Abstract

Objective

To examine whether hospital financial health was associated with differential changes in outcomes after implementation of 2003 ACGME duty hour regulations.

Data sources/Study Setting

Observational study of 3,614,174 Medicare patients admitted to 869 teaching hospitals from July 1, 2000 to June 30, 2005.

Study Design

Interrupted time series analysis using logistic regression to adjust for patient comorbidities, secular trends, and hospital site. Outcomes included 30-day mortality, AHRQ Patient Safety Indicators (PSIs), failure-to-rescue (FTR) rates, and prolonged length of stay (PLOS).

Principal Findings

All 8 analyses measuring the impact of duty hour reform on mortality by hospital financial health quartile, in post-reform year 1 (“Post 1”) or year 2 (“Post 2”) versus the pre-reform period, were not statistically significant: Post 1 OR range 1.00 – 1.02 and Post 2 OR range 0.99 – 1.02. For PSIs, all 6 tests showed clinically insignificant effect sizes. The FTR rate analysis showed no significant effect in either post-reform year (OR 1.00 for both). PLOS varied significantly only for the combined surgical sample in Post 2, and this effect was very small (OR 1.03; 95% CI 1.02, 1.04).

Conclusions

The impact of 2003 ACGME duty hour reform on patient outcomes did not differ by hospital financial health. This finding is somewhat reassuring, given additional financial pressure on teaching hospitals from 2011 duty hour regulations.

Keywords: Resident duty hour reform, quality of care, hospital financial health, patient outcomes, health policy

INTRODUCTION

Within the past two years, two major policy reforms that affect teaching hospitals were implemented: an unfunded mandate to further restrict resident duty hours (Accreditation Council for Graduate Medical Education 2010) and the Patient Protection and Affordable Care Act (ACA). The Accreditation Council for Graduate Medical Education (ACGME) approved a new set of resident duty hour restrictions to be implemented by July 1, 2011. Revised work hour rules will decrease maximum shift length from 30 hours to 16 hours for PGY-1 residents and to 28 hours (24 hours of clinical duty plus a 4-hour extension when needed) for PGY-2 residents and above (Accreditation Council for Graduate Medical Education 2010). Hospitals will incur additional, uncompensated personnel costs if excess resident work is transferred to substitute providers. It is estimated that compliance with these new regulations will cost $1.17–1.42 billion, assuming a mixture of substitute labor (Nuckols and Escarce 2012). Concurrently, the ACA will likely put greater financial pressure on hospitals through reduced annual market basket updates for inpatient hospitals, a 75% decrease in Medicare Disproportionate Share Hospital (DSH) payments, and penalties for hospitals with readmission rates above threshold levels. Several of these changes will become effective by October 1, 2012 (Accreditation Council for Graduate Medical Education 2010; The Henry J. Kaiser Family Foundation 2010).

How will these financial pressures affect teaching hospitals’ ability to implement the revised work hour rules while preserving or improving quality? Analyses of past duty hour reform demonstrated either no change (Volpp et al. 2007a; Silber et al. 2009; Volpp et al. 2009; Rosen et al. 2009; Fletcher et al. 2004; Jagsi et al. 2008) or small improvements in outcomes (Shetty and Bhattacharya 2007; Volpp et al. 2007b; Horwitz et al. 2007) associated with the reform. However, these studies did not examine whether the financial status of teaching hospitals influenced their ability to implement the 2003 resident work-hour rules without worsening patient outcomes, given implementation costs of up to $1.1 billion per year (Nuckols and Escarce 2005). To the extent that financially stressed teaching hospitals substituted highly-skilled inputs such as hospitalists in place of residents, the net impact on outcomes in these hospitals might even have been favorable (Shetty and Bhattacharya 2007; Roy et al. 2008). These findings would have important implications for the likely impact of current ACGME efforts to further restrict duty hours on quality.

In this manuscript, we present results from an analysis of the impact of the underlying financial health of hospitals on the association between implementation of ACGME duty hour rules and a comprehensive set of patient outcome measures among Medicare fee-for-service patients admitted to short-term, acute-care US nonfederal teaching hospitals. We compared trends in risk-adjusted mortality, patient safety event rates (Rosen et al. 2009), failure-to-rescue (FTR) (Silber et al. 2009), and prolonged length of stay (PLOS) (Volpp et al. 2009) among less versus more financially healthy teaching hospitals to examine whether hospitals that were financially distressed at baseline had more difficulty implementing ACGME duty hour rules in a manner that protected or improved patient outcomes.

METHODS

Main Outcome Measures

This study utilizes medical and surgical outcome measures described in prior work, including 30-day all-location all-cause mortality, selected AHRQ Patient Safety Indicators (PSIs) (Rosen et al. 2009; AHRQ Patient Safety Indicators 2006), FTR rates after admission for surgery (Volpp et al. 2009; Silber et al. 1992; Silber et al. 1995b; Silber et al. 2007; Silber et al. 2009a), and PLOS (Silber et al. 2003; Silber et al. 2009a; Silber et al. 2009b). Three PSI composite measures constructed in a prior study (Rosen et al. 2009) were utilized to assess patient safety events from iatrogenic complications of care: PSI-C reflecting continuity of care in the perioperative setting, PSI-T representing technical skills-based care, and PSI-O as an “Other” composite including a mix of surgical and medical PSIs.

Study Sample

The sample for the Resident Duty Hour Study has been described in detail in previous studies (Volpp et al. 2007a; Silber et al. 2009; Volpp et al. 2009; Rosen et al. 2009). In summary, the sample included 8,529,595 Medicare patients admitted to 3,321 short-term, acute-care general nonfederal hospitals over the years July 1, 2000 to June 30, 2005. Patients were grouped into the combined medical category based on a principal diagnosis of acute myocardial infarction (AMI), stroke, gastrointestinal bleeding, or congestive heart failure (CHF), or into the combined surgical category with a Diagnosis-Related Group (DRG) indicating general, orthopedic, or vascular surgery. We further excluded 36 hospitals that each had fewer than 5 deaths in the study period and 40,582 associated patient admissions, as well as 240 hospitals with 811,844 patient admissions due to missing financial information, because our financial analyses used more extensive data from Medicare Cost Reports. For this study, the relevant sample was limited to teaching hospitals, so we excluded 2,176 non-teaching hospitals with 4,062,995 patient admissions. The final sample included 869 teaching hospitals with 3,614,174 admissions over five years.
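The sample derivation above can be checked arithmetically. The short sketch below simply subtracts the reported exclusions from the starting counts; all numbers come from the paragraph above, and the variable names are illustrative only.

```python
# Sample-flow check using only the counts reported in the text.
start_hospitals, start_patients = 3_321, 8_529_595

# (reason, hospitals excluded, admissions excluded)
exclusions = [
    ("fewer than 5 deaths in the study period", 36, 40_582),
    ("missing financial information", 240, 811_844),
    ("non-teaching hospital", 2_176, 4_062_995),
]

hospitals, patients = start_hospitals, start_patients
for reason, n_hospitals, n_admissions in exclusions:
    hospitals -= n_hospitals
    patients -= n_admissions
    print(f"after excluding {reason}: {hospitals:,} hospitals, {patients:,} admissions")
```

The running totals end at the final sample reported in the text (869 teaching hospitals and 3,614,174 admissions).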

Financial Health of Hospitals

We divided hospitals into quartiles based on their average ratio of cash flow to total revenue from 2000–2003, a measure utilized in multiple prior studies (Bazzoli et al. 2008; Kane 1991; Clement et al. 1997; McCue and Clement 1996). This ratio (the sum of operating and non-operating net income plus annual depreciation expense, divided by total hospital revenue) was computed from financial data from Medicare Cost Reports provided by the Centers for Medicare & Medicaid Services (CMS). This measure presents a more complete picture of a hospital’s financial health than other metrics, as it incorporates revenues from non-operating sources. Quartile 1 was designated to represent hospitals with the best financial health.
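As an illustration of the measure described above, the following sketch computes the cash flow to total revenue ratio and assigns quartiles so that Quartile 1 holds the highest ratios. The function names, the example hospitals, and the choice of quartile cut-point method (the default of Python's `statistics.quantiles`) are assumptions made for illustration; the text does not specify how cut points or ties were handled.

```python
from statistics import quantiles

def cash_flow_ratio(operating_income, non_operating_income, depreciation, total_revenue):
    """(operating + non-operating net income + depreciation) / total revenue."""
    return (operating_income + non_operating_income + depreciation) / total_revenue

def assign_quartiles(avg_ratio_by_hospital):
    """Map hospital id -> quartile; Quartile 1 = highest ratio (best financial health)."""
    low_cut, median_cut, high_cut = quantiles(avg_ratio_by_hospital.values(), n=4)
    assigned = {}
    for hospital, ratio in avg_ratio_by_hospital.items():
        if ratio > high_cut:
            assigned[hospital] = 1
        elif ratio > median_cut:
            assigned[hospital] = 2
        elif ratio > low_cut:
            assigned[hospital] = 3
        else:
            assigned[hospital] = 4
    return assigned

# Hypothetical baseline-average ratios for eight made-up hospitals (not study data).
example_ratios = {"A": 0.30, "B": 0.20, "C": 0.14, "D": 0.12,
                  "E": 0.08, "F": 0.05, "G": 0.01, "H": -0.13}
example_quartiles = assign_quartiles(example_ratios)
print(example_quartiles)
```

In the study the ratio was averaged over 2000–2003 before quartiles were formed; the sketch takes those averages as given.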

Risk Adjustment, Risk score, and Hospital Control Measures

We employed a risk-adjustment approach developed by Elixhauser et al. (1998) as modified in prior studies (Volpp et al. 2007a; Volpp et al. 2007b; Silber et al. 2009a; Rosen et al. 2009; Glance et al. 2006; Quan et al. 2005; Southern, Quan, and Ghali 2004; Stukenborg, Wagner, and Connors 2001). This approach also included adjustment for age and sex, transfer status, year of admission, and interactions between year and resident-to-bed ratio (Keeler et al. 1992; Allison et al. 2000; Taylor, Whellan, and Sloan 1999). We also adjusted for the principal diagnosis in medical admissions or DRG in surgical patients, grouping paired DRGs with and without complications or comorbidities to avoid adjusting for iatrogenic events.

To identify high-risk patients for sub-analyses, risk scores for 30-day mortality were derived using out-of-sample data from 1999–2000 to avoid bias from a generated regressor (Pagan 1984). Patients with risk scores greater than the 90th percentile comprised the high-risk sub-sample used in analyses.

Data

Data on patient characteristics were drawn from the Medicare Provider Analysis and Treatment File (MEDPAR), which includes information on principal and secondary diagnoses, age, sex, comorbidities, and discharge status, including dates of death (Lawthers et al. 2000). Denominator files from CMS provided information on health maintenance organization enrollment. American Hospital Association data were used to identify hospitals that merged, opened, or closed during the study period. Financial data and the number of residents and beds per hospital were obtained from the Medicare Cost Reports. We used resident-to-bed ratio to measure teaching intensity as in previous studies (Volpp et al. 2007a; Volpp et al. 2007b; Silber et al. 2009a; Rosen et al. 2009; Keeler et al. 1992; Allison et al. 2000; Taylor, Whellan, and Sloan 1999).

Statistical Analysis

We used a multiple time series research design (Volpp et al. 2007a; Volpp et al. 2007b; Campbell and Stanley 1963), also known as differences-in-differences, to examine whether the implementation of duty hour reform was associated with a differential change in the trend of patient outcomes in less versus more financially healthy teaching hospitals. This approach reduces potential biases from unmeasured variables that are unchanged or change at a constant pace over time (Shadish, Cook, and Campbell 2002; Rosenbaum 2001). The multiple time series research design compares each hospital to itself, before and after reform, contrasting the changes in less financially healthy hospitals to the changes in more financially healthy hospitals, adjusting for observed differences in patient risk factors.

We tested whether pre-reform trends were similar in less vs. more financially healthy hospitals and adjusted for any observed underlying difference in these trends.

Using the outcome measures described above as dependent variables, we performed logistic regression adjusted for patient comorbidities, year indicator variables to control for secular trends affecting all patients (e.g., due to general changes in technology), and hospital fixed effects. The effect of the change in duty hour rules was measured as the coefficient of each financial health quartile (with Quartile 1 as the reference group) interacted with dummy variables indicating post-reform year 1 and post-reform year 2. These coefficients, presented as odds ratios (ORs), measure the degree to which patient outcomes changed in less vs. more financially healthy hospitals, comparing the post-reform years to pre-reform year 1. They were measured for each year separately because of the possibility of either delayed beneficial effects or early harmful effects. FTR analyses were performed on surgical/procedural patients only, since hospital-acquired complications are easier to ascertain for surgical than medical patients (Silber et al. 1992; Silber et al. 1995a; Silber et al. 2007; Silber et al. 2009a; Volpp et al. 2009). For all analyses, odds ratios greater than one indicate greater adjusted odds of the adverse outcome, or greater reductions in quality of care, at less financially healthy hospitals from pre- to post-reform.
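To make the model terms concrete, the sketch below builds the six quartile-by-year interaction dummies described above and converts a logit coefficient into an odds ratio with a 95% interval. The Wald interval (coefficient ± 1.96 standard errors), the variable names, and the numeric inputs are assumptions for illustration; they are not values or methods confirmed by the study.

```python
import math

def interaction_terms(quartile, period):
    """Six 0/1 dummies: quartiles 2-4 crossed with post-reform years 1 and 2.

    Quartile 1 and the pre-reform periods are the reference categories,
    so they produce all zeros.
    """
    return {
        f"q{q}_x_{p}": int(quartile == q and period == p)
        for q in (2, 3, 4)
        for p in ("post1", "post2")
    }

def odds_ratio_with_ci(beta, se, z=1.96):
    """Convert a logit coefficient and its standard error to (OR, lower, upper)."""
    return math.exp(beta), math.exp(beta - z * se), math.exp(beta + z * se)

# A Quartile 4 admission in post-reform year 2 switches on exactly one dummy.
row = interaction_terms(quartile=4, period="post2")

# Hypothetical coefficient and standard error, chosen only to show the conversion.
or_estimate, ci_lower, ci_upper = odds_ratio_with_ci(beta=0.05, se=0.02)
print(row)
print(f"OR {or_estimate:.2f} (95% CI {ci_lower:.2f}, {ci_upper:.2f})")
```

An odds ratio above one on such a term would indicate a relative worsening of the outcome in that quartile compared with Quartile 1, consistent with the interpretation given above.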

We performed a between-quartile trend likelihood ratio test (with 2 degrees of freedom) to evaluate the “dose response” between financial health and relative change in outcomes in each post-reform year. This test was implemented by comparing a model not allowing for a relationship between financial quartiles and relative patient outcomes and a model that included a linear trend across quartiles. The null hypothesis was that all quartiles had equivalent post-reform effects within a given year. Rejecting the null hypothesis would suggest that there were differences in post-reform outcomes according to pre-reform financial stress.
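A likelihood ratio test of this kind can be sketched as follows. The statistic is twice the difference in log-likelihood between the fuller and the restricted model, referred to a chi-square distribution; with the 2 degrees of freedom stated above, the upper-tail probability has the closed form exp(-x/2). The log-likelihood values below are hypothetical and serve only to show the calculation.

```python
import math

def lr_test_df2(loglik_restricted, loglik_full):
    """Likelihood ratio statistic and p-value for a 2-degree-of-freedom test.

    The closed form exp(-x / 2) is the chi-square upper-tail probability
    for exactly 2 degrees of freedom; other df need a general chi-square routine.
    """
    statistic = max(0.0, 2.0 * (loglik_full - loglik_restricted))
    p_value = math.exp(-statistic / 2.0)
    return statistic, p_value

# Hypothetical log-likelihoods (not taken from the study).
lr_statistic, lr_p_value = lr_test_df2(loglik_restricted=-1000.0, loglik_full=-997.0)
print(f"LR statistic = {lr_statistic:.2f}, p = {lr_p_value:.4f}")
```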

We employed the Bonferroni correction when evaluating for systematic patterns of significance given the large number of estimates. Stability analyses were performed using the least financially healthy hospitals (Quartile 4) as the control group, to examine for any underlying difference between the least financially healthy quartile and the rest of the hospitals, and using operating margin as an alternate measure of financial health. We also performed a falsification test to determine whether there was any difference in underlying pre-duty hour reform trends between hospitals by quartile of financial status. If such a difference existed, it might confound interpretation of pre/post differences in outcomes across quartiles of financial status. We implemented this analysis by using pre-reform year 3 as the baseline year with pre-reform year 1 as the comparator. Finally, we evaluated whether the association between financial status and changes in patient outcomes after duty hour reform varied by hospital teaching intensity. We tested this hypothesis by including interaction terms between the effects of interest (i.e., each financial health quartile interacted with dummy variables indicating post-reform year 1 and post-reform year 2) and the resident-to-bed ratio.

The research design prevents three possible types of bias (Volpp et al. 2007a; Volpp et al. 2007b). First, the models included hospital fixed-effects and thus differences caused by hospital characteristics that are stable over time do not confound the association between financial status and outcomes, as each hospital is compared with itself before and after duty hour reform. Second, we introduced year indicators to control for secular, unmeasured trends in treatment patterns (e.g. technological improvements) that could affect outcomes at all hospitals similarly. Third, we controlled for changes in patient case-mix by including controls for patient severity.

A limitation of this approach was that any diverging trend in outcomes for less versus more financially healthy teaching hospitals that was coincident with the reform could confound the analysis. Consequently, we extensively tested whether the pre-reform trends in outcomes were similar in more and less financially-healthy hospitals and adjusted for any observed underlying difference in pre-reform trends. The research design utilized financially healthy hospitals as controls for those of poorer financial health with respect to the impact of the reform because the former group likely had sufficient resources to implement needed changes.

RESULTS

Description of Hospitals by Financial Quartile

There was wide variation in the measures of financial health, with mean cash flow-to-total revenue ratio of 29.9% for Quartile 1, 13.4% for Quartile 2, 6.5% for Quartile 3, and −13.3% for Quartile 4 for the baseline years 2000–2003 (Table 1). Mean operating margin, defined as net operating income divided by net patient revenue, demonstrated similarly wide variation, ranging from 4.0% in Quartile 1 to −22.1% in Quartile 4. Only Quartile 1 had a positive mean operating margin. There was substantial correlation between the two measures of financial health (correlation coefficient = 0.81). Hospitals in the financially healthiest quartile were more likely to be for-profit (27.1% vs. overall 12.9% average) while hospitals in the least financially healthy quartile were more likely to be government-owned (15.8% vs. overall 6.1% average).

Table 1.

Characteristics of hospitals in sample from 2000–2003, by financial health quartileᵃ

| | Quartile 1ᵇ | Quartile 2ᵇ | Quartile 3ᵇ | Quartile 4ᵇ | Total |
|---|---|---|---|---|---|
| Mean cash flow-total revenue ratio, % (range) | 29.93% (18.67% – 90.99%) | 13.42% (9.74% – 18.39%) | 6.54% (3.17% – 9.73%) | −13.27% (−246.30% – 3.14%) | 9.13% (−246.30% – 90.99%) |
| Mean operating margin, % (range) | 3.97% (−38.98% – 37.93%) | −0.24% (−54.12% – 27.18%) | −3.01% (−62.71% – 20.80%) | −22.13% (−240.72% – 13.38%) | −5.37% (−240.72% – 37.93%) |
| No. (%) of facilities | 217 (24.97%) | 217 (24.97%) | 217 (24.97%) | 218 (25.09%) | 869 |
| No. (%) of discharges | 7,298,876 (28.50%) | 6,927,431 (27.06%) | 6,287,043 (24.55%) | 5,090,676 (19.88%) | 25,604,026 |
| Urban, % | 91.24% | 91.71% | 90.78% | 96.33% | 92.52% |
| Rural, % | 8.76% | 8.29% | 9.21% | 3.67% | 7.48% |
| Ownership | | | | | |
| For Profit, % | 27.14% | 10.58% | 6.34% | 6.52% | 12.89% |
| Not-for-profit, % | 68.57% | 87.50% | 90.24% | 77.72% | 81.04% |
| Government, % | 4.29% | 1.92% | 3.41% | 15.76% | 6.07% |
| Teaching intensity (Resident-to-bed ratio) | | | | | |
| Minor Teaching (>0 – 0.249), % | 79.72% | 75.12% | 75.58% | 53.67% | 71.00% |
| Major Teaching (0.250 – 1.094), % | 20.28% | 24.88% | 24.42% | 46.33% | 29.00% |
| Number of beds per hospital (SD) | 346.50 (242.46) | 326.67 (197.96) | 301.49 (187.02) | 293.42 (204.36) | 316.99 (209.64) |
| Occupancy rate, % (range) | 67.51% (23.38% – 100.32%) | 69.49% (33.61% – 102.16%) | 68.34% (29.51% – 103.40%) | 68.27% (11.83% – 105.44%) | 68.4% (11.83% – 105.44%) |

ᵃ Included 869 hospitals with 3,614,174 patients. Prereform and postreform years are academic years (e.g., prereform year 3 indicates academic year July 1, 2000 to June 30, 2001).

ᵇ Quartiles computed based on mean cash flow-total revenue ratio. Quartile 1 is the most financially healthy and Quartile 4 is the least financially healthy.

Patient Population and Unadjusted Outcomes

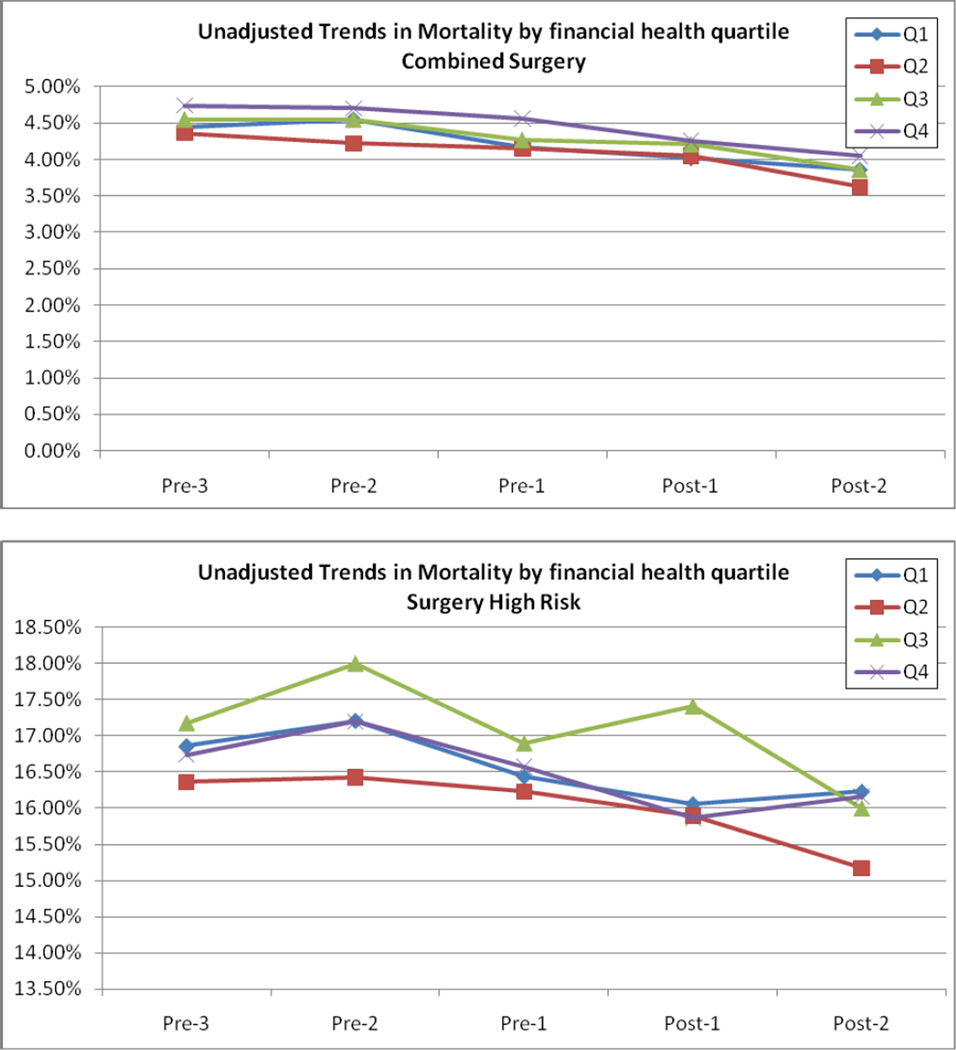

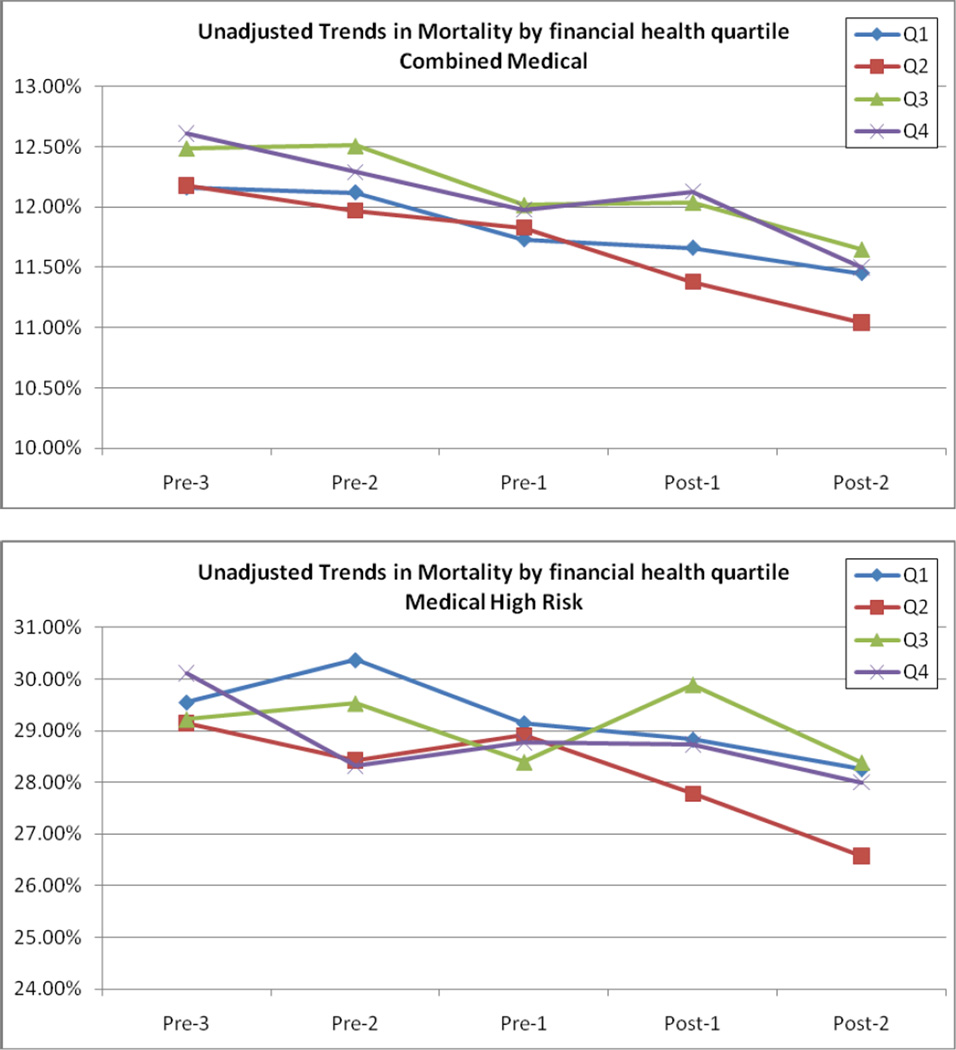

The number of admissions for the samples associated with each outcome measure was fairly constant over time, though there was variation in the outcome rates themselves across financial quartiles and years (Table 2). In the combined medical and high-risk medical samples, there were general downward trends in unadjusted mortality rates for all quartiles over the sample years. In the combined surgical sample, unadjusted mortality decreased similarly across quartiles, with Quartile 4 showing the highest unadjusted mortality rates in most periods (Figure 2). We do not present plots of unadjusted trends for the other outcome measures as there were no discernible relationships in the trends across financial quartiles.

Table 2.

Characteristics of the Study Population

| | Prereform Years, 2000–2003* (pooled) | | | | Postreform Year, 2003–2004* | | | | Postreform Year, 2004–2005* | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Financial Health (cash flow) Quartile of Hospital of Admission | Q1 | Q2 | Q3 | Q4 | Q1 | Q2 | Q3 | Q4 | Q1 | Q2 | Q3 | Q4 |
| Mortality – Combined Medical | | | | | | | | | | | | |
| No. of Cases | 278,174 | 264,153 | 243,307 | 180,012 | 88,843 | 83,418 | 79,044 | 57,088 | 84,278 | 80,421 | 75,710 | 55,184 |
| Unadjusted Mortality, % | 12.01% | 12.00% | 12.34% | 12.30% | 11.66% | 11.38% | 12.04% | 12.13% | 11.45% | 11.04% | 11.65% | 11.50% |
| High Risk Mortality – Combined Medical | | | | | | | | | | | | |
| No. of High Risk Cases | 27,965 | 27,259 | 25,089 | 18,337 | 8,799 | 8,448 | 7,856 | 5,659 | 8,429 | 8,341 | 7,900 | 5,572 |
| Unadjusted High Risk Mortality, % | 29.69% | 28.84% | 29.07% | 29.09% | 28.83% | 27.77% | 29.89% | 28.73% | 28.26% | 26.58% | 28.39% | 28.00% |
| Mortality – Combined Surgical | | | | | | | | | | | | |
| No. of Cases | 355,266 | 345,163 | 297,998 | 212,111 | 123,144 | 117,684 | 103,432 | 73,529 | 122,214 | 117,308 | 103,550 | 73,143 |
| Unadjusted Mortality, % | 4.38% | 4.24% | 4.45% | 4.67% | 4.02% | 4.05% | 4.21% | 4.25% | 3.86% | 3.62% | 3.86% | 4.05% |
| High Risk Mortality – Combined Surgical | | | | | | | | | | | | |
| No. of High Risk Cases | 36,862 | 35,461 | 30,260 | 23,020 | 12,565 | 11,964 | 10,320 | 7,677 | 12,100 | 11,790 | 10,197 | 7,604 |
| Unadjusted High Risk Mortality, % | 16.84% | 16.34% | 17.35% | 16.83% | 16.06% | 15.90% | 17.41% | 15.87% | 16.22% | 15.17% | 16.00% | 16.16% |
| FTR | | | | | | | | | | | | |
| No. of Cases (comp. or death) | 151,816 | 147,445 | 128,067 | 93,418 | 54,576 | 51,965 | 45,724 | 33,474 | 52,869 | 50,546 | 44,288 | 32,327 |
| Unadjusted FTR, % | 10.26% | 9.93% | 10.37% | 10.59% | 9.06% | 9.15% | 9.51% | 9.32% | 8.92% | 8.39% | 8.99% | 9.14% |
| PSI-C | | | | | | | | | | | | |
| No. of Cases | 938,125 | 892,495 | 788,709 | 592,914 | 307,843 | 293,824 | 261,905 | 198,745 | 301,559 | 289,273 | 262,102 | 196,158 |
| Unadjusted PSI, % | 0.61% | 0.58% | 0.60% | 0.60% | 0.58% | 0.57% | 0.60% | 0.60% | 0.61% | 0.57% | 0.61% | 0.60% |
| PSI-T | | | | | | | | | | | | |
| No. of Cases | 437,578 | 398,863 | 331,610 | 241,144 | 147,029 | 136,932 | 114,974 | 84,592 | 145,689 | 138,080 | 116,446 | 85,597 |
| Unadjusted PSI, % | 1.24% | 1.24% | 1.19% | 1.34% | 1.36% | 1.21% | 1.25% | 1.46% | 1.39% | 1.30% | 1.22% | 1.36% |
| PSI-O | | | | | | | | | | | | |
| No. of Cases | 968,887 | 920,114 | 814,972 | 610,357 | 311,783 | 297,117 | 265,528 | 201,109 | 302,955 | 290,664 | 263,504 | 197,077 |
| Unadjusted PSI, % | 0.64% | 0.70% | 0.65% | 0.69% | 0.70% | 0.74% | 0.68% | 0.67% | 0.70% | 0.70% | 0.65% | 0.71% |
| PLOS (combined medical) | | | | | | | | | | | | |
| No. of Cases | 278,174 | 264,153 | 243,307 | 180,012 | 88,843 | 83,418 | 79,044 | 57,088 | 84,278 | 80,421 | 75,710 | 55,184 |
| Unadjusted PLOS, % | 67.57% | 69.11% | 67.64% | 68.01% | 65.60% | 67.00% | 65.87% | 65.63% | 64.41% | 66.51% | 64.59% | 65.08% |
| PLOS (combined surgical) | | | | | | | | | | | | |
| No. of Cases | 148,541 | 142,773 | 124,676 | 82,434 | 51,805 | 48,841 | 44,314 | 28,979 | 52,073 | 49,203 | 44,578 | 29,140 |
| Unadjusted PLOS, % | 57.61% | 58.84% | 55.86% | 56.75% | 53.05% | 54.23% | 52.12% | 52.20% | 49.11% | 50.12% | 47.21% | 49.23% |

Included 3,614,174 patients from 869 facilities. Pre-reform years 3 to 1 were pooled.

Figure 2. Unadjusted trends in Mortality for Surgical Admissions by Hospital Financial Health Quartile.

The Accreditation Council for Graduate Medical Education duty hour regulations were implemented on July 1, 2003. Pre-reform year 3 (Pre-3) included academic year 2000–2001 (July 1, 2000, to June 30, 2001); pre-reform year 2 (Pre-2), academic year 2001–2002; pre-reform year 1 (Pre-1), academic year 2002–2003; post-reform year 1 (Post-1), academic year 2003–2004; and post-reform year 2 (Post-2), academic year 2004–2005. No significant divergence was found in the degree to which mortality changed from pre-reform year 1 to either post-reform year in any group. Significance levels assess whether the trend from pre-reform year 1 to post-reform years 1 and 2, respectively, differed for less vs. more financially healthy hospitals.

Adjusted Analyses

Adjusted analyses of the 6 patient outcomes across the samples of medical and surgical patients indicated no systematic improvement or worsening in outcomes in accordance with hospital financial status in either post-reform year 1 or post-reform year 2 (Table 3). Furthermore, the between-quartile trend likelihood ratio test, used to evaluate the “dose response” between financial health of hospitals and changes in risk-adjusted patient outcomes, demonstrated very few significant interactions between quartiles of hospitals’ financial health and relative changes in patient outcomes from pre-reform to post-reform (Table 3).

Table 3.

Adjusted Odds of Mortality, Patient Safety Event Rates, Failure-to-Rescue, and Prolonged Length of Stay after Duty Hour Reform in less vs. more financially healthy teaching hospitals

| | Post-reform Year 1 × | | | | Post-reform Year 2 × | | | |
|---|---|---|---|---|---|---|---|---|
| | Quartile 2ᵃ | Quartile 3ᵃ | Quartile 4ᵃ | Test of Between-Quartile Trend | Quartile 2ᵃ | Quartile 3ᵃ | Quartile 4ᵃ | Test of Between-Quartile Trend |
| Mortality | Odds Ratios (95% CI)ᵇ | | | | Odds Ratios (95% CI)ᵇ | | | |
| Combined medical | 0.96 (0.92,1.01) | 1.02 (0.97,1.06) | 1.03 (0.98,1.08) | 1.01 (1.00,1.02) | 0.95* (0.91,0.99) | 0.99 (0.95,1.04) | 0.98 (0.94,1.03) | 0.99 (0.98,1.01) |
| High-risk medical (vs. low-risk) | 0.98 (0.910,1.60) | 1.03 (0.95,1.11) | 0.95 (0.87,1.03) | 1.02 (0.99,1.04) | 0.97 (0.90,1.05) | 1.01 (0.93,1.09) | 1.02 (0.93,1.11) | 1.02 (0.99,1.05) |
| Combined surgical | 0.98 (0.92,1.04) | 1.01 (0.95,1.07) | 0.97 (0.91,1.04) | 1.00 (0.98,1.01) | 0.92** (0.86,0.98) | 0.97 (0.91,1.04) | 0.97 (0.90,1.04) | 0.995 (0.98,1.01) |
| High-risk surgical (vs. low-risk) | 0.94 (0.86,1.30) | 1.04 (0.95,1.35) | 0.96 (0.87,1.063) | 1.00 (0.97,1.03) | 0.98 (0.90,1.08) | 0.98 (0.89,1.07) | 1.04 (0.94,1.56) | 1.00 (0.97,1.025) |
| Patient safety event | | | | | | | | |
| PSI-C | 0.97 (0.88,1.06) | 1.03 (0.93,1.13) | 0.97 (0.87,1.08) | 1.00 (0.97,1.03) | 0.91* (0.83,1.00) | 0.99 (0.90,1.09) | 0.94 (0.84,1.05) | 0.99 (0.97,1.02) |
| PSI-T | 0.91* (0.82,1.00) | 0.95 (0.86,1.06) | 1.05 (0.94,1.17) | 1.00 (0.97,1.03) | 0.95 (0.86,1.05) | 0.95 (0.86,1.05) | 0.94 (0.84,1.05) | 0.97* (0.94,0.99) |
| PSI-O | 0.93 (0.85,1.01) | 0.93 (0.85,1.01) | 0.88* (0.80,0.97) | 0.96** (0.94,0.99) | 0.89** (0.82,0.97) | 0.90* (0.82,0.98) | 0.95 (0.86,1.05) | 0.98 (0.96,1.01) |
| FTR rate | 0.99 (0.93,1.05) | 1.02 (0.95,1.09) | 0.97 (0.90,1.04) | 1.00 (0.98,1.02) | 0.92** (0.86,0.98) | 0.97 (0.91,1.04) | 0.98 (0.91,1.06) | 1.00 (0.98,1.02) |
| PLOS rate | | | | | | | | |
| Combined medical | 0.99 (0.96,1.02) | 1.02 (0.99,1.05) | 0.96* (0.93,0.99) | 1.00 (0.99,1.01) | 1.01 (0.98,1.04) | 1.00 (0.98,1.04) | 0.98 (0.95,1.01) | 1.00 (0.99,1.01) |
| Combined surgical | 0.97 (0.93,1.01) | 1.04 (1.00,1.08) | 0.99 (0.95,1.04) | 1.01 (1.00,1.02) | 0.95** (0.91,0.99) | 1.01 (0.97,1.05) | 1.10*** (1.05,1.15) | 1.03*** (1.02,1.04) |

Abbreviations: CI, confidence interval; PSI, patient safety indicators; FTR, failure-to-rescue; PLOS, prolonged length of stay. High-risk samples include the top decile of patients by severity risk score.

ᵃ The interaction terms (Post-reform Year 1 × Quartile 4) and (Post-reform Year 2 × Quartile 4) measure whether there is any relative change in the odds of the patient outcome in Quartile 4 (least financially healthy) vs. Quartile 1 (financially healthiest) hospitals. Analogous interaction terms for financial quartiles 2 and 3 are also included. Models are also adjusted for age, sex, comorbidities, common time trends, and hospital site where treated.

ᵇ Units for odds ratios compare hospitals in the quartile of interest to hospitals in Quartile 1, the financially healthiest quartile.

Significance levels: * p<0.05; ** p<0.01; *** p<0.001.

Mortality

None of the mortality analyses demonstrated any systematic pattern of improvement or worsening in response to duty hour reform between more and less financially healthy hospitals in post-reform year 1. In post-reform year 2, only Quartile 2 (relative to the most financially healthy hospitals) showed statistically significant results for combined medical (OR 0.95; 95% CI 0.91 – 0.99) and combined surgical patients (OR 0.92; 95% CI 0.86 – 0.98). The between-quartile trend likelihood ratio tests showed no significant dose-response between financial health of hospitals and the change in risk-adjusted mortality in either post-reform year 1 (OR range 1.00 to 1.02) or post-reform year 2 (OR range 0.99 to 1.02).

Patient Safety Indicators

In post-reform year 1, PSI-T declined significantly (relative to the most financially healthy hospitals) only in Quartile 2 (OR 0.91; 95% CI 0.82 – 1.00) whereas PSI-O declined significantly (relative to the most financially healthy hospitals) only in Quartile 4 (OR 0.88; 95% CI 0.80 – 0.97). In post-reform year 2, PSI-C and PSI-O showed significant relative improvements from the pre-reform period in Quartile 2 (OR 0.91; 95% CI 0.83 – 1.00 and OR 0.89; 95% CI 0.82 – 0.97, respectively) and PSI-O also improved significantly in Quartile 3 (OR 0.90; 95% CI 0.82 – 0.98). The between-quartile trend likelihood ratio tests demonstrated no dose-response except for declining patient safety event rates in less vs. more financially healthy hospitals for the PSI-T outcome in post-reform year 2 (OR 0.97; 95% CI 0.94 – 0.99) and for PSI-O in post-reform year 1 (OR 0.96; 95% CI 0.94 – 0.99).

Failure-to-Rescue

FTR rates demonstrated no significant differences in pre-post changes across quartiles of financial health in post-reform year 1, and the only significant difference (relative to the most financially healthy hospitals) in post-reform year 2 was in quartile 2 (OR 0.92; 95% CI 0.86 – 0.98). The between-quartile trend likelihood ratio tests demonstrated no significant dose-response for financial health on FTR rates for either post-reform year 1 (OR 1.00; 95% CI 0.98 – 1.02) or post-reform year 2 (OR 1.00; 95% CI 0.98 – 1.02).

Prolonged Length of Stay

PLOS rates for the combined medical group declined significantly (relative to the most financially healthy hospitals) only in quartile 4 in post-reform year 1 (OR 0.96; 95% CI 0.93 – 0.99); there were no significant changes for any quartile (relative to the most financially healthy hospitals) in post-reform year 2. Finally, PLOS rates in the combined surgical sample showed no significant differences in pre-post changes across quartiles of financial health in post-reform year 1, but a significant decrease in quartile 2 (OR 0.95; 95% CI 0.91 – 0.99) and a significant increase in quartile 4 (OR 1.10; 95% CI 1.05 – 1.15) in post-reform year 2. The between-quartile trend likelihood ratio tests demonstrated increasing PLOS rates in less vs. more financially healthy hospitals only for the combined surgical sample in post-reform year 2 (OR 1.03; 95% CI 1.02 – 1.04).

Employing the Bonferroni correction resulted in only one statistically significant coefficient among the 60 quartile-by-year estimates, highlighting that there was no systematic relationship between hospital financial health and changes in outcomes following the 2003 duty hour reform. Of note, the trends for the PSI measures that were statistically significant, though not with the Bonferroni correction, indicated a relative improvement in outcomes at less financially healthy hospitals while PLOS demonstrated a corresponding relative worsening in quality at less financially healthy hospitals. Stability analyses replicating the unadjusted, adjusted, and quartile trend analyses utilizing an alternate measure of financial health, hospital operating margin (Bazzoli et al. 2008), demonstrated similar results. A falsification test that tested for pre-duty hour differences in trends across hospitals by financial quartiles confirmed that pre-existing quartile-specific trends were not present, suggesting that the observed differences post-reform were not confounded by underlying differences in trends by hospital financial status (Appendix Table 1). An analysis using 3-way interactions with teaching intensity suggested that trends were similar across the spectrum of teaching hospitals (Appendix Table 2). Finally, an analysis of low-risk patients also produced similar results (Appendix Table 3).
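The Bonferroni threshold implied by the 60 quartile-by-year estimates can be worked out as below. The count of 60 follows from the 10 outcome samples in Table 3, each with 3 quartile contrasts in 2 post-reform years; the family-wise significance level of 0.05 is an assumption, since the text does not state the level used.

```python
def bonferroni_threshold(alpha, n_tests):
    """Per-test significance threshold that keeps the family-wise error rate at alpha."""
    return alpha / n_tests

# 10 outcome samples x 3 quartile contrasts (Q2-Q4 vs. Q1) x 2 post-reform years.
n_estimates = 10 * 3 * 2

# alpha = 0.05 is an assumed family-wise level, not a value stated in the text.
per_test_threshold = bonferroni_threshold(alpha=0.05, n_tests=n_estimates)
print(f"{n_estimates} estimates -> per-test threshold {per_test_threshold:.5f}")
```

Under that assumption, an individual estimate would need a p-value below roughly 0.0008 to remain significant after correction.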

DISCUSSION

Our findings suggest that the underlying financial health of hospitals was not associated with differences in the degree to which a set of medical and surgical patient outcomes changed after implementation of the 2003 ACGME duty hour rules in a national sample of teaching hospitals. While there were isolated improvements in outcomes in some quartiles of hospital financial health for a few measures, there was no systematic dose-response relationship as measured by the test of between-quartile trends. It is unlikely that a causal relationship between hospital financial status and changes in patient outcomes due to duty hour reform exists.

Implementing resident duty hour rules is costly for hospitals, with one study estimating the nationwide costs to be between $673 million and $1.1 billion for the 2003 rules (Nuckols and Escarce 2005). Our findings offer evidence that despite the significant costs, the degree to which quality changed after the implementation of duty hour restrictions was not related to hospital financial health.

Projections of the costs for implementing the 2011 duty hour rules have been as high as $1.64 billion if attending physicians are used as substitutes for residents, approximately 15 percent more than the 2003 rules when adjusting for inflation (Nuckols and Escarce 2012; Nuckols et al. 2009). Our results are somewhat reassuring, as they suggest that teaching hospitals adapted successfully to the financial pressure caused by the 2003 unfunded mandate, protecting patient outcomes. However, we cannot determine from this study whether there may be a “breaking point” for individual hospitals that may be especially strained financially by both the recent changes in work hour rules and the forthcoming changes in Medicare payment policies.

Several studies that examined mortality, patient safety event rates, prolonged length of stay, and failure-to-rescue in a national sample of Medicare patients found no systematic improvement or worsening after duty hour reform, across levels of teaching intensity, without consideration of hospital financial health (Volpp et al. 2007a; Volpp et al. 2007b; Silber et al. 2009; Volpp et al. 2009; Rosen et al. 2009; Shetty and Bhattacharya 2007; Horwitz et al. 2007). However, in other studies, financial pressure has been shown to adversely impact the quality of care provided by hospitals. This evidence includes studies of the impact of policy reforms that resulted in financial pressure (Lindrooth et al. 2007; Shen 2003; Volpp et al. 2005; Clement et al. 2007; Encinosa and Bernard 2005), longitudinal studies of trends in financial performance and quality of care (Bazzoli et al. 2007; Bazzoli et al. 2008), as well as cross-sectional analyses of the association between financial condition and patient outcomes (Burstin et al. 1993). While our findings indicate that teaching hospitals were able to implement new duty hour rules without any worsening of outcomes, regardless of their financial health, the 2003 ACGME duty hour rules were not accompanied by financial pressure from payment reforms, such as those to be instituted shortly after the 2011 duty hour regulations.

There may be multiple reasons for the observed lack of significant variation in post-reform changes in patient outcomes across quartiles of hospital financial health. First, teaching hospitals may have provided adequate training and supervision or used physician extenders, fellows, or attendings (across all quartiles of financial health) after implementation of the ACGME duty hour rules to avoid declines in patient outcomes. Second, as less financially healthy hospitals tended to be more teaching intensive, their higher number of residents may have enabled greater flexibility in implementation. For example, residents could be reallocated from services requiring fewer duty hours to those requiring more. As resident-to-resident substitution does not incur additional cost, these hospitals may have more extensively redistributed resident time across services and rotations such that additional personnel were not required (Okie 2007). It is also possible that less financially healthy hospitals did not implement the reform or did so incompletely. Finally, hospitals in need of financial resources may have shifted into more profitable business lines or cut costs elsewhere to free up resources for duty hour reform implementation.

Our study has a number of possible limitations. Thirty-day all-cause mortality does not reflect changes in quality of life, functional status, and other important patient outcomes. Patient safety events may not be prevalent enough to detect changes over time. Despite the large patient sample, some of the confidence intervals were still quite wide and we cannot rule out small but clinically meaningful effects. To mitigate this concern, we employed a wide range of outcome measures varying from singular events, such as death, to aggregated composite measures of patient safety (PSIs). Observational studies based on administrative data lack detailed clinical information and are subject to unmeasured confounding. However, our multiple time series difference-in-difference approach compares outcomes over time within each hospital in less vs. more financially healthy hospitals. This methodology reduces the likelihood of bias as a confounding variable would need to be contemporaneous to the reform and to affect teaching hospitals differentially by financial status. Finally, we do not have information on the methods employed in implementing duty hour reform, including actual hours worked, at each hospital.
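The difference-in-difference logic described above can be illustrated on the odds scale. The sketch below is a simplified, unadjusted illustration rather than the study's regression model: it compares the pre-to-post change in the odds of an outcome between two hypothetical hospital groups, and all rates and function names are assumptions made for illustration.

```python
def odds(p):
    """Convert a probability (0 < p < 1) to odds."""
    return p / (1.0 - p)


def ratio_of_odds_ratios(pre_less, post_less, pre_more, post_more):
    """Unadjusted difference-in-difference on the odds scale.

    Each argument is an outcome rate (e.g., 30-day mortality) for
    less or more financially healthy hospitals, before or after reform.
    A value near 1.0 means the two groups changed to a similar degree.
    """
    or_less = odds(post_less) / odds(pre_less)  # post vs. pre, less healthy group
    or_more = odds(post_more) / odds(pre_more)  # post vs. pre, more healthy group
    return or_less / or_more


# Hypothetical rates, for illustration only (not study data).
result = ratio_of_odds_ratios(pre_less=0.10, post_less=0.09,
                              pre_more=0.10, post_more=0.09)
print(f"ratio of odds ratios: {result:.2f}")
```

Because a secular change that affects both groups equally cancels in the ratio, only a shock that coincides with the reform and differs by financial status would move this quantity away from 1.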

In conclusion, we found that the financial health of teaching hospitals was not systematically associated with any significant change in a comprehensive set of medical and surgical patient outcome measures across the duty hour reform time period. These findings present some reassuring evidence in light of further duty hour restrictions that were implemented in 2011 and payment reforms to be implemented in 2012–2014. Yet our findings do not guarantee that this future combination of financial stressors will not push some hospitals beyond their ability to prevent adverse impacts on patient outcomes. These impacts will need to be carefully monitored going forward.

Supplementary Material

Figure 1. Unadjusted trends in Mortality for Medical Admissions by Hospital Financial Health Quartile.

The Accreditation Council for Graduate Medical Education duty hour regulations were implemented on July 1, 2003. Pre-reform year 3 (Pre-3) included academic year 2000–2001 (July 1, 2000, to June 30, 2001); pre-reform year 2 (Pre-2), academic year 2001–2002; pre-reform year 1 (Pre-1), academic year 2002–2003; post-reform year 1 (Post-1), academic year 2003–2004; and post-reform year 2 (Post-2), academic year 2004–2005. No significant divergence was found in the degree to which mortality changed from pre-reform year 1 to either post-reform year in any group. Significance levels assess whether the trend from pre-reform year 1 to post-reform years 1 and 2, respectively, differed for less vs. more financially healthy hospitals.

Acknowledgments

Joint Acknowledgement/Disclosure Statement:

Funding/Support: This work was supported by National Heart, Lung, and Blood Institute (NHLBI) grant R01 HL082637, “Impact of Resident Work Hour Rules on Errors and Quality.”

Role of the Sponsors: The sponsors had no role in the design and conduct of the study, in the collection, management, analysis, and interpretation of the data, or in the preparation, review, or approval of the manuscript.

Footnotes

Drs. Volpp and Silber had full access to all of the data in the study and take responsibility for the integrity of the data and, together with Dr. Navathe, take responsibility for the accuracy of the data analysis.

Ms. Even-Shoshan, Mr. Zhou, Ms. Wang, and Mr. Halenar received financial compensation for their contributions.

Disclosures: None

REFERENCES

- Accreditation Council for Graduate Medical Education Web site. Approved Standards Information 2010 Common Program Requirements. [Accessed October 3, 2010]. Available at: http://acgme-2010standards.org/pdf/Common_Program_Requirements_07012011.pdf.

- Agency for Healthcare Research and Quality Patient Safety Indicators Software (AHRQ website) Version 3.0. Rockville, MD: 2006. [Accessed April 8, 2008]. Available at: http://www.qualityindicators.ahrq.gov/software.htm.

- Allison JJ, Kiefe CI, Weissman NW, Person SD, Rousculp M, Canto JG, Bae S, Williams D, Farmer R, Centor RM. Relationship of hospital teaching status with quality of care and mortality for Medicare patients with acute MI. Journal of the American Medical Association. 2000;284:1256–1262. doi: 10.1001/jama.284.10.1256.

- Bazzoli GJ, Chen H, Zhao M, Lindrooth RC. Hospital financial condition and the quality of patient care. Health Economics. 2008;17:977–995. doi: 10.1002/hec.1311.

- Bazzoli GJ, Clement J, Lindrooth RC, Chen HF, Aydede S, Braun B, Loeb J. Hospital financial condition and operational decisions related to the quality of hospital care. Medical Care Research and Review. 2007;64:148–168. doi: 10.1177/1077558706298289.

- Burstin HR, Lipsitz SR, Udvarhelyi IS, Brennan TA. The effect of hospital financial characteristics on quality of care. Journal of the American Medical Association. 1993;270:845–849.

- Campbell DT, Stanley JC. Experimental and Quasi-Experimental Designs for Research. Dallas, TX: Houghton Mifflin Co; 1963. pp. 56–63.

- Clement JP, Lindrooth RC, Chukmaitov AS, Chen HF. Does the patient’s payer matter in hospital patient safety? Medical Care. 2007;45:131–138. doi: 10.1097/01.mlr.0000244636.54588.2b.

- Clement JP, McCue MJ, Luke RD, Bramble JD. Financial performance of hospitals affiliated with strategic hospital alliances. Health Affairs (Millwood). 1997;16:193–203. doi: 10.1377/hlthaff.16.6.193.

- Elixhauser A, Steiner C, Harris DR, Coffey RM. Comorbidity measures for use with administrative data. Medical Care. 1998;36:8–27. doi: 10.1097/00005650-199801000-00004.

- Encinosa WE, Bernard DM. Hospital finances and patient safety outcomes. Inquiry. 2005;42:60–72. doi: 10.5034/inquiryjrnl_42.1.60.

- Fletcher KE, Davis SQ, Underwood W, Mangrulkar RS, McMahon LF, Saint S. Systematic review: effects of resident work hours on patient safety. Annals of Internal Medicine. 2004;141:851–857. doi: 10.7326/0003-4819-141-11-200412070-00009.

- Glance LG, Dick AW, Osler TM, Mukamel DB. Does date stamping ICD-9-CM codes increase the value of clinical information in administrative data? Health Services Research. 2006;41:231–251. doi: 10.1111/j.1475-6773.2005.00419.x.

- Horwitz LI, Kosiborod M, Lin Z, Krumholz HM. Changes in outcomes for internal medicine inpatients after work-hour regulations. Annals of Internal Medicine. 2007;147:97–103. doi: 10.7326/0003-4819-147-2-200707170-00163.

- Jagsi R, Weinstein DF, Shapiro J, Kitch BT, Dorer D, Weissman JS. The Accreditation Council for Graduate Medical Education’s limits on residents’ work hours and patient safety. A study of resident experiences and perceptions before and after hours reductions. Archives of Internal Medicine. 2008;168:493–500. doi: 10.1001/archinternmed.2007.129.

- Kane NM. Hospital profits: a misleading measure of financial health. Journal of American Health Policy. 1991;1:27–35.

- Keeler EB, Rubenstein LV, Kahn KL, Draper D, Harrison ER, McGinty MJ, Rogers WH, Brook RH. Hospital characteristics and quality of care. Journal of the American Medical Association. 1992;268:1709–1714.

- Lawthers AG, McCarthy EP, Davis RB, Peterson LE, Palmer RH, Iezzoni LI. Identification of in-hospital complications from claims data: is it valid? Medical Care. 2000;38:785–795. doi: 10.1097/00005650-200008000-00003.

- Lindrooth RC, Bazzoli GJ, Clement J. Hospital reimbursement and treatment intensity. Southern Economic Journal. 2007;73:575–587.

- McCue MJ, Clement JP. Assessing the characteristics of hospital bond defaults. Medical Care. 1996;34:1121–1134. doi: 10.1097/00005650-199611000-00006.

- Nuckols TK, Bhattacharya J, Wolman DM, Ulmer C, Escarce JJ. Cost Implications of Reduced Work Hours and Workloads for Resident Physicians. New England Journal of Medicine. 2009;360:2202–2215. doi: 10.1056/NEJMsa0810251.

- Nuckols TK, Escarce JJ. Cost Implications of ACGME's 2011 Changes to Resident Duty Hours and the Training Environment. Journal of General Internal Medicine. 2012;27:241–249. doi: 10.1007/s11606-011-1775-9.

- Nuckols TK, Escarce JJ. Residency work-hours reform: a cost analysis including preventable adverse events. Journal of General Internal Medicine. 2005;20:873–878. doi: 10.1111/j.1525-1497.2005.0133.x.

- Okie S. An elusive balance — residents’ work hours and the continuity of care. New England Journal of Medicine. 2007;356:2665–2667. doi: 10.1056/NEJMp078085.

- Pagan A. Econometric Issues in the Analysis of Regressions with Generated Regressors. International Economic Review. 1984;25:221–247.

- Quan H, Sundararajan V, Halfon P, Fong A, Burnand B, Luthi JC, Saunders LD, Beck CA, Feasby TE, Ghali WA. Coding algorithms for defining comorbidities in ICD-9-CM and ICD-10 administrative data. Medical Care. 2005;43:1130–1139. doi: 10.1097/01.mlr.0000182534.19832.83.

- Rosen AK, Loveland SA, Romano PS, Itani KMF, Silber JH, Even-Shoshan O, Halenar MJ, Teng Y, Zhu J, Volpp KG. Effects of resident duty hour reform on surgical and procedural patient safety indicators among hospitalized VA and Medicare patients. Medical Care. 2009;47:723–731. doi: 10.1097/MLR.0b013e31819a588f.

- Rosenbaum PR. Stability in the absence of treatment. Journal of the American Statistical Association. 2001;96:210–219. doi: 10.1198/016214501753381896.

- Roy CL, Liang CL, Lund M, Boyd C, Katz JT, McKean S, Schnipper JL. Implementation of a physician assistant/hospitalist service in an academic medical center: Impact on efficiency and patient outcomes. Journal of Hospital Medicine. 2008;3:361–368. doi: 10.1002/jhm.352.

- Shadish WR, Cook TD, Campbell DT. Experimental and Quasi-Experimental Designs for Generalized Causal Inference. Boston, MA: Houghton-Mifflin; 2002. p. 181.

- Shen YC. The effect of financial pressure on the quality of care in hospitals. Journal of Health Economics. 2003;22:243–269. doi: 10.1016/S0167-6296(02)00124-8.

- Shetty KD, Bhattacharya J. Changes in hospital mortality associated with residency work-hour regulations. Annals of Internal Medicine. 2007;147:73–80. doi: 10.7326/0003-4819-147-2-200707170-00161.

- Silber JH, Romano PS, Rosen AK, Wang Y, Even-Shoshan O, Volpp KG. Failure-to-rescue: comparing definitions to measure quality of care. Medical Care. 2007;45:918–925. doi: 10.1097/MLR.0b013e31812e01cc.

- Silber JH, Rosenbaum PR, Even-Shoshan O, Shabbout M, Zhang X, Bradlow ET, Marsh RM. Length of stay, conditional length of stay, and prolonged stay in pediatric asthma. Health Services Research. 2003;38:867–886. doi: 10.1111/1475-6773.00150.

- Silber JH, Rosenbaum PR, Koziol LF, Sutaria N, Marsh RR, Even-Shoshan O. Conditional Length of Stay. Health Services Research. 1999;34:349–363.

- Silber JH, Rosenbaum PR, Romano PS, Rosen AK, Wang Y, Teng Y, Halenar MJ, Even-Shoshan O, Volpp KG. Hospital teaching intensity, patient race, and surgical outcomes. Archives of Surgery. 2009a;144:113–120. doi: 10.1001/archsurg.2008.569.

- Silber JH, Rosenbaum PR, Rosen AK, Romano PS, Itani KM, Cen L, Lanyu M, Halenar MJ, Even-Shoshan O, Volpp KG. Prolonged hospital stay and the resident duty hour rules of 2003. Medical Care. 2009b;47:1191–1200. doi: 10.1097/MLR.0b013e3181adcbff.

- Silber JH, Rosenbaum PR, Ross RN. Comparing the Contributions of Groups of Predictors: Which Outcomes Vary With Hospital Rather Than Patient Characteristics. Journal of the American Statistical Association. 1995a;90:7–18.

- Silber JH, Rosenbaum PR, Schwartz JS, Ross RN, Williams SV. Evaluation of the complication rate as a measure of quality of care in coronary artery bypass graft surgery. Journal of the American Medical Association. 1995b;274:317–323.

- Silber JH, Williams SV, Krakauer H, Schwartz JS. Hospital and patient characteristics associated with death after surgery. A study of adverse occurrence and failure to rescue. Medical Care. 1992;30:615–629. doi: 10.1097/00005650-199207000-00004.

- Southern DA, Quan H, Ghali WA. Comparison of the Elixhauser and Charlson/Deyo methods of comorbidity measurement in administrative data. Medical Care. 2004;42:355–360. doi: 10.1097/01.mlr.0000118861.56848.ee.

- Stukenborg GJ, Wagner DP, Connors AF Jr. Comparison of the performance of two comorbidity measures, with and without information from prior hospitalizations. Medical Care. 2001;39:727–739. doi: 10.1097/00005650-200107000-00009.

- Taylor DH, Whellan DJ, Sloan FA. Effects of admission to a teaching hospital on the cost and quality of care for Medicare beneficiaries. New England Journal of Medicine. 1999;340:293–299. doi: 10.1056/NEJM199901283400408.

- The Henry J. Kaiser Family Foundation. Focus on health reform: summary of new health reform law. [Accessed October 5, 2010]. Available at: http://www.kff.org/healthreform/upload/8061.pdf.

- Volpp KG, Konetzka RT, Zhu J, Parson L, Peterson E. Effects of cuts in Medicare reimbursement on process and outcome of care for acute myocardial infarction patients. Circulation. 2005;112:2268–2275. doi: 10.1161/CIRCULATIONAHA.105.534164.

- Volpp KG, Rosen AK, Rosenbaum PR, Romano PS, Even-Shoshan O, Wang Y, Bellini L, Behringer T, Silber JH. Mortality among hospitalized Medicare beneficiaries in the first two years following ACGME resident duty hour reform. Journal of the American Medical Association. 2007a;298:975–983. doi: 10.1001/jama.298.9.975.

- Volpp KG, Rosen AK, Rosenbaum PR, Romano PS, Even-Shoshan O, Canamucio A, Bellini L, Behringer T, Silber JH. Mortality among patients in VA hospitals in the first 2 years following ACGME resident duty hour reform. Journal of the American Medical Association. 2007b;298:984–992. doi: 10.1001/jama.298.9.984.

- Volpp KG, Rosen AK, Rosenbaum PR, Romano PS, Itani KM, Bellini L, Even-Shoshan O, Cen L, Wang Y, Halenar MJ, Silber JH. Did duty hour reform lead to better outcomes among the highest risk patients? Journal of General Internal Medicine. 2009;24:1149–1155. doi: 10.1007/s11606-009-1011-z.
