Abstract

Objective

To determine the proportion of physician practices in the United States that currently meets medical home criteria.

Data Source/Study Setting

2007 and 2008 National Ambulatory Medical Care Survey.

Study Design

We mapped survey items to the National Committee for Quality Assurance’s (NCQA’s) medical home standards. After awarding points for each “passed” element, we calculated a practice’s infrastructure score, dividing its cumulative total by the number of available points. We identified practices that would be recognized as a medical home (Level 1 [25–49 percent], Level 2 [50–74 percent], or Level 3 [infrastructure score ≥75 percent]) and examined characteristics associated with NCQA recognition.

Results

Forty-six percent (95 percent confidence interval [CI], 42.5–50.2) of all practices lack sufficient medical home infrastructure. While 72.3 percent (95 percent CI, 64.0–80.7 percent) of multi-specialty groups would achieve recognition, only 49.8 percent (95 percent CI, 45.2–54.5 percent) of solo/partnership practices meet NCQA standards. Although better prepared than specialists, 40 percent of primary care practices would not qualify as a medical home under present criteria.

Conclusion

Almost half of all practices fail to meet NCQA standards for medical home recognition.

There are high expectations that delivery system reforms embodied in the patient-centered medical home will enhance the quality, safety, and accountability of medical care in the United States (Rittenhouse, Shortell, and Fisher 2009). To achieve this model’s ambitious goals, physician practices will need to implement a variety of clinical innovations, including evidence-based care pathways, performance measurement and feedback, and multi-dimensional health information technology (Rittenhouse and Shortell 2009).

To date, the degree to which existing practices possess the capacity to implement these and other model functions, based on current standards, has been incompletely assessed. Prior studies demonstrate that adoption of medical home infrastructure among primary care groups is associated strongly with organizational size (i.e., practices with greater than 140 physicians) (Rittenhouse et al. 2008). In fact, the use of medical home processes among small and medium-size practices, from which the majority of Americans receive health care (Isaacs, Jellinek, and Ray 2009), is limited (Rittenhouse et al. 2011). What remains largely unexplored, however, is the extent to which organizational structure affects uptake of medical home capabilities. Because they unite primary care and specialist physicians in the same practice, multi-specialty groups may have the greatest potential for meeting medical home standards; conversely, single-specialty groups that tend to focus on limited clinical “service lines” may be less prepared for the care coordination and integration activities called for by the medical home model. Understanding this distinction is important to the extent that multi-specialty groups are reported to be in decline as physicians gravitate toward single-specialty practices (Liebhaber and Grossman 2007).

Moreover, previous evaluations of medical home capacity have focused mainly on primary care practices (Rittenhouse et al. 2008, 2011; Friedberg et al. 2009; Goldberg and Kuzel 2009). While it is true that primary care physicians are uniquely suited to provide the first-contact, continuous, and comprehensive care described under the medical home model (Grumbach and Bodenheimer 2002), there are certain conditions (e.g., cancer, chronic kidney disease) for which medical or surgical specialty practices may represent a more logical and efficient medical home (Berenson 2010). With over 80 percent of cardiology, endocrinology, and pulmonology practices serving as the usual source of care for as many as 10 percent of their patients (Casalino et al. 2010), specialist-led medical homes are a real possibility. As such, there is also a need to better understand the current infrastructure in medical and surgical specialty practices.

In this context, we used data from the National Ambulatory Medical Care Survey (NAMCS) (Centers for Disease Control and Prevention 2010a) to evaluate medical home-relevant resources in physician practices in the United States. By comparing physician-reported resources to the National Committee for Quality Assurance (NCQA) standards for medical home recognition (National Committee for Quality Assurance 2008), we derived nationally representative estimates of the proportion of practices that would qualify as a medical home, according to practice size, organizational structure, and clinical specialty. We also explored potential disparities in access to “recognized” practices among specific vulnerable populations. Taken together, these data will both inform policy makers regarding the feasibility of proposed medical home reforms and provide preliminary insight concerning their accessibility to the underserved.

METHODS

Data Source and Subjects

For all analyses, we used restricted data files from the National Ambulatory Medical Care Survey (NAMCS), an annual three-stage probability sample of outpatient visits to randomly selected, nonfederally employed, office-based physicians in the United States (Centers for Disease Control and Prevention 2010b). The restricted data files contain physician and practice characteristics obtained during a survey induction interview that are not included with the public use micro-data files for confidentiality reasons. During the induction interview, participants indicate if they are in solo, partnership, or group practice; those in group practice report the number of affiliated physicians. From these data, we generated a four-level practice-size variable that distinguished between physicians in solo/partnership, small (three to five physicians), medium (six to 10), or large group (11 or more) practice. We used additional information collected as a part of the induction interview to distinguish between single- and multi-specialty practices. Among the solo/partnership and single-specialty group practices, we further differentiated adult primary care, medical specialty, and surgical specialty practices.

The NCQA Voluntary Recognition Program

As a framework for assessing each practice’s medical home infrastructure, we used the original nine overarching medical home standards established by the NCQA (National Committee for Quality Assurance 2008). These standards are being used currently to recognize medical home practices in several prominent demonstration projects (Patient-Centered Primary Care Collaborative 2008). Subsumed by the nine standards are 30 specific elements (including 10 designated as “must pass” for purposes of medical home recognition) related to, among other factors, practice resources and systems of care. In this study, we mapped 15 elements, concerning six of the NCQA standards, to items from the 2007 and 2008 NAMCS (Appendix Table 1).

We first determined whether a practice “passed” (yes/no) each measurable element. Using the NCQA’s scoring system (Appendix Table 1) (National Committee for Quality Assurance 2008), we then derived a cumulative point total for the practice by summing across all passed elements. Next, we calculated an infrastructure score for each practice (expressed as a percentage) by dividing its cumulative point total by the total number of available points. The maximum denominator for this score was 59 points; however, the denominator value changed in the setting of missing data (please see the Appendix Methods for an example of this calculation). The infrastructure score also allowed us to assign each practice to an NCQA level of recognition (not recognized [≤24 percent], Level 1 [25–49 percent], Level 2 [50–74 percent], or Level 3 [infrastructure score ≥75 percent]).
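The scoring steps described above can be illustrated with a minimal Python sketch. It is not the study's code: the element names and point values are hypothetical (the actual points per element appear in Appendix Table 1), elements with missing responses are simply dropped from the denominator, and scores falling between 24 and 25 percent are assumed here to be "not recognized." Only the percentage thresholds stated in the text are modeled.

```python
from typing import Mapping, Optional

# Hypothetical elements and point values, for illustration only.
EXAMPLE_POINTS = {"registry": 4, "e_prescribing": 6, "test_tracking": 2}


def infrastructure_score(points: Mapping[str, int],
                         passed: Mapping[str, Optional[bool]]) -> Optional[float]:
    """Return earned points as a percentage of available points.

    `passed` maps an element to True/False, or to None when the survey
    item is missing; missing elements shrink the denominator.
    """
    reported = [e for e in points if passed.get(e) is not None]
    available = sum(points[e] for e in reported)
    if available == 0:
        return None  # no usable items, so no score can be computed
    earned = sum(points[e] for e in reported if passed[e])
    return 100.0 * earned / available


def recognition_level(score: float) -> str:
    """Map a score to the recognition levels given in the text."""
    if score >= 75:
        return "Level 3"
    if score >= 50:
        return "Level 2"
    if score >= 25:
        return "Level 1"
    return "Not recognized"  # assumption: anything below 25 percent


# One practice: passed one element, failed one, did not answer one.
example = {"registry": True, "e_prescribing": False, "test_tracking": None}
score = infrastructure_score(EXAMPLE_POINTS, example)  # 4 of 10 reported points
level = recognition_level(score)
```

In this toy case the unanswered element reduces the denominator from 12 to 10 points, which is the behavior the text describes for missing data.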

Statistical Analyses

In all analyses, we applied appropriate sampling weights, clusters, and stratification to correct our standard error estimates for the complex survey design. To make practices (rather than physicians) our unit of analysis, we derived a medical practice estimator using methodology from the National Center for Health Statistics (Appendix Methods) (Hing and Burt 2007). This approach allowed us to generate unbiased, nationally representative practice-level estimates.
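The estimator itself is specified in the Appendix Methods and in Hing and Burt (2007) and is not reproduced in this section. Purely as an illustration of why physician-level and practice-level estimates can differ, the sketch below assumes a multiplicity-style adjustment in which each sampled physician's weight is divided by the number of physicians in his or her practice, so that a practice with many physicians is not counted many times. The function name, record layout, and toy numbers are hypothetical, and the sketch ignores clustering and stratification.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# (practice category, physician sampling weight, physicians in the practice)
Record = Tuple[str, float, int]


def practice_shares(physicians: Iterable[Record]) -> Dict[str, float]:
    """Percent of practices in each category under the assumed adjustment."""
    totals: Dict[str, float] = defaultdict(float)
    for category, weight, size in physicians:
        # Assumed adjustment: one physician represents 1/size of a practice.
        totals[category] += weight / size
    grand_total = sum(totals.values())
    return {c: 100.0 * t / grand_total for c, t in totals.items()}


# Toy data: equal physician weight in each category (a 50/50 physician split).
toy = [("solo", 100.0, 1), ("large group", 100.0, 20)]
shares = practice_shares(toy)
```

With these toy inputs the two categories hold equal numbers of physicians, yet solo practices account for roughly 95 percent of practices, which mirrors the direction of the physician-versus-practice contrast reported in the Results.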

After measuring the percentage of practices that passed a given NCQA element, we used linear regression to determine if this percentage varied significantly by practice size (solo/partnership, small, medium, or large group practice) or organizational structure (solo/partnership, single-specialty, or multi-specialty group practice). Among single-specialty group and solo/partnership practices, we made similar comparisons between primary care, medical specialty, and surgical specialty practices. Within primary care practices, we also determined whether attainment of a given NCQA element related to practice size and organizational structure.

Next, we calculated the proportion of practices that would achieve NCQA recognition. We then used multinomial logistic regression to evaluate associations between levels of NCQA recognition and practice size, organizational structure, and clinical specialty. We also conducted a series of sensitivity analyses designed to address concerns related to item nonresponse, indirect overlap between certain survey items and the corresponding NCQA elements, and unmeasured NCQA elements (Appendix Methods). Results from sensitivity analyses (which are available upon request) were consistent with those from our primary analyses and are not reported herein.

Finally, to explore potential disparities in access to “recognized” practices, we assessed levels of NCQA recognition among rural versus urban (as measured by the metropolitan statistical area) primary care practices. We also examined differences in the proportion of visits to “recognized” versus “not recognized” primary care practices among patients from disparate race/ethnic and poverty strata. We defined poverty status using a four-level categorical variable, available in the NAMCS visit file, that specifies the percent of the population in a patient’s ZIP code living below the poverty level (as defined by the United States Census Bureau). To account for contextual factors, we fit multivariable logistic regression models to evaluate the association between patient visits to “not recognized” practices and race/ethnicity or poverty status, adjusting for practice size and organizational structure.

We completed all statistical testing using computerized software (Stata version 11.0, StataCorp LP, TX, USA). The University of Michigan Health Sciences and Behavioral Sciences Institutional Review Board determined that this study was exempt from its oversight.

RESULTS

During the study interval, 43.8 percent of office-based physicians (95 percent confidence interval [CI], 40.6–47.1 percent) worked in solo or partnership practices. Solo/partnership practices comprised more than three-quarters of all physician practices in the United States (78.5 percent [95 percent CI, 76.2–80.7 percent]). Eleven percent (95 percent CI, 9.4–12.7 percent) of physicians worked in large practices, and 20.1 percent (95 percent CI, 17.2–23.3 percent) worked in multi-specialty groups. These practice settings represented 1.2 percent (95 percent CI, 1.0–1.5 percent) and 6.4 percent (95 percent CI, 5.3–7.6 percent) of all physician practices, respectively.

In general, the proportion of practices that passed a given NCQA element increased with practice size, and large groups outperformed smaller groups on 12 of 15 measured elements. In contrast, attainment was lowest among solo/partnership practices for all 15 elements, including eight that were achieved by fewer than 25 percent of solo/partnership practices. Table 1 presents summary measures of achievement according to practice size and organizational structure. Notably, solo/partnership practices passed fewer than one in three “must pass” elements (32.8 percent [95 percent CI, 30.8–34.9 percent]); only about half of these practices would achieve even the lowest NCQA level of recognition (49.8 percent [95 percent CI, 45.2–54.5 percent]).

Table 1.

Mean Infrastructure Scores, Percent of “Must Pass” Elements Achieved, and the Proportion of Practices Qualifying for Medical Home Recognition, Stratified by Practice Size and Organizational Structure

Values are estimates (95 percent confidence intervals). Practice size columns: Solo/Partner, Small Group, Medium Group, Large Group. Organizational structure columns: Single-Specialty, Multi-Specialty.

| Measure of Practices’ Achievement | All Practices (n = 138,795) | Solo/Partner (n = 108,963) | Small Group (n = 21,638) | Medium Group (n = 6,497) | Large Group (n = 1,697) | p-Value† | Single-Specialty (n = 21,016) | Multi-Specialty (n = 8,816) | p-Value†† |
|---|---|---|---|---|---|---|---|---|---|
| Mean infrastructure score* | 33.1 (31.4–34.8) | 31.0 (29.0–32.9) | 38.4 (35.8–41.0) | 45.5 (41.1–49.8) | 59.2 (55.1–63.2) | <.001 | 39.7 (37.2–42.2) | 44.4 (39.3–49.4) | <.001 |
| Percent of “must pass” elements achieved | 34.9 (33.1–36.7) | 32.8 (30.8–34.9) | 39.9 (37.3–42.5) | 46.9 (42.7–51.1) | 58.4 (54.5–62.3) | <.001 | 41.4 (38.9–43.8) | 45.2 (40.5–49.8) | <.001 |
| Percent of practices with a given level of recognition | | | | | | | | | |
| Not recognized | 46.3 (42.5–50.2) | 50.2 (45.5–54.8) | 35.9 (31.0–41.2) | 26.3 (19.9–33.8) | 12.2 (8.3–17.6) | <.001 | 34.5 (29.7–39.6) | 27.7 (20.1–36.8) | <.001 |
| Level 1 | 30.5 (26.8–34.2) | 30.0 (25.5–35.0) | 31.9 (27.3–36.9) | 34.7 (28.5–41.5) | 24.0 (18.1–31.2) | | 31.7 (27.5–36.2) | 33.0 (25.0–42.1) | |
| Level 2 | 14.5 (12.3–16.7) | 12.6 (10.2–15.5) | 21.4 (17.6–25.7) | 19.3 (14.6–25.0) | 28.3 (21.8–35.9) | | 21.0 (17.5–25.0) | 22.0 (16.3–29.1) | |
| Level 3 | 8.7 (6.8–10.6) | 7.2 (5.3–9.8) | 10.8 (7.9–14.5) | 19.7 (14.4–26.4) | 35.5 (27.7–44.2) | | 12.8 (9.8–16.6) | 17.3 (11.8–24.7) | |

*The denominator for a practice’s infrastructure score was based on the NAMCS items (that mapped to specific NCQA elements) for which it reported data. The maximum denominator for the score was 59 points; however, the denominator value changed in the setting of missing data.

†p-value is for the comparison between solo/partnership, small, medium, and large group practices.

††p-value is for the comparison between solo/partnership, single-specialty, and multi-specialty group practices.

NCQA, National Committee for Quality Assurance.

Multi-specialty groups outperformed their single-specialty counterparts on all but two of the NCQA elements. Regardless of the number of physicians, nearly three in four multi-specialty groups (72.3 percent [95 percent CI, 64.0–80.7 percent]) achieved NCQA standards for medical home recognition (Table 1). In contrast, only 65.5 percent (95 percent CI, 60.5–70.5 percent) and 49.8 percent (95 percent CI, 45.2–54.5 percent) of single-specialty group and solo/partnership practices, respectively, would achieve similar recognition status (Table 1).
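The recognition proportions quoted above can be recovered from Table 1 in two equivalent ways: as the sum of the Level 1, Level 2, and Level 3 rows, or as 100 minus the "not recognized" row. The short check below uses only the point estimates printed in Table 1; the variable names are ours, and the confidence intervals are not recomputed.

```python
# Point estimates (percent of practices) copied from Table 1.
table1 = {
    "multi-specialty group":  {"not": 27.7, "levels": (33.0, 22.0, 17.3)},
    "single-specialty group": {"not": 34.5, "levels": (31.7, 21.0, 12.8)},
    "solo/partnership":       {"not": 50.2, "levels": (30.0, 12.6, 7.2)},
}

# Recognized = Level 1 + Level 2 + Level 3.
recognized_by_sum = {
    name: round(sum(row["levels"]), 1) for name, row in table1.items()
}

# Recognized = 100 - not recognized.
recognized_by_complement = {
    name: round(100 - row["not"], 1) for name, row in table1.items()
}

for name in table1:
    print(f"{name}: {recognized_by_sum[name]} percent recognized")
```

Both routes give 72.3, 65.5, and 49.8 percent for multi-specialty, single-specialty, and solo/partnership practices, respectively; the last figure matches the value reported in the Abstract.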

Comparisons across specialties revealed that primary care solo/partnership practices have, on average, greater medical home infrastructure than similar practices in medical and surgical specialties (Appendix Table 2). Among single-specialty group practices, primary care groups were more likely than medical and surgical specialty groups to pass NCQA standards related to patient self-management, electronic prescribing, test tracking, and performance reporting. Moreover, primary care single-specialty groups had higher mean infrastructure scores than single-specialty medical and surgical groups (Table 2).

Table 2.

Mean Infrastructure Scores, Percent of “Must Pass” Elements Achieved, and the Proportion of Single-Specialty Group Practices Qualifying for Medical Home Recognition, Stratified by Clinical Specialty

Values are estimates (95 percent confidence intervals).

| Measure of Practices’ Achievement | All Single-Specialty (n = 21,016) | Primary Care (n = 9,014) | Medical Specialty (n = 7,140) | Surgical Specialty (n = 4,862) | p-Value |
|---|---|---|---|---|---|
| Mean infrastructure score* | 39.7 (37.0–42.4) | 44.3 (39.5–49.2) | 34.9 (31.7–38.2) | 38.0 (33.5–42.5) | .019 |
| Percent of “must pass” elements achieved | 41.4 (38.7–44.0) | 45.5 (40.7–50.2) | 37.8 (34.0–41.6) | 39.0 (34.3–43.6) | .053 |
| Percent of practices with a given level of recognition | | | | | |
| Not recognized | 34.5 (28.9–40.1) | 29.7 (22.1–38.5) | 41.0 (31.3–51.5) | 33.9 (25.6–43.3) | .238 |
| Level 1 | 31.7 (26.8–36.6) | 29.0 (22.0–37.3) | 30.6 (21.5–41.4) | 38.3 (30.3–47.0) | |
| Level 2 | 21.0 (17.1–25.0) | 23.6 (17.5–31.1) | 19.2 (13.2–27.1) | 18.7 (12.7–26.8) | |
| Level 3 | 12.8 (9.1–16.5) | 17.7 (12.3–24.9) | 9.2† (4.7–17.2) | 9.0 (5.4–14.8) | |

*The denominator for a practice’s infrastructure score was based on the NAMCS items (that mapped to specific NCQA elements) for which it reported data. The maximum denominator for the score was 59 points; however, the denominator value changed in the setting of missing data.

†Because it is based on fewer than 30 records or the standard error is more than 30 percent of the estimate, the National Center for Health Statistics considers this estimate unreliable.

NCQA, National Committee for Quality Assurance.

In analyses limited to primary care practices, attainment of individual NCQA elements was greatest among large groups (versus medium, small group, or solo/partnership practices) and multi-specialty groups (versus single-specialty group or solo/partnership practices) (Table 3). More than three in four large primary care (Appendix Table 3) and multi-specialty group practices (Table 4) would achieve NCQA standards for medical home recognition; conversely, fewer than 60 percent of solo/partnership primary care practices would achieve the same designation.

Table 3.

Primary Care Practice Attainment of Individual NCQA Elements, Stratified by Practice Size and Organizational Structure

Values are percentages (95 percent confidence intervals). Practice size columns: Solo/Partner, Small Group, Medium Group, Large Group. Organizational structure columns: Single-Specialty, Multi-Specialty.

| NCQA Element | All Primary Care (n = 60,859) | Solo/Partner (n = 46,829) | Small Group (n = 10,347) | Medium Group (n = 2,919) | Large Group (n = 764) | p-Value† | Single-Specialty (n = 9,014) | Multi-Specialty (n = 5,016) | p-Value†† |
|---|---|---|---|---|---|---|---|---|---|
| Patient tracking and registry functions | | | | | | | | | |
| Uses data system for basic patient information | 73.3 (66.9–78.8) | 71.2 (63.3–77.9) | 77.5 (69.2–84.2) | 87.7 (78.0–93.5) | 92.0 (82.4–96.6) | .002 | 82.2 (74.4–88.1) | 77.2 (65.2–85.9) | .062 |
| Has clinical data system with clinical data in searchable data fields | 35.5 (30.2–41.1) | 33.2 (27.0–40.1) | 39.6 (31.5–48.4) | 45.9 (35.3–56.8) | 78.9 (66.9–87.3) | <.001 | 39.5 (31.0–48.6) | 49.6 (36.6–62.7) | .051 |
| Uses paper or electronic-based charting tools to organize clinical information* | 31.2 (26.7–36.2) | 28.2 (22.7–34.5) | 37.3 (29.4–45.9) | 46.5 (35.3–58.1) | 77.1 (65.6–85.6) | <.001 | 39.7 (31.1–48.9) | 44.4 (32.1–57.3) | .025 |
| Uses data to identify important diagnoses and conditions in practice* | 29.4 (24.4–34.9) | 26.7 (21.0–33.3) | 35.7 (27.9–44.3) | 43.9 (33.8–54.5) | 51.2 (38.9–63.4) | .001 | 39.9 (32.0–48.3) | 34.7 (24.6–46.4) | .021 |
| Care management | | | | | | | | | |
| Generates reminders about preventive services for clinicians | 30.4 (25.5–35.8) | 28.4 (22.8–34.8) | 34.7 (27.0–43.3) | 39.6 (29.6–50.5) | 65.5 (53.8–75.6) | <.001 | 37.4 (29.0–46.8) | 37.2 (26.2–49.7) | .133 |
| Uses non-physician staff to manage patient care | 53.7 (48.7–58.6) | 52.8 (46.6–58.9) | 56.6 (47.8–64.9) | 57.2 (47.1–66.6) | 56.0 (43.5–67.8) | .848 | 57.5 (49.4–65.3) | 55.1 (43.5–66.1) | .665 |
| Conduct care management, including care plans, assessing progress, addressing barriers | 86.4 (79.8–91.0) | 85.7 (77.2–91.4) | 86.8 (79.6–91.7) | 90.4 (78.6–96.0) | 99.9 (99.4–100.0) | <.001 | 91.6 (84.1–95.7) | 83.8 (73.8–90.4) | .248 |
| Patient self-management support | | | | | | | | | |
| Actively support patient self-management* | 90.5 (86.1–93.6) | 90.1 (84.5–93.8) | 90.2 (83.2–94.4) | 96.9 (92.8–98.7) | 93.6 (86.2–97.2) | .103 | 92.3 (86.3–95.8) | 90.7 (79.1–96.2) | .787 |
| Electronic prescribing | | | | | | | | | |
| Uses electronic system to write prescriptions | 32.6 (28.2–37.4) | 30.1 (24.7–36.2) | 37.7 (30.5–45.4) | 45.9 (36.0–56.0) | 67.2 (55.6–77.0) | <.001 | 39.2 (31.5–47.5) | 44.2 (32.7–56.4) | .045 |
| Has electronic prescription writer with safety checks | 25.2 (20.9–30.1) | 23.6 (18.5–29.7) | 28.0 (21.2–36.0) | 34.3 (25.0–44.9) | 55.7 (43.3–67.4) | <.001 | 28.2 (21.8–35.6) | 35.5 (24.1–48.9) | .172 |
| Test tracking | | | | | | | | | |
| Tracks tests and identifies abnormal results systematically* | 35.9 (30.6–41.5) | 31.9 (25.4–39.2) | 47.6 (39.3–55.9) | 52.9 (42.7–62.8) | 72.0 (57.2–83.2) | <.001 | 47.6 (39.6–55.8) | 53.9 (41.8–65.5) | .004 |
| Uses electronic system to order and retrieve tests and flag duplicate tests | 16.1 (12.9–19.9) | 13.8 (10.1–18.5) | 19.2 (13.6–26.4) | 35.1 (25.4–46.3) | 47.4 (34.0–61.1) | <.001 | 23.0 (17.4–29.8) | 25.3 (14.5–40.4) | .028 |
| Performance reporting and improvement | | | | | | | | | |
| Measures clinical and/or service performance by physician or across the practice* | 23.9 (18.8–29.7) | 22.0 (15.9–29.8) | 28.2 (21.3–36.3) | 36.5 (27.5–46.5) | 28.6 (18.3–41.8) | .132 | 33.2 (25.9–41.3) | 24.4 (17.0–33.7) | .079 |
| Survey of patient’s care experience | 20.6 (15.7–26.5) | 19.3 (13.4–26.8) | 25.1 (18.5–33.1) | 24.0 (16.3–33.8) | 28.6 (18.5–41.5) | .454 | 23.9 (17.2–32.2) | 27.0 (18.2–38.0) | .428 |
| Reports performance across the practice or by physician* | 9.1 (6.5–12.5) | 7.6 (4.7–12.1) | 11.5 (7.3–17.7) | 19.0 (12.0–28.7) | 36.5 (24.2–50.8) | <.001 | 12.7 (8.5–18.6) | 17.0 (10.7–25.8) | .070 |

*NCQA “must pass” element.

†p-value is for the comparison between solo/partnership, small, medium, and large group practices.

††p-value is for the comparison between solo/partnership, single-specialty, and multi-specialty group practices.

NCQA, National Committee for Quality Assurance.

Table 4.

Mean Infrastructure Score, Percent of “Must Pass” Elements Achieved, and the Proportion of Primary Care Practices Qualifying for Medical Home Recognition, Stratified by Organizational Structure.

Values are estimates (95 percent confidence intervals).

| Measure of Practices’ Achievement | All Primary Care (n = 60,859) | Solo/Partner (n = 46,829) | Single-Specialty (n = 9,014) | Multi-Specialty (n = 5,016) | p-Value |
|---|---|---|---|---|---|
| Mean infrastructure score* | 37.6 (34.9–40.3) | 35.4 (32.2–38.7) | 44.3 (39.9–48.8) | 46.4 (39.5–53.2) | <.001 |
| Percent of “must pass” elements achieved | 37.9 (35.2–40.6) | 35.6 (32.3–38.8) | 45.5 (41.0–50.0) | 45.8 (40.0–51.9) | <.001 |
| Percent of practices with a given level of recognition | | | | | |
| Not recognized | 39.5 (33.6–45.5) | 43.1 (35.9–50.6) | 29.7 (22.8–37.6) | 24.0 (15.1–36.1) | .011 |
| Level 1 | 30.9 (26.0–35.7) | 31.1 (25.1–37.7) | 29.0 (22.6–36.5) | 32.6 (21.4–46.2) | |
| Level 2 | 18.3 (14.5–22.0) | 16.6 (12.6–21.7) | 23.6 (17.7–30.8) | 23.6 (16.0–33.2) | |
| Level 3 | 11.8 (8.4–14.3) | 9.2 (6.2–13.5) | 17.7 (12.5–24.4) | 19.8 (12.6–29.7) | |

*The denominator for a practice’s infrastructure score was based on the NAMCS items (that mapped to specific NCQA elements) for which it reported data. The maximum denominator for the score was 59 points; however, the denominator value changed in the setting of missing data.

NCQA, National Committee for Quality Assurance.

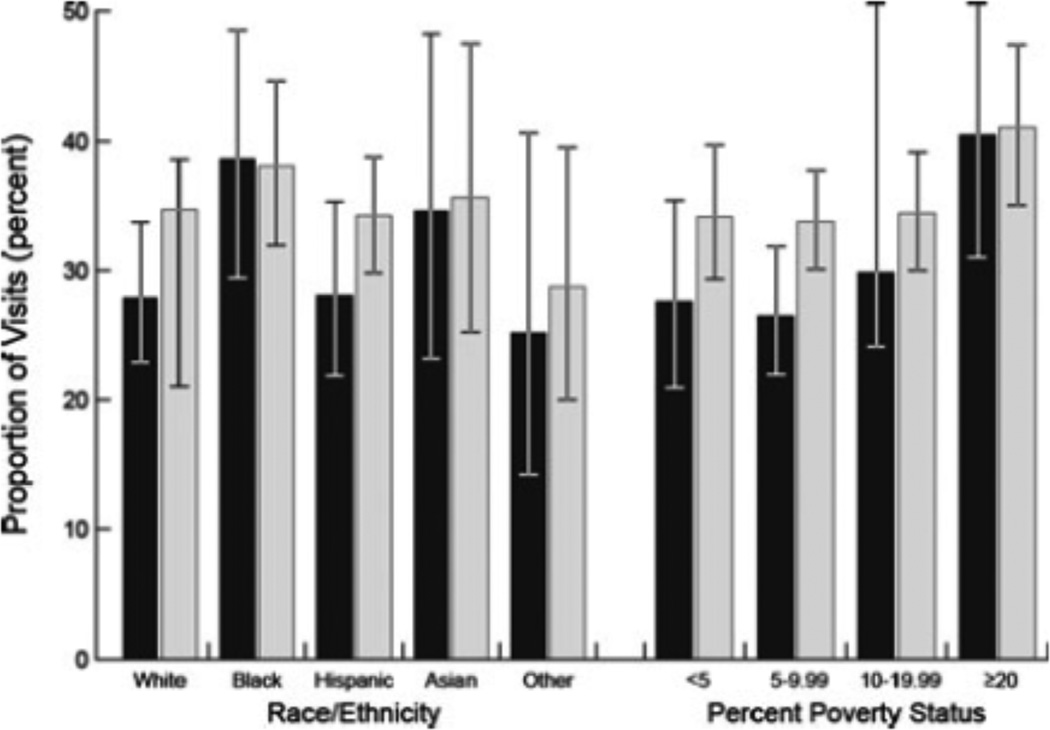

Finally, we observed similar mean infrastructure scores for primary care practices in urban (38.0 percent [95 percent CI, 35.0–40.9 percent]) versus rural (35.4 percent [95 percent CI, 30.1–40.7 percent]) environments. Yet we noted differences in access to “recognized” primary care practices across poverty strata (Figure 1). Namely, the proportion of visits to “not recognized” practices was higher among patients from the poorest versus most affluent neighborhoods (40.4 percent [95 percent CI, 31.0–50.6 percent] versus 27.6 percent [95 percent CI, 20.9–35.4 percent], respectively; p = .039). After adjusting for practice size and organizational structure, the magnitude of this association persisted, but it was no longer statistically significant (p = .109). There was no difference by race in the proportion of visits to “recognized” primary care practices.

Figure 1.

Proportion of Patient Visits to “Not Recognized” Primary Care Practices by Race/Ethnicity and Poverty Strata

Note: In the bar chart, visits to “not recognized” primary care practices, according to race/ethnicity and poverty strata, are depicted. The black- and gray-shaded bars depict unadjusted and adjusted estimates, respectively. The adjusted estimates account for practice size and organizational structure. The error bars represent 95 percent confidence intervals for the estimate. The poverty strata refer to the percent of population in a patient’s ZIP code living below the poverty level (as defined by the United States Census Bureau). Differences in unadjusted estimates across the poverty strata (p = .039) are statistically significant.

DISCUSSION

Our findings indicate that large group and multi-specialty physician practices possess the greatest capacity to implement proposed medical home reforms. Just as importantly, however, these data also highlight the strikingly low levels of medical home infrastructure among smaller practices in the United States. Only a minority of solo or partnership practices currently meet NCQA standards for test tracking, electronic prescribing, and performance reporting and improvement. Although primary care practices appear to be better positioned than their medical and surgical specialty counterparts, nearly 40 percent of these practices still lack the organizational resources and systems of care needed to qualify for even the lowest level of NCQA medical home recognition.

Our results are consistent with earlier work demonstrating that use of medical home processes in large primary care groups is strongly associated with overall practice size (Rittenhouse et al. 2008). Likewise, our findings are concordant with prior studies demonstrating that physicians in multi-specialty groups are more likely to adopt electronic health information systems and implement strategies for performance measurement and quality improvement (Mehrotra, Epstein, and Rosenthal 2006; Tollen 2008).

Although large and multi-specialty groups have greater medical home capacity, the reality is that 9 out of 10 Americans still receive some health care from smaller practices (Isaacs, Jellinek, and Ray 2009). Medical home infrastructure in small practice settings had been assessed previously in only two state-level analyses (Friedberg et al. 2009; Goldberg and Kuzel 2009). Recently, however, Rittenhouse and colleagues reported on the resources available in more than 1,300 small- and medium-size primary care groups from across the United States. Specifically, they examined aspects of care that corresponded to four Joint Principles of the patient-centered medical home (e.g., enhanced access) and found that, on average, these practices used only 20 percent of the processes measured (Rittenhouse et al. 2011). This finding is, in general, consistent with estimates from our nationally representative sample. Taken together, these data underscore the fact that many small, single-specialty, and non-primary care groups will require both substantial assistance and significant retooling to meet NCQA requirements for participation in current medical home reforms.

Despite high expectations for the patient-centered medical home, only a few studies have actually assessed the impact of this model on clinical costs and outcomes. In one study, Group Health Cooperative reported that, compared with two control clinics, a medical home pilot practice achieved superior 12-month outcomes for patient care experience, clinician work experience, and clinical quality of care (Reid et al. 2009). In the Geisinger system, early results from a medical home initiative revealed a 20 percent reduction in all-cause hospitalizations and a 7 percent savings in total medical costs for patients treated in a medical home practice (Paulus, Davis, and Steele 2008). Likewise, implementation of medical home components in the National Demonstration Project was associated with modest improvements in condition-specific quality of care after slightly more than 2 years (Jaén et al. 2010). While these early data are promising, their generalizability to other practices remains unknown, and there are no studies that evaluate the relationship between medical home practices and long-term patient outcomes. Therefore, given the substantial human and financial resources required to develop and maintain sufficient medical home infrastructure, the gradual adoption of these practice changes is not necessarily a bad thing insofar as it allows many practices to better understand which aspects of the model actually work before they make the investments necessary to implement it.

Our study must be considered in the context of several limitations. First, while we measured adoption of various structural resources necessary for NCQA recognition, there is little doubt that certain core principles of the medical home model (e.g., whole person orientation) can be achieved without objective documentation of specific NCQA standards. Indeed, some critics contend that these standards are too static and process-oriented, providing an incomplete assessment of medical home qualification (Carrier, Gourevitch, and Shah 2009), and that they fail to consider the medical home as an integrated whole rather than a sum of its individual parts (Grumbach and Bodenheimer 2002). Moreover, while the NCQA categorizes practices into levels of recognition, there is no empiric work that validates meaningful differences between these strata. Accordingly, alternative (or supplementary) measures of medical home designation are needed, including standards that place a greater emphasis on patient-centered care. That being said, our use of the NCQA standards is supported by a number of considerations. For one, they are endorsed by the leading primary care professional societies. In addition, most planned or ongoing state pilot projects and demonstrations employ these standards (Patient-Centered Primary Care Collaborative 2008).

Second, we could not assess 15 NCQA elements, including several (e.g., those related to access and communication [Campbell et al. 2001]) that may be achieved more easily by solo/partnership practices. Fortunately, the elements that we did evaluate capture most of the essential functions envisioned by medical home architects. Moreover, in sensitivity analyses where we assigned practices varying point totals associated with passing unmeasured elements, we noted no substantive changes to our main findings. Third, item non-response rates were non-trivial for several of the measured NCQA elements. Recognizing this concern, we performed both sensitivity analyses based on imputed datasets and subgroup analyses limited to physicians with complete response data; each of these steps provided reassuring evidence that our reported estimates are valid. Fourth, we acknowledge indirect overlap between some of the measured NAMCS items and the NCQA elements to which they are mapped. However, we believe that these differences are relatively non-differential in that some NAMCS items may over-estimate compliance with the corresponding NCQA element, while others may underestimate this relationship. Notably, our principal findings did not change substantially in sensitivity analyses that excluded the five NAMCS items that, in our judgment, had the least direct overlap. Despite these reservations, we believe that, in the absence of a perfect dataset for measuring medical home infrastructure across a variety of practice organizations in the United States, the NAMCS represents the best available substrate for evaluating this timely and policy-relevant issue.

Our findings have direct implications for ongoing health care delivery system reform. Collectively, these analyses suggest at least two potentially unintended consequences of current medical home initiatives. First, because medical home designation is likely to yield more lucrative reimbursement, physicians in solo/partnership practices may be compelled to aggregate into larger groups that more easily meet medical home standards. While this change might ultimately prove to be beneficial, it is also possible that some of these physicians—in particular those working in rural areas—may be unable to affiliate. In this scenario, the consequent financial pressures could lead to solo/partnership practice closures and impaired patient access. Second, insofar as medical home implementation improves health outcomes, our findings suggest that health care disparities could be exacerbated because certain vulnerable populations appear to be overrepresented in “not recognized” practices. Therefore, to make the benefits of medical homes more equitable and widely accessible, policy makers may need to address the challenges facing smaller practices.

Potential policy solutions exist. One approach may involve establishing financial incentives aimed at integrating solo/partnership practices into larger (potentially regionally based) physician organizations (Shortell and Casalino 2008). In this scenario, smaller practices could leverage economies of scale created by such affiliations (e.g., administrative efficiencies, shared clinical culture) to implement many of the medical home's quality and safety functions. Alternatively, legislative actions aimed at stimulating the adoption and implementation of health information technology may prove helpful. In fact, policy makers have already taken a first step in this direction. Under the American Recovery and Reinvestment Act's Health Information Technology for Economic and Clinical Health (HITECH) sections, $17 billion in financial incentives is earmarked for providers who adopt and utilize electronic health records, and physicians who demonstrate “meaningful use” of a certified electronic health record will be eligible (starting this year) to receive supplemental Medicare reimbursements. From a medical home perspective, these funds—along with complementary work in this area by the Office of the National Coordinator for Health Information Technology—could increase the number of practices that use electronic health records to efficiently track clinical and laboratory data and provide their patients with electronic prescriptions. In the coming years, it will be crucial to evaluate the impact of this investment on a variety of medical home functions; indeed, the success or failure of the HITECH incentives will likely determine both the political feasibility and the wisdom of additional funding in this area.

Authorization in the American Recovery and Reinvestment Act of federal dollars for a Health Information Technology Extension Program is also directly relevant to the feasibility of medical home reforms in solo/partnership practice settings. This program includes Regional Extension Centers that are already providing technical support for clinicians working toward “meaningful use” of health information technology. It seems plausible that the scope of work for Regional Extension Centers could be expanded beyond health information technology to include other activities that facilitate medical home reforms in these practices (Bodenheimer, Grumbach, and Berenson 2009; Gawande 2010; Grumbach and Mold 2010). For instance, their resources (both human and financial) could be used to train physicians affiliated with solo/partnership practices in population health management. Likewise, they could encourage and facilitate these physicians’ participation in the growing pool of quality collaboratives (Schouten et al. 2008); such participation may, in turn, accelerate growth in the number of small practices capable of implementing systems for achieving the medical home standards of performance monitoring and quality improvement. Finally, payers (including the Centers for Medicare and Medicaid Services) could institute payment reforms aimed at increasing reimbursement for services provided by non-physician clinicians, thereby creating a business case for expanding the prevalence of team-based care in solo/partnership and other smaller practices.

Our collective findings may help policy makers and other stakeholders predict the feasibility of medical home reforms across a variety of practice settings. In particular, these data foreshadow the challenges facing many smaller physician groups seeking to implement medical home reforms. Since most solo/partnership practices currently lack the structural resources and systems of care called for under the NCQA standards, tailored policy solutions will be needed to ensure that they can participate in this innovative practice model. Possible strategies for enhancing (physician and patient) access may include additional investments aimed at stimulating the adoption of health information technology, as well as financial incentives that promote practice-based performance measurement and quality improvement. Because smaller practices often care for vulnerable populations, failure to support them may exacerbate existing health disparities. As such, it will be important to assess these concerns in future prospective studies of planned medical home demonstrations.

Acknowledgments

This study was supported by the Robert Wood Johnson Foundation Clinical Scholars Program (to JH) and the Agency for Healthcare Research and Quality (K08 HS018346-01A1 to DM). This work utilized the Measurement Core of the Michigan Diabetes Research and Training Center funded by DK020572 from the NIDDK. The funding sources had no role in study design, data collection, data analysis, data interpretation, or in the writing of the report.

The findings and conclusions in this paper are those of the authors and do not necessarily represent the views of the Research Data Center, the National Center for Health Statistics, or the Centers for Disease Control and Prevention.

Footnotes

Disclosure Statement: The authors have no conflicts of interest.

Disclosures: None.

REFERENCES

- Berenson RA. Is There Room for Specialists in the Patient-Centered Medical Home? Chest. 2010;137(1):10–11. doi: 10.1378/chest.09-2502.

- Bodenheimer T, Grumbach K, Berenson RA. A Lifeline for Primary Care. New England Journal of Medicine. 2009;360(26):2693–2699. doi: 10.1056/NEJMp0902909.

- Campbell SM, Hann M, Hacker J, Burns C, Oliver D, Thapar A, Mead N, Safran DG, Roland MO. Identifying Predictors of High Quality Care in English General Practice: Observational Study. British Medical Journal. 2001;323(7316):784–787. doi: 10.1136/bmj.323.7316.784.

- Carrier E, Gourevitch MN, Shah NR. Medical Homes: Challenges in Translating Theory into Practice. Medical Care. 2009;47(7):714–722. doi: 10.1097/MLR.0b013e3181a469b0.

- Casalino LP, Rittenhouse DR, Gillies RR, Shortell SM. Specialist Physician Practices as Patient-Centered Medical Homes. New England Journal of Medicine. 2010;362(17):1555–1558. doi: 10.1056/NEJMp1001232.

- Centers for Disease Control and Prevention. Restricted Variables. [accessed on December 8, 2010];2010a Available at http://www.cdc.gov/rdc/B1dataType/dt122.htm.

- Centers for Disease Control and Prevention. About the Ambulatory Health Care Surveys. [accessed on December 8, 2010];2010b Available at http://www.cdc.gov/nchs/ahcd/about_ahcd.htm.

- Friedberg MW, Safran DG, Coltin KL, Dresser M, Schneider EC. Readiness for the Patient-Centered Medical Home: Structural Capabilities of Massachusetts Primary Care Practices. Journal of General Internal Medicine. 2009;24(2):162–169. doi: 10.1007/s11606-008-0856-x.

- Gawande A. Testing, Testing. [accessed on December 8, 2010];2010 Available at http://www.newyorker.com/reporting/2009/12/14/091214fa_fact_gawande.

- Goldberg DG, Kuzel AJ. Elements of the Patient-Centered Medical Home in Family Practices in Virginia. Annals of Family Medicine. 2009;7(5):301. doi: 10.1370/afm.1021. 208.

- Grumbach K, Bodenheimer T. A Primary Care Home for Americans: Putting the House in Order. Journal of the American Medical Association. 2002;288(7):889–893. doi: 10.1001/jama.288.7.889.

- Grumbach K, Mold JW. Transforming Primary Care and Community Health: A Health Care Cooperative Extension Service. Journal of the American Medical Association. 2010;301(24):589–5891. doi: 10.1001/jama.2009.923.

- Hing E, Burt CW. Office-Based Medical Practices: Methods and Estimates from the National Ambulatory Medical Care Survey. Advance Data. 2007;383:1–15.

- Isaacs SL, Jellinek PS, Ray WL. The Independent Physician–Going, Going. New England Journal of Medicine. 2009;360(7):655–657. doi: 10.1056/NEJMp0808076.

- Jaén CR, Ferrer RL, Miller WL, Palmer RF, Wood R, Davila M, Stewart EE, Crabtree BF, Nutting PA, Stange KC. Patient Outcomes at 26 Months in the Patient-Centered Medical Home National Demonstration Project. Annals of Family Medicine. 2010;8(suppl 1):S57–S67. doi: 10.1370/afm.1121.

- Liebhaber A, Grossman JM. Tracking Report No. 18. Washington, DC: Center for Studying Health System Change; 2007. Physicians Moving to Mid-Sized, Single-Specialty Practices.

- Mehrotra A, Epstein AM, Rosenthal MB. Do Integrated Medical Groups Provide Higher-Quality Medical Care Than Individual Practice Associations? Annals of Internal Medicine. 2006;145(11):826–833. doi: 10.7326/0003-4819-145-11-200612050-00007.

- National Committee for Quality Assurance. Standards and Guidelines for Physician Practice Connections–Patient Centered Medical Home (PPC-PCMH). Washington, DC: National Committee for Quality Assurance; 2008.

- Patient-Centered Primary Care Collaborative. Patient-Centered Medical Home: Building Evidence and Momentum. [accessed on December 8, 2010];2008 Available at http://www.aafp.org/online/etc./medialib/aafp_org/documents/press/pcmh-summit/pcmh-pilot-demonstration-report.Par.0001.File.tmp/Pilot-ReportFINAL.pdf.

- Paulus RA, Davis K, Steele GD. Continuous Innovation in Health Care: Implications of the Geisinger Experience. Health Affairs (Millwood) 2008;27(5):1235–1245. doi: 10.1377/hlthaff.27.5.1235.

- Reid RJ, Fishman PA, Yu O, Ross TR, Tufano JT, Soman MP, Larson EB. Patient-Centered Medical Home Demonstration: A Prospective, Quasi-Experimental, before and after Evaluation. American Journal of Managed Care. 2009;15(9):e71–e87.

- Rittenhouse DR, Casalino LP, Gillies RR, Shortell SM, Lau B. Measuring the Medical Home Infrastructure in Large Medical Groups. Health Affairs (Millwood) 2008;27(5):1246–1258. doi: 10.1377/hlthaff.27.5.1246.

- Rittenhouse DR, Shortell SM. The Patient-Centered Medical Home: Will It Stand the Test of Health Reform? Journal of the American Medical Association. 2009;301(19):2038–2040. doi: 10.1001/jama.2009.691.

- Rittenhouse DR, Shortell SM, Fisher ES. Primary Care and Accountable Care–Two Essential Elements of Delivery-System Reform. New England Journal of Medicine. 2009;361(24):2301–2303. doi: 10.1056/NEJMp0909327.

- Rittenhouse DR, Casalino LP, Shortell SM, McClellan SR, Gillies RR, Alexander JA, Drum ML. Small and Medium-Size Physician Practices Use Few Patient-Centered Medical Home Processes. Health Affairs (Millwood) 2011;30(8):1–10. doi: 10.1377/hlthaff.2010.1210.

- Schouten LM, Hulscher ME, van Everdingen JJ, Huijsman R, Grol RP. Evidence for the Impact of Quality Improvement Collaboratives: Systematic Review. British Medical Journal. 2008;336(7659):1491–1494. doi: 10.1136/bmj.39570.749884.BE.

- Shortell SM, Casalino LP. Health Care Reform Requires Accountable Care Systems. Journal of the American Medical Association. 2008;300(1):95–97. doi: 10.1001/jama.300.1.95.

- Tollen L. Fund Report. Vol. 89. New York: Commonwealth Fund; 2008. Physician Organization in Relation to Quality and Efficiency of Care: A Synthesis of Recent Literature.