Abstract

Prioritizing missense variants for further experimental investigation is a key challenge in current sequencing studies for exploring complex and Mendelian diseases. A large number of in silico tools have been employed for the task of pathogenicity prediction, including PolyPhen-2, SIFT, FatHMM, MutationTaster-2, MutationAssessor, CADD, LRT, phyloP and GERP++, as well as optimized methods of combining tool scores, such as Condel and Logit. Due to the wealth of these methods, an important practical question to answer is which of these tools generalize best, that is, correctly predict the pathogenic character of new variants. We here demonstrate in a study of ten tools on five datasets that such a comparative evaluation of these tools is hindered by two types of circularity: they arise due to (1) the same variants or (2) different variants from the same protein occurring both in the datasets used for training and for evaluation of these tools, which may lead to overly optimistic results. We show that comparative evaluations of predictors that do not address these types of circularity may erroneously conclude that circularity-confounded tools are most accurate among all tools, and may even outperform optimized combinations of tools.

Keywords: Pathogenicity Prediction Tools, Exome Sequencing

Introduction

Current high-throughput techniques to investigate the genetic basis of inherited diseases yield large numbers of potentially pathogenic sequence alterations (Tennessen et al. 2012; Purcell et al. 2014). Conducting further in-depth functional analyses on these large numbers of candidates is generally impractical. Reliable strategies that allow investigators to decide which of these variants to prioritize are therefore imperative. Researchers will often focus on non-synonymous single nucleotide variants (nsSNVs), which are disproportionately deleterious compared to synonymous variants (Hindorff et al. 2009; Kiezun et al. 2012; MacArthur et al. 2012), and filter out common variants, which are presumed to be more likely to be neutral. However, in many cases, tens of thousands of candidates still remain after this step. Computational tools that can be used to identify those missense variants most likely to have a pathogenic effect, that is, most likely to contribute to a disease, are therefore of high practical value.

A number of such tools are already available, such as MutationTaster-2 (Schwarz et al. 2014), LRT (Chun and Fay 2009), PolyPhen-2 (Adzhubei et al. 2010), SIFT (Ng and Henikoff 2003), MutationAssessor (Reva et al. 2011), FatHMM weighted and unweighted (Shihab et al. 2013), CADD (Kircher et al. 2014), phyloP (Cooper and Shendure 2011) and GERP++ (Davydov et al. 2010). They are widely used for separating pathogenic variants from neutral variants in sequencing studies (e.g. Leongamornlert et al. 2012; Rudin et al. 2012; Kim et al. 2013; Thevenon et al. 2014; Weinreb et al. 2014; Zhao et al. 2014). While these tools are all commonly applied to the problem of pathogenicity prediction, the original purposes they were designed for vary (see Table 1). Some measure sequence conservation (phyloP (Cooper and Shendure 2011), GERP++ (Davydov et al. 2010), and SIFT (Ng and Henikoff 2003)), others try to assess the impact of variants on protein structure or function (e.g. PolyPhen-2 (Adzhubei et al. 2010)) or to quantify the overall pathogenic potential of a variant based on diverse types of genomic information (e.g. CADD (Kircher et al. 2014)). Note that SIFT both measures sequence conservation and provides an analytically derived threshold for predicting whether or not protein function will be affected (Ng and Henikoff 2003). Furthermore, popular benchmark datasets for pathogenicity prediction differ in the way they define the pathogenic or neutral character of a variant (see Table 2). For instance, neutral variants are supposed to have a minor allele frequency larger than 1% in HumVar (Adzhubei et al. 2010), of less than 1% in ExoVar (Li et al. 2013), and of more than 1% in VariBench (Thusberg et al. 2011; Nair and Vihinen 2013).

Table 1.

Overview of the prediction tools used in this study

| Tool (Abbreviation) | Version | N | AA | Purpose, as stated by developers |
|---|---|---|---|---|
| PolyPhen-2 (PP2) | 2.2.2 | Yes | Yes | "Predicts possible impact of an amino acid substitution on the structure and function of a human protein using straightforward physical and comparative considerations"1 |
| MutationTaster-2 (MT2) | 2 | Yes | No | "Evaluation of the disease-causing potential of DNA sequence alterations"2 |
| MutationAssessor (MASS) | 2 | Yes | Yes | "Predicts the functional impact of amino-acid substitutions in proteins, such as mutations discovered in cancer or missense polymorphisms"3 |
| LRT | - | Yes | No | "Identify a subset of deleterious mutations that disrupt highly conserved amino acids within protein-coding sequences, which are likely to be unconditionally deleterious"4 |
| SIFT | 1.03 | Yes | Yes | "Predicts whether an amino acid substitution affects protein function"5 |
| GERP++ | - | Yes | No | "Identifies constrained elements in multiple alignments by quantifying substitution deficits. These deficits represent substitutions that would have occurred if the element were neutral DNA, but did not occur because the element has been under functional constraint. We refer to these deficits as "Rejected Substitutions". Rejected substitutions are a natural measure of constraint that reflects the strength of past purifying selection on the element."6 |
| phyloP | - | Yes | No | "Compute conservation or acceleration p-values based on an alignment and a model of neutral evolution"7 |
| FatHMM unweighted (FatHMM-U) | 2.2–2.3 | No | Yes | Predicts "functional consequences of both coding variants, i.e. non-synonymous single nucleotide variants (nsSNVs), and non-coding variants" 8 |
| FatHMM weighted (FatHMM-W) | 2.2–2.3 | No | Yes | Predicts "functional consequences of both coding variants, i.e. non-synonymous single nucleotide variants (nsSNVs), and non-coding variants" and its weighting scheme attributes higher tolerance scores to SNVs in proteins, related proteins, or domains that already include a high fraction of pathogenic variants 8 |
| CADD | 1.0 | Yes | No | "CADD is a tool for scoring the deleteriousness of single nucleotide variants as well as insertion/deletions variants in the human genome." 9 |

For each tool, the Version column shows the version of the tool, the N column shows whether it accepts nucleotide changes as input, and the AA column shows whether it accepts amino-acid changes as input. The last column provides a description of the tool, as stated by the developers.

Table 2.

Purpose of each dataset, as described by dataset creators

| Dataset | Purpose | POSITIVE CONTROL: Damaging / deleterious / disease-causing / pathogenic | NEGATIVE CONTROL: Neutral / benign / non-damaging / tolerated |
|---|---|---|---|
| HumVar | Mendelian Disease variant identification | "All disease-causing mutations from UniProtKB"1 | "Common human nsSNPs (MAF>1%) without annotated involvement in disease… treated as non-damaging"1 |
| ExoVar | "Dataset composed of pathogenic nsSNVs and nearly non-pathogenic rare nsSNVs"2 | "5,340 alleles with known effects on the molecular function causing human Mendelian diseases from the UniProt database… positive control variants" "pathogenic nsSNVs"2 | "4,752 rare (alternative/derived allele frequency <1%) nsSNVs with at least one homozygous genotype for the alternative/derived allele in the 1000 Genomes Project… negative control variants" "other rare variants"2 |
| VariBench | “Variation datasets affecting protein tolerance”3 | “The pathogenic dataset of 19,335 missense mutations obtained from the PhenCode database (downloaded in June 2009), IDbases and from 18 individual LSDBs. For this dataset, the variations along with the variant position mappings to RefSeq protein (>=99% match), RefSeq mRNA and RefSeq genomic sequences are available for download.”3 | “This is the neutral dataset or non synonymous coding SNP dataset comprising 21,170 human non synonymous coding SNPs with allele frequency >0.01 and chromosome sample count >49 from the dbSNP database build 131. This dataset was filtered for the disease-associated SNPs. The variant position mapping for this dataset was extracted from dbSNP database.”3 |
| predictSNP | “Benchmark dataset used for the evaluation of … prediction tools and training of consensus classifier PredictSNP” 4 | Disease-causing and deleterious variants from SwissProt, HGMD, HumVar, Humsavar, dbSNP, PhenCode, IDbases and 16 individual locus-specific databases | Neutral variants from SwissProt, HGMD, HumVar, Humsavar, dbSNP, PhenCode, IDbases and 16 individual locus-specific databases |
| SwissVar | “Comprehensive collection of single amino acid polymorphisms (SAPs) and diseases in the UniProtKB/Swiss-Prot knowledgebase”5 | “A variant is classified as Disease when it is found in patients and disease-association is reported in literature. However, this classification is not a definitive assessment of pathogenicity” 6 | “A variant is classified as Polymorphism if no disease-association has been reported” 6 |

For each dataset, the Purpose column shows the general purpose. The last two columns describe the positive and negative control categories of variants.

Given this wealth of different methods and benchmarks that can be used for pathogenicity prediction, an important practical question to answer is whether one or several tools systematically outperform all others in prediction accuracy. To address this question, we comprehensively assess the performance of ten tools that are widely used for pathogenicity prediction: MutationTaster-2 (Schwarz et al. 2014), LRT (Chun and Fay 2009), PolyPhen-2 (Adzhubei et al. 2010), SIFT (Ng and Henikoff 2003), MutationAssessor (Reva et al. 2011), FatHMM weighted and unweighted (Shihab et al. 2013), CADD (Kircher et al. 2014), phyloP (Cooper and Shendure 2011) and GERP++ (Davydov et al. 2010). We evaluate performance across major public databases previously used to test these tools (Adzhubei et al. 2010; Mottaz et al. 2010; Thusberg et al. 2011; Li et al. 2013; Nair and Vihinen 2013; Bendl et al. 2014) and show that two types of circularity severely affect the interpretation of the results. Here we use the term ‘circularity’ to describe the phenomenon that predictors are evaluated on variants or proteins that were used to train their prediction models. While a number of authors have acknowledged the existence of one particular form of circularity before (stemming specifically from overlap between data used to develop the tools and data upon which those tools are tested) (Adzhubei et al. 2010; Thusberg et al. 2011; Nair and Vihinen 2013; Vihinen 2013), our study is the first to provide a clear picture of the extent and impact of this phenomenon in pathogenicity prediction.

The first type of circularity we encounter is due to overlaps between datasets that were used for training and evaluation of the models. Tools such as MutationTaster-2 (Schwarz et al. 2014), PolyPhen-2 (Adzhubei et al. 2010), MutationAssessor (Reva et al. 2011) and CADD (Kircher et al. 2014), which require a training dataset to determine the parameters of the model, run the risk of capturing idiosyncratic characteristics of their training set, leading to poor generalization when applied to new data. To keep overfitting (Hastie et al. 2009) from inflating performance estimates, it is imperative that tools be evaluated on variants that were not used for the training of these tools (Vihinen 2013). This is particularly true when evaluating combinations of tool scores, as different tools have been trained on different datasets, increasing the likelihood that variants in the evaluation set appear in at least one of these datasets (González-Pérez and López-Bigas 2011; Capriotti et al. 2013; Li et al. 2013; Bendl et al. 2014). Notably, this type of circularity, which we refer to as type 1 circularity, could cause spurious increases in prediction accuracy for both single tools and combinations of tool scores.

The second type of circularity, which we refer to as type 2 circularity, is closely linked to a statistical property of current variant databases: Often, all variants from the same gene are jointly labelled as being pathogenic or neutral. As a consequence, a classifier that predicts pathogenicity based on known information about specific variants in the same gene will achieve excellent results, while being unable to detect novel risk genes, for which no variants have been annotated before. Further, it will not be able to perform another critical function: discrimination of pathogenic variants from neutral ones within a given protein.

Furthermore, we evaluate the performance of two tools which combine scores across methods, Condel (González-Pérez and López-Bigas 2011) and Logit (Li et al. 2013), and examine whether these tools are affected by circularity as well. These tools are based on the expectation that individual predictors have complementary strengths, because they rely on diverse types of information, such as sequence conservation or modifications at the protein level. Combining them hence has the potential to boost their discriminative power, as reported in a number of studies (González-Pérez and López-Bigas 2011; Capriotti et al. 2013; Li et al. 2013; Bendl et al. 2014). The problem of circularity, however, could be exacerbated when combining several tools. First, consider the case where the data that are used to learn the weights assigned to each individual predictor in the combination also overlap with the training data of one or more of the tools. Here, tools that have been fitted to the data already will appear to perform better and may receive artificially inflated weights. Second, consider the case where the data used to assess the combination of tools overlap with the data on which the tools have been trained. Here, the tools themselves are biased towards performing well on the evaluation data, which can make their combination appear to perform better than it actually does.

Materials and Methods

Datasets and data pre-processing

In this study we used five different datasets to assess the performance of available prediction tools and their combinations. We used publicly available and commonly used benchmark datasets: HumVar (Adzhubei et al. 2010), ExoVar (Li et al. 2013), VariBench (Thusberg et al. 2011; Nair and Vihinen 2013), predictSNP (Bendl et al. 2014) and the latest SwissVar (Dec. 2014) database (Mottaz et al. 2010) (Table 3). As these tools can require either nucleotide or amino acid substitutions as input, we used Variant Effect Predictor (VEP) (McLaren et al. 2010) to convert between both formats. We excluded all variants for which we could not determine an unambiguous nucleotide or amino acid change. Note that by contrast, analyses such as that of Thusberg et al. (2011) only assess tools that require amino-acid changes as input. As the intersection of the training data from the tool CADD (Kircher et al. 2014) and that of all other data sets is small (fewer than a hundred variants), we systematically excluded all variants overlapping with the CADD training data from all other data sets.

The VariBench dataset (benchmark database for variations) was created (Thusberg et al. 2011; Nair and Vihinen 2013) to address the problem of type 1 circularity. However, while the pathogenic variants of this dataset were new, its neutral variants may have been present in the training data of other tools. VariBench has an overlap of approximately 50% with both HumVar and ExoVar (Supp. Figure S1). We kept the non-overlapping variants to build an independent evaluation dataset, which we called VariBenchSelected and make available along with this manuscript (Supp. Data S1). From the predictSNP benchmark dataset we systematically excluded all variants that overlap with HumVar, ExoVar and VariBench and called the resulting dataset predictSNPSelected.

Finally, we created a fifth dataset, SwissVarSelected. Here, we excluded from the latest SwissVar database (Dec. 2014) all variants overlapping with the other four datasets: HumVar, ExoVar, VariBench and predictSNP. Thus, SwissVarSelected should be the dataset containing the newest variants across all datasets. With one possible exception, none of the prediction tools or conservation scores we investigated in this manuscript were trained on VariBenchSelected, predictSNPSelected or SwissVarSelected. The exception is that some variants in the Selected datasets may overlap with variants used to train MutationTaster-2 (MT2) (Schwarz et al. 2014), because MT2 was trained on private data (a large collection of disease variants from HGMD Professional (Stenson et al. 2014)).
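The successive filtering that yields the Selected datasets can be illustrated with a small sketch. It is not the authors' script (those are in Supp. Data S1). The `Variant` key, the `remove_overlap` helper and the toy records are hypothetical, and it assumes that two variants count as overlapping when protein, position and both residues match.

```python
from typing import Iterable, NamedTuple


class Variant(NamedTuple):
    """Hypothetical variant key; the real identity criterion may differ."""
    protein: str
    position: int
    ref: str
    alt: str


def remove_overlap(candidates: Iterable[Variant], *earlier: Iterable[Variant]) -> list:
    """Keep only the candidates that occur in none of the earlier datasets."""
    seen = set()
    for dataset in earlier:
        seen.update(dataset)
    return [variant for variant in candidates if variant not in seen]


# Toy records standing in for the benchmark datasets.
humvar = [Variant("P1", 10, "A", "T"), Variant("P2", 55, "G", "D")]
exovar = [Variant("P3", 7, "L", "P")]
varibench = [
    Variant("P1", 10, "A", "T"),   # also in HumVar, dropped
    Variant("P4", 99, "R", "W"),   # unique to VariBench, kept
    Variant("P3", 7, "L", "P"),    # also in ExoVar, dropped
]

varibench_selected = remove_overlap(varibench, humvar, exovar)
print(varibench_selected)
```

Applying the same helper with a growing list of earlier datasets mirrors the order in which predictSNPSelected and SwissVarSelected are derived.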

Table 3.

All datasets used in this study

| Datasets | Deleterious Variants (D) | Neutral Variants (N) | Total | Ratio (D:Total) | Tools potentially trained on data (fully or partly) | Removed variants overlapping with: |
|---|---|---|---|---|---|---|
| HumVar | 21090 | 19299 | 40389 | 0.52 | MT2, MASS, PP2, FatHMM-W | CADD training data |
| ExoVar | 5156 | 3694 | 8850 | 0.58 | MT2, MASS, PP2, FatHMM-W | CADD training data |
| VariBenchSelected | 4309 | 5957 | 10266 | 0.42 | MT2 | CADD training data, HumVar, ExoVar |
| predictSNPSelected | 10000 | 6098 | 16098 | 0.62 | MT2 | CADD training data, HumVar, ExoVar, VariBench |
| SwissVarSelected | 4526 | 8203 | 12729 | 0.36 | MT2 | CADD training data, HumVar, ExoVar, VariBench, predictSNP |

These pre-processed and filtered datasets are used to evaluate the performance of different prediction tools.

Pathogenicity prediction score sources and conservation scores

For any given variant, we obtained scores and prediction labels for each tool directly from their respective webservers or standalone tools (Table 1). The pathogenicity score of a missense variant may depend on which transcript of the corresponding gene is considered. For this reason, we standardized our analyses by examining the same transcript across all tools; if available, we chose the canonical transcript (Hubbard et al. 2009). In contrast, ANNOVAR (Wang et al. 2010) and dbNSFP 2.0 (Liu et al. 2013) use the transcript that yields the worst (i.e. most damaging) score, which means that different tools may select different transcripts for the same variant.
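The difference between the two transcript policies can be shown with a toy example. The transcript IDs, scores and both helper functions below are hypothetical, and the score orientation of each tool is passed in as an explicit assumption.

```python
def canonical_score(scores_by_transcript, canonical_id):
    """Score on the canonical transcript, or None if that transcript was not scored."""
    return scores_by_transcript.get(canonical_id)


def worst_transcript(scores_by_transcript, higher_is_more_damaging=True):
    """Return (transcript_id, score) for the most damaging score of one tool."""
    pick = max if higher_is_more_damaging else min
    return pick(scores_by_transcript.items(), key=lambda item: item[1])


# One variant scored by two hypothetical tools on two transcripts.
tool_a = {"T1": 0.20, "T2": 0.90}   # assumed: higher means more damaging
tool_b = {"T1": 0.01, "T2": 0.40}   # assumed: lower means more damaging

# Worst-score policy: each tool may end up on a different transcript.
print(worst_transcript(tool_a))
print(worst_transcript(tool_b, higher_is_more_damaging=False))

# Canonical policy: both tools are read on the same transcript.
print(canonical_score(tool_a, "T1"), canonical_score(tool_b, "T1"))
```

Under the worst-score policy the two tools are compared on different transcripts, which is what fixing a single transcript per variant avoids.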

Data availability and reproducibility of results

For each dataset, we compiled comma-separated files containing all obtained tool scores and predicted labels as well as information about the variant (true label, nucleotide and amino acid changes, minor allele frequencies, UniProt accession IDs (Magrane and Consortium 2011), Ensembl gene, transcript and protein IDs (Flicek et al. 2014) and dbSNP IDs (rs#) if available (Sherry et al. 2001)). All these datasets, including the tool predictions, can be found at the VariBench website (http://structure.bmc.lu.se/VariBench/GrimmDatasets.php) as well as in a single Excel file at the journal's website (Supp. Data S2).

Further, we provide all Python scripts used to generate all tables and figures in this study along with all datasets, compressed as a single ZIP file (Supp. Data S1). All data and Python scripts can also be downloaded from: http://www.bsse.ethz.ch/mlcb/research/bioinformatics-and-computational-biology/pathogenicity-prediction.html.

Performance evaluation

To evaluate the performance of all the tools in this study, we used a collection of statistics derived from a confusion matrix. To this end, we counted a correctly classified test point as a true positive (TP) if and only if it belonged to the positive class (pathogenic or damaging) and as a true negative (TN) if and only if it belonged to the negative class (neutral or benign). Accordingly, a false positive (FP) is a negative test point classified as positive, and a false negative (FN) is a positive test point classified as negative. Since a few datasets are slightly unbalanced, we assessed the performance of the single tools by computing receiver operating characteristic curves (ROC-curves). Furthermore, we computed Precision-Recall curves (ROC-PR-curves) (Davis and Goadrich 2006). The ROC-curve plots the fraction of TP over all positives, TP/(TP+FN) (sensitivity or true positive rate), against the fraction of FP over all negatives, FP/(TN+FP) (1-specificity or false positive rate), whereas the ROC-PR-curve plots the fraction of TP over all positives, TP/(TP+FN) (recall or sensitivity), against the fraction of TP over all predicted positives, TP/(TP+FP) (precision). To measure the performance, we computed the area under the ROC and ROC-PR curves (AUC and AUC-PR, respectively). The area under the curve can take values between 0 and 1. A perfect classifier has an AUC and AUC-PR of 1. The AUC of a random classifier is 0.5. Additionally, we assessed the performance with seven commonly used parameters as described in the Human Mutation guidelines (Vihinen 2012; Vihinen 2013) and reported the results in the Supp. Information:

| (1) |

| (2) |

| (3) |

| (4) |

| (5) |

| (6) |

| (7) |
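As an illustration of the quantities defined in this section, the following sketch computes several standard confusion-matrix measures and the AUC for a toy set of scores. The numbered equations are not legible in this copy, so the selection of measures here is an assumption and should not be read as the exact seven parameters of the guidelines. All function names and the toy data are hypothetical.

```python
from math import sqrt


def confusion_counts(y_true, y_pred):
    """Count TP, TN, FP, FN with 1 = pathogenic (positive) and 0 = neutral (negative)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, tn, fp, fn


def summary_measures(tp, tn, fp, fn):
    """Standard measures derived from the confusion matrix (NaN if undefined)."""
    def ratio(numerator, denominator):
        return numerator / denominator if denominator else float("nan")

    mcc_denominator = sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "precision": ratio(tp, tp + fp),
        "npv": ratio(tn, tn + fn),
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "mcc": ratio(tp * tn - fp * fn, mcc_denominator),
    }


def roc_auc(y_true, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))


y_true = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.3, 0.6, 0.2, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]   # hypothetical decision threshold

counts = confusion_counts(y_true, y_pred)
measures = summary_measures(*counts)
auc = roc_auc(y_true, scores)
print(counts, measures, auc)
```

The pairwise formulation of the AUC is quadratic in the number of variants and is meant only to make the definition concrete. For real datasets a library routine is preferable.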

Evaluation of the weighting scheme of FatHMM-W

Given the superior performance of FatHMM-W on VariBenchSelected and predictSNPSelected (see Results and Figure 1), we examined this prediction tool in more detail. This section provides relevant details about FatHMM’s weighted (FatHMM-W) and unweighted (FatHMM-U) versions and our evaluation of the weighting of FatHMM, which accounts for its superior performance. To evaluate the role of the weighting scheme of FatHMM-W (Shihab et al. 2013) (Supp. Text S1), we compared the original FatHMM-W method to an L1-regularized logistic regression (Lee et al. 2006) over the log-transformed features ln(Wn) and ln(Wd). These features are used by FatHMM to reweight the FatHMM-U score and construct FatHMM-W, the weighted version of FatHMM (Supp. Text S1).

We performed a 10-fold cross-validation on the five datasets HumVar (Adzhubei et al. 2010), ExoVar (Li et al. 2013), VariBenchSelected (Thusberg et al. 2011; Nair and Vihinen 2013), predictSNPSelected (Bendl et al. 2014) and SwissVarSelected (Mottaz et al. 2010) (see Table 3 and the section ‘Datasets and data pre-processing’). For this purpose, we randomly split the dataset of interest into ten subsets of equal size (to the extent possible), keeping the ratio of neutral to pathogenic variants the same across all subsets. This avoids generating a biased subset containing only variants of the same kind. We then combined nine subsets to train the model and used the remaining one to assess the performance (testing). We repeated this cross-validation procedure ten times. Because we were using a regularization term, we had to find a trade-off between fitting the training set closely and generalizing well, which required selecting a reasonable value for the regularization parameter C. We did this by performing an internal line-search for each fold to find the Cj that leads to the best AUC from a set of C-values, C = {1e-4, 1e-3, 1e-2, 1e-1, 1, 1e1, 1e2}. The overall performance of the model was the average across all ten AUC values. We then computed AUC, AUC-PR and the seven commonly used parameters as described in the Human Mutation guidelines (Vihinen 2012; Vihinen 2013). For our experiments, we used custom Python scripts (see Supp. Data S1) and scikit-learn (Pedregosa et al. 2011), an efficient machine learning library for Python that includes an L1-regularized logistic regression using the LIBLINEAR library (Fan et al. 2008).
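The stratified splitting step described above can be sketched with the standard library alone. This is an illustration and not the authors' script. The function names, the seed and the toy labels are hypothetical, and scikit-learn's `StratifiedKFold` provides comparable functionality.

```python
import random
from collections import defaultdict


def stratified_folds(labels, n_folds=10, seed=0):
    """Assign sample indices to n_folds folds while keeping class proportions similar."""
    rng = random.Random(seed)
    indices_by_class = defaultdict(list)
    for index, label in enumerate(labels):
        indices_by_class[label].append(index)

    folds = [[] for _ in range(n_folds)]
    for indices in indices_by_class.values():
        rng.shuffle(indices)
        # Deal the shuffled indices of each class round-robin over the folds.
        for position, index in enumerate(indices):
            folds[position % n_folds].append(index)
    return folds


def train_test_splits(folds):
    """Yield (train_indices, test_indices), holding out each fold once."""
    for held_out, test in enumerate(folds):
        train = [i for k, fold in enumerate(folds) if k != held_out for i in fold]
        yield train, test


# Toy labels: 40 pathogenic (1) and 60 neutral (0) variants.
labels = [1] * 40 + [0] * 60
folds = stratified_folds(labels)
splits = list(train_test_splits(folds))
print([sum(labels[i] for i in fold) for fold in folds])
```

Within each training split, the inner search over the C grid would repeat the same splitting on the training indices only, so that the held-out fold never influences the choice of C.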

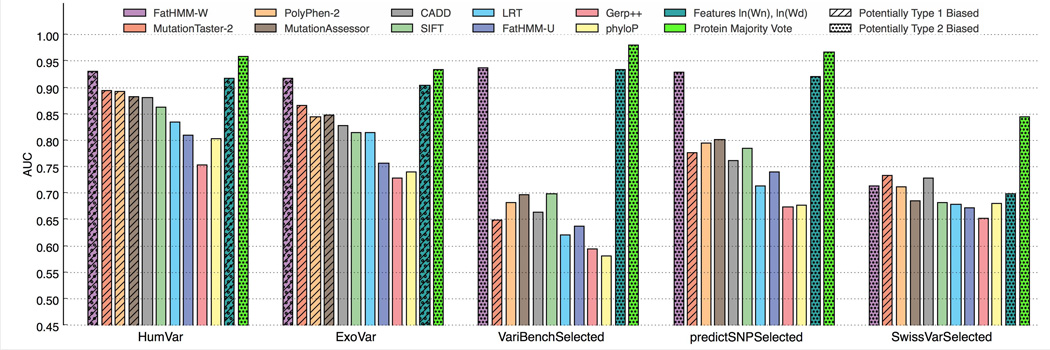

Figure 1. Predictive performance of 10 state-of-the-art pathogenicity prediction tools over five datasets.

Evaluation of the ten different pathogenicity prediction tools (by AUC) over five datasets. The hatched bars indicate potentially biased results, due to the overlap (or possible overlap) between the evaluation data and the data used (by tool developers) for training the prediction tool. The dotted bars indicate that the tool is biased due to type 2 circularity. The Protein Majority Vote predictor (MV) and the logistic regression (over the features used in the weighting scheme of FatHMM-W) are discussed in the second part of the results section.

Protein Majority Vote (MV)

To analyse how protein-related features can influence the performance of the prediction tools, we performed a Protein Majority Vote (MV). For each of the five evaluation datasets, we split the benchmark into ten subsets, and for each of the subsets, used the union of the nine other subsets as training data. Within that framework, we scored a variant by the pathogenic-to-neutral ratio, in the training data, of the protein that variant belongs to. If the protein did not appear in the training data, we assigned a score of 0.5. This strategy, while statistically effective on the currently existing databases, is not appropriate, as it cannot discriminate between neutral and pathogenic variants within the same protein.
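A minimal sketch of such a majority-vote scorer is given below. It assumes that the score is the fraction of pathogenic variants among the training variants of the same protein, which keeps the score in [0, 1] and is compatible with the default of 0.5. The function names and toy data are hypothetical.

```python
from collections import defaultdict


def fit_protein_fractions(training_variants):
    """Map each protein to its fraction of pathogenic training variants.

    training_variants: iterable of (protein_id, label), label 1 = pathogenic, 0 = neutral.
    """
    counts = defaultdict(lambda: [0, 0])   # protein -> [n_pathogenic, n_total]
    for protein, label in training_variants:
        counts[protein][0] += label
        counts[protein][1] += 1
    return {protein: n_path / n_total for protein, (n_path, n_total) in counts.items()}


def mv_score(protein, fractions, default=0.5):
    """Score a test variant by its protein alone; unseen proteins get the default."""
    return fractions.get(protein, default)


training = [("P1", 1), ("P1", 1), ("P1", 0), ("P2", 0)]
fractions = fit_protein_fractions(training)

print(mv_score("P1", fractions))   # protein with mostly pathogenic training variants
print(mv_score("P2", fractions))   # neutral-only protein
print(mv_score("P3", fractions))   # protein absent from the training data
```

Every variant of a given protein receives the same score, which is why this predictor cannot separate pathogenic from neutral variants within a protein.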

Results

Evaluation of ten pathogenicity prediction tools on five variant datasets

We evaluated the performance of eight different prediction tools: MutationTaster-2 (MT2) (Schwarz et al. 2014), LRT (Chun and Fay 2009), PolyPhen-2 (PP2) (Adzhubei et al. 2010), SIFT (Ng and Henikoff 2003), MutationAssessor (MASS) (Reva et al. 2011), FatHMM weighted (FatHMM-W) and FatHMM unweighted (FatHMM-U) (Shihab et al. 2013), and Combined Annotation Dependent Depletion (CADD) (Kircher et al. 2014) as well as two conservation scores: phyloP (Cooper and Shendure 2011) and GERP++ (Davydov et al. 2010). An overview of these tools and conservation scores can be found in Table 1. Details on how these scores were obtained can be found in the Methods section.

We evaluated these tools using a range of pre-processed public datasets and subsets of VariBench, predictSNP and SwissVar (see Methods), resulting in five evaluation datasets (Adzhubei et al. 2010; Mottaz et al. 2010; Thusberg et al. 2011; Li et al. 2013; Nair and Vihinen 2013; Bendl et al. 2014) (see Methods, Table 3 and Supp. Figure S1). Importantly, two of these datasets (HumVar (Adzhubei et al. 2010) and ExoVar (Li et al. 2013)) overlap with at least one of the training sets used to train the individual tools FatHMM-W, MT2, MASS and PP2 (Supp. Figure S1 and Table 3). The Selected datasets can be considered to be truly independent evaluation datasets, which are free of type 1 circularity (see Methods).

We report AUC values per tool and per dataset in Figure 1 and Supp. Table S1 (corresponding ROC, PR curves, AUC/AUC-PR values as well as other evaluation metrics can be found in Supp. Figures S2–S6 and Supp. Table S1). Hatched bars in Figure 1 indicate that the evaluation data were used in part or entirely to train the corresponding tool; these results may suffer from overfitting. Dotted bars indicate that the tools are biased, due to type 2 circularity (see section ‘Type 2 circularity as an explanation of the good performance of FatHMM weighted’).

Five central observations can be made in Figure 1: First, on the two benchmarks HumVar and ExoVar, the four best performing methods were fully or partly trained on these datasets. Second, while MT2, PP2 and MASS outperform CADD and SIFT on benchmarks that include some of their training data (HumVar, ExoVar), this is not the case on the independent VariBenchSelected and predictSNPSelected datasets. A potential explanation is that type 1 circularity — that is overlap between training and evaluation sets — might lead to overly optimistic results on the first two datasets. Third, across the first four datasets, FatHMM-W outperforms all other tools (Supp. Table S1 and Figure 1). All measured evaluation criteria support these findings on the VariBenchSelected and predictSNPSelected datasets (Supp. Table S1), even though FatHMM-W has no type 1 bias on VariBenchSelected and predictSNPSelected. However, it is confounded by type 2 circularity. Fourth, FatHMM-W shows a severe drop in performance on the SwissVarSelected dataset. Finally, we observed across all datasets that trained predictors generally outperform untrained conservation scores.

Type 2 circularity as an explanation of the good performance of FatHMM weighted

The superiority of FatHMM-W's (Shihab et al. 2013) predictions on VariBenchSelected and predictSNPSelected and the severe drop in performance on SwissVarSelected made us investigate its underlying model to find the reason for its superior performance on all but one dataset. FatHMM-W's weighting scheme attributes higher scores to amino acid substitutions in proteins, related proteins, or domains that already include a high fraction of pathogenic variants (see Supp. Text S1). A key element of this weighting scheme is the use of the two parameters Wn and Wd, which represent the relative frequency of neutral variants (Wn) and pathogenic variants (Wd) in the relevant protein family, defined through SUPERFAMILY (Gough et al. 2001) or Pfam (Sonnhammer et al. 1997). To further evaluate the role of this weighting in the performance of FatHMM-W, we compared the original FatHMM-W to a logistic regression over the features (ln(Wn) and ln(Wd)) in a 10-fold cross-validation on the Selected datasets (see Methods and Supp. Text S1). The use of these features alone was sufficient to achieve approximately the same predictive performance as FatHMM-W (see Supp. Table S2 and Figure 1).

Given that the ratio of neutral and pathogenic variants in the same protein family is the key feature used by FatHMM-W, we further analysed how an even simpler statistic – the fraction of pathogenic variants in the same protein – performs as a predictor. We refer to this predictor as a protein majority vote (MV) (see Methods). MV systematically outperforms FatHMM-W (Supp. Table S2 and Figure 1). The pathogenicity of neighbouring variants within the same protein is therefore the best predictor of pathogenicity across these datasets. This strategy, while statistically effective on the currently existing databases, is not appropriate. Indeed it assigns the same label to all variants in the same protein, based on information likely obtained at the protein-level (i.e. that it is associated with a disease), and cannot distinguish between pathogenic and neutral variants within the same protein.

To better understand the outstanding performance of FatHMM-W and the protein-based majority vote, we examined the relative frequency of pathogenic variants across proteins in all our datasets. In the independent evaluation dataset VariBenchSelected, we found that more than 98% of all proteins (4,425 out of 4,490, Table 4) contain variants from a single class, i.e. either “pathogenic” or “neutral” (Figure 2a). For the remainder of the manuscript, we shall refer to proteins with only one class of variant as “pure” proteins (divided in “pathogenic-only” proteins and “neutral-only” proteins). The existence of such “pure” proteins – while theoretically possible – should not be interpreted as a biological phenomenon. Rather, these designations are based on current knowledge, and are at least partially an artefact of how these particular datasets are populated.
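The grouping into pure and mixed proteins can be sketched as follows. The function name and the toy variant list are hypothetical. Labels use 1 for pathogenic and 0 for neutral.

```python
from collections import Counter, defaultdict


def protein_categories(variants):
    """Classify each protein as 'pathogenic-only', 'neutral-only' or 'mixed'."""
    labels_by_protein = defaultdict(set)
    for protein, label in variants:
        labels_by_protein[protein].add(label)

    categories = {}
    for protein, labels in labels_by_protein.items():
        if labels == {1}:
            categories[protein] = "pathogenic-only"
        elif labels == {0}:
            categories[protein] = "neutral-only"
        else:
            categories[protein] = "mixed"
    return categories


variants = [("P1", 1), ("P1", 1), ("P2", 0), ("P3", 0), ("P3", 1), ("P4", 0)]
categories = protein_categories(variants)

proteins_per_category = Counter(categories.values())
variants_per_category = Counter(categories[protein] for protein, _ in variants)
print(proteins_per_category, variants_per_category)
```

Counting proteins and counting variants per category correspond to the two perspectives summarized in Table 4.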

Table 4.

Protein categories and variants per category

| Datasets | “Pure” Pathogenic Proteins | Pathogenic Variants in “Pure” Proteins | “Pure” Neutral Proteins | Neutral Variants in “Pure” Proteins | Mixed Proteins | Variants in Mixed Proteins | Total Number of Proteins |
|---|---|---|---|---|---|---|---|
| HumVar | 1277 | 10484 | 8400 | 17140 | 911 | 12765 | 10588 |
| ExoVar | 891 | 4336 | 2794 | 3478 | 165 | 1036 | 3850 |
| VariBenchSelected | 286 | 3865 | 4139 | 5869 | 65 | 532 | 4490 |
| predictSNPSelected | 855 | 7090 | 3738 | 5649 | 228 | 3359 | 4821 |
| SwissVarSelected | 1444 | 2749 | 3614 | 6568 | 540 | 3412 | 5598 |

Overview of the total number of proteins per dataset and the composition of these datasets.

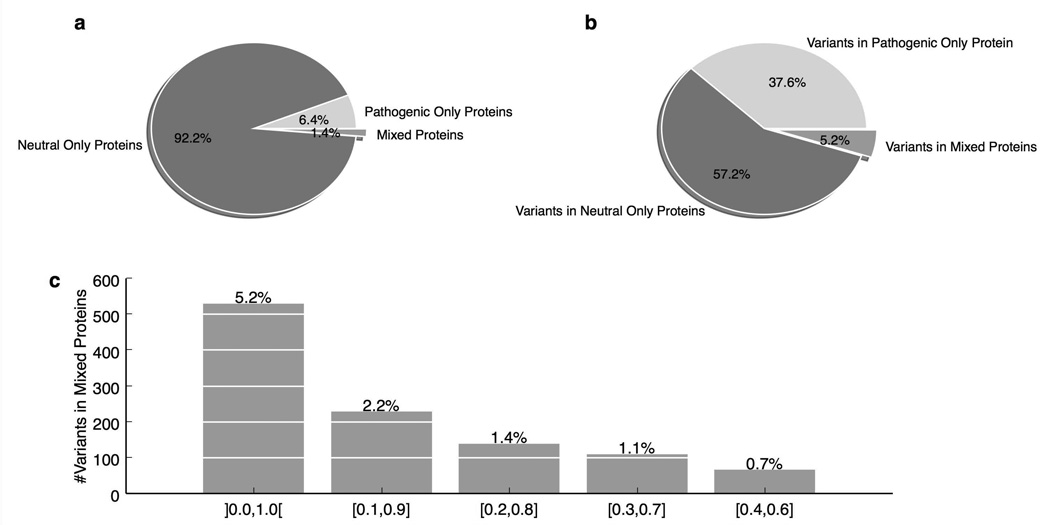

Figure 2. In the VariBenchSelected dataset, most SNPs are in genes with only neutral or only pathogenic variants.

(a) Protein perspective: proportion of proteins containing only neutral variants (“neutral-only”), only pathogenic variants (“pathogenic-only”), and both types of variants (“mixed”). Only 1.4% of the proteins are mixed. (b) Variant perspective: proportions of variants in each of the three categories of proteins. Only 5.2% of variants are in mixed proteins. (c) Fractions of variants in the VariBenchSelected dataset that belong to proteins with various ratios of pathogenic-to-neutral variants, binned into increasingly narrow bins approaching balanced proteins. The open interval ]0.0, 1.0[ contains all mixed proteins (as in 2b). Only 0.7% of all variants belong to almost perfectly balanced proteins (closed interval [0.4, 0.6]).

Nearly all (94.8%) variants in VariBenchSelected are located in pure proteins with 57.2% in neutral-only proteins and 37.6% in pathogenic-only proteins (Figure 2b). On such a dataset, excellent accuracies can be achieved by predicting the status of a variant based on the other variants in the same protein. We refer to this phenomenon as type 2 circularity. The remaining 5.2% of VariBenchSelected variants are located in “mixed” proteins (Fig. 2b and 2c), which contain both pathogenic and neutral variants (pathogenic-to-neutral ratio in the open interval ]0.0, 1.0[ in Figure 2c). While the MV approach will necessarily misclassify some of these variants, it will still perform well on proteins containing primarily neutral or primarily pathogenic variants, and overall, only 0.7% of all variants are in proteins containing an almost balanced ratio of pathogenic and neutral variants (pathogenic-to-neutral ratio in the interval [0.4, 0.6] in Figure 2c). Similar dataset compositions can be observed in the other three datasets HumVar, ExoVar and predictSNPSelected (Supp. Figures S7–S9). A striking property of SwissVarSelected is its much larger fraction of proteins with almost balanced pathogenic-to-neutral ratio: 6.5% of all variants (832 out of 12729) can be found in the most balanced category of mixed proteins [0.4,0.6] (see Supp. Figure S10), compared to an average of 1.5% in the other four datasets.
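The percentages quoted for VariBenchSelected follow directly from the counts in Table 4. The short calculation below reproduces them; the variable and key names are chosen for this illustration only.

```python
# Counts for VariBenchSelected, copied from Table 4.
pathogenic_in_pure = 3865   # pathogenic variants in "pure" proteins
neutral_in_pure = 5869      # neutral variants in "pure" proteins
variants_in_mixed = 532     # variants in mixed proteins
mixed_proteins = 65
total_proteins = 4490

total_variants = pathogenic_in_pure + neutral_in_pure + variants_in_mixed

def percent(part, whole):
    """Percentage rounded to one decimal place."""
    return round(100.0 * part / whole, 1)

composition = {
    "variants in pure proteins": percent(pathogenic_in_pure + neutral_in_pure, total_variants),
    "variants in neutral-only proteins": percent(neutral_in_pure, total_variants),
    "variants in pathogenic-only proteins": percent(pathogenic_in_pure, total_variants),
    "variants in mixed proteins": percent(variants_in_mixed, total_variants),
    "mixed proteins": percent(mixed_proteins, total_proteins),
}
for name, value in composition.items():
    print(f"{name}: {value}%")
```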

To further understand FatHMM-W’s performance, we evaluated it separately on mixed proteins. As shown in Figure 3, Supp. Figure S11 and Supp. Figure S12, FatHMM-W performs well on pure proteins but loses much of its predictive power on the mixed proteins, as it is misled by its weighting scheme. On almost-balanced proteins, FatHMM-W is therefore outperformed by all other tools but phyloP (Figure 3). This may also be the first reason why FatHMM-W performs worse on SwissVarSelected than on all other datasets: SwissVarSelected contains many more variants in the most mixed categories (Supp. Figure S10). FatHMM-W even performs poorly on mixed proteins from its own training dataset (Supp. Figure S11). We observed that PolyPhen-2 outperforms all other tools in the mixed categories for the datasets predictSNPSelected and SwissVarSelected (Supp. Figure S12). For the VariBenchSelected dataset no clear winner can be determined (Supp. Figure S12).

Figure 3. Performance of ten pathogenicity prediction tools according to protein pathogenic-to-neutral variant ratio.

Evaluation of tool performance on subsets of VariBenchSelected, predictSNPSelected and SwissVarSelected, defined according to the relative proportions of pathogenic and neutral variants in the proteins they contain. “Pure” indicates variants belonging to proteins containing only one class of variant. [x, y] indicate variants belonging to mixed proteins, containing a ratio of pathogenic-to-neutral variants between x and y. ]0.0, 1.0[ therefore indicates all mixed proteins (the ratios of 0.0 and 1.0 being excluded by the reversed brackets). While FatHMM-W performs well or excellently on variants belonging to pure proteins (VariBenchSelected and predictSNPSelected), it performs poorly on those belonging to mixed proteins.
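A stratified evaluation of this kind can be sketched as follows. The sketch interprets the pathogenic-to-neutral ratio as the fraction of pathogenic variants per protein (an assumption consistent with the interval ]0.0, 1.0[), computes the AUC by pairwise comparison rather than with a library, and uses hypothetical records and scores; it is not the code used to produce Figure 3.

```python
from collections import defaultdict

def auc(labels, scores):
    """Area under the ROC curve via pairwise comparison (ties count 0.5)."""
    pos = [s for l, s in zip(labels, scores) if l == 1]
    neg = [s for l, s in zip(labels, scores) if l == 0]
    if not pos or not neg:
        return None  # AUC is undefined when one class is missing
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def pathogenic_fraction_per_protein(records):
    """Map each protein to its fraction of pathogenic variants."""
    counts = defaultdict(lambda: [0, 0])  # protein -> [n_pathogenic, n_total]
    for protein, label, _ in records:
        counts[protein][0] += label
        counts[protein][1] += 1
    return {p: n_path / n_total for p, (n_path, n_total) in counts.items()}

def auc_by_stratum(records, low=0.4, high=0.6):
    """AUC on pure proteins, on all mixed proteins, and on the
    almost-balanced subset [low, high] of the mixed proteins."""
    fraction = pathogenic_fraction_per_protein(records)
    strata = {"pure": [], "mixed": [], "balanced": []}
    for protein, label, score in records:
        f = fraction[protein]
        if f in (0.0, 1.0):
            strata["pure"].append((label, score))
        else:
            strata["mixed"].append((label, score))
            if low <= f <= high:
                strata["balanced"].append((label, score))
    return {name: auc([l for l, _ in items], [s for _, s in items])
            for name, items in strata.items()}

# Hypothetical records: (protein, label, score of a protein-driven predictor).
toy_records = [("P1", 1, 0.9), ("P1", 1, 0.8),
               ("P2", 0, 0.1), ("P2", 0, 0.2),
               ("P3", 1, 0.7), ("P3", 0, 0.8),
               ("P4", 1, 0.3), ("P4", 0, 0.4), ("P4", 0, 0.2)]
stratified_auc = auc_by_stratum(toy_records)
print(stratified_auc)
```

In the toy data, the predictor separates the classes perfectly on pure proteins but is no better than chance on mixed proteins, the pattern that reporting per stratum is meant to expose.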

The second reason for the drop in performance is the presence of “new” proteins in SwissVarSelected that are unknown to the FatHMM-W weighting database. To show this, we used the HumVar and ExoVar datasets as a proxy for the training data among all our tools (FatHMM’s training data is not fully publicly available). We observed that ~91% of all pathogenic and ~68% of all neutral variants in VariBenchSelected are located in proteins that also occur in HumVar/ExoVar (Supp. Figure S13, Supp. Table S3). As FatHMM-W makes use of information from protein families, we computed pair-wise BLASTP (Camacho et al. 2009) alignments between all proteins in our Selected datasets and proteins in HumVar/ExoVar. Approximately 99% of all pathogenic variants in VariBenchSelected are located in proteins from HumVar/ExoVar or proteins with more than 70% sequence similarity to a protein in HumVar/ExoVar. Similar statistics can be observed for predictSNPSelected (Supp. Figure S13 and Supp. Table S4). However, for SwissVarSelected we observed that only ~61% of all pathogenic and ~56% of all neutral variants belong to proteins from HumVar/ExoVar (Supp. Figure S13 and Supp. Table S5). Approximately 78% of all pathogenic and ~77% of all neutral variants in SwissVarSelected are located in proteins from HumVar/ExoVar or in proteins with high sequence similarity (more than 70% sequence similarity) to a protein from HumVar/ExoVar (Supp. Figure S13 and Supp. Table S5). Hence a significant proportion of SwissVarSelected variants cannot be found in proteins from the proxy training dataset or proteins with high sequence similarity. All of these findings lead to the conclusion that FatHMM-W’s good performance on VariBenchSelected and predictSNPSelected is largely due to type 2 circularity.
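The protein-level overlap statistic can be illustrated with a minimal sketch. It covers only exact protein matches (the BLASTP similarity step is not reproduced here), and the function name, protein identifiers and toy data are hypothetical.

```python
def fraction_in_known_proteins(eval_variants, training_proteins):
    """Per class, the fraction of evaluation variants located in proteins
    that also occur in the (proxy) training data."""
    seen = {0: 0, 1: 0}
    total = {0: 0, 1: 0}
    for protein, label in eval_variants:
        total[label] += 1
        if protein in training_proteins:
            seen[label] += 1
    return {label: seen[label] / total[label]
            for label in total if total[label] > 0}

# Hypothetical proxy training proteins and evaluation variants
# (protein ID, label), with 1 = pathogenic, 0 = neutral.
proxy_training_proteins = {"P1", "P2", "P3"}
evaluation_variants = [("P1", 1), ("P2", 1), ("P9", 1),
                       ("P3", 0), ("P8", 0), ("P7", 0), ("P1", 0)]
coverage = fraction_in_known_proteins(evaluation_variants, proxy_training_proteins)
print(coverage)
```

A low fraction for a dataset would indicate, as for SwissVarSelected, that many of its variants lie in proteins for which a protein-level weighting scheme has no prior information.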

Evaluation of two combined predictors

After studying in silico tools for pathogenicity prediction, we compared the performance of two methods that combine individual tools into a joint prediction, the meta-predictors Condel (González-Pérez and López-Bigas 2011) and Logit (Li et al. 2013). Based on our previous findings, we were interested in how their performance compares when evaluated on datasets that avoid type 1 circularity. Furthermore, we wanted to compare the performance of meta-predictors that include FatHMM-W and may suffer from type 2 circularity, to meta-predictors that do not include FatHMM-W.

Condel’s webserver provides two combinations of tool scores: the older (original) version combines PP2 (Adzhubei et al. 2010), SIFT (Ng and Henikoff 2003) and MASS (Reva et al. 2011), and the latest one additionally includes FatHMM-W (Shihab et al. 2013). We refer to these two combinations as Condel and Condel+, respectively (Supp. Table S6). To provide a fair comparison, we used the same sets of tools for the Logit model by Li et al. (Logit and Logit+, see Supp. Table S6). Thus, in our manuscript, Logit combines PP2, SIFT, and MASS; Logit+ combines these three tools and FatHMM-W.

To avoid type 1 circularity, we chose the Selected datasets as our evaluation datasets, as they do not overlap with the training dataset of any individual tool or meta-predictor. Our results using Logit on all Selected datasets confirm those reported by Li et al. (Li et al. 2013): Logit outperforms all individual tools and Condel in terms of AUC (Figure 4 and Supp. Figures S14–S18). On VariBenchSelected, Condel’s performance (AUC=0.70) is on par with that of SIFT (AUC=0.70), the best performing of the tools it combines. We then evaluated the combination of tools on the pure and mixed proteins on VariBenchSelected, predictSNPSelected and SwissVarSelected (Supp. Figure S19). While Logit performs well on the pure proteins, Condel performs at least as well as Logit on variants in mixed proteins.

Figure 4. Comparison of the performance of two meta-predictors (Logit and Condel) and their component tools, across five datasets.

Bar heights reflect AUC for each tool and tool combination. Logit and Condel are meta-predictors combining MASS, PP2 and SIFT. The ‘+’ versions of Logit and Condel also include FatHMM-W. While effective in prediction, FatHMM-W (alone and in the Logit+ and Condel+ meta-predictors) is optimistically biased due to type 2 circularity (see Results section). In the ‘Selected’ datasets, Logit provides the best unbiased performance. SIFT has the lowest performance in the HumVar and ExoVar datasets, but it is also the only predictor that is unbiased in these two datasets.

The evaluation of Condel+ and Logit+ may be optimistically biased by type 2 circularity, given the inclusion of FatHMM-W. Across all datasets, we observed that adding FatHMM-W to either tool score combination (Condel+ or Logit+) led to a performance boost (Figure 4 and Supp. Figures S14–S18). However, this did not hold for mixed proteins, providing strong evidence for type 2 circularity. For mixed proteins, we observed a significant drop in performance for both Logit+ and Condel+ on all datasets but SwissVarSelected (see Supp. Figure S19).

Discussion

The wealth of pathogenicity prediction tools proposed in the literature raises the question whether there are systematic differences in the quality of their predictions when evaluated on a large number of variant databases. In an attempt to answer this question, we performed a comparative evaluation of pathogenicity prediction tools and demonstrated the existence of two types of circularity that meaningfully impair comparison of in silico pathogenicity prediction tools. We showed how ignoring these effects could lead to overly optimistic assessments of tool performance. One severe consequence of this phenomenon is that it may hinder the discovery of novel disease risk genes, as these tools are widely used to choose variants for further functional investigation.

In this manuscript we have described and demonstrated ‘type 1’ and ‘type 2’ circularity. Type 1 circularity occurs because of an overlap between training and evaluation datasets, possibly resulting in overfitting (Hastie et al. 2009), meaning that a tool is highly tailored to a given dataset, but will perform worse on novel variants. Type 1 circularity is a well-known and studied phenomenon in machine learning (Hastie et al. 2009) and there are guidelines on how it can be avoided (Vihinen 2013). To avoid type 1 circularity, we built the Selected datasets, in which none of the variants have previously been seen by any of the tools (with the possible and unavoidable exception of MT2). This makes them the most appropriate datasets on which to draw conclusions regarding the relative performance of the tools. Figure 1 and Supp. Table S1 suggest that MT2, PP2 and MASS overfit on their training data and have rather weak generalization abilities.

Our efforts to understand the outstanding performance of FatHMM-W on 4 out of 5 datasets led to additional insights about type 2 circularity. Our findings about type 2 circularity demonstrate that predicting the pathogenicity of a variant – based on the pathogenicity of all other known variants in the same protein – is a statistically successful, but ultimately inappropriate strategy. It is inappropriate because it will often fail to correctly classify variants in proteins that contain both pathogenic and neutral variants. Further, it will often fail to discover pathogenic variants in unannotated proteins.

The apparent success of this strategy is due to the fact that, in variant databases, it is frequently the case that all the variants of the same protein are annotated with the same status. Furthermore, pathogenic-only proteins contain many more labelled variants than neutral-only proteins. In these databases, pathogenic amino acid substitutions are heavily concentrated in a few key genes (Figure 2 and Supp. Figures S7–S9). These observations regarding the distribution of variants in our datasets likely result – in part – from research practices relevant to the way variant databases are populated. Often an initial discovery of a pathogenic variant in a gene (for a given disease) leads to additional discoveries of pathogenic variants in the same gene, in part because a given gene will be more heavily investigated once it has been identified as harbouring pathogenic variants.

These properties of variant databases explain why we observe that pathogenicity can be predicted from the annotation of variants within the same protein by a majority vote with excellent accuracies (Figure 1 and Supp. Table S2). They also explain why FatHMM-W, whose predictive power is driven by the pathogenic-to-neutral ratio of variants in the same protein, performs so well on VariBenchSelected and predictSNPSelected (Figure 1 and Supp. Table S2). This approach, however, will often fail to correctly classify amino acid substitutions in proteins that contain both pathogenic and neutral variants (Figure 3, Supp. Figure S11 and Supp. Figure S12). This partially explains why FatHMM-W performs worse on SwissVarSelected than on all other datasets, as it contains many more variants in the most mixed categories (Figure 3 and Supp. Figure S10). The same phenomenon also occurs when building meta-predictors: the performance of Logit+ and Condel+ is similarly optimistically biased because they contain FatHMM-W.

The pervasiveness of circularity makes it difficult to draw definitive conclusions regarding the relative performance of these prediction tools. We do nevertheless observe a reassuring trend for tools trained for the purpose of predicting pathogenicity to outperform conservation scores. In addition, the Logit combination of SIFT, PP2 and MASS is a slightly better predictor of pathogenicity than any of these tools taken individually. Also, for the mixed proteins in particular, Condel performs better than, or is on par with, Logit across the datasets (Supp. Figure S19).

It is important to note that the drop in performance that we observe when applying a method to a dataset (that it was not trained on) could also be due to the different definitions of pathogenicity and neutrality used in the different benchmark datasets (see Table 2). It should be an important goal of future studies to quantify this impact. However, as long as circularity exists in a comparative study, it will mask the effect of these differences in the definition of pathogenicity: In Figure 1, FatHMM-W seems to provide excellent prediction results across four different benchmark datasets, irrespective of the different definitions of pathogenicity and neutrality. However, our analysis shows that the true origin of this superior performance is type 2 circularity and not robustness to different definitions of pathogenicity and neutrality.

Therefore, a key step in future studies examining this problem will be to avoid any type of circularity. The existence of these types of circularity has immediate implications for the further development and evaluation of pathogenicity prediction tools: At the very least, and as recommended previously (Vihinen 2013), prediction tools should only be compared on benchmarks that do not overlap with any of the datasets used to train the tool. We provide such datasets, VariBenchSelected, predictSNPSelected, and SwissVarSelected (see Supp. Data S1) for this kind of independent evaluation. All prediction tools should make their training datasets public, as type 1 circularity cannot be excluded if any portion of a training dataset is kept private.
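The minimal requirement stated above, a benchmark that does not overlap with any training dataset, amounts to a set difference at the variant level. The sketch below shows the idea under the assumption that a variant can be identified by a (protein, position, reference amino acid, alternative amino acid) tuple; this key and all data are hypothetical and do not describe how the Selected datasets were actually built.

```python
def remove_training_overlap(benchmark, training_sets):
    """Keep only benchmark variants that are absent from every training set."""
    seen = set().union(*training_sets)
    return [variant for variant in benchmark if variant not in seen]

# Hypothetical variant keys: (protein ID, position, reference aa, alternative aa).
training_a = {("P1", 10, "A", "V"), ("P2", 55, "G", "D")}
training_b = {("P3", 7, "R", "H")}
benchmark = [("P1", 10, "A", "V"),
             ("P1", 12, "L", "P"),
             ("P3", 7, "R", "H"),
             ("P4", 100, "S", "F")]

selected = remove_training_overlap(benchmark, [training_a, training_b])
print(selected)
```

Note that the variant in P1 at position 12 survives the filter even though P1 occurs in a training set: removing exact overlaps addresses type 1 circularity only.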

A more rigorous strategy would be to re-train all predictors on the same dataset, in order to truly evaluate the predictors and not the quality of their training datasets. However, this is only possible if the raw variant descriptors (variant features) – from which the tools derive their predictions – are made available. We investigated all tools presented here and only for PP2 was it straightforward to obtain these descriptors (Adzhubei et al. 2010).

To address the problem of type 2 circularity, it is imperative that future studies report prediction accuracy as a function of the pathogenic-to-neutral ratio, as in Figure 3. An even better strategy would be to stratify training and test datasets such that variants from the same protein only occur in either the training or the test dataset, completely removing the possibility of classification within the same protein (Adzhubei et al. 2010). Furthermore, one could construct predictors for different classes of variants, defined by the pathogenic-to-neutral ratio in the proteins, to address the problem that none of the existing tools achieves consistently good results across these classes on VariBenchSelected. Both these alternative approaches, however, rely critically on the availability of raw descriptors used by the corresponding tools.
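A protein-level split of this kind can be sketched in a few lines. The function name, the test fraction, the fixed seed and the toy variants below are illustrative assumptions; the point is only that proteins, not individual variants, are assigned to the training or the test set.

```python
import random

def protein_level_split(variants, test_fraction=0.3, seed=0):
    """Split variants so that all variants of a protein end up on the
    same side (training or test), never on both."""
    proteins = sorted({protein for protein, _ in variants})
    rng = random.Random(seed)   # fixed seed for reproducibility
    rng.shuffle(proteins)
    n_test = max(1, round(test_fraction * len(proteins)))
    test_proteins = set(proteins[:n_test])
    train = [v for v in variants if v[0] not in test_proteins]
    test = [v for v in variants if v[0] in test_proteins]
    return train, test

# Hypothetical variants: (protein ID, label), with 1 = pathogenic, 0 = neutral.
all_variants = [("P1", 1), ("P1", 1), ("P2", 0), ("P2", 0), ("P3", 1),
                ("P3", 0), ("P4", 0), ("P5", 1), ("P5", 1), ("P5", 0)]
train_set, test_set = protein_level_split(all_variants)

train_proteins = {protein for protein, _ in train_set}
held_out_proteins = {protein for protein, _ in test_set}
print(sorted(train_proteins), sorted(held_out_proteins))
```

Because no protein contributes variants to both sides, a predictor cannot score well on the test set merely by recalling the labels of other variants in the same protein.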

Finally, there is another potential source of circularity to beware of in the future: The novel variants entered in databases may be annotated using existing pathogenicity prediction tools. These tools will therefore appear to perform well on ‘new’ data (from later versions of mutation databases), whereas in fact they will only be recovering labels that they have themselves provided. We therefore advocate documentation of the sources of evidence that were used to assign labels to variants when they are entered into a variant database. This is akin to standard practice for gene function databases, which record whether the annotation of a gene with a particular function was biologically validated and/or computationally predicted.

Acknowledgments

We thank Shaun Purcell for helpful feedback and advice throughout the project and Barbara Rakitsch and Dean Bodenham for fruitful discussion. We thank our anonymous reviewers for their helpful feedback.

Funding:

The research of Professor Dr. Karsten Borgwardt was supported by the Alfried Krupp Prize for Young University Teachers of the Alfried Krupp von Bohlen und Halbach-Stiftung. Dr. Smoller is supported in part by NIH/NIMH grant K24MH094614.

References

- Adzhubei IA, Schmidt S, Peshkin L, Ramensky VE, Gerasimova A, Bork P, Kondrashov AS, Sunyaev SR. A method and server for predicting damaging missense mutations. Nat. Methods. 2010;7:248–249. doi: 10.1038/nmeth0410-248.

- Bendl J, Stourac J, Salanda O, Pavelka A, Wieben ED, Zendulka J, Brezovsky J, Damborsky J. PredictSNP: Robust and Accurate Consensus Classifier for Prediction of Disease-Related Mutations. PLoS Comput Biol. 2014;10:e1003440. doi: 10.1371/journal.pcbi.1003440.

- Camacho C, Coulouris G, Avagyan V, Ma N, Papadopoulos J, Bealer K, Madden TL. BLAST+: architecture and applications. BMC Bioinformatics. 2009;10:421. doi: 10.1186/1471-2105-10-421.

- Capriotti E, Altman RB, Bromberg Y. Collective judgment predicts disease-associated single nucleotide variants. BMC Genomics. 2013;14:S2. doi: 10.1186/1471-2164-14-S3-S2.

- Chun S, Fay JC. Identification of deleterious mutations within three human genomes. Genome Res. 2009;19:1553–1561. doi: 10.1101/gr.092619.109.

- Cooper GM, Shendure J. Needles in stacks of needles: finding disease-causal variants in a wealth of genomic data. Nat. Rev. Genet. 2011;12:628–640. doi: 10.1038/nrg3046.

- Davis J, Goadrich M. The relationship between Precision-Recall and ROC curves. 2006.

- Davydov EV, Goode DL, Sirota M, Cooper GM, Sidow A, Batzoglou S. Identifying a High Fraction of the Human Genome to be under Selective Constraint Using GERP++. PLoS Comput Biol. 2010;6:e1001025. doi: 10.1371/journal.pcbi.1001025.

- Fan R-E, Chang K-W, Hsieh C-J, Wang X-R, Lin C-J. LIBLINEAR: A library for large linear classification. J. Mach. Learn. Res. 2008;9:1871–1874.

- Flicek P, Amode MR, Barrell D, Beal K, Billis K, Brent S, Carvalho-Silva D, Clapham P, Coates G, Fitzgerald S, Gil L, Girón CG, et al. Ensembl 2014. Nucleic Acids Res. 2014;42:D749–D755. doi: 10.1093/nar/gkt1196.

- González-Pérez A, López-Bigas N. Improving the Assessment of the Outcome of Nonsynonymous SNVs with a Consensus Deleteriousness Score, Condel. Am. J. Hum. Genet. 2011;88:440–449. doi: 10.1016/j.ajhg.2011.03.004.

- Gough J, Karplus K, Hughey R, Chothia C. Assignment of homology to genome sequences using a library of hidden Markov models that represent all proteins of known structure. J. Mol. Biol. 2001;313:903–919. doi: 10.1006/jmbi.2001.5080.

- Hastie T, Tibshirani R, Friedman J. The Elements of Statistical Learning. New York: Springer; 2009. Model Assessment and Selection; pp. 219–259.

- Hindorff LA, Sethupathy P, Junkins HA, Ramos EM, Mehta JP, Collins FS, Manolio TA. Potential etiologic and functional implications of genome-wide association loci for human diseases and traits. Proc. Natl. Acad. Sci. 2009;106:9362–9367. doi: 10.1073/pnas.0903103106.

- Hubbard TJP, Aken BL, Ayling S, Ballester B, Beal K, Bragin E, Brent S, Chen Y, Clapham P, Clarke L, Coates G, Fairley S, et al. Ensembl 2009. Nucleic Acids Res. 2009;37:D690–D697. doi: 10.1093/nar/gkn828.

- Kiezun A, Garimella K, Do R, Stitziel NO, Neale BM, McLaren PJ, Gupta N, Sklar P, Sullivan PF, Moran JL, Hultman CM, Lichtenstein P, et al. Exome sequencing and the genetic basis of complex traits. Nat. Genet. 2012;44:623–630. doi: 10.1038/ng.2303.

- Kim HS, Mendiratta S, Kim J, Pecot CV, Larsen JE, Zubovych I, Seo BY, Kim J, Eskiocak B, Chung H, McMillan E, Wu S, et al. Systematic Identification of Molecular Subtype-Selective Vulnerabilities in Non-Small-Cell Lung Cancer. Cell. 2013;155:552–566. doi: 10.1016/j.cell.2013.09.041.

- Kircher M, Witten DM, Jain P, O’Roak BJ, Cooper GM, Shendure J. A general framework for estimating the relative pathogenicity of human genetic variants. Nat. Genet. 2014;46:310–315. doi: 10.1038/ng.2892.

- Lee S-I, Lee H, Abbeel P, Ng AY. Efficient L1 Regularized Logistic Regression. 2006.

- Leongamornlert D, Mahmud N, Tymrakiewicz M, Saunders E, Dadaev T, Castro E, Goh C, Govindasami K, Guy M, O’Brien L, Sawyer E, Hall A, et al. Germline BRCA1 mutations increase prostate cancer risk. Br. J. Cancer. 2012;106:1697–1701. doi: 10.1038/bjc.2012.146.

- Li M-X, Kwan JSH, Bao S-Y, Yang W, Ho S-L, Song Y-Q, Sham PC. Predicting Mendelian Disease-Causing Non-Synonymous Single Nucleotide Variants in Exome Sequencing Studies. PLoS Genet. 2013;9:e1003143. doi: 10.1371/journal.pgen.1003143.

- Liu X, Jian X, Boerwinkle E. dbNSFP v2.0: A Database of Human Non-synonymous SNVs and Their Functional Predictions and Annotations. Hum. Mutat. 2013;34:E2393–E2402. doi: 10.1002/humu.22376.

- MacArthur DG, Balasubramanian S, Frankish A, Huang N, Morris J, Walter K, Jostins L, Habegger L, Pickrell JK, Montgomery SB, Albers CA, Zhang ZD, et al. A Systematic Survey of Loss-of-Function Variants in Human Protein-Coding Genes. Science. 2012;335:823–828. doi: 10.1126/science.1215040.

- Magrane M, Consortium U. UniProt Knowledgebase: a hub of integrated protein data. Database. 2011;2011:bar009–bar009. doi: 10.1093/database/bar009.

- McLaren W, Pritchard B, Rios D, Chen Y, Flicek P, Cunningham F. Deriving the consequences of genomic variants with the Ensembl API and SNP Effect Predictor. Bioinformatics. 2010;26:2069–2070. doi: 10.1093/bioinformatics/btq330.

- Mottaz A, David FPA, Veuthey A-L, Yip YL. Easy retrieval of single amino-acid polymorphisms and phenotype information using SwissVar. Bioinformatics. 2010;26:851–852. doi: 10.1093/bioinformatics/btq028.

- Nair PS, Vihinen M. VariBench: A Benchmark Database for Variations. Hum. Mutat. 2013;34:42–49. doi: 10.1002/humu.22204.

- Ng PC, Henikoff S. SIFT: predicting amino acid changes that affect protein function. Nucleic Acids Res. 2003;31:3812–3814. doi: 10.1093/nar/gkg509.

- Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, Blondel M, Prettenhofer P, Weiss R, Dubourg V, Vanderplas J, Passos A, et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011;12:2825–2830.

- Purcell SM, Moran JL, Fromer M, Ruderfer D, Solovieff N, Roussos P, O’Dushlaine C, Chambert K, Bergen SE, Kähler A, Duncan L, Stahl E, et al. A polygenic burden of rare disruptive mutations in schizophrenia. Nature. 2014;506:185–190. doi: 10.1038/nature12975.

- Reva B, Antipin Y, Sander C. Predicting the functional impact of protein mutations: application to cancer genomics. Nucleic Acids Res. 2011;39:e118–e118. doi: 10.1093/nar/gkr407.

- Rudin CM, Durinck S, Stawiski EW, Poirier JT, Modrusan Z, Shames DS, Bergbower EA, Guan Y, Shin J, Guillory J, Rivers CS, Foo CK, et al. Comprehensive genomic analysis identifies SOX2 as a frequently amplified gene in small-cell lung cancer. Nat. Genet. 2012;44:1111–1116. doi: 10.1038/ng.2405.

- Schwarz JM, Cooper DN, Schuelke M, Seelow D. MutationTaster2: mutation prediction for the deep-sequencing age. Nat. Methods. 2014;11:361–362. doi: 10.1038/nmeth.2890.

- Sherry ST, Ward M-H, Kholodov M, Baker J, Phan L, Smigielski EM, Sirotkin K. dbSNP: the NCBI database of genetic variation. Nucleic Acids Res. 2001;29:308–311. doi: 10.1093/nar/29.1.308.

- Shihab HA, Gough J, Cooper DN, Stenson PD, Barker GLA, Edwards KJ, Day INM, Gaunt TR. Predicting the Functional, Molecular, and Phenotypic Consequences of Amino Acid Substitutions using Hidden Markov Models. Hum. Mutat. 2013;34:57–65. doi: 10.1002/humu.22225.

- Sonnhammer ELL, Eddy SR, Durbin R. Pfam: A comprehensive database of protein domain families based on seed alignments. Proteins Struct. Funct. Genet. 1997;28:405–420. doi: 10.1002/(sici)1097-0134(199707)28:3<405::aid-prot10>3.0.co;2-l.

- Stenson PD, Mort M, Ball EV, Shaw K, Phillips AD, Cooper DN. The Human Gene Mutation Database: building a comprehensive mutation repository for clinical and molecular genetics, diagnostic testing and personalized genomic medicine. Hum. Genet. 2014;133:1–9. doi: 10.1007/s00439-013-1358-4.

- Tennessen JA, Bigham AW, O’Connor TD, Fu W, Kenny EE, Gravel S, McGee S, Do R, Liu X, Jun G, Kang HM, Jordan D, et al. Evolution and Functional Impact of Rare Coding Variation from Deep Sequencing of Human Exomes. Science. 2012;337:64–69. doi: 10.1126/science.1219240.

- Thevenon J, Milh M, Feillet F, St-Onge J, Duffourd Y, Jugé C, Roubertie A, Héron D, Mignot C, Raffo E, Isidor B, Wahlen S, et al. Mutations in SLC13A5 Cause Autosomal-Recessive Epileptic Encephalopathy with Seizure Onset in the First Days of Life. Am. J. Hum. Genet. 2014;95:113–120. doi: 10.1016/j.ajhg.2014.06.006.

- Thusberg J, Olatubosun A, Vihinen M. Performance of mutation pathogenicity prediction methods on missense variants. Hum. Mutat. 2011;32:358–368. doi: 10.1002/humu.21445.

- Vihinen M. How to evaluate performance of prediction methods? Measures and their interpretation in variation effect analysis. BMC Genomics. 2012;13:S2. doi: 10.1186/1471-2164-13-S4-S2.

- Vihinen M. Guidelines for reporting and using prediction tools for genetic variation analysis. Hum. Mutat. 2013;34:275–282. doi: 10.1002/humu.22253.

- Wang K, Li M, Hakonarson H. ANNOVAR: functional annotation of genetic variants from high-throughput sequencing data. Nucleic Acids Res. 2010;38:e164–e164. doi: 10.1093/nar/gkq603.

- Weinreb I, Piscuoglio S, Martelotto LG, Waggott D, Ng CKY, Perez-Ordonez B, Harding NJ, Alfaro J, Chu KC, Viale A, Fusco N, Cruz Paula Ada, et al. Hotspot activating PRKD1 somatic mutations in polymorphous low-grade adenocarcinomas of the salivary glands. Nat. Genet. 2014;46:1166–1169. doi: 10.1038/ng.3096.

- Zhao L, Wang F, Wang H, Li Y, Alexander S, Wang K, Willoughby CE, Zaneveld JE, Jiang L, Soens ZT, Earle P, Simpson D, et al. Next-generation sequencing-based molecular diagnosis of 82 retinitis pigmentosa probands from Northern Ireland. Hum. Genet. 2014:1–14. doi: 10.1007/s00439-014-1512-7.

Data Availability Statement

For each dataset, we compiled comma-separated files containing all obtained tool scores and predicted labels as well as information about the variant (true label, nucleotide and amino acid changes, minor allele frequencies, UniProt accession IDs (Magrane and Consortium 2011), Ensembl gene, transcript and protein IDs (Flicek et al. 2014) and dbSNP IDs (rs#) if available (Sherry et al. 2001)). All these datasets, including the tool predictions, can be found at the VariBench website (http://structure.bmc.lu.se/VariBench/GrimmDatasets.php) and as a single Excel file at the journal’s website (Supp. Data S2).

Further, we provide all Python scripts used to generate all tables and figures in this study along with all datasets, compressed as a single ZIP file (Supp. Data S1). All data and Python scripts can also be downloaded from: http://www.bsse.ethz.ch/mlcb/research/bioinformatics-and-computational-biology/pathogenicity-prediction.html.