Abstract

Purpose

Children must develop optimal perceptual weighting strategies for processing speech in their first language. Hearing loss can interfere with that development, especially if cochlear implants are required. The three goals of this study were to measure, for children with and without hearing loss: (1) cue weighting for a manner distinction, (2) sensitivity to those cues, and (3) real-world communication functions.

Method

One hundred and seven children participated: 43 with normal hearing (NH), 17 with hearing aids (HAs), and 47 with cochlear implants (CIs). They performed several tasks: labeling of stimuli from /bɑ/-to-/wɑ/ continua varying in formant rise time (FRT) and amplitude rise time (ART); discrimination of ART; word recognition; and phonemic awareness.

Results

Children with hearing loss were less attentive overall to acoustic structure than children with NH. Children with CIs, but not those with HAs, weighted FRT less and ART more than children with NH. Sensitivity could not explain cue weighting. FRT cue weighting explained significant amounts of variability in word recognition and phonemic awareness; ART cue weighting did not.

Conclusion

Signal degradation inhibits access to spectral structure for children with CIs, but cannot explain their delayed development of optimal weighting strategies. Auditory training could strengthen the weighting of spectral cues for children with CIs, thus aiding spoken language acquisition.

INTRODUCTION

Children must learn many skills during childhood, and one of the most essential is how to use language for communication. Mastering this skill involves acquiring keen sensitivity to the elements of linguistic structure, along with knowledge about how those elements can be combined, so that communicative intent is both comprehended and generated. Children must learn to recover linguistic elements from the speech signal automatically, that is, obligatorily and with a minimum of cognitive effort. They must also be able to do so under adverse listening conditions. The phonemic segment is considered an especially important element for children to become sensitive to. Children must develop precisely defined phonemic categories, and be able to recover phonemic structure automatically from the speech they hear.

This last suggestion presents a conundrum, however, because the phonemic structure of language is not represented isomorphically in the speech signal. Separate temporal sections of the signal are not discretely associated with individual phonemic segments, and there are no acoustic “signatures” defining individual phonemes. Instead, the speech signal is continuous and highly variable in acoustic form, depending on overall phonemic and grammatical structure, as well as on talker characteristics. Nonetheless, listeners are able to report the specific phonemes encoded in the speech they hear, as well as the order of those phonemes: that is, a phoneme-based transcription can be derived from a continuous and variable speech signal. Thus, a major goal of speech perception research since its inception has been to ascertain how listeners accomplish this feat: how they recover discrete segments quickly and accurately from continuous and variable signals.

Decades of investigation addressing the goal stated above have led to the common conclusion that mature listeners possess sophisticated perceptual strategies, ones that involve attending strongly to the components of the signal that are most relevant to identifying phonemic units, and organizing that structure appropriately (e.g., Lisker, 1975; McMurray & Jongman, 2011; Nittrouer, Manning, & Meyer, 1993; Strange & Shafer, 2008). Although not consciously employed, these strategies vary across languages, such that the specific acoustic properties attended to (or weighted) depend on which properties define phonemic segments in the language being examined (e.g., Beddor & Strange, 1982; Crowther & Mann, 1992; DiCanio, 2014; Flege & Port, 1981; Llanos, Dmitrieva, Shultz, & Francis, 2013; Miyawaki et al., 1975; Pape & Jesus, 2014). Precisely because the way acoustic structure in the speech signal gets weighted is language specific, there must surely be some perceptual learning involved, and several investigators have described that learning process (e.g., Francis, Kaganovich, & Driscoll-Huber, 2008; Holt & Lotto, 2006). In particular, there is reliable evidence that young children weight the various cues to phonemic identity differently than adults, and developmental changes occur in those weighting strategies (Bernstein, 1983; Bourland Hicks & Ohde, 2005; Greenlee, 1980; Mayo, Scobbie, Hewitt, & Waters, 2003; Nittrouer et al., 1993; Ohde & German, 2011; Simon & Fourcin, 1978; Wardrip-Fruin & Peach, 1984). The process of acquiring appropriate and language-specific weighting strategies is a protracted activity, occupying much of the first decade of life (Beckman & Edwards, 2000; Idemaru & Holt, 2013; Mayo et al., 2003; Nittrouer, 2004).

Unfortunately, several conditions of childhood can impose barriers to children’s progress through this process of discovering optimal listening strategies for the language they are learning. For example, children living in conditions of poverty may not have adequate exposure to complete language models to allow them to discover how acoustic structure specifies phonemic categories; that is, their experiences might not be sufficient for perceptual learning to occur optimally (Nittrouer, 1996). Another potentially interfering condition involves hearing loss, which can alter children’s auditory environments such that the acoustic properties most relevant to phonemic decisions in the ambient language are not plainly available to them. The problem is most serious for spectral properties of the speech signal, because spectral structure is the kind of structure most degraded by hearing loss. Even mild losses involve broadened cochlear filters, leaving the internal representation of speech “smeared” (e.g., Narayan, Temchin, Recio, & Ruggero, 1998; Oxenham & Bacon, 2003). This degradation in spectral structure especially diminishes access to static and time-varying formant patterns, and that information has been found to be particularly important to children’s speech perception (e.g., Bourland Hicks & Ohde, 2005; Greenlee, 1980; Nittrouer, 1992; Nittrouer & Studdert-Kennedy, 1987). For children with hearing loss significant enough to warrant cochlear implants (CIs), spectral degradation is even more extreme: The spread of excitation along the basilar membrane means that only a few broad spectral channels are available in the representation (e.g., Fishman, Shannon, & Slattery, 1997; Friesen, Shannon, Başkent, & Wang, 2001; Kiefer, von Ilberg, Rupprecht, Hubner-Egner, & Knecht, 2000; Shannon, Zeng, & Wygonski, 1998).

These constraints leave children who have hearing loss with diminished access to the acoustic structure (especially spectral structure) that defines phonemic categories. One way in which the developmental process might circumvent this barrier is for children with hearing loss to develop phonemic categories defined according to the acoustic properties available to them, rather than the ones used by listeners with normal hearing in their language environment. This possibility arises because of the speech attribute termed redundancy (e.g., Lacroix & Harris, 1979; Xu & Pfingst, 2008). This term implies that the multiple kinds of acoustic properties, or cues, arising during speech production are all equally useful for phoneme category formation. Accordingly, children with hearing loss might be able to develop phonemic categories defined by these alternative cues, the ones left intact by the signal processing of their auditory prostheses.

One study has directly examined whether listeners with hearing loss can use alternative acoustic cues to phonemic distinctions as efficiently as listeners with normal hearing use more traditional cues. Moberly et al. (2014) investigated whether adults with acquired hearing loss who use CIs weight formant structure and amplitude structure similarly to adults with normal hearing in their labeling of syllable-initial /b/ and /w/. In this manner contrast, the rate of vocal-tract opening over the course of syllable production affects both formant structure (i.e., rate of rise in frequency of the first two formants) and amplitude structure (i.e., rate of rise in amplitude). These two cues, termed formant rise time (FRT) and amplitude rise time (ART), have been examined in labeling tasks with adults and children. For this particular manner contrast, listeners of all ages with normal hearing and typical language skills weight FRT far more strongly than ART (Carpenter & Shahin, 2013; Goswami, Fosker, Huss, Mead, & Szucs, 2011; Nittrouer & Studdert-Kennedy, 1986; Nittrouer, Lowenstein, & Tarr, 2013; Walsh & Diehl, 1991). However, formant structure is not well preserved in the signal processing of CIs. Accordingly, Moberly et al. found that adults with CIs placed less perceptual weight on FRT than adults with normal hearing did, and greater perceptual weight on ART. Thus, these adults with CIs were indeed weighting the alternative cue to phonemic categorization more heavily, and the typically dominant cue less heavily, than adults with normal hearing. Because FRT and ART covary reliably in the speech signal, that alternative strategy might be predicted to be just as effective as the usual one. Moberly et al. tested that prediction by measuring the amount of variance in word recognition explained by the weighting of each cue.
It was found that the amount of perceptual weight placed on FRT (the typical cue) correlated strongly with word recognition scores, standardized β = 0.77, but the weight placed on ART was not related to word recognition at all. Based on these results, it was concluded that FRT and ART are not equivalent in linguistic utility, so adults with hearing loss who were better able to maintain their pre-loss listening strategies had better word recognition. The current study extended that investigation to children with CIs, who had been deaf since birth. It may be that the adults in the Moberly et al. study were unable to redefine their phonemic categories based on their post-loss auditory abilities, whereas children who have been deaf since birth may be able to use alternative strategies effectively. That is, their phonemic categories may be developed from the start on the basis of acoustic cues that differ from the ordinary ones.
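This section does not say how perceptual weights are quantified. One common approach in this literature treats them as the coefficients of a logistic regression that predicts the proportion of /wɑ/ responses from the cue values; the sketch below assumes that approach. The 0-to-1 rescaling of each cue, the simulated listener, and the helper names (`solve`, `fit_cue_weights`) are all hypothetical, and nothing here reproduces the analyses of Moberly et al. (2014) or of the present study.

```python
import math


def solve(a, b):
    """Solve the linear system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_cue_weights(rows, iters=50, tol=1e-10):
    """Hypothetical helper: binomial logistic regression fitted by Newton-Raphson.

    rows: (cues, n_trials, n_wa) for each stimulus, where cues is a tuple of
    cue values. Returns [intercept, weight_1, weight_2, ...].
    """
    k = len(rows[0][0]) + 1
    beta = [0.0] * k
    for _ in range(iters):
        grad = [0.0] * k                      # score vector
        info = [[0.0] * k for _ in range(k)]  # information matrix (negative Hessian)
        for cues, n, y in rows:
            x = (1.0, *cues)
            p = 1.0 / (1.0 + math.exp(-sum(b * xi for b, xi in zip(beta, x))))
            for i in range(k):
                grad[i] += (y - n * p) * x[i]
                for j in range(k):
                    info[i][j] += n * p * (1.0 - p) * x[i] * x[j]
        step = solve(info, grad)
        beta = [b + s for b, s in zip(beta, step)]
        if max(abs(s) for s in step) < tol:
            break
    return beta


# Simulated (made-up) listener on the FRT set: 9 FRTs x 2 ARTs, cues rescaled to 0-1.
TRUE = (-4.0, 7.0, 1.5)  # intercept, FRT weight, ART weight (hypothetical values)
rows = []
for art in (10, 70):
    for frt in range(30, 111, 10):
        cues = ((frt - 30) / 80, (art - 10) / 60)
        eta = TRUE[0] + TRUE[1] * cues[0] + TRUE[2] * cues[1]
        p = 1.0 / (1.0 + math.exp(-eta))
        rows.append((cues, 10, 10 * p))  # expected /wa/ count out of 10 trials

intercept, w_frt, w_art = fit_cue_weights(rows)
print(round(w_frt, 3), round(w_art, 3))  # should be close to the simulated 7.0 and 1.5
```

Because both cues are placed on a common 0-to-1 scale, the two coefficients can be compared directly: a listener who, like the adults with CIs described above, relied relatively more on ART would show a smaller FRT coefficient and a larger ART coefficient.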

Previous investigations into the perceptual weighting strategies of children with hearing loss have focused primarily on children with CIs, with mixed results. In general, children with CIs have been observed to weight cues that are not spectral in nature, but rather are based on the duration of signal properties, similarly to children with normal hearing (e.g., Caldwell & Nittrouer, 2013; Nittrouer, Caldwell-Tarr, Moberly, & Lowenstein, 2014). Spectral properties, on the other hand, are typically found to be weighted less by children with CIs than by those with normal hearing (e.g., Giezen, Escudero, & Baker, 2010; Hedrick, Bahng, Hapsburg, & Younger, 2011; Nittrouer et al., 2014). However, there are exceptions to that trend: in particular, Giezen et al. found that children with CIs weighted the static spectral structure of steady-state vowels the same as children with normal hearing. For children with moderate hearing loss who wear hearing aids (HAs), Nittrouer and Burton (2002) observed that they generally paid less attention to spectral properties than children with normal hearing did, whether those properties were static or time-varying. However, they also found that the distribution of perceptual attention across two spectral cues differed depending on the amount and quality of early linguistic experience: children with more and better experience displayed greater weighting of the spectral property typically weighted by adults in their native-language community. Thus, an effect of linguistic experience was observed in addition to the effect of hearing loss.

Current Study

The primary goal of the study reported here was to examine the perceptual weighting strategies of children with hearing loss, in order to determine whether they are the same as those of children with normal hearing (NH). In particular, the phonemic contrast used in this investigation allowed comparison of the weighting of two cues: one that is weighted heavily by listeners with NH, but to which listeners with hearing loss should have diminished access (FRT), and another that is not weighted heavily by listeners with NH, but which should be better preserved by impaired auditory systems, even those with CIs (ART). For children with HAs, formant structure should be more readily available than it is for children with CIs: although the broadened auditory filters associated with hearing loss diminish spectral representations, the extent of that effect should not be as severe as what is encountered by children wearing CIs. Amplitude structure should be fairly well preserved by HAs and CIs alike, although the ubiquitous application of dynamic-range compression means the range of that structure will be constrained, especially in CIs. Nonetheless, change in amplitude across time should be well represented for these children with hearing loss.

Overall, outcomes of this investigation should provide insight into how the acquisition of optimal, language-specific weighting strategies is influenced by learning language with hearing loss and amplification from either an HA or a CI. Comparing outcomes for children with HAs and CIs will help establish the extent to which deviations from typical weighting strategies are associated with impaired auditory functioning in general, or more specifically with electric stimulation. The set of stimuli used in this experiment provided an especially appealing test of weighting strategies because all of the acoustic structure pertinent to the phonemic contrast was strictly low frequency (i.e., below 2500 Hz). In particular, the relevant formant structure was restricted to the first and second formants, and both of those formants were below 1200 Hz. Consequently, it seemed likely that these signal components would be above aided thresholds for children with reasonably well-fit auditory prostheses.

A second goal of the current study was to evaluate auditory sensitivity to amplitude structure, primarily to determine the extent to which perceptual weighting of that cue could be traced to the sensitivity exhibited by these children with hearing loss. Here the term ‘sensitivity’ refers to how well the children are able to detect change in this cue. The preponderance of evidence has shown that the weighting of acoustic cues is not explained by listeners’ auditory sensitivities to those cues. This finding has been reported for second-language learners who do not weight a cue in that second language very strongly (e.g., Miyawaki et al., 1975), even though they discriminate changes in the cue as well as first-language speakers do when the cue is presented in isolation. A dissociation between auditory sensitivity and perceptual weighting has also been observed for children, who do not weight certain cues as strongly as adults, even though they are able to discriminate those cues as well as adults (Nittrouer, 1996; Nittrouer & Crowther, 1998). In the present study, it seemed worthwhile to examine sensitivity to the amplitude cue, for which similar sensitivity was predicted for children with and without hearing loss. An earlier study (Nittrouer et al., 2014) had already examined sensitivity to spectral structure, and the relationship of that sensitivity to perceptual weighting strategies involving that cue, explicitly for the children in the current study. That was possible because the children in this study are part of an ongoing longitudinal investigation. The earlier study revealed that sensitivity to spectral structure could not fully account for perceptual weighting of spectral cues for either group of children.

A third and final goal of the current study was to examine whether perceptual weighting strategies could explain language functioning in the real world. To achieve this goal, two measures were included. First, word recognition was evaluated; this is clearly an everyday communication function. Isolated words were used rather than sentences because, unless signals are degraded in some manner, most listeners are able to apply their knowledge of syntactic and semantic structure to aid recognition of words in sentences (e.g., Boothroyd & Nittrouer, 1988; Duffy & Giolas, 1974; Giolas, Cooker, & Duffy, 1970), although the magnitude of these effects varies with listener characteristics such as age (e.g., Elliott, 1979; Kalikow, Stevens, & Elliott, 1977; Nittrouer & Boothroyd, 1990; Sheldon, Pichora-Fuller, & Schneider, 2008) and hearing loss (e.g., Conway, Deocampo, Walk, Anaya, & Pisoni, 2014; Dubno, Dirks, & Morgan, 1982). At the same time, degrading sentence-length materials, for example by adding noise, did not seem appropriate in this case, because noise introduces other factors that can affect perception, and those factors would need to be accounted for. Moreover, word recognition has already been examined as a potential correlate of weighting strategies for deaf listeners with CIs: Moberly et al. (2014) found a strong relationship between weighting of spectral structure (FRT) and word recognition for adults with CIs. Nittrouer et al. (2014) also found a relationship between perceptual weighting strategies and word recognition, but only when children with and without hearing loss were included together in the correlation analysis. However, Nittrouer et al. also measured phonemic awareness, and for this skill significant relationships with weighting strategies were observed for children with NH and for those with hearing loss who wore CIs, even when the correlation analyses were performed separately for each group.
Consequently, a measure of phonemic awareness was incorporated into the current study. Although phonemic awareness tasks of the sort included in this study (i.e., judging similarity of words in terms of phonemic structure) are not performed in the real world, many language functions rely on that knowledge. In particular, reading and working memory both depend on awareness of phonemic structure (e.g., Pennington, Van Orden, Kirson, & Haith, 1991). Thus, including a measure of phonemic awareness would serve as an indicator of how well these children should be able to perform these other sorts of tasks.

In sum, the information gained from this investigation should help in the future design of interventions for children who receive CIs. In particular, it should indicate whether these children can acquire language with the alternative cues most readily available to them, or whether we should be facilitating the development of the weighting strategies typically used in their native language.

Method

Participants

One hundred and seven children participated in this study during the summer after they completed fourth grade. All were participants in an ongoing, longitudinal study of outcomes for children with hearing loss (Nittrouer, 2010), and came from across the United States. Forty-three of the children had NH, 17 had moderate-to-severe hearing loss and wore HAs, and 47 had severe-to-profound hearing loss and wore CIs. Table 1 shows demographic information for these three groups of children. Socioeconomic status, shown in the second row, was indexed using a two-factor scale on which both the highest educational level and the occupational status of the primary income earner in the home are considered (Nittrouer & Burton, 2005). Scores for each of these factors range from 1 to 8, with 8 being high. Values for the two factors are multiplied together, providing a range of possible scores from 1 to 64. A score of 30 on this index indicates that the primary income earner obtained a four-year university degree and holds a professional position, and scores in this general range were typical of these children’s families. The number of articulation errors, shown in the third row, reveals that all of these children had good articulation.
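The two-factor index described above reduces to a product of two bounded ratings. A minimal sketch follows; the function name and the example ratings are hypothetical, and which rating values correspond to particular degrees or occupations is defined by the scale itself (Nittrouer & Burton, 2005), not by this code.

```python
def ses_index(education: int, occupation: int) -> int:
    """Hypothetical helper: two-factor socioeconomic index.

    Each factor is rated from 1 to 8 (8 = high); the index is their product,
    so possible scores run from 1 to 64.
    """
    for name, value in (("education", education), ("occupation", occupation)):
        if not 1 <= value <= 8:
            raise ValueError(f"{name} rating must be between 1 and 8, got {value}")
    return education * occupation


# Made-up ratings, for illustration only.
print(ses_index(6, 5))                    # 30
print(ses_index(1, 1), ses_index(8, 8))   # 1 64
```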

Table 1.

Mean scores (and standard deviations, SDs) for each group. N = 43 for normal hearing, 17 for hearing aids, and 47 for cochlear implants, except where noted for age of second implant.

| | Normal Hearing | | Hearing Aids | | Cochlear Implants | |
|---|---|---|---|---|---|---|
| | M | SD | M | SD | M | SD |
| Age (years, months) | 10, 4 | 4 | 10, 3 | 4 | 10, 6 | 5 |
| Socioeconomic status | 35 | 13 | 33 | 12 | 33 | 11 |
| Number of articulation errors | <1 | <1 | <1 | 1 | 1 | 2 |
| Better-ear pure-tone average threshold (dB HL) | | | 63 | 9 | 105 | 14 |
| Average aided threshold (dB HL) | | | 27 | 14 | 25 | 8 |
| Age of identification (months) | | | 8 | 9 | 6 | 7 |
| Age of 1st hearing aids (months) | | | 10 | 9 | 8 | 6 |
| Age of 1st cochlear implant (months) | | | | | 21 | 18 |
| Age of 2nd cochlear implant (months) | | | | | 46 | 21 |

Socioeconomic status is on a 64-point scale; number of articulation errors is derived from the Goldman-Fristoe 2 Test of Articulation (Goldman & Fristoe, 2000); better-ear pure-tone average threshold is given in dB hearing level (HL) and represents mean unaided thresholds measured at the time of testing (children with HAs) or immediately prior to first cochlear implantation (children with CIs) for the frequencies of 0.5, 1.0, and 2.0 kHz; average aided threshold is for those same three frequencies, for the better ear; age of 2nd cochlear implant is given for the 29 children with two CIs at the time of testing.

All children with NH passed hearing screenings of the octave frequencies between 250 Hz and 8,000 Hz presented at 20 dB hearing level at the ages of 3 years, 6 years, 8 years, and 10 years. The last hearing screening was conducted at the time the data reported here were collected. The fourth row of Table 1 shows mean better-ear pure-tone average (unaided) thresholds for the three frequencies of 500, 1,000, and 2,000 Hz, obtained at the time of testing for children with HAs, and just before receiving a first CI for children with CIs. Average aided thresholds are provided for those same three frequencies, as well. Also shown for children with hearing loss are the ages of identification of hearing loss, obtaining first hearing aids, getting a first cochlear implant (if children had a CI), and getting a second cochlear implant (if children were one of the 31 who had two CIs). As can be seen, all 64 children with hearing loss in this study were identified fairly early and received appropriate treatment early. They all received early intervention focused on spoken language at least once per week before the age of three years, and attended preschool programs that focused on spoken language after the age of three years. All children attended mainstream programs from kindergarten through fourth grade, without sign language instruction. Of the 47 children with CIs, 27 had devices from Cochlear Corporation, 17 had devices from Advanced Bionics, and three had devices from MED-EL. Of the 17 children with HAs, 14 had Phonak and three had Oticon HAs.

Equipment and Materials

All testing took place in a sound-treated room. Stimuli were presented via a computer equipped with a Creative Labs Soundblaster digital-to-analog card. A Roland MA-12C powered speaker was used for audio presentation of stimuli, and it was positioned one meter in front of where children sat during testing, at 0-degrees azimuth. Stimuli in the labeling and discrimination tasks were presented at a 22.05-kHz sampling rate with 16-bit digitization. Stimuli in the word recognition task were presented at a 44.1-kHz sampling rate with 16-bit digitization. Stimuli in the phonemic awareness task were presented in audio-video format, with 1500-kbps video signals and 44.1-kHz, 16-bit audio signals. Video images were presented on a computer monitor positioned one meter from the child, with the speaker’s face centered in the monitor and measuring 17 cm in height.

Sessions for the word recognition and phonemic awareness tasks (as well as for the test of articulation) were video- and audio-recorded using a SONY HDR-XR550V video recorder. This allowed scoring to be done later by laboratory staff members who were as blind as possible to children’s hearing status. Children wore SONY FM transmitters in specially designed vests that transmitted speech signals to the receivers, which provided direct line input to the hard drives of the cameras. This procedure ensured good sound quality for all recordings.

For the labeling tasks, two pictures (on 8 × 8 in. cards) represented the two response labels: a drawing of a baby for /bɑ/, and a picture of a glass of water for /wɑ/. When these pictures were introduced, the experimenter explained to the child that they represented the response labels because babies babble by saying /bɑ/-/bɑ/ and babies call water /wɑ/-/wɑ/.

For the discrimination tasks, a 4 in. × 14 in. cardboard response card, divided by a line into two 7-in. halves, was used with all children during testing. One half of the card showed two black squares, representing the same response choice; the other half showed one black square and one red circle, representing the different response choice. Ten other cardboard cards (4 in. × 14 in., not divided in half) were used for training. Six cards each showed two simple drawings of common objects (e.g., hat, flower, ball): on three of these cards the same object was drawn twice (identical in size and color), and on the other three, two different objects were drawn. Four cards each showed two drawings of simple geometric shapes: two with the same shape in the same color and two with different shapes in different colors. A game board with ten steps was also used; children moved a marker to the next step on the board after each block of stimuli (ten blocks in each condition). Cartoon pictures were presented on a color monitor as reinforcement after completion of each block of stimuli. A bell sounded while the pictures were being shown, serving as additional reinforcement for responding.

Stimuli

Four sets of stimuli were used for labeling and discrimination testing, and these were the same as those used in Nittrouer et al. (2013).

Synthetic speech stimuli for labeling

The two synthetic /bɑ/-/wɑ/ stimulus sets from Nittrouer et al. (2013) were used. These stimuli were based on natural productions of /bɑ/ and /wɑ/, and were generated in Sensyn, a Klatt synthesizer. The stimuli were all 370 ms in duration, with a fundamental frequency of 100 Hz throughout. Starting and steady-state frequencies of the first two formants were the same for all stimuli, even though the time it took to reach steady-state frequencies varied (i.e., FRT). The first formant (F1) started at 450 Hz and rose to 760 Hz at steady state. The second formant (F2) started at 800 Hz and rose to 1150 Hz at steady state. The third formant (F3) remained constant at 2400 Hz. There were no formants above F3, so all signal components were relatively low frequency. In addition to varying FRT, the time it took for the amplitude to reach its maximum (i.e., ART) was varied independently. In natural speech, both FRT and ART are briefer for syllable-initial stops, such as /b/, and longer for syllable-initial glides, such as /w/.

In one set of stimuli in this experiment, FRT varied along a 9-step continuum from 30 ms to 110 ms in 10-ms steps. Each stimulus was generated with each of two ART values: 10 ms and 70 ms. This resulted in 18 FRT stimuli (9 FRTs × 2 ARTs). In the other set of stimuli, ART varied along a 7-step continuum from 10 ms to 70 ms in 10-ms steps. For these stimuli, FRT was set to both 30 ms and 110 ms, resulting in 14 ART stimuli (2 FRTs × 7 ARTs).
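The factorial structure of the two labeling sets can be checked by enumerating the (FRT, ART) pairs. The sketch below does only that; the function names are hypothetical, and it does not synthesize any audio.

```python
from itertools import product


def frt_set():
    """FRT set: 9 FRT steps (30-110 ms, 10-ms steps) crossed with ART of 10 and 70 ms."""
    frts = range(30, 111, 10)
    arts = (10, 70)
    return [(frt, art) for art, frt in product(arts, frts)]


def art_set():
    """ART set: 7 ART steps (10-70 ms, 10-ms steps) crossed with FRT of 30 and 110 ms."""
    frts = (30, 110)
    arts = range(10, 71, 10)
    return [(frt, art) for frt, art in product(frts, arts)]


frt_stimuli = frt_set()   # (FRT ms, ART ms) pairs
art_stimuli = art_set()
print(len(frt_stimuli), len(art_stimuli))  # 18 14
```

Both sets contain the two synthetic endpoints used in training, (30, 10) and (110, 70), which is why the same endpoints could serve both sets.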

Natural, unprocessed speech stimuli

Five exemplars each of /bɑ/ and /wɑ/ from Nittrouer et al. (2013) were used for training in the labeling tasks. These tokens were produced by a male talker and digitized at a 44.1 kHz sampling rate with 16-bit resolution.

Nonspeech stimuli for discrimination

For the discrimination task, two types of stimuli were used, which again were from Nittrouer et al. (2013). One was synthesized with Sensyn, using steady-state formants. The frequencies were 500 Hz for F1, 1000 Hz for F2, and 1500 Hz for F3. These frequencies are typical values for modeling the resonances of a male vocal tract with a quarter wavelength resonator, although they do not represent any English vowel. Thus they are speech-like, but not representative of any actual vowel sound. The f0 was 100 Hz. Total duration was 370 ms. Onset amplitude envelopes (similar to the envelopes used for the synthetic speech stimuli of the labeling task) were overlaid on these signals. ART ranged from 0 to 250 ms in 25-ms steps, resulting in 11 stimuli. These stimuli are termed the formant stimuli in this report. The other set of stimuli consisted of sine waves synthesized using Tone (Tice & Carrell, 1997), with the same duration, formant frequencies (for the sine waves) and ARTs as the formant stimuli, resulting in 11 stimuli. These stimuli are termed the tone stimuli in this report. These stimuli are not perceived as speech-like at all.
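For the tone stimuli, the description above fixes the duration, component frequencies, and ART steps, but not the shape of the onset envelope or the relative component amplitudes. The sketch below assumes a linear onset ramp, equal-amplitude sinusoids, no offset ramp, and the 22.05-kHz rate used for the discrimination stimuli. It is not a reimplementation of the Tone software, and the Sensyn formant stimuli are not reproduced.

```python
import math

FS_HZ = 22_050                            # sampling rate for discrimination stimuli
DURATION_MS = 370
TONE_FREQS_HZ = (500.0, 1000.0, 1500.0)   # one sinusoid per steady-state formant frequency
ARTS_MS = list(range(0, 251, 25))         # 0-250 ms in 25-ms steps -> 11 stimuli


def tone_stimulus(art_ms, fs=FS_HZ):
    """Equal-amplitude sum of sinusoids whose amplitude rises from zero to its
    maximum over art_ms (a linear ramp is an assumption, not from the source)."""
    n = DURATION_MS * fs // 1000          # integer number of samples
    ramp = art_ms * fs // 1000            # samples in the onset ramp (0 for 0 ms)
    samples = []
    for i in range(n):
        t = i / fs
        x = sum(math.sin(2 * math.pi * f * t) for f in TONE_FREQS_HZ) / len(TONE_FREQS_HZ)
        gain = i / ramp if i < ramp else 1.0
        samples.append(gain * x)
    return samples


stimuli = {art: tone_stimulus(art) for art in ARTS_MS}
print(len(stimuli), len(stimuli[0]))  # 11 8158
```

Dividing the summed sinusoids by the number of components keeps every sample within ±1, so the stimuli could be written to a 16-bit file without clipping.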

Word recognition

The four CID W-22 word lists were used (Hirsh et al., 1952). This measure consists of four lists of 50 monosyllabic words, spoken by a man. The lists were presented auditorily via computer.

Phonemic awareness

This task consisted of 48 items from a Final Consonant Choice task; these items are listed in the Appendix. The items were audio-video recorded by a male talker. These materials have been used often in the past (e.g., Caldwell & Nittrouer, 2013; Nittrouer, Caldwell, Lowenstein, Tarr, & Holloman, 2012; Nittrouer, Shune, & Lowenstein, 2011), and scoring has been consistent across studies. Nonetheless, split-half reliability was computed in this experiment for the children with NH (i.e., the control group).
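The section does not specify how the 48 items were split or whether a correction was applied. The sketch below assumes a common convention: an odd-even split, a Pearson correlation between half scores across children, and the Spearman-Brown correction to estimate full-length reliability. The function names and the example data are hypothetical.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def split_half_reliability(item_scores):
    """item_scores: one list of 0/1 item scores per child (48 items each).

    Correlates odd-item and even-item totals across children, then applies
    the Spearman-Brown correction 2r / (1 + r) for the full-length test.
    """
    odd = [sum(items[0::2]) for items in item_scores]
    even = [sum(items[1::2]) for items in item_scores]
    r_half = pearson_r(odd, even)
    return 2 * r_half / (1 + r_half)


# Made-up example: each child scores identically on both halves.
children = [[1] * (2 * k) + [0] * (48 - 2 * k) for k in range(5, 21)]
print(round(split_half_reliability(children), 3))
```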

Articulation

The Goldman-Fristoe 2 Test of Articulation (Goldman & Fristoe, 2000) was administered.

General Procedures

All procedures were approved by the Institutional Review Board of the Ohio State University. Children, with their parents, visited Columbus, Ohio for two days during the summer after their fourth-grade year, at which time the data reported here were collected. All stimuli were presented at 68 dB SPL.

Task-specific Procedures

Labeling task

For the labeling task, the experimenter introduced each picture separately and told the child the word associated with that picture. The experimenter then spoke the words ten times (five times for each word), and after each one the child practiced pointing to the correct picture and naming it. Having children both point to the picture and say the word ensured that they were correctly associating the word and the picture. Then the child heard the five natural, digitized exemplars each of /bɑ/ and /wɑ/, and was instructed to respond in the same way. Children were required to respond correctly to nine of these ten exemplars to proceed to the next level of training.

Training was provided next with the synthetic endpoints corresponding to the most /bɑ/-like (30 ms FRT, 10 ms ART) and most /wɑ/-like (110 ms FRT, 70 ms ART) stimuli. These endpoints were the same for both sets of labeling stimuli. Children heard five presentations of each endpoint, in random order, and had to respond correctly to nine of the ten to proceed to testing. Feedback was provided for up to two blocks of training, and then children were given up to three blocks without feedback to reach that training criterion. Had any child been unable to respond correctly to nine of ten endpoints by the third block, testing would have stopped, and no data from that child would have been included in the final analysis. This strict criterion, termed the training criterion, was established because the labeling data were the focus of the study, so it was essential that these data be available. In practice, all children met the training criterion.

During testing, each block contained every stimulus in a set (18 for the FRT set; 14 for the ART set), and ten blocks were presented. Listeners needed to label at least 70% of the endpoints for a set of stimuli correctly in order to have their data included in the analysis; this was the endpoint criterion. Requiring that children demonstrate this level of accurate responding, after establishing during training that they could label the endpoints correctly, served as a check on general attention.
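The two inclusion rules for the labeling task can be expressed as simple checks. This sketch is illustrative only: it assumes that the training criterion counts only the (up to three) blocks without feedback, and that each endpoint test trial is coded as correct or incorrect. The function and constant names are hypothetical.

```python
from typing import Sequence

TRAINING_PASS = 9            # correct responses needed out of 10 training trials
MAX_NO_FEEDBACK_BLOCKS = 3   # blocks without feedback allowed to reach criterion


def meets_training_criterion(no_feedback_scores: Sequence[int]) -> bool:
    """True if any allowed no-feedback block had at least 9 of 10 correct."""
    return any(score >= TRAINING_PASS
               for score in no_feedback_scores[:MAX_NO_FEEDBACK_BLOCKS])


def meets_endpoint_criterion(endpoint_correct: Sequence[bool]) -> bool:
    """True if at least 70% of endpoint test trials were labeled correctly."""
    n_trials = len(endpoint_correct)
    n_correct = sum(endpoint_correct)
    # Integer comparison avoids floating-point rounding at exactly 70%.
    return n_trials > 0 and 10 * n_correct >= 7 * n_trials
```

Under these assumptions, with ten test blocks and the two endpoints present in each set, a set yields 20 endpoint trials, so a child would need at least 14 of them labeled correctly.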

Discrimination tasks

For the discrimination tasks, an AX procedure was used. In this procedure, listeners compare a stimulus that varies across trials (X) with a constant standard (A). For both the formant and tone stimuli, the stimulus with the 0-ms ART was always the standard (A), and every stimulus (including the standard) was played as the comparison (X). The interstimulus interval between standard and comparison was 450 ms. The listener responded by pointing to the picture of the two black squares and saying “same” if the stimuli were judged to be the same, and by pointing to the picture of the black square and the red circle and saying “different” if the stimuli were judged to be different. Both pointing and verbal responses were required because each served as a check on the reliability of the other.

Prior to testing with the acoustic stimuli, children were shown the drawings of the six same and different objects and asked to report if the two objects on each card were the same or different. Then they were shown the cards with drawings of same and different geometric shapes, and asked to report if the two shapes were the same or different. Finally, children were shown the card with the two squares on one side and a circle and a square on the other side and asked to point to ‘same’ and to ‘different.’ These procedures ensured that all children understood the concepts of same and different.

In the next phase of training, all listeners were presented with five pairs of the acoustic stimuli that were identical and five pairs of stimuli that were maximally different, in random order. Listeners were asked to report whether the stimuli were the same or different and were given feedback for up to two blocks. Next, these same training stimuli were presented, and listeners were asked to report whether they were the same or different, without feedback. Listeners needed to respond correctly to nine of the ten training trials without feedback in order to proceed to testing, and were given up to three blocks without feedback to reach criterion. Unlike the criteria for the labeling task, listeners’ data were not removed from the overall data set if they were unable to meet training criteria for one of the conditions in the discrimination task. However, their data were not included for that one condition, and they were deemed unable to detect a change in the property under examination. During testing, each block included all of the comparison stimuli, played in random order. Ten blocks were presented. Listeners needed to respond correctly to at least 70% of the physically same and maximally different stimuli to have their data included for that condition in the final analysis.

Word recognition

Each child heard one of the four CID word lists, and lists were randomized across children within each group. For this task, children were told that they would hear a man’s voice say the phrase “Say the word,” which would be followed by a word. The child was instructed to repeat the word as accurately as possible. They were given only one opportunity to hear each word.

Phonemic awareness

The Final Consonant Choice task started with six practice items. In this task, the child saw and heard the talker say a target word. The child needed to repeat the target word correctly before seeing and hearing the three choices, and was given up to three opportunities to do so. Then, the child saw and heard the talker say three new words, and had to state which one ended in the same sound as the target word.

Articulation

In this test, children saw pictures of items and had to name them.

Scoring and Analysis

Computation of weighting factors from labeling tasks

To derive weighting factors for the FRT and ART cues, a single logistic regression analysis was performed for each listener on responses to all stimuli across the four continua (two FRT and two ART). The proportion of /w/ responses given to each stimulus served as the dependent measure, and settings of FRT and ART served as the predictor variables. Settings for both variables were scaled across their respective continua so that endpoints always had values of zero and one. The raw regression coefficients derived for each cue served as the weighting factors, and these FRT and ART weighting factors were used in subsequent statistical analyses. These factors were also normalized to the total amount of weight assigned to the two cues together: for example, the normalized FRT weighting factor was the raw FRT weighting factor divided by the sum of the raw FRT and ART weighting factors. Negative coefficients were recoded as positive in this analysis. This procedure matches that of others (e.g., Escudero, Benders, & Lipski, 2009; Giezen, Escudero, & Baker, 2010), who have termed these normalized scores cue ratios; that term is adopted in this report.
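The weighting-factor computation can be sketched in Python with NumPy. This is a minimal illustration, not the authors' analysis code: it fits a binomial logistic regression to each stimulus's proportion of /w/ responses by iteratively reweighted least squares (Newton's method), assuming ten presentations per stimulus (one per test block). The stimulus grid below (nine FRT steps and seven ART steps, each crossed with the two endpoint settings of the other cue) is a hypothetical layout chosen only to match the 18 and 14 stimuli per set, and the response proportions are synthetic. As with any maximum-likelihood logistic fit, the estimates diverge if a listener's responses are perfectly separated by the cues.

```python
import numpy as np


def fit_binomial_logit(X, prop, n_trials, max_iter=100, tol=1e-10):
    """Logistic regression on grouped proportions, fit by IRLS (Newton's method)."""
    X1 = np.column_stack([np.ones(len(prop)), X])  # intercept + predictors
    beta = np.zeros(X1.shape[1])
    for _ in range(max_iter):
        eta = X1 @ beta
        p = 1.0 / (1.0 + np.exp(-eta))
        w = n_trials * p * (1.0 - p)             # binomial IRLS weights
        z = eta + (prop - p) / (p * (1.0 - p))   # working response
        beta_new = np.linalg.solve(X1.T @ (w[:, None] * X1), X1.T @ (w * z))
        if np.max(np.abs(beta_new - beta)) < tol:
            return beta_new
        beta = beta_new
    return beta


def weighting_factors(frt, art, prop_w, n_trials=10):
    """Raw FRT and ART weighting factors plus cue ratios for one listener."""
    b = fit_binomial_logit(np.column_stack([frt, art]), prop_w, n_trials)
    w_frt, w_art = abs(b[1]), abs(b[2])  # negative coefficients recoded as positive
    total = w_frt + w_art
    return w_frt, w_art, w_frt / total, w_art / total


# Hypothetical layout: 9 FRT steps x 2 ART endpoints (18 stimuli) and
# 7 ART steps x 2 FRT endpoints (14 stimuli), each cue scaled from 0 to 1.
frt_steps, art_steps = np.linspace(0, 1, 9), np.linspace(0, 1, 7)
frt = np.concatenate([np.tile(frt_steps, 2), np.repeat([0.0, 1.0], 7)])
art = np.concatenate([np.repeat([0.0, 1.0], 9), np.tile(art_steps, 2)])

# Synthetic proportions of /w/ responses generated from a known model.
prop_w = 1.0 / (1.0 + np.exp(-(-4.0 + 6.5 * frt + 1.3 * art)))
w_frt, w_art, ratio_frt, ratio_art = weighting_factors(frt, art, prop_w)
```

Because the synthetic proportions come exactly from a logistic model, the fit recovers the generating coefficients, and the two cue ratios necessarily sum to 1.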

Computation of d′ for discrimination tasks

The discrimination functions of each listener were used to compute a d′ for each condition (Holt & Carney, 2005; Macmillan & Creelman, 2005). The d′ was selected as the measure of sensitivity because it is independent of response bias. It is expressed in z-values of the standard normal distribution, that is, in standard-deviation units. A d′ for each step along the continuum was computed as the difference between the z-value for the “hit” rate for that comparison stimulus (proportion of different responses when the A and X stimuli were different) and the z-value for the “false alarm” rate (proportion of different responses when the A and X stimuli were identical, that is, when the standard was compared with itself). An average d′ was then computed across all steps of the continuum, and this value was used in subsequent statistical analyses. The minimum this average d′ could be was 0, meaning the child did not discriminate ART at all, and the maximum was 4.65, meaning the child discriminated ART very well.
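The d′ computation can be sketched with Python's standard library. Clipping hit and false-alarm rates to the range .01 to .99 is an assumption, although it is consistent with the stated maximum of 4.65, because z(.99) − z(.01) ≈ 4.65. Flooring a negative average at 0 is likewise an assumption based on the stated minimum. Function names are hypothetical.

```python
from statistics import NormalDist

_z = NormalDist().inv_cdf  # proportion -> z-value of the standard normal


def _clip(rate, lo=0.01, hi=0.99):
    """Keep rates away from 0 and 1 so their z-values are finite (assumed bounds)."""
    return min(max(rate, lo), hi)


def mean_dprime(hit_rates, false_alarm_rate):
    """Average d' across comparison steps of one AX discrimination function.

    hit_rates: proportion of 'different' responses at each comparison step
    that differs from the standard.
    false_alarm_rate: proportion of 'different' responses when the standard
    was compared with itself.
    """
    z_fa = _z(_clip(false_alarm_rate))
    dprimes = [_z(_clip(h)) - z_fa for h in hit_rates]
    mean = sum(dprimes) / len(dprimes)
    return max(0.0, mean)  # assumed floor, matching the stated minimum of 0
```

A listener who always responds “different” to every changed comparison and never to the standard reaches the ceiling of about 4.65.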

Scoring for word recognition

The CID word lists were scored on a phoneme-by-phoneme basis by a graduate research assistant. A whole word was scored as correct only if all of its phonemes were correct and no phonemes were added. The children in this study had good articulation, as revealed by their scores on the Goldman-Fristoe 2 Test of Articulation (Goldman & Fristoe, 2000), shown in Table 1. A second graduate research assistant scored 25% of the word-recognition responses; both scorers were familiar with the speech-production patterns of these children. Percent agreement between the two scorers served as the measure of inter-rater reliability. For all other data analyses, the dependent CID measure was the number of words recognized correctly, according to the first scorer.
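Percent agreement between the two scorers can be computed as in this sketch, which assumes that each scorer's output is a list of word-level scores (correct or incorrect) for the same child, in the same order. The function name and the scores are hypothetical.

```python
def percent_agreement(scorer_a, scorer_b) -> float:
    """Percentage of items given identical scores by two independent scorers."""
    if not scorer_a or len(scorer_a) != len(scorer_b):
        raise ValueError("score lists must be non-empty and of equal length")
    matches = sum(a == b for a, b in zip(scorer_a, scorer_b))
    return 100.0 * matches / len(scorer_a)


# Hypothetical word-level scores (1 = correct, 0 = incorrect) for one child
first = [1, 1, 0, 1]
second = [1, 0, 0, 1]
agreement = percent_agreement(first, second)
```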

Scoring of phonemic awareness

The experimenter working with the child entered a response of correct or incorrect at the time of testing, and testing with these materials was discontinued after a child responded incorrectly to six consecutive items. All testing was also video-recorded, and a second graduate research assistant watched 25% of the video recordings and scored accuracy of responding in order to obtain a measure of inter-rater reliability. The dependent measure for analysis was the percentage of correct choices of words ending in the same sound as the corresponding targets. Percent correct scores for odd and even items were compared in order to provide a measure of test reliability.
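Scoring under the discontinue rule, together with the odd/even split used for the reliability check, might be sketched as follows. The text does not say how items after discontinuation enter the percentage; this sketch assumes they are scored as incorrect and that percent correct is computed over all test items. Function names and the response pattern are hypothetical.

```python
def score_with_discontinue(responses, stop_after=6):
    """Score test items (True = correct) under a discontinue rule.

    Items after `stop_after` consecutive errors are treated as not
    administered and scored incorrect (assumption).
    """
    scored, run = [], 0
    for correct in responses:
        if run >= stop_after:
            scored.append(False)      # testing already discontinued
            continue
        scored.append(bool(correct))
        run = 0 if correct else run + 1
    return scored


def percent_correct(items):
    """Percentage of True values; raises on an empty list."""
    if not items:
        raise ValueError("no items to score")
    return 100.0 * sum(items) / len(items)


responses = [True] + [False] * 6 + [True, True, True]   # hypothetical child
scored = score_with_discontinue(responses)
overall = percent_correct(scored)
odd_pct = percent_correct(scored[0::2])    # items 1, 3, 5, ...
even_pct = percent_correct(scored[1::2])   # items 2, 4, 6, ...
```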

Articulation

Two students scored each child’s responses separately, and their scores were compared for a measure of reliability. Numbers of errors are reported in Table 1.

Results

Agreement for word scores from the CID word lists that were scored by both graduate research assistants ranged from 92% to 100%, with a mean of 97%. Reliability for the phonemic awareness and articulation tasks was 100% for all scores obtained from the two graduate students. For all tasks, inter-rater reliability was judged to be adequate. For the measure of split-half reliability on the phonemic awareness task, mean scores of 69% correct for odd items and 70% correct for even items were obtained across all children. A Cronbach’s alpha of .946 was found, so the task was judged to be reliable.
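Cronbach's alpha for a participants × parts score matrix can be computed as below. When the two parts are the odd-item and even-item halves, as assumed here, alpha reduces to the Flanagan–Rulon split-half coefficient. This is a NumPy sketch, not the software used in the study, and the scores are hypothetical.

```python
import numpy as np


def cronbach_alpha(scores) -> float:
    """Cronbach's alpha for a (participants x parts) matrix of scores."""
    scores = np.asarray(scores, dtype=float)
    k = scores.shape[1]                                 # number of parts
    part_variances = scores.var(axis=0, ddof=1).sum()   # sum of part variances
    total_variance = scores.sum(axis=1).var(ddof=1)     # variance of total scores
    return k / (k - 1) * (1.0 - part_variances / total_variance)


# Hypothetical odd-item and even-item percent-correct scores for five children
halves = [[60, 70], [80, 80], [40, 50], [90, 100], [70, 60]]
alpha = cronbach_alpha(halves)
```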

The alpha level for all analyses was set at .05. However, precise p values are reported when p < .10. For p > .10, results are reported simply as not significant.

Weighting factors

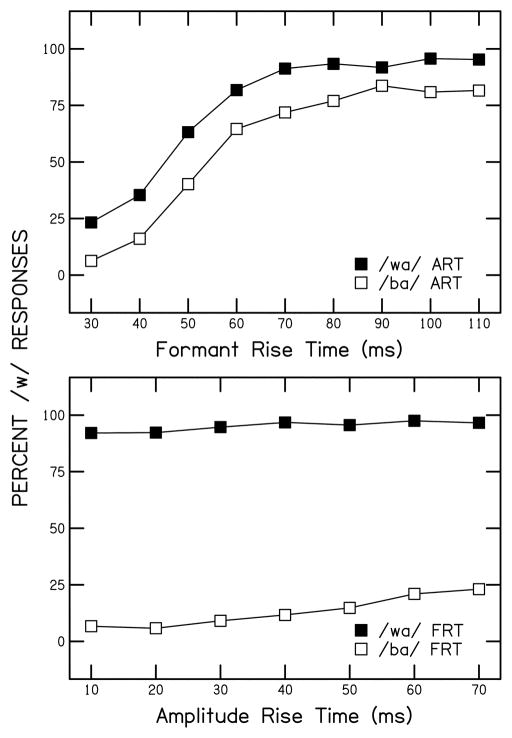

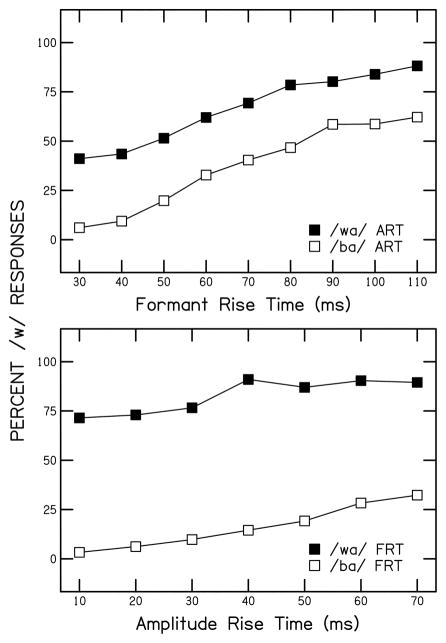

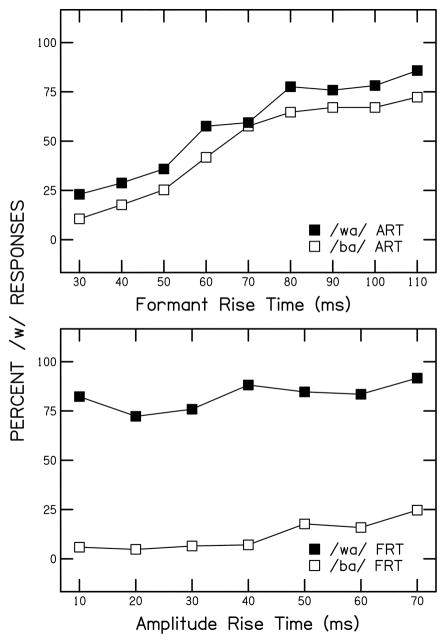

The first question addressed was whether weighting factors differed across the three groups of children. Figures 1 through 3 display labeling functions for each group, for both stimulus conditions: functions for the FRT continua are in the top panel, and those for the ART continua are in the bottom panel. Steepness of a function indicates the extent to which the cue represented on the x axis was weighted (the steeper the function, the greater the weight), and separation between functions indicates the extent to which the binary-set property was weighted (the greater the separation, the greater the weight). Looking first at Figure 1 for children with NH, the functions for the FRT continua (top) are rather steep but not widely separated, whereas the functions for the ART continua (bottom) are shallow and widely separated. This pattern indicates that these children attended more to FRT than to ART. Looking next at Figure 2 for children with HAs, the functions for the FRT continua are slightly shallower than those in Figure 1, but no more separated; the functions for the ART continua are slightly less separated, but no steeper. These patterns suggest that the children with HAs weighted FRT a little less than the children with NH, but there is no indication that they weighted ART any more. Finally, in Figure 3, labeling functions for the FRT continua are much shallower and more separated than those in Figure 1, and functions for the ART continua are less separated. Combined, these trends suggest that the children with CIs attended less to FRT, and more to ART, than the children with NH.

FIGURE 1.

Labeling functions for children with NH for the FRT continua (top panel) and ART continua (bottom panel). For the top panel (FRT continua), filled symbols indicate when ART was set to be appropriate for /wɑ/, and open symbols indicate when ART was set to be appropriate for /bɑ/. For the bottom panel (ART continua), filled symbols indicate when FRT was set to be appropriate for /wɑ/, and open symbols indicate when FRT was set to be appropriate for /bɑ/.

FIGURE 2.

Labeling functions for children with HAs for the FRT continua (top panel) and ART continua (bottom panel). See Figure 1 for details.

FIGURE 3.

Labeling functions for children with CIs for the FRT continua (top panel) and ART continua (bottom panel). See Figure 1 for details.

Table 2 shows mean weighting factors for each group. Raw weighting factors are in the top two rows and reflect the trends seen in the figures: the weight assigned to FRT decreases across groups (from NH to HA to CI), and children with CIs weighted ART more than children in the other two groups. A two-way, repeated-measures analysis of variance (ANOVA) was performed on these data, with acoustic cue (FRT or ART) as the repeated measure and group as the between-subjects factor. Results revealed significant main effects of cue, F(1,104) = 167.85, p < .001, η² = .617, and of group, F(2,104) = 13.99, p < .001, η² = .212. These outcomes reflect the facts that all groups weighted FRT more than ART, and that, with the two cues considered together, children with NH weighted the cues more than children with hearing loss did. In addition, the cue × group interaction was significant, F(2,104) = 19.42, p < .001, η² = .272, reflecting the fact that the groups differed in how they weighted the two cues.
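The η² values can be checked against the F ratios, because partial η² is fully determined by F and its degrees of freedom: η²p = F·df_effect / (F·df_effect + df_error). The Python sketch below applies that standard identity. Treating the reported values as partial η² is an inference from the fact that they agree with this formula to three decimals, not something the text states.

```python
def partial_eta_squared(f_ratio: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F ratio and its degrees of freedom.

    Follows from F = (SS_effect / df_effect) / (SS_error / df_error) and
    partial eta^2 = SS_effect / (SS_effect + SS_error).
    """
    numerator = f_ratio * df_effect
    return numerator / (numerator + df_error)


# F ratios reported for the two-way ANOVA on weighting factors
for label, f, df1 in [("cue", 167.85, 1), ("group", 13.99, 2), ("cue x group", 19.42, 2)]:
    print(label, round(partial_eta_squared(f, df1, 104), 3))
```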

Table 2.

Mean weighting factors and cue ratios (and SDs) for each group. N = 43 for normal hearing, 17 for hearing aids, and 47 for cochlear implants.

| | Normal Hearing | | Hearing Aids | | Cochlear Implants | |
|---|---|---|---|---|---|---|
| | M | SD | M | SD | M | SD |
| Weighting factors | | | | | | |
| FRT | 6.52 | 2.92 | 4.30 | 2.12 | 3.66 | 1.44 |
| ART | 1.30 | 0.85 | 0.79 | 0.82 | 1.79 | 1.15 |
| Cue ratios | | | | | | |
| FRT | 0.81 | 0.12 | 0.81 | 0.14 | 0.67 | 0.16 |
| ART | 0.19 | 0.12 | 0.19 | 0.14 | 0.33 | 0.16 |

To evaluate these trends further, one-way ANOVAs were performed separately for each cue, with group as the factor. For FRT, a significant effect was found, F(2,104) = 18.78, p < .001, η² = .265. Post hoc comparisons with Bonferroni adjustments revealed that children with NH had significantly higher weighting factors than both groups of children with hearing loss: for children with HAs, p = .003; for children with CIs, p < .001. However, children with HAs and those with CIs did not differ in their weighting of FRT. For ART, the main effect of group was also significant, F(2,104) = 7.19, p = .001, η² = .121. However, the pattern of post hoc comparisons (with Bonferroni adjustments) was slightly different. In this case, children with NH and those with HAs did not weight ART differently. The comparison of children with NH and those with CIs did not reach significance, although it was close, p = .056. The comparison of children with HAs and those with CIs was significant, p = .001. Based on these outcomes, it seems appropriate to conclude that children with CIs actually weighted ART more than children in the other two groups.
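With three groups there are three pairwise post hoc comparisons, so a Bonferroni adjustment multiplies each unadjusted p value by 3 (capping the result at 1) before comparing it with α = .05. This is a minimal sketch of that rule; the input p values are hypothetical, and the statistics package used in the study is not specified in the text.

```python
def bonferroni_adjust(p_values):
    """Bonferroni-adjusted p values: multiply by the number of tests, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical unadjusted p values for the three pairwise group comparisons
adjusted = bonferroni_adjust([0.01, 0.02, 0.5])
```

Comparing adjusted values with .05 is equivalent to comparing unadjusted values with .05/3.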

Cue ratios are shown at the bottom of Table 2 and provide useful insights. When the weighting factors are normalized to the total amount of perceptual attention paid to these two cues, the relative amount of weight assigned to each cue is similar for children with NH and those with HAs. However, children with CIs show a different pattern: less weight is allocated to FRT, and correspondingly more to ART. This description is supported by the outcomes of a one-way ANOVA performed on the FRT cue ratios: The main effect of group was significant, F(2,104) = 12.64, p < .001, η² = .196, and post hoc comparisons (with Bonferroni corrections) showed that cue ratios for children with CIs differed significantly from those of both children with NH, p < .001, and children with HAs, p = .002. Cue ratios for children with NH and those with HAs did not differ. Statistical analysis was not performed on ART cue ratios because it would produce outcomes identical to those for FRT cue ratios: the two cue ratios for each child sum to 1.

Sensitivity to ART

For the two discrimination tasks, not all children were able to reach criteria to have their data included in the analysis, either because they failed the training or because they failed to discriminate 70% or more of the same or most different stimuli during testing. Table 3 shows the numbers and percentages of children in each group who were unable to reach these criteria. One interesting outcome in these data is that more children were unable to discriminate even the most different ARTs when the stimuli were speech-like (i.e., the formant stimuli) than when they were not like speech at all (i.e., the tone stimuli).

Table 3.

Numbers (N) of children in each group who could not reach criteria to have their data included in the analysis for the discrimination tasks, along with percentages (P).

| | Normal Hearing | | Hearing Aids | | Cochlear Implants | |
|---|---|---|---|---|---|---|
| | N | P | N | P | N | P |
| Formant stimuli | 8 | 19 | 2 | 12 | 14 | 30 |
| Tone stimuli | 2 | 5 | 2 | 12 | 6 | 13 |

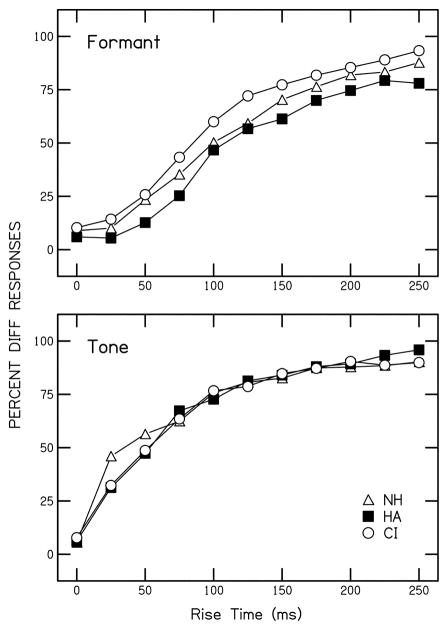

Figure 4 shows mean discrimination functions for both sets of stimuli, with functions for all three groups of children plotted together. In general, these functions appear similar for all three groups. Mean d′ values are presented in Table 4 and support the impression derived from Figure 4: sensitivity to ART was similar for all groups. In fact, one-way ANOVAs performed on these d′ values for both conditions failed to reveal any significant results. Thus it can be concluded that children in all groups (at least those who were able to do the task) were similarly sensitive to amplitude structure in these signals.

FIGURE 4.

Discrimination functions from the formant task (top panel) and tone task (bottom panel) for children with NH (open triangles), children with HAs (filled squares), and children with CIs (open circles).

Table 4.

Means (and SDs) for d′ computed in the discrimination tasks.

| | Normal Hearing | | Hearing Aids | | Cochlear Implants | |
|---|---|---|---|---|---|---|
| | M | SD | M | SD | M | SD |
| Formant stimuli | 1.96 | 0.90 | 1.76 | 0.64 | 2.13 | 0.96 |
| Tone stimuli | 2.89 | 0.92 | 2.92 | 1.05 | 2.74 | 0.90 |

Although group differences were not observed, correlation coefficients were computed between ART weighting factors and the d′ values for each of the formant and tone stimuli, to evaluate whether sensitivity to ART explained the weighting of that cue. No significant correlations were observed, suggesting that there was no relationship between sensitivity to the cue and weighting of it. Of course, that conclusion might be questioned, given that 22% of the children (24 of 107; Table 3) were unable to detect differences in ART as large as 250 ms when the stimuli were speech-like but not quite speech. For this reason, ART weighting factors were compared for the children who could and could not perform the discrimination task with the formant stimuli, the condition in which the most children were unable to meet criteria. Mean ART weighting factors (and SDs) for these groups were 1.42 (1.11) and 1.49 (0.76) for those who could and could not do the task, respectively. A t test performed on these values was not significant, so it was concluded that sensitivity to ART did not explain weighting of that cue.

Word recognition and phonemic awareness

Word recognition and phonemic awareness were examined next. Means (and SDs) for these measures are shown for each group in Table 5. Because these data were percentages, they were arcsine transformed before one-way ANOVAs were performed on each measure. For word recognition, a significant group effect was observed, F(2,104) = 90.92, p < .001, η² = .636, and post hoc comparisons with Bonferroni adjustments showed that children with NH performed better than children with HAs, as well as those with CIs, p < .001 in both cases. However, children with HAs and those with CIs did not differ in their word recognition scores. For the phonemic awareness (i.e., Final Consonant Choice) task, a significant group effect was observed, F(2,104) = 14.86, p < .001, η² = .222. In this case, post hoc comparisons with Bonferroni adjustments showed that children with CIs performed more poorly than both children with NH, p < .001, and children with HAs, p = .038. The difference between children with NH and those with HAs was not statistically significant.
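Arcsine transformation stretches scores near 0% and 100%, where the variance of percentages is compressed, which is why it is commonly applied before ANOVA on percent-correct data. The text does not say which variant was used; this sketch assumes the common form arcsin(√p), with p as a proportion, giving values in radians from 0 to π/2. Another common variant simply doubles that value. The function name and scores are hypothetical.

```python
import math


def arcsine_transform(percent_correct: float) -> float:
    """Arcsine square-root transform of a percent-correct score, in radians."""
    p = percent_correct / 100.0
    if not 0.0 <= p <= 1.0:
        raise ValueError("percent_correct must lie between 0 and 100")
    return math.asin(math.sqrt(p))


# Hypothetical individual word-recognition scores (percent correct)
transformed = [arcsine_transform(score) for score in (96, 69, 72)]
```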

Table 5.

Means (and SDs) for the word recognition and phonemic awareness tasks, given as percent correct.

| | Normal Hearing | | Hearing Aids | | Cochlear Implants | |
|---|---|---|---|---|---|---|
| | M | SD | M | SD | M | SD |
| Word recognition | 96 | 3 | 69 | 18 | 72 | 15 |
| Phonemic awareness | 81 | 15 | 73 | 18 | 58 | 24 |

A central question addressed by this study was whether the weighting strategies exhibited by children explained their abilities to recognize words and to perform the phonemic awareness task. To answer this question, stepwise linear regression was performed, with word recognition and phonemic awareness as dependent measures in separate analyses, and with FRT and ART weighting factors entered as predictor variables in both. Three regression analyses were performed for each dependent measure: one with all children included, one with only children with NH, and one with only children with CIs. A separate analysis was not performed for children with HAs because there were only 17 of these children. For the analysis of word recognition with all children included, FRT weighting explained a significant amount of variance, standardized β = .472, p < .001, but ART weighting did not. The analyses performed separately for children with NH or with CIs did not yield any significant predictors. For the analysis of phonemic awareness that included all children, FRT weighting explained a significant amount of variance, standardized β = .428, p < .001; again, ART weighting did not explain any additional variance. In this case, the group-specific analyses showed that FRT weighting explained a significant amount of variance for children with NH, standardized β = .331, p = .030, and for children with CIs, standardized β = .311, p = .033. Consequently, it may be concluded that the extent to which these children weighted FRT explained some of the variability in their abilities both to recognize words and to recognize word-internal phonemic structure.
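The stepwise procedure can be approximated by forward selection on z-scored variables, which makes the fitted coefficients standardized β values. This sketch is a simplification under stated assumptions: it uses a p-to-enter of .05, omits the removal step that some statistics packages include, and relies on NumPy and SciPy. The function names are hypothetical, and it is not the software used in the study.

```python
import numpy as np
from scipy import stats


def _zscore(a):
    """Standardize columns (or a vector) to mean 0 and SD 1."""
    a = np.asarray(a, dtype=float)
    return (a - a.mean(axis=0)) / a.std(axis=0, ddof=1)


def _last_coef_p(A, y):
    """Two-sided p value for the last coefficient of an OLS fit of y on A."""
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ beta
    df = A.shape[0] - A.shape[1]
    sigma2 = resid @ resid / df
    se = np.sqrt(sigma2 * np.linalg.inv(A.T @ A)[-1, -1])
    return 2 * stats.t.sf(abs(beta[-1] / se), df)


def forward_stepwise(X, y, names, p_enter=0.05):
    """Forward selection; returns {name: standardized beta} for entered predictors."""
    Xz, yz = _zscore(X), _zscore(y)
    n = len(yz)
    selected, remaining = [], list(range(Xz.shape[1]))
    while remaining:
        # p value each remaining predictor would have if entered next
        pvals = {j: _last_coef_p(np.column_stack([np.ones(n), Xz[:, selected + [j]]]), yz)
                 for j in remaining}
        best = min(pvals, key=pvals.get)
        if pvals[best] >= p_enter:
            break
        selected.append(best)
        remaining.remove(best)
    if not selected:
        return {}
    A = np.column_stack([np.ones(n), Xz[:, selected]])
    beta, *_ = np.linalg.lstsq(A, yz, rcond=None)
    return {names[j]: float(b) for j, b in zip(selected, beta[1:])}
```

With a single entered predictor, the standardized β equals the Pearson correlation between that predictor and the dependent measure.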

Weighting strategies and hearing-related factors

Because the extent to which children weighted FRT explained significant amounts of variability in both word recognition and phonemic awareness, the next question explored was whether certain characteristics associated with hearing loss led to more typical weighting of FRT. First, results for the children with HAs were examined. With FRT weighting factors as the dependent measure, socioeconomic status, age of identification of hearing loss, and both unaided and aided pure-tone average thresholds were entered as predictor variables in separate regression analyses. None of these factors explained a significant amount of variance in FRT weighting by these children with HAs. Similarly, no independent variable associated with socioeconomic status, hearing loss, or subsequent cochlear implantation (including age of first implant) explained a significant amount of variance in FRT weighting by the children with CIs. Finally, FRT weighting did not differ for these children with CIs based on whether they had one or two CIs.

Discussion

The study reported here was conducted to examine the perceptual weighting strategies of children with hearing loss. Three specific goals were addressed. The first goal was to evaluate whether children with hearing loss place the same perceptual weight on specific spectral and temporal cues as children with NH who are the same age. Attaining this goal would indicate whether these children with hearing loss are developing strategies for listening to speech in their first language commensurate with those of children with NH acquiring the same first language. It is generally recognized that adult listener-speakers possess listening strategies that are language-specific and automatic in function, leading to efficient and accurate speech recognition. The question addressed here was whether children with hearing loss appear to be on course to develop such strategies.

The second goal in this study was to determine if the amount of perceptual attention, or weight, assigned to one of these cues was explained by auditory sensitivities to that cue. Attaining this goal would help determine whether auditory training might be useful in helping children with hearing loss develop language-appropriate weighting strategies: If the weighting strategies of these children were found to be largely constrained by their auditory limitations, the conclusion could be reached that training would be of very limited value because it could not influence that sensitivity; only device improvements could do that. Alternatively, if perceptual weighting strategies for these children with hearing loss are even partially independent of auditory sensitivity, then training to help children develop appropriate strategies could be highly effective.

Finally, the third goal of the current study was to investigate how these children’s perceptual weighting strategies impacted their communication functioning in the real world. Attaining this goal would speak to the question of whether perceptual attention, as indexed by perceptual weighting strategies, is of real value to everyday communication.

Results of this study showed that the children with hearing loss weighted the spectral cue incorporated into the experiment, formant rise time (FRT), less than children with NH. That was true regardless of whether these children used HAs or CIs: in both cases, the absolute amount of perceptual weight assigned to FRT was less than that assigned by children with NH. For the amplitude cue, amplitude rise time (ART), the children with CIs, but not those with HAs, were found to weight it more than the children with NH. Because of these trends in absolute perceptual weights, once the weights were normalized, children with HAs showed a weighting pattern across cues similar to that of children with NH. On average, children in both groups assigned roughly 80% of their perceptual attention to the FRT cue and 20% to the ART cue. However, those metrics can be misleading because children with HAs assigned less overall weight to these cues than did children with NH. In other words, children with HAs were simply not as attentive to signal detail as children with NH. The normalized weighting factors, or cue ratios, for children with CIs showed that they assigned 67% of their attention to the FRT cue and 33% to the ART cue, on average. That pattern, along with the fact that these children were also less attentive overall to the acoustic structure of the stimuli, suggests that children with CIs could have difficulty learning to process speech efficiently.

The results of the discrimination task employed in this experiment revealed that sensitivity to acoustic structure did not fully explain the amount of perceptual attention paid to that structure. An identical outcome had been observed by Nittrouer et al. (2014) for these same children, when they were asked to discriminate formant transitions. Combined, these results suggest that these children with hearing loss could benefit from auditory training designed to help them focus their perceptual attention on the most linguistically significant components of the signal.

Finally, results of the regression analyses performed with measures of everyday communication functions as dependent variables showed that children’s abilities to attend to spectral structure (FRT in this case) explained significant amounts of variability in their word recognition and phonemic awareness skills. This finding suggests that it would be worthwhile to provide the kind of auditory training needed to help these children develop effective perceptual weighting strategies.

This last finding is perhaps the most important outcome of this study. The relationship between weighting strategies and how well these children could perform communication functions relevant to real-world expectations emphasizes the importance of developing language-appropriate weighting strategies. Each articulatory gesture generally gives rise to several acoustic cues, which together serve to define a phonemic category. For example, Lisker (1986) listed 16 acoustic cues that have been shown to be relevant to decisions regarding voicing of syllable-initial stops. While all these cues can contribute to those voicing decisions (Whalen, Abramson, Lisker, & Mody, 1993), not all of them have the same degree of influence (Lisker, 1975). Furthermore, native speakers of a language demonstrate the same relative weighting of the cues to any one distinction in that language. Presumably, the cues that have come to be weighted more strongly are the ones that lead to accurate decisions with little cognitive effort; in other words, the cues that support automatic processing of speech (Strange & Shafer, 2008). For this reason, it is preferable that a child develop the weighting strategies that are shared by mature listener-speakers of whatever first language is being learned.

The current study demonstrated that developing these optimal strategies can be challenging for a child with hearing loss. However, no evidence was found to suggest that the acquisition of these strategies is entirely constrained by sensitivity to the acoustic structure in question, a finding that replicates earlier results. In addition to the finding of Nittrouer et al. (2014), Nittrouer and Burton (2002) observed that children with the same degree of hearing loss who used HAs demonstrated diminished perceptual weight, compared to children with NH, for two acoustic cues to the place distinction for syllable-initial fricatives: the spectrum of the noise itself and onset formant transitions. However, cue weighting patterns differed across the two groups of children with HAs in that study, and the less mature of these strategies was associated with poorer performance in the real world. The only thing that differed between the two groups was that the children with the more mature weighting strategies had received early intervention services specifically designed for children with hearing loss. Those services presumably provided more experience with language, which could have meant more opportunities to acquire optimal weighting strategies. Overall, that study provided evidence for the suggestion that appropriate intervention, or training, could promote language-appropriate perceptual weighting strategies, which in turn should facilitate optimal communication skills.

Limitations of the current study and future directions

Although the current study provides some valuable insight into how intervention might proceed with children with hearing loss, and what it should focus on, there are additional considerations. In particular, the dependent measures of communication function examined here, word recognition and phonemic awareness, do not evaluate the full range of skills important to communication functioning. For example, listeners with hearing loss have special difficulty functioning in noise, and these tasks do not evaluate how these children will perform in that regard. Additionally, the effects of semantic and syntactic structure were not assessed. Large-scale studies are needed to develop comprehensive models of language processing and acquisition across the range of skills involved in that processing; only with such studies will we be able to understand the complex interactions across these skills. Furthermore, research into the most appropriate manner of intervention is required. Although the value of effective perceptual weighting strategies was demonstrated in this study, how best to facilitate the acquisition of those strategies remains an open question. Specifically, can these strategies be learned simply by enhancing children’s experiences listening to and producing spoken language, or are specific training procedures required? If specific training is required, what should it be and how should it be implemented? Intervention studies are needed to help answer these questions.

Summary

The study reported here provided evidence that children with hearing loss are less attentive overall to the acoustic structure of speech signals than children with NH. In addition, children with CIs, but not those with HAs, showed atypical patterns of perceptual attention across the cues. The weighting of one of these cues, ART, was not related to children’s auditory sensitivity to that cue, a finding that matches earlier results. However, weighting strategies were related to children’s abilities to perform ecologically relevant communication tasks. The conclusion to be drawn from this combination of results is that auditory training should be able to help these children with hearing loss attain language-appropriate weighting strategies, and that such training would have positive effects on their broader communication functioning. Nonetheless, questions remain concerning what kind of intervention should be implemented.

Acknowledgments

This work was supported by Grant No. R01 DC006237 from the National Institute on Deafness and Other Communication Disorders, the National Institutes of Health. The authors thank Taylor Wucinich and Demarcus Williams for their help in data collection.

Appendix. The Final Consonant Choice Task. Correct choice is underlined

| Practice examples | Choice 1 | Choice 2 | Choice 3 |
|---|---|---|---|
| 1. rib | mob | phone | heat |
| 2. stove | hose | stamp | cave |
| 3. hoof | shed | tough | cop |
| 4. lamp | rock | juice | tip |
| 5. fist | hat | knob | stem |
| 6. head | hem | rod | fork |

**Discontinue after 6 consecutive errors.**

| Test trials | Choice 1 | Choice 2 | Choice 3 |
|---|---|---|---|
| 1. truck | wave | bike | trust |
| 2. duck | bath | song | rake |
| 3. mud | crowd | mug | dot |
| 4. sand | sash | kid | flute |
| 5. flag | cook | step | rug |
| 6. car | foot | stair | can |
| 7. comb | cob | drip | room |
| 8. boat | skate | frog | bone |
| 9. house | mall | dream | kiss |
| 10. cup | lip | trash | plate |
| 11. meat | date | sock | camp |
| 12. worm | price | team | soup |
| 13. hook | mop | weed | neck |
| 14. rain | thief | yawn | sled |
| 15. horse | lunch | bag | ice |
| 16. chair | slide | chain | deer |
| 17. kite | bat | mouse | grape |
| 18. crib | job | hair | wish |
| 19. fish | shop | gym | brush |
| 20. hill | moon | bowl | hip |
| 21. hive | glove | light | hike |
| 22. milk | block | mitt | tail |
| 23. ant | school | gate | fan |
| 24. dime | note | broom | cube |
| 25. desk | path | lock | tube |
| 26. home | drum | prince | mouth |
| 27. leaf | suit | roof | leak |
| 28. thumb | cream | tub | jug |
| 29. barn | tag | night | pin |
| 30. doll | pig | beef | wheel |
| 31. train | grade | van | cape |
| 32. bear | shore | clown | rat |
| 33. pan | skin | grass | beach |
| 34. hand | hail | lid | run |
| 35. pole | land | poke | |
| 36. ball | clip | steak | pool |
| 37. park | bed | lake | crown |
| 38. gum | shoe | gust | lamb |
| 39. vest | cat | star | mess |
| 40. cough | knife | log | dough |
| 41. wrist | risk | throat | store |
| 42. bug | bus | leg | rope |
| 43. door | pear | dorm | food |
| 44. nose | goose | maze | zoo |
| 45. nail | voice | chef | bill |
| 46. dress | tape | noise | rice |
| 47. box | face | mask | book |
| 48. spoon | cheese | back | fin |
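The discontinue rule noted with the appendix tables can be expressed as a simple scoring loop. The Python sketch below is hypothetical and is not part of the original task materials: it assumes the rule applies to the test trials, that each administered trial is recorded as correct (`True`) or incorrect (`False`) in presentation order, and that the score is the number of correct responses before testing stops. The function name `score_with_discontinue` is illustrative only.

```python
from typing import Sequence, Tuple


def score_with_discontinue(
    responses: Sequence[bool], max_consecutive_errors: int = 6
) -> Tuple[int, int]:
    """Score responses under a discontinue rule (hypothetical helper).

    Assumes each element is True for a correct choice and False for an
    error, in the order trials were presented. Testing stops as soon as
    `max_consecutive_errors` errors occur in a row.
    Returns (number correct, number of trials administered).
    """
    correct = 0
    consecutive_errors = 0
    administered = 0
    for is_correct in responses:
        administered += 1
        if is_correct:
            correct += 1
            consecutive_errors = 0  # any correct response resets the error run
        else:
            consecutive_errors += 1
            if consecutive_errors >= max_consecutive_errors:
                break  # discontinue: remaining trials are not administered
    return correct, administered


# Example: 3 correct among trials 1-4, then errors on trials 5-10,
# so trials 11 onward are never administered or scored.
responses = [True, True, False, True] + [False] * 6 + [True] * 5
print(score_with_discontinue(responses))  # (3, 10)
```

Returning the number of administered trials alongside the score makes it easy to see where testing stopped; whether a percent-correct score should then be computed over all 48 test trials or only the administered ones is a separate assumption.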

References

- Beckman ME, Edwards J. The ontogeny of phonological categories and the primacy of lexical learning in linguistic development. Child Development. 2000;71:240–249. doi: 10.1111/1467-8624.00139.

- Beddor PS, Strange W. Cross-language study of perception of the oral-nasal distinction. Journal of the Acoustical Society of America. 1982;71:1551–1561. doi: 10.1121/1.387809.

- Bernstein LE. Perceptual development for labeling words varying in voice onset time and fundamental frequency. Journal of Phonetics. 1983;11:383–393.

- Boothroyd A, Nittrouer S. Mathematical treatment of context effects in phoneme and word recognition. Journal of the Acoustical Society of America. 1988;84:101–114. doi: 10.1121/1.396976.

- Bourland Hicks C, Ohde RN. Developmental role of static, dynamic, and contextual cues in speech perception. Journal of Speech, Language, and Hearing Research. 2005;48:960–974. doi: 10.1044/1092-4388(2005/066).

- Caldwell A, Nittrouer S. Speech perception in noise by children with cochlear implants. Journal of Speech, Language, and Hearing Research. 2013. doi: 10.1044/1092-4388(2012/11-0338).

- Carpenter AL, Shahin AJ. Development of the N1-P2 auditory evoked response to amplitude rise time and rate of formant transition of speech sounds. Neuroscience Letters. 2013;544:56–61. doi: 10.1016/j.neulet.2013.03.041.

- Conway CM, Deocampo J, Walk AM, Anaya EM, Pisoni DB. Deaf Children with Cochlear Implants Do Not Appear to Use Sentence Context to Help Recognize Spoken Words. Journal of Speech, Language, and Hearing Research. 2014;57:2174–2190. doi: 10.1044/2014_JSLHR-L-13-0236.

- Crowther CS, Mann V. Native language factors affecting use of vocalic cues to final consonant voicing in English. Journal of the Acoustical Society of America. 1992;92:711–722. doi: 10.1121/1.403996.

- DiCanio C. Cue weight in the perception of Trique glottal consonants. Journal of the Acoustical Society of America. 2014;135:884–895. doi: 10.1121/1.4861921.

- Dubno JR, Dirks DD, Morgan DE. Effects of mild hearing loss and age on speech recognition in noise. Journal of the Acoustical Society of America. 1982;72:s34. doi: 10.1121/1.391011.

- Duffy JR, Giolas TG. Sentence intelligibility as a function of key word selection. Journal of Speech and Hearing Research. 1974;17:631–637. doi: 10.1044/jshr.1704.631.

- Elliott LL. Performance of children aged 9 to 17 years on a test of speech intelligibility in noise using sentence material with controlled word predictability. Journal of the Acoustical Society of America. 1979;66:651–653. doi: 10.1121/1.383691.

- Escudero P, Benders T, Lipski SC. Native, non-native and L2 perceptual cue weighting for Dutch vowels: The case of Dutch, German and Spanish listeners. Journal of Phonetics. 2009;37:452–465.

- Fishman KE, Shannon RV, Slattery WH. Speech recognition as a function of the number of electrodes used in the SPEAK cochlear implant speech processor. Journal of Speech, Language, and Hearing Research. 1997;40:1201–1215. doi: 10.1044/jslhr.4005.1201.

- Flege JE, Port R. Cross-language phonetic interference: Arabic to English. Language and Speech. 1981;24:125–146.

- Francis AL, Kaganovich N, Driscoll-Huber C. Cue-specific effects of categorization training on the relative weighting of acoustic cues to consonant voicing in English. Journal of the Acoustical Society of America. 2008;124:1234–1251. doi: 10.1121/1.2945161.

- Friesen LM, Shannon RV, Başkent D, Wang X. Speech recognition in noise as a function of the number of spectral channels: Comparison of acoustic hearing and cochlear implants. Journal of the Acoustical Society of America. 2001;110:1150–1163. doi: 10.1121/1.1381538.

- Giezen MR, Escudero P, Baker A. Use of acoustic cues by children with cochlear implants. Journal of Speech, Language, and Hearing Research. 2010;53:1440–1457. doi: 10.1044/1092-4388(2010/09-0252).

- Giolas TG, Cooker HS, Duffy JR. The predictability of words in sentences. The Journal of Auditory Research. 1970;10:328–334.

- Goldman R, Fristoe M. Goldman Fristoe 2: Test of Articulation. Circle Pines, MN: American Guidance Service, Inc; 2000.

- Goswami U, Fosker T, Huss M, Mead N, Szucs D. Rise time and formant transition duration in the discrimination of speech sounds: the Ba-Wa distinction in developmental dyslexia. Developmental Science. 2011;14:34–43. doi: 10.1111/j.1467-7687.2010.00955.x.

- Greenlee M. Learning the phonetic cues to the voiced-voiceless distinction: A comparison of child and adult speech perception. Journal of Child Language. 1980;7:459–468. doi: 10.1017/s0305000900002786.

- Hedrick M, Bahng J, von Hapsburg D, Younger MS. Weighting of cues for fricative place of articulation perception by children wearing cochlear implants. International Journal of Audiology. 2011;50:540–547. doi: 10.3109/14992027.2010.549515.

- Hirsh IJ, Davis H, Silverman SR, Reynolds EG, Eldert E, Benson RW. Development of materials for speech audiometry. Journal of Speech and Hearing Disorders. 1952;17:321–337. doi: 10.1044/jshd.1703.321.

- Holt RF, Carney AE. Multiple looks in speech sound discrimination in adults. Journal of Speech, Language, and Hearing Research. 2005;48:922–943. doi: 10.1044/1092-4388(2005/064).

- Holt LL, Lotto AJ. Cue weighting in auditory categorization: implications for first and second language acquisition. Journal of the Acoustical Society of America. 2006;119:3059–3071. doi: 10.1121/1.2188377.

- Idemaru K, Holt LL. The developmental trajectory of children’s perception and production of English /r/-/l/. Journal of the Acoustical Society of America. 2013;133:4232–4246. doi: 10.1121/1.4802905.

- Kalikow DN, Stevens KN, Elliott LL. Development of a test of speech intelligibility in noise using sentence materials with controlled word predictability. Journal of the Acoustical Society of America. 1977;61:1337–1351. doi: 10.1121/1.381436.

- Kiefer J, von Ilberg C, Rupprecht V, Hubner-Egner J, Knecht R. Optimized speech understanding with the continuous interleaved sampling speech coding strategy in patients with cochlear implants: effect of variations in stimulation rate and number of channels. Annals of Otology, Rhinology and Laryngology. 2000;109:1009–1020. doi: 10.1177/000348940010901105.

- Lacroix PG, Harris JD. Effects of high-frequency cue reduction on the comprehension of distorted speech. Journal of Speech and Hearing Disorders. 1979;44:236–246. doi: 10.1044/jshd.4402.236.

- Lisker L. Is it VOT or a first-formant transition detector? Journal of the Acoustical Society of America. 1975;57:1547–1551. doi: 10.1121/1.380602.

- Lisker L. “Voicing” in English: A catalogue of acoustic features signaling /b/ versus /p/ in trochees. Language and Speech. 1986;29:3–11. doi: 10.1177/002383098602900102.

- Llanos F, Dmitrieva O, Shultz A, Francis AL. Auditory enhancement and second language experience in Spanish and English weighting of secondary voicing cues. Journal of the Acoustical Society of America. 2013;134:2213–2224. doi: 10.1121/1.4817845.

- Macmillan NA, Creelman CD. Detection theory: A user’s guide. Mahwah, N.J: Erlbaum; 2005.

- Mayo C, Scobbie JM, Hewlett N, Waters D. The influence of phonemic awareness development on acoustic cue weighting strategies in children’s speech perception. Journal of Speech, Language, and Hearing Research. 2003;46:1184–1196. doi: 10.1044/1092-4388(2003/092).

- McMurray B, Jongman A. What information is necessary for speech categorization? Harnessing variability in the speech signal by integrating cues computed relative to expectations. Psychological Review. 2011;118:219–246. doi: 10.1037/a0022325.

- Miyawaki K, Strange W, Verbrugge R, Liberman AM, Jenkins JJ, Fujimura O. An effect of linguistic experience: The discrimination of [r] and [l] by native speakers of Japanese and English. Perception & Psychophysics. 1975;18:331–340.

- Moberly AC, Lowenstein JH, Tarr E, Caldwell-Tarr A, Welling DB, Shahin AJ, et al. Do adults with cochlear implants rely on different acoustic cues for phoneme perception than adults with normal hearing? Journal of Speech, Language, and Hearing Research. 2014;57:566–582. doi: 10.1044/2014_JSLHR-H-12-0323.

- Narayan SS, Temchin AN, Recio A, Ruggero MA. Frequency tuning of basilar membrane and auditory nerve fibers in the same cochleae. Science. 1998;282:1882–1884. doi: 10.1126/science.282.5395.1882.

- Nittrouer S. Age-related differences in perceptual effects of formant transitions within syllables and across syllable boundaries. Journal of Phonetics. 1992;20:351–382.

- Nittrouer S. The relation between speech perception and phonemic awareness: Evidence from low-SES children and children with chronic OM. Journal of Speech and Hearing Research. 1996;39:1059–1070. doi: 10.1044/jshr.3905.1059.

- Nittrouer S. The role of temporal and dynamic signal components in the perception of syllable-final stop voicing by children and adults. Journal of the Acoustical Society of America. 2004;115:1777–1790. doi: 10.1121/1.1651192.

- Nittrouer S. Early development of children with hearing loss. San Diego: Plural Publishing; 2010.