Abstract

Electronic health record (EHR) data can be extracted for calculating performance feedback, but users’ perceptions of such feedback affect its effectiveness. Through qualitative analyses, we identified perspectives on barriers and facilitators to the perceived legitimacy of EHR-based performance feedback in 11 community health centers (CHCs). Providers said such measures rarely accounted for CHC patients’ complex lives or for providers’ decisions as informed by this complexity, which diminished the measures’ perceived validity. Suggestions for improving the perceived validity of performance feedback in CHCs are presented. Our findings add to the literature on EHR-based performance feedback by exploring provider perceptions in CHCs.

Keywords: feedback, quality improvement, algorithms, community health centers, qualitative research, attitude of health personnel, electronic health records, safety-net providers

Introduction

Care quality measures created with data extracted from electronic health records (EHRs) can provide valuable performance feedback to clinicians, with the potential to improve patient medical care. Thus far, however, such performance feedback has had only a limited impact on care quality.1–4 One important reason for this limitation is that users (recipients of the performance feedback) can perceive EHR data-based measures to be invalid or unfair,5–8 which diminishes the feedback’s influence on provider behaviors.9–11 This lack of trust in the legitimacy and accuracy of EHR-based performance feedback both stems from and illustrates the challenges of creating quality metrics based on readily extractable EHR data.12

Previous qualitative research identified some strategies for creating EHR data-based feedback measures that providers consider credible and valid, but with a few exceptions,9,10,13 this research was conducted in large, academic / integrated healthcare settings. Little is known about perceptions of and strategies for improving such feedback in the community health center (CHC) setting. Yet CHCs – the United States’ healthcare ‘safety net’ – differ from other healthcare settings in critical ways, most notably their patients’ socioeconomic vulnerability. Thus, there is a need to better understand barriers to the perceived legitimacy of EHR-based feedback measures in primary care CHCs, and approaches to crafting performance feedback that effectively improves care quality in this setting. To that end, we present an in-depth qualitative assessment of how primary care providers perceived EHR-based performance feedback, and of their suggestions for increasing the utility of such feedback data, drawing on data collected during a clinic-randomized implementation trial conducted in CHCs.

The terminology used to describe performance feedback in the literature varies. Here, performance metrics means the aggregate measurement of a given care point (e.g., rate of guideline-concordant statin prescribing, shown as a percentage on a graph). Data feedback means potentially actionable data linked back to individual patients (e.g., a list of patients with diabetes who are indicated for a statin but not prescribed one). Performance feedback encompasses both types of measurement.

Methods

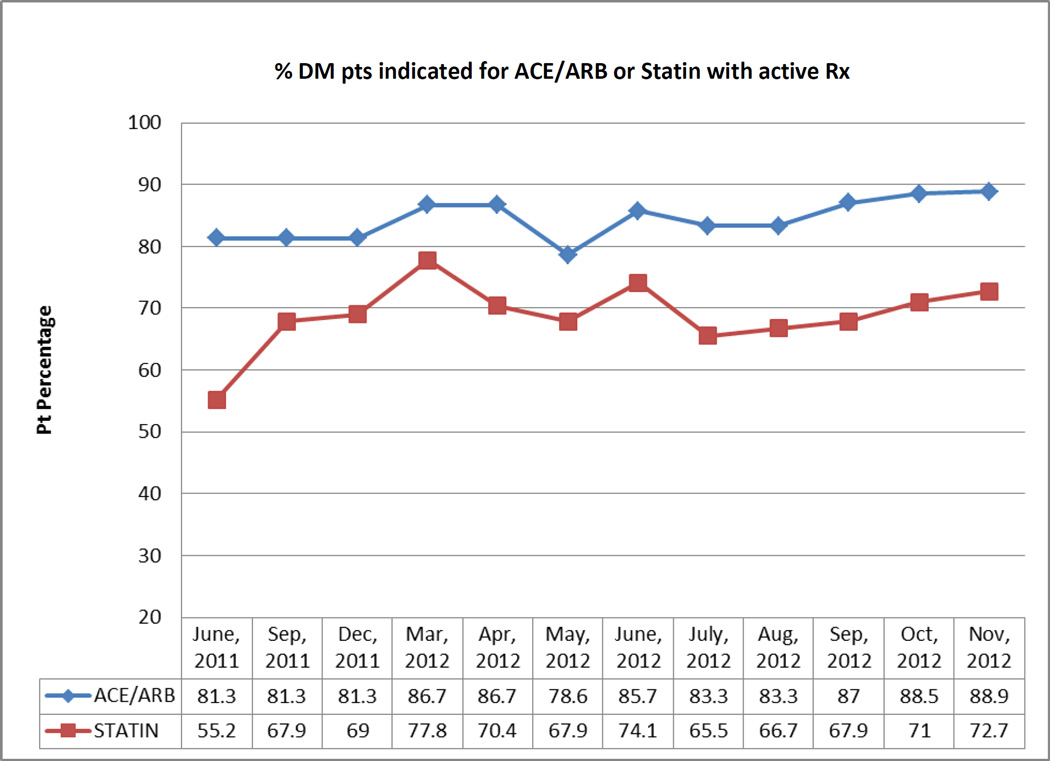

The ‘ALL Initiative’ (ALL) is an evidence-based intervention designed to increase the percentage of patients with diabetes who are appropriately prescribed cardioprotective statins and angiotensin-converting enzyme inhibitors (ACEI) / angiotensin II receptor blockers (ARB). The data presented here were collected in the context of a five-year pragmatic trial of the feasibility and impact of implementing ALL in 11 primary care CHCs in the Portland, OR area. The ALL intervention included encounter-based alerts, patient panel data roster tools, and educational materials, described in detail elsewhere.14,15 We also extracted data from the study CHCs’ shared EHR to create performance metrics on the percentage of diabetic patients who had active prescriptions for statins and ACEI/ARBs, if indicated for those medications per national guidelines. These study-specific metrics were calculated for each clinic and clinician, using aggregated data (Figure 1), and given to the study CHCs’ leadership as monthly clinic-level reports; in addition, patient panel summaries were given to each provider at the study clinics at varying intervals. Individual patients’ ‘indicated and active’ status was also given to providers by request (Figure 2). The CHCs’ leaders and individual providers distributed this performance feedback to clinic staff as desired; for details on how the feedback was disseminated, see Table 1.

Figure 1.

Provider-specific performance metrics

Figure 2.

Provider-specific data feedback

Table 1.

Distribution of study-related performance feedback, by organization

| Organization | Study Performance Metrics | Study Data Feedback |
|---|---|---|
| A | Distributed to individual providers one time in study year 4. | Initially, staff used study rosters to identify patients ‘indicated but not active,’ which they would note on the EHR Problem List for provider review. |
| | | In study year 4, intending to increase reliance on the real-time alert, began inserting only the intervention logic into the EHR Problem List. |
| | | Roster continued to be used by an RN diabetes QI lead for individual meetings with providers to discuss overall care of their diabetic patients. |
| B | Quarterly, site coordinator sent metrics for each provider and clinic to the Medical Director, who then disseminated to clinic-based lead providers. | Monthly, site coordinator posted roster-based lists of patients ‘indicated but not active’ by care team (usually two providers) on the organization’s shared drive. |
| | Some lead providers presented the metrics at clinic-specific provider team meetings. | Staff had to take the initiative to search for and pull the list. |
| | Graphs depicting overall clinic progress sometimes posted on clinic bulletin boards, at discretion of clinic managers. | |
| C | Site coordinator pulled provider-specific percentages of ‘indicated and active’ from the study results and emailed them in graph form (along with the clinic-wide percentages) to individual providers 4 times over the course of the 5-year study. | Approximately every 6 weeks, site coordinator created roster-based provider-specific lists of patients ‘indicated but not active’. |
| | Leadership sometimes used the clinic metrics as a springboard for discussion in leadership and QI meetings. | Distributed paper copies in-person and emailed electronic copies (varied). Usually given only to providers, but by request sometimes shared with other members of the care team. |

Using a convergent design within a mixed methods framework,16 we collected qualitative data on the dynamics and contextual factors affecting intervention uptake.17 The extent of qualitative data collection at each clinic was informed by pragmatic constraints and data saturation (the point at which no new information was observed).18 Table 2 details our methods and sampling strategy. The intervention was implemented in June 2011 and supported through May 2015; qualitative data were collected between December 2011 and October 2014. Qualitative analysis was guided by the constant comparative method,19 wherein each finding and interpretation is compared to previous findings to generate theory grounded in the data. We used the software program QSR NVivo to organize and facilitate analysis of the interview and group discussion transcripts and observation field notes. We identified key emergent concepts, or codes, and assigned them to appropriate text segments. Each code’s scope and definition were then refined, and additional themes identified, through iterative immersion / crystallization cycles (deep engagement with the data followed by reflection).20 Our interpretations of the data were confirmed through regular discussions among the research team, which included experts in clinical care, quality improvement, and quantitative and qualitative research, and clinic leadership at the study CHCs. This paper presents our qualitative findings on CHC physicians’ perspectives on the performance feedback provided to them as described above.

Table 2.

Qualitative Data Collection Methods

| Method | Sampling strategy | Number of resulting documents | Detail |
|---|---|---|---|
| Observation | Convenience | 126 field notes | |
| Semi-structured interviews | Purposive | 34 transcripts | |
| Group discussions | Purposive | 8 transcripts | |
| Diaries by site coordinators | Not applicable | 31 months of entries | |
| Document collection | Not applicable | 201 documents | |
| Chart review | Varied by organization | 431 unique patients | |

Abbreviations: PCP, Primary care provider; MD, Doctor of Medicine; RN, Registered nurse; MA, Medical assistant; TA, Team assistant; PCC, Patient Care Coordinator; PA, Physician assistant; NP, Nurse practitioner

This study was approved by the Kaiser Permanente NW Institutional Review Board. Study participants (clinic staff) gave verbal consent prior to data collection.

Results

CHC providers’ perceptions of performance feedback measures

CHC providers stated that they often questioned the validity of performance feedback measures, usually because the feedback measures did not account for CHC patients’ needs or the complexity of their lives, or for clinical decisions made by providers who understood this complexity. While similar concerns have been reported in other care settings,5,9–11,21,22 the socioeconomic vulnerabilities and fluidity of the CHC patient population added specific barriers to the perceived trustworthiness of the feedback measures.

Defining the population: Who counts as ‘indicated and active’?

In this study, the feedback measures’ denominator was the number of patients indicated for a given medication (ACEI/ARB or statin), and the numerator was the number prescribed the indicated medication in the last year. However, CHC patients’ socioeconomic circumstances (e.g., lack of money to pay for the medication, housing instability) or related clinician judgment (e.g., perceived likelihood of medication non-adherence, preference for a stepwise approach to prescribing for patients with complex needs) could be barriers to prescribing a given ‘indicated’ medication. For example, some CHC patients bought medications in their home country, where they cost less, or took medications that family members or friends had discontinued. Without documentation of these circumstances in the EHR, however, the feedback measures would identify the patient’s prescription as expired. Two examples illustrate this:

[Provider] asked about one medication that [the patient] said he was taking but it looked in the chart like he was out of. Patient explained that his son was taking the same medication but had recently been prescribed a higher dose, so he gave his dad (the patient) his remaining pills of the lower dose. ‘Because I don’t have money.’ (Field note)

… If patients are not on the medications, it is not because it wasn’t offered. [The provider] believes that if the patient was not on medications it is due to education level affecting understanding, lack of resources for scripts and tests, or patient flat out refuses. The concern … is how this information is reflected in the statistics or data. (Field note)

Furthermore, CHC providers reported that their patients are often unable to see their primary provider for periods of time (e.g., if they are out of the country, or in prison), or are not available for other reasons (e.g., transient populations, inaccurate / frequently changing contact information). When patients on a provider’s panel were temporarily receiving care elsewhere (e.g., while in jail), and medication data were not shared between care sites, feedback measures would be affected. Similarly, migrant workers remain on the provider’s panel (and thus in the feedback measures’ denominator) even when they are out of the country and cannot be reached by the clinic. Their prescriptions might expire while they are unable to see their provider, lowering rates of guideline-based prescribing in the performance feedback measures.

In addition, CHC patients are not enrolled members, which can affect measurement of care quality by making it unclear whether a patient was not receiving appropriate care, versus out of reach. Patients identified as lost to follow-up were removed from the feedback measures’ denominators; however, as clinics used different methods for defining patients as lost to follow-up, accounting for this accurately in data extraction was difficult. For example, patients could be considered in a given provider’s denominator if they were “touched” by the clinic in the last year (e.g., by attempted phone calls) even if no actual contact was made. Thus, patients who were never seen in person could be included in the feedback measure’s denominator.
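The effect of the ‘touched in the last year’ rule on the measure can be illustrated with a minimal sketch. It is not the study’s extraction algorithm: the record fields, the function names, the 365-day window, and the sample panel are all hypothetical and chosen only to show how counting attempted contacts changes the denominator.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional, Tuple


@dataclass
class PatientRecord:
    # All field names are hypothetical, not taken from the study's EHR.
    patient_id: str
    indicated: bool                        # indicated for the medication per guidelines
    last_prescribed: Optional[date]        # most recent prescription date, if any
    last_visit: Optional[date]             # most recent in-person visit, if any
    last_contact_attempt: Optional[date]   # e.g., an attempted phone call


def within_last_year(when: Optional[date], as_of: date) -> bool:
    """True if `when` falls in the 365 days up to and including `as_of`."""
    return when is not None and timedelta(0) <= as_of - when <= timedelta(days=365)


def prescribing_rate(patients: List[PatientRecord], as_of: date,
                     count_attempts_as_touch: bool) -> Tuple[int, int]:
    """Return (numerator, denominator) for 'indicated and active'."""
    numerator = 0
    denominator = 0
    for p in patients:
        if not p.indicated:
            continue
        touched = within_last_year(p.last_visit, as_of) or (
            count_attempts_as_touch
            and within_last_year(p.last_contact_attempt, as_of)
        )
        if not touched:
            continue  # treated as lost to follow-up and left out of the measure
        denominator += 1
        if within_last_year(p.last_prescribed, as_of):
            numerator += 1
    return numerator, denominator


# Made-up panel: p2 was never seen in person, only called once.
panel = [
    PatientRecord("p1", True, date(2014, 3, 1), date(2014, 3, 1), None),
    PatientRecord("p2", True, None, None, date(2014, 5, 1)),
    PatientRecord("p3", False, None, date(2014, 4, 1), None),
]
as_of = date(2014, 6, 30)
visits_only = prescribing_rate(panel, as_of, count_attempts_as_touch=False)
with_attempts = prescribing_rate(panel, as_of, count_attempts_as_touch=True)
print(visits_only, with_attempts)
```

In this made-up panel, counting an unanswered phone call as a ‘touch’ adds a patient to the denominator without any chance of adding them to the numerator, which is the pattern providers described as unfair.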

Situations such as these could not be effectively captured by the extraction algorithm, as the EHR lacked discrete data fields where providers could record them, so these exceptions were not reflected in the performance data. As a result, the CHC providers often questioned the measures’ validity and fairness. One provider said that receiving such reports can feel like “salt in the wound.” Another provider noted:

“… we get these stupid reports all the time telling you you’re good, you’re bad. I mean, just one less thing to like have somebody pointing fingers at me. … It’s horrible as a provider, really, to get all of these measurements … It’s like saying you’re going to be graded on this.” (PCP)

Gap between potential and actuality

Providers consistently described struggling between a desire to use feedback data to improve patient care and their inability to do so given inherent situational constraints. This could lead to feeling overwhelmed, anxious, frustrated, or guilty when they received the feedback reports.

“… the possibilities for data and what we could do with it in a systematic way are amazing. But we are so completely overloaded … that we just can't even deal with the data that we get…” (PCP)

However, a few positives were noted. Some providers acknowledged that performance feedback can be helpful reminders of the importance of the targeted medications in diabetes care, which motivated them to discuss this with their patients.

“I like it, personally, because … somebody is helping me to see. Sometimes it is difficult to see the whole picture …. It is not because you lack the knowledge or the experience. But you can't catch everything.” (PCP)

Others appreciated the feedback as a safeguard, even though they were often already aware of the patients flagged as needing specific actions or medications. Conversely, others thought it was not worth reviewing the reports, as they already knew their patients’ issues:

“[I would look over] the patients who were indicated for certain meds… that weren't on them, and just kind of just quickly review who those patients were. Just kind of … do I recognize this patient? Oh, am I surprised that they’re not on a statin or ACE? No I'm not. Okay.” (PCP)

Provider suggestions for improving performance feedback measures

Despite the tensions described above, most providers said they wanted to receive feedback data, but many noted that organizational changes (e.g., to workflow, staffing, and productivity expectations) would be necessary precursors to its effective use. Without these changes, providers thought such data would primarily serve as snapshots of current care quality, but not as tools to improve performance. They suggested a number of ways to improve both the acceptability and utility of performance feedback.

Staffing and resources

Dedicated, management-supported “brain time” was suggested as a means to enable care teams to review feedback data together and identify next steps to addressing care gaps. Providers also recommended designating a trusted team member (e.g., an RN) as responsible for identifying potentially actionable items from the feedback data.

“… [what] I’m kind of looking for is a QI [quality improvement] person to come in here that has the data, and goes to the team meetings, and can [be] sort of non-judgmentally preventive. … So it's not so much as you bad person … but hey, we look a little low here, how about if we just talk for a few minutes about, you know, what one little step we could take, and let's try it for a few months and see how it works. But being in the team so that they can support that work, and then checking back in.” (PCP)

Action plans

Providers also requested concrete suggestions for how to prioritize and act on feedback data (along with resources to do so), saying that data alone is insufficient to drive change.

“[What] I'd really like is here's your data, and here's what we're going to do with this. … Here's the twelve patients that you have six things wrong with them, that if you got these patients in they're really high yield, something like that.” (PCP)

Holistic, patient-specific format

Many providers commented that patient-level data would be more useful than aggregate performance metrics, and asked that such feedback include patient-specific information along with the panel-based metrics. Many also requested that such patient-level feedback data include relevant clinical indicators in addition to measures targeted by a given initiative (e.g., a diabetes ‘dashboard’ that shows HbA1c, blood pressure, and LDL results along with guideline-indicated medications) for a more holistic view of the patient’s needs.

“I guess … if you were trending in the wrong direction that would be useful information … But for me … probably meatier is pulling lists and looking at specific individuals and saying, you know, here’s this woman … she’s not on statin … is there a reason why?” (PCP)

The patient panel data (Figure 2), an example of this approach, was generally well-received by care teams. The colors served as a ‘stoplight’ tool: green indicated a measurement within normal limits, yellow indicated that a measure was approaching a concerning level, and red indicated a problem.

Discussion

Previously reported challenges to the effective use of EHR data-based performance feedback measures include users’ questions about ‘what counts’ / how the measures are calculated;9–11,23 welcoming the feedback but also feeling judged by it;9 and acting on population-based care measurement and expectations while providing patient-centered, individualized care.9–11,24,25 Feedback measures perceived to be supportive, rather than evaluative,9,24,26,27 that include goal setting and / or action plans,28–30 and that users believe account for patient and provider priorities, are more likely to be trusted, and thus potentially impactful.9–11,24,25,31,32

Our findings concur, and add to this literature by exploring provider perceptions within the safety net setting. CHC patients are often unable to follow care recommendations for financial reasons, may receive care elsewhere for periods of time, or may be otherwise unavailable to clinic staff, leading to inaccuracies in feedback measures. CHC providers, understanding their patients’ barriers to acting on recommended care, are understandably disinclined to trust feedback data that does not account for such barriers. Thus, in this important setting, creating EHR-based performance feedback that users perceive as valid may be particularly challenging because of limitations in how effectively such measures can account for the socioeconomic circumstances of CHC patients’ lives.

Limitations on the ability to extract data in a way that accounts for such factors are inherent to most EHRs.12,33–36 EHR data extraction entails accessing data recorded in discrete fields accessible and searchable by a computer algorithm. The type of ‘non-clinical’ patient information discussed above as barriers to care, as well as the reasoning behind the non-provision of recommended care, is rarely documented in standardized locations or in discrete data fields (if at all),37–39 compromising the ability to extract comprehensive performance feedback data recognized as legitimate by users. Improved EHR functions for documenting exceptions might enable more accurate quality measurement, and thus improve providers’ receptiveness to and trust of feedback data. In prior research, providers were more receptive to EHR-based clinical decision support when documentation of exceptions was enabled;40 the same may apply for feedback measures. Another EHR adaptation that could improve such measures’ accuracy would be heightened capacity for health information exchange, so that data on care that CHC patients receive external to their CHC could be reviewed by their primary care provider.

This study’s CHC providers’ suggestions for improving the legitimacy and utility of EHR data-based performance feedback did not speak directly to the challenges of using EHR data to create accurate measures, but they did so indirectly. For example, the providers recommended giving designated staff time and support for reviewing and acting on performance feedback. Such support could include ensuring that the appropriate people understand how each measure is extracted and constructed, and what a given measure might miss due to limitations in data structures. Providers who dispute performance feedback that is extracted from their own EHR data may feel more confident in the feedback if they understand how the metrics and reports are calculated from the raw data (e.g., the algorithm will not catch free text documentation of patient refusal; to remove that patient from the measure denominator it is necessary to use the alert override option). In addition, the providers’ ambivalence about the performance measures illuminates the need to acknowledge that care quality cannot be judged simplistically, and to ensure that focusing on measurement does not conflict with patient-centered care. Proactively acknowledging these needs and working with providers to address them could further strengthen trust in feedback measures.
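The distinction between free-text documentation and a discrete override can be made concrete with a short sketch. It is an illustration under stated assumptions, not the study’s extraction logic: the `ChartEntry` type, its field names, and the sample entries are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ChartEntry:
    # Hypothetical fields, for illustration only.
    patient_id: str
    alert_overridden: bool  # discrete flag set through an alert override option
    note_text: str          # free-text note; the extraction logic never reads it


def measure_denominator(entries: List[ChartEntry]) -> List[str]:
    """Keep every patient unless the discrete override flag is set."""
    return [e.patient_id for e in entries if not e.alert_overridden]


# Made-up entries: p1's refusal is documented only in free text.
entries = [
    ChartEntry("p1", False, "Patient declines statin after discussion."),
    ChartEntry("p2", True, ""),
    ChartEntry("p3", False, ""),
]
denominator_ids = measure_denominator(entries)
print(denominator_ids)
```

Here the patient whose refusal appears only in the note stays in the denominator, while the patient with the discrete override is removed. Explaining this behavior to providers is one way to make clear why a known exception still appears in a report.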

This study has several limitations. The study clinics were involved in other, concurrent practice change efforts, some of which also involved performance feedback. Given this, provider reactions may have been atypical, limiting generalizability of the findings. Interviews and observations were conducted by members of the research team potentially perceived to have an investment in intervention outcomes; respondents may therefore have moderated their responses. Finally, results are purely descriptive and are not correlated with any quantitative outcomes.

Conclusion

Provider challenges to the legitimacy of EHR data-based performance feedback measures have impeded the effective use of such feedback. Addressing issues related to such measures’ credibility and legitimacy, and providing strategies and resources to take action as necessary, may help realize the potential of EHR data-based performance feedback in improving patient care.

Acknowledgments

Thank you to Colleen Howard for her many contributions to the project, including data collection and interpretation, and to Jill Pope, Elizabeth L. Hess and C. Samuel Peterson for editorial and formatting assistance. Development of this manuscript, and the study which it describes, were supported by grant R18HL095481 from the National Heart, Lung and Blood Institute.

Footnotes

Clinical Trial Registration Number: NCT02299791

Conflict of interest: We have no conflict of interest, financial or otherwise, to disclose in relation to the content of this paper.

Drafts of portions of this work have been presented at the 2014 NAPCRG annual meeting, the 2015 Society for Implementation Research Collaboration (SIRC) conference, and the 8th Annual Conference on the Science of Dissemination and Implementation in Health.

Reference List

1. Persell SD, Kaiser D, Dolan NC, et al. Changes in performance after implementation of a multifaceted electronic-health-record-based quality improvement system. Med Care. 2011;49:117–125. doi: 10.1097/MLR.0b013e318202913d.

2. Persell SD, Khandekar J, Gavagan T, et al. Implementation of EHR-based strategies to improve outpatient CAD care. Am J Manag Care. 2012;18:603–610.

3. Bailey LC, Mistry KB, Tinoco A, et al. Addressing electronic clinical information in the construction of quality measures. Acad Pediatr. 2014;14:S82–S89. doi: 10.1016/j.acap.2014.06.006.

4. Ryan AM, McCullough CM, Shih SC, Wang JJ, Ryan MS, Casalino LP. The intended and unintended consequences of quality improvement interventions for small practices in a community-based electronic health record implementation project. Med Care. 2014;52:826–832. doi: 10.1097/MLR.0000000000000186.

5. Kizer KW, Kirsh SR. The double edged sword of performance measurement. J Gen Intern Med. 2012;27:395–397. doi: 10.1007/s11606-011-1981-5.

6. Brooks JA. The new world of health care quality and measurement. Am J Nurs. 2014;114:57–59. doi: 10.1097/01.NAJ.0000451688.04566.cf.

7. Gourin CG, Couch ME. Defining quality in the era of health care reform. JAMA Otolaryngol Head Neck Surg. 2014;140:997–998. doi: 10.1001/jamaoto.2014.2086.

8. Van der Wees PJ, Nijhuis-van der Sanden MW, van GE, Ayanian JZ, Schneider EC, Westert GP. Governing healthcare through performance measurement in Massachusetts and the Netherlands. Health Policy. 2014;116:18–26. doi: 10.1016/j.healthpol.2013.09.009.

9. Ivers N, Barnsley J, Upshur R, et al. "My approach to this job is…one person at a time": Perceived discordance between population-level quality targets and patient-centred care. Can Fam Physician. 2014;60:258–266.

10. Kansagara D, Tuepker A, Joos S, Nicolaidis C, Skaperdas E, Hickam D. Getting performance metrics right: a qualitative study of staff experiences implementing and measuring practice transformation. J Gen Intern Med. 2014;29(Suppl 2):S607–S613. doi: 10.1007/s11606-013-2764-y.

11. Dixon-Woods M, Leslie M, Bion J, Tarrant C. What counts? An ethnographic study of infection data reported to a patient safety program. Milbank Q. 2012;90:548–591. doi: 10.1111/j.1468-0009.2012.00674.x.

12. Baker DW, Persell SD, Thompson JA, et al. Automated review of electronic health records to assess quality of care for outpatients with heart failure. Ann Intern Med. 2007;146:270–277. doi: 10.7326/0003-4819-146-4-200702200-00006.

13. Rowan MS, Hogg W, Martin C, Vilis E. Family physicians' reactions to performance assessment feedback. Can Fam Physician. 2006;52:1570–1571.

14. Gold R, Muench J, Hill C, et al. Collaborative development of a randomized study to adapt a diabetes quality improvement initiative for federally qualified health centers. J Health Care Poor Underserved. 2012;23:236–246. doi: 10.1353/hpu.2012.0132.

15. Gold R, Nelson C, Cowburn S, et al. Feasibility and impact of implementing a private care system's diabetes quality improvement intervention in the safety net: a cluster-randomized trial. Implement Sci. 2015;10:83. doi: 10.1186/s13012-015-0259-4.

16. Fetters MD, Curry LA, Creswell JW. Achieving Integration in Mixed Methods Designs: Principles and Practices. Health Serv Res. 2013;48:2134–2156. doi: 10.1111/1475-6773.12117.

17. Bunce AE, Gold R, Davis JV, et al. Ethnographic process evaluation in primary care: explaining the complexity of implementation. BMC Health Serv Res. 2014;14:607. doi: 10.1186/s12913-014-0607-0.

18. Guest G, Bunce A, Johnson L. How Many Interviews Are Enough? An Experiment with Data Saturation and Variability. Field Methods. 2006;18:59–82.

19. Parsons KW. Constant Comparison. In: Lewis-Beck MS, Bryman A, Futing Liao T, editors. Encyclopedia of Social Science Research Methods. SAGE Publications, Inc; 2004. pp. 181–182.

20. Borkan J. Immersion/Crystallization. In: Crabtree BF, Miller WL, editors. Doing Qualitative Research (2nd Edition) Thousand Oaks, CA: Sage Publications; 1999. pp. 179–194.

21. Stange KC, Etz RS, Gullett H, et al. Metrics for assessing improvements in primary health care. Annu Rev Public Health. 2014;35:423–442. doi: 10.1146/annurev-publhealth-032013-182438.

22. Powell AA, White KM, Partin MR, et al. Unintended consequences of implementing a national performance measurement system into local practice. J Gen Intern Med. 2012;27:405–412. doi: 10.1007/s11606-011-1906-3.

23. Parsons A, McCullough C, Wang J, Shih S. Validity of electronic health record-derived quality measurement for performance monitoring. J Am Med Inform Assoc. 2012;19:604–609. doi: 10.1136/amiajnl-2011-000557.

24. Dixon-Woods M, Redwood S, Leslie M, Minion J, Martin GP, Coleman JJ. Improving quality and safety of care using "technovigilance": an ethnographic case study of secondary use of data from an electronic prescribing and decision support system. Milbank Q. 2013;91:424–454. doi: 10.1111/1468-0009.12021.

25. Powell AA, White KM, Partin MR, et al. Unintended consequences of implementing a national performance measurement system into local practice. J Gen Intern Med. 2012;27:405–412. doi: 10.1007/s11606-011-1906-3.

26. Casalino LP. The unintended consequences of measuring quality on the quality of medical care. N Engl J Med. 1999;341:1147–1150. doi: 10.1056/NEJM199910073411511.

27. Dixon-Woods M, Leslie M, Tarrant C, Bion J. Explaining Matching Michigan: an ethnographic study of a patient safety program. Implement Sci. 2013;8:70. doi: 10.1186/1748-5908-8-70.

28. Ivers NM, Grimshaw JM, Jamtvedt G, et al. Growing literature, stagnant science? Systematic review, meta-regression and cumulative analysis of audit and feedback interventions in health care. J Gen Intern Med. 2014;29:1534–1541. doi: 10.1007/s11606-014-2913-y.

29. Ivers NM, Sales A, Colquhoun H, et al. No more 'business as usual' with audit and feedback interventions: towards an agenda for a reinvigorated intervention. Implement Sci. 2014;9:14. doi: 10.1186/1748-5908-9-14.

30. Hysong SJ. Meta-analysis: audit and feedback features impact effectiveness on care quality. Med Care. 2009;47:356–363. doi: 10.1097/MLR.0b013e3181893f6b.

31. Malina D. Performance anxiety--what can health care learn from K-12 education? N Engl J Med. 2013;369:1268–1272. doi: 10.1056/NEJMms1306048.

32. Mannion R, Braithwaite J. Unintended consequences of performance measurement in healthcare: 20 salutary lessons from the English National Health Service. Intern Med J. 2012;42:569–574. doi: 10.1111/j.1445-5994.2012.02766.x.

33. Persell SD, Wright JM, Thompson JA, Kmetik KS, Baker DW. Assessing the validity of national quality measures for coronary artery disease using an electronic health record. Arch Intern Med. 2006;166:2272–2277. doi: 10.1001/archinte.166.20.2272.

34. Gardner W, Morton S, Byron SC, et al. Using computer-extracted data from electronic health records to measure the quality of adolescent well-care. Health Serv Res. 2014;49:1226–1248. doi: 10.1111/1475-6773.12159.

35. Urech TH, Woodard LD, Virani SS, Dudley RA, Lutschg MZ, Petersen LA. Calculations of Financial Incentives for Providers in a Pay-for-Performance Program: Manual Review Versus Data From Structured Fields in Electronic Health Records. Med Care. 2015;53:901–907. doi: 10.1097/MLR.0000000000000418.

36. Baus A, Zullig K, Long D, et al. Developing Methods of Repurposing Electronic Health Record Data for Identification of Older Adults at Risk of Unintentional Falls. Perspect Health Inf Manag. 2016;13:1b.

37. Steinman MA, Dimaano L, Peterson CA, et al. Reasons for not prescribing guideline-recommended medications to adults with heart failure. Med Care. 2013;51:901–907. doi: 10.1097/MLR.0b013e3182a3e525.

38. Matthews KA, Adler NE, Forrest CB, Stead WW. Collecting psychosocial "vital signs" in electronic health records: Why now? What are they? What's new for psychology? Am Psychol. 2016;71:497–504. doi: 10.1037/a0040317.

39. Behforouz HL, Drain PK, Rhatigan JJ. Rethinking the social history. N Engl J Med. 2014;371:1277–1279. doi: 10.1056/NEJMp1404846.

40. Persell SD, Dolan NC, Baker DW. Medical exceptions to decision support: a tool to identify provider misconceptions and direct academic detailing. AMIA Annu Symp Proc. 2008;1090