Abstract

This meta-analysis was conducted to understand the factors underlying effective messages to counter attitudes/beliefs based on misinformation. Because misinformation can lead to poor decisions about consequential matters and is persistent and difficult to correct, debunking it is an important scientific and public policy goal. This meta-analysis (k = 52, N = 6,878) revealed large effects for: presenting misinformation (2.41 ≤ d ≤ 3.08), debunking (1.14 ≤ d ≤ 1.33), and the persistence of misinformation in the face of debunking (0.75 ≤ d ≤ 1.06). Persistence was stronger and the debunking effect was weaker when audiences generated reasons in support of the initial misinformation. A detailed debunking message containing new details of the information currently recommended in various editorial policies, such as Retraction Watch, correlated positively with the debunking effect. Surprisingly, however, a detailed debunking message correlated positively with the misinformation-persistence effect.

Keywords: misinformation, correction, continued influence, science communication, belief persistence/perseverance

The effects of misinformation are of interest to many areas of psychology, from cognitive science, to social approaches, to the emerging discipline that prescribes best reporting and publication practices for all psychologists. Misinformation on consequential subjects is of special concern and includes claims that could affect health behaviors and voting decisions. For example, “genetically-modified mosquitoes caused the Zika virus outbreak in Brazil” is misinformation, a claim unsupported by scientific evidence (Schipani, 2016). Despite retraction of the scholarly article that asserted a causal link between the measles, mumps, and rubella vaccine and autism, some people are still convinced of this unfounded claim (Newport, 2015). Others continue to hold that there were weapons of mass destruction in Iraq, a belief undercut by the fact that none were found there after the US invasion (Newport, 2013). Similarly, others believe that the Affordable Care Act (ACA) mandated death panels in spite of the fact that independent fact-checkers have shown that such consultations about end-of-life care preferences are voluntary and not a pre-condition of enrolling in the ACA (Henig, 2009; Nyhan, 2010). The false beliefs on which we focus here occur when the audience initially believes misinformation and that misinformation persists or continues to exert psychological influence after it has been rebutted. In this context, an important question is: How strong is misinformation persistence across contexts, and what audience and message factors moderate this effect?

Mounting evidence suggests that the process of correcting misinformation is complex and remains incompletely understood (Lewandowsky et al., 2015; Lewandowsky, Ecker, Seifert, Schwarz, & Cook, 2012; Schwarz, Sanna, Skurnik, & Yoon, 2007). Lewandowsky and colleagues (2012) qualitatively reviewed the characteristics of effective debunking, a term we define as presenting a corrective message that establishes that the prior misinformation is false. Corrections may be partial, such as those that update details of the information, or complete, such as retractions of scientific articles based on inappropriate or fabricated evidence the authors or the journal no longer endorse. This meta-analysis complements the Lewandowsky review by quantitatively assessing the size and moderators of the debunking and misinformation-persistence effects.

Audience Factors that Reduce Credulity

As the literature confirms, “human memory is not a recording device, but rather a process of (re)construction that is vulnerable to both internal and external influence” (Van Damme & Smets, 2014). Scholars agree that systematically reasoning in line with the arguments contained in a message should increase the message’s impact (Arceneaux, Johnson, & Cryderman, 2013; Chaiken & Trope, 1999; Johnson-Laird, 1994; Kahneman, 2003; Petty & Briñol, 2010; Slothuus & de Vreese, 2010). Accordingly, when the elaboration process organizes, updates, and integrates elements of information, generating explanations in line with the initial misinformation, this process may create a network of confirming causal accounts about the misinformation in memory. Conditions that yield confirming explanations may be associated with stronger misinformation persistence and a weaker debunking effect (Arceneaux, 2012; Johnson-Laird, 2013). In contrast, considering the error in the initial information may lead to a weak explanatory model (Kowalski & Taylor, 2009). As a result, conditions that yield explanations that counter the misinformation should be associated with weaker misinformation persistence and greater debunking. In short, the direction of the cognitive activity of the audience is likely to predict misinformation persistence and ineffective correction.

The Debunking Message

Corrections that merely encourage people to consider the opposite of initial information often inadvertently strengthen the misinformation (Schwarz et al., 2007). Therefore, offering a well-argued, detailed debunking message appears to be necessary to reduce misinformation persistence (Jerit, 2008). Research on mental models (Johnson-Laird, 1994; Johnson-Laird & Byrne, 1991) suggests that an effective debunking message should be sufficiently detailed to allow recipients to abandon initial information for a new model (Johnson & Seifert, 1994; Wilkes & Leatherbarrow, 1988). Messages that simply label the initial information as incorrect may therefore leave recipients unable to remember what was wrong and without a model to understand the information (Johnson & Seifert, 1994; Wilkes & Leatherbarrow, 1988). Hence, we hypothesized that the level of detail of the debunking message (i.e., simply labeling the misinformation as incorrect vs. providing new and credible information) would be a vital factor in effective debunking and in curbing the persistence of misinformation.

Present Meta-Analysis

To conduct the proposed meta-analysis, we used pairs of keywords to obtain relevant scholarship from multiple databases in relevant areas (e.g., political science, communication, and public health; see SI for detailed information). Only those reports from studies that were clearly or possibly experimental remained as candidates. One of the most popular experimental paradigms is a series of reports of a warehouse fire (see Ecker, Lewandowsky, Swire, & Chang, 2011; Johnson & Seifert, 1994; Wilkes & Leatherbarrow, 1988). This paradigm involves three phases. In the first manipulation phase, experimental participants read a booklet containing either a misinformation message attributing the fire to the presence of volatile materials in the warehouse or a misinformation message accompanied by a debunking message, whereas control participants receive neither. The second phase is a delay during which participants work on an unrelated task for 10 minutes. In the third phase, participants receive open-ended questionnaires assessing their understanding of the reports. The questionnaires include ten causal inference questions (e.g., “What could have caused the explosions?”), ten factual questions (e.g., “What time was the fire eventually put out?”), and manipulation check items. These questions measure the tendency to make more detailed inferences about either the misinformation or the debunking message, with the possibility of greater misinformation persistence when the detailed inferences are about the misinformation.

We also specified three eligibility criteria to identify relevant studies: (a) the presence of open-ended questions or closed-ended scale measures of participants’ beliefs in (e.g., probability judgments about an event or person) or attitudes supporting (e.g., liking for a policy) the earlier misinformation and the debunking-message information, (b) the presence of a control group as well as one of the experimental groups (i.e., with misinformation and/or with a debunking message), and (c) the inclusion of a news message initially asserted to be true (the misinformation message) as well as a debunking message (see SI for details). Even though many topics involved real-world matters (e.g., Berinsky, 2012, for the 2010 Affordable Care Act materials; SI Materials and Methods), the message positions should be unfamiliar to the participants before the experiment.

The Selection of Studies

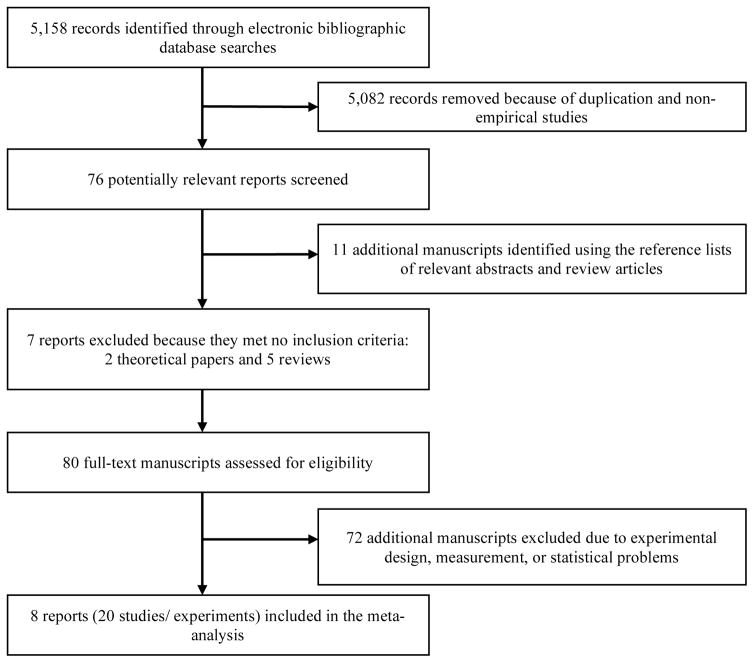

To obtain a complete set of studies, we used specific terms and keywords (including wildcards; see SI Materials and Methods) and searched multiple online databases: (a) PsycINFO, (b) Google Scholar, (c) MEDLINE, (d) PubMed, (e) ProQuest Dissertations and Theses Abstracts and Indexes: Social Sciences, (f) Communication Source, and (g) the Social Sciences Citation Index. We also checked reviews and bibliographies and culled the references of papers selected for inclusion (SI Materials and Methods). By February 15, 2015, this meta-analysis included eight research reports (N = 6,878), 20 experiments, and 52 statistically independent samples (see Figure 1).

Figure 1.

Flow diagram of the search protocol and workflow for study selection, as suggested by Moher, Liberati, Tetzlaff, and Altman (2009).

Estimation of Effect Sizes for Misinformation, Debunking, and Misinformation Persistence

We used Hedges’ d as our effect size. This approach includes a correction factor j, [1 – 3/(4*n – 1)], which reduces the positive bias introduced by the use of small samples in experimental studies. All the experiments we synthesized happened to have a between-subjects design. Thus, we compared Ms between experimental conditions to obtain the effect sizes of interest (SI Materials and Methods). The difference between the misinformation group and the control group constitutes the misinformation effect, the difference between the misinformation group and the debunking group constitutes the debunking effect, and the difference between the debunking group and the control group constitutes the misinformation-persistence effect. Two trained raters used means and standard deviations from the different groups to compute Hedges’ d, following the formulas outlined by Borenstein, Hedges, Higgins, and Rothstein (2009).
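As an illustration of the three contrasts just described, the following minimal sketch computes a bias-corrected standardized mean difference from group means, standard deviations, and sample sizes. It assumes that n in the correction factor refers to the degrees of freedom (n1 + n2 − 2), as in the formulas of Borenstein et al. (2009); the function name `hedges_d` and all summary statistics are hypothetical and are not taken from the synthesized studies.

```python
import math


def hedges_d(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Return (d, variance) for group A minus group B with small-sample correction."""
    df = n_a + n_b - 2
    # Pooled within-group standard deviation.
    sd_pooled = math.sqrt(((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / df)
    d_raw = (mean_a - mean_b) / sd_pooled
    # Correction factor j = 1 - 3 / (4 * df - 1); df is an assumption (see lead-in).
    j = 1 - 3 / (4 * df - 1)
    # Approximate sampling variance of the uncorrected difference.
    var_raw = (n_a + n_b) / (n_a * n_b) + d_raw ** 2 / (2 * (n_a + n_b))
    return j * d_raw, j ** 2 * var_raw


# Hypothetical (mean, SD, n) for the three between-subjects conditions.
misinfo = (6.0, 2.0, 20)
debunk = (4.0, 2.0, 20)
control = (2.0, 2.0, 20)

misinformation_effect = hedges_d(*misinfo, *control)[0]  # misinformation vs. control
debunking_effect = hedges_d(*misinfo, *debunk)[0]        # misinformation vs. debunking
persistence_effect = hedges_d(*debunk, *control)[0]      # debunking vs. control
```

Under this coding, a positive persistence effect means that the debunked group still scores above the control group on the misinformation measure.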

Coding of Moderators

Two of the authors worked as raters to calculate effect sizes and code the moderators, including audience and message factors. Specifically, we coded for (a) the generation of explanations in line with the misinformation, (b) the generation of counter-arguments to the misinformation, and (c) the level of detail of the debunking message. Raters resolved disagreements by discussion. All the coded variables reached adequate agreement (kappa = .87 – 1.00, ICC = .90 – 1.00). Table 1 summarizes the coded characteristics and results in the literature we synthesized.

Table 1.

Characteristics and Effect Sizes of Studies Included in Meta-Analysis

| Short Citation and Experiment | News Topics | Sample Sizes (N) | Percentage of Female Participants | Mean Age | Misinformation Effect Size | Debunking Effect Size | Misinformation-Persistence Effect Size | Publication Status | Online vs. Lab Data Collection | Generation of Explanations in Line with the Misinformation | Generation of Counter-arguments to the Misinformation | Detail of Debunking Messages |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Berinsky, 2012 | ||||||||||||
| Experiment 1 | Report that the 2010 Affordable Care Act (ACA) contains descriptions of death panels | 618 | - | - | - | - | 0.07 | WP | O | −0.93 | −0.91 | ND |
| Experiment 1 | Report that the 2010 Affordable Care Act (ACA) contains descriptions of death panels | 618 | - | - | - | - | −0.10 | WP | O | −0.93 | −0.91 | ND |
| Experiment 1 | Report that the 2010 Affordable Care Act (ACA) contains descriptions of death panels | 618 | - | - | - | - | −0.28 | WP | O | −0.93 | −0.91 | ND |
| Experiment 1 | Report that the 2010 Affordable Care Act (ACA) contains descriptions of death panels | 618 | - | - | - | - | −0.32 | WP | O | −0.93 | −0.91 | ND |
| Experiment 1 | Report that the 2010 Affordable Care Act (ACA) contains descriptions of death panels | 618 | - | - | - | - | −0.42 | WP | O | −0.93 | −0.91 | ND |
| Experiment 2 | Report that the 2010 Affordable Care Act (ACA) contains descriptions of death panels | 278 | - | - | - | - | 0.19 | WP | O | −0.93 | −0.91 | ND |
| Experiment 2 | Report that the 2010 Affordable Care Act (ACA) contains descriptions of death panels | 278 | - | - | - | - | −0.15 | WP | O | −0.93 | −0.91 | ND |
| Bullock, 2007 | ||||||||||||
| Experiment 1 | Positions of political candidates on policy arguments about Medicaid | 204 | 48 | - | - | - | 0.93 | T | O | −0.04 | −0.37 | ND |
| Experiment 1 | Positions of political candidates on policy arguments about Medicaid | 209 | 48 | - | - | - | 0.32 | T | O | −0.04 | −0.37 | ND |
| Experiment 2 | Positions of political candidates on policy arguments about Medicaid | 58 | 74 | - | - | - | 0.25 | T | O | −0.04 | −0.37 | ND |
| Experiment 2 | Positions of political candidates on policy arguments about Medicaid | 173 | 74 | - | - | - | −0.63 | T | O | −0.04 | −0.37 | ND |
| Experiment 3 | Positions of political candidates on spending on social services, protecting the environment versus job, and government aid to blacks | 100 | 64 | - | - | - | 0.36 | T | O | −0.04 | −0.37 | - |
| Experiment 3 | Positions of political candidates on spending on social services, protecting the environment versus job, and government aid to blacks | 165 | 64 | - | - | - | 0.20 | T | O | −0.04 | −0.37 | - |
| Ecker, Lewandowsky, & Tang, 2010 | ||||||||||||
| Experiment 1 | Report about the causes of a minibus accident | 50 | 76 | 19.1 | - | 0.42 | 3.26 | JA | L | −0.04 | −0.37 | D |
| Experiment 1 | Report about the causes of a minibus accident | 50 | 76 | 19.1 | - | 0.74 | 2.71 | JA | L | −0.04 | −0.37 | D |
| Experiment 1 | Report about the causes of a minibus accident | 50 | 76 | 19.1 | - | 1.19 | 1.77 | JA | L | −0.04 | 0.18 | D |
| Experiment 1 | Report about the causes of a minibus accident | 50 | 76 | 19.1 | - | 1.23 | 1.69 | JA | L | −0.04 | −0.37 | D |
| Experiment 2 | Report about the causes of a minibus accident | 92 | 72 | 19.9 | 3.31 | 2.34 | 0.83 | JA | L | −0.04 | 0.18 | D |
| Ecker, Lewandowsky, & Apai, 2011 | ||||||||||||
| Experiment 1 | Report about the causes of a plane crash | 20 | - | - | 9.89 | - | - | JA | L | −0.93 | −0.91 | - |
| Experiment 1 | Report about the causes of a plane crash | 20 | - | - | 8.91 | - | - | JA | L | −0.93 | −0.91 | - |
| Experiment 1 | Report about the causes of a plane crash | 30 | - | - | - | −1.31 | 10.38 | JA | L | −0.04 | −0.91 | D |
| Experiment 1 | Report about the causes of a plane crash | 30 | - | - | - | −0.73 | 6.45 | JA | L | −0.04 | 1.21 | D |
| Experiment 1 | Report about the causes of a plane crash | 30 | - | - | - | 4.74 | 1.61 | JA | L | −0.04 | −0.91 | D |
| Experiment 1 | Report about the causes of a plane crash | 30 | - | - | - | 4.55 | 1.04 | JA | L | −0.04 | 1.21 | D |
| Experiment 2 | Report about the causes of a plane crash | 32 | 85 | 21.4 | 2.49 | - | - | JA | L | −0.93 | −0.91 | - |
| Experiment 2 | Report about the causes of a plane crash | 32 | 85 | 21.4 | 1.34 | - | - | JA | L | −0.93 | −0.91 | - |
| Experiment 2 | Report about the causes of a plane crash | 48 | 85 | 21.4 | - | 0.87 | 0.80 | JA | L | −0.04 | −0.91 | D |
| Experiment 2 | Report about the causes of a plane crash | 48 | 85 | 21.4 | - | 0.98 | 0.64 | JA | L | −0.04 | −0.91 | D |
| Experiment 2 | Report about the causes of a plane crash | 64 | 85 | 21.4 | - | 1.03 | 0.50 | JA | L | −0.04 | 1.21 | D |
| Experiment 2 | Report about the causes of a plane crash | 64 | 85 | 21.4 | - | 0.88 | 0.81 | JA | L | −0.04 | 1.21 | D |
| Ecker, Lewandowsky, Swire, & Chang, 2011 | ||||||||||||
| Experiment 1 | Report about the causes of a warehouse fire accident | 69 | 67 | - | 1.55 | 0.72 | 0.83 | JA | L | 2.22 | 1.21 | - |
| Experiment 1 | Report about the causes of a warehouse fire accident | 69 | 67 | - | 1.55 | 1.17 | 0.38 | JA | L | 2.22 | 1.21 | - |
| Experiment 1 | Report about the causes of a warehouse fire accident | 69 | 67 | - | 1.2 | 0.76 | 0.44 | JA | L | 2.22 | 1.21 | - |
| Experiment 1 | Report about the causes of a warehouse fire accident | 69 | 67 | - | 1.2 | 0.79 | 0.41 | JA | L | 2.22 | 1.21 | - |
| Experiment 2 | Report about the causes of a warehouse fire accident | 46 | 69 | - | - | 0.33 | - | JA | L | 1.33 | 1.21 | - |
| Experiment 2 | Report about the causes of a warehouse fire accident | 46 | 69 | - | - | 0.29 | - | JA | L | 1.33 | 1.21 | - |
| Experiment 2 | Report about the causes of a warehouse fire accident | 46 | 69 | - | - | 0.46 | - | JA | L | 1.33 | 1.21 | - |
| Experiment 2 | Report about the causes of a warehouse fire accident | 46 | 69 | - | - | 0.35 | - | JA | L | 1.33 | 1.21 | - |
| Ecker et al., 2014 | ||||||||||||
| Experiment 1 | Report about the person responsible for a liquor store robbery | 72 | 67 | 19 | 2.28 | 0.65 | 1.28 | JA | L | −0.04 | −0.91 | D |
| Experiment 1 | Report about the person responsible for a liquor store robbery | 72 | 67 | 19 | 1.68 | 0.93 | 1.12 | JA | L | −0.04 | −0.91 | D |
| Experiment 2 | Report about the person responsible for an attempted bank robbery | 50 | 69 | 19 | - | 0.69 | - | JA | L | −0.04 | −0.91 | D |
| Experiment 2 | Report about the person responsible for an attempted bank robbery | 50 | 69 | 19 | - | 0.73 | - | JA | L | −0.04 | −0.91 | D |
| Johnson & Seifert, 1994 | ||||||||||||
| Experiment 1 | Report about the causes of a warehouse fire accident | 20 | - | - | - | - | 1.95 | JA | L | −0.04 | 1.21 | D |
| Experiment 1 | Report about the causes of a warehouse fire accident | 20 | - | - | - | - | 1.52 | JA | L | −0.04 | 1.21 | D |
| Experiment 1.5 | Report about the causes of a warehouse fire accident | 20 | - | - | - | - | 1.12 | JA | L | −0.04 | 1.21 | D |
| Experiment 1.5 | Report about the causes of a warehouse fire accident | 20 | - | - | - | - | 0.98 | JA | L | −0.04 | 1.21 | D |
| Experiment 2 | Report about the causes of a warehouse fire accident | 60 | - | - | 2.74 | 0.54 | 1.91 | JA | L | −0.04 | 1.21 | ND |
| Experiment 3 | Report about the causes of a police investigation into a theft at a private home | 81 | - | - | 0.96 | −0.11 | 0.96 | JA | L | −0.04 | 1.21 | ND |
| Experiment 3.5 | Report about the causes of a police investigation into a theft at a private home | 27 | - | - | 0.57 | JA | L | −0.04 | 1.21 | D | ||
| Thorson, 2013 | ||||||||||||
| Experiment 1 | Report of a political candidate who accepted campaign donations from a convicted felon | 157 | - | - | 5.71 | 2.88 | 1.22 | T | O | −0.93 | −0.91 | ND |
| Experiment 2 | Report of a political candidate who accepted campaign donations from a convicted felon | 240 | - | - | 4.62 | 3.40 | 0.91 | T | O | −0.93 | −0.91 | D |
| Experiment 2 | Report of a political candidate who accepted campaign donations from a convicted felon | 234 | - | - | 3.98 | 3.97 | −0.32 | T | O | −0.93 | −0.91 | D |

Note. Publication status (WP = working paper, T = thesis, JA = journal article); Online vs. lab data collection (O = online data collection, L = lab data collection); Generation of explanations in line with the misinformation; Generation of counter-arguments to the misinformation; Detail of debunking messages (ND = not detailed debunking information, D = detailed debunking information).

Audience factors

Two trained raters coded the generation of explanations in line with the misinformation as directly induced by experimental procedures (explicit procedure = 2; no explicit procedure = 1). They also judged whether there were instructions and/or experimental settings likely to spontaneously activate explanations in line with the misinformation (low likelihood = 1, moderate likelihood = 2, and high likelihood = 3). For example, Ecker, Lewandowsky, Swire, and Chang’s (2011) Experiment 1 was assigned a 2 (= explicit procedure) for explicit experimental procedure because the misinformation was repeated 1–3 times across conditions. The same experiment was assigned a 3 (= high likelihood) for spontaneous generation of explanations because participants were instructed to complete an open-ended questionnaire with causal inference questions (e.g., “What could have caused the explosions?”). In contrast, Berinsky’s (2012) report included neither an explicit procedure to strengthen the reception of the misinformation nor questionnaires to induce inferences about the misinformation. Therefore, this report was assigned a 1 for both variables. The standardized scores of these two variables were averaged into a composite index to represent the overall likelihood of explanations in line with the misinformation (see Table 1 for sample indexes).

The raters followed a similar scheme to code the generation of counter-arguments to the misinformation after participants received the debunking message; generating counter-arguments includes generating causal alternatives. First, they coded whether counter-arguments were directly induced by the experimental procedures (explicit procedure = 2; no explicit procedure = 1). Second, they coded whether counter-arguments were indirectly induced by the experimental setting (low likelihood = 1, moderate likelihood = 2, high likelihood = 3). For example, in Ecker, Lewandowsky, and Tang’s (2010) study, the debunking message was presented one time and did not elaborate on the multiple explanations supporting the information. Thus, the experimental procedure was coded as 1 (= no explicit procedure). However, participants were instructed to complete open-ended questions to make inferences about the misinformation after receiving the debunking message. Therefore, this study was coded 2 (= moderate likelihood) for spontaneous generation of counter-arguments. We then averaged the standardized scores of the direct and indirect codes as an overall index of generation of counter-arguments.
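The composite indexes described above (standardize the two coded variables, then average them) can be sketched as follows. This is only an illustration: the codes are hypothetical, and the use of the population standard deviation for standardization is an assumption, since the text does not state which form was used.

```python
from statistics import mean, pstdev


def zscores(values):
    """Standardize a list of codes (population SD; an assumption, see lead-in)."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]


# Hypothetical codes for four samples.
explicit_procedure = [2, 1, 1, 2]       # 2 = explicit procedure, 1 = none
spontaneous_likelihood = [3, 1, 2, 3]   # 1 = low, 2 = moderate, 3 = high

# Composite index: mean of the two standardized codes for each sample.
composite_index = [
    (a + b) / 2
    for a, b in zip(zscores(explicit_procedure), zscores(spontaneous_likelihood))
]
```

Because each component is standardized before averaging, the two codes contribute equally to the index even though they use different numbers of scale points.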

Level of detail of the debunking message

The two raters also coded whether the debunking message simply labeled the initial information as incorrect (a score of 1 = not detailed), or provided detailed information (a score of 2 = detailed). For example, the debunking message presented in Ecker, Lewandowsky, and Apai’s (2011) experiments was assigned a 2 because new information was provided (i.e., “The actual cause was determined to be a faulty fuel tank,” p. 287).

Analytic Procedures

The present study aimed to compare the effects of misinformation, debunking, and misinformation persistence, and we performed three separate meta-analyses (see chapter 25 in Borenstein et al., 2009). We first assessed publication/inclusion bias and analyzed the weighted mean magnitudes (d.) of the effect sizes using fixed-effects and random-effects models estimated with maximum-likelihood methods. Then, we used Cochran’s Q tests and I² statistics to determine whether the population of effect sizes was heterogeneous across samples (Hedges & Olkin, 1985) and performed three-level meta-analyses (i.e., nested by reports) to estimate the heterogeneity level and control for dependency among studies from a single report. Finally, we conducted moderator analyses to explain the non-sampling variance in the effects. For descriptive purposes, we followed Cohen’s (1988) definitions of effect sizes, i.e., small effect: 0.10 < d ≤ 0.20, medium effect: 0.20 < d ≤ 0.50, and large effect: 0.50 < d ≤ 0.80, to interpret the results.
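To make the pooling and heterogeneity statistics concrete, the sketch below computes an inverse-variance fixed-effects mean, Cochran’s Q, I², and a random-effects mean. Note that it substitutes the DerSimonian-Laird moment estimator of τ² for the maximum-likelihood estimation reported here, purely to keep the example short, and it does not implement the three-level model. The function name `pool_effects` and the input values are hypothetical.

```python
def pool_effects(effects, variances):
    """Pool effect sizes; tau2 uses DerSimonian-Laird (not ML), see lead-in."""
    k = len(effects)
    # Fixed-effects model: inverse-variance weights.
    w = [1 / v for v in variances]
    fixed = sum(wi * d for wi, d in zip(w, effects)) / sum(w)
    # Cochran's Q: weighted squared deviations from the fixed-effects mean.
    q = sum(wi * (d - fixed) ** 2 for wi, d in zip(w, effects))
    df = k - 1
    # I2: percentage of total variability not attributable to sampling error.
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    # Moment estimate of the between-sample variance, truncated at zero.
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    # Random-effects model: weights add tau2 to each sampling variance.
    w_re = [1 / (v + tau2) for v in variances]
    random = sum(wi * d for wi, d in zip(w_re, effects)) / sum(w_re)
    return {"fixed": fixed, "random": random, "Q": q, "I2": i2, "tau2": tau2}


# Hypothetical effect sizes and sampling variances for four samples.
result = pool_effects([0.2, 0.8, 1.5, 2.4], [0.04, 0.05, 0.06, 0.10])
```

When τ² is large relative to the sampling variances, the random-effects weights become nearly equal, which is one reason fixed-effects and random-effects means can diverge sharply in heterogeneous literatures.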

Results

Descriptions of Studies and Conditions

All reports were conducted between 1994 and 2015 and yielded 52 experimental conditions and 26 control conditions. The synthesized experiments concerned a variety of news. Eight reports used false social and political news, including reports of robberies (Ecker, Lewandowsky, Fenton, & Martin, 2014), the investigations of the warehouse fire (Ecker, Lewandowsky, Swire, et al., 2011; Johnson & Seifert, 1994) and traffic accidents (Ecker, Lewandowsky, & Apai, 2011; Ecker et al., 2010), the death panel descriptions of the 2010 Affordable Care Act (Berinsky, 2012), positions of political candidates on arguments about Medicaid (Bullock, 2007), and whether a political candidate had received donations from a convicted felon (Thorson, 2013). Table 1 presents a summary of characteristics for each meta-analyzed condition. The average number of participants was 132 (SD = 174). Most samples were collected in laboratory settings (69.2%), followed by third-party online platforms (30.8%). The average percentage of females was 72 (SD = 9.57), and the average age was 20 years old (SD = 1.16).

Mean Effect Sizes and Heterogeneity

Mean weighted analyses were used to estimate the misinformation effect, the debunking effect, and the misinformation-persistence effect (k = 52; total N = 6,878), using fixed-effects models, random-effects models, and random-effects models nested by reports. We followed the detection procedure proposed by Viechtbauer and Cheung (2010) to examine the influence of outliers with exceptionally large effect sizes (d > 5.50) for the misinformation and misinformation-persistence effects. We estimated all mean effects with and without the outliers, and the estimates were significant in both cases (see Table 2). Furthermore, the I² statistics indicated that non-sampling variability accounted for about 99% of the total variability in all cases (see Table 2).

Table 2.

Results of Effect Size Estimates With (Top Panel) and Without (Bottom Panel) Outliers

| Effect | k | Fixed-Effects Model: d. | Fixed-Effects Model: 95% CI | Fixed-Effects Model: Q | Random-Effects Model: d. | Random-Effects Model: 95% CI | Random-Effects Model: τ² | Random-Effects Model: I² | Three-Level Random-Effects Model: cluster | Three-Level Random-Effects Model: df | Three-Level Random-Effects Model: d. | Three-Level Random-Effects Model: 95% Wald CI | Three-Level Random-Effects Model: I²(2) | Three-Level Random-Effects Model: I²(3) | Random-Effects Model with Weights: d. | Random-Effects Model with Weights: 95% CI | Random-Effects Model with Weights: τ² | Random-Effects Model with Weights: I² |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| All Effects | ||||||||||||||||||
| Misinformation | 16 | 2.04 | 1.93 – 2.14 | 590.44*** | 3.08 | 2.02 – 4.15 | 4.48 (1.66) | 98.99 | 0 | 14 | 3.08 | 2.00 – 4.15 | 98.99 | - | 2.94 | 1.80 – 4.08 | 4.48 (1.66) | 98.99 |
| Debunking | 30 | 0.88 | 0.81 – 0.93 | 1031.21*** | 1.14 | 0.68 – 1.61 | 1.65 (0.44) | 98.45 | 30 | 28 | 1.14 | 0.68 – 1.61 | 98.45 | - | 1.33 | 0.62 – 2.04 | 2.28 (0.72) | 98.76 |
| Misinformation-Persistenceᵃ | 42 | 0.09 | 0.07 – 0.12 | 1701.81*** | 0.97 | 0.60 – 1.35 | 1.46 (0.33) | 99.47 | 8 | 39 | 0.92 | 0.40 – 1.44 | 63.35 | 35.93 | 1.06 | 0.68 – 1.44 | 1.38 (0.32) | 99.45 |
| Effects Without Outliers | ||||||||||||||||||
| Misinformationᵇ | 14 | 2.01 | 1.91 – 2.12 | 540.03*** | 2.46 | 1.73 – 3.19 | 1.87 (0.77) | 97.91 | 6 | 11 | 2.49 | 1.53 – 3.45 | 24.82 | 72.78 | 2.41 | 1.63 – 3.20 | 1.87 (0.72) | 97.91 |
| Misinformation-Persistenceᶜ | 40 | 0.09 | 0.06 – 0.12 | 1584.65*** | 0.75 | 0.50 – 1.00 | 0.60 (0.14) | 99.47 | 8 | 37 | 0.79 | 0.36 – 1.23 | 39.18 | 59.49 | 0.77 | 0.48 – 1.05 | 0.62 (0.16) | 98.91 |

Note. k = number of samples; d. = mean Hedges’ d; 95% CI = 95% confidence interval; Q = Cochran’s Q test; τ² = estimated amount of total heterogeneity (and standard error); I² = level of between-sample heterogeneity; Random-effects model with weights used standardized N residuals as weights; - = no estimation of effect size was performed because no missing study was identified on the left-hand side of the funnel plot in Figure 2; df = degrees of freedom; I²(2) = the amount of variance explained at level 2 (records); I²(3) = the amount of variance explained at level 3 (studies); ᵃ = significant likelihood ratio test between level 2 and level 3 models, χ² = 7.98, df = 1, p = .005; ᵇ = significant likelihood ratio test between level 2 and level 3 models, χ² = 8.34, df = 1, p = .004; ᶜ = significant likelihood ratio test between level 2 and level 3 models, χ² = 20.92, df = 1, p < .001. * p < .05, ** p < .01, *** p < .001.

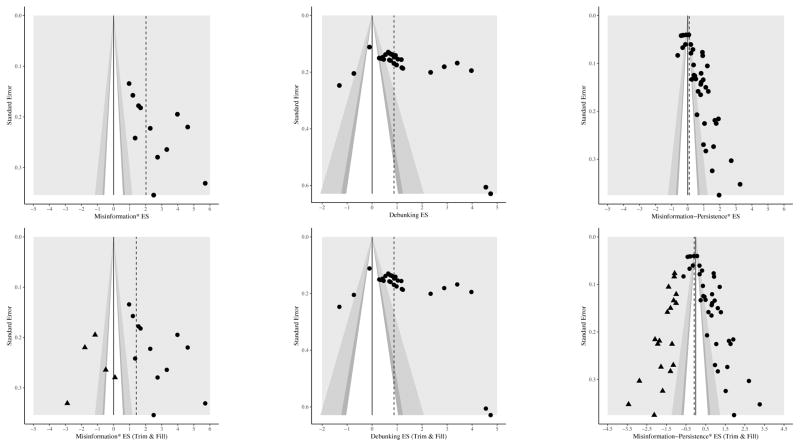

Assessment of Bias

Given the substantial degree of heterogeneity, we performed multiple sensitivity analyses to assess bias (see Table 3), including contour-enhanced funnel plots (Peters, Sutton, Jones, Abrams, & Rushton, 2008), trim and fill (Duval, 2005), selection models (Vevea & Woods, 2005), meta-regression with publication status as a moderator, p-curves (Simonsohn, Simmons, & Nelson, 2015), and p-uniform tests (van Assen, van Aert, & Wicherts, 2015). Table 3 summarizes the results of these analyses. Some of the methods suggested bias, whereas others did not. To be conservative, we explored the sources of this potential bias and corrected for it in later analyses.

Table 3.

Overview of Sensitivity Analyses for the Effects of Misinformation, Debunking, and Misinformation-Persistence

| Effect Sizes | All | Without Outliers | Any Indication of Bias |

|---|---|---|---|

| A. Contoured-Enhanced Funnel Plot: Funnel plots are scatter plots of the effects estimated from individual records against a measure of study size. Asymmetrical funnel plots suggest publication bias (Sterne & Harbord, 2004). Contour lines, which indicate levels of statistical significance (e.g., < .10, < .05,< .01), are added to funnel plots (Peters et at. 2008). FEM was used. | |||

| Misinformation | Asymmetric funnel plot with records fall outside the funnel | Asymmetric funnel plot with records fall outside the funnel | Yes, see Figure 2 |

| Debunking | Asymmetric funnel plot with records fall outside the funnel | - | Yes, see Figure 2 |

| Misinformation-Persistence | Asymmetric funnel plot with records fall outside the funnel | Asymmetric funnel plot with records fall outside the funnel | Yes, see Figure 2 |

|

| |||

| B. Trim and Fill Method: A nonparametric method to correct funnel plot asymmetry by removing the smaller records that cause the asymmetry, re-estimating the center of the effect sizes, and filling the omitted records to ensure that the funnel plot is more symmetrical (Borenstein et al., 2009; Duval, 2005). FEM was used. | |||

| Misinformation | Six estimated records filled on the left | Five estimated records filled on the left | Yes, see Figure 2 |

| Debunking | Zero estimated records filled on the left | - | No, see Figure 2 |

| Misinformation-Persistence | Nineteen estimated records filled on the left | Nineteen estimated records filled on the left | Yes, see Figure 2 |

|

| |||

| C. Selection Models: The weight-function models accounting for that effect sizes may not have the same probability of being published. Based on different probabilities, a selection model adjusts estimates of the mean effect size and can be compared with the unadjusted one to assess publication bias (Vevea & Woods, 2005). REM was used. | |||

| Misinformation | Small differences between unadjusted and adjusted estimates | Small differences between unadjusted and adjusted estimates | No, see Supplementary Information |

| Debunking | Large differences between unadjusted and adjusted estimates | - | Yes, see Supplementary Information |

| Misinformation-Persistence | Small differences between unadjusted and adjusted estimates | Small differences between unadjusted and adjusted estimates | No, see Supplementary Information |

|

| |||

| D. Meta-Regression Model: Publication type can be examined formally as in a moderator analysis. When data are selectively reported in a way that is related to the magnitude of the effect size (e.g., when results are only reported when they are statistically significant), such a variable can have biasing effects (Borenstein et al., 2009). REM was used. | |||

|

| |||

| Misinformation | Publication type was a significant moderator | Publication type was a significant moderator | Yes, see Supplementary Information |

| Debunking | Publication type was a significant moderator | - | Yes, see Supplementary Information |

| Misinformation-Persistence | Publication type was a significant moderator | Publication type was a significant moderator | Yes, see Supplementary Information |

|

| |||

| E. p-Curve: This method involves plotting the distribution of p-values reported in a set of studies. The analysis combines the half (.025) and full p-curve to make inferences about evidential value (Simonsohn, Simmons, & Nelson, 2015). | |||

| Misinformation | P-curve was right-skewed | P-curve was right-skewed | No, see Supplementary Information |

| Debunking | P-curve was right-skewed | - | No, see Supplementary Information |

| Misinformation-Persistence | P-curve was right-skewed | P-curve was right-skewed | No, see Supplementary Information |

|

| |||

| F. p-Uniform: This analysis shares the underlying assumption that the distribution of p values is uniform under the null hypothesis that the effect size equals the true effect size (van Assen et al., 2015). P method was used. | |||

| Misinformation | L.pb test was nonsignificant | L.pb test was nonsignificant | No, see Supplementary Information |

| Debunking | L.pb test was nonsignificant | - | No, see Supplementary Information |

| Misinformation-Persistence | L.pb test was nonsignificant | L.pb test was nonsignificant | No, see Supplementary Information |

To identify correlates of potential bias, we identified correlates of differences in sample sizes

We first conducted correlation analyses between sample size and methodological factors that had relatively complete data (missing values in less than 5% of the selected reports). Table 4 shows that sample size correlated with several methodological factors, including explanations in line with the misinformation and counter-arguments to the misinformation. Therefore, we then used the results from Table 4 to reduce the bias related to Ns and also reduce the potential influence of Ns in moderator analyses. Based on the multiple regression analyses in Table 4, we calculated standardized residuals to remove the influence of the covariates on sample size. Those residuals were then used to represent sample size N in a way that is independent of the effect of the methodological and publication factors. Specifically, we estimated a weight for each sample by referencing the smallest standardized residual, i.e., [standardized residual – minimum (standardized residuals) + 0.0001]. The weighted model is likely to mitigate the influence of sample sizes as a potential source of bias, which led us to also repeat all the analyses of misinformation and persistence with these weights included (see Footnote 1). Specifically, we calculated mean effect sizes for all the effects of misinformation, debunking, and misinformation-persistence using REM with the standardized residuals of sample size introduced as weights (WREM). Table 2 presents these results, which were similar to the earlier ones. Moderator analyses were also replicated with these weights and are reported in turn (see Table 5).
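The weighting step described above can be sketched in a few lines. This is a minimal illustration rather than the authors' code: it regresses sample size on a single covariate instead of the multiple regression in Table 4, standardizes residuals by their sample standard deviation (an assumption, since the standardization is not specified), and uses made-up sample sizes and publication codes. The function names `standardized_residuals` and `residual_weights` are hypothetical.

```python
from statistics import mean, stdev

def standardized_residuals(n, x):
    """Residuals of N regressed on one covariate, divided by their SD.

    Simplification: a single predictor stands in for the multiple
    regression reported in Table 4.
    """
    mx, mn = mean(x), mean(n)
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sum((xi - mx) * (ni - mn) for xi, ni in zip(x, n)) / sxx
    intercept = mn - slope * mx
    resid = [ni - (intercept + slope * xi) for xi, ni in zip(x, n)]
    sd = stdev(resid)  # assumption: sample SD used for standardization
    return [r / sd for r in resid]

def residual_weights(z, offset=0.0001):
    """Weight = standardized residual - minimum standardized residual + offset."""
    lowest = min(z)
    return [zi - lowest + offset for zi in z]

# Hypothetical data: sample sizes and an unpublished (1) vs. published (0) code.
sample_sizes = [40, 55, 60, 120, 300, 45]
unpublished = [0, 0, 0, 1, 1, 0]

weights = residual_weights(standardized_residuals(sample_sizes, unpublished))
print([round(w, 4) for w in weights])
```

Shifting by the minimum makes every weight strictly positive: the sample with the smallest residual receives the offset itself, and all other samples receive larger weights.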

Table 4.

Results of Correlational and Multiple Regression Analyses Predicting N

| | Misinformation | Debunking | Misinformation-Persistence |

|---|---|---|---|

| Simple Correlations | |||

| df | 14 | - | 40 |

| Publication Status | .91*** | - | .63*** |

| Online vs. Lab Data Collection | .91*** | - | .63*** |

| Publication Year | .23 | - | .36* |

| Explanations in line with the Misinformation | −.21 | - | −.50*** |

| Counterarguments to the Misinformation | - | - | −.52*** |

| Unstandardized Multiple Regression Coefficients | |||

| df | 14 | - | 36 |

| Publication Status | 169.78***a | - | 462.22***b 118.48**a |

| Online vs. Lab Data Collection | n.a. | - | n.a. |

| Publication Year | −0.82 | - | 2.15 |

| Explanations in line with the Misinformation | 10.62 | - | −2.63 |

| Counterarguments to the Misinformation | - | - | 5.49 |

Note. df = degrees of freedom; a = coefficients of dissertations compared to journal articles; b = coefficient of working papers compared to journal articles. Results are about the same if explanations in line with the misinformation and counter-argument generation are excluded (see Footnote 1). *p < .05, **p < .01, ***p < .001.

Table 5.

Results of Moderator Analyses of all Effects, Effects Nested by Reports, and Effects without Outliers

| Variables | Misinformation (MEM) | Misinformation (WMEM) | Debunking (MEM) | Misinformation-Persistence (MEM) | Misinformation-Persistence (WMEM) |

|---|---|---|---|---|---|

| All Effects | |||||

| Intercept | 3.17*** (0.42) | 3.07*** (0.45) | −0.74 (0.61) | 0.88* (0.39) | 1.08** (0.40) |

| Explanations in line with the Misinformation | −0.97** (0.33) | −0.98** (0.34) | −4.08*** (0.72) | 1.40* (0.58) | 2.09*** (0.62) |

| Counter-arguments to the Misinformation | n.a. | n.a. | 0.93*** (0.26) | −0.36 (0.24) | −0.68** (0.24) |

| Level of Detail of Debunking Messagea | n.a. | n.a. | 1.82** (0.64) | 0.86* (0.42) | 1.06* (0.43) |

| QM | 8.87** (14) | 8.30** (14) | 33.64*** (17) | 17.30*** (31) | 26.36*** (31) |

| τ2, I2 | 2.54 (0.96), 98.18 | 2.54 (0.96), 98.18 | 0.79 (0.26), 96.36 | 1.03 (0.26), 99.30 | 1.03 (0.26), 99.30 |

| R2 | 43.28 | 43.28 | 65.33 | 44.05 | 44.05 |

|

| |||||

| All Effects Nested by Reports | |||||

| Intercept | 3.17*** (0.42) | - | −0.74 (0.61) | 0.88* (0.39) | - |

| Explanations in line with the Misinformation | −0.97** (0.33) | - | −4.08*** (0.72) | 1.40* (0.58) | - |

| Counter-arguments to the Misinformation | n.a. | - | 0.93*** (0.26) | −0.36 (0.24) | - |

| Level of Detail of Debunking Messagea | n.a. | - | 1.82** (0.64) | 0.86* (0.42) | - |

| QM | 8.87** (14) | - | 33.64*** (17) | 17.30*** (31) | - |

| τ2, I2 | 2.54 (0.96), 98.18 | - | 0.79 (0.26), 96.36 | 1.03 (0.26), 99.30 | - |

| R2 | 43.28 | - | 65.33 | 44.05 | - |

| Likelihood-Ratio Test (χ2, df) | −0.00, 1 | - | −0.00, 1 | −0.00, 1 | - |

|

| |||||

| Effects Without Outliers | |||||

| Intercept | 2.65*** (0.30) | 2.60*** (0.33) | - | 0.68** (0.23) | 0.72** (0.24) |

| Explanations in line with the Misinformation | −0.65** (0.23) | −0.64** (0.24) | - | 0.67† (0.35) | 0.82* (0.36) |

| Counter-arguments to the Misinformation | n.a. | n.a. | - | 0.09 (0.15) | 0.08 (0.15) |

| Level of Detail of Debunking Messagea | n.a. | n.a. | - | 0.51* (0.25) | 0.52* (0.25) |

| QM | 8.12** (12) | 6.84** (13) | - | 23.38*** (29) | 23.47*** (29) |

| τ2, I2 | 1.16 (0.46), 96.61 | 1.16 (0.46), 96.61 | - | 0.34 (0.09), 98.07 | 0.34 (0.09), 98.07 |

| R2 | 38.03 | 38.03 | - | 44.66 | 44.66 |

Note. Entries in the top panel correspond to unstandardized beta weights with standard errors between parentheses. a: 1 = labeling initial information as incorrect, 2 = providing new and credible information; QM = test of moderators (degrees of freedom); τ2 = estimated amount of total heterogeneity (and standard error); I2 = level of between-sample heterogeneity; R2 = proportion of variance explained; MEM = Mixed-Effects Model; WMEM = Mixed-Effects Model weighted by standardized N residuals. †p < .10, *p < .05, **p < .01, ***p < .001.

Moderator Analyses

We used meta-regressions to analyze the effects of the moderators on the misinformation, debunking, and persistence effects and summarized the results in Table 5. As shown in the middle panel of Table 5, the likelihood-ratio tests were nonsignificant for all effects, suggesting that the non-nested models with moderators better represent the data than the nested ones. Table 6 presents effect sizes for debunking and misinformation persistence across moderator levels.

Table 6.

Effect Size Estimates for Debunking and Misinformation Persistence Across Levels of Moderator Variables using WREM with Weights

| | Debunking (a) | Misinformation-Persistence (b) |

|---|---|---|

| Explanations in line with the misinformation | ||

| High | 0.62 (18) | 1.72 (25) |

| Low | 3.77 (3) | −0.14 (10) |

| Counter-arguments to the misinformation | ||

| High | 1.48 (8) | 0.46 (13) |

| Low | 0.73 (11) | 1.58 (22) |

| Detail of Debunking Message | ||

| Labeling information source as incorrect | 0.16 (3) | 0.55 (14) |

| Providing detailed debunking information | 1.25 (18) | 1.58 (21) |

Note. Table entries are ds, with the number of samples in parentheses; a = inverse variances of the effect sizes were included as weights; b = the standardized residuals of sample size were included as weights. Means above and below .25 standard deviations were used to group high (vs. low) conditions.
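The high versus low grouping mentioned in the note to Table 6 can be illustrated with a short sketch. It assumes one reading of the rule: a sample counts as "high" when its moderator score lies more than .25 standard deviations above the mean and as "low" when it lies more than .25 standard deviations below it, with scores in between left ungrouped. The function name `split_high_low`, the "middle" label, and the ratings are hypothetical.

```python
from statistics import mean, stdev

def split_high_low(scores, band=0.25):
    """Label each score relative to mean +/- band * SD (assumed rule)."""
    m, sd = mean(scores), stdev(scores)
    groups = []
    for s in scores:
        if s > m + band * sd:
            groups.append("high")
        elif s < m - band * sd:
            groups.append("low")
        else:
            groups.append("middle")  # within the band, not assigned to a group
    return groups

# Hypothetical moderator ratings for five samples.
ratings = [1.0, 1.5, 2.0, 2.5, 3.0]
groups = split_high_low(ratings)
print(groups)
```

Under this reading, samples near the mean drop out of the comparison, which may explain why the sample counts in Table 6 differ across rows.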

Elaboration in line with the misinformation

We first examined whether generating explanations in line with the misinformation would moderate our misinformation, debunking, and misinformation-persistence effects. A meta-regression analysis with the misinformation effect as the outcome variable revealed an inverse association with the generation of explanations in line with the misinformation (WMEM: b = −0.98, 95% CI [−1.61, −0.33]; MEM: b = −0.97, 95% CI [−1.64, −0.31]). Specifically, the more likely recipients were to generate explanations supporting the misinformation, the weaker the misinformation effect was. This effect was unexpected because elaborating on information generally increases its impact when the message is strong (Cacioppo, Petty, & Crites, 1994; Petty & Briñol, 2010). Still, this effect does not change the interpretation of the more important results concerning the debunking and misinformation-persistence effects.

Our meta-regression analysis of the debunking effect revealed a negative association with the generation of explanations supporting the misinformation (MEM: b = −4.08, 95% CI [−5.50, −2.66]). As expected, the greater the elaboration in line with the misinformation, the weaker the later debunking effect. Furthermore, we found the anticipated moderation of the misinformation-persistence effect. The greater the likelihood of generating explanations in line with the misinformation, the greater the persistence of the misinformation (WMEM: b = 2.09, 95% CI [0.88, 3.30]; MEM: b = 1.40, 95% CI [0.26, 2.54]).
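As a rough check on how the reported intervals relate to Table 5, a 95% interval can be approximated from a coefficient and its standard error. The sketch below assumes a normal-approximation (Wald) interval, which is not confirmed as the method used in the analysis; `wald_ci` is a hypothetical helper name. It uses the MEM persistence coefficient (b = 1.40, SE = 0.58).

```python
from statistics import NormalDist

def wald_ci(b, se, level=0.95):
    """Normal-approximation confidence interval: b +/- z * SE."""
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    return b - z * se, b + z * se

# Coefficient and standard error taken from Table 5 (MEM, misinformation persistence).
low, high = wald_ci(1.40, 0.58)
print(round(low, 2), round(high, 2))
```

Because Table 5 reports coefficients and standard errors rounded to two decimals, intervals recomputed this way can differ from the reported ones in the last digit.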

Elaborating counter-arguments to the misinformation

As the upper panel of Table 5 indicates, results were consistent with our expectations that the likelihood of counterarguing the misinformation when the debunking message is presented would moderate the debunking effect (MEM: b = 0.93, 95% CI [0.42, 1.44]) as well as misinformation persistence (WMEM: b = −0.68, 95% CI [−1.16, −0.20]; MEM: b = −0.36, 95% CI [−0.82, 0.11]). In summary, the debunking effect was stronger and the misinformation persistence was weaker when recipients of the misinformation were more likely to counter-argue the misinformation than when they were not.

Detail of debunking message

We then assessed whether the level of detail of the debunking message affected the debunking effect and misinformation persistence. In line with our expectations, a detailed debunking was associated with a stronger debunking effect than a non-detailed debunking (MEM: b = 1.82, 95% CI [0.57, 3.07]). Contrary to expectations, however, a more detailed debunking message was associated with a stronger misinformation-persistence effect (WMEM: b = 1.06, 95% CI [0.23, 1.90]; MEM: b = 0.86, 95% CI [0.04, 1.67]). This result suggested that using a more detailed debunking message was effective in discrediting the misinformation but was associated with greater misinformation persistence. A post-hoc analysis of the relation between generating explanations for the initial misinformation and the level of detail of the debunking message revealed a large positive correlation, r = .52, df = 33, p = .0015. It seems plausible that the misinformation messages could have been more detailed in studies with more detailed debunking, a possibility that future meta-analyses should investigate.
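The significance test behind a correlation such as r = .52 with df = 33 can be reconstructed from the standard conversion t = r·sqrt(df / (1 − r²)). The sketch below only computes the test statistic; `correlation_t` is a hypothetical helper name, and obtaining the p value would additionally require the t distribution with 33 degrees of freedom.

```python
import math

def correlation_t(r, df):
    """t statistic for testing a Pearson correlation against zero."""
    return r * math.sqrt(df / (1.0 - r * r))

t_stat = correlation_t(0.52, 33)
print(round(t_stat, 2))
```

A statistic of roughly 3.5 on 33 degrees of freedom corresponds to a two-tailed p between .001 and .002, in line with the reported value.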

Discussion

The primary objective of this meta-analysis was to understand the factors underlying effective messages to counter attitudes/beliefs based on misinformation. Examining moderators provided empirical evidence to evaluate recommendations and suggestions for discrediting the false information. Employing Cohen’s effect size guidelines (1988), the findings show large effects for: misinformation, debunking, and misinformation-persistence across estimation methods (see Table 2), except the fixed-effects model of misinformation-persistence. Table 6 also presents effect sizes for debunking and misinformation persistence across moderator levels.
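For readers less familiar with Cohen's (1988) benchmarks, the conventional cutoffs for d are 0.2 (small), 0.5 (medium), and 0.8 (large). The sketch below applies them to the bounds of the persistence range given in the abstract; the function name `cohen_label` and the "negligible" label for values below 0.2 are illustrative choices, not Cohen's terms.

```python
def cohen_label(d):
    """Classify a standardized mean difference by Cohen's conventional cutoffs."""
    size = abs(d)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "negligible"  # illustrative label for values under 0.2

# Lower and upper bounds of the misinformation-persistence range in the abstract.
labels = [cohen_label(d) for d in (0.75, 1.06)]
print(labels)
```

An estimate of 0.75 falls just short of the 0.8 cutoff, which is consistent with the exception noted above for one of the persistence estimates.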

The results of generating explanations in line with the misinformation were consistent with the hypothesis that people who generate arguments supporting misinformation struggle to later question and change their initial attitudes and beliefs. As shown in Table 6, the debunking message was less effective when people were initially more likely to generate explanations supporting the misinformation than when they were not. The results of counterarguing the misinformation also supported predictions. The debunking message was more effective when people were more likely to counter-argue the misinformation than when they were not. Further, the results of detail of debunking messages were consistent with our hypothesis that debunking is more successful when it provides information that enables recipients to update the mental model justifying the misinformation (see Table 6). As expected, the debunking effect was weaker when the debunking message simply labeled misinformation as incorrect rather than when it introduced corrective information. Contrary to expectations, however, the debunking effects of more detailed debunking messages did not translate into reduced misinformation persistence, as the studies with detailed debunking might also have stronger misinformation persistence. In the following paragraphs, we discuss the detection of inclusion bias in our samples, and then present recommendations for uprooting discredited information.

Assessments of Inclusion Bias

Our analyses of publication and methodological correlates suggest that different research practices have been adopted across published and unpublished studies. Contrary to the usual bias (Hopewell, McDonald, Clarke, & Egger, 2007), unpublished samples in our meta-analysis (i.e., working papers and dissertations) had larger sample sizes than did published articles (see Table 4), a relation observed for the misinformation and misinformation-persistence effects. Furthermore, we found moderate to strong associations between sample size and methodological factors, suggesting that part of the bias is due to differences in study characteristics. Such results could also stem from more refined experimental methods, such as pilot testing a particular procedure to establish the required sample size a priori. In other words, such research practices as power analyses may contribute a greater number of studies with larger sample sizes and smaller effect sizes, as found in our study. The inconsistent results of various sensitivity analyses speak to the need for future research to investigate the robustness of various bias detection methods and develop new assessment tools to further understand publication/inclusion bias (Inzlicht, Gervais, & Berkman, 2015; Kepes, Banks, & Oh, 2014; McShane, Böckenholt, & Hansen, 2016; Peters et al., 2010).

Recommendations for Debunking Misinformation

Our results have practical implications for editorial and public opinion practices.

Recommendation 1: Reducing generation of arguments in line with the misinformation

Our findings suggested that elaboration in line with the misinformation reduces the acceptance of the debunking message, making it difficult to eliminate false beliefs. Elaborating on the reasons for a particular event allows recipients to form a mental model that can later bias processing of new information and make undercutting the initial belief difficult (Hart et al., 2009). Therefore, media and policy makers should report on an incident of misinformation (e.g., a retraction report) in ways that reduce detailed thoughts in support of the misinformation.

Recommendation 2: Creating conditions that facilitate scrutiny and counterarguing of misinformation

Our findings highlight the conclusion that counter-arguing the misinformation enhances the power of corrective efforts. Therefore, public mechanisms and educational initiatives should induce a state of skepticism and increase systematic doubt in all transmitted information. Furthermore, when retractions or corrections are issued, facilitating understanding and generation of detailed counter-arguments should yield optimal acceptance of the debunking message.

Recommendation 3: Correcting misinformation with new detailed information but keeping expectations low

The moderator analyses indicated that recipients of misinformation are less likely to accept the debunking messages when the counter messages simply label the misinformation as wrong rather than when they debunk the misinformation with new details (e.g., Thorson, 2013). A caveat is that the ultimate persistence of the misinformation depends on its initial take, and detailed debunking may not always function as expected.

Continuing to Develop Alert Systems and Conclusion

Policy makers should be aware of the likely persistence of misinformation in different areas. Alerting systems such as Factcheck.org exist in the political domain. Importantly, when a Facebook user’s search turns up a story identified as inaccurate by one of the five major fact-checking groups, a newly implemented feature provides links to fact-checking information generated by one of these debunking sites. Debunking journalism exists in the social and health domains as well. For example, Snopes.com has recently published corrections of fake news claiming that a billionaire had purchased the tiny town of Buford. At the same time, science communication scholarship and practice offer some innovative initiatives such as retractionwatch.com, founded in 2010 by Ivan Oransky and Adam Marcus, which provides readers with updated information about scientific retractions. In line with Recommendation 3, Retraction Watch frequently updates readers on the details of retraction investigations online. Such an ongoing monitoring system creates desirable conditions of scrutiny and counter-arguing of misinformation.

This meta-analysis began with a review of relevant literature on the perseverance of attitudes/beliefs and then assessed the impact of moderators on the misinformation, debunking, and misinformation-persistence effects. Compared to results from single experiments, a meta-analysis is a useful catalogue of experimental paradigms, dependent variables, moderators, and other methods factors used in studies in related domains. In light of our findings, we offered three recommendations: (a) reduce arguments that support misinformation, (b) engage audiences in scrutiny and counter-arguing of misinformation, and (c) introduce new information as part of the debunking message. Of course, these recommendations do not take the audience’s dispositional characteristics into account and may be ineffective or less effective for certain ideologies (Lewandowsky et al., 2015) and cultural backgrounds (Sperber, 2009).

Supplementary Material

Figure 2.

Contour-enhanced funnel plots of effect sizes (x-axes) (top panel). Contour-enhanced funnel plots with “filled” records (triangles) (bottom panel) using fixed-effects models. The vertical dashed lines indicate the mean estimates of the fixed-effects model. An asterisk refers to the removal of outliers for misinformation and misinformation-persistence effects.

Footnotes

1. Results of the moderator analyses for the misinformation and misinformation-persistence effects are about the same in strength and direction if explanations in line with the misinformation and counter-argument generation are excluded from the multiple-regression analyses and the estimations of the standardized residual N weights.

Author Contributions

M. S. Chan and C. R. Jones reviewed and coded all relevant studies. M. S. Chan analyzed the data and drafted the manuscript. M. S. Chan, K. Hall-Jamieson, and D. Albarracín provided critical revisions. All authors approved the final version of the manuscript for submission.

Contributor Information

Man-pui Sally Chan, University of Illinois at Urbana-Champaign.

Christopher R. Jones, University of Pennsylvania.

Kathleen Hall-Jamieson, University of Pennsylvania.

Dolores Albarracín, University of Illinois at Urbana-Champaign.

References

References marked with an asterisk indicate reports included in the meta-analysis.

- Arceneaux K. Cognitive biases and the strength of political arguments. American Journal of Political Science. 2012;56(2):271–285. http://doi.org/10.1111/j.1540-5907.2011.00573.x.

- Arceneaux K, Johnson M, Cryderman J. Communication, persuasion, and the conditioning value of selective exposure: Like minds may unite and divide but they mostly tune out. Journal of Political Communication. 2013;30(2). http://doi.org/10.1080/10584609.2012.737424.

- *Berinsky AJ. Rumors, truths, and reality: A study of political misinformation. 2012. Retrieved from http://web.mit.edu/berinsky/www/files/rumor.pdf.

- Borenstein M, Hedges L, Higgins J, Rothstein H. Introduction to meta-analysis. New York, NY: Wiley; 2009.

- *Bullock JG. Experiments on partisanship and public opinion: Party cues, false beliefs, and bayesian updating. Stanford University; 2007. Retrieved from https://books.google.com/books/about/Experiments_on_Partisanship_and_Public_O.html?id=OIhEAQAAIAAJ&pgis=1.

- Cacioppo JT, Petty RE, Crites SLJ. Attitude change. In: Ramachandran VS, editor. Encyclopedia of human behavior. San Diego: Academic Press; 1994. pp. 261–270.

- Chaiken S, Trope Y. Dual-process theories in social psychology. New York: The Guilford Press; 1999.

- Cohen J. Statistical power analysis for the behavioral sciences. 2. Hillsdale, NJ: Erlbaum; 1988.

- Duval S. The “trim and fill” method. In: Rothstein HR, Sutton AJ, Borenstein M, editors. Publication bias in meta-analysis: Prevention, assessment, and adjustments. West Sussex: John Wiley & Sons; 2005. pp. 127–144.

- *Ecker UKH, Lewandowsky S, Apai J. Terrorists brought down the plane!—No, actually it was a technical fault: Processing corrections of emotive information. The Quarterly Journal of Experimental Psychology. 2011;64(2):283–310. http://doi.org/10.1080/17470218.2010.497927.

- *Ecker UKH, Lewandowsky S, Fenton O, Martin K. Do people keep believing because they want to? Preexisting attitudes and the continued influence of misinformation. Memory & Cognition. 2014;42(2):292–304. http://doi.org/10.3758/s13421-013-0358-x.

- *Ecker UKH, Lewandowsky S, Swire B, Chang D. Correcting false information in memory: Manipulating the strength of misinformation encoding and its retraction. Psychonomic Bulletin & Review. 2011;18(3):570–578. http://doi.org/10.3758/s13423-011-0065-1.

- *Ecker UKH, Lewandowsky S, Tang DTW. Explicit warnings reduce but do not eliminate the continued influence of misinformation. Memory & Cognition. 2010;38(8):1087–1100. http://doi.org/10.3758/MC.38.8.1087.

- Ecker UKH, Swire B, Lewandowsky S. Correcting misinformation—A challenge for education and cognitive science. Processing inaccurate information: Theoretical and applied perspectives from cognitive science and the educational sciences. 2014:13–37. Retrieved from http://search.proquest.com/docview/1676367736?accountid=14553.

- Hart W, Albarracín D, Eagly AH, Brechan I, Lindberg MJ, Merrill L. Feeling validated versus being correct: A meta-analysis of selective exposure to information. Psychological Bulletin. 2009;135(4):555–588. http://doi.org/10.1037/a0015701.

- Hedges LV, Olkin I. Statistical methods for meta-analysis. Orlando, FL: Academic; 1985.

- Henig J. False euthanasia claims. 2009. Retrieved March 15, 2017, from http://www.factcheck.org/2009/07/false-euthanasia-claims/

- Hopewell S, McDonald S, Clarke M, Egger M. Grey literature in meta-analyses of randomized trials of health care interventions. The Cochrane Database of Systematic Reviews. 2007;(2):MR000010. http://doi.org/10.1002/14651858.MR000010.pub3.

- Inzlicht M, Gervais W, Berkman E. News of ego depletion’s demise is premature: Commentary on Carter, Kofler, Forster, & Mccullough, 2015. SSRN Electronic Journal. 2015. http://doi.org/10.2139/ssrn.2659409.

- Jerit J. Issue framing and engagement: Rhetorical strategy in public policy debates. Political Behavior. 2008;30(1):1–24. http://doi.org/10.1007/s11109-007-9041-x.

- Johnson-Laird PN. Mental models and probabilistic thinking. Cognition. 1994;50(1–3):189–209. http://doi.org/10.1016/0010-0277(94)90028-0. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/8039361.

- Johnson-Laird PN. Mental models and consistency. In: Gawronski B, Fritz S, editors. Cognitive consistency: A unifying concept in social psychology. New York: Guilford Press; 2013.

- Johnson-Laird PN, Byrne RMJ. Deduction. Hillsdale, NJ: Lawrence Erlbaum; 1991.

- *Johnson HM, Seifert CM. Sources of the continued influence effect: When misinformation in memory affects later inferences. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1994;20(6):1420–1436. http://doi.org/10.1037/0278-7393.20.6.1420.

- Kahneman D. A perspective on judgment and choice: mapping bounded rationality. The American Psychologist. 2003;58(9):697–720. http://doi.org/10.1037/0003-066X.58.9.697.

- Kepes S, Banks GC, Oh IS. Avoiding bias in publication bias research: The value of “null” findings. Journal of Business and Psychology. 2014;29(2):183–203. http://doi.org/10.1007/s10869-012-9279-0.

- Kowalski P, Taylor AK. The effect of refuting misconceptions in the introductory psychology class. Teaching of Psychology. 2009;36(3):153–159. http://doi.org/10.1080/00986280902959986.

- Lewandowsky S, Cook J, Oberauer K, Brophy S, Lloyd EA, Marriott M. Recurrent fury: Conspiratorial discourse in the blogosphere triggered by research on the role of conspiracist ideation in climate denial. Journal of Social and Political Psychology. 2015;3(1):161–197. http://doi.org/10.5964/jspp.v3i1.443.

- Lewandowsky S, Ecker UKH, Seifert CM, Schwarz N, Cook J. Misinformation and its correction: Continued influence and successful debiasing. Psychological Science in the Public Interest. 2012;13(3):106–131. http://doi.org/10.1177/1529100612451018.

- McShane BB, Böckenholt U, Hansen KT. Adjusting for publication bias in meta-analysis: An evaluation of selection methods and some cautionary notes. Perspectives on Psychological Science. 2016;11(5):730–749. http://doi.org/10.1177/1745691616662243.

- Newport F. Americans still think Iraq had weapons of mass destruction before war. 2013. Retrieved February 25, 2016, from http://www.gallup.com/poll/8623/americans-still-think-iraq-had-weapons-mass-destruction-before-war.aspx.

- Newport F. U.S., percentage saying vaccines are vital dips slightly. 2015. Retrieved January 18, 2016, from http://www.gallup.com/poll/181844/percentage-saying-vaccines-vital-dips-slightly.aspx.

- Nyhan B. Why the “death panel” myth wouldn’t die: Misinformation in the health care reform debate. The Forum - A Journal of Applied Research in Contemporary Politics. 2010;8(1). http://doi.org/10.2202/1540-8884.1354.

- Peters JL, Sutton AJ, Jones DR, Abrams KR, Rushton L. Contour-enhanced meta-analysis funnel plots help distinguish publication bias from other causes of asymmetry. Journal of Clinical Epidemiology. 2008;61(10):991–996. http://doi.org/10.1016/j.jclinepi.2007.11.010.

- Peters JL, Sutton AJ, Jones DR, Abrams KR, Rushton L, Moreno SG. Assessing publication bias in meta-analyses in the presence of between-study heterogeneity. Journal of the Royal Statistical Society: Series A (Statistics in Society). 2010;173(3):575–591. http://doi.org/10.1111/j.1467-985X.2009.00629.x.

- Petty RE, Briñol P. Attitude change. In: Baumeister RF, Finkel EJ, editors. Advanced social psychology: The state of science. Oxford: Oxford University Press; 2010. pp. 217–259.

- Schipani V. GMOs didn’t cause Zika outbreak. 2016. Retrieved March 15, 2017, from http://www.factcheck.org/2016/02/gmos-didnt-cause-zika-outbreak/

- Schwarz N, Sanna LJ, Skurnik I, Yoon C. Metacognitive experiences and the intricacies of setting people straight: Implications for debiasing and public information campaigns. Advances in Experimental Social Psychology. 2007;39:127–191. http://doi.org/10.1016/S0065-2601(06)39003-X.

- Simonsohn U, Simmons JP, Nelson LD. Better p-curves: Making p-curve analysis more robust to errors, fraud, and ambitious p-hacking, a reply to Ulrich and Miller (2015). Journal of Experimental Psychology: General. 2015;144(6):1146–1152. http://doi.org/10.1037/xge0000104.

- Slothuus R, de Vreese CH. Political parties, motivated reasoning, and issue framing effects. The Journal of Politics. 2010;72(3):630–645. http://doi.org/10.1017/S002238161000006X.

- Sperber D. Culturally transmitted misbeliefs. Behavioral and Brain Sciences. 2009;32(6):534–535. http://doi.org/10.1017/S0140525X09991348.

- Sterne JAC, Harbord RM. Funnel plots in meta-analysis. The Stata Journal. 2004;4(2):127–141. Retrieved from http://www.stata-journal.com/article.html?article=st0061.

- *Thorson EA. Belief echoes: The persistent effects of corrected misinformation. Dissertations available from ProQuest. 2013. Retrieved from http://repository.upenn.edu/dissertations/AAI3564225.

- van Assen MALM, van Aert RCM, Wicherts JM. Meta-analysis using effect size distributions of only statistically significant studies. Psychological Methods. 2015;20(3):293–309. http://doi.org/10.1037/met0000025.

- Van Damme I, Smets K. The power of emotion versus the power of suggestion: Memory for emotional events in the misinformation paradigm. Emotion. 2014;14(2):310–320. http://doi.org/10.1037/a0034629.

- Vevea JL, Woods CM. Publication bias in research synthesis: Sensitivity analysis using a priori weight functions. Psychological Methods. 2005;10(4):428–443. http://doi.org/10.1037/1082-989X.10.4.428.

- Viechtbauer W, Cheung MWL. Outlier and influence diagnostics for meta-analysis. Research Synthesis Methods. 2010;1(2):112–25. http://doi.org/10.1002/jrsm.11.

- Wilkes AL, Leatherbarrow M. Editing episodic memory following the identification of error. The Quarterly Journal of Experimental Psychology Section A. 1988;40(2):361–387. http://doi.org/10.1080/02724988843000168.
