Abstract

Event-related brain potentials (ERP) are important neural correlates of cognitive processes. In the domain of language processing, the N400 and P600 reflect lexical-semantic integration and syntactic processing problems, respectively. We suggest an interpretation of these markers in terms of dynamical system theory and present two nonlinear dynamical models for syntactic computations where different processing strategies correspond to functionally different regions in the system’s phase space.

Keywords: Computational psycholinguistics, Language processing, Event-related brain potentials, Dynamical systems

Introduction

How are symbolic processing capabilities such as language realized by neural networks in the human brain? This is one of the most important problems in cognitive neurodynamics. Although methods such as event-related brain potentials (ERPs), event-related fields (ERFs), and functional magnetic resonance imaging (fMRI) have yielded considerable experimental evidence relating to the neural correlates of language processing, very little is known about the computational neurodynamical processes occurring at mesoscopic and microscopic scales that are responsible for macroscopically measurable effects.

Existing attempts to model language-related brain potentials are macroscopic-phenomenological, successfully relating particular ERP components to particular computational steps on the one hand, and to distinct cortical areas, on the other hand (Friederici 1995, 1998, 1999, 2002; Grodzinsky and Friederici 2006; Hagoort 2003, 2005; Bornkessel and Schlesewsky 2006; Frisch et al. 2008). However, these models are presently unable to relate ERPs to underlying neurodynamical systems.

In this paper we attempt to provide such an account for syntactic language processing. (A first step towards the modeling of the mismatch negativity ERP (MMN) for word recognition has recently been suggested by Wennekers et al. (2006) and Garagnani et al. (2007)). Using a particular ERP experiment on the processing of German subject–object ambiguities (Friederici et al. 1998, 2001; Bader and Meng 1999; beim Graben et al. 2000; Vos et al. 2001; Frisch et al. 2002, 2004; Schlesewsky and Bornkessel 2006), a locally ambiguous context-free grammar and two appropriate deterministic pushdown recognizers are constructed from a Government and Binding (Chomsky 1981; Haegeman 1994) representation of the stimulus material. Subsequently, we present two different implementations of these automata by nonlinear dynamical systems, resulting from universal tensor product representations (Dolan and Smolensky 1989; Mizraji 1989; Smolensky 1990; Mizraji 1992; Smolensky and Legendre 2006; Smolensky 2006). The first model is a parallel distributed processing (PDP) model (Rumelhart et al. 1986) in a high-dimensional activation vector space. Local ambiguity is processed in parallel (Lewis 1998) and model ERPs are obtained from principal components in the activation space. The second model generalizes previous work of beim Graben et al. (2004) on nonlinear dynamical automata (NDAs), where generalized shifts in symbolic dynamics (Moore 1990, 1991; Siegelmann 1996) are mapped onto a bifurcating two-dimensional dynamical system. In this model, local ambiguity is processed serially according to a diagnosis and repair account (Lewis 1998). Model ERPs are then described by the parsing entropy, obtained from a measurement partition.

Both models aim at a dynamical system interpretation of language-related ERPs (Başar 1980, 1998): The application of different processing strategies to ambiguous sentences is reflected by the exploration of different regions (probably with different volumes as well) in the processor’s phase space. These regions can be functionally related to different ways of symbol manipulation (Fodor and Pylyshyn 1988; Smolensky 1991).

We begin with an overview of language-relevant ERP components and of how they could be interpreted in terms of dynamical system theory and other existing models of language processing. Then we describe a pilot study of a language processing ERP experiment and present two dynamical system models of the empirical data. This is followed by preliminary results of the ERP analysis to illustrate the dynamics of the two models in the light of their ERP correlates.

Event-related potentials in language studies

Event-related brain potentials (ERPs) are transient changes in the ongoing electroencephalogram (EEG) which are time-locked to the perception or processing of particular stimuli or cognitive events (Başar 1980, 1998; Regan 1989; Niedermeyer and da Silva 1999). ERPs differ with respect to their amplitude, polarity, latency (usually regarded as the time of maximal amplitude), duration, morphology, spatial topography, and, possibly, their putative neural generators. Some of these parameters vary depending on experimental manipulations that might be physical, such as pitch or volume for acoustic stimuli and size or color for visual stimuli, in the domain of psychophysics, or cognitive, such as instructions or unexpectedness, in the domain of cognitive neuroscience (Coles and Rugg 1995). As the ERP signal is about one order of magnitude smaller than the amplitude of the spontaneous EEG, signal analysis methods such as ensemble averaging, source separation, or nonlinear techniques have to be employed (Regan 1989; Niedermeyer and da Silva 1999; Friederici et al. 2001; Makeig et al. 2002; Marwan and Meinke 2004; beim Graben et al. 2000, 2005; Allefeld et al. 2004; Schinkel et al. 2007). In the conventional averaging paradigm, the resulting waveforms are labeled by the polarities and latencies of peaks, such as N100, P300, N400, P600, denoting negativities around 100 and 400 ms, and positivities around 300 and 600 ms, respectively.
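Why ensemble averaging recovers a signal that is an order of magnitude smaller than the background EEG can be illustrated with synthetic data. The sketch below is a toy simulation under stated assumptions (a hypothetical Gaussian-shaped component and independent Gaussian background "EEG" ten times larger); it is not an analysis of real recordings. Because the background is uncorrelated across epochs, averaging N time-locked epochs leaves the component intact while the residual noise shrinks roughly as 1/√N.

```python
import math
import random
import statistics

def simulate_epochs(n_epochs, n_samples=300, erp_amp=1.0, eeg_sd=10.0, seed=0):
    """Toy ERP experiment (all parameters hypothetical): every epoch contains the same
    Gaussian-shaped component plus independent Gaussian background 'EEG' whose
    standard deviation is ten times the component amplitude."""
    rng = random.Random(seed)
    erp = [erp_amp * math.exp(-((t - 150) / 30.0) ** 2) for t in range(n_samples)]
    epochs = [[x + rng.gauss(0.0, eeg_sd) for x in erp] for _ in range(n_epochs)]
    return erp, epochs

def residual_noise_sd(erp, epochs):
    """Standard deviation (across samples) of the ensemble average minus the true ERP."""
    n = len(epochs)
    average = [sum(column) / n for column in zip(*epochs)]
    return statistics.pstdev([a - x for a, x in zip(average, erp)])

for n in (1, 16, 256):
    erp, epochs = simulate_epochs(n)
    # residual noise shrinks roughly like eeg_sd / sqrt(n)
    print(n, round(residual_noise_sd(erp, epochs), 2))
```

Real EEG background is neither Gaussian nor independent across epochs, which is one reason the nonlinear and source-separation methods cited above are also used.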

Since the pioneering work of Kutas and Hillyard (1980, 1984), ERPs have become increasingly important for the investigation of online language processing in the human brain. In this paper we focus on two important measures from the psycholinguistic perspective, the N400 and the P600.

The N400 component in language processing

Kutas and Hillyard (1980) reported a parietally distributed negativity around 400 ms after stimulus onset for semantically anomalous sentence continuations (shown in bold) such as (1), compared to semantically normal control sentences (2).

(1)

(2)

In subsequent work, Kutas and Hillyard (1984) reported that this N400 component is also sensitive to semantic priming, not only in sentence processing but also in lexical decision tasks (Osterhout and Holcomb (1995); see also Kutas and van Petten (1994) and Coles and Rugg (1995) for further reviews). According to Coles and Rugg (1995, p. 23), “the N400 appears to be a ‘default’ component, evoked by words whose meaning is unrelated to, or not predicted by, the prior context of the words” (Dambacher et al. 2006). This view is further supported by recent experimental findings on N400s evoked by purely semantic and thematic-syntactic manipulations (Frisch and Schlesewsky 2001; Bornkessel et al. 2004; Frisch and beim Graben 2005; beim Graben et al. 2005). For example, in the (extended) Argument Dependency Model (eADM) of Bornkessel and Schlesewsky (2006), the N400 reflects a mismatch with previous prominence information held in working memory (eADM is discussed in section “Introduction/Phenomenological models”).

The P600 component in language processing

The first syntax-related ERP component, observed by Neville et al. (1991), was the left anterior negativity (LAN). It is often more pronounced at left-hemispheric recording sites (hence the name). The LAN is related to phrase structure or word category violations, such as at the critical word ‘of’ in

(3)

The LAN was accompanied by a sustained parietal positivity around 600 ms, which turned out to be a very reliable indicator of syntactic processing problems. Osterhout and Holcomb (1992) found this P600 [or Syntactic Positivity Shift (SPS); Hagoort et al. (1993)] for phrase structure violations, such as (4), and for local garden path sentences (5).

(4)

(5)

When reading the sentence (5) from left to right, the preposition ‘to’ (in bold) renders the sentence temporarily ungrammatical because the comprehender expects the verb ‘persuaded’ to be followed by an object noun phrase. However, further downstream, when the phrase ‘was sent…’ is encountered, the sentence is recognized as grammatical: the verb ‘persuaded’ is a contraction of the full form ‘who was persuaded’, in other words, a reduced relative construction. Such local ambiguities thus lead the reader temporarily down a garden path; hence the name (Fodor and Ferreira 1998).

The P600 does not reflect only ungrammaticality or ambiguity. Its amplitude and latency vary with syntactic complexity and reanalysis costs (Osterhout et al. 1994; Kaan et al. 2000). Mecklinger et al. (1995), Friederici et al. (1998) and Frisch et al. (2004) reported an earlier positivity (P345) for sentences where syntactic reanalysis was easier to perform than for those evoking a P600. Late positivities which also co-vary with semantic and pragmatic contexts have been reported, e.g., by Drenhaus et al. (2006). For this reason, Bornkessel and Schlesewsky (2006) propose distinguishing between the P600 and late positivities in their model.

The dynamical system approach

The brain is a complex dynamical system whose high-dimensional phase space is spanned by the activation space of its neural network. Measuring EEG, MEG or neuroimaging characteristics comprises a spatio-temporal coarse-graining that maps the phase space onto an observable space spanned by multivariate time series (Amari 1974; Atmanspacher and beim Graben 2007; Freeman 2007). This observable space is explored by trajectories representing e.g. EEG time series, such as individual ERP epochs, that start from randomly scattered initial conditions.

Following Başar (1980, 1998), the experimental manipulations of an ERP experiment can be regarded as control parameters in the sense that individual ERP epochs recorded under different conditions will explore different regions of the observable space, thereby revealing different topologies of the system’s flow. Changing the control parameters critically then causes bifurcations of the dynamics, which can be assessed by suitable order parameters, such as the averaged ERP, coherence, synchronization or information measures (Başar 1980, 1998; Allefeld et al. 2004; beim Graben et al. 2000).

Applying methods from information theory to ERP data requires a further coarse-graining of the continuous time series into a symbolic representation. Symbolic dynamics (Hao 1989; Lind and Marcus 1995) deals with dynamical systems of discrete phase space and time where trajectories are given by bi-infinite (for invertible maps) “dotted” symbol sequences

$$ s = \ldots a_{i_{-2}}\, a_{i_{-1}} \,.\, a_{i_0}\, a_{i_1}\, a_{i_2} \ldots \tag{6} $$

with symbols $a_{i_k}$ taken from a finite set, the alphabet $\mathcal{A}$. In Eq. (6) the dot denotes the observation time $t_0$ such that the symbol to the right of the dot, $a_{i_0}$, displays the current state. The dynamics is given by the left shift

$$ \sigma(s) = \ldots a_{i_{-2}}\, a_{i_{-1}}\, a_{i_0} \,.\, a_{i_1}\, a_{i_2} \ldots \tag{7} $$

resulting in a new sequence with current state $a_{i_1}$.
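To make the notation of Eqs. (6) and (7) concrete, a bi-infinite dotted sequence can be approximated by a finite window around the dot. The class below is a hypothetical illustration (the name `DottedWord` and the single-character symbols are our own choices): the left shift σ simply moves the dot one place to the right, so the former successor becomes the current state.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class DottedWord:
    """Finite window ... a_{-2} a_{-1} . a_0 a_1 ... of a bi-infinite dotted sequence.

    left:  symbols before the dot, in reading order
    right: symbols after the dot; right[0] is the current state a_{i_0}
    """
    left: tuple[str, ...]
    right: tuple[str, ...]

    def current(self) -> str:
        return self.right[0]

    def shift(self) -> "DottedWord":
        """Left shift sigma: the dot moves one place to the right."""
        return DottedWord(self.left + (self.right[0],), self.right[1:])

    def __str__(self) -> str:
        return "".join(self.left) + "." + "".join(self.right)

s = DottedWord(("a", "b"), ("b", "a", "a"))
print(s, "->", s.shift(), "current state:", s.shift().current())
# ab.baa -> abb.aa current state: a
```

Because the window is finite, the sketch cannot shift more often than there are symbols to the right of the dot; the bi-infinite sequences of Eq. (6) have no such limit.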

In order to get a symbolic dynamics from a continuous system $(X, \Phi_t)$ with phase space $X$ and flow $\Phi_t: X \to X$, one must first discretize time, such that $\Phi_t = \Phi^t$ are the iterations of a map $\Phi: X \to X$, and secondly, one has to partition the observable space $X$ into a finite number $I$ of mutually disjoint sets $\{A_i \mid i = 1, 2, \ldots, I\}$ which cover the whole phase space $X$, i.e.

$$ \bigcup_{i=1}^{I} A_i = X, \qquad A_i \cap A_j = \emptyset \quad \text{for } i \neq j. $$

The index set $\mathcal{A} = \{1, 2, \ldots, I\}$ of the partition can then be interpreted as the alphabet of symbols $a_i = i$. Thus, the current state $x_0 \in X$ contained in cell $A_i$ is mapped onto the dotted symbol “$.\,a_i$”. Accordingly, its successor $x_1 = \Phi(x_0)$ is mapped onto the symbol $a_j$ if $x_1 \in A_j$. On the other hand, the left shift $\sigma$ brings $a_j$ onto the “surface” of the sequence $s = \ldots a_k \,.\, a_i a_j \ldots$ by $\sigma(\ldots a_k \,.\, a_i a_j \ldots) = \ldots a_k a_i \,.\, a_j \ldots$.
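The two coarse-graining steps can be tried out on a one-dimensional example. In the sketch below, the logistic map Φ(x) = 4x(1 − x) on X = [0, 1] and the two-cell partition at x = 0.5 are our own illustrative choices, not taken from the ERP setting. Since this map is not invertible, only the one-sided (right) half of Eq. (6) is generated. The assertion checks the statement above: coding the successor Φ(x0) yields the left-shifted coding of x0.

```python
def phi(x: float) -> float:
    """Illustrative map Phi: the logistic map at r = 4 on X = [0, 1]."""
    return 4.0 * x * (1.0 - x)

def symbol(x: float) -> int:
    """Partition A_1 = [0, 0.5), A_2 = [0.5, 1]: return the index i of the cell containing x."""
    return 1 if x < 0.5 else 2

def symbolic_orbit(x0: float, n: int) -> list[int]:
    """Symbols a_{i_0} a_{i_1} ... a_{i_{n-1}} of the forward orbit x0, Phi(x0), Phi^2(x0), ..."""
    symbols = []
    x = x0
    for _ in range(n):
        symbols.append(symbol(x))
        x = phi(x)
    return symbols

x0 = 0.3
s0 = symbolic_orbit(x0, 10)
s1 = symbolic_orbit(phi(x0), 9)
assert s1 == s0[1:]   # the coding of Phi(x0) is the left shift of the coding of x0
print(s0)
```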

Partitioning the observable space of ERP time series, beim Graben et al. (2000, 2005), Frisch et al. (2004) and Drenhaus et al. (2006) were able to describe ERP components by suitable order parameters obtained from a symbolic dynamics of the time series. In this approach, each ERP epoch is represented by a string of length $L$ over a few symbols $\{a_i \mid i = 1, 2, \ldots, I\}$; these strings form an epoch ensemble of $N$ sequences $s^{(1)}, \ldots, s^{(N)}$ for each experimental condition. A subset of these epochs that agree at a certain time $t$ in a particular building block, a word $w$ of $n = |w|$ symbols,

$$ [w]_t = \{\, s^{(k)} \mid s^{(k)}_t\, s^{(k)}_{t+1} \ldots s^{(k)}_{t+n-1} = w,\; k = 1, \ldots, N \,\} \tag{8} $$

is called a cylinder set. Counting the members of cylinder sets (i.e. determining their probability measure $p([w]_t) = |[w]_t| / N$) yields the word statistics from which event-related cylinder entropies

$$ H(t) = -\sum_{w \in \mathcal{A}^n} p([w]_t) \log p([w]_t) \tag{9} $$

can be obtained.
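The word statistics and the cylinder entropy of Eq. (9) reduce to counting. The sketch below assumes that each epoch has already been symbolized into a string of equal length with one character per symbol, estimates p([w]_t) by relative frequency, and uses base-2 logarithms (the base is our choice; it only rescales H). The function name and the toy ensemble are hypothetical.

```python
import math
from collections import Counter

def cylinder_entropy(epochs: list[str], t: int, n: int) -> float:
    """Event-related cylinder entropy H(t) of Eq. (9), in bits.

    epochs: ensemble of N symbolic epochs (equal-length strings).
    Each distinct word w = epoch[t:t+n] labels a cylinder set [w]_t, whose
    probability is estimated by the relative frequency |[w]_t| / N.
    """
    if any(t + n > len(e) for e in epochs):
        raise ValueError("word window exceeds epoch length")
    counts = Counter(e[t:t + n] for e in epochs)
    N = len(epochs)
    return -sum((c / N) * math.log2(c / N) for c in counts.values())

ensemble = ["aab", "abb", "aab", "bbb"]   # hypothetical symbolized epochs, N = 4, L = 3
print(cylinder_entropy(ensemble, 0, 2))   # words aa, ab, aa, bb -> 1.5
```

Evaluating H(t) for every t in an epoch yields an entropy time course per condition; a drop in H marks times at which the epochs agree more strongly, which is what makes it usable as an order parameter.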

Language processing models in psycholinguistics

In this section we provide a brief overview of language processing models discussed in the psycholinguistic literature (see e.g., Lewis (2000), Vasishth and Lewis (2006b), Lewis et al. (2006)). These can be classified into three broad types (some models cut across these broad categories, of course). The first type is the phenomenological model. Such a model pursues a top-down approach, accounting for macroscopic, phenomenological evidence from neuroimaging techniques, EEG, MEG, behavioral data and clinical studies; this type of model is usually neither algorithmically nor mathematically codified. The second type is the symbolic computational model; this class of model follows the classical cognitivistic account of formal language theory and automata theory (Newell and Simon 1976; Fodor and Pylyshyn 1988). The third type is the dynamical systems approach to cognition; these models attempt to bridge the gap between symbolic computation and continuous dynamics on the one hand, and between qualitative descriptions and quantitative predictions on the other.

These types of models can be classified along another dimension as well: they can be either serial or parallel (Lewis 1998). In a serial model, when a temporary ambiguity is encountered, only one possibility is pursued until a valid representation is computed, or the computation breaks down. In case of a breakdown, either the immediately preceding computational steps have to be retraced until a viable alternative can be found (backtracking), or the search space of possible continuations is locally modified such that the processing trajectory jumps into another admissible track (repair). By contrast, in a parallel model, the entire search space is globally explored by the processor. We study the implications of these two strategies by developing a parallel processing model (section “Methods/Tensor product top-down recognizer”) and a serial diagnosis and repair model (section “Methods/Nonlinear dynamical automaton”).

Phenomenological models

Based on the Wernicke-Geschwind model (Kandel et al. 1995, Chapt. 34), Friederici (1995, 1999, 2002) proposed her serial neurocognitive model of language processing (see also Grodzinsky and Friederici (2006)) that comprises three different phases: In a first phase around 200 ms, only word category information is taken into account to construct a preliminary syntactic representation of a sentence. Word category violations elicit the early left anterior negativity (ELAN) in the ERP (see section “Introduction/Event-related potentials in language studies”). In the second phase from 300 to 500 ms, semantic and thematic information such as the verb’s subcategorization frames are used to compute the argument dependencies and a first semantic interpretation. Violations of subcategorization information or semantic plausibility are related to the left anterior negativity (LAN) and the N400 component, respectively. Finally, in the third phase (between 500 and 800 ms), syntactic reanalysis of phrase structure violations and garden-path interpretations are accompanied by the P600 component.

This model was supplemented by a diagnosis and repair mechanism (Friederici 1998), which describes the recovery of the human language system from weak garden paths without backtracking. After diagnosing the need for reanalysis, the search space of possible grammatical continuations is modified by a local repair operation, into which the system moves directly afterwards (Lewis 1998). Friederici (1998) provides evidence that the diagnosis process is reflected by the onset of the “P600”, which might appear as a P345 in constructions where only binding relations have to be recomputed (Friederici et al. 1998, 2001; Vos et al. 2001; Frisch et al. 2004). In contrast, amplitude and duration of the P600 reflect the amount of reanalysis costs. This view has been challenged by Hagoort (2003), who reported ERP results on weak and strong syntactic violations (11, 12) compared to a correct sentence (10) as in

(10)

(11)

(12)

where the weak violation (11) does not evoke an early positivity, as would have been expected if the diagnosis and repair model predicted that the weak violation is easier to diagnose and to repair. However, this interpretation misrepresents the model: the weak violation (11) is morphosyntactic and might therefore be harder to diagnose than the strong word category violation (12), which in fact evoked an early-starting, large and sustained P600.

Relying on Friederici’s (1995, 1999, 2002) neurocognitive model of language processing, Bornkessel and Schlesewsky (2006) have recently proposed their (extended) Argument Dependency Model, where only a syntactic core consisting of word category and argument structure information is maintained (van Valin 1993). The model accounts for cross-linguistic differences in ERP and neuroimaging patterns solely attributed to computational steps in the processing of semantic and thematic dependencies (Friederici’s phase 2). However, the third phase in the eADM comprises a generalized mapping and well-formedness checks that are reflected by late positivities in the ERP.

At the edge between the phenomenological and computational/dynamical models lies Hagoort’s Memory, Unification and Control (MUC) model (Hagoort 2003, 2005). It shares the property of a syntactic core (lexicalized structure frames) with the eADM and was computationally implemented by Vosse and Kempen (2000). The MUC attributes ERP and neuroimaging findings to a binding mechanism between lexical frames. The ELAN is evoked by a failure to bind lexical frames together, whereas the P600 is related to the time required to establish such bindings. However, the MUC differs from the neurocognitive model and the eADM with respect to the priority of syntactic computation. While the former are serial/hierarchical, presupposing a syntax-first mechanism, the latter is fully parallel, using all kinds of information (word category, argument structure, semantics) as they become available.

Computational symbolic models

Classical cognitive science rests on the physical symbol system hypothesis that any cognitive process is essentially symbol manipulation that can be achieved by a Turing machine (or less powerful automata) (Newell and Simon 1976; Fodor and Pylyshyn 1988; beim Graben 2004). It is obvious that formal language theory provides the natural framework for treating such systems (Hopcroft and Ullman 1979; Aho and Ullman 1972).

Formal languages. A formal language is a subset $\mathcal{L} \subseteq \mathcal{A}^*$ of finite words over an alphabet $\mathcal{A}$. Formal languages can be generated by formal grammars and recognized or translated by automata. Although natural languages are not context-free (Shieber 1985), context-free grammars (CFGs) and pushdown automata provide useful tools for the description of natural languages. A context-free grammar is a tuple $G = (T, N, P, S)$ where $T$ is the (terminal) alphabet of a language, $N$ is an alphabet of auxiliary nonterminal symbols, $P \subset N \times (N \cup T)^*$ is a set of rewriting rules $A \to \gamma$ (with $A \in N$, $\gamma \in (N \cup T)^*$), and $S \in N$ is a distinguished start symbol.

In the framework of Government and Binding Theory (GB) (Chomsky 1981; Haegeman 1994) the X-bar module supplies a context-free grammar

$$ \begin{aligned} XP &\to \mathrm{Spec}X \;\; X' \\ X' &\to X' \;\; YP \\ X' &\to X^0 \;\; YP \end{aligned} $$

where X and Y, respectively, denote one of the syntactic categories C (complementizer), I (inflection), D (determiner), N (noun), V (verb), A (adjective), or P (preposition). The start symbol of this grammar is usually CP or IP. The first rule determines that a maximal projection is formed from a specifier, SpecX, and an X′ phrase. The second rule allows for recursive adjunction and the third rule indicates that a head X0 takes a complement YP to form an X′ phrase. The head X0 projects over its complements and adjuncts to the maximal projection. We use this framework in section “Methods/Processing models” to construct our particular language processing model.
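To show how the three schemata expand into ordinary context-free productions, the sketch below instantiates them for given categories. The rule encoding (strings such as `V'` for V′ and `V0` for the head) and the choice to let every category serve as complement or adjunct are illustrative assumptions; in a full GB account such choices would be constrained elsewhere, e.g. by lexical subcategorization.

```python
Rule = tuple[str, tuple[str, ...]]
CATEGORIES = ["C", "I", "D", "N", "V", "A", "P"]

def xbar_rules(x: str, others: list[str]) -> list[Rule]:
    """Instantiate the three X-bar schemata for category x.

    others: categories Y whose maximal projection YP may serve as adjunct or
    complement of x (left unrestricted here, an illustrative assumption).
    """
    xp, xbar, head, spec = f"{x}P", f"{x}'", f"{x}0", f"Spec{x}"
    rules: list[Rule] = [(xp, (spec, xbar))]        # XP -> SpecX X'
    for y in others:
        rules.append((xbar, (xbar, f"{y}P")))       # X' -> X' YP  (adjunction)
        rules.append((xbar, (head, f"{y}P")))       # X' -> X0 YP  (head + complement)
    return rules

print(xbar_rules("V", ["D"]))
# [('VP', ('SpecV', "V'")), ("V'", ("V'", 'DP')), ("V'", ('V0', 'DP'))]
grammar = [r for x in CATEGORIES for r in xbar_rules(x, CATEGORIES)]
print(len(grammar))   # 105
```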

Automata. Context-free languages can be parsed (i.e. recognized or translated) by serial pushdown automata $M = (Q, T, \Gamma, \delta, q_0, Z_0, F)$, where $Q$ is a finite set of internal states, $T$ is the input alphabet matching the terminal alphabet of a context-free language, $\Gamma$ is a finite stack alphabet, $\delta: Q \times (T \cup \{\varepsilon\}) \times \Gamma \to \wp_{\mathrm{fin}}(Q \times \Gamma^*)$ is a partial transition function, $q_0 \in Q$ is the distinguished initial state, $Z_0 \in \Gamma$ is the initial stack symbol, and $F \subseteq Q$ is the set of final (accepting) states. An automaton with no final states, $F = \emptyset$, is said to accept by empty stack. Given a context-free grammar $G$, one can construct a pushdown automaton accepting the language generated by $G$ by simulating rule expansions. Such an automaton is called a top-down recognizer. Formally, if $G = (T, N, P, S)$ is a context-free grammar, then the pushdown automaton $M_G = (\{q\}, T, N \cup T, \delta, q, S, \emptyset)$ is a top-down recognizer for $G$, where $\delta$ is defined for all $A \in N$ and all $a \in T$ as follows:

$$ \delta(q, \varepsilon, A) = \{\, (q, \gamma) \mid A \to \gamma \in P \,\} \tag{13} $$

$$ \delta(q, a, a) = \{\, (q, \varepsilon) \,\} \tag{14} $$

where $\varepsilon$ denotes the empty word of the language. For further discussion, see Aho and Ullman (1972), Hopcroft and Ullman (1979) and beim Graben et al. (2004).
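Eqs. (13) and (14) can be simulated directly. The sketch below explores all branches of the nondeterministic top-down recognizer breadth-first, i.e. it processes local ambiguities in parallel; a serial recognizer would instead follow one branch and backtrack or repair on failure. The toy grammar is hypothetical, and the pruning step assumes the grammar has no ε-rules, so that every stack symbol must still consume at least one input token.

```python
from collections import deque

Grammar = dict[str, list[tuple[str, ...]]]

def top_down_accepts(grammar: Grammar, start: str, word: list[str]) -> bool:
    """Nondeterministic top-down recognizer M_G accepting by empty stack (Eqs. 13-14).

    A configuration is (stack, remaining input) with the stack top at index 0.
    Every choice offered by Eq. (13) is enqueued, so all branches are explored.
    Assumes no epsilon-rules (used for pruning).
    """
    queue = deque([((start,), tuple(word))])
    seen = set()
    while queue:
        config = queue.popleft()
        if config in seen:
            continue
        seen.add(config)
        stack, rest = config
        if not stack:
            if not rest:
                return True                  # empty stack and input consumed: accept
            continue
        if len(stack) > len(rest):
            continue                         # too few tokens left for the stack: dead branch
        top, below = stack[0], stack[1:]
        if top in grammar:                   # Eq. (13): expand A by any rule A -> gamma
            for rhs in grammar[top]:
                queue.append((tuple(rhs) + below, rest))
        elif top == rest[0]:                 # Eq. (14): match terminal a against input a
            queue.append((below, rest[1:]))
    return False

TOY = {
    "S": [("NP", "VP")],
    "NP": [("the", "N")],
    "N": [("dog",), ("cat",)],
    "VP": [("barks",), ("sees", "NP")],
}
print(top_down_accepts(TOY, "S", "the dog sees the cat".split()))   # True
```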

For a deterministic top-down recognizer, the sets $\delta(q, \varepsilon, A)$ in Eq. 13 always contain only one element, as the grammar $G$ must be locally unambiguous. This can, however, be achieved by decomposing the grammar $G$ into its locally unambiguous parts (beim Graben et al. (2004); see also Hale and Smolensky (2006) for a related approach). A deterministic top-down recognizer can be more conveniently characterized by its state descriptions $(\gamma, w)$, where $\gamma = \gamma_0 \gamma_1 \ldots \gamma_{k-1} \in (N \cup T)^*$ is the content of the stack while $w = w_1 w_2 \ldots w_l \in T^*$ is a finite word at the input tape. It follows from the definition of the transition function $\delta$ that the automaton has access only to the top of the stack $\gamma_0$ and to the first symbol of the input tape $w_1$ at each instant of time. Hence, we can define a map $\tau$ acting on pairs $(\gamma_0, w_1)$ of the topmost symbols of stack and input tape, respectively, by the transition function $\delta$:

$$ \tau(\gamma_0, w_1) = \begin{cases} (\varepsilon, \varepsilon) & \text{if } \gamma_0 = w_1 \in T, \\ (\beta, w_1) & \text{if } \gamma_0 \in N, \end{cases} \tag{15} $$

where the image replaces the pair $(\gamma_0, w_1)$ in the state description $(\gamma, w)$, and where, in the second case, $P$ contains a rule $\gamma_0 \to \beta$.
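For a locally unambiguous grammar, iterating the map τ of Eq. (15) produces a single trajectory of state descriptions (γ, w). The sketch below assumes a hypothetical rule table with exactly one right-hand side per nonterminal and no ε-rules; the input is accepted if stack and input are both empty when the iteration stops.

```python
Rules = dict[str, tuple[str, ...]]
State = tuple[tuple[str, ...], tuple[str, ...]]

def tau(top: str, first: str, rules: Rules) -> State:
    """Eq. (15): image of the pair (gamma_0, w_1) for a locally unambiguous grammar."""
    if top in rules:                  # predict: replace gamma_0 by beta, keep w_1
        return rules[top], (first,)
    if top == first:                  # attach: pop gamma_0 and consume w_1
        return (), ()
    raise ValueError(f"no transition for ({top!r}, {first!r})")

def run(rules: Rules, start: str, word: list[str]) -> list[State]:
    """Iterate tau on state descriptions (gamma, w) until stack or input is empty."""
    stack, inp = (start,), tuple(word)
    trace = [(stack, inp)]
    while stack and inp:
        new_top, new_first = tau(stack[0], inp[0], rules)
        stack, inp = new_top + stack[1:], new_first + inp[1:]
        trace.append((stack, inp))
    return trace

RULES = {"S": ("NP", "VP"), "NP": ("the", "N"), "N": ("dog",), "VP": ("barks",)}
trace = run(RULES, "S", "the dog barks".split())
for gamma, w in trace:
    print(" ".join(gamma) or "ε", "|", " ".join(w) or "ε")
print("accepted:", trace[-1] == ((), ()))
```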

Computational psycholinguistics does not only attempt to describe the grammar of natural languages; it also seeks to identify the mechanisms underlying human sentence processing (Lewis 2000). The existence of garden-paths is evidence that natural languages have local ambiguities; in addition, garden-paths have occasionally been taken to motivate serial processing theories (for contrasting parallel processing accounts, see section “Methods/Tensor product top-down recognizer” and Hale (2003, 2006), Boston et al. (in press)). One of the first serial processing models for human languages was Frazier and Fodor’s Sausage Machine (1978). It comprises two modules: the “sausage machine” generates phrase packages by looking a few symbols ahead into the input, while the “sentence structure supervisor” incrementally builds the phrase structure tree according to a context-free grammar. The model also accounts for particular processing strategies which minimize computational effort. Frazier and Fodor’s (1978) Minimal Attachment Principle favors phrase structure trees with a minimal number of nodes. Additionally, it has been shown that the model cannot be represented by a simple top-down recognizer (Fodor and Frazier 1980). However, there are proposals in the literature that the human parser may be a kind of deterministic left-corner automaton with finite look-ahead (Marcus 1980; Staudacher 1990), as suggested by the sausage machine.

Generalized shifts. Of special interest for our exposition in section “Methods/Nonlinear dynamical automaton” is that any automaton (even Turing machines and super-Turing devices) can be reconstructed by a particular kind of symbolic dynamics, called generalized shifts (Moore 1990, 1991; Siegelmann 1996). These are given by sets of bi-infinite dotted strings (Eq. 6) which evolve under the left shift (Eq. 7) augmented with a word replacement mechanism. Let $d \in \mathbb{N}$ be a number of digits around the dot, e.g. $d = 2$; then the words $w = a_{-1} \,.\, a_0$ of length $d$ lie in a domain of dependence (DoD). The generalized shift is given by

$$ \Psi(s) = \sigma^{F(s)}\big(s \oplus G(s)\big) \tag{16} $$

$$ F: \mathcal{A}^{\mathbb{Z}} \to \mathbb{Z}, \quad s \mapsto F(s) = l \tag{17} $$

$$ G: \mathcal{A}^{\mathbb{Z}} \to \mathcal{A}^{e}, \quad s \mapsto G(s) = w' \tag{18} $$

where $F$ and $G$ depend on $s$ only through the content of its DoD, $F(s) = l$ dictates a number of shifts to the right ($l < 0$), to the left ($l > 0$) or no shift at all ($l = 0$), $G(s)$ is a word $w'$ of length $e$ in the domain of effect (DoE) replacing the content $w$ in the DoD of $s$, and $\oplus$ denotes this replacement function.
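A single step of Eq. (16) can be sketched on a finite window `left.right` of a dotted sequence. As simplifying assumptions, the DoD and the DoE both consist of the two symbols a−1.a0 around the dot (d = e = 2), symbols are single characters, and F and G are passed in as ordinary functions of the DoD content; the window has to be long enough that the dot never leaves it.

```python
from typing import Callable

DoD = tuple[str, str]   # content a_{-1} . a_0 of the domain of dependence

def generalized_shift(left: str, right: str,
                      F: Callable[[DoD], int],
                      G: Callable[[DoD], DoD]) -> tuple[str, str]:
    """One step Psi(s) = sigma^F(s)(s (+) G(s)) on the finite window left.right."""
    dod = (left[-1], right[0])
    shift, (new_left, new_right) = F(dod), G(dod)
    # s (+) G(s): overwrite the DoD with the DoE word w' = G(s)
    left, right = left[:-1] + new_left, new_right + right[1:]
    # sigma^l moves the dot l places to the right (l > 0: left shift, l < 0: right shift)
    s, dot = left + right, len(left) + shift
    if not 0 < dot < len(s):
        raise ValueError("dot left the finite window")
    return s[:dot], s[dot:]

# With F = 1 and G = identity, Psi reduces to the plain left shift of Eq. (7)
print(generalized_shift("ab", "cd", lambda w: 1, lambda w: w))   # ('abc', 'd')
```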

A deterministic top-down recognizer as described by Eq. 15 can be easily represented by such a generalized shift, choosing

(19)

where the stack content γ had to be reversed to γ′ = γk−1… γ0 in order to bring the topmost symbol γ0 into the DoD of the generalized shift (beim Graben et al. 2004).
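The effect of this reversal can be seen in a small sketch that writes a state description (γ, w) as the dotted string γ′.w. The function names and the space-separated output format are our own; the point is only that the top of the stack γ0 and the first input symbol w1 end up adjacent to the dot, i.e. in the DoD.

```python
def encode(stack: tuple[str, ...], inp: tuple[str, ...]) -> str:
    """Dotted string gamma'.w with gamma' = gamma_{k-1} ... gamma_0 (reversed stack)."""
    return " ".join(reversed(stack)) + " . " + " ".join(inp)

def domain_of_dependence(stack: tuple[str, ...], inp: tuple[str, ...]) -> tuple[str, str]:
    """The two symbols adjacent to the dot: gamma_0 . w_1."""
    return stack[0], inp[0]

state = (("N", "VP"), ("dog", "barks"))   # stack top N, remaining input 'dog barks'
print(encode(*state))                     # VP N . dog barks
print(domain_of_dependence(*state))       # ('N', 'dog')
```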

Although the repair procedure of Lewis (1998) goes beyond the domain of top-down parsing, it can be straightforwardly embedded into the framework of generalized shifts by a table of additional maps, such as

(20)

where γk could be a (reversed) string in the stack with arbitrary length |γk|. As the repair operation can only take place within the stack, the input string w remains unaffected. We exploit this possibility in our serial diagnosis and repair model in section “Methods/Nonlinear dynamical automaton”.

Cognitive architectures. To conclude this section we shall also consider computational models that are partly hybrid architectures of symbolic and dynamic systems (Vosse and Kempen 2000; Hale 2003, 2006; Hale and Smolensky 2006; van der Velde and de Kamps 2006). One particular type of these models is based on cognitive architectures such as ACT-R, which we discuss next as an illustration.

The cognitive theory ACT-R (Anderson et al. 2004) is implemented as a general computational model and incorporates constraints developed through considerable experimental research on human information processing. The ACT-R theory relevant for the present discussion and the parsing model are outlined next, and its empirical coverage is briefly discussed. For details of the architecture the reader should consult the article mentioned above.

In its essence, ACT-R consists of two distinct systems, declarative memory and procedural memory. Declarative memory consists of items (chunks) identified by a single symbol. Each chunk is a set of feature–value pairs; the value of a feature may be a primitive symbol or the identifier of another chunk, in which case the feature–value pair represents a relation. In addition to the memory systems, focused buffers hold single chunks. There is a fixed set of buffers, each of which holds a single chunk in a distinguished state that makes it available for processing. Items outside of the buffers must be retrieved to be processed. The three important cognitive buffers are: the goal buffer, the problem state buffer, and the retrieval buffer. The goal buffer serves to represent the current control state information, and the problem state buffer represents the current problem state. The retrieval buffer serves as the interface to declarative memory, holding the single chunk from the last retrieval.

The retrieval-buffer structure has much in common with conceptions of working memory and short-term memory that posit an extremely limited focus of attention of one to three items, with retrieval processes required to bring items into the focus for processing (McElree and Dosher 1993). All procedural knowledge is represented as production rules—asymmetric associations specifying conditions and actions. Conditions are patterns to match against buffer contents, and actions are taken on buffer contents. All behavior arises from production rule firing; the order of behavior is not fixed in advance but emerges in response to the dynamically changing contents of the buffers. The sentence processing model critically depends on the built-in constraints on activation fluctuation of chunks as a function of usage and delay. Chunks have numeric activation values that fluctuate over time; activation reflects usage history and time-based decay. The activation affects a chunk’s probability and latency of retrieval. ACT-R also assumes that associative retrieval is subject to interference. Chunks are retrieved by a content-addressable, associative retrieval process (McElree 2000). Similarity-based retrieval interference arises as a function of retrieval cue-overlap: the effectiveness of a cue is reduced as the number of items associated with the cue increases. Associative retrieval interference arises because the strength of association from a cue is reduced as a function of the number of items associated with the cue.
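In ACT-R, the activation-based retrieval described above is governed by a small set of equations (Anderson et al. 2004). The sketch below restates their standard forms as we understand them: base-level activation from usage history and decay, associative activation weakened by the fan of a cue, and activation-dependent retrieval latency and probability. Apart from the conventional decay d = 0.5, the parameter values (S, F, τ, s) are illustrative placeholders, not the settings of the sentence processing model discussed here.

```python
import math

def base_level(lags: list[float], d: float = 0.5) -> float:
    """Base-level activation B_i = ln(sum_j t_j^(-d)); t_j = seconds since the j-th use."""
    return math.log(sum(t ** -d for t in lags))

def spreading(cue_weights: list[float], fans: list[int], S: float = 1.5) -> float:
    """Associative activation sum_j W_j * S_ji with S_ji = S - ln(fan_j):
    the more items a cue is associated with, the weaker each association."""
    return sum(w * (S - math.log(fan)) for w, fan in zip(cue_weights, fans))

def retrieval_latency(A: float, F: float = 0.14) -> float:
    """Retrieval time T = F * exp(-A): higher activation means faster retrieval."""
    return F * math.exp(-A)

def retrieval_probability(A: float, tau: float = 0.0, s: float = 0.3) -> float:
    """Probability that noisy activation exceeds the retrieval threshold tau."""
    return 1.0 / (1.0 + math.exp(-(A - tau) / s))

# A chunk used 1 s and 10 s ago, probed by one cue of weight 1.0 with fan 1 or fan 3
for fan in (1, 3):
    A = base_level([1.0, 10.0]) + spreading([1.0], [fan])
    print(fan, round(A, 3), round(retrieval_latency(A), 3), round(retrieval_probability(A), 3))
```

The fan term is what produces the associative interference mentioned above: a cue shared by several chunks (fan 3) raises the target’s activation less, so retrieval becomes slower and less reliable.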

The sentence processing model embedded in ACT-R consists of a definition of lexical items in permanent memory defined in terms of feature–value pairs, and a set of production rules specifying a left-corner parser (Aho and Ullman 1972). The model has been applied to several different published reading experiments involving English (Lewis and Vasishth 2006), German (Vasishth et al. 2008), and Hindi (Vasishth and Lewis 2006a), using the self-paced reading and eyetracking methodologies. The simulations provide detailed accounts of the effects of length and structural interference on both unambiguous and garden path structures.

An important result in this model is that all fits were obtained with most of the quantitative parameters set to default values in the ACT-R literature. The remaining theoretical degrees of freedom in the model are the production rules that embody parsing skill, and these rules represent a straightforward realization of left-corner parsing conforming to one overriding principle: compute the parse as quickly as possible. This approach thereby considerably reduces theoretical degrees of freedom; both the specific nature of the strategic parsing skill and the mathematical details of the memory retrieval derive from existing theory, plus the assumption of fast, incremental parsing.

Dynamical system models

The dynamical systems approach to cognition claims that cognitive computation is essentially the transient behavior of nonlinear dynamical systems (Crutchfield 1994; Port and van Gelder 1995; van Gelder 1998; Beer 2000; beim Graben et al. 2004).

Connectionist models.

Most attempts at such models of syntactic language processing (see Christiansen and Chater (1999) and Lewis (2000) for surveys) were made using local connectionist representations. Connectionist representations are given by activation patterns in neural networks that are either extended over many processors (“neurons”), for distributed representations, or localized to single processors, for local representations. All N network processors together span a finite-dimensional vector space X, the activation space, in which the representational states of a connectionist network are situated. Formally, a representation

$$ \mathbf{x} = (x_1, x_2, \ldots, x_N)^{\mathsf{T}} \in X \tag{21} $$

is local if exactly one processor i is activated at a certain time, i.e. $x_i = 1$ and $x_j = 0$ for all $j \neq i$. Every other representation is distributed (Smolensky and Legendre 2006).
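The definition of a local representation can be checked mechanically. The helper below is a hypothetical illustration, and the names `is_local` and `is_distributed` are ours. It tests whether an activation vector has exactly one unit at 1 and all others at 0. By the definition above, any other vector counts as distributed.

```python
import numpy as np

def is_local(x) -> bool:
    """True iff exactly one processor is active (x_i = 1) and all others are 0."""
    x = np.asarray(x, dtype=float)
    return bool(np.count_nonzero(x) == 1 and np.count_nonzero(x == 1.0) == 1)

def is_distributed(x) -> bool:
    """Every representation that is not local is distributed."""
    return not is_local(x)

print(is_local([0, 1, 0]), is_distributed([0.2, 0.7, 0.1]))
```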

In his seminal work, Elman (1995) suggested a simple recurrent neural network (SRN) architecture that predicts word categories of an input string. This model was elaborated by Tabor et al. (1997) and Tabor and Tanenhaus (1999) using a gravitational clustering mechanism to account for reading time differences. However, local representations have been criticized by Fodor and Pylyshyn (1988) as being cognitively implausible because they lack the required compositionality and causality properties.

In response to these criticisms, Dolan and Smolensky (1989); Smolensky (1990) and independently Mizraji (1989, 1992) suggested a unifying framework for distributed representations of structured symbolic information such as lists or phrase structure trees. According to Smolensky (1990), this approach comprises three steps:

decomposing symbolic structures via fillers and roles,

representing conjunctions,

superimposing conjunctions formed by tensor products of filler/role bindings.

A distributed connectionist representation of symbolic structures s in a set S is a mapping $\psi: S \to X$ from S to the activation vector space X. The set S contains strings, binary trees, or other symbol structures. Smolensky (1990) called the mapping ψ faithful if ψ is injective and no symbolic structure is mapped onto the zero vector.

In order to prescribe the desired representation, Smolensky (1990) defined the role decomposition F/R for the set of symbolic structures S in terms of the Cartesian product F × R of two sets, where the elements of F are called fillers and those of R roles. The role decomposition is then defined by the following mapping:

$$ \beta: S \to \wp(F \times R), \qquad s \mapsto \beta(s) = \{(f, r) \in F \times R \mid f/r\} \tag{22} $$

where each $s \in S$ is associated with the set β(s) of filler/role bindings in s, and f/r means that filler f binds to role r in s (Smolensky 1990, p. 169).3 The mapping β is referred to as the filler/role representation of S, which provides the first step of decomposing symbolic structures via fillers and roles.

Consider, e.g., a finite sequence (a word)

$$ s = a_{i_1} a_{i_2} \ldots a_{i_n} \tag{23} $$

of n symbols $a_i$ from a finite alphabet T. Following Smolensky, the symbols can be identified with the fillers, i.e. F = T. On the other hand, the roles are given by the n string positions, R = {1, 2, …, n}. Then, we obtain from Eq. 22

$$ \beta(s) = \{(a_{i_k}, k) \mid k = 1, \ldots, n\}, $$

where the expression $(a_{i_k}, k)$ states that filler $a_{i_k}$ is bound at role k in forming s.

A connectionist representation ψ comprises three different maps. First, all elements $f \in F$ and $r \in R$ are mapped onto different vectors $\psi_F(f) \in V_F$ and $\psi_R(r) \in V_R$ of two finite-dimensional vector spaces $V_F$ and $V_R$, respectively. Second, tensor product representations of the filler/role bindings are introduced by another function from β(s) to $V_F \otimes V_R$. Accordingly, Smolensky (1990, p. 174) defined the maps:

$$ \psi_F: F \to V_F, \qquad f \mapsto \psi_F(f) \tag{24} $$

$$ \psi_R: R \to V_R, \qquad r \mapsto \psi_R(r) \tag{25} $$

$$ \psi_b: \beta(s) \to V_F \otimes V_R, \qquad (f, r) \mapsto \psi_F(f) \otimes \psi_R(r) \tag{26} $$

Eqs. 24 and 25 map the fillers and roles onto the corresponding vector spaces. The filler/role binding is then formed by the algebraic tensor product in Eq. 26.

The second step, representing conjunctions, is achieved by pattern superposition, that is, by vector addition. If two propositions are each represented in one connectionist system by an activation pattern, then the conjunction of these propositions is represented by the pattern that results from superimposing the individual patterns. Smolensky (1990, p. 171) states that a connectionist representation ψ employs the superpositional representation of conjunction if and only if

$$ \psi\Big(\bigwedge_i p_i\Big) = \sum_i \psi(p_i) \tag{27} $$

where the $p_i$ are the filler/role bindings for s conceived as propositions: “filler f binds to role r”. Thus, the connectionist representation of a conjunction of filler/role bindings is the sum of the representations of the individual bindings.4 The connectionist representation ψ restricted to β(s) is defined in Eq. 26 as $\psi_b$, such that we eventually arrive at

$$ \psi(s) = \sum_{(f, r) \in \beta(s)} \psi_F(f) \otimes \psi_R(r) \tag{28} $$

The string s from example (23) is thus represented by the sum of tensor products

$$ \psi(s) = \sum_{k=1}^{n} \psi_F(a_{i_k}) \otimes \psi_R(k) \tag{29} $$

The representation Eq. 28 is faithful if the filler vectors and the role vectors are each linearly independent, which requires filler and role spaces of sufficient dimension. If this is not the case, the tensor product representation Eq. 28 exhibits graceful saturation of activation (Smolensky 1990; Smolensky and Legendre 2006). Note that a faithful tensor product representation allows the projection of activation patterns back into the respective filler and role spaces, thus enabling the unbinding of constituent structures. We shall use such a faithful representation for phrase structure trees in section “Methods/Tensor product top-down recognizer” for modeling our massively parallel top-down recognizer. Such models are important because they possess a compositional architecture whose representations by patterns of distributed activation are in fact causally efficacious for cognitive computations, thereby refuting the objection of Fodor and Pylyshyn (1988).
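Eq. 29 and the unbinding property of faithful representations can be illustrated numerically. The sketch below assumes orthonormal (canonical basis) filler and role vectors and uses NumPy's `np.kron` for the tensor product. It recovers the filler bound to a role by contracting with that role vector. The function names are ours. The last lines show how faithfulness is lost when all role vectors coincide.

```python
import numpy as np

def tpr_string(word, fillers, roles):
    """Eq. 29: sum over positions k of psi_F(a_k) (x) psi_R(k)."""
    return sum(np.kron(fillers[a], roles[k]) for k, a in enumerate(word, start=1))

def unbind(v, role_vec, n_fillers, n_roles):
    """Filler bound to role_vec, assuming orthonormal role vectors.

    np.kron(f, r) reshaped to (n_fillers, n_roles) is the outer product f r^T,
    so multiplying by r returns f; other roles contribute 0 by orthogonality.
    """
    return v.reshape(n_fillers, n_roles) @ role_vec

fillers = {"a": np.array([1.0, 0.0]), "b": np.array([0.0, 1.0])}
roles = {k: np.eye(3)[k - 1] for k in (1, 2, 3)}      # string positions 1..3
v = tpr_string("aba", fillers, roles)                  # vector in R^2 (x) R^3 = R^6
print(unbind(v, roles[2], 2, 3))                       # filler at position 2

# Non-faithful case: one-dimensional, identical role vectors.
flat = {k: np.array([1.0]) for k in (1, 2)}
print(np.allclose(tpr_string("ab", fillers, flat), tpr_string("ba", fillers, flat)))
```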

On the other hand, tensor product representations that are not faithful are interesting for other reasons. One example of such representations is the fractal encodings proposed by Tabor (1998, 2000) for constructing dynamical automata (Smolensky and Legendre 2006; Smolensky 2006). Gödel encodings (Moore 1990, 1991; Siegelmann 1996; beim Graben et al. 2004) are also special, namely one-dimensional, tensor product representations with integer fillers and real-valued roles:

$$ \psi_F(f_i) = i \in \{0, 1, \ldots, n-1\}, \qquad \psi_R(r_k) = n^{-k}, $$

where n is the number of fillers.

Dynamical recognizers.

Pollack (1991) introduced dynamical recognizers and implemented them through cascaded neural networks in order to describe classification tasks for formal languages. A dynamical recognizer is a quadruple $D = (X, T, \Omega, G)$, where X is a “phase space”, T is a finite input alphabet of symbols $a_i$, $\Omega: T \to (X \to X)$, $a_i \mapsto \omega_{a_i}$, is an iterated function system parameterized by the symbols from T, and $G: X \to \{0, 1\}$ is a “decision function”. The recognizer accepts a finite string $w = a_{i_1} a_{i_2} \ldots a_{i_n} \in T^*$ as belonging to a certain formal language when

$$ G\big(\omega_{a_{i_n}} \circ \cdots \circ \omega_{a_{i_2}} \circ \omega_{a_{i_1}}(x_0)\big) = 1 $$

for a particular initial condition $x_0 \in X$. Note that this dynamical system is completely non-autonomous: no intrinsic dynamics is prescribed, and trajectories are generated from the initial condition only as itineraries driven by the input, $x_k = \omega_{a_{i_k}}(x_{k-1})$. These systems have been further developed by Moore (1998) and Moore and Crutchfield (2000) towards “quantum automata”, and especially by Tabor (1998, 2000) towards dynamical automata. We exploit these ideas in section “Methods/Nonlinear dynamical automaton” to present a “quantum representation theory for dynamical automata”.
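Pollack's definition can be instantiated directly. The toy recognizer below is a hypothetical example, not taken from the source. Its phase space is the real line, each symbol selects an affine map, and the decision function accepts exactly the strings over {a, b} with equal numbers of a's and b's. It shows the non-autonomous character noted above: without input, the state never moves.

```python
from typing import Callable, Dict, Iterable

Map = Callable[[float], float]

def make_recognizer(omega: Dict[str, Map], decide: Callable[[float], int], x0: float):
    """Dynamical recognizer (X, T, Omega, G) with initial condition x0."""
    def accepts(word: Iterable[str]) -> int:
        x = x0
        for a in word:          # the input symbol selects the map to apply
            x = omega[a](x)
        return decide(x)
    return accepts

# Hypothetical toy instance: X = R, T = {a, b}.
omega = {"a": lambda x: x + 1.0, "b": lambda x: x - 1.0}
decide = lambda x: 1 if x == 0.0 else 0
accepts = make_recognizer(omega, decide, x0=0.0)

for w in ["ab", "abba", "aab", ""]:
    print(repr(w), accepts(w))
```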

Nonlinear dynamical automata. The simplest variant of the tensor product representation is the Gödel encoding, where a one-sided string (Eq. 23) is mapped onto a real number in the unit interval [0, 1] by a $b_T$-adic expansion

$$ x = \psi(s) = \sum_{k=1}^{\infty} \psi(a_{i_k})\, b_T^{-k} \tag{30} $$

Here, $\psi: T \to \{0, 1, \ldots, b_T - 1\}$ is an arbitrary encoding of the $b_T$ symbols in T by the integers from 0 to $b_T - 1$, and $\psi: T^{\mathbb{N}} \to [0, 1]$ denotes its extension to sequences from $T^{\mathbb{N}}$.

The Gödel encoding Eq. 30 has the advantage that dotted bi-infinite strings (Eq. 6) can also be described by numeric values, simply by splitting the string at the dot and representing the left-hand side of the dot by one number x and the right-hand side by another number y, such that

$$ x = \sum_{k=1}^{\infty} \psi(a_{-k})\, b_T^{-k} \tag{31} $$

$$ y = \sum_{k=0}^{\infty} \psi(a_{k})\, b_T^{-k-1} \tag{32} $$

Thus, a bi-infinite string $\ldots a_{-2} a_{-1} . a_0 a_1 \ldots$ is mapped onto a point (x, y) in the unit square $[0, 1]^2$. This “symbologram” provides a phase space representation of a symbolic dynamics (Cvitanović et al. 1988; Kennel and Buhl 2003).
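Eqs. 30–32 can be computed exactly for finite strings, which amounts to padding them with the symbol coded 0. The sketch below uses Python's `fractions.Fraction` to avoid rounding. The symbol coding and base are arbitrary choices for illustration, and the function names are ours. The left half is passed in reading order and reversed, so that $a_{-1}$ receives the weight $b_T^{-1}$.

```python
from fractions import Fraction
from typing import Dict, Iterable, Tuple

def goedel(symbols: Iterable[str], code: Dict[str, int], base: int) -> Fraction:
    """Eq. 30 for a finite string: sum_k code(a_k) * base**(-k), k = 1, 2, ..."""
    return sum((Fraction(code[a], base ** k) for k, a in enumerate(symbols, start=1)),
               Fraction(0))

def symbologram_point(left: str, right: str, code: Dict[str, int],
                      base: int) -> Tuple[Fraction, Fraction]:
    """Eqs. 31/32: map the dotted string left.right to (x, y) in [0, 1]^2.

    left is given in reading order (..., a_-2, a_-1) and is read backwards
    from the dot; right is (a_0, a_1, ...).
    """
    return goedel(reversed(left), code, base), goedel(right, code, base)

code = {"0": 0, "1": 1}                        # arbitrary coding, b_T = 2
print(symbologram_point("10", "11", code, 2))  # dotted string 10.11
```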

Next, consider a generalized shift with a DoD of length d, as in section “Introduction/Computational symbolic models”. Then, the word contained in the DoD on the left-hand side of the dot partitions the x-axis of the symbologram, while the word contained in the DoD on the right-hand side of the dot partitions its y-axis. In our case, d = 2, and the DoD is formed by the words $w = a_{-1}.a_0$, such that the symbol $a_{-1}$ partitions the x-axis and the symbol $a_0$ partitions the y-axis. Taken together, the DoDs partition the unit square into rectangles, which are the domains of the symbologram dynamics. Moore (1990, 1991) proved that this dynamics is given by piecewise affine linear (yet globally nonlinear) maps. In contrast to the non-autonomous, forced dynamical recognizers and automata (Pollack 1991; Tabor 1998, 2000), the nonlinear symbologram dynamics $\Phi: [0, 1]^2 \to [0, 1]^2$ resulting from generalized shifts is autonomous and intrinsic. Therefore, we shall refer to these systems as nonlinear dynamical automata (NDA). Rectangles in the NDA’s phase space, which correspond to cylinder sets of the underlying generalized shift, are evidently symbolic representations, and they are causally efficacious because they are contained in the cells of the partition defining the NDA’s dynamics (beim Graben 2004). It is less obvious that these rectangles are also constituents obeying compositionality demands (Fodor and Pylyshyn 1988). This, however, becomes clear by considering the symbolic dynamics of the NDA: constituents form admissible words of generalized shifts (Atmanspacher and beim Graben 2007).
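Moore's result can be illustrated with the simplest generalized shift, the ordinary left shift, which moves the dot one symbol to the right. Under the coding of Eqs. 31 and 32, the symbol $a_0$ is read off from the cell $j = \lfloor b_T y \rfloor$ of the y-partition. On each cell the map is affine: $x' = (x + j)/b_T$ and $y' = b_T y - j$. The sketch below checks this against re-encoding the shifted string. It does not implement the parser's generalized shifts, which additionally substitute words within the DoD. The helper names are ours.

```python
import math
from fractions import Fraction
from typing import Dict, Iterable, Tuple

def goedel(symbols: Iterable[str], code: Dict[str, int], base: int) -> Fraction:
    """Eq. 30 for a finite string."""
    return sum((Fraction(code[a], base ** k) for k, a in enumerate(symbols, start=1)),
               Fraction(0))

def encode(left: str, right: str, code: Dict[str, int], base: int) -> Tuple[Fraction, Fraction]:
    """Eqs. 31/32 for the dotted string left.right (left given in reading order)."""
    return goedel(reversed(left), code, base), goedel(right, code, base)

def shift_map(x: Fraction, y: Fraction, base: int) -> Tuple[Fraction, Fraction]:
    """Symbologram image of moving the dot one symbol to the right.

    j = floor(base * y) is the code of a_0, i.e. the cell of the y-partition
    containing the point; within each cell the map is affine.
    """
    j = math.floor(base * y)
    return (x + j) / base, base * y - j

code = {"0": 0, "1": 1, "2": 2}                  # arbitrary coding, b_T = 3
x, y = encode("21", "012", code, 3)              # dotted string 21.012
print(shift_map(x, y, 3) == encode("210", "12", code, 3))
```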

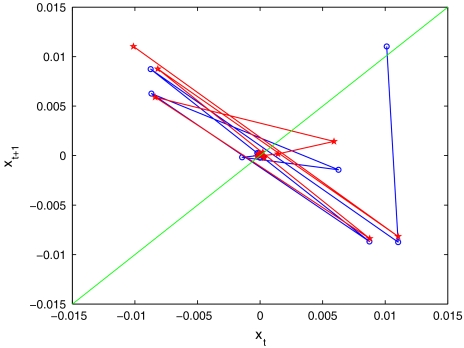

In sum, faithful high-dimensional tensor product representations as well as two-dimensional NDAs fulfill Fodor and Pylyshyn’s (1988) constraints on dynamical system architectures for cognitive computation, where different regions in phase space represent different symbolic contents with different causal effects.

Methods

In this section we present two different models of syntactic language processing, both of which are built on Smolensky’s universal tensor product representations. The first model makes explicit use of a high-dimensional, faithful filler/role binding architecture that allows the description of syntactic parsing as a massively parallel process in the phase space of a nonlinear dynamical system. The second model, on the other hand, generalizes the nonlinear dynamical automata approach of Gödel-encoded generalized shifts. It is a serial diagnosis and repair model, augmented with a non-autonomous forcing that describes incoming sentence material. Both models are developed on the basis of a context-free grammar that describes the processing difficulties in a pilot experiment on language-related brain potentials. The models are able to describe the obtained ERP results, at least qualitatively, by trajectories that explore different regions in phase space while pursuing different processing steps.

ERP experiment

In an ERP experiment on the processing of locally ambiguous German sentences, 14 subjects were asked to read 120 sentences each, belonging to two different condition classes:

|

33 |

|

34 |

Sentences were presented visually, phrase by phrase, on a computer monitor. Each phrase was shown for 500 ms, followed by a 100 ms blank screen, so that successive phrases appeared at 600 ms intervals. The sentences were presented in pseudo-randomized order, and subjects had to answer a probe question after each sentence to ensure that they attended to the stimuli.

The sentences (33) and (34) began with the feminine noun phrase (NP) die Rednerin (‘the speaker’), which is ambiguous with respect to its grammatical role (either subject or direct object). The disambiguating information was provided by the determiner of the second noun phrase. In sentence (33), den Berater (‘the advisor’) is explicitly marked for accusative case and is therefore assigned the direct object role. By contrast, der Berater bears nominative case, indicating that this NP must be interpreted as the subject of the sentence. Correspondingly, die Rednerin has to be disambiguated towards the direct object role in sentence (34).

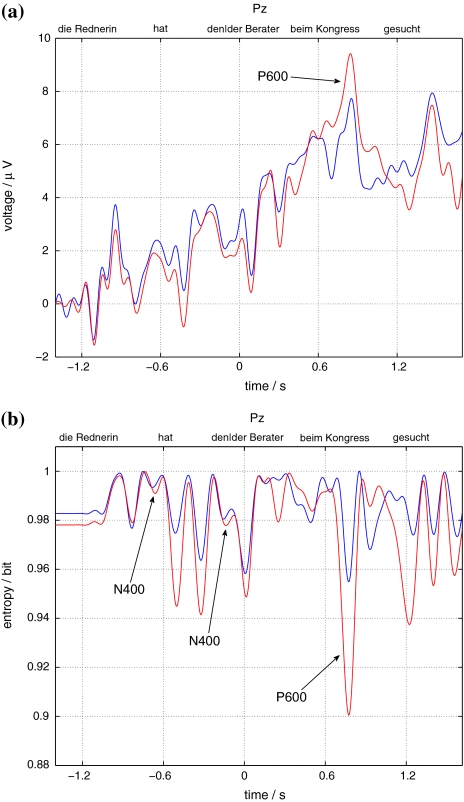

According to psycholinguistic principles such as the minimal attachment principle, discussed in section “Introduction/Computational symbolic models”, a subject interpretation of the ambiguous NP is preferred over the alternative direct object interpretation. This preference, the subject preference strategy, leads to processing problems for object-first sentences such as (34). These difficulties are reflected by changes in the event-related brain potential.

To measure ERPs, the EEG was recorded from 25 Ag/AgCl electrodes mounted according to the 10–20 system (Sharbrough et al. 1995). It was sampled at 250 Hz (impedances < 5 kΩ) and referenced to the left mastoid (re-referenced offline to linked mastoids). Additionally, the EOG was monitored to control for eye-movement artifacts.

For the ERP analysis only artifact-free trials (determined by visual inspection) with correct answers to the probe questions were selected. Epochs covering the whole sentence from −1,400 to 2,500 ms relative to the presentation of the critical second NP were baseline corrected by subtracting the time-average of a pre-sentence interval of 200 ms. Mean ERPs were computed for each subject and condition and subsequently averaged into the grand averages.
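The baseline correction and averaging steps can be sketched with NumPy. This is a minimal illustration under three assumptions. First, epochs are stored as arrays of shape (trials, channels, samples). Second, they already contain only the selected artifact-free, correctly answered trials. Third, the 200 ms pre-sentence interval is taken to be the first 200 ms of the −1,400 to 2,500 ms epoch. The function names are hypothetical, and the data in the usage lines are synthetic.

```python
import numpy as np

FS = 250                          # sampling rate in Hz
EPOCH_MS = (-1400, 2500)          # epoch limits relative to the critical second NP
N_SAMPLES = int((EPOCH_MS[1] - EPOCH_MS[0]) * FS / 1000)   # 975 samples at 4 ms
BASELINE_MS = 200                 # ASSUMPTION: first 200 ms of the epoch are pre-sentence

def baseline_correct(epochs: np.ndarray, fs: int = FS, baseline_ms: int = BASELINE_MS) -> np.ndarray:
    """Subtract, per trial and channel, the mean of the baseline interval."""
    n_base = int(round(baseline_ms * fs / 1000))
    return epochs - epochs[..., :n_base].mean(axis=-1, keepdims=True)

def grand_average(epochs_per_subject) -> np.ndarray:
    """Average trials within each subject, then average the subject ERPs."""
    subject_erps = [baseline_correct(e).mean(axis=0) for e in epochs_per_subject]
    return np.mean(subject_erps, axis=0)

# Synthetic example: 3 subjects, 25 channels, varying numbers of trials.
rng = np.random.default_rng(0)
data = [rng.normal(size=(rng.integers(20, 40), 25, N_SAMPLES)) for _ in range(3)]
print(grand_average(data).shape)     # (channels, samples)
```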

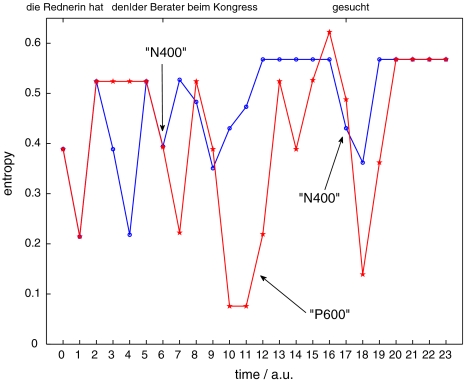

Moreover, a symbolic dynamics analysis (section “Introduction/The dynamical system approach”) was performed. As ERPs are strongly nonstationary over a whole sentence, the binary half-wave encoding (beim Graben 2001; beim Graben and Frisch 2004; Frisch et al. 2004) was employed with parameters: width of secant slope window, T1 = 70 ms; width of moving average window, T2 = 10 ms; dynamic encoding lag, l = 8 ms. This symbolization technique detects local maxima and minima of the EEG time series, mapping time intervals between their successive inflection points onto words consisting of only “0”s and “1”s, respectively. Note that the half-wave encoding resembles the first sifting step of the empirical mode decomposition (Huang et al. 1998; Sweeney-Reed and Nasuto 2007). Due to its local operations, the half-wave encoding technique is able to reduce baseline problems and nonstationary drifts. From the symbolically encoded ERP epochs, event-related cylinder entropies Eq. 9 were computed.
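Event-related cylinder entropies can be estimated from an ensemble of symbolically encoded epochs. The sketch below assumes that Eq. 9 is the Shannon entropy, in bits, of the distribution across trials of the length-L words starting at each time point. The exact normalization of Eq. 9 may differ. The half-wave encoding itself is not reproduced here, so the input is an already binarized array, and the function name is ours.

```python
from collections import Counter
import numpy as np

def cylinder_entropy(symbols: np.ndarray, word_len: int = 3) -> np.ndarray:
    """Entropy H(t) in bits of the length-word_len words across trials.

    symbols: integer array (n_trials, n_samples), e.g. half-wave encoded 0/1 data.
    Returns one value per start time t = 0 .. n_samples - word_len.
    """
    n_trials, n_samples = symbols.shape
    H = np.empty(n_samples - word_len + 1)
    for t in range(n_samples - word_len + 1):
        # Each trial contributes the word (cylinder) starting at time t.
        counts = Counter(tuple(row) for row in symbols[:, t:t + word_len])
        p = np.fromiter(counts.values(), dtype=float) / n_trials
        H[t] = -np.sum(p * np.log2(p))
    return H

rng = np.random.default_rng(1)
binary_epochs = rng.integers(0, 2, size=(40, 100))   # synthetic 0/1 data
print(cylinder_entropy(binary_epochs).max())          # at most log2(8) = 3 bits
```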

As the ERP results serve only illustrative purposes in this study, we do not perform statistical analyses here. A follow-up of this provisional pilot experiment is currently scheduled, and comprehensive results will be published later.

Processing models

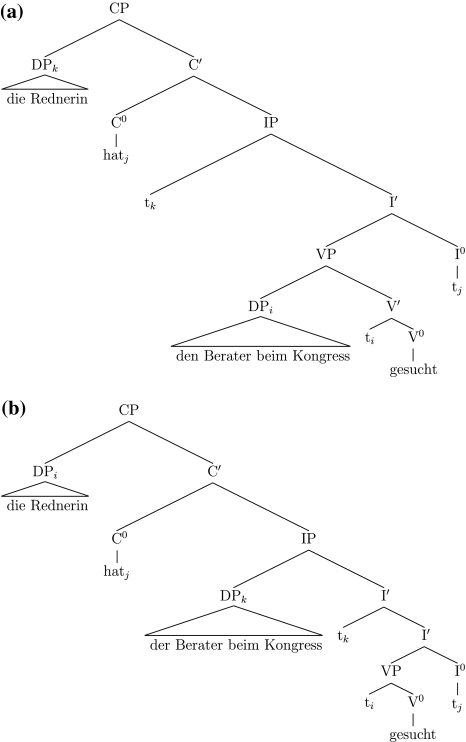

To construct a formal representation of our ERP language stimuli, we present the phrase structure trees of the sentences (33) and (34) according to Government and Binding Theory (GB) (Chomsky 1981; Haegeman 1994) in Fig. 1. Figure 1a shows the tree of (33) and Fig. 1b that of (34).

Fig. 1.

GB phrase structure trees. (a) For sentence (33). (b) For sentence (34).

These trees are derived on the basis of the X-bar module of GB (section “Introduction/Computational symbolic models”). Their binding relations result from movement operations that are represented by co-indexed nodes (hence the nodes DPk and DPi are essentially the same; they differ only in their binding partners, the traces tk and ti). Note that binding and movement cannot be captured within the framework of context-free grammars; minimalist grammars offer one way to do so (Stabler 1997; Michaelis 2001; Stabler and Keenan 2003; Hale 2003; Gerth 2006).

From the trees in Fig. 1, a context-free grammar is readily obtained. For the sake of simplicity, however, we first discard the lexical material, regarding the next-to-last nodes in the trees as the terminal symbols T = {DP1, C0, t, DP2, DP3, V0, I0}, where:

|

Additionally, only binary branches of the trees are taken into account such that the expansion of I0 is also abandoned. The corresponding nonterminal alphabet is then given by N = {CP, C′, I1, I2, VP, IP, V′} where we have introduced two categories I1, I2 for the I′ nodes in Fig. 1b. The grammar G = (T, N, P, CP) comprises the rewriting rules

|

|

35 |

|

36 |

|

37 |

|

38 |

|

39 |

|

40 |

|

41 |

|

42 |

|

43 |

|

44 |

|

The grammar G is locally ambiguous because there is more than one rule for expanding each of the nonterminals IP, I1, and VP. Following beim Graben et al. (2004), the grammar can be decomposed into two locally unambiguous grammars G1, G2, resulting in the production systems

|

45 |

for generating sentence (33), and

|

46 |

for generating sentence (34). (This decomposition is analogous to the harmonic normal form decomposition suggested by Hale and Smolensky (2006).)

Each of the languages produced by the disambiguated grammars G1, G2 can be deterministically parsed with a suitable top-down recognizer, as explained in section “Introduction/Computational symbolic models”. In the following two subsections, we use Smolensky’s tensor product representations to construct two different top-down recognizers. The first is a parallel, high-dimensional dynamical system using faithful filler/role bindings (Dolan and Smolensky 1989; Smolensky 1990, 2006; Smolensky and Legendre 2006). The second is a serial diagnosis and repair NDA based on a Gödel encoding of generalized shifts (Moore 1990, 1991; Siegelmann 1996), combined with a non-autonomous update dynamics (Pollack 1991; Moore 1998; Tabor 2000; Moore and Crutchfield 2000).

Tensor product top-down recognizer

Following Dolan and Smolensky (1989), Smolensky (1990, 2006) and Smolensky and Legendre (2006), hierarchical structures can be represented in the activation space of neural networks by filler/role bindings through tensor products. A labeled binary tree supplies three role positions, Parent, LeftChild, and RightChild, which can be identified with basis vectors of a three-dimensional role vector space,

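$$ R = \{\mathrm{Parent}, \mathrm{LeftChild}, \mathrm{RightChild}\} \subset V_R, \qquad \dim V_R = 3 \tag{47} $$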

To each of these roles, either a simple or a complex filler can be attached. Simple fillers are terminal and nonterminal symbols of the grammar G, which in turn are also identified with basis vectors of another, 14-dimensional space:

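$$ F = T \cup N = \{\mathrm{DP1}, \mathrm{C0}, \mathrm{t}, \mathrm{DP2}, \mathrm{DP3}, \mathrm{V0}, \mathrm{I0}, \mathrm{CP}, \mathrm{C'}, \mathrm{I1}, \mathrm{I2}, \mathrm{VP}, \mathrm{IP}, \mathrm{V'}\} \subset V_F, \qquad \dim V_F = 14 \tag{48} $$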

The binding of a category f to a tree position r is expressed by a tensor product $f \otimes r$. A simple tree is then given by the sum over all filler/role bindings, $\sum_i f_i \otimes r_i$. Since tree positions can themselves be occupied by subtrees, a recursive application of tensor products, in which the representation of a subtree is bound to a role of its mother tree, immediately increases the dimensionality of the respective subspaces. Therefore, the sum has to be replaced by a direct sum over tensor product spaces, leading to

|

49 |

where $\mathcal{F}$ is the infinite-dimensional Fock space generated by the finite-dimensional filler and role vector spaces $V_F$ and $V_R$ (Smolensky and Legendre 2006, p. 186, footnote 11). In fact, a faithful representation of infinitely recursive phrase structures would demand the complete Fock space (beim Graben et al. 2007).

However, the grammar G is fortunately not recursive, which makes Smolensky’s approach tractable with finite means. In the following, we devise a tensor product top-down recognizer. Its state description at time t comprises two Fock space elements, corresponding to the stack and the input of the automaton defined in Eq. 15, respectively. The stack element is the tensor product tree at processing time t. The input element is a direct sum of fillers, all of which correspond to terminals and are assigned to the root position; it thereby encodes the input being processed. Because the direct sum in these expressions is commutative and associative, we additionally require two pointers: one to the next, not yet expanded, nonterminal filler in the stack, and one to the topmost position in the input. Therefore, a tensor product top-down recognizer τi for processing grammar Gi (i = 1, 2) is characterized by a semi-symbolic state description

|

50 |

The parser recursively expands the nonterminal filler beneath the stack pointer into an elementary tree whenever the grammar contains a rule for that nonterminal. Thereafter, it checks whether the filler at the LeftChild or RightChild position of the expanded tree agrees with the terminal symbol in the input pointed to by the input pointer. If this is the case, that filler is removed from the input only (it remains in the tree), and the input pointer is readjusted to the next input symbol.
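Stripped of the tensor product encoding, the expand-and-attach cycle described above is the classic deterministic top-down recognizer. The sketch below is a stack-based formulation with a hypothetical toy grammar, with one rule per nonterminal so that it is locally unambiguous. The toy grammar is not G1 or G2, and the top of the Python list plays the role of the stack pointer.

```python
from typing import Dict, List, Sequence, Tuple

def top_down_recognize(rules: Dict[str, Tuple[str, ...]], start: str,
                       tokens: Sequence[str]) -> Tuple[bool, List[Tuple[str, str]]]:
    """Deterministic top-down recognizer for a locally unambiguous CFG.

    rules maps each nonterminal to its single right-hand side.
    Returns (accepted, trace of operations).
    """
    stack = [start]            # last element = leftmost unprocessed symbol
    pos = 0                    # input pointer
    trace = []
    while stack:
        top = stack.pop()
        if top in rules:                                  # expand a nonterminal
            stack.extend(reversed(rules[top]))
            trace.append(("expand", top))
        elif pos < len(tokens) and tokens[pos] == top:    # attach a matching terminal
            pos += 1
            trace.append(("attach", top))
        else:                                             # mismatch: reject
            return False, trace
    return pos == len(tokens), trace

toy_rules = {"S": ("x", "A"), "A": ("y", "z")}   # hypothetical binary-branching grammar
ok, trace = top_down_recognize(toy_rules, "S", ["x", "y", "z"])
print(ok, trace)
```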

Since filler/role bindings can be established recursively, we introduce four additional roles indicating the positions in the state description list above. Binding each component of the state description to its position role (the stack to the first, the input to the second, and so on) yields the representation of a state description of τi

|

51 |

As there are two sentences, (33) and (34), which can be processed by two deterministic tensor product top-down recognizers τ1 and τ2, there are four different parses to be examined. Parsing trajectories are presented in the Appendix.

For the numerical implementation of the model, we chose the arithmetic vector spaces $V_F = \mathbb{R}^{14}$ and $V_R = \mathbb{R}^{3}$ and identify the 14 fillers and the 3 roles with the canonical basis vectors of these spaces, respectively. Thus, e.g., the role for the parent position in vector space $V_R$ is $(1, 0, 0)^{\mathsf T}$. This leads to a local and faithful representation of these symbols.

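In this representation, the tensor products are then given by Kronecker products (Mizraji 1989, 1992) of filler and role vectors,

$$ f \otimes r = (f_1 r_1,\, f_1 r_2,\, f_1 r_3,\, f_2 r_1,\, \ldots,\, f_{14} r_3)^{\mathsf{T}} \in \mathbb{R}^{42}, $$

where $f = (f_1, \ldots, f_{14})^{\mathsf T} \in V_F$ and $r = (r_1, r_2, r_3)^{\mathsf T} \in V_R$.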

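Generally, the calculation of iterated tensor products leads to a vast number of subspaces of different dimensions. Consider, e.g., the tree representation in the stack position for state 2 of one of the parses,

$$ \mathrm{CP} \otimes \mathrm{Parent} \oplus \mathrm{DP1} \otimes \mathrm{LeftChild} \oplus \mathrm{C'} \otimes \mathrm{RightChild}, $$

saying that CP is attached to Parent, DP1 to LeftChild and C′ to RightChild. Since the filler C′ is beneath the “stack pointer”, rule (36), which expands C′ into an elementary tree with daughters A and B (C′ → A B), applies next. This results in state 3 of the trajectory, with

$$ \mathrm{CP} \otimes \mathrm{Parent} \oplus \mathrm{DP1} \otimes \mathrm{LeftChild} \oplus \big(\mathrm{C'} \otimes \mathrm{Parent} \oplus A \otimes \mathrm{LeftChild} \oplus B \otimes \mathrm{RightChild}\big) \otimes \mathrm{RightChild} $$

in the stack position.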

Obviously, the first two direct summands, CP ⊗ Parent and DP1 ⊗ LeftChild, belong to a different, lower-dimensional subspace of the Fock space than the remaining ones. Therefore, the use of direct sums is crucial for proper tensor product representations. However, as our model grammars are not recursive at all, we know that the representation of the last phrase structure tree of a well-formed parse is an element of a finite tensor product space of maximal dimension M. We therefore take this space as an embedding space and interpret the direct sums as direct sums of finite subspaces within it. Technically, we achieve this by multiplying all vectors from lower-dimensional subspaces from the right with tensor powers of the root role (Parent).

For the example above, we hence obtain the correct expression

|

where the usual vector addition is now admissible.
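The embedding by tensor powers of the root role can be made concrete with the canonical basis vectors introduced above. The sketch below encodes state 2 of the example and pads its lower-dimensional summands from the right with the Parent role. It then adds the expanded subtree bound to RightChild. Because rule (36) is not reproduced here, the expansion C′ → C0 IP is an assumption made only for illustration. The helper names are hypothetical.

```python
import numpy as np

# Eqs. 48 and 47 with canonical basis vectors (local, faithful representation).
FILLERS = ["DP1", "C0", "t", "DP2", "DP3", "V0", "I0",
           "CP", "C′", "I1", "I2", "VP", "IP", "V′"]
ROLES = ["Parent", "LeftChild", "RightChild"]
f = dict(zip(FILLERS, np.eye(len(FILLERS))))
r = dict(zip(ROLES, np.eye(len(ROLES))))

def elementary_tree(parent: str, left: str, right: str) -> np.ndarray:
    """Sum of the three filler/role bindings, each a Kronecker product."""
    return (np.kron(f[parent], r["Parent"]) + np.kron(f[left], r["LeftChild"])
            + np.kron(f[right], r["RightChild"]))

def pad(v: np.ndarray, dim: int) -> np.ndarray:
    """Multiply from the right with powers of the root role until v has size dim."""
    while v.size < dim:
        v = np.kron(v, r["Parent"])
    return v

state2 = elementary_tree("CP", "DP1", "C′")                    # in R^(14*3) = R^42
# ASSUMPTION for illustration only: rule (36) is C′ -> C0 IP.
subtree = np.kron(elementary_tree("C′", "C0", "IP"), r["RightChild"])   # in R^126
upper = np.kron(f["CP"], r["Parent"]) + np.kron(f["DP1"], r["LeftChild"])
state3 = pad(upper, subtree.size) + subtree                      # ordinary vector addition
print(state2.size, state3.size)
```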

Since all representations are now activation vectors from the same high-dimensional vector space  we can easily construct the desired parallel parser from the four trajectories obtained by  respectively, simply by superimposing the state descriptions of  and  for each processing step separately. This yields the parallel parse of the  sentence (33). On the other hand, superimposing the instantaneous state descriptions of  and  yields the parallel parse of the  sentence (34). Note that the shorter trajectories have to be padded with zero vectors so that all parses have the same duration.
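The superposition with zero padding can be sketched as follows, assuming each parse trajectory is already given as a list of activation vectors of equal length in the common embedding space (toy two-dimensional vectors here, not model data):

```python
def pad(trajectory, length, dim):
    """Append zero vectors until the trajectory has `length` states."""
    return trajectory + [[0.0] * dim for _ in range(length - len(trajectory))]

def superimpose(*trajectories):
    """Parallel parser state at time t: componentwise sum of all states at time t."""
    dim = len(trajectories[0][0])
    length = max(len(tr) for tr in trajectories)
    padded = [pad(tr, length, dim) for tr in trajectories]
    # zip(*padded) groups the states of all parses at equal t;
    # zip(*states) then groups their components for summation.
    return [[sum(vals) for vals in zip(*states)] for states in zip(*padded)]

# Usage: a parse of three steps superimposed with a shorter parse of two steps.
a = [[1, 0], [0, 1], [1, 1]]
b = [[0, 1], [1, 0]]
parallel = superimpose(a, b)
```

The shorter trajectory `b` contributes a zero vector at the last time step, so the superposition there equals the final state of `a`.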

Finally, our construction yields a time-discrete dynamical system  with phase space  and flow , representing a parallel tensor product top-down recognizer. The iterates  generate the parsing trajectory for t = 0, 1, 2, … starting with initial condition

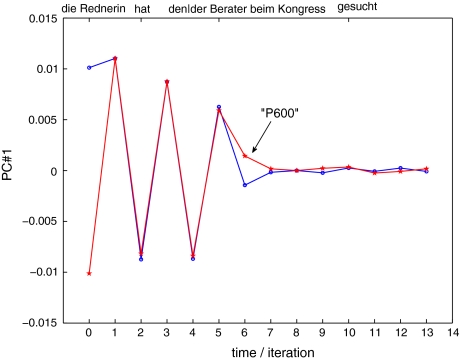

The results of this model are presented in section “Results/Tensor product top-down recognizer”. There, we have standardized the numerical data by the z-transform in order to make the different parses comparable. Additionally, we employ a principal component analysis (PCA) to reduce the dimensionality of the activation vector space and thereby facilitate visualization. In short, PCA is an orthogonal linear transformation that rotates the data into a new coordinate system such that the direction of greatest variance becomes the first principal component, PC#1, the direction of second greatest variance becomes the second principal component, and so on.
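A minimal sketch of both preprocessing steps, assuming the activation trajectory is stored as a NumPy array with one row per time step and one column per dimension of the embedding space, and that the z-transform is taken per dimension (the text does not specify the axis). The principal components are obtained here from the singular value decomposition of the centered data, which is one standard way to compute a PCA.

```python
import numpy as np

def zscore(X):
    """z-transform each column of X (rows: time steps, columns: activation dimensions)."""
    mu = X.mean(axis=0)
    sd = X.std(axis=0)
    sd[sd == 0] = 1.0  # constant dimensions stay at zero instead of dividing by zero
    return (X - mu) / sd

def pca(X, n_components=2):
    """Principal component scores and the variance along each component, via SVD."""
    Xc = X - X.mean(axis=0)
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    scores = Xc @ Vt[:n_components].T     # coordinates on PC#1, PC#2, ...
    variances = S**2 / (X.shape[0] - 1)   # in decreasing order
    return scores, variances

# Usage with random stand-in data (not model output): 14 time steps, 50 dimensions.
rng = np.random.default_rng(0)
X = rng.normal(size=(14, 50))
scores, variances = pca(zscore(X))
```

Plotting `scores[:, 0]` against time then gives the PC#1 time course of a parse.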

Nonlinear dynamical automaton

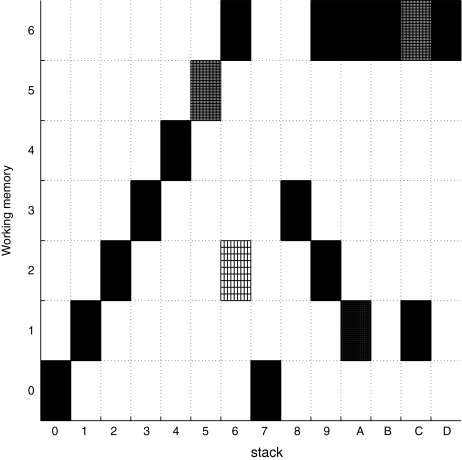

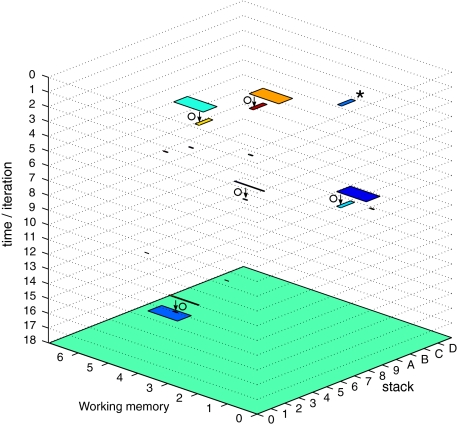

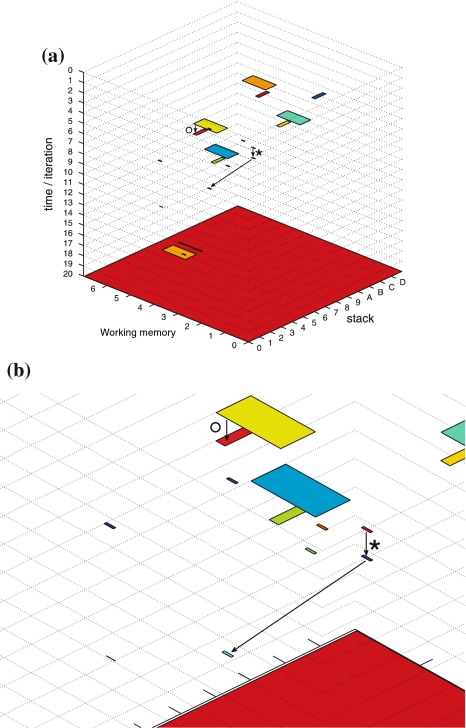

In this section, we construct an NDA top-down recognizer for the stimulus material of the ERP experiment along the lines of beim Graben et al. (2004). Given the two locally unambiguous grammars G1, G2 with productions Eqs. 45 and 46, we first construct two generalized shifts τ1, τ2 for recognizing the context-free languages they generate.

Pushdown automata generally process finite words  , whereas generalized shifts operate on bi-infinite strings  Therefore, we first have to describe finite words by infinite means. This can be achieved by forming equivalence classes of bi-infinite sequences that agree in a particular building block around the separating dot. Such classes of equivalent bi-infinite sequences are exactly the cylinder sets introduced in section “Introduction/The dynamical system approach”. Thus, every state description γ′ . w of a generalized shift emulating a top-down recognizer, with  (γ′ again denotes γ in reversed order) and  corresponds to a cylinder set

(52)

in  For the sake of subsequent modeling and numerical implementation, we regard the finite strings γ in the stack and w in the input as one-sided infinite strings, continued by random symbols  and

(53)

Next, we address the Gödel encoding used to construct the NDAs. Because the stack alphabet Γ and the input alphabet T of the pushdown automata differ in size, two separate Gödel encodings are recommended, one for the x-axis and another one for the y-axis of the symbologram. Using the random continuations Eq. 53, we obtain from Eq. 30

$$x = \sum_{k=1}^{\infty} g(\gamma_{k-1})\, b_\Gamma^{-k} \tag{54}$$

$$y = \sum_{k=1}^{\infty} g(w_k)\, b_T^{-k} \tag{55}$$

where bT is the number of terminal symbols in T, bΓ = bT + bN is the number of stack symbols in Γ = T ∪ N (bN being the number of nonterminal symbols in N), and g is the arbitrary Gödel encoding of these symbols.

Fixing the prefixes γ0…γk−1 of the stack and w1w2…wl of the input results in a cloud of points randomly scattered across a rectangle in the unit square of the symbologram. These rectangles are compatible with the symbol processing dynamics of the NDA, while individual points  do not have an immediate symbolic interpretation. We shall refer to arbitrary rectangles  as macrostates, distinguishing them from the microstates  of the underlying dynamical system.
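The following Python sketch illustrates how a macrostate arises from the two Gödel encodings described above. It rests on assumptions not stated in this form in the text: g maps each symbol to the value of its hexadecimal digit, bT = 7 and bΓ = 14 (inferred from the codes in Tables 1 to 3, where the terminals 0 to 6 occur in the input and the nonterminals 7 to D occur only on the stack), and the x-axis encodes the stack, read from its top symbol γ0 downwards.

```python
import random

B_T = 7       # assumption: terminal codes 0..6
B_GAMMA = 14  # assumption: stack symbol codes 0..13 (hex digits 0..D)

def code(symbol):
    """Gödel code g: here simply the hexadecimal digit value (assumption)."""
    return int(symbol, 16)

def godel(symbols, base):
    """Base-`base` expansion sum_k g(s_k) * base**-(k+1) of a symbol sequence."""
    return sum(code(s) * base ** -(k + 1) for k, s in enumerate(symbols))

def macrostate(stack_rev, inp):
    """Rectangle [x0, x1) x [y0, y1) fixed by the stack and input prefixes.

    stack_rev is gamma' (top symbol at the right end), so gamma = stack_rev reversed.
    """
    gamma = stack_rev[::-1]
    x0, y0 = godel(gamma, B_GAMMA), godel(inp, B_T)
    return (x0, x0 + B_GAMMA ** -len(gamma)), (y0, y0 + B_T ** -len(inp))

def microstate(stack_rev, inp, depth=20, rng=random):
    """One random point: both prefixes continued by `depth` random symbols."""
    gamma = stack_rev[::-1] + "".join(format(rng.randrange(B_GAMMA), "X") for _ in range(depth))
    w = inp + "".join(str(rng.randrange(B_T)) for _ in range(depth))
    return godel(gamma, B_GAMMA), godel(w, B_T)

# Usage: a cloud of microstates for the state 80 · 0361645 of Table 1.
rng = random.Random(0)
cloud = [microstate("80", "0361645", rng=rng) for _ in range(100)]
```

Every point of `cloud` falls inside `macrostate("80", "0361645")`, because the random continuation can only shift a coordinate by less than one unit of the last fixed digit.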

To accomplish our modeling task, we first assign Gödel codes to the symbols of the context-free grammar derived in section “Methods/Processing models” by arbitrarily introducing the integer numbers

(56)

where we have used “hexadecimal” notation (A to D) for the numbers 10 to 13.

Disregarding the numerical values of these codes for a moment, we can specify the two generalized shifts τ1, τ2 by their transition functions. For the shift τ1, which emulates the top-down recognizer processing the productions P1 (Eq. 45), this function is

(57)

Here, w1 always stands for the topmost symbol in the input. The last transition describes the attachment of any successfully predicted terminal. All other transitions describe predictions by expanding a rule in P1. Table 1 presents the dynamics of this generalized shift processing the sentence  (33).

Table 1.

Sequence of state transitions of the generalized shift τ1, processing the well-formed string 0361645

| Time | State | Operation |
|---|---|---|
| 0 | 7 · 0361645 |  |
| 1 | 80 · 0361645 |  |
| 2 | 8 · 361645 |  |
| 3 | 93 · 361645 |  |
| 4 | 9 · 61645 |  |
| 5 | A6 · 61645 |  |
| 6 | A · 1645 |  |
| 7 | 5C · 1645 |  |
| 8 | 5D1 · 1645 |  |
| 9 | 5D · 645 |  |
| 10 | 546 · 645 |  |
| 11 | 54 · 45 |  |
| 12 | 5 · 5 |  |
| 13 |  |  |

The operations are indicated as follows:  means prediction according to rule (X) in the productions Eq. 45 of the context-free grammar;  means cancellation of successfully predicted terminals from both stack and input; and  means acceptance of the string as well-formed.
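As a cross-check of Table 1, the sketch below replays τ1 in Python as an ordinary pushdown simulation rather than as a map on bi-infinite sequences. The rewrite rules in the hypothetical dictionary `P1` are not quoted from Eq. 45; they are read off the prediction steps of Table 1 (for instance, 7 · 0361645 becoming 80 · 0361645 implies the expansion 7 → 0 8), and the start symbol is assumed to be coded 7. A state is the pair (γ′, w), with the top of the stack at the right end of γ′, next to the dot.

```python
# Hypothetical rules read off Table 1: nonterminal code -> right-hand side.
P1 = {"7": "08", "8": "39", "9": "6A", "A": "C5", "C": "1D", "D": "64"}

def step(state, rules=P1):
    """One transition on the state (gamma', w); returns (new_state, operation)."""
    stack_rev, inp = state
    if not stack_rev:
        return None, "accept" if not inp else "fail"
    top = stack_rev[-1]
    if top in rules:                    # predict: expand the top nonterminal
        return (stack_rev[:-1] + rules[top][::-1], inp), "predict " + top
    if inp and inp[0] == top:           # attach: cancel a predicted terminal
        return (stack_rev[:-1], inp[1:]), "attach"
    return None, "fail"                 # predicted terminal does not match input

def recognize(word, rules=P1, start="7"):
    """Iterate the transitions from start · word; return visited states and verdict."""
    states = [(start, word)]
    while True:
        nxt, op = step(states[-1], rules)
        if nxt is None:
            return states, op
        states.append(nxt)

states, verdict = recognize("0361645")
for t, (g, w) in enumerate(states):
    print(t, g, "·", w)
```

The printed states reproduce the State column of Table 1, and `verdict` is `"accept"`. Feeding the string 0326645 to the same rules stops with `"fail"` after five transitions.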

Accordingly, we construct the generalized shift τ2 that recognizes the well-formedness of the string 0326645, which encodes sentence  (34), with respect to the productions P2. Table 2 displays the resulting symbolic dynamics.

Table 2.

Sequence of state transitions of the generalized shift τ2, processing the well-formed string 0326645

| Time | State | Operation |
|---|---|---|
| 0 | 7 · 0326645 |  |
| 1 | 80 · 0326645 |  |
| 2 | 8 · 326645 |  |
| 3 | 93 · 326645 |  |
| 4 | 9 · 26645 |  |
| 5 | A2 · 26645 |  |
| 6 | A · 6645 |  |
| 7 | B6 · 6645 |  |
| 8 | B · 645 |  |
| 9 | 5C · 645 |  |
| 10 | 546 · 645 |  |
| 11 | 54 · 45 |  |
| 12 | 5 · 5 |  |
| 13 |  |  |

The operations are indicated as above.

To describe the processing problem that arises when τ1 parses the  string 0326645, we present this dynamics in Table 3.

Table 3.

Sequence of state transitions of the generalized shift τ1, processing the unpreferred string 0326645

| Time | State | Operation |
|---|---|---|
| 0 | 7 · 0326645 |  |
| 1 | 80 · 0326645 |  |
| 2 | 8 · 326645 |  |
| 3 | 93 · 326645 |  |
| 4 | 9 · 26645 |  |
| 5 | A6 · 26645 |  |
| 6 | A6 · 26645 |  |

The operations are indicated as above, except that  refers to the processing problem that a predicted VP is not present in the input.

A possible solution for describing diagnosis and repair processes (Lewis 1998; Friederici 1998) in generalized shifts was proposed by beim Graben et al. (2004). Comparing step 6 in Table 3 with step 5 in Table 2 suggests the construction of a third generalized shift τ3 with only one nontrivial transition

(58)

This repair shift replaces the stack content A6 by another content A2, which lies on the admissible trajectory of τ2 shown in Table 2, steps 5 to 13.
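The diagnosis and repair step can be sketched in the same spirit. Again, `P1` and `P2` are hypothetical rule tables read off the prediction steps of Tables 1 and 2 rather than quoted from Eqs. 45 and 46, and `repair` implements our reading of the single nontrivial transition of τ3 (replace the stack content A6 by A2). The sketch checks that the repaired state rejoins the τ2 trajectory of Table 2 at step 5 and is then accepted.

```python
# Hypothetical rules read off Tables 1 and 2: nonterminal code -> right-hand side.
P1 = {"7": "08", "8": "39", "9": "6A", "A": "C5", "C": "1D", "D": "64"}
P2 = {"7": "08", "8": "39", "9": "2A", "A": "6B", "B": "C5", "C": "64"}

def step(state, rules):
    """Predict or attach on (gamma', w); None when no transition applies."""
    stack_rev, inp = state
    if not stack_rev:
        return None
    top = stack_rev[-1]
    if top in rules:
        return stack_rev[:-1] + rules[top][::-1], inp
    if inp and inp[0] == top:
        return stack_rev[:-1], inp[1:]
    return None

def run(state, rules):
    """Iterate until no transition applies; return all visited states."""
    states = [state]
    while (nxt := step(states[-1], rules)) is not None:
        states.append(nxt)
    return states

def repair(state):
    """Our reading of tau_3: replace the stack content A6 by A2."""
    stack_rev, inp = state
    if stack_rev.endswith("A6"):
        return stack_rev[:-2] + "A2", inp
    return state

word = "0326645"
garden_path = run(("7", word), P1)   # Table 3: stuck at A6 · 26645
repaired = repair(garden_path[-1])   # A2 · 26645, step 5 of Table 2
rest = run(repaired, P2)             # continue with tau_2 until acceptance
```

Ending in the empty state means both stack and input have been consumed, i.e. the repaired parse is accepted.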