Abstract:

The desire to optimize techniques and interventions that comprise clinical practice will inevitably involve the implementation of change in the process of care. To confirm the intended benefits of instituting clinical change, the process should be undertaken in a scientific manner. Although implementing changes in perfusion practice is limited by the availability of evidence based practice guidelines, we have the opportunity to audit our current practice according to institutional guidelines using quality improvement methods. Current electronic data collection technology is a useful tool available to facilitate the reporting of both clinical and process outcome improvements. The model of clinical effectiveness can be used as a systematic approach to introducing change in clinical practice, which involves reviewing the literature, acquiring appropriate skills and resources, auditing the change, and implementing continuous quality improvement to standardize the process. Finally, reporting the findings allows dissemination of the knowledge that can be generalized. Reporting change strengthens our efforts in clinical effectiveness, highlights the importance of perfusion practice, and increases the influence of the profession.

Keywords: quality improvement, cardiopulmonary bypass, clinical audit, practice change

Clinicians strive to optimize the techniques and interventions that comprise clinical practice and to find an ideal strategy that also minimizes adverse effects. Inevitably, this endeavor will involve implementing a change in practice, and to demonstrate that the change produces improvement, the process should be undertaken in a scientific manner. Two reports published by the Institute of Medicine (1,2) that highlighted the gap between ideal and actual delivery of healthcare have resulted in increased scrutiny of clinical efficiency, such that changes in regulatory or economic factors require that organizations adopt change (3). Change is often implemented when new products or techniques emerge that have the potential to improve outcomes. The challenge for us as clinicians in determining whether a change in practice is beneficial for our patients is to assimilate and evaluate the available evidence. However, deciding whether that evidence is sufficient to warrant a change in practice may be limited by both the quality and the quantity of the evidence. The ethos of “evidence based practice” has been described as a change in clinical mindset, from one heavily reliant on precedent and opinion to one based on science (4). Nevertheless, change may be threatening to individuals who want to preserve the status quo, where current practice is based on familiarity with tradition. Therefore, human and organizational behavior may influence the level of evidence required to implement change (3).

BEST PRACTICE AND CLINICAL EFFECTIVENESS

Best practice may be defined as “the conscientious, explicit, and judicious use of current best evidence in making decisions about the care of individual patients” (5). The term used to describe the application of evidence based practice at the population level, in contrast to the individual patient level, is “clinical effectiveness,” which incorporates the ethos of evidence based practice together with the requirement for cost effectiveness (6). The model for describing clinical effectiveness can also be used as a model for introducing change in clinical practice. Figure 1 illustrates the components of the process, which starts by reviewing the literature to determine best practice. The aim of the literature review is to determine whether there is sufficient evidence to justify implementing change. Where the evidence is inconclusive, there is an opportunity to add to it through research and thereby help to define best practice. If best practice can be defined, then the next step is to determine whether the appropriate techniques and procedures are known and whether the infrastructure is in place to support the change. Before we can define improvement in practice, we need a benchmark of our current processes, which can be obtained through clinical audit. The data collection and analysis inherent in the auditing process provide the foundation for continuous quality improvement through the cycle of audit and intervention. Once we have achieved clinical improvement, the knowledge obtained can be generalized through publication.

Figure 1.

A model for clinical effectiveness that can be used as a structure for instituting change in clinical practice that involves sourcing and reviewing the evidence, auditing practice, and reporting the findings. Adapted from NHS Executive. Achieving Effective Practice: The Clinical Effectiveness and Research Information Pack for Nurses, Midwives and Health Visitors, Department of Health, London; 1998.

What if the Evidence Is Insufficient to Define Best Practice?

A clinical example of this process is the attempt to answer the question “what is the best practice for arterial pressure management during CPB?” A recent review of the evidence related to optimal perfusion reported that there is insufficient evidence to recommend an optimal pressure for all patients (7). Low risk patients tolerate mean pressures of 50–60 mmHg without apparent complications; however, limited data suggest that high risk patients may benefit from pressures >70 mmHg. The authors conclude that there are currently limited data on which to base strong recommendations for the conduct of optimal CPB. Assuming we accept the validity of these findings (for the purpose of this example), we are left with the opportunity to contribute to the research ourselves. The conduct of quantitative research regarding optimal CPB parameters may not be within the purview of every institution; however, the opportunity exists to review existing process of care guidelines, determine whether a change in practice is warranted, and audit the existing practice. Analyzing the data from the audit will provide us with the opportunity to define, improve, and standardize compliance with process of care guidelines. Ultimately, although the lack of evidence based guidelines for the conduct of optimal CPB is currently a major limitation, we have the opportunity to implement quality assurance and improvement processes based on our institutional process of care guidelines.
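As an illustration of what such an audit might look like in practice, the following is a minimal sketch that measures compliance with an institutional arterial pressure guideline from electronically recorded data. The target range (50–70 mmHg), the threshold of 80% of samples within range, the record structure, and the example values are all hypothetical assumptions made for illustration; they are not recommendations and are not taken from the review cited above.

```python
from dataclasses import dataclass


@dataclass
class CaseRecord:
    """One CPB case; pressures are assumed to be sampled at equal intervals."""
    case_id: str
    map_mmhg: list[float]


def fraction_in_range(samples, low, high):
    """Return the fraction of samples that lie within [low, high]."""
    if not samples:
        raise ValueError("no pressure samples recorded")
    inside = sum(1 for s in samples if low <= s <= high)
    return inside / len(samples)


def audit_compliance(cases, low=50.0, high=70.0, required_fraction=0.8):
    """Return (per-case fraction in range, proportion of compliant cases).

    The default limits are hypothetical institutional targets.
    """
    per_case = {c.case_id: fraction_in_range(c.map_mmhg, low, high) for c in cases}
    compliant = sum(1 for f in per_case.values() if f >= required_fraction)
    return per_case, compliant / len(per_case)


# Hypothetical example data (not real patient data).
cases = [
    CaseRecord("A", [55, 60, 65, 72, 58]),
    CaseRecord("B", [45, 48, 52, 49, 47]),
]
per_case, overall = audit_compliance(cases)
print(per_case)   # fraction of samples within target for each case
print(overall)    # proportion of cases meeting the compliance threshold
```

The per-case fractions provide the benchmark of current practice, and the overall proportion gives a single figure that can be tracked before and after a change.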

THE SCIENCE OF QUALITY IMPROVEMENT

Methods of quality assurance focus on measuring the quality of our practice so that we can compare it with defined benchmarks. This facilitates quality improvement because it gives us the control against which we can quantify improvement. How, then, can quality improvement be considered from a research perspective?

Quality improvement involves gaining knowledge about the nature of our clinical practice. Research is aimed at gaining knowledge that can be generalized. In essence, if the knowledge obtained from a quality improvement project can be generalized, then the project can be considered research. Given the benefits of creating generalized knowledge in the context of reporting change in practice, it is worth giving the project some thought, and with careful planning one can embark on both a process of quality improvement and research.

If we consider the components of the continuous quality improvement (CQI) process, we start out with a specific goal, define the improvement process, and then measure problems and variation in the process. Our analysis is aimed at finding root causes for the variation, recommending improvements, and monitoring further improvements. The inherent similarity in approach to the research process is the systematic method of enquiry. Two examples of systematic methodology in CQI are the Plan/Do/Check/Act (PDCA) (8) and the Define/Measure/Analyze/Improve/Control (DMAIC) cycles (9).

Plan/Do/Check/Act

Plan—In this phase, intended improvements should be analyzed, looking for areas that hold opportunities for change. To identify these areas for change, the use of a flow chart or Pareto Chart should be considered.

Do—The change decided in the plan phase should be implemented.

Check—This is a crucial step in the PDCA cycle. After the change has been implemented for a short time, it must be determined how well it is working. Is it really achieving the improvement as hoped? This must be decided on several measures that can be used to monitor the level of improvement. Control charts can be helpful with this measurement.

Act—After planning, implementing, and then monitoring a change, it must be decided whether it is worth continuing with the new process. If it consumed too much time, was difficult to adhere to, or even led to no improvement, aborting the change may be considered. However, if the change led to a desirable improvement, expanding the scope to a different area, or slightly increasing the complexity may be considered. This recommences the Plan phase and can be the beginning of the continuous improvement process (8).
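The Pareto analysis suggested for the Plan phase can be sketched as follows: deviations from a process of care guideline are tallied by category and ranked, with a running cumulative percentage, so that the few categories accounting for most deviations stand out. The category names and counts are hypothetical and serve only to show the calculation.

```python
from collections import Counter


def pareto_table(events):
    """Rank categories by frequency and add a cumulative percentage.

    Returns a list of (category, count, cumulative_percent) tuples,
    most frequent category first.
    """
    counts = Counter(events)
    total = sum(counts.values())
    rows = []
    cumulative = 0
    for category, n in counts.most_common():
        cumulative += n
        rows.append((category, n, round(100 * cumulative / total, 1)))
    return rows


# Hypothetical audit log: one entry per recorded guideline deviation.
deviations = (
    ["arterial pressure below target"] * 5
    + ["glucose above target"] * 3
    + ["temperature below target"] * 2
)
for row in pareto_table(deviations):
    print(row)
```

In this made-up example, the first two categories account for 80% of the recorded deviations, which would suggest where a first PDCA cycle might be directed.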

Define/Measure/Analyze/Improve/Control

D, Define an improvement opportunity.

M, Measure process performance.

A, Analyze the process to determine root causes of performance problems; determine whether the process can be improved.

I, Improve the process by implementing solutions that address root causes.

C, Control the improved process by standardizing the process.

Newland and Baker have described the use of electronic data collection to facilitate the quality measurement of CPB (10) and the use of the quantitative data for CQI (11). These reports highlight the potential for existing intra-operative data collection systems to be used in the process of auditing change, and the use of statistical control charts as a tool for the interpretation of performance data.
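To illustrate how a statistical control chart supports the interpretation of performance data, the sketch below computes a p-chart, one common type of control chart for proportions. It is offered under stated assumptions and is not necessarily the chart type used in the reports cited above. The monthly counts are hypothetical; the center line is the pooled proportion, and the limits are the conventional three standard errors on either side of it.

```python
import math


def p_chart(event_counts, sample_sizes):
    """Compute p-chart points with 3-sigma control limits.

    event_counts[i] is the number of cases with the event (for example,
    a guideline deviation) in period i; sample_sizes[i] is the number of
    cases audited in that period.
    """
    p_bar = sum(event_counts) / sum(sample_sizes)  # center line
    points = []
    for events, n in zip(event_counts, sample_sizes):
        sigma = math.sqrt(p_bar * (1 - p_bar) / n)
        lcl = max(0.0, p_bar - 3 * sigma)  # a proportion cannot be below 0
        ucl = min(1.0, p_bar + 3 * sigma)  # or above 1
        p = events / n
        points.append(
            {"p": p, "lcl": lcl, "ucl": ucl, "out_of_control": p < lcl or p > ucl}
        )
    return p_bar, points


# Hypothetical monthly audit: deviations out of 40 cases per month.
events = [4, 5, 3, 6, 4, 15]
sizes = [40] * 6
p_bar, points = p_chart(events, sizes)
for month, pt in enumerate(points, start=1):
    flag = "investigate" if pt["out_of_control"] else "in control"
    print(month, round(pt["p"], 3), flag)
```

A point outside the limits signals variation that is unlikely to be due to chance alone and is a prompt to look for a root cause. In this example only the final month is flagged.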

One of the most comprehensive changes to institutional CPB practice has been reported by Trowbridge et al., who introduced a number of simultaneous changes to practice (12). Evidence based changes included incorporation of updated continuous blood gas monitoring, biocompatible circuitry, updated centrifugal blood propulsion, continuous autotransfusion technology, new generation myocardial protection instrumentation, plasmapheresis, topical platelet gel application, excluding hetastarch while increasing the use of albumin, viscoelastographic coagulation monitoring, and implementing a quantitative quality improvement program. Data analysis used both propensity scoring and logistic regression to control for demographic, preoperative, operative, and postoperative variables in comparing 317 patients before and 259 patients after the changes to practice. The methodology of this study provides an example of the model of clinical effectiveness, incorporating quality improvement. The discussion highlights a number of issues related to the process of change in clinical practice. The authors comment that implementing change in CPB is hampered by our limited ability to evaluate new technologies. This lack of published scientific data reduces our ability to demonstrate cost effectiveness and weakens the appreciation of the importance of our care, both vital concepts in terms of clinical effectiveness. The authors also raise the issue of risk benefit and its analogy to cost benefit, in that the higher the potential risks associated with a change in practice, the greater the level of evidence required to justify the change. Some of the changes that were reported were achieved by a cost reduction, such as with the cost of albumin and by ruthless negotiation of the price of coated circuits.

In interpreting the results of these endeavors, we need to consider what information can be generalized: Did the team achieve the expected benefits? Were there any unexpected benefits or problems? What can the team learn from these? What can be done to fine tune the solution so that it can be applied on a wider basis? These considerations should be addressed initially at the planning stage to determine appropriate endpoints for analysis. The inability to demonstrate cause and effect relationships in observational studies, together with the low frequency of adverse clinical outcomes following cardiac surgery, limits the usefulness of clinical endpoints as metrics for quality improvement studies. The numbers required to achieve adequate statistical power in such studies may result in the introduction of bias associated with changes in practice over time (10). The use of mortality as an endpoint for measuring changes in the quality of cardiac surgery has been described as too harsh, and a combined mortality/morbidity endpoint has been proposed as a more appropriate metric, with morbidity endpoints including stroke, renal failure, re-operation, prolonged ventilation (>48 hours), and sternal infection (13). A combined adverse endpoint for CPB is yet to be reported; however, inclusion of metrics of end organ function may be appropriate, including stroke, myocardial infarction, renal failure, and pulmonary dysfunction, together with mortality. Ultimately, the variables collected should be relevant to the change in practice, such that a qualitative or quantitative description of the process can be reported. Studies that are not statistically powered to determine changes in clinical endpoints should include measures of the process undergoing change.
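The effect of a low event rate on the numbers required can be shown with a rough calculation. The sketch below uses the standard normal approximation for comparing two independent proportions (two-sided test, equal group sizes). The event rates are hypothetical and chosen only to contrast a rare endpoint with a more frequent combined endpoint; they are not drawn from the cited studies.

```python
import math
from statistics import NormalDist


def n_per_group(p_before, p_after, alpha=0.05, power=0.80):
    """Approximate cases needed per group to detect a change in event rate.

    Normal approximation for two independent proportions:
        n = (z_{1-alpha/2} + z_{power})^2 * [p1(1-p1) + p2(1-p2)] / (p1 - p2)^2
    """
    if p_before == p_after:
        raise ValueError("event rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p_before * (1 - p_before) + p_after * (1 - p_after)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p_before - p_after) ** 2)


# Hypothetical rates: a 25% relative reduction in each endpoint.
mortality_n = n_per_group(0.020, 0.015)   # rare endpoint
combined_n = n_per_group(0.100, 0.075)    # more frequent combined endpoint
print(mortality_n, combined_n)
```

Under these assumptions the rare endpoint requires on the order of ten thousand cases per group, whereas the combined endpoint requires roughly two thousand. This is one way to see why process measures are needed when a single unit cannot accrue such numbers within a period of stable practice.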

The need for perfusionists to report changes in practice has also been recognized by Riley (14) in response to the adoption of a new outline for quality improvement reports by the journal Quality in Health Care. The new outline was adopted to facilitate reporting of process improvement that may not fit the traditional structure of introduction, methods, results, and discussion. Riley argues that quality improvement and technology changes in perfusion fit well into the reporting model (Figure 2).

Figure 2.

An outline for reporting quality improvement and/or changes in clinical practice. The reporting of these projects increases clinical effectiveness by generalizing the knowledge obtained.

THE SCIENCE OF CHANGE

Diffusion of Innovations

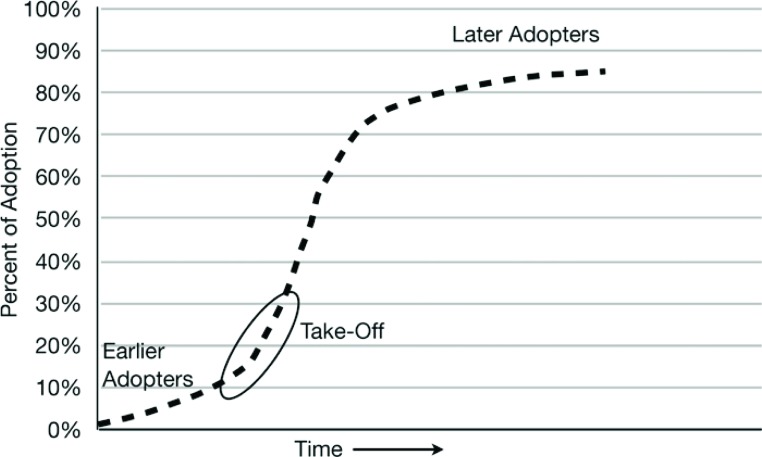

Diffusion is the process in which an innovation is communicated over time through members of a social system (15). The four main elements are the innovation (change), communication channels, time, and the social system. The rate at which innovations are adopted is determined by their perceived advantage, their compatibility, the ability to subject them to trial and observe the effect of the change, and their complexity. The influence of these factors results in the pattern of adoption illustrated in Figure 3.

Figure 3.

The diffusion process of adoption of an innovation that is communicated through certain channels over time among members of a social system. The typical S shaped curve is characterized by relatively few early adopters, a take off period that triggers rapid adoption, followed by a minority of late adopters. Adapted from Rogers EM. Diffusion of Innovations. New York: Free Press; 2003.

The process is initiated by a relatively small number of individuals known as “innovators,” with the majority of adoption occurring midway through the process. This model of change, described in a social context, highlights issues relevant to the application of change in CPB practice. First, optimization of communication channels will influence the ability to implement change; therefore, careful consideration should be given to how the intended change is communicated and to whom, in particular to key stakeholders. Communication should address the factors that determine the rate of adoption (potential advantage, compatibility, the ability to subject the change to trial and observe its effect, and complexity). To disseminate information regarding implementation of change more rapidly to the broader community, innovators in particular should closely audit and report their experience and results.
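The S shaped pattern of adoption can be made concrete with a simple curve. The sketch below uses a logistic function as a convenient approximation of cumulative adoption; this choice, along with the midpoint and rate values, is an assumption made for illustration and is not the specific formulation given by Rogers.

```python
import math


def cumulative_adoption(t, midpoint, rate):
    """Fraction of the social system that has adopted by time t (logistic curve)."""
    return 1.0 / (1.0 + math.exp(-rate * (t - midpoint)))


def new_adopters_per_period(periods, midpoint, rate):
    """Fraction adopting within each period 1..periods (difference of the curve)."""
    return [
        cumulative_adoption(t, midpoint, rate) - cumulative_adoption(t - 1, midpoint, rate)
        for t in range(1, periods + 1)
    ]


# Hypothetical parameters: adoption is half complete at t = 5.5 periods.
increments = new_adopters_per_period(periods=10, midpoint=5.5, rate=1.0)
for period, share in enumerate(increments, start=1):
    print(period, round(share, 3))
```

The per-period shares are small at the start, peak around the midpoint, and tail off again, which corresponds to the few early adopters, the rapid takeoff, and the minority of late adopters described for Figure 3.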

Strategic Planning and Organizational Processes

Planned change necessitates a strategy for change. Resolving performance problems or acting on opportunities for improvement in clinical practice requires strategic planning. Strategic planning is the process of directing and shaping change through the implementation of outcome oriented processes to manage that change (16). Organizational process is an important factor in the introduction of innovative practices. Several key strategies have been described in the transition of research into practice, including constructing decision making coalitions (a group of individuals to argue for the change), linking new initiatives to organizational goals (how the change fits with existing objectives), quantitatively monitoring implementation (data collection of endpoints), and developing a subculture to support change (17). This subculture not only exists locally, but may also be supported by collaborative ventures, such as the International Consortium of Evidence Based Perfusion or the Perfusion Downunder Collaboration.

A concept related to the effectiveness of influencing change that may be relevant to perfusionists is known as Covey’s circle of influence/concern, which may be described as a smaller expandable circle (influence) contained within a larger circle (concern). This concept highlights that to be truly effective we need to focus within our circle of influence and direct change at factors that are within our control. Focusing on matters that lie only within our circle of concern is less effective. Using this model, it has been shown that if we work on our inner circle, then as we make changes and improve, our circle of influence grows as those outside the circle view our achievements and wish to support us or collaborate (6). Such social contexts of change provide further impetus for perfusionists to engage in and report change in a scientific manner, to promote the diffusion of improvement innovations, to highlight the importance of our care, and to extend our circle of influence within the cardiac surgical community.

SUMMARY

To confirm the intended benefits of instituting clinical change, the process should be undertaken in a scientific manner. Current electronic data collection technology is a useful tool available to facilitate the reporting of clinical improvements. The model of clinical effectiveness can be used as an effective model for introducing change in clinical practice, which involves reviewing the literature, acquiring appropriate skills and resources to implement the change, auditing the change, and implementing continuous quality improvement to standardize the process. Finally, reporting the process allows dissemination of the knowledge that can be generalized. Reporting change strengthens our efforts in clinical effectiveness, highlights the importance of CPB practice, and increases the influence of the profession.

REFERENCES

1. Institute of Medicine. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: National Academy Press; 2001:3.

2. Kohn LT, Corrigan JM, Donaldson MS, eds. To Err Is Human: Building a Safer Health System. Institute of Medicine. Washington, DC: National Academy Press; 1999.

3. Nemeth LS. Implementing Change for Effective Outcomes. Outcomes Manag. 2003;7:134–9.

4. Walshe K. Evidence-based health care: Brave new world? Health Care Risk Report. 1996;2:16–8.

5. Sackett DL, Rosenberg WM, Gray JA, Haynes RB, Richardson WS. Evidence based medicine: What it is and what it isn’t. BMJ. 1996;312:71–2.

6. Parsley K, Corrigan P. Quality Improvement in Healthcare: Putting Evidence into Practice. Cheltenham, UK: Stanley Thornes Ltd; 1999.

7. Murphy GS, Hessel EA, Groom RC. Optimal perfusion during cardiopulmonary bypass: An evidence based approach. Anesth Analg. 2009;108:1394–417.

8. Australian and New Zealand College of Anaesthetists Guidelines of Continuous Quality Improvement. Available at: http://www.anzca.du.au/fpm/resources/educational-documents/guidelines-oncontinuous-quality-improvement.html/. Accessed May 29, 2009.

9. Rath and Strong Management Consultants. Six Sigma Pocket Guide. Lexington, MA: AON Consulting Worldwide; 2002.

10. Newland RF, Baker RA. Electronic data processing: The pathway to automated quality control of cardiopulmonary bypass. J Extra Corpor Technol. 2006;38:139–43.

11. Baker RA, Newland RF. Continuous quality improvement of perfusion practice: The role of electronic data collection and statistical control charts. Perfusion. 2008;23:7–16.

12. Trowbridge CC, Stammers AH, Wood GC, et al. Improved outcomes during cardiac surgery: A multifactorial enhancement of cardiopulmonary bypass techniques. J Extra Corpor Technol. 2005;37:165–72.

13. Shroyer AL, Coombs LP, Peterson ED, et al. The Society of Thoracic Surgeons: 30-day operative mortality and morbidity risk models. Ann Thorac Surg. 2003;75:1856–64.

14. Riley JB. Are perfusion technology and perfusionists ready for quality reporting employing six-sigma performance measurement? J Extra Corpor Technol. 2003;35:168–71.

15. Rogers EM. Diffusion of Innovations. New York: Free Press; 2003.

16. Katz JM, Green E. Managing Quality. A Guide to System-Wide Performance Management in Health Care. St. Louis: Mosby-Year Book, Inc; 1997.

17. Rosenheck RA. Organizational process: A missing link between research and practice. Psychol Serv. 2001;52:1607–12.