Abstract

At the one-word stage, children use gesture to supplement their speech (‘eat’ + point at cookie), and the onset of such supplementary gesture-speech combinations predicts the onset of two-word speech (‘eat cookie’). Gesture thus signals a child’s readiness to produce two-word constructions. The question we ask here is what happens when the child begins to flesh out these early skeletal two-word constructions with additional arguments. One possibility is that gesture continues to be a forerunner of linguistic change as children add arguments to their skeletal constructions. Alternatively, after serving as an opening wedge into language, gesture could cease to act as a forerunner of linguistic change. Our analysis of 40 children observed from 14 to 34 months showed that children relied on gesture to produce the first instance of a variety of constructions. However, once each construction was established in their repertoire, the children did not rely on gesture to flesh out the construction. Gesture thus acts as a harbinger of linguistic steps only when those steps involve new constructions, not when the steps merely flesh out existing constructions.

Children who produce one word at a time often use gesture to supplement their speech, turning a single word into an utterance conveying a sentence-like meaning (‘eat’ + point at cookie). Interestingly, the age at which children first produce supplementary gesture-speech combinations of this sort reliably predicts the age at which they first produce two-word utterances (‘eat cookie’; Goldin-Meadow & Butcher, 2003; Iverson & Goldin-Meadow, 2005; Özçalışkan & Goldin-Meadow, 2005a). Gesture thus serves as a signal that a child will soon be ready to begin producing basic two-word constructions. The question we ask here is what happens when the child begins to flesh out these skeletal constructions with additional arguments. Gesture could continue to be at the cutting edge of these expansions; if so, additional arguments would appear in gesture-speech combinations before they appear entirely in speech. Alternatively, gesture could act as a harbinger of new linguistic steps only when those steps involve novel constructions and not when the steps merely flesh out existing constructions; if so, additional arguments would be just as likely to appear in speech-alone combinations as in gesture-speech combinations. We followed the 40 children originally described in Özçalışkan and Goldin-Meadow (2005a) for another year to explore this question.

Gesture is a harbinger of new constructions

Gesture plays a distinctive role during the early stages of language development. Children communicate using gestures before they produce their first words (Acredolo & Goodwyn, 1985, 1989; Bates, 1976; Bates, Benigni, Bretherton, Camaioni & Volterra, 1979; Iverson, Capirci & Caselli, 1994; Özçalışkan & Goldin-Meadow, 2005b). They use deictic gestures to identify referents in their immediate environment (e.g., pointing at a dog to indicate a DOG), iconic gestures to convey information about the actions or attributes of an object (e.g., flapping arms to convey FLYING, holding arms spread apart to indicate BIG SIZE), and conventional gestures (i.e., emblems) to communicate socially-shared meanings (e.g., nodding the head to mean YES, flipping hands to mean DON’T KNOW).

Children produce their first gestures around 10 months of age and use them mainly to point out objects in their immediate environment. These early gestures are almost always produced without speech, but often with meaningless vocalizations (Bates, 1976; Bates et al., 1979; Butcher & Goldin-Meadow, 2000). The temporal and semantic coordination of gesture and speech comes later, somewhere between 14 and 22 months (Butcher & Goldin-Meadow, 2000). Initially, children produce complementary gesture-speech combinations, in which gesture reinforces the information conveyed in the accompanying speech (‘cookie’ + point at cookie; e.g., Butcher & Goldin-Meadow, 2000; Greenfield & Smith, 1976). The semantic relation between gesture and speech becomes more complex with increasing linguistic skill, and gesture comes to convey information that is different from, and supplementary to, the information conveyed in speech. In these supplementary gesture-speech combinations, gesture creates a two-unit construction by adding a new semantic element to the meaning, e.g., adding an action (‘hair’ + WASH), an object (‘eat’ + point at cookie; ‘have wheels’ + point at truck), or an attribute (‘window’ + SMALL). Unlike the earlier complementary combinations, supplementary gesture-speech combinations give children a tool for communicating sentence-like meanings (with one element of the construction in speech, the other in gesture) before they are able to convey those meanings using speech alone (e.g., ‘wash hair’, ‘trucks have wheels’, ‘small window’).

Previous research has found a close link between early supplementary gesture-speech combinations and later linguistic constructions (Goldin-Meadow & Butcher, 2003; Iverson & Goldin-Meadow, 2005; Özçalışkan & Goldin-Meadow, 2005a, 2006a, 2006b). First, the onset of supplementary gesture-word combinations always precedes the onset of word-word combinations in young children’s communication (Capirci, Iverson, Pizzuto & Volterra, 1996; Goldin-Meadow & Butcher, 2003; Özçalışkan & Goldin-Meadow, 2005b; Volterra & Iverson, 1995). More interestingly, the age at which children first produce supplementary combinations (‘eat’ + point at cookie) predicts the age at which they produce their first two-word utterances (‘eat cookie’; Goldin-Meadow & Butcher, 2003; Iverson & Goldin-Meadow, 2005). In other words, children who are first to produce combinations in which gesture and speech convey different information are also first to produce two-word combinations. This earlier work makes it clear that the ability to convey sentence-like information across gesture and speech is a good predictor of the child’s burgeoning ability to convey these meanings entirely within speech.

More recent work has sought precursors of particular sentential constructions in children’s supplementary gesture-speech combinations before such constructions are expressed entirely in speech (Özçalışkan & Goldin-Meadow, 2005a). Analysis of supplementary gesture-speech combinations in relation to multi-word speech in children from 14 to 22 months shows that children produce three distinct linguistic constructions across the two modalities several months before these same constructions appear entirely within speech. In particular, the children produce argument-argument (e.g., ‘mommy’ + point at cookie), predicate-argument (e.g., ‘cookie’ + EAT gesture) and predicate-predicate (e.g., ‘I like it’ + EAT gesture) constructions in a gesture-speech combination approximately four months before they produce them entirely in speech (‘mommy cookie’, ‘eat cookie’, ‘I like to eat it’). Moreover, this same developmental progression is found in children with early brain injury, whose delays in the onset of each construction in speech are preceded by similar delays in the onset of the construction in gesture+speech (Özçalışkan, Levine & Goldin-Meadow, 2007; see also Özçalışkan & Goldin-Meadow, 2007). Thus, the developmental pattern for the onset of each sentential construction type, from gesture+speech to speech-only, confirms that gesture can serve as a stepping-stone for the emergence of novel constructions in the spoken modality.

Is gesture a harbinger of construction expansion?

Even by 22 months of age, the argument+argument, predicate+argument, and predicate+predicate constructions that children produce in gesture-speech combinations and in multi-word speech are limited, each containing only two elements. The next step is for the child to flesh out these constructions with additional arguments. The question we ask is whether gesture plays a cutting-edge role in this process. To address this question, we continued to observe the children originally described in Özçalışkan and Goldin-Meadow (2005a) until they were 34 months old. We expected the children to follow one of two possible developmental paths. Gesture might continue to be a forerunner of linguistic change as children flesh out their skeletal constructions with additional arguments; if so, we would see precursors of the expanded constructions in gesture-speech combinations before they appear entirely in speech. Alternatively, once the skeletal structure of a construction becomes part of a child’s repertoire, gesture might no longer play a leading role in adding arguments to the construction.

METHODS

Sample and data collection

Forty children (22 girls, 18 boys) were videotaped in their homes every four months from 14 to 34 months. Each session lasted 90 minutes, and caregivers were asked to interact with their children as they typically would in their everyday routines and to ignore the presence of the experimenter. Sessions typically consisted of free play with toys, book reading, and snack time, but also varied based on the preferences of the caregivers. The children’s families constituted a heterogeneous mix in terms of family income and ethnicity, and were representative of the greater Chicago area. All children were being raised as monolingual English speakers.

Coding and analysis

All meaningful sounds and communicative gestures were transcribed. Sounds that were reliably used to refer to entities, properties, or events (‘doggie’, ‘nice’, ‘broken’), along with onomatopoeic sounds (e.g., ‘meow’, ‘choo-choo’) and conventionalized evaluative sounds (e.g., ‘oopsie’, ‘uh-oh’), were counted as words. Any communicative hand movement that did not involve direct manipulation of objects (e.g., twisting a jar open) or a ritualized game (e.g., patty cake) was considered a gesture. The only exception was when the child held up an object to bring to another’s attention; these acts served the same function as the pointing gesture and thus were treated as deictic gestures. Each gesture was classified into one of three types:¹ (1) Conventional gestures were gestures whose forms and meanings were prescribed by the culture (e.g., nodding the head to mean YES, extending an open palm next to a desired object to mean GIVE). (2) Deictic gestures were gestures that indicated concrete objects, persons, or locations, which we classified as the referents of these gestures (e.g., pointing to a dog to refer to a dog, holding up a bottle to refer to a bottle). (3) Iconic gestures were gestures that depicted the attributes or actions of an object via hand or body movements (e.g., moving the index finger forward and in circles to convey a ball’s rolling).

We divided all gesture and speech production into communicative acts. A communicative act was defined as a word or gesture, alone or in combination, that was preceded and followed by a pause, a change in conversational turn, or a change in intonational pattern. Communicative acts were classified into three categories: (1) Gesture-only acts were gestures produced without speech, either singly (e.g., point at cookie) or in combination (e.g., point at cookie + shake head; point at cookie + point at empty jar). (2) Speech-only acts were words produced without gesture, either singly (e.g., ‘cookie’) or in combination (‘mommy cookie’). (3) Gesture-speech combinations were acts containing both gesture and speech (e.g., ‘me cookie’ + point at cookie; ‘cookie’ + EAT gesture).

Gesture-speech combinations were further categorized into three types according to the relation between the information conveyed in gesture and speech. (1) A reinforcing relation was coded when gesture conveyed the same information as speech (e.g., ‘mommy’ + point at mommy, ‘cuppie’ + hold-up milk cup). (2) A disambiguating relation was coded when gesture clarified the referent of a deictic word in speech, including personal pronouns (e.g., ‘she’ + point at sister), demonstrative pronouns (e.g., ‘this’ + point at doll), and spatial deictic words (e.g., ‘there’ + point at couch). (3) A supplementary relation was coded when gesture added semantic information to the message conveyed in speech (e.g., ‘open’ + point to jar with a lid; ‘mommy water’ + hold up empty cup).

Supplementary gesture-speech combinations and multi-word combinations were categorized into three types according to the types of semantic elements conveyed: (1) multiple arguments without a predicate, (2) a predicate with at least one argument, and (3) multiple predicates with or without arguments. Each of these three categories was further coded in terms of the number of arguments explicitly produced (see examples in Table 1). Deictic gestures were assumed to convey arguments (e.g., point at a ball = ball, hold-up a cup = cup), and iconic gestures were assumed to convey predicates (e.g., moving hand to mouth repeatedly = EAT). Conventional gestures could also convey predicate meanings (e.g., extending an open palm next to a desired object = GIVE), but not arguments. Gesture-gesture combinations were rare in our data and thus were not included in the analysis.
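The coding rules above amount to a small decision procedure. The following is a minimal Python sketch under stated assumptions, not the coders' actual procedure: it assumes every word and gesture in a combination has already been assigned a semantic role, and the names `GESTURE_ROLE` and `classify` are hypothetical. It maps gesture types to roles as described above and returns the construction type together with the number of explicit arguments, which is the count used for the subcategories in Table 1.

```python
from typing import Optional, Sequence, Tuple

# Hypothetical mapping from gesture type to semantic role, following the coding
# rules: deictic gestures convey arguments; iconic and conventional gestures
# convey predicates (conventional gestures are never treated as arguments).
GESTURE_ROLE = {"deictic": "argument", "iconic": "predicate", "conventional": "predicate"}


def classify(roles: Sequence[str]) -> Optional[Tuple[str, int]]:
    """Classify one combination from the semantic roles of its elements.

    `roles` holds "argument" or "predicate" for every word and gesture in a
    supplementary gesture-speech or multi-word speech combination. Returns
    (construction type, number of explicit arguments), or None when the
    elements do not form one of the three construction types.
    """
    n_pred = sum(1 for r in roles if r == "predicate")
    n_arg = sum(1 for r in roles if r == "argument")
    if n_pred >= 2:
        return ("predicate+predicate", n_arg)    # with or without arguments
    if n_pred == 1 and n_arg >= 1:
        return ("predicate+argument(s)", n_arg)
    if n_pred == 0 and n_arg >= 2:
        return ("argument+argument(s)", n_arg)   # no predicate
    return None  # e.g., a single element, or a predicate with no argument


# 'eat' + point at cookie: a spoken predicate plus a deictic gesture.
print(classify(["predicate", GESTURE_ROLE["deictic"]]))  # ('predicate+argument(s)', 1)
```

For instance, if ‘I like it’ + EAT is coded (by assumption) as argument, predicate, argument plus an iconic predicate, the sketch classifies it as a predicate+predicate construction with two arguments, which matches its placement in Table 1.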

Table 1.

Examples of the types of semantic relations children conveyed in multi-word speech combinations and in supplementary gesture-speech combinationsᵃ

| Combination type | Multi-word speech combinations | Supplementary gesture-speech combinationsᵇ |
|---|---|---|
| Argument + Argument(s) | | |
| Two arguments | ‘Bottle daddy’ [18] | ‘Mommy’ + CUP (point) [18] |
| | ‘Feet in my socks’ [22] | ‘Daddy’ + DIRT ON GROUND (point) [22] |
| | ‘Dad church’ [26] | ‘Like icecream cone’ + MUSHROOM (hold-up) [26] |
| | ‘The cat in the tree’ [30] | ‘A choochoo train’ + TUNNEL (point) [30] |
| | ‘House for Nicholas’ [34] | ‘No basement’ + FATHER (point) [34] |
| Three arguments | ‘Here mommy doggie’ [22] | ‘Here mommy’ + BAG (hold-up) [18] |
| | ‘Mom keys in basket’ [26] | ‘Mama plate’ + TRASHCAN (point) [22] |
| | ‘I a booboo mom’ [30] | ‘Poopoo mommy’ + BATHROOM (point) [26] |
| | ‘Mom gatorade in my cup’ [34] | ‘Emily cereal’ + MOUTH (point) [30] |
| | | ‘Mommy in here’ + DOLL (hold-up) [34] |
| Predicate + Argument(s) | | |
| Predicate + one argument | ‘Roll it’ [18] | ‘Hair’ + WASH (iconic)ᶜ [18] |
| | ‘Mouse is swimming’ [22] | ‘Bite’ + TOAST (point) [22] |
| | ‘Pull my diaper’ [26] | ‘Sit’ + LEDGE (point) [26] |
| | ‘Popped this balloon’ [30] | ‘Baby’ + EAT (iconic)ᵈ [30] |
| | ‘Drink your tea’ [34] | ‘The snap clip’ + HANG (iconic)ᵉ [34] |
| Predicate + two arguments | ‘I want the lego’ [18] | ‘Daddy gone’ + OUTSIDE (point) [18] |
| | ‘Put it on the baby’ [22] | ‘Have food’ + FATHER (point) [22] |
| | ‘Dad is pushing the stroller’ [26] | ‘I running’ + KITCHEN (point) [26] |
| | ‘People climb ladder’ [30] | ‘It in the drawer’ + CLOSE (iconic)ᶠ [30] |
| | ‘I am sitting in the pool’ [34] | ‘Open it’ + KNIFE (point) [34] |
| Predicate + three arguments | ‘I cook bunny for suppertime’ [26] | ‘Give me muffin back’ + DOG (point) [26] |
| | ‘I drop my poopie mom’ [26] | ‘You cover me’ + BLANKET (hold-up) [26] |
| | ‘You see my butterfly on my wall’ [30] | ‘Read to me mommy’ + BOOK (point) [30] |
| | ‘You don’t push it with the foot’ [34] | ‘Daddy clean all the bird poopie’ + TABLE (point) [34] |
| Predicate + Predicate | | |
| Two predicates + zero or one argument | ‘Help me find’ [22] | ‘I paint’ + GIVE (conventional)ᵍ [22] |
| | ‘Let me see’ [26] | ‘Me scoop’ + GIVE (conventional)ʰ [26] |
| | ‘Make my tape stop’ [30] | ‘Go up’ + CLIMB (iconic)ⁱ [30] |
| | ‘Come and see our babies’ [34] | ‘Did it really hard’ + PRESS (iconic)ʲ [34] |
| Two predicates + multiple arguments | ‘Let me put on frog’ [26] | ‘I like it’ + EAT (iconic)ᵈ [22] |
| | ‘You make him fall’ [30] | ‘I ready taste it’ + GIVE (conventional)ᵏ [26] |
| | ‘I put it down and throwed it out’ [34] | ‘You making me’ + FALL (iconic)ˡ [30] |
| | | ‘I just like that’ + STIR (iconic)ᵐ [34] |

ᵃ The age, in months, at which each example was produced is given in brackets after the example.

ᵇ The speech is in single quotes, the meaning gloss for the gestures is in small caps, and the type of gesture (point, iconic, conventional) is indicated in parentheses following the gesture gloss. We did not code the order in which gesture and speech were produced in gesture-speech combinations; the word is arbitrarily listed first and the gesture second in each example.

ᶜ The child moves downward-facing open palms above head in circles as if washing hair.

ᵈ The child moves hand, with fingers touching thumb, repeatedly to mouth to convey eating.

ᵉ The child moves thumb and index fingers in an arch as if hanging an object in air.

ᶠ The child moves outward-facing palms away from body as if closing a drawer.

ᵍ The child extends an open palm next to a crayon to request the crayon so that she can paint.

ʰ The child extends an open palm towards a measuring cup to request the cup so that she can scoop flour from the bowl with it.

ⁱ The child moves semi-arched open palms upward as if climbing.

ʲ The child moves downward-facing open palms forcefully downward as if pressing an imaginary object.

ᵏ The child extends an open palm towards a muffin to request it so that she can taste it.

ˡ The child moves both hands forcefully downward to convey falling.

ᵐ The child moves index finger in circles above a teacup to convey stirring.

We assessed reliability on a subset of the videotaped sessions that was transcribed by an independent coder. Agreement between coders was 88% (κ = .76; N = 763) for identifying gestures, 91% (κ = .86; N = 375) for assigning meaning to gestures, and 99% (κ = .98; N = 482) and 96% (κ = .93; N = 179) for coding semantic relations in multi-word speech and in supplementary gesture-speech combinations, respectively. Data were analyzed using one-way repeated-measures ANOVAs, with age as the within-subjects factor, and t-tests or chi-square tests, as appropriate.
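Cohen's kappa (κ) corrects raw percentage agreement for the agreement expected by chance from each coder's label frequencies, κ = (p_o − p_e) / (1 − p_e). The following minimal Python sketch shows both statistics side by side; the function names and the four labeled items are hypothetical illustrations, not the coders' data.

```python
from collections import Counter
from typing import Hashable, Sequence


def percent_agreement(coder_a: Sequence[Hashable], coder_b: Sequence[Hashable]) -> float:
    """Proportion of items to which the two coders assigned the same label."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must label the same non-empty set of items")
    return sum(a == b for a, b in zip(coder_a, coder_b)) / len(coder_a)


def cohens_kappa(coder_a: Sequence[Hashable], coder_b: Sequence[Hashable]) -> float:
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(coder_a)
    p_o = percent_agreement(coder_a, coder_b)
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    # Chance agreement: for each label, the product of the two coders' marginal
    # proportions, summed over every label either coder used.
    p_e = sum((freq_a[lab] / n) * (freq_b[lab] / n) for lab in set(freq_a) | set(freq_b))
    if p_e == 1.0:
        return 1.0  # both coders used one identical label throughout; kappa undefined, 1.0 by convention here
    return (p_o - p_e) / (1 - p_e)


# Hypothetical gesture / not-gesture judgments by two coders on four items.
a = ["gesture", "gesture", "none", "none"]
b = ["gesture", "none", "none", "none"]
print(percent_agreement(a, b), cohens_kappa(a, b))  # 0.75 0.5
```

The example shows why κ is lower than raw agreement: 75% agreement shrinks to κ = .5 once the 50% agreement expected by chance from these label frequencies is removed.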

RESULTS

Children’s early speech, gesture and gesture-speech combinations

Children steadily increased their speech production over time. As can be seen in Table 2, children produced more communicative acts containing speech (F (5,170)=84.09, p<.001), more different word types (F (5,170)=174.74, p<.001), and more word tokens (F (5,170)=95.32, p<.001) with increasing age. There were significant increases in the number of communicative acts containing speech and in the number of word types between 18 and 22 months (Scheffé, p’s<.001), and significant increases in all three measures from 22 to 26 months (Scheffé, p’s<.001). In addition, children continued to increase their word tokens and word types significantly from 26 to 30 months (Scheffé, p’s<.01). Children’s vocabularies grew steeply, from a mean of 11 word types (44 word tokens) at 14 months to 239 word types (1741 word tokens) at 34 months, and the majority of the children (37/40) were producing multi-word combinations by 22 months.
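The degrees of freedom in these F tests can be unpacked with the standard formula for a one-way repeated-measures ANOVA. Assuming that design, with k = 6 sessions and n children contributing data at every session, the reported F(5,170) implies n = 35. This is an inference from the degrees of freedom, consistent with the missed sessions noted under Table 2, not a figure reported in the description of the sample.

```latex
\begin{aligned}
df_{\text{age}}   &= k - 1 = 6 - 1 = 5,\\
df_{\text{error}} &= (k - 1)(n - 1) = 5(n - 1) = 170
  \;\Rightarrow\; n - 1 = 34 \;\Rightarrow\; n = 35.
\end{aligned}
```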

Table 2.

Summary of the children’s speech and gesture productionᵃ

| Measure | 14 months | 18 months | 22 months | 26 months | 30 months | 34 months |
|---|---|---|---|---|---|---|
| Speechᵇ | | | | | | |
| Mean number of communicative acts containing speech (SD) | 38 (44) | 157 (125) | 351 (249) | 549 (257) | 608 (234) | 642 (253) |
| Mean number of word tokens (SD) | 44 (53) | 179 (142) | 479 (401) | 1054 (658) | 1475 (795) | 1741 (789) |
| Mean number of word types (SD) | 11 (12) | 35 (25) | 91 (62) | 162 (79) | 213 (76) | 239 (74) |
| Mean number of word-word combinations (SD) | 4 (7) | 16 (22) | 90 (116) | 243 (173) | 342 (169) | 393 (166) |
| Percentage of children producing at least one two-word combinationᶜ | 25% (10/40) | 63% (25/40) | 93% (37/40) | 97% (37/38) | 100% (37/37) | 100% (38/38) |
| Gesture | | | | | | |
| Mean number of communicative acts containing gesture (SD) | 53 (36) | 90 (63) | 117 (73) | 126 (88) | 115 (62) | 105 (59) |
| Mean number of gesture tokens (SD) | 54 (36) | 91 (64) | 119 (75) | 131 (90) | 123 (68) | 112 (65) |
| Mean number of gesture-speech combinations (SD) | 6 (9) | 30 (32) | 67 (49) | 95 (66) | 97 (55) | 88 (51) |
| Percentage of children producing at least one gesture-speech combination | 52% (21/40) | 98% (39/40) | 98% (39/40) | 97% (37/38) | 100% (37/37) | 100% (38/38) |

ᵃ SD = standard deviation.

ᵇ All speech utterances are included in the top part of this table, with or without gesture.

ᶜ We missed data collection for a few of the children at the later ages. The total number of children we observed was 38 at 26 and 34 months, and 37 at 30 months.

Children also increased their gesture production over time. They produced more communicative acts with gesture (F (5,170)=10.22, p<.001), more gesture tokens (F (5,170)=10.82, p<.001), and more gesture-speech combinations (F (5,170)=34.29, p<.001) with increasing age. There were significant increases in the mean number of communicative acts with gesture and gesture tokens between 14 and 18 months (Scheffé, p’s<.05), and a significant increase in the mean number of gesture-speech combinations between 18 and 22 months (Scheffé, p<.01). At 14 months, only half of the children (21/40) were producing gesture-speech combinations, but by 18 months, all but one child combined gesture with speech. Interestingly, in contrast to their speech production, which continued to improve over time, children’s gesture production declined slightly from 26 months onward.

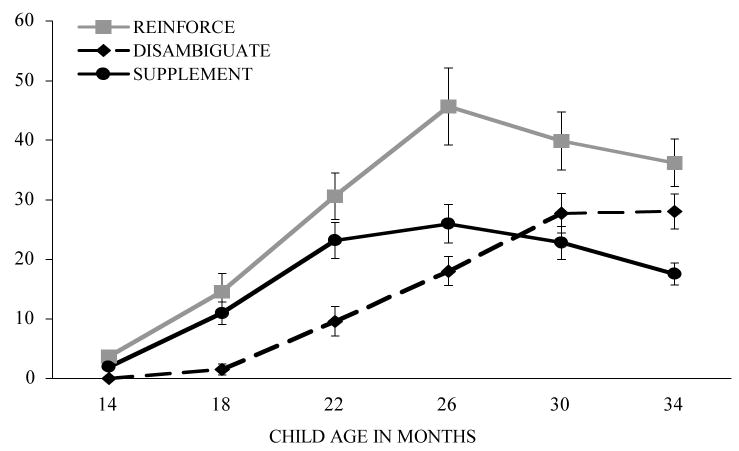

Children produced three distinct types of gesture-speech combinations between 14 and 34 months of age: combinations in which gesture reinforced (‘cookie’ + point at cookie), disambiguated (‘that one’ + point at cookie), or supplemented (‘eat’ + point at cookie) the information conveyed in speech. Children reliably increased their production of reinforcing (F (5,170)=20.10, p<.001), disambiguating (F (5,170)=32.12, p<.001), and supplementary (F (5,170)=16.87, p<.001) gesture-speech combinations over time (see Figure 1). There were significant increases in children’s production of reinforcing and supplementary combinations between 18 and 22 months (Scheffé, p’s<.05), and in their production of disambiguating combinations between 18 and 26 months (Scheffé, p<.001).

Figure 1.

Mean number of gesture-speech combinations produced by children at 14, 18, 22, 26, 30 and 34 months of age categorized according to type (error bars represent standard errors)

Among the three types of gesture-speech combinations children produced, supplementary combinations stand out as particularly interesting because it is in these combinations that children convey at least two different pieces of semantic information, one in speech and the other in gesture, thus conveying sentence-like meanings. In our previous work, exploring children’s supplementary combinations and multi-word speech between 14 and 22 months, we found that the children produced a variety of linguistic constructions first in a gesture-speech combination and only later in speech alone (Özçalışkan & Goldin-Meadow, 2005a). But children’s speech continues to improve after 22 months of age. Here we explore whether the children’s gesture-speech combinations change as their speech becomes increasingly complex. The question we ask is whether the children continue to use supplementary combinations for a construction once they have begun producing the construction in speech alone, and whether gesture plays a leading role in adding arguments to the construction.

Gesture’s role in establishing a construction in a child’s repertoire

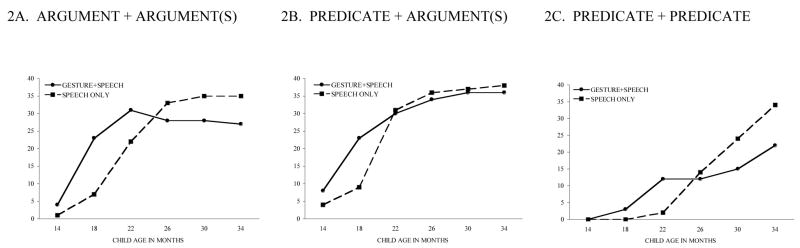

Figure 2 displays the number of children who produced at least one instance of each of the three construction types (argument+argument(s), predicate+argument(s), predicate+predicate), either in a gesture-speech combination (gesture+speech) or in multi-word speech (speech-only).

Figure 2.

Number of children who produced utterances with two or more arguments (panel A), utterances with a predicate and at least one argument (panel B), or utterances with two predicates (panel C) in speech alone (dashed lines) or in a gesture-speech combination (solid lines)

Beginning with argument+argument(s) constructions, we found that by 18 months, children were producing these constructions routinely, but primarily in gesture-speech combinations rather than in speech on its own (Özçalışkan & Goldin-Meadow, 2005a). Indeed, at 18 months, significantly more children produced this construction in gesture+speech than in speech-only (23 vs. 7, χ2 (1)=12.0, p<.001), and they also produced significantly more instances of the construction in gesture+speech than in speech-only (63 [SD=2.4] vs. 17 [SD=1.3], t(39)=2.74, p<.01). By 22 months, the children were conveying argument+argument(s) constructions frequently, both in gesture+speech (197 [SD=6.53]) and in speech on its own (245 [SD=11.82]). At 26 months, speech became the child’s preferred modality, and the children produced significantly more instances of the argument+argument(s) construction in speech-only than in gesture+speech (435 [SD=10.55] vs. 178 [SD=6.48], t(37)=3.10, p<.01), a pattern that continued through 34 months.

Turning next to predicate+argument(s) constructions, we found that here again, by 18 months, significantly more children produced the construction in gesture+speech than in speech-only (23 vs. 9, χ2 (1)=8.80, p<.01; Özçalışkan & Goldin-Meadow, 2005a); they also produced significantly more instances of the construction in gesture+speech than in speech-only (113 [SD=4.0] vs. 50 [SD=3.35], t(39)=2.14, p<.05) during the same observation session. By 22 months, a majority of the children (31/40) were conveying predicate+argument(s) constructions in speech and, indeed, produced significantly more instances of the construction in speech-only than in gesture+speech (1223 [SD=60.13] vs. 240 [SD=6.73], t(39)=2.67, p=.01), a pattern that continued through 34 months.

Turning last to predicate+predicate constructions, we found that at 22 months, many children were conveying this construction in gesture+speech, but very few were conveying it in speech on its own (12 vs. 2 children, χ2 (1)=7.01, p<.01; Özçalışkan & Goldin-Meadow, 2005a). At 22 months, children also produced significantly more instances of the construction in gesture+speech than in speech-only (17 [SD=0.78] vs. 2 [SD=0.22], t(39)=3.06, p<.01). At 26 months, children were conveying predicate+predicate constructions at comparable rates in gesture+speech (57 [SD=4.05]) and in speech alone (45 [SD=1.96]). By 30 months, speech became the preferred modality, and children produced significantly more instances of the predicate+predicate construction in speech-only than in gesture+speech (167 [SD=5.25] vs. 27 [SD=1.15], t(37)=4.51, p<.001), a pattern that continued through 34 months.
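Each comparison of instance counts above sets the same children's counts in gesture+speech against their counts in speech-only, and the reported degrees of freedom (t(39) when all 40 children were observed, t(37) at 26 months, when 38 were observed) are consistent with a paired-samples t-test, whose df is the number of pairs minus one. The following is a generic Python sketch of that test; the function name and the per-child counts are hypothetical, and the children's actual data are not reproduced here.

```python
import math
import statistics
from typing import Sequence, Tuple


def paired_t(x: Sequence[float], y: Sequence[float]) -> Tuple[float, int]:
    """Paired-samples t statistic and degrees of freedom (n - 1) for matched lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two matched lists with at least two pairs")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    # Standard error of the mean difference, using the sample (n - 1) standard deviation.
    se = statistics.stdev(diffs) / math.sqrt(n)
    if se == 0:
        raise ValueError("all differences are identical; t is undefined")
    return statistics.mean(diffs) / se, n - 1


# Hypothetical per-child counts of one construction in gesture+speech vs. speech-only.
gesture_speech = [3, 4, 5, 6, 7]
speech_only = [2, 2, 2, 2, 2]
t, df = paired_t(gesture_speech, speech_only)
print(f"t({df}) = {t:.2f}")  # t(4) = 4.24
```

Pairing by child removes between-child differences in overall talkativeness, which is why the test works on each child's difference score rather than on the two group means.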

Figure 2 shows that, as a group, children produced each construction in a gesture-speech combination before producing it entirely in speech. Does each individual child follow this developmental path, producing the construction first in gesture+speech and only later in speech-only? Table 3 presents the number of children who produced each of the three construction types in only one format (either gesture+speech or speech-only, second and third columns) or in both formats (three columns on the right) over the six observation sessions. Children who produced the construction in both formats were further classified according to whether they produced the construction first in gesture+speech, first in speech, or in both formats at the same time.
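The classification just described can be sketched as a small function that compares the first session at which a child produced a construction in each format. This is a minimal Python illustration, not the authors' scoring script: the names `SESSIONS`, `first_age`, and `order_category` are hypothetical, and it assumes that a session missing from a child's record counts as zero instances.

```python
from typing import Mapping, Optional

SESSIONS = (14, 18, 22, 26, 30, 34)  # observation ages in months


def first_age(counts: Mapping[int, int]) -> Optional[int]:
    """Earliest session (age in months) with at least one instance, else None.

    A session missing from `counts` is treated as zero instances (an assumption;
    here a missed visit is indistinguishable from non-production).
    """
    return next((age for age in SESSIONS if counts.get(age, 0) > 0), None)


def order_category(gs_counts: Mapping[int, int], s_counts: Mapping[int, int]) -> str:
    """Assign one child to a Table 3 column for one construction type."""
    gs, s = first_age(gs_counts), first_age(s_counts)
    if gs is None and s is None:
        return "never produced"
    if s is None:
        return "only in G+S"
    if gs is None:
        return "only in S"
    if gs < s:
        return "G+S first, then S"
    if gs == s:
        return "G+S and S at same age"
    return "S first, then G+S"


# Hypothetical child: gesture+speech at 18 months, speech-only from 22 months.
print(order_category({18: 2, 22: 5}, {22: 1, 26: 4}))  # G+S first, then S
```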

Table 3.

Number of children who produced the three types of constructions in only one format (gesture+speech or speech-only) or in both formats, classified according to the format used firstᵃ

| Type of construction | Produced in one format: only in G+S | Produced in one format: only in S | Produced in both formats: G+S first, then S | Produced in both formats: G+S and S at same age | Produced in both formats: S first, then G+S |
|---|---|---|---|---|---|
| Argument + Argument(s)ᵇ | 0 | 0 | 27 | 8 | 4 |
| Predicate + Argument(s) | 0 | 0 | 18 | 15 | 7 |
| Predicate + Predicateᶜ | 2 | 2 | 20 | 9 | 5 |

ᵃ G+S = gesture + speech; S = speech only.

ᵇ One child never produced the construction in either gesture+speech or speech-only.

ᶜ Two children never produced the construction in either gesture+speech or speech-only.

Overall, only a few children did not follow the predicted path from gesture+speech to speech-only: of the 40 children, only 4 (10%) produced the argument+argument(s) construction first in speech-only, 7 (17.5%) did so for the predicate+argument(s) construction, and 5 (12.5%) did so for the predicate+predicate construction. Children who produced a construction in both formats during the same observation session (8, 15 and 9 children for argument+argument(s), predicate+argument(s), and predicate+predicate combinations, respectively) do not bear on the hypothesis, as we cannot tell from our data which format the child used first. Of the children who produced a construction in both formats but at different observation sessions, significantly more produced the construction first in gesture+speech than in speech-only (argument+argument(s): 27 vs. 4, χ2 (1)=25.49, p<.001; predicate+argument(s): 18 vs. 7, χ2 (1)=5.82, p<.05; predicate+predicate: 20 vs. 5, χ2 (1)=11.40, p<.001). Thus, children, both as a group and individually, produced each of these three constructions in gesture+speech before producing the construction entirely in speech.
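As a check, the χ² values reported in this section can be reproduced by treating each comparison as a 2 × 2 table (format or order × produced/did not produce) in which each row sums to all 40 children, including children who never produced the construction, and applying Yates' continuity correction. This is our reconstruction rather than a stated procedure, and the helper names below are hypothetical; a library routine such as SciPy's contingency-table test with its continuity correction enabled should give the same statistic.

```python
from typing import List


def yates_chi_square_2x2(table: List[List[int]]) -> float:
    """Pearson chi-square with Yates' continuity correction (df = 1) for a 2x2 table."""
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            # Continuity correction: shrink |O - E| by 0.5, never below zero.
            chi2 += max(abs(observed - expected) - 0.5, 0.0) ** 2 / expected
    return chi2


def children_table(k_first: int, k_second: int, n_children: int = 40) -> List[List[int]]:
    """Assumed layout: one row per format (or order), columns = did / did not produce."""
    return [[k_first, n_children - k_first], [k_second, n_children - k_second]]


# (children in first group, children in second group, chi-square reported in the text)
REPORTED = {
    "argument+argument(s), 18 months": (23, 7, 12.0),
    "predicate+argument(s), 18 months": (23, 9, 8.80),
    "predicate+predicate, 22 months": (12, 2, 7.01),
    "argument+argument(s), order": (27, 4, 25.49),
    "predicate+argument(s), order": (18, 7, 5.82),
    "predicate+predicate, order": (20, 5, 11.40),
}

for label, (k1, k2, reported) in REPORTED.items():
    chi2 = yates_chi_square_2x2(children_table(k1, k2))
    print(f"{label}: chi2(1) = {chi2:.2f} (reported {reported:.2f})")
```

Under these assumptions all six computed values round to the reported ones; using only the children who produced the construction (39 or 38) as the row total does not reproduce them.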

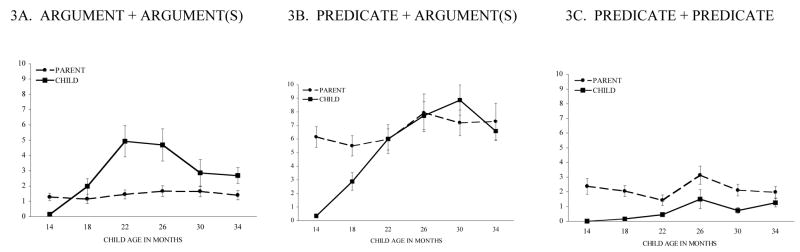

Our results show that children first used gesture and speech together but, over time, came to prefer speech. Nonetheless, even after speech became the preferred modality, a substantial number of children continued to produce the construction in gesture+speech (see Figure 2). Why? A close look at the children’s parents’ production of these construction types might give us the answer. We know from previous work (Özçalışkan & Goldin-Meadow, 2006a) that the parents of these children produced all three construction types in gesture+speech during their everyday interactions with their children. However, unlike their children, the parents’ productions did not change over the six observation sessions, either for argument+argument(s) (F (5,170)=0.37, ns), predicate+argument(s) (F (5,170)=1.20, ns), or predicate+predicate (F (5,170)=2.20, ns) combinations. The solid line in Figure 3 shows the mean number of argument+argument(s), predicate+argument(s), and predicate+predicate combinations the children produced in gesture+speech at each observation session, and the dashed line shows the mean number of times the parents produced each of the three constructions in gesture+speech.

Figure 3.

Mean number of gesture-speech combinations with two or more arguments (panel A), combinations with a predicate and at least one argument (panel B), or combinations with two predicates (panel C) produced by children (solid lines) or their parents (dashed lines) (error bars represent standard errors).

Note that the children produced more and more argument+argument(s) and predicate+argument(s) constructions in gesture+speech over time, eventually overshooting their parents’ productions of these gesture+speech combinations. At some point after the children established the construction in speech alone, their gesture+speech production levels decreased and began to approach their parents’ levels. By 34 months, the children’s production of predicate+argument(s) constructions in gesture+speech did not differ reliably from their parents’ (M child = 6.58, M parent = 7.29; t(37)=.55, ns); their production of argument+argument(s) constructions was still significantly higher than their parents’ (M child = 2.68, M parent = 1.40; t(37)=2.75, p<.01), although it was approaching the parents’ level. The children also increased their production of predicate+predicate constructions over time, although by our last observation session they had not yet reached their parents’ level. It is not clear whether, in future months, the children will overshoot their parents’ productions of predicate+predicate constructions, or remain at the levels attained at 34 months (which did not differ reliably from their parents’ levels, M child = 1.26, M parent = 1.97; t(37)=1.51, ns). In general, the children seemed to be using their gesture+speech combinations to bootstrap their way into producing these constructions in speech. Once they had achieved a construction in speech, the children decreased their production of the construction in gesture+speech toward their parents’ levels.

Gesture’s role in fleshing out constructions with additional arguments

Our analysis thus far establishes the distinctive role gesture plays for young language learners taking their first steps into these three linguistic constructions. It also suggests that, as children’s spoken language skills improve, gesture ceases to be the primary channel for conveying these constructions and speech becomes the preferred modality. However, because the children are learning English, which does not routinely permit argument omission, they will eventually need to flesh out these constructions with additional arguments. Does gesture play a leading role in this process? One possibility is that gesture helps children take the initial step into the bare-bones form of a construction (i.e., a two-argument construction, a predicate with a single argument, or two predicates with zero or one argument), but that once the construction becomes part of their communicative repertoire, children no longer rely on gesture to add further arguments to it. Alternatively, gesture might be instrumental in fleshing out a construction with additional arguments (e.g., a three-argument construction, a predicate with multiple arguments, or two predicates with multiple arguments).

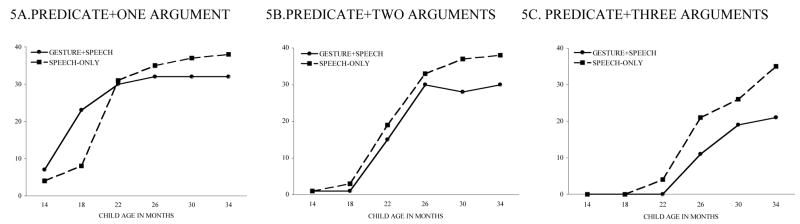

To explore these alternatives, we divided argument+argument(s) constructions into those containing two arguments and those containing three arguments; we divided predicate+argument(s) constructions into those containing one, two, or three arguments; and we divided predicate+predicate constructions into those containing zero or one argument and those containing two or more arguments (see Table 1). For each subcategory, we examined whether children first produced it in a gesture-speech combination before producing it entirely in speech.
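The onset comparison described above can be sketched in code. This is a minimal illustration under stated assumptions, not the authors’ coding procedure: the data layout (per-child counts of one construction subcategory, keyed by session age in months) and the names `ChildCounts`, `first_session`, `onset_order`, and `producers_per_session` are hypothetical, and the example counts are invented. `producers_per_session` returns the kind of per-session tallies plotted in Figures 4–6 (children with at least one instance in each modality).

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Observation sessions, labelled by child age in months.
SESSIONS = (14, 18, 22, 26, 30, 34)


@dataclass
class ChildCounts:
    """Hypothetical per-child record for ONE construction subcategory.

    gs[age] = instances produced in gesture+speech at that session
    so[age] = instances produced in speech alone at that session
    Sessions with no entry count as zero.
    """
    gs: dict[int, int] = field(default_factory=dict)
    so: dict[int, int] = field(default_factory=dict)


def first_session(counts: dict[int, int]) -> int | None:
    """Earliest session with at least one instance, or None if never produced."""
    for age in SESSIONS:
        if counts.get(age, 0) > 0:
            return age
    return None


def onset_order(child: ChildCounts) -> str:
    """Classify which modality carried the child's first instance of the construction."""
    g, s = first_session(child.gs), first_session(child.so)
    if g is None and s is None:
        return "never"
    if s is None or (g is not None and g < s):
        return "gesture+speech first"
    if g is None or s < g:
        return "speech-only first"
    return "same session"


def producers_per_session(children: list[ChildCounts]) -> dict[int, tuple[int, int]]:
    """Per session: (children with >=1 gesture+speech instance, children with >=1 speech-only instance)."""
    return {
        age: (
            sum(c.gs.get(age, 0) > 0 for c in children),
            sum(c.so.get(age, 0) > 0 for c in children),
        )
        for age in SESSIONS
    }


# Invented example counts for three children (illustration only).
a = ChildCounts(gs={18: 2, 22: 1}, so={22: 3})
b = ChildCounts(so={26: 1})
c = ChildCounts(gs={30: 1}, so={30: 2})

for name, child in [("a", a), ("b", b), ("c", c)]:
    print(name, onset_order(child))
print(producers_per_session([a, b, c]))
```

Treating "first produced" as the earliest session with at least one instance is an assumption of this sketch; a child whose first instances fall in the same session in both modalities is reported separately rather than forced into either group.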

Figure 4 shows the number of children who produced at least one instance of a two-argument (Figure 4A) or three-argument (Figure 4B) construction in a gesture-speech combination or in multi-word speech. As in our previous analyses, significantly more children produced two-argument combinations in gesture+speech than in speech-only at 18 months (23 vs. 7, χ2 (1)=12.0, p<.001), showing that gesture leads the way in the onset of two-argument constructions. However, we observed no such developmental pattern for three-argument constructions: the number of children who produced three-argument combinations in gesture+speech and in speech-only was comparable at each observation session, with no reliable differences.

Figure 4.

Number of children who produced utterances with two arguments (panel A) or three arguments (panel B) in speech alone (dashed lines) or in a gesture-speech combination (solid lines)

We see the same pattern in predicate+argument(s) constructions. Significantly more children produced predicate+one-argument constructions in gesture+speech than in speech-only at 18 months (Figure 5A; 23 vs. 8, χ2 (1)=10.32, p<.01), confirming gesture’s leading role in the onset of predicate+one-argument constructions. But gesture did not play a leading role in the onset of either predicate+two-argument (Figure 5B) or predicate+three-argument (Figure 5C) constructions. For each of these constructions, the number of children who produced it in gesture+speech and in speech-only was comparable at the earlier sessions; later, significantly more children produced it in speech-only than in gesture+speech (predicate+two-argument constructions at 30 months, 37 vs. 28, χ2 (1)=5.25, p<.05; predicate+three-argument constructions at 34 months, 35 vs. 21, χ2 (1)=10.06, p<.001).

Figure 5.

Number of children who produced utterances with a predicate and one argument (panel A), utterances with a predicate and two arguments (panel B), or utterances with a predicate and three arguments (panel C) in speech alone (dashed lines) or in a gesture-speech combination (solid lines)

Turning last to predicate+predicate constructions, we found a similar pattern, but delayed by 4 months. Significantly more children produced predicate+predicate constructions with no arguments or with one argument in gesture+speech than in speech-only at 22 months (Figure 6A, 10 vs. 2, χ2 (1)=4.8, p<.05). But gesture did not pave the way for predicate+predicate constructions with two or more arguments (Figure 6B); the number of children who produced these combinations in gesture+speech and speech-only was comparable at 22 months (3 vs. 0) and 26 months (8 vs. 9, χ2 (1)=0, ns). From 30 months onward, speech became the preferred modality, and significantly more children produced the construction in speech-only than in gesture+speech at 30 months (7 vs. 22, χ2 (1)=10.60, p<.001) and 34 months (19 vs. 29, χ2 (1)=4.22, p<.05).
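The text does not name the test behind the χ2 (1) values, but they can be reproduced as a Pearson χ2 test with Yates’ continuity correction on a 2×2 table (modality by whether a child did or did not produce the construction at that session), assuming all 40 children are counted in each modality. The sketch below is offered only as a way to check the arithmetic under that assumption, not as a statement of the authors’ procedure; `yates_chi2` is a hypothetical helper name. For a 2×2 table, `scipy.stats.chi2_contingency` with its default `correction=True` should give the same statistic.

```python
def yates_chi2(n_gs: int, n_so: int, n_total: int = 40) -> float:
    """Pearson chi-square with Yates' continuity correction on a 2x2 table.

    Rows: modality (gesture+speech, speech-only).
    Columns: children who did / did not produce the construction at a session.
    n_total is the number of children counted per modality (assumed 40 here).
    """
    a, b = n_gs, n_total - n_gs  # gesture+speech: producers, non-producers
    c, d = n_so, n_total - n_so  # speech-only: producers, non-producers
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        return float("nan")  # statistic undefined when a margin is empty
    # Corrected numerator, clamped at zero when |ad - bc| < n/2.
    num = n * max(abs(a * d - b * c) - n / 2, 0) ** 2
    return num / denom


# (gesture+speech, speech-only) counts and the chi-square values reported in the text.
reported = {
    (23, 7): 12.0, (23, 8): 10.32, (10, 2): 4.8, (8, 9): 0.0,
    (7, 22): 10.60, (19, 29): 4.22, (37, 28): 5.25, (35, 21): 10.06,
}
for (g, s), chi in reported.items():
    print(f"{g} vs. {s}: computed {yates_chi2(g, s):.2f}, reported {chi}")
```

Under this reconstruction, every computed value rounds to the value reported above, including χ2 (1)=0 for the 8 vs. 9 comparison, where |ad − bc| equals n/2 and the corrected statistic is exactly zero.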

Figure 6.

Number of children who produced utterances with two predicates and zero or one argument (panel A) or utterances with two predicates and two or more arguments (panel B) in speech alone (dashed lines) or in a gesture-speech combination (solid lines)

In summary, we found that children rely on gesture to take their initial steps into a construction. However, once the construction is established in their repertoire, gesture no longer seems to be needed and children do not use gesture as a stepping-stone to flesh out the constructions with additional arguments.

Children’s production of predicate+predicate constructions in speech and gesture

Among the three construction types, predicate+predicate combinations show the most change during the 14- to 34-month period. Predicate+predicate combinations make their first appearance in children’s gesture-speech combinations and multi-word speech at 22 months, and steadily increase in frequency until 34 months. We therefore examined changes in children’s predicate+predicate constructions over the observation sessions in more detail.

Table 4 shows the distribution of different types of predicate+predicate combinations children produced in their gesture-speech combinations (upper half) and in their multi-word speech (lower half) over the six observation sessions. In their early predicate+predicate constructions in gesture+speech, children used a limited set of conventional gestures, each mapping onto a single predicate meaning. These included (1) extending an open palm next to a desired object to convey GIVE (e.g., ‘me scoop’ + GIVE cup of flour; ‘I got to cut it’ + GIVE knife), (2) curling the fingers of an extended palm inward to convey COME (e.g., ‘bite me’ + COME; ‘do it’ + COME), and (3) raising both arms above the head to convey PICK-UP (e.g., ‘help me’ + PICK-UP; ‘do it again’ + PICK-UP). These three types of combinations accounted for 80–100% of all the predicate+predicate constructions in children’s gesture-speech combinations from 14 to 26 months.

Table 4.

Multi-predicate constructions produced by the children in gesture-speech and multi-word combinations, categorized according to type.ᵃ

| Construction type | 14-month | 18-month | 22-month | 26-month | 30-month | 34-month |
|---|---|---|---|---|---|---|
| Gesture+speech | | | | | | |
| GIVE + verb | -- | 67% (2,3) | 48% (6,12) | 76% (10,12) | 37% (8,15) | 37% (9,22) |
| PICKUP + verb | -- | 33% (1,3) | 33% (4,12) | 14% (3,12) | 14% (2,15) | -- |
| COME + verb | -- | -- | 2% (1,12) | 1% (1,12) | -- | 3% (3,22) |
| OTHER GESTUREᵇ + verb | -- | -- | 17% (2,12) | 9% (2,12) | 49% (9,15) | 60% (15,22) |
| Speech-only | | | | | | |
| Let me + verb | -- | -- | -- | 50% (10,14) | 21% (12,24) | 22% (19,34) |
| Verb1 + conjunction + verb2 | -- | -- | -- | 13% (4,14) | 21% (15,24) | 25% (24,34) |
| Want/make/need/help someone to + verb | -- | -- | 50% (1,2) | 16% (6,14) | 28% (12,24) | 23% (22,34) |
| Think/know/wish + verb | -- | -- | -- | 4% (1,14) | 3% (4,24) | 8% (11,34) |
| See/look + verb | -- | -- | -- | 5% (2,14) | 14% (13,24) | 10% (12,34) |
| Say/tell/ask + verb | -- | -- | -- | 1% (1,14) | 5% (7,24) | 5% (10,34) |
| Other embedded | -- | -- | 50% (1,2) | 11% (3,14) | 8% (9,24) | 7% (11,34) |

ᵃ Percentages were computed as follows: we took the number of gesture-speech or multi-word combinations of a given type that each child produced during an observation session and divided that number by the total number of gesture-speech or multi-word combinations conveying predicate+predicate constructions the child produced during that session; we then averaged those percentages, including only children who produced predicate+predicate constructions in either gesture+speech or speech alone during the observation session. The first number in parentheses is the number of children who produced that construction type during the observation session; the second is the total number of children who produced predicate+predicate constructions in that modality during the session.

ᵇ Other gesture refers to an iconic gesture that encoded some type of action (e.g., THROWING, FLYING, EATING).
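The averaging procedure in the first note to Table 4 can be sketched as follows. This is a minimal illustration, assuming each child’s denominator is that child’s predicate+predicate combinations within the same modality (which the differing second numbers in the gesture+speech and speech-only rows suggest); the function name `table4_cell`, the input layout, and the example counts are all hypothetical.

```python
from __future__ import annotations


def table4_cell(per_child: dict[str, dict[str, int]], ctype: str) -> tuple[int, int, int] | None:
    """Summarize one construction type for ONE modality at ONE observation session.

    per_child maps a child id to {construction type: count of predicate+predicate
    combinations of that type}. Returns (mean percentage rounded to an integer,
    children producing this type, children producing any predicate+predicate
    combination), or None if no child produced one.
    """
    # Only children with at least one predicate+predicate combination enter the average.
    producers = [counts for counts in per_child.values() if sum(counts.values()) > 0]
    if not producers:
        return None
    # Each child's share of this type among his or her own predicate+predicate combinations.
    shares = [counts.get(ctype, 0) / sum(counts.values()) for counts in producers]
    mean_pct = 100 * sum(shares) / len(shares)
    n_type = sum(1 for counts in producers if counts.get(ctype, 0) > 0)
    return round(mean_pct), n_type, len(producers)


# Invented gesture+speech counts for one session (illustration only).
session = {
    "child1": {"GIVE": 2, "PICKUP": 1},
    "child2": {"GIVE": 1, "OTHER": 1},
    "child3": {},  # no predicate+predicate combinations: excluded from the average
}
for ctype in ("GIVE", "PICKUP", "OTHER"):
    print(ctype, table4_cell(session, ctype))
```

Because each included child’s shares sum to 1, the averaged percentages for all types within one modality and session sum to 100% (up to rounding), which matches the column totals in Table 4.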

However, at 30 months, children began to produce iconic gestures at higher rates, allowing them to convey a wider range of predicate meanings (e.g., flapping arms to convey FLYING; moving a fist forcefully forward to convey PUSHING; moving a hand to the mouth repeatedly to convey EATING). This increase in iconic gestures, in turn, allowed children to convey a wider array of predicate+predicate combinations across gesture and speech from 30 months onward (e.g., ‘you have to make a somebody’ + move hand in air as if DRAWING; ‘no play nice’ + move downward-facing palm sideways as if STROKING a pet animal; ‘I know that’ + move downward-facing palm up and down as if DRIBBLING a basketball). These more varied combinations accounted for about half of the predicate+predicate constructions children produced in gesture+speech at the 30-month session (49%) and for most of them at the 34-month session (60%).

As described earlier, children did not produce multi-predicate combinations in speech until 26 months (the two exceptions were both produced at 22 months; see Table 4, bottom). Moreover, half of the predicate+predicate constructions children produced in speech at 26 months were of a single type: let me + verb2 (e.g., ‘let me see’; ‘let my fix it’; ‘let me do it’). Between 26 and 34 months, the children used two other types of predicate+predicate constructions in speech: two predicates joined by a conjunction (e.g., ‘come down and see my mom’; ‘when Molly comes over then we can eat it’; ‘I can’t go to school because I pee all the time’; ‘what are we going to do if it rain?’) and want/make/need/help someone (to) + verb2 constructions (e.g., ‘I want you sit right there’; ‘I want Lauren to play with me’; ‘you make him fall’; ‘I need mom to get me out’; ‘help me find a bulldozer’). These two types, along with the let me + verb2 frame, accounted for 70% of all the multi-predicate constructions in children’s speech-only utterances from 26 to 34 months of age. The remaining construction types accounted for few of the children’s predicate+predicate combinations in speech (Table 4, bottom): think/know + verb2 (‘I think we ate all of Kristine’; ‘do not know how to do’), see/look + verb2 (‘he sees you doing that’; ‘look at the baby jumping’), say/tell/ask + verb2 (‘I said I just drawed a house’; ‘she told me to read it’; ‘you asked me to make a tower’), and occasional other embedded multi-predicate utterances (‘show me how it works’; ‘use this to cut’; ‘mom make a tower that I go in’; ‘I have a camera just like you do’).

Thus, overall, the types of predicate+predicate constructions children produced in gesture+speech and in speech-only were initially restricted to a small set, becoming more diverse from 30 months onward. Even though speech became the preferred modality for expressing predicate+predicate combinations at 26 months, children’s gestures continued to contribute to the diversity of their predicate+predicate combinations. At the later ages, then, gesture remained a useful communicative tool, allowing children to convey a larger array of meanings than they could by using speech alone.

DISCUSSION

In this paper we examined young children’s use of supplementary gesture-speech combinations in relation to their multi-word speech as they made the transition from one-word speech to increasingly more complex linguistic constructions. We specifically focused on three types of linguistic constructions: multiple arguments (e.g., ‘egg’ + point at empty bird nest; ‘juice mama’ + hold up empty cup), single predicates with at least one argument (e.g., ‘baby’ + EAT gesture, ‘I wash her hair’ + point to sink) and multiple predicates with or without arguments (e.g., ‘me try it’ + GIVE gesture, ‘head broke’ + FALL gesture). Children produced each of these three construction types across gesture and speech several months before the constructions appeared entirely within speech. Not surprisingly, with further improvements in children’s spoken language skills from 22 to 34 months of age, gesture ceased being the primary channel of communication and speech became the preferred modality for conveying these different sentential constructions. More interestingly, gesture played a leading role in producing the initial basic form of a linguistic construction, but did not lead the way when children added arguments to flesh out the construction. In other words, once the skeleton of a construction was established in a child’s communicative repertoire, the child no longer relied on gesture as a stepping-stone to flesh out that skeleton with additional arguments.

Gesture and speech form a semantically and temporally integrated system in adult communication, even though each modality represents meaning in different ways (McNeill, 1992, 2005; Kendon, 1980, 2004). Children achieve semantic and temporal integration between their gesture and speech relatively early in development (Butcher & Goldin-Meadow, 2000). But what is the nature of the relationship between gesture and speech for young language learners taking their initial steps into sentence construction?

One possibility is that gesture is positively related to speech; that is, increases in gestural skill parallel increases in spoken language skill. Another possibility is that gesture is negatively related to speech; that is, increases in gestural skill compensate for deficits in spoken language skills. The types of sentential constructions children expressed in their gesture-speech combinations provide evidence for both patterns, but at different points in development.

When gesture compensates for speech

At the early stages of language learning (14 to 22 months), gesture is negatively related to speech, with gestural skills compensating for limitations in spoken language skills. At a point when children are constrained in their ability to express a sentence-like meaning entirely within the spoken modality, gesture offers them a tool to express that meaning. And children use this tool to convey increasingly complex linguistic constructions in their gesture-speech combinations before producing the constructions in speech alone. The act of expressing two different pieces of information across modalities, but within the bounds of a single communicative act, makes it clear that the child not only has knowledge of the two elements, but can assemble the elements into a single relational unit. What then prevents the child from expressing the sentence-like meaning entirely in speech?

One possibility is that the child’s grasp of the construction is initially at an implicit representational level accessible only to gesture (cf. Broaders, Cook, Mitchell & Goldin-Meadow, 2007). Expressing a construction entirely within the spoken modality requires explicit knowledge, not only of the words for the semantic elements but also of the semantic relation between them. It appears to take time for children to take this step (Goldin-Meadow & Butcher, 2003). Moreover, our data suggest that children take the step from implicit to explicit knowledge at different moments for different constructions. Another possibility is that gestures are easier to produce than words at the early stages of language learning (e.g., Acredolo & Goodwyn, 1988). Speech conveys meaning through rule-governed combinations of discrete units that are codified according to the norms of the language. In contrast, gesture conveys meaning idiosyncratically, by means of varying forms that are context-sensitive (Goldin-Meadow & McNeill, 1999; McNeill, 1992). Pointing at an object to label that object, or creating an iconic gesture on the fly while describing the action to be performed on the object, may be cognitively less demanding than producing words for these ideas (cf. Goldin-Meadow, Nusbaum, Kelly & Wagner, 2001; Wagner, Nusbaum & Goldin-Meadow, 2004). There is, in fact, evidence that children who experience temporary difficulties in oral language acquisition fall back on gesture to compensate (Acredolo & Goodwyn, 1988; Thal & Tobias, 1992).

Interestingly, children’s tendency to express two pieces of information across gesture and speech before conveying them entirely in speech has been shown not only for early language development (Goldin-Meadow & Butcher, 2003; Iverson & Goldin-Meadow, 2005; Özçalışkan & Goldin-Meadow, 2005a, 2006a, 2007; Özçalışkan, Levine & Goldin-Meadow, 2007), but also for other cognitive achievements mastered at later stages in development. Communications in which gesture and speech convey different information have been observed in a variety of tasks, including preschoolers learning to count (Graham, 1999) and reasoning about a board game (Evans & Rubin, 1979), elementary school children reasoning about conservation problems (Church & Goldin-Meadow, 1986) and math problems (Perry, Church & Goldin-Meadow, 1988), and even adults reasoning about gears (Perry & Elder, 1997). There is, moreover, evidence from a number of these tasks that children who produce supplementary gesture-speech combinations in explaining the task are in a transitional knowledge state with respect to that task and are ready to make progress if given the necessary instruction (Alibali & Goldin-Meadow, 1993; Church & Goldin-Meadow, 1986; Perry et al., 1988; Pine, Lufkin & Messer, 2004). Co-speech gesture can thus serve as a window onto the child’s mind, providing a richer view of the child’s communicative and cognitive abilities than the one offered by speech (Goldin-Meadow, 2003; McNeill & Duncan, 2000).

Our results join others in suggesting that early gesture is a harbinger of change, but might it also play a role in propelling change? Gesture has the potential to play a propelling role in learning through its communicative effects. For example, learners (unconsciously) signal through their gestures that they are in a particular cognitive state, and listeners can then adjust their responses accordingly (Goldin-Meadow, Goodrich, Sauer & Iverson, 2007; Goldin-Meadow & Singer, 2003). But gesture also has the potential to play a role in learning through its cognitive effects (cf. Goldin-Meadow & Wagner, 2005). For example, encouraging school-aged children to produce gestures conveying a correct problem-solving strategy increases the likelihood that the children will solve the problem correctly (Broaders et al., 2007; Cook & Goldin-Meadow, 2006; Cook, Mitchell & Goldin-Meadow, 2007). Thus, the act of gesturing may not only signal linguistic changes to come; it may play a role in bringing those changes about.

When gesture and speech go hand-in-hand

At later stages of language learning, the relation between gesture and speech changes so that increases in gestural skill begin to parallel increases in spoken language skill, at least with respect to certain constructions. Between 22 and 34 months of age, children gradually develop a preference for speech as their principal mode of communication. At this point, although children continue to use gesture-speech combinations, the frequencies with which they produce particular constructions in gesture and speech begin to approximate adult frequencies. We know from earlier work that adults rarely produce gestures without speech when communicating with other adults (McNeill, 1992) or with children (Iverson, Capirci, Longobardi & Caselli, 1999; Özçalışkan & Goldin-Meadow, 2005b). Indeed, 90% of the gestures adults produce when communicating with their children co-occur with speech (Özçalışkan & Goldin-Meadow, 2005b). Interestingly, a portion of these gesture-speech combinations are supplementary in both adult-to-adult conversations (e.g., an adult describing to another adult Granny’s chase after Sylvester in a cartoon movie moves her hand as if swinging an umbrella while saying, ‘she chases him out again,’ McNeill, 1992) and adult-to-child conversations (e.g., a mother narrating a story to her two-year-old child wiggles her fingers as if climbing while saying, ‘I will meet you up at the coconut tree,’ Özçalışkan & Goldin-Meadow, 2006a; see also Goldin-Meadow & Singer, 2003). In each of these examples, the adult uses gesture to convey aspects of the scene that are not conveyed in speech (e.g., the implement involved in the chase in the adult-to-adult conversation; the manner of ascending in the adult-to-child conversation). Such combinations are a relatively common feature of adult gesture-speech communication, and the children in our sample seem to be moving toward adult production levels of these types of combinations.

One interesting question is why adults (and children once they have passed the transitional learning point) continue to use supplementary gesture-speech combinations. The children’s supplementary gesture-speech combinations, particularly those produced when a child was just learning a construction, reflect the incipient steps a learner takes when beginning to master a task: the first progressive steps of a novice. For the children, supplementary gesture-speech combinations are an index of cognitive instability. But the caregivers in our study were all experts at expressing simple propositional information in sentences, and were able to express all of the constructions found in their supplementary gesture-speech combinations entirely within speech (Özçalışkan & Goldin-Meadow, 2006a). Why, then, did they produce supplementary gesture-speech combinations? We suggest that, for adults, supplementary combinations are an index of discourse instability, that is, a moment when speech and gesture are not completely aligned, reflecting the dynamic tension of the speaking process (McNeill, 1992; see also Goldin-Meadow, 2003). This difference is not developmental; rather, it reflects the state of the speaker’s knowledge. Adults produce supplementary gesture-speech combinations when they are learning a task (Perry & Elder, 1997) and, as we have shown here, children continue to produce supplementary gesture-speech combinations even after they have mastered a task.

But why does gesture play a leading role when children produce the initial form of a linguistic construction, and not when they add arguments to flesh out the construction? One possible explanation is that, by the later ages, conveying arguments in gesture was a skill the children had already mastered. The children in our sample had been using gesture to add arguments to speech since they were 14 months old; adding arguments was therefore not a skill they were still in the midst of learning. We know from earlier work that gesture plays a leading role in supplementary gesture-speech combinations only when the task is one that the speaker is in the process of learning (Goldin-Meadow & Singer, 2003). For example, children who are learning a math task convey strategies in their supplementary gesture-speech combinations that are at the cutting edge of their knowledge and cannot be found anywhere in their speech. Their teachers, who have mastered the task, do not: all of the strategies the teachers convey in gesture in their supplementary gesture-speech combinations can be found elsewhere in their speech on other problems. In our study, children relied on gesture to produce a construction when it was at the cutting edge of their linguistic knowledge. But once the construction had been incorporated into their repertoires, the children were able to use either gesture or speech to flesh it out.

Our findings suggest that, as the verbal system becomes the preferred means of communication, the gestural system undergoes reorganization with respect to language learning, moving from a state in which gesture is a harbinger of linguistic skills that will soon appear in speech, to a state in which gesture enriches the speaker’s communicative repertoire in response to discourse pressures (McNeill, 1992). This change in gesture’s language-learning role is signaled not only by changes in children’s supplementary gesture-speech combinations but also by increases in their iconic gestures. A substantial portion of the co-speech gestures adults produce in adult-adult conversations are iconic (McNeill, 1992); the children in our study showed the first signs of moving toward this pattern at 30 months, with the blossoming of iconic gestures in predicate+predicate constructions.

In summary, our analyses suggest that gesture extends a child’s communicative repertoire as the child goes through the stages of language learning, but does so in different ways at different points in development. Early on, gesture helps children take their initial steps into sentential constructions. Later, with improvements in spoken language skills, gesture begins to take on a new, more adult role and is no longer at the cutting edge of children’s linguistic achievements, although it is still a harbinger of things to come in other cognitive skills (Goldin-Meadow, 2003). Gesture acts as a harbinger of linguistic steps only when those steps involve new constructions, not when the steps merely flesh out existing constructions.

Acknowledgments

We thank Kristi Schonwald and Jason Voigt for administrative and technical support, and Karyn Brasky, Laura Chang, Elaine Croft, Kristin Duboc, Becky Free, Jennifer Griffin, Sarah Gripshover, Kelsey Harden, Lauren King, Carrie Meanwell, Erica Mellum, Molly Nikolas, Jana Oberholtzer, Lillia Rissman, Becky Seibel, Laura Scheidman, Meredith Simone, Calla Trofatter, and Kevin Uttich for help in data collection and transcription. We also thank Sotaro Kita and the two anonymous reviewers for their helpful comments. The research was supported by a P01 HD40605 grant to S. Goldin-Meadow.

Footnotes

Children occasionally produced a fourth type of gesture, the beat gesture. Beat gestures are formless hand movements that convey no semantic information but move in rhythmic relationship with speech to highlight aspects of discourse structure (e.g., flicking the hand or the fingers up and down or back and forth; McNeill, 1992). The incidence of beat gestures was extremely rare in the data; this category was therefore excluded from the analyses.

References

- Acredolo LP, Goodwyn SW. Symbolic gesturing in language development. Human Development. 1985;28:40–49. [Google Scholar]

- Acredolo LP, Goodwyn SW. Symbolic gesturing in normal infants. Child Development. 1989;59:450–466. [PubMed] [Google Scholar]

- Alibali MW, Goldin-Meadow S. Gesture-speech mismatch and mechanisms of learning: What the hand reveal about a child’s state of mind. Cognitive Psychology. 1993;25:468–523. doi: 10.1006/cogp.1993.1012. [DOI] [PubMed] [Google Scholar]

- Bates E. Language and context. New York: Academic Press; 1976. [Google Scholar]

- Bates E, Benigni L, Bretherton I, Camanioni L, Volterra V. The emergence of symbols: cognition and communication in infancy. New York: Academic Press; 1979. [Google Scholar]

- Broaders S, Wagner Cook S, Mitchell Z, Goldin-Meadow Making children gesture brings out implicit knowledge and leads to learning. Journal of Experimental Psychology: General. 2007;136(4):539–550. doi: 10.1037/0096-3445.136.4.539. [DOI] [PubMed] [Google Scholar]

- Butcher C, Goldin-Meadow S. Gesture and the transition from one- to two-word speech: when hand and mouth come together. In: McNeill D, editor. Language and gesture. Cambridge: Cambridge University Press; 2000. pp. 235–258. [Google Scholar]

- Capirci O, Iverson JM, Pizzuto E, Volterra V. Gestures and words during the transition to two-word speech. Journal of Child Language. 1996;23:645–673. [Google Scholar]

- Church RB, Goldin-Meadow S. The mismatch between gesture and speech as an index of transitional knowledge. Cognition. 1986;23:43–71. doi: 10.1016/0010-0277(86)90053-3. [DOI] [PubMed] [Google Scholar]

- Cook SW, Goldin-Meadow S. The role of gesture in learning: Do children use their hands to change their minds? Journal of Cognition and Development. 2006;7:211–232. [Google Scholar]

- Cook SW, Mitchell Z, Goldin-Meadow S. Gesturing makes learning last. Cognition. 2007 doi: 10.1016/j.cognition.2007.04.010. in press. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Evans MA, Rubin KH. Hand gestures as a communicative mode in school-aged children. Journal of Genetic Psychology. 1979;135:189–196. [Google Scholar]

- Goldin-Meadow S. Hearing Gesture: How our hands help us think. Cambridge, MA: Harvard University Press; 2003. [Google Scholar]

- Goldin-Meadow S, Butcher C. Pointing toward two word speech in young children. In: Kita S, editor. Pointing: where language, culture, and cognition meet. New Jersey: Lawrence Erlbaum Associates; 2003. pp. 85–107. [Google Scholar]

- Goldin-Meadow S, Goodrich W, Sauer E, Iverson J. Young children use their hands to tell their mothers what to say. Developmental Science. 2007;10(6):778–785. doi: 10.1111/j.1467-7687.2007.00636.x. [DOI] [PubMed] [Google Scholar]

- Goldin-Meadow S, McNeill D. The role of gesture and mimetic representation in making language the province of speech. In: Corbalis M, Lea, editors. The descent of mind. Oxford, UK: Oxford University Press; 1999. pp. 155–172. [Google Scholar]

- Goldin-Meadow S, Nusbaum H, Kelly S, Wagner S. Explaining math: Gesturing lightens the load. Psychological Science. 2001;12:516–522. doi: 10.1111/1467-9280.00395. [DOI] [PubMed] [Google Scholar]

- Goldin-Meadow S, Singer MA. From children’s hands to adults’ ears: Gesture’s role in teaching and learning. Developmental Psychology. 2003;39(3):509–520. doi: 10.1037/0012-1649.39.3.509. [DOI] [PubMed] [Google Scholar]

- Goldin-Meadow S, Wagner SM. How our hands help us learn. Trends in Cognitive Science. 2005;9:234–241. doi: 10.1016/j.tics.2005.03.006. [DOI] [PubMed] [Google Scholar]

- Graham TA. The role of gesture in children learning to count. Journal of Experimental Child Psychology. 1999;74:333–355. doi: 10.1006/jecp.1999.2520. [DOI] [PubMed] [Google Scholar]

- Greenfield P, Smith J. The structure of communication in early language development. New York: Academic Press; 1976. [Google Scholar]

- Iverson JM, Capirci O, Caselli MC. From communication to language in two modalities. Cognitive Development. 1994;9:23–43. [Google Scholar]

- Iverson JM, Capirci O, Longobardi E, Caselli MC. Gesturing in mother-child interactions. Cognitive Development. 1999;14:57–75. [Google Scholar]

- Iverson JM, Goldin-Meadow S. Gesture paves the way for language development. Psychological Science. 2005;16:368–371. doi: 10.1111/j.0956-7976.2005.01542.x. [DOI] [PubMed] [Google Scholar]

- Kendon A. Gesticulation and speech: Two aspects of the process of utterance. In: Key MR, editor. The relationship of verbal and nonverbal communication. New York: Mouton Publishers; 1980. pp. 207–227. [Google Scholar]

- Kendon A. Gesture: Visible action as utterance. Cambridge, UK: Cambridge University Press; 2004. [Google Scholar]

- McNeill D. Hand and mind: What gestures reveal about language and thought. Chicago: University of Chicago Press; 1992. [Google Scholar]

- McNeill D. Gesture and thought. Chicago: University of Chicago Press; 2005.

- McNeill D, Duncan S. Growth points in thinking for speaking. In: McNeill D, editor. Language and gesture. New York, NY: Cambridge University Press; 2000. pp. 141–161.

- Özçalışkan Ş, Goldin-Meadow S. Gesture is at the cutting edge of early language development. Cognition. 2005a;96(3):B101–B113. doi: 10.1016/j.cognition.2005.01.001.

- Özçalışkan Ş, Goldin-Meadow S. Do parents lead their children by the hand? Journal of Child Language. 2005b;32(3):481–505. doi: 10.1017/s0305000905007002.

- Özçalışkan Ş, Goldin-Meadow S. How gesture helps children construct language. In: Clark EV, Kelly BF, editors. Constructions in acquisition. Stanford, CA: CSLI Publications; 2006a. pp. 31–58.

- Özçalışkan Ş, Goldin-Meadow S. ‘X is like Y’: The emergence of similarity mappings in children’s early speech and gesture. In: Kristiansen G, Achard M, Dirven R, Ruiz de Mendoza F, editors. Cognitive linguistics: Foundations and fields of application. Berlin: Mouton de Gruyter; 2006b. pp. 229–262.

- Özçalışkan Ş, Goldin-Meadow S. Sex differences in language first appear in gesture. Child Development. 2007. Under review. doi: 10.1111/j.1467-7687.2009.00933.x.

- Özçalışkan Ş, Levine S, Goldin-Meadow S. Gesturing with an injured brain: How gesture helps children with early brain injury learn linguistic constructions. Cognition. 2007. Under review. doi: 10.1017/S0305000912000220.

- Perry M, Church RB, Goldin-Meadow S. Transitional knowledge in the acquisition of concepts. Cognitive Development. 1988;3:359–400.

- Perry M, Elder AD. Knowledge in transition: Adults’ developing understanding of a principle of physical causality. Cognitive Development. 1997;12:131–157.

- Pine KJ, Lufkin N, Messer D. More gestures than answers: Children learning about balance. Developmental Psychology. 2004;40:1059–1067. doi: 10.1037/0012-1649.40.6.1059.

- Thal D, Tobias S. Communicative gestures in children with delayed onset of oral expressive vocabulary. Journal of Speech and Hearing Research. 1992;37:157–170. doi: 10.1044/jshr.3506.1289.

- Volterra V, Iverson JM. When do modality factors affect the course of language acquisition? In: Emmorey K, Reilly JS, editors. Language, gesture, and space. Hillsdale, NJ: Erlbaum; 1995. pp. 371–390.

- Wagner S, Nusbaum H, Goldin-Meadow S. Probing the mental representation of gesture: Is handwaving spatial? Journal of Memory and Language. 2004;50:395–407.