Abstract

Background

Facilitating direct observation of medical students' clinical competencies is a pressing need.

Methods

We developed an electronic problem-specific Clinical Evaluation Exercise (eCEX) based on a national curriculum. We assessed its feasibility in monitoring and recording students' competencies and the impact of a grading incentive on the frequency of direct observations in an internal medicine clerkship. Students (n = 56) at three clinical sites used the eCEX and comparison students (n = 56) at three other clinical sites did not. Students in the eCEX group were required to arrange 10 evaluations with faculty preceptors. Students in the second group were required to document a single, faculty observed ‘Full History and Physical’ encounter with a patient. Students and preceptors were surveyed at the end of each rotation.

Results

eCEX increased students' and evaluators' understanding of direct-observation objectives and had a positive impact on the evaluators' ability to provide feedback and assessments. The grading incentive increased the number of times a student reported direct observation by a resident preceptor.

Conclusions

eCEX appears to be an effective means of enhancing student evaluation.

Keywords: students, medical, clinical competence, observation, computers, handheld, clinical clerkship, internal medicine

According to the Liaison Committee on Medical Education (LCME), the nationally recognized accrediting authority for allopathic medical schools in the USA and Canada, ‘there must be ongoing assessment that assures students have acquired and can demonstrate on direct observation the core clinical skills, behaviors, and attitudes that have been specified in the school's educational objectives.’ The LCME considers meeting this standard to be absolutely necessary for accreditation (1). Therefore, effective methods for accomplishing direct observation and assessment linked to educational objectives are vital for training medical students. However, several challenges exist in the direct observation and assessment of a medical student's clinical skills. A major challenge to overcome is that such skills are rarely observed. According to Howley, up to 81% of students reported never being observed interviewing or examining a patient during core third-year clerkships (2). Pulito reported that of the 1,056 comments generated by the faculty on clinical evaluation forms, not a single one commented on the history-taking or physical examination skills of medical students. He determined that the evaluation of a student's clinical skills by faculty is commonly inferred from other factors such as case presentations or derived from information received from residents (3).

When direct observation does occur, standards for judging clinical performance are commonly not made explicit to the evaluator (4). The lack of explicit performance standards affects the reliability and validity of competency assessments. For example, in a study of 326 British medical students, Hill noted that up to 29% of a student's score on a mini-CEX evaluation could be explained by faculty-specific variables (i.e. the examiner's stringency, subjectivity or satisfaction) – a higher percentage than that attributable to the student's actual ability (5).

Although standardized patient encounters are commonly employed in the assessment of clinical skills, Holmboe has argued persuasively for continued importance of the role of faculty in assessing a trainee's performance when caring for actual patients in a clinical setting (6).

The lack of effective strategies for making direct observation feasible in a real-life clinical setting is the most frequently cited barrier to direct observation (2, 4, 7, 8). Assessment tools that are flexible enough to be used in dynamic clinical environments, and that can reliably monitor and record a student's competence as part of routine clinical workflow, have become key instructional needs. To address some of these prevailing concerns and shortcomings, we set out to assess the feasibility of an assessment tool, a problem-specific electronic Clinical Evaluation Exercise (eCEX), in monitoring and recording student competencies in our internal medicine clerkship. In addition, we wanted to assess the impact of a grading incentive on the number of directly observed student–patient encounters. We hypothesized that the eCEX would be useful in recording a student's clinical performance and that students and evaluators would identify it as educationally valuable. Our secondary hypothesis was that a grading incentive (defined below) would be associated with an increase in the number of directly observed student–patient interactions.

Methods

Description of the technology

We developed a web-based content management system that enables the delivery of content to mobile devices. The main purpose of creating this system was to enable users with average computing skills to build flexible and customizable computer-based learning packages for distribution to mobile devices via direct internet links or as a downloaded program. Users of the system can create and manage the structure and layout of the content, links to other content within the program, and links to multimedia and external programs. Unlike a computer programming language, this content management system does not require extensive technical expertise to produce web content. It is designed to make content distribution easy, so that content can be viewed and interacted with using Microsoft Windows-based mobile devices and, more recently, the iPhone and iPod Touch. Because the system has all its data stored in a central location, updating and viewing content in real time is possible. The system was built on the Microsoft .NET™ framework, and, because it is web-based, it is widely accessible. We have previously reported our experience with this tool in distributing 19 of the core training problems from the Clerkship Directors in Internal Medicine (CDIM) Curriculum Guide, and with using this tool as a patient-encounter log (9, 10).

We recently equipped this software with a tool for the development of real-time assessment of student competencies related to these 19 CDIM training problems. We built the assessment instrument using a cascading tree structure. After adding the training problem (e.g., abdominal pain) in the root directory, we added the competencies to be assessed (e.g., communication skills, history taking, and physical examination skills) in subdirectories. Within each of the specific competency subdirectories, we added line items (e.g., for abdominal pain history taking we added lines for pain characteristics, associated symptoms, past history of GI disease, family history of GI disease, medications, and social history). Within each line, using an HTML editor, we added the specific items to be assessed (e.g., for abdominal pain→history taking→associated symptoms, added items included weight change, fever, dysuria, and GI-related symptoms such as diarrhea and melena, mostly derived from the CDIM curriculum). When displayed on a mobile device, these items appear as checkboxes. The tool allows the author to define the minimum number of checked items within a line that constitutes a ‘well done’ versus a ‘needs improvement’ performance. Additionally, the tool allows the author to define the minimum number of ‘well done’ lines required for an overall ‘well done’ as opposed to ‘needs improvement.’ This allows the grade to be rendered automatically. The faculty evaluators, however, have the option to override the grade at their discretion.
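The cascading structure and two-level thresholds described above can be illustrated with a minimal Python sketch. This is not the eCEX implementation (which was built on the .NET framework); the class names, the `grade` methods, the threshold values, and the items listed under ‘pain characteristics’ are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set


@dataclass
class Line:
    """One line item (e.g. 'associated symptoms'), rendered as checkboxes."""
    name: str
    items: List[str]
    min_checked: int  # author-defined threshold for a 'well done' line (hypothetical value)

    def grade(self, checked: Set[str]) -> str:
        # Count only the checked boxes that belong to this line.
        n = sum(1 for item in self.items if item in checked)
        return "well done" if n >= self.min_checked else "needs improvement"


@dataclass
class Competency:
    """One competency under a training problem (e.g. 'history taking')."""
    name: str
    lines: List[Line]
    min_well_done_lines: int  # author-defined threshold for the overall grade

    def grade(self, checked_by_line: Dict[str, Set[str]],
              override: Optional[str] = None) -> str:
        # The evaluator may override the automatically rendered grade.
        if override is not None:
            return override
        well_done = sum(
            1 for line in self.lines
            if line.grade(checked_by_line.get(line.name, set())) == "well done"
        )
        if well_done >= self.min_well_done_lines:
            return "well done"
        return "needs improvement"


# Training problem -> competencies -> lines -> items (illustrative content only).
pain = Line("pain characteristics", ["onset", "location", "severity"], min_checked=2)
assoc = Line("associated symptoms",
             ["weight change", "fever", "dysuria", "GI-related symptoms"],
             min_checked=3)
history = Competency("history taking", [pain, assoc], min_well_done_lines=2)
problems = {"abdominal pain": [history]}

# Boxes the evaluator ticked during one observed encounter.
checked = {
    "pain characteristics": {"onset", "location"},
    "associated symptoms": {"fever"},
}
auto_grade = history.grade(checked)
overridden = history.grade(checked, override="well done")
```

In this sketch only one of the two lines reaches its threshold, so the automatic grade is ‘needs improvement’ unless the evaluator overrides it.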

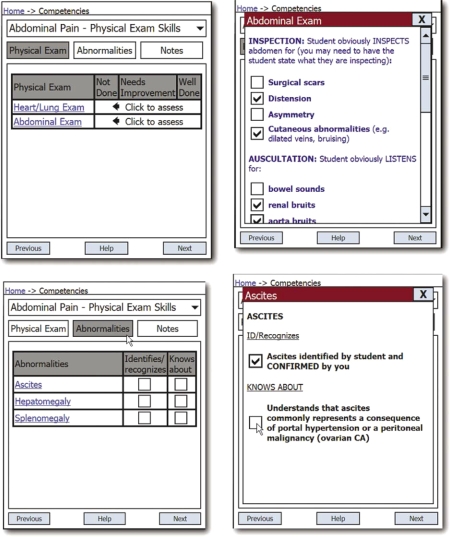

We allowed the students to choose the problem-specific performance objectives on which they wished to be assessed (e.g., the physical examination objectives in a patient with abdominal pain). They then displayed the line items for that objective on their device and handed it to a faculty preceptor or resident. The preceptor subsequently used the checklist to assess the student interacting with a real patient. We have termed this electronic problem-specific clinical evaluation tool the eCEX (Fig. 1).

Fig. 1.

Some screen shots of the eCEX evaluation tool.

After the evaluation, the faculty preceptors electronically signed the assessment record and this record of the students' performance was then stored in a central server for tracking purposes.

Setting

The College of Human Medicine at Michigan State University trains third-year medical students in six communities throughout Michigan. During the academic year 2008–2009, we implemented the eCEX as a required element of the internal medicine clerkship in a convenience sample of 56 students in three of our six community training sites. Fifty-six students in the remaining three training sites did not use the eCEX and constituted the control group.

For the grading incentive, students using the eCEX were to arrange 10 eCEX evaluations with their clinical preceptors as part of the requirements to pass the clerkship. Students chose both the specific competency (e.g., abdominal examination in a patient with abdominal pain) and the specific resident or attending evaluator at their discretion. They were also responsible for orienting and assisting the evaluator in the use of the eCEX software. Students at the control sites were required to complete a single observed complete history and physical examination on a patient chosen for them by the evaluator, in order to pass the clerkship. All additional direct observations in the clerkship were discretionary.

At the end of each eight-week clerkship rotation, all students and preceptors at the eCEX sites were surveyed on the educational utility and technical aspects of the eCEX (Appendices 1 and 2).

Additionally, students in both study groups were surveyed on the number of times they were directly observed by either faculty or residents during their eight weeks on the clerkship. All data were obtained by using the online survey service ‘Survey Monkey’ (www.surveymonkey.com).

The data were downloaded into a spreadsheet and imported into SPSS for statistical analysis. The number of directly observed encounters was analyzed with a one-tailed t-test between the communities using the eCEX and those not using the eCEX, with significance set at a p-value of <0.05. All other data were analyzed with descriptive statistics.

The local Institutional Review Board approved this study.

Results

Student and evaluator electronic Clinical Evaluation Exercise (eCEX) use

All 56 students in the eCEX group successfully uploaded 10 or more completed directly observed encounters. One hundred and twenty-nine faculty evaluators completed at least one eCEX. The average number of eCEX evaluations per faculty evaluator was 4.6 (range = 1–19). Twenty-seven faculty evaluators (20.9%) participated in a single eCEX whereas the remainder performed two or more eCEX evaluations (Table 1).

Table 1.

Student and faculty eCEX use data

| | Site 1 | Site 2 | Site 3 |
|---|---|---|---|
| Average number of evaluators per student per clerkship (range) | 3.7 (1–6) | 3.6 (2–7) | 4 (1–7) |
| Total number of evaluators per community | 46 | 47 | 36 |
| Average number of evaluations per evaluator | 3.5 (±3.6), range 1–19 | 4.9 (±3.7), range 1–14 | 5.6 (±5.2), range 1–19 |
| Percentage of faculty doing 1 CEX | 25 | 17 | 19.4 |
| Percentage of faculty doing >2 CEX | 46 | 62 | 66 |

Note: Summer 2008 through February 2009; 129 separate faculty provided at least one eCEX evaluation.

Students were observed, on average, by 3.6–4 separate faculty evaluators, depending on the community (range = 1–7 evaluators per student).

Survey data on the educational utility and technical aspects of the electronic Clinical Evaluation Exercise (eCEX)

Fifty-three of 56 (91%) eCEX students and 71 of 125 faculty evaluators (55%) responded to an online survey of their perception of the educational utility and technical aspects of the eCEX (Table 2).

Table 2.

Faculty and student survey on the utility of eCEX

| | Faculty (n = 72): strongly agree or agree (%) | Faculty (n = 72): disagree or strongly disagree (%) | Students (n = 53): strongly agree or agree (%) | Students (n = 53): disagree or strongly disagree (%) |
|---|---|---|---|---|
| Understand specific physical examination and history-taking competencies* | 88.4 | 2.9 | 73.6 | 17.0 |
| Technical problems | 1.5 | 88.2 | | |
| Difficulty fitting into work day | 8.6 | 84.2 | | |
| It was easy getting resident to observe | | | 20.7 | 52.8 |
| It was easy getting faculty to observe | | | 11.6 | 69.8 |
| Improved my assessments | 74.7 | 5.6 | | |
| Improved my ability to give feedback | 88.7 | 9.9 | | |
| Time it took me to learn to use the program (min) | 6.3 | | | |
| Time evaluating student (min) | 13.3 | | | |
| Overall usefulness | 95.3 | 1.5 | 43.4 | 28.3 |

Note: Summer 2008 through February 2009; neutral or NA not counted; 56 students used the eCEX, 53 (91%) responded to the survey on the eCEX utility; 70 out of 129 (54%) faculty/residents responded.

*‘Needing to know and demonstrate’ for students, and ‘improved ability to identify the specific competencies needing to be evaluated’ for faculty.

Students and faculty both agreed that the eCEX helped them understand the specific history and physical examination competencies being assessed during the encounters (75.5–88.4% agree/strongly agree). Faculty felt that the eCEX improved their assessments (74.7%) and their ability to give feedback (88.7%). The average time spent by faculty in evaluating a student was 13.3 minutes. Faculty spent an average of 6.3 minutes on learning to use the electronic checklist. They did not perceive accommodating the eCEX into their workday as difficult (84.2%). However, only 11.6–20.7% of the students agreed or strongly agreed that arranging for either residents or faculty to observe them was easy.

Number of directly observed encounters

All 112 students (100%) reported the number of times they were directly observed by a resident or attending. Students in the eCEX group identified residents as observers of their focused history and focused physical examinations an average of 7.7 (±8.3) and 11.5 (±11.3) times, respectively. The corresponding numbers for the control group were 4.6 (±3.9) and 6.9 (±7.4), respectively (p<0.05 for both comparisons). Comparisons of the other measures between the two groups were not statistically significant.
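As a rough arithmetic check on these comparisons, the following Python sketch recomputes a pooled-variance t statistic from the summary figures reported above. It rests on several assumptions that the text does not confirm: that the ± values are standard deviations, that each group contributed 56 respondents, and that a pooled (equal-variance) test was used. The function name `pooled_t` is hypothetical, and the critical value is an approximate table value for a one-tailed test at α = 0.05 with 110 degrees of freedom. It is not a reanalysis of the original data.

```python
import math


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Two-sample t statistic with pooled variance, from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se, df


# Approximate one-tailed critical value for alpha = 0.05, df = 110 (from t tables).
T_CRIT = 1.659

# Resident-observed focused histories: eCEX group vs. control group.
t_history, df = pooled_t(7.7, 8.3, 56, 4.6, 3.9, 56)

# Resident-observed focused physical examinations: eCEX group vs. control group.
t_exam, _ = pooled_t(11.5, 11.3, 56, 6.9, 7.4, 56)

for label, t in [("focused history", t_history), ("focused exam", t_exam)]:
    verdict = "exceeds" if t > T_CRIT else "does not exceed"
    print(f"{label}: t = {t:.2f} (df = {df}), {verdict} the critical value {T_CRIT}")
```

Under these assumptions both statistics come out at roughly 2.5, which is consistent with the reported p<0.05.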

Evaluation of competencies chosen by students

Students were free to choose the specific training problem from our pre-specified list of problems and the competency in which they would be evaluated. All the students chose at least one physical examination competency on which to be evaluated, whereas 20% of the students chose not to be evaluated on communication skills or history taking (Table 3).

Table 3.

eCEX student data

| | Communication skills | History taking | Physical examination |
|---|---|---|---|
| Average number of CEXs per competency domain | 2.1 (±1.8) | 3.4 (±2.1) | 5.6 (±2.7) |
| Percentage of students having no evaluations in the domain | 20.7 | 21.1 | 0 |

Note: The average number of patients that students were directly observed interacting with was 7.8.

Discussion

There are several unique aspects to our study. To our knowledge, this is the first electronic mobile assessment tool linked to problem-specific performance objectives from a nationally recognized curriculum. Additionally, we believe this is the first comparative study assessing an intervention aimed at increasing the number of directly observed student–patient encounters.

A number of studies have noted the difficulty encountered by faculty members in identifying the specific performance criteria upon which medical students should be evaluated. In a study of the implementation of a mini-CEX with 326 medical students, Hill commented that some examiners in the study were unsure of the standard to expect from medical students and problems existed ‘in trying to ensure that everyone was working to the same or similar standards’ (5). In another study of 396 mini-CEX assessments of 176 students, Fernando concluded that faculty evaluators were unsure of the level of performance expected of the learners (11). In a study of 172 family medicine clerks, on the role of direct observation in clerkship ratings, Hasnain suggested that the high level of faculty-rater disagreement concerning clinical performance of students might have been due ‘to the fact that standards for judging clinical competence were not explicit’ (4).

These findings should not be surprising given the nature of nationally recognized medical curricula. For example, the CDIM curriculum used in this study specifies that, in patients with COPD, students should be able to demonstrate the ability to obtain at least 17 specific historical items, demonstrate at least 10 separate physical examination steps, and be able to counsel and encourage patients about smoking cessation, peak flow monitoring, environmental issues, and asthma action plans when indicated. This volume of information makes knowing and remembering what a medical student should be able to do at a particular point in time and with a particular patient in a specific clinical situation very difficult. Therefore, assessing attainment of specific curricular objectives is difficult, if not impossible.

Our results demonstrate that delivering the expected performance criteria just before planned assessments using the eCEX fostered an understanding among faculty evaluators and students of what to expect during the evaluation. Specifically, 88% of faculty agreed that the eCEX helped them understand the physical examination and history competencies that they needed to evaluate, and 74% of students agreed that the eCEX helped them understand the specific history and physical examination competencies that they needed to know and demonstrate. In addition, 75–89% of the faculty respondents felt that the eCEX improved their assessments and their ability to give feedback.

Our second objective was to test whether or not a grading incentive increased the number of directly observed patient-encounters. The use of a grading incentive to accomplish CEX evaluations is not a new concept. Kogan used it as an incentive for students to return their paper-based mini-CEX booklets that were handed out at the beginning of their internal medicine clerkship. However, the incentive was not tied to the number of forms completed, unlike in our study where a grade was given only if the student completed 10 evaluations (8). As in our study, the actual results of the mini-CEX evaluations in Kogan's study were not tied to the final grade.

Using a handheld device-based mini-CEX, Torre required students to complete two electronic mini-CEX evaluations during a two-month clerkship and attained 100% compliance. The presence or absence of a specific grading incentive was not mentioned in this study. Again, the results of the actual CEX evaluations did not impact a student's final clerkship evaluation in Torre's study. Neither Kogan's nor Torre's study used a comparison group to assess the effect of their mini-CEX intervention on the number of directly observed encounters.

In a study of 173 medical students, Fernando noted that only 41% of students met the requirement of three mini-CEX evaluations in their final year of medical school. Fernando speculated that since these assessments were formative and did not count toward the students' final grade, the perceived importance of the mini-CEX may have been diminished (11).

It has been estimated that adequate reliability of the mini-CEX can be achieved with 8–15 encounters (5). Therefore, it is educationally important to ensure that a minimum number of CEX encounters occur. We have previously demonstrated that a grading incentive is associated with a very high rate of compliance with students meeting patient-log requirements (10). In this study, we also demonstrated that a grading incentive was associated with 100% compliance in all 56 students completing 10 eCEX evaluations.

In their study, Fernando et al. commented: ‘Providing formal training (on the use of the mini-CEX) to all clinical staff who are likely to encounter students, or who may be approached by students for assessment, is probably unrealistic’ (11). The results of our study strongly suggest that at least when it comes to the faculty's understanding of what to assess, formal training may not be necessary. Giving students the responsibility to orient faculty to the process and to provide faculty with a detailed electronic checklist just prior to the assessment may be an answer to the dilemma posed by Fernando.

Several limitations, however, need to be kept in mind when interpreting the findings of our study. First, this is a single institution study and the results may not be generalized to other institutions. Second, the three intervention sites in the study were assigned based upon convenience and not via randomization. It is possible that site-specific characteristics, not our intervention, may have explained the results. Third, we did not require our students to complete a full CEX but only a focused history or focused aspect of the physical examination. Thus our results may not be applied to situations in which a full CEX is required. Fourth, although we had an excellent survey response from the students, only 55% of the faculty responded, and those who did not respond may have been less enthusiastic about the value of the eCEX. Fifth, we did not study the reliability of faculty assessments using the eCEX. The use of structured checklists in the assessment of clinical performance is known to at least double the accuracy of trainee assessment by identifying more performance errors (12). However, this improved accuracy did not translate into more negative global assessments. The global assessments in our study were generated automatically, and several of the faculty commented that they appreciated this aspect of the eCEX. We aim to study the inter-rater reliability of the eCEX over the next academic year. Sixth, perhaps our study confirms a self-evident outcome that a grading incentive provides a strong stimulus for students. Although the expectations were quite different for the two groups, students in each group met their respective requirement for directly observed encounters. Given that we assessed two separate interventions – a grading incentive and the eCEX tool – we cannot say with certainty which intervention increased the number of directly observed encounters. 
It is quite possible that the use of the eCEX, and not the grading incentive, stimulated more frequent direct observation. Finally, we do not know how allowing students to select the patient and specific competency assessment in the eCEX group influenced the study's outcome.

We conclude that the use of a problem-specific electronic mobile checklist increased the students' and evaluators' understanding of the specific objectives of the observation and the evaluators' self-reported ability to give feedback and provide assessments. We further conclude that a grading incentive increased the number of times a student reported being observed by a resident physician while performing a focused history and physical examination. Future studies on the inter-rater reliability of the eCEX are planned.

Appendix 1. Faculty evaluator survey questionnaire.

| No. | Item | Response |
|---|---|---|
| 1. | The amount of time I spent evaluating the student was: | _____ |
| 2. | The number of CEX evaluations I personally could see myself doing with students during a 2 week inpatient block is: | ________ |

| No. | Item | Strongly agree | Agree | Neutral | Disagree | Strongly disagree |
|---|---|---|---|---|---|---|
| 3. | I found the eCEX software easy to use | ○ | ○ | ○ | ○ | ○ |
| 4. | I found it difficult to fit the CEX evaluation into my work day | ○ | ○ | ○ | ○ | ○ |
| 5. | Routine use of CEX during the medicine clerkship will help me improve my assessment of medical students' competencies | ○ | ○ | ○ | ○ | ○ |
| 6. | The use of CEX improved my ability to provide feedback to the students whom I evaluated | ○ | ○ | ○ | ○ | ○ |
| 7. | The CEX improved my ability to identify specific physical exam competencies which I needed to evaluate | ○ | ○ | ○ | ○ | ○ |
| 8. | The CEX improved my ability to identify specific history-taking competencies I needed to evaluate | ○ | ○ | ○ | ○ | ○ |
| 9. | I liked the idea of evaluating students by observing multiple short encounters | ○ | ○ | ○ | ○ | ○ |
| 10. | I experienced significant technical problems using the CEX | ○ | ○ | ○ | ○ | ○ |
| 11. | I would recommend that faculty, not the student, specify the specific required observations | ○ | ○ | ○ | ○ | ○ |

| No. | Item | Very useful | Useful | Neutral | Not useful |
|---|---|---|---|---|---|
| 12. | As an evaluator, I would rate the overall educational usefulness of the CEX as: | ○ | ○ | ○ | ○ |

Appendix 2. Student survey questionnaire.

| No. | Item | Very important/Strongly agree | Important/Agree | Neutral | Unimportant/Disagree | Very unimportant/Strongly disagree |
|---|---|---|---|---|---|---|
| 1. | The CEX improved my understanding of specific physical exam competencies I needed to know and demonstrate | ○ | ○ | ○ | ○ | ○ |
| 2. | The CEX improved my understanding of specific history-taking competencies I needed to know and demonstrate | ○ | ○ | ○ | ○ | ○ |
| 3. | I liked the idea of requiring multiple small observations | ○ | ○ | ○ | ○ | ○ |
| 4. | I spent more than 5 minutes in orienting preceptors to the use of the CEX | ○ | ○ | ○ | ○ | ○ |
| 5. | Ten separate observations were too many | ○ | ○ | ○ | ○ | ○ |
| 6. | The students should be allowed to choose the specific required observations (e.g. abdominal pain history) | ○ | ○ | ○ | ○ | ○ |
| 7. | The faculty preceptors should specify/select all of the specific required observations | ○ | ○ | ○ | ○ | ○ |

| No. | Item | Very easy | Easy | Neutral | Difficult | Very difficult |
|---|---|---|---|---|---|---|
| 8. | How easy was it to schedule a resident to observe you? | ○ | ○ | ○ | ○ | ○ |
| 9. | How easy was it to schedule an attending to observe you? | ○ | ○ | ○ | ○ | ○ |
| 10. | How easy was the overall use of the CEX? | ○ | ○ | ○ | ○ | ○ |

- How many times were you directly observed performing (did you directly observe a student performing) a focused physical exam on a patient?

- How many times were you directly observed performing (did you directly observe a student performing) a full physical exam on a patient?

- How many times were you directly observed performing (did you directly observe a student performing) a focused history on a patient?

- How many times were you directly observed performing (did you directly observe a student performing) a full history on a patient?

- How many times were you directly observed (did you directly observe a student) counseling a patient?

Conflict of interest and funding

This study was funded in part by grant 5 D56HP05217 from the Division of Medicine, Bureau of Health Professions, Health Resources and Services Administration.

References

1. LCME. Accreditation Standards [Internet]. Washington, DC: Liaison Committee on Medical Education; 2009 [updated 30 October 2009; cited 7 Dec 2009]. Available from: http://www.lcme.org/functionslist.htm

2. Howley LD, Wilson WG. Direct observation of students during clerkship rotations: a multiyear descriptive study. Acad Med. 2004;79:276–80. doi: 10.1097/00001888-200403000-00017.

3. Pulito AR, Donnelly MB, Plymatle M, Mentzer RM Jr. What do faculty observe of medical students' clinical performance? Teach Learn Med. 2006;18:99–104. doi: 10.1207/s15328015tlm1802_2.

4. Hasnain M, Connell KJ, Downing SM, Olthoff A, Yudkowsky R. Toward meaningful evaluation of clinical competence: the role of direct observation in clerkship ratings. Acad Med. 2004;79:S21–4. doi: 10.1097/00001888-200410001-00007.

5. Hill F, Kendall K, Galbraith K, Crossley J. Implementing the undergraduate mini-CEX: a tailored approach at Southampton University. Med Educ. 2009;43:326–34. doi: 10.1111/j.1365-2923.2008.03275.x.

6. Holmboe ES. Faculty and the observation of trainees' clinical skills: problems and opportunities. Acad Med. 2004;79:16–22. doi: 10.1097/00001888-200401000-00006.

7. Torre DM, Simpson DE, Elnicki DM, Sebastian JL, Holmboe ES. Feasibility, reliability and user satisfaction with a PDA-based mini-CEX to evaluate the clinical skills of third-year medical students. Teach Learn Med. 2007;19:271–7. doi: 10.1080/10401330701366622.

8. Kogan JR, Bellini LM, Shea JA. Feasibility, reliability, and validity of the mini-clinical evaluation exercise (mCEX) in a medicine core clerkship. Acad Med. 2003;78:S33–5. doi: 10.1097/00001888-200310001-00011.

9. Ferenchick G, Fetters GM, Carse AM. Just in time: technology to disseminate curriculum and manage educational requirements with mobile technology. Teach Learn Med. 2008;20:44–52. doi: 10.1080/10401330701542669.

10. Ferenchick G, Mohamand A, Mireles J, Solomon D. Using patient encounter logs for mandated clinical encounters in an internal medicine clerkship. Teach Learn Med. 2009;21:299–304. doi: 10.1080/10401330903228430.

11. Fernando N, Cleland J, McKenzie H, Cassar K. Identifying the factors that determine feedback given to undergraduate medical students following formative mini-CEX assessments. Med Educ. 2008;42:89–95. doi: 10.1111/j.1365-2923.2007.02939.x.

12. Noel GL, Herbers JE, Caplow MP, Cooper GS, Pangaro LN, Harvey J. How well do internal medicine faculty members evaluate the clinical skills of residents? Ann Intern Med. 1992;117:757–65. doi: 10.7326/0003-4819-117-9-757.