Abstract

The computation by which our brain elaborates fast responses to emotional expressions is currently an active field of brain studies. Previous studies have focused on stimuli taken from everyday life. Here, we investigated event-related potentials in response to happy vs neutral expressions displayed by humans and by non-humanoid robots. At the behavioural level, emotion shortened reaction times similarly for robotic and human stimuli. The early P1 wave was enhanced in response to happy compared to neutral expressions for robotic as well as for human stimuli, suggesting that emotion from robots is encoded as early as human emotion expression. Congruent with their lower faceness properties compared to human stimuli, robots elicited a later and lower N170 component than human stimuli. These findings challenge the claim that robots need to present an anthropomorphic aspect to interact with humans. Taken together, such results suggest that the early brain processing of emotional expressions is not bound to human-like arrangements embodying emotion.

Keywords: emotion, ERP, N170, P1, non-humanoid robots

INTRODUCTION

The computation by which our brain elaborates fast responses to emotional expressions is currently an active field of brain studies. Until now, studies have focused on stimuli taken from everyday life, namely facial expressions of emotion, emoticons (also called smileys) created to convey a human state of emotion and instantly taken as such, landscapes or social pictures likely to generate recalls of past emotional events, etc. Less is known, however, concerning brain responses to expressive stimuli that are devoid of humanity and have never been seen before. Non-humanoid robots can help provide this kind of information.

Robots are a potential scaffold for neuroscientific thought, to put it in Ghazanfar and Turesson’s words (Ghazanfar and Turesson, 2008). This comment intends to announce a turn in the exploration of the brain by neuroscientists. More and more is known about the fact that the Mirror Neuron System responds anthropomorphically to robotic movements, when the mechanical motion is closely matched with the biological one (Gazzola et al., 2007; Oberman et al., 2007). Interference between action and observation of a biological motion performed by a humanoid robot suggests similar action effect of robotic and human motor perception (Chaminade et al., 2005), although an artificial motion may require more attention (Chaminade et al., 2007). Electromyographic studies have found that we respond by similar automatic imitation to the photograph of a robot opening or closing hand and to the photograph of a human hand (Chaminade et al., 2005; Press et al., 2005; Bird et al., 2007; Oberman et al., 2007).

In sum, the human brain tends to respond similarly to human and robotic motion. But what about emotion, so deeply anchored in our social brain (Brothers, 1990; Allison et al., 2000)? Does the human brain respond similarly to emotion expressed by a human and by a robot?

To explore this question, we compared event-related potentials (ERPs) evoked by human expressions of emotion with those evoked by emotion-like patterns embodied in non-humanoid robots. The non-humanoid design should prevent the patterns from being taken as conveying mental states of emotion and from recalling emotional reactions already experienced.

Recent brain findings may feed assumptions about whether we can react similarly to robotic and human emotional stimuli. Perceptual processing of facial emotional expressions engages several visual regions involved in constructing perceptual representations of faces as well as activations of subcortical structures such as the amygdala (Adolphs, 2002; Vuilleumier and Pourtois, 2007). Functional MRI studies found greater activations in the occipital and temporal cortex and in the amygdala in response to expressive faces as compared to neutral faces (Breiter et al., 1996; Vuilleumier et al., 2001). Electroencephalographic studies have temporally localized this amplification of activity for emotional content at about 100 ms post-stimulus, with the amplitude of occipital ERP components being higher for emotional than for neutral faces (Pizzagalli et al., 1999; Batty and Taylor, 2003; Pourtois et al., 2005). In non-human primates, intracell recordings found temporal lobe face-selective neurons differentiating emotion vs neutral expression at a latency of 90 ms, and differentiating monkey identity about 70 ms later (Sugase et al., 1999). These delays match the latencies of the P1 and N170 visual components in human EEG studies, with the P1 wave being modulated by emotion, and the N170 wave being mainly modulated by facial configuration and originating in higher-level visual areas selective for face recognition (Bentin et al., 1996; George et al., 1996; Pizzagalli et al., 1999; Batty and Taylor, 2003; Rossion and Jacques, 2008).

The emotional modulation of the P1 has been described in the literature in response to human (Batty and Taylor, 2003) and schematic emotional faces (Eger et al., 2003) and also in response to emotionally arousing stimuli such as body expressions (Meeren et al., 2005), words (Ortigue et al., 2004) or complex visual scenes (Carretie et al., 2004; Alorda et al., 2007). Such perceptual enhancement has been found in response to positive as well as negative eliciting stimuli (Batty and Taylor, 2003; Brosch et al., 2008). The P1 visual component has a field distribution compatible with activation of occipital, visual brain areas, and may be due to several striate and extrastriate generators within the posterior visual cortex (Di Russo et al., 2003; Vanni et al., 2004; Joliot et al., 2009). The posterior activation is likely to be modulated by the amygdala, acting remotely on sensory cortices to amplify visual cortical activity (Amaral et al., 2003), and may as well involve frontal sources (Carretie et al., 2005). Such participation would be related to the top–down regulation of attention designed to enhance perceptual processing of emotionally relevant stimuli. P1 amplitude enhancement in response to emotional stimuli compared to neutral stimuli may reflect a sensory gain for the processing of emotional stimuli. This is congruent with results from fMRI studies that reported emotional amplification in several visual regions of early perceptual processing (e.g. Lang et al., 1998).

Now suppose that we are facing a non-humanoid robot displaying emotional and neutral patterns. We wonder whether the encoding of emotion from such a machine-like set-up may occur as early as P1: will we find a P1 enhancement for the emotional patterns, notwithstanding the fact that the set-up is a complex combination of emotional features and typical components of mechanical devices?

To investigate this question, we created pictures of robotic patterns presenting two contrasted properties: some of the robotic features are similar to inner features of emotion in the human face (mouth, eyes, brows) but a great number of other features are familiar components of machine set-ups (cables, nails, threads, metallic pieces). In such a case, we do not know what the prominent perceptual saliency should be. If we reason by analogy, we could consider that the answer is already given in studies having used schematic faces (Eger et al., 2003; Krombholz et al., 2007). Those studies have shown similar brain responses to the emotional expressions of real faces and of schematic faces. However, our robots are not analogous to schematic faces, at least regarding the criterion of complexity. In schematic faces, only the main invariant of an expression has been selected so as to provide both simpler and prototypical patterns. Conversely, our designs are complex mixtures of a prominent feature of emotion and familiar components of mechanical devices. Schematic faces show much less variance than human faces (Kolassa et al., 2009) or robots. It cannot be taken for granted that the invariant of the robotic emotional expression will be extracted from the whole. Those contrasted properties of our designs allow us to explore whether the brain can spot emotion in complex non-humanoid stimuli.

METHOD

Participants

Fifteen right-handed subjects took part in the experiment (seven women and eight men, mean age 22.1 ± 3.28 years). All had normal or corrected-to-normal vision. All participants provided informed consent to take part in the experiment, which had been previously approved by the local ethical committee.

Stimuli and design

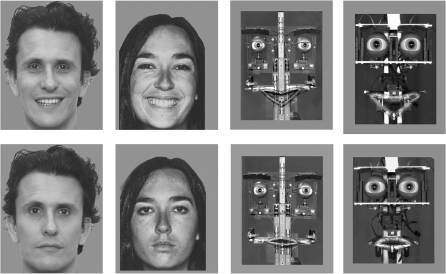

Eight photos of four different robotic set-ups displaying happy or neutral expressions and eight photos from four different human faces displaying happy or neutral expressions were selected (Figure 1). The eight robotic expressions were created following the Facial Action Coding System (FACS) criteria. Each emotional expression was validated by a FACS-certified researcher and a FACS expert. The robotic set-ups from which the pictures were drawn were all inspired by Feelix (Canamero and Fredslund, 2001; Canamero and Gaussier, 2005) and designed by Gaussier and Canet (Canamero and Gaussier, 2005; Nadel et al., 2006). They were composed of a robotic architecture linked to a computer. The eyes, eyebrows, eyelids and mouth were moved by 12 servomechanisms connected to a 12-channel serial servo-controller with independent variable speed. An in-house software was used to generate the emotional patterns and to command the different servo-motors accordingly. At a pre-experimental stage, robotic expressions were recognized by 20 adults as happy or neutral with a mean percent score of 95% for happy and 84% for neutral (Nadel et al., 2006).

Fig. 1.

Examples of the emotional stimuli presented. Human and robotic pictures of happy (up) and neutral (bottom) expressions.

Concerning human pictures, six were drawn from Ekman and Friesen’s classical set of prototypical facial expressions and two were posed by an actor exhibiting the same facial action units as those described in the FACS (Ekman and Friesen, 1976).

All 16 pictures were black and white. They were equalized for global luminance. Pictures were presented in the centre of the computer screen and subtended a vertical visual angle of 7°.
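As an illustration of the 7° vertical visual angle, the sketch below converts a visual angle into an on-screen picture height. The viewing distance is not reported in the text, so the 60 cm used here is purely an assumed value, and the function name `stimulus_size_cm` is hypothetical.

```python
import math

def stimulus_size_cm(visual_angle_deg, viewing_distance_cm):
    """Physical size subtending a given visual angle at a given distance.

    Uses the standard relation size = 2 * d * tan(angle / 2).
    """
    half_angle_rad = math.radians(visual_angle_deg) / 2
    return 2 * viewing_distance_cm * math.tan(half_angle_rad)

# ASSUMPTION: 60 cm viewing distance (not stated in the text).
height_cm = stimulus_size_cm(7.0, 60.0)
print(f"{height_cm:.1f} cm")  # 7.3 cm
```

With a different viewing distance the picture height scales proportionally, so the value above is only an example.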

Behavioural data recording and analysis

Participants were asked to decide whether each picture displayed a neutral or an emotional expression and to press a choice button with either the left or the right hand, in a counterbalanced order. Subjects were asked to react as quickly as possible while avoiding incorrect responses. The participants completed a total of 256 trials, comprising a practice block of 16 trials followed by four blocks of 60 experimental trials, lasting about 20 min. Each block consisted of random combinations of four robotic and four human displays of happy and neutral expressions. Each trial began with the presentation of a fixation cross at the centre of the screen for 100 ms, followed by a picture stimulus for 500 ms. The interval between trials varied randomly between 1000 and 2400 ms.
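The trial structure described above can be sketched as a simple schedule generator. This is only an illustration: the text does not say how pictures were sampled within a block or how the inter-trial interval was distributed, so uniform random sampling of the 16 pictures and a uniform integer interval are assumptions, and all names (`make_block`, `make_session`) are hypothetical.

```python
import random
from itertools import product

FIXATION_MS = 100             # fixation cross duration (from the text)
STIMULUS_MS = 500             # picture duration (from the text)
ITI_RANGE_MS = (1000, 2400)   # inter-trial interval bounds (from the text)
PRACTICE_TRIALS = 16
BLOCKS = 4
TRIALS_PER_BLOCK = 60

# 2 media x 2 emotions x 4 set-ups or faces = 16 pictures
PICTURES = list(product(("robot", "human"), ("happy", "neutral"), range(1, 5)))

def make_block(n_trials, rng):
    """Build one block; ASSUMPTION: pictures and ITIs are drawn uniformly."""
    trials = []
    for _ in range(n_trials):
        media, emotion, exemplar = rng.choice(PICTURES)
        trials.append({
            "media": media,
            "emotion": emotion,
            "exemplar": exemplar,
            "fixation_ms": FIXATION_MS,
            "stimulus_ms": STIMULUS_MS,
            "iti_ms": rng.randint(*ITI_RANGE_MS),  # inclusive bounds
        })
    return trials

def make_session(seed=0):
    """Practice block followed by the four experimental blocks."""
    rng = random.Random(seed)
    blocks = [make_block(PRACTICE_TRIALS, rng)]
    blocks += [make_block(TRIALS_PER_BLOCK, rng) for _ in range(BLOCKS)]
    return blocks

session = make_session()
total_trials = sum(len(block) for block in session)
print(total_trials)  # 256
```

The total of 16 + 4 × 60 = 256 trials matches the count given in the text; everything else about the randomization is a guess.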

Accuracy, measured as the percentage of correct identifications of emotional expression, and response time were analysed separately by a 2 × 2 repeated measures analysis of variance (ANOVA), with media (robot, human) and emotion (happy, neutral) as the repeated measures.
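For a design in which both within-subject factors have two levels, each ANOVA effect has one degree of freedom, and its F(1, n − 1) equals the squared paired t statistic computed on a per-subject contrast score. The sketch below uses that equivalence; it is not the authors' analysis code, the function names are hypothetical, and the four subjects' reaction times are invented solely to make the example run.

```python
from statistics import mean, variance

def contrast_F(scores):
    """F(1, n-1) for a single-df within-subject contrast (paired t squared)."""
    n = len(scores)
    return n * mean(scores) ** 2 / variance(scores)

def rm_anova_2x2(data):
    """data: one dict per subject, keyed by (media, emotion) cell means."""
    media, emotion, interaction = [], [], []
    for s in data:
        rh, rn = s[("robot", "happy")], s[("robot", "neutral")]
        hh, hn = s[("human", "happy")], s[("human", "neutral")]
        media.append((rh + rn) / 2 - (hh + hn) / 2)    # robot minus human
        emotion.append((rh + hh) / 2 - (rn + hn) / 2)  # happy minus neutral
        interaction.append((rh - rn) - (hh - hn))      # difference of differences
    return {
        "media": contrast_F(media),
        "emotion": contrast_F(emotion),
        "media x emotion": contrast_F(interaction),
    }

# INVENTED reaction times (ms) for four hypothetical subjects.
example = [
    {("robot", "happy"): 700, ("robot", "neutral"): 760,
     ("human", "happy"): 680, ("human", "neutral"): 730},
    {("robot", "happy"): 650, ("robot", "neutral"): 690,
     ("human", "happy"): 655, ("human", "neutral"): 700},
    {("robot", "happy"): 720, ("robot", "neutral"): 800,
     ("human", "happy"): 700, ("human", "neutral"): 745},
    {("robot", "happy"): 610, ("robot", "neutral"): 640,
     ("human", "happy"): 600, ("human", "neutral"): 650},
]
f_values = rm_anova_2x2(example)
for effect, f in f_values.items():
    print(f"{effect}: F(1,{len(example) - 1}) = {f:.2f}")
```

This shortcut only holds when every factor has two levels; a factor with more levels needs a full repeated measures ANOVA.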

Electrophysiological recording and analysis

Electroencephalograms (EEGs) were acquired from 62 Ag/AgCl electrodes positioned according to the extended 10–20 system and referenced to the nose. Impedances were kept below 5 kΩ. The horizontal electrooculogram (EOG) was acquired using a bipolar pair of electrodes positioned at the external canthi. The vertical EOG was monitored with supra- and infraorbital electrodes. The EEG and EOG signals were sampled at 500 Hz, filtered online with a bandpass of 0.1–100 Hz and offline with a low pass at 30 Hz using a zero-phase-shift digital filter. Eye blinks were removed offline and all signals with amplitudes exceeding ±100 µV in any given epoch were discarded. Data were epoched from −100 to 500 ms relative to stimulus onset, corrected for baseline over the 100 ms pre-stimulus window, transformed to a common average reference and averaged for each individual in each of the four experimental conditions. Peak amplitudes were analysed at the 19 most parietal–occipital electrode sites for the N170 (P7/8, P5/6, P3/4, P1/2, Pz, PO3/4, PO5/6, PO7/8, POZ, O1/2 and Oz) and the 10 most posterior parietal–occipital sites for the P1 (PO3/4, PO5/6, PO7/8, POZ, O1/2 and Oz). Peak latency was measured for the P1 by locating the most positive deflection within the latency window of 80–120 ms after stimulus onset. The N170 was identified in grand average waveforms as the maximum voltage area within the latency window of 130–190 ms. P1 peak amplitude and latency were analysed separately by a 2 × 2 × 10 repeated measures ANOVA, with media (robot, human), emotion (happy, neutral) and electrodes (10 levels) as intrasubject factors. For the N170, an identical ANOVA was run with the factor electrode involving 19 levels. A Greenhouse–Geisser correction was applied to P-values associated with multiple degrees of freedom repeated measures. Paired t-tests were used for 2 × 2 comparisons.
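The epoch-level steps above (amplitude-based rejection, baseline correction, averaging and peak picking) can be sketched for a single channel as follows. This is a simplified illustration on synthetic data, not the authors' pipeline: filtering, blink removal and re-referencing are omitted, the order of rejection and baseline correction is an assumption, and all function names are hypothetical.

```python
import math

SFREQ_HZ = 500          # sampling rate (from the text)
EPOCH_START_MS = -100   # epoch starts 100 ms before stimulus onset
EPOCH_END_MS = 500
REJECT_UV = 100.0       # rejection threshold of +/-100 microvolts

def ms_to_index(t_ms):
    """Convert a latency relative to onset (ms) into a sample index."""
    return round((t_ms - EPOCH_START_MS) * SFREQ_HZ / 1000)

N_SAMPLES = ms_to_index(EPOCH_END_MS) + 1

def is_clean(epoch):
    """True if no sample exceeds the rejection threshold."""
    return all(abs(v) <= REJECT_UV for v in epoch)

def baseline_correct(epoch):
    """Subtract the mean of the 100 ms pre-stimulus window."""
    n_base = ms_to_index(0)
    base = sum(epoch[:n_base]) / n_base
    return [v - base for v in epoch]

def average(epochs):
    """Sample-by-sample mean across epochs."""
    return [sum(samples) / len(epochs) for samples in zip(*epochs)]

def find_peak(erp, tmin_ms, tmax_ms, polarity=1):
    """Latency (ms) and amplitude of the extreme value in a window.

    polarity=1 finds the most positive point, polarity=-1 the most negative.
    """
    lo, hi = ms_to_index(tmin_ms), ms_to_index(tmax_ms)
    idx = max(range(lo, hi + 1), key=lambda i: polarity * erp[i])
    return EPOCH_START_MS + idx * 1000 / SFREQ_HZ, erp[idx]

def synthetic_epoch(offset_uv, spike_uv=0.0):
    """SYNTHETIC data: an 8 uV bump at 100 ms plus a DC offset and optional artifact."""
    epoch = []
    for i in range(N_SAMPLES):
        t_ms = EPOCH_START_MS + i * 1000 / SFREQ_HZ
        epoch.append(offset_uv + 8.0 * math.exp(-((t_ms - 100.0) / 15.0) ** 2))
    epoch[ms_to_index(300)] += spike_uv
    return epoch

raw = [synthetic_epoch(0.0), synthetic_epoch(5.0),
       synthetic_epoch(-3.0), synthetic_epoch(0.0, spike_uv=150.0)]
clean = [baseline_correct(e) for e in raw if is_clean(e)]
erp = average(clean)
p1_latency_ms, p1_amplitude_uv = find_peak(erp, 80, 120, polarity=1)
print(len(clean), p1_latency_ms, round(p1_amplitude_uv, 2))
```

A negative component such as the N170 could be located the same way with `find_peak(erp, 130, 190, polarity=-1)`.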

RESULTS

Behavioural effects of emotion

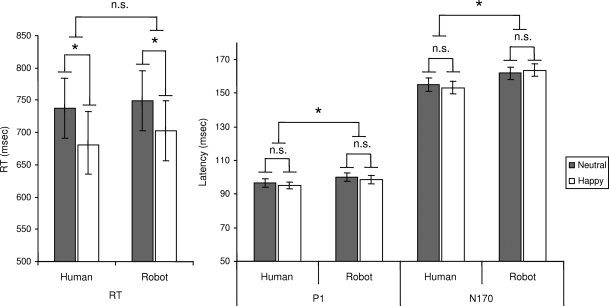

Participants correctly discriminated 94% of the expressions as emotional or neutral, irrespective of the robotic vs human media support, F(1,14) = 0.001, P = 0.97, or emotion, F(1,14) = 2.35, P = 0.15. Mean reaction times (Figure 2) were not significantly affected by media, F(1,14) = 3.43, P = 0.087, while there was a significant effect of emotion, F(1,14) = 10.34, P < 0.001. Responses to happy displays were shorter (692 ± 187 ms) than responses to neutral displays (743 ± 180 ms) independent of media effects, F(1,14) = 2.67, P = 0.52.

Fig. 2.

Temporal responses. Mean reaction times and mean latency values for the P1 and N170 peaks averaged across their analysed electrodes (ms ± S.E.M.). ns p > 0.5; *p < .01.

Early emotion effects on P1 component

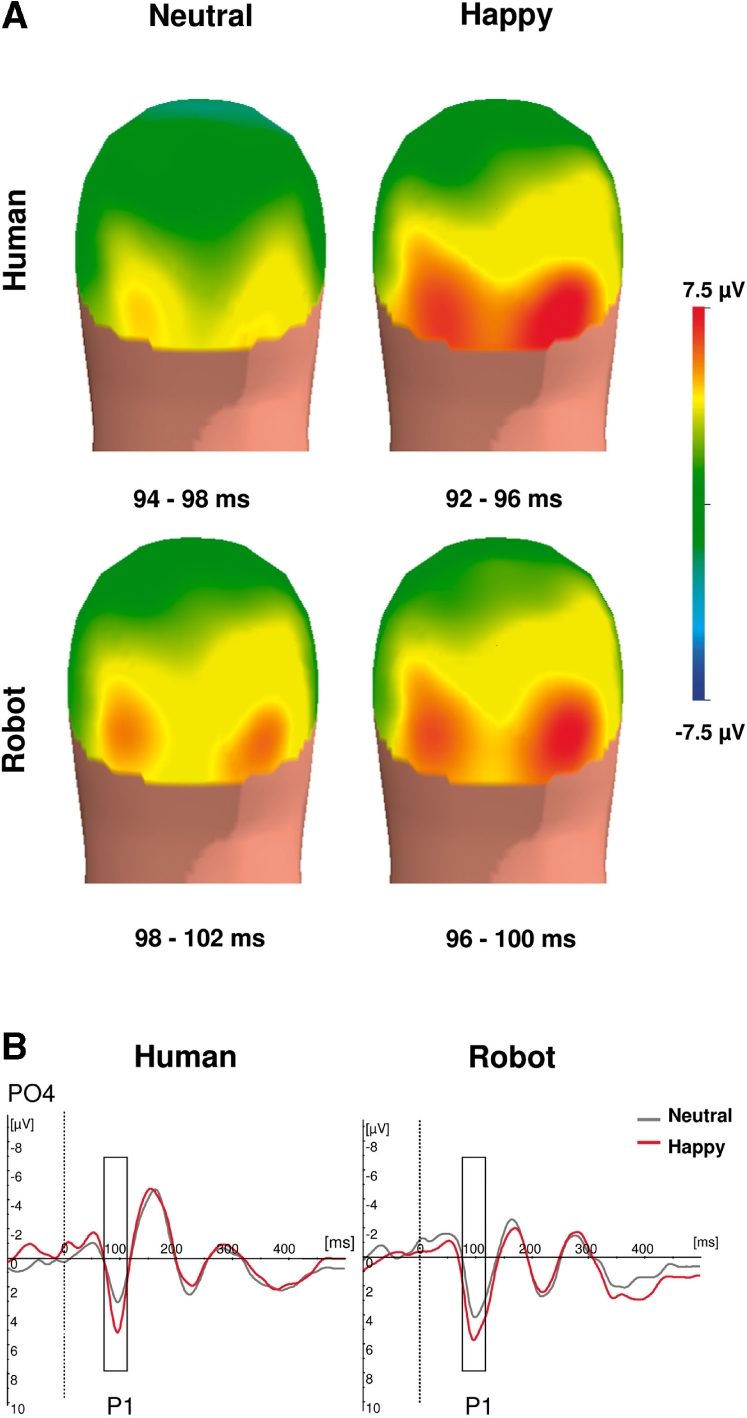

P1 amplitude (Figure 3) was higher for happy than for neutral expressions, F(1,14) = 25.68, P < 0.001. Mean amplitude was, respectively, 7.7 ± 3.36 µV for happy and 6.17 ± 3.25 µV for neutral. Separate analysis within each media showed that P1 emotion effect was significant for human [F(1,14) = 16.65, P < 0.001] as well as for robots [F(1,14) = 15.82, P < 0.001]. P1 amplitude was also affected by human vs robotic media, F(1,14) = 4.68, P < 0.05, with P1 responses being greater for robotic expressions (7.54 ± 3.1 µV) than for human expressions (6.33 ± 3.6 µV). There was no interaction between emotion and media, F(1,14) = 2.34, P = 0.14. P1 latency was shorter in response to human than to robotic expressions, F(1,14) = 9.018, P < 0.01. The P1 peak culminated parieto–occipitally at 97 ± 2.2 ms in response to human expressions, and at 101 ± 2.5 ms in response to robotic expressions. P1 latency did not vary with emotion, F(1,14) = 2.29, P = 0.15, nor did it interact with media, F(1,14) = 0.07, P = 0.93.

Fig. 3.

Emotion effect on the P1. (A) Back view of the grand average spline-interpolated topographical distribution of the ERP in the latency range of P1 according to media (human vs robot) and expression (happy vs neutral). (B) Grand averaged ERP response at PO4 for neutral (black) and happy (red) expressions of human (left) and robotic (right) displays.

Media effect on N170 component

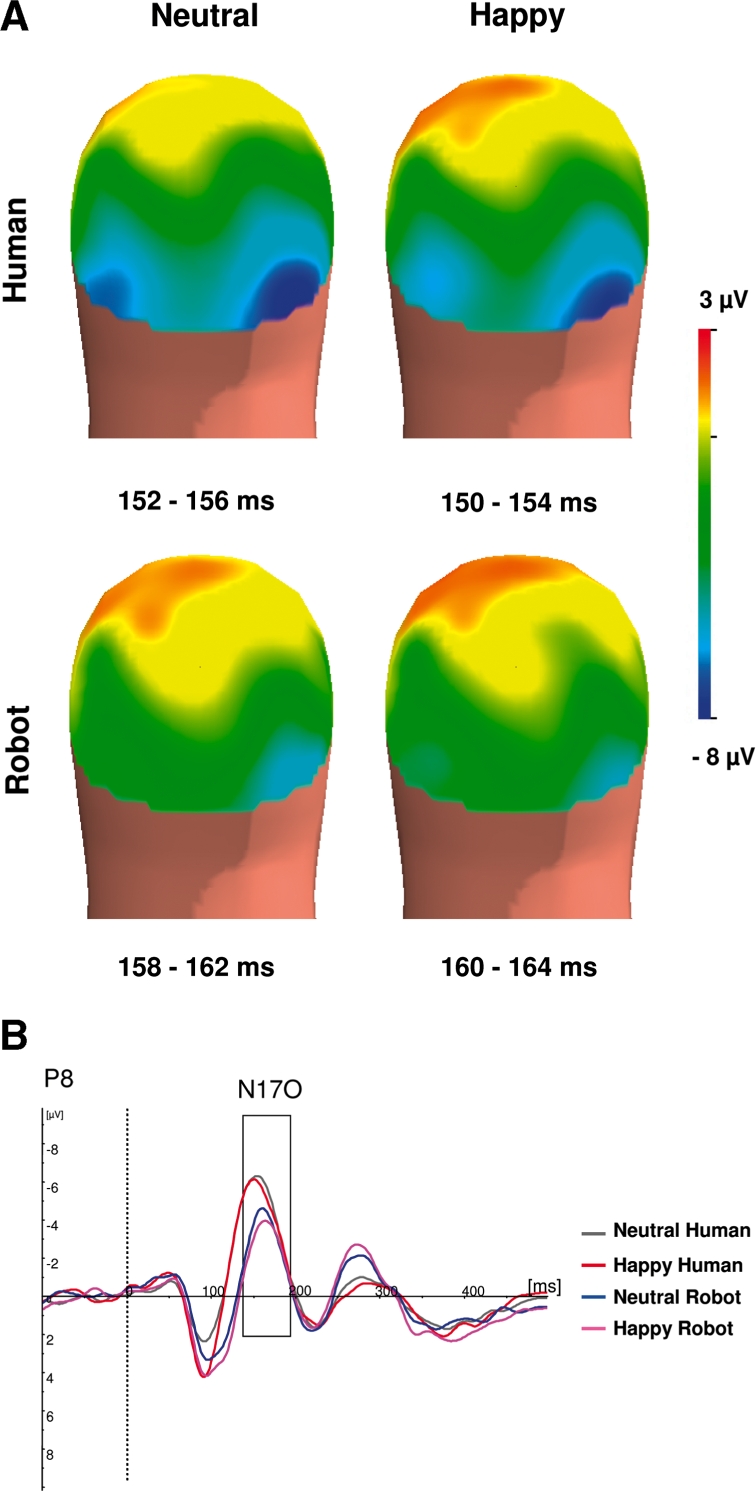

Media affected N170 latency and amplitude (Figure 4), with N170 latency being earlier for human than for robotic media, F(1,14) = 13.81, P < .01. N170 culminated temporo–occipitally at 154 ± 3.5 ms for human expressions and at 162 ± 3.8 ms for robotic expressions.

Fig. 4.

Media effect on the N170. (A) Back view of the grand average spline-interpolated topographical distribution of the ERP in the latency range of N170 according to media (human vs robot) and emotion (happy vs neutral). (B) Grand averaged ERP responses at P8 for neutral human (grey), happy human (red), neutral robotic (blue) and happy robotic (pink) stimuli.

There was a significant media effect on N170 amplitude, F(1,14) = 56.33, P < 0.0001. N170 was higher for human expressions (7.1 ± 3.71 µV) than for robotic ones (4.26 ± 3.5 µV).

There was no effect of emotion on N170 latency, F(1,14) = 0.09, P = 0.75, or amplitude, F(1,14) = 1.51, P = 0.225, and no interaction between expression and media for latency, F(1,14) = 1.15, P = 0.45, or amplitude, F(1,14) = 1.59, P = 0.23.

An electrode effect on N170 latency, F(18,252) = 3.73, P < 0.001, ε = 0.006, and amplitude, F(18,252) = 4.95, P < 0.0001, ε = 0.005, reflected larger amplitudes and earlier latencies to lateral than midline sites. Such effect supported the customary parietal–lateral distribution of the N170.

DISCUSSION

We compared ERP and behavioural responses to emotion elicited by robotic and human displays. The temporal dynamics of early brain electrical responses showed two successive visual components: an occipital P1 component amplitude that was modulated by emotion in response to robotic stimuli as well as to human stimuli and a temporo–occipital N170 component differentiating brain responses to robotic vs human stimuli.

These results are congruent with previous findings concerning human emotional expression. An enhancement of the P1 for emotional content has been shown in emotional detection tasks (Pizzagalli et al., 1999; Fichtenholtz et al., 2007) and also in studies exploring the impact of emotion on attentional orientation (Pourtois et al., 2005; Brosch et al., 2008). Such findings suggest that P1 enhancement may reflect an increased attention towards motivationally relevant stimuli during early brain processing.

Within the perspective of natural selective attention, perceptual encoding is proposed to be in part directed by underlying motivational systems of approach and avoidance (Lang et al., 1997; Schupp et al., 2006): Certain kinds of stimuli trigger selective attention due to their biological meaning. Emotional cues would guide visual attention and receive privileged processing that would in turn enhance the saliency of emotional stimuli (Adolphs, 2004; Rey et al., 2010). This hypothesis is supported by EEG and fMRI findings of higher perceptual activations in visual regions for emotional stimuli compared to neutral ones, when using human faces or face representations such as schematic faces (Vuilleumier et al., 2001; Eger et al., 2003; Britton et al., 2008). In this context, recent findings point out that the P1 would reflect a more general form of early attentional orienting based on other stimulus features such as salience (Taylor, 2002; Briggs and Martin, 2008). For example, P1 amplitude is enhanced in response to images of upright faces compared to inverted faces (Taylor, 2002).

This interpretation fits well with the fact that, at the level of perceptual encoding, affective properties may be confounded with physical properties of the stimulus (Keil et al., 2007). The initial sensory response in the visual cortex is sensitive to simple features associated with emotionality (Pourtois et al., 2005; Keil et al., 2007). The fact that simple emotional features are likely to modulate the P1 component may explain why happy robotic patterns elicited a higher P1 than neutral robotic ones. Indeed, the difference between the neutral and happy robotic displays was the lip pattern.

The detection of happy faces is especially dependent on the mouth region. Without the mouth region, detection times for happy faces are longer (Calvo et al., 2008), and the lower section of the face including the mouth is sufficient to accurately recognize happiness (Calder et al., 2000). The mouth region provides the most important diagnostic feature for the recognition or identification of happy facial expressions (Adolphs, 2002; Leppanen and Hietanen, 2007; Schyns et al., 2007). Our findings suggest that an upward lip pattern is not only sufficient for a face to be classified as happy but also sufficient for the emotional feature to be picked up and processed selectively among a mixed combination of emotional and non-emotional components, at the perceptual level of information processing, as early as 90 ms post-stimulus. In our robots, the upward lip pattern may be the salient element rather than the metallic piece beneath the mouth or the cables suspended behind the eye.

Behavioural results are congruent with such a facilitated detection of happy stimuli. Happy stimuli elicited quicker reaction times than neutral stimuli, for robot as well as for human stimuli. Such facilitation is in line with the literature of behavioural studies using human emotional faces as stimuli. Concerning positive emotional stimuli in particular, studies using dot-probe tasks have found greater attentiveness for happy faces (Brosch et al., 2008; Unkelbach et al., 2008). Happy faces, including schematic ones, are identified more accurately and more quickly than any other faces (Leppanen and Hietanen, 2004; Palermo and Coltheart, 2004; Juth et al., 2005). The absence of a faster detection of happy faces in a few studies (Ohman et al., 2001; Juth et al., 2005; Schubo et al., 2006) may be accounted for by a saliency effect, as measured by Calvo et al. (2008).

An increased saliency in the mouth region of happy robots may explain the faster categorization of happy robots as emotional. Stimulus categorization can actually be quickened by salient features that would be detected without requiring a complete visual analysis of the stimulus (Fabre-Thorpe et al., 2001), leading to shortened reaction times to detect target cues. Indeed, rapid recognition of happy faces may be achieved by using the smile as a shortcut for categorizing facial expressions (Leppanen and Hietanen, 2007).

Detecting emotionally significant stimuli in the environment might be an obligatory task of the organism. Emotional detection apparently does not habituate as a function of passive exposure, and exists in passive situations as well as in categorization tasks (Schupp et al., 2006; Bradley, 2009). Early posterior cortical activity in the P1 temporal window has been shown to be enhanced by emotional cues in tasks where emotional cues were either target (instructed attention) or non-target cues (motivated attention). For example, Schupp et al. (2007) found an early emotional occipital effect both when picture categories served as the target category and when they were passively displayed in separate runs.

Cortical indices of selective attention due to implicit emotional and explicit task-defined significance do not differ at the level of perceptual encoding. The same conclusion comes from fMRI studies: as presumed by Bradley et al. (2003), the attentional engagement prompted by motivationally relevant stimuli involves the same neural circuits as those that modulate attentional resource allocation more broadly, e.g. by task or instructional requirements. To summarize, P1 amplitude may be enhanced both by voluntary allocation of attentional resources and by the automatic capture of attention by motivationally relevant stimuli. Our findings show that such perceptual enhancement for emotional stimuli extends to complex patterns mixing emotional features and typical components of mechanical devices.

Our robotic results for P1 and N170 are consistent with differences between human and non-human processing such as those evidenced in Moser et al.’s (2007) fMRI study. Emotional computerized avatar faces elicited an amygdala activation together with less activity in several visual regions selective for face processing, compared to human faces (Moser et al., 2007). N170 reflects high-level visual processing of faces, namely structural encoding (Bentin et al., 1996). This component is typically higher in response to faces, including schematic ones (Sagiv and Bentin, 2001), than to any other category of objects, such as animals or cars (Carmel and Bentin, 2002; George et al., 1996). Accordingly, robotic pictures elicited a smaller and delayed N170 compared to human pictures. Insofar as the face configuration of a stimulus accounts for the N170 amplitude, a smaller N170 is coherent with the properties of our robotic displays: they had no contour, no nose, no chin, no cheeks, and the emotional features, though prototypical ones, were made of or combined with metallic pieces and cables (Nadel et al., 2006).

Physical characteristics of the non-humanoid designs may also explain why robotic stimuli elicited a higher and delayed P1 compared to human stimuli. P1 differences between robot and human stimuli may involve low-level features. P1 amplitude has indeed also been found to be sensitive to low-level features including complexity (Bradley et al., 2007).

The human visual system is sensitive to expressive displays of complex non-humanoid mechanical set-ups as early as it is to human expressions, even though, as the N170 shows, these set-ups are not visually encoded strictly like faces. Thus, our data do not support the hypothesis that humans are predisposed to react emotionally to facial stimuli by biologically given affect programs (Dimberg et al., 1997). They are, however, compatible with another evolutionary perspective proposing that our modern brain still obeys ‘ancestral priorities’ (New et al., 2007). It is argued that human attention evolved to reliably develop certain category-specific selection criteria including some semantic categories based on prioritization. Emotional patterns should be part of those semantic categories producing an attention bias, insofar as they are major informative signals for survival and for social relationships. Once selected, such patterns might have come to be progressively disembodied from faces and become a signal of their own. A key function of emotion is to communicate simplified but high impact information (Arbib and Fellous, 2004). The P1 response to non-humanoid robots suggests that high impact information can be drawn from mixed displays where the emotional invariant has to be selected among other salient physical features.

Two main consequences of such a prioritization deserve emphasis. First, it could be of interest for roboticists to bear in mind that human-like entities might not be the only way to develop social robots: if our brain responds similarly to non-anthropomorphic emotional signals, is there a need to design expressive agents that share our morphology? Are anthropomorphic signals of emotion a necessary ingredient for robot-based therapy of emotional impairments?

Secondly, our results invite speculation about the evolution of the modern brain regarding emotion. Following the Darwinian thesis (Darwin, 1872), a cognitive evolutionary perspective describes the continued heavy use of facial expressions by modern humans, even while possessing a tremendously powerful language, as a vestige of the early adaptation of Homo (Donald, 1991). Instead, this vestige may be thought of as an easy vehicle for a flexible modern brain capable of processing ‘undressed emotion’.

FUNDING

This work was funded by an EU Program, Feelix Growing (IST-045169).

Acknowledgments

We are very grateful to R. Soussignan, FACS certified, and to H. Oster, FACS expert, for their selection of human and robotic expressions according to FACS criteria. We thank P. Canet and M. Simon, Emotion Centre, for their help in designing robotic expressions.

REFERENCES

- Adolphs R. Recognizing Emotion from Facial Expressions: Psychological and Neurological Mechanisms. Behavioral and Cognitive Neuroscience Reviews. 2002;1:21–62. doi: 10.1177/1534582302001001003.

- Adolphs R. Emotional vision. Nature Neuroscience. 2004;7:1167–8. doi: 10.1038/nn1104-1167.

- Allison T, Puce A, McCarthy G. Social perception from visual cues: role of the STS region. Trends In Cognitive Sciences. 2000;4:267–78. doi: 10.1016/s1364-6613(00)01501-1.

- Alorda C, Serrano-Pedraza I, Campos-Bueno JJ, Sierra-Vazquez V, Montoya P. Low spatial frequency filtering modulates early brain processing of affective complex pictures. Neuropsychologia. 2007;45:3223–33. doi: 10.1016/j.neuropsychologia.2007.06.017.

- Amaral DG, Behniea H, Kelly JL. Topographic organization of projections from the amygdala to the visual cortex in the macaque monkey. Neuroscience. 2003;118:1099–120. doi: 10.1016/s0306-4522(02)01001-1.

- Arbib MA, Fellous JM. Emotions: from brain to robot. Trends In Cognitive Sciences. 2004;8:554–61. doi: 10.1016/j.tics.2004.10.004.

- Batty M, Taylor MJ. Early processing of the six basic facial emotional expressions. Cognitive Brain Research. 2003;17:613–20. doi: 10.1016/s0926-6410(03)00174-5.

- Bentin S, McCarthy G, Perez E, Puce A, Allison T. Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience. 1996;8:551–65. doi: 10.1162/jocn.1996.8.6.551.

- Bird G, Leighton J, Press C, Heyes C. Intact automatic imitation of human and robot actions in autism spectrum disorders. Proceedings of the Royal Society B. 2007;274:3027–31. doi: 10.1098/rspb.2007.1019.

- Bradley MM. Natural selective attention: orienting and emotion. Psychophysiology. 2009;46:1–11. doi: 10.1111/j.1469-8986.2008.00702.x.

- Bradley MM, Hamby S, Low A, Lang PJ. Brain potentials in perception: picture complexity and emotional arousal. Psychophysiology. 2007;44:364–73. doi: 10.1111/j.1469-8986.2007.00520.x.

- Bradley MM, Sabatinelli D, Lang PJ, Fitzsimmons JR, King W, Desai P. Activation of the visual cortex in motivated attention. Behavioral Neuroscience. 2003;117:369–80. doi: 10.1037/0735-7044.117.2.369.

- Breiter HC, Etcoff NL, Whalen PJ, et al. Response and habituation of the human amygdala during visual processing of facial expression. Neuron. 1996;17:875–87. doi: 10.1016/s0896-6273(00)80219-6.

- Briggs KE, Martin FH. Target processing is facilitated by motivationally relevant cues. Biological Psychology. 2008;78:29–42. doi: 10.1016/j.biopsycho.2007.12.007.

- Britton JC, Shin LM, Barrett LF, Rauch SL, Wright CI. Amygdala and fusiform gyrus temporal dynamics: responses to negative facial expressions. BMC Neuroscience. 2008;9:44. doi: 10.1186/1471-2202-9-44.

- Brosch T, Sander D, Pourtois G, Scherer KR. Beyond fear: rapid spatial orienting toward positive emotional stimuli. Psychological Science. 2008;19:362–70. doi: 10.1111/j.1467-9280.2008.02094.x.

- Brothers L. The social brain: a project for integrating primate behavior and neurophysiology in a new domain. Concepts in Neuroscience. 1990;1:27–51.

- Calder AJ, Young AW, Keane J, Dean M. Configural information in facial expression perception. Journal of Experimental Psychology: Human Perception and Performance. 2000;26:527–51. doi: 10.1037//0096-1523.26.2.527.

- Calvo MG, Nummenmaa L, Avero P. Visual search of emotional faces: eye-movement assessment of component processes. Experimental Psychology. 2008;55:359–70. doi: 10.1027/1618-3169.55.6.359.

- Canamero L, Fredslund J. I show you how I like you—Can you read it in my face? IEEE Transactions On Systems Man And Cybernetics. 2001;31:454–59.

- Canamero L, Gaussier P. Emotion understanding: robots as tools and models. In: Nadel J, Muir D, editors. Emotional Development. London: Oxford University Press; 2005. pp. 234–258.

- Carmel D, Bentin S. Domain specificity versus expertise: factors influencing distinct processing of faces. Cognition. 2002;83:1–29. doi: 10.1016/s0010-0277(01)00162-7.

- Carretie L, Hinojosa JA, Martin-Loeches M, Mercado F, Tapia M. Automatic attention to emotional stimuli: neural correlates. Human Brain Mapping. 2004;22:290–99. doi: 10.1002/hbm.20037.

- Carretie L, Hinojosa JA, Mercado F, Tapia M. Cortical response to subjectively unconscious danger. Neuroimage. 2005;24:615–23. doi: 10.1016/j.neuroimage.2004.09.009.

- Chaminade T, Franklin DW, Oztop E, Cheng G. Motor interference between humans and humanoid robots: effect of biological and artificial motion. IEEE International Conference on Development and Learning. 2005:96–101.

- Chaminade T, Hodgins J, Kawato M. Anthropomorphism influences perception of computer-animated characters’ actions. Social Cognitive and Affective Neuroscience. 2007;2:206–16. doi: 10.1093/scan/nsm017.

- Darwin C. The expression of the emotions in man and animals. London: Murray; 1872.

- Di Russo F, Martinez A, Hillyard SA. Source analysis of event-related cortical activity during visuo-spatial attention. Cerebral Cortex. 2003;13:486–99. doi: 10.1093/cercor/13.5.486.

- Dimberg U, Thunberg M, Elmehed K. Unconscious facial reactions to emotional facial expressions. Psychological Science. 1997;11:86–9. doi: 10.1111/1467-9280.00221.

- Donald M. Origins of the Modern Mind: three stages in the evolution of culture and cognition. Cambridge, MA: Harvard University Press; 1991.

- Eger E, Jedynak A, Iwaki T, Skrandies W. Rapid extraction of emotional expression: evidence from evoked potential fields during brief presentation of face stimuli. Neuropsychologia. 2003;41:808–17. doi: 10.1016/s0028-3932(02)00287-7.

- Ekman P, Friesen WV. Pictures of facial affect. Palo Alto: Consulting Psychologists Press; 1976.

- Fabre-Thorpe M, Delorme A, Marlot C, Thorpe S. A limit to the speed of processing in ultra-rapid visual categorization of novel natural scenes. Journal of Cognitive Neuroscience. 2001;13:171–80. doi: 10.1162/089892901564234.

- Fichtenholtz HM, Hopfinger JB, Graham R, Detwiler JM, Labar KS. Happy and fearful emotion in cues and targets modulate event-related potential indices of gaze-directed attentional orienting. Social Cognitive and Affective Neuroscience. 2007;2:323–33. doi: 10.1093/scan/nsm026.

- Gazzola V, Rizzolatti G, Wicker B, Keysers C. The anthropomorphic brain: the mirror neuron system responds to human and robotic actions. Neuroimage. 2007;35:1674–84. doi: 10.1016/j.neuroimage.2007.02.003.

- George N, Evans J, Fiori N, Davidoff J, Renault B. Brain events related to normal and moderately scrambled faces. Cognitive Brain Research. 1996;4:65–76. doi: 10.1016/0926-6410(95)00045-3.

- Ghazanfar AA, Turesson HK. How robots will teach us how the brain works. Nature Neuroscience. 2008;11:3.

- Joliot M, Leroux G, Dubal S, et al. Cognitive inhibition of number/length interference in a Piaget-like task: evidence by combining ERP and MEG. Clinical Neurophysiology. 2009;120:1501–13. doi: 10.1016/j.clinph.2009.06.003.

- Juth P, Lundqvist D, Karlsson A, Ohman A. Looking for foes and friends: perceptual and emotional factors when finding a face in the crowd. Emotion. 2005;5:379–95. doi: 10.1037/1528-3542.5.4.379.

- Keil A, Sabatinelli D, Ding M, Lang PJ, Ihssen N, Heim S. Re-entrant projections modulate visual cortex in affective perception: evidence from Granger causality analysis. Human Brain Mapping. 2007;30:532–40. doi: 10.1002/hbm.20521.

- Kolassa IT, Kolassa S, Bergman S, et al. Interpretive bias in social phobia: An ERP study with morphed emotional schematic faces. Cognition and Emotion. 2009;23:69–95.

- Krombholz A, Schaefer F, Boucsein W. Modification of N170 by different emotional expression of schematic faces. Biological Psychology. 2007;76:156–62. doi: 10.1016/j.biopsycho.2007.07.004.

- Lang PJ, Bradley MM, Cuthbert BN. Motivated attention: affect, activation and action. In: Lang PJ, Simons RF, Balaban M, editors. Attention and Emotion: sensory and motivational processes. Mahwah, NJ: Erlbaum; 1997. pp. 97–135.

- Lang PJ, Bradley MM, Fitzsimmons JR, et al. Emotional arousal and activation of the visual cortex: an fMRI analysis. Psychophysiology. 1998;35:199–210.

- Leppanen JM, Hietanen JK. Positive facial expressions are recognized faster than negative facial expressions, but why? Psychological Research. 2004;69:22–9. doi: 10.1007/s00426-003-0157-2.

- Leppanen JM, Hietanen JK. Is there more in a happy face than just a big smile? Visual Cognition. 2007;15:468–90.

- Meeren HK, van Heijnsbergen CC, de Gelder B. Rapid perceptual integration of facial expression and emotional body language. Proceedings of the National Academy of Sciences, U.S.A. 2005;102:16518–23. doi: 10.1073/pnas.0507650102.

- Moser E, Derntl B, Robinson S, Fink B, Gur RC, Grammer K. Amygdala activation at 3T in response to human and avatar facial expressions of emotions. Journal of Neuroscience Methods. 2007;161:126–33. doi: 10.1016/j.jneumeth.2006.10.016.

- Nadel J, Simon M, Canet P, et al. Human response to an expressive robot. Epigenetic Robotics. 2006;06:79–86.

- New J, Cosmides L, Tooby J. Category-specific attention for animals reflects ancestral priorities, not expertise. Proceedings of the National Academy of Sciences, U.S.A. 2007;104:16598–603. doi: 10.1073/pnas.0703913104.

- Oberman LM, McCleery JP, Ramachandran VS, Pineda JA. EEG evidence for mirror neuron activity during the observation of human and robot actions: Toward an analysis of the human qualities of interactive robots. Neurocomputing. 2007;70:2194–203.

- Ohman A, Lundqvist D, Esteves F. The face in the crowd revisited: a threat advantage with schematic stimuli. Journal of Personality and Social Psychology. 2001;80:381–96. doi: 10.1037/0022-3514.80.3.381.

- Ortigue S, Michel CM, Murray MM, Mohr C, Carbonnel S, Landis T. Electrical neuroimaging reveals early generator modulation to emotional words. Neuroimage. 2004;21:1242–51. doi: 10.1016/j.neuroimage.2003.11.007.

- Palermo R, Coltheart M. Photographs of facial expression: accuracy, response times, and ratings of intensity. Behavior Research Methods, Instruments, & Computers. 2004;36:634–8. doi: 10.3758/bf03206544.

- Pizzagalli D, Regard M, Lehmann D. Rapid emotional face processing in the human right and left brain hemispheres: an ERP study. Neuroreport. 1999;10:2691–98. doi: 10.1097/00001756-199909090-00001.

- Pourtois G, Thut G, Grave de Peralta R, Michel C, Vuilleumier P. Two electrophysiological stages of spatial orienting towards fearful faces: early temporo-parietal activation preceding gain control in extrastriate visual cortex. Neuroimage. 2005;26:149–63. doi: 10.1016/j.neuroimage.2005.01.015.

- Press C, Bird G, Flach R, Heyes C. Robotic movement elicits automatic imitation. Cognitive Brain Research. 2005;25:632–40. doi: 10.1016/j.cogbrainres.2005.08.020.

- Rey G, Knoblauch K, Prevost M, Komano O, Jouvent R, Dubal S. Visual modulation of pleasure in subjects with physical and social anhedonia. Psychiatry Research. 2010 (in press). doi: 10.1016/j.psychres.2008.11.015.

- Rossion B, Jacques C. Does physical interstimulus variance account for early electrophysiological face sensitive responses in the human brain? Ten lessons on the N170. Neuroimage. 2008;39:1959–79. doi: 10.1016/j.neuroimage.2007.10.011.

- Sagiv N, Bentin S. Structural encoding of human and schematic faces: holistic and part-based processes. Journal of Cognitive Neuroscience. 2001;13:937–51. doi: 10.1162/089892901753165854.

- Schubo A, Gendolla GH, Meinecke C, Abele AE. Detecting emotional faces and features in a visual search paradigm: are faces special? Emotion. 2006;6:246–56. doi: 10.1037/1528-3542.6.2.246.

- Schupp HT, Flaisch T, Stockburger J, Junghofer M. Emotion and attention: event-related brain potential studies. Progress in Brain Research. 2006;156:31–51. doi: 10.1016/S0079-6123(06)56002-9.

- Schupp HT, Stockburger J, Codispoti M, Junghofer M, Weike AI, Hamm AO. Selective visual attention to emotion. Journal of Neuroscience. 2007;27:1082–9. doi: 10.1523/JNEUROSCI.3223-06.2007.

- Schyns PG, Petro LS, Smith ML. Dynamics of visual information integration in the brain for categorizing facial expressions. Current Biology. 2007;17:1580–5. doi: 10.1016/j.cub.2007.08.048.

- Sugase Y, Yamane S, Ueno S, Kawano K. Global and fine information coded by single neurons in the temporal visual cortex. Nature. 1999;400:869–73. doi: 10.1038/23703.

- Taylor MJ. Non-spatial attentional effects on P1. Clinical Neurophysiology. 2002;113:1903–8. doi: 10.1016/s1388-2457(02)00309-7.

- Unkelbach C, Fiedler K, Bayer M, Stegmuller M, Danner D. Why positive information is processed faster: the density hypothesis. Journal of Personality and Social Psychology. 2008;95:36–49. doi: 10.1037/0022-3514.95.1.36.

- Vanni S, Warnking J, Dojat M, Delon-Martin C, Bullier J, Segebarth C. Sequence of pattern onset responses in the human visual areas: an fMRI constrained VEP source analysis. Neuroimage. 2004;21:801–17. doi: 10.1016/j.neuroimage.2003.10.047.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ. Effects of attention and emotion on face processing in the human brain: an event-related fMRI study. Neuron. 2001;30:829–41. doi: 10.1016/s0896-6273(01)00328-2.

- Vuilleumier P, Pourtois G. Distributed and interactive brain mechanisms during emotion face perception: evidence from functional neuroimaging. Neuropsychologia. 2007;45:174–94. doi: 10.1016/j.neuropsychologia.2006.06.003.