Abstract

Biological information processing is often carried out by complex networks of interconnected dynamical units. A basic question about such networks is that of reliability: if the same signal is presented many times with the network in different initial states, will the system entrain to the signal in a repeatable way? Reliability is of particular interest in neuroscience, where large, complex networks of excitatory and inhibitory cells are ubiquitous. These networks are known to autonomously produce strongly chaotic dynamics — an obvious threat to reliability. Here, we show that such chaos persists in the presence of weak and strong stimuli, but that even in the presence of chaos, intermittent periods of highly reliable spiking often coexist with unreliable activity. We elucidate the local dynamical mechanisms involved in this intermittent reliability, and investigate the relationship between this phenomenon and certain time-dependent attractors arising from the dynamics. A conclusion is that chaotic dynamics do not have to be an obstacle to precise spike responses, a fact with implications for signal coding in large networks.

INTRODUCTION

Information processing by complex networks of interconnected dynamical units occurs in biological systems on a range of scales, from intracellular genetic circuits to nervous systems [1, 2]. In any such system, a basic question is the reliability of the system, i.e., the reproducibility of its output when it is presented with the same driving signal but starts from different initial states. This question matters because the degree to which a network is reliable constrains how (and possibly how much) information can be encoded in the network's dynamics. Reliability is of particular interest in computational neuroscience, where the degree of a network's reliability determines the precision (or lack thereof) with which it maps sensory and internal stimuli onto temporal spike patterns. Analogous phenomena arise in a variety of physical and engineered systems, including coupled lasers [3] (where it is known as "consistency") and "generalized synchronization" of coupled chaotic systems [4].

The phenomenon of reliability is closely related to questions of dynamical stability, and in general whether a network is reliable reflects a combination of factors, including the dynamics of its components, its overall architecture, and the type of stimulus it receives [5]. Understanding the conditions and dynamical mechanisms that govern reliability in different classes of biological network models thus stands as a challenge in the study of networks of dynamical systems. A ubiquitous and important class of neural networks consists of those with a balance of excitatory and inhibitory connections [6]. Such balanced networks produce dynamics that match the irregular firing observed experimentally on the "microscale" of single cells, and on the macroscale can exhibit a range of behaviors, including rapid and linear mean-field dynamics that could be beneficial for neural computation [7–11]. However, such balanced networks are known to produce strongly chaotic activity when they fire autonomously or with constant inputs [9, 11, 12]. On the surface, this may appear incompatible with reliable spiking, as small differences in initial conditions between trials may lead to very different responses. However, a variety of results on the impact of temporally fluctuating inputs on chaotic dynamics suggest that the answer might be more subtle [5, 13–18].

At a more technical level, because of the link between reliability and dynamical stability, many previous theoretical studies of reliability of single neurons and neuronal networks have focused on the maximum Lyapunov exponent of the system as an indicator of reliability. This is convenient because (i) exponents are easy to estimate numerically and, for certain special types of models, can be estimated analytically [5, 9, 12, 19–21]; and (ii) using a single summary statistic permits one to see, at a glance, the reliability properties of a system across different parameter values. However, being a single statistic, the maximum Lyapunov exponent cannot capture all relevant aspects of the dynamics. Indeed, the maximum exponent measures the rate of separation of trajectories in the most unstable phase space direction; other aspects of the dynamics are missed by this metric. Recently, attention has turned to the full Lyapunov spectrum. In particular, [11] compute this spectrum for balanced, autonomously spiking neural networks and discuss the resulting limitations on information transmission.

In this paper, we present a detailed numerical study and steps toward a qualitative theory of reliability in fluctuation-driven networks with balanced excitation and inhibition. One of our main findings is that even in the presence of strongly chaotic activity (as characterized by positive Lyapunov exponents), single cell responses can exhibit intermittent periods of sharp temporal precision, punctuated by periods of more diffuse, unreliable spiking. We elucidate the local (meaning cell-to-cell) interactions involved in this intermittent reliability, and investigate the relationship between this phenomenon and certain time-dependent attractors arising from the dynamics (some geometric properties of which can be deduced from the Lyapunov spectrum).

MODEL DESCRIPTION

We study a temporally driven network of N = 1000 spiking neurons. Each neuron is described by a phase variable θi ∈ S1 = ℝ/ℤ, whose dynamics follow the "θ-neuron" model [22]. This model describes spike generation in so-called "Type I" neurons and is equivalent to the "quadratic integrate-and-fire" (QIF) model after a change of coordinates (see [22, 23] and the Appendix). These models can also be formally derived from biophysical neuron models near "saddle-node-on-invariant-circle" bifurcations; neurons with the underlying "normal form" dynamics [22, 24] are found in many brain areas. Isolated θ-neurons are known to respond reliably to stimuli [5, 19], cf. [25, 26]. Thus, any unreliability or chaos that we find is purely a consequence of network interactions.


Coupling from neuron j to neuron i is determined by the weight matrix A = {aij}. A is chosen randomly as follows: each cell is either excitatory (i.e., all its out-going weights are ≥ 0) or inhibitory (all its out-going weights are ≤ 0), with 20% of the cells j being inhibitory and 80% excitatory; we do not allow self-connections, so aii = 0. Each neuron has mean in-degree K = 20 from each population (excitatory and inhibitory) and the synaptic weights are in accordance with the classical balanced-state network architecture [9]. We note that our results appear to be qualitatively robust to changes in N and K, but a detailed study of scaling limits is beyond the scope of this paper.
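A minimal sketch of how a coupling matrix with this architecture could be drawn, in Python with NumPy. The independent (Bernoulli) connection rule and the weight scaling ±w/√K are assumptions modeled on the classical balanced-state construction; the paper's own weight values are given in its Appendix and are not reproduced here, so `w_exc`, `w_inh` and the function name `build_coupling` are hypothetical.

```python
import numpy as np


def build_coupling(N=1000, K=20, frac_inh=0.2, w_exc=1.0, w_inh=-1.0, seed=0):
    """Draw a random E/I coupling matrix A with A[i, j] = a_ij (input from j to i).

    Assumptions (hypothetical, not taken from the text): each possible synapse is
    present independently with probability K / (size of the presynaptic population),
    and every nonzero weight equals w / sqrt(K) with w = w_exc or w_inh.
    """
    rng = np.random.default_rng(seed)
    n_inh = int(round(frac_inh * N))
    n_exc = N - n_inh
    is_inh = np.arange(N) >= n_exc  # the last 20% of cells are inhibitory

    # Per-presynaptic-cell connection probability, so that each cell receives
    # K inputs on average from each population.
    p = np.where(is_inh, K / n_inh, K / n_exc)
    mask = rng.random((N, N)) < p[np.newaxis, :]
    np.fill_diagonal(mask, False)  # no self-connections: a_ii = 0

    # The sign is fixed by the presynaptic cell: E columns >= 0, I columns <= 0.
    w = np.where(is_inh, w_inh, w_exc) / np.sqrt(K)
    A = mask * w[np.newaxis, :]
    return A, is_inh


if __name__ == "__main__":
    A, is_inh = build_coupling()
    print("mean E in-degree:", (A[:, ~is_inh] != 0).sum(axis=1).mean())
    print("mean I in-degree:", (A[:, is_inh] != 0).sum(axis=1).mean())
```

Drawing each synapse independently makes the in-degree Binomial around K rather than exactly K; a fixed in-degree per cell would be an equally plausible reading of "mean in-degree".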

A neuron j is said to fire a spike when θj(t) crosses θj = 1; when this occurs, θi is impacted via the coupling term aij g(θj), where g(θ) is a smooth "bump" function with small support ([−1/20, 1/20]) around θ = 0 satisfying $\int_{S^1} g(\theta)\, d\theta = 1$, meant to model the rapid rise and fall of a synaptic current (see Appendix for details). In addition to coupling interactions, each cell receives a stimulus Ii(t) = η + εζi(t), where η represents a constant current and the ζi(t) are aperiodic signals, modeled here (as in [5, 25, 26]) by "frozen" realizations of independent white noise processes, scaled by an amplitude parameter ε. Note that the terms ζi(t) model external signals, not "noise" (i.e., driving terms that can vary between trials), though trial-to-trial noise can easily be added (as in [16]).

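The ith neuron in the network is therefore described by the following stochastic differential equation (SDE):

$$
d\theta_i = \left[ F(\theta_i) + Z(\theta_i)\left(\eta + \sum_{j=1}^{N} a_{ij}\, g(\theta_j)\right) + \frac{\varepsilon^2}{2}\, Z(\theta_i)\, Z'(\theta_i) \right] dt + \varepsilon\, Z(\theta_i)\, dW_{i,t}, \tag{1}
$$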

where the intrinsic dynamics F(θi) = 1 + cos(2πθi) and the stimulus response curve Z(θi) = 1 − cos(2πθi) follow directly from the coordinate change applied to the original QIF equations (see Appendix and [22]). Here, Wi,t is the independent Wiener process generating ζi(t), and the ε² term is the Itô correction arising from the coordinate change [27]. Finally, η sets the intrinsic excitability of individual cells. For η < 0, each isolated cell has a stable and an unstable fixed point, representing the resting and threshold potentials, respectively. Thus (in contrast to [11], where cells are intrinsically oscillatory), neurons are in the "excitable regime," displaying fluctuation-driven firing, as do many cortical neurons [28].
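The two fixed points can be located in closed form. A minimal sketch, assuming an isolated cell with the stimulus switched off (ε = 0, no coupling), so that dθ/dt = F(θ) + ηZ(θ) = (1 + η) + (1 − η)cos(2πθ); the function name is hypothetical. It also returns the slope of the vector field at each fixed point (about −8.89 at rest for η = −0.5).

```python
import math


def single_cell_fixed_points(eta):
    """Fixed points of the uncoupled, noiseless theta-neuron d(theta)/dt = F + eta * Z.

    With F = 1 + cos(2*pi*theta) and Z = 1 - cos(2*pi*theta), the right-hand side
    equals (1 + eta) + (1 - eta) * cos(2*pi*theta), which has zeros only if eta < 0.
    Returns (stable, unstable, slope_at_stable, slope_at_unstable).
    """
    if eta >= 0:
        raise ValueError("a stable/unstable pair of fixed points requires eta < 0")
    # cos(2*pi*theta) = -(1 + eta) / (1 - eta) lies in (-1, 1) for eta < 0
    phi = math.acos(-(1 + eta) / (1 - eta)) / (2 * math.pi)  # phi in (0, 1/2)

    def slope(theta):
        # derivative of the vector field: -(1 - eta) * 2*pi * sin(2*pi*theta)
        return -(1 - eta) * 2 * math.pi * math.sin(2 * math.pi * theta)

    stable, unstable = phi, 1.0 - phi  # slope < 0 at phi (rest), > 0 at 1 - phi (threshold)
    return stable, unstable, slope(stable), slope(unstable)


if __name__ == "__main__":
    s, u, ls, lu = single_cell_fixed_points(-0.5)
    print(f"rest {s:.4f} (slope {ls:.3f}), threshold {u:.4f} (slope {lu:.3f})")
```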

In what follows, we focus on networks in this regime by fixing η = −0.5, where cells spike because of temporal fluctuations in their inputs (both from external drive and network interactions) rather than behaving as perturbed, coupled oscillators. We study the effect of the amplitude ε of the external drive on the evoked dynamics. Note that in the absence of such inputs, these networks do not produce sustained activity.
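To make the model concrete, here is a minimal Euler-Maruyama sketch of Eq. (1) for one trial. The raised-cosine bump `g`, the toy network size, the ±1/√K weights and the step size are all assumptions chosen for illustration (the paper's bump and weights are specified in its Appendix). The frozen stimulus is the array `dW` of Wiener increments: reusing it with different initial phases produces repeated trials of the same signal.

```python
import numpy as np

TWO_PI = 2 * np.pi


def F(th):
    return 1 + np.cos(TWO_PI * th)


def Z(th):
    return 1 - np.cos(TWO_PI * th)


def dZ(th):
    return TWO_PI * np.sin(TWO_PI * th)


def g(th, half_width=1 / 20):
    """Hypothetical synaptic bump: a raised cosine on [-half_width, half_width]
    around theta = 0 (mod 1), normalized to unit area."""
    d = (th + 0.5) % 1.0 - 0.5  # signed circular distance to theta = 0
    inside = np.abs(d) < half_width
    return np.where(inside, (1 + np.cos(np.pi * d / half_width)) / (2 * half_width), 0.0)


def simulate(A, theta0, eta, eps, dW, dt):
    """Euler-Maruyama integration of Eq. (1) for one trial.

    dW (shape n_steps x N) holds the frozen Wiener increments, i.e. the stimulus.
    Returns the final phases and a list of (step, cell) spike events.
    """
    theta = np.array(theta0, dtype=float)
    spikes = []
    for n in range(dW.shape[0]):
        z = Z(theta)
        drift = F(theta) + z * (eta + A @ g(theta)) + 0.5 * eps**2 * z * dZ(theta)
        theta = theta + drift * dt + eps * z * dW[n]
        # crossing theta = 1 counts as a spike
        spikes.extend((n, int(i)) for i in np.flatnonzero(theta >= 1.0))
        theta %= 1.0
    return theta, spikes


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    N, K, dt, T = 200, 20, 1e-3, 5.0
    # Toy E/I matrix (hypothetical weights +-1/sqrt(K)), 20% inhibitory, no self-loops.
    is_inh = np.arange(N) >= int(0.8 * N)
    p = np.where(is_inh, K / is_inh.sum(), K / (~is_inh).sum())
    A = (rng.random((N, N)) < p) * np.where(is_inh, -1.0, 1.0) / np.sqrt(K)
    np.fill_diagonal(A, 0.0)
    dW = rng.normal(0.0, np.sqrt(dt), size=(int(T / dt), N))  # one frozen stimulus
    for trial in range(2):
        theta_T, spikes = simulate(A, rng.random(N), eta=-0.5, eps=0.5, dW=dW, dt=dt)
        print(f"trial {trial}: {len(spikes)} spikes")
```

Euler-Maruyama is used here only for simplicity; any scheme that drives every trial with the same increments preserves the frozen-stimulus setup.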

Fig. 1 illustrates that the general properties of the network dynamics, including a wide distribution of firing rates from cell to cell and highly irregular firing in individual cells, are consistent with many models of balanced-state networks in the literature, as well as general empirical observations from cortex [7, 8]. An additional such property is that our network’s mean firing rate scales monotonically with η and ε (data not shown), as in [9, 11].

FIG. 1.

(Color online) (A) Typical firing rate distributions for excitatory and inhibitory populations. (B) Typical interspike-interval (ISI) distribution of a single cell. The coefficient of variation (CV) is close to 1. (C) Invariant measure for an excitable cell (η < 0); inset: typical trajectory trace of an excitable cell where solid and dotted lines mark the stable and unstable fixed points. (D) Network raster plots for 250 randomly chosen cells. For all panels, η = −0.5, ε = 0.5.

MATHEMATICAL BACKGROUND

For reliability questions, we are interested in the response of a network to a fixed input signal starting from different initial states. Equivalently, we can imagine an ensemble of initial conditions all being driven simultaneously by the same signal ζ(t). If the system is reliable, then there should be a distinguished trajectory θ(t) to which the ensemble converges. In contrast, an unreliable network will lack such an attracting solution, as dynamical mechanisms conspire to keep trajectories separated. To put these ideas on a precise mathematical footing, it is useful to treat our SDE (1) as a random dynamical system (RDS). That is, we view the system as a nonautonomous ODE driven by a frozen realization of the Brownian process, and consider the action of the generated family of flow maps on phase space. In this section, we present a brief overview of RDS concepts and their meaning in the context of network reliability.

Random dynamical systems framework

The model network described by (1) is an SDE of the form

$$
dx_t = a(x_t)\, dt + b(x_t)\, dW_t, \tag{2}
$$

whose domain is the N-dimensional torus $\mathbb{T}^N = S^1 \times \cdots \times S^1$, and where $W_t = (W_{1,t}, \ldots, W_{N,t})$ has independent standard Brownian motions as components. We assume throughout that the Fokker-Planck equation associated with (2) has a unique, smooth steady state solution μ. Since we are interested in the time evolution of an ensemble of initial conditions driven by a single, fixed realization ζ generated by $W_t$, we consider the stochastic flow maps defined by the SDE, i.e., its solution maps. More precisely, this is a family of maps Ψt1,t2;ζ such that Ψt1,t2;ζ(xt1) = xt2, where xt is the solution of (2) given ζ. If a(x) and b(x) from (2) are sufficiently smooth, it has been shown (see, e.g., [29]) that the maps Ψt1,t2;ζ are well defined, smooth with smooth inverse (i.e., are diffeomorphisms), and are independent over disjoint time intervals [t1, t2].


RDS theory studies the action of these random maps on the state space. The object from RDS theory most relevant to questions of reliability is the sample distribution $\mu_t^\zeta$, defined here as

$$
\mu_t^\zeta = \lim_{s \to -\infty} (\Psi_{s,t;\zeta})_* \, \mu_{\mathrm{init}}, \tag{3}
$$

where (Ψs,t;ζ)* denotes the propagator associated with the flow Ψs,t;ζ, i.e., it is the linear operator transporting probability distributions from time s to time t by the flow Ψs,t;ζ, and μinit is the initial probability distribution of the ensemble.

The definition above has the following interpretation: suppose the system was prepared in the distant past so that it has a random initial condition (where "random" means "having distribution μinit"). Then $\mu_t^\zeta$ is precisely the distribution of all possible states at time t, after the ensemble has been subjected to a given stimulus ζ(t) for a sufficiently long time (how long is "sufficient" is system-dependent; the limit in the definition sidesteps that question). So if $\mu_t^\zeta$ were localized in phase space (i.e., if its support had a relatively small diameter), then the system's state at time t would be essentially determined by the stimulus up to that point, i.e., its response at time t would be reliable. In contrast, if $\mu_t^\zeta$ were not localized, then the response would be unreliable in the sense that the system's initial condition has a measurable effect on its state at time t. Note that $\mu_t^\zeta$ depends on both ζ and the time t: as time goes by, the system receives more inputs, and $\mu_t^\zeta$ continues to evolve; it is easy to see that $(\Psi_{s,t;\zeta})_* \, \mu_s^\zeta = \mu_t^\zeta$ for s < t. In general, we expect $\mu_t^\zeta$ to be essentially independent of the specific choice of μinit, so long as μinit is given by a sufficiently smooth probability density, e.g., the uniform distribution on $\mathbb{T}^N$.
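The construction in (3) can be approximated by brute force: draw an ensemble from μinit, push every member forward with one frozen noise realization, and look at the empirical distribution. A minimal sketch for the simplest case, a single uncoupled θ-neuron (N = 1) with Euler-Maruyama stepping; the step size, duration, ensemble size and the helper names `push_forward` and `circular_spread` are illustrative assumptions. Since isolated θ-neurons are reliable, the ensemble is expected to collapse toward a single moving point.

```python
import numpy as np

TWO_PI = 2 * np.pi


def push_forward(theta0, eta, eps, dW, dt):
    """Transport an ensemble of initial phases with ONE frozen noise realization.

    Approximates (Psi_{s,t;zeta})_* mu_init for a single uncoupled theta-neuron:
    every ensemble member receives the same increments dW (a 1-D array).
    """
    theta = np.array(theta0, dtype=float)
    for dw in dW:
        z = 1 - np.cos(TWO_PI * theta)
        dz = TWO_PI * np.sin(TWO_PI * theta)
        drift = 1 + np.cos(TWO_PI * theta) + eta * z + 0.5 * eps**2 * z * dz
        theta = (theta + drift * dt + eps * z * dw) % 1.0
    return theta


def circular_spread(theta):
    """Largest circular distance from the first ensemble member (0 = fully collapsed)."""
    d = (theta - theta[0] + 0.5) % 1.0 - 0.5
    return float(np.abs(d).max())


if __name__ == "__main__":
    rng = np.random.default_rng(2)
    dt, T = 1e-3, 20.0
    dW = rng.normal(0.0, np.sqrt(dt), size=int(T / dt))  # frozen stimulus
    ensemble0 = rng.random(500)  # mu_init: uniform on S^1
    ensemble_T = push_forward(ensemble0, eta=-0.5, eps=0.5, dW=dW, dt=dt)
    print(circular_spread(ensemble0), circular_spread(ensemble_T))
```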

Linear stability implies reliability

Not surprisingly, the reliability of a system is related to its dynamical stability. This link can be made precise via the Lyapunov exponents λ1 ≥ λ2 ≥ … ≥ λN of the stochastic flow. As in the deterministic case, these exponents measure the rate of separation of nearby trajectories; for a "typical" trajectory, we expect a small perturbation δxt to grow or contract like $|\delta x_t| \sim e^{\lambda_1 t}$ over sufficiently long timescales. Note that under very general conditions, the exponents are deterministic, i.e., they depend only on system parameters and not on the specific realization of the input ζ [30]. Moreover, consistent with the findings in [11, 16], we have observed that the exponents for our models are insensitive to specific realizations of the coupling matrix A (see Appendix), so that they are effectively functions of the system parameters alone.
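In the simplest setting, one uncoupled cell (N = 1), the exponent can be estimated by integrating the linearized equation alongside the trajectory with the same noise increments. A minimal sketch, assuming the Itô drift a = F + ηZ + (ε²/2)ZZ′ and diffusion b = εZ from Eq. (1) with coupling switched off, Euler-Maruyama stepping, and hypothetical choices of step size, duration and burn-in; this is not a description of the paper's numerical method (see its Appendix).

```python
import math
import random


def lyapunov_single_cell(eta, eps, T=100.0, burn_in=10.0, dt=1e-3, seed=0):
    """Estimate the Lyapunov exponent of one uncoupled theta-neuron driven as in Eq. (1).

    The phase is advanced with Euler-Maruyama; the linearized equation
    d(delta) = a'(theta) delta dt + b'(theta) delta dW, with b = eps * Z, is advanced
    with the same increment, and log|delta| is accumulated one step at a time
    (equivalent to renormalizing delta to 1 after every step).
    """
    rng = random.Random(seed)
    theta, log_growth = rng.random(), 0.0
    sqdt = math.sqrt(dt)
    n_burn, n_total = int(burn_in / dt), int((burn_in + T) / dt)
    for n in range(n_total):
        dw = rng.gauss(0.0, sqdt)
        s, c = math.sin(2 * math.pi * theta), math.cos(2 * math.pi * theta)
        z, dz, ddz = 1 - c, 2 * math.pi * s, (2 * math.pi) ** 2 * c
        # Ito drift a = F + eta*Z + (eps^2/2) Z Z' and its derivative a'
        a = 1 + c + eta * z + 0.5 * eps**2 * z * dz
        da = -2 * math.pi * s + eta * dz + 0.5 * eps**2 * (dz**2 + z * ddz)
        if n >= n_burn:  # discard the transient from the random initial phase
            log_growth += math.log(abs(1 + da * dt + eps * dz * dw))
        theta = (theta + a * dt + eps * z * dw) % 1.0
    return log_growth / T


if __name__ == "__main__":
    print(lyapunov_single_cell(-0.5, 0.01), lyapunov_single_cell(-0.5, 0.5))
```

For very weak drive the estimate sits close to the slope of the vector field at the resting state (about −8.9 for η = −0.5), since the cell rarely leaves it; the stronger drive gives a different, still negative, value, in line with the reliability of isolated θ-neurons.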

One link between the exponents and $\mu_t^\zeta$ is the following theorem:

Theorem 1 (Le Jan; Baxendale [31, 32])

If λ1 < 0 and a number of nondegeneracy conditions are satisfied [32], then $\mu_t^\zeta$ is a random sink, i.e., $\mu_t^\zeta = \delta_{x_t}$, where xt is a solution of the SDE.

Theorem 1 states that under broad conditions, an ensemble of trajectories described by a smooth initial density will collapse toward a single, distinguished trajectory. For this reason, λ1 < 0 is often associated with reliability.

A second, complementary theorem covers the case λ1 > 0.

Theorem 2 (Ledrappier and Young [33])

If λ1 > 0, then $\mu_t^\zeta$ is a random Sinai-Ruelle-Bowen (SRB) measure.

SRB measures originally arose in the theory of deterministic, dissipative chaotic systems [34, 35]. They are singular invariant probability distributions supported on a "strange attractor." Such attractors necessarily have zero phase volume because of dissipation; nevertheless, SRB measures capture the statistical properties of a set of trajectories of positive phase volume (i.e., the strange attractor has a nontrivial basin of attraction). They are the "smoothest" invariant probability distributions for such systems in that they have smooth conditional densities along unstable (expanding) phase directions. Indeed, locally they typically consist of Cartesian products of smooth manifolds with Cantor-like fractal sets; the tangent spaces Eu,ζ(x) to these smooth "leaves" are invariant in the sense that DΨs,t;ζ(xs) · Eu,ζ(xs) = Eu,ζ(xt), where DΨs,t;ζ(x) denotes the Jacobian of the flow map at x. Moreover, these subspaces are readily computable as a by-product of estimating Lyapunov exponents (see Appendix).

Random SRB measures share many of the same properties as SRB measures in the deterministic setting, but are time-dependent. While in principle they may be confined to small regions of phase space at all times, this is typically not the case for the systems we study here. A positive λ1 is thus often associated with unreliability, and the terms “chaotic” and “unreliable” are often used interchangeably. (Random SRB measures have also been used to model the distribution of “pond scum”; in that context they are known as “snapshot attractors” [36].)

Although the SRB measure evolves with time, it possesses some time-invariant properties because (after transients) it describes processes that are statistically stationary in time. Among these is the dimension of the underlying attractor; another is the number of unstable directions, i.e., the number of positive Lyapunov exponents, which give the dimension of the unstable manifolds of the attractor. The latter will be useful in what follows; we denote it by Mλ.

To summarize, these two theorems allow us to reach global conclusions on the structure of random attractors (singular or extended) using only the maximum Lyapunov exponent λ1, a measure of linear stability. This has a number of consequences in what follows: first, because λ1 is a single summary statistic determined only by system parameters (and not specific input or network realizations), it allows us to see quickly the reliability properties of a system across different parameters. Second, unlike other measures of reliability, λ1 can be computed easily in numerical studies by simulating single trials (as opposed to multiple repeated trials). However, λ1 can only tell us about reliability properties in an asymptotic sense (i.e., on sufficiently long timescales), and only about the dynamics in the fastest expanding directions. As we shall see later, the reliability properties of our networks reflect the geometric properties of their SRB measures beyond those captured by λ1 alone.

MAXIMUM LYAPUNOV EXPONENTS AND ASYMPTOTIC RELIABILITY

In line with previous studies [5, 9, 11, 12, 16, 19–21], we say that a network is asymptotically reliable if λ1 < 0 and asymptotically unreliable if λ1 > 0. In principle, even when λ1 < 0, distinct trajectories could take very long times to converge to the random sink. However, we note that for all asymptotically reliable networks we considered, convergence is typically achieved within about 10 time units. For the remainder of the paper, we will concentrate on “steady state” dynamics and we adopt the point of view that ensembles of solutions for all systems considered were initiated in the sufficiently distant past. The question of transient times, although very interesting, falls outside of the scope of this paper.

We begin by studying the dependence of the Lyapunov exponents λi on the input amplitude ε. Even in simple, low-dimensional autonomous systems, analytical calculations of the λi's often prove very difficult, if not impossible. We therefore numerically compute (see Appendix for details) the Lyapunov spectra of our network for various values of the input amplitude ε. Figure 2 (A) shows the first 100 Lyapunov exponents of these spectra. It demonstrates that, at intermediate values of ε, there are several positive Lyapunov exponents (their number is Mλ), and that this number varies nonmonotonically with ε. Panel (B) gives another view of this phenomenon, as well as the dependence of λ1 on ε. In particular, for sufficiently small ε, the networks produce a negative λ1.
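The Appendix describing the numerical method is not part of this excerpt. As a stand-in, the sketch below uses the standard QR (Benettin-type) approach: a k-dimensional orthonormal frame is carried along by the tangent map and re-orthonormalized every step, and the logarithms of the diagonal of R accumulate the leading k exponents; counting positive entries gives Mλ when k is large enough. The raised-cosine bump, the toy network size, the weights and the step size are hypothetical, and no claim is made that this toy network reproduces Fig. 2.

```python
import numpy as np

TWO_PI = 2 * np.pi
HALF_WIDTH = 1 / 20  # support half-width of the (hypothetical) raised-cosine bump


def bump_and_slope(th):
    """Raised-cosine stand-in for g and its derivative g' (unit area, support +-1/20)."""
    d = (th + 0.5) % 1.0 - 0.5
    inside = np.abs(d) < HALF_WIDTH
    arg = np.pi * d / HALF_WIDTH
    g = np.where(inside, (1 + np.cos(arg)) / (2 * HALF_WIDTH), 0.0)
    dg = np.where(inside, -np.pi * np.sin(arg) / (2 * HALF_WIDTH**2), 0.0)
    return g, dg


def lyapunov_spectrum(A, eta, eps, k, T=20.0, dt=1e-3, seed=0):
    """Leading k Lyapunov exponents of Eq. (1) by repeated QR re-orthonormalization."""
    rng = np.random.default_rng(seed)
    N = A.shape[0]
    theta = rng.random(N)
    Q, _ = np.linalg.qr(rng.normal(size=(N, k)))
    sums = np.zeros(k)
    diag = np.diag_indices(N)
    for _ in range(int(T / dt)):
        dw = rng.normal(0.0, np.sqrt(dt), size=N)
        s, c = np.sin(TWO_PI * theta), np.cos(TWO_PI * theta)
        z, dz, ddz = 1 - c, TWO_PI * s, TWO_PI**2 * c
        g, dg = bump_and_slope(theta)
        drive = eta + A @ g
        # Tangent map I + J dt + diag(eps Z' dW); off-diagonal J_ij = Z(theta_i) a_ij g'(theta_j)
        M = (z[:, None] * A * dg[None, :]) * dt
        M[diag] += 1 + (-TWO_PI * s + dz * drive + 0.5 * eps**2 * (dz**2 + z * ddz)) * dt + eps * dz * dw
        Q, R = np.linalg.qr(M @ Q)
        sums += np.log(np.abs(np.diag(R)))
        theta = (theta + (1 + c + z * drive + 0.5 * eps**2 * z * dz) * dt + eps * z * dw) % 1.0
    return np.sort(sums / T)[::-1]


if __name__ == "__main__":
    rng = np.random.default_rng(3)
    N, K = 50, 10
    # Toy E/I matrix with hypothetical weights +-1/sqrt(K); 20% inhibitory, no self-loops.
    is_inh = np.arange(N) >= int(0.8 * N)
    p = np.where(is_inh, K / is_inh.sum(), K / (~is_inh).sum())
    A = (rng.random((N, N)) < p) * np.where(is_inh, -1.0, 1.0) / np.sqrt(K)
    np.fill_diagonal(A, 0.0)
    lams = lyapunov_spectrum(A, eta=-0.5, eps=0.5, k=10)
    print("leading exponents:", np.round(lams, 2))
    print("positive among the leading k:", int((lams > 0).sum()))
```

Re-orthonormalizing at every step is the simplest choice; doing it every few steps is cheaper and gives the same exponents as long as the frame does not lose numerical rank in between.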

FIG. 2.

(Color online) (A) First 100 Lyapunov exponents of network with fixed parameters as in Fig. 1, as a function of ε. (B) Plot of λ1 (right scale), Mλ/N: the fraction of λi > 0 (left scale) vs ε. (C) Raster plots show example spike times of an arbitrarily chosen cell in the network on 30 distinct trials, initialized with random ICs. Circle and star markers indicate ε values of 0.18 and 0.5, respectively, shown in panel (B). For all panels, η = −0.5.

We note that for very small fluctuations (ε < 0.1), the network rarely spikes and λ1 is close to the real part of the largest eigenvalue associated with the stable fixed point of a single cell’s vector field. As ε increases, there is a small region (0.1 < ε < 0.2) where sustained network activity coexists with λ1 < 0. However, as ε increases further, there is a rapid transition to a positive λ1, indicating chaotic network dynamics and thus asymptotic unreliability. Consistent with RDS theory, the transition to λ1 > 0 is accompanied by the emergence of a random attractor with nontrivial unstable manifolds.

Since the networks we study are randomly connected and the cells are nearly identical, the underlying dynamics are fairly stereotypical from cell to cell. This enables us to focus on a randomly chosen cell for illustrative purposes and further analysis. Figure 2 (C) shows two sample raster plots in which the spike times of a single cell from 30 distinct trials (initiated at randomly sampled ICs) are plotted. The top plot is produced from an asymptotically reliable system (λ1 < 0) and, as expected, every spike is perfectly reproduced on all trials. In the bottom plot, where λ1 > 0, the spike times are clearly unreliable across trials, as RDS theory predicts. For the remainder of this paper, we refer to the parameter sets used in Fig 2 (C) as testbeds for stable and chaotic networks, respectively, and use them for illustrative purposes (see caption of Fig 2 for details).

Finally, spike trains from the chaotic network also show an interesting phenomenon: there are many moments where spike times align across trials, i.e., the system is (temporarily) reliable. We now investigate this phenomenon.

SINGLE-CELL RELIABILITY

Let us define the ith neural direction as the state space of the ith cell, which we identify with a circle S1. The degree of reliability of the ith cell is given by the corresponding marginal distribution, i.e., we define a projection πi(θ1, …, θN) = θi, and denote the corresponding projected single-cell distribution by $\mu_{t,i}^\zeta = (\pi_i)_* \mu_t^\zeta$. Note that when λ1 > 0, we expect $\mu_{t,i}^\zeta$ to be nonsingular, i.e., $\mu_{t,i}^\zeta$ corresponds to a smooth probability density function (though it may be more or less concentrated); an exception is when the random attractor is aligned in such a way that it projects onto a single point in the ith direction. If $\mu_{t,i}^\zeta$ is singular at time t, then the state of cell i is reproducible across trials at time t; geometrically, trajectories from distinct trials are perfectly aligned along the ith neural direction. On the other hand, if $\mu_{t,i}^\zeta$ has a broad density on S1, then the state of cell i at time t can vary greatly across trials, and the ith components of distinct trajectories are separated.

This is illustrated in Fig 3(A), where snapshots of 1000 randomly initialized trajectories are projected onto (θ1, θ2)-coordinates at distinct times t1 < t2 < t3. The upper snapshots are taken from an asymptotically reliable system (λ1 < 0), where $\mu_t^\zeta$ is singular and supported on a single point (a random sink) that evolves on $\mathbb{T}^N$ according to ζ(t). The bottom snapshots are taken from the λ1 > 0 regime and clearly show that distinct trajectories accumulate on "clouds" that change shape with time. These changes affect the spread of $\mu_{t,i}^\zeta$.


FIG. 3.

(Color online) (A) Snapshots of 1000 trajectories projected in two randomly chosen neural directions (θ1, θ2) at three distinct times. Upper and lower rows use the same parameters as in Fig 2 (C) and show a random sink and a random strange attractor, respectively. (B) Projections of the sample measure $\mu_t^\zeta$ onto the θ1 neural direction at distinct moments. (C) Scatter plot of average support score 〈si(t)〉 vs. entropy of the projected measure $h(\mu_{t,i}^\zeta)$, sampled over 2000 time points and 30 distinct cells. (D) Example histogram of 〈si〉 sampled across all cells in the network at a randomly chosen moment in time. Inset: snapshot of 〈si〉 vs. cell number i. (E) Example histogram of 〈si(t)〉 sampled across 2000 time points from a randomly chosen cell. Inset: sample time trace of 〈si(t)〉 vs. time. (F) and (G) Time evolution of the distance between two distinct trajectories θ¹(t), θ²(t). (F) Green dashed (bottom): $d_{S^1}(\theta^1_i(t), \theta^2_i(t))$ in a randomly chosen θi direction. Black solid (top): $\max_i d_{S^1}(\theta^1_i(t), \theta^2_i(t))$. (G) $d_{\mathbb{T}^N}(\theta^1(t), \theta^2(t))$. For all panels except A (top), network parameters: η = −0.5, ε = 0.5 with λ1 ≈ 2.5.


Our next task is to relate the geometry of the random attractor to the qualitative properties of the single-cell distributions $\mu_{t,i}^\zeta$. A convenient tool for quantifying the latter is the differential entropy $h(\mu_{t,i}^\zeta) = -\int_{S^1} \rho_{t,i}^\zeta(\theta) \log \rho_{t,i}^\zeta(\theta)\, d\theta$, where $\rho_{t,i}^\zeta$ is the density of $\mu_{t,i}^\zeta$. Recall that the differential entropy of the uniform distribution on S1 is 0, and that the more negative h is, the more concentrated (closer to singular) the distribution. In our context, the more orthogonal the attractor is to the ith direction in $\mathbb{T}^N$, the lower the entropy of its projection, as illustrated in Fig 3(B). We emphasize again that the shape of $\mu_{t,i}^\zeta$ is time-dependent, and so is its entropy.
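In practice the projected distribution is only available through samples (the ith coordinates of an ensemble of trajectories at time t), so its entropy has to be estimated. A minimal sketch using a plug-in histogram estimator; the estimator, the bin count and the name `projected_entropy` are assumptions for illustration, since the text does not specify how the entropy was computed.

```python
import numpy as np


def projected_entropy(samples, n_bins=200):
    """Histogram (plug-in) estimate of the differential entropy -int p log p on S^1 = [0, 1).

    `samples` holds the i-th coordinates of an ensemble of trajectories at one time t,
    i.e. draws from the projected single-cell distribution.
    """
    counts, edges = np.histogram(np.mod(samples, 1.0), bins=n_bins, range=(0.0, 1.0))
    width = edges[1] - edges[0]
    p = counts / (counts.sum() * width)  # piecewise-constant density estimate
    nz = p > 0  # empty bins contribute 0 (p log p -> 0)
    return float(-np.sum(p[nz] * np.log(p[nz])) * width)


if __name__ == "__main__":
    rng = np.random.default_rng(6)
    spread = rng.random(100_000)  # broad projection: entropy near 0
    tight = np.mod(0.3 + 0.01 * rng.normal(size=100_000), 1.0)  # concentrated: strongly negative
    print(projected_entropy(spread), projected_entropy(tight))
```

A histogram estimate cannot resolve structure finer than one bin, so for nearly singular projections the bin count effectively sets a floor on how negative the estimate can become.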


Uncertainty in single cell responses

We would like to predict $\mu_{t,i}^\zeta$ from properties of the underlying dynamics. Our first step in doing so is to validate our intuition about the orientation of $\mu_t^\zeta$. Following and somewhat generalizing an approach of [11], we use a quantity which we call the support score si(t) to represent the contribution of a neural direction to the unstable directions of the strange attractor at time t.

We first define this quantity locally for a single trajectory θ(t). For this trajectory, we expect that there exists a decomposition of the tangent space into stable (contracting) and unstable (expanding) invariant subspaces: Es,ζ(θ(t)) and Eu,ζ(θ(t)). Since the dimension of Eu,ζ(θ(t)) must be Mλ, let {v1, v2, …, vMλ} be an orthonormal basis for the unstable subspace at time t (i.e., vi ∈ ℝN). We define cell i's support score as

$$
s_i(t) = \left\| V r_i \right\|, \tag{4}
$$

where V is the Mλ × N matrix with the vi's as rows and ri is the (N-dimensional) unit vector in the ith direction. Note that 0 ≤ si(t) ≤ 1, and that si is the absolute value of the cosine of the angle between the ith neural direction and the unstable subspace. Thus, si represents the extent to which the ith direction contributes to state space expansion. The vectors {v1, v2, …, vMλ} are computed simultaneously with the λi's (see numerical methods in Appendix).
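Because V r_i is just the ith column of V, Eq. (4) reduces to column norms, and the ensemble average 〈si(t)〉 is a mean over one V per trajectory. A minimal sketch; the function names are hypothetical, and the random orthonormal frames in the demo only stand in for the unstable bases, which in practice would come from the Lyapunov computation (taking the leading Mλ vectors of a QR-propagated frame is one common choice, assumed here rather than taken from the text).

```python
import numpy as np


def support_scores(V):
    """Support scores s_i = ||V r_i|| of Eq. (4) for all N neural directions at once.

    V is an (M x N) array whose rows are an orthonormal basis of the unstable
    subspace; V @ r_i is simply column i of V, so the scores are column norms.
    """
    return np.linalg.norm(V, axis=0)


def ensemble_support_scores(frames):
    """Average the support scores over an ensemble of trajectories, one V per trajectory."""
    return np.mean([support_scores(V) for V in frames], axis=0)


if __name__ == "__main__":
    rng = np.random.default_rng(4)
    N, M = 100, 10
    # Hypothetical stand-ins for unstable frames: random orthonormal M-dimensional subspaces.
    frames = [np.linalg.qr(rng.normal(size=(N, M)))[0].T for _ in range(5)]
    s_avg = ensemble_support_scores(frames)
    print(s_avg.min(), s_avg.max())
```

A useful sanity check is that the squared scores of a single frame always sum to Mλ, since that sum is the squared Frobenius norm of V.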

In order to use the support score to quantify the orientation of the attractor, we need to extend the definition above, which is for a single trajectory, to an ensemble of trajectories governed by $\mu_t^\zeta$. However, si(t) could vary greatly depending on which trajectory we choose, as we might expect if $\mu_t^\zeta$ consisted of complex folded structures. Our numerical simulations show that this variation is limited in our networks: the typical variance of si(t) across an ensemble of trajectories with randomly chosen initial conditions is $\mathcal{O}(10^{-2})$ (for a fixed cell i and a fixed time t). This suggests that the unstable tangent spaces along many trajectories are similarly aligned. Therefore, we extend the idea of the support score to $\mu_t^\zeta$ by taking the average 〈si(t)〉 across $\mu_t^\zeta$. We numerically approximate this quantity by averaging over 1000 trajectories. As stated earlier, the behavior of all cells is statistically similar because the network is randomly coupled. As a consequence, the quantities 〈si(t)〉 and $h(\mu_{t,i}^\zeta)$ do not depend sensitively on which i is chosen.


Figure 3 (C) shows a scatter plot of 〈si(t)〉 vs. $h(\mu_{t,i}^\zeta)$ for a representative network that is asymptotically unreliable. It clearly shows that a larger contribution of neural direction i to state space expansion goes together with a higher entropy of the projected measure $\mu_{t,i}^\zeta$. This phenomenon is robust across all values of ε tested. Once again, we note that this correspondence is not automatic for an arbitrary dynamical system: there is no guaranteed relationship between the orientation of the unstable subspace and the entropy of the projected density. For example, the restriction of $\mu_t^\zeta$ to unstable manifolds could be very localized, thus having low entropy even for perfectly aligned subspaces.

Temporal statistics

Next, we inquire about the distributions of 〈si(t)〉 across time and neural directions. That is, again following [11], we study the number of cells that significantly contribute to unstable directions at any moment as well as the time evolution of this participation for a given cell.

Figure 3 (D) shows a typical distribution of support scores across all cells in the network at a fixed moment in time. The inset shows a trace of 〈si〉 across cells at that moment. The important fact is that this distribution is very uneven across neurons, being strongly skewed towards low values of 〈si〉. In panel (E) of the same figure, we see a typical distribution of support scores across time for a fixed cell. The inset shows a sample of the 〈si(t)〉 time trace for that cell. We emphasize that the uneven shape of these distributions implies that at any given moment in time, only a few cells significantly support expanding directions of the attractor, and moreover, that the identity of these cells changes as time evolves. A similar mechanism was reported for networks of autonomously oscillating cells [11], although only the maximally expanding direction was used to compute si(t). In both cases, neurons in the network essentially take turns participating in the state space expansion that is present in the chaotic dynamics.

This leads to trajectories that are unstable on long timescales (λ1 > 0), yet alternate between periods of stability and instability in single neural directions on short timescales. To directly verify this, Fig. 3 (F) shows a sample time trace of $d_{S^1}(\theta^1_i(t), \theta^2_i(t))$: the projection distance between two randomly initialized trajectories θ¹(t) and θ²(t) in a single neural direction i. Also shown is $\max_i d_{S^1}(\theta^1_i(t), \theta^2_i(t))$: the maximal projection distance over all neural directions. While the maximal S1 distance is almost always close to its largest possible value 0.5, the two trajectories regularly come arbitrarily close along any given S1 direction. This leads to a global separation $d_{\mathbb{T}^N}(\theta^1(t), \theta^2(t))$ that is relatively stable in time (Fig. 3 (G)) yet produces temporary local convergence (here $d_{\mathbb{T}^N}$ refers to the geodesic distance on the flat N-torus, i.e., a cube [0, 1]N with opposite faces identified). In what follows, we will see that this mechanism translates into spike trains that retain considerable temporal structure from trial to trial.

refers to the geodesic distance on the flat N-torus, i.e., a cube [0, 1]N with opposite faces identified). In what follows, we will see that this mechanism translates into spike trains that retain considerable temporal structure from trial to trial.
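The distances plotted in Fig. 3 (F) and (G) can be computed as in the following minimal sketch. It assumes that network states are stored as NumPy arrays of phases in [0, 1); the function names are illustrative, not taken from the authors' code. The torus distance relies on the fact that the flat metric on [0, 1]^N with opposite faces identified is the Euclidean norm of the per-coordinate circle distances.

```python
import numpy as np

def d_s1(x, y):
    """Geodesic distance on the circle S^1 = [0, 1) with 0 ~ 1 (at most 0.5)."""
    d = np.abs(np.asarray(x, dtype=float) - np.asarray(y, dtype=float)) % 1.0
    return np.minimum(d, 1.0 - d)

def projection_distances(theta1, theta2):
    """S^1 distance between two network states along every neural direction i."""
    return d_s1(theta1, theta2)

def d_torus(theta1, theta2):
    """Geodesic distance on the flat N-torus: Euclidean norm of circle distances."""
    return float(np.sqrt(np.sum(projection_distances(theta1, theta2) ** 2)))

# Usage: compare two random network states of N = 1000 cells.
rng = np.random.default_rng(0)
a, b = rng.random(1000), rng.random(1000)
local = projection_distances(a, b)   # one value per neural direction
print(local.max(), d_torus(a, b))    # maximal S^1 distance and global separation
```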

RELIABILITY OF SPIKE TIMES

Thus far, we have been concerned in general with the separation of trajectories arising from distinct trials (i.e., different ICs but fixed input ζ(t)). However, of relevance to the dynamical evolution of the network state are spike times: the only moments where distinct neural directions are effectively coupled. Indeed, coupling between cells of this network is restricted to a very small portion of state space, namely to a small interval around θi = 0 ~ 1 when a cell spikes (see Model section). This property is ubiquitous in neural circuits and other pulse-coupled systems [37] and is central to the time evolution of the projected measures.

Spike reliability captured by probability fluxes

From the perspective of spiking, what matters is the time evolution of projected measures on S1 in relation to the spiking boundary. This is captured by the probability flux Φi(t) of the projected measure through θi = 0 ~ 1. For our system, we can easily write down the equation for the flux, since inputs to a given cell have no effect at the spiking phase (i.e., Z(0) = 0 in (1)). From (1), every trajectory crosses θi = 0 ~ 1 with velocity θ̇i = F(0) = 2, and we have $\Phi_i(t) = 2\,\rho_i(0, t)$, where ρi(θ, t) denotes the density of the projected measure on S1. We emphasize that this probability flux is associated with the sample measures, and differs from the usual flux arising from the Fokker-Planck equation. Here, the variability between trajectories that widens the projected measures is due to chaotic network interactions, rather than to noise that differs from trial to trial. Overall, Φi(t) is modulated by a complex interaction of the stimulus drive ζ(t), the vector field of the system itself, and “diffusion” originating from chaos; as we have seen, the latter depends in a nontrivial way on the geometric structure of the underlying strange attractor.

In the limit of infinitely many trials, Φi(t) is exactly the normalized cross-trial spike time histogram, often referred to as the peri-stimulus time histogram (PSTH) in the neuroscience literature. A PSTH is obtained experimentally by repeatedly presenting the same stimulus to a neuron or neural system and recording the evoked spike times on each trial. Figure 4 (A) illustrates the time evolution of Φi. Perfectly reliable spike times (repeated across all trials) are represented by a time t* such that for an open interval U ∋ t*, Φi(t)|U = δ(t − t*). By contrast, finite values of Φi(t) indicate various degrees of spike repeatability. Of course, Φi(t) = 0 implies that cell i is not currently spiking on any trial.

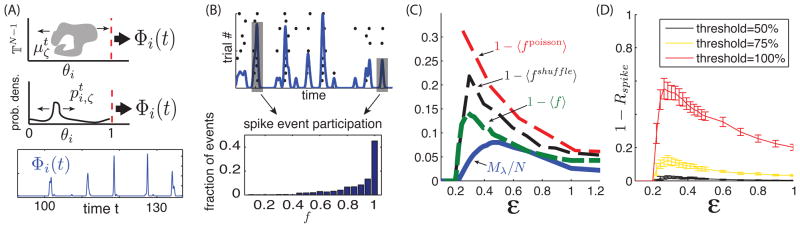

FIG. 4.

(Colors online) (A) Top and middle: cartoon representations of the flux Φi(t). Bottom: sample Φi time trace for a randomly chosen cell, approximated from 1000 trajectories. (B) Top: illustration of the spike event definition. Bottom: distribution of the spike event participation fraction f. (For (A) and (B): η = −0.5, ε = 0.5, λ1 ≃ 2.5.) (C) Curves of 1 − 〈f〉 (network), 1 − 〈fshuffle〉 (single cell with shuffled input spike trains from network simulations) and 1 − 〈fpoisson〉 (single cell with random Poisson spike inputs) vs. ε. Also shown is the fraction of positive Lyapunov exponents, Mλ/N, vs. ε. (D) Mean 1 − Rspike vs. ε for three threshold values. Error bars show one standard deviation of mean Rspike across all cells in the network. (For (C) and (D): η = −0.5.)

Spike events: repeatable temporal patterns

Our next goal is to use Φi(t) to derive a metric of spike time reliability for a network. Intuitively, given a spike observed on one trial, we seek the expected probability that this spike would be present on any other trial. This amounts to asking to what extent the function Φi(t) is “peaked” on average.

To develop a practical assessment of this extent, we begin by approximating Φi(t) from a finite number of trajectories. To do so, we change the flux from a continuous-time to a discrete-time quantity. For practical reasons, we let Φ̂i(t) denote the fraction of an ensemble of trajectories that crosses the θi = 1 ~ 0 boundary within a small time interval [t, t + Δt). As a discrete quantity, we now have Φ̂i(t) ≈ Φi(t)Δt. Borrowing a procedure from [38], we convolve this discretized flux with a Gaussian filter of standard deviation σ to obtain a smooth waveform (see Fig 4 (B)). We then define spike events as local maxima (peaks) of this waveform. A spike is assigned to an event if it falls within a tolerance window around the event time, defined by the width of the peak at half height. If the spikes contributing to an event are perfectly aligned, the tolerance is of order σ; if there is some variability in the spike times, the tolerance grows as the event's peak widens. This procedure ensures that spikes differing by negligible shifts are members of the same event. For our estimates, we used Δt = 0.005 (the time step of the numerical solver) and found that σ = 0.05 was large enough to define reasonable event sizes and small enough to discriminate between most consecutive spikes from the same trial. We note that the following results are robust to moderate changes in σ.
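The event-detection procedure above can be sketched for a single cell as follows. This is a minimal illustration, not the implementation from [38]: it assumes spike times are stored as one NumPy array per trial, uses a Gaussian kernel truncated at 4σ, and takes the contiguous region where the smoothed waveform stays above half the peak height as each event's tolerance window. The function name `detect_spike_events` is hypothetical. It also returns the participation fraction f of each event, which is used in the next paragraph. Overlapping windows of nearby events are not resolved in this sketch.

```python
import numpy as np

def detect_spike_events(trials, t_max, dt=0.005, sigma=0.05):
    """Detect spike events for one cell and return (event_times, f).

    trials: list of 1-D arrays, the cell's spike times on each trial.
    f[e] is the fraction of trials with at least one spike in event e's window.
    """
    trials = [np.asarray(s, dtype=float) for s in trials]
    n_trials = len(trials)
    n_bins = int(np.ceil(t_max / dt))
    # Discretized flux: fraction of trials crossing theta_i = 1 ~ 0 in [t, t + dt).
    counts = np.zeros(n_bins)
    for s in trials:
        idx = np.clip((s / dt).astype(int), 0, n_bins - 1)
        np.add.at(counts, idx, 1.0)
    phi_hat = counts / n_trials
    # Smooth with a Gaussian filter of standard deviation sigma (truncated at 4 sigma).
    half = int(np.ceil(4 * sigma / dt))
    lags = np.arange(-half, half + 1) * dt
    kernel = np.exp(-0.5 * (lags / sigma) ** 2)
    kernel /= kernel.sum()
    w = np.convolve(phi_hat, kernel, mode="same")
    # Spike events: local maxima of the smoothed waveform (leftmost bin of a flat top).
    i = np.arange(1, n_bins - 1)
    peaks = i[(w[i] > w[i - 1]) & (w[i] >= w[i + 1]) & (w[i] > 0)]
    event_times = peaks * dt
    f = np.empty(len(peaks))
    for e, p in enumerate(peaks):
        # Tolerance window: contiguous bins where w stays at or above half the peak height.
        lo, hi = p, p
        while lo > 0 and w[lo - 1] >= w[p] / 2:
            lo -= 1
        while hi < n_bins - 1 and w[hi + 1] >= w[p] / 2:
            hi += 1
        a, b = lo * dt, (hi + 1) * dt
        # Participation fraction: trials with at least one spike inside [a, b).
        f[e] = np.mean([np.any((s >= a) & (s < b)) for s in trials])
    return event_times, f
```

For example, 30 trials that all spike at t = 1.0 and, on every other trial, also at t = 2.0 yield two events with f = 1 and f = 0.5.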

Each spike event is then assigned a participation fraction f: the fraction of trials participating in the spike event. Figure 4 (B) shows the distribution of f's for the events recorded from all cells of our chaotic network testbed, using runs of 2500 time units with 30 trials and discarding the initial 10% to avoid transient effects. There is a significant fraction of events with f = 1, and the number of occurrences decreases monotonically for lower participation fractions. The mean 〈f〉 of this distribution is the finite-sampling equivalent of the average height of Φi peaks and therefore represents an estimate of the expected probability of an observed spike being repeated on other trials.

Finally, we compare 〈f〉 to the number of unstable directions of the chaotic attractor for a range of input amplitude ε. Figure 4 (C) shows both ε-dependent curves 1 − 〈f〉 and Mλ/N (previously shown in Fig 2 (B)). For weak input amplitudes (ε < 0.2), networks are asymptotically reliable and thus, Mλ/N = 0 and every event has full participation fraction (1 − 〈f〉 = 0). As ε increases, the network undergoes a rapid transition from stable to chaotic dynamics. Most interestingly, both 1 − 〈f〉 and Mλ/N follow the same trend, suggesting that the dimension of the underlying strange attractor plays an important role in the expected reliability of spikes. While this relationship is not perfect, it shows that the number of positive Lyapunov exponents serves as a better predictor of average spike reproducibility than the magnitude of λ1 alone.

The shapes of 1 − 〈f〉 and Mλ/N show an initial growth followed by a gradual decay, suggesting that following a transition from stable to chaotic dynamics, higher input fluctuations induce more reliable spiking. In the limit of high ε, this agrees with the intuition of an entraining effect by the input signal. This raises an important question about the observed dynamics: Is spike repeatability simply due to large deviations in the input? Or equivalently, is the role of chaotic network interactions comparable to “noise” in the inputs to individual neurons? That this may not be the case for moderate input amplitudes is suggested by the concentration of trajectories in the sample measures. We now seek to demonstrate the difference.

RELEVANT LOCAL MECHANISMS

Network interactions vs. stimulus

A natural question about the dynamical phenomena described above is: to what extent are they caused by network interactions, compared to direct effects of the stimulus? In our system, each cell receives an external stimulus ζi(t) as well as a sum of inputs from other cells. Because of network interactions, the latter inputs are highly structured even when λ1 > 0, and can be correlated across multiple trials. Indeed, all else being equal, the more singular and low-dimensional the sample measure is, the more cross-trial correlation there will be. The question is whether we would still observe the same spiking behavior when inputs from the rest of the network are replaced by more random inputs.

To test this, we compare the response of cell i in a network driven by the stimulus ζ(t) with that of a single “test cell.” The test cell receives: (i) the ith component ζi(t) of the same stimulus, and (ii) excitatory and inhibitory spike trains with statistics chosen to “match” network activity in two different ways that we describe below. For each, the number of such spike trains matches the mean in-degree K of the network. That is, there are K excitatory and K inhibitory spike trains, so that balance is conserved.

In our first use of the test cell, we present Poisson-distributed spike trains whose rates are adjusted to the network firing rate (at each ε). Importantly, all trains are independent (both within and across trials). We denote the corresponding average spike event participation fractions by 〈fpoisson〉.
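The Poisson surrogate inputs can be generated as in this minimal sketch. It assumes homogeneous Poisson trains whose rates are supplied by the caller (in the text, matched to the network firing rate at each ε) and draws fresh, independent trains on every call, i.e., on every trial. The helper names are hypothetical, and integrating these spikes into the θ-neuron test cell is not shown.

```python
import numpy as np

def poisson_spike_trains(n_trains, rate, t_max, rng):
    """Independent homogeneous Poisson spike trains on [0, t_max), one sorted array each."""
    trains = []
    for _ in range(n_trains):
        n = rng.poisson(rate * t_max)                 # spike count in [0, t_max)
        # Given the count, spike times of a Poisson process are i.i.d. uniform.
        trains.append(np.sort(rng.uniform(0.0, t_max, size=n)))
    return trains

def make_poisson_inputs(K, rate_exc, rate_inh, t_max, seed=None):
    """K excitatory and K inhibitory trains for one trial of the test cell."""
    rng = np.random.default_rng(seed)
    exc = poisson_spike_trains(K, rate_exc, t_max, rng)
    inh = poisson_spike_trains(K, rate_inh, t_max, rng)
    return exc, inh
```

Calling `make_poisson_inputs` with a different seed for each trial keeps the trains independent across trials as well as within them.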

In our second use of the test cell, we present spike trains taken from K excitatory and K inhibitory cells, each chosen from a simulation with a different initial condition but with the same stimulus drive ζ(t). This way, the stimulus modulation of the individual spike trains is preserved, but the global structure of the chaotic attractor is disrupted. The corresponding average spike event participation fractions in this case are denoted by 〈fshuffle〉.
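One way to assemble the trial-shuffled inputs is sketched below. It is an illustration under stated assumptions, not necessarily the exact shuffling scheme used for Fig. 4 (C): it assumes the network spike trains are stored as `spikes[trial][cell]` from simulations that share the stimulus ζ(t) but start from different initial conditions, and it draws, for each input slot, a distinct presynaptic cell together with an independently chosen simulation. Each train thus keeps its stimulus modulation while cross-cell correlations from a single trajectory of the attractor are broken. The function name is hypothetical.

```python
import numpy as np

def shuffled_inputs(spikes, exc_cells, inh_cells, K, seed=None):
    """Trial-shuffled surrogate inputs for one trial of the test cell.

    spikes[trial][cell] is a spike-time array; all trials share the same stimulus.
    Returns K excitatory and K inhibitory trains, each taken from a distinct cell
    and from an independently drawn simulation (trial).
    """
    rng = np.random.default_rng(seed)
    n_trials = len(spikes)

    def draw(pool):
        cells = rng.choice(pool, size=K, replace=False)     # distinct presynaptic cells
        sims = rng.integers(0, n_trials, size=K)            # independent simulation per train
        return [spikes[t][c] for t, c in zip(sims, cells)]

    return draw(exc_cells), draw(inh_cells)
```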

Fig 4 (C) shows 1 − 〈fpoisson〉 and 1 − 〈fshuffle〉 alongside 1 − 〈f〉. For moderate values of ε, the test-cell curves exceed the network curve by factors of about 2 (Poisson) and 1.5 (shuffle), and the three curves slowly converge as ε increases. This confirms that two dynamical regimes are present. When the input strength is very high, inputs tend to entrain neurons into firing regardless of synaptic inputs, as was intuitively stated above. However, for moderate input amplitudes, network interactions play a central role in the repeatability of spike times. Importantly, many repeatable spike events in chaotic networks are not present in the test cell driven with either surrogate Poisson or trial-shuffled excitatory and inhibitory inputs, even though the same stimulus ζi(t) was given in each case.

A second, closely related question is whether the reliable spiking events we see are solely due to large fluctuations in the stimulus, or whether network mechanisms play a significant role. The above results, which show that structured network interactions can have a significant impact on single-cell reliability, suggest that stimulus fluctuations alone do not account for them. Here we provide a second, more direct test of this question.

To proceed, we first classify each spike fired in the network as either reliable or not, by defining a quantity Rspike: the fraction of spikes belonging to an event with a participation fraction f greater than or equal to some threshold. In other words, Rspike is the complementary cumulative distribution of f, taken over spikes rather than events and evaluated at the chosen threshold. Equivalently, we say a spike event is reliable if its f is greater than or equal to that threshold, and unreliable otherwise. Individual spikes inherit the reliability classification of the event of which they are a member.
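Because each event contributes all of its member spikes, Rspike weights events by their size. A minimal sketch, assuming the number of spikes assigned to each event (pooled over trials) and its participation fraction f are already available; the function name is hypothetical.

```python
import numpy as np

def r_spike(event_sizes, f, threshold=1.0):
    """Fraction of spikes that belong to events with participation fraction f >= threshold.

    event_sizes: number of spikes assigned to each event, pooled over trials.
    f: participation fraction of each event.
    """
    event_sizes = np.asarray(event_sizes, dtype=float)
    reliable = np.asarray(f) >= threshold          # event-level classification
    return event_sizes[reliable].sum() / event_sizes.sum()

# Three events of 30, 15 and 10 spikes from 30 trials:
print(r_spike([30, 15, 10], [1.0, 0.5, 1 / 3], threshold=1.0))   # 30/55, about 0.545
```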

For visual comparison with Fig 4 (C), Fig 4 (D) shows 1 − Rspike as a function of ε for three threshold values (0.5, 0.75 and 1). These curves show the fraction of unreliable spikes, out of all spikes fired, for a given threshold. The error bars show the standard deviation of the value across all cells in the network. As expected for small ε, 1 − Rspike = 0 since λ1 < 0. Notice that as in the case of 1 − 〈f〉, the distinct choices of threshold do not affect overall trends, but they greatly impact the fraction of spikes labeled reliable (or unreliable). For what follows, we adopt a strict definition of spike time reliability by fixing the Rspike threshold at 1 (i.e. a spike is reliable if it is present in all trials). However, the subsequent results are fairly robust to the choice of this threshold.

We can now address the question raised above via spike-triggered averaging (STA). As the name describes, this procedure takes quantities related to a given cell’s dynamics (i.e. stimulus, synaptic inputs, etc.) in the moments leading to a spike, and averages them across an ensemble of spike times. In other words, it is a conditional expectation of the stimulus in the moments leading up to a spike; it can also be interpreted as the leading term of a Wiener-Volterra expansion of the neural response [39]. In what follows, we will distinguish between reliable and unreliable spikes while taking these averages in an effort to isolate dynamical differences between the two.

For illustration, we turn to our chaotic network testbed. Figure 5 (A) and (B) show the STA of both excitatory and inhibitory network interactions, as well as the external input, leading to reliable and unreliable spikes. More precisely, suppose we consider spike times $\{t^i_1, t^i_2, \ldots\}$ from cell i. Then the network interactions used in the STA form the ensemble of time traces $\{Z(\theta_i(t^i_k + s))\sum_j a_{ij}\, g(\theta_j(t^i_k + s))\}_k$, for s ≤ 0 in a short window preceding the spike, where Z(θ) and g(θ) are as in (1) and we differentiate between excitatory and inhibitory inputs according to the sign of aij. Similarly, the external input is taken from $\{\varepsilon Z(\theta_i(t^i_k + s))\,\zeta_i(t^i_k + s)\}_k$.
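A minimal sketch of the spike-triggered averages described above, separated by spike reliability. It assumes the quantity being averaged (for instance the recurrent input trace Z(θi(t))Σj aij g(θj(t)) or the external term εZ(θi(t))ζi(t)) is sampled on the solver grid, that spike times are given as integer sample indices, and that at least one spike of each class is available. The function names are hypothetical.

```python
import numpy as np

def spike_triggered_average(signal, spike_idx, window):
    """Average of `signal` over the `window` samples preceding each spike, plus the spike sample.

    Returns an array of length window + 1, indexed by lag s = -window, ..., 0.
    """
    spike_idx = np.asarray(spike_idx, dtype=int)
    spike_idx = spike_idx[spike_idx >= window]        # drop spikes too close to t = 0
    segments = np.stack([signal[i - window:i + 1] for i in spike_idx])
    return segments.mean(axis=0)

def split_sta(signal, spike_idx, reliable_mask, window):
    """STAs conditioned on reliable and on unreliable spikes, respectively."""
    spike_idx = np.asarray(spike_idx, dtype=int)
    mask = np.asarray(reliable_mask, dtype=bool)
    return (spike_triggered_average(signal, spike_idx[mask], window),
            spike_triggered_average(signal, spike_idx[~mask], window))
```

With Δt = 0.005, a window of 200 samples covers one time unit before each spike.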

FIG. 5.

(Color online) For (A) through (E), t = 0 marks the spike time and rel/unrel indicates the identity of the spike used in the average. (A) and (B): spike-triggered averaged external signal εZ(θi)ζi(t) (black), excitatory (purple) and inhibitory (orange) network inputs Z(θi) Σj aijg(θj). (A) Triggered on reliable spikes. (B) Triggered on unreliable spikes. (C) Spike-triggered support score S. (D) Spike-triggered local expansion measure E. (E) Spike-triggered average phase θi. For all panels: η = −0.5, ε = 0.5 with λ1 ≈ 2.5. Shaded areas surrounding the computed averages show two standard errors of the mean.ᵃ No shade indicates that the error is too small to visualize.

ᵃ Computed standard deviations were verified by spot checks using the method of batched means, with about 100 batches of size 1000.

There are two main points to take from these STAs. First, note that the average levels of spike-triggered recurrent excitation and inhibition very roughly balance one another in time periods well before spike times. However, right before spikes this balance is broken, leading to an excess of recurrent excitation which is stronger than the spike-triggered stimulus. This gives further evidence that recurrent interactions shape the dynamics with which the spikes themselves are elicited, rather than spikes being primarily driven by the external stimuli alone. Second, note that these STAs are qualitatively similar for both reliable and unreliable spikes. Even though the peak of the summed external input in Fig. 5(A) is higher than that in Fig. 5(B), it is not clear that this difference is sufficient, by itself, to explain the increase in reliability (as the magnitude of the mean external input is relatively small). This suggests that we look for other dynamical factors that might contribute to reliable spike events, a task to which we now turn.

Recall that the support score si(t) measures the contribution of a single cell's subspace to tangent unstable directions of a trajectory. Consider the corresponding STA S(t), i.e., the expected value of si(t) in a short time interval preceding each spike in the network. Fig. 5 (C) shows the resulting averages for both reliable and unreliable spikes. Moments before a cell fires an unreliable spike, S(t) is considerably larger than in the reliable spike case, indicating that global expansion is more strongly aligned with the spiking cell's direction before unreliable spike events. We now investigate properties of the flow leading to this phenomenon.

Source of local expansion

To better capture state space expansion in a given neural direction, consider v(t), the solution of the variational equation

| (5) |

where J(t) = DΨ0,t;ζ is the Jacobian of the flow evaluated along a trajectory θ(t). If we set v(0) to be randomly chosen but with unit length, then v(t) quickly aligns with the directions of maximum expansion in the tangent space of the flow about θ(t); moreover, because of ergodicity, $\lambda_1 = \lim_{t\to\infty} \frac{1}{t}\ln\|v(t)\|$. We can equivalently write a discretized version of this expression for small Δt: λ1 = limT→∞〈e(t)〉T, where 〈·〉T denotes the time average up to time T and

$e(t) = \frac{1}{\Delta t}\ln\frac{\|v(t+\Delta t)\|}{\|v(t)\|}$

is analogous to a finite-time Lyapunov exponent. For our network, e(t) fluctuates rapidly and depends on many factors, such as the number of spikes fired, the pattern of the inputs, and the phase coordinate of each cell over the time Δt. Its coefficient of variation is typically $\mathcal{O}(10)$ for Δt = 0.005, which is consistent with the fact that stability is very heterogeneous in time. To better understand the behavior of the flow along single neural directions, we define the local expansion coefficient

| (6) |

Note that ei(t) is a local equivalent of e(t) and directly measures the maximum expansion along a neural direction.
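The time-average estimate λ1 = limT→∞〈e(t)〉T can be illustrated with the following minimal sketch. It assumes an explicit Euler step of the linear variational equation and a user-supplied Jacobian `jacobian(n)` at step n; for the network, J would come from (1) evaluated along the simulated trajectory, which is not shown here. Renormalizing v to unit length after every step makes e(t) = ln(‖v(t+Δt)‖/‖v(t)‖)/Δt simply the log of the new norm divided by Δt. The function name is hypothetical.

```python
import numpy as np

def largest_lyapunov(jacobian, n_steps, dt, dim, seed=None):
    """Estimate lambda_1 as the time average of e(t) = ln(|v(t+dt)| / |v(t)|) / dt.

    jacobian(n) returns the Jacobian J at step n along the trajectory.
    Returns (estimate of lambda_1, array of e(t) values).
    """
    rng = np.random.default_rng(seed)
    v = rng.standard_normal(dim)
    v /= np.linalg.norm(v)                  # random initial vector of unit length
    e = np.empty(n_steps)
    for n in range(n_steps):
        v = v + dt * jacobian(n) @ v        # Euler step of the variational equation
        growth = np.linalg.norm(v)          # |v(t + dt)| since |v(t)| = 1
        e[n] = np.log(growth) / dt          # finite-time expansion rate e(t)
        v /= growth
    return e.mean(), e

# Toy check with a constant Jacobian diag(0.5, -1): the estimate approaches 0.5.
lam, e = largest_lyapunov(lambda n: np.diag([0.5, -1.0]), 20000, 0.005, 2, seed=0)
```

The returned array `e` can also be used to compute the coefficient of variation `e.std() / e.mean()` discussed above.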

Define E(t) as the STA corresponding to ei(t), shown in Fig. 5 (D). Notice that near its peak, Eunrel(t) is much broader than Erel(t), which indicates that prior to an unreliable spike, trajectories are subject to a larger accumulated infinitesimal expansion than in the reliable spike case.

In contrast to si(t), ei(t) is directly computable in terms of contributions from different terms in the flow. We refer the reader to the Appendix for a detailed treatment of input conditions leading to reliable or unreliable spikes. Importantly, the source of “local” expansion ei(t) is dominated by the effect of a single cell's vector field F(θi) (from Eqn. (1)), which depends directly on the phase trajectory θi(t) prior to a spike.

If θi(t) ∈ (0, 1/2), F′(θi(t)) is negative, and it becomes positive for θi(t) ∈ (1/2, 1); these are the contracting and expanding regions of a single cell in the absence of fluctuating inputs from the network or an external source. When an uncoupled cell is driven by ζi, we know that on average, it spends more time in its contractive region (θi ∈ (0, 1/2)) and is reliable as a result [5, 19]. While inputs may directly contribute to J(t), their effect is generally so brief that their chief contribution to ei(t) is to steer θi(t) toward expanding regions of its own subspace (see Appendix). Fig 5 (E) confirms that, on average, the phase of a cell preceding an unreliable spike spends more time in its expanding region. Such a phenomenon has previously been reported in the form of a threshold crossing velocity argument [40].

The key feature of this driven system, likely due to sparse and rapid coupling, is a sustained balance between inputs leading to contraction/expansion in local neural subspaces. A bias toward more occurrences of “expansive inputs” yields positive Lyapunov exponents (Mλ > 0) and implies on average, more growth than decay. What is perhaps surprising is that this state space expansion remains confined to subspaces supported by only a few neural directions, which creates this coexistence of chaos and highly reliable spiking throughout the network.

DISCUSSION

In this article, we explored the reliability of fluctuation-driven networks in the excitable regime — where model single cell dynamics contain stable fixed points. We showed that these networks can operate in stable or chaotic regimes and demonstrated that spike trains of single neurons from chaotic networks can retain a great deal of temporal structure across trials. We have found that an attribute of random attractors that directly impacts the reliability of single cells is the orientation of expanding subspaces, and that the evolving shape of the random attractor is reflected in the intermittent reliability of single neurons. We have also performed a detailed numerical study to analyze the local (i.e., cell-to-cell) interactions responsible for reliable spike events.

This said, a mechanistic understanding of the origins of chaotic, structured spiking remains to be fully developed. Specifically, we still need to work out the role of larger-scale network structures, and how unreliable spike events propagate through the network in a self-sustaining fashion in networks with λ1 > 0. This is a target of our future work.

Throughout this work, we have found the qualitative theory of random dynamical systems to be a useful conceptual framework for studying reliability. Though the theory is predicated on a number of idealizations, we expect most of them (e.g., the assumption that the stimuli are white noise rather than some other type of stochastic process) can be relaxed.

Finally, we note that the phenomena observed here may have consequences for neural information coding and processing. In particular, unreliable spikes are a hallmark of sensitivity to initial conditions and may therefore carry information about previous states of the system (or, equivalently, previous inputs). In contrast, reliable spikes carry repeatable information and computations about the external stimulus ζ(t) (either via directly evoked spikes or propagated by repeatable network interactions). We showed that both unreliable and reliable spike events coexist in chaotic regimes of the system explored. Preliminary results indicate that correlation across external drives greatly enhances a network’s spike time reliability and will be the object of an upcoming publication. The resulting implications for the neural encoding of signals are an intriguing avenue for further investigation.

Acknowledgments

The authors thank Lai-Sang Young for helpful insights. This work was supported in part by an NSERC graduate scholarship, an NIH Training Grant R90 DA033461, the Burroughs Wellcome Fund Scientific Interfaces, and the NSF under grant DMS-0907927. Numerical simulations were performed on NSF's XSEDE supercomputing platform.

APPENDIX

Model and coordinate transformations from QIF

Our networks are composed of θ-neurons, which are equivalent to the quadratic integrate-and-fire (QIF) model [22, 23]. The latter is formulated in terms of membrane potentials, and thus has a direct physical interpretation. However, it is a hybrid dynamical system, i.e., its solutions are instantaneously reset to a base value after a spike is emitted. For our purposes, such discontinuities are rather inconvenient. Fortunately, there exists a smooth change of coordinates mapping the QIF (hybrid) dynamics to θ-space, where a cell's membrane potential is represented by a phase variable on the unit circle S1. This representation has the advantage of being one of the simplest models that capture the nonlinear spike-generating mechanism of Type I neurons while keeping solutions smooth and on a compact domain, a mathematical feature central to this study. We now review this change of coordinates and the equivalence of the two models.

The variable v represents the membrane potential of a single neuron and its dynamics are described by the following equation:

| (7) |

where τ is the cell membrane time constant, vR and vT are rest and threshold voltages respectively and Δv = vT − vR. Ia is an applied constant current, and Id(t) is a time varying input drive. If v(t) crosses the threshold vT, its trajectory quickly blows up to infinity where it is said to fire a spike. Once a spike is fired, v(t) is reset to −∞ and the trajectory will converge toward vR. To implement this in simulations, a ceiling value is set such that when reached, it represents the apex of a spike and the voltage v(t) is “manually” reset to a value below threshold. As we will see, the θ-model circumvents the need for this procedure.

In the absence of other inputs (Id = 0), a baseline current Ia equal to the critical value I*

places the system at a saddle-node bifurcation, responsible for the onset of tonic (periodic) firing. Therefore, if Ia < I*, the neuron is said to be in the excitable regime, whereas if Ia > I*, it is in the oscillatory regime.

Let us suppose that the input term Id(t) is a realization of a white noise process scaled by a constant ρ. We can rewrite (7) as a stochastic differential equation (SDE)

| (8) |

where Wt is a standard Wiener process. We treat (8) as an SDE of Itô type [27], as it is more convenient for numerical simulations, and carry out the change of variables accordingly.

Let us introduce a new variable θ defined by

| (9) |

along with a rescaling of time

| (10) |

Equation (8) now reads

| (11) |

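where F(θ) = 1 + cos(2πθ), Z(θ) = 1 − cos(2πθ), and η is the rescaled baseline current (η < 0 exactly when Ia < I*).

This is the θ-model on [0, 1] we want. In the absence of stochastic drive and for η < 0, the two fixed points are given by

$\theta_s = \frac{1}{2\pi}\arccos\left(\frac{1+\eta}{\eta-1}\right), \qquad \theta_u = 1 - \theta_s,$

which for η = −1 yields θs = 1/4 and θu = 3/4. For η > 0, the neuron fires periodically at a frequency of $2\sqrt{\eta}$.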
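The fixed points and the firing frequency above can be checked numerically with the following minimal sketch. It assumes the deterministic single-cell drift θ̇ = F(θ) + ηZ(θ), which is consistent with the spike-time velocity θ̇ = F(0) = 2 and with the fixed points 1/4 and 3/4 for η = −1. The period ∫₀¹ dθ / (F(θ) + ηZ(θ)) is evaluated with the midpoint rule, which is very accurate for smooth periodic integrands.

```python
import numpy as np

def F(theta):
    return 1.0 + np.cos(2 * np.pi * theta)

def Z(theta):
    return 1.0 - np.cos(2 * np.pi * theta)

def fixed_points(eta):
    """Stable and unstable fixed points of theta' = F(theta) + eta * Z(theta), for eta < 0."""
    theta_s = np.arccos((1.0 + eta) / (eta - 1.0)) / (2 * np.pi)
    return theta_s, 1.0 - theta_s

def firing_frequency(eta, n=100000):
    """1 / period for eta > 0, with the period computed by the midpoint rule on [0, 1]."""
    theta = (np.arange(n) + 0.5) / n
    period = np.mean(1.0 / (F(theta) + eta * Z(theta)))
    return 1.0 / period

print(fixed_points(-1.0))                       # (0.25, 0.75)
print(firing_frequency(0.25), 2 * np.sqrt(0.25))
```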

Typical parameter choices for the QIF model are

| (12) |

with time in units of milliseconds. Expression (10) implies that one time unit in θ-coordinates corresponds to about 125 milliseconds. In the absence of applied current Ia, we get η = −1.

Network architecture and synaptic coupling

In the main manuscript, we explore the dynamics of Erdős-Rényi type random networks of N = 1000 cells, of which 80% are excitatory and 20% inhibitory. Each cell receives on average K = 20 synaptic connections from each of the excitatory and inhibitory subpopulations. We implement a classical balanced state architecture and scale the synaptic weights of these connections by 1/√K, which ensures that fluctuations from network interactions remain independent of K in the large N limit [9] (as long as K ≪ N and cells fire close to independently). Although we do not systematically explore the scaling effects of N and K, preliminary results for combinations of K = 50, 100, 200 and N = 2500, 5000 indicate that our findings are qualitatively robust to system size.
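A connectivity matrix with these statistics can be sampled as in the following minimal sketch. It assumes independent Bernoulli connections with population-specific probabilities (K/N_E from excitatory cells, K/N_I from inhibitory cells), no self-connections, and a single hypothetical coupling constant `a` scaled by 1/√K. The entry aij is the weight from presynaptic cell j to postsynaptic cell i, following the aij convention of (14). The per-cell O(10⁻²) heterogeneity of η and ε is not included.

```python
import numpy as np

def balanced_er_network(N=1000, K=20, frac_exc=0.8, a=1.0, seed=None):
    """Random E-I connectivity with mean in-degree K from each population.

    Returns (A, is_exc), where A[i, j] = +a/sqrt(K) (excitatory j), -a/sqrt(K)
    (inhibitory j) or 0, and is_exc flags the excitatory cells.
    """
    rng = np.random.default_rng(seed)
    n_exc = int(frac_exc * N)
    is_exc = np.arange(N) < n_exc
    # Connection probability depends on the presynaptic population only.
    p = np.where(is_exc, K / n_exc, K / (N - n_exc))
    mask = rng.random((N, N)) < p[np.newaxis, :]   # column j = presynaptic cell j
    np.fill_diagonal(mask, False)                  # no self-connections
    sign = np.where(is_exc, 1.0, -1.0)
    return mask * sign[np.newaxis, :] * a / np.sqrt(K), is_exc
```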

As mentioned earlier, one of the advantages of the θ-neuron model is the continuity of dynamics in phase space. We can therefore easily implement synaptic interaction between two neurons with differentiable and bounded terms. Synaptic interactions between θ-neurons are modeled using a smooth function

| (13) |

where and .

For example, for two cells coupled as 2 → 1, we have

| (14) |

in which a12 is the synaptic strength from neuron 2 to neuron 1. Neuron 2 only affects θ1 when θ2 ∈ [− b, b], mimicking a rapid rise and fall of a synaptic variable in response to presynaptic potential fluctuation during spike generation. We follow the approach of Latham et al. [23] to assess the effective coupling strength from neuron θ2 to neuron θ1 in the form of evoked post synaptic potentials (PSP). Specifically, we first derive a relationship between the value of a12 and the evoked PSP following a presynaptic spike from θ2 in the θ coordinates. We then translate this to the voltage coordinates.

We assume that η = −1 (equivalent to Ia = 0) and that θ1 sits at rest (θ1 = θR), and compute the value θS = θR + θPSP. As the support of g is quite small, let us linearize (14) for θ2 when it crosses 0 ~ 1. We obtain neuron 2's phase velocity at spike time, θ̇2 = 2, and hold this velocity constant in the calculation that follows. Suppose that at t = 0, θ2 is at the left end of g's support; then

which gives us the non-autonomous equation for θ1

| (15) |

We make a final assumption for small PSPs and assume that the behavior of (15) is linear about the resting phase (θ1 = θR). This yields θ̇1 = a12g(2t − β) which in turn gives us

Notice that , which gives the relationship

Although we have made fairly strong assumptions about the θ-dynamics in deriving this expression, we tested it numerically and found that predictions of post synaptic θ variations were accurate up to the third significant digit, for the range of PSPs of interest. Using (9), we get the equivalent expression:

For K = 20 and , we get the following approximations for excitatory and inhibitory PSPs: vEPSP ≃ 4.0mV and vIPSP ≃ −8.4mV.

Finally, we note that all synaptic couplings, when present between two cells, are of the same strength throughout the network (only the sign changes to distinguish between excitatory and inhibitory connections). Additionally, while both η and ε are network-wide constants, we introduce $\mathcal{O}(10^{-2})$ perturbations, randomly chosen for each cell, in order to avoid symmetries in the system.

Lyapunov spectrum approximation

In the main manuscript, we present approximations of the Lyapunov spectrum λ1 ≥ λ2 ≥ … ≥ λN and related quantities for the network described by (1). Under very general conditions, the λi are well defined for system (1) and do not depend on the choice of IC or ζ(t). However, the Lyapunov exponents generally cannot be computed analytically, and we therefore use Monte Carlo simulations to approximate them. We numerically simulate system (1) using an Euler-Maruyama scheme with time steps of 0.005. At each point in time, we simultaneously solve the corresponding variational equation

| (16) |

where J(t) is the Jacobian of the flow evaluated along the simulated trajectory and S(0) is the N × N identity matrix. The solution matrix S(t) is then orthogonalized at each time step in order to extract the exponential growth rates associated with each Lyapunov subspace. See [41] for details of this standard algorithm.
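The QR re-orthonormalization procedure can be sketched as follows. This is a minimal illustration of the standard algorithm, assuming an explicit Euler step of the variational equation and a user-supplied Jacobian `jacobian(n)`; for the stochastic network the Jacobian would be evaluated along the Euler-Maruyama trajectory, which is not shown. After each step the propagated frame is factored as Q R, and the exponents are the time averages of ln|Rkk|. The function name is hypothetical.

```python
import numpy as np

def lyapunov_spectrum(jacobian, n_steps, dt, dim):
    """Lyapunov exponents via repeated QR re-orthonormalization, in decreasing order.

    jacobian(n) returns the Jacobian J at step n along the trajectory.
    """
    Q = np.eye(dim)                                # S(0) = identity
    log_r = np.zeros(dim)
    for n in range(n_steps):
        S = Q + dt * jacobian(n) @ Q               # Euler step of the variational equation
        Q, R = np.linalg.qr(S)                     # orthonormal frame and stretching factors
        log_r += np.log(np.abs(np.diag(R)))
    return np.sort(log_r / (n_steps * dt))[::-1]

# Toy check: a constant, non-normal Jacobian with eigenvalues 0.5, -1 and -2.
P = np.array([[1.0, 1.0, 0.0], [0.0, 1.0, 1.0], [0.0, 0.0, 1.0]])
A = P @ np.diag([0.5, -1.0, -2.0]) @ np.linalg.inv(P)
print(lyapunov_spectrum(lambda n: A, n_steps=20000, dt=0.005, dim=3))
```

For a constant linear system the exponents reduce to the real parts of the eigenvalues (up to the O(Δt) bias of the Euler step), which makes this a convenient sanity check before applying the routine to the network Jacobian.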

All reported values of λi have a standard error less than 0.002, estimated by the method of batched means [42] (batch size = 500 time units) and cross-checked using several realizations of white noise processes and random connectivity matrices. We have also verified, by spot checks, that varying the batch window size does not affect the error estimate significantly.
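The method of batched means estimates the standard error of a time average from a correlated series by splitting it into contiguous batches and treating the batch means as approximately independent. A minimal sketch follows. For the Lyapunov exponents, the input would be the per-step growth rates (for instance ln|Rkk|/Δt from the QR iteration), and a batch of 500 time units corresponds to 100,000 steps at Δt = 0.005. The function name is hypothetical.

```python
import numpy as np

def batch_means_se(samples, batch_size):
    """Standard error of the mean of a correlated series by the method of batched means."""
    x = np.asarray(samples, dtype=float)
    n_batches = len(x) // batch_size
    if n_batches < 2:
        raise ValueError("need at least two full batches")
    # Mean of each contiguous batch; leftover samples at the end are discarded.
    means = x[: n_batches * batch_size].reshape(n_batches, batch_size).mean(axis=1)
    return means.std(ddof=1) / np.sqrt(n_batches)
```

Varying `batch_size` and checking that the estimate is stable is the spot check described above: if the batches are long compared with the correlation time, the estimate should change little.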

Numerical simulations were implemented in Python and Cython programming languages and carried out on NSF’s XSEDE supercomputing platform.

Spike triggered flow decomposition

We take a closer look at the single-cell flow in an attempt to better understand the origin of reliable and unreliable spikes. We concentrate on the effect of inputs on the local expansion coefficient ei(t) (see Eqn. (6)). Consider the time evolution of vi(t) by unpacking the ith component of the discretized version of (5):

| (17) |

where and ξt ~ N(0, 1); Δt is the time increment.

We substitute expression (17) as the numerator in the definition of ei(t) (Eqn. (6)), in order to discern the contribution of different terms in the network dynamics to state space expansion. Let us define the following terms

| (18) |

Notice the use of the absolute value for v(t) components which ensures that Hk(t) > 0 implies expansion (or, if Hk(t) < 0, contraction) in whichever of the positive and negative directions vi(t) is pointing.

Here, H0 captures the contribution of the single-cell vector field to the proportional growth (or decay) of vi(t). Note from Fig 6 (A) that F′(θi), the main contributing part of H0, is negative for θi ∈ (0, 1/2) and positive for θi ∈ (1/2, 1). Meanwhile, H1 measures the contribution of synaptic inputs, and H2(t) the relative contribution of presynaptic neurons' coordinates. The latter varies quite rapidly, because the derivative of the coupling function g(θj) (shown in Fig 6 (A)) takes large positive and negative values. In essence, it quantifies the transfer of expansion from one cell to the next: if |vj(t)| is large and sgn[vi(t)vj(t)] = 1, then H2 causes expansion of vi(t). Finally, H3 captures the contribution of the external drive.

FIG. 6.

(Color online) (A) Distinct terms of the single-cell flow and Jacobian. Inset: synaptic coupling function g(θ). For panels (B–F), t = 0 marks the spike time, and rel/unrel indicate whether reliable or unreliable spikes were used in the average. (B) Spike-triggered average phase θi (same as in Fig 5 E). (C–F) Spike-triggered average terms H0(θi), H1(θi), H2(θi) and H3(θi). Network parameters: η = −0.5, ε = 0.5, yielding λ1 ≃ 2.5. For all panels except (A), shaded areas surrounding the computed averages show two standard errors of the mean; no shading indicates that the error is too small to be visible.ᵃ

ᵃ Standard errors of the mean were verified via spot checks using the method of batch means, with about 100 batches of size 1000.
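The method of batch means can be sketched in Python. The idea is to split a long, possibly autocorrelated series into non-overlapping batches (100 here, following the footnote), treat the batch means as roughly independent, and use their spread to estimate the standard error of the overall mean. This is valid only when each batch is long compared with the correlation time. The function name and the AR(1) test series are illustrative choices, not taken from the study.

```python
import numpy as np

def batch_means_sem(x, n_batches=100):
    """Standard error of the mean of a (possibly correlated) series via non-overlapping batch means."""
    x = np.asarray(x, dtype=float)
    b = x.size // n_batches  # batch size; trailing samples that do not fill a batch are dropped
    if n_batches < 2 or b < 1:
        raise ValueError("need at least 2 batches with at least 1 sample each")
    means = x[: b * n_batches].reshape(n_batches, b).mean(axis=1)
    # The batch means are treated as approximately independent draws.
    return means.std(ddof=1) / np.sqrt(n_batches)

# Usage: an AR(1) series, for which the naive i.i.d. formula underestimates the error.
rng = np.random.default_rng(0)
phi, n = 0.9, 100 * 1000
x = np.empty(n)
x[0] = 0.0
for k in range(1, n):
    x[k] = phi * x[k - 1] + rng.standard_normal()
naive = x.std(ddof=1) / np.sqrt(n)
print(naive, batch_means_sem(x, n_batches=100))
```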

We now assess the relative importance of these dynamical effects for spike-time reliability by comparing the magnitudes and signs of the H terms. Specifically, we compute spike-triggered averages of these terms over the periods preceding reliable and unreliable spike events. As in the main text, a spike is considered reliable if it occurs on every simulated trial.
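The spike-triggered averaging described above can be sketched in Python. The sketch assumes spike times are available for each trial and uses trial 0 as the reference. It checks the condition "occurs on every trial" with a timing tolerance `tol`. The tolerance, the reference-trial choice and the function names are hypothetical and are not the main text's exact implementation.

```python
import numpy as np

def classify_spikes(trial_spikes, tol):
    """Mark each spike of trial 0 as reliable if every other trial has a spike within tol of it."""
    ref = np.asarray(trial_spikes[0], dtype=float)
    reliable = np.ones(ref.size, dtype=bool)
    for other in trial_spikes[1:]:
        other = np.asarray(other, dtype=float)
        if other.size == 0:
            reliable[:] = False
            continue
        # Distance from each reference spike to the nearest spike on this trial.
        dist = np.min(np.abs(ref[:, None] - other[None, :]), axis=1)
        reliable &= dist <= tol
    return ref, reliable

def spike_triggered_average(signal, t, spike_times, window):
    """Mean and standard error of signal over [s - window, s] for each spike time s."""
    dt = t[1] - t[0]                      # assumes a uniform time grid
    n = int(round(window / dt))
    segs = []
    for s in spike_times:
        k = int(round((s - t[0]) / dt))   # sample index of the spike
        if k - n >= 0 and k < len(signal):
            segs.append(signal[k - n:k + 1])
    lags = np.arange(-n, 1) * dt          # lag 0 marks the spike time
    if not segs:
        return lags, np.full(n + 1, np.nan), np.full(n + 1, np.nan)
    segs = np.array(segs)
    mean = segs.mean(axis=0)
    if len(segs) > 1:
        sem = segs.std(axis=0, ddof=1) / np.sqrt(len(segs))
    else:
        sem = np.zeros(n + 1)
    return lags, mean, sem
```

In practice, `spike_triggered_average` would be called separately on `ref[reliable]` and `ref[~reliable]`, once for each H term recorded along the trajectory.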

Notice first that inputs, synaptic or external, enter multiplicatively with Z′(θi), which is negative in (1/2, 1) (see Fig 6). This implies that more excitatory synaptic inputs, or more positive external inputs, arriving shortly before spikes promote contraction through H1 and H3. Indeed, both of these terms are primarily negative in the time periods before spikes (Panels (D), (F)), as positive inputs push cells across the spiking threshold.
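The sign argument can be checked numerically in Python. The check assumes the phase response Z(θ) = 1 − cos(2πθ), one common θ-neuron convention with the spike at θ = 1 (mod 1), assumed here rather than taken from this section. For this form, Z′(θ) = 2π sin(2πθ) is negative on (1/2, 1), so a positive drive multiplied by Z′(θi) yields a negative, contracting contribution late in the cycle.

```python
import numpy as np

def dZ(th):
    # Derivative of the assumed phase response Z(theta) = 1 - cos(2 pi theta).
    return 2 * np.pi * np.sin(2 * np.pi * th)

def input_term(theta_i, drive):
    """Contribution Z'(theta_i) * drive to the relative growth of |v_i| (H1- or H3-like)."""
    return dZ(theta_i) * drive

early = np.linspace(0.05, 0.45, 9)   # first half of the cycle
late = np.linspace(0.55, 0.95, 9)    # approaching the spike at theta = 1
print(input_term(late, 0.3))         # excitatory drive late in the cycle
```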

Expansion, especially for unreliable events, arises from the coupling term H2 and from the term H0 representing internal dynamics. Note in particular that the latter term is an order of magnitude larger than the others, so we focus on it next. Panel (B) of Fig 6 shows that phases cross the threshold more slowly before unreliable spikes than before reliable ones. Slower crossings keep θi longer in the region θi ∈ (1/2, 1), where F′(θi) > 0; this explains why the H0 averages, which depend mainly on F′(θi), are larger for the unreliable spikes.


Thus we conclude, as noted in the main text, that the primary dynamical mechanism behind the unstable dynamics is that inputs steer θi(t) into expansive regions of its own subspace (see Fig 6 (C) or Fig 5 (E)). The conclusion that instabilities in the flow are generated mainly by intrinsic dynamics is notable, as it suggests that network stability could vary in rich ways with cell type and spike-generation mechanism.

References

1. Eigen M, Gardiner W, Schuster P, Winkler-Oswatitsch R. Sci Am. 1981;244. doi: 10.1038/scientificamerican0481-88.

2. Bialek W, Rieke F, de Ruyter van Steveninck R, Warland D. Science. 1991;252:1854. doi: 10.1126/science.2063199.

3. Uchida A, McAllister R, Roy R. Phys Rev Lett. 2004;93. doi: 10.1103/PhysRevLett.93.244102.

4. Rulkov N, Sushchik M, Tsimring L, Abarbanel H. Phys Rev E. 1995 Jan;51:980. doi: 10.1103/physreve.51.980.

5. Lin K, Shea-Brown E, Young LS. J Nonlin Sci. 2009;19(5):497.

6. Shu Y, Hasenstaub A, McCormick D. Nature. 2003;423:288. doi: 10.1038/nature01616.

7. Tomko G, Crapper D. Brain Res. 1974;79:405. doi: 10.1016/0006-8993(74)90438-7.

8. Noda H, Adey W. Brain Res. 1970;18:513. doi: 10.1016/0006-8993(70)90134-4.

9. van Vreeswijk C, Sompolinsky H. Neural Comput. 1998. doi: 10.1162/089976698300017214.

10. Shadlen MN, Newsome WT. J Neurosci. 1998;18:3870. doi: 10.1523/JNEUROSCI.18-10-03870.1998.

11. Monteforte M, Wolf F. Phys Rev Lett. 2010;105:268104. doi: 10.1103/PhysRevLett.105.268104.

12. London M, Roth A, Beeren L, Häusser M, Latham PE. Nature. 2010;466:123. doi: 10.1038/nature09086.

13. Molgedey L, Schuchhardt J, Schuster HG. Phys Rev Lett. 1992;69:3717. doi: 10.1103/PhysRevLett.69.3717.

14. Banerjee A, Seriès P, Pouget A. Neural Comput. 2008;20:974. doi: 10.1162/neco.2008.05-06-206.

15. Rajan K, Abbott L, Sompolinsky H, Proment D. Phys Rev E. 2010. doi: 10.1103/PhysRevE.82.011903.

16. Lin KK, Shea-Brown E, Young LS. J Comput Neurosci. 2009 Aug;27:135. doi: 10.1007/s10827-008-0133-3.

17. Litwin-Kumar A, Oswald A-MM, Urban NN, Doiron B. PLoS Comput Biol. 2011;7:e1002305. doi: 10.1371/journal.pcbi.1002305.

18. Bazhenov M, Rulkov N, Fellous J-M, Timofeev I. Phys Rev E. 2005 Oct;72:041903. doi: 10.1103/PhysRevE.72.041903.

19. Ritt J. Phys Rev E. 2003;68:1. doi: 10.1103/PhysRevE.68.041915.

20. Pikovsky A, Rosenblum M, Kurths J. Synchronization: A Universal Concept in Nonlinear Sciences. 2001.

21. Pakdaman K, Mestivier D. Phys Rev E. 2001 Jan. doi: 10.1103/PhysRevE.64.030901.

22. Ermentrout B. Neural Comput. 1996;8:979. doi: 10.1162/neco.1996.8.5.979.

23. Latham PE, Richmond BJ, Nelson PG, Nirenberg S. J Neurophysiol. 2000;83:808. doi: 10.1152/jn.2000.83.2.808.

24. Ermentrout B, Terman D. Mathematical Foundations of Neuroscience. Interdisciplinary Applied Mathematics, Vol. 35. Springer; 2010. p. 422.

25. Mainen Z, Sejnowski T. Science. 1995;268:1503. doi: 10.1126/science.7770778.

26. Bryant H, Segundo J. J Physiol. 1976;260:279. doi: 10.1113/jphysiol.1976.sp011516.

27. Lindner B, Longtin A, Bulsara A. Neural Comput. 2003;15:1761. doi: 10.1162/08997660360675035.

28. Destexhe A, Rudolph M, Paré D. Nat Rev Neurosci. 2003 Sep;4:739. doi: 10.1038/nrn1198.

29. Kunita H. Stochastic Flows and Stochastic Differential Equations. Vol. 24. Cambridge: Cambridge University Press; 1990. p. xiv+346.

30. Kifer Y. Ergodic Theory of Random Transformations. Boston: Birkhäuser; 1986.

31. Le Jan Y. Ann Inst Henri Poincaré. 1987;23:11.

32. Baxendale. Spatial Stochastic Processes. Progress in Probability, Vol. 19. Springer; 1991. p. 189.

33. Ledrappier F, Young LS. Probab Theory Relat Fields. 1988;80:217.

34. Eckmann JP, Ruelle D. Rev Mod Phys. 1985 Jul;57:617.

35. Young L. J Stat Phys. 2002 Sep;108.

36. Namenson A, Ott E, Antonsen T. Phys Rev E. 1996;53:2287. doi: 10.1103/physreve.53.2287.

37. Herz A, Hopfield J. Phys Rev Lett. 1995;75:1222. doi: 10.1103/PhysRevLett.75.1222.

38. Tiesinga P, Fellous JM, Sejnowski TJ. Nat Rev Neurosci. 2008;9:97. doi: 10.1038/nrn2315.

39. Rieke F, Warland D, de Ruyter van Steveninck R, Bialek W. Spikes: Exploring the Neural Code. Cambridge, MA: 1996.

40. Banerjee A. J Comput Neurosci. 2006;20:321. doi: 10.1007/s10827-006-7188-9.

41. Geist K, Parlitz U, Lauterborn W. Prog Theor Phys. 1990;83:875.

42. Asmussen S, Glynn PW. Stochastic Simulation: Algorithms and Analysis. Stochastic Modelling and Applied Probability, Vol. 57. New York: Springer; 2007.

43. Lajoie G, Lin KK, Shea-Brown E. Phys Rev E. 2013 May;87:052901. doi: 10.1103/PhysRevE.87.052901.