Abstract

We compared electronic health record (EHR) data with Medicaid claims data in documenting adult preventive care. Insurance claims are commonly used to measure care quality. EHR data could serve this purpose, but little information exists about how the two sources compare in service documentation. For 13 101 Medicaid-insured adult patients attending 43 Oregon community health centers, we compared documentation of 11 preventive services in EHR versus Medicaid claims data. Documentation was comparable for most services. Agreement was highest for influenza vaccination (κ = 0.77; 95% CI 0.75 to 0.79), cholesterol screening (κ = 0.80; 95% CI 0.79 to 0.81), and cervical cancer screening (κ = 0.71; 95% CI 0.70 to 0.73), and lowest for services commonly referred out of primary care clinics and those that usually do not generate claims. EHRs show promise for use in quality reporting. Strategies to maximize data capture in EHRs are needed to optimize the use of EHR data for service documentation.

Keywords: Electronic Health Records, Preventive Services, Medical Care Delivery, Claims Data, Quality of Care

Background and significance

Healthcare organizations are increasingly required to measure and report the quality of care they deliver, for regulatory and reimbursement purposes.1–5 Such quality evaluations are often based on insurance claims data,6–8 which have been shown to accurately identify patients with certain diagnoses,9–12 but to be less accurate in identifying services provided, compared to other data sources.6–8 13 14

Electronic health records (EHRs) are an emergent source of data for quality reporting.15 16 Unlike claims, EHR data include unbilled services, and services provided to uninsured persons, or those with varied payers. Yet the use and accuracy of EHRs for service documentation and reporting may vary significantly.16–28 While EHR-based reports have been validated against Medicaid claims for provision of certain diabetes services,6 13 little is known about how EHR and claims data compare in documenting the delivery of a broad range of recommended preventive services.6 13 14 16 24

We used EHR data and Medicaid claims data to assess documentation rates of 11 preventive care services in a population of continuously insured adult Medicaid recipients served by a network of Oregon community health centers (CHCs). Our intent was not to evaluate the quality of service provision among patients due for a service, but to measure patient-level agreement for documentation of each service across the two data sources.

Materials and methods

Data sources

OCHIN EHR dataset

We obtained EHR data from OCHIN, a non-profit community health information network providing a linked, hosted EHR to >300 CHCs in 13 states.29 The OCHIN EHR data contain information from Epic practice management (eg, billing and appointments) as well as demographic, utilization, and clinical data from the full electronic medical record. Using automated queries of structured data, we extracted information for the measurement year (2011) from OCHIN's EHR data repository for the 43 Oregon CHCs that implemented OCHIN's practice management and full electronic medical record before January 1, 2010.

Medicaid insurance dataset

We obtained enrollment and claims data for all patients insured by Oregon's Medicaid program for 2011. This dataset was obtained 18 months after December 31, 2011 to account for lag time in claims processing. This study was approved by the Institutional Review Board of Oregon Health and Science University.

Study population

We used Oregon Medicaid enrollment data to identify adult patients aged 19–64 years throughout 2011, who were fully covered by Medicaid in that time and had ≥1 billing claim. We used Medicaid identification numbers to match patients in the Medicaid and EHR datasets; we then identified cohort members who had ≥1 primary care encounter in ≥1 of the study clinics in 2011 (n=18 471).30 We excluded patients who had insurance coverage in addition to Medicaid (n=3870), were pregnant (n=1494), or died (n=6) in the study period. The resulting dataset included 13 101 patients who were continuously, solely covered by Medicaid throughout 2011, and appeared in both the OCHIN EHR and Medicaid claims datasets.
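The exclusion counts above can be checked with simple arithmetic. The following is a minimal sketch, assuming the three exclusion groups were counted sequentially so that no patient is subtracted twice; the variable and function names are illustrative and do not come from the study.

```python
# Counts reported in the Study population paragraph.
matched_with_primary_care_visit = 18_471
exclusions = {
    "insurance coverage in addition to Medicaid": 3_870,
    "pregnant during 2011": 1_494,
    "died during 2011": 6,
}

def apply_exclusions(start: int, excluded: dict[str, int]) -> int:
    """Subtract each exclusion count in turn and print the running total."""
    remaining = start
    for reason, n in excluded.items():
        remaining -= n
        print(f"after excluding {reason} (n={n}): {remaining}")
    return remaining

final_cohort = apply_exclusions(matched_with_primary_care_visit, exclusions)
```

Under that assumption the running total ends at the 13 101 patients reported for the final cohort.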

Preventive service measures

The intent of this analysis was to compare the datasets in their documentation of whether a service was done/ordered for a given patient, not to identify who should have received that service. In other words, we did not examine whether the service was due in 2011 (eg, whether a 52-year-old woman had a normal mammogram in 2010 and was, therefore, not likely due for another until 2012).

To that end, we assessed documentation of 11 adult preventive care services during 2011: screening for cervical, breast, and colorectal cancer (including individual screening tests—colonoscopy, fecal occult blood test (FOBT), flexible sigmoidoscopy—and an overall colon cancer screening measure); assessment of body mass index (BMI) and smoking status; chlamydia screening; cholesterol screening; and influenza vaccination.31 32 Each service was measured in the eligible age/gender subpopulation for whom it is recommended in national guidelines (table 2 footnotes).

Table 2.

Receipt of screening services and agreement by data source, 2011

| Measure | Total eligible* patients | OCHIN EHR No. (%) | Claims No. (%) | Combined EHR and claims No. (%) | κ statistic (95% CI) |
|---|---|---|---|---|---|
| Cervical cancer screening† | 7509 | 2100 (28.0) | 2533 (33.7) | 2777 (37.0) | 0.71 (0.70 to 0.73) |
| Breast cancer screening‡ | 4173 | 1435 (34.4) | 1627 (39.0) | 2174 (52.1) | 0.34 (0.31 to 0.37) |
| Colon cancer screening, any§ | 3761 | 1199 (31.9) | 870 (23.1) | 1425 (37.9) | 0.48 (0.45 to 0.51) |
| Colonoscopy§ | 3761 | 270 (7.2) | 433 (11.5) | 590 (15.7) | 0.26 (0.21 to 0.30) |
| Flexible sigmoidoscopy§ | 3761 | 0 (0.0) | 7 (0.2) | 7 (0.2) | – |
| FOBT§ | 3761 | 1106 (29.4) | 500 (13.3) | 1130 (30.0) | 0.50 (0.47 to 0.53) |
| Chlamydia screening¶ | 523 | 224 (42.8) | 268 (51.2) | 309 (59.1) | 0.52 (0.45 to 0.59) |
| Cholesterol screening** | 12 817 | 5060 (39.5) | 5400 (42.1) | 5836 (45.5) | 0.80 (0.79 to 0.81) |
| Influenza vaccine†† | 3788 | 1573 (41.5) | 1318 (34.8) | 1653 (43.6) | 0.77 (0.75 to 0.79) |
| BMI assessed | 13 101 | 11 392 (87.0) | 141 (1.1) | 11 409 (87.1) | 0.0002 (−0.001 to 0.002) |
| Smoking status assessed | 13 101 | 12 021 (91.8) | 0 (0.0) | 12 021 (91.8) | – |

Denominator: patients appearing in both OCHIN EHR and claims datasets (N=13 101).

*Age/gender categories in which screening is appropriate.

†Women aged 19–64 with no history of hysterectomy.

‡Women aged ≥40 with no history of bilateral mastectomy.

§Men and women aged ≥50 with no history of colorectal cancer or total colectomy.

¶Sexually active women aged 19–24.

**Men and women aged ≥20; cholesterol screening includes low density lipoprotein, high density lipoprotein, total cholesterol, and triglycerides.

††Men and women aged ≥50.

BMI, body mass index; EHR, electronic health record; FOBT, fecal occult blood test.

To identify services in each dataset, we used code sets commonly used for reporting from each respective data source. In the EHR, we used codes based on Meaningful Use Stage 1 measures.4 These included ICD-9-CM diagnosis and procedure codes, CPT and HCPCS codes, LOINC codes, and medication codes. We also used relevant code groupings and codes specific to the OCHIN EHR, used for internal reporting and quality improvement. These codes could identify ordered and/or completed services; some codes may capture ordered services that were never completed. The codes used to capture service provision in the Medicaid claims data were based on the Healthcare Effectiveness Data and Information Set (HEDIS) specifications as they are tailored to claims-based reporting.3 These included standard diagnosis, procedure, and revenue codes. The 2013 HEDIS Physician measures did not include specifications for influenza vaccine or cholesterol screening, so the code sets used for assessing these measures in claims were the same as those used for the EHR data. A given service was considered ‘provided’ if it was documented as ordered, referred, or completed at least once during the measurement year. Online supplementary appendix A details the codes and data fields used to tabulate the numerator and denominator in each dataset for each measure.
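The "provided at least once during the measurement year" rule can be sketched as a simple patient-level flag. This is an illustrative sketch only: the record layout, the function name, and the placeholder codes `CODE_A` and `CODE_B` are hypothetical, and the actual code sets are those listed in the paper's online supplementary appendix A.

```python
from datetime import date

# Hypothetical placeholder codes; the real code lists are in supplementary appendix A.
EXAMPLE_CODE_SET = {"CODE_A", "CODE_B"}
YEAR_START, YEAR_END = date(2011, 1, 1), date(2011, 12, 31)

def service_documented(records, code_set):
    """Return True if any record carries a qualifying code dated in 2011."""
    return any(
        rec["code"] in code_set and YEAR_START <= rec["date"] <= YEAR_END
        for rec in records
    )

patient_records = [
    {"code": "CODE_X", "date": date(2011, 3, 2)},   # code not in the set
    {"code": "CODE_A", "date": date(2010, 11, 5)},  # qualifying code, wrong year
    {"code": "CODE_B", "date": date(2011, 7, 19)},  # qualifying code in 2011
]
flag = service_documented(patient_records, EXAMPLE_CODE_SET)
```

The same flag would be computed once per patient in each data source, which gives the two binary "observations" compared in the analysis.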

Analysis

We described the study sample demographics using EHR data. We then assessed documentation of each preventive service in three ways. First, we tabulated the percentage of eligible patients with documented services in the EHR, and in Medicaid claims. Next, we calculated κ statistics to measure level of agreement between EHR and Medicaid claims at the patient level. The κ statistic captures how well the datasets agree in their ‘observation’ of whether a given individual received a service, compared to agreement expected by chance alone. We considered κ statistic scores >0.60 to represent substantial agreement, 0.41–0.60 moderate agreement, and 0.21–0.40 fair agreement.33 Last, we tabulated the total percentage of services captured in the combined EHR–claims dataset (ie, the percentage obtained when combining the EHR and claims data).
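For two binary sources, the κ statistic is κ = (p_o − p_e) / (1 − p_e), where p_o is the observed proportion of patients on whom the sources agree and p_e is the agreement expected by chance from each source's marginal rate. The sketch below illustrates the calculation under two assumptions that are not stated in the text: that the combined count is the number of patients documented in either source (so the 2×2 cells follow by inclusion-exclusion), and that the confidence interval uses a simple large-sample standard error. The study may have used a different variance formula, and the function names are illustrative.

```python
import math

def two_by_two(n_total, n_ehr, n_claims, n_combined):
    """Recover the 2x2 agreement cells from marginal counts by inclusion-exclusion."""
    both = n_ehr + n_claims - n_combined
    ehr_only = n_ehr - both
    claims_only = n_claims - both
    neither = n_total - n_combined
    return both, ehr_only, claims_only, neither

def cohen_kappa(both, ehr_only, claims_only, neither, z=1.96):
    """Cohen's kappa with an approximate large-sample 95% CI (assumed method)."""
    n = both + ehr_only + claims_only + neither
    p_observed = (both + neither) / n
    p_ehr = (both + ehr_only) / n
    p_claims = (both + claims_only) / n
    p_expected = p_ehr * p_claims + (1 - p_ehr) * (1 - p_claims)
    kappa = (p_observed - p_expected) / (1 - p_expected)
    se = math.sqrt(p_observed * (1 - p_observed) / n) / (1 - p_expected)
    return kappa, kappa - z * se, kappa + z * se

# Cervical cancer screening row of table 2.
cells = two_by_two(n_total=7509, n_ehr=2100, n_claims=2533, n_combined=2777)
kappa, ci_low, ci_high = cohen_kappa(*cells)
print(f"kappa = {kappa:.2f} (95% CI {ci_low:.2f} to {ci_high:.2f})")
```

With the cervical cancer screening counts from table 2, this reproduces the reported κ of 0.71 to two decimal places, which supports the inclusion-exclusion reading of the combined column.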

Results

Patient demographics

The 13 101 patients in the study population were predominantly female (65.6%), white (74.2%), English speaking (82.7%), and from households earning ≤138% of the federal poverty level (91.6%). The study population was evenly distributed by age (table 1).

Table 1.

Demographic characteristics of study sample

| Patients appearing in both EHR and claims (N=13 101) | No. | % |
|---|---|---|
| Gender | | |
| Female | 8600 | 65.6 |
| Male | 4501 | 34.4 |
| Race | | |
| Asian/Pacific Islander | 772 | 5.9 |
| American Indian/Alaskan native | 180 | 1.4 |
| Black | 1409 | 10.8 |
| White | 9720 | 74.2 |
| Multiple races | 143 | 1.1 |
| Unknown | 877 | 6.7 |
| Race, ethnicity | | |
| Hispanic | 1186 | 9.1 |
| Non-Hispanic, white | 8943 | 68.3 |
| Non-Hispanic, other | 2441 | 18.6 |
| Unknown | 531 | 4.1 |
| Primary language | | |
| English | 10 836 | 82.7 |
| Spanish | 618 | 4.7 |
| Other | 1647 | 12.6 |
| Federal poverty level* | | |
| ≤138% FPL | 11 996 | 91.6 |
| >138% FPL | 838 | 6.4 |
| Missing/unknown | 267 | 2.0 |
| Age in years† | | |
| 19–34 | 4632 | 35.4 |
| 35–50 | 4681 | 35.7 |
| 51–64 | 3788 | 28.9 |
| Mean (SD) | 40.6 (12.3) | |

*Average of all 2011 encounters, excluding null values and values ≥1000% (which were considered erroneous).

†As of January 1, 2011.

EHR, electronic health record; FPL, federal poverty level.

Receipt of screening services

Medicaid claims documented the following services for more patients than did the EHR: cervical and breast cancer screening, colonoscopy, chlamydia screening, and cholesterol screening. Absolute differences ranged from 2.6 to 8.4 percentage points (table 2). The EHR data identified more patients with documented services than did claims data for total colorectal cancer screening, FOBT, influenza vaccine, BMI, and smoking assessment. The absolute differences for these services ranged from 6.7 to 91.8 percentage points, with two services (BMI and smoking assessment) ranking highest among all services documented in the EHR but minimally present in Medicaid claims. Flexible sigmoidoscopy was rarely documented in either dataset. Combining the EHR and Medicaid claims data yielded the highest documentation rates.
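The ranges of absolute differences quoted above can be recomputed from the rounded percentages in table 2. This is a small illustrative sketch; it assumes the differences were taken between the reported (rounded) percentages rather than recomputed from raw counts, and it omits flexible sigmoidoscopy, which the text treats separately.

```python
# Reported percentages from table 2: (EHR %, claims %).
reported = {
    "Cervical cancer screening": (28.0, 33.7),
    "Breast cancer screening": (34.4, 39.0),
    "Colon cancer screening, any": (31.9, 23.1),
    "Colonoscopy": (7.2, 11.5),
    "FOBT": (29.4, 13.3),
    "Chlamydia screening": (42.8, 51.2),
    "Cholesterol screening": (39.5, 42.1),
    "Influenza vaccine": (41.5, 34.8),
    "BMI assessed": (87.0, 1.1),
    "Smoking status assessed": (91.8, 0.0),
}

claims_higher = {}
ehr_higher = {}
for measure, (ehr_pct, claims_pct) in reported.items():
    # Absolute difference in percentage points, rounded like the table values.
    diff = round(abs(ehr_pct - claims_pct), 1)
    (ehr_higher if ehr_pct > claims_pct else claims_higher)[measure] = diff

claims_range = (min(claims_higher.values()), max(claims_higher.values()))
ehr_range = (min(ehr_higher.values()), max(ehr_higher.values()))
print("claims higher:", claims_range)
print("EHR higher:", ehr_range)
```

Grouping the services by which source documents more patients makes it easy to see which rows drive each end of the two ranges.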

Agreement between EHR and Medicaid claims

We observed similar levels of documentation and high levels of agreement (κ>0.60) between the two datasets for cervical cancer screening (κ=0.71, 95% CI 0.70 to 0.73), influenza vaccine (κ=0.77, 95% CI 0.75 to 0.79), and cholesterol screening (κ=0.80, 95% CI 0.79 to 0.81) (table 2). EHR and Medicaid claims data captured similar rates of services but showed lower agreement for breast cancer screening (κ=0.34, 95% CI 0.31 to 0.37), colorectal cancer screening-combined (κ=0.48, 95% CI 0.45 to 0.51), colonoscopy (κ=0.26, 95% CI 0.21 to 0.30), and chlamydia screening (κ=0.52, 95% CI 0.45 to 0.59), indicating that the two data sources were identifying services received by different patients. FOBT (κ=0.50, 95% CI 0.47 to 0.53) had differing documentation rates and also had lower agreement. κ statistics could not be computed for two measures, as no smoking assessments were documented in Medicaid claims and no flexible sigmoidoscopies were documented in the EHR. The poor agreement between data sources for BMI was likely due to the low number of patients with recorded BMI assessments in Medicaid claims compared to the EHR (1.1% vs 87.0%, respectively).

Discussion

For most of the preventive services we examined, EHR data compared favorably to Medicaid claims in documenting the percentage of patients with service receipt. Not surprisingly, services that are often referred out (eg, mammography, colonoscopy) were less frequently observed in the EHR than in claims, whereas some services usually performed in the primary care setting (eg, FOBT, influenza vaccination) were more frequently observed in the EHR (table 2).

For some measures, each dataset alone captured a similar percentage of patients receiving screening, but had low individual-level agreement, and much higher documentation rates when using a combined EHR–claims dataset (table 2), suggesting that each dataset captured some service provision in different patients, depending on the service. We hypothesized that the location of service delivery might also explain these differences. Services with high agreement between the EHR and claims datasets (eg, cervical cancer screening, cholesterol screening, influenza vaccination) are often performed within the primary care setting, and generate a billable charge that appears in claims data. Services that are usually referred out to other providers, such as breast and colorectal cancer screening, demonstrated lower agreement. Patients may have had a service ordered by a primary care provider but did not receive the service or did not have it billed to Medicaid, resulting in documentation in the EHR but not claims. Conversely, patients may have received a billable service but never had an associated order or communication back to the primary care clinic, resulting in documentation in claims data but not the EHR.
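The split between patients documented in both sources and those documented in only one can be derived from the counts in table 2. The sketch below assumes the combined count is the number of patients documented in either source; the derived cell counts are arithmetic consequences of that assumption, not figures reported in the study, and the function name is illustrative.

```python
# (EHR, claims, combined) counts from table 2.
table2 = {
    "Breast cancer screening": (1435, 1627, 2174),
    "Colonoscopy": (270, 433, 590),
    "Cholesterol screening": (5060, 5400, 5836),
}

def discordance(n_ehr, n_claims, n_combined):
    """Split patients with any documentation into both / EHR-only / claims-only."""
    both = n_ehr + n_claims - n_combined
    return {"both": both, "ehr_only": n_ehr - both, "claims_only": n_claims - both}

for measure, (n_ehr, n_claims, n_combined) in table2.items():
    cells = discordance(n_ehr, n_claims, n_combined)
    share_both = cells["both"] / n_combined
    print(f"{measure}: {cells}, documented in both = {share_both:.0%}")
```

Comparing a referred-out service with one usually performed in primary care shows how similar marginal rates can coexist with a small overlap between the two sources.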

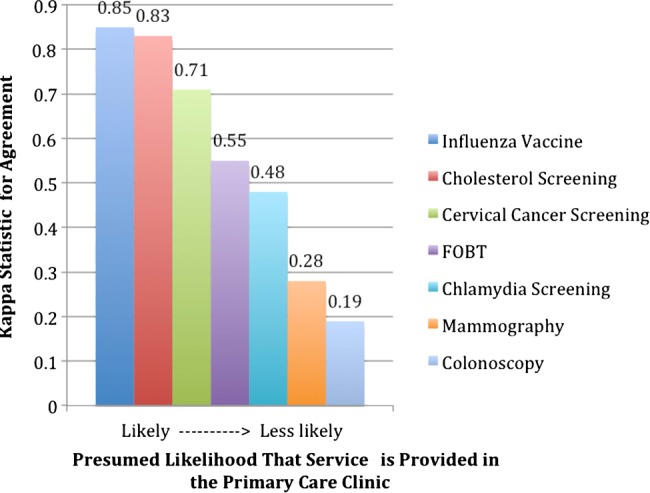

We examined whether location of service delivery could help to explain agreement between the two data sources for billable services using κ statistics. As shown in figure 1, higher κ values were associated with services more likely to be received in the primary care setting, and lower κ values with services more likely to be referred out. To our knowledge, this observation has not previously been demonstrated in a dataset of this size; these results suggest that health systems should emphasize strategies for incorporating outside records into their EHR. Smoking and BMI assessment likely had poor agreement between data sources because these screenings are rarely billed, and therefore are not present in claims. This asymmetry is important because reporting on these measures will be mandated by Meaningful Use, and these results suggest that claims-based reporting is likely insufficient to adequately capture these types of services.

Figure 1.

Decreasing κ statistic for agreement between electronic health record and Medicaid claims potentially related to location of service provision. Flexible sigmoidoscopy, body mass index, and tobacco assessment were not included because of low overall numbers.

Our results suggest that a combination of EHR and claims data could provide a reporting foundation for the most complete current assessment of healthcare quality. However, use of such a ‘hybrid’ method is not an efficient long-term solution, as it restricts quality assessments to patients with a single payer source. Restricting performance measurement solely to claims data often ignores health behavior assessments and counseling activities (eg, smoking assessment and BMI calculation) performed at the point of care. Our findings suggest that EHRs are a promising data source for assessing care quality. To maximize the use of EHRs for reporting and quality purposes, strategies to improve clinical processes that expand data capture of referrals and completed services within the EHR are needed. This will also help clinicians to ensure patients receive needed prevention. Improved clinic workflows, user-friendly provider interfaces, and systems to improve rapid cycle or point of care feedback to providers could all improve the capture of reportable data.

Another method for augmenting EHR data for reporting purposes is natural language processing (NLP), which may be used to mine non-structured clinical data. NLP has been used in controlled research settings to detect falls in inpatient EHRs, to conduct surveillance on adverse drug reactions and diseases of public health concern in outpatient EHRs, and to examine some preventive service use/counseling in narrow settings.34–43 However, to our knowledge, NLP has not been used to detect broad preventive service provision in a large dataset with heterogeneous data. NLP holds great promise for improved reporting accuracy, but it is not currently being routinely used by community practices for quality reporting, is not routinely available at the point of care, and cannot efficiently respond to federal reporting mandates (eg, Meaningful Use) that require structured data formats, which is why it was not a part of our analysis.4 44 However, should NLP technologies continue to become more accessible to clinical staff working with broad, heterogeneous datasets, they will be invaluable in complementing structured data queries. Future research should focus on how NLP can optimize the use of EHR data for supporting these functions.

Limitations

First, we only included patients who were continuously insured by Medicaid and received services at Oregon CHCs, which may limit generalizability of our findings. Second, although the selection of this continuously insured population allowed us to have the same population denominator in both datasets, these analyses do not fully represent the extent to which EHR data capture a larger and more representative patient population than claims data (eg, EHRs include services delivered when patients are uninsured). Third, for some services, especially those commonly referred out, EHR data might only capture whether the service was ordered but not whether it was completed; however, for some care quality measures, the ordering of a given service is the metric of interest. In contrast, Medicaid claims generally reflect only completed services, not services that providers recommended or ordered but patients never completed; this again varies by service. Fourth, the intent of our analysis was to conduct a patient-level comparison of services documented in 1 year in each dataset; we did not assess whether the patient was due for the services. Therefore, the rates reported should not be compared to national care quality rates. Future studies should use longitudinal, multi-year EHR data sources to assess services among those who are due for them, in order to expand these methods to a direct study of quality measures. Such efforts would benefit from capture/recapture methodology to estimate population-level screening prevalence. Finally, we may have missed services that were not coded in the automated extraction of EHR data. However, other analyses have revealed insignificant differences between the results of automated queries of structured data versus reviews that include unstructured data.45 Further examination is needed to determine where certain data ‘live’ in the EHR and how these data might be missed in automated queries.
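The capture/recapture idea mentioned above can be illustrated with the simplest two-source estimator. The text does not specify which estimator future work should use; the sketch below assumes the Lincoln–Petersen form (estimated total = n_A × n_B / n_both), which relies on the two sources capturing services independently, an assumption that may not hold for EHR and claims data. The function name is illustrative and the counts are hypothetical, not taken from the study.

```python
def lincoln_petersen(n_source_a, n_source_b, n_both):
    """Two-source capture-recapture estimate of the total number of events."""
    if n_both == 0:
        raise ValueError("estimator is undefined when the sources share no records")
    return n_source_a * n_source_b / n_both

# Hypothetical counts, not taken from the study.
n_a, n_b, n_overlap = 400, 500, 250
estimated_total = lincoln_petersen(n_a, n_b, n_overlap)

# Events seen in at least one source, and the estimated number missed by both.
seen_in_either = n_a + n_b - n_overlap
missed_by_both = estimated_total - seen_in_either
print(estimated_total, seen_in_either, missed_by_both)
```

In this toy case the estimator suggests that some screened patients appear in neither source, which is the quantity a population-level prevalence estimate would need to account for.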

Conclusion

Primary care organizations need reliable methods for evaluating and reporting the quality of the care they provide. EHRs are a promising source of data to improve quality reporting. Suggestions for how EHR data can better reflect the quality of preventive services delivered include further investigation into data location in EHRs, the development of standardized workflows and improvement processes for preventive service documentation, and electronic information exchanges to facilitate information about outside services getting back into the primary care EHR. Approaches like these may enable a more complete evaluation of the robustness and optimal use of emerging EHR data sources.

Acknowledgments

The authors thank Charles Gallia, PhD, for his considerable assistance with obtaining Oregon Medicaid data. The authors are grateful for editing and publication assistance from Ms. LeNeva Spires, Publications Manager, Department of Family Medicine, Oregon Health and Science University, Portland, Oregon, USA.

Footnotes

Contributors: All authors approved the final version of the manuscript and drafted/revised the manuscript for important intellectual content. JH, SRB, and JED made substantial contributions to study design, analysis, and interpretation of data. MJH and TL executed the acquisition and analysis of data. MM assisted with data analysis, and he and RG contributed to data interpretation and study design. JPO'M assisted with study design, data analysis, and interpretation. SC assisted with study design and data interpretation. AK contributed to data interpretation.

Funding: This study was supported by grant R01HL107647 from the National Heart, Lung, and Blood Institute and grant 1K08HS021522 from the Agency for Healthcare Research and Quality.

Competing interests: None.

Ethics approval: Oregon Health and Science University IRB.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. Centers for Medicaid and Medicare Services. Medicare and Medicaid programs: hospital outpatient prospective payment and ambulatory surgical center payment systems and quality reporting programs; electronic reporting pilot; inpatient rehabilitation facilities quality reporting program; revision to quality improvement organization regulations. Final rule with comment period. Federal Register 2012;77:68209–565

- 2. Wright A, Henkin S, Feblowitz J, et al. Early results of the meaningful use program for electronic health records. N Engl J Med 2013;368:779–80

- 3. Center for Medicaid and Medicare Services. Initial Core Set of Health Care Quality Measures for Adults Enrolled in Medicaid (Medicaid Adult Core Set) Technical Specifications and Resource Manual for Federal Fiscal Year 2013. 2013

- 4. Center for Medicaid and Medicare Services. 2011–2012 Eligible Professional Clinical Quality Measures (CQMs). [cited 2013 March 8]. http://www.cms.gov/Regulations-and-Guidance/Legislation/EHRIncentivePrograms/CQM_Through_2013.htm

- 5. Harrington R, Coffin J, Chauhan B. Understanding how the Physician Quality Reporting System affects primary care physicians. J Med Pract Manage 2013;28:248–50

- 6. Tang PC, Ralston M, Arrigotti MF, et al. Comparison of methodologies for calculating quality measures based on administrative data versus clinical data from an electronic health record system: implications for performance measures. J Am Med Inform Assoc 2007;14:10–15

- 7. Smalley W. Administrative data and measurement of colonoscopy quality: not ready for prime time? Gastrointest Endosc 2011;73:454–5

- 8. Bronstein JM, Santer L, Johnson V. The use of Medicaid claims as a supplementary source of information on quality of asthma care. J Healthc Qual 2000;22:13–18

- 9. Kottke TE, Baechler CJ, Parker ED. Accuracy of heart disease prevalence estimated from claims data compared with an electronic health record. Prev Chronic Dis 2012;9:E141

- 10. Segal JB, Powe NR. Accuracy of identification of patients with immune thrombocytopenic purpura through administrative records: a data validation study. Am J Hematol 2004;75:12–17

- 11. Solberg LI, Engebretson KI, Sperl-Hillen JM, et al. Are claims data accurate enough to identify patients for performance measures or quality improvement? The case of diabetes, heart disease, and depression. Am J Med Qual 2006;21:238–45

- 12. Fowles JB, Fowler EJ, Craft C. Validation of claims diagnoses and self-reported conditions compared with medical records for selected chronic diseases. J Ambul Care Manage 1998;21:24–34

- 13. Devoe JE, Gold R, McIntire P, et al. Electronic health records vs Medicaid claims: completeness of diabetes preventive care data in community health centers. Ann Fam Med 2011;9:351–8

- 14. MacLean CH, Louie R, Shekelle PG, et al. Comparison of administrative data and medical records to measure the quality of medical care provided to vulnerable older patients. Med Care 2006;44:141–8

- 15. Weiner JP, Fowles JB, Chan KS. New paradigms for measuring clinical performance using electronic health records. Int J Qual Health Care 2012;24:200–5

- 16. Kern LM, Kaushal R. Electronic health records and ambulatory quality. J Gen Intern Med 2013;28:1113

- 17. Chan KS, Fowles JB, Weiner JP. Review: electronic health records and the reliability and validity of quality measures: a review of the literature. Med Care Res Rev 2010;67:503–27

- 18. De Leon SF, Shih SC. Tracking the delivery of prevention-oriented care among primary care providers who have adopted electronic health records. J Am Med Inform Assoc 2011;18(Suppl 1):i91–5

- 19. Persell SD, Dunne AP, Lloyd-Jones DM, et al. Electronic health record-based cardiac risk assessment and identification of unmet preventive needs. Med Care 2009;47:418–24

- 20. Weiner M, Stump TE, Callahan CM, et al. Pursuing integration of performance measures into electronic medical records: beta-adrenergic receptor antagonist medications. Qual Saf Health Care 2005;14:99–106

- 21. Dean BB, Lam J, Natoli JL, et al. Review: use of electronic medical records for health outcomes research: a literature review. Med Care Res Rev 2009;66:611–38

- 22. Baker DW, Persell SD, Thompson JA, et al. Automated review of electronic health records to assess quality of care for outpatients with heart failure. Ann Intern Med 2007;146:270–7

- 23. Greiver M, Barnsley J, Glazier RH, et al. Measuring data reliability for preventive services in electronic medical records. BMC Health Serv Res 2012;12:116

- 24. Kerr EA, Smith DM, Hogan MM, et al. Comparing clinical automated, medical record, and hybrid data sources for diabetes quality measures. Jt Comm J Qual Improv 2002;28:555–65

- 25. Parsons A, McCullough C, Wang J, et al. Validity of electronic health record-derived quality measurement for performance monitoring. J Am Med Inform Assoc 2012;19:604–9

- 26. Kmetik KS, O'Toole MF, Bossley H, et al. Exceptions to outpatient quality measures for coronary artery disease in electronic health records. Ann Intern Med 2011;154:227–34

- 27. Persell SD, Wright JM, Thompson JA, et al. Assessing the validity of national quality measures for coronary artery disease using an electronic health record. Arch Intern Med 2006;166:2272–7

- 28. Kern LM, Malhotra S, Barron Y, et al. Accuracy of electronically reported ‘meaningful use’ clinical quality measures: a cross-sectional study. Ann Intern Med 2013;158:77–83

- 29. OCHIN. OCHIN 2012 Annual Report. 2013. https://ochin.org/depository/OCHIN%202012%20Annual%20Report.pdf

- 30. Calman NS, Hauser D, Chokshi DA. ‘Lost to follow-up’: the public health goals of accountable care. Arch Intern Med 2012;172:584–6

- 31. United States Preventive Service Task Force. USPSTF A and B Recommendations by Date. [cited 2013 August 26]. http://www.uspreventiveservicestaskforce.org/uspstf/uspsrecsdate.htm

- 32. Centers for Disease Control. Summary* Recommendations: Prevention and Control of Influenza with Vaccines: Recommendations of the Advisory Committee on Immunization Practices—(ACIP)—United States, 2013–14. 2013. [cited 2013 August 26]. http://www.cdc.gov/flu/professionals/acip/2013-summary-recommendations.htm

- 33. Viera AJ, Garrett JM. Understanding interobserver agreement: the kappa statistic. Fam Med 2005;37:360–3

- 34. Skentzos S, Shubina M, Plutzky J, et al. Structured vs. unstructured: factors affecting adverse drug reaction documentation in an EMR repository. AMIA Annu Symp Proc 2011;2011:1270–9

- 35. Toyabe S. Detecting inpatient falls by using natural language processing of electronic medical records. BMC Health Serv Res 2012;12:448

- 36. Lazarus R, Klompas M, Campion FX, et al. Electronic Support for Public Health: validated case finding and reporting for notifiable diseases using electronic medical data. J Am Med Inform Assoc 2009;16:18–24

- 37. Hazlehurst B, McBurnie MA, Mularski R, et al. Automating quality measurement: a system for scalable, comprehensive, and routine care quality assessment. AMIA Annu Symp Proc 2009;2009:229–33

- 38. Clark C, Good K, Jezierny L, et al. Identifying smokers with a medical extraction system. J Am Med Inform Assoc 2008;15:36–9

- 39. Denny JC, Peterson JF, Choma NN, et al. Extracting timing and status descriptors for colonoscopy testing from electronic medical records. J Am Med Inform Assoc 2010;17:383–8

- 40. Wagholikar KB, MacLaughlin KL, Henry MR, et al. Clinical decision support with automated text processing for cervical cancer screening. J Am Med Inform Assoc 2012;19:833–9

- 41. Hazlehurst B, Sittig DF, Stevens VJ, et al. Natural language processing in the electronic medical record: assessing clinician adherence to tobacco treatment guidelines. Am J Prev Med 2005;29:434–9

- 42. Harkema H, Chapman WW, Saul M, et al. Developing a natural language processing application for measuring the quality of colonoscopy procedures. J Am Med Inform Assoc 2011;18(Suppl 1):i150–6

- 43. Baldwin KB. Evaluating healthcare quality using natural language processing. J Healthc Qual 2008;30:24–9

- 44. Blumenthal D, Tavenner M. The ‘meaningful use’ regulation for electronic health records. N Engl J Med 2010;363:501–4

- 45. Angier H, Gallia C, Tillotson C, Gold R, et al. Pediatric health care quality measures in state Medicaid administrative claims plus electronic health records. North American Primary Care Research Group Annual Meeting; November 9–13, 2013; Ottawa, Canada: 2013
