Abstract

Objective: To collaborate with community members to develop tailored infographics that support comprehension of health information, engage the viewer, and may motivate health-promoting behaviors.

Methods: The authors conducted participatory design sessions with community members, who were purposively sampled and grouped by preferred language (English, Spanish), age group (18–30, 31–60, >60 years), and level of health literacy (adequate, marginal, inadequate). Research staff elicited the perceived meaning of each infographic, preferences between infographics, suggestions for improvement, and whether the infographics would motivate health-promoting behavior. Analysis and infographic refinement were iterative and concurrent with data collection.

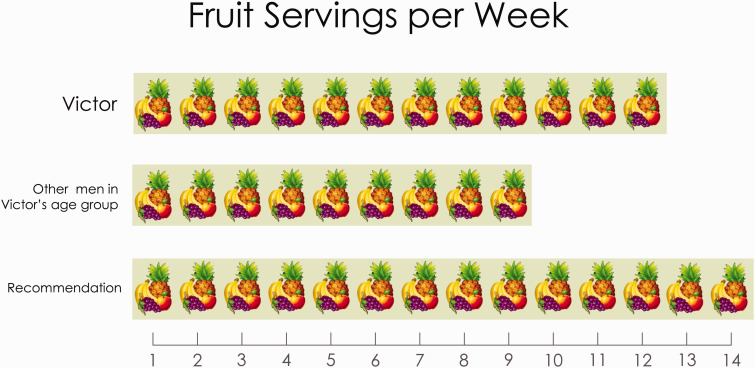

Results: Successful designs were information-rich, supported comparison, provided context, and/or employed familiar color and symbolic analogies. Infographics that employed repeated icons to represent multiple instances of a more general class of things (e.g., apple icons to represent fruit servings) were interpreted in a rigidly literal fashion and thus were unsuitable for this community. Preliminary findings suggest that infographics may motivate health-promoting behaviors.

Discussion: Infographics should be information-rich, contextualize the information for the viewer, and yield an accurate meaning even if interpreted literally.

Conclusion: Carefully designed infographics can be useful tools to support comprehension and thus help patients engage with their own health data. Infographics may support patients’ participation in the Learning Health System in three ways: contributing to the development of a robust data utility, using clinical communication tools for health self-management, and helping to build knowledge through patient-reported outcomes.

Keywords: patient participation, health communication, audiovisual aids, health literacy, comprehension

BACKGROUND AND SIGNIFICANCE

Visualizations, such as graphs, diagrams, and infographics, are useful for health communication and engagement, especially when used to support comprehension among individuals with low health literacy. 1 Pictograms have been used successfully to convey medication dosing information, and icon arrays to represent and compare the relative health risks associated with treatment options. 2,3 Smokers have rated graphic depictions of the consequences of smoking on cigarette warning labels as more engaging and effective than text-only warnings, and such depictions have been associated with motivation to quit smoking. 4 These results are in line with Mayer’s Multimedia Learning Principle that “people learn better from words and pictures than from words alone.” 5 However, the fact that some modes of presentation are more efficacious than others 6–8 lends support to Jensen’s assertion that “it is unlikely that visuals (generally) influence outcomes (generally)” (Jensen, 9 p. 180). Jensen further argues that, without a comprehensive typology of visual message features (e.g., graphical elements) based on conceptual distinctions, we cannot systematically test hypotheses about the effects of specific categories of visuals on specific outcomes in specific populations. Even in the absence of such a typology, there are still opportunities to identify some best practices for visualization design.

Visualizations are known to work best when pictures illustrating key points are closely linked to simple text and potentially distracting irrelevant details are omitted. 1 It is also important that health professionals, rather than artists, plan the pictures in collaboration with members of the intended audience, and that the effects of the resulting communications be evaluated with and without pictures. 1 Formal evaluation is necessary when engaging in participatory design with the target population because the most favored graphics are not necessarily the ones that best support comprehension. 7 Despite this potential pitfall, a participatory design approach has substantial value because even small or subtle details within an image can affect its cultural relevance. 1,10 Unfortunately, these best practices for health information visualizations provide only limited guidance for visualization design. We present the insights gained from our participatory design process with the intent of contributing to this body of best practices and of delineating the contributions of this work to the digital infrastructure of the Learning Health System.

OBJECTIVES

This work is part of the Washington Heights/Inwood Informatics Infrastructure for Comparative Effectiveness Research (WICER) project. The goals of WICER are to understand and improve the health of the Washington Heights and Inwood neighborhoods of northern Manhattan (zip codes 10031, 10032, 10033, 10034, 10040), the residents of which are predominantly Latino with roots in the Dominican Republic. As part of WICER, survey data were collected from >5800 community members, including self-reported outcomes (e.g., nutrition, mental health, physical activity, overall health), anthropometric measures (i.e., height, weight, waist circumference, and blood pressure), and measures of health literacy. 11

We believe that we have an ethical obligation to return the research data to WICER participants in a way that is accessible, easily comprehended, culturally relevant, and actionable. We are mindful, however, of the challenges inherent in meeting these goals in light of the low levels of health literacy evident in preliminary survey results. We formed the WICER Visualization Working Group (VWG) to develop infographics tailored through the incorporation of participants’ WICER data. The makeup of the VWG, the development of visualization goals, and the iterative prototyping phase with which the design process began are described in detail elsewhere. 12 The subsequent participatory design phase is the subject of this paper. Future steps are to test comprehension and perceived ease of comprehension of tailored infographics as compared with text alone. In addition, to automate the creation of infographics for distribution to WICER participants, we developed the Electronic Tailored Infographics for Community Engagement, Education, and Empowerment (EnTICE3) system, which takes survey data as input, supports matching of each type of data to a style of infographic through a set of rules (i.e., a style guide), and produces a tailored infographic. 13
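EnTICE3 is described here only at a high level: survey data in, rule-based matching of data type to infographic style, tailored infographic out. Its actual rules are reported elsewhere. The sketch below illustrates the general idea of a style guide as a lookup table keyed on data type, with design names borrowed from Table 1. All identifiers (`STYLE_GUIDE`, `InfographicSpec`, `choose_design`, and the data-type keys) are hypothetical and are not taken from EnTICE3.

```python
from dataclasses import dataclass

# Hypothetical rule table ("style guide") mapping each data type to a design
# named in Table 1. Keys and design names are illustrative only; they are not
# EnTICE3's actual rules.
STYLE_GUIDE: dict[str, str] = {
    "cdc_30_day_measure": "block chart",
    "bmi": "reference range number line with body silhouettes",
    "blood_pressure": "blood pressure cuff with double number lines",
    "overall_health": "star ratings",
    "sleep_and_energy": "battery power analogy",
    "chronic_stress": "paired pressure gauges",
}


@dataclass
class InfographicSpec:
    """What a (hypothetical) renderer would need to draw one tailored infographic."""
    participant_id: str
    data_type: str
    value: float
    design: str


def choose_design(participant_id: str, data_type: str, value: float) -> InfographicSpec:
    """Look up the design for one survey value; fail loudly if no rule exists."""
    design = STYLE_GUIDE.get(data_type)
    if design is None:
        raise ValueError(f"no style rule for data type {data_type!r}")
    return InfographicSpec(participant_id, data_type, value, design)


spec = choose_design("participant-001", "sleep_and_energy", 0.6)
print(spec.design)  # battery power analogy
```

Keeping the rules as data rather than branching logic makes it straightforward to swap in a different design when testing favors one, as happened repeatedly during the design sessions.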

The primary objective of the participatory design phase was to develop a set of infographics to the point that they would be suitable for tailoring and comprehension testing. Three key factors were relevant to suitability. First, participants needed to regard the infographics as, at minimum, acceptable and, ideally, appealing. Second, we needed preliminary evidence of adequate comprehension (i.e., were participants interpreting the prototype infographics as we intended?). Third, we needed at least one type of infographic for each type of variable. For example, fruit servings per week can be displayed in much the same way as vegetable servings per week; however, displaying body mass index (BMI) requires an altogether different type of design, in part because interpreting the data requires the viewer to execute different mental tasks. Identifying a single value is all that is needed to parse fruit servings per week, but parsing BMI requires the viewer first to identify a value and then to compare that value with criterion ranges. In the course of iterative prototyping, we found it useful to group infographic designs according to desired outcomes (i.e., completion of specific mental tasks), and from those desired outcomes we specified a set of seven matching visualization goals (see Table 1). We defined designs as successful if they appeared to support the desired outcome.
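The two-step task described for BMI (identify a value, then compare it with criterion ranges) can be made concrete. The sketch below assumes the standard adult BMI categories used by the CDC (underweight below 18.5, healthy weight 18.5 to below 25, overweight 25 to below 30, obese 30 and above, in kg/m²); the ranges drawn in the study's infographics are not reported here, and the function names are hypothetical.

```python
import bisect

# Assumed adult BMI cut-offs in kg/m^2 (CDC categories); each category is a
# half-open interval, so a value equal to a cut-off falls in the higher one.
CUTOFFS = [18.5, 25.0, 30.0]
CATEGORIES = ["underweight", "healthy weight", "overweight", "obese"]


def bmi(weight_kg: float, height_m: float) -> float:
    """Step 1: compute the value, BMI = weight / height squared."""
    if weight_kg <= 0 or height_m <= 0:
        raise ValueError("weight and height must be positive")
    return weight_kg / height_m ** 2


def bmi_category(value: float) -> str:
    """Step 2: locate the value within the criterion ranges."""
    # bisect_right counts how many cut-offs are <= value, which is exactly the
    # index of the matching category (e.g. 25.0 -> "overweight").
    return CATEGORIES[bisect.bisect_right(CUTOFFS, value)]


value = bmi(70, 1.75)
print(f"{value:.1f}", bmi_category(value))  # 22.9 healthy weight
```

By contrast, a fruit-servings infographic needs only the first kind of step: reading off a single number.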

Table 1:

Visualization Goals, Desired Outcomes, Types of Data, Types and Descriptions of Visualizations, and Results

|

Visualization Goal 1: To display a single piece of health information or health behavior.

| |||

|---|---|---|---|

|

Desired Outcome: Identification of value on a measure.

| |||

| Types of Data | Types of Visualizations | Description of Visualization | Result |

| Vegetable servings per week; exercise per week | a. Simple Bar Chart | Simple bar chart of vegetable servings per week or amount of exercise per week. | Lack of context confusing; interpreted as a recommendation. Performed well once converted to a hybrid of goals 4 and 5 with addition of criterion and others’ data as comparators. |

| b. Icon Array | Grouping of numbered icons. Each icon represents a unit of measure (i.e., vegetable serving, amount of exercise). | Confusing due to lack of context. Icons interpreted too literally. Discontinued. | |

| c. Iconographic Bar Chart | Bar chart in which bar is composed of icons. Each icon represents a unit of measure (i.e., vegetable serving, amount of exercise). | Confusing due to lack of context. Still unusable after addition of comparators ( Figures 1 and 6 a) due to overly literal interpretation of icons. Discontinued. | |

| d. Stopwatch | Amount of exercise hours and minutes per week shown as the digital display on a stopwatch. | Comprehended by most; discontinued for lack of interest/enthusiasm. Has potential as a graphical element as part of a more complex design. | |

| Visualization Goal 2: To display a single piece of health information or health behavior that includes a temporal component. | |||

| Desired Outcome: Identification of number of days out of 30 on which the value of the measure is positive. | |||

| CDC 30-day measure (e.g., days feeling healthy and full of energy in the last 30 days) | a. Block Chart | Array of 30 blocks representing days. Graphical elements (e.g., color, shading) used to differentiate affected and unaffected days. | Generally well-comprehended. “Sad” blue and “anxious” dark red helped to convey days of depression and anxiety, respectively. Can be paired with a comparator block array (as in Goal 4) to provide additional information and support comparison. |

| b. Calendar Block Chart | Similar to block chart format but with the addition of graphical elements that suggest a calendar month. | Popular format but discontinued because of overly literal interpretation that the phenomenon happened on the specific days shown. | |

| c. Stacked Bar Chart | Bar chart in which the full bar represents 30 days. Graphical element (e.g., color, shading) used to display part-to-whole relationship of affected and unaffected days. | Most participants understood this design but found it unappealing. Discontinued. | |

| BMI; waist circumference | a. Reference Range Number Line | Number line, with demarcation for BMI/waist circumference reference ranges (e.g., healthy weight, overweight, etc.). The value of interest is indicated or highlighted graphically. | These two visualization types were combined (body silhouettes positioned about their respective reference ranges) based on participants’ suggestion. Designs based on the combined visualization types were favored by participants and associated with good comprehension. They are information-rich, employ color analogies, support comparisons between categories, and contextualize the numerical data in the form of the body silhouettes. |

| b. Reference Category Silhouettes | Series of gender-specific body silhouettes corresponding to BMI/waist circumference reference categories. The relevant silhouette is indicated or highlighted graphically. | ||

| Visualization Goal 3: To compare a single piece of health information or health behavior with a criterion. | |||

|---|---|---|---|

| Desired Outcome: Identification of value of health information or behavior and whether it is in normal or abnormal range. | |||

| Types of Data | Types of Visualizations | Description of Visualization | Result |

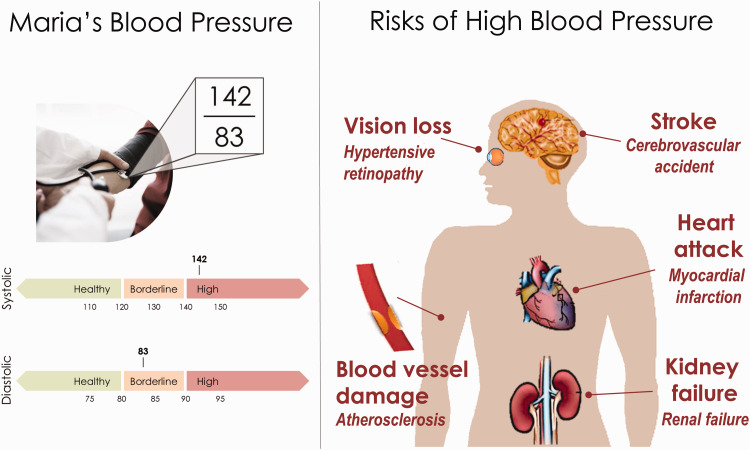

| Blood pressure | c. Reference Range Double Number Lines | Two number lines with reference ranges for blood pressure (i.e., healthy, borderline, hypertensive). The top line represents systolic values; the bottom represents diastolic values. The values of interest are indicated or highlighted graphically. | Of all the blood pressure visualizations for Goal 3, this was the most successful. It employs color analogies and is informative without being overwhelming. See the bottom left portion of Figure. 2 . |

| d. Drop Line Graph | A vertical line for which the top and bottom end points represent the systolic and diastolic values, respectively. Systolic values increase upward; diastolic values increase downward. Reference ranges (i.e., healthy, borderline, hypertensive) are displayed graphically as part of the graph field. | Despite the use of color analogies, participants found this novel graphical format confusing and unappealing. Discontinued. | |

| e. Nested Rectangle Ranges Graph | Graph in which systolic is plotted on the y -axis and diastolic is plotted on the x -axis. Shaded areas in the form of nested rectangles indicate reference ranges. Value of interest is marked on the graph. Adapted with permission from design by HealthEd, Inc. See http://tinyurl.com/nmnxlnq | Many participants were able to use this infographic without difficulty (e.g., correctly classifying a blood pressure of 130/90 as borderline) but perceived it to be unappealing and more confusing than the other designs. | |

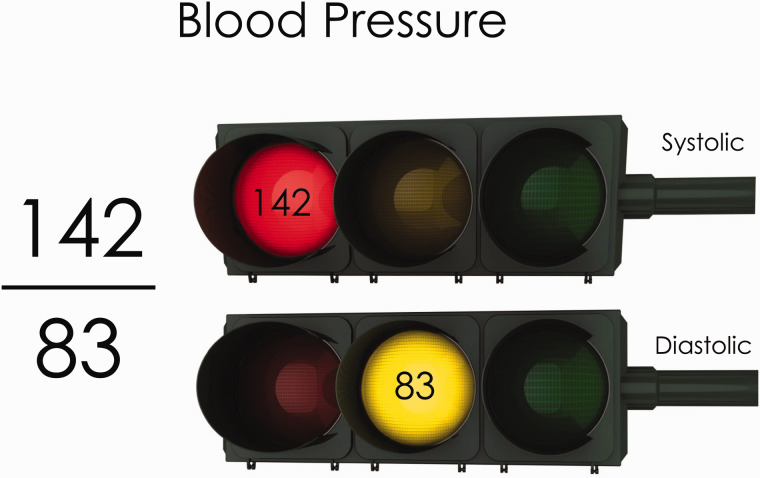

| f. Stoplight Analogy | The red, yellow, and green lights on a stoplight will be used to represent the reference ranges for blood pressure. The color corresponding to the value of interest is emphasized in the figure. | Almost universally understood, this image is the source of the color analogies used throughout the other designs ( Figure 3 ). Generally not favored because it was considered information-poor. | |

| Overall health status; amount of energy; chronic stress | a. Star Ratings | Two rows of five stars. One is labeled to represent the individual; the other to represent age group- and gender-matched community members. The number of highlighted stars indicates the relevant value for each row. | This design was appealing to and easily understood by nearly all participants ( Figure 6 b). It employs a symbolic analogy that is likely to be familiar to most people and supports comparison. |

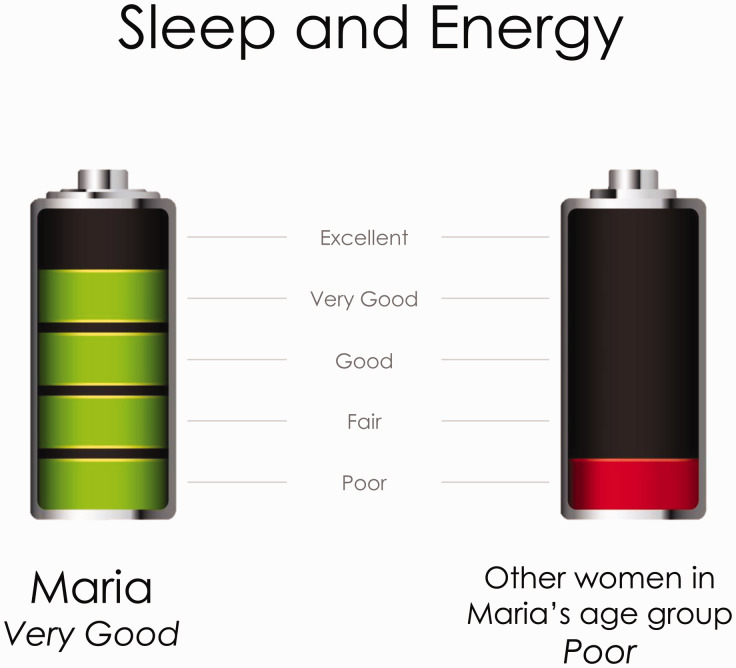

| b. Battery Power Analogy | The relevant value is represented as a proportion of the whole using the analogy of the amount of power left in a battery. | Participants readily embraced and understood the use of a battery to represent sleep and energy ( Figure 4 ). This design employs a color analogy and supports comparison. | |

| c. Paired Pressure Gauges | The value of interest is displayed as though on a pressure gauge in which reference ranges are marked on the display face. | Whether they interpreted it as a pressure gauge or speedometer, participants readily understood this design and found it appealing ( Figure 5 ). It, too, employs a color analogy and supports comparison. | |

| Visualization Goal 4: To compare a single piece of health information or health behavior of an individual with that of others. | |||

|---|---|---|---|

| Desired Outcome: Identification of value of health information or behavior and whether or not it is higher or lower than that of the comparison group. | |||

| Types of Data | Types of Visualizations | Description of Visualization | Result |

| Visualization Goal 5: To compare multiple pieces of health information or behaviors with the same denominator. | |||

| Desired Outcome: Identification of similarities and differences between values on multiple measures. | |||

| CDC 30-day measures (e.g., days of pain, depression, or anxiety in the last 30 days) | a. Clustered Bar Chart | Bar chart in which the horizontal axis is marked in 30 1-day increments. Each bar represents the number of affected days for different variable. | Although not especially engaging, participants seemed familiar with simple bar charts and had no difficulty interpreting them so long as more than one data point was displayed. This format supports comparison between categories. |

| b. Bar Chart within a Table | A table in which the rows represent different variables and the columns include the variable name/description, number of affected days, and a bar representing the number of affected days. | Although this design supported the same level of comprehension as the Clustered Bar Chart, it did so with added complexity that did not provide additional information. Discontinued. | |

| For example, overall health status, sleep and energy, nutrition, physical activity, mental health | a. Cloverleaf Analogy | A “clover” in which the relative size of each leaf represents the amount of each variable. Optimal values (in which all variables are equal and balanced) are indicated graphically (e.g., shading, dotted lines). | Initially, participants found this design confusing (see Figure 1 in 12 ). Based on their feedback, we improved it by rescaling such that high (not middling) values are good and by providing a figure that shows the ideal (all leaves filled) as a comparator. Having made those improvements, participants favored this design over 6a and 6b, said that it gave them a lot of information, and was easy to understand. |

| b. Adapted hGraph | An adaptation of the “hGraph” (an open-source project of Involution Studios; hgraph.org). Variables are plotted radially around a donut figure in which the figure represents optimal reference ranges. Points within the center of the donut or outside its boundaries represent out-of-range/suboptimal values. When connected, the points form a polygon; “rounder” shapes suggest balanced health whereas jagged shapes indicate imbalanced health status. | Participants found this design confusing and unappealing. Discontinued. | |

| c. Control Panel Analogy | Variable are represented by “status lights,” such as LED lights on electronics. As in the stoplight analogy, the color of the light (red, yellow, green) indicates the relevant value. | Although some struggled with it, most participants understood this design but preferred 6a. Design retained for further testing in hopes that improvements by a professional graphic designer will make it easier to interpret. | |

| Visualization Goal 7: To display and increase understanding of a single piece of health information through graphic illustration. | |||

| Desired Outcome: Identification of meaning and value of health information, and whether it is in the normal or abnormal range. | |||

| Blood pressure | a. Blood Pressure Cuff with BP Numeric Display | Reference Range Double Number Line (3c) embedded within a graphic showing an arm with blood pressure cuff. | The combination of 7a and 7b ( Figure 2 ) was universally comprehended and our most popular design. It is information-rich, provides context, and employs a familiar color analogy. |

| b. Blood Pressure with Effect on Body Systems Infographic | A blood pressure visualization (3c–f) embedded within an anatomical figure highlighting the body systems affected by hypertension. | ||

Note. Successful design types (i.e., leading to the desired outcome) are shown in bold.
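The cloverleaf revision described in Table 1 rescaled each variable so that a fuller leaf always means a better value, rather than the best value sitting in the middle of the scale. One plausible way to perform such a rescaling is to score each raw value by its distance from an optimal range, as sketched below. The study's actual transformation is not reported; the function name `leaf_fill`, the linear fall-off, and the `max_distance` cap are all hypothetical.

```python
def leaf_fill(value: float, optimal_low: float, optimal_high: float,
              max_distance: float) -> float:
    """Map a raw value to a leaf fill between 0 and 1, where 1.0 is best.

    Hypothetical scheme: values inside [optimal_low, optimal_high] get a full
    leaf; the fill shrinks linearly with distance outside that range and
    bottoms out at 0 once the distance reaches max_distance.
    """
    if optimal_low > optimal_high or max_distance <= 0:
        raise ValueError("invalid optimal range or max_distance")
    if value < optimal_low:
        distance = optimal_low - value
    elif value > optimal_high:
        distance = value - optimal_high
    else:
        distance = 0.0
    return max(0.0, 1.0 - distance / max_distance)


# Illustrative only: nightly sleep hours with an assumed 7 to 9 hour optimum.
print(leaf_fill(8, 7, 9, 4), leaf_fill(5, 7, 9, 4), leaf_fill(11, 7, 9, 4))  # 1.0 0.5 0.5
```

Under this scheme, too little and too much of a variable both shrink the leaf, so "fuller" consistently reads as "healthier," which is the property the revision aimed for.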

The secondary objective of the participatory design phase was to provide insight into attributes common to successful infographics and pitfalls common to unsuccessful infographics. These findings are the focus of this paper as they are of the greatest general interest. Included in the online supplement are methodological details and lessons learned that may be relevant to those undertaking similar work.

METHODS

Sample

Eligibility criteria included: 1) participation in the WICER survey (all respondents were 18 or older), 2) signed WICER consent form indicating willingness to be contacted for future research, 3) ability to comprehend English or Spanish, and 4) completion of health literacy items in the WICER survey.

Procedures

Study procedures were approved by the Columbia University Medical Center Institutional Review Board. Participatory design sessions of 90–120 min in length were held between April 23 and June 4, 2013. Participants were compensated for their time with either grocery coupons or movie tickets with a value of $50. Session objectives were to elicit participants’ perceptions of the meaning of each design (i.e., a preliminary comprehension check), preferences between similar designs and/or iterations of a single design, feedback for improvement, and views on whether or not the infographics might motivate them to change their health behaviors. To that end, the VWG developed four questions we used to guide the participatory design sessions. Participants were asked: 1) what does the infographic tell you? 2) which of the infographics do you prefer and why? 3) how can the infographic be improved? and 4) would this infographic motivate you to address the health issue?

Participants were purposively sampled and grouped based on preferred language (English or Spanish), and when possible, by age group (18–30, 31–60, >60), and level of health literacy as measured by their response to the question “How often do you have problems learning about your medical condition because of difficulty understanding written information?” (never = adequate; sometimes or occasionally = marginal; always or often = inadequate). 14 We selected this item from among the WICER health literacy measures because it most closely matched the issue that we sought to address through the infographic approach.
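The grouping rule above amounts to a small lookup: one survey item mapped to three literacy levels, combined with language and an age band. A minimal sketch follows, assuming the response labels are stored as the English option names (the survey was also administered in Spanish); `health_literacy_level`, `age_group`, and `session_group` are hypothetical names.

```python
# Response-to-level mapping for the single health literacy item, as given in
# the text. Response labels are assumed to be the English option names.
HEALTH_LITERACY_LEVELS = {
    "never": "adequate",
    "occasionally": "marginal",
    "sometimes": "marginal",
    "often": "inadequate",
    "always": "inadequate",
}


def health_literacy_level(response: str) -> str:
    key = response.strip().lower()
    if key not in HEALTH_LITERACY_LEVELS:
        raise ValueError(f"unrecognized response: {response!r}")
    return HEALTH_LITERACY_LEVELS[key]


def age_group(age: int) -> str:
    """Age bands used for grouping (all WICER respondents were 18 or older)."""
    if age < 18:
        raise ValueError("participants must be 18 or older")
    if age <= 30:
        return "18-30"
    if age <= 60:
        return "31-60"
    return ">60"


def session_group(language: str, age: int, response: str) -> tuple[str, str, str]:
    """Hypothetical grouping key: language first, then age band and literacy level."""
    return (language, age_group(age), health_literacy_level(response))


print(session_group("Spanish", 58, "Sometimes"))  # ('Spanish', '31-60', 'marginal')
```

Because grouping by age and literacy was applied only when possible, a key like this would describe a target composition for a session rather than a hard constraint.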

Participatory design sessions were held at the Columbia Community Partnership for Health, a storefront health-focused community engagement center located in Washington Heights run by the Irving Institute for Clinical and Translational Research. Each session was facilitated by a member of the research team, with a second team member assisting with consenting participants and note-taking. WICER staff obtained written informed consent from each participant. Design sessions were audio-recorded and at least one research team member made detailed notes of the proceedings. Participants were each given identical stacks of 8½″ × 11″ card stock printed with a single infographic design per page (24–36 designs per session). To preserve privacy and for ease of group discussion and comparison of designs, the infographics displayed simulated data and were not tailored to the participants. The order in which designs were presented was varied by session to mitigate order effects as a potential source of bias. Facilitators led participants through discussion of the designs one at a time using prompts 1, 3, and 4 listed above. After viewing a group of similar designs or several iterations of a single design, participants were asked to indicate their preferences between them (i.e., question 2 above) by voice or hand vote. Facilitators noted the majority preference, encouraged discussion of the reasons for participants’ preferences, and engaged in member checking by reflecting their observations back to participants for confirmation or correction. After each session, research team members took part in a short peer debriefing session to compare initial impressions.

Iterations on the infographic designs occurred between design sessions based on the information obtained during the sessions. Design decisions were made collaboratively by the multi-disciplinary VWG in weekly meetings and executed by the bilingual lead author (A.A.), who was present for 17 of the 21 sessions. Designs that performed poorly (apparent lack of comprehension, stated dislike, or unpopularity compared to similar designs) were modified substantially based on feedback or eventually dropped entirely. Typical modifications included color changes, alterations in iconography, and the addition of comparators. In one instance, two designs were merged into one based on participants’ suggestion (3a and 3b in Table 1). Group feedback occasionally prompted minor adjustments to successful designs, such as changes to labeling or increased spacing between graphical elements. Individual infographic designs were presented in at least two sessions between iterations to strengthen the dependability of the findings; most designs were shown at many sessions. Participatory design sessions were concluded when a stable set of suitable designs had been identified and sessions failed to yield additional useful insights.

Analysis

At the conclusion of the sessions, the lead author created a summary document synthesized directly from the audio recordings and the written session notes. In it, each design iteration is identified by a unique code. The summary document is organized chronologically by design session, with notes on participants’ comments under the code of the image to which the comments pertain. Most of the notes are verbatim transcriptions of notable and/or representative comments; in some cases, they reflect the gist of the group’s comments when those comments were all very similar. The lead author’s own impressions and interpretations of the participants’ sentiments were also included. The lead author then reviewed the summary document repeatedly and annotated it by hand in the margins with the thematic codes that emerged from the text, as in conventional content analysis. 15 This process served not only to identify attributes of successful/unsuccessful designs but also to audit the quality of the design decisions. The summary document was then distilled into a findings narrative that was reviewed, discussed, and affirmed by the VWG.

RESULTS

Participants

A total of 21 participatory design sessions (5 in English, 16 in Spanish) were conducted with 102 participants. Due to sometimes unpredictable turnout, group size ranged from 1 to 15 (M = 4.9, SD = 3.5). Participants ranged in age from 19 to 91 years (M = 53.2, Mdn = 58.0, SD = 16.5) and were predominantly female (n = 87, 85.3%) and Hispanic (n = 97, 95.1%). The distribution of participants by language, age group, and level of health literacy is shown in Table 2.

Table 2:

Numbers of Participatory Design Session Participants by Language, Age Group, and Level of Health Literacy

| Age group | English, inadequate | English, marginal | English, adequate | Spanish, inadequate | Spanish, marginal | Spanish, adequate | Total |
|---|---|---|---|---|---|---|---|
| 18–30 | – | 2 | 1 | 4 | 4 | 3 | 14 |
| 31–60 | – | 2 | 6 | 10 | 9 | 14 | 41 |
| >60 | – | 3 | 3 | 8 | 12 | 21 | 47 |
| Total | – | 7 | 10 | 22 | 25 | 38 | 102 |
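The counts in Table 2 and the summary figures reported above can be cross-checked with a few lines of arithmetic. The sketch below re-enters the table (dashes as 0), confirms the row, column, and grand totals, and recomputes the language split, the mean group size (assuming each participant attended a single session, which the reported mean of 4.9 is consistent with), and the reported percentages.

```python
# Table 2 re-entered by age group. Column order: English (inadequate,
# marginal, adequate), then Spanish (inadequate, marginal, adequate).
# Dashes in the table are entered as 0.
TABLE_2 = {
    "18-30": [0, 2, 1, 4, 4, 3],
    "31-60": [0, 2, 6, 10, 9, 14],
    ">60": [0, 3, 3, 8, 12, 21],
}
REPORTED_ROW_TOTALS = {"18-30": 14, "31-60": 41, ">60": 47}
REPORTED_COLUMN_TOTALS = [0, 7, 10, 22, 25, 38]
SESSIONS = 21

row_totals = {age: sum(counts) for age, counts in TABLE_2.items()}
column_totals = [sum(column) for column in zip(*TABLE_2.values())]
n = sum(row_totals.values())

assert row_totals == REPORTED_ROW_TOTALS
assert column_totals == REPORTED_COLUMN_TOTALS
assert n == 102

english = sum(column_totals[:3])  # participants preferring English
spanish = sum(column_totals[3:])  # participants preferring Spanish

print(f"English: {english}, Spanish: {spanish}")        # English: 17, Spanish: 85
print(f"mean group size: {n / SESSIONS:.1f}")           # mean group size: 4.9
print(f"female: {87 / n:.1%}, Hispanic: {97 / n:.1%}")  # female: 85.3%, Hispanic: 95.1%
```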

Process outcomes

By the conclusion of the participatory design process, we had arrived at a set of infographics suitable for tailoring and comprehension testing. That is, we had at least one way to display each of the variables of interest in a format that was acceptable or appealing to participants and for which there was preliminary evidence of adequate comprehension. The infographics in that set represented all of the visualization goals except Goal 1, “To display a single piece of health information or behavior.” Infographics matched to Goal 1 were the simplest visually but the hardest for participants to understand. One such infographic, a simpler version of Figure 1, had a bar for Victor’s fruit servings filled with apple icons (rather than fruit clusters) but no comparison bars. Participants found these “simple” designs very confusing. We speculate that participants struggled with sense-making because of a lack of context or comparison. The most typical interpretation of infographics associated with Goal 1 was that they were recommendations rather than a straightforward report of WICER data. The images may have contained so little information that participants assumed there had to be something to them that was not immediately obvious, and a nutrition recommendation seemed the most plausible explanation. Our solution was to convert all Goal 1 infographics to Goals 4 or 5 with the addition of comparators, as in Figure 1.

Figure 1:

Infographic of Victor’s fruit serving consumption per week as compared to that of other men in his age group and to the minimum recommended servings.

Elements of successful designs

The infographic designs favored and most easily comprehended by participants were those that were information-rich, supported comparison, provided context, and/or employed familiar analogies. The most successful design, shown in Figure 2, exemplifies these principles: the blood pressure value can be compared visually with criterion ranges, which in turn draw on the familiar analogy of stoplight colors (i.e., green, yellow/orange, and red). The picture of the blood pressure measurement and the figure illustrating the risks of high blood pressure provide context for the viewer. A simpler design that showed only the blood pressure value next to two horizontal stoplights, lit according to the risk categories of the systolic and diastolic values (Figure 3), was almost universally understood but generally not favored because it was considered information-poor. Time and again, participants emphasized that “more information is better.” Recommendations were considered a valuable comparator that made infographics more informative.

Figure 2:

Infographic of Maria’s blood pressure in the context of criterion ranges alongside some of the risks of high blood pressure.

Figure 3:

Infographic depicting blood pressure values and criterion categories using a stoplight analogy.

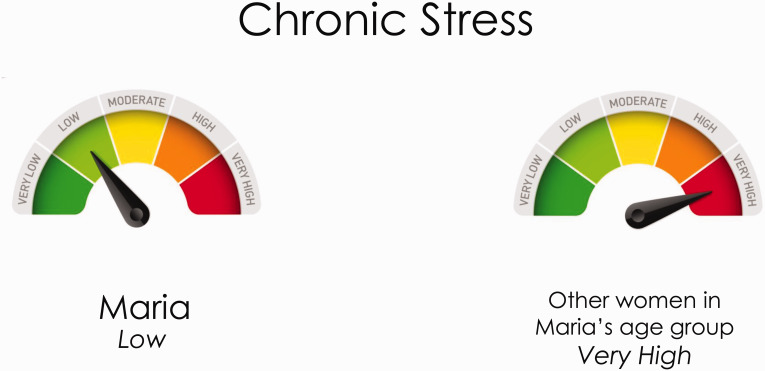

Participants responded well to symbolic and color analogies. A battery, similar to the icon showing the amount of charge on a cellular phone, was embraced as an analogy for sleep and energy (Figure 4). A semicircular dial similar to a pressure gauge or speedometer readout was a satisfactory analogy for level of chronic stress (Figure 5). Many successful infographics, including the two aforementioned symbolic analogies, employed the stoplight color analogy, wherein green represents “healthy,” “normal,” or “safe,” yellow/orange indicates “borderline” or “caution,” and red signals “danger.” These color meanings were universally understood. Participants also agreed that a somber shade of blue helped to convey the idea of sadness or depression.
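The stoplight logic behind Figures 2 and 3 can be sketched in two steps: classify the systolic and diastolic values separately (one light each, as in Figure 3), then report the more severe of the two as the overall category, which is the rule implied by nested reference rectangles anchored at the origin, as in the nested-rectangle graph in Table 1. The cut-offs below are illustrative placeholders in the style of the JNC 7 categories, not the ranges used in the study's figures: under these placeholders a 130/90 reading would be classed as hypertensive, whereas the study's graph treated it as borderline, so the study's ranges evidently differ. All names are hypothetical.

```python
from bisect import bisect_right

# Placeholder cut-offs in mmHg (JNC 7-style); replace with the ranges an
# infographic actually displays. A value equal to a cut-off falls in the
# higher category.
SYSTOLIC_CUTOFFS = [120, 140]
DIASTOLIC_CUTOFFS = [80, 90]
CATEGORIES = ["healthy", "borderline", "hypertensive"]
STOPLIGHT = {"healthy": "green", "borderline": "yellow", "hypertensive": "red"}


def classify(value: float, cutoffs: list[float]) -> int:
    """Index of the reference range that contains value."""
    return bisect_right(cutoffs, value)


def blood_pressure_lights(systolic: float, diastolic: float) -> dict[str, str]:
    s = classify(systolic, SYSTOLIC_CUTOFFS)
    d = classify(diastolic, DIASTOLIC_CUTOFFS)
    overall = CATEGORIES[max(s, d)]  # the more severe of the two readings
    return {
        "systolic": STOPLIGHT[CATEGORIES[s]],
        "diastolic": STOPLIGHT[CATEGORIES[d]],
        "overall": overall,
        "overall_color": STOPLIGHT[overall],
    }


print(blood_pressure_lights(125, 70))
# {'systolic': 'yellow', 'diastolic': 'green', 'overall': 'borderline', 'overall_color': 'yellow'}
```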

Figure 4:

Infographic of Maria’s sleep and energy as compared to that of other women in her age group.

Figure 5:

Infographic of Maria’s level of chronic stress compared to that of other women in her age group.

Literal interpretation and iconography

All but a few highly literate participants interpreted the infographics in a rigidly literal fashion, resulting in interpretations that were overly specific. For instance, participants who saw a version of Figure 1 with apple icons instead of fruit cluster icons complained about the monotony of eating the same fruit every day. Participants who saw both the apple and fruit cluster versions declared the fruit clusters a great improvement, but those who saw only the fruit clusters again commented on the monotony of eating those specific fruits daily (“But a whole pineapple!?”). As another example, a popular design showing 5 days of depressive symptoms in a month-calendar format often led to the false impression that the symptoms occurred on the specific days shown, rather than on any 5 of the 30 days.
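The calendar misreading suggests one design consideration for 30-day measures: an undated block array that fills blocks in a fixed order, so that no block maps to a particular date. The text rendering below is a minimal sketch under that assumption; the function name `block_chart`, the block characters, and the row width of 10 are hypothetical choices, not the study's design.

```python
def block_chart(affected_days: int, total_days: int = 30, per_row: int = 10,
                filled: str = "■", empty: str = "□") -> str:
    """Render an undated block array: the first `affected_days` blocks are
    filled and the rest empty, wrapped into rows of `per_row` blocks."""
    if not 0 <= affected_days <= total_days:
        raise ValueError("affected_days must be between 0 and total_days")
    blocks = filled * affected_days + empty * (total_days - affected_days)
    rows = [blocks[i:i + per_row] for i in range(0, total_days, per_row)]
    return "\n".join(rows)


print(block_chart(5))
# ■■■■■□□□□□
# □□□□□□□□□□
# □□□□□□□□□□
```

Filling blocks in a fixed order, rather than on particular calendar dates, presents the value as a count rather than a schedule.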

The tendency toward literal interpretation greatly reduced comprehension of designs in which icons were used to represent repeated instances of a general class of things. For example, most participants who saw Figure 6a agreed, if prompted, that the silhouetted runner was a good representation of exercise in general but, prior to prompting, spoke only of running or jogging. By contrast, Figure 6b was nearly universally understood despite the use of icons. Possibly this is owing to a greater familiarity with the use of stars in a rating system and/or the fact that the stars represent abstract gradations along a continuum rather than repeated instances of a general class of things. An infographic similar to Figure 6a, in which pairs of sneakers were shown in lieu of the runners, yielded interpretations rooted in literalism (e.g., “He has four pairs and the other has three” or “It shows … certain items that you should wear for exercise”).

Figure 6:

Infographic of (a) Maria's days of exercise per week as compared to that of other women in her age group and (b) Victor's self-rating of overall health as compared to that of other men in his age group. The icons in these infographics were employed in two qualitatively different ways that affected comprehension.

Motivation and engagement

Participants were in nearly unanimous agreement that seeing their health information displayed graphically would motivate them to address relevant health issues. Multiple participants spoke of putting an infographic up on the refrigerator to serve as a daily reminder to take steps toward health self-management. Participants’ comments and lively conversations demonstrated how infographics helped them engage with the data. Participants from several groups took home their entire decks of infographics, despite the fact that the data depicted were not their own, because they valued the information they contained. Participants occasionally mentioned, unprompted, that the design session itself had been a worthwhile educational experience: “I liked it a lot, too, because it was a very instructive topic and so then you take precautions with your health.”

DISCUSSION

Contrary to the design dictum that “less is more,” we discovered that when it comes to health information, sometimes more is more. Low health literacy implies potential difficulty interpreting health information, not a diminished desire for information. Efforts to make health messages more accessible via simplification run the risk of stripping away valuable meaning. 9

We have demonstrated that it is possible to create an accessible health message without sacrificing information richness. Regarding the most visually complex of the infographics (Figure 2), one participant put it succinctly: "This has lots of information that I think everyone would understand perfectly well." Given viewers' tendency toward literal interpretation, however, successful infographics should contain as much specificity as the available data permit, but no more, lest the extra detail be misinterpreted. Compared to experts, novices (to health information, in this case) are likelier to treat the noise in a signal, such as distracting details, as an important part of the message. 16 Infographics that invite comparison, especially to a recommendation, provide valuable context and thus support comprehension, as do familiar symbolic and color analogies. Our findings are consistent with the claim that one of the key functions of a visualization is to support sense-making by contextualizing information for the viewer. 17 The use of symbolic and color analogies presents two key advantages. First, the cultural referents upon which they are based (stoplight, battery, pressure gauge, star ratings) are common enough that they would likely be familiar not just to our predominantly Latino participants but also to the general population. Second, they suggest a conceptual frame into which the viewer can fit the data presented, thus easing the sense-making process. 16

General categories such as "vegetables" and "physical activity" were surprisingly difficult to convey visually at the necessary level of abstraction. We can only speculate that the convention of using repeated icons to represent a larger class of things was relatively unfamiliar to this population. Simple bar graphs, however, were familiar and readily understood. One function of bar graphs is that they force the viewer past a natural disinclination to read, because the textual information is essential for interpretation. There is value in minimizing the amount and complexity of text (e.g., "borderline" not "prehypertension"; "measurement" not "circumference") to make infographics accessible across levels of literacy. However, forcing viewers to read may be necessary to draw their attention to the finer distinctions between similar data types (e.g., minutes of moderate exercise vs minutes of vigorous exercise). Conclusions that differed from the intended meaning may have been exacerbated by a general tendency to interpret primarily from the image and only sometimes to consult the infographic heading when a satisfactory interpretation proved elusive. Just as participants were reluctant to read, they also were not inclined to do mathematical calculations (e.g., 14 fruit servings per week equals 2 per day). Moving forward, we will follow participants' suggestion to display nutritional information in a per-day rather than a per-week format.
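The per-week to per-day conversion that participants were reluctant to perform (e.g., 14 fruit servings per week equals 2 per day) is simple arithmetic that a tailoring pipeline could do before rendering an infographic label. The sketch below is illustrative only: the function name `per_day_label`, the 7-day divisor, and the one-decimal rounding for non-whole values are assumptions made for this example, not part of the study's infographic-generation process.

```python
from fractions import Fraction


def per_day_label(weekly_servings: int, food: str) -> str:
    """Convert a weekly serving count into a per-day label for display.

    Hypothetical helper: assumes a 7-day week, shows whole numbers exactly,
    and rounds non-whole daily amounts to one decimal place.
    """
    per_day = Fraction(weekly_servings, 7)  # exact division, no float error
    if per_day.denominator == 1:
        amount = str(per_day.numerator)  # e.g., 14 / 7 -> "2"
    else:
        amount = f"about {float(per_day):.1f}"  # e.g., 10 / 7 -> "about 1.4"
    unit = "serving" if per_day == 1 else "servings"
    return f"{amount} {food} {unit} per day"


print(per_day_label(14, "fruit"))  # 2 fruit servings per day
```

Doing the division upstream keeps the displayed text free of arithmetic, which matches participants' stated preference; whether rounded values such as "about 1.4" would themselves be read too literally is an open question the sketch does not address.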

At minimum, infographics should be easily comprehended; better yet, they also should be appealing to their intended audience. At best, infographics would motivate changes to behaviors that impact health. Separately, pictorial messages 4 and tailored interventions 18 have been shown to motivate behavior change. Tailored infographics are thus very promising in this regard. Participants’ assertions that they found the infographics motivating are no guarantee that their perceived motivation would translate into action; additional research is needed. However, participants were enthusiastic about the infographics and very positive about receiving their health data in this manner. One woman put it this way: “Thank you. Because imagine, in our countries nobody bothers with these things. You suffer from high blood pressure there and you’ll have to deal with it however you can.”

Strengths and limitations

The participatory design methods we employed were intended to meet our primary objective of developing a set of infographics suitable for tailoring and comprehension testing; identification of attributes of successful infographic designs was a secondary consideration. As such, our findings may not be as extensive or incisive as they would have been had we used methods specifically focused on the secondary objective. We did, however, employ a variety of techniques commonly used to promote rigor in qualitative research. Prior to their involvement with the participatory design sessions, WICER staff had spent over a year collecting survey data in the community. Their prolonged engagement helped to establish the credibility of the findings, as did the member checking, peer debriefings, and regular consultations with the VWG. To support the dependability of the findings, each infographic design was shown during multiple sessions. A thorough audit trail consisting of audio-recordings and detailed notes taken both live and from the recordings enhances the confirmability of the findings.

WICER respondents and participatory design session participants are predominantly from or have family roots in the Dominican Republic; other groups may have culture-specific communication needs. One limitation inherent to participatory design sessions is the possibility of agreement bias as group opinions coalesce around those of an outspoken thought leader. Facilitators' ability to assess comprehension was occasionally thwarted when participants instructed each other in the "correct" interpretation of an infographic before the recipient(s) of instruction could express a perspective. Learning effects were observed when we showed multiple designs for one data type. Changes in order of presentation helped mitigate, but did not altogether eliminate, those learning effects. Men represented only 15% of participants. However, the target audience of the visualizations (the WICER respondent pool) has a similar gender skew, with women outnumbering men three to one. Similarly, participants' age distribution parallels that of the survey cohort, for which the average age was 50.4 years (SD 16.8).

CONCLUSION

Carefully designed infographics can be useful tools to support comprehension and thus help patients engage with their own health data. Recommendations by the Institute of Medicine for the digital infrastructure for the Learning Health System delineate three key roles for patients related to their health data: "participate in the development of a robust data utility; use new clinical communication tools, such as personal portals, for self-management and care activities; and be involved in building new knowledge through patient-reported outcomes and other knowledge processes" (Institute of Medicine 19 p.283). Another recommendation focused on patient-centered care states that "Patients and families should be given the opportunity to be fully engaged participants at all levels, including individual care decisions, health system learning and improvement activities, and community-based interventions to promote health" (Institute of Medicine 19 p.31).

Our study informs each of these recommendations. We contend that patients should not only contribute to the development of a robust data utility but also be users of it. Infographics, such as those we developed, are needed to facilitate use of data by individuals with low levels of health literacy. If patients are to use clinical communication tools such as patient portals, they must first recognize the need for preventive health actions and be motivated to take them. 20 The results of this study lend preliminary support to the notion that quality infographics can motivate those actions. Thus, integration of infographics into clinical communication tools may enhance the existing self-management components of the tools. Furthermore, our findings suggest that infographics are a relevant tool for engagement. Such engagement is a key component of patients' involvement in building new knowledge through patient-reported outcomes and other knowledge processes.

An important take-away from this study is the critical importance of working directly with the community to identify imagery that will be meaningful, culturally relevant, and actionable. Visualizations based only on assumptions may be wasted effort at best, and unintentionally misleading at worst. 3 In keeping with the recommendation that patients and families be engaged not only at the individual level but also at the community level to promote health, some of our infographics included community comparators as well as individual data. Although our primary purpose for the comparison was to help individuals understand how their data compared to others in the community, our findings also inform representation of community-level data for other purposes, such as informatics tools for engaging individuals and families to think about health interventions at the community level. If the Learning Health System is to reduce health disparities, it is imperative that those with varying levels of health literacy are able to engage in contributing to its digital infrastructure. Infographics, designed in collaboration with their intended recipients, are one strategy for meeting this goal.

FUNDING

The development and evaluation of the infographics were funded by the Agency for Healthcare Research and Quality (1R01HS019853, 1R01HS022961) and New York State Department of Economic Development NYSTAR (C090157), which also provided support for Drs. Bakken, Bales, Merrill, Yoon, and Ms. Suero-Tejeda. Dr Arcia's and Ms. Woollen's contributions were supported by the National Institute of Nursing Research (T32NR007969) and by the National Library of Medicine (T15LM007079), respectively. Dr Bakken is also supported by the National Center for Advancing Translational Sciences (UL1TR000040). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Contributors

A.A.: infographic design, data collection, data analysis, and drafting of manuscript. M.E.B. and J.A.M.: infographic design, substantive edits to manuscript. N.S-T.: data collection, substantive edits to manuscript. J.W.: infographic design, data collection, substantive edits to manuscript. S.B.: funding, infographic design, data collection, data analysis, and drafting of manuscript.

COMPETING INTERESTS

The authors have no competing interests.

ACKNOWLEDGEMENTS

The authors thank the WICER participants and the members of the WICER Visualization Working Group for their contributions to the initial infographic designs and the Irving Institute for Clinical and Translational Research for use of the Columbia Community Partnership for Health for the participatory design sessions.

Supplementary Material

Supplementary material is available online at http://jamia.oxfordjournals.org/.

REFERENCES

- 1. Houts PS, Doak CC, Doak LG, et al. The role of pictures in improving health communication: a review of research on attention, comprehension, recall, and adherence. Patient Educ Couns. 2006;61:173–190.

- 2. Garcia-Retamero R, Okan Y, Cokely ET. Using visual aids to improve communication of risks about health: a review. Scientific World J. 2012;2012:Article ID 562637.

- 3. Katz MG, Kripalani S, Weiss BD. Use of pictorial aids in medication instructions: a review of the literature. Am J Health Syst Pharm. 2006;63:2391–2397.

- 4. Hammond D. Health warning messages on tobacco products: a review. Tob Control. 2011;20(5):327–337.

- 5. Mayer RE. Multimedia Learning. 2nd ed. New York: Cambridge University Press; 2009.

- 6. Ancker JS, Chan C, Kukafka R. Interactive graphics for expressing health risks: development and qualitative evaluation. J Health Commun. 2009;14:461–475.

- 7. Ancker JS, Senathirajah Y, Kukafka R, et al. Design features of graphs in health risk communication: a systematic review. JAMIA. 2006;13:608–618.

- 8. McCaffery KJ, Dixon A, Hayen A, et al. The influence of graphic display format on the interpretations of quantitative risk information among adults with lower education and literacy: a randomized experimental study. Med Decis Making. 2012;32:532–544.

- 9. Jensen JD. Addressing health literacy in the design of health messages. In: Cho H, ed. Health Communication Message Design. Los Angeles, CA: Sage Publications; 2012:171–190.

- 10. Kreps GL, Sparks L. Meeting the health literacy needs of immigrant populations. Patient Educ Couns. 2008;71(3):328–332.

- 11. Lee YJ, Boden-Albala B, Larson E, et al. Online health information seeking behaviors of Hispanics in New York City: a community-based cross-sectional study. J Med Internet Res. 2014;16(7):e176.

- 12. Arcia A, Bales ME, Brown W, et al. Method for the development of data visualizations for community members with varying levels of health literacy. AMIA Annu Symp Proc. 2013;2013:51–60.

- 13. Arcia A, Velez M, Bakken S. Style guide: an interdisciplinary communication tool to support the process of generating tailored infographics from electronic health data using EnTICE3. EGEMS. 2015;3(1):Article 3.

- 14. Chew LD, Griffin JM, Partin MR, et al. Validation of screening questions for limited health literacy in a large VA outpatient population. J Gen Intern Med. 2008;23(5):561–566.

- 15. Hsieh H-F, Shannon SE. Three approaches to qualitative content analysis. Qual Health Res. 2005;15(9):1277–1288.

- 16. Klein G, Phillips JK, Rall EL, Peluso DA. A data-frame theory of sensemaking. In: Hoffman RR, ed. Expertise Out of Context: Proceedings of the Sixth International Conference on Naturalistic Decision Making. New York, NY: Lawrence Erlbaum Associates; 2007:113–155.

- 17. Faisal S, Blandford A, Potts HWW. Making sense of personal health information: challenges for information visualization. Health Inform J. 2012;19(3):198–217.

- 18. Lustria MLA, Noar SM, Cortese J, Van Stee SK, Glueckauf RL, Lee J. A meta-analysis of web-delivered tailored health behavior change interventions. J Health Commun. 2013;18(9):1039–1069.

- 19. IOM (Institute of Medicine). Best Care at Lower Cost: The Path to Continuously Learning Health Care in America. Washington, DC: The National Academies Press; 2013.

- 20. Janz NK, Becker MH. The health belief model: a decade later. Health Educ Q. 1984;11(1):1–45.
