Abstract

Several research groups have shown how to map fMRI responses to the meanings of presented stimuli. This paper presents new methods for doing so when only a natural language annotation is available as the description of the stimulus. We study fMRI data gathered from subjects watching an episode of BBC’s Sherlock [1], and learn bidirectional mappings between fMRI responses and natural language representations. By leveraging data from multiple subjects watching the same movie, we were able to perform scene classification with 72% accuracy (random guessing would give 4%) and scene ranking with average rank in the top 4% (random guessing would give 50%). The key ingredients underlying this high level of performance are (a) the use of the Shared Response Model (SRM) and its variant SRM-ICA [2, 3] to aggregate fMRI data from multiple subjects, both of which are shown to be superior to standard PCA in producing low-dimensional representations for the tasks in this paper; (b) a sentence embedding technique adapted from the natural language processing (NLP) literature [4] that produces semantic vector representations of the annotations; and (c) the use of previous timestep information in the featurization of the predictor data. These optimizations in how we featurize the fMRI data and text annotations provide a substantial improvement in classification performance relative to standard approaches.

Keywords: fMRI, text annotations, natural language processing, shared response model, natural movie stimulus, multi-modal model

1. Introduction

Recent work has provided convincing evidence that fMRI readings from human subjects can be related to semantics of presented stimuli. Such experiments consist of finding (1) low-dimensional representations of the fMRI signals, and (2) low-dimensional semantic representations of the external stimulus. These tasks often build upon work in machine learning.

The earliest work concerned simple settings with carefully controlled stimuli, such as subjects being presented (visually or auditorily) with one of a set of carefully selected words [5]. The semantic representation of a word was computed using word embeddings, a tool from natural language processing [6] that represents each word as a point in a d-dimensional meaning space. This work was extended [7, 8] to perform “brain reading”, using fMRI readings and a popular text-analysis tool called topic modeling to reconstruct word clouds from brain activity evoked by a word/concept stimulus.

The next obvious step in this research program is to understand fMRI readings collected from subjects as they process more complex stimuli such as movies. In such settings, it is not clear how to represent the semantics of the stimulus, since a multitude of signals (auditory as well as visual) are presented within a short time interval. One approach to solving this task was presented in [9], which studied fMRI responses to a natural movie stimulus. In this case, the movie stimulus was represented with a feature space of 1705 distinct nouns and verbs. A subsequent study [10] examined fMRI responses to audio stories, and departed from the previous work by applying distributional embeddings to featurize the dialog and predict voxel activation. The goal in these papers was to derive a semantic word map for the voxels of the brain. Another paper [11] gathered fMRI data from subjects reading a story, and used unweighted averages of distributional embeddings to featurize sentences for predicting voxel activity.

In this paper, we study the Sherlock fMRI dataset [1], which consists of fMRI recordings of 16 people watching the British television program “Sherlock” for 50 minutes; this time series was broken into 1973 TRs, where each TR corresponds to 1.5 seconds of film. As a proxy for the semantics of the movie, we use externally produced English text annotations of the program (average annotation length of 15 words per TR). We examine brain data from predefined regions of interest (ROIs) in the brain, and analyze each one separately. In particular, we examine the default mode network (DMN), dorsal and ventral language areas, the occipital lobe, and a 26000-voxel mask containing voxels with high intersubject correlation across the whole brain. We seek to determine whether various modifications to fMRI and text featurization, as well as the use of previous timepoint information, help to improve bidirectional mappings between fMRI data and semantic meaning vectors. In particular, we examine the effects of three featurization methods for fMRI and text data: a low-dimensional shared fMRI representation across subjects, weighted semantic embeddings of text annotations, and the use of previous timepoints when learning linear maps between fMRI and text.

Aggregating fMRI responses across subjects

In prior work, combining fMRI response data from multiple subjects is often solved by averaging, anatomical alignment and smoothing, or latent multivariate feature modeling [11, 12, 10]. Further work concludes that high-level representations of content from movies are shared across people and that averaging across people can yield considerable de-noising benefits [1]. Another recent paper [2] introduced the Shared Response Model (SRM), an algorithm that stems from previous work on hyperalignment [13]. The SRM in [2] jointly learns a low-dimensional shared space S and subject-specific maps Wi with orthogonal columns, and can be thought of as a multi-subject extension of PCA. Simultaneously reducing dimensionality across subjects outperforms other averaging approaches at matching up specific timepoints in a movie across subjects.

Semantic representation of stimulus

To find semantic representations of English annotations, it is natural to draw upon related work in natural language processing. One common approach involves word embeddings created by using co-occurrence information in a large corpus like Wikipedia. A simple technique for representing longer pieces of text is to average the vectors for the individual words [11]. Recently, this simplistic idea has been extended in natural language processing by using recurrent neural nets [14] or by modifying the original model for learning word vectors to learn word sequence chunks (for instance, paragraphs) directly from the text [15]. These more powerful methods have the drawback of requiring large corpora, making them unusable in our current setting where we only have 1973 brief text annotations. Very recently, [4] suggested a simpler method for this task that requires no additional information beyond the existing word embeddings, yet beats these more complicated methods in standard natural language tasks. We adapt this method to construct annotation embeddings using weighted combinations of the vector representations for the words in each annotation. One of our key results is that this new embedding significantly outperforms unweighted averaging of word vectors.

Using previous timestep information

A movie stimulus naturally breaks up into multi-timestep scenes that occur at different timepoints. Thus, at any given timepoint, there may be a window of previous timesteps that are part of the current scene and thus are relevant to understanding the current time point in both fMRI and Text space. We would like to incorporate this past information shared within scenes in order to learn better maps between fMRI and Text. Other models [10, 11] incorporate past information by modeling the hemodynamic response function (HRF) that describes the fMRI BOLD response to a stimulus. However, this approach focuses on small timescales, and only accounts for the delayed and temporally-smeared BOLD response rather than attempting to aggregate scene information. Our approach is to first approximate the HRF delay with a simple one-time shift of 4.5 seconds, and to then incorporate longer time-scales into our model by including in the featurization a k-sized window of previous timesteps, where k is varied from 0 to 30 (these numbers correspond to 0 – 45 seconds).

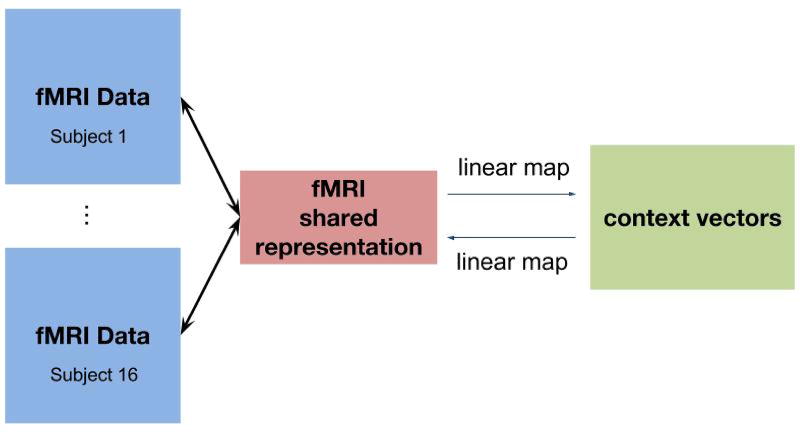

To evaluate the effect of each of these featurization methods, we use linear maps, learned on the first half of the movie only, to relate the fMRI signal to the representation of the semantic content. These maps are validated with two experiments: scene classification and scene ranking. We divide the second half of the movie into 25 uniformly-sized chunks. Scene classification is the task of using correlation to match each predicted interval of fMRI or semantic activity with its ground-truth interval, and reporting the percentage of intervals for which the correct interval is the top match. Since there are 25 intervals, random chance performance at this task is 4%. Scene ranking uses the same setup, except that we measure the average normalized rank of the correct answer; random chance performance here is 50%. For a visual summary of the setup, see Figure 1. These experiments are executed with the fMRI → Text maps (given fMRI data, predict text annotations) as well as the Text → fMRI maps (given text annotations, predict fMRI data).

Figure 1.

Summary of Experimental Setup: We learn a shared response for the brain activity of 16 different subjects watching BBC’s Sherlock, construct semantic featurizations for associated semantic annotations, and learn bidirectional linear maps between the two data modes.

1.1. Main results

Our goal in this study is to characterize the usefulness of the representation learned by the Shared Response Model, the importance of including previous time steps, and the ideal method for featurizing text information for future predictive tasks. We measure success by evaluating the performance of different featurization approaches on the scene classification and scene ranking tasks.

Our main results are (i) showing that fMRI responses from multiple individuals can be effectively combined using SRM to improve the matching accuracy between the fMRI and the text annotations (1.3× average improvement over our baseline, the average PCA representation; Table 1, Figures 6, 7); (ii) demonstrating that combining word vectors into annotation vectors with a suitable weighting [4] improves on unweighted averaging by 1.2× on average (Table 1, Figures 6, 7); and (iii) finding that appropriate inclusion of information from previous time steps yields as much as a 5.3× improvement (1.8× on average) in tasks measuring the performance of mapping from fMRI to Text (see Figure 6, Dorsal Language ROI). Including more time steps has diminishing returns beyond a certain point: the optimal number seems to be around 5–8 previous time steps. For the Text → fMRI task, using previous time steps decreases performance on average (Table 1).

Table 1.

Table of Improvement Ratios for Various Algorithmic Parameters: In this table we give the maximum and average improvement ratios for a specific algorithmic technique over another, including usage of previous time steps, SRM/SRM-ICA versus PCA, SIF-weighted annotation embeddings versus unweighted annotation embeddings, and Procrustes versus ridge regression for both fMRI → Text and Text → fMRI. When we use previous timesteps, we consider the results for using 5 – 8 previous time steps. These numbers are all for the scene classification task. Note that the values from the maximum columns can be seen visually in Figures 6 and 7 respectively.

| fMRI → Text | Maximum | Average |
|---|---|---|
| Previous Timesteps vs. None | 5.3× | 1.8× |
| Procrustes vs. Ridge | 2.8× | 1.3× |
| SRM/SRM-ICA vs. PCA | 1.8× | 1.3× |
| Weighted-SIF vs. Unweighted | 1.6× | 1.2× |

| Text → fMRI | Maximum | Average |
|---|---|---|
| Previous Timesteps vs. None | 2.5× | 0.5× |
| Procrustes vs. Ridge | 3.0× | 0.8× |
| SRM/SRM-ICA vs. PCA | 2.3× | 1.2× |
| Weighted-SIF vs. Unweighted | 1.8× | 1.1× |

Figure 6.

Comparisons for all ROIs for the fMRI → Text Top-1 Scene Classification Experiment: The chance rate for this task is 4%. Each plot is for a different ROI. Here, we only display results which use the Procrustes linear map since it on average performs better than ridge regression for fMRI → Text. We also fix the number of previous time points used for the shaded bars at 8 previous time steps, since that tends to be near optimal. We present comparisons between SRM/SRM-ICA and PCA using blue colors versus red colors, and compare weighted semantic aggregation (left) to unweighted semantic aggregation (right) by x-axis position.

Figure 7.

Comparisons for all ROIs for the Text → fMRI Top-1 Scene Classification Experiment: The chance rate for this task is 4%. Each plot is for a different ROI. Here, we only display results which use the ridge regression linear map since it on average performs better than Procrustes for Text → fMRI. We also fix the number of previous time points used for the shaded bars at 8 previous time steps, since that tends to be near optimal. We present comparisons between SRM/SRM-ICA and PCA using blue colors versus red colors, and compare weighted semantic aggregation (left) to unweighted semantic aggregation (right) by x-axis position.

We also report the top performances for each task. For the fMRI → Text task, our top scene classification performance is 72% accuracy, meaning that for 72% of the time intervals we examine, our predicted annotation representation correlates most strongly with the true annotation representation for that time interval (see Figure 5, Whole Brain ROI). This result improves considerably over the random guessing rate of 4%. The corresponding scene ranking performance is 96%, meaning that, on average, the true annotation representation lies within the top 4% when all annotation representations are sorted by correlation with the predicted one. In other words, the correct scene is on average ranked first or second among all 25 scenes. Since more than one or two scenes in the movie feature a single prominent character such as Sherlock or John Watson, this result implies that our methods can distinguish between scenes that are similar with respect to measures like the presence of certain characters.

Figure 5.

Best Bidirectional Accuracy Scores for Each Brain Region of Interest for both Scene Classification and Ranking: In this figure, for each ROI and for each experiment (Text → fMRI 4% (red), 50% (blue) chance rates; fMRI → Text 4% (red), 50% (blue) chance rates), we give the best performance as a percentage. For all measures, closer to 100% is better. We can see that Whole Brain, DMN-A, and DMN-B tend to perform the best, and that fMRI → Text performs better than Text → fMRI.

On the other hand, the results for Text → fMRI classification were somewhat worse, although performance was still well above chance. The top scene classification performance for Text → fMRI is 56% accuracy (vs. 4% chance), and the corresponding scene ranking accuracy is 91% (vs. 50% chance; see Figure 5, DMN-A ROI).

2. Methods

2.1. Preprocessing the Dataset

The dataset we work with in this paper is the Sherlock fMRI dataset [1]: 16 people watch the “Sherlock” television show for 50 minutes, broken into 1973 TRs, where each TR is 1.5 seconds of film.

Before performing any analysis, the fMRI data are preprocessed and standardized using the techniques described in [1]. Then, we identify six distinct brain regions of interest (ROIs) that we treat completely separately. That is, we first apply ROI masks to the whole-brain data and then learn SRM-representations for each of these ROIs separately. We use the ROIs for the default mode network (DMN-A, DMN-B) and the ROIs for the ventral and dorsal language areas identified in [16]. Our methodology for finding the default mode network relies on intersubject functional correlation (ISFC), a technique first introduced by [17]. The central idea is that natural stimuli (like movies) evoke reliable, time-dependent activity across a variety of brain networks. For more details, see Figure 2. We are interested in the DMN ROIs in particular since prior work has demonstrated that these regions play a crucial role in tracking the narrative in settings such as watching movies or reading stories [17, 18, 19, 20, 21, 16, 22]. The “Whole Brain” ROI is a 26000-voxel mask of the brain that highlights voxels that have intersubject correlation > 0.2 on the data, and the Occipital Lobe ROI is defined from the MNI Structural Atlas in FSL (https://fsl.fmrib.ox.ac.uk/fsl/fslwiki/Atlases). We include these ROIs for holistic comparison across the whole brain.
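The Whole Brain ROI above keeps voxels whose intersubject correlation (ISC) exceeds 0.2. The text does not say exactly how ISC was computed, so the sketch below assumes the common leave-one-out variant: each subject's voxel time series is correlated with the average of the remaining subjects, and these correlations are averaged over subjects. The helper names (`leave_one_out_isc`, `isc_mask`) and the synthetic data are hypothetical.

```python
import numpy as np


def leave_one_out_isc(data):
    """data: (n_subjects, n_voxels, n_TRs). Returns per-voxel leave-one-out ISC,
    averaged over subjects (assumed ISC definition)."""
    n_subj = data.shape[0]
    # z-score each voxel time series along time (population std)
    z = (data - data.mean(axis=2, keepdims=True)) / data.std(axis=2, keepdims=True)
    total = z.sum(axis=0)
    iscs = []
    for s in range(n_subj):
        others = (total - z[s]) / (n_subj - 1)          # mean of the other subjects
        others = (others - others.mean(axis=1, keepdims=True)) / others.std(axis=1, keepdims=True)
        iscs.append((z[s] * others).mean(axis=1))        # Pearson r per voxel
    return np.mean(iscs, axis=0)


def isc_mask(data, threshold=0.2):
    """Boolean voxel mask: True where ISC exceeds the threshold (hypothetical helper)."""
    return leave_one_out_isc(data) > threshold


# Synthetic check: only voxels 0-9 share a stimulus-driven signal across subjects
rng = np.random.default_rng(0)
shared = rng.standard_normal((10, 300))
data = rng.standard_normal((16, 50, 300))   # 16 subjects, 50 voxels, 300 TRs
data[:, :10, :] += 2.0 * shared
mask = isc_mask(data)
```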

Figure 2.

Visualization of the DMN and Ventral/Dorsal Language Area ROIs [16]: Here, we display four of the regions of interest on a brain map. These masks were collected on the Pie Man dataset [16], then fit to a standard anatomical brain (MNI152), and interpolated to 3-mm isotropic voxels [16]. In order to define the DMN-A and DMN-B regions, as well as the Ventral and Dorsal language area regions, the intersubject functional correlation matrix [17] was calculated from the fMRI data of 36 subjects collected while they were listening to stories [16]. Then, k-means clustering was applied to find the networks. The DMN-A and DMN-B networks were identified by comparing the resultant clusters to the DMN ROIs derived by thresholding the functional correlation between the posterior cingulate (identified from an atlas) and the rest of the brain in resting-state fMRI data from 36 subjects [16]. The Ventral and Dorsal language areas were identified by comparing the clusters to previous results in the literature [16].

We also truncate the first three TRs of fMRI data and the last three TRs of semantic annotation data. This operation effectively aligns the fMRI and semantic data under the assumption that there is a 4.5 second delay between the onset of the stimulus and the BOLD response signal.
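The truncation above amounts to a fixed lag of 4.5 s / 1.5 s = 3 TRs. A minimal NumPy sketch of the alignment, assuming both matrices store one column per TR and start at the same TR; the function and constant names are hypothetical:

```python
import numpy as np

TR_SECONDS = 1.5
HRF_DELAY_SECONDS = 4.5
SHIFT_TRS = int(round(HRF_DELAY_SECONDS / TR_SECONDS))  # 4.5 s / 1.5 s = 3 TRs


def align_fmri_and_text(fmri, text, shift=SHIFT_TRS):
    """fmri: (n_features, T) BOLD data; text: (d, T) annotation vectors.
    Drops the first `shift` fMRI TRs and the last `shift` annotation TRs, so that
    fMRI column t is paired with the annotation shown `shift` TRs earlier."""
    T = fmri.shape[1]
    return fmri[:, shift:], text[:, :T - shift]


# Toy example: column values encode the TR index
fmri = np.arange(10.0).reshape(1, 10)
text = 10 * np.arange(10.0).reshape(1, 10)
f, t = align_fmri_and_text(fmri, text)   # f starts at TR 3, t ends at TR 6
```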

2.2. Constructing and Aggregating Semantic Vectors

The Sherlock fMRI data are supplemented by text annotations describing each TR with a few sentences. These annotations were created by human annotators who viewed the film and carefully noted a couple of sentences’ worth of detail for every TR. For instance, a moment from the scene where Sherlock and John first meet is described as “Sherlock takes the phone that John hands to him. He flips the screen up, presses a button and blatantly asks: Afghanistan or Iraq?” Notably, these annotations contain some inferences about the subtext of the actions, expressions, and atmosphere of the scene. This additional information diversifies the content of the annotations, and allows for the creation of diverse and informative embeddings that capture more semantic content than would otherwise be possible with current natural language understanding techniques. The improved diversity of the embeddings also makes the task of identifying unique scenes in text space easier.

In order to represent words, we take advantage of the distributional properties of words in a large corpus, namely English Wikipedia. We train word embeddings as described in [23], which perform on par with other standard word embedding techniques like GloVe and Word2Vec [23]. At this point, we diverge from prior work by calculating and applying a domain-specific re-centering of the embeddings. After creating an embedding for each word in the vocabulary of the Sherlock annotations, we calculate the top principal component of all word embeddings in the vocabulary. We then scale the normalized top principal component by the average Euclidean norm of a word embedding in the Sherlock vocabulary. This vector represents a kind of average topic for the Sherlock vocabulary. Since we would like our word embeddings to be discriminative within this average topic, we subtract this vector from every word embedding. We can view this step as a translation that moves the word embeddings away from the region of semantic space that is close to generic words in the Sherlock annotation corpus.
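The re-centering step can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: the top principal direction is taken from an SVD of the uncentered embedding matrix, its arbitrary sign is flipped to point toward the mean embedding, and the helper name `recenter_embeddings` is hypothetical.

```python
import numpy as np


def recenter_embeddings(emb):
    """emb: (n_words, d) embeddings of the annotation vocabulary.
    Returns (recentered embeddings, 'average topic' vector)."""
    # Top principal direction (assumption: SVD of the uncentered matrix)
    _, _, vt = np.linalg.svd(emb, full_matrices=False)
    u = vt[0]                                   # unit-norm direction
    if u @ emb.mean(axis=0) < 0:                # SVD sign is arbitrary; orient toward the mean
        u = -u
    avg_norm = np.linalg.norm(emb, axis=1).mean()
    topic = avg_norm * u                        # scaled to the average embedding norm
    return emb - topic, topic                   # same translation for every word


# Toy vocabulary with a strong shared offset along the first axis
rng = np.random.default_rng(0)
common = np.zeros(50)
common[0] = 5.0
emb = rng.standard_normal((200, 50)) + common
new_emb, topic = recenter_embeddings(emb)
```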

The central assumption in [23] is a log-linear probability model for a word w in a vocabulary V given a context c, where the context represents a small window of words in the corpus:

$$\Pr[w \mid c] = \frac{\exp(\langle \upsilon_w, c \rangle)}{Z_c},$$

where υw represents the vector for a given word and $Z_c = \sum_{w \in V} \exp(\langle \upsilon_w, c \rangle)$ is a term that normalizes the distribution. The idea is that the context vector c represents the subject matter of the text at a given point in time.

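Using this assumption and a few others, the word vector learning problem is phrased in [23] as the squared-norm objective:

$$\min_{\{\upsilon_w\},\, C}\ \sum_{w, w'} X_{w,w'} \left( \log X_{w,w'} - \lVert \upsilon_w + \upsilon_{w'} \rVert_2^2 - C \right)^2,$$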

where C is a bias term, X is the co-occurrence count matrix between single words in a small window of text (fixed at ≈ 5 words) and υw are the word vectors we are trying to learn. This objective can be optimized with gradient descent. For a full treatment of the theoretical properties of the word vectors and the derivation of the squared-norm objective, see [23].
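To make the objective concrete, here is a small full-batch gradient-descent sketch on a random toy co-occurrence matrix. It is not the training procedure of [23]; the initialization, the learning rate, the restriction of the sum to nonzero counts, and all function names are assumptions chosen for illustration.

```python
import numpy as np


def sn_loss_and_grads(V, C, X):
    """Squared-norm objective
        L = sum_{w,w'} X[w,w'] * (log X[w,w'] - ||v_w + v_w'||^2 - C)^2
    over pairs with X[w,w'] > 0. V: (n, d) word vectors; C: scalar bias;
    X: (n, n) symmetric co-occurrence counts."""
    mask = X > 0
    log_x = np.where(mask, np.log(np.where(mask, X, 1.0)), 0.0)
    S = V[:, None, :] + V[None, :, :]          # S[a, b] = v_a + v_b, shape (n, n, d)
    sq_norm = (S ** 2).sum(axis=2)             # ||v_a + v_b||^2
    R = np.where(mask, log_x - sq_norm - C, 0.0)
    G = X * R                                  # count-weighted residuals
    loss = (G * R).sum()
    # dL/dv_w = -4 * sum_b (G[w, b] + G[b, w]) * (v_w + v_b)
    grad_V = -4.0 * np.einsum("ab,abd->ad", G + G.T, S)
    grad_C = -2.0 * G.sum()
    return loss, grad_V, grad_C


def fit_sn(X, d=10, lr=1e-6, steps=200, seed=0):
    """Plain full-batch gradient descent; returns vectors, bias and loss history."""
    rng = np.random.default_rng(seed)
    V = 0.1 * rng.standard_normal((X.shape[0], d))
    C = 0.0
    history = []
    for _ in range(steps):
        loss, grad_V, grad_C = sn_loss_and_grads(V, C, X)
        history.append(loss)
        V = V - lr * grad_V
        C = C - lr * grad_C
    return V, C, history


# Toy usage on a random symmetric "co-occurrence" matrix
rng = np.random.default_rng(0)
counts = rng.integers(1, 20, size=(20, 20)).astype(float)
X = counts + counts.T
V, C, history = fit_sn(X)
```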

For every 1.5-second time-point in our Sherlock movie, annotators were asked to provide a natural description of what is happening in the movie: actions, dialog, and so on. This annotation is typically a few sentences long, and contains around 15 words on average. We can think of each annotation as the current context of the movie narrative. The log-linear probability model of [23] for words given context c implies that the maximum likelihood estimator of the context is simply the average of all words in the annotation. (This formulation is a theoretical justification for a standard rule of thumb in natural language processing for representing the sense of a small piece of text by the average of the embeddings for the words in the text). We will call these representations the unweighted annotation vectors.

However, one imagines that not all words in the annotation are equally important, and that a better representation might be possible by taking this into account. This approach has been studied in various neural network frameworks [14]; however, applying these kinds of models requires a large annotation corpus, while we only have 1973 annotations of about 15 words each. A recent paper [4] suggests a principled approach for computing a representation of a small piece of text. The intuition from [4] is that words that occur with much greater frequency in the original corpus may inherently contain less information, since these words are in some sense uniform with respect to the whole word distribution. Therefore, more frequent words should be weighted less. The paper [4] modifies the above language generation model so that the probability of a word w given context c is

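$$\Pr[w \mid c] = \alpha\, p(w) + (1 - \alpha)\, \frac{\exp(\langle \upsilon_w, c \rangle)}{Z_c} \qquad (1)$$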

where Zc normalizes the distribution and α ∈ [0, 1]. We can think of this model as a weighted sum of the probability of a word w appearing not conditioned on the context c and the probability of a word w appearing conditioned on the context c.

The revised estimate of the context vector c in this modified objective is

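$$\hat{c} \;\propto\; \sum_{w \in \mathrm{annotation}} \frac{\beta}{\beta + p(w)}\, \upsilon_w \qquad (2)$$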

where $\beta = \frac{1 - \alpha}{\alpha Z}$, with the normalizing constant Z ≈ Zc treated as roughly the same for all contexts. Typically, we choose α such that $\beta \approx 10^{-4}$. These representations are called the smooth inverse frequency (SIF) annotation vectors, or weighted annotation vectors. Figure 3 depicts an example sentence with the respective word weights colored according to importance in the sentence embedding.
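Both the unweighted and the SIF-weighted annotation vectors can be sketched in a few lines. The tokenization, the unigram probabilities `word_prob`, and the function name are assumptions; each word vector is scaled by the SIF weight β/(β + p(w)) [4], and the sum is divided by the number of in-vocabulary words, which only rescales the proportional estimate. Setting `weighted=False` gives the plain average used for the unweighted annotation vectors.

```python
import numpy as np


def annotation_vectors(annotations, emb, vocab, word_prob, beta=1e-4, weighted=True):
    """annotations: list of token lists, one per TR.
    emb: (n_words, d) word vectors; vocab: word -> row index in emb;
    word_prob: word -> unigram probability p(w) in the embedding corpus.
    Returns a (T, d) array; transpose it to get the d x T matrix Y."""
    out = np.zeros((len(annotations), emb.shape[1]))
    for i, tokens in enumerate(annotations):
        words = [t for t in tokens if t in vocab]
        if not words:
            continue  # no known words: leave an all-zero vector
        weights = np.array([beta / (beta + word_prob[w]) if weighted else 1.0 for w in words])
        vecs = emb[[vocab[w] for w in words]]
        out[i] = weights @ vecs / len(words)
    return out


# Toy example: a frequent word ("the") versus a rare one ("sherlock")
vocab = {"the": 0, "sherlock": 1}
emb = np.eye(2)
word_prob = {"the": 0.05, "sherlock": 1e-6}
ann = [["the", "sherlock"], ["watson"]]
weighted_vecs = annotation_vectors(ann, emb, vocab, word_prob)
plain_vecs = annotation_vectors(ann, emb, vocab, word_prob, weighted=False)
```

With these toy numbers, the rare word dominates the weighted vector, while the unweighted vector gives both words equal weight.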

Figure 3.

Visualization of Semantic Annotation Vector Weightings: We display an example sentence from the Sherlock annotations, where we have colored important words red, and unimportant words blue. Brighter red means more important, and darker blue means less important.

Using either the unweighted or the weighted approach produces one annotation vector for each of our T time steps. On the training portion of the data (the first half of the movie), we calculate an average annotation vector and subtract it from all data (training and test). Here, we assume that the average annotation vector is the same across both halves of the movie, which turns out to be a good assumption.
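Centering with the training-half mean only, so that no statistics from the test half enter the features, can be sketched as follows. It assumes the annotation vectors are stored as columns of a d × T matrix in time order; the function name is hypothetical.

```python
import numpy as np


def center_with_training_mean(A, n_train):
    """A: (d, T) annotation vectors in time order; the first n_train columns are training.
    Subtracts the training-set mean vector from every column (train and test)."""
    train_mean = A[:, :n_train].mean(axis=1, keepdims=True)
    return A - train_mean, train_mean


A = np.array([[1.0, 3.0, 10.0],
              [2.0, 4.0, 20.0]])
centered, train_mean = center_with_training_mean(A, n_train=2)
```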

2.3. Shared Response Models for Multi-Subject fMRI

The Shared Response Model (SRM) [2] is an unsupervised probabilistic latent variable model for multi-subject fMRI data under a time-synchronized stimulus. From each subject’s fMRI view of the movie, SRM learns projections to a shared space that captures semantic aspects of the fMRI response.

Specifically, SRM learns N maps $W_i \in \mathbb{R}^{\upsilon \times k}$ with orthogonal columns and a shared response S by solving

$$\min_{W_1, \dots, W_N,\, S}\ \sum_{i=1}^{N} \lVert X_i - W_i S \rVert_F^2 \quad \text{s.t.} \quad W_i^\top W_i = I_k,$$

where $X_i \in \mathbb{R}^{\upsilon \times T}$ is the ith subject’s fMRI response (υ voxels by T repetition times) and $S \in \mathbb{R}^{k \times T}$ is a feature time series in a k-dimensional shared space. In this paper, k = 20, since a low-rank SVD with 20 dimensions captures 90% of the variance of the original fMRI matrices [1]. We also experimented with k = 50, 80, 100, and 1000, but the results barely differed from those with k = 20. Note that, for testing, the learned $W_i$ allow us to project unseen fMRI data $X_i'$ into the shared space via $W_i^\top X_i'$, since $W_i$ has orthogonal columns.
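For intuition, a deterministic variant of the alternating optimization behind SRM is sketched below: with S fixed, each W_i is an orthogonal Procrustes solution obtained from the SVD of X_i S^T, and with the W_i fixed, S is the average of the projections W_i^T X_i. This is a simplified sketch, not the probabilistic SRM of [2] or the implementation of [24]; the random initialization, the iteration count and the function names are assumptions.

```python
import numpy as np


def fit_srm(Xs, k=20, n_iter=10, seed=0):
    """Deterministic SRM by alternating minimization of
    sum_i ||X_i - W_i S||_F^2 subject to W_i^T W_i = I_k.
    Xs: list of (v_i, T) arrays; voxel counts may differ across subjects."""
    rng = np.random.default_rng(seed)
    # Random orthonormal initialization of each W_i
    Ws = [np.linalg.qr(rng.standard_normal((X.shape[0], k)))[0] for X in Xs]
    S = np.mean([W.T @ X for W, X in zip(Ws, Xs)], axis=0)
    for _ in range(n_iter):
        # W_i update: orthogonal Procrustes, W_i = U V^T from the SVD of X_i S^T
        Ws = []
        for X in Xs:
            U, _, Vt = np.linalg.svd(X @ S.T, full_matrices=False)
            Ws.append(U @ Vt)
        # S update: average of the subjects' projections W_i^T X_i
        S = np.mean([W.T @ X for W, X in zip(Ws, Xs)], axis=0)
    return Ws, S


def project_new(W, X_new):
    """Project unseen data of one subject into the shared space (W^T W = I)."""
    return W.T @ X_new
```

Each update minimizes the objective exactly with the other block held fixed, so the objective never increases from one iteration to the next.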

We also examine a variant of SRM called SRM-ICA [3] that modifies the SRM algorithm with an independent components analysis (ICA) objective. ICA is an unsupervised learning technique that identifies independent signals from a mixture by looking for rotations of the data that produce non-Gaussian signals. SRM-ICA brings this approach to learning a shared space. Whereas SRM alternates between solving for each $W_i$ by minimizing $\lVert X_i - W_i S \rVert_F$ and updating S with the average of the projections $W_i^\top X_i$, SRM-ICA replaces the objective used to update each $W_i$ with an ICA objective: maximizing the non-Gaussianity of the shared response estimate $W_i^\top X_i$, individually for each $(X_i, W_i)$ pair.

Here we are using the Shared Response Model to highlight aspects of the neural signal that are shared across people. To the extent that the information in the text annotations is reflected in the thoughts of most or all subjects, SRM should be helpful in mapping between fMRI and this (shared) information. Note that an alternative use of SRM is to take the shared variance identified by SRM and subtract it out, thereby highlighting idiosyncratic neural variance; this could be useful in situations where fMRI is being mapped to more idiosyncratic cognitive states. In the original Shared Response Model paper [2], the authors include an experiment where they apply SRM to two groups that were expected to have differing perceptions of the stimulus. They found that subtracting out shared variance across the groups using SRM improved subsequent discrimination of fMRI time series from the two groups.

In our experiments, we use the implementation of SRM due to [24]. We compare average SRM and SRM-ICA projections against the baseline average principal components analysis (PCA) projections. PCA is a standard linear dimensionality reduction technique that finds an optimal (in Frobenius norm) orthogonal projection of the data onto a low-dimensional subspace.

2.4. Learning Linear Maps

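Our approach to predicting semantic annotation vectors from fMRI vectors and vice versa is simply linear regression with two kinds of regularization. Let $X \in \mathbb{R}^{\upsilon \times T}$ represent the fMRI data matrix (either SRM, SRM-ICA, or PCA) for a specific ROI, where υ here denotes the number of fMRI features, and let $Y \in \mathbb{R}^{100 \times T}$ represent the annotation vectors. Our main approach is to solve the Procrustes problem

$$\min_{\Omega}\ \lVert \Omega X - Y \rVert_F \quad \text{s.t.} \quad \Omega^\top \Omega = I_{\upsilon \times \upsilon}.$$

Thus, we learn a matrix $\Omega \in \mathbb{R}^{100 \times \upsilon}$ as a map from X → Y, decoding fMRI vectors into semantic space. Our other approach is the ridge regression problem

$$\min_{\omega_j}\ \lVert X^\top \omega_j - y_j \rVert_2^2 + \lambda \lVert \omega_j \rVert_2^2, \qquad j \in [1, 100],$$

solved separately for each word vector dimension j, where $y_j \in \mathbb{R}^{T}$ is the jth row of Y. Stacking the $\omega_j^\top$ as rows forms $\Omega \in \mathbb{R}^{100 \times \upsilon}$ as before, with the orthogonality constraint replaced by a row-wise ℓ2-norm regularization.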
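Both maps have closed-form solutions. The sketch below assumes X is p × T (features by TRs) and Y is q × T; the Procrustes map uses the SVD of Y X^T, and the ridge map solves (X X^T + λI) Ω^T = X Y^T. The regularization strength λ is not specified in the text, so `lam` is a free parameter here, and the function names are hypothetical.

```python
import numpy as np


def fit_procrustes(X, Y):
    """Orthogonal Procrustes map Omega minimizing ||Omega X - Y||_F.
    X: (p, T), Y: (q, T). For p <= q, Omega (q, p) has orthonormal columns."""
    U, _, Vt = np.linalg.svd(Y @ X.T, full_matrices=False)  # Y X^T is (q, p)
    return U @ Vt


def fit_ridge(X, Y, lam=1.0):
    """Ridge map: row j of Omega minimizes ||X^T w_j - y_j||^2 + lam * ||w_j||^2.
    All rows are solved jointly: Omega^T = (X X^T + lam I)^{-1} X Y^T."""
    p = X.shape[0]
    return np.linalg.solve(X @ X.T + lam * np.eye(p), X @ Y.T).T


# Toy check: targets generated by an exact orthonormal-columns map
rng = np.random.default_rng(0)
Q = np.linalg.qr(rng.standard_normal((8, 5)))[0]   # (8, 5), orthonormal columns
X = rng.standard_normal((5, 200))
Y = Q @ X
Omega_proc = fit_procrustes(X, Y)
Omega_ridge = fit_ridge(X, Y, lam=1e-8)
```

For the Text → fMRI direction, the same functions apply with the roles of X and Y swapped. An orthonormal-columns Ω requires p ≤ q; when p > q, the same SVD construction yields a map with orthonormal rows instead.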

2.5. Adding Previous Timesteps

One could augment the fMRI and annotation vectors using past time steps by finding a complicated combination of the features at each time step, resulting in a representation with the same number of dimensions. For now, we sidestep the complexity of this task by simply concatenating k previous vectors to the predictor vector at each time step (TR) before learning mappings as before. A potential downside to this approach is that the dimensionality grows linearly with k, which can become intractable for large k. However, this approach allows every predictor feature at every timepoint to have its own weight in the linear map, creating a powerful model. Thus, in the fMRI → Text case, we stacked the k previous fMRI vectors onto each fMRI vector, and did not modify the text annotation vectors. In the Text → fMRI case, we stacked k previous text annotation vectors and left the fMRI vectors unmodified. When previous time steps do not exist, we append an all-zeros vector instead. We can think of the modified representations as capturing a notion of the dynamics occurring over an interval of 1.5(k + 1) seconds (TR length × total number of time points). In this paper, we tried k = 1 to 9 in steps of 1, and then k = 10 to 30 in steps of 5. See Figure 4 for a visualization.
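The concatenation with zero padding can be sketched as below. The helper name is hypothetical, and placing the current TR first is an arbitrary choice: permuting the blocks only permutes the columns of the learned linear map.

```python
import numpy as np


def stack_previous(X, k):
    """X: (p, T) predictor features, one column per TR.
    Returns (p * (k + 1), T): column t is [x_t; x_{t-1}; ...; x_{t-k}],
    with all-zero vectors where t - j < 0."""
    p, T = X.shape
    blocks = []
    for j in range(k + 1):
        shifted = np.zeros_like(X)
        shifted[:, j:] = X[:, :T - j]   # delay by j TRs, zero-padding the first j columns
        blocks.append(shifted)
    return np.vstack(blocks)


X = np.arange(1, 7, dtype=float).reshape(1, 6)
X_stacked = stack_previous(X, 2)   # shape (3, 6)
```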

Figure 4.

Visualizing Concatenation: We visualize what the single timestep case looks like compared to a case where we use the previous two timesteps in our featurization as well. The latter case results in a more complicated model, since one of the dimensions of our linear map triples in size.

2.6. Experiment Descriptions

First, we divide our 1973 TRs into 50 uniformly-sized chunks of time, the first 25 of which are our training data and the latter 25 of which are our testing data. We learn maps both from fMRI to text annotations and from text annotations to fMRI on the training data. From now on, we refer to fMRI → Text experiments as those which take an fMRI representation as input and attempt to predict a semantic annotation vector representation. Likewise, Text → fMRI experiments are those which take in a semantic annotation vector input and predict an fMRI representation. Also note that we train the linear maps on the individual TRs as opposed to the 25 chunks.

We perform two primary experiments in this paper, scene classification and scene ranking. These experiments are applied to both the fMRI → Text and Text → fMRI settings. In the following description, we denote the predictor space by X and the target space by Y.

Suppose we are in the X → Y setting. For each time chunk i ∈ [1, 25] in X-space, we predict chunk i in Y-space using the learned map, by applying the map individually to each TR within the time chunk. Then, we calculate the Pearson correlation of the predicted chunk i (represented by concatenating the representations for each TR in the chunk into one long vector) with each of the actual time chunks j ∈ [1, 25], and we rank the chunk indexes by correlation.
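A sketch of this ranking step, assuming `pred` and `actual` are q × T matrices for the test half with one column per TR. Each chunk is flattened TR by TR (column-major order), matching the concatenation described above; if T is not divisible by the number of chunks, the leftover TRs at the end are dropped, which is an assumption rather than something the text specifies. Function names are hypothetical.

```python
import numpy as np


def flatten_chunks(M, n_chunks):
    """Split the columns (TRs) of M into n_chunks equal contiguous chunks and
    concatenate the TR vectors of each chunk into one long vector."""
    length = M.shape[1] // n_chunks   # leftover TRs at the end are dropped
    return np.stack([M[:, i * length:(i + 1) * length].ravel(order="F")
                     for i in range(n_chunks)])


def chunk_rankings(pred, actual, n_chunks=25):
    """Returns (ranking, corr): corr[i, j] is the Pearson correlation between
    predicted chunk i and actual chunk j; ranking[i] lists actual chunk indices
    from highest to lowest correlation with predicted chunk i."""
    P = flatten_chunks(pred, n_chunks)
    A = flatten_chunks(actual, n_chunks)
    corr = np.corrcoef(P, A)[:n_chunks, n_chunks:]
    return np.argsort(-corr, axis=1), corr


# Sanity check: a perfect prediction ranks every correct chunk first
rng = np.random.default_rng(0)
actual = rng.standard_normal((4, 103))
ranking, corr = chunk_rankings(actual.copy(), actual)
```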

Scene classification

Given the ranking of actual time chunks by correlation with the predicted chunk, we report the proportion of the time that the correct chunk index is ranked the highest. This measure has a 4% chance rate, meaning that if we randomly ranked the actual chunks, any particular chunk would be the top chunk 4% of the time.

Scene ranking

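Given the ranking of actual time chunks by correlation with the predicted chunk, we calculate the average normalized rank score $\frac{1}{25}\sum_{i=1}^{25}\left(1 - \frac{r_i - 1}{24}\right)$, where $r_i$ is the rank (1 = highest correlation) of the correct chunk i among the 25 actual chunks. This measure has a 50% chance rate, meaning that if we randomly ranked the actual time chunks, the average rank of any particular chunk would be in the middle.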
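Given the chunk correlation matrix, both metrics reduce to the rank of the correct chunk. The sketch below assumes the normalized rank score 1 − (r − 1)/(n − 1), which has the 50% chance rate stated above, and breaks ties in favour of the correct chunk; both choices are assumptions, and the function name is hypothetical.

```python
import numpy as np


def scene_metrics(corr):
    """corr[i, j]: correlation of predicted chunk i with actual chunk j (n x n).
    Returns (top-1 scene classification accuracy, mean normalized rank score)."""
    n = corr.shape[0]
    # Rank of the correct chunk for each prediction (1 = highest correlation);
    # ties are resolved in favour of the correct chunk.
    ranks = 1 + (corr > np.diag(corr)[:, None]).sum(axis=1)
    top1 = np.mean(ranks == 1)
    rank_score = np.mean(1.0 - (ranks - 1) / (n - 1))
    return top1, rank_score


# Constructed example where the correct chunk for prediction i has rank i + 1,
# i.e. every rank from 1 to 25 occurs once (the chance-level situation)
n = 25
corr = -np.ones((n, n))
for i in range(n):
    corr[i, i] = 0.0
    others = [j for j in range(n) if j != i][:i]
    corr[i, others] = 1.0
top1, score = scene_metrics(corr)   # 1/25 and 0.5
```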

We report both of these metrics because the 4% chance rate task gives a better idea of the distribution of the ranking, while other authors have used the 50% chance rate, obtaining ranking scores between 70% – 80% [7, 11, 8].

We also give some additional analysis of the properties of stacking previous time points, and discuss how they affect prediction capabilities. In particular, we observe the dependence of classification accuracy on the number of previous time steps.

3. Results

3.1. Top Absolute Performances over All Algorithms

Figure 5 suggests that the DMN regions perform well in the experiments, which fits with prior research in this area ([20], [16]). We achieve 72% accuracy over 4% chance with the Whole Brain region in the scene classification task. Since the scene ranking measure is always ≥ 80%, the average rank of the correct answer is in the top 20% of the scenes, which translates to top 5 scenes out of 25. For fMRI → Text we perform even better, where the average rank of the correct answer is in the top 10% of the scenes (top 3 scenes out of 25). Notably, we get excellent performance out of the Whole Brain region, which has 26000 voxels selected by merely choosing voxels whose intersubject correlation is above a certain threshold. This result demonstrates that our methods are not overly dependent on applying domain-specific knowledge (we do not necessarily have to preselect an ROI to get good results).

fMRI → Text

Here we discuss the performance of the fMRI → Text experiments. In Figure 5, we display the top accuracy over all algorithmic choices for each experiment. Accuracy is high, reaching 72% on the scene classification task and the mid-90%s on the scene ranking task. In particular, the Whole Brain and DMN regions perform best, consistent with previous work ([20] and others) showing that the DMN plays an important role in narrative processing.

Text → fMRI

In contrast, the Text → fMRI experiments perform worse than the fMRI → Text experiments. The best top–1 scene classification accuracy is 56%, for the DMN-A region; the other top-performing regions reach accuracies in the mid-to-high 40%s. For the ranking task, performance ranges from 80% to 90%, again slightly worse than in the fMRI → Text ranking experiment.

3.2. Comparing Algorithmic Choices

To simplify the presentation of Figures 6 and 7, we fixed the algorithmic choices that generally outperformed the alternatives: all linear maps for fMRI → Text were learned using the Procrustes method, and all linear maps for Text → fMRI were learned using ridge regression. For fMRI → Text scene classification, Procrustes performed 1.25× better than ridge on average, whereas for Text → fMRI scene classification, ridge performed 1.2× better than Procrustes on average (Table 1). There were exceptions to this rule, as the max ratios in Table 1 indicate. In Figures 6 and 7, data points labeled as using previous time steps report the result for 8 previous time steps; the optimal number of previous time steps for fMRI → Text was typically between 5 and 8, so we fixed this parameter across all graphs in these figures.
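The two linear maps compared above differ in how they constrain the map W from predictor features X to target features Y. The sketch below shows the textbook closed forms, assuming time-aligned arrays X of shape (T, k) and Y of shape (T, d); the function names and the `alpha` default are hypothetical, and the exact preprocessing and hyperparameter selection used in this paper are not shown.

```python
import numpy as np


def fit_procrustes(X, Y):
    """Orthogonal Procrustes map from X (T, k) to Y (T, d).

    Returns W = U @ Vt from the SVD of X.T @ Y, which maximizes
    trace(W.T @ X.T @ Y) over matrices with orthonormal rows or columns.
    When k <= d (including square W) this is exactly the minimizer of
    ||X @ W - Y||_F subject to W @ W.T = I.
    """
    U, _, Vt = np.linalg.svd(X.T @ Y, full_matrices=False)
    return U @ Vt


def fit_ridge(X, Y, alpha=1.0):
    """Ridge regression map from X (T, k) to Y (T, d).

    Closed form W = (X^T X + alpha * I)^{-1} X^T Y. The default alpha is a
    hypothetical placeholder; in practice it would be chosen by cross-validation.
    """
    k = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(k), X.T @ Y)


# Usage on synthetic data where Y is an exact rotation of X
rng = np.random.default_rng(1)
X = rng.standard_normal((200, 20))
R, _ = np.linalg.qr(rng.standard_normal((20, 20)))  # random orthogonal matrix
Y = X @ R
W_proc = fit_procrustes(X, Y)          # recovers R
W_ridge = fit_ridge(X, Y, alpha=1e-6)  # nearly unregularized, so also close to R
```

Procrustes restricts W to a rotation or reflection and has no regularization parameter to tune, whereas ridge fits an unconstrained linear map shrunk by `alpha`.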

Comparing SRM and SRM-ICA to PCA

Using SRM or SRM-ICA instead of PCA considerably improves best-case performance, particularly on the fMRI → Text tasks, in some cases reaching as much as 1.8× the top–1 scene classification performance of PCA (Figure 6). SRM-ICA typically performs slightly better than SRM, especially on the Whole Brain ROI. The case is weaker for Text → fMRI: although performance can increase by as much as 2.3× the top–1 scene classification performance of PCA, the average benefit is smaller (Table 1, Figure 7). Averaged over all settings, we see considerable gains in both directions: SRM/SRM-ICA improve on PCA by 1.3× for fMRI → Text scene classification and by 1.2× for Text → fMRI scene classification. For the ranking tasks, the improvement for the best selections of algorithm parameters is less distinct, but SRM and SRM-ICA can drastically improve on PCA when parameters are poorly chosen. This suggests that SRM or SRM-ICA should be preferred over PCA: on a new dataset, where it is not known in advance which linear map to use, how many previous time points to incorporate, and so on, our results suggest that these SRM variants will improve strongly on PCA if the parameters are poorly chosen, and still improve decently on PCA otherwise.
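To make the multi-subject aggregation concrete, the sketch below implements the deterministic variant of the Shared Response Model: each subject's data X_i (voxels × TRs) is modeled as W_i S, with W_i having orthonormal columns and S a k-dimensional response shared across subjects. It alternates two exact minimization steps, so the objective never increases. This is an illustrative sketch under that assumption, not the authors' code: the paper may have used the probabilistic SRM of [2] (BrainIAK, acknowledged below, provides SRM implementations in its `brainiak.funcalign` module), and SRM-ICA [3] is not shown. The function name `det_srm` and the synthetic data are hypothetical.

```python
import numpy as np


def det_srm(data, k, n_iter=20, seed=0):
    """Deterministic Shared Response Model (sketch).

    data: list of arrays X_i of shape (n_voxels_i, T), one per subject.
    Minimizes sum_i ||X_i - W_i S||_F^2 over W_i (n_voxels_i, k) with
    orthonormal columns and a shared response S (k, T).
    Returns (list of W_i, S, objective value after each iteration).
    """
    rng = np.random.default_rng(seed)
    # Random orthonormal initialization of each subject's basis
    W = [np.linalg.qr(rng.standard_normal((X.shape[0], k)))[0] for X in data]
    S = np.mean([Wi.T @ X for Wi, X in zip(W, data)], axis=0)
    history = []
    for _ in range(n_iter):
        # W-step: an orthogonal Procrustes problem for each subject
        W = []
        for X in data:
            U, _, Vt = np.linalg.svd(X @ S.T, full_matrices=False)
            W.append(U @ Vt)
        # S-step: with orthonormal W_i, the optimum is the mean of W_i^T X_i
        S = np.mean([Wi.T @ X for Wi, X in zip(W, data)], axis=0)
        history.append(sum(np.linalg.norm(X - Wi @ S) ** 2 for Wi, X in zip(W, data)))
    return W, S, history


# Usage: three synthetic subjects with different voxel counts sharing a 5-dim response
rng = np.random.default_rng(3)
S_true = rng.standard_normal((5, 100))
subjects = []
for n_vox in (50, 60, 40):
    Q, _ = np.linalg.qr(rng.standard_normal((n_vox, 5)))
    subjects.append(Q @ S_true + 0.1 * rng.standard_normal((n_vox, 100)))
W, S, hist = det_srm(subjects, k=5, n_iter=10)
```

Held-out data from subject i can then be projected into the shared space as `W[i].T @ X_new`; setting `k=20` would correspond to the 20-dimensional shared space mentioned in the Discussion.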

Weighted vs. Unweighted Aggregation of Word Embeddings

Using SIF-weighted embeddings to featurize the annotations also improves on unweighted averaging. From Table 1 and Figure 6, weighted embeddings improve best-case fMRI → Text top–1 performance by as much as 1.3×, and by 1.2× on average over unweighted embeddings. The case is weaker for Text → fMRI top–1 (Figure 7): for some algorithms and ROIs, weighted aggregation improves best-case performance by as much as 2.5×, but in other cases unweighted averaging outperforms weighted averaging. On average, however, weighted embeddings improve by 1.1× over unweighted averaged embeddings.
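The difference between the two aggregation schemes can be sketched as follows. Following the SIF recipe of [4], each word vector is weighted by a / (a + p(w)), where p(w) is the word's unigram probability, so frequent words contribute less; [4] then removes the projection of every sentence vector onto the first singular vector of the stacked sentence vectors. The function names, the value `a=1e-3`, and the toy vocabulary and probabilities below are assumptions for illustration, as is whether this paper applied the common-component removal step.

```python
import numpy as np


def annotation_vector(tokens, word_vecs, word_prob, a=1e-3, weighted=True):
    """Average the word vectors of one annotation.

    weighted=True uses the SIF weight a / (a + p(w)); weighted=False is the
    plain unweighted average. word_vecs maps token -> 1-D array and
    word_prob maps token -> unigram probability. Tokens without a vector are skipped.
    """
    toks = [t for t in tokens if t in word_vecs]
    dim = len(next(iter(word_vecs.values())))
    if not toks:
        return np.zeros(dim)
    weights = np.array([a / (a + word_prob.get(t, 0.0)) if weighted else 1.0 for t in toks])
    vecs = np.array([word_vecs[t] for t in toks])
    return weights @ vecs / len(toks)


def remove_common_component(E):
    """Subtract each row's projection onto the first right singular vector of E.

    E has one row per annotation.
    """
    _, _, Vt = np.linalg.svd(E, full_matrices=False)
    u = Vt[0]
    return E - np.outer(E @ u, u)


# Usage with a hypothetical two-word vocabulary
vecs = {"the": np.array([1.0, 0.0]), "detective": np.array([0.0, 1.0])}
probs = {"the": 0.05, "detective": 1e-5}
sif = annotation_vector(["the", "detective"], vecs, probs)                    # dominated by "detective"
plain = annotation_vector(["the", "detective"], vecs, probs, weighted=False)  # equal weights
```

In the weighted vector, the frequent word "the" contributes about 2% of its vector while the rare word keeps about 99%; the unweighted average treats both equally.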

The Effects of Previous Time Points

Figure 6 shows that adding previous time steps improves accuracy in the fMRI → Text case. Table 1 shows that using previous time points can improve performance by as much as 5.3×, and by 1.8× on average, nearly doubling performance. In contrast, Figure 7 shows that for Text → fMRI, adding previous time steps almost universally hurts performance, on average halving it (Table 1). Figure 8 illustrates both effects for the DMN-A ROI.
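Stacking previous time points means concatenating the predictor features at each TR with those of the preceding TRs before fitting the linear map. The sketch below assumes a (T, d) feature matrix with one row per TR and zero-pads time points before the start of the run; the function name `stack_previous` and the padding choice are hypothetical (dropping the first rows instead would be an equally plausible choice).

```python
import numpy as np


def stack_previous(X, n_prev):
    """Concatenate each time point with its n_prev predecessors.

    X: (T, d) array with one row per TR. Returns a (T, d * (n_prev + 1))
    array whose row t is [X[t], X[t-1], ..., X[t-n_prev]]; time points
    before the start of the run are filled with zeros.
    """
    T, d = X.shape
    padded = np.vstack([np.zeros((n_prev, d)), X])
    # padded[n_prev + t] == X[t], so lag `lag` starts at row n_prev - lag
    lags = [padded[n_prev - lag : n_prev - lag + T] for lag in range(n_prev + 1)]
    return np.hstack(lags)


# Usage: 6 TRs of 2-dimensional features, stacked with 2 previous TRs
X = np.arange(12.0).reshape(6, 2)
S = stack_previous(X, 2)
```

Stacking multiplies the predictor dimensionality: a 20-dimensional shared fMRI space with 8 previous TRs yields 20 × 9 = 180 input features per TR, which is one reason extra time points add free parameters to the map.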

Figure 8.

Varying Previous Timesteps: For the DMN-A region, using SRM-ICA, weighted averaging, Procrustes for the fMRI → Text linear map, and ridge for the Text → fMRI linear map, we plot accuracy (y-axis) against the number of previous time points used in the linear map fit (x-axis). Accuracy peaks at around 5–8 previous TRs for the fMRI → Text tasks, and decays roughly monotonically as previous TRs are added for the Text → fMRI tasks.

Notably, using previous time steps is different from learning a hemodynamic response function, as other authors have done [11, 10]. Instead, we investigate whether information from longer time scales improves performance. In Figure 8, fMRI → Text classification performance peaks between 5 and 8 previous time steps (7.5–12 seconds earlier, after accounting for the HRF). However, using any number of previous time steps (up to 30 TRs, or 45 seconds) still improves over the baseline of using no previous time steps.

For Text → fMRI, however, the story is different: using previous time points does not improve performance, and in fact decreases it (Figure 8). We discuss the differences between the Text → fMRI and fMRI → Text results in the next section.

4. Discussion

In this paper, we have explored several methods that improve mappings between fMRI responses to a natural stimulus and semantic text data describing that stimulus. SRM and SRM-ICA perform considerably better than simple averaging or PCA. Figure 6 shows that weighted aggregation of the word vectors into annotation vectors improves baseline accuracy by a moderate amount relative to simple averaging. We also show that adding previous time steps substantially improves fMRI → Text accuracy.

Using SRM-ICA in fMRI space, weighted annotation vectors in semantic space, and a Procrustes linear map learned between the concatenations of five previous time points in fMRI and semantic space, we achieve 72% scene classification accuracy (chance: 4%) for the Whole Brain region on the fMRI → Text task.

Other ROIs typically exceed 60% scene classification accuracy as well. Similarly, in the scene ranking task, the average rank score of the correct answer exceeds 90% across ROIs. Text → fMRI does not perform as well but is still far above chance (56% with the DMN-A ROI against a 4% chance rate, and an average rank score above 80% across ROIs). Another takeaway is that SRM and SRM-ICA almost always improve on PCA, and provide particularly substantial improvement when the other parameter settings (such as the semantic featurization or the choice of linear map and its hyperparameters) are not tuned. These results indicate that we can use multiple subjects to learn a 20-dimensional shared space for the fMRI data that improves performance on our experiments. Thus, we provide concrete evidence for the hypothesis made in [10] regarding the existence of a shared fMRI representation across multiple subjects that correlates significantly with fine-grained semantic context vectors derived from statistical word co-occurrence properties.

The method of combining word vectors is another essential part of our results. SIF-weighted averaging [4] of the words in an annotation performs on average 1.2× better than unweighted averaging for fMRI → Text top–1 scene classification, and on average 1.1× better for Text → fMRI top–1 scene classification. Since we use only semantic vectors to featurize a movie stimulus, our work provides additional support for the notion that the distributional hypothesis of word meaning may extend to real-life multi-sensory stimuli.

Finally, using multiple previous time points when mapping fMRI → Text is very beneficial: it improves results by as much as 5.3×, and on average nearly doubles performance (Table 1).

Overall, accuracy for Text → fMRI was worse than for fMRI → Text. Also, using previous time points hurt performance for Text → fMRI (whereas it helped for fMRI → Text). One possible cause of these differences is that, in several places in the movie, text vectors were almost identical between adjacent TRs, whereas the fMRI patterns varied. Where this property occurred, Text → fMRI posed a (more difficult) one-to-many mapping problem, whereas fMRI → Text was an (easier) many-to-one mapping. These “pockets of stationarity” in the text vectors are a consequence of our using human-generated annotations to construct our semantic embeddings. As we noted before, these annotations reflect the annotator’s inferences about what is happening in the movie; if the annotator’s understanding of the current situation in the movie stays relatively stationary, the text embeddings will also stay relatively stationary. This reasoning may also account for why adding previous timepoints was not helpful for Text → fMRI; if the annotations for previous timepoints are the same as for the current timepoint, adding these previous timepoints has the effect of adding extra free parameters to the model without adding new, useful information.

Putting these ideas together, we think it is possible that the annotations left out some details that were nonetheless cognitively registered by the fMRI participants (and thus reflected in their fMRI data). One way to improve Text → fMRI accuracy would therefore be to identify points in time when the text annotations are relatively stationary, and then ask the annotator to add details, possibly missed on the first pass, that distinguish between those time points. At the same time, the human cognitive system's ability to maintain a stable understanding of a situation in the face of changing sensory input is a feature and not a bug; the relative stationarity of the annotations within scenes reflects our ability to extract deep structure from complex narratives, and in ongoing related work we are developing new tools to identify and study brain regions that are involved in extracting this deep structure [25].

Acknowledgments

The dataset is online [1], and the code used in this paper is available on GitHub (https://github.com/kiranvodrahalli/fMRI_Text_maps_NI). We used BrainIAK (http://brainiak.org/) for some of the algorithm implementations in this paper. This work was funded by a grant from the Intel Corporation; NIMH R01MH112357 awarded to U. Hasson and K. Norman; NIH grants R01-MH094480 and 2T32MH065214-11; NSF grants CCF-1527371 and DMS-1317308, a Simons Investigator Award, a Simons Collaboration Grant, and ONR grant N00014-16-1-2329 awarded to S. Arora; and NSERC Discovery Grant RG-PIN 2014-04465 awarded to C. Honey. P.-H. Chen was supported by a Google PhD Fellowship. We also thank C. Chen and V. Mocz for their comments on this work.

References

1. Chen J, Leong YC, Honey CJ, Yong CH, Norman KA, Hasson U. Shared memories reveal shared structure in neural activity across individuals. Nature Neuroscience. 2017;20:115–125. doi: 10.1038/nn.4450. URL http://www.nature.com/neuro/journal/v20/n1/full/nn.4450.html.

2. Chen P-H, Chen J, Yeshurun Y, Hasson U, Haxby JV, Ramadge PJ. A Reduced-Dimension fMRI Shared Response Model. The 29th Annual Conference on Neural Information Processing Systems (NIPS).

3. Zhang H, Chen P-H, Chen J, Zhu X, Turek JS, Willke TL, Hasson U, Ramadge PJ. A searchlight factor model approach for locating shared information in multi-subject fMRI analysis. arXiv preprint. URL https://arxiv.org/abs/1609.09432.

4. Arora S, Liang Y, Ma T. A Simple but Tough-to-Beat Baseline for Sentence Embeddings. International Conference on Learning Representations (ICLR) 2017. URL https://openreview.net/pdf?id=SyK00v5xx.

5. Mitchell TM, Shinkareva SV, Carlson A, Chang K-M, Malave VL, Mason RA, Just MA. Predicting Human Brain Activity Associated with the Meanings of Nouns. Science. 2008;320:1191–1194. doi: 10.1126/science.1152876.

6. Deerwester S, Dumais ST, Furnas GW, Landauer TK, Harshman R. Indexing by Latent Semantic Analysis. Journal of the American Society for Information Science. 1990;41:391–407.

7. Pereira F, Detre G, Botvinick M. Generating Text from Functional Brain Images. Frontiers in Human Neuroscience. 2011;5:72. doi: 10.3389/fnhum.2011.00072. URL http://journal.frontiersin.org/article/10.3389/fnhum.2011.00072.

8. Pereira F, Lou B, Pritchett B, Kanwisher N, Botvinick M, Fedorenko E. Decoding of generic mental representations from functional MRI data using word embeddings. bioRxiv preprint.

9. Huth AG, Nishimoto S, Vu AT, Gallant J. A Continuous Semantic Space Describes the Representation of Thousands of Object and Action Categories across the Human Brain. Neuron. 2012;76:1210–1224. doi: 10.1016/j.neuron.2012.10.014.

10. Huth AG, de Heer WA, Griffiths TL, Theunissen FE, Gallant JL. Natural speech reveals the semantic maps that tile human cerebral cortex. Nature. 2016;532:453–458. doi: 10.1038/nature17637.

11. Wehbe L, Murphy B, Talukdar P, Fyshe A, Ramdas A, Mitchell T. Simultaneously Uncovering the Patterns of Brain Regions Involved in Different Story Reading Subprocesses. PLOS ONE. 9. doi: 10.1371/journal.pone.0112575.

12. Conroy B, Singer B, Guntupalli J, Ramadge P, Haxby J. Inter-subject alignment of human cortical anatomy using functional connectivity. NeuroImage. 2013;81:400–411. doi: 10.1016/j.neuroimage.2013.05.009.

13. Haxby JV, Guntupalli JS, Connolly AC, Halchenko YO, Conroy BR, Gobbini MI, Hanke M, Ramadge PJ. A Common, High-Dimensional Model of the Representation Space in Human Ventral Temporal Cortex. Neuron. 2011;72:404–416. doi: 10.1016/j.neuron.2011.08.026. URL http://haxbylab.dartmouth.edu/publications/HGC+11.pdf.

14. Kiros R, Zhu Y, Salakhutdinov R, Zemel RS, Torralba A, Urtasun R, Fidler S. Skip-Thought Vectors. Advances in Neural Information Processing Systems. URL https://papers.nips.cc/paper/5950-skip-thought-vectors.

15. Le Q, Mikolov T. Distributed Representations of Sentences and Documents. Proceedings of the 31st International Conference on Machine Learning, JMLR. 32.

16. Simony E, Honey CJ, Chen J, Lositsky O, Yeshurun Y, Hasson U. History dependent dynamical reconfiguration of the default mode network during narrative comprehension. Nature Communications. 7. doi: 10.1038/ncomms12141. URL http://www.nature.com/articles/ncomms12141.

17. Hasson U, Nir Y, Levy I, Fuhrmann G, Malach R. Intersubject Synchronization of Cortical Activity During Natural Vision. Science. 2004;303:1634–1640. doi: 10.1126/science.1089506. URL http://media.wix.com/ugd/b75639_74b4709ef98248a1be41e5ea433fdaed.pdf.

18. Hasson U, Malach R, Heeger D. Reliability of cortical activity during natural stimulation. Trends in Cognitive Sciences. 2010;14:40–48. doi: 10.1016/j.tics.2009.10.011.

19. Honey CJ, Thompson CR, Lerner Y, Hasson U. Not Lost in Translation: Neural Responses Shared Across Languages. Journal of Neuroscience. 2012;32:15277–15283. doi: 10.1523/JNEUROSCI.1800-12.2012.

20. Regev M, Honey CJ, Simony E, Hasson U. Selective and Invariant Neural Responses to Spoken and Written Narratives. Journal of Neuroscience. 2013;33:15978–15988. doi: 10.1523/JNEUROSCI.1580-13.2013.

21. Ames D, Honey CJ, Chow M, Todorov A, Hasson U. Contextual Alignment of Cognitive and Neural Dynamics. Journal of Cognitive Neuroscience. 2015;27:655–664. doi: 10.1162/jocn_a_00728.

22. Yeshurun Y, Swanson S, Simony E, Chen J, Lazaridi C, Honey CJ, Hasson U. Same story, different story: the neural representation of interpretive frameworks. Psychological Science. doi: 10.1177/0956797616682029.

23. Arora S, Li Y, Liang Y, Ma T, Risteski A. A Latent Variable Model Approach to PMI-based Word Embeddings. Transactions of the Association for Computational Linguistics. 4.

24. Anderson MJ, Capota M, Turek JS, Zhu X, Willke TL, Wang Y, Chen P-H, Manning JR, Ramadge PJ, Norman KA. Enabling factor analysis on thousand-subject neuroimaging datasets. URL https://arxiv.org/abs/1608.04647.

25. Baldassano C, Chen J, Zadbood A, Pillow JW, Hasson U, Norman KA. Discovering event structure in continuous narrative perception and memory. bioRxiv preprint. doi: 10.1016/j.neuron.2017.06.041. URL http://biorxiv.org/content/early/2016/10/14/081018.