Abstract

Mechanisms underlying the self/other distinction have mainly been investigated with a focus on visual, tactile or proprioceptive cues, whereas very little is known about the contribution of acoustical information. Here, the ability to distinguish between one's own and others' voices is investigated using a neuropsychological approach. Right (RBD) and left brain damaged (LBD) patients and healthy controls performed a voice discrimination task and a voice recognition task. Stimuli were paired words/pseudowords pronounced by the participant, by a familiar person or by an unfamiliar person. In the voice discrimination task, participants had to judge whether two voices were the same or different, whereas in the voice recognition task participants had to judge whether or not their own voice was present. Crucially, differences between patient groups were found. In the discrimination task, only RBD patients were selectively impaired when their own voice was present. By contrast, in the recognition task, both RBD and LBD patients were impaired and showed two different biases: RBD patients misattributed the other's voice to themselves, while LBD patients denied the ownership of their own voice. Thus, two kinds of bias can affect self-voice recognition: we can refuse self-stimuli (voice disownership), or we can misidentify others' stimuli as our own (embodiment of others' voice). Overall, these findings reflect different impairments in self/other distinction at both the behavioral and the anatomical level, with the right hemisphere involved in voice discrimination and both hemispheres involved in the explicit recognition of voice identity. The finding of selective brain networks dedicated to processing one's own voice demonstrates the relevance of self-related acoustic information in bodily self-representation.

Abbreviations: LBD patient, left brain damaged patient; RBD patient, right brain damaged patient; VLSM, voxel-based lesion-symptom mapping

Keywords: Self/other distinction, Voice recognition, Voice discrimination, Embodiment, Brain damaged patients, Lesion-symptom mapping

Highlights

- The neural bases of self/other voice discrimination and recognition were studied.
- Self-voice discrimination was selectively impaired in right brain damaged patients.
- Left brain damaged patients showed self-voice disownership in voice recognition.
- Right brain damaged patients showed others' voice embodiment in voice recognition.
- Specific components of voice identity are linked to distinct brain networks.

1. Introduction

A large amount of evidence from studies using different sensory modalities (e.g., auditory, visual and tactile) and tasks indicates that self-related information is treated as a special stimulus (Northoff, 2016; Sui and Humphreys, 2017). For instance, in the acoustical domain a variety of sounds (i.e., musical sound or other auditory information) can be referred to the self or to other people (Sevdalis and Keller, 2014). Accordingly, recent electrophysiological evidence demonstrated that self-generated sounds evoke a smaller brain response than externally generated ones (Timm et al., 2016). An important corpus of studies also demonstrated that the human voice has a critical role for identity recognition (von Kriegstein and Giraud, 2004; Schall et al., 2015; Perrodin et al., 2015; Pernet et al., 2015).

A relevant contribution to understanding the anatomical correlates of voice identity recognition has been provided by neuropsychological studies (Van Lancker and Canter, 1982; Van Lancker and Kreiman, 1987; Van Lancker et al., 1989; Peretz et al., 1994; Lang et al., 2009; Hailstone et al., 2010; Blank et al., 2014; Luzzi et al., 2017; Papagno et al., 2017). For instance, Van Lancker et al. (1989) administered two different tasks to left (LBD) and right brain damaged (RBD) patients. In the voice discrimination task, pairs of unfamiliar voices were presented and participants were required to decide whether the stimuli were the same or different. In the voice recognition task, participants were asked to recognize familiar voices. Defects in voice discrimination occurred following left or right temporal lobe lesions, whereas voice recognition was impaired following right parietal lesions. More recently, other studies reported an involvement of the right temporal lobe in voice discrimination (Papagno et al., 2017) as well as in voice recognition (Luzzi et al., 2017). Moreover, an impairment in familiar voice recognition associated with bilateral fronto-temporal atrophy was described (Hailstone et al., 2010).

However, so far, no neuropsychological study has focused on self-voice processing. Despite the lack of studies on this issue, it is of great interest to elucidate how self-stimuli are processed in the acoustical domain. Indeed, behavioral studies observed that healthy participants were less accurate with their own voice than with unfamiliar voices when they were explicitly asked to decide whether or not the stimuli represented their own voice (Rosa et al., 2008; Allen et al., 2005). Accordingly, neurophysiological and neuroimaging data converge in showing that one's own voice and other people's voices are processed differently. In this respect, Graux et al. (2013, 2015), by recording event-related potentials, found that passively listening to the self-voice evoked smaller P3a responses than listening to familiar and unfamiliar voices. Moreover, fMRI studies revealed that patterns of activity in specific brain regions, such as the right (Kaplan et al., 2008) and left inferior frontal gyri (Allen et al., 2005), underlie discrimination between the sound of one's own vs. other people's voice.

In sum, neuroimaging and neuropsychological studies did not clarify the role of each hemisphere with respect to two main factors: the type of task (voice discrimination and voice recognition) and the type of stimulus (self, familiar and unfamiliar voice). For this reason, here, we investigate the neural correlates of self-voice discrimination and self-voice recognition in patients with right or left focal lesions by adopting a paradigm previously described by Candini et al. (2014). Healthy participants listened to paired auditory stimuli pronounced by themselves, by a familiar or unfamiliar person. Afterwards, in the voice discrimination task participants had to judge whether paired stimuli were pronounced by same or different speakers, while in the voice recognition task they had to identify if one of the paired stimuli was or was not their own voice. Participants showed a selective facilitation in discriminating rather than recognizing the self-stimuli, whereas no difference was found with others' stimuli. These findings suggest that different mechanisms may underlie the processing of self-related stimuli when an explicit recognition is required (recognition task) or not required (discrimination task).

We hypothesize two different scenarios: if self-voice discrimination and recognition share functional mechanisms, their impairments should result from damage to a single neural network, and should be associated in patients. Conversely, if self-voice discrimination and recognition are subtended by distinct functional mechanisms, impairments of self-voice discrimination and recognition should be associated with lesions of relatively segregated brain networks, and should not necessarily be associated in patients. More specifically, considering the type of task, in line with neuropsychological findings, a deficit in voice discrimination should be found in patients with left or right hemisphere lesions (Van Lancker and Kreiman, 1987; Van Lancker et al., 1989; Papagno et al., 2017). However, it should be noted that previous reports did not consider one's own voice. In line with lesion studies focused on self body-parts discrimination, we put forward the hypothesis that the right hemisphere has a major role also in self-voice discrimination (Frassinetti et al., 2008, 2010). Regarding the recognition task, deficits for familiar voices were mainly associated with right hemisphere lesions (Van Lancker and Canter, 1982; Van Lancker and Kreiman, 1987; Van Lancker et al., 1989; Neuner and Schweinberger, 2000; Hailstone et al., 2010; Luzzi et al., 2017; Papagno et al., 2017). However, the few studies that investigated the anatomical correlates of self-voice recognition reported an involvement of right (Nakamura et al., 2001; Kaplan et al., 2008) or left frontal regions (Allen et al., 2005), suggesting a possible contribution of both hemispheres to self and others' voice recognition.

2. Material and methods

2.1. Participants

An a priori statistical power analysis for sample size estimation was performed using G*Power (v. 3.1.9). The analysis suggested that about 15 participants per group were necessary to achieve 90% power with alpha = 0.05 and effect size η2p = 0.29 (Candini et al., 2014).

In line with the power analysis, thirty-two consecutive brain damaged patients were recruited at the Maugeri Clinical Scientific Institutes (Castel Goffredo, Italy), the S. Maria delle Croci Hospital (Ravenna, Italy) and the Sol et Salus Hospital (Rimini, Italy) to participate in the study. Sixteen patients were affected by right hemispheric lesion (RBD; 12 males; mean ± sd age = 69.2 ± 12 years; mean ± sd education = 8.4 ± 4.1 years) and sixteen patients were affected by left hemispheric lesion (LBD; 8 males; mean ± sd age = 64.8 ± 11 years; mean ± sd education = 8.1 ± 4.1 years). Criteria for inclusion in the study were first-ever stroke (ischemic or hemorrhagic), confirmed by either brain CT or MRI findings.

Sixteen healthy participants (Controls; 9 males, mean ± sd age = 64.9 ± 10 years; mean ± sd education = 10.5 ± 4.6 years) matched by age, education and handedness served as controls. Two one-way ANOVAs confirmed that the three groups (Controls, RBD and LBD patients) did not significantly differ in age [F(2,45) = 1.67, p = 0.19] or years of education [F(2,45) = 0.75, p = 0.48].

All participants were right-handed and without auditory pathology by their own verbal report. In order to confirm that hearing was normal, all participants preliminarily performed a yes/no task in which they were presented with tones (pure tones: 1500 Hz, 70 dB, lasting for 150 ms) and silences. Participants had to respond only to pure tones by pressing a button on a keyboard. A total of 30 trials were delivered binaurally through earphones (50% = pure tone and 50% = silence). Admission to the study was contingent on participants obtaining 100% accuracy.

All patients were administered the Mini-Mental State Examination (Folstein et al., 1975) to screen for general cognitive impairment and the Token Test (De Renzi and Vignolo, 1962) to exclude comprehension deficits (see Table 1 for demographical and neuropsychological details).

Table 1.

Clinical and neuropsychological data of left and right brain damaged patients according to the lesion site.

LBD = left brain damaged patients; RBD = right brain damaged patients; Education and Age are indicated in years; TPL = Time post lesion (days); I = ischemic stroke, H = hemorrhagic stroke; MMSE = Mini Mental State Examination (scores are corrected for years of education and age; cut-off > 24); Token Test = cut-off > 26.5; na = score not available.

Columns Patient to Aetiology report demographical and clinical details; columns MMSE and Token Test report the neuropsychological examination.

| Patient | Age | Educ | TPL | Aetiology | MMSE | Token Test |
|---|---|---|---|---|---|---|
| LBD 1 | 71 | 8 | 45 | I | 27 | 36 |
| LBD 2 | 69 | 4 | 59 | H | 24 | 27 |
| LBD 3 | 77 | 5 | 39 | I | 24 | 30 |
| LBD 4 | 72 | 8 | 28 | H | 19 | 22 |
| LBD 5 | 64 | 6 | 84 | H | 24 | 32 |
| LBD 6 | 62 | 5 | 63 | H | 22 | 26 |
| LBD 7 | 71 | 5 | 1825 | H | 25 | 29 |
| LBD 8 | 77 | 5 | 6245 | H | 30 | 28 |
| LBD 9 | 41 | 13 | 382 | H | 28 | 28 |
| LBD 10 | 56 | 13 | 446 | I | 30 | 31 |
| LBD 11 | 62 | 17 | 208 | I | 28 | 33 |
| LBD 12 | 47 | 8 | 252 | I | 29 | 33 |
| LBD 13 | 32 | 13 | 1100 | I | 26 | 30 |
| LBD 14 | 51 | 11 | 65 | H | 27 | 32 |
| LBD 15 | 46 | 11 | 390 | I | 28 | 27 |
| LBD 16 | 48 | 8 | 1358 | I | 29 | 32 |
| RBD 1 | 69 | 8 | 1620 | I | 30 | 30 |
| RBD 2 | 81 | 5 | 37 | H/I | 20 | na |
| RBD 3 | 56 | 3 | 128 | H | 27 | 32 |
| RBD 4 | 60 | 13 | 164 | I | 26 | 31 |
| RBD 5 | 66 | 13 | 1100 | H | 24 | 31 |
| RBD 6 | 80 | 3 | 744 | I | 27 | 31 |
| RBD 7 | 83 | 5 | 11 | I | 28 | 34 |
| RBD 8 | 62 | 13 | 1541 | I | 26 | 31 |
| RBD 9 | 80 | 7 | 79 | I | 24 | 30 |
| RBD 10 | 64 | 13 | 21 | H | 26 | na |
| RBD 11 | 58 | 8 | 22 | I | 29 | 34 |
| RBD 12 | 80 | 7 | 65 | I | 22 | 33 |
| RBD 13 | 74 | 13 | 442 | I | 30 | 31 |
| RBD 14 | 41 | 13 | 210 | I | 28 | 32 |
| RBD 15 | 68 | 8 | 208 | I | 30 | 33 |
| RBD 16 | 37 | 13 | 248 | I | 28 | 33 |

All participants were Italian speakers and naive to the purpose of the study. Written informed consent was obtained before taking part in the experiment. The study was approved by the local ethics committee (Department of Psychology, Bologna) and all procedures were in agreement with Puri et al. (2008).

2.2. Stimuli

In a first session, human voices were recorded in a silent room using a recorder positioned 60 cm from the speaker's trunk. Vocal stimuli were Italian words and pseudowords, that is, either meaningful words or meaningless analogues. Words were six disyllabic, high-frequency stimuli (in Italian: cane, foca, lupo, alce, rana, topo; in English: dog, seal, wolf, elk, frog, mouse), all belonging to the same semantic category (animals). Six pseudowords were obtained from the same words by replacing two letters (cona, faco, lusa, leca, tupi, rona). Subsequently, each vocal stimulus was digitized at 44100 Hz, 70 dB, 16 bit, stereo modality, and processed using dedicated software (Cool Edit Pro) to adjust the overall sound pressure and to balance the volume. The mean duration of each stimulus was 623.5 ms (SD = 89.5; range = 532–816 ms) and its frequencies were not manipulated.

Each stimulus could represent the participant's voice (A stimulus), the familiar other voice (B stimulus) or the unfamiliar other voice (C stimulus). The familiar other voice was the voice of a person the participant encounters every day. The unfamiliar other voice was taken from a data set of stimuli previously collected from a group of healthy participants. Familiar and unfamiliar voices were matched by gender, age and regional accent with each participant's voice. Self, familiar and unfamiliar voices were recorded while words and pseudowords were pronounced with a flat tone and as clearly as possible. Whenever the recorded items were not easily identifiable, the experimenter asked the speaker to repeat them until they were.

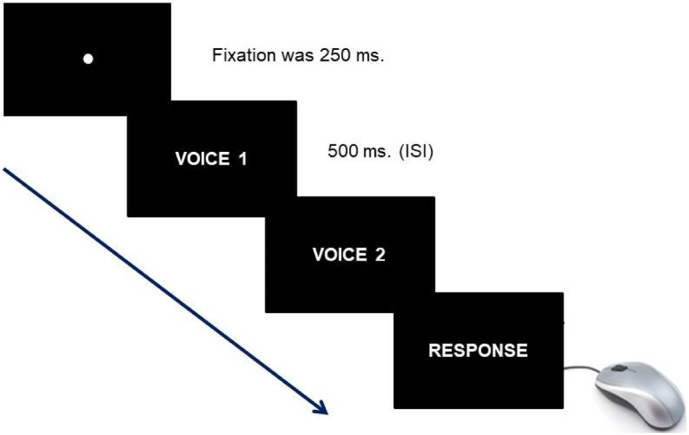

2.3. Procedure

Each trial started with a central fixation point (250 ms duration), followed by the sequential presentation (500 ms ISI) of a pair of vocal stimuli. The trial ended as soon as the participant responded. The inter-trial interval was fixed at 1000 ms. In each trial the two stimuli could either belong to the same person (same trials) or to different people (different trials) (Candini et al., 2014). Moreover, stimuli could belong to the participant (A), the familiar other (B) or the unfamiliar other (C). Thus, three combinations of “same” stimuli (AA, BB and CC) and three combinations of “different” stimuli (AB, AC and BC) were presented. Voices were matched by gender, age and regional accent. Finally, half of the trials consisted of identical stimuli (e.g., cane/cane or cona/cona), whereas the other half comprised non-identical stimuli (e.g., cane/foca or cona/faco). Presentation of each word/pseudoword was counterbalanced between “same” and “different” trials under the six combinations. Words and pseudowords were presented in two separate blocks. In each block, 4 trials for each combination of stimuli according to the voice's owner (AA-BB-CC-AC-AB-BC) were presented, for a total of 24 trials. The presentation order, the stimuli sequence (identical and non-identical) as well as the owner factor (AA-BB-CC-AC-AB-BC) were randomized between trials, whereas the order of the two blocks (words and pseudowords) was balanced between participants.
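The block structure described above can be illustrated with a minimal Python sketch. It is not the E-Prime script used in the study: the function name `build_block`, the dictionary fields and the random choice of items are assumptions made for illustration, and the sketch does not reproduce the exact counterbalancing of each word across “same” and “different” trials.

```python
import random

# Stimuli and owner combinations as listed in the text.
WORDS = ["cane", "foca", "lupo", "alce", "rana", "topo"]
PSEUDOWORDS = ["cona", "faco", "lusa", "leca", "tupi", "rona"]
OWNER_PAIRS = ["AA", "BB", "CC", "AB", "AC", "BC"]  # A = self, B = familiar, C = unfamiliar


def build_block(items, trials_per_pair=4, seed=None):
    """Return a shuffled list of trials for one block (hypothetical helper).

    Each owner combination gets `trials_per_pair` trials; half of them use
    identical items (e.g. cane/cane), the other half non-identical items.
    Items are drawn at random, which is a simplification of the study's
    counterbalancing.
    """
    rng = random.Random(seed)
    trials = []
    for pair in OWNER_PAIRS:
        for i in range(trials_per_pair):
            identical = i < trials_per_pair // 2
            first = rng.choice(items)
            if identical:
                second = first
            else:
                second = rng.choice([w for w in items if w != first])
            trials.append({
                "owners": pair,
                "same_speaker": pair[0] == pair[1],
                "items": (first, second),
                "identical_items": identical,
            })
    rng.shuffle(trials)  # randomize presentation order within the block
    return trials


if __name__ == "__main__":
    block = build_block(WORDS, seed=0)
    print(len(block), "trials;", sum(t["same_speaker"] for t in block), "same-speaker trials")
```

With the default settings each block contains 24 trials, 12 with the same speaker and 12 with different speakers, matching the counts given in the text.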

Participants sat in front of a PC screen at a distance of about 60 cm, and were required to press two previously assigned response keys, one for affirmative (YES) and one for negative (NO) answers. Response buttons were counterbalanced between participants. Stimuli were delivered through earphones (JVC HA-F140) and were presented binaurally. E-Prime 2.0 (Psychology Software Tools ©1996–2012) was used to control stimulus presentation and randomization. Key press RTs and response accuracy were recorded.

First of all, participants were instructed to ignore the linguistic content and to focus on the speaker. In the voice discrimination task, half of the participants were asked to judge whether the two voices belonged to the same person and the other half whether the two voices belonged to different people. In the voice recognition task, all participants were required to explicitly judge whether at least one of the two voices corresponded to their own voice. No feedback on the accuracy of the responses was provided during the experiment (Fig. 1).

Fig. 1.

Experimental trial.

Example of experimental trial in which two paired voices were subsequently presented.

All participants performed the two tasks in one single session, with eight practice trials before the main experiment. Auditory stimuli used during the training period were not used in the subsequent experiment. To avoid an interference effect of the explicit recognition of one's own voice on the “same/different” task, the voice discrimination task always occurred before the voice recognition task. The whole experiment took approximately half an hour.

2.4. Statistical analyses

We compared Self and Other conditions according to the definition adopted in our previous study (Candini et al., 2014): in the Self trials, at least one stimulus belonged to the participant (Self condition: AA-AB-AC); in the Other trials, no stimulus belonged to the participant (Other condition: BB-CC-BC). The accuracy (% of correct responses) measured the performance.
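As an illustration of the Self/Other scoring rule just described, the following Python sketch computes the percentage of correct responses per condition. The function name `accuracy_by_owner` and the `(owners, correct)` input format are hypothetical and are not taken from the original analysis pipeline.

```python
# Owner combinations that define each condition, as stated in the text.
SELF_PAIRS = {"AA", "AB", "AC"}    # at least one stimulus is the participant's voice
OTHER_PAIRS = {"BB", "CC", "BC"}   # no stimulus is the participant's voice


def accuracy_by_owner(trials):
    """Percentage of correct responses for the Self and Other conditions.

    `trials` is an iterable of (owners, correct) tuples, e.g. ("AB", True).
    Conditions without any trial are omitted from the result.
    """
    counts = {"Self": [0, 0], "Other": [0, 0]}  # [n_correct, n_trials]
    for owners, correct in trials:
        if owners in SELF_PAIRS:
            cond = "Self"
        elif owners in OTHER_PAIRS:
            cond = "Other"
        else:
            raise ValueError(f"unknown owner combination: {owners!r}")
        counts[cond][0] += int(correct)
        counts[cond][1] += 1
    return {c: 100.0 * k / n for c, (k, n) in counts.items() if n}


if __name__ == "__main__":
    demo = [("AA", True), ("AB", False), ("BC", True), ("CC", True)]
    print(accuracy_by_owner(demo))
```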

First of all, to determine whether or not the dataset was normally distributed, we applied the Kolmogorov–Smirnov test. The lack of significant results (p > 0.05) allowed us to assume that the dataset was not significantly different from a normal distribution. Furthermore, to elucidate possible differences across the three groups, we conducted an Analysis of Variance (ANOVA) on accuracy with Group (Controls, RBD and LBD patients) as between-subject factor, and Task (Voice discrimination/Voice recognition task) and Owner (Self/Other condition) as within-subject factors. Post-hoc comparisons were conducted using the Duncan test. Effect size was expressed as partial eta squared (η2p).

3. Results

3.1. Comparison between healthy participants, RBD and LBD patients

A significant effect of the Group factor was found [F(2,45) = 8.47, p = 0.001, η2p = 0.27] which was due to lower accuracy of RBD patients (63%) compared to LBD patients (73%, p = 0.04) and Controls (82%, p = 0.001). The difference between LBD patients and controls approached significance (p = 0.056). The variable Task [F(1,45) = 8.41, p = 0.005, η2p = 0.16] (Voice discrimination = 76% vs. Voice recognition = 69%) was significant. Furthermore, a significant three-way interaction Group x Owner x Task was found [F(2,45) = 13.79, p = 0.001, η2p = 0.38].

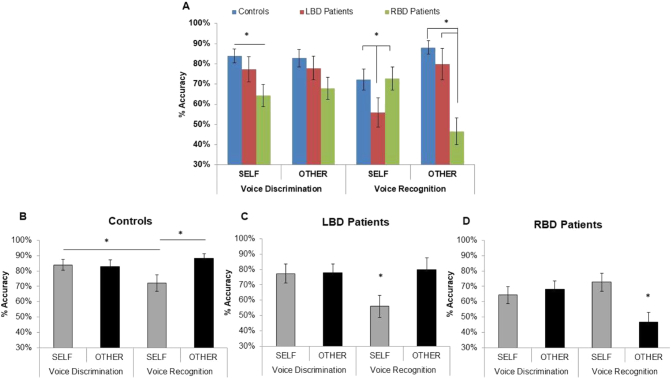

In the Voice discrimination task, as regards Self stimuli, RBD patients (64%) performed significantly worse than controls (84%, p = 0.03), whereas no difference was found between LBD patients and controls (77% vs. 84%, p = 0.44) or between RBD and LBD patients (p = 0.13). As regards Other stimuli, no significant differences across the three groups emerged (RBD = 68%; LBD = 78%; Controls = 83%; p = 0.09 for all comparisons). Crucially, for Self stimuli, accuracy was higher in the voice discrimination than in the voice recognition task in controls (84% vs. 72%, p = 0.05) and LBD patients (77% vs. 56%, p = 0.001), but not in RBD patients (64% vs. 73%, p = 0.17).

In the Voice recognition task, with Self stimuli, LBD patients (56%) performed significantly worse than controls (72%, p = 0.05) and RBD patients (73%; p = 0.05). Conversely, with Other stimuli, RBD patients (47%) performed significantly worse than controls (88%; p = 0.001) and LBD patients (80%; p = 0.001). Crucially, there was no significant difference either for Self stimuli between RBD patients and controls (p = 0.98; Fig. 2A) or for Other stimuli between LBD patients and controls (p = 0.44). Moreover, controls and LBD patients performed worse with Self than Other stimuli (Controls 72% vs. 88%; p = 0.03; LBD patients 56% vs. 80%; p = 0.001; Fig. 2B and C). A completely different result was found in RBD patients, who performed significantly worse with Other than Self stimuli (Other = 47% vs. Self = 73%; p = 0.001; Fig. 2D). The variable Owner [F(2,45) = 1.79, p = 0.19; η2p = 0.04] was not significant.

Fig. 2.

Accuracy in voice discrimination and voice recognition tasks as a function of Ownership. The comparison of accuracy (mean percentage of correct responses) in voice discrimination and voice recognition as a function of Ownership (Self, Other) between groups (A) and within group: controls (B), LBD patients (C) and RBD patients (D) are shown. Error bars depict SEMs. Significant differences (p < 0.05) between-groups (2A) and within-group (2B-D) are starred.

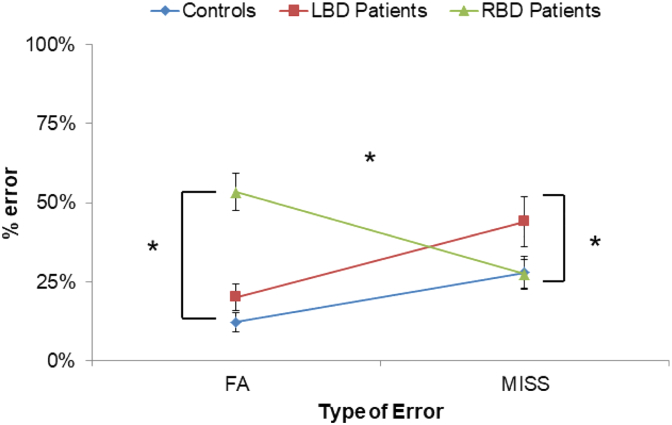

In order to look in more detail at the nature of the errors made by participants in the explicit recognition of self-voice, the percentage of False Alarms (FA; erroneous recognition of self-voice, calculated in Other trials) and the percentage of Misses (erroneous rejection of self-voice, calculated in Self trials) were compared across the three groups. An ANOVA was conducted on the percentage of errors with Group (Controls, RBD and LBD patients) as between-subject factor and Error (FA and MISS) as within-subject factor. The main effect of Group was significant [F(2,45) = 6.13, p = 0.004, η2p = 0.21] due to more errors by RBD (40%) and LBD patients (32%) than controls (20%; p = 0.05 for both comparisons). No difference was found between RBD and LBD patients (p = 0.16). Crucially, a significant Group x Error interaction was found [F(2,45) = 14.79, p = 0.001, η2p = 0.40]. Post-hoc comparisons revealed that RBD patients made more False Alarms than Misses (53% vs. 27%; p = 0.001), whereas both controls and LBD patients made more Misses than False Alarms (Controls = 28% vs. 12%; p = 0.04; LBD patients = 44% vs. 20%; p = 0.002). Interestingly, different error patterns emerged when RBD and LBD patients were compared to healthy participants. Indeed, RBD patients had a higher False Alarm rate (53%) than controls (12%, p = 0.001) and LBD patients (20%, p = 0.001). In contrast, LBD patients (44%) had a higher Miss rate than controls (28%, p = 0.037) and RBD patients (27%, p = 0.004; Fig. 3).

Fig. 3.

Analysis of errors.

The mean of False alarms (FA) and Misses (MISS) expressed as a percentage of error for Controls, left brain damaged (LBD) and right brain damaged (RBD) patients. Error bars depict SEMs. Significant differences (p < 0.05) are starred.
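The two error measures analysed in this section can be sketched in Python as follows. The function name `recognition_errors` and the `(owners, said_yes)` input format are hypothetical; the sketch only encodes the definitions given in the text (False Alarms computed on Other trials, Misses on Self trials).

```python
SELF_PAIRS = {"AA", "AB", "AC"}    # own voice present: the correct answer is YES
OTHER_PAIRS = {"BB", "CC", "BC"}   # own voice absent: the correct answer is NO


def recognition_errors(trials):
    """False Alarm and Miss percentages in the voice recognition task.

    `trials` is an iterable of (owners, said_yes) tuples, where `said_yes`
    is True when the participant reported hearing their own voice.
    """
    fa = miss = n_self = n_other = 0
    for owners, said_yes in trials:
        if owners in SELF_PAIRS:
            n_self += 1
            miss += int(not said_yes)   # own voice rejected
        elif owners in OTHER_PAIRS:
            n_other += 1
            fa += int(said_yes)         # other's voice attributed to the self
        else:
            raise ValueError(f"unknown owner combination: {owners!r}")
    return {
        "FA": 100.0 * fa / n_other if n_other else float("nan"),
        "MISS": 100.0 * miss / n_self if n_self else float("nan"),
    }


if __name__ == "__main__":
    demo = [("AA", True), ("AB", False), ("AC", True), ("AA", True),
            ("BB", False), ("BC", True), ("CC", False), ("BC", False)]
    print(recognition_errors(demo))
```

Under these definitions the False Alarm rate is the complement of accuracy on Other trials and the Miss rate is the complement of accuracy on Self trials, which agrees with the reported group means (for example, 47% accuracy on Other stimuli and 53% False Alarms in RBD patients).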

3.2. Control analysis: effect of time post lesion onset

Since alterations in voice production following cerebral stroke have been described in patients (Vuković et al., 2012), it was crucial to exclude that the observed results might be simply ascribed to the time post lesion onset. For this purpose, an Analysis of Covariance (ANCOVA) was conducted on accuracy with time post lesion (indicated as days from the lesion onset) as covariate, Group (RBD and LBD patients) as between-subject factor, and Task (Voice discrimination/Voice recognition task) and Owner (Self/Other condition) as within-subject factors.

No significant effect emerged either for the time post lesion factor [F(1,29) = 0.01, p = 0.90] or for its interaction with the other variables (Group, Task and Owner). Instead, the three-way interaction Group x Owner x Task [F(1,29) = 15.99, p = 0.001, η2p = 0.36] previously found in the main analysis was still significant. Since time post lesion onset did not affect patients' performance, we can hypothesize that the observed deficits cannot be ascribed to a difficulty in recognizing a voice that may have changed after the lesion.

3.3. Lesion mapping and analysis

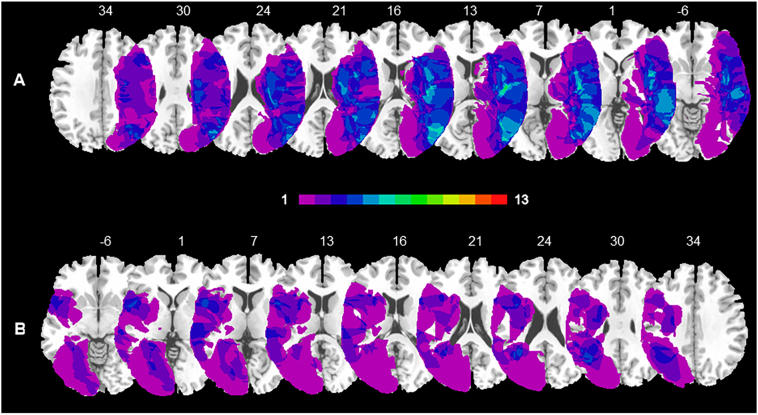

Brain lesions were identified by Computed Tomography and Magnetic Resonance digitalized images (CT/MRI) of 13 RBD and 13 LBD patients. For each patient, the location and extent of brain damage was delineated and manually mapped onto the MNI stereotactic space with the free software MRIcro (Rorden and Brett, 2000). As a first step, the MNI template was rotated to approximate the horizontal plane of the patient's scan. A trained rater (MC) manually mapped the lesion onto each correspondent template slice by using anatomical landmarks. Drawn lesions were then inspected by a second trained rater (FF) and, in case of disagreement, an intersection lesion map was used. Finally, lesion maps were rotated back into the standard space by applying the inverse of the transformation parameters used in the stage of adaptation to the brain scan. We calculated the lesions overlap on the ch2 template provided by MRIcro, separately for RBD and LBD patients groups, to create a color-coded overlay map of injured voxels across all patients and to provide an overview of all lesioned brain areas.

The region of maximum overlap in RBD patients' lesion was located along two different regions: one more anterior, involving the fronto-insular areas (46, −15, 10), and one more posterior, mainly located along the temporal lobe, extending to the middle temporal gyrus (53, −68, 15; for a graphical representation Fig. 4A). The region of maximum overlap in LBD patients' lesion was located in periventricular frontal regions (−33, 10, 25), in the insular cortices (−48, 8, 0) and involved the superior temporal (−39, −51, 23) and angular gyri (−37, −56, 30; for a graphical representation Fig. 4B). These regions were damaged in at least 3 RBD and 3 LBD patients.

Fig. 4.

Lesion overlay plots of right and left brain damaged patients.

Overlay of reconstructed lesion plots of right brain damaged (RBD) (A) and left brain damaged (LBD) patients (B) superimposed onto MNI template. The number of overlapping lesions is illustrated by different colours coding from violet (n = 1) to light green (n = 7).

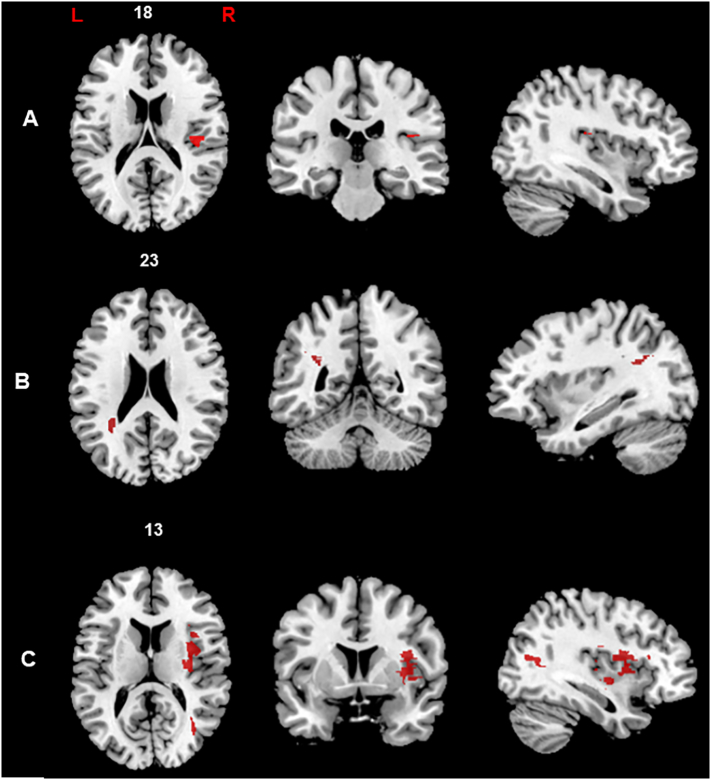

We applied a voxel-wise statistical approach to assess the contribution of lesion location to the selective impairment in discrimination and recognition of self and other's voice. We performed a voxel-based lesion-symptom mapping analysis (VLSM; Bates et al., 2003; Verdon et al., 2010), which compares behavioral scores between patients with and without a lesion in a particular voxel. The VLSM analysis was performed on the percentage of accuracy for each condition (self and other's voice), separately for the voice discrimination and voice recognition tasks. In order to control for “sufficient lesion affection” and to prevent the results of lesion-behaviour mapping from being biased by voxels that are only rarely affected, we set a minimum number of patients with a lesion in a particular voxel (at least five cases in our study; Sperber and Karnath, 2017). The behavioral scores were then compared between these two groups, yielding a t-statistic for each voxel. We adopted the t-test to perform statistical comparisons, as implemented in NPM (Non Parametric Mapping) and MRIcron software. The tests were performed using a permutation-derived correction in order to avoid producing inflated Z scores and to correct for multiple comparisons without sacrificing statistical power (permFWE; Kimberg and Schwartz, 2007; Medina et al., 2010). To determine whether an observed test statistic is truly due to the difference in voxel status (lesioned or non-lesioned), the data were permuted 3000 times, and a new test statistic was calculated for each permutation. The distribution of those t-statistics was used to determine the cut-off score at p < 0.05. Then, brain regions that showed significant relationships with behavioral deficits were identified.
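
The logic of this voxel-wise permutation procedure can be sketched with NumPy. This is a generic max-statistic permutation test written for illustration, not the NPM/MRIcron implementation: the function name `vlsm_permutation`, the use of Welch's t, the one-sided direction (lower scores in lesioned patients) and the extra requirement of at least two spared patients per voxel are all assumptions.

```python
import numpy as np


def vlsm_permutation(lesions, scores, min_patients=5, n_perm=3000,
                     alpha=0.05, seed=0):
    """Voxel-wise t-tests with a permutation-based family-wise threshold.

    lesions : (n_patients, n_voxels) boolean array, True = voxel lesioned
    scores  : (n_patients,) behavioral scores (e.g. % accuracy)
    Returns a dict with the t-map (NaN for untested voxels), the threshold
    and a boolean map of voxels exceeding it.
    """
    lesions = np.asarray(lesions, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    n, n_vox = lesions.shape
    n_les = lesions.sum(axis=0)
    # Test only voxels lesioned in enough patients (and spared in at least two).
    keep = (n_les >= min_patients) & (n - n_les >= 2)
    if not keep.any():
        raise ValueError("no voxel satisfies the minimum lesion count")
    L = lesions[:, keep].astype(float)
    n1 = L.sum(axis=0)          # lesioned patients per voxel
    n0 = n - n1                 # spared patients per voxel

    def t_map(y):
        # Welch t per voxel, spared minus lesioned: positive = deficit.
        s1, q1 = y @ L, (y ** 2) @ L
        s0, q0 = y.sum() - s1, (y ** 2).sum() - q1
        m1, m0 = s1 / n1, s0 / n0
        v1 = np.maximum(q1 - n1 * m1 ** 2, 0.0) / (n1 - 1)
        v0 = np.maximum(q0 - n0 * m0 ** 2, 0.0) / (n0 - 1)
        return (m0 - m1) / np.sqrt(v1 / n1 + v0 / n0 + 1e-12)

    t_obs = t_map(scores)
    rng = np.random.default_rng(seed)
    # Null distribution of the maximum t across voxels under shuffled scores.
    max_t = np.array([t_map(rng.permutation(scores)).max()
                      for _ in range(n_perm)])
    threshold = float(np.quantile(max_t, 1.0 - alpha))

    t_full = np.full(n_vox, np.nan)
    t_full[keep] = t_obs
    significant = np.zeros(n_vox, dtype=bool)
    significant[keep] = t_obs > threshold
    return {"t": t_full, "threshold": threshold, "significant": significant}
```

Taking the maximum statistic across voxels in each permutation is what provides family-wise error control: a voxel is reported only if its observed t exceeds the 95th percentile of the largest t expected anywhere in the map by chance.
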
In the statistical analysis described below, the anatomical distribution of the statistical results was assessed using the Automated Anatomical Labelling map (template AAL; Tzourio-Mazoyer et al., 2002), which classifies the anatomical distribution of digital images in stereotactic space. The mapping of voice discrimination revealed a peak located in the right gray matter in the posterior insular cortex significantly associated with self-voice discrimination deficit (38, −25, 18). Conversely, no significant cluster associated with performance in other's voice discrimination emerged (Fig. 5A). The mapping of voice recognition revealed distinct anatomical clusters for self and other's voice. Indeed, the self-voice recognition deficit was associated with a lesion of the left temporo-parietal white matter, adjacent to the angular gyrus (−34, −50, 23; Fig. 5B). On the other hand, the other's voice recognition deficit was significantly associated with right hemisphere lesion, specifically located in the periventricular frontal (32, 20, 17), insular (34, 3, 17) and middle temporal regions (35, −63, 13; Fig. 5C).

Fig. 5.

Representative slices showing the anatomical correlates of voice discrimination and voice recognition.

Brain regions significantly associated with self-voice discrimination (A), self-voice recognition (B) and other's voice recognition (C) are represented on the axial, coronal (from left to right) and sagittal planes. All voxels that survived the permutation correction are displayed (p < 0.05).

In addition, to reveal the brain lesion associated with a deficit in voice-identity processing, we conducted a further VLSM analysis on overall patients' performance expressed as percentage of accuracy (composite score of voice discrimination and voice recognition regardless of Self and Other conditions; see Supplementary Material).

4. Discussion

In this study, we investigated the anatomical bases of discrimination and recognition of pre-recorded self and other voice in RBD, LBD patients and in a group of age-matched neurologically healthy participants. Different patterns of results emerged in the two tasks adopted. In the voice discrimination task, a difference across the three groups was observed when the participant's voice was present: RBD patients, but not LBD patients, showed a selective impairment compared to Controls. Instead, no difference across groups emerged with other people's voice. Moreover, within each group no difference between self and others' voice emerged.

In the voice recognition task, significant differences emerged both within and across the three groups. First, considering within-group performance, healthy participants as well as LBD patients were less accurate in recognizing self than others' voice. The opposite pattern was found in RBD patients, whose performance was worse with others' than self-voice. The lower accuracy for the self than others' voice observed in healthy controls and LBD patients is in line with previous results on young healthy participants reported by our group (Candini et al., 2014). This effect can be explained by the discrepancy between our own recorded voice and how we subjectively perceive and memorize our own voice. Since conduction through air and bones modifies voice characteristics during speech production (Maurer and Landis, 1990), one may argue that participants did not recognize a recorded vocal stimulus as their own voice because it was not heard as usual and it did not match the voice-stimulus stored in memory. Indeed, it has been demonstrated that filtering or manipulating the frequencies of one's own voice can improve self-voice recognition (Shuster and Durrant, 2003; Xu et al., 2013). Second, considering across-group differences, lesions in the left and right hemisphere caused selective deficits affecting self-voice and others' voice, respectively, compared to controls: LBD patients exhibited a self-disadvantage effect, while RBD patients exhibited an other-disadvantage effect. Looking more closely at the type of errors made by participants, we found a significant difference between the three groups: LBD patients rejected their own voice when it was present and therefore committed more misses than controls and RBD patients; RBD patients, instead, more frequently misattributed the other's voice to themselves when their own voice was not present and therefore committed more false alarms than controls and LBD patients.

A similar dichotomy is also described in neurological patients with body-representation disorders. Indeed, they exhibit two types of deficits: either a disownership of their own body-parts (Vallar and Ronchi, 2009; Candini et al., 2016; Moro et al., 2016) or a tendency to claim that an alien hand is their own, showing the so-called pathological embodiment of other's hand (Garbarini et al., 2015). The term embodiment typically refers to the tendency observed in brain damaged patients to identify the examiner's hand as their own when it is located on their contralesional side according to an egocentric, body-congruent perspective (Garbarini et al., 2014, 2015). Here, we used the term embodiment to indicate the tendency to attribute the other's voice to oneself, even though we did not manipulate the spatial configuration of the voice. Thus, in accordance with the definition of “embodiment”, further studies should verify whether “the properties of an alien voice are processed in the same way as the properties of the one's voice” (De Vignemont, 2011).

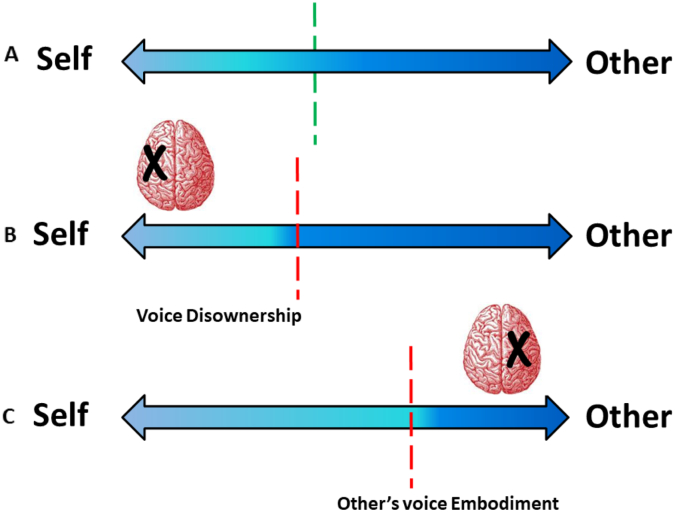

Taken together, evidence on voice and body-part recognition suggests that impaired corporeal self-representation might impact our ability to explicitly distinguish between self and other in two different ways: some patients deny the ownership of their own voice or body-parts, while others misattribute the other's voice or body-parts to themselves. On this basis, we may speculate that the self/other distinction is represented along a horizontal line on which “self” and “other” are located at opposite ends. Consequently, when we have to recognize a voice as our own, our judgment can be erroneous in opposite ways: we can reject self-stimuli as our own voice (voice disownership), or we can misidentify other-stimuli as our own voice (embodiment of other's voice). These opposite biases seem to reflect different impairments in the self/other distinction at both the behavioral and the anatomical level (see Fig. 6).

Fig. 6.

A representation of self-other voice recognition in Controls, left brain damaged (LBD) and right brain damaged (RBD) patients.

The figure shows three different scenarios (in Controls, LBD and RBD patients, respectively) in which Self and Other are located at the two opposite ends and the double-headed arrow indicates their horizontal continuum. The dotted line represents the boundary between self and other. The hypothetical boundary between self and other would fall at the midpoint of the line in the case of equal FA % (erroneous recognition of self-voice) and MISS % (erroneous rejection of self-voice). The deviation from the real midpoint corresponds to the difference between the FA and MISS percentages.

A) The green dotted line indicates the self/other boundary in Controls (FA minus MISS = −16%).

B) The red dotted line represents the tendency to deny the ownership of one's own voice (voice disownership) following a left hemisphere lesion (FA minus MISS = −24%).

C) The red dotted line represents the tendency to misattribute the other's voice to oneself (others' voice embodiment), observed following a right hemisphere lesion (FA minus MISS = 26%). (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article.)
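
The boundary deviation described in the legend is simple arithmetic, and a minimal Python sketch may make the sign convention explicit. It assumes only what the legend states: the deviation is the FA percentage minus the MISS percentage, and the three differences are those reported for panels A to C. The names `boundary_shift`, `bias_direction` and `REPORTED_SHIFT` are hypothetical labels introduced for illustration and are not part of the original analysis.

```python
# Illustrative sketch only: the function and variable names are hypothetical.

# FA-minus-MISS differences (percentage points) as reported in the Fig. 6 legend.
REPORTED_SHIFT = {"Controls": -16, "LBD": -24, "RBD": 26}


def boundary_shift(fa_pct: float, miss_pct: float) -> float:
    """Signed deviation of the self/other boundary from the midpoint.

    fa_pct   : percentage of false alarms (other's voice accepted as own)
    miss_pct : percentage of misses (own voice rejected)
    """
    return fa_pct - miss_pct


def bias_direction(shift: float) -> str:
    """Describe which kind of error dominates for a given deviation."""
    if shift < 0:
        return "misses dominate (toward rejecting one's own voice)"
    if shift > 0:
        return "false alarms dominate (toward claiming the other's voice)"
    return "no bias (boundary at the midpoint)"


if __name__ == "__main__":
    for group, shift in REPORTED_SHIFT.items():
        print(f"{group}: {shift:+d} points, {bias_direction(shift)}")
```

Under this convention a negative value moves the boundary in the direction of self-rejection (as in Controls and, more markedly, LBD patients), whereas a positive value moves it in the direction of embodiment of the other's voice (as in RBD patients).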

Anatomically, the pathological tendency to reject the presented voice as one's own (voice disownership) was associated with a lesion of the left hemisphere involving a subcortical temporo-parietal area. In this respect, neuroimaging evidence supports the involvement of a left cerebral network in the attribution of self-related acoustic information. For instance, a study conducted with patients with schizophrenia reported a similar misattribution of self-generated speech to an alien source. This misidentification was associated with functional abnormalities in the left temporal cortex and the anterior cingulate bilaterally (Allen et al., 2007). We must recognize, however, that anatomical data in LBD patients should be taken with caution, since patients with aphasia following lesions involving language areas in the left frontal and temporal lobes could not take part in the current study. Indeed, one of the criteria for inclusion was the absence of deficits in language comprehension. Moreover, preserved speech production was required to record participants' voice. To overcome this limitation, future studies should investigate the contribution of left frontal and temporal regions to self/other voice recognition by adopting a different paradigm and/or type of stimuli.

Interestingly, we found that the pathological tendency to erroneously attribute the other's voice to oneself (embodiment of other's voice) was associated with a cortical network within the right hemisphere, extending to insular, frontal, and temporal cortex. The involvement of these regions in voice identity recognition is well supported by previous studies. In a PET study, greater activity was observed in the right parainsular cortex when healthy participants were asked to recognize their own voice from unfamiliar voices (Nakamura et al., 2001). Furthermore, in an fMRI study, Kaplan et al. (2008) demonstrated that seeing our own face and hearing our own voice mainly activated a right frontal area, suggesting that this region is crucial to build a “self-representation” across different sensory modalities. Concerning the involvement of temporal areas in voice identity recognition, mounting evidence has demonstrated that temporal regions mainly process the auditory properties of voices in order to categorize vocal identity (Belin et al., 2000; von Kriegstein and Giraud, 2004; Andics et al., 2010; Gainotti, 2011).

Furthermore, impaired performance in self and other's voice recognition, but not in voice discrimination, was associated with damage to white matter tracts linking subcortical structures with cortical associative areas. Although very tentative, a possible explanation could be that, to properly attribute voices to the self or to other people, acoustical sensory information is integrated with higher-order self-representation, a process which is prevented in our right and left brain damaged patients because the white matter was not intact.

Finally, a facilitation for discrimination compared to recognition of Self-stimuli was found both in Controls and LBD patients, but not in RBD patients. Crucially, this facilitation was not observed for Other stimuli, suggesting that it was not simply driven by unbalanced levels of difficulty between the two tasks. Anatomically, the impairment in self-voice discrimination was significantly associated with right insular damage. This evidence is in line with several previous studies which identify the right insular cortex as a key region for the sense of body ownership (Tsakiris et al., 2007; Baier and Karnath, 2008; Serino et al., 2013). Going further, the facilitation for self-stimuli discrimination leads us to hypothesize that self-voice is processed differently depending on whether or not explicit recognition is required. Indeed, voice discrimination, in which there was no explicit request to identify who the stimuli belong to, can be considered a reliable proxy for the implicit recognition of one's own voice.

The differences discussed above between RBD and LBD patients and healthy participants in the voice discrimination and voice recognition tasks indicate that implicit and explicit self-voice knowledge may be selectively impaired following brain lesions. Neuropsychological evidence has so far supported the distinction between implicit and explicit processing of stimuli. In line with our findings, some studies reported a crucial involvement of different brain areas in the right hemisphere for implicit and explicit self-body processing (Moro et al., 2011; Moro, 2013; Candini et al., 2016). On the other hand, the involvement of the left hemisphere in explicit self-body recognition is in line with findings reported by Garbarini et al. (2015). The authors described an impairment in explicit recognition of one's own body not only in right brain damaged patients, but also in one left brain damaged patient. Thus, these studies reinforce the idea that implicit and explicit processing are likely subserved by distinct anatomical networks.

In conclusion, these findings extend our previous results (Candini et al., 2014), highlighting different and complementary contributions of both hemispheres to self/other voice recognition. Distinct areas of the right hemisphere are crucial for implicitly recognizing our own voice and for explicitly rejecting the voice of others rather than attributing it to ourselves. Furthermore, in explicit self-voice recognition the left hemisphere plays a complementary role in properly attributing our own voice to ourselves. Accordingly, the impairment of the explicit recognition of self-voice could result from different mechanisms. While RBD patients failed because they tended to embody others' voice, LBD patients denied the attribution of their own voice to themselves and thus exhibited voice disownership. Overall, the finding of selective brain networks dedicated to processing one's own voice demonstrates the relevance of self-related acoustic information in bodily self-representation.

Conflict of interest

The authors declare no competing financial interests. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Acknowledgments

This research was supported by Maugeri Clinical Scientific Institutes - IRCCS of Castel Goffredo (Italy) (Prof. Francesca Frassinetti) and funded by RFO (Ricerca Fondamentale Orientata, University of Bologna) to Prof. Francesca Frassinetti and to Dr. Michela Candini. This research was supported by a grant from Ministero della Salute (Ricerca Finalizzata PE Estero Theory enhancing, grant PE-2016-02362477) to Prof. Francesca Frassinetti and to Dr. Stefano Avanzi.

We are grateful to Serena Giurgola and Sara Mauri for their precious help during data collection.

Footnotes

Supplementary data to this article can be found online at https://doi.org/10.1016/j.nicl.2018.03.021.

Appendix A. Supplementary data

Supplementary material

References

- Allen P.P., Amaro E., Fu C.H., Williams S.C.R., Brammer M., Johns L.C., McGuire P.K. Neural correlates of the misattribution of self-generated speech. Hum. Brain Mapp. 2005;26:44–53. doi: 10.1002/hbm.20120.

- Allen P.P., Amaro E., Fu C.H., Williams S.C.R., Brammer M., Johns L.C., McGuire P.K. Neural correlates of the misattribution of speech in schizophrenia. Br. J. Psychiatry. 2007;190:162–169. doi: 10.1192/bjp.bp.106.025700.

- Andics A., McQueen J.M., Petersson K.M., Gál V., Rudas G., Vidnyánszky Z. Neural mechanisms for voice recognition. NeuroImage. 2010;52:1528–1540. doi: 10.1016/j.neuroimage.2010.05.048.

- Baier B., Karnath H.O. Tight link between our sense of limb ownership and self-awareness of actions. Stroke. 2008;39(2):486–488. doi: 10.1161/STROKEAHA.107.495606.

- Bates E., Wilson S.M., Saygin A.P., Dick F., Sereno M.I., Knight R.T., Dronkers N.F. Voxel-based lesion-symptom mapping. Nat. Neurosci. 2003;6:448–450. doi: 10.1038/nn1050.

- Belin P., Zatorre R.J., Lafaille P., Ahad P., Pike B. Voice-selective areas in human auditory cortex. Nature. 2000;403:309–312. doi: 10.1038/35002078.

- Blank H., Wieland N., von Kriegstein K. Person recognition and the brain: merging evidence from patients and healthy individuals. Neurosci. Biobehav. Rev. 2014;47:717–734. doi: 10.1016/j.neubiorev.2014.10.022.

- Candini M., Zamagni E., Nuzzo A., Ruotolo F., Iachini T., Frassinetti F. Who is speaking? Implicit and explicit self and other voice recognition. Brain Cogn. 2014;92C:112–117. doi: 10.1016/j.bandc.2014.10.001.

- Candini M., Farinelli M., Ferri F., Avanzi S., Cevolani D., Gallese V., Northoff G., Frassinetti F. Implicit and explicit routes to recognize the own body: evidence from brain damaged patients. Front. Hum. Neurosci. 2016;10:405. doi: 10.3389/fnhum.2016.00405.

- De Renzi E., Vignolo L.A. The token test: a sensitive test to detect receptive disturbances in aphasics. Brain. 1962;85:665–678. doi: 10.1093/brain/85.4.665.

- De Vignemont F. Embodiment, ownership and disownership. Conscious. Cogn. 2011;20(1):82–93. doi: 10.1016/j.concog.2010.09.004.

- Folstein M.F., Folstein S.E., McHugh P.R. “Mini-mental state”: a practical method for grading the cognitive state of patients for the clinician. J. Psychiatry Res. 1975;12:189–198. doi: 10.1016/0022-3956(75)90026-6.

- Frassinetti F., Maini M., Romualdi S., Galante E., Avanzi S. Is it mine? Hemispheric asymmetries in corporeal self recognition. J. Cogn. Neurosci. 2008;20:1507–1516. doi: 10.1162/jocn.2008.20067.

- Frassinetti F., Maini M., Benassi M., Avanzi S., Cantagallo A., Farnè A. Selective impairment of self body-parts processing in right brain-damaged patients. Cortex. 2010;46:322–328. doi: 10.1016/j.cortex.2009.03.015.

- Gainotti G. What the study of voice recognition in normal subjects and brain-damaged patients tells us about models of familiar people recognition. Neuropsychologia. 2011;49:2273–2282. doi: 10.1016/j.neuropsychologia.2011.04.027.

- Garbarini F., Fornia L., Fossataro C., Pia L., Gindri P., Berti A. Embodiment of others' hands elicits arousal responses similar to one's own hands. Curr. Biol. 2014;24(16):R738. doi: 10.1016/j.cub.2014.07.023.

- Garbarini F., Fossataro C., Berti A., Gindri P., Romano D., Pia L., della Gatta F., Maravita A., Neppi-Modona M. When your arm becomes mine: pathological embodiment of alien limbs using tools modulates own body representation. Neuropsychologia. 2015;70:402–413. doi: 10.1016/j.neuropsychologia.2014.11.008.

- Graux J., Gomot M., Roux S., Bonnet-Brilhault F., Camus V., Bruneau N. My voice or yours? An electrophysiological study. Brain Topogr. 2013;26:72–82. doi: 10.1007/s10548-012-0233-2.

- Graux J., Gomot M., Roux S., Bonnet-Brilhault F., Bruneau N. Is my voice just a familiar voice? An electrophysiological study. Soc. Cogn. Affect. Neurosci. 2015;10:101–105. doi: 10.1093/scan/nsu031.

- Hailstone J.C., Crutch S.J., Vestergaard M.D., Patterson R.D., Warren J.D. Progressive associative phonagnosia: a neuropsychological analysis. Neuropsychologia. 2010;48(4):1104–1114. doi: 10.1016/j.neuropsychologia.2009.12.011.

- Kaplan J.T., Aziz-Zadeh L., Uddin L.Q., Iacoboni M. The self across the senses: an fMRI study of self-face and self-voice recognition. Soc. Cogn. Affect. Neurosci. 2008;3:218–223. doi: 10.1093/scan/nsn014.

- Kimberg D.Y., Schwartz M.F. Power in voxel-based lesion-symptom mapping. J. Cogn. Neurosci. 2007;19:1067–1080. doi: 10.1162/jocn.2007.19.7.1067.

- von Kriegstein K., Giraud A.L. Distinct functional substrates along the right superior temporal sulcus for the processing of voices. NeuroImage. 2004;22:948–955. doi: 10.1016/j.neuroimage.2004.02.020.

- Lang C.J.G., Kneidl O., Hielscher-Fastabend M., Heckmann J.G. Voice recognition in aphasic and non-aphasic stroke patients. J. Neurol. 2009;256:1303–1306. doi: 10.1007/s00415-009-5118-2.

- Luzzi S., Coccia M., Polonara G., Reverberi C., Ceravolo G., Silvestrini M., Fringuelli F.M., Baldinelli S., Provinciali L., Gainotti G. Selective associative phonagnosia after right anterior temporal stroke. Neuropsychologia. 2017 doi: 10.1016/j.neuropsychologia.2017.05.016.

- Maurer D., Landis T. Role of bone conduction in the self-perception of speech. Folia Phoniatr. 1990;42:226–229. doi: 10.1159/000266070.

- Medina J., Kimberg D.Y., Chatterjee A., Coslett H.B. Inappropriate usage of the Brunner-Munzel test in recent voxel-based lesion-symptom mapping studies. Neuropsychologia. 2010;48:341–343. doi: 10.1016/j.neuropsychologia.2009.09.016.

- Moro V. The interaction between implicit and explicit awareness in anosognosia: emergent awareness. J. Cogn. Neurosci. 2013;4:199–200. doi: 10.1080/17588928.2013.853656.

- Moro V., Pernigo S., Zapparoli P., Cordioli Z., Aglioti S.M. Phenomenology and neural correlates of implicit and emergent motor awareness in patients with anosognosia for hemiplegia. Behav. Brain Res. 2011;225:259–269. doi: 10.1016/j.bbr.2011.07.010.

- Moro V., Pernigo S., Tsakiris M., Avesani R., Edelstyn N.M.J., Jenkinson P.M., Fotopoulou A. Motor versus body awareness: voxel-based lesion analysis in anosognosia for hemiplegia and somatoparaphrenia following right hemisphere stroke. Cortex. 2016;15:62–77. doi: 10.1016/j.cortex.2016.07.001.

- Nakamura K., Kawashima R., Sugiura M., Kato T., Nakamura A., Hatano K., Nagumo S., Kubota K., Fukuda H., Ito K., Kojima S. Neural substrates for recognition of familiar voices: a PET study. Neuropsychologia. 2001;39:1047–1054. doi: 10.1016/s0028-3932(01)00037-9.

- Neuner F., Schweinberger S.R. Neuropsychological impairments in the recognition of faces, voices, and personal names. Brain Cogn. 2000;44:342–366. doi: 10.1006/brcg.1999.1196.

- Northoff G. Is the self a higher-order or fundamental function of the brain? The “basis model of self-specificity” and its encoding by the brain's spontaneous activity. J. Cogn. Neurosci. 2016;7:203–222. doi: 10.1080/17588928.2015.1111868.

- Papagno C., Mattavelli G., Casarotti A., Bello L., Gainotti G. Defective recognition and naming of famous people from voice in patients with unilateral temporal lobe tumours. Neuropsychologia. 2017 doi: 10.1016/j.neuropsychologia.2017.07.021.

- Peretz I., Kolinsky R., Tramo M., Labrecque R., Hublet C., Demeurisse G., Belleville S. Functional dissociations following bilateral lesions of auditory-cortex. Brain. 1994;117:1283–1301. doi: 10.1093/brain/117.6.1283.

- Pernet C.R., McAleer P., Latinus M., Gorgolewski K.J., Charest I., Bestelmeyer P.E.G., Watson R.H., Fleming D., Crabbe F., Valdes-Sosa M., Belin P. The human voice areas: spatial organization and inter-individual variability in temporal and extra-temporal cortices. NeuroImage. 2015;119:164–174. doi: 10.1016/j.neuroimage.2015.06.050.

- Perrodin C., Kayser C., Abel T.J., Logothetis N.K., Petkov C.I. Who is that? Brain networks and mechanisms for identifying individuals. Trends Cogn. Sci. 2015;19:783–796. doi: 10.1016/j.tics.2015.09.002.

- Puri K.A., Suresh K.R., Gogtay N.J., Thatte U.M. Declaration of Helsinki, 2008: implications for stakeholders in research. World Med. J. 2008;54:120–124. doi: 10.4103/0022-3859.52846.

- Rorden C., Brett M. Stereotaxic display of brain lesions. Behav. Neurol. 2000;12:120–191. doi: 10.1155/2000/421719.

- Rosa C., Lassonde M., Pinard C., Keenan J.P., Belin P. Investigations of hemispheric specialization of self-voice recognition. Brain Cogn. 2008;68:204–214. doi: 10.1016/j.bandc.2008.04.007.

- Schall S., Kiebel S.J., Maess B., von Kriegstein K. Voice identity recognition: functional division of the right STS and its behavioral relevance. J. Cogn. Neurosci. 2015;27:280–291. doi: 10.1162/jocn_a_00707.

- Serino A., Alsmith A., Costantini M., Mandrigin A., Tajadura-Jimenez A., Lopez C. Bodily ownership and self-location: components of bodily self-consciousness. Conscious. Cogn. 2013;22:1239–1252. doi: 10.1016/j.concog.2013.08.013.

- Sevdalis V., Keller P.E. Know thy sound: perceiving self and others in musical contexts. Acta Psychol. 2014;152:67–74. doi: 10.1016/j.actpsy.2014.07.002.

- Shuster L.I., Durrant J.D. Toward a better understanding of the perception of self-produced speech. J. Commun. Disord. 2003;36(1):1–11. doi: 10.1016/s0021-9924(02)00132-6.

- Sperber C., Karnath H.O. Impact of correction factors in human brain lesion-behavior inference. Hum. Brain Mapp. 2017;38:1692–1701. doi: 10.1002/hbm.23490.

- Sui J., Humphreys G.W. The ubiquitous self: what the properties of self-bias tell us about the self. Ann. N. Y. Acad. Sci. 2017;1396:222–235. doi: 10.1111/nyas.13197.

- Timm J., Schönwiesner M., Schröger E., SanMiguel I. Sensory suppression of brain responses to self-generated sounds is observed with and without the perception of agency. Cortex. 2016;80:5–20. doi: 10.1016/j.cortex.2016.03.018.

- Tsakiris M., Hesse M.D., Boy C., Haggard P., Fink G.R. Neural signatures of body ownership: a sensory network for bodily self-consciousness. Cereb. Cortex. 2007;17:2235–2244. doi: 10.1093/cercor/bhl131.

- Tzourio-Mazoyer N., Landeau B., Papathanassiou D., Crivello F., Etard O., Delcroix N., Mazoyer B., Joliot M. Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. NeuroImage. 2002;15:273–289. doi: 10.1006/nimg.2001.0978.

- Vallar G., Ronchi R. Somatoparaphrenia: a body delusion. A review of the neuropsychological literature. Exp. Brain Res. 2009;192:533–551. doi: 10.1007/s00221-008-1562-y.

- Van Lancker D.R., Canter G.J. Impairment of voice and face recognition in patients with hemispheric damage. Brain Cogn. 1982;1:185–195. doi: 10.1016/0278-2626(82)90016-1.

- Van Lancker D., Kreiman J. Voice discrimination and recognition are separate abilities. Neuropsychologia. 1987;25:829–834. doi: 10.1016/0028-3932(87)90120-5.

- Van Lancker D.R., Kreiman J., Cummings J. Voice perception deficits: neuroanatomical correlates of phonagnosia. J. Clin. Exp. Neuropsychol. 1989;11:665–674. doi: 10.1080/01688638908400923.

- Verdon V., Schwartz S., Lovblad K.O., Hauert C.A., Vuilleumier P. Neuroanatomy of hemispatial neglect and its functional components: a study using voxel-based lesion-symptom mapping. Brain. 2010;133:880–894. doi: 10.1093/brain/awp305.

- Vuković M., Sujić R., Petrović-Lazić M., Miller N., Milutinović D., Babac S., Vuković I. Analysis of voice impairment in aphasia after stroke-underlying neuroanatomical substrates. Brain Lang. 2012;123:22–29. doi: 10.1016/j.bandl.2012.06.008.

- Xu M., Homae F., Hashimoto R., Hagiwara H. Acoustic cues for the recognition of self-voice and other-voice. Front. Psychol. 2013;4:735. doi: 10.3389/fpsyg.2013.00735.
