Abstract

Retinal blood vessel extraction is considered an indispensable step in the diagnosis of many retinal diseases. In this work, a parallel fully convolved neural network–based architecture is proposed for retinal blood vessel segmentation. In addition, the improvement in network performance obtained with different levels of image preprocessing is studied. The proposed method is evaluated on DRIVE (Digital Retinal Images for Vessel Extraction) and STARE (STructured Analysis of the Retina), the widely accepted public databases in this research area. Across the preprocessing variants studied, the proposed work attains best average accuracy, sensitivity, and specificity of about 96.37%, 86.53%, and 98.18%, respectively. Data independence is also demonstrated by testing abnormal STARE images with the DRIVE-trained model. The proposed architecture extracts vessels well irrespective of vessel thickness. The results show that the proposed work outperforms most existing segmentation methods. Because the entire work is carried out on a CPU at low computational cost, and vessel extraction takes less than 2 s per image, it can be implemented as a real-time application tool.

Keywords: Retinal vessel, Deep learning, Enhancement, Fully convolved neural network

Introduction

Most retinal diseases are silent intruders on human sight, and their symptoms remain undetectable until the disease is well progressed. Diabetic retinopathy is one of the leading silent thieves of vision, with no symptoms at the early stages; in advanced cases, it leads to degraded vision and blindness. At every stage of the disease, the internal anatomical structure of the retina can change, and each change reflects the level of disease progression. Blockage of blood flow and the formation of abnormal new blood vessels (neovascularization) are among the commonly seen indicators of the level of diabetic retinopathy. For these reasons, vascular pattern extraction and analysis is considered a significant task in retinal image processing for disease diagnosis. Since the retinal blood vessels are the only blood circulation system in the human eye [1], their extraction is an essential task in the diagnosis of most diseases. In addition to retinal disease diagnosis, the retinal vascular pattern is also a dominant biometric key for authentication.

Factors to be considered in retinal vessel extraction include artifacts arising during image capture and the dependence of image appearance on the ethnic origin of the person. In addition, abnormalities such as microaneurysms, hemorrhages, lesions, and fragile vessels are common sources of false positives in vessel detection. Because the intensity range of microaneurysms, hemorrhages, and some lesions is similar to that of the retinal vessels, vessel pattern extraction remains a crucial unresolved challenge. Manual extraction is time-consuming, so an automated process is needed. Retinal blood vessel extraction techniques can be based on supervised or unsupervised algorithms, and numerous works have confirmed that supervised algorithms generally perform better.

Dizdaroglu et al. [2] proposed an unsupervised, structure-based level set method for segmenting the retinal vasculature and also introduced a zero-level contour regularization. Barkhoda et al. [3] constructed two feature vectors using radial and angular partitioning and used fuzzy logic based on the Manhattan distance for decision making in vascular pattern matching. Zhao et al. [4] proposed a multiscale superpixel-based tracking method for retinal vessel segmentation. Patches are obtained from the image, and intensity, connectivity, and location are used to extract superpixels. A vessel is then tracked from a selected seed point until a terminating condition is met.

Marin et al. [5] proposed a supervised algorithm based on a multilayer feed-forward network trained using moment-invariant and gray-level features. The authors compared their work with several supervised methods and claimed that their method is superior in terms of data independence. Fraz et al. [6] proposed a supervised classification of retinal blood vessels using bagged and boosted decision trees, with features from gradient field orientation analysis, Gabor filters, line strength, and morphological transformations. Their method was evaluated on three publicly available databases (DRIVE, STARE, and CHASE), and they reported feature importance for each database; the features identified as important on DRIVE differ from those identified on STARE. In supervised methods, the final result usually varies with the features extracted for classification. Hence, feature selection plays a key role in accurate classification: in all supervised algorithms, the user needs to identify discriminative features to achieve classification with minimum error. Ideally, an algorithm should classify with acceptable results, and its performance should not depend on the particular training samples.

Some research works also incorporate a feature selection method alongside their main objective. Feature selection should be optimal: it should balance the number of features selected against the result that can be achieved. To address this tradeoff, an increasing number of nature-inspired algorithms have recently been applied to select the optimum feature set. Swarm intelligence–based algorithms [7, 8] and algorithms that imitate animal behavior in finding an optimum solution [9, 10] are among those used to solve the feature selection optimization problem. In [11], the optimum feature set for retinal blood vessel segmentation is selected using the bat algorithm. Although important features can be identified with any of these feature selection algorithms, a gap still exists in ascertaining features that imitate our neural system.

Deep learning methods imitate the human neural system, and the convolutional neural network [12, 13] is one of the most popular architectures. The fully convolutional deep neural network with stationary wavelet transform proposed by Oliveira et al. [14] combines multiscale analysis and deep learning for retinal vessel segmentation. Jiang et al. [15] proposed a transfer learning–based fully convolutional neural network for retinal blood vessel segmentation. Guo et al. [16] proposed a convolutional neural network with a reinforcement sample learning strategy for retinal vessel segmentation. From the literature survey, we infer that thin vessel detection is still a challenging task. In addition, the non-uniform illumination and contrast of fundus images is a further challenge that vessel detection must address.

The proposed architecture for retinal vessel segmentation addresses both of the above issues. Thin vessel detection is handled by a parallel fully convolutional architecture, and the effect of non-uniform illumination and contrast is reduced by preprocessing. The proposed method makes the following contributions:

i. The same architecture is analyzed with various preprocessing techniques.

ii. The independence of the network model from the training data is shown through cross-training results.

iii. Vessel segmentation on abnormal images shows better results.

The paper is organized as follows. “Materials” describes the databases used in this work. “Methods” explains the architecture and the preprocessing methods applied in detail. “Results” presents the outputs obtained. “Discussion” covers the performance of the proposed work with different preprocessing techniques, the strength of the work shown by cross-training, and a comparison with existing methods. “Conclusions” gives the summary and future work.

Materials

The DRIVE (Digital Retinal Images for Vessel Extraction) database [17, 18] is a collection of images from a diabetic retinopathy screening program in the Netherlands. A Canon CR5 non-mydriatic camera with a 45° field of view was used to capture the images at 768 × 584 pixels. The database contains 40 images, divided equally into 20 training images and 20 testing images. Groundtruth is available for both training and testing images. For the testing images, two groundtruths are available: one serves as the gold standard, and the other is used to validate automated segmentation against a second human observer. The groundtruths were manually delineated by observers trained by an experienced ophthalmologist, marking the pixels most probably belonging to vessels. The database includes images both with and without symptoms of diabetic retinopathy. The database providers also state that the images were used for making clinical diagnoses.

STARE (STructured Analysis of the Retina) [19, 20] is another publicly available database. The full dataset contains 400 images, of which 40 are available with groundtruth hand-labeled by two experts. The images were acquired using a TopCon TRV-50 fundus camera with a 35° field of view. The images have 24 bits per pixel and dimensions of 605 × 700 pixels. The database can also be used for artery/vein classification and optic disc detection.

Methods

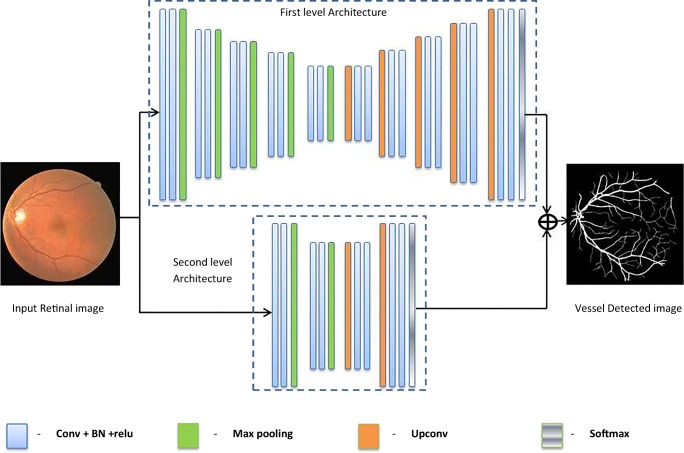

Parallel Fully Convolved Neural Network Architecture

The architecture proposed for retinal vessel segmentation is derived from a semantic segmentation architecture [21], with some alterations. It contains two fully convolved neural networks connected in parallel. The first-level network is trained with the groundtruth images and extracts vessels of large and moderate thickness. The second-level network is trained using the skeletonized groundtruth and extracts thin and fine vessels clearly. The outputs of the two levels are combined to obtain the overall vessel-detected output. The overall architecture of the proposed method is shown in Fig. 1.

Fig. 1.

Proposed architecture
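The paper does not state which skeletonization algorithm was used to build the level-II groundtruth. As a minimal sketch, assuming a classic thinning method is representative, the Zhang-Suen algorithm below reduces a binary vessel mask (represented here, by assumption, as a list of lists of 0/1 values) to one-pixel-wide centerlines. The function name `zhang_suen_thin` is hypothetical; this illustrates the idea and is not the authors' implementation.

```python
def zhang_suen_thin(mask):
    """Thin a binary mask (list of lists of 0/1) to one-pixel-wide centrelines.

    Hypothetical stand-in for the unspecified skeletonisation used for the
    level-II groundtruth; implements the classic Zhang-Suen two-subiteration scheme.
    """
    rows, cols = len(mask), len(mask[0])
    # Pad with a one-pixel border of zeros so every pixel has eight neighbours.
    g = [[0] * (cols + 2)] + [[0] + list(r) + [0] for r in mask] + [[0] * (cols + 2)]

    def neighbours(y, x):
        # P2..P9, clockwise, starting from the pixel directly above.
        return [g[y - 1][x], g[y - 1][x + 1], g[y][x + 1], g[y + 1][x + 1],
                g[y + 1][x], g[y + 1][x - 1], g[y][x - 1], g[y - 1][x - 1]]

    changed = True
    while changed:
        changed = False
        for step in (0, 1):
            to_delete = []
            for y in range(1, rows + 1):
                for x in range(1, cols + 1):
                    if g[y][x] != 1:
                        continue
                    p = neighbours(y, x)
                    p2, p3, p4, p5, p6, p7, p8, p9 = p
                    b = sum(p)  # number of foreground neighbours
                    # number of 0 -> 1 transitions in the circular sequence P2..P9, P2
                    a = sum(1 for i in range(8) if p[i] == 0 and p[(i + 1) % 8] == 1)
                    if step == 0:
                        ok = p2 * p4 * p6 == 0 and p4 * p6 * p8 == 0
                    else:
                        ok = p2 * p4 * p8 == 0 and p2 * p6 * p8 == 0
                    if 2 <= b <= 6 and a == 1 and ok:
                        to_delete.append((y, x))
            # Delete all flagged pixels at once, as the algorithm requires.
            for y, x in to_delete:
                g[y][x] = 0
            changed = changed or bool(to_delete)
    # Strip the padding again.
    return [row[1:-1] for row in g[1:-1]]
```

On a 3-pixel-thick horizontal bar, the sketch leaves a single-pixel line along the middle row (slightly shortened at the ends), which is the kind of thin-vessel target the second-level network is trained on.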

Convolutional Layer

The convolutional layer in a deep learning architecture imitates the biological behavior of neuron activation [22]: a particular neuron is activated only by stimuli in a specific region of the visual field. The kernels used in the convolutional layer replicate this idea, since each output value depends only on a small local neighborhood of the input. In the proposed work, each convolutional layer uses 64 filter kernels of size 3 × 3, with a padding of one pixel and a stride of 1.
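The layer settings above (3 × 3 kernels, padding 1, stride 1) keep the spatial size unchanged. A minimal plain-Python sketch of why: the standard output-size relation out = ⌊(n + 2p − k)/s⌋ + 1 gives out = n for k = 3, p = 1, s = 1. The single-channel convolution is for illustration only and its names are hypothetical; a real layer also sums over input channels and repeats this for each of the 64 kernels.

```python
def conv_output_size(n, kernel=3, padding=1, stride=1):
    """Output length along one spatial axis: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - kernel) // stride + 1


def conv2d_single(image, kernel, padding=1, stride=1):
    """Naive single-channel 2-D convolution (cross-correlation, as in most CNN libraries)."""
    h, w = len(image), len(image[0])
    k = len(kernel)
    # Zero-pad the input by `padding` pixels on every side.
    padded = [[0.0] * (w + 2 * padding) for _ in range(h + 2 * padding)]
    for y in range(h):
        for x in range(w):
            padded[y + padding][x + padding] = image[y][x]
    out_h = conv_output_size(h, k, padding, stride)
    out_w = conv_output_size(w, k, padding, stride)
    out = [[0.0] * out_w for _ in range(out_h)]
    for oy in range(out_h):
        for ox in range(out_w):
            y0, x0 = oy * stride, ox * stride
            # Weighted sum of the k x k neighbourhood under the kernel.
            out[oy][ox] = sum(kernel[i][j] * padded[y0 + i][x0 + j]
                              for i in range(k) for j in range(k))
    return out
```

With these settings any input size n is preserved through the convolutional layers, so only the pooling and unpooling layers change the resolution.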

Batch Normalization

Internal covariate shift [23] is a common issue in the intermediate layers: during training, each layer must continually adapt to changes in the distribution of its inputs, which are the activations of the preceding layers. This may slow down training, so batch normalization is applied to overcome this issue. Each layer input x_i in a mini-batch B is normalized as

$$\hat{x}_i = \frac{x_i - \mu_B}{\sqrt{\sigma_B^2 + \epsilon}} \qquad (1)$$

The layer output is then obtained through the scaling and shifting operation $y_i = \gamma \hat{x}_i + \beta$, where γ and β are parameters learned during training, μB is the batch mean, σB² is the batch variance, and ε is a small constant added for numerical stability. In the testing phase, the mean and variance are not computed from the batch; instead, values calculated during training are used.
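A minimal sketch of Eq. (1) and the scale-and-shift step for a mini-batch of scalar activations, in plain Python. The constant ε (here `eps=1e-5`) and the function names are assumptions for illustration. In a real layer these statistics are computed per channel over the batch and spatial dimensions, and the test-time statistics are typically running averages accumulated during training.

```python
import math


def batch_norm_train(x, gamma, beta, eps=1e-5):
    """Training-time batch normalisation of a mini-batch of scalar activations."""
    m = len(x)
    mu = sum(x) / m                                          # batch mean, mu_B
    var = sum((xi - mu) ** 2 for xi in x) / m                # batch variance, sigma_B^2
    x_hat = [(xi - mu) / math.sqrt(var + eps) for xi in x]   # Eq. (1)
    y = [gamma * xh + beta for xh in x_hat]                  # scale and shift
    return y, mu, var


def batch_norm_infer(x, gamma, beta, mean, var, eps=1e-5):
    """Test-time normalisation using statistics stored during training."""
    return [gamma * (xi - mean) / math.sqrt(var + eps) + beta for xi in x]
```

For the batch [1, 2, 3, 4], the sketch returns a batch mean of 2.5 and a variance of 1.25, and the normalized outputs have approximately zero mean and unit variance before γ and β are applied.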

Pooling and Unpooling Layer

Downsampling reduces the number of parameters and the amount of computation and helps control overfitting. The downsampling operation also stores the indices of the selected values for use by the decoder, and upsampling is performed in the decoding part. Max pooling of size 2 × 2 is used as the downsampling operation in this work.
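A minimal sketch of index-preserving 2 × 2 max pooling and the matching unpooling used in SegNet-style decoders [21]. The stride of 2 and the zero-filled unpooling are assumptions (the paper gives only the 2 × 2 pooling size), and the function names are hypothetical.

```python
def max_pool_2x2(fmap):
    """2x2 max pooling with stride 2 that also records where each maximum came from."""
    h, w = len(fmap), len(fmap[0])
    pooled, indices = [], []
    for y in range(0, h - 1, 2):
        prow, irow = [], []
        for x in range(0, w - 1, 2):
            window = [(fmap[y + dy][x + dx], (y + dy, x + dx))
                      for dy in (0, 1) for dx in (0, 1)]
            value, pos = max(window, key=lambda t: t[0])
            prow.append(value)
            irow.append(pos)          # index stored for the decoder
        pooled.append(prow)
        indices.append(irow)
    return pooled, indices


def max_unpool_2x2(pooled, indices, shape):
    """Put each pooled value back at its recorded position; all other entries are zero."""
    h, w = shape
    out = [[0.0] * w for _ in range(h)]
    for prow, irow in zip(pooled, indices):
        for value, (y, x) in zip(prow, irow):
            out[y][x] = value
    return out
```

Because unpooling reuses the stored positions instead of interpolating, the decoder can place activations back at the exact locations of the maxima. This is useful for thin, sharply localized structures such as vessels.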

Activation Function

The rectified linear unit (ReLU) activation function is used in this work. It increases the nonlinear properties of the decision function and of the overall network without affecting the receptive fields of the convolutional layers [24]. Training with ReLU is faster than with other activation functions, without compromising accuracy [24]. The ReLU activation function is given by f(x) = max(0, x).

Training Options

The initial weights are drawn from a Gaussian distribution with mean zero and standard deviation 0.01, and the initial biases are set to zero. The network parameters (weights and biases) are updated using gradient descent with momentum, which takes small steps in the direction of the negative gradient of the loss function. The update of a parameter is given by

$$\theta_{n+1} = \theta_n - \alpha \nabla E(\theta_n) + \gamma\,(\theta_n - \theta_{n-1}) \qquad (2)$$

where n is the iteration number, θ is the weight or bias parameter, E(θ) is the loss function, α is the learning rate, and γ is the contribution factor of the previous step. For the proposed work, the learning rate is set to 0.05 and the contribution factor to 0.9. To avoid overfitting, L2 regularization [25] is applied, and the regularized loss function is given by

$$E_R(\theta) = E(\theta) + \lambda\,\Omega(w), \qquad \Omega(w) = \tfrac{1}{2}\,w^{\top} w \qquad (3)$$

where λ is the regularization factor and w is the weight vector; regularization is applied only to the weights, not to the biases.
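A minimal sketch of one parameter update in the form of Eq. (2), with the gradient of the L2 term in Eq. (3) added for weights only. The flat-list representation, the function name, and passing the learning rate α explicitly as `lr` are assumptions for illustration.

```python
def sgdm_step(theta, theta_prev, grad, lr, momentum=0.9, l2=0.0, is_weight=True):
    """One gradient-descent-with-momentum update (Eq. 2) with optional L2 (Eq. 3).

    theta, theta_prev and grad are lists of floats for one parameter tensor.
    """
    new_theta = []
    for t, t_prev, g in zip(theta, theta_prev, grad):
        if is_weight:
            # d/dt of lambda * (1/2) * t**2 is lambda * t; biases skip this term.
            g = g + l2 * t
        # Negative-gradient step plus a fraction of the previous step.
        new_theta.append(t - lr * g + momentum * (t - t_prev))
    return new_theta
```

Usage: starting from θ = 1.0 with a constant gradient of 0.2 and α = 0.05, the first step gives 0.99. The second step gives 0.99 − 0.01 + 0.9 × (0.99 − 1.0) = 0.971, so the momentum term makes consecutive steps grow while the gradient keeps the same sign.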

Cross Entropy Loss Function

In retinal images, vessel pixels are few in proportion to the other regions, and this imbalance leads to improper classification [26]. To balance the pixel counts, a weight is assigned to each class.

Let w1 and w2 be the pixel counts of the two classes. The total pixel count is the sum of the two:

$$N = w_1 + w_2 \qquad (4)$$

To give equal importance to both classes in classification, the pixel occurrence frequency of each class is calculated as

$$f_i = \frac{w_i}{N}, \quad i = 1, 2 \qquad (5)$$

The weight of each class is then assigned as the reciprocal of its pixel occurrence frequency:

$$c_i = \frac{1}{f_i} = \frac{N}{w_i} \qquad (6)$$
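A minimal sketch of the class weighting in Eqs. (4) to (6), assuming a groundtruth mask given as a list of lists with 0 for non-vessel and 1 for vessel pixels (the function name is hypothetical). The sketch assumes both classes are present; otherwise the reciprocal in Eq. (6) is undefined.

```python
def inverse_frequency_weights(mask):
    """Class weights as the reciprocal of each class's pixel frequency (Eqs. 4-6)."""
    counts = [0, 0]                        # pixel counts: index 0 non-vessel, 1 vessel
    for row in mask:
        for label in row:
            counts[label] += 1
    total = counts[0] + counts[1]          # Eq. (4): total pixel count
    freq = [c / total for c in counts]     # Eq. (5): occurrence frequency per class
    return [1.0 / f for f in freq]         # Eq. (6): reciprocal frequency
```

Under this scheme the ratio of the two weights equals the ratio of the pixel counts. An 18:1 vessel-to-background weighting would therefore correspond to masks with about 18 background pixels per vessel pixel.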

We also examine how the performance of the network architecture depends on the input images. The retinal fundus images are processed with various preprocessing techniques, and each version is used to train the same fully convolutional network architecture with identical parameter settings. First, the network is trained on the DRIVE training images in RGB, without any channel separation. The training is then repeated with the preprocessed versions of the same training images.

Preprocessing I

The green channel of a color retinal fundus image shows the vascular region with better contrast against the background. Hence, the green channel is separated and enhanced using the contrast limited adaptive histogram equalization (CLAHE) algorithm [27]. CLAHE transforms each pixel with a transformation function derived from the cumulative distribution function (CDF) of the histogram of its neighborhood. To limit amplification, the histogram is clipped at a predefined value before the CDF is computed. The clip limit is set to 0.01.
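A minimal sketch of the contrast-limiting idea for a single tile: clip the histogram, redistribute the clipped counts evenly, and map gray levels through the resulting CDF. This is deliberately simplified. Full CLAHE divides the image into tiles and bilinearly interpolates between neighboring tile mappings, and a single redistribution pass can push some bins slightly above the limit again. The clip limit here is an absolute bin count; how a normalized limit such as 0.01 maps to counts is implementation-specific and is not modeled. The function names are hypothetical.

```python
def clip_histogram(hist, clip_limit):
    """Clip every bin at clip_limit and spread the removed counts evenly over all bins."""
    clipped = [min(h, clip_limit) for h in hist]
    excess = sum(hist) - sum(clipped)
    share, remainder = divmod(excess, len(hist))
    for i in range(len(hist)):
        clipped[i] += share + (1 if i < remainder else 0)
    return clipped


def tile_equalization_lut(pixels, clip_limit, levels=256):
    """Contrast-limited equalisation mapping for ONE tile (no inter-tile interpolation)."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    hist = clip_histogram(hist, clip_limit)
    lut, running, total = [], 0, len(pixels)
    for count in hist:
        running += count                  # cumulative distribution function
        lut.append(round((levels - 1) * running / total))
    return lut
```

Clipping caps how steep the CDF can become, which limits how strongly any one gray level (for example, noise in a flat background region) is stretched.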

Preprocessing II

Non-uniform illumination is corrected using morphological operations. An opening operation is applied to the green channel to remove the foreground and extract only the background. Subtracting this background from the green channel yields the top-hat transformed image. Equalizing the illumination in this way enhances the vascular regions, which are then further enhanced using CLAHE.
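A minimal sketch of the background-subtraction step. It assumes a flat square structuring element of radius `radius`, since the paper does not give the element's shape or size. `white_top_hat` returns the image minus its grayscale opening (erosion followed by dilation). Vessels appear darker than the background in the green channel, so the usage example applies the transform to the inverted green channel, where vessels become bright foreground that the opening removes. This inversion is an assumption, not a step stated in the paper.

```python
def _window_filter(img, radius, op):
    """Apply op (min = erosion, max = dilation) over a (2r+1) x (2r+1) window."""
    h, w = len(img), len(img[0])
    # Windows are clipped at the image border.
    return [[op(img[yy][xx]
                for yy in range(max(0, y - radius), min(h, y + radius + 1))
                for xx in range(max(0, x - radius), min(w, x + radius + 1)))
             for x in range(w)]
            for y in range(h)]


def grey_opening(img, radius):
    """Erosion followed by dilation: removes bright structures narrower than the element."""
    return _window_filter(_window_filter(img, radius, min), radius, max)


def white_top_hat(img, radius):
    """Image minus its opening, keeping only the small bright structures."""
    opened = grey_opening(img, radius)
    return [[p - o for p, o in zip(row, orow)] for row, orow in zip(img, opened)]


# Usage: a dark 1-pixel vessel on a bright green-channel background.
green = [[200, 200, 120, 200, 200]]
inverted = [[255 - p for p in row] for row in green]
print(white_top_hat(inverted, radius=1))
```

The structuring element should be wider than the widest vessel, so that the opening approximates only the slowly varying background illumination.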

Preprocessing III

In this preprocessing approach, non-uniform illumination in the fundus images is considered along with contrast enhancement. Local normalization is applied to equalize the illumination throughout the image:

$$g(x, y) = \frac{f(x, y) - m_f(x, y)}{\sigma_f(x, y)} \qquad (7)$$

where f is the input image, g is the normalized image, and m_f and σ_f² are the local mean and variance, which are estimated by local spatial smoothing with Gaussian filters. Contrast enhancement is then applied to the illumination-equalized image.
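A minimal sketch of Eq. (7) in plain Python. It assumes the local variance is estimated by Gaussian-smoothing the squared deviation from the local mean (one common formulation), that borders replicate edge pixels, and that a small `eps` guards against division by zero. The two smoothing widths `sigma_mean` and `sigma_var` are hypothetical parameters, because the paper does not report filter sizes.

```python
import math


def gaussian_kernel(sigma):
    """Normalised 1-D Gaussian kernel truncated at about 3 sigma."""
    radius = max(1, int(3 * sigma))
    k = [math.exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(k)
    return [v / total for v in k]


def smooth(img, sigma):
    """Separable Gaussian smoothing; borders are handled by replicating edge pixels."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    h, w = len(img), len(img[0])
    rows = [[sum(k[j] * img[y][min(w - 1, max(0, x + j - r))] for j in range(len(k)))
             for x in range(w)] for y in range(h)]
    return [[sum(k[i] * rows[min(h - 1, max(0, y + i - r))][x] for i in range(len(k)))
             for x in range(w)] for y in range(h)]


def local_normalize(img, sigma_mean, sigma_var, eps=1e-6):
    """Eq. (7): g = (f - m_f) / sigma_f with Gaussian-smoothed local statistics."""
    mean = smooth(img, sigma_mean)                                    # m_f
    centred = [[p - m for p, m in zip(r, mr)] for r, mr in zip(img, mean)]
    var = smooth([[c * c for c in r] for r in centred], sigma_var)   # sigma_f^2
    return [[c / math.sqrt(v + eps) for c, v in zip(cr, vr)]
            for cr, vr in zip(centred, var)]
```

Because both the local mean and the local spread are removed, a region that is uniformly brighter or has higher contrast (f → a·f + b) yields essentially the same normalized values. This is the illumination-equalizing effect the preprocessing relies on.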

Results

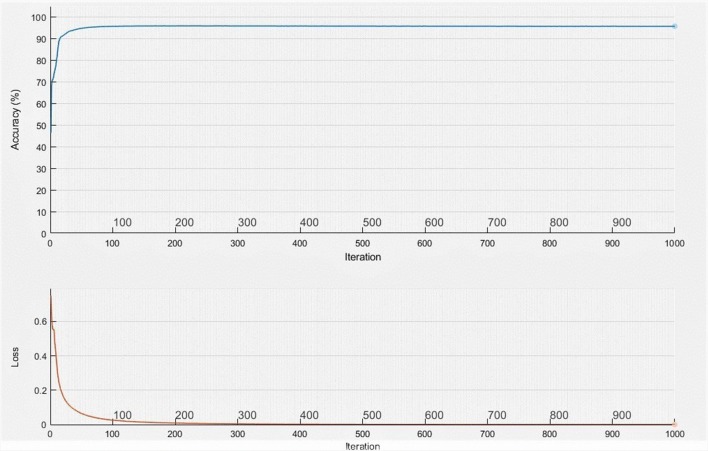

The proposed parallel architecture of fully convolved neural networks is trained using the raw images as well as the preprocessed images. The experiments show that the performance of the same network architecture can be increased by enhancing the input. A constant learning rate of about 0.001 is used. A weighted cross-entropy loss function is applied to counter the class imbalance problem: during training, vessel pixels are given 18 times more weight than non-vessel pixels. The network is trained with the 20 training images from the DRIVE database for 1000 iterations, at which point the training accuracy and mini-batch loss reach about 95.86% and 0.01, respectively. Figure 2 shows the training accuracy and loss with respect to the iterations. The accuracy saturates at around 100 iterations and the loss at around 500 iterations; hence, training is continued up to 1000 iterations. All experiments were performed on an Intel Core i5-3570 CPU with 32 GB of RAM under a 64-bit operating system.

Fig. 2.

Training accuracy and loss

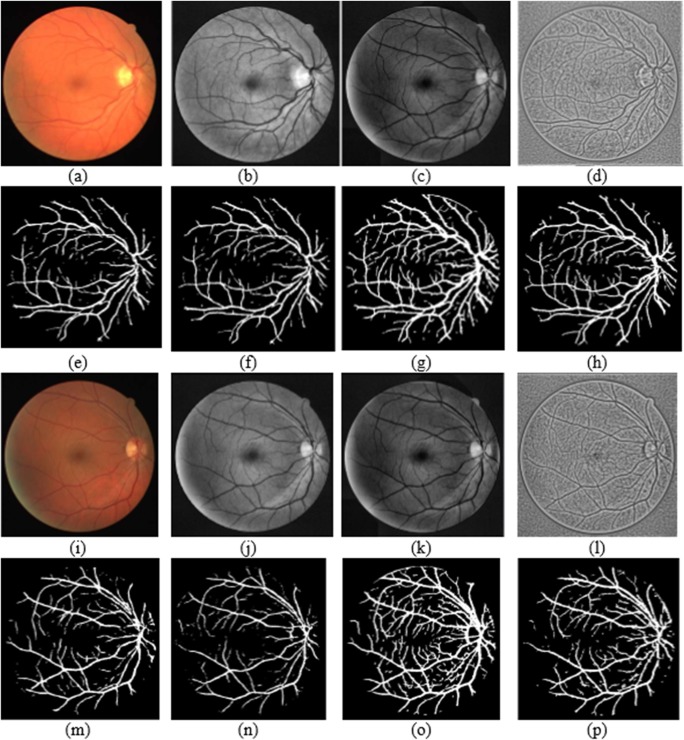

Accuracy, sensitivity, and specificity are the performance measures used in this work, since they are the measures most commonly reported in the literature. The ideal value of each measure is 1. Accuracy is the fraction of all pixels, vessel and background, that are classified correctly. Sensitivity indicates how well the blood vessels are correctly recognized as vessels, and specificity indicates how well the background regions are correctly identified as background. The input images and the groundtruths used for training are shown in Fig. 3.

Fig. 3.

Training images. a RGB image; b, c, and d preprocessed images; e and f groundtruth for level I and II respectively
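A minimal sketch of the three measures, computed from pixel-wise counts of true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN), with 1 marking vessel pixels. The optional field-of-view mask `fov` is an assumption: restricting evaluation to the FOV is a common convention on DRIVE and STARE, but the paper does not state whether it was used.

```python
def vessel_metrics(pred, truth, fov=None):
    """Return (accuracy, sensitivity, specificity) for binary vessel masks."""
    tp = fp = tn = fn = 0
    for y, (prow, trow) in enumerate(zip(pred, truth)):
        for x, (p, t) in enumerate(zip(prow, trow)):
            if fov is not None and not fov[y][x]:
                continue  # ignore pixels outside the field of view
            if p and t:
                tp += 1
            elif p:
                fp += 1
            elif t:
                fn += 1
            else:
                tn += 1
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    sensitivity = tp / (tp + fn)   # vessels correctly recognised as vessels
    specificity = tn / (tn + fp)   # background correctly recognised as background
    return accuracy, sensitivity, specificity
```

Because vessel pixels are a small minority, a segmentation that misses many thin vessels can still score high accuracy and specificity. Sensitivity is the measure that exposes such misses.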

A DRIVE input image and its corresponding groundtruth are shown in Fig. 4, and sample segmentation outputs obtained with the first-level network architecture are shown in Fig. 5. The first row shows the database images with groundtruth. The second and fourth rows show the different categories of input considered in this work, and the third and fifth rows show the corresponding segmentation outputs.

Fig. 4.

DRIVE sample images and groundtruth

Fig. 5.

Output obtained for the random sample test images. a, i RGB input; e, m corresponding output; b, j enhanced green channel input; f, n corresponding output; c, k morphologically illumination corrected input; g, o corresponding output; d, l locally normalized illumination corrected input; h, p corresponding output

Discussion

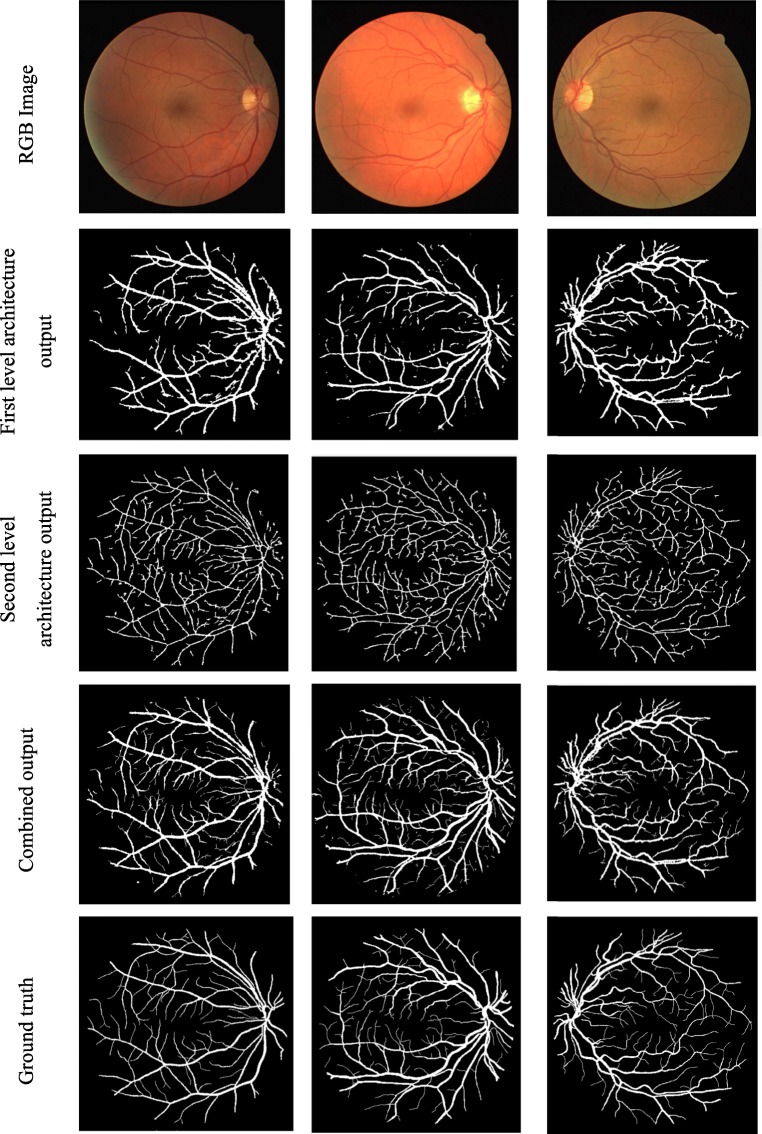

The first-level fully convolved neural network mainly detects the major vessels and fails to detect fine thin vessels, especially where the background intensity is at the same level as the vessels. The second-level network, trained using vessel skeletons, detects the entire vascular pattern irrespective of vessel thickness. To improve the visual quality, isolated pixels are removed in a postprocessing step. Figure 6 shows the outputs of the first- and second-level architectures and their combined result.

Fig. 6.

Output obtained by the individual level and overall architecture
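The paper states that the two level outputs are combined and that isolated pixels are removed, but not how. The following is a minimal sketch under two stated assumptions. The fusion rule is a pixel-wise logical OR, and "isolated pixels" means 8-connected foreground components smaller than a hypothetical threshold `min_size`. The function names are also hypothetical.

```python
from collections import deque


def combine_levels(level1, level2):
    """Pixel-wise OR of the two binary outputs (an assumed fusion rule)."""
    return [[1 if a or b else 0 for a, b in zip(r1, r2)] for r1, r2 in zip(level1, level2)]


def remove_small_components(mask, min_size):
    """Delete 8-connected foreground components with fewer than min_size pixels."""
    h, w = len(mask), len(mask[0])
    out = [row[:] for row in mask]
    seen = [[False] * w for _ in range(h)]
    for sy in range(h):
        for sx in range(w):
            if not mask[sy][sx] or seen[sy][sx]:
                continue
            # Breadth-first search collects one connected component.
            component, queue = [], deque([(sy, sx)])
            seen[sy][sx] = True
            while queue:
                y, x = queue.popleft()
                component.append((y, x))
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            if len(component) < min_size:
                for y, x in component:
                    out[y][x] = 0
    return out
```

An OR keeps every thin vessel found by the second level without losing the thick vessels from the first. The component filter then removes the isolated false-positive pixels that such a union tends to accumulate.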

Tables 1 and 2 give the results obtained with the proposed parallel fully convolved neural network architecture for the different preprocessing operations.

Table 1.

Performance of DRIVE Database—RGB and enhanced green channel input image

| Image | RGB image: sensitivity (%) | RGB image: specificity (%) | RGB image: accuracy (%) | Enhanced green channel: sensitivity (%) | Enhanced green channel: specificity (%) | Enhanced green channel: accuracy (%) |
|---|---|---|---|---|---|---|
| 1 | 82.46 | 96.90 | 95.84 | 77.67 | 97.69 | 95.90 |
| 2 | 81.99 | 95.81 | 94.74 | 76.78 | 97.95 | 95.78 |
| 3 | 88.61 | 96.13 | 95.58 | 82.86 | 97.89 | 96.48 |
| 4 | 84.64 | 96.30 | 95.51 | 72.23 | 98.13 | 95.75 |
| 5 | 86.64 | 95.52 | 94.99 | 66.84 | 98.41 | 95.45 |
| 6 | 86.12 | 95.19 | 94.64 | 68.51 | 98.79 | 95.84 |
| 7 | 79.39 | 96.15 | 94.98 | 69.62 | 98.17 | 95.56 |
| 8 | 81.92 | 95.89 | 95.09 | 61.05 | 98.71 | 95.47 |
| 9 | 84.38 | 95.70 | 95.16 | 57.87 | 99.11 | 95.77 |
| 10 | 80.70 | 96.74 | 95.71 | 71.48 | 97.98 | 95.80 |
| 11 | 77.82 | 96.57 | 95.18 | 89.85 | 97.36 | 96.69 |
| 12 | 82.72 | 96.54 | 95.64 | 67.87 | 98.40 | 95.77 |
| 13 | 84.24 | 95.08 | 94.41 | 72.19 | 98.22 | 95.67 |
| 14 | 76.11 | 97.12 | 95.63 | 73.35 | 97.62 | 95.65 |
| 15 | 72.91 | 97.52 | 95.89 | 76.72 | 97.62 | 95.93 |
| 16 | 84.33 | 96.60 | 95.75 | 70.04 | 98.49 | 95.92 |
| 17 | 81.58 | 95.97 | 95.15 | 74.02 | 98.65 | 96.04 |
| 18 | 79.78 | 96.87 | 95.80 | 70.68 | 98.21 | 96.03 |
| 19 | 81.59 | 97.40 | 96.26 | 84.55 | 98.02 | 96.90 |
| 20 | 79.73 | 97.14 | 96.13 | 73.12 | 98.24 | 96.39 |
| Average | 81.88 | 96.36 | 95.40 | 72.87 | 98.18 | 95.94 |

Table 2.

Performance of DRIVE Database—morphological based and local normalized illumination correction

| Image | Morphological illumination correction: sensitivity (%) | Morphological illumination correction: specificity (%) | Morphological illumination correction: accuracy (%) | Local normalized illumination correction: sensitivity (%) | Local normalized illumination correction: specificity (%) | Local normalized illumination correction: accuracy (%) |
|---|---|---|---|---|---|---|
| 1 | 85.06 | 97.47 | 96.42 | 80.71 | 96.78 | 95.60 |
| 2 | 89.00 | 97.75 | 96.73 | 83.88 | 96.15 | 95.17 |
| 3 | 88.82 | 94.63 | 94.16 | 81.80 | 94.85 | 94.03 |
| 4 | 84.48 | 97.53 | 96.23 | 85.44 | 95.87 | 95.22 |
| 5 | 92.10 | 96.74 | 96.36 | 85.40 | 95.62 | 94.99 |
| 6 | 91.05 | 96.51 | 96.05 | 85.00 | 95.09 | 94.48 |
| 7 | 89.70 | 97.19 | 96.55 | 82.58 | 95.93 | 95.07 |
| 8 | 87.84 | 96.68 | 96.01 | 83.71 | 95.46 | 94.86 |
| 9 | 89.55 | 96.79 | 96.34 | 83.38 | 96.17 | 95.48 |
| 10 | 87.04 | 98.00 | 97.11 | 81.21 | 96.61 | 95.65 |
| 11 | 85.48 | 97.73 | 96.68 | 78.31 | 96.02 | 94.83 |
| 12 | 85.70 | 95.93 | 95.25 | 82.07 | 96.13 | 95.27 |
| 13 | 89.06 | 97.62 | 96.78 | 83.49 | 95.95 | 95.05 |
| 14 | 80.90 | 98.21 | 96.69 | 77.49 | 96.21 | 95.12 |
| 15 | 82.10 | 97.67 | 96.67 | 74.80 | 97.30 | 95.91 |
| 16 | 86.15 | 97.37 | 96.40 | 83.40 | 96.48 | 95.58 |
| 17 | 84.70 | 96.84 | 95.92 | 81.70 | 96.44 | 95.52 |
| 18 | 83.08 | 97.86 | 96.68 | 79.54 | 97.04 | 95.90 |
| 19 | 86.03 | 98.54 | 97.47 | 82.32 | 97.41 | 96.33 |
| 20 | 82.72 | 98.02 | 96.93 | 78.15 | 97.22 | 96.07 |
| Average | 86.53 | 97.25 | 96.37 | 81.72 | 96.24 | 95.31 |

Among the preprocessing variants, the CLAHE-enhanced green channel gives the highest average specificity (98.18%), while morphological illumination correction followed by contrast enhancement gives the highest average accuracy (96.37%) and sensitivity (86.53%). When the raw RGB images are given directly, the average sensitivity, specificity, and accuracy are about 81.88%, 96.36%, and 95.40%, respectively. Although the enhanced green channel input achieves the maximum specificity, noticeable artifacts are visible in the non-uniformly illuminated and low-contrast regions of some images. We also observed that when contrast enhancement alone is applied, tiny vessels are not extracted well by the same architecture. From these results, we concluded that both contrast enhancement and illumination equalization should be included in the preprocessing stage; the best performance of the same network architecture is obtained when both are applied, as with morphological illumination correction. Table 3 compares the accuracy of the one-level and two-level architectures; the two-level architecture gives higher accuracy for every input type.

Table 3.

Performance comparison of one-level and two-level architecture

| Architecture (accuracy, %) | Enhanced green channel | RGB image | Enhanced and local normalized illumination correction | Enhanced and morphological based illumination correction |
|---|---|---|---|---|
| One-level | 92.4 | 93.3 | 93.2 | 94.2 |
| Two-level | 95.9 | 95.4 | 95.3 | 96.37 |

Table 4 compares the performance of the proposed architecture with some existing methods. Compared with most existing supervised methods, the proposed method attains better performance. We also compare the proposed work with other deep learning–based works. Most deep learning works are run on a GPU and trained on the same DRIVE dataset for a large number of iterations, whereas the proposed work achieves better results than most of them on a CPU, using the same DRIVE training images, with only 1000 iterations. The network learns well within the first 100 iterations and maintains the same level thereafter. Sensitivity indicates how effectively the network architecture detects the vascular regions. The proposed method achieves sensitivities of about 86.53%, 81.88%, 81.72%, and 72.87% for the morphological illumination correction, RGB, local normalization, and enhanced green channel inputs, respectively; the first three are higher than those of all the existing methods listed in Table 4. The accuracy achieved is also high compared with existing methods, and with morphological illumination correction it is the highest in Table 4.

Table 4.

Performance comparison with existing methodologies

| Publication year | Method | Accuracy | Sensitivity | Specificity |
|---|---|---|---|---|
| 2004 | Niemeijer [18] | 0.9417 | 0.6898 | 0.9696 |
| 2006 | Soares [28] | 0.9446 | 0.7230 | 0.9762 |
| 2012 | Fraz [29] | 0.948 | 0.7406 | 0.9807 |
| 2014 | Cheng [30] | 0.9474 | 0.7252 | 0.9798 |
| 2016 | Aslani [31] | 0.9513 | 0.7545 | 0.9801 |
| 2018 | Shahid [32] | 0.958 | 0.730 | 0.9793 |
| 2018 | Soomro [33] | 0.953 | 0.752 | 0.976 |
| 2018 | Biswal [34] | 0.9541 | 0.71 | 0.97 |
| 2018 | Wang [35] | 0.9541 | 0.7648 | 0.9817 |
| DNN-based methods | | | | |
| 2016 | Li [36] | 0.9527 | 0.7569 | 0.9816 |
| 2016 | Liskowski [37] | 0.9515 | 0.752 | 0.9806 |
| 2018 | Oliveira [38] | 0.9576 | 0.8039 | 0.9804 |
| 2018 | Jiang [39] | 0.9624 | 0.754 | *0.9825* |
| 2018 | Yan [40] | 0.9542 | 0.7653 | 0.9818 |
| 2018 | Hu [41] | 0.9533 | 0.7772 | 0.9793 |
| 2019 | Proposed method (RGB) | 0.954 | 0.8188 | 0.964 |
| | Proposed method (enhanced green channel) | 0.9594 | 0.7287 | 0.9818 |
| | Proposed method (morphological operation) | *0.9637* | *0.8653* | 0.9725 |
| | Proposed method (local normalization) | 0.9531 | 0.8172 | 0.9624 |

Values in italics specify the maximum values

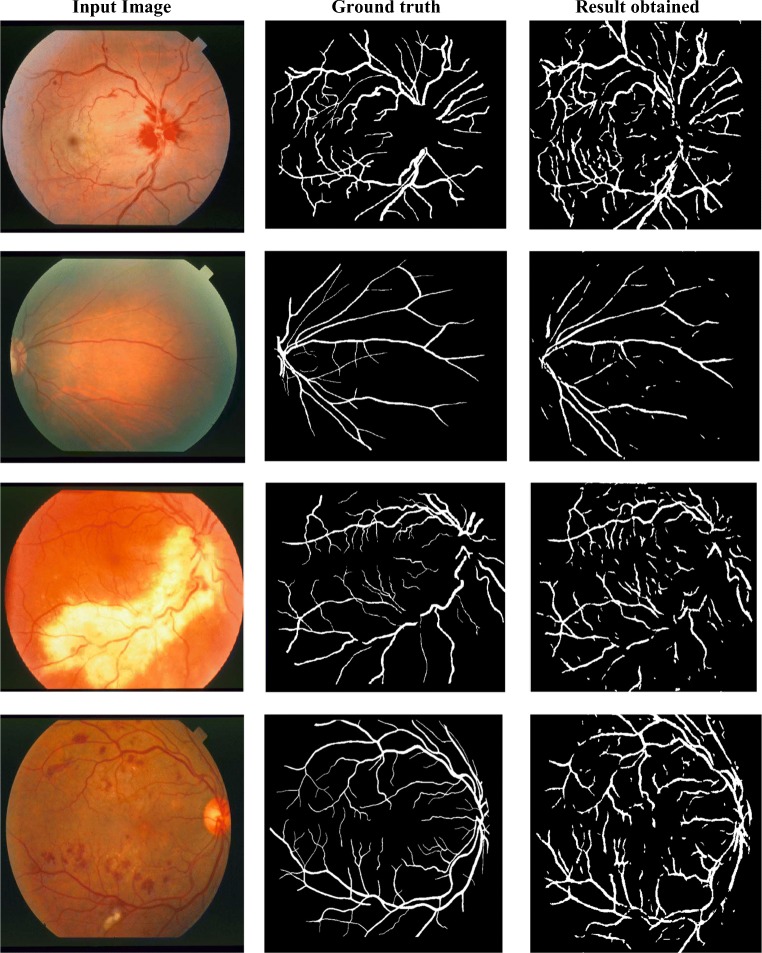

To verify data independence, STARE images are tested with the DRIVE-trained model. Figure 7 shows the cross-training results on abnormal STARE images using the model trained on DRIVE. The last-row result clearly shows that the proposed network distinguishes vessel regions from red lesion regions acceptably, even when both have similar intensity levels. We also deliberately tested abnormal STARE images with non-uniform illumination and with red and bright lesions. In all these cases, the model clearly distinguishes the abnormalities from the blood vessels. These cross-training results indicate that the trained model is not data dependent.

Fig. 7.

Cross-training result on abnormal images (STARE)

Table 5 lists the performance on the pathological STARE images obtained with the DRIVE-trained model. Most existing works report performance on pathological images only under self-training. Even under cross-training, our results are comparable to those of most existing methods. Although vessel connectivity is lost in some regions under cross-training, false positives due to abnormalities are reduced as far as possible.

Table 5.

Performance comparison of pathological images on STARE database

Conclusions

In this paper, a parallel fully convolutional neural network architecture is proposed for pixel classification to extract the vascular region in retinal fundus images. The dependence of network performance on the input data is examined by applying different preprocessing techniques. The proposed work also addresses the class imbalance problem by assigning appropriate weights to the training samples. The proposed method achieves higher accuracy and sensitivity than the other existing supervised methods compared. In particular, the cross-training results on abnormal images are comparable to self-trained results. The proposed architecture detects the entire vascular pattern efficiently, irrespective of vessel thickness. Many existing methods fail to detect thin vessels because of illumination effects; the proposed parallel architecture handles this issue efficiently. Because the proposed deep learning architecture is trained on a CPU, it can be implemented in low-cost real-time applications.

Acknowledgments

The authors would like to acknowledge the Principal and Management of Mepco Schlenk Engineering College, Sivakasi, for their encouragement and support of this research work. We also thank the anonymous reviewers for their comments.

Compliance with Ethical Standards

Conflict of Interest

The authors declare that they have no conflict of interest.

Ethical Approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Sathananthavathi .V, Email: sathananthavathi@gmail.com.

Indumathi .G, Email: gindhu@mepcoeng.ac.in.

Swetha Ranjani .A, Email: swethaalagar@gmail.com.

References

- 1.Lupas¸cu CA, Tegolo D, Trucco E. FABC: retinal vessel segmentation using AdaBoost. IEEE Trans Inf Technol Biomed. 2010;14(5):1267–1274. doi: 10.1109/TITB.2010.2052282. [DOI] [PubMed] [Google Scholar]

- 2.Dizdaroglu B, Cansizoglu E a, Kalpathy-Cramer J, keck K, Chiang MF, Erdogmus D. Structure based level set method for automatic retinal vasculature segmentation. EURASIP J Image Video Process. 2014;1(39):1–26. [Google Scholar]

- 3.Barkhoda W, Akhlaqian F, Amiri MD, Nouroozzadeh MS. Retina identification based on the pattern of blood vessels using fuzzy logic. EURASIP J Adv Signal Process. 2011;1(113):1–8. [Google Scholar]

- 4.Zhao J, Yang J, Ai D, HongSong Y, Jiang YH, Luosh Z, Wang Y. Automatic retinal vessel segmentation using multi-scale superpixel chain tracking. Digital Signal Process. 2018;81:26–41. [Google Scholar]

- 5.Marin D, Aquino A, Arias MEG, Bravo JM. A new supervised method for blood vessel segmentation in retinal images by using gray-level and moment invariants-based features. IEEE Trans Med Imaging. 2011;30(1):146–158. doi: 10.1109/TMI.2010.2064333. [DOI] [PubMed] [Google Scholar]

- 6.Fraz MM, Remagnino P, Hoppe A, Uyyanonvara B, Rudnicka AR, Owen CG, Barman SA. An ensemble classification-based approach applied to retinal blood vessel segmentation. IEEE Trans Biomed Eng. 2012;59(9):2538–2548. doi: 10.1109/TBME.2012.2205687. [DOI] [PubMed] [Google Scholar]

- 7.Firpi HA, Goodman E. Proceedings of the 33rd applied imagery pattern recognition workshop. Washington: IEEE Computer Society; 2004. Swarmed feature selection; pp. 112–118. [Google Scholar]

- 8.Yang XS: Engineering Optimizations via Nature-Inspired Virtual Bee Algorithms. In: Mira J, Álvarez JR Eds. Artificial Intelligence and Knowledge Engineering Applications: A Bioinspired Approach. IWINAC 2005. Lecture Notes in Computer Science, vol 3562. Springer, Berlin, Heidelberg

- 9.Hossam EE, Zawbaabc A bou M, Hassanien E. Binary grey wolf optimization approaches for feature selection. Neurocomputing. 2016;172:371–381. [Google Scholar]

- 10.Yu-Peng C, Ying L, Gang W, Yue-Feng Z, Qian X, Jia-Hao F, Xue-Ting C. A novel bacterial foraging optimization algorithm for feature selection. Expert Syst Appl. 2017;83:1–17. [Google Scholar]

- 11.Sathananthavathi V, Indumathi G. BAT algorithm inspired retinal blood vessel segmentation. IET Image Process. 2018;12(11):2075–2083. [Google Scholar]

- 12.Fu H, Xu Y, Lin S, Kee Wong DW, Liu J: DeepVessel: Retinal Vessel Segmentation via Deep Learning and Conditional Random Field. In: Ourselin S, Joskowicz L, Sabuncu M, Unal G, Wells W Eds. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2016. MICCAI 2016. Lecture Notes in Computer Science, vol 9901. Springer, Cham, 2016

- 13. Maninis KK, Pont-Tuset J, Arbeláez P, Van Gool L. Deep retinal image understanding. In: Ourselin S, Joskowicz L, Sabuncu M, Unal G, Wells W, editors. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2016. Lecture Notes in Computer Science, vol 9901. Cham: Springer; 2016.

- 14. Oliveira A, Pereira S, Silva CA. Retinal vessel segmentation based on fully convolutional neural networks. Expert Syst Appl. 2018;112:229–242.

- 15. Jiang Z, Zhang H, Wang Y, Ko S-B. Retinal blood vessel segmentation using fully convolutional network with transfer learning. Comput Med Imaging Graph. 2018;68:1–15. doi: 10.1016/j.compmedimag.2018.04.005.

- 16. Guo Y, Budak U, Vespa LJ, Khorasani E, Şengür A. A retinal vessel detection approach using convolution neural network with reinforcement sample learning strategy. Measurement. 2018;125:586–591.

- 17. Staal JJ, Abramoff MD, Niemeijer M, Viergever MA, van Ginneken B. Ridge based vessel segmentation in color images of the retina. IEEE Trans Med Imaging. 2004;23:501–509. doi: 10.1109/TMI.2004.825627.

- 18. Niemeijer M, Staal JJ, van Ginneken B, Loog M, Abramoff MD. Comparative study of retinal vessel segmentation methods on a new publicly available database. SPIE Med Imag. 2004;5370:648–656.

- 19. Hoover A, Kouznetsova V, Goldbaum M. Locating blood vessels in retinal images by piece-wise threshold probing of a matched filter response. IEEE Trans Med Imaging. 2000;19(3):203–210. doi: 10.1109/42.845178.

- 20. Hoover A, Goldbaum M. Locating the optic nerve in a retinal image using the fuzzy convergence of the blood vessels. IEEE Trans Med Imaging. 2003;22(8):951–958. doi: 10.1109/TMI.2003.815900.

- 21. Badrinarayanan V, Kendall A, Cipolla R. SegNet: a deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans Pattern Anal Mach Intell. 2017;39(12):2481–2495. doi: 10.1109/TPAMI.2016.2644615.

- 22. Matsugu M, Mori K, Mitari Y, Kaneda Y. Subject independent facial expression recognition with robust face detection using a convolutional neural network. Neural Netw. 2003;16(5):555–559. doi: 10.1016/S0893-6080(03)00115-1.

- 23. Ioffe S, Szegedy C. Batch normalization: accelerating deep network training by reducing internal covariate shift. arXiv:1502.03167. 2015.

- 24. Krizhevsky A, Sutskever I, Hinton GE. ImageNet classification with deep convolutional neural networks. Adv Neural Inf Process Syst. 2012;1:1097–1105.

- 25. Bishop CM. Pattern recognition and machine learning. New York: Springer; 2006.

- 26. Liskowski P, Krawiec K. Segmenting retinal blood vessels with deep neural networks. IEEE Trans Med Imaging. 2016;35(11):2369–2380. doi: 10.1109/TMI.2016.2546227.

- 27. Zuiderveld K. Contrast limited adaptive histogram equalization. In: Graphics Gems IV. San Diego: Academic Press Professional; 1994. pp. 474–485.

- 28. Soares JVB, Leandro JJG, Cesar RM, Jelinek HF, Cree MJ. Retinal vessel segmentation using the 2-D Gabor wavelet and supervised classification. IEEE Trans Med Imaging. 2006;25(9):1214–1222. doi: 10.1109/TMI.2006.879967.

- 29. Fraz MM, Remagnino P, Hoppe A, et al. An ensemble classification-based approach applied to retinal blood vessel segmentation. IEEE Trans Biomed Eng. 2012;59(9):2538–2548. doi: 10.1109/TBME.2012.2205687.

- 30. Cheng E, Du L, Wu Y, et al. Discriminative vessel segmentation in retinal images by fusing context-aware hybrid features. Mach Vis Appl. 2014;25(7):1779–1792.

- 31. Aslani S, Sarnel H. A new supervised retinal vessel segmentation method based on robust hybrid features. Biomed Signal Process Control. 2016;30:1–12.

- 32. Shahid M, Taj IA. Robust retinal vessel segmentation using vessel's location map and Frangi enhancement filter. IET Image Process. 2018;12(4):494–501.

- 33. Soomro TA, Khan TM, Khan MAU, et al. Impact of ICA-based image enhancement technique on retinal blood vessels segmentation. IEEE Access. 2018;6:3524–3538.

- 34. Biswal B, Pooja T, Bala Subrahmanyam N. Robust retinal blood vessel segmentation using line detectors with multiple masks. IET Image Process. 2018;12(3):389–399.

- 35. Wang X, Jiang X, Ren J. Blood vessel segmentation from fundus image by a cascade classification framework. Pattern Recogn. 2019;88:331–341.

- 36. Li Q, Feng B, Xie L, Liang P, Zhang H, Wang T. A cross-modality learning approach for vessel segmentation in retinal images. IEEE Trans Med Imaging. 2016;35(1):109–118. doi: 10.1109/TMI.2015.2457891.

- 37. Liskowski P, Krawiec K. Segmenting retinal blood vessels with deep neural networks. IEEE Trans Med Imaging. 2016;35(11):2369–2380. doi: 10.1109/TMI.2016.2546227.

- 38. Oliveira A, Pereira S, Silva CA. Retinal vessel segmentation based on fully convolutional neural networks. Expert Syst Appl. 2018;112:229–242.

- 39. Jiang Z, Zhang H, Wang Y, Ko S-B. Retinal blood vessel segmentation using fully convolutional network with transfer learning. Comput Med Imaging Graph. 2018;68:1–15. doi: 10.1016/j.compmedimag.2018.04.005.

- 40. Yan Z, Yang X, Cheng K-T. Joint segment-level and pixel-wise losses for deep learning based retinal vessel segmentation. IEEE Trans Biomed Eng. 2018;65(9):1912–1923. doi: 10.1109/TBME.2018.2828137.

- 41. Hu K, Zhang Z, Niu X, Zhang Y, Cao C, Xiao F, Gao X. Retinal vessel segmentation of color fundus images using multiscale convolutional neural network with an improved cross-entropy loss function. Neurocomputing. 2018;309:179–191.

- 42. Azzopardi G, Strisciuglio N, Vento M, Petkov N. Trainable COSFIRE filters for vessel delineation with application to retinal images. Med Image Anal. 2015;19(1):46–57. doi: 10.1016/j.media.2014.08.002.

- 43. Nguyen UT, Bhuiyan A, Park LA, Ramamohanarao K. An effective retinal blood vessel segmentation method using multi scale line detection. Pattern Recogn. 2013;46(3):703–715.