SUMMARY

Historically, the detection of antibodies against infectious disease agents was achieved using test systems that utilized biological functions such as neutralization, complement fixation, hemagglutination, or visualization of the binding of antibodies to specific antigens, testing doubling dilutions of the patient sample to determine an endpoint. These test systems have since been replaced by automated platforms, many of which have been integrated into general medical pathology. Methods employed to standardize and control clinical chemistry testing have been applied to these serology tests. However, there is evidence that these methods are not suitable for infectious disease serology. An overriding reason is that, unlike testing for an inert chemical, testing for specific antibodies to infectious disease agents is highly variable: the measurand for each test system varies in choice of antigen, antibody classes/subclasses, modes of detection, and assay kinetics, and individuals’ immune responses vary with time after exposure, individual immunocompetence, nutrition, treatment, and exposure to variable circulating sero- or genotypes or organism mutations. Therefore, unlike that of inert chemicals, the quantification of antibodies cannot be standardized using traditional methods. In contrast, there is evidence that the standardization of quantitative nucleic acid testing, with results reported in international units, has been successful across many viral load tests. Traditional methods used to control clinical chemistry testing, such as Westgard rules, are also not appropriate for serology testing for infectious diseases, mainly because of the variability introduced by frequent reagent lot changes. This review examines why the standardization and control of infectious disease testing warrant further investigation and why more appropriate guidelines should be implemented.

KEYWORDS: control, infectious disease, international standards, run control, standardization

INTRODUCTION

Testing for infectious diseases informs the diagnosis of disease, monitors the efficacy of treatment, identifies stages of disease, and provides evidence of immunity and disease prevalence. Since the 1960s, infectious disease testing has changed dramatically through increased knowledge of the immune system, the advent of significant technologies such as immunoassays and nucleic acid testing (NAT), biomedical engineering and robotics, and the introduction of regulatory systems, all of which have had a major impact on the provision of more accurate and timely results. More recently, near-patient or point-of-care testing (PoCT), as well as self-testing, has extended infectious disease testing into remote, regional, and community-based settings. Irrespective of the mode, infectious disease science has faced the challenge of managing the standardization and control of these tests. It is important that patients receive comparable results when tested in different testing facilities using different tests (standardized) and that the results from each facility are reproducible over time (controlled).

HISTORY OF SEROLOGY AND NUCLEIC ACID TESTING FOR INFECTIOUS DISEASE

Prior to the 1980s, the detection of antibodies and antigens associated with infections was conducted predominantly using laboratory-developed tests such as hemagglutination inhibition assays (HAI), complement fixation tests (CFT), plaque neutralization assays (PNT), immunofluorescence, radial hemolysis, or Ouchterlony double immunodiffusion. These bioassays detect the functional reactivity between antibodies and antigens and were the first test systems employed for the detection and semiquantification of antibodies in infectious disease serology (1). They are labor-intensive and require specific technical skills, and their results varied depending on the reagents used and their quality (2–4). The antigen source was usually whole organisms, and the test systems detected all antibody isotypes without differentiation, in particular circulating IgG, IgM, and IgA. A lack of standardization of these tests was reported (1, 5), and some professional bodies sought to introduce standard techniques (6) and quality assurance programs that improved the comparability of results across laboratories (7, 8). However, these efforts were not applied universally.

Each of these test systems uses the biological functions of the antibody response to the antigen to generate a detectable signal. PNT uses live virus inoculated onto a cell culture to create viral plaques. When the virus is incubated with a serial dilution of the patient sample, the dilution that reduces the number of plaques by 50% is used to determine the endpoint of the test. HAI uses the ability of some antigens to agglutinate the red blood cells of specific animal species. When the antigen is incubated with specific antibodies, its binding sites are blocked and the hemagglutination effect of the antigen is inhibited. In a similar manner, CFT employs a combination of the patient sample, a standardized concentration of the target antigen, and complement. The complement is “fixed” when the antigen:antibody complex is formed. If the complement remains unfixed due to a lack of antigen-specific antibodies (either because the patient has no antibodies or because the antibodies have been diluted out by serial dilution), the free complement lyses antibody-coated red blood cells added to the test system. For each of these biological test systems, a serial dilution of the patient sample can be tested to determine an “endpoint,” the dilution of patient serum beyond which the signal is no longer detectable. Traditionally, a 4-fold (two doubling-dilution) rise in titer between paired samples obtained 10 to 14 days apart indicates a recent (acute) infection; however, this approach is often not possible when samples are collected too late after infection or when only a single sample is available.
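The endpoint and paired-sample logic described above can be expressed as a short Python sketch. It is illustrative only: the 1:10 starting dilution, the eight-well series, and the well readings are hypothetical, and the function names are not taken from any laboratory system. The required fold rise is a parameter; the example uses fourfold, the criterion commonly applied to paired sera.

```python
from typing import Sequence


def doubling_dilutions(start: int = 10, steps: int = 8) -> list[int]:
    """Reciprocal dilutions of a doubling series: 10, 20, 40, ... (i.e., 1:10, 1:20, 1:40, ...)."""
    return [start * 2**i for i in range(steps)]


def endpoint_titer(reciprocals: Sequence[int], reactive: Sequence[bool]) -> int:
    """Return the reciprocal of the highest dilution that is still reactive.

    Reading stops at the first nonreactive well, so a stray reactive well further
    along the series is ignored. Returns 0 if the least dilute well is nonreactive.
    """
    titer = 0
    for dilution, is_reactive in zip(reciprocals, reactive):
        if not is_reactive:
            break
        titer = dilution
    return titer


def significant_rise(acute: int, convalescent: int, fold: int = 4) -> bool:
    """True if the convalescent titer is at least `fold` times the acute titer.

    A nonreactive acute sample (titer 0) followed by a reactive convalescent
    sample is treated as seroconversion.
    """
    if acute == 0:
        return convalescent > 0
    return convalescent >= fold * acute


dilutions = doubling_dilutions()  # 1:10 through 1:1280
acute = endpoint_titer(dilutions, [True, True, False, False, False, False, False, False])
convalescent = endpoint_titer(dilutions, [True, True, True, True, True, False, False, False])
print(acute, convalescent, significant_rise(acute, convalescent))  # 20 160 True
```

Because each well is one doubling step, a single-well (2-fold) difference is generally regarded as within the reproducibility of a titration, which is why a two-well (fourfold) rise is the usual threshold; in this sketch, `significant_rise(20, 40)` returns `False`.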

In 1979, Voller et al. reported the use of an enzyme-linked immunoassay for the detection of specific antibodies (9). Since that time, immunoassays (IAs) have revolutionized infectious disease serology. Detection systems used in IAs have included enzyme-based color reactions (EIA), radioactive labels (RIA), fluorescence (IFA), and chemiluminescence (CLIA) (10). Usually, the test system has the target antigen bound to a solid phase (e.g., a microtiter plate, magnetic beads, or plastic beads). The patient sample is incubated with the bound antigens, and the patient’s antibodies specific to the target antigen(s) bind to them. After washing, a labeled anti-human conjugate is added, which binds to the captured patient antibodies. After a further wash, the chemical substrate is added and the resulting signal is detected. Generally, there is a dose response, in which the signal increases with the amount of bound antibody.

Depending on the assay design, the dose response has a lower plateau, where the antibody concentration has not yet risen to a detectable level, and an upper plateau, where the test system is saturated. Between these two plateaus, the dose response is usually approximately linear, so that the signal rises in step with the concentration of the antibody being detected (10). This dose response is often presented graphically as a sigmoidal curve, typically with concentration on a logarithmic axis. Importantly, the dose response is specific to each test system and cannot be assumed to behave with the same dynamics across different test systems. False-negative or artificially low results may be encountered when there is an excess of either the antibody or the antigen being detected (the hook or prozone effect). The excess antibodies or antigens saturate the available binding sites, preventing the antibody that carries the detection signal from binding.
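A sigmoidal dose response of this kind is commonly modeled with a four-parameter logistic (4PL) function. The Python sketch below uses hypothetical parameter values (not taken from any real assay) to show how the same doubling of antibody concentration produces a large signal change near the midpoint of the curve but very little change near either plateau, and why a concentration can only be read back from a signal lying between the plateaus. It does not model the hook effect, in which the signal falls again at very high concentrations.

```python
# Hypothetical 4PL parameters for an illustrative assay (not from any real kit).
A_LOW = 0.05   # signal at zero antibody (lower plateau)
D_HIGH = 3.0   # signal at saturation (upper plateau)
C_MID = 10.0   # concentration at the inflection point (midpoint signal)
B_SLOPE = 1.5  # steepness of the transition between plateaus


def four_pl(conc: float) -> float:
    """Signal predicted by a four-parameter logistic curve at a given concentration."""
    return D_HIGH + (A_LOW - D_HIGH) / (1.0 + (conc / C_MID) ** B_SLOPE)


def inverse_four_pl(signal: float) -> float:
    """Concentration that would produce `signal`; only defined strictly between the plateaus."""
    if not A_LOW < signal < D_HIGH:
        raise ValueError("signal lies on or beyond a plateau; concentration cannot be read off")
    return C_MID * ((A_LOW - D_HIGH) / (signal - D_HIGH) - 1.0) ** (1.0 / B_SLOPE)


# Signal change produced by doubling the concentration in different regions of the curve.
for low, high in [(0.2, 0.4), (10.0, 20.0), (200.0, 400.0)]:
    delta = four_pl(high) - four_pl(low)
    print(f"{low:>6} -> {high:>6}: signal change {delta:.3f}")
```

With these values, the mid-curve doubling changes the signal by more than an order of magnitude more than a doubling near either plateau, one reason a signal cannot be assumed to scale proportionally with antibody amount across the whole range.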

In the past 2 decades, immunoassays have become increasingly automated, with numerous continuous-access robotic platforms routinely used in clinical and blood-screening laboratories. These test systems are highly sensitive, specific, and precise, allowing tighter control of test results. During the 1980s, Mullis and Faloona described a process called PCR, which allows the amplification of specific nucleic acid sequences (11). This technology initiated the development of many molecular techniques that have since been applied to infectious diseases, including the use of chemical probes to detect amplified nucleic acid, reverse transcription for the detection of RNA, and real-time or quantitative PCR (qPCR). These technologies allowed a move away from viral culture or direct antigen detection and are now applied to detecting the causative agents of most infectious diseases. As with the developments in serological methods, NAT has allowed the automation, standardization, and control of test systems.

It is notable that the emergence of immunoassays and NAT broadly coincided with the onset of the HIV epidemic. This situation elicited large investments into research and development of technologies used for infectious disease testing. It also saw a strengthening of the regulatory environment (12–14), not only in clinical laboratories but especially in blood screening, where several notable failures to apply emerging technologies to HIV, and subsequently hepatitis C virus (HCV), had severe medicolegal consequences (15, 16). Governments of most developed countries introduced regulations to control the sale and use of in vitro diagnostic devices (IVDs) used to detect pathogens that posed a high risk to the community. Manufacturers and testing laboratories are now required to hold certification to relevant International Organization for Standardization (ISO) standards. National regulatory bodies and associated frameworks were strengthened, requiring IVDs to conform to set standards, such as the European Union Common Technical Specifications (12). The Global Harmonization Task Force, since replaced by the International Medical Device Regulators Forum, was established to harmonize requirements across jurisdictions and thereby reduce the regulatory burden (17). To support countries lacking a regulatory framework and to guide IVD procurement by funding and implementing bodies such as the Global Fund, UNDP, the World Bank, and the Clinton Foundation, the World Health Organization (WHO) established the prequalification of IVDs, coordinated through its Department of Essential Medicines and Health Products (EMP). This activity focuses on testing for priority diseases in resource-limited settings (18).

STANDARDIZATION AND CONTROL OF CLINICAL CHEMISTRY TESTING

During this period, efforts to standardize and control medical testing were championed by clinical chemists, beginning with external quality assessment (EQA), the introduction of standard methods, and the metrological traceability of measurands to higher-order reference materials through the ISO 17511 standard (7). National measurement institutes measure pure, high-grade analytical materials such as glucose or potassium using certified reference methods, such as high-performance liquid chromatography (HPLC) or atomic absorption spectrometry, to produce certified reference standards, often traceable to the International System of Units (SI; Système International d’Unités). Through a chain of traceability, secondary standards are produced, and these, in turn, are used to create calibrators and standards for use in medical test systems. This traceability hierarchy helps ensure that patient samples tested on different test systems return the same result within a known measurement uncertainty (19). EQA programs can monitor the success or failure of this process (7). The commutability of reference materials for multiple clinical chemistry analytes has been a focus of professional bodies (20, 21). However, certified reference materials have not been developed for all clinical chemistry analytes, and not all of those available can be assumed to be commutable with clinical samples. Where the analyte is complex or variable, standardization continues to be challenging.
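To illustrate how the confidence attached to a routine result follows from the traceability hierarchy, the Python sketch below assumes that each step down the chain adds an independent relative standard uncertainty and combines them by root-sum-of-squares, the usual approach for uncorrelated components in the Guide to the Expression of Uncertainty in Measurement (GUM). The step names and percentages are hypothetical.

```python
import math

# Hypothetical relative standard uncertainties (%) contributed at each step of a
# calibration hierarchy; real values depend on the analyte and the methods used.
CHAIN = [
    ("primary reference material", 0.5),
    ("secondary reference material", 1.0),
    ("manufacturer's calibrator", 1.5),
    ("routine patient result", 2.0),
]


def cumulative_uncertainty(chain):
    """Combine independent relative standard uncertainties by root-sum-of-squares, step by step."""
    combined = 0.0
    levels = []
    for name, u_step in chain:
        combined = math.sqrt(combined**2 + u_step**2)
        levels.append((name, combined))
    return levels


for name, u in cumulative_uncertainty(CHAIN):
    # A coverage factor k = 2 gives roughly 95% coverage for a normal distribution.
    print(f"{name:<30} u = {u:.2f}%   U(k=2) = {2 * u:.2f}%")
```

Uncertainty can only grow down the chain, which is why the higher-order materials need to be characterized far more tightly than routine results.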

To control clinical chemistry test systems, modifications of the statistical process control methods created by Walter Shewhart and championed by W. Edwards Deming in postwar Japan were introduced (22, 23). Assuming that the results of repeated testing of the same sample follow a Gaussian (normal) distribution, approximately 95% of results would fall within ±2 standard deviations (SD) of the mean and 99.7% within ±3 SD. Traditional quality control (QC) monitoring methods use this principle to establish QC acceptance limits, noting that approximately 5% of “true” results will be falsely rejected if the mean ±2 SD is used as the acceptance limit. Results outside the mean ±2 SD are investigated further or rejected. James Westgard published a set of rules, commonly known as Westgard Rules, that created a framework for decision-making around the acceptance or rejection of QC results. These rules have been adopted almost universally in clinical chemistry testing (24–26). More recently, a risk-based approach to monitoring the performance of medical test systems has been encouraged (27), with a strong emphasis on Six Sigma principles. However, until recently, no systematic review of the applicability of these rules to other disciplines, such as infectious disease serology, had been undertaken.
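The Gaussian reasoning and a simplified subset of Westgard Rules can be sketched in Python. This is an illustration under stated assumptions, not a validated QC system: the rule names follow the common Westgard notation (1_2s, 1_3s, 2_2s, R_4s, 4_1s, 10_x), but every rule here is applied along the history of a single control, whereas in practice some rules (notably R_4s) are applied across control levels within a run, and laboratories choose which rules to use. The QC values, mean, and SD are hypothetical.

```python
import math
from typing import Sequence


def fraction_outside(k: float) -> float:
    """Probability that a normally distributed result falls outside mean +/- k SD."""
    return 1.0 - math.erf(k / math.sqrt(2.0))


def z_scores(results: Sequence[float], mean: float, sd: float) -> list[float]:
    """Express QC results as SD units from the established mean."""
    return [(r - mean) / sd for r in results]


def westgard_flags(z: Sequence[float]) -> list[str]:
    """Rules triggered by the most recent result in one control's history (oldest first).

    Simplified: every rule is applied along a single control series.
    """
    flags = []
    last = z[-1]
    if abs(last) > 2:
        flags.append("1_2s")  # warning: inspect with the other rules
    if abs(last) > 3:
        flags.append("1_3s")  # reject: one result beyond 3 SD
    if len(z) >= 2:
        prev = z[-2]
        if (last > 2 and prev > 2) or (last < -2 and prev < -2):
            flags.append("2_2s")  # reject: two consecutive beyond the same 2 SD limit
        if (last > 2 and prev < -2) or (last < -2 and prev > 2):
            flags.append("R_4s")  # reject: consecutive results span more than 4 SD
    if len(z) >= 4 and (all(v > 1 for v in z[-4:]) or all(v < -1 for v in z[-4:])):
        flags.append("4_1s")  # reject: four consecutive beyond the same 1 SD limit
    if len(z) >= 10 and (all(v > 0 for v in z[-10:]) or all(v < 0 for v in z[-10:])):
        flags.append("10_x")  # reject: ten consecutive on the same side of the mean
    return flags


print(f"{fraction_outside(2):.4f}")  # 0.0455
print(f"{fraction_outside(3):.4f}")  # 0.0027
print(westgard_flags(z_scores([100.0, 104.6, 104.8], mean=100.0, sd=2.0)))  # ['1_2s', '2_2s']
```

The false-rejection figure compounds when several controls are judged independently at ±2 SD: the chance that at least one of n in-control results is flagged is 1 - (1 - 0.0455)^n, about 9% for two controls, which is why 1_2s is typically used as a warning rather than a rejection rule.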

There are significant differences between the measurement of clinical chemistry analytes and that of infectious diseases (Table 1). The underlying reason for these differences is that, when testing for an inert chemical such as glucose, the test system is determining the actual quantity (how much) of glucose that is present. In contrast, when testing for antibodies, the test system is determining the efficacy of binding (how well) of the antibodies to the antigen. A sample having low levels of antibodies with high affinity and avidity could have a level of reactivity higher than that of a sample with a high concentration of low-avidity antibodies.
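The point about affinity can be made concrete with a deliberately simple equilibrium model in Python. It assumes 1:1 binding with antigen in excess, so that the bound fraction of each antibody population is antigen / (Kd + antigen), and it ignores avidity from multivalent binding, dissociation during washing, and assay kinetics. The concentrations and dissociation constants (Kd) are hypothetical.

```python
def bound_antibody(total_ab_nm: float, kd_nm: float, antigen_nm: float) -> float:
    """Equilibrium amount of antibody bound to antigen, assuming 1:1 binding and antigen excess.

    With the free antigen concentration held roughly constant, the bound fraction
    of a single antibody population is antigen / (Kd + antigen).
    """
    return total_ab_nm * antigen_nm / (kd_nm + antigen_nm)


ANTIGEN_NM = 1.0  # hypothetical effective antigen concentration on the solid phase

# Sample A: little antibody, but high affinity (low Kd).
signal_a = bound_antibody(total_ab_nm=1.0, kd_nm=0.01, antigen_nm=ANTIGEN_NM)
# Sample B: five times more antibody, but low affinity (high Kd).
signal_b = bound_antibody(total_ab_nm=5.0, kd_nm=100.0, antigen_nm=ANTIGEN_NM)

print(f"A: {signal_a:.3f} nM bound, B: {signal_b:.3f} nM bound")  # A: 0.990 nM bound, B: 0.050 nM bound
```

Despite containing one-fifth as much antibody, sample A binds roughly 20 times more in this model, because its lower Kd means nearly all of it is bound.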

TABLE 1.

Differences between clinical chemistry and infectious disease serology testing

| Clinical chemistry characteristics | Infectious disease serology characteristics |
|---|---|
| Inert analyte | Type B functional biological analyte |
| Several medical decision points | Single decision point (pos/neg) |
| Quantitative | Qualitative |
| Can adjust for bias | No adjustment for bias |
| Single homogeneous molecule | Multiple and various antigens |
| Lower level of regulation | Highly regulated |
| Linear dose response curve | Nonlinear dose response curve |
| Adjusts for reagent lot variation | No adjustment for reagent lot variation |
| International standards^a | Poor or no international standards |
| Certified reference methods | No certified reference methods |
| Available in a pure form | Different forms |
| TEa^b can be estimated | TEa cannot be defined |

^a Note that not all clinical chemistry analytes have relevant international standards.

^b TEa, total allowable error.

As infectious disease testing has become more automated, test systems used in clinical chemistry have been adapted to the detection of infectious disease antibodies and antigens. Many larger pathology laboratories have introduced the concept of a “core laboratory,” where samples for a large range of analytes, including infectious disease markers, are tested on platforms linked by a “track,” increasing the efficiency of the laboratory. As these core laboratories are commonly overseen by clinical chemists, it is not surprising that methods used to standardize and control clinical chemistry testing were implemented for infectious disease testing. Unfortunately, it has become evident that these methods, notably the standardization of infectious disease antibody testing and the use of Westgard Rules, are not appropriate for infectious disease testing. Over the past 3 decades, a significant body of work has provided insight into these deficiencies and has culminated in the abandonment of the WHO International Standard for the calibration of anti-rubella IgG assays and in the development of an alternative, more appropriate method to interpret the results of infectious disease serology QC.

VARIATION OF SEROLOGICAL MARKERS FOR INFECTIOUS DISEASES

Many clinical chemistry measurands, such as glucose, are small molecules, have minimal heterogeneity, and can be described by a chemical formula (e.g., C6H12O6). In contrast, analytes such as antibodies, whose measurement reflects functional, biological activity and which are called “type B” quantities, are heterogeneous and are not directly traceable to SI units (28, 29). Unlike the standardization of clinical chemistry analytes, the standardization of testing for infectious diseases has a checkered history. Although a number of international standards for infectious diseases have been developed since the 1960s and subsequently used to try to standardize serological tests, these efforts have, by and large, been unsuccessful (1, 30). This is due to many issues. Of note, many international standards for serology were developed without due consideration of metrological principles (29, 31–33). In addition, serological assays are generally qualitative, and the analytes being measured are complex, biological, and polyclonal (1, 29, 34). Antibodies can be of variable classes/subclasses, fragmented, polyclonal or monoclonal, and free or complexed, and they can have variable affinity and avidity (29). Unfortunately, although these factors have been recognized and warnings have been published (29, 34, 35), it has been assumed that the method of standardization used for functional type B measurands would be suitable for application to antibody testing, resulting in unforeseen consequences that have taken decades to resolve (1, 36).

Pathogenic organisms are complex and variable in structure and often mutate over time. All organisms, be they viruses, bacteria, fungi, or parasites, have one or more immunogenic sites. Humans mount an immune response when they are exposed to these immunogenic antigens. For example, rubella virus has just three immunogenic sites, two envelope proteins designated E1 and E2 and one capsid protein (C), and only a single serotype (1). Wild-type measles virus is genetically more diverse, with eight clades containing 24 genotypes based on the nucleotide sequences of the hemagglutinin (H) and nucleoprotein (N) genes, which are the most variable genes in the viral genome (37). The eight clades are designated A to H, with numerals identifying the individual genotypes. Nevertheless, measles virus is considered to be immunologically stable, with no detectable serological variation. In contrast, HIV elicits antibody responses to the group-specific antigen (GAG) protein p24 and its precursor p55, the envelope (ENV) precursor protein gp160, and proteins gp120 and gp41. Antibodies to the polymerase (POL) gene products p31, p51, and p66 are also commonly detectable. HIV-1 has four groups: M (major), O (outlier), N (non-M, non-O), and P. Group M has nine subtypes (38). Test systems, which usually detect antibodies to HIV-1 and HIV-2 as well as HIV p24 antigen, must account for each of these variables.

Generally, the number of immunogenic sites increases with the complexity of the organism. Like HIV, many organisms have different genotypes and/or serotypes. When organisms share a large proportion of their genome but vary in the immunogenic regions, the differences in the immune responses they elicit can be detected and differentiated. For example, whereas rubella virus has a single serotype, dengue virus has four serotypes, DEN-1, DEN-2, DEN-3, and DEN-4, which can be differentiated serologically (39). Variation in the genomes of pathogens can also be detected by nucleic acid testing. HCV has six common genotypes, designated 1 to 6, whose distribution varies around the world. Genotypes can be differentiated by either serotyping or genotyping (40).

The immunogenicity of organisms also changes over time through mutation. Influenza virus is well known for its antigenic “drifts” and “shifts.” Antigenic drifts are small changes in the viral genes that accumulate over time (8). This phenomenon is also seen in HIV (38) and is one of the reasons the development of a vaccine has proven difficult for both organisms. Antigenic shift is a major change in the virus. In influenza virus, a shift is commonly associated with the reassortment of the genomes of two influenza viruses derived from different animal species, creating a strain that can evade previously developed antibody responses. Some viruses are particularly prone to mutation. Hepatitis B virus (HBV) has a unique life cycle that includes an error-prone enzyme, reverse transcriptase, and a very high rate of virion production. Treatment of infections such as HBV can select for viral subpopulations, changing the immunogenicity of the virus over time (41).

Current IAs are designed to detect particular antibody classes (or total antibodies) to a specific antigen, e.g., anti-Epstein-Barr virus (anti-EBV) viral capsid antigen IgM, or total (IgM and IgG) antibodies to hepatitis B core antigen. Manufacturers use various sources of antigen (whole virus, disrupted virus, purified viral antigens, or recombinant antigens) and conjugates, which may be polyclonal, either across multiple classes (total antibodies) or class-specific, or may be monoclonal antibodies directed to a specific viral epitope. One or more monoclonal antibodies may be used in the design of an assay.

When a human is exposed to an organism, they generally mount an immune response, typically either a primary or a secondary immune response. In a primary response, the B lymphocytes circulating in a person who is naive, i.e., has never been in contact with the antigen, produce antibodies to the antigen(s) present on the organism. This response is delayed and initially sluggish. The initial antibody response is usually the production of antigen-specific IgM, followed by IgG antibodies. These early IgG antibodies are immature, not necessarily specific to the antigen, and sometimes target only certain immunogenic sites of the pathogen. Over time, the elicited IgG antibodies increase in affinity (the strength of the bond between an antibody and its specific antigen) and avidity (the overall strength of the bonds between all the antibodies and the pathogen) and develop reactivity to the full range of that organism’s immunogenic sites. The primary humoral response also generates memory cells, which are primed to produce high-avidity IgG on reexposure to the antigen, enabling a more rapid, larger, and more specific secondary immune response.

Test systems for antibodies must account for this array of variables, highlighting the difference between infectious disease serology and testing for inert, stable analytes such as glucose or potassium. Unlike the measurand in clinical chemistry, the measurand detected in infectious disease serology is extremely variable and is specific to each test system. For example, it is well established that the quantitative results of anti-rubella IgG assays are not comparable (1, 36, 42–46). The principles of standardization and control applied to clinical chemistry analytes therefore cannot be used in infectious disease serology (30).

QUANTIFICATION OF ANTIBODIES TO INFECTIOUS DISEASES

The level of antibody in a patient is rarely useful in a clinical setting. In biological test systems, a rise in antibody titer can be used to confirm an acute infection. In some infectious disease tests, such as the syphilis rapid plasma reagin (RPR) test, a doubling dilution titer of 1:8 or greater is indicative of untreated infection. However, an IA manufacturer’s instructions rarely suggest that a rise in the signal reported by the IA indicates an acute infection.

Although there are many international standards for infectious disease serology, routine reporting of results in international units (IU), with a defined clinical cutoff associated with this unitage, has been limited to three main analytes: anti-rubella IgG, antibody to hepatitis B surface antigen (HBsAb), and anti-measles IgG. The cutoffs for immunity for anti-rubella IgG and HBsAb are assumed to be 10 IU/ml and 10 mIU/ml, respectively. The cutoff for rubella has been seriously questioned and is under review (30), and further work is required for HBsAb. In a study that reviewed the protective immunity of university students after a measles outbreak, the measles PNT titer corresponding to protection was found to be ≥120 mIU/ml (47). PNT assays have been standardized against the WHO measles antibody international standard (currently the WHO 3rd international standard; NIBSC 97/648). However, the package insert of that standard states, “this preparation has not been calibrated for use in ELISA assays and/or a unitage assigned for this use.”

The history of international standards for infectious disease serology is informative. Serological international standards were first produced in the 1960s, primarily for the purpose of assessing the potencies of vaccines for rubella and measles. Studies on the preparation of standards are referred to the WHO Expert Committee on Biological Standardization for adoption (48–51). The first international reference preparation of anti-rubella serum was prepared in 1966 using a pool of convalescent-phase human sera. It was replaced in 1968 by the second international reference preparation of anti-rubella serum, designated BS/96.1833 and also known as RUBS. Despite its name, it was prepared from normal human immunoglobulin. The current rubella IgG international standard was introduced in 1995; it was originally developed by the Statens Serum Institut (Copenhagen, Denmark) in the 1970s and designated RUB-1-94 (48). It, too, was prepared from pooled, concentrated human immunoglobulin rather than human serum and consisted of human immunoglobulin in equal parts with saline. It should be highlighted that the RUBS and RUB-1-94 preparations are purified immunoglobulin rather than normal human serum or plasma (50) and therefore have a matrix different from that of patient samples.

The potency of RUB-1-94 was assessed in a multicenter trial involving 11 laboratories from seven countries, which used biological HAI, radial hemolysis assays, or first-generation EIAs. Since then, most commercial anti-rubella IgG assays have been calibrated against this international standard and report results in IU/ml (1); however, many studies have demonstrated a lack of agreement in the quantitative results of assays reporting in IU/ml. These discrepancies in quantification often lead to different clinical interpretations, which can result in adverse clinical outcomes (1, 36, 46).

More recently, the first WHO international standard for anti-SARS-CoV-2 immunoglobulin (human) (code: 20/136) has been released by NIBSC. It is made from plasma from convalescent individuals and has a value of 1,000 IU/ml on reconstitution. The instructions for use indicate that the intended use of the standard is “for the calibration and harmonization of serological assays detecting anti-SARS-CoV-2 neutralising antibodies” and that “for binding antibody assays, an arbitrary unitage of 1000 binding antibody units (BAU)/ml can be used to assist the comparison of assays detecting the same class of immunoglobulins with the same specificity (e.g., anti-RBD IgG, anti-N IgM, etc.).” However, within months of its release, commercial manufacturers released “quantitative SARS-CoV-2” assays. It is not yet evident whether results will be reported in IU/ml, BAU/ml, or an assay-specific unit. If manufacturers report results in IU/ml, a lack of standardization between assays may be experienced.

Generally, serological assays are qualitative in nature. The detection of a signal, be it chemiluminescent, fluorescent, or color, is used to determine the presence or absence of the antibody being detected. Although there is a dose response relationship between the amount of antibody and the signal, it should not be assumed that this response is always proportional or linear. As there is little or no clinical utility in the amount of signal, reporting quantitative serology assay results is not recommended.
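Qualitative immunoassays commonly express the signal relative to a run-specific cutoff as a signal-to-cutoff (S/CO) ratio. The Python sketch below shows that reporting logic; the cutoff value and the ±10% equivocal zone are hypothetical, as each assay defines its own cutoff and any equivocal range.

```python
def interpret(signal: float, cutoff: float, grey_zone: float = 0.1) -> str:
    """Qualitative result from a signal-to-cutoff (S/CO) ratio.

    The +/- grey_zone band around S/CO = 1 is a hypothetical equivocal zone;
    real assays define their own cutoff and equivocal range in the package insert.
    """
    ratio = signal / cutoff
    if ratio >= 1.0 + grey_zone:
        return "reactive"
    if ratio < 1.0 - grey_zone:
        return "nonreactive"
    return "equivocal"


cutoff = 0.25  # hypothetical cutoff signal for one assay run
for signal in (0.05, 0.25, 0.6, 6.0):
    print(signal, interpret(signal, cutoff))
```

Signals 10-fold apart (S/CO of 2.4 and 24) are both reported simply as reactive; the size of the ratio is not carried into the result.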

DIFFICULTIES IN THE STANDARDIZATION OF ANTIBODY QUANTIFICATION

The reasons underlying the difficulties in standardizing antibody quantification are detailed elsewhere (29, 34, 35). Metrological principles for the establishment of standards, which are difficult to apply to biological standards, require nominated reference laboratories to prepare standards composed of the same matrix as the samples tested in the test system. The measurand must be defined and invariable, and the amount of measurand should be measured using a certified reference method and, ideally, expressed in SI units (52, 53). Where this cannot be achieved, as is the case for biological analytes, the analyte is called a type B analyte. The three elements of a type B analyte are the system (e.g., serum), the component (e.g., anti-rubella virus IgG), and the kind of quantity (essentially the biological response or biological activity), which together make up the measurand (29).

However, for many biological standards, each of these elements varies across test systems. As described above, the antibodies that develop in response to infection vary because of antigenic differences across genotypes and subtypes, the stage of disease progression, and antibody avidity and affinity; the test systems used also differ (in antigen sources or detection systems), as does biological functionality within the test. Therefore, the measurands differ across test systems used to detect and quantify the same analyte, e.g., anti-rubella IgG. It is not surprising that it has proved impossible to standardize quantitative antibody testing: each test system quantifies a different measurand and uses a different detection system. It should be noted that, even though serological assays that detect antibodies directed at the same organism are difficult to standardize, they usually have comparable clinical sensitivity and specificity.
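Why calibrating different assays to the same standard need not make their results agree can be shown with a toy model in Python. Each hypothetical assay responds to antibodies against the rubella proteins E1, E2, and C with different weights (standing in for differences in antigen source and conjugate), and each is calibrated by a single-point, linear comparison with a standard assigned a hypothetical 100 IU/ml. All compositions, weights, and the linear response are assumptions for illustration; the 10 IU/ml cutoff is the one described for rubella IgG.

```python
from typing import Sequence

# Hypothetical amounts (arbitrary units) of antibody against the rubella proteins E1, E2, and C.
STANDARD = (50.0, 30.0, 20.0)  # composition of the calibrating standard
STANDARD_IU = 100.0            # hypothetical unitage assigned to the standard (IU/ml)
PATIENT = (2.0, 8.0, 6.0)      # composition of one patient sample
CUTOFF_IU = 10.0               # immunity cutoff used for rubella IgG

# Each hypothetical assay weights the antibody populations differently,
# standing in for differences in antigen source, conjugate, and format.
ASSAY_WEIGHTS = {
    "assay A": (1.0, 0.2, 0.0),  # mostly detects anti-E1
    "assay B": (0.3, 1.0, 0.5),  # broader antigen mix
}


def signal(sample: Sequence[float], weights: Sequence[float]) -> float:
    """Assay signal, assumed linear in each antibody population."""
    return sum(amount * w for amount, w in zip(sample, weights))


def reported_iu(sample: Sequence[float], weights: Sequence[float]) -> float:
    """Result in IU/ml after single-point calibration against the standard."""
    return STANDARD_IU * signal(sample, weights) / signal(STANDARD, weights)


for name, weights in ASSAY_WEIGHTS.items():
    patient_iu = reported_iu(PATIENT, weights)
    status = "above cutoff" if patient_iu >= CUTOFF_IU else "below cutoff"
    print(f"{name}: standard {reported_iu(STANDARD, weights):.1f} IU/ml, "
          f"patient {patient_iu:.1f} IU/ml ({status})")
```

Both assays read the standard as 100 IU/ml, yet they disagree about the patient, whose antibody profile differs from that of the standard: assay A reports about 6.4 IU/ml and assay B about 21.1 IU/ml, placing the same sample on opposite sides of the cutoff.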

Although standardization of serological tests has been fraught, several factors have influenced manufacturers to attempt calibration using the WHO international standards. This arguably began in 1995 with the 45th report of the WHO Expert Committee on Biological Standardization, which stated that there was a need for a rubella standard “for the calibration of diagnostic kits” (49). Although not explicit, both European and US IVD regulations suggest that all IVDs be traceable to a higher-order standard where one exists. The 1998 European Directive stated, “the traceability of values assigned to calibrators and/or control materials must be assured through available reference measurement procedures and/or available reference materials of a higher order” (14). Therefore, many IVD manufacturers have used WHO international standards to calibrate a range of assays to report in IU/ml, including tests for antibodies to Toxoplasma, cytomegalovirus (CMV), and syphilis, although quantitative reporting has rarely been used for clinical decision-making outside anti-rubella IgG and HBsAb testing.

What is the future for the standardization of infectious disease serology testing? In 2017, the WHO Expert Committee on Biological Standardization determined that the rubella IgG international standard should remain available but that IVD manufacturers should be made aware of the standard’s lack of commutability and should therefore consider introducing qualitative assays for the detection of rubella IgG (51). Recent publications coauthored by representatives of WHO, NIBSC, the Paul Ehrlich Institute, CDC, FDA, and the National Serology Reference Laboratory, Australia, have noted that results of rubella IgG assays should no longer be expressed in IU/ml (30). The package inserts of several international standards carry caveats stating that they should not be used for the calibration of test kits. After more than a decade of activity by concerned scientists, the package insert of the rubella RUB-1-94 standard has recently been amended to include the statement, “IVD manufacturers, regulators and assay users should be made aware of RUBI-1-94 potential lack of commutability when used as a calibrant. This was highlighted by the WHO Expert Committee on Biological Standardization in TRS 68th Report (Section 3.3.4: 2018)” (51). This statement is in line with a similar statement on the measles standard. No similar statement has yet been included in the HBsAb package insert. It is hoped that IVD directives, professional bodies, and key opinion leaders will come to embrace the concept that the standardization of serological assays for infectious diseases is not possible and will promote qualitative reporting of results.

STANDARDIZATION OF ANTIGENS AND NUCLEIC ACID TESTING

There are several international standards for infectious disease antigens, such as HBsAg (54) and HIV p24 (55), which have been used to calibrate IAs. However, the quantitative results of these tests are not commonly used in clinical decision-making. Limited evidence suggests that standardization of antigen testing is less problematic than that of antibody testing. Although HBsAg quantification may have some clinical use (56), HBV viral load testing is more commonly used to monitor therapy and disease progression (57). Similarly, HIV viral load testing is used more often in clinical decisions than HIV p24 quantification, and only a few studies have investigated the relationship between HIV p24 levels and disease progression (58).

The standardization of viral load quantification has been more successful than that of infectious disease serology (59). Standardization of viral load testing has been championed by the Standardization of Genome Amplification Techniques (SoGAT) group, which was formed by the WHO and coordinated by the National Institute for Biological Standards and Control (NIBSC, Potters Bar, UK) and the Paul Ehrlich Institute (Langen, Germany). The Joint Committee for Traceability in Laboratory Medicine (JCTLM), formed in 2002, facilitates traceability to higher-order standards. Results reported by viral load assays calibrated against international standards have demonstrated commutability, especially for the blood-borne viruses HCV, HIV, and HBV, whose nucleic acids were the first to have international standards: HCV RNA in 1997 (60) and HIV RNA and HBV DNA in 1999 (61, 62). Since then, more than 20 international standards for viral and parasitic (malaria and Toxoplasma) nucleic acids have been released (59).

The potency of these international standards is determined by collaborative studies, in which candidate materials, usually plasma from infected donors, are tested by a number of laboratories using different technologies (59–64). The consensus potency is calculated and expressed in international units, a measure of biological functionality, rather than in SI units. This approach assumes that variation due to extraction efficiency, the target region (its length and conservation), and the detection system is accounted for. However, some institutes have used droplet digital PCR (ddPCR) to quantify the number of copies in a reference standard more accurately and, accounting for the extraction efficiency of the test system, to compare the copy numbers with the mass of RNA or DNA measured by HPLC (65). In this way, the viral load can be expressed in SI units (ng/μl).
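The ddPCR step can be sketched in Python. This is a minimal illustration that assumes the standard Poisson correction used in droplet digital PCR and an average mass of about 650 g/mol per base pair of double-stranded DNA. The droplet volume, amplicon length, and function names are hypothetical. The sketch does not model the extraction-efficiency correction, and RNA would need a different mass per nucleotide.

```python
import math

AVOGADRO = 6.02214076e23   # molecules per mole (exact SI value)
G_PER_MOL_PER_BP = 650.0   # assumption: average mass of one dsDNA base pair

def ddpcr_copies_per_ul(positive: int, total: int, droplet_volume_nl: float) -> float:
    """Poisson-corrected target concentration in copies per microlitre of reaction."""
    if total <= 0 or not 0 <= positive < total:
        raise ValueError("need 0 <= positive < total droplets")
    # lambda = mean copies per droplet, from the fraction of droplets with no template
    lam = -math.log((total - positive) / total)
    return lam / (droplet_volume_nl * 1e-3)  # 1 nl = 1e-3 ul

def copies_to_ng_per_ul(copies_per_ul: float, length_bp: int) -> float:
    """Convert a dsDNA copy concentration to a mass concentration (ng/ul)."""
    grams_per_copy = length_bp * G_PER_MOL_PER_BP / AVOGADRO
    return copies_per_ul * grams_per_copy * 1e9  # g -> ng

# Hypothetical run: half of 20,000 droplets positive, 0.85 nl droplets.
conc = ddpcr_copies_per_ul(10_000, 20_000, 0.85)
print(f"{conc:.1f} copies/ul")
```

The Poisson step matters because a droplet can hold more than one copy. When half the droplets are positive, the mean occupancy is ln 2 ≈ 0.69 copies per droplet, not 0.5.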

Although standardization of nucleic acid testing has been successful, it is not without issues. NIBSC manufactures and distributes international standards, providing them on request to IVD manufacturers and testing facilities. Despite some restrictions on their supply, some standards have been exhausted and have required replacement. For example, the current HCV international standard is the sixth. Although every effort is made to ensure commutability between successive standards, each new standard is compared with the previous one rather than with the initial standard. It could be argued that a better approach would be to maintain a single international standard from which secondary standards are created and provided to manufacturers and testing facilities. In this way, the consistency of the unit from one standard to the next could be strengthened. Fragmentation of the target genome can also cause difficulties in quantifying nucleic acid, as experienced with herpesviruses such as EBV and CMV (66, 67). Most commercial assays specify validated sample types in the instructions for use and often report different performance characteristics depending on the sample matrix. Even so, results from tests calibrated with an international standard have been shown to correlate better than those from uncalibrated tests (68). Only through standardization of assays can clinical thresholds be established for monitoring treatment efficacy or guiding intervention (69).
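A simple error-propagation sketch shows why a single primary standard could help. It assumes, hypothetically, that each value-assignment step adds an independent, unbiased error with the same standard deviation on the log10 scale. Under that assumption, chained errors add in variance. The uncertainty of the nth replacement relative to the first therefore grows with the square root of the number of bridging steps. Calibration against one retained standard involves only a single step. A systematic bias in each step of the same sign would accumulate faster, roughly linearly.

```python
import math

def chained_sd(step_sd_log10: float, bridging_steps: int) -> float:
    """SD of a replacement standard relative to the original when each new
    standard is value-assigned only against its immediate predecessor."""
    if bridging_steps < 0:
        raise ValueError("bridging_steps must be >= 0")
    # Assumption: independent errors add in variance, var_k = k * step_sd^2.
    return step_sd_log10 * math.sqrt(bridging_steps)

def anchored_sd(step_sd_log10: float, bridging_steps: int) -> float:
    """SD when every replacement is value-assigned directly against a single
    retained primary standard: one calibration step regardless of generation."""
    if bridging_steps < 0:
        raise ValueError("bridging_steps must be >= 0")
    return step_sd_log10 if bridging_steps > 0 else 0.0

# A sixth standard is five bridging steps from the first.
# The 0.05 log10 per-step SD is a hypothetical value.
for steps in range(1, 6):
    print(steps, round(chained_sd(0.05, steps), 3), anchored_sd(0.05, steps))
```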

CONTROL OF INFECTIOUS DISEASE TESTING

The analytical control of infectious disease testing suffers from a different, but related, set of issues. As with standardization, control processes almost universally accepted in clinical chemistry have been applied to infectious disease testing without systematic studies to determine whether they are applicable to tests measuring biological functionality. Internationally accepted guidelines for quality control in medical pathology have been written by clinical chemists, for clinical chemistry. However, QC results for infectious disease serology do not follow a Gaussian distribution, so commonly accepted control principles do not apply. Until recently, no systematic review of the applicability of these QC rules to other disciplines, such as infectious disease serology, had been undertaken. In fact, apart from those authored by or in collaboration with the National Reference Laboratory, Australia (NRL), very few peer-reviewed publications on QC of infectious disease serology have appeared over the past 2 decades. In 2019, NRL demonstrated that applying Westgard rules to serology leads to unacceptable levels of false rejections (70).

The reason for these false rejections is simple. When establishing acceptance limits, guidelines suggest using 20 to 30 data points to calculate the mean and SD (25, 26, 71–75). This principle can be applied to clinical chemistry. There, standardization protocols make patients' results traceable to an international standard through a series of calibrations linking secondary and working standards to the manufacturer's assay calibrators (52). Recalibration of the test system accounts for any bias associated with changes in assay components, resulting in minimal lot-to-lot differences. Therefore, patient or QC samples tested on different reagent lots are expected to report comparable quantitative values, within the precision of the test. Infectious disease serology assays lack this traceability. A mean and SD derived from 20 to 30 results on one reagent lot may therefore not describe the results obtained on the next.
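The failure mode described above can be reproduced with a minimal sketch of Levey-Jennings limits and four common Westgard rules (1_3s, 2_2s, 4_1s, and 10_x), applied to consecutive results of a single control. The limits are set from 20 results on one reagent lot, as the guidelines suggest. The QC values and the shift on the second lot are invented for illustration. The rule set is a simplified subset, not a complete multirule implementation.

```python
from statistics import mean, stdev

def westgard_violations(values, mu, sd):
    """Return (index, rule) pairs for a simplified subset of Westgard rules,
    applied to consecutive results of one control level."""
    z = [(v - mu) / sd for v in values]
    hits = []
    for i, zi in enumerate(z):
        if abs(zi) > 3:
            hits.append((i, "1_3s"))
        if i >= 1 and ((z[i - 1] > 2 and zi > 2) or (z[i - 1] < -2 and zi < -2)):
            hits.append((i, "2_2s"))
        if i >= 3:
            w = z[i - 3 : i + 1]
            if all(x > 1 for x in w) or all(x < -1 for x in w):
                hits.append((i, "4_1s"))
        if i >= 9:
            w = z[i - 9 : i + 1]
            if all(x > 0 for x in w) or all(x < 0 for x in w):
                hits.append((i, "10_x"))
    return hits

# Hypothetical QC results: 20 on the original lot establish the mean and SD.
lot_a = [2.0, 2.1, 1.9, 2.05, 1.95, 2.0, 2.1, 1.9, 2.02, 1.98] * 2
mu, sd = mean(lot_a), stdev(lot_a)
# Same QC sample on a new reagent lot with higher reactivity but no more scatter.
lot_b = [2.2, 2.18, 2.22, 2.19, 2.21]
print(westgard_violations(lot_a, mu, sd))
print(westgard_violations(lot_b, mu, sd))
```

With these invented values, the original lot passes. From the second result onward, every result on the new lot triggers at least one rule, even though its scatter is no larger than that of the first lot.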

CONTROL OF NUCLEIC ACID TESTING

NAT can be either qualitative or quantitative and incorporates a range of technologies such as PCR and reverse transcription-PCR, as well as isothermal amplification methods, including transcription-mediated amplification (TMA), loop-mediated isothermal amplification, clustered regularly interspaced short palindromic repeats (CRISPR)-based methods, and strand displacement amplification (76). Many NAT assays are multiplex assays detecting two or more nucleic acid targets in the same test run (77). Increasingly, NAT is used at the point of care, using single-use cartridges and fully enclosed testing platforms (78). The control and monitoring of each technology present different challenges, and a detailed assessment of each is beyond the scope of this review; however, an overview is presented. By the nature of the technology, small amounts of target nucleic acid are amplified before detection to increase analytical sensitivity. The designer of the controls must consider whether the amplification process maintains a dose response, that is, whether the signal increases proportionally with the amount of target nucleic acid. This is generally the case for quantitative NAT, especially assays reporting in international units.

All NAT assays should include an internal control to detect interference with amplification (79, 80). The internal control is an endogenous or spiked nucleic acid that is present in the test system in addition to the target and should always be positive. In addition to the internal control, target-specific positive and negative controls should be included for all nucleic acids detected (77, 79–81). The positive control should contain clinically relevant amounts of the target, either in its native state or as raw nucleic acid; however, in vitro RNA transcripts or plasmids do not control for the extraction step (82). A sensitivity control, using a sample with a viral load close to a clinical cutoff, is sometimes used to ensure that analytical sensitivity is maintained (79). This is particularly important in multiplex assays, to ensure the analytical sensitivity for each target (77). Calibration verification of quantitative NAT is required every 6 months in College of American Pathologists (CAP)-accredited laboratories (79). For quantitative NAT, one or more positive run controls with a known viral load should be tested periodically and the results monitored graphically. It is recommended that the positive run control undergo the full test process, including extraction and amplification. Negative controls are usually blank samples containing all relevant reagents without target. Some test systems, in particular point-of-care NAT and platforms that restrict operator manipulation, use in-built electronic system controls. These system checks automatically monitor sample and reagent processing and optics, as well as software and electronics (78).
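The control logic described above can be sketched as a run-acceptance check. This is a hypothetical illustration, not any manufacturer's algorithm. It assumes one positive and one negative run control per target and an internal control reported for each sample. It also assumes a common convention that a detected target is reportable even when the internal control is suppressed, because abundant target can outcompete the internal control.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Well:
    sample_id: str
    target_ct: Optional[float]  # None = target not detected
    ic_ct: Optional[float]      # internal control Ct; None = not detected

def evaluate_run(positive: Well, negative: Well, samples: list[Well]):
    """Return (run_valid, reasons, per-sample calls) for one target (hypothetical rules)."""
    reasons = []
    if positive.target_ct is None:
        reasons.append("positive control not detected")
    if negative.target_ct is not None:
        reasons.append("negative control reactive: possible contamination")
    if reasons:
        return False, reasons, {}
    calls = {}
    for s in samples:
        if s.target_ct is not None:
            # Assumption: a detected target is reported even if the IC is suppressed.
            calls[s.sample_id] = "detected"
        elif s.ic_ct is None:
            # No target and no internal control: amplification may have been inhibited.
            calls[s.sample_id] = "invalid (internal control failed)"
        else:
            calls[s.sample_id] = "not detected"
    return True, reasons, calls

ok, why, calls = evaluate_run(
    Well("PC", 28.4, 31.0), Well("NC", None, 31.2),
    [Well("S1", 35.1, 31.5), Well("S2", None, 30.9), Well("S3", None, None)],
)
print(ok, why, calls)
```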

Qualitative NAT assays are used for the direct detection of pathogens in clinical samples and in blood screening. Results may be based on a quantitative value such as a signal-to-cutoff ratio (S/Co) or a cycle threshold (Ct). Ct values are considered representative of the amount of target in the sample and can therefore be used to monitor variation effectively (83). Qualitative tests, however, may not have a proportional dose response. In some TMA-based blood screening NAT assays, for example, the result is reported as S/Co, and the value is similar irrespective of the viral load. This makes variation in the QC signal more difficult to interpret.
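Ct is logarithmic in target amount, so a drift in a control's Ct can be translated into an approximate log10 change in the target detected. The sketch assumes a constant amplification efficiency E, so that the target multiplies by 1 + E per cycle. At 100% efficiency, a shift of about 3.3 cycles corresponds to a 10-fold (1 log10) change. The function name and example values are hypothetical. This conversion does not apply to S/Co-based assays whose signal is insensitive to viral load.

```python
import math

def ct_shift_to_log10(delta_ct: float, efficiency: float = 1.0) -> float:
    """Approximate log10 change in target implied by a Ct shift, assuming
    constant efficiency. A later Ct (positive delta_ct) implies less target."""
    if efficiency <= 0:
        raise ValueError("efficiency must be positive, e.g. 0.95 for 95%")
    return -delta_ct * math.log10(1 + efficiency)

print(round(ct_shift_to_log10(3.32), 2))       # control Ct 3.32 cycles later than expected
print(round(ct_shift_to_log10(1.0, 0.9), 3))   # 1-cycle shift at a hypothetical 90% efficiency
```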

Determining the most appropriate method for establishing acceptance criteria for run control testing remains a challenge. As described previously, in the absence of an alternative, laboratories use traditional QC monitoring processes, including Westgard rules. The CAP Checklist for Molecular Testing references only the traditional methods used in clinical chemistry for establishing control limits (79). It includes no NAT-specific references, despite having been written specifically for molecular testing. Critical assessments of the appropriateness of traditional approaches are lacking, and no validated alternatives have been published. For viral load assays calibrated against the WHO international standard, controls should be manufactured with a known viral load, so acceptance limits could be determined by comparing the QC test result with the assigned level. Acceptance limits could also be set at ±0.5 log10 or ±0.3 log10, reflecting clinically significant changes (84). However, this work was performed in the 1990s on early-generation NAT, and it could be argued that these ranges are too wide. Baylis et al. analyzed quality assurance program data (Table S2 in reference 59). Their analysis indicates that the standard deviation of log10 results obtained from multiple laboratories is less than 0.2 log10 for assays quantifying blood-borne virus nucleic acid. Further studies into the most appropriate method of establishing control limits for NAT are required.
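For a control with an assigned viral load, the comparison-based acceptance check described above reduces to a difference on the log10 scale. In this sketch the function and values are hypothetical. The tolerance is a parameter, so that either the ±0.5 or the ±0.3 log10 window, or a narrower laboratory-defined window, can be applied.

```python
import math

def qc_within_assigned(measured_iu_ml: float, assigned_iu_ml: float,
                       tolerance_log10: float = 0.5) -> tuple[bool, float]:
    """Accept a quantitative NAT control if |log10(measured) - log10(assigned)| <= tolerance."""
    if measured_iu_ml <= 0 or assigned_iu_ml <= 0:
        raise ValueError("concentrations must be positive")
    diff = math.log10(measured_iu_ml) - math.log10(assigned_iu_ml)
    return abs(diff) <= tolerance_log10, diff

for tol in (0.5, 0.3):
    accepted, diff = qc_within_assigned(3000, 1000, tol)
    print(f"tolerance ±{tol} log10: diff={diff:+.2f}, accepted={accepted}")
```

A result three times the assigned value (about +0.48 log10) passes the ±0.5 log10 window but fails the ±0.3 log10 window. The choice of window therefore directly determines how much drift goes unflagged.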

There are numerous standards and guidelines that address the standardization or control of NAT. A summary of guidelines relating to the standardization of NAT has previously been published (59). Different countries publish national guidelines. For example, the CAP publishes the Molecular Biology Checklist (79), which details the requirements for CAP accreditation. Similarly, the Australian National Pathology Accreditation Advisory Council (NPAAC) publishes guidelines on the Requirements for Medical Testing of Microbial Nucleic Acids (80).

CONTROL OF SEROLOGY TESTING

Infectious disease serology test results are qualitative but are derived from a quantitative value. However, the quantitative values, usually a ratio of the signal to a manufacturer-defined cutoff, are assay specific and not traceable to an international standard. As described above, international standards are not useful for calibrating immunoassays across different test platforms. The issue is further complicated because, when the same QC sample is tested over time, the introduction of a new reagent lot can change the reactivity of the QC result (85, 86). The mean calculated from results on previous reagent lots and used to establish QC acceptance limits then no longer applies to the new lot, causing QC rejection (70), as shown in Fig. 1. This situation inevitably causes difficulties in interpretation for laboratories using Westgard rules, because the guidelines are silent on what approach should be taken when a reagent lot change alters reactivity.

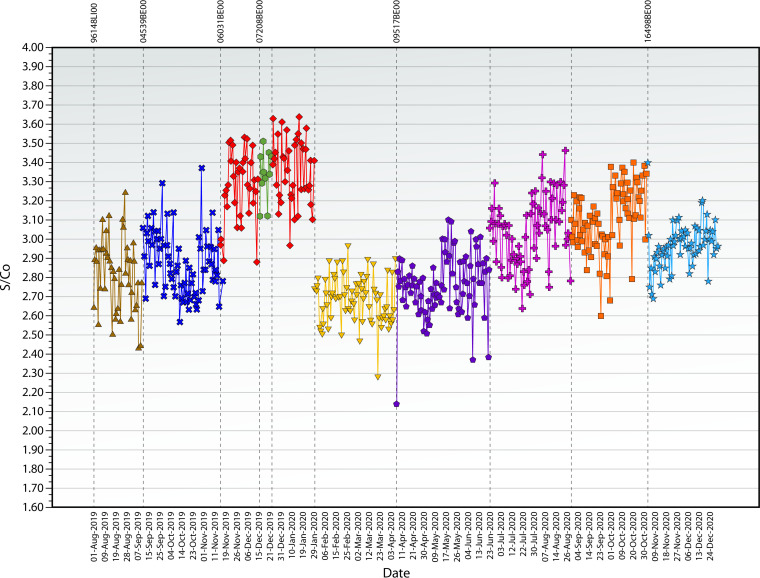

FIG 1. Levey-Jennings chart of quality control results reported over time, with each color indicating results obtained from a different reagent lot. Example of lot-to-lot variation in a commonly used anti-HCV-specific immunoassay.

An alternative to Westgard rules, called QConnect, has been developed and described previously (85). It uses historical QC data to determine acceptance limits for QC testing and rests on a few basic principles. First, QC samples following QConnect principles are optimized for each assay. Because the measurand of infectious disease serology is assay specific, a patient or QC sample will elicit different reactivity on different assays. A series of dilutions of the stock material is tested on each assay to obtain low-level reactivity on the linear part of the assay's dose-response curve. Once the dilution is selected, each new lot of the assay-specific QC sample is manufactured from the same stock material at the same concentration, so that each QC lot gives the same low-level reactivity. The QC results are collected into a single database, allowing comparison of QC results across laboratories, instruments, reagent lots, and operators. The Internet-based software EDCNet (www.nrlquality.org.au/qconnect) captures results from QC samples (86, 87). Using these historical data, acceptance limits are calculated for each QC/assay combination from many thousands of data points rather than 20 to 30 (85). Any result outside these QConnect limits is unexpected and should trigger a root cause investigation. QConnect results can also be used to estimate measurement uncertainty from both bias and imprecision (88), a requirement of ISO 15189.
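The idea of deriving limits from a large historical dataset, rather than from 20 to 30 points on one lot, can be sketched as follows. This is not the published QConnect algorithm. For illustration only, it assumes that limits are the mean ± k SD of all pooled historical results for one QC/assay combination, so that lot-to-lot variation is built into the limits. It also assumes that measurement uncertainty is estimated by combining imprecision and bias in quadrature, which is one common approach. The data and function names are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev

def historical_limits(results: list[float], k: float = 3.0) -> tuple[float, float]:
    """Hypothetical limits: mean +/- k*SD of pooled results spanning many reagent lots."""
    m, s = mean(results), stdev(results)
    return m - k * s, m + k * s

def combined_uncertainty(lab_results: list[float], consensus_mean: float) -> float:
    """Assumed approach: root-sum-of-squares of a laboratory's imprecision
    and its bias from the consensus mean."""
    bias = mean(lab_results) - consensus_mean
    return sqrt(stdev(lab_results) ** 2 + bias ** 2)

# Pooled S/Co results for one QC sample on one assay (hypothetical, several lots).
pooled = [1.9, 2.1, 2.0, 2.4, 2.5, 2.3, 1.7, 1.8, 1.6, 2.0, 2.2, 2.1]
low, high = historical_limits(pooled)
print(f"limits {low:.2f}-{high:.2f}")
print(round(combined_uncertainty([2.3, 2.4, 2.2, 2.5], mean(pooled)), 3))
```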

Proponents of traditional QC methods for monitoring infectious disease serology have suggested that serological assays are “just another assay which follows the same laws as any other” (89). The evidence does not support this statement (90). It has been suggested that the SD could be calculated for each new reagent lot. This approach is impractical, because lot changes occur frequently and the calculated SD would be valid for only a short period before the next change of reagent lot. Pooling SDs from multiple reagent lots has also been suggested (89), but both approaches account only for imprecision, not bias. Arguably, a significant change in reactivity due to a new reagent lot is more likely to result in a false-positive or false-negative result (91).
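The limitation of pooled SDs can be illustrated with two hypothetical reagent lots that have identical imprecision but different mean reactivity. The sketch uses the standard pooled-variance formula; the lot data are invented. The pooled SD is the same whether or not the second lot is shifted. Limits built only from it therefore cannot reveal the bias, whereas comparing each lot mean with an established reference mean does.

```python
from math import sqrt
from statistics import mean, stdev

def pooled_sd(lots: dict[str, list[float]]) -> float:
    """Pooled SD across lots: sqrt(sum((n_i - 1) * s_i^2) / sum(n_i - 1))."""
    num = sum((len(v) - 1) * stdev(v) ** 2 for v in lots.values())
    den = sum(len(v) - 1 for v in lots.values())
    return sqrt(num / den)

def lot_bias(lots: dict[str, list[float]], reference_mean: float) -> dict[str, float]:
    """Difference between each lot mean and an established reference mean."""
    return {lot: mean(v) - reference_mean for lot, v in lots.items()}

lots = {"A": [1.0, 1.1, 0.9], "B": [1.5, 1.6, 1.4]}  # lot B reads 0.5 higher
print(round(pooled_sd(lots), 3))
print({k: round(v, 2) for k, v in lot_bias(lots, reference_mean=1.0).items()})
```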

According to the CLSI EP23-A guideline, QC monitoring should be based on the probability of occurrence and the severity of harm (27). In infectious disease serology, the greatest risk is reporting a false-negative result for a communicable disease such as HIV infection or hepatitis. This is especially true in a blood donor screening environment, where a false-negative result may lead to transfusion-transmitted infection. A false-positive result may cause unnecessary distress to the patient, medicolegal complications, and possibly inappropriate treatment. Some test results may lead to unnecessary medical intervention, such as a termination of pregnancy in the case of rubella testing. Therefore, it can be argued that a shift in reactivity due to a lot-to-lot change is far more important than increased imprecision.

To this end, several studies have examined the impact of lot-to-lot changes on false-positive and false-negative results (91, 92). In one study, changes in the results of negative blood donor samples were mapped against reagent lot changes. No correlation was detected, and no false-positive results occurred (92). This is not surprising because, in blood-screening serological assays, the mean result for negative donors lies far below the assay cutoff. However, this study examined only three analytes from the same assay manufacturer, and assays with different characteristics may behave differently. A method for visualizing the relationship between QC and donor sample results, as well as other correlations, has been described previously (93, 94). True low-positive serology results are found almost exclusively during early infection, while the immune response is developing. Low-level reactivity is detectable for only hours as the immune response matures. It is during this window that a false-negative result is possible if a reagent lot produces lower-than-expected reactivity. A recent study investigated the likelihood of a false-negative result during the window period (91). A series of six reagent lots of an anti-HCV assay was found to produce lower-than-expected reactivity. Of 44 low-positive seroconversion samples tested on affected and unaffected assay lots, only three had results below the assay cutoff when tested on two of the six affected lots. A further sample had a result below the cutoff on only one affected lot. The risk of false-negative or false-positive results due to changes in reagent lot reactivity is therefore low; however, it is important that laboratories monitor changes in bias as well as imprecision.

The use of a well-designed, risk-based quality control process is essential for pathology testing. Variation in testing is commonplace, and its extent and frequency should be monitored over time. Variation arises from changes in reagents (91), processes, consumables (95), and equipment. Collecting these metadata with the QC results and systematically graphing the results facilitates investigation into the cause of unacceptable variation. This approach is applicable to semiquantitative serological assays, NAT, and PoCT (96). It is time for an evidence-based review of quality control processes for infectious disease testing to be undertaken (88).
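Collecting metadata alongside each QC result makes this kind of investigation straightforward. The sketch below uses a hypothetical record structure and invented values. It groups results by each metadata field and ranks the fields by how far apart their group means are, which points to the factor most associated with a shift. A real investigation would also consider imprecision, the number of results per group, and confounding between factors.

```python
from collections import defaultdict
from dataclasses import dataclass, fields
from statistics import mean

@dataclass(frozen=True)
class QCRecord:
    value: float          # e.g. S/Co of the QC sample
    reagent_lot: str
    consumable_lot: str
    instrument: str
    operator: str

def group_means(records: list[QCRecord], field_name: str) -> dict[str, float]:
    """Mean QC value for each level of one metadata field."""
    groups: dict[str, list[float]] = defaultdict(list)
    for r in records:
        groups[getattr(r, field_name)].append(r.value)
    return {key: mean(vals) for key, vals in sorted(groups.items())}

def rank_factors(records: list[QCRecord]) -> list[tuple[str, float]]:
    """Rank metadata fields by the range of their group means (largest first)."""
    spreads = []
    for f in fields(QCRecord):
        if f.name == "value":
            continue
        means = group_means(records, f.name).values()
        spreads.append((f.name, max(means) - min(means)))
    return sorted(spreads, key=lambda item: item[1], reverse=True)

records = [
    QCRecord(2.0, "L1", "C1", "I1", "op1"), QCRecord(2.1, "L1", "C2", "I2", "op2"),
    QCRecord(1.5, "L2", "C1", "I2", "op1"), QCRecord(1.6, "L2", "C2", "I1", "op2"),
]
print(rank_factors(records))
```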

CONCLUSION

It is important that testing for infectious diseases by serology and NAT is accurate, provides meaningful clinical information, and has minimized bias and imprecision. This can be achieved by standardizing and controlling testing. However, the principles commonly applied to clinical chemistry have been shown not to be applicable to infectious disease serology. This is because the detection and quantification of antibodies are extremely variable. Testing for biological functionality must account for a range of factors, such as genetic variation of the organisms, changes in the immune response over time, and differences in assay design and components. The measurand of serological assays is assay specific, and therefore commutability of quantitative results across assays is not possible. There is a strong argument that international standards for antibody quantification should not be used to calibrate IAs and that regulatory bodies, the WHO, and other interested parties should state categorically that infectious disease serology assays should be qualitative.

This is not the case for NAT or antigen testing, where the approach to standardization has resulted in the standardization of test systems for RNA, DNA, and, to a lesser extent, antigen quantification. There is good evidence that the development and use of international standards have harmonized reporting of results across test systems. This fact has allowed for the establishment of thresholds for implementation or cessation of treatment, rejection of donor plasma prior to fractionation, and the ability to compare test results across testing systems and therefore from laboratory to laboratory.

The control of test systems should be risk based. As serological assays have become more automated, the control mechanisms used by clinical chemists, especially the use of Westgard rules, have been adopted by testing laboratories. However, no systematic review of the applicability of these rules had been undertaken until recently. This work has demonstrated that an unacceptable number of false rejections are encountered if international guidelines for clinical chemistry QC are followed. NRL developed and published an alternative approach, called QConnect, which is more applicable to infectious disease serology. A recent study has demonstrated that, even when a test system experiences significant changes, as evidenced by a drop in QC reactivity, the risk of a true false-negative result during a seroconversion event is minimal.

Infectious disease testing using biological function is different from testing for inert molecules. The rules applied to clinical chemistry for standardization and control cannot and should not be applied without evidence. Indeed, evidence is mounting to indicate that specific approaches to standardization and control of infectious disease testing are required. More detailed investigations in this area are encouraged. WHO, professional bodies, organizations publishing guidance documents, and the IVD industry should commit resources to reviewing the appropriateness of guidance documents for the standardization and control of infectious disease testing.

Biography

Wayne Dimech is Executive Manager, Scientific and Business Relations of the NRL, a WHO Collaborating Centre. Mr. Dimech obtained a bachelor’s degree in applied science and an MBA and is a fellow of both the Australian Institute of Medical Scientists and the Faculty of Science (Research) of the Royal College of Pathologists of Australasia. He has worked in private and public pathology laboratories and specialized in infectious disease serology. Mr. Dimech’s research interests include the control and standardization of assays that detect and monitor infectious diseases. Mr. Dimech is an advisor to numerous national and international working groups, including European Commission expert panels in the field of medical devices, ISO/TC 212 WG5 Biorisk and Biosafety, and the Joint Committee for Traceability in Laboratory Medicine (JCTLM) – Nucleic Acid Testing. He has also undertaken consultancies under the auspices of the WHO, the International Health Regulations, and the UNDP. He has authored or coauthored about 50 articles in international peer-reviewed journals and contributed to three book chapters.

REFERENCES

1. Dimech W, Grangeot-Keros L, Vauloup-Fellous C. 2016. Standardization of assays that detect anti-rubella virus IgG antibodies. Clin Microbiol Rev 29:163–174. 10.1128/CMR.00045-15.

2. Bradstreet CM, Kirkwood B, Pattison JR, Tobin JO. 1978. The derivation of a minimum immune titre of rubella haemagglutination-inhibition (HI) antibody. A Public Health Laboratory Service collaborative survey. J Hyg (Lond) 81:383–388. 10.1017/s0022172400025262.

3. Stephenson I, Das RG, Wood JM, Katz JM. 2007. Comparison of neutralising antibody assays for detection of antibody to influenza A/H3N2 viruses: an international collaborative study. Vaccine 25:4056–4063. 10.1016/j.vaccine.2007.02.039.

4. Gust ID, Mathison A, Winsor H. 1973. A survey of rubella haemagglutination-inhibition testing in the southern states of Australia. Bull World Health Organ 49:139–142.

5. Schmidt NJ, Lennette EH. 1970. Variables of the rubella hemagglutination-inhibition test system and their effect on antigen and antibody titers. Appl Microbiol 19:491–504. 10.1128/am.19.3.491-504.1970.

6. Hierholzer JC, Suggs MT. 1969. Standardized viral hemagglutination and hemagglutination-inhibition tests. I. Standardization of erythrocyte suspensions. Appl Microbiol 18:816–823. 10.1128/am.18.5.816-823.1969.

7. Miller WG. 2018. The standardization journey and the path ahead. J Lab Precis Med 3:87. 10.21037/jlpm.2018.09.14.

8. Zacour M, Ward BJ, Brewer A, Tang P, Boivin G, Li Y, Warhuus M, McNeil SA, LeBlanc JJ, Hatchette TF, Public Health Agency of Canada and Canadian Institutes of Health Influenza Research Network. 2016. Standardization of hemagglutination inhibition assay for influenza serology allows for high reproducibility between laboratories. Clin Vaccine Immunol 23:236–242. 10.1128/CVI.00613-15.

9. Voller A, Bartlett A, Bidwell DE. 1978. Enzyme immunoassays with special reference to ELISA techniques. J Clin Pathol 31:507–520. 10.1136/jcp.31.6.507.

10. Cox KL, Devanarayan V, Kriauciunas A, Manetta J, Montrose C, Sittampalam S. 2004. Immunoassay methods. In Markossian S, Sittampalam GS, Grossman A, Brimacombe K, Arkin M, Auld D, Austin CP, Baell J, Caaveiro JMM, Chung TDY, Coussens NP, Dahlin JL, Devanaryan V, Foley TL, Glicksman M, Hall MD, Haas JV, Hoare SRJ, Inglese J, Iversen PW, Kahl SD, Kales SC, Kirshner S, Lal-Nag M, Li Z, McGee J, McManus O, Riss T, Saradjian P, Trask OJ, Jr., Weidner JR, Wildey MJ, Xia M, Xu X (ed), Assay guidance manual. Eli Lilly & Company and the National Center for Advancing Translational Sciences, Bethesda (MD).

11. Mullis KB, Faloona FA. 1987. Specific synthesis of DNA in vitro via a polymerase-catalyzed chain reaction. Methods Enzymol 155:335–350. 10.1016/0076-6879(87)55023-6.

12. The Commission of European Communities. 2009. Commission Decision of 27 November 2009 amending Decision 2002/364/EC on common technical specifications for in vitro diagnostic medical devices (notified under document C(2009) 9464) (text with EEA relevance) (2009/886/EC). Off J Eur Commun L318:25–40.

13. Australian Government. 2020. Classification of IVD medical devices. Department of Health Therapeutic Goods Administration, Canberra.

14. The European Parliament and the Council of the European Union. 1998. Directive 98/79/EC of the European Parliament and the Council of 27 October 1998 on in vitro diagnostic medical devices. Off J Eur Commun L331:1–37.

15. Leveton LB, Sox HC, Jr, Stoto MA (ed). 1995. HIV and the blood supply: an analysis of crisis decisionmaking. National Academies Press (US), Washington (DC). 10.17226/4989.

16. Bayer RFE (ed). 1999. Blood feuds: AIDS, blood, and the politics of medical disaster. Oxford University Press, New York.

17. IMDRF IVD Working Group. 21 January 2021. Principles of in vitro diagnostic (IVD) medical devices classification. IMDRF/IVD WG/N64FINAL:2021 (formerly GHTF/SG1/N045:2008). International Medical Device Regulators Forum. http://www.imdrf.org/docs/imdrf/final/technical/imdrf-tech-wng64.pdf.

18. World Health Organization. 2018. Overview of the WHO prequalification of in-vitro diagnostic assessment. WHO, Geneva.

19. American Association for Laboratory Accreditation. 2012. P102 - A2LA policy on measurement traceability. American Association for Laboratory Accreditation.

20. Miller WG, Schimmel H, Rej R, Greenberg N, Ceriotti F, Burns C, Budd JR, Weykamp C, Delatour V, Nilsson G, MacKenzie F, Panteghini M, Keller T, Camara JE, Zegers I, Vesper HW, IFCC Working Group on Commutability. 2018. IFCC Working Group recommendations for assessing commutability part 1: general experimental design. Clin Chem 64:447–454. 10.1373/clinchem.2017.277525.

21. Nilsson G, Budd JR, Greenberg N, Delatour V, Rej R, Panteghini M, Ceriotti F, Schimmel H, Weykamp C, Keller T, Camara JE, Burns C, Vesper HW, MacKenzie F, Miller WG, IFCC Working Group on Commutability. 2018. IFCC working group recommendations for assessing commutability part 2: using the difference in bias between a reference material and clinical samples. Clin Chem 64:455–464. 10.1373/clinchem.2017.277541.

22. Shewhart WA. 1931. Economic control of quality of manufactured product. Van Nostrand, New York.

- 23.Levey S, Jennings ER. 1950. The use of control charts in the clinical laboratory. Am J Clin Pathol 20:1059–1066. 10.1093/ajcp/20.11_ts.1059. [DOI] [PubMed] [Google Scholar]

- 24.Westgard JO. 1994. Selecting appropriate quality-control rules. Clin Chem 40:499–501. 10.1093/clinchem/40.3.499. [DOI] [PubMed] [Google Scholar]

- 25.Westgard JO. 2003. Internal quality control: planning and implementation strategies. Ann Clin Biochem 40:593–611. 10.1258/000456303770367199. [DOI] [PubMed] [Google Scholar]

- 26.Westgard JO. 2013. Statistical quality control procedures. Clin Lab Med 33:111–124. 10.1016/j.cll.2012.10.004. [DOI] [PubMed] [Google Scholar]

- 27.Clinical and Laboratory Standards Institute. 2011. EP23-A laboratory quality control based on risk management: approved guidelines. CLSI, Wayne, PA. [Google Scholar]

- 28.Panteghini M, Forest JC. 2005. Standardization in laboratory medicine: new challenges. Clin Chim Acta 355:1–12. 10.1016/j.cccn.2004.12.003. [DOI] [PubMed] [Google Scholar]

- 29. Dybkaer R. 1996. Quantities and units for biological reference materials used with in vitro diagnostic measuring systems for antibodies. Scand J Clin Lab Invest 56:385–391. 10.3109/00365519609088792.

- 30. Kempster SL, Almond N, Dimech W, Grangeot-Keros L, Huzly D, Icenogle J, El Mubarak HS, Mulders MN, Nubling CM. 2020. WHO international standard for anti-rubella: learning from its application. Lancet Infect Dis 20:e17–e19. 10.1016/S1473-3099(19)30274-9.

- 31. WHO Technical Report Series. 2006. Recommendations for the preparation, characterization and establishment of international and other biological reference standards (revised 2004). WHO, Geneva.

- 32. Dybkaer R, Storring PL. 1995. Application of IUPAC-IFCC recommendations on quantities and units to WHO biological reference materials for diagnostic use. International Union of Pure and Applied Chemistry (IUPAC) and International Federation of Clinical Chemistry (IFCC). Eur J Clin Chem Clin Biochem 33:623–625.

- 33. Dybkaer R, Storring PL. 1994. Application of IUPAC-IFCC recommendations on quantities and units to WHO biological reference materials for diagnostic use: recommendations 1994. J Int Fed Clin Chem 6:101–103.

- 34. Muller MM. 2000. Implementation of reference systems in laboratory medicine. Clin Chem 46:1907–1909. 10.1093/clinchem/46.12.1907.

- 35. Ekins R. 1991. Immunoassay standardization. Scand J Clin Lab Invest Suppl 205:33–46. 10.3109/00365519109104600.

- 36. Bouthry E, Furione M, Huzly D, Ogee-Nwankwo A, Hao L, Adebayo A, Icenogle J, Sarasini A, Revello MG, Grangeot-Keros L, Vauloup-Fellous C. 2016. Assessing immunity to rubella virus: a plea for standardization of IgG (Immuno)assays. J Clin Microbiol 54:1720–1725. 10.1128/JCM.00383-16.

- 37. Bellini WJ, Rota JS, Rota PA. 1994. Virology of measles virus. J Infect Dis 170 Suppl 1:S15–23. 10.1093/infdis/170.supplement_1.s15.

- 38. Bbosa N, Kaleebu P, Ssemwanga D. 2019. HIV subtype diversity worldwide. Curr Opin HIV AIDS 14:153–160. 10.1097/COH.0000000000000534.

- 39. Ludolfs D, Schilling S, Altenschmidt J, Schmitz H. 2002. Serological differentiation of infections with dengue virus serotypes 1 to 4 by using recombinant antigens. J Clin Microbiol 40:4317–4320. 10.1128/JCM.40.11.4317-4320.2002.

- 40. van Doorn LJ, Kleter B, Pike I, Quint W. 1996. Analysis of hepatitis C virus isolates by serotyping and genotyping. J Clin Microbiol 34:1784–1787. 10.1128/JCM.34.7.1784-1787.1996.

- 41. Caligiuri P, Cerruti R, Icardi G, Bruzzone B. 2016. Overview of hepatitis B virus mutations and their implications in the management of infection. World J Gastroenterol 22:145–154. 10.3748/wjg.v22.i1.145.

- 42. Dimech W, Panagiotopoulos L, Francis B, Laven N, Marler J, Dickeson D, Panayotou T, Wilson K, Wootten R, Dax EM. 2008. Evaluation of eight anti-rubella virus immunoglobulin G immunoassays that report results in international units per milliliter. J Clin Microbiol 46:1955–1960. 10.1128/JCM.00231-08.

- 43. Dimech W, Bettoli A, Eckert D, Francis B, Hamblin J, Kerr T, Ryan C, Skurrie I. 1992. Multicenter evaluation of five commercial rubella virus immunoglobulin G kits which report in international units per milliliter. J Clin Microbiol 30:633–641. 10.1128/jcm.30.3.633-641.1992.

- 44. Dimech W, Arachchi N, Cai J, Sahin T, Wilson K. 2013. Investigation into low-level anti-rubella virus IgG results reported by commercial immunoassays. Clin Vaccine Immunol 20:255–261. 10.1128/CVI.00603-12.

- 45. Dimech W. 2016. Where to now for standardization of anti-Rubella virus IgG testing. J Clin Microbiol 54:1682–1683. 10.1128/JCM.00800-16.

- 46. Huzly D, Hanselmann I, Neumann-Haefelin D, Panning M. 2016. Performance of 14 rubella IgG immunoassays on samples with low positive or negative haemagglutination inhibition results. J Clin Virol 74:13–18. 10.1016/j.jcv.2015.11.022.

- 47. Chen RT, Markowitz LE, Albrecht P, Stewart JA, Mofenson LM, Preblud SR, Orenstein WA. 1990. Measles antibody: reevaluation of protective titers. J Infect Dis 162:1036–1042. 10.1093/infdis/162.5.1036.

- 48. WHO Expert Committee on Biological Standardization. 1994. WHO technical report series. WHO, Geneva.

- 49. WHO Expert Committee on Biological Standardization. 1995. WHO technical report series. WHO, Geneva.

- 50. WHO Expert Committee on Biological Standardization. 1998. WHO technical report series. WHO, Geneva.

- 51. WHO Expert Committee on Biological Standardization. 2018. WHO technical report series. WHO, Geneva. https://apps.who.int/iris/bitstream/handle/10665/272807/9789241210201-eng.pdf?ua=1.

- 52. Kallner A. 2001. International standards in laboratory medicine. Clin Chim Acta 307:181–186. 10.1016/s0009-8981(01)00425-9.

- 53. De Bièvre P, Dybkær R, Fajgelj A, Hibbert DB. 2011. Metrological traceability of measurement results in chemistry: concepts and implementation (IUPAC Technical Report). Pure Appl Chem 83:1873–1935. 10.1351/PAC-REP-07-09-39.

- 54. Wilkinson DE, Seiz PL, Schuttler CG, Gerlich WH, Glebe D, Scheiblauer H, Nick S, Chudy M, Dougall T, Stone L, Heath AB, Collaborative Study Group. 2016. International collaborative study on the 3rd WHO international standard for hepatitis B surface antigen. J Clin Virol 82:173–180. 10.1016/j.jcv.2016.06.003.

- 55. Prescott GH, Atkinson J, Morris CE. 2018. International collaborative study to establish the 1st WHO international reference panel for HIV-1 p24 antigen and HIV-2 p26 antigen. Expert Committee on Biological Standardization. World Health Organization, Geneva.

- 56. Cornberg M, Wong VW, Locarnini S, Brunetto M, Janssen HLA, Chan HL. 2017. The role of quantitative hepatitis B surface antigen revisited. J Hepatol 66:398–411. 10.1016/j.jhep.2016.08.009.

- 57. Australian Government. 2015. National Hepatitis B testing policy v1.2. Department of Health; Australasian Society for HIV, Viral Hepatitis and Sexual Health Medicine.

- 58. Gray ER, Bain R, Varsaneux O, Peeling RW, Stevens MM, McKendry RA. 2018. p24 revisited: a landscape review of antigen detection for early HIV diagnosis. AIDS 32:2089–2102. 10.1097/QAD.0000000000001982.

- 59. Baylis SA, Wallace P, McCulloch E, Niesters HGM, Nubling CM. 2019. Standardization of nucleic acid tests: the approach of the World Health Organization. J Clin Microbiol 57. 10.1128/JCM.01056-18.

- 60. Saldanha J, Lelie N, Heath A, WHO Collaborative Study Group. 1999. Establishment of the first international standard for nucleic acid amplification technology (NAT) assays for HCV RNA. Vox Sang 76:149–158. 10.1046/j.1423-0410.1999.7630149.x.

- 61. Holmes H, Davis C, Heath A, Hewlett I, Lelie N. 2001. An international collaborative study to establish the 1st international standard for HIV-1 RNA for use in nucleic acid-based techniques. J Virol Methods 92:141–150. 10.1016/s0166-0934(00)00283-4.

- 62. Fryer JF, Heath AB, Wilkinson DE, Minor PD, Collaborative Study Group. 2017. A collaborative study to establish the 3rd WHO International Standard for hepatitis B virus for nucleic acid amplification techniques. Biologicals 46:57–63. 10.1016/j.biologicals.2016.12.003.

- 63. Saldanha J, Lelie N, Yu MW, Heath A, B19 Collaborative Study Group. 2002. Establishment of the first World Health Organization International Standard for human parvovirus B19 DNA nucleic acid amplification techniques. Vox Sang 82:24–31. 10.1046/j.1423-0410.2002.00132.x.

- 64. Fryer JF, Heath AB, Wilkinson DE, Minor PD, Collaborative Study Group. 2016. A collaborative study to establish the 1st WHO International Standard for Epstein-Barr virus for nucleic acid amplification techniques. Biologicals 44:423–433. 10.1016/j.biologicals.2016.04.010.

- 65. Bateman AC, Greninger AL, Atienza EE, Limaye AP, Jerome KR, Cook L. 2017. Quantification of BK virus standards by quantitative real-time PCR and droplet digital PCR is confounded by multiple virus populations in the WHO BKV international standard. Clin Chem 63:761–769. 10.1373/clinchem.2016.265512.

- 66. Boom R, Sol CJ, Schuurman T, Van Breda A, Weel JF, Beld M, Ten Berge IJ, Wertheim-Van Dillen PM, De Jong MD. 2002. Human cytomegalovirus DNA in plasma and serum specimens of renal transplant recipients is highly fragmented. J Clin Microbiol 40:4105–4113. 10.1128/JCM.40.11.4105-4113.2002.

- 67. Hayden RT, Tang L, Su Y, Cook L, Gu Z, Jerome KR, Boonyaratanakornkit J, Sam S, Pounds S, Caliendo AM. 2019. Impact of fragmentation on commutability of Epstein-Barr virus and cytomegalovirus quantitative standards. J Clin Microbiol 58. 10.1128/JCM.00888-19.

- 68. Dimech W, Cabuang LM, Grunert HP, Lindig V, James V, Senechal B, Vincini GA, Zeichhardt H. 2018. Results of cytomegalovirus DNA viral loads expressed in copies per millilitre and international units per millilitre are equivalent. J Virol Methods 252:15–23. 10.1016/j.jviromet.2017.11.001.

- 69. Dioverti MV, Lahr BD, Germer JJ, Yao JD, Gartner ML, Razonable RR. 2017. Comparison of standardized cytomegalovirus (CMV) viral load thresholds in whole blood and plasma of solid organ and hematopoietic stem cell transplant recipients with CMV infection and disease. Open Forum Infect Dis 4:ofx143. 10.1093/ofid/ofx143.

- 70. Dimech W, Karakaltsas M, Vincini GA. 2018. Comparison of four methods of establishing control limits for monitoring quality controls in infectious disease serology testing. Clin Chem Lab Med 56:1970–1978. 10.1515/cclm-2018-0351.

- 71. Anonymous. 2015. Revision of the “Guideline of the German Medical Association on Quality Assurance in Medical Laboratory Examinations – Rili-BAEK” (unauthorized translation). J Lab Med 39:26–69.

- 72. Badrick T. 2008. The quality control system. Clin Biochem Rev 29 Suppl 1:S67–70.

- 73. Clinical and Laboratory Standards Institute. 2006. C24-A3 Statistical quality control for quantitative measurement procedures: principles and definitions; approved guideline, 3rd ed. CLSI, Wayne PA.

- 74. Clinical and Laboratory Standards Institute. 2016. C24 - Statistical quality control for quantitative measurement procedures: principles and definitions. CLSI, Wayne PA.

- 75. Public Health England. 2015. Quality assurance in the diagnostic virology and serology laboratory. Standards Unit, Microbiology Services, PHE, London, UK.

- 76. Centers for Disease Control and Prevention. 2021. Nucleic acid amplification tests (NAATs). https://www.cdc.gov/coronavirus/2019-ncov/lab/naats.html.

- 77. Elnifro EM, Ashshi AM, Cooper RJ, Klapper PE. 2000. Multiplex PCR: optimization and application in diagnostic virology. Clin Microbiol Rev 13:559–570. 10.1128/CMR.13.4.559.

- 78. Zhang JY, Bender AT, Boyle DS, Drain PK, Posner JD. 2021. Current state of commercial point-of-care nucleic acid tests for infectious diseases. Analyst 146:2449–2462. 10.1039/d0an01988g.

- 79. Anonymous. 2016. Molecular pathology checklist. College of American Pathologists, Northfield, IL, USA.

- 80. National Pathology Accreditation Advisory Council. 2013. Requirements for medical testing of microbial nucleic acids. Commonwealth of Australia, Canberra, Australia.

- 81. Association for Molecular Pathology Clinical Practice Committee. 2014. Molecular diagnostic assay validation: update to the 2009 AMP molecular diagnostic assay validation white paper. Association for Molecular Pathology, Bethesda, MD, USA.

- 82. Baylis SA, Nubling CM, Dimech W. 2021. Standardization of diagnostic assays, p 52–63. In Encyclopedia of virology, 4th ed, vol 5. Elsevier.

- 83. Dimech W, Vincini G. 2017. Evaluation of a multimarker quality control sample for monitoring the performance of multiplex blood screening nucleic acid tests. 28th regional congress of the ISBT, Guangzhou, China, November 25–28, 2017. Vox Sang 112:92.

- 84. Sebire K, McGavin K, Land S, Middleton T, Birch C. 1998. Stability of human immunodeficiency virus RNA in blood specimens as measured by a commercial PCR-based assay. J Clin Microbiol 36:493–498. 10.1128/JCM.36.2.493-498.1998.

- 85. Dimech W, Vincini G, Karakaltsas M. 2015. Determination of quality control limits for serological infectious disease testing using historical data. Clin Chem Lab Med 53:329–336. 10.1515/cclm-2014-0546.

- 86. Walker S, Dimech W, Kiely P, Smeh K, Francis B, Karakaltsas M, Dax EM. 2009. An international quality control programme for PRISM chemiluminescent immunoassays in blood service and blood product laboratories. Vox Sang 97:309–316. 10.1111/j.1423-0410.2009.01218.x.

- 87. Dimech W, Walker S, Jardine D, Read S, Smeh K, Karakaltsas M, Dent B, Dax EM. 2004. Comprehensive quality control programme for serology and nucleic acid testing using an internet-based application. Accred Qual Assur 9:148–151. 10.1007/s00769-003-0734-5.

- 88. Dimech W, Francis B, Kox J, Roberts G, Serology Uncertainty of Measurement Working Party. 2006. Calculating uncertainty of measurement for serology assays by use of precision and bias. Clin Chem 52:526–529. 10.1373/clinchem.2005.056689.

- 89. Badrick T, Parvin C. 2019. Letter to the editor on article Dimech W, Karakaltsas M, Vincini G. Comparison of four methods of establishing control limits for monitoring quality controls in infectious disease serology testing. Clin Chem Lab Med 2018;56:1970–8. Clin Chem Lab Med 57:e71–e72. 10.1515/cclm-2018-1276.

- 90. Dimech W, Vincini G, Karakaltsas M. 2019. Counterpoint to the letter to the editor by Badrick and Parvin in regard to comparison of four methods of establishing control limits for monitoring quality controls in infectious disease serology testing. Clin Chem Lab Med 57:e73–e74. 10.1515/cclm-2018-1321.

- 91. Dimech WJ, Vincini GA, Cabuang LM, Wieringa M. 2020. Does a change in quality control results influence the sensitivity of an anti-HCV test? Clin Chem Lab Med 58:1372–1380. 10.1515/cclm-2020-0031.

- 92. Dimech W, Freame R, Smeh K, Wand H. 2013. A review of the relationship between quality control and donor sample results obtained from serological assays used for screening blood donations for anti-HIV and hepatitis B surface antigen. Accred Qual Assur 18:11–18. 10.1007/s00769-012-0950-y.

- 93. Wand HD, Freame W, Smeh RK. 2015. Visual and statistical assessment of quality control results for the detection of hepatitis B surface antigen among Australian blood donors. Annals of Clinical and Laboratory Res.