Abstract

Objective

To develop automated algorithms for the detection of posterior vitreous detachment (PVD) using OCT imaging.

Design

Evaluation of a diagnostic test or technology.

Subjects

Overall, 42 385 consecutive OCT images (865 volumetric OCT scans) obtained with Heidelberg Spectralis from 865 eyes from 464 patients at an academic retina clinic between October 2020 and December 2021 were retrospectively reviewed.

Methods

We developed a customized computer vision algorithm based on image filtering and edge detection to detect the posterior vitreous cortex for the determination of PVD status. A second deep learning (DL) image classification model based on convolutional neural networks and ResNet-50 architecture was also trained to identify PVD status from OCT images. The training dataset consisted of 674 OCT volume scans (33 026 OCT images), while the validation testing set consisted of 73 OCT volume scans (3577 OCT images). Overall, 118 OCT volume scans (5782 OCT images) were used as a separate external testing dataset.

Main Outcome Measures

Accuracy, sensitivity, specificity, F1-scores, and area under the receiver operator characteristic curves (AUROCs) were measured to assess the performance of the automated algorithms.

Results

Both the customized computer vision algorithm and DL model results were largely in agreement with the PVD status labeled by trained graders. The DL approach achieved an accuracy of 90.7% and an F1-score of 0.932 with a sensitivity of 100% and a specificity of 74.5% for PVD detection from an OCT volume scan. The AUROC was 89% at the image level and 96% at the volume level for the DL model. The customized computer vision algorithm attained an accuracy of 89.5% and an F1-score of 0.912 with a sensitivity of 91.9% and a specificity of 86.1% on the same task.

Conclusions

Both the computer vision algorithm and the DL model applied on OCT imaging enabled reliable detection of PVD status, demonstrating the potential for OCT-based automated PVD status classification to assist with vitreoretinal surgical planning.

Financial Disclosure(s)

Proprietary or commercial disclosure may be found after the references.

Keywords: Automated detection, Deep Learning, Posterior vitreous detachment, OCT

Abbreviations and Acronyms: AI, artificial intelligence; AUROC, area under the receiver operator characteristic curve; CNN, convolutional neural network; DL, deep learning; ILM, internal limiting membrane; PVD, posterior vitreous detachment; ViT, vision transformers

The age-related process of vitreous separation occurs as a result of vitreous liquefaction, ultimately leading to a complete posterior vitreous detachment (PVD).1 OCT is one of the most widely used imaging modalities in ophthalmology and is a critical tool in the analysis of the vitreoretinal interface.2 In particular, OCT is often utilized in the clinical setting to aid in the determination of a PVD.2,3

The accurate detection of PVD status is important for clinical prognostication and for presurgical planning for vitreoretinal surgeons. Patients with a recent diagnosis of acute PVD are at increased risk of retinal tears and detachments and should be followed closely by an ophthalmologist.4,5 Conversely, patients with floaters without a PVD are of less concern for a retinal tear. Posterior vitreous detachment status has also been shown to have prognostic implications for disease progression and for how patients respond to treatment in retinal diseases, such as diabetic retinopathy, retinal vein occlusion, and age-related macular degeneration.6, 7, 8, 9, 10 In addition, knowing whether a patient has a partial or a complete PVD is important in guiding surgical planning.3 For example, if a patient does not have a complete PVD, a surgeon may be more inclined to choose scleral buckle as the procedure of choice for retinal detachment repair instead of pneumatic retinopexy or pars plana vitrectomy.

Enabled by increasing medical imaging data availability, deep learning (DL) and artificial intelligence (AI) have been recently applied to the field of ophthalmology, assisting clinicians in the diagnosis and management of ophthalmic diseases. Image-based DL models, such as convolutional neural networks (CNNs), have shown promising results in the automated detection of retinal diseases, such as diabetic retinopathy, epiretinal membrane, and age-related macular degeneration.11, 12, 13, 14, 15, 16, 17, 18 Despite the wide application of computer-aided algorithms in the diagnosis of the abovementioned retinal diseases, to our knowledge, there is currently no reliable computer vision algorithm or DL model that can localize the posterior vitreous cortex and detect PVD status on OCT images. Although there have been a few prior studies using DL approaches for diagnosing PVD, these models used ocular ultrasound images rather than OCT images, which are far more common in clinical practice.19,20 The aim of this study was thus to enable and evaluate the automated detection of PVD through OCT imaging to improve the evaluation of the vitreoretinal interface.

Methods

Clinical Protocols and Dataset Annotation

This study adhered to the tenets set forth in the Declaration of Helsinki and the Health Insurance Portability and Accountability Act, and institutional review board/ethics committee approval was obtained at the University of California San Diego. A waiver of written informed consent was granted. Patients who had undergone evaluation by a retina specialist with OCT imaging at the University of California San Diego Department of Ophthalmology between October 2020 and December 2021 were reviewed. Adults and children encompassing a wide range of retinal pathologies were included. Exclusion criteria consisted of poor OCT image quality (i.e., scans with poor resolution because of anterior segment or vitreous opacity or because of motion artifact) and eyes with a known history of having undergone prior pars plana vitrectomy.

Macular OCT scans were obtained using a spectral-domain system (Spectralis OCT, Heidelberg Engineering) composed of a volume scan consisting of 49 horizontally-oriented B-scans covering a 6 × 6 mm area at a resolution of 512 × 496 pixels per B-scan (frame averaging > 16 frames), in addition to a 9-mm vertical and horizontal line scan that included the optic nerve at a resolution of 768 × 496 pixels (Spectralis high-speed scan protocol with frame averaging > 100 frames). Four trained ophthalmologists (A.L.L., L.H., J.A., and D.E.K.) reviewed the entire volume B-scans in addition to the horizontal and vertical raster scans of each patient for the determination of PVD status. The definition of PVD in this study was a complete stage 4 PVD without any presence of the premacular bursa or posterior vitreous cortex on any scans of the OCT.2,3 In questionable cases, consensus grading was performed by an expert-trained retina specialist (E.N.) for the final determination of PVD status. Patient information was anonymized and images were deidentified before transferring data for analysis.

Automated PVD Detection Algorithms

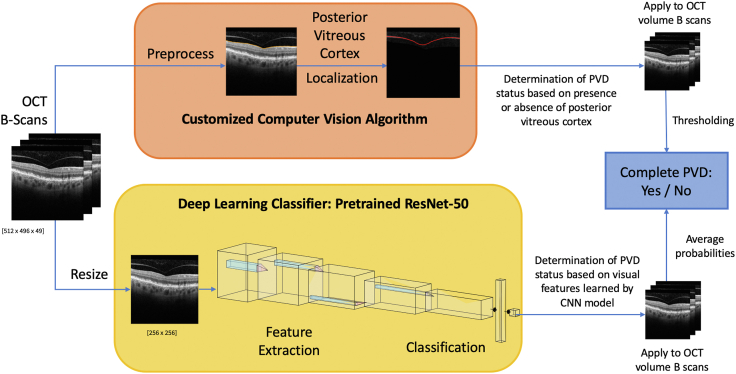

We approached the computer-aided, automated PVD detection task using the following 2 different methods (Fig 1): (1) developing a computer vision algorithm to detect the presence or absence of a PVD based on posterior vitreous cortex localization, and (2) training a deep CNN model based on our OCT dataset for determination of PVD status.

Figure 1.

Flowchart summary for the 2 posterior vitreous detachment (PVD) automated detection methods implemented on OCT imaging: customized computer vision algorithm (upper); deep learning convolutional neural network (CNN) model (lower).

Computer Vision Algorithm based on Posterior Vitreous Cortex Localization

The customized PVD detection algorithm was designed and prototyped in Python 3. The algorithm evaluated PVD status by examining the posterior vitreous cortex in each raster of the OCT volume scan. If the premacular bursa or posterior vitreous cortex was detected in any part of the OCT image for > 1 scan out of 49 OCT B-scans in the volume scan, the algorithm determined that the posterior vitreous cortex was attached and that a complete PVD was therefore absent. Conversely, if the posterior vitreous cortex was not visualized for > 47 scans out of 49 OCT B-scans (thresholding parameters applied to avoid false-positives), the algorithm determined that a complete PVD (stage 4 PVD) was present.
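The volume-level decision rule described above can be sketched in a few lines of Python. This is a minimal illustration rather than the study's code: the function name `classify_volume`, the parameter `min_attached_scans`, and the returned label strings are assumptions introduced here, and the per-B-scan detection flags are taken as given.

```python
def classify_volume(cortex_detected, min_attached_scans=2):
    """Classify one OCT volume from per-B-scan detection flags.

    cortex_detected: one boolean per B-scan (49 in the protocol described
    in the text), True where the premacular bursa or posterior vitreous
    cortex was found. Names and labels here are illustrative assumptions.
    """
    n_detected = sum(bool(flag) for flag in cortex_detected)
    # Detected in more than 1 B-scan: the cortex is attached, so there is
    # no complete PVD.
    if n_detected >= min_attached_scans:
        return "no complete PVD"
    # Otherwise the cortex is absent in more than 47 of 49 B-scans.
    return "complete PVD"


if __name__ == "__main__":
    flags = [False] * 48 + [True]   # a single, possibly spurious detection
    print(classify_volume(flags))   # complete PVD
```

With 49 B-scans the two thresholds in the text are complementary: a single positive B-scan is tolerated as a possible false detection, and two or more mark the cortex as attached.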

For the automated detection of PVD status, the customized computer vision algorithm consisted of 2 parts (Fig 2): image-preprocessing and posterior vitreous cortex localization. In the image-preprocessing step, the OCT scans were normalized based on pixel intensity. A Gaussian blur (OpenCV-python library version 4.1.2) was then applied to the original OCT scan to reduce noise in the image. A kernel with a denoise level of 5 × 3 was used to suppress imaging noise present across the image and to facilitate the detection of the internal limiting membrane (ILM). Instead of using a common square-shaped kernel, we chose this specific kernel size to be longer on the horizontal axis than the vertical axis, because the ILM spans more pixels horizontally in an image than vertically. Furthermore, less denoising power was applied in the vertical axis, as keeping high-resolution and high-frequency signals on the vertical axis of the image was crucial to subsequent ILM and posterior vitreous cortex detection. Next, an edge detection-based algorithm was utilized to detect the ILM of the retina and to segment out the posterior vitreous cortex. The ILM segmentation algorithm first recognized candidate segments of the ILM on rectangular sliding windows of the image using a customized edge-detection function based on the intensity difference between the vitreoretinal interface and thresholding of continuity of the ILM. In this customized function, a sliding window of size 1 × 10 was scanned through the image stepwise, pixel by pixel, to detect a difference in mean intensity values with a threshold > 100 to recognize potential ILM segments. The algorithm then removed outlier segments far away from the main ILM segments using a vertical distance threshold of 20 pixels and applied a spline fitting to the remaining potential ILM segments to outline the final ILM. Because only the area above the ILM was needed for posterior vitreous cortex localization, the retinal area below the ILM was masked out to save extra computations. Thus, after masking, only the vitreous area was retained for further processing.
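The sliding-window edge detection and outlier removal described above can be illustrated with a short NumPy sketch. Several details are assumptions made for illustration and are not taken from the study's code: the 1 × 10 window is interpreted as 10 vertically stacked pixels in one column, the candidate row in each column is the strongest dark-to-bright transition exceeding the threshold, and outliers are measured against the median candidate row. The function names `ilm_candidates` and `remove_outliers` are hypothetical, and the spline fit is omitted.

```python
import numpy as np


def ilm_candidates(image, window=10, threshold=100.0):
    """Return one candidate ILM row per column, or -1 where none is found.

    For every row r, the mean of the `window` pixels below r is compared
    with the mean of the `window` pixels above r (assumed interpretation
    of the 1 x 10 sliding window).
    """
    img = np.asarray(image, dtype=np.float64)
    n_rows, n_cols = img.shape
    # Cumulative sums along the vertical axis give fast window means.
    csum = np.vstack([np.zeros((1, n_cols)), np.cumsum(img, axis=0)])
    w = window
    below = csum[2 * w:] - csum[w:n_rows - w + 1]
    above = csum[w:n_rows - w + 1] - csum[:n_rows - 2 * w + 1]
    diff = (below - above) / w
    best = diff.argmax(axis=0)
    strength = diff.max(axis=0)
    return np.where(strength > threshold, best + w, -1)


def remove_outliers(rows_per_col, max_dist=20):
    """Discard candidates more than `max_dist` pixels from the median row."""
    rows_per_col = np.asarray(rows_per_col)
    valid = rows_per_col >= 0
    cleaned = rows_per_col.copy()
    if valid.any():
        median_row = np.median(rows_per_col[valid])
        far = np.abs(rows_per_col - median_row) > max_dist
        cleaned[valid & far] = -1
    return cleaned


if __name__ == "__main__":
    scan = np.zeros((80, 8))
    scan[50:, :] = 200        # synthetic bright retina below row 50
    scan[12:22, 3] = 255      # synthetic bright artifact in one column
    print(remove_outliers(ilm_candidates(scan)))
```

In the synthetic example, the artifact column yields a candidate far above the others and is dropped by the 20-pixel rule, which mirrors the role of the outlier removal step in the text.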

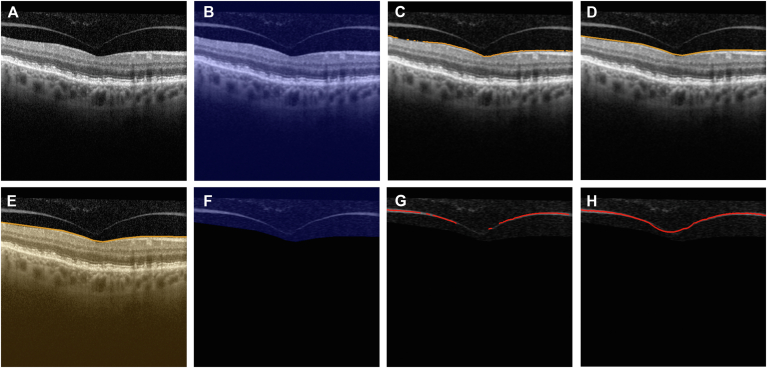

Figure 2.

Stepwise image processing illustration for customized posterior vitreous cortex localization algorithm. A, Original OCT B-scan image of a patient without a complete posterior vitreous detachment. B, Gaussian blur filter from OpenCV is applied to the entire image (blue mask). C, D, The internal limiting membrane (ILM) is located using the customized algorithm (orange line). E, Retinal area below the ILM is masked out (orange mask) and only relevant areas are kept for further posterior vitreous cortex localization. F, Gaussian blur filter from OpenCV is applied to the vitreous area (blue mask). G, H, The posterior vitreous cortex (if present in the image) is detected and located. Customized distance metrics are calculated on the detected segments and compared against heuristic thresholds to determine if the posterior vitreous cortex is present in the image.

In the posterior vitreous cortex localization step, another Gaussian blur filter with a 7 × 1 kernel was applied to further smooth the image of the vitreous and to remove horizontal noise. As with the kernel size selection in the image-preprocessing step, we chose a 1-pixel length in the vertical axis to retain higher resolution on the vertical axis of the image, because the posterior vitreous cortex also empirically spanned more pixels horizontally than vertically in a B-scan. A customized function similar to the ILM detection method was crafted to localize the posterior vitreous cortex based on intensity thresholding using a 10 × 15 pixel-sized sliding window. After candidate segments of the posterior vitreous cortex were detected, the same outlier removal algorithm as used in ILM segmentation was applied. Once a target segment that satisfied the intensity threshold was detected, the total pixel length and a continuity metric defined by the vertical difference of 2 adjacent candidate segments were calculated. When all the evaluation criteria for posterior vitreous cortex recognition were satisfied, the algorithm localized the detected line as the posterior vitreous cortex.
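The two segment metrics named above (total pixel length and a continuity metric based on the vertical difference between adjacent segments) can be sketched as follows. The segment representation, the function names, and both threshold values are hypothetical; the text does not report the thresholds that were used.

```python
def cortex_metrics(segments):
    """Compute total length and largest vertical jump for candidate segments.

    segments: list of (x_start, x_end, y) tuples, an assumed representation
    in which each candidate segment is roughly horizontal at row y.
    """
    ordered = sorted(segments)
    total_length = sum(x_end - x_start + 1 for x_start, x_end, _ in ordered)
    # Continuity: vertical difference between each pair of adjacent segments.
    jumps = [abs(b[2] - a[2]) for a, b in zip(ordered, ordered[1:])]
    max_jump = max(jumps, default=0)
    return total_length, max_jump


def looks_like_cortex(segments, min_length=100, max_jump_allowed=10):
    """Apply illustrative (not published) thresholds to the two metrics."""
    if not segments:
        return False
    total_length, max_jump = cortex_metrics(segments)
    return total_length >= min_length and max_jump <= max_jump_allowed


if __name__ == "__main__":
    candidate = [(0, 59, 40), (60, 119, 43)]
    print(cortex_metrics(candidate))      # (120, 3)
    print(looks_like_cortex(candidate))   # True
```

Requiring both a minimum length and a bounded vertical jump is one way to reject short, scattered bright spots while accepting a long, smooth line.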

To develop and fine-tune this algorithm, 110 OCT volume scans (5390 OCT images) were used. Among these scans, 10 eyes from 10 different patients were examined in detail to estimate the thresholding for visual features and relevant sliding window sizes used in the algorithm, including posterior vitreous cortex thickness threshold, intensity threshold, and line continuity metrics. These eyes were also used in the kernel size selections of Gaussian blur filters for the visual examination of denoising outcomes. The remaining 100 OCT scans (4900 images) were used to test-run prototypes of the algorithm and fine-tune the threshold values based on feedback from a trained retina specialist (E.N.).

Training DL Model for PVD Detection

To enable a DL approach to automatically detect a PVD, we implemented a DL pipeline in PyTorch 1.9.0 to train CNNs to determine the PVD status of an OCT image from the PVD status label of the volume scan. A ResNet-50 CNN model was trained on Google Colab with a Tesla P100-PCIE-16GB graphics processing unit to learn visual representations of images from an eye with complete PVD versus absence of complete PVD. We performed the training-validation dataset split on the volume (per eye) level to prevent data snooping so that the validation set would not contain any image from an eye that the model was trained on. A random shuffle was performed for the 9:1 training-validation dataset split to ensure that the data from the 2 classes were roughly balanced, following the same distribution as the entire dataset. The training set consisted of 674 OCT volume scans (33 026 OCT images), with 51.0% volume scans from eyes with complete PVD and 49.0% scans from eyes without complete PVD. The validation set used for model selection and hyperparameter tuning consisted of 73 OCT volume scans (3577 OCT images), with 41.6% volume scans from eyes with complete PVD and 58.4% scans from eyes without complete PVD. The ResNet-50 version 1.5 model used was pretrained on the ImageNet dataset to enable transfer learning of visual image representations.
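A volume-level (per-eye) split of the kind described above can be sketched in plain Python. This is an illustrative sketch under stated assumptions: the function names, the fixed seed, and the `(image_path, volume_id)` index format are introduced here and are not taken from the study's pipeline.

```python
import random


def split_by_volume(volume_ids, val_fraction=0.1, seed=0):
    """Shuffle unique volume IDs and split them roughly 9:1.

    Splitting on volume IDs rather than on images keeps every B-scan of
    an eye on the same side of the split.
    """
    ids = sorted(set(volume_ids))
    rng = random.Random(seed)   # fixed seed is an assumption, for repeatability
    rng.shuffle(ids)
    n_val = max(1, round(len(ids) * val_fraction))
    return ids[n_val:], ids[:n_val]


def images_for(volumes, image_index):
    """Select image paths whose volume ID is in `volumes`.

    image_index: list of (image_path, volume_id) pairs (hypothetical format).
    """
    keep = set(volumes)
    return [path for path, volume_id in image_index if volume_id in keep]


if __name__ == "__main__":
    index = [(f"vol{v}_scan{s}.png", v) for v in range(20) for s in range(49)]
    train_ids, val_ids = split_by_volume([v for _, v in index])
    print(len(images_for(train_ids, index)), len(images_for(val_ids, index)))
```

Because the split is made before images are assigned, no validation image can come from an eye seen during training.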

To make image sizes compatible with the pretrained model, all OCT images were converted to red, green, and blue images from grayscale images (PIL Image Python library version 7.1.2) and downsized to 256 × 256 pixels (torchvision Python library version 0.13.1) at runtime in the customized data loader code. The loss function used in training was the cross-entropy loss, and the optimizer used was Adam. The training process used a uniform learning rate of 5E-5, and a batch size of 128. We trained the model for 100 epochs and saved the model weights with the lowest validation loss during training as the best model to be used in testing and evaluation.
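The grayscale-to-RGB conversion and downsizing step can be illustrated with NumPy alone. This is a stand-in for the PIL and torchvision calls mentioned above, not a reproduction of them: the function name `to_model_input` is hypothetical, and nearest-neighbour resampling is an assumption, since the interpolation method is not stated in the text.

```python
import numpy as np


def to_model_input(gray, size=256):
    """Resize a 2-D grayscale B-scan to size x size and replicate it to 3 channels."""
    gray = np.asarray(gray)
    # Nearest-neighbour resampling: pick evenly spaced source rows and columns.
    rows = np.arange(size) * gray.shape[0] // size
    cols = np.arange(size) * gray.shape[1] // size
    resized = gray[rows][:, cols]
    # An ImageNet-pretrained network expects 3 channels, so the single
    # grayscale channel is copied into each of them.
    return np.repeat(resized[:, :, None], 3, axis=2)


if __name__ == "__main__":
    scan = np.random.default_rng(0).integers(0, 256, size=(496, 512), dtype=np.uint8)
    print(to_model_input(scan).shape)   # (256, 256, 3)
```

Replicating the channel adds no information; it only matches the input shape that the pretrained weights were trained with.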

Evaluation and Comparison of PVD Detection Algorithms

In addition to evaluating the 2 methods on the validation dataset with 73 OCT volume scans, in December 2021 we collected 118 OCT volume scans (containing 5782 OCT images) as a separate external testing dataset for the final evaluation of our automated PVD detection methods. The test set included 63.2% volume scans from eyes with complete PVD and 36.8% scans from eyes without complete PVD. There was no overlap in OCT images used in the testing dataset and the dataset used for training and validation for either of the 2 algorithms. Patients who had undergone prior vitrectomy were included in this testing data set to better simulate clinical practice with the anticipated future application of the AI algorithm to all patients who present to the retina clinic and undergo OCT imaging, regardless of prior surgical status. The baseline characteristics of the patients in each data set are provided in Table 1.

Table 1.

Baseline Characteristics of Patients

| | Training/Validation Data Set | Testing Data Set |
|---|---|---|
| Number of eyes | 674/73 | 118 |
| Number of OCT images | 33 026/3577 | 5782 |
| Patients | 371 (∗30) | 57 (∗6) |
| Age (yrs), mean (SD) | 64.2 (18.2) | 69.75 (16.5) |
| Gender (%) | | |
| Male | 161 (43.4) | 26 (45.6) |
| Female | 210 (56.6) | 31 (54.4) |
| Race (%) | | |
| Asian | 45 (12.1) | 6 (10.5) |
| Black or African American | 7 (1.9) | 1 (1.8) |
| Native Hawaiian or Pacific Islander | 3 (0.8) | 0 (0) |
| White | 253 (68.2) | 41 (71.9) |
| Other race or mixed race | 49 (13.2) | 9 (15.8) |
| Unknown | 14 (3.8) | 0 (0) |

SD = standard deviation.

Mandatory exclusion patients were excluded from demographics analysis.

To evaluate and compare the computer vision approach and the DL model, we performed testing by running both algorithms on the same testing set of 118 OCT volume scans. For each eye in a volume scan, we recorded the predicted PVD status as either complete PVD or absence of complete PVD and compared the results with ground truth labels to calculate the accuracy, sensitivity, specificity, precision, and F1-score of both algorithms. Receiver operating characteristics curves were plotted by varying the probability output threshold of the DL model, and the area under the receiver operator characteristic curves (AUROCs) were calculated (Scikit-learn Python library version 1.0.2) for both image-level and volume-level PVD status detection results. Because the 2 classes of PVD status were not perfectly balanced in the dataset, we also plotted the precision-recall curves to show the trade-off in precision as the decision threshold shifted to correctly recognize more complete PVD images. The average precision scores of the precision-recall curves, equivalent to the area under curve, were also calculated at the image level and volume level.
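The evaluation metrics listed above follow directly from confusion-matrix counts and from a ranking of predicted probabilities. The sketch below uses only the Python standard library. It is not the study's evaluation code (which used Scikit-learn), the function names are hypothetical, the example counts are invented for illustration and are not study data, and non-zero denominators are assumed.

```python
def binary_metrics(tp, fp, tn, fn):
    """Accuracy, sensitivity, specificity, precision, and F1 from counts.

    Complete PVD is treated as the positive class.
    """
    sensitivity = tp / (tp + fn)            # recall for complete PVD
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
    }


def auroc(labels, scores):
    """AUROC as the probability that a positive outranks a negative.

    Ties count as half a win. This pairwise form is equivalent to the
    area under the ROC curve obtained by sweeping the decision threshold.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    # Invented counts for illustration only.
    print(binary_metrics(tp=40, fp=10, tn=45, fn=5))
    print(auroc([1, 1, 0, 0], [0.9, 0.4, 0.5, 0.1]))
```

The pairwise AUROC is quadratic in the number of samples, which is acceptable for a sketch but slower than a sort-based implementation on large image-level datasets.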

Results

Performance of Algorithms on Validation Dataset

Validation testing was performed on both automated algorithms on our dataset of 3577 images and 73 OCT volume scans (Table 2). The DL algorithm achieved an accuracy of 81.4% with an 88.3% sensitivity, 76.6% specificity, and 0.795 F1-score at the image level. At the volume level, the DL algorithm achieved a 100.0% sensitivity and 80.8% specificity with an accuracy of 88.1% and an F1-score of 0.866, whereas the customized algorithm attained an 82.1% sensitivity and 79.0% specificity with an accuracy of 80.3% and an F1-score of 0.779.

Table 2.

Algorithms Performance Metrics Comparison on Validation Dataset

| | | Accuracy | Sensitivity | Specificity | Precision | F1-score |
|---|---|---|---|---|---|---|
| Per volume | Customized algorithm | 80.3% | 82.1% | 79.0% | 74.2% | 0.779 |
| Per volume | Deep learning model | 88.1% | 100.0% | 80.8% | 76.3% | 0.866 |
| Per image | Deep learning model | 81.4% | 88.3% | 76.6% | 72.3% | 0.795 |

Comparison of PVD Detection Algorithms with Testing Dataset

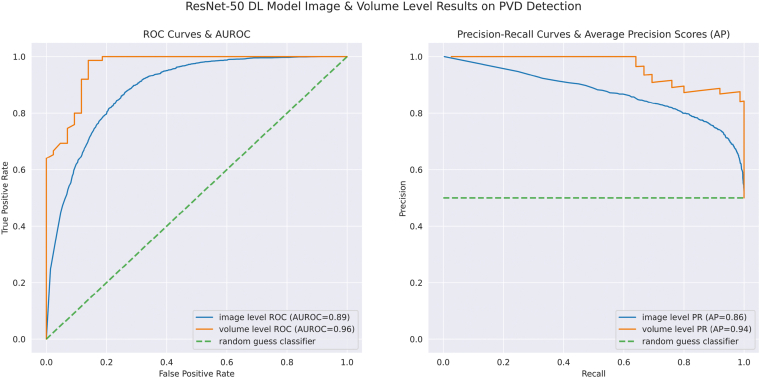

Both the customized algorithm and DL model detection results were largely in agreement with the PVD status labeled by trained graders with the testing dataset (Table 3). For the DL model, at the level of each individual OCT image, an accuracy of 83.0% and an F1-score of 0.874 were attained with a sensitivity of 93.2% and a specificity of 65.6%. The AUROC was 89% and the average precision was 86% at the image level (Fig 3). By encompassing the entire volume OCT scan and averaging the probabilities of complete PVD from each image, we achieved an accuracy of 90.7% and an F1-score of 0.932 with a sensitivity of 100% and a specificity of 74.5%. At the volume level, the AUROC was 96% and the average precision was 94% for the DL model (Fig 3). For the customized algorithm at the volume level, the accuracy was 89.5% and the F1-score was 0.912, with a sensitivity of 91.9% and a specificity of 86.1%.
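The volume-level prediction obtained by averaging per-image probabilities can be sketched as follows. The function name, the input format of `(volume_id, probability)` pairs, and the 0.5 decision threshold are assumptions made for illustration; the text does not state the threshold that was applied.

```python
from collections import defaultdict


def volume_predictions(image_probs, threshold=0.5):
    """Average per-image probabilities of complete PVD within each volume.

    image_probs: iterable of (volume_id, probability) pairs, one per B-scan.
    Returns {volume_id: (mean_probability, predicted_complete_pvd)}.
    """
    grouped = defaultdict(list)
    for volume_id, prob in image_probs:
        grouped[volume_id].append(prob)
    results = {}
    for volume_id, probs in grouped.items():
        mean_prob = sum(probs) / len(probs)
        results[volume_id] = (mean_prob, mean_prob >= threshold)
    return results


if __name__ == "__main__":
    probs = [("eye_a", 0.9), ("eye_a", 0.7), ("eye_b", 0.2), ("eye_b", 0.4)]
    for volume_id, (mean_prob, is_pvd) in volume_predictions(probs).items():
        print(volume_id, round(mean_prob, 2), is_pvd)
```

Averaging lets a few uncertain or misclassified B-scans be outweighed by the rest of the volume, which is consistent with the higher volume-level scores reported above.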

Table 3.

Algorithms Performance Metrics Comparison on Testing Dataset

| | | Accuracy | Sensitivity | Specificity | Precision | F1-score |
|---|---|---|---|---|---|---|
| Per volume | Customized algorithm | 89.5% | 91.9% | 86.1% | 90.5% | 0.912 |
| Per volume | Deep learning model | 90.7% | 100.0% | 74.5% | 87.2% | 0.932 |
| Per image | Deep learning model | 83.0% | 93.2% | 65.6% | 82.2% | 0.874 |

Figure 3.

Receiver operating characteristic (ROC) curves and precision-recall (PR) curves at the OCT image level and volume level for the deep learning (DL) model for posterior vitreous detachment (PVD) detection. Area under the receiver operator characteristic curves (AUROCs) and average precision (AP) are depicted in the diagram.

Discussion

Utilizing both traditional computer vision and DL approaches, we developed reliable algorithms for the automatic detection of PVD status from OCT images. The accurate detection of PVD status is a critical part of the ophthalmic examination because PVD is a clinically important entity for disease prognostication and presurgical planning for vitreoretinal surgeons. There are many different techniques for assessing PVD status, including slit-lamp biomicroscopy, ultrasonography, and OCT imaging. The presence of a Weiss ring on clinical evaluation suggests a complete PVD; however, identification through biomicroscopy can be challenging at times and does not allow staging of PVD status.1,21 B-scan ultrasonography has shown utility in the detection of PVD status; however, it has limited anatomic resolution and is highly operator dependent with lower interexaminer agreement than OCT.1,21,22 The use of OCT to assist evaluation of PVD status has thus become increasingly important because it is a clinically practical tool that ophthalmologists can use to efficiently and effectively identify the presence or absence of PVD.21, 22, 23 Kraker et al24 found that 6-mm OCT scans detected complete PVD with 91% sensitivity and 99% specificity. Peri-papillary scan images can also improve the diagnostic ability of OCT, especially in cases in which vitreous separation occurs in the macula but the vitreous still remains attached at the optic nerve.22 In our study, we utilized both the 6 × 6 mm volume scans and the 9-mm vertical and horizontal line scans that included the optic nerve for the determination of PVD status.

Often, the greatest challenge in PVD status determination is distinguishing between stage 0, in which there is a completely attached hyaloid and absence of PVD, and a stage 4 complete PVD.2,3 One strategy for classifying an eye as stage 0 is to check whether the premacular bursa is visible but the posterior vitreous cortex is not.3 Once the process of vitreous separation occurs, identifying a partial PVD by visualizing the posterior vitreous cortex is easier and more apparent. However, there are cases in which vitreoretinal separation is difficult to discern on OCT for the human examiner. A computer algorithm trained to visualize the posterior vitreous cortex may be able to more accurately distinguish between stage 0 and early stage 1 partial PVD, and between stage 0 and stage 4 PVD. While developing our customized computer-vision-based algorithm, we reviewed the OCT again in cases in which there was a discrepancy between the human and machine interpretation and found that, in several instances, the algorithm had detected the presence or absence of PVD more accurately. In this way, computer-aided diagnosis may be able to assist providers in more accurately delineating PVD staging for clinical prognostication. Furthermore, in a high-volume clinic in which reviewing OCT volume scans can be time-consuming, a reliable AI algorithm for PVD status determination may allow for more efficient clinical decision-making.

Within ophthalmology, DL techniques have been applied to the diagnosis of many retinal diseases, including age-related macular degeneration, diabetic retinopathy, and macular edema.11,12,15, 16, 17, 18 Automatic segmentation of 9 retinal layer boundaries has been successfully validated on eyes with nonexudative age-related macular degeneration using a novel framework based on CNN and graph search methods.13 Although the majority of DL techniques have been applied to the retina, there are few reports of segmentation of the vitreous. To our knowledge, there are currently no DL methods that have been developed to automatically segment the posterior vitreous cortex for the identification of PVD on OCT. A DL segmentation algorithm was developed for automated eye compartment segmentation of the vitreous, retina, choroid, and sclera.25 In this model, the vitreous was defined above the demarcation line of the ILM.25 Automated segmentation of vitreous hyperreflective foci, the vitreous, and retinal pigment epithelium has also been developed using a DL approach in patients with uveitis.26 In this methodology, the hyperreflective structure of the posterior vitreous cortex was manually removed by the clinician as a false-positive structure during the image-preprocessing step.26 A DL system to recognize vitreous detachment, retinal detachment, and vitreous hemorrhage on ophthalmic ultrasound was developed and achieved accuracies of 0.90, 0.94, and 0.92, respectively.19 To our knowledge, prior literature is scarce regarding automated algorithms to recognize PVD status utilizing OCT imaging, and we believe that our study presents an innovative development in AI for automated PVD detection.

In our study, both automated algorithms demonstrated good reliability in PVD detection on OCT imaging volume scans, achieving accuracies of 89.5% and 90.7%, with sensitivities of 91.9% and 100%, and specificities of 86.1% and 74.5% for the traditional computer vision approach and DL method, respectively. For the ResNet-50 DL model, AUROC analyses confirmed that PVD detection was more accurate when analyzing the entire OCT volume scan (96%) than at the image level (89%). This increase in accuracy and specificity was expected because, when all the scans from a volume were considered, averaging the probability of each image provided a higher confidence level.

The DL method using ResNet-50 CNN achieved better overall performance than the customized algorithm method, yet both algorithms have the potential to be further improved and refined to increase reliability in PVD detection. For the DL approach, the performance would likely improve with the adoption of a more advanced model architecture, such as the vision transformers (ViTs) model,27 which incorporates attention modules that examine input images by smaller regions. Transformer-based architectures were originally developed for natural language processing but in recent years were applied to computer vision, and ViT models were shown to be superior in performance in image recognition task benchmarks compared with traditional CNN models. However, the performance advantage over ResNet models only reveals itself when the number of training data set images surpasses a certain threshold.27 Given the limited amount of OCT images in our study, a pretrained ResNet-50 model with fewer model parameters was thus used for optimal convergence and less overfitting. If the training dataset of OCT imaging for PVD detection grows substantially in the future, we envision ViT models pretrained on image recognition benchmark datasets achieving better performance on this task than the ResNet-50 model used in our study.

We developed the customized algorithm based on the idea of utilizing traditional image analyses and heuristics in PVD diagnosis from OCT imaging. Traditional image analyses typically involve manual development of techniques based on feature extraction and edge detection.28 The customized algorithm applies automated image processing and calculations of visual metrics to examine the presence of the posterior vitreous cortex in an OCT B-scan, a feature that an ophthalmologist would also use to determine PVD status. In this sense, the customized algorithm method can be seen as an automated version of exercising existing knowledge about PVD diagnostics. This concept is similar to the clinical setting in which the examiner typically scrolls through the OCT volume scan to detect if any posterior vitreous cortex is visualized in any frame for the final determination of PVD status. For our automated algorithms, the parameters and threshold metrics were determined heuristically by running the PVD detection algorithms on the training and validation data sets and then selecting the values which resulted in the best accuracy. The generalization capability of this method is thus dependent on the set of OCT images that were used to determine the parameters. Because the number of parameters of this algorithm is orders of magnitude fewer than the DL model, the DL model was able to generalize features learned in the training dataset better than the thresholding-based customized algorithms. To further improve this automated algorithm method, more visual features used in clinical PVD detection can be taken into account, and the decision-making can be further fine-tuned to increase the precision and complexity of the algorithms.

Other limitations of our study include that our models were trained on images obtained using the Spectralis OCT system at a single academic institution. Although we included images from eyes both with and without pathology, additional future studies utilizing OCT images from different systems and from other institutions are needed to establish external validity and broader generalizability. We excluded OCT images of poor image resolution, and thus neither of our methods was developed to handle poor image quality or motion artifacts. The lack of a very large, robust training data set composed of thousands of images may also have limited the training of our DL model and the use of more sophisticated models with a higher number of parameters.

In conclusion, we demonstrated a novel application of both traditional computer vision algorithms and DL methods for the automated detection of PVD on OCT imaging. Future steps include performing external validation studies and optimizing the algorithms for efficient usage in the clinical setting to assist providers in clinical decision-making. Digital analysis tools, such as our algorithm, offer promise in enhancing the evaluation of the vitreoretinal interface to help guide presurgical planning and clinical prognostication for improved patient care.

Manuscript no. XOPS-D-22-00148R2.

Footnotes

Presented at the Association for Research in Vision and Ophthalmology Annual Meeting, May 3, 2022, Denver, Colorado and will be presented at the American Society of Retina Specialists Annual Meeting, July 14, 2022, New York City, New York.

Disclosures:

All authors have completed and submitted the ICMJE disclosures form.

The authors made the following disclosures: S.L.B.: Financial support – National Institutes of Health Office (grant no.: DP5OD029610), the National Eye Institute (grant no.: P30EY022589).

B.R.S.: Financial support – National Institutes of Health Office (grant no.: DP5OD029610), the National Eye Institute (grant no.: P30EY022589).

E.N.: Financial support – National Institutes of Health (grant no.: K08EY028999); Consultant – Genentech/Roche, EyeBio, Alcon, Allergan/Abbvie.

D.U.B.: Financial support – National Eye Institute.

The other authors have no proprietary or commercial interest in any materials discussed in this article.

Supported by an unrestricted departmental grant from Research to Prevent Blindness. The sponsor or funding organization had no role in the design or conduct of this research.

HUMAN SUBJECTS: Human subjects were included in this study.

This study adhered to the tenets set forth in the Declaration of Helsinki and the Health Insurance Portability and Accountability Act, and Institutional Review Board (IRB)/Ethics Committee approval was obtained at the University of California San Diego (UCSD). A waiver of written informed consent was granted.

No animal subjects were used in this study.

Author Contributions:

Conception and design: Nudleman, Li, Feng, Wang, Baxter, Huang, Arnett, Bartsch, Kuo, Saseendrakumar, Guo.

Data collection: Nudleman, Li, Feng, Wang, Baxter, Huang, Arnett, Bartsch, Kuo, Saseendrakumar, Guo.

Analysis and interpretation: Nudleman, Li, Feng, Wang, Baxter, Huang, Arnett, Bartsch, Kuo, Saseendrakumar, Guo.

Obtained funding: N/A.

Overall responsibility: Nudleman, Li, Feng, Wang, Baxter, Huang, Arnett, Bartsch, Kuo, Saseendrakumar, Guo.

References

- 1. Abraham J.R., Ehlers J.P. Posterior vitreous detachment: methods for detection. Ophthalmol Retina. 2020;4:119–121. doi: 10.1016/j.oret.2019.12.014.

- 2. Uchino E., Uemura A., Ohba N. Initial stages of posterior vitreous detachment in healthy eyes of older persons evaluated by optical coherence tomography. Arch Ophthalmol. 2001;119:1475–1479. doi: 10.1001/archopht.119.10.1475.

- 3. Hwang E.S., Kraker J.A., Griffin K.J., et al. Accuracy of spectral-domain OCT of the macula for detection of complete posterior vitreous detachment. Ophthalmol Retina. 2020;4:148–153. doi: 10.1016/j.oret.2019.10.013.

- 4. Seider M.I., Conell C., Melles R.B. Complications of acute posterior vitreous detachment. Ophthalmology. 2022;129:67–72. doi: 10.1016/j.ophtha.2021.07.020.

- 5. Uhr J.H., Obeid A., Wibbelsman T.D., et al. Delayed retinal breaks and detachments after acute posterior vitreous detachment. Ophthalmology. 2020;127:516–522. doi: 10.1016/j.ophtha.2019.10.020.

- 6. Houston S.K., Rayess N., Cohen M.N., et al. Influence of vitreomacular interface on anti-vascular endothelial growth factor therapy using treat and extend treatment protocol for age-related macular degeneration (vintrex). Retina. 2015;35:1757–1764. doi: 10.1097/IAE.0000000000000663.

- 7. Mayr-Sponer U., Waldstein S.M., Kundi M., et al. Influence of the vitreomacular interface on outcomes of ranibizumab therapy in neovascular age-related macular degeneration. Ophthalmology. 2013;120:2620–2629. doi: 10.1016/j.ophtha.2013.05.032.

- 8. Ono R., Kakehashi A., Yamagami H., et al. Prospective assessment of proliferative diabetic retinopathy with observations of posterior vitreous detachment. Int Ophthalmol. 2005;26:15–19. doi: 10.1007/s10792-005-5389-2.

- 9. Singh R.P., Habbu K.A., Bedi R., et al. A retrospective study of the influence of the vitreomacular interface on macular oedema secondary to retinal vein occlusion. Br J Ophthalmol. 2017;101:1340–1345. doi: 10.1136/bjophthalmol-2016-309747.

- 10. Bertelmann T., Kicova N., Mennel S., et al. The impact of posterior vitreous adhesion on ischaemia in eyes with retinal vein occlusion. Acta Ophthalmol. 2016;94:e43–e48. doi: 10.1111/aos.12815.

- 11. Kermany D.S., Goldbaum M., Cai W., et al. Identifying medical diagnoses and treatable diseases by image-based deep learning. Cell. 2018;172:1122–1131.e9. doi: 10.1016/j.cell.2018.02.010.

- 12. de Fauw J., Ledsam J.R., Romera-Paredes B., et al. Clinically applicable deep learning for diagnosis and referral in retinal disease. Nat Med. 2018;24:1342–1350. doi: 10.1038/s41591-018-0107-6.

- 13. Fang L., Cunefare D., Wang C., et al. Automatic segmentation of nine retinal layer boundaries in OCT images of non-exudative AMD patients using deep learning and graph search. Biomed Opt Express. 2017;8:2732–2744. doi: 10.1364/BOE.8.002732.

- 14. Kuwayama S., Ayatsuka Y., Yanagisono D., et al. Automated detection of macular diseases by optical coherence tomography and artificial intelligence machine learning of optical coherence tomography images. J Ophthalmol. 2019;2019. doi: 10.1155/2019/6319581.

- 15. Lee C.S., Baughman D.M., Lee A.Y. Deep learning is effective for the classification of OCT images of normal versus age-related macular degeneration. Ophthalmol Retina. 2017;1:322–327. doi: 10.1016/j.oret.2016.12.009.

- 16. Gargeya R., Leng T. Automated identification of diabetic retinopathy using deep learning. Ophthalmology. 2017;124:962–969. doi: 10.1016/j.ophtha.2017.02.008.

- 17. Schlegl T., Waldstein S.M., Bogunovic H., et al. Fully automated detection and quantification of macular fluid in OCT using deep learning. Ophthalmology. 2018;125:549–558. doi: 10.1016/j.ophtha.2017.10.031.

- 18. Ting D.S.W., Cheung C.Y.L., Lim G., et al. Development and validation of a deep learning system for diabetic retinopathy and related eye diseases using retinal images from multiethnic populations with diabetes. JAMA. 2017;318:2211–2223. doi: 10.1001/jama.2017.18152.

- 19. Chen D., Yu Y., Zhou Y., et al. A deep learning model for screening multiple abnormal findings in ophthalmic ultrasonography (with video). Transl Vis Sci Technol. 2021;10:22. doi: 10.1167/tvst.10.4.22.

- 20. Adithya V.K., Baskaran P., Aruna S., et al. Development and validation of an offline deep learning algorithm to detect vitreoretinal abnormalities on ocular ultrasound. Indian J Ophthalmol. 2022;70:1145–1149. doi: 10.4103/ijo.IJO_2119_21.

- 21. Wagley S., Belin P.J., Ryan E.H. Utilization of spectral domain optical coherence tomography to identify posterior vitreous detachment in patients with retinal detachment. Retina. 2021;41:2296–2300. doi: 10.1097/IAE.0000000000003209.

- 22. Moon S.Y., Park S.P., Kim Y.K. Evaluation of posterior vitreous detachment using ultrasonography and optical coherence tomography. Acta Ophthalmol. 2020;98:e29–e35. doi: 10.1111/aos.14189.

- 23. Flaxel C.J., Adelman R.A., Bailey S.T., et al. Posterior vitreous detachment, retinal breaks, and lattice degeneration preferred practice pattern. Ophthalmology. 2020;127:P146–P181. doi: 10.1016/j.ophtha.2019.09.027.

- 24. Kraker J.A., Kim J.E., Koller E.C., et al. Standard 6-mm compared with widefield 16.5-mm OCT for staging of posterior vitreous detachment. Ophthalmol Retina. 2020;4:1093–1102. doi: 10.1016/j.oret.2020.05.006.

- 25. Maloca P.M., Lee A.Y., de Carvalho E.R., et al. Validation of automated artificial intelligence segmentation of optical coherence tomography images. PLoS One. 2019;14. doi: 10.1371/journal.pone.0220063.

- 26. Lee H., Kim S., Chung H., Kim H.C. Automated quantification of vitreous hyperreflective foci and vitreous haze using optical coherence tomography in patients with uveitis. Retina. 2021;41:2342–2350. doi: 10.1097/IAE.0000000000003190.

- 27. Dosovitskiy A., Beyer L., Kolesnikov A., et al. An image is worth 16x16 words: transformers for image recognition at scale. arXiv preprint arXiv:2010.11929. 2020:1–22.

- 28. O’Mahony N., Campbell S., Carvalho A., et al. Deep Learning vs. Traditional Computer Vision. In: Arai K., Kapoor S., editors. Advances in Computer Vision. Vol 943. Advances in Intelligent Systems and Computing. Springer International Publishing; 2020:128–144.