Abstract

We conducted (I) 18 event-related potential (ERP) field tests to detect concealed information regarding major terrorist crimes and other real-world crimes and (II) 5 ERP tests regarding participation in a classified counterterrorism operation. This study is a test of the brain fingerprinting scientific standards hypothesis: that a specific set of methods for event-related potential (ERP) concealed information tests (CIT) known as the brain fingerprinting scientific standards provide the sufficient conditions to produce less than 1% error rate and greater than 95% median statistical confidence for individual determinations of whether the tested information is stored in each subject’s brain. All previous published results in all laboratories are compatible with this hypothesis. We recorded P300 and P300-MERMER ERP responses to visual text stimuli of three types: targets contain known information, irrelevants contain unknown/irrelevant information, and probes contain the situation-relevant information to be tested, known only to the perpetrator and investigators. Classification CIT produced significantly better results than comparison CIT, independent of classification criteria. Classification CIT had 0% error rate; comparison CIT had 6% error rate. As in previous studies, classification-CIT median statistical confidences were approximately 99%, whereas comparison CIT statistical confidences were no better than chance for information-absent (IA) subjects (who did not know the tested information). Over half of the comparison-CIT IA determinations were invalid due to a less-than-chance computed probability of being correct. Experiment (I) results for median statistical confidence: Classification CIT, IA subjects: 98.6%; information-present (IP) subjects (who know the tested information): 99.9%; comparison CIT, IA subjects: 48.7%; IP subjects: 99.5%. Experiment (II) results (Classification CIT): error rate 0%, median statistical confidence 96.6%. Countermeasures had no effect on the classification CIT. These results, like all previous results in our laboratory and all others, support the brain fingerprinting scientific standards hypothesis and indicate that the classification CIT is a necessary condition for a reliable, accurate, and valid brainwave-based CIT. The comparison CIT, by contrast, produces high error rates and IA statistical confidences no better than chance.

Supplementary Information

The online version contains supplementary material available at 10.1007/s11571-022-09795-1.

Keywords: Brain fingerprinting, Concealed information test, P300, P300-MERMER, ERP, Classification CIT, Comparison CIT, Counterterrorism, Terrorism, Criminal investigation, Scientific standards, Detection of concealed information, MERMER, Guilty knowledge test, Lie detection, Forensic science, Field study, Forensic neuroscience

Introduction

Previous related research

In our previous field studies (e.g., Farwell et al. 2013), we applied a very specific method of event-related brain potential (ERP)-based classification concealed information test (classification CIT) in the investigation of real-world crimes. We refer to the particular method practiced therein as the “brain fingerprinting” method. In previous publications (Farwell 1992, 1994, 1995a, 1995b, 2007, 2010, 2012, 2013, 2014; Farwell and Donchin 1986 [abstract], 1988a [abstract], 1991; Farwell and Smith 2001; Farwell et al. 2013; Farwell et al. 2014) we have specified in detail what this method entails and how it differs from other methods of brainwave-based CITs. Farwell and Farwell (1995) investigated the role of consciousness in similar neurological and psychophysiological phenomena applying quantum physics apparatus and protocols.

Farwell and Donchin (1991) applied this method to detect concealed information regarding both real minor crimes and mock espionage crimes. Farwell and FBI scientist Sharon Smith (Farwell and Smith 2001; Roberts (2007); applied this specific method to detect information regarding real-world events in the lives of FBI agents. Farwell, FBI scientist Drew Richardson, and present author Graham Richardson (Farwell et al. 2013) applied it to detect (1) Information regarding the FBI stored in the brains of FBI agents; (2) Information regarding bomb making stored in the brains of improvised explosive device (IED)/explosive ordnance disposal (EOD) experts; (3) And information regarding real-world crimes in two studies, one funded by the CIA. Farwell et al. (2014) applied it in another CIA-funded study to detect concealed information known to US Navy military medical experts. All these studies achieved 0% error rate and over 95% median statistical confidences.

Independent replications of the same methods, i.e., studies that met the brain fingerprinting scientific standards as described herein (see Appendix 1), consistently achieved essentially the same results. For example, Allen and Iacono (1997; see also Miller 2010) also achieved 0% error rate, high statistical confidences, and resistance to countermeasures with the classification CIT in a replication of Farwell and Donchin’s (1991) study conducted according to the brain fingerprinting scientific standards. Unlike all of the above studies except Farwell and Donchin (1991), they reported some indeterminate results.1 Neshige et al. (1991) developed a similar method in Japan, using photographs as stimuli.

The crimes we previously investigated with this method and reported in previous publications were committed by one or two individuals acting alone. Planning, if any, was done solely by the perpetrator(s). These crimes included, for example, two unplanned murders and one murder planned and perpetrated by a serial killer.

Highlights of innovation in the present study

The present study comprises the following innovations: the first application of the ERP-based CIT in real-world counterterrorism cases; the first application of the ERP-based CIT in investigation of crimes with multiple perpetrators; and the first application of the ERP-based CIT in detection of information stored in the brains of the planners as well as the perpetrators of real-world crimes; the first to empirically compare the classification CIT and the comparison CIT in specific-issue cases; the first to empirically compare the classification CIT and the comparison CIT in field cases; the first to test the predictions of the brain fingerprinting scientific standards hypothesis (the only existing hypothesis to explain the distribution of results in previous studies) in specific-issue, field, and counterterrorism cases and cases involving multiple perpetrators and planners; and the innovation of applying classification operating characteristic (COC) curves and analysis and area between curves (ABC) analysis to ERP data and to CIT data.

The present study: a counterterrorism and real-crime field study

In the current study we have included crimes committed by individuals acting alone or with one or two others, as in previous studies. For example, we include one unplanned murder committed by two perpetrators, with one accomplice and no one else involved in the crime.

In addition, however, in the present study we have applied the same “brain fingerprinting” forensic science method in the investigation of terrorist crimes. These terrorist crimes were planned, orchestrated, and perpetrated by terrorist organizations involving multiple individuals. In each case only a few of the individuals physically carried out the crime at the crime scene, while multiple individuals were involved in planning and orchestrating the crimes. Investigation of such terrorist crimes involves different challenges from those encountered in our previous field studies.

We designed and conducted brain fingerprinting tests to detect concealed information regarding a several terrorist crimes. Examples are as follows:

A hijacking wherein all of the hijackers were killed. One handler and one mastermind who planned and orchestrated the crime remained to be brought to justice. We developed a brain fingerprinting test to detect concealed information known only to the mastermind and to the handler (and to investigators).

A mass shooting involving multiple victims and multiple shooters. Some of the shooters died at the scene, along with numerous victims. Some of the shooters were neither killed, captured, nor identified at the scene. The surviving shooters, along with the mastermind and handler, remained to be brought to justice. We developed a brain fingerprinting test to detect information known only to the surviving shooters, the mastermind, and the handler (and to investigators).

A suicide bombing in which the suicide bomber died at the scene, along with multiple victims. A mastermind planned the attack and recruited the suicide bomber. A handler provided instructions, explosives, and logistical support for the suicide bomber. We developed a brain fingerprinting test to detect information known only to the mastermind and handler (and to investigators).

We applied these same methods in the investigation of real-world non-terrorist crimes.

The brain fingerprinting scientific standards hypothesis

The most striking feature of the research on brainwave-based concealed information tests to date is the sharp bimodal distribution of results with respect to both error rate and statistical confidences for individual determinations. The brain fingerprinting scientific standards hypothesis is the only hypothesis advanced so far to explain this pattern.

The present paper is a test of the brain fingerprinting scientific standards hypothesis on the detection of concealed information regarding major real-world terrorist crimes as well as conventional crimes.

The brain fingerprinting scientific standards hypothesis explains the following facts. One set of methods has consistently produced error rates of less than 1% (actually, 0%) and median statistical confidences of greater than 95% in every published study. Another, fundamentally different set of methods has produced widely variable error rates, averaging 20%–30% and sometimes as high as 50% (chance), along with statistical confidences consistently averaging no better than chance (50%) for information-absent determinations.

Farwell, D. Richardson, G. Richardson, and Furedy (Farwell 2012; Farwell et al. 2013; Farwell et al. 2014) proposed the brain fingerprinting scientific standards hypothesis to explain these results. The threefold hypothesis refers to the published brain fingerprinting scientific standards (Farwell 2012; Farwell et al. 2013; Farwell et al. 2014) as follows:

Hypothesis 1

Applying methods that substantially2 meet the 20 brain fingerprinting scientific standards provides sufficient conditions to produce less than 1% error rate3 overall and less than 5% error rate in every individual study. This holds true (1a) without countermeasures, (1b) with countermeasures, and (1c) in field cases, where it is always unknown whether countermeasures are being practiced or not.

Hypothesis 2

Applying scientific methods that substantially meet the 20 brain fingerprinting scientific standards provides sufficient conditions to consistently produce median statistical confidences for individual determinations of 95% overall, including at least 90% for information-present determinations (the subject knows the tested information) and 90% in the opposite direction for information-absent determinations (the subject does not know the tested information). (Farwell et al. 2013) suggested that data to date would justify increasing this to 95% median statistical confidences for both.)

Hypothesis 3

Some but not all of the 20 scientific standards are also necessary conditions to simultaneously obtain the above described levels of (3a) error rate and (3b) statistical confidence. The standards that are not necessary are nevertheless useful in that they improve accuracy and/or statistical confidence.

Farwell and Donchin’s (1988b, 1991) seminal papers, wherein the brain fingerprinting scientific standards were first substantially applied, have been cited by over 3000 subsequent publications in the literature. All published data to date (and all known unpublished data) are in accord with the three-part brain fingerprinting scientific standards hypothesis. No one has published any data contradictory to this hypothesis. No one has proposed an alternative hypothesis to explain the striking bimodal distribution of results in the published data. (See Farwell 2012, 2014 for reviews.)

The methods reported herein and the brain fingerprinting scientific standards on which they are based comprise an application of the classification concealed information test (CIT). A fundamentally different method is the comparison CIT. The comparison CIT does not meet the brain fingerprinting scientific standards. As predicted by the brain fingerprinting scientific standards hypothesis, in every study so far the comparison CIT has failed to produce the results that have characterized the studies meeting the standards: near-zero error rates along with high statistical confidences for both information-present4 and information-absent determinations.

This study directly compared the results produced by the classification CIT with the results produced by the comparison CIT for the same subjects tested for the same concealed information regarding the same real-world terrorist attacks and other crimes.

Fundamental principles and procedures of the classification CIT

To assess whether or not the subject knows specific information, the BF classification CIT establishes two templates: one for the subject’s response to known, situation-relevant information, and another for the subject’s response to irrelevant information. Then the subject’s response to the tested information is classified as being more similar to his response to known, relevant information or to his response to irrelevant/unknown information.

To accomplish this, the BF classification CIT presents three types of stimuli. These consist of words, phrases, or pictures presented briefly on a computer screen. “Probes” contain the information to be tested. Probes have three defining characteristics: (1) They consist of correct, salient information about the investigated situation; (2) The subject has no way of knowing the probes other than having participated in the investigated situation; and (3) The subject denies knowing the information contained in the probes. For example, if the murder weapon is a knife, and the subject has never been informed of this fact, and the subject denies knowing what the murder weapon is, then a probe stimulus could be “knife.”

“Irrelevants” contain equally plausible, but in fact incorrect and irrelevant, information regarding the investigated situation. The subject’s response to irrelevants provides a template for the subject’s response (or lack of a response) to unknown/irrelevant information. For each probe, there are two or more corresponding irrelevants consisting of equally plausible but incorrect features of the investigated event. For example, if the description of the probe is “the murder weapon used in the murder of John Jones” and probe is “knife,” corresponding irrelevants could be “rifle” and “pistol.”

“Targets” contain known, relevant details about the investigated situation. For example, if the subject knows he is being investigated for the murder of Joe Smith, “Joe Smith” could be used as a target stimulus. For each target, two or more equally plausible but incorrect irrelevants are presented. For example, if the target is “Joe Smith,” corresponding irrelevants could be “Sam Jones” and “Mike Davis.”

The subject is informed of the significance of the probes in the context of the investigated situation, but is not informed which stimuli are probes and which are irrelevants. For example, the subject may be told: “One of the items you will see on the screen will be the murder weapon. You will see the words ‘knife,’ ‘pistol,’ and ‘rifle.’ You have told us that you do not know what the murder weapon is, so you will not recognize it when it is presented, is this right?”

The subject is also told of the significance of the targets in the context of the investigated situation. Unlike probes, however, targets are explicitly identified to the subject as being crime-relevant (or situation-relevant). Moreover, the subject is assigned a specific, differential, overt behavioral task to perform in response to the targets. The subject is instructed to press a specific button in response to targets, and a different button in response to “everything else.”

In this and our previous studies, probes and targets each constitute 1/6 of stimuli presented, and irrelevants constitute 2/3.

A subject who knows the tested information embodied in the probes recognizes three types of stimuli: known targets, unknown/irrelevant irrelevants, and known probes. For such a subject, “everything else” (everything except the targets for which he pushes a special button) consists of two types of stimuli: probes that he recognizes as being relevant and significant in the context of the investigated situation, and irrelevant stimuli.

A subject who does not know the tested information will distinguish only two types of stimuli. He will recognize the targets, which have been clearly identified and require a specific, overt, differential button-press response. Since he does not know the probes, and since irrelevants and probes are equally plausible as being correct features of the investigated situation, he will not distinguish between probes and irrelevants. For a subject lacking the knowledge embodied in the probes, “everything else” will all be unknown/irrelevant stimuli. For him, true irrelevants are indistinguishable from (unknown and unrecognized) probes.

For a subject who knows the tested information, probes are extremely similar to targets. Both contain known, situation-relevant information. Thus, brain responses to probes will be extremely similar to brain responses to targets in every respect. For a subject lacking the tested information, probes will be indistinguishable from irrelevants. Thus, brain responses to probes will be extremely similar to brain responses to irrelevants.

In this way, the targets provide a template for the subject’s brain response to known, situation-relevant information. The irrelevants provide a template for the subject’s brain response to unknown/irrelevant information. The data analysis of the BF classification CIT classifies the subject’s brain response to the probes as being more similar to his response to targets, or more similar to his response to irrelevants. We use the bootstrapping statistical technique applied to correlations between brain responses to the different stimulus types to accomplish this classification. This procedure produces a determination for each subject and a corresponding statistical confidence, or probability of being correct, for each individual determination.

If the subject’s response is classified with a high statistical confidence as being more similar to the known targets than to the unknown/irrelevant irrelevants, then the determination is “information present”: the subject knows the information embodied in the probes. If the subject’s response is classified with a high statistical confidence as being more similar to his response to the irrelevants, then the determination is “information absent”: he does not know the tested information. If the subject’s response cannot be classified with a high statistical confidence in either direction, then no determination is made. The outcome is “indeterminate.”

In all of our recent studies, targets are relevant to the investigated situation. In some previous studies (Farwell and Donchin 1991), targets were inherently irrelevant (like irrelevants), and artificially made relevant only by the assigned button-press task. The primary advantage of using situation-relevant targets is that for a subject who knows the relevant information situation-relevant targets are more similar to probes than inherently irrelevant targets would be. Thus, for an information-present subject, brain responses to probes and targets tend to be more similar when targets are situation-relevant, resulting in greater accuracy and/or statistical confidence. (This and other advantages are discussed in detail in Farwell 2012 and Farwell et al. 2013).

Previous real-world, field studies with the BF classification CIT

The BF classification CIT has been applied in real-world cases involving two types of tests that detect of two types of information respectively. Specific issue tests detect information regarding a particular event that took place at a particular time and place, such as a crime or terrorist attack (Farwell and Donchin 1991 [Experiment 2]; Farwell and Smith 2001; Farwell et al. 2013). Specific screening or focused screening tests detect information that identifies people with specific training, expertise, or inside information of an agency or group, such as knowledge specific to FBI agents or bomb makers (Farwell et al. 2013). (The CIT is not applicable in general screening applications, where investigators do not know what information they are seeking to detect.)

Farwell and Donchin (1991) comprised two specific issue experiments. Experiment 1 was a laboratory study comprising detecting information regarding a mock espionage scenario. Experiment 2 detected information regarding minor real-world crimes. Both experiments achieved 0% error rate/100% accuracy. 12.5% of cases were indeterminate.

Allen and Iacono (1997) independently replicated Farwell and Donchin’s (1991) BF classification CIT methods and results (no false negatives or false positives; some indeterminates). They also implemented an alternative, Bayesian data analysis method that produced results that were highly accurate, albeit not as accurate as the Farwell and Donchin BF-classification-CIT method.

Farwell and Smith (2001) was a real-life specific issue experiment that comprised detecting information regarding non-criminal events in the lives of FBI agents. Results were 0% error rate/100% accuracy, 0% indeterminates, median statistical confidences for individual determinations of 99.9%, and all individual statistical confidences over 95%.

Farwell et al. (2013) comprised four real-life studies, two specific issue studies and two specific screening studies. The CIA real-life study was a specific issue study funded by the CIA. It comprised detecting information regarding real-life events, including felony crimes. Results were 0% error rate/100% accuracy, 0% indeterminates, median statistical confidences for individual determinations of 99.9%, and all individual statistical confidences over 95%.

The real crimes real consequences study was a real-life specific issue test comprising detecting information regarding real crimes wherein there were life-changing consequences to the outcome of the test (e.g., conviction for murder and the death sentence or life in prison). In cases where there were no judicial consequences, life-changing consequences were achieved by offering subjects a $100,000 reward for beating the test. Results were 0% error rate/100% accuracy and 0% indeterminates.

The FBI agent study was a real-life specific screening study comprising detecting information unique to FBI agents. Results were 0% error rate/100% accuracy, 0% indeterminates, median statistical confidences for individual determinations of 99.9%, and all individual statistical confidences over 95%.

The bomb maker study was a real-life specific screening study that comprised detecting information unique to bomb makers (explosive ordnance disposal [EOD] and improvised explosive device [IED] experts). Results were 0% error rate/100% accuracy, 0% indeterminates, median statistical confidences for individual determinations of 99.9%, and all individual statistical confidences over 95%.

Farwell et al. (2014) was a CIA-funded real-life specific screening study conducted in conjunction with the US Navy that comprised detecting information known only to military medical experts. Information-present subjects were US Navy military medical experts. Results for the BF classification CIT were 0% error rate/100% accuracy, 0% indeterminates, and median statistical confidences of 99.9%.

Farwell et al. (2014) compared the results of the BF classification CIT with the results of the comparison CIT applied to the same data. The comparison CIT produced significantly a higher error rate and significantly lower statistical confidences than the classification CIT. Comparison-CIT error rate was 20%. Median statistical confidence was 67%. As predicted by the statistical model, median statistical confidence for information-absent subjects was no better than chance; in fact, it was less than chance, 28.9%. More than half of the information-absent determinations were invalid, having less than 50% computed probability of being correct. Error rate, statistical confidences, and invalid results are similar to those of previous comparison-CIT studies in other laboratories. Median statistical confidence and percentage of invalid results are in accord with the predictions of the statistical model for the comparison CIT.

In summary, all previous field and real-life studies on the BF classification CIT have resulted in 0% error rate/100% accuracy and extremely high median statistical confidences. All but one study (Farwell and Donchin 1991) have also resulted in 0% indeterminates.

Countermeasures

The BF classification CIT, when practiced according to the 20 brain fingerprinting scientific standards (Farwell 2012; Farwell et al. 2013, 2014) has been shown to be highly resistant to countermeasures. No one has ever beaten a classification CIT, when implemented according to these standards, despite real-world motivations. In past studies, these motivations have included consequences such as the death penalty or life in prison, as well as a $100,000 reward for beating the test (Farwell et al. 2013).

Three types of countermeasures have been reported to be effective against comparison-CIT methods, including the complex trial protocol of Rosenfeld et al. (2008). All CIT methods involve differences in responses to the different types of stimuli. Countermeasures that have been effective against some methods have focused on attempting to manipulate responses to the respective stimulus types. There are essentially three ways of attempting to do this that have proven to be effective against the comparison CIT but not against the BF classification CIT: (1) Additional attention to irrelevants: Attempting to enhance the response to irrelevant stimuli by covertly performing a task (such as moving the toe) in response to each irrelevant stimulus (Rosenfeld et al. 2004, 2008); (2) Additional attention to targets: Attempting to enhance the response to the target stimuli by covertly performing an additional task in response to targets (Mertens and Allen 2008); (3) “Try not to think about it”: Attempting to reduce brain responses to probe stimuli by attempting not to think about the event related to the information contained in the probes (Bergström et al. 2013). A fourth method comprising a simple mental task designed to distract the subject from the stimuli (Sasaki et al. 2002) was ineffective.

All of the studies that have reported an effect of countermeasures on error rate have tested procedures that failed to implement over half of the 20 standard procedures. Predictably, they all reported high error rates and low statistical confidences even without countermeasures.

The “additional attention to irrelevants” countermeasure has defeated the comparison CIT (Rosenfeld 2004; Farwell 2011a, b; Farwell et al. 2014), and in particular Rosenfeld et al.’s (2008) complex trial protocol. In a series of experiments, the comparison-CIT complex trial protocol produced error rates of 15% without countermeasures and 29% with countermeasures (for reviews see Farwell 2012, 2014).

Mertens and Allen (2008) tested the effect of the “additional attention to targets” and “additional attention to irrelevants” countermeasures on both a classification CIT (their “bootstrapped correlation” condition) and a comparison CIT (“bootstrapped amplitude difference”). Although one of their conditions was a classification CIT, this study failed to implement several important standard procedures (7, 12, 18, and 19), and predictably produced high error rates in all conditions even without countermeasures.

Both countermeasures tested by Mertens and Allen (2008) produced a considerable increase in error rate for the comparison CIT, but no significant difference in error rate for the classification CIT. In the classification-CIT condition that most closely resembled Farwell and Donchin (1991) and our other studies (Mertens and Allen, page 292, footnote 1), 21% of the determinations made were false negatives without countermeasures, and only 16% of the determinations made were false negatives with countermeasures. In other words, countermeasures not only failed to produce more errors in the classification CIT, if anything they resulted in a (non-significantly) lower error rate.

Mertens and Allen (2008) found the comparison CIT, by contrast, to be highly susceptible to countermeasures. The error rate for the comparison CIT was 81% for the countermeasure groups (19% accuracy), which is far worse than chance (50%) performance. Even without countermeasures, the comparison CIT produced 53% errors, worse than chance performance and dramatically higher error rate than the classification CIT.

Farwell et al. (2013) showed that the “additional attention to irrelevants” and the “additional attention to targets” countermeasures had no effect on the BF classification CIT when implemented according to the 20 standard procedures, even when subjects had strong motivation to beat the test. Farwell et al. taught subjects these countermeasures in a field study on real crimes. All subjects were correctly detected, with no false positives and no false negatives. (There were also no indeterminates).

Bergström et al. (2013) tested the “try not to think about it” countermeasure on a comparison CIT. They applied the comparison-CIT bootstrapping data analysis method of Rosenfeld et al. (2004). Their results showed that, like the other two countermeasure methods described above, the “try not to think about it” countermeasure is effective against the comparison CIT.5

Although the countermeasure applied was different, Bergström et al.’s (2013) results for the comparison CIT both with and without countermeasures were similar to the results produced by the same comparison-CIT analysis procedure in the Rosenfeld et al. (2004) study and other comparison-CIT studies by that group (e.g., Verschuere et al. 2009). With the experiment and subject group with the most accurate results, and using the peak-to-peak comparison-CIT bootstrapping algorithm applied in Rosenfeld et al. (2004) and that group’s various other studies, Bergström et al. (2013) reported 30% false negatives without countermeasures, 45% false negatives with countermeasures, and 17% false positives. These results are typical of the comparison CIT as applied by Rosenfeld, Verschuere, Meijer, and others who apply that method.

In addition to demonstrating that the comparison CIT is highly susceptible to yet another countermeasure, Bergström et al. (2013) reached two conclusions, both of which are addressed by our present study: (1) “The generalizability of our findings is somewhat complicated by the multitude of different guilty knowledge protocols,” and (2) “It is also crucial that suppression countermeasures are assessed outside the laboratory. First, memories of a real crime may differ in intrusiveness from those of a crime simulation, which could affect suppression success. Second, real criminals will likely differ in their motivation to control retrieval from typical research volunteers.”

Our study addresses both of these issues. Although the BF classification CIT incorporating the 20 standard procedures has been shown to be highly resistant to the other two types of countermeasures that have defeated the comparison CIT (including Rosenfeld’s complex trial protocol), the “try not to think about it” countermeasure has never been tested on the BF classification CIT. We tested this countermeasure in the present study.

Moreover, we tested the resistance of the BF classification CIT to this countermeasure in field conditions in the investigation of real crimes, with major consequences to the outcome, on subjects with high motivation to beat the test. To provide life-changing motivation in cases where there were no judicial consequences to the outcome of the test, we offered a $100,000 reward for beating the BF classification CIT wherein the brain fingerprinting scientific standards were met.

Understanding the literature: different methods produce different results

In the literature on the brainwave-based CIT, there is an obvious bimodal distribution with respect to both error rate and statistical confidence in the results reported. What has sometimes escaped commentators, and even some researchers, is the fact that the two strikingly different patterns of results are brought about by two very different sets of methods.

One set of methods, exemplified by Farwell and Donchin (1991), Farwell and Smith (2001), and Farwell et al. (2013, 2014) has in every study—including both field and laboratory studies—produced error rates of less than 1% and median statistical confidences for individual determinations that are greater than 95%, including greater than 90% median statistical confidences for both information-present and information-absent determinations (Farwell 2012; Farwell and Richardson 2013). These methods are also highly resistant to countermeasures. Independent replications (e.g., Allen and Iacono 1997) have produced essentially the same results.

A very different set of methods, exemplified by Rosenfeld et al. (1987, 2004, 2007, 2008, 2018), Meijer et al. (2007, 2014), Meixner et al. (2009), Meixner and Rosenfeld (2014), and Lu et al. (2017), has produced an order of magnitude higher error rates and dramatically lower statistical confidences—averaging no better than chance (50%) for information-absent determinations. These methods are also highly susceptible to countermeasures.

To make sense of the bimodal distribution of results reported in the literature, and in particular the two very different patterns of results reported, it is necessary to have an understanding of the fundamental differences in methods that bring about these extremely different results.

The brain fingerprinting scientific standards specify in detail the methods that have consistently produced low error rates and high statistical confidences. In previous publications (Farwell 2012; Farwell et al. 2013, 2014; Farwell and Richardson 2013), we have discussed the methodological differences between the studies reporting low error rates and high statistical confidences versus the studies reporting high error rates and low statistical confidences with respect to the specific scientific standards that were met or not met in the two different groups of studies, as well as in specific individual studies. The studies exemplifying the two different modes of the bimodal distribution differed with respect to their compliance or lack of compliance with at least 15 of the 20 standards.

The primary difference between the methods that produced low error rates and high statistical confidences and those that produced high error rates and low statistical confidences is that the former practiced the BF classification CIT (which meets defining scientific standards 13, 14, and 17) and the latter practiced the comparison CIT (which does not meet those particular standards).

The classification CIT is a fundamentally different paradigm from the comparison CIT. The BF classification CIT analyzes responses to different types stimuli than those analyzed in the comparison CIT, and analyzes them in a fundamentally different way. These differences are described in detail in the Methods and Discussion sections.

In the present study, as in Farwell et al. (2014), we directly investigated the differences in results produced by the BF classification CIT versus the comparison CIT, with all other variables held constant. We applied both the BF classification-CIT and the comparison-CIT analysis methods to the same data, and compared the results produced by the respective techniques. This allows us to draw conclusions regarding the differences in results produced by the classification CIT and the comparison CIT in a design in which everything else except the classification/comparison distinction is the same for both conditions.

Scientific questions addressed by this research

This research addresses one primary scientific question (I) and a secondary question (II). Both are relevant to the practical application of the brainwave-based concealed information test in field situations in real-world counterterrorism and criminal investigations.

-

I.

Do field tests on suspects in real-world terrorist crimes and other crimes support the brain fingerprinting scientific standards hypothesis?

This fundamental question can be divided into the following parts:

-

Does the classification CIT, when implemented according to the 20 brain fingerprinting scientific standards, produce

-

(A)Error rate, and

-

(B)Statistical confidences for individual determinations that are viable for field use in real-world counterterrorism and criminal investigation applications?

“Viable for field use” is defined as meeting the following criteria:-

(i)Less than 1% error rate;

-

(ii)Median statistical confidences for individual determinations of at least 95%; including

-

(iii)Median statistical confidences of at least 90% for both information-present determinations and information-absent determinations;

-

(iv)Produced in the following conditions:

- without countermeasures;

- with countermeasures; and

- in field cases with substantial consequences to the outcome, where it is unknown for certain whether or not countermeasures are being applied.

-

(A)

- Do the 20 brain fingerprinting scientific standards provide sufficient conditions for a brainwave-based classification CIT that is viable for field use?

-

II.What are the differences, if any, between the results produced by the BF classification CIT versus the comparison CIT?

-

II.

This question can be divided into the following parts:

- Does the BF classification CIT produce significantly more accurate and valid results and higher statistical confidences than the comparison CIT, when all other variables are held constant?

-

(i)“Accurate and reliable” constitutes a combination of lower error rate across subjects and/or higher statistical confidences within subjects;

-

(ii)To be “valid” a study must use valid statistics properly and must at a minimum not determine any subject to be information present or information absent when the statistics applied compute a probability of less than 50% that the selected determination is correct.

-

(iii)“All other variables held constant” means that the only difference between the two methods is the fundamental one: that the classification CIT classifies the probe responses as being more similar to the target responses or to the irrelevant responses, and the comparison CIT computes whether the probe responses are larger than the irrelevant responses (and ignores the targets).

-

(i)

-

2.

Is implementing the BF classification CIT, rather than the comparison CIT (brain fingerprinting scientific standards 13, 14, and 17), a necessary condition for a combination of adequate error rate and adequate statistical confidences to meet the criteria for viable field use?

Methods

Material and methods

Subjects

We conducted 24 tests on 23 subjects on information regarding (1) Terrorist crimes; (2) Other crimes; and (3) A classified counterterrorism operation.

In Experiment 1 we conducted 19 tests on 18 subjects. (One subject was tested on information regarding two different terrorist crimes.) Results of testing showed that 6 subjects were “information present,” i.e., they had information stored in their brains regarding specific terrorist crimes or other known crimes, and that 12 subjects were “information absent,” i.e., lacked such information.

The cases reported were all of the counterterrorism and criminal cases in specific theaters that we conducted in the time period during which we were collecting data for this report. All of the other cases that we conducted in other theaters and at other times achieved identical error rates (0%) and extremely similar statistical confidences.

In Experiment 2 we conducted 5 tests on 5 subjects who were participants in a classified counterterrorism operation. All were correctly determined to be “information present.”

Due to real-world considerations, including the classified nature of the operations, ongoing counterterrorism operations and criminal investigations, and uncertainties regarding the identity and history of some of the subjects, complete demographic information is unavailable. All subjects were of sufficient age to meet ethics requirements (over 18 years).

Experimental procedures were approved by the Brain Fingerprinting Laboratories ethics committee and performed in accordance with the ethical standards of the 1964 Declaration of Helsinki, including written informed consent prior to participation.

Stimuli

Three types of stimuli consisting of words or phrases were presented on a computer screen: probes, targets, and irrelevants. Probes contained specific information relevant to the investigated situation. In Experiment 1 the investigated situations were different for each test, comprising terrorist and other crimes. In Experiment 2 the investigated situation was the same for all subjects, namely a specific classified counterterrorism operation. The test is designed to detect the subject’s knowledge or lack of knowledge of the information contained in the probes as relevant in the context of the investigated situation.

For each probe (and each target) comparable irrelevants were structured that contained similar, plausible, but incorrect information about the investigated situation. For a subject lacking the relevant knowledge contained in the probes, the irrelevants and probes were equally plausible as correct, relevant details. The irrelevants that were comparable to each probe were indistinguishable from the probe for a subject lacking the tested information.

Each probe contained correct, relevant information fitting the description of that probe. Descriptions of each probe were presented to each subject in the course of the experiment. The two irrelevants comparable to each probe contained incorrect information that would be plausible as fitting that same description for an individual lacking the information contained in the probes. For example, a probe stimulus may be the specific composition of a bomb in a terrorist bombing, or the content of intercepted communications between the mastermind, the handlers, and the end perpetrators in a hijacking. Corresponding irrelevants could be plausible alternative information that logically could be, but in fact is not, an accurate description of items matching this description. For obvious security reasons, the exact stimuli cannot be given.

Subjects were provided with a description of each probe that specified the significance of the probe in the context of the investigated situation, but were not informed which was the correct, situation-relevant probe and which were the corresponding irrelevants.

Similarly, each target stimulus contained correct, situation-relevant information, and the two irrelevant stimuli comparable to each target contained comparable, incorrect but plausible information. Unlike probes, targets were identified as such in instructions to the subjects.

Stimuli were constructed in groups of six: one probe, one target, and four irrelevants. For each probe there were two comparable irrelevants. For each target there were two comparable irrelevants. We used a ratio of 1/6 targets, 1/6 probes, and 2/3 irrelevants so targets and probes were relatively rare, which is known to enhance P300 amplitude (Farwell and Donchin 1988b, 1991).

Our prediction was that targets would elicit a large P300 and P300-MERMER (memory and encoding related multifaceted electroencephalographic response; see Farwell 2012; Rapp et al. 1993) (or P300 + LNP—late negative potential) in all subjects, irrelevants would not elicit a large P300-MERMER, and probes would elicit a large P300-MERMER only in information-present subjects. Thus, for information-present subjects, ERP responses to probes would be similar to ERPs for targets. For information-absent subjects, ERP responses to probes would be similar to ERPs for irrelevants.

For all but one test there were 9 unique probes, 9 unique targets, and 36 unique irrelevants, a total of 54 unique stimuli. These comprised 9 groups of stimuli, each consisting of one probe, one target, and four irrelevants. In each test, 3 groups (comprising one “stimulus set”) were presented. There was one exception to all of this: For one test, only 2 crime-relevant items that were known only to the investigators and the perpetrator were available, so there were only 2 probes, 2 targets, and 4 irrelevants. Each unique stimulus was presented multiple times, as described below.

The stimuli were words and short phrases, represented alphanumerically, presented on a 21.5″ AOC LED HD monitor Model #G2260VWQ6 at a distance of 24″ from the subject. The text was in white typeface against a blue background. The average length of stimuli was 12 characters, presented at a horizontal visual angle of 6.4 degrees. They varied in length from 8 to 16 characters.

Procedure

Before the test, we made certain that the subject understood the significance of the probes, without revealing which stimuli were probes. We described the significance of each probe to the subject. We then showed the subject the probe and the corresponding irrelevants, along with the description of the significance of the probe, without revealing which was the probe. Thus, subjects were informed of the significance of each probe stimulus, but were not told which stimulus was the probe and which were corresponding irrelevants. For example, subjects were told, “One of these three items is the kind of specific projectiles contained in the suicide vest,” followed by a list of one probe and two irrelevants (in random order). Although the descriptions of the probes were made known to subjects, the probe stimuli themselves were never identified as probes, nor were probes in any way distinguishable from irrelevants, except to an individual who already knew the crime-relevant information contained in the probes.

Targets were explicitly identified to the subjects. Experimental instructions ensured that the subject knew the targets and their significance in the context of the investigated situation. We described the significance of each target to the subject. We showed the subject each target and the corresponding irrelevants, along with the description of the significance of the target.

We also showed subjects a list of the targets and noted that subjects would be required to recognize the targets during the test. We instructed subjects to press one button in response to targets, and another button in response to “all other stimuli.” The subject’s task was to read and comprehend each stimulus, and then to indicate by a button press whether the stimulus was a target stimulus or not.

For a subject possessing the knowledge embodied in the probes, "all other stimuli" consisted of two types of stimuli: probes containing the known situation-relevant information, and irrelevant stimuli. For a subject lacking the tested knowledge, "all other stimuli" appeared equally irrelevant. Probes were indistinguishable from irrelevants. For “all other stimuli” (that is, everything except targets), the subject was instructed to push the opposite button from the one pushed in response to targets. This instruction applied whether the subject perceived these as a single category—all equally irrelevant, if the subject was information absent—or as two categories—(1) Irrelevant, and (2) Relevant to the concealed information being tested, if the subject was information present.

The differential button-press task in response to every stimulus presentation ensured that the subject was required to read and comprehend every stimulus, including the probe stimuli, and to prove behaviorally that he had done so on every trial. (A “trial” is defined as one stimulus presentation and the resulting brainwave and behavioral response.) This allowed us to avoid depending on detecting brain responses to assigned tasks that the subject could covertly avoid doing, while performing the necessary overt responses.

This is a critical difference between the method we employed and other methods such as Rosenfeld’s complex trial protocol that do not require subjects to distinguish the different types of stimuli to prove behaviorally on every trial that they have done so. This feature is specified in the brain fingerprinting scientific standards, and has been shown to be a necessary condition for a valid and reliable test.

We obtained the permission of all subjects to provide the information regarding the outcome of the test to authorities, agencies, and/or judicial forums where it may be requested as relevant scientific evidence. We explained to the subjects what relevant information the test could potentially provide. Before the test, subjects were knowledgeable regarding the possible consequences of the test—or any other event that might potentially provide relevant evidence—because they were well informed regarding their legal situation and any investigations or legal proceedings in which they were involved. In some cases these consequences involved life or death, prison sentences, or other inherently life-changing consequences. All subjects knew these consequences because they were knowledgeable regarding their current situation. All subjects had access to legal counsel and unlimited communication with anyone with whom they wished to discuss their situation.

To provide for life-changing consequences for all subjects including those for whom there were no judicial consequences to the outcome, we offered a $100,000 reward for beating the test. This reward applied only to the BF classification CIT implemented according to the brain fingerprinting scientific standards, and not to the comparison CIT.

We taught all subjects the “try not to think about it” countermeasure (Bergström et al. 2013) and instructed them practice it. We used the exact same instructions as Bergström et al., as closely as can be determined from their publication. Prior to the test, we instructed the subjects as follows: “Try to stop any memories of the event from coming to mind at all during the test.” This instruction immediately followed the standard instruction in brain fingerprinting scientific standard #7, as specified below.

Testing was divided into separate blocks. In each block the computer display presented 72 stimulus presentations or trials. In each block, 3 stimulus groups (one stimulus set) were presented. That is, in each block there were 3 unique probes, 3 unique targets, and 12 unique irrelevants. Each stimulus was presented 4 times in a block to make the total of 72 stimulus presentations per block. Stimuli were presented in random order. Each full test comprised at least 9 blocks.

Immediately before each block, we repeated the description of the significance of each of the probes and targets that were to appear in each block (but not the actual stimuli). For example, “In this test you will see the secret location of the control headquarters of the terrorist attack, the party who supplied the weapons, the code names for the weapons, the way the instructions were communicated from the mastermind to the end perpetrators, and the vehicle used in the attack.”

Stimuli were presented for 400 ms at an inter-stimulus interval (ISI; stimulus onset asynchrony—SOA) of 3000 ms. A fixation point (“X”) was presented for 1000 ms prior to each stimulus. For each trial, the sequence was a fixation point for 1000 ms, the stimulus (target, probe, or irrelevant) for 400 ms, a blank screen for 1600 ms, and then the next fixation point.

Trials contaminated by artifacts generated by eye movements or muscle-generated noise were rejected on-line, and additional trials were presented until 72 artifact-free trials were obtained. Trials with a range of greater than 150 microvolts in the electro-oculograph (EOG) channel were rejected. Data for “rejected” trials were collected and recorded, but rejected trials did not contribute to the count of trials presented, so each rejection resulted in an additional stimulus presentation.

Brain responses were recorded from the midline frontal, central, and parietal scalp locations (Fz, Cz, and Pz, International 10–20 System) referenced to the left ear, and from a location on the forehead to track eye movements. Custom electrodes were held in place by a custom headset.

Electroencephalograph (EEG) data and electro-oculograph (EOG/eye movement) data were digitized at 500 Hz. Data were amplified at a gain of 50,000 using custom amplifiers embedded in a custom Cognionics Quick-20 headset and communicated wirelessly via Bluetooth to the computer for recording and analysis. Analog filters passed signals between 1 and 30 Hz. Data were stored on a solid-state drive for off-line analysis.

Data were recorded in a sound-isolated room. The subject sat facing the stimulus-display monitor. The experimenter sat in the same room, out of sight of the subject.

Data analysis

We analyzed ERP data from the Pz scalp site. Data were digitally filtered using an equal-ripple, zero-phase-shift, optimal, finite impulse response, low-pass filter with a passband cutoff frequency of 6 Hz and a stopband cutoff frequency of 8 Hz (Farwell et al. 1993). Trials with a range of greater than 150 microvolts in the EOG channel were excluded from analysis. We decided on this threshold based on our previous experience (Farwell and Donchin 1991; Farwell et al. 2013). In exploratory data analysis, we have varied this threshold considerably, and the results are robust even if we change this parameter within quite a wide range.

For each subject’s data we conducted two separate analyses: a classification-CIT analysis and a comparison-CIT analysis, applying the respective bootstrapping procedures described below. The epoch analyzed was 300 to 1500 ms post-stimulus.

Classification-CIT statistical analysis bootstrapping method

The primary data-analysis task was to determine whether the ERP responses to the probe stimuli resembled the responses to the targets, containing a large P300 and P300-MERMER similar to that elicited by the targets, or whether the probe responses were more similar to the irrelevant responses, which lacked a large P300 and P300-MERMER.

We used bootstrapping (Farwell and Donchin 1988a, 1991; Wasserman and Bockenholt 1989; Farwell et al. 2013; Efron 1979) to determine whether the probe responses were more similar to the target responses or to the irrelevant responses, and to compute a statistical confidence for this determination for each individual subject. The metric for similarity was double-centered correlation.

The bootstrapping procedure accomplished two goals: (1) To take into account the variability across single trials, while also maintaining the smooth and relatively noise-free shape provided by signal averaging (which is vital for reliable correlation computations); (2) To isolate the critical variable—knowledge of the information embodied in the probes—by classifying the responses to the probe stimuli as being either more similar to the target responses or to the irrelevant responses.

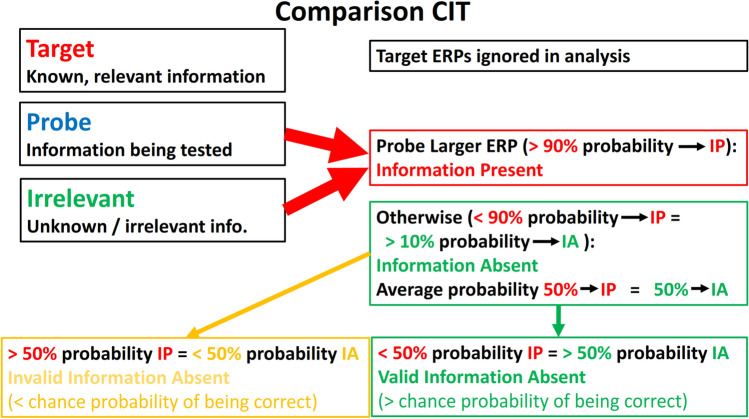

Figure 1 illustrates the classification-CIT data analysis method.

Fig. 1.

Classification CIT Data Analysis. The BF classification CIT uses bootstrapping on correlations to compute the probability that the brain response to the probe stimuli is (a) significantly more similar to the target response than to the irrelevant response (“information present” determination), (b) significantly more similar to the irrelevant response than to the target response (“information absent” determination), or (c) data are insufficient to make a determination with a high statistical confidence in either direction (indeterminate—no determination is made). All determinations, both information present and information absent, are made with at least a 90% statistical confidence

Briefly, the bootstrapping procedure for the BF classification CIT is as follows. We repeat the following procedure 1000 times. Randomly sample P probes, T targets, and I irrelevants, with P, T, and I equal to the total number of probe, target, and irrelevant trials in the data set respectively. In each iteration, compare the probe-target correlation with the probe-irrelevant correlation. Count the number of times that the probe-target correlation is greater than the probe-irrelevant correlation, and convert this to a percentage. This is the probability that the probe response is more similar to the target response than to the irrelevant response, which is the probability that information present is the correct determination. 100% minus this is the probability that the probe response is more similar to the irrelevant response, which is the probability that information absent is the correct determination.

We set an a priori bootstrapping probability criterion of 90% for an information-present determination and 90% (in the opposite direction) for an information-absent determination.6 If the probability was greater than 90% that the probe response was more similar to the target response than to the irrelevant response, we classified the subject as information present. If the probability was greater than 90% that the probe response was more similar to the irrelevant response than to the target response, the subject was classified as information absent. (This is equivalent to a probability of less than 10% (i.e., 100%–90%) that information present is correct).

If the results did not meet either the criterion for information present or the criterion for information absent, we did not classify the subject in either category. The outcome would then be indeterminate (although there were no indeterminates in this study or any of our studies since 1992).

In previous BF-classification-CIT research we have set the criterion for information-absent determinations at 70% probability that information absent is the correct determination (30% probability that information present is correct). Our rationale has been based on the situation prevalent in the field arenas where we have previously applied this method. Our previous field applications have been primarily in the criminal justice system in the US.

From a human rights and ethical point of view, in our view it is better to be more lenient in making an information-absent determination, which generally provides evidence of innocence, than an information-present determination, which generally provides evidence of guilt. It is the view of many in the US that it is better to allow many people to avoid conviction for crimes of which they are guilty than to have even one innocent person falsely convicted and incarcerated or put to death. Greater leniency in making an information-absent determination is in accord with this view.

In the present research, we have raised the criterion for an information-absent determination to 90% probability for two reasons. First, our results in previous field studies with substantial consequences to the outcome (Farwell et al. 2013) have produced higher than 90% statistical confidence for every subject. If we had implemented 90% criterion, our results would have been the same. In fact, in cases such as the Harrington case where our test provided exculpatory evidence, statistical confidence was greater than 99%.

Second, the field counterterrorism applications that provided some of the data for this study have different ethical, moral, and human rights implications than those that were applicable in the criminal investigations in which we participated previously. The cost in human life and global security to allowing a terrorist mastermind to escape prosecution and incarceration, and thus to allow him to continue his terrorist activities, is much higher than the cost of letting a common domestic criminal get away with his crimes. In counterterrorism, unlike the situation in the vast majority of ordinary crimes, a false negative error could have disastrous consequences in loss of human life. Therefore it makes sense to require a high standard for an information-absent determination, just as it does for an information-present determination.

For these reasons we have raised the criterion for an information-absent determination to 90% statistical confidence, the same as the criterion for an information-present determination (but in the opposite direction).

The bootstrapping-computed probability that information-present is the correct determination is also known as the bootstrap index. When the bootstrap probability exceeds the criterion for an information-present determination, the subject is determined to be information present, and in this case the bootstrap probability is the statistical confidence for the information-present determination.

Mathematically, the probability that information absent is the correct determination is 100% minus the probability that information present is the correct determination, or 100% minus the bootstrap index. For example, if there is a 5% probability that the probe response is more similar to the target than to the irrelevant response (information present is correct), then there is an 95% probability that the probe response is more similar to the irrelevant response (information absent is correct).

If the probability was greater than 90% that the probe response was more similar to the irrelevant response than to the target response (equivalent to a 10% probability that the probe response was more similar to the target response, or 10% bootstrap index), we classified the subject as information absent. The bootstrap probability that an information-absent determination is correct (100% minus the probability that information present is correct) is the statistical confidence for this information-absent determination.

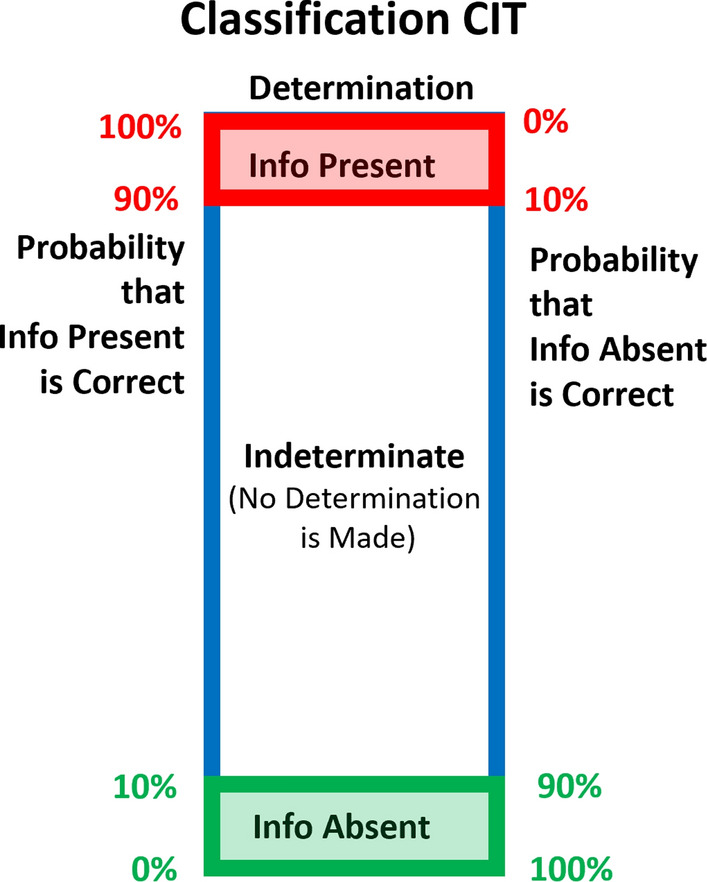

Figure 2 illustrates the classification-CIT bootstrap probabilities and determinations.

Fig. 2.

Classification CIT Bootstrap Probabilities and Determinations. The bootstrapping probability computation computes the probability that information present is the correct determination. The probability that information absent is correct is 100% minus the probability that information present is correct. A determination of either information present or information absent requires at least a 90% probability that the selected determination is correct, equivalent to 10% probability that the opposite determination is correct. Otherwise, no determination is made

Note that the probability that information absent is the correct determination equals 100% minus the probability that information present is the correct determination. The probability that information present is the correct determination is the statistical confidence for an information-present determination. The probability that information absent is the correct determination is the statistical confidence for an information-absent determination. Equivalently, the statistical confidence for an information-absent determination is 100% minus the originally computed (information-present) bootstrap probability, or 100% minus the bootstrap index.

There has been some confusion in the literature because some authors compute the bootstrap index—which is equivalent to the statistical confidence for an information-present determination—and then do not subtract it from 100% when discussing information-absent (or “innocent”) determinations. (This includes our own publication Farwell and Donchin 1991). There is nothing wrong with discussing information-absent determinations in relation to the originally computed bootstrap index, as long as one keeps in mind the fact that the bootstrap index is the probability that information absent is the incorrect determination (that is, information present is correct). Some commentators and even some researchers have been confused in evaluating information-absent determinations by the fact that a bootstrap index of 80%—which looks high at first glance—constitutes a 20% probability that information absent is the correct determination, and a bootstrap index of 20%—which looks low at first glance—constitutes an 80% probability that information absent is the correct determination.

For each subject, each data analysis method produced a determination and a statistical confidence, e.g., “information present, 99.9% statistical confidence.” The statistical confidence is the probability that the determination is correct, based on the within-subjects statistical computation taking into account the size of the effect and the variability in the data.

Error rate is the percentage of incorrect information-present (false positive) and information-absent (false negative) determinations. Accuracy is 100% minus the error rate.

In reporting error rates and/or accuracy, indeterminates must be reported as such. In reporting “accuracy,” some authors have confounded indeterminates with false positives and/or false negatives, reporting “accuracy” as the percentage of tests that result in a correct determination, and hiding the number of indeterminates. This in effect constitutes misrepresenting all indeterminates as false negative errors. This irretrievably hides the true error rate if there are indeterminates, and makes it impossible to make a meaningful comparison with studies that report the true error rate. In any meaningful reporting, indeterminates if any must be identified as such, and not confounded with false positive or false negative errors. (Some legitimate techniques such as Bayesian analysis do not allow indeterminates, in which case this fact must also be reported).

The BF classification CIT detects information. Ground truth is whether or not the subject possessed the information embodied in the probes at the time of the test. Ground truth was determined by confession and, when applicable, judicial outcome of the criminal cases. That is, in every case either (a) The subject confessed independently of the test and was proven judicially to be guilty, or (b) Another person confessed and/or was proven judicially to be guilty, or (c) The subject did not confess and was found judicially to be innocent.

We restricted our conclusions to a determination as to whether or not a subject knew the specific situation-relevant knowledge embodied in the probes at the time of the test. Our procedures recognize the fact that the brainwave-based BF classification CIT detects only the presence or absence of information—not guilt, innocence, honesty, lying, deception, or any past action or non-action.

Comparison-CIT statistical analysis bootstrapping method

The comparison CIT uses bootstrapping in an entirely different way from the BF classification CIT. The comparison CIT ignores the target responses and applies bootstrapping to compute the probability that the amplitude of the probe ERP is larger than the amplitude of the irrelevant ERP. The amplitude of the ERP response is defined as the difference between the highest voltage in the P300 window (300–900 ms) and the lowest voltage in the LNP window (900–1500 ms). This is the peak-to-peak amplitude of the P300-MERMER, equivalent to the sum of the peak amplitudes of the P300 and the LNP. It is sometimes represented as simply the P300 amplitude. (See the discussion in the Supplementary Material on “Definition of Terms and Notes on Terminology”). Computing the P300 amplitude in this way is in accord with the metric used previous applications of the comparison CIT, including the complex trial protocol, e.g., Rosenfeld et al. (2008).

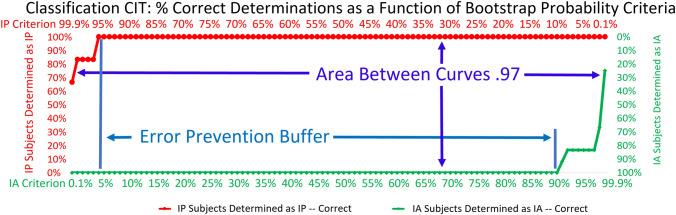

Figure 3 illustrates the comparison-CIT data analysis method.

Fig. 3.

Comparison CIT Data Analysis. The comparison CIT uses bootstrapping to determine if (a) there is greater than a 90% probability that the probe response is larger than the irrelevant response, resulting in an information-present determination; or (b) there is less than a 90% probability that the probe response is larger than the irrelevant response, resulting in an information-absent determination. The probability that information absent is the correct determination is 100% minus the probability that information present is correct. Information-present determinations have a statistical confidence of at least 90%. Information-absent determinations have a statistical confidence of greater than 10%. The statistical model predicts that the average statistical confidence for information-absent determinations is 50% (chance). If the statistical confidence is greater than 50%, the determination is valid, i.e., there is a computed probability of greater than chance that the selected determination is correct. If the statistical confidence is less than 50%, the determination is invalid, i.e., there is a computed probability of less than chance that the determination is correct

The comparison CIT uses the bootstrapping probability statistic computed on the amplitude of the brain responses to determine whether (a) The probe response is significantly larger than the irrelevant response, or (b) The probe response is not significantly larger than the irrelevant response. The former results in an information-present determination. The latter results in an information-absent determination.

In the comparison-CIT data analysis, trials are randomly sampled with replacement and averaged as described above for the BF classification CIT, except that only probe and irrelevant trials are sampled and averaged. In each of 1000 iterations, the amplitude of the ERP in the sampled probe average is compared with the amplitude of the ERP in the sampled irrelevant average. The percentage of times that the sampled probe ERP is larger than the sampled irrelevant ERP provides an estimate of the probability that the probe ERP is larger than the irrelevant ERP.

As in previous studies in ours and other laboratories, amplitude is defined as the difference in microvolts between the largest positive amplitude in the P300 window (300–900 ms post-stimulus) and the largest negative (or least positive) amplitude in the following negative LNP window (900–1500 ms post-stimulus).

If the probability that the probe ERP is larger than the irrelevant ERP is greater than 90%, then the subject is determined to be information present. If the probability that the probe ERP is larger than the irrelevant ERP is less than 90%, then the subject is determined to be information absent. (The comparison CIT does not have an indeterminate category).

A probability of 90% that information present is correct (that is, probe ERP is larger than irrelevant ERP) is equivalent to a probability of 10% (that is, 100%—90%) that information absent is correct.

Therefore, any subject with a probability of greater than 10% that information absent is correct is determined to be information absent. This results in subjects being determined to be information absent when the computed bootstrap probability is as high as 89.9% that information present would be the correct determination, that is, as low as a 10.1% statistically computed probability that the selected information-absent determination is correct. Information-absent statistical confidences range from 10.1 to 100%.

The statistical model predicts that the average statistical confidence for information-absent determinations is 50%, (chance). This is the approximate result of all previously published studies on the comparison CIT.

If the statistical confidence is greater than 50%, the determination is valid, i.e., there is a computed probability of greater than chance that the selected determination is correct. If the statistical confidence is less than 50%, the determination is invalid, i.e., there is a computed probability of less than chance that the determination is correct. The statistical model predicts that approximately half of information-absent determinations will be invalid. This is the approximate result of all previously published studies on the comparison CIT.

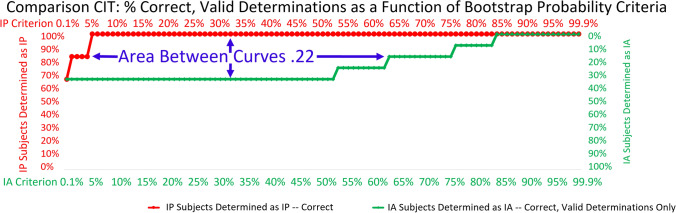

Figure 4 illustrates the comparison CIT bootstrap probabilities and determinations.

Fig. 4.

Comparison CIT Bootstrap Probabilities and Determinations. If the probability is greater than 90% that information present is the correct determination (less than 10% probability that information absent is correct), the subject is determined as information present. If the probability is less than 90% that information present is correct (greater than 10% probability that information absent is correct), the subject is determined as information absent. If the selected information-absent determination has greater than chance (50%) probability of being correct, it is a valid information-absent determination. If the probability that the selected information-absent determination is correct is less than chance (50%), it is an invalid information-absent determination

According to the predictions of the statistical model and all results of the comparison CIT reported in the literature to date, statistical confidences for the comparison CIT average 50% (chance).

According to the predictions of the statistical model as well as the results of all studies published to date, approximately half of all information-absent determinations by the comparison CIT are invalid.

In summary, the possible outcomes of the statistical computations for the comparison CIT are correct positive, correct valid negative, correct invalid negative, false positive, and false negative.

The common comparison-CIT practice of presenting invalid but “correct” results—that is, chosen determinations that have a less-than-chance (50%) probability of being correct—produces obvious logical and statistical anomalies. An alternative to reporting invalid results and less-than-chance statistical confidences is to lower the criterion for determining a subject to be information present to 50%. This means the criterion for an information-absent determination is 100% − 50% = 50% as well. With this criterion, all determinations are valid. Each subject is assigned whichever determination has a greater probability of being correct. The probability that each determination is correct is better than chance in every case. This results in a trade-off between statistical confidence and error rate, however.

We have presented the results of the comparison CIT below for both methods, the 90% information-present criterion/10% information-absent criterion and the 50%/50% criterion for both.

With a 50%/50% criterion, all determinations are valid: all are computed to be more likely than not to be correct. All determinations have a greater than 50% computed probability of being correct. All determinations have a statistical confidence of greater than chance. However, an “information absent” determination with a statistical confidence of 51%, although valid, is not of practical use. To know that there is a 51% probability that the subject is information absent provides little information about the reality of the situation.

If the probability is greater than 70% that information absent is the correct determination, however, this provides at least some potentially useful information.

Therefore, in addition to tabulating the results for the 90%/10% statistical confidence criterion and the 50%/50% statistical confidence criterion, we have also applied a third metric, tabulating which correct information-absent determinations have a greater than 70% statistical confidence. (Unlike some information-absent determinations, all information-present determinations meet this criterion because they must meet the higher 90% information-present criterion.)

Conventional signal-detection methods for illustrating error rate as a function of probability criteria are inadequate

Most common metrics and visualization modalities for assessing the trade-off between correct determinations and errors as a function of the criteria for determinations are inadequate for representing any classification-CIT data, including the data of the present study, for several reasons.

One common method for illustrating and analyzing the results of the conventional ANS-based CIT is signal-detection theory and receiver operating characteristic (ROC) curves. As the name implies, receiver operating characteristic analysis was developed to analyze the operating characteristics of signal receivers, for example, to determine whether a blip on a radar screen was substantial enough to indicate the presence of a ship, or not. Such methods can readily be applied to estimate, for example, whether a change in skin conductance was large enough to indicate deception, or not. The signal is reduced to a single number, and if this number exceeds a threshold, it is classified as a detected signal. If not, then not.

ROC curves can be used to represent the data of the comparison CIT because there is only a single criterion that can be represented by a single number (P300 amplitude). For each subject, this number can be compared to a single criterion to make the relevant determination. Any subjects’ data not determined as information-present is automatically determined as information absent. The information-absent criterion is determined by the information-present criterion: the latter is simply 100% minus the former. Thus, the data set for multiple subjects with respect to a single criterion can be represented by a single line on a graph.