Summary

Background

Breast cancer is the leading cause of cancer-related deaths in women. Accurate diagnosis of breast cancer from medical images relies heavily on the experience of radiologists. This study aimed to develop an artificial intelligence model that diagnoses single-mass breast lesions on contrast-enhanced mammography (CEM) to assist the diagnostic workflow.

Methods

A total of 1912 women with single-mass breast lesions who underwent CEM before biopsy or surgery were enrolled at three centres in China between June 2017 and October 2022. Patients were divided into training and validation sets, an internal testing set, a pooled external testing set, and a prospective testing set. A fully automated pipeline system (FAPS) using RefineNet and Xception + pyramid pooling module (PPM) was developed to segment and classify breast lesions. In the pooled external and prospective testing sets, we evaluated the performance of six radiologists and the adjustment of Breast Imaging Reporting and Data System (BI-RADS) category 4 assessments under the FAPS-assisted strategy. Segmentation performance was assessed using the Dice similarity coefficient (DSC), and classification performance was assessed using heatmaps, the area under the receiver operating characteristic curve (AUC), sensitivity, and specificity. The radiologists' reading time was recorded for comparison with that of the FAPS. This trial is registered with China Clinical Trial Registration Centre (ChiCTR2200063444).

Findings

The FAPS achieved segmentation DSCs of 0.888 ± 0.101, 0.820 ± 0.148 and 0.837 ± 0.132 in the internal, pooled external and prospective testing sets, respectively. For classification, it achieved AUCs of 0.947 (95% confidence interval [CI]: 0.916–0.978), 0.940 (95% CI: 0.894–0.987) and 0.891 (95% CI: 0.816–0.945) in the same three sets. The FAPS classified a single lesion much faster than the radiologists (approximately 6 s vs 3 min). Moreover, the FAPS-assisted strategy improved the performance of radiologists. With FAPS assistance, BI-RADS category 4 assessments were adjusted in 12.4% and 13.3% of patients in the pooled external and prospective testing sets, respectively, which may help guide the selection of clinical management strategies.

Interpretation

The CEM-based FAPS showed potential for the segmentation and classification of breast lesions, with good generalisation ability and clinical applicability.

Funding

This study was supported by the Taishan Scholar Foundation of Shandong Province of China (tsqn202211378), National Natural Science Foundation of China (82001775), Natural Science Foundation of Shandong Province of China (ZR2021MH120), and Special Fund for Breast Disease Research of Shandong Medical Association (YXH2021ZX055).

Keywords: Deep learning, Fully automated pipeline system, Contrast-enhanced mammography, Breast lesions, Segmentation, Classification

Research in context.

Evidence before this study

We searched PubMed for studies published from database inception to December 6, 2022, using the terms (deep learning OR artificial intelligence) AND (breast cancer OR breast lesions) AND (contrast-enhanced spectral mammography OR contrast-enhanced mammography), with no language restrictions. Only three studies on deep learning-based classification of breast lesions using contrast-enhanced mammography images were identified. However, these studies had limitations, including small sample sizes and single-centre, retrospective designs. In addition, they relied on manual segmentation, which is time-consuming and labour-intensive.

Added value of this study

Our study proposed a fully automated pipeline system (FAPS) based on contrast-enhanced mammography (CEM) that performs both segmentation and classification of breast lesions, and evaluated it in pooled external and prospective testing sets. Moreover, the FAPS-assisted strategy improved radiologists' performance and could guide the selection of clinical management strategies.

Implications of all the available evidence

Our findings show that the FAPS provides a non-invasive method for segmenting and classifying breast lesions on CEM images, with high performance that may assist clinical decision-making. Further prospective multicentre validation is needed to provide stronger evidence of the value of the FAPS in clinical practice.

Introduction

Breast cancer is the most commonly diagnosed cancer and the leading cause of cancer-related deaths in women.1 According to the American Cancer Society's statistics for 2021, an estimated 281,550 new cases of invasive breast cancer and approximately 43,600 deaths were expected among American women that year.2 There is therefore an urgent need for a reliable method to accurately differentiate malignant from benign breast lesions.

Mammography is currently used clinically for routine breast cancer screening to reduce mortality.3 However, because overlapping glandular tissue can obscure lesions, especially in dense breasts, its diagnostic performance is unsatisfactory.4 Contrast-enhanced mammography (CEM) is a promising technology that combines intravenous iodinated contrast enhancement with digital mammography.5 The American College of Radiology recommends CEM for the diagnosis of breast cancer.6 Previous research has shown that the sensitivity of CEM is comparable to that of magnetic resonance imaging (MRI) and that its positive predictive value is significantly higher than that of MRI in breast cancer diagnosis.7 However, radiologists' assessments can be affected by variations in technique and by interobserver variability in interpretation. In addition, because CEM has only been developed in recent years, radiologists' diagnostic experience with it remains limited.

Recently, deep learning has achieved remarkable results in radiology.8, 9, 10, 11 Compared with traditional radiomics based on handcrafted features,12 deep learning automatically extracts deep imaging features and has shown higher efficiency and reproducibility.13 Many studies have explored the application of deep learning to the detection and diagnosis of clinical diseases, including breast cancer, further confirming its potential value.14, 15, 16, 17, 18 Several studies have attempted to differentiate benign from malignant breast lesions by combining CEM images with convolutional neural networks.19, 20, 21 However, models trained on small, single-centre datasets cannot adequately learn diverse image features, and such datasets do not meet the requirements for testing generalisation. In addition, approaches based on manual segmentation are time-consuming and labour-intensive, which hinders clinical translation. Moreover, previous studies were not tested in a prospective clinical setting, so bias may exist.

To address these problems, we aimed to develop a deep learning-enabled fully automated pipeline system (FAPS) for the segmentation and classification of single-mass breast lesions on CEM images, and to test it in pooled external and prospective testing sets. Finally, we evaluated the ability of the FAPS to assist clinical decision-making.

Methods

Ethics

The study was approved by the Institutional Ethics Committee of Yantai Yuhuangding Hospital (retrospective study approval number: 2022-87; prospective study approval number: 2022-72). The requirement for informed consent was waived for the retrospective study. Written informed consent was obtained from all participants in the prospective study (ChiCTR2200063444). This study followed the STARD 2015 reporting guidelines.

Patients

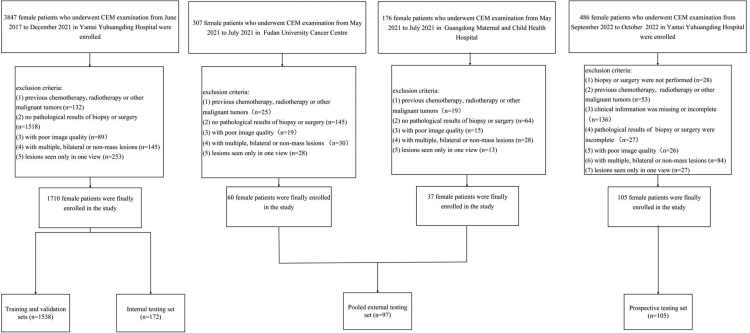

This study included 1912 patients. For the retrospective study, the inclusion criteria were as follows: (1) suspicious breast lesions detected during routine screening, such as mammography or ultrasonography; (2) low-energy and recombined CEM images acquired within two weeks before biopsy or surgery; and (3) complete medical records, including demographic and clinical information. The exclusion criteria were as follows: (1) previous chemotherapy, radiotherapy, or other malignant tumors; (2) no pathological results from biopsy or surgery; (3) poor image quality; (4) multiple, bilateral or non-mass lesions; and (5) lesions visible in only one view. Detailed criteria for the prospective study and clinical characteristics are provided in Supplementary Material 1. The patient enrollment process is illustrated in Fig. 1. Finally, 1710 patients who underwent CEM before biopsy or surgery between June 2017 and December 2021 at Yantai Yuhuangding Hospital were included. These patients were split into training and validation sets (1538 patients) and an internal testing set (172 patients) at a ratio of approximately 9:1. Ten-fold cross-validation was performed in the training and validation sets to learn and optimise the model parameters. Using the same criteria, 60 consecutive patients from the Fudan University Cancer Centre and 37 patients from the Guangdong Maternal and Child Health Hospital, enrolled between May 2021 and July 2021, formed the pooled external testing set. In addition, 105 patients were prospectively enrolled for testing at Yantai Yuhuangding Hospital from September 2022 to October 2022. CEM images were obtained from the Picture Archiving and Communication System. The CEM image acquisition parameters are shown in Supplementary Material 2.
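The patient-level hold-out and ten-fold cross-validation described above can be sketched as follows. This is a minimal illustration, assuming a simple random permutation of patient indices without class stratification; the seed and the function names `split_train_test` and `kfold_indices` are hypothetical, and this is not the authors' code (which is available at https://github.com/yyyhd/FAPS). Splitting by patient rather than by image keeps the CC and MLO views of one patient in the same subset.

```python
import numpy as np


def split_train_test(n_patients, test_fraction=0.1, seed=0):
    """Randomly hold out a fraction of patients as the internal testing set.

    Returns (training/validation indices, internal-test indices).
    The seed and the lack of stratification are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_patients)
    n_test = int(round(n_patients * test_fraction))
    return order[n_test:], order[:n_test]


def kfold_indices(indices, k=10):
    """Yield (train, validation) index arrays for k-fold cross-validation."""
    folds = np.array_split(np.asarray(indices), k)
    for i in range(k):
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train, folds[i]


train_val, internal_test = split_train_test(1710)
print(len(train_val), len(internal_test))  # 1539 171
fold_sizes = [len(val) for _, val in kfold_indices(train_val)]
```

An exact 10% hold-out of 1710 patients gives 1539/171 rather than the reported 1538/172, consistent with the text's description of the split as approximately 9:1.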

Fig. 1.

Study profile.

Image segmentation and data preprocessing

Before the segmentation model was trained, lesions were manually segmented by a radiologist (Segmenter 1) with 10 years of experience in breast imaging diagnosis, and the segmentations were reviewed by another radiologist with 15 years of experience. Segmentation was performed using ITK-SNAP (version 3.6; www.itksnap.org). To assess inter- and intra-observer consistency, 100 patients were randomly selected from the training set and additionally segmented by two other radiologists (Segmenter 2 and Segmenter 3) with 10 and 12 years of experience, respectively. In addition, Segmenter 1 repeated the segmentation after an interval of one month. All radiologists were blinded to the histopathological data. The Dice similarity coefficient (DSC) was used to evaluate inter- and intra-observer agreement, with average DSCs of 0.852 ± 0.125 and 0.876 ± 0.108, respectively.
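For reference, the DSC between a predicted mask A and a reference mask B is 2|A ∩ B| / (|A| + |B|), ranging from 0 (no overlap) to 1 (identical masks). A minimal NumPy sketch of the per-case DSC and the mean ± SD summary follows; returning 1.0 when both masks are empty and using the sample SD (ddof=1) are assumptions, since the text does not specify either convention, and the function names are hypothetical.

```python
import numpy as np


def dice(pred, truth):
    """Dice similarity coefficient 2|A∩B| / (|A| + |B|) for two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    total = pred.sum() + truth.sum()
    if total == 0:
        return 1.0  # both masks empty: treated as perfect agreement (assumed convention)
    return float(2.0 * np.logical_and(pred, truth).sum() / total)


def mean_sd(values):
    """Mean and sample standard deviation of per-case DSCs."""
    arr = np.asarray(values, dtype=float)
    return float(arr.mean()), float(arr.std(ddof=1))


# Two 2 x 2 squares offset by one column share 2 of their 4 pixels each.
a = np.zeros((4, 4), dtype=bool)
a[0:2, 0:2] = True
b = np.zeros((4, 4), dtype=bool)
b[0:2, 1:3] = True
print(dice(a, b))  # 0.5
```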

Before the CEM images were fed into the segmentation network, an automatic threshold segmentation algorithm (Otsu's method) was used to separate the breast from the background. The breast region was then cropped, and the images were resized to 512 × 1024 pixels. To increase the training data and avoid overfitting, we performed data augmentation, including horizontal flipping, rotation (up to 20°), scaling (0.9–1.1), and horizontal and vertical shifting (−0.2 to 0.2). To create two-dimensional inputs for the segmentation network, the low-energy, low-energy and recombined images of the craniocaudal (CC) or mediolateral oblique (MLO) view were used as the red, green, and blue channels, respectively.22 For the classification network, regions of 512 × 512 pixels were first cropped from the CEM images according to the predicted segmentation mask, followed by normalisation and data augmentation. The low-energy and recombined images of each view were then fed together into the classification network. Preprocessing was performed in Python (version 3.6.6; Python Software Foundation, Wilmington, Del).
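The segmentation-input preprocessing (Otsu thresholding, cropping, resizing and channel stacking) can be sketched with NumPy alone. This is a simplified illustration under stated assumptions: the low-energy and recombined images are co-registered arrays of the same shape, the breast mask is derived from the low-energy image, 512 × 1024 is read as width × height, nearest-neighbour resizing stands in for whatever interpolation the authors used, and intensities are scaled by their maximum. All function names are hypothetical, and data augmentation is omitted.

```python
import numpy as np


def otsu_threshold(image, bins=256):
    """Otsu's method: choose the histogram split that maximises between-class variance."""
    hist, edges = np.histogram(image.ravel(), bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(hist).astype(float)       # pixel count in bins 0..k (background)
    w1 = w0[-1] - w0                          # pixel count in bins k+1.. (foreground)
    s0 = np.cumsum(hist * centers)
    mu0 = s0 / np.maximum(w0, 1)
    mu1 = (s0[-1] - s0) / np.maximum(w1, 1)
    between = w0 * w1 * (mu0 - mu1) ** 2      # proportional to between-class variance
    k = int(np.argmax(between))
    return edges[k + 1]                       # upper edge of the last background bin


def crop_to_mask(image, mask):
    """Crop an image to the bounding box of a boolean mask."""
    rows = np.where(mask.any(axis=1))[0]
    cols = np.where(mask.any(axis=0))[0]
    return image[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]


def resize_nearest(image, out_h, out_w):
    """Nearest-neighbour resize (a stand-in for proper interpolation)."""
    h, w = image.shape
    ri = np.arange(out_h) * h // out_h
    ci = np.arange(out_w) * w // out_w
    return image[ri[:, None], ci[None, :]]


def segmentation_input(low_energy, recombined, out_h=1024, out_w=512):
    """Build an (out_h, out_w, 3) image with R, G, B = low-energy, low-energy, recombined."""
    breast = low_energy >= otsu_threshold(low_energy)
    le = resize_nearest(crop_to_mask(low_energy, breast), out_h, out_w)
    rc = resize_nearest(crop_to_mask(recombined, breast), out_h, out_w)
    rgb = np.stack([le, le, rc], axis=-1).astype(np.float32)
    return rgb / max(float(rgb.max()), 1e-8)  # scale intensities to [0, 1]


# Synthetic example: a bright "breast" region on a dark background.
rng = np.random.default_rng(0)
low = np.zeros((200, 100))
low[20:180, :60] = 100.0
rec = rng.random((200, 100))
x = segmentation_input(low, rec)
print(x.shape)  # (1024, 512, 3)
```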

Overview of FAPS

The FAPS consists of two subnetworks: RefineNet for segmentation and Xception + pyramid pooling module (PPM) for classification. RefineNet performs high-precision semantic segmentation; nnUNet and DeepLabV3 models were trained separately for comparison. For classification, the Xception network was adopted as the backbone and used the segmentation mask predicted by RefineNet. Instead of the traditional feature fusion method (described in Supplementary Material 3), we adopted a channel fusion method in this study. First, two feature maps were obtained from the last convolutional layer of the Xception + PPM network. These feature maps were then concatenated and fused channel-wise, and global average pooling was applied to obtain a one-dimensional feature vector. A fully connected layer produced the predicted probability for a single view. Finally, the prediction for the whole breast was the average of the predicted probabilities of the CC and MLO views. This pipeline is shown in Fig. 2A. To investigate whether each component of the proposed network contributed to accurate prediction, an ablation analysis was performed (Supplementary Material 3). We also explored the influence of the PPM on FAPS performance. The basic structures of the subnetworks and the PPM are shown in Supplementary Fig. S1, and the study design is illustrated in Fig. 2. During model development, the reference standard was the pathological result of biopsy or surgery. The networks and the detailed training process are described in Supplementary Material 4. The networks and source code are publicly available (https://github.com/yyyhd/FAPS).
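The channel fusion head and the CC/MLO averaging described above can be sketched with NumPy. This is an illustration under assumptions, not the released implementation: we assume the two feature maps come from the low-energy and recombined images of one view (the text does not say), and that the fully connected layer outputs a single logit passed through a sigmoid. `fc_weights`, `fc_bias` and the function names are hypothetical, and the Xception + PPM backbone itself is not shown.

```python
import numpy as np


def view_probability(feat_a, feat_b, fc_weights, fc_bias):
    """Malignancy probability for one view from two (C, H, W) feature maps."""
    fused = np.concatenate([feat_a, feat_b], axis=0)  # channel-wise fusion -> (2C, H, W)
    pooled = fused.mean(axis=(1, 2))                    # global average pooling -> (2C,)
    logit = float(pooled @ fc_weights + fc_bias)        # fully connected layer -> scalar
    return 1.0 / (1.0 + np.exp(-logit))                 # sigmoid (assumed output activation)


def breast_probability(p_cc, p_mlo):
    """Whole-breast prediction: mean of the CC and MLO view probabilities."""
    return (p_cc + p_mlo) / 2.0


# Toy example: C = 2 channels per map, 3 x 3 spatial size.
feat_a = np.ones((2, 3, 3))
feat_b = 2.0 * np.ones((2, 3, 3))
w = np.array([0.5, 0.0, 0.0, 0.25])
p_cc = view_probability(feat_a, feat_b, w, fc_bias=-1.0)   # logit 0 -> 0.5
p_mlo = view_probability(feat_a, feat_b, w, fc_bias=1.0)   # logit 2 -> about 0.881
print(breast_probability(p_cc, p_mlo))
```

Concatenating along the channel axis before pooling lets a single fully connected layer weigh features from both inputs jointly, whereas averaging the two view probabilities afterwards treats the CC and MLO views as equally informative.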

Fig. 2.

The design of this study. (A) Process by which the FAPS performs segmentation and classification. (B) Process by which the FAPS assisted radiologists in the pooled external and prospective testing sets. Blue arrows represent patients whose BI-RADS categories were upgraded, and red arrows represent those whose categories were downgraded. FAPS = fully automated pipeline system; PPM = pyramid pooling module; CC = craniocaudal; MLO = mediolateral oblique; BI-RADS = Breast Imaging Reporting and Data System.

Radiologists’ performance compared with FAPS

Three junior and three senior radiologists with 4–15 years of experience in breast imaging diagnosis reviewed the images in the pooled external and prospective testing sets. All radiologists were blinded to the pathological results; only age, medical history, family history, and CEM images were available to them. Images were assessed using CEM BI-RADS.6 When assigning a BI-RADS category, each radiologist also classified the lesion as benign or malignant, and the result was recorded once the decision was made. The radiologists' reading time was recorded with an external clock, from the start of reading the first image to the completion of the last image, for comparison with the FAPS. For the FAPS, the time was measured from the start of segmentation to the end of classification. To simulate clinical conditions, the cutoff threshold for binary classification was chosen to maximise sensitivity when the FAPS was compared with the six radiologists. Sensitivity and specificity were the primary metrics for comparing the radiologists and the FAPS.

Radiologists’ performance with FAPS assistance

CEM images from both testing sets were used to assess the assistive performance of the FAPS. Immediately after the first assessment, with no washout period, the six radiologists reassessed the BI-RADS category and classification of each lesion using the predicted probability provided by the FAPS. They could either keep or revise their initial results. Because CEM BI-RADS categories correspond to different management strategies, correctly downgrading or upgrading a category can guide the selection of clinical management strategies. The detailed process is illustrated in Fig. 2B. In addition, the agreement between each pair of radiologists with and without FAPS assistance was calculated using Cohen's kappa. Further analyses were performed in two subgroups: dense breasts and lesions with diameters ≤2 cm. Dense breasts comprised two subcategories: heterogeneously dense and extremely dense breasts.
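Cohen's kappa corrects the observed agreement p_o between two readers for the agreement p_e expected by chance: κ = (p_o − p_e) / (1 − p_e). A minimal pure-Python sketch for one pair of readers, plus an average over all reader pairs, follows. Treating BI-RADS categories as unordered labels (unweighted kappa) and summarising six readers by the mean of their 15 pairwise values are assumptions, since the text does not state how pairwise values were aggregated; the function names and toy ratings are hypothetical.

```python
from collections import Counter
from itertools import combinations


def cohens_kappa(ratings_a, ratings_b):
    """Unweighted Cohen's kappa for two readers rating the same cases."""
    n = len(ratings_a)
    p_o = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    count_a, count_b = Counter(ratings_a), Counter(ratings_b)
    categories = count_a.keys() | count_b.keys()
    p_e = sum(count_a[c] * count_b[c] for c in categories) / n ** 2
    if p_e == 1.0:
        return 1.0  # both readers used one identical category throughout
    return (p_o - p_e) / (1.0 - p_e)


def mean_pairwise_kappa(ratings_by_reader):
    """Average Cohen's kappa over all reader pairs (15 pairs for six readers)."""
    pairs = list(combinations(ratings_by_reader.values(), 2))
    return sum(cohens_kappa(a, b) for a, b in pairs) / len(pairs)


r1 = ["4A", "4A", "3", "5"]
r2 = ["4A", "4B", "3", "5"]
print(round(cohens_kappa(r1, r2), 3))  # 0.667
```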

Application of the FAPS for some controversial cases

After the first round of reading, the radiologists disagreed on some cases, and we examined the performance of the FAPS in these controversial cases. We first used activation heatmaps to visualise the regions that contributed most to the model's predictions. To further explain the model's behaviour, we analysed the main histopathological types of the cases misclassified by the FAPS in the two testing sets. Finally, we used the Kendall correlation coefficient to assess whether agreement among the six radiologists improved when the FAPS was used as an assistive tool in four controversial cases.
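The activation heatmaps were produced with Grad-CAM (see the statistical analysis). Its core computation weights each feature map A^k of the target convolutional layer by the spatial mean of the gradient of the class score with respect to that map, sums the weighted maps, and applies a ReLU. The NumPy sketch below shows only this step; it assumes the activations and gradients have already been obtained from a deep-learning framework's automatic differentiation, and it omits upsampling the map to image size. The function name and toy arrays are hypothetical.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Grad-CAM map from (K, H, W) activations and gradients of the class score."""
    alphas = gradients.mean(axis=(1, 2))                    # one weight per feature map
    cam = np.tensordot(alphas, activations, axes=(0, 0))    # sum_k alpha_k * A^k -> (H, W)
    cam = np.maximum(cam, 0.0)                              # ReLU: keep positive evidence only
    peak = cam.max()
    return cam / peak if peak > 0 else cam                  # scale to [0, 1] for display


# Map 0 pushes the score up (positive gradients), map 1 pushes it down.
acts = np.array([[[1.0, 0.0], [0.0, 0.0]],
                 [[0.0, 0.0], [0.0, 1.0]]])
grads = np.stack([np.ones((2, 2)), -np.ones((2, 2))])
heatmap = grad_cam(acts, grads)  # highlights only the top-left position
```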

Statistical analysis

Baseline characteristics of benign and malignant patients in the four datasets were compared according to variable type and distribution. For continuous variables, such as age and lesion diameter, normality and homogeneity of variance were first assessed using the Kolmogorov–Smirnov test and Bartlett's test, respectively. If both assumptions were met, the two-sample t-test was used; otherwise, the Mann–Whitney U test was used. Categorical variables, such as breast density, were analysed using Pearson's chi-squared test or Fisher's exact test. The performance of all classification models was assessed using the area under the receiver operating characteristic curve (AUC), sensitivity, specificity and accuracy. The DeLong test was used to compare AUCs. 95% confidence intervals (CIs) were estimated from 10,000 bootstrap replicates. Differences in the radiologists' performance with and without FAPS assistance were assessed using McNemar's χ2 test. To interpret the model predictions, heatmaps were produced using gradient-weighted class activation mapping (Grad-CAM).23
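Three of these procedures can be illustrated compactly: the AUC computed as the Mann–Whitney probability that a malignant case scores higher than a benign one, a percentile bootstrap CI for the AUC, and McNemar's χ2 test on the discordant pairs of paired reader decisions. This sketch uses NumPy and the standard library only; the percentile bootstrap method, the continuity correction, redrawing resamples that contain only one class, and all function names are assumptions, since the text does not specify them. The DeLong test is not reproduced here.

```python
import math

import numpy as np


def auc(y_true, scores):
    """AUC = P(score of malignant case > score of benign case), ties counted as 1/2."""
    y = np.asarray(y_true).astype(bool)
    s = np.asarray(scores, dtype=float)
    pos, neg = s[y], s[~y]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((greater + 0.5 * ties) / (pos.size * neg.size))


def bootstrap_auc_ci(y_true, scores, n_boot=10_000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for the AUC, resampling cases with replacement."""
    rng = np.random.default_rng(seed)
    y = np.asarray(y_true)
    s = np.asarray(scores, dtype=float)
    stats = []
    while len(stats) < n_boot:
        idx = rng.integers(0, len(y), size=len(y))
        if y[idx].min() == y[idx].max():
            continue  # resample contains only one class: AUC undefined, redraw
        stats.append(auc(y[idx], s[idx]))
    lower, upper = np.percentile(stats, [100 * alpha / 2, 100 * (1 - alpha / 2)])
    return float(lower), float(upper)


def mcnemar(b, c, continuity=True):
    """McNemar's chi-squared test (1 df) from the two discordant counts b and c.

    b: cases correct without assistance but wrong with it; c: the reverse.
    """
    if b + c == 0:
        return 0.0, 1.0
    diff = max(abs(b - c) - 1, 0) if continuity else abs(b - c)
    chi2 = diff ** 2 / (b + c)
    p_value = math.erfc(math.sqrt(chi2 / 2))  # upper tail of chi-squared with 1 df
    return chi2, p_value


print(auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
print(mcnemar(10, 2))  # chi2 = 49/12, about 4.08; p about 0.043
```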

Subgroup analyses investigated how the performance of the model differed by breast density and lesion diameter. In addition, a sensitivity analysis using an age-matched cohort was performed in datasets with age differences. In exploratory post-hoc analyses, we compared the performance of RefineNet with that of other models, including nnUNet and DeepLabV3, and compared channel fusion with feature fusion of the different views.

Sample size evaluation was performed using PASS (version 21.0.3). Other statistical analyses were performed using R (version 3.6.2) and Python (version 3.6.6). A two-sided p < 0.05 indicated a statistically significant difference. This trial is registered with China Clinical Trial Registration Centre (ChiCTR2200063444).

Role of the funding source

The funders had no role in the study design, data collection and analysis, data interpretation, decision to publish, or manuscript preparation. The corresponding authors confirm that they have full access to the data and have the final responsibility for deciding to submit the manuscript for publication.

Results

Patients

Between June 2017 and October 2022, 1912 women were enrolled. The mean age (± standard deviation [SD]) was 52.07 ± 11.56 years in the training and validation sets, 52.70 ± 12.63 years in the internal testing set, 49.32 ± 10.23 years in the pooled external testing set and 52.02 ± 12.13 years in the prospective testing set. The clinical characteristics of the patients are summarised in Table 1. Mean age differed among the datasets, as well as between malignant and benign cases. An age-matched analysis confirmed that these differences had little impact on the results. Detailed information is provided in Supplementary Material 5.

Table 1.

Baseline characteristics in the training and validation sets, internal testing set, pooled external testing set and prospective testing set.

| Characteristics | Training and validation: benign (n = 387) | Training and validation: malignant (n = 1151) | P value | Internal testing: benign (n = 45) | Internal testing: malignant (n = 127) | P value | Pooled external testing: benign (n = 30) | Pooled external testing: malignant (n = 67) | P value | Prospective testing: benign (n = 31) | Prospective testing: malignant (n = 74) | P value |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Age, years (mean ± SD) | 42.72 ± 10.74 | 55.23 ± 10.03 | 0.008a | 43.98 ± 13.51 | 55.80 ± 10.78 | <0.001a | 42.86 ± 8.91 | 52.07 ± 9.54 | <0.001a | 42.90 ± 10.26 | 55.84 ± 10.79 | <0.001a |
| Diameter, cm (mean ± SD) | 2.1 ± 1.50 | 2.33 ± 1.03 | <0.001a | 2.48 ± 1.82 | 2.35 ± 0.97 | 0.559 | 2.43 ± 1.28 | 2.87 ± 1.28 | 0.065 | 2.48 ± 2.02 | 2.64 ± 1.07 | 0.777 |
| ≤1 cm, n (%) | 95 (24.5) | 49 (4.2) | | 11 (24.4) | 4 (3.1) | | 3 (10) | 1 (1.5) | | 5 (16.1) | 0 | |
| 1–2 cm, n (%) | 139 (35.9) | 470 (40.8) | | 14 (31.1) | 52 (40.9) | | 12 (40) | 17 (25.4) | | 10 (32.2) | 25 (33.8) | |
| >2 cm, n (%) | 153 (39.5) | 632 (54.9) | | 20 (44.4) | 71 (55.9) | | 15 (50) | 49 (73.1) | | 16 (51.6) | 49 (66.2) | |
| Breast density, n (%) | | | <0.001a | | | 0.466 | | | 0.499 | | | <0.001a |
| A | 123 (31.8) | 365 (31.7) | | 16 (35.6) | 34 (26.8) | | 1 (3.3) | 6 (9) | | 1 (3.2) | 28 (37.8) | |
| B | 97 (25.1) | 241 (20.9) | | 9 (20) | 21 (16.5) | | 11 (36.7) | 28 (41.8) | | 8 (25.8) | 20 (27) | |
| C | 145 (37.5) | 379 (32.9) | | 11 (24.4) | 47 (37) | | 16 (53.3) | 26 (38.8) | | 21 (67.7) | 24 (32.4) | |
| D | 22 (5.7) | 166 (14.4) | | 9 (20) | 25 (19.7) | | 2 (6.7) | 7 (10.4) | | 1 (3.2) | 2 (2.7) | |
| Histopathologic types, n (%) | | | | | | | | | | | | |
| Benign lesions | | | | | | | | | | | | |
| Fibroadenoma | 183 (47.2) | | | 11 (24.4) | | | 13 (43.3) | | | 12 (38.7) | | |
| Adenosis | 97 (25.1) | | | 2 (4.4) | | | 11 (36.7) | | | 11 (35.5) | | |
| Intraductal papilloma | 45 (11.6) | | | 2 (4.4) | | | 1 (3.3) | | | 4 (12.9) | | |
| Inflammation | 15 (3.9) | | | 1 (2.2) | | | 1 (3.3) | | | 1 (3.2) | | |
| Fibrocystic disease | 14 (3.9) | | | 0 | | | 0 | | | 1 (3.2) | | |
| Phyllodes tumor | 20 (5.2) | | | 3 (6.7) | | | 2 (6.7) | | | 2 (6.5) | | |
| Unknown/other | 13 (3.4) | | | 26 (57.8) | | | 2 (6.7) | | | 0 | | |
| Malignant lesions | | | | | | | | | | | | |
| Invasive ductal carcinoma | | 1032 (89.7) | | | 122 (96.1) | | | 44 (65.7) | | | 55 (74.3) | |
| Ductal carcinoma in situ | | 23 (1.9) | | | 1 (0.7) | | | 1 (1.5) | | | 5 (6.8) | |
| Invasive lobular carcinoma | | 28 (2.4) | | | 2 (1.5) | | | 1 (1.5) | | | 3 (4.1) | |
| Papillary carcinoma | | 28 (2.4) | | | 0 | | | 1 (1.5) | | | 2 (2.7) | |
| Mucinous adenocarcinoma | | 18 (1.6) | | | 2 (1.6) | | | 0 | | | 5 (6.8) | |
| Unknown/other | | 22 (1.9) | | | 0 | | | 20 (29.9) | | | 6 (8.1) | |

Note: SD = standard deviation.

a Significant difference between benign and malignant patients (p < 0.05).

Performance of the subnetwork

RefineNet achieved a DSC of 0.888 ± 0.101 in the internal testing set, which was higher than that of nnUNet and DeepLabV3. The visualised segmentation results of two examples also illustrated the superior performance of RefineNet. Detailed results are shown in Fig. 3. Therefore, RefineNet was adopted as the segmentation subnetwork of the FAPS.

Fig. 3.

Performance of the segmentation network. (A) Performance of the segmentation models, measured by the DSC, in the training and validation sets and the internal testing set. (B) Visualisation of segmentation results. (a) and (e) are the low-energy images of the CC and MLO views, respectively; (b) and (f) are the recombined images of the CC and MLO views, respectively; (c) and (g) are the ground-truth masks; and (d) and (h) are the segmentation results. Upper images: a 36-year-old woman with fibroadenoma; CEM images show a 6.4 cm lesion. The DSC is 0.955 for the CC view and 0.954 for the MLO view. Lower images: a 56-year-old woman with a phyllodes tumor; CEM images show a 3.5 cm lesion. The DSC is 0.906 for the CC view and 0.936 for the MLO view. CC = craniocaudal; MLO = mediolateral oblique; CEM = contrast-enhanced mammography; DSC = Dice similarity coefficient.

Using the predicted segmentation mask, Xception with channel fusion of the CC and MLO views achieved an AUC of 0.947 (95% CI: 0.916–0.978) for classification in the internal testing set, the best result in the ablation analysis. It was therefore incorporated into the FAPS as the classification network. Details are provided in Supplementary Table S1 and Supplementary Fig. S2.

Performance of the FAPS and comparison with radiologists

In the segmentation task, the FAPS achieved DSCs of 0.820 ± 0.182 and 0.837 ± 0.137 in the pooled external and prospective testing sets, respectively. In the classification task, adding the PPM raised the AUC of the FAPS in the internal testing set to 0.953 (95% CI: 0.925–0.981), although the improvement over the model without the module was not statistically significant (p > 0.05). In the pooled external and prospective testing sets, the FAPS achieved AUCs of 0.940 (95% CI: 0.894–0.987) and 0.891 (95% CI: 0.816–0.945), sensitivities of 0.955 (95% CI: 0.867–0.980) and 0.932 (95% CI: 0.843–0.975), specificities of 0.700 (95% CI: 0.504–0.846) and 0.613 (95% CI: 0.423–0.776), and accuracies of 0.876 (95% CI: 0.794–0.934) and 0.838 (95% CI: 0.754–0.903), respectively.
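The reported proportions follow from simple confusion-matrix arithmetic. In the sketch below, the counts are back-calculated by us from the reported values and the set sizes in Table 1 (67 malignant and 30 benign cases in the pooled external testing set; 74 and 31 in the prospective testing set); they are not reported directly in the text, and the function name is hypothetical. For example, 64/67 = 0.955 and (64 + 21)/97 = 0.876.

```python
def diagnostic_metrics(tp, fn, tn, fp):
    """Sensitivity, specificity and accuracy from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)   # malignant lesions correctly called malignant
    specificity = tn / (tn + fp)   # benign lesions correctly called benign
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    return sensitivity, specificity, accuracy


# Counts back-calculated from the reported proportions (hypothetical reconstruction).
external = diagnostic_metrics(tp=64, fn=3, tn=21, fp=9)
prospective = diagnostic_metrics(tp=69, fn=5, tn=19, fp=12)
print([round(v, 3) for v in external])     # [0.955, 0.7, 0.876]
print([round(v, 3) for v in prospective])  # [0.932, 0.613, 0.838]
```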

The sensitivity and specificity points of the six radiologists in the two testing sets are plotted together with the ROC curves of the FAPS in Fig. 4. The performance of the FAPS was comparable to that of the senior radiologists and higher than that of the junior radiologists. As shown in Table 2, the sensitivity of all radiologists was comparable to that of the FAPS, whereas the specificity of the FAPS was significantly higher than that of the radiologists (p < 0.05). This may be because radiologists sacrifice specificity to avoid missed diagnoses in routine clinical practice. The performance of each of the six radiologists is shown in Supplementary Table S2. In addition, the FAPS took approximately 6 s to classify a single breast lesion, much faster than the radiologists (3 min on average). The FAPS may therefore substantially improve diagnostic efficiency in clinical practice.

Fig. 4.

The performance of the FAPS and radiologists without and with FAPS assistance in the pooled external testing set (A) and prospective testing set (B). ROC = receiver operating characteristic; AUC = area under receiver operating characteristic curve; FAPS = fully automated pipeline system; R = radiologist.

Table 2.

The diagnostic performance of FAPS, radiologists alone, and FAPS-assisted radiologists.

| Group | Pooled external: sensitivity (95% CI) | Pooled external: specificity (95% CI) | Pooled external: accuracy (95% CI) | Prospective: sensitivity (95% CI) | Prospective: specificity (95% CI) | Prospective: accuracy (95% CI) |
|---|---|---|---|---|---|---|
| FAPS | 0.955 (0.867–0.988) | 0.700 (0.504–0.846) | 0.876 (0.794–0.934) | 0.932 (0.843–0.975) | 0.613 (0.423–0.776) | 0.838 (0.754–0.903) |
| Radiologists without FAPS assistance | | | | | | |
| Senior | 0.945 (0.853–0.986) | 0.667 (0.472–0.821) | 0.859 (0.706–0.912) | 0.923 (0.831–0.962) | 0.462 (0.295–0.643)a | 0.757 (0.546–0.819)a |
| Junior | 0.945 (0.853–0.986) | 0.444 (0.271–0.641) | 0.791 (0.651–0.880)a | 0.923 (0.831–0.962) | 0.365 (0.211–0.552)a | 0.759 (0.551–0.824) |
| All | 0.945 (0.853–0.986) | 0.556 (0.345–0.727)a | 0.825 (0.697–0.873)a | 0.923 (0.831–0.962) | 0.414 (0.248–0.597)a | 0.758 (0.549–0.821)a |
| Radiologists with FAPS assistance | | | | | | |
| Senior | 0.960 (0.878–0.989) | 0.689 (0.523–0.889) | 0.876 (0.794–0.934) | 0.950 (0.869–0.989) | 0.430 (0.275–0.601) | 0.797 (0.702–0.883) |
| Junior | 0.960 (0.878–0.989) | 0.478 (0.336–0.694) | 0.811 (0.642–0.845) | 0.932 (0.843–0.975) | 0.452 (0.283–0.631) | 0.791 (0.605–0.875) |
| All | 0.960 (0.878–0.989) | 0.583 (0.424–0.742) | 0.844 (0.701–0.894) | 0.941 (0.857–0.976) | 0.441 (0.277–0.618) | 0.794 (0.612–0.879) |

Note: FAPS = fully automated pipeline system; 95% CI = 95% confidence interval.

a Significant difference between the radiologists alone and the radiologists with FAPS assistance (p < 0.05).

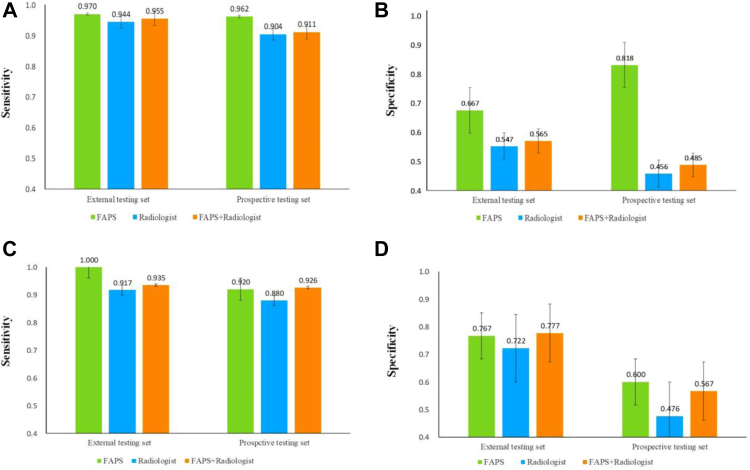

Radiologists’ performance with FAPS assistance

As shown in Table 2 and Fig. 4, FAPS assistance increased the sensitivity of all radiologists by 1.5 percentage points and the specificity by 2.7 percentage points in the pooled external testing set, and the sensitivity by 1.8 percentage points and the specificity by 2.7 percentage points in the prospective testing set. In addition, with FAPS assistance, the average agreement among all radiologists improved from 0.444 to 0.582 in the pooled external testing set and from 0.312 to 0.442 in the prospective testing set. Detailed results are shown in Fig. 5. Finally, we analysed the subgroups of dense breasts and small lesions, in which accurate judgement is difficult for radiologists because dense glandular tissue can obscure lesions and small lesions are hard to characterise. As shown in Fig. 6, the sensitivity and specificity of the radiologists were lower than those of the FAPS in both subgroups, but their performance improved with FAPS assistance.

Fig. 5.

The agreement degree of pairs of radiologists without and with FAPS assistance in the pooled external testing set (A and B) and prospective testing set (C and D). FAPS = fully automated pipeline system.

Fig. 6.

Subgroup analysis in the pooled external and prospective testing sets. Sensitivity and specificity with or without FAPS-assisted diagnosis in the dense breast subgroup (A and B) and the lesion diameter ≤2 cm subgroup (C and D). FAPS = fully automated pipeline system.

Adjustment of BI-RADS category 4 with FAPS assistance

As shown in Supplementary Table S3, radiologists tended to upgrade the BI-RADS categories of malignant lesions, whereas they downgraded the categories of benign lesions cautiously to avoid missed diagnoses. When the FAPS-predicted risk was available, BI-RADS category 4A lesions were successfully downgraded in two and three patients on average in the pooled external and prospective testing sets, respectively. The clinical management of these patients changed from tissue diagnosis (biopsy) to short-term follow-up, avoiding unnecessary biopsy and reducing the medical burden. In addition, BI-RADS category 4 lesions were upgraded in 11 and 13 patients on average in the two testing sets, respectively; management strategies should be reconsidered for these patients.

Application of the FAPS for controversial cases

As shown in Fig. 7, we selected four typical cases on which the radiologists disagreed for analysis. The heatmaps visualise the regions that contributed most to the model's decisions; red regions carry larger weights, indicating where the FAPS found the most predictive information for classifying breast lesions. In all four cases, the FAPS correctly focused on the lesion area. The performance of the six radiologists in the four controversial cases is shown in Supplementary Table S4. As shown in Fig. 7A and C, FAPS assistance improved the radiologists' performance. However, errors remained, as shown in Fig. 7B and D. In Fig. 7B, the shape, margin, and enhancement pattern of the benign phyllodes tumor were easily confused with those of a malignant one, leading to incorrect judgements by both the FAPS and the radiologists. In Fig. 7D, the invasive ductal carcinoma showed enhancement only at its margin on the recombined image, so the centre of the heatmap received relatively low weight, ultimately leading to an incorrect prediction; one junior radiologist was misled by the FAPS. Even so, with FAPS assistance, the agreement among the six radiologists in these four cases improved from 0.206 to 0.378.

Fig. 7.

Heatmap analysis of four controversial cases. For each case, the three images are the low-energy image (a), the recombined image (b), and the heatmap (c) of the CC view. The red regions have higher predictive significance than the green and blue regions. (A) Images in a 36-year-old woman with fibroadenoma. CEM images show a 6.4 cm lesion. (B) Images in a 56-year-old woman with a phyllodes tumor. CEM images show a 3.5 cm lesion. (C) Images in a 53-year-old woman with invasive ductal carcinoma. CEM images show a 2.2 cm lesion. (D) Images in a 63-year-old woman with invasive ductal carcinoma. CEM images show a 4.8 cm lesion. FAPS = fully automated pipeline system; BI-RADS = Breast Imaging Reporting and Data System; CC = craniocaudal; MLO = mediolateral oblique; R = radiologist; CEM = contrast-enhanced mammography.
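The heatmaps in Fig. 7 weight image regions by their contribution to the classification. The reference list cites Grad-CAM (ref. 23), and the sketch below assumes heatmaps of that type. It shows only the core Grad-CAM arithmetic in NumPy: given the activations of a chosen convolutional layer and the gradients of the target class score with respect to them (these require the trained network and are simply taken as inputs here), each channel is weighted by its spatially averaged gradient, the weighted channels are summed, and negative values are clipped. The function names, the toy arrays, and the nearest-neighbour upsampling helper are illustrative choices, not the FAPS code.

```python
import numpy as np


def grad_cam(feature_maps: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Core Grad-CAM step, independent of any deep learning framework.

    feature_maps: activations A^k of the target layer, shape (K, H, W).
    gradients:    d(score of target class)/dA^k, same shape (K, H, W).
    Returns an (H, W) heatmap scaled to [0, 1].
    """
    if feature_maps.shape != gradients.shape:
        raise ValueError("feature maps and gradients must have the same shape")
    # alpha_k: global-average-pooled gradient for each channel.
    alphas = gradients.mean(axis=(1, 2))  # shape (K,)
    # Weighted sum over channels, then ReLU to keep positive evidence only.
    cam = np.maximum(np.tensordot(alphas, feature_maps, axes=1), 0.0)  # (H, W)
    peak = cam.max()
    return cam / peak if peak > 0 else cam


def upsample_nearest(heatmap: np.ndarray, factor: int) -> np.ndarray:
    """Nearest-neighbour upsampling so the heatmap can be overlaid on the image."""
    return np.kron(heatmap, np.ones((factor, factor)))


# Toy example: two 2x2 channels; channel 0 supports the class, channel 1 opposes it.
A = np.array([[[1.0, 0.0], [0.0, 0.0]],
              [[0.0, 0.0], [0.0, 1.0]]])
G = np.stack([np.ones((2, 2)), -np.ones((2, 2))])
cam = grad_cam(A, G)
print(cam)  # 1.0 at the top-left pixel, 0.0 elsewhere
print(upsample_nearest(cam, 4).shape)  # (8, 8)
```

In practice the heatmap is upsampled to the input resolution (often bilinearly rather than by the nearest-neighbour shortcut used here) and rendered with a colour map, so that high values appear red and low values blue, as in Fig. 7.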

To better understand the behaviour of FAPS, we further analysed all cases that FAPS misclassified and found that most errors occurred in adenosis with dense glandular tissue. Moreover, FAPS failed to correctly classify any of the four phyllodes tumors in the two testing sets. However, the radiologists also performed poorly on such cases. The histopathological types of the FAPS prediction errors are shown in Supplementary Fig. S3.

Discussion

In this study, we developed a robust FAPS for the automated segmentation and classification of breast lesions on CEM images, which achieved DSCs of 0.820 and 0.837 and AUCs of 0.940 and 0.891 in the pooled external and prospective testing sets, respectively. Moreover, the FAPS-assisted strategy can improve radiologists' performance and guide adjustments to patients' clinical management strategies. To the best of our knowledge, this is the first study to develop a fully automated system for CEM images with high accuracy and diagnostic efficiency, and it is expected to improve the early diagnosis rate of breast cancer and relieve the workload of radiologists.
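The DSC and AUC values above follow standard definitions. For readers who want to compute the same metrics on their own data, a minimal NumPy sketch of both is given below; it is not the study's evaluation code, the function names and toy inputs are illustrative, and the AUC uses the rank-based (Mann-Whitney) formulation with ties counted as one half. Confidence intervals such as those reported for the AUCs would need an additional procedure (for example, bootstrapping), which is not shown.

```python
import numpy as np


def dice(pred_mask: np.ndarray, true_mask: np.ndarray) -> float:
    """Dice similarity coefficient 2|P ∩ T| / (|P| + |T|) for binary masks."""
    p = np.asarray(pred_mask, dtype=bool)
    t = np.asarray(true_mask, dtype=bool)
    denom = int(p.sum() + t.sum())
    if denom == 0:
        return 1.0  # convention: two empty masks agree perfectly
    return 2.0 * int(np.logical_and(p, t).sum()) / denom


def roc_auc(scores, labels) -> float:
    """AUC as P(score of a random malignant case > score of a random benign case).

    Mann-Whitney formulation; tied scores contribute one half.
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    pos, neg = scores[labels], scores[~labels]
    if pos.size == 0 or neg.size == 0:
        raise ValueError("need at least one malignant and one benign case")
    diff = pos[:, None] - neg[None, :]  # every malignant vs every benign pair
    return float((diff > 0).mean() + 0.5 * (diff == 0).mean())


pred = np.array([[1, 1, 0], [1, 1, 0], [0, 0, 0]])
truth = np.array([[1, 1, 0], [1, 0, 0], [0, 0, 0]])
print(dice(pred, truth))  # 6/7, about 0.857
print(roc_auc([0.9, 0.7, 0.4, 0.2], [1, 0, 1, 0]))  # 0.75
```

The pairwise AUC comparison grows with the product of malignant and benign counts, which is fine for test sets of a few hundred lesions; for much larger sets a sort-based rank computation is the usual alternative.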

Manual segmentation is time-consuming and labour-intensive, which hinders its adoption in clinical practice. Some studies have begun to explore multitask networks to avoid manual segmentation.11,24,25 However, current research based on CEM images still relies on manual annotation. We proposed the first fully automated design, FAPS, that uses CEM images to perform both the segmentation and the classification of breast lesions. The automated pipeline takes approximately 6 s to complete the whole task for a single breast lesion, which could substantially improve the diagnostic efficiency for breast lesions and reduce the workload of radiologists.

In clinical practice, both the low-energy and the recombined CEM images are used to diagnose breast lesions. However, how to effectively fuse and exploit the features of these images remains an open problem. Previous studies have attempted to fuse features from the CC and MLO views using deep learning.19,20 After global average pooling of the feature maps, the resulting one-dimensional feature vectors were concatenated and used for prediction. In contrast, our study adopted channel fusion, in which the feature maps are first concatenated along the channel dimension, and channel fusion was confirmed to outperform feature fusion. Furthermore, PPM captures global context by pooling features over sub-regions at multiple scales, as demonstrated in a previous study.26 Our results showed that FAPS performed better when PPM was adopted.
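To make the contrast between the two fusion strategies concrete, the NumPy sketch below works on random (C, H, W) feature maps from two inputs, for example maps derived from the low-energy and recombined images; which inputs FAPS actually fuses, and at which layer, is an assumption here. Feature fusion pools each map to a vector before concatenation, discarding spatial layout, whereas channel fusion concatenates the maps along the channel axis and keeps the spatial grid. A simplified pyramid pooling module in the spirit of ref. 26 is included: it average-pools the fused map over several grid sizes, upsamples each result back to the input size, and concatenates everything with the input. The bin sizes (1, 2, 4), the shapes, and all function and variable names are hypothetical; the 1×1 convolutions that PSPNet applies to each pooled branch are omitted, and this is not the FAPS implementation.

```python
import numpy as np


def feature_fusion(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Global-average-pool each (C, H, W) map to a C-vector, then concatenate -> (2C,)."""
    return np.concatenate([a.mean(axis=(1, 2)), b.mean(axis=(1, 2))])


def channel_fusion(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Concatenate (C, H, W) maps along the channel axis -> (2C, H, W)."""
    return np.concatenate([a, b], axis=0)


def avg_pool_to_grid(x: np.ndarray, bins: int) -> np.ndarray:
    """Average-pool (C, H, W) to (C, bins, bins); assumes H and W divisible by bins."""
    c, h, w = x.shape
    if h % bins or w % bins:
        raise ValueError("H and W must be divisible by the number of bins")
    return x.reshape(c, bins, h // bins, bins, w // bins).mean(axis=(2, 4))


def pyramid_pooling(x: np.ndarray, bin_sizes=(1, 2, 4)) -> np.ndarray:
    """Simplified PPM: pool at several scales, upsample (nearest), concatenate."""
    c, h, w = x.shape
    branches = [x]
    for bins in bin_sizes:
        pooled = avg_pool_to_grid(x, bins)  # (C, bins, bins)
        # Repeat each pooled cell back over the region it summarises.
        up = pooled.repeat(h // bins, axis=1).repeat(w // bins, axis=2)  # (C, H, W)
        branches.append(up)
    return np.concatenate(branches, axis=0)  # (C * (1 + len(bin_sizes)), H, W)


rng = np.random.default_rng(0)
low_energy_feats = rng.random((64, 8, 8))  # hypothetical (C, H, W) features
recombined_feats = rng.random((64, 8, 8))

print(feature_fusion(low_energy_feats, recombined_feats).shape)  # (128,)
fused = channel_fusion(low_energy_feats, recombined_feats)
print(fused.shape)                   # (128, 8, 8)
print(pyramid_pooling(fused).shape)  # (512, 8, 8)
```

The shapes show the practical difference in this sketch: after feature fusion no spatial grid remains for a module such as PPM to pool over, whereas channel fusion preserves it, so multiscale context can still be extracted from the fused maps.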

In a previous study, Dalmis et al.27 used a random forest classifier to combine the outputs of multiparametric MRI-based deep learning with clinical information to classify 576 breast lesions. Perek et al.21 and Song et al.20 constructed CNNs based on CEM images from 54 and 95 patients, respectively, to discriminate benign from malignant breast lesions. However, models developed in such small, single-centre studies may fail to learn the diversity of image features, and their generalisation performance was not tested. In addition, retrospective designs may introduce bias relative to real clinical settings. Large-sample training together with multicentre and prospective validation is therefore necessary for deep learning models. Recently, Song et al.20 attempted to address these challenges by constructing a deep learning model trained on multimodal breast ultrasound images of 634 patients from two hospitals and prospectively testing it on 141 patients. However, breast ultrasound is prone to false positives because image acquisition is highly operator-dependent.28 Considering these problems, we trained FAPS on CEM images and evaluated it in multicentre and prospective testing sets.

Moreover, previous studies did not clarify the role of artificial intelligence in assisting clinical decision-making.19,20 For example, Patel et al.29 only compared the performance of their model with that of radiologists. In our study, we demonstrated that FAPS can improve the diagnostic performance of radiologists in both testing sets, even in the subgroups of dense breasts and small lesions. In addition, with FAPS assistance, the BI-RADS category 4 assessments of 12.4% and 13.3% of patients were adjusted in the two testing sets, which may play an important role in guiding the selection of clinical management strategies. Admittedly, FAPS may sometimes make an incorrect diagnosis or mislead radiologists. Further analysis of these instances revealed that several challenging cases had insufficient image information or poorly defined characteristics, which also confused the radiologists. Nevertheless, the instances in which FAPS misled radiologists occurred mainly among junior radiologists, which was attributable to their limited experience or lack of confidence in their own diagnoses.

Our study has several limitations. First, patients with multiple lesions were excluded because their lesions might not have one-to-one pathological correspondence. Because of segmentation constraints, non-mass lesions and lesions visible in only a single view were also excluded. This selection bias may have led to overly optimistic results. To meet future application needs, it may be necessary to include patients with a wider variety of lesion types, such as multifocal and non-mass lesions. Second, deep learning is highly data-dependent, and more data from multiple institutions are needed for the predictive model to learn the diverse characteristics of breast lesions. However, FAPS was developed on a single training cohort in this study, which may be insufficient to capture the diversity of data from multiple institutions. Third, all patients tested in this study were from China, and only from its southern and eastern regions. To better evaluate the generalisation performance of the model, it should be tested on more cross-regional and cross-national data. Fourth, mean age differed among the datasets, and also between the malignant and benign cases. Although an age-matched analysis confirmed that this difference had little impact on the results, further verification is needed. Finally, deep learning is an extremely complex black-box structure, and its mechanisms remain far from fully explained. In the future, multi-omics factors such as genomic data should be further investigated.

In conclusion, FAPS provides a noninvasive method for the segmentation and classification of breast lesions on CEM images; it exhibits superior performance and can serve clinical practice well. In the future, including a wider variety of lesion types and further prospective multicentre validation will provide stronger evidence for the performance of FAPS in assisting clinicians.

Contributors

TZ, NM, HZ, and CX were responsible for concept and design. TZ and FL provided statistical analysis. All authors were involved in drafting and technical support in deep learning methods. XL, TC, JG, SZ, ZL, YG, SW, FZ, HM, and HX were responsible for acquisition, analysis, or interpretation of data. TZ and FL were involved in drafting the manuscript. NM, HZ, and CX had full access to all the data and verified the underlying data. All authors were involved in reviewing the manuscript and approved the final manuscript for submission.

Data sharing statement

The network and source code are fully available (https://github.com/yyyhd/FAPS). The data supporting the findings of this study are available from the corresponding author upon request.

Declaration of interests

HX received funding from the Natural Science Foundation of Shandong Province of China (ZR2021MH120). NM received funding from the Taishan Scholar Foundation of Shandong Province of China (tsqn202211378), the National Natural Science Foundation of China (82001775), and the Special Fund for Breast Disease Research of Shandong Medical Association (YXH2021ZX055). All other authors declare no competing interests.

Acknowledgments

We thank all study participants. The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as potential conflicts of interest. The study was supported by the Taishan Scholar Foundation of Shandong Province of China (tsqn202211378), National Natural Science Foundation of China (82001775), Natural Science Foundation of Shandong Province of China (ZR2021MH120), and Special Fund for Breast Disease Research of Shandong Medical Association (YXH2021ZX055).

Footnotes

Supplementary data related to this article can be found at https://doi.org/10.1016/j.eclinm.2023.101913.

Contributor Information

Cong Xu, Email: 616574369@qq.com.

Haicheng Zhang, Email: haicheng92@126.com.

Ning Mao, Email: maoning@pku.edu.cn.

Appendix A. Supplementary data

References

- 1. Sung H., Ferlay J., Siegel R.L., et al. Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J Clin. 2021;71(3):209–249. doi: 10.3322/caac.21660.

- 2. Siegel R.L., Miller K.D., Fuchs H.E., Jemal A. Cancer statistics, 2021. CA Cancer J Clin. 2021;71(1):7–33. doi: 10.3322/caac.21654.

- 3. Pace L.E., Keating N.L. A systematic assessment of benefits and risks to guide breast cancer screening decisions. JAMA. 2014;311(13):1327–1335. doi: 10.1001/jama.2014.1398.

- 4. Kim H.-E., Kim H.H., Han B.-K., et al. Changes in cancer detection and false-positive recall in mammography using artificial intelligence: a retrospective, multireader study. Lancet Digit Health. 2020;2(3):e138–e148. doi: 10.1016/S2589-7500(20)30003-0.

- 5. Sogani J., Mango V.L., Keating D., Sung J.S., Jochelson M.S. Contrast-enhanced mammography: past, present, and future. Clin Imaging. 2021;69:269–279. doi: 10.1016/j.clinimag.2020.09.003.

- 6. Lee C.H., Phillips J., Sung J.S., Lewin J.M., Newell M.S. Contrast Enhanced Mammography (CEM) (A supplement to ACR BI-RADS® mammography 2013). American College of Radiology; 2022. https://www.acr.org/-/media/ACR/Files/RADS/BI-RADS/BIRADS_CEM_2022.pdf

- 7. Lee-Felker S.A., Tekchandani L., Thomas M., et al. Newly diagnosed breast cancer: comparison of contrast-enhanced spectral mammography and breast MR imaging in the evaluation of extent of disease. Radiology. 2017;285(2):389–400. doi: 10.1148/radiol.2017161592.

- 8. Jiao Z., Choi J.W., Halsey K., et al. Prognostication of patients with COVID-19 using artificial intelligence based on chest x-rays and clinical data: a retrospective study. Lancet Digit Health. 2021;3(5):e286–e294. doi: 10.1016/S2589-7500(21)00039-X.

- 9. Venkadesh K.V., Setio A.A.A., Schreuder A., et al. Deep learning for malignancy risk estimation of pulmonary nodules detected at low-dose screening CT. Radiology. 2021;300(2):438–447. doi: 10.1148/radiol.2021204433.

- 10. Lu L., Dercle L., Zhao B., Schwartz L.H. Deep learning for the prediction of early on-treatment response in metastatic colorectal cancer from serial medical imaging. Nat Commun. 2021;12(1):6654. doi: 10.1038/s41467-021-26990-6.

- 11. von Schacky C.E., Wilhelm N.J., Schäfer V.S., et al. Multitask deep learning for segmentation and classification of primary bone tumors on radiographs. Radiology. 2021;301(2):398–406. doi: 10.1148/radiol.2021204531.

- 12. Mayerhoefer M.E., Materka A., Langs G., et al. Introduction to radiomics. J Nucl Med. 2020;61(4):488–495. doi: 10.2967/jnumed.118.222893.

- 13. Russakovsky O., Deng J., Su H., et al. ImageNet large scale visual recognition challenge. Int J Comput Vis. 2015;115(3):211–252.

- 14. Si K., Xue Y., Yu X., et al. Fully end-to-end deep-learning-based diagnosis of pancreatic tumors. Theranostics. 2021;11(4):1982–1990. doi: 10.7150/thno.52508.

- 15. Gao R., Zhao S., Aishanjiang K., et al. Deep learning for differential diagnosis of malignant hepatic tumors based on multi-phase contrast-enhanced CT and clinical data. J Hematol Oncol. 2021;14(1):154. doi: 10.1186/s13045-021-01167-2.

- 16. Peng S., Liu Y., Lv W., et al. Deep learning-based artificial intelligence model to assist thyroid nodule diagnosis and management: a multicentre diagnostic study. Lancet Digit Health. 2021;3(4):e250–e259. doi: 10.1016/S2589-7500(21)00041-8.

- 17. Wang L., Ding W., Mo Y., et al. Distinguishing nontuberculous mycobacteria from Mycobacterium tuberculosis lung disease from CT images using a deep learning framework. Eur J Nucl Med Mol Imaging. 2021;48(13):4293–4306. doi: 10.1007/s00259-021-05432-x.

- 18. Wang Y., Feng Y., Zhang L., Wang Z., Lv Q., Yi Z. Deep adversarial domain adaptation for breast cancer screening from mammograms. Med Image Anal. 2021;73. doi: 10.1016/j.media.2021.102147.

- 19. Song J., Zheng Y., Xu C., Zou Z., Ding G., Huang W. Improving the classification ability of network utilizing fusion technique in contrast-enhanced spectral mammography. Med Phys. 2022;49(2):966–977. doi: 10.1002/mp.15390.

- 20. Song J., Zheng Y., Zakir Ullah M., et al. Multiview multimodal network for breast cancer diagnosis in contrast-enhanced spectral mammography images. Int J Comput Assist Radiol Surg. 2021;16(6):979–988. doi: 10.1007/s11548-021-02391-4.

- 21. Perek S., Kiryati N., Zimmerman-Moreno G., Sklair-Levy M., Konen E., Mayer A. Classification of Contrast-Enhanced Spectral Mammography (CESM) images. Int J Comput Assist Radiol Surg. 2018;14(2):249–257. doi: 10.1007/s11548-018-1876-6.

- 22. Xi I.L., Zhao Y., Wang R., et al. Deep learning to distinguish benign from malignant renal lesions based on routine MR imaging. Clin Cancer Res. 2020;26(8):1944–1952. doi: 10.1158/1078-0432.CCR-19-0374.

- 23. Selvaraju R.R., Cogswell M., Das A., Vedantam R., Parikh D., Batra D. Grad-CAM: visual explanations from deep networks via gradient-based localization. In: IEEE International Conference on Computer Vision (ICCV); 2017:618–626.

- 24. Jin C., Yu H., Ke J., et al. Predicting treatment response from longitudinal images using multi-task deep learning. Nat Commun. 2021;12(1):1851. doi: 10.1038/s41467-021-22188-y.

- 25. Choi Y.S., Bae S., Chang J.H., et al. Fully automated hybrid approach to predict the IDH mutation status of gliomas via deep learning and radiomics. Neuro Oncol. 2021;23(2):304–313. doi: 10.1093/neuonc/noaa177.

- 26. Zhao H., Shi J., Qi X., Wang X., Jia J. Pyramid scene parsing network. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2017:2881–2890.

- 27. Dalmis M.U., Gubern-Merida A., Vreemann S., et al. Artificial intelligence-based classification of breast lesions imaged with a multiparametric breast MRI protocol with ultrafast DCE-MRI, T2, and DWI. Invest Radiol. 2019;54(6):325–332. doi: 10.1097/RLI.0000000000000544.

- 28. Shen Y., Shamout F.E., Oliver J.R., et al. Artificial intelligence system reduces false-positive findings in the interpretation of breast ultrasound exams. Nat Commun. 2021;12(1):5645. doi: 10.1038/s41467-021-26023-2.

- 29. Patel B.K., Ranjbar S., Wu T., et al. Computer-aided diagnosis of contrast-enhanced spectral mammography: a feasibility study. Eur J Radiol. 2018;98:207–213. doi: 10.1016/j.ejrad.2017.11.024.
