Abstract

The brain integrates volition, cognition, and consciousness seamlessly over three hierarchical (scale-dependent) levels of neural activity for their emergence: a causal or ‘hard’ level, a computational (unconscious) or ‘soft’ level, and a phenomenal (conscious) or ‘psyche’ level respectively. The cognitive evolution theory (CET) is based on three general prerequisites: physicalism, dynamism, and emergentism, which entail five consequences about the nature of consciousness: discreteness, passivity, uniqueness, integrity, and graduation. CET starts from the assumption that brains should have primarily evolved as volitional subsystems of organisms, not as prediction machines. This emphasizes the dynamical nature of consciousness in terms of critical dynamics to account for metastability, avalanches, and self-organized criticality of brain processes, then coupling it with volition and cognition in a framework unified over the levels. Consciousness emerges near critical points, and unfolds as a discrete stream of momentary states, each volitionally driven from oldest subcortical arousal systems. The stream is the brain’s way of making a difference via predictive (Bayesian) processing. Its objective observables could be complexity measures reflecting levels of consciousness and its dynamical coherency to reveal how much knowledge (information gain) the brain acquires over the stream. CET also proposes a quantitative classification of both disorders of consciousness and mental disorders within that unified framework.

Keywords: Brain dynamics, Consciousness, Metastability, Criticality, Complexity, Bayesian brain, Mental disorders

Introduction

Recent advances in artificial intelligence (AI), inspired by neurobiology, support the idea that consciousness could arise from machine learning in exclusively computational ways without requiring any kind of freedom from artificial neural networks, if these were endowed with the global architecture for self-monitoring and metacognition (Lake et al. 2017). Machine consciousness might progress by investigating the architecture, then transferring those insights into computer algorithms (Dehaene et al. 2017). However, what criterion of conscious experience should be reliable there? How can we say with confidence that a machine is or is not conscious, if the machine operates like humans? The existing tests for machine consciousness under criteria, such as flexibility, improvisation, spontaneous problem-solving (Pennartz et al. 2019), are widely practicable in neuroscience, AI studies, and robotics, but they depend ultimately on our subjective interpretation of behavior (Elamrani and Yampolskiy 2019). Consciousness as a phenomenon that is self-evidential through Descartes’ cogito remains elusive. How could consciousness be certified in any particular case beyond reportability?

To answer these questions, the science of consciousness has to explain how the brain integrates volition, cognition (including perception and memory), and consciousness seamlessly over three hierarchical levels of brain dynamics respectively: (i) a causal or ‘hard’ level, (ii) a computational (unconscious) or ‘soft’ level, and (iii) a phenomenal (conscious) or ‘psyche’ level. Although this schema suggests an intuitive analogy with a computer’s hardware and software, the analogy serves mainly to indicate the absence of a psyche level, i.e., consciousness, in computers, for a reason unknown to us. Nonetheless, the division would still be trivial unless it were put upon a strict physical foundation. The cognitive evolution theory (CET), outlined here, argues that such a foundation can indeed be proposed by assuming that the levels for the emergence of volition, cognition, and consciousness are scale-dependent. Each of these phenomena can be best accounted for at a separate scale of emergence.

In the literature, dividing a system of interest into different spatiotemporal scales is typically defined across micro-, meso- and macroscales. Their further specification depends on the size and nature of the system of interest (e.g., the Solar system vs. the cell). Accordingly, CET relates volition, cognition (absorbing perception and memory), and consciousness to these three scales of neural activity—neuronal, modular, and whole-brain dynamics.

Volition

Generally, volition is concerned with internally generated or self-initiated (conscious or unconscious) action. It must necessarily have causal power. Although causation can be described at various spatiotemporal scales, depending on the size and relevant dynamics of a system of interest, a microscale always offers a more rigorous and fine-grained picture than a meso- or a macroscale. Because every coarser scale of description is biased by averaging many variables into a single one, micro-causation alone is, in fact, responsible for causal processes at all coarser scales. Ignoring this fact generates fallacious concepts in cognitive neuroscience such as ‘downward causation’, which should be more correctly called ‘correlation’ (Atmanspacher and Rotter 2008). In particular, it is now commonly acknowledged that statistical dependencies based on functional connectivity patterns extracted from neuroimaging data can produce spurious causation which is only correlation (Reid et al. 2019; Mehler and Kording 2018; Weichwald and Peters 2021). Accordingly, CET argues that volition can genuinely be accounted for only at a causal (hard) level of physically interacting neurons. Thus, a hard level is to be associated exclusively with a microscale of brain dynamics (including the atomic and even the quantum scale, unless the latter can be explicitly neglected as noise at larger scales).

Cognition

In the simplest formulation, cognition (including perception and memory) is learning. This must be causally (biologically or artificially) implemented. It is in line with the fact that cognition, in contrast to volition, occurs not at a microscale of single neurons as binary input–output devices but at a mesoscale of networks of such devices, i.e., anatomical brain regions exchanging information at a computational (soft) level. Of course, it can be noted that cognition and sentience already occur at the level of unicellular organisms (Torday and Miller 2016; Baluška et al. 2021), but this is not of interest here. Importantly, in neural networks the computations cannot have causal power (allegedly via downward causation) to volitionally influence brain dynamics at the ultimate microscale. Otherwise, we should agree that computers or, at least, learning AI systems already have their share of free volition.

What is of interest here, as mentioned above, is that these systems have neither consciousness nor volition but may implement some sort of cognition and even outperform brains in some tasks. What, then, is special in brain cognition that generates conscious experience? CET argues that it is free volition. However, if volition is physically predetermined from the past, superdeterminism comes into play. Superdeterminism argues that the brain is exactly that: a learning machine indiscernible from those AI systems. To prevent superdeterminism, quantum randomness must somehow intrude into classical neural activity at a microscale. This line of reasoning leads many physics-oriented researchers to try to reconcile consciousness with quantum effects, which are clearly lacking in modern computers and AI systems.

Consciousness

Finally, conscious states emerge globally at a macroscale of the whole-brain network. This scale corresponds to a phenomenal (psyche) level, to which many volitional and cognitive systems contribute, thereby generating what is viewed as the neural correlates of consciousness (NCC) (Crick and Koch 2003). This psyche level is neither volitional nor cognitive but only representative of both. It is the self-evidential experience of a specious (Varela 1999) or remembered (Edelman 1989) present over which a person’s ‘way of being’ (Tononi 2008) unfolds as the stream of consciousness.

These levels of emergence may, at first sight, seem related to Marr’s (2010) tri-level explanation: computation level (why), algorithmic level (what), and implementation level (how), each suggesting its own context-dependent explanation of the same phenomenon (vision). In CET, by contrast, the levels are spatially scale-dependent, each being physically responsible for the emergence of a separate feature of the brain: volition, cognition, and consciousness respectively. Nor should this be confused with Zeki’s (2003) three spatiotemporal levels, each hierarchically nested within a larger one. Although scale-conditioned, these are again proposed for the same phenomenon: for micro-consciousness, for macro-consciousness, and for the unified experience of a person, composed of all those. In fact, Zeki’s approach does the opposite of CET by rendering consciousness ubiquitous and scale-independent. For example, Hunt and Schooler (2019) go further and suggest extending Zeki’s levels over the evolutionary timeline, beginning with a rudimentary form of consciousness in non-organic matter at an atomic scale. Going this way, one might then come to postulate proto-consciousness at a quantum scale (Hameroff and Penrose 2014). CET does not consider this conjecture.

This suggests a dynamical model based on a framework, drawn over diverse neuroscientific domains, with contributions from classical and quantum physics, critical dynamics, predictive processing, information theory, and evolutionary neuroscience to approach a general theory of consciousness. The approach is based on three general prerequisites: physicalism, dynamism, and emergentism. These entail five consequences about the nature of consciousness: discreteness, passivity, uniqueness, integrity, and graduation. CET starts from the assumption that brains should have primarily evolved as volitional subsystems of organisms at a hard level of a microscale, not as prediction machines at a soft level of a mesoscale (Knill and Pouget 2004; Clark 2013). Only then these two levels might account for the emergence of consciousness at a psyche level at a macroscale. This also argues that consciousness is a process that can be consistently described only as a temporal stream of discrete states.

There are now a number of dominant theories of consciousness, each identifying consciousness with something else: integrated information, global workspace, predictive processing, or self-monitoring. This does not make them rivals; rather, each is fragmentary in explaining how the brain integrates consciousness (psyche), cognition (soft), and volition (hard) seamlessly across the three hierarchical levels. Many authors attempt to compare the theories (Shea and Frith 2019; Hohwy and Seth 2020; Mashour et al. 2020; Doerig et al. 2020; Sattin et al. 2021; Del Pin et al. 2021; Signorelli et al. 2021; VanRullen and Kanai 2021), or even to converge them into one or another dynamical framework (Northoff and Lamme 2020; Chang et al. 2020; Cofré et al. 2020; Safron 2020).

Unlike the static theories above, CET shares certain features with two dynamical theories of consciousness, Operational Architectonics (OA) (Fingelkurts et al. 2010) and the Temporo-spatial theory (TTC) of Northoff and Huang (2017): they all describe the stream of consciousness, and do so in terms of critical brain dynamics, often also called scale-free dynamics (Stam and de Bruin 2004; He et al. 2010; He 2014; Fields et al. 2021). It is well known that self-organized criticality and scale-free topology facilitate each other (Heiney et al. 2021). CET refers to the concept of criticality (Bak et al. 1987; Kelso 1995; Blanchard et al. 2000; Chialvo 2010) as it is well grounded in thermodynamics and leads naturally to entropy-based concepts such as order, disorder, and complexity, which are all fundamental in CET. Scale-freeness, by contrast, originates from network science, where it is linked to small-world organization and self-similarity, upon which OA and, especially, TTC are based.

However, there are other distinctions between CET and these theories. Both OA and TTC seem to adopt the idea of James that the stream of consciousness should be continuous. Accordingly, conscious states are proposed to have duration, with an abrupt transition from one to another at critical points of brain dynamics (Fingelkurts et al. 2013; Northoff and Zilio 2022). The basic states, with a duration of about 200 ms, will then be hierarchically nested within more and more extended temporal slices up to a few seconds and further over long-range temporal scales, thereby generating self-similar patterns of ‘operational’ or ‘intrinsic’ spacetime. CET takes the opposite view, similar to that of Freeman’s cinematic theory (Freeman 2007; Kozma and Freeman 2017): the stream of consciousness consists of discrete states which are ignited transiently at a psyche level like momentary snapshots (VanRullen and Koch 2003; Herzog et al. 2020) just at moments of phase transitions, while the brain processes information continuously at a soft level in unconscious ways. The stream will then be formalized on the time continuum as a transitive and irreflexive chain of point-like conscious states. This is a principled distinction between CET and both these theories.
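The formal claim in the last paragraph can be spelled out with a short sketch. The symbols $s_k$, $t_k$ and $\prec$ are illustrative notation assumed here, not notation taken from the theory; the block only states what "transitive and irreflexive chain" means for point-like states indexed by instants of time.

```latex
% Assumed notation: s_k is the conscious state ignited at instant t_k.
\begin{align*}
  & S = \{ s_k \}_{k \in \mathbb{N}}, \qquad t_1 < t_2 < t_3 < \dots \\
  & s_i \prec s_j \iff t_i < t_j \\
  & \text{irreflexivity: } \neg (s_i \prec s_i) \\
  & \text{transitivity: } (s_i \prec s_j) \wedge (s_j \prec s_k) \Rightarrow s_i \prec s_k \\
  & \text{chain: } i \neq j \Rightarrow (s_i \prec s_j) \vee (s_j \prec s_i)
\end{align*}
```

Under this reading the states form a strict total order, and between any two successive instants $t_k$ and $t_{k+1}$ lies an interval in which processing continues at the soft level without a conscious state.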

The term ‘conscious state’ can have at least two very different meanings. On a strict physical account, the ‘state of consciousness’ is a state of the brain at a given moment, typically represented by some function. At that moment the brain may or may not hold a particular conscious state. The second meaning refers to consciousness in a general sense, as if averaged over time, for example, when one says that a patient is in a conscious state, i.e., in the state of permanent wakefulness. But this does not mean that the patient must be in a state of awareness at every moment, unless one neglects the rigorous notion of the mathematical continuum. To be continuous, consciousness should pervade every point on the time continuum, e.g., within a ‘sliding window’ (Fekete et al. 2018). In contrast, in a discrete stream, consciousness can occupy only separate points of the continuum that are exposed to a psyche level, without making the brain generally unconscious as it is, e.g., in sleep, in coma, or under anesthesia.

Historically, the hypothesis of temporally continuous consciousness is implicitly linked with another old belief of humans in the active role of consciousness, i.e., free will. Indeed, both assumptions need a physical model which would divide brain dynamics into two continuous parts: the “underground” for unconscious processing, and the “highway” for conscious processing as if those were going in parallel, yet requiring their own separate NCC for corresponding dynamics. It should then be assumed that the most part of its continuous time consciousness can routinely control information presented by sensorimotor regions to go on in completely deterministic ways but sometimes intervene in unconscious processing (underground?) to make its own free choice.

While it remains unclear whether or not OA and TTC admit free will, in CET consciousness is both discrete and passive. CET considers the ‘subjective feeling of continuity’ and the ‘experience of free will’ to be two interlinked illusions of consciousness generated by its self-evidential and representative nature: consciousness cannot in principle detect its own absence in the brain. In the stream, conscious experience will always be available on introspection as if it were self-initiated there.

CET argues, each conscious state must be volitionally driven from oldest subcortical arousal systems in the brainstem integrating functions of many vital systems and containing numerous cranial nuclei and white matter tracts to higher thalamocortical areas (Parvizi and Damasio 2001; Merker 2007). Only then those areas might be involved in perception and cognition at a soft level (Fig. 1). This is a reason why this theory is called CET. Here, the word ‘evolution’ combines two meanings—cognitive and biological. First, unlike ordinary (though complex) dynamical systems the brain is a system that learns and memorizes. This is not merely a dynamical but an evolutionary process. In other words, ‘cognitive evolution’ means cumulative cognitive neurodynamics which accumulate information (knowledge) over time. Only learning systems can evolve, and brains were created by biological evolution just for it. In Darwinist terms, they do it to promote their organisms’ adaptive success (fitness). This is a point where cognitive evolution of a particular brain over its lifetime (ontogeny) and biological evolution of the brain over species (phylogeny) converge to a timeline where they advance each other.

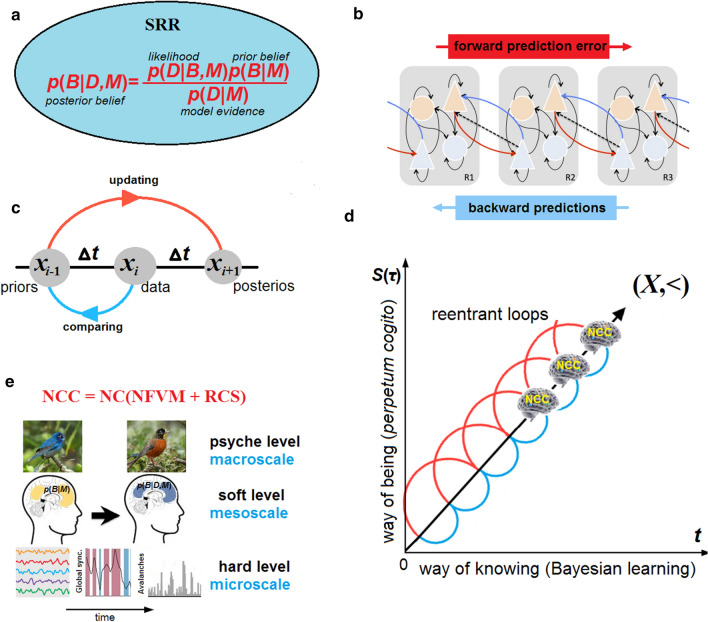

Fig. 1.

The origin of consciousness. CET starts from the assumption that the brain should have primarily evolved as volitional subsystems of organisms from simplest neural reflexes. At a causal (hard) level, those should put a principled psyche-matter divide between organisms, exploiting their stimulus-reactions repertoires freely, and non-living systems, governed completely by cause-effects interactions. On the evolutionary scale, memory and cognition should evolve together, thereby advancing each other. Their volition-driven unconscious cooperation would generate momentary conscious states over the stream at a phenomenal (psyche) level. Likewise, emotions can hardly be dissociated from self-awareness; their (limbic) neural substrates should evolve in parallel with conscious cortex-centered substrates, and motivate cognition by emotional decision-making in the functional integrity of the brain

While random gene mutations are responsible for the variety of species (and their brains), these alone might not be sufficient for evolution. Cognition is needed for action and goal-directed behavior. Under selection pressure, a brain that is more successful in its cognitive evolution by minimizing prediction-error survives and spreads its genes over generations. Those are material for new gene mutations. However, CET argues that, in both ontogeny and phylogeny, volition must be in place before cognition can come into play. Put simply, an error must be volitionally initiated before being cognitively minimized. Hence, on the evolutionary timeline, the brain should primarily have evolved as volitional subsystems of organisms (rooted in the most ancient part of the brain, the myelencephalon). Thus, in CET, volition must be causally accounted for at a microscale, yet placed anatomically in the brainstem.

It is remarkable that precisely this ancient brain region, which contains nuclei of the most primitive automatic functions for maintaining the body’s physiological homeostasis, such as regulating blood pressure, heart rate, and breathing, combines them with arousal centers responsible for the highest phenomenon of brain activity: consciousness. Instead of speculating whether or not consciousness has free will, CET argues that every state in the stream of consciousness must already be volition-driven. In this sense, the brainstem not only regulates the sleep cycle for maintaining general states of consciousness (wakefulness) over extended periods of time; it also volitionally initiates ‘pulsating’ consciousness (Freeman 2007), like a heartbeat, within those periods of wakefulness. The evolution of this passive and discrete consciousness from the simplest organisms to humans would then be a mere consequence of developing thalamocortical regions which might (i) modulate a volitional impulse from the myelencephalon and (ii) enrich the cognitive contents of consciousness over species.

After all, CET implies that not integrated information of irreducible causal mechanisms (Tononi 2008; Oizumi et al. 2014), architecture peculiarities of neural networks (Dehaene and Naccache 2001; Baars 2003), or predictive processing (Knill and Pouget 2004; Clark 2013; Seth 2014), but volition is a main obstacle that prevents computer scientists from making AI systems conscious. Just like ‘consciousness’, the concept of free will is far from obvious. While the Turing tests on machine consciousness can tell us nothing about the essence of consciousness, the free will tests lack a convincing theoretical paradigm for studying volition. Overall, free will is thought to be either illusory or hard to certify (Lavazza 2016). In general, the problem splits into two main accounts depending on how volition and consciousness are conceptualized.

In neuroscience, (i) consciousness is clearly taken to emerge from neural activity, and to be or not to be able to control unconscious processes. A typical conclusion is that consciousness has no control over neural processes, but the brain itself as a ‘Bayesian optimal estimator’ (Knill and Pouget 2004) can on its own part perform voluntary actions to minimize prediction-error or informational free energy (Friston et al. 2013). Thus, some kind of free volition is assumed to act on the authority of the brain not of consciousness itself. In this context, the volitional repertoires are usually viewed as a correction function in feedback circuitry (Clark 2013). The genuine causal freedom of such self-initiated actions is not questioned.

In physics, (ii) consciousness is presented mainly in the context of observer-dependent quantum phenomena. Superdeterminism is a hypothesis that all observed events and conscious observers themselves are completely controlled in the unambiguous causal ways from the Big Bang (‘t Hooft 2016). This claim is irrelevant to the computational theory of mind from the position (i), assuming that the brain itself implements hierarchical predictive processing constantly by bidirectional causal cascades (Friston 2008; Pezzulo et al. 2018). Instead, superdeterminism holds that all neural processes are only a small part of the global causal process going over the whole universe by actions of law. As regards the brain’s own counterfactual computations at a soft level, those cannot be dissociated from brain dynamics at a hard level to advance free volition despite determinism. Neither consciousness nor even the brain can have a bit of freedom. Bayesian active inference might be free of predetermination, if only its feedback circuits were closed causal loops, strictly forbidden in physics.

In this sense, the problem of free will put to the dichotomy between conscious volition and unconscious decision-making becomes unessential. The only scientifically legitimate question one can ask follows from the position (ii). Can free volition (causally independent of the past) be in principle feasible in the brain as a physical body governed by deterministic laws? A more profound conceptualization of free volition should be suggested under a criterion applicable universally to various biological and artificial systems. It happens that the problem of free will, outstanding over centuries, is intrinsically coupled with another general problem of conscious presence in those systems. Even if consciousness as a special state of matter can be measured unambiguously like mass or charge in physics (Tononi 2008; Oizumi et al. 2014; Tegmark 2015), the amount of information the system is able to integrate is secondary to the main question. What is special in this state of matter to discriminate exactly between conscious brains and non-conscious systems, integrating information as well?

But if volitional mechanisms of a system can be certified, this reveals a very special behavior, which could evolve to conscious properties by providing the system with computational power. Unlike machines, brains consist of neurons which are themselves living systems not merely binary devices (Signorelli and Meling 2021). A natural phenomenon that may then account for their autonomy lies in the quantum domain despite the fact that all significant neural processes occur apparently at classical spatiotemporal scales. On this assumption, primitive neural networks should have primarily evolved as free-volitional (quantum in origin) subsystems of organisms, not as deterministic prediction machines, requiring larger biological resources. Accordingly, their conscious properties, typically related to higher animals, should have appeared much later than their unconscious functions as, for example, in invertebrates (Brembs 2011).

To put it sharply from the position (ii), can invertebrates have some volitional mechanism, evolutionarily embedded in their neural networks to make a choice? How might the brain make a genuinely free choice not only accessible to an organism’s adaptive behavior but also evolved over species to conscious properties of higher animals? Conscious experience would then emerge as a byproduct of volitional mechanisms and cognitive thalamocortical computations based on oscillatory neural synchronization and complex patterns of brain dynamics (Ward 2011). In other words, the way Nature had chosen to evolve biological brains under natural selection can diverge significantly from the way of computer scientists in making AI systems.

The article is organized to cope more or less consistently with the multiple levels, aspects, and approaches in studying consciousness. After discussing the free will problem at a causal (hard) level and its relation to the active role of consciousness, we introduce the concept of the stream of consciousness into the framework of critical dynamics. The next section incorporates the volitional mechanism into brain dynamics to account for conscious states at a phenomenal (psyche) level. The gap between the two levels must be then filled with cognitive function at a computational (soft) level. CET adopts predictive processing as a strong candidate to provide a basis for explaining conscious contents processed unconsciously. Complexity measures are suggested in the next section to explain how consciousness might be statistically estimated. Then CET suggests the ‘cognition quantity’ measure that should account for the (algorithmic) coherency of cognitive processes and their impairments in mental disorders. The discussion section is given to the biological function of consciousness compared to machine consciousness.

Free will problem

The aim of this section is to account for volition at a causal (hard) level of brain dynamics.

In neuroscience, the free will problem is traditionally framed from position (i) as a test of whether consciousness makes a choice at will or the brain itself decides covertly in unconscious ways (Haynes et al. 2007; Guggisberg and Mottaz 2013; Schultze-Kraft et al. 2016). Since Libet’s (1985) findings, experiments have addressed the question directly: Can consciousness let an action emerging from the motor area go on, or block it with an explicit veto on the movement, implemented by the prefrontal areas? However, since any kind of intentional veto also has to be neurally processed, it can be noted that awareness itself comes after the decision has been made by the brain (Soon et al. 2013).

To put the problem at the fundamental physical level from the position (ii), CET will follow Bell’s approach in his famous no-go theorem (Bell 1993) and its modified version, the Free Will Theorem of Conway and Kochen (2008). Without entering into details of these theorems, the assumption of our interest here is one that concerns free will. Its conceptualization is postulated as the ability of agents to decide freely, for example, how to prepare an experiment or which measurement to perform. This is then presented by conditional probability as

$$P(a \mid \lambda) = P(a) \tag{1}$$

Here $a$ is an experimenter’s actual choice, and $\lambda$ stands for the hidden deterministic variables, conditioned on our incomplete knowledge about the dynamics of a system. Note that the system of interest is the experimenter’s brain, making a choice, not anything else. The variables $\lambda$ are assumed to embrace all necessary information about the past of both the experimenter and the environment. For clarity, this conceptualization does not discriminate between consciousness and the brain, being neutral as to the initiator of volition. Bell’s assumption given by Eq. (1) states that the experimenter’s actual choice has to be independent of the past (or, more exactly, of its past lightcone), so that the unconditional probability $P(a)$ holds.
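The independence condition can be illustrated with a small numerical sketch. It reads Eq. (1) as the standard statement that conditioning on the hidden variables leaves the probability of the choice unchanged, $P(a \mid \lambda) = P(a)$. The function name `satisfies_eq1`, the labels `lam0`, `lam1`, `x`, `y`, and both toy distributions are hypothetical and serve only as illustration.

```python
from fractions import Fraction


def satisfies_eq1(joint):
    """Return True if P(a | lam) == P(a) for every lam with nonzero probability.

    `joint` maps (lam, a) pairs to exact joint probabilities (Fractions).
    """
    lams = {lam for lam, _ in joint}
    choices = {a for _, a in joint}
    # Marginal distributions of the hidden variables and of the choice.
    p_lam = {l: sum(joint.get((l, a), Fraction(0)) for a in choices) for l in lams}
    p_a = {a: sum(joint.get((l, a), Fraction(0)) for l in lams) for a in choices}
    return all(
        joint.get((l, a), Fraction(0)) / p_lam[l] == p_a[a]
        for l in lams
        if p_lam[l] > 0
        for a in choices
    )


# Toy case 1 (assumed): the choice is statistically independent of the past.
free = {(l, a): Fraction(1, 4) for l in ("lam0", "lam1") for a in ("x", "y")}

# Toy case 2 (assumed): the hidden variables fully fix the choice.
fixed = {("lam0", "x"): Fraction(1, 2), ("lam1", "y"): Fraction(1, 2)}

print(satisfies_eq1(free), satisfies_eq1(fixed))
```

The first distribution satisfies the condition and the second violates it, which is the superdeterministic situation discussed in the text. Exact fractions are used so that the equality test is not affected by floating-point rounding.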

Clearly, if those hidden variables could exist in principle and enable us to make exact predictions ahead of time via $P(a \mid \lambda)$ for a certain choice of the experimenter, the choice would have to be written off as a subjective illusion, as is usually reported in Libet-type experiments conceived from position (i). Moreover, the variables would dismiss any volitional mechanism, even an unconscious one, from position (ii) as well. This would generally mean that by uncovering those hidden deterministic variables and applying them to artificial neural networks, the subject’s consciousness might be copied to run automatically on many digital clones, clearly with no freedom available there. Thus, the link between consciousness and volition becomes obvious once we note that it seems impossible to give any operational difference between a perfect machine predictor of a subject’s states and a machine copy of the subject’s consciousness, regardless of their nature (Aaronson 2016).

In contrast to classical information, however, quantum information cannot be uncovered, owing to a random wavefunction collapse that is not controlled by $\lambda$. An important consequence follows from the no-cloning theorem, which states that it is fundamentally impossible to make a perfect copy of an unknown quantum state because of its ‘privacy’ (Wootters and Zurek 2008). At the level of neuroscience and computer science, this also makes it impossible to clone a particular consciousness, if all its private states are quantum-triggered. On this condition, some free-volitional mechanism can be the only scientifically legitimate obstruction to machine-cloned consciousness.

The hypothesis that the brain is totally controlled by the hidden deterministic variables $\lambda$, contrary to Eq. (1), is called superdeterminism. Although many physicists (including Bell) find the hypothesis implausible, some still advocate it (’t Hooft 2016; Hossenfelder and Palmer 2020). Superdeterminism argues that brains are just deterministic (hence copyable) machines: humans do what the universe wants them to do while keeping in mind the illusion of free will. Its striking conclusion is that the outcome of a subject’s particular decision at any time should have been predetermined long before the subject’s birth. In fact, superdeterminism amounts to a scientifically rigorous version of old-fashioned fatalism (Gisin 2013). Fortunately, while conceived to banish any sort of mysticism from quantum mechanics (’t Hooft 2016), such as nonlocal (faster than light) correlations or the observer-dependent wavefunction collapse, superdeterminism leads inevitably to much more mystical consequences, such as ‘cosmic conspiracies’ that should violate the standard statistical inequality of the Bell theorem in precisely prepared quantum experiments (Gallicchio et al. 2014).

CET places Bell’s condition, given by Eq. (1), at its foundation as a mathematically rigorous formulation of free will: no hidden deterministic variables can have full control over brain dynamics. In the physical framework, however, there is simply no other legitimate way to account for volitional mechanisms besides quantum randomness, because classical processes in the brain rule out any other kind of genuine freedom (Yurchenko 2021). The probabilities in quantum mechanics are fundamentally different from those in statistical mechanics. In fact, statistical mechanics dealing with big data is still a deterministic theory. In contrast, quantum entanglement and superposition are widely used in cryptographic applications to generate the so-called Bell-certified random numbers that could not be prepared classically (Pironio et al. 2010). Hence, if we want to account for free volition, we need to admit quantum effects in the brain.
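The gap between classical and quantum statistics that underlies Bell certification can be made concrete with the CHSH form of the Bell inequality. The sketch below is a textbook illustration and not part of CET: the singlet-state correlation $E = -\cos(\alpha - \beta)$ and the measurement angles are standard choices assumed here, and the function names are hypothetical.

```python
import itertools
import math


def chsh(E):
    """CHSH combination S = E(0,0) - E(0,1) + E(1,0) + E(1,1)."""
    return E(0, 0) - E(0, 1) + E(1, 0) + E(1, 1)


def classical_bound():
    """Largest |S| over all deterministic local assignments of +/-1 outcomes."""
    best = 0
    for a0, a1, b0, b1 in itertools.product((-1, 1), repeat=4):
        A, B = (a0, a1), (b0, b1)
        # Each side's outcome depends only on its own setting (locality).
        best = max(best, abs(chsh(lambda i, j: A[i] * B[j])))
    return best


def singlet_value():
    """|S| for the assumed singlet-state correlation E = -cos(alpha - beta)."""
    alice = (0.0, math.pi / 2)            # assumed settings for one side
    bob = (math.pi / 4, 3 * math.pi / 4)  # assumed settings for the other side
    return abs(chsh(lambda i, j: -math.cos(alice[i] - bob[j])))


print(classical_bound(), singlet_value())
```

Because S is linear in the correlations, any probabilistic mixture of deterministic local strategies stays within the same bound of 2, whereas the quantum correlations reach $2\sqrt{2}$. An observed violation of the bound is what certifies that the outcomes were not fixed in advance by local hidden variables.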

Modern theories of consciousness can be divided into two camps, classical and quantum-inspired, depending on how they resolve the free will problem. Most of the dominant theories belong to the first camp. Although the free will problem is largely ignored there, they implicitly rely on classical statistical physics. Thus, all those theories are prone to superdeterminism. For example, to rescue conscious will within that classical account in the context of Libet-type experiments, some neuroscientists assume that noisy neural fluctuations can be involved in self-initiated actions (Schurger et al. 2016). They find that the key precursor process for triggering internally generated actions could be essentially random in a stochastic framework (Khalighinejad et al. 2018). Indeed, as the brain contains a huge number of neurons, causal neural processes can be estimated there mainly with the help of statistical descriptions. These descriptions, however, reflect the state of our knowledge, which by itself does not violate determinism. This is precisely why Bell-certification was suggested in cryptographic applications of quantum mechanics, for example, for generating a string of random numbers that a Turing machine could not compute. Analogously, Bell-certification should be applicable to the volitional mechanisms of the brain, in contrast to a mere reduction to classical stochastic noise.1

In contrast, the second camp pays much attention to quantum effects, which have now been well confirmed in biological systems, contrary to the expectation that they should be rapidly thermalized as noise in the warm and wet environment (O’Reilly and Olaya-Castro 2014; Chenu and Scholes 2015). It was proposed that large-scale quantum entanglement across the brain due to microtubules could endow consciousness with an active role in brain dynamics at a psyche level (Hameroff 2012; Hameroff and Penrose 2014) or be involved in long-lasting quantum cognitive processing due to spin-entangled Posner molecules at a soft level (Fisher 2015, 2017). These and other quantum-inspired models of consciousness and cognition (Sabbadini and Vitiello 2019; Georgiev 2020) are beyond the scope of this paper. Most importantly, CET does not belong to either of those two camps.

First of all, CET, as stated above, rejects the possibility that consciousness might somehow be active at a psyche level of brain dynamics. Second, to account for the brain’s free volition at a hard level, CET proposes a minimal use of quantum randomness at a microscale of neural activity, where volition causally originates, without resorting to much more mysterious macroscopic quantum effects. Thus, while dismissing any kind of conscious will at a psyche level of a macroscale, CET assumes that quantum randomness can influence brain dynamics at a hard level of a microscale. To this end, CET will recruit the molecular machinery of exocytosis, following the hypothesis of Beck and Eccles (1992, 1998) that the brain could utilize a quantum trigger of exocytosis in a synaptic cleft. Such a micro-event might be Bell-certified.

In solving this problem, CET meets a new obstacle, which, however, can naturally be overcome within the three levels of brain dynamics. It has been pointed out many times that randomness alone has nothing to do with free actions, which are caused by a reason, not by chance (Koch et al. 2009; Aaronson 2016). Only two ultimate explanations are possible there. First, if volition emerges unconsciously from completely deterministic neural processes to awareness, as is typically reported in Libet-type experiments, there is no genuine freedom in it, and this kind of volition can be ascribed to machines as well. Second, if a subject’s action were indeed free of the past, then it would be difficult to find a testable difference between physical randomness and behavioral freedom, albeit uncontrolled (Conway and Kochen 2008).

To reconcile causal freedom with cognitive control, a volitional mechanism, responsible for random quantized events at a hard level of a microscale, should be classically amplified and modulated across a mesoscale at a soft level of brain dynamics. A particular conscious state generated by the brain would then be passive at a psyche level but not predetermined from the past at a hard level. Thus, admitting quantum randomness via some neurobiological free volition mechanism (NFVM), such as the Beck-Eccles quantum trigger, is a matter of logical necessity to certify the brain’s genuine freedom to act, against a superdeterministic and/or classical stochastic account of its dynamics (Jedlicka 2017). Finally, CET places the NFVM into the arousal centers of the brainstem, the phylogenetically oldest part of the brain. We will return to this issue and incorporate the NFVM into brain dynamics after introducing the stream of consciousness.

Stream of consciousness in brain dynamics

The aim of this section is to formalize the relation between brain dynamics at a causal (hard) level, and consciousness at a phenomenal (psyche) level.

The notion of the stream of consciousness has been pervasive in the literature but never properly defined. Formalizing the stream could make our understanding of consciousness, which is associated with multiple meanings (see e.g., Sattin et al. 2021), more operational and distinguishable from different brain processes, in the same way as the development of classical mechanics and thermodynamics allowed physicists to distinguish weight from mass, or heat from temperature. Consciousness will remain elusive until we introduce a unified framework for brain dynamics and then separate conscious experience from all concomitant and overlapping neural processes maintained by different systems.

The basic prerequisites of CET are these.

Physicalism (causality): consciousness depends entirely on neural activity governed by natural laws at a hard level, not on anything else;

Dynamism (temporality): consciousness not only requires the neural correlates of consciousness (NCC), it also needs time to be cognitively processed at a soft level;

Scale-dependence (emergentism): neural activity at micro- and mesoscopic scales cannot account for its subjective, internally generated mental phenomena at a psyche level without resorting to large-scale brain dynamics.

Physicalism, also called the mind-brain identity, deprives consciousness of any active role in brain dynamics. Or, in philosophical terms, CET adopts epiphenomenalism by rejecting the idea that consciousness can have causal power over the brain. Dynamism makes consciousness a discrete stream of states like momentary snapshots (VanRullen and Koch 2003; Herzog et al. 2020), which cannot control brain dynamics at a soft level either. Finally, scale-dependence excludes multiple conscious entities in the brain like those admitted in Integrated Information Theory (Tononi and Koch 2015) or Resonance Theory of Consciousness (Hunt and Schooler 2019).

Based on the three prerequisites, CET will model consciousness as the stream of macrostates at a hard level, each specified by a particular structural–functional configuration of the whole-brain network , with NCC ⊆ . Here stands for a graph where N is the set of nodes (ideally, neurons), and is the set of edges representing synapses. The configurations with each node’s own dynamics averaged over spontaneous fluctuations in neural activity are typically presented with network statistics, extracted locally from various neuroimaging data.

The large-scale brain dynamics can then be approximated in terms of stochastic non-equilibrium systems by Coordination dynamics of coupled phase oscillators (Tognoli and Kelso 2014) or, more generally, in the Langevin formalism, a mixture of deterministic and stochastic contributions to the motion of a system,2

| 2 |

Here is a descriptive function whose representation by the order parameter in a phase space O should account for metastability, scale-free avalanches, and self-organized criticality in brain dynamics (Bak et al. 1987; Blanchard et al. 2000; Beggs and Plenz 2003; Hesse and Gross 2014). In complex neural processes, multiple metastable states arise near criticality in the activities of the neurons that make up a system, thereby enlarging network repertoires for flexible behavioral outcomes (Deco and Jirsa 2012; Cocchi et al. 2017; Dahmen et al. 2019). Criticality is of crucial importance in neural activity because it enhances the information-processing capabilities of the brain, poised on the edge between order and disorder (Chialvo 2010; Beggs and Timme 2012).
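The role of criticality in producing scale-free avalanches can be sketched with a toy branching process, a common simplified model in the avalanche literature rather than the dynamics CET itself specifies. In this minimal sketch, every name and parameter (the branching ratio `sigma`, two downstream units per active unit, the size cap `max_size`) is an assumption made for illustration. Below the critical branching ratio avalanches die out quickly; at `sigma = 1` their sizes become heavy-tailed and the mean size grows sharply.

```python
import random


def avalanche_size(sigma, rng, max_size=10_000):
    """Size of one avalanche in a branching process with branching ratio sigma.

    Each active unit activates each of two downstream units with probability
    sigma / 2, so the mean number of descendants per active unit is sigma.
    The seed unit is counted in the size.
    """
    active, size = 1, 1
    while active and size < max_size:
        nxt = 0
        for _ in range(active):
            nxt += (rng.random() < sigma / 2) + (rng.random() < sigma / 2)
        active = nxt
        size += nxt
    return size


def mean_avalanche_size(sigma, trials=2000, seed=0):
    """Average avalanche size over independent trials with a fixed seed."""
    rng = random.Random(seed)
    return sum(avalanche_size(sigma, rng) for _ in range(trials)) / trials


sub = mean_avalanche_size(0.5)   # subcritical: activity dies out quickly
crit = mean_avalanche_size(1.0)  # critical: heavy-tailed avalanche sizes

print(f"mean size, subcritical: {sub:.2f}; critical (capped): {crit:.2f}")
```

For the subcritical case the mean size is close to the analytical value 1 / (1 - sigma); at criticality the mean is limited only by the cap, which is the toy analogue of avalanches spanning all scales.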

As consciousness cannot be detected directly because of its subjective nature, being accessible experimentally only via a subjective report, the only option left to the science of consciousness is to postulate its emergence from neural activity. Of course, this makes falsification problematic (Kleiner and Hoel 2021), and explains why there are now a bewildering number of very different theories, each suggesting its own account of conscious presence in brains and other systems (Doerig et al. 2020).

In CET, the stream S(τ) of consciousness will be formally defined as a derivative of brain dynamics in discretized time τ,

| 3 |

In effect, this equation should capture the instantaneous transitions from the continuous brain dynamics to discrete conscious states, each identified with a single point o ∈ O in a phase space, where the response of the brain to external stimuli is maximized (Shew et al. 2011; Tagliazucchi et al. 2016). A similar approach to studying consciousness over critical dynamics was proposed by Werner (2009). Accordingly, the transitions over metastable brain states can be viewed as neural correlates of pulsating conscious experience in the framework of the cinematic theory of cognition (Freeman 2007; Kozma and Freeman 2017). This approach now finds experimental support in many studies (Lee et al. 2010; Haimovici et al. 2013; Mediano et al. 2016; Tagliazucchi 2017; Kim and Lee 2019) showing that only states integrated near criticality can ignite consciousness.

Formally, consciousness can be viewed like the physical force, derived from the momentum in Newtonian mechanics, F = dp/dt. Although the force is measurable and calculable, it is not a real entity but only a dynamical property of a moving system. Seeing consciousness as a ‘mental force’ of brain dynamics seems to be more accurate and insightful than the view that consciousness is an intrinsic property of matter like mass (Oizumi et al. 2014; Tononi and Koch 2015). In terms of physics, there is a principled ontological difference between the force and mass in F = ma, where m is a scalar quantity, which is indeed constantly intrinsic to a system, whereas F is a vector quantity of a system’s action that can sometimes be zero. Similarly, consciousness can trivially be absent from the brain, not to mention other material (biological or artificial) systems.

According to Eq. (3), the brain has no mental force if its dynamics depart from criticality, as occurs in unconscious states such as coma, sleep, or general anesthesia (Hudetz et al. 2014; Tagliazucchi et al. 2016; Lee et al. 2019). This also tells us that even in critical dynamics the brain lacks the mental force during some interval ∆t while dynamically reaching the next critical point. There are two complementary ways to estimate ∆t: either by monitoring brain dynamics to calculate phase transitions or from a subjective report. The problem of monitoring, however, is non-trivial because of the heterogeneous timescales involved (see e.g., Golesorkhi et al. 2021). An optimum timescale in resting state and in task data is usually reported to be around 200 ms (Kozma and Freeman 2008; Deco et al. 2019).

Here we follow the second way and assign the interval to a wide temporal window ms, comprising many experimental findings from video sequences of intelligible images about 7–13 per second (VanRullen et al. 2014) to the attentional blink on masked targets separated by 200–450 ms (Shapiro et al. 1997; Drissi-Daoudi et al. 2019). Yet, an important neurophysiological aspect of brain dynamics is that the stream cannot be normally delayed for a period longer than about 300 ms because this timescale is crucial for the emergence of consciousness (Dehaene and Changeux 2011; Herzog et al. 2016). Consciousness spontaneously fades after that period, for example, in anesthetized states (Tagliazucchi et al. 2016).

In CET, consciousness and unconsciousness do not cooperate in parallel as if advancing each other in two separate dynamics (highway vs. underground). Conscious states appear instantaneously as a snapshot accompanied by a phenomenal percept of the ‘specious’ (Varela 1999) or ‘remembered’ present (Edelman 1989) at a particular moment of time. Consciousness is not an independent observer of how the states were prepared, so awareness requires no time to ignite. Since the ignition across the whole brain’s workspace at a psyche level occurs phenomenally due to self-organized criticality (Friston et al. 2012), it is not a dynamical process that must transmit information to a special site of the network for it to become conscious experience. Experience is just the information the brain has processed at a moment τ. Although conscious experience emerges only at critical points like a snapshot (VanRullen and Koch 2003; Herzog et al. 2020), subjects can feel the continuity of being as if they were conscious all the time.

Now let be a mapping from the phase space onto a vector space over the product of all nodes (neurons) of the brain. The map returns S(τ) from a point to an -dimensional vector where or for neurons active or inactive at a given time. Thus, each state S(τ) can be represented by x as a certain structural–functional configuration of at a moment τ responsible for subjective experience at a given critical point. This is also a particular NCC (see the next section). We write,

| 4 |

The discreteness of the stream means that all conscious states can, at least in principle, be naturally enumerated from a subject’s birth, not merely by a lag in experimental settings. Let the brain bring consciousness to a state at a moment . We can return Eq. (4) into the continuous time description (omitting details),

| 5 |

The next conscious state will emerge over the time interval as

| 6 |

In a timeless description, the stream S(τ) is a discrete chain (X, <), where and whose relation < standing for temporal/causal order is transitive and irreflexive. Here the irreflexivity means that forbids closed causal loops and, in particular, instantaneous feedback circuitry in brain dynamics that might enable consciousness with causal power over the brain, for example, due to the quantum temporal back-referral of information (Hameroff and Penrose 2014). In CET, consciousness is neither active nor continuous so that it cannot—classically or quantum-mechanically—choose its own way in brain dynamics. How can then the stream be free of predetermination?

Consciousness NFVM-driven in brain dynamics

The aim of this section is to incorporate free volition into the stream of consciousness. To do so consistently, consider the concept of NCC in the framework of the stream. The NCC has been traditionally defined as the minimal neural substrate expressed by specific signatures that are necessary and sufficient for any conscious experience (Crick and Koch 2003). This is based on the assumption that a key function of consciousness is to produce the best current interpretation of the visual scene and to make this information available to the planning stages of the brain (Rees et al. 2002). In general, the empirical search for NCC is implicitly based on the idea that consciousness plays an active role in presenting a subject with a multimodal, situational survey of the environment, and in subserving their complex decision-making and goal-directed behavior (Pennartz et al. 2019). This idea is widely accepted in the neuroscientific community. When unfolded over an evolutionary scale, the idea thus leads to a scenario in which special neural networks should have developed that endow consciousness with the mental power to control cognition at a computational (soft) level, while picking up free decision-making from the neural computations at a causal (hard) level.

On the other hand, the importance of dissociating the true NCC from the variety of neural processes that underpin conscious experience has often been stressed, and the role of different areas of the brain in specifying conscious contents has been debated for decades (Noë and Thompson 2004; de Graaf et al. 2012). However, the extensive cortical and subcortical networks involved in the integrity of large-scale brain dynamics make it difficult to precisely identify the contribution of individual brain regions to NCC (Mashour and Hudetz 2018). Most importantly, the problem of defining the true NCC is tightly intertwined with the problem of defining consciousness and its biological function. How can we study the correlates of consciousness without knowing what consciousness is and how it evolved?

CET allows specifying the problem more operationally by decomposing the concept of NCC into certain neural configurations that are responsible for different conscious states. In principle, we can uncover NCC for any particular state by merely detecting activity patterns in at that moment τ. Then we can define the minimal neural substrate by the intersection of all those states over the stream, or, more generally, as

| 7 |

Here is a set of all possible states from full vigilance to sleep to coma a subject might have during lifetime. Thus, to identify which minimal correlates should be necessary for consciousness, we need to associate with the most primitive core of conscious presence presented clinically in brain-injured patients with the unresponsive wakefulness syndrome (Giacino et al. 2014) or by subcortical consciousness in infants born without the telencephalon, e.g., in hydranencephaly or anencephaly (Merker 2007).
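The idea of defining the minimal neural substrate as the intersection of the neural configurations of all conscious states can be sketched with plain sets. This is only a toy illustration: the node labels and the three example states are hypothetical and carry no empirical claim, and the function name `minimal_substrate` is an assumption introduced here.

```python
from functools import reduce


def minimal_substrate(states):
    """Intersection of the active-node sets of all given states.

    Each state is an iterable of labels of the nodes active at that moment.
    """
    return reduce(set.intersection, (set(s) for s in states))


# Hypothetical active-node sets for three states in a stream (labels are illustrative only).
stream = [
    {"brainstem", "thalamus", "V1", "PFC"},
    {"brainstem", "thalamus", "A1"},
    {"brainstem", "thalamus"},
]

core = minimal_substrate(stream)
print(sorted(core))
```

The more states are included, from full vigilance down to the most primitive forms of conscious presence, the smaller the intersection becomes, which is why the text ties the minimal correlates to the most primitive core.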

Otherwise, if one wishes to assume an active (and continuous) consciousness involved in attentional effort, active inference, decision-making, planning, goal-directed behavior, and other functions, it may be that the NCC would comprise most of the brain as its own “highway” for producing these activities,

| 8 |

Thus, it can be futile to try to identify neural correlates of consciousness without having confidence in its role in brain dynamics. On the other hand, because conscious contents cannot be evaporated from conscious states to account for empty or contentless phenomenal experience (Hohwy 2009; Bachmann and Hudetz 2014), neural correlates thought to be involved in conscious experience have already been involved in volitional and cognitive processes (Naccache 2018; Aru et al. 2019). Of course, this fact by itself is indifferent to the question “what causes what?” Namely, it does not explain whether or not consciousness is active there.

Contrary to the idea of active consciousness, CET takes the ‘inverted perspective’: consciousness is a passive phenomenon, ignited at critical points of brain dynamics and resulting completely from unconscious computational processes at a soft level. Instead of discussing different brain regions with their contributions to subjective experience, e.g., the prefrontal cortex vs. posterior ‘hot zones’ (Koch et al. 2016; Boly et al. 2017), or involving pre-stimulus and post-stimulus activity correlates (Northoff and Zilio 2022), CET argues: there is no special NCC that might causally influence brain dynamics at a hard level. Conscious states emerge transiently at a psyche level as neural configurations triggered by the NFVM and classically amplified by bottom-up causation via scale-free avalanches that are intrinsic to and ubiquitous in critical dynamics (Beggs and Plenz 2003; Hahn et al. 2010; Shew et al. 2011).

Further supporting evidence comes from experiments showing that perturbations or nanostimulations in vivo of a single neuron can cause those avalanches and induce phase transitions of cortical recurrent networks, thereby modifying global brain states (Fujisawa et al. 2006; Cheng-Yu et al. 2009; London et al. 2010; Houweling et al. 2010) with a marked impact on conscious states (Tanke et al. 2018; Knauer and Stüttgen 2019). Of course, such experiments, if viewed in the context of Libet-type experiments (Fried et al. 2011) to account for volition, do not provide direct evidence for the NFVM because the amplifications are mainly detected on cortical neurons.

Meanwhile, CET places the NFVM into the brainstem to account for internally generated quantized neuronal events that might generate scale-free avalanches across the brain. This placement is based on the fact that it is precisely the brainstem that is responsible for spontaneous arousal and permanent vigilance conducted through the ascending reticular activating system (ARAS) to thalamocortical systems (Parvizi and Damasio 2001). Although the cortex is mostly responsible for elaborating conscious contents, only the ARAS and intralaminar nuclei of the thalamus can abolish consciousness. Moreover, due to the brainstem’s anatomical location in the neural hierarchy, its neuromodulatory influences, acting as control parameters of criticality, are capable of moving the whole cortex through a broad range of metastable states, responsible for cognitive processing in brain dynamics (Bressler and Kelso 2001).

On the evolutionary timeline, brains evolved gradually as multilevel hierarchical systems consisting of anatomical parts that were selection-driven as adaptive specialized modules for executing one function or another. Any brain function requires an appropriate neuronal structure for generating various dynamical patterns to carry it out optimally. It is well known that the global architecture of the brain is not uniformly designed across its anatomical parts, whose structural features are specialized for the corresponding functions. For example, the cortex and the cerebellum exhibit different network properties. Possibly, the network characteristics of the brainstem with its reticular formation were developed to be especially conducive to small neuronal fluctuations that might be amplified across many spatiotemporal scales to account for reflexes and primary volitional reactions projected afterwards to higher thalamocortical systems (see “Discussion”).

How, then, could conscious states be causally free from the past? CET takes the proverbial coin toss as an illustration. If someone, say, Alice, tosses a coin of her truly free will, resulting from a micro-event in her brain and amplified in spontaneous neural activity, the action is independent of the past, and, hence, the macro-event caused by Alice is genuinely random (not predetermined by the entire previous history of the universe). Although the outcome of tossing is typically probabilistic with a corresponding distribution, the trajectory of the coin is completely deterministic. The random outcome is thus epistemic, i.e., related to the state of our knowledge about the coin’s behavior, not to the behavior itself. Nevertheless, this can be Bell-certified if Alice’s conscious states (coupled with corresponding actions) were indeed NFVM-triggered in her brainstem in the same way as, for instance, quantum effects can participate in bird navigation based on the interaction of electron spin with the geomagnetic field (Ritz 2011; Hiscock et al. 2016).

To put the stream S(τ) and the NFVM together, CET will replace the fundamental cause-effect framework by a behavioristic stimulus-reaction space. In general, all physical interactions of any sort throughout the world can be viewed in the language of behaviorism insofar as any physical system, from a particle to a planet, depends on its environment. Instead of using the cause-effect language, one can assume conversely that all physical systems ‘respond’ to environmental ‘stimuli’ without adding anything to a standard physical theory, for example, by saying that planets behave adaptively to gravitational fields in spacetime. Clearly, no behavioral freedom could be possible there.

In contrast, CET assumes that the brain has some freedom to respond to stimuli, and introduces a stimulus–response repertoire (SRR). Considering brain dynamics within the SRR results in information not about what possible causal mechanisms should lead the brain to its current state, but how the brain could arrive at a certain state among many possible responses from a given state (stimulus). Integrated Information Theory, for example, stresses the cause-effect repertoire of brain dynamics to compute the information generated when the system transitions to one particular state out of a repertoire of statistically possible (counterfactual) states (Tononi 2008). In reality, however, every next state of the brain emerges from the previous state (a particular NCC) that has been already actually defined in the past. Moreover, the SRR may be physically possible just due to metastability in critical dynamics that provide variability and perceptual transitions (Haldeman and Beggs 2005), thus, leaving room for volitional responses there (Fig. 2a).

Fig. 2.

The stream of consciousness. a In brain dynamics, every conscious state evolves from the previous one as a schematic bunch of all possible metastable states , processed within a current SRR in a state-space and collapsed after ∆t to a certain conscious state balanced at criticality. Placing the NFVM into the brainstem responsible for arousal and vigilance guarantees that each conscious state will initially be free from predetermination. b The stream S(τ) is shown as a broken (bold) line, running over bunches of different SRRs, each triggered by the NFVM. A state emerges instantaneously as the ‘winner-take-all’ coalition that does not transmit information to special NCC. c In binocular rivalry, while the incoming signals remain constant, the percept switches to and fro over a temporal period about 2 s during which many states are to be processed in S(τ). Instead of visually experiencing a confusing picture of two images (a cat and a car) simultaneously, subjects report a perceptual alternation in seeing only one of those at a given time

The responses should then be represented by the probability p, related to our incomplete knowledge about a system’s behavior that, however, could be completely predetermined at a hard level. The probability distribution behind an SRR would thus amount to the counterfactual outcomes the brain might arrive at at a moment τ. However, if we adopt Bell’s assumption, no hidden deterministic variables λ can control the NFVM. Now we can formally define the mechanism by translating Eq. (1) into CET:

| 9 |

where and stand for the previous state in the stream S(τ) and the environmental variables respectively.

Equation (9) returns CET to standard stochastic descriptions of brain dynamics, but now the descriptions can be Bell-certified, not merely statistically independent from the environment. On this condition, the probability of a subject’s choice could not be refined to unlimited precision in principle for lack of such variables. Overall, the stream S(τ) evolves as a chain (X, <) of separate conscious states, each computed unconsciously at a soft level within a given SRR (Fig. 2b).

This picture is in agreement with many neuroscientific findings, firstly, on bistable perception within a constant SRR. Binocular rivalry is a phenomenon of visual perception, placed between different images, which are presented separately to each eye but at the same time. Instead of the two images being seen superimposed, only one single image is consciously perceived at a time. After a few seconds, while the brain has processed many states focused on the same image, there is a switch to perceiving the other image, after which the cycle repeats (Fig. 2c). Binocular rivalry appears between two competing hemispheres beyond any conscious volition, and can be an example of how the NFVM affects perceptual switches passively observed by consciousness.

How should the brain unconsciously arrive at a certain state by deciding between two equivalent stimuli during a bounded interval ∆t? Commonly accepted approaches to binocular rivalry stress precisely the role of randomness that should account for alternating conscious scenes within a constant SRR. Data from various experiments characterize the alternation by a crucial influence of noise in neural activity mediating deterministic dynamics (Brascamp et al. 2006). A similar explanation is given in Hohwy et al. (2008) on predictive processing as a competition of priors between two error-minima, one per image, in a free-energy landscape with bistability in stochastic resonance. None of the mentioned explanations of binocular rivalry in terms of classical stochastic processes contradicts CET. We only ask how the conscious states alternating within the same SRR might be free in brain dynamics. According to Eq. (9), the principled premise here is the NFVM, which guarantees that the very arousal underlying each conscious state in the stream S(τ) will already be free of predetermination.
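The classical stochastic account of perceptual alternation mentioned above can be sketched as noise-driven switching in a double-well landscape. This is a generic toy model, not the specific model of Brascamp et al. (2006) or Hohwy et al. (2008): the drift term `x - x**3`, the noise amplitudes, the switching thresholds, and the function name `simulate_rivalry` are all assumptions for illustration. Note also that the noise here is pseudo-random and classical, so the sketch says nothing about Bell-certification; it only shows that, in such a landscape, alternation requires noise.

```python
import math
import random


def simulate_rivalry(noise, steps=200_000, dt=0.01, seed=1):
    """Euler-Maruyama integration of dx = (x - x**3) dt + noise * dW.

    The two wells at x = -1 and x = +1 stand in for the two percepts.
    Returns the number of switches between wells.
    """
    rng = random.Random(seed)
    x, percept, switches = 1.0, 1, 0
    for _ in range(steps):
        x += (x - x**3) * dt + noise * math.sqrt(dt) * rng.gauss(0.0, 1.0)
        # Count a switch only once the state is well inside the other basin.
        if percept == 1 and x < -0.5:
            percept, switches = -1, switches + 1
        elif percept == -1 and x > 0.5:
            percept, switches = 1, switches + 1
    return switches


no_noise = simulate_rivalry(noise=0.0)    # purely deterministic: stays in one well
with_noise = simulate_rivalry(noise=0.6)  # noise drives alternation between wells

print(f"switches without noise: {no_noise}, with noise: {with_noise}")
```

Without noise the state never leaves its initial well, while moderate noise produces repeated alternation under constant "stimulus" conditions, the qualitative signature of bistable perception.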

The NFVM can well be reconciled with some mental diseases such as obsessive–compulsive disorder, accompanied by distortions of the sense of agency, when patients cannot tell whether or not they were responsible for a particular action. The experience of free will is reported to be (often painfully) affected (Oudheusden et al. 2018) by the presence of intrusive recurrent thoughts and unwanted urges with compulsively repetitive acts. Such distortions must be directly related to cognitive function: if the process of unconscious control is violated at a soft level, a subject can experience distortions of the sense of agency at a psyche level as if someone else had dictated the subject’s choice. But the NFVM is intact. In other words, the NFVM is just the invisible mechanism that initiates at a hard level those decisions that are internally generated at a soft level and then exposed to a psyche level.

Volitional-cognitive complex

The aim of this section is to connect the discretized stream of consciousness at a phenomenal (psyche) level with the multitude of cognitive (unconscious) processes at a computational (soft) level, imposed upon brain dynamics at a causal (hard) level and implemented by various functional systems, thereby consistently connecting all the hierarchical levels across the three spatial scales of neural activity.

Many proponents of the active role of consciousness suggest that free will can trespass computationally into brain dynamics, but only under a set of special circumstances. For example, higher-order thoughts can involve the use of language when planning future actions at a soft level. Yet, the soft level should require its own causal explanation beyond a hard level (Rolls 2020). CET rejects this hypothesis as physically implausible. First of all, Eq. (3) does not discriminate between different kinds of conscious states. All the states must be uniformly processed, yet Bell-certified at a hard level, so that some states cannot be causally freer than others. On the other hand, CET recognizes that only rapid and random reflexes might benefit from the NFVM because the stream of consciousness, if it consisted of completely random states, would be cognitively disconnected and, thus, ill-adaptive. The mechanisms of control are also necessary for acquiring knowledge and understanding experience through coherent predictive processing. This implies a two-stage model in which random neural events, initiated by the NFVM from arousal nuclei at a microscale, will be unconsciously constrained by cognitive thalamocortical systems at a mesoscale before reaching conscious states at a macroscale.

To do so, CET adopts the predictive processing theory (PPT) as a strong candidate for a soft level that can bridge the gap between brain dynamics at a hard level and phenomenal experience at a psyche level. PPT postulates that brains should have evolved mainly as prediction machines (Knill and Pouget 2004; Friston 2008; Clark 2013) which minimize prediction error to support the best adaptive responses within alternating SRRs. Hohwy and Seth (2020) argue that PPT, precisely because it is a theory of perception, cognition, and action along which the stream S(τ) unfolds dynamically, could provide a systematic basis for a complete theory of consciousness. Such a theory needs to incorporate volition, consciousness, and cognition seamlessly into a general framework. Various combinations of PPT with known theories of consciousness, such as Integrated Information Theory or Global Workspace Theory, have been proposed (Safron 2020; VanRullen and Kanai 2021). The main advantage of PPT over these static theories is its intrinsically dynamical nature. Another way to introduce dynamics into the theories is self-organized criticality (Tagliazucchi 2017; Kim and Lee 2020). However, in the framework of CET, criticality and predictive processing are well compatible in describing brain dynamics: the former is about causation at a hard level, and the latter is about computation at a soft level. Moreover, criticality is thought to optimize information computing (Shew et al. 2011). This turns CET toward Bayesian learning as a core computational device of predictive processing.

Bayesian learning is the transformation of priors about the parameters into posteriors via data, presented by stimuli within a given SRR (Fig. 3a). The posteriors then become the updated priors of the brain’s generative model for future data in predictive processing over SRRs. Bayesian learning is often thought of as a single process implemented by means of top-down and bottom-up signal flow over hierarchical layers (Friston 2008; Seth 2013) in the brain (Fig. 3b). The same models are successfully exploited in deep machine learning. Two issues arise here. First, unlike brains, such machines lack any conscious experience at a psyche level, for a reason unknown to us. Second, suppose the machine might be conscious in that single process. Would it mean that its conscious states should all emerge only as priors or as posteriors (related to the output layers of generative models in machine learning)? To translate Bayesian learning into the language of the stream S(τ), CET takes priors and posteriors to be separate conscious states, each unconsciously processed during an interval ∆t. One more state must then be placed between them for perceived data. With priors now taken as the boundary conditions of Bayesian learning, its full cycle needs a triplet (Fig. 3c). Importantly, such a triplet arises only in a static representation, requiring three successive conscious states. In brain dynamics, they become mixed into a single process in which priors turn into posteriors that serve for data acquisition in the next states.
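The prior-to-posterior cycle described above can be illustrated with a minimal Python sketch. It assumes a Beta prior over a Bernoulli parameter, chosen only because its update reduces to simple counting. The function names (`update_beta`, `beta_mean`, `run_stream`) and the toy observations are hypothetical and not part of CET. Each iteration yields one (prior, datum, posterior) triplet, and the posterior is then reused as the prior of the next step.

```python
from fractions import Fraction


def update_beta(prior, observation):
    """One Bayesian step: Beta(alpha, beta) prior, Bernoulli datum (0 or 1)."""
    if observation not in (0, 1):
        raise ValueError("observation must be 0 or 1")
    alpha, beta = prior
    # Conjugate update: a success increments alpha, a failure increments beta.
    return (alpha + observation, beta + (1 - observation))


def beta_mean(params):
    """Mean of a Beta(alpha, beta) distribution, kept exact as a fraction."""
    alpha, beta = params
    return Fraction(alpha, alpha + beta)


def run_stream(prior, observations):
    """Chain the updates so that each posterior becomes the next prior.

    Returns a list of (prior, datum, posterior) triplets.
    """
    triplets = []
    for datum in observations:
        posterior = update_beta(prior, datum)
        triplets.append((prior, datum, posterior))
        prior = posterior  # the posterior serves as the prior of the next step
    return triplets


# Illustrative data only: a flat Beta(1, 1) prior and four binary stimuli.
triplets = run_stream((1, 1), [1, 1, 0, 1])
for prior, datum, posterior in triplets:
    print(prior, datum, posterior, float(beta_mean(posterior)))
```

Written out statically, every step is a separate triplet. In the loop itself, the three roles merge into one running variable, which loosely mirrors the contrast drawn above between the static representation and the single dynamical process.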

Fig. 3.

The reentrant cognition system in predictive processing. a Bayes’ theorem describes how the prior belief B (expectation) based on the brain’s generative model M is transformed into the posterior belief over data acquisition D, all placed into a state space of a given SRR. b Hierarchical predictive processing across three cortical regions with feedforward and feedback information flow (adapted from Friston 2008). c Triplets of successive states, accompanied by self-organized recurrent neural activity across hierarchically distributed brain areas, are connected by the RCS over two ∆t intervals as an unclosed causal loop. Consciousness at the present state (data acquisition) self-refers (blue short arc) to its previous state (priors) to arrive (red long arc) at the future state (posteriors). d Here brain dynamics are mapped onto the irreflexive chain (X, <) in a 2-dimensional space of a physical axis t (way of knowing) and a phenomenal axis S(τ) (way of being). The chain evolves by the RCS as the perpetum cogito process, running from a subject’s birth moment. Unlike the static description of Bayesian learning above, in dynamics, the priors, posteriors, and data acquisition become a single process. e The schematic of neural activity over the causal, computational, and phenomenal levels of description.

Importantly, by turning to Bayesian learning, CET arrives naturally at the hard-soft duality between brain dynamics at a causal level and predictive processing at a computational level, both expressed with the same statistical tools. Meanwhile, predictive processing is about subjective information the brain has computed from its own perspective in a given objective state, not about the state itself (a particular NCC). In other words, this reflects a cognitive (epistemic) aspect of neural activity, not its physical (ontic) aspect, presented by , which encodes that information in neurons. The NCC can be uncovered by neuroimaging data, whereas its contents are accessible only through a subjective report. Without realizing this hard-soft duality, a reader can be confused. In our framework, priors, data acquisition, and posteriors all refer to conscious contents the brain has learned from its own perspective, whereas S(τ) conforms to a certain NCC responsible for those contents at the physical level. Because of the duality, we can know everything about the NCC but still be unable to explain how subjective experience appears there.

Indeed, consciousness is subjective self-evidential experience. This is the essence of Descartes’ self-referential cogito “I think, therefore I am.” Its stream, Tononi (2008) argues, is a way of being rather than a way of knowing. Conscious experience cannot, however, be dissociated from its cognitive contents (Hohwy 2009; Aru et al. 2019; Naccache 2018), or from its introspective account, i.e., self-awareness (Lau and Rosenthal 2011; Friston 2018). Now we argue that the way of being (consciousness) and the way of knowing (cognition) go side by side by imposing self-referential cogito upon Bayesian learning. On this condition, every conscious state in the stream S(τ) should self-refer. Recall, however, that the chain (X, <) of conscious states is irreflexive since closed causal loops are forbidden there. Neurophysiologically, therefore, self-reference cannot be made instantaneously but needs time to be causally processed in brain dynamics, with consequent subjective experience. When consciousness self-refers, it refers to its present state, while coming causally and computationally into the next updated state in the stream S(τ).

Henceforth in CET, self-referential cogito will follow Bayesian learning in every conscious state over the stream. Meanwhile, consciousness and cognition both depend entirely on brain dynamics: the way of knowing originates from metastability, and the way of being emerges near criticality. Critical dynamics thus allow unconscious predictive processing to be naturally separated from conscious experience, ignited instantaneously only at particular moments of time. Without the conceptualization presented by Eq. (3), it would be hard to explain how conscious snapshots are separated from both continuous brain dynamics at a causal level and unconscious predictive processing at a computational level.

If so, then from the perspective of neural circuitry, information flow in the brain should somehow embody Bayesian learning and cogito with corresponding neural mechanisms. Reentry is a typical neurophysiological device suggested by Edelman et al. (2011) for the binding problem: How do functionally segregated areas of the brain correlate their activities in the absence of an executive program or superordinate map? Reentry is viewed as an ongoing process among competing neuronal groups of the dynamical core, which is central to the emergence of consciousness in a particular state (Edelman 2003; Baars et al. 2013). This emphasizes the role of recurrent activities between cortical areas by feedforward and feedback connections (Mashour et al. 2020). It has also been shown that critical dynamics are well compatible with learning in recurrent neural networks (Del Papa et al. 2017).

In CET, the system comprising all thalamocortical areas involved in perception and cognition, with the predominant role of the prefrontal cortex in cognitive control (Miller and Cohen 2001), will be called the Reentrant Cognition System (RCS). The RCS has to capture the dual aspect of brain dynamics and provide both global and local dynamical binding of neural activity: while being a causal system, it is responsible for the long-term cognitive coherency of the stream S(τ) over Bayesian learning, which is schematically depicted by an unclosed temporal loop imposed upon brain dynamics with respect to causality (Fig. 3c). The RCS must be (i) autonomous, (ii) self-connected over S(τ), and (iii) applicable uniformly to every conscious state experienced and remembered along the way of being through self-referential cogito. In the stream, self-awareness emerges from recursive applications of primary perceptual experience at one moment to cognitive contents at the next moment. In this sense, self-awareness is what the brain has learned about its own representations of the world (Cleeremans 2011).

This is just the reason why the process can be called “perpetum cogito” (Yurchenko 2017), in which priors, data acquisition, and posteriors intertwine with each other into a single process by recurrent (causally unclosed) neuronal structural–functional loops over time. Thus, self-organized criticality at a causal (hard) level, predictive processing at a computational (soft) level, and self-evidencing conscious experience at a phenomenal (psyche) level all should be covered by the perpetum cogito (Fig. 3d). The brain does not store perceptual data and intermediate computations; only its ultimate decisions (“best guess”) over Bayesian learning will be stored. This explains why brain states can be preserved when they reach conscious experience, whereas unconscious information, underlying the decisions, quickly decays (Dehaene and Changeux 2011). While being ignorant of unconscious processing (e.g., in visual masking), consciousness remains well informed about the brain’s ultimate decisions (e.g., in binocular rivalry), and thus acquires an illusion of volitional and cognitive control. Thus, the perpetum cogito process provides the discrete stream of consciousness with the persistent and temporally extended sense of Self. Importantly, this must not be confused with conscious processing, which covertly requires its own “highway” in brain dynamics to control the unconscious “underground” of neural activity. CET finds the very term fallacious as leading to the illusion of free will. What might be loosely called ‘conscious processing’ should ultimately be the perpetum cogito as a discrete reentry process (way of being) that passively follows unconscious predictive processing (way of knowing) and is exposed near criticality to a psyche level as more or less coherent decisions of Bayesian learning at that time. Their adaptive success depends on the RCS.

The NFVM and the RCS together form a volitional-cognitive complex, anatomically extended over the whole brain. While the RCS occupies mainly the thalamocortical regions, the NFVM is a key underlying mechanism placed in the brainstem and responsible for bottom-up initiation of conscious states, each then processed by the RCS within a given SRR during ∆t. The states also have to be modulated in sensorimotor systems to provide cognitive function with coherence maximization between SRRs in the ever-changing environment. Thus, to be cognitively connected under the way of being that makes a difference, the brain should have the volitional-cognitive complex fine-tuned and exploited entirely.

According to the inverted perspective adopted by CET, consciousness is a passive snapshot ignited at a psyche level, having neither causal nor computational power over neural activity at either the hard or the soft level. There are no neural correlates of consciousness that might be responsible for its active role. Momentary conscious states emerge phenomenally at critical points of brain dynamics as ultimate decisions of Bayesian learning. Their neural correlates are just the neural correlates (NC) of the complex (Fig. 3e). Heuristically,

| 10 |

More exactly, the neural correlates of a particular conscious state S(τ), presented by the variable , depend not only on a set of neurons recruited by the complex at that moment but also on how well that configuration of diverse structural–functional networks can maintain self-organized criticality to provide large-scale brain dynamics with the mental force. Its magnitude, traditionally referred to as the level or ‘quantity’ of consciousness in a given state, varies across different states, including clinical ones. A number of different quantitative measures have now been proposed to estimate the level of consciousness in different states. We will consider the most promising of them in the next section.

Complexity and transfer entropy in stream of consciousness

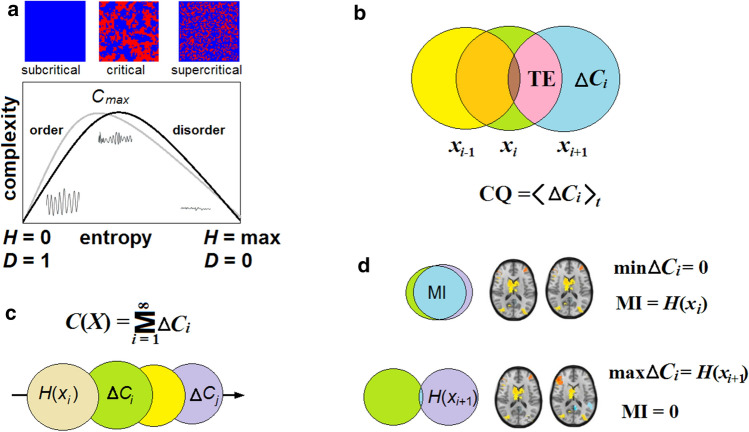

According to Eq. (3), conscious states emerge only near criticality where the brain is poised between order and disorder (Chialvo 2010). This provides an optimal state for dynamical variability and information storage, and has been suggested as a determinant for information-based measures of consciousness (Mediano et al. 2016; Tagliazucchi 2017; Kim and Lee 2019). Indeed, both are statistically relevant, since they describe neural activity at the same physical level (Werner 2009; Deco et al. 2015; Aguilera 2019). While the critical dynamics are characterized by the order parameter, for example, a mean proportion of activated neurons in , with the control parameter, depending on connectivity density over time (Hesse and Gross 2014), the information-based measures evaluate the degree of integration (order) of in a particular state. In CET, objective observables of consciousness at a moment τ will be complexity measures.

Here we consider only two measures that are most relevant to neural activity. In physics, a statistical complexity was proposed to reflect a thermodynamic depth of physical systems with accessible states arranged from an ideal gas in equilibrium to a perfect crystal, maximally ordered (Lòpez-Ruiz et al. 1995). This is a product of Shannon entropy H as the disorder measure, and the opposite measure D called “disequilibrium” as a distance between and H.

| 11 |

| 12 |

In an ideal gas, H is maximal and D = 0. Conversely, for a crystal, H = 0 and D is maximal. Thus, the product captures the balance between order and disorder well and becomes zero for both purely chaotic and purely crystallized systems. Nevertheless, it does not allow for complex non-ordinary systems that are themselves information-processing structures.
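The vanishing of the product at both extremes can be checked numerically. The minimal sketch below assumes the disequilibrium in the form given by López-Ruiz et al. (1995), D = Σ (p_i − 1/N)², the squared distance from the equiprobable distribution over N accessible states, together with a Shannon entropy normalized by log N. These conventions, the function names, and the toy distributions are assumptions made for illustration, and they may differ in notation or normalization from Eqs. (11) and (12).

```python
import math


def _check_distribution(p):
    """Validate that p is a probability distribution over at least two states."""
    if len(p) < 2:
        raise ValueError("need at least two accessible states")
    if any(pi < 0 for pi in p) or not math.isclose(sum(p), 1.0, abs_tol=1e-9):
        raise ValueError("probabilities must be non-negative and sum to 1")


def normalized_entropy(p):
    """Shannon entropy divided by log N, so that it lies in [0, 1]."""
    _check_distribution(p)
    h = sum(-pi * math.log(pi) for pi in p if pi > 0)
    return h / math.log(len(p))


def disequilibrium(p):
    """Squared distance of p from the equiprobable distribution."""
    _check_distribution(p)
    n = len(p)
    return sum((pi - 1.0 / n) ** 2 for pi in p)


def lmc_complexity(p):
    """Statistical complexity as the product of entropy and disequilibrium."""
    return normalized_entropy(p) * disequilibrium(p)


# Toy distributions over four states (illustrative values only).
ideal_gas = [0.25, 0.25, 0.25, 0.25]   # maximal disorder
crystal = [1.0, 0.0, 0.0, 0.0]         # maximal order
intermediate = [0.7, 0.1, 0.1, 0.1]    # between the two extremes

for name, p in [("ideal gas", ideal_gas), ("crystal", crystal),
                ("intermediate", intermediate)]:
    print(name, normalized_entropy(p), disequilibrium(p), lmc_complexity(p))
```

Under these assumptions the complexity is zero for the equiprobable and the fully ordered distributions and positive only in between.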