Abstract

Background:

Funding agencies, publishers, and other stakeholders are pushing environmental health science investigators to improve data sharing; to promote the findable, accessible, interoperable, and reusable (FAIR) principles; and to increase the rigor and reproducibility of the data collected. Accomplishing these goals will require significant cultural shifts surrounding data management and strategies to develop robust and reliable resources that bridge the technical challenges and gaps in expertise.

Objective:

In this commentary, we examine the current state of managing data and metadata—referred to collectively as (meta)data—in the experimental environmental health sciences. We introduce new tools and resources based on in vivo experiments to serve as examples for the broader field.

Methods:

We discuss previous and ongoing efforts to improve (meta)data collection and curation. These include global efforts by the Functional Genomics Data Society to develop metadata collection tools such as the Investigation, Study, Assay (ISA) framework, and the Center for Expanded Data Annotation and Retrieval. We also conduct a case study of in vivo data deposited in the Gene Expression Omnibus that demonstrates the current state of in vivo environmental health data and highlights the value of using the tools we propose to support data deposition.

Discussion:

The environmental health science community has played a key role in efforts to achieve the goals of the FAIR guiding principles and is well positioned to advance them further. We present a proposed framework to further promote these objectives and minimize the obstacles between data producers and data scientists to maximize the return on research investments. https://doi.org/10.1289/EHP11484

Introduction

Transparent and comprehensive data sharing is a laudable but challenging goal in any research domain, including the environmental health sciences. Major failings in research reproducibility often result in wasted time and lost resources.1 Through implementation of robust strategies to share data and metadata, hereafter referred to collectively as (meta)data, we believe that these failings can be addressed. Today, the FAIR principles are central elements of data management, sharing policies, and initiatives across diverse institutions, including the National Institutes of Health (NIH),2 the National Academies of Sciences, Engineering and Medicine,3 the European Commission,4 the Wellcome Trust,5 and Health Canada.6 This is highlighted by NIH’s updated policy for data management and sharing, which requires a “Data Management and Sharing Plan” as a component for research grant proposals starting 25 January 2023 (NOT-OD-21-103).2 In addition, the NIH’s focus on rigor and reproducibility, and accountability to the U.S. taxpayer, is indirectly driving improvement in standards for (meta)data quality, especially data collected in support of large cross–data set meta-analyses and the application of artificial intelligence and machine learning.7–9

Despite these efforts, widespread awareness and adoption of the FAIR principles to date have been modest.10,11 Significant gaps have been reported between the expectations outlined in the FAIR principles (Table 1) and the current reality of data sharing.12 This commentary provides a broad overview of current approaches and tools to promote the adoption of the FAIR principles for environmental health research data. We outline the importance of capturing domain-relevant metadata, using in vivo and omic data sets as a reference point to highlight the gaps and successes in metadata collection, and we introduce a strategy to better capture the metadata associated with the diverse experimental designs, models, and end points typical of environmental health research experiments. A case study highlights the benefit of improving resources surrounding (meta)data collection.

Table 1.

Overview of the FAIR data principles.

| Term | Brief description<sup>a</sup> |
|---|---|
| Findable | Data can be persistently identified manually and by machine automated processes through rich metadata deposition. |
| Accessible | (Meta)data are available through standardized protocols with clear authentication and authorization procedures. |
| Interoperable | Metadata use qualified formal, accessible, shared and broadly applicable language. |
| Reusable | (Meta)data are richly described and meet domain-relevant standards with clear usage licenses and detailed provenance. |

<sup>a</sup>Definitions summarized from Wilkinson et al.53

Defining Expectations for Reporting Standards

Significant challenges are associated with the discovery, evaluation, integration, and reuse of data because of the diversity of study designs, approaches, and analyses employed in the environmental health sciences.13 Specific (meta)data requirements have been established to support validation, integration, reanalysis, and reuse; these are commonly referred to as minimum information checklists, reporting frameworks, reporting guidelines, or reporting standards. Minimum reporting standards (Table 2) vary broadly in their scope and may address only assay-specific expectations, as in the case of microarrays [e.g., Minimum Information about Microarray Experiments (MIAME)]14,15 or cell-based assay expectations [e.g., Minimum Information about a Cellular Assay (MIACA)].16 Some reporting standards, such as MIAME and its next-generation sequencing successor [Minimum Information about a Sequencing Experiment (MINSEQE)], are now widely required by established repositories and for publication in peer-reviewed journals.17–20

Table 2.

Discussed minimum reporting requirement standards.

| Abbreviation | Name | DOI | Developed for environmental health studies |
|---|---|---|---|
| TBC56 | Tox Bio Checklist | 10.25504/FAIRsharing.jgbxr | Yes |
| MIABE28 | Minimum Information About a Bioactive Entity | 10.25504/FAIRsharing.dt7hn8 | No |
| MIAME/Tox29 | Minimum Information About a Microarray Experiment (Toxicology) | 10.25504/FAIRsharing.zrmjr7 | Yes |
| TERM57 | Toxicology Experiment Reporting Module | 10.1016/j.yrtph.2021.105020 | Yes |
| MIATA30 | Minimal Information About T Cell Assays | 10.25504/FAIRsharing.n7nqde | No |
| MINSEQE17 | Minimal Information About a Sequencing Experiment | 10.25504/FAIRsharing.a55z32 | No |
| MIACA16 | Minimal Information About a Cellular Assay | 10.25504/FAIRsharing.7d0yv9 | No |

Note: DOI, digital object identifier.

The Minimum Information for Biological and Biomedical Investigations (MIBBI) standards collection created by the MIBBI Foundry, now FAIRsharing.org, attempts to facilitate the harmonization of reporting standards.13,21 At the time of this writing, FAIRsharing.org has become a registry of standards, databases, and policies pertaining to FAIR, and it lists 208 standards covering diverse assay types and experimental models as “Ready,” meaning active and available for use. This proliferation of reporting standards faces significant hurdles to adoption because of a lack of coordination within user communities, which results in the same information being collected multiple times for a single study using different controlled vocabularies and formats.13 In addition to overlapping, reporting standards use different terms to describe similar features (e.g., species vs. organism) and inconsistent language (e.g., mouse vs. Mus musculus; micromolar vs. microgram per kilogram vs. parts per million). Whereas ongoing efforts such as the Linked Data Modeling Language (LinkML) aim to standardize the structure for defining and sharing reporting standards,22 few published reporting standards seem to follow a consistent structure. For example, different “Ready” reporting standards are described in disparate formats, including human-readable text (e.g., PDF) and machine-readable markup language (e.g., XML). In addition to encouraging adoption of reporting standards, establishing a consistent approach to defining standards in a machine-readable manner presents an opportunity to retrospectively evaluate the completeness of (meta)data collection.22,23
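
To illustrate why a machine-readable definition makes retrospective evaluation possible, the following minimal Python sketch encodes a toy reporting standard as a dictionary and checks one metadata record against it. The field names, allowed values, and the `check_completeness` helper are hypothetical; they are not drawn from any published standard or from LinkML itself.

```python
# Hypothetical, simplified reporting standard: each required field maps to
# either None (any non-empty value is accepted) or a set of allowed values.
TOY_STANDARD = {
    "organism": {"Mus musculus", "Rattus norvegicus"},
    "sex": {"male", "female"},
    "dose_unit": {"mg/kg", "ug/kg"},
    "strain": None,
}


def check_completeness(record, standard):
    """Return (missing, invalid) lists of field names for one metadata record."""
    missing, invalid = [], []
    for field, allowed in standard.items():
        value = record.get(field)
        if value is None or str(value).strip() == "":
            missing.append(field)      # field absent or empty
        elif allowed is not None and value not in allowed:
            invalid.append(field)      # present, but outside the vocabulary
    return missing, invalid


# Made-up record: "mouse" is informative to a human but not a controlled term.
record = {"organism": "mouse", "sex": "male", "dose_unit": "mg/kg"}
missing, invalid = check_completeness(record, TOY_STANDARD)
print("missing:", missing)
print("invalid:", invalid)
```

In this sketch the record is flagged both for a missing field and for a value outside the controlled vocabulary, the two kinds of gap discussed above.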

Reporting Standards in Environmental Health Research

The environmental health sciences are a broad, wide-ranging domain of research with methodologies spanning bacterial assays to in vivo experiments to epidemiological studies collectively aimed at characterizing the effects of environmental exposures on human health. As such, the usefulness of data for an individual chemical or a class of compounds, generated across diverse models using different approaches, could be severely compromised by missing or inadequate metadata. For example, a recent systematic review of per- and polyfluoroalkyl substances found that 19% of candidate animal studies that initially passed screening did not adequately characterize exposure.24 Similarly, in an evaluation of sex bias in human smoking data sets in the Gene Expression Omnibus (GEO), 34.5% of samples were found to be missing metadata for sex.25 Incomplete metadata reporting for human, in vivo, ex vivo, and in vitro experiments can severely restrict the potential of these data to support the development and validation of new assays and methodologies.26

In the domain of toxicology, reporting standards such as the Tox Bio Checklist (TBC) and Organisation for Economic Co-operation and Development (OECD) Toxicology Experiment Reporting Module (TERM) have primarily been applied to omic data types, particularly transcriptomic data. The TBC was an early attempt to capture study designs and biology rather than focusing on a specific technology platform.27 FAIRsharing.org lists TBC with a status of “Uncertain” because there is no maintainer, whereas TERM is not listed. Although it is difficult to causally link centralization of reporting standards to adoption, it is likely that the absence of centralized resources presents another hurdle for adoption, particularly across diverse research communities such as government and academia that may rely on different authorities. Many other relevant reporting standards for environmental health research experiments can also be identified at FAIRsharing.org, such as the Minimum Information about a Bioactive Entity (MIABE)28 and the toxicogenomics subset of MIAME (MIAME/Tox), now listed as “Deprecated” because it is no longer maintained.29

Many reporting standards not specifically developed for environmental health research experiments capture key aspects of the types of experiments that are performed in environmental health research. Integrating complementary reporting standards would likely capture a significant fraction of the expected metadata relevant to different components of an experiment. For example, a T-cell toxicity assay may well warrant use of the Minimal Information about T Cell Assays (MIATA) expectations by capturing cell collection and handling characteristics, but MIATA does not include dose and stressor details because those are not relevant to all T-cell assays.30 Therefore, to capture environmental health science–relevant metadata for a T-cell assay, MIATA would need to be combined with another set of standards that captures information about the stressor through identifiers such as the Distributed Structure-Searchable Toxicity (DSSTox) identifier31 as well as the treatment study design. In our opinion, there is an unresolved need to identify and/or develop a suite of reporting standards that defines essential metadata for assessing exposure and/or treatment across all types of experiments.

Resources and Infrastructure for (Meta)Data Collection

In addition to developing, describing, and implementing reporting standards, we believe there is a need to create infrastructure to collect (meta)data in a structured and consistent manner, such as ELIXIR’s Investigation/Study/Assay (ISA) Commons framework (isacommons.org)19,20 and the Center for Expanded Data Annotation and Retrieval (CEDAR) workbench (metadatacenter.org).32 Both ISA and CEDAR use reporting standards to ensure a) the expected metadata are included, b) the vocabulary is standardized, and c) the (meta)data are shared in a common structured machine-readable exchange format. These tools emphasize both vocabulary and metadata. Although not widely adopted, the ISA framework formed the basis of the ArrayExpress19 and MetaboLights33 repositories and the Nature Publishing Group journal Scientific Data.34 These examples primarily represent omic data types and illustrate the wide gap between these and other data types, such as individual end points (e.g., tissue weights), in the current state of data management and sharing. Although ISA and CEDAR use different structures and formats to collect metadata, both enable machine-readable sharing and provide the opportunity to develop data exchange tools between these and other formats.

To advance the FAIR principles in environmental health research, we believe that submission to public repositories will be instrumental. Systematic reviews, which collect and summarize literature for individual compounds, groups of chemicals, or environmental exposures, can query these repositories to find relevant data sets. For example, human exposure meta-analyses examining smoking or environmental exposures to a variety of environmental contaminants (e.g., polychlorinated biphenyls, arsenic) leveraged data sets in GEO to identify and use relevant transcriptomic data sets.25,35 However, data repositories vary in scope from specific data types (e.g., GEO) to specific experiment types such as CEBS36 and ToxRefDB,37 which are administered by the National Institute of Environmental Health Sciences (NIEHS) Division of Translational Toxicology (DTT) and the U.S. Environmental Protection Agency (U.S. EPA), respectively. Ensuring that all elements are accurately deposited in a structured manner is a significant challenge in the creation of a comprehensive environmental health science data repository that accepts data submissions from any investigator or institution. Alternatively, individual groups may opt to undertake the considerable development and administration efforts of a local repository, though this risks further community fragmentation. Open-source frameworks such as the Gen3 data commons, however, can provide a middle ground between institution-specific databases and harmonized data repositories.38–40

Methods

Case Study for the Use of Reporting Standards

Revising existing in vivo environmental health science experiment requirements.

Recently, the NIEHS Superfund Research Program joined the FAIR effort by incorporating new data management and analysis requirements for grantees to accelerate disparate data integration and reuse to assess the potential health and environmental impacts of Superfund chemicals of concern.41,42 As part of these efforts, a working group was formed between Superfund Research Centers at Michigan State University (https://iit.msu.edu/centers/superfund/), the University of Kentucky (superfund.engr.uky.edu/), the University of Louisville (https://louisville.edu/enviromeinstitute/superfund), and the University of Iowa (https://iowasuperfund.uiowa.edu/). Our objective was to update and harmonize the TBC and TERM reporting standards. Given the resources associated with developing standards (the Netherlands Organization for Health Research and Development estimated costs at USD per standard),43 we used existing in vivo experiment standards to illustrate how environmental health science data can be made more FAIR. We focused not only on metadata expectations but also on resources and tools aimed at facilitating implementation. The developed Minimum Information about Animal Toxicology Experiments In Vivo (MIATE/invivo) standard includes: a) updated requirements in human- and machine-readable formats, b) ISA configurations compatible with the ISA tools, c) templates for CEDAR, d) a GEO submission template, and e) example data (https://fairsharing.org/FAIRsharing.wYScsE). Although MIATE/invivo and associated resources were developed for in vivo treatment experiments, they provide a framework for development of resources tackling in vitro, ex vivo, and other data types.

Harmonizing existing in vivo toxicology (meta)data requirements.

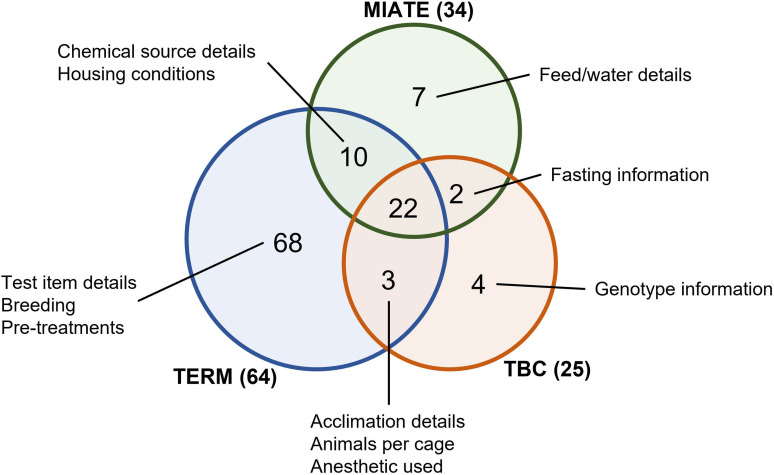

To maintain interoperability with CEBS and ToxRefDB, MIATE/invivo retained most fields in the TBC and TERM reporting standards (Figure 1; Excel Table S1). Specifically, fields related to genetic alterations and strain information requested by TBC were replaced using ontologies from resources such as the Rat Strain Ontology42 or Mouse Genome Informatics.44,45 Because novel genotypes are not used in all experiments, we instead suggest developing reporting standards to capture the appropriate metadata for studies using genetically modified animals. To our knowledge, such reporting standards have not been formalized in literature or as a record in FAIRsharing.org. The chemical stressor component was edited to accommodate multiple chemicals (i.e., mixtures), and the standard also required defining the source and purity of the chemical stressor. To resolve prior inaccuracies in chemical stressor nomenclature, MIATE/invivo required that chemical stressors be defined using a DSSTox identifier.31

Figure 1.

Comparison of metadata terms listed in the TERM, TBC, and MIATE/invivo reporting standards. Each standard was manually examined. Only metadata requirements relevant to in vivo experiments for each reporting standard were included for comparison. Because of the use of different vocabularies, each reporting standard metadata term was manually mapped to an equivalent term in the other reporting standards if one were present. Mapping summary can be found in Excel Table S1. The Venn diagram shows the number of common and unique terms for each reporting standard. Note: MIATE/invivo, Minimum Information about Animal Toxicology Experiments in Vivo; TBC, Tox Bio Checklist; TERM, Toxicology Experiment Reporting Module.

Unlike TERM, MIATE/invivo relegates sample and organ metadata to individual assays because these can involve different tissues from the same test subject. We expect that metadata for specific end points will be better captured by standards established by domain experts for individual assays. MIATE/invivo leverages the open-source ISA framework, which supports interoperability between reporting standards for comprehensive metadata capture. We also included provisions for more thorough documentation of food and water intake in diet-based experiments as well as more detailed information regarding complex treatment regimens in longitudinal studies involving multiple collection time points. Fields were also extended to improve study reproducibility as outlined in the Animal Research: Reporting of In Vivo Experiments (ARRIVE) recommendations.46 Nevertheless, note that MIATE/invivo and comparable standards strive to capture only a minimal set of required metadata elements. Data producers, independent investigators, risk/safety assessors, regulators, and responsible stewards all can benefit when these tools provide optional or custom fields that use controlled vocabularies to capture additional relevant metadata.

Illustrating the value of using resources for standardized data depositing.

Using MIATE/invivo and associated resources, here we present a case study using in vivo environmental health data sets deposited in GEO to highlight the need for and advantages of collecting and depositing (meta)data in a more robust manner. Because GEO is one of the more mature repositories and most journals expect transcriptomic data to be deposited there, it represents a rich source of data sets for evaluation. Moreover, we previously deposited data in GEO using earlier versions of MIATE/invivo, which illustrates the benefits of standardized (meta)data collection. The detailed methodology used here, including source code and outputs, is provided as a supplemental document (MIATE-3.0.1.zip), deposited to Zenodo [doi.org/10.5281/zenodo.7667576; this digital object identifier (DOI) will link to the most recent version, and version 3 was used for this commentary], and available on GitHub (https://github.com/Zacharewskilab/MIATE).

In short, GEO was queried for mouse and rat data sets using the keywords “dose-response,” “toxicology,” or “dose.” Metadata were computationally extracted from all data sets, and a semiautomated assessment removed studies involving biological (e.g., infections), physical (e.g., cold temperature), or psychological stressors (e.g., isolation), as well as studies involving co-treatments, whether with a chemical or not, which resulted in 1,233 total data sets (Excel Table S2). Because of incomplete (meta)data collection and lack of standardization, some data sets may be incorrectly included, but their inclusion further reinforces the importance of robust metadata collection tools. Metadata fields extracted from the 1,233 data sets were then manually mapped to terms in the TBC, TERM, or MIATE/invivo reporting standards (Excel Table S3).
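
The term-mapping step can be pictured with a short Python sketch. This is not the code in the supplemental archive; it is a simplified illustration that assumes sample metadata arrive as "key: value" strings and that a hand-built synonym table (`FIELD_SYNONYMS`, hypothetical) links depositor-chosen field names to standard terms. The sample values are made up.

```python
# Hypothetical synonym table: depositor-chosen field name -> standard term.
FIELD_SYNONYMS = {
    "strain": "strain",
    "mouse strain": "strain",
    "sex": "sex",
    "gender": "sex",
    "agent": "stressor",
    "treatment": "stressor",
    "dose": "dose",
    "time": "time_point",
    "time point": "time_point",
}


def map_characteristics(characteristics):
    """Split 'key: value' strings and map each key to a standard term.

    Returns (mapped, unmapped): a dict of standard term -> value, and a list
    of keys (or whole entries without a colon) that need manual review.
    """
    mapped, unmapped = {}, []
    for entry in characteristics:
        key, sep, value = entry.partition(":")
        if not sep:                       # no "key: value" structure at all
            unmapped.append(entry.strip())
            continue
        key = key.strip().lower()
        term = FIELD_SYNONYMS.get(key)
        if term is None:
            unmapped.append(key)
        else:
            mapped[term] = value.strip()
    return mapped, unmapped


sample = ["Gender: male", "mouse strain: C57BL/6", "agent: TCDD", "batch: 2"]
mapped, unmapped = map_characteristics(sample)
print(mapped)
print(unmapped)
```

Every key that falls through to `unmapped` represents manual curation effort, which is why consistent field names at deposition time matter.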

Discussion

State of Environmental Health Metadata in a Public Repository

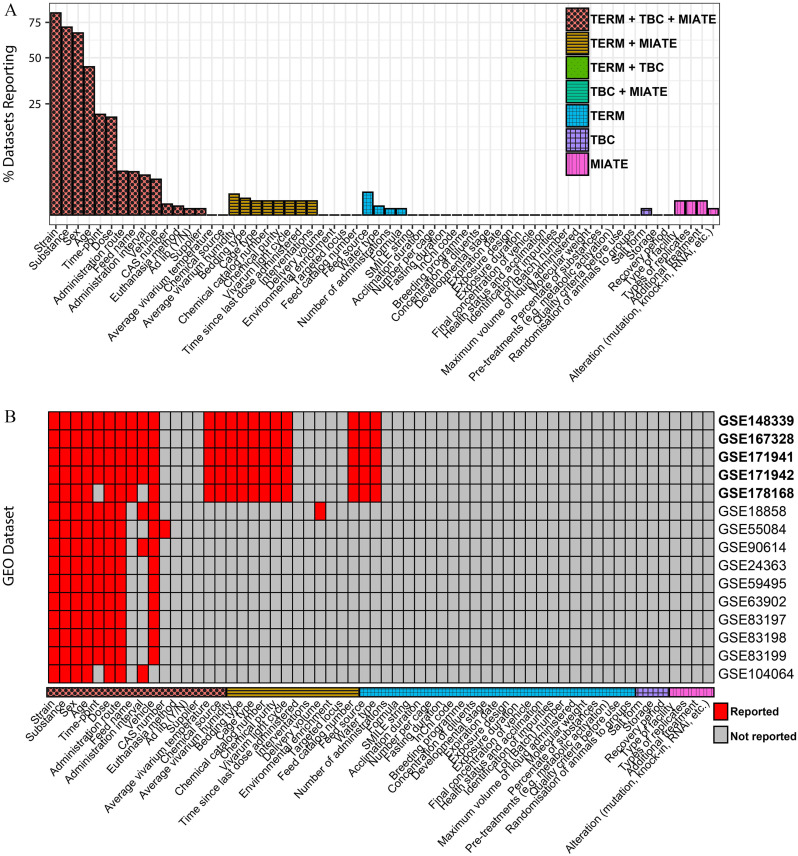

As expected, all GEO data sets reported the organism as required for deposition. However, none provided the complete minimum metadata expected by MIATE/invivo reporting standards when assessed without using complex algorithms such as natural language processing (Figure 2A). Although strain, toxicant identifier, sex, age, dose, and time point were commonly reported, the format and language were inconsistent. For example, 297 unique strain names were identified within the 1,233 data sets, but manual review identified different vocabularies (e.g., BALB/C, BALB-C, BABL/C), none of which were specific enough to identify the exact genetic stock (e.g., BALB/cJ-MGI:2159737 vs. BALB/cN-MGI:2161229). Even more concerning were the various reporting approaches used for treatment, vehicle, and dose, information that is essential for integrative analyses, systematic reviews, and use of artificial intelligence and machine learning. In several cases, treatment was reported as a “yes/no” or the toxicant and dose were reported together (e.g., “ sodium-arsenite”). Although the appropriate information is present, inconsistent structure compromises machine-automated parsing, greatly increasing the difficulty of reuse and the potential for error. Furthermore, toxicants were often reported using common names (e.g., tamoxifen) or abbreviations (e.g., 2AAF for 2-acetylaminofluorene). An automated approach to map names to CAS or simplified molecular-input line-entry system identifiers returned a matching identifier only of the time, with many being incorrect [e.g., Ni (nickel) matched iodamine]. This possibility of error highlights the importance of establishing not only metadata expectations but also ontologies, controlled vocabularies, and unique descriptive identifiers (e.g., the DSSTox ID31) such as those being collected by efforts of the NIEHS Environmental Health Language Collaborative.47
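
The cost of fusing toxicant and dose into one string can be made concrete with a small Python sketch. It assumes a fixed "amount unit name" layout and an illustrative, non-exhaustive unit list; the example strings are invented and do not come from any GEO record. Real free-text fields vary far more than this pattern allows, which is the point: anything that does not match has to go to manual review.

```python
import re

# Pattern for strings that fuse a dose and a toxicant name, e.g.
# "30 mg/kg compound-x". The unit list is illustrative, not exhaustive.
DOSE_PATTERN = re.compile(
    r"^\s*(?P<amount>\d+(?:\.\d+)?)\s*"
    r"(?P<unit>mg/kg|ug/kg|ppm|uM)\s+"
    r"(?P<toxicant>.+?)\s*$",
    re.IGNORECASE,
)


def split_treatment(text):
    """Split a fused 'amount unit toxicant' string into structured fields.

    Returns None when the string does not match, so that the caller can
    route it to manual review instead of guessing.
    """
    match = DOSE_PATTERN.match(text)
    if match is None:
        return None
    return {
        "dose": float(match.group("amount")),
        "dose_unit": match.group("unit"),
        "toxicant": match.group("toxicant"),
    }


print(split_treatment("30 mg/kg compound-x"))  # hypothetical fused value
print(split_treatment("yes"))                  # "yes/no" carries no dose at all
```

Collecting dose, unit, and a stressor identifier as separate fields at the source removes the need for this kind of brittle parsing.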

Figure 2.

Evaluation of GEO deposited metadata conformance to the in vivo experimental environmental health science reporting standards TERM, TBC, and MIATE/invivo. GEO was queried for mouse and rat data sets using the keywords “dose-response,” “toxicology,” or “dose,” followed by computational extraction of metadata. Following semiautomated metadata assessment to remove studies involving biological (e.g., infections), physical (e.g., cold temperature), or psychological stressors, a total of 1,233 GEO data sets were examined (Excel Table S2). Expected terms were compared to requested metadata in TERM, TBC, and MIATE/invivo reporting standards (Excel Table S3). (A) Percentage of data sets providing each reporting standard term is shown. (B) Top 15 data sets providing the most terms present in any reporting standards shown as a heat map indicating which term was reported. Bolded GEO accession identifiers represent data sets using a draft version of MIATE/invivo. Documentation and code for reproducibility are provided as a supplemental document (MIATE-3.0.1.zip) and available as part of MIATE/invivo resources (doi.org/10.5281/zenodo.7667576). Data used for plotting are provided in supplementary tables (Excel Table S4–S5). Note: GEO, Gene Expression Omnibus; MIATE/invivo, Minimum Information about Animal Toxicology Experiments in Vivo; TBC, Tox Bio Checklist; TERM, Toxicology Experiment Reporting Module.

Demonstrating the value of standardizing data deposition.

MIATE/invivo also provides an accompanying template for data deposition into GEO (Table S6), which was used to deposit several data sets. Not surprisingly, of all 1,233 data sets we assessed, these had the most terms that could be identified and parsed through automated processes [Figure 2B (bolded accession numbers)]. We examined the next top 15 data sets in terms of how many MIATE/invivo terms could be computationally identified. These data sets captured 27%–34% of the terms despite the lack of adoption of standards and controlled vocabulary among data depositors, indicating some agreement regarding essential information to be included. Three data sets collected without using MIATE/invivo [those representing the top (GSE18858),48 middle (GSE70583), and bottom (GSE116653)49 data sets for the number of relevant identifiable terms] were examined more closely (Table 3). Evaluation highlighted that a lack of standardization is one of the largest hurdles, because relevant terms could be identified by manually reading the associated manuscripts and the GEO summary but could not be computationally identified using fully automated approaches to parse the metadata. It is notable that GSE18858 did not provide information regarding housing conditions, chemical identifiers (other than name), or feed/water information in the GEO metadata. However, it did include delivery volumes, which were not initially covered by MIATE/invivo but have since been added, illustrating that MIATE/invivo must be a living standard that grows and improves over time. Most data sets required some amount of labor-intensive manual mapping of terms. Consequently, the inability to obtain metadata from database records does not necessarily reflect poor data quality, because the relevant information can be retrieved manually or possibly through natural language processing algorithms.

Table 3.

Manual examination of representative data sets for the inclusion of metadata terms within MIATE/invivo.

| GEO accession | MIATE/invivo terms identified manually<sup>a</sup> | MIATE/invivo terms identified by term mapping | MIATE/invivo terms identified as exact matches |
|---|---|---|---|
| GSE116653 | 38<sup>b</sup> | 1: 10: | 0 |
| GSE18858 | 45 | <sup>c</sup> | 0 |
| GSE70583 | 17<sup>d</sup> | 5: | 1: strain – no ontology |

Note: GEO, Gene Expression Omnibus; MIATE/invivo, Minimum Information about Animal Toxicology Experiments In Vivo.

<sup>a</sup>Includes ability to derive information through database searches (e.g., mapping chemical name to DSSToxID).

<sup>b</sup>Manuscript was not clearly linked to GEO record.

<sup>c</sup>Vehicle was not provided in manuscript but is in GEO record.

<sup>d</sup>No link to manuscript could be identified. All information was determined from summary text.

This case study evaluated gene expression data in GEO, a more mature repository containing environmental health research data in comparison with dedicated repositories such as CEBS and ToxRefDB. Most other data types, particularly nonomic data, either lack established repositories or have repositories that are not as widely adopted as GEO. Even finding relevant data is itself a significant hurdle; among those identified, there was both an absence and an inconsistent use of controlled language. We show that depositing data using a defined set of requirements improved these aspects and that comparable requirements can be developed to establish other high-quality depositions. These outcomes are possible even without the availability of a dedicated repository, as demonstrated recently for geospatial information system (GIS) data sets.50

Proposed workflow for addressing FAIR principles in environmental health research.

This commentary highlights the need for collective community change regarding when and how (meta)data are collected to achieve the FAIR objectives. We propose the following workflow to further encourage data sharing and reuse as well as generate new knowledge from existing data. This workflow is aimed primarily at motivating the adoption of these principles through positive reinforcement and developing resources that reduce the burden on individual investigators.

Item A. Library of reporting standards.

This step flows from the concept of common data elements used in the health care field to collect standardized language and terms. Although not widely used in basic and preclinical toxicology and pharmacology, it is reflected in TBC, TERM, MIATE/invivo, and the Standard for Exchange of Nonclinical Data. A single centralized resource where standards are cataloged in machine-readable formats would specify the required elements and allowable values. Established resources such as FAIRsharing.org, for example, could require that data standard records include a LinkML schema,22 or recognize those that do. Development of the standards in this library would be a collaborative, community-based, version-controlled effort built on an accepted schema standard, with the costs dispersed across the individual developing communities. Validated standards would also be assigned a persistent DOI as well as useful metadata tags for easy searching of the relevant standards. For example, MIATE/invivo is version-controlled using GitHub, and releases are assigned a DOI using Zenodo (doi.org/10.5281/zenodo.7667576).

Item B. Template creation, applications, and tools.

A typical environmental health study would likely need to fulfill multiple minimal requirements for each diverse research area. For example, studies involving dose-dependent gene expression analysis in a novel genetic mouse would need to satisfy MINSEQE and MIATE/invivo requirements, as well as additional minimal information describing the novel model that should be defined by the genetic model research community. Additional experimental end points would similarly have distinct sets of expected metadata. The requirements may have common data elements (e.g., species, strain), whereas others may be unique to one reporting standard (e.g., dose or target gene). To demonstrate how this could work, we developed a Python software tool to integrate multiple ISA Study configurations in the default format while maintaining compatibility with the downstream use of an ISAcreator tool (https://github.com/zacharewskilab/MIATE).
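
As a conceptual illustration only (this is not the tool in the repository above and does not read ISA configuration files), the Python sketch below merges the required fields of two hypothetical standards into one template so that shared elements are requested once. The standard names and field lists are invented for the example.

```python
# Hypothetical required-field lists; real standards define many more terms.
STANDARDS = {
    "sequencing": ["organism", "strain", "library_strategy", "instrument"],
    "in_vivo_exposure": ["organism", "strain", "stressor_id", "dose", "dose_unit"],
}


def merge_standards(standards):
    """Build one template from several standards.

    Returns a dict mapping each field to the list of standards that require
    it. Fields keep their first-seen order, and a field shared by several
    standards appears only once in the template.
    """
    template = {}
    for name, fields in standards.items():
        for field in fields:
            template.setdefault(field, []).append(name)
    return template


template = merge_standards(STANDARDS)
shared = [field for field, sources in template.items() if len(sources) > 1]
print(list(template))
print("shared:", shared)
```

Keeping track of which standard requested each field also makes it possible to export standard-specific views of the same record later.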

Item C. Facilitating (meta)data collection at the source.

The responsibility for collecting the relevant metadata falls on data producers, who may not possess experience with data models, controlled vocabularies, ontologies, data formats, or bioinformatics. Nevertheless, all stakeholders benefit from robust, well-maintained, and user-friendly software tools to guide effective (meta)data collection. Existing tools such as ISAcreator,51 CEDAR workbench,32 and Swate52 can be used to assist (meta)data collectors with ontologies to minimize common errors. Although these tools facilitate the use of controlled language and ontologies, not all simultaneously collect the associated data or are optimized to capture multiple independent end points for an experiment. As the research community continues to develop these resources, it will be critical that end users be considered as well as the mechanism to go from data collection to sharing.

Item D. Collecting (meta)data in a structured manner.

Established repositories provide access to organized and accessible metadata, but not all data types have a dedicated repository. The proposed workflow ensures that (meta)data are structured prior to deposition to support interoperability and reusability. Collection of structured (meta)data is standard practice for tools such as ISAcreator, which collects (meta)data in ISA-Tab or ISA-JSON formats along with data files into a single compressed archive file. Alternatively, CEDAR provides metadata in both RDF and JSON-LD machine-readable formats. With prior knowledge regarding which metadata collection resource was used, independent investigators can more easily access and reuse the (meta)data.

Item E. Exporting to public repositories.

Deposition of (meta)data into repositories is an important component for supporting the “findable” and “accessible” aspects of FAIR.53 Although some data types will not have an established repository, others do. For example, ArrayExpress and GEO both host expression data, Metabolomics Workbench and MetaboLights manage metabolomic data, and Proteomics Identification Database retains proteomic data. However, repositories vary regarding metadata requirements and deposition formats (e.g., SOFT or MINiML XML for GEO and mwTab for Metabolomics Workbench). These variations do not mean that (meta)data needs to be recollected or manually reorganized for each deposition. Instead, tools could be developed to convert structured (meta)data into the formats accepted by each repository, minimizing copying errors and simplifying (meta)data submission. For example, ISA has a suite of tools that provides a converter for ArrayExpress.
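To make the conversion idea concrete, the following sketch renders one structured sample record in two target layouts without re-entering any values. It is illustrative only: the record layout and both function names are hypothetical, the SOFT-style labels reflect our reading of GEO's submission documentation and should be checked against the current specification, and the flat row is a generic layout rather than mwTab or any other repository format.

```python
def to_soft_sample(sample):
    """Render one sample as SOFT-style text (labels are an assumption)."""
    lines = [
        f"^SAMPLE = {sample['id']}",
        f"!Sample_title = {sample['title']}",
        f"!Sample_organism_ch1 = {sample['species']}",
    ]
    # Free-form attributes become "tag: value" characteristics lines.
    for tag, value in sample["characteristics"].items():
        lines.append(f"!Sample_characteristics_ch1 = {tag}: {value}")
    return "\n".join(lines)


def to_flat_row(sample):
    """Render the same sample as one flat row for a generic tabular target."""
    row = {"sample_id": sample["id"], "species": sample["species"]}
    row.update(sample["characteristics"])
    return row


# Hypothetical structured record, collected once at the source.
sample = {
    "id": "sample_1",
    "title": "Liver, 10 ug/kg, replicate 1",
    "species": "Mus musculus",
    "characteristics": {"strain": "C57BL/6", "dose": "10 ug/kg", "tissue": "liver"},
}

soft_text = to_soft_sample(sample)
flat_row = to_flat_row(sample)
print(soft_text)
print(flat_row)
```

Both outputs are derived from the same record, so a correction made at the source propagates to every deposition format instead of being retyped.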

Item F. Promoting adoption through validation.

Data generators have limited motivation to take on the burden of depositing (meta)data, considering the time and effort involved. Although many avenues should be explored to credit robust efforts to produce FAIR data, implementation of tools to assess metadata quality would provide guidance and improve (meta)data deposition efforts. For example, a GEO submission would be expected to score high for gene expression data requirements but low for proteomic data. Similarly, a data set evaluating a cancer cell line is unlikely to score well for MIATE/invivo, and it should not. When looking to reuse data for artificial intelligence and machine learning, these scores could be used as selection criteria. Tools to assess (meta)data deposition quality against expected requirements would increase confidence in the quality of the data set and accelerate its reuse. For example, the Metabolomics Workbench File Validator website (https://moseleybioinformaticslab.github.io/mwFileStatusWebsite/) continuously provides evaluation reports for all publicly available data sets in the Metabolomics Workbench for conformity to the mwTab deposition requirements and consistency between mwTab and JSON versions.54,55
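One simple way such a score could be computed is as the fraction of required fields that are filled in a deposited record. The sketch below is a toy under stated assumptions: the required-field list is a hypothetical, abbreviated stand-in for MIATE/invivo, and `score_metadata` is a name introduced here, not an existing tool. A realistic validator would also check controlled vocabulary terms, units, and value formats.

```python
def is_filled(value):
    """Treat None and blank strings as missing; a dose of 0 still counts."""
    return value is not None and str(value).strip() != ""


def score_metadata(record, required_fields):
    """Return (fraction of required fields filled, sorted missing fields)."""
    required = set(required_fields)
    if not required:
        raise ValueError("required_fields must not be empty")
    missing = sorted(f for f in required if not is_filled(record.get(f)))
    return (len(required) - len(missing)) / len(required), missing


# Hypothetical, abbreviated stand-in for the MIATE/invivo checklist.
MIATE_INVIVO = {"species", "strain", "dose", "route of exposure"}

invivo_record = {
    "species": "Mus musculus",
    "strain": "C57BL/6",
    "dose": 0,  # vehicle control
    "route of exposure": "oral gavage",
}
cell_line_record = {"species": "Homo sapiens", "cell line": "HepG2", "dose": 5}

invivo_score, invivo_missing = score_metadata(invivo_record, MIATE_INVIVO)
cell_score, cell_missing = score_metadata(cell_line_record, MIATE_INVIVO)

print("in vivo:", invivo_score, invivo_missing)
print("cell line:", cell_score, cell_missing)
```

As the text anticipates, the cell line record scores lower against the in vivo checklist, and the list of missing fields gives the depositor concrete feedback rather than only a number.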

Challenges and future directions.

One impediment to achieving the FAIR objectives is providing sufficient incentives to encourage voluntary deposition of rich (meta)data. Overcoming this obstacle falls not only on data generators but also on funding agencies and publishers, who have significant influence within the research community. Progress toward the FAIR objectives is best exemplified by transcriptomic data reporting, which has matured with experience, has led to established repositories (e.g., GEO), and is subject to requirements by most publishers for manuscript publication. Nevertheless, it is not uncommon to find papers that fail to provide sufficiently rich metadata to perform independent secondary analyses or, worse, do not submit complete or even partial data sets. This is not surprising: because effective automated validation methods are not currently available, the responsibility of verifying and validating deposited data generally falls on peer reviewers or journal staff.

All stakeholders, from data generators to publishers and funding institutions, have a role in addressing this challenge. To accelerate adoption of the FAIR principles, we suggest finding ways to recognize investigator contributions during manuscript acceptance and funding decisions, and to evaluate the quality of data submissions so that investigators are gently pushed to improve their own data. Item F outlines a putative mechanism to automate the evaluation of deposited data, which could provide feedback to data generators, funding agencies, and publishers following comparison with the expected reporting standard.

Some journals, including Environmental Health Perspectives, already ask submitters to meet the standards outlined at EQUATOR (Enhancing Quality and Transparency of Health Research; https://www.equator-network.org/). Likewise, publishers could ask submitters to link their deposited data to specific relevant standards listed in a library of standards as outlined in Item A. Such a requirement would allow automated processes to validate individual data sets against their associated standards and provide feedback to authors to improve data reporting. Even in the absence of robust data evaluation tools, this approach could be implemented today to give authors feedback about the standards relevant to their data type. However, major organized efforts are needed to determine how to define and collect individual standards in machine-readable formats.

Conclusions

In our opinion, overcoming the challenges of achieving the FAIR objectives will require significant sweat equity and investment. As a community, we need to redefine the culture of data management and sharing. Training, particularly at the institutional level, is expected to support such efforts. However, development and maintenance of user-friendly resources for structured data collection and data deposition will be essential to minimize the burden on investigators and incentivize their participation. Moreover, it will be particularly valuable to develop open-source resources that include long-term maintenance plans. It is expected that as resources such as MIATE/invivo mature, they will be developed further to accommodate other experimental designs, including developmental, ex vivo, and in vitro studies. The environmental health sciences are an ever-expanding area of research involving diverse experiment types. Capturing the nuances of each experimental study design and data type is essential to maximize investment returns and advance discoveries in environmental health research.

Acknowledgments

The authors would like to thank M. Heacock, J.M. Fostel, and M. Conway, all of the National Institute of Environmental Health Sciences (NIEHS), for continued discussions on promoting and accelerating the adoption of FAIR principles in the environmental health science community. This work was supported by the NIEHS Superfund Research Program (SRP) to T.Z. (P42ES004911) and SRP Data Interoperability and Reuse supplements to the Michigan State University Superfund Research Center (SRC) (P42ES004911), the University of Kentucky SRC (P42ES007380), the University of Louisville SRC (P42ES023716), and the University of Iowa SRP (P42ES013661).

References

- 1. Baker M. 2016. 1,500 Scientists lift the lid on reproducibility. Nature 533(7604):452–454, 10.1038/533452a.

- 2. NIH (National Institutes of Health) Office of Science Policy. 2020. Final NIH Policy for Data Management and Sharing: NOT-OD-21-013. https://grants.nih.gov/grants/guide/notice-files/NOT-OD-21-013.html.

- 3. National Academies of Sciences, Engineering, and Medicine. 2018. Open Science by Design: Realizing a Vision for 21st Century Research. Washington, DC: National Academies Press. 10.17226/25116 [accessed 19 June 2023].

- 4. European Commission, Directorate-General for Research and Innovation. 2018. Turning FAIR into Reality: Final Report and Action Plan from the European Commission Expert Group on FAIR data. https://data.europa.eu/doi/10.2777/1524 [accessed 19 June 2023].

- 5. Wellcome Trust. Open research. https://wellcome.org/what-we-do/our-work/open-research [accessed 6 February 2023].

- 6. Nemer M. 2020. Roadmap for Open Science. https://science.gc.ca/site/science/sites/default/files/attachments/2022/Roadmap-for-Open-Science.pdf [accessed 19 June 2023].

- 7. Bhhatarai B, Walters WP, Hop C, Lanza G, Ekins S. 2019. Opportunities and challenges using artificial intelligence in ADME/Tox. Nat Mater 18(5):418–422, 10.1038/s41563-019-0332-5.

- 8. Boyles RR, Thessen AE, Waldrop A, Haendel MA. 2019. Ontology-based data integration for advancing toxicological knowledge. Curr Opin Toxicol 16:67–74, 10.1016/j.cotox.2019.05.005.

- 9. Chen X, Roberts R, Tong W, Liu Z. 2022. Tox-GAN: an artificial intelligence approach alternative to animal studies–a case study with toxicogenomics. Toxicol Sci 186(2):242–259, 10.1093/toxsci/kfab157.

- 10. Brock J. 2019. “A love letter to your future self”: what scientists need to know about FAIR data. https://www.nature.com/nature-index/news-blog/what-scientists-need-to-know-about-fair-data [accessed 6 February 2023].

- 11. Wallach JD, Boyack KW, Ioannidis JPA. 2018. Reproducible research practices, transparency, and open access data in the biomedical literature, 2015–2017. PLoS Biol 16(11):e2006930, 10.1371/journal.pbio.2006930.

- 12. Ali B, Dahlhaus P. 2022. The role of FAIR data towards sustainable agricultural performance: a systematic literature review. Agriculture-Basel 12(2):309, 10.3390/agriculture12020309.

- 13. Taylor CF, Field D, Sansone SA, Aerts J, Apweiler R, Ashburner M, et al. 2008. Promoting coherent minimum reporting guidelines for biological and biomedical investigations: the MIBBI project. Nat Biotechnol 26(8):889–896, 10.1038/nbt.1411.

- 14. Brazma A, Hingamp P, Quackenbush J, Sherlock G, Spellman P, Stoeckert C, et al. 2001. Minimum information about a microarray experiment (MIAME)-toward standards for microarray data. Nat Genet 29(4):365–371, 10.1038/ng1201-365.

- 15. FAIRsharing.org. 2021. Minimum Information About a Microarray Experiment (MIAME). https://fairsharing.org/FAIRsharing.32b10v [accessed 26 September 2022].

- 16. FAIRsharing.org. 2022. Minimal Information About a Cellular Assay (MIACA). https://fairsharing.org/FAIRsharing.7d0yv9 [accessed 19 June 2023].

- 17. FAIRsharing.org. 2022. Minimal Information about a high throughput SEQuencing Experiment (MINSEQE). https://fairsharing.org/FAIRsharing.a55z32 [accessed 19 June 2023].

- 18. Sansone SA, Rocca-Serra P, Field D, Maguire E, Taylor C, Hofmann O, et al. 2012. Toward interoperable bioscience data. Nat Genet 44(2):121–126, 10.1038/ng.1054.

- 19. Sansone SA, Rocca-Serra P, Brandizi M, Brazma A, Field D, Fostel J, et al. 2008. The first RSBI (ISA-TAB) workshop: “can a simple format work for complex studies?” OMICS 12(2):143–149, 10.1089/omi.2008.0019.

- 20. Sansone SA, Rocca-Serra P, Tong W, Fostel J, Morrison N, Jones AR, et al. 2006. A strategy capitalizing on synergies: the Reporting Structure for Biological Investigation (RSBI) working group. OMICS 10(2):164–171, 10.1089/omi.2006.10.164.

- 21. Kettner C, Field D, Sansone SA, Taylor C, Aerts J, Binns N, et al. 2010. Meeting report from the second “minimum information for biological and biomedical investigations” (MIBBI) workshop. Stand Genomic Sci 3(3):259–266, 10.4056/sigs.147362.

- 22. Moxon S, Solbrig H, Unni D, Jiao D, Bruskiewich R, Balhoff J, et al. 2021. The Linked Data Modeling Language (LinkML): a general-purpose data modeling framework grounded in machine-readable semantics. In: CEUR Workshop Proceedings: 2021 International Conference on Biomedical Ontologies (ICBO 2021). 16–18 September 2021. Bozen-Bolzano, Italy, 148.–.

- 23. Gamble M, Goble C, Klyne G, Zhao J. 2012. MIM: A Minimum Information Model: A Minimum Information Model vocabulary and framework for Scientific Linked Data. In: 2012 IEEE 8th International Conference on E-Science. 8–12 October 2012. Chicago, Illinois, 1–8.

- 24. Costello E, Rock S, Stratakis N, Eckel SP, Walker DI, Valvi D, et al. 2022. Exposure to per- and polyfluoroalkyl substances and markers of liver injury: a systematic review and meta-analysis. Environ Health Perspect 130(4):46001, 10.1289/EHP10092.

- 25. Flynn E, Chang A, Nugent BM, Altman R. 2021. Comprehensive assessment of smoking and sex related effects in publicly available gene expression data. bioRxiv. Preprint posted online September 29, 2021. 10.1101/2021.09.27.461968.

- 26. Comess S, Akbay A, Vasiliou M, Hines RN, Joppa L, Vasiliou V, et al. 2020. Bringing big data to bear in environmental public health: challenges and recommendations. Front Artif Intell 3:31, 10.3389/frai.2020.00031.

- 27. Fostel JM, Burgoon L, Zwickl C, Lord P, Corton JC, Bushel PR, et al. 2007. Toward a checklist for exchange and interpretation of data from a toxicology study. Toxicol Sci 99(1):26–34, 10.1093/toxsci/kfm090.

- 28. FAIRsharing.org. 2021. Minimum Information About a Bioactive Entity (MIABE). https://fairsharing.org/FAIRsharing.dt7hn8 [accessed 15 February 2023].

- 29. FAIRsharing.org. 2022. Minimum Information About an Array-Based Toxicogenomics Experiment (MIAME/Tox). https://fairsharing.org/10.25504/FAIRsharing.zrmjr7 [accessed 4 November 2014].

- 30. FAIRsharing.org. 2021. Minimal Information About T Cell Assays (MIATA). https://fairsharing.org/FAIRsharing.n7nqde [accessed 19 June 2023].

- 31. Grulke CM, Williams AJ, Thillanadarajah I, Richard AM. 2019. EPA’s DSSTox database: history of development of a curated chemistry resource supporting computational toxicology research. Comput Toxicol 12:100096, 10.1016/j.comtox.2019.100096.

- 32. O’Connor MJ, Warzel DB, Martinez-Romero M, Hardi J, Willrett D, Egyedi AL, et al. 2019. Unleashing the value of Common Data Elements through the CEDAR Workbench. AMIA Annu Symp Proc 2019:681–690.

- 33. Haug K, Cochrane K, Nainala VC, Williams M, Chang J, Jayaseelan KV, et al. 2020. MetaboLights: a resource evolving in response to the needs of its scientific community. Nucleic Acids Res 48(D1):D440–D444, 10.1093/nar/gkz1019.

- 34. Hufton A. Scientific Data’s metadata specification. Scientificdataupdates: a blog from Scientific Data. https://blogs.nature.com/scientificdata/2014/01/08/scientific-datas-metadata-specification/ [accessed 24 May 2023].

- 35. Kim S, Hollinger H, Radke EG. 2022. Omics in environmental epidemiological studies of chemical exposures: a systematic evidence map. Environ Int 164:107243, 10.1016/j.envint.2022.107243.

- 36. Waters M, Stasiewicz S, Merrick BA, Tomer K, Bushel P, Paules R, et al. 2008. CEBS–Chemical Effects in Biological Systems: a public data repository integrating study design and toxicity data with microarray and proteomics data. Nucleic Acids Res 36(Database issue):D892–D900, 10.1093/nar/gkm755.

- 37. Watford S, Ly Pham L, Wignall J, Shin R, Martin MT, Friedman KP. 2019. ToxRefDB version 2.0: improved utility for predictive and retrospective toxicology analyses. Reprod Toxicol 89:145–158, 10.1016/j.reprotox.2019.07.012.

- 38. Do N, Grossman R, Feldman T, Fillmore N, Elbers D, Tuck D, et al. 2019. The Veterans Precision Oncology Data Commons: transforming VA data into a national resource for research in precision oncology. Semin Oncol 46(4–5):314–320, 10.1053/j.seminoncol.2019.09.002.

- 39. Jensen MA, Ferretti V, Grossman RL, Staudt LM. 2017. The NCI Genomic Data Commons as an engine for precision medicine. Blood 130(4):453–459, 10.1182/blood-2017-03-735654.

- 40. Grossman R, Abel B, Angiuoli S, Barrett J, Bassett D, Bramlett K, et al. 2017. Collaborating to compete: Blood Profiling Atlas in Cancer (BloodPAC) Consortium. Clin Pharmacol Ther 101(5):589–592, 10.1002/cpt.666.

- 41. Heacock ML, Amolegbe SM, Skalla LA, Trottier BA, Carlin DJ, Henry HF, et al. 2020. Sharing SRP data to reduce environmentally associated disease and promote transdisciplinary research. Rev Environ Health 35(2):111–122, 10.1515/reveh-2019-0089.

- 42. Heacock ML, Lopez AR, Amolegbe SM, Carlin DJ, Henry HF, Trottier BA, et al. 2022. Enhancing data integration, interoperability, and reuse to address complex and emerging environmental health problems. Environ Sci Technol 56(12):7544–7552, 10.1021/acs.est.1c08383.

- 43. Musen MA. 2022. Without appropriate metadata, data-sharing mandates are pointless. Nature 609(7926):222, 10.1038/d41586-022-02820-7.

- 44. Nigam R, Munzenmaier DH, Worthey EA, Dwinell MR, Shimoyama M, Jacob HJ. 2013. Rat strain ontology: structured controlled vocabulary designed to facilitate access to strain data at RGD. J Biomed Semantics 4(1):36, 10.1186/2041-1480-4-36.

- 45. Law M, Shaw DR. 2018. Mouse Genome Informatics (MGI) is the international resource for information on the laboratory mouse. In: Eukaryotic Genomic Databases: Methods and Protocols. Kollmar M, ed. New York, NY: Springer New York, 141–161.

- 46. Percie Du Sert N, Hurst V, Ahluwalia A, Alam S, Avey MT, Baker M, et al. 2020. The ARRIVE guidelines 2.0: updated guidelines for reporting animal research. BMC Vet Res 16(1):242, 10.1186/s12917-020-02451-y.

- 47. Holmgren SD, Boyles RR, Cronk RD, Duncan CG, Kwok RK, Lunn RM, et al. 2021. Catalyzing knowledge-driven discovery in environmental health sciences through a community-driven harmonized language. Int J Environ Res Public Health 18(17):8985, 10.3390/ijerph18178985.

- 48. Thomas RS, Clewell HJ 3rd, Allen BC, Wesselkamper SC, Wang NC, Lambert JC, et al. 2011. Application of transcriptional benchmark dose values in quantitative cancer and noncancer risk assessment. Toxicol Sci 120(1):194–205, 10.1093/toxsci/kfq355.

- 49. Sai L, Yu G, Bo C, Zhang Y, Du Z, Li C, et al. 2019. Profiling long non-coding RNA changes in silica-induced pulmonary fibrosis in rat. Toxicol Lett 310:7–13, 10.1016/j.toxlet.2019.04.003.

- 50. Ojha S, Thompson PT, Powell CD, Moseley HNB, Pennell KG. 2022. A FAIR approach for detecting and sharing PFAS hot-spot areas and water systems. ChemRxiv. Preprint posted online July 25, 2022. 10.26434/chemrxiv-2022-bt3f6.

- 51. Rocca-Serra P, Brandizi M, Maguire E, Sklyar N, Taylor C, Begley K, et al. 2010. ISA software suite: supporting standards-compliant experimental annotation and enabling curation at the community level. Bioinformatics 26(18):2354–2356, 10.1093/bioinformatics/btq415.

- 52. Mühlhaus T, Brillhaus D, Tschöpe M, Maus O, Grüning B, Garth C, et al. 2021. DataPLANT–Tools and Services to structure the Data Jungle for fundamental plant researchers. In: E-Science-Tage 2021: Share Your Research Data. Heuveline V, Bisheh N, eds. Heidelberg, Germany: heiBOOKS, 132–145.

- 53. Wilkinson MD, Dumontier M, Aalbersberg IJ, Appleton G, Axton M, Baak A, et al. 2016. The FAIR guiding principles for scientific data management and stewardship. Sci Data 3:160018, 10.1038/sdata.2016.18.

- 54. Powell CD, Moseley HNB. 2022. The metabolomics workbench file status website: a metadata repository promoting FAIR principles of metabolomics data. bioRxiv. Preprint posted online March 7, 2022. 10.1101/2022.03.04.483070.

- 55. Powell CD, Moseley HNB. 2021. The Python Library for RESTful Access and Enhanced Quality Control, Deposition, and Curation of the Metabolomics Workbench Data Repository. Metabolites 11(3), 10.3390/metabo11030163.

- 56. FAIRsharing.org. 2021. Tox Biology Checklist (TBC). https://fairsharing.org/229 [accessed 19 June 2023].

- 57. Harrill JA, Viant MR, Yauk CL, Sachana M, Gant TW, Auerbach SS, et al. 2021. Progress towards an OECD reporting framework for transcriptomics and metabolomics in regulatory toxicology. Regul Toxicol Pharmacol 125:105020, 10.1016/j.yrtph.2021.105020.
