Abstract

Study Design

Cross-sectional database study

Objective

The purpose of this study was to develop a successful, reproducible, and reliable convolutional neural network (CNN) model capable of segmentation and classification for grading intervertebral disc degeneration (IVDD), and to quantify the network's impact on doctors' clinical decision-making.

Methods

A total of 5685 discs from 1137 patients were graded independently by four experienced doctors according to the Pfirrmann classification. A ground truth (GT) was established for each disc in accordance with the decision of the majority of doctors. The U-net model was used for segmentation; 1815 discs from 363 patients were used to train and test the U-net. The Inception-v3 model was employed for classification. All discs were separated into two distinct sets: 90% in a training set and 10% in a test set. The performance metrics of these models were measured, reliability tests were performed, and the impact of CNN assistance on doctors was assessed.

Results

Segmentation accuracy was .9597, with a Jaccard index of .8717 and a Sørensen-Dice coefficient of .9314. Classification accuracy was .9346, and the F1 score was .9355. The intraclass correlation coefficient (ICC) and kappa values between CNN and GT were .95-.97. With CNN's assistance, the success rates of doctors increased by 7.9% to 22%.

Conclusions

The fully automated network outperformed doctors markedly in terms of accuracy and reliability. The results of CNN were comparable to those of other recent studies in the literature. It was determined that CNN’s assistance had a substantial positive effect on the doctor’s decision.

Keywords: degenerative disc disease, convolutional neural network, Pfirrmann classification, segmentation

Introduction

Low back pain is a prevalent condition with significant social and medical costs. It has a complex etiology, and IVDD, with a 40% prevalence, is one of the most prominent causes. 1 Current conservative and surgical interventions are generally symptom-modulating and have limited long-term benefits. Recent research on regenerative therapies,2,3 particularly cell-based approaches, 4 has shown encouraging preclinical results and suggests that they may be beneficial in treating early disc degeneration. Therefore, the proper classification of IVDD is crucial for treatment. 5 With magnetic resonance imaging (MRI), both morphometric6,7 and quantitative classifiers8–11 for IVDD grading have been created, and varied degrees of reproducibility have been reported. Interobserver variability in the manual classification of degenerative disc disease (DDD) is relatively high. 12 Therefore, disc degeneration has also been classified using various convolutional neural networks and deep learning techniques.13–16 In those studies, the outcomes of deep neural networks were compared with those of doctors to highlight their efficacy. These networks have been shown to be highly effective at classification, but it is unclear whether this success influences the treatment decisions made by clinicians. In this study, we aimed to develop a deep neural network capable of both segmentation and classification of IVDD according to the Pfirrmann classification, which is widely used in clinical practice, and to demonstrate the impact of this network on the decisions of clinicians, who are still primarily responsible for diagnosis and treatment.

Methods

Medical Data Collection and Labeling

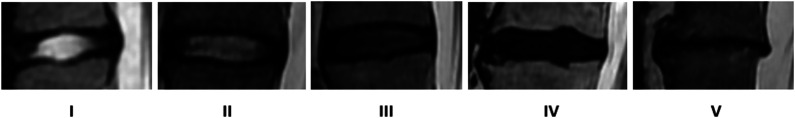

The Institutional Review Board of Yeni Yuzyil University approved this research (Protocol No. 2020/06-462). Informed consent was obtained from all participants. The entire dataset was obtained from a single center and consisted of patients complaining of low back pain. Between 2016 and 2020, the imaging data of 1248 patients were reviewed. Patients without sagittal T2W images, with metallic implants in the lumbar vertebrae creating image artifacts, or with scans of poor quality were excluded. A total of 1137 patients were included. The L1-2 to L5-S1 discs were evaluated. Midsagittal T2W sections were obtained from each patient and anonymized. MRI of the lumbar spine was performed with a 1.5-T scanner (GE, Signa, 1.5-T). Only sagittal T2W images were utilized in this study. T2W sagittal images were acquired using a fast spin-echo sequence with a repetition time of 2680-4900 msec, an echo time of 100-109 msec, and an echo train length of 17. The display field of view (DFOV) was 32 × 32 cm, with a slice thickness of 4 mm. A total of 5685 lumbar discs (five intervertebral discs per patient) in these images were classified independently and blindly by two orthopaedists, one neurosurgeon, and one radiologist (three with more than 15 years of experience and one with more than 7 years of experience) according to the Pfirrmann classification (Figure 1).

Figure 1.

Pfirrmann classification(6) from grade 1 to 5.

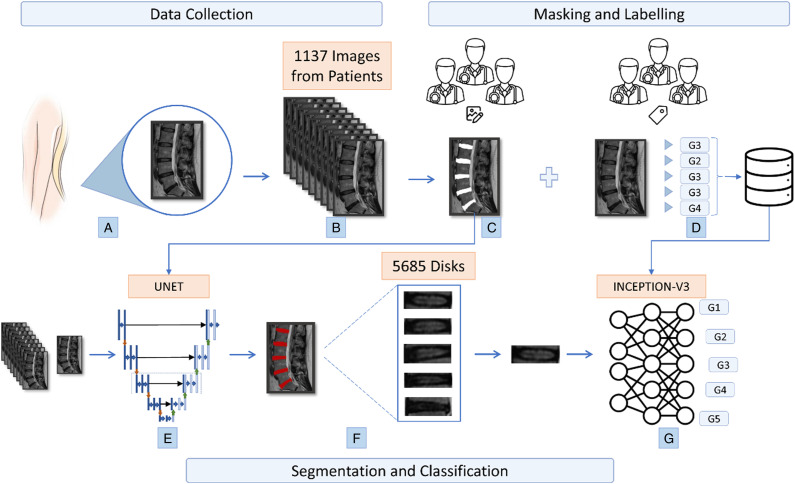

A grade on which at least three of the four doctors agreed was adopted as the ground truth. If this quorum was not reached, the disc was re-evaluated by the four doctors in a separate session and labeled by consensus. To train and evaluate the performance of our system, discs were separated into two distinct sets: 90% in a training set and 10% in a test set. To evaluate the accuracy (concurrent validity) of the automatic ratings, the CNN's grades on the test set were compared with both the ground truth and the individual results of each doctor. A flowchart of the study is given in Figure 2.
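The labeling rule described above can be sketched in a few lines. This is a minimal illustration rather than the authors' code: the function name `ground_truth_grade` and the use of `None` to flag discs that go to the consensus session are assumptions made for the example.

```python
from collections import Counter


def ground_truth_grade(grades, quorum=3):
    """Return the grade shared by at least `quorum` raters, else None.

    `grades` holds one Pfirrmann grade (1-5) per doctor. None is a
    hypothetical flag for a disc that needs the consensus session.
    """
    grade, votes = Counter(grades).most_common(1)[0]
    return grade if votes >= quorum else None


# Hypothetical ratings from the four doctors for three discs.
print(ground_truth_grade([3, 3, 3, 4]))  # three raters agree -> 3
print(ground_truth_grade([2, 2, 3, 3]))  # tie -> None
print(ground_truth_grade([1, 2, 2, 3]))  # only two agree -> None
```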

Figure 2.

Flowchart of the study: (a) obtaining lumbar discs; (b) collection of disc images from 1137 patients; (c) masking of images by specialists with the ImageJ program for segmentation; (d) labeling of 5 discs in each image for classification; (e) creation of the U-net model; (f) obtaining discs from images according to the U-net model; (g) classification of segmented discs according to the generated CNN Inception-v3 model.

Segmentation and Image Processing

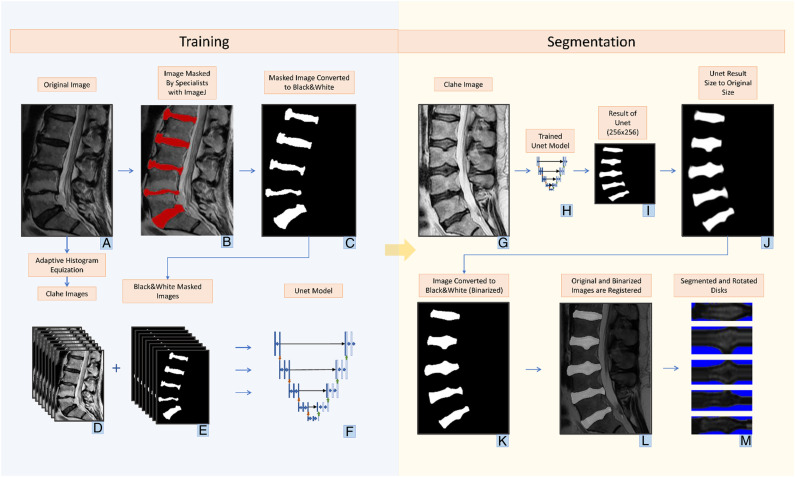

Before classification, the five discs in each image must be segmented as part of the image collection and labeling process. However, manual segmentation of all discs is costly and time-consuming. Therefore, the U-net segmentation approach was utilized. Expert physicians used the ImageJ program to fill the five discs in each of 363 MRI images with a distinct color, marking the discs for use in the U-net model. In these colored images, the disc areas were thresholded to white (255,255,255) and all other areas to black (0,0,0). The U-net model was trained on 303 images and their corresponding binary masks of the designated discs. On a test set of 60 images, an accuracy of 95.97% was achieved after training was completed. In addition to accuracy, the Jaccard coefficient and Dice score obtained from the model are presented in Table 2. The output images generated by the U-net model were 256 × 256 pixels. Subsequently, a thresholding and scaling procedure was applied to the output images to accurately extract the discs based on the size of each original image. The final images were the same size as the original images and represented the locations of the discs with a distinct hue. From these, the discs were segmented mostly by evaluating the bounding rectangle areas, but rotated rectangle areas also needed to be assessed to accommodate certain images with distinct discs in the cut sections. Additionally, it was found that moving the discs horizontally improved classification performance. After all discs had been acquired, discs that were unsuitable for classification based on the U-net result were fixed manually.

Table 2.

Results of the U-Net Model on the Test Dataset.

| Accuracy | Jaccard index (IoU) | Sørensen-Dice similarity coefficient |
|---|---|---|
| .9597 | .8717 | .93146749 |
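For readers unfamiliar with the two overlap metrics in Table 2, the following minimal sketch computes them for a pair of binary masks. The masks and the function name `jaccard_and_dice` are hypothetical and serve only as an illustration; this is not the evaluation code used in the study.

```python
def jaccard_and_dice(pred_mask, true_mask):
    """Return (Jaccard index, Dice coefficient) for two binary masks.

    Masks are nested lists of 0/1 values with identical shapes.
    """
    pred = [bool(v) for row in pred_mask for v in row]
    true = [bool(v) for row in true_mask for v in row]
    intersection = sum(p and t for p, t in zip(pred, true))
    total = sum(pred) + sum(true)
    union = total - intersection
    if union == 0:  # both masks empty: treat as perfect overlap
        return 1.0, 1.0
    return intersection / union, 2 * intersection / total


# Hypothetical 2 x 3 masks: predicted disc pixels vs. expert mask.
pred = [[1, 1, 0],
        [0, 1, 0]]
true = [[1, 0, 0],
        [0, 1, 1]]
jaccard, dice = jaccard_and_dice(pred, true)
print(jaccard, dice)
```

For a single pair of masks the two metrics are linked by Dice = 2J / (1 + J). Applying this identity to the reported Jaccard index of .8717 gives about .9315, which is consistent with the Dice value in Table 2.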

The classical U-net model employed for the segmentation task is presented in Figure 3.

Figure 3.

Training phase: (a) original images; (b) images masked by doctors with the ImageJ program for the training phase; (c) image converted to black and white to focus only on disc areas; (d) images processed with contrast-limited adaptive histogram equalization (CLAHE); (e) masked versions of CLAHE images; (f) generating the U-net model from CLAHE and masked images. Segmentation phase: (g) CLAHE image for segmentation; (h) trained U-net model; (i) image created with the U-net model in 256 × 256 dimensions; (j) converting the image from U-net to its original size; (k) changing the image to black and white with thresholding; (l) determining the location of the discs in the white areas by overlaying the original image and the black-and-white U-net result; (m) saving the images obtained by cropping and horizontally rotating the regions of interest. Discs are ready for classification.

During the training process of the U-net model, the optimizer used was Adam, the loss function was binary cross-entropy, and the learning rate was set at .001. Along with accuracy, the Jaccard coefficient was adopted as an additional metric to monitor the performance of the model throughout the training process. It has been observed that the use of contrast-limited adaptive histogram equalization (CLAHE) can improve the accuracy of U-net segmentation. Therefore, in this study, U-net was trained using images pre-processed with CLAHE, and the resulting masks obtained after training were used for the segmentation of the original unaltered images.

Data Augmentation

In the context of an image classification task, various data augmentation techniques were explored to improve the accuracy of the model. Specifically, random augmentations were applied to the original images in order to generate new training data. The selection of augmentation types was done in a manner that did not introduce any undesirable artifacts or distortions in the images. The chosen augmentation types included a rotation of 0-5 degrees, a brightness range of .5-1.5, and a zoom range of 0-.2. Furthermore, two additional image processing techniques, CLAHE and gamma correction, were also applied to the images in order to further enhance the dataset. CLAHE was applied with a contrast limit threshold of 2, and gamma correction was applied with a gamma value of 1.5. The augmentations and their corresponding parameter values can be found in Table 1.

Table 1.

Augmentations and Enhancements Applied to Image Data.

| Augmentation type | Value |
|---|---|
| rotation_range | 5 |
| brightness_range | [.5, 1.5] |
| zoom_range | 0.2 |
| CLAHE contrast limit | 2 |
| Gamma correction γ value | 1.5 |
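As an illustration of the gamma correction entry in Table 1, the sketch below builds an 8-bit lookup table for γ = 1.5. The text does not state which exponent convention was used, so the formula in the code is an assumption, and the function names are hypothetical.

```python
def gamma_lookup_table(gamma=1.5):
    """Build a 256-entry lookup table for 8-bit gamma correction.

    Assumed convention: out = 255 * (in / 255) ** (1 / gamma), so that
    gamma > 1 brightens mid-tones. The opposite convention (exponent
    `gamma`) would darken them instead.
    """
    return [round(255 * (v / 255) ** (1 / gamma)) for v in range(256)]


def apply_gamma(image, gamma=1.5):
    """Apply gamma correction to a grayscale image given as nested lists."""
    lut = gamma_lookup_table(gamma)
    return [[lut[v] for v in row] for row in image]


# Hypothetical 2 x 3 grayscale patch with intensities in 0-255.
patch = [[0, 64, 128],
         [192, 255, 32]]
corrected = apply_gamma(patch)
print(corrected)
```

Under this convention, black and white are left unchanged and only intermediate intensities are shifted.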

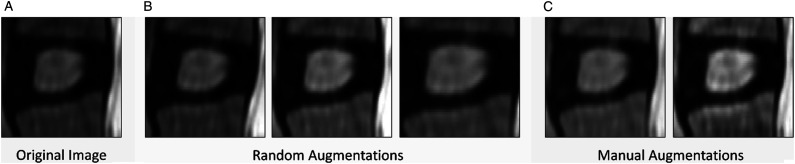

Figure 4 illustrates sample images that have undergone the augmentation process. The augmented and processed images were then used to train a model and compared against the original segmented images. The objective was to evaluate the impact of these augmentation and processing techniques on the performance of the model. The results were analyzed to determine the effectiveness of these techniques in improving the classification accuracy of the model.

Figure 4.

Utilized augmentation methods (a) original image (b) random augmentations: brightness, rotation, zoom variations (c) CLAHE and gamma augmentations.

Classification Model

In this study, transfer learning was employed for classification using the Inception-v3 model. The following sections provide information about the Inception model and the specific hyperparameters and parameters used in this study.

Inception Model

Inception models are a family of CNN architectures developed by Szegedy et al. 17 at Google. Their main purpose was to let CNNs grow in capacity as they became deeper while keeping the computational cost under control. The approach is based on widening the model rather than only making it deeper. This horizontal expansion is achieved by running convolutions with different kernel sizes in parallel and concatenating their outputs at the end of the block. Because the filters differ in size, the model can learn features at different scales of the input. Since this learning is performed in parallel, it reduces the parameter complexity of the model. The blocks where these operations are performed are called Inception blocks.

In an Inception block, 1 × 1, 3 × 3, and 5 × 5 filters and a max pooling operation are applied in parallel to the block's input. Since concatenating the results of all these operations would still be computationally expensive, the number of channels is reduced by applying a 1 × 1 filter before each larger filter and after max pooling. Inception-v1 stacked multiple such blocks and added auxiliary classifiers to prevent overfitting. Inception-v2 and Inception-v3 added batch normalization and factorized convolutions, which further reduce the computational cost of the model and make it more efficient.
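The saving from the 1 × 1 reduction can be made concrete with a short weight count. The channel sizes below (192 input channels, 16 reduced channels, 32 output channels) are illustrative assumptions chosen for the example, not values reported in this study, and biases are ignored.

```python
def conv_weights(kernel, in_channels, out_channels):
    """Number of weights in a kernel x kernel convolution (biases ignored)."""
    return kernel * kernel * in_channels * out_channels


# Illustrative channel sizes (assumed for this example only).
IN_CHANNELS, REDUCED, OUT_CHANNELS = 192, 16, 32

# A 5 x 5 convolution applied directly to the block input.
direct = conv_weights(5, IN_CHANNELS, OUT_CHANNELS)

# The same 5 x 5 convolution preceded by a 1 x 1 channel reduction.
bottleneck = (conv_weights(1, IN_CHANNELS, REDUCED)
              + conv_weights(5, REDUCED, OUT_CHANNELS))

print(direct, bottleneck, round(direct / bottleneck, 1))  # 153600 15872 9.7
```

With these assumed sizes, the reduced branch needs roughly a tenth of the weights of the direct 5 × 5 convolution.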

In general, Inception models aim to achieve higher success with lower computational costs. Much higher performance has been achieved by using Inception modules, batch normalization, auxiliary classifiers, and factorized convolutions in the model.

The Inception-v3 model used in the study has a very deep and complex structure and has proven its success in many image classification benchmarks. It was preferred because higher success can be achieved with less computational power by utilizing the Inception-v3 model features.

Model and Hyperparameters

In the study, the Inception-v3 model trained on ImageNet was used to perform disc degeneration grade classification. The model was fine-tuned for the classification task by rearranging the layers that perform classification and adjusting the last layers to be suitable for training. To prevent overfitting, the GlobalAveragePooling layer was preferred over the Flatten layer, and a Dropout layer was added before it. Finally, the Softmax layer was used to classify five grades. In addition to the added layers, some of the last layers from the original model were unfrozen and used in training.

The model's hyperparameters, including the initial learning rate, optimizer, dropout rate, number of unfrozen layers, and batch size, were determined using Keras Tuner and the Hyperband tuning algorithm. As a result of hyperparameter optimization, a dropout rate of .2, an initial learning rate of .1, and a batch size of 32 were used, and stochastic gradient descent (SGD) was selected as the optimizer. The number of unfrozen layers was determined to be 20 for the data without augmentation and 30 for the data with random augmentation.

The dataset used in this study was found to be unbalanced upon examination of the number of instances in each class. Specifically, the Grade 1 and Grade 2 classes had significantly fewer instances than the other grade classes, which can lead to poor performance of machine learning models due to bias towards the majority class. To address this problem, the class weight parameter was used during model training. It was set to “balanced,” which gives each class a weight that is inversely proportional to how frequently it occurs in the dataset. As a result, all classes received a comparable amount of attention throughout training, reducing the negative impact of class imbalance. This technique has been widely used in the literature to improve the performance of models on imbalanced datasets.
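A minimal sketch of such a weighting is shown below. It assumes the common "balanced" heuristic, weight = n_samples / (n_classes × class count), as implemented in scikit-learn. For illustration it uses the grade counts of the full dataset reported in the Results, whereas in practice the weights would be computed on the training portion only. The function name is hypothetical.

```python
# Grade counts of the full dataset (408, 513, 2519, 1840, 405 discs).
grade_counts = {1: 408, 2: 513, 3: 2519, 4: 1840, 5: 405}


def balanced_class_weights(counts):
    """Weight each class by n_samples / (n_classes * class_count)."""
    n_samples = sum(counts.values())
    n_classes = len(counts)
    return {label: n_samples / (n_classes * count)
            for label, count in counts.items()}


weights = balanced_class_weights(grade_counts)
for grade, weight in weights.items():
    print(f"Grade {grade}: weight {weight:.3f}")
```

Rare grades receive weights above 1 and frequent grades weights below 1, so each grade contributes the same total weight to the loss.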

The model was trained using an Nvidia GeForce GTX 1660Ti with 6 GB of memory, an Intel Core i7-10750H processor, and a 15 GB RAM system. The model was set to train for a maximum of 150 epochs. As the Inception model accepts 299 × 299 images, the images were resized accordingly before being fed into the model. Early stopping was employed to prevent overfitting: training was stopped once the validation loss had not decreased for 17 epochs. A decreasing learning rate was used during training. If the validation loss did not decrease for three consecutive epochs, the learning rate was reduced by a factor of .2.
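The interplay of the two stopping rules can be sketched as a small simulation. This is a simplified illustration and not the training code of the study: it assumes that "reduced by .2" means multiplying the learning rate by 0.2, it ignores refinements such as cooldown periods or minimum improvement thresholds, and the function name and loss values are hypothetical.

```python
def simulate_training_control(val_losses, lr=0.1, factor=0.2,
                              lr_patience=3, stop_patience=17):
    """Return (epoch, learning_rate) pairs until early stopping triggers."""
    best = float("inf")
    epochs_since_best = 0      # drives early stopping
    epochs_since_lr_event = 0  # drives learning-rate reduction
    history = []
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best = loss
            epochs_since_best = 0
            epochs_since_lr_event = 0
        else:
            epochs_since_best += 1
            epochs_since_lr_event += 1
            if epochs_since_lr_event >= lr_patience:
                lr *= factor  # assumed meaning of "reduced by .2"
                epochs_since_lr_event = 0
        history.append((epoch, lr))
        if epochs_since_best >= stop_patience:
            break  # no improvement for `stop_patience` epochs
    return history


# Hypothetical validation losses: two improving epochs, then a plateau.
losses = [1.0, 0.9] + [0.95] * 30
history = simulate_training_control(losses)
print(len(history), history[-1])
```

In this toy run the learning rate is cut every third stagnant epoch, and training stops 17 epochs after the last improvement.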

Throughout the study, a total of 4 different training sessions were conducted using the original data, randomly augmented data, CLAHE-applied data, and gamma correction-applied data. The hyperparameters determined for all datasets were used. Since the dataset without any augmentation yielded the best results, this dataset was employed in this study.

Determination of the Impact of CNN Assistance on Doctors

Before this evaluation, each doctor was informed of their own accuracy rate and CNN’s overall success. Each doctor was then given back the discs that they had labeled erroneously but CNN had labeled correctly. The doctors were unaware of whether they or CNN had correctly or erroneously labeled these discs. Each doctor was then asked if they would have made the same decision as CNN if they had been allowed to view CNN’s classification prior to making their own assessment. In this manner, we evaluated the extent to which the doctors' errors would have been mitigated with CNN assistance. The numbers of correct and incorrect answers before and after CNN assistance were analyzed, and the percentage effect of CNN assistance on each doctor's success rate was determined.

Results

The performance of the models was evaluated using various metrics such as accuracy, precision, sensitivity, and F1-score. The evaluation was conducted on a test dataset consisting of previously unseen images. Furthermore, the performance of the classification model was compared to that of medical experts to assess its effectiveness. The results of these comparisons are also reported in this section.
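The support-weighted metrics named above can be computed from a confusion matrix as in the following sketch. The 3-class matrix is hypothetical and serves only to show the calculation. Rows are assumed to be true classes and columns predicted classes.

```python
def weighted_metrics(cm):
    """Accuracy and support-weighted precision, sensitivity, and F1.

    cm[i][j] = number of samples of true class i predicted as class j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    support = [sum(row) for row in cm]
    predicted = [sum(cm[i][j] for i in range(n)) for j in range(n)]
    accuracy = sum(cm[k][k] for k in range(n)) / total
    precision = sensitivity = f1 = 0.0
    for k in range(n):
        p = cm[k][k] / predicted[k] if predicted[k] else 0.0
        r = cm[k][k] / support[k] if support[k] else 0.0
        f = 2 * p * r / (p + r) if p + r else 0.0
        weight = support[k] / total
        precision += weight * p
        sensitivity += weight * r
        f1 += weight * f
    return {"accuracy": accuracy, "precision": precision,
            "sensitivity": sensitivity, "f1": f1}


# Hypothetical 3-class confusion matrix (not data from this study).
toy_cm = [[8, 2, 0],
          [1, 7, 2],
          [0, 1, 9]]
metrics = weighted_metrics(toy_cm)
print(metrics)
```

Support-weighted sensitivity equals accuracy by construction, which is why these two metrics coincide whenever weighted averaging is used.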

Segmentation Results

Table 2 presents the accuracy, Jaccard index, and Sørensen dice similarity coefficient values of the segmentation performed with the U-net model.

Classification Results

Table 3 shows the average accuracy, precision, sensitivity, Cohen's Kappa, F1, and AUC values, along with their corresponding standard deviations (SD), obtained through 10-fold cross-validation for each of the four datasets evaluated in this study.

Table 3.

Classification Results.

| Metric | Normal data [Mean ± SD] | Augmented data [Mean ± SD] | CLAHE [Mean ± SD] | Gamma correction [Mean ± SD] |
|---|---|---|---|---|
| Accuracy | .9190 ± .008567 | .8860 ± .008941 | .8712 ± .0062 | .9026 ± .008792 |
| Precision (weighted) | .9236 ± .008407 | .9023 ± .006845 | .8725 ± .006214 | .9060 ± .009268 |
| Sensitivity (weighted) | .9190 ± .008566 | .8859 ± .008941 | .8712 ± .006201 | .9026 ± .008792 |
| Cohen's kappa | .8823 ± .009983 | .8359 ± .012559 | .8114 ± .00905 | .8580 ± .013013 |
| F1 score (weighted) | .9204 ± .008468 | .8900 ± .008337 | .8715 ± .006150 | .9035 ± .008976 |
| AUC (weighted/ovr) | .9901 ± .000674 | .9870 ± .000739 | .983 ± .001216 | .9885 ± .000747 |
| Trainable layer | 20 | 30 | 20 | 20 |
| Trainable parameter | 1,060,869 | 4,379,397 | 1,060,869 | 1,060,869 |
| Total parameter | 21,813,029 | 21,813,029 | 21,813,029 | 21,813,029 |

As can be seen in Table 3, the model that was trained using the original dataset had the highest average accuracy (.9190 ± .008567), AUC value (.9901 ± .000674), and F1 score (.9204 ± .008468), whereas the augmented dataset had a slightly lower accuracy (.8860 ± .008941), AUC value (.9870 ± .000739), and F1 score (.8900 ± .008337). The gamma corrected dataset showed slightly higher accuracy (.9026 ± .008792), AUC value (.9885 ± .000747), and F1 score (.9035 ± .008976) compared to the CLAHE applied dataset, which had the lowest accuracy (.8712 ± .0062), AUC value (.983 ± .001216), and F1 score (.8715 ± .006150).

Based on the results, the model with the highest accuracy rate was selected for comparison with the evaluations of the doctors. This model was trained using the dataset without augmentation and achieved an accuracy rate of 93.46% and an F1 score of .9355. The complete results of the model used for the comparison are presented in Table 4.

Table 4.

The Confusion Matrix and the Final Results of the CNN Model.

| | Predicted 1 | Predicted 2 | Predicted 3 | Predicted 4 | Predicted 5 | Total |
|---|---|---|---|---|---|---|
| 1 | 37 | 6 | 0 | 0 | 0 | 43 |
| 2 | 3 | 39 | 6 | 0 | 0 | 48 |
| 3 | 1 | 8 | 238 | 6 | 0 | 253 |
| 4 | 0 | 0 | 5 | 169 | 6 | 180 |
| 5 | 0 | 0 | 0 | 2 | 40 | 42 |
| Total | 41 | 53 | 249 | 177 | 46 | 566 |

| Accuracy | Loss | Cohen's kappa | Precision | Sensitivity | F1 score | AUC |
|---|---|---|---|---|---|---|
| .934629 | .216085 | .904582 a | .937701 | .934629 | .935573 | .991673 |

a This is the kappa value for test data only.

Reliability

The results of the reliability tests are given in Table 5. The pairwise ICC (intraclass correlation coefficient) values between the GT, CNN, and doctors were statistically significant, and the inter-rater agreement was excellent (.90-.97). The highest agreement was found between CNN-GT (ICC: .97), while the lowest agreement was observed between CNN-Dr2 (ICC: .90) and CNN-Dr3 (ICC: .90). In the kappa test for inter-observer agreement, CNN-GT agreement was very good (κ: .95), and CNN-Dr1 (κ: .75) and GT-Dr1 (κ: .77) agreement was good. The other pairwise agreement results were statistically significant but had moderate to good agreement coefficients (κ: .53-.66).

Table 5.

Summary of ICC and Weighted Kappa Values. ICC values below .5 indicate poor reliability, .5 to .75 moderate reliability, .75 to .9 good reliability, and over .90 excellent reliability. Weighted kappa values below .20 indicate poor agreement, .21 to .40 fair, .41 to .60 moderate, .61 to .80 good, and over .80 very good agreement. 18

| Measure | Rater | GT (95% CI) | CNN (95% CI) | DR1 (95% CI) | DR2 (95% CI) | DR3 (95% CI) | DR4 (95% CI) | P |
|---|---|---|---|---|---|---|---|---|
| ICC | GT | 1 | .97 (.95-.97) | .95 (.95-.96) | .91 (.90-.91) | .91 (.89-.91) | .93 (.92-.93) | <0.01 |
| ICC | CNN | .97 (.95-.97) | 1 | .95 (.94-.95) | .90 (.90-.91) | .90 (.89-.90) | .93 (.92-.93) | <0.01 |
| Kappa | GT | 1 | .95 | .77 | .55 | .66 | .62 | <0.01 |
| Kappa | CNN | .95 a | 1 | .75 | .53 | .65 | .61 | <0.01 |

a This is the kappa value for overall data.

General information about the dataset is provided in Table 6. A total of 5685 discs were classified. From grades 1 to 5, there were 408 (7.17%), 513 (9.02%), 2519 (44.3%), 1840 (32.36%), and 405 (7.12%) discs, respectively.

Table 6.

Comparative Evaluation Outcomes.

| Grade | Ground truth (n, %) | Outcome | CNN test | CNN total | Dr1 test | Dr1 total | Dr2 test | Dr2 total | Dr3 test | Dr3 total | Dr4 test | Dr4 total |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Grade 1 | 408 | True | 36 | 395 | 35 | 365 | 32 | 310 | 13 | 93 | 36 | 346 |
| | | False | 6 | 13 | 7 | 43 | 10 | 98 | 29 | 315 | 6 | 62 |
| | 7.17% | % | 85.7 | 96.8 | 83.3 | 89.4 | 76.2 | 75.9 | 30.9 | 22.8 | 85.7 | 84.8 |
| Grade 2 | 513 | True | 42 | 494 | 40 | 384 | 41 | 440 | 34 | 286 | 44 | 424 |
| | | False | 8 | 19 | 10 | 129 | 9 | 73 | 16 | 227 | 6 | 89 |
| | 9.02% | % | 84.0 | 96.2 | 80.0 | 74.8 | 82.0 | 85.7 | 68.0 | 55.7 | 88.0 | 82.6 |
| Grade 3 | 2519 | True | 238 | 2425 | 208 | 2083 | 199 | 1861 | 207 | 2013 | 169 | 1595 |
| | | False | 16 | 94 | 46 | 436 | 55 | 658 | 47 | 506 | 85 | 924 |
| | 44.3% | % | 93.7 | 96.2 | 81.8 | 82.7 | 78.3 | 73.8 | 81.5 | 79.9 | 66.5 | 63.3 |
| Grade 4 | 1840 | True | 173 | 1798 | 149 | 1534 | 105 | 1109 | 155 | 1613 | 128 | 1365 |
| | | False | 5 | 42 | 29 | 306 | 73 | 731 | 23 | 227 | 50 | 475 |
| | 32.36% | % | 97.2 | 97.7 | 83.7 | 83.3 | 59.0 | 60.2 | 87.1 | 87.6 | 71.9 | 74.1 |
| Grade 5 | 405 | True | 39 | 398 | 39 | 381 | 17 | 185 | 32 | 349 | 37 | 379 |
| | | False | 2 | 7 | 2 | 24 | 24 | 220 | 9 | 56 | 4 | 26 |
| | 7.12% | % | 95.1 | 98.2 | 95.1 | 94.0 | 41.4 | 45.6 | 78.0 | 86.1 | 90.2 | 93.5 |
| Total | 5685 | True | 528 | 5510 | 471 | 4747 | 394 | 3905 | 441 | 4354 | 414 | 4109 |
| | | False | 37 | 175 | 94 | 938 | 171 | 1780 | 124 | 1331 | 151 | 1576 |
| | | % | 93.46 | 96.9 | 83.3 | 83.5 | 69.7 | 68.6 | 78.0 | 76.5 | 73.2 | 72.2 |

Both the results of the test group, whose grade distribution was similar to that of the full dataset, and the results for all discs are given in Table 6, together with the grade distributions and the comparative classification results of CNN and doctors for each grade. The CNN success rate was markedly higher than that of the doctors, both in the test group and in total (93.4% and 96.9%, respectively).

Effect of CNN Assistance

With CNN assistance, doctors were able to correct 48-74.5% of their faulty decisions. The overall performance of CNN was 93.4%. The success rate of the assisted doctors was 90.6% to 92.9%. Overall, CNN raised the doctors' success rates by 7.9 to 22 percentage points, bringing them closer to its own level (Table 7).

Table 7.

Impact of CNN Assistance on Doctors.

| Doctor | Before CNN assistance (True/False/Success rate) | After CNN assistance (True/False/Success rate) | Rate of change in doctor’s decision with CNN assistance |
|---|---|---|---|
| Dr1 | 4747/938/83.5% | 5197/488/91.4% | +7.9% |
| Dr2 | 3905/1780/68.6% | 5155/530/90.6% | +22% |
| Dr3 | 4354/1331/76.5% | 5246/439/92.3% | +15.8% |
| Dr4 | 4109/1576/72.3% | 5283/402/92.9% | +20.6% |
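The figures in Table 7 follow from simple arithmetic on the counts of correct answers, as the sketch below shows. It assumes that every answer changed after CNN assistance was a previously wrong answer that became correct, which matches the protocol of re-presenting only erroneously labeled discs. The function and variable names are hypothetical, and results may differ from the table in the last digit because of rounding.

```python
TOTAL_DISCS = 5685

# Correct answers before and after CNN assistance, from Table 7.
correct_counts = {
    "Dr1": (4747, 5197),
    "Dr2": (3905, 5155),
    "Dr3": (4354, 5246),
    "Dr4": (4109, 5283),
}


def assistance_effect(before, after, total=TOTAL_DISCS):
    """Summarize the change in a doctor's results, in percent."""
    errors_before = total - before
    return {
        "before_pct": 100 * before / total,
        "after_pct": 100 * after / total,
        "gain_points": 100 * (after - before) / total,
        "errors_corrected_pct": 100 * (after - before) / errors_before,
    }


effects = {name: assistance_effect(*counts)
           for name, counts in correct_counts.items()}
for name, effect in effects.items():
    print(name, {key: round(value, 1) for key, value in effect.items()})
```

The share of corrected errors ranges from about 48% for Dr1 to about 74.5% for Dr4, which matches the range quoted in the text.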

Discussion

Several classification techniques have been established for IVDD thus far. MRI is the clinically favored imaging modality for IVDs. The Pfirrmann classification, which is commonly employed as a morphological classification, is insufficiently discriminatory in elderly populations, despite its generally strong agreement.19–22 In addition to the substantial intra-class differences, the low inter-class differences negatively impact the success of the categorization. 14 To address these constraints, this classification has been modified morphologically and quantitatively,7,22 and the authors of both modifications have claimed improved discriminatory results. In addition, new MRI techniques, such as T1rho, T2*, gagCEST, and sodium MRI, have been developed to assess alterations in the disc’s chemical composition.23–25 T1rho has been demonstrated to be correlated with the degenerative process; however, its association with low back pain remains unknown. Moreover, these techniques have not been extensively used because of drawbacks such as expensive software and specialized hardware requirements, lengthy imaging periods, low sensitivity, and a lack of imaging standardization. 25

In recent years, deep learning, specifically CNNs, has proven to be an effective segmentation and classification approach for IVDD and has made substantial advancements. Deep learning-based algorithms can identify richly discriminative features from raw data without requiring complex feature design. 26 These methods are utilized for both quantitative and qualitative segmentation and classification tasks. Several methods have been used for automated segmentation. With a deformable part model detector, the Dice overlap coefficient for IVD segmentation was reported as 84.0% ± 1.5. 27 Huang et al. reported 92.6% quantitative segmentation performance with their U-net-based CNN, named Spine Explorer. Manual (using ImageJ) and automatic (using Spine Explorer) measurements had excellent agreement (.81-1.00). CSF-adjusted signal measures were shown to have a substantial correlation with the Pfirrmann score, whereas disc height had a modest correlation. 16 We also used U-net in our qualitative study, and our segmentation performance was 95.9%, which was comparable to Huang but superior to Jamaluddin. Castro-Mateos et al. noted that relatively high intra- and inter-class variability was reported in the literature for manual classification and suggested that automating classification could be more beneficial. Hence, they created an automated segmentation method based on an active contour model. The segmentation method yielded results with varying degrees of precision, with a Dice similarity index ranging from 85% to 95%. 12 In another study by the same author, the success rate for IVD segmentation using active contour models was reported as 91.7% ± 5.6. 28 This rate corresponds to our results. Jamaluddin et al. extracted IVDs from detected vertebral bodies in T2W MRI images and reported 95.6% detection and labeling accuracy. 13 Gao et al. noted that the Pfirrmann staging system may present certain difficulties for the automation of regular DL models. The main reason for this issue is related to the architecture of the classifier, specifically the employed loss function. In their study comparing four different CNN networks for disc degeneration grading, they used push-pull regularization (PPR) to circumvent this issue and found that incorporating PPR into the network improved performance by 8-10%. 14 In our research, the regular Inception-v3 model had a 93.4% success rate. Comparing the BianqueNet and DeepLabv3 networks with various modules, Zheng et al. obtained a quantified segmentation performance of .9310-.9480 Dice coefficients. 29 Similar to our DL model, Niemayer et al. reported more than 90% classification accuracy with reliability (k > .9) in a study employing a VGG16-like architecture. 15 In a study utilizing the YOLO-v5 single-stage detection model, detection and classification accuracy rates of greater than 95% were also reported. 30

Clearly, DL models are quite effective in IVDD classification. However, two points are notable. First, there is still a failure rate of 7-15% in recent studies. In our study, DL assistance was found to boost human performance only up to the level of its own performance. This failure rate can be attributed to the insufficient discrimination of the employed classification system. 14 It is reasonable to anticipate that the success of DL models will increase with a new classification that provides better discrimination. Second, recent studies have consistently demonstrated the efficacy of DL models, but no research has been undertaken to determine the extent to which this success would influence the clinician's ultimate decision regarding the disease's stage. To our knowledge, our study is the first to objectively assess how DL assistance affects a doctor's grading decisions. Although IVDD is a progressive condition, it appears that this process can be inhibited by emerging regenerative therapies. Appropriate staging is crucial when selecting a patient for these new therapies. 5

Limitations

Our CNNs were trained and evaluated only on images from a single type of scanner. Consequently, an additional factor may be required to account for variations that may occur with different imaging equipment.

Another limitation of the method could be the chosen grading system. In our study, we used the Pfirrmann classification, which requires a standard T2-weighted MR image. This classification has some problems, such as high intra-class differences and low inter-class differences, as well as low discriminatory power.12,22 To surmount this disadvantage, we compiled a manual classification from four experts and established the ground truth as the value with the highest number of votes. However, new classifications that designate the degrees of degeneration in a different manner may be implemented for future research.

Conclusion

We presented a DL-based system for IVD segmentation and classification. In terms of reliability (κ > .95) and accuracy (.934), the network markedly outperformed humans. We have quantitatively demonstrated that the network has a positive effect on doctors' assessments. Our findings are comparable to those of other DL-based research.

Footnotes

Authors’ Contributions: Concept – Z.S., E.B., I.S., S.S., H.U., R.K.; Design – Z.S., S.S., H.U.; Supervision – Z.S., S.S., I.S., H.U.; Materials – Z.S., E.B., R.K., I.S., S.S.; Data Collection and/or Processing – Z.S., E.B., R.K., I.S., S.S.; Analysis and/or Interpretation – Z.S., I.S., S.S., R.K., H.U.; Literature Review – Z.S., I.S., S.S., E.B., H.U.; Writing – Z.S., E.B., I.S., S.S., H.U., R.K.; Critical Review – Z.S., S.S., H.U. All authors made substantial contributions to the interpretation of the data, critically revised the article, and approved the final version for submission.

Declaration of Conflicting Interests: The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The author(s) received no financial support for the research, authorship, and/or publication of this article.

ORCID iD

Zafer Soydan https://orcid.org/0000-0001-6387-8628

Data Availability Statement

The data used during the current study are available from the corresponding author on reasonable request.

References

- 1. Hoy DG, Smith E, Cross M, et al. Reflecting on the global burden of musculoskeletal conditions: lessons learnt from the global burden of disease 2010 study and the next steps forward. Ann Rheum Dis. 2015;74(1):4-7. doi: 10.1136/annrheumdis-2014-205393

- 2. Matsuyama Y, Chiba K, Iwata H, Seo T, Toyama Y. A multicenter, randomized, double-blind, dose-finding study of condoliase in patients with lumbar disc herniation. J Neurosurg Spine. 2018;28(5):499-511. doi: 10.3171/2017.7.SPINE161327

- 3. Le Maitre CL, Hoyland JA, Freemont AJ. Catabolic cytokine expression in degenerate and herniated human intervertebral discs: IL-1beta and TNFalpha expression profile. Arthritis Res Ther. 2007;9(4):R77. doi: 10.1186/ar2275

- 4. Thorpe AA, Binch AL, Creemers LB, Sammon C, Le Maitre CL. Nucleus pulposus phenotypic markers to determine stem cell differentiation: fact or fiction? Oncotarget. 2016;7(3):2189-2200. doi: 10.18632/oncotarget.6782

- 5. Binch ALA, Fitzgerald JC, Growney EA, Barry F. Cell-based strategies for IVD repair: clinical progress and translational obstacles. Nat Rev Rheumatol. 2021;17(3):158-175. doi: 10.1038/s41584-020-00568-w

- 6. Pfirrmann CW, Metzdorf A, Zanetti M, Hodler J, Boos N. Magnetic resonance classification of lumbar intervertebral disc degeneration. Spine (Phila Pa 1976). 2001;26(17):1873-1878. doi: 10.1097/00007632-200109010-00011

- 7. Griffith JF, Wang YX, Antonio GE, et al. Modified Pfirrmann grading system for lumbar intervertebral disc degeneration. Spine (Phila Pa 1976). 2007;32(24):E708-E712. doi: 10.1097/BRS.0b013e31815a59a0

- 8. Yoon MA, Hong SJ, Kang CH, Ahn KS, Kim BH. T1rho and T2 mapping of lumbar intervertebral disc: correlation with degeneration and morphologic changes in different disc regions. Magn Reson Imaging. 2016;34(7):932-939. doi: 10.1016/j.mri.2016.04.024

- 9. Zhou Z, Jiang B, Zhou Z, et al. Intervertebral disk degeneration: T1ρ MR imaging of human and animal models. Radiology. 2013;268(2):492-500. doi: 10.1148/radiol.13120874

- 10. Togao O, Hiwatashi A, Wada T, et al. A qualitative and quantitative correlation study of lumbar intervertebral disc degeneration using glycosaminoglycan chemical exchange saturation transfer, Pfirrmann grade, and T1-ρ. AJNR Am J Neuroradiol. 2018;39(7):1369-1375. doi: 10.3174/ajnr.A5657

- 11. Pulickal T, Boos J, Konieczny M, et al. MRI identifies biochemical alterations of intervertebral discs in patients with low back pain and radiculopathy. Eur Radiol. 2019;29(12):6443-6446. doi: 10.1007/s00330-019-06305-6

- 12. Castro-Mateos I, Hua R, Pozo JM, Lazary A, Frangi AF. Intervertebral disc classification by its degree of degeneration from T2-weighted magnetic resonance images. Eur Spine J. 2016;25(9):2721-2727. doi: 10.1007/s00586-016-4654-6

- 13. Jamaludin A, Lootus M, Kadir T, et al. ISSLS prize in bioengineering science 2017: automation of reading of radiological features from magnetic resonance images (MRIs) of the lumbar spine without human intervention is comparable with an expert radiologist. Eur Spine J. 2017;26(5):1374-1383. doi: 10.1007/s00586-017-4956-3

- 14. Gao F, Liu S, Zhang X, Wang X, Zhang J. Automated grading of lumbar disc degeneration using a push-pull regularization network based on MRI. J Magn Reson Imag. 2021;53(3):799-806. doi: 10.1002/jmri.27400

- 15. Niemeyer F, Galbusera F, Tao Y, Kienle A, Beer M, Wilke HJ. A deep learning model for the accurate and reliable classification of disc degeneration based on MRI data. Invest Radiol. 2021;56(2):78-85. doi: 10.1097/RLI.0000000000000709

- 16. Huang J, Shen H, Wu J, et al. Spine explorer: a deep learning based fully automated program for efficient and reliable quantifications of the vertebrae and discs on sagittal lumbar spine MR images. Spine J. 2020;20(4):590-599. doi: 10.1016/j.spinee.2019.11.010

- 17. Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z. Rethinking the inception architecture for computer vision. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2016; Las Vegas, NV, USA, pp. 2818-2826. doi: 10.1109/CVPR.2016.308

- 18. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33(1):159-174

- 19. Farshad-Amacker NA, Farshad M, Winklehner A, Andreisek G. MR imaging of degenerative disc disease. Eur J Radiol. 2015;84(9):1768-1776. doi: 10.1016/j.ejrad.2015.04.002

- 20. Kettler A, Wilke HJ. Review of existing grading systems for cervical or lumbar disc and facet joint degeneration [published correction appears in Eur Spine J. 2006 Jun;15(6):719]. Eur Spine J. 2006;15(6):705-718. doi: 10.1007/s00586-005-0954-y

- 21. Miyazaki M, Hong SW, Yoon SH, Morishita Y, Wang JC. Reliability of a magnetic resonance imaging-based grading system for cervical intervertebral disc degeneration. J Spinal Disord Tech. 2008;21(4):288-292. doi: 10.1097/BSD.0b013e31813c0e59

- 22. Rim DC. Quantitative Pfirrmann disc degeneration grading system to overcome the limitation of Pfirrmann disc degeneration grade. Korean J Spine. 2016;13(1):1-8. doi: 10.14245/kjs.2016.13.1.1

- 23. Paul CPL, Smit TH, de Graaf M, et al. Quantitative MRI in early intervertebral disc degeneration: T1rho correlates better than T2 and ADC with biomechanics, histology and matrix content. PLoS One. 2018;13(1):e0191442. Published 2018 Jan 30. doi: 10.1371/journal.pone.0191442

- 24. Welsch GH, Trattnig S, Paternostro-Sluga T, et al. Parametric T2 and T2* mapping techniques to visualize intervertebral disc degeneration in patients with low back pain: initial results on the clinical use of 3.0 tesla MRI. Skeletal Radiol. 2011;40(5):543-551. doi: 10.1007/s00256-010-1036-8

- 25. Tamagawa S, Sakai D, Nojiri H, Sato M, Ishijima M, Watanabe M. Imaging evaluation of intervertebral disc degeneration and painful discs-advances and challenges in quantitative MRI. Diagnostics (Basel). 2022;12(3):707

- 26. LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521(7553):436-444. doi: 10.1038/nature14539

- 27. Jamaludin A, Lootus M, Kadir T, Zisserman A. Automatic intervertebral discs localization and segmentation: a vertebral approach. Paper presented at international workshop on computational methods and clinical applications for spine imaging 2016; 5 October 2015; Munich, Germany (Vol.9402):97-103. doi: 10.1007/978-3-319-41827-8_9

- 28. Castro-Mateos I, Pozo JM, Lazary A, Frangi AF, Aylward S, Hadjiiski L. 2D Segmentation of intervertebral discs and its degree of degeneration from T2-weighted magnetic resonance images. Paper presented at medical imaging 2014: computer-aided diagnosis; March 2014; Washington, USA (Vol.9035): Society of Photo-Optical Instrumentation Engineers. doi: 10.1117/12.2043755

- 29. Zheng HD, Sun YL, Kong DW, et al. Deep learning-based high-accuracy quantitation for lumbar intervertebral disc degeneration from MRI. Nat Commun. 2022;13(1):841. Published 2022 Feb 11. doi: 10.1038/s41467-022-28387-5

- 30. Liawrungrueang W, Kim P, Kotheeranurak V, Jitpakdee K, Sarasombath P. Automatic detection, classification, and grading of lumbar intervertebral disc degeneration using an artificial neural network model. Diagnostics (Basel). 2023;13(4):663. Published 2023 Feb 10. doi: 10.3390/diagnostics13040663
