Abstract

In this paper, we consider asymptotically exact support recovery in the context of high dimensional and sparse Canonical Correlation Analysis (CCA). Our main results describe four regimes of interest based on information theoretic and computational considerations. In regimes of “low” sparsity we describe a simple, general, and computationally easy method for support recovery, whereas in a regime of “high” sparsity, it turns out that support recovery is information theoretically impossible. In terms of information theoretic lower bounds, our results also demonstrate a non-trivial requirement on the “minimal” size of the nonzero elements of the canonical vectors for asymptotically consistent support recovery. Subsequently, the regime of “moderate” sparsity is further divided into two subregimes. In the lower of the two subregimes, we show that polynomial time support recovery is possible by using a sharp analysis of a co-ordinate thresholding [1] type method. In contrast, in the higher end of the moderate sparsity regime, appealing to the “Low Degree Polynomial” Conjecture [2], we provide evidence that polynomial time support recovery methods are inconsistent. Finally, we carry out numerical experiments to compare the efficacy of the various methods discussed.

Keywords: Canonical Correlation Analysis, Support Recovery, Low Degree Polynomials, Variable Selection, High Dimension

I. Introduction

Canonical Correlation Analysis (CCA) is a highly popular technique for performing initial dimension reduction while exploring relationships between two multivariate objects. Owing to its natural interpretability and success in finding latent information, CCA has been embraced across a vast range of disciplines, including, but not limited to, psychology and agriculture, information retrieval [3]-[5], brain-computer interfaces [6], neuroimaging [7], genomics [8], organizational research [9], natural language processing [10], [11], fMRI data analysis [12], computer vision [13], and speech recognition [14], [15].

Early developments in the theory and applications of CCA are now well documented in the statistical literature, and we refer the interested reader to [16] and references therein for further details. However, the modern surge of interest in CCA, often motivated by data from high throughput biological experiments [17]-[19], requires rethinking several aspects of the traditional theory and methods. A natural structural constraint that has gained popularity in this regard is sparsity, i.e., the phenomenon that only a small (unknown) collection of variables are related to each other. In order to formally introduce the framework of sparse CCA, we present our statistical setup next. We shall consider n i.i.d. samples $(X_i, Y_i)$, $i \in [n]$, with $X_i \in \mathbb{R}^p$ and $Y_i \in \mathbb{R}^q$ being multivariate mean zero random variables with joint variance-covariance matrix

$$\Sigma = \begin{pmatrix} \Sigma_x & \Sigma_{xy} \\ \Sigma_{yx} & \Sigma_y \end{pmatrix}. \tag{1}$$

The first canonical correlation is then defined as the maximum possible correlation between two linear combinations of X and Y. Equivalently, it is the optimal value of the following maximization problem:

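$$\max_{a \in \mathbb{R}^p,\; b \in \mathbb{R}^q} \; a^\top \Sigma_{xy} b \quad \text{subject to} \quad a^\top \Sigma_x a = b^\top \Sigma_y b = 1. \tag{2}$$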

The solutions $(u_1, v_1)$ to (2) are the vectors that maximize the correlation of the projections of X and Y in those respective directions. Higher order canonical correlations can thereafter be defined recursively (cf. [20]). In particular, for $j \geq 2$, we define the jth canonical correlation and the corresponding directions uj and vj by maximizing (2) with the additional constraint

$$a^\top \Sigma_x u_k = 0, \qquad b^\top \Sigma_y v_k = 0, \qquad k = 1, \ldots, j-1. \tag{3}$$
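For readers who wish to experiment, the population problem (2)-(3) can be solved numerically by whitening followed by a singular value decomposition: the singular values of $\Sigma_x^{-1/2}\Sigma_{xy}\Sigma_y^{-1/2}$ are the canonical correlations, and back-transformed singular vectors give the canonical directions. The following is a minimal NumPy sketch, assuming $\Sigma_x$ and $\Sigma_y$ are positive definite; the helper names `inv_sqrt` and `population_cca` are ours, not taken from the paper or from Support.CCA.

```python
import numpy as np

def inv_sqrt(S):
    """Symmetric inverse square root of a positive definite matrix."""
    w, Q = np.linalg.eigh(S)
    return Q @ np.diag(1.0 / np.sqrt(w)) @ Q.T

def population_cca(Sigma_x, Sigma_y, Sigma_xy):
    """Canonical correlations and directions from the blocks of Sigma in (1)."""
    Wx, Wy = inv_sqrt(Sigma_x), inv_sqrt(Sigma_y)
    # Singular values of the whitened cross-covariance are the canonical correlations.
    A, lam, Bt = np.linalg.svd(Wx @ Sigma_xy @ Wy, full_matrices=False)
    # Map the singular vectors back so that U^T Sigma_x U = I and V^T Sigma_y V = I.
    U = Wx @ A
    V = Wy @ Bt.T
    return lam, U, V

# Toy check with p = 3, q = 2 and a random positive definite joint covariance.
rng = np.random.default_rng(0)
M = rng.standard_normal((5, 5))
Sigma = M @ M.T + 0.1 * np.eye(5)
Sx, Sy, Sxy = Sigma[:3, :3], Sigma[3:, 3:], Sigma[:3, 3:]
lam, U, V = population_cca(Sx, Sy, Sxy)
print(np.round(lam, 3))                        # canonical correlations, decreasing
print(np.allclose(U.T @ Sx @ U, np.eye(2)))    # normalization implied by (2)-(3)
```

The columns of `U` and `V` are determined only up to a sign flip, which mirrors the identifiability caveat discussed in Section II.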

As mentioned earlier, in many modern data examples the sample size n is comparable to, or much smaller than, p or q, rendering classical CCA inconsistent and inadequate without further structural assumptions [21]-[23]. The framework of Sparse Canonical Correlation Analysis (SCCA) (cf. [8], [24]), where the ui’s and the vi’s are sparse vectors, was subsequently developed to target low dimensional structures (which allow consistent estimation) when p, q are potentially larger than n. The corresponding sparse estimates of the leading canonical directions naturally perform variable selection, thereby leading to the recovery of their support (cf. [8], [19], [24], [25]). It is unknown, however, under which settings this naïve method of support recovery, or any other method for that matter, is consistent. The support recovery of the leading canonical directions serves the important purpose of identifying groups of variables that explain the most linear dependence between the high dimensional random objects (X and Y) under study, and thereby provides crucial interpretability. Asymptotically optimal support recovery is yet to be explored systematically in the context of SCCA, both theoretically and from the computational viewpoint. In fact, despite the renewed enthusiasm for CCA, both the theoretical and applied communities have mainly focused on the estimation of the leading canonical directions and on relevant scalable algorithms; see, e.g., [22], [24], [26]-[28]. This paper explores the crucial question of support recovery in the context of SCCA.

The problem of support recovery for SCCA naturally connects to a vast class of variable selection problems (cf. [29]-[33]). The closest problem in terms of complexity turns out to be sparse PCA (SPCA) [34]. Support recovery in the latter problem is known to present interesting information theoretic and computational bottlenecks (cf. [30], [35]-[37]). Moreover, information theoretic and computational issues also arise in the context of the SCCA estimation problem (cf. [24], [26]-[28]). In view of the above, it is natural to expect that such information theoretic and computational issues arise in the SCCA support recovery problem as well. However, the techniques used in the analysis of SPCA support recovery are not directly applicable to the SCCA problem, which poses additional challenges due to the presence of the high dimensional nuisance parameters $\Sigma_x$ and $\Sigma_y$. The main focus of our work is therefore to obtain a complete picture of the information theoretic and computational limitations of SCCA support recovery. Before going into further details, we present a brief summary of our contributions, and defer the discussion of the main subtleties to Section III. Our methods can be implemented using the R package Support.CCA [38].

A. Summary of Main Results

We say a method successfully recovers the support if it achieves exact recovery with probability tending to one uniformly over the sparse parameter spaces defined in Section II. In the sequel, we denote the cardinality of the combined support of the ui’s and the vi’s by sx and sy, respectively. Thus sx and sy will be our respective sparsity parameters. Our main contributions are listed below.

1). General methodology:

In Section III-A, we construct a general algorithm called RecoverSupp, which leads to successful support recovery whenever the latter is information theoretically tractable. This also serves as the first step in creating a polynomial time procedure for recovering the support in one of the difficult regimes of the problem; see, e.g., Corollary 2, which shows that RecoverSupp combined with a co-ordinate thresholding type method recovers the support in polynomial time in a regime that requires subtle analysis. Moreover, Theorem 1 shows that the minimal signal strength required by RecoverSupp matches the information theoretic limit whenever the nuisance precision matrices $\Omega_x = \Sigma_x^{-1}$ and $\Omega_y = \Sigma_y^{-1}$ are sufficiently sparse.

2). Information theoretic and computational hardness as a function of sparsity:

As the sparsity level increases, we show that the CCA support recovery problem transitions from being efficiently solvable, to being NP hard (conjectured), and finally to being information theoretically impossible. According to this hardness pattern, the sparsity domain can be partitioned into the following three regimes: (i) , , , , and (iii) , . We describe below the distinguishing behaviours of these three regimes, which parallel the sparse PCA scenario.

We show that when , (“easy regime”), polynomial time support recovery is possible, and well-known consistent estimators of the canonical correlates (cf. [24], [28]) can be utilized to that end. When , (“difficult regime”), we show that a co-ordinate thresholding type algorithm (inspired by [1]) succeeds provided . We call the latter regime “difficult” because it is unknown whether existing estimation methods like COLAR [28] or SCCA [24] have valid statistical guarantees in this regime; see Section III-A and Section III-D for more details.

In Section III-C, we show that when , (“hard regime”), support recovery is computationally hard subject to the so-called “low degree polynomial conjecture” recently popularized by [39], [40], and [2]. Of course, this phenomenon is observable only when p, , because otherwise, the problem would be solvable by ordinary CCA analysis (cf. [23], [41]). Our findings are consistent with the conjectured computational barrier in the context of the SCCA estimation problem [28].

When , , we show that support recovery is information theoretically impossible (see Section III-B).

3). Information theoretic hardness as a function of minimal signal strength:

In context of support recovery, the signal strength is quantified by

Generally, support recovery algorithms require the signal strength to lie above some threshold. As a concrete example, the detailed analyses provided in [1], [30], and [35] are all based on the nonzero principal component elements being of the order . To the best of our knowledge, prior to our work, there was no result in the PCA/CCA literature on the information theoretic limit of the minimal signal strength. PCA studies generally assume that the top eigenvectors are de-localized, i.e., that the principal components have elements of the order , and thereby mostly consider this case. We do not make any such assumption on the canonical covariates, and we therefore believe that our study paints a more complete picture of support recovery.

In Section III-B, we show that (or ) is a necessary requirement for successful support recovery by U (or V).

B. Notation

For a vector , we denote its support by . We will overload notation, and for a matrix , we will denote by D(A) the indexes of the nonzero rows of A. By an abuse of notation, we will sometimes refer to D(A) as the support of A as well. When and are unknown parameters, the estimators of their supports will generally be denoted by and , respectively. We let denote the set of all positive numbers, and write for the set of all natural numbers {0, 1, 2, …}. For any , we let [n] denote the set {1, … , n}. We define the projection of A onto by

| (4) |

For any finite set , we denote its cardinality by . Also, for any event , we let be the indicator of the event . For any , we let denote the unit sphere in .

We let be the usual lk norm in for . In particular, we let denote the number of nonzero elements of a vector . For any probability measure on the Borel sigma field of , we let be the set of all measurable functions such that . The corresponding inner product will be denoted by . We denote the operator norm and the Frobenius norm of a matrix by and , respectively. We let Ai* and Aj denote the i-th row and j-th column of A, respectively. For , we define the norms and . The maximum and minimum eigenvalues of a square matrix A will be denoted by and , respectively. Also, we let s(A) denote the maximum number of nonzero entries in any column of A, i.e., .

The results in this paper are mostly asymptotic (in n) in nature and thus require some standard asymptotic notations. If an and bn are two sequences of real numbers then (and ) implies that (and ) as , respectively. Similarly (and ) implies that for some (and for some ). Alternatively, will also imply and will imply that for some . We write if there are positive constants C1 and C2 such that for all . We will write to indicate an and bn are asymptotically of the same order up to a poly-log term. Finally, in our mathematical statements, C and c will be two different generic constants which can vary from line to line.

II. Mathematical Formalism

We denote the rank of $\Sigma_{xy}$ by r. It can be shown that exactly r canonical correlations are positive and the rest are zero in the model (2). We will consider the matrices $U = [u_1, \ldots, u_r]$ and $V = [v_1, \ldots, v_r]$. From (2) and (3), it is not hard to see that $U^\top \Sigma_x U = I_r$ and $V^\top \Sigma_y V = I_r$. The indexes of the nonzero rows of U and V, respectively, are the combined supports of the ui’s and the vi’s. Since we are interested in the recovery of the latter, it will be useful to study the properties of U and V. To that end, we often make use of the following representation connecting $\Sigma_{xy}$ to U and V [16]:

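$$\Sigma_{xy} = \Sigma_x U \Lambda V^\top \Sigma_y, \qquad \Lambda = \operatorname{diag}(\lambda_1, \ldots, \lambda_r), \tag{5}$$

where $\lambda_j$ denotes the jth canonical correlation.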
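The representation (5) also gives a convenient way to simulate from a sparse CCA model with prescribed supports. The sketch below is a hypothetical construction, not the one used in our experiments: it takes $\Sigma_x = I_p$ and $\Sigma_y = I_q$ for simplicity, so that (5) reduces to $\Sigma_{xy} = U\Lambda V^\top$, and the joint covariance (1) is then positive definite whenever every $\lambda_j < 1$ (its eigenvalues are $1 \pm \lambda_j$ and 1). The function name `sparse_cca_covariance` is ours.

```python
import numpy as np

def sparse_cca_covariance(p, q, sx, sy, lams, seed=0):
    """Joint covariance (1) whose cross block follows (5), with Sigma_x = I_p, Sigma_y = I_q.

    Illustrative assumption: all r directions share the first sx (resp. sy)
    coordinates, so D(U) = {0, ..., sx-1} and D(V) = {0, ..., sy-1} (0-indexed).
    Requires sx, sy >= r and every canonical correlation in [0, 1).
    """
    rng = np.random.default_rng(seed)
    r = len(lams)
    U = np.zeros((p, r))
    V = np.zeros((q, r))
    # Orthonormal columns on the support rows give U^T U = I_r and V^T V = I_r.
    U[:sx] = np.linalg.qr(rng.standard_normal((sx, r)))[0]
    V[:sy] = np.linalg.qr(rng.standard_normal((sy, r)))[0]
    Sigma_xy = U @ np.diag(lams) @ V.T          # (5) with identity Sigma_x, Sigma_y
    Sigma = np.block([[np.eye(p), Sigma_xy], [Sigma_xy.T, np.eye(q)]])
    return Sigma, U, V

Sigma, U, V = sparse_cca_covariance(p=50, q=40, sx=5, sy=4, lams=[0.8, 0.5])
D_U = np.flatnonzero(np.any(U != 0, axis=1))    # indexes of nonzero rows of U
D_V = np.flatnonzero(np.any(V != 0, axis=1))    # indexes of nonzero rows of V
print(D_U, D_V)
```

Because D(U) and D(V) are defined through the nonzero rows of U and V, the row-wise check above is exactly the support notion used throughout this paper.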

To keep our results straightforward, we restrict our attention to a particular model throughout, defined as follows.

Definition 1. Suppose . Let be a constant. We say if

- A1 (Sub-Gaussian): X and Y are sub-Gaussian random vectors (cf. [42]), with joint covariance matrix Σ as defined in (1). Also, $\operatorname{rank}(\Sigma_{xy}) = r$.

- A2: Recall the definition of the canonical correlation from (3). Note that by definition, . For , additionally satisfies .

- A3 (Sparsity): The numbers of nonzero rows of U and V are sx and sy, respectively, that is and . Here U and V are as defined in (5).

- A4 (Bounded eigenvalue)

- A5 (Positive eigen-gap): for i = 2, … , r.

Sometimes we will consider a sub-model of where each is Gaussian. This model will be denoted by , where “G” stands for the Gaussian assumption. We next provide some remarks on the modeling assumptions A1-A5.

- A1. We begin by noting that we do not require X and Y to be jointly sub-Gaussian. Moreover, the individual sub-Gaussian assumption itself is common in the , regime in the SCCA literature (cf. [24], [28], [43]). Our proof techniques depend crucially on the sub-Gaussian assumption, and we anticipate that the results derived in this paper would change if this assumption were violated. For the sharper analysis in the difficult regime (, ), our proof techniques require the Gaussian model, which parallels [1]’s treatment of sparse PCA in the corresponding difficult regime. In general, the Gaussian spiked model assumption in sparse PCA goes back to [44], and is common in the PCA literature (cf. [30], [35]).

- A2-A4. These assumptions are standard in the analysis of canonical correlations (cf. [24], [28]).

- A5. This assumption concerns the gap between consecutive canonical correlations. We refer to this gap as the “eigengap” because of its similarity with the eigengap in the sparse PCA literature (cf. [1], [45]). This assumption is necessary for the estimation of the i-th canonical covariates. Indeed, if then there is no hope of estimating the i-th canonical covariates because they are not identifiable, and so support recovery also becomes infeasible. This assumption can be relaxed to requiring only k of the λi’s to be strictly larger than where k ≤ r. In this case, we can recover the support of only the first k canonical covariates.

In the following sections, we will denote the preliminary estimators of U and V by $\hat U$ and $\hat V$, respectively. The columns of $\hat U$ and $\hat V$ will be denoted by $\hat u_i$ and $\hat v_i$, respectively. Therefore $\hat u_i$ and $\hat v_i$ will stand for the corresponding preliminary estimators of ui and vi. In the case of CCA, the ui’s and vi’s are identifiable only up to a sign flip; hence, they are also estimable only up to a sign flip. We denote the empirical estimates of $\Sigma_x$, $\Sigma_y$, and $\Sigma_{xy}$ by $\hat\Sigma_x$, $\hat\Sigma_y$, and $\hat\Sigma_{xy}$, respectively, which will often carry superscripts to indicate their estimation through suitable subsamples of the data. Finally, we let denote a positive constant which depends on only through , but can vary from line to line.

III. Main Results

We divide our main results into the following parts based on both the statistical and computational difficulties of different regimes. First, in Section III-A we present a general method and associated sufficient conditions for support recovery. This allows us to elicit a sequence of questions regarding the necessity of these conditions and the remaining gaps, from both statistical and computational perspectives. Our subsequent sections are devoted to answering these very questions. In particular, in Section III-B we discuss information theoretic lower bounds, followed by evidence for statistical-computational gaps in Section III-C. Finally, in Section III-D we close the remaining computational gap in the asymptotic regime through a sharp analysis of a special co-ordinate thresholding type method.

A. A Simple and General Method:

We begin with a simple method for estimating the support, which readily establishes the result for the easy regime, and sets the direction for the investigation of the other, more subtle regimes. Since the estimation of D(U) is similar to that of D(V), we focus only on the estimation of D(V) for the time being.

Suppose $\hat V$ is a row sparse estimator of V. The set of nonzero row indexes of $\hat V$ is the most intuitive estimator of D(V). Such a $\hat V$ is also easily attainable because most estimators of the canonical directions in high dimensions are sparse (cf. [24], [26], [28], among others). Although we have not yet been able to establish the validity of this apparently “naïve” method, we provide numerical results in Section IV to explore its finite sample performance. However, a simple method can refine these initial estimators to recover the support D(V), often optimally. We now provide the details of this method and derive its asymptotic properties.

To that end, suppose we have at our disposal an estimating procedure for $\Omega_y$, which we generically denote by $\hat\Omega_y$, and an estimator $\hat U$ of U. We split the sample into two equal parts, and compute $\hat U$ and $\hat\Omega_y$ from the first part of the sample, and the estimator $\hat\Sigma_{yx}$ from the second part of the sample. Define . Our estimator of D(V) is then given by

| (6) |

where cut is a pre-specified cut-off or threshold. We discuss the choice of cut later. The resulting algorithm will be referred to as RecoverSupp from now on. Algorithm 1 gives the algorithm for the support recovery of V; the full version of RecoverSupp, which estimates D(U) and D(V) simultaneously, can be found in Appendix A (see Algorithm 3 there). RecoverSupp is similar in spirit to the “cleaning” step in the sparse PCA support recovery literature (cf. [1]). One thing to remember here is that is not an estimator of V. In fact, the (i, j)-th element of is an estimator of .
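A minimal NumPy sketch of this support recovery step for D(V) is given below; it is not the Support.CCA code. Because the defining formula and (6) are not reproduced here, the sketch makes two labeled assumptions: the statistic is $\hat\Omega_y \hat\Sigma_{yx} \hat U$, which targets $\Omega_y \Sigma_{yx} U = V\Lambda$ by (5) and $U^\top \Sigma_x U = I_r$ (consistent with the remark that it does not estimate V itself), and row i is declared in the support when its largest absolute entry exceeds cut. The callables `estimate_U` and `estimate_Omega_y` stand for any user-chosen estimators, and `recover_supp_v` is a hypothetical name.

```python
import numpy as np

def recover_supp_v(X, Y, estimate_U, estimate_Omega_y, cut):
    """Hedged sketch of RecoverSupp for D(V) with a two-way sample split.

    Assumptions (ours): statistic = Omega_hat_y @ Sigma_hat_yx @ U_hat, and
    row i is kept when max_j |statistic[i, j]| > cut.
    """
    half = X.shape[0] // 2
    X1, Y1 = X[:half], Y[:half]                    # first half: U_hat, Omega_hat_y
    X2, Y2 = X[half:], Y[half:]                    # second half: Sigma_hat_yx
    U_hat = estimate_U(X1, Y1)                     # p x r
    Omega_hat_y = estimate_Omega_y(Y1)             # q x q
    Sigma_hat_yx = Y2.T @ X2 / X2.shape[0]         # mean-zero model, so no centering
    stat = Omega_hat_y @ Sigma_hat_yx @ U_hat      # q x r, targets V Lambda
    return np.flatnonzero(np.max(np.abs(stat), axis=1) > cut)

# Toy usage with oracle nuisance inputs (Omega_y = I and U known exactly).
rng = np.random.default_rng(1)
p = q = 20
u = np.zeros(p); u[0] = 1.0                        # D(U) = {0}
v = np.zeros(q); v[:3] = 1 / np.sqrt(3)            # D(V) = {0, 1, 2}
Sigma_xy = 0.9 * np.outer(u, v)                    # (5) with identity Sigma_x, Sigma_y
Sigma = np.block([[np.eye(p), Sigma_xy], [Sigma_xy.T, np.eye(q)]])
Z = rng.multivariate_normal(np.zeros(p + q), Sigma, size=4000)
X, Y = Z[:, :p], Z[:, p:]
supp = recover_supp_v(X, Y,
                      estimate_U=lambda X1, Y1: u[:, None],
                      estimate_Omega_y=lambda Y1: np.eye(q),
                      cut=0.25)
print(supp)
```

The value cut = 0.25 is an arbitrary toy choice that sits between the noise level (about $1/\sqrt{2000}$ per entry) and the signal $0.9/\sqrt{3}$; in general the threshold must scale as discussed around Theorem 1.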

Remark 1. In many applications, the rank r may be unknown. [46] (see Section 4.6.5 therein) suggests using the screeplot of the canonical correlations to estimate r. The screeplot is also a popular tool for estimating the number of nonzero principal components in PCA [1]. For CCA, the screeplot is the plot of the estimated canonical correlations versus their orders. If there is a clear gap between two successive correlations, [46] suggests taking the order of the larger correlation as the estimator of r. One can use [24]’s SCCA method to estimate the canonical correlations and obtain the screeplot. There are other ways of estimating r as well. For example, in their trans-eQTL study, [47] use a resampling technique on a holdout dataset to generate observations from the null distribution of the i-th canonical correlation estimate under the hypothesis , where . The largest i for which the test is rejected is taken as the estimated rank. A similar technique has been used by [48] to select the ranks for a related method, JIVE.
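The screeplot rule in Remark 1 leaves “clear gap” informal. One simple, hypothetical way to operationalize it, sketched below, is to locate the largest difference between successive estimated canonical correlations and return the order of the larger one. The function name is ours, and the input correlations may come from any estimator, e.g., [24]’s SCCA.

```python
import numpy as np

def rank_from_scree_gap(rho):
    """Estimate r as the order of the larger correlation at the largest successive gap.

    rho: estimated canonical correlations (any order, at least two values).
    The 'largest gap' criterion is our illustrative stand-in for a 'clear gap'.
    """
    rho = np.sort(np.asarray(rho, dtype=float))[::-1]   # decreasing order
    gaps = rho[:-1] - rho[1:]                           # rho_k - rho_{k+1}
    return int(np.argmax(gaps)) + 1                     # 1-indexed order

print(rank_from_scree_gap([0.91, 0.85, 0.22, 0.18, 0.15]))   # prints 2
```

A pure largest-gap rule always returns some rank, even when no clear gap exists, so in practice it is best used alongside visual inspection or a resampling test such as the one of [47].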

Algorithm 1 RecoverSupp: support recovery of V

It turns out that, despite its simplicity, RecoverSupp has desirable statistical guarantees provided $\hat U$ and $\hat\Omega_y$ are reasonable estimators of U and $\Omega_y$, respectively. These theoretical properties of RecoverSupp, and the hypotheses and questions they raise, lay out the roadmap for the rest of our paper. However, before getting into the detailed theoretical analysis of RecoverSupp, we state an l2-consistency condition on $\hat U$ and $\hat V$, where we remind the readers that $\hat u_i$ and $\hat v_i$ denote the i-th columns of $\hat U$ and $\hat V$, respectively. Recall also that the i-th columns of U and V are denoted by ui and vi, respectively.

Condition 1 (l2 consistency ). There exists a function so that and the estimators and of ui and vi satisfy

with probability 1 − o(1) uniformly over .

We will discuss the estimators which satisfy Condition 1 later. Theorem 1 also requires the signal strength Sigy to be at least of the order , where the parameter depends on the type of as follows:

is of type A if there exists Cpre > 0 so that satisfies with probability 1 − o(1) uniformly over . Here we remind the readers that . In this case, .

is of type B if with probability 1 − o(1) uniformly over for some Cpre > 0. In this case, .

is of type C if . In this case, .

The estimation error of clearly decays from type A to type C, with the error being zero for type C. Because is generally much smaller than , shrinks monotonically from type A to type C as well. Thus it is fair to say that reflects the precision of the estimator , in that is smaller if is a sharper estimator. We are now ready to state Theorem 1, which is proved in Appendix C.

Theorem 1. Suppose and the estimators satisfy Condition 1. Further suppose is of type A, B, or C, which are stated above. Let where depends on the type of as outlined above. Then there exists a constant , depending only on , so that if

| (7) |

and , with , then the algorithm RecoverSupp fully recovers D(V) with probability 1 − o(1) uniformly over (for of type A and C), or uniformly over (for of type B).

The assumption that log p and log q are o(n) appears throughout the theoretical literature on CCA (cf. [24], [28]). A requirement of this type is generally unavoidable. Note that Theorem 1 implies that a more precise estimator of $\Omega_y$ requires a smaller signal strength for full support recovery.

Main idea behind the proof of Theorem 1:

Because , is an estimator of for and . If , then for all . Therefore, in this case, we expect to be small for all . First, we will show that whenever , is uniformly bounded by for and with high probability. Here is a constant. Second, when , we will show that cannot be too small. In fact, we will show that

| (8) |

for some with high probability in this case. The lower bound in the above inequality is bounded below by . Thus, if the minimal signal strength Sigy is bounded below by a large enough multiple of ϵn, then the lower bound will be larger than the upper bound in the case. Therefore, in this scenario, we can choose C > 0 so that

If we set , then the above inequality leads to.

The constants C1 and C2 determine the constant in (7) and our choice of θn.

Thus the key step in the proof of Theorem 1 is analyzing the bias of , which hinges on the following bias decomposition:

| (9) |

Note that the term corresponds to the bias in estimating . Similarly, the error terms and arise from the bias in estimating and ui, respectively. The main contributing term in the upper bound in (9) is . One can use the consistency property of to show that is of the order . Since has different rates and modes of convergence in cases A, B, and C, has different orders in these cases, which explains why ϵn is of a different order in each case.

The term is much smaller; it is of the order . The proof relies on the fact that the l∞ error of estimating by is of the order for sub-Gaussian X and Y. The error term is exactly zero for , and hence does not contribute. Thus only and contribute to the bias of for , which is therefore bounded by for some C1 > 0 with high probability in this case. The term does, however, contribute to the bias of for , and it is of the order in this case. Because Err is small by Condition 1, we can show that is smaller than , which eventually leads to the relation in (8), thus completing the proof.

We have already mentioned that RecoverSupp is analogous to the cleaning step in sparse PCA. Therefore the proof of Theorem 1 has similarities with analogous results in sparse PCA; see, for example, Theorem 3 of [1], which proves the consistency of a “cleaned” estimator of the joint support of the spiked principal components. However, the proof in the CCA case is more involved because of the presence of , which needs to be estimated for the cleaning step. Different estimators of can have different rates of convergence, which leads to the different types of estimators. This ultimately leads to different requirements on the order of the threshold cut and the minimal signal strength Sigy.

Before discussing the implications of Theorem 1, we make two important remarks.

Remark 2. Although the estimation of the high dimensional precision matrix is potentially complicated, it is often unavoidable owing to the inherent subtlety of the CCA framework, which stems from the presence of the high dimensional nuisance parameters $\Sigma_x$ and $\Sigma_y$. [26] also used a precision matrix estimator for partial recovery of the support. In the case of sparse CCA, to the best of our knowledge, there is no algorithm that can recover the support, partially or completely, without estimating the precision matrix. However, our requirements on are not strict, in that many common precision matrix estimators, e.g., the nodewise Lasso [49, Theorem 2.4], the thresholding estimator [50, Theorem 1 and Section 2.3], and the CLIME estimator [51, Theorem 6], exhibit the decay rates of types A and B under standard sparsity assumptions on . We will not get into the details of the sparsity requirements on because they are unrelated to the sparsity of U or V, and hence are irrelevant to the primary goal of the current paper.

Remark 3. In the easy regime , polynomial time estimators satisfying Condition 1 are already available, e.g., COLAR [28, Theorem 4.2] or SCCA [24, Condition C4]. Thus it is easily seen that polynomial time support recovery is possible in the easy regime provided (7) is satisfied.

The implications of Theorem 1 for the sparsity requirements on D(U) and D(V) in full support recovery are somewhat implicit in its assumptions and conditions. The restriction on the sparsity is imposed indirectly by two different sources, which we now elaborate on. To keep the interpretations simple, throughout the following discussion we assume that (a) , (b) p and q are of the same order, and (c) sx and sy are also of the same order. Note that (a) implies for a type B estimator of . Since we separate the task of estimating the nuisance parameter from the support recovery of V, we also assume that , which implies for a type A estimator of . The assumption , combined with (a), reduces the minimal signal strength condition (7) in Theorem 1 to .

In light of the discussion above, the first source of sparsity restriction is the minimal signal strength condition (7) on Sigy. To see this, first note that

where . Since ,

implying . Therefore, implicit in Theorem 1 lies the condition

| (10) |

which is enforced by the minimal signal strength requirement (7). Thus Theorem 1 does not hold for even when and r are small. This regime requires some attention because, in the case of sparse PCA [30] and linear regression [29], support recovery at the analogous sparsity level is proven to be information theoretically impossible. However, although a parallel result can be intuited to hold for CCA, the nuances of SCCA support recovery in this regime are yet to be explored. Therefore, the sparsity requirement in (10) raises the question whether support recovery for CCA is at all possible when , even if and is known.

Question 1. Does there exist any decoder such that when ?

A related question is whether the minimal signal strength requirement (7) is necessary. To the best of our knowledge, there is no formal study on the information theoretic limit of the minimal signal strength even in the context of sparse PCA support recovery. Indeed, as we noted before, the detailed analyses of support recovery for SPCA provided in [1], [30], and [35] are all based on the nonzero principal component elements being of the order . Finally, although this question is not directly related to the sparsity conditions, it probes the sharpness of the results in Theorem 1.

Question 2. What is the minimum signal strength required for the recovery of D(V)?

We will discuss Question 1 and Question 2 at greater length in Section III-B. In particular, Theorem 2(A) shows that there exists C > 0 so that support recovery at is indeed information theoretically intractable. On the other hand, in Theorem 2(B), we show that the minimal signal strength has to be of the order for full recovery of D(V). Thus when , (7) is indeed necessary from information theoretic perspectives.

The second source of restriction on the sparsity lies in Condition 1. Condition 1 is an l2-consistency condition, which itself carries a sparsity requirement owing to the inherent hardness of estimating U. Indeed, Theorem 3.3 of [28] entails that it is impossible to estimate the canonical directions ui consistently if for some large C > 0. Hence, Condition 1 indirectly imposes the restriction . However, when , , and , the above restriction is already absorbed into the condition elicited in the last paragraph. In fact, there exist consistent estimators of U whenever and (see [27] or Section 3 of [28]). Therefore, in the latter regime, RecoverSupp coupled with the above-mentioned estimators succeeds. In view of the above, it might be tempting to think that Condition 1 does not impose significant additional restrictions. The restriction due to Condition 1, however, is rather subtle and manifests itself through computational challenges. Note that when support recovery is information theoretically possible, recovery by RecoverSupp is computationally at least as hard as the estimation of U. Indeed, the estimators of U which work in the regime , are not adaptive to the sparsity, and they require a search over exponentially many sets of size sx and sy. Furthermore, under , all polynomial time consistent estimators of U in the literature, e.g., COLAR [28, Theorem 4.2] or SCCA [24, Condition C4], require sx, sy to be of the order . In fact, [28] indicates that estimation of U or V for sparsity of larger order is NP hard.

The above raises the question whether RecoverSupp (or any other method) can succeed in polynomial time when , . We turn to the landscape of sparse PCA for intuition. Indeed, in the case of sparse PCA, different scenarios are observed in the regime , depending on whether , or (recall that for SPCA we denote the sparsity of the leading principal component direction generically by s). We focus on the sub-regime first. In this case, both estimation and support recovery for sparse PCA are conjectured to be NP hard, which means that no polynomial time method succeeds; see Section III-C for more details. This hints that the regime , is NP hard for sparse CCA as well.

Question 3. Is there any polynomial time method that can recover the support D(V) when , ?

We dedicate Section III-C to answering this question. Subject to the recent advances in the low degree polynomial conjecture, we establish computational hardness of the regime , (up to a logarithmic factor gap) subject to , q. Our results are consistent with [28]’s findings in the estimation case and cover a broader regime; see Remark 5 for a comparison.

When the sparsity is of the order and , however, polynomial time support recovery and estimation are possible for the sparse PCA case. [1] showed that a co-ordinate thresholding type spectral algorithm works in this regime. Thus the following question is immediate.

Question 4. Is there any polynomial time method that can recover the support D(V) when , ?

We give an affirmative answer to Question 4 in Section III-D, in parallel with the observations for sparse PCA. In fact, Corollary 2 shows that when and are known, , and , , estimation is possible in polynomial time. Since estimation is possible, RecoverSupp suffices for polynomial time support recovery in this regime, where is well below the information theoretic limit of . The main tool used in Section III-D is co-ordinate thresholding, which was originally proposed for high dimensional matrix estimation [50] and is seemingly unrelated to the estimation of canonical directions. However, under our setup, if the covariance matrix is consistently estimated in operator norm, then by Wedin’s sin θ theorem [52], an SVD suffices to obtain a consistent estimator of U and V suitable for further precise analysis.
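The two ingredients named above, entrywise thresholding followed by an SVD, can be illustrated in the simplest setting where $\Sigma_x = I_p$ and $\Sigma_y = I_q$ are known, so that (5) gives $\Sigma_{xy} = U\Lambda V^\top$ and its leading singular vectors are U and V up to sign. The NumPy sketch below is only an illustration under these assumptions: soft-thresholding at $\tau/\sqrt{n}$ is a hypothetical choice, not the precise procedure or tuning analyzed in Section III-D, and the function names are ours.

```python
import numpy as np

def soft_threshold(A, t):
    """Entrywise soft-thresholding: sign(a) * max(|a| - t, 0)."""
    return np.sign(A) * np.maximum(np.abs(A) - t, 0.0)

def coordinate_threshold_cca(X, Y, r, tau):
    """Threshold the sample cross-covariance, then take its rank-r SVD.

    Assumes Sigma_x = I_p and Sigma_y = I_q are known (so Sigma_xy = U Lambda V^T).
    The level tau / sqrt(n) is an illustrative choice.
    """
    n = X.shape[0]
    S_xy = X.T @ Y / n                               # sample cross-covariance (mean zero)
    S_thr = soft_threshold(S_xy, tau / np.sqrt(n))
    A, s, Bt = np.linalg.svd(S_thr, full_matrices=False)
    return A[:, :r], s[:r], Bt[:r].T                 # U_hat, top singular values, V_hat

rng = np.random.default_rng(2)
p = q = 100
u = np.zeros(p); u[:5] = 1 / np.sqrt(5)
v = np.zeros(q); v[:5] = 1 / np.sqrt(5)
Sigma_xy = 0.8 * np.outer(u, v)
Sigma = np.block([[np.eye(p), Sigma_xy], [Sigma_xy.T, np.eye(q)]])
Z = rng.multivariate_normal(np.zeros(p + q), Sigma, size=2000)
U_hat, s_hat, V_hat = coordinate_threshold_cca(Z[:, :p], Z[:, p:], r=1, tau=3.0)
print(abs(U_hat[:, 0] @ u), abs(V_hat[:, 0] @ v))    # alignment up to sign
```

The singular values returned here are shrunk by the thresholding, so they should not be read as estimates of the canonical correlations; only the singular vectors are used, e.g., as the preliminary estimator fed into RecoverSupp.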

Remark 4. RecoverSupp uses sample splitting, which can reduce efficiency. One can swap the roles of the two halves of the sample and compute two estimators of the supports. It is easy to show that both the intersection and the union of the resulting supports enjoy the asymptotic guarantees of Theorem 1.
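The swap described in Remark 4 can be sketched as follows. This is a minimal illustration, not the authors' implementation: the `decoder` argument is a hypothetical stand-in for RecoverSupp (whose internals are not reproduced here), assumed to take an estimation half and a selection half of the data and to return a set of indices.

```python
from typing import Callable, FrozenSet, Tuple

import numpy as np

# Hypothetical decoder interface (an assumption, not the paper's API):
# decoder(X_est, Y_est, X_sel, Y_sel) -> estimated support as a frozenset of indices.
Decoder = Callable[[np.ndarray, np.ndarray, np.ndarray, np.ndarray], FrozenSet[int]]


def cross_fit_supports(decoder: Decoder, X: np.ndarray, Y: np.ndarray,
                       seed: int = 0) -> Tuple[FrozenSet[int], FrozenSet[int]]:
    """Run a sample-splitting decoder twice with the two halves swapped.

    Returns (intersection, union) of the two support estimates, the two
    combinations mentioned in Remark 4.
    """
    n = X.shape[0]
    perm = np.random.default_rng(seed).permutation(n)
    half_a, half_b = perm[: n // 2], perm[n // 2:]
    s1 = decoder(X[half_a], Y[half_a], X[half_b], Y[half_b])  # estimate on a, select on b
    s2 = decoder(X[half_b], Y[half_b], X[half_a], Y[half_a])  # roles swapped
    return s1 & s2, s1 | s2
```

The intersection is the more conservative combination (fewer false inclusions), while the union misses fewer true coordinates; Remark 4 states that both inherit the guarantees of Theorem 1.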

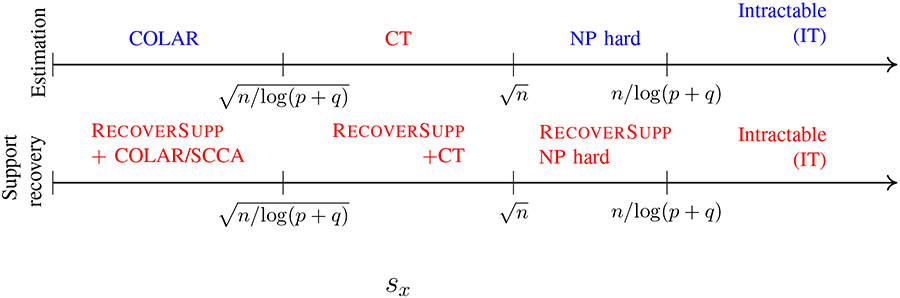

This section is best summarized by Figure 1, which depicts the information theoretic and computational landscape of sparse CCA in terms of the sparsity. In other words, Figure 1 is the phase transition plot for SCCA support recovery with respect to sparsity. Our contributions (colored in red) complete the picture initiated by [28].

Fig. 1: Phase transition plots for SCCA estimation and support recovery problems with respect to sparsity. We have taken here. COLAR corresponds to the estimation method of [28]. Our contributions are colored in red. See [28] for more details on the regions colored in blue.

B. Information Theoretic Lower Bounds: Answers to Question 1 and 2

Theorem 2 establishes the information theoretic limits on the sparsity levels sx, sy, and the signal strengths Sigx and Sigy. The proof of Theorem 2 is deferred to Appendix D.

Theorem 2. Suppose and are estimators of D(U) and D(V), respectively. Let sx, sy > 1, and , . Then the following assertions hold:

- A. If , then

  On the other hand, if , then

- B. Let be the class of distributions satisfying . Then

  On the other hand, if , then

In both cases, the infimum is over all possible decoders and .

First, we discuss the implications of part A of Theorem 2. This part entails that for full support recovery of V, the minimum sample size requirement is of the order . This requirement is consistent with the traditional lower bound on n in the context of support recovery for sparse PCA [30, Theorem 3] and L1 regression [29, Corollary 1]. However, when , the sample size requirement for estimation of V is slightly relaxed, that is, [28, Theorem 3.2]. Therefore, from an information theoretic point of view, the task of full support recovery appears to be slightly harder than the task of estimation. The scenario for partial support recovery might be different, and we do not pursue it here. Moreover, as mentioned earlier, in the regime , RecoverSupp works with the estimator of U from [28] (see Section 3 therein). Thus, part A of Theorem 2 implies that is the information theoretic upper bound on the sparsity for full support recovery in sparse CCA.

Part B of Theorem 2 implies that the minimum signal strength cannot be pushed below the level . Thus the minimal signal strength requirement (7) of Theorem 1 is indeed minimal up to a factor of . The last statement can be refined further. To that end, we remind the reader that for a good estimator of , i.e., a type B estimator, if . However, the latter always holds if support recovery is at all possible, because in that case , and elementary linear algebra gives . Thus, it is fair to say that, provided a good estimator of , the requirement (7) is minimal up to a factor of . In particular, for banded inverses with finite bandwidth, our results are rate optimal.

It is further worth comparing this part of the result with the SPCA literature. In the SPCA support recovery literature, the lower bound on the signal strength is generally expressed in terms of the sparsity s, and a signal strength of order is usually postulated (cf. [1], [30], [35]). Using our proof strategies, it can easily be shown that for SPCA, the analogous lower bound on the signal strength would be . The latter is generally much smaller than , and only when , is the requirement of close to the lower bound. Thus, in the regime , the lower bound should rather be of the order . Therefore, the minimum signal strength requirement of typically assumed in the SPCA literature seems larger than necessary.

Main idea behind the proof of Theorem 2:

The main device used in this proof is Fano’s inequality [53]. Note that for any ,

(11)

Therefore it suffices to show that the left hand side in the above inequality is bounded away from 1/2 for some carefully chosen . If is finite, we can lower bound the left hand side of (11) using Fano’s inequality [53], which yields

(12)

Thus the main task is to choose so that the right hand side (RHS) of (12) is large. We will choose so that X and Y are jointly Gaussian. In particular, , , and where and are fixed, and α is allowed to vary in a set . In this model, r = 1, ρ is the canonical correlation, and α and β0 are the left and right canonical directions, respectively. Also, varies across as α varies across . Moreover, . Our main task thus boils down to choosing carefully.

The idea behind choosing is as follows. For any decoder, i.e., an estimator of the support, the chance of making an error increases when is large. This can also be seen by noting that the right hand side of (12) increases as increases. However, even if we prefer a larger , we need to ensure that the KL divergence between the distributions in the resulting is small. The reason is that, for a large , the right hand side of (12) can be small unless the KL divergence between the corresponding distributions in is small. In other words, any decoder will struggle to detect the true support of α when there are many distributions to choose from and these distributions are also close to each other in KL divergence.
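For reference, one standard textbook form of Fano's inequality for M ≥ 2 candidate distributions P_1, …, P_M, each observed through n i.i.d. draws, is shown below. Display (12) is presumably an instance of such a bound, but its exact form and constants may differ. The bound makes the trade-off described above explicit: it is large when log M is large relative to n times the average pairwise KL divergence.

```latex
\[
\inf_{\hat\psi}\;\max_{1 \le j \le M} P_j^{\otimes n}\bigl(\hat\psi \ne j\bigr)
\;\ge\; 1 - \frac{\dfrac{n}{M^{2}} \sum_{j=1}^{M}\sum_{k=1}^{M} \mathrm{KL}\bigl(P_j \,\|\, P_k\bigr) + \log 2}{\log M}.
\]
```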

For part A of Theorem 2, we choose in the following way. Letting

we let be the class of α’s obtained by replacing one of the in α0 by 0, and one of the zeros in α0 by . A typical α obtained this way looks like

In this case, it turns out that . Under the conditions of part A of Theorem 2, we can show that the RHS of (12) is bounded below by 1/2 for this . The proof of part A is similar to its PCA analogue, Theorem 3 of [30], which is also based on Fano’s lemma and uses a similar construction for . However, part B has no PCA analogue. For part B of Theorem 2, we let be the class of all α’s such that

where

can take any position out of the positions. Clearly, . It can be shown that the RHS of (12) is bounded below by 1/2 in this case as well.
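The part A construction can be checked numerically on a toy instance. The sketch below assumes, purely for illustration, that Σx = Ip, Σy = Iq, and Σxy = ρ α β0ᵀ with unit-norm α and β0, and that the nonzero entries of α0 all equal 1/√s (the actual displays above are not reproduced here). It enumerates the swap class, which has s(p − s) members, computes the Gaussian KL divergences numerically, and compares them with the closed form ρ²‖α − α′‖²/(2(1 − ρ²)); a short calculation gives this form in the identity-covariance model because the two joint covariance matrices share the same determinant. The bound evaluated is the textbook Fano form, not necessarily (12) itself.

```python
import itertools

import numpy as np


def joint_cov(alpha, beta, rho):
    """Covariance of (X, Y) when Sigma_x = I_p, Sigma_y = I_q, Sigma_xy = rho * alpha beta^T."""
    cross = rho * np.outer(alpha, beta)
    return np.block([[np.eye(len(alpha)), cross], [cross.T, np.eye(len(beta))]])


def gaussian_kl(S1, S2):
    """KL( N(0, S1) || N(0, S2) ) for positive definite S1 and S2."""
    d = S1.shape[0]
    _, logdet1 = np.linalg.slogdet(S1)
    _, logdet2 = np.linalg.slogdet(S2)
    return 0.5 * (np.trace(np.linalg.solve(S2, S1)) - d + logdet2 - logdet1)


def swap_class(p, s):
    """All alphas obtained from alpha0 = (1/sqrt(s)) * 1_{[s]} by zeroing one nonzero
    entry and setting one zero entry to 1/sqrt(s). The value 1/sqrt(s) is an assumption."""
    alpha0 = np.zeros(p)
    alpha0[:s] = 1 / np.sqrt(s)
    members = []
    for i, j in itertools.product(range(s), range(s, p)):
        a = alpha0.copy()
        a[i], a[j] = 0.0, 1 / np.sqrt(s)
        members.append(a)
    return members


def fano_lower_bound(n, kl_matrix):
    """Textbook Fano bound: 1 - (n * mean pairwise KL + log 2) / log M."""
    M = kl_matrix.shape[0]
    return 1 - (n * kl_matrix.mean() + np.log(2)) / np.log(M)


p, q, s, rho = 10, 4, 3, 0.5
beta0 = np.ones(q) / np.sqrt(q)
members = swap_class(p, s)
covs = [joint_cov(a, beta0, rho) for a in members]
kl = np.array([[gaussian_kl(S1, S2) for S2 in covs] for S1 in covs])
# Closed form in this model: KL = rho^2 / (2 (1 - rho^2)) * ||alpha - alpha'||^2.
sq_dist = np.array([[np.sum((a - b) ** 2) for b in members] for a in members])
closed_form = rho**2 / (2 * (1 - rho**2)) * sq_dist
for n in (1, 2, 5):
    print(n, fano_lower_bound(n, kl))  # the bound shrinks as n grows
```

The loop makes the trade-off visible: with the packing fixed, more samples shrink the error lower bound, which is why the conditions of Theorem 2 tie n to the sparsity.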

C. Computational Limits and Low Degree Polynomials: Answer to Question 3

We have so far explored the information theoretic upper and lower bounds for recovering the true support of the leading canonical correlation directions. However, as indicated in the discussion preceding Question 3, the statistically optimal procedures in the regime where , are computationally intensive and of exponential complexity (as a function of p, q). In particular, [28] have already shown that when sx and sy belong to parts of this regime, estimation of the canonical correlates is computationally hard, subject to the Planted Clique Conjecture from computational complexity theory. For support recovery, SPCA has been explored in detail and the corresponding computational hardness has been established in analogous regimes; see, e.g., [1], [30], and [35] for details. A similar phenomenon of computational hardness is observed in the SPCA spike detection problem [54]. In light of the above, it is natural to believe that SCCA support recovery is also computationally hard in the regime , , and, as a result, exhibits a statistical-computational gap. Although several paths exist to provide evidence towards such gaps⁴, the recent developments using “Predictions from Low Degree Polynomials” [2], [39], [40] are particularly appealing due to their simplicity of exposition. In order to show computational hardness of the SCCA support recovery problem in the , regime, we resort to this style of ideas, which has so far been applied successfully to explore statistical-computational gaps in sparse PCA [36], Stochastic Block Models, and tensor PCA [40], among others. This allows us to explore the computational hardness of the problem in the entire regime where

(13)

compared to the somewhat partial results (see Remark 5 for detailed comparison) in earlier literature.

We organize our argument for the existence of a statistical-computational gap in this regime as follows. After a brief background on the statistical literature on such gaps, we first present a natural reduction of our problem to a suitable hypothesis testing problem in Section III-C1. Subsequently, in Section III-C2 we present the main idea of the “low degree polynomial conjecture” by appealing to the recent developments in [39], [40], and [2]. Finally, we present our main result for this regime in Section III-C3, thereby providing evidence of the aforementioned gap modulo the Low Degree Polynomial Conjecture presented in Conjecture 1.

1). Reduction to Testing Problem:

Denote by the distribution of a random vector. Therefore corresponds to the case when X and Y are uncorrelated. We first show that support recovery in is possible only if is distinguishable from , i.e., only if the test vs. has asymptotically zero error.

To formalize the ideas, suppose we observe i.i.d. random vectors which are distributed either as or . We denote the n-fold product measures corresponding to and by and , respectively. Note that if , then . We overload notation and denote the combined sample and by X and Y, respectively. In this section, X and Y should be viewed as unordered sets. The test for testing the null vs. the alternative is said to strongly distinguish and if

The above implies that both the type I error and the type II error of Φn converge to zero as . In the case of a composite alternative , the test strongly distinguishes from if

Now we explain how support recovery and the testing framework are connected. Suppose there exist decoders which exactly recover D(U) and D(V) under for . Then the trivial test, which rejects the null if either of the estimated supports is non-empty, strongly distinguishes from . This observation can be formalized as the following lemma.

Lemma 1. Suppose there exist polynomial time decoders and of D(U) and D(V) so that

(14)

Further assume, , and . Then there exists a polynomial time test which strongly distinguishes and .

Thus, if a regime does not allow any polynomial time test for distinguishing from , there can be no polynomial time computable consistent decoder for D(U) and D(V). Therefore, it suffices to show that there is no polynomial time test which distinguishes from in the regime , . To be more explicit, we want to show that if , , then

(15)

for any Φn that is computable in polynomial time.

The testing problem under consideration is commonly known as the CCA detection problem, owing to its alternative formulation as vs. . In other words, the test tries to detect whether there is any signal in the data. Note that Lemma 1 also implies that detection is an easier problem than support recovery, in that the former is always possible whenever the latter is feasible. The converse need not hold, however, since detection does not reveal much information about the support.
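The argument behind Lemma 1 can be illustrated with a small simulation in an easy setting. The sketch uses a hypothetical decoder that is not RecoverSupp: it keeps the rows and columns of the sample cross-covariance XᵀY/n that contain an entry larger than τ = √(4 log(pq)/n) in absolute value (an assumed threshold chosen for this toy example). The test rejects exactly when either estimated support is non-empty, and Monte Carlo estimates of its type I error and power are printed. The data follow an assumed rank-one model with identity marginal covariances.

```python
import numpy as np


def toy_support_decoder(X, Y, tau):
    """Hypothetical decoder for illustration only (not RecoverSupp): keep row i and
    column j of X^T Y / n whenever that row/column has an entry exceeding tau."""
    n = X.shape[0]
    large = np.abs(X.T @ Y / n) > tau
    rows = set(np.flatnonzero(large.any(axis=1)).tolist())
    cols = set(np.flatnonzero(large.any(axis=0)).tolist())
    return rows, cols


def trivial_test(X, Y, tau):
    """Reject H0 (X and Y uncorrelated) iff either estimated support is non-empty."""
    rows, cols = toy_support_decoder(X, Y, tau)
    return int(bool(rows) or bool(cols))


def sample_rank_one(n, alpha, beta, rho, rng):
    """n draws of (X, Y) with identity marginals and Sigma_xy = rho * alpha beta^T."""
    p, q = len(alpha), len(beta)
    cross = rho * np.outer(alpha, beta)
    cov = np.block([[np.eye(p), cross], [cross.T, np.eye(q)]])
    Z = rng.multivariate_normal(np.zeros(p + q), cov, size=n)
    return Z[:, :p], Z[:, p:]


rng = np.random.default_rng(1)
p = q = 30
n, s, rho, reps = 2000, 3, 0.8, 20
alpha = np.zeros(p)
alpha[:s] = 1 / np.sqrt(s)
beta = np.zeros(q)
beta[:s] = 1 / np.sqrt(s)
tau = np.sqrt(4 * np.log(p * q) / n)
# rho = 0 gives the null (independent X and Y); rho = 0.8 gives the alternative.
type_one = np.mean([trivial_test(*sample_rank_one(n, alpha, beta, 0.0, rng), tau) for _ in range(reps)])
power = np.mean([trivial_test(*sample_rank_one(n, alpha, beta, rho, rng), tau) for _ in range(reps)])
print("estimated type I error:", type_one, "estimated power:", power)
```

In the hard regime studied in this section, the point is precisely that no polynomial time decoder is expected to behave like this toy one.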

2). Background on the Low-degree Framework:

We provide a brief introduction to the low-degree polynomial conjecture, which forms the basis of our analyses here, and refer the interested reader to [39], [40], and [2] for in-depth discussions on the topic. We will apply this method in the context of the test vs. . The low-degree method centers around the likelihood ratio , which takes the form in the above framework. Our key tool here will be the Hermite polynomials, which form a basis system of [62]. Central to the low-degree approach is the projection of onto the subspace (of ) spanned by the Hermite polynomials of degree at most . The latter projection, to be denoted by from now on, is important because it measures how well polynomials of degree ≤ Dn can distinguish from . In particular,

(16)

where the maximization is over polynomials of degree at most Dn [36].
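The orthonormality that underlies this projection can be checked numerically. The sketch below, an illustration rather than part of the paper's analysis, builds the normalized probabilists' Hermite polynomials h_k = He_k/√(k!) with NumPy's `numpy.polynomial.hermite_e` module and verifies that E[h_j(Z) h_k(Z)] equals 1 if j = k and 0 otherwise for Z ~ N(0, 1), using Gauss-HermiteE quadrature (exact here, since all integrands are polynomials of low degree).

```python
import math

import numpy as np
from numpy.polynomial import hermite_e as He


def normalized_hermite(k, x):
    """h_k(x) = He_k(x) / sqrt(k!), where He_k is the probabilists' Hermite polynomial."""
    coeffs = np.zeros(k + 1)
    coeffs[k] = 1.0
    return He.hermeval(x, coeffs) / math.sqrt(math.factorial(k))


def gram_matrix(max_degree, n_nodes=60):
    """Matrix of E[h_j(Z) h_k(Z)], Z ~ N(0, 1), for j, k <= max_degree."""
    nodes, weights = He.hermegauss(n_nodes)      # weight function exp(-x^2 / 2)
    weights = weights / math.sqrt(2 * math.pi)   # rescale to the N(0, 1) density
    H = np.array([normalized_hermite(k, nodes) for k in range(max_degree + 1)])
    return (H * weights) @ H.T


G = gram_matrix(8)
print(np.allclose(G, np.eye(9)))
```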

The norm of the untruncated likelihood ratio has long held an important place in the theory of hypothesis testing, since implies that and are asymptotically indistinguishable. While the untruncated likelihood ratio is connected to the existence of any distinguishing test, degree Dn projections of are connected to the existence of polynomial time distinguishing tests. The implications of the above heuristics are made precise by the following conjecture [40, Hypothesis 2.1.5].

Conjecture 1 (Informal). Suppose . For “nice” sequences of distributions and , if as n → ∞ whenever Dn ≤ t(n)polylog(n), then there is no time-n^{t(n)} test that strongly distinguishes and .

Thus Conjecture 1 implies that degree-Dn polynomials are a proxy for time-n^{t(n)} algorithms [2]. If we can show that for a Dn of the order for some ϵ > 0, then the low degree conjecture says that no polynomial time test can strongly distinguish and [2, Conjecture 1.16].

Conjecture 1 is informal in the sense that we do not specify the “nice” distributions, which are defined in Section 4.2.4 of [2] (see also Conjecture 2.2.4 of [40]). Niceness requires to be sufficiently symmetric, which is generally guaranteed by naturally occurring high dimensional problems like ours. The “niceness” condition is imposed to eliminate pathological cases where testing can be made easier by methods like Gaussian elimination. See [40] for more details.

3). Main Result:

Similar to [36], we consider a Bayesian framework. It might not be immediately clear how a Bayesian formulation fits into the low-degree framework and leads to (15); the connection will become clear shortly. We put independent Rademacher priors and on α and β. We say if are i.i.d., and for each ,

(17)

The Rademacher prior can be defined similarly. We will denote the product measure by . Let us define

(18)

When , is the covariance matrix corresponding to and with covariance . Hence, for to be positive definite, is a sufficient condition. The priors and put positive weight on α and β that do not lead to a positive definite , and hence call for extra care during the low-degree analysis. This subtlety is absent in the sparse PCA analogue [36].
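To see why the priors call for extra care, consider the special case Σx = Ip and Σy = Iq (an assumption made only for this sketch). The joint covariance with cross block ρ α βᵀ then has eigenvalues 1 ± ρ‖α‖‖β‖ together with ones, so it is positive definite exactly when ρ‖α‖‖β‖ < 1. The sketch also assumes a common form of the sparse Rademacher prior, in which each coordinate equals ±1/√s with probability s/(2p) each and 0 otherwise; display (17) is not reproduced here and may differ in its normalization. The code estimates how often prior draws violate positive definiteness and cross-checks the norm criterion against a direct eigenvalue computation.

```python
import numpy as np


def sparse_rademacher(dim, s, rng, size):
    """Assumed sparse Rademacher prior: each coordinate is +1/sqrt(s) or -1/sqrt(s) with
    probability s/(2*dim) each and 0 otherwise, so that E||alpha||^2 = 1."""
    u = rng.random((size, dim))
    signs = rng.choice([-1.0, 1.0], size=(size, dim))
    return np.where(u < s / dim, signs / np.sqrt(s), 0.0)


def is_pd_joint(alpha, beta, rho):
    """Numerically check positive definiteness of [[I, rho a b^T], [rho b a^T, I]]."""
    cross = rho * np.outer(alpha, beta)
    cov = np.block([[np.eye(len(alpha)), cross], [cross.T, np.eye(len(beta))]])
    return np.linalg.eigvalsh(cov).min() > 0


rng = np.random.default_rng(0)
p, q, sx, sy, rho, draws = 50, 40, 5, 4, 0.9, 2000
A = sparse_rademacher(p, sx, rng, draws)
B = sparse_rademacher(q, sy, rng, draws)
# Closed-form criterion in the identity-marginal case: PD iff rho * ||a|| * ||b|| < 1.
norm_rule = rho * np.linalg.norm(A, axis=1) * np.linalg.norm(B, axis=1) < 1
print("fraction of prior draws with a PD joint covariance:", norm_rule.mean())
```

A nontrivial fraction of draws fails the criterion, which is the reason the analysis restricts attention to the event where the covariance is valid.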

Let us define

(19)

We denote the n-fold product measure corresponding to by . If , then the marginal density of (X, Y) is . The following lemma, which is proved in Appendix H-C, explains how the Bayesian framework is connected to (15).

Lemma 2. Suppose and . Then

where is the shorthand for .

Note that a similar result holds for as well because . Lemma 2 implies that to show (15), it suffices to show that a polynomial time computable fails to strongly distinguish the marginal distribution of X and Y from . The latter problem falls within the realm of the low degree framework because the corresponding likelihood ratio takes the form

(20)

Using priors on the alternative space is a common trick to convert a composite alternative into a simple one, which is generally more amenable to various mathematical tools.

If we can show that for some , then Conjecture 1 would indicate that a time computable fails to distinguish the distribution of from . Theorem 3 accomplishes the above under some additional conditions on p, q, and n, which we will discuss shortly. Theorem 3 is proved in Appendix E.

Theorem 3. Suppose ,

(21)

Then is O(1) where is as defined in (20).

The following Corollary results from combining Lemma 2 with Theorem 3.

Corollary 1. Suppose

(22)

If Conjecture 1 is true, then for , there is no time test that strongly distinguishes and .

Corollary 1 indicates that, if Conjecture 1 holds, polynomial time algorithms cannot strongly distinguish and provided sx, sy, p, and q satisfy (22). Combined with Lemma 1, this suggests that under (22), support recovery is NP hard.

We now discuss condition (22). The first constraint in (22) is expected because it ensures , , which indicates that the sparsity lies in the hard regime. We next explain why the other constraint p, is needed. If , the sample canonical correlations are consistent, and therefore strong separation is possible in polynomial time without any restriction on the sparsity [23], [41]. Even if and , strong separation is still possible in model (18) provided the canonical correlation ρ exceeds some threshold depending on c1 and c2 [23]. The restriction p, ensures that the problem is hard enough that vanilla CCA does not lead to successful detection. The constant 3e is not sharp and can possibly be improved. The necessity of the condition p, for support recovery is unknown, however. Since support recovery is a harder problem than detection, in the hard regime polynomial time support recovery algorithms may fail under a weaker condition on n, p, and q.

Remark 5. [Comparison with previous work:] As mentioned earlier, [28] was the first to discover the existence of a computational gap in the context of sparse CCA. In their seminal work, [28] established the computational hardness of the CCA estimation problem in a particular subregime of , provided is allowed. In view of the above, it was hinted that sparse CCA becomes computationally hard when , . However, when is bounded, the entire regime , is probably not computationally hard. In Section III-D, we show that if , then both polynomial time estimation and support recovery are possible if , at least when and are known. The latter sparsity regime can be considerably larger than , . Together, Section III-D and the current section indicate that in the bounded case, the transition to computational hardness for sparse CCA probably happens at the sparsity level , not , which is consistent with sparse PCA. Also, the low-degree polynomial conjecture allowed us to explore almost the entire targeted regime , , whereas [28], who used the planted clique conjecture, considered only a subregime of , .

We will end the current section with a brief outline of the proof of Theorem 3.

The main idea behind the proof of Theorem 3:

Let us denote by the linear span of all -variate Hermite polynomials of degree at most Dn. For each and , we let , where is the univariate normalized Hermite polynomial of degree zi. We will discuss the Hermite polynomials in greater detail in Appendix E. Any normalized m-variate Hermite polynomial is of the form , where . Then is the linear span of all with

Since is the projection of on , it then holds that

The first step of the proof is to find out the expression of . Since , we can partition w into w = (w1, … , wn), where for each i ∈ [n]. Using some algebra, we can show that

Exploiting the properties of Hermite polynomials, it can be shown that

where for , , and any function , the notation stands for the z-th order partial derivative of f with respect to t evaluated at the origin. The rest of the proof is similar to the PCA analogue in [36], but there is an extra indicator term in the CCA case. Following [36], we use the common trick of introducing replicas of α and β to simplify the algebra. Suppose , and , are independent. Let W be the indicator function of the event . Denote by the p-th order truncation of the Taylor series expansion of at x = 0. Following some algebra, it can be shown that

Comparing the above with the analogous result for PCA, namely Lemma 4.2 of [36], we note that the indicator term W does not appear in the PCA analogue. The indicator term W appears in the CCA case because we had set to be for to tackle the extra restrictions on α and β in this case.
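The basis described at the start of this outline can be made concrete for small m and Dn. The sketch below, an illustration that is not part of the proof, enumerates the multi-indices z with |z| ≤ D, confirms that there are C(m + D, D) of them, and checks numerically that products of normalized univariate Hermite polynomials are orthonormal under the standard Gaussian measure in two dimensions, using a tensor-product Gauss-HermiteE rule.

```python
import itertools
import math

import numpy as np
from numpy.polynomial import hermite_e as He


def multi_indices(m, D):
    """All z in N^m with |z| = z_1 + ... + z_m <= D (brute force; small m and D only)."""
    return [z for z in itertools.product(range(D + 1), repeat=m) if sum(z) <= D]


def hermite_1d(k, x):
    """Normalized probabilists' Hermite polynomial He_k(x) / sqrt(k!)."""
    coeffs = np.zeros(k + 1)
    coeffs[k] = 1.0
    return He.hermeval(x, coeffs) / math.sqrt(math.factorial(k))


def hermite_multi(z, xs):
    """H_z(x) = prod_i h_{z_i}(x_i); xs is a sequence of coordinate arrays."""
    out = 1.0
    for zi, xi in zip(z, xs):
        out = out * hermite_1d(zi, xi)
    return out


# Dimension of the span of m-variate Hermite polynomials of degree <= D.
m, D = 3, 4
print(len(multi_indices(m, D)), math.comb(m + D, D))  # both equal 35

# Orthonormality under N(0, I_2), using a tensor-product quadrature rule.
nodes, w = He.hermegauss(20)
w = w / math.sqrt(2 * math.pi)
X1, X2 = np.meshgrid(nodes, nodes, indexing="ij")
W = np.outer(w, w)
Z2 = multi_indices(2, 3)
G = np.array([[np.sum(W * hermite_multi(a, (X1, X2)) * hermite_multi(b, (X1, X2)))
               for b in Z2] for a in Z2])
print(np.allclose(G, np.eye(len(Z2))))
```

Because the basis is orthonormal, the squared norm of the projection of the likelihood ratio is the sum of its squared Hermite coefficients over these multi-indices, which is the quantity the proof controls.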

D. A Polynomial Time Algorithm for Regime : Answer to Question 4

In this subsection, we show that in the difficult regime , a soft co-ordinate thresholding (CT) type algorithm estimates the canonical directions consistently when . CT was introduced in the seminal work of [50] for estimating high dimensional covariance matrices. For SPCA, the CT algorithm of [1] is the only algorithm that provably recovers the full support in the difficult regime (see also [35]). In the context of CCA, [26] uses CT for partial support recovery in the rank one model under what we refer to as the easy regime. However, the main goal of [26] was the estimation of the leading canonical vectors, not support recovery. As a result, [26] detects the support of the relatively large elements of the leading canonical directions, which are subsequently used to obtain consistent preliminary estimators of the leading canonical directions. Our thresholding level and theoretical analysis differ from those of [26] because the analytical tools used in the easy regime do not work in the difficult regime.

1). Methodology: Estimation via CT:

By “thresholding a matrix A co-ordinate-wise”, we roughly mean assigning the value zero to any element of A which is below a certain threshold in absolute value. Similar to [1], we consider the soft thresholding operator, which, at threshold level t, takes the form

η(x) = sign(x) · max(|x| − t, 0).

Note that the soft thresholding operator is continuous.
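A minimal NumPy sketch of the entry-wise soft thresholding operator follows, with hard thresholding included only for contrast (it is not used in the method described here). Just above the threshold, soft thresholding moves continuously away from zero, whereas hard thresholding jumps by t, which is the continuity property noted above.

```python
import numpy as np


def soft_threshold(A, t):
    """Entry-wise soft thresholding: sign(a) * max(|a| - t, 0)."""
    A = np.asarray(A, dtype=float)
    return np.sign(A) * np.maximum(np.abs(A) - t, 0.0)


def hard_threshold(A, t):
    """For contrast only: keep entries with |a| > t unchanged and zero out the rest."""
    A = np.asarray(A, dtype=float)
    return np.where(np.abs(A) > t, A, 0.0)


A = np.array([[0.30, -0.05], [-0.25, 0.10]])
print(soft_threshold(A, 0.1))  # large entries shrink by 0.1, small ones become zero
```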

Algorithm 2: Coordinate Thresholding (CT) for estimating D(V)

We will also assume that the covariance matrices and are known. To understand the difficulty with unknown and , we remind the reader that . Because the matrices U and V are sandwiched between the matrices and , their sparsity pattern is not reflected in the sparsity pattern of . Therefore, if one blindly applies CT to , one can at best hope to recover the sparsity pattern of the outer matrices and . If the supports of the matrices U and V are of main concern, CT should rather be applied to the matrix . If and are unknown, one needs to efficiently estimate before the application of CT. Although under certain structural conditions it is possible, at least in theory, to find rate optimal estimators and of and , the errors and may still blow up due to the presence of the high dimensional matrix , which can be as large as in operator norm. One may be tempted to replace with a sparse estimator of to facilitate faster estimation, but that does not work because we explicitly require the formulation of as a sum of Wishart matrices (see (37) in the proof). The latter representation, which is critical for the sharp analysis, may not be preserved by a CLIME [51] or nodewise Lasso estimator [49] of Σxy.

We remark in passing that it is possible to obtain an estimator such that . Although the latter does not provide much control over the operator norm of , it is sufficient for partial support recovery, e.g., recovering the rows of U or V with the strongest signals. (See, for example, Appendix B of [26] for some results in this direction under the easy regime when r = 1.)

As indicated by the previous paragraph, we apply coordinate thresholding to the matrix , which directly targets the matrix . We call this step the peeling step because it extracts the matrix from the sandwiched matrix . We then perform the entry-wise co-ordinate thresholding algorithm on the peeled form with threshold Thr so as to obtain . We postpone the discussion on Thr to Section III-D2. The thresholded matrix is an estimator of , but we need an estimator of . Therefore, we again sandwich between and . The motivation behind this sandwiching is that if , then is a good estimator of in that

Note that is an SVD of . Using the Davis-Kahan sin θ theorem [52], one can show that the SVD of produces estimators and of and , where the columns of and are ϵn-consistent in ℓ2 norm for the columns of and , respectively, up to a sign flip (cf. Theorem 2 of [52]). Pre-multiplying the resulting U′ by yields an estimator of U up to sign flips of the columns. We need not worry about the sign flips because Condition 1 allows for sign flips of the columns. Therefore, we feed this estimator into RecoverSupp as our final step. See Algorithm 2 for more details.
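The peel, threshold, sandwich, and SVD steps can be sketched as below. This is a hedged reconstruction from the prose, not a transcription of Algorithm 2. It assumes centred data, known Σx and Σy, the normalization Σxy = Σx U Λ Vᵀ Σy with Uᵀ Σx U = Vᵀ Σy V = Ir, sandwiching by the symmetric square roots Σx^{1/2} and Σy^{1/2}, and a user-supplied threshold `thr` rather than the Thr of Theorem 4. The function names are hypothetical, the toy example is not in the difficult regime, and the printed read-out of the largest entries is only a placeholder for RecoverSupp, which is not reproduced.

```python
import numpy as np


def soft_threshold(A, t):
    """Entry-wise soft thresholding sign(a) * max(|a| - t, 0)."""
    return np.sign(A) * np.maximum(np.abs(A) - t, 0.0)


def spd_power(S, power):
    """S**power for a symmetric positive definite S via its eigendecomposition."""
    vals, vecs = np.linalg.eigh(S)
    return (vecs * vals**power) @ vecs.T


def ct_estimate(X, Y, Sx, Sy, thr, r=1):
    """Peel, threshold, sandwich, SVD; assumes centred data and known Sx, Sy."""
    n = X.shape[0]
    Sxy_hat = X.T @ Y / n                                       # sample cross-covariance
    peeled = np.linalg.solve(Sx, Sxy_hat) @ np.linalg.inv(Sy)   # targets U Lambda V^T
    thresholded = soft_threshold(peeled, thr)                   # co-ordinate thresholding
    sandwiched = spd_power(Sx, 0.5) @ thresholded @ spd_power(Sy, 0.5)
    left, _, right_t = np.linalg.svd(sandwiched)
    U_hat = spd_power(Sx, -0.5) @ left[:, :r]                   # undo the Sx^{1/2} factor
    V_hat = spd_power(Sy, -0.5) @ right_t[:r].T
    return U_hat, V_hat


# Toy rank-one example with diagonal (known) covariances; all values are illustrative.
rng = np.random.default_rng(0)
p, q, n, rho = 20, 15, 5000, 0.9
Sx = np.diag(np.linspace(1.0, 2.0, p))
Sy = np.diag(np.linspace(1.0, 2.0, q))
alpha = np.zeros(p)
alpha[[1, 4, 7]] = 1.0
beta = np.zeros(q)
beta[[0, 2, 9]] = 1.0
alpha /= np.sqrt(alpha @ Sx @ alpha)   # normalisation alpha^T Sx alpha = 1
beta /= np.sqrt(beta @ Sy @ beta)      # normalisation beta^T Sy beta = 1
Sxy = rho * Sx @ np.outer(alpha, beta) @ Sy
cov = np.block([[Sx, Sxy], [Sxy.T, Sy]])
Z = rng.multivariate_normal(np.zeros(p + q), cov, size=n)
U_hat, V_hat = ct_estimate(Z[:, :p], Z[:, p:], Sx, Sy, thr=0.05)
u = U_hat[:, 0]
cosine = abs(u @ alpha) / (np.linalg.norm(u) * np.linalg.norm(alpha))
print("|cos(u_hat, alpha)| =", round(float(cosine), 3))
print("largest entries of |u_hat|:", sorted(np.argsort(-np.abs(u))[:3].tolist()))
```

The absolute value in the cosine reflects the sign ambiguity of the SVD, which Condition 1 tolerates.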

Remark 6. In the case of electronic health records data, it is possible to obtain large surrogate samples of X and Y separately, which might allow relaxing the assumption of known precision matrices above. We do not pursue such semi-supervised setups here.

2). Analysis of the CT Algorithm:

For the asymptotic analysis of the CT algorithm, we assume the underlying distribution to be Gaussian, i.e., . This Gaussian assumption is used to perform a crucial decomposition of the sample covariance matrix that holds for Gaussian random vectors. [1], who used similar devices to obtain sharp rates in SPCA, also required a similar Gaussian assumption. We do not yet know how to extend these results to sub-Gaussian random vectors.

Let us consider the threshold , where Thr is explicitly given in Theorem 4. Unfortunately, tuning Thr requires knowledge of the underlying sparsity levels sx and sy. Similar to [1], our thresholding level differs from the traditional choice of order in the easy regime analyzed in [50], [63], and [26]. The latter level is too large to successfully recover all the nonzero elements in the difficult regime. We threshold at a lower level, which in turn complicates the analysis considerably. Our main result in this direction, stated in Theorem 4, is proved in Appendix F.

Theorem 4. Suppose . Further suppose , , and . Let K and C1 be constants so that and , where C > 0 is an absolute constant. Suppose the threshold level Thr is defined by

Suppose is a constant that takes the value K, C1, or one in case (i), (ii), and (iii), respectively. Then there exists an absolute constant C > 0 so that the following holds with probability 1 − o(1) for :

To disentangle the statement of Theorem 4, let us assume for the time being. Then case (ii) in the theorem corresponds to . Thus, CT works in the difficult regime provided . Note that the threshold in this case is almost of the order , which is much smaller than , the traditional threshold for the easy regime. Next, observe that case (i) is an easy case because is much smaller than . Therefore, in this case, the traditional threshold of the easy regime works. Case (iii) includes the hard regime, where polynomial time support recovery is probably impossible. Because CT is unlikely to improve over the vanilla estimator in this regime, the threshold is set to zero.

Remark 7. Theorem 4 requires because one of our concentration inequalities in the analysis of case (ii) needs this technical condition (see Lemma 8). The omitted regime is in fact an easier one, where special methods like CT are not even required. Indeed, it is well known that subgaussian X and Y satisfy (cf. Theorem 4.7.1 of [42])

which is in the regime under concern. Including this result in the statement of Theorem 4 would unnecessarily lengthen the exposition. Therefore, we exclude this regime from Theorem 4 to focus on the regime.

Remark 8. The statement of Theorem 4 is not explicit about the lower bound on the constant C1. However, our simulations suggest that the algorithm works for . Both threshold parameters C1 and K in Theorem 4 depend on the unknown . The proof actually shows that can be replaced by , , , .

Finally, Theorem 4 leads to the following corollary, which establishes that in the difficult regime, there exist estimators satisfying Condition 1, and that Algorithm 2 succeeds with probability tending to one provided . This answers Question 4 in the affirmative for Gaussian distributions.

Corollary 2. Instate the conditions of Theorem 4. Then there exists so that if

(23)

then the defined in Algorithm 2 satisfies Condition 1, and Algorithm 2 correctly recovers D(V) with probability tending to one.

We defer the proof of Corollary 2 to Appendix G. We will now present a brief outline of the proof of Theorem 4.

Main idea behind the proof of Theorem 4:

The proof hinges on the hidden variable representation of X and Y due to [64]. We discuss this representation in detail in Appendix C-2; it states that the data matrices X and Y can be represented as

where , , and are independent standard Gaussian data matrices, and , , , and . We will later show in Appendix C-2 that and are well defined positive definite matrices. It follows that has the representation

Next, we define some sets. Let , , , and . Thus E1 and E2 correspond to the supports, whereas F1 and F2 correspond to their complements. Now we partition [p] × [q] into the following three sets:

(24)

and

(25)

Therefore E is the set that contains the joint support. We can decompose as

(26)

where is the projection operator defined in (4).

The usefulness of the decomposition in (26) is that S1, S2, and S3 have disjoint supports, which enables us to write

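We can therefore analyze the three terms , , and separately. This step relies on the disjointness of the supports: because η acts entry-wise, it is additive over matrices with disjoint supports, but in general it is not linear, i.e., η(A + B) = η(A) + η(B) does not hold for arbitrary matrices A and B.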
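The role of disjoint supports can be checked directly. In the sketch below, the three-way partition (E1 × E2, the two mixed blocks, and F1 × F2) is a hypothetical stand-in, since (24) and (25) are not reproduced here; any partition of [p] × [q] leads to the same conclusion. Because η acts entry-wise and maps 0 to 0, soft thresholding is additive across the three pieces, while a one-entry example shows that it is not additive in general.

```python
import numpy as np


def soft_threshold(A, t):
    """Entry-wise soft thresholding sign(a) * max(|a| - t, 0)."""
    return np.sign(A) * np.maximum(np.abs(A) - t, 0.0)


rng = np.random.default_rng(0)
p, q, t = 6, 5, 0.5
E1 = np.zeros(p, dtype=bool)
E1[:2] = True                       # hypothetical support of U
E2 = np.zeros(q, dtype=bool)
E2[:3] = True                       # hypothetical support of V
F1, F2 = ~E1, ~E2
# Hypothetical three-way partition of [p] x [q].
mask_E = np.outer(E1, E2)
mask_mixed = np.outer(E1, F2) | np.outer(F1, E2)
mask_null = np.outer(F1, F2)
S = rng.normal(size=(p, q))
S1, S2, S3 = S * mask_E, S * mask_mixed, S * mask_null   # entry-wise projections
lhs = soft_threshold(S1 + S2 + S3, t)
rhs = soft_threshold(S1, t) + soft_threshold(S2, t) + soft_threshold(S3, t)
print(np.allclose(lhs, rhs))        # additive because the supports are disjoint

A = np.array([[0.4]])
B = np.array([[0.4]])
print(soft_threshold(A + B, t), soft_threshold(A, t) + soft_threshold(B, t))  # about 0.3 vs 0.0
```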

As indicated above, we analyze the operator norms of , , and separately. Among S1, S2, and S3, S1 is the only matrix supported on E, the true support. The basic idea of the proof is to show that co-ordinate thresholding preserves the matrix S1 and kills off the other matrices S2 and S3, which contain the noise terms. S1 includes the matrix . Because concentrates around Ir by the Bai-Yin law (cf. Lemma 4.7.1 of [42]), concentrates around . Therefore, the analysis of η(S1) is relatively straightforward.

Most of the proof is devoted to showing that and are small, i.e., that co-ordinate thresholding kills off the noise terms. The difficulty arises because the threshold was kept smaller than the traditional threshold of order to adjust for the hard regime. Therefore, the approaches of [50] or [28] do not work in this regime. The noise matrices S2 and S3 are sums of matrices of the form , , or , or their transposes, where for the rest of this section, M and N should be understood as deterministic matrices of appropriate dimension whose definitions can change from line to line. Analyzing and essentially hinges on Lemma 8, which upper bounds the operator norm of matrices of the form . The proof of Lemma 8 uses, among other tools, a sharp Gaussian concentration result from [1] (see Corollary 10 therein) and a generalized Chernoff inequality for dependent Bernoulli random variables [65]. Using Lemma 8, we can also upper bound operator norms of matrices of the form because can be represented as for some matrix M3 of appropriate dimension. Therefore, Lemma 8 suffices to show that and are small, which completes the proof.

The proof of Theorem 4 has similarities with the proof of the analogous result for PCA in [1] (see Theorem 1 therein). However, one main difference is that for PCA, the key instrument is the representation of X as the spiked model [44], which yields the representation

(27)

where and are standard Gaussian data matrices, and is a deterministic matrix. The analysis in PCA revolves around the sample covariance matrix , which, following (27), writes as

From the above representation, it can be shown that the analogues of S2 and S3 in the PCA case are sums of matrices of the form or their transposes. [1] uses an upper bound on to bound the PCA analogue of and (see Proposition 13 therein). In contrast, we encounter terms of the form since CCA is concerned with XᵀY/n. To deal with these terms, we need an upper bound on instead, which requires a separate, elaborate proof. Although the basic idea behind bounding and bounding is similar, the proof of bounding is more involved. For example, some independence structures are destroyed by the pre- and post-multiplication by the matrices M1 and N1, respectively. We require concentration inequalities for dependent Bernoulli random variables to tackle the latter.

IV. Numerical Experiments

This section illustrates the performance of different polynomial time CCA support recovery methods as the sparsity transitions from the easy to the difficult regime. We base our demonstration on a Gaussian rank one model, i.e., (X, Y) are jointly Gaussian with covariance matrix . For simplicity, we take p = q and sx = sy = s. In all our simulations, ρ is set to 0.5, and , where

are unit norm vectors. Note that most elements of β are of order $O(s^{-2/3})$, whereas a typical element of α is of order $O(s^{-1/2})$. Therefore, we refer to α and β as the moderate and the small signal case, respectively. For the population covariance matrices Σx and Σy of X and Y, we consider the following two scenarios:

- A (Identity): Σx = Ip and Σy = Iq. Since p = q, the two matrices coincide.

- B (Sparse inverse): This example is taken from [28]. In this case, are banded matrices, whose entries are given by

Next, we describe our common simulation scheme. We take the sample size n to be 1000 and consider three values of p: 100, 200, and 300. The largest value of p + q is thus 600, which is smaller than, but proportional to, n. Our simulations indicate that all the methods considered here require n to be considerably larger than p + q for the asymptotics to kick in at ρ = 0.5. We will discuss this point in detail later. We further let vary in the interval [0.01, 2]; more specifically, we consider 16 equidistant points in [0.01, 2] for the ratio .
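The simulation design above can be sketched in Python for case A. This is a minimal sketch under stated assumptions, not the authors' code: it assumes the standard rank-one CCA parametrization in which the cross-covariance of X and Y is ρ Σx α βᵀ Σy (the exact covariance display did not survive extraction), and the helper `rank_one_cca_sample` and its canonical vectors (equal entries 1/√s on the first s coordinates, so every nonzero entry is of order s^{-1/2}) are hypothetical stand-ins for the α and β defined above. The 16 equidistant ratio values in [0.01, 2] are reproduced with `np.linspace`.

```python
import numpy as np

# 16 equidistant values of the sparsity ratio in [0.01, 2]
RATIO_GRID = np.linspace(0.01, 2, 16)


def rank_one_cca_sample(n, p, s, rho=0.5, seed=0):
    """Draw n i.i.d. pairs (X_i, Y_i) from a rank-one Gaussian CCA model
    with Sigma_x = I_p and Sigma_y = I_p (case A, p = q).

    Assumption: alpha = beta = (1/sqrt(s), ..., 1/sqrt(s), 0, ..., 0) is a
    hypothetical unit-norm canonical pair, not the vectors used in the paper.
    """
    rng = np.random.default_rng(seed)
    alpha = np.zeros(p)
    alpha[:s] = 1.0 / np.sqrt(s)
    beta = alpha.copy()
    # Joint covariance [[I, rho * alpha beta^T], [rho * beta alpha^T, I]];
    # its eigenvalues are 1 - rho, 1, and 1 + rho, so it is positive definite.
    sigma_xy = rho * np.outer(alpha, beta)
    cov = np.block([[np.eye(p), sigma_xy], [sigma_xy.T, np.eye(p)]])
    Z = rng.multivariate_normal(np.zeros(2 * p), cov, size=n)
    return Z[:, :p], Z[:, p:], alpha, beta
```

For example, `rank_one_cca_sample(n=1000, p=100, s=10)` returns two 1000 × 100 data matrices whose sample cross-covariance X^T Y/n concentrates around ρ/s = 0.05 on the support block and around zero elsewhere.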

Next, we discuss the error metric used to compare the performance of different support recovery methods. Type I and type II errors are commonly used to measure the performance of support recovery [1]. For support recovery of α, we define the type I error as the proportion of zero elements of α that appear in the estimated support . Thus, we quantify the type I error of α by . On the other hand, the type II error for α is the proportion of elements of D(α) that are absent from , i.e., the type II error is quantified by . The type I and type II errors corresponding to β are defined similarly. Our simulations demonstrate that methods with low type I error often exhibit high type II error, and vice versa. In such situations, comparing the corresponding methods becomes difficult if one uses the type I and type II errors separately. Therefore, we consider a scaled Hamming loss type metric, which suitably combines the type I and type II errors. The symmetric Hamming error of estimating D(α) by is [66, Section 2.1]

Note that the above quantity is always bounded above by one. We can similarly define the symmetric Hamming error between D(β) and . Finally, the estimates of these three errors (type I, type II, and scaled Hamming loss) are based on 1000 Monte Carlo replications.
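The type I and type II errors defined above translate directly into set operations. The Python sketch below is illustrative: the helper name `support_errors` is hypothetical, and because the exact normalization in [66, Section 2.1] is not reproduced here, it assumes the symmetric difference is scaled by |Ŝ| + |D(α)|, which is one choice that keeps the error in [0, 1].

```python
def support_errors(est, true, p):
    """Type I, type II, and a scaled symmetric Hamming error for a support estimate.

    est, true: collections of indices in range(p) (estimated and true supports).
    Assumption: the Hamming error is normalized by |est| + |true|, a hypothetical
    choice that keeps it in [0, 1]; the normalization in [66] may differ.
    """
    est, true = set(est), set(true)
    nulls = set(range(p)) - true                   # zero coordinates of the vector
    # Type I: fraction of zero coordinates wrongly included in the estimate
    type1 = len(est & nulls) / len(nulls) if nulls else 0.0
    # Type II: fraction of true support coordinates missed by the estimate
    type2 = len(true - est) / len(true) if true else 0.0
    denom = len(est) + len(true)
    hamming = len(est ^ true) / denom if denom else 0.0  # symmetric difference
    return type1, type2, hamming
```

Under this normalization, the scaled Hamming error equals one exactly when the estimated and true supports are nonempty and disjoint.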

Now we discuss the support recovery methods we compare here.

Naïve SCCA. We estimate α and β using the SCCA method of [24], and set and , where and are the corresponding SCCA estimators. To implement the SCCA method of [24], we use the R code referenced therein with its default tuning parameters.

Cleaned SCCA. This method implements RecoverSupp with the above mentioned SCCA estimators of α and β as the preliminary estimators.

CT. This is the method outlined in Algorithm 2, which is RecoverSupp coupled with the CT estimators of α and β.
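To make the coordinate thresholding idea concrete, here is a generic Python sketch in the spirit of [1], restricted to case A (Σx = Ip, Σy = Iq), where the population cross-covariance reduces to ρ α βᵀ and its leading singular vectors are ±α and ±β. It is not Algorithm 2: the function name `ct_rank_one`, the hard-thresholding rule, and the threshold argument `thr` are illustrative assumptions, and no RecoverSupp cleaning step is included.

```python
import numpy as np


def ct_rank_one(X, Y, thr):
    """Coordinate-thresholding sketch for a rank-one CCA model with identity
    marginal covariances (an assumption; general Sigma_x, Sigma_y would
    require whitening first).

    X: (n, p) data matrix, Y: (n, q) data matrix, thr: hard threshold level.
    Returns unit-norm estimates of the leading left and right singular vectors
    of the thresholded sample cross-covariance (each defined up to sign).
    """
    n = X.shape[0]
    S = X.T @ Y / n                             # sample cross-covariance X^T Y / n
    S_thr = np.where(np.abs(S) > thr, S, 0.0)   # hard threshold: kill small entries
    U, _, Vt = np.linalg.svd(S_thr)             # singular values in decreasing order
    return U[:, 0], Vt[0, :]
```

Hard thresholding is used here only for simplicity; a soft-thresholding variant would change just the `np.where` line.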

Our CT method requires knowledge of the population covariance matrices Σx and Σy. Therefore, to keep the comparison fair, we also implement RecoverSupp with the population covariance matrices in the cleaned SCCA method. Because they rely on RecoverSupp, both cleaned SCCA and CT depend on the threshold cut, whose tuning appears to be non-trivial. We set , where C is the thresholding constant. Our simulations show that a large C results in high type II error, whereas insufficient thresholding inflates the type I error. Taking the Hamming loss into account, we observe that C ≈ 1 leads to better overall performance in case A. On the other hand, case B requires a smaller thresholding parameter. In particular, we set C = 1 in case A, and C = 0.05 and 0.2 for the support recovery of α and β, respectively, in case B. The CT algorithm requires an additional threshold parameter, namely the parameter Thr in Algorithm 2, which corresponds to the co-ordinate thresholding step. We set Thr in accordance with Theorem 4 and Remark 8, with K being and C1 being . We set as in Remark 8, that is

The errors incurred by our methods in case A are displayed in Figure 2 (for α) and Figure 3 (for β). Figures 4 and 5, on the other hand, display the errors in the recovery of α and β, respectively, in case B.

Fig. 2:

Support recovery for α when Σx = Ip and Σy = Iq (case A). Here, threshold refers to cut in Theorem 1.

Fig. 3:

Support recovery for β when Σx = Ip and Σy = Iq (case A). Here, threshold refers to cut in Theorem 1.

Fig. 4:

Support recovery for α when Σx and Σy are the sparse covariance matrices of case B. Here, threshold refers to cut in Theorem 1.

Fig. 5:

Support recovery for β when Σx and Σy are the sparse covariance matrices of case B. Here, threshold refers to cut in Theorem 1.

We now discuss the main observations from the above plots. When the sparsity parameter s is considerably low (less than ten in the current settings), the naïve SCCA method is sufficient, in the sense that the specialized methods do not perform any better. Moreover, the naïve method is the most conservative of the three methods. As a consequence, its type I error is always small, although its type II error grows faster than that of the other methods. The specialized methods improve the type II error at the cost of a higher type I error. At higher sparsity levels, however, the specialized methods can outperform the naïve method in terms of the Hamming error. This is most evident when the setting is also complex, i.e., when the signal is small or the underlying covariance matrices are not the identity. In particular, Figures 2 and 4 show that when the signal strength is moderate and the sparsity is high, cleaned SCCA has the lowest Hamming error. In the small signal case, however, CT exhibits the best Hamming error as increases; cf. Figures 3 and 5.

The type I error of CT can be slightly improved if the sparsity information is incorporated in the thresholding step. We simply replace cut by the maximum of cut and the s-th largest element of , where the latter is as in Algorithm RecoverSupp. See, e.g., Figure 6, which shows that this modification reduces the Hamming error of the CT algorithm in case A. Our empirical analysis suggests that the CT algorithm has room for improvement from an implementation perspective. In particular, it may be desirable to develop a more efficient, systematic procedure for choosing cut. However, such a detailed numerical analysis is beyond the scope of the current paper and will require further modifications of the initial methods for estimating α and β, for reasons of both scalability and finite sample performance. We leave these explorations as important future directions.
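The sparsity-aware modification replaces cut by max(cut, s-th largest value), which caps the number of selected coordinates at s (barring ties). A minimal Python sketch follows; `scores` is a hypothetical stand-in for the per-coordinate quantity thresholded in RecoverSupp (its definition is not reproduced here), the name `threshold_support` is illustrative, and keeping coordinates whose score is at least the effective cut is an assumed comparison convention.

```python
import numpy as np


def threshold_support(scores, cut, s=None):
    """Return indices whose score is at least the effective cut.

    scores: hypothetical nonnegative per-coordinate statistics.
    cut:    base threshold.
    s:      optional sparsity level; if given, the effective cut becomes
            max(cut, s-th largest score), so at most s indices survive
            unless there are ties.
    """
    scores = np.asarray(scores, dtype=float)
    eff_cut = cut
    if s is not None:
        if not 1 <= s <= scores.size:
            raise ValueError("s must lie between 1 and len(scores)")
        s_th_largest = np.sort(scores)[::-1][s - 1]
        eff_cut = max(cut, s_th_largest)
    return np.flatnonzero(scores >= eff_cut)
```

With scores [0.9, 0.1, 0.5, 0.05, 0.7] and cut 0.08, the plain rule keeps indices 0, 1, 2, 4, while supplying s = 2 raises the cut to 0.7 and keeps only indices 0 and 4, trading a possible type II error for a lower type I error.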

Fig. 6:

Support recovery by the CT algorithm when the sparsity information is used to improve the type I error. Here, Σx and Σy are Ip and Iq, respectively, and threshold refers to cut in Theorem 1. To see the decrease in type I error, compare these errors with those in Figures 2 and 3.

It is natural to ask what effect cleaning via RecoverSupp has on SCCA. As mentioned earlier, during our simulations we observed that a cleaning step generally improves the type II error of naïve SCCA but also increases its type I error. In terms of the combined measure, i.e., the Hamming error, cleaning does have an edge at higher sparsity levels in case B; cf. Figures 4 and 5. The scenario is different in case A, however. Although Figures 2 and 3 indicate that almost no cleaning occurs at the chosen threshold level of one, we observed that cleaning does happen at lower threshold levels. However, this does not improve the overall Hamming error of naïve SCCA. The effect of cleaning may be different for other SCCA methods.

To summarize, when the sparsity is low, support recovery using naïve SCCA is probably as good as the specialized methods. At higher sparsity levels, however, specialized support recovery methods may be preferable. A precise analysis of the seemingly naïve SCCA is therefore an interesting direction for future work.

V. Discussion

In this paper, we have discussed the rate optimal behavior of the information theoretic and computational limits of joint support recovery for the sparse canonical correlation analysis problem. Inspired by recent results in the estimation theory of sparse CCA, a flurry of results in sparse PCA, and related developments based on the low-degree polynomial conjecture, we are able to paint a complete picture of the landscape of support recovery for SCCA. Regarding future directions, it is worth noting that our results are so far not designed to recover D(vi) for each individual i ∈ [r] separately (hence the term joint recovery). Although this is also the case for most of the state of the art in the sparse PCA problem (results often exist only for the combined support [1] or for the single spike model where r = 1 [29]), we believe that this is an interesting question for deeper exploration in the future. Moreover, moving beyond asymptotically exact support recovery to more nuanced metrics (e.g., Hamming loss) will also require new ideas worth studying. Finally, it remains an interesting open question whether polynomial time support recovery is possible in the , regime using a CT type idea, but with unknown yet structured high dimensional nuisance parameters Σx, Σy.

Acknowledgments

This work was supported by National Institutes of Health grant P42ES030990.

Biographies

Nilanjana Laha received a Bachelor of Statistics in 2012 and a Master of Statistics in 2014 from the Indian Statistical Institute, Kolkata. She then received a Ph.D. in statistics in 2019 from the University of Washington, Seattle. She was a postdoctoral research fellow in the Department of Biostatistics at Harvard University from 2019 to 2022. She is currently an assistant professor in Statistics at Texas A&M University. Her research interests include dynamic treatment regimes, high dimensional association, and shape constrained inference.

Rajarshi Mukherjee received a Bachelor of Statistics in 2007 and a Master of Statistics in 2009 from the Indian Statistical Institute, Kolkata. He received his Ph.D. degree in Biostatistics from Harvard University in 2014. He was a Stein fellow in the Department of Statistics at Stanford University from 2014 to 2017. He was an assistant professor in the Division of Biostatistics at the University of California, Berkeley, from 2017 to 2018. Since 2018, he has been an assistant professor in the Department of Biostatistics at Harvard University. His research interests primarily lie in structured signal detection problems in high dimensional and network models, and functional estimation and adaptation theory in nonparametric statistics.

Appendix A. Full version of RecoverSupp

Algorithm 3 RecoverSupp: simultaneous support recovery of U and V

In Algorithm 3, we used different cut-offs for estimating and , which are cutx and cuty, respectively. In practice, one can choose the same threshold cut for both of them.

Appendix B. Proof preliminaries

The Appendix collects the proofs of all our theorems and lemmas. This section introduces some new notation and collects some facts that are used repeatedly in our proofs.

A. New Notations

Since the columns of , i.e., [], are orthogonal, we can extend them to an orthogonal basis of , which can also be expressed in the form [] since Σx is non-singular. Let us denote the matrix [u1,…,up] by , whose first r columns form the matrix U. Along the same lines, we can define , whose first r columns constitute the matrix V.

Suppose is a matrix. Recall the projection operator defined in (4). For any S ⊂ [p], we let denote the matrix . Similarly, for , we let AF be the matrix . For , we define the norms and . We will use the notation ∣A∣∞ to denote the quantity .

The Kullback-Leibler (KL) divergence between two probability distributions P1 and P2 will be denoted by KL(P1 ∣ P2). For , we let denote the greatest integer less than or equal to .

B. Facts on

First, note that since by (2) for all i ∈ [q], we have . Similarly, we can also show that . Second, we note that , and

(28)

because the largest element of Λ is at most one. Since the Xi’s and Yi’s are subgaussian, for any random vector v independent of X and Y, it follows from [45, Lemma 7] that

(29)

with probability 1 – o(1) uniformly over . Also, we can show that satisfies

where the Cauchy-Schwarz inequality was used in the first step.

C. General Technical Facts

Fact 1. For two matrices and , we have

Fact 2 (Lemma 11 of [1]). Let be a matrix with i.i.d. standard normal entries, i.e., Zi,j ~ N(0, 1). Then for every t > 0,

As a consequence, there exists an absolute constant C > 0 such that

Recall that for , in Appendix B-A, we defined ∥A∥1,∞ and ∥A∥∞1 to be the matrix norms and , respectively.
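The inequality in Fact 2 can be probed numerically. Because its display was lost in extraction, the check below assumes it takes the standard Davidson-Szarek form P(‖Z‖op ≥ √n + √p + t) ≤ exp(−t²/2) for an n × p standard Gaussian matrix Z; the dimensions n, p and the helpers `op_norm_exceedance` and `assumed_bound` are illustrative. The Python sketch estimates the exceedance probability by Monte Carlo for comparison with that assumed bound.

```python
import numpy as np


def op_norm_exceedance(n, p, t, reps=200, seed=0):
    """Monte Carlo estimate of P(||Z||_op >= sqrt(n) + sqrt(p) + t) for an
    n x p matrix Z with i.i.d. N(0, 1) entries."""
    rng = np.random.default_rng(seed)
    level = np.sqrt(n) + np.sqrt(p) + t
    hits = 0
    for _ in range(reps):
        Z = rng.standard_normal((n, p))
        # ord=2 returns the largest singular value, i.e., the operator norm
        if np.linalg.norm(Z, ord=2) >= level:
            hits += 1
    return hits / reps


def assumed_bound(t):
    """Right-hand side exp(-t^2 / 2) of the assumed Davidson-Szarek form."""
    return float(np.exp(-t ** 2 / 2))
```

For moderate dimensions the empirical frequencies typically sit well below the bound, since E‖Z‖op ≤ √n + √p by Gordon's inequality and the norm concentrates tightly around its mean.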

The following fact is a corollary of (29).

Fact 3. Suppose X and Y are jointly subgaussian. Then .

Fact 4 (Chi-square tail bound). Suppose . Then for any y > 5, we have

Proof of Fact 4. Since the Zl’s are independent standard Gaussian random variables, by tail bounds for chi-squared random variables (the form below is from Lemma 12 of [1]),

Plugging in x = yk, we obtain that

which implies for y > 1,

which can be rewritten as

as long as y > 5. □
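The chi-squared tail bound invoked in this proof can be sanity-checked numerically. Assuming the cited form (Lemma 12 of [1]) is the Laurent-Massart upper-tail inequality P(χ²_k ≥ k + 2√(kx) + 2x) ≤ e^{−x} (its display is not reproduced above), the Python sketch below evaluates the exact chi-squared survival function for even k through the identity P(χ²_{2m} > t) = P(Poisson(t/2) ≤ m − 1) and compares it with e^{−x}. The helper names are hypothetical.

```python
import math


def chi2_sf_even(t, k):
    """Exact P(chi^2_k > t) for even k, using
    P(chi^2_{2m} > t) = sum_{j=0}^{m-1} exp(-t/2) (t/2)^j / j!."""
    if k % 2:
        raise ValueError("k must be even")
    lam = t / 2.0
    term, total = math.exp(-lam), 0.0
    for j in range(k // 2):
        total += term            # add the Poisson(lam) mass at j
        term *= lam / (j + 1)    # move to the mass at j + 1
    return total


def laurent_massart_level(k, x):
    """Level k + 2 sqrt(k x) + 2x, exceeded with probability at most exp(-x)
    under the assumed Laurent-Massart form."""
    return k + 2.0 * math.sqrt(k * x) + 2.0 * x
```

For instance, with k = 2 the identity reduces to P(χ²_2 > t) = e^{−t/2}, which gives a quick check of the helper.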

Appendix C. Proof of Theorem 1

For the sake of simplicity, we denote , , and by , , and , respectively. The reader should keep in mind that and are independent of and because they are constructed from a different sample. Next, using Condition 1, we can show that there exists so that

as n → ∞. Without loss of generality, we assume wi = 1 for all i ∈ [r]; the proof is similar for general wi’s. Thus

(30)

Therefore for all i ∈ [r] with probability tending to one.