Abstract

In intraoperative brain cancer procedures, real-time diagnosis is essential for safe and effective care. The prevailing workflow relies on hematoxylin and eosin (H&E) staining for tissue processing, which is resource-intensive, time-consuming, and laborious. Recently, an approach combining stimulated Raman histology (SRH) with deep convolutional neural networks (CNNs) has opened a new avenue for real-time cancer diagnosis during surgery. Although promising, this approach leaves room for improvement in feature extraction. In this study, we employ a coherent Raman scattering imaging method and a self-supervised deep learning model (VQ-VAE-2) to accelerate SRH image acquisition and improve feature representation, thereby strengthening automated real-time bedside diagnosis. Specifically, we propose the VQSRS network, which integrates vector quantization with a proxy task based on patch annotation to analyze brain tumor subtypes. Trained on images collected with our SRS microscopy system, VQSRS is substantially faster than traditional techniques, which typically take 20–30 min. Comparative studies based on dimensionality reduction and clustering confirm that the diagnostic capacity of VQSRS rivals that of CNNs. By learning a hierarchical structure of recognizable histological features, VQSRS classifies the major pathological tissue categories in brain tumors. In addition, an external semantic segmentation method is applied to identify tumor-infiltrated regions in SRH images. Collectively, these findings indicate that this automated real-time prediction technique could streamline intraoperative cancer diagnosis and assist pathologists.

Supplementary Information

The online version contains supplementary material available at 10.1007/s10278-024-01001-4.

Keywords: Stimulated Raman histology, SRS imaging system, Brain tumor subtype classification, Intraoperative diagnosis, Vector quantization, Generative deep learning

Introduction

Brain tumors are a diverse group of neoplasms originating in human brain tissue, with varying degrees of aggressiveness and lethality [1]. The early detection of brain tumors is crucial for preventing metastasis and ensuring effective subsequent treatment. Although pathological diagnosis has long been considered the “gold standard” for tumor diagnosis, the field still faces several challenges. In some underdeveloped regions and small hospitals, the shortage of pathologists is particularly acute, forcing pathologists to work under heavy workloads for extended periods [2]. Moreover, pathological diagnosis relies heavily on the expertise and experience of individual pathologists, and the subjectivity of their judgments has contributed to growing diagnostic inconsistency. In many cases, the excised tumor must be analyzed during surgery to confirm that the specimen is sufficient for a final diagnosis and to guide the surgical procedure [3]. However, traditional pathological diagnosis of brain tumors is labor-intensive, involving tissue freezing, sectioning, and staining, among other steps [4, 5]. It is therefore essential to develop advanced technologies for rapid, automated intraoperative brain tumor biopsy and pathology to improve patient treatment and surgical outcomes.

With rapid advances in optical microscopy and deep learning, we have developed a streamlined diagnostic process aimed at addressing these challenges. This work builds on substantial prior efforts to advance stimulated Raman scattering (SRS) technology, including improvements in sensitivity [6–9], speed [10–14], spatial resolution [15–18], specificity [19–22], and device miniaturization [23, 24], as well as biomedical applications such as label-free tissue pathology [25–29]. SRS microscopy is uniquely capable of imaging endogenous biochemical components, particularly at the micrometer scale in single cells. Stimulated Raman histology (SRH) [24, 30], an application of SRS [31–38] to histology, provides a powerful tool: it uses narrow-linewidth picosecond laser pulses to selectively visualize lipid and protein distributions, revealing the biochemical characteristics of tissue. The technique requires no staining and enables real-time imaging. It reveals microscopic features essential for diagnosis, including histological details such as axons and lipid droplets that may not be visible in traditional H&E-stained images [39, 40]. Additionally, SRH avoids artifacts that arise during the preparation of frozen or smear tissue, further improving diagnostic accuracy.

Hollon et al. [40] pioneered a new approach to rapid intraoperative brain tumor diagnosis by combining SRH with deep convolutional neural networks (CNNs). Their method is a three-step tissue diagnostic process: image acquisition, image processing, and CNN-based diagnostic prediction. Encouragingly, the diagnostic accuracy of the CNN on SRH images reached 94.6%, comparable to the 93.9% achieved with traditional histological images. Ji et al. [41] used a U-Net-based deep learning algorithm to convert single-shot femtosecond stimulated Raman scattering (femto-SRS) images into dual-channel picosecond stimulated Raman scattering (pico-SRS) images with chemical resolution, enabling rapid, label-free histological imaging of gastric biopsy tissues during endoscopy. Although these studies made significant strides, both rely on supervised deep learning models. Current research suggests that self-supervised models can achieve performance comparable to that of supervised methods [42–45]. Such models are trained on auxiliary proxy tasks that withhold part of the data and require the model to predict it. This works because task-relevant information tends to be distributed across many dimensions of the data [46]. We use the publicly available OpenSRH [47] dataset to train the model to reconstruct these images, compelling it to recognize their basic features. Once training is complete, the vector representations learned through the proxy task are expected to capture the crucial features of the images and can be used for image comparison and classification, supporting further analysis and applications. This approach opens new possibilities in image analysis and offers a promising path to improving model performance.

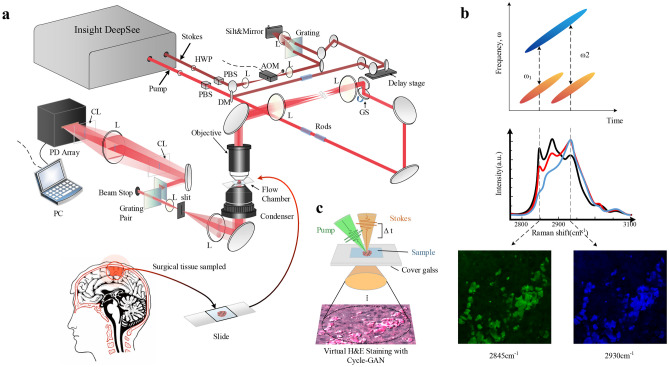

This paper presents the development, validation, and application of VQSRS, a deep learning-based self-supervised clustering and prediction approach (Fig. 1). The key innovation is a proxy task that encourages features extracted from different images of the same disease to be distinguishable from those of other diseases in the dataset. On the hardware side, a new femtosecond laser, the InSight DeepSee, is used to build an SRS microscopy system that mitigates the traditional limitations of femtosecond lasers and offers rapid, label-free, sub-micrometer-resolution imaging of unprocessed biological tissue samples.

Fig. 1.

Overview of VQSRS. Stage 1: Freshly excised specimens are loaded directly into the SRS microscopy system for image acquisition, and Cycle-GAN is applied for SRH virtual staining, which enhances image contrast and visual quality. Stage 2: The distinctive features of pathological tissues are learned; self-supervised strategies and proxy tasks are used to train a classifier. Stage 3: The model optimized in Stage 2 is used to annotate brain tumor subtypes, and semantic segmentation is used to predict heatmaps that visualize the regions of interest within the images.

Materials and Methods

Introduction of Experimental Instruments

One goal of our research is to validate the potential for rapid histological imaging of fresh brain tissue biopsies using a new femtosecond laser, the InSight DeepSee (Spectra-Physics, 1565 Barber Lane, Milpitas, CA 95035, USA). With picosecond stimulated Raman scattering (SRS), as used in SRH, each switch between Raman frequencies takes 5 s, which slows spectral imaging [19]. In contrast, femtosecond SRS offers a high signal-to-noise ratio (SNR) and fast imaging owing to its higher pulse peak power, but its main drawback is the lack of spectral resolution for chemical imaging [31, 48], which limits its direct applicability in histology. To harness the advantages of femtosecond lasers while overcoming this limitation, we used a dual-output femtosecond laser as the excitation source for both the pump and Stokes beams. Dispersive elements (rods) chirp the femtosecond pulses to a duration of approximately 2–4 ps, retaining the strengths of femtosecond excitation while enabling high-quality chemical imaging. The pump laser was tuned to 800 nm and the Stokes laser to 1040 nm to match CH bond vibrations for SRS imaging (Fig. 2a). By adjusting the time delay between the pulses, we acquired SRS images at different Raman frequencies (Fig. 2b); this light source provides access to Raman shifts from 2800 cm−1 to 3130 cm−1. SRH images were acquired at two Raman shifts: ω1 = 2845 cm−1 (CH2), which highlights lipid-rich regions, and ω2 = 2930 cm−1 (CH3), which emphasizes regions rich in DNA and proteins. These raw images carry information about lipids, DNA, and proteins and are used to generate the SRS images.
This method couples the pump and Stokes beams in both time and space and directs them onto the sample (Fig. 2c). Virtual H&E staining is then applied to generate pseudo-colored SRH images that reveal histological features for further analysis.
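As a quick check of the wavelength choice, the Raman shift probed by a pump/Stokes pair is the difference of their wavenumbers, 10^7/λ_pump − 10^7/λ_Stokes with λ in nm. The minimal sketch below uses illustrative function names (not from the original work), considers only the nominal center wavelengths, and ignores the bandwidth of the chirped pulses. For 800 nm and 1040 nm it gives about 2884.6 cm−1, which lies between the two target shifts, and both targets fall inside the reported 2800–3130 cm−1 tuning window.

```python
# Raman shift (cm^-1) probed by a pump/Stokes pair, from vacuum wavelengths in nm.
# 1 cm = 1e7 nm, so a wavelength of L nm corresponds to 1e7 / L cm^-1.


def raman_shift_cm1(pump_nm: float, stokes_nm: float) -> float:
    """Return the pump-Stokes frequency difference in wavenumbers (cm^-1)."""
    return 1e7 / pump_nm - 1e7 / stokes_nm


def in_tuning_range(shift_cm1: float, low: float = 2800.0, high: float = 3130.0) -> bool:
    """Check whether a target Raman shift lies inside the reported tuning window."""
    return low <= shift_cm1 <= high


if __name__ == "__main__":
    center = raman_shift_cm1(800.0, 1040.0)
    print(f"center Raman shift: {center:.1f} cm^-1")
    for target, label in [(2845.0, "CH2 (lipid)"), (2930.0, "CH3 (protein/DNA)")]:
        print(label, target, "reachable:", in_tuning_range(target))
```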

Fig. 2.

Experimental design and workflow. a Optical setup of the SRS microscopy system built around the InSight DeepSee laser. The system uses laser scanning microscopy and collects the transmitted laser light through a condenser lens; the target Raman frequency is selected by adjusting the time delay between the two pulses. L, lens; PD, photodetector; HWP, half-wave plate; PBS, polarizing beam splitter. b Femtosecond pulses are chirped into picosecond pulses, yielding SRS images with spectral resolution. Images are acquired at two Raman shifts, 2845 cm−1 and 2930 cm−1. c The acquired images, which retain sufficient spectral and chemical resolution, are virtually stained using Cycle-GAN

To keep the optical path stable, the pump beam path is fixed. The pump beam (higher photon energy) and the Stokes beam (lower photon energy) are combined using a dichroic mirror (DM) that transmits the shorter wavelength. The reflector and DM are each mounted on a two-axis adjustable mirror mount; adjusting these components steers the Stokes beam into spatial collinearity with the pump beam. The Stokes beam is also sinusoidally modulated at 2.2 MHz by an acousto-optic modulator (AOM). Because the pump loses power only when the Stokes beam is present, this modulation is transferred to the pump as a stimulated Raman loss (SRL) signal at 2.2 MHz, which improves the sensitivity of SRL detection. In the time domain, a delay line formed by two mirrors and a translation stage ensures temporal overlap of the pump and Stokes pulses, and both pulses are shaped by a pulse-shaping system. After temporal and spatial coupling, the SRS signal is generated, collected by a photodetector (PD) array, and converted into image data on a computer. The pixel dwell time can be as short as 7 µs.
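Modulating the Stokes beam means the SRL appears as a small oscillation of the pump intensity at the modulation frequency, which is typically recovered by phase-sensitive (lock-in) detection. The sketch below illustrates that principle on a synthetic, noise-free signal; the digitizer rate, the SRL depth, and the `simulate_pump` model are hypothetical, and the text does not describe how demodulation is implemented in this system.

```python
import math


def simulate_pump(n_samples, sample_rate_hz, mod_freq_hz, dc=1.0, srl_depth=1e-3):
    """Hypothetical noise-free pump intensity at the photodetector.

    The Stokes beam is modulated as (1 + sin) / 2, and the pump loses a fraction
    srl_depth of its power whenever the Stokes beam is fully on (SRL).
    """
    return [
        dc * (1.0 - srl_depth * 0.5 * (1.0 + math.sin(2.0 * math.pi * mod_freq_hz * k / sample_rate_hz)))
        for k in range(n_samples)
    ]


def lockin_amplitude(samples, sample_rate_hz, ref_freq_hz):
    """Amplitude of the component of `samples` oscillating at ref_freq_hz.

    Mixes the signal with in-phase (sin) and quadrature (cos) references and
    averages; the plain mean acts as the low-pass filter, which is exact when the
    record spans an integer number of reference periods.
    """
    x = y = 0.0
    for k, s in enumerate(samples):
        phase = 2.0 * math.pi * ref_freq_hz * k / sample_rate_hz
        x += s * math.sin(phase)
        y += s * math.cos(phase)
    x /= len(samples)
    y /= len(samples)
    return 2.0 * math.hypot(x, y)


if __name__ == "__main__":
    fs = 44e6        # hypothetical digitizer rate: 20 samples per 2.2 MHz period
    f_mod = 2.2e6    # Stokes modulation frequency given in the text
    n = 300          # 15 full periods, about 6.8 us (close to the 7 us pixel dwell)
    pump = simulate_pump(n, fs, f_mod)
    # The modulated part of the pump has amplitude dc * srl_depth / 2.
    print(f"recovered SRL amplitude: {lockin_amplitude(pump, fs, f_mod):.2e}")
```

At a 7 µs dwell time each pixel spans about 15.4 modulation periods (7 µs × 2.2 MHz), and a hypothetical 512 × 512 frame would take about 1.8 s per Raman channel (262,144 pixels × 7 µs), excluding scanner overhead.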

Coloring of SRH Images

The traditional SRH coloring method uses a virtual H&E color lookup table to represent the lipid and protein components of tissue, extracted from the CH2 image at 2845 cm−1 and the CH3 image at 2930 cm−1, respectively [40]. The lipid channel is mapped to pink through an eosin-like lookup table, and the DNA and protein channel is mapped to deep purple through a hematoxylin-like lookup table. All color mappings are linear. Combining the two independently colored channels produces an SRH image resembling traditional H&E staining.
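A minimal sketch of linear lookup-table coloring follows. It assumes min–max normalization of each channel, hypothetical RGB endpoints for the eosin-like and hematoxylin-like tables, and multiplicative combination of the two tinted channels; the actual table values and the combination rule are not given in the text.

```python
# Hypothetical lookup-table endpoints (RGB in [0, 1]); the actual tables are not given.
EOSIN_PINK = (0.93, 0.45, 0.70)
HEMATOXYLIN_PURPLE = (0.35, 0.15, 0.55)


def normalize(channel):
    """Min-max rescale a 2-D list of intensities to [0, 1]."""
    flat = [v for row in channel for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid division by zero for a constant image
    return [[(v - lo) / span for v in row] for row in channel]


def linear_tint(value, color):
    """Linear lookup table: 0 -> white, 1 -> `color`, straight line in between."""
    return tuple(1.0 - value * (1.0 - c) for c in color)


def virtual_he(ch2_lipid, ch3_protein):
    """Color CH2 (lipid) eosin-like and CH3 (protein/DNA) hematoxylin-like, then
    multiply the two tinted images, like two absorbing stains on a bright field."""
    lipid, protein = normalize(ch2_lipid), normalize(ch3_protein)
    image = []
    for row_lip, row_prot in zip(lipid, protein):
        image.append([
            tuple(a * b for a, b in zip(linear_tint(lip, EOSIN_PINK),
                                        linear_tint(prot, HEMATOXYLIN_PURPLE)))
            for lip, prot in zip(row_lip, row_prot)
        ])
    return image


if __name__ == "__main__":
    rgb = virtual_he([[0, 10], [5, 10]], [[0, 0], [10, 10]])
    for row in rgb:
        print([tuple(round(v, 2) for v in px) for px in row])
```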

To improve virtual H&E staining of SRH mosaics, we employ a cycle-consistent generative adversarial network (Cycle-GAN) [49, 50]. This approach offers several advantages over lookup-table staining. First, Cycle-GAN learns color mappings from a large number of real H&E-stained images, capturing the diversity of real tissue samples. Second, it is adaptive, adjusting the staining mapping to the features of each input image and thus performing better across tissue types and conditions (Fig. 3a). Third, because Cycle-GAN is trained without supervision, no paired data need to be created manually, which increases flexibility. The cycle-consistency loss requires that an image translated into the other domain and back again match the original. As optimization progresses, the generator produces increasingly realistic H&E-stained images. This approach provides valuable visual information for research and diagnosis without the cost and time of physical staining, substantially improving efficiency and feasibility.
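The cycle-consistency term can be sketched as follows, with G translating SRH to H&E and F translating back. The toy linear "generators" stand in for the real networks and are purely illustrative; in the full Cycle-GAN objective this term is added to the adversarial losses of both generators.

```python
def l1(a, b):
    """Mean absolute error between two equal-length lists of pixel values."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(x_srh, y_he, G, F):
    """Cycle-consistency term: mean |F(G(x)) - x| + mean |G(F(y)) - y|.

    G maps SRH -> H&E and F maps H&E -> SRH; here both are plain callables on
    flat lists of pixel values.
    """
    forward = l1(F(G(x_srh)), x_srh)   # SRH -> H&E -> SRH
    backward = l1(G(F(y_he)), y_he)    # H&E -> SRH -> H&E
    return forward + backward


if __name__ == "__main__":
    G = lambda img: [2.0 * v + 1.0 for v in img]        # toy SRH -> H&E mapping
    F_exact = lambda img: [(v - 1.0) / 2.0 for v in img]  # exact inverse of G
    F_off = lambda img: [v / 2.0 for v in img]           # imperfect inverse
    x, y = [0.0, 0.25, 0.5], [1.0, 2.0]
    print(cycle_consistency_loss(x, y, G, F_exact), cycle_consistency_loss(x, y, G, F_off))
```

A perfectly inverse pair gives zero loss, while the imperfect F is penalized in both directions.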

Fig. 3.

Comparison of SRH image coloring with traditional H&E staining for multiple brain tumor subtypes. a Virtually colorized SRH images for brain tumor diagnosis generated with Cycle-GAN, shown with example eosin-like images highlighting lipids and hematoxylin-like images highlighting DNA and proteins. b Side-by-side comparison of the conventional H&E histopathology workflow and the VQSRS intraoperative workflow. Scale bar, 50 μm

In this study, SRH image coloring was performed on seven brain tumor subtypes covering the most common diagnostic types (Fig. 3a). Unlike traditional H&E staining, SRH requires no tissue staining, preserving the original structure and biological information of the sample. Imaging is also faster: high-quality images of tissue samples can be acquired within seconds, making the method suitable for rapid intraoperative analysis and real-time decision support (Fig. 3b). In the traditional pathology workflow, the diagnostic accuracy of certified neuropathologists is around 91% [47]. Although the accuracy of our method (88.2%) is slightly lower, its speed and immediacy represent an important advance for clinical practice in brain tumor surgery.

Generating Image Representations Through Self-Supervised Feature Learning

We adopted VQ-VAE-2, the second version of the vector quantized variational autoencoder, to reconstruct SRS images acquired with the InSight DeepSee system while preserving spatial chemical information [51, 52]. VQ-VAE is a powerful self-supervised feature learning model that encodes images into quantized latent vectors, which are then decoded to reconstruct the input image [53]. This discrete encoding has strong representational capacity, and evidence suggests that it can learn effective representations of pathological tissue images. We therefore chose VQ-VAE-2 as our starting point for studying the latent representation of SRS images. Both VQ-VAE and VQ-VAE-2 use vector quantization (VQ) to obtain discrete latent representations: for each vector output by the encoder, the model finds the nearest embedding in a learned codebook (by Euclidean distance), and that embedding's index represents the vector. The encoder output is thus converted into discrete codes rather than continuous values. The codebook embeddings are themselves updated throughout training so that they remain well suited to the encoding–decoding task.
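The nearest-neighbor lookup at the heart of vector quantization can be written in a few lines. This sketch covers only the forward assignment step; codebook updates and the straight-through gradient used during training are omitted, and the toy codebook is made up for illustration.

```python
import math


def quantize(vectors, codebook):
    """Replace each encoder output vector with its nearest codebook entry.

    Returns (indices, quantized_vectors). Distance is Euclidean; ties go to the
    lowest index because min() keeps the first minimum it sees.
    """
    indices, quantized = [], []
    for v in vectors:
        best_k = min(range(len(codebook)), key=lambda k: math.dist(v, codebook[k]))
        indices.append(best_k)              # the discrete code stored for this vector
        quantized.append(list(codebook[best_k]))
    return indices, quantized


if __name__ == "__main__":
    codebook = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]  # toy 3-entry, 2-D codebook
    encoder_out = [[0.2, -0.1], [0.9, 1.3], [4.0, 6.0]]
    print(quantize(encoder_out, codebook))
```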

Although VQSRS can learn representations of the entire dataset and reconstruct input images from the generated latent variables, training and feature extraction with the generative model alone do not yield features useful for brain tumor subtype classification. To use the generative model as a feature extractor while ensuring that the trained parameters suit subtype classification, we added proxy tasks to the model to improve performance and generalization (Fig. 4). Specifically, two patch-based prediction tasks were introduced after the vector quantization stage, each predicting the category of the input SRS image. Two tasks are used because VQ-VAE-2 has a two-level architecture that outputs both global and local representations. Local representations typically encode fine-grained variations and local structures in the input, whereas global representations capture overall structure, semantic information, and high-level abstract features, providing consistent global features. The first task is attached after the first quantizer to extract a low-dimensional representation of the image, and the second is placed after the deepest quantizer to ensure consistency between the global representation and patch-level features. The model's objective is therefore not perfect image reconstruction but tumor subtype-specific image representations, obtained by constraining the model with patch annotations. This design trains the generative model to be a better feature extractor for tumor subtype classification. The quantizer outputs comprise a global representation, a 320 × 10 × 10 feature tensor, and a local representation of size 64 × 75 × 75 that captures contextual information in the image; together they provide the informative features used for subsequent tumor subtype classification.
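One way to write the combined training objective described above is sketched below. It assumes each proxy head applies global average pooling followed by a linear layer and a softmax cross-entropy against the patch label. The function names, the linear heads, and the loss weights `w_local` and `w_global` are hypothetical; the text does not state how the reconstruction, quantization, and proxy-task terms are weighted.

```python
import math


def global_average_pool(feature_map):
    """Average a C x H x W nested list over H and W, giving a length-C vector
    (e.g. 320 x 10 x 10 -> 320 for the global code)."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map]


def linear(vec, weights, bias):
    """Dense layer: `weights` holds one row of len(vec) per output class."""
    return [sum(w * x for w, x in zip(row, vec)) + b for row, b in zip(weights, bias)]


def cross_entropy(logits, label):
    """Softmax cross-entropy for one sample, using log-sum-exp for stability."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def total_loss(recon_loss, vq_loss, local_logits, global_logits, label,
               w_local=1.0, w_global=1.0):
    """Reconstruction + VQ terms plus one patch-label term per quantizer level."""
    return (recon_loss + vq_loss
            + w_local * cross_entropy(local_logits, label)
            + w_global * cross_entropy(global_logits, label))


if __name__ == "__main__":
    pooled = global_average_pool([[[1, 3], [5, 7]], [[0, 0], [0, 4]]])  # toy 2 x 2 x 2 map
    logits = linear(pooled, [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
    print(pooled, logits, total_loss(0.1, 0.2, logits, logits, label=0))
```

With uninformative (all-equal) logits over seven subtypes, each proxy term equals ln 7 ≈ 1.95, a useful sanity check at the start of training.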

Fig. 4.

The overall structure of the VQSRS self-supervised feature learning model. Preprocessing: The two images acquired at different Raman shifts are subtracted to generate a third channel that enhances nuclear and overall image contrast, and the three channels are merged into an RGB image that serves as the VQSRS input. Encoding: The input is fed into a two-level encoder that produces global and local feature representations. Vector quantization (VQ): Each latent vector is replaced by its nearest codebook vector and indexed by that codebook entry. Decoding: The decoder reconstructs images from the VQ outputs, capturing essential features. The resulting local and global latent representations are used for feature analysis and clustering analysis
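The preprocessing step can be sketched as follows. The subtraction order (CH3 − CH2, a common choice for nuclear contrast in SRH), clipping of negative differences to zero, and the channel order (CH2, CH3, CH3 − CH2) are all assumptions, since the caption specifies none of them.

```python
def three_channel_input(ch2, ch3):
    """Stack CH2 (2845 cm^-1), CH3 (2930 cm^-1) and a CH3 - CH2 difference channel
    into an H x W list of (r, g, b) tuples.

    Assumptions: subtraction order CH3 - CH2, negative differences clipped to 0,
    and channel order (CH2, CH3, CH3 - CH2).
    """
    if len(ch2) != len(ch3) or any(len(a) != len(b) for a, b in zip(ch2, ch3)):
        raise ValueError("CH2 and CH3 images must have the same shape")
    return [
        [(a, b, max(b - a, 0.0)) for a, b in zip(row2, row3)]
        for row2, row3 in zip(ch2, ch3)
    ]


if __name__ == "__main__":
    print(three_channel_input([[1.0, 2.0]], [[3.0, 1.0]]))
```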

Experimental Setup

Tuning hyperparameters is crucial for good performance when training deep neural networks. The hyperparameter configuration is given in Table 1, and the data are split into training and validation sets in an 8:2 ratio (Fig. 5a and b). All data in the results figures come from the validation set, so the reported performance reflects unseen data. We trained with the AdamW optimizer and a cosine learning-rate schedule, which helps the model converge. To stabilize early training, we used a 3-epoch learning-rate warm-up with a maximum learning rate of 0.002, which lets the model adapt gradually to the features of the data. Each batch contains 16 images. Figure 5e and f show the top-1 accuracy and cross-entropy loss for the training and validation sets; monitoring these metrics allowed us to adjust hyperparameters and training strategies in a timely manner. All experiments were run on three NVIDIA RTX 3080 Ti GPUs.

Table 1.

Hyperparameter settings

| Hyperparameter | Value |
|---|---|
| Mini-batch size | 16 |
| Warm-up epochs | 3 |
| Optimizer | AdamW |
| Max learning rate | 0.002 |
| Weight decay | 0.0001 |
| Learning-rate schedule | Cosine |
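The learning-rate schedule in Table 1 can be sketched as a linear warm-up over the first 3 epochs followed by cosine decay. The linear warm-up shape, the decay floor `min_lr`, and `total_epochs` are assumptions, since neither the warm-up shape nor the number of training epochs is reported.

```python
import math


def learning_rate(epoch, total_epochs, max_lr=0.002, warmup_epochs=3, min_lr=0.0):
    """Linear warm-up to max_lr over warmup_epochs, then cosine decay to min_lr.

    `epoch` may be fractional (e.g. epoch + step / steps_per_epoch) for
    per-iteration updates.
    """
    if epoch < warmup_epochs:
        return max_lr * epoch / warmup_epochs
    progress = (epoch - warmup_epochs) / max(1, total_epochs - warmup_epochs)
    progress = min(progress, 1.0)  # hold at min_lr after the last epoch
    return min_lr + 0.5 * (max_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    total = 50  # hypothetical number of epochs
    for e in (0, 1.5, 3, 26.5, 50):
        print(e, f"{learning_rate(e, total):.6f}")
```

In PyTorch, the optimizer row of Table 1 would correspond to `torch.optim.AdamW(model.parameters(), lr=0.002, weight_decay=1e-4)`, with a function like this one supplying the per-epoch learning rate.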

Fig. 5.

Datasets and evaluation of VQSRS. a, b Class distribution of the training and validation sets, given as numbers of patches and patients; each patient contributes images of only one tumor type. c Confusion matrix: each row corresponds to instances of an actual class and each column to instances predicted as a given class. A total of 52,447 validation images were assessed; the diagonal entries give the number of correctly predicted images per class. d ROC curves of one-vs-rest binary classifiers, one per brain tumor tissue class. The area under the curve (AUC) indicates the model's ability to distinguish between categories; the pituitary classifier has the highest AUC, 0.9994. e, f Overfitting assessment of VQSRS, tracking accuracy (e) and loss (f) during training
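For reference, a one-vs-rest AUC like those in Fig. 5d can be computed from each class's predicted probability with the rank (Mann–Whitney) formulation, which handles tied scores by average ranks and runs in O(n log n). This is a generic sketch, not the evaluation code used for the figure.

```python
def auc_one_vs_rest(scores, labels, positive_class):
    """ROC AUC of `scores` for `positive_class` versus all other classes.

    Uses AUC = (sum of positive ranks - P(P+1)/2) / (P * N), with 1-based ranks
    and tied scores sharing their average rank.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        avg_rank = (i + j) / 2.0 + 1.0  # average 1-based rank of this tie group
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    n_pos = sum(1 for y in labels if y == positive_class)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one positive and one negative sample")
    rank_sum = sum(r for r, y in zip(ranks, labels) if y == positive_class)
    return (rank_sum - n_pos * (n_pos + 1) / 2.0) / (n_pos * n_neg)


if __name__ == "__main__":
    print(auc_one_vs_rest([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1], positive_class=1))
```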

We compared the performance of the models using paired-sample t-tests on the ROC curves in Extended Data Fig. 1; detailed statistics are provided in Extended Data Table 1. We observed significant differences among the models at a significance level of 0.05, suggesting that, on this dataset, VQSRS may be better suited to tumor prediction.

Semantic Segmentation and Predicted Heatmaps

To capture the spatial heterogeneity of subtypes and histological grades within each imaged tissue, we generate probabilistic prediction heatmaps. High-resolution semantic segmentation is performed as follows:

1. Image patches are extracted across the entire image using a 300 × 300-pixel sliding window.

2. Each patch is input into VQSRS, which outputs probabilities (from 0 to 1) for the non-cancer and cancer classes that sum to 1. If the non-cancer probability exceeds 40%, the patch is classified as normal; if the cancer probability exceeds 40%, it is classified as cancer; otherwise, it is classified as undiagnosed [41].

3. The window is shifted right by 100 pixels, and steps 1–2 are repeated. When the window reaches the image edge, it shifts down by 100 pixels and continues in the opposite direction.

4. Each 100 × 100-pixel sub-block of the original image is thus classified multiple times (Fig. 6b). For each sub-block, the counts of each label (cancer, non-cancer, undiagnosed) are tallied and divided by the total number of classifications of that sub-block to give the prediction probabilities.

5. The resulting probability map is restored to the original image size, and the probabilities for each class are color-coded and overlaid on the SRH image to generate heatmaps.
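The sliding-window procedure can be sketched as below, with a hypothetical `predict(top, left)` callback standing in for VQSRS. Because the two probabilities sum to 1, any threshold below 0.5 makes the "undiagnosed" label unreachable and allows both conditions to hold at once (for example, 0.45 and 0.55 with a 40% threshold). The sketch therefore tests the larger class first and exposes the threshold as a parameter. It scans in raster order rather than the serpentine order described; the set of windows, and hence the heatmap, is the same. With a 300-pixel window and a 100-pixel stride, an interior 100 × 100 sub-block is covered by nine windows.

```python
LABELS = ("cancer", "normal", "undiagnosed")


def classify_patch(p_cancer, threshold=0.4):
    """Three-way call from a two-class probability (p_normal = 1 - p_cancer).

    The larger class is tested first, so one label is returned even when both
    probabilities exceed the threshold.
    """
    p_normal = 1.0 - p_cancer
    if p_cancer >= p_normal and p_cancer > threshold:
        return "cancer"
    if p_normal > p_cancer and p_normal > threshold:
        return "normal"
    return "undiagnosed"


def sliding_window_heatmap(height, width, predict, window=300, stride=100, cell=100,
                           threshold=0.4):
    """Per-cell label frequencies over all overlapping windows.

    predict(top, left) returns p_cancer for the window with that top-left corner.
    Assumes window and stride are multiples of `cell`.
    """
    rows, cols = height // cell, width // cell
    counts = [[dict.fromkeys(LABELS, 0) for _ in range(cols)] for _ in range(rows)]
    for top in range(0, height - window + 1, stride):
        for left in range(0, width - window + 1, stride):
            label = classify_patch(predict(top, left), threshold)
            for r in range(top // cell, (top + window) // cell):
                for c in range(left // cell, (left + window) // cell):
                    counts[r][c][label] += 1
    heatmap = []
    for row in counts:
        heat_row = []
        for tally in row:
            total = sum(tally.values())
            heat_row.append({k: (v / total if total else 0.0) for k, v in tally.items()})
        heatmap.append(heat_row)
    return heatmap


if __name__ == "__main__":
    # Toy 500 x 500 image: windows touching the left edge look cancerous.
    heat = sliding_window_heatmap(500, 500, lambda top, left: 0.9 if left == 0 else 0.1)
    print([round(cell["cancer"], 2) for cell in heat[2]])
```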

Fig. 6.

Method for image segmentation. A 300 × 300-pixel sliding window with a stride of 100 pixels is used to extract patches from the image. The corresponding probability heatmap pixel grid visualizes the segmentation result: overlapping all patches shows, for each heatmap pixel, how many patches contribute to its probability distribution

One advantage of this method is that most heatmap pixels are covered by multiple patches, whose predictions are combined for each heatmap pixel. This overlap acts like pooling of local prediction probabilities and yields smoother predictive heatmaps.

Results

Reconstructed SRS Chemical Imaging Results

Laser excitation drives molecular vibrations in the sample, and spectral resolution allows their different vibrational signatures to be distinguished. Our preprocessing of SRS images retains the vibrational intensity information obtained at 2845 cm−1 and 2930 cm−1 (Fig. 7b), enabling the model to better learn chemical contrast. After training and reconstruction with VQSRS, we compared the input and output SRS intensities to assess reconstruction accuracy (Fig. 7c). The input and reconstructed SRS intensities are nearly identical, demonstrating that VQSRS successfully restores chemical contrast.

Fig. 7.

SRS image reconstruction and corresponding Raman spectra based on VQSRS. a SRS images, pre-processed and reconstructed using VQSRS. b Intensity profiles of the input images at 2845 cm−1, 2930 cm−1, and the preprocessed input image (dashed line in a). c Intensity profiles of the VQSRS-reconstructed images and the input images (dashed line in a)

To compare these data, we conducted a differential analysis. Because the data at 2845 cm−1 and 2930 cm−1 and the Input and Reconstruction data are not normally distributed, we used Friedman tests and paired-sample Wilcoxon signed-rank tests. The Friedman test across vibration frequencies (Extended Data Table 2) revealed highly significant differences among the Inputs at 2845 cm−1 and 2930 cm−1, with large Cohen's d values (all exceeding 0.8). This matches our expectations, because the Inputs were preprocessed and the peaks at 2845 cm−1 and 2930 cm−1 represent different tissue components at the same locations. The Wilcoxon signed-rank test on the Input and Reconstruction data (Extended Data Table 3) showed relatively small differences, with a small Cohen's d (0.166). This is consistent with the reconstruction results: the differences between Input and Reconstruction are subtle yet statistically significant and may reflect limitations in the model's reconstruction ability.
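The text does not state which variant of Cohen's d was used. For paired measurements such as Input versus Reconstruction intensities, a common choice is d_z, the mean of the paired differences divided by their sample standard deviation, sketched below with the standard library; treat the choice of variant as an assumption.

```python
import statistics


def cohens_d_paired(before, after):
    """Cohen's d_z for paired samples: mean(after - before) / stdev(after - before)."""
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [b - a for a, b in zip(before, after)]
    return statistics.mean(diffs) / statistics.stdev(diffs)


if __name__ == "__main__":
    # Toy intensity profiles (e.g. input vs. reconstruction along a line).
    print(cohens_d_paired([1.0, 2.0, 3.0, 4.0], [1.1, 2.0, 3.2, 4.1]))
```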

Cluster Results

We separately trained four models, SRH_CNN, MOCO, MAE, and VQSRS, on the dataset and compared them comprehensively. The resulting image representations of brain tumor subtypes include localization information. Although these representations have far fewer dimensions than the original images, they still have too many for direct human interpretation or visualization. We therefore used Uniform Manifold Approximation and Projection (UMAP) [54], a dimensionality reduction technique, to map the set of global representations for all data into a two-dimensional coordinate system, producing UMAP projection plots. This facilitates visualization and interpretation of sample distributions, cluster structures, and relationships within the image data while preserving local structure and similarity as much as possible.

In Fig. 8a, each point represents a brain tumor image from the validation set. Several distinct clusters of points are visible, corresponding to the spatial structure of the different subtypes and visually confirming the diversity of the task. The figure illustrates two points. First, VQSRS without a proxy task is purely self-supervised. Although it is effective at clustering, its clusters are less pronounced, and because it produces no class outputs, the adjusted Rand index (ARI) cannot be computed. We therefore introduced a proxy task with patch labels during the vector quantization phase to provide category information during training while retaining the benefits of self-supervised learning, as reflected in the clustering differences between VQSRS and the supervised SRH_CNN shown in the figure. Second, pathological images differ substantially from natural images, for example in their lack of a canonical orientation, low color variation, and diverse viewpoints. These differences may reduce the effectiveness of existing self-supervised learning (SSL) methods such as MOCO and MAE. We employed VQ-VAE-2, which uses a distinctive generative self-supervised approach that learns both global and local features of pathological images through reconstruction. Vector quantization maps continuous latent representations onto a discrete codebook, discretizing the features. During training, this discrete structure lets the model group similar features through nearest-neighbor search, facilitating effective discrimination of the data.

Fig. 8.

Comparison of UMAP plots and example instances for VQSRS. a UMAP projection plots for the three models, displayed with the same color scheme for comparison. b Brain tumor SRS image instances corresponding to each VQSRS cluster. On the right are Sankey diagrams for each tissue type, with predictions on the left side of each diagram and ground truth on the right

Further analysis of the VQSRS UMAP plots shows that tumor categories with similar histological features have similar feature representations, while different tumor types form distinct clusters, underscoring the ability of VQSRS to capture latent similarities among tumor samples. This clustering structure clarifies the similarities and differences among tumor categories and can help identify and interpret hidden associations and structures within the image data.

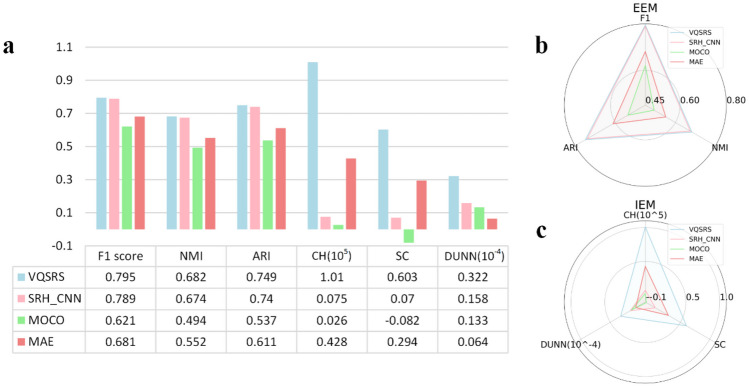

Quantifying Cluster Performance

To quantify clustering performance, we evaluated the four models with several metrics. As shown in Table 2, VQSRS performed best on most indicators, including specificity and average classification accuracy. The clustering evaluation metrics for these models, six in total, are presented in Fig. 9a, and the differential analysis is given in Extended Data Table 4. All models were trained with identical hyperparameters in the same training environment; each model then made predictions on the validation set, and the resulting values were used for UMAP dimensionality reduction.

Table 2.

Evaluation metrics for each model. The best value in each row is shown in bold

| Deep learning model | VQSRS | SRH_CNN | MAE | MOCO |
|---|---|---|---|---|
| Method | Self-supervised + proxy task | Supervised | Self-supervised (non-contrastive learning) | Self-supervised (contrastive learning) |
| Accuracy (%) | **88.22** | 86.48 | 76.23 | 70.29 |
| Sensitivity (%) | 98.84 | **99.54** | 99.37 | 97.89 |
| Specificity (%) | **95.35** | 92.13 | 83.79 | 79.76 |
| Clustering index (ARI) | **0.749** | 0.740 | 0.611 | 0.537 |

Fig. 9.

Metric comparison. a External evaluation metrics measure the agreement between the clustering results and the ground-truth labels, while internal evaluation metrics measure cluster cohesion and separation. b Radar chart of the three external metrics. c Radar chart of the three internal metrics

Clustering evaluation metrics generally fall into two types: internal and external [55]. Internal metrics assess clustering quality from the inherent structure of the data, without requiring ground-truth labels; examples include the silhouette coefficient (SC), the Calinski-Harabasz index (CH), and the Dunn index [56]. External metrics assess clustering quality against known labels; examples include the F1 score, normalized mutual information (NMI), and ARI [57]. A visual comparison of these metrics is shown in Fig. 9b.
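To make the two metric families concrete, the sketch below implements one external metric (ARI, using the pair-counting contingency-table formula) and one internal metric (the Dunn index, defined as the smallest between-cluster distance divided by the largest within-cluster distance) in plain NumPy. It is an illustrative reimplementation of these textbook definitions, not the evaluation code behind Fig. 9; scikit-learn provides tested implementations of ARI, NMI, SC, and CH.

```python
import numpy as np


def _pairs(x):
    """Number of unordered pairs, C(x, 2), elementwise."""
    x = np.asarray(x, dtype=float)
    return x * (x - 1) / 2.0


def adjusted_rand_index(labels_true, labels_pred):
    """External metric: chance-corrected agreement between two partitions."""
    _, t = np.unique(labels_true, return_inverse=True)
    _, p = np.unique(labels_pred, return_inverse=True)
    table = np.zeros((t.max() + 1, p.max() + 1), dtype=np.int64)
    np.add.at(table, (t, p), 1)  # contingency table n_ij
    sum_ij = _pairs(table).sum()
    sum_a = _pairs(table.sum(axis=1)).sum()
    sum_b = _pairs(table.sum(axis=0)).sum()
    expected = sum_a * sum_b / _pairs(table.sum())
    max_index = 0.5 * (sum_a + sum_b)
    return (sum_ij - expected) / (max_index - expected)


def dunn_index(X, labels):
    """Internal metric: min between-cluster distance / max cluster diameter."""
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels)
    # All pairwise Euclidean distances (O(N^2) memory, fine for small N)
    dist = np.sqrt(((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1))
    same = labels[:, None] == labels[None, :]
    return dist[~same].min() / dist[same].max()


ari_same = adjusted_rand_index([0, 0, 1, 1], [1, 1, 0, 0])  # same partition, relabeled
ari_cross = adjusted_rand_index([0, 0, 1, 1], [0, 1, 0, 1])  # worse than chance
dunn = dunn_index([[0, 0], [0, 1], [10, 0], [10, 1]], [0, 0, 1, 1])
print(ari_same, ari_cross, dunn)
```

ARI ignores how clusters are named (a relabeled but identical partition scores 1) and can go negative for worse-than-chance agreement, while a large Dunn index signals compact, well-separated clusters without using any labels.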

Figure 9 compares six clustering evaluation metrics. The external and internal metrics rank the three models consistently. To improve the readability of the radar charts, the axes and data were transformed in Fig. 9d and h: in Fig. 9d the axis coordinates are shifted to make the differences easier to compare, and in Fig. 9h the values are rescaled to percentages. On the external metrics, the VQVAE model with only the proxy task performs worse than SRH_CNN, whereas VQSRS, which introduces dual vector quantization, performs best. On the internal metrics, the VQVAE model already clusters better than SRH_CNN, and VQSRS improves on it further.

Extracting Feature Spectra for Analysis

VQSRS can generate high-resolution brain tumor localization maps from the extracted image representations. To understand what these representations encode, we generated feature spectra for the primary constituents of each tumor by computing histograms of the codebook feature indices in the localized representations shown in Fig. 4. To group related or potentially redundant features, we applied hierarchical biclustering [58] (Fig. 10) to obtain a meaningful ordering of the spectra. First, we obtained the codebook indices of all patches. We then computed an index histogram for each brain tumor and stacked these histograms into an n × b_n matrix, where n is the number of brain tumors and b_n is the number of codebook indices. Pearson correlation coefficients between codebook indices were computed to form a 256 × 256 correlation matrix, and hierarchical clustering of this matrix yielded the feature spectra. The color bar in the upper right corner indicates correlation strength; we selected seven major clusters and separated them accordingly. Each mean feature spectrum corresponds to a specific localization cluster, and its counts indicate how often the corresponding codebook indices occur in the brain tumor representations.
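The histogram, correlation, and clustering steps above can be sketched with NumPy and SciPy as follows. Assumptions: each tumor's patches are already encoded as integer codebook indices; codebook indices are treated as variables (columns) when computing Pearson correlations; and average-linkage clustering on the distance 1 - r is used. The paper does not specify the linkage or distance, so these choices, the function name, and the toy data are illustrative. Because the correlation matrix is symmetric, one leaf ordering serves both its rows and its columns.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, leaves_list, linkage
from scipy.spatial.distance import squareform


def feature_spectra(codes_per_tumor, n_codes=256, n_clusters=7):
    """Order codebook indices by the correlation of their usage across tumors.

    codes_per_tumor: list of 1-D integer arrays holding the codebook indices
                     of all patches that belong to one brain tumor.
    """
    # n x b_n histogram matrix: row = tumor, column = codebook index
    hist = np.stack(
        [np.bincount(codes, minlength=n_codes) for codes in codes_per_tumor]
    ).astype(float)
    # b_n x b_n Pearson correlation between codebook indices (columns)
    with np.errstate(invalid="ignore", divide="ignore"):
        corr = np.corrcoef(hist, rowvar=False)
    corr = np.nan_to_num(corr)  # never-used codes have zero variance -> NaN
    # Convert correlation into a symmetric, non-negative distance matrix
    dist = np.clip(1.0 - corr, 0.0, None)
    dist = (dist + dist.T) / 2.0
    np.fill_diagonal(dist, 0.0)
    tree = linkage(squareform(dist, checks=False), method="average")
    order = leaves_list(tree)  # re-ordering used to draw the heatmap
    groups = fcluster(tree, t=n_clusters, criterion="maxclust")  # major clusters
    return hist, corr, order, groups


rng = np.random.default_rng(0)
codes = [rng.integers(0, 16, size=200) for _ in range(10)]  # 10 toy tumors, 16 codes
hist, corr, order, groups = feature_spectra(codes, n_codes=16, n_clusters=3)
print(hist.shape, corr.shape, groups.max())
```

Averaging the rows of `hist[:, order]` within each group would give one mean spectrum per cluster, analogous to the panels in Fig. 10b.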

Fig. 10.

Feature spectral analysis. a The features in the localized representations are re-ordered through hierarchical clustering to form feature spectra. The color bar provides a visual representation of correlation strengths. b Mean feature spectra are generated for each distinct localization cluster. Occurrences indicate the number of times the quantized vectors were found in the localized image representations. All spectra and heatmaps are vertically aligned

Semantic Segmentation Reveals Intratumor Heterogeneity

We additionally applied a semantic segmentation method that performs pixel-level classification, illustrating how VQSRS-based analysis can highlight diagnostic areas within SRH images (Fig. 11). Using a dense sliding-window algorithm, we computed a probability distribution over the diagnostic categories for every pixel in the SRH image, derived from the patch-level predictions of the overlapping windows that cover that pixel. Mapping the category probabilities onto pixel intensities yields RGB overlays that indicate tumor, normal, and non-diagnostic regions, producing image overlays with pixel-level predictive coverage. Our segmentation method achieved a mean intersection over union (IoU) of 73.2 for the tumor class. This moderate performance may be attributable to the large number of non-diagnostic images in the dataset, which degrades the results of this segmentation-like approach and introduces some bias. Using machine learning to identify and categorize non-diagnostic data could enable more precise segmentation.
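The dense sliding-window aggregation and the IoU metric can be illustrated with a short NumPy sketch. The window size, stride, class ordering (tumor, normal, non-diagnostic), and the `classify_patch` callable are hypothetical stand-ins for the trained VQSRS patch classifier; here each pixel's probabilities are simply the average over all windows that cover it.

```python
import numpy as np


def dense_probability_map(image, classify_patch, patch=4, stride=2, n_classes=3):
    """Average patch-level class probabilities over all overlapping windows.

    classify_patch: hypothetical callable mapping a (patch, patch) array to an
                    (n_classes,) probability vector.
    Returns an (n_classes, H, W) array; pixels never covered stay all-zero.
    """
    height, width = image.shape
    prob_sum = np.zeros((n_classes, height, width))
    counts = np.zeros((height, width))
    for y in range(0, height - patch + 1, stride):
        for x in range(0, width - patch + 1, stride):
            probs = classify_patch(image[y:y + patch, x:x + patch])
            prob_sum[:, y:y + patch, x:x + patch] += probs[:, None, None]
            counts[y:y + patch, x:x + patch] += 1
    return prob_sum / np.maximum(counts, 1)


def iou(pred_mask, true_mask):
    """Intersection over union of two boolean masks."""
    union = np.logical_or(pred_mask, true_mask).sum()
    return np.logical_and(pred_mask, true_mask).sum() / union if union else 1.0


def toy_classifier(p):
    """Stand-in classifier: bright patches are 'tumor' (tumor, normal, non-diag)."""
    return np.array([p.mean(), 1.0 - p.mean(), 0.0])


# Toy 8x8 image whose left half is "tumor" (intensity 1)
image = np.zeros((8, 8))
image[:, :4] = 1.0
prob = dense_probability_map(image, toy_classifier)
tumor_mask = prob.argmax(axis=0) == 0
print(iou(tumor_mask, image > 0))
```

Pixels near the tumor boundary receive intermediate probabilities because their windows straddle both regions; this smoothing is what turns patch predictions into a heatmap.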

Fig. 11.

Semantic segmentation of SRH images using probability heatmaps to identify tumor infiltration and diagnostic regions. a SRH images collected from patients diagnosed with high-grade glioma (HGG). b SRH imaging of unprocessed tissue specimens preserves cytological detail and tissue structure, aiding visualization of brain tumor boundaries. c Inferred class probability heatmaps for tumor, normal, and non-diagnostic regions, shown with the ground-truth segmentation; the tumor probability heatmap is overlaid on the SRH images. d Associated patient-level diagnostic class probabilities

Reconstruction of Image Details

In our experiments, we used VQSRS to reconstruct original images from a validation set covering seven tumor subtype classes. The quality of the reconstructed images improved substantially as training progressed (Fig. 12). In the early epochs, the reconstructions were somewhat blurred and distorted; even the overall contours of the images lacked clarity in some details. By the second epoch, blurring was reduced, subtle textures became clearer, and information such as nuclear contrast and cell density began to be preserved. From the eighth epoch onward, the images were sharper overall, colors were restored more accurately, and more detail was captured in regions of complex texture. Nevertheless, the model still struggled to reproduce details accurately in highly complex texture regions. Overall, clarity, detail preservation, and color fidelity improved steadily with training, but further work is needed to improve the model on more complex images.

Fig. 12.

a Example panel of reconstructed images from the validation set. The first row shows patches of the original input images; the remaining rows show the reconstructions at different epochs. b Training and validation mean squared error (MSE) loss over the training phase, providing a quantitative assessment of the model's reconstruction quality. Training converges to a near-perfect fit, and the validation loss reaches 0.0152 after the 12th iteration. Repeating the training 20 times yields comparable convergence of the MSE loss on both the training and validation sets. Further training does not improve the validation loss, which meets the criterion for early stopping
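The early-stopping criterion mentioned for Fig. 12b can be sketched as a patience rule on the validation MSE. The patience of 3 and the tolerance `min_delta` are assumed values chosen for illustration, and the loss curve is synthetic apart from reusing 0.0152 as its minimum; the study does not report its exact stopping rule.

```python
def mse(a, b):
    """Mean squared error between two equal-length sequences of pixel values."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def early_stopping(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch, best_loss) under a patience rule.

    Training stops once the validation loss has failed to improve on the
    best value by more than min_delta for `patience` consecutive epochs.
    """
    best_loss, best_epoch, waited = float("inf"), -1, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                return epoch, best_epoch, best_loss
    return len(val_losses) - 1, best_epoch, best_loss


# Synthetic validation curve that plateaus after its minimum
val_curve = [0.050, 0.031, 0.020, 0.0152, 0.0153, 0.0155, 0.0160, 0.0158]
print(early_stopping(val_curve))
print(mse([0.0, 0.5, 1.0], [0.0, 0.4, 1.2]))
```

In practice the weights saved at `best_epoch` are restored, so the model kept is the one with the lowest validation MSE rather than the last one trained.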

Discussion and Conclusion

As an emerging microscopy technique based on molecular vibrations, SRS imaging has grown rapidly in biology and medicine. However, the limited switching speed of traditional picosecond laser pulses has made it difficult to improve the sensitivity and imaging speed of SRS microscopy. This study focuses on improving the sensitivity and speed of SRS imaging and develops a high-spectral-resolution SRS imaging technique based on the InSight DeepSee laser. By stretching femtosecond pulses into picosecond pulses with dispersive elements, we increase the SRS imaging speed while maintaining good chemical resolution.
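The pulse stretching described above can be estimated with the standard result for an initially transform-limited Gaussian pulse of FWHM duration tau0 after a dispersive element adds group-delay dispersion (GDD): tau = tau0 * sqrt(1 + (4 ln 2 * GDD / tau0^2)^2). The 100 fs input duration and the GDD values below are illustrative assumptions, not the parameters of the setup used in this study.

```python
import math


def chirped_pulse_fwhm(tau0_fs: float, gdd_fs2: float) -> float:
    """FWHM (fs) of an initially transform-limited Gaussian pulse after adding GDD (fs^2)."""
    return tau0_fs * math.sqrt(1.0 + (4.0 * math.log(2.0) * gdd_fs2 / tau0_fs**2) ** 2)


for gdd in (0.0, 1e4, 1e5):
    print(f"GDD = {gdd:>8.0f} fs^2 -> FWHM = {chirped_pulse_fwhm(100.0, gdd):7.1f} fs")
```

With these assumed numbers, about 10^5 fs^2 of GDD stretches a 100 fs pulse to roughly 2.8 ps, i.e. into the picosecond regime, while the spectral bandwidth is unchanged.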

In addition, this study explores the application of self-supervised deep learning to SRS images. Building on the strong feature extraction capability of VQVAE2, we propose a novel model named VQSRS. By constraining the model with a proxy task, VQSRS obtains image representations applicable to various brain tumor subtypes from both global and local perspectives. At the global scale, experiments show clear improvements in both external and internal evaluation metrics and enable the generation of clear brain tumor prediction heatmaps. At the local scale, we use localized representations containing structural and contextual information to compute feature spectra that characterize the structural features of each image.

Despite these results, there are many directions for further development. We performed tissue diagnosis for only seven categories, which does not cover all tumor and non-tumor entities. In addition, because the dataset currently lacks WHO grading, finer differentiation of tumor types, such as determining whether a neuroglial tumor belongs to the low-grade neuroglial tumor category, has not been possible. The dataset therefore needs further refinement to enable more accurate diagnoses. Moreover, when the predicted probabilities of two different classes are very close, the model output may not provide enough information to distinguish them; in future work, designing a decision threshold for each tumor type may help separate such cases. In summary, VQSRS offers a promising way to improve intraoperative diagnosis in regions with limited access to pathology services by enabling near-real-time acquisition of histological data. However, our study also highlights current limitations: the technology has not yet demonstrated diagnostic accuracy comparable to conventional histology. VQSRS is not a standalone replacement for pathologists but a complementary tool. Integrating it with standard interpretation may expedite diagnosis, but the additional investment required and the need for continued research must be considered carefully. Although the technology holds promise, its full clinical applicability awaits further refinement and improved diagnostic accuracy, achieved through collaboration between technology developers and healthcare professionals.


Author Contribution

Zijun Wang constructed the model, implemented the algorithm, performed the experiments, and partially wrote the manuscript. Kaitai Han and Zhenghui Wang analyzed the results and partially wrote the manuscript. Wu Liu and Mengyuan Huang partially analyzed the results. Chaojing Shi, Xi Liu, Guocheng Sun, and Shitou Liu collected the data. Qianjin Guo supervised the whole study, conceptualized the algorithm, analyzed the results, and partially wrote the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (NSFC; Nos. 52361145714, 21673252), the Beijing Municipal Education Commission, China (grant no. 2019821001), and the Climbing Program Foundation of the Beijing Institute of Petrochemical Technology (Project No. BIPTAAI-2021007).

Data Availability

The data used in this study were obtained from publicly available datasets and are openly accessible to the research community at https://opensrh.mlins.org/.

Declarations

Ethics Approval and Consent to Participate

This study utilized publicly available datasets, which do not involve human participants. As the data are pre-existing and anonymized, no ethics approval or consent to participate was required for this research.

Competing Interests

The authors declare no competing interests.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Ferlay J, Colombet M, Soerjomataram I, Parkin DM, Piñeros M, Znaor A, Bray F. Cancer statistics for the year 2020: An overview. Int J Cancer. 2021;149:778–789. doi: 10.1002/ijc.33588.

- 2. Chaya N. Poor access to health Services: Ways Ethiopia is overcoming it. Res Comment. 2007;2:1–6.

- 3. Hamilton PW, Van Diest PJ, Williams R, Gallagher AG. Do we see what we think we see? The complexities of morphological assessment. J Pathol. 2009;218:285–291. doi: 10.1002/path.2527.

- 4. Novis DA, Zarbo RJ. Interinstitutional comparison of frozen section turnaround time. Arch Pathol Lab Med. 1997;121:559.

- 5. Gal AA, Cagle PT. The 100-year anniversary of the description of the frozen section procedure. JAMA. 2005;294:3135–3137. doi: 10.1001/jama.294.24.3135.

- 6. Wei L, Min W. Electronic Preresonance Stimulated Raman Scattering Microscopy. J Phys Chem Lett. 2018;9:4294–4301. doi: 10.1021/acs.jpclett.8b00204.

- 7. Xiong H, Qian N, Miao Y, Zhao Z, Min W. Stimulated Raman Excited Fluorescence Spectroscopy of Visible Dyes. J Phys Chem Lett. 2019;10:3563–3570. doi: 10.1021/acs.jpclett.9b01289.

- 8. Xiong H, Shi L, Wei L, Shen Y, Long R, Zhao Z, Min W. Stimulated Raman excited fluorescence spectroscopy and imaging. Nat Photonics. 2019;13:412–417. doi: 10.1038/s41566-019-0396-4.

- 9. Xiong H, Min W. Combining the best of two worlds: Stimulated Raman excited fluorescence. J Chem Phys. 2020;153.

- 10. Saar BG, Freudiger CW, Reichman J, Stanley CM, Holtom GR, Xie XS. Video-rate molecular imaging in vivo with stimulated Raman scattering. Science. 2010;330:1368–1370.

- 11. Ozeki Y, Umemura W, Otsuka Y, et al. High-speed molecular spectral imaging of tissue with stimulated Raman scattering. Nat Photonics. 2012;6:845–851. doi: 10.1038/nphoton.2012.263.

- 12. Liao C-S, Slipchenko MN, Wang P, Li J, Lee S-Y, Oglesbee RA, Cheng J-X. Microsecond scale vibrational spectroscopic imaging by multiplex stimulated Raman scattering microscopy. Light Sci Appl. 2015;4:e265. doi: 10.1038/lsa.2015.38.

- 13. Liao C-S, Wang P, Wang P, Li J, Lee HJ, Eakins G, Cheng J-X. Spectrometer-free vibrational imaging by retrieving stimulated Raman signal from highly scattered photons. Sci Adv. 2015;1:e1500738. doi: 10.1126/sciadv.1500738.

- 14. Wakisaka Y, Suzuki Y, Iwata O, et al. Probing the metabolic heterogeneity of live Euglena gracilis with stimulated Raman scattering microscopy. Nat Microbiol. 2016;1:1–4. doi: 10.1038/nmicrobiol.2016.124.

- 15. Kim D, Choi DS, Kwon J, Shim S-H, Rhee H, Cho M. Selective Suppression of Stimulated Raman Scattering with Another Competing Stimulated Raman Scattering. J Phys Chem Lett. 2017;8:6118–6123. doi: 10.1021/acs.jpclett.7b02752.

- 16. Bi Y, Yang C, Chen Y, et al. Near-resonance enhanced label-free stimulated Raman scattering microscopy with spatial resolution near 130 nm. Light Sci Appl. 2018;7:81. doi: 10.1038/s41377-018-0082-1.

- 17. Xiong H, Qian N, Zhao Z, Shi L, Miao Y, Min W. Background-free imaging of chemical bonds by a simple and robust frequency-modulated stimulated Raman scattering microscopy. Opt Express. 2020;28:15663–15677. doi: 10.1364/OE.391016.

- 18. Xiong H, Qian N, Miao Y, Zhao Z, Chen C, Min W. Super-resolution vibrational microscopy by stimulated Raman excited fluorescence. Light Sci Appl. 2021;10:87. doi: 10.1038/s41377-021-00518-5.

- 19. Wei L, Hu F, Shen Y, et al. Live-cell imaging of alkyne-tagged small biomolecules by stimulated Raman scattering. Nat Methods. 2014;11:410–412. doi: 10.1038/nmeth.2878.

- 20. Hu F, Lamprecht MR, Wei L, Morrison B, Min W. Bioorthogonal chemical imaging of metabolic activities in live mammalian hippocampal tissues with stimulated Raman scattering. Sci Rep. 2016;6:39660. doi: 10.1038/srep39660.

- 21. Hu F, Wei L, Zheng C, Shen Y, Min W. Live-cell vibrational imaging of choline metabolites by stimulated Raman scattering coupled with isotope-based metabolic labeling. Analyst. 2014;139:2312–2317. doi: 10.1039/C3AN02281A.

- 22. Alfonso-García A, Pfisterer SG, Riezman H, Ikonen E, Potma EO. D38-cholesterol as a Raman active probe for imaging intracellular cholesterol storage. J Biomed Opt. 2016;21:061003. doi: 10.1117/1.JBO.21.6.061003.

- 23. Liao C-S, Wang P, Huang CY, et al. In Vivo and in Situ Spectroscopic Imaging by a Handheld Stimulated Raman Scattering Microscope. ACS Photonics. 2018;5:947–954. doi: 10.1021/acsphotonics.7b01214.

- 24. Orringer DA, Pandian B, Niknafs YS, et al. Rapid intraoperative histology of unprocessed surgical specimens via fibre-laser-based stimulated Raman scattering microscopy. Nat Biomed Eng. 2017;1:0027. doi: 10.1038/s41551-016-0027.

- 25. Yang Y, Yang Y, Liu Z, et al. Microcalcification-Based Tumor Malignancy Evaluation in Fresh Breast Biopsies with Hyperspectral Stimulated Raman Scattering. Anal Chem. 2021;93:6223–6231. doi: 10.1021/acs.analchem.1c00522.

- 26. Zhang B, Xu H, Chen J, et al. Highly specific and label-free histological identification of microcrystals in fresh human gout tissues with stimulated Raman scattering. Theranostics. 2021;11:3074. doi: 10.7150/thno.53755.

- 27. Wei Z, Liu X, Yan R, Sun G, Yu W, Liu Q, Guo Q. Pixel-level multimodal fusion deep networks for predicting subcellular organelle localization from label-free live-cell imaging. Front Genet. 2022;13:1002327. doi: 10.3389/fgene.2022.1002327.

- 28. Wei Z, Liu W, Yu W, Liu X, Yan R, Liu Q, Guo Q. Multiple Parallel Fusion Network for Predicting Protein Subcellular Localization from Stimulated Raman Scattering (SRS) Microscopy Images in Living Cells. Int J Mol Sci. 2022;23:10827. doi: 10.3390/ijms231810827.

- 29. Sun G, Liu S, Shi C, Liu X, Guo Q. 3DCNAS: A universal method for predicting the location of fluorescent organelles in living cells in three-dimensional space. Exp Cell Res. 2023;433:113807. doi: 10.1016/j.yexcr.2023.113807.

- 30. Freudiger CW, Min W, Saar BG, et al. Label-free biomedical imaging with high sensitivity by stimulated Raman scattering microscopy. Science. 2008;322:1857–1861. doi: 10.1126/science.1165758.

- 31. Zhang J, Zhao J, Lin H, Tan Y, Cheng J-X. High-speed chemical imaging by dense-net learning of femtosecond stimulated Raman scattering. J Phys Chem Lett. 2020;11:8573–8578. doi: 10.1021/acs.jpclett.0c01598.

- 32. Hu F, Shi L, Min W. Biological imaging of chemical bonds by stimulated Raman scattering microscopy. Nat Methods. 2019;16:830–842. doi: 10.1038/s41592-019-0538-0.

- 33. Zhang L, Wu Y, Zheng B, et al. Rapid histology of laryngeal squamous cell carcinoma with deep-learning based stimulated Raman scattering microscopy. Theranostics. 2019;9:2541. doi: 10.7150/thno.32655.

- 34. Tian F, Yang W, Mordes DA, et al. Monitoring peripheral nerve degeneration in ALS by label-free stimulated Raman scattering imaging. Nat Commun. 2016;7:13283. doi: 10.1038/ncomms13283.

- 35. Lu F-K, Basu S, Igras V, et al. Label-free DNA imaging in vivo with stimulated Raman scattering microscopy. Proc Natl Acad Sci. 2015;112:11624–11629. doi: 10.1073/pnas.1515121112.

- 36. Fu D, Zhou J, Zhu WS, et al. Imaging the intracellular distribution of tyrosine kinase inhibitors in living cells with quantitative hyperspectral stimulated Raman scattering. Nat Chem. 2014;6:614–622. doi: 10.1038/nchem.1961.

- 37. Freudiger CW, Min W, Holtom GR, Xu B, Dantus M, Sunney Xie X. Highly specific label-free molecular imaging with spectrally tailored excitation-stimulated Raman scattering (STE-SRS) microscopy. Nat Photonics. 2011;5:103–109. doi: 10.1038/nphoton.2010.294.

- 38. Wang MC, Min W, Freudiger CW, Ruvkun G, Xie XS. RNAi screening for fat regulatory genes with SRS microscopy. Nat Methods. 2011;8:135–138. doi: 10.1038/nmeth.1556.

- 39. Ji M, Orringer DA, Freudiger CW, et al. Rapid, label-free detection of brain tumors with stimulated Raman scattering microscopy. Sci Transl Med. 2013;5:201ra119.

- 40. Hollon TC, Pandian B, Adapa AR, et al. Near real-time intraoperative brain tumor diagnosis using stimulated Raman histology and deep neural networks. Nat Med. 2020;26:52–58. doi: 10.1038/s41591-019-0715-9.

- 41. Liu Z, Su W, Ao J, et al. Instant diagnosis of gastroscopic biopsy via deep-learned single-shot femtosecond stimulated Raman histology. Nat Commun. 2022;13:4050. doi: 10.1038/s41467-022-31339-8.

- 42. Cho K, Kim KD, Nam Y, et al. CheSS: Chest X-Ray Pre-trained Model via Self-supervised Contrastive Learning. J Digit Imaging. 2023:1–9.

- 43. Liang X, Dai J, Zhou X, et al. An Unsupervised Learning-Based Regional Deformable Model for Automated Multi-Organ Contour Propagation. J Digit Imaging. 2023:1–9.

- 44. Goyal P, Caron M, Lefaudeux B, et al. Self-supervised pretraining of visual features in the wild. arXiv preprint arXiv:2103.01988. 2021.

- 45. Holmberg OG, Köhler ND, Martins T, et al. Self-supervised retinal thickness prediction enables deep learning from unlabelled data to boost classification of diabetic retinopathy. Nat Mach Intell. 2020;2:719–726. doi: 10.1038/s42256-020-00247-1.

- 46. Batson J, Royer L. Noise2Self: Blind denoising by self-supervision. In: International Conference on Machine Learning. PMLR; 2019. pp. 524–533.

- 47. Jiang C, Chowdury A, Hou X, et al. OpenSRH: optimizing brain tumor surgery using intraoperative stimulated Raman histology. Adv Neural Inf Process Syst. 2022;35:28502–28516.

- 48. Zhang D, Slipchenko MN, Cheng J-X. Highly sensitive vibrational imaging by femtosecond pulse stimulated Raman loss. J Phys Chem Lett. 2011;2:1248–1253. doi: 10.1021/jz200516n.

- 49. Cao R, Nelson SD, Davis S, et al. Label-free intraoperative histology of bone tissue via deep-learning-assisted ultraviolet photoacoustic microscopy. Nat Biomed Eng. 2023;7:124–134. doi: 10.1038/s41551-022-00940-z.

- 50. Harms J, Lei Y, Wang T, et al. Paired cycle-GAN-based image correction for quantitative cone-beam computed tomography. Med Phys. 2019;46:3998–4009. doi: 10.1002/mp.13656.

- 51. Wu H, Flierl M. Vector quantization-based regularization for autoencoders. In: Proceedings of the AAAI Conference on Artificial Intelligence. 2020. pp. 6380–6387.

- 52. Razavi A, van den Oord A, Vinyals O. Generating diverse high-fidelity images with VQ-VAE-2. Adv Neural Inf Process Syst. 2019;32.

- 53. van den Oord A, Vinyals O. Neural discrete representation learning. Adv Neural Inf Process Syst. 2017;30.

- 54. McInnes L, Healy J, Melville J. UMAP: Uniform manifold approximation and projection for dimension reduction. arXiv preprint arXiv:1802.03426. 2018.

- 55. Hussain SF, Ramazan M. Biclustering of human cancer microarray data using co-similarity based co-clustering. Expert Syst Appl. 2016;55:520–531. doi: 10.1016/j.eswa.2016.02.029.

- 56. Wu Z, Zhang Y, Zhang JZ, Xia K, Xia F. Determining Optimal Coarse-Grained Representation for Biomolecules Using Internal Cluster Validation Indexes. J Comput Chem. 2020;41:14–20. doi: 10.1002/jcc.26070.

- 57. Zelig A, Kariti H, Kaplan N. KMD clustering: Robust general-purpose clustering of biological data. 2023:2020.10.04.325233. doi: 10.1101/2020.10.04.325233.

- 58. Cheng Y, Church GM. Biclustering of expression data. In: ISMB. 2000. pp. 93–103.
