Abstract

Our ability to perceive multiple objects is mysterious. Sensory neurons are broadly tuned, producing potential overlap in the populations of neurons activated by each object in a scene. This overlap raises questions about how distinct information is retained about each item. We present a novel signal switching theory of neural representation, which posits that neural signals may interleave representations of individual items across time. Evidence for this theory comes from new statistical tools that overcome the limitations inherent to standard time-and-trial-pooled assessments of neural signals. Our theory has implications for diverse domains of neuroscience, including attention, figure binding/scene segregation, oscillations, and divisive normalization. The general concept of switching between functions could also lend explanatory power to theories of grounded cognition.

Keywords: multiplexing, neural representation, neural code, multiplicity

Current theories of neural representations fall short for multiple stimuli

Current theories of neural representation (see Glossary) hold that information is encoded by a combination of neural identity and firing rate: which neurons respond and how vigorously they do so form the representation of a stimulus. These theories have been developed to account for responses observed for the presentation of one stimulus at a time. On its face, this central dogma cannot easily handle the case of multiple stimuli.

The critical issue concerns the breadth (and nature) of sensory tuning (Figure 1). Consider the domain of space. In the visual system, acuity is ultimately limited by the spacing of the photoreceptors – where a photon might arise from within a given photoreceptor’s receptive field is essentially invisible to us; it is only the fact that a given photoreceptor has been activated that provides information to subsequent stages of processing. Photoreceptor spacing and receptive field size lead to an acuity limit of roughly half an arc-minute. (Measurements of acuity can yield lower values in special cases, but photoreceptor spacing remains the limiting factor; see [1] for full discussion.) However, as signals progress along the visual pathway, receptive fields increase in size, and the initially tight correspondence between receptive field size and acuity falls apart. Even in primary visual cortex, a relatively early point along the visual pathway, the smallest receptive fields are approximately 10-fold larger than the spatial limits of visual perception [2, 3], with much larger fields appearing in later stages. Current accounts for how this problem is solved concern the population of neurons: the loss of information about where the stimulus is within the receptive field in a single neuron is thought to be compensated for by overlap in the population of activated neurons, such that the precise location of a stimulus can be inferred from the peak of the population activity. Such a scheme can work well when there is only one stimulus, but how the locations of multiple stimuli might be reconstructed from such a population scheme is not obvious. By analogy, images taken with a low-resolution camera can indicate the location of a single dot against a plain background fairly well, but fail to preserve detail when the scene is more complex (Figure 1a).
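To make the population argument concrete, the sketch below reads out stimulus location from the peaks of a simulated population response. It is a toy illustration, not a model from the studies cited. The grid of preferred positions, the Gaussian tuning width (`sigma_deg`), and the assumption that responses to two stimuli simply add are all hypothetical choices. With one stimulus, the peak sits at the true location. With two stimuli closer together than about twice the tuning width, the summed activity has a single peak at their midpoint, so the population appears to signal one stimulus.

```python
import math

# Hypothetical population: preferred positions from -30 to +30 deg, 1 deg apart,
# all sharing the same broad Gaussian tuning width (an assumption).
PREFERRED = [float(x) for x in range(-30, 31)]

def population_response(stimuli, sigma_deg=6.0):
    """Response of each neuron; linear summation across stimuli is a simplifying assumption."""
    return [sum(math.exp(-((p - s) ** 2) / (2 * sigma_deg ** 2)) for s in stimuli)
            for p in PREFERRED]

def local_peaks(resp):
    """Preferred positions of neurons that are more active than both neighbours."""
    return [PREFERRED[i] for i in range(1, len(resp) - 1)
            if resp[i] > resp[i - 1] and resp[i] > resp[i + 1]]

print(local_peaks(population_response([5.0])))          # one stimulus: [5.0]
print(local_peaks(population_response([-4.0, 4.0])))    # two close stimuli: [0.0]
print(local_peaks(population_response([-15.0, 15.0])))  # two distant stimuli: [-15.0, 15.0]
```

Only the well-separated pair yields two peaks. This is the low-resolution camera problem in miniature.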

Figure 1: The brain’s tuning problem and signal switching as a possible solution.

a) In the visual system, the initial “fine” coding in the retina gives way to “coarse” coding at later stages of the visual pathway, e.g., [2, 3, 108–111]. Coarse coding should impair perception of fine detail and multiple stimuli, as illustrated here by analogy with the resolution of photographs. b) A related, but distinct, problem occurs in the auditory system, where neurons are monotonically sensitive to the horizontal eccentricity of sounds [e.g., 5, 6, 7, 8, 112, 113]. How such a firing rate code for sound location can be deployed when there is more than one sound is unclear. c) Both problems can be thought of as a too-many-stimuli-for-the-wires problem. In telecommunications systems, when multiple signals (e.g., A, B, C, D) must be transmitted during the same general period of time over a single channel, a switch can be used to gate the inputs so that only one signal is carried in the channel at any given moment, with different signals transmitted across time. d) If the brain implements something similar, the activity patterns in response to multiple stimuli might appear to consist of epochs of time in which each stimulus dominates the signal in alternation, as shown for two hypothetical stimuli [modified from 26]. Depending on the timescale of fluctuations and their coordination across the population, it may be that multiple distinct channels are created dynamically in the brain, a variation on the form of multiplexing deployed in the telecommunications case (see text for details).

The auditory system has an even more severe problem. Spatial location is reconstructed not via optical image formation but rather from computations derived from interaural timing and level differences (among other sources of information). The timing and level cues vary with the angle of incidence of a sound in relation to the axis connecting the two ears, i.e., the largest temporal leads and loudness advantages occur when the sound is nearest to one ear vs. the other. In the mammalian auditory system, the majority of neurons replicate this tuning profile, i.e., they respond more strongly the closer a sound is to the axis of one ear vs. the other (Figure 1b) [4–8]. It is thought that location is inferred from the level of firing of such neurons, rather than which neurons are active as might occur in a neural map. Again, such a code can work well when there is only one sound, but when two sounds occur, how is a firing rate code supposed to ensure the representation of both stimuli? One possibility is that segregation of stimuli to different subpopulations of neurons may occur via their differential tuning to sound frequency, such that the locations of sounds of different frequencies would be encoded in the level of firing of different subsets of frequency-tuned neurons. However, at suprathreshold sound intensities, frequency tuning is very broad [9], leading to a “blurring” problem that is akin to the one described above for the visual system.
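The difficulty with a pure rate code can be sketched in a few lines. Assume a hypothetical neuron whose firing rate rises sigmoidally with azimuth; the values of `R_MAX` and `SLOPE` are illustrative, not measured. Any single firing rate then decodes to exactly one location. Suppose the response to two sounds were simply the average of the single-sound responses, which is only one of several possible outcomes. Sounds at -30 and +30 degrees would then be read out as a single sound at the midline.

```python
import math

R_MAX = 100.0  # hypothetical maximum firing rate (spikes/s)
SLOPE = 15.0   # hypothetical sigmoid slope (deg)

def rate(azimuth_deg):
    """Monotonic rate code: firing increases as the sound moves toward +90 deg."""
    return R_MAX / (1.0 + math.exp(-azimuth_deg / SLOPE))

def decode(r):
    """Invert the tuning curve: one firing rate maps back to one azimuth."""
    return -SLOPE * math.log(R_MAX / r - 1.0)

print(decode(rate(30.0)))  # approximately 30 deg: one sound is recovered

# Two sounds at -30 and +30 deg. If the pair response were the average of the
# single-sound responses (an assumption), the read-out is a phantom sound near 0 deg.
pair_rate = (rate(-30.0) + rate(30.0)) / 2.0
print(decode(pair_rate))  # approximately 0 deg
```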

In short, the majority of the brain’s visual and auditory neurons are sufficiently broadly tuned that representing more than one perceptible stimulus or stimulus attribute at once appears challenging. To date, this situation has gone surprisingly unrecognized. Studies that investigate responses to more than one stimulus are few and far between, and the implications of the findings of such studies for preserving information are often not fully considered. For example, a common finding among such studies is that neurons appear to respond more weakly to a preferred stimulus when there are other stimuli present vs. when the preferred stimulus is presented alone (e.g., [10]). If such changes in firing rate were to reflect an effective shrinkage in the receptive field size, thus sharpening the tuning of individual neurons and dynamically making the code more fine-grained, this could in principle facilitate coding multiple stimuli. But in the auditory system, such sharpening does not appear to be sufficient [11], and in the visual system, the general theory that has been offered proposes instead a general lowering or normalization of activity rather than a sharpening of tuning ([12], but see e.g., [12–14] for some cases where sharpening of tuning can arise in the presence of surround stimuli and see section “A non-lossy explanation for (some) normalization?” for more detail).

This gap leads us to present a novel theory of how the brain might solve this problem through signal switching across time. Specifically, when more than one stimulus is present, perhaps neurons switch between encoding different items, using interleaved temporal epochs to preserve distinct information about each individual item across time and/or across the neural population (Figure 1c,d). Below we describe this theory and its inspirations, supporting evidence, and implications for multiple domains of neuroscience.

Neural Signal Switching

The problem of multiple signals needing to be squeezed into a channel with limited capacity finds precedent in the domain of telecommunications [15]. When there are more signals than wires to transmit them, telecommunications circuitry is designed such that signals “take turns” accessing the limited channels available, a process known as time-division multiplexing (Figure 1c). The possibility that the brain might deploy a form of multiplexing has previously been suggested in the domain of working memory. Working memory has a capacity limit of ~5–7 items, and it has been proposed that this capacity limit may arise due to an encoding process in which items to be remembered are slotted into different phases of an oscillatory cycle [16–22].
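The telecommunications scheme in Figure 1c can be sketched as a round-robin switch. This is a generic illustration of time-division multiplexing, not a model of any neural circuit. The function names and the equal-length, fixed-schedule signals are assumptions made for clarity. The key point is that the receiver can recover every source because it knows which time slot belongs to which signal.

```python
from itertools import chain

def tdm_multiplex(signals):
    """Round-robin switch: each time slot in the channel carries one source's sample."""
    n_samples = len(signals[0])
    if any(len(s) != n_samples for s in signals):
        raise ValueError("this sketch assumes equal-length signals")
    return list(chain.from_iterable(zip(*signals)))

def tdm_demultiplex(channel, n_sources):
    """The receiver knows the schedule: source k owns every n_sources-th slot."""
    return [channel[k::n_sources] for k in range(n_sources)]

A, B, C, D = [1, 2, 3], [10, 20, 30], [100, 200, 300], [7, 8, 9]
channel = tdm_multiplex([A, B, C, D])
print(channel)                                      # [1, 10, 100, 7, 2, 20, 200, 8, 3, 30, 300, 9]
print(tdm_demultiplex(channel, 4) == [A, B, C, D])  # True
```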

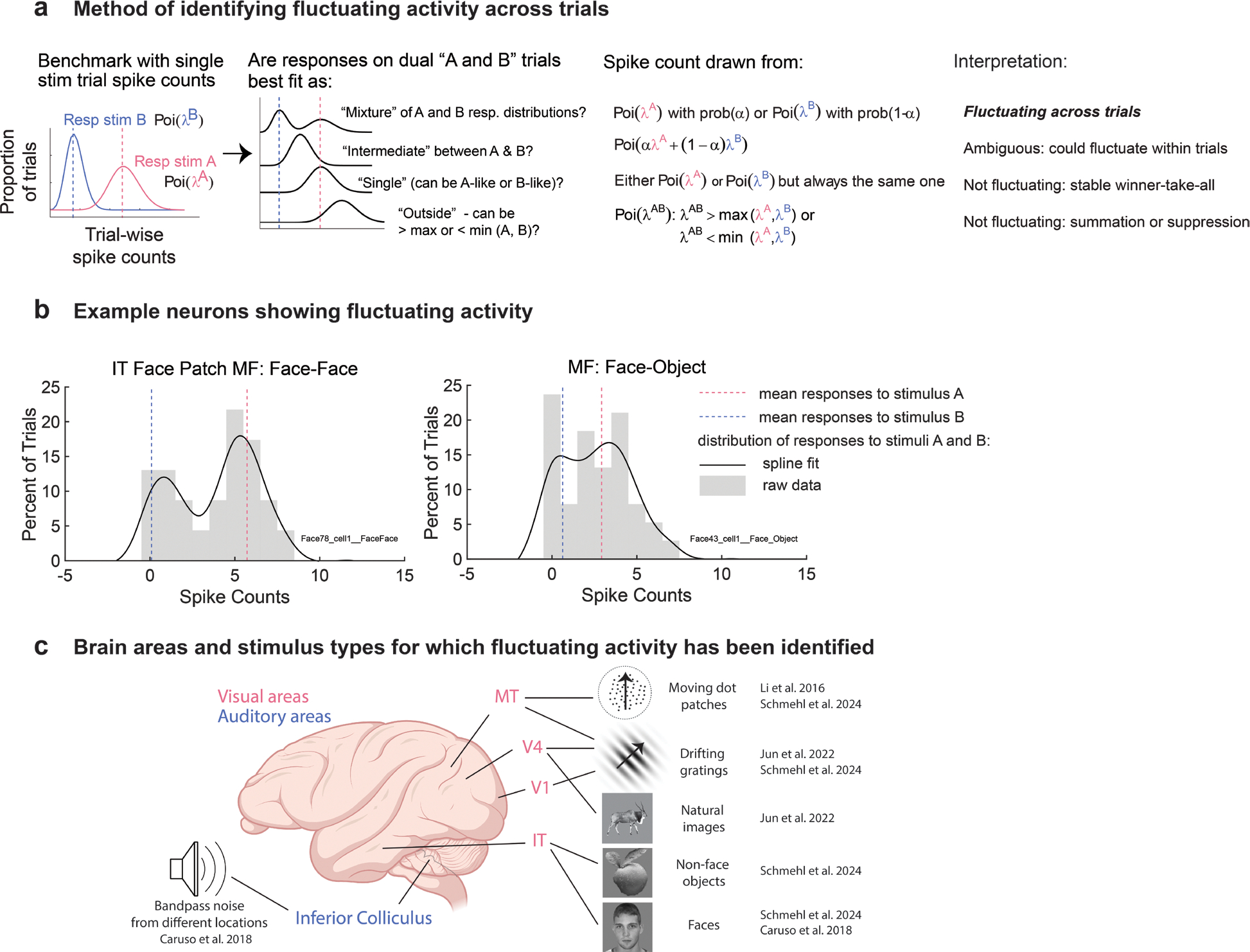

Progress in studying such phenomena at the single neuron level has been limited by analysis methods that rely on pooling activity across time, stimulus repetitions, or both. We recently developed new statistical methods for analyzing activity patterns evoked by two stimuli at multiple time scales [23–27]. These methods require “triplets” of conditions: stimulus A, stimulus B, and A and B in combination (AB) (Figure 2a). For each triplet, we determine the distribution of responses when only one stimulus, A or B, is presented. The AB condition provides the test case: does neural activity switch between A-like and B-like response patterns when both A and B are presented? Benchmarking with the A-alone and B-alone response distributions is key to interpreting any fluctuating activity as possibly preserving a representation of the component stimuli.

Figure 2. Statistical tests to identify fluctuating sensory activity.

a) The across-trial analysis method considers the distributions of spike counts on combined stimulus trials in comparison to the component single stimulus trials. Of particular interest are mixtures, which capture the neuron fluctuating between responding to one stimulus on some trials and the other on the remaining trials, and intermediates, which could reflect a similar switching response but at a time scale faster than the trial duration. b) Example spike count distributions from two neurons in the middle fundus face patch when tested with either two faces or a face and another object [26, 27]. c) Fluctuating activity at the scale of trials (i.e., mixtures) has been identified using a variety of stimulus types in multiple visual as well as auditory areas of the rhesus monkey [23, 26, 27, 34].

The analysis methods consider the distribution of activity at various time scales. Comparing spike counts across trials, we used a Bayesian model comparison to ascertain whether the trial-wise AB spike counts are best fit by a mixture of the trial-wise A-like and B-like Poisson distributions (mixture), consistent with fluctuations between encoding each individual stimulus across trials (Figure 2a). The alternative options involved single-mode Poisson distributions that match one of the A-like or B-like distributions (single, or winner/loser-take-all), lie between those distributions (intermediate, potentially consistent with more rapid, sub-trial fluctuations, to be discussed further below), or lie outside the range of those distributions (outside, consistent with an additive or strongly suppressive interaction between the stimuli). This analysis excludes cases in which the A-alone and B-alone distributions are statistically indistinguishable from one another or when either does not appear to be Poisson-like (e.g., [28]).
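The logic of the across-trial comparison can be sketched as follows. This is a simplified stand-in rather than the published procedure [23, 26, 27]. It plugs in the observed A-alone and B-alone mean counts as the Poisson rates. It places uniform priors on the unknown quantities: the mixing probability, or a single AB rate inside or outside the range between the A and B rates. It caps the “outside” range at a hypothetical `upper_factor` times the larger rate, and it integrates the likelihood on a grid. The hypothesis labels follow the text; the function names, parameters, and example counts are illustrative.

```python
import math

def log_poisson(k, lam):
    """Log probability of count k under a Poisson distribution with rate lam."""
    return k * math.log(lam) - lam - math.lgamma(k + 1)

def log_weighted_mean_exp(values, weights=None):
    """Stable log of sum(w * exp(v)); equal weights give a log-average."""
    if weights is None:
        weights = [1.0 / len(values)] * len(values)
    m = max(values)
    return m + math.log(sum(w * math.exp(v - m) for v, w in zip(values, weights)))

def loglik(counts, lam):
    """Log-likelihood of all AB counts under one shared Poisson rate."""
    return sum(log_poisson(k, lam) for k in counts)

def classify_triplet(a, b, ab, n_grid=200, upper_factor=3.0):
    """Posterior probability of each hypothesis for the AB counts, with equal prior odds."""
    lam_a, lam_b = sum(a) / len(a), sum(b) / len(b)  # plug-in rates (simplification)
    lo, hi = min(lam_a, lam_b), max(lam_a, lam_b)
    if lo <= 0 or lo == hi:
        raise ValueError("need distinct, nonzero A-alone and B-alone rates")
    grid = [(i + 0.5) / n_grid for i in range(n_grid)]  # midpoints on (0, 1)

    log_ml = {
        # single: all AB trials look like A, or all look like B
        "single": log_weighted_mean_exp([loglik(ab, lam_a), loglik(ab, lam_b)]),
        # mixture: each trial is A-like with probability p, else B-like; p ~ U(0, 1)
        "mixture": log_weighted_mean_exp([
            sum(log_weighted_mean_exp([log_poisson(k, lam_a), log_poisson(k, lam_b)],
                                      [p, 1 - p]) for k in ab)
            for p in grid]),
        # intermediate: one shared rate, uniform between the single-stimulus rates
        "intermediate": log_weighted_mean_exp([loglik(ab, lo + u * (hi - lo)) for u in grid]),
    }
    # outside: one shared rate, uniform on (0, lo) together with (hi, upper_factor * hi)
    top = upper_factor * hi
    total = lo + (top - hi)
    rates = [u * lo for u in grid] + [hi + u * (top - hi) for u in grid]
    weights = [lo / (n_grid * total)] * n_grid + [(top - hi) / (n_grid * total)] * n_grid
    log_ml["outside"] = log_weighted_mean_exp([loglik(ab, r) for r in rates], weights)

    m = max(log_ml.values())
    z = sum(math.exp(v - m) for v in log_ml.values())
    return {h: math.exp(v - m) / z for h, v in log_ml.items()}

# Hypothetical spike counts (spikes per trial) for one triplet.
a = [4, 5, 6, 5, 5, 4, 6, 5, 5, 5]
b = [24, 25, 26, 25, 25, 24, 26, 25, 25, 25]
ab_switching = [5, 25, 4, 26, 5, 24, 6, 25, 5, 25]
ab_steady = [15, 14, 16, 15, 15, 14, 16, 15, 15, 15]
for ab in (ab_switching, ab_steady):
    post = classify_triplet(a, b, ab)
    print(max(post, key=post.get), {h: round(p, 3) for h, p in post.items()})
```

The switching AB counts favor the mixture hypothesis, and the steady counts near 15 favor the intermediate hypothesis. Both sets have roughly the same mean, so only the shape of the distribution separates them.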

We have tested this spike-counts-across-trials analysis method in a variety of auditory and visual primate brain areas using a range of tasks and stimulus conditions, and using data collected in a variety of laboratories. When stimuli are distinguishable from one another, such as when they are presented from different locations, mixtures are evident in all the brain regions tested (Figure 2b,c; auditory: inferior colliculus; visual: V1, MT, V4, IT face patch MF, IT face patch AL) [23, 26, 27].

Fluctuating activity has also been identified by several other groups in other contexts using related methods. For example, in studies of binocular rivalry in which different stimuli are presented to each eye, some neurons in visual cortical areas show responses that fluctuate along with concomitant changes in which eye dominates the percept (e.g., [29, 30]). Other studies [31–33] have deployed analytical techniques involving mixtures of Poissons and have reported that such mixtures/overdispersion are more evident when the image is not homogeneous but can be considered to contain more than one element. Finally, one study [34] showed alternating responses to adjacent dot patches in MT, a finding we replicated by analyzing their data with our analysis method [27].

In our spike-counts-across-trials analyses, slow fluctuations as indicated by mixtures were not the only response pattern identified. Rather, intermediates were also identified in some datasets, leaving open the possibility that fluctuations might exist at a faster time scale than the across-trial scale analysis could detect. To detect fluctuation within individual trials, we developed a second analysis method, the Dynamic Admixture Point Process (DAPP) model (Figure 3). In DAPP, spike counts are binned into subintervals within trials, and each AB trial is treated as a weighted recombination of the A-like and B-like response distributions observed during each bin. The weights are allowed to vary dynamically across time within a trial and vary stochastically across trials, producing random weight curves that are not time locked across repetitions. The stochastic generator is estimated from the data, and an inference is drawn on the shape of the weight curves generated by it: do they tend to be steady, or do they exhibit varying contributions of A and B across time? Using 50 ms bins, we found some evidence of within-trial varying patterns of activity in both the inferior colliculus and the face patch system [24, 26]. Such within-trial variation in activity occurred among cases dubbed intermediate at the whole-trial time scale but rarely among mixtures, which usually showed steady weight functions across within-trial time scales. These findings confirmed the presence of slow fluctuations, and also suggested that faster ones may exist as well, varying by neuron, stimulus condition, and brain area.
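DAPP itself infers a stochastic process that generates weight curves across trials, and its cutoffs for “extreme” and “wavy” are given in [24, 26]. The sketch below is a much cruder illustration of the same quantities and is not DAPP. For each 50 ms bin, it finds the weight alpha that best explains one AB spike count as a Poisson draw with rate alpha*lam_a + (1 - alpha)*lam_b. This is the maximum-likelihood value, clipped to [0, 1]. It then summarizes each trial by the mean and swing (max minus min) of alpha. The thresholds `extreme_cut` and `wavy_cut` and the example counts are hypothetical. Single-bin counts are noisy, so a per-bin estimate like this overstates waviness in real data. That noise is one motivation for estimating a shared generative process across trials, as DAPP does.

```python
def bin_weights(ab_counts, lam_a, lam_b):
    """Per-bin alpha, where the AB rate is modeled as alpha*lam_a + (1 - alpha)*lam_b.

    For one Poisson count k, the likelihood peaks where that rate equals k, so the
    maximum-likelihood alpha is (k - lam_b) / (lam_a - lam_b), clipped to [0, 1].
    """
    alphas = []
    for k, la, lb in zip(ab_counts, lam_a, lam_b):
        if la == lb:
            raise ValueError("A-like and B-like rates must differ in every bin")
        alphas.append(min(1.0, max(0.0, (k - lb) / (la - lb))))
    return alphas

def tag_trial(alphas, extreme_cut=0.25, wavy_cut=0.5):
    """Label one trial by the mean and swing (max - min) of its weight curve."""
    mean_alpha = sum(alphas) / len(alphas)
    swing = max(alphas) - min(alphas)
    level = "extreme" if min(mean_alpha, 1 - mean_alpha) < extreme_cut else "central"
    shape = "wavy" if swing > wavy_cut else "flat"
    return f"{shape}-{level}"

# Hypothetical expected counts per 50 ms bin on A-alone and B-alone trials (six bins).
lam_a = [10.0] * 6
lam_b = [2.0] * 6
trials = {
    "steady A-like": [10, 10, 9, 11, 10, 10],
    "alternating": [10, 10, 2, 2, 10, 2],
    "steady in-between": [6, 6, 5, 7, 6, 6],
}
for name, counts in trials.items():
    # prints one label per trial: flat-extreme, wavy-central, flat-central
    print(name, tag_trial(bin_weights(counts, lam_a, lam_b)))
```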

Figure 3. A method of identifying faster fluctuations.

a) The dynamic admixture point process (DAPP) model treats combined stimulus trials as weighted admixtures of the distributions of activity observed on single stimulus trials. The weight curves (alpha) can vary across time and trials. The properties of the processes that generate these curves are learned by the model, permitting the generation of new curves. The distributions of the trial-wise means and ranges of these curves are then used to tag a triplet’s data as central vs. extreme and flat vs. wavy based on statistical cutoffs explained in [24, 26]. In general, whole trial mixtures should be flat-extreme, and whole trial intermediates should be central but could be either flat or wavy, with the latter category indicating fluctuation. b,c) The observed and generated weight curves for the two examples from the inferior colliculus [26]. b) Example 1: On any given trial, weight curves are flat, but across trials they are bimodally distributed at the extreme values that correspond to either the individual A or B responses (left panel). Trial-wise mean alpha values cluster at 0 or 1 (middle panel), and the individual curves do not “swing” very much from their averages (right panel). These weight curves are consistent with a mixture, or across-trial fluctuation. c) Example 2: Weight curves vary during the course of the trial (“wavy”, left panel), and are central in their average value, with trial-wise mean alpha near 0.5 (middle panel). The waviness is captured by the high maximum swing size (right panel). These weight curves are consistent with within-trial fluctuation, despite being classified as intermediate at the whole trial scale.

A grand theory of everything?

Neural signal switching is relevant to a variety of topics in perception, cognition, and attention. In this section, we review several phenomena to which neural signal switching may contribute.

Figure binding and scene segregation

What constitutes a “stimulus” to be encoded by neurons? The sensory scene is continuous, yet we experience it as consisting of discrete objects with boundaries. Additionally, individual objects can have multiple distinct parts to be represented; for example, the letter E is composed of one vertical line and three horizontal ones. Accurate perception necessitates encoding all parts as distinct yet connected. How such grouping and segregation occurs has been a topic of intense interest for perceptual psychology and systems neuroscience for about 100 years.

Neural switching patterns could relate to the perceptual segregation of objects from one another and/or into component parts. An earlier theory of how the brain might solve the binding problem proposed that the different attributes of complex visual stimuli are linked together via temporal synchrony of firing patterns across neurons [35–39]. Put another way, this earlier theory effectively postulated that responses to stimuli might not be steady across time, and that the timing of responses in different neurons to different components of the stimulus might reflect (or cause) the perceptual linkage (or lack thereof) of different aspects of the sensory scene.

While the majority of the neural switching patterns we have observed to date operate at a much slower timescale than was postulated by this earlier theory of binding, we have observed intriguing potential connections to object binding. Specifically, the only datasets where we have not observed substantial mixtures and intermediates involved superimposed gratings that formed a fused “plaid” [23, 27]. The relative absence of fluctuating activity when the stimuli form a single object in comparison to cases where the stimuli are distinct is notable.

Perhaps most importantly, neural switching theory offers a new foothold for investigating the neural mechanisms underlying this perceptual experience. The Gestalt principles that lead to grouping have been well described, at least in the visual domain, for a century [40]. Studies that parametrically vary the stimulus attributes that support or disrupt grouping, such as connectedness, similarity, and common fate, while measuring neural switching will be important for gaining a full understanding of the relationship between these neural and perceptual phenomena. Simultaneous recordings from multiple neurons will also be needed to ascertain whether activity fluctuations are coordinated across neurons (see next section).

Neural switching theory and attention

Both neural switching theory and theories of attention concern how the brain processes multiple stimuli, and can be thought of as branches of the same conceptual family. But there are key differences. Neural switching theory seeks to account for how the representation of multiple stimuli might be preserved (Figure 4a), whereas attention theories typically concern how the brain focuses on a small number of salient individual items to the detriment of most others (Figure 4b,c).

Figure 4. Correlation patterns can reveal whether switching is information-preserving vs. information-selecting.

a) Simultaneously recorded units in V1 show evidence of encoding both A and B stimuli across the population on every trial. Activity of individual units is color-coded according to whether the activity was more akin to the A-like (pink) or B-like (blue) distributions of spikes on a given trial. Only units with at least moderate evidence of mixture activity patterns are depicted; that this identification was successful can be seen by the presence of both A-like (pink) and B-like (blue) squares for every neuron across trials (rows). Notably, every trial also has both A-like and B-like responses across neurons (columns). Overall, there are more A-like than B-like responses, suggesting a possible bias in the representation. b-c) The pattern differs from those expected under covert attention (b) or normalization (c). Under covert attention, neurons might switch in synchrony between encoding one item vs. the other; this is shown as a simulation in which the same A-like and B-like responses actually observed in (a) are depicted as having occurred in a correlated fashion across neurons. Normalization (c) would be expected to produce a common intermediate value across neurons and trials (purple).

Another key difference concerns the general level of description. Attention refers to a cognitive, behavioral process – the experience of being more aware of one stimulus than others [41]. Neural switching theory postulates potential neural mechanisms for preserving information regarding multiple items in the neural code, and doesn’t make explicit claims about correlations with perceptual awareness of individual items except to argue that such information preservation is a necessary pre-condition to awareness.

Nevertheless, there are intriguing open questions at the intersection of neural switching theory and attention. These open questions surround how responses are coordinated across the neural population and how switching patterns may relate to sampling of the sensory scene across time.

Preservation vs. prioritization depends on coordination across the population.

Open questions regarding the relationship between neural switching theory and attention concern the degree of coordination between individual neurons and how this relates to perception. If all neurons in a population encode only one of the multiple items present at a given instant, and if that item is the only item that the subject perceives at that moment, a neural mechanism related to attention may be at work. However, if a neural representation is preserved for multiple items, that population may form a pre-attentive stage of processing, with attention operating at a later point [e.g. 42].

In one dataset that speaks to this question, the pattern of results is most consistent with the A and B stimuli both being encoded simultaneously across the population. Specifically, in V1, when multiple neurons showing mixture response patterns are recorded simultaneously, both A-like and B-like response patterns occur on every trial, but in varying subsets of neurons (Figure 4a). However, two additional aspects of the observed pattern are intriguing. First, the A and B stimuli do not appear to be coded equally across the population. In this set of recordings, the A stimulus appears to be encoded preferentially compared to the B stimulus. Such a bias could lead to stimulus A being perceived as more salient. Second, the switching patterns do not appear to be random across neurons. While no two neurons are perfectly correlated with each other, there are ensembles of neurons that appear better correlated with each other than with other neurons. For example, units 1 and 2 are highly correlated with each other, and units 3, 4, and 5 are quite correlated with each other but not well correlated with units 1 and 2. This suggests that there may be subgroups of neurons that tend to be highly correlated with each other (e.g., [31, 43]), consistent with the burgeoning literature on the “dimensionality” of neural codes being lower than the number of neurons in those codes [44]. An implication of this is that the number of ensembles in a neural population may contribute to limits on the number of stimuli that can be encoded.
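Two summary statistics capture the distinction drawn here: the fraction of trials on which both A-like and B-like labels occur somewhere in the population, and the pairwise correlation of labels across trials. The sketch below computes both from a trials-by-units matrix of labels (1 = A-like, 0 = B-like), along with the overall share of A-like labels as a crude index of bias. The label matrices are made up for illustration and are not the recorded data. One loosely mimics the V1 pattern described above, with units 1 and 2 moving together and units 3 to 5 forming a second ensemble. The other mimics synchronized, attention-like switching in which every unit takes the same label on a given trial.

```python
import math

def fraction_trials_with_both(labels):
    """Share of trials (rows) on which at least one unit is A-like and one is B-like."""
    return sum(1 for row in labels if 0 < sum(row) < len(row)) / len(labels)

def fraction_a_like(labels):
    """Overall share of A-like labels; values above 0.5 suggest a bias toward A."""
    return sum(map(sum, labels)) / sum(len(row) for row in labels)

def pearson(x, y):
    """Pearson correlation of two label sequences (assumes neither is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((u - mx) * (v - my) for u, v in zip(x, y))
    sxx = sum((u - mx) ** 2 for u in x)
    syy = sum((v - my) ** 2 for v in y)
    return sxy / math.sqrt(sxx * syy)

def unit_correlations(labels):
    """Units-by-units matrix of label correlations across trials."""
    units = list(zip(*labels))
    return [[pearson(u, v) for v in units] for u in units]

# Hypothetical labels: rows are trials, columns are units 1-5.
preserving = [
    [1, 1, 0, 0, 0],
    [0, 0, 1, 1, 1],
    [1, 1, 1, 1, 0],
    [1, 1, 0, 0, 0],
    [0, 0, 1, 1, 1],
    [1, 1, 0, 1, 0],
]
synchronized = [[1] * 5, [0] * 5, [1] * 5, [1] * 5, [0] * 5, [0] * 5]

print(fraction_trials_with_both(preserving), fraction_trials_with_both(synchronized))  # 1.0 0.0
print(fraction_a_like(preserving))  # above 0.5: more A-like than B-like labels
corr = unit_correlations(preserving)
print([[round(c, 2) for c in row] for row in corr])  # units 1-2 correlate perfectly
```

In the synchronized case every pair of units is perfectly correlated and no trial carries both items, which is the signature expected of selection rather than preservation.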

Sampling of the sensory scene.

An intriguing possible connection between attention and neural switching involves evidence from numerous studies suggesting that attention may rely on sampled processes. The reaction time to identify a target can sometimes scale with the number of items to be evaluated [45]. Such serial processing would be consistent with an underlying mechanism involving a duty cycle of successive stimulus encoding [e.g. 46, 47–49]. This sampling may be internally controlled: stimulus detectability varies across time and is correlated with the phase of oscillations in EEG signals [50–52] or local field potentials in various brain areas [53]. Furthermore, even nominally sustained attention can exhibit rhythmic properties [54–56]. Such findings therefore also suggest an ongoing, endogenous cycling in neural coding with concomitant effects on perception.

In sum, neural switching theory can provide insights into several phenomena thought to be associated with attention, from salience to limited processing capacity to temporal sampling of the sensory scene. More work will be needed to bring these different perspectives into better conceptual alignment.

A non-lossy explanation for (some) normalization?

A common observation in sensory brain regions is that neural activity often fails to scale linearly with the total amount of energy in the sensory scene, a pattern often termed “normalization,” e.g., [12, 57–63] (or, more generally, “subadditivity”; for review, see [14]). Conceptually, normalization has been seen as ensuring that neurons stay in a firing rate range tailored to the statistics of the scene, such as reducing contrast sensitivity in a high contrast environment compared to a low contrast environment (e.g., [12]). Some cases of normalization, though, are more akin to the case we are concerned about, namely the presence of more than one distinct stimulus in a scene. For example, when a grating is presented in the center of a V1 neuron’s receptive field, and it is surrounded by a grating of a different orientation, the V1 neuron’s (average) response can be intermediate between the (average) responses evoked by either the center or surround stimuli alone (cross-orientation suppression, e.g., [12]). Such suppression can also occur for surrounds of the same orientation, in particular when stimulus contrast is high [13].

But the interpretation of such suppressive patterns depends on whether the underlying activity is actually fluctuating between single-stimulus response rates. In short, perhaps what has appeared as normalization is partly an illusion of time-and-trial pooling analysis methods. By focusing on the average response across time and trials, any fluctuating activity would have been overlooked, and it could be the case that firing rates of individual neurons are actually switching between the value(s) associated with the presence of stimulus A and those associated with the presence of stimulus B at some temporal scale. In our analysis methods, both mixtures (across-trial fluctuations) and intermediates involve responses that would be categorized as normalizing when responses are pooled across trials. But both mixtures and the “wavy-central” (within-trial fluctuations) subcategory of intermediates (Figure 3c) actually retain information about each item. These information-retaining strategies contrast with the alternative normalizing strategy, in which the activity on each trial is close to the across-trial average level (see Figure 4c for a simulation of this case).

Aside from our methods, clues to the underlying pattern can also come from simply evaluating across-trial variability in neural firing, a topic already of considerable interest in visual cortical processing [14, 64–66]. A relatively high variance in the dual-stimulus context could indicate an underlying signal switching operation. It has been shown that the variance-to-mean ratio (Fano factor) of visual activity can shift into different modes depending on the sensory context [14], and indeed the Fano factor has been shown to increase for larger, heterogeneous images, which may be analogous to the multiple-stimulus conditions considered here [32, 33].
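A quick simulation shows why variability can distinguish these cases. Assume hypothetical single-stimulus rates of 5 and 25 spikes per trial. A neuron that responds to A on a random half of AB trials and to B on the rest (a mixture) has roughly the same mean count as a neuron that fires at the averaged rate on every trial, but a much larger Fano factor. For a 50/50 mixture of two Poisson distributions with rates lam_a and lam_b, the expected variance is the mean plus (lam_a - lam_b)^2 / 4, i.e., 15 + 100 = 115 here, giving a Fano factor of about 7.7 versus about 1 for a single Poisson process. The Poisson sampler is Knuth's textbook multiplication method, chosen only to keep the sketch dependency-free.

```python
import math
import random
from statistics import mean, variance

def poisson(lam, rng):
    """Knuth's multiplication method; adequate for the small rates used here."""
    threshold = math.exp(-lam)
    k, p = 0, rng.random()
    while p > threshold:
        k += 1
        p *= rng.random()
    return k

def fano(counts):
    """Variance-to-mean ratio of spike counts across trials."""
    return variance(counts) / mean(counts)

rng = random.Random(1)
LAM_A, LAM_B, N_TRIALS = 5.0, 25.0, 2000  # hypothetical rates (spikes/trial) and trial count

# Mixture: each AB trial is a draw from either the A-alone or the B-alone distribution.
mixture = [poisson(LAM_A if rng.random() < 0.5 else LAM_B, rng) for _ in range(N_TRIALS)]
# Averaging: every AB trial is a draw at the midpoint rate.
averaged = [poisson((LAM_A + LAM_B) / 2, rng) for _ in range(N_TRIALS)]

print("mixture: mean", round(mean(mixture), 1), "Fano", round(fano(mixture), 1))
print("averaged: mean", round(mean(averaged), 1), "Fano", round(fano(averaged), 1))
```

Pooled means near 15 spikes make both look like normalization; only the variability reveals the switching.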

If fluctuating neural signals do underlie some cases currently attributed to normalization, a key postulated benefit of normalization is retained: the distribution of activity levels across time and neurons continues to ensure the population level of activity stays within a limited firing rate range. In contrast, cases of “true” normalization pose a new problem: they indicate that the relationship between firing rate and the evoking stimuli is context dependent. Put another way, if the response to two stimuli, RAB, is the average of the responses observed to each stimulus alone (RA and RB), then the brain will have lost information about RA and RB individually (e.g., Figure 4c). Depending on how such a neural representation is evaluated by subsequent stages of processing, it could even seem like there is a single stimulus at some midpoint between stimulus A and stimulus B. This is known to happen in the case of saccades: when two visual targets are presented simultaneously, humans and monkeys will sometimes look between them [67–69]. A read-out algorithm would need to handle such shifting relationships between firing rate and the underlying stimuli gracefully (such as by “peak-picking” the most active neurons regardless of the absolute level of their firing rate).
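The information loss under averaging can be stated as a one-line worked example, using hypothetical firing rates in spikes/s:

```latex
R_{AB} = \tfrac{1}{2}\left(R_A + R_B\right), \qquad
\tfrac{1}{2}(20 + 60) = \tfrac{1}{2}(30 + 50) = \tfrac{1}{2}(40 + 40) = 40
```

Once only R_AB is available, the pairs (20, 60), (30, 50), and (40, 40) cannot be told apart, and the last is indistinguishable from one stimulus evoking 40 spikes/s on its own. Under switching, by contrast, each epoch carries either R_A or R_B, so both values remain recoverable from the distribution of activity.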

Oscillations and duty cycles

When accompanied by sufficient correlations across the neural population, any regularity or periodicity in neural switching could result in oscillatory signals measurable in local field potentials, EEG, or even spiking activity [70], and could indicate phase-of-firing coding [16, 22]. The duty cycle of periodicity could be fixed (i.e., switching could occur on the same temporal schedule regardless of the number of items), or it might vary with sensory or cognitive load. Recent work involving a working memory task found a reduction in the frequency of theta-band modulation in the human hippocampus as a function of increasing numbers of items to be remembered, suggesting the duty cycle is extended to cover all the items [71]. In monkeys, changes in the power in different frequency bands have been observed in local field potentials of several brain regions as a function of perceptual load [72]. Humans engaged in a multi-object tracking task appeared to use a fixed amount of time to sample each object, such that the total amount of time to sample all objects might be expected to vary with object number [46].
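The two scheduling regimes make different predictions about the rhythm as load increases, which can be sketched as follows. The cycle length, slot duration, item counts, and function names are hypothetical choices for illustration, not estimates from [46, 71].

```python
def fixed_cycle(n_items, cycle_s=0.25):
    """Fixed schedule: the cycle never changes, so each item's slot shrinks with load."""
    return {"cycle_hz": 1.0 / cycle_s, "slot_s": cycle_s / n_items}

def fixed_slot(n_items, slot_s=0.05):
    """Load-dependent schedule: each item keeps its slot, so the cycle stretches and slows."""
    cycle_s = n_items * slot_s
    return {"cycle_hz": 1.0 / cycle_s, "slot_s": slot_s}

for n in (2, 4):
    print(n, "items | fixed cycle:", fixed_cycle(n), "| fixed slot:", fixed_slot(n))
```

Under the fixed-slot regime the cycle frequency falls as items are added, the direction of the hippocampal theta result described above [71]; under the fixed-cycle regime the frequency is unchanged and only the time available per item drops.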

Perceptual and motor imperfections: masking, crowding, and averaging movements

There may be limitations in how well neural signal switching operates. For example, signals might be imperfectly segregated from one another or imperfectly timed with the rest of the neural population, or there might be limits in the spatial or temporal scale at which signal switching is capable of operating. Such limitations could be associated with interesting perceptual parallels, such as when the presence of stimulus A alters some aspect of the perception of stimulus B. Examples include simultaneous masking [73, 74], distorted object perception associated with visual crowding [75], and averaging eye movements evoked by simultaneous targets [e.g. 76, 77]. In our previous study [26], even though the monkeys successfully made saccades to each sound, those saccades tended to fall slightly short of the actual sound locations. Such behavioral observations are potentially consistent with imperfections in the coding of each item.

Response variability

Variability in neural signals has troubled neuroscientists since the field emerged as a scientific discipline [e.g. 78], as it may cast doubt on the reliability of neural representations. Many experimental studies have chipped away at unexplained sources of variance in responses to nominally identical conditions; one compelling example is recent evidence of extensive motor correlates in task-related activity throughout cortical regions [79]. If neurons switch between encoding different stimuli at times controlled by some internal state, this too will contribute to the apparent noise in neural firing patterns. In the years to come, it will be of interest to explore activity fluctuations together with other factors to ascertain just how much signal might be contained in what has until recently seemed like noise.

A role in thought?

More broadly, neural switching has intriguing implications for the theory of grounded cognition. Briefly, this theory posits that cognitive capacities like thought rely at least in part on running simulations in brain areas that carry sensory and motor signals [80–83]. Simplistically, when you think about a cat, your visual areas may exhibit activity patterns reminiscent of seeing a cat, while auditory and tactile areas may contribute elements related to the sound and feel of a cat. But a central problem with this theory is how the brain might distinguish such simulations from the real thing, especially given that a failure to do so might produce hallucinations. Signal switching offers a possible explanation: perhaps neural ensembles switch between encoding stimuli that are actually present and running such simulations in the background.

Such a possibility would account for several common observations in neuroscience, such as human imaging studies showing overlap between the brain regions activated by sensory stimuli and those activated during mental imagery and language processing [e.g. 84, 85–87]. In animals, what constitutes “thought” is likely considerably simpler, but that does not mean it does not occur. Perhaps some portion of the variability or noise observed in response to nominally identical single-stimulus conditions reflects fluctuations between sensing the stimulus in the first place and using the same circuit for other cognitive functions that may be unknown to or uncontrolled by the experimenter.

Open Questions

Many questions remain, from underlying biological mechanisms to ongoing statistical challenges. In the sections below, we consider these topics.

How is information routed?

A critical question concerns what mechanisms control how signals are routed from one neuron/neural ensemble to another. That such mechanisms exist is supported by recent studies showing that correlations between signals simultaneously recorded in different brain regions can change in different contexts, implying that the “effective connectivity” between them can change (for reviews, see [88–91]). In principle, such internal control over signal flow could be deployed in contexts such as the one proposed here. A possible mechanism by which signal flow could be controlled dynamically involves axo-axonal synapses, which may allow neurons to gate inputs to other neurons [e.g. 92, 93–95].

A second factor that may be related to neural switching is that neurons periodically undergo state changes that impact their excitability [e.g. 96, 97]. These changes could be internal to the neuron or could be controlled by external synaptic inputs or even ephaptic coupling [98, 99]. If such states differentially apply to some of a neuron’s inputs vs. others, they could introduce a predisposition to respond to a particular stimulus at any given moment.

Computational work concerning the formation of transient cell assemblies is also relevant. Such efforts have suggested that networks of neurons might exhibit distinct attractor states corresponding to different items stored in or recalled from memory. These networks might also transition between stable states when encoding different items [e.g. 100, 101, 102]. How the transitions occur is not fully understood, but they could involve either explicit signals from elsewhere that trigger the transitions “on purpose” or stochastic fluctuations arising from biological noise within individual neurons.
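
As a minimal illustration of the attractor idea, the sketch below uses the classic Hebbian outer-product storage rule associated with [100] to store two ±1 patterns standing in for two items, then shows that a corrupted cue settles into the nearest stored pattern. The pattern values and the function names `store` and `recall` are illustrative assumptions, and units are updated synchronously for brevity (Hopfield's original formulation updates units asynchronously). The sketch does not model transitions between attractors, which would require added noise or external input.

```python
import numpy as np


def store(patterns):
    """Hebbian (outer-product) weights for +/-1 patterns, with no self-connections."""
    p = np.asarray(patterns, dtype=float)   # shape (n_patterns, n_units)
    w = p.T @ p / p.shape[1]                # sum of outer products, scaled by n_units
    np.fill_diagonal(w, 0.0)
    return w


def recall(w, cue, max_steps=20):
    """Synchronous sign updates until the state stops changing.

    max_steps caps the loop because synchronous updates can, in general,
    fall into 2-cycles instead of settling.
    """
    s = np.asarray(cue, dtype=float)
    for _ in range(max_steps):
        h = w @ s                                             # net input to each unit
        new = np.where(h > 0, 1.0, np.where(h < 0, -1.0, s))  # ties keep current value
        if np.array_equal(new, s):
            break
        s = new
    return s


# Two hypothetical "items" encoded as orthogonal +/-1 patterns over 8 units.
item_a = np.array([1, 1, 1, 1, -1, -1, -1, -1], dtype=float)
item_b = np.array([1, -1, 1, -1, 1, -1, 1, -1], dtype=float)
w = store([item_a, item_b])

cue_a = item_a.copy()
cue_a[0] *= -1  # corrupt one unit of item A
cue_b = item_b.copy()
cue_b[1] *= -1  # corrupt one unit of item B
print(recall(w, cue_a), recall(w, cue_b))
```

Each stored pattern is a fixed point of the dynamics, so the network has two distinct stable states; which one it occupies depends on the cue it starts from.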

What is the temporal and spatial resolution of neural signal switching?

In the time-division multiplexing used in telecommunications, a single digital bit of information can be coded on the order of nanoseconds. Brain signaling is slower, with delays for action potentials and synaptic transmission in the millisecond range, and reaction times to sensory stimuli of a hundred milliseconds or more. It is therefore highly unlikely that individual packets in the brain will be as brief as they are in artificial systems. In our tests, we found evidence for fluctuations when examining neural activity both within and across trials. However, the true underlying time scales remain unknown, and identifying the precise moment when switches occur requires other methods [e.g., 103, 104, 105].
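
Why the analysis time scale matters can be illustrated with a deterministic toy model. This is an assumption for illustration only, not the DAPP model or any method from our studies. Suppose a neuron's firing rate alternates every `switch_ms` milliseconds between a rate evoked by item A and a rate evoked by item B. The hypothetical helper `expected_count` integrates this piecewise-constant rate over an analysis window. Windows shorter than one switching interval reflect a single item's rate. Windows spanning a full A/B cycle return the average rate regardless of where they start, so the alternation is invisible to counts pooled at that scale.

```python
def expected_count(t_start_ms: float, window_ms: float, switch_ms: float,
                   rate_a_hz: float, rate_b_hz: float) -> float:
    """Expected spike count in [t_start, t_start + window) for a toy neuron whose
    rate is rate_a during [0, switch), rate_b during [switch, 2*switch), and so on."""
    if switch_ms <= 0:
        raise ValueError("switch_ms must be positive")
    t, t_end, total = t_start_ms, t_start_ms + window_ms, 0.0
    while t < t_end:
        seg = int(t // switch_ms)            # index of the current A/B segment
        seg_end = (seg + 1) * switch_ms      # time of the next switch
        dt = min(seg_end, t_end) - t         # portion of the window in this segment
        rate = rate_a_hz if seg % 2 == 0 else rate_b_hz
        total += rate * dt / 1000.0          # Hz x ms -> expected spikes
        t += dt
    return total


# Hypothetical rates: 40 Hz for item A, 10 Hz for item B, switching every 50 ms.
params = dict(switch_ms=50.0, rate_a_hz=40.0, rate_b_hz=10.0)
short_a = expected_count(0.0, 25.0, **params)    # short window inside an A segment
short_b = expected_count(60.0, 25.0, **params)   # short window inside a B segment
long_0 = expected_count(0.0, 100.0, **params)    # one full A/B cycle
long_25 = expected_count(25.0, 100.0, **params)  # same length, different phase
print(short_a, short_b, long_0, long_25)
```

With these hypothetical values, a 25 ms window yields 1.0 or 0.25 expected spikes depending on its phase, while any 100 ms window yields 2.5, the count expected from a steady 25 Hz neuron.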

Relatedly, we introduced multiplexing as a possible solution to problems arising from broad neural tuning. Yet, in many of our datasets, evidence for multiplexing occurs even when only one of the two stimuli is located within the receptive field (e.g., [23]). While breadth of tuning creates the need for something other than spatial maps, it is unknown how fluctuations, correlation patterns, and underlying neural ensembles might relate to the degree of separation between the two stimuli and the granularity of overall tuning. Switching in neural activity may be a strategy deployed primarily when stimuli are close enough that the overlap in activatable neurons is substantial, and may be less likely when individual stimuli evoke more distinct activity patterns. It is also unclear whether the granularity of sensory maps might change under more cluttered conditions. For example, perhaps receptive fields shrink or shift to minimize the overlap problem [106, 107].

What is a single stimulus?

What if nominally single-stimulus response distributions are themselves fluctuating? While experimenters may intend to present only a single stimulus, doing so is not physically possible. There are always other perceptible stimuli in the environment beyond the limited set presented deliberately by the experimenter (the dim glow of the display monitor, the hum of the HVAC system, and more). Thus, if fluctuations occur when two stimuli are presented by deliberate experimental design, there could also be fluctuations in the “single” stimulus case. We currently exclude such cases from the analysis by requiring that responses in the “single” stimulus conditions be satisfactorily modeled by a single Poisson distribution. Further work is therefore needed to explore whether signal-carrying fluctuations occur in the case of nominally single stimuli.
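
One simple way to ask whether a set of single-stimulus spike counts is compatible with a single Poisson distribution is an index-of-dispersion test. Under a Poisson model the variance equals the mean, so counts from a process that fluctuates between two rates tend to show excess dispersion. The sketch below uses a Monte Carlo (parametric bootstrap) null. It is a generic, swapped-in check, not the criterion used in the analyses described in this article. The function names, the one-sided (overdispersion-only) alternative, and the default of 10,000 simulations are assumptions.

```python
import numpy as np


def dispersion_index(counts):
    """Sum of squared deviations divided by the mean (about chi-square, n-1 df, under Poisson)."""
    counts = np.asarray(counts, dtype=float)
    mean = counts.mean()
    if mean == 0:
        return 0.0  # all-zero counts: treat as no excess dispersion
    return float(((counts - mean) ** 2).sum() / mean)


def poisson_dispersion_pvalue(counts, n_sims=10_000, seed=0):
    """One-sided Monte Carlo p-value for overdispersion relative to a single Poisson."""
    counts = np.asarray(counts, dtype=float)
    observed = dispersion_index(counts)
    rng = np.random.default_rng(seed)
    # Simulate data sets of the same size from a Poisson with the observed mean.
    sims = rng.poisson(counts.mean(), size=(n_sims, counts.size)).astype(float)
    sim_means = sims.mean(axis=1)
    ss = ((sims - sim_means[:, None]) ** 2).sum(axis=1)
    # Guard against all-zero simulated samples (mean 0 -> index defined as 0).
    sim_d = np.divide(ss, sim_means, out=np.zeros_like(ss), where=sim_means > 0)
    # Add-one correction keeps the Monte Carlo p-value strictly positive.
    return float((np.sum(sim_d >= observed) + 1) / (n_sims + 1))


print(poisson_dispersion_pvalue([5, 5, 5, 5, 5]))
print(poisson_dispersion_pvalue([0, 0, 0, 0, 20, 20, 20, 20]))
```

For example, counts of [5, 5, 5, 5, 5] show no excess dispersion and give a p-value of 1.0, whereas [0, 0, 0, 0, 20, 20, 20, 20], which resemble a mixture of two rates, give a p-value near the floor of 1/10001. A small p-value flags counts that a single Poisson describes poorly, though it cannot by itself distinguish switching from other sources of overdispersion.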

Concluding Remarks

Fluctuating activity might increase the brain’s processing power by permitting individual neurons to encode multiple items across time. This novel hypothesis about the nature of brain representations is relevant to a wide range of findings in neuroscience from the perceptual, neurophysiological, and cognitive realms (see Outstanding Questions). Testing this theory is challenging and requires novel quantitative methods that incorporate the entire distribution of neural response patterns, not just their central tendencies. A risk is that the theory is not globally falsifiable: there is likely no experiment that can show that neural signal switching never occurs anywhere in the brain. Rather, a comparative approach evaluating the strength of evidence under different experimental conditions is likely the most productive avenue for future research in this area. We hope that neural signal switching theory encourages consideration of gaps in existing models and illuminates possible connections between seemingly disparate phenomena in the field.

Outstanding Questions.

What causes neural fluctuations? How a neuron comes to respond to one stimulus vs. another is not known. The process could be “bottom up” (driven by the stimuli), “top down” (driven by a fluctuating control process that is stimulus independent), or noise-driven (influenced by stochastic fluctuations that promote responses to one stimulus vs. the other).

What is the spatial scale of neural signal switching? Does fluctuating activity occur chiefly when nearby stimuli compete for representation in an overlapping population of neurons, or is the phenomenon more widespread? Receptive field sizes vary along the processing hierarchy; if fluctuation is linked to receptive field size, then the spatial scale at which it occurs is likely to vary across the processing hierarchy as well.

What is the temporal scale of neural signal switching? Currently, detecting switching is contingent upon the time scales chosen for analysis. Whether fluctuations could be identified at faster or slower time scales than those shown here is unknown.

Do different neurons in an ensemble code the same item at the same time? Signal switching could allow single neurons to preserve information about multiple stimuli across time, but true information preservation requires neurons to coordinate their responses such that at least one neuron responds to each stimulus at a given moment. Whether and how such coordination occurs across the neural population will be a critical area for ongoing exploration.

How is such a code “read out” by downstream neural populations? We present signal switching as an information preservation strategy, but attentional mechanisms may cause selective filtering somewhere along the processing hierarchy. What determines the preservation vs. selection of sensory information at these different stages, and how the overall code is read out, remain unclear.

What other phenomena could neural switching theory help explain? Signal switching has intriguing potential connections to attention, working memory, perception, and the theory of grounded cognition. The potential role of neural switching in these phenomena remains open for exploration.

Highlights.

Different stimuli can potentially activate overlapping populations of neurons in the brain. How does the brain maintain information about multiple items?

Here, we describe a new theory: neurons might switch back and forth between encoding each item across time.

Recent statistical advances have allowed neurophysiology studies to probe for such activity fluctuations, generating support for this theory and opening intriguing new research directions.

Many open questions remain, such as the time scale of activity fluctuations, the manner in which they are coordinated across (and read out from) neural ensembles, and the implications for perceptual binding, neural oscillations, and cognitive processes like attention, memory, and thought.

Acknowledgments

We are grateful for thoughtful comments on the manuscript from Anita Disney, Jeffrey Mohl, and Shawn Willett, and have benefited from additional fruitful discussions involving Na Young Jun, Yunran Chen, Nicholas Marco, Tingan Zhu, and Chad Smith as well as Aaron Batista, Nicolas Brunel, Yuchen Cao, Marlene Cohen, Winrich Freiwald, Cynthia King, Stephanie Lovich, David Murphy, Leslie Osborne, John Pearson, Liz Romanski, Justine Shih, and Matthew Slayton. The work was supported in part by grants to JMG and STT from NIH (R01 DC013096, R01 DC016363).

Glossary

- Arc-minute

A unit of measurement relating to visual space. An arc-minute is 1/60 of one degree of visual angle.

- Binding

A perceptual phenomenon in which multiple stimuli that share a similar spatial location or stimulus properties may seem to form a single object.

- Duty cycle

In a time-multiplexed code, the cycle time it takes to represent each item once.

- Dynamic Admixture Point Process (DAPP) model

A mathematical model that permits detection of fluctuations at short time scales, such as within an individual trial.

- Encode

How a neuron’s firing pattern carries information about stimulus identity and properties.

- Multiplexing

In this article, a phenomenon whereby a single neuron can encode multiple stimuli by switching between representations of individual stimuli across time windows, allowing information to be maintained about multiple stimuli across time or across multiple neurons. The term has also been applied to cases in which more than one variable influences a neuron’s firing pattern, such as both sensory and decision-related activity.

- Normalization

Defined most generally, a neural response pattern that scales less than linearly with the energy in the sensory scene. The particular case of interest in this article is when a neural response to two stimuli resembles the average of the responses to each stimulus individually.

- Neural code

A general term for the representation of information in neural activity.

- Receptive field

The region of space within which a stimulus can evoke a response from a neuron (see also “sensory tuning”).

- Representation

The information carried by the firing patterns of populations of neurons, and how that information signifies particular stimulus identities or properties.

- Sensory tuning

A neuron’s preference to respond more strongly to some types of stimuli than others. Neurons can be tuned to respond preferentially to stimuli in specific regions of space (see also “receptive field”), having specific stimulus properties (e.g., sound frequency), or having a specific identity (e.g., identity of a human face).

Footnotes

Declaration of Interests

The authors declare no competing interests.

References

- 1.Geisler WS (1984) Physical limits of acuity and hyperacuity. J Opt Soc Am A 1 (7), 775–82.

- 2.Dow BM et al. (1981) Magnification factor and receptive field size in foveal striate cortex of the monkey. Exp Brain Res 44 (2), 213–28.

- 3.Keliris GA et al. (2019) Estimating average single-neuron visual receptive field sizes by fMRI. Proceedings of the National Academy of Sciences 116 (13), 6425–6434.

- 4.Middlebrooks JC et al. (1994) A panoramic code for sound location by cortical neurons. Science 264 (5160), 842–844.

- 5.Groh JM et al. (2003) A monotonic code for sound azimuth in primate inferior colliculus. Journal of Cognitive Neuroscience 15, 1217–1231.

- 6.Werner-Reiss U and Groh JM (2008) A rate code for sound azimuth in monkey auditory cortex: implications for human neuroimaging studies. Journal of Neuroscience 28 (14), 3747–58.

- 7.McAlpine D and Grothe B (2003) Sound localization and delay lines--do mammals fit the model? Trends Neurosci 26 (7), 347–50.

- 8.Grothe B et al. (2010) Mechanisms of sound localization in mammals. Physiol Rev 90 (3), 983–1012.

- 9.Bulkin DA and Groh JM (2011) Systematic mapping of the monkey inferior colliculus reveals enhanced low frequency sound representation. Journal of Neurophysiology 105 (4), 1785–97.

- 10.Schneider DM and Woolley SM (2011) Extra-classical tuning predicts stimulus-dependent receptive fields in auditory neurons. J Neurosci 31 (33), 11867–11878.

- 11.Willett SM and Groh JM (2022) Multiple sounds degrade the frequency representation in monkey inferior colliculus. Eur J Neurosci 55 (2), 528–548.

- 12.Carandini M and Heeger DJ (2012) Normalization as a canonical neural computation. Nat Rev Neurosci 13 (1), 51–62.

- 13.Sceniak MP et al. (1999) Contrast’s effect on spatial summation by macaque V1 neurons. Nat Neurosci 2 (8), 733–9.

- 14.Goris RLT et al. (2024) Response sub-additivity and variability quenching in visual cortex. Nat Rev Neurosci 25 (4), 237–252.

- 15.Froehlich E and Kent A (1991) The Froehlich/Kent encyclopedia of telecommunications, CRC Press.

- 16.Lisman JE and Idiart MA (1995) Storage of 7 +/− 2 short-term memories in oscillatory subcycles. Science 267 (5203), 1512–5.

- 17.Siegel M et al. (2009) Phase-dependent neuronal coding of objects in short-term memory. Proc Natl Acad Sci U S A 106 (50), 21341–6.

- 18.Kayser C et al. (2009) Spike-Phase Coding Boosts and Stabilizes Information Carried by Spatial and Temporal Spike Patterns. Neuron 61 (4), 597–608.

- 19.O'Keefe J and Recce ML (1993) Phase relationship between hippocampal place units and the EEG theta rhythm. Hippocampus 3 (3), 317–30.

- 20.Huxter JR et al. (2008) Theta phase-specific codes for two-dimensional position, trajectory and heading in the hippocampus. Nat Neurosci 11 (5), 587–94.

- 21.Montemurro MA et al. (2008) Phase-of-Firing Coding of Natural Visual Stimuli in Primary Visual Cortex. Current Biology 18 (5), 375–380.

- 22.Cattani A et al. (2017) Local Field Potential, Phase Coding. In Encyclopedia of Computational Neuroscience (Jaeger D and Jung R eds), pp. 1–7, Springer; New York.

- 23.Jun NY et al. (2022) Coordinated multiplexing of information about separate objects in visual cortex. Elife 11.

- 24.Glynn C et al. (2021) Analyzing second order stochasticity of neural spiking under stimuli-bundle exposure. Annals of Applied Statistics 15 (1), 41–63 (arXiv:1911.04387).

- 25.Mohl JT et al. (2020) Sensitivity and specificity of a Bayesian single trial analysis for time varying neural signals. Neurons, Behavior, Data Analysis, and Theory.

- 26.Caruso VC et al. (2018) Single neurons may encode simultaneous stimuli by switching between activity patterns. Nat Commun 9 (1), 2715.

- 27.Schmehl MN et al. (2024) Multiple objects evoke fluctuating responses in several regions of the visual pathway. Elife 13.

- 28.Amarasingham A et al. (2006) Spike Count Reliability and the Poisson Hypothesis. J Neurosci 26 (3), 801–809.

- 29.Hesse JK and Tsao DY (2020) A new no-report paradigm reveals that face cells encode both consciously perceived and suppressed stimuli. Elife 9.

- 30.Leopold DA and Logothetis NK (1996) Activity changes in early visual cortex reflect monkeys' percepts during binocular rivalry. Nature 379 (6565), 549–53.

- 31.Sokoloski S et al. (2021) Modelling the neural code in large populations of correlated neurons. Elife 10.

- 32.Coen-Cagli R and Kohn A (2017) Variability of V1 population responses to natural images reflects probabilistic inference. Cognitive Computational Neuroscience, New York.

- 33.Sokoloski S and Coen-Cagli R (2022) Disentangling neural dynamics with fluctuating hidden Markov models. Cosyne, virtual.

- 34.Li K et al. (2016) Neurons in Primate Visual Cortex Alternate between Responses to Multiple Stimuli in Their Receptive Field. Front Comput Neurosci 10, 141.

- 35.Von Der Malsburg C (1994) The correlation theory of brain function. In Models of neural networks, pp. 95–119, Springer.

- 36.Milner PM (1974) A model for visual shape recognition. Psychol Rev 81 (6), 521–35.

- 37.Gray CM and Singer W (1989) Stimulus-specific neuronal oscillations in orientation columns of cat visual cortex. Proc Natl Acad Sci U S A 86 (5), 1698–702.

- 38.Singer W and Gray CM (1995) Visual feature integration and the temporal correlation hypothesis. Annu Rev Neurosci 18, 555–86.

- 39.Gray CM (1999) The temporal correlation hypothesis of visual feature integration: still alive and well. Neuron 24 (1), 31–47, 111–25.

- 40.Todorovic D (2008) Gestalt principles. Scholarpedia 3 (12), 5345.

- 41.Hommel B et al. (2019) No one knows what attention is. Attention, Perception, & Psychophysics 81 (7), 2288–2303.

- 42.Broadbent DE (1957) A mechanical model for human attention and immediate memory. Psychological review 64 (3), 205.

- 43.Sokoloski S and Coen-Cagli R (2019) Conditional Finite Mixtures of Poisson Distributions for Context-Dependent Neural Correlations. arXiv 1908.00637.

- 44.Ebitz RB and Hayden BY (2021) The population doctrine in cognitive neuroscience. Neuron 109 (19), 3055–3068.

- 45.Treisman A and Gelade G (1980) A feature integration theory of attention. Cogn Psychol 12, 97–136.

- 46.d’Avossa G et al. (2006) Attentional selection of moving objects by a serial process. Vision Research 46 (20), 3403–3412.

- 47.Jia J et al. (2017) Sequential sampling of visual objects during sustained attention. PLoS Biol 15 (6), e2001903.

- 48.Jensen O and Vissers ME (2017) Multiple visual objects are sampled sequentially. PLoS Biol 15 (7), e2003230.

- 49.Holcombe AO and Chen W-Y (2013) Splitting attention reduces temporal resolution from 7 Hz for tracking one object to <3 Hz when tracking three. Journal of vision 13 (1), 12–12.

- 50.Busch NA et al. (2009) The phase of ongoing EEG oscillations predicts visual perception. J Neurosci 29 (24), 7869–76.

- 51.Busch NA and VanRullen R (2010) Spontaneous EEG oscillations reveal periodic sampling of visual attention. Proc Natl Acad Sci U S A 107 (37), 16048–53.

- 52.Vanrullen R et al. (2011) Ongoing EEG Phase as a Trial-by-Trial Predictor of Perceptual and Attentional Variability. Front Psychol 2, 60.

- 53.Fiebelkorn IC et al. (2018) A Dynamic Interplay within the Frontoparietal Network Underlies Rhythmic Spatial Attention. Neuron 99 (4), 842–853 e8.

- 54.Fiebelkorn IC et al. (2013) Rhythmic sampling within and between objects despite sustained attention at a cued location. Curr Biol 23 (24), 2553–8.

- 55.Helfrich RF et al. (2018) Neural Mechanisms of Sustained Attention Are Rhythmic. Neuron 99 (4), 854–865 e5.

- 56.Fiebelkorn IC and Kastner S (2019) A Rhythmic Theory of Attention. Trends Cogn Sci 23 (2), 87–101.

- 57.Carandini M and Heeger DJ (1994) Summation and division by neurons in primate visual cortex. Science 264 (5163), 1333–6.

- 58.Carandini M et al. (1997) Linearity and normalization in simple cells of the macaque primary visual cortex. Journal of Neuroscience 17 (21), 8621–44.

- 59.Ohshiro T et al. (2017) A Neural Signature of Divisive Normalization at the Level of Multisensory Integration in Primate Cortex. Neuron 95 (2), 399–411 e8.

- 60.Ohshiro T et al. (2011) A normalization model of multisensory integration. Nat Neurosci 14 (6), 775–82.

- 61.Britten KH and Heuer HW (1999) Spatial summation in the receptive fields of MT neurons. Journal of Neuroscience 19 (12), 5074–84.

- 62.Li X and Basso MA (2005) Competitive stimulus interactions within single response fields of superior colliculus neurons. J Neurosci 25 (49), 11357–73.

- 63.Zoccolan D et al. (2005) Multiple Object Response Normalization in Monkey Inferotemporal Cortex. The Journal of Neuroscience 25 (36), 8150–8164.

- 64.Goris RL et al. (2014) Partitioning neuronal variability. Nat Neurosci 17 (6), 858–65.

- 65.Coen-Cagli R and Solomon SS (2019) Relating Divisive Normalization to Neuronal Response Variability. J Neurosci 39 (37), 7344–7356.

- 66.Henaff OJ et al. (2020) Representation of visual uncertainty through neural gain variability. Nat Commun 11 (1), 2513.

- 67.Glimcher PW and Sparks DL (1993) Representation of averaging saccades in the superior colliculus of the monkey. Exp Brain Res 95 (3), 429–35.

- 68.van Opstal AJ and van Gisbergen JA (1990) Role of monkey superior colliculus in saccade averaging. Exp Brain Res 79 (1), 143–9.

- 69.Wollenberg L et al. (2018) Visual attention is not deployed at the endpoint of averaging saccades. PLoS Biol 16 (6), e2006548.

- 70.Rollenhagen JE and Olson CR (2005) Low-frequency oscillations arising from competitive interactions between visual stimuli in macaque inferotemporal cortex. J Neurophysiol 94 (5), 3368–87.

- 71.Axmacher N et al. (2010) Cross-frequency coupling supports multi-item working memory in the human hippocampus. Proc Natl Acad Sci U S A 107 (7), 3228–33.

- 72.Kornblith S et al. (2016) Stimulus Load and Oscillatory Activity in Higher Cortex. Cereb Cortex 26 (9), 3772–84.

- 73.Moore BC (1995) Frequency analysis and masking. Hearing 161, 205.

- 74.Hermens F and Bell A (2014) Speeded classification in simultaneous masking. Journal of Vision 14 (6), 6–6.

- 75.Whitney D and Levi DM (2011) Visual crowding: a fundamental limit on conscious perception and object recognition. Trends Cogn Sci 15 (4), 160–8.

- 76.Lisberger SG and Ferrera VP (1997) Vector averaging for smooth pursuit eye movements initiated by two moving targets in monkeys. J Neurosci 17 (19), 7490–502.

- 77.van Opstal AJ and van Gisbergen JAM (1990) Role of monkey superior colliculus in saccade averaging. Exp Brain Res 79 (1), 143–9.

- 78.Bullock TH (1970) The reliability of neurons. The Journal of general physiology 55 (5), 565.

- 79.Musall S et al. (2019) Single-trial neural dynamics are dominated by richly varied movements. Nature Neuroscience 22 (10), 1677–1686.

- 80.Barsalou LW (2008) Grounded cognition. Annu Rev Psychol 59, 617–45.

- 81.Lakoff G and Johnson M (1980) Metaphors we live by, University of Chicago Press.

- 82.Gallese V and Lakoff G (2005) The Brain's concepts: the role of the Sensory-motor system in conceptual knowledge. Cogn Neuropsychol 22 (3), 455–79.

- 83.Groh JM (2014) Thinking about thinking. In Making Space: How the Brain Knows Where Things Are, pp. 203–217, Harvard University Press.

- 84.Slotnick SD et al. (2005) Visual mental imagery induces retinotopically organized activation of early visual areas. Cereb Cortex 15 (10), 1570–83.

- 85.Hauk O et al. (2004) Somatotopic representation of action words in human motor and premotor cortex. Neuron 41 (2), 301–7.

- 86.Lacey S et al. (2012) Metaphorically feeling: comprehending textural metaphors activates somatosensory cortex. Brain Lang 120 (3), 416–21.

- 87.Huth AG et al. (2016) Natural speech reveals the semantic maps that tile human cerebral cortex. Nature 532 (7600), 453–8.

- 88.Hutchison RM et al. (2013) Dynamic functional connectivity: promise, issues, and interpretations. Neuroimage 80, 360–78.

- 89.Canolty RT and Knight RT (2010) The functional role of cross-frequency coupling. Trends Cogn Sci 14 (11), 506–15.

- 90.Montijn JS et al. (2012) Divisive normalization and neuronal oscillations in a single hierarchical framework of selective visual attention. Front Neural Circuits 6, 22.

- 91.Lurie DJ et al. (2020) Questions and controversies in the study of time-varying functional connectivity in resting fMRI. Network Neuroscience 4 (1), 30–69.

- 92.Walberg F (1965) Axoaxonic contacts in the cuneate nucleus, probable basis for presynaptic depolarization. Exp Neurol 13 (2), 218–31.

- 93.Feuerstein TJ (2008) Presynaptic receptors for dopamine, histamine, and serotonin. Handb Exp Pharmacol (184), 289–338.

- 94.Pugh JR and Jahr CE (2013) Activation of axonal receptors by GABA spillover increases somatic firing. J Neurosci 33 (43), 16924–9.

- 95.Trigo FF et al. (2008) Axonal GABAA receptors. Eur J Neurosci 28 (5), 841–8.

- 96.Hasenstaub A et al. (2007) State changes rapidly modulate cortical neuronal responsiveness. J Neurosci 27 (36), 9607–22.

- 97.Engel TA et al. (2016) Selective modulation of cortical state during spatial attention. Science 354 (6316), 1140–1144.

- 98.Anastassiou CA et al. (2011) Ephaptic coupling of cortical neurons. Nature Neuroscience 14 (2), 217–223.

- 99.Bergmann TO et al. (2019) Pulsed Facilitation of Corticospinal Excitability by the Sensorimotor μ-Alpha Rhythm. The Journal of Neuroscience 39 (50), 10034–10043.

- 100.Hopfield JJ (1982) Neural networks and physical systems with emergent collective computational abilities. Proceedings of the national academy of sciences 79 (8), 2554–2558.

- 101.Sompolinsky H and Kanter I (1986) Temporal association in asymmetric neural networks. Physical review letters 57 (22), 2861.

- 102.Bernstein J et al. (2017) Markov transitions between attractor states in a recurrent neural network. 2017 AAAI Spring Symposium Series.

- 103.Latimer KW et al. (2015) Single-trial spike trains in parietal cortex reveal discrete steps during decision-making. Science 349 (6244), 184–7.

- 104.Latimer KW et al. (2016) Response to Comment on "Single-trial spike trains in parietal cortex reveal discrete steps during decision-making". Science 351 (6280), 1406.

- 105.Zoltowski DM et al. (2019) Discrete Stepping and Nonlinear Ramping Dynamics Underlie Spiking Responses of LIP Neurons during Decision-Making. Neuron 102 (6), 1249–1258 e10.

- 106.Womelsdorf T et al. (2006) Dynamic shifts of visual receptive fields in cortical area MT by spatial attention. Nat Neurosci 9 (9), 1156–60.

- 107.Connor CE et al. (1997) Spatial attention effects in macaque area V4. J Neurosci 17 (9), 3201–14.

- 108.Smith AT et al. (2001) Estimating Receptive Field Size from fMRI Data in Human Striate and Extrastriate Visual Cortex. Cerebral Cortex 11 (12), 1182–1190.

- 109.Motter BC (2009) Central V4 Receptive Fields Are Scaled by the V1 Cortical Magnification and Correspond to a Constant-Sized Sampling of the V1 Surface. J Neurosci 29 (18), 5749–5757.

- 110.Freeman J and Simoncelli E (2011) Metamers of the Visual Stream. Nature neuroscience 14, 1195–201.

- 111.Grill-Spector K et al. (2017) The Functional Neuroanatomy of Human Face Perception. Annu Rev Vis Sci 3, 167–196.

- 112.Higgins NC et al. (2010) Specialization of binaural responses in ventral auditory cortices. J Neurosci 30 (43), 14522–32.

- 113.Woods TM et al. (2006) Effects of stimulus azimuth and intensity on the single-neuron activity in the auditory cortex of the alert macaque monkey. J Neurophysiol 96 (6), 3323–37.