Summary

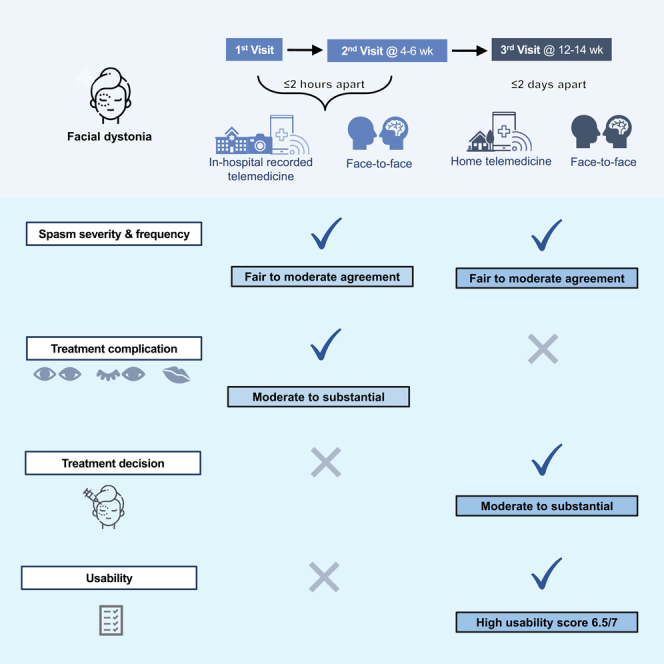

This study investigated the reliability and usability of telemedicine for evaluating facial dystonia grading and treatment complications. Eighty-two telemedicine recordings were obtained from 43 adults with blepharospasm (12, 28%) or hemifacial spasm (31, 72%) (mean age 64.5 ± 9.3 years, 32 females [74%]). Two recorded in-hospital telemedicine visits were arranged alongside in-person visits at baseline and at 4–6 weeks. After 8 weeks, the neuro-ophthalmologists who had performed the in-person visits re-evaluated the telemedicine video records. Intra-rater agreement in assessing spasm gradings was moderate (severity: kappa = 0.42, 95% confidence interval [CI] 0.21–0.62; frequency: kappa = 0.41, 95% CI 0.21–0.61), with substantial agreement in detecting lagophthalmos (kappa = 0.61, 95% CI 0.36–0.86). Adding symptoms to signs increased sensitivity and negative predictive value (NPV) in detecting lagophthalmos (from 67% to 100% and from 94% to 100%, respectively) and drooping lips (from 38% to 75% and from 94% to 96%, respectively). The Thai version of the Telehealth Usability Questionnaire showed a high mean usability score of 6.5 (SD 0.8) out of 7. Telemedicine could be developed further as an alternative platform to evaluate facial dystonia.

Subject areas: Health sciences, Ophthalmology, Health technology

Graphical abstract

Highlights

- Telemedicine had high usability scores for facial dystonia
- Agreements in assessing treatment complications were better than spasm gradings
- The dynamicity of facial dystonia spasm should be considered
- Chin-up position enhanced lagophthalmos detection


Introduction

Clinical presentations of facial dystonia, including benign essential blepharospasm (BEB) and hemifacial spasm (HFS), are involuntary episodic contractions of the orbicularis oculi muscles.1 Botulinum toxin injection is the current choice of treatment, but reinjections are needed.1,2 Standard quarterly in-person visits tend to limit the ability to detect complications such as lagophthalmos, ptosis, and drooping lips, which often occur with maximum effect at four to six weeks after injections.2,3 Frequent follow-ups to adjust injection dosage and locations are preferred but necessitate increased patient traveling burdens.3,4,5 Since the COVID-19 pandemic, the implementation of telemedicine has expanded globally for social distancing and convenience.4,5,6,7,8 While the accuracy of telemedicine physical examinations has been validated for many disorders, there has been no evidence on the efficacy of using telemedicine for detecting and monitoring facial dystonia.3,9,10,11,12,13,14,15,16

This study aimed to investigate the reliability and usability of telemedicine evaluations for facial dystonia compared to in-person evaluation by neuro-ophthalmologists.

Results

Demographic data

Forty-three participants with facial dystonia gave their consent and completed the first visit for an evaluation by the neuro-ophthalmologist. Due to traveling difficulties, one participant missed the second visit. Three participants dropped out after the first telemedicine visit and one after the third. A total of 82 video recordings were collected (Figure 1). Five videos had no sound due to recording errors, leaving 77 videos with symptom evaluation. Grading score data were not collected from one participant during the third home telemedicine visit, reducing the final total to 38 participants for grading score comparison.

Figure 1.

Study flow diagram

Half of the telemedicine videos were recorded after in-person examinations, composed of the 39 first visits and two second visits. The other half were recorded before in-person examinations. The mean video assessment washout period was 137 days (standard deviation [SD] = 36). The low-grade group was slightly larger than the high-grade group with the highest number of videos in the Jankovic rating scale (JRS) grade 3 (Table 1).

Table 1.

Demographic data of recruited participants and video recordings

| Participant items | n = 43 participants | Recording items | n = 82 recordings: Severity | n = 82 recordings: Frequency |
|---|---|---|---|---|
| Gender, No. (%) | | Jankovic rating scale, No. (%) | | |
| Male | 11 (26) | Grade 0 | 16 (20) | 16 (19.5) |
| Female | 32 (74) | Grade 1 | 18 (22) | 18 (22) |
| Mean age, years (SD) | 64.5 (9.3) | Grade 2 | 14 (17) | 16 (19.5) |
| Disease, participants (%), recordings (%) | | Grade 3 | 24 (29) | 23 (28) |
| BEB | 12 (28), 21 (26) | Grade 4 | 10 (12) | 9 (11) |
| HFS | 31 (72), 61 (74) | Categorized grading, No. (%) | | |
| Device operating system, No. (%) | | Low-grade group (grade 0–2) | 48 (58.5) | 50 (61) |
| iOS | 10 (23) | High-grade group (grade 3–4) | 34 (41.5) | 32 (39) |
| Android | 33 (77) | Sequence between in-person examination and telemedicine recording, No. (%) | | |
| Prior telemedicine experience, No. (%) | | | | |
| Yes | 29 (67) | In-person visit first | 41 (50) | |
| No | 14 (33) | Telemedicine visit first | 41 (50) | |

n = number of samples, No. = number in each group.

Reliability

Comparing 82 telemedicine visit videos to in-person visit evaluations, intra-rater agreements for the spasm severity and frequency gradings, displayed by quadratic weighted kappa score, were 0.44 and 0.42, respectively. Kappa statistics calculated for the reliability of categorized spasm severity and frequency gradings were 0.42 and 0.41, respectively. Kappa scores in detecting signs of complications were highest for lagophthalmos detection (kappa = 0.61) followed by ptosis and drooping lips. No sign of extraocular muscle limitation was present throughout the study (Table 2).

Table 2.

Agreement between signs or symptoms of telemedicine and signs of in-person evaluations

| Items | | | |
|---|---|---|---|
| Complications | Signs (n = 82 videos) | Signs or symptoms (n = 77 videos) | |
| Lagophthalmos | 0.61 (0.36–0.86) | 0.35 (0.18–0.50) | |
| Ptosis | 0.59 (0.31–0.86) | 0.08 (−0.04–0.20) | |
| Drooping lips | 0.47 (0.11–0.82) | 0.24 (0.04–0.44) | |
| Eye movement limitation | 0 | 0 | |
| Spasm gradings and treatment decision | In-hospital telemedicine visit | Third real-life telemedicine visit | |
| Spasm severity score (0–4) | 0.44 (0.25–0.64) | 0.51 (0.23–0.78) | (n = 38 participants) |
| Classified spasm severity | 0.42 (0.21–0.62) | 0.56 (0.29–0.83) | |
| Spasm frequency score (0–4) | 0.42 (0.22–0.62) | 0.49 (0.22–0.77) | |
| Classified spasm frequency | 0.41 (0.21–0.61) | 0.51 (0.23–0.79) | |
| Further treatment decision | – | 0.63 (0.38–0.88) | (n = 39 participants) |

Quadratic weighted Kappa or Kappa (95% confidence interval).

n = number of samples.

Using signs of facial dystonia and its treatment complications from the in-person examination as the gold standard, telemedicine evaluation of classified spasm severity and frequency and of the presence of lagophthalmos, ptosis, and drooping lips showed high specificity, negative predictive value (NPV), and accuracy in recognizing complications (Table 3). However, kappa scores, specificity, and accuracy were all lower when signs or symptoms of complications in the telemedicine visit arm were compared with signs in the in-person visit (Tables 2 and 3), whereas the sensitivity and NPV for detecting lagophthalmos and drooping lips increased (Table 3).

Table 3.

Diagnostic accuracy of telemedicine visit evaluation

| Diagnostic accuracy | Complications (in-hospital telemedicine): Lagophthalmos, signs | Lagophthalmos, S&S | Ptosis, signs | Ptosis, S&S | Drooping lips, signs | Drooping lips, S&S | In-hospital telemedicine: Classified severity | In-hospital telemedicine: Classified frequency | Third home-based telemedicine: Classified severity | Third home-based telemedicine: Classified frequency | Third home-based telemedicine: Treatment decision |
|---|---|---|---|---|---|---|---|---|---|---|---|
| n = | 82 videos | 77 videos | 82 videos | 77 videos | 82 videos | 77 videos | 82 videos | 82 videos | 38 patients | 38 patients | 39 patients |
| Sensitivity, % | 67 (35–90) | 100 (74–100) | 75 (35–97) | 75 (35–97) | 38 (9–76) | 75 (35–97) | 62 (44–78) | 63 (44–79) | 83 (62–95) | 82 (60–95) | 83 (63–95) |
| Specificity, % | 94 (86–98) | 64 (52–75) | 93 (85–98) | 49 (37–61) | 99 (93–100) | 73 (61–83) | 79 (65–90) | 78 (64–89) | 73 (45–92) | 69 (41–89) | 80 (52–96) |
| PPV, % | 67 (42–85) | 32 (26–40) | 55 (32–75) | 14 (9–20) | 75 (26–96) | 23 (15–34) | 68 (53–80) | 65 (50–77) | 83 (66.8–92) | 78 (63–88) | 87 (71–95) |
| NPV, % | 94.3 (88–97) | 100 | 97 (91–99) | 95 (84–98) | 94 (89.5–96.2) | 96 (89–99) | 75 (65–82) | 77 (67–84) | 73 (52–88) | 73 (52–88) | 75 (54–88) |
| Positive LR | 11.7 (4.2–32.8) | 2.8 (2–3.8) | 11.1 (4.4–28.3) | 1.5 (0.9–2.3) | 27.8 (3.3–236.4) | 2.8 (1.6–4.8) | 3 (1.6–5.5) | 2.8 (1.6–5.1) | 3.1 (1.3–7.3) | 2.6 (1.2–5.6) | 4.2 (1.5–11.7) |
| Negative LR | 0.4 (0.2–0.8) | 0 | 0.3 (0.1–0.9) | 0.5 (0.2–1.8) | 0.6 (0.4–1.1) | 0.3 (0.1–1.2) | 0.5 (0.3–0.8) | 0.5 (0.3–0.8) | 0.2 (0.1–0.6) | 0.3 (0.1–0.7) | 0.2 (0.1–0.5) |
| Accuracy, % | 90 (82–96) | 70 (58–79) | 92 (83–97) | 51 (40–62) | 93 (85–97) | 73 (62–82) | 72 (61–81) | 72 (61–81) | 79 (63–90) | 76 (60–89) | 82 (67–93) |

(95% confidence interval).

n = number of samples, S&S = sign and symptom, PPV = positive predictive value, NPV = negative predictive value, LR = likelihood ratio.
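
As an illustration of how the point estimates in Table 3 relate to one another, the following minimal Python sketch derives them from a 2×2 table. The function name `diagnostic_accuracy` is hypothetical, and the counts are an assumption: the paper does not report the underlying 2×2 tables, so the values below were chosen only because they reproduce the rounded figures in the lagophthalmos (signs) column. Confidence intervals are not computed here.

```python
def diagnostic_accuracy(tp, fp, fn, tn):
    """Return common diagnostic accuracy measures from 2x2 counts.

    The reference standard (here, the in-person examination) defines
    the positives (tp + fn) and the negatives (fp + tn).
    Note: the positive likelihood ratio is undefined when specificity is 1.
    """
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
        "positive_lr": sensitivity / (1 - specificity),
        "negative_lr": (1 - sensitivity) / specificity,
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
    }


# Assumed counts (not reported in the paper), chosen to match the rounded
# lagophthalmos "signs" column of Table 3 for 82 videos.
measures = diagnostic_accuracy(tp=8, fp=4, fn=4, tn=66)
for name, value in measures.items():
    print(f"{name}: {value:.3f}")
```

Under these assumed counts, a low prevalence of the complication (12 of 82 videos) helps explain why the NPV is high even when sensitivity is modest.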

In the third visit, the weighted kappa scores for spasm severity and frequency across 38 telemedicine evaluations were 0.51 and 0.49, respectively. Kappa scores were slightly higher for the categorized spasm severity and frequency gradings, at 0.56 and 0.51, respectively. Agreement in deciding on further injection reached a kappa score of 0.63 (n = 39), which falls in the substantial range of the Landis and Koch scale (Table 2).

All recordings were reviewed to check for the environment, lighting control, and patient cooperation. Participants’ attention was judged as good throughout the recording.

Subgroup analyses

Analysis by examination sequence showed that, for visits held on the same day, the earlier evaluation tended to receive a higher grading than the later one. Out of 41 telemedicine visits that occurred after in-person examinations, six (15%) were graded as more severe than the in-person visit, compared with 18 (44%) graded lower. Further categorization into high-grade (3–4) and low-grade (0–2) groups showed similar results, with three (7%) higher-graded telemedicine visits versus 12 (29%) lower-graded visits. Conversely, among the telemedicine visits that came before in-person examinations, 18 (44%) were graded as more severe than the in-person visit, while eight (20%) were graded as less severe. After categorization, only one (2%) telemedicine visit was graded as milder than the in-person visit, in contrast to seven (17%) visits with greater grading scores.

Usability

Forty participants gave high telehealth usability scores with the highest mean score in the satisfaction subscale with 6.69 out of 7. The mean score for the reliability subscale was the lowest (6.23). Total mean usability score was 6.5 (SD 0.8) out of 7. Details of all 21 questions are displayed in Table 4.

Table 4.

Telehealth usability questionnaire and usability score (n = 40 patients)

| Items and subscales | Min | Max | Mean | SD | |

|---|---|---|---|---|---|

| 1. | Telehealth improves my access to healthcare services. | 5 | 7 | 6.65 | 0.7 |

| 2. | Telehealth saves me time traveling to a hospital or specialist clinic. | 5 | 7 | 6.8 | 0.56 |

| 3. | Telehealth provides for my healthcare need. | 5 | 7 | 6.4 | 0.87 |

| Usefulness | 6.62 | 0.74 | |||

| 4. | It was simple to use this system. | 4 | 7 | 6.45 | 0.96 |

| 5. | It was easy to learn to use the system. | 5 | 7 | 6.33 | 0.83 |

| 6. | I believe I could become productive quickly using this system. | 5 | 7 | 6.4 | 0.84 |

| 7. | The way I interact with this system is pleasant. | 5 | 7 | 6.65 | 0.58 |

| 8. | I like using the system. | 5 | 7 | 6.25 | 0.87 |

| 9. | The system is simple and easy to understand. | 4 | 7 | 6.45 | 0.87 |

| Ease of Use | 6.42 | 0.83 | |||

| 10. | This system is able to do everything I would want it to be able to do. | 5 | 7 | 6.25 | 0.87 |

| 11. | I can easily talk to the clinician using the telehealth system. | 4 | 7 | 6.56 | 0.75 |

| 12. | I can hear the clinician clearly using the telehealth system. | 5 | 7 | 6.73 | 0.64 |

| 13. | I felt I was able to express myself effectively. | 5 | 7 | 6.48 | 0.72 |

| 14. | Using the telehealth system, I can see the clinician as well as if we met in person. | 4 | 7 | 6.58 | 0.78 |

| Effectiveness | 6.52 | 0.76 | |||

| 15. | I think the visits provided over the telehealth system are the same as in-person visits. | 4 | 7 | 6.45 | 0.81 |

| 16. | Whenever I made a mistake using the system, I could recover easily and quickly. | 5 | 7 | 6.26 | 0.91 |

| 17. | The system gave error messages that clearly told me how to fix problems. | 2 | 7 | 5.97 | 1.21 |

| Reliability | 6.23 | 1 | |||

| 18. | I feel comfortable communicating with the clinician using the telehealth system. | 5 | 7 | 6.78 | 0.48 |

| 19. | Telehealth is an acceptable way to receive healthcare services. | 5 | 7 | 6.7 | 0.56 |

| 20. | I would use telehealth services again. | 5 | 7 | 6.54 | 0.72 |

| 21. | Overall, I am satisfied with this telehealth system. | 5 | 7 | 6.74 | 0.6 |

| Satisfaction | 6.69 | 0.6 |

Modified from Parmanto, Lewis, Graham, and Bertolet.17

n = number of samples.

Effect on daily life activities

Spasmodic eyelids were reported to disturb participant habits in 36 out of 82 (45%) visits. Limited activities were driving (n = 13, 36%), social meeting (n = 11, 31%), reading (n = 6, 17%), walking (n = 4, 11%), and sewing (n = 2, 6%). Impacts on eating, watching television, and sleeping were reported in one visit per activity (3%). No adverse events were reported during the study.

Discussion

This study found moderate-to-substantial agreement in evaluating facial dystonia treatment complications (kappa = 0.47–0.61) and moderate agreement in evaluating spasm gradings. The ability of telemedicine to detect gross presentations has been demonstrated in a wide range of medical specialties, including ophthalmology and neurology.3,9,10,11,12,13,14,15,16 Fraint et al. (n = 46) reported excellent reliability (kappa = 0.89) in determining the Toronto Western Spasmodic Torticollis Rating Scale (TWSTRS) motor severity summary score in cervical dystonia, a disease which, like facial dystonia, can be assessed by visual examination.2,3 In contrast to that study, our telemedicine evaluation showed only moderate agreement for spasm severity (kappa = 0.44), spasm frequency (kappa = 0.42), and classified gradings (severity, kappa = 0.42; frequency, kappa = 0.41). This could be explained by the dynamic nature of facial dystonia, whereas spasmodic torticollis is evaluated through a more static component and larger organ movement.1,2,3 In general, fine periodic facial spasms change over time, and other factors such as stress, light, activity, and attention add to the difficulty of video diagnosis.1,2 In this study, however, the room environment, lighting, and participants’ activity were controlled according to the protocol in the telemedicine visit arm, and participants’ attention was good in all videos. We are therefore unable to assess how these factors might affect reliability in an uncontrolled environment. Moreover, video quality and internet signal affected the ability to assess participant grading despite substantial efforts to enhance recording resolution. Tarolli et al. also reported moderate correlations between remote and in-person motor assessments, with an intraclass correlation coefficient (ICC) of 0.43 for the Movement Disorder Society-Unified Parkinson’s Disease Rating Scale (MDS-UPDRS) and 0.51 for the UPDRS motor assessment.14 In addition, lower agreement and difficulties in evaluating dystonia (ICC = 0.31) and the oculomotor aspect (ICC = 0.41) were demonstrated in a pilot study on remote assessment in Huntington’s disease (n = 11).15 We found that regardless of how far apart the two visits were, an hour or a few days, the agreement in facial dystonia grading and injection requirement remained at a similar level.

After symptoms were taken into consideration, the increased sensitivity and NPV in detecting lagophthalmos and drooping lips corresponded with previous reports on the screening potential of telemedicine when a detailed history is combined with video or photograph examination.8,18 Supporting evidence for a greater ability to detect related complications has been reported.12,13 A kappa coefficient of 0.55 in detecting facial paresis was comparable to our substantial agreement in lagophthalmos detection (kappa = 0.61) and moderate agreement for both ptosis (kappa = 0.59) and drooping lips detection (kappa = 0.47).12 Although abnormal eye movement was not present in this study, it was detected in general neurological patients with a kappa coefficient of 0.58.12 In addition, an overall kappa coefficient of 0.65 was demonstrated for detecting abnormal extraocular motility, ptosis, and other ocular signs in two ophthalmology clinics.12,13 Since lagophthalmos is a complication that can be treated by lubrication or lid taping to prevent further corneal complications, its high detectability by telemedicine could improve facial dystonia management.2 Our assessors also noted that the chin-up position could make lagophthalmos easier to notice. This position was used to detect lagophthalmos in previous reports.19,20

The lower kappa scores, specificity, and accuracy for complications evaluated by signs or symptoms during telemedicine visits, compared with signs identified during in-person visits, could be explained by a higher number of false positives, because patients may report symptoms in the absence of signs.

High usability scoring from this study is consistent with prior satisfactory teleconsultation reports.3,21,22,23 Lower grading severity in the later visit in a post hoc subgroup analysis, whether it was telemedicine or in-person visit, could be due to participant familiarity with the examination processes.

Being the first to investigate telemedicine reliability in the evaluation of both the grading and treatment complications of facial dystonia was the strength of this study. The examination protocol of this study should be studied further as a possible future standard.

In conclusion, telemedicine produced high usability scores and better reliability in evaluating facial dystonia treatment complications than its spasm gradings. The dynamic periodic spasm of the disease should be taken into consideration when assessing difficulties in reaching higher reliability.

Limitations of the study

The shortcomings of this study were the subjective scale and the omission of inter-rater agreement data. The validated JRS was selected as our major rating scale due to its good internal consistency in assessing BEB and its accepted use for BEB and HFS.24,25 Inter-rater agreement was omitted due to resource limitations and because our neuro-ophthalmologist assessors (P.H. and S.J.) have similar grading abilities from more than ten years of experience. Nevertheless, each pair of telemedicine and in-person examinations was graded by the same neuro-ophthalmologist to exclude possible inter-rater effects. Within this context, we aimed to minimize measurement and recall bias with a washout period of at least eight weeks.26 The telemedicine and in-person visits were also held less than 2 h apart and performed under controlled examination steps to minimize influencing factors such as rest status, time, and situation.1,2 Another shortcoming is the relatively small sample size, which resulted in rather low precision, as demonstrated by the wide confidence intervals. However, paired analysis could add some statistical power, and the study results are similar to those of previous studies on different diseases.

STAR★Methods

Key resources table

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |

|---|---|---|

| Software and algorithms | ||

| IBM SPSS Statistics 28.0 | International Business Machines Corporation (IBM) | https://www.ibm.com/support/pages/downloading-ibm-spss-statistics-28 |

| Chula Care Application | King Chulalongkorn Memorial Hospital. The mobile application can be downloaded through the App Store and Play Store. (This application is available to King Chulalongkorn Memorial Hospital’s patients after identity verification. Telemedicine visits are accessible only after an official appointment.) | https://chulalongkornhospital.go.th/kcmh/chula-care-application/ https://apps.apple.com/th/app/chula-care/id1382259986?l=th https://play.google.com/store/apps/details?id=th.go.chulalongkornhospital.chulacare&pli=1 https://bit.ly/2yzaKjV https://chula.virtualhosp.com/backoffice/login |

| Other | ||

| Telehealth Usability Questionnaire (TUQ) | International Journal of Telerehabilitation | https://doi.org/10.5195/ijt.2016.6196 |

| Thai version of Telehealth Usability Questionnaire | International Journal of Telerehabilitation | https://doi.org/10.5195/ijt.2023.6577 |

Resource availability

Lead contact

Further information and requests for resources should be directed to and will be fulfilled by the lead contact, Parima Hirunwiwatkul (hparima@gmail.com).

Materials availability

- The Thai version of the Telehealth Usability Questionnaire (T-TUQ), used to evaluate the usability of telemedicine in this study, and the original, previously developed and validated Telehealth Usability Questionnaire (TUQ) have both been published.17,27
- This study is not related to any reagent or code.

Data and code availability

- All data reported in this paper will be shared by the lead contact upon request.
- This paper does not report original code.
- Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon request.

Experimental model and study participant details

This diagnostic study was approved by the Institutional Review Board of the Faculty of Medicine, Chulalongkorn University, Bangkok, Thailand (Approval number 433/2021) and registered in the Thai clinical trials registry (TCTR20210508001). Each action in this study was completed in accordance with the Declaration of Helsinki and Good Clinical Practice guidelines. All participants provided written informed consent.

Objectives

The primary objective was to validate the reliability of telemedicine in evaluating spasm severity and frequency of facial dystonia participants and any corresponding functional disability. Other secondary objectives were to determine telemedicine’s ability to recognize possible complications of botulinum toxin injections, facilitate decisions on further injections, assess impacts on daily life activities, and assess its usability.

Samples and participants

Since the five severity gradings were grouped into high-grade and low-grade groups, the calculated sample size was 74 recordings, considering an expected kappa coefficient of 0.8, a precision of 0.15 (kappa = 0.65–0.95), a 95% confidence level (95% CI), and an outcome proportion of 0.7.28,29,30 After assuming a drop-out rate of 10%, a sample size of 83 telemedicine visits was targeted.
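
The drop-out adjustment can be illustrated with a short Python sketch. The source does not state which formula was used; the sketch assumes the common convention of dividing the required sample size by the expected retention rate and rounding up, which is consistent with the reported target. The function name `inflate_for_dropout` is hypothetical.

```python
import math


def inflate_for_dropout(required_n, dropout_rate):
    """Inflate a required sample size to allow for an expected drop-out rate.

    Assumed convention: n_target = ceil(required_n / (1 - dropout_rate)).
    """
    if not 0 <= dropout_rate < 1:
        raise ValueError("dropout_rate must be in [0, 1)")
    return math.ceil(required_n / (1 - dropout_rate))


# 74 recordings required, 10% expected drop-out (values from the text above).
target = inflate_for_dropout(74, 0.10)
print(target)
```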

Eighty-three in-hospital telemedicine visit video recordings of facial dystonia participants were collected from the botulinum toxin outpatient clinic, Department of Ophthalmology, King Chulalongkorn Memorial Hospital (KCMH), Bangkok, Thailand. In-hospital telemedicine visits were arranged through the KCMH telemedicine application called “Chula Care”. The in-person visit, held within two hours of the telemedicine visit and evaluated by a neuro-ophthalmologist, was considered the gold standard.

Thai participants of Southeast Asian ethnicity with facial dystonia, of any sex or gender and at any stage of disease, were recruited by invitation at the clinic from 11 November 2021 to 31 March 2022. Demographic data can be found in Table 1. Eligible participants were at least 18 years old and able to communicate in Thai and use the hospital telemedicine application, either by themselves or with help from their caregivers. Participants with fluctuating co-morbidities such as myasthenia gravis, or with diseases that decrease the ability to communicate through telemedicine such as severe dementia, were excluded.

Participants in this study were healthy, immunocompetent hosts who were naïve to the study experimental model. No procedures were arranged for any participant prior to this study.

Method details

Before each telemedicine visit, participants were trained on how to use the telemedicine application by finding the proper environment, position, and lighting for high quality video according to the following setups:

- (1) Position in room with windows
  - Front-facing the window
  - Avoid back-facing and side-facing
  - Close curtains to adjust lighting for best visualization
- (2) Ceiling light position
  - In front of the camera
  - Not directly above nor at the side of the participants
  - Can be turned off if there are other lighting source
- (3) Stand lighting properties
  - Ring LED light size six to eight inch in diameter
  - Cool white light color
  - Appropriate intensity for facial visibility with minimum reflective light
- (4) Camera/Mobile phone position
  - Center the camera in front of the ring LED light
  - Center the camera in front of participants faces at eye level
  - Move closer or farther to entirely visualize participants’ faces to neck
  - Use stand phone holder for stabilization
  - While looking straight, please look at the camera on the phone not the screen.

At the first two visits, at baseline and at 4–6 weeks, each participant was examined by a neuro-ophthalmologist (P.H. or S.J.) for spasm gradings, signs of complications, and impaired daily activities. Within two hours of each examination, the corresponding telemedicine visit was conducted by non-assessor researchers (P.P., W.T., B.A.) and recorded without evaluation at the KCMH telemedicine clinic (Figure 1). Botulinum toxin was then injected as indicated at the first visit. Symptoms of complications were collected only during the telemedicine visits. Video recordings of the first and second visits were considered independent of each other because of their different clinical presentations. At 12 to 14 weeks, the last, non-recorded telemedicine visit was held from the participant’s home within two days before the third in-person visit to evaluate real-life telemedicine usability. Further treatment decisions and spasm gradings were collected at this last visit. After a washout period of more than eight weeks, the videos of the first two telemedicine visits were de-identified, re-ordered, and evaluated by the same neuro-ophthalmologist (P.H. or S.J.) who had examined the participant during the corresponding in-person visit (Figure 1). Each pair of telemedicine and in-person examinations was thus graded by the same neuro-ophthalmologist to exclude possible inter-rater effects. All telemedicine and in-person visits were performed according to an adapted protocol:

- (1) Patients at rest, eyes open (10 sec)
- (2) Patients voluntarily perform a forceful eye closure and grin followed by eye reopening and mouth closure (repeated 5 times, one cycle per second)
- (3) Patients at rest, eyes open (10 sec)
- (4) Patients voluntarily perform gentle eye closure followed by eye reopening (repeated 5 times, one cycle per second)
- (5) Patients at rest, eyes open (10 sec)
- (6) Patients voluntarily perform a static pout with bloated cheeks (10 sec)
- (7) Patients at rest, eyes open (10 sec)
- (8) In primary head position, patients look to the left, to the right, up and down while using both hands to lift their upper lids. (15 sec)
- (9) Patients at rest, eyes open (10 sec)
- (10) Patients voluntarily perform gentle eye closure and chin up to detect lagophthalmos. (10 sec)
- (11) The doctor asks the patient the following questions:
- (12) How’s the severity and frequency of the spasmodic eyelids and facial musculatures?
- (13) Do you have a symptom of water in your eyes while washing your face?
- (14) Do you notice that you have drooping lids or unequal lids position?
- (15) Any drooling from the side of your mouth while drinking or brushing your teeth?
- (16) Do you see double vision? Is the diplopia in a vertical or horizontal direction?
- (17) Do facial spasms affect your daily life activities? What are the affected activities?
- (18) Patient at rest, eyes open (at least 120 sec), avoid extraneous movements or head and face touching which could affect the spasm severity.24

Quantification and statistical analysis

Outcomes and measurements

Spasm severity and frequency gradings were the primary outcomes as measured by the JRS graded from 0 to 4.25 Gradings were collected separately and later categorized into two groups, the high-grade or incapacitated group (grade 3-4) and the low-grade or non-functionally disabled group (grade 0-2). Secondary outcomes were: (1) signs and symptoms of complications after treatment including lagophthalmos, ptosis, drooping lips, and extraocular muscles limitation; (2) impaired daily activities; (3) usability, assessed by the Thai version of Telehealth Usability Questionnaire (T-TUQ).27 This questionnaire was translated from a previously developed and validated Telehealth Usability Questionnaire (TUQ), with permission from the questionnaire developer, University of Pittsburgh.17,27 Translation and back translation processes were reviewed for content validity by four expert judgements and pilot cognitive interview with ten patients.27 Each question was scored based on a seven-point Likert scale and classified into five subscales: usefulness, ease of use, effectiveness, reliability, and satisfaction (Table 4).17
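
To make the subscale structure concrete, the following Python sketch groups the 21 questionnaire items into the five subscales as laid out in Table 4 and averages them. It is an approximation under a stated assumption: the sketch averages the published per-item means from Table 4, whereas the study presumably averaged respondent-level scores, which are not available here. The names `SUBSCALES`, `ITEM_MEANS`, and `subscale_means` are hypothetical.

```python
from statistics import mean

# Item ranges per subscale, following the layout of Table 4.
SUBSCALES = {
    "usefulness": range(1, 4),       # items 1-3
    "ease_of_use": range(4, 10),     # items 4-9
    "effectiveness": range(10, 15),  # items 10-14
    "reliability": range(15, 18),    # items 15-17
    "satisfaction": range(18, 22),   # items 18-21
}

# Per-item mean scores as published in Table 4 (seven-point Likert scale).
ITEM_MEANS = {
    1: 6.65, 2: 6.80, 3: 6.40,
    4: 6.45, 5: 6.33, 6: 6.40, 7: 6.65, 8: 6.25, 9: 6.45,
    10: 6.25, 11: 6.56, 12: 6.73, 13: 6.48, 14: 6.58,
    15: 6.45, 16: 6.26, 17: 5.97,
    18: 6.78, 19: 6.70, 20: 6.54, 21: 6.74,
}


def subscale_means(item_scores):
    """Average the item scores belonging to each subscale."""
    return {
        name: mean(item_scores[item] for item in items)
        for name, items in SUBSCALES.items()
    }


summary = subscale_means(ITEM_MEANS)
overall = mean(ITEM_MEANS.values())
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
print(f"overall: {overall:.1f}")
```

Averaging item means this way agrees with the subscale means in Table 4 to two decimal places, although small differences could arise if some items had missing responses.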

Statistical analysis

Appropriate descriptive statistics including mean with SD, frequency and percentage were used to describe subject characteristics, affected daily activities, and usability score in results, Table 1, and Table 4.

Intra-rater agreement between telemedicine visit videos and in-person visit evaluations was assessed by quadratic weighted kappa coefficient for spasm severity and frequency separately. The kappa coefficient statistic was calculated to evaluate the binary outcomes agreements, which are the categorized severity and frequency gradings, detection of complications by signs alone, detection of complications by signs or symptoms identified during telemedicine visit compared to signs identified during in-person visit, and decisions for further botulinum toxin injection in Table 2. The employed definition of kappa was previously described by Landis and Koch.30 Using an in-person evaluation by a neuro-ophthalmologist as the gold standard best practice, sensitivity, specificity, PPV, NPV, positive likelihood ratio, negative likelihood ratio, and accuracy were further calculated with 95%CI in Table 3. Inter-rater agreement was omitted because our neuro-ophthalmologist assessors (P.H. and S.J.) have similar JRS grading abilities through experience and real-life practice. All analyses were performed using SPSS (version 28.0; IBM Corp) without imputation of missing data. Indeterminate results were discussed between P.H. and S.J.
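
The agreement statistics described above can be sketched in plain Python. The study itself used SPSS; the code below is only an illustrative implementation of Cohen's kappa with optional quadratic weights, and the paired gradings are hypothetical, not study data. The names `weighted_kappa` and `categorize` are assumptions introduced for this example. Confidence intervals are not computed.

```python
from collections import Counter


def weighted_kappa(rater_a, rater_b, n_categories, quadratic=True):
    """Cohen's kappa for paired integer grades in 0..n_categories-1.

    With quadratic=True, disagreements are penalised by the squared
    distance between grades; with quadratic=False, every disagreement
    counts equally (unweighted kappa).
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be paired and non-empty")
    if n_categories < 2:
        raise ValueError("at least two categories are required")
    n = len(rater_a)
    observed = Counter(zip(rater_a, rater_b))
    marg_a = Counter(rater_a)
    marg_b = Counter(rater_b)

    def weight(i, j):
        if quadratic:
            return (i - j) ** 2 / (n_categories - 1) ** 2
        return 0.0 if i == j else 1.0

    obs_disagreement = 0.0
    exp_disagreement = 0.0
    for i in range(n_categories):
        for j in range(n_categories):
            w = weight(i, j)
            obs_disagreement += w * observed[(i, j)] / n
            exp_disagreement += w * marg_a[i] * marg_b[j] / (n * n)
    if exp_disagreement == 0:
        raise ValueError("kappa is undefined when expected disagreement is zero")
    return 1.0 - obs_disagreement / exp_disagreement


def categorize(grade):
    """Map a JRS grade to 0 (low-grade, 0-2) or 1 (high-grade, 3-4)."""
    return 1 if grade >= 3 else 0


# Hypothetical paired gradings, for illustration only (not study data).
in_person = [0, 1, 2, 3, 4, 3, 2, 1, 0, 3]
telemedicine = [0, 1, 3, 3, 4, 2, 2, 0, 0, 4]

kappa_quadratic = weighted_kappa(in_person, telemedicine, n_categories=5)
kappa_binary = weighted_kappa(
    [categorize(g) for g in in_person],
    [categorize(g) for g in telemedicine],
    n_categories=2,
    quadratic=False,
)
print(round(kappa_quadratic, 3), round(kappa_binary, 3))
```

Quadratic weights penalise a two-grade disagreement four times as heavily as a one-grade disagreement, which suits an ordinal scale such as the JRS; the unweighted form is used for the binary high-grade versus low-grade comparison.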

Additional resources

The original Telehealth Usability Questionnaire (TUQ) and Thai version Telehealth Usability Questionnaire (T-TUQ) are available through https://doi.org/10.5195/ijt.2016.6196 and https://doi.org/10.5195/ijt.2023.6577, respectively.26 This study was registered in the Thai clinical trials registry (TCTR20210508001) available through https://www.thaiclinicaltrials.org/#.

Acknowledgments

The authors thank Teerakiat Jaroensettasin, MD, consultant in Child and Adolescent Psychiatry, Colchester, UK, and his team for the TUQ back translation process. Funding was granted from the Quality Improvement Fund of King Chulalongkorn Memorial Hospital, Thai Red Cross Society, Bangkok, Thailand (grant number 273/2564).

Author contributions

P.P., P.H., W.T., and S.J. contributed to the conceptualization and visualization. P.P., P.H., W.T., S.J., and S.K. developed the methodology and performed the experiments. P.P., P.H., W.T., S.J., and B.A. collected clinical data. P.P. performed the data curation and formal analysis and wrote the original manuscript. P.H., W.T., S.J., B.A., and S.K. critically reviewed and edited the manuscript. P.P., P.H., and S.K. contributed to the resources. P.P. and P.H. acquired the funding. P.H. administered the project and supervised the study. P.P. and P.H. verified the data and contributed equally to the study. All authors had full access to all the data in this study and had final responsibility for the decision to submit for publication.

Declaration of interests

The authors declare no competing interests.

Published: May 3, 2024

References

- 1. Holds J.B., White G.L., Thiese S.M., Anderson R.L. Facial dystonia, essential blepharospasm and hemifacial spasm. Am. Fam. Physician. 1991;43:2113–2120.

- 2. Moss H.E. In: Liu, Volpe, and Galetta’s neuro-ophthalmology: diagnosis and management. Third ed. Liu G.T., Volpe N.J., Galetta S.L., editors. Elsevier; 2019. Eyelid and facial nerve disorders; pp. 467–469.

- 3. Fraint A., Stebbins G.T., Pal G., Comella C.L. Reliability, feasibility and satisfaction of telemedicine evaluations for cervical dystonia. J. Telemed. Telecare. 2020;26:560–567. doi: 10.1177/1357633X19853140.

- 4. Dullet N.W., Geraghty E.M., Kaufman T., Kissee J.L., King J., Dharmar M., Smith A.C., Marcin J.P. Impact of a university-based outpatient telemedicine program on time savings, travel costs, and environmental pollutants. Value Health. 2017;20:542–546. doi: 10.1016/j.jval.2017.01.014.

- 5. Caffery L.J., Farjian M., Smith A.C. Telehealth interventions for reducing waiting lists and waiting times for specialist outpatient services: A scoping review. J. Telemed. Telecare. 2016;22:504–512. doi: 10.1177/1357633X16670495.

- 6. Grossman S.N., Han S.C., Balcer L.J., Kurzweil A., Weinberg H., Galetta S.L., Busis N.A. Rapid implementation of virtual neurology in response to the COVID-19 pandemic. Neurology. 2020;94:1077–1087. doi: 10.1212/WNL.0000000000009677.

- 7. Lai K.E., Ko M.W., Rucker J.C., Odel J.G., Sun L.D., Winges K.M., Ghosh A., Bindiganavile S.H., Bhat N., Wendt S.P., et al. Tele-Neuro-Ophthalmology During the Age of COVID-19. J. Neuro Ophthalmol. 2020;40:292–304. doi: 10.1097/WNO.0000000000001024.

- 8. Ko M.W., Busis N.A. Tele-Neuro-Ophthalmology: Vision for 20/20 and Beyond. J. Neuro Ophthalmol. 2020;40:378–384. doi: 10.1097/WNO.0000000000001038.

- 9. Martin-Khan M., Wootton R., Whited J., Gray L.C. A systematic review of studies concerning observer agreement during medical specialist diagnosis using videoconferencing. J. Telemed. Telecare. 2011;17:350–357. doi: 10.1258/jtt.2011.101113.

- 10. Yager P.H., Clark M.E., Dapul H.R., Murphy S., Zheng H., Noviski N. Reliability of circulatory and neurologic examination by telemedicine in a pediatric intensive care unit. J. Pediatr. 2014;165:962–966.e65. doi: 10.1016/j.jpeds.2014.07.002.

- 11. Lade H., McKenzie S., Steele L., Russell T.G. Validity and reliability of the assessment and diagnosis of musculoskeletal elbow disorders using telerehabilitation. J. Telemed. Telecare. 2012;18:413–418. doi: 10.1258/jtt.2012.120501.

- 12. Awadallah M., Janssen F., Körber B., Breuer L., Scibor M., Handschu R. Telemedicine in general neurology: interrater reliability of clinical neurological examination via audio-visual telemedicine. Eur. Neurol. 2018;80:289–294. doi: 10.1159/000497157.

- 13. Nitzkin J.L., Zhu N., Marier R.L. Reliability of telemedicine examination. Telemed. J. 1997;3:141–157. doi: 10.1089/tmj.1.1997.3.141.

- 14. Tarolli C.G., Andrzejewski K., Zimmerman G.A., Bull M., Goldenthal S., Auinger P., O’Brien M., Dorsey E.R., Biglan K., Simuni T. Feasibility, reliability, and value of remote video-based trial visits in Parkinson’s disease. J. Parkinsons Dis. 2020;10:1779–1786. doi: 10.3233/JPD-202163.

- 15. Bull M.T., Darwin K., Venkataraman V., Wagner J., Beck C.A., Dorsey E.R., Biglan K.M. A pilot study of virtual visits in Huntington disease. J. Huntingtons Dis. 2014;3:189–195. doi: 10.3233/JHD-140102.

- 16. Louis E.D., Levy G., Côte L.J., Mejia H., Fahn S., Marder K. Diagnosing Parkinson’s disease using videotaped neurological examinations: Validity and factors that contribute to incorrect diagnoses. Mov. Disord. 2002;17:513–517. doi: 10.1002/mds.10119.

- 17. Parmanto B., Lewis A.N., Jr., Graham K.M., Bertolet M.H. Development of the Telehealth Usability Questionnaire (TUQ). Int. J. Telerehabil. 2016;8:3–10. doi: 10.5195/ijt.2016.6196.

- 18. Fatehi F., Jahedi F., Tay-Kearney M.-L., Kanagasingam Y. Teleophthalmology for the elderly population: A review of the literature. Int. J. Med. Inform. 2020;136. doi: 10.1016/j.ijmedinf.2020.104089.

- 19. Cabuk K.S., Karabulut G.O., Fazil K., Nacaroglu S.A., Gunaydin Z.K., Taskapili M. 2D analysis of gold weight implantation surgery results in paralytic lagophthalmos. Beyoglu Eye J. 2021;6:200–205. doi: 10.14744/bej.2021.95866.

- 20. McCord C.D., Walrath J.D., Nahai F. Concepts in eyelid biomechanics with clinical implications. Aesthet. Surg. J. 2013;33:209–221. doi: 10.1177/1090820X12472692.

- 21. Labiris G., Panagiotopoulou E.K., Kozobolis V.P. A systematic review of teleophthalmological studies in Europe. Int. J. Ophthalmol. 2018;11:314–325. doi: 10.18240/ijo.2018.02.22.

- 22. Pinar U., Anract J., Perrot O., Tabourin T., Chartier-Kastler E., Parra J., Vaessen C., de La Taille A., Roupret M. Preliminary assessment of patient and physician satisfaction with the use of teleconsultation in urology during the COVID-19 pandemic. World J. Urol. 2021;39:1991–1996. doi: 10.1007/s00345-020-03432-4.

- 23. Robiony M., Bocin E., Sembronio S., Costa F., Bresadola V., Tel A. Redesigning the Paradigms of Clinical Practice for Oral and Maxillofacial Surgery in the Era of Lockdown for COVID-19: From Tradition to Telesemeiology. Int. J. Environ. Res. Public Health. 2020;17:6622. doi: 10.3390/ijerph17186622.

- 24. Defazio G., Hallett M., Jinnah H.A., Stebbins G.T., Gigante A.F., Ferrazzano G., Conte A., Fabbrini G., Berardelli A. Development and validation of a clinical scale for rating the severity of Blepharospasm. Mov. Disord. 2015;30:525–530. doi: 10.1002/mds.26156.

- 25. Wabbels B., Jost W.H., Roggenkämper P. Difficulties with differentiating botulinum toxin treatment effects in essential blepharospasm. J. Neural. Transm. 2011;118:925–943. doi: 10.1007/s00702-010-0546-9.

- 26. Campbell W.S., Talmon G.A., Foster K.W., Baker J.J., Smith L.M., Hinrichs S.H. Visual memory effects on intraoperator study design. Am. J. Clin. Pathol. 2015;143:412–418. doi: 10.1309/AJCPUC3TYMS3QOBM.

- 27. Hirunwiwatkul P., Pongpanich P., Tulvatana W., Jariyakosol S., Phuenpathom W., Krittanupong S., Chonramak R., Pichedvanichok T., Bhidayasiri R., Nimnuan C. Evaluation of Psychometric Properties of Thai Version Telehealth Usability Questionnaire (T-TUQ). Int. J. Telerehabil. 2023;15. doi: 10.5195/ijt.2023.6577.

- 28. Donner A., Eliasziw M. A goodness-of-fit approach to inference procedures for the kappa statistic: Confidence interval construction, significance-testing and sample size estimation. Stat. Med. 1992;11:1511–1519. doi: 10.1002/sim.4780111109.

- 29. Shoukri M.M., Asyali M.H., Donner A. Sample size requirements for the design of reliability study: review and new results. Stat. Methods Med. Res. 2004;13:251–271. doi: 10.1191/0962280204sm365ra.

- 30. Landis J.R., Koch G.G. The measurement of observer agreement for categorical data. Biometrics. 1977;33:159–174. doi: 10.2307/2529310.

Associated Data

Data Availability Statement

- All data reported in this paper will be shared by the lead contact upon request.

- This paper does not report original code.

- Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon request.