Abstract

Background

Radiotherapy is a core treatment modality for oropharyngeal cancer (OPC), where the primary gross tumor volume (GTVp) is manually segmented with high interobserver variability. This calls for reliable and trustworthy automated tools in the clinical workflow. Therefore, accurate uncertainty quantification and its downstream utilization are critical.

Methods

Here we propose uncertainty-aware deep learning for OPC GTVp segmentation, and illustrate the utility of uncertainty in multiple applications. We examine two Bayesian deep learning (BDL) models and eight uncertainty measures, and utilize a large multi-institute dataset of 292 PET/CT scans to systematically analyze our approach.

Results

We show that our uncertainty-based approach accurately predicts the quality of the deep learning segmentation in 86.6% of cases, identifies low performance cases for semi-automated correction, and visualizes regions of the scans where the segmentations likely fail.

Conclusions

Our BDL-based analysis provides a first step towards more widespread implementation of uncertainty quantification in OPC GTVp segmentation.

Subject terms: Medical imaging, Cancer imaging

Plain language summary

Radiotherapy is used as a treatment for people with oropharyngeal cancer. It is important to distinguish the areas where cancer is present so the radiotherapy treatment can be targeted at the cancer. Computational methods based on artificial intelligence can automate this task but need to be able to distinguish areas where it is unclear whether cancer is present. In this study we compare these computational methods that are able to highlight areas where it is unclear whether or not cancer is present. Our approach accurately predicts how well these areas are distinguished by the models. Our results could be applied to improve the computational methods used during radiotherapy treatment. This could enable more targeted treatment to be used in the future, which could result in better outcomes for people with oropharyngeal cancer.

Sahlsten et al. systematically evaluate two Bayesian deep learning methods and eight uncertainty measures for the segmentation of oropharyngeal cancer primary gross tumor volume with a multi-institute PET/CT dataset. The uncertainty-aware approach can accurately predict the segmentation quality, which enables automatic segmentation quality control.

Introduction

Management of oropharyngeal cancer (OPC), a type of head and neck squamous cell carcinoma (HNSCC), remains a challenge even for experienced multidisciplinary centers1. A core treatment modality in OPC patient care is radiotherapy (RT). The current standard of care for OPC RT relies on clinical experts’ manually generated segmentation of the primary gross tumor volume (GTVp) as a target structure to deliver RT dose. However, the GTVp in OPC is notorious for being one of the most difficult structures amongst all cancer types to segment accurately for RT planning due to its exceptionally high interobserver variability2–4. Consequently, GTVp segmentation has been cited as the single largest factor of uncertainty in RT planning5,6. Therefore, automated approaches that can reduce interobserver variability are of paramount importance in improving the current OPC RT workflow.

Deep learning (DL) has increasingly been used in the OPC RT space to automatically segment organs at risk7,8 and target structures9–12. Impressively, even for GTVp segmentation, several DL approaches have reported exceptionally high performance in terms of volumetric and surface-level agreement with the ground-truth segmentations13. Importantly, the DL-based auto-segmentation has been shown to be superior to the expected expert’s interobserver variability14, thereby highlighting its potential utility as a support tool for accurate clinical decision-making. Notably, many of these advances in OPC GTVp segmentation have been spurred by open-source data challenges15, namely the HEad and neCK TumOR (HECKTOR) PET/CT tumor segmentation challenge14,16,17. However, while there exists a deluge of DL-based OPC auto-segmentation approaches that demonstrate potentially clinically acceptable performance in terms of geometric measures as evident from HECKTOR16, the relative confidence (i.e., uncertainty) with which these models generate their predictions remains a relatively unexplored domain.

Uncertainty quantification is crucial to improve the trust of clinicians in automated systems and to facilitate the clinical implementation of these technologies18. Within RT, the segmentation is a clear and well-discussed application space for uncertainty estimation19. This is particularly relevant for RT target structures (i.e., OPC GTVp) where high interobserver variability is expected. In addition, there is interest in separating the predictive uncertainty into aleatoric and epistemic components and analyzing them separately20. While the performance of OPC GTVp auto-segmentation models is seemingly impressive, the actual clinical utility for most of these methods has yet to be solidified due to a lack of investigations on model uncertainty. Previous work in DL uncertainty estimation has been extensively investigated in segmenting lung-related21–23 and brain-related24–26 structures. While DL uncertainty estimation has been applied to a broad range of HNSCC-related classification tasks27–30 and dose prediction31, only a limited number of studies have investigated uncertainty estimation for 3-dimensional HNSCC medical image segmentation, predominantly for nasopharyngeal cancer32 or organs at risk33,34; to our knowledge only one study has attempted to investigate segmentation uncertainty estimation in OPC35. Therefore, there exists a considerable gap in knowledge about how to construct DL auto-segmentation models that lend themselves to uncertainty estimation and subsequently how to quantify the model uncertainty at individual patient and voxel-wise levels for OPC GTVp segmentation.

In this study, we explore the utilization of uncertainty with deep learning in 3D medical imaging by proposing an uncertainty-aware DL approach for OPC GTVp auto-segmentation. We develop probabilistic DL models based on Deep Ensemble and MC Dropout Ensemble using large-scale PET/CT datasets and systematically investigate various established uncertainty measures for patient-level uncertainty, i.e., the information-theoretic entropy, expected entropy, mutual information, coefficient of variation, and structure expected entropy. In addition, we derive three measures that are, to the best of our knowledge, novel, namely the DSC-risk, structure predictive entropy, and structure mutual information, and we present a qualitative analysis of voxel-wise uncertainty with the entropy, expected entropy, and mutual information. We evaluate the auto-segmentation results with established segmentation performance measures, and we evaluate the utility of uncertainty information by employing several quantitative evaluation methods to link uncertainty measures to the known performance measures and by qualitatively investigating the resulting uncertainty estimates.

Methods

This research is based on retrospective and registry-based data, and as such does not consider human subjects and is not subject to IRB approval. Our external validation dataset was retrospectively collected under a HIPAA-compliant protocol approved by the MD Anderson Institutional Review Board (RCR03-0800), which implements a waiver of informed consent. In this section, we introduce background on uncertainty estimation in deep learning, present the materials and experimental setup, i.e., the datasets used in this study, describe the DL models we employ, introduce the uncertainty measures that are used to quantify the model uncertainty, and list all the performance evaluation metrics used for the experiments. Our proposed uncertainty-based framework is depicted in Fig. 1.

Fig. 1. Proposed framework for uncertainty-aware GTVp segmentation of OPC patients.

The probabilistic deep learning model, with stochastic parameters distributed according to an approximate posterior distribution, segments the GTVp, outputs a voxel-level uncertainty map, and quantifies the patient-level uncertainty value. The patient-level uncertainty is then used to estimate the segmentation quality by checking whether the uncertainty is below or above the predetermined threshold. When the patient-level uncertainty exceeds the threshold, a medical expert will manually inspect and perform corrections to the deep learning segmentation, if necessary. The downstream utilization of the segmentation is then informed by the patient-wise and voxel-wise uncertainties, as well as the patient-wise performance estimate.

Uncertainty estimation in deep learning

Approaches based on conventional DL have been found to be overconfident in the predictions they make. This means that the probability estimates they provide do not correspond to the observed likelihood of them being correct36. The Bayesian approach has been described to show promise in improving uncertainty estimation and calibration of DL methods37. A direct estimation of the Bayesian posterior of the parameters is intractable, and thus approximation methods are required. Two common posterior approximation methods for segmentation in the literature are deep ensembling22,38 and Monte Carlo (MC) Dropout26,39,40. The deep ensemble approach assumes that multiple networks, having been trained independently with stochastic gradient descent with weight decay, provide samples from the modes of the posterior. The MC Dropout can be seen as a variational approximation of the posterior41 that can be efficiently sampled from. A key concept for the Bayesian predictive models is the posterior predictive distribution that requires marginalization over the model parameters. Using samples from the deep ensemble or MC Dropout, we can use Monte Carlo approximation for the posterior predictive distribution:

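$$p(y \mid x, \mathcal{D}) \approx \frac{1}{M} \sum_{m=1}^{M} p(y \mid x, \theta_m) \tag{1}$$

where $x$ is the input, $y$ is the output, $\mathcal{D}$ is the training data, $M$ is the number of MC samples, and $\theta_m$ is the $m$th MC sample of the parameters.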
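The Monte Carlo approximation described above can be illustrated with a minimal sketch. It assumes that each parameter sample has already produced a flat list of per-voxel foreground probabilities; the function name `posterior_predictive` and the toy values are hypothetical and are not taken from the study.

```python
from typing import List, Sequence


def posterior_predictive(samples: Sequence[Sequence[float]]) -> List[float]:
    """Average per-voxel foreground probabilities over the parameter samples."""
    if not samples:
        raise ValueError("at least one Monte Carlo sample is required")
    n_samples = len(samples)
    n_voxels = len(samples[0])
    return [sum(s[v] for s in samples) / n_samples for v in range(n_voxels)]


# Three hypothetical stochastic forward passes over four voxels.
mc_samples = [
    [0.9, 0.2, 0.6, 0.1],
    [0.8, 0.1, 0.4, 0.1],
    [1.0, 0.3, 0.5, 0.1],
]
mean_prob = posterior_predictive(mc_samples)
```

The same averaging applies whether the samples come from independently trained ensemble members, from dropout masks, or from both.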

Uncertainty in any modeling task is often thought to be separable into two main sources, i.e., aleatoric and epistemic42. The aleatoric uncertainty is the component that is caused by inherent randomness in the data, e.g., noise, while the epistemic uncertainty originates from imperfect knowledge of the model, e.g., the type of model or its parameters. In deep learning, both aleatoric and epistemic uncertainty contribute to the predictive uncertainty, i.e., the uncertainty that the deep learning model has in its predictions43.

To measure the uncertainty associated with a set of events, a classic uncertainty measure is the information-theoretic entropy, proposed by Shannon44, defined for a predictive model $p(y \mid x)$ of $y$ given $x$ as:

$$\mathbb{H}[y \mid x] = -\sum_{y} p(y \mid x) \log p(y \mid x) \tag{2}$$

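For a Bayesian supervised predictive model with the probability distribution $p(\theta \mid \mathcal{D})$ over the set of possible parameters $\Theta$, such as a Bayesian neural network (BNN), the predictive entropy of the model can be decomposed to the aleatoric and epistemic components45 as follows:

$$\mathbb{H}[y \mid x, \mathcal{D}] = \mathbb{E}_{p(\theta \mid \mathcal{D})}\big[\mathbb{H}[y \mid x, \theta]\big] + \mathbb{I}[y; \theta \mid x, \mathcal{D}] \tag{3}$$

where $\theta \in \Theta$ is a realization (or an event) of the parameters, $\mathbb{I}[y; \theta \mid x, \mathcal{D}]$ is the mutual information between the output of the model and the parameters of the model, and the term $\mathbb{E}_{p(\theta \mid \mathcal{D})}\big[\mathbb{H}[y \mid x, \theta]\big]$ captures the aleatoric uncertainty while $\mathbb{I}[y; \theta \mid x, \mathcal{D}]$ stands for the epistemic uncertainty component. For the remainder of this work, $\mathbb{H}[y \mid x, \mathcal{D}]$ will be called the predictive entropy, $\mathbb{E}_{p(\theta \mid \mathcal{D})}\big[\mathbb{H}[y \mid x, \theta]\big]$ will be called the expected entropy, and $\mathbb{I}[y; \theta \mid x, \mathcal{D}]$ will be called the mutual information. A common approach in deep learning segmentation is to consider that the labels of each voxel are conditionally independent of each other given the input, see e.g., refs. 46,47. Hence the entropy-based uncertainty of the output as a whole is the sum of the voxel-wise entropies.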
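A minimal sketch of this decomposition for binary voxel labels, assuming Monte Carlo samples of per-voxel foreground probabilities and the conditional independence of voxels stated above. The function names are hypothetical, and natural logarithms are an assumption.

```python
import math
from typing import Sequence, Tuple


def binary_entropy(p: float) -> float:
    """Entropy (in nats) of a Bernoulli variable with success probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log(p) + (1.0 - p) * math.log(1.0 - p))


def decompose(samples: Sequence[Sequence[float]]) -> Tuple[float, float, float]:
    """Return (predictive entropy, expected entropy, mutual information),
    each summed over voxels."""
    n_samples = len(samples)
    n_voxels = len(samples[0])
    predictive = 0.0
    expected = 0.0
    for v in range(n_voxels):
        probs = [s[v] for s in samples]
        # Entropy of the averaged prediction: total predictive uncertainty.
        predictive += binary_entropy(sum(probs) / n_samples)
        # Average of the per-sample entropies: aleatoric component.
        expected += sum(binary_entropy(p) for p in probs) / n_samples
    # The difference is the epistemic component (mutual information).
    return predictive, expected, predictive - expected


# Samples that agree on an ambiguous voxel: uncertainty is purely aleatoric.
agree = decompose([[0.5], [0.5]])
# Samples that confidently disagree: uncertainty is purely epistemic.
disagree = decompose([[1.0], [0.0]])
```

In the first case the mutual information is zero and the expected entropy equals the predictive entropy; in the second the expected entropy is zero and the mutual information carries all of the predictive entropy.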

Recently, there has also been interest in defining alternative uncertainty measures for deep learning. One approach to such measures is to view the uncertainty in terms of the pointwise risk at an input $x$ (refs. 48–50). That is:

$$R(x) = \mathbb{E}_{p(y \mid x)}\big[\ell\big(y, f(x)\big)\big] \tag{4}$$

where $\ell$ is a cost function and $f(x)$ is the output of a neural network. When the cost function is the negative log-likelihood and we assume that the output of the neural network is the true conditional distribution of possible events, we have:

$$R(x) = \mathbb{E}_{p(y \mid x)}\big[-\log p(y \mid x)\big] = \mathbb{H}[y \mid x] \tag{5}$$

Thus, the information-theoretic entropy can be viewed as a special case of the pointwise risk with a negative log-likelihood as the cost function. Furthermore, the risk-based approach allows one to design a wide variety of uncertainty measures depending on how one views what the cost should be in the setting. However, since we do not necessarily have a cost function that is of an additive form of voxel-wise costs, the voxel-wise uncertainty might not be computable. In addition, for real life use-cases, it requires special modeling tools to decompose the risk-based uncertainty into aleatoric and epistemic components50.

In addition to the entropy-based and risk-based views of uncertainty estimation, numerous ad hoc uncertainty measures have been proposed for medical DL segmentation that have not been derived from first principles but have achieved remarkable results in the tasks involved. For example, Roy et al.26 proposed so-called structure-wise uncertainty computation, where the uncertainty is only computed on voxels that the model deems as part of the foreground structure, e.g., in GTVp segmentation the voxels where the predicted GTVp probability is at least 0.5. In the study, the structure predictions were then used to calculate two novel uncertainty measures, i.e., the coefficient of variation of the volume of thresholded predictions and the expected entropy of the structure voxels. In addition, the work proposed a third novel uncertainty measure called pairwise Dice similarity coefficient, which was not included in our study due to the high computational cost when using a large number of MC samples combined with ensembling.

Since the theory of uncertainty estimation with deep learning is still a developing field, and there does not exist a universal approach to it, systematic evaluation of uncertainty-aware neural networks and uncertainty measures for each use-case is necessary.

Materials and experimental setup

In this section, we present the dataset and the experimental setup used for our results.

Dataset

For this study, we utilized two main OPC patient datasets containing PET/CT data: (1) the publicly available 2021 HECKTOR Challenge training dataset51, which we obtained by completing the End User Agreement through AICrowd52, and (2) an external validation dataset from The University of Texas MD Anderson Cancer Center (MDA). The HECKTOR dataset contains 224 OPC patients with co-registered PET/CT scans. Each scan included a GTVp segmentation mask manually generated by a single clinical annotator, although multiple annotators were involved across the whole dataset. Additional details on the HECKTOR dataset can be found in the corresponding overview paper51. The MDA external validation dataset contains 67 human papillomavirus-positive OPC patients with co-registered PET/CT scans with manually generated GTVp segmentation masks from a single clinician annotator (S.A.). Manual segmentation was performed using Velocity AI software v. 3.0.1 (Atlanta, GA, USA). Additional details on the MDA external validation dataset, including image acquisition characteristics and demographic variables, can be found in Supplementary Methods, Supplementary Tables 1 and 2. The MDA external validation dataset was retrospectively collected under a HIPAA-compliant protocol approved by the MDA institutional review board (RCR03-0800), which implements a waiver of informed consent.

For model training and evaluation, all data were resampled to 1 mm isotropic voxel spacing (1 mm in-plane pixel spacing and 1 mm slice thickness) and cropped into 144 × 144 × 144 voxel-sized volumes centered around the GTVp segmentation. The CT scans were windowed at [−200, 200] Hounsfield Units and rescaled to the [−1, 1] range, and the PET scans were z-score normalized. The models were trained using a fivefold cross-validation scheme on the HECKTOR dataset. For the performance evaluation of the model, the MDA external validation dataset was used.
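The intensity normalization described above can be sketched as follows. This is an illustration on flat lists of voxel values, not the study's pipeline; the function names are hypothetical, and computing the PET z-score per scan with the population standard deviation is an assumption.

```python
from statistics import fmean, pstdev
from typing import List, Sequence


def window_and_rescale_ct(hu_values: Sequence[float],
                          lower: float = -200.0,
                          upper: float = 200.0) -> List[float]:
    """Clip CT intensities to [lower, upper] HU and map them linearly to [-1, 1]."""
    scaled = []
    for hu in hu_values:
        clipped = min(max(hu, lower), upper)
        scaled.append(2.0 * (clipped - lower) / (upper - lower) - 1.0)
    return scaled


def zscore_pet(values: Sequence[float]) -> List[float]:
    """Subtract the mean and divide by the standard deviation (assumed per scan)."""
    mean = fmean(values)
    std = pstdev(values)
    if std == 0.0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


ct_scaled = window_and_rescale_ct([-1000.0, -200.0, 0.0, 200.0, 3000.0])
pet_scaled = zscore_pet([1.0, 2.0, 3.0, 4.0, 5.0])
```

Values outside the window saturate at −1 or 1, so air and dense bone no longer dominate the CT input range.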

Bayesian deep learning models

We investigated two approximations of Bayesian inference in DL, i.e., the Deep Ensemble and the Monte Carlo (MC) Dropout Ensemble. The DL architecture in both models used the same 3D residual U-net from the Medical Open Network for AI (MONAI) (0.7.0)53 that was chosen due to its established success in OPC GTVp segmentation10,12,51,54. This architecture has two input channels, i.e., one for CT and one for PET, and a single output channel with the sigmoid activation function. The input is followed by an encoder consisting of five convolution blocks with 16, 32, 64, 128, and 256 channels, followed by a decoder mirroring the channel count, and a feature concatenation from each encoder block to the respective decoder block. Each of these blocks has two convolution layers, each followed by instance normalization, dropout, and parametric ReLU layers, and a residual connection with convolution between the input and output of the block. The only difference between the methods is that the MC Dropout method applies the dropout stochastic regularization layer during test-time41, whereas the output was deterministic with the Deep Ensemble.

Both ensembles consisted of five models that were each trained using fivefold cross-validation for the HECKTOR dataset. From a Bayesian point of view, the posterior predictive distribution of a Deep Ensemble is approximated with a uniform mixture of the individual networks in the ensemble, i.e., the average of the predictions of the individual networks is approximate Bayesian inference. With the MC Dropout Ensemble, the uniform mixture is over multiple networks with MC dropout, and the predictive distribution is approximated by the average over Monte Carlo samples from each of these networks55. In practice, we used 60 MC samples from each of the five ensemble members for the approximation.

For both ensembles, the optimal hyperparameters, i.e., the loss function and dropout rate, were searched based on the cross-validation performance. For the loss function, we evaluated the Dice loss, which is a soft approximation to the Dice similarity coefficient:

$$\mathcal{L}_{\mathrm{Dice}}(y, \hat{y}) = 1 - \frac{2 \sum_{i} y_i \hat{y}_i}{\sum_{i} y_i + \sum_{i} \hat{y}_i} \tag{6}$$

where $y_i$ and $\hat{y}_i$ are the label and prediction values at the coordinate $i$, respectively. In addition, we evaluated the sum of Dice and Binary Cross-Entropy (BCE) losses:

$$\mathcal{L}_{\mathrm{DiceBCE}}(y, \hat{y}) = \mathcal{L}_{\mathrm{Dice}}(y, \hat{y}) + \mathcal{L}_{\mathrm{BCE}}(y, \hat{y}) \tag{7}$$

$$\mathcal{L}_{\mathrm{BCE}}(y, \hat{y}) = -\frac{1}{N} \sum_{i=1}^{N} \big[ y_i \log \hat{y}_i + (1 - y_i) \log (1 - \hat{y}_i) \big] \tag{8}$$

where $N$ is the number of voxels. The dropout rate was evaluated with values of 0.1, 0.2, …, 0.9. The tuning of the model was based on the combination of the overall segmentation performance and the quality of the uncertainty estimation, selecting the largest value of the area under the Dice similarity coefficient referral curve, which is described in detail in the Uncertainty performance evaluation subsection. To keep our analysis as general as possible, uncertainty was not utilized otherwise during model development or training. The final model for both the Deep Ensemble and MC Dropout Ensemble utilized a dropout rate of 0.5 and the DiceBCELoss.
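For illustration, a combined Dice and BCE loss can be sketched on flat lists of labels and predicted probabilities. This is not the MONAI implementation used in the study; the smoothing constant `eps`, the unsquared Dice denominator, and the mean reduction of the BCE term are assumptions, and all function names are hypothetical.

```python
import math
from typing import Sequence


def dice_loss(labels: Sequence[float], preds: Sequence[float],
              eps: float = 1e-7) -> float:
    """Soft Dice loss: one minus a differentiable overlap score."""
    intersection = sum(y * p for y, p in zip(labels, preds))
    denominator = sum(labels) + sum(preds)
    # eps (an assumption) avoids division by zero for empty masks.
    return 1.0 - (2.0 * intersection + eps) / (denominator + eps)


def bce_loss(labels: Sequence[float], preds: Sequence[float],
             eps: float = 1e-7) -> float:
    """Binary cross-entropy averaged over voxels."""
    total = 0.0
    for y, p in zip(labels, preds):
        p = min(max(p, eps), 1.0 - eps)  # keep log() finite
        total -= y * math.log(p) + (1.0 - y) * math.log(1.0 - p)
    return total / len(labels)


def dice_bce_loss(labels: Sequence[float], preds: Sequence[float]) -> float:
    """Sum of the soft Dice loss and the binary cross-entropy loss."""
    return dice_loss(labels, preds) + bce_loss(labels, preds)


labels = [1.0, 0.0, 1.0, 0.0]
good = dice_bce_loss(labels, [1.0, 0.0, 1.0, 0.0])
poor = dice_bce_loss(labels, [0.4, 0.6, 0.4, 0.6])
```

The Dice term rewards overlap of the whole structure, while the BCE term penalizes each voxel separately, which is one common motivation for summing the two.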

Segmentation performance evaluation

The predictions of the models were thresholded with values of 0.5 and higher being considered as GTVp and otherwise as background. We denote the thresholded predictions with $\hat{Y}$ and the binary mask with $Y$. We evaluated the segmentation performance with the Dice similarity coefficient (DSC):

$$\mathrm{DSC}(Y, \hat{Y}) = \frac{2\,|Y \cap \hat{Y}|}{|Y| + |\hat{Y}|} \tag{9}$$

the mean surface distance (MSD):

$$\mathrm{MSD}(Y, \hat{Y}) = \frac{1}{|S(Y)| + |S(\hat{Y})|} \left( \sum_{a \in S(Y)} \min_{b \in S(\hat{Y})} \lVert a - b \rVert + \sum_{b \in S(\hat{Y})} \min_{a \in S(Y)} \lVert a - b \rVert \right) \tag{10}$$

and the Hausdorff distance at 95% (95HD):

$$\mathrm{95HD}(Y, \hat{Y}) = \max \left( P_{95}\Big\{ \min_{b \in S(\hat{Y})} \lVert a - b \rVert : a \in S(Y) \Big\},\; P_{95}\Big\{ \min_{a \in S(Y)} \lVert a - b \rVert : b \in S(\hat{Y}) \Big\} \right) \tag{11}$$

where $S(\cdot)$ is an operator that extracts the set of surface voxels, $|\cdot|$ is the cardinality of a set, and $P_{95}$ is the 95th percentile maximum. These metrics were selected because of their ubiquity in the literature and ability to capture both volumetric overlap and boundary distances56,57. For the fivefold cross-validation results, we report the mean and standard error of the mean (SEM) of the metrics computed on each fold, whereas for the holdout set we report the mean and interquartile range (IQR) of point estimates. The model output was resampled into original resolution with nearest-neighbor sampling and evaluated against original resolution segmentations. The performance of MSD and 95HD was evaluated in millimeters. When comparing the segmentation model metrics, we implemented two-sided Wilcoxon signed-rank tests with P values less than or equal to 0.05 considered as significant. Statistical comparisons and their annotations on figures were performed using the SciPy (1.7.3)58 and statannotations (0.4.4)59 Python packages, respectively.
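The thresholding step and the DSC can be illustrated with a short sketch on flattened masks. The function names are hypothetical, and returning 1.0 when both masks are empty is an assumption rather than a convention taken from the study.

```python
from typing import List, Sequence


def threshold(probs: Sequence[float], cutoff: float = 0.5) -> List[int]:
    """Label voxels with probability at or above the cutoff as GTVp (1)."""
    return [1 if p >= cutoff else 0 for p in probs]


def dice_similarity(pred_mask: Sequence[int], true_mask: Sequence[int]) -> float:
    """Dice similarity coefficient between two binary masks of equal length."""
    intersection = sum(p & t for p, t in zip(pred_mask, true_mask))
    total = sum(pred_mask) + sum(true_mask)
    if total == 0:
        return 1.0  # assumption: two empty masks agree perfectly
    return 2.0 * intersection / total


pred = threshold([0.5, 0.49, 0.9, 0.1])
dsc = dice_similarity(pred, [1, 1, 0, 0])
```

Here the prediction and the reference each contain two foreground voxels and share one of them, so the overlap is half of the maximum.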

Uncertainty measures

We consider predictive entropy, expected entropy, and mutual information as the entropy-based uncertainty measures. We also examine the risk-based uncertainty estimation, for which we propose the negative DSC to be the cost function in GTVp segmentation. We call this uncertainty measure DSC-risk, which is calculated as:

$$\mathrm{DSC\text{-}risk}(x) = \mathbb{E}_{p(y \mid x, \mathcal{D})}\big[-\mathrm{DSC}(y, \hat{Y})\big] \tag{12}$$

where $\hat{Y}$ is the thresholded prediction and the expectation over $y$ is with respect to the posterior predictive distribution $p(y \mid x, \mathcal{D})$. Since the expectation over all the possible segmentations cannot be calculated in practice, we utilize instead a Monte Carlo estimate of the DSC-risk:

$$\mathrm{DSC\text{-}risk}(x) \approx -\frac{1}{K} \sum_{k=1}^{K} \mathrm{DSC}(y_k, \hat{Y}), \qquad y_k \sim p(y \mid x, \mathcal{D}) \tag{13}$$

Thus, this estimate is taken in a “doubly stochastic” manner, since $p(y \mid x, \mathcal{D})$ is also estimated with Monte Carlo approximation. We decided not to consider the MSD and 95HD-based cost functions with the risk-based uncertainty estimation in order to keep the number of uncertainty measures within reasonable limits.
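One possible reading of the Monte Carlo DSC-risk estimate is sketched below. It assumes that candidate segmentations are drawn voxel-wise from independent Bernoulli distributions defined by the averaged prediction, and that each draw is compared with the thresholded averaged prediction; both choices, along with the function names, the number of draws, and the seed, are assumptions and not details confirmed by the text.

```python
import random
from typing import Sequence


def dice(a: Sequence[int], b: Sequence[int]) -> float:
    """Dice similarity coefficient between two binary masks."""
    total = sum(a) + sum(b)
    if total == 0:
        return 1.0  # assumption: two empty masks agree perfectly
    return 2.0 * sum(x & y for x, y in zip(a, b)) / total


def dsc_risk(mean_probs: Sequence[float], n_draws: int = 200,
             seed: int = 0) -> float:
    """Monte Carlo estimate of the expected negative DSC."""
    rng = random.Random(seed)
    prediction = [1 if p >= 0.5 else 0 for p in mean_probs]
    total = 0.0
    for _ in range(n_draws):
        # Draw one plausible segmentation from the averaged prediction.
        draw = [1 if rng.random() < p else 0 for p in mean_probs]
        total -= dice(draw, prediction)
    return total / n_draws


confident = dsc_risk([0.99] * 50 + [0.01] * 50)
ambiguous = dsc_risk([0.6] * 50 + [0.4] * 50)
```

With the negative DSC as the cost, values lie between −1 and 0, and a more ambiguous prediction yields a risk closer to 0, i.e., higher uncertainty.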

In addition, we use the recently proposed structure-based uncertainty estimates i.e., coefficient of variation () and structure expected entropy (), which are defined next26. Let be the set of voxels that the model with parameters predicts as part of the GTVp structure:

| 14 |

Then the and are calculated as:

| 15 |

| 16 |

where stands for the cardinality of a set i.e., the number of voxels predicted as GTVp, the standard deviation, and the mean of the number of voxels predicted as GTVp with respect to the parameter distribution of the deep learning model. The expectations over the model parameters are computed with Monte Carlo approximation.
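The two structure-based measures can be sketched as follows, given Monte Carlo samples of per-voxel foreground probabilities. Averaging the entropy over the structure voxels of each sample (rather than summing it), using the population standard deviation, and the function names are all assumptions made for this illustration.

```python
import math
from statistics import fmean, pstdev
from typing import List, Sequence


def binary_entropy(p: float) -> float:
    """Entropy (in nats) of a Bernoulli variable with success probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log(p) + (1.0 - p) * math.log(1.0 - p))


def structure_voxels(probs: Sequence[float], cutoff: float = 0.5) -> List[int]:
    """Indices of voxels that one sample predicts as part of the structure."""
    return [i for i, p in enumerate(probs) if p >= cutoff]


def coefficient_of_variation(samples: Sequence[Sequence[float]]) -> float:
    """Standard deviation of the predicted volume divided by its mean."""
    volumes = [len(structure_voxels(s)) for s in samples]
    mean_volume = fmean(volumes)
    if mean_volume == 0.0:
        return 0.0
    return pstdev(volumes) / mean_volume


def structure_expected_entropy(samples: Sequence[Sequence[float]]) -> float:
    """Per-sample mean entropy over structure voxels, averaged over samples."""
    per_sample = []
    for s in samples:
        voxels = structure_voxels(s)
        entropies = [binary_entropy(s[i]) for i in voxels]
        per_sample.append(fmean(entropies) if entropies else 0.0)
    return fmean(per_sample)


samples = [[0.9, 0.9, 0.1], [0.9, 0.1, 0.1]]
cv = coefficient_of_variation(samples)
seh = structure_expected_entropy(samples)
```

In this toy case the predicted volume alternates between two voxels and one voxel, so the coefficient of variation reflects disagreement about the size of the structure, while the entropy term only looks at voxels inside it.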

To study the structure uncertainty with entropy-based measures in depth, we also have a minor contribution of combining the two other entropy-based measures with structure uncertainty calculation. Hence we define the structure predictive entropy () and structure mutual information () as follows:

| 17 |

| 18 |

| 19 |

where the set of structure voxels is now estimated from the posterior predictive distribution , instead of the Monte Carlo samples of predictive distribution .

Uncertainty performance evaluation

To evaluate the utility of the patient-level uncertainty, we performed multiple experiments described in the literature. First, similar to ref. 22, we developed a linear regression model with the cross-validation DSC values as the independent variables and uncertainty values as the dependent variables. In addition, a linear model with the uncertainty value as the independent variable and DSC as the dependent variable was evaluated, with results reported in Supplementary Results (Supplementary Table 3 and Supplementary Fig. 1). We then defined a threshold between uncertain and certain segmentations as the uncertainty value that the model predicted for 0.61 DSC. The threshold value was selected at 0.61 DSC since it represents the average interobserver variability for GTVp segmentation on PET/CT data as per previous literature14. The patient-level uncertainty estimates were then compared to the DSC values computed on the holdout dataset by counting the four possible combinations, i.e., the model is uncertain and the segmentation is inaccurate ($n_{iu}$), the model is uncertain and the segmentation is accurate ($n_{au}$), the model is certain and the segmentation is accurate ($n_{ac}$), and the model is certain and the segmentation is inaccurate ($n_{ic}$). We then computed the following measures proposed in ref. 40: the conditional probability that the segmentation is accurate given that the model is certain, $p(\text{accurate} \mid \text{certain})$, and that the segmentation is inaccurate given that the model is uncertain, $p(\text{inaccurate} \mid \text{uncertain})$, which are defined as:

$$p(\text{accurate} \mid \text{certain}) = \frac{n_{ac}}{n_{ac} + n_{ic}} \tag{20}$$

$$p(\text{inaccurate} \mid \text{uncertain}) = \frac{n_{iu}}{n_{iu} + n_{au}} \tag{21}$$

In addition, we report the overall performance with the Accuracy vs. Uncertainty (AvU) measure:

$$\mathrm{AvU} = \frac{n_{ac} + n_{iu}}{n_{ac} + n_{au} + n_{ic} + n_{iu}} \tag{22}$$

which gives the proportion of cases in which the segmentation is accurate and the model is certain, or the segmentation is inaccurate and the model is uncertain, similarly to the Patch Accuracy vs. Patch Uncertainty measure of ref. 40. In addition, we examined the linear relationship between the DSC performance and patient-level uncertainty estimates by quantifying the Pearson correlation coefficient between the DSC values and model certainty, defined as negative uncertainty and denoted by prefixing the symbol of each of the eight uncertainty measures with a minus sign.
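The threshold selection and the resulting accuracy-versus-uncertainty summary can be sketched as below. The sketch assumes an ordinary least squares fit, treats a DSC at or above 0.61 as accurate and an uncertainty at or below the threshold as certain, and uses toy values; the function names and these boundary conventions are assumptions.

```python
from statistics import fmean
from typing import Sequence, Tuple


def fit_line(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares fit of y = slope * x + intercept."""
    mean_x, mean_y = fmean(x), fmean(y)
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def uncertainty_threshold(cv_dsc: Sequence[float],
                          cv_uncertainty: Sequence[float],
                          dsc_cutoff: float = 0.61) -> float:
    """Uncertainty that the fitted line predicts at the DSC cutoff."""
    slope, intercept = fit_line(cv_dsc, cv_uncertainty)
    return slope * dsc_cutoff + intercept


def accuracy_vs_uncertainty(dsc: Sequence[float],
                            uncertainty: Sequence[float],
                            threshold: float,
                            dsc_cutoff: float = 0.61) -> Tuple[float, float, float]:
    """Return p(accurate|certain), p(inaccurate|uncertain), and their overall rate."""
    n_ac = n_au = n_ic = n_iu = 0
    for d, u in zip(dsc, uncertainty):
        accurate = d >= dsc_cutoff
        certain = u <= threshold
        if accurate and certain:
            n_ac += 1
        elif accurate:
            n_au += 1
        elif certain:
            n_ic += 1
        else:
            n_iu += 1
    nan = float("nan")
    p_acc_cert = n_ac / (n_ac + n_ic) if (n_ac + n_ic) else nan
    p_inacc_unc = n_iu / (n_iu + n_au) if (n_iu + n_au) else nan
    overall = (n_ac + n_iu) / (n_ac + n_au + n_ic + n_iu)
    return p_acc_cert, p_inacc_unc, overall


# Toy cross-validation data where uncertainty falls linearly with DSC.
thr = uncertainty_threshold([0.5, 0.6, 0.7, 0.8, 0.9], [0.5, 0.4, 0.3, 0.2, 0.1])
result = accuracy_vs_uncertainty([0.9, 0.8, 0.5, 0.7], [0.1, 0.5, 0.6, 0.2], thr)
```

In the toy holdout set, two accurate cases are flagged as certain, one accurate case is flagged as uncertain, and one inaccurate case is flagged as uncertain, so three of the four cases are handled as intended.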

We also examined uncertainty-based referral simulation that is common in uncertainty-aware classification tasks45,48,55. In the batch referral process, each patient is assigned an uncertainty score using one of the uncertainty measures and the patients are sorted based on the score. Then, the patients are removed from the set, one at a time, beginning from the highest uncertainty score, and after each removal, i.e., simulated referral, the performance measures are computed on the remaining set of patients. This process simulates a scenario where the patients for which the model has high uncertainty are referred to an expert for manual verification and/or correction, while expecting higher performance for the remaining patients. This process is repeated until 10% of the patients remain, as we observed that the performance measure estimates become increasingly stochastic with fewer patients. As a summary score for the combination of segmentation performance and uncertainty quantification through batch referral, we evaluate the area under the referral curve with Dice similarity coefficient (R-DSC AUC) by averaging the segmentation performance over multiple referral thresholds, in a similar manner as performed by Band et al.45 with accuracy.
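The batch referral simulation can be sketched as follows. The sketch records the mean DSC of the retained patients after each simulated referral and summarizes the curve by its unweighted average; the function names, the rounding of the 10% stopping point, and the unweighted averaging are assumptions.

```python
import math
from statistics import fmean
from typing import List, Sequence


def referral_curve(dsc: Sequence[float], uncertainty: Sequence[float],
                   min_fraction: float = 0.1) -> List[float]:
    """Mean DSC of the retained patients after each simulated referral."""
    # Sort patients from most certain to most uncertain.
    order = sorted(range(len(dsc)), key=lambda i: uncertainty[i])
    retained = [dsc[i] for i in order]
    min_keep = max(1, math.ceil(min_fraction * len(dsc)))
    curve = []
    while len(retained) >= min_keep:
        curve.append(fmean(retained))
        retained.pop()  # refer the most uncertain remaining patient
    return curve


def r_dsc_auc(curve: Sequence[float]) -> float:
    """Summary score: average retained-set DSC over all referral levels."""
    return fmean(curve)


curve = referral_curve([0.9, 0.8, 0.4, 0.6], [0.1, 0.2, 0.9, 0.5])
auc = r_dsc_auc(curve)
```

When the uncertainty ranks the poor segmentations first, as in this toy example, the curve rises with every referral and the summary score exceeds the mean DSC of the full set.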

In addition, we evaluate the uncertainty-based referral with an instance-based process, where scans are referred according to a predetermined uncertainty threshold calculated with the training data. This analysis is closer to clinical practice, as the cases are flagged for high uncertainty with a predetermined threshold value, instead of a value corresponding to a certain percentile computed on a batch of data, i.e., the holdout test set. We examine this process with three uncertainty thresholds, set at 0.80, 0.85, and 0.90 cross-validation DSC. The uncertainty thresholds are calculated for each uncertainty measure independently. Because the instance referral process does not control the number of referred patients, and we would like the model to confidently and accurately segment as many patients as possible, we also report the number of patients considered as certain in the instance referral process analysis.
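A minimal sketch of the instance-based referral, assuming a single predetermined uncertainty threshold and treating uncertainties at or below it as certain. The function name, the toy values, and the threshold of 0.3 are hypothetical.

```python
from statistics import fmean
from typing import Optional, Sequence, Tuple


def instance_referral(dsc: Sequence[float], uncertainty: Sequence[float],
                      threshold: float) -> Tuple[Optional[float], int]:
    """Mean DSC and count of the patients that are kept as certain."""
    retained = [d for d, u in zip(dsc, uncertainty) if u <= threshold]
    mean_dsc = fmean(retained) if retained else None
    return mean_dsc, len(retained)


mean_dsc, n_certain = instance_referral(
    [0.9, 0.8, 0.4, 0.6], [0.1, 0.2, 0.9, 0.5], threshold=0.3
)
```

Unlike the batch process, each scan is judged on its own, so the number of retained patients is an outcome of the threshold rather than a fixed quantity, which is why it is reported alongside the performance.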

Results

Segmentation performance

For the overall segmentation performance without considering uncertainty, the MC Dropout Ensemble had a mean DSC of 0.751 (SEM: 0.023) on the cross-validation data and 0.720 (IQR: 0.172) on the holdout data. The Deep Ensemble had a mean DSC of 0.755 (SEM: 0.019) on the cross-validation data and 0.716 (IQR: 0.177) on the holdout data. For the MSD metric, the MC Dropout Ensemble had a mean of 2.01 mm (SEM: 0.295) on the cross-validation data and 2.31 mm (IQR: 1.15) on the holdout data, whereas the Deep Ensemble had a mean of 2.07 mm (SEM: 0.271) on the cross-validation data and 2.27 mm (IQR: 1.47) on the holdout data. For the MC Dropout Ensemble and the Deep Ensemble, the 95HD metric had a mean of 6.49 mm (SEM: 1.14) and 6.89 mm (SEM: 1.11) on the cross-validation data, and 8.25 mm (IQR: 3.74) and 8.00 mm (IQR: 4.04) on the holdout data, respectively. There was a statistically significant difference (P ≤ 0.05) between the Deep Ensemble and the MC Dropout Ensemble for all the metrics on the holdout set with effect sizes of −0.37, −0.30, and −0.18 for DSC, MSD, and 95HD, respectively. Overall segmentation performance for the holdout data is illustrated in Fig. 2.

Fig. 2. Segmentation performance of the two approximate Bayesian methods.

Violin plot of Dice similarity coefficient (DSC), mean surface distance (MSD), and Hausdorff distance at 95% (95HD) performance on the external dataset (N = 67). The dotted lines mark the quartiles. Statistical significance is measured using the Wilcoxon signed-rank test.

Uncertainty estimation

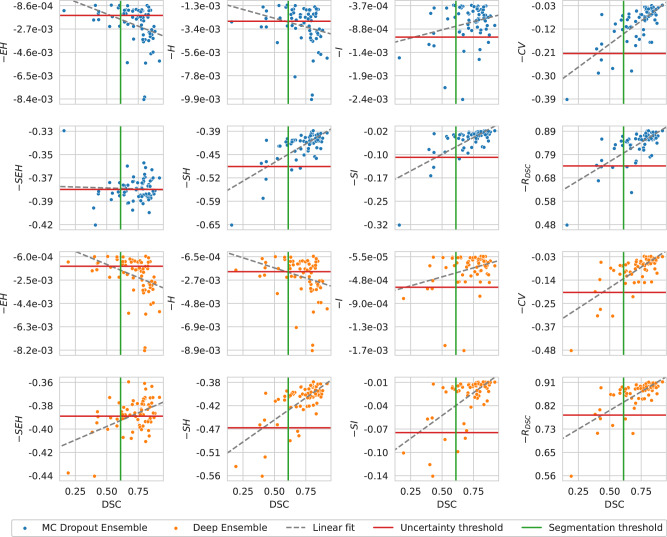

In the performance estimation with uncertainty, the MC Dropout Ensemble had the highest p(accurate|certain), p(inaccurate|uncertain), and accuracy vs. uncertainty values of 90.9% with structure expected entropy, 80.0% with coefficient of variation, and 86.6% with coefficient of variation, respectively. The Deep Ensemble likewise had the highest p(accurate|certain) with structure expected entropy, p(inaccurate|uncertain) with coefficient of variation, and accuracy vs. uncertainty with coefficient of variation, giving rise to values of 92.1%, 80.0%, and 86.6%, respectively. The mutual information, structure predictive entropy, structure mutual information, and DSC-risk uncertainty measures had similar but slightly worse performance than the coefficient of variation on both the MC Dropout Ensemble and Deep Ensemble models. The worst performing uncertainty measure was expected entropy, with accuracy vs. uncertainty values of 38.8% and 37.3% for MC Dropout Ensemble and Deep Ensemble, respectively. Overall, for both models the expected entropy, predictive entropy, and structure expected entropy uncertainty measures performed worse than the other measures, with accuracy vs. uncertainty values ranging from 37.3% to 65.7%, while the other measures ranged from 80.6% to 86.6%. In this analysis, the thresholds were calculated at 0.61 DSC on the cross-validation performance. Expressed as certainty (negative uncertainty), the thresholds with MC Dropout Ensemble were −1.7e-03 for expected entropy, −2.7e-03 for predictive entropy, −1.1e-03 for mutual information, −0.21 for coefficient of variation, −0.38 for structure expected entropy, −0.49 for structure predictive entropy, −0.10 for structure mutual information, and 0.74 for DSC-risk. The corresponding thresholds with Deep Ensemble were −1.4e-03, −2.0e-03, −6.0e-04, −0.20, −0.39, −0.47, −0.08, and 0.79. Full results are shown in Fig. 3 and Table 1.

From the linear correlation analysis between DSC and certainty, the certainty based on coefficient of variation had a correlation of ρ = 0.718 (P = 7.89e-12) and ρ = 0.720 (P = 6.64e-12) for MC Dropout Ensemble and Deep Ensemble, respectively. Meanwhile, the certainty based on structure expected entropy had a correlation with the DSC of ρ = −0.043 (P = 7.32e-01) and ρ = 0.465 (P = 7.24e-05) for MC Dropout Ensemble and Deep Ensemble, respectively. The structure predictive entropy had a correlation with the DSC of ρ = 0.704 (P = 2.99e-11) and ρ = 0.676 (P = 3.47e-10) for MC Dropout Ensemble and Deep Ensemble, respectively. The structure mutual information had a correlation with the DSC of ρ = 0.676 (P = 3.36e-11) and ρ = 0.623 (P = 1.84e-08) for MC Dropout Ensemble and Deep Ensemble, respectively. The DSC-risk had a correlation of ρ = 0.698 (P = 5.41e-11) and ρ = 0.704 (P = 3.13e-11) for MC Dropout Ensemble and Deep Ensemble, respectively. Expected entropy had a correlation with the DSC of ρ = −0.361 (P = 2.7e-03) and ρ = −0.388 (P = 1.2e-03) for MC Dropout Ensemble and Deep Ensemble, respectively. The volume-level predictive entropy had a correlation with the DSC of ρ = −0.258 (P = 3.5e-02) and ρ = −0.303 (P = 1.3e-02) for MC Dropout Ensemble and Deep Ensemble, respectively. Lastly, the volume-level mutual information had a correlation with the DSC of ρ = 0.257 (P = 3.6e-02) and ρ = 0.303 (P = 1.3e-02) for MC Dropout Ensemble and Deep Ensemble, respectively.

Fig. 3. Correlation analysis between model segmentation performance and model certainty.

Segmentation performance is based on Dice similarity coefficient (DSC) and model certainty (i.e., negative uncertainty) is based on expected entropy, predictive entropy, mutual information, coefficient of variation, structure expected entropy, structure predictive entropy, structure mutual information, or DSC-risk. The segmentation and uncertainty thresholds are drawn at the interobserver variability value of 0.61 DSC and at the predicted certainty value of 0.61 cross-validation DSC, respectively. Note that the x-axis is shared between the columns.

Table 1.

Conditional probabilities and overall accuracy for segmentation accuracy based on uncertainty

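| Uncertainty measure | MC Dropout Ensemble: p(accurate\|certain) (%) | MC Dropout Ensemble: p(inaccurate\|uncertain) (%) | MC Dropout Ensemble: accuracy vs uncertainty (%) | Deep Ensemble: p(accurate\|certain) (%) | Deep Ensemble: p(inaccurate\|uncertain) (%) | Deep Ensemble: accuracy vs uncertainty (%) |
|---|---|---|---|---|---|---|
| Expected entropy | 75.0 | 12.8 | 38.8 | 74.1 | 12.5 | 37.3 |
| Predictive entropy | 80.0 | 15.6 | 49.3 | 77.1 | 12.5 | 46.3 |
| Mutual information | 86.2 | 44.4 | 80.6 | 86.9 | 66.7 | 85.1 |
| Coefficient of variation | 87.1 | **80.0** | **86.6** | 87.1 | **80.0** | **86.6** |
| Structure expected entropy | **90.9** | 26.5 | 58.2 | **92.1** | 31.0 | 65.7 |
| Structure predictive entropy | 85.5 | 60.0 | 83.6 | 85.2 | 50.0 | 82.1 |
| Structure mutual information | 85.7 | 75.0 | 85.1 | 86.9 | 66.7 | 85.1 |
| DSC-risk | 86.9 | 66.7 | 85.1 | 86.9 | 66.7 | 85.1 |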

Accurate/inaccurate is determined by the 0.61 DSC threshold, and certain/uncertain is determined by the predicted confidence threshold at 0.61 cross-validation DSC. The overall segmentation accuracy is defined as the accuracy vs uncertainty. Best results for each model are in bold.
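The quantities in Table 1 can be sketched as follows. The sketch assumes that a scan counts as accurate when its DSC is at least 0.61 and as certain when its certainty is at least the threshold, and that accuracy vs uncertainty is the fraction of scans that are either accurate and certain or inaccurate and uncertain. The function name and the counts are hypothetical. The counts were chosen only because they reproduce the coefficient of variation row for 67 scans, and the actual confusion counts are not given in the text.

```python
def accuracy_vs_uncertainty(dsc, certainty, dsc_threshold=0.61, certainty_threshold=0.0):
    """Return p(accurate|certain), p(inaccurate|uncertain), and accuracy vs uncertainty."""
    n_ac = n_ic = n_au = n_iu = 0
    for d, c in zip(dsc, certainty):
        accurate = d >= dsc_threshold          # assumption: inclusive threshold
        certain = c >= certainty_threshold     # assumption: inclusive threshold
        if accurate and certain:
            n_ac += 1
        elif certain:
            n_ic += 1
        elif accurate:
            n_au += 1
        else:
            n_iu += 1
    n_certain, n_uncertain = n_ac + n_ic, n_au + n_iu
    p_acc_certain = n_ac / n_certain if n_certain else float("nan")
    p_inacc_uncertain = n_iu / n_uncertain if n_uncertain else float("nan")
    avu = (n_ac + n_iu) / (n_certain + n_uncertain)
    return p_acc_certain, p_inacc_uncertain, avu


# Hypothetical holdout set of 67 scans: 54 accurate and certain, 8 inaccurate
# and certain, 4 inaccurate and uncertain, 1 accurate and uncertain.
toy_dsc = [0.80] * 54 + [0.40] * 8 + [0.40] * 4 + [0.80] * 1
toy_certainty = [1.0] * 62 + [-1.0] * 5

p_ac, p_iu, avu = accuracy_vs_uncertainty(toy_dsc, toy_certainty)
print(f"{100 * p_ac:.1f}% {100 * p_iu:.1f}% {100 * avu:.1f}%")
```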

When simulating the batch referral process on the holdout set by rejecting the most uncertain scans, up to 90% of the total number of scans, the coefficient of variation had the highest R-DSC AUC and expected entropy had the lowest R-DSC AUC for both models. During the referral process, all uncertainty measures generally increased the performance, except for structure expected entropy with the MC Dropout Ensemble, for which the DSC fell below the initial (full holdout set) performance at around 35% and beyond 85% of referred cases. The batch referral curves for each uncertainty measure and model are presented in Fig. 4. For the MC Dropout Ensemble, the R-DSC AUC was 0.681 with expected entropy, 0.696 with predictive entropy, 0.727 with mutual information, 0.782 with coefficient of variation, 0.752 with structure expected entropy, 0.771 with structure predictive entropy, 0.772 with structure mutual information, and 0.769 with DSC-risk. For the Deep Ensemble, the R-DSC AUC was 0.672 with expected entropy, 0.684 with predictive entropy, 0.727 with mutual information, 0.783 with coefficient of variation, 0.734 with structure expected entropy, 0.774 with structure predictive entropy, 0.778 with structure mutual information, and 0.775 with DSC-risk.

Fig. 4. Model segmentation performance in the batch referral process based on uncertainty measures.

Evaluated with Dice similarity coefficient (DSC), mean surface distance (MSD), and Hausdorff distance at 95% (95HD). Most uncertain scans are referred, based on expected entropy, predictive entropy, mutual information, coefficient of variation, structure expected entropy, structure predictive entropy, structure mutual information, and DSC-risk, up to 90% of the total scans.
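A batch referral curve of this kind can be sketched as follows. The sketch assumes that scans are referred one at a time in order of decreasing uncertainty, that the mean DSC of the retained scans is recorded after each referral, and that the R-DSC AUC is the trapezoidal area under that curve divided by the covered range of referred fractions. The normalization, the function names, and the toy data are assumptions made for illustration.

```python
def referral_curve(dsc, uncertainty, max_fraction=0.9):
    """Mean DSC of retained scans after referring the k most uncertain scans."""
    n = len(dsc)
    # Indices sorted from most certain to most uncertain.
    order = sorted(range(n), key=lambda i: uncertainty[i])
    max_referred = min(int(max_fraction * n), n - 1)  # always keep one scan
    fractions, mean_dsc = [], []
    for k in range(max_referred + 1):
        retained = order[: n - k]
        fractions.append(k / n)
        mean_dsc.append(sum(dsc[i] for i in retained) / len(retained))
    return fractions, mean_dsc


def normalized_auc(x, y):
    """Trapezoidal area under (x, y), divided by the x-range (assumed normalization)."""
    area = sum((x[i + 1] - x[i]) * (y[i] + y[i + 1]) / 2 for i in range(len(x) - 1))
    return area / (x[-1] - x[0])


# Hypothetical holdout set of five scans.
toy_dsc = [0.9, 0.8, 0.7, 0.4, 0.2]
toy_uncertainty = [0.1, 0.2, 0.3, 0.8, 0.9]

fractions, curve = referral_curve(toy_dsc, toy_uncertainty)
r_dsc_auc = normalized_auc(fractions, curve)
print(fractions, [round(v, 3) for v in curve], round(r_dsc_auc, 3))
```

An uncertainty measure that ranks poor segmentations as most uncertain yields a curve that rises as more scans are referred, and therefore a higher area.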

For the instance-based referral process with the 0.80 uncertainty threshold, the MC Dropout Ensemble referred a single patient with the structure predictive entropy and structure mutual information measures, which improved DSC to 0.728, while the Deep Ensemble did not refer any patients. For the 0.85 uncertainty threshold, the MC Dropout Ensemble had the highest DSC value of 0.769 with the coefficient of variation and 47 patients retained, while the Deep Ensemble had the highest DSC value of 0.827 with mutual information and 3 patients retained. For the 0.90 uncertainty threshold, the MC Dropout Ensemble had the highest DSC value of 0.876 with the coefficient of variation and 2 patients retained, while the Deep Ensemble had the highest DSC value of 0.808 with DSC-risk and 11 patients retained. Full results for all uncertainty measures for both models and all thresholds are shown in Table 2.

Table 2.

Segmentation performance after threshold-based referral

| Uncertainty measure | MC Dropout Ensemble, threshold 0.80 | MC Dropout Ensemble, threshold 0.85 | MC Dropout Ensemble, threshold 0.90 | Deep Ensemble, threshold 0.80 | Deep Ensemble, threshold 0.85 | Deep Ensemble, threshold 0.90 |
|---|---|---|---|---|---|---|
| Expected entropy | 0.720 (N = 67) | − (N = 0) | − (N = 0) | 0.716 (N = 67) | − (N = 0) | − (N = 0) |
| Predictive entropy | 0.720 (N = 67) | − (N = 0) | − (N = 0) | 0.716 (N = 67) | − (N = 0) | − (N = 0) |
| Mutual information | 0.720 (N = 67) | − (N = 0) | − (N = 0) | 0.716 (N = 67) | 0.827 (N = 3) | − (N = 0) |
| Coefficient of variation | 0.720 (N = 67) | 0.769 (N = 47) | 0.876 (N = 2) | 0.716 (N = 67) | 0.752 (N = 53) | − (N = 0) |
| Structure expected entropy | 0.720 (N = 67) | − (N = 0) | − (N = 0) | 0.716 (N = 67) | − (N = 0) | − (N = 0) |
| Structure predictive entropy | 0.728 (N = 66) | 0.754 (N = 55) | 0.807 (N = 12) | 0.716 (N = 67) | 0.751 (N = 56) | 0.805 (N = 13) |
| Structure mutual information | 0.728 (N = 66) | 0.755 (N = 51) | 0.835 (N = 9) | 0.716 (N = 67) | 0.750 (N = 48) | − (N = 0) |
| DSC-risk | 0.720 (N = 67) | 0.755 (N = 55) | 0.807 (N = 11) | 0.716 (N = 67) | 0.753 (N = 52) | 0.808 (N = 11) |

Segmentation performance based on Dice similarity coefficient (DSC) of remaining samples after thresholding with uncertainty. The uncertainty threshold is based on cross-validation DSC at values of 0.80, 0.85, and 0.90. The number of patients retained after referral is given in parentheses. The best results for each threshold and model are in bold.
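The threshold-based referral summarized in Table 2 can be sketched as follows. The sketch assumes that a scan is retained when its certainty (negative uncertainty) is at least the threshold derived from cross-validation, and that an empty retained set is reported without a DSC value, as in the table's "− (N = 0)" cells. The function name and the toy values are hypothetical.

```python
def threshold_referral(dsc, certainty, threshold):
    """Mean DSC and count of scans retained at a given certainty threshold."""
    retained = [d for d, c in zip(dsc, certainty) if c >= threshold]
    if not retained:
        return None, 0  # every scan was referred
    return sum(retained) / len(retained), len(retained)


# Hypothetical holdout scans with certainty expressed as negative uncertainty.
toy_dsc = [0.9, 0.8, 0.6, 0.3]
toy_certainty = [-0.05, -0.10, -0.30, -0.60]

mean_kept, n_kept = threshold_referral(toy_dsc, toy_certainty, threshold=-0.20)
mean_none, n_none = threshold_referral(toy_dsc, toy_certainty, threshold=0.0)
print(mean_kept, n_kept, mean_none, n_none)
```

A stricter threshold retains fewer scans, which is why the higher thresholds in Table 2 trade a higher DSC for a smaller number of automatically accepted patients.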

In addition, we analyzed the uncertainty estimation in terms of the two distance-based measures, MSD and 95HD. These results are reported in the Supplementary Methods, with Supplementary Table 4 and Supplementary Fig. 2 for MSD and Supplementary Table 5 and Supplementary Fig. 3 for 95HD. Overall, the best-performing uncertainty measures were the mutual information and coefficient of variation. All other uncertainty measures performed similarly to these, with the exception of predictive entropy, expected entropy, and structure expected entropy, which had the worst performance, as in the main analysis using DSC.

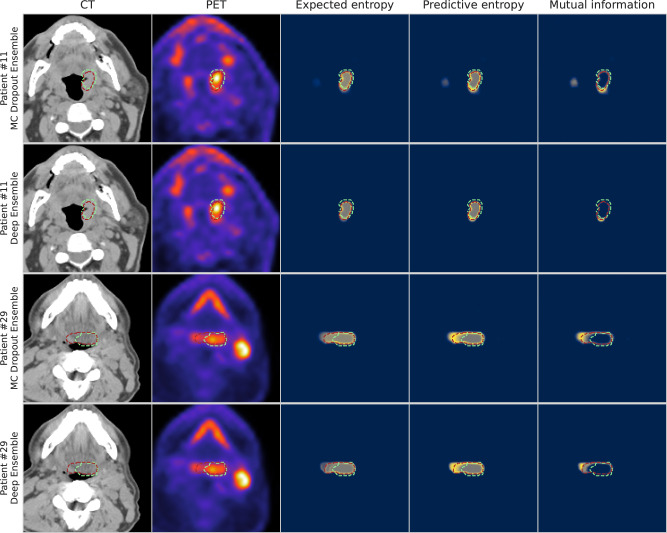

When visually examining the voxel-wise uncertainty measures of predictive entropy, mutual information, and expected entropy for both MC Dropout Ensemble and Deep Ensemble models, the uncertainty is highest around the edges of the predicted segmentation mask for all the measures. Mutual information is mainly focused on the edges with the inner volume having high confidence, while expected entropy demonstrates moderate uncertainty near the inner volume. Full visual comparison of axial slices is shown in Fig. 5. Additional in-depth qualitative analysis of uncertainty maps for select cases are shown in Supplementary Results, Supplementary Figs. 4–7.

Fig. 5. Visualization of segmentations overlaid on top of the input modalities and uncertainty maps for two patients from the external validation set.

Patient #11 and Patient #29 correspond to a T1N2M0 and T2N2M0 tumor case, respectively; both patients had tumors that originated in the base of tongue region. The columns illustrate in order: computed tomography (CT), positron emission tomography (PET), expected entropy, predictive entropy, and mutual information. The model and expert segmentations are superimposed in red and green, respectively. Rows one and three contain the results for MC Dropout Ensemble while rows two and four contain the results for the Deep Ensemble. Blue, gray, and yellow colors in uncertainty maps correspond to low, medium, and high model uncertainty, respectively.
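The three voxel-wise maps can be illustrated for a single voxel with a minimal sketch. It assumes a binary (tumor vs background) prediction, natural logarithms, and the standard decomposition in which mutual information equals predictive entropy minus expected entropy. The function names and sample values are hypothetical and do not reproduce the study's implementation.

```python
from math import log


def binary_entropy(p, eps=1e-12):
    """Entropy (in nats) of a Bernoulli distribution with foreground probability p."""
    p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
    return -(p * log(p) + (1.0 - p) * log(1.0 - p))


def voxel_uncertainty(samples):
    """Expected entropy, predictive entropy, and mutual information for one voxel.

    `samples` holds the foreground probabilities predicted by the individual
    ensemble members or dropout passes.
    """
    mean_p = sum(samples) / len(samples)
    predictive = binary_entropy(mean_p)                              # total
    expected = sum(binary_entropy(p) for p in samples) / len(samples)  # aleatoric
    mutual_information = predictive - expected                       # epistemic
    return expected, predictive, mutual_information


# Samples that agree on an ambiguous voxel: all uncertainty is in expected entropy.
ee_agree, pe_agree, mi_agree = voxel_uncertainty([0.5, 0.5, 0.5])
# Samples that confidently disagree: the uncertainty shows up as mutual information.
ee_split, pe_split, mi_split = voxel_uncertainty([0.0, 1.0])
print(ee_agree, pe_agree, mi_agree)
print(ee_split, pe_split, mi_split)
```

The two cases show why the maps can differ: agreement on an ambiguous voxel raises expected entropy, whereas disagreement between samples raises mutual information.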

Discussion

In this study, we have systematically investigated uncertainty-aware deep learning for OPC GTVp segmentation. We examined ways to leverage the uncertainty information in this segmentation task, specifically the uncertainty-based patient-level DSC performance estimation, utilization of uncertainty for automated referral for manual correction, and visualization of voxel-level uncertainty information. We implemented two established variations of Bayesian inference in DL, namely the MC Dropout Ensemble and the Deep Ensemble. Through experimentation with a multitude of uncertainty quantification measures (coefficient of variation, expected entropy, predictive entropy, mutual information, structure expected entropy, structure predictive entropy, structure mutual information, and DSC-risk) we compare and contrast differences between these approaches. Given the relative sparsity of existing literature for uncertainty estimation in OPC-related segmentation, our results act as an essential first benchmarking step towards a deeper understanding and further implementation of these techniques for clinically applicable radiotherapy segmentation workflows.

In terms of segmentation performance without considering uncertainty, both of the evaluated methods had similar performance while outperforming the expected average expert interobserver variability at 0.61 DSC. While the methods had statistically significant differences in performance, it should be noted that these minor differences are likely not clinically meaningful. Generally speaking, the state-of-the-art performance for DL-based PET/CT OPC GTVp segmentation has remained mostly stagnant over the past few years, with external validation results being within a similar range as ours51,54. This is likely secondary to the already established large interobserver variability in OPC tumor-related segmentation. This further emphasizes the need for methods to provide clinicians with uncertainty estimates that could be used to further guide their clinical decision-making.

The uncertainty estimation performance between the two models was similar across most of the uncertainty measures, which suggests that the Deep Ensemble can be considered to be as accurate in the uncertainty estimation as the MC Dropout Ensemble, while requiring fewer computational resources. Indeed, our results are well aligned with a recent large-scale study of Bayesian DL, which showed that the Deep Ensemble is a competitive approximation for Bayesian inference37. As our uncertainty quantification methods require no modifications to the training of these models, and as the Deep Ensemble has been a popular approach for PET/CT OPC tumor segmentation (17 out of the 22 teams participating in the 2021 HECKTOR segmentation challenge utilized model ensembling51), it is straightforward to apply our uncertainty quantification framework to existing models to enable the model confidence to be used in practice.

Among all the uncertainty measures investigated, the coefficient of variation was generally favorable in terms of the performance estimation with uncertainty and both of the referral processes, while the predictive entropy, expected entropy, and structure expected entropy generally had the worst performance. However, all the measures had a tendency to be overconfident in the uncertainty-based performance estimation, which is seen as a gap between the generalization and cross-validation DSC values. The poor expected entropy performance suggests that most of the uncertainty in this task is related to the model uncertainty, as the expected entropy has been described to capture the aleatoric component of uncertainty45. This is also suggested by the improved performance of the structure expected entropy with the Deep Ensemble in comparison to the MC Dropout Ensemble. The dropout mechanism of the MC Dropout Ensemble covers the parameter space with much larger support than the samples of the Deep Ensemble, which could in turn identify more of the uncertainty as parameter-related, a component that is not captured by the structure expected entropy. When comparing the structure and standard entropy measures, all structure-based measures perform better than their standard counterparts. One probable cause for this is the considerably higher class imbalance in 3D segmentation in comparison to the 2D setting. Indeed, since the entropy can be seen as the expected negative log-likelihood of the predictive distribution, which is a well-known surrogate loss for misclassification rate60, it is expected that it is most influenced by the majority class. However, deeper analysis of these measures warrants a more technical analysis that is out of scope of this study. The risk-based uncertainty measure DSC-risk had comparable performance to the structure uncertainty measures, but it was outperformed by the coefficient of variation in most of the experiments. The expected conditional risk used to develop the measure could be extended to reflect clinical preference or risk-averseness, such as the shape, size, and location of the model output segmentation, to fine-tune the uncertainty estimation for the specific OPC tumor segmentation task.

From our qualitative analysis of the uncertainty estimation, both of the methods produced uncertain voxels mainly on the edges of the predicted segmentation mask for the expected entropy, i.e., the aleatoric component, mutual information, i.e., the epistemic component, and predictive entropy that includes both components of uncertainty. When comparing the models, the MC Dropout Ensemble method provided a smoother uncertainty gradient and more variation in the uncertainty, likely due to providing 300 samples per voxel compared to the five samples of Deep Ensemble. Moreover, a key takeaway from additional qualitative analysis includes a general overemphasis of PET signal by the models that could lead to erroneous predictions and uncertainty quantification. However, this finding is somewhat expected since studies of PET/CT auto-segmentation have demonstrated that models generally utilize PET signal to a higher degree in model predictions compared to CT signal61,62.

Lei et al.33, Tang et al.32, and van Rooij et al.34 are among the few studies that have investigated segmentation-related uncertainties in HNSCC. Similar to our work, these studies utilized ensembling (Lei et al.33) and MC Dropout (Tang et al.32, van Rooij et al.34) to segment nasopharyngeal cancer tumors and organs at risk on CT images, respectively. Moreover, in the only currently published study on the topic of uncertainty estimation in OPC GTVp segmentation, De Biase et al.35 proposed a novel DL-based method using PET/CT images that generated probability maps for capturing the model uncertainty. The sequences of three consecutive 2-dimensional slices and the corresponding tumor segmentations were used as inputs to a model that leveraged inter/intra-slice context using attention mechanisms and recurrent neural network architectures. In their study, ensembling was used to derive probability maps rather than uncertainty maps, whereupon the authors experimented with different probability thresholds corresponding to areas of higher or lower agreement among the trained models. Our study acts as an important adjunct to the study by De Biase et al.35, as the various methodologies investigated herein could be coupled with their proposed clinical solution.

There are some limitations in our study. First, although there are numerous probabilistic DL methods, we examined only two commonly used ones. These methods were selected due to their relative prevalence in existing literature and were thus deemed an important starting point for exploring uncertainty estimation in OPC tumor-related segmentation. Second, we have utilized a relatively limited sample size for model training and evaluation. However, this study contains a robust training set from multiple institutions as supplied by the de facto standard data science competition for OPC segmentation (i.e., HECKTOR) with external validation through our own institutional holdout dataset. Notably, our external validation dataset only contained human papillomavirus-positive patients; additional stratified analyses should be performed in future studies using larger heterogeneous external datasets. Moreover, we have chosen to utilize bounding boxes around the GTVp, as was performed for the 2021 HECKTOR Challenge, in order to simplify the segmentation problem and focus on the exploration of uncertainty estimation, whereas future studies should attempt the integration of uncertainty estimation into fully developed OPC segmentation workflows that can be applied to “as encountered” PET/CT images. Third, we have limited our investigation to the primary tumor and have not investigated nodal metastasis in this study, but as the newer editions of the HECKTOR Challenge include these regions of interest, the incorporation of nodal metastasis should be the focus of future studies. Fourth, we have only investigated segmentations generated by a single observer for each scan, but the influence of multi-observer segmentations on uncertainty estimates is a future research direction. Finally, we have chosen to squarely focus on PET/CT as an imaging modality due to its ubiquity in OPC GTVp segmentation workflows. However, it is known that different imaging modalities (e.g., magnetic resonance imaging, contrast-enhanced CT) can provide complementary information for OPC tumor segmentation63, and the combination of multiple image inputs may affect auto-segmentation model outputs10,61,64. Therefore, future research should investigate how results differ for models using alternative imaging modalities and the impacts of individual channel inputs on the uncertainty estimation.

Conclusions

We applied Bayesian DL models with various uncertainty measures for OPC GTVp segmentation using multimodal large-scale datasets in order to evaluate the utility of uncertainty estimation. We found that regardless of the uncertainty measure applied, both of the models (Deep Ensemble and MC Dropout Ensemble) provided similar utility in terms of predicting segmentation quality and referral performance; due to its slightly lower computational cost and greater ubiquity, Deep Ensemble may be preferable to MC Dropout Ensemble. Notably, the coefficient of variation had overall favorable performance for both models and may therefore be well suited as an uncertainty measure. While research in uncertainty estimation for OPC GTVp auto-segmentation is in its nascent stage, we anticipate that uncertainty estimation will become increasingly important as these AI-based technologies begin to enter clinical workflows. Therefore, our benchmarking study is a crucial first step toward a wider adoption and exploration of these techniques. Future studies should investigate further uncertainty quantification methodology, larger sample sizes, additional relevant segmentation targets (i.e., metastatic lymph nodes), and incorporation of additional imaging modalities.


Acknowledgements

The work of Joel Jaskari, Jaakko Sahlsten, and Kimmo K. Kaski was supported in part by the Academy of Finland under Project 345449. Antti Mäkitie is supported in part by a grant from the Finnish Society of Sciences and Letters. Kareem A. Wahid is supported by the Dr. John J. Kopchick Fellowship through The University of Texas MD Anderson UTHealth Graduate School of Biomedical Sciences, the American Legion Auxiliary Fellowship in Cancer Research, and an NIH/National Institute for Dental and Craniofacial Research (NIDCR) F31 fellowship (1 F31DE031502-01) and the NCI NRSA Image Guided Cancer Therapy Training Program (T32CA261856). Benjamin H. Kann is supported by an NIH/National Institute for Dental and Craniofacial Research (NIDCR) K08 Grant (K08DE030216). Clifton D. Fuller receives related grant support from the NCI NRSA Image Guided Cancer Therapy Training Program (T32CA261856), as well as additional unrelated salary/effort support from NIH institutes. Dr. Fuller receives grant and infrastructure support from MD Anderson Cancer Center via: the Charles and Daneen Stiefel Center for Head and Neck Cancer Oropharyngeal Cancer Research Program; the Program in Image-guided Cancer Therapy; and the NIH/NCI Cancer Center Support Grant (CCSG) Radiation Oncology and Cancer Imaging Program (P30CA016672). Dr. Fuller has received unrelated direct industry grant/in-kind support, honoraria, and travel funding from Elekta AB.

Author contributions

Study concepts: all authors; study design: J.S., K.W., M.N., and J.J.; data acquisition: S.A., K.W., M.N., and R.H.; quality control of data and algorithms: J.S. and E.G.; data analysis and interpretation: J.S., K.W., J.J., B.K., A.M., and K.K.; manuscript editing: J.S., J.J., K.W., M.N., E.G., B.K., A.M., K.K., and C.F. All authors contributed to the article and approved the submitted version.

Peer review

Peer review information

Communications Medicine thanks Alessia de Biase and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. A peer review file is available.

Data availability

The HECKTOR 2021 training dataset51 is publicly accessible from https://www.aicrowd.com/challenges/miccai-2021-hecktor after completing and signing an End User Agreement. The anonymized external validation dataset is publicly available on Figshare (10.6084/m9.figshare.22718008) under a CC BY 4.0 license65. CSV files used to create the Figures are available on Figshare (10.6084/m9.figshare.22718008)65.

Code availability

All analyses were performed using the cited software, packages, and pipelines, whose code is publicly available. The DL architecture in both models used the same 3D residual U-net from the Medical Open Network for AI (MONAI) (0.7.0)52 (https://github.com/Project-MONAI/MONAI). Statistical comparisons and their annotations on figures were performed using the SciPy (1.7.3)57 (https://github.com/scipy/scipy) and statannotations (0.4.4)58 (https://github.com/trevismd/statannotations) Python packages, respectively.

Competing interests

The authors declare no competing interests.

Footnotes


These authors jointly supervised this work: Mohamed A. Naser, Kimmo Kaski.

Contributor Information

Mohamed A. Naser, Email: manaser@mdanderson.org

Kimmo Kaski, Email: kimmo.kaski@aalto.fi.

Supplementary information

The online version contains supplementary material available at 10.1038/s43856-024-00528-5.

References

- 1. Bray F, et al. Global cancer statistics 2018: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J. Clin. 2018;68:394–424. doi: 10.3322/caac.21492.

- 2. Rasch C, Steenbakkers R, van Herk M. Target definition in prostate, head, and neck. Semin. Radiat. Oncol. 2005;15:136–145. doi: 10.1016/j.semradonc.2005.01.005.

- 3. Cardenas CE, et al. Comprehensive quantitative evaluation of variability in magnetic resonance-guided delineation of oropharyngeal gross tumor volumes and high-risk clinical target volumes: an R-IDEAL Stage 0 Prospective Study. Int. J. Radiat. Oncol. Biol. Phys. 2022;113:426–436. doi: 10.1016/j.ijrobp.2022.01.050.

- 4. Lin, D. et al. E pluribus unum: prospective acceptability benchmarking from the Contouring Collaborative for Consensus in Radiation Oncology crowdsourced initiative for multiobserver segmentation. J. Med. Imaging 10, S11903 (2023).

- 5. Njeh CF. Tumor delineation: the weakest link in the search for accuracy in radiotherapy. J. Med. Phys. 2008;33:136–140. doi: 10.4103/0971-6203.44472.

- 6. Segedin, B. & Petric, P. Uncertainties in target volume delineation in radiotherapy—are they relevant and what can we do about them? Radiol. Oncol. 50, 254–262 (2016).

- 7. Nikolov S, et al. Clinically applicable segmentation of head and neck anatomy for radiotherapy: deep learning algorithm development and validation study. J. Med. Internet Res. 2021;23:e26151. doi: 10.2196/26151.

- 8. McDonald, B. A. et al. Investigation of autosegmentation techniques on T2‐weighted MRI for off‐line dose reconstruction in MR‐linac workflow for head and neck cancers. Med. Phys. 51, 278–291 (2024).

- 9. Taku N, et al. Auto-detection and segmentation of involved lymph nodes in HPV-associated oropharyngeal cancer using a convolutional deep learning neural network. Clin. Transl. Radiat. Oncol. 2022;36:47–55. doi: 10.1016/j.ctro.2022.06.007.

- 10. Wahid KA, et al. Evaluation of deep learning-based multiparametric MRI oropharyngeal primary tumor auto-segmentation and investigation of input channel effects: results from a prospective imaging registry. Clin. Transl. Radiat. Oncol. 2022;32:6–14. doi: 10.1016/j.ctro.2021.10.003.

- 11. Naser, M. A., van Dijk, L. V., He, R., Wahid, K. A. & Fuller, C. D. Tumor segmentation in patients with head and neck cancers using deep learning based-on multi-modality PET/CT images. In Head and Neck Tumor Segmentation (eds Andrearczyk, V., Oreiller, V. & Depeursinge, A.) 85–98 (Springer International Publishing, 2021).

- 12. Naser, M. A. et al. Head and neck cancer primary tumor auto segmentation using model ensembling of deep learning in PET/CT images. In Head and Neck Tumor Segmentation and Outcome Prediction (eds Andrearczyk, V., Oreiller, V. L., Hatt, M. & Depeursinge, A.) 121–133 (Springer International Publishing, 2022).

- 13. Savjani RR, et al. Automated tumor segmentation in radiotherapy. Semin. Radiat. Oncol. 2022;32:319–329. doi: 10.1016/j.semradonc.2022.06.002.

- 14. Oreiller V, et al. Head and neck tumor segmentation in PET/CT: the HECKTOR challenge. Med. Image Anal. 2022;77:102336. doi: 10.1016/j.media.2021.102336.

- 15. Wahid KA, et al. Artificial intelligence for radiation oncology applications using public datasets. Semin. Radiat. Oncol. 2022;32:400–414. doi: 10.1016/j.semradonc.2022.06.009.

- 16. Andrearczyk V, et al. Automatic head and neck tumor segmentation and outcome prediction relying on FDG-PET/CT images: findings from the second edition of the HECKTOR challenge. Med. Image Anal. 2023;90:102972. doi: 10.1016/j.media.2023.102972.

- 17. Andrearczyk, V. et al. Overview of the HECKTOR challenge at MICCAI 2022: automatic head and neck tumor segmentation and outcome prediction in PET/CT. In Head and Neck Tumor Segmentation and Outcome Prediction (eds Andrearczyk, V., Oreiller, V. L., Hatt, M. & Depeursinge, A.) 1–30 (Springer Nature Switzerland, 2023).

- 18. Kompa B, Snoek J, Beam AL. Second opinion needed: communicating uncertainty in medical machine learning. NPJ Digit. Med. 2021;4:4. doi: 10.1038/s41746-020-00367-3.

- 19. van den Berg CAT, Meliadò EF. Uncertainty assessment for deep learning radiotherapy applications. Semin. Radiat. Oncol. 2022;32:304–318. doi: 10.1016/j.semradonc.2022.06.001.

- 20. Depeweg, S., Hernández-Lobato, J. M., Doshi-Velez, F. & Udluft, S. Decomposition of uncertainty in Bayesian deep learning for efficient and risk-sensitive learning. In Proceedings of the 35th International Conference on Machine Learning (eds. Dy, J. & Krause, A.) Vol. 80, 1184–1193 (PMLR, 2018).

- 21. Hu, S. et al. Supervised uncertainty quantification for segmentation with multiple annotations. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2019 137–145 (MICCAI 2019, Cham, 2019).

- 22. Hoebel, K. et al. An exploration of uncertainty information for segmentation quality assessment. In Medical Imaging 2020: Image Processing Vol. 11313, 381–390 (SPIE, 2020).

- 23. Kohl, S. A. A. et al. A probabilistic U-Net for segmentation of ambiguous images. In Advances in Neural Information Processing System, Vol. 31 (eds Bengio, S. et al.) 6965–6975 (Curran Associates, Inc., 2018).

- 24. Carannante, G., Dera, D., Bouaynaya, N. C., Rasool, G. & Fathallah-Shaykh, H. M. Trustworthy medical segmentation with uncertainty estimation. Preprint at https://arxiv.org/pdf/2111.05978v1 (2021).

- 25. Sagar, A. Uncertainty quantification using variational inference for biomedical image segmentation. In Proceedings of the IEEE/CVF Winter Conference (IEEE, 2022).

- 26. Roy, A. G., Conjeti, S., Navab, N. & Wachinger, C. Inherent brain segmentation quality control from fully ConvNet Monte Carlo sampling. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2018. 664–672 (Springer International Publishing, 2018).

- 27. Dohopolski M, Chen L, Sher D, Wang J. Predicting lymph node metastasis in patients with oropharyngeal cancer by using a convolutional neural network with associated epistemic and aleatoric uncertainty. Phys. Med. Biol. 2020;65:225002. doi: 10.1088/1361-6560/abb71c.

- 28. Song B, et al. Bayesian deep learning for reliable oral cancer image classification. Biomed. Opt. Express. 2021;12:6422–6430. doi: 10.1364/BOE.432365.

- 29. Dolezal JM, et al. Uncertainty-informed deep learning models enable high-confidence predictions for digital histopathology. Nat. Commun. 2022;13:6572. doi: 10.1038/s41467-022-34025-x.

- 30. Dohopolski, M. et al. Uncertainty estimations methods for a deep learning model to aid in clinical decision-making—a clinician’s perspective. Preprint at https://arxiv.org/abs/2210.00589 (2022).

- 31. Nguyen D, et al. A comparison of Monte Carlo dropout and bootstrap aggregation on the performance and uncertainty estimation in radiation therapy dose prediction with deep learning neural networks. Phys. Med. Biol. 2021;66:054002. doi: 10.1088/1361-6560/abe04f.

- 32. Tang P, et al. Unified medical image segmentation by learning from uncertainty in an end-to-end manner. Knowledge-Based Systems. 2022;241:108215. doi: 10.1016/j.knosys.2022.108215.

- 33. Lei W, et al. Automatic segmentation of organs-at-risk from head-and-neck CT using separable convolutional neural network with hard-region-weighted loss. Neurocomputing. 2021;442:184–199. doi: 10.1016/j.neucom.2021.01.135.

- 34. van Rooij W, Verbakel WF, Slotman BJ, Dahele M. Using spatial probability maps to highlight potential inaccuracies in deep learning-based contours: facilitating online adaptive radiation therapy. Adv. Radiat. Oncol. 2021;6:100658. doi: 10.1016/j.adro.2021.100658.

- 35. De Biase, A., Sijtsema, N. M., van Dijk, L., Langendijk, J. A. & van Ooijen, P. M. A. Deep learning aided oropharyngeal cancer segmentation with adaptive thresholding for predicted tumor probability in FDG PET and CT images. Phys. Med. Biol. 10.1088/1361-6560/acb9cf (2023).

- 36. Guo, C., Pleiss, G., Sun, Y. & Weinberger, K. Q. On calibration of modern neural networks. In Proceedings of the 34th International Conference on Machine Learning (eds. Precup, D. & Teh, Y. W.) Vol. 70, 1321–1330 (PMLR, 2017).

- 37. Izmailov, P., Vikram, S., Hoffman, M. D. & Wilson, A. G. G. What are Bayesian neural network posteriors really like? In Proceedings of the 38th International Conference on Machine Learning (eds. Meila, M. & Zhang, T.) Vol. 139, 4629–4640 (PMLR, 2021).

- 38. Mehrtash A, Wells WM, Tempany CM, Abolmaesumi P, Kapur T. Confidence calibration and predictive uncertainty estimation for deep medical image segmentation. IEEE Trans. Med. Imaging. 2020;39:3868–3878. doi: 10.1109/TMI.2020.3006437.

- 39. Hoebel, K., Chang, K., Patel, J., Singh, P. & Kalpathy-Cramer, J. Give me (un)certainty—an exploration of parameters that affect segmentation uncertainty. Preprint at https://arxiv.org/abs/1911.06357 (2019).

- 40. Mukhoti, J. & Gal, Y. Evaluating Bayesian deep learning methods for semantic segmentation. Preprint at https://arxiv.org/abs/1811.12709 (2018).

- 41.Gal, Y. & Ghahramani, Z. Dropout as a Bayesian approximation: representing model uncertainty in deep learning. In Proceedings of The 33rd International Conference on Machine Learning (eds. Balcan, M. F. & Weinberger, K. Q.) Vol. 48, 1050–1059 (PMLR, 2016).

- 42.Abdar M, et al. A review of uncertainty quantification in deep learning: techniques, applications and challenges. Inf. Fusion. 2021;76:243–297. doi: 10.1016/j.inffus.2021.05.008. [DOI] [Google Scholar]

- 43.Gal, Y. Uncertainty in Deep Learning. PhD thesis, University of Cambridge (2016).

- 44.Shannon CE. A mathematical theory of communication. Bell Syst. Tech. J. 1948;27:379–423. doi: 10.1002/j.1538-7305.1948.tb01338.x.

- 45.Band, N. et al. Benchmarking Bayesian Deep Learning on Diabetic Retinopathy Detection Tasks. In Proceedings of the Neural Information Processing Systems Track on Datasets and Benchmarks, Vol. 1 (eds Vanschoren, J. & Yeung, S.) (2021).

- 46.Shelhamer E, Long J, Darrell T. Fully convolutional networks for semantic segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017;39:640–651. doi: 10.1109/TPAMI.2016.2572683.

- 47.Ronneberger, O., Fischer, P. & Brox, T. U-Net: convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015 234–241 (Springer International Publishing, 2015).

- 48.Jaskari J, et al. Uncertainty-aware deep learning methods for robust diabetic retinopathy classification. IEEE Access. 2022;10:76669–76681. doi: 10.1109/ACCESS.2022.3192024.

- 49.Xu A, Raginsky M. Minimum excess risk in Bayesian learning. IEEE Trans. Inf. Theory. 2022;68:7935–7955. doi: 10.1109/TIT.2022.3176056.

- 50.Lahlou, S. et al. DEUP: direct epistemic uncertainty prediction. Trans. Mach. Learn. Res. 2835–8856 (2023).

- 51.Andrearczyk, V. et al. Overview of the HECKTOR challenge at MICCAI 2021: automatic head and neck tumor segmentation and outcome prediction in PET/CT images. In Head and Neck Tumor Segmentation and Outcome Prediction 1–37 (Springer International Publishing, 2022).

- 52.AIcrowd MICCAI 2021: HECKTOR Challenges. AIcrowd https://www.aicrowd.com/challenges/miccai-2021-hecktor (2021).

- 53.Jorge Cardoso, M. et al. MONAI: an open-source framework for deep learning in healthcare. Preprint at https://arxiv.org/abs/2211.02701 (2022).

- 54.Andrearczyk, V. et al. Overview of the HECKTOR challenge at MICCAI 2020: automatic head and neck tumor segmentation in PET/CT. In Head and Neck Tumor Segmentation 1–21 (Springer International Publishing, 2021).

- 55.Filos, A. et al. A systematic comparison of Bayesian deep learning robustness in diabetic retinopathy tasks. Preprint at https://arxiv.org/abs/1912.10481 (2019).

- 56.Taha AA, Hanbury A. Metrics for evaluating 3D medical image segmentation: analysis, selection, and tool. BMC Med. Imaging. 2015;15:29. doi: 10.1186/s12880-015-0068-x.

- 57.Sherer MV, et al. Metrics to evaluate the performance of auto-segmentation for radiation treatment planning: a critical review. Radiother. Oncol. 2021;160:185–191. doi: 10.1016/j.radonc.2021.05.003.

- 58.Virtanen P, et al. SciPy 1.0: fundamental algorithms for scientific computing in Python. Nat. Methods. 2020;17:261–272. doi: 10.1038/s41592-019-0686-2.

- 59.Charlier, F. et al. Statannotations (v0.6). Zenodo 10.5281/zenodo.7213391 (2022).

- 60.Murphy, K. P. Machine Learning: A Probabilistic Perspective (MIT Press, 2012).

- 61.Andrearczyk, V. et al. Automatic segmentation of head and neck tumors and nodal metastases in PET-CT scans. In Proceedings of the Third Conference on Medical Imaging with Deep Learning (eds Arbel, T. et al.) Vol. 121, 33–43 (PMLR, 2020).

- 62.Yang J, Beadle BM, Garden AS, Schwartz DL, Aristophanous M. A multimodality segmentation framework for automatic target delineation in head and neck radiotherapy. Med. Phys. 2015;42:5310–5320. doi: 10.1118/1.4928485.

- 63.Salzillo TC, et al. Advances in imaging for HPV-related oropharyngeal cancer: applications to radiation oncology. Semin. Radiat. Oncol. 2021;31:371–388. doi: 10.1016/j.semradonc.2021.05.001.

- 64.Ren J, Eriksen JG, Nijkamp J, Korreman SS. Comparing different CT, PET and MRI multi-modality image combinations for deep learning-based head and neck tumor segmentation. Acta Oncol. 2021;60:1399–1406. doi: 10.1080/0284186X.2021.1949034.

- 65.Sahlsten, J. et al. PET/CT data for PDL auto-segmentation project. Figshare 10.6084/M9.FIGSHARE.22718008 (2024).

Associated Data

Data Availability Statement

The HECKTOR 2021 training dataset51 is publicly accessible from https://www.aicrowd.com/challenges/miccai-2021-hecktor after completing and signing an End User Agreement. The anonymized external validation dataset is publicly available on Figshare (10.6084/m9.figshare.22718008) under a CC BY 4.0 license65. The CSV files used to create the figures are available in the same Figshare record (10.6084/m9.figshare.22718008)65.

All analyses were performed using the cited software, packages, and pipelines, whose code is publicly available. Both models used the same 3D residual U-Net architecture from the Medical Open Network for AI (MONAI) (0.7.0)53 (https://github.com/Project-MONAI/MONAI). Statistical comparisons and their annotations on figures were performed using the SciPy (1.7.3)58 (https://github.com/scipy/scipy) and statannotations (0.4.4)59 (https://github.com/trevismd/statannotations) Python packages, respectively.
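For readers who want to reproduce the analyses, the following minimal sketch checks whether a local Python environment matches the package versions stated above. It is an illustration and not part of the published pipeline: the helper name `check_versions` is hypothetical, and the PyPI distribution names (`monai`, `scipy`, `statannotations`) are assumed rather than stated in the text.

```python
from importlib import metadata

# Versions as stated in the availability statement above.
# The distribution names are an assumption of this sketch.
PINNED = {"monai": "0.7.0", "scipy": "1.7.3", "statannotations": "0.4.4"}


def check_versions(pinned=PINNED):
    """Return {name: (expected, installed_or_None, matches)} per package."""
    report = {}
    for name, expected in pinned.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            # Package is not installed in the current environment.
            installed = None
        report[name] = (expected, installed, installed == expected)
    return report


if __name__ == "__main__":
    for name, (expected, installed, ok) in check_versions().items():
        status = "ok" if ok else "mismatch"
        print(f"{name}: expected {expected}, found {installed} ({status})")
```

A mismatch does not necessarily prevent the code from running, but results obtained with other versions may differ from those reported.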