Abstract

Background

Laparoscopic cholecystectomy is a very frequent surgical procedure. However, in an ageing society, fewer surgical staff will need to perform surgery on patients. Collaborative surgical robots (cobots) could address surgical staff shortages and workload. To achieve context-awareness for surgeon-robot collaboration, intraoperative action workflow recognition is a key challenge.

Methods

A surgical process model was developed for intraoperative surgical activities including actor, instrument, action and target in laparoscopic cholecystectomy (excluding camera guidance). These activities, as well as instrument presence and surgical phases were annotated in videos of laparoscopic cholecystectomy performed on human patients (n = 10) and on explanted porcine livers (n = 10). The machine learning algorithm Distilled-Swin was trained on our own annotated dataset and the CholecT45 dataset. The validation of the model was conducted using a fivefold cross-validation approach.

Results

In total, 22,351 activities were annotated with a cumulative duration of 24.9 h of video segments. The machine learning algorithm trained and validated on our own dataset scored a mean average precision (mAP) of 25.7% and a top K = 5 accuracy of 85.3%. With training and validation on our dataset and CholecT45, the algorithm scored a mAP of 37.9%.

Conclusions

An activity model was developed and applied for the fine-granular annotation of laparoscopic cholecystectomies in two surgical settings. A machine recognition algorithm trained on our own annotated dataset and CholecT45 achieved a higher performance than training only on CholecT45 and can recognize frequently occurring activities well, but not infrequent activities.

The analysis of an annotated dataset allowed for the quantification of the potential of collaborative surgical robots to address the workload of surgical staff. If collaborative surgical robots could grasp and hold tissue, up to 83.5% of the assistant’s tissue interacting tasks (i.e. excluding camera guidance) could be performed by robots.

Supplementary Information

The online version contains supplementary material available at 10.1007/s00464-024-10958-w.

Keywords: Collaborative surgical robots, Robot autonomy, Surgical process modeling, Surgical data science, Action recognition, Machine learning

Objectives

The aim of this study was to develop and validate a fine-granular workflow model of surgical activities in laparoscopic cholecystectomy for cooperation with collaborative surgical robots.

Background

Laparoscopic cholecystectomy (LC) is the gold standard for gallbladder removal, with over 700,000 surgeries performed each year in the United States and over 170,000 in Germany [1, 2]. However, surgical staff shortages pose a serious challenge in our ageing society, as fewer surgical staff will need to perform LC on patients [3–5].

As a possible solution, computer vision for LC shows potential to alleviate the workload of the surgical staff with the advent of intraoperative decision support, automated quality control and cooperation with collaborative robots [6–9].

Specifically, in contrast to commercially available teleoperated surgical robots, collaborative surgical robots work alongside the surgeon during conventional laparoscopic surgery by automatically performing surgical tasks, such as camera guidance or suturing bowel anastomosis [10–13]. This hybrid approach has shown promising results in non-medical fields such as industrial assembly lines [14–18].

A key challenge for surgical computer vision is the application of fine-granular surgical workflow recognition in order for collaborative surgical robots to become context-aware [8, 19–22]. To train machine learning (ML) algorithms with surgical knowledge for fine-granular surgical workflow recognition, surgical workflow information needs to be captured and structured in large quantity but data which includes fine-granular surgical workflow annotation for LC is lacking [23–25]. One established dataset, CholecT45 (previously CholecT40, based on videos from the single-center Cholec80 benchmark dataset), uses the concept of action triplets (instrument, verb, target) for surgical action data annotation and was used as a benchmark dataset for surgical workflow recognition in the MICCAI EndoVis challenges CholecTriplet2021 and CholecTriplet2022 [26–30]. However, clear action, verb and target class annotation rules are not publicly available and the used annotation software is proprietary, thus the dataset is neither reproducible nor easily extendable [31, 32]. Also, actor information is not included, meaning that action triplet recognition for each of the surgeon’s and surgical assistant’s hands for the development of individual collaborative robot arms is not possible.

Neumuth et al. proposed to formalize the fine-granular surgical workflow using the surgical activity, which is a quadruplet (actor, instrument, action, target) [33]. This concept was previously introduced for surgical action recognition in LC in the MICCAI EndoVis 2019 HeiChole benchmark challenge [34]. However, this challenge focused on surgical phases and the data used for the action recognition task did not contain information on the actor, instrument or target of an action.

Furthermore, a key step in the development of collaborative autonomous surgical robots is in making the safe preclinical translation of prototypes possible [35, 36]. Despite this, research on surgical action recognition in multiple surgical settings like phantom, ex vivo porcine, in vivo porcine and human cadaver is scarce [37].

To overcome these limitations with regards to activity recognition for collaborative surgical robotics, we aimed to answer the following research questions:

How can the concept of surgical activity be applied to model laparoscopic cholecystectomy in the surgical settings “in vivo human” and “ex vivo porcine”?

How can the model be applied to real-world procedures by means of human intelligence, i.e. manual video annotation?

Can a machine learning algorithm automate activity recognition?

The contributions of this work are threefold: we develop and share a surgical process model of the activity workflow for LC (activity model), annotate and analyze a novel activity dataset on publicly available human LC videos and new ex vivo porcine LC videos with a free and open-source annotation software, and evaluate the performance of a ML algorithm trained on our novel dataset and on CholecT45.

Materials & methods

Activity model

Model development

The previously introduced surgical activity definition was used for the development of a novel surgical process model for video annotation of LC [33]. Discussion rounds were held between a board-certified general surgeon, an engineer and two medical students with experience in surgical data annotation until agreement on the model was reached. The following characteristics were specified using practical guidelines from previous research:

- Activity definition: modeling actor, instrument, action, and target information in a bottom-up manner as activities with the following definitions [38, 39]:

  - Actor: specifies which stakeholder’s hand performs the action.

  - Instrument: specifies with which tool the action is performed by the actor.

  - Action: specifies what is being done by the actor.

  - Target: specifies what tissue is being manipulated.

- Standardization: defining a hierarchical task granularity structure that corresponds to a recent consensus and applying it on videos from start to end including idle time [40].

- Causality: modeling for causal consumption of data, meaning that the future should not be known when observing a given moment during a procedure [39].

- Generalizability: modeling for application to a generic LC procedure to capture procedure variations for the preclinical development of collaborative surgical robots, both on explanted porcine livers and on human patients [38, 40, 41].
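The activity quadruplet defined above can be pictured as a small record type. The following is a minimal sketch, not the annotation format used in the study: the class name `Activity`, the field names `start_s` and `end_s`, and the actor label "left hand of surgeon" are assumptions made for illustration. The example values follow the activity described in the caption of Fig. 1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Activity:
    """One annotated surgical activity: a quadruplet plus its time segment."""

    actor: str        # which stakeholder's hand performs the action
    instrument: str   # tool with which the action is performed
    action: str       # what is being done
    target: str       # tissue that is being manipulated
    start_s: float    # segment start in seconds (hypothetical field name)
    end_s: float      # segment end in seconds (hypothetical field name)

    @property
    def duration_s(self) -> float:
        """Length of the annotated time segment in seconds."""
        return self.end_s - self.start_s

    def as_triplet(self) -> tuple:
        """Drop the actor to obtain an (instrument, action, target) triplet."""
        return (self.instrument, self.action, self.target)


# Example from Fig. 1: the surgeon's left hand grasps the gallbladder
# with a grasper from 14:47.50 to 14:49.00 min.
example = Activity(
    actor="left hand of surgeon",   # assumed label, analogous to the class list
    instrument="grasper",
    action="grasp",
    target="gallbladder",
    start_s=14 * 60 + 47.5,
    end_s=14 * 60 + 49.0,
)
print(example.as_triplet(), example.duration_s)
```

Keeping the actor as a separate field makes it straightforward to derive action triplets or per-actor subsets from the same annotations.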

Class selection

The activity model was populated with classes for each activity feature, for example “right hand of surgeon” for actors, “overholt” for instruments, “blunt dissect” for actions or “Calot triangle” for targets (Supplementary Material, Sect. 1). The selection of classes was based on previous research and the clinical experience of a board-certified general surgeon [34, 42]. In total, 7 surgical phases, 3 actors, 24 instruments, 12 actions, 25 targets and binary instrument visibility were selected as possible classes.

Model validation with manual annotation

Data collection

Videos of LC performed on human patients by surgeons in the Hospital Salem (Heidelberg, Germany) present in the publicly available HeiChole benchmark dataset were selected for annotation, amounting to ten videos [34]. Restricting the selection to a single center within the HeiChole benchmark dataset kept the annotation effort manageable and the data consistent, because only LC with the same trocar placement and using the most popular dissection technique in Germany, the electric hook, were included [43, 44].

Ten videos of LC performed on explanted porcine livers by medical students and surgical residents in the surgical training center of Heidelberg University Hospital (Heidelberg, Germany) were collected for annotation. To manage annotation effort, only LC videos on explanted porcine livers with a length of less than one hour were included [44]. Video segments in which the endoscope was outside of the laparoscopy box were censored with a white frame.

As the HeiChole benchmark dataset is publicly available and all videos were fully anonymized before use, neither ethics approval nor written consent was needed.

Data annotation

The video annotation rules were based on the surgical activity model (Supplementary Material, Sect. 2). The free and open-source ANVIL annotation software was used for annotation [45].

All videos were converted to 25 frames per second and annotated by one medical student with experience in surgical data annotation and clinical experience as a first assistant in LC (Fig. 1). Class and rule ambiguities that were discovered during the annotation process were resolved by discussions with a board-certified general surgeon. Accordingly, video annotations were systematically updated 11 times in total as the annotation rules were being refined.

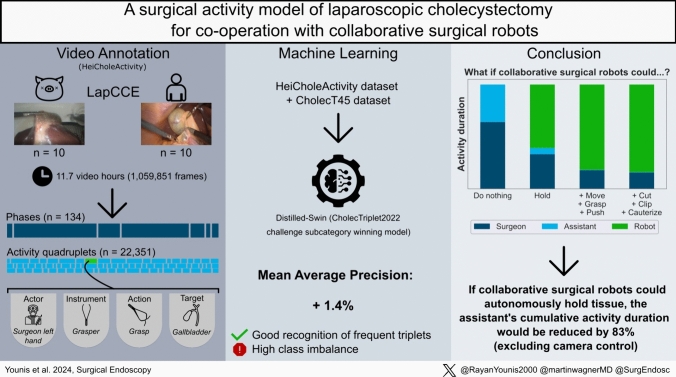

Fig. 1.

Visual abstract of the study. The left bottom shows an example of an activity: “The surgeon’s left hand used a grasper to grasp the gallbladder from 14:47,50 to 14:49,00 min.”

Automatic activity recognition with machine learning

Data processing

A machine learning (ML) algorithm was trained and tested for automatic surgical action recognition on the annotated data. We used Distilled-Swin in its (SwinT + MultiD + SelfD) variant, which ranked first in the action triplet recognition category of the MICCAI EndoVis challenge CholecTriplet2022 [29, 46]. Distilled-Swin is a Swin transformer model trained through self-distillation; this approach is specifically designed to address challenges related to a high number of classes and class imbalance [47].

To use the dataset as input data for ML, it was preprocessed in the following order. First, invisible activities were removed to limit the introduction of potential class ambiguities. Second, the actor was removed from each activity quadruplet to form action triplets to comply with the algorithm design. Third, all targets of activities with the action “move” were converted to “no target” to reduce potential temporal ambiguities. Fourth, the individual classes were grouped by clinical similarity, relevance and categorizations in previous literature [26, 30, 34, 48] to reduce the number of occurring triplet combinations to 100 or fewer, as favored by the algorithm design (Supplementary Material, Sect. 3). Independently, the individual classes of the surgical activity model were also grouped to symbolically fit into the classes used in CholecT45. The dataset’s video frames were sampled at a constant framerate of 3.125 FPS to limit the overall model input to fewer than 150,000 frames because of hardware limitations, similar to previous work in the literature [49].
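The order of these preprocessing steps can be sketched in a few lines. This is an illustrative sketch only, not the pipeline used in the study: the function name `to_action_triplets`, the dictionary keys, the toy records and the grouping table are all assumptions, and the real class grouping is the one given in the Supplementary Material.

```python
def to_action_triplets(activities, class_groups):
    """Convert annotated activity quadruplets into grouped action triplets."""
    triplets = []
    for act in activities:
        # Step 1: drop activities that are not visible.
        if not act["visible"]:
            continue
        # Step 2: discard the actor, keeping instrument, action and target.
        instrument, action, target = act["instrument"], act["action"], act["target"]
        # Step 3: activities with the action "move" get the target "no target".
        if action == "move":
            target = "no target"
        # Step 4: map each class to its grouped category (unchanged if ungrouped).
        triplets.append(
            tuple(class_groups.get(name, name) for name in (instrument, action, target))
        )
    return triplets


# Toy input; the records and the grouping table are illustrative only.
activities = [
    {"actor": "left hand of surgeon", "instrument": "grasper",
     "action": "grasp", "target": "gallbladder", "visible": True},
    {"actor": "right hand of surgeon", "instrument": "electric hook",
     "action": "move", "target": "Calot triangle", "visible": True},
    {"actor": "right hand of assistant", "instrument": "grasper",
     "action": "hold", "target": "gallbladder", "visible": False},
]
class_groups = {"grasp": "grasp/hold", "hold": "grasp/hold"}

triplets = to_action_triplets(activities, class_groups)
print(triplets)
```

Applying the grouping last means that the "move" rule is evaluated on the original action classes, which mirrors the order stated in the text.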

Experiments

Three experiments were carried out with the Distilled-Swin algorithm. First, training and cross-validation were performed on our own dataset using the groupKfold algorithm to avoid data leakage between video sequences [50]. Each video appeared exactly once in the test set across all folds (Table 1). The data were stratified based on the triplet combination to balance the number of classes per fold. The action triplet recognition performance was measured with the mean average precision (mAP) and top K = 5 Accuracy (Top-5-Acc) percentages using the ivtmetrics library [49]. Performance differences with different data input were investigated (Table 1).

Table 1.

Data split for cross-validation using all data

| | Fold 0 | Fold 1 | Fold 2 | Fold 3 | Fold 4 |
|---|---|---|---|---|---|
| 0 | HeiChole17 | 002exvivo | 005exvivo | 004exvivo | 001exvivo |
| 1 | HeiChole18 | 013exvivo | 009exvivo | 010exvivo | 011exvivo |
| 2 | HeiChole20 | HeiChole16 | HeiChole19 | HeiChole14 | 012exvivo |
| 3 | – | HeiChole21 | HeiChole22 | HeiChole15 | 014exvivo |
| 4 | – | – | – | – | HeiChole13 |
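The idea behind a group-wise split is that all frames of one video stay together, so that no video contributes to both training and testing within a fold. The sketch below is a plain-Python illustration of that principle under simplifying assumptions: the function `group_k_fold` is hypothetical, it assigns videos round-robin, and it does not reproduce the stratification by triplet combination or the exact fold assignment of Table 1. Libraries such as scikit-learn offer `GroupKFold` for the same purpose.

```python
def group_k_fold(video_ids, n_splits=5):
    """Yield (train_videos, test_videos) pairs.

    Every video lands in exactly one test fold, so frames from the same
    video never appear in both the training and the test set of a fold.
    """
    unique = sorted(set(video_ids))
    folds = [unique[i::n_splits] for i in range(n_splits)]  # round-robin
    for test in folds:
        train = [v for v in unique if v not in test]
        yield train, test


# Video identifiers as listed in Table 1.
VIDEOS = [f"HeiChole{i}" for i in range(13, 23)] + [
    f"{i:03d}exvivo" for i in (1, 2, 4, 5, 9, 10, 11, 12, 13, 14)
]

splits = list(group_k_fold(VIDEOS, n_splits=5))
for fold, (train, test) in enumerate(splits):
    print(fold, len(train), test)
```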

Second, the algorithm was trained on the combination of our HeiCholeActivity dataset and the CholecT45 dataset and then validated on the official cross-validation split of the CholecT45 dataset [49]. The calculation of the action triplet recognition performance was identical to the first experiment and mAP was measured.

Third, our dataset was separated by actor into three subsets, meaning that each subset included instrument, action and target annotations specific to the surgeon’s left hand, surgeon’s right hand or assistant’s right hand. A separate Distilled-Swin algorithm was trained on each subset. The cross-validation of each algorithm was identical to the first experiment and mAP was measured.

Results

Activity dataset

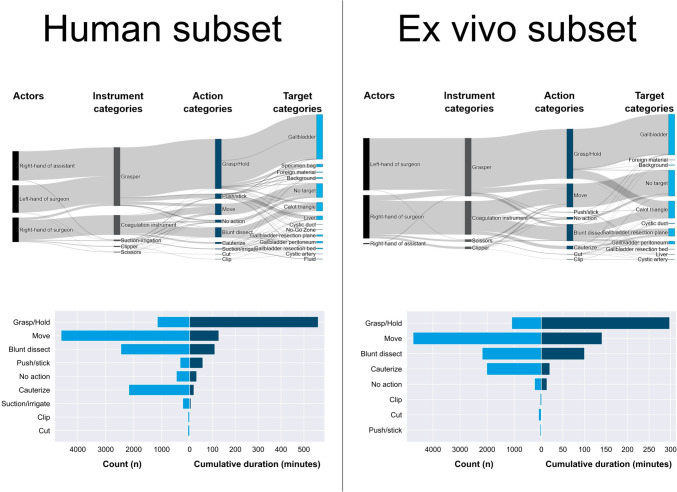

Our dataset consists of n = 20 videos at 25 FPS with a cumulative duration of 11.7 h or 1,059,851 frames (Fig. 1). The dataset contains 22,351 annotated individual activities, i.e. time segments corresponding to activities, with a cumulative duration of 24.9 h. The human subset contained a greater variety of targets than the ex vivo porcine subset, and the addition of the assistant’s hand to the annotation resulted in the actions “grasp” and “hold” accounting for a higher proportion of the overall duration. The cumulative number and cumulative duration of activities are shown separately for the human and ex vivo porcine subsets (Fig. 2). Instrument presence was annotated 9,841 times and phases 134 times in total. A proportion of 9.02% of frames contains no visible activity at all, and 83.5% of the assistant’s cumulative activity duration consisted of “grasp” and “hold”.

Fig. 2.

Selection of descriptive statistics of the activity model-based annotated HeiCholeActivity dataset for the human subset (left) and ex vivo porcine subset (right). Top: Cumulative duration of combinations of annotated features within all activities. Bottom: Total amount of activity time segments (light blue) and cumulative duration of activity time segments (dark blue), grouped by action category

Automatic action triplet recognition

Our own class reduction method for model input resulted in 5 instrument categories, 9 action categories and 14 target categories which formed 88 different triplet combinations occurring in the dataset. The dataset had a size of 132,482 frames after preprocessing.
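As a rough consistency check, the reported frame counts can be related to the sampling rate. The sketch below assumes that sampling at 3.125 FPS from 25 FPS video amounts to keeping every 8th frame, and it treats the whole dataset as one continuous sequence, which is a simplification of per-video sampling.

```python
import math

SOURCE_FPS = 25.0          # frame rate of the annotated videos
TARGET_FPS = 3.125         # sampling rate used for model input
TOTAL_FRAMES = 1_059_851   # reported dataset size at 25 FPS

step = SOURCE_FPS / TARGET_FPS                # keep one frame out of every `step`
sampled = math.ceil(TOTAL_FRAMES / step)      # frames left after sampling
hours = TOTAL_FRAMES / SOURCE_FPS / 3600      # total video duration in hours

print(step, sampled, round(hours, 2))
```

Under these assumptions the result is 132,482 sampled frames, in line with the reported dataset size after preprocessing and below the 150,000-frame limit.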

In the first experiment with training on our own HeiCholeActivity dataset only with our own class reduction method, the action triplet recognition algorithm scored 25.7% mAP and 85.5% Top-5-Acc using all videos for training and cross-validation. Detailed results of the algorithm cross-validation performance can be seen in Table 2.

Table 2.

Action triplet recognition algorithm performance using different training data input from the HeiCholeActivity dataset

mAP: mean average precision score; Top-5-Acc: top K = 5 accuracy score

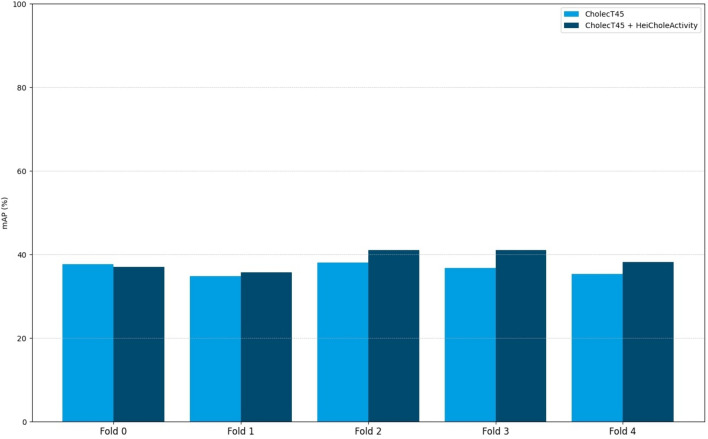

In the second experiment with training on our own HeiCholeActivity dataset and CholecT45 with CholecT45 triplet classes, the action triplet recognition algorithm scored 37.9% mAP. Figure 3 presents an overview of the per-fold performance compared to algorithm performance using only CholecT45 for training [31, 46].

Fig. 3.

Comparison between the Distilled-Swin algorithm cross-validation performances on CholecT45 using only CholecT45 for training or the combination of CholecT45 and HeiCholeActivity

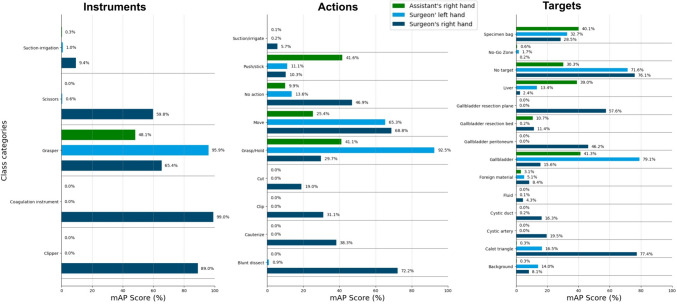

In the third experiment, with training on our HeiCholeActivity dataset only, using our own class reduction method and actor separation, the action triplet recognition algorithm scored 49.5% mAP for action triplets of the surgeon’s left hand, 46.8% for action triplets of the surgeon’s right hand and 56.6% for action triplets of the assistant’s right hand. A detailed overview of the per-class performance for each actor can be seen in Fig. 4.

Fig. 4.

Per-actor class category recognition performance using the HeiCholeActivity dataset for training

Discussion

Activity model for laparoscopic cholecystectomy

Our activity model formalizes the surgical activity workflow aiming at cooperation with collaborative surgical robots and has been applied to annotate real-world videos. Furthermore, our descriptive analysis provides quantitative insights into the surgical workflow, e.g. what the differences between LC on humans and on explanted porcine livers are (Fig. 2) and which activities are performed at which frequency during LC.

Activity recognition with ML

As of now, activity recognition with machine learning is not clinically applicable yet, probably because of limited data quantity and variability. The algorithm’s cross-validation performance using only the annotated videos on explanted porcine livers as input was higher than using only the annotated videos on humans (Table 2). This could be attributed to the different number of unique action triplet classes in each subset, as more unique classes potentially lead to more class imbalance and lower mAP scores. Passing supplementary separated action, instrument and target labels as well as phase and actor annotations as auxiliary information yielded no particular performance improvement.

In the first experiment, the algorithm’s cross-validation mAP score using all of our own HeiCholeActivity dataset as input (25.7%) was lower than during its participation in the CholecTriplet2022 challenge, which used the CholecT45 dataset as input (36.5%) [29]. Several factors may explain this difference in performance. While the annotation of all video frames including idle time reflects the actual occurrence of each activity, it introduced a high temporal action triplet class imbalance (Fig. 2). Accentuated by the limited quantity of annotated data, this resulted in good recognition of frequent classes but poor recognition of many infrequent classes. This negatively affected the overall mAP score, as the performance for each triplet class has the same weight, but positively affected the top K = 5 accuracy, as frequent classes were recognized correctly in many frames. Top K = 5 accuracy should be interpreted cautiously, as it is comparatively high but may not be a suitable metric to measure clinical usability.
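The opposite effect of class imbalance on the two metrics can be reproduced on a toy example. The sketch below uses a simplified single-label definition of both metrics and invented scores; it is not the ivtmetrics implementation, which handles multi-label triplet predictions, and the numbers carry no relation to the study results.

```python
def average_precision(scores, positives):
    """AP for one class: mean precision at the rank of each positive frame."""
    ranked = sorted(zip(scores, positives), key=lambda p: p[0], reverse=True)
    hits, precisions = 0, []
    for rank, (_, is_positive) in enumerate(ranked, start=1):
        if is_positive:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions) if precisions else None


def mean_average_precision(score_rows, labels, n_classes):
    """Unweighted mean of per-class AP; classes without positives are skipped."""
    aps = []
    for c in range(n_classes):
        ap = average_precision([row[c] for row in score_rows],
                               [label == c for label in labels])
        if ap is not None:
            aps.append(ap)
    return sum(aps) / len(aps)


def top_k_accuracy(score_rows, labels, k=5):
    """Fraction of frames whose true class is among the k highest scores."""
    correct = 0
    for row, label in zip(score_rows, labels):
        top = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        correct += label in top
    return correct / len(labels)


# Toy data: class 0 is frequent, classes 1 and 2 are rare, 3 to 5 never occur.
labels = [0, 0, 0, 0, 1, 2]
score_rows = [
    [0.90, 0.30, 0.06, 0.0, 0.0, 0.0],
    [0.80, 0.25, 0.05, 0.0, 0.0, 0.0],
    [0.70, 0.10, 0.04, 0.0, 0.0, 0.0],
    [0.60, 0.05, 0.03, 0.0, 0.0, 0.0],
    [0.50, 0.20, 0.02, 0.0, 0.0, 0.0],
    [0.40, 0.02, 0.01, 0.0, 0.0, 0.0],
]

m_ap = mean_average_precision(score_rows, labels, n_classes=6)
top5 = top_k_accuracy(score_rows, labels, k=5)
print(round(m_ap, 3), top5)
```

In this toy case the frequent class is ranked perfectly (AP of 1), whereas the two rare classes reach an AP of only 1/3 and 1/6. Because every class counts equally, the mAP drops to 0.5, while the top-5 accuracy stays at 1.0 since the true class is always among the five highest scores.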

Our dataset contains fewer videos than the one used in CholecTriplet2022 (20 vs. 45 videos) and addressing the lower amount of total data and infrequent classes by sampling videos at a higher framerate (3.125 vs. 1 FPS) did not appear to solve this issue. Thus, more videos and video segments should be annotated in the future. The auxiliary use of semantically segmented video frames, which contain rarely targeted but often visible tissues, may also improve performance [42]. Recording videos with multi-modal medical device sensor data, e.g. the electrocautery signal feed, may also improve annotations [51]. Semi-supervised ML approaches may bridge the gap between the availability of surgical video and lack of annotations thereof [52].

In the second experiment, the algorithm cross-validation mAP score using our own HeiCholeActivity dataset as well as CholecT45 as input improved over the score achieved by the algorithm using only CholecT45 as input (37.9% vs. 36.5%) [29, 46]. This improvement is encouraging for further research on state-of-the-art algorithms, which achieve 40.6% mAP using only CholecT45 for training [53]. However, the difference in annotation rules between the datasets made the symbolic mapping of our own classes to the classes of CholecT45 partly inconsistent. For example, the target “Calot triangle” was mapped to “cystic_plate” in the CholecT45 dataset. The target “Calot triangle” was often used in our own dataset to annotate dissecting activities in the Calot triangle dissection phase and implicitly includes cystic duct, cystic artery and cystic plate. This is because identifying the correct target in a causal manner while it was still covered by fatty tissue was potentially error-prone, and thus too ambiguous and subjective for consistent goal-driven annotations. Therefore, “cystic duct” and “cystic artery” can only be annotated when clipped or cut according to our annotation rules. In CholecT45, “cystic duct” and “cystic artery” were also annotated in the Calot triangle dissection phase. This highlights the need for transparent annotation rules across datasets in the literature, which we address in the Supplementary Material.
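A symbolic class mapping of this kind can be represented as a simple lookup table. The sketch below is illustrative: only the single entry shown is taken from the text, the names `TARGET_TO_CHOLECT45` and `map_target` are hypothetical, and the full mapping used in the study is not reproduced here.

```python
# Only this entry is stated in the text; a complete table would list
# every target class of the activity model.
TARGET_TO_CHOLECT45 = {
    "Calot triangle": "cystic_plate",
}


def map_target(target):
    """Return the CholecT45 target class for an activity-model target.

    Raises KeyError for unmapped classes so that gaps in the mapping are
    noticed instead of being silently passed through.
    """
    try:
        return TARGET_TO_CHOLECT45[target]
    except KeyError:
        raise KeyError(f"no CholecT45 mapping defined for target {target!r}") from None


print(map_target("Calot triangle"))
```

Failing loudly on unmapped classes is one way to surface inconsistencies between annotation schemes early, before they affect training.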

In the third experiment, big differences in class performance between actors were noted. For example, the action “Grasp/Hold” was recognized well for the surgeon’s left hand and the target “Calot triangle” was recognized well for the surgeon’s right hand (Fig. 4).

Limitations

During the development and application of the activity model, several limitations were discovered with regard to the real-time, i.e. causal, nature of the use case of collaborative surgical robotics. A compromise was negotiated between unambiguous start and end events for each activity and a manageable annotation effort during the development and iteration of every annotation rule. For example, the target of a movement can only be roughly estimated until the movement is finished, thus forcing the annotator to change annotations retroactively if the previously assumed target turns out to be false. This incompatibility between real-time recognition and goal-oriented modeling may be inherent to the ambiguous nature of the prediction of future actions, especially for the use case of collaborative robotic assistance. Modeling in a strictly event-driven manner could cause fewer causality issues, but would produce either a substantial loss of clinical meaning or a substantially higher annotation effort [39]. Therefore, during model refinement and resulting iterations of the annotation rules, a latency period of one second was introduced to annotate the most probable activity during this timeframe (Supplementary Material, Sect. 2, Action annotation). This allowed causality to be mostly preserved, i.e. no significant retroactive correction of the annotated activity was needed. For the use case of collaborative surgical robots, an input latency of one second is estimated by the authors to be tolerable to reliably understand the surgeon’s workflow early enough in most situations, similar to a human assistant’s reaction and processing time to see and understand the surgeon’s workflow in real-time. However, to alleviate this latency in the future, muscle activity sensors on the surgeon’s arms may help to anticipate their next activity [54].

Actors also occasionally performed two actions simultaneously, e.g. the right hand grasper moving to the specimen bag while holding the gallbladder. The activity model captured only the primary action, resulting in the potential loss of clinically relevant information. This could be addressed in future works.

Additionally, “blunt dissect” was found to be an action which included many different dissection techniques with the electric hook, overholt or other instruments. In future works, each dissection technique could become its own class and therefore provide more detailed information on the intraoperative workflow [55]. Similarly, differentiating between the anatomic regions of a targeted tissue could be useful to capture the advancement of the procedure in a more accurate and clinically relevant manner (e.g. dissecting the left, right or center of the Calot triangle and then clipping the proximal or distal end of the cystic duct). This could also be added to the activity model in future works.

Research steps towards clinical application

LC is a popular benchmark procedure for surgical workflow recognition with ML due to it being a high-volume procedure performed by novice surgeons [21]. However, more complex surgical procedures performed by experienced surgeons such as robot-assisted minimally invasive esophagectomy (RAMIE) are becoming increasingly relevant for surgical workflow recognition due to higher process standardization and clinical need for assistance systems to further improve patient outcomes [56, 57].

Regardless of the selected procedure, high quantity and variation of input data are key for ML algorithms to generalize and be robust for clinical application. Therefore, we add results from a new single-center annotated subset of human LC from a German hospital to the research field. We also created a novel single-center annotated subset of ex vivo porcine LC to facilitate translational research on collaborative surgical robots in pre-clinical development phases. Both subsets share the same surgical activity process model. The model’s classes were mapped to the classes of the CholecT45 dataset in order to use both datasets for algorithm training.

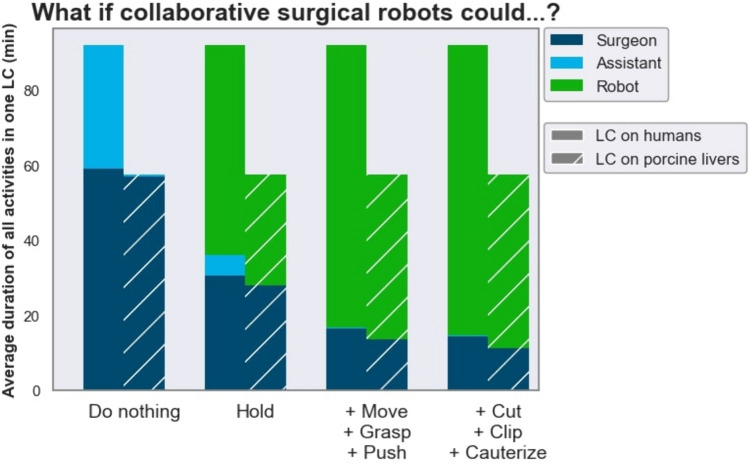

If collaborative surgical robots could grasp and hold tissue, 83.5% of the assistant’s tissue interacting tasks (i.e. excluding camera guidance) could be performed by the robot. Thus, collaborative surgical robots may have the potential to lower the workload of surgical staff in LC if they could autonomously perform assisting actions in the future (Fig. 5). If robots took over assisting functions such as using a grasping instrument (moving, pushing, grasping tissue or holding tissue) and controlling the endoscope during LC, they could free up time for the surgical assistant to assist in more complex surgical procedures, work in the ward or rest during on-call night shifts. Conversely, a reliable robotic surgical assistant may lower the hurdle for surgical assistants to start performing basic laparoscopic procedures like LC themselves while the supervising surgeon would not necessarily need to be scrubbed in and assisting.

Fig. 5.

Quantitative impact of collaborative robotics on human–robot-cooperation in laparoscopic cholecystectomy (LC). The plot displays the hypothetical cumulative duration of activities of each stakeholder (surgeon, assistant, robot) across all videos if collaborative surgical robots could autonomously perform increasing sets of specific action tasks. It is assumed that the number of available robots is not limited. Camera holding with the assistant’s left hand was not included, because it was not annotated as an activity in our model and does not involve direct interaction with tissue
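The kind of calculation behind such a share, and behind reassigning activity time to a robot, can be sketched as follows. The segment list is invented toy data, the function names are hypothetical, and restricting the reassignment to one actor is an assumption made for illustration; the sketch is not the analysis code of the study.

```python
from collections import defaultdict


def share_of_actions(segments, actor, actions):
    """Fraction of an actor's cumulative activity duration spent on `actions`."""
    total = sum(d for a, _, d in segments if a == actor)
    selected = sum(d for a, act, d in segments if a == actor and act in actions)
    return selected / total if total else 0.0


def reassign_to_robot(segments, actor, automated_actions):
    """Cumulative duration per stakeholder if a robot takes over the given
    actions of one actor (assumes an unlimited number of robots)."""
    totals = defaultdict(float)
    for seg_actor, action, duration_s in segments:
        takes_over = seg_actor == actor and action in automated_actions
        totals["robot" if takes_over else seg_actor] += duration_s
    return dict(totals)


# Toy segments as (actor, action, duration in seconds); values are invented.
segments = [
    ("assistant", "grasp", 2.0),
    ("assistant", "hold", 6.0),
    ("assistant", "move", 2.0),
    ("surgeon", "blunt dissect", 10.0),
    ("surgeon", "hold", 5.0),
]

share = share_of_actions(segments, "assistant", {"grasp", "hold"})
totals = reassign_to_robot(segments, "assistant", {"grasp", "hold"})
print(share, totals)
```

In this toy case, 80% of the assistant's activity time would move to the robot; applied to the annotated dataset, the same type of ratio corresponds to the 83.5% reported for “grasp” and “hold”.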

While robotically automating shorter, more critical actions (such as cutting, clipping, cauterizing) may not save a substantial amount of time in surgery, it may reduce the surgeon’s daily mental stress if performed reliably [58].

The requirements for state-of-the-art data input for ML compelled us to preprocess the dataset. This resulted in decreased data complexity and introduced feature selection as a type of data leak [59]. However, the authors believe that the data’s complexity reflects the activity model’s clinical relevance. With regard to enabling collaborative surgical robotics, the data’s clinical relevance is crucial, and thus should not be simplified but preserved. The authors consider that such collaborative surgical robots will require workflow recognition capabilities comparable to those of surgeons for seamless and complex real-time assistance, which does not leave space for trade-offs in data complexity and may require further improvements in the performance of ML algorithms.

Concerning the practical use of the activity model, we estimate the actual annotating time for all 20 videos to have taken a total of over 500 working hours, including the learning curve and iterations, despite the specified video selection criteria. Therefore, we consider annotation effort to be a bottleneck for the further use of machine learning algorithms for activity recognition. The resulting selection bias may have led to the collection of less diverse data and fewer edge cases. These bottlenecks could be addressed by the use of foundation models during the annotation process [60]. However, foundation models designed for workflow recognition are lacking. Label interpolation could make use of annotated time segments in order to augment the quantity of annotated data. Perceived annotation effort could also be reduced if a modern, free and gamified video annotation software were developed [61]. Less annotation effort would reduce selection bias and potentially enable multi-center randomized video collection for surgical action annotation. This would also enable the annotating capacity to be scaled up to improve intra- and inter-rater reliability of the annotations.

Finally, future works could contain more surgical settings, e.g. robot-assisted LC on humans and LC on living pigs. This could improve the generalization of ML-based surgical action recognition algorithms during the preclinical development of collaborative surgical robots.

Importantly, considerations on accountability and liability in the use of collaborative surgical robots leveraging automated processes need to be taken into account already during the development process. A level of autonomy (LoA) needs to be clearly defined in each development stage and comply with national and international medical device regulations before entering the market. However, LoA definitions lack consistency in the literature and need a unified approach [11, 62, 63]. In particular, potential harm to patients needs to be avoided at all costs. Determining the liability of the main stakeholders, mainly between the surgical staff and the robot manufacturer, when complications for the patient arise, can pose a challenge when the system is tightly integrated into the surgical workflow and responsibilities are not clearly defined [64].

Conclusion

This paper introduces an activity model of laparoscopic cholecystectomy in different surgical settings. Its first application with video annotation and its use for machine learning-based action triplet recognition are presented. The activity model accurately captures clinically relevant information on the fine granular surgical workflow and shows the potential of collaborative surgical robots in the operating rooms of the future. However, the gap between the constraints of machine learning approaches and the requirements of surgeons for the use case of collaborative surgical robotics needs to be bridged in future works.

Funding

Open Access funding enabled and organized by Projekt DEAL. This work has been funded by the German federal ministry of research and education (BMBF) within the Koala-Grasp project (funding number: 13GW0471D), by the German Research Foundation (DFG, Deutsche Forschungsgemeinschaft) as part of Germany’s Excellence Strategy—EXC 2050/1—Project ID 390696704—Cluster of Excellence “Centre for Tactile Internet with Human-in-the-Loop” (CeTI) of TUD Dresden University of Technology and by the National Center for Tumor Diseases Heidelberg within the Cancer-Therapy-Programm “Data Science driven Surgical Oncology”.

Data availability

The raw video data and annotations that support the findings of this study are available from the corresponding author upon reasonable request.

Disclosures

Franziska Mathis-Ullrich, Beat Peter Müller-Stich and Martin Wagner are project leaders of the Koala-Grasp project, funded by the German Federal Ministry of Education and Research (BMBF) (funding number: 13GW0471D). Stefanie Speidel, Marius Distler, Jürgen Weitz and Martin Wagner are funded by the German Research Foundation (DFG) as part of Germany’s Excellence Strategy—EXC 2050/1—Project ID 390696704—Cluster of Excellence “Centre for Tactile Internet with Human-in-the-Loop” (CeTI) of TUD Dresden University of Technology. Rayan Younis is funded by the National Center for Tumor Diseases Heidelberg within the Cancer Therapy Program “Data Science driven Surgical Oncology” (DSdSO). Amine Yamlahi, Sebastian Bodenstedt, Paul Maria Scheikl, Anna Kisilenko, Marie Daum, André Schulze, Philipp Anthony Wise, Felix Nickel and Lena Maier-Hein have no conflict of interest or financial ties to disclose.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Shaffer EA (2006) Epidemiology of gallbladder stone disease. Best Pract Res Clin Gastroenterol 20:981–996. 10.1016/j.bpg.2006.05.004

- 2. Statistisches Bundesamt (Destatis) (2023) Krankenhäuser: Die 20 häufigsten Operationen insgesamt (OPS5). https://www.destatis.de/DE/Themen/Gesellschaft-Umwelt/Gesundheit/Krankenhaeuser/Tabellen/drg-operationen-insgesamt.html. Accessed 11 May 2024

- 3. Ellison EC, Pawlik TM, Way DP, Satiani B, Williams TE (2018) Ten-year reassessment of the shortage of general surgeons: Increases in graduation numbers of general surgery residents are insufficient to meet the future demand for general surgeons. Surgery 164:726–732. 10.1016/j.surg.2018.04.042

- 4. Kirch DG, Petelle K (2017) Addressing the physician shortage: the peril of ignoring demography. JAMA 317:1947. 10.1001/jama.2017.2714

- 5. Vallböhmer D, Fuchs H, Krones C (2018) Nehmen wir jetzt jeden? eine umfrage in Deutschen chirurgischen kliniken. Passion Chir 8

- 6. Battaglia E, Boehm J, Zheng Y, Jamieson AR, Gahan J, Majewicz Fey A (2021) Rethinking autonomous surgery: focusing on enhancement over autonomy. Eur Urol Focus 7:696–705. 10.1016/j.euf.2021.06.009

- 7. Gumbs AA, Grasso V, Bourdel N, Croner R, Spolverato G, Frigerio I, Illanes A, Abu Hilal M, Park A, Elyan E (2022) The advances in computer vision that are enabling more autonomous actions in surgery: a systematic review of the literature. Sensors 22:4918. 10.3390/s22134918

- 8. Maier-Hein L, Vedula SS, Speidel S, Navab N, Kikinis R, Park A, Eisenmann M, Feussner H, Forestier G, Giannarou S, Hashizume M, Katic D, Kenngott H, Kranzfelder M, Malpani A, März K, Neumuth T, Padoy N, Pugh C, Schoch N, Stoyanov D, Taylor R, Wagner M, Hager GD, Jannin P (2017) Surgical data science for next-generation interventions. Nat Biomed Eng 1:691–696. 10.1038/s41551-017-0132-7

- 9. Yip M, Das N (2018) Robot autonomy for surgery. The encyclopedia of medical robotics. World Scientific, pp 281–313

- 10. Attanasio A, Scaglioni B, De Momi E, Fiorini P, Valdastri P (2021) Autonomy in surgical robotics. Annu Rev Control Robot Auton Syst 4:651–679. 10.1146/annurev-control-062420-090543

- 11. Haidegger T (2019) Autonomy for surgical robots: concepts and paradigms. IEEE Trans Med Robot Bionics 1:65–76. 10.1109/TMRB.2019.2913282

- 12. Wagner M, Bihlmaier A, Kenngott HG, Mietkowski P, Scheikl PM, Bodenstedt S, Schiepe-Tiska A, Vetter J, Nickel F, Speidel S, Wörn H, Mathis-Ullrich F, Müller-Stich BP (2021) A learning robot for cognitive camera control in minimally invasive surgery. Surg Endosc 35:5365–5374. 10.1007/s00464-021-08509-8

- 13. Saeidi H, Opfermann JD, Kam M, Wei S, Leonard S, Hsieh MH, Kang JU, Krieger A (2022) Autonomous robotic laparoscopic surgery for intestinal anastomosis. Sci Robot 7:eabj2908. 10.1126/scirobotics.abj2908

- 14. Beuss F, Schmatz F, Stepputat M, Nokodian F, Fluegge W, Frerich B (2021) Cobots in maxillofacial surgery—challenges for workplace design and the human-machine-interface. Procedia CIRP 100:488–493. 10.1016/j.procir.2021.05.108

- 15. Hentout A, Aouache M, Maoudj A, Akli I (2019) Human–robot interaction in industrial collaborative robotics: a literature review of the decade 2008–2017. Adv Robot 33:764–799. 10.1080/01691864.2019.1636714

- 16. Sherwani F, Asad MM, Ibrahim BSKK (2020) Collaborative robots and industrial revolution 4.0 (IR 4.0). In: 2020 International Conference on Emerging Trends in Smart Technologies (ICETST). IEEE, Karachi, Pakistan, pp 1–5

- 17. Taesi C, Aggogeri F, Pellegrini N (2023) COBOT applications—recent advances and challenges. Robotics 12:79. 10.3390/robotics12030079

- 18. Vicentini F (2021) Collaborative robotics: a survey. J Mech Des 143:040802. 10.1115/1.4046238

- 19. Chadebecq F, Lovat LB, Stoyanov D (2022) Artificial intelligence and automation in endoscopy and surgery. Nat Rev Gastroenterol Hepatol. 10.1038/s41575-022-00701-y

- 20. Kassahun Y, Yu B, Tibebu AT, Stoyanov D, Giannarou S, Metzen JH, Vander Poorten E (2016) Surgical robotics beyond enhanced dexterity instrumentation: a survey of machine learning techniques and their role in intelligent and autonomous surgical actions. Int J Comput Assist Radiol Surg 11:553–568. 10.1007/s11548-015-1305-z

- 21. Mascagni P, Alapatt D, Sestini L, Altieri MS, Madani A, Watanabe Y, Alseidi A, Redan JA, Alfieri S, Costamagna G, Boškoski I, Padoy N, Hashimoto DA (2022) Computer vision in surgery: from potential to clinical value. Npj Digit Med 5:163. 10.1038/s41746-022-00707-5

- 22. Wagner M, Brandenburg JM, Bodenstedt S, Schulze A, Jenke AC, Stern A, Daum MTJ, Mündermann L, Kolbinger FR, Bhasker N, Schneider G, Krause-Jüttler G, Alwanni H, Fritz-Kebede F, Burgert O, Wilhelm D, Fallert J, Nickel F, Maier-Hein L, Dugas M, Distler M, Weitz J, Müller-Stich B-P, Speidel S (2022) Surgomics: personalized prediction of morbidity, mortality and long-term outcome in surgery using machine learning on multimodal data. Surg Endosc 36:8568–8591. 10.1007/s00464-022-09611-1

- 23. Kranzfelder M, Staub C, Fiolka A, Schneider A, Gillen S, Wilhelm D, Friess H, Knoll A, Feussner H (2013) Toward increased autonomy in the surgical OR: needs, requests, and expectations. Surg Endosc 27:1681–1688. 10.1007/s00464-012-2656-y

- 24. von Rueden L, Mayer S, Beckh K, Georgiev B, Giesselbach S, Heese R, Kirsch B, Walczak M, Pfrommer J, Pick A, Ramamurthy R, Garcke J, Bauckhage C, Schuecker J (2021) Informed machine learning—a taxonomy and survey of integrating prior knowledge into learning systems. IEEE Trans Knowl Data Eng. 10.1109/TKDE.2021.3079836

- 25. Maier-Hein L, Eisenmann M, Sarikaya D, März K, Collins T, Malpani A, Fallert J, Feussner H, Giannarou S, Mascagni P, Nakawala H, Park A, Pugh C, Stoyanov D, Vedula SS, Cleary K, Fichtinger G, Forestier G, Gibaud B, Grantcharov T, Hashizume M, Heckmann-Nötzel D, Kenngott HG, Kikinis R, Mündermann L, Navab N, Onogur S, Roß T, Sznitman R, Taylor RH, Tizabi MD, Wagner M, Hager GD, Neumuth T, Padoy N, Collins J, Gockel I, Goedeke J, Hashimoto DA, Joyeux L, Lam K, Leff DR, Madani A, Marcus HJ, Meireles O, Seitel A, Teber D, Ückert F, Müller-Stich BP, Jannin P, Speidel S (2022) Surgical data science – from concepts toward clinical translation. Med Image Anal 76:102306. 10.1016/j.media.2021.102306

- 26. Nwoye CI, Gonzalez C, Yu T, Mascagni P, Mutter D, Marescaux J, Padoy N (2020) Recognition of instrument-tissue interactions in endoscopic videos via action triplets. In: Martel AL et al (eds) Medical Image Computing and Computer Assisted Intervention – MICCAI 2020. MICCAI 2020. Lecture Notes in Computer Science, vol 12263. Springer, Cham, pp 364–374

- 27. Twinanda AP, Shehata S, Mutter D, Marescaux J, de Mathelin M, Padoy N (2017) EndoNet: a deep architecture for recognition tasks on laparoscopic videos. IEEE Trans Med Imaging 36:86–97. 10.1109/TMI.2016.2593957

- 28. Nwoye CI, Alapatt D, Yu T, Vardazaryan A, Xia F, Zhao Z, Xia T, Jia F, Yang Y, Wang H, Yu D, Zheng G, Duan X, Getty N, Sanchez-Matilla R, Robu M, Zhang L, Chen H, Wang J, Wang L, Zhang B, Gerats B, Raviteja S, Sathish R, Tao R, Kondo S, Pang W, Ren H, Abbing JR, Sarhan MH, Bodenstedt S, Bhasker N, Oliveira B, Torres HR, Ling L, Gaida F, Czempiel T, Vilaça JL, Morais P, Fonseca J, Egging RM, Wijma IN, Qian C, Bian G, Li Z, Balasubramanian V, Sheet D, Luengo I, Zhu Y, Ding S, Aschenbrenner J-A, van der Kar NE, Xu M, Islam M, Seenivasan L, Jenke A, Stoyanov D, Mutter D, Mascagni P, Seeliger B, Gonzalez C, Padoy N (2023) CholecTriplet2021: a benchmark challenge for surgical action triplet recognition. Med Image Anal 86:102803. 10.1016/j.media.2023.102803

- 29. Nwoye CI, Yu T, Sharma S, Murali A, Alapatt D, Vardazaryan A, Yuan K, Hajek J, Reiter W, Yamlahi A, Smidt F-H, Zou X, Zheng G, Oliveira B, Torres HR, Kondo S, Kasai S, Holm F, Özsoy E, Gui S, Li H, Raviteja S, Sathish R, Poudel P, Bhattarai B, Wang Z, Rui G, Schellenberg M, Vilaça JL, Czempiel T, Wang Z, Sheet D, Thapa SK, Berniker M, Godau P, Morais P, Regmi S, Tran TN, Fonseca J, Nölke J-H, Lima E, Vazquez E, Maier-Hein L, Navab N, Mascagni P, Seeliger B, Gonzalez C, Mutter D, Padoy N (2023) CholecTriplet2022: show me a tool and tell me the triplet—an endoscopic vision challenge for surgical action triplet detection. Med Image Anal 89:102888. 10.1016/j.media.2023.102888

- 30. Nwoye CI, Yu T, Gonzalez C, Seeliger B, Mascagni P, Mutter D, Marescaux J, Padoy N (2022) Rendezvous: attention mechanisms for the recognition of surgical action triplets in endoscopic videos. Med Image Anal 78:102433. 10.1016/j.media.2022.102433

- 31. Nwoye C, Murali A, Sharma S, Yu T, Kun Y, Vardazaryan A, Alapatt D, Padoy N (2022) MICCAI 2022 EndoVis CholecTriplet2022: challenge dataset annotation protocol. https://cholectriplet2022.grand-challenge.org/annotation-protocol/. Accessed 11 May 2024

- 32. Zia A, Liu X, Bhattacharyya K, Wang Z, Berniker M, Jarc A, Nwoye C, Alapatt D, Murali A, Sharma S, Vardazaryan A, Padoy N, Van Amsterdam B, Psychogyios D, Colleoni E, Stoyanov D, Rau A, Bano S, Jin Y, Cartucho J, Giannarou S, Lopez YE, Buc E, Soonawalla Z, Gordon-Weeks A, Silva M, Bartoli A, Roß T, Reinke A, Bodenstedt S, Stoyanov D, Maier H, Speidel S (2022) Endoscopic vision challenge 2022

- 33. Neumuth T, Jannin P, Strauss G, Meixensberger J, Burgert O (2009) Validation of knowledge acquisition for surgical process models. J Am Med Inform Assoc 16:72–80. 10.1197/jamia.M2748

- 34. Wagner M, Müller-Stich B-P, Kisilenko A, Tran D, Heger P, Mündermann L, Lubotsky DM, Müller B, Davitashvili T, Capek M, Reinke A, Reid C, Yu T, Vardazaryan A, Nwoye CI, Padoy N, Liu X, Lee E-J, Disch C, Meine H, Xia T, Jia F, Kondo S, Reiter W, Jin Y, Long Y, Jiang M, Dou Q, Heng PA, Twick I, Kirtac K, Hosgor E, Bolmgren JL, Stenzel M, von Siemens B, Zhao L, Ge Z, Sun H, Xie D, Guo M, Liu D, Kenngott HG, Nickel F, von Frankenberg M, Mathis-Ullrich F, Kopp-Schneider A, Maier-Hein L, Speidel S, Bodenstedt S (2023) Comparative validation of machine learning algorithms for surgical workflow and skill analysis with the HeiChole benchmark. Med Image Anal 86:102770. 10.1016/j.media.2023.102770

- 35. Marcus HJ, Payne CJ, Hughes-Hallett A, Gras G, Leibrandt K, Nandi D, Yang G-Z (2016) Making the leap: the translation of innovative surgical devices from the laboratory to the operating room. Ann Surg 263:1077–1078. 10.1097/SLA.0000000000001532

- 36. Troccaz J, Dagnino G, Yang G-Z (2019) Frontiers of medical robotics: from concept to systems to clinical translation. Annu Rev Biomed Eng 21:193–218. 10.1146/annurev-bioeng-060418-052502

- 37. Selvam P, Sundar KJA (2023) Chapter 23—a deep learning framework for surgery action detection. In: Garg H, Chatterjee JM (eds) Deep learning in personalized healthcare and decision support. Academic Press, pp 315–328

- 38. Neumuth T (2017) Surgical process modeling. Innov Surg Sci 2:123–137. 10.1515/iss-2017-0005

- 39. Ward TM, Fer DM, Ban Y, Rosman G, Meireles OR, Hashimoto DA (2021) Challenges in surgical video annotation. Comput Assist Surg 26:58–68. 10.1080/24699322.2021.1937320

- 40. Meireles OR, Rosman G, Altieri MS, Carin L, Hager G, Madani A, Padoy N, Pugh CM, Sylla P, Ward TM, Hashimoto DA (2021) SAGES consensus recommendations on an annotation framework for surgical video. Surg Endosc 35:4918–4929. 10.1007/s00464-021-08578-9

- 41. Neumuth T, Jannin P, Schlomberg J, Meixensberger J, Wiedemann P, Burgert O (2011) Analysis of surgical intervention populations using generic surgical process models. Int J Comput Assist Radiol Surg 6:59–71. 10.1007/s11548-010-0475-y

- 42. Wagner M, Bodenstedt S (2021) Endoscopic vision challenge (EndoVis) 2021: HeiChole surgical workflow analysis and full scene segmentation (HeiSurF). https://www.synapse.org/heisurf. Accessed 27 Oct 2021

- 43. Hüttl TP, Hrdina C, Krämling H-J, Schildberg FW, Meyer G (2001) Gallstone surgery in German university hospitals: development, complications and changing strategies. Langenbecks Arch Surg 386:410–417. 10.1007/s004230100249

- 44. Palinkas LA, Horwitz SM, Green CA, Wisdom JP, Duan N, Hoagwood K (2015) Purposeful sampling for qualitative data collection and analysis in mixed method implementation research. Adm Policy Ment Health Ment Health Serv Res 42:533–544. 10.1007/s10488-013-0528-y

- 45. Kipp M (2001) ANVIL—a generic annotation tool for multimodal dialogue. In: Seventh Eur Conf Speech Commun Technol 1367–1370

- 46. Yamlahi A, Tran TN, Godau P, Schellenberg M, Michael D, Smidt F-H, Nölke J-H, Adler T, Tizabi MD, Nwoye C, Padoy N, Maier-Hein L (2023) Self-distillation for surgical action recognition. In: Greenspan H et al (eds) Medical Image Computing and Computer Assisted Intervention – MICCAI 2023. MICCAI 2023. Lecture Notes in Computer Science, vol 14228. Springer, Cham, pp 637–646

- 47. Liu Z, Lin Y, Cao Y, Hu H, Wei Y, Zhang Z, Lin S, Guo B (2021) Swin transformer: hierarchical vision transformer using shifted windows

- 48. Kotsiantis SB, Zaharakis ID, Pintelas PE (2006) Machine learning: a review of classification and combining techniques. Artif Intell Rev 26:159–190. 10.1007/s10462-007-9052-3

- 49. Nwoye CI, Padoy N (2022) Data splits and metrics for method benchmarking on surgical action triplet datasets. 10.48550/ARXIV.2204.05235

- 50. Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, Blondel M, Prettenhofer P, Weiss R, Dubourg V, Vanderplas J, Passos A, Cournapeau D et al (2011) Scikit-learn: machine learning in Python. J Mach Learn Res 12(85):2825–2830

- 51. Dergachyova O, Bouget D, Huaulmé A, Morandi X, Jannin P (2016) Automatic data-driven real-time segmentation and recognition of surgical workflow. Int J Comput Assist Radiol Surg 11:1081–1089. 10.1007/s11548-016-1371-x

- 52. DiPietro R, Hager GD (2019) Automated surgical activity recognition with one labeled sequence. In: Shen D, Liu T, Peters TM, Staib LH, Essert C, Zhou S, Yap P-T, Khan A (eds) Medical image computing and computer assisted intervention – MICCAI 2019. Springer International Publishing, Cham, pp 458–466

- 53. Li Y, Bai B, Jia F (2024) Parameter-efficient framework for surgical action triplet recognition. Int J Comput Assist Radiol Surg. 10.1007/s11548-024-03147-6

- 54. Chen Y, Zhang H, Wang C, Ang KK, Ng SH, Jin H, Lin Z (2023) A hierarchical dynamic Bayesian learning network for EMG-based early prediction of voluntary movement intention. Sci Rep 13:4730. 10.1038/s41598-023-30716-7

- 55. Ma R, Ramaswamy A, Xu J, Trinh L, Kiyasseh D, Chu TN, Wong EY, Lee RS, Rodriguez I, DeMeo G, Desai A, Otiato MX, Roberts SI, Nguyen JH, Laca J, Liu Y, Urbanova K, Wagner C, Anandkumar A, Hu JC, Hung AJ (2022) Surgical gestures as a method to quantify surgical performance and predict patient outcomes. Npj Digit Med 5:187. 10.1038/s41746-022-00738-y

- 56. Hussain SM, Brunetti A, Lucarelli G, Memeo R, Bevilacqua V, Buongiorno D (2022) Deep learning based image processing for robot assisted surgery: a systematic literature survey. IEEE Access 10:122627–122657. 10.1109/ACCESS.2022.3223704

- 57. Takeuchi M, Kawakubo H, Saito K, Maeda Y, Matsuda S, Fukuda K, Nakamura R, Kitagawa Y (2022) Automated surgical-phase recognition for robot-assisted minimally invasive esophagectomy using artificial intelligence. Ann Surg Oncol 29:6847–6855. 10.1245/s10434-022-11996-1

- 58. Arora S, Sevdalis N, Nestel D, Woloshynowych M, Darzi A, Kneebone R (2010) The impact of stress on surgical performance: a systematic review of the literature. Surgery 147:318-330.e6. 10.1016/j.surg.2009.10.007

- 59. Kapoor S, Narayanan A (2023) Leakage and the reproducibility crisis in machine-learning-based science. Patterns. 10.1016/j.patter.2023.100804

- 60. Mazurowski MA, Dong H, Gu H, Yang J, Konz N, Zhang Y (2023) Segment anything model for medical image analysis: an experimental study. Med Image Anal 89:102918. 10.1016/j.media.2023.102918

- 61. Warsinsky S, Schmidt-Kraepelin M, Thiebes S, Wagner M, Sunyaev A (2022) Gamified expert annotation systems: meta-requirements and tentative design. In: Drechsler A, Gerber A, Hevner A (eds) The transdisciplinary reach of design science research. Springer International Publishing, Cham, pp 154–166

- 62. Connor MJ, Dasgupta P, Ahmed HU, Raza A (2020) Autonomous surgery in the era of robotic urology: friend or foe of the future surgeon? Nat Rev Urol 17:643–649. 10.1038/s41585-020-0375-z

- 63. Yang G-Z, Cambias J, Cleary K, Daimler E, Drake J, Dupont PE, Hata N, Kazanzides P, Martel S, Patel RV, Santos VJ, Taylor RH (2017) Medical robotics—regulatory, ethical, and legal considerations for increasing levels of autonomy. Sci Robot 2:eaam8638. 10.1126/scirobotics.aam8638

- 64. Jamjoom AAB, Jamjoom AMA, Thomas JP, Palmisciano P, Kerr K, Collins JW, Vayena E, Stoyanov D, Marcus HJ, The iRobotSurgeon Collaboration (2022) Autonomous surgical robotic systems and the liability dilemma. Front Surg 9:1015367. 10.3389/fsurg.2022.1015367
