Abstract

Procedural fidelity refers to the degree to which the procedures of an assessment or intervention (i.e., the independent variables) are implemented as prescribed by the protocol. Procedural fidelity is an important factor in demonstrating the internal validity of experiments and clinical treatments. Previous reviews of the inclusion of procedural fidelity in published empirical articles have shown that procedural fidelity procedures and measures are underreported in specific journals. We conducted a systematic review of The Analysis of Verbal Behavior (TAVB) to evaluate trends in procedural fidelity reporting from 2007 to 2021. Of the 253 articles published in TAVB during this period, 144 articles (168 studies) met the inclusion criteria for further analysis. Our results showed that 54% of studies reported procedural fidelity data, a slightly higher percentage than in previous reviews. In comparison, interobserver-agreement (IOA) data were reported in a high percentage of the studies reviewed (i.e., 93%). We discuss the results and their implications for applied research.

Keywords: Interobserver agreement, Procedural fidelity, Treatment integrity, Verbal behavior

Behavior analysts use research to guide treatment selection and implementation, and they are obligated to stay in regular contact with the scholarly literature (Briggs & Mitteer, 2022; Carr & Briggs, 2010). Therefore, it is important that the interventions practitioners access have high internal validity demonstrated through research. Internal validity refers to the degree to which changes in a dependent variable can be accurately attributed to changes in an independent variable (Gresham et al., 1993). Threats to internal validity include maturation, reactivity, and environmental histories (Christ, 2007). Another threat is failure to implement the independent variable as prescribed. Procedural fidelity refers to the extent to which an independent variable is implemented as prescribed by a predetermined procedure (Peterson et al., 1982). Measuring procedural fidelity permits practitioners and researchers to evaluate internal validity and provides additional support that changes in the dependent measures are due to the independent variable rather than to other variables. Including procedural fidelity data in published research is therefore important, because behavior analysts rely on that research to guide treatment decisions.

Multiple reviews have investigated procedural fidelity trends in behavioral journals over the past 40 years (e.g., McIntyre et al., 2007; Peterson et al., 1982; Sanetti et al., 2012). In an initial review of procedural fidelity, Peterson et al. (1982) discussed the importance of establishing a functional relationship between independent and dependent variables and found that only 23% of articles published in the Journal of Applied Behavior Analysis (JABA) between 1969 and 1980 assessed fidelity. They also classified independent-variable risk into three categories: fidelity was assessed (no risk), fidelity was not assessed but the procedures were mechanical or computer implemented (low risk), or fidelity was not assessed and errors were possible (high risk). Peterson et al. classified 68% of studies as high risk.

McIntyre et al. (2007) extended Peterson et al. (1982) by reviewing school-based experimental investigations published in JABA between 1991 and 2005. Although independent variables were frequently defined, procedural fidelity data were collected in only 30% of investigations. Studies were coded as no, low, or high risk for treatment inaccuracies based on the classifications in Peterson et al. Approximately 45% of the studies were classified as high risk, an improvement over the 68% reported by Peterson et al.

More recently, procedural fidelity reporting has been assessed in other journals. For example, Cymbal et al. (2022) investigated procedural fidelity reporting in the Journal of Organizational Behavior Management (JOBM) between 2000 and 2020 and found that only 24% of studies reported fidelity data and 44% of studies were coded as high risk. Falakfarsa et al. (2022) reviewed articles published in Behavior Analysis in Practice (BAP) between 2008 and 2019 and found that 47% of studies reported procedural fidelity data and 48% were classified as high risk for treatment-implementation inaccuracies. Han et al. (2023) extended previous research by reviewing JABA from 1980 to 2020 and comparing the results with previous reviews (i.e., Cymbal et al., 2022; Falakfarsa et al., 2022). Procedural fidelity was underreported across journals, although reporting increased over time in JABA and BAP. This pattern contrasts with the reporting of interobserver-agreement (IOA) data, which have consistently been reported more often than procedural fidelity across journals (e.g., Falakfarsa et al., 2022). For example, Falakfarsa et al. found that 94% of the studies they reviewed reported IOA data, about twice the percentage that reported procedural fidelity data (47%).

To our knowledge, no procedural fidelity review has included articles published in The Analysis of Verbal Behavior (TAVB), which was first published in 1982 and currently has 38 volumes. Because the journal emphasizes applied and innovative real-world applications, it is important that the research it disseminates is implemented with high procedural fidelity. In the absence of procedural fidelity data, it is difficult to draw valid conclusions about the effectiveness of verbal-behavior interventions and assessments. Verbal-behavior research may be especially vulnerable to procedural fidelity failures because much of it is conducted in applied settings (e.g., clinics, schools, and homes). Thus, the purpose of the present investigation was to review articles published in TAVB between 2007 and 2021 and report on procedural fidelity and related variables.

Method

Criteria for Review

We reviewed all articles published in TAVB from 2007 (Volume 23) to 2021 (Volume 37), a total of 253 articles. To be included in the review, a study had to be experimental (i.e., an independent variable was manipulated to affect a dependent variable), include a method section that described the procedures of the investigation, and use either a single-case design or a group design. We excluded studies that used an A-B design without replication or a posttest-only design, as well as studies that did not report a design. Consistent with Falakfarsa et al. (2022), if an article reported multiple studies, we coded each study separately. A total of 144 articles comprising 168 studies met the inclusion criteria and were included in the analysis.
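A minimal sketch of the inclusion rule as a predicate, assuming each study has already been coded on the three criteria. The function name and design labels are hypothetical and are not the authors' coding instrument; unreplicated A-B designs, posttest-only designs, and studies without a reported design are excluded simply because their labels are not in the included set.

```python
# Hypothetical design labels; only these qualify under the inclusion rule.
# Unreplicated A-B designs, posttest-only designs, and studies reporting
# no design are excluded because their labels do not appear in this set.
INCLUDED_DESIGNS = {"single-case", "group", "both"}


def meets_inclusion(experimental: bool, has_method_section: bool, design: str) -> bool:
    """Return True if a study meets all three inclusion criteria.

    experimental: an independent variable was manipulated to affect a
        dependent variable.
    has_method_section: the procedures were described in a method section.
    design: a label such as "single-case", "group", "both",
        "unreplicated A-B", "posttest-only", or "none".
    """
    return experimental and has_method_section and design in INCLUDED_DESIGNS


# Each study within a multi-study article is evaluated separately.
print(meets_inclusion(True, True, "single-case"))       # True
print(meets_inclusion(True, True, "unreplicated A-B"))  # False
```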

Coding

We reviewed each study for the following variables: (a) participant demographics, (b) treatment agent, (c) location of intervention, (d) assessment and intervention, (e) design, (f) operational definitions for the dependent variables, (g) operational definitions for the independent variables, (h) monitoring procedural fidelity, (i) procedural fidelity calculation type, (j) risk for procedural inaccuracies, and (k) monitoring interobserver agreement (IOA).

Participant Demographics

Each study was coded as “yes” or “no” for reporting demographic data. A study was coded as “yes” if it included two or more of the following: (a) age, (b) gender, (c) race/ethnicity, (d) diagnosis, or (e) socio-economic status. A study was coded as “no” if it reported fewer than two participant demographic factors.
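The demographic coding rule reduces to counting how many of the five listed factors a study reports. A hypothetical sketch, assuming reported factors have already been extracted as text labels (the labels and the function name are illustrative only):

```python
# The five factors listed in the coding scheme (labels are illustrative).
DEMOGRAPHIC_FACTORS = {
    "age",
    "gender",
    "race/ethnicity",
    "diagnosis",
    "socioeconomic status",
}


def code_demographics(reported_factors: list[str]) -> str:
    """Code "yes" if at least two listed factors are reported, else "no"."""
    # Count only recognized factors, ignoring duplicates and other labels.
    n_reported = len(DEMOGRAPHIC_FACTORS & set(reported_factors))
    return "yes" if n_reported >= 2 else "no"


print(code_demographics(["age", "diagnosis"]))  # yes
print(code_demographics(["age", "height"]))     # no
```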

Treatment Agent

Coders reviewed each study and categorized each individual responsible for implementing the intervention or assessment into one of the following categories: (a) teacher, (b) professional (e.g., psychologist, speech pathologist, RBT®, BCBA®, behavior therapist), (c) paraprofessional/staff/instructor, (d) parent, (e) sibling, (f) researcher/experimenter, (g) peer tutor, (h) self, or (i) other (i.e., does not fit into any of the categories above). When more than one individual was responsible for implementing the intervention, each treatment agent was coded (i.e., a study could have multiple treatment agents).

Location of Intervention

Coders reviewed each study and categorized the location into one of the following categories: (a) school (i.e., preschool, elementary school, high school, university), (b) clinic (i.e., hospital-based clinic, university-based clinic, community clinic), (c) home, (d) group home or residential facility, (e) not specified (i.e., no location indicated), and (f) other for locations that did not fit any other category. If multiple locations were used in one study, all locations were recorded (i.e., each study could have multiple locations).
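Because a study could have multiple treatment agents and multiple locations, category counts are tallies of codes rather than of studies, so they can sum to more than the number of studies. A minimal sketch with three hypothetical studies (not data from this review):

```python
from collections import Counter

# Hypothetical coded locations for three studies; a study may have several.
study_locations = [
    {"school"},
    {"home", "clinic"},
    {"school", "home"},
]

# Tally each location code once per study in which it appears.
location_counts = Counter(loc for locs in study_locations for loc in locs)

# Count studies coded with more than one location.
multi_location_studies = sum(1 for locs in study_locations if len(locs) > 1)

print(location_counts["school"], location_counts["home"], location_counts["clinic"])  # 2 2 1
print(sum(location_counts.values()), "codes across", len(study_locations), "studies")  # 5 codes across 3 studies
```

The same tallying applies to treatment agents.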

Assessment and Intervention

Each study was coded as including an assessment (e.g., preference assessments, functional assessments), intervention (e.g., reinforcement, extinction, response cost), or both (Falakfarsa et al., 2022). Preference assessments and reinforcer assessments were only categorized as an assessment if they were one of the main independent variables (e.g., a study in which a preference assessment was conducted to identify preferred items to use in an intervention was not categorized as an assessment).

Design

Each study was coded as using (a) a single-case design, (b) a group design, or (c) both. For studies that reported using a single-case design or both designs, coders categorized the single-case design into one of the following categories based on the authors’ description: (a) multiple-baseline/multiple-probe, (b) reversal, (c) alternating treatments/multielement, (d) adapted alternating treatments, (e) changing criterion, (f) mixed or multiple designs, and (g) other.

Operational Definitions of the Dependent Variables

A study was coded as “yes” or “no” to determine if a dependent variable was operationally defined. Observers coded a study as “yes” if a dependent variable was operationally defined in a way that would allow for replication (Falakfarsa et al., 2022).

Operational Definitions of the Independent Variables

Coders scored each study as “yes” or “no” for operational definitions for the independent variables. A study was coded as “yes” if the assessment or intervention could be replicated with the information that was provided (Falakfarsa et al., 2022; McIntyre et al., 2007).

Monitoring Procedural Fidelity

Each study was coded as “yes,” “no,” or “monitored” to indicate whether procedural fidelity was monitored and reported (Falakfarsa et al., 2022; Sanetti et al., 2012). A study was coded as “yes” if procedural fidelity was monitored and numerical data were reported for at least one independent variable, as “no” if the study did not mention collecting procedural fidelity data, and as “monitored” if the authors noted monitoring procedural fidelity but did not report any corresponding data. For each study that reported procedural fidelity data, coders classified the average procedural fidelity percentage as “high” (90% or higher), “moderate” (80–89%), or “low” (below 80%).
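The monitoring codes and fidelity bands can be sketched as two small functions. This is an illustration under stated assumptions, not the authors' procedure: the function names are hypothetical, and the sketch reads “80–89%” as any average of at least 80% and below 90%, which the text does not state explicitly.

```python
from typing import Optional


def fidelity_band(average: float) -> str:
    """Classify an average fidelity percentage with the review's cutoffs.

    Assumption: values between 89 and 90 (e.g., 89.5) count as moderate,
    i.e., "80-89%" is read as "at least 80 and below 90".
    """
    if average >= 90:
        return "high"
    if average >= 80:
        return "moderate"
    return "low"


def code_fidelity(mentioned: bool, data_reported: bool,
                  average: Optional[float] = None) -> tuple[str, Optional[str]]:
    """Return (monitoring code, average band) for one study."""
    if data_reported:
        # Numerical data reported; the band is None if no average was given.
        band = fidelity_band(average) if average is not None else None
        return "yes", band
    if mentioned:
        return "monitored", None
    return "no", None


print(code_fidelity(True, True, 95.0))  # ('yes', 'high')
print(code_fidelity(True, True, 84.0))  # ('yes', 'moderate')
print(code_fidelity(True, False))       # ('monitored', None)
print(code_fidelity(False, False))      # ('no', None)
```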

Procedural Fidelity Calculation Type

For each study that reported monitoring procedural fidelity, coders recorded the procedural fidelity calculation type: (a) components/steps, (b) trials, (c) intervals, (d) checklists, or (e) other (e.g., blocks, sessions).

Risk for Procedural Inaccuracies

All studies were coded as “no risk,” “low risk,” or “high risk” for procedural inaccuracies (McIntyre et al., 2007; Peterson et al., 1982). A study was coded as “no risk” if implementation of the treatment was reported as monitored (i.e., procedural fidelity monitoring was coded as “yes” or “monitored”). A study was coded as “low risk” if procedural fidelity was not reported to be measured or monitored, but the treatment was judged to be at low risk for inaccuracies. Low-risk treatments included treatments that were (a) mechanically defined (e.g., computer-mediated), (b) permanent products (e.g., posting of classroom rules), (c) continuously applied (e.g., noncontingent access to preferred items or activities), or (d) single components (e.g., escape contingent on work completion). A study was coded as “high risk” if procedural fidelity was not reported to be measured or monitored but was necessary (i.e., the study did not meet the criteria for low risk or no risk).
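The risk rule is a short decision sequence that depends on the fidelity code. A hypothetical sketch, assuming each study's treatment has already been tagged with zero or more of the four low-risk features; treating any single feature as sufficient for “low risk” is an assumption, as is every name in the code.

```python
# Hypothetical labels for the four low-risk treatment features listed above.
LOW_RISK_FEATURES = {
    "mechanically defined",  # e.g., computer-mediated
    "permanent product",     # e.g., posted classroom rules
    "continuously applied",  # e.g., noncontingent access to items
    "single component",      # e.g., escape contingent on work completion
}


def code_risk(fidelity_code: str, treatment_features: set[str]) -> str:
    """Classify a study's risk for procedural inaccuracies.

    fidelity_code: "yes", "no", or "monitored" from the fidelity coding step.
    Assumption: one listed low-risk feature is sufficient for "low risk".
    """
    if fidelity_code in {"yes", "monitored"}:
        return "no risk"
    if treatment_features & LOW_RISK_FEATURES:
        return "low risk"
    return "high risk"


print(code_risk("monitored", set()))              # no risk
print(code_risk("no", {"mechanically defined"}))  # low risk
print(code_risk("no", set()))                     # high risk
```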

Monitoring IOA

All studies were coded as “yes” or “no” to determine if IOA was monitored and reported (Falakfarsa et al., 2022). A study was coded as “yes” if a second, independent observer collected data on one or more of the dependent variables. A study was coded as “no” if the study did not report collecting IOA. For each study that reported IOA, coders also scored the average agreement percentage (i.e., high, moderate, low, not specified). Agreement percentages were coded as “high” if average scores were 90% or higher, “moderate” if average scores were 80–89%, “low” if average scores were below 80%, and “not specified” if specific IOA values were not reported.
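The IOA coding adds a “not specified” band for studies that collected IOA but reported no specific values. A hypothetical sketch using the same cutoffs as the fidelity bands (names are illustrative, and averages between 89% and 90% are assumed to count as moderate):

```python
from typing import Optional


def code_ioa(second_observer: bool, average: Optional[float]) -> tuple[str, Optional[str]]:
    """Return (IOA code, agreement band) for one study.

    second_observer: a second, independent observer collected data on at
        least one dependent variable.
    average: the reported average agreement percentage, or None if no
        specific value was reported.
    """
    if not second_observer:
        return "no", None
    if average is None:
        return "yes", "not specified"
    if average >= 90:
        return "yes", "high"
    if average >= 80:
        return "yes", "moderate"
    return "yes", "low"


print(code_ioa(True, 92.5))   # ('yes', 'high')
print(code_ioa(True, None))   # ('yes', 'not specified')
print(code_ioa(False, None))  # ('no', None)
```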

Each study was also coded “yes,” “no,” or “not applicable” for whether IOA was measured and reported for procedural fidelity data. A study was coded as “yes” if IOA was measured and reported for the implementation of one or more independent variables, as “no” if procedural fidelity was monitored but IOA was not collected for any independent variable, and as “not applicable” if procedural fidelity data were not collected.
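The coding of IOA for procedural fidelity depends on the fidelity code. A hypothetical sketch, assuming that studies coded “monitored” collected fidelity data and are therefore applicable; the function name is illustrative:

```python
def code_fidelity_ioa(fidelity_code: str, ioa_on_any_iv: bool) -> str:
    """Code whether IOA was reported for procedural fidelity data.

    fidelity_code: "yes", "no", or "monitored" from the fidelity coding step.
    ioa_on_any_iv: IOA was measured and reported for the implementation of
        at least one independent variable.
    Assumption: "monitored" studies collected fidelity data, so only "no"
    maps to "not applicable".
    """
    if fidelity_code == "no":
        return "not applicable"
    return "yes" if ioa_on_any_iv else "no"


print(code_fidelity_ioa("yes", True))   # yes
print(code_fidelity_ioa("yes", False))  # no
print(code_fidelity_ioa("no", False))   # not applicable
```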

Intercoder Reliability

Two independent observers reviewed the inclusion criteria and classified each article as meeting or not meeting them. Initial agreement across the 253 articles published between 2007 and 2021 was 95%. Disagreements were discussed with a third independent reviewer until consensus was reached, yielding 100% agreement on the inclusion criteria.

For all articles and studies that met the inclusion criteria, two independent observers completed the coding for each of the areas described above. Percentage agreement was calculated by dividing the number of agreements by the number of agreements plus disagreements and multiplying by 100; agreement was 94.4%. As with the inclusion criteria, disagreements were discussed with a third independent reviewer until consensus was reached, yielding 100% agreement.
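The percentage-agreement formula described above can be written as a short function. The sketch assumes each coder's decisions are stored as parallel lists, one entry per coded item; the function name and example codes are hypothetical, not data from this review.

```python
def percentage_agreement(coder_a: list[str], coder_b: list[str]) -> float:
    """Item-by-item percentage agreement between two coders.

    agreements / (agreements + disagreements) * 100
    """
    if len(coder_a) != len(coder_b):
        raise ValueError("both coders must code the same items")
    if not coder_a:
        raise ValueError("no items to compare")
    agreements = sum(a == b for a, b in zip(coder_a, coder_b))
    disagreements = len(coder_a) - agreements
    return agreements / (agreements + disagreements) * 100


# Hypothetical codes for four items; the coders disagree on the third.
a = ["yes", "no", "yes", "high risk"]
b = ["yes", "no", "no", "high risk"]
print(percentage_agreement(a, b))  # 75.0
```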

Results

Most studies (93%; n = 156) reported two or more participant demographic factors, whereas 7% (n = 11) reported fewer than two, and 1% (n = 1) did not involve human participants. Regarding whether studies conducted an assessment, an intervention, or both as the primary independent variable, 85% (n = 142) implemented an intervention, 9% (n = 15) conducted an assessment, 5% (n = 8) did both, and 2% (n = 3) did not fit any of these categories. Regarding experimental design, most studies used a single-case design (90%, n = 151), with few using a group design (8%, n = 13) or both (2%, n = 4). Table 1 shows the number and percentage of the 155 studies that used a single-case design (alone or with a group design), by type of single-case design. Multiple-baseline or multiple-probe designs were the most common (48%, n = 74), followed by mixed/multiple single-case designs (19%, n = 29) and alternating-treatments/multielement designs (12%, n = 18). Each remaining type of single-case design (i.e., reversal, adapted alternating treatments, changing criterion, other) accounted for 11% or less of studies.

Table 1.

Type of single-case designs used across studies

| Type of single-case design | Count | % |
|---|---|---|
| Multiple baseline(s)/probe | 74 | 48 |
| Reversal | 7 | 4 |
| Alternating treatments/multi-element | 18 | 12 |
| Adapted alternating treatments | 8 | 5 |
| Changing criterion | 2 | 1 |
| Mixed/multiple | 29 | 19 |
| Other | 17 | 11 |

Count = 155

Regarding locations, studies were most frequently conducted in schools (n = 82), homes (n = 40), and clinics (n = 36). The remaining locations were other locations (n = 30), group homes or residential facilities (n = 7), or not specified (n = 2). Most studies were conducted in a single location (n = 142) rather than in multiple locations (n = 26). Regarding treatment agents, the most frequently reported were researchers/experimenters (n = 133) and professionals (n = 30). Few studies included paraprofessionals (n = 15), teachers (n = 12), parents (n = 7), other agents (n = 7), peer tutors (n = 1), or participants themselves (i.e., self; n = 1) as treatment agents, and none included siblings. Most studies were implemented by a single treatment agent (n = 134), with only a few incorporating multiple treatment agents (n = 34).

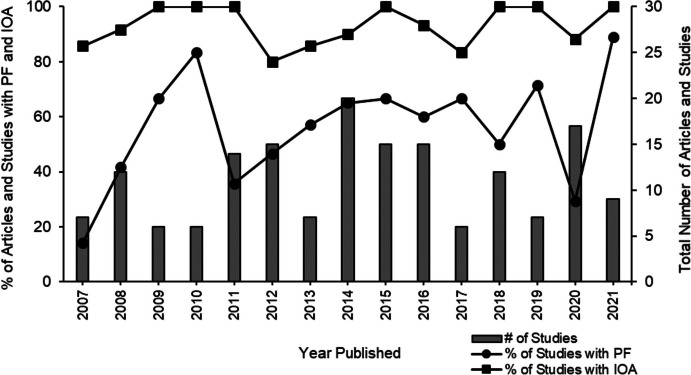

Nearly all studies provided operational definitions of the independent (99%) and dependent (97%) variables. Figure 1 depicts the percentage and number of the 168 studies reviewed that reported procedural fidelity data in TAVB between 2007 and 2021. Of the studies included in the review, 54% (n = 91) reported procedural fidelity data, 45% (n = 75) did not report collecting procedural fidelity data, and 1% (n = 2) monitored procedural fidelity but did not report data. Procedural fidelity reporting varied across years, with the lowest percentage in 2007 and the highest in 2021. The percentage of studies reporting procedural fidelity was also higher in 2009, 2010, 2019, and 2021; however, fewer studies meeting the inclusion criteria were published in those years, so these percentages rest on small numbers of studies. Of the studies that collected and reported procedural fidelity data (n = 92), 96% (n = 88) reported high average fidelity scores (i.e., at least 90% fidelity), whereas only 2% (n = 2) reported moderate scores (i.e., 80–89% fidelity), 1% (n = 1) reported low scores (i.e., below 80% fidelity), and 1% (n = 1) did not report average fidelity scores. Of the studies that reported collecting procedural fidelity data, only 12% (n = 11) also collected IOA data on procedural fidelity.

Fig. 1.

Percentage and number of studies reporting procedural fidelity and interobserver agreement data by year. Note. PF = Procedural Fidelity; IOA = Interobserver Agreement

Of the 168 studies, 55% (n = 93) monitored procedural fidelity, and the calculation-type percentages that follow are based on these 93 studies. Calculating fidelity based on trials was the most common method (45%; n = 42), followed by steps or components (26%; n = 24), checklists (12%; n = 11), and other types (i.e., blocks or sessions; 12%; n = 11); the remaining studies did not report a calculation method (5%; n = 5). The number and percentage of studies categorized as high, low, or no risk for procedural inaccuracies are depicted in Fig. 2. Of the 168 studies, 58 met the criteria for high risk, 17 were categorized as low risk, and 93 were categorized as no risk.

Fig. 2.

Number and percentage of studies coded as high, low, or no risk of procedural inaccuracies
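The calculation-type counts reported above sum to 93, which matches the number of studies coded as no risk (consistent with 91 studies reporting fidelity data plus 2 that monitored it without reporting data), so those percentages use 93 as the denominator, whereas the risk categories use all 168 studies. A short sketch that recomputes both sets of percentages from the reported counts; the risk percentages are derived here and are not stated in the text, and the variable names are illustrative.

```python
# Counts reported in the Results.
calculation_types = {
    "trials": 42,
    "steps/components": 24,
    "checklist": 11,
    "other": 11,
    "not reported": 5,
}
risk = {"high risk": 58, "low risk": 17, "no risk": 93}


def to_percentages(counts: dict[str, int]) -> dict[str, int]:
    """Convert counts to whole-number percentages of their own total."""
    total = sum(counts.values())
    return {label: round(100 * n / total) for label, n in counts.items()}


print(sum(calculation_types.values()))  # 93
print(to_percentages(calculation_types))
# {'trials': 45, 'steps/components': 26, 'checklist': 12, 'other': 12, 'not reported': 5}
print(to_percentages(risk))
# {'high risk': 35, 'low risk': 10, 'no risk': 55}
```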

Figure 1 also displays the percentage and number of studies reporting IOA data for each publication year. Most studies (93%; n = 157) reported collecting IOA data, and the annual percentage of studies reporting IOA was relatively high and stable (range, 80% to 100%), especially compared with procedural fidelity. Finally, of the studies that collected and reported IOA data, 89% (n = 140) reported high, 10% (n = 15) moderate, and 1% (n = 2) low average agreement scores.

Discussion

The present study extended previous reviews of procedural fidelity reporting practices in the behavior-analytic literature by assessing publication practices in TAVB between 2007 and 2021. Overall, 54% of the studies included in this review reported procedural fidelity data, even though at least 97% of studies operationally defined their dependent and independent variables. Although procedural fidelity reporting remains low, the percentage in TAVB is slightly higher than those reported in previous reviews. For example, Falakfarsa et al. (2022) reviewed procedural fidelity reporting in BAP from 2008 to 2019 and found that fewer than 50% of studies reported procedural fidelity, and Cymbal et al. (2022) found that only 24% of studies published in JOBM from 2000 to 2020 reported or monitored procedural fidelity. Consistent with other reviews (e.g., Falakfarsa et al., 2022), we found that IOA was reported more often than procedural fidelity.

The present review extends previous reviews on procedural fidelity in several ways. First, the current study analyzed the type of experimental design used. The most common single-case design across the studies reviewed was the multiple-baseline or multiple-probe design (48%). Procedural fidelity is especially important in these designs because the independent variable is introduced in a staggered fashion over time and across participants, behaviors, or settings; if implementation drifts from one tier to the next, replications of the effect across tiers may not reflect the same independent variable. Thus, researchers should ensure that the independent variable is implemented consistently and accurately in every tier.

Second, we coded studies based on the inclusion of demographic information (i.e., two or more participant demographic factors reported). To our knowledge, no previous procedural fidelity review has reported on the inclusion of demographic information. Researchers have recently emphasized the importance of reporting demographic information (e.g., ethnicity, race, socioeconomic status, gender/sex), as it may highlight important disparities in access to, and the generality of, behavioral interventions. Jones et al. (2020) reviewed the reporting of demographic variables in articles published in JABA from 2013 to 2019 and found that demographic variables were underreported. In the current review, 93% of studies reported at least two demographic variables for one or more participants, a higher percentage than Jones et al. (2020) reported. However, we did not analyze reporting of each demographic variable individually; thus, future research should consider replicating Jones et al. with other journals, including TAVB.

Third, other than a recent review by Essig et al. (2023), we are not aware of any reviews that reported the percentage of studies that collected IOA for procedural fidelity data. We found that only 12% of studies in TAVB reported IOA for procedural fidelity data. These results are consistent with Essig et al., who found that only 18% of studies published over a 5-year span in JABA and BAP reported IOA for procedural fidelity data. This finding is concerning given the importance of IOA for establishing the internal validity of behavioral assessments and interventions. Thus, for the field to be confident that independent variables are responsible for changes in dependent variables, we should further evaluate the conditions under which IOA for procedural fidelity is necessary. Although reporting IOA for dependent variables is viewed as necessary for publication, the same standard is not upheld for the primary independent variable. This is unlikely to change unless stronger editorial and grant contingencies are established (St. Peter et al., 2023).

Finally, we coded the procedural fidelity calculation type. Roughly half of the studies in the current review described how fidelity was calculated, and in general the studies we reviewed did not clearly describe their calculation procedures. Bergmann et al. (2023) found that the way procedural fidelity data are collected and calculated may lead to Type I and Type II errors. However, the present study is limited because we coded calculation type broadly; researchers rarely provided operational definitions of their procedural fidelity calculation procedures. Future research should clearly communicate the procedures used to calculate procedural fidelity and should continue to examine how these calculations affect conclusions.

There are additional limitations to the current review. First, we reviewed only articles published between 2007 and 2021, although TAVB has been publishing research on verbal behavior since 1982. Therefore, conclusions about long-term trends are limited, and our results represent recent rather than historical trends in TAVB. Second, although we based our coding on previous procedural fidelity reviews, some of our definitions differed slightly from those of other studies. For example, our classification of studies as assessment, intervention, or both differed from previous research: we coded a study as including an assessment only if the assessment was a primary independent variable, whereas Falakfarsa et al. (2022) coded a study as including an assessment if one was conducted. Specifically, Falakfarsa et al. identified 49% of studies as including both assessment and intervention, whereas the current review identified 5%. Such variations in definitions may limit comparisons across reviews. Finally, we coded risk using the definitions of previous procedural fidelity reviews (e.g., McIntyre et al., 2007; Peterson et al., 1982). These definitions do not account for other variables that might affect procedural fidelity (e.g., treatment agent and setting), and future research should consider these variables when classifying risk.

Although slightly more than 50% of the studies in our analysis reported procedural fidelity, there is still room to improve research practices that enhance the validity of assessments and interventions and support their transition to practice. Research on the procedural fidelity practices of providers is also warranted. Many of the studies in the current review were conducted in schools, clinics, and homes, and there are likely several barriers to collecting procedural fidelity data in these settings (e.g., limited resources; Fallon et al., 2020). Future research should assess these potential barriers. Doing so would help identify gaps in measuring procedural fidelity in applied settings and could help identify training needs, supporting the application of evidence-based practices that are accessible, feasible, and socially valid for practitioners. Given the potential risk of procedural inaccuracies, studies could also better report who the treatment agents are and what training they received before implementing procedures. In 2022, TAVB began requiring submitted manuscripts to report procedural fidelity or to discuss its absence as a limitation. This policy suggests that earlier reviews advocating for procedural fidelity reporting in behavior-analytic journals are being heard (e.g., Falakfarsa et al., 2022; Han et al., 2023; Peterson et al., 1982).

Data availability

The data that support the findings are available from the corresponding author upon reasonable request.

Declarations

Competing interests

The authors declare no conflicts of interest.

Ethical approval

No human subject research was conducted.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- Bergmann S, Niland H, Gavidia VL, Strum MD, Harman MJ. Comparing multiple methods to measure procedural fidelity of discrete-trial instruction. Education and Treatment of Children. 2023;46:201–220. doi: 10.1007/s43494-023-00094-w.

- Briggs AM, Mitteer DR. Updated strategies for making regular contact with the scholarly literature. Behavior Analysis in Practice. 2022;15(2):541–552. doi: 10.1007/s40617-021-00590-8.

- Carr JE, Briggs AM. Strategies for making regular contact with the scholarly literature. Behavior Analysis in Practice. 2010;3:13–18. doi: 10.1007/BF03391760.

- Christ TJ. Experimental control and threats to internal validity of concurrent and nonconcurrent multiple baseline designs. Psychology in the Schools. 2007;44(5):451–459. doi: 10.1002/pits.20237.

- Cymbal D, Wilder DA, Cruz N, Ingraham G, Llinas M, Clark R, Kamlowsky M. Procedural integrity reporting in the Journal of Organizational Behavior Management (2000–2020). Journal of Organizational Behavior Management. 2022;42(3):230–243. doi: 10.1080/01608061.2021.2014380.

- Essig L, Rotta K, Poling A. Interobserver agreement and procedural fidelity: An odd asymmetry. Journal of Applied Behavior Analysis. 2023;56(1):78–85. doi: 10.1002/jaba.961.

- Falakfarsa G, Brand D, Jones L, Godinez ES, Richardson DC, Hanson RJ, Velazquez SD, Wills C. Treatment integrity reporting in Behavior Analysis in Practice 2008–2019. Behavior Analysis in Practice. 2022;15(2):443–453. doi: 10.1007/s40617-021-00573-9.

- Fallon LM, Cathcart SC, Sanetti LMH. Assessing parents’ treatment fidelity: A survey of practitioners in home settings. Focus on Autism and Other Developmental Disabilities. 2020;35(1):15–25. doi: 10.1177/1088357619866.

- Gresham FM, Gansle KA, Noell GH. Treatment integrity in applied behavior analysis with children. Journal of Applied Behavior Analysis. 1993;26(2):257–263. doi: 10.1901/jaba.1993.26-257.

- Han JB, Bergmann S, Brand D, Wallace MD, St. Peter CC, Feng J, Long BP. Trends in reporting procedural integrity: A comparison. Behavior Analysis in Practice. 2023;16(2):388–398. doi: 10.1007/s40617-022-00741-5.

- Jones SH, St. Peter CC, Ruckle MM. Reporting of demographic variables in the Journal of Applied Behavior Analysis. Journal of Applied Behavior Analysis. 2020;53(3):1304–1315. doi: 10.1002/jaba.722.

- McIntyre LL, Gresham FM, DiGennaro FD, Reed DD. Treatment integrity of school-based interventions with children in the Journal of Applied Behavior Analysis 1991–2005. Journal of Applied Behavior Analysis. 2007;40(4):659–672. doi: 10.1901/jaba.2007.659-672.

- Peterson L, Homer AL, Wonderlich SA. The integrity of independent variables in behavior analysis. Journal of Applied Behavior Analysis. 1982;15(4):477–492. doi: 10.1901/jaba.1982.15-477.

- Sanetti LMH, Dobey LM, Gritter KL. Treatment integrity of interventions with children in the Journal of Positive Behavior Interventions from 1999 to 2009. Journal of Positive Behavior Interventions. 2012;14(1):29–46. doi: 10.1177/1098300711405853.

- St. Peter CC, Brand D, Jones SH, Wolgemuth JR, Lipien L. On a persisting curious double standard in behavior analysis: Behavioral scholars' perspectives on procedural fidelity. Journal of Applied Behavior Analysis. 2023;56(2):336–351. doi: 10.1002/jaba.974.
