
Keywords: Artificial vision, Recycling, Classification, Automation

Abstract

Environmental protection has gained importance over time because of the negative and often irreversible consequences of pollution worldwide. One of the major challenges faced in many parts of the world is the inadequate management and classification of solid waste. To help tackle this issue, this paper proposes an automated sorting system based on artificial vision that recognizes and separates recyclable materials (Plastic, Glass, Cardboard and Metal). A webcam is connected in real time to the Nvidia® Jetson Nano™ 2 GB board, which runs a convolutional neural network (CNN) trained to classify the waste. The system achieved an accuracy of 95 % in separating plastic, 96 % for glass and metal, and 94 % for cardboard. We conclude that the system can contribute to the recycling effort, which in turn helps reduce environmental pollution.

Specifications table

| Hardware name | AutoRecycler |
|---|---|
| Subject area | |
| Hardware type | |
| Closest commercial analog | The Bin-e smart recycling garbage can is the closest commercial analog to this project. It employs image sorting algorithms to separate garbage into plastic, paper, metal and glass [1]. |
| Open source license | CC BY-NC-ND 4.0 |
| Cost of hardware | Approximate cost: USD 420 |
| Source file repository | https://doi.org/10.17632/yf8z2263gy.2 |

1. Hardware in context

Over the years, the accelerated growth of population and infrastructure in urban centers worldwide has had major negative impacts on our planet. Among them are global warming, the increase of carbon dioxide, the decrease of ozone in the atmosphere, the overexploitation of natural resources to obtain new raw materials, and waste production [2], [3]. Solid waste originates from the materials used in the manufacture, transformation or use of consumer goods; examples include discarded paper, plastic or glass bottles, metal containers and cardboard boxes. These by-products come from activities carried out in homes, offices, schools, commerce and industry [4], [5]. One of the most significant environmental impacts is the contamination of both surface and underground water caused by the decomposition and dumping of garbage in rivers, streams and seas. As a result, natural water is no longer fit for human consumption. Solid waste left out in the open deteriorates the quality of the air we breathe, causing respiratory infections as well as nasal and eye irritation, in addition to the discomfort caused by nauseating smells. It also causes aesthetic deterioration in many urban and rural areas and degrades natural landscapes. All of these effects have a great impact on people's health and quality of life [4].

According to data provided by the World Bank and the world's leading environmental authority, the United Nations Environment Program (UNEP), the world generates 2000 million tons of solid urban waste each year, 45% of which is not properly managed and only 16% of which is successfully recycled [6]. Population growth is directly related to the increase in solid waste generation, since it raises the demand for consumer goods, food and services [2], [7]. The United Nations has pointed out that Latin America and the Caribbean, a region that generates about 216 million tons of urban solid waste a year, have the lowest recycling rates globally. The organization emphasizes that 90% of this waste is unused and only 4.5% of it is recycled properly, causing environmental problems that affect soil, water and air [8], [3]. Taking this into consideration, UNEP promotes recycling initiatives around the world with the aim of decreasing waste production and pollution, and supports countries in becoming more environmentally sustainable, in the hope of reducing negative climate impacts [6]. One of these initiatives is the collection of recyclable materials to be transformed into new products for reuse. This process offers several benefits: conservation of natural resources, energy savings, less overexploitation of raw materials (which in turn protects natural habitats), and a reduction in the amount of waste dumped in landfills, among other contributions to environmental conservation [9], [10].

In summary, accelerated urban growth has generated serious environmental problems, such as global warming and water and air pollution. Poor solid waste management, especially in Latin America and the Caribbean, aggravates these problems because of low recycling rates. Global initiatives such as those of UNEP seek to mitigate these effects by promoting recycling and the transformation of waste into new products, which helps to conserve natural resources, reduce the exploitation of raw materials and reduce the amount of waste in landfills.

Given the importance of recycling in waste management strategies around the world, and as a contribution to it, an automated waste separation system based on artificial vision, called AutoRecycler, was implemented. The system extracts information from digital images using a convolutional neural network (CNN) trained on a set of such images, and thereby detects recyclable items made of glass, plastic, cardboard and metal. These items are detected through a webcam connected in real time to the Nvidia® Jetson Nano™ 2GB board. DC servomotors regulate the opening of the gates located inside the prototype. The device was designed to be easy to use, with the objective of separating waste correctly and of encouraging people to act responsibly when sorting the waste they produce every day, thus contributing to the community and to the preservation of the environment.

1.1. Related works

Previous research has been carried out that is related to the proposed work, which seeks to generate a significant contribution to recycling. Three relevant studies were selected for this project in order to analyze the models used by different authors. This analysis allows identifying differences and similarities with the work proposed here and highlighting the novelties of the AutoRecycler project.

Mazin Abdulmahmood and Ryan Grammenos [11] continue previous research on WasteNet, a solid waste classification model that sorts recycling into five categories: Cardboard, Glass, Metal, Paper and Plastic. Starting from the TrashNet dataset, the authors incorporated new inferences to extend the original dataset and combined it with the IBM dataset. In this process they used images with a white background and retrained the Convolutional Neural Network (CNN). The paper proposes a methodology for reconstructing and fitting conventional image classification models using EfficientNets. It also presents a TensorRT pipeline to accelerate real-time execution on the Nvidia® Jetson Nano™ embedded device. The work focuses on developing a more efficient version of WasteNet, a collaborative recycling sorting model. A Raspberry Pi V2 camera is used to detect items, along with a PCA9685 PWM controller that sends the signal to three servomotors for gate movement. During testing, the researchers collected materials directly from the containers to demonstrate the project's effectiveness in real time. The new model achieves an accuracy of 95.8% in tests and 95% in real situations, a 14% increase over the original model. The authors also present a test system to check the effectiveness of the model on a final prototype [11].

Xueying Li and Ryan Grammenos [12], in turn, present the design and implementation of a small-scale, low-cost, battery-powered, intelligent recycling garbage can able to segment waste in real time. Five material categories were used in this project (Cardboard, Glass, Metal, Paper and Plastic), in addition to the categories Empty and Hand. “Empty” indicates that there is no item in the image, while “Hand” indicates that a human hand has been detected, so that the user's hand is not trapped. When either of these two categories is detected, the container remains in standby and continues with the detection. The authors collected more than 1,800 images of recyclable waste to train the Convolutional Neural Network (CNN). They also created a testbed to capture images in which the detection space was empty, images with a black background, and images showing the user's hands. These images were combined with the public TrashNet dataset, which contains images with a white background, in order to train different neural network classification models and observe their performance. Two embedded systems were used: the Jetson Nano™ board and the K210 unit. The camera used was the Raspberry Pi camera. The best model developed was MobileNet V3, which achieved an accuracy of 93.99% on the Jetson Nano™ and 94.61% on the K210 [12].

The two articles above develop different models for detecting waste in the classes Cardboard, Glass, Metal, Paper and Plastic, with the objective of evaluating their performance. However, it is not evident that these systems automatically sort the objects into the corresponding recycling containers. In contrast, Lauren Tan, Jessica Lew and Tate Arevalo [13] created an intelligent trash can that automatically classifies items into two categories: recyclable or non-recyclable. Users must toss items one by one into the box set up for detection, and the device sorts each item into the corresponding category, disposing of it in the correct garbage can via a sliding mechanism. The authors considered four main types of materials: Metals, Glass, PET and HDPE Plastics, and Paper/Cardboard. The project used the Jetson Nano™ board, a Raspberry Pi V2 camera, and inductive and capacitive sensors integrated into the platform under the lid. These sensors were added to identify the material of the objects. In addition, a stepper motor was used to transfer the objects from one container to another. For image classification, they used the existing ResNet101 model, combined with images from the Kaggle dataset and their own images. Training was performed with the Jetson-Inference deep learning library on the Jetson Nano™ board. This prototype has an accuracy rate of 90% [13].

A wide variety of projects and research efforts continue to apply technology to improve the recycling process and the proper separation of waste around the world. The aforementioned articles share several features with the prototype proposed here: the use of the Nvidia® Jetson Nano™ board to develop the system, the application of artificial vision, and the training of Convolutional Neural Networks (CNN) with the authors' own photographs, although those authors combined their images with others from public repositories. They also used images with a white background for the classes Plastic, Glass, Cardboard and Metal, and captured images of different objects belonging to these classes. This article presents the small-scale AutoRecycler prototype, based on artificial vision, which detects Plastic, Glass, Cardboard and Metal and performs the classification process automatically within the prototype by means of a mechanism created specifically for this purpose.

The novelty of the AutoRecycler system, compared with the projects analyzed above, lies in the set of images captured from a variety of everyday materials of different types, sizes, colors and conditions. A total of 4,850 images were taken in order to improve the effectiveness of the system in detecting objects. The system is implemented on a small scale, which suggests that it can be replicated, and its structure is designed to perform the sorting process automatically.

The mechanics of the prototype work as follows. The user introduces the objects to be recycled one by one into the detection zone. Once the material is recognized, the gate of the corresponding container opens and the gate of the detection zone is then activated. The object slides quickly down the ramp, which is inclined at an angle of 20 degrees, towards the open container gate. There the object bounces and falls into the assigned container, which constitutes an implementation of automatic recycling. In this way all items of the same material are directed into the same container.

2. Hardware description

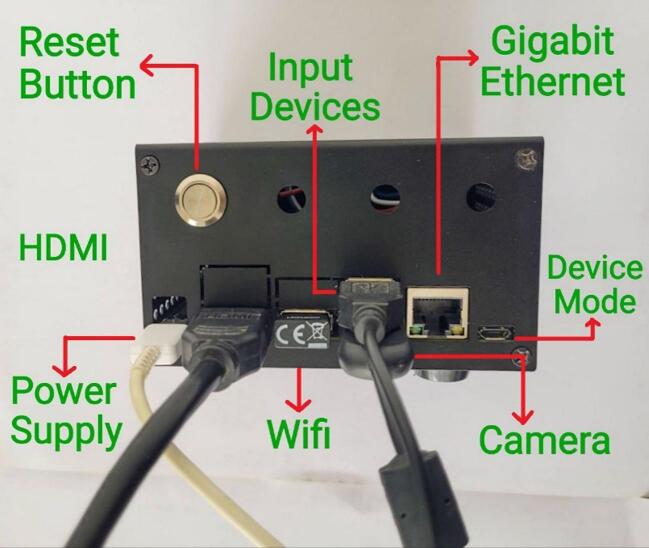

The AutoRecycler system was developed with the Nvidia® Jetson Nano™ 2GB board. This small computer is used to create artificial intelligence (AI) and robotics applications and consumes between 5 W and 10 W. To work properly it requires a 5V⎓3A power supply, a USB Wi-Fi adapter, a mouse, a keyboard, an HDMI display and a USB webcam, which captures the images used for waste detection by the recycling program. A 64GB microSD card was used for data storage during the installation and programming of the board. These components can be seen in (Fig. 1) [14], [15].

Fig. 1.

Components of the detection system.

The Jetson Nano™ board is protected by a specialized metal case created by Nvidia® which fits the board and its connection ports. The case includes a power button and a reset button. The board has a USB Type-C port for power supply and an HDMI port for display connection. For devices such as the Wi-Fi adapter, mouse, keyboard and webcam, two USB 2.0 ports and one USB 3.0 port are available; the USB 3.0 port offers faster data transfer than the USB 2.0 ports. In addition, the board has a Gigabit Ethernet port that allows a wired internet connection with higher speed and bandwidth. Finally, a micro-USB port gives access to the board’s Device Mode. These connections are shown in (Fig. 2) [14], [16].

Fig. 2.

Jetson Nano™ 2 GB card connections.

The program was developed using supervised learning, a branch of Machine Learning that uses labeled data to train algorithms capable of classifying or predicting data. This approach makes it possible to evaluate the relevance of various features or attributes using the labeled data and thus to improve the model fit gradually towards the expected result. Specifically, supervised classification was employed, in which each example is labeled with one of two or more classes, so that the model can select the appropriate label in real time based on the knowledge acquired during training [17].

A Convolutional Neural Network (CNN), a Deep Learning model, was trained on the images in order to identify the objects in them and the class to which each object belongs. This approach extracts relevant features from the images that facilitate the accurate identification of the objects [18]. The detection model used was SSD-Mobilenet-v2, an architecture noted for its speed and efficiency in real-time object detection [19]. This combination offers a good balance between accuracy and response time for detecting and classifying objects in images on embedded hardware [20].

In order to test the integrated system, a model with four different bins was made. The different elements to be recycled (plastic, glass, cardboard and metal) were put in the bins. Servomotors with an operating voltage of 4.8V to 6V DC and operating speed of 0.12s/60° were used for the classification and operation of the model’s hatches [21].

The AutoRecycler system has low power consumption. The Jetson Nano™ board consumes between 5 and 10 W, depending on the workload [15]. The PCA9685 PWM controller, which manages the servomotors, consumes as little as 0.005 W. Each SG90 servomotor consumes approximately 1 W while moving, and considerably less when idle [22]. Furthermore, since not all servomotors operate simultaneously, the total power consumption of the system stays well below the sum of these maximum values.
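As a rough illustration of these figures, the worst-case draw can be tallied in a few lines of Python. The wattages are the ones quoted above and the count of seven servomotors comes from the build instructions. The scenario names and the simplification that idle servos draw nothing are assumptions made only for this sketch.

```python
# Power budget sketch based on the figures quoted in the text.
JETSON_MAX_W = 10.0    # upper bound for the Jetson Nano board [15]
PCA9685_W = 0.005      # PWM controller
SERVO_ACTIVE_W = 1.0   # approximate draw of one SG90 servo while moving [22]
NUM_SERVOS = 7         # one input gate servo plus two per container gate

def power_budget(active_servos: int, jetson_w: float = JETSON_MAX_W) -> float:
    """Return the total draw in watts for a given number of moving servos.

    Idle servos are treated as drawing nothing, which is a simplification.
    """
    if not 0 <= active_servos <= NUM_SERVOS:
        raise ValueError("active_servos must be between 0 and NUM_SERVOS")
    return jetson_w + PCA9685_W + active_servos * SERVO_ACTIVE_W

# Pessimistic case: every servo moving at once.
worst_case = power_budget(NUM_SERVOS)
# Hypothetical single sort: input gate servo plus one pair of container servos.
single_sort = power_budget(3)

print(f"worst case: {worst_case:.3f} W, single sort: {single_sort:.3f} W")
```

Even the pessimistic case stays near 17 W under these assumptions, which is consistent with the low-power claim.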

The elements of differentiation of the recycling system include:

- Low energy consumption.
- Ease of use.
- Being an automatic waste separation system.

3. Design files summary

| Design file name | File type | Open source license | Location of the file |
|---|---|---|---|
| Bill_of_Materials | XLSX | CC BY-NC-ND 4.0 | https://doi.org/10.17632/yf8z2263gy.2 |
| Docker_Installation | TXT | CC BY-NC-ND 4.0 | https://doi.org/10.17632/yf8z2263gy.2 |
| Camera_Capture | TXT | CC BY-NC-ND 4.0 | https://doi.org/10.17632/yf8z2263gy.2 |
| PCA9685Driver_Installation | TXT | CC BY-NC-ND 4.0 | https://doi.org/10.17632/yf8z2263gy.2 |
| Training_Recicla | TXT | CC BY-NC-ND 4.0 | https://doi.org/10.17632/yf8z2263gy.2 |
| AutoRecycler_Prototype_Drawing | PDF | CC BY-NC-ND 4.0 | https://doi.org/10.17632/yf8z2263gy.2 |
| AutoRecycler_CAD | DWG | CC BY-NC-ND 4.0 | https://doi.org/10.17632/yf8z2263gy.2 |

The repository https://doi.org/10.17632/yf8z2263gy.2 contains the files needed to build the AutoRecycler project. These files are classified as follows:

- The file “Bill_of_Materials.xlsx” contains the list of materials used in the project.
- The file “Docker_Installation.txt” contains the step-by-step instructions for installing Docker on the Jetson Nano™ 2GB board.
- The file “Camera_Capture.txt” contains the lines of code to run inside the Docker container to create the “labels.txt” file with the label names, along with detailed directions to open the Camera-Capture tool and build the dataset from one's own images.
- The file “PCA9685Driver_Installation.txt” contains the commands to install the library (adafruit_servokit) from a terminal on the Jetson Nano™ board.
- The file “Training_Recicla.txt” contains the lines of code to train the Convolutional Neural Network (CNN) inside the Docker container.
- The file “AutoRecycler_Prototype_Drawing.pdf” contains the design and dimensions for building the prototype.
- The file “AutoRecycler_CAD.dwg”, created in AutoCAD, contains the detailed design of the AutoRecycler prototype, presented from different angles. It includes 2D and 3D views that provide a complete understanding of the structure.
- The folder “Recycle_Dataset” contains the subfolder “recycle”, which includes three subfolders. “JPEGImages” contains the set of 4,850 images captured for the detection of the recyclable elements (Plastic, Glass, Cardboard and Metal). “Annotations” stores the 4,850 bounding boxes (BoundingBox) of the objects, one per image. “ImageSets” contains the assignment of the collected data to the categories Train, Val and Test, distributed as follows: Train 36.2%, Val 31.3% and Test 32.5%. These data were used to train the Convolutional Neural Network (CNN).
- The folder “my_project” contains the scripts “Server.py” and “OpenCVDetection_Recicla.py” for the final project execution, the file “labels.txt” with the names of the classes (Plastic, Glass, Cardboard and Metal) used in the development of the system, and the trained model “ssd-mobilenet.onnx”.
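To check a split such as the Train, Val and Test percentages reported above, the image identifiers listed in the “ImageSets” files can be counted. The sketch below assumes a Pascal VOC style layout in which each split is a text file (`train.txt`, `val.txt`, `test.txt`) with one image identifier per line. Those file names and the toy data are assumptions for illustration and are not taken from the repository.

```python
from pathlib import Path
import tempfile

def split_percentages(image_sets_dir: Path, splits=("train", "val", "test")) -> dict:
    """Count the image IDs listed in each <split>.txt file and return percentages."""
    counts = {}
    for name in splits:
        lines = (image_sets_dir / f"{name}.txt").read_text().splitlines()
        counts[name] = sum(1 for line in lines if line.strip())
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no image IDs found")
    return {name: round(100.0 * n / total, 1) for name, n in counts.items()}

# Toy demonstration in a temporary folder (not the real dataset).
with tempfile.TemporaryDirectory() as tmp:
    main_dir = Path(tmp) / "ImageSets" / "Main"
    main_dir.mkdir(parents=True)
    (main_dir / "train.txt").write_text("img_0001\nimg_0002\n")
    (main_dir / "val.txt").write_text("img_0003\n")
    (main_dir / "test.txt").write_text("img_0004\n")
    demo = split_percentages(main_dir)

print(demo)
```

Pointing `split_percentages` at the corresponding folder of the downloaded dataset should give values close to the percentages stated above, provided the layout matches the assumption.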

4. Bill of materials summary

In the “Bill_of_Materials.xlsx” file, located in the repository mentioned in the previous section, the materials used in the development of the automatic solid waste classification system (AutoRecycler) can be found.

5. Build instructions

Step 1: Obtain the materials needed to carry out the project. See the file “Bill_of_Materials.xlsx”.

Step 2: Connect the Jetson Nano™ 2GB board appropriately (Fig. 2).

Step 3: Run the initial setup of the Nvidia® Jetson Nano™ 2GB board. This step requires the Jetson Nano™ development kit found on the official Nvidia® website [16].

Step 4: Install the L4T-Pytorch-based Docker container on the L4T R32.6.1 version of the Nvidia® Jetson Nano™ 2GB board for project development. A container is a runnable unit of software where everything needed is hosted in the same application. This makes it smaller and portable. Docker is an open source project that allows the creation, development and running of applications in containers [23]. Review the file “Docker_Installation.txt”.

Step 5: Using the “Camera-Capture” tool within the Docker container, create a dataset for object detection from your own images and draw a bounding box (BoundingBox) around each element in every image. For the AutoRecycler project, we created the file “labels.txt” with the classes (Plastic, Glass, Cardboard and Metal) and took 4,850 pictures on a white background with the Logitech® C920S webcam. In each image, a bounding box was drawn around the object; all classes are represented in the categories (Train, Val and Test) of the dataset (Detection) (Fig. 3). To carry out Step 5, refer to the file “Camera_Capture.txt”.

- To ensure the accuracy of the system, several factors were considered when capturing the 4,850 images. The objects were photographed from various perspectives so that they can be recognized from different angles when the material enters the detection zone of the prototype. Variety in the appearance of the materials was also considered: elements of different sizes, colors and shapes were used, some photographed with labels and lids and others without them. We chose to capture images that showed the hands holding the object, in order to avoid interference during reading and while the object is introduced into the detection zone. The condition of the materials was taken into account as well; although most were in good condition, some had irregularities, which helps the system detect objects in different conditions. Photographs were taken under different types of lighting: sunlight at different times of the day, and LED light from a white lamp adjusted to various intensities at night. This was done so that lighting would not interfere with detection. The dataset created for the AutoRecycler project is located in the “Recycle_Dataset” folder.
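The bounding boxes drawn in Step 5 are stored in the “Annotations” folder. Assuming they follow the Pascal VOC XML format, an annotation can be read back as sketched below. The sample XML, its file name and its coordinates are invented for illustration and do not come from the dataset.

```python
import xml.etree.ElementTree as ET

# Invented example of a Pascal VOC style annotation (assumed format).
SAMPLE_XML = """
<annotation>
  <filename>example.jpg</filename>
  <size><width>1280</width><height>720</height><depth>3</depth></size>
  <object>
    <name>Metal</name>
    <bndbox><xmin>410</xmin><ymin>220</ymin><xmax>830</xmax><ymax>610</ymax></bndbox>
  </object>
</annotation>
"""

def read_boxes(xml_text: str) -> list:
    """Return a list of (class_name, (xmin, ymin, xmax, ymax)) tuples."""
    root = ET.fromstring(xml_text.strip())
    boxes = []
    for obj in root.iter("object"):
        box = obj.find("bndbox")
        coords = tuple(
            int(float(box.findtext(tag))) for tag in ("xmin", "ymin", "xmax", "ymax")
        )
        boxes.append((obj.findtext("name"), coords))
    return boxes

boxes = read_boxes(SAMPLE_XML)
print(boxes)
```

A check of this kind can help confirm that every image has a box and that each class name matches an entry in “labels.txt” before training starts.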

Fig. 3.

Capture images with the “Camera-Capture” tool in the (Train) category of the (Metal) class.

Step 6: With the data acquired in Step 5, train a Convolutional Neural Network (CNN) for 32 epochs so that it identifies the objects in the images and the class to which each of them belongs [18]. The detection model used is SSD-Mobilenet-v2, an architecture chosen for its speed and efficiency in detecting objects in real time [19], [24]. The file “Training_Recicla.txt”, located in the repository, gives detailed instructions on how to carry out the training.
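The exact training commands are given in “Training_Recicla.txt”. As a hedged sketch only, the snippet below assembles the kind of command lines used by the `train_ssd.py` and `onnx_export.py` scripts of the jetson-inference SSD tutorial, on the assumption that the project follows that workflow. The dataset path, model path and batch size are hypothetical; only the 32 epochs come from the text. The commands are built and printed, not executed.

```python
def build_training_commands(dataset_dir: str, model_dir: str,
                            epochs: int = 32, batch_size: int = 4):
    """Return (train, export) argument lists; nothing is executed here."""
    train = [
        "python3", "train_ssd.py",
        "--dataset-type=voc",           # Pascal VOC style dataset (assumed)
        f"--data={dataset_dir}",
        f"--model-dir={model_dir}",
        f"--batch-size={batch_size}",   # hypothetical value
        f"--epochs={epochs}",           # 32 epochs, as stated in Step 6
    ]
    export = ["python3", "onnx_export.py", f"--model-dir={model_dir}"]
    return train, export

# Hypothetical paths inside the Docker container.
train_cmd, export_cmd = build_training_commands("data/recycle", "models/recycle")
print(" ".join(train_cmd))
print(" ".join(export_cmd))
```

Under this assumption, the export step would produce the ONNX file that the detection script loads, which in the repository is named “ssd-mobilenet.onnx”.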

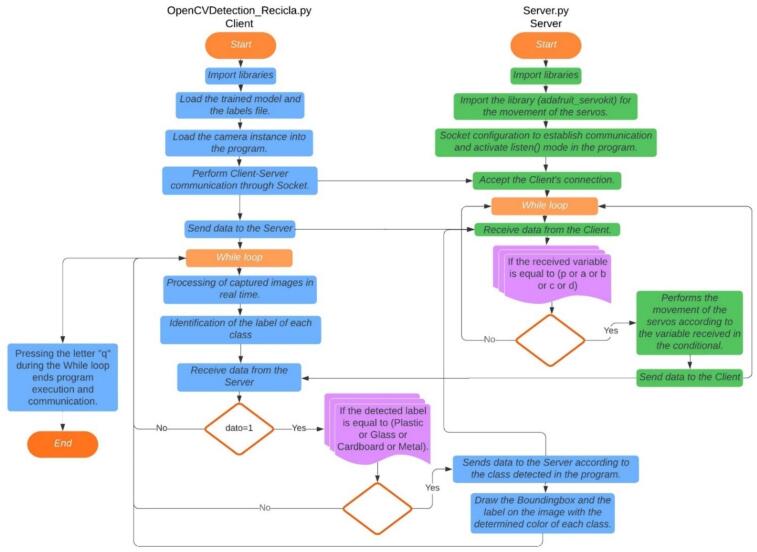

Step 7: Download the folder “my_project” from the repository; it contains the file “OpenCVDetection_Recicla.py”. This script loads the previously trained model and performs the object detection inference. It was written in Python 3.6.9 with OpenCV 4.5.0. The script must be run at the same time as “Server.py”. See the flowchart of the programs “OpenCVDetection_Recicla.py” and “Server.py” in (Fig. 5).

Fig. 5.

Flowchart of the “OpenCVDetection_Recicla.py” and “Server.py” programs.

Step 8: Make the connections required to operate the DC servomotors in the prototype, using the 16-channel I2C PWM controller PCA9685 connected to the Jetson Nano™ board, seven 5V DC servomotors and an external 5V⎓3A power supply (Fig. 4). The materials are listed in the file “Bill_of_Materials.xlsx”.

Fig. 4.

Connection diagram for the movement of the servos in the prototype.

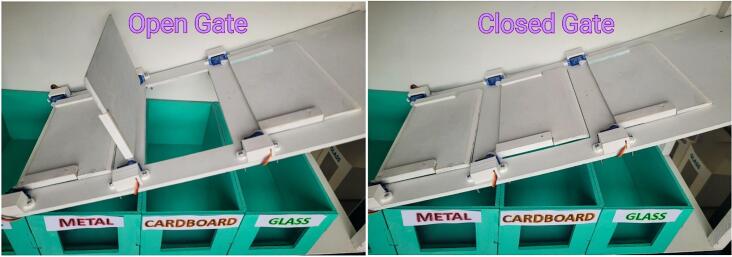

The 16-channel I2C PWM controller PCA9685 was used in the design of the AutoRecycler prototype because it controls several devices precisely through pulse width modulation (PWM). It communicates with the Jetson Nano™ board over the I2C protocol, which allows the board to send instructions to the PCA9685 to adjust the speed, direction or position of the connected servomotors. The 5V DC servomotors receive PWM signals from the PCA9685 that determine their position in the system. The external 5V⎓3A power supply provides stable power for the controller and the servomotors [21], [14]. Seven servomotors were used in this project, distributed as follows: one controls the gate in the detection zone, which drops the object onto the ramp, and two are installed on each of the container gates (Glass, Cardboard and Metal). Using two servos per gate makes the gates more robust when they open, close and receive the object, which increases the durability of the prototype.

Step 9: Install the library (adafruit_servokit) outside the Docker container on the Jetson Nano™ board. This library is needed to use the PCA9685 I2C PWM controller, as it provides a programming interface for controlling the servomotors connected to the controller [21]. To install it, execute the lines of code found in the file “PCA9685Driver_Installation.txt”.
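To illustrate how the servo interface might be used, the sketch below maps each material to a pair of servo channels and sets their angles through the `servo[n].angle` attribute that `adafruit_servokit.ServoKit` exposes. The channel numbers, angles and function names are hypothetical; the real wiring is shown in (Fig. 4) and the actual calibration code is in “Server.py”. A small stand-in class replaces `ServoKit` so that the sketch runs without hardware.

```python
import time

# Hypothetical channel map on the PCA9685 (the real wiring is in Fig. 4).
INPUT_CHANNEL = 0
GATE_CHANNELS = {"Glass": (1, 2), "Cardboard": (3, 4), "Metal": (5, 6)}
CLOSED_ANGLE, OPEN_ANGLE = 0, 90   # placeholder angles; calibrate per servo

class DryRunServo:
    """Stand-in exposing the same `angle` attribute as a ServoKit servo."""
    def __init__(self):
        self.angle = None

class DryRunKit:
    """Stand-in for adafruit_servokit.ServoKit so the sketch runs without hardware."""
    def __init__(self, channels=16):
        self.servo = [DryRunServo() for _ in range(channels)]

def sort_item(kit, material, hold_s=0.0):
    """Open the container gate for `material`, release the item, then close all gates."""
    # Plastic has no gate servos in this map (assumed to reach the end of the ramp).
    channels = GATE_CHANNELS.get(material, ())
    for ch in channels:
        kit.servo[ch].angle = OPEN_ANGLE          # container gate opens first
    kit.servo[INPUT_CHANNEL].angle = OPEN_ANGLE   # then the detection zone gate
    time.sleep(hold_s)                            # time for the object to travel
    for ch in (INPUT_CHANNEL, *channels):
        kit.servo[ch].angle = CLOSED_ANGLE
    return channels

# On the Jetson Nano one would instead use:
#   from adafruit_servokit import ServoKit; kit = ServoKit(channels=16)
kit = DryRunKit()
moved = sort_item(kit, "Glass")
print(moved)
```

Keeping the channel map in one dictionary means that a wiring change only requires editing that table.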

Step 10: Inside the “my_project” folder is the “Server.py” file. This script includes the servo calibration code, which provides the necessary motion in the prototype. It must be run outside Docker, since the libraries it needs are not installed in the container.

- For object detection and servomotor movement to work together, the programs “OpenCVDetection_Recicla.py” and “Server.py” must run at the same time and communicate in both directions. This was implemented with the socket library in Python, which is imported in both scripts: “OpenCVDetection_Recicla.py” acts as the client and “Server.py” as the server. The connection is established when the client connects to the server, and information is exchanged over the transmission control protocol and the internet protocol (TCP/IP). The connection uses the IP address of the Jetson Nano™ board (the host) together with a port number [25]. These two values indicate where the information is sent, so they must match in both scripts. The flow chart of the programs can be seen in (Fig. 5).
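The client and server roles described above can be sketched with Python's standard `socket` module. This is a minimal illustration under stated assumptions and not the code of “Server.py” or “OpenCVDetection_Recicla.py”: the message format (a plain class label answered by an acknowledgement) is invented, and the loopback address with an OS-chosen port replaces the board's IP address and fixed port so that the example runs on a single machine.

```python
import socket
import threading

HOST = "127.0.0.1"   # placeholder; the text uses the Jetson Nano's IP address

def serve_once(server_sock, received):
    """Accept one client, read one class label and acknowledge it (server role)."""
    conn, _addr = server_sock.accept()
    with conn:
        label = conn.recv(1024).decode("utf-8")
        received.append(label)
        conn.sendall(f"ACK {label}".encode("utf-8"))

def send_label(host, port, label):
    """Connect, send a detected class label and return the reply (client role)."""
    with socket.create_connection((host, port), timeout=5) as client:
        client.sendall(label.encode("utf-8"))
        return client.recv(1024).decode("utf-8")

server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind((HOST, 0))            # port 0 lets the OS pick a free port for this demo
server.listen(1)
port = server.getsockname()[1]    # host and port must match on both sides

received = []
worker = threading.Thread(target=serve_once, args=(server, received))
worker.start()
reply = send_label(HOST, port, "Glass")
worker.join()
server.close()

print(received, reply)
```

In the prototype the two roles run as separate processes, one inside Docker and one outside, which is why a network socket is a convenient channel between them.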

Step 11: Run both programs at the same time to verify the communication between them. “Server.py” must be run from a terminal outside Docker and “OpenCVDetection_Recicla.py” from the terminal inside Docker (Fig. 6). When “Server.py” runs, the connection established through the IP address is displayed on the screen. “OpenCVDetection_Recicla.py” opens a pop-up window with the real-time image from the connected webcam, highlighting the detected object with a BoundingBox next to its class label (Fig. 13). In the code, a specific color was assigned to each class to make it easier to identify. To close the program, press the “q” key.

Fig. 6.

Simultaneous execution of the programs “OpenCVDetection_Recicla.py” and “Server.py”.

Fig. 13.

Operation of the “OpenCVDetection_Recicla.py” and “Server.py” codes in real time.

Step 12: Build the AutoRecycler prototype mock-up (Fig. 7). The mock-up is 68 cm long, 55 cm high and 25 cm wide. The containers for Plastic, Glass, Cardboard and Metal are 12 cm long, 12 cm high and 25 cm wide. To make the stored objects visible, each container has a front opening 7.5 cm wide and 7.5 cm long. To move the objects to each container, a ramp was designed that is 57 cm long and 25 cm wide, with an inclination of 20 degrees. The ramp gates are 18 cm long and 11 cm wide, proportional to the dimensions of each container. The object detection area is 12 cm long, 12 cm high and 25 cm wide; it has a gate with the same dimensions as the ramp gates and a front opening 7.5 cm wide and 7.5 cm long. A bar 68 cm long and 25 cm wide holds the components of the prototype. For stability, two square wooden columns were installed at the front and two identical columns at the back, each measuring 2 × 2 × 41 cm. A horizontal column of 2 × 2 × 68 cm supports the bar where the system components are located, and a smaller horizontal column of 2 × 2 × 21 cm supports and stabilizes the ramp. Two covers were installed on the sides of the prototype to improve the safety of the system. The cover on the right side, where the detection area is located, is 43 cm high and 25 cm wide, while the cover on the left side is 55 cm high and 25 cm wide. The file “AutoRecycler_Prototype_Drawing.pdf” in the repository shows the design, dimensions and material thickness of the AutoRecycler prototype.

Fig. 7.

AutoRecycler prototype model.

For the operation of the AutoRecycler prototype, the inclined ramp was fully enclosed, using MDF wood on the back and on the sides of the system. The front is enclosed with transparent acrylic, which allows the movement and sorting of the detected items to be observed in real time. This design improves the safety of the prototype by preventing people from accidentally touching the moving parts, and it also reduces possible operating errors by protecting the internal components from external factors.

Step 13: Cover the object detection area in white in order to reproduce the conditions under which the training images were captured (Fig. 8).

Fig. 8.

Detection zone covered in white.

6. Operation instructions

Step 1: Place the webcam at a distance of 25 cm from the area of the prototype where the objects to be detected are placed (Fig. 9). This distance was determined from the conditions used when capturing the images for CNN training, and it ensures good visibility and focus of the objects during detection and classification.

Fig. 9.

Location of the camera and the object to be detected.

Step 2: Check all the connections of the Jetson Nano™ board (Fig. 1), (Fig. 2) and (Fig. 4), and properly arrange the wires on the model. It is essential to guarantee a stable and secure connection for the correct operation of the system, avoiding possible interference and performance problems.

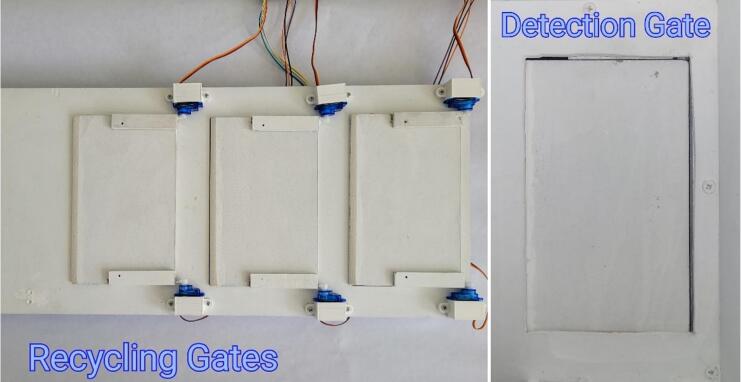

Step 3: Install the DC servomotors in the prototype according to the diagram shown in (Fig. 4). The servos (Glass1, Glass2, Cardboard1, Cardboard2, Metal1 and Metal2) should be placed on the corresponding ramp hatches so that they open and close them (Fig. 10) and (Fig. 11). The servo (Input) should be installed in the same way on the detection zone hatch, so that the object descends to the ramp and then to the container.

Fig. 10.

Gate movement.

Fig. 11.

Location of the servomotors on the ramp.

Step 4: Initialize the Jetson Nano™ 2GB board.

Step 5: Verify that all servomotors and gates are closed before starting the detection and sorting programs (Fig. 12). This measure guarantees the correct operation of the system and the prevention of any possible damage or mishap during the execution of the programs.

Fig. 12.

Gates closed in the prototype.

Step 6: Simultaneously run the program “OpenCVDetection_Recicla.py” from the Docker terminal and the program “Server.py” from another terminal outside Docker (Fig. 6). Once the programs are running, you can see the connection established through the IP address and a pop-up window showing the real-time image of the webcam, highlighting the detected object with a bounding box next to its corresponding class label.

- Real-time operation of the programs (Fig. 13).

Step 7: For the program to properly detect the object, place only one object in the detection zone at a time. The objects must also belong to the defined classes (Plastic, Glass, Cardboard and Metal) as shown in (Fig. 9) and (Fig. 13).

Step 8: Press the letter “q” to close the “OpenCVDetection_Recicla.py” program and end the socket communication between the two programs.

- The operation of the AutoRecycler prototype is shown step by step in the flow chart in (Fig. 14).

Fig. 14.

Flow chart of the AutoRecycler prototype operation.
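The socket exchange mentioned in Steps 6 and 8 can be pictured with a small sketch. This is not the code of “OpenCVDetection_Recicla.py” or “Server.py”, which are available in the repository; the function names, the newline-delimited text protocol and the `quit` message are assumptions made only for illustration. One side plays the detection program and sends class labels, while the other side plays the server and records them.

```python
import socket
import threading

CLASSES = {"Plastic", "Glass", "Cardboard", "Metal"}


def run_server(server_sock, received):
    """Accept one client and record each class label it sends (hypothetical protocol)."""
    conn, _addr = server_sock.accept()
    with conn, conn.makefile("r", encoding="utf-8") as stream:
        for line in stream:
            message = line.strip()
            if message == "quit":   # assumed shutdown message, e.g. sent when "q" is pressed
                break
            if message in CLASSES:  # ignore anything that is not a known class
                received.append(message)


def send_labels(host, port, labels):
    """Play the role of the detection program: send labels, then the quit message."""
    with socket.create_connection((host, port)) as client:
        for label in labels:
            client.sendall((label + "\n").encode("utf-8"))
        client.sendall(b"quit\n")


def demo():
    """Run both ends on localhost and return the labels the server received."""
    received = []
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server_sock:
        server_sock.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
        server_sock.listen(1)
        port = server_sock.getsockname()[1]
        worker = threading.Thread(target=run_server, args=(server_sock, received))
        worker.start()
        send_labels("127.0.0.1", port, ["Glass", "Unknown", "Metal"])
        worker.join(timeout=5)
    return received
```

Calling `demo()` runs both ends in one process; on the prototype the two roles would run in separate terminals, one inside Docker and one outside it.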

7. Validation and characterization

During the prototype validation phase, recognition tests were carried out with the objects initially used to train the network. The following elements were also validated: the distance between the camera and the object to be detected, the background on which the object is located, and the detection capability when there are multiple objects in front of the camera. The results established that the distance between the camera and the object can range from 20 cm to 30 cm, and that the background should be white to obtain more accurate detection. It is important to note that these values may differ depending on the initial conditions of each training. When several objects were placed in the detection zone at the same time, the system was able to recognize each one of them (Fig. 15). However, to meet the classification criteria defined for the AutoRecycler prototype, it was decided to detect one object at a time. This is because the system could open different hatches at the same time if several objects were placed simultaneously, which could lead to erroneous separation of waste and defeat the purpose of the system.

Fig. 15.

Detection of different objects at the same time.
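The one-object-at-a-time rule described above can be sketched as a small filter applied to the detector output before any hatch is moved. This is a minimal illustration, not the authors' implementation: the `(label, score)` detection format, the function name `pick_single_object` and the `min_confidence` threshold are assumptions.

```python
from typing import List, Optional, Tuple

Detection = Tuple[str, float]  # (class label, confidence score in [0, 1])

CLASSES = ("Plastic", "Glass", "Cardboard", "Metal")


def pick_single_object(detections: List[Detection],
                       min_confidence: float = 0.5) -> Optional[str]:
    """Return the class to sort, or None when zero or several objects are seen.

    min_confidence is a hypothetical threshold, not a value taken from the paper.
    """
    confident = [label for label, score in detections
                 if label in CLASSES and score >= min_confidence]
    if len(confident) != 1:
        return None  # nothing to sort, or ambiguous: keep every hatch closed
    return confident[0]
```

Returning `None` for several simultaneous detections keeps all hatches closed, which avoids the case where different hatches open at the same time.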

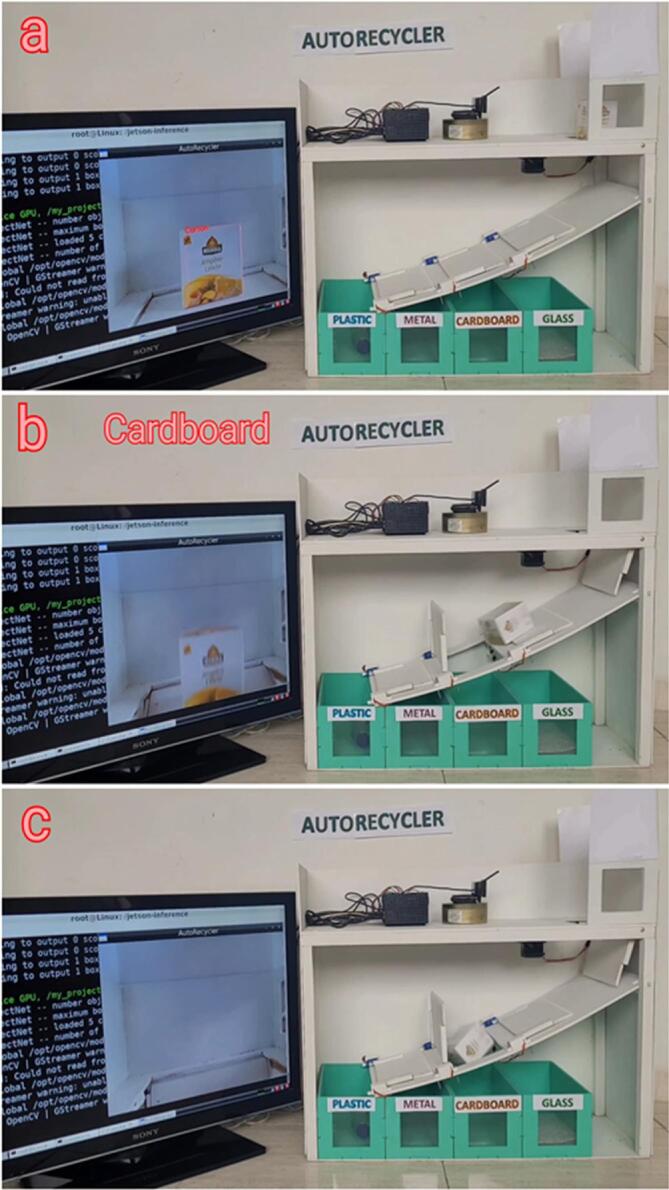

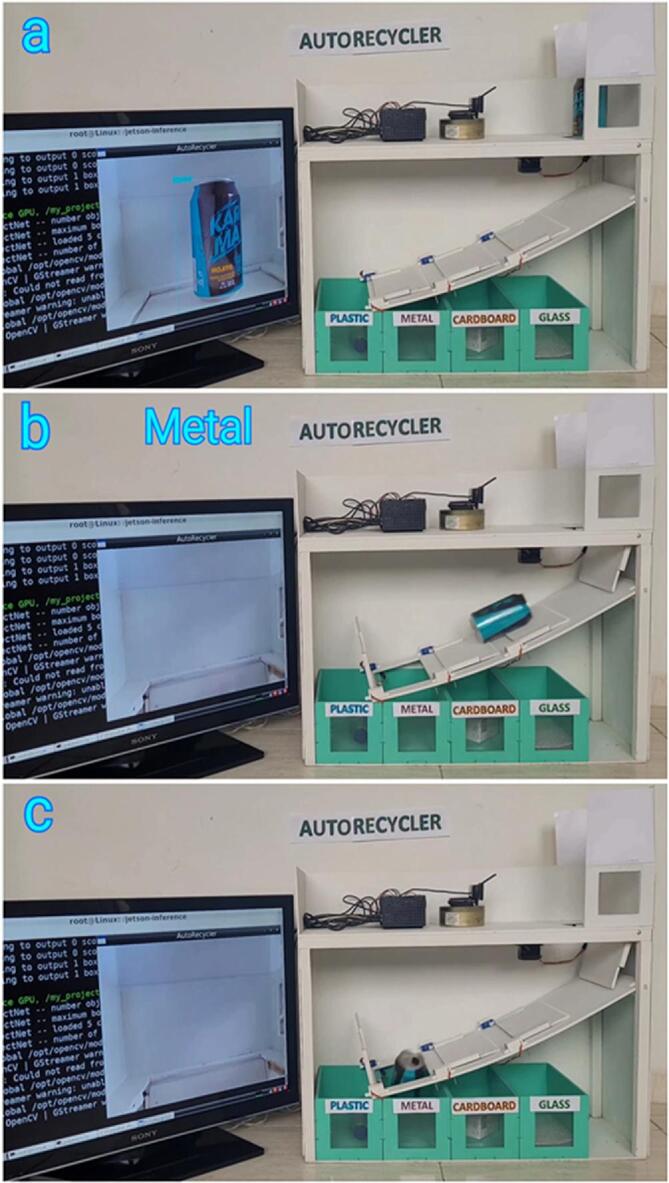

In the tests carried out on the final prototype, the system was able to adequately separate solid waste (Plastic, Glass, Cardboard and Metal), detecting one object at a time. The servomotors were installed in order to route the objects as they slide down the ramp, whose inclination of 20 degrees facilitates their descent. This can be seen in (Fig. 16), which corresponds to the validation of metal, in (Fig. 17) for cardboard, in (Fig. 18) for plastic, and in (Fig. 19) for glass.

Fig. 16.

Validation of the detection process, sliding and metal classification in the AutoRecycler prototype.

Fig. 17.

Validation of the detection process, sliding and cardboard classification in the AutoRecycler prototype.

Fig. 18.

Validation of the detection process, sliding and plastic classification in the AutoRecycler prototype.

Fig. 19.

Validation of the detection process, sliding and glass classification in the AutoRecycler prototype.

The operation of the AutoRecycler prototype can be seen through three items (a, b and c), as shown in (Fig. 16), (Fig. 17), (Fig. 18), and (Fig. 19). Item (a) shows the detection process for each of the elements: the screen displays the image captured in real time with a bounding box and a label on the detected object. Each element is assigned a different color to facilitate its identification. After the object is detected, the hatch to which it is directed opens, and then the second hatch, where the detected object is finally placed, follows accordingly. Item (b) shows that, when the object falls through the entrance hatch in the detection zone, the ramp directs it as expected. Finally, item (c) shows that, after sliding down the ramp, the object reaches the designated hatch and falls into the container dedicated to its type of material (Plastic, Glass, Cardboard and Metal), thus achieving an effective classification of the elements. Within the repository, the “Video_01.mp4” file shows the operation of the AutoRecycler prototype.

The response times of the AutoRecycler prototype for object detection in the different material categories (Plastic, Glass, Cardboard and Metal) are consistent, averaging 1 s for all categories when placing the object in the detection zone. This consistent time ensures that the system can process and classify the different types of materials with the same efficiency and speed, optimizing system performance and ensuring smooth operation.

Once the object is detected, the response time to activate the servos and open the corresponding container gate is 2 s for the categories (Glass, Cardboard and Metal). Then, the inlet gate opens after 2 s, allowing the objects to fall down the inclined chute and be sorted into the appropriate container. The container gates close 5 s after the inlet gate opens, and the inlet gate closes 2 s later. In the case of the Plastic category, the response times are similar, except for the opening and closing times of the container gate since this category does not have one. The response times of the AutoRecycler prototype are designed to guarantee a smooth and efficient operation of the recycling process, ensuring that the system has adequate time for sorting the objects.
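The timing described above can be laid out as a simple event schedule. The sketch below is one reading of the paragraph under stated assumptions, not the code from the repository: it assumes that each delay is measured from the previous event, that both servos of a class move together, and that for Plastic the inlet gate keeps the same timing with the container gate events simply omitted. The function name `gate_schedule` and the constant names are hypothetical.

```python
from typing import List, Tuple

# Servo names follow the operation instructions; Plastic has no container gate.
CONTAINER_SERVOS = {
    "Glass": ("Glass1", "Glass2"),
    "Cardboard": ("Cardboard1", "Cardboard2"),
    "Metal": ("Metal1", "Metal2"),
    "Plastic": (),
}

CONTAINER_OPEN_DELAY = 2   # s after detection
INLET_OPEN_DELAY = 2       # s after the container gate opens (assumed reference point)
CONTAINER_CLOSE_DELAY = 5  # s after the inlet gate opens
INLET_CLOSE_DELAY = 2      # s after the container gate closes


def gate_schedule(label: str) -> List[Tuple[int, str, str]]:
    """Return (seconds after detection, servo, action) events for one object."""
    servos = CONTAINER_SERVOS[label]
    inlet_open = CONTAINER_OPEN_DELAY + INLET_OPEN_DELAY   # t = 4 s
    container_close = inlet_open + CONTAINER_CLOSE_DELAY   # t = 9 s
    inlet_close = container_close + INLET_CLOSE_DELAY      # t = 11 s

    events = [(CONTAINER_OPEN_DELAY, servo, "open") for servo in servos]
    events.append((inlet_open, "Input", "open"))
    events += [(container_close, servo, "close") for servo in servos]
    events.append((inlet_close, "Input", "close"))
    return events
```

Under these assumptions a full cycle takes 11 s from detection to the closing of the inlet gate; a different reading of the reference points would shift the individual times.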

After validating the prototype with objects used during the training, additional tests were carried out using different elements that were not part of the initial testing, corresponding to the aforementioned classes (Plastic, Glass, Cardboard and Metal). For each class, detection, sliding and classification tests were performed using 6 different elements, located in various positions in the detection zone of the AutoRecycler prototype. This process was carried out aiming to evaluate the accuracy of the system with both the objects used in the training and with different elements that were not initially included.

- For the validation of the Plastic class in the prototype, the elements from (Fig. 20) were used.

Fig. 20.

Elements used in the validation of the Plastic category in the AutoRecycler prototype.

For the Plastic category, validation tests were performed for each of the objects (Fig. 20) on three aspects: the detection of the items in the detection zone, the sliding of each item down the inclined ramp, and the classification in the corresponding container. Each aspect was scored from 1 to 5, with 1 being the lowest score, reflecting poor performance of the object in the prototype, and 5 being the highest score, indicating good performance in the system (Table 1).

Table 1.

Validation results with the new objects from the Plastic category in the prototype.

| PLASTIC VALIDATION | | | |
|---|---|---|---|
| Object number | Detection | Sliding | Classification |
| 1 | 5 | 5 | 5 |
| 2 | 5 | 5 | 5 |
| 3 | 3 | 5 | 5 |
| 4 | 5 | 5 | 5 |
| 5 | 3 | 5 | 5 |
| 6 | 5 | 5 | 5 |

During the tests, high accuracy in detecting the elements (1, 2, 4 and 6) at various angles within the detection zone could be observed. However, some deficiencies were identified in the detection of the objects (3 and 5) at certain positions of the elements. All six objects slid smoothly and were successfully classified in the prototype, indicating satisfactory performance of the automated classification system for the Plastic class.

- For the validation of the Glass class in the prototype, the elements from (Fig. 21) were used.

Fig. 21.

Elements used in the validation of the Glass category in the AutoRecycler prototype.

For the Glass category, validation tests were performed for each of the objects (Fig. 21) on three aspects: the detection of the items in the detection zone, the sliding of each item down the inclined ramp, and the classification in the corresponding container. Each aspect was scored from 1 to 5, with 1 being the lowest score, reflecting poor performance of the object in the prototype, and 5 being the highest score, indicating good performance in the system (Table 2).

Table 2.

Validation results with the new objects from the Glass category in the prototype.

| GLASS VALIDATION | | | |
|---|---|---|---|
| Object number | Detection | Sliding | Classification |
| 1 | 5 | 5 | 5 |
| 2 | 5 | 5 | 3 |
| 3 | 5 | 5 | 5 |
| 4 | 5 | 5 | 5 |
| 5 | 5 | 5 | 5 |
| 6 | 5 | 5 | 4 |

During the validation of the Glass category, different tests were carried out for each of the objects used, which showed very good results in the detection of the elements. In addition, the objects slid smoothly in the prototype. However, during the classification attempts, difficulties arose with elements (2 and 6) due to their height. These elements encountered obstacles when trying to enter through the gate into the corresponding container, which may be related to the dimensions of the objects.

- For the validation of the Cardboard class in the prototype, the elements from (Fig. 22) were used.

Fig. 22.

Elements used in the validation of the Cardboard category in the AutoRecycler prototype.

For the Cardboard category, validation tests were performed for each of the objects (Fig. 22) on three aspects: the detection of the items in the detection zone, the sliding of each item down the inclined ramp, and the classification in the corresponding container. Each aspect was scored from 1 to 5, with 1 being the lowest score, reflecting poor performance of the object in the prototype, and 5 being the highest score, indicating good performance in the system (Table 3).

Table 3.

Validation results with the new objects from the Cardboard category in the prototype.

| CARDBOARD VALIDATION | | | |
|---|---|---|---|
| Object number | Detection | Sliding | Classification |
| 1 | 5 | 5 | 4 |
| 2 | 5 | 5 | 5 |
| 3 | 3 | 5 | 5 |
| 4 | 5 | 5 | 5 |
| 5 | 5 | 5 | 5 |
| 6 | 4 | 4 | 5 |

In the validation of the cardboard-based materials, accurate detection of elements (1, 2, 4 and 5) was verified. However, difficulties were observed in the detection of objects (3 and 6) in some specific positions. Regarding sliding in the prototype, most of the elements performed well, with the exception of object (6), which, due to its shape, had trouble sliding properly in some attempts. Regarding classification, object (1) presented some difficulties when trying to enter through the gate into the container, due to its larger size compared to the other objects.

- For the validation of the Metal class in the prototype, the elements from (Fig. 23) were used.

Fig. 23.

Elements used in the validation of the Metal category in the AutoRecycler prototype.

For the Metal category, validation tests were performed for each of the objects (Fig. 23) on three aspects: the detection of the items in the detection zone, the sliding of each item down the inclined ramp, and the classification in the corresponding container. Each aspect was scored from 1 to 5, with 1 being the lowest score, reflecting poor performance of the object in the prototype, and 5 being the highest score, indicating good performance in the system (Table 4).

Table 4.

Validation results with the new objects from the Metal category in the prototype.

| METAL VALIDATION | | | |
|---|---|---|---|
| Object number | Detection | Sliding | Classification |
| 1 | 4 | 5 | 5 |
| 2 | 5 | 5 | 5 |
| 3 | 4 | 5 | 5 |
| 4 | 5 | 5 | 5 |
| 5 | 5 | 5 | 5 |
| 6 | 5 | 4 | 5 |

During the validation of the Metal category, the following results were observed. Elements (2, 4, 5 and 6) were detected correctly when placed in different positions, whereas elements (1 and 3) presented slight difficulty in their detection. In the sliding tests, elements (1, 2, 3, 4 and 5) slid correctly down the ramp without problems, but element (6) presented some difficulty when sliding due to the characteristics of the object. Finally, the classification results showed that all the elements were correctly classified within the prototype.

The errors observed in the validation of the detection and sliding processes in the system were minimal. They can be attributed to variations in the characteristics of the objects, which depend on the material of each one. Likewise, errors in classification can arise due to the dimensions of the objects and their position at certain angles when entering the corresponding container. Although the system was trained with a wide set of images representing various materials and objects, a decrease in system accuracy was observed on certain occasions during tests with objects that were not part of the initial training, due to the type, size or condition of the items to be recycled. It is important to highlight that, during these tests, the system satisfactorily identified some objects that were not part of the training set.

The AutoRecycler prototype yielded very favorable results both when validating the classes with objects previously used during training and when validating with several different objects. From the results obtained above, it can be estimated that the Plastic class has a validation accuracy of 95%, while Glass and Metal have an accuracy of 96% in the prototype. Likewise, it was determined that Cardboard obtained a validation accuracy of 94%. To calculate the percentage of accuracy of each class, the number of validated objects was taken into account in relation to the number of points corresponding to each of the evaluated criteria (Detection, Sliding and Classification), aiming to evaluate the effectiveness of the prototype. These results indicate that the AutoRecycler prototype is a system with high accuracy in detecting different materials and with an appropriate mechanism for their sliding and classification.

The accuracy of the system was determined by a simple direct rule of three represented by the formula $\text{Accuracy} = \dfrac{C \times B}{A}$, where:

- B corresponds to (100%) of the total points in the validation table.

- C is the sum of the points obtained in the Detection, Sliding and Classification items of the table.

- A corresponds to the total sum of the possible points in the table for the Detection, Sliding and Classification items, calculated in relation to the number of objects (90 points, that is, 6 objects × 3 items × 5 points), where each item has a maximum score of 5 per object, equivalent to 100%.

This methodology was applied to calculate the individual accuracy of each category (Plastic, Glass, Cardboard and Metal) using their respective evaluation tables (Table 1), (Table 2), (Table 3) and (Table 4).
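The rule of three can be checked against the four validation tables with a few lines of Python. The scores below are copied from Tables 1 to 4; the function name `accuracy_percent` and the data layout are illustrative choices rather than part of the published code.

```python
import math

# One (Detection, Sliding, Classification) tuple per object, copied from Tables 1-4.
SCORES = {
    "Plastic":   [(5, 5, 5), (5, 5, 5), (3, 5, 5), (5, 5, 5), (3, 5, 5), (5, 5, 5)],
    "Glass":     [(5, 5, 5), (5, 5, 3), (5, 5, 5), (5, 5, 5), (5, 5, 5), (5, 5, 4)],
    "Cardboard": [(5, 5, 4), (5, 5, 5), (3, 5, 5), (5, 5, 5), (5, 5, 5), (4, 4, 5)],
    "Metal":     [(4, 5, 5), (5, 5, 5), (4, 5, 5), (5, 5, 5), (5, 5, 5), (5, 4, 5)],
}

MAX_SCORE = 5  # highest score per item and object


def accuracy_percent(rows):
    """Rule of three: A points correspond to B = 100 %, so C points correspond to C * B / A."""
    a = len(rows) * len(rows[0]) * MAX_SCORE  # total possible points (90 per table)
    b = 100                                   # percentage assigned to the total
    c = sum(sum(row) for row in rows)         # points actually obtained
    return c * b / a


for name, rows in SCORES.items():
    # Truncate to a whole percentage to compare with the reported figures.
    print(name, math.floor(accuracy_percent(rows)))
```

The obtained sums are 86, 87, 85 and 87 points out of 90 for Plastic, Glass, Cardboard and Metal. Truncated to whole percentages, these give 95%, 96%, 94% and 96%, which matches the reported accuracies.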

The AutoRecycler system has the following limitations:

- The size of the elements to be classified must be in accordance with the size of the prototype gates.

- Object detection is limited to the reduced set of elements used for training the Convolutional Neural Network (CNN). If the variety of classes and objects detected is to be increased, a new training with a larger number of images is required.

- For the AutoRecycler system to work, the previously mentioned programs must be run, which requires connecting a display to the Jetson Nano™ board in order to observe its operation and launch the programs.

To improve the accuracy of the system and correct the errors encountered, several strategies can be implemented. First, the training data set should be expanded with images of objects that were not part of the initial training, so that the system learns to recognize and classify previously unseen objects. In addition, it is recommended that the size of the prototype and the gates be increased so that the system can handle larger items. It is also important to keep the characteristics of the sliding ramp while making the improvements needed for objects to slide regardless of their individual characteristics, which will help achieve smooth movement through the system. With the implementation of these strategies, the accuracy of the system is expected to increase, resulting in more accurate detection, sliding and sorting of recyclable materials.

Future work on this system could focus on continuous improvement and optimization through the development of an application to analyze the amount of recycled material and the performance and functionality of the prototype, with the possibility of powering it with solar energy to make it more efficient and sustainable. It would also be valuable to analyze the incorporation of a greater variety of materials in the sorting stage, which would favor the management of recyclable resources, and to explore more advanced manufacturing methods and more sustainable materials in order to create a large-scale prototype for future commercialization and public access, which could generate market opportunities and have an impact on society.

CRediT authorship contribution statement

Anggie P. Echeverry: Writing – original draft, Validation, Software, Resources, Project administration, Methodology, Investigation, Formal analysis, Conceptualization. Carlos F. López: .

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgments

The authors express their gratitude to the Corporación Universitaria Autónoma del Cauca [ISNI: 0000 0004 0483 8740] (Colombia) for the knowledge provided during their professional training, and to the technology and environment research team (GITA) for the support provided throughout the research process. This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Biographies

Anggie Paola Echeverry Cháux, a student in the electronic engineering program at the Corporación Universitaria Autónoma del Cauca, Colombia, has completed the coursework for her degree and is currently working on her degree project to complete her university studies. During 2022 and 2023, Anggie completed the programming degrees in languages (Java and Python) and development (Software and Mobile Applications) at the Universidad de Antioquia. She also completed the diploma in Banking Software Development at the Corporación Universitaria Autónoma del Cauca, Colombia.

Carlos Felipe López Córdoba is an electronic engineer with a Master's degree in energy systems. He has carried out research projects focused on the development of technological solutions, for which he was granted an invention patent in 2020. He later wrote a book chapter on the energy potential of self-generation in fish farms, published in 2024. He is currently a research professor at the Corporación Universitaria Autónoma del Cauca, Colombia.

Footnotes

Supplementary data to this article can be found online at https://doi.org/10.1016/j.ohx.2024.e00575.

Contributor Information

Anggie P. Echeverry, Email: anggie.echeverry.c@uniautonoma.edu.co.

Carlos F. López, Email: carlos.lopez.c@uniautonoma.edu.co.


References

- 1.“Bin-e | Smart Waste Bin.” Accessed: Apr. 14, 2024. [Online]. Available: https://www.bine.world/.

- 2.Escobar Rodríguez Á.L. Hacia la gestión ambiental de residuos sólidos en las metrópolis de América Latina. Innovar. 2002;12(20):111–120.

- 3.Baus D. City University of New York (CUNY); 2017. Overpopulation and the Impact on the Environment. M.S. thesis.

- 4.“Efectos de la inadecuada gestión de residuos sólidos – Estrucplan.” Accessed: Mar. 18, 2023. [Online]. Available: https://estrucplan.com.ar/efectos-de-la-inadecuada-gestion-de-residuos-solidos/.

- 5.M. H. Dehghani, G. A. Omrani, and R. R. Karri, “Solid Waste—Sources, Toxicity, and Their Consequences to Human Health,” Soft Computing Techniques in Solid Waste and Wastewater Management, pp. 205–213, Jan. 2021, doi: 10.1016/B978-0-12-824463-0.00013-6.

- 6.“Día Internacional de Cero Desechos: La ONU aboga por el cambio hacia una economía circular.” Accessed: Mar. 18, 2024. [Online]. Available: https://news.un.org/es/story/2023/03/1519822.

- 7.Fletcher C.A. Manchester Metropolitan University; 2019. Towards zero waste: The search for effective waste management policy to support the transition to a circular economy. Doctoral thesis (PhD).

- 8.“Cómo la basura afecta al desarrollo de América Latina.” Accessed: May 17, 2023. [Online]. Available: https://news.un.org/es/story/2018/10/1443562.

- 9.L. López González and D. Espinoza Corrales, “Conducta de separación de residuos en los hogares costarricenses,” Dissertare, ISSN-e 2542-3177, vol. 5, no. 1 (January-June), p. 5, 2020.

- 10.Raza-Carrillo D., Acosta J. Environmental planning and recycling of solid urban waste. Economia, Sociedad y Territorio. 2022;22(69):519–544. doi: 10.22136/est20221696.

- 11.M. Abdulmahmood and R. Grammenos, “Improving the Deployment of Recycling Classification through Efficient Hyper-Parameter Analysis,” Oct. 2021, Accessed: Feb. 09, 2024. [Online]. Available: https://arxiv.org/abs/2110.11043v2.

- 12.Li X., Grammenos R. Evaluation of practical edge computing CNN-based solutions for intelligent recycling bins. IET Smart Cities. 2023;5(3):194–209. doi: 10.1049/SMC2.12057.

- 13.L. Tan, J. Lew, and T. Arevalo, “Bin There Dump That,” May 2021, Accessed: Feb. 09, 2024. [Online]. Available: https://course.ece.cmu.edu/~ece500/projects/s21-teamc6/final-project-report-and-video/.

- 14.Vazquez J.A.E. Nvidia Jetson nano, un mini pc para desarrollo de robótica e inteligencia artificial. RICT Revista De Investigación Científica, Tecnológica e Innovación. 2023;1(1):1–4. doi: 10.12345/ridt.ccaiXXiYY.ZZZZ.

- 15.“Jetson Nano 2GB Developer Kit User Guide | NVIDIA Developer.” Accessed: Jan. 13, 2024. [Online]. Available: https://developer.nvidia.com/embedded/learn/jetson-nano-2gb-devkit-user-guide#id-.JetsonNano2GBDeveloperKitUserGuidevbatuu_v1.0-PowerConsumption.

- 16.“Jetson Nano Developer Kit | NVIDIA Developer.” Accessed: Sep. 13, 2023. [Online]. Available: https://developer.nvidia.com/embedded/learn/get-started-jetson-nano-devkit.

- 17.D. L. Pachón Espinel, “Prototipo de Sistema Automatizado con Visión Artificial para la Selección de Empaques de Plástico, Vidrio y Lata en el Proceso de Reciclaje,” Thesis, UDEC-Universidad de Cundinamarca, 2019.

- 18.R. Ribani and M. Marengoni, “A Survey of Transfer Learning for Convolutional Neural Networks,” Proceedings - 32nd Conference on Graphics, Patterns and Images Tutorials, SIBGRAPI-T 2019, pp. 47–57, Oct. 2019, doi: 10.1109/SIBGRAPI-T.2019.00010.

- 19.Evan, M. Wulandari, and E. Syamsudin, “Recognition of Pedestrian Traffic Light using Tensorflow and SSD MobileNet V2,” IOP Conf Ser Mater Sci Eng, vol. 1007, no. 1, p. 012022, Dec. 2020, doi: 10.1088/1757-899X/1007/1/012022.

- 20.S. Kumar, R. Kumar, and Saad, “Real-Time Detection of Road-Based Objects using SSD MobileNet-v2 FPNlite with a new Benchmark Dataset,” 2023 4th International Conference on Computing, Mathematics and Engineering Technologies: Sustainable Technologies for Socio-Economic Development, iCoMET 2023, 2023, doi: 10.1109/ICOMET57998.2023.10099364.

- 21.Maurya A., Singh A., Waghmare S. University of Mumbai; 2023. Humanoid Robot (Robo) Project Synopsis Report.

- 22.“Adafruit 16-Channel 12-bit PWM/Servo Driver - I2C interface [PCA9685],” Adafruit. Accessed: Jun. 22, 2024. [Online]. Available: https://www.adafruit.com/product/815.

- 23.T. Siddiqui, S. A. Siddiqui, and N. A. Khan, “Comprehensive Analysis of Container Technology,” 2019 4th International Conference on Information Systems and Computer Networks, ISCON 2019, pp. 218–223, Nov. 2019, doi: 10.1109/ISCON47742.2019.9036238.

- 24.Sivkov S., et al. The algorithm development for operation of a computer vision system via the OpenCV library. Proc. Comput. Sci. 2020;169:662–667. doi: 10.1016/J.PROCS.2020.02.193.

- 25.A. Shah, G. Servar, and U. Tomer, “Realtime Chat Application using Client-Server Architecture,” vol. 10, May 2022, doi: 10.22214/ijraset.2022.42848.
