ABSTRACT

Causal directed acyclic graphs (DAGs) serve as intuitive tools to visually represent causal relationships between variables. While they find widespread use in guiding study design, data collection and statistical analysis, their adoption remains relatively rare in the domain of psychology. In this paper we describe the relevance of DAGs for health psychology, review guidelines for developing causal DAGs, and offer recommendations for their development. A scoping review searching for papers and resources describing guidelines for DAG development was conducted. Information extracted from the eligible papers and resources (n = 11) was categorised, and results were used to formulate recommendations. Most records focused on DAG development for data analysis, with similar steps outlined. However, we found notable variations on how to implement confounding variables (i.e., sequential inclusion versus exclusion). Also, how domain knowledge should be integrated in the development process was scarcely addressed. Only one paper described how to perform a literature search for DAG development. Key recommendations for causal DAG development are provided and discussed using an illustrative example.

KEYWORDS: Directed acyclic graph, causal diagram, causal inference, guidelines, recommendations, development

Background

Understanding causal relationships is a key aim of health research, in particular for theory building, and developing and evaluating interventions. Experiments and randomised controlled trials are considered the gold standard for interpreting associations between variables as cause–effect relations (i.e., for inferring causality) (Deaton & Cartwright, 2018). In many situations, such studies are not feasible or suitable because of ethical and practical challenges, or because interest lies in real-world settings (Rohrer, 2018). For that reason, researchers often rely on observational designs for inferring cause–effect relations (Rohrer, 2018).

Inferring causality from observational designs is notoriously challenging, because causal interpretations of associations are not guaranteed. Due to a lack of randomisation, an association between the independent variable (the exposure) and dependent variable (the outcome) might be the result of a common cause of both variables (i.e., confounding bias) (Glymour, 2006). For example, an association between meeting the WHO guidelines regarding moderate-to-vigorous physical activity (MVPA) (World Health Organization, 2020) and working memory (WM) performance in older adults does not necessarily reflect a causal effect of meeting the MVPA guidelines on WM performance. It could also be explained by age, a common cause of both variables. Consequently, the validity of causal interpretations relies on whether confounding has been sufficiently eliminated. Furthermore, interpretations of cause–effect relations may be hampered by the selection of participants on variables influenced by (common causes of) exposure and outcome characteristics. Expanding our example, suppose that participants were recruited via elderly care facilities, where residents typically have poorer physical and/or cognitive health. If a resident meets the guidelines for MVPA, it is more probable that this resident was admitted due to challenges in WM performance. Conversely, residents with good WM performance are more likely to have been admitted because of limited engagement in MVPA. Consequently, in this sample, one is likely to find a negative relation between the exposure (reaching the MVPA guidelines) and the outcome (WM performance). Such a relation may be absent or even in the opposite direction in the general population of older adults. A distorted (possibly inverted) relation between exposure and outcome may thus occur because of endogenous selection or collider bias, a type of bias that, unlike confounding bias, is much less well known among researchers in psychology (Glymour, 2006).
In an experimental design, collider bias is often eliminated by sampling from a well-defined population before randomising participants. Besides confounding and collider bias, measurement bias (also called information bias) may arise in observational studies, and hamper causal interpretations of observational associations (Hernán, 2009). For example, reaching the MVPA guidelines may be measured with a questionnaire asking older adults to think back about the time they spent in several physical activities (e.g., walking, biking, etc.) over the past seven days (Craig et al., 2003). In this measure of MVPA, the true MVPA level will be distorted by other factors, including the individual’s ability to recall the MVPA undertaken over the past seven days. Measurement error (at least in the exposure) is generally prevented in randomised studies because participants are randomly assigned to well-defined exposure/treatment levels.
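The care-facility example of collider bias can be made concrete with a small simulation. The sketch below is illustrative only: it assumes that meeting the MVPA guidelines and WM performance are entirely unrelated in the population, and that admission to a care facility becomes more likely when a person is inactive and/or has low WM performance. The admission probabilities, the function names `simulate` and `mean_difference`, and the sample size are hypothetical choices, not estimates from any study.

```python
import random
import statistics


def simulate(n=50_000, seed=1):
    """Simulate a population in which MVPA has no effect on WM performance."""
    rng = random.Random(seed)
    population, residents = [], []
    for _ in range(n):
        meets_mvpa = rng.random() < 0.5   # exposure, unrelated to the outcome
        wm = rng.gauss(0.0, 1.0)          # outcome (standardised WM score)
        # Assumed selection mechanism: admission is a common effect (collider)
        # of being inactive and of having below-average WM performance.
        p_admit = 0.05 + 0.40 * (not meets_mvpa) + 0.40 * (wm < 0)
        population.append((meets_mvpa, wm))
        if rng.random() < p_admit:
            residents.append((meets_mvpa, wm))
    return population, residents


def mean_difference(sample):
    """Mean WM of those meeting the guidelines minus mean WM of those who do not."""
    active = [wm for meets, wm in sample if meets]
    inactive = [wm for meets, wm in sample if not meets]
    return statistics.fmean(active) - statistics.fmean(inactive)


population, residents = simulate()
print("Difference in the full population:", round(mean_difference(population), 2))
print("Difference among care residents:  ", round(mean_difference(residents), 2))
```

Under these assumptions the difference is close to zero in the full population but clearly negative among residents, even though no causal effect was simulated. Restricting the sample to residents amounts to conditioning on a common effect of exposure and outcome.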

Awareness of these biases, especially confounding bias, has fostered a culture of caution regarding causal inference in observational research. As a result, many researchers have learned to avoid describing their aims and results in terms of ‘causal effects’, and write about (adjusted) ‘associations’ (Grosz et al., 2020; Hernán, 2018). Many researchers have also learned that adjusting for a large set of covariates is ‘a good practice’ to reduce confounding bias, often with the implicit belief that ‘more is better’ (Gaskell, 2020). However, adding covariates without considering the underlying causal structure may increase rather than reduce bias. In cases where causal effects are of interest, which often is the case, there is a need to employ more formal and systematic approaches that delineate the underlying causal assumptions and the associated analytical methods.

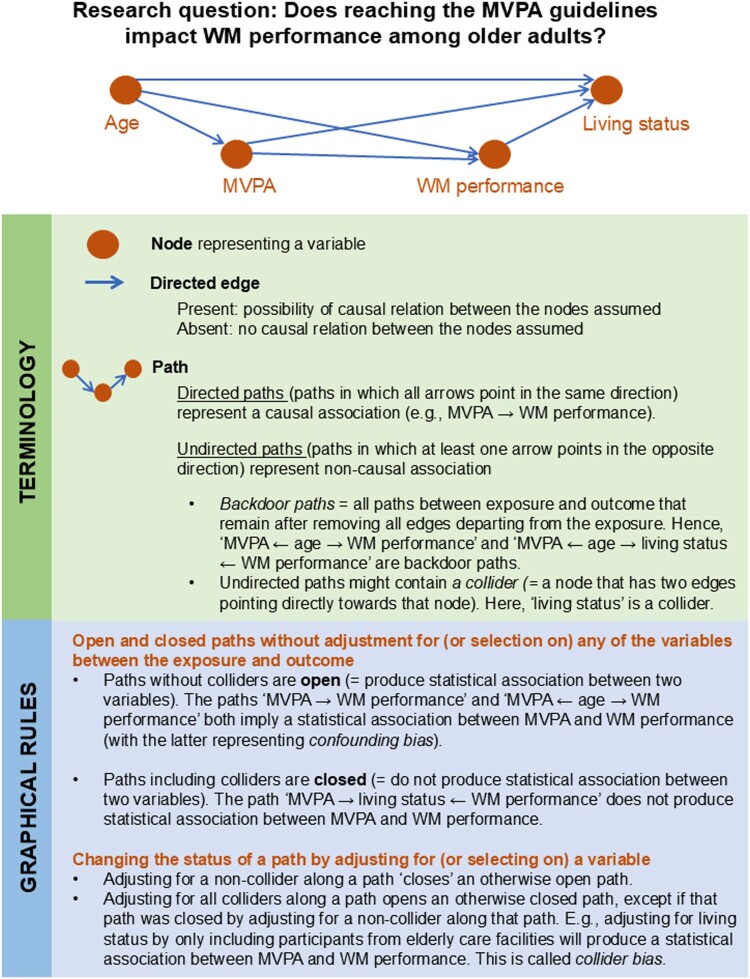

One such approach is the utilisation of causal Directed Acyclic Graphs (DAGs). These causal diagrams are invaluable tools for planning and conducting studies that aim to uncover causal effects in observational data (Greenland et al., 1999; Pearl, 2009). When our aim is to examine causal relationships, we need to go through two phases. In the identification phase, we conceptually define the causal structure between our variables based on background knowledge and decide whether the causal effect of interest can be identified from the available data under the assumed causal structure. In the estimation phase, we perform the statistical analysis using information derived from the identification phase. DAGs are highly relevant tools for the first phase as they allow us to provide an explicit and transparent visual representation of relevant background knowledge and the research design. A DAG can easily be scrutinised by fellow researchers, and provides a clear basis for discussion. Furthermore, DAGs allow a more formal approach to identify (DAG-implied) adjustment sets that reduce confounding bias and avoid inducing collider bias (due to over-adjustment or selective sampling of individuals from the target population). General introductions to causal DAGs are readily available (see e.g., Glymour, 2006; Hernán & Robins, 2020, Chapter 6; Morgan & Winship, 2015, Chapter 3; Elwert, 2013; Glymour et al., 2016). Figure 1 illustrates key definitions and graphical rules of DAGs, employing an example from health psychology. Although causal DAGs are increasingly used by epidemiologists and methodological specialists for guiding study design, data collection and adequate statistical analysis, they remain relatively rare in the health sciences (Tennant et al., 2021), and the behavioral and social sciences.

Figure 1.

Basic definitions and graphical rules for Directed Acyclic Graphs. Notes. Measurement bias can be visualised using different structures. Interested readers are referred to Chapter 9 of Hernán and Robins (Hernán & Robins, 2020).
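For readers who prefer code to diagrams, the basic definitions can also be expressed programmatically. The following minimal sketch stores a DAG as a list of (cause, effect) pairs, checks that it contains no cycles, and labels a third variable as a common cause, a mediator, or a common effect (collider) of the exposure and outcome. The graph `EDGES` is a hypothetical extension of the MVPA and WM example; in particular, `Fitness` and `CareAdmission` are assumed nodes added for illustration and are not taken from Figure 1. The classification is deliberately coarse and does not replace a formal analysis of the paths in the DAG.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical DAG: each pair reads "cause -> effect".
EDGES = [
    ("Age", "MVPA"), ("Age", "WM"),                        # Age: common cause
    ("MVPA", "WM"),                                        # effect of interest
    ("MVPA", "Fitness"), ("Fitness", "WM"),                # Fitness: mediator
    ("MVPA", "CareAdmission"), ("WM", "CareAdmission"),    # common effect
]


def is_acyclic(edges):
    """Return True if the directed graph contains no cycles."""
    predecessors = {}
    for cause, effect in edges:
        predecessors.setdefault(cause, set())
        predecessors.setdefault(effect, set()).add(cause)
    try:
        list(TopologicalSorter(predecessors).static_order())
        return True
    except CycleError:
        return False


def descendants(edges, node, blocked=frozenset()):
    """Nodes reachable from `node` along directed paths that avoid `blocked`."""
    children = {}
    for cause, effect in edges:
        children.setdefault(cause, set()).add(effect)
    seen, stack = set(), [node]
    while stack:
        for child in children.get(stack.pop(), ()):
            if child not in seen and child not in blocked:
                seen.add(child)
                stack.append(child)
    return seen


def role(edges, variable, exposure, outcome):
    """Coarse label for a third variable relative to exposure and outcome."""
    causes_exposure = exposure in descendants(edges, variable)
    # A common cause must reach the outcome by a path that avoids the exposure.
    causes_outcome = outcome in descendants(edges, variable, blocked={exposure})
    if causes_exposure and causes_outcome:
        return "common cause"
    if variable in descendants(edges, exposure) and outcome in descendants(edges, variable):
        return "mediator"
    if variable in descendants(edges, exposure) and variable in descendants(edges, outcome):
        return "common effect (collider)"
    return "other"


for name in ("Age", "Fitness", "CareAdmission"):
    print(name, "->", role(EDGES, name, "MVPA", "WM"))
```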

There may be several reasons for the slow uptake of causal DAGs in health psychology. First, explicit causal inference is often considered taboo in nonexperimental research in psychology (Grosz et al., 2020). Due to the prevailing mantra that only randomised experiments can uncover causal relationships, researchers conducting observational studies refrain from using causal language in reporting study aims and results. As a result, associational rather than causal analyses are conducted. Paradoxically, many researchers, even those using observational designs, are interested in answering causal questions, with causal implications inevitably surfacing when translating findings into policy and practice recommendations (Grosz et al., 2020; Hernán, 2018). Such (adjusted) ‘associational’ approaches are prone to the biases outlined above, and of limited value for health decision making (Crutzen & Peters, 2021; Glymour, 2006; Grosz et al., 2020; Hernán, 2018).

Second, structural equation models (SEMs) have a widespread use in psychology (Bollen & Pearl, 2013), and network analyses are currently experiencing an unprecedented surge in popularity (Borsboom et al., 2021). Both share (visual) similarities with causal DAGs (Bollen & Pearl, 2013; Borsboom et al., 2021; Huang et al., 2023). Hence, questions about the added value of the utilisation of causal DAGs arise. In observational studies, causal DAGs are primarily used as input to guide statistical estimation of a causal effect of a pre-defined exposure on a pre-defined outcome, and are, unlike network models, only rarely considered as the output of a statistical analysis. Unlike parametric SEMs and network models, causal DAGs are conceptual tools used primarily in the identification phase of causal analysis, and are not inherently linked to any specific statistical model or analytical technique. DAGs can hence be considered as non-parametric SEMs (Bollen & Pearl, 2013). In addition, SEMs are used for answering both causal and non-causal research questions (Kunicki et al., 2023). Network analysis is used to examine patterns of conditional (in)dependence between variables. Sometimes these patterns are used to develop hypotheses regarding the causal relations between variables or even to learn the underlying causal DAG from the available data (Ryan et al., 2022). However, this endeavour (sometimes termed ‘causal discovery’) is challenging (and arguably much more ambitious than causal effect estimation), not only because edges in network models can reflect non-causal correlations by adjusting for colliders, but also because it fundamentally relies on the strong assumption that all common causes of any pair of variables are included in the network model and hence captured in the available data (Ryan et al., 2022).

Third, because of their acyclic nature, researchers may believe that causal DAGs are not able to represent bidirectional effects between exposures and outcomes over time and are therefore ill-suited for capturing numerous phenomena of interest to health psychologists. This idea may have been reinforced by the fact that DAGs are often introduced with over-simplified examples with time-fixed exposures and single outcome measurements. Nevertheless, by considering and encoding the temporal and longitudinal nature of variables, DAGs can encode bidirectional effects characterised by ‘feedback loops’ between exposures, outcomes, and confounders (see Figure 2 for an example) (Hernán & Robins, 2020; Murray & Kunicki, 2022). In doing so, DAGs provide insight into the complex nature of potential biasing pathways that may operate through feedback loops between exposures, outcomes and time-varying confounders (Hernán & Robins, 2020).

Figure 2.

Panel A displays a feedback loop. Panel B shows the corresponding causal DAG encoding the temporal nature underlying the feedback loop. Notes. ‘k’ refers to timepoint k.
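The idea of encoding a feedback loop as a DAG by indexing variables by timepoint can be sketched in a few lines. The function `unroll` below is a hypothetical helper, not a reproduction of Figure 2: it assumes that every variable influences its own next measurement and that each arrow in the loop acts with a lag of one timepoint. The variable names are illustrative.

```python
def unroll(cyclic_edges, n_timepoints):
    """Turn a cyclic diagram into a time-indexed edge list.

    Assumes every effect operates from timepoint k to timepoint k + 1.
    """
    variables = sorted({v for edge in cyclic_edges for v in edge})
    edges = []
    for k in range(n_timepoints - 1):
        for v in variables:
            # Carry-over of each variable to its next measurement.
            edges.append((f"{v}_{k}", f"{v}_{k + 1}"))
        for cause, effect in cyclic_edges:
            # Lagged effect of one variable on the other.
            edges.append((f"{cause}_{k}", f"{effect}_{k + 1}"))
    return edges


# A hypothetical loop in which MVPA and WM influence each other over time.
loop = [("MVPA", "WM"), ("WM", "MVPA")]
for cause, effect in unroll(loop, n_timepoints=3):
    print(cause, "->", effect)
```

Because every arrow points from an earlier to a later timepoint, the resulting graph cannot contain a cycle, even though the original loop does.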

Fourth, although the mathematical underpinnings of DAGs are well documented, guidance for applied researchers aiming to develop a DAG for answering their causal research questions is limited. Lack of knowledge regarding DAG development is an often reported barrier among researchers for adopting causal DAGs in empirical research (Barnard-Mayers et al., 2021). Furthermore, this lack of information can result in the development of highly implausible DAGs or the failure to use the developed DAG to (properly) guide further data-analysis (i.e., the estimation phase) (Barnard-Mayers et al., 2021).

This paper aims to further enhance the use of DAGs in the domain of health psychology by summarising the guidelines and recommendations on causal DAG development in the literature via a scoping review. Based on the findings, we will discuss and formulate recommendations for the development of DAGs, and illustrate these using an example within the field of health psychology.

Methods

This review is based on Arksey and O’Malley’s six-step framework for conducting a scoping review (Arksey & O'Malley, 2005) and the PRISMA extension for scoping reviews (Tricco et al., 2018). The completed PRISMA checklist can be found in Supplementary File 1. The protocol was uploaded to the Open Science Framework (OSF) on the 10th of June 2022 (osf.io/u42q9).

Step 1 – Identifying the research question

For this review, we considered the question ‘which guidelines or recommendations are provided for the development of causal DAGs?’. The review therefore sought to identify and summarise papers and resources focused on offering guidelines on how to develop a DAG for a research question.

Step 2 – Identifying relevant records

Relevant records (e.g., papers, book chapters, online courses) were identified in two ways. First, potential records were identified by searching bibliographic databases. For the concept ‘DAG’ we adopted the search strategy used by Tennant and colleagues in their review on the use of DAGs in applied health research (Tennant et al., 2021). The search strategy was adapted for three bibliographic databases: (1) Medline (PubMed), (2) EMBASE and (3) Web of Science (see Supplementary File 2). Only English-language papers and resources were considered. No restrictions were set for the research area, time since publication, or type of paper or resource. Papers and resources that (1) did not provide guidance on how to create a DAG or (2) focused on causal discovery were excluded. The most recent search was performed on the 11th of March 2022. Additional papers and resources were identified by hand searching the reference lists of the papers identified by the bibliographic searches.

Step 3 – Selecting relevant records

Records retrieved from the three databases were exported to Rayyan software (Ouzzani et al., 2016). After removing duplicates, relevant records were identified in two phases. First, titles and abstracts were screened in duplicate by LP, ADP, and JVC. LP screened all the records while ADP and JVC each screened 50 percent. For each record, the researchers indicated whether the record should be included, excluded, or in case of doubt, the label ‘maybe’ was allocated. After this, the results were compared and disagreements were discussed. The full texts of the remaining records were then assessed for final eligibility by LP who also searched the reference lists for additional relevant papers and resources.

Step 4 – Charting the data

In step 4, LP extracted the key information from each of the included papers and resources (Arksey & O'Malley, 2005). Regarding general information, this included publication year, author names, title, country of first author’s affiliation, and type of publication (e.g., article). Regarding the provided guidance and recommendations, information was extracted according to four themes: (1) the main purpose of DAG development (i.e., whether the identified paper or resource focuses on developing a DAG to guide study design, data analysis, or both), (2) proposed guidelines for DAG development, (3) guidance on the consultation of experts for DAG development, and (4) guidance on how to perform a literature search for DAG development. Because the included records do not report on empirical studies, no quality appraisal of the individual records was performed.

Step 5 – Collating, summarising and reporting the results

In step 5, a narrative summary of the findings was developed focusing on three themes of particular relevance to applied health researchers. First, we describe the purpose of developing a DAG. Second, we discuss the similarities and differences between the papers and resources regarding the proposed steps for DAG development. Third, we summarise the guidance on how to obtain domain knowledge (i.e., background knowledge regarding how other variables are related to the exposure and outcome of interest) for the development of a DAG.

Step 6 – Consultation exercise

In the final step, the aim and content of the review were discussed with applied health researchers, to ensure it best meets the needs of potential users. To achieve this, three members of the research team (LP, ADP, and JVC) organised workshops at the 19th annual meeting of the International Society of Behavioral Nutrition and Physical Activity (Phoenix, USA) and at Ghent University (Ghent, Belgium). During these workshops, the aim and the content of the review were presented and discussed. Participants were asked: (1) ‘What are facilitators for reading the scoping review?’ (2) ‘What are barriers for reading the scoping review?’ and (3) ‘Are there other relevant themes that we should discuss in the scoping review?’. Supplementary File 3 provides an overview of the characteristics of the attendees and of the feedback obtained and how we implemented this feedback in the final manuscript.

Results

Sample description

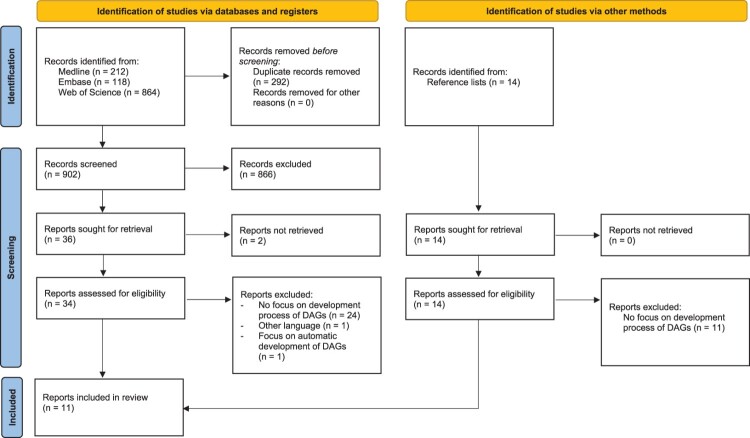

Figure 3 shows the completed PRISMA flow diagram, which summarises the review selection process. A total of 902 unique records were identified from the three bibliographic databases. Of these, eight were included in the review. Fourteen additional records were identified from reference lists, of which three were included in the review. An overview of the eleven included papers and resources is provided in Table 1.

Figure 3.

Completed PRISMA flow diagram.

Table 1.

Included papers and resources.

| Authors (Date) | Country | Publication type | Title | Name of journal / book |
|---|---|---|---|---|
| Sauer and VanderWeele (2013)* | USA | Book supplement | Use of directed acyclic graphs | Developing a Protocol for Observational Comparative Effectiveness Research: A User’s Guide |
| Tafti and Shmueli (2020) | USA | Article | Beyond Overall Treatment Effects: Leveraging Covariates in Randomized Experiments Guided by Causal Structure | Information Systems Research |
| Grace and Irvine (2020) | USA | Article | Scientist’s guide to developing explanatory statistical models using causal analysis principles | Ecology |
| Gaskell (2020) | USA | Article | An Introduction to Causal Diagrams for Anesthesiology Research | Anesthesiology |
| Ferguson et al. (2020) | UK | Article | Evidence synthesis for constructing directed acyclic graphs (ESC-DAGs): a novel and systematic method for building directed acyclic graphs | International Journal of Epidemiology |
| Suzuki et al. (2020)* | Japan | Article | Causal diagrams: pitfalls and tips | Journal of Epidemiology |
| Tennant et al. (2021) | UK | Article | Use of directed acyclic graphs (DAGs) to identify confounders in applied health research: review and recommendations | International Journal of Epidemiology |
| Watkins (2021); Watkins (2022) | Australia | Conference abstract and website | An online searchable database of example causal diagrams to make them easier to construct | International Journal of Epidemiology |
| Laubach et al. (2021) | USA | Article | A biologist’s guide to model selection and causal inference | Proceedings of the Royal Society B |
| Digitale et al. (2022) | USA | Article | Tutorial on directed acyclic graphs | Journal of Clinical Epidemiology |
| Hernán (2022)* | USA | Online course | Causal diagrams: draw your assumptions before your conclusions | NA |

Note: * = record identified via reference lists of the identified papers.

Narrative summary

In the following paragraphs, we discuss (1) whether the included papers or resources focused on DAG development for guiding study design and/or data analysis, (2) the guidelines and recommendations proposed for DAG development, and (3) guidance provided for obtaining domain knowledge. Supplementary File 4 presents a summary of these themes for each of the included records.

Purpose of DAG development

Of the eleven included papers and resources, six focus on the development and use of DAGs for guiding data analysis (Ferguson et al., 2020; Grace & Irvine, 2020; Laubach et al., 2021; Sauer & VanderWeele, 2013; Tennant et al., 2021; Hernán, 2022). DAGs are indeed useful resources for gaining insight into the causal relations between variables and allow for the identification of sufficient adjustment sets, i.e., sets of variables that are sufficient for confounding adjustment of the exposure-outcome relation (Greenland et al., 1999). However, in their review, Tennant and colleagues found that many studies using DAGs do not report the DAG-implied adjustment set(s) and some studies even undermine the DAG-implied adjustment set(s) by adding or removing covariates from the analysis (Tennant et al., 2021). The authors suggested that lack of confidence or fear of criticism might cause these practices and recommended clearly reporting both the DAG and the DAG-implied adjustment set(s) (Tennant et al., 2021).
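To illustrate what checking a DAG-implied adjustment set involves, the sketch below tests a candidate set against the back-door criterion (Pearl, 2009). The set must contain no descendant of the exposure, and it must block every path between exposure and outcome once the arrows leaving the exposure are removed. Blocking is assessed with the standard moralised ancestral graph procedure for d-separation. The example graph and all function names are hypothetical, and the back-door criterion is a sufficient rather than a necessary condition. Dedicated software should be preferred for real analyses.

```python
from itertools import combinations


def parent_map(edges):
    """Map every node to the set of its direct causes."""
    parents = {}
    for cause, effect in edges:
        parents.setdefault(cause, set())
        parents.setdefault(effect, set()).add(cause)
    return parents


def ancestors(edges, nodes):
    """Return the given nodes together with all of their ancestors."""
    parents = parent_map(edges)
    seen, stack = set(nodes), list(nodes)
    while stack:
        for parent in parents.get(stack.pop(), ()):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


def d_separated(edges, x, y, given):
    """Check whether x and y are d-separated by `given` in the DAG."""
    given = set(given)
    keep = ancestors(edges, {x, y} | given)      # ancestral subgraph
    parents = parent_map(edges)
    neighbours = {node: set() for node in keep}
    for child in keep:
        causes = parents.get(child, set())
        for cause in causes:                      # drop edge directions
            neighbours[cause].add(child)
            neighbours[child].add(cause)
        for a, b in combinations(causes, 2):      # connect co-parents
            neighbours[a].add(b)
            neighbours[b].add(a)
    seen, stack = {x}, [x]
    while stack:                                  # search that avoids `given`
        for node in neighbours[stack.pop()]:
            if node == y:
                return False
            if node not in seen and node not in given:
                seen.add(node)
                stack.append(node)
    return True


def satisfies_backdoor(edges, exposure, outcome, adjustment):
    """Check the back-door criterion for a candidate adjustment set."""
    adjustment = set(adjustment)
    nodes = set(parent_map(edges))
    descendants = {n for n in nodes
                   if n != exposure and exposure in ancestors(edges, {n})}
    if adjustment & descendants:
        return False
    without_outgoing = [(c, e) for c, e in edges if c != exposure]
    return d_separated(without_outgoing, exposure, outcome, adjustment)


# Hypothetical DAG for the running MVPA and WM example.
DAG = [("Age", "MVPA"), ("Age", "WM"), ("MVPA", "WM"),
       ("MVPA", "CareAdmission"), ("WM", "CareAdmission")]

print(satisfies_backdoor(DAG, "MVPA", "WM", set()))
print(satisfies_backdoor(DAG, "MVPA", "WM", {"Age"}))
print(satisfies_backdoor(DAG, "MVPA", "WM", {"Age", "CareAdmission"}))
```

In this hypothetical graph, adjusting for nothing leaves the path through age open, adjusting for age alone satisfies the criterion, and additionally adjusting for care admission violates it because care admission is a descendant of the exposure.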

Five records highlight that DAGs also play a vital role in early phases of the research process and in guiding study design (Watkins, 2022; Digitale et al., 2022; Gaskell, 2020; Suzuki et al., 2020; Tafti & Shmueli, 2020). For example, Digitale et al. indicated that DAGs are valuable tools for conceiving research questions and are ideally reported in research proposals (Digitale et al., 2022). Additionally, DAGs allow researchers to prioritise which variables to measure for answering the causal questions of interest (Gaskell, 2020; Tafti & Shmueli, 2020). Consequently, limited time and resources are not wasted by measuring variables that are irrelevant or should not be adjusted for (Tafti & Shmueli, 2020).

Proposed guidelines and recommendations

Seven papers and resources outline specific steps for DAG development (Watkins, 2022; Laubach et al., 2021; Digitale et al., 2022; Ferguson et al., 2020; Gaskell, 2020; Tafti & Shmueli, 2020; Hernán, 2022). Four provide more general recommendations (Grace & Irvine, 2020; Sauer & VanderWeele, 2013; Suzuki et al., 2020; Tennant et al., 2021). The papers and resources providing specific steps for DAG development all indicate that the first step of creating a DAG consists of adding the exposure and outcome of interest and precisely defining both (Watkins, 2022; Laubach et al., 2021; Digitale et al., 2022; Ferguson et al., 2020; Gaskell, 2020; Tafti & Shmueli, 2020; Hernán, 2022). In two papers the authors argue that potential mediators should be included in the next step (Gaskell, 2020; Tafti & Shmueli, 2020). However, unless researchers are interested in the specific pathways of the effect from the exposure on the outcome (direct or indirect paths), including potential mediators is not a necessary step when focusing on the total effect (Hernán, 2022).

In the second step, common causes of exposure and outcome are added to the DAG. In the third step, common causes of each pair of variables included in the DAG in steps 1 and 2 are added (Watkins, 2022; Laubach et al., 2021; Digitale et al., 2022; Ferguson et al., 2020; Gaskell, 2020; Tafti & Shmueli, 2020; Hernán, 2022). The second and third steps are considered the most important aspect of developing a causal DAG (Sauer & VanderWeele, 2013). Importantly, when using DAGs to guide data analysis, the common causes integrated in the DAG should not be limited to variables available in the dataset, but should also include unmeasured variables when applicable (Watkins, 2022; Digitale et al., 2022; Ferguson et al., 2020; Gaskell, 2020; Sauer & VanderWeele, 2013; Tafti & Shmueli, 2020; Tennant et al., 2021; Hernán, 2022). Furthermore, to allow easier interpretation of the DAG and to avoid mistakes, Tennant et al. recommend visually arranging the nodes in such a way that they reflect the passage of time from left to right or from top to bottom (Tennant et al., 2021).

Further integrating these common causes in a DAG requires careful consideration. We identified two distinct approaches for this step in the selected literature: an additive approach and a dismantling approach. Six of the included records propose an additive approach in which the complexity of the DAG is gradually increased (Watkins, 2022; Laubach et al., 2021; Digitale et al., 2022; Gaskell, 2020; Tafti & Shmueli, 2020; Hernán, 2022). In this approach, after steps 1-3, the need to add directed edges between each pair of variables in the DAG is considered. For example, one record recommends adding a directed edge wherever theory or logic suggests a potential direct effect between two variables (Tafti & Shmueli, 2020). However, as absent arrows represent stronger assumptions than included arrows, Tennant et al. argue that providing a rationale for including edges is unnecessary (Tennant et al., 2021). Instead, the authors recommend justifying omitted edges with theory and/or evidence (Tennant et al., 2021). In line with this reasoning, the dismantling approach starts with including all potential edges and then gradually reduces the complexity of the DAG by removing edges (Ferguson et al., 2020; Grace & Irvine, 2020). For example, after applying steps 1-3, Ferguson et al. propose to draw edges between each pair of variables in the DAG, resulting in a saturated DAG (i.e., a DAG in which all variables are connected with all other variables in the DAG) (Ferguson et al., 2020). This step can be performed without considering the directionality of the edges linking the variables (Ferguson et al., 2020). Then, each of the edges is sequentially assessed and a rationale is provided for altering the direction of the edge (e.g., based on temporal order) or for removing it (Ferguson et al., 2020). Making decisions regarding the direction of an edge between two variables that might influence each other in a DAG reflecting a cross-sectional design can be difficult. 
In this case, one resource advises that an edge should be drawn in the direction of the strongest causal effect (Watkins, 2022). Another option could be to develop multiple DAGs and to conduct sensitivity analyses testing the robustness of the results under different assumptions (i.e., by altering the direction of edges between the variables that might influence each other bidirectionally) (Ferguson et al., 2020; Sauer & VanderWeele, 2013).
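The dismantling approach described above can be sketched as follows. The sketch simplifies the procedure by assuming that a temporal ordering of the variables is already available, so that the saturated DAG can be directed from earlier to later variables in one pass. The helper names, the variables, and the removed edge with its rationale are purely illustrative and carry no substantive claim.

```python
from itertools import combinations


def saturated_dag(ordered_nodes):
    """Connect every pair of nodes, directed from the earlier to the later one."""
    return set(combinations(ordered_nodes, 2))


def dismantle(edges, removals):
    """Remove edges one at a time, requiring a written rationale for each.

    `removals` maps an edge to the justification for omitting it.
    """
    remaining = set(edges)
    log = []
    for edge, rationale in removals.items():
        if edge not in remaining:
            raise KeyError(f"{edge} is not in the DAG")
        if not rationale.strip():
            raise ValueError(f"Omitting {edge} requires a rationale")
        remaining.remove(edge)
        log.append(f"{edge[0]} -> {edge[1]} omitted: {rationale}")
    return remaining, log


# Hypothetical variables, listed in assumed temporal order.
nodes = ["Age", "Education", "MVPA", "WM"]
full = saturated_dag(nodes)                       # 4 nodes give 6 edges
reduced, log = dismantle(
    full,
    {("Education", "WM"): "illustrative placeholder rationale"},
)
print(len(full), len(reduced))
print(log)
```

Requiring a rationale for every omitted edge mirrors the recommendation that omissions, rather than inclusions, are what need to be justified.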

In many realistic research settings, our analytical sample is not randomly selected from our target population. Selection bias is defined as ‘any bias away from the true causal effect in the referent population (i.e., the population before the selection process) due to selecting the sample from the referent population’ (Lu et al., 2022, p. 699). Three records explicitly describe the inclusion of selection nodes in the DAG (Digitale et al., 2022; Gaskell, 2020; Hernán, 2022). By adding a selection node and drawing a box around this node, researchers indicate that they implicitly stratify on this variable by focusing on a specific subsample of the referent population.

Measurement bias occurs when the relation between the outcome and the exposure is altered due to the process by which the data are measured (Hernán & Robins, 2020). Four records described the utility of DAGs to identify measurement bias (Digitale et al., 2022; Suzuki et al., 2020; Watkins, 2021; Hernán, 2022), but only one provided guidelines on how to represent measurement bias in DAGs. Adding nodes for mismeasured variables and edges representing causal influences on this mismeasurement is a final step in the DAG development approach proposed by Hernán (Hernán, 2022).

Guidance on how to obtain domain knowledge for DAG development

Decisions regarding which variables are relevant to include in a DAG should be based on domain knowledge (Ferguson et al., 2020; Hernán et al., 2002). As argued by Pearce and Lawlor, DAGs can only be as good as the domain knowledge used to develop them (Pearce & Lawlor, 2016). However, none of the included records describe the process via which domain experts are to be involved or consulted for DAG development. Similarly, guidance on how to perform a literature search for DAG development is scarce. On his website ‘causaldiagrams.org’ Watkins provides a searchable database of published health research literature including a causal diagram (Watkins, 2022). This database allows researchers to inform their DAG by previously created DAGs that focus on similar research questions. However, a DAG developed for one specific causal question in a specific context and for a specific population may not, without any modification, be copied to answer a similar causal question in a different context and population. Only one paper provided explicit guidance on how to perform a literature search for DAG development and how to incorporate the findings in a DAG. Ferguson and colleagues recommend starting the development of a DAG with a (novel) systematic review or a review of systematic reviews focusing on the ‘focal relationship(s)’, i.e., exposure-outcome relationship(s), of interest (Ferguson et al., 2020). Each of the studies identified via the systematic review or review of systematic reviews then goes through three stages. In the first stage, an implied graph is created. This implied graph is a saturated graph containing the outcome, the exposure and all ‘control variables’ for a specific study. The authors define control variables as variables that were included in the analysis assessing the relationship between the exposure and the outcome. In the second stage, each edge in the implied graph is evaluated based on causal theory. 
Hence, in this stage, edges can be reversed or removed. The final stage consists of integrating the directed edges and nodes from each of the implied DAGs into one DAG (Ferguson et al., 2020). Variables that were not identified in the retrieved literature, but are considered important by the researcher are also added in this step. The authors indicate that the final DAG might become very complex. Hence, researchers are advised to ‘recombine’ similar nodes. Similarity could be based on theory (e.g., the nodes are categories of one concept or are used interchangeably in the literature) or on conceptually related nodes having similar edges to and from other nodes (Ferguson et al., 2020). However, as the authors indicate, this approach is labour-intensive (Ferguson et al., 2020) and problems may arise when there is limited literature available for the specific research question.
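The final integration stage can be illustrated with a short sketch. It assumes that each study's implied graph has already been evaluated and reduced to a set of directed edges, and that the researcher supplies a mapping of similar nodes to one shared label. The function `integrate`, the two example studies, and the node names are hypothetical and are not part of the published ESC-DAGs protocol.

```python
def integrate(implied_graphs, recombine=None):
    """Merge evaluated implied graphs into one edge set.

    `recombine` maps node names that should be treated as one concept
    to a shared label.
    """
    recombine = recombine or {}
    merged = set()
    for edges in implied_graphs:
        for cause, effect in edges:
            cause = recombine.get(cause, cause)
            effect = recombine.get(effect, effect)
            if cause != effect:          # drop self-loops created by recombining
                merged.add((cause, effect))
    return merged


# Two hypothetical studies of the same focal relationship.
study_1 = {("Age", "MVPA"), ("Age", "WM"), ("MVPA", "WM")}
study_2 = {("Years of schooling", "MVPA"), ("Educational level", "WM"),
           ("MVPA", "WM")}

integrated = integrate(
    [study_1, study_2],
    recombine={"Years of schooling": "Education",
               "Educational level": "Education"},
)
for cause, effect in sorted(integrated):
    print(cause, "->", effect)
```

Edges supported by several studies appear only once in the merged graph. The merged graph should still be checked for cycles, because edges that are acyclic within each study can form a cycle once combined.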

Discussion

Causal DAGs are tools to visually represent assumed causal relationships between variables. Importantly, they help make the causal assumptions that researchers invoke when causally interpreting their observational study results more transparent and explicit. Their uptake in psychology is limited, which might be due to a lack of knowledge and guidance on how to develop DAGs to inform study design and to guide data analysis. We therefore conducted a scoping review, examining papers and resources that describe guidelines and recommendations for their development. Our review revealed several areas of consensus, some less common suggestions, and some areas of disagreement.

Several resources highlighted the benefits of creating DAGs early in the research process, and not only for guiding data analysis. When constructing DAGs, many recommended including all common causes of both the exposure and outcome, regardless of whether they have been measured. There were varying recommendations on the optimal approach for including edges in DAGs. Some resources suggested an additive approach (e.g., Tafti & Shmueli [2020]), whereas others suggested a dismantling approach (e.g., Ferguson et al. [2020]). Only a few resources recommended incorporating nodes to represent participant selection procedures (Digitale et al., 2022; Gaskell, 2020; Hernán, 2022). Just one record offered guidance on adding nodes to represent measurement error (Hernán, 2022). Although many resources mentioned the importance of relying on expert knowledge in the field, there was very limited guidance on soliciting and integrating such domain knowledge during the DAG development process (see Ferguson et al. [2020] for a notable exception).

Based on these findings, we propose six steps when constructing a causal DAG (see Table 2). Given that constructing a DAG demands considerable time and investment, it is essential to allocate sufficient time to this process. Steps 1–5 comprise key recommendations distilled from our review. These steps focus on DAG development and hence refer to the identification phase of causal inference. In cases where the identified literature did not provide detailed guidance, we refer to the broad literature of causal inference. Step 6 provides guidance on how to use DAGs to inform data analysis, and is thus part of the estimation phase of causal inference. This step was added to ensure that the information derived from the DAG is actually used to analyse the data. To make the six-step procedure more tangible, a running example in the domain of health psychology is used. For each of the accompanying DAGs shown below, we provide a link to Dagitty, a free online tool not only for drawing, but also for analysing DAGs (Textor et al., 2011). Using this tool, interested readers can further explore how altering the DAGs changes the set of variables that should ideally be adjusted for in the estimation phase (see step 6).

Table 2.

Six steps to consider when creating a DAG.

| Step | Content |
|---|---|
| 1 | Create the DAG as early as possible in the research process |
| 2 | Precisely define the exposure and the outcome |
| 3 | Add common causes |
| 4 | Consider adding nodes representing selection procedures |
| 5 | Consider adding nodes representing measurement error |
| 6 | Use the DAG to inform data analysis |

Step 1 – Create the DAG as early as possible in the research process

We recommend constructing a DAG as early as possible, preferably before designing the study and at the latest before data analysis. Developing a DAG before designing the study permits researchers to select the variables that need to be measured and adjusted for (Greenland et al., 1999). Consequently, time and resources are not wasted by assessing variables that do not require adjustment (Tafti & Shmueli, 2020). Early DAG development may also help in refining selection procedures and measurement instruments to avoid potential sources of bias (e.g., by targeted recruitment of hard-to-reach subgroups). If the data have already been collected, it is still relevant to create a DAG at an early phase. Doing so will indicate whether existing datasets from different studies need to be linked to cover all relevant confounding pathways (see step 3), or whether additional information from other sources is needed to deal with selection (see step 4) or measurement (see step 5) bias.

Illustrative example for step 1. For exposition, we return to our example from the introduction section in which we examine the causal effect of reaching the WHO guidelines regarding MVPA on WM performance in older adults (World Health Organization, 2020). Here, we assume that we develop the DAG before conducting a large prospective longitudinal study among adults aged 65–80.

Step 2 – Precisely define the exposure and the outcome

The first nodes to be added are the exposure and the outcome, which should be sufficiently well-defined and, ideally, separated in time. Longitudinal designs provide a means to temporally separate the exposure and outcome, allowing us to reduce the risk of reverse causality (i.e., the outcome causally affects the exposure). Of importance, a DAG focuses on the effect of one exposure on an outcome, i.e., the key research question at hand. If interest lies in the distinct effects of different (types of) exposures, separate DAGs should be constructed. For example, if we are also interested in whether alcohol consumption impacts WM performance, we should construct another, separate DAG. Furthermore, we should decide whether we are interested in examining the causal effect of an exposure at a single time point or an exposure (pattern) that accumulates over time. For example, we could examine the impact of reaching the MVPA guidelines at one timepoint (single time point exposure) or at two consecutive timepoints (cumulative exposure) on WM performance. Examining the impact of a cumulative exposure will make the DAG more complex as it requires adding multiple nodes to represent repeated measures of the exposure (and outcome) over time.

Illustrative example for step 2. We focus on the impact of reaching the WHO guidelines for MVPA (i.e., ≥ 150 minutes of MVPA per week [World Health Organization, 2020]) assessed over a period of one week on WM performance on the day following the assessment of our exposure. Note that MVPA will be assessed over a period of seven days, but that these data will be combined into a single binary variable (i.e., reaching or not reaching the WHO guidelines regarding MVPA). Hence, we have a single time point exposure and a temporal distinction between our exposure and outcome. The exposure and outcome are added to the DAG, and a directed edge is drawn from the exposure to the outcome (see Figure 4). Considering that the relationship between reaching the MVPA guidelines and WM performance could be bidirectional, one might also be interested in the effect of WM performance on reaching the MVPA guidelines. This would require the construction of a separate DAG for this specific research question and ideally measurements for WM would then precede rather than succeed those of MVPA. Both the exposure and the outcome will need to be further specified. Hence, we will have to decide how exactly we define MVPA (e.g., using metabolic equivalent of task) and WM performance (e.g., visuospatial or auditory WM performance).

Figure 4.

DAG depicting the exposure and outcome. Notes. https://dagitty.net/mgFapKirJ The green colour of the arrow indicates this edge captures an open directed path.

Step 3 – Add common causes

Adding common causes to the DAG requires domain knowledge obtained via literature and/or consultation of a domain expert. The evidence synthesis method proposed by Ferguson and colleagues allows researchers to gain an overview of variables that were considered relevant in studies by the original authors (Ferguson et al., 2020). However, scrutiny is required, as the original authors might have added variables to their analyses without using a causal framework (making those analyses prone to bias). The approach of Ferguson and colleagues also quickly becomes infeasible when a substantial body of literature is available on a topic (Campbell et al., 2021). We recommend starting with a scoping review of articles with similar research questions and listing the common causes considered in these studies. A discussion with a group of experts may then follow about whether or not to include these in a DAG. To date, limited information is available regarding how to consult domain experts for DAG development. Possible methods are stakeholder workshops (Rodrigues et al., 2022) and publicly available feedback forms (Barnard-Mayers et al., 2022).

Researchers might wonder how many common causes their DAG should contain to be considered a ‘good’ DAG. There is no clear-cut answer to this question (Pearce & Lawlor, 2016). DAGs are always a simplification of reality. Their strength lies, however, in making this simplification explicit and open to scrutiny (Fleischer & Roux, 2008). Providing information on how domain knowledge was obtained (i.e., which domain experts and/or other resources were consulted) and incorporated will help reviewers and readers evaluate the quality of a given DAG, and prompt informed discussions. We recommend documenting the development process of a DAG in detail in accompanying protocols and papers.

Edges linking the nodes can be added one by one (additive approach). Otherwise, a fully saturated DAG can be created, and implausible edges removed (dismantling approach). Organising the nodes in a way such that they represent the passage of time facilitates drawing the edges in the correct direction (Tennant et al., 2021). Irrespective of the approach used, it is essential to prioritise the justification of the omission of edges, rather than their inclusion. Furthermore, measured as well as unmeasured common causes should be considered (Morgan & Winship, 2015).
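The dismantling approach can be sketched in a few lines of code. The example below is a minimal illustration, not a prescribed workflow: the node names follow our running example, but the temporal ordering of the baseline variables and the single omitted edge are hypothetical choices made only to show the mechanics. A saturated DAG is built by drawing an edge from every earlier node to every later node, after which each removal is recorded together with its justification.

```python
from itertools import combinations

# Nodes in an assumed temporal order (earliest first). The relative order of
# the baseline variables is a hypothetical choice made for illustration.
ORDER = ["Age", "Smoking", "Past MVPA", "Cognitive complaints",
         "MVPA", "WM performance"]


def saturated_dag(order):
    """Return every edge pointing from an earlier node to a later node."""
    return set(combinations(order, 2))


def is_acyclic(nodes, edges):
    """Kahn's algorithm: acyclic if every node can be removed in topological order."""
    indegree = {node: 0 for node in nodes}
    for _, child in edges:
        indegree[child] += 1
    queue = [node for node in nodes if indegree[node] == 0]
    removed = 0
    while queue:
        node = queue.pop()
        removed += 1
        for parent, child in edges:
            if parent == node:
                indegree[child] -= 1
                if indegree[child] == 0:
                    queue.append(child)
    return removed == len(nodes)


edges = saturated_dag(ORDER)

# Dismantling: every omitted edge encodes a strong "no direct effect"
# assumption, so each omission is stored with its justification.
# The omission below is purely hypothetical.
omitted = {("Smoking", "Past MVPA"): "justification to be documented here"}
dag = edges - set(omitted)

print(len(edges), "edges in the saturated DAG")
print(len(dag), "edges after dismantling")
print("acyclic:", is_acyclic(ORDER, dag))
```

Storing omissions in a dictionary keeps the justification next to the assumption it supports, which fits the advice to prioritise justifying omitted edges over included ones.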

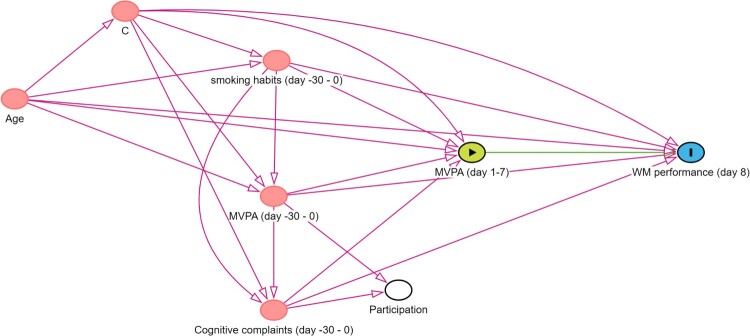

Illustrative example for step 3. Common causes of MVPA and WM performance can be identified via a literature review and by consulting researchers from the domain of physical activity and/or cognitive functioning among older adults. A good example is provided by Campbell and colleagues, who used the evidence synthesis method of Ferguson et al. to construct a DAG outlining the relationship between physical activity and cognitive function (Campbell et al., 2021; Ferguson et al., 2020). Common causes included, amongst others, age and smoking habits (Campbell & Cullen, 2023). These variables are also likely to affect both reaching the MVPA guidelines and WM performance. Hence, age and smoking status are added to our DAG. As past MVPA and cognitive complaints are likely to influence the exposure and outcome, these variables are also added as common causes. Participants would be asked to consider the past month when reporting on their smoking habits, MVPA and cognitive complaints. For simplicity, the remaining common causes are represented by the node C. Figure 5 shows that adding the common causes creates multiple undirected or spurious (backdoor) paths between reaching the MVPA guidelines and WM performance.

Figure 5.

DAG with common causes. Notes. https://dagitty.net/mUqwRMept Arrows lying on an open undirected path are indicated in red.
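To make the notion of a backdoor path more tangible, the sketch below lists all paths between the exposure and the outcome in a simplified version of this DAG. It assumes that each common cause only has edges into the exposure and the outcome (edges among the common causes themselves are left out, which is a simplification of ours and not necessarily how the Dagitty model is specified). The function names are illustrative.

```python
EXPOSURE, OUTCOME = "MVPA", "WM performance"
COMMON_CAUSES = ["Age", "Smoking habits", "Past MVPA",
                 "Cognitive complaints", "C"]

# Assumed edge list: exposure -> outcome, plus each common cause pointing
# to both exposure and outcome (no edges among the common causes).
edges = {(EXPOSURE, OUTCOME)}
for cause in COMMON_CAUSES:
    edges.add((cause, EXPOSURE))
    edges.add((cause, OUTCOME))


def neighbours(node):
    """Nodes adjacent to `node`, ignoring edge direction."""
    children = {b for a, b in edges if a == node}
    parents = {a for a, b in edges if b == node}
    return sorted(children | parents)


def simple_paths(start, end, path=None):
    """Yield every path from start to end that visits no node twice."""
    path = path or [start]
    if path[-1] == end:
        yield path
        return
    for nxt in neighbours(path[-1]):
        if nxt not in path:
            yield from simple_paths(start, end, path + [nxt])


def render(path):
    """Write a path with arrows showing the direction of each edge."""
    text = path[0]
    for a, b in zip(path, path[1:]):
        text += (" -> " if (a, b) in edges else " <- ") + b
    return text


def is_backdoor(path):
    """A backdoor path starts with an arrow pointing into the exposure."""
    return (path[1], path[0]) in edges


all_paths = list(simple_paths(EXPOSURE, OUTCOME))
backdoor = [p for p in all_paths if is_backdoor(p)]

for p in all_paths:
    label = "backdoor" if is_backdoor(p) else "directed"
    print(f"{label:9s} {render(p)}")
```

Under these assumptions, every backdoor path runs through exactly one common cause and contains no collider, so each stays open until that common cause is adjusted for.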

Having a longitudinal design facilitates identifying a variable as a common cause or as a mediator. For example, sleep quality assessed before the assessment of MVPA can be considered a common cause, but not a mediator. However, sleep quality during the night following day 7 can act as a mediator, but not as a common cause. As we are interested in the total effect of reaching the MVPA guidelines on WM performance, mediators are not included in our DAG (Hernán, 2022).

Step 4 – Consider adding nodes representing selection procedures

Selection bias often occurs as a result of stratifying on or adjusting for a collider, threatening the internal validity of the study. Selection nodes are not only useful to signal and visualise potential threats to internal validity, but also to external validity (Greenland, 2017; Hernán, 2017). Correcting for bias due to systematic selection of the analytical sample (from the target population of interest) usually requires information that is typically missing in the study data (e.g., to estimate the probability of selection in the study) (Hernán et al., 2004; Hernán & Robins, 2020; 2024). In contrast, when creating a DAG before designing the study, one might create more adequate designs to reduce the impact of such selection procedures; see Ren and Loh (2024) for examples from psychology research of how selection bias can arise.

Illustrative example for step 4. When advertising a study examining the impact of reaching the WHO guidelines regarding MVPA on WM performance in older adults, one can imagine that people reaching these guidelines and people experiencing cognitive complaints are more likely to participate in the study. Figure 6 shows a DAG in which this selection procedure is visualised. The node ‘Participation’ indicates whether or not people participate in the study. We stratify on this variable as we will only have data from people who participated in the study. In doing so, we become aware of the undirected pathways between our exposure and outcome (i.e., MVPA (day 1–7) ← MVPA (day -30–0) → Participation ← Cognitive complaints (day -30–0) → WM performance (day 8) and MVPA (day 1–7) ← Cognitive complaints (day -30–0) → Participation ← MVPA (day -30–0) → WM performance (day 8)). This is also known as volunteer bias (Lu et al., 2022). Leaving these paths open would threaten the internal validity of our study. Of interest, these spurious paths can be closed by adjusting for ‘MVPA (day -30–0)’ or ‘Cognitive complaints (day -30–0)’. However, although this adjustment eliminates collider bias (in the absence of unmeasured common causes of participation and the outcome, and of participation and the exposure) and hence removes the threat to internal validity, it does not eliminate the remaining selection bias (due to selective sampling) that may threaten external validity.

Figure 6.

DAG including a collider. Notes. https://dagitty.net/mocqpRgwk
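The opening and closing of such a collider path can be checked mechanically with a d-separation test. The sketch below uses the standard moralised ancestral graph criterion on a deliberately reduced DAG that isolates one of the paths through ‘Participation’: the exposure-outcome edge and the direct confounding edges are left out, which is our simplification for illustration and not the full DAG of Figure 6. The function names are illustrative.

```python
def ancestors_of(nodes, edges):
    """Return `nodes` together with all of their ancestors."""
    found = set(nodes)
    frontier = list(nodes)
    while frontier:
        child = frontier.pop()
        for parent, c in edges:
            if c == child and parent not in found:
                found.add(parent)
                frontier.append(parent)
    return found


def d_separated(x, y, given, edges):
    """Test whether x and y are d-separated given the set `given`."""
    given = set(given)
    # 1. Keep only x, y, the conditioning set and their ancestors.
    keep = ancestors_of({x, y} | given, edges)
    sub = [(a, b) for a, b in edges if a in keep and b in keep]
    # 2. Moralise: link parents that share a child, then drop directions.
    links = {frozenset(edge) for edge in sub}
    for child in keep:
        parents = [a for a, b in sub if b == child]
        links |= {frozenset((p, q)) for p in parents for q in parents if p != q}
    # 3. Block the conditioned nodes and look for any remaining connection.
    reached, frontier = {x}, [x]
    while frontier:
        node = frontier.pop()
        for link in links:
            if node in link:
                (other,) = link - {node}
                if other not in given and other not in reached:
                    reached.add(other)
                    frontier.append(other)
    return y not in reached


# Reduced DAG (an assumption of this sketch): only the selection path.
EDGES = [
    ("Past MVPA", "MVPA"),
    ("Past MVPA", "Participation"),
    ("Cognitive complaints", "Participation"),
    ("Cognitive complaints", "WM performance"),
]

for given in ([], ["Participation"], ["Participation", "Past MVPA"]):
    status = "closed" if d_separated("MVPA", "WM performance", given, EDGES) else "open"
    print(f"conditioning on {given}: path is {status}")
```

In this reduced DAG the path is closed when nothing is conditioned on, opens once we stratify on participation, and closes again when past MVPA (or cognitive complaints) is added to the conditioning set, in line with the reasoning above.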

Step 5 – Consider adding nodes representing measurement error

Hernán and Robins recommend first drawing a DAG assuming that all variables are perfectly measured. This allows researchers to focus on confounding and selection bias before considering the complexities related to measurement error. However, mismeasurement and its potential impact in the form of measurement bias should not be overlooked as measurement errors in the exposure, outcome, or important confounders can strongly bias the effect estimate (Hernán & Robins, 2020). By developing a DAG before designing the study, one can anticipate different sources of measurement bias and change the research design accordingly. Correcting for measurement bias after data collection requires (like selection bias) researchers to collect and model information that is usually not available in the original study data (e.g., from a validation (sub)sample that includes key variables measured without error) (Keogh, 2014; Keogh et al., 2020).

Psychologists are often interested in the causal effect of latent variables. Examining the causal effect of latent variables requires a precise definition of these variables and a clear justification for the methods used to assess these variables (VanderWeele, 2022). This is in line with recent calls for more conceptual clarification regarding measurement instruments and psychological constructs (Bringmann et al., 2022; Crombez et al., 2023; Crombez et al., 2024; Peters & Crutzen, 2024). The Decentralized Construct Taxonomy specifications outlined by Peters and Crutzen may be a helpful resource to transparently define constructs, and to provide instructions for their assessment (Peters & Crutzen, 2024).

Illustrative example for step 5. We could decide to assess older adults’ MVPA using a questionnaire asking participants to recall their physical activities in the past seven days (e.g., Craig et al., 2003) and their WM performance using neuropsychological tests. Figure 7 displays the relation between both variables. For simplicity, we will only focus on measurement error in the exposure and outcome. To avoid clutter, we only display these variables in the DAG. The nodes ‘MVPA’ and ‘WM performance’ refer to the perfectly measured variables, also known as latent constructs (Hernán & Robins, 2020). We will not have direct access to these values. Instead, we will have to use the measured values ‘MVPA*’ and ‘WM performance*’. These measures are influenced not only by the true latent variables but also by unknown measurement errors represented by ‘U_MVPA’ and ‘U_WM_performance’. Because the ability to correctly report physical activities over the past seven days may depend on WM performance, an arrow is drawn from the outcome to U_MVPA. Consequently, MVPA* and WM performance* are expected to be associated even under the null hypothesis of no effect of the true exposure on the true outcome (Hernán, 2009). Hence, based on this DAG, researchers may decide to assess MVPA using a method that is less likely to be influenced by WM performance (e.g., accelerometry).

Figure 7.

DAG representing measurement bias. Notes: https://dagitty.net/mWeagRxdW
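A small simulation can illustrate why this structure is problematic. The sketch below generates a true exposure and a true outcome that are independent (the null hypothesis holds) and then adds measurement error, where the error in the exposure depends on the true outcome. All numbers are assumptions chosen for illustration: both variables are treated as standardised continuous scores (rather than the binary exposure of our example), and the size and sign of the dependence of the recall error on WM performance are arbitrary.

```python
import random


def pearson(xs, ys):
    """Pearson correlation between two equally long lists of numbers."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


rng = random.Random(2024)
n = 20_000

mvpa_true, wm_true, mvpa_star, wm_star = [], [], [], []
for _ in range(n):
    mvpa = rng.gauss(0, 1)   # true exposure
    wm = rng.gauss(0, 1)     # true outcome, independent of the exposure (null)
    # Assumed error structure: lower WM performance leads to over-reporting.
    u_mvpa = -0.5 * wm + rng.gauss(0, 0.5)
    u_wm = rng.gauss(0, 0.5)  # error in the outcome, independent of everything
    mvpa_true.append(mvpa)
    wm_true.append(wm)
    mvpa_star.append(mvpa + u_mvpa)
    wm_star.append(wm + u_wm)

r_true = pearson(mvpa_true, wm_true)
r_measured = pearson(mvpa_star, wm_star)

print(f"correlation between true variables:     {r_true:.3f}")
print(f"correlation between measured variables: {r_measured:.3f}")
```

Under these assumed parameters the true variables are uncorrelated up to sampling noise, whereas the measured variables show a clear negative correlation that arises purely from the arrow between the outcome and the exposure's measurement error.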

Step 6 – Use the DAG to inform data analysis

In this step we move from the identification phase to the estimation phase. Different data-analytic methods can be used which can be broadly classified into three categories: regression adjustment (which focuses on modelling the relation between the outcome and exposure, conditional on confounders), propensity score methods (which primarily focus on modelling the relation between the exposure and confounders) and double robust methods (which focus on modelling both types of relations) (Hernán et al., 2019). Irrespective of the specific data-analytic method, the main aim is to close all spurious paths between the exposure and outcome while ensuring that no new spurious paths are opened. Hence, we aim to adjust for common causes, but not for colliders or mediators. This is a challenging job when we have a complex DAG. Fortunately, tools like Dagitty can help to automatically identify potential (or minimally sufficient) adjustment set(s) for estimating particular effects using regression adjustment, propensity score methods or double robust methods (Textor et al., 2011).

There are two important caveats related to the use of DAGs in the estimation phase. First, DAGs provide no guidance regarding the assumptions underlying parametric modelling (e.g., functional form of the relation between the variables in the DAG) (Digitale et al., 2022). Hence, well-considered statistical modelling decisions are still required in order to obtain consistent estimates of the exposure’s effect on the outcome. Second, because DAGs provide no information on the strength or the functional form of the relation between the included variables, the magnitude of different sources of bias is not captured by a DAG. Hence, DAGs may foster the detection and understanding of biases, but provide little information on the strength of these biases (Pearce & Lawlor, 2016). To overcome this limitation, researchers should conduct quantitative bias analyses, a form of sensitivity analysis that uses external data to provide more accurate effect and uncertainty estimates (Fox et al., 2022).

Illustrative example for step 6. In this step, we will assume that we were able to assess our variables without measurement error. The DAG displayed in Figure 6 indicates that we should adjust for Age, C, Cognitive complaints (day -30–0), MVPA (day -30–0), and smoking habits (day -30–0). An often used method is to include these variables as covariates in a linear regression model. This approach requires that each of the included variables has an additive and linear relationship with the outcome. Incorrectly modelled covariates will result in residual confounding.
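The logic of adjustment can be illustrated with simulated data. The sketch below is a deliberately simplified stand-in for the analysis described above: it uses a single hypothetical binary confounder (an ‘older’ versus ‘younger’ age group) instead of the full adjustment set, and it adjusts by standardisation over strata rather than by linear regression, which avoids functional form assumptions in this simple setting. The effect size and all probabilities are assumed values.

```python
import random

rng = random.Random(7)
TRUE_EFFECT = 0.5   # assumed effect of meeting the guidelines on WM performance
n = 50_000

rows = []
for _ in range(n):
    older = rng.random() < 0.5                       # hypothetical binary age group
    meets = rng.random() < (0.3 if older else 0.7)   # age affects the exposure
    wm = TRUE_EFFECT * meets - 1.0 * older + rng.gauss(0, 1)  # age affects the outcome
    rows.append((older, meets, wm))


def mean_wm(meets, older=None):
    """Mean outcome among people with the given exposure (and age group)."""
    values = [w for o, m, w in rows
              if m == meets and (older is None or o == older)]
    return sum(values) / len(values)


# Unadjusted comparison: mixes the exposure effect with the effect of age.
naive = mean_wm(True) - mean_wm(False)

# Standardisation: stratum-specific differences weighted by stratum size.
adjusted = 0.0
for stratum in (False, True):
    weight = sum(1 for o, _, _ in rows if o == stratum) / n
    adjusted += weight * (mean_wm(True, stratum) - mean_wm(False, stratum))

print(f"true effect:       {TRUE_EFFECT:.2f}")
print(f"naive estimate:    {naive:.2f}")
print(f"adjusted estimate: {adjusted:.2f}")
```

In this setup the naive difference overstates the effect because older participants both meet the guidelines less often and perform worse, whereas the standardised estimate recovers the simulated effect up to sampling noise. With several (continuous) confounders, stratification becomes impractical and a model such as the regression described above is needed, which brings back the functional form assumptions.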

Until now, we illustrated the six steps for the effect of a single time point exposure on a single time point outcome. As indicated in step 2, DAGs can be readily extended to represent multiple time point exposures (and outcomes). For example, in a longitudinal study, we could examine the impact of reaching the MVPA guidelines at two consecutive timepoints on WM performance. This type of research question requires us to properly model time-varying confounders that may be affected by earlier exposures (also known as treatment-confounder feedback) and hence simultaneously act as mediators on certain causal pathways and as confounders on other causal pathways. For example, reaching the MVPA guidelines in week 1 might affect cognitive complaints at the end of that week, which in turn may affect reaching the MVPA guidelines in week 2. Hence, the time-varying confounder ‘cognitive complaints’ becomes both a mediator and a confounder. The issue of treatment-confounder feedback is also relevant to consider when deciding on whether a cumulative or sustained exposure (aggregated) over a longer time window (e.g., MVPA or diet assessed over one week) could be more adequately treated as a time-varying rather than a time-fixed exposure (see Note). Traditional regression adjustment and most propensity score methods (with the exception of inverse probability weighting) fail to properly account for treatment-confounder feedback and will generally produce biased effect estimates (Hernán & Robins, 2020, Chapter 20). G-methods, a family of advanced statistical methods (where the ‘g’ stands for generalised) developed by Robins and colleagues (Robins & Hernan, 2008), can be used to estimate the cumulative causal effects of exposure at consecutive timepoints (or more generally defined exposure regimes) (Hernán & Robins, 2020). Accessible introductions to g-methods are available (Loh et al., 2024; Loh & Ren, 2023a); see Kennedy et al. (2023) for a recent application of one such method.
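The problem of treatment-confounder feedback, and how one g-method (the g-formula) handles it, can be illustrated with a small simulation. The sketch below is a toy example under assumptions of our own: all variables except the outcome are binary, the week-1 exposure is generated as if randomised, and a hypothetical unmeasured factor `u` affects both cognitive complaints and WM performance. The simulated joint effect of meeting the guidelines in both weeks versus neither week is 2.0.

```python
import random
from collections import defaultdict

rng = random.Random(11)
n = 200_000

# cells[(a1, cc, a2)] collects the outcomes for each observed history.
cells = defaultdict(list)
for _ in range(n):
    u = rng.random() < 0.5                        # hypothetical unmeasured cause of cc and y
    a1 = rng.random() < 0.5                       # meets guidelines in week 1
    cc = rng.random() < 0.4 - 0.3 * a1 + 0.5 * u  # complaints after week 1
    a2 = rng.random() < 0.8 - 0.6 * cc            # meets guidelines in week 2
    y = 1.0 * a1 + 1.0 * a2 - 2.0 * u + rng.gauss(0, 1)  # WM performance
    cells[(a1, cc, a2)].append(y)


def mean(values):
    return sum(values) / len(values)


def count(a1, cc=None):
    """Number of people with the given week-1 exposure (and complaints)."""
    return sum(len(v) for (k1, kc, _), v in cells.items()
               if k1 == a1 and (cc is None or kc == cc))


def g_formula(a1, a2):
    """Sum over cc of E[Y | a1, cc, a2] * P(cc | a1)."""
    return sum(mean(cells[(a1, cc, a2)]) * count(a1, cc) / count(a1)
               for cc in (False, True))


def crude(a1, a2):
    """Mean outcome by exposure history, ignoring complaints."""
    return mean(cells[(a1, False, a2)] + cells[(a1, True, a2)])


# Contrast: meeting the guidelines in both weeks versus in neither week.
g_est = g_formula(True, True) - g_formula(False, False)
crude_est = crude(True, True) - crude(False, False)
# Conditioning on complaints: average of within-stratum contrasts.
stratified_est = sum(
    (count(True, cc) + count(False, cc)) / n
    * (mean(cells[(True, cc, True)]) - mean(cells[(False, cc, False)]))
    for cc in (False, True)
)

print(f"g-formula:  {g_est:.2f}")
print(f"crude:      {crude_est:.2f}")
print(f"stratified: {stratified_est:.2f}")
```

Under these assumed parameters, the g-formula contrast lands close to the simulated effect of 2.0. Ignoring complaints leaves the week-2 exposure confounded, and conditioning on complaints opens a collider path from the week-1 exposure through complaints to the unmeasured factor, so the crude and stratified contrasts are pulled away from 2.0 in opposite directions.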

The six guiding steps outlined above illustrate that developing and using DAGs requires iterative discussions and considerable time investment. However, this brings some substantial benefits. First, it stimulates researchers to develop a better understanding of their research question and their underlying assumptions before collecting and/or analysing their data. Second, it fosters closer collaboration and cross-pollination by enhancing communication between researchers from different domains (Digitale et al., 2022). Consequently, developing DAGs to answer research questions fits within both the ‘Open Science’ movement, which focuses on transparency, rigour, reproducibility and replicability (UNESCO and Canadian Commission for UNESCO, 2022) and the ‘Slow Science’ movement, which invites researchers to think about the bigger aims of science (e.g., contributing to society) and advocates for less, but higher quality research (Altman, 1994; Frith, 2020).

Limitations and future directions

There are some limitations to this review. First, although we sought to include all relevant resources, we may have missed papers and resources. However, including all papers and resources may not be necessary to provide a general overview of guidelines and recommendations. Second, the six steps outlined above cannot be considered an exhaustive checklist for DAG development. Nonetheless, we do believe that these six steps will provide researchers with a scaffolding, which will allow them to further delve into the growing DAG-literature.

Despite their advantages, DAGs also have limitations. First, DAGs do not replace the need to formulate a clear research question. In fact, predefining a precise target of causal inference (ideally a causal ‘estimand’ that is defined without making any reference to a particular statistical model) is indispensable for DAG development, for design-related and data analytical choices, and ultimately, for enabling unambiguous and well-balanced interpretations of study results (Lundberg & Johnson, 2021). Second, as indicated above, DAGs are acyclic by definition. Nonetheless, feedback loops can be represented by ‘unrolling’ the relation between variables over discrete timepoints (i.e., splitting single variable nodes into multiple sequential nodes to represent their longitudinal measurements) (Bongers, 2022). Health behaviors are, however, influenced by a continuous interplay between variables at different levels (intra-individual, inter-individual and contextual) and are constantly changing and adapting over time and contexts (Chevance et al., 2021; Gomersall, 2018). In recent years there has been a plea to acknowledge the complex dynamics of health behaviors (Chevance et al., 2021; Gomersall, 2018; Heino et al., 2021). This shift is reflected in the rise of studies using intensive longitudinal designs (e.g., experience sampling methodology [Kuppens, 2021]) capturing health behaviors over time and contexts. The application of DAGs for causal inference with intensive longitudinal data is, however, in its early stages. Furthermore, within the domain of psychology, mixed-effects models are often used to analyse longitudinal data. Currently, literature focusing on causal inference with longitudinal data analysed using mixed-effects models is scarce (although see Shardell and Ferrucci [2018] and Kim and Steiner [2021] for recent developments). The call to embrace the complex dynamics of health behaviors is also reflected in the adoption of modelling techniques that are able to deal with cyclic and nonlinear relationships between variables (e.g., formal, dynamical systems modelling [Perski et al., 2024]). Recently, a framework of structural dynamical causal models (SDCMs) has been proposed to explicate the causal interpretation of dynamical systems (Bongers, 2022). Nonetheless, it is important to note that the fundamental causal diagrammatic issues raised in this paper, specifically the ability to nonparametrically identify causal effects of non-randomised exposures, apply generally in longitudinal designs with many time points. For example, even in the simplest possible scenario with just two time points, deciding whether to adjust or not to adjust for a previous outcome can be facilitated using causal diagrams (Loh & Ren, 2023b). Finally, DAGs are mainly used to examine causal relationships between well-defined and measurable variables (e.g., vaccination status). Psychologists are often interested in the effect of latent variables (e.g., self-efficacy for engaging in MVPA) on health behaviors. Further research on methods combining structural equation modelling, which acknowledges the presence of latent variables, and DAGs is expected to enhance causal inference within the domain of psychology (Mulder, 2024).

Supplementary Material

Acknowledgements

The authors would like to thank all participants of the ‘Using Directed Acyclic Graphs to guide your research: an introduction, exercises and interactive discussions’ workshops for their constructive feedback.

Glossary

Abbreviations: DAG: directed acyclic graph; SEM: structural equation model; MVPA: moderate-to-vigorous intensity physical activity; WM: working memory; SDCM: structural dynamical causal model.

Funding Statement

LP was supported by a Research Foundation – Flanders (FWO) Postdoctoral Fellowship – junior (12ZF122N). WWL was partially supported by the University Research Committee Award of Emory University. JVC was supported by a FWO Postdoctoral Fellowship – senior (12I1120N). GC and ADP were funded by a grant from the Medical Research Council and Versus Arthritis to the PAINSTORM consortium as part of the Advanced Pain Discovery Platform (MR/W002388/1), and by a FWO research scientific network grant (PAIN, W001721N).

Note

The longer the time window over which exposure levels are aggregated, the longer the window of opportunity for intermediate (non-aggregated) exposure levels to evolve and vary over time, and hence also for confounding factors to get entangled in treatment-confounder feedback loops, also within the considered time window. Acknowledging the original time-varying nature of time-aggregated exposures may therefore be warranted to adequately address (time-varying) confounding (and potential forms of time-dependent bias) within such longer time windows.

Disclosure statement

PWGT is a director of Causal Thinking Ltd, which provides causal inference research and training. PWGT may therefore benefit from any study that demonstrates the value of causal inference methods.

Author contributions

LP: Conceptualisation, Literature screening, Data extraction, Writing – original draft; JS: Conceptualisation, Writing – review & editing; LWW: Conceptualisation, Writing – review & editing; GC: Conceptualisation, Writing – review & editing; FDB: Conceptualisation, Writing – review & editing; NJ: Conceptualisation, Writing – review & editing; PWGT: Writing – review & editing; JVC: Conceptualisation, Literature screening, Writing – review & editing; ADP: Conceptualisation, Literature screening, Writing – review & editing.

References

- Altman, D. G. (1994). The scandal of poor medical research. BMJ, 308(6924), 283–284. doi: 10.1136/bmj.308.6924.283

- Arksey, H., & O'Malley, L. (2005). Scoping studies: Towards a methodological framework. International Journal of Social Research Methodology, 8(1), 19–32. doi: 10.1080/1364557032000119616

- Barnard-Mayers, R., Childs, E., Corlin, L., Caniglia, E. C., Fox, M. P., Donnelly, J. P., & Murray, E. J. (2021). Assessing knowledge, attitudes, and practices towards causal directed acyclic graphs: A qualitative research project. European Journal of Epidemiology, 36(7), 659–667. doi: 10.1007/s10654-021-00771-3

- Barnard-Mayers, R., Kouser, H., Cohen, J. A., Tassiopoulos, K., Caniglia, E. C., Moscicki, A.-B., Campos, N. G., Caunca, M. R., Seage III, G. R., & Murray, E. J. (2022). A case study and proposal for publishing directed acyclic graphs: The effectiveness of the quadrivalent human papillomavirus vaccine in perinatally HIV Infected girls. Journal of Clinical Epidemiology, 144, 127–135.

- Bollen, K. A., & Pearl, J. (2013). Eight myths about causality and structural equation models. In S. Morgan (Ed.), Handbook of causal analysis for social research (pp. 301–328). Springer.

- Bongers, S. R. (2022). Causal modeling & dynamical systems: A new perspective on feedback.

- Borsboom, D., Deserno, M. K., Rhemtulla, M., Epskamp, S., Fried, E. I., McNally, R. J., Robinaugh, D. J., Perugini, M., Dalege, J., Costantini, G., et al. (2021). Network analysis of multivariate data in psychological science. Nature Reviews Methods Primers, 1(1), 58. doi: 10.1038/s43586-021-00055-w

- Bringmann, L. F., Elmer, T., & Eronen, M. I. (2022). Back to basics: The importance of conceptual clarification in psychological science. Current Directions in Psychological Science, 31(4), 340–346. doi: 10.1177/09637214221096485

- Campbell, T., & Cullen, B. (2023). Estimating the effect of physical activity on cognitive function within the UK Biobank cohort. International Journal of Epidemiology, 52(5), 1592–1611. doi: 10.1093/ije/dyad009

- Campbell, T., Ferguson, K., Whyte, J., & Cullen, B. (2021). Constructing a graphical model of the relationship between physical activity and cognitive function based on a systematic review of prospective evidence.

- Chevance, G., Perski, O., & Hekler, E. B. (2021). Innovative methods for observing and changing complex health behaviors: Four propositions. Translational Behavioral Medicine, 11(2), 676–685. doi: 10.1093/tbm/ibaa026 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Craig, C. L., Marshall, A. L., Sjostrom, M., Bauman, A. E., Booth, M. L., Ainsworth, B. E., Pratt, M., Ekelund, U., Yngve, A., Sallis, J. F., & Oja, P. (2003). International physical activity questionnaire: 12-country reliability and validity. Medicine & Science in Sports & Exercise, 35(8), 1381–1395. doi: 10.1249/01.mss.0000078924.61453.fb [DOI] [PubMed] [Google Scholar]

- Crombez, G., Scott, W., & De Paepe, A. L. (2024). Knowing what we are talking about: The case of pain catastrophizing. The Journal of Pain, 25(3), 591–594. doi: 10.1016/j.jpain.2023.12.014 [DOI] [PubMed] [Google Scholar]

- Crombez, G., Veirman, E., Van Ryckeghem, D., Scott, W., & De Paepe, A. (2023). The effect of psychological factors on pain outcomes: Lessons learned for the next generation of research. Pain reports, 8(6), e1112. doi: 10.1097/PR9.0000000000001112 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Crutzen, R., & Peters, G.-J. (2021). The regression trap: Why regression analyses are not suitable For selecting determinants to target In behavior change interventions. [DOI] [PMC free article] [PubMed]

- Deaton, A., & Cartwright, N. (2018). Understanding and misunderstanding randomized controlled trials. Social Science & Medicine, 210, 2–21. doi: 10.1016/j.socscimed.2017.12.005 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Digitale, J. C., Martin, J. N., & Glymour, M. M. (2022). Tutorial on directed acyclic graphs. Journal of Clinical Epidemiology, 142, 264–267. doi: 10.1016/j.jclinepi.2021.08.001 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Elwert, F. (2013). Graphical causal models. In S. Morgan (Ed.), Handbook of causal analysis for social research (pp. 245–273). Springer.

- Ferguson, K. D., McCann, M., Katikireddi, S. V., Thomson, H., Green, M. J., Smith, D. J., & Lewsey, J. D. (2020). Evidence synthesis for constructing directed acyclic graphs (ESC-DAGs): A novel and systematic method for building directed acyclic graphs. International Journal of Epidemiology, 49(1), 322–329. doi: 10.1093/ije/dyz150

- Fleischer, N. L., & Roux, A. D. (2008). Using directed acyclic graphs to guide analyses of neighbourhood health effects: An introduction. Journal of Epidemiology & Community Health, 62(9), 842–846. doi: 10.1136/jech.2007.067371

- Fox, M. P., MacLehose, R. F., & Lash, T. L. (2022). Applying quantitative bias analysis to epidemiologic data. Springer.

- Frith, U. (2020). Fast lane to slow science. Trends in Cognitive Sciences, 24(1), 1–2. doi: 10.1016/j.tics.2019.10.007

- Gaskell, A. L., & Sleigh, J. W. (2020). An introduction to causal diagrams for anesthesiology research. Anesthesiology, 132(5), 951–967. doi: 10.1097/ALN.0000000000003193

- Glymour, M. M. (2006). Using causal diagrams to understand common problems in social epidemiology. In J. M. Oakes & J. S. Kaufman (Eds.), Methods in social epidemiology (pp. 393–428). Jossey-Bass/Wiley.

- Glymour, M., Pearl, J., & Jewell, N. P. (2016). Causal inference in statistics: A primer. John Wiley & Sons.

- Gomersall, T. (2018). Complex adaptive systems: A new approach for understanding health practices. Health Psychology Review, 12(4), 405–418. doi: 10.1080/17437199.2018.1488603

- Grace, J. B., & Irvine, K. M. (2020). Scientist’s guide to developing explanatory statistical models using causal analysis principles. Ecology, 101(4), e02962. doi: 10.1002/ecy.2962

- Greenland, S. (2017). Response and follow-up bias in cohort studies. American Journal of Epidemiology, 185(11), 1044–1047. doi: 10.1093/aje/kwx106

- Greenland, S., Pearl, J., & Robins, J. M. (1999). Causal diagrams for epidemiologic research. Epidemiology, 10(1), 37–48. doi: 10.1097/00001648-199901000-00008

- Grosz, M. P., Rohrer, J. M., & Thoemmes, F. (2020). The taboo against explicit causal inference in nonexperimental psychology. Perspectives on Psychological Science, 15(5), 1243–1255.

- Heino, M. T., Knittle, K., Noone, C., Hasselman, F., & Hankonen, N. (2021). Studying behaviour change mechanisms under complexity. Behavioral Sciences, 11(5), 77. doi: 10.3390/bs11050077

- Hernán, M. A. (2022). Causal diagrams: Draw your assumptions before your conclusions. HarvardX. https://www.edx.org/learn/data-analysis/harvard-university-causal-diagrams-draw-your-assumptionsbefore-your-conclusions

- Hernán, M. A., & Cole, S. R. (2009). Invited commentary: Causal diagrams and measurement bias. American Journal of Epidemiology, 170(8), 959–962. doi: 10.1093/aje/kwp293

- Hernán, M. A. (2017). Invited commentary: Selection bias without colliders. American Journal of Epidemiology, 185(11), 1048–1050. doi: 10.1093/aje/kwx077

- Hernán, M. A. (2018). The C-word: Scientific euphemisms do not improve causal inference from observational data. American Journal of Public Health, 108(5), 616–619. doi: 10.2105/AJPH.2018.304337

- Hernán, M. A., Hernández-Díaz, S., & Robins, J. M. (2004). A structural approach to selection bias. Epidemiology, 15(5), 615–625. doi: 10.1097/01.ede.0000135174.63482.43

- Hernán, M. A., Hernández-Díaz, S., Werler, M. M., & Mitchell, A. A. (2002). Causal knowledge as a prerequisite for confounding evaluation: An application to birth defects epidemiology. American Journal of Epidemiology, 155(2), 176–184. doi: 10.1093/aje/155.2.176

- Hernán, M. A., Hsu, J., & Healy, B. (2019). A second chance to get causal inference right: A classification of data science tasks. Chance, 32(1), 42–49. doi: 10.1080/09332480.2019.1579578

- Hernán, M. A., & Robins, J. M. (2020). Causal inference: What if. Chapman & Hall/CRC.

- Huang, D., Susser, E., Rudolph, K. E., & Keyes, K. M. (2023). Depression networks: A systematic review of the network paradigm causal assumptions. Psychological Medicine, 53(5), 1665–1680.

- Kennedy, T. M., Kennedy, E. H., & Ceballo, R. (2023). Marginal structural models for estimating the longitudinal effects of community violence exposure on youths’ internalizing and externalizing symptoms. Psychological Trauma: Theory, Research, Practice, and Policy, 15(6), 906–916. doi: 10.1037/tra0001398

- Keogh, R. H., Shaw, P. A., Gustafson, P., Carroll, R. J., Deffner, V., Dodd, K. W., Küchenhoff, H., Tooze, J. A., Wallace, M. P., & Kipnis, V. (2020). STRATOS guidance document on measurement error and misclassification of variables in observational epidemiology: Part 1—basic theory and simple methods of adjustment. Statistics in Medicine, 39(16), 2197–2231. doi: 10.1002/sim.8532

- Keogh, R. H., & White, I. R. (2014). A toolkit for measurement error correction, with a focus on nutritional epidemiology. Statistics in Medicine, 33(12), 2137–2155. doi: 10.1002/sim.6095

- Kim, Y., & Steiner, P. M. (2021). Causal graphical views of fixed effects and random effects models. British Journal of Mathematical and Statistical Psychology, 74(2), 165–183. doi: 10.1111/bmsp.12217

- Kunicki, Z. J., Smith, M. L., & Murray, E. J. (2023). A primer on structural equation model diagrams and directed acyclic graphs: When and how to use each in psychological and epidemiological research. Advances in Methods and Practices in Psychological Science, 6(2). doi: 10.1177/25152459231156085

- Kuppens, P. (2021). The open handbook of experience sampling methodology: A step-by-step guide to designing, conducting, and analyzing ESM studies.

- Laubach, Z. M., Murray, E. J., Hoke, K. L., Safran, R. J., & Perng, W. (2021). A biologist's guide to model selection and causal inference. Proceedings of the Royal Society B: Biological Sciences, 288(1943), 20202815. doi: 10.1098/rspb.2020.2815

- Loh, W. W., & Ren, D. (2023a). A tutorial on causal inference in longitudinal data with time-varying confounding using g-estimation. Advances in Methods and Practices in Psychological Science, 6(3). doi: 10.1177/25152459231174029

- Loh, W. W., & Ren, D. (2023b). The unfulfilled promise of longitudinal designs for causal inference. Collabra: Psychology, 9(1). doi: 10.1525/collabra.89142

- Loh, W. W., Ren, D., & West, S. G. (2024). Parametric g-formula for testing time-varying causal effects: What it is, why it matters, and how to implement it in Lavaan. Multivariate Behavioral Research, 1–24. doi: 10.1080/00273171.2024.2354228

- Lu, H., Cole, S. R., Howe, C. J., & Westreich, D. (2022). Toward a clearer definition of selection bias when estimating causal effects. Epidemiology, 33(5), 699–706. doi: 10.1097/EDE.0000000000001516

- Lundberg, I., Johnson, R., & Stewart, B. M. (2021). What is your estimand? Defining the target quantity connects statistical evidence to theory. American Sociological Review, 86(3), 532–565. doi: 10.1177/00031224211004187

- Morgan, S. L., & Winship, C. (2015). Counterfactuals and causal inference. Cambridge University Press.

- Mulder, J. D. (2024). Structural equation modeling for description, prediction, and causation. Utrecht University.

- Murray, E. J., & Kunicki, Z. (2022). As the wheel turns: Causal inference for feedback loops and bidirectional effects.

- Ouzzani, M., Hammady, H., Fedorowicz, Z., & Elmagarmid, A. (2016). Rayyan—a web and mobile app for systematic reviews. Systematic Reviews, 5(1), 1–10. doi: 10.1186/s13643-016-0384-4

- Pearce, N., & Lawlor, D. A. (2016). Causal inference—so much more than statistics. International Journal of Epidemiology, 45(6), 1895–1903. doi: 10.1093/ije/dyw328

- Pearl, J. (2009). Causality: Models, reasoning and inference (2nd ed.). Cambridge University Press.

- Perski, O., Copeland, A., Allen, J., Pavel, M., Rivera, D. E., Hekler, E., Hankonen, N., & Chevance, G. (2024). The iterative development and refinement of health psychology theories through formal, dynamical systems modelling: A scoping review and initial ‘best practice’ recommendations.

- Peters, G.-J., & Crutzen, R. (2024). Knowing what we're talking about: Facilitating decentralized, unequivocal publication of and reference to psychological construct definitions and instructions. Meta-Psychology, 8. doi: 10.15626/MP.2022.3638

- Ren, D., & Loh, W. W. (2024). Advancing group-based disparities research and beyond: A cautionary note on selection bias. Advances in Methods and Practices in Psychological Science.

- Robins, J., & Hernán, M. (2008). Estimation of the causal effects of time-varying exposures. In G. Fitzmaurice, M. Davidian, G. Verbeke, & G. Molenberghs (Eds.), Longitudinal data analysis (pp. 553–599). Chapman and Hall/CRC.

- Rodrigues, D., Kreif, N., Lawrence-Jones, A., Barahona, M., & Mayer, E. (2022). Reflection on modern methods: Constructing directed acyclic graphs (DAGs) with domain experts for health services research. International Journal of Epidemiology, 51(4), 1339–1348. doi: 10.1093/ije/dyac135

- Rohrer, J. M. (2018). Thinking clearly about correlations and causation: Graphical causal models for observational data. Advances in Methods and Practices in Psychological Science, 1(1), 27–42. doi: 10.1177/2515245917745629

- Ryan, O., Bringmann, L. F., & Schuurman, N. K. (2022). The challenge of generating causal hypotheses using network models. Structural Equation Modeling: A Multidisciplinary Journal, 29(6), 953–970. doi: 10.1080/10705511.2022.2056039

- Sauer, B., & VanderWeele, T. J. (2013). Use of directed acyclic graphs. In P. Velentgas, N. A. Dreyer, P. Nourjah, et al. (Eds.), Developing a protocol for observational comparative effectiveness research: A user's guide. Rockville, MD: Agency for Healthcare Research and Quality (US).

- Shardell, M., & Ferrucci, L. (2018). Joint mixed-effects models for causal inference with longitudinal data. Statistics in Medicine, 37(5), 829–846. doi: 10.1002/sim.7567

- Suzuki, E., Shinozaki, T., & Yamamoto, E. (2020). Causal diagrams: Pitfalls and tips. Journal of Epidemiology, 30(4), 153–162. doi: 10.2188/jea.JE20190192

- Tafti, A., & Shmueli, G. (2020). Beyond overall treatment effects: Leveraging covariates in randomized experiments guided by causal structure. Information Systems Research, 31(4), 1183–1199. doi: 10.1287/isre.2020.0938

- Tennant, P. W., Murray, E. J., Arnold, K. F., Berrie, L., Fox, M. P., Gadd, S. C., Harrison, W. J., Keeble, C., Ranker, L. R., Textor, J., Tomova, G. D., Gilthorpe, M. S., & Ellison, G. T. H. (2021). Use of directed acyclic graphs (DAGs) to identify confounders in applied health research: Review and recommendations. International Journal of Epidemiology, 50(2), 620–632. doi: 10.1093/ije/dyaa213

- Textor, J., Hardt, J., & Knüppel, S. (2011). DAGitty: A graphical tool for analyzing causal diagrams. Epidemiology, 22(5), 745. doi: 10.1097/EDE.0b013e318225c2be

- Tricco, A. C., Lillie, E., Zarin, W., O'Brien, K. K., Colquhoun, H., Levac, D., Moher, D., Peters, M. D., Horsley, T., Weeks, L., ..., & Straus, S. E. (2018). PRISMA extension for scoping reviews (PRISMA-ScR): Checklist and explanation. Annals of Internal Medicine, 169(7), 467–473. doi: 10.7326/M18-0850

- UNESCO and Canadian Commission for UNESCO. (2022). An introduction to the UNESCO Recommendation on Open Science. doi: 10.54677/XOIR1696

- van Alten, S., Domingue, B. W., Faul, J., Galama, T., & Marees, A. T. (2024). Reweighting UK Biobank corrects for pervasive selection bias due to volunteering. International Journal of Epidemiology, 53(3). doi: 10.1093/ije/dyae054

- VanderWeele, T. J. (2022). Constructed measures and causal inference: Towards a new model of measurement for psychosocial constructs. Epidemiology, 33(1), 141–151. doi: 10.1097/EDE.0000000000001434

- Watkins, T. (2021). Abstract #1070 An online searchable database of example causal diagrams to make them easier to construct. International Journal of Epidemiology, 50, supplement 1. doi: 10.1093/ije/dyab168.702

- Watkins, T. (2022). Causal diagrams – Information and examples. https://causaldiagrams.org/

- World Health Organization. (2020). WHO guidelines on physical activity and sedentary behaviour. World Health Organization.

Associated Data