Abstract

The Comprehensive In-vitro Proarrhythmia Assay (CiPA) initiative aims to refine the assessment of drug-induced torsades de pointes (TdP) risk, utilizing computational models to predict cardiac drug toxicity. Despite advances in machine learning applications for this purpose, the specific contribution of in-silico biomarkers to toxicity risk levels has yet to be thoroughly elucidated. This study addresses this gap by implementing explainable artificial intelligence (XAI) to illuminate the impact of individual biomarkers in drug toxicity prediction. We employed the Markov chain Monte Carlo method to generate a detailed dataset for 28 drugs. Twelve in-silico biomarkers computed for 12 of these drugs were used to train various machine learning models, including Artificial Neural Networks (ANN), Support Vector Machines (SVM), Random Forests (RF), XGBoost, K-Nearest Neighbors (KNN), and Radial Basis Function (RBF) networks. The innovation of our study is the use of XAI, mainly through the SHAP (SHapley Additive exPlanations) method, to dissect and quantify the contributions of biomarkers across these models. Model performance was then evaluated on a test set of 16 drugs. The ANN model coupled with the eleven most influential in-silico biomarkers showed the highest classification performance among all classifiers, with Area Under the Curve (AUC) scores of 0.92 for predicting high-risk, 0.83 for intermediate-risk, and 0.98 for low-risk drugs. We also found that the optimal in-silico biomarkers selected by SHAP analysis may differ between classification models. However, biomarker selection did not always improve performance; therefore, evaluating various classifiers remains essential to obtain the desired classification performance. Our proposed method could provide a systematic way to assess the best classifier with the optimal in-silico biomarkers for predicting the TdP risk of drugs, thereby advancing the field of cardiac safety evaluations.

Subject terms: Virtual screening, Machine learning

Introduction

Torsade de Pointes (TdP) is a hazardous cardiac condition characterized by the twisting of the QRS complex in electrocardiogram (ECG) signals, typically triggered by adverse drug reactions1,2. The evaluation of drug-induced cardiac arrhythmias leading to TdP has been a pivotal concern for both researchers and international regulatory bodies. The Comprehensive In-vitro Proarrhythmia Assay (CiPA) paradigm was introduced to address this issue. CiPA is a novel approach that incorporates in silico simulations and comprehensive evaluation of drug responses across multiple ion channels3. CiPA comprises four integral components4. The first involves in vitro experiments targeting multiple cardiac ionic currents, with a primary focus on hERG, late sodium, and L-type calcium currents, to assess the torsadogenic risk of a drug4,5. The second employs in silico models, using in vitro datasets as input to estimate drug toxicity by mimicking human ventricular myocyte responses5. The third scrutinizes potential unexpected in vitro effects on human stem cell-derived ventricular cardiac myocytes5. Lastly, the fourth determines the potential human impact of drugs, considering human-specific metabolic characteristics by examining in vivo ECG biomarkers in phase I clinical trials5.

According to FDA requirements, each new drug requires preclinical testing to evaluate its pharmacological activity and acute toxicity in animals6. In emergencies involving exposure to hazardous substances, the FDA grants an exemption for the use of the drug in humans based on the results of animal testing under the Animal Efficacy Rule7. However, animal testing involves high research costs, and there is a risk of missing drugs that could benefit humans8. This happens because the results of preclinical toxicological testing in animals do not consistently predict effectiveness in humans, so the probability of test failure reaches almost 50%8. Therefore, in-silico modeling methods have been developed for drug testing that are more consistent and efficient and that can improve human safety. These methods are related to the CiPA guidelines, which use computer simulations that mimic the human ventricle to predict the risk of drug toxicity.

The baseline cardiac cell model commonly used for developing CiPA-based TdP risk classifiers originates from the model proposed by O’Hara et al. (the O’Hara-Rudy, or ORd, model)9. O’Hara et al. developed a human ventricular action potential model, leveraging new data from healthy human ventricles to include features such as Ca2+ and voltage-dependent inactivation of the L-type Ca2+ current (ICaL), and kinetics for various ion currents such as the transient outward, rapid delayed rectifier (IKr), Na+/Ca2+ exchange (INaCa), and inward rectifier currents. Their model incorporated action potential recordings across physiological cycle lengths and examined the rate dependence and restitution of action potential duration (APD) with and without specific channel blockers. Then, Li et al. introduced a dynamic drug-IKr interaction into the ORd cardiac cell model10. Furthermore, Dutta et al. engineered an in silico model employing the CiPAORdv1.0 ventricular myocyte model9,11. The model of Dutta et al. fine-tuned the maximal conductance constants of the ion channels in the ORd-dynamic-IKr model proposed by Li et al.10, explicitly targeting IKs, ICaL, IKr, INaL, and IK1.

Multiple studies have developed drug testing systems based on CiPA guidelines for categorizing the TdP risk associated with drugs. Polak et al. suggested using feature extraction from in-silico biomarkers, such as , , Pseudo ECG, QRS width, QT interval, initial repolarization time, and late repolarization time as input to classify drug risk levels. They used an empirical decision tree with the best accuracy of 89%12. Liu et al. used the XGBoost algorithm to classify compounds that exhibit hERG inhibitory activity. They obtained an accuracy of 84.4%, and the area under the receiver operating characteristic curve (AUC) was 0.87613.

Furthermore, Lancaster and Sobie achieved significant results using a support vector machine (SVM) that takes both AP and inputs to classify drugs as arrhythmogenic or non-arrhythmogenic14. This system performed excellently in their research, with an AUC score reaching 0.963 and only a 12.8% misclassification rate. In addition, Yoo et al. proposed classifying drug risk levels by using nine in-silico biomarkers () as input for artificial neural network (ANN) with an AUC result of 0.92 for the high-risk group, 0.83 for the intermediate-risk group, and 0.98 for the low-risk group15. Based on the results of previous studies, ANN provides the best performance for classifying drug toxicity risk levels.

In short, previous research has used various in-silico biomarkers with strong correlations to TdP risk as input features for machine learning algorithms that predict the proarrhythmia risk of drugs. Some previous studies have also utilized optimized hERG models in in-silico simulations to determine drug risk levels for TdP. However, although various machine learning algorithms, including logistic regression, random forest (RF), Extreme Gradient Boosting (XGBoost), and ANN, have been used to classify the cardiac toxicity of drugs12,13,15, the primary challenge remains determining which biomarkers are most important for classifying the TdP risk level of drugs.

In related fields, researchers have also significantly studied optimization and prediction models. For example, a study explores co-design strategies to optimize both structural and dynamic performance parameters simultaneously, resulting in reduced operating costs and improved performance16. Similarly, other research demonstrates the application of bio-inspired optimization algorithms in parameter identification, achieving high accuracy and robustness in the identified parameters17. Another innovative approach is the bio-inspired optimization algorithm applied to various optimization problems, showing significant improvements in solution quality and computational efficiency18.

While these examples use sophisticated optimization algorithms tailored to specific engineering problems, in this research, we employ grid search (GS) to optimize hyperparameters for machine learning models to predict TdP risk. GS is an exhaustive search method that tunes hyperparameters to find the optimal model configuration by evaluating all possible combinations within a specified range. This method ensures that the selected model parameters lead to the best possible predictive performance.

Several methods have been developed to explain the predictive behavior of machine learning models, and one of the most prominent is the SHapley Additive exPlanations (SHAP) method. The SHAP method was introduced in 2017 by Lundberg et al.19 to interpret complex machine learning models by unifying six existing methods, namely LIME, DeepLIFT, Layer-Wise Relevance Propagation, and three classic Shapley value estimation methods (Shapley regression values, Shapley sampling values, and Quantitative Input Influence)20–26. Lundberg et al.27 later demonstrated the SHAP method in a tree explanation method known as the Tree Explainer, applied to several medical machine learning problems. The authors showed that the proposed method allows researchers to identify important but rare mortality risk factors in the general US population, find distinct sub-groups sharing similar risk factors, highlight interaction effects among risk factors for chronic kidney disease, and examine features that degrade the performance of a machine learning model over time. Therefore, given the capability of the SHAP method to interpret the predictive mechanism of a machine learning model, we propose a systematic method that incorporates SHAP for evaluating the importance of in silico biomarkers for predicting the TdP risk of drugs, as well as for assessing and improving the predictive performance of the classifiers.

Methods

In this study, we present an algorithm to evaluate proarrhythmic drug risk by identifying the most significant biomarkers contributing to high, intermediate, and low-risk groups. Figure 1 illustrates the entire process of our proposed method. The block diagram consists of several steps, including preprocessing in-vitro experimental data to generate 2000 samples for each drug and using in-silico simulation to generate variability of twelve in-silico biomarkers. Finally, the system performance is evaluated by testing several machine learning models based on the explainable artificial intelligence (XAI) approach.

Fig. 1.

Illustration of our proposed algorithm for evaluating proarrhythmic drug risk, identifying key biomarkers in high, intermediate, and low-risk groups. The figure outlines the process, including preprocessing in-vitro data, sample generation, feature variability simulation, and identifying significant biomarkers using XAI algorithms (ANN). System performance is evaluated through model testing based on the XAI approach.

In-silico simulation

This study employed in-vitro patch clamp experiments for 28 drugs (see Table 1) sourced from the CiPA group’s dataset (https://github.com/FDA/CiPA/tree/Model-Validation-2018/Hill_Fitting/data). The dataset comprises dose–response inhibition effects of drugs on various ion channels, including calcium channels (ICaL), hERG channels (IKr), inward rectifying potassium channels (IK1), slow delayed rectifying potassium channels (IKs), Kv4.3 channels (Ito), late sodium channels (INaL), and peak sodium channels (INa)3. For each drug and ion channel, the dataset contains multiple measurements that capture the variability in response. The in vitro patch clamp experiments for the 28 drugs consist of recordings of the dose–response relationship of each drug on the specified ion channels. The input format includes the drug, the ion channel, the concentration levels, and the corresponding ion channel responses. This detailed dataset served as the foundation for our subsequent analyses.

Table 1.

List of 28 drugs along with their corresponding Cmax values.

| Proarrhythmic risk level | Train drug | Train Cmax (nM) | Test drug | Test Cmax (nM) |
|---|---|---|---|---|
| High risk | Sotalol | 14,690 | Disopyramide | 742 |
| High risk | Dofetilide | 2 | Ibutilide | 100 |
| High risk | Bepridil | 33 | Vandetanib | 255 |
| High risk | Quinidine | 3237 | Azimilide | 70 |
| Intermediate | Cisapride | 2.6 | Clarithromycin | 1206 |
| Intermediate | Terfenadine | 4 | Clozapine | 71 |
| Intermediate | Chlorpromazine | 38 | Domperidone | 19 |
| Intermediate | Ondansetron | 139 | Droperidol | 6.3 |
| Intermediate | | | Pimozide | 0.43 |
| Intermediate | | | Astemizole | 0.26 |
| Intermediate | | | Risperidone | 1.81 |
| Low | Verapamil | 81 | Metoprolol | 1800 |
| Low | Ranolazine | 1948.20 | Nifedipine | 7.7 |
| Low | Diltiazem | 122 | Nitrendipine | 3.02 |
| Low | Mexiletine | 4129 | Tamoxifen | 21 |
| Low | | | Loratadine | 0.45 |

Choosing the number of Hill curves estimated via Markov chain Monte Carlo (MCMC), 2000 per drug, was a crucial aspect of our study. We aimed for a sample size large enough to ensure robust and reliable analyses. The choice of 2000 samples was based on statistical power, computational feasibility, and the need to adequately represent the underlying probability distribution of the Hill equation parameters (IC50 and Hill coefficient) for each drug and ion channel combination28. This approach allowed us to evaluate comprehensively how each drug interacts with specific ion channels.

MCMC simulations were applied to generate 2000 samples representing the joint probability distribution of IC50 and Hill coefficient28, which was used for the subsequent analyses. The dose–response inhibition effect of a drug can be expressed mathematically as follows:

$$\text{Inhibition} = \frac{1}{1 + \left(\mathrm{IC}_{50}/[D]\right)^{h}} \tag{1}$$

where $\mathrm{IC}_{50}$ is the concentration at which 50% inhibition of the ion current occurs, $[D]$ represents the drug concentration, and $h$ represents the Hill coefficient9,29. The inhibition effect of a drug on an ion channel is assumed to rescale the maximum conductance of the ion channel as follows:

$$g_{i,\mathrm{drug}} = g_{i}\,\left(1 - \text{Inhibition}\right) \tag{2}$$

where $g_{i,\mathrm{drug}}$ is the maximum conductance of ion channel $i$ under drug effects, whereas $g_{i}$ is the maximum conductance of ion channel $i$ without drug effect. Subsequently, drug effects were simulated at four concentration levels, from 1 to 4 times the maximal plasma concentration (Cmax) of each drug. The Cmax values used in our simulations were obtained from the relevant literature and experimental data specific to each drug. Before drug administration, the cell model was paced without drug effects for 1000 stimulations at a cycle length of 2000 ms until it reached a steady state. The drug effect was then applied for the same number of stimulations at the same cycle length.
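The dose–response block and conductance rescaling described above can be sketched in a few lines. This is a minimal illustration that assumes the standard Hill form, in which the blocked fraction is 1 / (1 + (IC50/[D])^h). The function names and the IC50 and Hill coefficient values are hypothetical; only the Cmax of dofetilide is taken from Table 1.

```python
def hill_inhibition(conc_nM, ic50_nM, hill):
    """Fraction of the current that is blocked (0..1) at a drug concentration."""
    if conc_nM <= 0:
        return 0.0  # no drug, no block
    return 1.0 / (1.0 + (ic50_nM / conc_nM) ** hill)


def scaled_conductance(g_control, conc_nM, ic50_nM, hill):
    """Maximum conductance after rescaling by the unblocked fraction."""
    return g_control * (1.0 - hill_inhibition(conc_nM, ic50_nM, hill))


cmax_nM = 2.0               # dofetilide Cmax from Table 1
ic50_nM, hill = 5.0, 1.0    # hypothetical values, standing in for one MCMC sample

# Four concentration levels: 1x to 4x Cmax
concentrations = [m * cmax_nM for m in (1, 2, 3, 4)]

# Remaining fraction of the control conductance at each level
remaining = [scaled_conductance(1.0, c, ic50_nM, hill) for c in concentrations]
```

In the study this calculation would be repeated for each of the 2000 sampled parameter pairs and for each ion channel of a drug.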

The action potential (AP) selection procedure was employed to obtain the AP and calcium profile (CP), as shown in Fig. 2, for each drug sample. Following the AP selection mechanism proposed by Chang et al.28, the AP with the highest maximum repolarization rate among the last 250 stimulations was selected. For an AP that fully repolarizes, the maximum repolarization rate is searched between 30% and 90% repolarization. For an AP that reaches 30% but not 90% repolarization, it is searched from 30% repolarization until the end of the beat. For an AP that cannot reach 30% repolarization, it is searched between the AP peak and the end of the beat. APs that failed to depolarize were omitted from the analysis. Finally, the APs resulting from the selection procedure were used to extract the in-silico biomarkers.
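The three search windows of the AP selection procedure can be sketched as follows. This is an illustrative reading, not the authors' implementation. It assumes that the first sample of a beat is the resting level and that the "maximum rate" is the largest dV/dt value inside the window. All function names and the two synthetic traces are hypothetical.

```python
def repolarization_window(v):
    """Return (start, end) sample indices of the window searched for the slope."""
    peak = max(range(len(v)), key=lambda i: v[i])
    v_rest = v[0]                       # assumption: the beat starts at rest
    amplitude = v[peak] - v_rest

    def first_at_or_below(fraction):
        level = v[peak] - fraction * amplitude
        for i in range(peak, len(v)):
            if v[i] <= level:
                return i
        return None                     # this repolarization level is never reached

    i30, i90 = first_at_or_below(0.30), first_at_or_below(0.90)
    if i30 is None:                     # cannot reach 30%: peak to end of beat
        return peak, len(v) - 1
    if i90 is None:                     # reaches 30% but not 90%: 30% to end of beat
        return i30, len(v) - 1
    return i30, i90                     # full repolarization: 30% to 90%


def max_repol_rate(t, v):
    """Largest dV/dt inside the search window (assumed definition)."""
    start, end = repolarization_window(v)
    slopes = [(v[i + 1] - v[i]) / (t[i + 1] - t[i]) for i in range(start, end)]
    if not slopes:
        raise ValueError("search window is empty")
    return max(slopes)


def select_beat(beats):
    """Pick the (t, v) beat with the highest maximum repolarization rate."""
    return max(beats, key=lambda beat: max_repol_rate(*beat))


t = list(range(9))
full = [-88, 40, 30, 10, -20, -50, -80, -88, -88]   # repolarizes completely
stuck = [-88, 40, 30, 20, 10, 5, 5, 5, 5]           # never reaches 30%
beats = [(t, full), (t, stuck)]
chosen = select_beat(beats)
```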

Fig. 2.

Illustration of in-silico biomarkers in AP profile and Ca profile, which consisted of repolarization and .

Biomarker extraction

In our study, we selected twelve in-silico biomarkers: the repolarization slope, the maximum depolarization slope, the resting membrane potential (Vm resting), the action potential durations at 90% and 50% repolarization (APD_90 and APD_50), the triangle area between APD_90 and APD_50, the calcium transient durations at 90% and 50% repolarization (CaD_90 and CaD_50), the triangle area between CaD_90 and CaD_50, the diastolic calcium concentration, qInward (Eq. 3), and qNet (Eq. 4). These were generated through in-silico simulations using 2000 sets of IC50 and Hill coefficients from the MCMC analysis. Nine of these biomarkers were shown by Yoo et al.15 to be significant for the predictive performance of an ANN. We incorporated three additional biomarkers, informed by the findings of Jeong, Danadibrata et al., and Parikh et al., to enhance machine learning performance30,31,32.

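$$q\mathrm{Inward} = \frac{1}{2}\left(\frac{\mathrm{AUC}_{I_{CaL},\,\mathrm{drug}}}{\mathrm{AUC}_{I_{CaL},\,\mathrm{control}}} + \frac{\mathrm{AUC}_{I_{NaL},\,\mathrm{drug}}}{\mathrm{AUC}_{I_{NaL},\,\mathrm{control}}}\right) \tag{3}$$

$$q\mathrm{Net} = \int_{0}^{\mathrm{BCL}} \left(I_{NaL} + I_{CaL} + I_{Kr} + I_{Ks} + I_{K1} + I_{to}\right)\,dt \tag{4}$$

where AUC is the area under the current trace over one beat, with and without drug, and BCL is the basic cycle length.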

The selection was driven by the relevance of the biomarkers to drug effects, electrophysiological characteristics, and calcium handling in cardiac cells. For instance, the repolarization slope of the action potential (AP) was chosen based on research by Chang et al.28, which selected the AP with the highest repolarization slope from the last 250 stimulations. The maximum slope of the depolarization phase is associated with the AP upstroke. Vm resting signifies the resting potential of the AP, while APD_50 and APD_90 represent the duration from the peak to 50% and 90% repolarization, respectively. The AP triangulation biomarker is the triangle area between APD_90 and APD_50.

Likewise, the calcium transient durations at 50% and 90% repolarization, CaD_50 and CaD_90, are considered biomarkers (Fig. 2). The calcium triangulation biomarker is the triangle area between CaD_90 and CaD_50, while the diastolic calcium biomarker is the calcium concentration during the diastolic phase. These biomarkers were chosen to capture a comprehensive range of cellular responses to drug effects, providing robust features for machine learning analysis.
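The duration biomarkers and the charge integral can be extracted from a sampled trace as sketched below. This is a simplified illustration. It assumes that the minimum of the trace is the baseline, uses linear interpolation between samples, and integrates with the trapezoidal rule. The function names, the synthetic trace, and the two current traces are hypothetical.

```python
def duration_to_repolarization(t, y, fraction):
    """Time from the peak until the trace has recovered by `fraction` of its
    amplitude. Works for a voltage trace (APD) or a calcium transient (CaD)."""
    peak = max(range(len(y)), key=lambda i: y[i])
    baseline = min(y)                   # assumption: minimum is the resting level
    level = y[peak] - fraction * (y[peak] - baseline)
    for i in range(peak + 1, len(y)):
        if y[i] <= level:
            # Linear interpolation between samples i-1 and i
            w = (y[i - 1] - level) / (y[i - 1] - y[i])
            return t[i - 1] + w * (t[i] - t[i - 1]) - t[peak]
    return None                         # level never reached within the beat


def trapezoid(t, y):
    """Area under a sampled trace (trapezoidal rule)."""
    return sum(0.5 * (y[i] + y[i + 1]) * (t[i + 1] - t[i])
               for i in range(len(t) - 1))


t = [0, 1, 2, 3, 4, 5]
vm = [-80, 20, 0, -30, -70, -80]        # synthetic action potential (mV)

apd50 = duration_to_repolarization(t, vm, 0.50)
apd90 = duration_to_repolarization(t, vm, 0.90)
vm_resting = min(vm)

# Net charge over one beat: sum of the integrals of the individual currents.
# Only two placeholder currents are shown here.
currents = {"IKr": [0, 1, 1, 1, 1, 0], "ICaL": [0, -1, -1, 0, 0, 0]}
q_net = sum(trapezoid(t, trace) for trace in currents.values())
```

The same duration function applied to the calcium transient gives CaD_50 and CaD_90, and the minimum of that transient gives the diastolic calcium concentration.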

The grid search for optimizing machine learning models

GS is a widely used method for hyperparameter tuning in machine learning models. It systematically explores all combinations of hyperparameter values on a grid and assesses the performance of each variant with a validation set33,34. We allocated 80% of our dataset for training and the remaining 20% for validation, allowing for a rigorous optimization process during GS. The models, including ANN, XGBoost, RF, SVM, K-Nearest Neighbors (KNN), and radial basis function (RBF) networks, underwent fivefold cross-validation within the training subset to enhance their robustness and minimize overfitting. GS trains a model on every hyperparameter combination and selects the configuration with the best validation performance34. Despite its thoroughness, GS becomes computationally intensive as the hyperparameter space expands35. Therefore, we constrained the hyperparameter selection to balance computational efficiency and model performance, and ultimately applied the best-configured model to the test set33.

An ANN is a network of interconnected neurons organized in layers, including input, hidden, and output layers36. The input layer of the proposed ANN receives the 12 in-silico biomarkers. The training data cover 12 drugs, each represented by 2000 data points, yielding a rich dataset for model training.

The GS method was employed to optimize the ANN configuration, focusing on parameters such as batch size, with 32 and 64 being considered, and optimizer choices, including RMSprop and Adam. Neuron counts in the range of 5–9 and learning rates of 0.1, 0.01, and 0.001 were also explored to fine-tune the model’s performance. The model’s training process included a rigorous fivefold cross-validation, where the dataset was divided into 80% for training and 20% for validation in each fold, ensuring comprehensive and unbiased model assessment. The training utilized a batch size of 32, allowing for efficient iterative learning and weight updating.
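The exhaustive search over the ANN grid described above can be sketched without any machine learning library. The grid values are taken from the text, while `grid_search`, `k_fold_indices`, and the scoring function `toy_score` are hypothetical stand-ins. A real run would train the ANN on the training indices and return its validation accuracy.

```python
from itertools import product


def param_grid(space):
    """Yield every combination of hyperparameter values as a dict."""
    keys = list(space)
    for values in product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))


def k_fold_indices(n_samples, k):
    """Yield (train, validation) index lists for k interleaved folds."""
    folds = [list(range(i, n_samples, k)) for i in range(k)]
    for i in range(k):
        train = [j for f in folds[:i] + folds[i + 1:] for j in f]
        yield train, folds[i]


def grid_search(space, fit_and_score, n_samples, k=5):
    """Return (best_params, best_mean_score) over all grid points."""
    best = None
    for params in param_grid(space):
        scores = [fit_and_score(params, train, val)
                  for train, val in k_fold_indices(n_samples, k)]
        mean = sum(scores) / len(scores)
        if best is None or mean > best[1]:
            best = (params, mean)
    return best


ann_space = {
    "batch_size": [32, 64],
    "optimizer": ["rmsprop", "adam"],
    "neurons": [5, 6, 7, 8, 9],
    "learning_rate": [0.1, 0.01, 0.001],
}


def toy_score(params, train_idx, val_idx):
    # Placeholder score, NOT a trained model: peaks at 6 neurons and small rates
    return -abs(params["neurons"] - 6) - params["learning_rate"]


n_combinations = len(list(param_grid(ann_space)))
best_params, best_score = grid_search(ann_space, toy_score, n_samples=20)
```

With fivefold cross-validation this grid requires 60 x 5 = 300 model fits, which illustrates why the search space was kept small.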

Before training, the data underwent Z-score normalization to ensure a consistent scale across all inputs, enhancing the model’s classification accuracy37. The ANN architecture features three hidden layers, each with six nodes, and an output layer with three nodes (see Fig. 3a). The rectified linear unit (ReLU) was selected as the activation function for the hidden layers because it introduces non-linearity without impeding gradient flow. In the output layer, the softmax activation function converts the network output into a probability distribution over the three risk classes15.
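The three operations named above, Z-score normalization, ReLU, and softmax, are short enough to write out directly. The sketch below uses the population standard deviation for the Z-score, which is an assumption, and the input numbers are arbitrary.

```python
import math


def zscore_fit(values):
    """Mean and (population) standard deviation of one feature column."""
    mean = sum(values) / len(values)
    variance = sum((x - mean) ** 2 for x in values) / len(values)
    return mean, math.sqrt(variance)


def zscore_apply(values, mean, std):
    """Rescale a feature column to zero mean and unit variance."""
    return [(x - mean) / std for x in values]


def relu(z):
    """Rectified linear unit applied element-wise."""
    return [max(0.0, x) for x in z]


def softmax(z):
    """Convert raw outputs into probabilities that sum to 1."""
    m = max(z)                              # subtract max for numerical stability
    exps = [math.exp(x - m) for x in z]
    total = sum(exps)
    return [e / total for e in exps]


mean, std = zscore_fit([1.0, 2.0, 3.0])
scaled = zscore_apply([1.0, 2.0, 3.0], mean, std)

classes = ["high", "intermediate", "low"]
probabilities = softmax([2.0, 1.0, 0.1])    # arbitrary output-layer values
predicted = classes[probabilities.index(max(probabilities))]
```

The mean and standard deviation should be fitted on the training data only and then reused to scale the validation and test data.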

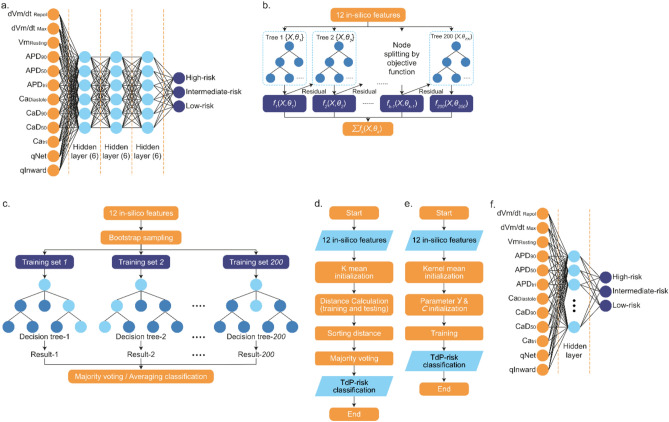

Fig. 3.

(a) Schematic representation of the ANN classification model, which employs twelve in-silico biomarkers as inputs. The model architecture comprises three hidden layers, each consisting of six neurons. Outputs from the ANN model are categorized into three risk classes: high-risk, intermediate-risk, and low-risk for TdP. (b) Illustration of the XGBoost classifier model. This model utilizes twelve in-silico features to train an ensemble of 200 decision trees. The trees are built sequentially, with each tree learning from the errors (residuals) of the previous ones, thereby refining the classification. The node splitting is guided by an objective function, and the final output is the sum of predictions from all trees. (c) Depiction of the Random Forest training process. Starting with twelve in-silico features, the method employs bootstrap sampling to create multiple training sets. Each set is used to train a decision tree, resulting in 200 trees. The classification outcome for a sample is then determined by majority voting or averaging the results from all decision trees. (d) Workflow of the KNN classifier. The process begins with twelve in-silico features and initializes using K mean. It calculates the distance between training and testing points, sorts by distance, and applies majority voting for TdP risk classification, resulting in the final output. (e) Process diagram for the SVM classifier. The model starts with twelve in-silico features and uses kernel mean initialization. The parameters γ and C are then established, followed by the training phase, culminating in the TdP risk classification. (f) Diagram of the RBF network. The network starts with twelve in-silico features leading into a hidden layer that applies the RBF for transformation. The output is divided into three risk categories: high, intermediate, and low risk for TdP.

The loss function, ‘categorical cross-entropy’, was used to gauge the difference between the predicted and actual probability distributions, guiding the model towards precise predictions. The ‘adam’ optimizer was chosen for its effectiveness in managing sparse gradients and expediting the training process38. These methodical design and validation steps were taken to ensure the ANN model effectively interprets the intricate relationships within the biomarker-drug interaction data, leading to reliable drug effect classification.
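For a single sample with a one-hot label, categorical cross-entropy reduces to the negative log of the probability assigned to the true class. A minimal sketch follows. The small `eps` clamp is a common safeguard against log(0) and is an assumption here, not a detail from the study.

```python
import math


def categorical_cross_entropy(y_true, y_pred, eps=1e-12):
    """Loss for one sample: y_true is one-hot, y_pred is a probability vector."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))


def mean_loss(batch_true, batch_pred):
    """Average loss over a batch of samples."""
    losses = [categorical_cross_entropy(t, p)
              for t, p in zip(batch_true, batch_pred)]
    return sum(losses) / len(losses)


# Class order: high, intermediate, low. True class: intermediate.
loss = categorical_cross_entropy([0, 1, 0], [0.25, 0.5, 0.25])
perfect = categorical_cross_entropy([1, 0, 0], [1.0, 0.0, 0.0])
```

A confident correct prediction drives the loss towards zero, while a confident wrong prediction is penalized heavily.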

XGBoost, an advanced gradient boosting decision tree algorithm, builds boosted trees efficiently using parallel computation39. It incorporates regularization to balance the reduction of the objective function against model complexity, thus preventing overfitting40,41. In our study, the XGBoost model classifies TdP risk from the 12 in-silico biomarkers computed for the 12 training drugs (see Fig. 3b). Each of these drugs is represented by 2000 data points, providing a comprehensive dataset for the model to analyze42. Figure 3b depicts a schematic representation of XGBoost classification, illustrating how the algorithm iteratively improves its predictions.

During the training process, the model calculates the loss at each node to identify the most informative splits. XGBoost adds new decision trees one at a time, partitioning the input features to improve the fit. Each added tree learns a new function that corrects the residual error of the trees built so far. After training, with K decision trees developed, each sample traverses these trees and ends in one leaf node per tree, each of which carries a score. The scores aggregated over all trees determine the final predicted risk class of each sample.

Optimizing the XGBoost classification model involved using GS to select optimal parameters. This entailed testing various configurations of the number of trees (50, 100, 150, 200) and maximum depth levels (3, 5, 7, 9), enabling us to find the best balance for model performance. Applying fivefold cross-validation ensured a rigorous assessment of the model’s predictive accuracy, reinforcing its reliability and effectiveness in deciphering complex biomarker-drug interactions.

RF is a practical ensemble learning approach for classification. In this method, a collection of decision trees is constructed during the training process, with the final output obtained through the aggregate estimation of individual trees43,44. This model integrates various predictions to produce more accurate and stable results.
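The two ingredients of this ensemble, bootstrap resampling of the training set and aggregation of the individual tree outputs, can be sketched as follows. The tree training itself is omitted, and the vote list is a hypothetical example.

```python
import random
from collections import Counter


def bootstrap_indices(n_samples, rng):
    """Draw n_samples indices with replacement (one bootstrap training set)."""
    return [rng.randrange(n_samples) for _ in range(n_samples)]


def majority_vote(predictions):
    """Class predicted by most trees."""
    return Counter(predictions).most_common(1)[0][0]


rng = random.Random(0)                  # fixed seed for reproducibility
training_sets = [bootstrap_indices(10, rng) for _ in range(3)]

# Hypothetical outputs of three trees for one drug sample
tree_votes = ["high", "intermediate", "high"]
forest_prediction = majority_vote(tree_votes)
```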

In our study, the RF model uses the 12 in-silico biomarkers (Fig. 3c) computed for the 12 training drugs. Each drug is represented by 2000 data points that capture the variability of its response. The RF model analyzes these data to identify patterns and relationships, enabling prediction of the TdP risk class of a drug from its biomarker profile.

We applied the GS method to optimize the model, selecting the number of decision trees from 100, 150, and 200, and determining the maximum features with options ‘log2’, ‘auto’, and ‘sqrt’44–46. The maximum depth of the trees was set using values ‘None’, 3, 5, 7, and 9, employing entropy and Gini selection criteria to assess the best splits.

During the training process, we implemented fivefold cross-validation to evaluate the model’s performance objectively. The model was trained using 80% of the available data, while the remaining 20% was used to validate and test the accuracy of the model’s predictions.

We chose the SVM framework for its precision in handling high-dimensional data (Fig. 3e). We employed StandardScaler to normalize the feature vectors, ensuring a uniform scale across dimensions47,48. StratifiedKFold cross-validation with five folds ensured that each fold preserved the class distribution, which is vital for reliable performance evaluation. Through GS, we optimized the hyperparameters, selecting a regularization parameter (C) of 1, a kernel coefficient (gamma) of ‘scale’, and the RBF kernel, balancing model complexity against overfitting.

The optimal settings were deduced to streamline model operations, where StratifiedKFold ensured even class representation in each training subset, and GS systematically explored parameter combinations to enhance model efficacy. This systematic training and validation process, where accuracy scores were diligently recorded across folds, revealed SVM’s adaptability and resilience, reflected in synthesized average accuracy metrics47–49. These empirical findings underscore SVM’s competence in deciphering complex patterns within the dataset, affirming its applicability and effectiveness in complex classification tasks within machine learning research.
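Stratified splitting, as used here, assigns samples to folds class by class so that every fold keeps the overall class proportions. The sketch below is a plain-Python illustration of that idea and not the scikit-learn implementation. The function name and the label list are hypothetical.

```python
from collections import Counter, defaultdict


def stratified_folds(labels, k=5):
    """Split sample indices into k folds with equal class proportions."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        # Deal the samples of each class round-robin across the folds
        for position, index in enumerate(indices):
            folds[position % k].append(index)
    return folds


labels = ["high"] * 10 + ["intermediate"] * 10 + ["low"] * 10
folds = stratified_folds(labels, k=5)
fold_class_counts = [Counter(labels[i] for i in fold) for fold in folds]
```

Each of the five folds then contains two samples of every risk class, so validation accuracy is not distorted by a fold that lacks one class.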

The KNN algorithm, fundamental in machine learning, classifies data points based on their proximity to their nearest neighbors (Fig. 3d). It operates under the premise that similar items are closer to each other in the feature space. The ‘k’ in KNN refers to the number of nearest neighbors considered for the majority voting process that determines the class of the queried point47.

Optimization in this study was carried out using GS, focusing on key parameters: the number of neighbors (n_neighbors) and the distance metric (Euclidean or Manhattan). The number of neighbors affects the granularity of the classification, with too few neighbors leading to noise sensitivity and too many causing underfitting. The distance metric determines how similarity is calculated, influencing the grouping and classification outcome.

A StratifiedKFold cross-validation approach was employed to ensure each class was evenly represented across folds, thus maintaining validity and reliability in the model’s performance assessment. Through GS, we identified the optimal hyperparameters, which were then applied to construct and evaluate the refined KNN model. The process involved systematic iteration over different data subsets and assessing the model’s performance in terms of accuracy in each fold.

To illustrate, imagine a two-dimensional feature space where data points from two classes are spread. KNN classifies a new point based on the majority class within its ‘k’ nearest neighbors, with distance measured in either a straight line (Euclidean) or grid-like path (Manhattan).
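The two-dimensional picture above translates directly into code. This minimal sketch implements both distance metrics and the majority vote. The training points and labels are made up for illustration.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Straight-line distance."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def manhattan(a, b):
    """Grid-like (city block) distance."""
    return sum(abs(x - y) for x, y in zip(a, b))


def knn_predict(train_X, train_y, query, k=3, metric=euclidean):
    """Majority class among the k training points nearest to the query."""
    ranked = sorted(zip(train_X, train_y), key=lambda pair: metric(pair[0], query))
    nearest_labels = [label for _, label in ranked[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


# Hypothetical two-class data in a two-dimensional feature space
train_X = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5)]
train_y = ["low", "low", "low", "high", "high", "high"]

near_origin = knn_predict(train_X, train_y, (0.5, 0.5), k=3)
near_cluster = knn_predict(train_X, train_y, (5.5, 5.5), k=3, metric=manhattan)
```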

The empirical analysis revealed that the KNN model exhibited robustness and high accuracy with the optimized settings, signifying its capability to discern complex patterns. Compared to other classification methods, the inherent adaptability and straightforward logic of KNN make it a reliable choice for various practical applications.

RBF networks are artificial neural networks that are known for their ability to handle nonlinear relationships50. They are commonly used in machine learning for pattern recognition and classification tasks. RBF networks transform the input space into a higher-dimensional space using RBF, typically Gaussian, to facilitate complex classification scenarios50–52.

In our study, the RBF network was implemented as a layer within a Keras Sequential model, using TensorFlow. The key parameters of this RBF layer are ‘units’, the number of neurons, and ‘gamma’, a scale factor that controls the spread of the radial basis function and therefore how the network discriminates between different input features (Fig. 3f).

During initialization, the centers of the RBF neurons are randomly set with a uniform distribution to diversify the starting points for learning. Operationally, the RBF layer computes its output by applying the Gaussian function to measure the Euclidean distance between inputs and these centers, adjusted by the ‘gamma’ parameter53,54. This mechanism enables the network to project the input into a higher-dimensional space, enhancing classification capabilities.
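The forward computation of such a layer can be written out in plain Python. The sketch assumes the common Gaussian form exp(-gamma * ||x - c||^2), which the text does not spell out. The initialization range and all names are hypothetical, and no Keras code is reproduced here.

```python
import math
import random


def init_centers(units, n_features, rng, low=-1.0, high=1.0):
    """Random uniform starting centers, one per RBF neuron."""
    return [[rng.uniform(low, high) for _ in range(n_features)]
            for _ in range(units)]


def rbf_layer(x, centers, gamma):
    """Gaussian activation of each neuron for one input vector."""
    activations = []
    for center in centers:
        squared_distance = sum((xi - ci) ** 2 for xi, ci in zip(x, center))
        activations.append(math.exp(-gamma * squared_distance))
    return activations


rng = random.Random(42)
centers = init_centers(units=4, n_features=12, rng=rng)   # 12 biomarkers in

# Hand-picked centers make the behavior easy to verify
outputs = rbf_layer([0.0, 0.0], [[0.0, 0.0], [1.0, 0.0]], gamma=1.0)
```

An input that coincides with a center gives an activation of 1, and the activation decays with distance at a rate set by `gamma`.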

The network performance was optimized using GS, a systematic approach to parameter optimization. This process, integrated seamlessly within Keras via the KerasClassifier wrapper, allows for efficient exploration across the specified parameter grid, ensuring the most effective combination for model training is identified. Following the RBF layer, the model includes a dense layer with softmax activation to classify the transformed inputs into three distinct categories, meeting the multi-class classification requirements. Rigorous fivefold stratified cross-validation was employed during training to assess the model’s reliability and consistency across different data subsets.

By adopting these methods, we leveraged the strengths of RBF networks, which are known for handling nonlinear relationships robustly in machine learning tasks. Integration with deep learning frameworks such as TensorFlow and Keras improved usability and allowed for extensive experimentation and detailed performance analysis. Combining the theoretical strengths of RBF networks with practical, scalable deep learning tooling provided a comprehensive approach to complex classification scenarios.

This study showcases the RBF network’s effectiveness in practical classification problems, offering insights into its performance relative to other networks or traditional classification methods. The practical implications of utilizing RBF networks in machine learning are vast, demonstrating their value and relevance across various industries, particularly where complex nonlinear patterns must be deciphered and classified accurately.

SHAP (SHapley Additive exPlanations)

In this study, XAI, particularly the SHAP method, is crucial for understanding the factors driving model predictions27. Unlike traditional approaches that focus solely on improving model performance through dimensionality reduction, our goal with XAI is to enhance model performance and interpretability simultaneously. The SHAP method enables us to explore physiological insights related to each biomarker, providing a detailed breakdown of the contribution of each biomarker to predictions without sacrificing information richness27. This use of XAI aligns with our objective of retaining the complexity of the analysis while gaining an in-depth understanding of the underlying factors27. We also consider the research methodology employed by Li et al.55, which applied a single feature without reducing its dimensionality, although without explicitly utilizing XAI. By adopting the SHAP method, we go beyond conventional approaches, ensuring that the interpretability of our model is not compromised during performance optimization26. Analogous to fair payments for contributions in a game, SHAP values capture the average marginal contribution of each feature value, providing a comprehensive perspective on complex relationships within the dataset56. The SHAP value can be calculated using Eq. (5)19.

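$$\phi_i = \sum_{S \subseteq \{1,\dots,N\} \setminus \{i\}} \frac{|S|!\,(N-|S|-1)!}{N!}\left[ f_{S\cup\{i\}}\left(x_{S\cup\{i\}}\right) - f_S\left(x_S\right) \right] \tag{5}$$

where $\phi_i$ is the contribution of the i-th feature, N is the number of features, S is a subset of the features used in the model that excludes feature i, and x is the feature value vector of the instance to be explained. $f_S(x_S)$ is the prediction for the feature values in the set S, marginalized over the features not included in S. One model, $f_{S\cup\{i\}}$, is trained with the i-th feature present, while another, $f_S$, is trained with that feature omitted19.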
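The subset-weighted average behind Eq. (5) can be made concrete with a brute-force sketch. It is feasible only for a handful of features and is not how SHAP libraries compute values in practice. The toy value function below stands in for the model prediction on a feature subset and is entirely hypothetical.

```python
from itertools import combinations
from math import factorial

def shapley_values(n_features, value):
    """Exact Shapley values by enumerating every subset S that excludes i.
    `value` maps a frozenset of feature indices to a model output."""
    phi = []
    for i in range(n_features):
        others = [j for j in range(n_features) if j != i]
        total = 0.0
        for size in range(len(others) + 1):
            # Weight |S|! (N - |S| - 1)! / N! for subsets of this size.
            weight = (factorial(size) * factorial(n_features - size - 1)
                      / factorial(n_features))
            for subset in combinations(others, size):
                s = frozenset(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi

def toy_value(s):
    """Hypothetical model: additive effects plus an interaction of 0 and 1."""
    v = 0.0
    if 0 in s:
        v += 2.0
    if 1 in s:
        v += 1.0
    if 0 in s and 1 in s:
        v += 1.0
    return v

phi = shapley_values(3, toy_value)
```

In this toy case the interaction term is split equally between features 0 and 1, feature 2 receives zero, and the values sum to the difference between the full-model output and the empty-set output.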

Feature importance

The SHAP feature importance of a feature is the average of its absolute Shapley values across all data56. Absolute values are used because Shapley values can affect predictions both positively and negatively, and the two directions would otherwise cancel out57. The SHAP feature importance can be represented by Eq. (6)56.

$$I_i = \frac{1}{n}\sum_{k=1}^{n}\left|\phi_i^{(k)}\right| \tag{6}$$

where $\phi_i^{(k)}$ is the contribution of the i-th feature for the k-th sample and n is the number of Shapley values. SHAP feature importance differs from permutation feature importance because SHAP is based on the magnitude of the feature attribution, while permutation feature importance is based on the decrease in model performance56.
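A minimal sketch of the importance measure in Eq. (6) follows, assuming the SHAP values are already available as a samples-by-features matrix. The numbers and the function name are hypothetical.

```python
def shap_feature_importance(shap_matrix):
    """Mean absolute SHAP value per feature.
    Rows are samples, columns are features."""
    n_samples = len(shap_matrix)
    n_features = len(shap_matrix[0])
    return [sum(abs(row[j]) for row in shap_matrix) / n_samples
            for j in range(n_features)]

# Hypothetical SHAP values for three samples and two features.
shap_matrix = [[0.4, -0.1],
               [-0.2, 0.1],
               [0.3, -0.1]]
importance = shap_feature_importance(shap_matrix)

# Feature indices ordered from most to least important.
ranking = sorted(range(len(importance)),
                 key=lambda j: importance[j], reverse=True)
```

Taking absolute values first keeps a feature that pushes predictions in both directions from appearing unimportant.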

Performance evaluation

Following the validation procedure implemented by the CiPA research group, we established a drug allocation strategy in which 12 drugs were designated for the training set and 16 drugs were reserved for the testing set (Table 1)55. Our study involved the creation of 10,000 drug sets, each consisting of 2000 samples, to ensure a diverse and representative dataset (see Fig. 4). This process included the random selection of drug samples to form these sets. Notably, each drug set comprised one sample from each of the 16 drugs, contributing to the variability and randomness in our dataset. This randomization approach was adopted to prevent potential systematic bias from a predetermined allocation. The 10,000 drug sets are not individual datasets but a collection from which random samples are drawn for each iteration of our analysis. The dataset size was chosen to enhance statistical robustness and facilitate a comprehensive evaluation using a pre-trained machine learning model in 10,000 classification tests. The results of these tests, presented in Fig. 5, were analyzed in terms of median values and 95% confidence intervals (CI). The 95% CI was calculated as the 2.5th to 97.5th percentiles of measurements across the 10,000 datasets for each observed test parameter, following the methodology proposed by Li et al.55. This approach addresses dataset size concerns and supports the reliability and robustness of our classification models under diverse conditions. Model performance testing uses Eqs. (7–11)29,58–60.
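The resampling and percentile-based summary can be sketched as follows. This is a simplified illustration, not the study's pipeline: the per-drug samples are random numbers, the "metric" is a placeholder mean rather than a classifier score, and only 1000 iterations are run.

```python
import random
import statistics

def percentile(sorted_values, q):
    """Percentile with linear interpolation, q in [0, 100]."""
    pos = (len(sorted_values) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(sorted_values) - 1)
    return sorted_values[lo] + (sorted_values[hi] - sorted_values[lo]) * (pos - lo)

def summarize(metric_values):
    """Median and 95% CI taken as the 2.5th and 97.5th percentiles."""
    ordered = sorted(metric_values)
    return (statistics.median(ordered),
            percentile(ordered, 2.5),
            percentile(ordered, 97.5))

def draw_drug_set(samples_by_drug, rng):
    """Pick one random sample per drug to form a single test set."""
    return {drug: rng.choice(samples)
            for drug, samples in samples_by_drug.items()}

rng = random.Random(0)
# Hypothetical data: 16 drugs with 20 scalar samples each.
samples_by_drug = {f"drug_{d}": [rng.random() for _ in range(20)]
                   for d in range(16)}

metrics = []
for _ in range(1000):
    drug_set = draw_drug_set(samples_by_drug, rng)
    # Placeholder metric; a real run would score a trained classifier here.
    metrics.append(sum(drug_set.values()) / len(drug_set))

median, ci_low, ci_high = summarize(metrics)
```

Because the interval is read directly from the empirical distribution of test outcomes, it makes no normality assumption about the metric.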

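$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{7}$$

$$\text{Sensitivity} = \frac{TP}{TP + FN} \tag{8}$$

$$\text{Specificity} = \frac{TN}{TN + FP} \tag{9}$$

$$LR^{+} = \frac{\text{Sensitivity}}{1 - \text{Specificity}} \tag{10}$$

$$LR^{-} = \frac{1 - \text{Sensitivity}}{\text{Specificity}} \tag{11}$$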

Fig. 4.

An evaluation algorithm was employed to assess the performance of the classification model proposed by the CiPA research group, utilizing the principles of the central limit theorem; AUC, the area under the receiver operating curve; LR, likelihood ratio.

Fig. 5.

(a) Feature importance visualization for the ANN model. The bar chart displays the mean SHAP values of each in-silico biomarker across three classes: high-risk, intermediate-risk, and low-risk. The feature qInward appears to be the most influential for high-risk classification, while Vm Resting has the least impact. (b) Feature importance chart for the XGBoost model. The graph illustrates the average impact of each in-silico biomarker on the model’s output, with higher mean SHAP values indicating greater importance. For high-risk classification, qInward and dVmdt Repol show significant influence. (c) Summary of SHAP values by class for the RF model. This bar chart represents the mean SHAP values by class, highlighting the features that most strongly affect the model’s predictions, with qInward showing a high impact on the high-risk class. (d) SHAP value summary for the SVM model. The bar chart details the feature importance, where CaD_50 is notably influential across all risk categories, suggesting a critical role in the model’s risk stratification process. (e) Feature importance for the KNN model, depicted through mean SHAP values. CaD_50 and APD_90 stand out as key features with high importance for the high-risk and intermediate-risk classifications, respectively. (f) Visualization of feature importance for the RBF model. The chart highlights the mean SHAP values, with dVmdt Max playing a prominent role across all risk categories.

In this study, accuracy refers to the proportion of all samples that are classified correctly, while recall (sensitivity) quantifies the proportion of correctly identified positive instances among all actual positive instances29,58,59,61. Specificity, on the other hand, gauges the model’s ability to identify true negative instances correctly. LR stands for likelihood ratio. We employed recall (sensitivity) and specificity to compute the positive likelihood ratio (LR+) and negative likelihood ratio (LR−), following the methodology applied by CiPA and previous studies, such as those conducted by Yoo et al., Li et al. 2019 and Yoo et al.15,29,55.

LR+ indicates how much more likely a positive prediction is for a truly positive instance than for a truly negative one, while LR− indicates how much more likely a negative prediction is for a truly positive instance than for a truly negative one29,61. True Positive (TP) is the number of instances correctly predicted as positive, whereas False Negative (FN) counts instances where the model predicts the negative class when the true class is positive. False Positive (FP) counts instances that are negative but are incorrectly predicted as positive. Conversely, True Negative (TN) is the number of instances correctly predicted as negative60.
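The quantities defined above can be computed from the four confusion counts of one risk class treated as positive against the rest. The sketch below is illustrative only: the function name and the counts are hypothetical, and the handling of zero denominators (returning `inf` or `nan`) is an assumption, although it shows how such entries can arise when specificity is exactly 1.

```python
import math

def binary_metrics(tp, fn, fp, tn):
    """Accuracy, sensitivity, specificity, LR+ and LR- for one class
    versus the rest. Assumes at least one positive and one negative sample."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    false_positive_rate = fp / (fp + tn)   # equals 1 - specificity
    false_negative_rate = fn / (tp + fn)   # equals 1 - sensitivity

    if false_positive_rate > 0:
        lr_pos = sensitivity / false_positive_rate
    else:
        # Division by zero: report inf, or nan for the 0/0 case (assumed convention).
        lr_pos = math.inf if sensitivity > 0 else math.nan

    if specificity > 0:
        lr_neg = false_negative_rate / specificity
    else:
        lr_neg = math.inf if false_negative_rate > 0 else math.nan

    return {"accuracy": accuracy, "sensitivity": sensitivity,
            "specificity": specificity, "lr_pos": lr_pos, "lr_neg": lr_neg}

# Hypothetical counts for one risk class.
m = binary_metrics(tp=3, fn=1, fp=2, tn=10)
perfect_specificity = binary_metrics(tp=3, fn=1, fp=0, tn=12)
```

A larger LR+ and a smaller LR− both indicate a more informative classifier; values near 1 mean the prediction barely changes the odds.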

It is essential to note that we did not present the values of sensitivity and specificity in this report, aligning with the focus of our research, which follows the methodology of previous studies by Yoo et al., Li et al. and Yoo et al.15,29,55. These studies also solely presented AUC, LR+, and LR− values. Similarly, we adopted a comparable approach to facilitate a straightforward comparison of results with these prior research endeavors.

Results

Deciphering the role of electrophysiological biomarkers in drug toxicity prediction: a SHAP-based comparative analysis of machine learning models

This study delved into the impact of electrophysiological biomarkers on drug toxicity prediction, employing SHAP-based analysis to comprehend the specific feature contributions within various machine learning models27. SHAP, an advanced model interpretation method, provides measurable insights into each biomarker’s influence on model predictions, facilitating a deep understanding of how electrophysiological characteristics affect drug toxicity risk57. In the ANN context (Fig. 5a), SHAP analysis revealed significant contributions from biomarkers such as qInward with a SHAP value of 0.42, indicating its primary role in the predictive mechanism, followed by at 0.18, at 0.17, and at 0.15. These findings highlight the ANN’s sensitivity to ionic fluctuations and action potential duration variations. Meanwhile, the XGBoost model placed the highest emphasis on qInward with a SHAP score of 13.38 (Fig. 5b), underscoring the importance of cellular dynamics, with subsequent notable values for at 5.07, at 4.65, and at 2.49, illustrating the model’s focus on depolarization and repolarization processes. The RF model demonstrated a balanced approach in feature importance (Fig. 5c), with qInward and qNet each garnering a SHAP value of 0.23, illustrating how RF integrates diverse electrophysiological signals for robust prediction. Conversely, the SVM highlighted with a SHAP value of 0.32 (Fig. 5d), while the KNN model prioritized with a SHAP score of 0.49, emphasizing the significance of calcium duration in their analysis (Fig. 5e). The RBF model spotlighted with a SHAP value of 0.13, accentuating its focus on transient electrophysiological dynamics (Fig. 5f). Through SHAP analysis, this study provides nuanced understanding of how each machine learning model processes and evaluates electrophysiological information, underlining the importance of a multidisciplinary approach in drug toxicity analysis. 
These results not only advance our comprehension of electrophysiology and machine learning but also contribute to the development of more informative and effective drug toxicity risk assessment methods, offering valuable insights for future drug toxicity prediction strategies.

SHAP value distributions across machine learning models in biomarkers

In the Supplementary Material, Fig. S1a–r meticulously illustrate SHAP (SHapley Additive exPlanations) value distributions across six classification models, offering a granular view of how individual features contribute to each model’s predictive power. SHAP values, a concept central to explainable AI, serve to quantify the effect of each feature on the model’s predictions, delineating their importance in the predictive process. These values are stratified into high, intermediate, and low impact categories to facilitate an in-depth analysis of the feature importance hierarchy within each model, correlating directly with their statistical significance and predictive impact.

Figure S1a–c in the supplementary material correspond to the ANN, depicting the SHAP value distribution. Figure S1a illustrates high-impact features at high-risk levels such as qInward and , Fig. S1b shows high-impact features at intermediate-risk levels including qInward and , while Fig. S1c represents high-impact features at low-risk levels like qInward and . The gradation in feature importance highlights the role of electrophysiological parameters within the ANN analytical framework.

For the XGBoost model, Fig. S1d–f in the supplementary material articulate the tiered feature influence, with Fig. S1d emphasizing high-impact features at high-risk levels such as qInward and , Fig. S1e showing high-impact features at intermediate-risk levels like and qInward, and Fig. S1f depicting high-impact features at low-risk levels like qInward and . These figures collectively underline the strategic assessment of the model towards dynamic cellular properties.

The significance of features in the RF model is illustrated through Fig. S1g–i in the supplementary material. Figure S1g highlights high-impact features at high-risk levels including qInward and qNet, Fig. S1h displays high-impact features at intermediate-risk levels like qInward and , and Fig. S1i identifies high-impact features at low-risk levels like and . This demonstrates the RF model’s comprehensive approach to integrating a wide spectrum of electrophysiological features.

The SVM model analysis is encapsulated in Fig. S1j–l of the supplementary material. High-impact features at high-risk levels like and qInward are presented in Fig. S1j, high-impact features at intermediate-risk levels like and in Fig. S1k, and high-impact features at low-risk levels including qInward and in Fig. S1l. This distribution reflects the SVM’s methodical consideration of feature weights in its decision-making process.

In the context of the KNN model, Fig. S1m–o in the supplementary material illustrate the SHAP value distribution, with Fig. S1m depicting high-impact features at high-risk levels like qInward and , Fig. S1n showing intermediary features like and , and Fig. S1o outlining high-impact features at low-risk levels like and . This highlights the KNN model’s reliance on the local significance of electrophysiological attributes for classification accuracy.

Lastly, the feature priorities of the RBF network are presented in Fig. S1p–r of the supplementary material, with Fig. S1p depicting high-impact features at high-risk levels like and , Fig. S1q encompassing high-impact features at intermediate-risk levels including and , and Fig. S1r illustrating high-impact features at low-risk levels like and . This emphasizes the RBF model’s attention to key features that determine kernel-based similarity assessment.

The elaborate portrayal of SHAP value distribution in the supplementary material provides an extensive explanation of the feature-based discriminative power of each model, underscoring the complexity and specificity of feature importance in the context of predictive modeling. This granular analysis enhances our understanding of the decision-making paradigms of the models, offering substantial insights into the intricate dynamics that govern machine learning in electrophysiological data interpretation.

Quantitative analysis of feature impact on model predictions using SHAP

In this study, we delve into the intricate landscape of feature influence on model predictions by employing SHAP (SHapley Additive exPlanations) analysis, a cornerstone in the field of explainable AI. SHAP values are used to quantify the impact of individual features on a model’s predictions, providing a clear and interpretable measure of each feature’s relative importance. These values play a crucial role in elucidating the mechanisms behind model decision-making, thus enhancing transparency and understanding. Illustrated in the supplementary material, the waterfall plots categorize the impact of features into high, intermediate, and low levels across six classification models. These plots systematically depict the incremental contribution of each feature, offering a detailed view of how specific features influence the prediction outcomes in these models. Through this analysis, we gain a nuanced understanding of the dynamic interplay between features and their roles in predictive modeling.

In the Supplementary Material, the waterfall plots for the Artificial Neural Networks (ANN) model (Fig. S2a–c) distinctly delineate the feature hierarchy and its influence on model predictions. In these plots, risk levels are demarcated to demonstrate how features contribute differently under varying conditions of predictive uncertainty. Figure S2a, representing the high-risk category, exhibits features like and as having substantial contributions, signifying their pivotal roles when the prediction stakes are high and the model’s output is most critical. These features, due to their strong impact, are likely to drive significant shifts in model prediction outcomes under scenarios classified as high-risk. Figure S2b transitions to the intermediate risk level, where features like and are shown. Although these features have a noteworthy impact, their influence is moderated compared to the high-risk features, indicating their balanced role in scenarios where predictions carry a moderate level of certainty and risk. This intermediate category bridges the high and low-risk features, showing a gradient of feature impact on the model’s predictions. Lastly, Fig. S2c captures the low-risk features such as and , which, despite their influence on the model, contribute less decisively compared to the high and intermediate-risk features. Their presence in the low-risk category highlights their subtle yet consistent role in shaping the model’s output when the predictions are relatively stable or less prone to change.

In the XGBoost analysis, the supplementary material’s Fig. S2d–f elucidate the SHAP value distribution and its correlation with different risk levels, providing a clear depiction of how features impact the model’s predictions. Figure S2d, representing the high-risk category, underscores the prominent influence of features like qInward and qNet, which are critical in driving the model’s high-stakes predictions. These features, with their substantial SHAP values, are deemed high-impact due to their strong predictive power in scenarios where the potential for drug-induced toxicity is greatest. Moving to the intermediate risk level, Fig. S2e showcases features such as and , which, while still influential, have a moderated effect on the model’s output compared to the high-risk features. This intermediate category bridges the gap between the most and least influential features, representing conditions of moderate predictive uncertainty where these features still play a significant but less decisive role. At the low-risk level, Fig. S2f focuses on features like and , which have lower SHAP values, indicating their lesser, yet still relevant, contribution to the model’s prediction capabilities. These low-impact features are more subtle in their influence, affecting predictions where the likelihood of toxicity is lower, and thus the predictive certainty is higher.

For the RF model, SHAP waterfall plots are presented in Fig. S2g–i. These plots elucidate the incremental influence of each feature compared to a baseline, clarifying their roles in shaping the model’s predictions for specific instances. Figure S2g focuses on high-risk scenarios, demonstrating that features like qInward and qNet possess significant positive SHAP values, enhancing the model’s predictive likelihood, while negative values would indicate a diminishing effect on predictions. This suggests that qInward and qNet are pivotal in escalating the model’s risk assessment, underlining their substantial positive impact on the prediction outcome. In Fig. S2h, which represents intermediate-risk situations, the waterfall plot reveals the impact of features such as and , alongside others. Here, and emerge as influential, with their considerable positive contributions marking them as integral in deciphering the model’s decision-making process. This nuanced representation helps in understanding the intermediate-risk features’ incremental effects on model behavior. Finally, Fig. S2i reiterates the analysis for low-risk levels, aligning with the observations in Fig. S2g but potentially differing in the impact magnitude of features like and . This consistency in feature presence across risk levels, with variable impact magnitudes, accentuates the nuanced role these features play in the RF model’s predictive logic, with and notably shaping the prediction outcomes.

For the Support Vector Machine (SVM) model, the SHAP waterfall plots in Fig. S2j–l of the supplementary material rank the features by their importance and contribution to the SVM’s decision-making, showing how differently the features contribute to the predictive outcomes. In Fig. S2j, high-impact features like qInward, , and are highlighted at the top, signifying their significant influence on the model’s predictions in high-risk scenarios, whereas lower-ranked features such as and have a lesser impact. In Fig. S2k, a notable reshuffling shows , qNet, and rising in importance, revealing how feature prioritization changes at the intermediate-risk level. Finally, Fig. S2l shows qInward, , and leading the feature list, asserting their dominant role in shaping the model’s output, while features like and , though still contributing, are positioned lower. Together, these plots show how the SVM weighs electrophysiological features in its predictive logic and how feature significance shifts across the stratified risk levels.

In the k-Nearest Neighbors (KNN) model’s SHAP Waterfall plot analysis, represented in Fig. S2m–o of the supplementary material, we delve into the nuanced understanding of how individual features assert their influence on predictive outcomes. High-impact features like and are prominently displayed at the top in Fig. S2m, signaling their significant roles in high-risk prediction scenarios, while mid-tier and lower-tier features like and are positioned to reflect their moderate and lesser degrees of influence, respectively. This structured hierarchy not only delineates the gradation of feature impacts but also correlates with the model’s risk-level assessment, showcasing a clear differentiation in feature prioritization. As we transition to Fig. S2n, the consistent prominence of and , coupled with and , illustrates a dynamic adjustment in the model’s sensitivity, indicating a shift in feature importance across different risk levels. Figure S2o further emphasizes this trend, with and leading the influence chart, accentuating their pivotal effects on the model’s low-risk predictions. The subsequent ranking of features like , , , and unfolds the intricate interplay within the predictive process, highlighting how these elements collectively fine-tune the KNN model’s accuracy across a spectrum of risk considerations. This in-depth analysis encapsulates the KNN model’s analytical depth, providing a comprehensive view of how electrophysiological features are intricately woven into its decision-making fabric, thereby elucidating the sophisticated machinery driving its predictive mechanics in the realm of electrophysiological data analysis.

The SHAP Waterfall plots for the RBF model, as shown in Fig. S2p–r of the supplementary material, offer a detailed exposition of how specific features impact the model’s predictive behavior across varying risk levels. In Fig. S2p, high-risk features such as , , and are identified as having a significant influence on the model’s decisions, underscoring their critical role in shaping high-risk predictions and denoting their dominant impact in the model’s analytical hierarchy. Transitioning to Fig. S2q, the analysis shifts to an intermediate-risk perspective, where the same features like and continue to exhibit importance but in a varied context, reflecting the model’s adaptability and nuanced response to the dynamic electrophysiological landscape. This shift underscores the variability and sensitivity of the RBF model to these key features, demonstrating their adjusted significance under different risk assessments. In the low-risk spectrum, depicted in Fig. S2r, there is a further delineation of feature impact, with and coming to the forefront alongside the persistent influence of and , which elucidates their ongoing, albeit less pronounced, role in influencing the model’s predictions at this risk level. Collectively, these plots methodically illustrate the hierarchical and stratified nature of feature influence within the RBF model, providing a comprehensive view of how it discerns and integrates various electrophysiological features to refine its predictive outcomes across a spectrum of risk levels, thus enriching our understanding of the intricate decision-making processes embedded within this sophisticated machine learning model.

Comprehensive analysis of machine learning models for enhanced torsades de pointes risk stratification

In this study, we embarked on a detailed exploration of Torsades de Pointes (TdP) risk classification using a refined ANN model. This method integrated grid search-optimized parameters and SHAP-derived feature importance assessments, testing a dataset of 16 drugs with 12 in-silico biomarkers. The rigorous testing process involved 10,000 classification iterations to ensure the reliability of our findings.

Our ANN model underwent extensive hyperparameter optimization, leading to a configuration that yielded an accuracy of 0.83 (CI 0.79–0.83) and AUC scores of 0.92 (CI 0.88–0.96), 0.83 (CI 0.73–0.90), and 0.98 (CI 0.95–0.98) for the high-, intermediate-, and low-risk TdP categories, respectively, as seen in Table 2. A deeper analysis indicated that the removal of the Vmresting biomarker significantly enhanced the model’s performance, illustrating the delicate interplay between feature selection and model accuracy.

Table 2.

The performance of the ANN algorithm and the other classifiers is presented after undergoing 10,000 tests on 16 drugs, displaying the median and 95% CI values derived from the 10,000 test outcomes.

| System performance parameter | ANN (11 features) | XGBoost (6 features) | RF (6 features) | KNN (6 features) | SVM (7 features) | RBF (6 features) | Yoo et al.15 | Li et al.55 |
|---|---|---|---|---|---|---|---|---|
| Accuracy | 0.83 (0.79, 0.83) | 0.67 (0.58, 0.75) | 0.71 (0.62, 0.79) | 0.75 (0.62, 0.79) | 0.67 (0.62, 0.75) | 0.71 (0.62, 0.75) | – | – |
| AUC high risk | 0.92 (0.88, 0.96) | 0.81 (0.54, 0.94) | 0.83 (0.71, 0.94) | 0.88 (0.67, 0.99) | 0.75 (0.6, 0.88) | 0.92 (0.85, 0.94) | 0.92 (0.85, 0.96) | 0.86 (0.81, 0.9) |
| AUC intermediate risk | 0.83 (0.73, 0.9) | 0.56 (0.43, 0.68) | 0.56 (0.46, 0.66) | 0.6 (0.48, 0.69) | 0.73 (0.65, 0.79) | 0.71 (0.67, 0.76) | 0.83 (0.73, 0.91) | – |
| AUC low risk | 0.98 (0.95, 0.98) | 0.67 (0.58, 0.8) | 0.74 (0.64, 0.78) | 0.71 (0.59, 0.79) | 0.46 (0.38, 0.62) | 0.75 (0.69, 0.78) | 0.98 (0.91, 1) | 0.86 (0.82, 0.9) |
| LR+ high risk | 500,000.97 (250,001.07, 500,001.98) | 3.0 (1.0, 6.0) | 3.0 (2.0, 6.0) | 9.0 (3.0, nan) | 3.0 (1.0, 9.0) | 3.0 (2.0, 4.0) | 5000 (4000, 6000) | 5 (3.33, 12.5) |
| LR+ intermediate risk | 2.25 (1.8, 2.25) | 1.29 (0.43, 5.14) | 1.93 (0.86, 5.14) | 1.71 (0.86, 2.57) | 1.61 (1.29, 2.57) | inf (0.0, nan) | 2.249 (1.80, 2.25) | – |
| LR+ low risk | 600,000.92 (400,000.53, 600,002.16) | 3.3 (2.2, 4.4) | 3.3 (2.2, 4.4) | 3.3 (1.1, 4.4) | 1.1 (1.1, 2.2) | 2.93 (1.65, 6.6) | 6000 (4.39, 6000) | 2.01 (1.61, 2.84) |
| LR− high risk | 0.5 (0.5, 0.75) | 0.38 (0.0, 1.0) | 0.3 (0.0, 0.67) | 0.27 (0.0, 0.6) | 0.6 (0.3, 1.0) | 0.0 (0.0, 0.0) | 0.5 (0.4, 0.59) | 0.556 (0.278, 0.588) |
| LR− intermediate risk | 0.0 (0.0, 0.0) | 0.86 (0.55, 1.29) | 0.73 (0.48, 1.07) | 0.64 (0.48, 1.07) | 0.32 (0.21, 0.77) | 0.86 (0.71, 1.12) | 0.18e−3 (0.18e−3, 0.26) | – |
| LR− low risk | 0.4 (0.4, 0.6) | 0.49 (0.24, 0.55) | 0.49 (0.24, 0.55) | 0.49 (0.24, 0.98) | 0.98 (0.88, 0.98) | 0.27 (0.24, 0.63) | 0.4 (0.4, 0.66) | 0.118 (1.8e−6, 0.284) |

Furthermore, we expanded our analysis to include other classification models for comparison. The XGBoost and RF models, with their respective feature sets, displayed strong predictive abilities, particularly in low-risk classifications. Their accuracies, while not surpassing the ANN, offer valuable insights and underscore the utility of ensemble methods.

The KNN classifier demonstrated its strength in identifying high-risk cases with an AUC of 0.88 (CI 0.67–0.99) (Table 2), highlighting its potential for rapid risk assessment in clinical settings. By contrast, the SVM, with seven features, reached an AUC of only 0.46 (CI 0.38–0.62) for low-risk classification, well below the 0.98 achieved by the ANN (Table 2).

The RBF model, another deep learning-based classifier in our study, showed promising results with a solid performance across different risk levels. Its accuracy and AUC for the intermediate-risk group were competitive, showing its capacity for complex data separation.

Compared to previous studies, our results present significant advancements in TdP risk classification. The ANN model’s positive and negative likelihood ratios for various risk levels demonstrate a high level of accuracy, especially for the high and low-risk categories. Even with the intermediate-risk level’s challenges, our model outperformed existing models, indicating its strength as a reliable clinical tool for risk assessment. These findings not only contribute to the ongoing dialogue in the field but also provide a comprehensive view of the potential of machine learning in clinical decision-making.

Assessing the impact of feature reduction on predictive model performance

We next examined how the number of features affects the performance of the various predictive models, using SHAP values to guide our feature reduction strategy.

For the ANN, reducing the number of features from twelve to eleven had a negligible impact on the ability to predict high-risk cases, with the Area Under the Curve (AUC) remaining stable at 0.87 and the p-value shifting from 0.001364 to 0.006442 (Table 3). This feature reduction coincided with improved performance for predicting intermediate- and low-risk scenarios, evidenced by AUC values of 0.66 and 0.83, respectively (p = 0.006442; Table 3). Such outcomes support the hypothesis that an optimally filtered feature set, selected based on its influence, can surpass a more extensive feature array.
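One way to organize such a SHAP-guided reduction is sketched below: features are ranked by importance, the least important ones are dropped one at a time, and each reduced set is scored. This is an assumed procedure for illustration, not the study's code. The importance values and the scoring function are hypothetical placeholders; in a real run, `evaluate` would train and test a classifier on the given feature subset.

```python
def shap_guided_reduction(importance, evaluate, min_features):
    """Score nested feature subsets obtained by dropping the features
    with the lowest SHAP importance first; return the best subset."""
    ranked = sorted(importance, key=importance.get, reverse=True)
    scores = {}
    for k in range(len(ranked), min_features - 1, -1):
        scores[k] = evaluate(ranked[:k])
    best_k = max(scores, key=scores.get)
    return ranked[:best_k], scores

# Hypothetical mean |SHAP| values for five biomarkers.
importance = {"qInward": 0.42, "qNet": 0.20, "APD_90": 0.15,
              "CaD_50": 0.10, "VmResting": 0.01}

def toy_evaluate(features):
    """Placeholder score: a small penalty for keeping the weakest feature
    and a larger penalty for each informative feature that is removed."""
    informative = [f for f in features if f != "VmResting"]
    score = 0.9 - 0.1 * (4 - len(informative))
    if "VmResting" in features:
        score -= 0.05
    return score

selected, scores = shap_guided_reduction(importance, toy_evaluate, min_features=3)
```

In this toy setting the best score is reached after removing only the least important feature, mirroring the pattern in which a slightly reduced set outperforms the full set.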

Table 3.

Impact of feature reduction on model performance metrics—this table presents a factorial ANOVA analysis delineating the effect of systematic feature reduction on the Area Under the Curve (AUC) for high, intermediate, and low-risk predictions across multiple classification models. Reduction in the number of features is directed by SHapley Additive exPlanations (SHAP) value significance. Each entry corresponds to an AUC value, accompanied by the CI in parentheses and the p-value, providing a statistical testament to the performance shifts observed as the feature set is pruned. Notably, the eleven-feature configuration in the ANN model exemplifies a consistent high-risk prediction AUC of 0.87, with significant p-values indicating robustness against feature diminution.

| Model | Number of features | AUC high | AUC intermediate | AUC low | p-value |
|---|---|---|---|---|---|
| ANN | 12 features | 0.87 (0.83, 0.91) | 0.61 (0.56, 0.65) | 0.64 (0.61, 0.67) | 0.001364 |
| | 11 features | 0.87 (0.82, 0.91) | 0.66 (0.62, 0.7) | 0.83 (0.79, 0.87) | 0.006442 |
| | 10 features | 0.78 (0.74, 0.82) | 0.58 (0.55, 0.61) | 0.78 (0.74, 0.81) | 0.014848 |
| | 9 features | 0.76 (0.72, 0.81) | 0.65 (0.61, 0.7) | 0.83 (0.79, 0.87) | 0.000943 |
| | 8 features | 0.79 (0.75, 0.84) | 0.61 (0.57, 0.65) | 0.79 (0.75, 0.82) | 0.003039 |
| | 7 features | 0.82 (0.78, 0.86) | 0.68 (0.63, 0.73) | 0.82 (0.78, 0.85) | 0.014543 |
| | 6 features | 0.72 (0.68, 0.76) | 0.65 (0.61, 0.69) | 0.69 (0.64, 0.73) | 0.313042 |
| XGBoost | 12 features | 0.71 (0.66, 0.77) | 0.52 (0.45, 0.58) | 0.65 (0.60, 0.71) | 4.13E−13 |
| | 11 features | 0.73 (0.68, 0.79) | 0.54 (0.49, 0.60) | 0.65 (0.60, 0.70) | 3.24E−10 |
| | 10 features | 0.75 (0.70, 0.81) | 0.51 (0.45, 0.58) | 0.65 (0.60, 0.70) | 1.01E−11 |
| | 9 features | 0.74 (0.68, 0.79) | 0.51 (0.45, 0.58) | 0.65 (0.60, 0.71) | 7.35E−12 |
| | 8 features | 0.8 (0.75, 0.86) | 0.56 (0.50, 0.61) | 0.69 (0.64, 0.74) | 9.21E−10 |
| | 7 features | 0.80 (0.74, 0.85) | 0.57 (0.52, 0.63) | 0.70 (0.65, 0.76) | 1.45E−11 |
| | 6 features | 0.80 (0.75, 0.86) | 0.57 (0.51, 0.62) | 0.67 (0.61, 0.72) | 1.47E−10 |
| RF | 12 features | 0.79 (0.74, 0.85) | 0.49 (0.49, 0.49) | 0.77 (0.71, 0.84) | 7.20E−18 |
| | 11 features | 0.79 (0.74, 0.85) | 0.49 (0.42, 0.55) | 0.78 (0.71, 0.84) | 4.28E−17 |
| | 10 features | 0.79 (0.74, 0.85) | 0.48 (0.42, 0.55) | 0.78 (0.78, 0.78) | 9.39E−18 |
| | 9 features | 0.80 (0.75, 0.86) | 0.50 (0.43, 0.55) | 0.77 (0.72, 0.83) | 7.06E−16 |
| | 8 features | 0.81 (0.63, 0.99) | 0.54 (0.54, 0.54) | 0.76 (0.76, 0.76) | 7.10E−184 |
| | 7 features | 0.82 (0.77, 0.88) | 0.54 (0.54, 0.54) | 0.78 (0.78, 0.78) | 7.85E−17 |
| | 6 features | 0.83 (0.64, 1) | 0.56 (0.56, 0.56) | 0.74 (0.74, 0.74) | 1.20E−183 |
| SVM | 12 features | 0.62 (0.62, 0.62) | 0.57 (0.5, 0.63) | 0.75 (0.75, 0.75) | 5.66E−19 |
| | 11 features | 0.62 (0.62, 0.62) | 0.59 (0.53, 0.65) | 0.75 (0.69, 0.80) | 5.06066E−13 |
| | 10 features | 0.61 (0.55, 0.67) | 0.58 (0.52, 0.65) | 0.75 (0.69, 0.80) | 1.20E−12 |
| | 9 features | 0.691 (0.55, 0.68) | 0.59 (0.54, 0.65) | 0.74 (0.68, 0.80) | 4.77E−10 |
| | 8 features | 0.67 (0.61, 0.74) | 0.75 (0.75, 0.75) | 0.55 (0.50, 0.61) | 9.21E−12 |
| | 7 features | 0.75 (0.75, 0.75) | 0.73 (0.67, 0.79) | 0.45 (0.39, 0.52) | 1.59E−17 |
| | 6 features | 0.71 (0.71, 0.71) | 0.67 (0.67, 0.67) | 0.56 (0.51, 0.62) | 3.05E−14 |
| KNN | 12 features | 0.81 (0.63, 0.99) | 0.56 (0.56, 0.56) | 0.69 (0.69, 0.69) | 3.80E−183 |
| | 11 features | 0.81 (0.75, 0.88) | 0.56 (0.56, 0.56) | 0.69 (0.69, 0.69) | 1.63E−20 |
| | 10 features | 0.81 (0.63, 0.99) | 0.56 (0.56, 0.56) | 0.69 (0.69, 0.69) | 3.80E−183 |
| | 9 features | 0.81 (0.75, 0.88) | 0.56 (0.56, 0.56) | 0.69 (0.69, 0.69) | 1.63E−20 |
| | 8 features | 0.81 (0.63, 0.99) | 0.56 (0.56, 0.56) | 0.69 (0.69, 0.69) | 3.80E−183 |
| | 7 features | 0.81 (0.63, 0.99) | 0.56 (0.56, 0.56) | 0.69 (0.69, 0.69) | 3.80E−183 |
| | 6 features | 0.82 (0.76, 0.89) | 0.56 (0.56, 0.56) | 0.69 (0.69, 0.69) | 1.0622E−19 |
| RBF | 12 features | 0.59 (0.55, 0.64) | 0.69 (0.64, 0.74) | 0.73 (0.68, 0.78) | 2.03E−03 |
| | 11 features | 0.52 (0.48, 0.55) | 0.63 (0.59, 0.66) | 0.69 (0.66, 0.74) | 3.52E−02 |
| | 10 features | 0.63 (0.60, 0.67) | 0.68 (0.62, 0.73) | 0.68 (0.63, 0.72) | 7.25E−01 |
| | 9 features | 0.83 (0.80, 0.87) | 0.68 (0.68, 0.68) | 0.64 (0.59, 0.69) | 1.67E−03 |
| | 8 features | 0.73 (0.69, 0.76) | 0.62 (0.57, 0.66) | 0.68 (0.64, 0.73) | 3.65E−01 |
| | 7 features | 0.75 (0.72, 0.79) | 0.67 (0.62, 0.72) | 0.64 (0.60, 0.68) | 1.77E−01 |
| | 6 features | 0.89 (0.83, 0.93) | 0.64 (0.61, 0.68) | 0.74 (0.69, 0.79) | 1.22E−04 |

The picture differs for XGBoost. With the full set of 12 features, the model reached an AUC of 0.71 for high-risk prediction (p = 4.13E−13) (Table 3), whereas the intermediate-risk AUC stayed between 0.51 and 0.57 at every feature count. Feature reduction therefore did little for the intermediate class, which suggests that XGBoost may rely on interactions among several features to separate it.

The RF model favored eight features, particularly for low-risk prediction, with an AUC of 0.76 (p = 7.10E−184) (Table 3), supporting the notion that a near-optimal feature set can be identified that balances performance with model simplicity.

Conversely, the SVM and KNN models showed a less clear relationship between feature count and prediction accuracy: the data do not point to a single optimal feature set, hinting at more nuanced interactions between feature selection and model output.

For the RBF network, high-risk prediction improved when the feature count was reduced to six, yielding an AUC of 0.89 (p = 1.22E−04) (Table 3). This finding suggests that a small set of highly influential features can substantially enhance model performance.
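The feature-reduction procedure compared across models above can be sketched as a simple loop: rank features by importance, drop the least important one at a time, and re-evaluate. The sketch assumes a fixed ranking taken from the full model; whether the ranking was recomputed after each removal is not stated in the text. The `evaluate` callback is a hypothetical stand-in for retraining a classifier and computing its AUC, and the importance values and the names `marker_c` and `marker_d` are placeholders.

```python
def prune_by_importance(importance, evaluate, min_features=6):
    """Drop the least important feature one at a time and re-evaluate.

    importance: dict mapping feature name -> importance score (e.g. mean |SHAP|).
    evaluate:   callable taking a list of feature names and returning a score;
                a hypothetical stand-in for "retrain and compute AUC".
    Returns a list of (n_features, kept_features, score), largest set first.
    """
    ranked = sorted(importance, key=importance.get, reverse=True)
    history = []
    for n in range(len(ranked), min_features - 1, -1):
        kept = ranked[:n]
        history.append((n, kept, evaluate(kept)))
    return history


def toy_evaluate(kept):
    """Made-up score: two informative features, small penalty per feature."""
    score = 0.6 - 0.02 * len(kept)
    if "qInward" in kept:
        score += 0.1
    if "qNet" in kept:
        score += 0.1
    return score


# Placeholder importance values, not results from the study.
importance = {"qInward": 0.5, "qNet": 0.3, "marker_c": 0.1, "marker_d": 0.05}
history = prune_by_importance(importance, toy_evaluate, min_features=2)
best = max(history, key=lambda h: h[2])
```

In practice `evaluate` would be run per classifier, since the comparison above indicates that the best feature count differs between models.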

Discussion

This study focuses on substructural biomarkers and uses SHapley Additive exPlanations (SHAP) values to determine the influence of each biomarker in predicting drug-related risks such as torsades de pointes (TdP). By applying feature importance analysis, the method systematically assesses how each biomarker contributes to the overall predictive model. This approach not only enhances the accuracy of toxicity prediction but also clarifies the role of specific electrophysiological features and enriches our understanding of the biological mechanisms behind drug-induced effects.

In the context of this research, ANN, XGBoost, RF, SVM, KNN, and RBF are used to identify in-silico biomarkers that significantly impact drug toxicity evaluation. Twelve biomarkers were selected as input features, encompassing morphological aspects of the Action Potential (AP) like , , , , , and , calcium transient morphology like , , , , and features related to charge movement, namely qNet, and qInward.

Using the GS method, we determined the optimal model and then applied SHAP to identify the biomarkers that contribute most to drug risk classification. SHAP is based on coalitional game theory: a SHAP value describes the contribution of each feature to a prediction. Unlike the heuristic approaches used in previous studies, SHAP accounts for the predicted probabilities across all inputs. Because SHAP values are signed, positive and negative values of the same magnitude indicate equally strong influence on a prediction. We therefore calculated the mean absolute SHAP value of each biomarker to identify those with the largest contributions.
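The coalition-based definition and the mean absolute aggregation described above can be made concrete with a minimal sketch. It computes exact Shapley values by enumerating every coalition of features, which is feasible only for a handful of features; SHAP libraries rely on approximations and a background dataset instead. The toy model, the single all-zero baseline, and the function names are assumptions for illustration, not the study's implementation.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one sample by enumerating all coalitions.

    predict:  callable taking a list of feature values and returning a number.
    x:        feature values of the sample being explained.
    baseline: reference values used for features outside the coalition.
    Cost grows as 2**n, so this is practical only for a few features.
    """
    n = len(x)

    def value(coalition):
        mixed = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(mixed)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def mean_abs_importance(per_sample_phi):
    """Average |phi| over samples, so opposite-signed effects do not cancel."""
    n = len(per_sample_phi[0])
    m = len(per_sample_phi)
    return [sum(abs(row[i]) for row in per_sample_phi) / m for i in range(n)]


def toy_model(f):
    """Made-up model: two linear terms plus one interaction."""
    return 2.0 * f[0] - 1.0 * f[1] + f[0] * f[2]


samples = [[1.0, 1.0, 1.0], [-1.0, 2.0, 0.0]]
baseline = [0.0, 0.0, 0.0]
phis = [shapley_values(toy_model, s, baseline) for s in samples]
importance = mean_abs_importance(phis)
```

For each sample, the Shapley values sum to the difference between the model output for that sample and for the baseline. The first feature has a positive contribution in one sample and a negative one in the other, which is why the ranking uses absolute values.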

The feature importance levels from the SHAP results of the ANN model in Fig. 5a, highlight qInward, , and as significant influences in the TdP risk assessment, aligning with Yoo et al. findings that emphasize the complexity of action potentials and calcium transients in proarrhythmic potential15. This conformity strengthens the validation of our model and illustrates its capacity to capture crucial electrophysiological dynamics critical for accurate drug toxicity prediction. Specifically, the prominence of qInward in the SHAP analysis reflects the intricate role of inward ion currents, especially calcium and sodium, in initiating and maintaining arrhythmogenic activity. This emphasizes the charge movement in drug-induced TdP risk discussed in Dutta et al. and Li et al.10,11. Furthermore, the sufficient importance of duration markers like and aligns with traditional electrophysiological theory and supports the CiPA initiative’s shift towards complex in silico assessment strategies.

This analysis highlights the interaction between various electrophysiological parameters, indicating a more complex relationship than previously understood in the context of TdP risk, where the focus was often solely on AP prolongation or ionic current changes. Our ANN model, enriched with a comprehensive set of biomarkers, not only strengthens existing electrophysiological insights but also reveals the inherent complexity of proarrhythmic potential in drug-induced cardiotoxicity. A deeper understanding from the SHAP feature importance analysis confirms the robustness of our ANN approach in navigating the complex landscape of TdP risk assessment. ANN tends to capture the non-linear and complex interactions between features, using a deep architecture with multiple layers and neurons to model these relationships in detail, allowing it to detect very specific nuances and patterns in the data.

The SHAP feature importance results for our XGBoost model, shown in Fig. 5b, provide nuanced insights into the critical electrophysiological determinants for TdP risk assessment. The prominence of qInward and confirms the importance of inward ion flux and repolarization dynamics in modulating cardiac risk, in line with findings from Yoo et al. and Dutta et al.11,15, where a complex balance of ion currents underlies proarrhythmic potential. The model’s emphasis on along with indicates comprehensive electrophysiological interactions affecting arrhythmogenicity, highlighting the need for a holistic approach in cardiac safety evaluation, as also suggested in the CiPA comprehensive in silico modeling framework.

In the XGBoost model, the prioritization of features like qNet underscores the critical role of Channel block handling in cardiac toxicity, in line with the nuanced insights provided by Li et al.55, where qNet is an integral part of TdP risk prediction. This alignment underlines the relevance of these biomarkers in reflecting the multifactorial nature of drug-induced arrhythmia. Moreover, the presence of in the analysis, albeit with a lower importance score, indicates the subtle impact of resting membrane potential on cardiac electrophysiology, necessitating further investigation as discussed in broader cardiac safety research.

Our SHAP feature importance analysis for the XGBoost model aligns with established electrophysiological paradigms and also shows how these biomarkers interact within the predictive model, adding to the current understanding of TdP risk. Integrating comprehensive biomarker analysis within a machine learning framework represents an advance in refining drug safety assessment, leveraging the strength of XGBoost to dissect complex biomarker interactions in predicting proarrhythmic risk. Our findings point the way toward using sophisticated analytical models to capture the complex landscape of cardiac arrhythmogenicity. In XGBoost, features with greater influence on the target are given higher priority, which indicates that the model is effective at feature selection and at handling nonlinear data, using gradient boosting to enhance prediction.

On the other hand, the SHAP feature importance results for the RF model (Fig. 5c) identify qInward and qNet as the most influential factors in determining TdP risk, aligning with the critical role these features play in drug-induced arrhythmogenicity as discussed in studies by Yoo et al., and Li et al.10,15,55. The importance of qInward in the model underscores the fundamental role of inward ion currents, particularly through calcium and sodium channels, in shaping the cardiac action potential and inducing arrhythmias. This is consistent with the comprehensive analysis conducted in the CiPA initiative, which emphasizes the multifactorial nature of TdP risk, beyond the traditional focus on hERG channel blocking.

Additionally, the significance of and in the RF model highlights the complex interaction between action potential duration and calcium homeostasis in cardiac electrophysiology, reflecting the intricate insights provided by Dutta et al. about regulated ion channel activity affecting cardiac repolarization11. This analysis reveals a sophisticated landscape where subtle variations in these parameters can significantly alter the proarrhythmic potential of drugs, reinforcing the need to integrate a broad spectrum of biomarkers for more accurate and holistic risk assessment.

It can be concluded that the RF model, employing an ensemble approach, combines predictions from many decision trees to improve accuracy and reliability. RF tends to give equal weight to features and is good at identifying feature importance through its process.

The feature importance results from the SVM model, shown in Fig. 5d, indicate equal priority of biomarkers for assessing TdP risk. The importance of and qInward in this analysis reaffirms the critical role of calcium dynamics and inward ion flow, respectively, in mediating arrhythmic potential, a finding that reflects the physiological basis emphasized in the CiPA initiative. Notably, the SVM model’s emphasis on and qNet aligns with the electrophysiological criteria discussed by Dutta et al. and Li et al.11,55, where these parameters are vital in representing the proarrhythmic tendencies of drugs through detailed action potential profiles and ion charge characteristics.

The lower ranking of features like and in importance challenges the conventional consideration of their roles, indicating that TdP risk associated with drug compounds may not directly correspond with typical markers of cellular activity or repolarization duration. This insight challenges traditional views and signifies more complex interactions in arrhythmogenesis, requiring a broader perspective in risk stratification.

Furthermore, the SVM analysis reflects a sophisticated understanding of how various biomarkers collectively inform proarrhythmic risk, advocating a multifaceted approach in cardiac safety evaluation as supported by the advanced ANN methodology of Yoo et al.15. The depth of this analysis enhances the precision of TdP risk assessment, providing a compelling argument for the integration of diverse electrophysiological features beyond standard APD measurements.

At its core, the SHAP feature importance results for the SVM model significantly contribute to the evolving narrative of cardiac safety pharmacology, affirming the intricate balance of ionic and electrophysiological parameters in mediating drug-induced TdP risk. Meanwhile, the SVM model operates by seeking an optimal hyperplane to separate classes in feature space, with a strong focus on features that help define the decision margin. The use of kernels, such as RBF, allows SVM to work effectively in high-dimensional spaces.
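The role of the kernel in the SVM decision rule can be illustrated with a minimal sketch. It shows the RBF (Gaussian) kernel and the decision value of an already trained binary kernel SVM. The support vectors, coefficients, bias, and `gamma` below are made-up placeholders, and the binary setting is a simplification of the three-class problem studied here.

```python
from math import exp


def rbf_kernel(u, v, gamma=0.5):
    """Gaussian (RBF) kernel: exp(-gamma * ||u - v||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(u, v))
    return exp(-gamma * sq_dist)


def decision_value(x, support_vectors, dual_coefs, bias, gamma=0.5):
    """Kernel SVM decision function: sum_i coef_i * K(sv_i, x) + bias.

    The sign of the returned value gives the predicted side of the margin.
    """
    total = sum(c * rbf_kernel(sv, x, gamma)
                for sv, c in zip(support_vectors, dual_coefs))
    return total + bias


# Placeholder "trained" parameters, chosen by hand for illustration.
support_vectors = [[0.0, 0.0], [2.0, 2.0]]
dual_coefs = [1.0, -1.0]
bias = 0.0

near_first = decision_value([0.0, 0.0], support_vectors, dual_coefs, bias)
near_second = decision_value([2.0, 2.0], support_vectors, dual_coefs, bias)
midpoint = decision_value([1.0, 1.0], support_vectors, dual_coefs, bias)
```

Only the support vectors enter the sum, which is consistent with the model's focus on the samples, and hence the features, that define the decision margin.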