Abstract

In this narrative review, we discuss the applications of artificial intelligence (AI) in clinical magnetic resonance imaging (MRI) exams, with a particular focus on Japan’s contributions to this field. In the first part of the review, we introduce the various applications of AI in optimizing different aspects of the MRI process, including scan protocols, patient preparation, image acquisition, image reconstruction, and postprocessing techniques. Additionally, we examine AI’s growing influence in clinical decision-making, particularly in areas such as segmentation, radiation therapy planning, and reporting assistance. By emphasizing studies conducted in Japan, we highlight the nation’s contributions to the advancement of AI in MRI. In the latter part of the review, we highlight the characteristics that make Japan a unique environment for the development and implementation of AI in MRI examinations. Japan’s healthcare landscape is distinguished by several key factors that collectively create a fertile ground for AI research and development. Notably, Japan boasts one of the highest densities of MRI scanners per capita globally, ensuring widespread access to the exam. Japan’s national health insurance system plays a pivotal role by providing MRI scans to all citizens irrespective of socioeconomic status, which facilitates the collection of inclusive and unbiased imaging data across a diverse population. Japan’s extensive health screening programs, coupled with collaborative research initiatives like the Japan Medical Imaging Database (J-MID), enable the aggregation and sharing of large, high-quality datasets. With its technological expertise and healthcare infrastructure, Japan is well-positioned to make meaningful contributions to the MRI–AI domain. The collaborative efforts of researchers, clinicians, and technology experts, including those in Japan, will continue to advance the future of AI in clinical MRI, potentially leading to improvements in patient care and healthcare efficiency.

Keywords: Artificial intelligence, Magnetic resonance imaging, Healthcare, Medicine, Review

Introduction

The integration of artificial intelligence (AI) in magnetic resonance imaging (MRI) has led to significant improvements in clinical MRI exams [1, 2]. MRI is highly valued for its absence of ionizing radiation and its excellent soft tissue contrast, making it indispensable for diagnosing a wide range of diseases across various organs and systems [3]. However, challenges such as long acquisition times, motion sensitivity, patient comfort, and technical complexities persist. AI has shown promise in addressing these issues by optimizing each step of the MRI process, ranging from patient preparation and scan planning to image reconstruction and assistance in image interpretation and report generation.

Japan’s unique characteristics in MRI examinations, including a large number of MRI scanners per capita [4, 5], skilled technologists [6], and extensive health screening data [7], combined with the national health insurance system [8], provide fertile ground for AI research and development. Japan boasts one of the highest densities of MRI scanners globally, enabling widespread access to advanced imaging technology. Additionally, Japanese MRI technologists are renowned for their expertise [6], contributing to high-quality image data collection. This, coupled with the country’s national insurance system that offers CT and MRI scans to all citizens [7], ensures the collection of unbiased imaging data across all age groups and socioeconomic statuses. Collaborative research across multiple universities is also prevalent in Japan, with initiatives like the Japan Medical Imaging Database (J-MID) enabling the sharing of large datasets [9], further fostering AI development. These factors collectively create an ideal environment for developing and implementing AI solutions in clinical MRI. Furthermore, Japan is actively contributing to education on AI applications across various organs and modalities [10–17], showcasing its commitment to this field.

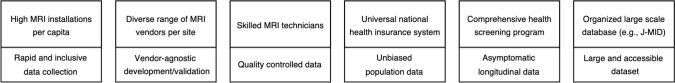

In this narrative review, we survey the applications of AI in MRI with a focus on Japan’s contributions to this field. The article first discusses the role of AI in improving clinical MRI exams, covering each step from optimizing scan protocols and patient preparation to improving image quality and postprocessing. It then discusses AI’s role in clinical decision-making, particularly in segmentation and radiation therapy planning, as well as the use of large language models (LLMs) for reporting. Given the extensive research on AI in MRI, this paper predominantly focuses on studies from Japan. In the latter half, the paper offers insights into Japan’s potential future contributions to the field (Fig. 1), mentioning initiatives such as the J-MID and identifying possible areas for research and development that could advance the current state of technology.

Fig. 1. Japan’s unique characteristics in clinical MRI examinations

Patient preparation and scan planning

MRI examinations often necessitate the acquisition of multiple sequences, leading to prolonged scan times [18]. Ensuring optimized protocols for every patient can be challenging, especially in institutions where radiologists may not always be readily available. AI algorithms are proving valuable in optimizing scan planning by automatically determining ideal slice positioning and coverage, leading to more efficient scan sessions [19–21]. These approaches not only ensure greater consistency across different operators but also tailor the acquisition process to each patient’s unique anatomy.

Additionally, active research is exploring the use of AI to optimize MRI sequences themselves, going beyond adjustments to high-level parameters such as matrix size and echo time. Examples include utilizing deep reinforcement learning to design radiofrequency pulses [22] and to generate k-space sampling patterns [23]. While these technical advancements are beyond the scope of this review, they represent an exciting frontier in AI-driven MRI development.

Image reconstruction

Deep learning reconstruction (DLR) methods, particularly those utilizing convolutional neural networks (CNNs), have demonstrated success in MRI reconstruction [24, 25]. These AI algorithms can effectively increase image resolution and reduce noise, which in turn allows for more heavily undersampled acquisitions. Numerous studies have shown their promise in clinical MRI exams, including but not limited to the brain [26–28], head and neck [29], upper abdomen [30–33], prostate [34], musculoskeletal system [35], and breast. These studies, along with many others reported daily worldwide, have led to broad agreement that DLR effectively improves subjective image quality and signal-to-noise ratio in structural imaging compared to other existing acceleration techniques.

Beyond these improvements in image quality, it is essential to evaluate the broader impact of DLR on patient management. A critical aspect to consider is its influence on clinical assessments, such as T-category evaluation [36], which directly affects patient care decisions. In a cohort of 213 patients pathologically diagnosed with non-small cell lung cancer, the application of DLR improved image quality but did not necessarily lead to changes in T-category evaluation [37]. Similarly, in prostate imaging, while DLR enhanced image quality, it did not result in alterations to Prostate Imaging Reporting and Data System classification [38]. These findings underscore the importance of evaluating the clinical implications of DLR, rather than focusing solely on improvements in image quality that may not directly translate into clinical value.

Although the effectiveness of DLR in structural imaging has been widely reported, its effect on quantitative measurements, such as the apparent diffusion coefficient (ADC) and relaxation times, warrants further exploration. For example, one study found no substantial differences in prostate ADCs derived with and without DLR [39]. In contrast, another study reported significantly higher ADC values in both prostate tissue and lesions when comparing images reconstructed with DLR to those reconstructed with parallel imaging [40]. Additionally, the effectiveness of DLR has been demonstrated in non-structural imaging, such as diffusion metrics [41], relaxation times [42, 43], and neuromelanin imaging [44], with findings suggesting that DLR enables more aggressive undersampling. Given the diversity of DLR techniques and implementations, their impact on quantitative measurements remains a topic of significant interest.
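To make the ADC comparison concrete, the following is a minimal sketch of how an ADC map can be derived from two diffusion-weighted signals and how a relative difference between two reconstructions might be summarized. It assumes the standard mono-exponential model S(b) = S0 · exp(−b · ADC); the function names and the default b-values are illustrative assumptions and are not taken from the cited studies.

```python
import numpy as np

def adc_two_point(s_low, s_high, b_low=0.0, b_high=1000.0, eps=1e-12):
    """Voxel-wise ADC (mm^2/s) from two diffusion-weighted signals.

    Assumes the mono-exponential model S(b) = S0 * exp(-b * ADC) with
    b-values in s/mm^2. The default b-values are illustrative only.
    """
    s_low = np.asarray(s_low, dtype=float)
    s_high = np.asarray(s_high, dtype=float)
    # Clip to avoid log(0) or division by zero in background voxels.
    ratio = np.clip(s_low, eps, None) / np.clip(s_high, eps, None)
    return np.log(ratio) / (b_high - b_low)

def relative_difference_percent(adc_reference, adc_test):
    """Percent difference of the mean ADC in a region (test vs. reference)."""
    ref = float(np.mean(adc_reference))
    return 100.0 * (float(np.mean(adc_test)) - ref) / ref
```

In such a comparison, `adc_reference` would hold the ADC values within a region of interest from the conventional reconstruction and `adc_test` the values from the DLR images of the same region; a systematic nonzero difference would indicate that the reconstruction itself shifts the quantitative value.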

While the majority of the techniques discussed above rely on image-to-image CNNs trained with supervised learning, new developments are actively emerging, including physics-informed DLR approaches such as unrolled variational networks [45] and self-supervised learning methods [46]. Physics-informed DLR approaches leverage k-space information, providing higher acceleration and, theoretically, a lower risk of hallucination. Notably, some unrolled variational networks have been validated in patient cohorts, demonstrating promising results even at high undersampling rates, such as R = 12 [40, 47–49]. Additionally, self-supervised learning methods are gaining traction as powerful tools for image reconstruction [43, 46]. These techniques have demonstrated great technical potential, and further validation in clinical settings is underway.
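The idea of leveraging k-space information can be illustrated with a small sketch. The code below retrospectively undersamples a 2D image at a nominal acceleration factor R and applies a hard data-consistency step, in which measured k-space lines overwrite those of an image estimate. This is a simplified single-coil illustration under stated assumptions (Cartesian sampling, a hypothetical `center_lines` parameter, hypothetical function names); it is not the implementation of any cited method, and unrolled networks typically interleave such a step with learned regularization.

```python
import numpy as np

def to_kspace(image):
    """Centered 2D FFT: image -> k-space with the DC term in the middle."""
    return np.fft.fftshift(np.fft.fft2(image))

def to_image(kspace):
    """Inverse of to_kspace."""
    return np.fft.ifft2(np.fft.ifftshift(kspace))

def sampling_mask(n_lines, R, center_lines=8):
    """Boolean mask over phase-encode lines: every R-th line plus a
    fully sampled block around the k-space center (an assumption here)."""
    mask = np.zeros(n_lines, dtype=bool)
    mask[::R] = True
    c = n_lines // 2
    mask[c - center_lines // 2 : c + center_lines // 2] = True
    return mask

def data_consistency(estimate, kspace_measured, mask):
    """Replace the estimate's k-space lines with measured data where sampled."""
    k_est = to_kspace(estimate)
    k_dc = np.where(mask[:, None], kspace_measured, k_est)
    return to_image(k_dc)

# Example: nominal R = 4 on a synthetic 64 x 64 image.
rng = np.random.default_rng(0)
image = rng.random((64, 64))
mask = sampling_mask(64, R=4)
kspace_measured = to_kspace(image) * mask[:, None]
zero_filled = np.abs(to_image(kspace_measured))  # aliased baseline image
```

Because the central lines are fully sampled, the effective acceleration in this sketch is lower than the nominal R. The data-consistency step guarantees that whatever a network predicts, the output still agrees with the acquired samples, which is one intuition behind the lower hallucination risk mentioned above.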

Image postprocessing: segmentation and contrast synthesis

Segmentation

Image segmentation has been one of the most promising areas of AI in medical imaging. Deep learning models, particularly U-Net and its variants, have dramatically improved the speed and accuracy of MRI segmentation tasks. These AI-driven segmentation tools can automatically delineate organs, tumors, and other structures of interest, saving significant time for radiologists and improving consistency [50]. As segmentation precision and accuracy increase, the segmented volumes and volumes of interest can be subsequently used for downstream tasks such as classification and prognosis (e.g., longitudinal volume tracking for evaluating treatment response) [51].
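As a minimal sketch of how segmentation outputs feed such downstream tasks, the code below computes the volume of a binary mask from its voxel spacing and the Dice overlap between two masks. The Dice coefficient is a commonly used agreement metric and is introduced here as an illustrative assumption, not as the metric used by the cited studies; the function names are hypothetical.

```python
import numpy as np

def mask_volume_ml(mask, voxel_spacing_mm):
    """Volume of a binary mask in millilitres, given voxel spacing in mm."""
    voxel_mm3 = float(np.prod(voxel_spacing_mm))
    return np.count_nonzero(mask) * voxel_mm3 / 1000.0

def dice_coefficient(mask_a, mask_b):
    """Dice overlap between two binary masks (1.0 means identical)."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    denom = a.sum() + b.sum()
    if denom == 0:
        return 1.0  # both masks empty: treated as perfect agreement
    return 2.0 * np.logical_and(a, b).sum() / denom
```

For longitudinal tracking, `mask_volume_ml` would be applied to the segmentation at each time point, and the change in volume between examinations would serve as the response measure.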

One of the most successful applications of MRI segmentation is brain segmentation, due to the brain’s complex anatomy and functional importance. Brain segmentation software, such as FreeSurfer, has been widely used in neuroimaging and brain analyses [52]. However, these conventional pipelines involve computationally intensive, time-consuming optimization steps. To address this, deep learning-based neuroimaging pipelines for the automated segmentation of structural human brain MRI scans, including surface reconstruction and cortical parcellation, have been proposed [53, 54].

AI-based segmentation is not only useful for diagnosis but can also improve and optimize radiation treatment planning [55]. AI-driven segmentation and image analysis tools are streamlining the radiation therapy planning process by accurately delineating target volumes and organs at risk on MRI. These algorithms can automatically contour tumor volumes and critical structures with high accuracy, reducing the need for time-consuming manual delineation. For example, AI-based automatic pipelines have enabled precise lesion segmentation and subsequent outcome prediction using pretreatment MRI [56, 57].

Contrast synthesis

Contrast synthesis represents an exciting application of AI in MRI. Generating missing contrast-weighted images from existing sequences can allow for the retrieval of important contrast information, even after the exam has concluded, thereby improving workflow flexibility and potentially improving patient outcomes. For example, not all institutions routinely acquire MR angiography (MRA) in their protocols, and using AI to retrospectively generate MRA [58] or increase the spatial resolution of MRA [59] may be helpful in certain cases. Similarly, synthesizing fat-suppressed images in the spine [60] and in the breast [61, 62] has been demonstrated. Further, Liu et al. have demonstrated that synthesizing a wide range of contrast-weighted images from the available contrast-weighted images is feasible [63].

Reducing the intravenous dose of contrast agents is also being explored, using AI to boost the subtle T1-shortening effects of gadolinium-based contrast agents. This may make it possible to reduce the contrast dose while preserving image quality. For example, AI-enhanced MRI exams with 10% of the standard dose showed similar or improved image quality and contrast enhancement compared to the standard dose [64, 65].

The combination of MRI and AI offers the capability to synthesize not only a diverse range of MRI contrasts but also images from other modalities. PET imaging, for instance, offers powerful insights into metabolic processes, but it carries drawbacks such as high cost, the invasiveness of radioactive tracer administration, and limited availability. MRI, which is more accessible and non-invasive, can be combined with AI to infer specific PET images. For example, a study has shown that AI-based models can generate synthetic methionine PET images that strongly correlate with real PET images, demonstrating good performance for glioma grading and prognostication [66]. Applications to amyloid-beta (Aβ) PET imaging have also been explored. Vega et al. demonstrated that Aβ PET images can be synthesized from structural MRI with a high degree of similarity to the real PET images [67]. Additionally, Fujita et al. utilized MR fingerprinting [69] to synthesize Aβ PET images that showed significant correlation with Aβ-PET measurements and cognitive scores [68].

Clinical decision making: detection and classification

Detection

Detecting lesions on medical images is the first and most crucial step in diagnosing a wide range of medical conditions. Without the initial identification of these lesions, healthcare providers would be unable to diagnose or manage patient care effectively. For instance, the detection of an incidental, unruptured intracranial aneurysm on an MRI can guide patients towards appropriate monitoring or even preventive intervention.

One of the earliest reports on the development and validation of AI for detecting cerebral aneurysms in time-of-flight MRA in a clinical setting came from Japan. In a study by Ueda et al., a retrospective evaluation included 748 aneurysms for training, 649 for validation, and 80 for an external test dataset, demonstrating high sensitivity and improved aneurysm detection compared to initial reports [70]. Similarly, Nakao et al. demonstrated that combining a convolutional neural network with a maximum intensity projection algorithm is effective for detecting intracranial aneurysms [71]. This combination of convolutional neural network and maximum intensity projection has also been applied in dynamic contrast-enhanced (DCE) breast imaging. Adachi et al. demonstrated that their AI system showed higher performance in detecting lesions in maximum intensity projections of DCE breast MRI and improved the diagnostic performance of human readers [72].
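The maximum intensity projection used as a preprocessing step in these approaches can be sketched in a few lines. The code below collapses a 3D volume into 2D projections by taking the brightest voxel along each ray; the function names are hypothetical, and this is only an axis-aligned illustration rather than the projection scheme of any cited study.

```python
import numpy as np

def maximum_intensity_projection(volume, axis=0):
    """Project a 3D volume to 2D by keeping the maximum along one axis."""
    return np.max(np.asarray(volume), axis=axis)

def orthogonal_mips(volume):
    """Return the three axis-aligned MIPs of a 3D volume as a tuple."""
    return tuple(maximum_intensity_projection(volume, axis=a) for a in range(3))
```

Because bright vessels in time-of-flight MRA and enhancing lesions in DCE MRI dominate the projection, a 2D network applied to such images can see an entire vascular tree or breast in a single input, at the cost of losing depth information along the projection direction.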

While AI systems have demonstrated promising performance in the medical literature, several key aspects of their integration into the interpretation of MRI exams are yet to be fully evaluated. These include the timing of AI involvement (e.g., concurrent with the initial review, prescreening, second reader, or stand-alone), its impact on interpretation time, and the short- and long-term interaction between AI and human readers. A systematic investigation of these factors is essential to optimize the integration of AI into clinical practice, ensuring accurate and efficient image interpretation while maintaining patient safety and trust in the technology.

Classification

AI can potentially assist clinicians by providing metrics for classifying lesions, estimating patient survival, predicting treatment response [73, 74], or assessing the risk of disease recurrence. One of the earliest reports on using CNNs to classify diffuse liver disease from MRI came from Japan: a retrospective study of 634 patients with various stages of liver fibrosis, evaluated with gadoxetic acid-enhanced hepatobiliary phase magnetic resonance (MR) imaging, demonstrated that a deep convolutional neural network model has high diagnostic performance in the staging of liver fibrosis [75]. Although this study did not include an external dataset, it was a pioneering work in the use of CNNs for classification on MRI, which has since been applied to tasks such as breast lesion classification on DCE MRI [76, 77] and the discrimination of soft tissue tumors [78].

From a clinical workflow perspective, classification and stratification are considered more downstream tasks compared to lesion detection. These tasks are closer to the decision-making and management phases of patient care, where the outcomes directly influence treatment plans and patient prognosis. Because the results of classification and stratification have a more immediate impact on patient outcomes, integrating AI systems into these stages of clinical practice requires greater caution and thorough validation.

Assisting in reporting and communication

Large language models (LLMs) are transforming radiology reporting by assisting with and generating reports [79]. AI-powered reporting systems based on LLMs can automatically generate preliminary radiology reports from MRI findings. These systems can ensure consistent terminology, include all relevant measurements and comparisons, and even flag critical findings for immediate attention. Chat Generative Pre-trained Transformer (ChatGPT) achieved a diagnostic accuracy rate of 50% in neuroradiology cases [80] and 94% in brain tumor cases [81]. However, these studies were based on text inputs (i.e., the image findings were described by humans), and the performance is expected to depend on how the image findings were described by the human readers. A recent study has further evaluated multimodal LLMs that take the images themselves as inputs [82]. Current limitations of LLMs in radiology applications include hallucinations, knowledge cutoff dates, poor complex reasoning, and a tendency to perpetuate bias [79]. LLMs are affecting radiology dramatically; for further information, we refer readers to review articles dedicated to LLMs in radiology, such as [79].

Japan’s contributions, core strengths, and future role

Japan’s contribution in MRI research

Japan has made significant contributions to MRI research, leveraging its technological prowess and unique healthcare landscape. The Japanese Society for Magnetic Resonance in Medicine (JSMRM) is a non-profit society established in 1986, with more than 3600 members in the fields of clinical medicine, basic medicine, physics, chemistry, biology, and engineering [83]. JSMRM is one of the oldest and largest national societies in the field of magnetic resonance in medicine worldwide.

Notable recent contributions of JSMRM in clinical MRI include topics such as diffusion MRI [84, 85], quantitative MRI [51, 86], and the glymphatic system [87–89]. It is noteworthy that many key studies on the glymphatic system have been reported from Japan, such as the in vivo observation of gadolinium-based contrast agent leakage and its mechanism of deposition and accumulation in the brain [87]. A practical tool for obtaining a surrogate metric to evaluate the glymphatic system, called diffusion tensor image analysis along the perivascular space (DTI-ALPS), has also been proposed from Japan [88]. This technique has shown associations between MRI parameters and amyloid β deposition, neuronal changes, and cognitive impairment in Alzheimer's disease [89].
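For readers unfamiliar with DTI-ALPS, the index is commonly described in the literature as a ratio of diffusivities measured in regions of projection and association fibers at the level of the lateral ventricle body. The sketch below follows that common description and should be read as an assumption, since the formula is not given in this review; the function and argument names are hypothetical.

```python
def alps_index(dxx_proj, dxx_assoc, dyy_proj, dzz_assoc):
    """ALPS index as commonly described (an assumption, not from this review).

    x is the right-left direction, taken as the direction of the perivascular
    space; dyy_proj and dzz_assoc are the diffusivities perpendicular to both
    the perivascular space and the main fiber direction in each region.
    """
    numerator = (dxx_proj + dxx_assoc) / 2.0
    denominator = (dyy_proj + dzz_assoc) / 2.0
    return numerator / denominator
```

A value close to 1 would indicate little preferential diffusion along the perivascular direction, whereas larger values would indicate relatively greater diffusivity along it.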

The JSMRM includes members from industry, reflecting the country's strong tradition in electronics and imaging technology. Combined with its advanced healthcare system, this has fostered strong academic-industry collaborations [90–98].

Japan’s core strengths and its potential impact

Japan's distinct landscape in MRI examinations creates a uniquely advantageous environment for the development and implementation of AI technologies (Fig. 1). With the highest per capita number of MRI installations worldwide [4, 5], sourced from various vendors, Japan has the capacity to rapidly accumulate large MRI datasets. Additionally, Japan’s national health insurance system is one of the few globally that provides universal access to CT and MRI scans [8], allowing for the collection of imaging data across all demographics, irrespective of age or socioeconomic status. This inclusivity is crucial in minimizing bias during AI development for MRI [99]. Japanese MRI technologists, known for their exceptional proficiency, consistently produce high-quality images, leading to the generation of extensive, quality-controlled MRI datasets. This convergence of widespread MRI accessibility, diverse patient representation, and technical expertise forms an ideal foundation for advancing AI applications in MRI.

These extensive MRI data are systematically collected and organized into well-structured medical imaging datasets. A significant initiative in this area is the J-MID project [9]. Established in 2018 with the backing of the Japan Agency for Medical Research and Development, J-MID functions as a cloud-based national database infrastructure dedicated to diagnostic imaging. It provides researchers with access to a substantial repository of high-quality imaging data, which is critical for developing and validating robust AI algorithms in medical imaging. Currently, this comprehensive database contains over 500 million images, including CT and MRI scans, as well as diagnostic reports from both national and private university hospitals across Japan.

In addition to scans covered by national insurance, Japan offers a distinctive health screening program [7]. This elective program allows individuals to undergo additional tests, including MRI, beyond the standard scans provided by insurance. Notably, many participants in the program undergo multiple annual screenings, resulting in the generation of valuable longitudinal data [100]. Unlike many other research databases, this health screening program database includes a substantial number of healthy individuals [101], including young adults. This unique aspect allows researchers to explore the early stages of disease development and assess the potential for preventive interventions. The predominance of healthy participants also reduces the confounding effects of pre-existing conditions, making it easier to isolate specific factors influencing health outcomes. The combination of longitudinal data and the unique characteristics of the program participants makes this database an invaluable resource for advancing medical knowledge and enhancing healthcare practices. These elements together create an optimal environment for developing and implementing AI solutions in clinical MRI.

Future research and development directions

Japan’s aging population presents unique opportunities for the application of AI in MRI, especially when combined with the country’s core strengths. While increasing the number of physicians could address healthcare shortages [102], Japan is exploring alternative solutions that leverage its technological expertise and data integration capabilities without expanding the physician workforce.

Centralizing available AI-based tools will enhance their accessibility by allowing users to easily identify and utilize existing options. To support this goal, the Japanese Radiological Society has established guidelines for medical image AI, requiring vendors to be certified and registered in their database. In Japan, hospitals are reimbursed for using AI-powered diagnostic support software, provided they implement appropriate safety management based on guidelines established by relevant academic societies [103, 104]. Additionally, creating guidelines for AI integration will ensure its seamless incorporation into clinical practice.

Developing standardized, scalable AI applications necessitates datasets from various types of scanners. The presence of multi-vendor equipment within Japanese facilities facilitates the evaluation of AI’s generalizability across different platforms [105]. Japan is uniquely positioned to foster collaboration across diverse platforms, enabling the development of AI solutions that are compatible with a wide range of systems.

Furthermore, Japan’s universal healthcare system enables the rapid collection of high-quality data across the entire population, including extensive multimodal datasets from healthy individuals. These datasets are crucial for training AI models that are both accurate and unbiased. By capitalizing on these strengths, Japan can address the high medical demand and physician shortages, positioning itself at the forefront of global MRI-AI innovation.

Conclusion

Japan, with its technological expertise and unique healthcare system, is well positioned to be a global leader in the MRI–AI domain. The collaborative efforts of researchers, clinicians, and technology experts worldwide, with significant contributions from Japan, will shape the future of AI in clinical MRI, ultimately leading to improved patient outcomes and more efficient healthcare systems.

Acknowledgements

We would like to extend our gratitude to Claude and ChatGPT, AI language models, for their assistance in checking grammar and spelling in this article. The validity of all text was thoroughly confirmed by the authors.

Declarations

Conflict of interest

Yusuke Matsui received a grant and lecturer fee from Canon Medical Systems outside the submitted work. Kenji Hirata received a grant from GE HealthCare Japan outside the submitted work. The other authors declare that they have no conflicts of interest.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Hosny A, Parmar C, Quackenbush J, Schwartz LH, Aerts HJWL. Artificial intelligence in radiology. Nat Rev Cancer. 2018;18:500–10.

- 2. Shimron E, Perlman O. AI in MRI: computational frameworks for a faster, optimized, and automated imaging workflow. Bioengineering (Basel). 2023. 10.3390/bioengineering10040492.

- 3. McRobbie DW. MRI from picture to proton. Cambridge: Cambridge University Press; 2003.

- 4. Matsumoto M, Koike S, Kashima S, Awai K. Geographic distribution of CT, MRI and PET devices in Japan: a longitudinal analysis based on national census data. PLoS One. 2015;10:e0126036.

- 5. OECD temporary archive. [cited 2024 Aug 19]. Available from: https://web-archive.oecd.org/temp/2024-02-21/78817-health-data.htm

- 6. About JSRT. [cited 2024 Aug 20]. Available from: https://www.jsrt.or.jp/data/english/jsrt/

- 7. Lu J. Ningen Dock: Japan’s unique comprehensive health checkup system for early detection of disease. Glob Health Med. 2022;4:9–13.

- 8. Ikegami N. Universal health coverage for inclusive and sustainable development: lessons from Japan. World Bank Publications; 2014.

- 9. Kakihara D, Nishie A, Machitori A, Honda H. The Japan medical imaging database (J-MID). In: Nakashima N, editor. Epidemiologic research on real-world medical data in Japan, vol. 1. Singapore: Springer Nature Singapore; 2022. p. 87–93.

- 10. Yamada A, Kamagata K, Hirata K, Ito R, Nakaura T, Ueda D, et al. Clinical applications of artificial intelligence in liver imaging. Radiol Med. 2023;128:655–67.

- 11. Fujima N, Kamagata K, Ueda D, Fujita S, Fushimi Y, Yanagawa M, et al. Current state of artificial intelligence in clinical applications for head and neck MR imaging. Magn Reson Med Sci. 2023;22:401–14.

- 12. Yanagawa M, Ito R, Nozaki T, Fujioka T, Yamada A, Fujita S, et al. New trend in artificial intelligence-based assistive technology for thoracic imaging. Radiol Med. 2023;128:1236–49.

- 13. Tsuboyama T, Yanagawa M, Fujioka T, Fujita S, Ueda D, Ito R, et al. Recent trends in AI applications for pelvic MRI: a comprehensive review. Radiol Med. 2024. 10.1007/s11547-024-01861-4.

- 14. Nakaura T, Kobayashi N, Yoshida N, Shiraishi K, Uetani H, Nagayama Y, et al. Update on the use of artificial intelligence in hepatobiliary MR imaging. Magn Reson Med Sci. 2023;22:147–56.

- 15. Barat M, Pellat A, Hoeffel C, Dohan A, Coriat R, Fishman EK, et al. CT and MRI of abdominal cancers: current trends and perspectives in the era of radiomics and artificial intelligence. Jpn J Radiol. 2024;42:246–60.

- 16. Hirata K, Kamagata K, Ueda D, Yanagawa M, Kawamura M, Nakaura T, et al. From FDG and beyond: the evolving potential of nuclear medicine. Ann Nucl Med. 2023;37:583–95.

- 17. Tatsugami F, Nakaura T, Yanagawa M, Fujita S, Kamagata K, Ito R, et al. Recent advances in artificial intelligence for cardiac CT: enhancing diagnosis and prognosis prediction. Diagn Interv Imaging. 2023;104:521–8.

- 18. Pooley RA. AAPM/RSNA physics tutorial for residents: fundamental physics of MR imaging. Radiographics. 2005;25:1087–99.

- 19. Denck J, Haas O, Guehring J, Maier A, Rothgang E. Automated protocoling for MRI exams-challenges and solutions. J Digit Imaging. 2022;35:1293–302.

- 20. Hoinkiss DC, Huber J, Plump C, Lüth C, Drechsler R, Günther M. AI-driven and automated MRI sequence optimization in scanner-independent MRI sequences formulated by a domain-specific language. Front Neuroimaging. 2023;2:1090054.

- 21. Eghbali N, Siegal D, Klochko C, Ghassemi MM. Automation of protocoling advanced MSK examinations using natural language processing techniques. AMIA Jt Summits Transl Sci Proc. 2023;2023:118–27.

- 22. Shin D, Kim Y, Oh C, An H, Park J, Kim J, et al. Deep reinforcement learning-designed radiofrequency waveform in MRI. Nat Mach Intell. 2021;3:985–94.

- 23. Alkan C, Mardani M, Liao C, Li Z, Vasanawala SS, Pauly JM. AutoSamp: autoencoding k-space sampling via variational information maximization for 3D MRI. IEEE Trans Med Imaging. 2024. 10.1109/TMI.2024.3443292.

- 24. Knoll F, Hammernik K, Zhang C, Moeller S, Pock T, Sodickson DK, et al. Deep-learning methods for parallel magnetic resonance imaging reconstruction: a survey of the current approaches, trends, and issues. IEEE Signal Process Mag. 2020;37:128–40.

- 25. Kiryu S, Akai H, Yasaka K, Tajima T, Kunimatsu A, Yoshioka N, et al. Clinical impact of deep learning reconstruction in MRI. Radiographics. 2023;43:e220133.

- 26. Iwamura M, Ide S, Sato K, Kakuta A, Tatsuo S, Nozaki A, et al. Thin-slice two-dimensional T2-weighted imaging with deep learning-based reconstruction: improved lesion detection in the brain of patients with multiple sclerosis. Magn Reson Med Sci. 2024;23:184–92.

- 27. Tajima T, Akai H, Yasaka K, Kunimatsu A, Yamashita Y, Akahane M, et al. Usefulness of deep learning-based noise reduction for 1.5 T MRI brain images. Clin Radiol. 2023;78:e13–21.

- 28. Yasaka K, Akai H, Sugawara H, Tajima T, Akahane M, Yoshioka N, et al. Impact of deep learning reconstruction on intracranial 1.5 T magnetic resonance angiography. Jpn J Radiol. 2022;40:476–83.

- 29. Yasaka K, Tanishima T, Ohtake Y, Tajima T, Akai H, Ohtomo K, et al. Deep learning reconstruction for 1.5 T cervical spine MRI: effect on interobserver agreement in the evaluation of degenerative changes. Eur Radiol. 2022;32:6118–25.

- 30. Kiso K, Tsuboyama T, Onishi H, Ogawa K, Nakamoto A, Tatsumi M, et al. Effect of deep learning reconstruction on respiratory-triggered T2-weighted MR imaging of the liver: a comparison between the single-shot fast spin-echo and fast spin-echo sequences. Magn Reson Med Sci. 2024;23:214–24.

- 31. Tamada D, Kromrey M-L, Ichikawa S, Onishi H, Motosugi U. Motion artifact reduction using a convolutional neural network for dynamic contrast enhanced MR imaging of the liver. Magn Reson Med Sci. 2020;19:64–76.

- 32. Tajima T, Akai H, Yasaka K, Kunimatsu A, Akahane M, Yoshioka N, et al. Clinical feasibility of an abdominal thin-slice breath-hold single-shot fast spin echo sequence processed using a deep learning-based noise-reduction approach. Magn Reson Imaging. 2022;90:76–83.

- 33. Tajima T, Akai H, Sugawara H, Yasaka K, Kunimatsu A, Yoshioka N, et al. Breath-hold 3D magnetic resonance cholangiopancreatography at 1.5 T using a deep learning-based noise-reduction approach: comparison with the conventional respiratory-triggered technique. Eur J Radiol. 2021;144:109994.

- 34. Tajima T, Akai H, Sugawara H, Furuta T, Yasaka K, Kunimatsu A, et al. Feasibility of accelerated whole-body diffusion-weighted imaging using a deep learning-based noise-reduction technique in patients with prostate cancer. Magn Reson Imaging. 2022;92:169–79.

- 35.Akai H, Yasaka K, Sugawara H, Tajima T, Akahane M, Yoshioka N, et al. Commercially available deep-learning-reconstruction of MR imaging of the Knee at 1.5T has higher image quality than conventionally-reconstructed imaging at 3T: a normal volunteer study. Magn Reson Med Sci. 2023;22:353–60. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Rosen RD, Sapra A. TNM classification. StatPearls Publishing; 2023. [PubMed] [Google Scholar]

- 37.Takenaka D, Ozawa Y, Yamamoto K, Shinohara M, Ikedo M, Yui M, et al. Deep learning reconstruction to improve the quality of mr imaging: evaluating the best sequence for T-category assessment in non-small cell lung cancer patients. Magn Reson Med Sci. 2023. 10.2463/mrms.mp.2023-0068. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Bischoff LM, Peeters JM, Weinhold L, Krausewitz P, Ellinger J, Katemann C, et al. Deep learning super-resolution reconstruction for fast and motion-robust T2-weighted prostate MRI. Radiology. 2023;308: e230427. [DOI] [PubMed] [Google Scholar]

- 39.Ueda T, Ohno Y, Yamamoto K, Murayama K, Ikedo M, Yui M, et al. Deep learning reconstruction of diffusion-weighted MRI improves image quality for prostatic imaging. Radiology. 2022;303:373–81. [DOI] [PubMed] [Google Scholar]

- 40.Nishioka N, Fujima N, Tsuneta S, Yoshikawa M, Kimura R, Sakamoto K, et al. Enhancing the image quality of prostate diffusion-weighted imaging in patients with prostate cancer through model-based deep learning reconstruction. Eur J Radiol Open. 2024;13: 100588. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Sagawa H, Fushimi Y, Nakajima S, Fujimoto K, Miyake KK, Numamoto H, et al. Deep learning-based noise reduction for fast volume diffusion tensor imaging: assessing the noise reduction effect and reliability of diffusion metrics. Magn Reson Med Sci. 2021;20:450–6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Jun Y, Cho J, Wang X, Gee M, Grant PE, Bilgic B, et al. SSL-QALAS: Self-supervised learning for rapid multiparameter estimation in quantitative MRI using 3D-QALAS. arXiv [eess IV]. 2023. 10.1002/mrm.29786. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Jun Y, Arefeen Y, Cho J, Fujita S, Wang X, Grant PE, et al. Zero-DeepSub: Zero-shot deep subspace reconstruction for rapid multiparametric quantitative MRI using 3D-QALAS. arXiv [eessIV]. 2023. 10.1002/mrm.30018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Oshima S, Fushimi Y, Miyake KK, Nakajima S, Sakata A, Okuchi S, et al. Denoising approach with deep learning-based reconstruction for neuromelanin-sensitive MRI: image quality and diagnostic performance. Jpn J Radiol. 2023;41:1216–25. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Funayama S, Motosugi U, Ichikawa S, Morisaka H, Omiya Y, Onishi H. Model-based deep learning reconstruction using a folded image training strategy for abdominal 3D T1-weighted imaging. Magn Reson Med Sci. 2023;22:515–26. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Yaman B, Hosseini SAH, Moeller S, Ellermann J, Uğurbil K, Akçakaya M. Self-supervised physics-based deep learning MRI reconstruction without fully-sampled data. 2020 IEEE 17th International Symposium on Biomedical Imaging (ISBI). IEEE; 2020. p. 921–5

- 47.Hirano Y, Fujima N, Kameda H, Ishizaka K, Kwon J, Yoneyama M, et al. High resolution TOF-MRA using compressed sensing-based deep learning image reconstruction for the visualization of lenticulostriate arteries: a preliminary study. Magn Reson Med Sci. 2024. 10.2463/mrms.mp.2024-0025. [DOI] [PubMed] [Google Scholar]

- 48.Fujima N, Nakagawa J, Kameda H, Ikebe Y, Harada T, Shimizu Y, et al. Improvement of image quality in diffusion-weighted imaging with model-based deep learning reconstruction for evaluations of the head and neck. MAGMA. 2024;37:439–47. [DOI] [PubMed] [Google Scholar]

- 49.Fujima N, Nakagawa J, Ikebe Y, Kameda H, Harada T, Shimizu Y, et al. Improved image quality in contrast-enhanced 3D–T1 weighted sequence by compressed sensing-based deep-learning reconstruction for the evaluation of head and neck. Magn Reson Imaging. 2024;108:111–5. [DOI] [PubMed] [Google Scholar]

- 50.Ma J, He Y, Li F, Han L, You C, Wang B. Segment anything in medical images. Nat Commun. 2024;15:654. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Hagiwara A, Fujita S, Kurokawa R, Andica C, Kamagata K, Aoki S. Multiparametric MRI: from simultaneous rapid acquisition methods and analysis techniques using scoring, machine learning, radiomics, and deep learning to the generation of novel metrics. Invest Radiol. 2023. 10.1097/RLI.0000000000000962. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Fischl B. FreeSurfer. Neuroimage. 2012;62:774–81. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Henschel L, Conjeti S, Estrada S, Diers K, Fischl B, Reuter M. FastSurfer—a fast and accurate deep learning based neuroimaging pipeline. Neuroimage. 2020;219: 117012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.Billot B, Greve DN, Puonti O, Thielscher A, Van Leemput K, Fischl B, et al. SynthSeg: segmentation of brain MRI scans of any contrast and resolution without retraining. Med Image Anal. 2023;86: 102789. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.Kawamura M, Kamomae T, Yanagawa M, Kamagata K, Fujita S, Ueda D, et al. Revolutionizing radiation therapy: the role of AI in clinical practice. J Radiat Res. 2024;65:1–9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Li L, Xu B, Zhuang Z, Li J, Hu Y, Yang H, et al. Accurate tumor segmentation and treatment outcome prediction with DeepTOP. Radiother Oncol. 2023;183: 109550. [DOI] [PubMed] [Google Scholar]

- 57.Fang W, Mao Y, Wang H, Sugimori H, Kiuch S, Sutherland K, et al. Fully automatic quantification for hand synovitis in rheumatoid arthritis using pixel-classification-based segmentation network in DCE-MRI. Jpn J Radiol. 2024. 10.1007/s11604-024-01592-6. [DOI] [PubMed] [Google Scholar]

- 58.Fujita S, Hagiwara A, Otsuka Y, Hori M, Takei N, Hwang K-P, et al. Deep learning approach for generating MRA images from 3D quantitative synthetic MRI without additional scans. Invest Radiol. 2020;55:249–56. [DOI] [PubMed] [Google Scholar]

- 59.Wicaksono KP, Fujimoto K, Fushimi Y, Sakata A, Okuchi S, Hinoda T, et al. Super-resolution application of generative adversarial network on brain time-of-flight MR angiography: image quality and diagnostic utility evaluation. Eur Radiol. 2023;33:936–46. [DOI] [PubMed] [Google Scholar]

- 60.Tanenbaum LN, Bash SC, Zaharchuk G, Shankaranarayanan A, Chamberlain R, Wintermark M, et al. Deep learning-generated synthetic MR imaging STIR spine images are superior in image quality and diagnostically equivalent to conventional STIR: a multicentre, Multireader Trial. AJNR Am J Neuroradiol. 2023;44:987–93. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61.Fujioka T, Mori M, Oyama J, Kubota K, Yamaga E, Yashima Y, et al. Investigating the image quality and utility of synthetic MRI in the breast. Magn Reson Med Sci. 2021;20:431–8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Mori M, Fujioka T, Katsuta L, Kikuchi Y, Oda G, Nakagawa T, et al. Feasibility of new fat suppression for breast MRI using pix2pix. Jpn J Radiol. 2020;38:1075–81. [DOI] [PubMed] [Google Scholar]

- 63.Liu J, Pasumarthi S, Duffy B, Gong E, Datta K, Zaharchuk G. One model to synthesize them all: multi-contrast multi-scale transformer for missing data imputation. IEEE Trans Med Imaging. 2023;42:2577–91. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Pasumarthi S, Tamir JI, Christensen S, Zaharchuk G, Zhang T, Gong E. A generic deep learning model for reduced gadolinium dose in contrast-enhanced brain MRI. Magn Reson Med. 2021;86:1687–700. [DOI] [PubMed] [Google Scholar]

- 65.Gong E, Pauly JM, Wintermark M, Zaharchuk G. Deep learning enables reduced gadolinium dose for contrast-enhanced brain MRI. J Magn Reson Imaging. 2018;48:330–40. [DOI] [PubMed] [Google Scholar]

- 66.Takita H, Matsumoto T, Tatekawa H, Katayama Y, Nakajo K, Uda T, et al. AI-based virtual synthesis of methionine PET from contrast-enhanced MRI: development and external validation study. Radiology. 2023;308: e223016. [DOI] [PubMed] [Google Scholar]

- 67.Vega F, Addeh A, Ganesh A, Smith EE, MacDonald ME. Image translation for estimating two-dimensional axial amyloid-beta PET from structural MRI. J Magn Reson Imaging. 2024;59:1021–31. [DOI] [PubMed] [Google Scholar]

- 68.Fujita S, Otsuka Y, Murata K, Koerzdoerfer G, Nittka M, Motoi Y, et al. MR fingerprinting and complex-valued neural network for quantification of brain amyloid burden. Available from: https://archive.ismrm.org/2022/0559.html

- 69.Ma D, Gulani V, Seiberlich N, Liu K, Sunshine JL, Duerk JL, et al. Magnetic resonance fingerprinting. Nature. 2013;495:187–92. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 70.Ueda D, Yamamoto A, Nishimori M, Shimono T, Doishita S, Shimazaki A, et al. Deep learning for MR angiography: automated detection of cerebral aneurysms. Radiology. 2019;290:187–94. [DOI] [PubMed] [Google Scholar]

- 71.Nakao T, Hanaoka S, Nomura Y, Sato I, Nemoto M, Miki S, et al. Deep neural network-based computer-assisted detection of cerebral aneurysms in MR angiography. J Magn Reson Imaging. 2018;47:948–53. [DOI] [PubMed] [Google Scholar]

- 72.Adachi M, Fujioka T, Mori M, Kubota K, Kikuchi Y, Xiaotong W, et al. Detection and diagnosis of breast cancer using artificial intelligence based assessment of maximum intensity projection dynamic contrast-enhanced magnetic resonance images. Diagnostics (Basel). 2020. 10.3390/diagnostics10050330. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 73.Yardimci AH, Kocak B, Sel I, Bulut H, Bektas CT, Cin M, et al. Radiomics of locally advanced rectal cancer: machine learning-based prediction of response to neoadjuvant chemoradiotherapy using pre-treatment sagittal T2-weighted MRI. Jpn J Radiol. 2023;41:71–82. [DOI] [PubMed] [Google Scholar]

- 74.Zhu W, Xu Y, Zhu H, Qiu B, Zhan M, Wang H. Multi-parametric MRI radiomics for predicting response to neoadjuvant therapy in patients with locally advanced rectal cancer. Jpn J Radiol. 2024. 10.1007/s11604-024-01630-3. [DOI] [PubMed] [Google Scholar]

- 75.Yasaka K, Akai H, Kunimatsu A, Abe O, Kiryu S. Liver fibrosis: deep convolutional neural network for staging by using gadoxetic acid-enhanced hepatobiliary phase MR images. Radiology. 2018;287:146–55. [DOI] [PubMed] [Google Scholar]

- 76.Fujioka T, Yashima Y, Oyama J, Mori M, Kubota K, Katsuta L, et al. Deep-learning approach with convolutional neural network for classification of maximum intensity projections of dynamic contrast-enhanced breast magnetic resonance imaging. Magn Reson Imaging. 2021;75:1–8. [DOI] [PubMed] [Google Scholar]

- 77.Goto M, Sakai K, Toyama Y, Nakai Y, Yamada K. Use of a deep learning algorithm for non-mass enhancement on breast MRI: comparison with radiologists’ interpretations at various levels. Jpn J Radiol. 2023;41:1094–103. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 78.Cay N, Mendi BAR, Batur H, Erdogan F. Discrimination of lipoma from atypical lipomatous tumor/well-differentiated liposarcoma using magnetic resonance imaging radiomics combined with machine learning. Jpn J Radiol. 2022;40:951–60. [DOI] [PubMed] [Google Scholar]

- 79.Bhayana R. Chatbots and large language models in radiology: a practical primer for clinical and research applications. Radiology. 2024;310: e232756. [DOI] [PubMed] [Google Scholar]

- 80.Horiuchi D, Tatekawa H, Shimono T, Walston SL, Takita H, Matsushita S, et al. Accuracy of ChatGPT generated diagnosis from patient’s medical history and imaging findings in neuroradiology cases. Neuroradiology. 2024;66:73–9. [DOI] [PubMed] [Google Scholar]

- 81.Mitsuyama Y, Tatekawa H, Takita H, Sasaki F, Tashiro A, Oue S, et al. Comparative analysis of GPT-4-based ChatGPT’s diagnostic performance with radiologists using real-world radiology reports of brain tumors. Eur Radiol. 2024. 10.1007/s00330-024-11032-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 82.Horiuchi D, Tatekawa H, Oura T, Shimono T, Walston SL, Takita H, et al. Comparison of the diagnostic accuracy among GPT-4 based ChatGPT, GPT-4V based ChatGPT, and radiologists in musculoskeletal radiology. medRxiv. 2023. 10.1101/2023.12.07.23299707v1.abstract.37425910 [Google Scholar]

- 83.Aoki S. In commemoration of the 20th anniversary of MRMS. Magn Reson Med Sci. 2022;21:7–8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 84.Iima M, Le Bihan D, Okumura R, Okada T, Fujimoto K, Kanao S, et al. Apparent diffusion coefficient as an MR imaging biomarker of low-risk ductal carcinoma in situ: a pilot study. Radiology. 2011;260:364–72. [DOI] [PubMed] [Google Scholar]

- 85.Iima M, Kataoka M, Kanao S, Onishi N, Kawai M, Ohashi A, et al. Intravoxel incoherent motion and quantitative non-gaussian diffusion MR imaging: evaluation of the diagnostic and prognostic value of several markers of malignant and benign breast lesions. Radiology. 2018;287:432–41. [DOI] [PubMed] [Google Scholar]

- 86.Fushimi Y, Nakajima S, Sakata A, Okuchi S, Otani S, Nakamoto Y. Value of quantitative susceptibility mapping in clinical neuroradiology. J Magn Reson Imaging. 2024;59:1914–29. [DOI] [PubMed] [Google Scholar]

- 87.Naganawa S, Taoka T, Ito R, Kawamura M. The glymphatic system in humans: investigations with magnetic resonance imaging. Invest Radiol. 2024;59:1–12. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 88.Taoka T, Masutani Y, Kawai H, Nakane T, Matsuoka K, Yasuno F, et al. Evaluation of glymphatic system activity with the diffusion MR technique: diffusion tensor image analysis along the perivascular space (DTI-ALPS) in Alzheimer’s disease cases. Jpn J Radiol. 2017;35:172–8. [DOI] [PubMed] [Google Scholar]

- 89.Kamagata K, Saito Y, Andica C, Uchida W, Takabayashi K, Yoshida S, et al. Noninvasive magnetic resonance imaging measures of glymphatic system activity. J Magn Reson Imaging. 2024;59:1476–93. [DOI] [PubMed] [Google Scholar]

- 90.Okuchi S, Okada T, Fujimoto K, Fushimi Y, Kido A, Yamamoto A, et al. Visualization of lenticulostriate arteries at 3T: optimization of slice-selective off-resonance sinc pulse-prepared TOF-MRA and its comparison with flow-sensitive black-blood MRA. Acad Radiol. 2014;21:812–6. [DOI] [PubMed] [Google Scholar]

- 91.Mehemed TM, Fushimi Y, Okada T, Yamamoto A, Kanagaki M, Kido A, et al. Dynamic oxygen-enhanced MRI of cerebrospinal fluid. PLoS One. 2014;9: e100723. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 92.Funaki T, Fushimi Y, Takahashi JC, Takagi Y, Araki Y, Yoshida K, et al. Visualization of periventricular collaterals in moyamoya disease with flow-sensitive black-blood magnetic resonance angiography: preliminary experience. Neurol Med Chir. 2015;55:204–9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 93.Hinoda T, Fushimi Y, Okada T, Fujimoto K, Liu C, Yamamoto A, et al. Quantitative susceptibility mapping at 3 T and 1.5 T: evaluation of consistency and reproducibility. Invest Radiol. 2015;50:522–30. [DOI] [PubMed] [Google Scholar]

- 94.Mehemed TM, Fushimi Y, Okada T, Kanagaki M, Yamamoto A, Okada T, et al. MR imaging of the pituitary gland and postsphenoid ossification in fetal specimens. AJNR Am J Neuroradiol. 2016;37:1523–7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 95.Sakata A, Fushimi Y, Okada T, Arakawa Y, Kunieda T, Minamiguchi S, et al. Diagnostic performance between contrast enhancement, proton MR spectroscopy, and amide proton transfer imaging in patients with brain tumors. J Magn Reson Imaging. 2017;46:732–9. [DOI] [PubMed] [Google Scholar]

- 96.Kanazawa Y, Fushimi Y, Sakashita N, Okada T, Arakawa Y, Miyazaki M. B1 power optimization for chemical exchange saturation transfer imaging: a phantom study using egg white for amide proton transfer imaging applications in the human brain. Magn Reson Med Sci. 2018;17:86–94. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 97.Ito S, Okuchi S, Fushimi Y, Otani S, Wicaksono KP, Sakata A, et al. Thin-slice reverse encoding distortion correction DWI facilitates visualization of non-functioning pituitary neuroendocrine tumor (PitNET)/pituitary adenoma and surrounding normal structures. Eur Radiol Exp. 2024;8:28. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 98.Fujita S, Hagiwara A, Kimura K, Taniguchi Y, Ito K, Nagao H, et al. Three-dimensional simultaneous T1 and T2* relaxation times and quantitative susceptibility mapping at 3 T: a multicenter validation study. Magn Reson Imaging. 2024;112:100–6. [DOI] [PubMed] [Google Scholar]

- 99.Ueda D, Kakinuma T, Fujita S, Kamagata K, Fushimi Y, Ito R, et al. Fairness of artificial intelligence in healthcare: review and recommendations. Jpn J Radiol. 2024;42:3–15. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 100.Fujita S, Mori S, Onda K, Hanaoka S, Nomura Y, Nakao T, et al. Characterization of brain volume changes in aging individuals with normal cognition using serial magnetic resonance imaging. JAMA Netw Open. 2023;6: e2318153. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 101.Mori S, Onda K, Fujita S, Suzuki T, Ikeda M, Zay Yar Myint K, et al. Brain atrophy in middle age using magnetic resonance imaging scans from Japan’s health screening programme. Brain Commun. 2022;4: fcac211. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 102.The Lancet Regional Health – Western Pacific. Junior doctor strikes in South Korea: more doctors are needed? The Lancet Regional Health - Western Pacific. 2024;44:101056. [DOI] [PMC free article] [PubMed]

- 103.Lobig F, Subramanian D, Blankenburg M, Sharma A, Variyar A, Butler O. To pay or not to pay for artificial intelligence applications in radiology. NPJ Digit Med. 2023;6:117. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 104.Tamura M, Nakano S, Sugahara T. Reimbursement pricing for new medical devices in Japan: Is the evaluation of innovation appropriate? Int J Health Plann Manage. 2019;34:583–93. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 105.Hagiwara A, Fujita S, Ohno Y, Aoki S. Variability and standardization of quantitative imaging: monoparametric to multiparametric quantification, radiomics, and artificial intelligence. Invest Radiol. 2020;55:601–16. [DOI] [PMC free article] [PubMed] [Google Scholar]