Abstract

As Moore’s Law comes to an end, the implementation of high-performance chips through transistor scaling has become increasingly challenging. To improve performance, increasing the chip area to integrate more transistors has become an essential approach. However, due to restrictions such as the maximum reticle area, cost, and manufacturing yield, the chip’s area cannot be continuously increased, and it encounters what is known as the “area-wall”. In this paper, we provide a detailed analysis of the area-wall and propose a practical solution, the Big Chip, as a novel chip form to continuously improve performance. We introduce a performance model for evaluating Big Chip and discuss its architecture. Finally, we derive the future development trends of the Big Chip.

Keywords: Big Chip, Integrated chips, Area-wall, Chiplet, Performance model

1. Introduction

The utilization of cloud and edge platforms is experiencing rapid growth, driven by the rising popularity of deep neural networks (DNNs) and scientific computing [1], [2]. The computility necessary for conducting the most extensive AI training runs has been expanding exponentially, with a doubling time of 3.4 months. This metric has increased by over 300,000 times since 2012. Nevertheless, the considerable computational intensity of these algorithms remains a significant obstacle to their practical deployment. Consequently, there is a growing need for increased chip performance to meet the demand for higher computility. The performance of the chip is related to the following three factors:

| $\text{Performance} = D \times A \times E$ | (1) |

D represents the transistor density, which is generally associated with manufacturing processes and device mechanism. A represents the chip’s area, related to integration scale. E represents the architectural factor, reflecting the performance of each transistor, typically determined by the chip’s structure. We refer to the above formula as the DAE model for chip performance. Consequently, when using the same chip architecture, improving transistor scaling and area are two crucial ways to enhance chip performance.

The manufacturing process of integrated circuits (ICs) has historically evolved in tandem with Moore’s Law. Currently, we have reached the production stage of 5 nm process, and the 3 nm process is steadily advancing. Each breakthrough in the process node has resulted in improved performance and reduced power consumption. However, as Moore’s Law [2] and Dennard scaling [3] approach their limits, it is becoming increasingly challenging and costly to increase the number of transistors integrated into a single chip [4].

As transistor scaling becomes increasingly unsustainable, one practical approach to integrating more functional units is to increase the chip’s area. However, an important obstacle that arises when expanding the area of a single chip is what we refer to as the “area-wall.” The area-wall refers to the limitation of a single die’s area due to manufacturing technology and cost constraints. The manufacturing of chips depends on lithography, and the chip area is restricted by the lithography aperture [5]. The maximum exposure area of a single die is limited to 858 mm² (26 mm × 33 mm) due to the size of the reticle and the physical properties of the optics. Significant advancements in lithography systems would be required to increase the maximum exposure area, which is challenging from a cost perspective. Moreover, cost is another challenge associated with increasing chip area, because the cost per unit chip area increases in more advanced processing nodes [6]. Finally, yield is also a significant challenge for large-area chips: a larger die is more likely to contain a manufacturing defect, which reduces the wafer’s yield [7].

To design a chip that breaks the limit of the area-wall, we propose a novel chip form, named the Big Chip. The term “Big Chip” refers to a chip that has a larger area than the maximum exposure area of the most advanced lithography machine currently available. This type of chip also typically features a massive number of transistors and is implemented using semiconductor manufacturing technology. The Big Chip has two characteristics. Firstly, the area of the Big Chip is large, breaking the area limitation of the lithography stepper, and the number of transistors integrated into the chip can exceed the number that can be integrated on a monolithic chip under the current fabrication technology. Secondly, the Big Chip is composed of multiple functional dies, and several emerging semiconductor fabrication techniques are used to integrate the pre-fabricated dies into a Big Chip. Cerebras utilizes planar manufacturing technology to implement a wafer-scale big chip with an area of 46,225 mm². Chiplet integration is also a promising technology, which combines multiple chiplets on an interposer or substrate in a single package [8], [9], [10], [11], [12], [13], [14], [15], [16]. AMD and Nvidia launched high-performance processor designs based on multi-chiplet architecture in 2019 [12] and 2020 [17], respectively. With the large chip area, the chip performance is boosted considerably.

Despite the widespread attention to building the Big Chip, comprehensive analyses of this field are scarce and urgently needed. This paper provides a detailed analysis of the Big Chip. Firstly, we analyze the area-wall in detail, taking into consideration physical limitations, yield, and cost. Based on this analysis, we further introduce potential techniques that can be used to implement the Big Chip. Secondly, we propose a performance model to guide the design and evaluation of the Big Chip. Finally, we present the architecture and our implementation for building the Big Chip based on chiplet technology, and discuss future development trends.

2. Challenge: Chip’s area-wall

High-performance computing systems are consistently demanding more computility to support computationally intensive workloads in many fields. More computility requires more integrated transistors, which can be achieved by larger die area and denser silicon. However, silicon density growth has been slowing down recently due to more expensive wafers, lower production yield and more complex design rules. Thus, the best way to implement higher computility is to integrate larger chips. However, traditional monolithic integration suffers from the area-wall, which obstructs the growth of die area. Fortunately, the impact of the area-wall can be significantly weakened by multi-chiplet integration technology. In this section, we analyze in detail the three reasons for the area bottleneck.

2.1. Lithography exposure area limitation

In modern lithography systems [5], [18], [19], the reticle is exposed under oblique incident light, and the reflected light carrying information from the reticle goes through a set of optics and finally falls on the surface of the wafer, as shown in Fig. 1. The exposure image on the wafer is actually a demagnification of the image on the reticle. Given the magnification factor $Mag$, the size of the image exposed on the wafer is $1/Mag$ of the size on the reticle. An important measure of how much light can be collected on a surface, called the numerical aperture, is defined as the sine of half the opening angle of the light cone. The numerical apertures on the surfaces of the reticle and the wafer are $NA_{reticle} = \sin\theta_{reticle}$ and $NA_{wafer} = \sin\theta_{wafer}$ respectively, where the half-angles $\theta_{reticle}$ and $\theta_{wafer}$ are shown in Fig. 1. These two numerical apertures are related by the formula [5]:

| $NA_{reticle} = \dfrac{NA_{wafer}}{Mag}$ | (2) |

There are two options to increase the wafer exposure area: designing optics with a smaller $Mag$, and increasing the reticle area. However, both options are hard to realize in current industry.

Fig. 1.

Demonstration of lithography system.

According to the Rayleigh criterion [20], more advanced processing nodes require $NA_{wafer}$ to be increased. This prevents optics from being designed with a smaller $Mag$, because otherwise $NA_{reticle}$ gets larger according to Eq. 2. A larger $NA_{reticle}$ means a wider light cone at the reticle, which forces the Chief Ray Angle at Object (CRAO, shown in Fig. 1) to be larger so that the incident and reflected light cones do not overlap. However, a larger CRAO reduces image quality and mask efficiency. Thus most current advanced lithography systems are designed with 4× optics, and a potentially larger $Mag$ is needed for more advanced process nodes.

Suppose the width and length of the reticle are $W$ and $L$. The exposure size can be expressed by the following equation:

| $\text{Exposure size} = \dfrac{W}{Mag} \times \dfrac{L}{Mag}$ | (3) |

The largest commercially available reticle in current industry is of size 6”, which yields a usable field of 104 mm × 132 mm after discarding the manufacturing margin. Since current advanced lithography systems have $Mag = 4$, this leads to the current maximum exposure size of 26 mm × 33 mm = 858 mm². It is important to emphasize that our above analysis is focused on silicon-based chip fabrication and does not consider processes such as TFT (thin-film-transistor) fabrication.
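The arithmetic behind the 858 mm² limit can be checked with a short sketch. The function name `exposure_field` is hypothetical, and the 8× case is only an illustrative assumption showing how a larger demagnification shrinks the field for the same reticle.

```python
def exposure_field(reticle_w_mm, reticle_l_mm, mag):
    """Return (width, length, area) of the wafer-side exposure field.

    The wafer image is the usable reticle field scaled down by the
    demagnification factor in both dimensions.
    """
    width = reticle_w_mm / mag
    length = reticle_l_mm / mag
    return width, length, width * length


# Usable field of a 6-inch reticle (104 mm x 132 mm) with 4x optics.
print(exposure_field(104.0, 132.0, 4.0))  # (26.0, 33.0, 858.0)

# Hypothetical 8x optics with the same reticle: the field area drops by 4x.
print(exposure_field(104.0, 132.0, 8.0))  # (13.0, 16.5, 214.5)
```

Because the field area scales with the inverse square of the demagnification, moving to optics with a larger magnification factor without a larger reticle lowers the monolithic area limit further.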

2.2. Yield limitation

Historically, industry has been seeking an accurate model to predict the die yield to guide production [21]. A yield model is also important for exploring the possible level of integration to guide chip design. Several models have been proposed to predict yield under different assumptions. The Poisson yield model assumes a uniform and random distribution of defects, which tends to underestimate yield for large chips. Seeds’ model introduces an exponential distribution to model defect density variation from chip to chip. The negative binomial model, which is widely used, leverages both defect density and the defect clustering phenomenon to determine yield. The following formula shows the negative binomial model for predicting the yield of a monolithic die, where $A$ is the die area, $D_0$ is the defect density dependent on the process node, and $\alpha$ is the defect clustering parameter [21]:

| $Y = \left(1 + \dfrac{A D_0}{\alpha}\right)^{-\alpha}$ | (4) |
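As a minimal sketch, the Poisson and negative binomial yield models can be compared numerically. The defect density (0.001 defects/mm²) and clustering parameter (3) below are illustrative assumptions, not values taken from this paper, and the function names are hypothetical.

```python
import math


def poisson_yield(area_mm2, d0_per_mm2):
    """Poisson model: defects are uniformly and randomly distributed."""
    return math.exp(-area_mm2 * d0_per_mm2)


def negative_binomial_yield(area_mm2, d0_per_mm2, alpha):
    """Negative binomial model with defect clustering parameter alpha."""
    return (1.0 + area_mm2 * d0_per_mm2 / alpha) ** (-alpha)


# Assumed, illustrative process parameters.
D0 = 0.001   # defects per mm^2
ALPHA = 3.0  # clustering parameter

for area in (100.0, 400.0, 858.0):
    y_p = poisson_yield(area, D0)
    y_nb = negative_binomial_yield(area, D0, ALPHA)
    print(f"A = {area:5.0f} mm^2  Poisson = {y_p:.3f}  neg. binomial = {y_nb:.3f}")
```

For any positive area the negative binomial estimate lies above the Poisson estimate, and the gap widens as the die grows, which matches the remark that the Poisson model tends to underestimate yield for large chips.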

Based on this, we propose a yield model for a general multi-chiplet system. A general multi-chiplet system can be abstracted as several parts $P_1, \dots, P_n$, where each part $P_i$ is further partitioned into $K_i$ identical chiplets. The area of each chiplet in $P_i$ is $(1 + r_i) A_i / K_i$, where $A_i$ is the total critical area (by critical we mean excluding die-to-die modules) of $P_i$ and $r_i$ is the ratio of die-to-die area to critical area. $P_i$ is manufactured with a processing node that has defect density $D_i$ and clustering parameter $\alpha_i$, and the yield of its chiplets can be predicted as in the monolithic case. We propose that the yield of the multi-chiplet system is determined by the integrated minimum yield of all parts. Note that the integration process also introduces potential failures, so the integration yield should also be counted in the system yield. Suppose the success rate of bonding a chiplet is $y_b$, and $Y_i$ is the yield of chiplet $i$ (a constituent of $P_i$), which is defined as the quotient of actual production and target production of chiplet $i$. The multi-chiplet system yield is modeled as

| $Y_{system} = \min_i (Y_i) \times \prod_i y_b^{K_i}$ | (5) |

Monolithic integration suffers from low yield when implementing a larger die area, especially when the processing node is advanced. We argue that multi-chiplet integration enables implementing a larger total die area than monolithic integration under the same yield objective. Here, we consider a K-chiplet system with only one part, with critical area $A$, die-to-die ratio $r$, defect density $D_0$ and clustering parameter $\alpha$, and compare it to the monolithic die. The K-chiplet system yield can be calculated as $Y = \left(1 + \frac{(1 + r) A D_0}{K \alpha}\right)^{-\alpha} y_b^{K}$. By taking the inverse function of the yield models, we can model the maximal critical area that can be achieved by the monolithic and K-chiplet systems respectively as follows:

| $A_{mono} = \dfrac{\alpha}{D_0}\left(Y^{-1/\alpha} - 1\right), \quad A_{K} = \dfrac{K}{1 + r} \times \dfrac{\left(Y / y_b^{K}\right)^{-1/\alpha} - 1}{Y^{-1/\alpha} - 1} \times A_{mono}$ | (6) |

There is an internal constraint on $Y$ that $Y < y_b^{K}$, since the yield of a multi-chiplet system is necessarily smaller than the bonding yield. Eq. 6 has a clear interpretation. For $A_K$, the first product term shows that a larger critical area can be manufactured with more chiplets (larger $K$), but this is obstructed by the ratio $r$ of die-to-die modules. The second product term is a factor that reflects the overhead from integration processes, showing that integration overhead hinders the expansion of a multi-chiplet system. In the extreme case, if the bonding yield is 100%, this factor turns to 1. In reality, the second term is less than 1 and becomes smaller when $K$ increases, representing higher integration overhead when integrating more chiplets.
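The comparison can be sketched numerically under explicitly stated assumptions: each chiplet die follows the negative binomial yield model, every bonding step succeeds independently with the same probability, and the system yield is the die yield multiplied by the bonding yield of all K chiplets. The function names and all parameter values below are hypothetical and are not taken from this paper.

```python
def max_area_monolithic(y_target, d0, alpha):
    """Largest critical area (mm^2) a monolithic die can reach at yield y_target."""
    return (alpha / d0) * (y_target ** (-1.0 / alpha) - 1.0)


def max_area_chiplets(y_target, d0, alpha, k, d2d_ratio, bond_yield):
    """Largest total critical area (mm^2) of a K-chiplet system at yield y_target.

    Assumes system yield = die yield * bond_yield**k, and that each chiplet
    carries d2d_ratio extra area for die-to-die modules.
    """
    bonding = bond_yield ** k
    if y_target >= bonding:
        raise ValueError("target yield must be below the total bonding yield")
    # Yield each chiplet die must reach once bonding losses are accounted for.
    die_yield = y_target / bonding
    die_area = (alpha / d0) * (die_yield ** (-1.0 / alpha) - 1.0)
    # Remove the die-to-die overhead and sum over the K chiplets.
    return k * die_area / (1.0 + d2d_ratio)


# Assumed, illustrative parameters.
D0, ALPHA = 0.001, 3.0  # defects/mm^2, clustering parameter
mono = max_area_monolithic(0.5, D0, ALPHA)
quad = max_area_chiplets(0.5, D0, ALPHA, k=4, d2d_ratio=0.1, bond_yield=0.99)
print(f"monolithic: {mono:.0f} mm^2, 4 chiplets: {quad:.0f} mm^2")
```

With perfect bonding, one chiplet and no die-to-die overhead, the chiplet expression reduces to the monolithic one, which is a quick sanity check on the sketch.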

Fig. 2 shows the maximal critical area (longitudinal axis) that can be achieved under given yield constraint (horizontal axis) using monolithic and chiplet integration technologies. Under the same yield constraint, integration using more chiplets generally enables more critical area. Moreover, multi-chiplet system easily breaks the physical monolithic die area limit (marked with dashed lines).

Fig. 2.

Maximal critical area (mm²) that can be manufactured under yield constraints. The left and right parts show the 5 nm and 14 nm processes respectively. The horizontal dashed line shows the 858 mm² physical upper bound on monolithic die area.

2.3. Cost limitation

The manufacturing cost can be estimated with yield and raw cost for each part of the integrated system. For monolithic die, the cost is estimated simply with die yield and raw die cost, where the yield is used to amortize costs from failed dies. For multi-chiplet system, the cost is counted for multiple consisting parts and the integration process, as pointed out previously [6], [7], [22], [23]. We model the manufacturing cost of monolithic and multi-chiplet system as follows:

| (7) |

where are the raw cost of unit area of die and interposer. is the cost of bonding unit area of die onto interposer.

Based on this cost model, we again compare the cost efficiencies of monolithic and K-chiplets systems as the case in Fig. 2. We model the number of transistors as transistor density multiplies critical area and plot the cost per transistor in Fig. 3. We also plot the system cost for comparison in Fig. 4.

Fig. 3.

Cost per transistor when implementing different critical areas (mm²) at the 5 nm (left) and 14 nm (right) processing nodes. The cost is normalized to the cost of the smallest monolithic die plotted.

Fig. 4.

System cost (arbitrary unit) when implementing different critical areas (mm²) at the 5 nm (left) and 14 nm (right) processing nodes.

For a mature processing node (see 14 nm in Fig. 3, Fig. 4), both the cost per transistor and the system cost of the monolithic die are lower than those of the multi-chiplet system. However, for new and advanced nodes, the multi-chiplet system shows lower cost for large critical areas. Note that in the figures we do not show critical areas beyond 858 mm² (dashed line) for the monolithic die, because they can only be achieved with chiplet integration. Meanwhile, the cost curve of a system with more chiplets grows more smoothly, implying that a system with more chiplets has a greater cost advantage when implementing a large enough critical area.

3. Technique: breaking the area-wall

The big chip, which consists of over a trillion transistors and thousands of square millimeters of area (beyond a reticle), can currently be implemented using two approaches. The first approach is chiplet integration, which involves combining multiple chiplets on an interposer or substrate in a single package. In 2018, AMD proposed the EPYC processor, which integrated four identical chiplets using MCM (Multi-Chip Module) technology [24]. Huawei also proposed their Server SoC series based on chiplet-integration [25]. Through TSMC CoWoS technology, the Kunpeng 920 SoC series integrated multiple chiplets with different functions. The second approach is Wafer-scale integration (WSI), which involves building a very large integrated circuit from an entire silicon wafer. In 1980, Trilogy System made an early attempt at wafer scale integration for IBM mainframe [26]. Such integration placed the inter-die communication on the wafer, decreasing the inter-die communication latency and power. However, the yield and lithography problems led to the failure of wafer scale integration by Trilogy System [26]. Cerebras System implemented Wafer Scale Engine-1 (WSE-1) [27] in 2019 and Wafer Scale Engine-2 (WSE-2) [28] in 2021. Both approaches can significantly improve the chip’s performance. However, there are several challenges that arise in the design and implementation of the Big Chip, including fabrication and packaging, design cost and IP reuse, yield, and cooling. In the following sections, we will delve into these challenges and the solutions provided by chiplet-integration and wafer-scale integration.

Fabrication and packaging. It is essential to ensure high-performance and reliable die-to-die interconnection when packaging the big chip. In standard fabrication, the scribe line is the region that separates a die from its neighboring die. For wafer-scale integration, numerous wires are routed across the scribe lines to interconnect the dies on the wafer. For example, Cerebras System WSE-1 [27] used the newly proposed TSMC InFO_SoW packaging technology [29], as shown in Fig. 5a, to add wires in the scribe lines, achieving a mesh interconnection that features 2x line density and bandwidth density compared with MCM. Instead of adding wires on the scribe lines to connect the dies, chiplet design allows die-to-die communication based on an organic substrate or silicon interposer [24], [30], which provides more flexible and diverse die layout options. Packaging is another challenge for wafer-scale integration. When packaging a large-scale wafer with a PCB, it is necessary to mitigate the impact of the different thermal expansion of the wafer and the PCB under heat in order to improve the reliability of the package [27]. In addition, the impact of the interaction between the large wafer and the PCB, such as mechanical stress, must also be considered during packaging. To improve the packaging performance, some components for buffering stress, such as the connector, are used to alleviate these problems [27]. However, the extra connector increases the difficulty of packaging. The precise alignment of bumps between the wafer, the connector and the PCB needs to be ensured. Currently, no existing and reliable packaging tool guarantees such alignment, so a new custom packaging tool needs to be developed [27]. Chiplet integration provides multiple mature and verified 2D/2.5D/3D packaging technologies, as shown in Fig. 5b [4], and the reliability of these chiplet packages has also been proved in some studies [31], [32].

Fig. 5.

The fabrication and packaging comparison of chiplet integration and wafer-scale integration [4], [29]. (a) Wafer-scale integration. (b) Chiplet integration.

Design cost and IP reuse. In building the big chip, the design cost, including design time and expense, needs to be considered, and IP reuse is a popular way to help reduce it. As wafer-scale integration implements all dies on the same wafer, every die on the wafer is implemented with the same process [27]. This results in two drawbacks in the system design. Firstly, wafer-scale integration rules out implementing some dies with an advanced process and others with a mature process. Secondly, wafer-scale integration forms such a tightly coupled system that a die on the wafer is hard to reuse as a functional component [33]. The chiplet IP reuse scheme is shown in Fig. 6. The system applications are decomposed into many basic functional chiplets, which are then combined logically and integrated physically. Compared with wafer-scale integration, chiplet packaging technology supports the integration of dies made with heterogeneous processes. It allows the critical processing units that target high performance to be implemented with an advanced process, and other units, such as IO, to be manufactured with mature processes, increasing the computility and minimizing the cost [34]. Furthermore, an implemented die can be reused as a pre-built component or IP in the next-generation design, which significantly shortens the design time and decreases the design cost [35]. Therefore, chiplet integration brings outstanding advantages in reducing design costs through IP reuse.

Fig. 6.

The chiplet IP reuse scheme.

Yield. The overall yield of a Big Chip composed of multiple dies is a major concern. Chiplet integration and wafer-scale integration respectively introduce the Known Good Die (KGD) [36] method and redundant design [27] to improve the overall yield. Due to device and environmental factors, it is hard to ensure that every die on a wafer is good, which means that for wafer-scale integration, defective dies on the wafer are unavoidable. In addition, as some wafer-scale integration designs add wires for interconnection in the scribe lines, defects that occur in the scribe region also harm the yield. In order to address the yield challenge, Cerebras proposed a redundancy design which includes 1.5% extra cores [27]. As a similar wafer-scale integration design, the chip by Trilogy System introduced a 2x redundancy design [26]. Such a redundancy design allows a defective die to be disabled and then replaced by a redundant die whose links to other good dies are rebuilt on the fabric, avoiding the degradation of the on-chip network and communication performance caused by the defective die. However, the redundancy design and re-connection increase the design overhead and require tight co-design between the designer and the foundry. In contrast, chiplet technology is beneficial to improving the overall yield, and it does so in two ways. The first is to increase yield by decreasing the individual die size [37]. Based on chiplet technology, a big chip can be integrated from small dies, and yield improves as the die size becomes smaller. The second is to use Known Good Dies (KGD) [36] for packaging. Instead of cutting the largest square from the wafer, chiplet technology cuts individual dies from the wafer and only allows the dies that pass the burn-in test to be packaged, which leads to improved yield.

Cooling. With the increasing number of transistors integrated in the big chip, its power consumption rises sharply and can be surprisingly large. Thus, heat dissipation becomes a critical issue. In wafer-scale integration, the WSE [27] uses a cold plate and a custom connector to address heat dissipation and thermal effects. The heat is removed by contact between the water and the chip. In current chiplet integration, a heat sink is introduced to dissipate the heat [30]. The heat dissipation solution of chiplet integration with a small heat sink is more flexible in mobile and edge applications than that of wafer-scale integration with a sizeable water-cooling system.

4. Model: evaluating the Big Chip

4.1. Demand for performance model

The large scale of a big chip system poses new challenges, such as limitations on off-chip access to interior chiplets and long-distance communication. The high degree of customizability and wide range of integration technologies and architectures make it difficult to determine the optimal design for a specific market [38]. In such cases, a general performance model is needed to provide insights into key aspects of Big Chip design and to offer guidelines for architecture improvements, including integration technology choices, parallelism, interconnect and memory hierarchy design, off-chip bandwidth, etc.

We propose a performance model to characterize performance bottlenecks in regions of different scales. Although not perfect, the model provides insights into key aspects that can improve the upper bound of performance for a given design. We first explain how this model is extrapolated with a focus on data communication and parallelism, which are crucial factors in determining system performance. Then, we identify methods for improving peak performance in different regions and illustrate how the model changes under certain methods.

4.2. Extrapolation of performance model

We study the performance change as the big chip system scales up. To cover the aspects we consider, such as data communication and parallelism, we choose area ($A$) as the variable to indicate the change of system scale. Three main factors contribute to the processing latency of the whole system: computing, off-chip access and inter-die (or inter-core) communication. The latency of these three parts can be simply calculated as

| $T_{comp} = \dfrac{1}{C}, \quad T_{off} = \dfrac{D_{off}}{BW_{off}}, \quad T_{inter} = \dfrac{D_{inter}}{BW_{inter}}$ | (8) |

where $C$ refers to the computing ability, $BW_{off}$ indicates the off-chip bandwidth, and $BW_{inter}$ is the inter-die or inter-core bandwidth. Here $D_{off}$ and $D_{inter}$ are the normalized amounts of data movement, which indicate the amount of data movement per computation (with unit of B/op) from off-chip memory and between dies (or cores) respectively.

Now we need to figure out the relations between $C$, the bandwidths $BW_{off}$ and $BW_{inter}$, and $A$. The computing ability of a big chip with a certain design increases linearly as the system scales up. The relation can be expressed as

| $C = \rho_{c} \cdot f \cdot A$ | (9) |

where $\rho_{c}$ refers to the density of computing elements and $f$ is the computing frequency. $\rho_{c}$ can be estimated by dividing the number of computing elements in a die (or core) by its area in a certain design.

The bandwidth of off-chip access is proportional to the circumference of the chip, as the I/Os are arranged at the edges of the chip. If we take the I/O density as design-specific and approximate the chip as a square, whose circumference is $4\sqrt{A}$, then the relation between off-chip bandwidth and area can be estimated as

| $BW_{off} = \rho_{off} \cdot 4\sqrt{A}$ | (10) |

where $\rho_{off}$ indicates the off-chip bandwidth density along the edge of the chip, with the unit of B/(s·mm). It can also be expressed as the product of the I/O density and the frequency of data transmission.

When it comes to the inter-die or inter-core communication latency, there are two basic assumptions. The first one is that the data transmissions on the buses happen concurrently with each other. Under this assumption, the intra-chip communication latency is the maximum among the latencies of all the buses, where $D_i$ and $BW_i$ denote the normalized data volume and the bandwidth of bus $i$:

| $T_{inter} = \max_i \left(\dfrac{D_i}{BW_i}\right)$ | (11) |

The second assumption is that the scaling up of the big chip system depends mainly on repeating the same baseline design, which can be the design for a die or a core. Then the bandwidth of each baseline design, which is $BW_i$ in Eq. 11, can be taken as a constant $BW_{inter}$. The intra-chip communication latency can thus be expressed as

| $T_{inter} = \dfrac{\max_i (D_i)}{BW_{inter}} = \dfrac{D_{inter}}{BW_{inter}}$ | (12) |

We then derive the relationship between the total latency and these three decisive parts. Here we introduce another assumption similar to the first one above, that the computing, off-chip access and intra-chip communication operate concurrently with each other. Then we have

| $T_{total} = \max \left(T_{comp},\ T_{off},\ T_{inter}\right)$ | (13) |

As the performance is proportional to the reciprocal of latency, we have

| $\text{Performance} \propto \dfrac{1}{T_{total}} = \min \left(\dfrac{1}{T_{comp}},\ \dfrac{1}{T_{off}},\ \dfrac{1}{T_{inter}}\right)$ | (14) |

This is an extreme assumption for latency estimation, and there exists another extreme in which these three operations are totally sequential to each other. Then the total latency equals the sum of all three latencies. The real-world case lies between these two extremes. Even under the sequential extreme, we can assume that there is one part in each region that dominates the total latency, and then the expression is the same as Eq. 14. Substituting Eqs. 9, 10 and 12 into Eq. 14, we have the final performance model as a function of chip area:

| $\text{Performance} = \min \left(\rho_{c} f A,\ \dfrac{4 \rho_{off} \sqrt{A}}{D_{off}},\ \dfrac{BW_{inter}}{D_{inter}}\right)$ | (15) |

The three parts of performance are proportional to area, proportional to the square root of area and constant as area changes respectively. With various values of the other parameters, there should be three possibilities of the trend of the performance model, which are shown in Fig. 7. In the balanced pattern shown in Fig. 7a, the performance model is partitioned into 3 regions. In the first region with smaller chip area, the lack of computing ability is the key bottleneck of performance. As the system scales up, the off-chip access retards the growth of the performance resulting from the increasing parallel computing resources. In this region, the performance keeps growing with increasingly slow trend, and reaches the plateau when intra-chip communication plays a dominant role. In the compute-intensive and compute-sparse pattern, the sufficiency or lack of computing resources results in the absence of computing-dominated or off-chip-dominated region, as shown in Fig. 7b and c.
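The three regions can be illustrated with a minimal sketch that takes the minimum of a compute bound proportional to area, an off-chip bound proportional to the square root of area, and a constant inter-die bound. The square-chip perimeter of 4·sqrt(area), the function and parameter names, and all parameter values are assumptions chosen only so that the three regions of the balanced pattern appear; they are not taken from any real design.

```python
import math


def performance_bounds(area_mm2, rho_c, freq, rho_off, d_off, bw_inter, d_inter):
    """Return the three upper bounds on performance for a chip of the given area."""
    return {
        # Compute bound grows linearly with area.
        "compute": rho_c * freq * area_mm2,
        # Off-chip bound grows with the perimeter of a square chip (assumed).
        "off-chip": 4.0 * rho_off * math.sqrt(area_mm2) / d_off,
        # Inter-die bound stays constant as the baseline design is repeated.
        "inter-die": bw_inter / d_inter,
    }


def performance(area_mm2, **params):
    """Return (attainable performance, name of the limiting factor)."""
    bounds = performance_bounds(area_mm2, **params)
    limiter = min(bounds, key=bounds.get)
    return bounds[limiter], limiter


# Hypothetical, normalized parameters.
PARAMS = dict(rho_c=1.0, freq=1.0, rho_off=10.0, d_off=4.0,
              bw_inter=1200.0, d_inter=4.0)

for area in (50.0, 400.0, 2000.0):
    perf, limiter = performance(area, **PARAMS)
    print(f"A = {area:6.0f} mm^2 -> performance {perf:7.1f} (limited by {limiter})")
```

With these values the limiter moves from compute to off-chip access to inter-die communication as the area grows; changing the parameters so that one bound never becomes the minimum reproduces the patterns in which a region is absent.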

Fig. 7.

Three possible trends of performance model.

4.3. Comparison with monolithic multi-core and multi-chip systems

We compare the performance model of the chiplet system with those of monolithic multi-core and multi-chip systems to demonstrate the performance advantage of the big chip system. The parameters of the baseline design we used come from AMD’s “Zeppelin” SoC and their first-generation chiplet processor [24]. We assume that the computing ability and off-chip access are the same in the three systems, so the only difference lies in the “intra-chip communication” region. The inter-die and inter-chip communications are provided by the Infinity Fabric (IF) and PCIe, respectively, equipped on the “Zeppelin” SoC [24], and the off-chip bandwidth density is estimated by dividing its two-channel DDR4 bandwidth by the length of the SoC’s long edge. We set $D_{off}$ and $D_{inter}$ to 6 and 4 respectively. The performance curves are exhibited in Fig. 8.

Fig. 8.

The comparison of performance models of chiplet, monolithic multi-core and multi-chip systems.

Ideally, the monolithic chip has higher peak performance than the other two systems owing to fewer limits on inter-core bandwidth. However, the monolithic design faces the great challenge of the “area-wall” imposed by the maximum field size of the lithography stepper, which retards the growth of performance. To continue expanding the system, the monolithic design has to turn to multi-chip integration techniques under a conventional process, so its performance curve asymptotically approaches the line of the multi-chip design as area increases (this trend is not plotted in the figure). Thus, we can infer the advantage of the big chip system in high-performance scalability.

4.4. Performance optimization

Performance optimization methods can typically be categorized into three levels: workload mapping, architecture and physical design. In the performance model, optimizations appear as changes in the functions or positions of the curve. In the rest of the section, we first take 3D-stacking as an example to clarify how physical design changes the shape of the performance curve, and then explain the roles played by $D_{off}$ and $D_{inter}$ in our model as well as their dominant factors.

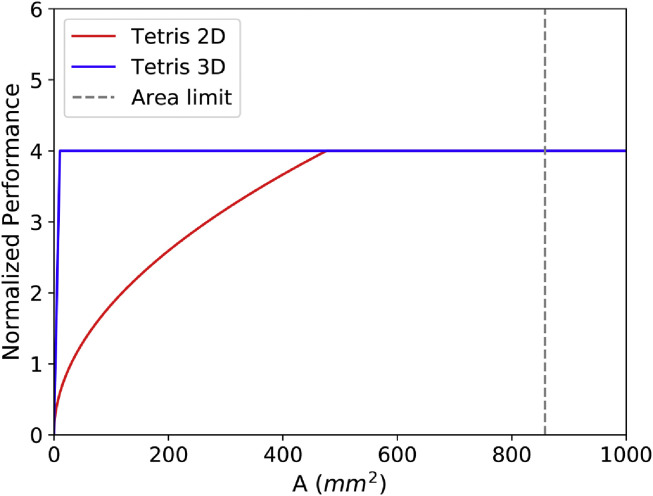

Optimization on the model shape. We take the 2D-integration and 3D-stacking implementations of the Tetris chiplet accelerator [39] to show that physical design, especially the integration technique, optimizes chip performance fundamentally by changing the shape of the performance curve.

The main difference between the 2D and 3D implementations is the way of off-chip access. 2D Tetris uses LPDDR3, conforming to the relation demonstrated in Eq. 10. 3D Tetris uses the Hybrid Memory Cube (HMC) [40] as its 3D memory substrate, which is stacked vertically face-to-face with the logic die and communicates through high-speed Through-Silicon Vias (TSVs). The off-chip bandwidth then becomes proportional to the area, as indicated in Eq. 16.

| $BW_{off} = \rho_{3D} \cdot A$ | (16) |

Here $\rho_{3D}$ is the off-chip bandwidth density per unit area and has the unit of B/(s·mm²).
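The difference in scaling between edge-limited and area-limited off-chip access can be sketched as follows. The function names, the square-chip perimeter of 4·sqrt(area), and the unit densities are illustrative assumptions and not Tetris parameters.

```python
import math


def offchip_bw_2d(area_mm2, rho_edge):
    """Edge I/O: bandwidth scales with the perimeter of a square chip (assumed)."""
    return 4.0 * rho_edge * math.sqrt(area_mm2)


def offchip_bw_3d(area_mm2, rho_area):
    """3D stacking: vertical links are spread over the area, so bandwidth scales with it."""
    return rho_area * area_mm2


# Quadrupling the area doubles the 2D bandwidth but quadruples the 3D bandwidth.
for area in (100.0, 400.0, 1600.0):
    bw_2d = offchip_bw_2d(area, rho_edge=1.0)
    bw_3d = offchip_bw_3d(area, rho_area=1.0)
    print(f"A = {area:6.0f} mm^2  2D: {bw_2d:6.1f}  3D: {bw_3d:6.1f}")
```

Because computing ability also grows linearly with area, an area-proportional off-chip bandwidth keeps the ratio of bandwidth to compute constant as the chip scales, whereas with edge I/O that ratio falls as the inverse square root of the area.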

Then we can get the performance models of both implementations as shown in Fig. 9, in which the inter-die bandwidth is from the traditional HMC design. Owing to the highly parallel processing elements, though the frequency is not that high (500 MHz) [39], the computility is so abundant that it never becomes the bottleneck in either implementation.

Fig. 9.

The comparison between the performance models of 2D-integration and 3D-stacking designs of Tetris. The 3D-stacking optimization changes the shape of the model curve.

Though still limited by the maximum silicon area, the 3D implementation has a substantially narrower off-chip bottleneck region than the 2D design, and can easily achieve the peak performance even with a small chip area, which is attributed to the abundant wiring resources and high-speed transmission provided by 3D-memory-stacking techniques. We can see from the example that a design effort may not contribute to the performance of the system at every scale, but can give a prominent improvement in the region that designers are interested in.

The roles of the off-chip and inter-die data volumes. The off-chip data volume is the amount of data transferred between the chip and off-chip memory, and the inter-die data volume is the maximum amount of data transmitted between two dies or cores interconnected by a bus. Both describe data volume and were assumed constant with chip area in the discussion above, but they are affected by architecture design choices such as the on-chip memory capacity and the structure of the interconnects.

The off-chip data volume depends on the application (the amount of data needed by the computation), on the workload mapping and scheduling policies, and on the architecture design, especially the on-chip memory capacity. The data accessed off-chip consists of two parts: a constant part determined by the data volume the workload needs, and a redundant part caused by an ineffective workload mapping policy or insufficient on-chip memory capacity. Improving the mapping and the proportion of on-chip memory improves data locality and reduces the off-chip data volume accordingly, so the performance curve rises in the off-chip region, as shown in Fig. 10a.

Fig. 10.

The impacts of the off-chip and inter-die data volumes.

The inter-die data volume is determined by the application, the on-chip memory capacity, and the structure of the interconnects. The application and the on-chip memory influence the data locality on each die. The effect of the interconnect structure can be explained from a more general perspective. When a fixed amount of data is transported between two nodes, the more routes there are between them, the less data is assigned to each route, which reduces the amount of data carried by each bus and therefore the maximum as well. Cmesh (concentrated mesh) is an example of an interconnect design that can achieve a lower inter-die data volume than a plain mesh. The reduced volume raises the peak performance of the chip design.
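
The two effects can be illustrated with a small sketch. It assumes the same simplified bound structure as the discussion above (performance is the minimum of an off-chip bound and an inter-die bound), an idealised even split of traffic across disjoint routes, and hypothetical coefficients `k_off` and `bw_inter`; none of these values come from the paper.

```python
def off_chip_volume(essential: float, redundant: float) -> float:
    """Off-chip traffic: the part the workload needs plus avoidable reloads."""
    return essential + redundant


def max_bus_volume(transfer_volume: float, num_routes: int) -> float:
    """Largest volume carried by any bus when a transfer is split evenly
    over `num_routes` disjoint routes (an idealised assumption)."""
    if num_routes < 1:
        raise ValueError("at least one route is required")
    return transfer_volume / num_routes


def performance(area: float, d_off: float, d_inter: float,
                k_off: float = 2.0, bw_inter: float = 200.0) -> float:
    """Minimum of the off-chip bound (area-proportional bandwidth assumed)
    and the inter-die bound; k_off and bw_inter are hypothetical."""
    return min(k_off * area / d_off, bw_inter / d_inter)


if __name__ == "__main__":
    # Better mapping removes redundant reloads: the curve rises in the off-chip region.
    print(performance(50.0, off_chip_volume(1.0, 1.0), 1.0))
    print(performance(50.0, off_chip_volume(1.0, 0.25), 1.0))
    # More routes lower the per-bus maximum: the peak rises.
    print(performance(800.0, 1.0, max_bus_volume(4.0, 1)))
    print(performance(800.0, 1.0, max_bus_volume(4.0, 2)))
```

In this toy setting, cutting the redundant off-chip traffic lifts the performance at a small area, while doubling the number of routes doubles the peak, in line with the qualitative trends of Fig. 10.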

5. Architecture: building the Big Chip

The architecture of the big chip has a significant influence on performance and is closely tied to the memory access pattern. Whereas traditional multi-core processor design considers multiple cores on a single die accessing memory, big chip design focuses on multiple multi-core dies accessing the memory system. Based on the memory access pattern, big chips can be classified into symmetric-chiplet, NUMA (non-uniform memory access)-chiplet, cluster-chiplet, and heterogeneous-chiplet architectures. In the following, we use the example of building big chips with chiplet technology to discuss the characteristics of these architectures in terms of performance, scalability, reliability, communication, and other aspects.

Symmetric-chiplet architecture. As shown in Fig. 11a, the symmetric-chiplet architecture consists of many identical computing chiplets that access a shared uniform memory or IO through a router network or through inter-chiplet resources such as the interposer. Each chiplet can be designed as a multi-core structure with a local cache, or as a NoC structure with multiple processing elements. The uniform memory can be accessed equally by all chiplets, which gives the UMA (uniform memory access) effect. The symmetric-chiplet architecture has three main advantages. Firstly, it allows multiple chiplets to execute instructions in parallel, providing high computing ability. A workload can be split into small pieces and distributed to different chiplets to speed up execution while keeping the load balanced among them. Secondly, it provides uniform latency from every chiplet to the memory, without the remote accesses or memory copies of a distributed shared memory system such as NUMA, and thus saves the latency and energy consumed by unnecessary data movement. Thirdly, it offers redundancy: other chiplets can take over the job of a failed chiplet, which increases system reliability. Thanks to the shared memory, the symmetric-chiplet processor can scale up the number of chiplets without adding extra private memory. However, as the number of chiplets keeps growing, the interconnect design becomes heavily limited by the physical wiring. Handling high-bandwidth inter-chiplet communication and memory request conflicts is also challenging. Increasing the number of chiplets may increase the conflicts among memory requests from different chiplets, which harms system performance. On average, the memory bandwidth is partitioned among the chiplets, so increasing the number of chiplets decreases the memory bandwidth available to each chiplet.

Several designs from industry and academia adopt the symmetric-chiplet architecture. The Apple M1 Ultra processor [41] uses a chiplet-integration design with two identical M1 Max chips and a unified memory architecture. Up to 128 GB of unified memory can be accessed by the cores on the dies. Fotouhi [42] proposed a uniform memory architecture based on chiplet integration to overcome distance-dependent power consumption and latency. Sharma [43] presents a multi-chiplet system that shares a uniform memory through on-board optical interconnects.
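
The load-balancing and bandwidth-partitioning arguments above can be made concrete with a short sketch. It assumes an idealised even split of both work and shared memory bandwidth; the function names and the 400 GB/s figure are hypothetical.

```python
def split_workload(num_items: int, num_chiplets: int) -> list:
    """Distribute work items so that chiplet loads differ by at most one item."""
    if num_chiplets < 1:
        raise ValueError("at least one chiplet is required")
    base, extra = divmod(num_items, num_chiplets)
    return [base + (1 if i < extra else 0) for i in range(num_chiplets)]


def per_chiplet_bandwidth(total_bw_gbs: float, num_chiplets: int) -> float:
    """Average share of the shared memory bandwidth per chiplet (even split assumed)."""
    if num_chiplets < 1:
        raise ValueError("at least one chiplet is required")
    return total_bw_gbs / num_chiplets


if __name__ == "__main__":
    print(split_workload(10, 4))
    for n in (2, 4, 8):
        # The per-chiplet share shrinks as identical chiplets are added.
        print(n, per_chiplet_bandwidth(400.0, n))
```

The sketch ignores request conflicts, which in practice would reduce the usable share further.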

Fig. 11.

The architecture of the Big Chip processor. (a) Symmetric-chiplet Architecture. (b) NUMA-chiplet Architecture. (c) Cluster-chiplet Architecture. (d) Heterogeneous-chiplet Architecture. (e) Hierarchical-chiplet Architecture.

NUMA-chiplet architecture. The NUMA-chiplet architecture contains multiple chiplets interconnected through a point-to-point network or a central router. Its memory system is shared by all chiplets but physically distributed across the architecture, as shown in Fig. 11b. Each chiplet can adopt a design of multiple cores with a shared cache, or of PEs interconnected by a NoC. Moreover, each chiplet can have its own local memory, such as DRAM or HBM, which is the most obvious feature distinguishing this architecture from the symmetric-chiplet architecture. Although the memories attached to different chiplets are distributed in the system, the memory address space is shared globally. This distributed placement of shared memory results in the NUMA effect: accessing remote memory is slower than accessing local memory [44].

The NUMA-chiplet architecture has several advantages. From the view of a single chiplet, each chiplet holds its own memory, with relatively private memory bandwidth and capacity, which reduces conflicts with memory requests from other chiplets. Furthermore, the close chip-to-memory placement provides low latency and low power consumption for data movement. In addition, multiple chiplets interconnected by a high-bandwidth point-to-point network or router can execute tasks in parallel, which boosts system performance and compatibility. The architecture is also highly scalable, since each chiplet comes with its own memory. However, as the NUMA-chiplet architecture scales up to more chiplets, designing the chiplet-to-chiplet interconnection network becomes challenging. In addition, the cost and the difficulty of the programming model grow as the number of chiplets increases.

Several designs adopt the NUMA-chiplet architecture. AMD’s first-generation EPYC processor connected four identical chiplets, each with local memory [24]. The latency difference between local and remote memory accesses can be up to 51 ns [44]. In AMD’s second-generation EPYC processor, the computing chiplets are connected to the memory through the IO chiplet, which shows a NUMA-chiplet architecture [34]. Another typical NUMA-chiplet design is Intel Sapphire Rapids [45]. It consists of four chiplets connected through MDFIO (Multi-Die Fabric IO). The four chiplets are organized as a 2 × 2 array, and each die works as a NUMA node. Zaruba [46] architects four RISC-V processor-based chiplets, each with three links to the other three chiplets, to provide non-uniform memory access.
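
To show how the NUMA effect feeds into average memory latency, the sketch below weights local and remote accesses by the fraction of accesses served locally. The 51 ns penalty is the upper figure quoted above for first-generation EPYC [44]; the 90 ns local latency in the usage lines is a hypothetical placeholder, and the linear averaging is a simplification that ignores contention.

```python
REMOTE_PENALTY_NS = 51.0  # up-to figure quoted in the text for first-generation EPYC [44]


def average_latency_ns(local_latency_ns: float, local_fraction: float,
                       remote_penalty_ns: float = REMOTE_PENALTY_NS) -> float:
    """Average access latency when `local_fraction` of accesses hit local memory."""
    if not 0.0 <= local_fraction <= 1.0:
        raise ValueError("local_fraction must lie in [0, 1]")
    remote_fraction = 1.0 - local_fraction
    return local_latency_ns + remote_fraction * remote_penalty_ns


if __name__ == "__main__":
    # 90 ns is an assumed local latency, not a measured value.
    for fraction in (1.0, 0.75, 0.5, 0.0):
        print(fraction, average_latency_ns(90.0, fraction))
```

The closer the mapping keeps data to the chiplet that uses it, the closer the average stays to the local latency, which is why NUMA-aware placement matters for the programming model.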

Cluster-chiplet architecture. As shown in Fig. 11c, the cluster-chiplet architecture contains many clusters of chiplets, with up to thousands of cores in total. A high-speed or high-throughput network topology, such as a ring, mesh, or 1D/2D torus, connects the clusters to meet the high-bandwidth and low-latency communication requirements of such an extremely large-scale system. Each cluster is formed by many interconnected chiplets with their own memory, and each cluster can run its own operating system. Clusters communicate with one another through message passing. With powerful clusters linked by a high-performance interconnect, the cluster-chiplet architecture is highly scalable and provides a huge amount of computility. As a strongly scalable architecture, it is the basis of many designs. IntAct [30] integrates 96 cores, divided into 6 chiplets, on an active interposer; the 6 chiplets are connected by a NoC. Tesla [47] presented the microarchitecture of the Dojo system for exa-scale computing. In Dojo, one training tile consists of 25 D1 chiplets arranged as a 5 × 5 matrix. Many training tiles interconnected by a 2D mesh network can form a larger system. Integrated as an MCM, Simba [1] constructs a chiplet system using a mesh interconnect. The PEs inside each chiplet are connected by a NoC.
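
The topologies named above differ in how many hops separate the farthest nodes. The following sketch compares the diameter of a 2D mesh and a 2D torus under minimal routing. The 5 × 5 grid in the usage lines has 25 nodes, the same count as the D1 chiplets of one Dojo training tile, but the code is a generic illustration and does not model Dojo or any other cited design.

```python
from itertools import product


def mesh_hops(a: tuple, b: tuple) -> int:
    """Minimal hop count between two nodes of a 2D mesh (Manhattan distance)."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def torus_hops(a: tuple, b: tuple, n: int) -> int:
    """Minimal hop count on an n x n 2D torus, where each row and column wraps around."""
    dx = abs(a[0] - b[0])
    dy = abs(a[1] - b[1])
    return min(dx, n - dx) + min(dy, n - dy)


def diameter(n: int, topology: str) -> int:
    """Largest minimal hop count between any two nodes of an n x n grid."""
    nodes = list(product(range(n), repeat=2))
    if topology == "mesh":
        return max(mesh_hops(a, b) for a in nodes for b in nodes)
    if topology == "torus":
        return max(torus_hops(a, b, n) for a in nodes for b in nodes)
    raise ValueError(f"unknown topology: {topology!r}")


if __name__ == "__main__":
    print("mesh diameter:", diameter(5, "mesh"))
    print("torus diameter:", diameter(5, "torus"))
```

The wrap-around links of the torus halve the worst-case distance on this grid, at the price of longer physical wires.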

Heterogeneous-chiplet architecture. The heterogeneous-chiplet architecture integrates different kinds of chiplets, as shown in Fig. 11d. Different kinds of chiplets on the same interposer complement one another and collaboratively carry out the computing task. The Huawei Kunpeng 920 series SoC [25] is a heterogeneous system based on computing chiplets, IO chiplets, AI chiplets, etc. Intel Lakefield [48] stacks a compute chiplet on a base chiplet. The compute chiplet integrates many processing cores, including CPU, GPU, and IPU (image processing unit), and the base chiplet contains abundant IO interfaces, including PCIe Gen3 and USB Type-C. In Ponte Vecchio [49], two base tiles are interconnected using EMIB (Embedded Multi-Die Interconnect Bridge), and the compute tiles and RAMBO tiles are stacked on each base tile. The Intel Meteor Lake processor [50] integrates a GPU tile, a CPU tile, an IO tile, and an SoC tile.

For current and future exa-scale computing, we predict that a hierarchical-chiplet architecture will be a powerful and flexible solution. As shown in Fig. 11e, the hierarchical-chiplet architecture is organized as many cores and many chiplets with a hierarchical interconnect. Inside a chiplet, cores communicate over an ultra-low-latency interconnect, while chiplets are interconnected with low latency thanks to advanced packaging technology, so that the on-chip(let) latency and the NUMA effect in such a highly scalable system can be minimized. The memory hierarchy contains core memory, on-chip(let) memory, and off-chip(let) memory. These three levels differ in memory bandwidth, latency, power consumption, and cost. In the hierarchical-chiplet architecture, multiple cores are connected through a cross switch and share a cache, forming a pod; pods are interconnected through the intra-chiplet network. Multiple pods form a chiplet, and chiplets are interconnected through the inter-chiplet network and then connected to the off-chip(let) memory. Careful design is needed to make full use of this hierarchy. Using the memory bandwidth sensibly to balance the workload across the computing hierarchy can significantly improve the efficiency of the chiplet system. Properly designing the communication network resources ensures that the chiplets can collaboratively perform shared-memory tasks (Fig. 12).

Fig. 12.

Comparison between different architectures of Big Chip.
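
The core, pod, and chiplet hierarchy described for the hierarchical-chiplet architecture can be sketched as a small data structure that reports which interconnect level a message between two cores has to cross. The class name, the linear core numbering, and the sizes used in the example are hypothetical; the paper does not prescribe them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hierarchy:
    """Hypothetical sizing of a hierarchical-chiplet system."""
    cores_per_pod: int
    pods_per_chiplet: int
    chiplets: int

    @property
    def cores_per_chiplet(self) -> int:
        return self.cores_per_pod * self.pods_per_chiplet

    @property
    def total_cores(self) -> int:
        return self.cores_per_chiplet * self.chiplets

    def locate(self, core_id: int) -> tuple:
        """Map a global core id to (chiplet, pod, core-in-pod)."""
        if not 0 <= core_id < self.total_cores:
            raise ValueError(f"core id {core_id} out of range")
        chiplet, rest = divmod(core_id, self.cores_per_chiplet)
        pod, core = divmod(rest, self.cores_per_pod)
        return chiplet, pod, core

    def interconnect_level(self, src: int, dst: int) -> str:
        """Outermost interconnect a message from src to dst must traverse."""
        src_chiplet, src_pod, src_core = self.locate(src)
        dst_chiplet, dst_pod, dst_core = self.locate(dst)
        if src_chiplet != dst_chiplet:
            return "inter-chiplet network"
        if src_pod != dst_pod:
            return "intra-chiplet network"
        if src_core != dst_core:
            return "cross switch"
        return "same core"


if __name__ == "__main__":
    system = Hierarchy(cores_per_pod=4, pods_per_chiplet=2, chiplets=4)
    print(system.total_cores)
    for dst in (3, 4, 8):
        print(0, dst, system.interconnect_level(0, dst))
```

A mapper could use such a classification to keep frequently communicating tasks within one pod or chiplet, so that most traffic stays on the lowest-latency level.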

6. Building the Big Chip: our implementation

To explore the design and implementation techniques of the Big Chip, we architected and designed a 256-core processor system based on 16 chiplets, named the Zhejiang Big Chip. Here, we introduce the proposed Big Chip processor.

The Zhejiang Big Chip adopts a scalable tile-based architecture, as illustrated in Fig. 13. The processor consists of 16 chiplets and has the potential to scale up to 100 chiplets. Each chiplet contains 16 CPU cores connected via a network-on-chip (NoC), and the tiles are interconnected fully symmetrically to enable communication among multiple chiplets. The CPU cores are based on the RISC-V instruction set. Moreover, the processor adopts a unified memory system, which means that any core on any tile can directly access memory across the entire processor.

Fig. 13.

Zhejiang Big Chip overview.
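
The core counts quoted for the Zhejiang Big Chip follow directly from the per-chiplet configuration. The helper below is only a worked arithmetic check; the function name is hypothetical, and the 100-chiplet case assumes that each chiplet keeps 16 cores when the design is scaled up.

```python
CORES_PER_CHIPLET = 16  # stated in the text for the Zhejiang Big Chip


def total_cores(num_chiplets: int, cores_per_chiplet: int = CORES_PER_CHIPLET) -> int:
    """Total core count of a tile-based processor built from identical chiplets."""
    if num_chiplets < 1:
        raise ValueError("at least one chiplet is required")
    return num_chiplets * cores_per_chiplet


if __name__ == "__main__":
    print(total_cores(16))   # current 16-chiplet configuration
    print(total_cores(100))  # assumed scaled-up configuration
```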

To connect multiple chiplets, a die-to-die (D2D) interface is employed. The interface uses a channel-sharing technique based on time multiplexing. This approach reduces the number of inter-chip signals, thereby minimizing the area overhead of I/O bumps and interposer wiring resources, which can significantly lower the complexity of the substrate design. The chiplets are terminated at the top metal layer, where the micro I/O pads are built. The Zhejiang Big Chip processor is designed and fabricated in a 22 nm CMOS process.
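
The idea behind time-multiplexed channel sharing can be sketched in a few lines: a wide logical word is sent over a narrower set of physical wires in successive time slots and reassembled at the receiver. The word width, wire count, and slot ordering below are hypothetical, since the actual D2D protocol of the Zhejiang Big Chip is not described in the text.

```python
def tdm_send(word: int, word_bits: int, wires: int) -> list:
    """Split a word into time slots of `wires` bits each, least significant slot first."""
    if wires < 1 or word_bits % wires != 0:
        raise ValueError("word_bits must be a positive multiple of wires")
    if not 0 <= word < (1 << word_bits):
        raise ValueError("word does not fit in word_bits")
    mask = (1 << wires) - 1
    return [(word >> (slot * wires)) & mask for slot in range(word_bits // wires)]


def tdm_receive(slots: list, wires: int) -> int:
    """Reassemble the word from the received time slots."""
    word = 0
    for slot, chunk in enumerate(slots):
        word |= chunk << (slot * wires)
    return word


if __name__ == "__main__":
    payload = 0xDEADBEEFCAFEF00D
    slots = tdm_send(payload, word_bits=64, wires=16)
    # 64 logical bits cross the die boundary on 16 wires in 4 time slots.
    print(len(slots), [hex(s) for s in slots])
    print(tdm_receive(slots, wires=16) == payload)
```

In this example the number of physical signals drops by a factor of four, at the cost of four transfer slots per word, which is the trade between bump count and per-word latency that channel sharing makes.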

7. The prospect and challenge

In addition to improving computility, the Big Chip will also promote the development of novel design methodologies. We predict that near-memory computing and optical-electronic computing will be important research directions.

7.1. Near-memory computing

As the computing workload is heavily concentrated in the chiplets, the off-chip memory system usually has only the simple functions of storing data and IO. For applications with poor data locality, on-chip cache misses occur frequently, and data must be reloaded from off-chip memory. This frequent data movement between a large number of chiplets and memory can lead to extra latency and high energy consumption. In symmetric-chiplet architectures, bus congestion can worsen the situation and degrade system performance. To address these issues, near-data processing can be applied in the form of near-memory computing, which places processing and memory units close together with high-bandwidth interconnections to maximize system performance. Near-memory computing breaks the performance limitations of traditional memory hierarchies. 3D stacked memory is an excellent example and is gaining attention as a solution to capacity, bandwidth, and performance limitations. In 3D stacked memory, multiple DRAM chiplets are stacked vertically on a bottom logic chiplet, and TSVs implement the electrical connections between the chiplets, providing high-bandwidth inter-chiplet data transmission. The logic chiplet at the bottom of the stack can perform relatively simple data processing and thereby take over part of the computing workload. Another approach to near-memory computing is to increase the on-chip cache capacity so that more data stays on the chip instead of being moved frequently between on-chip and off-chip storage. AMD has proposed 3D V-Cache technology, which stacks a 64 MB cache on the 32 MB shared L3 cache of Zen 3, giving a total of 96 MB of L3 cache. The Cerebras WSE has even achieved 18 GB of on-chip memory.
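
A back-of-the-envelope sketch shows why letting the logic chiplet handle simple processing reduces data movement. It compares the bytes that cross the memory interface when a sum over an array is computed on the compute chiplet with the case where the bottom logic chiplet performs the reduction and returns only the result. The function names and the array size are hypothetical, and the model ignores command and address traffic.

```python
def bytes_moved_host_side(num_elements: int, element_bytes: int) -> int:
    """Every operand crosses the memory interface when the compute chiplet sums them."""
    return num_elements * element_bytes


def bytes_moved_near_memory(element_bytes: int) -> int:
    """Only the final sum crosses the interface when the logic chiplet reduces in place."""
    return element_bytes


def total_l3_mb(base_l3_mb: int, stacked_mb: int) -> int:
    """Total L3 capacity after stacking extra cache on the base L3."""
    return base_l3_mb + stacked_mb


if __name__ == "__main__":
    n, width = 1_000_000, 4  # assumed: one million 4-byte values
    print(bytes_moved_host_side(n, width), bytes_moved_near_memory(width))
    print(total_l3_mb(32, 64))  # the 3D V-Cache figures quoted in the text
```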

7.2. Optical-electronic computing

Optical-electronic computing has emerged as a potential solution to address the bottleneck of electrical design, particularly the electrical IO for inter-chiplet communication, which becomes more pronounced as the demand for high bandwidth increases [51], [52], [53]. The current data rate, pin count, and pin pitch of electrical interconnects are limited by signal integrity issues such as crosstalk. Moreover, some pins are reserved for non-communication purposes such as power/ground pins, further decreasing pin utilization and exacerbating the interconnect physical limitations that hinder high-bandwidth communication between chiplets. In addition, the long-distance signal transmission on the interposer or substrate suffers from degradation and high latency, which can be mitigated by limiting the distance between chiplets or adding repeaters. However, limiting the distance between chiplets can result in multiple hops to communicate with remote chiplets, which further impacts system performance.
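
Two of the electrical limits discussed above lend themselves to simple arithmetic: the aggregate bandwidth left after power and ground pins are set aside, and the number of hops needed when the reach of a link is limited. The sketch below uses hypothetical pin counts, data rates, and distances, and it assumes every signal pin runs at the same rate.

```python
import math


def signal_bandwidth_gbps(total_pins: int, power_ground_pins: int,
                          rate_gbps_per_pin: float) -> float:
    """Aggregate bandwidth of the pins left for signalling."""
    if not 0 <= power_ground_pins <= total_pins:
        raise ValueError("power/ground pins must not exceed the total pin count")
    return (total_pins - power_ground_pins) * rate_gbps_per_pin


def hops_needed(distance_mm: float, max_reach_mm: float) -> int:
    """Number of reach-limited links needed to cover a distance."""
    if max_reach_mm <= 0:
        raise ValueError("max_reach_mm must be positive")
    return math.ceil(distance_mm / max_reach_mm)


if __name__ == "__main__":
    print(signal_bandwidth_gbps(1000, 250, 2.0))
    print(hops_needed(25.0, 10.0))
```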

The Optical-IO Processor, as defined in this paper, stands out as an important future technology that uses IO chiplets with optical devices to provide high-bandwidth communication. Optical-IO Processors can overcome the signal integrity limitations of traditional electrical interconnects, which makes them an attractive solution to the issues of electrical design described above. Studies have demonstrated the feasibility of high-performance, low-energy optical IO implementation and packaging [51], [52], [53], [54], [55].

7.3. Challenges

Although the big chip can achieve powerful computing ability, it still faces three main challenges: yield, cooling, and performance. Firstly, the integration of big chips involves many steps, which makes it difficult to ensure high yield given device, technology, environmental, and other factors. While methods such as known good die (KGD) testing can improve yield, the cost of designing for defective dies must also be considered. Secondly, heat dissipation is a significant issue in big chip design, because a large number of dies generates enormous heat. Cooling systems and low-power design are therefore crucial. Lastly, task mapping and design space exploration for big chips are challenging to implement. Moreover, in chiplet integration, the non-uniform bandwidth effect must be considered.
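
The yield argument can be illustrated with the classic Poisson die-yield model, Y = exp(-A · D0). This is one of several standard yield models and is used here purely as an assumption; it is not necessarily the model used elsewhere in this paper. The defect density and die areas are hypothetical, and assembly and bonding yield are ignored.

```python
import math


def poisson_yield(area_mm2: float, defects_per_mm2: float) -> float:
    """Fraction of good dies under the Poisson yield model."""
    return math.exp(-area_mm2 * defects_per_mm2)


def silicon_per_good_system(die_area_mm2: float, dies_per_system: int,
                            defects_per_mm2: float) -> float:
    """Silicon area fabricated per working system when every die is tested
    before assembly (KGD), so that only good dies are packaged."""
    good_fraction = poisson_yield(die_area_mm2, defects_per_mm2)
    return dies_per_system * die_area_mm2 / good_fraction


if __name__ == "__main__":
    d0 = 0.001  # assumed defect density: 0.1 defects per cm^2
    print("monolithic 800 mm^2 yield:", poisson_yield(800.0, d0))
    print("single 200 mm^2 chiplet yield:", poisson_yield(200.0, d0))
    print("silicon per good system, monolithic:", silicon_per_good_system(800.0, 1, d0))
    print("silicon per good system, 4 chiplets with KGD:", silicon_per_good_system(200.0, 4, d0))
```

Under this model, four untested 200 mm² chiplets would all be good with the same probability as one 800 mm² die, so the benefit comes from testing dies before integration; that is also why the cost of KGD testing and of handling defective dies enters the trade-off.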

Declaration of competing interest

The authors declare that they have no conflicts of interest in this work.

Acknowledgments

This work was supported in part by the National Natural Science Foundation of China (61834006, 62025404, 62104229, 62104230, 61874124, 62222411), in part by the Strategic Priority Research Program of Chinese Academy of Sciences (XDB44030300, XDB44020300), and in part by Zhejiang Lab under Grant 2021PC0AC01.

Biographies

Yinhe Han received the MS and PhD degrees in computer science from the Institute of Computing Technology (ICT), Chinese Academy of Sciences (CAS), in 2003 and 2006, respectively. He is currently a professor at ICT, CAS. His main research interests are microprocessor design, integrated circuit design, and computer architecture. In these research fields, he has published 100 papers. He is a Senior Member of the China Computer Federation (CCF). He serves on the technical program committees of several conferences, such as DAC and HPCA. He is an associate editor of IEEE Transactions on Computers.

Haobo Xu received the BS and MS degrees from Southeast University, Nanjing, China, in 2013 and 2016, respectively, and the PhD degree in computer science from the Institute of Computing Technology (ICT), Chinese Academy of Sciences (CAS), Beijing, China, in 2020. He is currently an associate professor with ICT, CAS. His research interests include computer architecture, domain-specific accelerator, and VLSI design.

Ninghui Sun received the BS degree from Peking University, in 1989, and the MS and PhD degrees in computer science from the Institute of Computing Technology, Chinese Academy of Sciences, in 1992 and 1999, respectively. He is a professor with the Institute of Computing Technology, Chinese Academy of Sciences. He is the architect of the Dawning series high-performance computers. His research interests include computer architecture, operating system, and parallel algorithm.

Contributor Information

Haobo Xu, Email: xuhaobo@ict.ac.cn.

Ninghui Sun, Email: snh@ict.ac.cn.

References

- 1. Shao Y.S., Clemons J., Venkatesan R., et al. Proceedings of the 52nd Annual IEEE/ACM International Symposium on Microarchitecture. 2019. Simba: Scaling deep-learning inference with multi-chip-module-based architecture; pp. 14–27.

- 2. Moore G.E. Cramming more components onto integrated circuits, reprinted from electronics, volume 38, number 8, april 19, 1965, pp. 114 ff. IEEE Solid-State Circuits Soc. Newsl. 2006;11(3):33–35.

- 3. Dennard R.H., Gaensslen F.H., Yu H.-N., et al. Design of ion-implanted MOSFET’s with very small physical dimensions. IEEE J. Solid-State Circuits. 1974;9(5):256–268.

- 4. Lau J.H. Springer Nature; 2021. Semiconductor Advanced Packaging.

- 5. Neumann J.T., Gräupner P., Kaiser W., et al. Photomask Technology 2012. vol. 8522. SPIE; 2012. Interactions of 3D mask effects and NA in EUV lithography; pp. 322–333.

- 6. Feng Y., Ma K. Proceedings of the 59th ACM/IEEE Design Automation Conference. 2022. Chiplet actuary: A quantitative cost model and multi-chiplet architecture exploration; pp. 121–126.

- 7. Stow D., Akgun I., Xie Y. 2019 ACM/IEEE International Workshop on System Level Interconnect Prediction (SLIP) IEEE; 2019. Investigation of cost-optimal network-on-chip for passive and active interposer systems; pp. 1–8.

- 8. Li F., Wang Y., Cheng Y., et al. Proceedings of the 41st IEEE/ACM International Conference on Computer-Aided Design. 2022. GIA: A reusable general interposer architecture for agile chiplet integration; pp. 1–9.

- 9. Zheng H., Wang K., Louri A. 2020 57th ACM/IEEE Design Automation Conference (DAC) IEEE; 2020. A versatile and flexible chiplet-based system design for heterogeneous manycore architectures; pp. 1–6.

- 10. Zaruba F., Schuiki F., Benini L. Manticore: A 4096-core RISC-V chiplet architecture for ultraefficient floating-point computing. IEEE Micro. 2020;41(2):36–42.

- 11. Tan Z., Cai H., Dong R., et al. 2021 ACM/IEEE 48th Annual International Symposium on Computer Architecture (ISCA) IEEE; 2021. NN-baton: DNN workload orchestration and chiplet granularity exploration for multichip accelerators; pp. 1013–1026.

- 12. Naffziger S., Lepak K., Paraschou M., et al. 2020 IEEE International Solid-State Circuits Conference (ISSCC) IEEE; 2020. AMD chiplet architecture for high-performance server and desktop products; pp. 44–45.

- 13. Lin M.-S., Huang T.-C., Tsai C.-C., et al. A 7-nm 4-GHz arm-core-based cowos chiplet design for high-performance computing. IEEE J. Solid-State Circuits. 2020;55(4):956–966.

- 14. Kim J., Murali G., Park H., et al. Architecture, chip, and package codesign flow for interposer-based 2.5-D chiplet integration enabling heterogeneous IP reuse. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2020;28(11):2424–2437.

- 15. Kabir M.D.A., Peng Y. 2020 25th Asia and South Pacific Design Automation Conference (ASP-DAC) IEEE; 2020. Chiplet-package co-design for 2.5 D systems using standard ASIC CAD tools; pp. 351–356.

- 16. Hwang R., Kim T., Kwon Y., et al. 2020 ACM/IEEE 47th Annual International Symposium on Computer Architecture (ISCA) IEEE; 2020. Centaur: a chiplet-based, hybrid sparse-dense accelerator for personalized recommendations; pp. 968–981.

- 17. Zimmer B., Venkatesan R., Shao Y.S., et al. A 0.32–128 TOPS, scalable multi-chip-module-based deep neural network inference accelerator with ground-referenced signaling in 16 nm. IEEE J. Solid-State Circuits. 2020;55(4):920–932.

- 18. Suzuki A. Advances in optics and exposure devices employed in excimer laser/EUV lithography. Handb. Laser Micro-and Nano-Eng. 2020:1–42.

- 19. Kneer B., Migura S., Kaiser W., et al. EUV lithography optics for sub 9 nm resolution. Proc. SPIE. 2015;9422 doi: 10.1117/12.2175488.

- 20. Lin B.J. Optical Microlithography V. vol. 633. SPIE; 1986. Where is the lost resolution? pp. 44–50.

- 21. Cunningham J.A. The use and evaluation of yield models in integrated circuit manufacturing. IEEE Trans. Semicond. Manuf. 1990;3(2):60–71.

- 22. Stow D., Xie Y., Siddiqua T., et al. 2017 IEEE/ACM International Conference on Computer-Aided Design (ICCAD) IEEE; 2017. Cost-effective design of scalable high-performance systems using active and passive interposers; pp. 728–735.

- 23. T. Tang, Y. Xie, Cost-aware exploration for chiplet-based architecture with advanced packaging technologies, arXiv preprint arXiv:2206.07308 (2022).

- 24. Beck N., White S., Paraschou M., et al. 2018 IEEE International Solid-State Circuits Conference (ISSCC) 2018. ‘Zeppelin’: An SoC for multichip architectures; pp. 40–42.

- 25. Xia J., Cheng C., Zhou X., et al. Kunpeng 920: The first 7-nm chiplet-based 64-core ARM SoC for cloud services. IEEE Micro. 2021;41(5):67–75.

- 26. Lauterbach G. The path to successful wafer-scale integration: The cerebras story. IEEE Micro. 2021;41(6):52–57.

- 27. Wafer-scale deep learning. 2019 IEEE Hot Chips 31 Symposium (HCS) 2019. pp. 1–31.

- 28. Lie S. 2022 IEEE Hot Chips 34 Symposium (HCS) 2022. Cerebras architecture deep dive: First look inside the HW/SW co-design for deep learning: cerebras systems; pp. 1–34.

- 29. Chun S.-R., Kuo T.-H., Tsai H.-Y., et al. 2020 IEEE 70th Electronic Components and Technology Conference (ECTC) IEEE; 2020. InFO_sow (system-on-wafer) for high performance computing; pp. 1–6.

- 30. Vivet P., Guthmuller E., Thonnart Y., et al. Intact: A 96-core processor with six chiplets 3D-stacked on an active interposer with distributed interconnects and integrated power management. IEEE J. Solid-State Circuits. 2021;56(1):79–97.

- 31. Lin P.-Y., Yew M.C., Yeh S.S., et al. 2021 IEEE 71st Electronic Components and Technology Conference (ECTC) 2021. Reliability performance of advanced organic interposer (CoWoS®-r) packages; pp. 723–728.

- 32. Lin M.L., Liu M.S., Chen H.W., et al. 2022 IEEE 72nd Electronic Components and Technology Conference (ECTC) 2022. Organic interposer CoWoS-r+ (plus) technology; pp. 1–6.

- 33. Kim J., Murali G., Park H., et al. Architecture, chip, and package codesign flow for interposer-based 2.5-D chiplet integration enabling heterogeneous IP reuse. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2020;28(11):2424–2437.

- 34. Naffziger S., Lepak K., Paraschou M., et al. 2020 IEEE International Solid-State Circuits Conference (ISSCC) 2020. AMD chiplet architecture for high-performance server and desktop products; pp. 44–45.

- 35. Wang M., Wang Y., Liu C., et al. 2021 58th ACM/IEEE Design Automation Conference (DAC) IEEE; 2021. Network-on-interposer design for agile neural-network processor chip customization; pp. 49–54.

- 36. Gilg L., Zorian Y. Multi-Chip Module Test Strategies. 1997. Known good die; pp. 15–25.

- 37. Ma X., Wang Y., Wang Y., et al. CCF Transactions on High Performance Computing. 2022. Survey on chiplets: Interface, interconnect and integration methodology; pp. 1–10.

- 38. Iyer S.S. Heterogeneous integration for performance and scaling. IEEE Trans. Compon. Packag. Manuf. Technol. 2016;6(7):973–982.

- 39. Gao M., Pu J., Yang X., et al. Proceedings of the Twenty-Second International Conference on Architectural Support for Programming Languages and Operating Systems. 2017. Tetris: Scalable and efficient neural network acceleration with 3d memory; pp. 751–764.

- 40. Jeddeloh J., Keeth B. 2012 Symposium on VLSI Technology (VLSIT) IEEE; 2012. Hybrid memory cube new DRAM architecture increases density and performance; pp. 87–88.

- 41. A. Newsroom, Apple unveils m1 ultra, the world’s most powerful chip for a personal computer, 2022. https://www.apple.com/hk/en/newsroom/2022/03/apple-unveils-m1-ultra-the-worlds-most-powerful-chip-for-a-personal-computer/, Last accessed on 2022-11-18.

- 42. Fotouhi P., Werner S., Lowe-Power J., et al. Proceedings of the International Symposium on Memory Systems. 2019. Enabling scalable chiplet-based uniform memory architectures with silicon photonics; pp. 222–334.

- 43. Sharma A., Bamiedakis N., Karinou F., et al. 2021 Optical Fiber Communications Conference and Exhibition (OFC) IEEE; 2021. Multi-chiplet system architecture with shared uniform access memory based on board-level optical interconnects; pp. 1–3.

- 44. Naffziger S., Beck N., Burd T., et al. 2021 ACM/IEEE 48th Annual International Symposium on Computer Architecture (ISCA) IEEE; 2021. Pioneering chiplet technology and design for the AMD EPYC™ and Ryzen™ processor families: Industrial product; pp. 57–70.

- 45. Nassif N., Munch A.O., Molnar C.L., et al. 2022 IEEE International Solid-State Circuits Conference (ISSCC) vol. 65. 2022. Sapphire rapids: The next-generation Intel Xeon scalable processor; pp. 44–46.

- 46. Zaruba F., Schuiki F., Benini L. Manticore: A 4096-core RISC-V chiplet architecture for ultraefficient floating-point computing. IEEE Micro. 2021;41(2):36–42.

- 47. Talpes E., Williams D., Sarma D.D. 2022 IEEE Hot Chips 34 Symposium (HCS) 2022. DOJO: The microarchitecture of Tesla’s exa-scale computer; pp. 1–28.

- 48. Gomes W., Khushu S., Ingerly D.B., et al. 2020 IEEE International Solid-State Circuits Conference (ISSCC) 2020. 8.1 Lakefield and mobility compute: A 3D stacked 10 nm and 22FFL hybrid processor system in 12 × 12 mm², 1 mm package-on-package; pp. 144–146.

- 49. Gomes W., Koker A., Stover P., et al. 2022 IEEE International Solid-State Circuits Conference (ISSCC) vol. 65. 2022. Ponte vecchio: A multi-tile 3D stacked processor for exascale computing; pp. 42–44.

- 50. Gomes W., Morgan S., Phelps B., et al. 2022 IEEE Hot Chips 34 Symposium (HCS) 2022. Meteor lake and arrow lake Intel next-gen 3D client architecture platform with foveros; pp. 1–40.

- 51. Hosseini K., Kok E., Shumarayev S.Y., et al. 2021 Optical Fiber Communications Conference and Exhibition (OFC) IEEE; 2021. 8 Tbps co-packaged FPGA and silicon photonics optical IO; pp. 1–3.

- 52. Wade M., Davenport M., De Cea F.M., et al. 2018 European Conference on Optical Communication (ECOC) IEEE; 2018. A bandwidth-dense, low power electronic-photonic platform and architecture for multi-tbps optical I/O; pp. 1–3.

- 53. Wade M., Anderson E., Ardalan S., et al. TeraPHY: A chiplet technology for low-power, high-bandwidth in-package optical I/O. IEEE Micro. 2020;40(2):63–71.

- 54. Meade R., Ardalan S., Davenport M., et al. 2019 Optical Fiber Communications Conference and Exhibition (OFC) IEEE; 2019. TeraPHY: a high-density electronic-photonic chiplet for optical I/O from a multi-chip module; pp. 1–3.

- 55. Sun C., Jeong D., Zhang M., et al. 2020 IEEE Symposium on VLSI Technology. IEEE; 2020. TeraPHY: An O-band WDM electro-optic platform for low power, terabit/s optical I/O; pp. 1–2.