Abstract

Objective:

We investigated how different error patterns of an AI aid in non-binary decision scenarios influence human operators’ trust in the AI system and their task performance.

Background:

Existing research on trust in automation/autonomy predominantly uses signal detection theory (SDT) to model autonomy performance. SDT classifies the world into binary states and hence oversimplifies the interactions observed in real-world scenarios. Allowing multi-class classification of the world reveals intriguing error patterns that prior literature has not explored.

Method:

Thirty-five participants completed 60 trials of a simulated mental rotation task assisted by an AI with 70–80% reliability. Participants’ trust in and dependence on the AI system and their performance were measured. By combining participants’ initial performance and the AI aid’s performance, five distinct patterns emerged. Mixed-effects models were built to examine the effects of different patterns on trust adjustment, performance, and reaction time.

Results:

Varying error patterns from AI impacted performance, reaction times, and trust. Some AI errors provided false reassurance, misleading operators into believing their incorrect decisions were correct, worsening performance and trust. Paradoxically, some AI errors prompted safety checks and verifications, which, despite causing a moderate decrease in trust, ultimately enhanced overall performance.

Conclusion:

The findings demonstrate that the types of errors made by an AI system significantly affect human trust and performance, emphasizing the need to model the complexity of real-world human-AI interaction.

Application:

These insights can guide the development of AI systems that classify the state of the world into multiple classes, enabling the operators to make more informed and accurate decisions based on feedback.

Keywords: trust dynamics, multi-class classification, human-automation interaction, human-autonomy interaction, human-AI interaction

Précis:

We examine how AI errors influence human trust and performance. Some AI errors provide false reassurance, misleading operators into believing their incorrect decisions are correct, which worsens performance and significantly reduces trust. Conversely, and paradoxically, some AI errors prompt safety verification, ultimately enhancing overall performance.

INTRODUCTION

Consider the following hypothetical scenario:

Sarah, a highly skilled pharmacist, is responsible for filling the medication bottles for each prescription order. Recently, the pharmacy where Sarah works introduced an AI computer vision system¹ that can scan a filled bottle and identify the specific medication it contains. The AI system serves as an additional layer of verification before medication is dispensed.

Today, Sarah receives a prescription order for a patient, Noah, who needs to take medication X.

Upon receiving the order, one of the following five cases could happen:

Case A: Sarah correctly fills the bottle with medication X. The AI system scans the bottle, correctly identifies the filled medication as X, and signals a green light.

Case B: Sarah correctly fills the bottle with medication X. The AI system scans the bottle but incorrectly identifies the filled medication as Y (or any medication that is not X). Because medication Y is not the ordered medication, the AI system raises a red flag and indicates the need to double-check.

Case C: Sarah has a lapse and incorrectly fills the bottle with medication Y. Fortunately, the AI system correctly identifies the filled medication as Y. Because medication Y is not the ordered medication, the AI system raises a red flag.

Case D: Sarah has a lapse and incorrectly fills the bottle with medication Y. The AI system scans the filled medication and identifies it as medication Z (or any medication that is neither X nor Y). Because medication Z is not the ordered medication, the AI system raises a red flag.

Case E: Sarah has a lapse and incorrectly fills the bottle with medication Y. The AI system scans the filled medication, incorrectly identifies it as medication X, and signals a green light.

This hypothetical scenario, which reflects recent developments in medication dispensing (Q. Chen et al., 2024; J. Y. Kim et al., 2025; Lester et al., 2021; Tsai et al., 2025; Zheng et al., 2023), has characteristics that are largely overlooked in existing research on trust in and dependence on automation/autonomy and AI systems. Existing research typically involves a human subject performing tasks, some of which are automated. For example, an automated combat identification (CID) aid can scan the environment, identify a friend or a foe, and make recommendations to soldiers (Du, Huang, & Yang, 2020; Guo, Yang, & Shi, 2023, 2024; Neyedli, Hollands, & Jamieson, 2011; Wang, Jamieson, & Hollands, 2009). To model the performance of the automation, most studies use signal detection theory (SDT) (Hautus, Macmillan, & Creelman, 2021; Tanner & Swets, 1954). However, SDT categorizes the world into binary states (signal present or absent), which oversimplifies the complex dynamics and variability observed in real-world scenarios.

Consider the five cases in the hypothetical scenario, particularly Cases D and E (Figure 1b), which are especially thought-provoking. In Case D, although the AI erroneously identifies medication Y as Z, this error can be considered beneficial from a utilitarian perspective: because the misidentified medication differs from the prescribed medication X, it rightly triggers a safety alert. Case E also involves an AI error, but this mistake could lead to catastrophic results: the AI mistakenly confirms the incorrectly filled medication as correct, misleading the human operator and providing false reassurance. Notably, as shown in Figure 1a, if the AI’s prediction were only binary, Cases D and E would collapse into a single case.

Figure 1.

Difference in the performance patterns when choices are binary or non-binary. In the binary choice (a), the Wrong-Incorrect pattern always matches the reference. However, in the non-binary choice (b), the Wrong-Incorrect pattern could be categorized into two, based on whether incorrect predictions match the reference (prescribed pill).

This research explores how people trust and depend on automation/autonomy when the predictions made by these systems extend beyond simple binary decisions. We first review SDT, followed by existing studies on trust and dependence that use SDT within both single- and dual-task frameworks. We then introduce and detail the current study.

SDT, Trust, Automation Compliance and Reliance

SDT models the relationship between signals and noise, as well as the automation’s ability to detect signals among noise (Sorkin & Woods, 1985; Tanner & Swets, 1954). The state of the world is characterized by either “signal present” or “signal absent,” which may or may not be identified correctly by automation. The combination of the state of the world and the automation’s detection results in four possible states: hit, miss, false alarm (FA), and correct rejection (CR) (see Figure 2).

Figure 2.

Signal Detection Theory (SDT)
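To make the four SDT outcomes concrete, the Python sketch below tallies trials into hits, misses, FAs, and CRs and derives the hit rate, the FA rate, and the standard sensitivity index d′ = z(hit rate) − z(FA rate). The function names and trial counts are hypothetical illustrations, not data from any study reviewed here, and the sketch omits the corrections usually applied when a rate is exactly 0 or 1.

```python
from collections import Counter
from statistics import NormalDist


def sdt_outcome(signal_present: bool, alarm: bool) -> str:
    """Map the state of the world and the automation's output to one SDT cell."""
    if signal_present:
        return "hit" if alarm else "miss"
    return "false alarm" if alarm else "correct rejection"


def sdt_summary(trials):
    """Return (hit rate, FA rate, d') for an iterable of (signal_present, alarm) pairs.

    Hypothetical helper: rates of exactly 0 or 1 make z() undefined, so a real
    analysis would apply a correction first; this sketch does not.
    """
    counts = Counter(sdt_outcome(s, a) for s, a in trials)
    hit_rate = counts["hit"] / (counts["hit"] + counts["miss"])
    fa_rate = counts["false alarm"] / (counts["false alarm"] + counts["correct rejection"])
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return hit_rate, fa_rate, z(hit_rate) - z(fa_rate)


# Hypothetical aid: 8 hits, 2 misses, 1 false alarm, 9 correct rejections.
trials = ([(True, True)] * 8 + [(True, False)] * 2
          + [(False, True)] * 1 + [(False, False)] * 9)
hit_rate, fa_rate, d_prime = sdt_summary(trials)
print(hit_rate, fa_rate, round(d_prime, 2))  # 0.8 0.1 2.12
```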

SDT is widely employed to examine trust in and dependence on automation/autonomy and AI systems. Typically, SDT is used to model the reliability of automation with which the human operators interact in both single-task (Bhat, Lyons, Shi, & Yang, 2022, 2024; Lacson, Wiegmann, & Madhavan, 2005; Madhavan, Wiegmann, & Lacson, 2006; Neyedli et al., 2011; Wang et al., 2009; Wiegmann, Rich, & Zhang, 2001) and multi-task paradigms (Chung & Yang, 2024; Du et al., 2020; McBride, Rogers, & Fisk, 2011; Wiczorek & Manzey, 2014; Yang, Unhelkar, Li, & Shah, 2017). The general findings indicate that as automation reliability increases, trust and dependence on automation increase accordingly.

When error types (FAs and misses) are examined more closely, findings suggest they lead to distinct behavioral patterns (Chancey, Bliss, Yamani, & Handley, 2017; Meyer, 2001, 2004). Dependence on automation can be categorized into compliance and reliance. Compliance is characterized by operators responding as though the error exists when the system indicates an error. FAs often result in commission errors when the operator follows the automation’s false indication. Reliance, by contrast, occurs when operators believe the automated system’s indication of safety. When operators fail to respond to events due to automation misses, omission errors arise (Meyer, 2001, 2004; Skitka, Mosier, & Burdick, 1999). Research suggests that FAs primarily influence compliance, though some studies indicate they may also affect reliance, whereas misses predominantly impact reliance (Chancey, Bliss, Liechty, & Proaps, 2015; Chancey et al., 2017; Dixon & Wickens, 2006; Dixon, Wickens, & McCarley, 2007; Meyer, 2001).
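The compliance/reliance distinction and the two automation-induced error types can be summarized as a small decision rule. The Python sketch below is a hypothetical illustration (the function name and labels are ours): it classifies a single trial from the state of the world, the automation’s alarm, and whether the operator acted, and it flags only the commission and omission errors described above.

```python
def classify_dependence(signal_present: bool, alarm: bool, operator_acts: bool):
    """Return (dependence behavior, automation-induced error or None) for one trial."""
    if alarm:
        # The system indicates a problem: acting on it is compliance.
        behavior = "compliance" if operator_acts else "non-compliance"
    else:
        # The system indicates safety: not acting is reliance.
        behavior = "reliance" if not operator_acts else "non-reliance"

    error = None
    if alarm and not signal_present and operator_acts:
        error = "commission"  # operator followed a false alarm
    elif not alarm and signal_present and not operator_acts:
        error = "omission"  # operator relied on a miss and failed to respond
    return behavior, error


print(classify_dependence(signal_present=False, alarm=True, operator_acts=True))
# ('compliance', 'commission')
```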

For example, Chancey et al. (2017) investigated automation reliability and error types (FA-prone vs. miss-prone) in a simulated flight task. Participants performed a primary task of maintaining level flight and a secondary resource management task supported by automated aids with high (90%) and low (60%) reliability. Trust was measured at an aggregate level halfway through each session. The results showed that reliability significantly affected compliance in the FA-prone condition, with participants more likely to comply with the highly reliable systems. In contrast, in the miss-prone condition, reliability primarily affected reliance, with greater reliance observed on highly reliable systems. Additionally, FAs had a stronger impact on trust than misses; trust mediated the relationship between FAs and compliance but not between misses and reliance (Chancey et al., 2017).

Other research indicates that misses may cause operators to trust automation less. For instance, in a study simulating an unmanned aerial vehicle mission with an automation-assisted secondary weapon-deployment task, Davenport and Bustamante (2010) investigated participants’ trust, reliance, and compliance with FA-prone and miss-prone aids. Trust was assessed through post-session questionnaires, revealing a greater trust decrement for systems with more misses than for those with more FAs. Compliance was greater for the miss-prone aid, while reliance was greater for the FA-prone aid (Davenport & Bustamante, 2010). Conversely, other studies indicate that operators may exhibit lower trust in systems with a high frequency of FAs. This may be because FAs are more salient: operators must actively verify each alarm, which makes the error conspicuous and reduces the system’s credibility (Breznitz, 2013; Johnson, Sanchez, Fisk, & Rogers, 2004).

Some studies implicitly assessed compliance and reliance using performance and reaction time metrics. Dixon et al. (2007) examined error types in tracking and system monitoring tasks. Participants were grouped by automation aid type: no help, perfectly reliable aid, FA-prone aid (60% reliability), and miss-prone aid (60% reliability). Reliance was evaluated through performance deficits, while compliance was measured by comparing response times to those observed in the perfectly reliable aid condition. Results indicated that the FA-prone system reduced both compliance and reliance, while the miss-prone system only reduced reliance. In the FA-prone condition, failure detection rates and response times in the monitoring task were worse than in the perfectly reliable automation condition, reflecting reduced compliance. Both error types affected reliance, as evidenced by poorer tracking task performance after silent automation trials. Notably, the FA-prone condition resulted in lower performance and longer detection times than the miss-prone condition (Dixon et al., 2007). In the multi-task simulation experiment of Sanchez, Rogers, Fisk, and Rovira (2014), participants performed a collision avoidance driving task supported by 94.5% reliable automation and a secondary tracking task. Reliance was assessed based on whether participants chose to double-check after receiving support from the automated aid. Results revealed a trend of increasing reliance in the FA condition as participants became more familiar with the automation, whereas reliance decreased in the miss condition (Sanchez et al., 2014).

In high-stakes scenarios where the consequences of missing critical events could be catastrophic, automation thresholds are often calibrated to favor FAs to minimize the chance of overlooking abnormalities (Sanchez et al., 2014; Sorkin & Woods, 1985). However, excessive FAs may affect trust more than misses, leading to disuse (neglect or under-dependence), deviating from the original purpose (Chancey et al., 2017; Dixon & Wickens, 2006; Parasuraman & Riley, 1997). These studies treated the error type (FA-prone and miss-prone) as a between-subject variable. Future research could benefit from evaluating within-subject differences to provide a more comprehensive understanding of human behavioral patterns in response to automation errors.

Properties of Trust Dynamics

Over the past 30 years, many researchers have investigated people’s trust in automated/autonomous and AI systems. Historically, most studies have approached trust from a snapshot view, typically using end-of-experiment questionnaires to evaluate trust levels. Research within this view has identified many factors that can influence people’s trust in these systems (Hoff & Bashir, 2015; Kaplan, Kessler, Brill, & Hancock, 2023). More recently, acknowledging that a person’s trust can change dynamically while interacting with automated/autonomous technologies, there is a shift of research focus from snapshot trust to trust dynamics – how humans’ trust in autonomy forms and evolves due to moment-to-moment interaction with automated/autonomous technologies (de Visser et al., 2020; Wischnewski, Krämer, & Müller, 2023; Yang, Guo, & Schemanske, 2023; Yang, Schemanske, & Searle, 2023).

One body of research on trust dynamics explores how trust can be violated and then restored. Trust violations occur after negative experiences (false alarms and misses), which substantially decrease trust to a level below the original level (Baker, Phillips, Ullman, & Keebler, 2018; Esterwood & Robert, 2022; Esterwood & Robert Jr, 2023; P. H. Kim, Ferrin, Cooper, & Dirks, 2004; T. Kim & Song, 2021; Lewicki & Brinsfield, 2017; Sebo, Krishnamurthi, & Scassellati, 2019). Some trust violation research suggests that misses may lead to lower trust in automation. For example, Azevedo-Sa et al. (2020) investigated how failures in semi-autonomous driving systems influence drivers’ trust. Participants engaged in a non-driving task in a semi-autonomous vehicle, which occasionally provided FAs or missed obstacles. Trust dynamics were evaluated after each interaction, revealing that misses had a more harmful effect on trust than FAs (Azevedo-Sa et al., 2020). In contrast, other studies suggest no differences between error types. For instance, Guzman-Bonilla and Patton (2024) investigated participants’ responses to automation errors (FAs or misses) during a block pair matching task. Trust was measured after each block, and their findings indicated that error type did not affect participants’ trust (Guzman-Bonilla & Patton, 2024). After trust violations occur, trust can be repaired through various strategies, such as apologies (Kohn, Momen, Wiese, Lee, & Shaw, 2019; Mahmood, Fung, Won, & Huang, 2022; Natarajan & Gombolay, 2020), denials (Kohn et al., 2019), explanations (Ashktorab, Jain, Liao, & Weisz, 2019; M. Faas, Kraus, Schoenhals, & Baumann, 2021; Natarajan & Gombolay, 2020), and promises (Albayram et al., 2020).

The second body of research is focused on developing real-time trust prediction models (Bhat et al., 2022; M. Chen, Nikolaidis, Soh, Hsu, & Srinivasa, 2018; Guo & Yang, 2021; Xu & Dudek, 2015). Examples of trust prediction models include the online probabilistic trust inference model (OPTIMo) by Xu and Dudek (2015), the Beta random variable model by Guo and Yang (2021), and the Bayesian model combining Gaussian processes and recurrent neural networks by Soh, Xie, Chen, and Hsu (2020). For a detailed review, please refer to Kok and Soh (2020).

The third body of research, which this study builds on, uncovers general properties that govern how a person’s trust in automated/autonomous and AI systems changes over time. Recently, Yang, Guo, and Schemanske (2023) summarized three key properties of trust dynamics: continuity (trust at the present moment i is significantly associated with trust at the previous moment i − 1), negativity bias (negative experiences due to autonomy failures have a greater influence on trust than positive experiences due to autonomy successes), and stabilization (a person’s trust will stabilize over repeated interactions with the same autonomy). The first two properties have been reported consistently in prior research (Lee & Moray, 1992; Manzey, Reichenbach, & Onnasch, 2012; Yang, Schemanske, & Searle, 2023; Yang et al., 2017). The last property, stabilization, was first demonstrated empirically by Yang, Guo, and Schemanske (2023). Building on these properties, Guo and Yang (2021) developed a predictive computational model that assumes trust at any point follows a Beta distribution. This model surpasses previous models in prediction accuracy while maintaining generalizability and explanatory power, indicating the importance of the three properties of trust dynamics.
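The three properties can be illustrated with a deliberately simplified Beta-distributed trust state. The Python sketch below is not the fitted model of Guo and Yang (2021); the class name, starting parameters, and success and failure gains are hypothetical. Expected trust is α/(α + β); each interaction updates the previous state (continuity), failures add more evidence than successes (negativity bias), and because evidence accumulates, each new interaction moves the mean less (stabilization).

```python
from dataclasses import dataclass


@dataclass
class BetaTrust:
    """Toy trust state; all parameter values are hypothetical."""
    alpha: float = 1.0         # accumulated positive evidence
    beta: float = 1.0          # accumulated negative evidence
    gain_success: float = 1.0  # evidence added after an AI success
    gain_failure: float = 2.0  # larger gain after a failure encodes negativity bias

    @property
    def mean(self) -> float:
        """Expected trust, E[trust] = alpha / (alpha + beta)."""
        return self.alpha / (self.alpha + self.beta)

    def update(self, ai_correct: bool) -> float:
        """Update from the previous state (continuity); return the change in expected trust."""
        before = self.mean
        if ai_correct:
            self.alpha += self.gain_success
        else:
            self.beta += self.gain_failure
        return self.mean - before


# Negativity bias: from the same starting state, a failure moves trust more than a success.
print(BetaTrust().update(True), BetaTrust().update(False))

# Stabilization: after 20 successes, the same failure moves trust much less.
agent = BetaTrust()
for _ in range(20):
    agent.update(True)
print(agent.update(False))
```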

The present study

For readability, we denote the five cases in the hypothetical scenario with two letters. The first letter, R or W, indicates whether the participant’s initial decision was right or wrong. The second letter, C, I, or I_ref, indicates whether the AI’s prediction, given the participant’s initial decision, was correct or incorrect. We distinguish WI from WI_ref, where “ref” emphasizes that the AI mistakenly confirms the incorrectly filled medication as the prescribed (i.e., reference) medication. The outcome of the task is likewise denoted R or W (right or wrong final decision). Combining the five patterns (cases) with the two possible outcomes for each yields ten outcomes (see Table 1).

TABLE 1:

Description of the five cases and two outcomes. Bold text indicates potential incorrect outcomes resulting from automation errors.

| Case | Pattern | Prescribed Medication | Filled Medication | AI Prediction | Final Outcome if R | Final Outcome if W |
|---|---|---|---|---|---|---|
| A | RC | X | X | X | Dispense medication | Error-correction refill |
| B | RI | X | X | Y/Z | Dispense medication | **Error-correction refill** |
| C | WC | X | Y | Y | Error-correction refill | Dispense medication |
| D | WI | X | Y | Z | Error-correction refill | **Dispense medication** |
| E | WI_ref | X | Y | X | Error-correction refill | **Dispense medication** |
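The mapping from a trial to its pattern in Table 1 can be expressed as a short function. In the Python sketch below, the function name and arguments are hypothetical: `reference` is the prescribed item, `selected` is the item actually filled or chosen, and `predicted` is the AI’s identification. Setting `distinguish_ref=False` mimics the binary view of Figure 1a, in which Cases D and E fall into the same Wrong-Incorrect pattern.

```python
def classify_pattern(reference: str, selected: str, predicted: str,
                     distinguish_ref: bool = True) -> str:
    """Label one trial with its two-letter pattern (RC, RI, WC, WI, or WI_ref)."""
    human = "R" if selected == reference else "W"
    if predicted == selected:
        ai = "C"  # the AI correctly identified what was filled
    elif distinguish_ref and human == "W" and predicted == reference:
        ai = "I_ref"  # the AI wrongly "confirms" the wrong item as the prescribed one
    else:
        ai = "I"
    return human + ai


# The five cases of Table 1 (prescribed medication X throughout).
cases = {"A": ("X", "X", "X"), "B": ("X", "X", "Y"), "C": ("X", "Y", "Y"),
         "D": ("X", "Y", "Z"), "E": ("X", "Y", "X")}
for case, args in cases.items():
    print(case, classify_pattern(*args), classify_pattern(*args, distinguish_ref=False))
```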

The study has multiple aims. Its primary interest is the influence of AI predictions following wrong initial decisions, specifically the comparison among patterns WC (i.e., Case C), WI (i.e., Case D), and WI_ref (i.e., Case E).

Both patterns WI and WI_ref involve the wrong medication being filled and subsequently misidentified by the AI system. The critical difference lies in the consequence of the AI’s error: in pattern WI, the AI’s misidentification of medication Y as Z prompts a re-evaluation of the filled medication, potentially averting a mistake. Conversely, in pattern WI_ref, the AI incorrectly identifies medication Y as X, misleading the human operator and providing false reassurance, thereby compounding the error. Therefore, we hypothesize:

H1: Pattern WC will lead to the best performance, followed by pattern WI, then pattern WI_ref.

In terms of trust change, we hypothesize that patterns WI and WI_ref will both lead to trust decrements, whereas pattern WC will lead to a trust increment. In addition, the trust decrement will be larger for pattern WI_ref.

H2: Pattern WC will have the highest trust increment, followed by pattern WI, then pattern WI_ref. This trend should be observed regardless of the final outcome of the task (Note: a larger trust decrement is considered a smaller trust increment).

Regarding the time taken to make a final decision after receiving the AI’s prediction, we hypothesize an interaction between the three patterns and the final outcome. As mentioned earlier, pattern WI_ref misleads the human operator and provides false reassurance. Therefore, we expect that participants who are indeed misled in pattern WI_ref will respond very quickly. Conversely, recognizing and rectifying the error should take the longest in pattern WI, because the incorrect prediction aligns with neither the reference nor the initial answer.

H3a: People will take less time to commit an error in pattern WI_ref than in patterns WC and WI.

H3b: People will take more time to recognize an error in pattern WI than in patterns WC and WI_ref.

Along with the primary interest, we wish to provide further evidence for the continuity (H4), negativity bias (H5), and stabilization (H6) properties of trust dynamics.

H4: Trust at the present moment i is significantly associated with trust at the previous moment i − 1.

H5: The magnitude of trust decrement following incorrect predictions (RI, WI, WI_ref) will be greater than the magnitude of trust increment following correct predictions (RC, WC).

H6: The magnitude of trust adjustment will decrease as a function of time for both the correct and the incorrect AI predictions.

METHOD

This research complied with the American Psychological Association code of ethics and was exempt from Institutional Review Board oversight at the University of Michigan (HUM00230326). Informed consent was obtained from each participant.

Participants

A total of 35 University of Michigan undergraduate and graduate students (mean age = 22.43 years, SD = 3.27) participated in the experiment. Participants were required to have normal or corrected-to-normal vision. Participants received a base payment of $10 plus a performance-based bonus of up to $10. Participants completed a three-dimensional spatial visualization and recognition task (J. Kim, Chen, Lester, & Yang, 2023; J. Y. Kim, Richie, & Yang, 2024; Vandenberg & Kuse, 1978) assisted by an AI. In each trial, participants were presented with a reference image selected from a pool of 30 images. They were then shown five options and asked to choose the one that matched the reference. Following their selection, the simulated AI predicted the shape of the participant’s chosen image. Finally, participants indicated whether their initial selection was correct. This workflow mirrors a medication dispensing task, in which a pharmacist first receives a prescription (e.g., vitamin C) and retrieves the corresponding medication from an inventory containing hundreds of options. The retrieved medication is then verified against the prescription order by pharmacy technicians or an AI system. Based on the verification, the pharmacist decides whether to dispense the medication or to rectify errors by retrieving the appropriate item.

Experimental apparatus and stimuli

Stimuli development.

The study employed a simulated mental rotation task (MRT), with stimuli developed following Shepard and Metzler (1971). We created 30 three-dimensional (3D) objects, half composed of 10 cubes and the other half of 8 cubes. For each object, we created a reference two-dimensional image of the object at a specific rotation (see Figure 3). Subsequently, for each reference, we created 14 rotated images by rotating the 3D object in 45-degree steps about the horizontal and vertical axes. This resulted in 30 reference images, each with 14 corresponding rotated images, denoted as correct alternatives (of the reference).
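One reading of the rotation scheme that yields exactly 14 rotated images is to rotate the object about each axis separately in 45-degree steps, excluding the unrotated pose: 7 angles (45° to 315°) per axis times 2 axes. The Python sketch below only enumerates these (axis, angle) pairs under that assumption; it does not render images, and the names are hypothetical.

```python
from itertools import product

STEP_DEG = 45                      # rotation increment stated in the text
AXES = ("horizontal", "vertical")  # rotation axes stated in the text


def rotated_views(step_deg: int = STEP_DEG, axes=AXES):
    """Enumerate single-axis (axis, angle) rotations, excluding the 0-degree pose."""
    angles = range(step_deg, 360, step_deg)  # 45, 90, ..., 315
    return list(product(axes, angles))


views = rotated_views()
print(len(views))  # 14
```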

Figure 3.

Illustration of stimuli development. After creating the (a) reference images, one (b) mirrored image, fourteen 45-degree (c) horizontal axis rotated figures, and fourteen 45-degree (d) vertical axis rotated figures were created for each reference image.

The difficulty of the stimuli was determined in a separate study conducted in Qualtrics, with participants recruited via Amazon Mechanical Turk (MTurk). Following the mental rotation study of Shepard and Metzler (1971), participants viewed multiple image pairs. The left image was a randomly chosen reference image, and the right image was a correct alternative, a distractor, or the reference image itself (Figure 4). Distractors were randomly chosen from the remaining 29 reference images, which included 14 images with the same number of cubes as the reference image (i.e., 8 or 10) and 15 images with a different number of cubes. Participants had 10 seconds to judge whether each image pair showed the same or different objects. Data from participants who failed to match the validation pairs (reference-reference image pairs) correctly were discarded. Stimulus difficulty was determined by the mean hit rate.
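The exclusion rule and the difficulty measure can be sketched as follows, assuming a hypothetical long-format response log in which each record notes the participant, the reference image, the pair type (`alternative`, `distractor`, or `validation`), and whether the participant answered “same.” Participants who missed any validation pair are dropped, and each reference image’s difficulty is the proportion of its correct-alternative pairs judged “same” (its hit rate). Field names and example data are illustrative only.

```python
from collections import defaultdict


def stimulus_hit_rates(responses):
    """Map each reference image to its mean hit rate after the validation exclusion."""
    # A participant fails validation by not answering "same" on a reference-reference pair.
    failed = {r["participant"] for r in responses
              if r["pair_type"] == "validation" and not r["said_same"]}
    judgments = defaultdict(list)
    for r in responses:
        if r["participant"] in failed or r["pair_type"] != "alternative":
            continue  # only correct-alternative pairs contribute hits
        judgments[r["reference"]].append(r["said_same"])
    return {ref: sum(v) / len(v) for ref, v in judgments.items()}


log = [
    {"participant": 1, "reference": "A", "pair_type": "validation", "said_same": True},
    {"participant": 1, "reference": "A", "pair_type": "alternative", "said_same": True},
    {"participant": 1, "reference": "A", "pair_type": "alternative", "said_same": False},
    {"participant": 1, "reference": "A", "pair_type": "distractor", "said_same": False},
    {"participant": 2, "reference": "A", "pair_type": "validation", "said_same": False},
    {"participant": 2, "reference": "A", "pair_type": "alternative", "said_same": True},
]
print(stimulus_hit_rates(log))  # {'A': 0.5}
```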

Figure 4.

For the beta test, participants were asked to determine whether each image pair showed the same item in different rotations (by clicking “Yes, they are the same item”) or different items (by clicking “No, they are different”) within 10 seconds.

Experimental Task.

The task was adapted from a three-dimensional spatial visualization test (Vandenberg & Kuse, 1978) and was developed using the Python Tkinter package. Figure 5 shows the flowchart of the experimental task.

Figure 5.

Flowchart of the experiment

Before the experiment, participants completed a demographics survey (i.e., age and gender) and a trust propensity survey estimating their propensity to trust automation (Merritt, Heimbaugh, LaChapell, & Lee, 2013).

During the experiment, participants performed the MRT, which consisted of 60 trials presented in random order. Each trial contained several steps. First, participants were shown a reference image and five choices, containing one correct alternative and four distractors (Figure 6a). The correct alternative was identical to the reference image in structure but was shown in a rotated position. Two distractors were randomly chosen from rotated mirrored images of the reference and the other two were randomly chosen from rotated images of other reference images. Participants were asked to choose the image that portrayed the same 3D object as the one portrayed in the reference image, as accurately as possible and within 15 seconds, then to click the “Next” button. The 15-second duration was determined based on pilot testing. If no selection was made within the time limit, the text on the initial choice page displayed “You did not make a selection within the time limit” (Figure 6b), and participants needed to click the “Next” button to start the next trial.
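The composition of each five-option choice set can be sketched as below. This is a hypothetical illustration rather than the study’s Tkinter code: images are encoded as (kind, object, rotation) tuples, the two mirrored distractors are assumed to use different rotations, and the two non-mirrored distractors are assumed to come from two different other reference objects.

```python
import random
from typing import List, Optional, Sequence, Tuple

Choice = Tuple[str, int, int]  # (kind, object id, rotation index); hypothetical encoding


def build_choice_set(reference_id: int, object_ids: Sequence[int],
                     rotations: Sequence[int],
                     rng: Optional[random.Random] = None) -> List[Choice]:
    """Return one correct alternative and four distractors in random order."""
    if rng is None:
        rng = random.Random()
    correct = ("alternative", reference_id, rng.choice(rotations))
    # Two distractors: rotated mirror images of the reference object.
    mirrored = [("mirrored", reference_id, rot) for rot in rng.sample(list(rotations), 2)]
    # Two distractors: rotated images of two other reference objects.
    others = rng.sample([o for o in object_ids if o != reference_id], 2)
    foreign = [("other", oid, rng.choice(rotations)) for oid in others]
    choices = [correct, *mirrored, *foreign]
    rng.shuffle(choices)
    return choices


print(build_choice_set(reference_id=3, object_ids=range(30), rotations=range(14),
                       rng=random.Random(0)))
```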

Figure 6.

Participants were asked to make their initial answer choice within 15 seconds.

After making the initial choice, participants rated their confidence in their initial choice using a visual analog scale, with the leftmost point labeled “Not confident at all” and the rightmost point “Absolutely confident” (Figure 7).

Figure 7.

Participants rated their confidence using a visual analog scale.

Next, participants were shown the AI’s prediction, displayed below their initial selection to illustrate the rotation of the AI-predicted shape. In Figure 8a, the participant initially selected the third image (indicated by an arrow), but the AI misidentified the initial selection and presented the reference image of its predicted shape (i.e., Case B described in the Introduction). In contrast, in Figure 8b, the participant initially selected the fifth image (indicated by an arrow), and the AI misidentified this selection and displayed the reference image of the correct alternative (i.e., Case E described in the Introduction).

Figure 8.

Participants were presented with the AI system’s recognition and chose within 10 seconds whether to stick with or reject their initial choice. If no selection was made, they proceeded to the next trial.

After viewing the AI’s prediction, participants had 10 seconds to either stick with their initial answer by clicking “I was right” or reject it by clicking “I was wrong.” As with the initial choice, this duration was determined through pilot testing. If no selection was made within 10 seconds, the final choice page displayed a message similar to that in Figure 6b, and participants needed to press the “Next” button to move to the next trial.

Participants were then presented with feedback on their performance and the validity of the AI’s prediction (Figure 9).

Figure 9.

Participants were presented with the performance feedback page, showing their performance on the initial and final answer choices and the validity of the AI’s prediction.

Finally, participants rated their trust in and the perceived reliability of the AI system using a visual analog scale (Figure 10). Following the approach of Yang, Schemanske, and Searle (2023), participants rated their trust after each trial. The leftmost anchor of the trust scale was labeled “I don’t trust the decision aid at all” and the rightmost anchor was labeled “I absolutely trust the decision aid.” Perceived reliability was rated on a 0 to 100 scale.

Figure 10.

Participants rated their trust and perceived reliability using a visual analog scale.

Experimental design

The experiment employed a within-subjects design. The independent variable was the patterns of performance (Tables 1 and 2). It is important to note that the presence of each pattern or outcome was participant-dependent, as the participant’s initial and final responses could not be manipulated.

TABLE 2:

Means and standard deviations (SD) of confidence rating, trust adjustment, final performance, and final reaction time, along with the number of pattern occurrences and the number of participants, grouped by pattern and outcome of performance. (Note: Performance is based on the final answer choice; therefore, each pattern has only one performance value.)

| Pattern | Outcome | Confidence Rating, Mean (SD) | Trust Adjustment, Mean (SD) | Final Performance (%), Mean (SD) | Final Reaction Time (s), Mean (SD) | N Patterns | N Participants |
|---|---|---|---|---|---|---|---|
| RC | All | 74.58 (23.28) | 1.17 (4.33) | 96.96 (17.17) | 3.64 (2.00) | 889 | 35 |
| | R | 75.48 (22.73) | 1.21 (4.36) | | 3.54 (1.88) | 862 | 35 |
| | W | 45.81 (22.84) | −0.07 (3.02) | | 6.70 (3.00) | 27 | 13 |
| RI | All | 72.53 (24.53) | −3.67 (6.06) | 78.61 (41.11) | 5.78 (2.47) | 201 | 35 |
| | R | 78.45 (21.57) | −3.65 (5.89) | | 5.82 (2.53) | 158 | 33 |
| | W | 50.77 (22.58) | −3.77 (6.71) | | 5.63 (2.26) | 43 | 23 |
| WC | All | 50.41 (22.11) | 0.76 (4.03) | 62.33 (48.50) | 5.71 (2.71) | 592 | 35 |
| | R | 44.93 (21.75) | 1.01 (3.39) | | 5.96 (2.62) | 369 | 35 |
| | W | 59.46 (19.63) | 0.34 (4.90) | | 5.30 (2.83) | 223 | 33 |
| WI | All | 51.68 (23.32) | −1.91 (5.39) | 56.85 (49.65) | 6.34 (2.88) | 197 | 35 |
| | R | 43.38 (21.53) | −2.23 (5.32) | | 6.25 (2.71) | 112 | 32 |
| | W | 62.62 (21.07) | −1.48 (5.47) | | 6.45 (3.10) | 85 | 30 |
| WI_ref | All | 53.71 (26.16) | −4.64 (8.03) | 25.00 (43.53) | 4.56 (2.86) | 96 | 34 |
| | R | 42.29 (23.23) | −4.46 (7.82) | | 6.79 (3.15) | 24 | 15 |
| | W | 57.51 (26.12) | −4.71 (8.15) | | 3.82 (2.35) | 72 | 31 |

To induce the occurrence of different patterns, the AI system was configured as follows. The AI system made a correct recognition 70% of the time, irrespective of whether the participant’s initial choice was right or wrong. Pattern RC (Case A) occurred when the participant’s initial choice was correct and the AI’s recognition was correct; pattern WC (Case C) occurred when the participant’s initial choice was wrong and the AI’s recognition was correct. The AI system made an incorrect recognition 20% of the time by recognizing the participant’s selected choice as a shape that was neither the selected shape nor the reference shape. This led to pattern RI (Case B) if the participant’s initial choice was correct and pattern WI (Case D) if the participant’s initial choice was wrong. The AI system recognized the participant’s selected choice as the reference shape 10% of the time. This led to pattern RC (Case A) if the participant’s initial choice was right and pattern WI_ref (Case E) if the participant’s initial choice was wrong. Therefore, depending on each participant’s initial choices, AI reliability ranged from 70% (all initial choices wrong) to 80% (all initial choices right). This reliability is at or above the threshold of roughly 70% for an aid to be perceived as useful (Wickens & Dixon, 2007). In the present study, participants were informed that the AI aid’s recommendations were imperfect, but they were not provided with specific information about its reliability level.
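The AI’s response rule and the resulting 70–80% reliability range can be checked with a short simulation. The Python sketch below is a hypothetical reimplementation of the rule as described, not the study’s code: with probability 0.7 the AI recognizes the selected shape correctly, with probability 0.2 it returns a shape that is neither the selected shape nor the reference, and with probability 0.1 it returns the reference. When the initial choice is right, the last case is also a correct recognition, so expected reliability is 0.7 + 0.1 × p, where p is the proportion of correct initial choices.

```python
import random


def ai_recognition(reference, selected, shapes, rng):
    """Return the AI's identification of the participant's selected image."""
    u = rng.random()
    if u < 0.70:
        return selected  # correct recognition (Cases A and C)
    if u < 0.90:
        # incorrect and not the reference (Cases B and D)
        return rng.choice([s for s in shapes if s not in (selected, reference)])
    return reference  # the reference shape (Case A if right initially, Case E if wrong)


def expected_reliability(p_initial_correct: float) -> float:
    """Expected proportion of correct AI recognitions."""
    return 0.70 + 0.10 * p_initial_correct


def simulated_reliability(initial_correct: bool, n: int = 20_000, seed: int = 0) -> float:
    """Monte Carlo estimate of reliability for a participant who is always right or always wrong."""
    rng = random.Random(seed)
    shapes = list(range(30))
    reference = 0
    selected = 0 if initial_correct else 1
    hits = sum(ai_recognition(reference, selected, shapes, rng) == selected
               for _ in range(n))
    return hits / n


print(simulated_reliability(True), expected_reliability(1.0))   # both about 0.8
print(simulated_reliability(False), expected_reliability(0.0))  # both about 0.7
```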

Measures

We are interested in the following measures.

Confidence.

After participants completed step 1 of each trial, they rated their confidence in their initial selection on a Visual Analog Scale from 0 to 100. The leftmost anchor was labeled “I am not confident in my answer at all,” and the rightmost anchor was labeled “I absolutely trust the decision aid.”

Trust Adjustment.

After each trial $t$, participants reported their trust $T_t$ in the decision aid. We calculate the trust adjustment after trial $t$ as:

$$\Delta T_t = T_t - T_{t-1}$$

Because trust is first reported after trial 1, each participant contributes only 59 trust adjustments (trials 2–60).
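A minimal sketch of the trust-adjustment computation, assuming one trust rating per trial stored in trial order (the function name is hypothetical). Sixty ratings yield 59 differences because the first trial has no preceding rating.

```python
def trust_adjustments(trust_ratings):
    """Return [T_t - T_{t-1}] for t = 2..n, given post-trial ratings in trial order."""
    return [current - previous for previous, current in zip(trust_ratings, trust_ratings[1:])]


print(trust_adjustments([50, 55, 52]))     # [5, -3]
print(len(trust_adjustments([50] * 60)))   # 59
```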

Performance.

Another dependent variable was final performance on the experimental task, calculated as the percentage of correct final answers (i.e., correctly judging whether the initial answer was right or wrong) for each pattern and each participant (Figure 8a).
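The per-participant, per-pattern performance score can be sketched as follows. The `(participant_id, pattern, final_correct)` record layout and the function name are hypothetical assumptions made for illustration; the paper does not describe its data format.

```python
from collections import defaultdict


def performance_by_pattern(trials):
    """Percentage of correct final answers per (participant, pattern) cell.

    `trials` yields hypothetical (participant_id, pattern, final_correct) tuples.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for participant_id, pattern, final_correct in trials:
        key = (participant_id, pattern)
        totals[key] += 1
        correct[key] += int(final_correct)
    return {key: 100.0 * correct[key] / totals[key] for key in totals}


trials = [("P01", "E", False), ("P01", "E", True), ("P01", "C", True), ("P02", "E", False)]
print(performance_by_pattern(trials))
# {('P01', 'E'): 50.0, ('P01', 'C'): 100.0, ('P02', 'E'): 0.0}
```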

Reaction Time.

The reaction time was measured in seconds from when the AI prediction appeared until the participant pressed either the “I was right” or “I was wrong” button (Figure 8a).

Experimental procedure

After participants signed the consent form and completed the online demographics and trust propensity surveys (Merritt et al., 2013), they watched a video explaining the experimental task. Participants were informed that the AI aid’s recommendations were imperfect and may or may not be correct. Each participant completed 60 trials. Upon completion, participants completed a post-experiment survey.

Statistical Analysis

We constructed linear mixed-effects models with random effects for participants to examine our hypotheses, using the “lme4” package in R (Bates, Mächler, Bolker, & Walker, 2014). Mixed-effects models handle repeated measures and missing values well and are therefore particularly appropriate for our analysis. We built the models iteratively: following the standard procedure for building mixed-effects models (Field, Field, & Miles, 2012), we started simple and gradually added complexity. Nested models were compared using the likelihood ratio test (LRT), and the simpler model was retained when no significant difference was found. The significance level for this study was set to α = 0.05.
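In lme4, nested models are typically compared with `anova()`, which refits them by maximum likelihood before computing the test. The Python sketch below reproduces only the arithmetic of a likelihood ratio test from two log-likelihoods; the log-likelihood values in the example are hypothetical, and the chi-square upper tail is computed with a closed-form recurrence so that no third-party library is needed.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X >= x) for a chi-square variable with `df` degrees of freedom.

    Uses Q(s + 1, y) = Q(s, y) + y**s * exp(-y) / Gamma(s + 1), where Q is the
    regularized upper incomplete gamma function and y = x / 2, starting from
    Q(1/2, y) = erfc(sqrt(y)) for odd df or Q(1, y) = exp(-y) for even df.
    """
    if x <= 0:
        return 1.0
    y = x / 2.0
    if df % 2:
        s, q = 0.5, math.erfc(math.sqrt(y))
    else:
        s, q = 1.0, math.exp(-y)
    while s < df / 2.0:
        q += math.exp(s * math.log(y) - y - math.lgamma(s + 1.0))
        s += 1.0
    return q


def likelihood_ratio_test(loglik_simple: float, loglik_complex: float, df_diff: int):
    """Return (LR statistic, p-value) for a simpler model nested in a more complex one."""
    stat = 2.0 * (loglik_complex - loglik_simple)
    return stat, chi2_sf(stat, df_diff)


# Hypothetical fits: adding four case dummies raises the log-likelihood by 5.
stat, p = likelihood_ratio_test(loglik_simple=-1000.0, loglik_complex=-995.0, df_diff=4)
print(round(stat, 2), round(p, 4))  # 10.0 0.0404
```

With α = 0.05, the more complex model would be retained in this hypothetical example; with p ≥ .05 the simpler model would be kept.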

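The mixed-effects model equation is:

$$Y_{ij} = \beta_0 + u_{0i} + \beta_1 D_{B,ij} + \beta_2 D_{C,ij} + \beta_3 D_{D,ij} + \beta_4 D_{E,ij} + \varepsilon_{ij}$$

Where:

$Y_{ij}$: The outcome variable for participant $i$ under case pattern $j$.

$\beta_0$: The fixed-effect intercept.

$u_{0i}$: The random intercept for participant $i$.

$D_{B,ij}$, $D_{C,ij}$, $D_{D,ij}$, $D_{E,ij}$: Dummy variables for the case patterns, comparing each case to the reference case (i.e., Case A).

$\beta_1$, $\beta_2$, $\beta_3$, $\beta_4$: The fixed-effect coefficients for the case patterns (B, C, D, and E) relative to the reference case A.

$\varepsilon_{ij}$: The residual error for participant $i$ in case pattern $j$.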
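To make the treatment (dummy) coding concrete, the sketch below computes the fixed-plus-random part of the model for a single observation. The coefficient values are hypothetical and the residual term is omitted. In lme4 syntax, a model of this form would correspond roughly to `outcome ~ case + (1 | participant)` with Case A as the first factor level; the exact formulas the authors fitted are not reproduced here.

```python
CASES = ("A", "B", "C", "D", "E")
NON_REFERENCE = CASES[1:]  # Case A is the reference level and gets no dummy


def dummies(case):
    """Return the dummy vector [D_B, D_C, D_D, D_E] for one observation."""
    if case not in CASES:
        raise ValueError(f"unknown case pattern: {case!r}")
    return [int(case == level) for level in NON_REFERENCE]


def linear_predictor(case, beta0, betas, u_i):
    """beta0 + u_i + sum_k beta_k * D_k; the residual error is not included."""
    return beta0 + u_i + sum(b * d for b, d in zip(betas, dummies(case)))


# Hypothetical values: intercept, effects of B-E relative to A, one participant's intercept.
beta0, betas, u_i = 1.0, [0.5, -2.0, -3.0, -4.0], 0.25
print(dummies("A"), dummies("D"))                # [0, 0, 0, 0] [0, 0, 1, 0]
print(linear_predictor("A", beta0, betas, u_i))  # 1.25
print(linear_predictor("C", beta0, betas, u_i))  # -0.75
```

Each coefficient is therefore read as the difference between that case and Case A for the same participant.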

Post-hoc t-tests were conducted to analyze the differences between patterns. Degrees of freedom were estimated with the Kenward-Roger method, which adjusts the variance-covariance matrix of the fixed effects using a Taylor series expansion and then calculates degrees of freedom (Kenward & Roger, 1997). This method is widely used with mixed-effects models because it helps control Type I error rates, yields accurate p-values, and remains reliable across a range of sample sizes (Kuznetsova, Brockhoff, & Christensen, 2017; Luke, 2017; McNeish & Stapleton, 2016).

RESULTS

After all trials were completed, we counted the occurrences of each pattern for each final outcome. Because a pattern depends on the participant’s initial choice and can only be determined after the fact, not every participant necessarily displayed every pattern. Table 2 shows the number of occurrences of each pattern and the descriptive statistics of all measures.

Confidence

Analyzing participants’ confidence data serves as a data-quality check: we expected participants to have higher confidence when their initial choices were correct. We therefore grouped the patterns into two classes: right human initial choice (patterns A and B) and wrong human initial choice (patterns C, D, and E). Confidence ratings differed significantly between right (Mean = 72.74, SD = 1.98) and wrong (Mean = 56.77, SD = 2.00) initial choices (t(1962.72) = −20.20, p < .001).

Comparing Patterns C, D, and E

Significance indicators on the figures mark statistically significant differences between patterns.

Trust adjustment.

There was a significant difference between the three patterns in trust adjustment (F(2, 875.68) = 61.2, p < .001). Pattern C produced a significantly more positive trust adjustment than pattern D (t(875) = 6.57, p < .001) and pattern E (t(878) = 9.96, p < .001). Pattern E produced a significantly greater trust decrement than pattern D (t(876) = −4.46, p < .001) (Figure 11).

Figure 11.

Trust adjustment by patterns of performance. The error bars represent +/− 2 standard errors.

Performance.

There was a significant difference between the three patterns in final performance (F(2, 858.11) = 29.43, p < .001). Post-hoc results show that pattern E had significantly worse performance than pattern C (t(856.62) = −7.67, p < .001) and pattern D (t(856.47) = −5.73, p < .001).

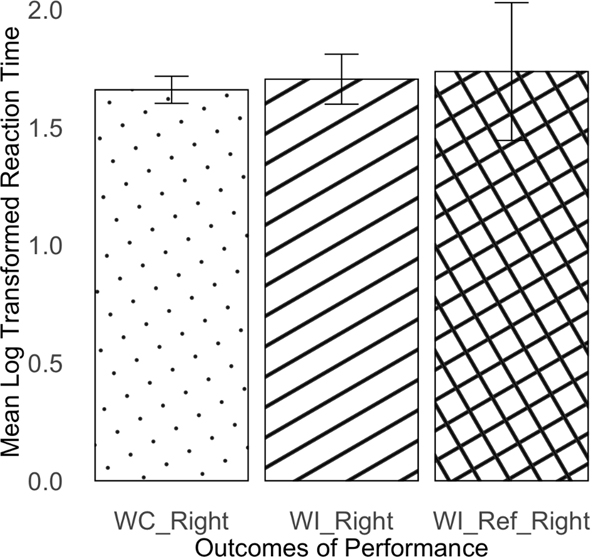

Reaction Time.

Reaction times were log-transformed before statistical analysis. Both pattern (F(2, 852.35) = 26.82, p < .001) and final outcome (F(1, 862.59) = 24.64, p < .001) significantly affected reaction time, and their interaction was also significant (F(2, 855.15) = 10.38, p < .001). Because of the significant interaction, we compared the three patterns within each level of outcome. When the final outcome was right (Figure 13a), there was no significant difference between the three patterns (F(2, 475.49) = 0.35, p = .71); when the final outcome was wrong (Figure 13b), pattern significantly affected reaction time (F(2, 363.47) = 22.09, p < .001). Post-hoc comparisons revealed that pattern E resulted in significantly quicker reaction times than pattern C (t(362.36) = 5.82, p < .001) and pattern D (t(358.65) = 6.14, p < .001).
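The log transformation can be illustrated with a short sketch. It assumes the natural logarithm (the text does not state the base) and uses hypothetical reaction times. Because the model is fitted on log seconds, a back-transformed mean is a geometric mean, which is less influenced by a few very slow trials than the arithmetic mean.

```python
import math
from statistics import mean


def log_rt(rts_seconds):
    """Natural-log transform of reaction times in seconds (values must be > 0)."""
    if any(rt <= 0 for rt in rts_seconds):
        raise ValueError("reaction times must be positive")
    return [math.log(rt) for rt in rts_seconds]


def back_transformed_mean(rts_seconds):
    """exp(mean(log RT)), i.e., the geometric mean in seconds."""
    return math.exp(mean(log_rt(rts_seconds)))


rts = [2.0, 4.0, 8.0, 32.0]  # hypothetical, right-skewed reaction times
print(mean(rts))                             # 11.5
print(round(back_transformed_mean(rts), 2))  # 6.73
```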

Figure 13.

Reaction Time by patterns of performance. The error bars represent +/− 2 standard errors.

Evaluating Continuity, Negativity Bias, and Stabilization

To examine the three properties of trust dynamics, the patterns were grouped into two classes: correct AI predictions (i.e., patterns A and C) and incorrect AI predictions (i.e., patterns B, D, and E).

Continuity.

Comparing correct with incorrect AI predictions shows that AI success led to a trust increment, whereas AI prediction failures led to a decline in trust. To investigate the relationship between the present trust rating and its history, we computed the autocorrelation of trust ratings. The results, depicted in Figure 14, show that trust at time $t$ is highly correlated with trust at the previous moment $t-1$. Moreover, the mean autocorrelation decreased as the time gap between ratings increased, indicating weaker correlation over longer intervals.
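A minimal sketch of the autocorrelation analysis, assuming the standard sample autocorrelation computed within each participant’s trust series and then averaged across participants; the paper does not specify its exact estimator, and the function names and example series are hypothetical.

```python
from statistics import mean


def autocorrelation(series, lag):
    """Sample autocorrelation at `lag`: the sum over t of (x_t - m)(x_{t+lag} - m),
    divided by the total sum of squared deviations from the series mean m."""
    n = len(series)
    if not 0 <= lag < n:
        raise ValueError("lag must satisfy 0 <= lag < len(series)")
    m = mean(series)
    denom = sum((x - m) ** 2 for x in series)
    if denom == 0:
        raise ValueError("constant series: autocorrelation is undefined")
    num = sum((series[t] - m) * (series[t + lag] - m) for t in range(n - lag))
    return num / denom


def mean_autocorrelation(trust_by_participant, lag):
    """Average the within-participant autocorrelation at `lag`."""
    return mean(autocorrelation(series, lag) for series in trust_by_participant)


# Hypothetical trust series for two participants.
series_a = [50, 55, 60, 58, 62, 65, 63, 66]
series_b = [70, 60, 65, 55, 60, 50, 55, 45]
for lag in (1, 2, 3):
    print(lag, round(mean_autocorrelation([series_a, series_b], lag), 3))
```

Plotting the averaged values against `lag` gives a curve of the kind summarized in Figure 14.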

Figure 14.

Autocorrelation of trust as a function of time separation. The error bars represent +/− 2 standard errors.

Negativity bias.

To evaluate negativity bias, we compared the magnitude of trust increments with that of trust decrements. Trust adjustment differed significantly between correct (Mean = 1.01, SD = 0.13) and incorrect (Mean = −3.16, SD = 0.22) AI predictions (t(1968.00) = −16.58, p < .001); the mean decrement after an incorrect prediction was roughly three times as large as the mean increment after a correct one.

Stabilization.

To evaluate the stabilization property, we fitted a linear mixed model of the magnitude of trust adjustment as a function of time. Results show a significant decrease in the magnitude of trust adjustment over time for both correct AI predictions (β = −0.035, t(1448) = −4.58, p < .001) and incorrect AI predictions (β = −0.203, t(469.27) = −3.50, p < .001).
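As a simplified illustration of the stabilization test, the sketch below computes a pooled least-squares slope of the absolute trust adjustment on trial number. It assumes “magnitude” means the absolute value and, unlike the linear mixed model used in the study, ignores participant-level random effects; the data are hypothetical.

```python
from statistics import mean


def ols_slope(x, y):
    """Ordinary least-squares slope of y regressed on x."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    return sxy / sxx


def stabilization_slope(trial_numbers, adjustments):
    """Slope of |Delta T| on trial number; a negative value suggests stabilization."""
    return ols_slope(trial_numbers, [abs(d) for d in adjustments])


# Hypothetical adjustments for trials 2-60 whose magnitude shrinks by 0.05 per trial.
trials = list(range(2, 61))
adjustments = [(-1) ** t * (5 - 0.05 * t) for t in trials]
print(round(stabilization_slope(trials, adjustments), 3))  # -0.05
```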

DISCUSSION

In this section, we discuss the findings in relation to our aims and hypotheses. The primary interest of the study was to compare patterns C, D, and E. The results partially support H1, in which we hypothesized that pattern C would lead to the best performance, followed by pattern D and then pattern E. Our results showed that pattern E had significantly worse performance than patterns C and D: of the 96 occurrences of pattern E, 72 ended in a wrong final answer. This highlights the high likelihood of errors when an automated decision aid erroneously confirms a participant’s wrong initial answer, leading the participant to believe falsely that the initial mistake was correct. In H1, we also hypothesized that performance in pattern C would be higher than in pattern D. To our surprise, the difference between patterns C and D was not significant, which suggests that errors of the pattern D type can be strongly beneficial from a utilitarian point of view. This counterintuitive finding, that certain AI errors are in fact beneficial, only surfaces when we go beyond the binary categorization of signal detection theory (SDT).

In prior work examining human-automation/autonomy interaction, risk has been identified as a significant factor influencing trust, dependence, and task performance (Hoff & Bashir, 2015). The risk of an event is the product of the probability of that event and its consequence (Sheridan, 2008; Slovic & Lichtenstein, 1968). Mathematically, Sheridan (2008) expressed risk as the “product of the probability of an initial reference event [$E$] and the sum of products of probability of each consequence [$X_i$] occurring given that E occurs, as well as the cost of each consequence [$C(X_i)$].” Therefore,

$$R = P(E)\sum_{i} P(X_i \mid E)\,C(X_i),$$

where the sum runs over all possible consequences $X_i$ of $E$. Applying the equation to the present study, $P(E)$ is the probability of each case (i.e., pattern C, D, or E), and $P(X_i \mid E)$ can be considered the probability of each final outcome given that case (i.e., a right or a wrong final answer). In the experiment, participants were encouraged to perform the task as accurately as possible. We did not manipulate the cost of an error (e.g., one medication error could be harmless while another could lead to patient death) or of a success. Therefore, $C(X_{\text{right}})$ and $C(X_{\text{wrong}})$ are constants. The findings pertinent to H1 can be summarized as $P(X_{\text{right}} \mid \text{Case C}) \approx P(X_{\text{right}} \mid \text{Case D}) > P(X_{\text{right}} \mid \text{Case E})$. Furthermore, if we are willing to assume specific values for $C(X_{\text{right}})$ and $C(X_{\text{wrong}})$, we could calculate the average consequence of each of the three cases.
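The average-consequence calculation can be sketched with the right and wrong final-outcome counts from Table 2 (rows WC, WI, and WI Ref, which correspond to patterns C, D, and E). The costs used here, 0 for a right final answer and 1 for a wrong one, are hypothetical and are not values the authors report; with these costs the average consequence reduces to each pattern’s error rate. The sketch computes only the conditional sum of probability times cost and omits the P(E) factor.

```python
# Final-outcome counts (right, wrong) per pattern, taken from Table 2.
OUTCOME_COUNTS = {"C": (369, 223), "D": (112, 85), "E": (24, 72)}

# Hypothetical costs chosen only for illustration, not from the study.
COST_RIGHT, COST_WRONG = 0.0, 1.0


def expected_consequence(n_right, n_wrong, cost_right=COST_RIGHT, cost_wrong=COST_WRONG):
    """Sum over the two final outcomes of P(outcome | case) * cost(outcome)."""
    n = n_right + n_wrong
    return (n_right / n) * cost_right + (n_wrong / n) * cost_wrong


for case, (n_right, n_wrong) in OUTCOME_COUNTS.items():
    print(case, round(expected_consequence(n_right, n_wrong), 3))
# C 0.377
# D 0.431
# E 0.75
```

Other cost assumptions can be passed through `cost_right` and `cost_wrong`; the ordering of the three cases holds for any costs with `cost_wrong > cost_right`.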

H2 is fully supported: pattern C led to the highest trust increment, followed by pattern D and then pattern E. Prior research has consistently shown that automation performance is a key determinant of the direction of trust change: automation success leads to a trust increment, and failure leads to a trust decrement (Lee & Moray, 1992; Manzey et al., 2012; Yang, Schemanske, & Searle, 2023; Yang et al., 2017). We simultaneously observe two seemingly competing theories of how people interpret automation performance. On the one hand, if automation performance is assessed based on the automation’s prediction performance per se, we would expect a trust increment for pattern C and a trust decrement for both patterns D and E. In addition, the two trust decrements should be similar because, in both cases, the automation made a wrong prediction. On the other hand, if automation performance is assessed based on the “utility” of a prediction, we would expect a trust decrement for pattern E and a trust increment for both patterns C and D, as both patterns prompt a re-evaluation of the initial selection. Further, the two trust increments should be similar because the final performance of the two patterns was comparable. Our results revealed that neither theory is entirely right or wrong. Instead, the two forces, the AI’s performance per se and the “utility” of an AI prediction, jointly determine trust change. In prior research based on SDT, the automation’s prediction performance and its “utility” are aligned. In multi-class scenarios, however, the prediction performance and the “utility” of pattern D point in opposite directions, which produces this finding. Specifically, the direction of trust change, increment or decrement, is largely determined by the AI’s performance. Consistent with prior literature (Lee & Moray, 1992; Manzey et al., 2012; Yang, Schemanske, & Searle, 2023; Yang et al., 2017), a wrong prediction leads to a trust decrement. However, the magnitude of the trust decrement is further influenced by the “utility”: a wrong prediction that leads to a good outcome produces a smaller trust decrement.

Regarding the time taken to make a final decision after receiving the AI’s prediction, we hypothesized an interaction between the three patterns and the final outcome. The results supported H3a: when the human operators were misled by the incorrect reassurance from the AI, they responded very quickly, with significantly shorter reaction times in pattern E than in patterns C and D. The results did not support H3b: we found no significant difference between the three patterns when participants successfully recognized the false reassurance. This lack of significance could be due to the small sample size, and future research is needed to examine this further. In addition, we observed a speed-accuracy trade-off, in which faster responses were associated with more errors. In a practical work environment, whether time or accuracy takes priority hinges on the payoff structure. For example, in medical settings where a wrong medication may lead to catastrophic outcomes, it might be more beneficial to prioritize accuracy over time.

Along with our primary objectives, we also sought to validate the three properties of trust dynamics, namely continuity (H4), negativity bias (H5), and stabilization (H6). Continuity and negativity bias have been reported consistently in the literature (Lee & Moray, 1992; Manzey et al., 2012; Yang, Wickens, & Hölttä-Otto, 2016). The stabilization property was reported more recently by Yang, Guo, and Schemanske (2023) and Guo and Yang (2021). Our study provides further validation of this property: as a person uses an automated technology repeatedly, that person’s trust stabilizes over time. It is important to note that the three properties are derived from aggregated data in empirical research (i.e., trust change averaged over a group of participants). Therefore, the three properties represent population-average behavior.

CONCLUSION

Most studies on trust in and dependence on automation/autonomy and AI systems have used SDT to model the performance of the systems. However, SDT categorizes the world into binary states (signal present or absent), which oversimplifies the complex dynamics observed in real-world scenarios. This binary categorization fails to capture the complexity inherent in multi-class classification scenarios, which are increasingly prevalent in advanced AI systems across various sectors. Examples include medical diagnosis through image classification (classifying chest X-rays as normal, pneumonia, or lung cancer), object detection in autonomous vehicles (recognizing the various objects encountered on the road), and product categorization in e-commerce (classifying products as electronics, clothing, home appliances, or books), among others (Gao et al., 2018; Kozareva, 2015; Rahman, Desai, & Bhattacharya, 2008). To our knowledge, this is one of the few, if not the only, studies to examine trust in and dependence on automation/autonomy and AI systems beyond the binary classification of the state of the world.

Our study revealed differences in performance, response time, and trust among three patterns: C, D, and E. These findings emphasize the need for careful consideration of error patterns in automated systems. When the AI falsely reassured the human operator, as in pattern E, operators were highly likely to make hasty decisions that often led to errors. This highlights a significant danger in AI design: systems that mislead operators can severely undermine safety and efficiency. Conversely, errors that prompt safety checks and verifications, even though incorrect, can enhance the overall reliability of human-AI systems by preventing potential mishaps. Our results also illustrate the generalizability of the three properties of trust dynamics (continuity, negativity bias, and stabilization), showing how people’s trust changes over time through moment-to-moment interaction with AI.

There are several limitations to this study. First, we did not manipulate the cost of an error or the cost of success (i.e., the cost terms in the risk equation), which often vary in safety-critical domains. For instance, Aldhwaihi, Schifano, Pezzolesi, and Umaru (2016) found that although most dispensing errors caused no harm, some errors had serious consequences that could potentially cause harm or even death, depending on the dosage and the patient’s vulnerability. Future research could incorporate varying costs into experiments to examine the impact of different AI error types. Future research should also validate the results in more realistic contexts, particularly in safety-critical domains. Second, inspired by the medical dispensing examples described at the beginning of the article, the testbed we developed focused on a mental rotation and recognition task. Future studies could replicate the study using testbeds focusing on other human information processing components, such as memory and decision-making. Third, there were only 96 occurrences of pattern E, which could explain the lack of significance for H3b. Future studies could benefit from a larger sample size.

Supplementary Material

Figure 12.

Performance by patterns of performance. The error bars represent +/− 2 standard errors.

Figure 15.

Trust adjustment by patterns of performance. The error bars represent +/− 2 standard errors.

Keypoints.

AI systems that offer a multi-class classification of the state of the world can lead to types of errors that SDT does not traditionally capture.

Varying error patterns from AI significantly impacted performance, reaction times, and trust adjustments.

AI errors can provide false reassurance, misleading operators into believing their incorrect decisions were correct. This leads to worsened performance and a significant decline in trust. Conversely, and paradoxically, some AI errors can prompt safety verification, ultimately enhancing overall performance.

We illustrate the generalizability of the three properties of trust dynamics: continuity, negativity bias, and stabilization.

Acknowledgement:

This study is in part supported by the University of Michigan Industrial and Operations Engineering department’s fellowship, the University of Michigan Rackham Graduate School’s graduate student research grant, and the National Library of Medicine of the National Institutes of Health under award number R01LM013624. The authors would like to thank Szu-Tung Chen for her assistance in testbed development, and Yili Liu for lively intellectual discussions.

Biographies

Jin Yong Kim is a Ph.D. candidate in the Department of Industrial and Operations Engineering (Human Factors) at the University of Michigan Ann Arbor. He obtained a BSE in Industrial and Operations Engineering from the University of Michigan in 2021.

Corey Lester is an Assistant Professor in the College of Pharmacy at the University of Michigan Ann Arbor. He received his PharmD from the University of Rhode Island in 2012 and completed a PGY-1 Community Practice Residency at Virginia Commonwealth University. He received his PhD from the University of Wisconsin-Madison, School of Pharmacy in 2017.

X. Jessie Yang is an Associate Professor in the Department of Industrial and Operations Engineering and the Department of Robotics at the University of Michigan Ann Arbor. She obtained a PhD in Mechanical and Aerospace Engineering (Human Factors) from Nanyang Technological University, Singapore in 2014.

Footnotes

We used a Wizard of Oz approach in our study, with no algorithm running in the backend. We acknowledge the differences among automation, autonomy, and AI, but the distinction is not within the scope of this study. The word “AI” is adopted to acknowledge advances in computer vision and image classification; deep learning algorithms for such tasks have been developed in many domains (Q. Chen, Al Kontar, Nouiehed, Yang, & Lester, 2024; Krizhevsky, Sutskever, & Hinton, 2012).

Contributor Information

Jin Yong Kim, Industrial and Operations Engineering, University of Michigan, Ann Arbor, MI.

Corey Lester, College of Pharmacy, University of Michigan, Ann Arbor, MI.

X. Jessie Yang, Industrial and Operations Engineering, University of Michigan, Ann Arbor, MI.

References

- Albayram Y, Jensen T, Khan MMH, Fahim MAA, Buck R, & Coman E. (2020). Investigating the effects of (empty) promises on human-automation interaction and trust repair. In Proceedings of the 8th International Conference on Human-Agent Interaction (pp. 6–14).

- Aldhwaihi K, Schifano F, Pezzolesi C, & Umaru N. (2016). A systematic review of the nature of dispensing errors in hospital pharmacies. Integrated Pharmacy Research and Practice, 1–10.

- Ashktorab Z, Jain M, Liao QV, & Weisz JD (2019). Resilient chatbots: Repair strategy preferences for conversational breakdowns. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (pp. 1–12).

- Azevedo-Sa H, Jayaraman SK, Esterwood CT, Yang XJ, Robert LP, & Tilbury DM (2020). Comparing the effects of false alarms and misses on humans’ trust in (semi) autonomous vehicles. In Companion of the 2020 ACM/IEEE International Conference on Human-Robot Interaction (pp. 113–115).

- Baker AL, Phillips EK, Ullman D, & Keebler JR (2018). Toward an understanding of trust repair in human-robot interaction: Current research and future directions. ACM Transactions on Interactive Intelligent Systems (TiiS), 8 (4), 1–30.

- Bates D, Mächler M, Bolker B, & Walker S (2014). Fitting linear mixed-effects models using lme4. arXiv preprint arXiv:1406.5823.

- Bhat S, Lyons JB, Shi C, & Yang XJ (2022). Clustering Trust Dynamics in a Human-Robot Sequential Decision-Making Task. IEEE Robotics and Automation Letters, 7 (4), 8815–8822. doi: 10.1109/LRA.2022.3188902

- Bhat S, Lyons JB, Shi C, & Yang XJ (2024). Evaluating the Impact of Personalized Value Alignment in Human-Robot Interaction: Insights into Trust and Team Performance Outcomes. In Proceedings of the 2024 ACM/IEEE International Conference on Human-Robot Interaction (pp. 32–41). New York, NY, USA: Association for Computing Machinery. doi: 10.1145/3610977.3634921

- Breznitz S. (2013). Cry wolf: The psychology of false alarms. Psychology Press.

- Chancey ET, Bliss JP, Liechty M, & Proaps AB (2015). False alarms vs. misses: Subjective trust as a mediator between reliability and alarm reaction measures. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting (Vol. 59, pp. 647–651).

- Chancey ET, Bliss JP, Yamani Y, & Handley HA (2017). Trust and the compliance–reliance paradigm: The effects of risk, error bias, and reliability on trust and dependence. Human Factors, 59 (3), 333–345.

- Chen M, Nikolaidis S, Soh H, Hsu D, & Srinivasa S. (2018). Planning with trust for human-robot collaboration. In Proceedings of the 2018 ACM/IEEE International Conference on Human-Robot Interaction (HRI ’18) (pp. 307–315).

- Chen Q, Al Kontar R, Nouiehed M, Yang XJ, & Lester C. (2024). Rethinking Cost-Sensitive Classification in Deep Learning via Adversarial Data Augmentation. INFORMS Journal on Data Science. doi: 10.1287/ijds.2022.0033

- Chung H, & Yang XJ (2024). Associations between trust dynamics and personal characteristics. In 2024 IEEE 4th International Conference on Human-Machine Systems (ICHMS) (pp. 1–6). doi: 10.1109/ICHMS59971.2024.10555583

- Davenport RB, & Bustamante EA (2010). Effects of false-alarm vs. miss-prone automation and likelihood alarm technology on trust, reliance, and compliance in a miss-prone task. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting (Vol. 54, pp. 1513–1517).

- de Visser EJ, Peeters MM, Jung MF, Kohn S, Shaw TH, Pak R, & Neerincx MA (2020). Towards a theory of longitudinal trust calibration in human–robot teams. International Journal of Social Robotics, 12 (2), 459–478.

- Dixon SR, & Wickens CD (2006). Automation reliability in unmanned aerial vehicle control: A reliance-compliance model of automation dependence in high workload. Human Factors, 48 (3), 474–486.

- Dixon SR, Wickens CD, & McCarley JS (2007). On the independence of compliance and reliance: Are automation false alarms worse than misses? Human Factors, 49 (4), 564–572.

- Du N, Huang KY, & Yang XJ (2020). Not All Information Is Equal: Effects of Disclosing Different Types of Likelihood Information on Trust, Compliance and Reliance, and Task Performance in Human-Automation Teaming. Human Factors, 62 (6), 987–1001. doi: 10.1177/0018720819862916

- Esterwood C, & Robert LP (2022). A literature review of trust repair in HRI. In 2022 31st IEEE International Conference on Robot and Human Interactive Communication (RO-MAN) (pp. 1641–1646).

- Esterwood C, & Robert LP Jr (2023). Three strikes and you are out!: The impacts of multiple human–robot trust violations and repairs on robot trustworthiness. Computers in Human Behavior, 142, 107658.

- Field A, Field Z, & Miles J. (2012). Discovering statistics using R. Sage.

- Gao H, Cheng B, Wang J, Li K, Zhao J, & Li D. (2018). Object classification using CNN-based fusion of vision and LIDAR in autonomous vehicle environment. IEEE Transactions on Industrial Informatics, 14 (9), 4224–4231.

- Guo Y, & Yang XJ (2021). Modeling and predicting trust dynamics in human–robot teaming: A Bayesian inference approach. International Journal of Social Robotics, 13 (8), 1899–1909.

- Guo Y, Yang XJ, & Shi C. (2023). Enabling Team of Teams: A Trust Inference and Propagation (TIP) Model in Multi-Human Multi-Robot Teams. In Robotics: Science and Systems XIX. doi: 10.15607/RSS.2023.XIX.003

- Guo Y, Yang XJ, & Shi C. (2024). TIP: A trust inference and propagation model in multi-human multi-robot teams. Autonomous Robots, 48 (7), 1–19. doi: 10.1007/s10514-024-10175-3

- Guzman-Bonilla E, & Patton CE (2024). Misses or false alarms? How error type affects confidence in automation reliability estimates. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting (p. 10711813241275093).

- Hautus MJ, Macmillan NA, & Creelman CD (2021). Detection theory: A user’s guide. Routledge.

- Hoff KA, & Bashir M. (2015). Trust in automation: Integrating empirical evidence on factors that influence trust. Human Factors, 57 (3), 407–434.

- Johnson JD, Sanchez J, Fisk AD, & Rogers WA (2004). Type of automation failure: The effects on trust and reliance in automation. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting (Vol. 48, pp. 2163–2167).

- Kaplan AD, Kessler TT, Brill JC, & Hancock P. (2023). Trust in artificial intelligence: Meta-analytic findings. Human Factors, 65 (2), 337–359.

- Kenward MG, & Roger JH (1997). Small sample inference for fixed effects from restricted maximum likelihood. Biometrics, 983–997.

- Kim J, Chen ST, Lester C, & Yang XJ (2023). Exploring trust and performance in human-automation interaction: Novel perspectives on incorrect reassurances from imperfect automation.

- Kim JY, Marshall VD, Rowell B, Chen Q, Zheng Y, Lee JD, . . . Yang XJ (2025). The effects of presenting AI uncertainty information on pharmacists’ trust in automated pill recognition technology: Exploratory mixed subjects study. JMIR Human Factors, 12, e60273.

- Kim JY, Richie O, & Yang XJ (2024). Evaluating the influence of incorrect reassurances on trust in imperfect automated decision aid. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting (Vol. 68, pp. 81–87).

- Kim PH, Ferrin DL, Cooper CD, & Dirks KT (2004). Removing the shadow of suspicion: The effects of apology versus denial for repairing competence- versus integrity-based trust violations. Journal of Applied Psychology, 89 (1), 104.

- Kim T, & Song H. (2021). How should intelligent agents apologize to restore trust? Interaction effects between anthropomorphism and apology attribution on trust repair. Telematics and Informatics, 61, 101595.

- Kohn SC, Momen A, Wiese E, Lee Y-C, & Shaw TH (2019). The consequences of purposefulness and human-likeness on trust repair attempts made by self-driving vehicles. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting (Vol. 63, pp. 222–226).

- Kok BC, & Soh H. (2020). Trust in robots: Challenges and opportunities. Current Robotics Reports, 1 (4), 297–309.

- Kozareva Z. (2015). Everyone likes shopping! Multi-class product categorization for e-commerce. In Proceedings of the 2015 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (pp. 1329–1333).

- Krizhevsky A, Sutskever I, & Hinton GE (2012). ImageNet Classification with Deep Convolutional Neural Networks. In Advances in Neural Information Processing Systems (Vol. 25). Curran Associates, Inc. Retrieved 2025-01-02, from https://proceedings.neurips.cc/paperfiles/paper/2012/hash/c399862d3b9d6b76c8436e924aAbstract.html

- Kuznetsova A, Brockhoff PB, & Christensen RHB (2017). lmertest package: tests in linear mixed effects models. Journal of statistical software, 82 (13). [Google Scholar]

- Lacson FC, Wiegmann DA, & Madhavan P. (2005). Effects of attribute and goal framing on automation reliance and compliance. In Proceedings of the human factors and ergonomics society annual meeting (Vol. 49, pp. 482–486). [Google Scholar]

- Lee J, & Moray N. (1992). Trust, control strategies and allocation of function in human-machine systems. Ergonomics, 35 (10), 1243–1270. [DOI] [PubMed] [Google Scholar]

- Lester CA, Li J, Ding Y, Rowell B, Yang XJ, & Al Kontar R. (2021). Performance evaluation of a prescription medication image classification model: an observational cohort. NPJ Digital Medicine, 4 (1), 118. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lewicki RJ, & Brinsfield C. (2017). Trust repair. Annual review of organizational psychology and organizational behavior, 4 (1), 287–313. [Google Scholar]

- Luke SG (2017). Evaluating significance in linear mixed-effects models in r. Behavior research methods, 49, 1494–1502. [DOI] [PubMed] [Google Scholar]

- Madhavan P, Wiegmann DA, & Lacson FC (2006). Automation failures on tasks easily performed by operators undermine trust in automated aids. Human Factors, 48 (2), 241–256.

- Mahmood A, Fung JW, Won I, & Huang C-M (2022). Owning mistakes sincerely: Strategies for mitigating AI errors. In Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems (pp. 1–11).

- Manzey D, Reichenbach J, & Onnasch L (2012). Human performance consequences of automated decision aids: The impact of degree of automation and system experience. Journal of Cognitive Engineering and Decision Making, 6 (1), 57–87.

- McBride SE, Rogers WA, & Fisk AD (2011). Understanding the effect of workload on automation use for younger and older adults. Human Factors, 53 (6), 672–686. doi: 10.1177/0018720811421909

- McNeish DM, & Stapleton LM (2016). The effect of small sample size on two-level model estimates: A review and illustration. Educational Psychology Review, 28, 295–314.

- Merritt SM, Heimbaugh H, LaChapell J, & Lee D (2013). I trust it, but I don’t know why: Effects of implicit attitudes toward automation on trust in an automated system. Human Factors, 55 (3), 520–534.

- Meyer J (2001). Effects of warning validity and proximity on responses to warnings. Human Factors, 43 (4), 563–572.

- Meyer J (2004). Conceptual issues in the study of dynamic hazard warnings. Human Factors, 46 (2), 196–204.

- Faas SM, Kraus J, Schoenhals A, & Baumann M (2021). Calibrating pedestrians’ trust in automated vehicles: Does an intent display in an external HMI support trust calibration and safe crossing behavior? In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems (pp. 1–17).

- Natarajan M, & Gombolay M (2020). Effects of anthropomorphism and accountability on trust in human robot interaction. In Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction (pp. 33–42).

- Neyedli HF, Hollands JG, & Jamieson GA (2011). Beyond identity: Incorporating system reliability information into an automated combat identification system. Human Factors, 53 (4), 338–355.

- Parasuraman R, & Riley V (1997). Humans and automation: Use, misuse, disuse, abuse. Human Factors, 39 (2), 230–253.

- Rahman MM, Desai BC, & Bhattacharya P (2008). Medical image retrieval with probabilistic multi-class support vector machine classifiers and adaptive similarity fusion. Computerized Medical Imaging and Graphics, 32 (2), 95–108.

- Sanchez J, Rogers WA, Fisk AD, & Rovira E (2014). Understanding reliance on automation: Effects of error type, error distribution, age and experience. Theoretical Issues in Ergonomics Science, 15 (2), 134–160.

- Sebo SS, Krishnamurthi P, & Scassellati B (2019). “I don’t believe you”: Investigating the effects of robot trust violation and repair. In 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI) (pp. 57–65).

- Shepard RN, & Metzler J (1971). Mental rotation of three-dimensional objects. Science, 171 (3972), 701–703.

- Sheridan TB (2008). Risk, human error, and system resilience: Fundamental ideas. Human Factors, 50 (3), 418–426. doi: 10.1518/001872008X250773

- Skitka LJ, Mosier KL, & Burdick M (1999). Does automation bias decision-making? International Journal of Human-Computer Studies, 51 (5), 991–1006.

- Slovic P, & Lichtenstein S (1968). Relative importance of probabilities and payoffs in risk taking. Journal of Experimental Psychology, 78 (3, Pt. 2), 1–18. doi: 10.1037/h0026468

- Soh H, Xie Y, Chen M, & Hsu D (2020). Multi-task trust transfer for human–robot interaction. The International Journal of Robotics Research, 39 (2–3), 233–249.

- Sorkin RD, & Woods DD (1985). Systems with human monitors: A signal detection analysis. Human-Computer Interaction, 1 (1), 49–75.

- Tanner WP, & Swets JA (1954). A decision-making theory of visual detection. Psychological Review, 61 (6), 401–409.

- Tsai C-C, Kim JY, Chen Q, Rowell B, Yang XJ, Kontar R, . . . Lester C (2025). Effect of artificial intelligence helpfulness and uncertainty on cognitive interactions with pharmacists: Randomized controlled trial. Journal of Medical Internet Research, 27, e59946.

- Vandenberg SG, & Kuse AR (1978). Mental rotations, a group test of three-dimensional spatial visualization. Perceptual and Motor Skills, 47 (2), 599–604.

- Wang L, Jamieson GA, & Hollands JG (2009). Trust and reliance on an automated combat identification system. Human Factors, 51 (3), 281–291. doi: 10.1177/0018720809338842

- Wickens CD, & Dixon SR (2007). The benefits of imperfect diagnostic automation: A synthesis of the literature. Theoretical Issues in Ergonomics Science, 8 (3), 201–212.

- Wiczorek R, & Manzey D (2014). Supporting attention allocation in multitask environments: Effects of likelihood alarm systems on trust, behavior, and performance. Human Factors, 56 (7), 1209–1221.

- Wiegmann DA, Rich A, & Zhang H (2001). Automated diagnostic aids: The effects of aid reliability on users’ trust and reliance. Theoretical Issues in Ergonomics Science, 2 (4), 352–367.

- Wischnewski M, Krämer N, & Müller E (2023). Measuring and understanding trust calibrations for automated systems: A survey of the state-of-the-art and future directions. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (pp. 1–16).

- Xu A, & Dudek G (2015). OPTIMo: Online probabilistic trust inference model for asymmetric human-robot collaborations. In Proceedings of the 2015 ACM/IEEE International Conference on Human-Robot Interaction (HRI ’15) (pp. 221–228).

- Yang XJ, Guo Y, & Schemanske C (2023). From trust to trust dynamics: Combining empirical and computational approaches to model and predict trust dynamics in human-autonomy interaction. In Duffy VG, Landry SJ, Lee JD, & Stanton N (Eds.), Human-Automation Interaction: Transportation (pp. 253–265). Cham: Springer International Publishing. doi: 10.1007/978-3-031-10784-9_15

- Yang XJ, Schemanske C, & Searle C (2023). Toward quantifying trust dynamics: How people adjust their trust after moment-to-moment interaction with automation. Human Factors, 65 (5), 862–878.

- Yang XJ, Unhelkar VV, Li K, & Shah JA (2017). Evaluating effects of user experience and system transparency on trust in automation. In Proceedings of the 2017 ACM/IEEE International Conference on Human-Robot Interaction (pp. 408–416).

- Yang XJ, Wickens CD, & Hölttä-Otto K (2016). How users adjust trust in automation: Contrast effect and hindsight bias. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting (Vol. 60, pp. 196–200).

- Zheng Y, Rowell B, Chen Q, Kim JY, Al Kontar R, Yang XJ, et al. (2023). Designing human-centered AI to prevent medication dispensing errors: Focus group study with pharmacists. JMIR Formative Research, 7 (1), e51921.

Associated Data
