Abstract

Background

Emergency departments (EDs) are struggling with an increasing influx of patients. One method to help departments prepare for surges in admissions is time series forecasting (TSF). The aim of this study was to create an overview of the current literature to help guide future research. First, we aimed to identify the external variables used. Second, we aimed to identify the TSF methods used and their performance.

Methods

We included model development or validation studies that forecast patient arrivals to the ED and used external variables. We included studies on any forecast horizon and any forecasting methodology. The literature search was conducted in the PubMed, Scopus, Web of Science, CINAHL and Embase databases. We extracted data on the methods and variables used. The study is reported according to TRIPOD-SRMA guidelines. The risk of bias was assessed using PROBAST and the authors’ own dimensions.

Results

We included 30 studies. Our analysis identified 10 groups of variables used in models. Weather and calendar variables were commonly used. We found 3 families of TSF methods. However, none of the studies followed reporting guidelines and model code was seldom published.

Conclusions

Our results identify the need for better reporting of model development and validation, so that the role of external variables in the created models can be better understood, as well as for more uniform reporting of results across research groups and for external validation of the created models. Based on our findings, we also suggest a future research agenda for this field.

Clinical trial number

Not applicable.

Supplementary information

The online version contains supplementary material available at 10.1186/s12873-025-01250-8.

Graphical Abstract

Keywords: Systematic review, Forecasting, Emergency service, Artificial intelligence, Statistical models, External data, TRIPOD, PROBAST

Background

Emergency departments (EDs) are the backbone of acute patient care, are becoming prominent in chronic care, and are one of the main entry points into the healthcare system alongside primary care and its referrals [1, 2]. EDs around the globe are experiencing overcrowding and staff shortages. ED staff in the Western world have raised concerns that system resources are poorly matched to patient influx during peak hours [3]. This has a negative effect on patient care and safety as well as on staff working conditions [3].

A time series (TS) is a sequence of observations of a phenomenon collected over time [4]. Time series forecasting (TSF) attempts to predict how the observed phenomenon will develop in the near to far future, based on its history and other variables [4]. TSF was popularized during the COVID-19 pandemic, when it was used to predict the pandemic trajectory [5]. Beyond medicine, TSF is also used in retail, energy, logistics and many other industries, which face the similar problem of balancing supply and demand [6]. With TSF in the medical field, we can try to anticipate the number of patients that will get infected, show symptoms or seek medical care within a set future horizon [7].
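As a minimal sketch of this idea (not a method taken from any of the cited studies), the snippet below forecasts arrivals for a given day as the mean of the most recent observations that fell on the same weekday. The forecast therefore uses both the history of the series and one calendar variable. The function name, the `window` parameter and the arrival counts are illustrative assumptions.

```python
from statistics import mean


def weekday_mean_forecast(history, weekdays, target_weekday, window=4):
    """Forecast arrivals as the mean of the last `window` observations
    recorded on the same weekday as the day being forecast."""
    same_day = [y for y, d in zip(history, weekdays) if d == target_weekday]
    if not same_day:
        raise ValueError("no past observations for this weekday")
    return mean(same_day[-window:])


# Synthetic daily arrival counts for two weeks (hypothetical data).
arrivals = [120, 100, 98, 101, 105, 90, 85, 124, 102, 99, 100, 107, 92, 83]
# Weekday index for each observation: 0 = Monday ... 6 = Sunday.
weekdays = [day % 7 for day in range(len(arrivals))]

# Forecast for the next Monday, based on the two Mondays observed so far.
forecast_monday = weekday_mean_forecast(arrivals, weekdays, target_weekday=0)
```

A baseline of this kind is mainly useful as a reference point against which models with more external variables can be compared.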

With new advances coming from other fields of TSF, the research field is growing, and new technologies are emerging [2, 8, 9]. EDs routinely collect data that can be used for TSF, which makes them a good starting point before proceeding to other departments. A major problem is the lack of uniform terminology (Box 1), which blocks rapid development and hinders the ability of systematic reviews to capture all of the research pertaining to a research question [2, 8, 9].

Box 1.

Important term definitions

| Number of visits: number of patients arriving to the emergency department (This includes all patients, regardless of whether they are attended to, leave without being seen, or are redirected elsewhere). |

| Number of arrivals: number of patients who have visited the ED and have stayed there, sometimes also called “census”. |

| Attendance: number of patients that were actually attended to in the emergency department (can be lower than the number of visits). |

| Disposition: decision on whether the patient is admitted to a ward or discharged. |

One of the earliest articles in the field of ED TSF was published in 1994 and described the use of raw observations, moving averages and autoregressive integrated moving average (ARIMA) models [10]. Since then, teams from around the world have been trying to improve their predictions using the different models available [2, 8, 9, 11]. Recently, with advances in hardware and lower entry requirements for advanced methods such as machine learning [12], the field has been experiencing rapid development.

We identified 4 systematic reviews carried out on this topic to date [2, 8, 9, 11]. Each raises the field of ED TSF to a higher level and views the topic through a slightly different prism. Although the reviews are exhaustive, they still leave a novice in the field wondering where and how to start. It is still uncertain which variables perform better and which models should be used. Additionally, none of the reviews addresses bias in a systematic way using externally validated tools.

With this in mind, the aim of this systematic review is to help authors starting in the ED arrivals TSF field. The primary goal was to identify which external variables to use to make prediction models more accurate. The secondary goal was to find out which methods of TSF are used and which of them perform better.

Methods

Our systematic review is reported according to the TRIPOD-SRMA guidelines [13] (see Supplement 1), which use some elements of the PRISMA checklist with added thematic items for AI and modelling [13]. The protocol of the study (see Supplement 2) was not registered in PROSPERO because the review does not examine randomized controlled studies and is out of scope for the database.

Information sources and search strategy

Our search strategy was informed by previous systematic reviews from this field (Table 1) [2, 8, 9, 11].

Table 1.

Previous systematic reviews on ED TSF

| Author | Databases searched | Search strategy | Search strategy hits | Number of included studies | Search start | Search end |

|---|---|---|---|---|---|---|

| Wargon et al. [2] | PubMed (Medline), Science Citation Index | Emergency Service [mh] AND (forecasting [all] or scheduling) AND (simulation OR models, theoretical [mh]) | 201 | 9 | NA | 2007 |

| Muhammet et al. [11] | PubMed (Medline), Scopus, Web of Science, Google Scholar, Wiley, Elsevier, Springer, IEEE, BMJ Journals, Hindawi, Wolters Kluwer Health, Mary Ann Liebert, Oxford Journals, and Taylor & Francis | Emergency department/emergency room AND forecasting/predicting/estimating AND patient demand/arrival/visit/volume/presentation, LOS, admission, waiting time, ambulance diversion, etc. | 2964 | 41 | 2002 | 2018 |

| Jiang et al. [9] | PubMed (Medline), ScienceDirect and Scopus | ‘forecast OR predict’ AND ‘daily attendances OR patient volumes OR patient visits’ AND ‘emergency room OR emergency department’ AND ‘time series OR regression’ | 1677 | 35 | 2004 | 2021 |

| Silva et al. [8] | PubMed (Medline), Web of Science and Scopus | forecasting AND ED AND (visits OR arrivals)) AND (method OR model) AND (better OR improved) AND (performance OR accuracy) | 233 | 7 | 2015 | 2021 |

Our systematic search was conducted in 5 databases: PubMed [14], Scopus [15], Web of Science [16], CINAHL [17] and Embase [18], on the 13th of February 2025. We used the following search query, which was based on the search queries of previous systematic reviews (Table 1):

(“emergency department*” OR “emergency room*” OR “emergency service*” OR “ED” OR “ER”) AND (attendance* OR visit* OR “patient volume” OR “patient flow”) AND (modelling OR modeling) AND TITLE-ABS-KEY (forecast* OR prediction OR “time series forecasting” OR “time series model” OR “patient visits forecast” OR overcrowding) OR TITLE-ABS-KEY (“machine learning”).

We restricted our search to exclude certain publication types: conference abstracts, books and book chapters did not appear in our search results. Only results in the English language were included. No restrictions regarding study design were applied. Only studies reporting development and validation of models were included. The search included all eligible articles published until February 2025. The review builds on the previous systematic reviews listed in Table 1.

Study selection process

We included model development and/or validation studies that forecast patient arrivals to the ED and used external variables to better inform their model. Studies of patients of any age, any sex or any disease were considered relevant to this review. We included studies on any forecast horizon and any forecasting methodology. Our primary outcome was the final list of external variables used in the model. The secondary outcome was an analysis of which model performed best (if more than one model was used).

Before screening, search results were deduplicated using the SR-accelerator Deduplicator software [19]. Search results were independently screened for eligibility by 2 authors (LP, KGR). The same authors retrieved the full texts, screened them for inclusion and carried out the citation search. Discrepancies were resolved by a second look and agreement between the two authors.
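The deduplication step can be illustrated with a small sketch. This is not the algorithm used by the SR-accelerator Deduplicator, whose internals are not described here; it is a simplified stand-in that treats two records as duplicates when their normalized title and publication year match. The record fields (`title`, `year`) and function names are assumptions made for illustration.

```python
import re


def normalize_title(title):
    """Lowercase a title and collapse punctuation and whitespace so that
    exports of the same record from different databases compare equal."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def deduplicate(records):
    """Keep the first occurrence of each (normalized title, year) pair."""
    seen = set()
    unique = []
    for record in records:
        key = (normalize_title(record["title"]), record["year"])
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


# Hypothetical search results exported from two databases.
search_results = [
    {"title": "Forecasting ED visits", "year": 2020},
    {"title": "Forecasting ED Visits.", "year": 2020},
    {"title": "Forecasting ED visits", "year": 2021},
]
unique_results = deduplicate(search_results)
```

Exact matching on title and year is deliberately conservative; near-duplicates with differing titles would still need manual review.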

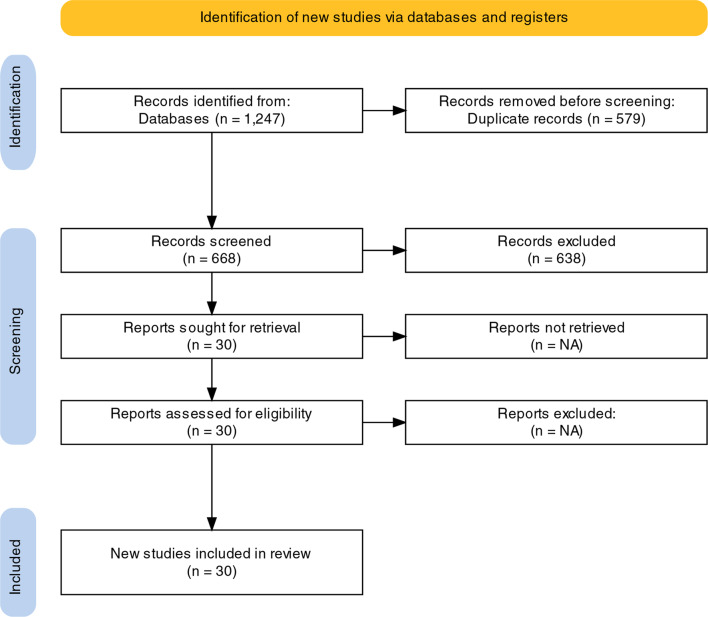

The selection process was recorded in sufficient detail to complete a PRISMA flow diagram (see Fig. 1), which was created online [20], and a list of deduplicated studies (Supplement 3).

Fig. 1.

PRISMA flowchart representing the study selection process

Data items

An author-created form (initially piloted on one included study) was used for data extraction of characteristics of studies, outcomes and risk of bias. One study author (LP) extracted the following data from included studies:

year of publication,

country,

dataset size,

methods used,

list of all tested external variables,

the population the model tried to capture,

list of external variables used in the final model,

forecasting horizon,

external variable preprocessing used,

missing data handling,

main conclusions and

future research directions.

Risk of bias and applicability assessment

Risk of bias was assessed using the PROBAST tool [21]. Two authors (LP, KGR) assessed risk of bias for each study on 73 PROBAST points. A TSF expert (JŽ) also suggested additional items to better assess the risk of bias and quality of manuscripts, which could be answered with a yes or no. These items were:

Is the model made public (e.g. Hugging Face or GitHub)?

Is the development dataset size specified (number of records)?

Was the development data made available (e.g. Zenodo or Figshare)?

Were any reporting guidelines followed (e.g. TRIPOD)?

Did the study use internal or external validation?

What kind of data validation was used and what type is it according to TRIPOD 2015 [22]?

Did the authors report predictions with confidence intervals?

The PROBAST tool includes 4 steps. In the first step, the systematic review question is specified (see the aim of the study); this step is not repeated later. In the second to fourth steps, each study is assessed for the type of prediction model evaluation, then for risk of bias and applicability, and finally an overall judgement is passed [21]. If only low risk is found in steps 2 and 3, the article has a low risk of bias and a low concern over applicability. If at least one dimension in step 2 or 3 is marked as high, the article gets a high risk of bias or a high concern over applicability [21].
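The overall judgement rule described above can be sketched in a few lines. This is an illustrative encoding, not code from the PROBAST authors: the function name and the dictionary of domain ratings are assumptions, and the "unclear" outcome for mixed low/unclear ratings is an assumption that goes beyond the two cases spelled out in the text.

```python
def overall_judgement(domain_ratings):
    """Combine per-domain ratings ("low", "high" or "unclear") into one
    overall rating: any "high" gives "high", all "low" gives "low"."""
    ratings = [rating.lower() for rating in domain_ratings.values()]
    if not ratings:
        raise ValueError("at least one domain rating is required")
    if any(rating == "high" for rating in ratings):
        return "high"
    if all(rating == "low" for rating in ratings):
        return "low"
    # Assumption: anything else (some domains unclear, none high).
    return "unclear"


# Hypothetical ratings for one study across the four PROBAST domains.
example_study = {
    "participants": "low",
    "predictors": "low",
    "outcome": "low",
    "analysis": "high",
}
example_rating = overall_judgement(example_study)
```

The same function can be applied separately to the risk of bias ratings and to the applicability ratings of a study.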

The unit of analysis was “population seeking care in EDs”. We did not contact investigators or study sponsors to provide missing data. We did not assess publication bias/small studies effect. We did not perform subgroup or sensitivity analysis because it does not apply to these kinds of studies.

Results

Search results

Our systematic search identified 1247 studies: 10 from CINAHL (EBSCO), 208 from Embase, 319 from PubMed, 519 from Scopus and 191 from Web of Science. After removing duplicates, 668 studies were screened. Thirty studies were included in the systematic review [10, 23–51]. Figure 1 shows the PRISMA [52] diagram of the study.

Study and model characteristics

The summary data of the 30 studies included in our systematic review (SR) can be seen in Table 2. Most of the included articles tried to predict all patient admissions, while 4 focused on asthma or respiratory illnesses [27, 30, 33, 42] and 2 on ED arrivals related to respiratory illnesses [33, 42]. The average study period was 4 years, ranging from 1 year to 13 years in one study (see Supplement 4) [53]. The vast majority of research comes from the United States of America and Asia. Most European studies are based in the UK or Spain. The forecast horizon ranged from 1 day to 1 year. Sample size was between 3000 and 901,000. The most common conclusions are that using weather and calendar variables improves prediction accuracy, that machine learning models generally perform better than other methods of TSF and that the use of internet search data (i.e. Google Trends) can meaningfully help improve models, but needs further research. The future research suggested by authors is similar between articles. Prospective studies using external validation are suggested. Additionally, authors want to try incorporating more granularity into their predictions, i.e. predicting triage categories of arriving patients and the number of different chief complaints. Finally, authors propose creating new hybrid models (also known as ensemble or fusion of models) to improve on currently available ones. Some of the studies also used variable pre-processing (Table 2). None of the studies used any methods to fill in the missing data.

Table 2.

Summary data of 30 included studies in the field of prognostic models for emergency department arrivals forecasting.

| Year | Author | Country | Number of patient records | Forecast horizons | Population | Main conclusions | Future research | External variables preprocessing |

|---|---|---|---|---|---|---|---|---|

| 2024 | Peláez et al. [23] | Spain | 3322 | 7 days | GP | I. Dividing the database according to the day of the week allows obtaining the best results in both databases II. Meteorological variables exhibit notable significance. Particularly, maximum and minimum temperatures III. The most effective threshold is identified at 40 visits when splitting the dataset based on the count of ED visits from the sixth previous day IV. Variables related to temperature hold significant relevance in both databases when performing the training data segmentation into various subsets V. Variables referring to the number of visitors on preceding days also play a pivotal role in the process | I. The development of hybrid models II. Refining the selection of optimal thresholds and variables to improve forecasting precision III. The distinction between various patient categories and an extension of the prediction-time horizons of the forecasting problem IV. The adaptation of the prediction system to extreme events | I. Correlation matrix for variable selection, feature standardization using mean and standard deviation |

| 2023 | Gafni-Pappas et al. [24] | USA | NA | 1 day | GP | I. Machine learning models perform better at predicting daily patient volumes as compared to simple univariate time series models | I. Prospective study using the identified variables II. Additional feature engineering III. Assessing differences in model accuracy on other outcomes IV. One could also consider reviewing common emergency department complaints to see if the models can predict these V. Other variables to aid in prediction may exist | None |

| 2022 | Tuominen et al. [25] | Finland | 900700 | 1 day | GP | I. Predictive accuracy of next day arrivals can be increased using high-dimensional feature selection approach when compared to both univariate and nonfiltered high-dimensional approach II. Performance over multiple horizons was similar with a gradual decline for longer horizons | I. Enhancing the feature selection mechanism II. Investigating its applicability to other domains III. Identifying other potentially relevant explanatory variables | None |

| 2022 | Fan et al. [26] | Hong Kong | 139910 | 7 days | GP | I. The internet search index significantly improves forecasting as a reliable predictor monitoring continuous behavior trend and sudden changes during the epidemic II. Nonlinear model is better | I. Explore ED-related data from social media platforms II. Predict the ED patient flow with different severity levels III. The relationship between levels of patient arrivals and internet information | I. Minimum-maximum normalization technique before modeling |

| 2020 | Peng et al. [27] | China | 13218 | prediction of peak ED arrival | Chronic respiratory disease patients | I. Random forests reached the best classification prediction performance | None | I. Removed records with less than 10 people on weekends to eliminate weekend effects, bringing the total number of samples collected to 55 |

| 2019 | Tideman et al. [28] | USA | NA | Unknown | GP | I. Search volume data can meaningfully improve forecasting of ED | I. Validating the methodology at additional diverse sites II. Incorporating additional novel sources of data III. Prospectively testing the models in real time | I. Pairwise correlation and PCA |

| 2018 | Jiang et al. [29] | Hong Kong | 245377 | 28 days | GP | I. Predictive values are significant indicators of patients' demand and can be used by ED managers to make resource planning and allocation | None | I. A genetic algorithm (GA)-based feature selection algorithm is improved and implemented as a pre-processing step |

| 2015 | Ram et al. [30] | USA | NA | 3 days | Asthma patients | I. Model can predict the number of asthma ED visits based on near-real-time environmental and social media data with approximately 70% precision | I. A temporal prediction model that analyzes the trends in tweets and AQI changes and estimates the time lag between these changes and the number of asthma ED visits. II. Examine the effects of seasonal variations III. The effect of relevant data from other types of social media IV. How combining real-time or near-real-time social media and environmental data with more traditional data might affect the performance and timing of the current individual-level prediction models V. Diseases with geographical and temporal variability | I. Z-score normalization, Twitter with ratio number of asthma related vs. all tweets |

| 2011 | Perry et al. [31] | Canada | 140000 | 1 day | Weather induced heat-related illness | I. Estimates of HRI visits from regression models using both weather variables and visit counts captured by syndromic surveillance as predictors were slightly more highly correlated with NACRS HRI ED | None | None |

| 2023 | Hugo Álvarez et al. [32] | Spain | 361698 | 1, 3 and 7 days | GP | I. Attention model with calendar data achieves the best results | None | None |

| 2025 | Morbey et al. [33] | United Kingdom | Unknown | 1 to 28 days | Respiratory ED visit | I. Machine learning pipelines can be used to create daily forecast that predict the peak in demand for RSV activity over the following 28 days II. Correctly predict the peak in ED acute bronchiolitis attendances in children under 5 years III. Whilst ‘ensemble forecast methods’ inevitably fit the data better, they lack transparency, and it is difficult to explain the theoretical justification for individual forecasts | I. Better forecasts during atypical seasons by weighting the training data to give more emphasis to the rare events II. Synthetic data could be incorporated in training data | I. Data were smoothed by weighting activity to remove day of the week effects caused by weekends and public holidays so that weekends and any public holidays within the week always contributed two sevenths of the weekly activity. II. Data were normalised, so that the smoothed daily activity were in the range zero to one |

| 2024 | Lim et al. [34] | Singapore | unknown | Unknown | GP | I. The EWS-ED framework is designed to detect drifts and predict the extent of these drifts, thereby allowing pre-emptive signals to be detected in order to consider model updating or retraining II. Importance of evaluating concept drift from the conflicting problem arising due to the stability versus the plasticity of predictive models over time. | I. External validation of the modeling framework II. Including data from multiple hospitals across different regions | None |

| 2024 | Porto et al. [35] | Australia, the USA, the Netherlands | 46495 | 7 and 45 days | GP | I. XGBoost consistently outperformed other models across all forecast horizons, with FE significantly enhancing the predictive capabilities of all ML algorithms. II. Temperatures and days of the week are important predictors III. Calendar variables show more importance than meteorological ones | I. Apply FE to other variables, such as meteorological data | None |

| 2023 | Susnjak et al. [36] | New Zealand | unknown | 90 days | GP | I. Best performing models are Voting, Averaging, Stacking, and Prophet | None | None |

| 2022 | Petsis et al. [37] | Greece | 893046 | 1 day | GP | I. Day of the week, the mean number of visits of the four previous on duty days, and the daily maximum temperature of the city of Ioannina are the variables that most affect the number of patient ED visits on the next on duty day II. Monday is the busiest day for the ED III. On days with a maximum daily temperature of over 24 Celsius degrees, larger numbers of visits are expected IV. A mean above 284 number of visits on the four previous on duty days means that probably there would be many visits on the next on duty day | None | None |

| 2021 | Sharafat et al. [38] | USA | unknown | 30 days | GP | I. PatientFlowNet has a slightly better prediction accuracy for exogenous variables II. It produces substantially more accurate predictions for the endogenous flow variables such as treatment and discharge rates | None | None |

| 2021 | Vollmer et al. [39] | UK | unknown | 1, 3 and 7 days | GP | I. Framework that is quick and easy to implement | I. Focus on granular data sets including individual level patient data with diagnoses and testing information | None |

| 2021 | Duarte et al. [40] | UK | unknown | 7 days | GP | I. Prediction of Emergency Department (ED) indicators can be improved by using machine learning models in comparison to traditional, particularly when sudden changes are observed in the data | I. Simulations with different KPIs and data sample periods | None |

| 2020 | Yousefi et al. [41] | Brazil | unknown | 1 to 7 days | GP | I. For short-term forecasting horizons (1 and 2 days), ARIMA-LR performs better than the other models II. For forecasting horizons of 3–7 days, MLR shows better results | I. Future study can integrate simulation with forecasting to show the impact of patient visits on the performance of an ED II. Same model in a number of EDs in different countries to evaluate the performance of the proposed model | None |

| 2019 | Martin et al. [42] | Canada | unknown | 3 days | Influenza like illness | I. The most effective modelling approach was based on a GLMM with random intercept and incorporated spatio-temporal characteristics as well as an indicator variable similar to a hinge function | I. Model predictions could be updated after the 3-day window of advanced warning- update estimates post-prediction. II. Predictions at a smaller geographic area or at the hospital-level, and by age category III. Other methods to model the spatial distribution of the visits across the city IV. Model performance could be evaluated based on other influenza season characteristics V. Model should be tested in practice | None |

| 2019 | Ho et al. [43] | Singapore | unknown | 1 day and 7 days | GP | I. Informed resource and manpower allocation in hospitals II. Can serve as a key mitigation measure against worsening ED congestion problems | None | None |

| 2018 | Ekström et al. [44] | Sweden | unknown | 3 days | GP | I. Gradient boosting may be favorable over neural networks for predicting the coming 72 hours | I. Longer prediction horizons | None |

| 2016 | Calegari et al. [45] | Brazil | 57128 | 1, 7, 14, 21, and 30 days | GP | I. Seasonal and weekly patterns II. Time-series models can be used to provide accurate forecasts, especially short-range forecasts horizons III. Some climatic factors displayed significant correlation with demand series from different classes of patients but do not increase the accuracy of prediction when incorporated into the model | I. Study should be extended to regions with different climatic characteristics | None |

| 2015 | Aroua et al. [46] | Canada | unknown | 7 days | GP | I. SARIMAX provides better information than simple SARIMA models II. The level of snow, the maximum and minimum weekly average temperature and weeks of the year are often factors that are significant | None | None |

| 2015 | Zlotnik et al. [47] | Spain | unknown | 2, 4, 8, 12, 16, 20, 24 weeks | GP | I. The M5P models are simpler and computationally faster than SVM models, may be a good alternative for larger data sets | I. Additional adjustments of model for different locales | None |

| 2015 | Ablogaye et al. [48] | Australia | unknown | 12 months | GP | I. VARIMA models are more reliable for predicting ED demand by patient subgroups and provide a more accurate forecast for ED demand than the normal or standard univariate ARMA and Winters’ methods | I. Scenario modelling to consider potential changes in population projections and in health policy and clinical practice II. Identify the factors/drivers that contribute to the increasing trend of ED presentation III. Health services strategic planning, and guide workforce modelling, infrastructure and financial modelling | None |

| 2010 | Perry et al. [49] | Canada | unknown | 4 and 7 days | Respiratory illness | I. Telehealth can be used to estimate future visits at the health unit level II. Estimates are better for health units with larger populations III. Non-linear modeling methods produced more accurate estimates IV. Because no knowledge of the actual number of past visits is used in any model over the validation data, the statistically significant decrease in prediction accuracy with increasing forecasting lead time provides evidence that there is information about the future number of visits in the call time series | None | None |

| 2009 | Jones et al. [50] | USA | unknown | 35 days | GP | I. Multivariate VAR models provided more accurate forecasts of ED arrivals for extended forecast horizons when compared to standard univariate time series methods II. We are not confident that these forecasts could be used reliably in the clinical setting | I. Focus on the development and use of robust analytical methods such as queuing theory, optimization, and simulation modeling that can be used to provide decision support for clinical staffing, real-time monitoring, and forecasting | None |

| 1994 | Tandberg et al. [10] | USA | 87354 | unknown | GP | I. Models based on prior observations allow for predictions for future staffing and other needs. II. Simple models performed better than more complex III. Time series forecasts of length of stay and patient acuity are not likely to contribute additional useful information for staffing and resource allocation decisions | None | None |

| 2019 | Asheim et al. [51] | Norway | 191939 | 1, 2 and 3 hours | GP | I. This method shows continuously updated forecasts with better accuracy than methods based on time variables alone, particularly for periods of large inflow | I. A more sophisticated machine learning algorithm giving more accurate forecasts II. Interpretability of the proposed model may be valuable | I. Rounding to 10 minutes before |

Legend: (GP: general population, ED: emergency department, AQI: air quality index, NACRS: National Ambulatory Care Reporting System, HRI: heat-related illness, EWS-ED: Early Warning System for ED attendance predictions, FE: feature engineering, M5P: decision tree with regression on nodes, SVM: Support Vector Machines, VAR: Vector Autoregression, RSV: respiratory syncytial virus, ML: machine learning, ARIMA: Autoregressive Integrated Moving Average, KPI: Key Performance Indicator, ARIMA-LR: ARIMA Linear Regression, MLR: Multiple Linear Regression, SARIMA: Seasonal ARIMA, SARIMAX: Seasonal Autoregressive Integrated Moving Average with exogenous variables, VARIMA: Vector Autoregressive Integrated Moving Average, ARMA: Autoregressive Moving Average)
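The preprocessing column of Table 2 names minimum-maximum normalization and z-score standardization of external variables. The sketch below shows what these two transformations compute; it is not taken from any of the included studies. The function names and temperature values are illustrative, and the use of the population standard deviation is an assumption, since the studies do not specify which estimator they used.

```python
from statistics import mean, pstdev


def min_max_normalize(values):
    """Rescale values linearly so that the minimum maps to 0 and the maximum to 1."""
    lowest, highest = min(values), max(values)
    if highest == lowest:
        raise ValueError("cannot rescale a constant series")
    return [(value - lowest) / (highest - lowest) for value in values]


def z_score_standardize(values):
    """Centre values on their mean and divide by the population standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)  # assumption: population, not sample, standard deviation
    if sigma == 0:
        raise ValueError("cannot standardize a constant series")
    return [(value - mu) / sigma for value in values]


# Hypothetical daily maximum temperatures in degrees Celsius.
daily_max_temp = [10.0, 20.0, 30.0]
scaled = min_max_normalize(daily_max_temp)
standardized = z_score_standardize(daily_max_temp)
```

In a forecasting setting, the minimum, maximum, mean and standard deviation would normally be estimated on the training period only and then reused for later data, so that no information from the forecast period leaks into the model.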

Primary outcome

The number of external variables (Table 3) varied between studies from one [28] to over thirty [32]. The external variables can be split into thematic groups: (I) weather, (II) air quality, (III) social networks (e.g. X, formerly Twitter), (IV) calendar, (V) Google search trends, (VI) hospital website visits, (VII) hospital information system, (VIII) prehospital dispatch, (IX) experienced local staff and (X) national health phone lines. Weather and calendar variables were most commonly used in the final model. Although studies tried a wide array of external variables, the final model usually used only a select few that were found to have the highest predictive value. These variables are presented in the table below.

Table 3.

External variables that were used in final models for forecasting ED arrivals

| Author | Variables Used | Variables Retained in the Best Model |

|---|---|---|

| Peláez et al. [23] | 1. Weather a. temperature, b. pressure, c. rainfall for the three previous days, 2. Calendar a. day of week, b. moon phase, c. the presence of festivities on both the current and preceding days, 3. HIS a. daily ED visits of the previous week. | 1. Weather a. minimum temperature b. maximum temperature c. wind speed 2. HIS a. daily ED visits of the previous week |

| Gafni-Pappas et al. [24] | 1. Weather a. max temperature, b. precipitation, c. average cloud coverage, d. relative humidity, e. average SFC pressure, f. daily change in SFC pressure, g. average wet bulb, h. average solar radiation, 2. Air quality a. overall air quality score, b. max ozone concentration, c. percent flu visits 3. Calendar a. day of the week | 1. Calendar a. day of the week b. maximum temperature 2. Weather a. SFC pressure |

| Tuominen et al. [25] | 1. HIS a. number of available hospital beds, 2. Calendar a. weekday, b. month, c. specific holiday, d. lagged holiday, e. working day, f. N of minor public events, g. N of major public events, h. N of all public events. 3. Weather a. cloud count, b. air pressure, c. relative humidity, d. rain intensity, e. snow depth, f. air temperature, g. dew point h. temperature, i. visibility, j. air temperature min, k. air temperature max, 4. Website traffic a. website Visitstays.fi, b. website Visitstays.fi/acuta c. Ekström’s visitstays.fi, d. Ekström’s ratiotays.fi, 5. Search index/Google trends a. “Acuta” | 1. Calendar a. Shrove Sunday b. Easter day c. Midsummer d. Christmas eve e. All Saint’s day f. Independence day eve g. Ascension day h. Holidayt −1 i. working day j. March k. February l. December m. 29 individual public events* n. N of major public events o. Weekdays i. Thursday ii. Saturday iii. Friday iv. Wednesday v. Tuesday vi. Sunday vii. Monday 2. Available hospital beds 3. Weather a. cloud count 4. Hospital website visits |

| Fan et al. [26] | 1. Calendar a. months, b. school holiday c. public holidays 2. Weather a. the daily highest temperature b. daily lowest temperature 3. Search index/Google trends | 1. Calendar a. months, b. school holiday c. public holidays 2. Weather a. the daily highest temperature b. daily lowest temperature 3. Search index/Google trends a. ginger, swine flu symptoms, infect, dept. of health, fever, swine flu clinic, dentist, enterovirus, cough |

| Peng et al. [27] |

1. Weather a. wind speed b. outside temperature c. atmospheric pressure d. relative humidity 2. Air quality a. particulate matter less than 2.5 μm in diameter b. sulphur dioxide. c. carbon monoxide d. nitrogen dioxide e. 8-hour average ozone slip in a day |

Same |

| Tideman et al. [28] |

1. Search index/Google trends a. allergies, blizzard, bronchitis, fever, flu, flu shot, forecast, humidifier, pneumonia, and snow 2. Calendar a. holidays b. special days (eg. Monday after a long weekend) 3. Weather a. min, mean, max, temp b. barometer c. visibility d. mean, max wind speed e. daily snowfall f. weather warnings |

1. Weather a. maximum temperature b. mean visibility 2. Search index/Google trends a. snow, allergies, blizzard, flu shot, fever, forecast, pneumonia 3. Calendar |

| Jiang et al. [29] |

1. Calendar a. hour of day, b. day of week, c. month of year, d. public holiday, e. before public holiday, f. after public holiday, 2. Weather a. absolute daily max air temp, b. mean air temp, c. absolute daily min air temp, d. mean dew point, e. mean relative humidity, f. mean amount of cloud, g. total rainfall, h. mean wind speed, i. change of max air, j. mean air, k. absolute min air, l. mean dew, m. mean relative humidity, n. mean amount of cloud, o. total rainfall, p. mean wind speed to one day before |

same |

| Ram et al. [30] |

1. Twitter data, 2. Search index/Google trends 3. Air quality index (AQI) 4. Air quality measures a. particulate matter b. ground-level ozone c. carbon monoxide d. sulfur oxides e. nitrogen oxides f. lead |

1. Air quality a. CO b. NOx c. PM 2. Twitter data a. asthma related tweets 3. Search index/Google trends |

| Perry et al. [31] |

1. Weather a. heat index b. Humidex c. wet bulb globe temperature d. temperature maximum e. temperature minimum f. average daily values of the temperature g. dew point h. wind speed |

1. Weather a. temperature b. humidity c. wind speed |

| Hugo Álvarez et al. [32] |

1. Calendar a. weekday b. month of year c. holidays d. academic holidays e. weekends 2. Weather a. temperature (avg, min, max, variation, hour max, hour min) b. max wind direction c. wind speed avg d. hours of daylight e. atmospheric pressure (min, max) 3. Air quality a. BEN, CH4, CO, EBE, NMHC, NO, NO2, NOX, O3, PM10, PM2.5, SO2, TCH, TOL, OXY, MXY, PXY 4. Allergens a. alnus, alternaria, artemisia, betula, carex, castanea, cupressaceae, fraxinus, gramineae, mercurialis, morus, olea, arecaceae, pinus, plantago, platanus, populus, amaranthaceae, quercus, rumex, ulmus, urticaceae 5. Search index/Google trends a. allergy, analgesic, antihistamines, appendicitis, asthma, aspirin, bronchiolitis, fall, conjunctivitis, damage, depression, fainting, diabetes, pain, emergency, endometriosis, illness, coping, epilepsy, COPD, fever, gastroenteritis, flu, hospital, ibuprofen, incident, heart attack, discomfort, medication, migraine, omeprazole, otitis, paracetamol, prostate, pseudoephedrine, pneumonia, allergic reaction, common cold, brawl, hospital route, bleeding, sinister, high blood pressure, thyroid, urgency, chickenpox, gallbladder |

1. Calendar a. weekday b. month of year c. holidays d. academic holidays e. weekends 2. Weather a. temperature (avg, min, max, variation, hour max,hour min) b. max wind direction c. wind speed avg d. hours of daylight e. atmospheric pressure (min, max) 3. Air quality a. BEN, CH4, CO, EBE, NMHC, NO, NO2, NOX, O3, PM10, PM2.5, SO2, TCH, TOL, OXY, MXY, PXY 4. Search index/Google trends a. appendicitis, bronchiolitis, conjunctivitis b. influenza, hospital, otitis, common cold |

| Morbey et al. [33] |

1. Calendar a. day of month 2. UKHSA real-time syndromic surveillance system* |

Same |

| Lim et al. [34] |

1. calendar a. Holiday i. public holidays ii. post-holiday (the working day following a public holiday) iii. pre-holiday (the day preceding a public holiday) b. working day (indicator for weekdays and non-public holidays) c. day of the week (indicator for Monday, Tuesday, Wednesday, Thursday, Friday, Saturday, or Sunday) |

Same |

| Porto et al. [35] |

1. Calendar data a. day of the week in which the patient arrived at the ED (7 dummy variables) b. month of the year in which the patient arrived at the ED (12 dummy variables) 2. Weather data a. minimum daily temperature in Celsius of the arrival day, mean daily temperature in Celsius of the arrival day, maximum temperature in Celsius of the arrival day 3. Feature Engineered (FE) variables |

Same |

| Susnjak et al. [36] |

1. Calendar a. public_holiday (Public holiday indicator (0/1)) b. week (Week number ranging from 1 to 53) 2. Covid a. COVID-19 alert level ranging from one to four |

1. Covid-19 Alert level |

| Petsis et al. [37] |

1. Calendar a. the day of year, the month, the day of the month, the day of the week, and the week of the year b. holidays and event based i. weekend, public holiday, before public holiday, after public holiday, event, before event, after event, school holiday 2. Weather a. min temp b. max temp c. mean temp d. rain e. average wind speed |

Same, with most important being: 1. Calendar a. the day of the week 2. Weather a. maximum temperature |

| Sharafat et al. [38] |

1. HIS a. arrival b. treatment c. discharge |

1. HIS a. arrival b. treatment c. discharge |

| Vollmer et al. [39] |

1. Calendar a. school and bank holidays 2. Weather 3. Search index/Google trends a. flu 4. Experienced staff-known local events that cause surge |

1. Calendar a. Christmas b. Monday c. Sunday d. school holiday e. Saturday f. Wednesday g. Thursday h. July i. time j. March k. Tuesday l. Nottingham Carnival m. August n. December o. June p. September q. bank holiday r. November s. February t. May u. October v. April 2. Weather a. precipitation yesterday b. min air temperature yesterday c. max air temperature yesterday 3. Search index/Google trends a. flu |

| Duarte et al. [40] |

1. HIS a. N of attendances b. unallocated patients with decision to admit c. medically fit to discharge |

1. HIS a. N of attendances b. unallocated patients with decision to admit c. medically fit to discharge |

| Yousefi et al. [41] |

1. Calendar a. soccer match days b. weekends c. holidays d. day before holiday e. day after holiday |

Same |

| Martin et al. [42] |

1. HIS a. date of birth b. forward sortation area (FSA) of the postal code and c. chief complaint 2. Calendar a. season |

1. HIS a. visitor age b. area of residence and 2. Calendar a. season |

| Ho et al. [43] | 1. Search index/Google trends |

1. Search index/Google trends a. “SGH map,” “Singapore General Hospital address,” “SGH appointment,” and “SGH A&E.” |

| Ekström et al. [44] |

1. Calendar a. day of week b. month c. hour of the day d. holidays |

1. Calendar a. day of week b. month c. hour of the day d. holidays |

| Calegari et al. [45] |

1. calendar a. month b. day of the week 2. weather a. average temperature b. min temperature c. max temperature d. temperature gap e. rain f. air velocity g. relative humidity h. hours of insolation |

1. Weather a. minimal temperature b. maximal temperature c. rain d. air velocity e. relative humidity f. temperature gap |

| Aroua et al. [46] |

1. Calendar a. weeks of the year 2. Weather a. weekly average temperature b. maximum temperature c. minimum temperature d. weekly temperature differences e. rain f. snow |

Same |

| Zlotnik et al. [47] |

1. Calendar a. day of week b. holiday c. month of the year d. week number |

Same |

| Aboagye-Sarfo et al. [48] | 1. Weather |

1. Calendar a. month of the year 2. HIS a. age group b. place of treatment c. triage category d. disposition |

| Perry et al. [49] |

1. National phone service a. Daily aggregate number Telehealth Ontario Calls** for respiratory illness 2. Calendar a. indicator variable for upcoming holidays and weekends |

Same |

| Jones et al. [50] |

1. HIS a. ED arrivals b. ED census c. ED laboratory orders d. ED radiography orders e. ED computed tomography (CT) orders f. inpatient census g. inpatient laboratory h. inpatient radiography orders i. inpatient CT orders |

1. HIS a. laboratory orders, b. radiography orders, c. CT orders, d. inpatient census e. inpatient diagnostic orders |

| Tandberg et al. [10] | None | None |

| Asheim et al. [51] |

1. Calendar a. time of day b. day of the week c. month d. holidays 2. Dispatch a. prehospital notifications |

Same |

Legend: SFC: surface pressure chart, PM: particulate matter, AQI: air quality index, N: number, HIS: Hospital Information System, FSA: Forward Sortation Area, *: Monitor anonymised health service contacts from across the National Health Service (NHS) in England, **: a free 24-hour health advice telephone service
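As an illustration of how calendar and HIS variables of the kind listed in Table 3 can be turned into model inputs, the following minimal sketch builds one feature row for a single day. It is not taken from any of the included studies: the function names, the feature names, the chosen lags and the visit counts are all hypothetical.

```python
from datetime import date, timedelta


def calendar_features(day, holidays):
    """Calendar indicators for one date (feature names are illustrative)."""
    # One dummy variable per weekday; Python numbers Monday as 0.
    features = {f"dow_{i}": int(day.weekday() == i) for i in range(7)}
    features["month"] = day.month
    features["holiday"] = int(day in holidays)
    # Days directly before and after a public holiday.
    features["pre_holiday"] = int(day + timedelta(days=1) in holidays)
    features["post_holiday"] = int(day - timedelta(days=1) in holidays)
    return features


def lagged_visits(visits, day, lags=(1, 7)):
    """ED visit counts from earlier days; None when a lag is unavailable."""
    return {f"visits_lag_{k}": visits.get(day - timedelta(days=k)) for k in lags}


# Hypothetical inputs: one public holiday and two made-up daily counts.
holidays = {date(2024, 12, 25)}
visits = {date(2024, 12, 17): 210, date(2024, 12, 23): 198}

day = date(2024, 12, 24)
row = {**calendar_features(day, holidays), **lagged_visits(visits, day)}
print(row)
```

Weather, air quality or search-trend variables could be merged into the same row by date in the same way, provided that only values known at forecast time are used.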

Secondary outcome

We have identified 53 different methods from 3 different method families across our systematic review (see Table 4 below). ARIMA, Support Vector Machines and Random Forest are the most commonly used methods.

Table 4.

Methods used across the literature

| Method Family | Method | Studies using this method |

|---|---|---|

| Statistical Methods | ARIMA | Gafni-Pappas et al. [24], Tuominen et al. [25], Jiang et al. [29], Vollmer et al. [39], Duarte et al. [40], Yousefi et al. [41], Ekström et al. [44], Calegari et al. [45], Aboagye-Sarfo et al. [48], Tandberg et al. [10], Susnjak et al. [36], Fan et al. [26] |

| | ARIMAX | Tuominen et al. [25], Jiang et al. [29], Fan et al. [26] |

| | Seasonal-ARIMA | Jiang et al. [29], Calegari et al. [45], Lim et al. [34], Sharafat et al. [38] |

| | ARIMA with linear regression | Yousefi et al. [41] |

| | Exponential Smoothing | Gafni-Pappas et al. [24], Vollmer et al. [39], Calegari et al. [45] |

| | EWMA (Exponentially Weighted Moving Average) | Perry et al. [49] |

| | Linear Regression | Morbey et al. [33] |

| | Multiple Linear Regression | Tideman et al. [28], Yousefi et al. [41], Ho et al. [43], Perry et al. [31], Aroua et al. [46], Fan et al. [26] |

| | Poisson Regression | Perry et al. [31], Martin et al. [42], Asheim et al. [51] |

| | Relaxed LASSO | Jiang et al. [29] |

| | Generalised linear model with elastic net regularisation | Morbey et al. [33] |

| | LASSO and generalised linear model | Porto et al. [35] |

| | General Linear Method | Yousefi et al. [41] |

| | Simulated annealing and Floating search | Tuominen et al. [25] |

| | Facebook Prophet [54] | Gafni-Pappas et al. [24], Susnjak et al. [36], Duarte et al. [40] |

| | Ridge regression | Susnjak et al. [36] |

| | Generalized estimating equations | Yousefi et al. [41] |

| | Holt-Winters | Calegari et al. [45], Aboagye-Sarfo et al. [48] |

| | Stratified average | Zlotnik et al. [47] |

| | Non-linear difference equation model | Perry et al. [49] |

| | Moving averages | Tandberg et al. [10] |

| Machine Learning | Decision Tree | Ram et al. [30], Zlotnik et al. [47] |

| | Random Forest | Gafni-Pappas et al. [24], Tuominen et al. [25], Peng et al. [27], Jiang et al. [29], Porto et al. [35], Susnjak et al. [36], Sharafat et al. [38], Morbey et al. [33], Aroua et al. [46], Fan et al. [26] |

| | Support Vector Machine (SVM) | Jiang et al. [29], Ram et al. [30], Morbey et al. [33], Yousefi et al. [41], Aroua et al. [46], Zlotnik et al. [47], Jones et al. [50], Fan et al. [26], Porto et al. [35] |

| | Naive Bayes | Ram et al. [30], Susnjak et al. [36] |

| | Artificial Neural Network | Ram et al. [30] |

| | Deep Neural Network | Jiang et al. [29], Ekström et al. [44] |

| | Long Short-Term Memory (LSTM) | Duarte et al. [40], Yousefi et al. [41], Sharafat et al. [38], Vollmer et al. [39], Fan et al. [26] |

| | Attention-Based Neural Network | Álvarez-Chaves et al. [32] |

| | Deep Neural Network Integrated Genetic Algorithm | Jiang et al. [29] |

| | K-nearest neighbour | Morbey et al. [33], Susnjak et al. [36] |

| | Neural network regression | Porto et al. [35] |

| | Feed forward neural network | Sharafat et al. [38] |

| | General regression neural network | Duarte et al. [40] |

| | Recurrent neural network | Duarte et al. [40] |

| | Radial basis function (RBF) network | Duarte et al. [40] |

| | Parallel cascade model | Perry et al. [49] |

| | State space model | Perry et al. [49] |

| | Extreme learning machine | Fan et al. [26] |

| | Convolutional neural network | Sharafat et al. [38] |

| Ensemble Methods | Cluster-based Ensemble Learning | Pelaez-Rodriguez et al. [23] |

| | Gradient Boosted Machines | Gafni-Pappas et al. [24], Ekström et al. [44], Morbey et al. [33], Porto et al. [35], Petsis et al. [37], Aroua et al. [46] |

| | CatBoost | Susnjak et al. [36] |

| | Voting regressor | Susnjak et al. [36] |

| | Averaging model | Susnjak et al. [36] |

| | Stacking model | Susnjak et al. [36], Vollmer et al. [39] |

| | Threshold-based machine learning | Pelaez-Rodriguez et al. [23] |

Legend: ARIMA: autoregressive integrated moving average; ARIMAX: autoregressive integrated moving average with exogenous inputs; LASSO: least absolute shrinkage and selection operator; SVM: support vector machine; RBF: radial basis function; Facebook Prophet: decomposable time series model with trend, seasonality and holiday components

To rate model performance, the studies used different measures, which are explained in Table 5. Because none of the measures in Table 5 was reported across all studies, the secondary aim of comparing the models’ ability to correctly predict ED arrivals was not achieved. A meta-analysis was not performed because of the high heterogeneity of the included studies: the study designs, measured outcomes and study populations all varied. Pooling results under such variability would introduce substantial bias and compromise the reliability of the meta-analysis. We do, however, present the best-performing model from each study and its horizon in Table 6.

Table 5.

Measures used to describe models’ ability to correctly predict emergency department arrivals

| Acronym | Measure |

| RMSE | Root mean square error |

| MSE | Mean Square Error |

| MAPE | Mean Absolute Percentage Error |

| DM | Diebold-Mariano test (The null hypothesis is that the reference model is more accurate than the test model [26]) |

| Precision* | Number of true positives divided by the total number of positive predictions |

| Pearson correlation | Coefficient that measures the linear association |

| AUC | Area under curve |

| MAE | Mean absolute error |

| R2 | Coefficient of determination |

| Peak error | The peak error measure is zero if the peak forecast correctly identifies both the date and intensity of the peak |

Legend: *Precision was used in one study that, unlike the others, additionally tried to predict whether the arrivals would constitute a peak event
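The point-forecast error measures in Table 5 follow standard definitions, which the short sketch below computes on made-up numbers. The function name and the example values are illustrative and do not come from any included study; note that MAPE is undefined when an observed count is zero.

```python
import math


def forecast_errors(actual, predicted):
    """Standard point-forecast error measures for two equal-length sequences."""
    n = len(actual)
    residuals = [a - p for a, p in zip(actual, predicted)]
    mse = sum(r * r for r in residuals) / n
    mae = sum(abs(r) for r in residuals) / n
    # MAPE divides by the observed value, so it fails if any actual value is 0.
    mape = 100 * sum(abs(r) / abs(a) for r, a in zip(residuals, actual)) / n
    mean_actual = sum(actual) / n
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    r2 = 1 - sum(r * r for r in residuals) / ss_tot
    return {"MSE": mse, "RMSE": math.sqrt(mse), "MAE": mae, "MAPE": mape, "R2": r2}


# Hypothetical daily arrivals and forecasts.
actual = [100, 120, 80, 100]
predicted = [90, 120, 90, 110]
errors = forecast_errors(actual, predicted)
print(errors)
```

RMSE, MSE and MAE depend on the scale of the series, so they are not comparable between departments of different sizes, whereas MAPE and R2 are scale-free. This is one reason why a shared set of reported measures matters for cross-study comparison.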

Table 6.

Comparison of model performance based on reported metrics and horizon of the best performing model

| Authors | Horizon* | RMSE | MSE | MAPE | Precision | Pearson Correlation | MAE | AUC | Peak error ** | R^2 |

| Lim et al. [34] | Not clearly marked | 5.3 | ||||||||

| Petsis et al. [37] | 22.96 | 6.5 | 18.37 | |||||||

| Sharafat et al. [38] | 38.6 | 55.6 | 25.1 | 87 | ||||||

| Gafni-Pappas et al. [24] | 18.94 | |||||||||

| Fan et al. [26] | 14.55 | 3 | ||||||||

| Peng et al. [27] | 0.809 | |||||||||

| Tideman et al. [28] | 7.58 | |||||||||

| Ram et al. [30] | days | 70% | ||||||||

| Perry et al. [31] | 0.29 | 0.73 | ||||||||

| Porto et al. [35] | 7 days | 5.48 | ||||||||

| Duarte et al. [40] | 7.1002 | |||||||||

| Aroua et al. [46] | 6 | |||||||||

| Perry et al. [49] | 4 days | 36 | ||||||||

| Martin et al. [42] | 5.65 | |||||||||

| Tandberg et al. [10] | 28 days | 42 | ||||||||

| Morbey et al. [33] | 0.195 | |||||||||

| Zlotnik et al. [47] | 2 weeks | 10.717 | ||||||||

| Ekström et al. [44] | 2 days | 17.5 | ||||||||

| Susnjak et al. [36] | 13 weeks | 15.9 | 8.9 | 12.8 | ||||||

| Aboagye-Sarfo et al. [48] | 12 months | 323.45 | 1.81 | 424.28 | ||||||

| Jones et al. [50] | 1 hour | 2.5 | ||||||||

| Jiang et al. [29] | 2.7 | |||||||||

| Pelaez-Rodriguez et al. [23] | 1 day | 8.36 | ||||||||

| Tuominen et al. [25] | 6.6 | |||||||||

| Álvarez-Chaves et al. [32] | 5.98 | |||||||||

| Vollmer et al. [39] | 6.7 | 14.27 | ||||||||

| Yousefi et al. [41] | 4.89 | 99.6 | ||||||||

| Ho et al. [43] | 0.4744 | |||||||||

| Calegari et al. [45] | 2.91 | |||||||||

| Asheim et al. [51] | 3 hours | 31 |

Legend: * Horizon of best performing model, **The peak error measure is zero if the peak forecast correctly identifies both the date and intensity of the peak, RMSE: Root mean square error, MSE: Mean Square Error, MAPE: Mean Absolute Percentage Error, MAE: Mean Absolute Error, AUC: Area Under the Curve, R^2: Coefficient of determination

We compared models based on the reported performance measures and the horizon of each manuscript’s best-performing model.

The most common time horizon was 1 day (7/30 studies) and the most commonly reported metric was MAPE (16/30 studies) (Table 6).

Risk of bias and applicability

Risk of bias was assessed first on the authors’ own dimensions and then using the PROBAST tool.

Own dimensions

On the authors’ own risk of bias domains, studies in general did not make their development data available (Table 7). Only one of the manuscripts published the full code [51]. For validation, a train/test split was most common. Additionally, Morbey et al. [33] used a Generalized Additive Model for Location, Scale and Shape (GAMLSS) to create confidence intervals, Porto et al. [35] used Time-Series Split Cross-Validation (TSCV), Martin et al. [42] used external validation, and 5 of the studies reported their predictions with confidence intervals. According to TRIPOD, the studies had analysis type 1b (development and validation using resampling) or type 2b (nonrandom split-sample development and validation); some studies were both type 1b and 2b, and one study additionally used type 3 analysis [42] (Table 7).

Table 7.

Risk of bias assessment using authors’ own domains

| Author | Model Made Public | Dataset Size Specified | Training Data Made Public | Train: Test Division Made | Guidelines (Ex. TRIPOD) Followed | Confidence Intervals Used? | Validation Methods | TRIPOD 2015 Type* |

|---|---|---|---|---|---|---|---|---|

| Pelaez-Rodriguez et al. [23] | No | Yes | Yes | Yes | No | No | Cross Validation K = 5, Train/Test Split | 1b, 2b |

| Gafni-Pappas et al. [24] | No | Yes | No | Yes | No | No | Cross Validation K = 5, Train/Test Split | 1b, 2b |

| Tuominen et al. [25] | No | Yes | Partially | Yes | No | No | Train/Test Split and Cross Validation (K Unknown) | 1b, 2b |

| Fan et al. [26] | No | Yes | No | Yes | No | No | Train/Test Split | 2b |

| Peng et al. [27] | No | Yes | No | No | No | No | Train/Test Split | 2b |

| Tideman et al. [28] | No | No | No | No | No | No | Train/Test Split, Cross Validation (K = 10) | 1b, 2b |

| Jiang et al. [29] | No | Yes | No | Yes | No | No | Train/Test Split, Cross Validation (K = 5) | 1b, 2b |

| Ram et al. [30] | No | No | No | No | No | No | Cross Validation (K = 10) | 1b |

| Perry et al. [31] | No | Yes | No | Yes | No | No | Train/Test Split | 2b |

| Álvarez-Chaves et al. [32] | No | Yes | No | Yes | No | No | Train/Test Split, Cross Validation | 1b, 2b |

| Morbey et al. [33] | No | No | No | Yes | No | Yes | Generalized Additive Model For Location, Scale, And Shape (GAMLSS) To Create Uncertainty Intervals | 1b |

| Lim et al. [34] | No | Yes | No | Yes | No | Yes | Incremental Learning Validation Process | 1b |

| Porto et al. [35] | No | Yes | Yes | Yes | No | No | Cross Validation (K = 5), Time-Series Split Cross-Validation (TSCV) | 1b, 2b |

| Susnjak et al. [36] | No | Yes | No | Yes | No | No | Cross Validation (K = 5), Train/Test Split | 1b, 2b |

| Petsis et al. [37] | No | Yes | No | Yes | No | No | Cross Validation, Train/Test Split | 1b, 2b |

| Sharafat et al. [38] | No | No | No | Yes | No | No | Train/Validation/Test Split | 1b, 2b |

| Vollmer et al. [39] | No | No | No | Yes | No | No | Train/Test Split, Temporal One Day Cross Validation For Each Day | 2b |

| Duarte et al. [40] | No | No | No | No | No | Yes | Train/Test Split | 2b |

| Yousefi et al. [41] | No | No | No | Yes | No | No | Test/Train Split | 2b |

| Martin et al. [42] | No | No | No | Yes | No | No | Train/Test Split, Cross Validation, External Validation | 1b, 2b, 3 |

| Ho et al. [43] | No | No | No | No | No | No | None | None |

| Ekström et al. [44] | No | Yes | No | Yes | No | No | Training, Validation and Train/Test Split | 2b |

| Calegari et al. [45] | No | Yes | No | Yes | No | No | Train/Test Split | 2b |

| Aroua et al. [46] | No | Yes | No | Yes | No | No | None | None |

| Zlotnik et al. [47] | No | Yes | No | Yes | No | No | Train/Test Split | 2b |

| Aboagye-Sarfo et al. [48] | No | No | No | Yes | No | Yes | Train/Test Split | 2b |

| Perry et al. [49] | No | Yes | No | Yes | No | No | Train/Test Split | 2b |

| Jones et al. [50] | No | Yes | No | Yes | No | No | Train/Test Split | 2b |

| Tandberg et al. [10] | No | Yes | No | Yes | No | Yes | Train/Test Split | 2b |

| Asheim et al. [51] | Yes | Yes | No | Yes | No | No | Train/Test Split | 2b |

Legend: * see TRIPOD 2015 [22]; 1b: development and validation using resampling; 2b: non-random split-sample development and validation; 3: development using one data set and evaluation on separate data
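Several of the validation methods in Table 7 are time-ordered variants of cross-validation, in which every training window precedes its test window so that the model is never evaluated on data older than what it was fitted on. The sketch below shows one common form, an expanding-window split. It is a generic illustration under our own assumptions, not the procedure of any included study; the function name and parameters are hypothetical.

```python
def expanding_window_splits(n_obs, n_folds, test_size):
    """Yield (train_indices, test_indices) pairs in which training data
    always precede the test data, with the training window growing per fold."""
    first_test_start = n_obs - n_folds * test_size
    if first_test_start <= 0:
        raise ValueError("not enough observations for the requested folds")
    for fold in range(n_folds):
        test_start = first_test_start + fold * test_size
        train = list(range(test_start))
        test = list(range(test_start, test_start + test_size))
        yield train, test


# Ten consecutive days, three folds, two test days per fold.
splits = list(expanding_window_splits(n_obs=10, n_folds=3, test_size=2))
for train, test in splits:
    print(f"train 0..{train[-1]}  test {test}")
```

A random K-fold split, by contrast, mixes past and future observations, which can make reported accuracy look better than it would be in prospective use.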

PROBAST

Using the PROBAST tool (Table 8; see Supplement 4), we found that 11 out of 30 studies have a high overall risk of bias [10, 27, 30, 31, 43, 46–49]. Only 2 out of 30 studies raise high concern over applicability to the review question [30, 33].

Table 8.

Risk of Bias summary using PROBAST tool

| Author | Overall judgement of risk of bias | Concern over overall judgement of applicability |

|---|---|---|

| Pelaez-Rodriguez et al. [23] | low | low |

| Gafni-Pappas et al. [24] | low | low |

| Tuominen et al. [25] | low | low |

| Fan et al. [26] | low | low |

| Peng et al. [27] | high | low |

| Tideman et al. [28] | low | low |

| Jiang et al. [29] | low | low |

| Ram et al. [30] | high | high |

| Perry et al. [31] | high | low |

| Álvarez-Chaves et al. [32] | low | low |

| Morbey et al. [33] | low | high |

| Lim et al. [34] | low | low |

| Porto et al. [35] | low | low |

| Susnjak et al. [36] | low | low |

| Petsis et al. [37] | low | low |

| Sharafat et al. [38] | low | low |

| Vollmer et al. [39] | low | low |

| Duarte et al. [40] | low | low |

| Yousefi et al. [41] | low | low |

| Martin et al. [42] | low | low |

| Ho et al. [43] | high | low |

| Ekström et al. [44] | low | low |

| Calegari et al. [45] | low | low |

| Aroua et al. [46] | high | low |

| Zlotnik et al. [47] | high | low |

| Aboagye-Sarfo et al. [48] | high | low |

| Perry et al. [49] | high | low |

| Jones et al. [50] | low | low |

| Tandberg et al. [10] | high | low |

| Asheim et al. [51] | high | low |

Discussion

To the authors’ knowledge, this is the first systematic review of ED TSF to carry out a validated risk of bias assessment on the included studies [2, 8, 9, 11], which is an important factor when trying to build a foundation for further advancement of the field. We found 10 thematic areas of external variables that studies used to improve their basic TSF model, and 53 different TSF methods from 3 method families. Additionally, we found that training data are rarely published and that most studies did not publish their final models for use by other researchers.

Methodological approaches

TSF can optimize the logistics of EDs and has been gaining more momentum recently with over 870 results for TSF and ED on PubMed [55]. It shows great promise in accurately predicting ED arrivals [2, 8, 9, 11].

We found that authors used simple and transparent methods of data preprocessing; no methods for filling in missing values were described or used. Because the data are drawn from routinely collected patient-care metadata, it is possible that there were no missing data, which can be an issue in other types of modeling studies [56]. We recommend that authors describe the completeness of the data used in future work to achieve better transparency.

TSF has developed multiple methodological approaches to the problem, especially during the COVID-19 pandemic [57, 58]. The current state of the art has been identified in a comprehensive review by Kolambe et al. [59]. Recently, the field has also seen many new programming libraries and commercial solutions enter the market, for example Nixtla, Kumo AI, Sulie, Chronos and Neural Prophet [12, 60–63]. In our systematic review, we identified 3 studies that used an off-the-shelf library, Facebook Prophet [24, 36, 40], which was already out of date at the time of publication, having been succeeded by Neural Prophet [63].

In our analysis, we identified multiple methods, from classic ones such as ARIMA to advanced machine learning and hybrid models. The systematic review from 2009 found that ARIMA, exponential smoothing, regression, moving average and linear regression were the most used [2]. Silva et al. suggested using ARIMA for ED TSF when patterns show strong seasonality and machine learning approaches when there is more randomness [8]. More recent reviews identified a wide range of methods, from statistical time series methods (e.g. ARIMA, SARIMA, MSARIMA) and regression methods (multiple linear regression, Poisson regression, quadratic model) to more novel ones using artificial intelligence (e.g. artificial neural networks) and hybrid models (e.g. ARIMA-LR, ARIMA-ANN) [2, 8, 9, 11]. Because of differences in how accuracy is reported (already pointed out by Silva et al. and Wargon et al. [2, 8]), we cannot make a recommendation on which methods should be tested first or which hold the most promise for future research. Silva et al. have already recommended starting with ARIMA [8]. We, however, suggest starting with one model from each of the forecasting families identified: ARIMA from the statistical methods, neural networks (Artificial Neural Network, ANN, or Deep Neural Network, DNN) from the machine learning methods, and gradient-boosted machines from the ensemble (hybrid) methods. These starting points should give future studies a good baseline against which to compare methods and choose the best-performing ones.

Reporting guidelines have been created to ensure, among other things, minimization of the risk of bias, comparability and reproducibility [64]. This is the second review to address bias in studies of ED TSF; one earlier review approached bias, but did so in an unvalidated way [9]. One of the most important hubs for research reporting guidelines is the EQUATOR Network [65]. In modeling, the most frequently cited guideline is the TRIPOD statement [66], and a version of it was also used for reporting this systematic review [13]. None of the authors of the included studies adhered to any existing reporting guideline, which obstructs reproducibility and diminishes the usefulness of their research. To assess bias, we adhered to TRIPOD-SRMA guidelines [13]. We identified 11 manuscripts as having a high risk of bias. Without a TRIPOD statement in the included manuscripts, it is also difficult to extract the information required for a systematic review, as the wording is not uniform across research groups.

We found that almost none of the reported results included confidence or prediction intervals alongside the predicted values. This is an important omission, as it leaves forecast end-users without a reference for how accurate the forecast is and which extreme scenarios could occur. One of the methods that could be used to quantify such uncertainty is conformal prediction [67]. This would also build trust with the users, as they would be able to better interpret the forecasts [67].
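To make the idea concrete, the sketch below shows split conformal prediction in its simplest form: the absolute errors of an already fitted model on a held-out calibration set determine the half-width of the interval placed around a new point forecast. This is a minimal illustration with made-up numbers, not a method used by any included study; the function name and inputs are hypothetical. The coverage guarantee assumes exchangeable data, which time series with trend or seasonality may violate, so adaptations for time series would be needed in practice.

```python
import math


def conformal_interval(calib_actual, calib_predicted, new_prediction, alpha=0.1):
    """Split conformal interval with nominal coverage 1 - alpha,
    built from absolute residuals on a held-out calibration set."""
    scores = sorted(abs(a - p) for a, p in zip(calib_actual, calib_predicted))
    n = len(scores)
    # Finite-sample corrected rank of the residual used as the half-width.
    rank = math.ceil((n + 1) * (1 - alpha))
    if rank > n:
        raise ValueError("too few calibration points for this alpha")
    half_width = scores[rank - 1]
    return new_prediction - half_width, new_prediction + half_width


# Hypothetical calibration data: ten days of observed arrivals and the
# model's forecasts for those days (absolute errors are 1 to 10).
calib_actual = [101, 98, 103, 96, 105, 94, 107, 92, 109, 90]
calib_predicted = [100] * 10

lower, upper = conformal_interval(calib_actual, calib_predicted, 120, alpha=0.2)
print(lower, upper)
```

The approach is model-agnostic: it wraps any of the point-forecasting methods in Table 4 without changing how they are trained.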

Different platforms have emerged to compare models with one another, drive community collaboration and speed up development. Examples of such platforms are Kaggle and Hugging Face [68, 69]. These platforms host competitions with a standard dataset and a forecasting model, on which users and research teams base their own models and try to improve prediction scores [70]. Since 1982, a worldwide time series competition called the “M competition” has been organized, which compares different TSF methods using real-world data [71]. During the COVID-19 pandemic, two websites specifically dedicated to the challenge of COVID-19 TSF were created, separately for the European Union and the United States of America [57, 58]. Our research found that the majority of the included studies did not make their model open for use by others or perform external validation. Furthermore, only two studies made their development data easily available [23, 35] and one made them partially available [25]. We could not achieve the secondary aim of creating a scoreboard and finding the best-performing model because the studies reported predictive performance in different ways. We found that RMSE, MSE, MAPE, DM, precision and Pearson correlation were used, but none of the measures was used throughout the body of research, making comparisons impossible, although RMSE and MAPE were used most frequently. We suggest adding the Weighted Interval Score (WIS), which was also used when comparing COVID-19 forecasting methods. WIS scores a forecast’s prediction intervals together with its median, and in the COVID-19 forecasting comparisons it was reported relative to a baseline whose prediction equals the last known value [58]. We additionally suggest using validated open-source libraries to carry out evaluation, such as scores, published by the Australian Bureau of Meteorology [72], instead of writing new validation code.
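For readers unfamiliar with WIS, the sketch below computes it for a single observation in the form commonly used for quantile-based forecasts: the absolute error of the median is combined with interval scores for a set of central prediction intervals, each weighted by half its nominal miscoverage. The function names and all numbers are illustrative assumptions; for reported results, a validated library implementation should be preferred over hand-written code such as this.

```python
def interval_score(lower, upper, observed, alpha):
    """Score of one central (1 - alpha) prediction interval: its width
    plus a penalty that grows when the observation falls outside it."""
    score = upper - lower
    if observed < lower:
        score += (2 / alpha) * (lower - observed)
    elif observed > upper:
        score += (2 / alpha) * (observed - upper)
    return score


def weighted_interval_score(median, intervals, observed):
    """WIS for one observation. `intervals` maps each alpha to the
    (lower, upper) bounds of the central (1 - alpha) interval."""
    total = 0.5 * abs(observed - median)
    for alpha, (lower, upper) in intervals.items():
        total += (alpha / 2) * interval_score(lower, upper, observed, alpha)
    return total / (len(intervals) + 0.5)


# Hypothetical forecast of daily ED arrivals: median 100 with a 50% and a
# 90% central interval, scored against an observed count of 115.
intervals = {0.5: (90, 110), 0.1: (80, 120)}
wis = weighted_interval_score(100, intervals, 115)
print(round(wis, 3))
```

Lower values are better. With no intervals supplied, the score reduces to the absolute error of the median, so WIS extends MAE to probabilistic forecasts.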

In our analysis, many of the studies were both type 1b and 2b according to TRIPOD 2015, some were only type 1b or only type 2b, and one was additionally type 3 [22]. It is important to note that none of the studies were type 4 (evaluating a previously published model on separate data). Because of this, the reported model performance may be too optimistic, which reduces the reproducibility and generalizability of the models [22, 73]. Our findings are in line with the general trend in medicine, where the vast majority of developed models do not undergo external validation [73]. External validation is a crucial step for models to enter clinical practice and meaningfully affect it [73]. We recommend that a standard dataset be published in such a way that all ED TSF researchers can easily validate their models externally.

External variables

External variables have been shown to improve TSF models in more recent systematic reviews of ED TSF [2, 8, 9, 11]. In previous reviews, calendar dates, weather, air quality, website traffic, influenza levels, socioeconomic data, triage level data, patient information and the Monday effect were identified as external variables in use [2, 8, 9, 11]. We found 10 different thematic areas of external variables. To the existing weather, calendar, air quality, hospital website and other potential variables (e.g. socioeconomic indicators, triage level, influenza level), we add social networks (e.g. X, formerly Twitter), Google search trends, HIS, calls to the non-acute medical care phone number, prehospital teams’ announcements of arrivals and experienced local staff’s knowledge of busy holidays. Prehospital notifications and call center data were each used by a single study, Asheim et al. [51] and Perry et al. [49], respectively. Currently, the usefulness of these data in other countries is questionable, as they require a high level of digitalization and health policy supporting the underlying systems, especially the non-acute phone number. A good candidate for incorporating call center data would also be Finland with its 116 117 helpline [74]. An interesting external variable not used before is experienced local staff’s knowledge, which helps identify the holidays that drive the most traffic to the ED [39]. A good mix of external variables can probably be assembled from the gathered examples and is likely to vary with the locale of the model. We also found that too many variables can hinder model accuracy, so testing which combinations work best is an important step in every model development. Authors should check the availability and effect of the above-mentioned variables before conducting research.

We found that weather-related and calendar variables are most commonly used in the final models, which was also established by previous reviews [2, 9, 11]. The novelty of our study is a much wider array of external variables than in previous reviews [2, 8, 9, 11], with our review finding 10 thematic areas. None of the studies in our sample incorporated socioeconomic indicators, which Jiang et al. suggested possibly hold value but are under-researched [9]. An interesting addition could also be satellite-based data such as the Normalized Difference Vegetation Index, which was used in conjunction with temperature to predict severe allergic reactions in Greece [75].

It has also been suggested that a meta-analysis of external variables should be carried out to identify the most prudent variables to gather and use [8]. Given the current state of the literature in this field, this is still not achievable, as authors mostly do not publish their model weights, do not quantify the predictive value of the external variables used in their models, and do not make their model code open source to allow external validation and reproducibility of their analyses. We did, however, create a table reporting the forecast horizon and the best-performing model metric for all included studies.

Limitations and strengths

Our literature review is limited to a small sample of articles fitting our screening criteria. Compared to other reviews, we had the third-largest sample of articles after Jiang et al. [9] and Muhammet et al. [11], while other reviews had a smaller sample [2, 8]. Additionally, our article does not compare the forecasting ability of different models, because the dimensions used to report these results are heterogeneous. We included research from different continents, which may limit applicability, as different social, geographical, environmental and cultural determinants might lead to different external variables predicting ED arrivals; there is probably no single set of answers that would apply in all situations. This systematic review should serve as a guide as to which variables deserve more testing effort.

The main strength of this systematic review is its adherence to the TRIPOD-SRMA reporting guidelines [13], which are intended specifically for reviews of forecasting models, and its additional evaluation of the risk of bias of the included research. We also curated a list of external variables on which TSF for ED could be based and of the methods that are most common for these problems. Additionally, we identified directions on which future research could be based. Finally, we found some major shortcomings of current research that should be easy for authors to address in future studies.

Implications for research and future directions

Of the 30 articles included in our review, 18 proposed directions for future research.

These directions are (I) feature engineering, (II) predicting incoming patient categories, (III) use of social media and internet data, (IV) prospective study design, (V) simulation of policy changes, (VI) studying geographical variation, (VII) using multiple study sites, (VIII) discovering new variables, (IX) creation of hybrid models, (X) external validation of models, (XI) longer prediction horizons and (XII) synthetic data use. They suggest that data pre-processing should be incorporated into the model preparation workflow. This was not mentioned in articles inside our scope of research, even though it is a valid and good suggestion. Additionally, Jiang et al. suggest creating hybrid models, which is in line with our results [9].

Explainable AI (XAI) is an expanding field that emphasizes the importance of understanding why models produce one result and not another, rather than only trusting their performance metrics [36]. This was suggested as a future research direction by Asheim et al. [51], with some of the research already carried out by Susnjak et al. [36]. NeuralProphet, a successor to Facebook Prophet, one of the most widely used forecasting libraries in the field of TSF, is one step in this direction, promising explainable predictions [63]. Another such methodology is incorporating SHapley Additive exPlanations (SHAP) into result reporting workflows [76]. It has already been used extensively in the field of ED triage, with a positive impact [77]. This type of research is better suited to addressing legislative concerns and gaining the trust of key stakeholders who are expected to act on the created forecasts [78].

Feature engineering is the process of transforming input variables so that the machine learning methods applied can make better use of them [79]. Such transformations include Principal Component Analysis (PCA), logarithmic transformations, the Box-Cox transformation and others [79]. We found that authors used PCA, Z-scoring, weighting and normalization. Further research is warranted to find which kind of feature engineering works best for a given TSF methodology. Pelaz et al. [23] mentioned this as a possible direction of future research as well.
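To make these transformations concrete, the following is a minimal Python sketch of Z-scoring, a logarithmic transformation and the Box-Cox transformation with a fixed parameter. It is an illustration under stated assumptions, not the procedure of any included study: the function names, the arrival counts and the choice of lambda = 0.5 are hypothetical.

```python
import math
import statistics


def z_score(values):
    """Standardize values to zero mean and unit (population) standard deviation."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    if sd == 0:
        raise ValueError("z-scoring is undefined for a constant series")
    return [(v - mean) / sd for v in values]


def log_transform(values):
    """Apply log(1 + x), which tolerates zero counts."""
    return [math.log1p(v) for v in values]


def box_cox(values, lam):
    """Box-Cox transform for strictly positive values and a fixed lambda."""
    if any(v <= 0 for v in values):
        raise ValueError("Box-Cox requires strictly positive values")
    if lam == 0:
        return [math.log(v) for v in values]
    return [(v ** lam - 1) / lam for v in values]


# Hypothetical daily ED arrival counts, for illustration only.
arrivals = [112, 98, 105, 140, 131, 99, 120]

scaled = z_score(arrivals)                 # comparable scale across variables
logged = log_transform(arrivals)           # compresses large counts
transformed = box_cox(arrivals, lam=0.5)   # lambda chosen arbitrarily here
```

In a forecasting setting, the mean, standard deviation and lambda would normally be estimated on the training window only and then applied to the test window, so that no information from the forecast period leaks into the model.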

An important concern about feasibility was raised by Wargon et al. [2]. None of the studies touched on the clinical importance of their models, nor did any model make it through the translational phase into practice. It is not clear whether EDs can actually benefit from the prediction of ED arrivals. We believe that an interview-based study with ED directors from different economic areas (USA, EU, etc.) would be prudent to define which time horizons should be used, what level of certainty is required and which predictions hold actual clinical and managerial value.

We hypothesize that forecasting long-term trends in ED arrivals might be more important than short-term forecasts of the exact number of patients attending EDs.

Hybrid models should be tested, as they combine the strengths of different methods to overcome the limitations of any single model. It is also important to state that, at the time of writing this review, a meta-analysis of the effect of external variables is not feasible, because authors do not make their models open and do not report specific results for each of the external variables tested, which would allow a better assessment of which ones carry the most promise across the literature. Finally, we recommend that authors report their accuracy results in a metric that allows for easy comparison; in our review, we identified RMSE and MAPE as the most used. Additionally, we recommend the use of the Weighted Interval Score (WIS), which has proven useful in scoring COVID-19 models.
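As an illustration of how the three recommended metrics can be computed, the following minimal Python sketch implements RMSE, MAPE and WIS. The WIS function assumes the commonly used formulation for a forecast given as a median plus a set of central prediction intervals; the function names and all numbers are hypothetical and are not taken from any included study.

```python
import math


def rmse(actual, predicted):
    """Root mean square error of point forecasts."""
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))


def mape(actual, predicted):
    """Mean absolute percentage error, in percent."""
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an observed value is zero")
    ratios = [abs((a - p) / a) for a, p in zip(actual, predicted)]
    return 100 * sum(ratios) / len(ratios)


def interval_score(lower, upper, observed, alpha):
    """Interval score of a central (1 - alpha) prediction interval."""
    score = upper - lower  # width: wide intervals are penalized
    if observed < lower:
        score += (2 / alpha) * (lower - observed)
    elif observed > upper:
        score += (2 / alpha) * (observed - upper)
    return score


def weighted_interval_score(median, intervals, observed):
    """WIS for one observation.

    intervals maps alpha to (lower, upper) for each central
    (1 - alpha) prediction interval; lower values are better.
    """
    total = 0.5 * abs(observed - median)
    for alpha, (lower, upper) in intervals.items():
        total += (alpha / 2) * interval_score(lower, upper, observed, alpha)
    return total / (len(intervals) + 0.5)


# Hypothetical observed and forecast daily arrivals, for illustration only.
observed_arrivals = [100, 120, 80]
point_forecasts = [110, 114, 80]
print(rmse(observed_arrivals, point_forecasts))
print(mape(observed_arrivals, point_forecasts))

# One probabilistic forecast: median 100 with 50% and 90% intervals.
forecast_intervals = {0.5: (90, 110), 0.1: (80, 125)}
print(weighted_interval_score(100, forecast_intervals, observed=130))
```

Unlike RMSE and MAPE, WIS scores the whole predictive distribution, so it rewards forecasts whose intervals are both narrow and well calibrated.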

This review serves as a good starting point for creating a TSF model for ED arrivals. With gaps identified, especially in the comparability of the studies, the authors will create a TSF challenge on one of the aforementioned platforms and prepare a dataset that all authors could use for external validation of their models, as neither such a TSF challenge nor such a dataset currently exists. Silva et al. have already recommended that at least 3 years of data be used for ED TSF research [8]. Not all research groups have access to such datasets. The creation of this challenge will democratize this research field and allow other researchers to externally validate their models, thus making it possible to advance the field and actually translate the findings and models into real-world use. We also recommend further exploration of external variables, especially socioeconomic ones, which are under-researched and could improve prediction models.

Conclusions

TSF is feasible for use in EDs. Our results emphasize the need for better reported results of model development and validation to better understand the role of external variables used in created models, as well as for more uniform reporting of results between different research groups and external validation of created models. We believe that open science initiatives and stricter requirements of journals for authors to adhere to when reporting will allow for such studies to be conducted in the near future. We recommend authors make their models and results as open as possible in order to improve the field of TSF in ED.

Acknowledgements

This systematic review is part of the author’s (LP) PhD candidacy. Mentors of the PhD candidate are TA and JŽ. The mentor for review writing is AK.

Abbreviations

- AI

Artificial Intelligence

- ANN

Artificial Neural Network

- AQI

Air Quality Index

- ARMA

Autoregressive–Moving-Average

- ARIMA

Autoregressive integrated moving average

- ARIMA-LR

Autoregressive integrated moving average – linear regression

- ARIMAX

AutoRegressive Integrated Moving Average + exogenous variables

- AUC

Area Under Curve

- SARIMA

Seasonal Autoregressive Integrated Moving Average

- SARIMAX

Seasonal Autoregressive Integrated Moving Average + exogenous variables

- BMJ

British Medical Journal

- CINAHL

Cumulative Index to Nursing and Allied Health Literature

- COPD

Chronic Obstructive Pulmonary Disease

- COVID-19

Coronavirus disease 2019

- DM

Diebold-Mariano test

- DNN

Deep Neural Network

- EBSCO

EBSCO Information Services

- ED

Emergency department

- EMBASE

Excerpta Medica Database

- EWS ED

Early Warning System for ED attendance predictions

- EWMA

Exponentially Weighted Moving Average

- FE

Feature engineering

- FSA

Forward Sortation Area

- GA

Genetic Algorithm

- GP

General Population

- HIS

Hospital Information System

- HRI NACRS ED

National Ambulatory Care Reporting System

- IEEE

Institute of Electrical and Electronics Engineers

- KPI

Key Performance Indicator

- LASSO

Least absolute shrinkage and selection operator

- LR

Linear regression

- LSTM

Long short-term memory networks

- MAE

Mean Absolute Error

- MAPE

Mean Absolute Percentage Error

- ML

Machine Learning

- MLR

Multiple Linear Regression

- MSE

Mean Square Error

- NA

Not Applicable

- PCA

Principal Component Analysis

- PM

Particulate Matter

- PRISMA

Preferred Reporting Items for Systematic Reviews and Meta-Analyses

- PROBAST

Prediction model Risk Of Bias ASsessment Tool

- RBF

Radial basis function

- RMSE

Root mean square error

- RSV

Respiratory syncytial virus

- SFC

Surface Pressure Chart

- SHAP

SHapley Additive exPlanations

- SR

Systematic review

- SRMA

Systematic review and meta analysis

- SVM

Support Vector Machines

- TRIPOD

Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis

- TSF

Time series forecasting

- USA

United States of America

- UKHSA

United Kingdom Health Security Agency

- VARIMA

Vector Autoregressive Integrated Moving Average

- WIS

Weighted Interval Score

Author contribution

LP: Conceptualization, Data Curation, Formal Analysis, Investigation, Methodology, Project Administration, Resources, Software, Visualization, Writing – Original Draft, Writing – Review & Editing. KG: Article Screening, Data Curation, Investigation, Writing – Review & Editing. JŽ: Conceptualization, Methodology, Writing – Review & Editing. TA: Writing – Review & Editing. AK: Writing – Original Draft, Writing – Review & Editing.

Funding

This work is in part financed by P3-0429 (SLORApro).

Data availability

All data generated or analysed during this study are included in this published article and its supplementary information files.

Declarations

Ethical approval

IRB approval was not necessary for this type of study.

Use of AI

Generative AI was not used in the creation of this manuscript or any parts of analysis.

Patient and public involvement

No patients or public were involved in this study.

Consent for publication

Not applicable

Competing interest

The authors declare no competing interests.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Garattini L, Badinella Martini M, Nobili A. Urgent and emergency care in Europe: time for a rational network? European Journal of Internal Medicine. 2024 Feb;120:1–2.

- 2. Wargon M, Guidet B, Hoang TD, Hejblum G. A systematic review of models for forecasting the number of emergency department visits. Emergency Medicine Journal. 2009 Jun 1;26(6):395–99.