Abstract

Purpose

To report on the development, evaluation, and acceptability of a simulated patient resource designed for teaching and assessment in low vision rehabilitation. The findings aim to inform possible future integration of this method into optometric education.

Methods

In response to COVID-19 restrictions, a simulated patient resource was developed to allow students undertaking the practical elements of postgraduate modules in low vision practice at Cardiff University to complete their training. Using a mixed methods case study approach, the evaluation examined perspectives from students, assessors, and simulated patients to establish whether there was a consensus of opinion on its use. The study did not seek to demonstrate educational impact or to validate the simulated patient (SiP) method against existing methods.

Results

Five assessors, five simulated patients and seven students completed evaluations. Both assessors and students broadly accepted the method for both teaching and assessment, with mean scores across all groups ranging from 7.3 to 9.6 on a 1–10 scale (where 1 indicated very poor acceptance and 10 excellent acceptance). The discussion and establishing magnification elements of the encounter were the least well accepted. Simulated patients found the level of preparation for the role adequate. There were no significant differences in Likert scores between assessors and students in either the teaching or the assessment sessions. Thematic analysis of free text comments showed mainly positive responses, with all groups highlighting the benefits of detailed scenarios and pre-session training. The method was acceptable to the professional governing and accrediting body, the College of Optometrists.

Conclusion

Implementing a guideline-compliant simulated patient resource for high-stakes assessment requires significant time and resources. Our single cohort analysis indicates broad acceptance amongst assessors and students. Further research is needed to evaluate its educational impact across a wider range of scenarios and competencies while also further assessing its validity. Though simulated patients offer a viable alternative for teaching and assessing low vision practice, real patient encounters remain preferable.

Keywords: simulated patients, low vision, low vision rehabilitation, optometry, post graduate teaching, simulation-based learning

Plain Language Summary

Simulation-based education in healthcare replicates real-life scenarios for teaching purposes. One method used is the simulated patient; these are individuals trained to portray patients with specific health conditions. This approach allows healthcare professionals to develop, practice, and be assessed on their clinical consultation skills.

During the COVID-19 pandemic, we developed a program at Cardiff University to train and use simulated patients, enabling eye care practitioners to complete their training in low vision practice. This paper outlines how we used accepted guidelines to design this resource and analyses feedback from students, simulated patients, and teaching staff involved in using it.

Our experiences taught us that developing and implementing such a simulated patient resource requires significant time and resources. Even though feedback suggested that the method is mostly acceptable to both assessors and students, we have concluded that having access to a readily available pool of real patients is the more credible option for teaching and assessing this accredited level of low vision practice. However, in the absence of real patients, the use of simulated patients is a viable option for teaching and assessing low vision practice, provided the resource is carefully planned and implemented.

We feel that more research is needed to explore how this method could be used effectively and more widely in teaching and assessing other optometric skills.

Introduction

Simulation-based education is now widely used in the training of healthcare professionals at all levels.1–4 The Coronavirus Disease 2019 (COVID-19) pandemic focused attention on how simulation can be used not only to train skills related to pandemic response5 but also to continue the training and assessment of health professionals when patient interactions were restricted.6 One of the modalities of simulation in use in health care training is the simulated patient (SiP), which offers a potential pathway for teaching and assessment when specific groups of real patients are not available. SiPs are volunteers who have been trained to play the role of a patient, simulating health problems to allow a health professional to develop, practice and ultimately be assessed on their clinical consultation skills.7 SiPs are increasingly used in both training and assessment as a response to changing constraints on education systems such as increased student numbers, ethical considerations and the increased need to deliver competency-based assessments. The SiP method offers several organizational advantages, including standardised case presentations for all candidates, on-demand availability, participation in teaching and adaptability to different teaching levels.4,8 SiPs have been used in an optometric context to assess clinical practice,9–11 to teach communication skills12 and to teach low vision practice.13

The COVID-19 pandemic disrupted teaching in optometry schools worldwide.14,15 In response to restrictions on the use of patient volunteers imposed in Wales during the autumn of 2020, we used accepted guidelines to develop a simulated patient resource to allow students undertaking the practical elements of postgraduate modules in low vision practice at Cardiff University to complete their training and assessment.

This paper examines the development of this resource and presents the results of its evaluation using a qualitative approach. We did not set out to show educational effect but to explore this training and teaching method from the perspectives of its participants to determine if there was a consensus of opinion on its use. This analysis aims to identify lessons learned from this experience that can inform its possible future use for ourselves and other educators.

Methods

Ordinarily, students undertaking the practical elements of postgraduate modules in low vision practice at Cardiff University attend for two consecutive days of teaching and assessment. The first day consists of workshops without patient involvement. On the second day, students practice their low vision routine with a real patient under assessor guidance in the morning, followed by a summative assessment with a real patient in the afternoon. These patients have a relevant pathology and have been coached to present their condition in a standardised way,7,8,12 for example by always stating the same low vision goal. The program has an annual intake of approximately 60 students in two cohorts over two semesters.

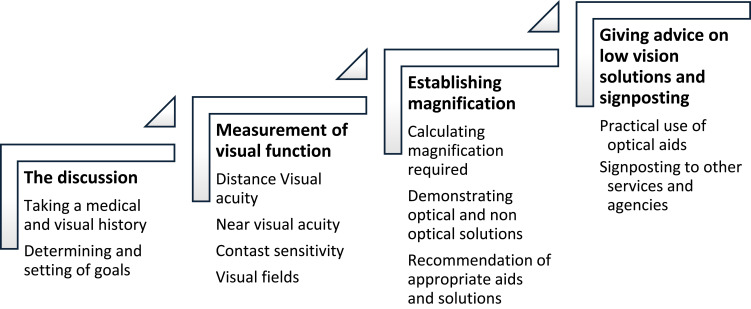

This real patient encounter is structured around four areas of learning or “domains”: the taking of a person’s visual and medical history including determining and setting of goals, the measurement of visual function, establishing magnification and the recommendation of optical aids and other low vision solutions (Figure 1).

Figure 1.

Domains taught and assessed in the low vision assessment.

Students are not required to refract the patient or examine the fundus. Patients are examined in a standard 4 m optometric consulting room setting with the student and assessor in the room at all times during teaching and assessment. The real patients used are often from vulnerable groups, and during COVID-19 restrictions these individuals were part of the “shielding” population and could not attend these sessions.

Our study employed a mixed methods case study methodology16 to explore the use of SiPs. This takes one defined or “bounded” event, in this case the teaching and assessment session, and explores the differing perspectives of the participant groups experiencing it using both scaled and open text responses.

Developing the Resource

Guided by relevant elements of frameworks and guidelines for the development and use of SiPs in education,8,17–19 a simulated patient resource was developed to replace real patients. The goal was to allow the teaching and assessment sessions to be carried out in an almost identical format and the development process aimed to address assessment validity and fidelity. This approach can best be described as a scenario-based simulation using the modality of a simulated patient.2

Developing the SiP Scenarios

We obtained 50 anonymised patient records from the Low Vision Service Wales20 and guided by a published scenario template,19 an experienced low vision practitioner worked alongside the Module Lead to author 6 scenarios based on these records. A blueprinting exercise ensured the scenarios addressed the learning/assessment outcomes currently mapped to the College of Optometrists standards for this module.21 The plan was approved by the College of Optometrists as part of the temporary arrangements for the Professional Certificate in Low Vision to take account of COVID-19 impact on delivery.22

The Director of Postgraduate Teaching, who also specialized in low vision practice, conducted a standard-setting exercise on the scenarios, following a structured review and feedback matrix. The incorporated feedback informed subsequent revisions.

Briefing packs and prompt sheets for use by the SiPs were developed for each scenario under the headings detailed in Box 1.

Box 1.

Briefing Scenario Pack Headings for SiPs

| Heading |
|---|
| Patient name and age |
| Setting and reason for the interaction |
| General background information |
| Ocular history |
| General medical history |
| Medication |
| Social history |
| Lifestyle |
| Reported difficulties and main goals to achieve |
| Support to date |
| Temperament and behaviour |
| Key points to convey |
| Opening statement |
| Plain language explanation of visual status |
| Behaviour at key points in the assessment |

Planning the SiP Encounter

The SiP teaching and assessment encounters were structured around the original patient encounter using the four domains outlined in Figure 1. History taking, needs assessment, and discussion of potential low vision solutions were all communication-based activities requiring the SiP to act the scenario. The measuring of visual function and the assessment of magnification required the use of simulation spectacles at certain points in the assessment.

Simulation glasses simulating both contrast and acuity loss were matched to the visual function measures detailed in the case scenarios. Assessors were already experienced in using simulation glasses for teaching the measurement of LogMAR visual acuity and contrast sensitivity in workshops. Assessors used an existing marking rubric based on the four domains to grade the encounter.

Training and Preparation of the SiPs and Assessors

SiPs were recruited from department staff due to COVID-19 restrictions. A recent review showed that student peers acting as SiPs were a realistic alternative to external SiPs, at least in the teaching context,23 and the familiarity of staff with the eyecare setting offered benefits. Assessors were all experienced low vision practitioners who had carried out this teaching and assessing role over the past decade.

An online training package for both assessors and SiPs was developed which defined the role of the SiP in this context, set out the core competencies for a SiP, showed recorded video of examples of SiPs in similar settings and defined the role of the SiP in feedback after teaching.

SiPs were given their scenario briefing pack three weeks in advance and then discussed the scenario with the lead assessor before the teaching and assessment day. Each SiP was only asked to work with one scenario during the day.

A 45-minute pre-session briefing was conducted before the teaching and the assessment session to brief both SiPs and assessors separately. SiPs also had a preparation session with their matched assessor to ensure the simulation spectacles matched their scenario and that they were familiar with their use and the layout of the room. The simulation spectacles used were adapted from the Vine Simulation package24 to simulate impaired central acuity and contrast sensitivity loss.

Evaluating the Resource in Action

Questionnaires were developed for the assessors, SiPs and students, structured around the four domains being taught and assessed. Likert scales, number scales and free text responses were used to explore the differing perspectives of each of these groups. Assessors and SiPs completed questionnaires on the day, while students were provided an online link for use after the event so as not to interfere with their learning and assessment.

Analysis

Questionnaire data was analysed to identify how each of the groups responded to the various elements of the teaching and assessment process, and we conducted a thematic analysis25 of the free text data to identify common themes using NVivo 12.

We worked to a definition of thematic analysis as “A method for identifying and reporting patterns (themes) within data”.25 We approached this analysis from a realist perspective that accepts that the questionnaire responses represented the views of the participants. We developed themes using an inductive “bottom up” approach, which produces themes closely linked to the data. Our interest was to describe the participants’ views and experiences at an explicit level, that is, not looking beyond the data to develop an understanding of why the participants responded in the way they did.25

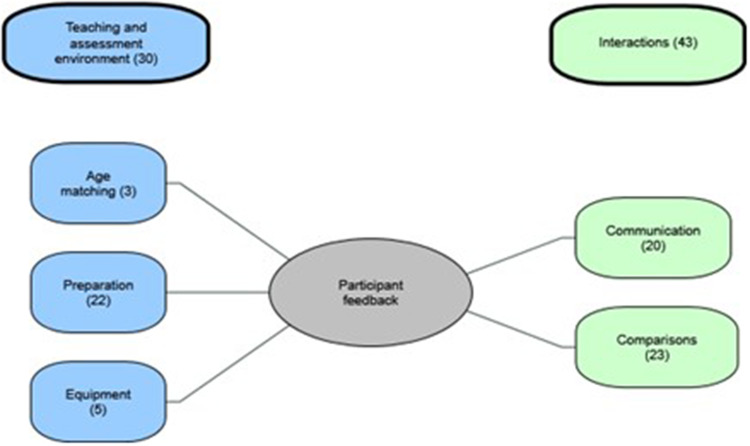

The thematic analysis followed a recognised process,25,26 with researchers MK and NL transcribing handwritten responses and agreeing a coding framework. The coded data informed the thematic map detailed in Figure 2.

Figure 2.

Thematic map.

This study has been reported in accordance with the Standards for Reporting Qualitative Research.27 Ethical approval was granted by the Cardiff University School of Optometry and Vision Sciences ethics committee. All participants gave informed consent which included agreement for the publication of anonymised responses/direct quotes.

Results

There were 5 assessors, 5 SiPs and 10 students. All the assessors, SiPs, and 7 of the students completed questionnaires.

Preparation

SiPs were asked about the level of preparation for the role. All SiPs responded that the level of detail in the scenario was “just about OK” and all felt that they were either well prepared or very well prepared for the role.

Teaching

SiPs and assessors were asked about their overall experiences of the teaching session, and the results are summarised in Table 1. The SiPs reported positively on their ability to play the role, get across key points and their ability to carry out tests. Assessors agreed that the roles were played convincingly and that the SiPs were able to carry out tests. Students also reported that they felt the characters were convincingly played.

Table 1.

Participants’ Perceptions of the Teaching Session

| Question Asked | Disagree or Strongly Disagree n (%) | Neither Agree nor Disagree n (%) | Agreed or Strongly Agreed n (%) | Number of Responses n |
|---|---|---|---|---|
| SiPs’ perceptions of the teaching session | | | | |
| I was able to carry out all the clinical tests | 0 | 0 | 4 (100%) | n=4* |
| I was able to remember all the key points in my case | 0 | 0 | 5 (100%) | n=5 |
| I was able to get across all the key points to the student | 0 | 1 (20%) | 4 (80%) | n=5 |
| I felt I played my character convincingly | 0 | 0 | 5 (100%) | n=5 |
| Assessors’ perceptions of the teaching session | | | | |
| The SiPs were able to carry out the clinical test | 0 | 0 | 5 (100%) | n=5 |
| The SiPs played their character convincingly | 0 | 0 | 5 (100%) | n=5 |
| Students’ perceptions of the teaching session | | | | |
| Did the SiPs play their characters convincingly? | 0 | 0 | 7 (100%) | n=7 |

Note: *Only four SiPs chose to answer this question.

Assessors and students rated their teaching session experiences, as detailed in Table 2. Assessors scored the four domains from 1 (very hard) to 10 (very easy), with mean scores above 8 (range 8.2–8.6), although discussion received an individual score as low as 5. When comparing these sessions to real-patient teaching, all assessors found them the same or easier, except for discussion, which two found harder to teach.

Table 2.

Assessors’ and Students’ Scoring of Teaching Session by Domain

| Domain | Assessor (n=5): I Found Teaching This Domain (1 = Very Hard to 10 = Very Easy), Mean Score (Range) | Assessor (n=5): Teaching This Domain Compared with Real Volunteer Patients in the Past, Much Harder or Harder, n (%) | Assessor (n=5): The Same, n (%) | Assessor (n=5): Easier or Much Easier, n (%) | Student (n=7): How Well Were They Able to Practice This Domain? (1 = Not Very Well at All to 10 = Very Well), Mean Score (Range) | Results for Mann–Whitney U-test for Differences Between Assessors’ and Students’ Median Scores |
|---|---|---|---|---|---|---|
| The discussion: taking a history and the determining and setting of goals | 8.2 (5–10) | 2 (40%) | 0 | 3 (60%) | 8.3 (6–10) | U=18, z=0.086, p=1, rrb=0.44 |
| Measuring visual function | 8.8 (8–10) | 0 | 1 (20%) | 4 (80%) | 9.6 (9–10) | U=24.5, z=1.296, p=0.27, rrb=0.40 |
| Establishing magnification and making recommendations of appropriate aids | 8.6 (7–10) | 0 | 3 (60%) | 2 (40%) | 7.3 (4–10) | U=13, z=−0.751, p=0.53, rrb=0.54 |
| Giving advice on low vision solutions and signposting | 8.6 (7–10) | 0 | 3 (60%) | 2 (40%) | 9 (7–10) | U=20.5, z=0.511, p=0.64, rrb=0.43 |

Students were asked to rate their ability to practice each domain on a scale of 1 to 10. Mean scores were above 7 (range 7.3 to 9.6) across all domains. The discussion and magnification domains had the lowest mean scores and widest ranges, mean 8.3, range 6 to 10 and mean 7.3, range 4 to 10, respectively.

A Mann–Whitney U-test of the differences between each group’s median scores revealed no significant differences between the scores assessors and students gave for each domain in the teaching session.
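As an illustration of the kind of comparison reported above, the following is a minimal sketch of how a Mann–Whitney U statistic and its tie-corrected normal approximation could be computed. The function names and the example scores are hypothetical and are not the study data. The software used by the authors, the method used to obtain p-values and the definition of the reported effect size are not stated in the text, so none of these are reproduced here.

```python
from math import sqrt


def average_ranks(values):
    """Return 1-based ranks, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(group_a, group_b):
    """Return (U for group_a, z) using a tie-corrected normal approximation.

    No continuity correction is applied; this is an assumption of the sketch.
    """
    n_a, n_b = len(group_a), len(group_b)
    if n_a == 0 or n_b == 0:
        raise ValueError("both groups must contain at least one score")
    pooled = list(group_a) + list(group_b)
    ranks = average_ranks(pooled)
    # U counts pairs where a score in group_a exceeds one in group_b (ties count 0.5).
    u_a = sum(ranks[:n_a]) - n_a * (n_a + 1) / 2
    n = n_a + n_b
    tie_counts = {}
    for value in pooled:
        tie_counts[value] = tie_counts.get(value, 0) + 1
    tie_term = sum(t ** 3 - t for t in tie_counts.values())
    variance = n_a * n_b / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    z = (u_a - n_a * n_b / 2) / sqrt(variance) if variance > 0 else 0.0
    return u_a, z


# Hypothetical 1-10 scores for one domain (illustrative only, not study data).
assessor_scores = [8, 9, 10, 7, 9]
student_scores = [9, 10, 9, 10, 10, 9, 10]
u_stat, z_score = mann_whitney_u(assessor_scores, student_scores)
print(f"U={u_stat}, z={z_score:.3f}")
```

With groups as small as five and seven, the normal approximation is rough, and an exact or permutation-based p-value would usually be preferred; the sketch is intended only to show how U and z relate to the ranked scores.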

Assessment

Table 3 summarises participant perceptions of the assessment session. SiPs reported positively on their role-playing, communication, and ability to conduct tests, with assessors agreeing they performed convincingly. Most students found the character portrayal convincing, though two remained neutral.

Table 3.

Participants’ Perceptions of the Assessment Session

| Question Asked | Disagree or Strongly Disagree n (%) | Neither Agree nor Disagree n (%) | Agreed or Strongly Agreed n (%) | Number of Responses n |
|---|---|---|---|---|
| SiPs’ perceptions of the assessment session | | | | |
| I was able to carry out all the clinical tests | 0 | 0 | 5 (100%) | n=5 |
| I was able to remember all the key points in my case | 0 | 0 | 5 (100%) | n=5 |
| I was able to get across all the key points to the student | 0 | 0 | 5 (100%) | n=5 |
| I felt I played my character convincingly | 0 | 0 | 5 (100%) | n=5 |
| Assessors’ perceptions of the assessment session | | | | |
| The SiPs were able to carry out the clinical test | 0 | 0 | 5 (100%) | n=5 |
| The SiPs played their character convincingly | 0 | 0 | 5 (100%) | n=5 |
| Students’ perceptions of the assessment session | | | | |
| Did the SiPs play their characters convincingly? | 2 (29%) | 0 | 5 (71%) | n=7 |

Table 4 details assessor and student ratings. Assessors scored the four domains from 1 (very hard) to 10 (very easy), with mean scores above 8 (range 8.6–9.2). Compared with assessing real patients, assessors found all domains the same or easier to assess, except for discussion, which one assessor found harder.

Table 4.

Assessors’ and Students’ Scoring of Assessment Session by Domain

| Domain | Assessor (n=5): I Found Assessing This Domain (1 = Very Hard to 10 = Very Easy), Mean Score (Range) | Assessor (n=5): Assessing This Domain Compared with Real Volunteer Patients in the Past, Much Harder or Harder, n (%) | Assessor (n=5): The Same, n (%) | Assessor (n=5): Easier or Much Easier, n (%) | Student (n=7): How Well Were They Able to Evidence This Domain? (1 = Not Very Well at All to 10 = Very Well), Mean Score (Range) | Results for Mann–Whitney U-test for Differences Between Assessors’ and Students’ Median Scores |
|---|---|---|---|---|---|---|
| The discussion: taking a history and the determining and setting of goals | 8.6 (7–10) | 1 (20%) | 1 (20%) | 3 (60%) | 8 (6–10) | U=11.5, z=−0.662, p=0.537, rrb=0.64 |
| Measuring visual function | 9.2 (8–10) | 0 | 3 (60%) | 2 (40%) | 9.3 (8–10) | U=15.5, z=0.101, p=1, rrb=0.67 |
| Establishing magnification and making recommendations of appropriate aids | 8.8 (8–10) | 0 | 3 (60%) | 2 (40%) | 7.5 (5–10) | U=8.5, z=−1.226, p=0.247, rrb=0.72 |
| Giving advice on low vision solutions and signposting | 8.8 (8–10) | 0 | 3 (60%) | 2 (40%) | 8.5 (7–10) | U=13, z=−0.388, p=0.792, rrb=0.62 |

Student scores for demonstrating skills ranged from 7.5 to 9.3, with the widest variations in discussion (mean 8, range 6–10) and establishing magnification (mean 7.5, range 5–10).

A Mann–Whitney U-test showed no significant differences between assessors’ and students’ median scores for each domain.

Thematic Analysis

Two overarching themes emerged: “teaching and assessment environment” and “interactions”, with five sub-themes (Figure 2). In total, 73 statements were identified—30 under “teaching and assessment environment” and 43 under “interactions”. The thematic map shows the statement distribution to illustrate the spread of comments, not their relative importance.

Of these, 37 (51%) were positive, 17 (23%) neutral, and 19 (26%) negative or suggested improvements. Assessors made 41 statements, SiPs 25, and students 7. A total of 55 statements related to the teaching session and 18 to the assessment session. Statements covered all four domains, preparation level, and role performance, with the latter being the most frequent (32) and measuring visual function the least (5).
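The statement counts reported above can be cross-checked with a short tally. This is a minimal sketch: the dictionary and function names are illustrative, and the counts are copied from the text rather than derived from the coded data.

```python
# Statement counts as reported in the thematic analysis text.
counts_by_theme = {"teaching and assessment environment": 30, "interactions": 43}
counts_by_tone = {"positive": 37, "neutral": 17, "negative or suggested improvement": 19}
counts_by_group = {"assessors": 41, "SiPs": 25, "students": 7}
counts_by_session = {"teaching": 55, "assessment": 18}


def percentage_breakdown(counts):
    """Return each count as a whole-number percentage of the total."""
    total = sum(counts.values())
    return {label: round(100 * n / total) for label, n in counts.items()}


# Every way of splitting the statements should add up to the same total.
totals = {
    name: sum(counts.values())
    for name, counts in [
        ("theme", counts_by_theme),
        ("tone", counts_by_tone),
        ("group", counts_by_group),
        ("session", counts_by_session),
    ]
}
tone_percentages = percentage_breakdown(counts_by_tone)
print(totals)
print(tone_percentages)
```

Each breakdown sums to the 73 statements reported, and the tone percentages round to the 51%, 23% and 26% given in the text.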

The Teaching and Assessment Environment

This first theme grouped statements that were defined by their relevance to the process of teaching and assessment with three sub themes emerging, namely: preparation, equipment and age matching.

Unsurprisingly, almost all statements about preparation came from the SiPs and all were positive or neutral. The importance of timely preparation was noted as was the depth of detail in the cases that helped in the delivering of the role.

Excellent level of detail. Just enough to allow me to retain the information. Prompt sheet was a welcome addition to solidify my key points before the session.

The level of detail also included information that was not integral to the patient’s complaint which helped me understand his character and lifestyle.

There was a lot of background so quite easy to form natural detailed answers.

Personal preparation and the practice sessions were both highlighted as important in preparing for the encounters.

There were five statements about equipment related to the simulation glasses and to the use of the ADD+4.00 to establish reading. Three of these highlighted that the simulation glasses were annoying and prone to steaming up and one complained about the difficulty of reading with an ADD+4.00 addition. The last expressed an interest in how the simulation glasses worked.

Age matching was mentioned by the students, who found it helpful to have a SiP in the same age range as the case scenario.

I was lucky to get an older patient, ... pretending to talk to an older person with a young patient [SiP] is difficult.

Interactions

This second theme was defined by statements that were relevant to the personal interactions of the participants, further grouped into two sub themes namely: comparisons and communication.

There were 23 statements comparing the use of a SiP with the use of a real patient, the vast majority (19) made by the assessors. Most (12) made a favorable comparison, while the eight negative comments were observations on the unrealistic predictability of the SiPs in their visual behavior. Comments on measuring visual function were mixed, with real patients seen as more reliable for distance measures,

I felt more confident in the [real] patients, they are more stable when measuring VA.

but less so for near vision assessment.

The sim [simulated] patients are [more] stable with the NV than the real patients.

It was felt the SiPs were more predictable overall and hence this made teaching easier but that there was a lack of emotional connection and realism.

Teaching was easy but did not feel as effective.

I felt this was a better test of the students abilities, however, there was a lack of emotional communication to assess.

The final sub theme dealt with statements around communication, with assessors highlighting how convincing the SiPs were. The SiPs noted in detail the challenges of answering questions in a realistic way and how the training had prepared them to divulge information in a measured manner.

It was tricky to work out how much to say when the students didn’t ask many questions. It’s tempting to help them out.

I am aware I had an urge to elaborate beyond the student’s question, an urge I suppressed in alignment with the ‘chunking’ guidance.

Cautious not to overindulge information not directly asked.

Discussion

The increased use of simulation-based learning in mainstream healthcare teaching is primarily driven by the need to teach high levels of practitioner competency across increasingly complex work scenarios with ever more limited access to “real” patient situations and finite training resources.7,28,29

Simulated patients have been used in evaluating optometric clinical care10,11,30 and in the teaching of communication skills to undergraduate optometry students.12,31 Oberstein et al13 reported on the use of teaching staff in the role of expert simulated patients as a response to “no show” teaching patients and later as a response to COVID-19 restrictions. These same restrictions required that we adapt our assessment and teaching methods to ensure stakeholder acceptance and practicality, prioritizing validity and acceptability.32 This also offered us the chance to re-evaluate our use of real patients in teaching and assessment.

Simulation-based learning (SBL) design follows a five-stage process: set learning goals, select a simulation method, select a simulation modality, define assessment, and establish feedback.33 Since we replaced only the patient within an existing framework, we focused on choosing the simulation method and modality, as other elements remained unchanged.

The simulation method used was a scenario-based simulation,2 chosen because the teaching and assessment were of a patient encounter composed of multiple competencies including both interactive communication and clinical skills. Scenario-based assessments such as these aim to recreate a clinical encounter with all its complexities and are in contrast to skills-based simulations2 that isolate a single, often procedural skill, such as assessing the use of a Volk lens by examining a model eye. The simulation modality chosen was a simulated patient with the additional use of a simulation aid to mimic reduced visual function during part of the encounter. This approach, known as hybrid simulation,8 enables the simulation of a full patient encounter, including clinical signals, and has the documented advantage of combining communication and procedural skills into one realistic encounter, unlike the alternative OSCE method which assesses them separately.34,35

When considering this method for both teaching and assessment, validity is a central consideration. Application of current frameworks36,37 to discuss the validity of the whole assessment framework that the SiP encounter sat within (accepted by the College of Optometrists in the delivery of the Professional Qualification in Low Vision)21 is beyond the scope of this paper, and we make the assumption that it is valid. The question is then whether the SiP element of the method is valid and this hinges on the concept of fidelity—how realistic it is—both at a superficial and theoretical level.

The simulated encounter is similar in many ways to the original real patient encounter. A patient is seen sitting in a chair and is able to answer questions about their situation. This structural or physical fidelity38 addresses what is sometimes termed face validity,39 that is, does the assessment appear to be assessing the right thing? Our participants' initial reactions suggest that both assessors and students felt that the role was played convincingly in both the teaching and assessment sessions, and SiP comments highlighted the level of preparation as an important factor in enabling them to play their roles convincingly. Detailed planning of the encounter and SiP preparation, from detailed case documentation to practice and pre-briefing, is recommended for successful SiP use7,19 and this probably contributed to this positive response. Age matching of actors to SiP scenario characters was commented on as a positive but, given the constraints of the staff volunteer pool, was not possible in all cases, which undoubtedly affected the physical fidelity of the experience.

Basing the scenarios on real patient data and standard setting against the teaching and assessment learning objectives addressed the concept of content validity,40 that is—does the method teach and assess all the elements of the competency? To ensure the scenarios had adequate content validity we needed to ensure that the symptoms, level of visual function and responses were reasonable for a low vision patient. Only a detailed design of the scenarios ensures this.

Examining the responses of participants at a domain level offered a detailed perspective. The scored feedback on the initial discussion in both the teaching and assessment sessions showed a wide range of Likert scores from both assessors and students indicating a mixed response to this domain. It was the only domain where some assessors found it harder to both teach and assess when compared to the real patients with one assessor commenting on the lack of emotional engagement. This suggests that even with a high level of preparedness the initial interview was still the least well accepted by students and assessors and this is unexpected as teaching and assessing communication is a strength of the SiP method.8,41 Conversely, the other communication domain, that of advice giving, was better accepted, possibly because the interaction was more one way, relying less on the information given by the SiP.

Conversely, during the measuring of visual function, when physical fidelity was at its lowest because the SiPs were wearing a simulation spectacle (which most found annoying), both assessors and students reported higher scores with narrower ranges and assessors reported a better parity with the experience of using real patients. This seeming anomaly might be explained by the argument posed by Hamstra et al42 that physical fidelity is less important in simulation than functional fidelity (or functional task alignment) and consequent learner engagement. It is argued that physical fidelity is a distraction and what is more important in educational terms is the meaningfulness of the experience for the learner.42 It may be that our participants, when confronted with the superficially more realistic but more in-depth task of the initial discussion, were less able to “buy into it” but were more able to do so with the less realistic but much simpler task of measuring visual function. Likewise, the domain of establishing magnification, which involved the faking of a reduced near acuity but without a simulation spectacle, showed a wide range of scores for the students in both the teaching and assessment sessions, suggesting they were not all convinced of its functional task alignment.

Simulating visual function loss is challenging, as methods vary in effectiveness. Abraham et al43 found that both commercial and self-made approaches lacked consistency between expected and measured impact. In our study, SiPs wore simulation glasses to align with the acuity expected in each scenario. An alternative approach, using pre-agreed scores, could have removed the need for simulation aids but would have introduced variability in testing distances.

This study examined participant perspectives on the SiP resource and its acceptability to key stakeholders, including the module team, the School, and the College of Optometrists. Assessors and students broadly accepted the method, though the discussion and establishing magnification elements were less well received. Assessors, experienced in both teaching and assessment using real patients, provided the strongest support for its use in low vision practice. The method was acceptable to the professional governing and accrediting body.

Organizationally, the use of the SiP method has many documented advantages. SiPs allow for a standardized presentation of cases to all candidates, are available as and when required, and can be calibrated to the level of teaching needed. They can undergo teaching or assessment sessions many times over, can be trained to present unusual or emotionally complex scenarios and, when trained in feedback, can become educators themselves.7,44 Conversely, and our experience concurs, the main drawback of the method is the considerable resource needed to develop it,7 especially if the method is not already in routine use in existing teaching programs and educators are new to planning and implementing the methodology.

SiPs are in use to train and assess a wide range of health disciplines, primarily in medicine1,45 but also in pharmacy,46 nursing,47 physical therapy,48 dentistry49 and physiotherapy.50 The method is used in the main to teach communication skills51 but can also be used to teach physical examination and procedural skills,4 for example slit-lamp techniques. In assessment, SiPs are widely used within OSCE examinations but also for in situ assessment of clinical performance. It is when the method is combined with physical or virtual simulation in the form of a hybrid simulation34,52,53 that a whole patient encounter can be developed for a wide range of conditions. For example, a SiP can be interviewed by the practitioner and then “examined” using a slit lamp with a virtual feed complemented by on-screen imaging data. The practitioner is therefore able to complete a full encounter, including communication of results and treatment plan.

Ultimately, the development and use of SiPs must be weighed against the “real thing”, in our case the patients who, post-pandemic, have again become available to us. Our results show that teaching and assessing the “discussion” and “establishing magnification” domains were the most challenging and problematic, especially for the students, probably because of a lack of functional task alignment. These are key competencies in low vision practice. The real patient, although less organizationally flexible than the SiP, offers the credibility of demonstrating practice in a genuine clinical scenario without the requirement of simulation, which the module team feel is more appropriate for this level of formal accreditation in low vision practice.

Weaknesses and Limitations of This Study

The case study methodology used does not aim to show educational effect or to validate the use of the SiP against existing methods.

Although the experienced assessors involved represent a substantial proportion of those working in low vision education in the UK, this study examines only a single instance of using the SiP methodology within one aspect of optometric education. It focuses on the experiences of those involved in teaching and assessing one cohort, evaluating only the short-term outcome of method acceptability.

We have limited our consideration of the validity of the SiP method to the early-stage concepts of face and content validity specific to the use of the SiPs within an existing teaching and assessment method. The wider validity of these methods remains open to discussion.

The students’ perspective reflects only this one experience, and their response to the teaching and assessment can only be seen relative to their experience of interacting with patients in general practice.

Future Research

These findings should be interpreted as preliminary data that can inform a broader discussion on the role of SiPs in optometric education. If, as seen in other health professions, optometric educators are to adopt SiPs more widely, further research is essential to explore how and when this method can be effectively applied to teaching and assessing optometric competencies.

Specifically, studies should examine the educational impact across a wider range of scenarios and competencies, alongside a structured evaluation of the method’s validity. This would require both practical and theoretical comparisons with alternative or existing teaching approaches to determine its effectiveness and broader applicability. Larger sample sizes and varied cohorts would allow for the use of more detailed inferential statistical modelling to quantify and predict validity and educational impact. Of specific interest would be an exploration of hybrid simulations where SiPs provide the human interaction and virtual environments provide clinical data, such as imaging. This would allow educators to present whole case scenarios of complex or rare cases in standardized ways for both teaching and assessment.

Conclusions

Developing and implementing a simulated patient resource that conforms to guidelines and is acceptable for high-stakes assessment involves considerable time and resources.

Assessors and students found the method in the main acceptable, with the discussion and magnification elements being least well accepted. The positive view of the experienced assessors offers the strongest indication that the method is acceptable to teach and assess low vision practice.

The method was acceptable to the professional governing and accrediting body, the College of Optometrists, as part of its temporary arrangements to take account of the impact of COVID-19.

Access to real patients is seen as the more credible option to teach and assess this level of low vision practice. In the absence of real low vision patients, the use of SiPs is a realistic option for teaching and assessing if the resource is planned and implemented carefully.

If optometric educators are to adopt SiPs more widely, further research is essential to explore how and when this method can be effectively applied to teaching and assessing optometric competencies. When combined with the virtual presentation of clinical signs, this method provides an opportunity for standardized teaching and assessment of complex cases that would not be available at scale if real patients were used.

Disclosure

The authors report no conflicts of interest in this work.

References

- 1. Issenberg SB, Scalese RJ. Simulation in health care education. Perspect Biol Med. 2008;51(1):31–46. doi: 10.1353/pbm.2008.0004

- 2. Battista A, Nestel D. Chapter 11: simulation in medical education. In: Swanwick T, Forrest K, editors. Understanding Medical Education. Wiley Blackwell; 2019:151–162.

- 3. Purva M, Fent G, Prakash A, Armstrong M. Enhancing UK core medical training through simulation-based education: an evidence-based approach. A report from the joint JRCPTB/HEE expert group on simulation in core medical training. Health Education England; 2016.

- 4. Bearman ML, Nestel DF, Andreatta P. Simulation-based medical education. In: Walsh K, editor. Oxford Textbook of Medical Education. 1st ed. United Kingdom: Oxford University Press; 2013:186–197.

- 5. Nayahangan LJ, Konge L, Russell L, Andersen S. Training and education of healthcare workers during viral epidemics: a systematic review. BMJ Open. 2021;11(5):e044111. doi: 10.1136/bmjopen-2020-044111

- 6. Park CS, Clark L, Gephardt G, et al. Manifesto for healthcare simulation practice. BMJ Simul Technol Enhanc Learn. 2020;6(6):365–368. doi: 10.1136/bmjstel-2020-000712

- 7. Cleland JA, Abe K, Rethans JJ. The use of simulated patients in medical education: AMEE Guide No 42. Med Teach. 2009;31(6):477–486. doi: 10.1080/01421590903002821

- 8. Forrest K, McKimm J, Edgar S. Essential Simulation in Clinical Education. John Wiley & Sons; 2013.

- 9. Shah R, Edgar DF, Spry PG, et al. Glaucoma detection: the content of optometric eye examinations for a presbyopic patient of African racial descent. Br J Ophthalmol. 2009;93(4):492–496. doi: 10.1136/bjo.2008.145623

- 10. Shah R, Edgar DF, Rabbetts R, et al. The content of optometric eye examinations for a young myope with headaches. Ophthalmic Physiol Opt. 2008;28(5):404–421. doi: 10.1111/j.1475-1313.2008.00587.x

- 11. Shah R, Edgar DF, Harle DE, et al. The content of optometric eye examinations for a presbyopic patient presenting with symptoms of flashing lights. Ophthalmic Physiol Opt. 2009;29(2):105–126. doi: 10.1111/j.1475-1313.2008.00613.x

- 12. Shah R, Ctori I, Edgar DF, Parker P. Use of standardised patients in optometry training. Clin Exp Optom. 2021;104(8):848–853. doi: 10.1080/08164622.2021.1896332

- 13. Oberstein S, Beckmann EA. Using an expert simulated patient strategy to teach in the low vision optometry clinic. Optometric Educ. 2023;49(1).

- 14. Jonuscheit S, Lam AKC, Schmid KL, Flanagan J, Martin R, Troilo D. COVID-19: ensuring safe clinical teaching at university optometry schools. Ophthalmic Physiol Opt. 2021;41(1):144–156. doi: 10.1111/opo.12764

- 15. Subramanian A. Two years on: what has COVID-19 taught us about online (telerehabilitation) visual impairment teaching clinics? Clin Exp Optom. 2023;106(1):91–93. doi: 10.1080/08164622.2022.2121642

- 16. Yin RK. How to do better case studies: (with illustrations from 20 exemplary case studies). In: The SAGE Handbook of Applied Social Research Methods. 2nd ed. SAGE Publications, Inc.; 2009.

- 17. INACSL standards of best practice: simulationSM simulation design. Clin Simul Nurs. 2016;12:S5–S12. doi: 10.1016/j.ecns.2016.09.005

- 18. Lewis KL, Bohnert CA, Gammon WL, et al. The association of standardized patient educators (ASPE) standards of best practice (SOBP). Adv Simul. 2017;2(1):10. doi: 10.1186/s41077-017-0043-4

- 19. Dudley F. The Simulated Patient Handbook: A Comprehensive Guide for Facilitators and Simulated Patients. CRC Press; 2018.

- 20. Ryan B, Khadka J, Bunce C, Court H. Effectiveness of the community-based low vision service wales: a long-term outcome study. Br J Ophthalmol. 2013;97(4):487–491. doi: 10.1136/bjophthalmol-2012-302416

- 21. College of Optometrists. Learning outcomes for professional certificate in low vision. College of Optometrists; 2017. Available from: https://www.college-optometrists.org/professional-development/further-qualifications/higher-qualifications. Accessed July 5, 2024.

- 22. College of Optometrists. College higher qualifications – temporary arrangements to take account of COVID-19 impact on delivery V1.2 April 2020. 2020.

- 23. Dalwood N, Bowles KA, Williams C, Morgan P, Pritchard S, Blackstock F. Students as patients: a systematic review of peer simulation in health care professional education. Med Educ. 2020;54(5):387–399. doi: 10.1111/medu.14058

- 24. Visual Impairment North East. The Vine Simulation Package. 2024. Available from: https://vinesimspecs.co.uk/. Accessed August 22, 2024.

- 25. Braun V, Clarke V. Using thematic analysis in psychology. Qual Res Psychol. 2006;3(2):77–101. doi: 10.1191/1478088706qp063oa

- 26. Kiger ME, Varpio L. Thematic analysis of qualitative data: AMEE Guide No. 131. Med Teach. 2020;42(8):846–854. doi: 10.1080/0142159X.2020.1755030

- 27. O’Brien BC, Harris IB, Beckman TJ, Reed DA, Cook DA. Standards for reporting qualitative research: a synthesis of recommendations. Acad Med. 2014;89(9):1245–1251. doi: 10.1097/ACM.0000000000000388

- 28. Jeffries PR. A framework for designing, implementing, and evaluating: simulations used as teaching strategies in nursing. Nurs Educ Perspect. 2005;26(2):96–103.

- 29. Barry Issenberg S, McGaghie WC, Petrusa ER, Lee Gordon D, Scalese RJ. Features and uses of high-fidelity medical simulations that lead to effective learning: a BEME systematic review. Med Teach. 2005;27(1):10–28. doi: 10.1080/01421590500046924

- 30. Shah R, Edgar D, Evans BJ. Measuring clinical practice. Ophthalmic Physiol Opt. 2007;27(2):113–125. doi: 10.1111/j.1475-1313.2006.00481.x

- 31. Anderson HA, Young J, Marrelli D, Black R, Lambreghts K, Twa MD. Training students with patient actors improves communication: a pilot study. Optometry Vision Sci. 2014;91(1):121–128. doi: 10.1097/OPX.0000000000000112

- 32. Van Der Vleuten CPM. The assessment of professional competence: developments, research and practical implications. Adv Health Sci Educ. 1996;1(1):41–67. doi: 10.1007/BF00596229

- 33. Cook DA, Hamstra SJ, Brydges R, et al. Comparative effectiveness of instructional design features in simulation-based education: systematic review and meta-analysis. Med Teach. 2013;35(1):e867–98. doi: 10.3109/0142159X.2012.714886

- 34. Kneebone R, Kidd J, Nestel D, Asvall S, Paraskeva P, Darzi A. An innovative model for teaching and learning clinical procedures. Med Educ. 2002;36(7):628–634. doi: 10.1046/j.1365-2923.2002.01261.x

- 35. Kneebone R, Nestel D, Bello F. Learning in a Simulated Environment. Elsevier; 2017.

- 36. Kane MT. Validating the interpretations and uses of test scores. J Educ Meas. 2013;50(1):1–73. doi: 10.1111/jedm.12000

- 37. Messick S. The interplay of evidence and consequences in the validation of performance assessments. Educ Res. 1994;23(2):13–23. doi: 10.3102/0013189X023002013

- 38. Allen J, Buffardi L, Hays R. The relationship of simulator fidelity to task and performance variables. USARI Ft B Sci. 1991.

- 39. Allen MS, Robson DA, Iliescu D. Face Validity. Eur J Psychol Assess. 2023;39(3):153–156. doi: 10.1027/1015-5759/a000777

- 40. Schuwirth LWT, Van der Vleuten CPM. How to design a useful test: the principles of assessment. Understand Med Educ. 2018:275–289.

- 41. Kaplonyi J, Bowles KA, Nestel D, et al. Understanding the impact of simulated patients on health care learners’ communication skills: a systematic review. Med Educ. 2017;51(12):1209–1219. doi: 10.1111/medu.13387

- 42. Hamstra SJ, Brydges R, Hatala R, Zendejas B, Cook DA. Reconsidering fidelity in simulation-based training. Acad Med. 2014;89(3):387–392. doi: 10.1097/ACM.0000000000000130

- 43. Abraham CH, Sakyi-Badu G, Boadi-Kusi SB, et al. Simulation of visual impairment in persons with normal vision for scientific research. Ophthalmic Physiol Opt. 2024;44(2):442–456. doi: 10.1111/opo.13268

- 44. Forrest K, McKimm J. Healthcare Simulation at a Glance. John Wiley & Sons; 2019:52–53.

- 45. Lane C, Rollnick S. The use of simulated patients and role-play in communication skills training: a review of the literature to August 2005. Patient Educ Couns. 2007;67(1–2):13–20. doi: 10.1016/j.pec.2007.02.011

- 46. Watson MC, Norris P, Granas AG. A systematic review of the use of simulated patients and pharmacy practice research. Int J Pharm Pract. 2006;14(2):83–93. doi: 10.1211/ijpp.14.2.0002

- 47. MacLean S, Kelly M, Geddes F, Della P. Use of simulated patients to develop communication skills in nursing education: an integrative review. Nurse Educ Today. 2017;48:90–98. doi: 10.1016/j.nedt.2016.09.018

- 48. Pritchard SA, Blackstock FC, Nestel D, Keating JL. Simulated patients in physical therapy education: systematic review and meta-analysis. Phys Ther. 2016;96(9):1342–1353. doi: 10.2522/ptj.20150500

- 49. Johnson GM, Halket CA, Ferguson GP, Perry J. Using standardized patients to teach complete denture procedures in second year of dental school. J Dent Educ. 2017;81(3):340–346. doi: 10.1002/j.0022-0337.2017.81.3.tb06280.x

- 50. Javaherian M, Dabbaghipour N, Khabaz Mafinejad M, Ghotbi N, Khakneshin AA, Attarbashi Moghadam B. The role of simulated patient in physiotherapy education: a review article. J Mod Rehabil. 2021. doi: 10.18502/jmr.v14i2.7704

- 51. Doyle AJ, Sullivan C, O’Toole M, et al. Training simulated participants for role portrayal and feedback practices in communication skills training: a BEME scoping review: BEME Guide No. 86. Med Teach. 2024;46(2):162–178. doi: 10.1080/0142159X.2023.2241621

- 52. Kneebone R, Baillie S. Contextualized simulation and procedural skills: a view from medical education. J Vet Med Educ. 2008;35(4):595–598. doi: 10.3138/jvme.35.4.595

- 53. Kneebone RL, Scott W, Darzi A, Horrocks M. Simulation and clinical practice: strengthening the relationship. Med Educ. 2004;38(10):1095–1102. doi: 10.1111/j.1365-2929.2004.01959.x