Abstract

Background

Clinical notes are a vital and detailed source of information about patient hospitalizations. However, the sheer volume and complexity of these notes make evaluation and summarization challenging. Nonetheless, summarizing clinical notes is essential for accurate and efficient clinical decision-making in patient care. Generative language models, particularly large language models such as GPT-4, offer a promising solution by creating coherent, contextually relevant text based on patterns learned from large datasets.

Methods

This study describes the development of a discharge summary system using large language models. By conducting an online survey and interviews, we gather feedback from end users, including physicians and patients, to ensure the system meets their practical needs and fits their experiences. Additionally, we develop a rating system to evaluate prompt effectiveness by comparing model-generated outputs with human assessments, which serve as benchmarks to evaluate the performance of the automated model.

Results

Here we show that the model’s ability to interpret diagnoses borders on human-level accuracy, demonstrating its potential to assist healthcare professionals in routine tasks such as generating discharge summaries.

Conclusions

This advancement underscores the potential of large language models in clinical settings and opens up possibilities for broader applications in healthcare documentation and decision-making support.

Subject terms: Health care, Health services, Medical research

Plain language summary

This study developed a system to support physicians in writing hospital discharge summaries. Clinical notes often include essential patient information, but their length and complexity can make it challenging to summarize them efficiently. To address this, we applied artificial intelligence (AI) techniques to help generate clear and organized summaries based on patient data. We collected input from both physicians and patients through surveys and interviews to ensure the system aligned with their needs. We also evaluated the summaries created by the system by comparing them to those written by healthcare professionals. The results showed that the AI-generated summaries were comparable in accuracy to human-written versions. This suggests that such a system could assist physicians in their documentation tasks and contribute to clearer communication during care transitions. Future applications may include other types of clinical documentation.

Damasio et al. develop a discharge summary system based on large language models using feedback from both physicians and patients. The results show that the system can accurately support the generation of summaries from clinical notes, demonstrating its potential to assist healthcare professionals in routine tasks.

Introduction

A well-constructed discharge summary must present a concise overview of a patient’s history, diagnosis, relevant treatments, medical interventions, and follow-up plans. It is a critical communication tool between hospitals and primary care providers, facilitating continuity of care and ensuring the transfer of essential medical information1–3. By enhancing the continuum of care with well-constructed discharge summaries, we support patient-level clinical decision-making during their care journey. Although the patient does not participate in the preparation of this document, the clarity and accessibility of the information promotes understanding of the diagnosis, treatment, and medical advice, encouraging adherence and engagement in their own care. The study focuses on validating the technical content of hospital discharge summaries, prioritizing the perspective of health care professionals, who are primarily responsible for creating, interpreting, and using this information.

Understanding the structure of a discharge summary requires recognizing the substantial volume of data generated throughout a patient’s hospitalization4. This set of data, documented in Electronic Health Records (EHR), includes structured, semi-structured, and unstructured information, capturing a comprehensive record of the patient’s journey through the healthcare system. Structured data is organized into database tables, facilitating analysis and retrieval; in our scenario, this primarily refers to exams and medications. In contrast, semi-structured data lacks a rigidly defined format, making its analysis more complex. For example, clinical notes contain information such as the reason for admission and the discharge plan. Although clinical notes are also stored in database tables, there is usually a text field where some information is described, resembling a long letter. Unstructured data refers to information that does not have a predefined model or is not easily categorized into structured fields. This type of data includes document entries (like DOCX and PDF), images, audio recordings, and videos.

Given the high clinical demand, physicians frequently face time constraints that hinder their ability to thoroughly review the complete hospitalization history and synthesize the most critical information. Artificial Intelligence (AI) can play a crucial role in overcoming this challenge by creating high-quality discharge summaries, thus improving patient care5. Implementing electronic discharge summary systems can considerably reduce healthcare wait times and enhance the accuracy and standardization of the information provided6. Therefore, optimizing this process can improve patient safety, satisfaction, and the overall quality of care.

Generative language models and Large Language Models (LLMs) offer valuable support in summarizing the data physicians need to review. Although these models do not replace the nuanced judgment and evaluation of medical professionals, they have powerful text-generation capabilities based on patterns and structures learned from training data7. Models such as GPT-3, GPT-4, and DNABERT can generate realistic simulations, personalized feedback, and comprehensive responses to queries. Nevertheless, while acknowledging the potential of LLMs in healthcare, concerns arise due to risks (for example, confabulation, fragility, and factual errors)8. Besides, studies such as ref. 9 have reported that clinicians display low adherence and high resistance to adopting new digital health technologies due to the lack of integration with the clinical workflow, which motivated us to engage multiple actors during the development process.

Several recent studies have demonstrated the potential of LLMs in automating clinical documentation processes, including the generation of discharge summaries10–12. These approaches aim to optimize the quality of documentation and reduce the administrative burden on healthcare professionals. For example, GPT-based chatbots have been shown to improve discharge summaries by automating processes, saving physicians time, and enhancing the quality of documentation10. Furthermore, advanced systems integrating Human-Computer Interaction, Natural Language Processing, and Big Data have been developed to improve the timeliness and relevance of information provided to healthcare professionals and patients11.

However, challenges related to accuracy, safety, and integration into clinical workflows remain significant13. For instance, a cross-sectional study evaluating LLM-generated discharge summaries highlighted concerns about omissions and hallucinations, with nearly 40% of reviewed summaries presenting potential safety risks13. These findings underscore the need for careful evaluation and responsible integration of LLMs in clinical practice.

Recognizing these challenges, we focused our efforts on developing a solution tailored to the Brazilian healthcare context, where the public health system (SUS) plays a central role. SUS serves as the largest healthcare provider in the country, ensuring access for all citizens, with approximately 80% of Brazilians relying exclusively on it14. This unique healthcare landscape influenced the design and implementation of our discharge summary system, which leverages LLMs to address the needs and constraints of clinicians within this setting.

In this study, we present our method for developing a discharge summary system that incorporates LLMs. We adopted a three-step approach, starting with requirements gathering, followed by the development, which draws on an online survey and interviews. The last step involved rating the system we developed. The detailed findings from these investigations are discussed in the following sections.

Our results demonstrated that the approach based on LLMs is capable of generating high-quality summaries, comparable to those produced by healthcare professionals. The evaluation conducted by physicians indicated that most of the generated versions were rated as “Very Good” or “Excellent”, reflecting the tool’s effectiveness in supporting the preparation of hospital discharge summaries. Additionally, the acceptance of AI by both physicians and patients was predominantly positive, reinforcing the system’s potential for adoption within the Brazilian public healthcare context.

Methods

This section describes the multidisciplinary development process of our AI-based discharge summary system integrated within NoHarm.ai. We detail the composition of the team and stakeholder involvement, the system design and summarization methodology, the user interface and discharge summary structure, and the evaluation approach, including user feedback and ethical considerations specific to the Brazilian healthcare context.

Development team and stakeholder consultation

We developed a discharge summary system (code available at Zenodo15) to enhance the quality of care transitions by establishing more effective communication and facilitating collaboration among the healthcare professionals involved. This system is a feature of NoHarm.ai, which leverages AI to support clinical decision-making throughout the patient’s care journey, thereby strengthening the continuum of care. NoHarm.ai is used in more than 150 partner hospitals spread across Brazil to improve patient care.

Our development team was multidisciplinary, encompassing expertise in Computer Science, Pharmacy, Medicine, Electrical Engineering, and Information Systems, along with specializations in Healthcare Quality and Artificial Intelligence. The team also reflected considerable diversity in terms of gender, professional experience, and geographic location, enriching the perspectives and approaches adopted throughout the project.

In addition to the core team, we consulted a diverse group of healthcare professionals and stakeholders, including resident physicians, nurses, psychologists, pharmacists, internal medicine specialists, quality assurance professionals, and innovation and quality staff. This inclusive approach ensured that the system’s design and functionalities addressed the practical needs and perspectives of various roles in healthcare.

System design and summarization methods

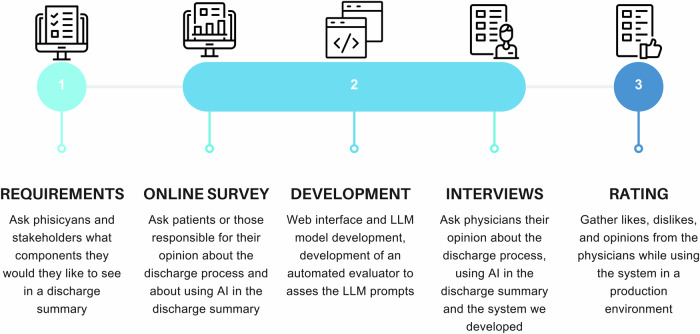

We outline the process we followed in Fig. 1. The project began with gathering system and model requirements from healthcare professionals and stakeholders in partner hospitals. This stage was followed by three key actions: developing a functional system prototype, undertaking an online survey, and conducting interviews. The prototype was designed to integrate with hospital information systems, incorporating both structured data and AI-generated outputs. It features a web interface, the LLM, and a previously introduced automated evaluator16 to assess the quality and effectiveness of the prompts.

Fig. 1. Process to develop and evaluate the discharge summary.

We employed a three-step approach, beginning with (1) requirements gathering, followed by (2) development, which involved an online survey and interviews. The final step focused on (3) evaluating the system we created.

During the development phase, we employed the following method to summarize the information stored in clinical notes:

To improve the performance of the LLM, it was fine-tuned to Brazilian Portuguese using data collected from the web and scientific articles.

We created a token classifier using Named Entity Recognition (NER) models such as Flair to identify important information in clinical notes, such as the reason for admission, test results, drug allergies, conditions, and discharge plans, among others. We generated a discharge summary by applying the LLM to the words and phrases classified in the previous step in each section of the summary.

We verbalized structured data, such as laboratory test results and the list of medications used during the hospital stay, by transforming it into natural language using Llama 2.

The hybrid approach (using NER and LLM together) has scalability advantages, such as low computational cost, since NER is a faster model for classifying tokens and the LLM only needs to process pre-classified words and phrases. The LLM handles only up to 4K tokens, while NER can extract short phrases from larger texts. This allows scaling the data processing pipeline to include more hospitals without increasing project costs.
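The cost argument above can be illustrated with a minimal sketch. It assumes a hypothetical `Span` record for NER output and a crude whitespace-based token count (`approx_tokens`); neither is taken from the NoHarm.ai code, and a real deployment would use the LLM's own tokenizer. Only the 4K limit comes from the text.

```python
from dataclasses import dataclass

TOKEN_BUDGET = 4000  # "up to 4K tokens" per the text; the exact limit is model-specific


@dataclass
class Span:
    """Hypothetical NER output: a labeled phrase pulled from a clinical note."""
    label: str
    text: str


def approx_tokens(text: str) -> int:
    """Crude stand-in for a tokenizer: counts whitespace-separated words."""
    return len(text.split())


def pack_spans(spans: list[Span], budget: int = TOKEN_BUDGET) -> list[Span]:
    """Keep spans in order until adding the next one would exceed the budget."""
    kept: list[Span] = []
    used = 0
    for span in spans:
        cost = approx_tokens(span.text)
        if used + cost > budget:
            break
        kept.append(span)
        used += cost
    return kept


def build_prompt(section: str, spans: list[Span]) -> str:
    """Assemble a prompt for one summary section from pre-classified phrases."""
    lines = [f"- [{s.label}] {s.text}" for s in spans]
    header = f"Write the '{section}' section using only these findings:\n"
    return header + "\n".join(lines)


# Illustrative input; a tiny budget is used so the truncation is visible.
spans = [
    Span("ALLERGY", "allergic to penicillin"),
    Span("ALLERGY", "no latex allergy reported"),
]
prompt = build_prompt("Allergies", pack_spans(spans, budget=5))
```

Because the LLM only sees the short phrases selected by the tagger, the prompt length depends on the number of extracted entities rather than on the length of the full clinical record.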

Discharge summary structure and user interface

We segmented the discharge summary into sections, using an optimized prompt that requires zero or minimal activation to operate properly. Summary elements or sections comprise Reason for admission, Diagnosis, Allergies, Home medications, Clinical summary, Laboratory tests, Imaging studies, Procedures performed, Medications used during hospitalization, Discontinued medications, Discharge conditions, Discharge plan, and Discharge prescription. These sections were defined based on the needs communicated by physicians.

We present the summary sections in a web interface tool (Fig. 2) where physicians can edit, validate, and improve the discharge summary. The physician searches the web system for the patient whose discharge summary they want to write. Physicians can search by appointment number or select a patient from a list filtered by criteria such as the inpatient sector. Once a patient is selected, the interface in Fig. 2 shows the input and the output for the discharge summary at the same time, since the white boxes are editable. NoHarm is already integrated with several hospitals’ electronic medical record systems, so it can access and process all the aforementioned information.

Fig. 2. NoHarm discharge summary—web interface.

Discharge summary generated by our AI-based system. The summary demonstrates how structured and unstructured clinical data are organized to support effective communication among healthcare professionals during patient discharge.

User feedback, evaluation, and ethical considerations

While developing our approach, we collected user feedback to better understand the problem and improve the prototype. We interviewed primary care physicians and collected data from patients who had been hospitalized or from individuals who had patients under their care hospitalized through an online survey. This research has the approval of the ethics committee, under the code CAAE 75541023.5.0000.5336 at Plataforma Brasil (Brazilian Research Ethics Committee), and informed consent was obtained from the study participants. We followed the methods proposed by ref. 17 to perform an online unsupervised survey and the activities proposed by ref. 18 to conduct the online interviews. With these insights, we identified the needs of both patients and physicians regarding the use of discharge summaries. If necessary, we could add more functionalities to our system and retrain our model.

To conclude the evaluation process, we used a rating system so the physicians could evaluate our results straightforwardly while creating discharge summaries with real data for their current patients. The rating process also gave us insights into improving our proposed solution.

Although the methodology used is familiar to technologists and healthcare leaders, the value of our study lies in the insights specific to the Brazilian context. We adapted the process to capture communication gaps between hospitals and primary care within SUS, which 62.2% of our survey respondents reported using. Additionally, the physician interviews and the patient survey revealed critical gaps, such as the lack of discharge summaries, that directly impact post-hospital care. These findings guided practical adjustments to the AI model and system interface, ensuring a solution more aligned with the local reality.

Results

In this section, we present our key findings from each step of the methodology.

Online survey with patients

We conducted a comprehensive online survey with 128 respondents from Brazil, including both patients who had been hospitalized and individuals who had cared for hospitalized patients. The respondents were from several states across the country. Most participants (61.4% of the sample) were from Rio Grande do Sul (RS), the state where most researchers live. Additionally, there were participants from the states of São Paulo, Minas Gerais, Distrito Federal, Bahia, Mato Grosso do Sul, Goiás, and Paraná. Brazil’s vast geography and regional disparities in healthcare access, infrastructure, and resources considerably impact patient experiences and care delivery. Having respondents from diverse regions allows this study to capture a broad range of perspectives, ensuring that the proposed AI solutions address healthcare inequities and are adaptable to different contexts within the country. In the survey, we also received answers from people with different special needs: five participants were visually impaired, two had hearing disabilities, two lived with motor disabilities, and others had different types of disabilities. The participants’ ages ranged from 18 to 73, with the largest age group being 40–50, comprising 29.1% of the sample. The data analysis revealed that most participants were female, accounting for 70.9% of the total. Besides, 48.8% of participants hold postgraduate degrees, and 62.2% make use of SUS (Sistema Único de Saúde, the Brazilian public healthcare system).

In our survey, approximately 32.8% of patients reported not receiving a discharge document, while 13.3% stated they did not recall receiving one (Table 1). When asked if they later felt that any information was not provided by their primary care physician, the respondents that answered “yes” cited the following gaps, in order of importance: procedures performed, test results, medications used during hospitalization, home care instructions (discharge plan), discharge conditions, medications discontinued during hospitalization, and medication allergies. Besides, 97.7% of patients answered they would like to receive a discharge document with all relevant information about their hospitalization. However, 10.9% of respondents stated that there are some pieces of information they would not want to be included in the document, such as past abortions, drug use, psychiatric issues, and sexually transmitted diseases.

Table 1.

Access and relevance of post-discharge information

| Aspect | Results (n = 128 patients) |
|---|---|
| Patients reported not receiving a discharge document | 32.8% |
| Patients who would like to receive a discharge summary | 97.7% |
| Patients who use SUS | 62.2% |
| Top missing information cited by patients | 1. Procedures performed; 2. Test results; 3. Medications used during hospitalization; 4. Home care instructions (discharge plan); 5. Discharge conditions; 6. Discontinued medications; 7. Medication allergies |

Only 16.4% of the respondents did not have specialized knowledge of AI. When asked how they would feel if AI was used to assist physicians in discharge procedures, 73.44% felt comfortable/curious about the idea.

Interview with primary care physicians

We also interviewed 10 primary care physicians who had recently treated patients who were discharged. The majority of participants were male (70%—7 participants). The participants’ ages ranged from 18 to 73, with the largest age group being 29–39, constituting 70% of the total. The majority (70%—7 participants) of the interviewed physicians had considerable professional experience, with over 6 years of clinical practice, and 90% had experience in family and community clinics.

Most respondents reported that they received either printed or verbal discharge summaries from patients. The gaps they identified concern critical information that is often missing, such as medication changes, discharge plans, and test results. We presented the discharge summary interface (see Fig. 2) with an example to the primary care physicians (PCPs), and all of them agreed that our system would meet their needs. When asked how they would feel on learning that AI had been used to create a patient discharge summary that was then reviewed by the doctor, 70% answered that they would feel comfortable and 30% answered that they would feel very comfortable. We also presented an interface with the discharge summary items described in Fig. 2 to the PCPs. When asked whether the product seemed to address their needs when receiving discharge summaries, 60% completely agreed and 40% partially agreed. The PCPs put forward some suggestions regarding our summary, which are detailed in Table 2.

Table 2.

Primary care physicians’ suggestions for improving discharge summaries

| Category | PCPs’ suggestions |
|---|---|
| Patient identification | Include SUS ID, address, email, age, and length of hospital stay. |
| Medications | Separate previously used or occasional medications. |
| Laboratory results | Improve test result presentation, highlighting the latest altered results in a table or a comparative chart (hospital admission vs. discharge). |
| Imaging exams | Display only the most recent textual exam result and attach the full report. |
| Discharge plan | Ensure that home care instructions are detailed and clear. |

Development

The discharge summary generation process involves several steps: (1) extracting relevant entities from clinical notes using NER, (2) structuring these entities into predefined categories, (3) applying an LLM to generate a structured summary, and (4) allowing physicians to review and edit the final output before submission.
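The four steps above can be sketched as a small pipeline. This is an illustration under stated assumptions, not the released implementation: the label names in `LABEL_TO_SECTION` are hypothetical, `extract_entities` is a rule-based stand-in for the trained NER model, and the `llm` argument is any callable that maps a prompt to text.

```python
from collections import defaultdict
from typing import Callable

# Hypothetical mapping from entity labels to summary sections.
LABEL_TO_SECTION = {
    "ADMISSION_REASON": "Reason for admission",
    "ALLERGY": "Allergies",
    "DISCHARGE_PLAN": "Discharge plan",
}


def extract_entities(note: str) -> list[tuple[str, str]]:
    """Step 1 stand-in: treats 'LABEL: text' lines as already-tagged entities.
    A trained NER model would replace this function."""
    entities = []
    for line in note.splitlines():
        label, sep, text = line.partition(":")
        if sep and label.strip() in LABEL_TO_SECTION:
            entities.append((label.strip(), text.strip()))
    return entities


def group_by_section(entities: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Step 2: bucket entity texts under their summary section."""
    sections: dict[str, list[str]] = defaultdict(list)
    for label, text in entities:
        sections[LABEL_TO_SECTION[label]].append(text)
    return dict(sections)


def generate_summary(
    sections: dict[str, list[str]], llm: Callable[[str], str]
) -> dict[str, str]:
    """Step 3: call the LLM once per section on its pre-classified phrases."""
    return {name: llm(f"{name}: " + "; ".join(items)) for name, items in sections.items()}


def apply_review(draft: dict[str, str], edits: dict[str, str]) -> dict[str, str]:
    """Step 4: physician edits override the generated text before submission."""
    return {**draft, **edits}


note = "ADMISSION_REASON: chest pain\nALLERGY: penicillin\nvitals stable"
echo_llm = lambda prompt: prompt.upper()  # placeholder for a real model call
draft = generate_summary(group_by_section(extract_entities(note)), echo_llm)
final = apply_review(draft, {"Allergies": "Penicillin (rash)"})
```

Keeping the review step as a plain override makes the physician's edit the last word on every section, which mirrors the oversight requirement described in the text.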

A detailed evaluation of the LLM system, including model selection, fine-tuning process, dataset composition, and performance metrics, is provided in our previous study16. In that work, we systematically assessed the effectiveness of LLMs in automating diagnosis summarization for discharge summaries (see Fig. 2), using quantitative metrics such as F1-score and human expert validation. For a comprehensive discussion on the methodology and evaluation criteria, we refer the reader to ref. 16.

We compared the performance of six LLMs with human assessments using the F1-Score metric. Our previous study reveals areas for improvement in the medical knowledge and diagnostic capabilities of LLMs. The use of LLMs in healthcare, specifically for generating discharge summaries, is highlighted as a potentially transformative tool. The importance of evaluating LLMs for natural language processing performance in healthcare settings is emphasized to ensure their safe, effective use. The study underscores the significance of crafting precise prompts for LLM evaluation and the role of NER in processing clinical text. The alignment of LLM rankings generated by GPT-4 with those provided by specialists is seen as a positive development, enhancing the scalability of the model evaluation process.
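For readers unfamiliar with the metric, the following minimal sketch shows how an F1-score can be computed when a model's output is compared against a human reference. The set-based matching of diagnosis strings is an assumption made for illustration; the exact matching unit used in ref. 16 is not described in this section, and the example diagnoses are invented.

```python
def precision_recall_f1(
    predicted: set[str], reference: set[str]
) -> tuple[float, float, float]:
    """Compare predicted items against a human reference set."""
    true_positives = len(predicted & reference)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(reference) if reference else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Invented example: two of three predicted diagnoses match the reference.
model_output = {"pneumonia", "hypertension", "anemia"}
human_reference = {"pneumonia", "hypertension", "type 2 diabetes"}
p, r, f1 = precision_recall_f1(model_output, human_reference)
```

In this example precision and recall are both 2/3, so the F1-score is also 2/3.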

A key distinction of our approach is that the system does not merely return extracted data as output. Instead, the LLM reformulates and condenses scattered clinical information into an organized discharge summary. For example, while the input consists of fragmented notes from multiple sections of the EHR, the output presents a cohesive narrative suitable for discharge documentation. Additionally, the system ensures physician oversight, allowing clinicians to modify and finalize the summaries before they are stored in the patient’s record.

Furthermore, we incorporated free-text generation capabilities, allowing the LLM to go beyond structured data and generate contextualized narrative summaries based on extracted entities. This ensures that the discharge summaries are not only complete but also clinically meaningful, providing additional insights that might not be immediately apparent in raw extracted data.

Additionally, while the NER system extracts key medical entities, these do not serve as direct inputs to the summary without processing. Instead, the LLM integrates, refines, and restructures the information, ensuring that the final output adds value beyond simple entity extraction by improving coherence and clinical relevance.

This approach ensures that the system enhances readability, reduces physician workload, and maintains accuracy while supporting clinical decision-making in post-discharge care.

Rating

To allow the users (physicians) to evaluate the final result of the discharge summary, we developed a like-and-dislike evaluation for each part of the summary and an evaluation of the overall content of the summary based on a five-point Likert scale. Also, physicians could provide textual feedback to further elaborate on their assessments. On the discharge summary platform, we analyzed the preliminary results provided by physicians who used the tool, based on patient attendance. We received evaluations from four different hospitals, each assessing the results of their respective discharge summary sessions. The physicians used the discharge summary for 28 patients. The majority of the feedback was positive, with 0% of respondents rating the summary as Poor, 5.13% as Fair, 20.51% as Good, 61.54% as Very Good, and 12.82% as Excellent. For 12 patients, each specific item of the summary was evaluated. There were suggestions for improvements, and 51.9% of the respondents considered that the information was insufficient, 18.5% thought it was excessive, and 29.6% regarded it as incorrect. The limited use of the discharge summary tool likely reflects early-phase integration challenges, insufficient training, and some inaccuracies in the summaries. Additionally, it is common for physicians to provide feedback primarily when issues arise, as they are less likely to comment when the tool meets expectations. These factors highlight the need for iterative improvements to enhance trust, usability, and adoption in clinical workflows, thereby ensuring that the AI-generated discharge summaries effectively support clinical decision-making and strengthen the continuum of care.
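The aggregation of the five-point ratings can be sketched as follows. The raw counts are not reported in the text; the counts used below are hypothetical and were chosen only because they reproduce the reported percentages, so they should not be read as the study's actual data.

```python
from collections import Counter

SCALE = ["Poor", "Fair", "Good", "Very Good", "Excellent"]


def likert_distribution(ratings: list[str]) -> dict[str, float]:
    """Return the percentage of ratings at each scale level, rounded to 2 decimals."""
    if not ratings:
        raise ValueError("at least one rating is required")
    counts = Counter(ratings)
    total = len(ratings)
    return {level: round(100 * counts[level] / total, 2) for level in SCALE}


# Hypothetical counts (not from the source) consistent with the reported shares.
ratings = ["Fair"] * 2 + ["Good"] * 8 + ["Very Good"] * 24 + ["Excellent"] * 5
dist = likert_distribution(ratings)
```

Reporting the counts alongside the percentages would make it easier to relate the overall ratings to the number of patients and rating sessions.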

Summary of results

The survey and physician interviews highlight key insights into healthcare delivery and the role of AI in medical documentation in Brazil. With 128 respondents from several states, the study shows that 62.2% rely on SUS. A considerable gap exists, as 32.8% of participants reported that they did not receive a discharge document, while 97.7% would like to have one, emphasizing the need for improved post-discharge communication. However, some respondents prefer that sensitive information be excluded from these documents.

There is a positive attitude toward using AI, with 73.44% of respondents comfortable or curious about AI-assisted discharge summaries. Physicians also generally welcomed AI—70% are comfortable and 30% are very comfortable with its use. Feedback from physicians suggested improvements, such as including more details on the patient and better presentation of laboratory test results. The evaluation of AI models for generating discharge summaries shows promise but also highlights the need for ongoing development to improve accuracy and utility. Overall, the data supports the potential of AI in healthcare, especially for enhancing discharge summaries, provided that privacy and customization are carefully addressed.

The data presents a general overview of the physicians’ feedback on the discharge summaries of 28 patients. Overall, the feedback was positive, with 61.54% of respondents rating the summary as Very Good, 20.51% as Good, and 12.82% as Excellent, while 5.13% rated it as Fair and none rated it as Poor. This suggests that the summary is largely effective but could benefit from improvements. In a more detailed assessment of specific items of 12 patients, 51.9% of the information was deemed insufficient, indicating a need for more meticulous detail. We recognize that having incomplete information is normal, as our system is designed to support summarization, not replace human evaluation, which is essential to complement the data. Additionally, 18.5% of the information was considered excessive, suggesting that some parts may be redundant or unnecessary, while 29.6% was found to be incorrect, raising concerns about data accuracy. These findings highlight the importance of ensuring the completeness, relevance, and accuracy of the discharge summary to better support healthcare professionals in patient care.

Discussion

The results of this study highlight the considerable potential of integrating LLMs into healthcare, particularly in enhancing the quality and efficiency of discharge summaries. By addressing critical gaps in patient documentation, LLMs offer opportunities to improve continuity of care and strengthen communication among healthcare professionals. However, these advancements also introduce challenges that require careful and responsible implementation. A key finding is the need to build trust among healthcare providers and patients regarding AI-driven systems. While most respondents expressed comfort or curiosity about using AI, concerns about data privacy, algorithmic transparency, and inclusivity must be addressed. Future research should focus on longitudinal studies to assess the long-term impact of AI-generated discharge summaries on patient outcomes and clinical workflows.

Our findings are consistent with previous research demonstrating the potential of LLMs for generating clinical summaries and other medical documents10,19. For example, Jung et al.19 investigated the use of Mistral-7B to automate discharge notes for cardiac patients, achieving positive evaluations regarding clinical relevance and support for informed decision-making. However, unlike some studies that highlighted significant risks of inaccuracy and omission13, our hybrid approach—combining NER and LLM—yielded predominantly positive results, as assessed by participating physicians.

Moreover, studies such as that by Blease et al.20 indicate a growing adoption of AI tools to support clinical documentation, with many physicians already using generative AI for documentation and differential diagnosis. This trend reinforces the relevance of our approach within the Brazilian healthcare context, particularly in addressing documentation gaps and enhancing communication during care transitions.

Throughout the Discharge Summary project, our understanding of responsible AI evolved as we encountered challenges and made key ethical decisions. Initially focused on technical accuracy, we expanded our approach to address issues such as algorithmic bias, data privacy, and equity. This led to the adoption of responsible AI principles at every stage, from training NER models—following a data minimization strategy to reduce the amount of patient information sent to the LLM—to developing rigorous evaluation methods for LLM performance. To promote inclusivity, we also incorporated patients with neglected or rare diseases into the training data.

The integration of AI-powered discharge summaries into NoHarm was seamless, as it extended an existing system already widely used by clinical pharmacy professionals in hospitals. This eliminated major technical adaptation challenges; however, adoption among physicians—who were not previously NoHarm users—required additional efforts. To facilitate acceptance, we implemented a rating system that allowed physicians to assess the coherence and accuracy of AI-generated summaries. This process provided valuable insights, with most evaluations rating the summaries as “Very Good” or “Excellent.” Nonetheless, further efforts are needed to increase adoption, including raising awareness of the tool’s benefits, providing training for new users, and ensuring deeper integration into clinical workflows. Expanding physician engagement is essential to maximize the solution’s impact on hospital documentation quality and continuity of care.

Moreover, the need for customization and adaptability in discharge summaries emerged as a crucial area for improvement. Physician feedback emphasized the importance of refining the presentation of key information, such as laboratory test results and medication changes. While adhering to the regulated standards for discharge summaries validated by organizations such as the Joint Commission, the system becomes increasingly tailored to specific scenarios, gaining clarity and practicality for various specialties, both surgical and clinical, as it is continually trained on new data. Future development should focus on modular summaries tailored to specific clinical scenarios, supported by intuitive interfaces that allow healthcare providers to efficiently review and refine content.

While our study was conducted in an early-stage validation phase, preliminary feedback from physicians indicates that AI-generated discharge summaries have the potential to streamline clinical documentation. In interviews, physicians highlighted that manually summarizing hospitalization records is often time-consuming, particularly when retrieving relevant details from scattered clinical notes. Our system provides structured summaries, which could facilitate faster discharge processes and improve information continuity. However, further real-world implementation studies are required to evaluate its effectiveness in routine clinical practice, including its impact on workflow efficiency, patient outcomes, and physician satisfaction. Additionally, since evaluations were primarily qualitative and based on physician feedback, future studies should incorporate objective performance metrics and independent double-checking to ensure broader generalizability and reliability of AI-generated summaries in clinical practice.
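
One way the independent double-checking suggested above could be quantified is with an inter-rater agreement statistic such as Cohen's kappa, computed over the labels two reviewers assign to the same summaries. This is a sketch of a possible future analysis, not a method used in this study; the `cohen_kappa` helper and the example labels are hypothetical.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty item set")
    n = len(rater_a)
    # Observed agreement: fraction of items given identical labels.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: computed from each rater's marginal label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    labels = set(freq_a) | set(freq_b)
    expected = sum(freq_a[c] * freq_b[c] for c in labels) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1 - expected)


# Made-up labels from two hypothetical reviewers of four summaries.
reviewer_1 = ["ok", "ok", "bad", "bad"]
reviewer_2 = ["ok", "bad", "bad", "bad"]
kappa = cohen_kappa(reviewer_1, reviewer_2)
```

Unlike raw percentage agreement, kappa discounts the agreement expected by chance, which matters when most summaries receive the same favorable rating.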

Finally, advancing our understanding of the ethical implications of AI in healthcare remains critical. While the system shows considerable promise, occasional inaccuracies and excessive information highlight the need for rigorous validation protocols. Expanding the dataset to include a wider range of clinical cases and implementing continuous evaluation mechanisms will help mitigate these issues. This study lays the foundation for leveraging AI in healthcare documentation, offering actionable insights for future research. Prioritizing responsible AI practices, user-centric design, and interdisciplinary collaboration will be key to unlocking the full potential of LLMs in improving healthcare delivery.

Conclusions

This study highlights the potential of LLMs in enhancing the quality and efficiency of discharge summaries in Brazilian healthcare. Discharge summaries are critical for ensuring continuity of care and effective communication between hospitals and primary care providers. Traditional methods often need revision to cope with the increasing clinical demands faced by physicians, which motivates the exploration of AI-driven solutions.

Our methodology involved collecting feedback from both physicians and patients through surveys and interviews, highlighting the essential data elements required in discharge summaries and the general perception of AI in healthcare. The insights we gained emphasized the importance of integrating AI tools to improve discharge planning and the quality of patient care.

In conclusion, the implementation of AI and LLMs in discharge summaries offers a promising solution to the challenges faced by the Brazilian healthcare system. This approach can streamline the documentation process and enhance the accuracy and comprehensiveness of patient records, supporting effective clinical decision-making during care transitions and ultimately contributing to better healthcare outcomes and efficient resource allocation. Future research should focus on refining these models and expanding their application to other areas of medical documentation to fully realize the benefits of AI in healthcare.

This study was conducted in a preliminary validation phase, which imposes certain limitations. First, the evaluations of the generated summaries were predominantly qualitative, based on healthcare professionals’ perceptions, without independent double-checking or blind validation. This may introduce bias in assessing the quality of the summaries. Additionally, the amount of data used for training and testing the model remains limited, and large-scale adoption of the system has not yet been evaluated. Future studies should include a more robust quantitative analysis with objective performance metrics and comparisons between different AI models, as well as expand the training data to reflect a broader range of clinical scenarios.

Acknowledgements

We acknowledge the support provided by the Bill & Melinda Gates Foundation and thank Pedro Braga, from ITS Rio, for his valuable review, which substantially contributed to our work. We also appreciate the feedback from our respondents and interviewees, which helped us improve our tool.

Author contributions

J.D.O.: conceptualization, methodology, writing, data collection, survey and interview design; H.D.P.S.: model development & evaluation, funding acquisition, writing–review & editing, supervision; A.H.U.: funding acquisition, investigation, validation, writing–review & editing, supervision; J.C.C.: methodology, visualization, data collection, writing; M.A.: software interface development, text review; J.S.: model development & evaluation; M.M.C.: investigation, writing; D.F.: investigation, writing, and model evaluation.

Peer review

Peer review information

Communications Medicine thanks Ethan Goh, Samuel Thio, and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Data availability

The data related to the surveys, interviews, and figures that support this study’s findings are not openly available due to reasons of sensitivity, but are available from the corresponding author upon reasonable request. The data are located in controlled access data storage at NoHarm.ai cloud.

Code availability

The source code supporting the findings of this study is publicly available at Zenodo15.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

These authors contributed equally: Juliana Damasio Oliveira, Henrique D. P. Santos, Ana Helena D. P. S. Ulbrich, Julia Colleoni Couto, Marcelo Arocha, Joaquim Santos.

Supplementary information

The online version contains supplementary material available at 10.1038/s43856-025-01091-3.

References

- 1. Chua, C. E. & Teo, D. B. Writing a high-quality discharge summary through structured training and assessment. Med. Educ. 57, 773–774 (2023).

- 2. Sebastianus, F. & Suharto, E. Information system design completeness of filling out discharge summary of inpatients. J. Tek. Inform. 3, 877–887 (2022).

- 3. Dielissen, P. W. & Beuken-van Everdingen, M. Quality of discharge summary for patients with limited life expectancy. Ned. Tijdschr. Voor Geneeskd. 166, 6575–6575 (2022).

- 4. Chatterton, B. et al. Primary care physicians’ perspectives on high-quality discharge summaries. J. Gen. Intern. Med. 39, 1438–1443 (2024).

- 5. Goodman, H. Discharging patients from acute care hospitals. Nurs. Stand. 30, 49–60 (2016).

- 6. O’Leary, K. J. et al. Creating a better discharge summary: improvement in quality and timeliness using an electronic discharge summary. J. Hosp. Med. 4, 219–225 (2009).

- 7. Ushio, A., Alva-Manchego, F. & Camacho-Collados, J. Generative language models for paragraph-level question generation. In Conference on Empirical Methods in Natural Language Processing 670–688 (Association for Computational Linguistics, 2022).

- 8. Lee, P., Bubeck, S. & Petro, J. Benefits, limits, and risks of GPT-4 as an AI chatbot for medicine. N. Engl. J. Med. 388, 1233–1239 (2023).

- 9. Ebnali, M. et al. A coding framework for usability evaluation of digital health technologies. In International Conference on Human-Computer Interaction. 185–196 (Springer, Cham, 2022).

- 10. Ersoy, A., Vizcarra, G., Mayeesha, T. & Muller, B. In what languages are generative language models the most formal? analyzing formality distribution across languages. In Findings of the Association for Computational Linguistics 2650–2666 (Association for Computational Linguistics, 2023).

- 11. Patel, S. B. & Lam, K. ChatGPT: the future of discharge summaries? Lancet Digital Health 5, 107–108 (2023).

- 12. Lal, H. & Lal, P. NLP chatbot for discharge summaries. In International Conference on Intelligent Communication and Computational Techniques. 250–257 (IEEE, 2019).

- 13. Zaretsky, J. et al. Generative artificial intelligence to transform inpatient discharge summaries to patient-friendly language and format. JAMA Netw. Open 7, 240357–240357 (2024).

- 14. Pinto, L. R. et al. Analisys of hospital bed capacity via queuing theory and simulation. In Winter Simulation Conference. 1281–1292 (IEEE, 2014).

- 15. Juliana, D. et al. Development and evaluation of a clinical note summarization system using large language models. Zenodo 10.5281/zenodo.15342747 (2025).

- 16. Santos, J. et al. Evaluating LLMs for diagnosis summarization. In International Conference of the IEEE Engineering in Medicine and Biology Society. 1–7 (Pubmed, 2024).

- 17. Kitchenham, B. & Pfleeger, S. Principles of survey research part 1: turning lemons into lemonade. ACM SIGSOFT Softw. Eng. Notes 26, 16–18 (2001).

- 18. Hove, S. E. & Anda, B. Experiences from conducting semi-structured interviews in empirical software engineering research. In Software Metrics Symposium. 10 (IEEE, 2005).

- 19. Jung, H. et al. Enhancing clinical efficiency through LLM: discharge note generation for cardiac patients. Preprint at https://arxiv.org/abs/2404.05144 (2024).

- 20. Blease, C. R., Locher, C., Gaab, J., Hägglund, M. & Mandl, K. D. Generative artificial intelligence in primary care: an online survey of UK general practitioners. BMJ Health Care Inform. 31, 101102 (2024).
