Abstract

Threat-relevance theory suggests that gaze direction determines the self-relevance of facial threats. Indeed, angry eye-contact is a more relevant threat compared to its counterpart with averted gaze. Similarly, fearful eye-contact is not a threat to the observer, but averted fearful gaze can signal a relevant threat nearby. Following evidence that amygdala-reactivity to self-relevant threat depends on coarse visual processing, we investigate whether self-relevant threat attracts attention due to processing of low spatial frequency information via peripheral vision. Furthermore, we aim to provide behavioral relevance to this mechanism by investigating whether the psychopathic trait fearless-dominance promotes attention to self-relevant anger. Across three studies (N = 12, 31 and 36), we find that, during visual-search for emotional faces, fewer gaze fixations are needed to find facial fear and anger when they are self-relevant. Self-relevance, however, does not promote peripheral identification of fear and anger in a signal-detection experiment. Together, this confirms that self-relevant facial threats indeed attract peripheral-attention. Moreover, image-analysis suggests that this is due to their low spatial-frequency content. Lastly, peripheral-attention to angry eye-contact is indeed more pronounced in relation to the psychopathic trait fearless-dominance, while diminished in relation to the psychopathic trait impulsive-antisociality, which provides preliminary behavioral relevance to this mechanism.

Supplementary Information

The online version contains supplementary material available at 10.1038/s41598-025-15695-1.

Keywords: Visual search, Image statistics, Psychopathy, Fearless dominance, Impulsive antisociality, Response modulation theory

Subject terms: Psychology, Human behaviour, Emotion, Social behaviour, Visual system

Introduction

Threat-relevance theory proposes that gaze direction of angry and fearful faces determines how relevant these facial threats are to the observer1. An angry person making eye-contact is a threat to the observer, whereas a fearful person looking away signals the location of a nearby threat. As such, these expression/gaze combinations can be considered self-relevant threat signals as opposed to their counterparts, with averted and direct gaze respectively. Self-relevant threat is indeed recognized faster and rated as more intense, and eye-contact promotes the recognition of anger in ambiguous facial expressions whereas averted gaze promotes the recognition of fear2,3.

Interestingly, self-relevance makes threat-reactivity in the right amygdala1,4–6, a limbic brain-region crucial for allocating attention7 and reactivity to threat8, occur more rapidly. This happens via magnocellular pathways, which support fast, coarse, and achromatic processing of small low-spatial frequency luminance contrasts9. Retinal cells supporting the magnocellular pathway are relatively more present in the periphery compared to the fovea, and processing of peripheral compared to foveal information in the primary visual cortex is indeed more tuned to temporal contrast differences10. Thus, self-relevant facial threat seems to tap into visual and emotional mechanisms that also support rapid peripheral-attention11, but the behavioral relevance of this interaction has not yet been studied directly.

Therefore, we start our studies with a proof-of-concept visual search study (Study 1), investigating whether self-relevance promotes detection of facial threat, in which we expect that angry eye-contact and fearful faces gazing away are found faster compared to their counterparts with different gaze directions. In this visual search task, trial instructions are always the same (‘find the emotional face’), as are the distractors (emotionally neutral faces), which together prevents search goals/templates and distractor properties from biasing search performance12–15. Furthermore, for all our stimuli, we take control over low-level features by constructing grey-scaled, luminance-corrected average faces using multiple validated stimuli. This avoids low-level artifacts in the individual images, while retaining those low-level features, such as spatial frequency contrast differences, that are relevant to the emotional content. Consequently, the only manipulated variable in this experiment is the target image, of which the low-level differences are directly relevant to the expressed emotion and gaze. In Studies 2 and 3, we subsequently use eye-tracking, as well as signal detection theory, to focus on foveal and peripheral (or non-foveal) vision to evaluate whether self-relevant threat indeed has the ability to attract attention via peripheral vision.

The final aim of our studies is to provide behavioral relevance to this mechanism of self-relevant threat detection. For this, we investigate whether the psychopathic trait fearless-dominance modulates peripheral-attention to self-relevant anger. Psychopathy is a personality disorder underlying antisocial behavior, often at great cost for individuals and society16. Although psychopathy is rare, psychopathic traits are more common in the general population, with fearless-dominance and impulsive-antisociality described as their main factors17,18. In mild forms, these traits can aid the expression of high-risk/high-gain behaviors that can help the individual reach great heights in society19,20. However, when these traits are more severe, the balance can tip towards criminal behavior.

With regard to the psychopathic trait fearless-dominance, it is interesting to note that reduced threat reactivity21, as well as general insensitivity to emotions expressed by others22, is argued to underlie antisocial behavior. Signals of social correction in particular, such as fearful and angry eye-contact, are recognized poorly, which is argued to subsequently underlie a lack of downregulation of aggressive tendencies in response to these signals23–25. Indeed, particularly the psychopathic trait fearless-dominance is related to reduced scanning of faces, as measured with eye-tracking, during emotion recognition26.

On the other hand, there is also evidence that psychopaths and criminal offenders have an attentional bias towards hostile stimuli and angry faces in particular27,28. Such an attentional bias for angry faces is in line with the observation that socially dominant individuals are preconsciously motivated to seek confrontation with angry others through eye-contact30–33. Interestingly, within a group of clinically assessed psychopaths, it was recently shown that particularly the interpersonal facet of the four-facet model of psychopathy34, which is conceptually related to the trait fearless-dominance, also predicts preconscious motivation to confront angry eye-contact35. Fearless-dominance might thus impair the ability to evaluate signals of social correction on the one hand, but at the same time enhance the ability to detect angry eye-contact.

Intriguingly, threat reactivity impairments in psychopathy can normalize when the goals given to the participant during the experiment also promote attention to the threat29. This pattern of relatively normal threat reactivity in psychopathy, but only when the task goal aligns with the threat content, underlies the response modulation theory of psychopathy36. Response modulation theory suggests that psychopathy results from a lack of the automaticity in directing attention to (social) threat that is normally observed in the general population. According to this theory, only when the threat aligns with the individual’s current goals will it also attract the psychopath’s attention. In line with this theory, one could thus argue that, when the task goal aligns with those properties of faces that communicate threat, the implicit motivation to confront that threat found in relation to fearless-dominance34 might prevail, resulting in an attentional bias for angry eye-contact instead of reduced threat reactivity. Within the scope of the present research, in which we apply a visual search task where the goal of the participants is explicitly focused on finding facial threats, we would thus expect that fearless-dominance levels are positively associated with the detection of self-relevant anger, which we assess in Study 3.

Study 1

Methods

Participants and procedure

Healthy volunteers (6 female, 6 male; mean age = 20.9, SD = 1.32), with (corrected to) normal visual acuity, were recruited among Utrecht University students using flyers and the online Social and Behavioural Sciences (FSS) Research Participation System. Participation was rewarded with course-credit (one participant-hour of which the students must collect ten) or payment (7 euros). For this proof-of-concept study, we aimed to identify a behaviorally meaningful, and thus large, effect-size, and a sample-size of N = 12 can be considered large enough to identify one-tailed large effect-sizes (d = 0.8) with a power of 0.80 in pair-wise comparisons (computed using G*Power 3.137).

All studies were performed in accordance with the declaration of Helsinki. All participants provided written informed consent before the experiments started, and all studies reported in this paper were approved by the ethical committee of the faculty of social and behavioral sciences at Utrecht University.

Participants were invited to the lab, briefed about the experiment, and asked to sign the informed consent form. The eye-tracker was calibrated using a 9-point calibration procedure immediately followed by instructions, practice trials and the visual search task.

Apparatus

The visual search task was presented on a Tobii T120 (120 Hz sampling rate) eye-tracker with 0.5 degrees accuracy (Tobii Technology, Danderyd, Sweden). Participants were comfortably seated at ~ 60 cm distance from the integrated monitor and responded to the experiment via a combination of gaze fixation and button presses (spacebar of the computer keyboard). All experiments were built in E-Prime 2.0 (Psychology Software Tools, Pittsburgh, PA) and stable synchronization between the experiment-PC and eye-tracker was secured using the E-Prime Extensions for Tobii package (EET v2.0.2.41).

Stimuli

As self-relevant facial threat images, we used angry expressions with direct gaze, and fearful expressions with an averted gaze. We also used angry expressions with averted gaze, and fearful expressions with direct gaze, to make direct self-relevance related comparisons possible. Fearful, angry, and emotionally neutral faces, with direct and averted (left/right) gaze of all male and female Caucasian actors were selected from the Radboud Faces Database38. The images were first grey-scaled, then the head area of the image was cropped, and this crop was masked with a 180 × 120 oval with a cosine ramp edge, ensuring that the images blended smoothly into the grey (RGB: 128,128,128) background. Next, for each option (female/male, anger/fear/neutral, left/right/direct gaze), 8 identities were generated by averaging 14 random actors resulting in 8*2*3*3 = 144 unique face images. Each face was based on a unique combination of actors. The luminance of the images was controlled for such that the median luminance of all images was identical, and that there were no significant differences in luminance between image types (e.g., male, anger, left gaze vs. female, fear, direct gaze).

Visual search task

We used an interactive gaze-contingency paradigm to record visual search performance. Participants were instructed that each stimulus display would contain one emotional face that was either angry or fearful, that all other images would be emotionally neutral, and that it was the participant’s task to ‘Find the emotional face, look at it and press the spacebar as fast and accurate as possible’. Gaze direction was not mentioned at any point in the experiment.

Each trial commenced with a fixation-cross in the center of the screen for 1500 ms. After a gaze-contingent check that the participant was correctly fixating the cross, the stimulus display was presented. If no fixation could be detected, a track-status screen appeared showing a representation of the eyes of the participant in relation to the quality of eye-tracking, and the participant was instructed to adjust position and restart the trial with a button press. Gaze-contingent feedback was provided immediately after the participant pressed the button by presenting a square around the face that was gazed at when the response button was pressed. This square was green when the face was the target, or red when it was a distractor. If no response was given within 5 s after stimulus display presentation, the response was scored as incorrect. See Fig. 1A for an example.

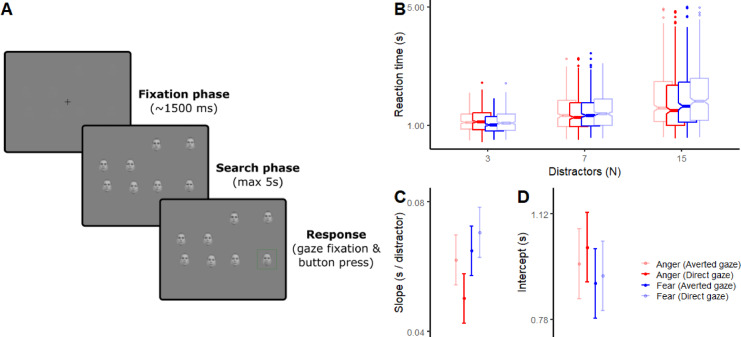

Fig. 1.

(A) Example of a trial in the visual search task with 7 distractors. (B) Boxplots of raw reaction time data from Study 1. (C) Search-slopes show that self-relevant threats are found more efficiently whereas (D) search-intercepts indicate that fearful targets are more easily identified. Values represent estimated marginal means (EMMs) with 95% confidence intervals (CI).

Stimulus displays consisted of either 4, 8 or 16 faces (grid-sizes). All faces were emotionally neutral (distractors), except for one angry or fearful face (target). To ensure an equal distribution of stimuli in relation to the initial fixation-cross, the stimulus display (a mid-screen rectangle of 1138 × 882 pixels) was divided into quadrants (Qs), which again were divided into quadrants (QQs) resulting in 16 possible image positions. First, the target was assigned a QQ based on our stimulus balancing algorithm (see below). Next, the distractors were equally divided over the other Qs and randomly assigned a QQ within each Q. Each face was positioned at the center of its assigned QQ with a random jitter (x ± 41, y ± 9 pixels).
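The positioning scheme described above can be illustrated with a short Python sketch. This is not the original E-Prime implementation. It assumes that every quadrant holds the same number of faces (grid-size divided by four, with the target taking one slot in its own quadrant), that the jitter is drawn uniformly within the stated range, and that coordinates are measured from the top-left corner of the display rectangle. The function names are hypothetical.

```python
import random

# Values taken from the text: display rectangle and jitter ranges (pixels).
DISPLAY_W, DISPLAY_H = 1138, 882
JITTER_X, JITTER_Y = 41, 9


def qq_centers(width=DISPLAY_W, height=DISPLAY_H):
    """Return {(q, qq): (x, y)} with the centers of the 16 sub-quadrants.

    q indexes the four quadrants and qq the four sub-quadrants inside a
    quadrant, both in row-major order (an illustrative convention).
    """
    centers = {}
    cell_w, cell_h = width / 4, height / 4
    for row in range(4):
        for col in range(4):
            q = (row // 2) * 2 + (col // 2)
            qq = (row % 2) * 2 + (col % 2)
            centers[(q, qq)] = ((col + 0.5) * cell_w, (row + 0.5) * cell_h)
    return centers


def place_faces(n_faces, target_q, target_qq, rng=None):
    """Place one target and n_faces - 1 distractors on jittered QQ centers.

    Assumption: each quadrant receives n_faces / 4 faces in total.
    """
    rng = rng or random.Random()
    centers = qq_centers()
    per_quadrant = n_faces // 4
    faces = []
    for q in range(4):
        free = [0, 1, 2, 3]
        slots = []
        if q == target_q:
            # The target position is fixed by the balancing scheme.
            slots.append(target_qq)
            free.remove(target_qq)
        # Remaining slots in this quadrant are filled with distractors.
        slots += rng.sample(free, per_quadrant - len(slots))
        for qq in slots:
            cx, cy = centers[(q, qq)]
            faces.append({
                "quadrant": q,
                "sub_quadrant": qq,
                "is_target": q == target_q and qq == target_qq,
                # Assumption: uniform jitter within the stated range.
                "x": cx + rng.uniform(-JITTER_X, JITTER_X),
                "y": cy + rng.uniform(-JITTER_Y, JITTER_Y),
            })
    return faces
```

Calling `place_faces(8, target_q=1, target_qq=0)` would return eight face records, two per quadrant, exactly one of which is flagged as the target.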

The task was presented in 12 blocks (32 trials each, 384 trials in total), with always the same grid-size within a block (4 blocks for each grid-size condition). Block order was random, but with never more than two blocks of the same grid-size in succession. After each block, participants could immediately proceed with the next block or take a 30-second break.
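A constrained random block order of this kind can be generated in several ways. The Python sketch below uses simple rejection sampling, which is an assumption for illustration only, since the text does not state how the constraint was enforced. The function names are hypothetical.

```python
import random


def longest_run(seq):
    """Length of the longest run of identical consecutive items."""
    best = run = 0
    previous = object()  # sentinel that equals no grid-size
    for item in seq:
        run = run + 1 if item == previous else 1
        best = max(best, run)
        previous = item
    return best


def make_block_order(grid_sizes=(4, 8, 16), blocks_per_size=4,
                     max_run=2, rng=None):
    """Shuffle the blocks until no grid-size repeats more than max_run times."""
    rng = rng or random.Random()
    blocks = [g for g in grid_sizes for _ in range(blocks_per_size)]
    while True:
        rng.shuffle(blocks)
        if longest_run(blocks) <= max_run:
            return list(blocks)
```

Rejection sampling keeps every admissible order equally likely, at the cost of an unpredictable (but in practice small) number of reshuffles.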

Our stimulus balancing algorithm ensured that within two blocks of the same grid-size (64 trials), each of the 16 positions (QQs) was used once for each emotion/gaze combination. Target gaze was operationalized as either ‘direct’ or ‘averted’, with averted gaze always being a random pick between left- and right-sided gaze. The distractors’ gaze was always a random pick between direct, left and right, separately for each distractor. Within each block, an equal number of female and male targets were used, and the distractors were pseudo-randomly chosen such that each trial contained an equal number of male and female faces. Finally, one block of 16 practice trials, including 4 random trials for each condition with grid-size = 16, preceded the experiment.
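The balancing constraint can be made concrete with a minimal Python sketch. It crosses the 16 positions with the four emotion/gaze combinations to obtain 64 target specifications and spreads them over two blocks. How the original algorithm split trials across the two blocks and assigned target sex is not described, so the random split and the shuffled sex assignment below are assumptions, and the function name is hypothetical.

```python
import itertools
import random


def balanced_trials(rng=None):
    """Return two blocks of 32 target specifications for one grid-size.

    Across both blocks, each of the 16 positions occurs exactly once for
    each emotion/gaze combination (16 x 4 = 64 trials).
    """
    rng = rng or random.Random()
    conditions = list(itertools.product(("anger", "fear"),
                                        ("direct", "averted")))
    trials = [
        {"position": pos, "emotion": emotion, "gaze": gaze}
        for pos in range(16)
        for emotion, gaze in conditions
    ]
    rng.shuffle(trials)  # assumption: random split over the two blocks
    blocks = [trials[:32], trials[32:]]
    for block in blocks:
        # Equal number of female and male targets within each block.
        sexes = ["female", "male"] * 16
        rng.shuffle(sexes)
        for trial, sex in zip(block, sexes):
            trial["target_sex"] = sex
            # Averted gaze is a random pick between left and right.
            trial["gaze_side"] = (rng.choice(("left", "right"))
                                  if trial["gaze"] == "averted" else "direct")
    return blocks
```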

Data analysis

Reaction time (RT) was defined as time between stimulus presentation onset and button press. Trials with incorrect responses, determined by gaze location at the time of button press, were removed (3.4%) and outliers were removed using the interquartile range (IQR) method (separately for each participant and grid-size).
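For illustration, an IQR-based exclusion applied separately per participant and grid-size might look like the Python sketch below. The fence multiplier of 1.5 (Tukey's convention) and the quartile definition used by `statistics.quantiles` are assumptions, as the text does not specify them. The record keys and function name are hypothetical.

```python
from collections import defaultdict
from statistics import quantiles


def remove_iqr_outliers(trials, k=1.5):
    """Drop trials whose RT lies outside [Q1 - k*IQR, Q3 + k*IQR].

    trials: list of dicts with keys 'participant', 'grid_size' and 'rt'.
    Fences are computed separately for each participant and grid-size.
    """
    groups = defaultdict(list)
    for trial in trials:
        groups[(trial["participant"], trial["grid_size"])].append(trial)

    kept = []
    for group in groups.values():
        rts = [t["rt"] for t in group]
        if len(rts) < 4:
            # Too few trials to estimate quartiles; keep them all.
            kept.extend(group)
            continue
        q1, _, q3 = quantiles(rts, n=4)
        iqr = q3 - q1
        low, high = q1 - k * iqr, q3 + k * iqr
        kept.extend(t for t in group if low <= t["rt"] <= high)
    return kept
```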

We evaluate threat-relevance effects by assessing the search slopes and intercepts of the regression of reaction time (RT) on the number of distractors using a linear mixed-model. Search slope thus reflects the additional time needed to find the target with each distractor, and search intercept reflects the time needed to identify the target when looking at it. As such, these measures respectively capture search efficiency and target identification time39.
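The meaning of search slope and intercept can be illustrated with an ordinary least-squares fit of RT on the number of distractors. The Python sketch below is a deliberate simplification: it ignores the participant-level clustering handled by a mixed model, and the function name is hypothetical.

```python
def search_slope_intercept(n_distractors, rts):
    """Least-squares slope and intercept of RT on number of distractors.

    slope: additional RT (ms) per added distractor (search efficiency).
    intercept: predicted RT at zero distractors, which is meaningful here
    because the predictor is not centered.
    """
    n = len(rts)
    mean_x = sum(n_distractors) / n
    mean_y = sum(rts) / n
    sxx = sum((x - mean_x) ** 2 for x in n_distractors)
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(n_distractors, rts))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept
```

If the predictor is divided by 100 before fitting, as is sometimes done for numerical reasons, the fitted slope is expressed per 100 distractors and has to be divided by 100 to obtain ms per distractor.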

RTs were entered into a full-factorial 2 × 2 linear mixed model, with EMOTION (fear, angry) and GAZE (averted, direct) as within factors. To estimate search slopes and intercepts, we entered the number of distractors as a non-centered rescaled (divided by 100) linear predictor. Data were clustered by participant, and a random intercept across participant was included to account for between-subjects variance across all predictors. EMOTION, GAZE and number of distractors were also entered as random slopes to the models, but due to non-convergence of a number of those models (across all experiments) we report only models without these random slopes. Models that did converge with random slopes included did not yield different fixed-effects results. Models were estimated in R, version 4.2.240, using the lme4 package41. All models reported in this study were tested for reliability by computing RSpearman−Brown using odd-even model-comparison based on trial-number.
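The Spearman-Brown correction behind an odd-even reliability estimate can be sketched as follows. The exact procedure used for the model comparison is not described in detail, so this Python sketch assumes that a set of estimates (for example, condition means) is obtained separately from odd- and even-numbered trials, that the two sets are correlated, and that the correlation is then stepped up to full test length. The function names are hypothetical.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation between two equally long sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


def spearman_brown(r_half):
    """Step a split-half correlation up to full length: 2r / (1 + r)."""
    return 2 * r_half / (1 + r_half)


def odd_even_reliability(odd_estimates, even_estimates):
    """Spearman-Brown corrected correlation between odd and even halves."""
    return spearman_brown(pearson_r(odd_estimates, even_estimates))
```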

Results

We find, based on the interaction of EMOTION and GAZE in search-slopes, that self-relevance promotes search efficiency (-4.4 ms/distractor, p = .027), which is also the case when comparing angry with fearful faces (-5.8 ms/distractor, p = .003). On the other hand, based on the search intercepts, we find that fearful compared to angry targets are identified faster (-38.6 ms, p = .043), see Fig. 1B-D. Odd-even reliability of this model was RSpearman−Brown=0.89. Statistics, estimates and 95% confidence intervals for all models are reported in the Supplemental Information, here SI-Model-RT1.

Interim discussion

Our results thus confirm our hypothesis that self-relevance promotes finding facial threat in visual search. Additionally, our results suggest that fearful, compared to angry, faces are more easily identified as search target.

Theoretically, this identification advantage of fearful faces is driven by foveal perception of the targets. Peripheral visual processing is on the other hand more likely related to the observation that angry faces and self-relevant threats are found more efficiently. This is the case, because this effect is found in the slope-differences, which are driven by parallel processing of the stimulus display using foveal as well as peripheral vision. Therefore, to separate foveal from peripheral effects, we aim to replicate these findings, while also collecting eye-tracking data to evaluate the respective contributions of foveal and peripheral visual processing. For this, we run the same experiment in Study 2, and now also decompose RT into its peripheral- and foveal-vision components in line with recent studies showing that these have distinct contributions to visual search42. We first focus on the search time before target localization (first gaze fixation on the target). During this phase, search behavior is driven by foveal perception of the distractors as well as peripheral visual processing of the target and distractors, which guides the direction of the next fixation. We operationalise these respectively with the average time spent gazing at each distractor (dwell time; DT), and the number of distractor fixations (nF) needed before target localization. Secondly, the time after target localization (defined as the time between first fixation on the target and button-press) involves mainly foveal perception of the target, which we operationalise as target identification time (TIT). Note that the task we use is not an identification task, thus TIT does not refer to identification of the target being fearful or angry specifically, but identification of the target as not being a neutral distractor. In sum, we separate RT into three measures: peripheral target processing (nF), foveal perception of distractors (DT), and foveal perception of targets (TIT).

Study 2

Methods

Participants and procedure

Healthy volunteers (19 female, 12 male; mean age = 21.7, SD = 1.09), with (corrected to) normal visual acuity, were recruited among Utrecht University students using flyers and the online Social and Behavioural Sciences (FSS) Research Participation System. Participation was rewarded with course-credit (one participant-hour of which the students must collect ten) or payment (7 euros). This sample-size was chosen because we anticipated the eye-tracking effects to be of medium effect-size, and a sample size of N = 31 is large enough to identify one-tailed medium effect-sizes (d = 0.5) with a power of 0.80 in pair-wise comparisons (computed using G*Power 3.137).

Stimuli, task and procedure were the same as in Study 1, with as only exception that we used an eye-tracker with a higher sampling-rate (300 Hz), namely a Tobii TX300 eye-tracker (Tobii Technology, Danderyd, Sweden).

Gaze-fixation detection

For gaze-fixation detection, we used a dispersion algorithm. A gaze-data point was considered part of a potential gaze-fixation when it was located within a circle of 100 pixels of the average location of the preceding gaze-data points of that potential gaze-fixation. A potential gaze-fixation was considered a valid gaze-fixation when its duration exceeded 100 ms. Eye blinks and invalid gaze-data, as defined by the Tobii eye-tracker validity coding system, were excluded from the gaze-fixation algorithm.
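A dispersion-based detector of the kind described above could be sketched in Python as follows. This is an illustrative reading of the description, not the original implementation. It assumes that the 100 pixels refer to a radius, that invalid samples are skipped without ending a candidate fixation, and that duration is the time between the first and last sample of a candidate. The function name and the sample format are hypothetical.

```python
from math import hypot


def detect_fixations(samples, radius_px=100.0, min_duration_ms=100.0):
    """Group gaze samples into fixations with a running-centroid rule.

    samples: time-ordered iterable of (t_ms, x, y, valid) tuples.
    Returns a list of dicts with start_ms, end_ms and mean x, y.
    """
    fixations = []
    current = []  # (t, x, y) samples of the candidate fixation

    def close_candidate():
        # Keep the candidate only if it lasted longer than the minimum.
        if current:
            duration = current[-1][0] - current[0][0]
            if duration > min_duration_ms:
                n = len(current)
                fixations.append({
                    "start_ms": current[0][0],
                    "end_ms": current[-1][0],
                    "x": sum(s[1] for s in current) / n,
                    "y": sum(s[2] for s in current) / n,
                })
            current.clear()

    for t, x, y, valid in samples:
        if not valid:
            continue  # blinks and invalid data are left out (assumption)
        if current:
            n = len(current)
            cx = sum(s[1] for s in current) / n
            cy = sum(s[2] for s in current) / n
            # A sample too far from the running centroid starts a new candidate.
            if hypot(x - cx, y - cy) > radius_px:
                close_candidate()
        current.append((t, x, y))
    close_candidate()
    return fixations
```

Recomputing the centroid for every sample keeps the sketch short; a running sum would be the obvious optimization for long recordings.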

Data analysis

As in Study 1, reaction time (RT) was defined as time between stimulus presentation onset and button press. Trials with incorrect responses, determined by gaze location at the time of button press, were removed (3.8%), and outliers were removed using the interquartile range (IQR) method (separately for each participant and grid-size). We subsequently used gaze-fixations to isolate three measures from these RTs: target identification time (TIT), which is the time after target localization (first fixation on the target); the number of fixations (nF) on the distractors before target localization; and the average dwell-time (DT) of those distractor fixations. For all three measures, we again removed outliers using the IQR method.
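The decomposition of a single trial into nF, DT and TIT can be sketched in Python as follows. The sketch assumes that each detected fixation has already been assigned to a face (or to no face), which is a step the text does not describe. The field names and function name are hypothetical.

```python
def decompose_trial(fixations, target_id, rt_ms):
    """Split one trial's RT into nF, DT and TIT.

    fixations: time-ordered list of dicts with 'face_id' (None when the
    fixation is not on a face), 'start_ms' and 'end_ms', all relative to
    stimulus onset.
    Returns None when the target was never fixated.
    """
    distractor_durations = []
    for fix in fixations:
        if fix["face_id"] == target_id:
            n_f = len(distractor_durations)
            mean_dwell = sum(distractor_durations) / n_f if n_f else None
            return {
                "nF": n_f,                         # distractor fixations before localization
                "DT": mean_dwell,                  # average dwell-time on distractors
                "TIT": rt_ms - fix["start_ms"],    # time from localization to button press
            }
        if fix["face_id"] is not None:
            distractor_durations.append(fix["end_ms"] - fix["start_ms"])
    return None
```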

As in Study 1, RTs were entered into a full-factorial 2 × 2 linear mixed model, with EMOTION (fear, angry) and GAZE (averted, direct) as within factors. To estimate search slopes and intercepts, we entered the number of distractors as a non-centered rescaled (divided by 100) linear predictor. DTs and TITs were entered in full-factorial 2 × 2 × 3 linear mixed models, with EMOTION (fear, angry), GAZE (averted, direct) and number of distractors (3, 7 and 15) as within factors. For nFs, we used the same approach using a generalized mixed model with a Poisson distribution and a log-link function. Data were clustered by participant, and a random intercept across participants was included to account for between-subjects variance across all predictors.

Results

The RT analysis (RSpearman−Brown=0.84) replicates Study 1: An EMOTION by GAZE interaction in search-slopes shows that self-relevance promotes search efficiency (-4.5 ms/distractor, p < .001), which is driven by both emotions (see Fig. 2B). Furthermore, angry faces are found more efficiently compared to fearful faces (-4.0 ms/distractor, p = .001), and fearful targets are identified faster compared to angry targets (-30.6 ms, p = .007), as shown in Fig. 2A-C and SI-Model-RT2. When teasing apart RT using the eye-tracking data, we find that the nF data (RSpearman−Brown=0.89) support the search efficiency effect, showing that fewer fixations are needed to reach angry compared to fearful targets (incidence rate ratio (IRR) = 0.979, p < .001). Moreover, an EMOTION by GAZE interaction shows that fewer fixations are also needed to reach self-relevant targets (IRR = 0.973, p < .001). Both effects also interact with the number of distractors (IRR = 0.982, p = .045 and IRR = 0.974, p = .004, respectively), indicating the effects are strongest in trials with the most distractors, see Fig. 2D and SI-Model-nF2. No effects were observed in DT (RSpearman−Brown=0.91), suggesting that foveal evaluation of the distractors does not contribute to search efficiency related to anger and threat relevance (see Fig. 2E and SI-Model-DT2). TIT (RSpearman−Brown=0.89) fully explains the fear-identification advantage over anger (-13.8 ms, p < .001), together with a small but significant self-relevance effect (-3.9 ms, p = .001), suggesting that it takes less time to identify fearful, compared to angry, faces as a target when perceiving them foveally, see Fig. 2F and SI-Model-TIT2.

Fig. 2.

Visual search results Study 2 (left) and Study 3 (right). (A&G) Boxplots of raw reaction time data. (B&H) Search-slopes show that self-relevant threats (anger/direct gaze and fear/averted gaze) are found more efficiently, which is due to (D&J) fewer fixations needed to reach the target and not because of (E&K) average dwell-times on the distractors. (C&I) Search-intercepts suggest that fearful targets are more easily identified, which is indeed due to (F&L) faster target identification times. Values represent estimated marginal means (EMMs) with 95% confidence intervals (CI).

Interim discussion

These results show that fearful, compared to angry, faces are more easily identified when processing them foveally. Additionally, fewer fixations are needed to find self-relevant targets, and the same holds for angry compared with fearful targets. Thus, these results suggest that fearful faces are more easily identified, whereas self-relevant facial threat seems to attract peripheral-attention. However, following the spatial cueing literature43 it might also be the case that it is more difficult to disengage from angry compared to fearful faces, which could also underlie the additional time needed to identify the angry targets. Likewise, in peripheral vision it is also possible that self-relevant targets are more easily identified, thereby resulting in faster localization times independent of attentional capture.

To investigate this further, we set out to test the particular contributions of foveal and peripheral vision in the identification of the target-emotions using signal detection theory44. To this end, in Study 3, we combined the visual search task with two signal-detection experiments focusing on target identification through foveal and peripheral-vision respectively. With this combination of measures, we can thus investigate whether self-relevance promotes the recognition of facial threat in peripheral vision, and whether facial fear compared to anger is more easily identified in foveal vision. If self-relevant facial threat indeed attracts peripheral-attention, as our visual search results suggest, we expect to find no identification advantage for these stimuli in the peripheral signal detection task. If fear is indeed more easily identified foveally, we would expect this also to be confirmed in the foveal signal detection task. Finally, an additional aim of Study 3 is to evaluate whether the psychopathic trait fearless-dominance modulates this peripheral-attention to self-relevant anger, following the hypothesis that fearless-dominance levels predict increased peripheral attention to self-relevant anger (see Introduction).

Study 3

Methods

Participants and procedure

Healthy volunteers (18 female, 18 male; mean age = 19.9, SD = 1.08), with (corrected to) normal visual acuity, were recruited among Utrecht University students using flyers and the online Social and Behavioural Sciences (FSS) Research Participation System. Participation was rewarded with course-credit (one participant-hour of which the students must collect ten) or payment (7 euros). The visual search task and analyses were similar to Study 2, with the exception that we used half the number of trials.

Participants were invited to the lab, briefed about the experiment, and asked to sign the informed consent form. The eye-tracker was calibrated using a 9-point calibration procedure immediately followed by instructions, practice trials and the visual search task. After that, the participants received instructions on, and completed the signal detection tasks, after which they filled in the FD/IA questionnaire17.

FD/IA questionnaire

To assess the psychopathic traits fearless-dominance and impulsive-antisociality, we used the FD/IA questionnaire17. Based on subscales of the Multidimensional Personality Questionnaire (MPQ)18, this questionnaire was reported to have good internal consistency (Cronbach’s alpha: MPQ-FD = 0.80; MPQ-IA = 0.85)45. The questionnaire consists of 40 statements in total, equally divided between the two measures, that had to be answered on a 5-point Likert-scale ranging from ‘strongly disagree’ to ‘strongly agree’. Example statements are: ‘I take charge’ (MPQ-FD) and ‘I use others for my own ends’ (MPQ-IA). Cronbach’s alphas in the present sample were: MPQ-FD = .73; MPQ-IA = .76.

Signal detection tasks

Participants were instructed that each trial would contain a rapid presentation of either an emotional (angry or fearful) or an emotionally neutral face. The participants’ task was to indicate whether the face expressed an emotion or not by pressing ‘q’ or ‘p’ (counterbalanced across participants) on the computer keyboard as fast and accurately as possible. Gaze direction was not mentioned at any point in the experiment.

Each trial commenced with a gaze-contingent fixation check similar to that in the visual search experiment. Stimulus displays consisted of brief presentations of a face stimulus followed by a masking image at the same location as the face. The face-stimuli were the same as in the visual search task and they were either presented at the location of the fixation-cross (foveal-task) or in the middle of one of the four QQs (see Methods, Study 1: Visual search task) surrounding the fixation-cross (peripheral-task). Presentation time of the faces (foveal-task: 40 ms, peripheral-task: 53 ms) was determined based on pilot research, such that for both tasks the measure of interest (d-prime, see below) would be within the average range of 0.5-2.0.

Each task consisted of 128 trials: 64 emotionally neutral, 32 angry and 32 fearful faces. The same stimuli from the visual search task were used. Within each emotional category the stimuli were balanced for sex, position (for the peripheral-task) and gaze (direct or randomly picked left or right gaze). The foveal-task was always done first, and before each task the participants did eight practice trials.

Signal detection analysis

D-prime was computed for each target type (anger/fear, direct/averted) with neutral faces with the same gaze direction as reference category, using the d-prime implementation from the psycho package in R46. For foveal and peripheral task separately, d-prime values were entered in full-factorial 2 × 2 linear mixed models with EMOTION (fear, angry) and GAZE (averted, direct) as within factors.
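For readers unfamiliar with the metric, d-prime is the difference between the z-transformed hit rate and false-alarm rate. The Python sketch below illustrates the computation. It optionally applies a log-linear correction (adding 0.5 to each count) to avoid infinite values at rates of 0 or 1. Whether this matches the correction applied by the R implementation used in the analysis is not verified here, so the correction and the function name should be read as assumptions.

```python
from statistics import NormalDist


def d_prime(n_hit, n_miss, n_fa, n_cr, loglinear=True):
    """Detection sensitivity: z(hit rate) - z(false-alarm rate).

    n_hit / n_miss: emotional faces judged emotional / neutral.
    n_fa / n_cr: neutral faces judged emotional / neutral.
    loglinear: add 0.5 to each count (assumed correction for extreme rates).
    """
    if loglinear:
        hit_rate = (n_hit + 0.5) / (n_hit + n_miss + 1)
        fa_rate = (n_fa + 0.5) / (n_fa + n_cr + 1)
    else:
        hit_rate = n_hit / (n_hit + n_miss)
        fa_rate = n_fa / (n_fa + n_cr)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(fa_rate)
```

In the design described above, the hits and misses would come from one target type (for example, angry faces with direct gaze) and the false alarms and correct rejections from neutral faces with the same gaze direction.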

Results

Analysis of visual search parameters (RT, TIT, nF and DT; all RSpearman−Brown>0.77) replicates all effects from Study 2, see Fig. 2G-L and SI-Models-RT3, nF3, DT3 and TIT3. Next, we enter fearless-dominance and impulsive-antisociality scores (see Table 1) as continuous variables to the models for RT and nF. Here we focus on the faces that make eye-contact as these can be considered the two main signals of social correction. In particular, following the violence inhibition theory of Blair (2003), angry eye-contact can serve as a corrective signal to stop aggressive behavior and fearful eye-contact can serve as a cue to stop aggression by evoking empathy for the victim. This analysis shows that fearless-dominance predicts faster search times (-54.4 ms/standard deviation difference on FD, p = .012) and fewer fixations needed (IRR = 0.96 per SD, p = .012) to localize self-relevant anger compared to fear, whereas impulsive-antisociality predicts the opposite: slower search times (46.8 ms/SD, p = .031) and more fixations (IRR = 1.05 per SD, p = .017), see Fig. 3C-F and SI-Model-FDIA (please note the IRRs reported in the SI are scored with anger-direct as reference category).

Table 1.

Descriptives of questionnaire scores and gender effects.

| Trait | Group | N | Mean | Median | SD | T-value | p-value |
|---|---|---|---|---|---|---|---|
| Fearless-dominance | male | 17 | 71.1 | 70 | 6.94 | 1.26 | 0.22 |
| | female | 18 | 66.7 | 65 | 12.7 | | |
| Impulsive-antisociality | male | 17 | 41.8 | 36 | 10.95 | 0.01 | 0.99 |
| | female | 18 | 41.7 | 37.5 | 11.2 | | |

Fig. 3.

(A) D-prime values for the foveal signal detection task showing that detection sensitivity is higher for fearful compared to angry targets. (B) D-prime values for the peripheral signal detection task showing no differences for emotion and self-relevance. (C-F) Linear relations of psychopathic traits with RT and nF for the main signals of social correction, angry and fearful faces making eye-contact. (C-D) Fearless-dominance predicts greater search-efficiency and peripheral-attention for angry compared to fearful eye-contact, whereas (E-F) impulsive-antisociality predicts the opposite. Anger/direct gaze slopes and their difference with fear/averted gaze are significant across GRID-size conditions, but strongly driven by the trials with the most distractors. Values represent estimated marginal means (EMMs) with 95% confidence intervals (CI).

From the signal detection experiments, we compute the metric d-prime, which represents detection sensitivity44 for each target type. We find a significantly positive d-prime (1.20, p < .001) for the foveal task, which is higher for fearful compared to angry targets (difference = 0.51, p < .001), but does not differ in relation to self-relevance (emotion by gaze interaction: p = .087), see Fig. 3A and SI-Model-SDF. For the peripheral task, we also find a significantly positive d-prime (0.83, p < .001), but the effects of emotion and self-relevance are non-significant (all ps > 0.11), see Fig. 3B and SI-Model-SDP.

Interim discussion

In addition to replicating the results from Study 2, our signal-detection results show that in peripheral vision there is no advantage of anger compared to fear, nor a self-relevance effect, in detection-sensitivity. We can thus conclude that the observation that these targets are found more easily, using fewer fixations, results from those targets attracting peripheral attention rather than from those targets being identified more easily in peripheral vision. Moreover, we find a detection advantage of fear compared to anger in the foveal signal detection task, suggesting that the fear advantage we find in target identification during visual search is most likely due to this foveal detection advantage, and not due to difficulties in disengaging from angry faces. Lastly, we find preliminary support for the hypothesis that the psychopathic trait fearless-dominance promotes peripheral-attention for self-relevant anger. This finding aligns with evidence that dominance motivation promotes preconscious confrontation by making and maintaining eye-contact with angry others30–33. Interestingly, the psychopathic trait impulsive-antisociality seems to predict the opposite pattern: reduced peripheral attention for self-relevant anger. Arguably, this combination of effects could underlie the duality in findings regarding the processing of facial expressions of threat in psychopathy. Indeed, on the one hand facial signals of social correction are recognized poorly, theoretically underlying a lack of downregulation of aggressive tendencies in response to these signals23–25. On the other hand, attentional biases towards hostile stimuli and angry faces have been reported in psychopaths and criminal offenders27,28.

Now the question remains what it is that attracts peripheral-attention. Although emotion researchers generally acknowledge that threat-content of a visual stimulus is inherently the product of its low-level features, they tend to ignore the more parsimonious feature-based interpretation. These studies typically focus on functional and clinical relevance, like trait and state characteristics47, emotional disorders48 and clinical implications49. Regardless, it can be argued from the threat-based as well as the feature-based account that facial expressions of threat exploit the sensitivities of the human visual system12,50. We thus take the stance that facial emotions are inherently intertwined with the visual features that contribute to these expressions, and now aim to identify what these features are51,52, but see Hedger and colleagues53 for a critical discussion on this topic in relation to unguided detection tasks.

Contrasts between targets and the background and/or distractors are some of the strongest drivers of attention in visual search39. In the present studies we controlled our stimuli such that the features that are irrelevant to the emotion or gaze direction are minimized via averaging, grey-scaling and correcting for luminance differences. These procedures, however, do not equalize all aspects of contrast differences between the images. Specifically, luminance corrections control for contrast differences between the images and the background luminance. Locally, meaning within an image, contrast energy may be distributed differently across spatial frequency bands, and these differences are known to influence attentional effects related to facial expressions51,54. Thus, contrasts in different spatial frequency bands may still differ between the images used in our studies. In fact, since emotional expressions differ in their relative spatial frequency contrasts, this would even be expected51,55–57. Such differences between images in their spatial frequency content are particularly relevant to the current study given that sensitivity to different types of contrasts (e.g., spatial frequencies) is not affected equally by eccentricity. Specifically, whereas sensitivity tends to decrease with increasing eccentricity, this drop-off is less pronounced for lower spatial frequencies58,59. This means that faces with relatively strong low spatial frequency content would provide relatively stronger signals in the periphery and therefore facilitate the allocation of attention.

Thus, to better understand our findings, and link them to specific spatial frequency contrast differences, we analyzed spatial frequency specific contrast differences within the faces and between the conditions used in our studies. For this we combine our observations with the previously discussed evidence that self-relevant threat is detected through magnocellular pathways, which together suggests that low spatial-frequency properties of anger and self-relevant threat drive the peripheral-attention effects. The fear advantage in foveal perception, on the other hand, can also be driven by higher spatial-frequency properties.

Image statistics

Methods

We take a descriptive approach, analyzing the 144 images used in this study to identify which spatial frequency specific contrasts contribute to the increased foveal perception of fear, as well as the peripheral-attention to anger and self-relevant threat. Specifically, for each image, we extracted the Fourier Magnitude spectrum using the protosc toolbox60 for 48 spatial frequency bins (twice the default setting of the toolbox). Next, based on the conditions used in the experiments, we calculated the averages of the spatial frequency contrasts for (1) all angry faces, (2) all angry faces with a direct gaze, (3) all angry faces with an averted gaze, (4) all fearful faces, (5) all fearful faces with a direct gaze, and (6) all fearful faces with an averted gaze. Finally, we calculated the differences between relevant stimulus conditions (e.g., Anger averted vs. Anger direct).

If visual search parameters can be explained by the differences in spatial frequency content between image conditions, we would expect faster reaction times for images that have more contrast in certain spatial frequency bins compared to images from another condition. Standard errors shown in Fig. 4 are estimated using only one image per image condition at a time instead of all 16. The procedure is repeated using each image one at a time to calculate a standard error of the mean.
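The general idea of this analysis can be sketched in Python as follows. This is not the protosc toolbox used in this study: the function names, the linear bin layout up to the Nyquist limit, and the removal of mean luminance before the transform are assumptions made for illustration. Frequencies are expressed in cycles per pixel; converting them to cycles per degree would additionally require the pixels-per-degree of the display.

```python
import numpy as np


def radial_magnitude_spectrum(image, n_bins=48):
    """Mean Fourier magnitude within n_bins rings of radial spatial frequency."""
    img = np.asarray(image, dtype=float)
    img = img - img.mean()  # drop the mean-luminance (DC) term
    mag = np.abs(np.fft.fftshift(np.fft.fft2(img)))
    h, w = img.shape
    fy = np.fft.fftshift(np.fft.fftfreq(h))  # vertical, cycles per pixel
    fx = np.fft.fftshift(np.fft.fftfreq(w))  # horizontal, cycles per pixel
    radius = np.hypot(*np.meshgrid(fx, fy))  # radial frequency per coefficient
    edges = np.linspace(0.0, 0.5, n_bins + 1)  # bins up to the Nyquist limit
    idx = np.digitize(radius.ravel(), edges) - 1  # coefficients at/above 0.5 fall outside
    flat = mag.ravel()
    spectrum = np.zeros(n_bins)
    for b in range(n_bins):
        in_bin = flat[idx == b]
        spectrum[b] = in_bin.mean() if in_bin.size else 0.0
    return edges, spectrum


def condition_difference(images_a, images_b, n_bins=48):
    """Per-bin difference between the mean spectra of two image conditions."""
    mean_a = np.mean([radial_magnitude_spectrum(i, n_bins)[1] for i in images_a], axis=0)
    mean_b = np.mean([radial_magnitude_spectrum(i, n_bins)[1] for i in images_b], axis=0)
    return mean_a - mean_b


# Synthetic check: a vertical grating with 8 cycles across 64 pixels
# (0.125 cycles per pixel) and a blank image as comparison.
x = np.arange(64)
grating = np.tile(np.sin(2 * np.pi * 8 * x / 64), (64, 1))
edges, spectrum = radial_magnitude_spectrum(grating, n_bins=10)
diff = condition_difference([grating], [np.zeros((64, 64))], n_bins=10)
```

In this sketch, a positive value in a given bin of the difference means that the first condition carries more contrast energy in that frequency band than the second, which is the quantity plotted per condition pair in Fig. 4.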

Fig. 4.

Contrast differences (Fourier magnitude, y-axis) between different image conditions (A: Anger, F: Fearful, a: averted gaze, d: direct gaze) across spatial frequency (cycles per degree [cpd], x-axis). Error bars represent the standard error of the mean. For example, the green line shows the average contrast difference between images of angry faces with direct gaze (Ad) or fearful faces with averted gaze (Fa), and images of angry faces with averted gaze (Aa) or fearful faces with direct gaze (Fd).

Results

In the low frequency range, 1 to 3 cycles per degree (cpd), we see higher contrasts for anger compared to fear (Fig. 4, A-F) and for direct compared to averted gaze (Fig. 4, d-a). Consequently, self-relevant anger is also characterized by higher contrasts (Fig. 4, Ad-Aa), which is in line with the observation that facial anger, particularly when self-relevant, attracts peripheral-attention. Self-relevance within fear (Fig. 4, Fd-Fa) is characterized by high contrasts in the medium frequency range, 4 to 8 cpd, which suggests that these frequencies also contribute to attracting peripheral-attention. Interestingly, fear compared to anger (containing all gaze directions; Fig. 4, A-F) is characterized by high contrasts in the medium to high frequency range (6 to 12 cpd), which is in line with the idea that the fear identification advantage we find across our studies is driven by high spatial frequencies.

Overall discussion

We investigated whether self-relevance promotes threat-detection in the context of emotional faces. Across three studies, we repeatedly show that self-relevance promotes peripheral-attention to angry and fearful faces. To our knowledge, this is the first evidence that the magnocellular and amygdalar processing of self-relevant threat discussed earlier1,4–6,9 also promotes peripheral-attention. Interestingly, peripheral-attention to (facial) threat is a much-studied topic with support going back and forth. The observation here that a simple eye-direction manipulation promotes peripheral-attention, even in opposite but consistently self-relevant directions for anger and fear, strongly supports the idea that threat indeed attracts attention.

We also show that these observations align well with expectations based on the spatial-frequency content of the images. This aspect of the results can be approached in multiple ways. One way is to interpret the self-relevance effects in terms of bottom-up reactions to salient, high-effective contrast signals. However, the fact that self-relevant social signals also consist of the strongest low spatial-frequency contrasts, can also be approached from the perspective that these facial expressions of threat exploit the sensitivities of the human visual system12,50. Although such evolutionary arguments are essentially unfalsifiable53, it is striking that a simple gaze manipulation has expression-specific, and opposing, effects on the spatial-frequency content of emotional expressions that may cause attention to be attracted in a manner that aligns with the behaviors expected from the threat-relevance theory.

In Study 3, we also find support for the hypothesis that peripheral-attention for self-relevant anger is amplified in relation to fearless-dominance. Although this result should be considered preliminary due to the relatively low sample-size, it does provide some interesting directions for future research into psychopathy. In particular, given that our task design included the specific goal to find the emotional target, this observation seems in line with response-modulation theory36,61, which argues that psychopaths need motivational drive to employ emotional reactivity that is typically automatic in the healthy population. Here, alignment of the task goal and fearless-dominance appears to motivate individuals to attend to self-relevant anger, possibly even overriding the lack of such automatic attention associated with impulsive-antisociality. Arguably, this intriguing dissociation could provide psychopaths with the possibility to rapidly notice and confront opposition when it aligns with their goals, or to ignore it if not. In the general population, this combination could facilitate high risk/high gain social behaviors, but in more extreme forms this might result in antisociality and aggressive behavior. Of course, our current sample-size warrants only tentative conclusions, which hold only for non-clinical psychopathic traits. Interestingly, however, the reported effects seem reliable in a 64-trial subset of our visual search design (i.e., the trials with the most distractors), and given the challenges associated with clinical studies, particularly in psychopathy, this is an important observation that could make such clinical studies actually feasible.

An alternative explanation for our observation that peripheral-attention to angry eye-contact is modulated by psychopathic traits could again be sought in specific low spatial-frequency sensitivities. In terms of visual-search, this would involve a bottom-up explanation in which fearless-dominance and impulsive-antisociality make one respectively more, and less, sensitive to low spatial frequency contrasts. However, in line with response modulation theory, psychopathic traits have been shown to be associated with top-down, and not bottom-up, driven visual search62,63. This evidence thus favors our top-down explanation that fearless-dominance motivates individuals to attend to self-relevant anger, whereas the lack of motivation to evaluate such signals of social correction in relation to impulsive-antisociality could result in disregarding them. Taken together, although low spatial-frequencies drive bottom-up attentional allocation, the correlations with psychopathic traits seem most likely related to top-down motivational differences.

It is furthermore important to note that our visual search design is optimized for attention effects. Distractors were homogeneous and the search task was always the same, rendering the search task fully driven by the targets. As such, our experimental setup favors parallel search for the targets, i.e., using peripheral vision to sample multiple stimuli at the same time in order to find the target more efficiently39. Indeed, the effects in peripheral-attention we report here can be considered a reflection of such a search mechanism. Importantly, this is different from designs with heterogeneous distractors and variable search goals15,64, where perceptually driven dwell-times on the distractors are the biggest contributor to search (in)efficiency. The observation that facial anger is found more efficiently while facial fear is identified more easily is also interesting in this respect. Arguably, this might indicate that facial fear is found relatively more rapidly in heterogeneous designs, which we hope to address in future studies.

Following a similar line of argument, our observation that self-relevant threat attracts peripheral-attention is also in line with the angry-superiority effect65 in visual search, which suggests that angry, compared to happy, faces are more easily detected among distractors due to their threat value. Using a design with highly heterogeneous distractors we, however, recently showed that happy faces are more easily found compared to their angry counterparts52. There is some evidence suggesting that in designs optimized for parallel search, i.e., with homogeneous distractors, angry-superiority might emerge66, similar to what we find in the present study for self-relevant threat. A future study with explicit manipulations of heterogeneity of distractors and eye-tracking measures, to tease apart foveal and peripheral effects, should investigate this further.

Although our eye-tracking measures can distinguish between mechanisms supported by foveal and non-foveal vision, a limitation to our interpretations regarding peripheral vision is that we did not explicitly manipulate retinal eccentricity of our stimulus presentations. Future studies could address this issue to make more thorough conclusions possible, in terms of which part(s) of peripheral vision contribute to the effects reported here. Another limitation to our study is that we did not control our stimuli a priori for spatial frequency differences. Although equalizing all spatial frequencies risks degradation of emotional content in the images, future studies could try to address this issue. Lastly, generalization of the effects reported here to a more representative population should also be a focus of future research.

To conclude, in this study we show that high spatial-frequency contrasts promote faster identification of fearful faces in visual search, whereas low spatial-frequency contrasts promote peripheral-attention towards angry faces and self-relevant facial threats. Furthermore, peripheral-attention to angry eye-contact is more pronounced in individuals high on fearless-dominance, and diminished in relation to impulsive-antisociality. Together, this provides the first behavioral evidence that self-relevant threat attracts attention. Moreover, this seems to support behavior in a meaningful manner as, in line with the response modulation theory of psychopathy, fearless-dominance seems to promote social confrontation, whereas impulsive-antisociality renders one insensitive to angry signals of social correction.

Acknowledgements

We thank Giulia Bertoldo, Jurriaan Freutel, Piet Jonker and Sandra van der Meer for help with data collection.

Author contributions

D.T. and S.M.S. devised the research. D.T. performed the behavioral data analyses. S.M.S. performed the image statistics analyses. D.T. and S.M.S. wrote the manuscript.

Data availability

All materials, data and analyses are publicly available (https://osf.io/3ucf8/). The images from the experiment and for image-analyses are not allowed to be made available in public media. Consequently, they are available on reasonable request by contacting the corresponding author (DT).

Declarations

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Adams, R. B., Gordon, H. L., Baird, A. A., Ambady, N. & Kleck, R. E. Effects of gaze on amygdala sensitivity to anger and fear faces. Science 300, 1536 (2003).

- 2. Adams, R. B. & Kleck, R. E. Effects of direct and averted gaze on the perception of facially communicated emotion. Emotion 5, 3–11 (2005).

- 3. Adams, R. B. & Kleck, R. E. Perceived gaze direction and the processing of facial displays of emotion. Psychol. Sci. 14, 644–647 (2003).

- 4. Im, H. Y. et al. Observer’s anxiety facilitates magnocellular processing of clear facial threat cues, but impairs parvocellular processing of ambiguous facial threat cues. Sci. Rep. 7, 15151 (2017).

- 5. Cushing, C. A. et al. Neurodynamics and connectivity during facial fear perception: the role of threat exposure and signal congruity. Sci. Rep. 8, (2018).

- 6. Adams, R. B. et al. Amygdala responses to averted vs direct gaze fear vary as a function of presentation speed. Soc. Cogn. Affect. Neurosci. 7, 568–577 (2012).

- 7. Terburg, D. et al. Hypervigilance for fear after basolateral amygdala damage in humans. Transl. Psychiatry 2, (2012).

- 8. Terburg, D. et al. The basolateral amygdala is essential for rapid escape: a human and rodent study. Cell 175, (2018).

- 9. Kveraga, K., Ghuman, A. S. & Bar, M. Top-down predictions in the cognitive brain. Brain Cogn. 65, 145–168 (2007).

- 10. Himmelberg, M. M. & Wade, A. R. Eccentricity-dependent temporal contrast tuning in human visual cortex measured with fMRI. Neuroimage 184, 462–474 (2019).

- 11. Cheng, A., Eysel, U. T. & Vidyasagar, T. R. The role of the magnocellular pathway in serial deployment of visual attention. Eur. J. Neurosci. 20, 2188–2192 (2004).

- 12. Horstmann, G. Preattentive face processing: what do visual search experiments with schematic faces tell us? Vis. Cogn. 15, 799–833 (2007).

- 13. Horstmann, G., Scharlau, I. & Ansorge, U. More efficient rejection of happy than of angry face distractors in visual search. Psychon. Bull. Rev. 13, 1067–1073 (2006).

- 14. Fox, E. et al. Facial expressions of emotion: are angry faces detected more efficiently? Cogn. Emot. 14, 61–92 (2000).

- 15. Horstmann, G. & Becker, S. I. More efficient visual search for happy faces may not indicate guidance, but rather faster distractor rejection: evidence from eye movements and fixations. Emotion 20, 206–216 (2020).

- 16. Kiehl, K. A. et al. The criminal psychopath: history, neuroscience, treatment, and economics. Jurimetrics 51, 355 (2011).

- 17. Witt, E. A., Donnellan, M. B., Blonigen, D. M., Krueger, R. F. & Conger, R. D. Assessment of fearless dominance and impulsive antisociality via normal personality measures: convergent validity, criterion validity, and developmental change. J. Pers. Assess. 91, 265–276 (2009).

- 18. Patrick, C. J., Curtin, J. J. & Tellegen, A. Development and validation of a brief form of the multidimensional personality questionnaire. Psychol. Assess. 14, 150–163 (2002).

- 19. Benning, S. D., Venables, N. C. & Hall, J. R. Successful psychopathy. Handb. Psychopathy 2, 585–608 (2018).

- 20. Francis, K. B. et al. Simulating moral actions: an investigation of personal force in virtual moral dilemmas. Sci. Rep. 7, 1–11 (2017).

- 21. Oskarsson, S. et al. The startle reflex as an indicator of psychopathic personality from childhood to adulthood: a systematic review. Acta Psychol. (Amst.) 220, 103427 (2021).

- 22. Blair, R. J. Neurobiological basis of psychopathy. Br. J. Psychiatry 182, 5–7 (2003).

- 23. Best, M., Williams, J. M. & Coccaro, E. F. Evidence for a dysfunctional prefrontal circuit in patients with an impulsive aggressive disorder. Proc. Natl. Acad. Sci. U S A 99, 8448–8453 (2002).

- 24. Van Honk, J. & Schutter, D. J. L. G. Testosterone reduces conscious detection of signals serving social correction: implications for antisocial behavior. Psychol. Sci. 18, 663–667 (2007).

- 25. Marsh, A. A. & Blair, R. J. Deficits in facial affect recognition among antisocial populations: a meta-analysis. Neurosci. Biobehav. Rev. 32, 454–465 (2008).

- 26. Boll, S. & Gamer, M. Psychopathic traits affect the visual exploration of facial expressions. Biol. Psychol. 117, 194–201 (2016).

- 27. Zhao, Z., Yu, X., Ren, Z., Zhang, L. & Li, X. The remediating effect of attention bias modification on aggression in young offenders with antisocial tendency: a randomized controlled trial. J. Behav. Ther. Exp. Psychiatry 75, (2022).

- 28. Domes, G., Mense, J., Vohs, K. & Habermeyer, E. Offenders with antisocial personality disorder show attentional bias for violence-related stimuli. Psychiatry Res. 209, 78–84 (2013).

- 29. Newman, J. P., Curtin, J. J., Bertsch, J. D. & Baskin-Sommers, A. R. Attention moderates the fearlessness of psychopathic offenders. Biol. Psychiatry 67, 66–70 (2010).

- 30. Terburg, D., Aarts, H. & van Honk, J. Testosterone affects gaze aversion from angry faces outside of conscious awareness. Psychol. Sci. 23, (2012).

- 31. Hortensius, R., van Honk, J., De Gelder, B. & Terburg, D. Trait dominance promotes reflexive staring at masked angry body postures. PLoS One 9, (2014).

- 32. Terburg, D., Hooiveld, N., Aarts, H., Kenemans, J. L. & van Honk, J. Eye tracking unconscious face-to-face confrontations: dominance motives prolong gaze to masked angry faces. Psychol. Sci. 22, (2011).

- 33. Terburg, D. et al. Testosterone abolishes implicit subordination in social anxiety. Psychoneuroendocrinology 72, (2016).

- 34. Hare, R. D. Hare psychopathy checklist-revised, Second Edition. (Multi-Health Systems, Toronto, Canada, 2003).

- 35. Rijnders, R. J. P., Dykstra, A. H., Terburg, D., Kempes, M. M. & van Honk, J. Sniffing submissiveness? Oxytocin administration in severe psychopathy. Psychoneuroendocrinology 131, (2021).

- 36. Hamilton, R. B. & Newman, J. P. The response modulation hypothesis: formulation, development, and implications for psychopathy. (2018).

- 37. Faul, F., Erdfelder, E., Buchner, A. & Lang, A. G. Statistical power analyses using G*Power 3.1: tests for correlation and regression analyses. Behav. Res. Methods 41, 1149–1160 (2009).

- 38. Langner, O. et al. Presentation and validation of the Radboud Faces Database. Cogn. Emot. 24, 1377–1388 (2010).

- 39. Wolfe, J. M. & Horowitz, T. S. What attributes guide the deployment of visual attention and how do they do it? Nat. Rev. Neurosci. 5, 495–501 (2004).

- 40. R Core Team. R: A language and environment for statistical computing. https://www.R-project.org/ (2022).

- 41. Bates, D., Mächler, M., Bolker, B. & Walker, S. Fitting linear mixed-effects models using lme4. J. Stat. Softw. 67, 1–48 (2015).

- 42. Shasteen, J. R., Sasson, N. J. & Pinkham, A. E. Eye tracking the face in the crowd task: why are angry faces found more quickly? PLoS One 9, e93914 (2014).

- 43. Mogg, K., Holmes, A., Garner, M. & Bradley, B. P. Effects of threat cues on attentional shifting, disengagement and response slowing in anxious individuals. Behav. Res. Ther. 46, 656–667 (2008).

- 44. Verghese, P. Visual search and attention: a signal detection theory approach. Neuron 31, 523–535 (2001).

- 45. Blonigen, D. M., Carlson, M. D., Hicks, B. M., Krueger, R. F. & Iacono, W. G. Stability and change in personality traits from late adolescence to early adulthood: a longitudinal twin study. J. Pers. 76, 229–266 (2008).

- 46. Makowski, D. The psycho package: an efficient and publishing-oriented workflow for psychological science. J. Open Source Softw. 3, 470 (2018).

- 47. Juth, P., Lundqvist, D., Karlsson, A. & Öhman, A. Looking for foes and friends: perceptual and emotional factors when finding a face in the crowd. Emotion 5, 379–395 (2005).

- 48. Ashwin, C., Wheelwright, S. & Baron-Cohen, S. Finding a face in the crowd: testing the anger superiority effect in Asperger syndrome. Brain Cogn. 61, 78–95 (2006).

- 49. Lucas, N., Bourgeois, A., Carrera, E., Landis, T. & Vuilleumier, P. Impaired visual search with paradoxically increased facilitation by emotional features after unilateral pulvinar damage. Cortex 120, 223–239 (2019).

- 50. Horstmann, G. & Bauland, A. Search asymmetries with real faces: testing the anger-superiority effect. Emotion 6, 193–207 (2006).

- 51. Stuit, S. M. et al. The image features of emotional faces that predict the initial eye movement to a face. Sci. Rep. 11, (2021).

- 52. Stuit, S. M., Alejandra, M., Sanchez, P. & Terburg, D. Perceptual, not attentional, guidance drives happy superiority in complex visual search. Behav. Sci.144, (2025).

- 53. Hedger, N., Garner, M. & Adams, W. J. Do emotional faces capture attention, and does this depend on awareness? Evidence from the visual probe paradigm. J. Exp. Psychol. Hum. Percept. Perform. 45, 790–802 (2019).

- 54. Stuit, S. M., Paffen, C. L. E. & Van der Stigchel, S. Prioritization of emotional faces is not driven by emotional content. Sci. Rep. 13, 1–9 (2023).

- 55. Goffaux, V. & Rossion, B. Faces are ‘spatial’ - holistic face perception is supported by low spatial frequencies. J. Exp. Psychol. Hum. Percept. Perform. 32, 1023–1039 (2006).

- 56. Jeantet, C., Caharel, S., Schwan, R., Lighezzolo-Alnot, J. & Laprevote, V. Factors influencing spatial frequency extraction in faces: a review. Neurosci. Biobehav. Rev. 93, 123–138 (2018).

- 57. Kumar, D. & Srinivasan, N. Emotion perception is mediated by spatial frequency content. Emotion 11, 1144–1151 (2011).

- 58. Rijsdijk, J. P., Kroon, J. N. & van der Wildt, G. J. Contrast sensitivity as a function of position on the retina. Vis. Res. 20, 235–241 (1980).

- 59. Haun, A. M. What is visible across the visual field? Neurosci. Conscious. (2021).

- 60. Stuit, S. M., Paffen, C. L. E. & Van der Stigchel, S. Introducing the prototypical stimulus characteristics toolbox: protosc. Behav. Res. Methods 54, 2422–2432 (2022).

- 61. Rijnders, R. J. P., Terburg, D., Bos, P. A., Kempes, M. M. & van Honk, J. Unzipping empathy in psychopathy: empathy and facial affect processing in psychopaths. Neurosci. Biobehav. Rev. 131, 1116–1126 (2021).

- 62. Hoppenbrouwers, S. S., Van der Stigchel, S., Sergiou, C. S. & Theeuwes, J. Top-down attention and selection history in psychopathy: evidence from a community sample. J. Abnorm. Psychol. 125, 1–7 (2016).

- 63. Krusemark, E. A., Kiehl, K. A. & Newman, J. P. Endogenous attention modulates early selective attention in psychopathy: an ERP investigation. Cogn. Affect. Behav. Neurosci. 16, 779–788 (2016).

- 64. Horstmann, G., Herwig, A. & Becker, S. I. Distractor dwelling, skipping, and revisiting determine target absent performance in difficult visual search. Front. Psychol. 7, 1–13 (2016).

- 65. Öhman, A., Soares, S. C., Juth, P., Lindström, B. & Esteves, F. Evolutionary derived modulations of attention to two common fear stimuli: serpents and hostile humans. J. Cogn. Psychol. 24, 17–32 (2012). 10.1080/20445911.2011.629603

- 66. Öhman, A., Lundqvist, D. & Esteves, F. The face in the crowd revisited: a threat advantage with schematic stimuli. J. Pers. Soc. Psychol. 80, 381–396 (2001).
