Abstract

The promise of adaptation and adaptive designs in implementation science has been hindered by a lack of clarity and precision in defining what it means to adapt, especially regarding the distinction between adaptive study designs and adaptive implementation strategies. To support a common language for science and practice, we reviewed the implementation science literature and found that the term adaptive was used to describe interventions, implementation strategies, and trial designs. To provide clarity and offer recommendations for reporting and strengthening study design, we propose a taxonomy that distinguishes fixed from adaptive implementation strategies and implementation trial designs. To improve impact, (a) future implementation studies should prespecify implementation strategy core functions that can in turn be taught to and replicated by health system/community partners, (b) funders should support exploratory studies that refine and specify implementation strategies, and (c) investigators should systematically address design requirements and ethical considerations (e.g., randomization, blinding/masking) with health system/community partners.

Keywords: implementation strategies, adaptation, cluster randomized design, quality improvement, community engagement

BACKGROUND

Implementation science is an evolving discipline that aims to close the gap between the development of effective interventions and their implementation in public health and clinical practice (3, 25, 53). Within the past decade, there has been explosive growth in the identification, design, and testing of implementation strategies (51–53). An implementation strategy is a tool or method that helps existing providers improve the uptake of evidence-based practices and policies in public health or routine clinical care (implementation practice).

The term adaptive design is increasingly used to describe any tool or approach that adjusts something in response to new conditions. For example, in clinical practice, adaptive intervention designs can be used by clinicians to improve outcomes by adjusting the provision of treatment to the baseline and ongoing needs of patients (17, 23, 25). In public health practice, an adapted intervention (18) can be used by communities to adjust health programs to meet the needs of populations. In implementation practice, adaptive implementation strategy designs can be used by implementers to improve adoption, implementation, and sustainment of effective interventions by addressing specific barriers to implementation (e.g., provider burden, lack of organizational resources) (10, 11, 13, 39). In effectiveness and implementation science, adaptive trial designs can be used by investigators to improve efficiency or address ethical concerns by adjusting aspects of a randomized trial design (e.g., sample size or the number of randomized arms being compared) during its conduct (31).

Despite the growing interest in adaptive designs, imprecise language is evident in both written and spoken communication about the relevant concepts. This lack of clarity may stem from the need for shorthand to communicate the myriad methodological concepts that (a) employ the word “adapt,” such as “adaptation,” “adaptive trials,” “adaptive interventions,” and “adaptive implementation strategies”; or (b) do not explicitly use the word “adapt” but are known to be adaptive in some sense, such as “precision implementation,” “iterative design,” “rapid-cycle research,” “tailoring,” “optimization,” and “sequentially randomized trials.” Misunderstanding may also stem from a lack of precision in the development of implementation study designs, which tend to focus on external validity.

Confusion around the term adaptive in implementation science can lead to (a) implementation strategies that are not replicable by future implementers and (b) implementation research designs that are not replicable by future scientists. Advancing the field of implementation science requires both types of replication, which, in turn, rely on clear prespecification of methods and/or interventions. Yet when the term adaptive design is used within implementation science, it is often unclear whether it describes a research design or an implementation strategy design, a distinction fundamental to replication. Recent efforts to create a systematic framework for replicating implementation studies, such as the implementation replication framework (12), provide some clarity, but more is needed for adaptive design concepts in particular (5).

The goal of this methodological review is to clarify the use of the term adaptive in implementation science and practice. Based on a brief review of the literature and building on recent reviews of implementation study designs by Brown (5), Curran (16, 17), Geng (23), and Hwang (29), we describe how the word adapt is currently used, propose a new taxonomy of adaptation in implementation science, and make recommendations to strengthen adaptation methods in implementation science and practice. The ultimate aim is to improve the uptake of effective interventions in real-world settings and, in turn, population health. In this review, intervention refers to a treatment, program, or practice delivered to patients or consumers by health care or community-based providers.

ADAPTATION CONCEPTS IN IMPLEMENTATION SCIENCE

We assessed articles published within the past seven years that described implementation science designs and implementation strategy compilations to identify how the term adaptation was used. We identified five commonly used terms in implementation science that include the core word adapt; these terms are used variously to describe nine distinct types of designs (Table 1). Sometimes a single term conveys a single design concept; other times, a single term is used for multiple design concepts, and in these cases a naming convention is provided to distinguish between the uses. Of the nine designs, six are research designs (for use by an effectiveness or implementation scientist), two are implementation strategy designs (for use by an implementation practitioner), and one is an intervention design (for use by a clinician).

Table 1.

Table of terms and concepts related to “adaptive design”a

| Term | Line | Design | Actor (who) | When | Goal | Example in literature |
|---|---|---|---|---|---|---|
| Adaptation | 1 | Adaptation of intervention as an implementation research design | Implementation scientist | Before an implementation trial | Alter an effective intervention prior to studying its implementation | Trauma-focused CBT (14) |
| | 2 | Adaptation of intervention as an effectiveness intervention design | Effectiveness scientist | Before an effectiveness trial | Alter an intervention prior to studying its effectiveness | SMI Life Goals (36) |
| | 3 | Adaptation of an intervention as an implementation strategy | Implementation practitioner | During implementation | Improve implementation | Getting To Outcomes (10, 11), Replicating Effective Programs (39) |
| Adaptive intervention | 4 | Adaptive intervention design | Clinician | During intervention | Improve patient outcomes | Stepped care (32) |
| Adaptive implementation | 5 | Adaptive implementation strategy | Implementation practitioner | During implementation | Improve implementation | ASIC (36, 40, 58), Re-Engage (35), ADEPT (59, 60), ROCC (62) |
| Adaptive trial | 6 | Adaptive intervention trial design | Effectiveness scientist | During an effectiveness trial | Improve intervention effectiveness study efficiency or ethics | ESETT (31) |
| | 7 | Adaptive implementation trial design | Implementation scientist | During an implementation trial | Improve implementation study efficiency or ethics | PEPReC (22) |
| Adaptive iterative design | 8 | Iterative intervention effectiveness research | Effectiveness scientist | During a pilot effectiveness trial | Ensure feasibility and acceptability of intervention | PARAMEDIC2 (50) |
| | 9 | Iterative implementation research | Implementation scientist | During a pilot implementation trial | Ensure feasibility and acceptability of implementation strategy | FRAME-IS (45) |

Abbreviations: ADEPT, Adaptive Implementation of Effective Programs Trial; ASIC, Adaptive School-based Implementation of CBT; CBT, cognitive behavioral therapy; ESETT, Established Status Epilepticus Treatment Trial; FRAME-IS, Framework for Reporting Adaptations and Modifications to Evidence-based Implementation Strategies; PARAMEDIC2, Prehospital Assessment of the Role of Adrenaline: Measuring the Effectiveness of Drug administration in Cardiac arrest; PEPReC, Partnered Evidence-based Policy Resource Center; Re-Engage, re-engagement care management program for Veterans lost to care; ROCC, Recovery Oriented Collaborative Care; SMI, serious mental illness.

Intervention refers to a treatment, program, or practice delivered to patients or consumers by health care or community-based providers.

The term adaptation has been used to describe changes to an intervention prior to implementation (Table 1, line 1), changes to an intervention that informed another effectiveness study (Table 1, line 2), or changes to an intervention as part of the implementation strategy process (Table 1, line 3). For example, trauma-focused cognitive behavioral therapy (TF-CBT) was adapted to better implement cognitive behavioral therapy (CBT) in marginalized populations (14). In another clinical effectiveness study for persons with serious mental illness, the collaborative care model was changed to include cardiovascular disease management (36). Implementation strategies such as Replicating Effective Programs (REP) (39) and Getting To Outcomes (GTO) (10, 11, 13) incorporated the process of adapting interventions during the implementation research study (Table 1, line 3).

Adaptive interventions (Table 1, line 4) can refer to interventions that are modified by clinicians in routine practice, such as stepped care (32), which involves a prespecified sequence of interventions that are implemented depending on the patient’s severity and response to the initial intervention. An adaptive implementation strategy (Table 1, line 5) is akin to stepped care but involves a prespecified sequence of implementation strategies to enhance effective intervention uptake in sites needing additional implementation assistance (35, 36, 40, 58–60).

Adaptive trial designs (Table 1, lines 6 and 7) can refer to planned modifications made during the trials, whether for interventions (9, 22, 31, 48) or for implementation strategies (22). Finally, adaptive iterative designs (Table 1, lines 8 and 9) usually refer to iterative modifications made to the implementation strategies themselves (45) in response to feedback on the intervention's or implementation strategy's performance; these modifications are typically not prespecified.

NEW TAXONOMY FOR ADAPTIVE DESIGN IN IMPLEMENTATION SCIENCE

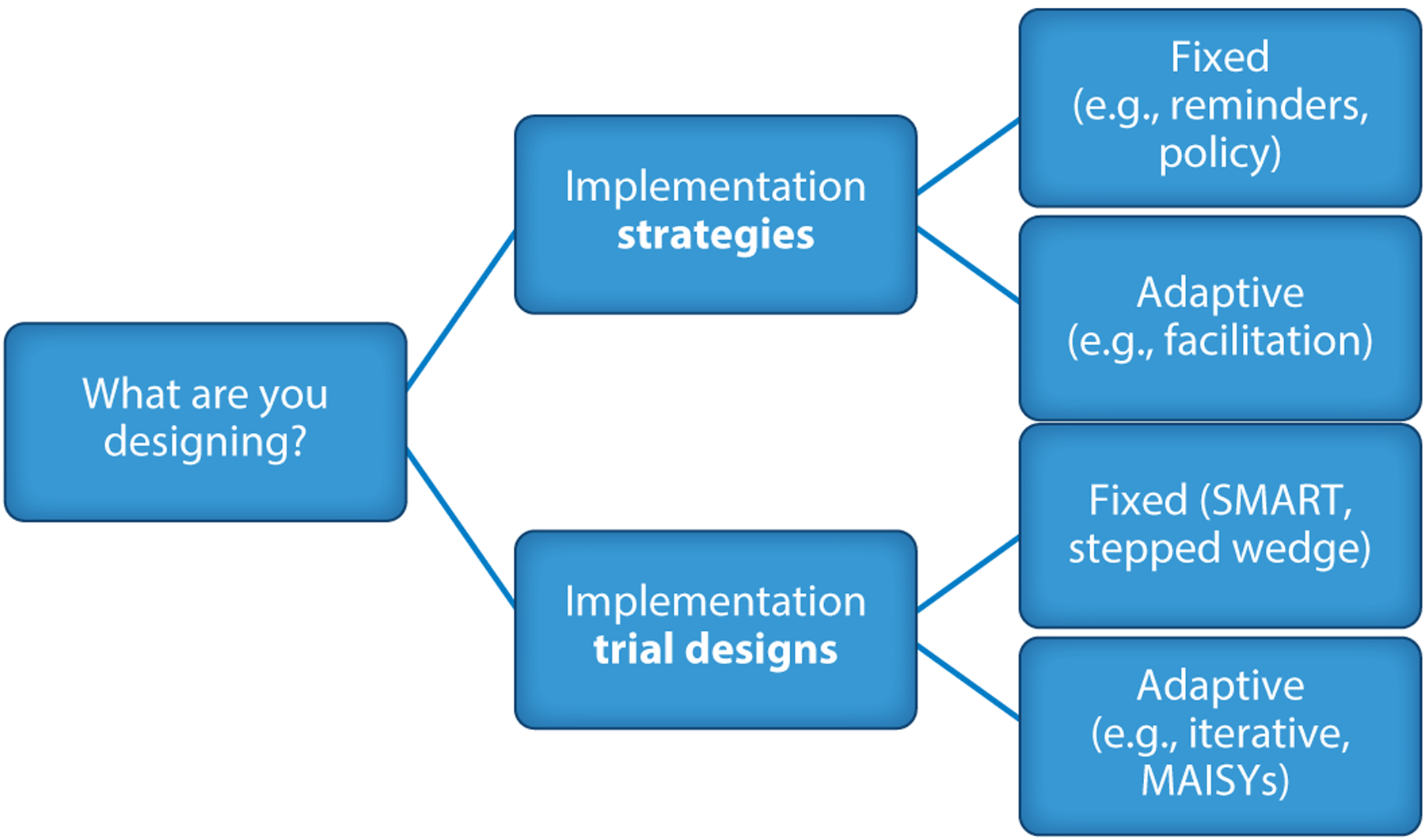

Below, we focus on more precisely defining adaptive design in implementation science, with a particular focus on implementation strategy studies (Table 1, lines 3, 5, 7, and 9). Hybrid designs were introduced to combine tests of interventions and implementation strategies into a single trial to speed the conduct of research (16, 17). A hybrid type 2 study is a design that combines an intervention effectiveness trial with a test of an implementation strategy that helps support provider uptake of the intervention. A hybrid type 3 study primarily tests the impact of an implementation strategy to improve intervention uptake, while collecting some data on individual outcomes. Implementation designs (5, 30) as well as adaptive trials for clinical interventions have been described in more detail elsewhere (61). Figure 1 illustrates how (a) the implementation strategies to be tested can be fixed (unchanged) or adaptive (change over time), and (b) implementation trial designs (16, 17) can be fixed or adaptive as well.

Figure 1.

Updated taxonomy of adaptation in implementation science. Abbreviations: SMART, sequential multiple-assignment randomized trial; MAISYs, multi-level adaptive implementation strategy designs.

Fixed and Adaptive Implementation Strategies

Many implementation strategies have not been fully specified or empirically tested. So-called fixed implementation strategies typically involve discrete tools or methods such as clinical reminders, provider training, or a performance incentive or policy at the organization or regional level. Moreover, an implementation strategy that involves changes or adaptations to the effective intervention itself is considered a fixed implementation strategy because the processes used to adapt the intervention are the same, even if the intervention is modified across different contexts.

One example of a fixed implementation strategy that includes a common process leading to adaptation of the effective intervention is REP. Developed by the Centers for Disease Control and Prevention in the 1990s to implement evidence-based behavioral interventions more rapidly in communities, REP includes a user-centered design whereby practitioners and patients provide input via community working groups on how the intervention should be adapted to their settings. The discussion yields a set of menu options that still allow the intervention’s core functions to be delivered (19, 20, 39). Core functions (39) are the active ingredients of an intervention that help achieve its desired effect. In contrast, forms are the variations by which core functions can be delivered without compromising their active ingredients. For example, in REP, community working group participants review the core functions of the CBT psychosocial intervention (e.g., active discussions of patients’ thoughts, feelings, and behaviors by a therapist) and are then asked to provide examples of how these core functions can be adapted into different forms for their particular settings (e.g., virtual care, modular-based topics). These adapted forms are then included in the intervention manual, as long as active discussions of thoughts, feelings, and behaviors are still present.
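The REP rule that adapted forms are acceptable only while every core function remains present can be expressed as a simple completeness check. The sketch below is a minimal illustration under stated assumptions: the `AdaptedManual` class, its methods, and the labels used for CBT core functions and forms are hypothetical and are not part of REP's published materials.

```python
from dataclasses import dataclass, field


@dataclass
class AdaptedManual:
    """Hypothetical record of an intervention manual after REP-style adaptation."""

    # Prespecified active ingredients that every site must retain.
    core_functions: frozenset
    # Locally adapted forms, keyed by the core function each one delivers.
    forms: dict = field(default_factory=dict)

    def add_form(self, function: str, form: str) -> None:
        # A proposed form is accepted only if it delivers a prespecified core function.
        if function not in self.core_functions:
            raise ValueError(f"{function!r} is not a prespecified core function")
        self.forms.setdefault(function, []).append(form)

    def missing_functions(self) -> set:
        # Core functions that no adapted form delivers in this setting.
        return {f for f in self.core_functions if not self.forms.get(f)}

    def preserves_core_functions(self) -> bool:
        return not self.missing_functions()


# Illustrative (hypothetical) labels loosely based on the CBT example above.
manual = AdaptedManual(frozenset({"discuss thoughts", "discuss feelings", "discuss behaviors"}))
manual.add_form("discuss thoughts", "virtual care session")
manual.add_form("discuss feelings", "modular-based topic")
print(manual.missing_functions())  # {'discuss behaviors'}
manual.add_form("discuss behaviors", "modular-based topic")
print(manual.preserves_core_functions())  # True
```

Keying forms by the core function they deliver turns the rule in the text ("as long as active discussions ... are still present") into an explicit, checkable property of the manual rather than a judgment made after the fact.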

Adaptive implementation strategies enable implementers to change the delivery of the implementation strategies as well as the interventions to better address barriers to implementation across different sites. Most implementation strategies could be classified as adaptive because they typically involve ongoing active discussions between the implementer and practitioners. An example of an adaptive implementation strategy is facilitation (4, 20, 34, 40, 41, 49), in which the implementer (facilitator) guides practitioners to overcome barriers to adoption of an effective intervention, usually through individualized consultation focused on enhancing the practitioner’s self-efficacy and strategic thinking skills. During the implementation process, the facilitator may deploy other implementation strategies beyond the core functions of facilitation, such as identifying and preparing practice champions or convening cross-site learning collaboratives.

Other terms are used to describe adaptive implementation strategies, including precision implementation and tailoring. In these situations, adaptive implementation strategies typically involve a process of assigning specific implementation strategies to address barriers using the optimization approaches described below. Tailoring has often been described as involving the adjustment of implementation strategies to make them fit better into a specific context (52). Regardless of terminology, it is important to specify an implementation strategy’s core functions, and the different implementation strategies involved, so they can be evaluated and replicated, as described below.

Fixed and Adaptive Implementation Trial Designs

Implementation trials generate new knowledge about which implementation strategies work best to improve effective intervention uptake. Fixed trial designs typically start with a known set of static implementation strategies and design choices. Adaptive implementation trial designs involve changing the implementation strategies or design methods used during the trial. Fixed and adaptive randomized implementation trial designs are described below with key examples, and Table 2 presents advantages and disadvantages of each design. In describing adaptive implementation trial designs, we choose to focus primarily on randomized designs because they principally involve the allocation and reallocation of implementation strategies, such as with the use of sequential multiple assignment randomized trial (SMART) designs (2, 6, 55). While a more in-depth discussion of observational implementation designs is provided elsewhere (19, 29, 44, 46), fixed designs can include quasi-experimental designs (43) such as stepped-wedge or interrupted time series designs.

Table 2.

Advantages and disadvantages of various designs

| Trial design type | Design | Advantages | Disadvantages |
|---|---|---|---|
| Fixed Trial Designs | Cluster randomized trials | Is the most straightforward; can be applied to a wide range of content areas | Fixed nature may not adequately account for natural variation or best reflect real-world implementation; some sites are randomized to less intense arm |
| | Stepped-wedge trials (randomized, quasi-experimental) | All sites receive the intervention by the end of the trial; increased measurement may reduce the sample size needed | Increases costs and response burden because of the greater number of measurements; can take longer to complete trial; has an inflexible schedule of measurement and start times, which is challenging |
| | SMART designs | Reflects real-world and personalized nature of clinical decision-making; can answer multiple research questions within a single trial; can require smaller samples by incorporating adaptive intervention strategies | Can be complex to run; results may not generalize to different settings where the same intervention personalization is not available; may not have sufficient information to prespecify implementation strategies; may be insufficiently powered to assess each permutation |
| Adaptive Trial Designs | Adaptive iterative design | Allows for real-time adaptation of interventions based on emerging evidence and insights gained during implementation; emphasizes a learning orientation; involves stakeholders | The need for continuous data collection can require additional resources and could present data quality challenges; iteration could create complex implementation strategies, which could also limit generalizability |
| | Adaptive implementation strategy designs | Yields data about which strategies work in a certain context | May not have sufficient information to prespecify the progression of implementation strategies; can be resource intensive and complex; can be difficult to disentangle the effects; can be difficult to scale outside of the tested context |
| | MAISYs | Allows for the evaluation of implementation strategies that target multiple levels of a health care system; can optimize strategies based on feedback; promotes learning systems; may enhance contextual relevance | Can be complex to run; is resource intensive given the involvement of multiple levels; may lack generalizability because of the focus on local tailoring |

Abbreviations: MAISYs, multi-level adaptive implementation strategy designs; SMART, sequential multiple assignment randomized trial.

Fixed trial designs.

Fixed implementation trial designs use prespecified implementation strategies and often use cluster randomization, allocation arms, and end points to compare different implementation strategies. Stepped-wedge designs randomize the timing of strategy receipt rather than whether sites receive a strategy, and some stepped-wedge designs are quasi-experimental, with no randomization of sites or regions at all. Other quasi-experimental fixed designs used in implementation science include interrupted time series and pre-post designs with a nonequivalent control group, which are often applied when randomization is not possible. SMART designs (2) are a particular type of fixed design that includes decision points at which sites can be rerandomized to augmented or different implementation strategies. SMART implementation designs, described below, inform adaptive implementation strategies by determining which sequence works best to promote effective intervention uptake.

Cluster randomized trials.

Cluster randomized trials are a common trial design used in implementation science to evaluate the effectiveness of implementation strategies or policies at the organizational or community level. In cluster randomized implementation trials, groups (or clusters) of settings such as clinics, schools, or organizations are randomly assigned to receive either the implementation strategy or usual practice to determine which approach is best for promoting adoption and sustained use of effective interventions in real-world practice. These trials can be used to evaluate a wide range of implementation strategies, including those at the provider level (e.g., training, reminders, coaching) or the organization level (e.g., performance incentives, facilitation). One advantage of cluster randomized trials in implementation science is that they minimize the potential for contamination, in which individuals in the control group are exposed to the implementation strategy through interactions with individuals in the intervention group. A caveat is that individuals within the same cluster tend to resemble one another; analyses must account for this within-cluster correlation, which reduces the effective sample size.
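The loss of effective sample size mentioned above is commonly quantified with the design effect for equal-sized clusters, DE = 1 + (m - 1)ρ, where m is the number of individuals per cluster and ρ is the intracluster correlation coefficient (ICC). This standard formula is not stated in the original text; the sketch below, with hypothetical function names and illustrative numbers, shows how quickly clustering erodes the information a trial carries.

```python
def design_effect(cluster_size: float, icc: float) -> float:
    """Variance inflation for equal-sized clusters: DE = 1 + (m - 1) * ICC."""
    if cluster_size < 1:
        raise ValueError("cluster_size must be at least 1")
    if not 0.0 <= icc <= 1.0:
        raise ValueError("icc must lie in [0, 1]")
    return 1.0 + (cluster_size - 1.0) * icc


def effective_sample_size(n_clusters: int, cluster_size: float, icc: float) -> float:
    """Number of independent individuals carrying the same information as the clustered sample."""
    total = n_clusters * cluster_size
    return total / design_effect(cluster_size, icc)


if __name__ == "__main__":
    # Hypothetical trial: 20 clinics with 50 patients each and an ICC of 0.05.
    de = design_effect(50, 0.05)
    n_eff = effective_sample_size(20, 50, 0.05)
    print(f"design effect = {de:.2f}, effective n = {n_eff:.0f} of 1000")
    # design effect = 3.45, effective n = 290 of 1000
```

In this illustration, 1,000 enrolled patients carry roughly the information of 290 independent patients. Unequal cluster sizes generally inflate the design effect further, so the equal-size formula tends to understate the loss in real trials.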

Several tests of the GTO implementation strategy (1, 8, 10–12, 27, 28) have employed a cluster randomized trial design. GTO is a 10-step, manualized, multicomponent facilitated implementation strategy that includes provider training, intervention adaptation, academic detailing (real-time observations by facilitators who provide specific implementation feedback), feedback reports, and facilitation. Preparing to Run Effective Programs (PREP) was a cluster randomized trial (8, 10–12, 27, 28) comparing 15 Boys & Girls Club sites that implemented CHOICE, a five-session evidence-based alcohol and drug prevention program, with 14 similar sites that implemented the same program supported by GTO. A central component of GTO is step 4 (“fit”), in which facilitators collaborated with Boys & Girls Club personnel to adapt CHOICE to fit their organization while preserving its core functions, for example, by varying the timing of sessions, splitting sessions, or changing the examples used to match the target population.

Stepped-wedge trials.

Stepped-wedge trials are a type of cluster design in which the timing of site starts is randomized or, if not randomized, rolled out sequentially. In stepped-wedge trials, all sites receive the implementation strategy, but at different start times. Stepped-wedge designs are often applied when it is not pragmatic to provide the implementation strategy to all sites simultaneously (e.g., when implementation interventionists need time to prepare site practitioners to use the effective intervention). They are also used when it is impossible to randomize receipt of an effective intervention because of the time-sensitive nature of the rollout. However, a cluster randomized trial with a staggered start can often serve a time-sensitive rollout equally well, especially because, when there is limited capacity to bring all sites up to speed at once, randomizing start times is the most ethical option. In addition, all sites in a stepped-wedge design serve as their own controls before receiving the implementation strategy. However, this approach assumes that the implementation strategy clearly leads to more benefit than harm for participants, rather than equipoise (i.e., it assumes that offering the implementation strategy to all sites is the right approach). An alternative design that still allows all sites to receive the implementation strategy is a cluster randomized trial with a wait-list control. Despite their practicality, stepped-wedge designs have several notable disadvantages, including the need for strict adherence to start times, the burden of more repeated measurement, and the need to account for secular trends.

In a recent stepped-wedge trial, Getting To Implementation (GTI) (56) was used to support practitioners in improving care for Veterans with cirrhosis, or advanced liver disease. GTI is a manualized implementation support tool based on GTO; rather than helping sites select and implement effective interventions, as in GTO, it assists sites in selecting the implementation strategies themselves (e.g., Table 1, line 3). Sites serving Veterans with cirrhosis were cluster-randomized to the time at which they would receive GTI. This design was chosen because leadership wanted all sites to receive implementation support by the end of the study, but resources were available to provide GTI support to only four sites at a time. Data were collected from all sites at baseline, 6 months, 12 months, 18 months, and 24 months using repeated surveys of patients and providers.
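The allocation logic of a stepped-wedge trial can be sketched as a randomized ordering of sites into waves, with each wave crossing over from control to the implementation strategy at a later period and never crossing back. The function name, the 16 sites, and the one-wave-per-period timing are hypothetical; the four-sites-per-step constraint mirrors the GTI example, but the actual GTI site count and schedule are not reproduced here.

```python
import random
from typing import Dict, List, Optional, Sequence


def stepped_wedge_schedule(sites: Sequence[str], sites_per_step: int,
                           seed: Optional[int] = None) -> Dict[str, List[int]]:
    """Return a 0/1 exposure row per site (1 = receiving the strategy).

    Period 0 is a baseline in which no site is exposed; wave k (1-indexed)
    starts the strategy at period k and remains exposed afterward.
    """
    if sites_per_step < 1:
        raise ValueError("sites_per_step must be at least 1")
    rng = random.Random(seed)
    order = list(sites)
    rng.shuffle(order)  # the randomized element: which sites start when
    n_steps = -(-len(order) // sites_per_step)  # ceiling division
    n_periods = n_steps + 1  # one baseline period plus one period per step
    schedule = {}
    for index, site in enumerate(order):
        wave = index // sites_per_step + 1
        schedule[site] = [1 if period >= wave else 0 for period in range(n_periods)]
    return schedule


if __name__ == "__main__":
    sites = [f"site{i:02d}" for i in range(1, 17)]  # hypothetical sites
    schedule = stepped_wedge_schedule(sites, sites_per_step=4, seed=2024)
    # Print sites in order of start time: earliest starters have the most 1s.
    for site, row in sorted(schedule.items(), key=lambda item: -sum(item[1])):
        print(site, row)
```

With 16 sites and four per step, this sketch yields five measurement periods, which would line up with measurement at baseline, 6, 12, 18, and 24 months if each step lasted six months. Because every site contributes control periods before crossover, and calendar time and exposure move together, the analysis must also model secular trends.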

Sequential multiple assignment randomized trial designs.

SMART designs (2), which are also considered optimization randomized trial designs, have been increasingly used to determine the optimal sequence of implementation strategies for improving the uptake of effective interventions in real-world practice. SMART designs involve prespecified sequences of different implementation strategies (e.g., audit and feedback, training, facilitation) used in the same cohort of settings; sometimes a sequence instead augments the same implementation strategy for sites that are not responding to an initial implementation strategy (e.g., are not using the effective intervention). The advantage of SMART designs in implementation strategy trials is that they can show which sequence of strategies works best to improve uptake and use of an intervention and whether strategies work better if offered immediately or delayed. In addition, the more intensive implementation strategies are offered only to sites that need additional implementation support, as indicated by their nonresponse (e.g., few or no practitioners using the effective intervention). Because the strategy sequences must be prespecified, SMART designs support replication; the corresponding disadvantage is that there are often insufficient preliminary studies to justify the selection of a set of promising implementation strategies.

Previous SMART implementation trials have focused on enhancing uptake of effective collaborative care models for persons with mental disorders (34, 40). For example, the Adaptive Implementation of Effective Programs Trial (ADEPT) (59, 60) determined whether adding internal facilitators (i.e., clinical managers trained to provide embedded support to practitioners using the effective intervention) to external facilitators (i.e., study team–supported implementation experts who build site practitioners’ self-efficacy skills) could improve intervention uptake and patient outcomes, compared with practitioner training through the REP implementation strategy or REP plus external facilitation alone. Sites not responding to REP were randomized to either external facilitation or external plus internal facilitation, allowing investigators to compare effectiveness among sites that did not benefit from REP alone.
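The second decision point in an ADEPT-style SMART can be sketched as follows: sites whose uptake under REP meets a response threshold continue with REP, while nonresponding sites are rerandomized in equal numbers to the two facilitation arms. The threshold value, the uptake measure, the arm labels, and the function name are hypothetical illustrations, not the trial's actual protocol.

```python
import random
from typing import Dict, Optional

REP_CONTINUE = "REP (continue)"
# Hypothetical labels for the two second-stage arms offered to nonresponders.
FACILITATION_ARMS = ("REP + external facilitation",
                     "REP + external + internal facilitation")


def assign_second_stage(uptake_by_site: Dict[str, int], threshold: int = 10,
                        seed: Optional[int] = None) -> Dict[str, str]:
    """Assign second-stage strategies at the SMART decision point.

    uptake_by_site: hypothetical tailoring variable, e.g., number of patients
    receiving the effective intervention after the first stage.
    """
    rng = random.Random(seed)
    assignments: Dict[str, str] = {}
    nonresponders = []
    # sorted() makes the result depend only on the seed, not on dict order.
    for site, uptake in sorted(uptake_by_site.items()):
        if uptake >= threshold:
            assignments[site] = REP_CONTINUE  # responders stay on the first-stage strategy
        else:
            nonresponders.append(site)
    rng.shuffle(nonresponders)
    # Alternate arms over the shuffled list so arm sizes differ by at most one.
    for i, site in enumerate(nonresponders):
        assignments[site] = FACILITATION_ARMS[i % 2]
    return assignments


if __name__ == "__main__":
    uptake = {"A": 14, "B": 3, "C": 0, "D": 11, "E": 6, "F": 2, "G": 9}
    for site, arm in sorted(assign_second_stage(uptake, seed=7).items()):
        print(site, arm)
```

Only nonresponders are rerandomized, which is what lets investigators compare the two facilitation arms among sites that did not benefit from REP alone. A real trial would use a prespecified randomization procedure generated independently of the study team rather than this simple shuffle.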

Adaptive trial designs.

Adaptive implementation strategy trial designs can be either exploratory (i.e., the sequences of implementation strategies are not prespecified) or hypothesis driven (the sequences of implementation strategies are prespecified).

Adaptive iterative designs.

Adaptive iterative design is an example of an exploratory design where implementation strategies are systematically evaluated and modified based on real-time feedback and data. This design allows for continuous improvement, where insights gained from implementation inform subsequent strategy refinements. The key components of adaptive iterative designs include flexibility (e.g., adapt the implementation strategy in light of the evolving contextual needs), continuous learning (e.g., collect practitioner feedback on the implementation strategy), feedback loops (e.g., implementer and practitioner identify new strategies), and collaboration (e.g., implementers or practitioners learn from other sites to improve implementation). These adjustments can involve modifications to both the effective intervention and the implementation strategy. Adaptive iterative designs are ideal when there is uncertainty about which implementation strategies to use and when the sequence or combination of implementation strategies needs to be pilot tested (e.g., in a hybrid type 2 trial). However, changes to the implementation strategies that are not prespecified and occur during testing may introduce bias and preclude replicability.

Adaptive implementation strategy designs.

Adaptive implementation strategy designs are prespecified versions of adaptive implementation strategies. They support replication while also preserving flexibility in implementation. In adaptive implementation strategy designs, the study protocol outlines the sequence of implementation strategies to use depending on the site context, changing needs, and responsiveness to previously used implementation strategies. Adaptive implementation strategy designs are derived from SMART trial designs, which test which sequences of implementation strategies work best for improving effective intervention uptake. Ideally, adaptations to implementation strategies are anticipated and occur at a critical decision point, when a decision is made about which implementation strategy to provide or enhance based on the information available at that point. The measures used to make these decisions are known as “tailoring variables” (2, 47), which can include any measure of past performance, current need, predictions of future performance, prior strategies offered, or engagement with previous strategies (2, 44). Adaptive implementation strategy designs are flexible, yet they differ from adaptations to the effective intervention and from unanticipated changes to implementation strategies (23) because they prespecify decision points and decision rules.
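A prespecified decision rule can be written down as a function of the tailoring variables observed at each decision point. The variables, the threshold, and the strategy names below are hypothetical; the sketch illustrates only that the rule is fixed before the trial, so any site with the same tailoring-variable values receives the same next strategy.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class TailoringVariables:
    """Hypothetical tailoring variables measured at one decision point."""
    uptake_rate: float                   # past performance: share of practitioners using the intervention
    engaged_with_prior: bool             # engagement with previously offered strategies
    strategies_offered: Tuple[str, ...]  # strategies already provided to the site


UPTAKE_TARGET = 0.5  # hypothetical response threshold


def next_strategy(tv: TailoringVariables) -> str:
    """Prespecified decision rule; branches are checked in order and the first match wins."""
    if tv.uptake_rate >= UPTAKE_TARGET:
        return "continue current support"
    if "facilitation" not in tv.strategies_offered:
        return "augment with facilitation"
    if not tv.engaged_with_prior:
        return "identify and prepare a practice champion"
    return "intensify facilitation"


if __name__ == "__main__":
    site = TailoringVariables(uptake_rate=0.2, engaged_with_prior=True,
                              strategies_offered=("training",))
    print(next_strategy(site))  # augment with facilitation
```

Because each branch is written down before the trial, a log of tailoring-variable values and the resulting decisions is enough for another team to reproduce the strategy sequence, which is the property that distinguishes these designs from unplanned changes.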

Multi-level adaptive implementation strategy designs (MAISYs) (24) are an emerging approach to guide adjustments to implementation strategies (e.g., augmenting, intensifying) based on contextual needs at multiple levels (e.g., clinic, practitioner). MAISYs are prespecified methods informed by multi-level implementation sequential, multiple assignment, randomized trial (MI-SMART) designs for implementation strategies, in which sequences of randomizations of different implementation strategies occur at multiple levels to optimize the implementation approach. An example of a MAISY is the Adaptive School-based Implementation of CBT (ASIC) trial (40, 58), in which a regional-level implementation strategy (REP, including CBT dissemination to and training of school professionals) was augmented by school-level coaching (i.e., enhanced training in CBT competency), facilitation (strategic thinking skills to overcome barriers to CBT), or coaching and facilitation in combination.

RECOMMENDATIONS

Using this alternative taxonomy to further clarify the meaning of adaptation across interventions, implementation strategies, and implementation strategy trial designs, we provide the following recommendations for strengthening implementation science methods, especially for implementation strategy trial designs (see Table 3) (42).

Table 3.

Recommendations to clarify the meaning of adaptation: rationale and future directions

| Recommendation | Rationale | Future direction |
|---|---|---|
| Discern and prespecify core functions of implementation strategies, which are the strategy’s active ingredients that help achieve the intended effect of improving intervention uptake | | |
| Consider exploratory study designs that refine and specify implementation strategies | | |
| Address design requirements and ethical considerations up front with health system/community partners to maximize rigor and responsiveness, notably rationale for randomization and blinding/masking of outcomes | | |

Abbreviations: FRAME-IS, Framework for Reporting Adaptations and Modifications to Evidence-based Implementation Strategies; LISTS, Longitudinal Implementation Strategy Tracking System; SMART, sequential multiple assignment randomized trial.

Recommendation 1: Discern and Prespecify Core Functions of Implementation Strategies

To determine whether implementation strategies are truly being adapted, they should be prespecified wherever possible, which also supports their replication. One method is to discern each strategy’s core functions and the forms those functions take. In doing so, implementation scientists ought to take a full inventory of the actors and actions of the different implementation strategies to be used and assume that all the functions of the implementation strategies will be offered under an intent-to-treat framework in an implementation strategy trial. For example, the core functions of the facilitation implementation strategy were recently expanded based on a review of recent trial results (37). However, if prespecification is not possible or if there is uncertainty about whether the implementation strategy or strategies will need to be adapted, then hypotheses about the impact of the implementation strategies should be considered exploratory, to avoid overly constraining prespecification of the strategies. This approach also enables implementers to pivot their use of different implementation strategies based on real-time feedback on new barriers from health system and community partners.

For implementation strategies such as facilitation (37), core functions include assessing readiness and identifying implementation barriers and facilitators with the providers responsible for effective intervention deployment; an example of a form might be the type of data collected in a needs assessment or the frequency of stakeholder meetings. Another implementation strategy core function is the process used to adapt and tailor an effective intervention, such as the use of a plan-do-study-act cycle. The form of a plan-do-study-act cycle may vary in terms of the time between pilot tests or the number of sites or patients involved.

We recommend that researchers describe the core functions of an implementation strategy that should be fixed and specify a priori the forms of the strategy (dose, timing, length, setting) that can be adapted. However, most implementation strategies do not have established core functions or forms and require further study to ensure replication. Efforts are underway to classify the functions or mechanisms of implementation strategies because strategy forms can (and likely should) vary widely depending on contextual factors. For example, a discrete strategy such as changing reimbursement can target different people in different ways and have a different source, scale, and scope. For this reason, Proctor et al. (54) developed recommendations for specifying implementation strategies for replication and evidence generation, which are used in the implementation replication framework (12).
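One way to operationalize this recommendation is to record, before the study begins, which elements of a strategy are core functions and which are adaptable forms, and then to classify each proposed change against that record. The hypothetical Python sketch below uses the facilitation core functions mentioned above and the form dimensions listed here (dose, timing, length, setting); the class name, the returned labels, and the example form values are assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class StrategySpec:
    """A priori specification: fixed core functions and adaptable forms (hypothetical structure)."""
    name: str
    core_functions: frozenset[str]                       # must stay fixed
    forms: dict[str, str] = field(default_factory=dict)  # may vary by context


def classify_change(spec: StrategySpec, changed_item: str) -> str:
    """Label a proposed change relative to the prespecified strategy."""
    if changed_item in spec.core_functions:
        return "adaptation: core function changed"
    if changed_item in spec.forms:
        return "form variation: core functions retained"
    return "unspecified: record and review"  # not covered a priori


facilitation = StrategySpec(
    name="facilitation",
    core_functions=frozenset({"assess readiness", "identify barriers and facilitators"}),
    # Illustrative form values only; the dimensions follow the text above.
    forms={"dose": "1 call per week", "timing": "months 1-6",
           "length": "60 minutes", "setting": "virtual"},
)
print(classify_change(facilitation, "setting"))  # form variation: core functions retained
```

Under this scheme, moving facilitation meetings from virtual to in-person changes only a form, whereas dropping the readiness assessment changes a core function and counts as an adaptation; anything not listed is flagged for review rather than silently absorbed.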

Recommendation 2: Consider Exploratory Study Designs that Refine and Specify Implementation Strategies

The second recommendation for improving the uptake of effective interventions is to design the strongest implementation strategy possible and prepare for its rigorous testing using an appropriate implementation trial design. However, although funding agencies favor fully powered trials, not all implementation strategies have had their core functions adequately specified. Investing in large implementation trials without fully specifying the implementation strategies can generate inconclusive results that limit replication by other researchers or practitioners. To this end, greater value should be placed on funding exploratory trials that ensure implementation strategies are well refined and adequately specified. Emerging frameworks and methods, notably from the US Department of Veterans Affairs Quality Enhancement Research Initiative (QUERI) (38) and Guastaferro & Collins (26), enable more impactful exploratory work to refine implementation strategies without producing inconclusive results due to unanticipated adaptations; these include rapid cycle research, the multiphase optimization strategy (MOST) (26), and the QUERI Roadmap (38). These frameworks can guide the development and refinement of appropriate implementation strategies using feedback from consumers, providers, systems leaders, and policy makers, as well as through preliminary testing (e.g., a hybrid type 2 study).

Derived from previous frameworks that recommend ways to speed up the implementation process (54), rapid cycle research approaches (30) use contextually informed processes that are modified to enable time-sensitive implementation strategy studies. Rapid cycle research has six phases: preparation (establish partnerships), problem exploration (identify the problems to solve collaboratively with local stakeholders), knowledge exploration (better understand the problem from different points of view), solution development (design, with stakeholders, a solution that is simple and scalable), solution testing (match the method, whether traditional research or quality improvement, to the question while minimizing threats to internal validity and moving as quickly as possible), and implementation and dissemination (identify and address barriers, spread the solution, and attempt to bring it to scale). Much of what makes rapid cycle research proceed more quickly is collaboration with interested parties (e.g., patients, providers, system leaders): research protocols fit the setting better and are thus easier to execute, and solutions spread more easily because buy-in is established from the beginning.

The MOST process can be applied to implementation strategies: the implementer pilots the implementation strategy to inform its further refinement for use across other settings. In the pilot phase, both the intervention and the implementation strategies are refined based on their intensity/affordability, scalability, and efficiency in an initial cohort of sites. For example, MOST can be used if the question is, How do I optimize intervention or implementation strategy components? In contrast, a SMART design can be used if the question is, What should I do for sites that do not respond to first-line strategies? SMART designs are used once the implementation strategies (including their core functions and measures of fidelity) have been specified; they inform adaptive implementation strategies by empirically testing different strategies, which may be derived from MOST designs.
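Within MOST, the question of how to optimize components is commonly addressed with a factorial experiment in which each candidate component is switched on or off. The Python sketch below enumerates the conditions of a 2^3 full factorial design for three hypothetical implementation strategy components; the component names are placeholders, and the choice of a full rather than fractional factorial is an assumption of this example.

```python
from itertools import product

# Hypothetical candidate components of an implementation strategy package.
COMPONENTS = ("training", "audit_and_feedback", "facilitation")


def factorial_conditions(components: tuple[str, ...]) -> list[dict[str, bool]]:
    """Enumerate every on/off combination of the components (a 2^k full factorial)."""
    return [dict(zip(components, levels))
            for levels in product((False, True), repeat=len(components))]


conditions = factorial_conditions(COMPONENTS)
for number, condition in enumerate(conditions, start=1):
    active = [name for name, on in condition.items() if on] or ["none"]
    print(number, ", ".join(active))
```

Each component's main effect can then be estimated by comparing the four conditions in which it is on with the four in which it is off, so every site contributes to the estimate for every component.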

Similarly, the QUERI Roadmap for Implementation and Quality Improvement (38) was developed to enable researchers and practitioners to design an implementation strategy study from the beginning, using similar methods outlined in the rapid cycle research process. The QUERI Roadmap places specific emphasis on prespecifying the intervention, implementation strategies, and outcomes to be evaluated based on input from those most affected by the implementation process. QUERI also provides examples and training opportunities in prespecified implementation strategies such as facilitation, leadership for organizational change and implementation, and evidence-based quality improvement, which have developed a set of core functions that can be replicated across different settings (https://www.queri.research.va.gov/training_hubs/default.cfm).

In exploratory implementation strategy studies, tracking adaptation is critical, and one method to do so is the Framework for Reporting Adaptations and Modifications to Evidence-based Implementation Strategies (FRAME-IS) (45). Study teams use the FRAME-IS to gather input from different interested parties to capture how the implementation strategy was modified, including the nature, timing, goal, and dissemination of the modification. The Longitudinal Implementation Strategy Tracking System (LISTS) (57) is a tool for tracking both anticipated and unanticipated adaptations to implementation strategies. Notably, LISTS uses the Proctor action-actor framework, the Expert Recommendations for Implementing Change (ERIC) (51, 52), and the FRAME-IS to describe the different implementation strategies used and the extent to which they were adapted. Moreover, these tools may also help in discerning a strategy’s core functions (19, 20, 39) from its forms and clarify when an adaptation, such as a change in the frequency, length of time, venue, or timing of an implementation strategy, actually occurred.
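A structured log is one way to track modifications in the spirit of FRAME-IS and LISTS. The Python sketch below records the four attributes named above (nature, timing, goal, dissemination) plus whether the modification was anticipated, and counts unanticipated modifications per strategy. The field names and example entries are hypothetical and do not reproduce the official FRAME-IS or LISTS coding forms.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Modification:
    """One logged modification to an implementation strategy (hypothetical fields)."""
    strategy: str
    nature: str         # what changed
    timing: str         # when it changed
    goal: str           # why it changed
    dissemination: str  # how widely the change was applied
    anticipated: bool   # prespecified in the protocol?


def unanticipated_by_strategy(log: list[Modification]) -> Counter:
    """Count unanticipated modifications for each strategy."""
    return Counter(m.strategy for m in log if not m.anticipated)


log = [
    Modification("facilitation", "meetings moved to virtual", "month 2",
                 "reduce travel burden", "all sites", anticipated=True),
    Modification("facilitation", "meeting frequency reduced", "month 4",
                 "staffing shortage", "one site", anticipated=False),
    Modification("audit and feedback", "report redesigned", "month 5",
                 "clarity for providers", "two sites", anticipated=False),
]
print(unanticipated_by_strategy(log))
```

Keeping anticipated and unanticipated changes in one log makes it easy to see where a strategy drifted from its prespecification, which is the information needed to decide whether a change touched a core function or only a form.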

However, a key challenge with these tools is that they require implementation science background and training to use, thus limiting their scalability, especially across multiple, lower-resourced settings. In addition, to date there are few ways to ascertain data on implementation strategy use in a more cost-efficient and standardized manner. For example, emerging informatics methods are being used to automatically capture fidelity to effective interventions (15), but their use is limited to settings with comprehensive electronic health records.

Nonetheless, more journals should promote opportunities for the publication of exploratory implementation strategy trials. Moreover, in many ways SMART trials are well suited to piloting because one can systematically tinker with the implementation strategy by prespecifying augmentations or changes. However, SMART trials might be limited in their power to discern primary effects of the different sequences of strategies. Ultimately, creating additional opportunities to conduct exploratory trials fosters innovation in the development of more impactful implementation strategies.

Recommendation 3: Address Design Requirements and Ethical Considerations Up Front with Health System/Community Partners to Maximize Rigor and Responsiveness

For implementation strategy trials, addressing up front the design requirements such as randomization, blinding, and other ethical considerations, in addition to appropriate interventions and implementation strategies, will enable appropriate balancing of rigor with responsiveness and engagement with individual and community partners and prevent the need to stray from prespecified components of the trial during the study.

Implementation trial randomization: pros and cons.

Most of the study designs described above can involve randomization. However, randomization may not be appropriate in all situations when evaluating implementation strategies. The purpose of randomization is to balance arms on unmeasured confounders. However, there are times when randomization is not perceived as equitable, ethical, or feasible. This is particularly true when there is no equipoise, i.e., when one arm is thought to be superior to another. In implementation science, this situation often occurs when there is an inactive control. Moreover, communities may have a legitimate need to roll out the effective intervention sooner rather than later because of a public health crisis. Given that most implementation strategies are thought to enhance access to an evidence-based practice or program (compared with passive implementation), an inactive control is often considered suboptimal. In such cases, a stepped-wedge or cluster trial design with a wait-list control may be preferable. In other situations, the more intensive implementation strategy may not be optimal, especially for sites with labor shortages and overburdened providers (59, 60); designs such as SMART, which time increases in implementation intensity to each site’s needs, can then be more feasible. Randomization is a key concern to be raised with community members and participants in trial planning. It is critical to include marginalized populations in these conversations to avoid exacerbating mistrust in research and to ensure that important voices are heard. This work is important from an ethical standpoint and can ensure that research is maximally applicable and valid, but it can also require resources to engage in such ongoing partnerships (6).
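A stepped-wedge design gives every site the implementation strategy eventually while still randomizing when each site starts. The minimal Python sketch below generates such a schedule under assumed conditions: one all-control baseline period, equal-sized groups of sites crossing over at each step, and a fixed random seed. The site labels and the function name are illustrative.

```python
import random


def stepped_wedge_schedule(clusters: list[str], n_steps: int, seed: int = 7) -> dict[str, list[int]]:
    """Return {cluster: [0/1 for each period]}, where 1 means the strategy is active.

    Period 0 is an all-control baseline; at each of the n_steps later periods,
    a randomly ordered group of clusters crosses over and stays crossed over.
    """
    rng = random.Random(seed)
    order = list(clusters)
    rng.shuffle(order)  # random crossover order
    schedule = {}
    for i, cluster in enumerate(order):
        crossover = 1 + (i * n_steps) // len(order)  # equal-sized groups per step
        schedule[cluster] = [int(period >= crossover) for period in range(n_steps + 1)]
    return schedule


sites = ["A", "B", "C", "D", "E", "F"]
for site, periods in sorted(stepped_wedge_schedule(sites, n_steps=3).items()):
    print(site, periods)
```

Because sites never revert to control, the design works like a wait-list control: every site eventually receives the strategy, which speaks to the equity concerns about withholding it.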

Blinding (masking) and reporting of interim results in implementation trials.

One of the challenges and opportunities with implementation trials is that community or health system partners want to know about progress, especially in uptake of an effective intervention across their sites. One way to enable progress reporting is to report on intermediate variables, such as the number of patients receiving at least one intervention encounter, that are not tied to a primary or secondary outcome such as intervention fidelity or patient health status. In general, study data collectors and analysts should be blinded to intervention arm assignment. While it may be impossible to blind the study implementer (e.g., facilitator) to site progress data, implementers can be blinded to site progress in other allocation arms. Moreover, the same measures should be used across allocation arms to assess implementation and patient outcomes.

Blinding (masking) is the “process used in experimental research by which study participants, persons caring for the participants, persons providing the intervention, data collectors, and data analysts are kept unaware of group assignment (control vs intervention)” (21, p. 70). Journal protocol publications may require documentation of whether and how blinding was done, e.g., following the Consolidated Standards of Reporting Trials (CONSORT) (7). For implementation trials, blinding of participants (e.g., care providers) and implementation interventionists is not possible, but blinding of outcome data by arm is feasible (e.g., outcomes assessors are blinded to group assignment). Challenges with maintaining blinding include community partners’ desire to be updated during the study and the sharing of interim progress between the implementation interventionist (e.g., facilitator) and on-site practitioners who want to know their overall progress and to assess barriers to intervention uptake.

Overall, we recommend that only the unblinded study statistician should see trial results for primary and secondary outcomes by study arm until all data have been collected. Data on primary and secondary outcomes that typically serve as process variables (e.g., reach, adoption, intervention fidelity) can be shared with implementation intervention staff, but only for the single implementation arm in which they work and only for the purposes of encouraging adherence to the approved protocol. Feedback to interested parties (e.g., patient engagement groups, providers, community, and health system partners) should be based on data different from the primary and secondary outcomes such as reach; doing so ensures that investigators can adjust early on if there is an imbalance in participation that can be rectified. Ultimately, it is essential to describe how blinding was done. For example, in the ASIC implementation trial (40), the coach and facilitator knew the randomization assignments, but the research assistant (RA) did not. The analyst and RA remained blinded to outcomes until study completion, and interim reports included response rates and enrollment but not primary outcome data.
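The data-sharing rules recommended above can be written as an explicit access check, which makes a blinding plan easier to describe and audit. The Python sketch below is hypothetical: the role names, the three data categories, and the assumption that interim feedback is shared only when pooled across arms are illustrative choices, not requirements from CONSORT or from the ASIC trial.

```python
from typing import Optional

# Hypothetical data categories for a blinding plan.
OUTCOME = "primary_or_secondary_outcome"   # e.g., patient health status
PROCESS_OUTCOME = "process_outcome"        # outcome serving as a process variable, e.g., reach
INTERIM_FEEDBACK = "interim_feedback"      # non-outcome data, e.g., enrollment, response rates


def can_view(role: str, category: str,
             viewer_arm: Optional[str] = None,
             data_arm: Optional[str] = None) -> bool:
    """Return True if `role` may see data of `category` before data collection ends.

    `data_arm` is the arm the data are broken out by; None means pooled across arms.
    """
    if role == "unblinded_statistician":
        return True  # the only role that sees outcomes by arm
    if category == INTERIM_FEEDBACK:
        return data_arm is None  # assumption: shared only when pooled across arms
    if role == "implementation_staff" and category == PROCESS_OUTCOME:
        return viewer_arm is not None and data_arm == viewer_arm  # own arm only
    return False  # analysts, research assistants, partners: blinded to outcomes


print(can_view("implementation_staff", PROCESS_OUTCOME, viewer_arm="A", data_arm="B"))  # False
```

Writing the rules down this way also gives the trial a concrete artifact to cite when describing how blinding was done.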

Other ethical considerations.

Community and health system partners are crucial to the success of implementation, and it is critical to seek their input when developing and adapting interventions and implementation strategies. For example, dosing of implementation strategies must be carefully considered, as more intensive strategies may burden providers, particularly in lower-resourced settings, inadvertently exacerbating health inequities. It is likewise critical to measure the potential harms of the strategies (e.g., provider burnout, erosion of patient trust).

SMART and adaptive trial designs were developed to address gaps in effective intervention uptake in lower-resourced sites, but it is essential to be transparent about the methods to avoid worsening disparities and mistrust or overburdening an already stretched system. In the ADEPT trial (59, 60), some sites were less open to facilitation support because frontline providers felt “volun-told” to serve as an internal facilitator. Likewise, it is important to focus on sustainment as a key implementation outcome. In a hypothetical SMART trial, some sites might respond quickly to implementation support in order to fill unmet clinical needs, yet longer-term barriers to sustainment are not addressed if they are not randomized to receive additional implementation strategies. A potential solution is to use an unrestricted SMART design (33), where additional implementation support is not contingent on the site’s responsiveness. Overall, it is critical to carefully design studies and implementation strategies and assess the impacts of the implementation strategies throughout the trial to maximize the benefits and minimize the harms of such studies.
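The difference between a response-contingent SMART and the unrestricted variant described above comes down to which sites are eligible for the second-stage randomization. The Python sketch below illustrates that eligibility rule only, following the description in the text; the design labels, the function name, and the binary response flag are assumptions, and it does not describe the protocol of any specific trial.

```python
def eligible_for_second_stage(sites: dict[str, bool], design: str) -> list[str]:
    """Return site IDs eligible for second-stage randomization to additional support.

    `sites` maps a site ID to whether it responded to the first-stage strategy.
    "contingent": only non-responding sites are re-randomized.
    "unrestricted": every site is re-randomized, regardless of response.
    """
    if design == "contingent":
        return sorted(site for site, responded in sites.items() if not responded)
    if design == "unrestricted":
        return sorted(sites)
    raise ValueError(f"unknown design: {design!r}")


sites = {"A": True, "B": False, "C": True, "D": False}
print(eligible_for_second_stage(sites, "contingent"))    # ['B', 'D']
print(eligible_for_second_stage(sites, "unrestricted"))  # ['A', 'B', 'C', 'D']
```

Under the unrestricted rule, early-responding sites A and C can still be randomized to additional support, which is what allows longer-term sustainment barriers at those sites to be addressed.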

CONCLUSIONS

The promise of adaptation and adaptive designs in implementation science is hindered by the lack of clarity and precision in defining what it means to adapt, especially with regard to the distinction between adaptive study designs and adaptive implementation strategies. This review provides a close look at this major development in implementation study designs and proposes a new way of describing adaptive implementation strategy and trial designs. An important consideration for an implementation strategy study is to discern whether an implementation strategy is being adapted (e.g., by the addition of distinct strategies) or not (e.g., retaining its existing core functions but in different forms) and to track these features in exploratory studies. To improve replicability and impact, future implementation science studies should better discern and prespecify core functions of implementation strategies; consider more exploratory studies that refine and specify implementation strategies, especially as they respond to changes in health system/community needs and barriers; and address design requirements and ethical considerations up front (e.g., randomization, blinding/masking).

SUMMARY POINTS.

Despite the growing interest in adaptive designs, the many types of adaptive designs appear to be misunderstood in written and spoken communication.

The term adaptation has been used to describe changes to an intervention prior to implementation, changes to a treatment that informed another effectiveness study, or changes to a treatment as part of the implementation strategy process.

“Adaptive interventions” can refer to treatments that are modified by clinicians in routine practice, while an “adaptive implementation strategy” involves a prespecified sequence of implementation strategies to enhance effective intervention uptake in sites needing additional implementation assistance.

“Adaptive intervention” and “adaptive implementation” trial designs usually refer to unanticipated modifications made during the trials, whether they be for interventions or programs or implementation strategies (20). Adaptive iterative designs usually refer to iterative modifications made to the implementation strategies themselves (21, 50) in response to feedback on the intervention or implementation strategy’s performance that are not prespecified in the study protocol, thereby limiting their potential replication and impact.

Implementation strategies to be tested can be fixed (unchanged) or adaptive (changing over time), and implementation trial designs can likewise be fixed or adaptive. The different combinations of fixed and adaptive strategies and trial designs create a new taxonomy to better understand adaptation.

Core functions of implementation strategies should be specified a priori in order to facilitate rigorous testing and replicability.

Researchers should also consider exploratory study designs that refine and specify implementation strategies to avoid investing in large-scale trials of unclear strategies.

For implementation studies, design requirements and ethical considerations should be addressed up front with health system/community partners to maximize rigor and responsiveness.

FUTURE ISSUES.

Organizations, including funders that support implementation science, should increasingly use grant mechanisms that encourage more exploratory design and analysis of implementation strategies in order to establish their core functions, which could then be fully assessed in a subsequent randomized trial.

A significant line of future research is matching implementation strategies to specific barriers found at a site and then testing the match to see if the implementation strategies improve uptake of effective interventions and subsequent health outcomes. For this reason, specifying the core functions of implementation strategies and the forms in which they can be delivered without compromising those core functions is even more critical in order to ensure replication of the implementation strategies and their translation to community and health system partners.

Health and social services licensing and accreditation organizations should consider offering certification programs in implementation strategies whose core functions have been tested and validated, especially through training programs and educational core competencies, in order to maximize their consistent use and spread in routine care settings.

Funders and other organizations responsible for implementing effective interventions in real-world and community-based settings should develop opportunities for community and health system partners to learn implementation study design methods to enable their active participation and optimization of research in their respective settings.

ACKNOWLEDGMENTS

This work was supported by the US Department of Veterans Affairs, Veterans Health Administration, Health Services Research & Development Service (I01HX002344), and the National Institutes of Health (UG3 HL154280, R01 MH114203; UM1TR004404; P50DA054039, R01DA048910, K23DA048182).

Glossary

- CBT: cognitive behavioral therapy
- REP: Replicating Effective Programs
- GTO: Getting To Outcomes
- SMART: sequential multiple assignment randomized trial
- ADEPT: Adaptive Implementation of Effective Programs Trial
- MAISYs: Multi-level adaptive implementation strategy designs
- ASIC: Adaptive School-based Implementation of CBT
- MOST: multiphase optimization strategy
- QUERI: Quality Enhancement Research Initiative
- FRAME-IS: Framework for Reporting Adaptations and Modifications to Evidence-based Implementation Strategies
- LISTS: Longitudinal Implementation Strategy Tracking System
- ERIC: Expert Recommendations for Implementing Change

Footnotes

DISCLOSURE STATEMENT

The authors are not aware of any affiliations, memberships, funding, or financial holdings that might be perceived as affecting the objectivity of this review. Institutional review board review was not required as no research or human subjects data were analyzed. The views expressed are those of the authors and do not necessarily represent the views of the Department of Veterans Affairs.

LITERATURE CITED

- 1.Acosta J, Chinman M, Ebener P, Malone PS, Cannon J, D’Amico EJ. 2020. Sustaining an evidence-based program over time: moderators of sustainability and the role of the Getting To Outcomes implementation support intervention. Prev. Sci 21:807–19 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Almirall D, Nahum-Shani I, Wang L, Kasari C. 2018. Experimental designs for research on adaptive interventions: singly and sequentially randomized trials. In Optimization of Behavioral, Biobehavioral, and Biomedical Interventions, ed. Collins LM, Kugler K, pp. 89–120. Cham, Switz.: Springer [Google Scholar]

- 3.Bero LA, Grilli R, Grimshaw JM, Harvey E, Oxman AD, Thomson MA. 1998. Closing the gap between research and practice: an overview of systematic reviews of interventions to promote the implementation of research findings. BMJ 317(7156):465–68 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Berta W, Cranley L, Dearing JW, Dogherty EJ, Squires JE, Estabrooks CA. 2015. Why (we think) facilitation works: insights from organizational learning theory. Implement. Sci 10(1):141. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Brown CH, Curran G, Palinkas LA, Aarons GA, Wells KB, et al. 2017. An overview of research and evaluation designs for dissemination and implementation. Annu. Rev. Public Health 38:1–22 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Burgess RA, Shittu F, Iuliano A, Haruna I, Valentine P, et al. 2023. Whose knowledge counts? Involving communities in intervention and trial design using community conversations. Trials 24(1):385. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Butcher NJ, Monsour A, Mew EJ, Chan A-W, Moher D, et al. 2022. Guidelines for reporting outcomes in trial reports: the CONSORT-Outcomes 2022 Extension. JAMA 328(22):2252–64 [DOI] [PubMed] [Google Scholar]; Presents evidence-and consensus-based standards for reporting outcomes in clinical trials.

- 8.Cannon JS, Gilbert M, Ebener P, Malone PS, Reardon CM. 2019. Influence of an implementation support intervention on barriers and facilitators to delivery of a substance use prevention program. Prev. Sci 20:1200–10 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Chang M, Chow S-C. 2005. A hybrid Bayesian adaptive design for dose response trials. J. Biopharm. Stat 15(4):677–91 [DOI] [PubMed] [Google Scholar]

- 10.Chinman M, Acosta J, Ebener P, Malone PS, Slaughter M. 2016. Can implementation-support help community-based settings better deliver evidence-based sexual health promotion programs: a randomized trial of Getting To Outcomes®. Implement. Sci 11:78. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Chinman M, Acosta J, Ebener P, Malone PS, Slaughter M. 2018. A cluster-randomized trial of Getting To Outcomes’ impact on sexual health outcomes in community-based settings. Prev. Sci 19:437–48 [DOI] [PMC free article] [PubMed] [Google Scholar]; Demonstrates that Getting To Outcomes improves program fidelity and outcomes from a randomized trial.

- 12.Chinman M, Acosta J, Ebener P, Shearer A. 2022. “What we have here, is a failure to [replicate]”: ways to solve a replication crisis in implementation science. Prev. Sci 23(5):739–50 [DOI] [PubMed] [Google Scholar]

- 13.Chinman M, Ebener P, Malone PS, Cannon J, D’Amico E, Acosta J. 2018. Testing implementation support for evidence-based programs in community settings: a replication cluster-randomized trial of Getting To Outcomes®. Implement. Sci 13:131. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Cohen JA, Mannarino AP, Deblinger E. 2006. Treating Trauma and Traumatic Grief in Children and Adolescents. New York: Guilford Press [Google Scholar]

- 15.Creed TA, Kuo PB, Oziel R, Reich D, Thomas M, et al. 2022. Knowledge and attitudes toward an artificial intelligence-based fidelity measurement in community cognitive behavioral therapy supervision. Adm. Policy Ment. Health 49(3):343–56 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Curran GM, Bauer M, Mittman B, Pyne JM, Stetler C. 2012. Effectiveness-implementation hybrid designs: combining elements of clinical effectiveness and implementation research to enhance public health impact. Med. Care 50(3):217–26 [DOI] [PMC free article] [PubMed] [Google Scholar]; Presents the hybrid designs for implementation research, which combines efficacy and implementation trial aspects.

- 17.Curran GM, Landes SJ, McBain SA, Pyne JM, Smith JD, et al. 2022. Reflections on 10 years of effectiveness-implementation hybrid studies. Front. Health Serv 2:1053496. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Eisman AB, Kim B, Salloum RG, Shuman CJ, Glasgow RE. 2022. Advancing rapid adaptation for urgent public health crises: using implementation science to facilitate effective and efficient responses. Front. Public Health 10:959567. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Esmail LC, Barasky R, Mittman BS, Hickam DH. 2020. Improving comparative effectiveness research of complex health interventions: standards from the Patient-Centered Outcomes Research Institute (PCORI). J. Gen. Intern. Med 35(Suppl. 2):875–81 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Fixsen D, Blasé KA Naoom SF, Wallace F. 2009. Core implementation components. Res. Soc. Work Pract 19(5):531–40 [Google Scholar]; Reviews literature and presents a framework of core implementation functions that are consistent across efforts.

- 21.Forbes D 2013. Blinding: an essential component in decreasing risk of bias in experimental designs. Evid.-Based Nursing 16:70–71 [DOI] [PubMed] [Google Scholar]

- 22.Frakt AB, Prentice JC, Pizer SD, Elwy AR, Garrido MM, et al. 2018. Overcoming challenges to evidence-based policy development in a large, integrated delivery system. Health Serv. Res 53(6):4789–807 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Geng EH, Mody A, Powell BJ. 2023. On-the-go adaptation of implementation approaches and strategies in health: emerging perspectives and research opportunities. Annu. Rev. Public Health 44:21–36 [DOI] [PubMed] [Google Scholar]

- 24.Goss C 2023. MAISYs: a flexible tool to meet the changing needs of implementation science. Wash. Univ. St. Louis Cent. Dissem. Implement. Blog, Jan. 22. https://publichealth.wustl.edu/maisys-a-flexible-tool-to-meet-the-changing-needs-of-implementation-science/ [Google Scholar]

- 25.Grimshaw JM, Shirran L, Thomas R, Mowatt G, Fraser C, Bero L, et al. 2001. Changing provider behavior: an overview of systematic reviews of interventions. Med. Care 39(8 Suppl. 2):II2–45 [PubMed] [Google Scholar]

- 26.Guastaferro K, Collins LM. 2021. Optimization methods and implementation science: an opportunity for behavioral and biobehavioral interventions. Implement. Res. Pract 2:26334895211054363 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Herman PM, Chinman M, Cannon J, Ebener P, Malone PS, et al. 2020. Cost analysis of Getting to Outcomes implementation support of CHOICE in Boys and Girls Clubs in Southern California. Prev. Sci 21:245–55 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Herman PM, Chinman M, Ebener P, Malone PS, Acosta J. 2020. Cost analysis of a randomized trial of Getting to Outcomes implementation support for a teen pregnancy prevention program offered in Boys and Girls Clubs in Alabama and Georgia. Prev. Sci 21(8):1114–25 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Hwang S, Birken SA, Melvin CL, Rohweder CL, Smith JD. 2020. Designs and methods for implementation research: advancing the mission of the CTSA program. J. Clin. Transl. Sci 4:159–67 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Johnson K, Gustafson D, Ewigman B, Provost L, Roper R. 2015. Using rapid-cycle research to reach goals: awareness, assessment, adaptation, acceleration. AHRQ Publ. 15-0036, Agency Healthc. Res. Qual. (AHRQ), Rockville, MD [Google Scholar]

- 31.Kapur J, Elm J, Chamberlain JM, Barsan W, Cloyd J, et al. 2019. Randomized trial of three anticonvulsant medications for status epilepticus. N. Engl. J. Med 381(22):2103–13 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Kasari C, Kaiser A, Goods K, Nietfeld J, Mathy P, et al. 2014. Communication interventions for minimally verbal children with autism: a sequential multiple assignment randomized trial. J. Am. Acad. Child Adolesc. Psychiatry 53(6):635–46

- 33.Kho A, Daumit GL, Truesdale KP, Brown A, Kilbourne AM, et al. 2022. The National Heart Lung and Blood Institute Disparities Elimination through Coordinated Interventions to Prevent and Control Heart and Lung Disease Alliance. Health Serv. Res. 57(Suppl. 1):20–31

- 34.Kilbourne AM, Almirall D, Eisenberg D, Waxmonsky J, Goodrich DE, et al. 2014. Protocol: Adaptive Implementation of Effective Programs Trial (ADEPT): cluster randomized SMART trial comparing a standard versus enhanced implementation strategy to improve outcomes of a mood disorders program. Implement. Sci. 9:132

- 35.Kilbourne AM, Almirall D, Goodrich DE, Lai Z, Abraham KM, et al. 2014. Enhancing outreach for persons with serious mental illness: 12-month results from a cluster randomized trial of an adaptive implementation strategy. Implement. Sci. 9(1):163

- 36.Kilbourne AM, Barbaresso MM, Lai Z, Nord KM, Bramlet M, et al. 2017. Improving physical health in patients with chronic mental disorders: twelve-month results from a randomized controlled collaborative care trial. J. Clin. Psychiatry 78(1):129–37

- 37.Kilbourne AM, Geng E, Eshun-Wilson I, Sweeney S, Shelley D, et al. 2023. How does facilitation in healthcare work? Using mechanism mapping to illuminate the black box of a meta-implementation strategy. Implement. Sci. Commun. 4(1):53

- 38.Kilbourne AM, Goodrich DE, Miake-Lye I, Braganza MZ, Bowersox NW. 2019. Quality enhancement research initiative implementation roadmap: toward sustainability of evidence-based practices in a learning health system. Med. Care 57(10 Suppl. 3):S286–93

- 39.Kilbourne AM, Neumann MS, Pincus HA, Bauer MS, Stall R. 2007. Implementing evidence-based interventions in health care: application of the Replicating Effective Programs framework. Implement. Sci. 2(1):42
  Describes an implementation strategy that focuses on a process to enable adaptation of effective interventions up front in order to enable more feasible implementation over time.

- 40.Kilbourne AM, Smith SN, Choi SY, Koschmann E, Liebrecht C, Rusch A, et al. 2018. Adaptive School-based Implementation of CBT (ASIC): clustered-SMART for building an optimized adaptive implementation intervention to improve uptake of mental health interventions in schools. Implement. Sci. 13(1):119

- 41.Kirchner JE, Ritchie MJ, Pitcock JA, Parker LE, Curran GM, Fortney JC. 2014. Outcomes of a partnered facilitation strategy to implement primary care–mental health. J. Gen. Intern. Med. 29(4):904–12

- 42.Kwan BM, Brownson RC, Glasgow RE, Morrato EH, Luke DA. 2022. Designing for dissemination and sustainability to promote equitable impacts on health. Annu. Rev. Public Health 43:331–53

- 43.Leeman J, Birken SA, Powell BJ, Rohweder C, Shea CM. 2017. Beyond “implementation strategies”: classifying the full range of strategies used in implementation science and practice. Implement. Sci. 12(1):125

- 44.Mazzucca S, Tabak RG, Pilar M, Ramsey AT, Baumann AA, et al. 2018. Variation in research designs used to test the effectiveness of dissemination and implementation strategies: a review. Front. Public Health 6:32

- 45.Miller CJ, Barnett ML, Baumann AA, Gutner CA, Wiltsey-Stirman S. 2021. The FRAME-IS: a framework for documenting modifications to implementation strategies in healthcare. Implement. Sci. 16(1):36
  Presents a framework and tool to track changes to implementation strategies during an implementation effort.

- 46.Miller CJ, Smith SN, Pugatch M. 2020. Experimental and quasi-experimental designs in implementation research. Psychiatry Res. 283:112452

- 47.Nahum-Shani I, Almirall D, Buckley J. 2019. An introduction to adaptive interventions and SMART Designs in Education. NCSER 2020-001, US Dep. Educ., Natl. Cent. Spec. Educ. Res., Washington, DC. https://ies.ed.gov/ncser/pubs/2020001/pdf/2020001.pdf

- 48.Pallmann P, Bedding AW, Choodari-Oskooei B, Dimairo M, Flight L, et al. 2018. Adaptive designs in clinical trials: why use them, and how to run and report them. BMC Med. 16(1):29

- 49.Parchman ML, Anderson ML, Dorr DA, Fagnan LJ, O’Meara ES, et al. 2019. A randomized trial of external practice support to improve cardiovascular risk factors in primary care. Ann. Fam. Med. 17(Suppl. 1):S40–49

- 50.Perkins GD, Ji C, Deakin CD, Quinn T, Nolan JP, et al., PARAMEDIC2 Collab. 2018. A randomized trial of epinephrine in out-of-hospital cardiac arrest. N. Engl. J. Med. 379(8):711–21

- 51.Powell BJ, Beidas RS, Lewis CC, Aarons GA, McMillen JC, et al. 2017. Methods to improve the selection and tailoring of implementation strategies. J. Behav. Health Serv. Res. 44(2):177–94
  Provides an overview of methods for matching implementation strategies to the local need and context.

- 52.Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, et al. 2015. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement. Sci. 10:21
  Researchers used implementation experts to develop a standard terminology for implementation strategies.

- 53.Proctor EK, Powell BJ, McMillen JC. 2013. Implementation strategies: recommendations for specifying and reporting. Implement. Sci. 8(1):139
  Provides guidance on how to report implementation strategies in papers in a standardized format.

- 54.Proctor E, Ramsey AT, Saldana L, Maddox TM, Chambers DA, Brownson RC. 2022. FAST: a framework to assess speed of translation of health innovations to practice and policy. Glob. Implement. Res. Appl. 2(2):107–19

- 55.Quanbeck A, Almirall D, Jacobson N, Brown RT, Landeck JK, et al. 2020. The Balanced Opioid Initiative: protocol for a clustered, sequential, multiple-assignment randomized trial to construct an adaptive implementation strategy to improve guideline-concordant opioid prescribing in primary care. Implement. Sci. 15:26

- 56.Rogal SS, Yakovchenko V, Morgan T, Bajaj JS, Gonzalez R, et al. 2020. Getting to Implementation: a protocol for a Hybrid III stepped wedge cluster randomized evaluation of using data-driven implementation strategies to improve cirrhosis care for Veterans. Implement. Sci. 15(1):92

- 57.Smith JD, Merle JL, Webster KA, Cahue S, Penedo FJ, Garcia SF. 2022. Tracking dynamic changes in implementation strategies over time within a hybrid type 2 trial of an electronic patient-reported oncology symptom and needs monitoring program. Front. Health Serv. 2:983217

- 58.Smith SN, Almirall D, Choi SY, Koschmann E, Rusch A, et al. 2022. Primary aim results of a clustered SMART for developing a school-level, adaptive implementation strategy to support CBT delivery at high schools in Michigan. Implement. Sci. 17(1):42

- 59.Smith SN, Almirall D, Prenovost K, Liebrecht C, Kyle J, et al. 2019. Change in patient outcomes after augmenting a low-level implementation strategy in community practices that are slow to adopt a collaborative chronic care model: a cluster randomized implementation trial. Med. Care 57(7):503–11

- 60.Smith SN, Liebrecht CM, Bauer MS, Kilbourne AM. 2020. Comparative effectiveness of external vs blended facilitation on collaborative care model implementation in slow-implementer community practices. Health Serv. Res. 55(6):954–65

- 61.U.S. Dep. Health Hum. Serv., Food Drug Adm. 2019. Adaptive design clinical trials for drugs and biologics guidance for industry. Rep., Cent. Drug Eval. Res., Cent. Biol. Eval. Res., Silver Spring, MD. https://www.fda.gov/regulatory-information/search-fda-guidance-documents/adaptive-design-clinical-trials-drugs-and-biologics-guidance-industry

- 62.Waxmonsky J, Kilbourne AM, Goodrich DE, Nord KM, Lai Z, et al. 2014. Enhanced fidelity to treatment for bipolar disorder: results from a randomized controlled implementation trial. Psychiatr. Serv. 65(1):81–90

RELATED RESOURCES

- Patient-Cent. Outcomes Res. Inst. 2023. PCORI methodology standards. https://www.pcori.org/research/about-our-research/research-methodology/pcori-methodology-standards#Complex

- RAND Corp. Getting To Outcomes. https://www.rand.org/health-care/projects/getting-to-outcomes.html

- Univ. Mich. Inst. Soc. Res. Adaptive Intervention and Design Center. https://d3c.isr.umich.edu/

- VA Qual. Enhanc. Res. Initiat. (QUERI). 2021. QUERI implementation roadmap. https://www.queri.research.va.gov/tools/roadmap.cfm

- VA Qual. Enhanc. Res. Initiat. (QUERI). 2023. Implementation strategy training opportunities. https://www.queri.research.va.gov/training_hubs/default.cfm