Abstract

Transformer, one of the latest technological advances of deep learning, has gained prevalence in natural language processing and computer vision. Since medical imaging bears some resemblance to computer vision, it is natural to inquire about the status quo of Transformers in medical imaging and ask the question: can the Transformer models transform medical imaging? In this paper, we attempt to respond to this inquiry. After a brief introduction of the fundamentals of Transformers, especially in comparison with convolutional neural networks (CNNs), and highlighting key defining properties that characterize the Transformers, we offer a comprehensive review of the state-of-the-art Transformer-based approaches for medical imaging and exhibit current research progress made in the areas of medical image segmentation, recognition, detection, registration, reconstruction, enhancement, etc. In particular, what distinguishes our review is its organization based on the Transformer’s key defining properties, which are mostly derived from comparing the Transformer and CNN, and its type of architecture, which specifies the manner in which the Transformer and CNN are combined, all helping the readers to best understand the rationale behind the reviewed approaches. We conclude with discussions of future perspectives.

Keywords: Transformer, Medical imaging, Survey

1. Introduction

Medical imaging (Beutel et al., 2000) is a non-invasive technology that acquires signals by leveraging the physical principles of sound, light, electromagnetic waves, etc., from which visual images of internal tissues of the human body are generated. There are many widely used medical imaging modalities, including ultrasound, digital radiography, computed tomography (CT), magnetic resonance imaging (MRI), and optical coherence tomography (OCT). According to a report published by EMC2, about 90% of all healthcare data are medical images, which have undoubtedly become a critical source of evidence for clinical decision making, such as diagnosis and intervention.

Artificial intelligence (AI) technologies that process and analyze medical images have gained prevalence in scientific research and clinical practices in recent years (Zhou et al., 2019). This is mainly due to the surge of deep learning (DL) (LeCun et al., 2015), which has achieved superb performances in a multitude of tasks, including classification (He et al., 2016; Hu et al., 2018; Huang et al., 2017), object detection (Girshick et al., 2014; Wang et al., 2017b), and semantic segmentation (Zhao et al., 2017; Chen et al., 2017). The convolutional neural networks (CNNs or ConvNets) are DL methods customarily designed for image data. The earliest applications of CNNs in medical imaging go back to the 1990s (Lo et al., 1995b,a; Sahiner et al., 1996). Though they showed encouraging results, it was not until the last decade that CNNs began to exhibit state-of-the-art performances and widespread deployment in medical image analysis. Ever since U-Net (Ronneberger et al., 2015) won the 2015 ISBI cell tracking challenge, CNNs have taken the medical image analysis research by storm. Up till today, U-Net and its variants continue to demonstrate outstanding performance in many fields of medical imaging (Isensee et al., 2021; Zhou et al., 2022a; Cui et al., 2019). Other deep learning techniques, such as recurrent neural networks (RNNs) (Zhou et al., 2019) and deep reinforcement learning (DRL) (Zhou et al., 2021d), have been developed and built on top of CNNs for medical image analysis.

More recently, the Transformer (Vaswani et al., 2017) has shown great potential in medical imaging applications, as it has flourished in natural language processing and is flourishing in computer vision. Given both the similarities and the differences between natural and medical image representations, it is worthwhile to investigate the status quo of Vision Transformers for medical imaging. It remains unclear whether Vision Transformers are better than CNNs for understanding medical images, and whether Transformers can transform medical imaging. Like any other machine learning technique, Transformers have both advantages and disadvantages. For example, one of the benefits of Transformers is that they tend to have large effective receptive fields, which means they are better at understanding contextual information than CNNs. This is particularly useful in medical imaging, where it is important to take into account not only the area of concern but also the surrounding tissue and organs when diagnosing a medical condition. On the downside, Transformers tend to be more computationally intensive and require more data. This can be a challenge in the field of medical imaging, where resources may be limited due to factors such as patient privacy concerns. At the present stage, it is uncertain whether Transformers will revolutionize the field of medical imaging, but current research has shown their potential in achieving improved performance on various medical imaging tasks. In this paper, we highlight the properties of Vision Transformers and present a comparative review of Transformer-based medical image analysis. The survey is confined to Vision Transformers: unless stated otherwise, "Transformer" and "Transformer-based" in this paper refer to "Vision Transformer", that is, models that integrate vanilla Language Transformer base blocks and are applied to image analysis tasks.

We organize the rest of the paper as follows: (i) a brief introduction to CNNs for medical image analysis (Section 2); (ii) an introduction to Transformer with its general principle, key properties, and its main differences from a CNN (Section 3); (iii) current progress of state-of-the-art Transformer methods for solving medical imaging tasks, including medical image segmentation, recognition, classification, detection, registration, reconstruction, and enhancement, which is the main part (Section 4); and (iv) unsolved challenges and future potential of Transformer in medical imaging (Section 5).

2. CNN for Medical Image Analysis

2.1. CNNs for medical imaging

We begin by briefly outlining the applications of CNNs in medical imaging and discussing their potential limitations. CNNs are specialized in analyzing data with a known grid-like topology (e.g., images). This is due to the fact that the convolution operation imposes a strong prior on the weights, compelling the same weights to be shared across all pixels. As the exploration of deep CNN architectures has intensified since the development of AlexNet for image classification in 2012 (Krizhevsky et al., 2012), the first few successful efforts at deploying CNNs for medical imaging lay in medical image classification. These network architectures often begin with a stack of convolutional layers and pooling operations, followed by a fully connected layer that produces a vector reflecting the probability of belonging to a certain class (Roth et al., 2014, 2015; Cireşan et al., 2013; Brosch et al., 2013; Xu et al., 2014; Malon and Cosatto, 2013; Cruz-Roa et al., 2013; Li et al., 2014). In the meantime, similar architectures have been used for medical image segmentation (Ciresan et al., 2012; Prasoon et al., 2013; Zhang et al., 2015a; Xing et al., 2015; Vivanti et al., 2015) and registration (Wu et al., 2013; Miao et al., 2016; Simonovsky et al., 2016) by performing the classification task on a pixel-by-pixel basis.

In 2015, Ronneberger et al. introduced U-Net (Ronneberger et al., 2015), which is built based on the concept of the fully convolutional network (FCN) (Long et al., 2015). In contrast to previous encoder-only networks, U-Net employs a decoder composed of successive blocks of convolutional layers and upsampling layers. Each block upsamples the previous feature maps such that the final output has the same resolution as the input. U-Net represents a substantial advance over previous networks. First, it eliminated the need for laborious sliding-patch inferences by having the input and output be full-sized images. Moreover, because the input to the network is a full-sized image as opposed to a small patch, U-Net has a better understanding of contextual information presented in the input. Although many other CNN architectures have demonstrated superior performances (e.g., HyperDense-Net (Dolz et al., 2018) and DnCNN (Zhang et al., 2017; Cheng et al., 2019; Kim et al., 2018)), the U-Net-like encoder-decoder paradigm has remained the de facto choice when it comes to CNNs for pixel-level tasks in medical imaging. Many variants of such a kind have been proposed and demonstrated promising results on various applications, including segmentation (Isensee et al., 2021; Zhou et al., 2018; Oktay et al., 2018; Gu et al., 2020; Zhang et al., 2020), registration (Balakrishnan et al., 2019; Dalca et al., 2019; Zhao et al., 2019b,a), and reconstruction (Han and Ye, 2018; Cui et al., 2019). Attempts have been made to improve CNNs by incorporating RNNs or LSTMs for medical image analysis. For instance, Alom et al. proposed a combination of ResUNet with RNN (Alom et al., 2018), which includes a feature accumulation module to enhance feature representations for image segmentation. Gao et al. proposed Distance-LSTM (Gao et al., 2019a), which is capable of modeling the time differences between longitudinal scans. This model is efficient at learning the intra-scan feature variabilities. 
Similarly, Gao et al. (2018) merged CNNs with LSTM to learn spatial-temporal representations of brain MRI slices for segmentation. In general, RNNs offer modeling capabilities that can complement CNNs. By integrating CNNs with RNNs, it becomes feasible to capture global spatial-temporal feature relationships. Nevertheless, due to the resource-intensive nature of RNNs, they are mostly used for particular tasks, such as comprehending sequential data (e.g., longitudinal data).

Despite the widespread success of CNNs in medical imaging applications over the last decade, there are still inherent limitations within the architecture that prevent CNNs from reaching even greater performance. The vast majority of current CNNs deploy rather small convolution kernels (e.g., 3 × 3 or 5 × 5). Such a locality of convolution operations results in the CNNs being biased toward local spatial structures (Zhou et al., 2021b; Naseer et al., 2021; Dosovitskiy et al., 2020), which makes them less effective at modeling the long-range dependencies required to better comprehend the contextual information presented in the image. Extensive efforts have been made to address such limitations by expanding the theoretical receptive fields (RFs) of CNNs, with the most common methods including increasing the depth of the network (Simonyan and Zisserman, 2015), introducing recurrent- (Liang and Hu, 2015) or skip-/residual-connections (He et al., 2016), introducing dilated convolution operations (Yu and Koltun, 2016; Devalla et al., 2018), deploying pooling and up-sampling layers (Ronneberger et al., 2015; Zhou et al., 2018), as well as adopting cascaded or two-stage frameworks (Isensee et al., 2021; Gao et al., 2019b, 2021a). Despite these attempts, the first few layers of CNNs still have limited RFs, making them unable to explicitly model the long-range spatial dependencies. Only at the deeper layers can such dependencies be modeled implicitly. However, it was revealed that as the CNNs deepen, the influence of faraway voxels diminishes rapidly (Luo et al., 2016). The effective receptive fields (ERFs) of these CNNs are, in fact, much smaller than their theoretical RFs, even though their theoretical RFs encompass the entire input image.
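To make the notion of a theoretical RF concrete, the following minimal sketch computes it for a stack of convolution and pooling layers using the standard recurrence over kernel size, stride, and dilation. The function name and the three layer configurations are illustrative assumptions, not networks taken from the cited works, and the sketch says nothing about the (smaller) effective RF discussed above.

```python
def theoretical_receptive_field(layers):
    """Theoretical receptive field (in input pixels, along one axis).

    `layers` is a list of (kernel_size, stride, dilation) tuples ordered
    from the input to the output of the network.
    """
    rf, jump = 1, 1  # jump: spacing in input pixels between adjacent outputs
    for kernel, stride, dilation in layers:
        effective_kernel = dilation * (kernel - 1) + 1
        rf += (effective_kernel - 1) * jump
        jump *= stride
    return rf


# Hypothetical configurations, chosen only to illustrate the trend.
# Ten plain 3x3 convolutions: the RF grows linearly with depth.
plain = [(3, 1, 1)] * 10
# Ten 3x3 convolutions with a 2x2, stride-2 pooling after every second one.
pooled = ([(3, 1, 1)] * 2 + [(2, 2, 1)]) * 5
# Five 3x3 convolutions with dilation rates 1, 2, 4, 8, 16.
dilated = [(3, 1, 2 ** i) for i in range(5)]

for name, cfg in [("plain", plain), ("pooled", pooled), ("dilated", dilated)]:
    print(name, theoretical_receptive_field(cfg))
```

Under these assumptions, depth alone enlarges the theoretical RF slowly, whereas pooling and dilation enlarge it much faster, which is why they feature among the remedies listed above.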

2.2. Motivations behind using Transformers

Transformers, as an alternative network architecture to CNNs, have recently demonstrated superior performance in many computer vision tasks (Dosovitskiy et al., 2020; Liu et al., 2021b; Wu et al., 2021a; Zhu et al., 2020; Wang et al., 2021f; Chu et al., 2021; Yuan et al., 2021b; Dong et al., 2022). The core element of Transformers is the self-attention mechanism, which is not subject to the same limitations as convolution operations, making them better at capturing explicit long-range dependencies (Wang et al., 2022c). Transformers have other appealing features: they scale up more easily (Liu et al., 2022e) and are more robust to corruption (Naseer et al., 2021). Additionally, their weak inductive bias enables them to achieve better performance than CNNs with the aid of large-scale model sizes and datasets (Liu et al., 2022e; Zhai et al., 2022; Dosovitskiy et al., 2020; Raghu et al., 2021). Existing Transformer-based models have shown encouraging results in several medical imaging applications (Chen et al., 2021d; Hatamizadeh et al., 2022b; Chen et al., 2022b; Zhang et al., 2021e), prompting a surge of interest in further developing such models (Shamshad et al., 2022; Liu and Shen, 2022; Parvaiz et al., 2022; Matsoukas et al., 2021). This paper provides an overview of Transformer-based models developed for medical imaging applications and highlights their key properties, advantages, shortcomings, and future directions. In the next section, we briefly review the fundamentals of Transformers.

3. Fundamentals of Transformer

Language Transformer (Vaswani et al., 2017) is a neural network based on self-attention mechanisms and feed-forward modules to compute representations and global dependencies. Recently, large Language Transformer models that employ self-supervised pre-training, such as BERT (Devlin et al., 2018) and GPT (Radford et al., 2018; Radford et al.; Brown et al., 2020), have demonstrated improved efficiency and scalability in natural language processing (NLP). In addition, Vision Transformer (ViT) (Dosovitskiy et al., 2020) partitions and flattens images into sequences and applies the Transformer to model visual features in a sequence-to-sequence paradigm. Below, we first give a detailed introduction to Vision Transformer, focusing on self-attention and its general pipeline. Next, we summarize the characteristics of convolution and self-attention and how the two interact. Lastly, we describe key properties of Transformer from multiple perspectives.

3.1. Self-attention in Transformer

Humans selectively attend to part of the available information, often unintentionally, when observing, learning, and thinking. The attention mechanism in neural networks mimics this physiological signal-processing behavior (Bahdanau et al., 2014). A typical attention function computes a weighted aggregation of features, filtering and emphasizing the most significant components or regions (Bahdanau et al., 2014; Xu et al., 2015; Dai et al., 2017; Hu et al., 2018).

3.1.1. Self-attention

Self-attention (SA) (Bahdanau et al., 2014) is a variant of attention mechanism (Figure 1 (left)), which is designed for capturing the internal correlation in data or features. The standard SA (Vaswani et al., 2017) first maps the input $X$ into a query $Q$, a key $K$, and a value $V$, using three learnable parameters $W_q$, $W_k$, and $W_v$, respectively:

$$Q = XW_q, \quad K = XW_k, \quad V = XW_v. \tag{1}$$

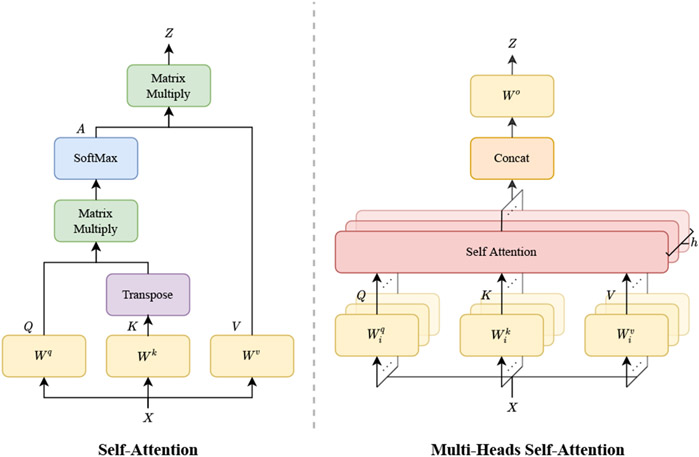

Fig. 1.

Details of a self-attention mechanism (left) and a multi-head self-attention (MSA) (right). Compared to self-attention, the MSA conducts several attention modules in parallel. The independent attention features are then concatenated and linearly transformed to the output.

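Then, the similarity between query Q and key K is computed and normalized, yielding an attention distribution $A$:

$$A = \mathrm{Softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right), \tag{2}$$

where $d_k$ is the dimension of the key vectors, following the scaling used in (Vaswani et al., 2017).

The attention weight is applied to value V, giving the output of a self-attention block:

$$Z = \mathrm{SA}(Q, K, V) = AV. \tag{3}$$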

In general, the key K acts as an embedding matrix that "memorizes" data, and the query Q is a look-up vector. The affinity between the query Q and the corresponding key K defines the attention matrix A. The output Z of a self-attention layer is computed as a sum of value V, weighted by A. The matrix A calculated in (2) connects all elements, thereby leading to a good capability of handling long-range dependencies in both NLP and CV tasks.
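The computation described above (projection to Q, K, and V, a row-wise normalized attention matrix A, and an output Z that is a weighted sum of V) can be sketched in a few lines of NumPy. This is a minimal single-head illustration under assumptions of ours: the token count, the dimensions, the random weights, and the scaling by the square root of the key dimension (as in Vaswani et al., 2017) are chosen for demonstration only.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the maximum before exponentiating for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(X, W_q, W_k, W_v):
    """Single-head self-attention over N tokens stored as the rows of X."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    d_k = K.shape[-1]
    A = softmax(Q @ K.T / np.sqrt(d_k))  # (N, N); every row sums to 1
    Z = A @ V                            # each output is a weighted sum of values
    return Z, A


rng = np.random.default_rng(0)
N, D = 6, 8  # illustrative number of tokens and embedding dimension
X = rng.standard_normal((N, D))
W_q, W_k, W_v = (rng.standard_normal((D, D)) for _ in range(3))
Z, A = self_attention(X, W_q, W_k, W_v)
print(Z.shape, A.shape)
```

Because A has one entry for every pair of tokens, each output row can draw on every input token in a single layer, which is the long-range connectivity referred to above.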

3.1.2. Multi-head self-attention (MSA)

Multiple self-attention blocks, namely multi-head self-attention (Figure 1 (right)), are performed in parallel to produce multiple output maps. The final output is typically a concatenation and projection of all outputs of SA blocks, which can be given by:

$$\mathrm{MSA}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\,W^{O}, \qquad \mathrm{head}_i = \mathrm{SA}(QW_i^{Q}, KW_i^{K}, VW_i^{V}), \tag{4}$$

where h denotes the total number of heads and $W^{O}$ is a linear projection matrix, aggregating the outputs from all attention heads. $W_i^{Q}$, $W_i^{K}$, and $W_i^{V}$ are parameters of the ith attention head. MSA projects Q, K and V into multiple sub-spaces that compute similarities of context features. Note that a larger number of heads does not necessarily lead to better performance (Voita et al., 2019).
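The multi-head computation can be sketched as follows. As an implementation assumption, the per-head projection matrices are stored as column blocks of single D × D matrices and applied directly to the input tokens, a common way of realizing the per-head sub-space projections; all sizes and weights are illustrative.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_self_attention(X, W_q, W_k, W_v, W_o, h):
    """X: (N, D) tokens; W_q, W_k, W_v, W_o: (D, D); h must divide D."""
    N, D = X.shape
    assert D % h == 0, "the number of heads must divide the embedding dimension"
    d_head = D // h

    def split_heads(M):
        # (N, D) -> (h, N, d_head): column block i is the sub-space of head i.
        return M.reshape(N, h, d_head).transpose(1, 0, 2)

    Q, K, V = split_heads(X @ W_q), split_heads(X @ W_k), split_heads(X @ W_v)
    A = softmax(Q @ K.transpose(0, 2, 1) / np.sqrt(d_head))  # (h, N, N)
    heads = A @ V                                             # (h, N, d_head)
    concat = heads.transpose(1, 0, 2).reshape(N, D)           # concatenate heads
    return concat @ W_o                                       # output projection


rng = np.random.default_rng(0)
N, D, h = 6, 8, 4  # illustrative sizes
X = rng.standard_normal((N, D))
W_q, W_k, W_v, W_o = (rng.standard_normal((D, D)) / np.sqrt(D) for _ in range(4))
out = multi_head_self_attention(X, W_q, W_k, W_v, W_o, h)
print(out.shape)
```

With h = 1 the function reduces to ordinary single-head self-attention followed by the output projection, which is a convenient sanity check for the head-splitting logic.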

3.2. Vision Transformer pipeline

3.2.1. Overview

A typical design of a Vision Transformer consists of a Transformer encoder and a task-specific decoder, depicted in Figure 2 (left). Take the processing of 2D images for instance. Firstly, the image $X \in \mathbb{R}^{H \times W \times C}$ is split into a sequence of N non-overlapping patches {X1, X2, …, XN}; $X_i \in \mathbb{R}^{P \times P \times C}$ and $N = HW/P^2$, where C is the number of channels, [H, W] denotes the image size, and [P, P] is the resolution of a patch. Next, each patch is vectorized and then linearly projected into tokens:

$$\tilde{X} = [X_1E;\ X_2E;\ \ldots;\ X_NE], \qquad E \in \mathbb{R}^{(P^2C) \times D}, \tag{5}$$

where D is the embedding dimension. Then, a positional embedding, Epos, is added so that the patches can retain their positional information:

$$Z_0 = [X_1E;\ X_2E;\ \ldots;\ X_NE] + E_{pos}, \qquad E_{pos} \in \mathbb{R}^{N \times D}. \tag{6}$$
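The tokenization steps above (splitting into non-overlapping patches, vectorizing, projecting linearly, and adding a positional embedding) can be sketched as follows. The image size, patch size, and embedding dimension are arbitrary illustrative choices, and random matrices stand in for the learned projection and the positional embedding.

```python
import numpy as np


def patchify(image, P):
    """Split an (H, W, C) image into N = HW / P^2 vectorized patches."""
    H, W, C = image.shape
    assert H % P == 0 and W % P == 0, "image size must be divisible by P"
    x = image.reshape(H // P, P, W // P, P, C)
    x = x.transpose(0, 2, 1, 3, 4)       # (H/P, W/P, P, P, C)
    return x.reshape(-1, P * P * C)      # (N, P*P*C), patches in raster order


rng = np.random.default_rng(0)
H, W, C, P, D = 32, 32, 1, 8, 16         # illustrative sizes
image = rng.standard_normal((H, W, C))
N = (H // P) * (W // P)

# Random stand-ins for parameters that would be learned in practice.
E = rng.standard_normal((P * P * C, D)) * 0.02
E_pos = rng.standard_normal((N, D)) * 0.02

patches = patchify(image, P)             # (N, P*P*C)
Z0 = patches @ E + E_pos                 # (N, D) tokens fed to the encoder
print(patches.shape, Z0.shape)
```

In this sketch the patch size P directly controls the sequence length N, so halving P quadruples the number of tokens for a 2D image.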

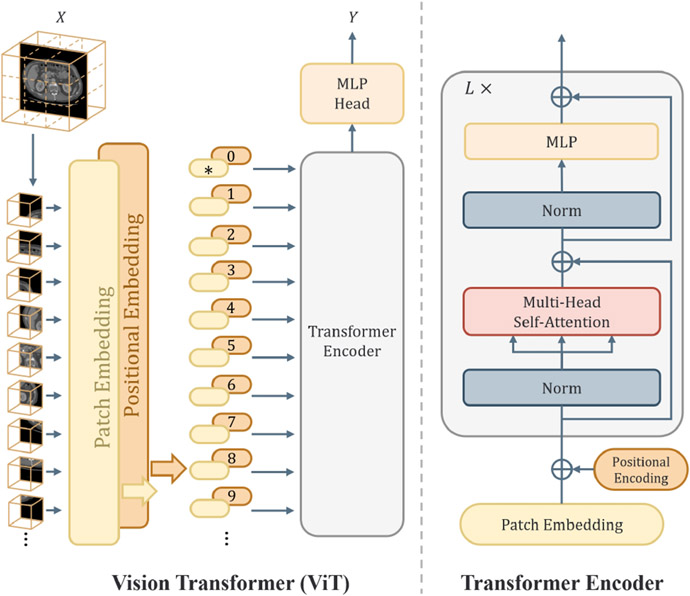

Fig. 2.

Overview of Vision Transformer (left) and illustration of the Transformer encoder (right). The strategy for partitioning an image involves dividing it into several patches of a fixed size, which are then treated as sequences using an efficient Transformer implementation from NLP.

The resulting tokens are fed into a Transformer encoder as shown in Figure 2 (right), which consists of L stacked base blocks. Each base block consists of a multi-head self-attention and a multi-layer perceptron (MLP), with Layer-Norm (LN). The feature can be formulated as:

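$$Z'_l = \mathrm{MSA}(\mathrm{LN}(Z_{l-1})) + Z_{l-1}, \qquad Z_l = \mathrm{MLP}(\mathrm{LN}(Z'_l)) + Z'_l, \qquad l = 1, \ldots, L, \tag{7}$$

where $Z_{l-1}$ and $Z_l$ denote the input and output tokens of the $l$-th base block.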
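A base block of the encoder can be sketched as below, assuming the pre-norm arrangement used in ViT (Layer-Norm before each sub-module and a residual connection around it). The layer norm omits its learnable scale and shift, the GELU uses the tanh approximation, and the parameter dictionary and initialization scale are illustrative choices of ours.

```python
import numpy as np


def layer_norm(z, eps=1e-6):
    # Normalize each token over its features (learnable scale/shift omitted).
    mu = z.mean(axis=-1, keepdims=True)
    var = z.var(axis=-1, keepdims=True)
    return (z - mu) / np.sqrt(var + eps)


def gelu(x):
    # tanh approximation of the GELU activation
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def msa(z, p, h):
    N, D = z.shape

    def split(m):  # (N, D) -> (h, N, D/h)
        return m.reshape(N, h, D // h).transpose(1, 0, 2)

    q, k, v = split(z @ p["W_q"]), split(z @ p["W_k"]), split(z @ p["W_v"])
    a = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(D // h))
    return (a @ v).transpose(1, 0, 2).reshape(N, D) @ p["W_o"]


def mlp(z, p):
    return gelu(z @ p["W_1"] + p["b_1"]) @ p["W_2"] + p["b_2"]


def encoder_block(z, p, h):
    z_mid = msa(layer_norm(z), p, h) + z       # attention sub-block + residual
    return mlp(layer_norm(z_mid), p) + z_mid   # MLP sub-block + residual


def init_block(D, rng, scale=0.02):
    shapes = {"W_q": (D, D), "W_k": (D, D), "W_v": (D, D), "W_o": (D, D),
              "W_1": (D, 4 * D), "W_2": (4 * D, D)}
    p = {name: rng.standard_normal(shape) * scale for name, shape in shapes.items()}
    p["b_1"], p["b_2"] = np.zeros(4 * D), np.zeros(D)
    return p


rng = np.random.default_rng(0)
N, D, h, L = 16, 32, 4, 3                      # illustrative sizes
blocks = [init_block(D, rng) for _ in range(L)]
Z_in = rng.standard_normal((N, D))
Z = Z_in
for p in blocks:                               # L stacked base blocks
    Z = encoder_block(Z, p, h)
print(Z.shape)
```

The token shape is unchanged from block to block, which is what allows L such blocks to be stacked; the residual connections mean that a block with zeroed output projections acts as the identity.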

3.2.2. Non-overlapping patch generation

ViT adapts a standard Transformer to vision tasks with as few modifications as possible. Therefore, the patches {X1, …, XN} are generated in a non-overlapping style. On the one hand, non-overlapping patches partially break the internal structure of an image (Han et al., 2021a); MSA blocks integrate information from various patches, alleviating this problem. On the other hand, there is no computational redundancy when feeding non-overlapping patches into the Transformer.

3.2.3. Positional embedding

Transformers tokenize and analyze each patch individually, resulting in the loss of positional information on each patch in relation to the whole image, which is undesired given that the position of each patch is imperative for comprehending the context in the image. Positional embeddings are proposed to encode such information into each patch such that the positional information is preserved throughout the network. Moreover, positional embeddings serve as the manually introduced inductive bias in Transformers. In general, there are three types of positional embedding: sinusoidal, learnable, and relative. The first two encode absolute positions from 1 to the number of patches, while the last encodes relative positions/distances between patches. In the following subsections, we briefly introduce each of the positional embeddings.

Sinusoidal positional embedding.

To encode the position of each patch, we might intuitively assign an index value between 1 and the total number of patches to each patch. Yet, an obvious issue arises: if the number of patches is large, there may be a significant discrepancy in the index values, which hinders network training. Here, the key idea is to represent different positions using sinusoids of different wavelengths. For each patch position n, the sinusoidal positional embedding is defined as (Vaswani et al., 2017):

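$$E_{pos}(n, 2k) = \sin\left(\frac{n}{10000^{2k/D}}\right), \qquad E_{pos}(n, 2k+1) = \cos\left(\frac{n}{10000^{2k/D}}\right), \tag{8}$$

where $k = 0, 1, \ldots, D/2 - 1$ indexes the sinusoid frequencies and D is the embedding dimension.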
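A minimal sketch of the sinusoidal positional embedding of Vaswani et al. (2017) is given below. Positions are indexed from 0 here rather than from 1, the base of 10000 follows the original formulation, and the function name and sizes are illustrative.

```python
import numpy as np


def sinusoidal_positional_embedding(N, D, base=10000.0):
    """Return an (N, D) embedding; even columns hold sines, odd columns cosines."""
    assert D % 2 == 0, "the embedding dimension is assumed to be even"
    n = np.arange(N)[:, None]             # patch positions 0 .. N-1
    k = np.arange(D // 2)[None, :]        # frequency index
    angles = n / base ** (2 * k / D)      # wavelengths grow geometrically with k
    E = np.zeros((N, D))
    E[:, 0::2] = np.sin(angles)
    E[:, 1::2] = np.cos(angles)
    return E


E_pos = sinusoidal_positional_embedding(N=16, D=8)
print(E_pos.shape)
```

All entries stay within [-1, 1] regardless of the number of patches, which avoids the large index values that motivated this design.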

Learnable positional embedding.

Instead of encoding the exact positional information onto the patches, a more straightforward way is to deploy a learnable matrix, Elrn, and let the network learn the positional information on its own. This is known as the learnable positional embedding.

Relative positional embedding.

Contrary to using a fixed embedding for each location, as is done in sinusoidal and learnable positional embeddings, relative positional embedding encodes the relative information according to the offset between the elements in Q and K being compared in the self-attention mechanism (Raffel et al., 2020). Many relative positional embedding approaches have been developed, and this is still an active field of research (Shaw et al., 2018; Raffel et al., 2020; Dai et al., 2019; Huang et al., 2020; Wang et al., 2020a; Wu et al., 2021b). However, the basic principle stays the same, in which they encode information about the relative position of Q, K, and V through a learnable or hard-coded additive bias during the self-attention computation.
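The additive-bias principle can be illustrated with a deliberately simplified 1D sketch: a table holds one scalar per possible offset between two token positions, and the looked-up bias is added to the attention logits before the softmax. The table layout and function names are assumptions for illustration and do not reproduce any specific method cited above; in practice the table would be learned, and 2D or 3D offsets would be used for images and volumes.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def relative_position_bias(table, N):
    """(N, N) bias whose (i, j) entry depends only on the offset i - j."""
    offsets = np.arange(N)[:, None] - np.arange(N)[None, :]  # in [-(N-1), N-1]
    return table[offsets + (N - 1)]                          # shift to valid indices


def attention_with_relative_bias(Q, K, V, table):
    N, d_k = Q.shape
    logits = Q @ K.T / np.sqrt(d_k) + relative_position_bias(table, N)
    return softmax(logits) @ V


rng = np.random.default_rng(0)
N, d = 5, 4                                # illustrative sizes
Q, K, V = (rng.standard_normal((N, d)) for _ in range(3))
table = rng.standard_normal(2 * N - 1)     # stand-in for a learnable bias table
B = relative_position_bias(table, N)
Z = attention_with_relative_bias(Q, K, V, table)
print(B.shape, Z.shape)
```

Because the bias depends only on offsets, the same table applies wherever a pair of tokens sits in the sequence, unlike the absolute embeddings described earlier.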

3.2.4. Multi-layer perceptrons

In the conventional Transformer design (e.g., the original ViT (Dosovitskiy et al., 2020) and Transformer (Vaswani et al., 2017)), the MLP comes after each self-attention module. MLP is a crucial component since it injects inductive bias into Transformer, while the self-attention operation lacks inductive bias. This is because MLP is local and translation-equivariant, but self-attention computation is a global operation. The MLP comprises two linear (fully connected) layers with an activation (typically a GeLU) in between:

$$\mathrm{MLP}(x) = \mathrm{GeLU}(xW_1 + b_1)\,W_2 + b_2, \tag{9}$$

where x denotes the input, and W and b denote, respectively, the weight matrix and the bias of the corresponding linear layer. The dimensions of the weight matrices, W1 and W2, are typically set as D × 4D and 4D × D (Dosovitskiy et al., 2020; Vaswani et al., 2017). Since the input is a matrix of flattened and tokenized patches (i.e., Eqn. (6)), applying W to x is analogous to applying a convolutional layer with a kernel size of 1 × 1. Consequently, the MLPs in the Transformer are highly localized and equivariant to translation.
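The locality of the MLP can be checked numerically. In the sketch below, which uses random weights, arbitrary sizes, and the tanh approximation of GELU (all assumptions of ours), the MLP gives the same result whether tokens are processed jointly or one at a time, and reordering the tokens merely reorders the outputs.

```python
import numpy as np


def gelu(x):
    # tanh approximation of the GELU activation
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))


def mlp(x, W1, b1, W2, b2):
    """Two linear layers with a GELU in between, applied to each token (row)."""
    return gelu(x @ W1 + b1) @ W2 + b2


rng = np.random.default_rng(0)
N, D = 5, 4                                   # illustrative sizes
x = rng.standard_normal((N, D))
W1, b1 = rng.standard_normal((D, 4 * D)), rng.standard_normal(4 * D)
W2, b2 = rng.standard_normal((4 * D, D)), rng.standard_normal(D)

out = mlp(x, W1, b1, W2, b2)
# Token-by-token processing: no token ever sees its neighbours.
out_tokenwise = np.stack([mlp(x[i], W1, b1, W2, b2) for i in range(N)])
# Reordering the tokens reorders the outputs in exactly the same way.
perm = rng.permutation(N)
out_shuffled = mlp(x[perm], W1, b1, W2, b2)

print(np.allclose(out, out_tokenwise), np.allclose(out[perm], out_shuffled))
```

Equivariance under arbitrary reordering is stronger than the translation equivariance stated above and includes it as a special case; mixing information across tokens is left entirely to the self-attention module.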

3.3. Transformer vs. CNNs

CNNs provide promising results for image analysis, while Vision Transformers have shown comparable or even superior performance when pre-training or large-scale datasets are available (Dosovitskiy et al., 2020). This raises the question of how Transformers and CNNs differ in the way they understand images. The receptive field of a CNN gradually expands as the network goes deeper; therefore, the features extracted in lower stages are quite different from those in higher stages (Raghu et al., 2021). Features are analyzed and represented layer by layer, with global information gradually injected. Besides, the expanding receptive field of neurons and the use of pooling operations result in equivariance and local invariance with respect to translation (Jaderberg et al., 2015; Kauderer-Abrams, 2017), which empowers CNNs to exploit samples and parameters more effectively (see Appendix .1 for further details). Beyond that, locality and weight sharing give CNNs an advantage in capturing local structures. Owing to their limited receptive fields, however, CNNs are limited in capturing long-distance relationships among image regions. In the Transformer model, the MSA provides a global receptive field even at the lowest layer of ViT, resulting in similar representations across blocks at different depths (Raghu et al., 2021). The MSA block of each layer is capable of aggregating features from a global perspective, reaching a good understanding of long-distance relationships. Each 16 × 16 patch token naturally covers a large receptive field, which can lead to better global feature modeling. In 3D Transformers for volumetric data, this advantage is even more pronounced: the use of a 16 × 16 × 16 patch size is intuitive and beneficial for high-dimensional, high-resolution medical images, as anatomical context is crucial for medical deep learning.

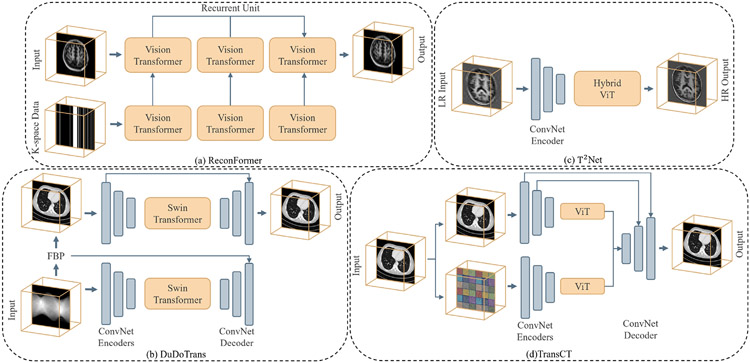

3.3.1. Combining Transformer and CNN

To embrace the benefits of both conventional CNNs (e.g., ResNet (He et al., 2016) and U-Net (Ronneberger et al., 2015)) and conventional Transformers (e.g., the original ViT (Dosovitskiy et al., 2020) and DETR (Carion et al., 2020)), multiple works have combined the strengths of CNNs and Transformers. These works fall into three types, which we describe one by one in the following paragraphs. Additionally, Fig. 3 contains a taxonomy of typical methods that combine CNN and Transformer.

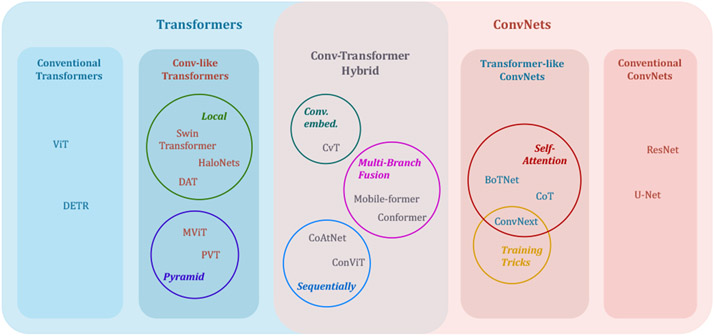

Fig. 3.

Taxonomy of typical approaches in combining CNNs and Transformer.

Conv-like Transformers:

This type of model introduces some convolutional properties into the conventional Vision Transformer. The building blocks are still MLPs and MSAs, but they are arranged in a convolutional style. For example, in Swin Transformer (Liu et al., 2021b), HaloNets (Vaswani et al., 2021), and DAT (Xia et al., 2022b), the self-attention is performed within local windows hierarchically, and neighboring windows are merged in subsequent layers. The hierarchical multi-scale framework in MViT (Fan et al., 2021) and the pyramid structure in PVT (Wang et al., 2021f) guide a Transformer to increase the capacity of intermediate layers progressively.
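The idea of restricting self-attention to local windows can be sketched as follows. This is a simplified illustration under our own assumptions (non-overlapping square windows, no shifted windows, no window merging), not the implementation of Swin Transformer or any other cited model; the function names and the grid and window sizes are hypothetical.

```python
import numpy as np


def window_partition(tokens, M):
    """Group an (H, W, D) token grid into (num_windows, M*M, D) local windows."""
    H, W, D = tokens.shape
    assert H % M == 0 and W % M == 0, "grid size must be divisible by window size"
    x = tokens.reshape(H // M, M, W // M, M, D).transpose(0, 2, 1, 3, 4)
    return x.reshape(-1, M * M, D)


def attention_pairs(H, W, M=None):
    """Number of query-key pairs for global (M=None) or windowed attention."""
    N = H * W
    if M is None:
        return N * N
    return (N // (M * M)) * (M * M) ** 2   # each window attends only to itself


rng = np.random.default_rng(0)
tokens = rng.standard_normal((8, 8, 3))    # illustrative 8 x 8 grid of 3-d tokens
windows = window_partition(tokens, M=4)    # 4 windows of 16 tokens each
print(windows.shape)

# Illustrative cost comparison for a 56 x 56 token grid with 7 x 7 windows.
print(attention_pairs(56, 56), attention_pairs(56, 56, M=7))
```

Under these assumptions, windowed attention reduces the number of query-key pairs by a factor equal to the number of windows, at the price of losing direct interaction between tokens in different windows within a single layer.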

Transformer-like CNNs:

This type of model introduces the traits of Vision Transformers into CNNs. The building blocks are convolutions, but they are arranged in a more Vision Transformer-like way. Thus, models of this type are excluded from the introduction of Transformer models in Section 4. Specifically, the self-attention mechanism is combined with convolutions, as in CoT (Li et al., 2021e) and BoTNet (Srinivas et al., 2021), making full use of neighboring context to compensate for the CNNs’ weakness in capturing long-range dependencies. ConvNeXt (Liu et al., 2022e) modernizes a ResNet by using depth-wise convolution as a substitute for self-attention and by following the training tricks of Swin Transformer (Liu et al., 2021b).

Conv-Transformer hybrid:

A straightforward way of combining CNNs and Transformers is to employ them both in an attempt to leverage both of their strengths, so the building blocks are convolutions, MLPs, and MSAs. In CvT (Wu et al., 2021a), this is done by keeping the self-attention modules to capture long-distance relationships while using convolutions to project the patch embeddings. Another type of method is multi-branch fusion, as in Conformer (Peng et al., 2021) and Mobile-former (Chen et al., 2021g), which typically fuses the feature maps from two parallel branches, one from a CNN and the other from a Transformer, such that the information provided by both architectures is retained throughout the decoder. Analogously, convolutions and Transformer blocks are arranged sequentially in ConViT (d’Ascoli et al., 2021) and CoAtNet (Dai et al., 2021c), and representations from convolutions are aggregated by MSAs in a global view.

3.4. Key properties

The basic theory and architecture design of the Transformer alone do not yet fully explain why it works better than, say, a CNN in many scenarios. Below are some key properties associated with Transformers from the perspectives of modeling and computation.

3.4.1. Modeling

M1: Long-range dependency.

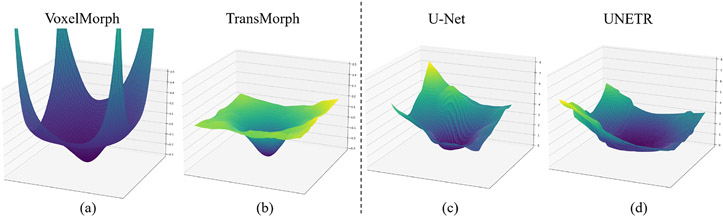

The MSA module connects all patches with a constant distance, and it is shown in (Joshi, 2020) that a Transformer model can be viewed as a graph neural network (GNN). This gives the Transformer large theoretical and effective receptive fields (as shown in Fig. 4) and possibly brings a better understanding of contextual information and long-range dependencies than CNNs.

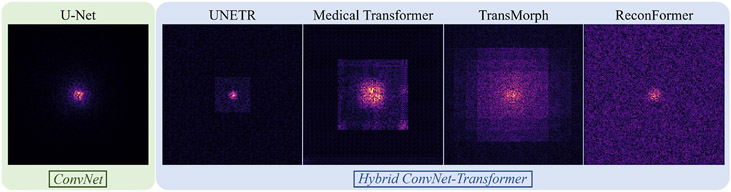

Fig. 4.

Effective receptive fields (ERFs) (Luo et al., 2016) of the well-known CNN, U-Net (Ronneberger et al., 2015), versus the hybrid Transformer-CNN models, including UNETR (Hatamizadeh et al., 2019), Medical Transformer (Valanarasu et al., 2021), TransMorph (Chen et al., 2022b), and ReconFormer (Guo et al., 2022d). The ERFs are computed at the last layer of the model prior to the output. The γ correction of γ = 0.4 was applied to the ERFs for better visualization. Despite the fact that its theoretical receptive field encompasses the whole image, the pure CNN model, U-Net (Ronneberger et al., 2015), has a limited ERF, with gradient magnitude rapidly decreasing away from the center. On the other hand, all Transformer-based models have large ERFs that span over the entire image.

M2: Detail modeling.

Images are projected into embeddings by MLPs in Transformers. The embeddings of local patches are refined and adjusted progressively at the same scale. In contrast, features in CNNs such as ResNet and U-Net are resized by pooling and strided-convolution operations, so they carry different levels of detail at different scales. Dense modeling and trainable aggregation of features in Transformers can preserve contextual details while more semantic information is injected as deeper layers are reached (Li et al., 2022e).

M3: Inductive bias.

The convolutions in CNNs exploit the relations from the locality of pixels and apply the same weights across the entire image. This inherent inductive bias leads to faster convergence of CNNs and better performances in small datasets (d’Ascoli et al., 2021). On the other hand, because computing self-attention is a global operation, Transformers in general have a weaker inductive bias than CNNs (Cordonnier et al., 2019). The only manually injected inductive bias in original ViT (Dosovitskiy et al., 2020) is the positional embedding. Therefore, Transformers lack the inherent properties of locality and scale-invariance, making them more data-demanding and harder to train (Dosovitskiy et al., 2020; Touvron et al., 2021b). However, the reduced inductive bias may improve the performance of Transformers when trained on a larger-scale dataset. See Appendix .2 for further details.

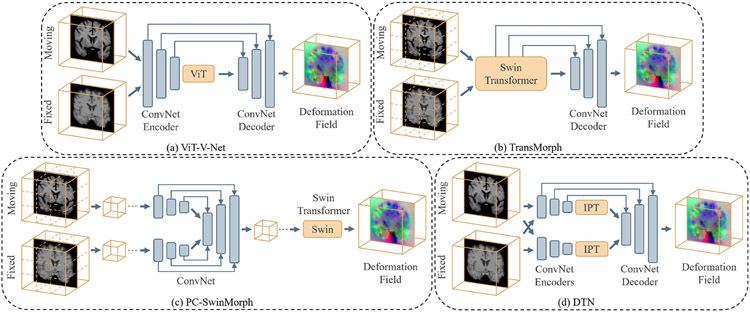

M4: Loss landscape.

The self-attention operation of Transformer tends to promote a flatter loss landscape (Park and Kim, 2022), even for hybrid CNN-Transformer models, as shown in Fig. 5. This results in improved performance and better generalizability compared to CNNs when trained under the same conditions. See Appendix .3 for further details.

Fig. 5.

Loss landscapes for the models based on CNNs versus Transformers. The left and right panels depict, respectively, the loss landscapes for registration and segmentation models. The left panel shows loss landscapes generated based on normalized cross-correlation loss and a diffusion regularizer; the right panel shows loss landscapes created based on a combination of Dice and cross-entropy losses. Transformer-based models, such as (b) TransMorph (Chen et al., 2022b) and (d) UNETR (Hatamizadeh et al., 2022b), exhibit flatter loss landscapes than CNN-based models, such as (a) VoxelMorph (Balakrishnan et al., 2019) and (c) U-Net (Ronneberger et al., 2015).

M5: Noise robustness.

Transformers are more robust to common corruptions and perturbations, such as blurring, motion, contrast variation, and noise (Bhojanapalli et al., 2021; Xie et al., 2021a).

3.4.2. Computation

C1: Scaling behavior.

Transformers show the same scaling properties in NLP and CV (Zhai et al., 2022). The Transformer models achieve higher performance when their computation, model capacity, and data size scale up together.

C2: Easy integration.

It is easy to integrate Transformers and CNNs into one computational model. As shown in Section 3.3.1 and later sections, there are multiple ways of integrating them, resulting in flexible architecture designs that are mainly grouped into Conv-like Transformers, Transformer-like CNNs, and Conv-Transformer hybrid.

C3: Computational intensiveness.

While promising results may be obtained with Transformers, typical Transformers (e.g., ViT (Dosovitskiy et al., 2020; Zhai et al., 2021)) require a significant amount of time and memory, particularly during training. See Appendix .4 for further details.

4. Current Progress

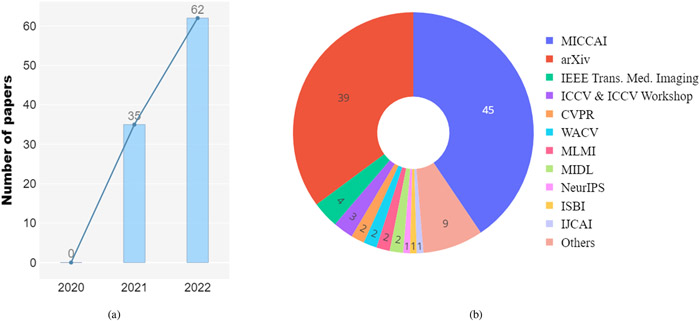

As shown in Fig. 6(a), Vision Transformers are currently under intensive study. We first describe the inclusion and exclusion criteria used to select papers for this review. We searched IEEE Xplore, PubMed, Springer, and ScienceDirect, as well as the proceedings of conferences, including medical imaging conferences such as MICCAI, IPMI, ISBI, RSNA, and SPIE. We then searched manuscripts and project references on Google Scholar. These queries returned over 2000 Transformer-related papers, most of which come from language studies or natural image analysis. We build our survey on the self-attention paper and the Vision Transformer paper, which are key milestones for exploring Transformers in medical studies, and we restrict the survey to medical applications of Transformers. Fig. 6(b) gives a graphic summary of the selected papers: it categorizes them by task in the medical domain and shows the percentage of articles drawn from conferences, journals, and pre-print platforms. The list of selected papers, which covers a wide range of topics including medical image segmentation, recognition & classification, detection, registration, reconstruction, and enhancement, is by no means exhaustive. Fig. 7 gives an overview of the current applications of Vision Transformers, and below we present a literature summary for each topic, indicating the key properties that each approach uses.

Fig. 6.

(a) The number of papers accepted to the MICCAI conference from 2020 to 2022 whose titles included the word "Transformer". (b) Sources of all 114 selected papers.

Fig. 7.

An overview of Transformers applied in medical tasks in segmentation, recognition & classification, detection, registration, reconstruction, and enhancement.

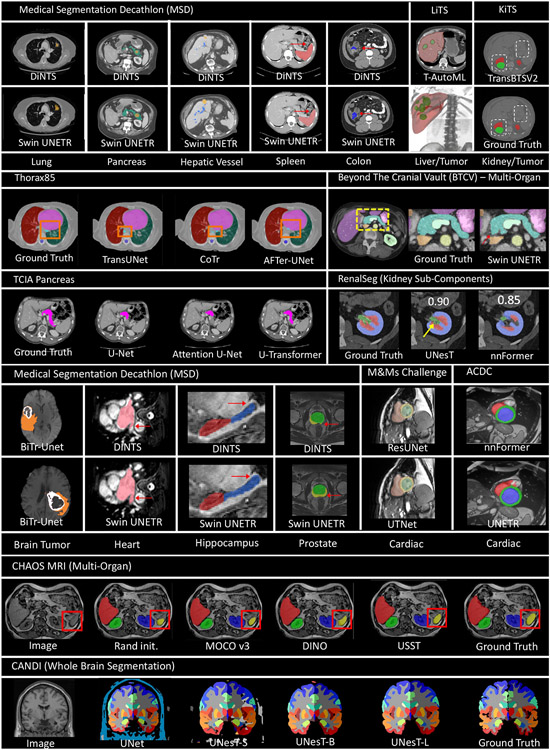

4.1. Medical image segmentation

In general, Transformer-based models outperform ConvNets for solving medical image segmentation tasks. The main reasons are as follows:

The ability to model longer-range contextual dependencies in high-dimensional, high-resolution medical images. [Property M1]

The scalability and robustness of ViT and Swin Transformer strengthen the dense prediction for pixel-wise segmentation (Liu et al., 2021b). [Property M2]

The superior scaling behavior of Transformers over ConvNets and the lack of convolutional inductive bias in Transformers make them more advantageous to large-scale self-supervised pre-training on medical image datasets (Tang et al., 2022; Zhai et al., 2022). [Property C1 and M3]

Network architecture design is flexible by mixing Transformer and CNN modules. [Property C2]

Despite this superior performance, the use of Transformers for medical image segmentation faces challenges in transferring the representation capability from the language domain to image modalities. Unlike word tokens, which are modeled as the basic embedding unit, visual features appear at varying scales. This multi-scale problem can be significant in dense prediction tasks on medical images with high voxel resolution. However, current Transformer backbones commonly learn embeddings at a fixed scale, which is ill-suited to segmentation tasks, especially on large-scale medical radiography, microscopy, fundus, endoscopy, or other imaging modalities. To adapt vanilla Transformer models for medical image segmentation, recent works integrate components of ViT into dedicated segmentation models. In the following, we summarize and discuss recent works on how Transformer blocks are used in segmentation models. Table 1 lists all reviewed segmentation approaches along with their architecture type, model size, dataset, method highlights, etc. As one of the most classical approaches in medical segmentation, U-Net (Ronneberger et al., 2015) is widely chosen for comparison by its followers. The U-shaped architecture and skip connections in U-Net have proved effective in leveraging hierarchical features. Fig. 8 presents some typical Transformer-based U-shaped segmentation model architectures.

Table 1.

Summary of the reviewed Transformer-based models for medical image segmentation. "N" or "N/A" in the #Param column denotes that the number of model parameters is not reported or not applicable. "N.A." denotes not applicable for the intermediate blocks or decoder module.

| Reference | Architecture | 2D/3D | #Param | Modality | Dataset | ViT as Enc/Inter/Dec | Highlights |

|---|---|---|---|---|---|---|---|

| CoTr (Xie et al., 2021b) | Conv-Transformer Hybrid | 3D | 46.51M | CT | Multi-organ (BTCV (Landman et al., 2015)) | No/Yes/No | The Transformer block with deformable module captures deep features in the bottleneck. |

| SpecTr (Yun et al., 2021) | Conv-Transformer Hybrid | 3D | N | Microscopy | Cholangiocarcinoma (Zhang et al., 2019b) | No/Yes/No | Hybrid Conv-Transformer encoder with spectral normalization. |

| TransBTS (Wang et al., 2021e) | Conv-Transformer Hybrid | 3D | 32.99M | MRI | Brain Tumor (Baid et al., 2021) | No/Yes/No | 3D Transformer blocks for encoding bottleneck features. |

| UNETR (Hatamizadeh et al., 2021) | Conv-Transformer Hybrid | 3D | 92.58M | CT, MRI | Multi-organ (BTCV (Landman et al., 2015)), Brain Tumor, Spleen (MSD (Simpson et al., 2019)) | Yes/No/No | The 3D Transformer directly encodes the image into features and uses a CNN decoder for capturing global information. |

| BiTr-UNet (Jia and Shu, 2021) | Conv-Transformer Hybrid | 3D | N | MRI | Brain Tumor (Baid et al., 2021) | No/Yes/No | The bi-level Transformer blocks are used for encoding two level bottleneck features of acquired CNN feature maps. |

| VT-UNet (Peiris et al., 2021) | Conv-Transformer Hybrid | 3D | 20.8M | MRI, CT | Brain tumor, Pancreas, Liver (MSD (Simpson et al., 2019)) | Yes/Yes/Yes | The encoder directly embeds 3D volumes to jointly capture local/global information; the decoder introduces a parallel cross-attention expansive path. |

| Swin UNETR (Tang et al., 2022; Hatamizadeh et al., 2022a) | Conv-Transformer Hybrid | 3D | 61.98M | CT, MRI | Multi-organ (BTCV) (Landman et al., 2015), MSD 10 tasks (Simpson et al., 2019) | Yes/No/No | The 3D encoder with Swin Transformer directly encodes the 3D CT/MRI volumes, with a CNN-based decoder for better capturing global information. |

| HybridCTrm (Sun et al., 2021a) | Conv-Transformer Hybrid | 3D | N | MRI | MRBrainS (Mendrik et al., 2015), iSEG-2017 (Wang et al., 2019b) | Hybrid/N.A./No | A hybrid architecture encodes images from CNN and Transformer in parallel. |

| UNesT (Yu et al., 2022c) | Conv-Transformer Hybrid | 3D | 87.30M | CT | Kidney Sub-components (RenalSeg, KiTS (Heller et al., 2021)) | Yes/No/No | The use of hierarchical Transformer models for efficiently capturing multi-scale features with a 3D block aggregation module. |

| Universal (Jun et al., 2021) | Conv-Transformer Hybrid | 3D | N | MRI | Brain Tumor (Baid et al., 2021) | No/Yes/No | The proposed model takes advantages of three views of 3D images and fuse 2D features to 3D volumetric segmentation. |

| PC-SwinMorph (Liu et al., 2022a) | Conv-Transformer Hybrid | 3D | N | MRI | Brain (CANDI (Kennedy et al., 2012), LPBA-40 (Shattuck et al., 2008)) | No/No/Hybrid | The designed patch-based contrastive and stitching strategy enforces better fine-detail alignment and richer feature representation. |

| TransBTSV2 (Li et al., 2022c) | Conv-Transformer Hybrid | 3D | 15.30M | MRI, CT | Brain Tumor (Baid et al., 2021), Liver/Kidney Tumor (LiTS (Bilic et al., 2019), KiTS (Heller et al., 2021)) | No/Yes/No | The deformable bottleneck module is used in the Transformer blocks modeling bottleneck features to capture more shape-aware representations. |

| GDAN (Lin et al., 2022b) | Conv-Transformer Hybrid | 3D | N/A | CT | Aorta | No/Yes/No | Geometry-constrained module and deformable self-attention module are designed to guide segmentation. |

| VT-UNet (Peiris et al., 2022) | Conv-Transformer Hybrid | 3D | N/A | MRI, CT | Brain Tumor (Baid et al., 2021) | Yes/Yes/No | The self-attention mechanism simultaneously encodes local and global cues; the decoder employs a parallel self- and cross-attention formulation to capture fine details for boundary refinement. |

| ConTrans (Lin et al., 2022a) | Conv-Transformer Hybrid | 2D | N/A | Endoscopy, Microscopy, RGB, CT | Cell (Pannuke), (Polyp, CVC-ClinicDB (Bernal et al., 2015), CVC-ColonDB (Bernal et al., 2012), ETIS-Larib (Silva et al., 2014), Kvasir (Jha et al., 2020)), Skin (ISIC (Codella et al., 2018)) | Yes/Yes/No | Spatial-Reduction-Cross-Attention (SRCA) module is embedded in the decoder to form a comprehensive fusion of these two distinct feature representations and eliminate the semantic divergence between them. |

| DA-Net (Wang et al., 2022b) | Conv-Transformer Hybrid | 2D | N/A | MRA images | Retina Vessels (DRIVE (Staal et al., 2004) and CHASE-DB1 (Fraz et al., 2012)) | No/Yes/No | Dual Branch Transformer Module (DBTM) that can simultaneously and fully enjoy the patches-level local information and the image-level global context. |

| EPT-Net (Liu et al., 2022c) | Conv-Transformer Hybrid | 3D | N/A | Intracranial Aneurysm | Intracranial Aneurysm (IntrA (Yang et al., 2020)) | No/Yes/No | Dual stream transformer (DST), outer-edge context dissimilation (OCD) and inner-edge hard-sample excavation (IHE) help the semantics stream produce sharper boundaries. |

| Latent Space Transformer (Li et al., 2022f) | Conv-like Transformer | 3D | N/A | CT, MRI | LiTS (Bilic et al., 2019), CHAOS (Kavur et al., 2020) | Yes/Yes/Yes | It intentionally makes the large patches overlap to enhance intra-patch communication. |

| Swin-MIL (Qian et al., 2022) | Conv-like Transformer | 2D | N/A | Microscopy | Haematoxylin and Eosin (H&E) | Yes/Yes/No | A novel weakly supervised method for pixel-level segmentation in histopathology images, which introduces Transformer into the MIL framework to capture global or long-range dependencies. |

| Segtran (Li et al., 2021a) | Conv-Transformer Hybrid | 2D/3D | 166.7M | Fundus, Colonoscopy, MRI | Disc/Cup (REFUGE20 (Orlando et al., 2020)), Polyp, Brain Tumor | No/Yes/N.A. | The use of squeeze and expansion block for contextualized features after acquiring visual and positional features of CNN. |

| MT-UNet (Wang et al., 2021c) | Conv-Transformer Hybrid | 2D | N | CT, MRI | Multi-organ (BTCV (Landman et al., 2015)), Cardiac (ACDC (Bernard et al., 2018)) | No/Yes/No | The proposed mixed Transformer module simultaneously learns inter- and intra-affinities used for modeling bottleneck features. |

| TransUNet++ (Wang et al., 2022a) | Conv-Transformer Hybrid | 2D | N | CT, MRI | Prostate, Liver tumor (LiTS (Bilic et al., 2019)) | No/Yes/No | The feature fusion scheme at decoder enhances local interaction and context. |

| RT-Net (Huang et al., 2022b) | Conv-Transformer Hybrid | 2D | N | Fundus | Retinal (IDRiD (Porwal et al., 2018), DDR) | No/Yes/No | The dual-branch architecture with a global Transformer block and a relation Transformer block enables detection of lesions that are small or have blurred borders. |

| TransUNet (Chen et al., 2021d) | Conv-Transformer Hybrid | 2D | 105.28M | CT, MRI | Multi-organ (BTCV (Landman et al., 2015)), Cardiac (ACDC (Bernard et al., 2018)) | No/Yes/No | Transformer blocks for encoding bottleneck features. |

| U-Transformer (Petit et al., 2021) | Conv-Transformer Hybrid | 2D | N | CT | Pancreas (TCIA) (Holger and Amal, 2016), Multi-organ | No/Yes/No | The U-shape design with multi-head self-attention for bottleneck features and multi-head cross attention in the skip connections. |

| MBT-Net (Zhang et al., 2021a) | Conv-Transformer Hybrid | 2D | N | Microscopy | Corneal Endothelium cell (TM-EM300, Alizarine (Ruggeri et al., 2010)) | No/Yes/No | The design of hybrid residual Transformer model captures multi-branch global features. |

| MCTrans (Ji et al., 2021) | Conv-Transformer Hybrid | 2D | 7.64M | Microscopy, Colonoscopy, RGB | Cell (Pannuke), (Polyp, CVC-ClinicDB (Bernal et al., 2015), CVC-ColonDB (Bernal et al., 2012), ETIS-Larib (Silva et al., 2014), Kvasir (Jha et al., 2020)), Skin (ISIC (Codella et al., 2018)) | No/Yes/No | The Transformer blocks are used for encoding bottleneck features in a UNet-like model. |

| Decoder (Li et al., 2021b) | Conv-Transformer Hybrid | 2D | N | CT, MRI | Brain tumor (MSD (Simpson et al., 2019)), Multi-organ (BTCV (Landman et al., 2015)) | No/No/Yes | The first study to evaluate the effect of using a Transformer as the decoder in medical image segmentation tasks. |

| UTNet (Gao et al., 2021b) | Conv-Transformer Hybrid | 2D | 9.53M | MRI | Cardiac (Campello et al., 2021) | Hybrid/Hybrid/No | The design of a hybrid architecture in the encoder with convolutional and Transformer layers. |

| TransClaw UNet (Chang et al., 2021) | Conv-Transformer Hybrid | 2D | N | CT | Multi-organ (BTCV (Landman et al., 2015)) | No/Yes/No | The Transformer blocks are used as additional encoder for strengthening global connection of CNN encoded features. |

| TransAttUNet (Chen et al., 2021a) | Conv-Transformer Hybrid | 2D | N | RGB, X-ray, CT, Microscopy | Skin (ISIC (Codella et al., 2018)), Lung (JSRT (Shiraishi et al., 2000), Montgomery (Jaeger et al., 2014), NIH (Tang et al., 2019)), (Clean-CC-CCII (He et al., 2020b)), Nuclei (Bowl, GLaS (Malík et al., 2020)) | No/Yes/No | The model combines Transformer self-attention and global spatial attention for modeling semantic information. |

| LeViT-UNet(384) (Xu et al., 2021a) | Conv-Transformer Hybrid | 2D | 52.17M | CT, MRI | Multi-organ (BTCV (Landman et al., 2015)), Cardiac (ACDC (Bernard et al., 2018)) | No/Yes/No | The lightweight design of Transformer blocks as second encoder. |

| Polyp-PVT (Dong et al., 2021a) | Conv-Transformer Hybrid | 2D | N | Endoscopy | Polyp (Kvasir (Jha et al., 2020), CVC-ClinicDB (Bernal et al., 2015), CVC-ColonDB (Bernal et al., 2012), Endoscene (Vázquez et al., 2017), ETIS (Silva et al., 2014)) | Yes/No/No | The Transformer encoder directly learns the image patches representation. |

| COTRNet (Shen et al., 2021b) | Conv-Transformer Hybrid | 2D | N | CT | Kidney (KITS21 (Heller et al., 2021)) | Hybrid/N.A./No | The U-shape model design has the hybrid of CNN and Transformers for both encoder and decoder. |

| TransBridge (Deng et al., 2021) | Conv-Transformer Hybrid | 2D | 11.3M | Echocardiograph | Cardiac (EchoNet-Dynamic) (Ouyang et al., 2020) | No/Yes/No | The Transformer blocks are used for capturing bottleneck features for bridging CNN encoder and decoder. |

| GT UNet (Li et al., 2021c) | Conv-Transformer Hybrid | 2D | N | Fundus | Retinal (DRIVE (Staal et al., 2004)) | Hybrid/N.A./No | The design of hybrid grouping and bottleneck structures greatly reduces computation load of Transformer. |

| BAT (Wang et al., 2021d) | Conv-Transformer Hybrid | 2D | N | RGB | Skin (ISIC (Codella et al., 2018), PH2 (Mendonça et al., 2013)) | No/Yes/No | The model proposes a boundary-wise attention gate in Transformer for capturing prior knowledge. |

| AFTer-UNet (Yan et al., 2022) | Conv-Transformer Hybrid | 2D | 41.5M | CT | Multi-organ (BTCV (Landman et al., 2015)), Thorax (Thorax-85 (Chen et al., 2021e), SegTHOR (Lambert et al., 2020)) | No/Yes/No | The proposed axial fusion mechanism enables intra- and inter-slice communication and reduced complexity. |

| Conv Free (Karimi et al., 2021) | Conventional Transformer | 3D | N | CT, MRI | Brain cortical (Bastiani et al., 2019) plate, Pancreas, Hippocampus (MSD (Simpson et al., 2019)) | Yes/Yes/N.A. | 3D Transformer blocks as encoder without convolution layers |

| nnFormer (Zhou et al., 2021a) | Conv-like Transformer | 3D | 158.92M | CT, MRI | Brain tumor (Baid et al., 2021), Multi-organ (BTCV (Landman et al., 2015)), Cardiac (ACDC (Bernard et al., 2018)) | Yes/Yes/Yes | The 3D model with pure Transformer as encoder and decoder. |

| MISSFormer (Huang et al., 2021) | Conv-like Transformer | 2D | N | MRI, CT | Multi-organ (BTCV (Landman et al., 2015)), Cardiac (ACDC (Bernard et al., 2018)) | Yes/Yes/Yes | The U-shape design with patch merging and expanding modules as encoder and decoder. |

| D-Former (Wu et al., 2022b) | Conv-like Transformer | 2D | 44.26M | CT, MRI | Multi-organ (BTCV (Landman et al., 2015)), Cardiac (ACDC (Bernard et al., 2018)) | Yes/Yes/Yes | The 3D network contains local/global scope modules to increase the scopes of information interactions and reduces complexity. |

| Swin-UNet (Cao et al., 2021) | Conv-like Transformer | 2D | N | CT, MRI | Multi-organ (BTCV (Landman et al., 2015)), Cardiac (ACDC (Bernard et al., 2018)) | Yes/Yes/Yes | The pure Transformer U-shape segmentation model design enables the use for both encoder and decoder |

| iSegFormer (Liu et al., 2022b) | Conv-like Transformer | 3D | N/A | MRI | Knee (OAI-ZIB (Ambellan et al., 2019)) | Yes/Yes/Yes | It contains a memory-efficient Transformer that combines a Swin Transformer with a lightweight multilayer perceptron (MLP) decoder. |

| NestedFormer (Xing et al., 2022) | Conv-like Transformer | 3D | N/A | MRI | BraTS2020 (Baid et al., 2021), MeniSeg | Yes/Yes/Yes | A novel Nested Modality-Aware Transformer (NestedFormer) to explicitly explore the intra-modality and inter-modality relationships of multi-modal MRIs for brain tumor segmentation. |

| Patcher (Ou et al., 2022) | Conv-like Transformer | 3D | N/A | MRI, Endoscopy | Stroke Lesion, Kvasir-SEG (Jha et al., 2020) | Yes/Yes/Yes | This design allows Patcher to benefit from both the coarse-to-fine feature extraction common in CNNs and the superior spatial relationship modeling of Transformers. |

| SMESwin UNet (Wang et al., 2022e) | Conv-like Transformer | 2D | N/A | Microscopy | GlaS (Malík et al., 2020) | Yes/Yes/Yes | Fuses multi-scale semantic features and attention maps by designing a compound structure with CNN and ViTs (named MCCT), based on the Channel-wise Cross fusion Transformer (CCT). |

| DS-TransUNet (Lin et al., 2021) | Conv-Transformer Hybrid | 2D | N | Colonoscopy, RGB, Microscopy | Polyp (Jha et al., 2020), Skin (ISIC (Codella et al., 2018)), Gland (GLaS (Malík et al., 2020)) | Yes/Yes/Yes | The use of swin Transformer as both encoder and decoder forms the U-shape design of segmentation model. |

| MedT (Valanarasu et al., 2021) | Conv-Transformer Hybrid | 2D | N | Ultrasound, Microscopy | Brain (Valanarasu et al., 2020), Gland (Sirinukunwattana et al., 2017), Multi-organ Nuclei (MoNuSeg (Kumar et al., 2019)) | Yes/No/No | A fusion model with a global and local branches as encoders. |

| PMTrans (Zhang et al., 2021d) | Conv-Transformer Hybrid | 2D | N | Microscopy, CT | Gland (GLAS (Malík et al., 2020)), Multi-organ Nuclei (MoNuSeg (Kumar et al., 2019)), Head (HECKTOR (Andrearczyk et al., 2020)) | Hybrid/No/No | The pyramid design of structure enables multiscale Transformer layers for encoder image features. |

| TransFuse (Zhang et al., 2021b) | Conv-Transformer Hybrid | 2D | 26.3M | Endoscopy, RGB, X-ray, MRI | Polyp (Kvasir (Jha et al., 2020), ClinicDB (Bernal et al., 2015), ColonDB (Bernal et al., 2012), EndoScene (Vázquez et al., 2017), ETIS (Silva et al., 2014)), Skin (ISIC (Codella et al., 2018)), Hippocampus, Prostate (MSD (Simpson et al., 2019)) | Hybrid/N.A./No | Features encoded by a CNN branch and a Transformer branch are fused by a BiFusion module and passed to the decoder for segmentation. |

| CrossTeaching (Luo et al., 2021) | Conv-Transformer Hybrid | 2D | N | MRI | ACDC (Bernard et al., 2018) | Hybrid/Hybrid/Hybrid | The two branch network employs advantage of UNet and Swin-UNet. |

| TransFusionNet (Meng et al., 2021) | Conv-Transformer Hybrid | 2D | N | CT | Liver Tumor(LiTS (Bilic et al., 2019)), LiverVessels (LTBV) (Huang et al., 2018), Multi-organ (3Dircadb (Soler et al., 2010)) | Hybrid/N.A./No | The Transformer- and CNN-based encoders extract both features directly from input and fuse to the CNN decoder. |

| X-Net (Li et al., 2021d) | Conv-Transformer Hybrid | 2D | N | Microscopy, Endoscopy | Nuclei (Bowl (Caicedo et al., 2019), TNBC (Naylor et al., 2018)), Polyp (Kvasir (Jha et al., 2020)) | Hybrid/Hybrid/Hybrid | The use of CNN reconstruction model and Transformer segmentation model with mixed representations. |

| T-AutoML (Yang et al., 2021) | Net Architecture Search | 3D | 16.96M | CT | Liver, Lung tumor (MSD (Simpson et al., 2019)) | N.A. | The first medical architecture search framework designed for Transformer-based models. |

| (Xie et al., 2021c) | Pre-training Framework | 2D/3D | N | CT, MRI, X-ray, Dermoscopy | JSRT (Shiraishi et al., 2000), ChestXR (Wang et al., 2017a), BTCV (Landman et al., 2015), RICORD (Tsai et al., 2021), CHAOS (Kavur et al., 2020), ISIC (Codella et al., 2018) | No/Yes/No | The unified pre-training framework of 3D and 2D images for Transformer models. |

| (Zhou et al., 2022c) | Pre-training Framework | 3D | N | CT, MRI, X-ray | Lung (ChestX-ray14 (Wang et al., 2017a)), Multi-organ (BTCV (Landman et al., 2015)), Brain Tumor (MSD (Simpson et al., 2019)) | Yes/No/No | The masked autoencoder scheme adapts the pre-training framework for medical images. |

| (Tang et al., 2022; Hatamizadeh et al., 2022a) | Pre-training Framework | 3D | N | CT, MRI | Multi-organ (BTCV (Landman et al., 2015)),MSD 10 tasks (Simpson et al., 2019) | Yes/No/No | Very large-scale medical image pre-training framework with Swin Transformers. |

| SMIT (Jiang et al., 2022) | Pre-training Framework | 3D | N | CT, MRI | Covid19, Kidney Cancer, BTCV (Landman et al., 2015) | Yes/No/No | Self-distillation learning with masked image modeling method to perform SSL for vision transformers (SMIT) is applied to 3D multi-organ segmentation from CT and MRI. It contains a dense pixel-wise regression within masked patches called masked image prediction |

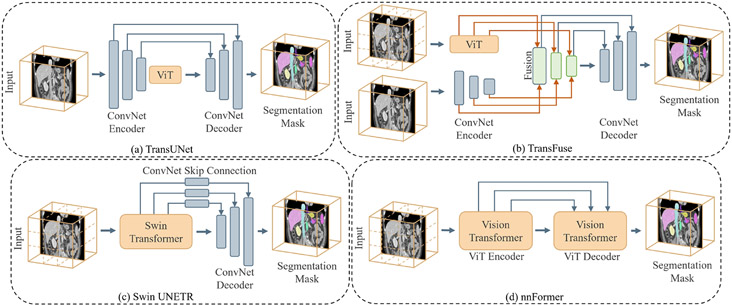

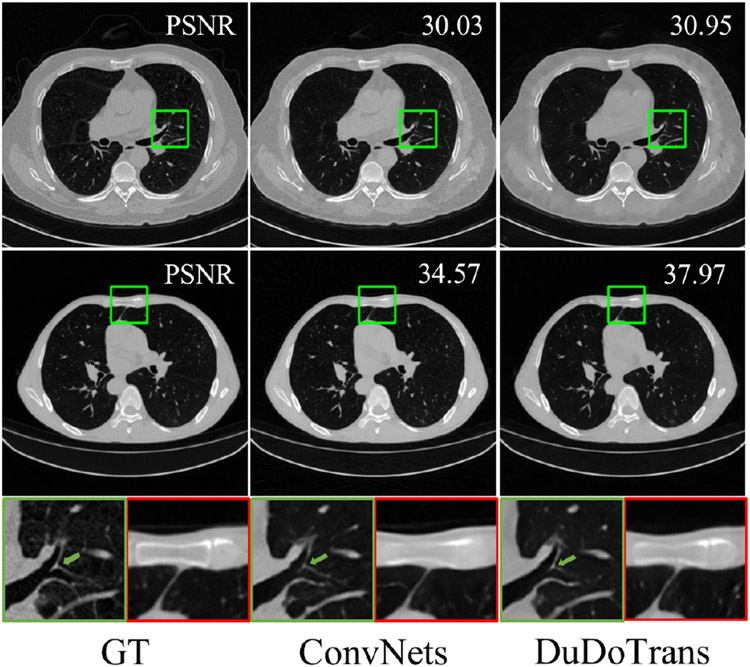

Fig. 8.

Typical Transformer-based U-shaped segmentation model architectures. (a) The TransUNet (Chen et al., 2021d)-like structure uses Transformer as additional encoder modeling bottleneck features. (b) The Swin UNETR (Tang et al., 2022) uses the Transformer as the main encoder and CNN decoder to construct the hybrid network. (c) The TransFuse (Zhang et al., 2021b) fuses CNN and Transformer encoders together to connect the decoder. (d) The nnFormer (Zhou et al., 2021a)-like structure uses a pure Transformer for both encoder and decoder.

ViT as main encoder:

Vision Transformers reformulate the segmentation problem as a 1D sequence-to-sequence inference task and learn medical context from the embedded patches. A major advantage of the sequence-to-sequence modeling strategy is its larger receptive field compared to CNNs (Dosovitskiy et al., 2020), which yields stronger representation capability with longer-range dependencies. Exploiting these properties, several models apply a Transformer directly to the input sequence of tokenized patches (Hatamizadeh et al., 2022b; Tang et al., 2022; Peiris et al., 2021; Yu et al., 2022c). (Hatamizadeh et al., 2022b) and (Peiris et al., 2021) introduce volumetric models that use the global attention-based Vision Transformer as the main encoder and then connect it to a CNN-based decoder or expanding modules. (Tang et al., 2022; Hatamizadeh et al., 2022a) demonstrate the use of the shifted-window (Swin) Transformer, which offers more powerful representation ability, as the main encoder in a ‘U-shaped’ segmentation architecture. The Swin UNETR model achieves state-of-the-art performance on the 10 tasks in the Medical Segmentation Decathlon (MSD) (Simpson et al., 2019) and on the BTCV benchmark. Similarly, (Yu et al., 2022c) propose a hierarchical Transformer-based segmentation model that uses 3D block aggregation and achieves state-of-the-art results on kidney sub-component segmentation in CT images.
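The reformulation of an image as a 1D token sequence can be sketched as follows. This is a minimal illustration that assumes a 2D image and non-overlapping square patches; the `patchify` helper is a hypothetical name, and the learned linear projection and positional embedding that a ViT applies to each token are omitted.

```python
def patchify(image, patch):
    """Split a 2D image (a list of rows) into a row-major sequence of flattened patches."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image size must be divisible by the patch size")
    tokens = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            # Flatten one patch into a 1D token, row by row.
            tokens.append([
                image[r][c]
                for r in range(top, top + patch)
                for c in range(left, left + patch)
            ])
    return tokens


# A 4 x 4 toy image whose pixel values equal their row-major index.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
tokens = patchify(image, 2)
print(len(tokens), tokens[0], tokens[-1])  # 4 [0, 1, 4, 5] [10, 11, 14, 15]
```

Because every token can attend to every other token in the resulting sequence, a single self-attention layer already spans the whole image, which is the receptive-field advantage described above.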

ViT as additional encoder:

The second widely adopted structure for medical image segmentation uses the Transformer as a secondary encoder after ConvNets. The rationale for this design is that Transformers lack inductive biases such as locality and translation equivariance. In addition, using a CNN as the main encoder brings a computational benefit, since it is expensive to compute global self-attention among voxels in high-resolution medical images. An early adoption of a 12-layer ViT for the bottleneck features is TransUNet (Chen et al., 2021d), which follows the 2D UNet (Ronneberger et al., 2015) design and incorporates the Transformer blocks in the middle structure. TransUNet++ (Wang et al., 2022a) and DS-TransUNet (Lin et al., 2021) propose improved versions of the design that achieve promising results for CT segmentation tasks. For volumetric medical segmentation, TransBTS (Wang et al., 2021e) and TransBTSV2 (Li et al., 2022c) introduce the Transformer to model spatial patch embedding for the bottleneck feature. CoTr (Xie et al., 2021b), TransBridge (Deng et al., 2021), TransClaw (Chang et al., 2021), and TransAttUNet (Chen et al., 2021a) study variants of the attention blocks in the Transformer, such as the deformable mechanism that restricts attention to a small set of key positions. Segtran (Li et al., 2021a) exploits the squeeze and expansion block for modeling contextual features with Transformers for hidden representations. MT-UNet (Wang et al., 2021c) uses a mixed structure for learning inter- and intra-affinities among features. More recently, several studies such as AFTer-UNet (Yan et al., 2022), BAT (Wang et al., 2021d), GT-UNet (Li et al., 2021c), and Polyp-PVT (Dong et al., 2021a) focus on using grouping, boundary-aware, or slice communication modules for improved robustness in ViT.

Fusion models with ViT and ConvNet:

While Transformers excel at modeling long-range dependencies, their limited ability to capture local features remains a challenge. Instead of cascading the Conv and Transformer blocks, researchers propose to use a ViT and a ConvNet as parallel encoders that both take the medical image as input. The embedded features are then fused and connected to the decoder. This multi-branch design benefits from learning global and local information with the ViT and the ConvNet in parallel, rather than stacking the representations sequentially. TransFuse (Zhang et al., 2021b) uses a bi-fusion paradigm, in which the features from the two branches are fused to jointly make inference. CrossTeaching (Luo et al., 2021) employs semi-supervised learning with UNet and Swin Transformer for medical segmentation. TransFusionNet (Meng et al., 2021) uses a CNN as the decoder to bridge the fused features learned from the Transformer and the ConvNet. PMTrans (Zhang et al., 2021d) introduces a pyramid structure for a multi-branch encoder with Transformers. X-Net (Li et al., 2021d) demonstrates a dual encoding-decoding X-shape network structure for pathology images. MedT (Valanarasu et al., 2021) designs model encoders with a CNN global branch and a local branch with gated axial self-attention. DS-TransUNet (Lin et al., 2021) proposes to split the input image into non-overlapping patches and then use two encoder branches that learn feature representations at different scales; the final output is fused by a Transformer Interactive Fusion (TIF) module.

Pure Transformer:

In addition to hybrid models, networks with pure Transformer blocks have been shown to be effective at dense prediction tasks such as segmentation. The nnFormer (Zhou et al., 2021a) proposes a 3D Transformer that exploits the combination of interleaved convolutions and self-attention operations. The nnFormer also replaces the skip connection with a skip attention mechanism, and it outperforms nnUNet significantly. MISSFormer (Huang et al., 2021) is a pure Transformer network with a feed-forward enhanced Transformer block and a context bridge. It models local features at different scales to leverage long-range dependencies. D-Former (Wu et al., 2022b) envisions an architecture with a D-Former block, which contains the dynamic position encoding block (DPE), local scope modules (LSMs), and global scope modules (GSMs). The design employs a dilated mechanism that directly processes 3D medical images and improves the communication of information without increasing the number of tokens in self-attention. Swin-UNet (Cao et al., 2021) uses shifted-window self-attention Transformer blocks to construct a U-shaped segmentation network for 2D images. This pure Transformer architecture also uses the Transformer block in the expansion modules to upsample feature maps. However, current pure Transformer-based segmentation models are commonly large, which makes robust and scalable design challenging.

Pre-training framework for medical segmentation:

Empirical studies of the Vision Transformer show that self-attention blocks commonly require large-scale pre-training data to learn a more powerful backbone (Dosovitskiy et al., 2020). Compared to CNNs, Transformer models are more data-demanding at different scales (Zhai et al., 2022), so effective and efficient ViT models are typically pre-trained on datasets of appropriate scale. However, adapting from natural images to the medical domain remains a challenge because the context gap is large. In addition, generating expert annotations of medical images is nontrivial, expensive, and time-consuming; it is therefore difficult to collect large-scale annotated data in medical image analysis. Compared to fully supervised datasets, raw medical images without expert annotation are easier to obtain. Hence, transfer learning, which aims to reuse the features of an already trained ViT on different but related tasks, can be employed. To further improve the robustness and efficiency of ViT in medical image segmentation, several works learn feature representations in a self-supervised manner, without manual labels. Self-supervised Swin UNETR (Tang et al., 2022) collects a large-scale set of CT images (5,000 subjects) for pre-training the Swin Transformer encoder, which yields significant improvement and state-of-the-art performance on BTCV (Landman et al., 2015) and the Medical Segmentation Decathlon (MSD) (Antonelli et al., 2021). The pre-training framework employs multi-task self-supervised learning approaches including image inpainting, contrastive learning, and rotation prediction. Self-supervised masked autoencoder (MAE) (Zhou et al., 2022c) investigates the MAE-based self pre-training paradigm designed for Transformers, which forces the network to predict masked targets by collecting information from the context. Furthermore, the unified 2D/3D pre-training (Xie et al., 2021c) aims to construct a teacher-student framework to leverage unlabeled medical data. The approach designs a pyramid Transformer U-Net as the backbone, which takes either 2D or 3D patches as inputs depending on the embedding dimension.
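The masking step behind MAE-style pre-training can be illustrated with a short sketch. It assumes uniform random masking over patch tokens; the `random_mask` helper, the 216-token volume, and the 0.75 mask ratio are illustrative assumptions rather than settings reported by the cited works. The encoder would see only the visible tokens, and the reconstruction loss would be computed on the masked ones.

```python
import random


def random_mask(num_tokens, mask_ratio, seed=0):
    """Return (visible, masked) lists of token indices under uniform random masking."""
    if not 0.0 <= mask_ratio < 1.0:
        raise ValueError("mask_ratio must be in [0, 1)")
    rng = random.Random(seed)
    num_masked = int(num_tokens * mask_ratio)
    masked = sorted(rng.sample(range(num_tokens), num_masked))
    masked_set = set(masked)
    visible = [i for i in range(num_tokens) if i not in masked_set]
    return visible, masked


# Example: 216 patch tokens with three quarters of them hidden from the encoder.
visible, masked = random_mask(216, 0.75)
print(len(visible), len(masked))  # 54 162
```

No labels are involved at any point, which is why such schemes can exploit raw, unannotated medical images.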

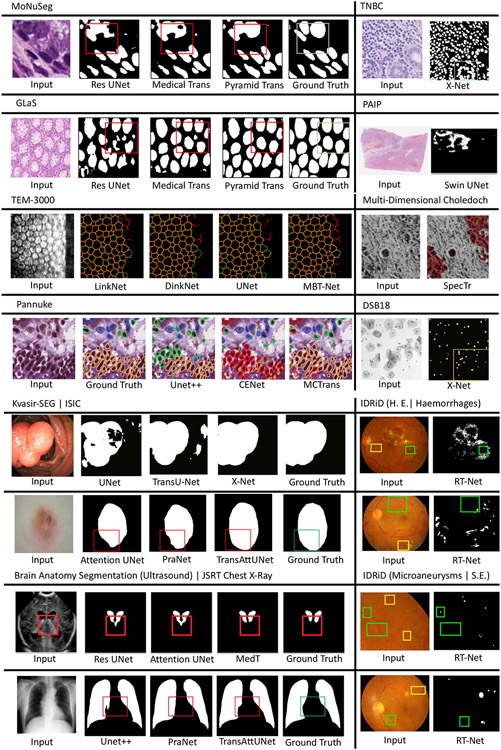

Segmentation Transformers for different imaging modalities:

The diversity of medical imaging modalities poses challenges for deep learning tools. The Medical Segmentation Decathlon (Antonelli et al., 2021), a challenge dataset designed for general-purpose segmentation tools, contains multiple radiological modalities including dynamic CT and T1w, T2w, and FLAIR MRI. In addition, pathology images, endoscopy intervention data, and videos are also challenging medical segmentation scenarios. Among Transformer models evaluated on CT only, CoTr (Xie et al., 2021b), U-Transformer (Petit et al., 2021), TransClaw (Chang et al., 2021), COTRNet (Shen et al., 2021a), AFTerNet (Yan et al., 2022), TransFusionNet (Meng et al., 2021), T-AutoML (Yang et al., 2021), and others conduct extensive experiments. Many researchers also explore general segmentation approaches that can handle at least volumetric data in both CT and MRI, including UNETR (Hatamizadeh et al., 2022b), VT-UNet (Peiris et al., 2021), SwinUNETR (Tang et al., 2022), UNesT (Yu et al., 2022c), MT-UNet (Wang et al., 2021c), TransUNet (Chen et al., 2021d), TransClaw (Chang et al., 2021), LeViT-UNet (Xu et al., 2021a), nnFormer (Zhou et al., 2021a), MISSformer (Huang et al., 2021), D-Former (Wu et al., 2022b), Swin-UNet (Cao et al., 2021), and some pre-training workflows. Regarding pathology images, SpecTr (Yun et al., 2021), MBT-Net (Zhang et al., 2021a), MCTrans (Ji et al., 2021), MedT (Valanarasu et al., 2021), and X-Net (Li et al., 2021d) are some pioneering works. Finally, SegTrans (Li et al., 2021e), MCTrans (Ji et al., 2021), Polyp-PVT (Dong et al., 2021a), DS-TransUNet (Lin et al., 2021), and TransFuse (Zhang et al., 2021b) can model endoscopy images or video frames.

4.2. Medical image recognition and classification

Since its advent, ViT (Dosovitskiy et al., 2020) has exhibited exceptional performance in natural image classification and recognition (Wang et al., 2021f; Liu et al., 2021b; Touvron et al., 2021b; Chu et al., 2021). The benefits of ViT over CNN for image classification tasks are likely due to the following properties:

The ability of a single self-attention operation in ViT to globally characterize the contextual information in the image, owing to its large theoretical and effective receptive field (Ding et al., 2022; Raghu et al., 2021). [Property M1]

The self-attention operation tends to promote a flatter loss landscape, which results in improved performance and better generalizability (Park and Kim, 2022). [Property M4]

ViT is shown to be more resilient than CNN to distortions (e.g., noise, blur, and motion artifacts), semantic changes, and out-of-distribution samples (Cordonnier et al., 2019; Bhojanapalli et al., 2021; Xie et al., 2021a). [Property M5]

ViT has a weaker inductive bias than CNN, whose convolutional inductive bias has been shown to be advantageous for learning from smaller datasets (Dosovitskiy et al., 2020). However, with the help of pre-training on a significantly large amount of data, ViT is able to surpass the convolutional inductive bias by learning the relevant patterns directly from data. [Property M3]

Related to the previous property, the superior scaling behavior of ViT over CNN with the aid of a large model size and pre-training on large datasets (Liu et al., 2022e; Zhai et al., 2022). [Property C1]

It is flexible to design different network architectures by mixing Transformer and CNN modules to accommodate different modeling requirements. [Property C2]
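To make Property M1 and the locality bias behind Property M3 concrete, the following minimal sketch contrasts a single self-attention operation with a 3-tap convolution on a toy 1D token sequence. It is an illustration under simplifying assumptions, not an implementation from any of the reviewed works: the query/key/value projections are taken to be identities, and the names `self_attention`, `conv1d_same`, and `first_token_changes` are hypothetical helpers introduced here.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Single-head self-attention with identity Q/K/V projections (assumption)."""
    d = len(tokens[0])
    out = []
    for q in tokens:
        # Scaled dot-product score of this query against every token.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights = softmax(scores)
        # Output is a weighted sum over all tokens, hence a global receptive field.
        out.append([sum(w * v[j] for w, v in zip(weights, tokens)) for j in range(d)])
    return out


def conv1d_same(tokens, kernel=(0.25, 0.5, 0.25)):
    """3-tap convolution along the token axis with zero padding (local receptive field)."""
    n, d = len(tokens), len(tokens[0])
    half = len(kernel) // 2
    out = []
    for i in range(n):
        row = [0.0] * d
        for k, w in enumerate(kernel):
            j = i + k - half
            if 0 <= j < n:
                for c in range(d):
                    row[c] += w * tokens[j][c]
        out.append(row)
    return out


def first_token_changes(layer, tokens):
    """Return True if perturbing the last token changes the layer output at position 0."""
    perturbed = [list(t) for t in tokens]
    perturbed[-1] = [x + 1.0 for x in perturbed[-1]]
    before = layer(tokens)[0]
    after = layer(perturbed)[0]
    return any(abs(a - b) > 1e-9 for a, b in zip(before, after))


# Toy sequence of 8 tokens with 2 channels each (arbitrary illustrative values).
tokens = [[0.1 * (i + 1), 0.05 * (i - 3)] for i in range(8)]
attention_is_global = first_token_changes(self_attention, tokens)
conv_is_global = first_token_changes(conv1d_same, tokens)
print(attention_is_global, conv_is_global)
```

In this toy setting, perturbing the last token alters the attention output at the first position but leaves the convolution output there unchanged. A CNN would need several stacked layers before the two positions interact, whereas the attention weights that link them must be learned from data rather than being built into the operator.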

These appealing properties have sparked an increasing interest in developing Transformer-based models for medical image classification and recognition. The original ViT (Dosovitskiy et al., 2020) achieves superior classification performance with the help of pre-training on large-scale datasets. Indeed, owing to their weaker inductive bias, pure ViTs are more "data hungry" than CNNs (Park and Kim, 2022; Liu et al., 2021a; Bao et al., 2021). Consequently, many supervised and self-supervised pre-training schemes for Transformers have been proposed for applications such as COVID-19 classification (Park et al., 2021; Xie et al., 2021c; Mondal et al., 2021), retinal disease classification (Yu et al., 2021a; Matsoukas et al., 2021), and histopathological image classification (Wang et al., 2021g). Despite the intriguing potential of these models, obtaining large-scale pre-training datasets is not always practicable for some applications. Therefore, efforts have been devoted to developing hybrid Transformer-CNN classification models that are less data-demanding (Sriram et al., 2021; Park et al., 2021; Dai et al., 2021a; He et al., 2021a; Gao et al., 2021c). Next, we briefly review and analyze these recent works for medical image classification and list the reviewed works in Table 2.

Table 2.

A summary of the reviewed Transformer-based models for medical image classification. "N.A." denotes not applicable for intermediate blocks or the decoder module. "N" denotes that the number of model parameters is not reported or not applicable. "t" denotes the temporal dimension.

| Reference | Architecture | 2D/3D | Pre-training | #Param | Classification Task | Modality | Dataset | Highlights |
|---|---|---|---|---|---|---|---|---|
| (Sriram et al., 2021) | Conv-Transformer Hybrid | 2D | Pre-trained CNN | N | COVID-19 Prognosis | X-ray | CheXpert (Irvin et al., 2019), NYU COVID (Shamout et al., 2021) | A pre-trained CNN backbone extracts features from individual images, and a Transformer is applied to the extracted features from a sequence of images for prognosis. |
| (Park et al., 2021) | Conv-Transformer Hybrid | 2D | Pre-trained CNN | N | COVID-19 Diagnosis | X-ray | CheXpert (Irvin et al., 2019) | A pre-trained CNN backbone is integrated with ViT for classification. |
| TransPath (Wang et al., 2021g) | Conv-Transformer Hybrid | 2D | Pre-trained CNN + ViT | N | Histopathological Image Classification | Microscopy | TCGA (Tomczak et al., 2015), PAIP (Kim et al., 2021b), NCT-CRC-HE (Kather et al., 2019), PatchCamelyon (Bejnordi et al., 2017), MHIST (Wei et al., 2021) | The entire network is pre-trained prior to the downstream tasks. The TAE module is introduced to the ViT in order to aggregate token embeddings and subsequently excite the MSA output. |
| i-ViT (Gao et al., 2021c) | Conv-Transformer Hybrid | 2D | No | N | Histological Subtyping | Microscopy | AIPath (Gao et al., 2021d) | A lightweight CNN is used to extract features from a series of image patches, which is then followed by a ViT to capture high-level relationships between patches for classification. |
| (He et al., 2021a) | Conv-Transformer Hybrid | 2D | No | N | Brain Age Estimation | MRI | Brain MRI (BGSP (Holmes et al., 2015), OASIS-3 (LaMontagne et al., 2019), NIH-PD (Evans et al., 2006), ABIDE-I (Di Martino et al., 2014), IXI*, DLBS (Park et al., 2012), CMI (Alexander et al., 2017), CoRR (Zuo et al., 2014)) | Two CNN backbones are used, one of which extracts features from the whole image and the other from the image patches. Then, a Transformer is used to aggregate the features from the two backbones for classification. |
| SETMIL (Zhao et al., 2022) | Conv-Transformer Hybrid | 2D | Pre-trained CNN | N | Gene Mutation Prediction, Lymph Node Metastasis Diagnosis | Microscopy | Whole Slide Pathological Image | A novel spatial encoding with Transformer is proposed for multiple instance learning. |
| KAT (Zheng et al., 2022b) | Conv-Transformer Hybrid | 2D | Pre-trained CNN | N | Tumor Grading & Prognosis | Microscopy | Whole Slide Pathological Image | A cross-attention Transformer is proposed to enable information exchange across tokens based on their spatial relationship on the whole slide image. |
| RAMST (Lv et al., 2022) | Conv-Transformer Hybrid | 2D | Pre-trained CNN | N | Microsatellite Instability Classification | Microscopy | Whole Slide Pathological Image | A combined region- and whole-slide-level Transformer is proposed. The Transformer accepts sampled patches per the attention map and combines two levels of information for the final classification. |
| LA-MIL (Reisenbüchler et al., 2022) | Conv-Transformer Hybrid | 2D | Pre-trained CNN | N | Microsatellite Instability Classification, Mutation Prediction | Microscopy | Whole Slide Pathological Image (TCGA colorectal & stomach (Weinstein et al., 2013)) | A local attention graph-based Transformer is proposed for multiple instance learning, as well as an adaptive loss function to mitigate the class imbalance problem. |
| BabyNet (Plotka et al., 2022) | Conv-Transformer Hybrid | 2D+t | No | N | Birth Weight Prediction | Ultrasound | Fetal Ultrasound Video Scans | BabyNet advances a 3D ResNet with a Transformer module to improve the local and global feature aggregation. |
| Multi-transSP (Zheng et al., 2022a) | Conv-Transformer Hybrid | 2D | Pre-trained CNN | N | Survival Prediction for Nasopharyngeal Carcinoma Patients | CT | In-house CT Scans | A hybrid CNN-Transformer model that combines CT image and tabular data (i.e., clinical text data) is developed for survival prediction of nasopharyngeal carcinoma patients. |
| BrainFormer (Dai et al., 2022) | Conv-Transformer Hybrid | 3D | No | N | Autism, Alzheimer's Disease, Depression, Attention Deficit Hyperactivity Disorder, and Headache Disorders Classification | fMRI | ABIDE (Di Martino et al., 2014), ADNI (Petersen et al., 2010), MPILMBB (Mendes et al., 2019), ADHD-200 (Bellec et al., 2017), and ECHO | A 3D CNN and Transformer hybrid network employs CNNs to model local cues and a Transformer to capture global relations among distant brain regions. |
| xViTCOS (Mondal et al., 2021) | Conventional Transformer | 2D | Pre-trained ViT | N | COVID-19 Diagnosis | CT, X-ray | Chest CT (COVIDx CT-2A (Gunraj et al., 2021)), Chest X-ray (COVIDx-CXR-2 (Pavlova et al., 2021), CheXpert (Irvin et al., 2019)) | A multi-stage transfer learning strategy is proposed for fine-tuning pre-trained ViT on medical diagnostic tasks. |
| MIL-VT (Yu et al., 2021a) | Conventional Transformer | 2D | Pre-trained ViT | N | Fundus Image Classification | Fundus | APTOS2019†, RFMiD2020 (Pachade et al., 2021) | A multiple instance learning module is introduced to the pre-trained ViT that learns from both the classification tokens and the image patches. |
| (Matsoukas et al., 2021) | Conventional Transformer | 2D | Pre-trained ViT | N | Dermoscopic, Fundus, and Mammography Image Classification | Fundus, Dermoscopy, Mammography | ISIC2019‡, APTOS2019†, CBIS-DDSM (Lee et al., 2017) | This study investigates the effectiveness of pre-training DeiT versus ResNet on medical diagnostic tasks. |
| TMSS (Saeed et al., 2022) | Conventional Transformer | 3D | No | N | Survival Prediction for Head and Neck Cancer Patients | PET/CT | HECKTOR (Oreiller et al., 2022) | A Transformer for end-to-end survival prediction and segmentation using PET/CT and electronic health records (i.e., clinical text data). |
| Uni4Eye (Cai et al., 2022) | Conventional Transformer | 2D/3D | Pre-trained ViT | N | Ophthalmic Disease Classification | OCT, Fundus | OCTA-500 (Li et al., 2020), GAMMA (Wu et al., 2022a), EyePACS§, Ichallenge-AMD (Milea et al., 2020), Ichallenge-PM (Fu et al., 2018), PRIME-FP20 (Ding et al., 2021) | A self-supervised learning framework is developed to pre-train a Transformer using both 2D and 3D ophthalmic images for ophthalmic disease classification. |
| STAGIN (Kim et al., 2021a) | Conventional Transformer | 3D+t | No | 1.2M | Gender, Cognitive Task Classification | fMRI | HCP S1200 (Van Essen et al., 2013) | A conventional Transformer encoder is employed to capture the temporal attention over features of functional connectivity from fMRI. |
| BolT (Bedel et al., 2022) | Conventional Transformer | 3D+t | No | N | Gender Prediction, Cognitive Task and Autism Spectrum Disorder Classification | fMRI | HCP S1200 (Van Essen et al., 2013), ABIDE (Di Martino et al., 2014) | A cascaded Transformer encodes features of BOLD responses via progressively increased temporally-overlapped window attention. |
| SiT (Dahan et al., 2022) | Conventional Transformer | 3D | Pre-trained ViT | 21.6M | Cortical Surface Patching, Postmenstrual Age (PMA) and Gestational Age (GA) | MRI | dHCP (Hughes et al., 2017) | Reformulates the surface learning task as a seq2seq problem and solves it with ViTs. |
| Twin-Transformers (Yu et al., 2022d) | Conventional Transformer | 3D+t | No | N | Brain Networks Identification | N | HCP S1200 (Van Essen et al., 2013) | Twin-Transformers is proposed to simultaneously capture temporal and spatial features from fMRI. |
| (Cheng et al., 2022) | Conv-like Transformer | 3D | No | 6.23M | Cortical Surfaces Quality Assessment | MRI | Infant Brain MRI Dataset | The first work to extend the Transformer to spherical space. |
| USST (Xie et al., 2021c) | Conv-like Transformer | 2D/3D | Pre-trained ViT | N | COVID-19 Diagnosis, Pneumonia Classification | X-ray, CT | RICORD (Tsai et al., 2021), ChestXR (Akhloufi and Chetoui, 2021) | A unified pre-training framework that allows pre-training with both 2D and 3D images is introduced for Transformers. |

Hybrid model: