Abstract

News media often report that the trend of some public health outcome has changed. These statements are frequently based on longitudinal data, and the change in trend is typically found to have occurred at the most recent data collection time point—if no change had occurred the story is less likely to be reported. Such claims may potentially influence public health decisions on a national level.

We propose two measures for quantifying the trendiness of trends. Assuming that reality evolves in continuous time, we define what constitutes a trend and a change in trend, and introduce a probabilistic Trend Direction Index. This index has the interpretation of the probability that a latent characteristic has changed monotonicity at any given time conditional on observed data. We also define an index of Expected Trend Instability quantifying the expected number of changes in trend on an interval.

Using a latent Gaussian process model, we show how the Trend Direction Index and the Expected Trend Instability can be estimated in a Bayesian framework, and use the methods to analyse the proportion of smokers in Denmark during the last 20 years and the development of new COVID-19 cases in Italy from 24 February onwards.

Keywords: Bayesian statistics, functional data analysis, Gaussian processes, trends

1. INTRODUCTION

Trend detection has received increased attention in many fields, and while many important applications have their roots in the fields of economics (stock development) and environmental change (global temperature), trend identification has important ramifications in industry (process monitoring), medicine (disease development) and public health (changes in society).

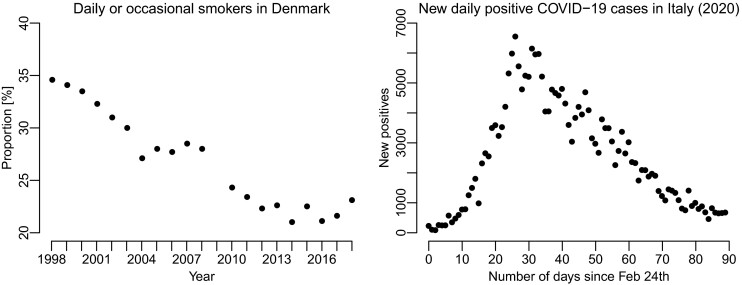

This manuscript is concerned with the fundamental problem of estimating an underlying trend based on random variables observed repeatedly over time. In addition to this problem, we also wish to assess the probabilities that such a trend is changing as a function of time. Our motivation comes from two recent examples: in the first, the news media in Denmark stated that the trend in the proportion of smokers in Denmark had changed at the end of the year 2018 such that the proportion was now increasing, whereas it had been decreasing for the previous 20 years. This statement was based on survey data collected yearly since 1998 and reported by the Danish Health Authority (The Danish Health Authority, 2019), and it is critical for the Danish Health Authority to be able to evaluate and react if an actual change in trend has occurred. The second example relates to the recent outbreak of COVID-19 in Italy where it is of tremendous importance to determine if the disease spread is increasing or slowing down by considering the trend in number of new cases (see Figure 1).

FIGURE 1.

Left panel: The proportion of daily or occasional smokers in Denmark during the last 20 years estimated from survey data and reported by the Danish Health Authority. The 2009 measurement is missing due to a problem with representativity. Right panel: The number of daily new cases tested positive for COVID-19 in Italy from 24 February 2020 and 90 days onwards

The concept of ‘trend’ is not itself well-defined and it is often up to each researcher to define exactly what is meant by ‘trend’ (Esterby, 1993). Consequently, it is not obvious what constitutes a change in trend, and that makes it difficult to compare statistical methods to detect trend changes, since they attempt to address slightly different problems.

Change-point analysis is an often-used approach for detecting if a change (of a prespecified type) has taken place (Basseville & Nikiforov, 1993; Barry & Hartigan, 1993). However, change-point analysis is marred by the fact that change-points close to the boundary of the observed time frame are notoriously difficult to detect, and by its assumption that changes are abrupt and occur at specific time points.

Another common approach to analysing trends in time series is to apply a low-pass smoothing filter to the observed data in order to remove noise and extract the underlying latent trend (Chandler & Scott, 2011). Smoothing alone, however, does not settle what constitutes a change in trend, so it is also necessary to specify a decision criterion to determine if any filtered changes are relevant.

Gottlieb and Müller (2012) define a stickiness coefficient for longitudinal data and use the stickiness coefficient to summarize the extent to which deviations from the mean trajectory tend to co-vary over time. While the stickiness coefficient determines changes from the expected trend, it is a measure that is not easily interpreted.

Kim et al. (2009) proposed an ℓ1 Trend Filtering approach based on an idea by Hodrick and Prescott (1997) that imposes sparsity on the first-order differences of the conditional mean. This produces trend estimates that are piecewise linear with the inherent assumption that changes to the time series are abrupt. The Trend Filtering approach has been further extended to sparsity of kth order differences and to a Bayesian framework, where the flexibility of the difference of the conditional mean is controlled through the prior distributions (Kowal et al., 2019; Ramdas & Tibshirani, 2016).

We propose a new method for evaluating changes in the trend of a latent function f, which starts by a clear specification of the problem and relevant measures of the trend: The Trend Direction Index gives a local probability of the monotonicity of f and provides an answer to the research question ‘What is the probability that the latent function is increasing?’ The Expected Trend Instability index gives the expected number of changes in the monotonicity of f over a time interval, and thus provides information about the volatility of f. It can be used to evaluate research questions about the rate of change in direction of f. In particular, it can be used to gauge how surprising such statements as ‘It is the first time in 20 years that f has stopped decreasing and started to increase’ are. Besides providing exact answers to specific and relevant research questions, our proposed method estimates the actual probability that the trend is changing.

The manuscript is structured as follows: In Section 2, we present our statistical model based on a latent Gaussian process formulation giving rise to explicit expressions for the Trend Direction Index and the Expected Trend Instability conditional on observed data. Section 3 is concerned with estimating the model's parameters. In Section 4, we undertake a simulation study to show the performance of the proposed method and to compare it with Trend Filtering, and in Section 5, we provide extended applications to our two cases: the development of the proportion of smokers in Denmark during the last 20 years and the development of new COVID-19 cases in Italy. We conclude with a discussion.

Proofs of propositions are given in the Supplementary Material, and reproducible code and Stan implementations of the model are available at the first author's GitHub repository (Jensen, 2019).

2. METHODS

We assume that reality evolves in continuous time and that there exists a random, latent function $f = \{f(t) : t \in \mathcal{T}\}$ with hyperparameters Θ, sufficiently smooth on a compact subset of the real line, $\mathcal{T} \subset \mathbb{R}$, governing the underlying evolution of some observable characteristic in a population. We can observe this latent characteristic with noise by sampling f at discrete time points according to the additive model $Y_i = f(t_i) + \varepsilon_i$ where $\varepsilon_i$ is a zero mean random variable independent of $f(t_i)$. Given observations of the form $(Y_i, t_i)_{i=1}^n$, we are interested in modelling the dynamical properties of f.

The trend of f is defined as its instantaneous slope given by the function $df(t) = \left.\frac{\mathrm{d}f(s)}{\mathrm{d}s}\right|_{s=t}$, and f is increasing and has a positive trend at t if df(t) > 0, and f is decreasing with a negative trend at t if df(t) < 0. A change in trend is defined as a change in the sign of df, that is when f goes from increasing to decreasing or vice versa.

As f is a random function inferred by observations in discrete time, there are no singular points where a sign change in the trend can be asserted almost surely from the probability distribution of the estimate of f. This is instead characterized by a gradual and continuous change in the probability of the monotonicity of f, and an assessment of a change in trend is defined by the probability of the sign of df. This stands in contrast to traditional change-point models which assume that there are one or more exact time points where a sudden change in a function or its parameterization occurs (Carlstein et al., 1994).

The probability of a positive trend for f at time t + δ is quantified by the Trend Direction Index (TDI)

$$\mathrm{TDI}(t, \delta) = P\left(df(t + \delta) > 0 \mid \mathcal{F}_t\right) \tag{1}$$

where $\mathcal{F}_t$ is a σ-algebra of available information observed up until time t. The value of TDI(t, δ) is a local probabilistic index, and it is equal to the probability that f is increasing at time t + δ given everything known about the data generating process up until and including time t. A similar definition can be given for a negative trend but that is equal to 1 − TDI(t, δ) and therefore redundant. The sign of δ determines whether the Trend Direction Index estimates the past (δ ≤ 0) or forecasts the future (δ > 0). Most of the examples seen in the news concerning public health outcomes are concerned with t being equal to the current calendar time and δ = 0. This excludes the usage of both change-point and segmented regression models (Quandt, 1958) as there are no observations available beyond the stipulated change-point. A useful reparameterization of the Trend Direction Index is $\mathrm{TDI}(\max \mathcal{T}, t - \max \mathcal{T})$ with $t$ in the observation period $\mathcal{T}$. This parameterization conditions on the full observation period and looks back in time, whereas setting $t = \max \mathcal{T}$ corresponds to the current Trend Direction Index at the end of the observation period.

In addition to the Trend Direction Index, we define a global measure of trend instability. Informally, we say that a random function f is trend stable on an interval if its sample paths maintain their monotonicity so that the trends do not change sign on the interval. To quantify the trend instability, we propose to use the expected number of zero-crossings by df on an interval $\mathcal{I}$. We define the Expected Trend Instability as

$$\mathrm{ETI}(\mathcal{I}) = E\left[\#\{t \in \mathcal{I} : df(t) = 0\} \mid \mathcal{F}\right] \tag{2}$$

equal to the expected value of the size of the random set of zero-crossings by df on $\mathcal{I}$ when conditioning on a suitable σ-algebra $\mathcal{F}$. A common case is when $\mathcal{F}$ is generated by all observed data on the observation period $\mathcal{T}$ and $\mathcal{I} = \mathcal{T}$. The lower $\mathrm{ETI}(\mathcal{I})$ is, the more stable the trend of f on $\mathcal{I}$ is and vice versa.

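We note, thanks to a comment by an anonymous reviewer, that the Expected Trend Instability represents an upper bound for the probability of observing at least one zero-crossing event, df(·) = 0, on an interval $\mathcal{I}$ since, by Markov's inequality,

$$P\left(\#\{t \in \mathcal{I} : df(t) = 0\} \geq 1 \mid \mathcal{F}\right) \leq E\left[\#\{t \in \mathcal{I} : df(t) = 0\} \mid \mathcal{F}\right] = \mathrm{ETI}(\mathcal{I})$$

where the inequality becomes sharp if $\mathcal{I}$ is small. The Expected Trend Instability can therefore be used over smaller intervals to provide statements about the probability that a change in trend will happen.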

These two general definitions of trendiness will be evaluated in the light of a particular statistical model in the next sections leading to expressions of their estimates.

2.1. Latent Gaussian process model

The definitions given in the previous section impose restrictions on the latent function f. We shall assume that f is a Gaussian process on the time domain $\mathcal{T}$. From a Bayesian perspective, this is equivalent to imposing an infinite dimensional prior distribution on the latent characteristic governing the observed outcomes. Statistical models with Gaussian process priors are a flexible approach for non-parametric regression (Radford, 1999), and using a latent Gaussian process provides an analytically tractable way for performing statistical inference on its derivatives. The general idea of our model is to apply the properties of the Gaussian process prior on the latent characteristic to update the finite dimensional distributions by conditioning on the observed data. This results in a joint posterior Gaussian process for f and its derivatives from which estimates of the trend indices can be derived.

A random function f is a Gaussian process if and only if the vector $(f(t_1), \ldots, f(t_n))$ has a multivariate normal distribution for every finite set of evaluation points $(t_1, \ldots, t_n)$, and we write $f \sim \mathcal{GP}(\mu(\cdot), C(\cdot, \cdot))$ where μ is the mean function and C is a symmetric, positive definite covariance function (Cramer & Leadbetter, 1967). We observe dependent data in terms of outcomes and their associated sampling times, $(Y_i, t_i)_{i=1}^n$, and we assume that the data are generated by the hierarchical model

$$\begin{aligned}
f \mid \beta, \theta &\sim \mathcal{GP}\left(\mu_\beta(\cdot), C_\theta(\cdot, \cdot)\right) \\
Y_i \mid t_i, f(t_i), \Theta &\overset{\text{iid}}{\sim} N\left(f(t_i), \sigma^2\right), \qquad \Theta = (\beta, \theta, \sigma)
\end{aligned} \tag{3}$$

where β is a vector of parameters for the mean function of f, θ is a vector of parameters governing the covariance of f and σ is the conditional standard deviation of the observations. Together, these parameters, Θ, are hyperparameters of the model.

Assumption 1

We assume the following regularity conditions.

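- A1: $f$ is a separable Gaussian process.
- A2: $\mu_\beta$ is a twice continuously differentiable function.
- A3: $C_\theta$ has mixed third-order partial derivatives continuous at the diagonal.
- A4: The joint distribution of $(df(t), d^2f(t))$ is non-degenerate at any $t$, that is $\operatorname{Var}[df(t)] > 0$ and $\left|\operatorname{Cor}[df(t), d^2f(t)]\right| < 1$.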

Assumption A1 is a technical condition required to ensure that functionals of f defined on an uncountable index set can form random variables. All continuous Gaussian processes are separable. A2 is required in order to make $E[df \mid \beta, \theta]$ and $E[d^2f \mid \beta, \theta]$ well-defined as expected values for the joint distribution in Equation (4). This is a modelling choice and can be fulfilled by choosing $\mu_\beta$ accordingly. A3 is similarly required in order to make the prior covariance matrix in Equation (4) well-defined. We discuss practicalities regarding this assumption in Section 2.4. A4 states that one cannot use a covariance function for f for which (i) the covariance of df becomes degenerate with no variability or (ii) the first and second order derivatives are perfectly correlated. This is to ensure that the Trend Direction Index and the Expected Trend Instability are well-defined quantities. This is again a modelling choice and can always be verified in practice for a particular choice of $C_\theta$. It should be noted that while assumptions A2 and A3 impose restrictions on the functions that can be used to describe the mean and the covariance functions, the class of functions is still extremely flexible and should accommodate most modelling situations.

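Under the above assumptions, an important property of a Gaussian process is that it together with its first and second derivatives is a multivariate Gaussian process with explicit expressions for the joint mean, covariance and cross-covariance functions. Specifically, the joint distribution of the latent function, f, and its first and second derivatives, df and $d^2f$, is the multivariate Gaussian process

$$\begin{bmatrix} f \\ df \\ d^2f \end{bmatrix} \Bigg|\; \beta, \theta \sim \mathcal{GP}\left( \begin{bmatrix} \mu_\beta \\ d\mu_\beta \\ d^2\mu_\beta \end{bmatrix}, \begin{bmatrix} C_\theta & \partial_2 C_\theta & \partial_2^2 C_\theta \\ \partial_1 C_\theta & \partial_1 \partial_2 C_\theta & \partial_1 \partial_2^2 C_\theta \\ \partial_1^2 C_\theta & \partial_1^2 \partial_2 C_\theta & \partial_1^2 \partial_2^2 C_\theta \end{bmatrix} \right) \tag{4}$$

where $d^k\mu_\beta$ is the k’th derivative of $\mu_\beta$ and $\partial_j^k$ denotes the k’th order partial derivative with respect to the j’th variable (Cramer & Leadbetter, 1967). Proposition 1 states the joint posterior distribution of $(f, df, d^2f)$ conditional on the observed data.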

Proposition 1

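Let the data generating model be defined as in Equation (3) and let $\mathbf{Y} = (Y_1, \ldots, Y_n)$ be the vector of observed outcomes together with its sampling times $\mathbf{t} = (t_1, \ldots, t_n)$. Then by the conditions in Assumption 1 the joint distribution of $(f, df, d^2f)$ conditional on $\mathbf{Y}$, $\mathbf{t}$ and the hyper-parameters Θ evaluated at any finite vector $\mathbf{t}^*$ of p time points is

$$\begin{bmatrix} f(\mathbf{t}^*) \\ df(\mathbf{t}^*) \\ d^2f(\mathbf{t}^*) \end{bmatrix} \Bigg|\; \mathbf{Y}, \mathbf{t}, \Theta \sim N\left(\boldsymbol{\mu}, \boldsymbol{\Sigma}\right)$$

where $\boldsymbol{\mu} \in \mathbb{R}^{3p}$ is the column vector of posterior expectations and $\boldsymbol{\Sigma} \in \mathbb{R}^{3p \times 3p}$ is the joint posterior covariance matrix. Partitioning these as

$$\boldsymbol{\mu} = \begin{bmatrix} \mu_f(\mathbf{t}^* \mid \Theta) \\ \mu_{df}(\mathbf{t}^* \mid \Theta) \\ \mu_{d^2f}(\mathbf{t}^* \mid \Theta) \end{bmatrix}, \qquad \boldsymbol{\Sigma} = \begin{bmatrix} \Sigma_f & \Sigma_{f,df} & \Sigma_{f,d^2f} \\ \Sigma_{f,df}^\top & \Sigma_{df} & \Sigma_{df,d^2f} \\ \Sigma_{f,d^2f}^\top & \Sigma_{df,d^2f}^\top & \Sigma_{d^2f} \end{bmatrix}$$

the individual components are given by

$$\begin{aligned}
\mu_f(\mathbf{t}^* \mid \Theta) &= \mu_\beta(\mathbf{t}^*) + C_\theta(\mathbf{t}^*, \mathbf{t}) K^{-1} \left(\mathbf{Y} - \mu_\beta(\mathbf{t})\right) \\
\mu_{df}(\mathbf{t}^* \mid \Theta) &= d\mu_\beta(\mathbf{t}^*) + \partial_1 C_\theta(\mathbf{t}^*, \mathbf{t}) K^{-1} \left(\mathbf{Y} - \mu_\beta(\mathbf{t})\right) \\
\mu_{d^2f}(\mathbf{t}^* \mid \Theta) &= d^2\mu_\beta(\mathbf{t}^*) + \partial_1^2 C_\theta(\mathbf{t}^*, \mathbf{t}) K^{-1} \left(\mathbf{Y} - \mu_\beta(\mathbf{t})\right) \\
\Sigma_f(\mathbf{t}^*, \mathbf{t}^* \mid \Theta) &= C_\theta(\mathbf{t}^*, \mathbf{t}^*) - C_\theta(\mathbf{t}^*, \mathbf{t}) K^{-1} C_\theta(\mathbf{t}, \mathbf{t}^*) \\
\Sigma_{df}(\mathbf{t}^*, \mathbf{t}^* \mid \Theta) &= \partial_1 \partial_2 C_\theta(\mathbf{t}^*, \mathbf{t}^*) - \partial_1 C_\theta(\mathbf{t}^*, \mathbf{t}) K^{-1} \partial_2 C_\theta(\mathbf{t}, \mathbf{t}^*) \\
\Sigma_{d^2f}(\mathbf{t}^*, \mathbf{t}^* \mid \Theta) &= \partial_1^2 \partial_2^2 C_\theta(\mathbf{t}^*, \mathbf{t}^*) - \partial_1^2 C_\theta(\mathbf{t}^*, \mathbf{t}) K^{-1} \partial_2^2 C_\theta(\mathbf{t}, \mathbf{t}^*) \\
\Sigma_{f,df}(\mathbf{t}^*, \mathbf{t}^* \mid \Theta) &= \partial_2 C_\theta(\mathbf{t}^*, \mathbf{t}^*) - C_\theta(\mathbf{t}^*, \mathbf{t}) K^{-1} \partial_2 C_\theta(\mathbf{t}, \mathbf{t}^*) \\
\Sigma_{f,d^2f}(\mathbf{t}^*, \mathbf{t}^* \mid \Theta) &= \partial_2^2 C_\theta(\mathbf{t}^*, \mathbf{t}^*) - C_\theta(\mathbf{t}^*, \mathbf{t}) K^{-1} \partial_2^2 C_\theta(\mathbf{t}, \mathbf{t}^*) \\
\Sigma_{df,d^2f}(\mathbf{t}^*, \mathbf{t}^* \mid \Theta) &= \partial_1 \partial_2^2 C_\theta(\mathbf{t}^*, \mathbf{t}^*) - \partial_1 C_\theta(\mathbf{t}^*, \mathbf{t}) K^{-1} \partial_2^2 C_\theta(\mathbf{t}, \mathbf{t}^*)
\end{aligned}$$

with the shorthand $K = C_\theta(\mathbf{t}, \mathbf{t}) + \sigma^2 I$.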

In addition to the closed-form expressions of the posterior distributions of the latent function and its derivatives given in Proposition 1, it can also be useful to consider the predictive distribution of the model corresponding to the conditional distribution of a new observation given the observed data. This is given by

$$Y^* \mid t^*, \mathbf{Y}, \mathbf{t}, \Theta \sim N\left(\mu_f(t^* \mid \Theta),\; \Sigma_f(t^*, t^* \mid \Theta) + \sigma^2\right) \tag{5}$$

where Y* is a new random variable predicted at some arbitrary time point t*.
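The conditioning step behind Proposition 1 can be sketched numerically. The following is a minimal illustration rather than the authors' Stan implementation: it assumes a constant prior mean `mu` and a squared exponential covariance with hypothetical parameter names `alpha` (marginal standard deviation) and `rho` (length-scale), and it returns only the pointwise posterior moments of f and df.

```python
import numpy as np

def se_kernels(s, t, alpha, rho):
    """Squared exponential covariance C(s, t) and the derivatives needed for (f, df)."""
    d = s[:, None] - t[None, :]
    c = alpha**2 * np.exp(-d**2 / (2 * rho**2))
    d1c = -d / rho**2 * c                      # derivative in the first argument
    d1d2c = (1 / rho**2 - d**2 / rho**4) * c   # mixed derivative in both arguments
    return c, d1c, d1d2c

def posterior_f_df(t_obs, y, t_new, alpha, rho, sigma, mu=0.0):
    """Posterior means and pointwise variances of f and df at the points t_new."""
    k_oo, _, _ = se_kernels(t_obs, t_obs, alpha, rho)
    k_no, d1k_no, _ = se_kernels(t_new, t_obs, alpha, rho)
    k_nn, _, d1d2k_nn = se_kernels(t_new, t_new, alpha, rho)
    gram = k_oo + sigma**2 * np.eye(len(t_obs))
    weights = np.linalg.solve(gram, y - mu)
    mu_f = mu + k_no @ weights
    mu_df = d1k_no @ weights                   # a constant prior mean has zero derivative
    var_f = np.diag(k_nn - k_no @ np.linalg.solve(gram, k_no.T))
    var_df = np.diag(d1d2k_nn - d1k_no @ np.linalg.solve(gram, d1k_no.T))
    return mu_f, var_f, mu_df, var_df
```

Far from the observations the posterior of df reverts to its prior, which under these assumptions has mean zero and variance `alpha**2 / rho**2`.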

Proposition 1 provides the foundation for the rest of the methodological development. While the Gaussian process prior might seem restrictive, it has the computational advantage that the posterior of $(f, df, d^2f)$ is characterized by the finite dimensional joint distributions which in turn are given by the mean and covariance functions. Furthermore, for fixed Θ, there exist one-step update formulas for the posteriors that can be useful and efficient for real-time or online applications. A theoretical result motivating the applicability is that the model possesses the universal approximation property meaning that it can approximate any continuous function uniformly on a closed interval of the real line to any desired tolerance given sufficient data (Micchelli et al., 2006).

In the following two subsections, we show how the Trend Direction Index and the Expected Trend Instability can be expressed under the data generating model in Equation (3) using the results of Proposition 1.

2.2. The trend direction index

The Trend Direction Index was defined generally in Equation (1). Conditioning on the σ-algebra of all observed data, we may express the Trend Direction Index under the model in Equation (3) through the posterior distribution of df. The following proposition states this result.

Proposition 2

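Let $\mathcal{F}_t$ be the σ-algebra generated by $(\mathbf{Y}, \mathbf{t})$ where $\mathbf{Y} = (Y_1, \ldots, Y_n)$ is the vector of observed outcomes and $\mathbf{t} = (t_1, \ldots, t_n)$ the associated sampling times. Furthermore, assume that assumptions A1–A3 above are fulfilled. The Trend Direction Index defined in Equation (1) can then be written in terms of the posterior distribution of df as

$$\mathrm{TDI}(t, \delta \mid \Theta) = P\left(df(t + \delta) > 0 \mid \mathbf{Y}, \mathbf{t}, \Theta\right) = \frac{1}{2} + \frac{1}{2} \operatorname{erf}\left(\frac{\mu_{df}(t + \delta \mid \Theta)}{\sqrt{2}\, \Sigma_{df}(t + \delta, t + \delta \mid \Theta)^{1/2}}\right)$$

where $\operatorname{erf}(x) = \frac{2}{\sqrt{\pi}} \int_0^x e^{-u^2}\, \mathrm{d}u$ is the error function, and $\mu_{df}$ and $\Sigma_{df}$ are the posterior mean and covariance of the trend defined in Proposition 1.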

Proposition 2 shows that the Trend Direction Index is equal to 0.5 when $\mu_{df}(t + \delta \mid \Theta) = 0$, corresponding to the expected value of the posterior of f being locally constant at time t + δ. A decision rule based on TDI(t, δ | Θ) ≶ 50% is therefore a natural choice for assessing the local trendiness of f. However, different thresholds based on external loss or utility functions can be used depending on the application. Note that the requirements of assumptions A2 and A3 can be reduced to first-order differentiability (A2) and mixed second-order differentiability (A3), respectively, if only the TDI is to be used.
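Since the posterior of df at a single time point is normal, the index in Proposition 2 reduces to a normal tail probability. A minimal sketch, assuming the posterior mean and variance of df at t + δ have already been computed (the function and argument names are illustrative):

```python
import math

def trend_direction_index(mu_df, var_df):
    """P(df > 0) when df ~ N(mu_df, var_df), written with the error function."""
    return 0.5 + 0.5 * math.erf(mu_df / math.sqrt(2.0 * var_df))

# A posterior mean of zero gives an index of exactly one half.
tdi_flat = trend_direction_index(0.0, 1.0)
# A posterior mean one standard deviation above zero gives roughly 0.84.
tdi_up = trend_direction_index(1.0, 1.0)
```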

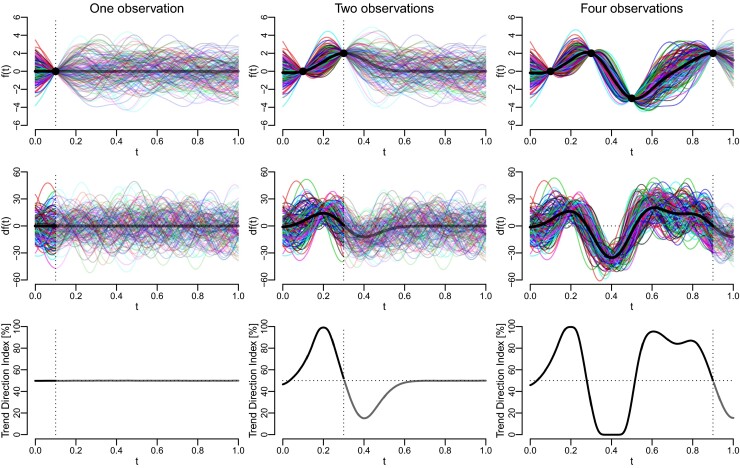

The Trend Direction Index is illustrated in Figure 2 in the noise-free case, σ = 0, with known values of β and θ, and where the prior expected value of f is set equal to a constant. The first and second rows of the plot show 150 random sample paths from the posterior distribution of (f, df) with the posterior expectations in bold lines, and the third row shows the Trend Direction Index. Since the hyperparameters, Θ, are known in this example, the Trend Direction Index is a deterministic function of time. The three columns in the plot show how the posteriors of f, df and TDI are updated after one, two and four observations both forwards and backwards in time. The figure shows how the inclusion of additional observations results in changes of the posterior distribution of the trend and the Trend Direction Index. The uncertainty of the forecasts remains unchanged, whereas the posterior distributions back in time restrict the uncertainty of the curves to accommodate the observations. The vertical dotted lines denote the point in time after which forecasting occurs. When forecasting beyond the last observation, the posterior of f becomes more and more dominated by its prior, implying that df becomes symmetric around zero so that the Trend Direction Index stabilizes around 50%.

FIGURE 2.

A total of 150 realizations from the posterior distribution of f (top row), df (middle row) with expected values in bold and the Trend Direction Index (bottom row) conditional on one, two and four noise-free observations. Dotted vertical lines show the points in time after which forecasting takes place.

2.3. The expected trend instability

The Expected Trend Instability was defined in Equation (2) as the expected number of roots of the trend on an interval conditional on observed data. Now we make this concept more precise and frame it in the context of the latent Gaussian process model. Let $\mathcal{I}$ be a compact subset of the real line and consider the random càdlàg function

$$N_{\mathcal{I}}(t) = \#\{s \in \mathcal{I} : s \leq t,\; df(s) = 0\}$$

counting the cumulative number of points in $\mathcal{I}$ up to time t where the trend is equal to zero. The Expected Trend Instability on $\mathcal{I}$ is equal to

$$\mathrm{ETI}(\mathcal{I}) = E\left[N_{\mathcal{I}}(\max \mathcal{I}) \mid \mathcal{F}\right]$$

giving the expected number of zero-crossings by df on $\mathcal{I}$. The following proposition gives the expression for the Expected Trend Instability under the data generating model in Equation (3).

Proposition 3

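Let $\mathcal{F}$ be the σ-algebra generated by the observed data (Y, t) and $\boldsymbol{\mu}$ and $\boldsymbol{\Sigma}$ the moments of the joint posterior distribution of $(f, df, d^2f)$ as stated in Proposition 1, and assume that all assumptions A1–A4 are fulfilled. The Expected Trend Instability is then

$$\mathrm{ETI}(\mathcal{I} \mid \Theta) = \int_{\mathcal{I}} \mathrm{dETI}(t \mid \Theta)\, \mathrm{d}t$$

where dETI is the local Expected Trend Instability given by

$$\mathrm{dETI}(t \mid \Theta) = \lambda(t \mid \Theta)\, \phi\left(\frac{\mu_{df}(t \mid \Theta)}{\Sigma_{df}(t, t \mid \Theta)^{1/2}}\right) \left(2 \phi\left(\zeta(t \mid \Theta)\right) + \zeta(t \mid \Theta) \operatorname{erf}\left(\frac{\zeta(t \mid \Theta)}{\sqrt{2}}\right)\right)$$

and $\phi$ is the standard normal density function, $\operatorname{erf}$ is the error function, and λ, ω and ζ are functions defined as

$$\begin{aligned}
\lambda(t \mid \Theta) &= \frac{\Sigma_{d^2f}(t, t \mid \Theta)^{1/2}}{\Sigma_{df}(t, t \mid \Theta)^{1/2}} \left(1 - \omega(t \mid \Theta)^2\right)^{1/2} \\
\omega(t \mid \Theta) &= \frac{\Sigma_{df,d^2f}(t, t \mid \Theta)}{\Sigma_{df}(t, t \mid \Theta)^{1/2}\, \Sigma_{d^2f}(t, t \mid \Theta)^{1/2}} \\
\zeta(t \mid \Theta) &= \frac{\mu_{d^2f}(t \mid \Theta)\, \Sigma_{df}(t, t \mid \Theta)^{1/2}\, \Sigma_{d^2f}(t, t \mid \Theta)^{-1/2} - \mu_{df}(t \mid \Theta)\, \omega(t \mid \Theta)}{\Sigma_{df}(t, t \mid \Theta)^{1/2} \left(1 - \omega(t \mid \Theta)^2\right)^{1/2}}
\end{aligned}$$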
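The local index can be evaluated numerically from the posterior moments of (df, d²f) at a time point. The sketch below is an assumption-labelled illustration: it uses the standard Kac-Rice argument (the density of df at zero multiplied by the expected absolute value of d²f given df = 0), which is the usual route to expressions of this kind, and the helper names are hypothetical. Integration over the interval uses a plain trapezoidal rule on a user-chosen grid.

```python
import math

def normal_pdf(x):
    """Standard normal density."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def local_eti(mu_df, mu_d2f, var_df, var_d2f, cov_df_d2f):
    """Expected rate of zero-crossings of df at one time point (Kac-Rice)."""
    omega = cov_df_d2f / math.sqrt(var_df * var_d2f)    # correlation of df and d2f
    # Conditional moments of d2f given df = 0.
    cond_sd = math.sqrt(var_d2f * (1.0 - omega**2))
    cond_mean = mu_d2f - cov_df_d2f / var_df * mu_df
    zeta = cond_mean / cond_sd
    lam = cond_sd / math.sqrt(var_df)
    density_term = normal_pdf(mu_df / math.sqrt(var_df))
    abs_moment = 2.0 * normal_pdf(zeta) + zeta * math.erf(zeta / math.sqrt(2.0))
    return lam * density_term * abs_moment

def eti(grid, local_values):
    """Trapezoidal integral of the local index over an increasing time grid."""
    total = 0.0
    for i in range(1, len(grid)):
        total += 0.5 * (local_values[i] + local_values[i - 1]) * (grid[i] - grid[i - 1])
    return total
```

As a sanity check, for a zero-mean process with uncorrelated df and d²f the rate reduces to the classical Rice formula, the square root of Var(d²f)/Var(df) divided by π.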

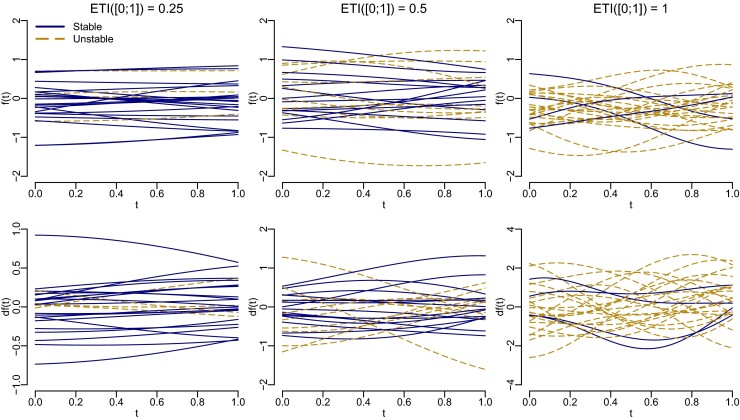

To illustrate the Expected Trend Instability, Figure 3 shows 25 random Gaussian processes on the unit interval simulated under three different values of ETI. The sample paths are paired so that each function in the first row has an associated trend in the second row. The different values of ETI are set to 0.25, 0.5 and 1 corresponding to the expected number of times that the functions change monotonicity or equivalently that the trend crosses zero on that interval. Sample paths that are trend stable, that is always increasing/decreasing, are shown by solid blue lines, and sample paths that are trend unstable, that is the derivative crosses zero, are shown by dashed gold coloured lines. It is seen that for low values of ETI most of the sample paths preserve their monotonicity on the interval and their associated derivatives are correspondingly either always positive or always negative. For larger values of ETI, more of the trends start crossing zero implying less stability in the monotonicity of f. We note that even though we are only modelling a single curve, the Expected Trend Instability is defined in terms of a posterior distribution of random curves which is what the figure illustrates.

FIGURE 3.

A total of 25 random pairs sampled from the joint distribution of a Gaussian process (f) and its derivative (df) with different values of Expected Trend Instability (ETI). The first row shows samples from f, and the second row shows samples from df. The columns define different values of ETI. Sample paths that are trend stable are shown by solid blue lines, and unstable sample paths are shown by dashed gold coloured lines.

2.4. Modelling the prior

To complete the model specification in Equation (3), we must decide on the functional forms of the mean and covariance functions of the Gaussian process prior, but there is an inherent ambiguity in how to choose these. To explain this issue, we note that a square-integrable Gaussian process on a compact domain can be written as $f(t) = \mu(t) + \sum_{p=1}^{\infty} \sqrt{\lambda_p}\, Z_p \phi_p(t)$ where $Z_p$ are independent, zero mean and unit variance normally distributed random variables, $\phi_p$ are pairwise orthonormal functions and $\lambda_p \geq 0$ are weights. This is known as the Karhunen–Loève representation. Furthermore, Mercer's theorem states that any continuous covariance function admits the representation $C(s, t) = \sum_{p=1}^{\infty} \lambda_p \phi_p(s) \phi_p(t)$ uniformly on the product of the domain with itself, where $\phi_p$ are the eigenfunctions of C, with eigenvalues $\lambda_p$, and form an orthonormal basis of $L^2$. This shows that f can be written as a sum of its mean and a possibly infinite weighted sum of functions defined implicitly through the spectral decomposition of the covariance function. So if, for example, the linear function is an eigenfunction of the covariance function it will be superfluous in the mean. This results in a trade-off situation where a rich specification of the mean, $\mu_\beta$, gives little space for flexibility added by the covariance function, C. In contrast, a spartan mean structure requires a flexible covariance structure. The latter approach is prevalent in applied Gaussian process regression modelling where a very simple mean structure (often just a constant or linear term) is used in the prior but in combination with a flexible covariance function. We refer to Kaufman et al. (2011) for further discussions of these issues. We note that this issue arises because our approach focuses on a single realization of a random function. In the case of multiple observations of f, the issue would be different.

Regarding the choice of covariance function, the squared exponential (SE), the rational quadratic (RQ), the Matern 3/2 (M3/2) and the Matern 5/2 (M5/2) are commonly used covariance functions for Gaussian process regression (Rasmussen & Williams, 2006). These covariance functions are given by

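$$\begin{aligned}
C_\theta^{\mathrm{SE}}(s, t) &= \alpha^2 \exp\left(-\frac{(s - t)^2}{2 \rho^2}\right), & \theta &= (\alpha, \rho) \\
C_\theta^{\mathrm{RQ}}(s, t) &= \alpha^2 \left(1 + \frac{(s - t)^2}{2 \nu \rho^2}\right)^{-\nu}, & \theta &= (\alpha, \rho, \nu) \\
C_\theta^{\mathrm{M3/2}}(s, t) &= \alpha^2 \left(1 + \frac{\sqrt{3}\, |s - t|}{\rho}\right) \exp\left(-\frac{\sqrt{3}\, |s - t|}{\rho}\right), & \theta &= (\alpha, \rho) \\
C_\theta^{\mathrm{M5/2}}(s, t) &= \alpha^2 \left(1 + \frac{\sqrt{5}\, |s - t|}{\rho} + \frac{5 (s - t)^2}{3 \rho^2}\right) \exp\left(-\frac{\sqrt{5}\, |s - t|}{\rho}\right), & \theta &= (\alpha, \rho)
\end{aligned} \tag{6}$$

The SE covariance function gives rise to very smooth functions because it is infinitely differentiable. This can be disadvantageous in some applications as it makes it difficult for the posterior to adapt to localized changes in smoothness. The other listed covariance functions try to remedy this issue. Specifically, the RQ covariance function can be derived as an infinite scale mixture of SE covariance functions in terms of $\rho^{-2}$. This enables the resulting estimates to operate on different time scales simultaneously. Smaller values of ν will give rise to more wiggly posteriors, and as it is one of the model's hyperparameters its value and the implied adaptivity will be data-driven. For ν → ∞ the RQ covariance function converges to the SE covariance function. The Matern covariance functions, shown here explicitly for ν = 3/2 and ν = 5/2, belong to a larger class of covariance functions indexed by a continuous parameter ν. The general expression is involved and includes a modified Bessel function for which derivatives are difficult to calculate. In this class of covariance functions, ν directly controls the differentiability which again can be chosen in a data-driven manner.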
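For concreteness, the four covariance functions can be written as short NumPy functions. This sketch assumes the common parameterization from the Gaussian process literature, with a marginal standard deviation `alpha`, a length-scale `rho` and, for the RQ function, a mixture parameter `nu`; these names are illustrative and may differ from the symbols used in Equation (6).

```python
import numpy as np

def se(s, t, alpha, rho):
    """Squared exponential covariance."""
    return alpha**2 * np.exp(-(s - t)**2 / (2 * rho**2))

def rq(s, t, alpha, rho, nu):
    """Rational quadratic covariance; approaches se() as nu grows."""
    return alpha**2 * (1 + (s - t)**2 / (2 * nu * rho**2))**(-nu)

def matern32(s, t, alpha, rho):
    """Matern covariance with smoothness 3/2."""
    a = np.sqrt(3.0) * np.abs(s - t) / rho
    return alpha**2 * (1 + a) * np.exp(-a)

def matern52(s, t, alpha, rho):
    """Matern covariance with smoothness 5/2."""
    a = np.sqrt(5.0) * np.abs(s - t) / rho
    return alpha**2 * (1 + a + a**2 / 3.0) * np.exp(-a)
```

Passing `s[:, None]` and `t[None, :]` for one-dimensional arrays `s` and `t` yields the full covariance matrix through broadcasting.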

If non-stationary components are suspected to be present in the data generating process, these can be included in the covariance structure as part of defining the prior. One example is the non-stationary covariance function for periodic components discussed by MacKay (1998). Another noteworthy example is the neural network covariance structure of Williams (1998). In fact, Neal (2012) showed that a Bayesian neural network with one hidden layer converges to a Gaussian process when the number of hidden neurons goes to infinity. The actual form of covariance function implied by the neural network depends on the prior for the network weights and the activation functions.

It should be noted that sums and products of covariance functions produce valid covariance functions. Thus, a very wide range of flexible covariance structures can be constructed from simpler building blocks.

We conclude this section by noting two covariance functions that cannot be used in our model. This is because they are both in violation of Assumption A3, which can be verified by a straightforward calculation. The first case is the M3/2 covariance function in Equation (6). While it can be used to estimate the Trend Direction Index, it cannot be used if an estimate of the Expected Trend Instability is also required. Another example is $C_\theta(s, t) = \alpha^2 \exp\left(-|s - t| / \rho\right)$. This is the covariance function of the Ornstein–Uhlenbeck process, which is mean-squared continuous but not mean-squared differentiable. This also fails to satisfy the required assumption.

2.5. The Gaussian assumptions and non-normal outcomes

The closed-form expressions derived above depend on the assumptions of normality on the latent scale and for the conditional distribution of the observed data. For outcomes that are conditionally non-normal, the posterior distributions are in general analytically intractable. In some cases, one can see our assumptions as an approximation facilitated by the central limit theorem, for example in the case where the outcome is a proportion and the number of experiments is large, or if the outcome is count data and the rate is not too low. The conditional variance, $\sigma^2$ in Equation (3), can therefore be changed to reflect the structure of the variance of such a limiting distribution.

One possibility for altering the model is to retain the structure but apply a transformation of the observed data. This amounts to altering the second part of Equation (3) to

$$g(Y_i) \mid t_i, f(t_i), \Theta \overset{\text{iid}}{\sim} N\left(f(t_i), \sigma^2\right)$$

where g is a known, monotone function. Our model could directly be fitted to the transformed outcomes, but the posterior estimates will also be on the transformed scale and may therefore be difficult to interpret.

Another possibility is to consider both the outcome and the latent function on the same transformed scale. This alters Equation (3) to

$$\begin{aligned}
g(f) \mid \beta, \theta &\sim \mathcal{GP}\left(\mu_\beta(\cdot), C_\theta(\cdot, \cdot)\right) \\
g(Y_i) \mid t_i, f(t_i), \Theta &\overset{\text{iid}}{\sim} N\left(g(f(t_i)), \sigma^2\right)
\end{aligned}$$

Our model is again directly applicable under this alternative data generating model. It follows that the trend on this scale is $d(g \circ f)(t) = g'(f(t))\, df(t)$, and the Trend Direction Index will therefore be equal to $P\left(g'(f(t + \delta))\, df(t + \delta) > 0 \mid \mathcal{F}_t\right)$. This can, however, be transformed back to the original scale of df by normalization since we can sample from the posterior distribution of g(f(t)) by the Gaussian assumptions on the transformed scale. Therefore, it is also possible to obtain samples from $df(t) = d(g \circ f)(t) / g'(f(t))$ as $g^{-1}$ and $g'$ are known. The Trend Direction Index on the original scale can in this way be obtained by a Monte Carlo approximation using the transformed samples.
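The Monte Carlo back-transformation described above can be sketched for a single time point. This is an illustrative sketch only: it assumes the joint posterior of the transformed function and its derivative at that time point is bivariate normal with known `mean` and `cov`, and the caller supplies the inverse transformation `g_inv` and the derivative `g_prime` (hypothetical argument names).

```python
import numpy as np

def tdi_original_scale(mean, cov, g_inv, g_prime, n_draws=10_000, seed=1):
    """Sample df on the original scale and estimate P(df > 0) by Monte Carlo."""
    rng = np.random.default_rng(seed)
    # h = g(f) and dh = d(g o f) are jointly normal on the transformed scale.
    h, dh = rng.multivariate_normal(mean, cov, size=n_draws).T
    f = g_inv(h)              # latent function on the original scale
    df = dh / g_prime(f)      # chain rule: dh = g'(f) * df
    return df, float(np.mean(df > 0))

# Example with a log transformation, g(x) = log(x), so g_inv = exp and g'(x) = 1/x.
df_draws, tdi = tdi_original_scale(
    mean=[0.0, 0.5],
    cov=[[0.1, 0.0], [0.0, 1.0]],
    g_inv=np.exp,
    g_prime=lambda x: 1.0 / x,
)
```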

3. ESTIMATION

The trend indices depend on the hyperparameters, Θ, of the latent Gaussian process. These must be estimated from the observed data, and we consider two different estimation procedures: maximum likelihood estimation and a fully Bayesian estimator.

The difference between the maximum likelihood and the Bayesian method is that they give rise to two different interpretations of the Trend Direction Index. In the former, the index is a deterministic function, while in the latter it is a random function governed by the posterior distribution of Θ. The maximum likelihood estimator is also known as the empirical Bayes approach since the hyperparameters are estimated from data using the marginal likelihood of the model. This can be seen as an approximation to the Bayesian model where the hyperparameters are fixed at their most likely values instead of being integrated out.

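The maximum likelihood estimator consists of finding the values of the hyperparameters that maximize the marginal likelihood of the observed data and plugging these into the expressions of the posterior distributions and the trend indices. The marginal distribution of Y can be found by integrating out the distribution of the latent Gaussian process in the conditional specification Y | f(t), t, Θ in Equation (3). Since the observation model consists of normally distributed random variables conditional on the latent Gaussian process, the marginal distribution is multivariate normal with expectation $\mu_\beta(\mathbf{t})$ and n × n covariance matrix $C_\theta(\mathbf{t}, \mathbf{t}) + \sigma^2 I$. The marginal log-likelihood function is therefore

$$\log L(\Theta \mid \mathbf{Y}, \mathbf{t}) = -\frac{1}{2} \log\left|C_\theta(\mathbf{t}, \mathbf{t}) + \sigma^2 I\right| - \frac{1}{2} \left(\mathbf{Y} - \mu_\beta(\mathbf{t})\right)^\top \left(C_\theta(\mathbf{t}, \mathbf{t}) + \sigma^2 I\right)^{-1} \left(\mathbf{Y} - \mu_\beta(\mathbf{t})\right) - \frac{n}{2} \log 2\pi \tag{7}$$

and the maximum likelihood estimate $\widehat{\Theta}^{\mathrm{ML}}$ can be obtained by numerical optimization or found as the roots to the score equations $\nabla_\Theta \log L(\Theta \mid \mathbf{Y}, \mathbf{t}) = 0$. This estimate can be plugged in to the expressions for the posterior distributions of $(f, df, d^2f)$ in Proposition 1 enabling simulation of the posterior distributions at any vector of time points. Estimates of the Trend Direction Index and the Expected Trend Instability are similarly $\mathrm{TDI}(t, \delta \mid \widehat{\Theta}^{\mathrm{ML}})$ and $\mathrm{ETI}(\mathcal{I} \mid \widehat{\Theta}^{\mathrm{ML}})$ according to Propositions 2 and 3, respectively, and the predictive distribution of a new observation is equal to Equation (5) with the plug-in estimate $\widehat{\Theta}^{\mathrm{ML}}$.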
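The marginal log-likelihood of a multivariate normal can be evaluated stably through a Cholesky factorization. The sketch below is not the authors' implementation: it assumes a constant mean `mu` and a squared exponential covariance with hypothetical parameters `alpha`, `rho` and `sigma`, and it replaces a proper numerical optimizer with a crude grid search purely for illustration.

```python
import numpy as np

def marginal_log_likelihood(y, t, alpha, rho, sigma, mu=0.0):
    """Log density of y under N(mu, C(t, t) + sigma^2 I) with an SE covariance."""
    d = t[:, None] - t[None, :]
    cov = alpha**2 * np.exp(-d**2 / (2 * rho**2)) + sigma**2 * np.eye(len(t))
    chol = np.linalg.cholesky(cov)
    z = np.linalg.solve(chol, y - mu)                 # whitened residuals
    log_det = 2.0 * np.sum(np.log(np.diag(chol)))
    return -0.5 * log_det - 0.5 * z @ z - 0.5 * len(t) * np.log(2.0 * np.pi)

def grid_search(y, t, alphas, rhos, sigmas):
    """Return the grid point with the largest marginal log-likelihood."""
    candidates = ((marginal_log_likelihood(y, t, a, r, s), (a, r, s))
                  for a in alphas for r in rhos for s in sigmas)
    best_value, best_params = max(candidates, key=lambda item: item[0])
    return best_params, best_value
```

In practice a gradient-based optimizer on log-transformed parameters would usually replace the grid search.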

The maximum likelihood estimator is easy to implement and fast to compute, but it is difficult to propagate the uncertainties of the parameter estimates through to the posterior distributions and the trend indices. This is disadvantageous since in order to conduct a qualified assessment of trendiness we are not only interested in point estimates but also the associated uncertainties. A Bayesian estimator, while slower to compute, facilitates a straightforward way to encompass all uncertainties in the final estimates. To specify a Bayesian estimator, we must augment the data generating model in Equation (3) with another hierarchical level specifying the prior distribution of the hyperparameters. We therefore add the following level

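$$
\Theta \mid \Psi \sim G(\Psi)
$$

to the specification, where G is some family of distributions indexed by a vector Ψ. The joint distribution of the model can be factorized as

$$
P(\mathbf{Y}, f(\mathbf{t}), \Theta \mid \Psi, \mathbf{t}) = P(\mathbf{Y} \mid f(\mathbf{t}), \Theta, \Psi, \mathbf{t})\, P(f(\mathbf{t}) \mid \Theta, \Psi, \mathbf{t})\, P(\Theta \mid \Psi, \mathbf{t})
$$

and each conditional probability is specified by a submodel in the augmented hierarchy. We always condition on Ψ and t as they are considered fixed. The posterior distribution of the hyperparameters given the observed data is then

$$
P(\Theta \mid \mathbf{Y}, \Psi, \mathbf{t}) = \frac{\int P(\mathbf{Y}, f(\mathbf{t}), \Theta \mid \Psi, \mathbf{t})\, \mathrm{d}f(\mathbf{t})}{\iint P(\mathbf{Y}, f(\mathbf{t}), \Theta \mid \Psi, \mathbf{t})\, \mathrm{d}f(\mathbf{t})\, \mathrm{d}\Theta} \tag{8}
$$

and we let $\widetilde{\Theta} \sim P(\Theta \mid \mathbf{Y}, \Psi, \mathbf{t})$. The posterior distribution of Θ induces corresponding distributions over the trend indices, obtained by evaluating TDI, dETI and ETI at $\widetilde{\Theta}$. For example, the Trend Direction Index in the Bayesian formulation is a surface in (t, δ) where each value is a distribution over probabilities. We suggest summarizing the trend indices by their posterior quantiles. For the Trend Direction Index, we summarize its posterior distribution by functions $Q_\tau$ such that

$$
P\left(\mathrm{TDI}(t, \delta \mid \widetilde{\Theta}) \le Q_\tau(t, \delta) \mid \mathbf{Y}, \mathbf{t}\right) = \tau
$$

with, for example, τ ∈ {0.025, 0.5, 0.975} to obtain the posterior median and 95% credible intervals. In the Bayesian model, the predictive distribution in Equation (5) should be averaged across the posterior distribution of the hyperparameters. This leads to the posterior predictive distribution

$$
P(Y^{*} \mid \mathbf{Y}, \mathbf{t}, t^{*}, \Psi) = \int P(Y^{*} \mid \mathbf{Y}, \mathbf{t}, t^{*}, \Theta)\, P(\Theta \mid \mathbf{Y}, \Psi, \mathbf{t})\, \mathrm{d}\Theta
$$

where the integral is in practice approximated through the MCMC samples.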
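The quantile summaries can be approximated pointwise from MCMC output. The sketch below assumes that the Trend Direction Index has already been evaluated on a time grid for each posterior draw of the hyperparameters; the names `quantile` and `summarize_tdi` and the nested-list data layout are hypothetical choices for illustration.

```python
def quantile(draws, tau):
    """Empirical quantile with linear interpolation between order statistics."""
    xs = sorted(draws)
    pos = tau * (len(xs) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(xs) - 1)
    return xs[lo] + (pos - lo) * (xs[hi] - xs[lo])

def summarize_tdi(tdi_draws, taus=(0.025, 0.5, 0.975)):
    """tdi_draws[m][j] holds the TDI at grid point j for posterior draw m.

    Returns a dict mapping each tau to the pointwise quantile curve.
    """
    n_grid = len(tdi_draws[0])
    return {tau: [quantile([draw[j] for draw in tdi_draws], tau)
                  for j in range(n_grid)]
            for tau in taus}
```

The same pattern applies to the local Expected Trend Instability, and the posterior predictive distribution can be approximated analogously by averaging the plug-in predictive density over the draws.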

We have implemented both the maximum likelihood and the Bayesian estimator in Stan (Carpenter et al., 2017) and R (R Core Team, 2018) in combination with the rstan package (Stan Development Team, 2018). Stan is a probabilistic programming language enabling fully Bayesian inference using Markov chain Monte Carlo sampling. The Stan implementation of the maximum likelihood estimator requires the marginal maximum likelihood estimates of the parameters supplied as data, and from these it simulates random realizations of the posterior distributions in Proposition 1 on a user-supplied grid of time points and returns point estimates of TDI and dETI. The latter can then be integrated numerically to obtain the Expected Trend Instability on an interval. The Bayesian estimator requires a specification of Ψ supplied as data, and from that it generates realizations of the hyperparameters from their posterior distribution in Equation (8). These samples are then used to obtain the posterior distribution of the Trend Direction Index, and similarly for dETI, by evaluating the expressions in Propositions 2 and 3 at each sampled value.

3.1. Model selection

In connection with Section 2.4 on choosing the mean and covariance structure for the Gaussian process prior, we propose a practical approach based on specifying a set of candidate models and choosing the best fitting model among these according to a cross-validation procedure. Different types of cross-validation can be performed depending on the purpose of the analysis. In some cases, one stands at the end of the data collection and wants to look at what has been observed. In these cases, it would be natural to condition on all the observed data and perform leave-one-out cross-validation. In other cases, one is interested in forecasting the trend and the associated indices, and here it would be natural to take the direction of time into account. This could be done, for example, by performing one-step-ahead cross-validation in which the observed data are compared to a sequence of models forecasting one step ahead in time based on successive partitioning of the time series. For more information on such procedures, see, for example, Bergmeir and Benítez (2012).

To perform the leave-one-out cross-validation, we consider a set of candidate models indexed by $m = 1, \ldots, M$. This set would typically include different parameterizations of the mean and covariance function of f. Turn by turn, a single data pair (Yi, ti) is excluded, and we let the corresponding leave-one-out data be denoted $(\mathbf{Y}_{-i}, \mathbf{t}_{-i})$. Based on the leave-one-out data, the hyperparameters are estimated for each model by maximizing the marginal log-likelihood in Equation (7) and given by

$$
\widehat{\Theta}^{m}_{-i} = \arg\max_{\Theta}\, \log L_m(\Theta \mid \mathbf{Y}_{-i}, \mathbf{t}_{-i})
$$

Plugging these estimates into the expression for the posterior expectation of f in Proposition 1, we obtain the leave-one-out predictions, and the mean squared error of prediction (or another loss function) can be calculated by comparing the predictions and the observed values averaged across all data points as

$$
\mathrm{MSPE}_m = \frac{1}{n}\sum_{i=1}^{n}\left(Y_i - \mathrm{E}\left[f(t_i) \mid \mathbf{Y}_{-i}, \mathbf{t}_{-i}, \widehat{\Theta}^{m}_{-i}\right]\right)^2
$$

The selected model among the candidate set is the one minimizing $\mathrm{MSPE}_m$ over m. Different cross-validation schemes can be performed in a similar manner by modifying how the leave-one-out data sets are constructed.
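The leave-one-out procedure can be sketched generically as follows. To keep the example short and self-contained, the candidate models are simple stand-ins (a constant and a linear least-squares mean) rather than Gaussian process models; in the setting of this section, `fit` would maximize the marginal log-likelihood and `predict` would return the posterior expectation of f. All function names are hypothetical.

```python
def loo_mspe(t, y, fit, predict):
    """Leave-one-out mean squared error of prediction for one candidate model."""
    total = 0.0
    for i in range(len(y)):
        t_train, y_train = t[:i] + t[i + 1:], y[:i] + y[i + 1:]
        params = fit(t_train, y_train)  # refit without the pair (y[i], t[i])
        total += (y[i] - predict(params, t[i])) ** 2
    return total / len(y)

# Stand-in candidate models (NOT Gaussian processes): constant and linear mean.
def fit_constant(t, y):
    return sum(y) / len(y)

def predict_constant(params, t_new):
    return params

def fit_linear(t, y):
    t_bar, y_bar = sum(t) / len(t), sum(y) / len(y)
    slope = (sum((a - t_bar) * (b - y_bar) for a, b in zip(t, y))
             / sum((a - t_bar) ** 2 for a in t))
    return y_bar - slope * t_bar, slope

def predict_linear(params, t_new):
    intercept, slope = params
    return intercept + slope * t_new

def select_model(t, y, candidates):
    """Return the name with the smallest leave-one-out MSPE, plus all scores."""
    scores = {name: loo_mspe(t, y, fit, pred)
              for name, (fit, pred) in candidates.items()}
    return min(scores, key=scores.get), scores
```

A one-step-ahead scheme would only change how the training subsets are built inside `loo_mspe` (using the observations before index i instead of all observations except i).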

We note that with our model being implemented in Stan, efficient approximate leave-one-out cross-validation and model comparison using the LOOIC criterion can be directly performed with the loo package (Vehtari et al., 2019).

4. SIMULATION STUDY

To assess the performance of our method, we performed a simulation study. We generated r = 1, …, 10,000 random Gaussian processes on the unit interval with zero mean and the SE covariance function (see Equation (6)) with parameters α = 1 and in 15 different scenarios in which we varied the number of observation points (n = 25, 50, 100) and the measurement noise (σ = 0.025, 0.05, 0.1, 0.15, 0.2). The Supplementary Material shows 50 random sample paths for each scenario.

In each of the r simulations, we know the true latent functions $(f_r, df_r)$, and by fitting our model, we obtain estimates $\hat{f}_r$ and $\widehat{df}_r$ corresponding to the posterior expectations in Proposition 1, and TDIr and dETIr from Propositions 2 and 3. We compare these estimates to the truths using two different measures: an integrated residual and the squared L2 norm. The integrated residual for f is defined as the integral over the unit interval of the pointwise difference between $f_r$ and $\hat{f}_r$, and similarly for df. For the Trend Direction Index, the cumulative residual is defined analogously by comparing TDIr(t) with $1(df_r(t) > 0)$, where 1 denotes the indicator function. For the Expected Trend Instability, the cumulative residual compares the estimated Expected Trend Instability with Nr(t), where Nr(t) is the càdlàg counting process that jumps with a value of 1 every time dfr has a root on the interval. If our estimates are unbiased, we expect these integrated residuals to have zero mean across the simulations. The squared L2 norms are defined in a similar manner for all the quantities, for example as the integral over the unit interval of $(f_r(t) - \hat{f}_r(t))^2$, and reflect the variability of the estimates.
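The two comparison measures can be approximated on a grid with the trapezoidal rule. This is a minimal sketch under stated assumptions: the sign convention (truth minus estimate) and the helper names are choices made here for illustration, not taken from the simulation code.

```python
def trapezoid(values, grid):
    """Trapezoidal approximation of the integral of `values` over `grid`."""
    return sum(0.5 * (values[i] + values[i + 1]) * (grid[i + 1] - grid[i])
               for i in range(len(grid) - 1))

def integrated_residual(truth, estimate, grid):
    """Signed error integrated over the grid (assumed sign: truth - estimate)."""
    return trapezoid([a - b for a, b in zip(truth, estimate)], grid)

def squared_l2(truth, estimate, grid):
    """Squared L2 distance between the true and the estimated function."""
    return trapezoid([(a - b) ** 2 for a, b in zip(truth, estimate)], grid)

def tdi_residual(true_df, tdi, grid):
    """Integrated difference between the indicator 1(df > 0) and the TDI."""
    indicator = [1.0 if d > 0 else 0.0 for d in true_df]
    return integrated_residual(indicator, tdi, grid)
```

An estimate that is shifted upwards by a constant c on the unit interval gives an integrated residual of −c and a squared L2 norm of c², which illustrates how the first measure captures bias and the second captures overall deviation.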

For comparison, we employed the Trend Filtering method implemented in the R package genlasso (Arnold & Tibshirani, 2019) on the same simulated data and reported similar measures for its estimated mean and derivative, using 10-fold cross-validation of the penalty parameter. We only compare the estimates of the latent mean and its derivative between the two approaches as Trend Filtering does not provide a probability distribution for the derivative.

Summary statistics from the simulation study are shown in Table 1. It is seen that both our model and Trend Filtering provide unbiased estimates in all scenarios. Looking at the squared L2 norm, our estimates of f and df show very small variability across all scenarios, while the estimates from Trend Filtering show an increase in variability for increasing measurement noise and a decrease for an increasing number of observations. This is expected as we simulate random functions with continuous sample paths, and Trend Filtering estimates piecewise linear approximations. While this leads to unbiased estimates, the variability of these estimates is larger, and this is more pronounced for smaller numbers of observations.

TABLE 1.

Summary statistics from the simulation study

| n | σ | Integrated residual | | | | | | Squared L2 norm | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | | $\hat{f}^{\mathrm{GP}}$ | $\hat{f}^{\mathrm{TF}}$ | $\widehat{df}^{\mathrm{GP}}$ | $\widehat{df}^{\mathrm{TF}}$ | TDI | ETI | $\hat{f}^{\mathrm{GP}}$ | $\hat{f}^{\mathrm{TF}}$ | $\widehat{df}^{\mathrm{GP}}$ | $\widehat{df}^{\mathrm{TF}}$ | TDI | ETI |
| 25 | 0.025 | 0 | 0 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.041 | 0.504 | 0.011 | 0.008 |
| 25 | 0.050 | 0 | 0 | 0.000 | 0.000 | 0.000 | −0.002 | 0.001 | 0.001 | 0.130 | 1.188 | 0.021 | 0.018 |
| 25 | 0.100 | 0 | 0 | 0.002 | 0.003 | 0.001 | −0.008 | 0.003 | 0.004 | 0.408 | 2.988 | 0.037 | 0.040 |
| 25 | 0.150 | 0 | 0 | −0.001 | −0.002 | −0.001 | −0.020 | 0.005 | 0.008 | 0.818 | 5.248 | 0.051 | 0.064 |
| 25 | 0.200 | 0 | 0 | −0.003 | −0.003 | −0.001 | −0.034 | 0.009 | 0.014 | 1.343 | 8.248 | 0.063 | 0.094 |
| 50 | 0.025 | 0 | 0 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.026 | 0.535 | 0.009 | 0.006 |
| 50 | 0.050 | 0 | 0 | 0.000 | 0.000 | 0.000 | −0.002 | 0.000 | 0.001 | 0.081 | 1.287 | 0.016 | 0.012 |
| 50 | 0.100 | 0 | 0 | 0.000 | 0.000 | 0.000 | −0.006 | 0.001 | 0.002 | 0.244 | 3.348 | 0.028 | 0.028 |
| 50 | 0.150 | 0 | 0 | 0.000 | 0.001 | 0.000 | −0.012 | 0.003 | 0.005 | 0.469 | 6.353 | 0.038 | 0.045 |
| 50 | 0.200 | 0 | 0 | 0.000 | 0.003 | 0.001 | −0.020 | 0.005 | 0.008 | 0.759 | 9.832 | 0.050 | 0.068 |
| 100 | 0.025 | 0 | 0 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.016 | 0.399 | 0.007 | 0.004 |
| 100 | 0.050 | 0 | 0 | 0.000 | 0.001 | 0.000 | −0.001 | 0.000 | 0.000 | 0.050 | 1.020 | 0.012 | 0.009 |
| 100 | 0.100 | 0 | 0 | 0.000 | 0.000 | 0.000 | −0.003 | 0.001 | 0.001 | 0.150 | 2.187 | 0.022 | 0.019 |
| 100 | 0.150 | 0 | 0 | 0.000 | −0.003 | 0.000 | −0.007 | 0.002 | 0.002 | 0.283 | 4.454 | 0.030 | 0.031 |
| 100 | 0.200 | 0 | 0 | 0.002 | 0.002 | 0.001 | −0.012 | 0.003 | 0.004 | 0.450 | 6.140 | 0.038 | 0.045 |

Each value is the mean across 10,000 simulations except for ETI where the median is reported. Superscript GP denotes our proposed method and TF denotes Trend Filtering. Numbers have been rounded to three decimal places.

The variability of the Trend Direction Index and the Expected Trend Instability as measured by the squared L2 norm was low in all scenarios but increased with the magnitude of the measurement noise as expected. In a few cases, the estimated Expected Trend Instability was far away from its true value. This was especially pronounced in scenarios with a small number of observations and a high measurement noise. The reason was that the estimated latent function degenerated to either a constant function or to a perfect interpolation of the observed data. This is a consequence of the hyperparameters being weakly identified under such circumstances. These cases are still part of the reported summary statistics in Table 1. For such cases, the model fit can be regularized through the priors of the hyperparameters, but this must be determined on a case-by-case basis.

5. APPLICATIONS

5.1. Trend of proportion of Danish smokers

A report published by The Danish Health Authority in January 2019 updated the estimated proportion of daily or occasional smokers in Denmark with new data from 2018 (The Danish Health Authority, 2019). The data were based on an online survey including 5017 participants. The report also included data on the proportion of smokers in Denmark during the last 20 years, which are shown in Figure 1. The report was picked up by several newspapers under headlines stating that the proportion of smokers in Denmark had significantly increased for the first time in two decades (Navne et al., 2019). The report published no statistical analyses for this statement but wrote that because the study population is so large, more or less all differences become statistically significant at the 5% level (this was written as a 95% significance level in the report).

This data set provides an instrumental way of exemplifying our two proposed measures of trendiness. In this application, we wish to assess the statistical properties of the following questions:

- Q1: Is the proportion of smokers increasing in the year 2018 conditional on data from the last 20 years?

- Q2: If the proportion of smokers is currently increasing, when did this increase probably start?

- Q3: Is it the first time during the last 20 years that the trend in the proportion of smokers has changed?

A naive approach for trying to answer questions Q1 and Q2 is to apply sequential χ2-tests in a 2 × 2 table. Table 2 shows the p-values for the χ2-test of independence between the proportion of smokers in 2018 and each of the five previous years. Using a significance level of 5%, the conclusion is ambiguous. Compared to the previous year, there was no significant change in the proportion in 2018. Three out of these five comparisons fail to show a significant change in proportions. It is therefore evident that such pointwise testing is not sufficiently perceptive to catch the underlying continuous development.

TABLE 2.

p-values obtained from χ2-tests of independence between the proportion of smokers in 2018 and the five previous years

| | 2017 | 2016 | 2015 | 2014 | 2013 |
|---|---|---|---|---|---|
| p-value | 0.074 | **0.020** | 0.495 | **0.012** | 0.576 |

Numbers in bold are statistically significant differences at the 5% level.
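For reference, a pairwise test of this kind can be sketched as below. The source does not state whether a continuity correction was applied, so this sketch uses the uncorrected Pearson statistic, and the function name `chi2_2x2` is hypothetical. With one degree of freedom the p-value has the closed form erfc(√(x/2)), so no statistical library is needed.

```python
import math

def chi2_2x2(a, b, c, d):
    """Pearson chi-square test of independence for the 2 x 2 table [[a, b], [c, d]].

    Rows could be two survey years and columns smokers / non-smokers.
    No continuity correction is applied (an assumption of this sketch).
    """
    n = a + b + c + d
    stat = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p_value = math.erfc(math.sqrt(stat / 2.0))  # survival function of chi2 with 1 df
    return stat, p_value
```

Each comparison uses only two years of data at a time, which is why the sequence of p-values need not evolve smoothly from one year to the next.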

Similarly, a simple approach for trying to answer question Q3 would be to look at the cumulative number of times that the difference in proportion between consecutive years changes sign. In the data set, there were nine changes in the sign of the difference between the proportion in each year and the proportion in the previous year, giving this very crude estimate of the number of times that the trend has changed. This approach suffers from the facts that it is based on a finite difference approximation at the sampling points to the continuous derivative, and that it uses the noisy measurements instead of the latent function. Consequently, the changes in trend are quite unstable.
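The crude count described here amounts to a few lines of code. The sketch below is illustrative only; the function name and the choice to ignore zero differences are assumptions made for this example.

```python
def count_sign_changes(values):
    """Number of sign changes in the first differences of a yearly series.

    Zero differences are skipped (an assumption of this sketch).
    """
    diffs = [b - a for a, b in zip(values, values[1:])]
    signs = [1 if d > 0 else -1 for d in diffs if d != 0]
    return sum(1 for s0, s1 in zip(signs, signs[1:]) if s0 != s1)
```

Because every measurement error can flip the sign of a small difference, the count tends to grow with the noise level rather than with the number of genuine turning points of the latent function.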

We now present an analysis of the data set using our method. As a specification of the latent function, we considered three different mean functions (constant, linear and quadratic) and the four different covariance functions given in Equation (6). This gives a total of 12 different candidate models to compare. Since we condition on all the observed data and are not interested in forecasting in this application, we performed the model comparison by leave-one-out cross-validation as discussed in Section 3.1.

Table 3 shows the mean squared error of prediction for each candidate model. For the models with a RQ covariance function and a linear and a quadratic mean function the parameter ν diverged numerically implying convergence to the SE covariance function. Comparing the leave-one-out mean squared error of prediction, the prior distribution of f in the optimal model has a constant mean function and a RQ covariance function. The marginal maximum likelihood estimates of the parameters in the optimal model were

| (9) |

TABLE 3.

Leave-one-out cross-validated mean squared error of prediction for each of the 12 candidate models

| | SE | RQ | Matern 3/2 | Matern 5/2 |
|---|---|---|---|---|
| Constant | 0.682 | **0.651** | 0.687 | 0.660 |
| Linear | 0.806 | ⇐ | 0.896 | 0.865 |
| Quadratic | 0.736 | ⇐ | 0.800 | 0.785 |

⇐ indicates numerical convergence to the SE covariance function. The value in bold indicates the lowest leave-one-out cross-validated mean squared error of prediction.

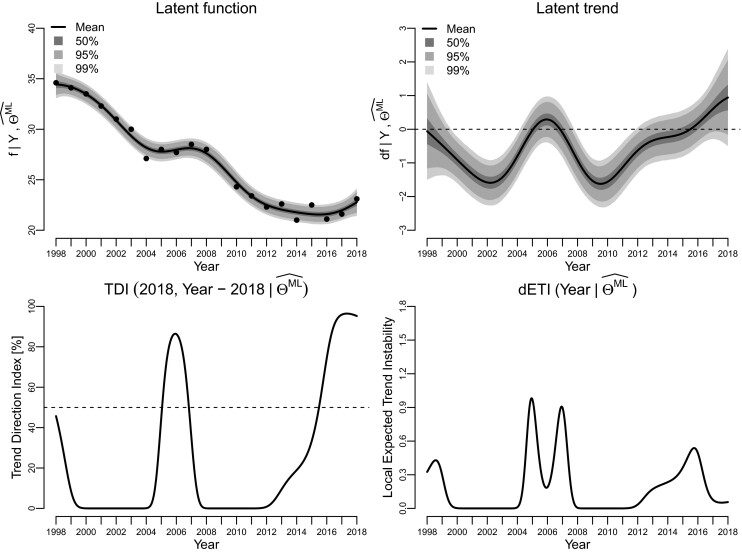

Figure 4 shows the fit of the model by the maximum likelihood method. The plots were obtained by plugging the maximum likelihood estimates into the expressions for the posterior distributions of f and df defined in Proposition 1, the Trend Direction Index in Proposition 2 and the Expected Trend Instability in Proposition 3. The predictions were performed on an equidistant grid of 500 time points spanning the 20 years. The plot of the posterior trend (top right) shows two regions in time where the posterior mean of the derivative is positive, one around [2004; 2008] and one shortly after 2015 and until the end of the observation period. The Trend Direction Index (bottom left) quantifies this positive trendiness as a probability standing in 2018 and looking back in time while also taking the uncertainty into account. The bottom right panel shows the local Expected Trend Instability, and its integral is equal to the expected number of times that the trend has changed sign. Table 4 summarizes the maximum likelihood estimates of the Trend Direction Index at the end of the observation period and the previous five years as well as the Expected Trend Instability during the full observation period and during only the last ten years. Crosspoint is the first point in time during the last ten years where the Trend Direction Index became greater than 50%.

FIGURE 4.

Results from fitting the latent Gaussian process model by maximum likelihood. The first row shows the posterior distributions of f (left) and df (right) with the posterior means in bold and grey areas showing pointwise probability intervals for the posterior distribution. The second row shows the estimated Trend Direction Index (left) and the local Expected Trend Instability (right)

TABLE 4.

Summary measures from the maximum likelihood and Bayesian analyses

| | Maximum likelihood | Bayesian posterior median | Bayesian 95% posterior interval |
|---|---|---|---|
| TDI(2018, 0) | 95.24% | 93.32% | [82.15%;98.86%] |
| TDI(2018, −1) | 95.92% | 94.21% | [84.28%;99.11%] |
| TDI(2018, −2) | 74.41% | 77.87% | [51.02%;94.94%] |
| TDI(2018, −3) | 33.36% | 44.11% | [18.23%;69.19%] |
| TDI(2018, −4) | 18.96% | 20.60% | [6.05%;31.82%] |
| TDI(2018, −5) | 9.50% | 6.21% | [0.03%;22.21%] |
| Crosspoint | 2015.48 | 2015.19 | [2014.62;2015.96] |
| ETI([1998, 2018]) | 3.68 | 3.36 | [1.24;4.79] |
| ETI([2008, 2018]) | 1.39 | 1.25 | [1.02;2.22] |

The rows show the estimated Trend Direction Index for 2013 to 2018 and the Expected Trend Instability for the last 10 and 20 years all conditional on data from 1998 to 2018. For the Bayesian analysis, posterior medians and 95% posterior probability intervals are given. Crosspoint is the time during the last 10 years where TDI first exceeded 50%.
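Given TDI and local ETI values on a time grid, the two derived summaries in Table 4 can be approximated as sketched below. The linear interpolation of the crossing time and the trapezoidal rule are choices made for this illustration, and the function names are hypothetical.

```python
def crosspoint(times, tdi, level=0.5):
    """First time the TDI exceeds `level`, interpolating linearly between grid points."""
    if tdi[0] > level:
        return times[0]
    for i in range(len(times) - 1):
        if tdi[i] <= level < tdi[i + 1]:
            frac = (level - tdi[i]) / (tdi[i + 1] - tdi[i])
            return times[i] + frac * (times[i + 1] - times[i])
    return None  # the TDI never exceeds the level on this grid

def eti(times, d_eti):
    """Trapezoidal integral of the local Expected Trend Instability over the grid."""
    return sum(0.5 * (d_eti[i] + d_eti[i + 1]) * (times[i + 1] - times[i])
               for i in range(len(times) - 1))
```

Restricting `times` to the last ten years before calling either function gives the quantities for the shorter window; in the Bayesian analysis the same computation would be repeated for each posterior draw.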

Based on the results from the maximum likelihood analysis, we may answer the questions by stating that the expected proportion of smokers in Denmark is currently increasing with a probability of 95.24%. This is, however, not a recent development as the probability of an increasing proportion has been greater than 50% since the middle of 2015. This can be compared to the sequential χ2-tests in Table 2, which gave a cruder and less consistent result. The estimated values of ETI in Table 4 show that there has been an average of 3.68 changes in the monotonicity of the proportion during the last 20 years. This value does not support the statement by the news outlets that it is the first time in 20 years that the trend has changed. The value, however, reduces to 1.39 when only looking 10 years back, which is less than half the ETI for the full 20-year period.

We also applied the Bayesian estimator to the data using the same prior mean and covariance structure. The Bayesian estimator requires a prior distribution of the hyperparameters. We used independent priors of the form

where each prior was a heavy-tailed distribution with a moderate variance centred at the maximum likelihood estimates. We used the following distributions

where the maximum likelihood values are given in Equation (9), and Half-T(·, ·, df) and Half-N(·, ·) denote the location-scale half-t distribution with df degrees of freedom and the half-normal distribution, respectively, both restricted to positive values due to the requirement of positivity. We ran four independent Markov chains for 25,000 iterations each with half of the iterations used for warm-up and discarded. Convergence was assessed by trace plots of the MCMC draws and the potential scale reduction factor, $\hat{R}$, of Gelman and Rubin (1992). The trace plots are included in the Supplementary Material.
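For readers unfamiliar with the potential scale reduction factor, the classic between-chain and within-chain variance comparison can be sketched as follows. This is the basic form of the diagnostic for a single scalar parameter; Stan reports refined split-chain variants, so the numbers produced by this sketch are not expected to match rstan output exactly. The function name is hypothetical.

```python
import math
from statistics import mean, variance

def potential_scale_reduction(chains):
    """Basic Gelman-Rubin diagnostic for equally long chains of one parameter."""
    n = len(chains[0])
    within = mean([variance(chain) for chain in chains])          # W
    between_over_n = variance([mean(chain) for chain in chains])  # B / n
    var_plus = (n - 1) / n * within + between_over_n
    return math.sqrt(var_plus / within)
```

Values close to one indicate that the chains are sampling from similar distributions, whereas values clearly above one suggest that the chains have not yet mixed.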

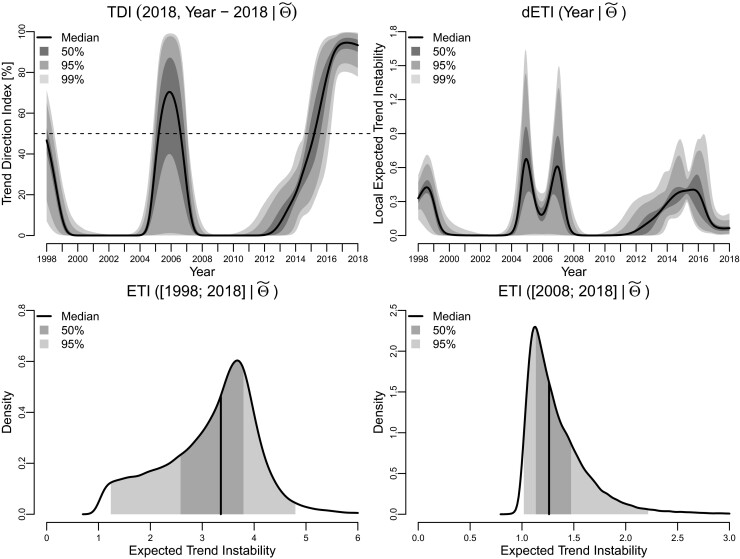

Figure 5 shows the results from the Bayesian estimator. In this model, both trend indices are time-varying posterior distributions, and the top row of the figure shows the posterior distributions of the Trend Direction Index (left) and the local Expected Trend Instability (right) summarized by time-dependent quantiles. The bottom row shows posterior density estimates of the Expected Trend Instability during the last 20 years (left) and during the last 10 years (right). The same summary statistics as for the maximum likelihood analysis are given in Table 4 but here stated in terms of posterior medians and 2.5% and 97.5% posterior quantiles.

FIGURE 5.

Results from fitting the latent Gaussian process model by Bayesian analysis. The first row shows the posterior distributions of TDI (left) and local ETI (right) with the posterior means in bold and grey areas showing pointwise 95% and 99% probability intervals for the posterior distribution. The second row shows densities and probability intervals for the Expected Trend Instability for the 20-year period back in time from 2018 (left) and the 10-year period back in time (right)

The results from the two analyses generally agree, but there are two differences that we wish to address. Both analyses showed a local peak in trendiness around 2006. In the maximum likelihood analysis, this occurred at 2005.94 with a Trend Direction Index of 86.47%. In the Bayesian analysis, the peak occurs at 2005.87 with a median Trend Direction Index of 79.43% and a 95% posterior probability interval of [1.23%; 97.73%]. The added uncertainty estimates facilitated by the Bayesian estimator show that this trendiness is so variable that there is no reason to believe in a substantial increase in proportions at that point in time. This insight could not have been obtained from the maximum likelihood analysis.

The second difference is that the Bayesian model seems to generally induce more sluggish estimates due to mixing over the underlying parameters. This can be seen from the plot of the median local Expected Trend Instability in Figure 5, which is generally lower than its corresponding maximum likelihood point estimates in Figure 4. This is similarly reflected in the median ETI estimates in Table 4, which are lower than their values under maximum likelihood. Looking at the posterior distributions of the covariance parameters θ (not shown), we see that this is mainly a result of not restricting the parameter ν to its maximum likelihood value. The 95% probability interval of the posterior distribution of ν was [0.328; 10.743], which is highly right-skewed compared to the maximum likelihood estimate of .

To understand the effect of ν on the smoothness of the fitted models, we can compare the local expected number of crossings by a Gaussian process and its derivative at their mean values in the simple case of a zero-mean process with either the RQ or the SE covariance function. In this case, the formula in Proposition 3 simplifies immensely, and as shown in the Supplementary Material the local expected number of mean-crossings by f is equal to $\pi^{-1}\rho^{-1}$ for both covariance functions. However, for df, the local expected number of mean-crossings is equal to $3^{1/2}\pi^{-1}\rho^{-1}$ for the SE covariance function and $3^{1/2}\pi^{-1}\rho^{-1}(1+\nu^{-1})^{1/2}$ for the RQ covariance function. We note that $(1+\nu^{-1})^{1/2} > 1$ for 0 < ν < ∞ and that this factor is monotonically decreasing in ν with a limit of one as ν → ∞. Therefore, the value of ν has no effect on the crossing intensity of the process itself, but the crossing intensity of its derivative is always larger under the RQ covariance function than under the SE covariance function, with equality in the limit. A right-skewed posterior distribution of ν therefore favours fewer crossings of the trend, leading to a more stable trend and a smaller value of the Expected Trend Instability.
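These crossing intensities can be recovered from Rice's formula for stationary zero-mean Gaussian processes. The sketch below is not the derivation in the Supplementary Material. It assumes the common parameterizations $C_{SE}(\tau) = \alpha^2 \exp(-\tau^2/(2\rho^2))$ and $C_{RQ}(\tau) = \alpha^2 (1 + \tau^2/(2\nu\rho^2))^{-\nu}$ for the covariance functions, and the symbols $\tau$ (time lag) and $\lambda_{2k}$ (spectral moments) are introduced here for illustration only.

```latex
% Spectral moments: \lambda_{2k} = (-1)^k C^{(2k)}(0).
% Rice's formula: expected zero-crossings per unit time are
%   \pi^{-1} (\lambda_2 / \lambda_0)^{1/2}  for f,
%   \pi^{-1} (\lambda_4 / \lambda_2)^{1/2}  for df.
\begin{align*}
C_{SE}(\tau) &= \alpha^2\left(1 - \frac{\tau^2}{2\rho^2} + \frac{\tau^4}{8\rho^4} - \cdots\right)
  &&\Rightarrow\quad \lambda_0 = \alpha^2,\;\; \lambda_2 = \frac{\alpha^2}{\rho^2},\;\; \lambda_4 = \frac{3\alpha^2}{\rho^4}, \\
C_{RQ}(\tau) &= \alpha^2\left(1 - \frac{\tau^2}{2\rho^2} + \frac{(1+\nu^{-1})\,\tau^4}{8\rho^4} - \cdots\right)
  &&\Rightarrow\quad \lambda_0 = \alpha^2,\;\; \lambda_2 = \frac{\alpha^2}{\rho^2},\;\; \lambda_4 = \frac{3\alpha^2(1+\nu^{-1})}{\rho^4}.
\end{align*}
\begin{align*}
\text{crossings of } f:&\quad \frac{1}{\pi}\sqrt{\frac{\lambda_2}{\lambda_0}} = \pi^{-1}\rho^{-1}
  \quad\text{(SE and RQ)}, \\
\text{crossings of } df:&\quad \frac{1}{\pi}\sqrt{\frac{\lambda_4}{\lambda_2}} =
  \begin{cases}
    3^{1/2}\pi^{-1}\rho^{-1} & \text{(SE)}, \\
    3^{1/2}\pi^{-1}\rho^{-1}(1+\nu^{-1})^{1/2} & \text{(RQ)}.
  \end{cases}
\end{align*}
```

The two covariance functions agree up to second order in $\tau$, which is why ν only enters through the fourth-order term and therefore only affects the crossings of the derivative.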

As a final remark to this application, we note that the observed data are proportions and therefore by nature not normally distributed. Commonly used transformations for proportions towards normality are the isometric log ratio or the arcsine-square-root or logit functions. In accordance with Section 2.5, we also performed the trend analysis on the logit transformed outcomes. The results from this analysis are included in the Supplementary Material and did not give rise to different interpretations. This is perhaps not surprising as the observed proportions are far from the boundaries of the parameter space and consequently the normality approximation is more likely to hold.

5.2. Number of new COVID-19-positive cases in Italy

As a second application, we look at the development of the number of new COVID-19 positive cases in Italy since 24 February 2020. Data were updated each day and made available at the GitHub repository of the Italian Civil Protection Department (Consiglio dei Ministri—Dipartimento della Protezione Civile, 2020). Ninety days had passed when this analysis was performed.

The data set provides a direct way to assess the COVID-19 disease progression and to monitor the impact of political initiatives to reduce disease spread. In this application, we wish to assess the statistical properties of the following questions:

- Q1: Is the disease spread currently under control or does the number of new positive cases seem to be on the rise?

- Q2: How did the Italian government's decision to lock down most of Italy on 9 March 2020 reflect in the spread of the virus?

For this application, we fitted the Gaussian process model using the maximum likelihood method, and as prior specification we used a constant mean function and the RQ covariance function.

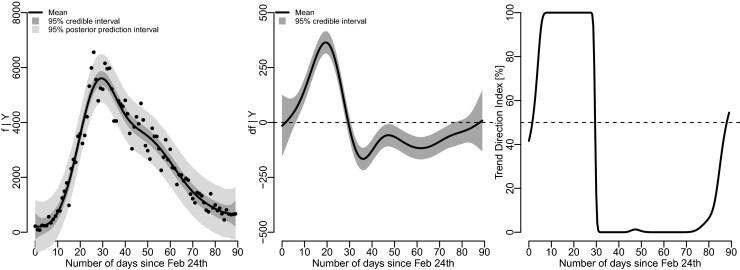

Figure 6 shows the result of the analysis. Between day 5 and 6, the Trend Direction Index crossed 95% probability and continued to climb to 100%. After 29 days (24 March 2020), the index decreased sharply and then remained low for some time. The Italian government imposed a quarantine on most of Italy from 9 March, and the sharp drop coincides nicely with the (as of present) expected incubation period of 2–14 days, which roughly reflects the incubation and testing period for the individuals who contracted the virus before the 9 March lockdown. After 24 March, the trend was clearly not positive for a long time.

FIGURE 6.

Results from the trend analysis of the Italian COVID-19 data. The left panel shows the number of new positives since 24 February along with the posterior mean of f and 95% credible and posterior prediction intervals. The middle panel shows the posterior distribution of the trend (df), and the right panel shows the Trend Direction Index

However, at day 88 (22 May 2020), the Trend Direction Index had again increased and crossed 50%, and at the time of this analysis, the index was equal to 54%. It is therefore currently not certain whether the number of new positives is increasing or decreasing, but a slight probability favours the former. It should be stressed that the Trend Direction Index monitors the sign and not the size of the trend, and this recent increase of TDI to 54% may reflect that the number of new cases is merely starting to level off (df(t)≈0). The Trend Direction Index can be used as a monitoring tool to determine if the Italian authorities need to take extra actions.

6. DISCUSSION

In this article, we have proposed two new measures, the Trend Direction Index and the Expected Trend Instability, in order to quantify the trendiness of a latent, random function observed in discrete time. Using a Gaussian process model for the latent structure, we showed how these indices can be estimated from data in both a maximum likelihood and a Bayesian framework and provide probabilistic statements about the monotonicity of the latent development of an observed outcome over time.

Both indices have intuitive interpretations that directly refer to properties of the latent trend, and our proposed methodology exploits the assumption of continuity allowing us to calculate a different (and in many cases more relevant) probability than what can be obtained from, for example multiple pairwise comparisons in discrete time.

The comparison with Trend Filtering showed that our proposed method provides unbiased estimates of the underlying function f and its derivative df while relaxing the assumption of piecewise linearity of the underlying process. In addition, it provides a probability distribution for the derivative, which is used directly in the Trend Direction Index and which directly addresses the research questions often posed.

It is worth noting that the indices are scale-free and do not tell anything about the magnitude of a trend. Consequently, the two indices should always be accompanied by plots of the posterior of df. If a prespecified magnitude, u, of a trend is desired, then the definition of the TDI is easily modified to accommodate this by replacing the threshold of zero with u, that is, by considering the probability that df exceeds u.
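Under the Gaussian process model, the posterior of df at a fixed time point is Gaussian conditional on the hyperparameters, so the thresholded index reduces to a normal tail probability. The sketch below assumes that the posterior mean and standard deviation of df at the time point of interest are already available; the function name and arguments are hypothetical.

```python
import math

def tdi_with_threshold(post_mean_df, post_sd_df, u=0.0):
    """P(df > u) for a Gaussian posterior of df with the given mean and sd.

    With u = 0 this is the probability of a positive trend.
    """
    z = (post_mean_df - u) / post_sd_df
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
```

Choosing u > 0 asks for the probability that the trend exceeds a practically relevant rate of increase rather than merely being positive.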

In conclusion, we have introduced a method for quantifying the trendiness of a trend that specifically addresses questions such as ‘Has the trend changed?’. Our approach is based on two intuitive measures that are easily interpreted, provide well-defined measures of trend behaviour, and can be applied in a large number of situations. The flexibility of the model is further improved by the few assumptions required about the underlying latent trend.

SUPPORTING INFORMATION

Additional supporting information (Supplementary Material) may be found online in the Supporting Information section.

ACKNOWLEDGEMENTS

The authors thank two anonymous reviewers and the associate editor for providing thoughtful comments that substantially improved the manuscript.

Contributor Information

Andreas Kryger Jensen, Biostatistics, Institute of Public Health University of Copenhagen, Copenhagen, Denmark.

Claus Thorn Ekstrøm, Biostatistics, Institute of Public Health University of Copenhagen, Copenhagen, Denmark.

REFERENCES

- Arnold, T.B. & Tibshirani, R.J. (2019) genlasso: Path Algorithm for Generalized Lasso Problems. Available from: https://CRAN.R-project.org/package=genlasso.

- Barry, D. & Hartigan, J.A. (1993) A Bayesian analysis for change point problems. Journal of the American Statistical Association, 88(421), 309–319.

- Basseville, M. & Nikiforov, I.V. (1993) Detection of abrupt changes: Theory and application. Upper Saddle River: Prentice-Hall.

- Bergmeir, C. & Benítez, J.M. (2012) On the use of cross-validation for time series predictor evaluation. Information Sciences, 191, 192–213.

- Carlstein, E., Müller, H.-G. & Siegmund, D. (Eds.) (1994) Change-point problems. Vol. 23. Lecture Notes-Monograph Series. Hayward, CA: Institute of Mathematical Statistics.

- Carpenter, B., Gelman, A., Hoffman, M.D., Lee, D., Goodrich, B., Betancourt, M., et al. (2017) Stan: A probabilistic programming language. Journal of Statistical Software, 76(1).

- Chandler, R. & Scott, M. (2011) Statistical methods for trend detection and analysis in the environmental sciences. Statistics in Practice. Hoboken: Wiley.

- Consiglio dei Ministri—Dipartimento della Protezione Civile, Presidenza del. (2020) COVID-19 Italia—Monitoraggio Situazione; 2020. Retrieved from: https://github.com/pcm-dpc/COVID-19.

- Cramer, H. & Leadbetter, M.R. (1967) Stationary and related stochastic processes—sample function properties and their applications. Hoboken: John Wiley & Sons, Inc [Google Scholar]

- Esterby, S.R. (1993) Trend analysis methods for environmental data. Environmetrics, 4(4), 459–81. [Google Scholar]

- Gelman, A. & Rubin, D.B. (1992) Inference from iterative simulation using multiple sequences. Statistical Science, 7(4), 457–72. [Google Scholar]

- Gottlieb, A. & Müller H.-G. (2012) A stickiness coefficient for longitudinal data. Computational Statistics & Data Analysis, 56(12), 4000–4010. [Google Scholar]

- Hodrick, R.J. & Prescott, E.C. (1997) Postwar U.S. business cycles: An empirical investigation. Journal of Money, Credit and Banking, 29(1), 1–16. [Google Scholar]

- Jensen, A.K. (2019) GitHub Repository for the Trendiness of Trends. 2019. Retrieved from https://github.com/aejensen/TrendinessOfTrends.

- Kaufman, C.G., Bingham, D., Habib, S., Heitmann, K. & Frieman, J.A.. others (2011) Efficient emulators of computer experiments using compactly supported correlation functions, with an application to cosmology. The Annals of Applied Statistics, 5(4), 2470–92. [Google Scholar]

- Kim, S.-J., Koh, K.Boyd, S. & Gorinevsky, D. (2009) ℓ1 trend filtering. SIAM Review, 51, 339–60. [Google Scholar]

- Kowal, D.R., Matteson, D.S. & Ruppert, D. (2019) Dynamic shrinkage processes. Journal of the Royal Statistical Society: Series B, 81, 781–804. [Google Scholar]

- MacKay, D.J.C. (1998) Introduction to Gaussian processes. Neural Networks and Machine Learning.

- Micchelli, C.A., Xu, Y. & Zhang, H. (2006) Universal kernels. Journal of Machine Learning Research, 7(Dec), 2651–67.

- Navne, H., Schmidt, A.L. & Rasmussen, L.I. (2019) Første Gang I 20 år: Flere Danskere Ryger. Retrieved from: https://politiken.dk/forbrugogliv/sundhedogmotion/art6938627/Flere-danskere-ryger.

- Neal, R.M. (2012) Bayesian learning for neural networks. Lecture Notes in Statistics. New York: Springer.

- Quandt, R.E. (1958) The estimation of the parameters of a linear regression system obeying two separate regimes. Journal of the American Statistical Association, 53(284), 873–80.

- Radford, N.M. (1999) Regression and classification using Gaussian process priors (with discussion). In Dawid, A.P., Bernardo, J.M., Berger, J.O. & Smith, A.F.M. (Eds) Bayesian statistics 6: Proceedings of the Sixth Valencia International Meeting, pp. 475–501.

- Ramdas, A. & Tibshirani, R.J. (2016) Fast and flexible ADMM algorithms for trend filtering. Journal of Computational and Graphical Statistics, 25(3), 839–858.

- Rasmussen, C.E. & Williams, C.K.I. (2006) Gaussian processes for machine learning. Cambridge: MIT Press.

- R Core Team. (2018) R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing. Retrieved from: https://www.R-project.org/.

- Stan Development Team. (2018) RStan: The R Interface to Stan. Retrieved from: http://mc-stan.org/.

- The Danish Health Authority. (2019) Danskernes Rygevaner 2018. Retrieved from: https://www.sst.dk/da/udgivelser/2019/danskernes-rygevaner-2018.

- Vehtari, A., Gabry, J., Magnusson, M., Yao, Y. & Gelman, A. (2019) loo: Efficient Leave-One-Out Cross-Validation and WAIC for Bayesian Models. Retrieved from: https://mc-stan.org/loo.

- Williams, C.K.I. (1998) Computation with infinite neural networks. Neural Computation, 10(5), 1203–16.
