Abstract

Background

Over the past decade, autism spectrum disorder (ASD) research has blossomed, and multiple clinical trials have tested potential interventions, with varying results and no clear demonstration of efficacy. Lack of clarity about which biological mechanisms to target, and a lack of sensitive, objective tools to identify subgroups and measure symptom changes, have hampered efforts to develop treatments. A platform trial for proof-of-concept studies in ASD could help address these issues. A major goal of a platform trial is to find the best treatment as expeditiously as possible by investigating multiple treatments simultaneously, using specialized statistical tools for allocation and analysis. We describe the setup of a platform trial and perform simulations to evaluate its operating characteristics under several scenarios. We use the Autism Behavior Inventory (ABI), a psychometrically validated web-based rating scale, to measure change in ASD core and associated symptoms.

Methods

The setup, conduct, and decision-making rules of a platform trial are described in detail. Simulations of a virtual platform trial are performed for several scenarios to compare operating characteristics. The success and futility criteria for treatments are based on posterior probabilities from a Bayesian model.

Results

Overall, simulation results show potential gains in statistical properties, especially improved decision-making ability, although careful planning is needed because of the complexities of a platform trial.

Conclusions

Autism research, shaped particularly by the heterogeneity of ASD, may benefit from the platform trial approach for proof-of-concept (POC) clinical studies.

1. Introduction

Novel trial designs have attracted the interest of researchers and policymakers as a way to overcome the inefficiencies inherent in the design of some randomized controlled trials (RCTs), the standard approach for generating clinical data [1,2]. Traditional RCT designs are usually limited to the assessment of a single agent (“intervention”) in a relatively homogeneous population, use fixed randomization, and are based on protocols with a finite duration and prespecified outcomes [3]. Platform trials, in contrast, are designed to continually investigate a fixed or variable number of treatments or treatment combinations using a decision algorithm, with the broad goal of identifying effective treatments [3,4]. A master protocol is designed to evaluate multiple hypotheses for the investigation of multiple interventions or across populations, and it defines the study's primary endpoint(s), primary analysis, and decision rules [5].

In platform trials, the accrued data are used to update a statistical analysis in real time, which then informs decisions about dropping or adding treatments over the course of the trial [6]. This continual testing allows effective treatments to be evaluated more efficiently and expeditiously, with fewer resources than traditional RCTs. Furthermore, enrolling more heterogeneous populations allows stratification into subtypes based on clinical biomarkers, thus enabling quantification of treatment effects within subgroups [3]. The success of I-SPY2 (Investigation of Serial studies to Predict Your Therapeutic Response with Imaging and Molecular Analysis 2) in efficiently evaluating and graduating newer neoadjuvant chemotherapies for high-risk breast cancer has strongly influenced the development of new-generation trial designs in other therapeutic areas aimed at rapidly advancing meaningful therapies [7,8,9]. Other examples of successful ongoing platform trials include INSIGhT (Individualized Screening Trial of Innovative Glioblastoma Therapy [10]) and AGILE (Adaptive Global Innovative Learning Environment [11]) for glioblastoma, and EPAD (European Prevention of Alzheimer's Dementia) for Alzheimer's disease and dementia [12].

Autism spectrum disorder (ASD) is a heterogeneous neurodevelopmental disorder characterized by impaired social interaction, restricted interests, and repetitive behavior. The complex etiology and heterogeneous clinical presentation of ASD, including wide variation in severity, predominance of one or the other core symptom, and the presence of associated symptoms and comorbidities, present immense challenges for the clinical development of treatments for ASD [13,14]. Despite pioneering clinical research on potential interventions for ASD core symptoms, progress has been impeded by a general lack of understanding of the underlying biological mechanisms and by a lack of reliable endpoints to detect symptom changes. Gold-standard measures for ASD diagnosis, including the Autism Diagnostic Observation Schedule, 2nd edition (ADOS-2) and the Autism Diagnostic Interview-Revised (ADI-R), have limited use in measuring changes in symptoms over time [15]. Furthermore, the dearth of validated endpoints, including biomarkers, which reflects the interplay of varied neurological systems and pathways, has hampered the characterization of subgroups within populations of individuals with ASD [13,16].

The Janssen Autism Knowledge Engine (JAKE®) is a dynamic research tool comprising electroencephalography (EEG), eye-tracking, electrocardiography (ECG), and facial affect expression, developed specifically to optimize clinical trials for ASD. The JAKE system aims to identify biomarkers to categorize homogeneous ASD subgroups and to provide quantifiable measures to aid treatment monitoring and track changes in ASD symptomatology [17]. The Autism Behavior Inventory (ABI), a component of the My JAKE application, is a psychometrically validated web-based rating scale designed to measure change in ASD core symptoms and associated behaviors, with inputs from both clinicians and caregivers [18,19]. The ABI was developed as a clinical endpoint for interventional studies addressing core ASD symptoms. It includes 62 items across five domains: (a) Social Communication (SC), (b) Restrictive Behaviors (RB), (c) Mood and Anxiety, (d) Self-Regulation, and (e) Challenging Behavior [18,19]. We used the ABI SC and RB domains as clinical endpoints in developing an adaptive clinical trial design to evaluate the efficacy of potential interventions on the core symptoms of ASD. Here we describe the setup of the platform trial and its evaluation via simulation.

2. Methods

2.1. Setting up a platform trial for ASD

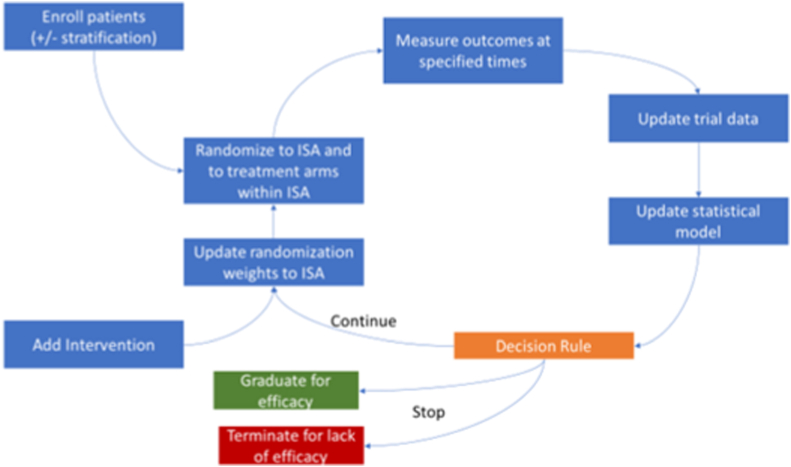

Fig. 1 shows a schematic representation of a platform trial for ASD [1]. After patient inclusion criteria and stratification factors are identified, patients will be randomized to the interventions defined in the intervention specific appendices (ISAs). Following outcome assessment, the data will be used to update the statistical model, and prespecified decision rules will be applied. If only one intervention is being evaluated at a given time, randomization weights across interventions do not apply.

Fig. 1.

Schematic of a platform trial for ASD

ASD, autism spectrum disorder; ISA, intervention specific appendix. Adapted from Ref. [1].

2.2. Master protocol and ISA

The master protocol describes the framework of the study design, the study population, and the inclusion and exclusion criteria common to all interventions. There can be multiple interventions; participants on an intervention and its matching blinded placebo are labelled as an intervention cohort. Each intervention will be described in an ISA, and each ISA may specify its own decision rules, for example making it more difficult to “graduate” for success or easier to be dropped for futility (i.e., when there is a very low probability of achieving a meaningful treatment effect at the end of the trial). Intervention cohorts may start at different time points during the platform trial. The master protocol will be developed to adequately address and describe the following: (1) the validity of sharing a placebo/standard-of-care (SoC) arm across different ISAs, especially for an ISA that enters the platform trial after a large time gap relative to earlier ISAs; (2) the scenario in which placebo/SoC changes for the target population during the conduct of the trial; (3) the maximum sample size for each ISA, including the number of patients on the experimental and placebo/SoC arms; and (4) details of randomization between and within ISAs, which could include setting minimum and maximum bounds on the randomization ratios.

Double-blinding will be maintained across the arms within an intervention cohort, but participants will know their intervention cohort. Randomization within an ISA may be stratified to ensure an equal distribution of subgroups across the arms in that ISA.
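The text does not specify how stratified randomization would be implemented. One common approach, assumed here purely for illustration, is permuted blocks generated separately within each stratum, so that arms stay balanced within every subgroup after each completed block. In the minimal Python sketch below, the block of four (two experimental, two placebo), the stratum labels, and the names `permuted_block_schedule` and `StratifiedRandomizer` are all hypothetical.

```python
import random
from collections import defaultdict


def permuted_block_schedule(n_blocks, block=("E", "E", "P", "P"), seed=None):
    """Concatenate n_blocks random permutations of `block` (E = experimental, P = placebo).

    Every completed block contains the same arm mix (1:1 here), so the arms
    are balanced after each block.
    """
    rng = random.Random(seed)
    schedule = []
    for _ in range(n_blocks):
        shuffled = list(block)
        rng.shuffle(shuffled)
        schedule.extend(shuffled)
    return schedule


class StratifiedRandomizer:
    """Hypothetical helper keeping a separate block schedule for each stratum."""

    def __init__(self, strata, n_blocks=25, seed=0):
        # Distinct seeds per stratum so the schedules differ between subgroups.
        self.schedules = {
            stratum: permuted_block_schedule(n_blocks, seed=seed + i)
            for i, stratum in enumerate(strata)
        }
        self.next_index = defaultdict(int)

    def assign(self, stratum):
        """Return the next arm for a participant in `stratum` (IndexError once exhausted)."""
        i = self.next_index[stratum]
        self.next_index[stratum] += 1
        return self.schedules[stratum][i]


if __name__ == "__main__":
    randomizer = StratifiedRandomizer(["subgroup A", "subgroup B"])
    arms = [randomizer.assign("subgroup A") for _ in range(8)]
    print(arms.count("E"), arms.count("P"))  # 4 4 after two complete blocks
```

Blocking guarantees balance only at block boundaries; an unequal within-ISA ratio (for example 1:2) could be expressed by changing the contents of `block`.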

2.3. Platform trial conduct and decision making

For ASD, the changes from baseline in the ABI SC and RB domain scores are the primary endpoints. The total sample size for each ISA will depend on the target effect of the intervention. When a new ISA is added to the platform, each active treatment arm in the ISA will be assumed to be equal to control in reducing ASD symptoms, as assessed by the defined endpoints. An ISA will be deemed positive, and the corresponding intervention will “graduate”, if it is very likely that the experimental treatment provides benefit over control. The intervention may also be “dropped” from the platform if it is unlikely to provide benefit. The decision to graduate or drop an intervention can also be stratified by a covariate. The details of the decision boundaries will be specified in each ISA.

The platform trial will consist of evolution analyses (EAs) and a final analysis (FA) for each ISA. EAs begin when the first ISA has accrued a minimum amount of information, defined as a prespecified number of enrolled participants with a specified amount of follow-up. After the first EA, additional EAs will be conducted at regular intervals based on time or on the number of participants. At each EA, all participant data will be included in the analysis; however, only ISAs that have met the minimum information requirement will be considered for success or futility, so that an intervention cannot be removed from the study early on the basis of too little information. The FA for an ISA will occur when all patients in that ISA have a prespecified amount of follow-up. The major difference between an EA and the FA is that the decision boundaries may differ between them, which helps control the false-positive rate and lowers the likelihood of an incorrect early decision.
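The minimum-information rule can be made concrete with a short Python sketch. It assumes, hypothetically, that information is measured as the number of participants who have completed a fixed follow-up (12 weeks by default) by the analysis date, with times in calendar weeks from the start of the platform. The class and function names, the 12-week default, and the threshold of 30 in the usage example are illustrative, not protocol values.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ISA:
    """Hypothetical record of one ISA: calendar week at which each participant enrolled."""
    name: str
    enrollment_weeks: List[float] = field(default_factory=list)


def n_with_followup(isa, analysis_week, followup_weeks):
    """Count participants who have completed `followup_weeks` by `analysis_week`."""
    return sum(1 for t in isa.enrollment_weeks if analysis_week - t >= followup_weeks)


def eligible_for_decision(isas, analysis_week, min_participants, followup_weeks=12):
    """Names of ISAs meeting the minimum-information rule at this EA.

    Data from every ISA still enter the model; only the ISAs returned here
    may be stopped for success or futility at this analysis.
    """
    return [
        isa.name
        for isa in isas
        if n_with_followup(isa, analysis_week, followup_weeks) >= min_participants
    ]


if __name__ == "__main__":
    isa1 = ISA("ISA 1", [w / 2 for w in range(90)])       # 2 per week from week 0
    isa2 = ISA("ISA 2", [52 + w / 2 for w in range(90)])  # starts about 12 months later
    print(eligible_for_decision([isa1, isa2], analysis_week=56, min_participants=30))
    # ['ISA 1']
```

At week 56 only ISA 1 can be stopped, even though any ISA 2 data collected by then would still enter the model.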

At each EA, a decision will be made on whether an intervention should be stopped early for success or futility, or should continue enrolling patients until the next analysis. An intervention will “graduate” if the posterior probability that the difference in change from baseline between treatment and placebo exceeds the minimal acceptable value (MAV) is large for either the ABI SC or the ABI RB endpoint. Conversely, the intervention will be dropped from the platform if the posterior probability that it provides benefit is small for both ABI SC and ABI RB. Each ISA must specify the MAV for treatment differences on both ABI SC and ABI RB, as well as the posterior probability cutoffs for graduating an intervention, denoted PU (posterior probability upper cutoff), and for dropping it from the platform, denoted PL (posterior probability lower cutoff). More details are provided in the Statistical Model section. The posterior probability cutoffs may be defined separately for an EA, denoted $PU_{EA}$ and $PL_{EA}$, and for the FA, denoted $PU_{FA}$ and $PL_{FA}$. The cutoffs may also differ between ABI RB and ABI SC. The rules for combining the decisions from the two domains, ABI SC and ABI RB, must be clearly specified in each ISA.

2.4. Statistical model

The statistical analyses for the platform will use a Bayesian model for the ABI SC and RB domain scores. The model will be fit separately for each intervention but may use common placebo-arm participants from multiple intervention cohorts. It is a Bayesian first-order autoregressive (AR(1)) model for ABI SC and RB change-from-baseline domain scores over a 12-week period with weekly visits, using all available patient data. AR(1) was chosen because it assumes the same variance at each time point and a correlation between time points that is higher the closer in time the points are.
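For a stationary AR(1) process with autocorrelation parameter ρ, the variance is the same at every visit and the correlation between two measurements separated by h visits is ρ^h. The Python sketch below builds that correlation and covariance matrix to illustrate the decay described above. It assumes equally spaced visits and |ρ| < 1; `rho` plays a role analogous to γ in the model below, and the function names are illustrative.

```python
def ar1_correlation(n_visits, rho):
    """Stationary AR(1) correlation matrix: corr(visit s, visit t) = rho ** |s - t|.

    Assumes equally spaced visits and |rho| < 1, so correlation decays
    geometrically as visits get further apart.
    """
    if not -1.0 < rho < 1.0:
        raise ValueError("a stationary AR(1) needs |rho| < 1")
    return [[rho ** abs(s - t) for t in range(n_visits)] for s in range(n_visits)]


def ar1_covariance(n_visits, rho, sigma2):
    """Same variance sigma2 at every visit (the diagonal); AR(1) decay off the diagonal."""
    return [[sigma2 * c for c in row] for row in ar1_correlation(n_visits, rho)]


if __name__ == "__main__":
    for row in ar1_correlation(4, 0.5):
        print(row)
    # first row: [1.0, 0.5, 0.25, 0.125]
```

With only one correlation parameter and one variance, AR(1) is far more parsimonious than an unstructured covariance over 12 visits.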

The determination of ISA success is based on the posterior distribution of the change from baseline in ABI SC and RB mean scores. For decision making, we compute the probability that the difference between the experimental intervention (E) and placebo (P) at week 12 exceeds the MAV; if this probability is large (small), E may graduate (or be dropped for futility).

For generality, we assume that SC and RB are evaluated at J time points, with j = 1 denoting baseline. For outcome k ∈ {SC, RB}, denote the J outcome measurements by $Y_{1,k}, Y_{2,k}, \ldots, Y_{J,k}$, collected in the vector $\mathbf{Y}_k = (Y_{1,k}, \ldots, Y_{J,k})$. The change-from-baseline measurements are $\mathbf{Y}'_k = (Y_{2,k} - Y_{1,k}, \ldots, Y_{J,k} - Y_{1,k})$, and $\mathbf{Y} = (\mathbf{Y}_{SC}, \mathbf{Y}_{RB})$ is the vector of all outcome data for an ISA. For simplicity, the changes from baseline for SC and RB were modelled independently using the same AR(1) model, written below for a single outcome with the index k dropped. There are J − 1 change-from-baseline values $Y'_1, \ldots, Y'_{J-1}$ (one fewer than the number of visits, because baseline is subtracted). With $X_j$ the time at which measurement j was taken, define

$$\mu_j = \alpha + \beta X_j .$$

The first change is modelled as

$$Y'_1 \sim N(\mu_1, \tau),$$

and, for $j = 2, \ldots, J-1$,

$$Y'_j \sim N\big(\mu_j + \gamma\,(Y'_{j-1} - \mu_{j-1}),\ \tau\big).$$

Priors:

$$\alpha \sim N(0, 0.1), \qquad \beta \sim N(0, 0.1), \qquad \gamma \sim N(0, 0.1), \qquad \tau \sim \mathrm{Gamma}(1.12, 2.54).$$

Note that the second parameter of each normal distribution is the precision (inverse variance), not the variance.

The prior means of zero were chosen to reflect the expectation of no change over time. In addition, we assume a variance of 10 (precision 0.1) to reflect a lack of knowledge about the response curve; these vague priors allow the accumulating data to drive the analysis and hence the decision making.
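To make the conditional AR(1) specification concrete, the Python sketch below draws one participant's change-from-baseline trajectory for fixed, hypothetical parameter values and converts a precision to a standard deviation. It simulates from the likelihood only and does not fit the Bayesian model; the function names and parameter values are illustrative assumptions, and the visit weeks follow Section 2.5.

```python
import math
import random


def simulate_changes(alpha, beta, gamma, tau, times, rng):
    """Draw one change-from-baseline trajectory Y'_1, ..., Y'_{J-1}.

    Follows the conditional specification in the text; here the normal
    distributions are written with variance 1/tau because tau is a precision:
      mu_j = alpha + beta * X_j
      Y'_1 ~ N(mu_1, 1/tau)
      Y'_j ~ N(mu_j + gamma * (Y'_{j-1} - mu_{j-1}), 1/tau)   for j >= 2
    `times` holds X_j, the post-baseline visit times in weeks.
    """
    sd = 1.0 / math.sqrt(tau)  # precision -> standard deviation
    mu = [alpha + beta * x for x in times]
    y = [rng.gauss(mu[0], sd)]
    for j in range(1, len(times)):
        y.append(rng.gauss(mu[j] + gamma * (y[j - 1] - mu[j - 1]), sd))
    return y


def precision_to_sd(precision):
    """Convert a precision (as in the N(0, 0.1) priors) to a standard deviation."""
    return 1.0 / math.sqrt(precision)


if __name__ == "__main__":
    rng = random.Random(1)
    # Hypothetical parameter values; visit weeks follow Section 2.5.
    print(simulate_changes(alpha=0.0, beta=0.02, gamma=0.5, tau=25.0,
                           times=[2, 4, 8, 12], rng=rng))
    print(precision_to_sd(0.1))  # about 3.16, i.e. prior variance 10
```

The second call shows why a precision of 0.1 corresponds to the prior variance of 10 described above.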

2.4.1. Decision making

Since the difference between placebo control (P) and experimental intervention (E) at week 12 is of interest, posterior samples of the P and E outcomes were drawn, and the probability that the difference in means between E and P at week 12 exceeds the MAV was computed. Denoting the week-12 means for outcome k by $m_{P,k}$ and $m_{E,k}$ for P and E, respectively, and $\Delta_k = m_{E,k} - m_{P,k}$,

$$P_k = \Pr(\Delta_k > \mathrm{MAV}_k \mid \mathbf{Y}_P, \mathbf{Y}_E).$$

For each outcome k and analysis $l \in \{\mathrm{EA}, \mathrm{FA}\}$, the following decisions are made:

$$P_k > PU_l \;\Rightarrow\; \text{graduate for success}$$

$$P_k < PL_l \;\Rightarrow\; \text{drop for futility}$$

EA, evolution analyses; FA, final analysis; PL, posterior probability lower cutoffs to be dropped from the platform; PU, posterior probability upper cutoffs for graduating an intervention

Otherwise, patients continue to be enrolled if the analysis is an EA, or the final decision is indeterminate if it is the FA. If the decision at the FA is indeterminate, other data may typically be used to decide whether development of the treatment should continue or stop.
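The per-outcome rule can be sketched in Python as two small functions: one estimates $P_k$ as the fraction of posterior draws in which $\Delta_k$ exceeds the MAV, and the other applies the PU/PL cutoffs. The function names and the toy draws in the usage example are hypothetical; in practice the draws would come from the fitted AR(1) model.

```python
def posterior_prob_exceeds(draws_e, draws_p, mav):
    """Monte Carlo estimate of P_k = Pr(Delta_k > MAV_k | data).

    draws_e, draws_p: posterior draws of the week-12 means m_{E,k} and m_{P,k}
    (draw i of each list taken together); Delta_k = m_{E,k} - m_{P,k}.
    """
    if not draws_e or len(draws_e) != len(draws_p):
        raise ValueError("need equal, non-empty lists of posterior draws")
    hits = sum(1 for e, p in zip(draws_e, draws_p) if e - p > mav)
    return hits / len(draws_e)


def outcome_decision(p_k, pu, pl, final):
    """Cutoff rule for one outcome: final=False for an EA, True for the FA."""
    if p_k > pu:
        return "graduate"
    if p_k < pl:
        return "drop"
    return "indeterminate" if final else "continue"


if __name__ == "__main__":
    draws_e = [0.3] * 90 + [0.0] * 10   # toy draws of m_E at week 12
    draws_p = [0.0] * 100               # toy draws of m_P at week 12
    p_sc = posterior_prob_exceeds(draws_e, draws_p, mav=0.11)
    print(p_sc)                                                   # 0.9
    print(outcome_decision(p_sc, pu=0.99, pl=0.01, final=False))  # continue
    print(outcome_decision(p_sc, pu=0.85, pl=0.25, final=True))   # graduate
```

The same posterior probability of 0.9 leads to "continue" under the strict EA cutoffs but to "graduate" under the FA cutoffs.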

2.5. Single ISA simulation study

To conduct a simulation study, the trial design, sample sizes, and decision rules (numeric values for PU and PL at both the EA and FA) were specified, and assumptions on the true parameter values were made. Missing data were not generated in the simulation because data were assumed to be missing at random (MAR); if dropout is an issue, sample sizes may need to be adjusted accordingly. Each scenario specifies assumptions about the true parameters, such as the patient recruitment rate, the true time profile of responses for ABI SC and ABI RB across weeks 0, 2, 4, 8, and 12 for all treatments, and, if more than one ISA is being evaluated, when ISAs are expected to enter the study. Several plausible scenarios, ranging from very pessimistic to optimistic, were considered. For each scenario, virtual patients were enrolled in a virtual trial and their outcomes were simulated. Summaries of each virtual trial's results were recorded, including whether the results were “positive” or “negative.” This trial simulation procedure was repeated 2500 times to estimate the operating characteristics of the design.
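The simulation loop described above (generate a virtual trial, apply the decision rule, repeat 2500 times, tally outcomes) can be sketched in Python. To keep the example short and self-contained, it replaces the Bayesian AR(1) model of Section 2.4 with a much simpler model: only the week-12 change is simulated, the outcome SD is known (the default of 0.5 is a hypothetical placeholder), and with a flat prior the posterior of Δ is normal, so Pr(Δ > MAV | data) has a closed form. Only a single FA is simulated. The function name and defaults are illustrative, and its output should not be read as the operating characteristics reported in this paper.

```python
import random
from statistics import NormalDist, fmean


def simulate_operating_characteristics(n_per_arm, true_delta, mav, pu, pl,
                                       sd=0.5, n_sims=2500, seed=2024):
    """Estimate P(Go), P(No Go), P(Indeterminate) at a single final analysis.

    Simplifying assumptions (not the paper's AR(1) model): each participant's
    week-12 change is Normal(mean, sd) with sd known, placebo mean 0, and a
    flat prior, so Delta | data ~ Normal(diff_hat, se^2).
    """
    rng = random.Random(seed)
    se = sd * (2.0 / n_per_arm) ** 0.5
    counts = {"go": 0, "no_go": 0, "indeterminate": 0}
    for _ in range(n_sims):
        exp = [rng.gauss(true_delta, sd) for _ in range(n_per_arm)]
        pbo = [rng.gauss(0.0, sd) for _ in range(n_per_arm)]
        diff_hat = fmean(exp) - fmean(pbo)
        p_k = 1.0 - NormalDist(diff_hat, se).cdf(mav)  # Pr(Delta > MAV | data)
        if p_k > pu:
            counts["go"] += 1
        elif p_k < pl:
            counts["no_go"] += 1
        else:
            counts["indeterminate"] += 1
    return {k: v / n_sims for k, v in counts.items()}


if __name__ == "__main__":
    # FA cutoffs and SC MAV from the text; sample size and effects are illustrative.
    for delta in (0.0, 0.2, 0.4):
        print(delta, simulate_operating_characteristics(45, delta, 0.11, 0.85, 0.25))
```

Replacing the closed-form posterior with draws from the fitted AR(1) model, and adding EAs, enrollment timing, and multiple ISAs, would be needed to approach the full design.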

Changes from baseline in the ABI SC and ABI RB domain scores were expressed in terms of the number of items in the domain showing a 1-point improvement relative to baseline. A 1-point improvement was considered clinically relevant based on cognitive interviews with caregivers during scale development [20].

For example, ABI SC has 23 items, and a 1-point improvement in 5 items corresponds to an average change of 0.22 (5/23) in the ABI SC domain score. Assuming no change in any item in the control group, if a 1-point change in 5 of the 23 ABI SC items is considered clinically relevant, the MAV is defined as half of that value, 0.11. Similarly, RB has 15 items, and a 1-point improvement in 4 items corresponds to an average change of 0.27 (4/15); the MAV, half of that value, is approximately 0.14 (0.27/2). The MAV for each domain can be specified in each ISA as the number of items showing improvement relative to control. When the platform trial is executed, each ISA will specify the MAV for SC and RB as well as the cutoffs for decision making. Because of the smaller sample size at an EA, thresholds were set so that there is very little chance of stopping an ISA unless the treatment provides substantial improvement or is very unlikely to provide benefit. For example, the results reported here use a design with PL = 0.01 and PU = 0.99 at the EA and PL = 0.25 and PU = 0.85 at the FA.
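The MAV arithmetic can be checked with a few lines of Python. This sketch assumes, as in the text, no change in the control group and an MAV equal to one half of the clinically relevant average item change. The function names are illustrative. Computed without intermediate rounding, the RB MAV is about 0.133; the value of approximately 0.14 comes from halving the rounded 0.27.

```python
def average_item_change(items_improved, n_items, points=1):
    """Average per-item change in a domain when `items_improved` items improve by `points`."""
    return points * items_improved / n_items


def mav_from_items(items_improved, n_items, fraction=0.5):
    """MAV taken as `fraction` (one half in the text) of the clinically relevant
    average change, assuming no change in the control group."""
    return fraction * average_item_change(items_improved, n_items)


if __name__ == "__main__":
    print(round(average_item_change(5, 23), 2), round(mav_from_items(5, 23), 2))  # 0.22 0.11
    print(round(average_item_change(4, 15), 2), round(mav_from_items(4, 15), 3))  # 0.27 0.133
```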

2.6. ASD platform trial conduct and decision making

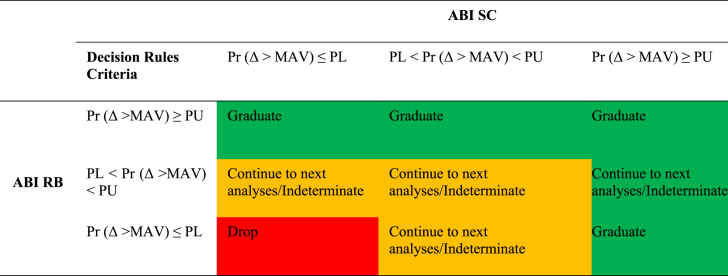

Each ISA must define how the individual outcome decisions described above for ABI SC and ABI RB will be combined for decision making about the intervention. The decision “continue to next analysis” applies at any EA, and “indeterminate” applies at the FA (Table 1). Each ISA is expected to use the same combination of ABI SC and ABI RB for decision making at the EA and FA; however, this is not required, and new approaches for combining ABI SC and ABI RB may be evaluated on an ISA-by-ISA basis. For example, a new experimental treatment may target RB, and the ISA could therefore define the combination to use only ABI RB. For the ASD platform study, by default each ISA will use PL = 0.01 and PU = 0.99 at an EA and PL = 0.25 and PU = 0.85 at the FA, together with the decision rules in Table 1.

Table 1.

Decision rules to graduate, drop and continue to next analyses (at evolution analyses)/Indeterminate (at FA) using both ABI RB and ABI SC.

Δ is the difference between the experimental and control arms in change from baseline scores.

For table layout, the posterior probability Pr(Δ > MAV) is a condensed notation for Pr(Δ > MAV | data) in all column and row headings.

ABI, Autism Behavior Inventory; FA, final analysis; MAV, the minimal acceptable value; PL, posterior probability lower cutoffs at which an intervention will be dropped; PU, posterior probability upper cutoffs for graduating an intervention; RB, Restrictive Behaviors; SC, Social Communication.
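Because Table 1 is reproduced only as an image, the Python sketch below encodes the combination rule as stated in Section 2.3 rather than from the table itself: graduate if either outcome graduates, drop only if both outcomes are dropped, and otherwise continue (EA) or indeterminate (FA). Treating a conflict (one outcome graduates, the other is dropped) as "graduate" follows from that wording and is an assumption. The `outcomes` argument is a hypothetical way for an ISA to restrict the rule, for example to RB only.

```python
VALID = {"graduate", "drop", "continue", "indeterminate"}


def combine_decisions(decisions, final, outcomes=("SC", "RB")):
    """Combine per-outcome decisions into one ISA-level decision.

    Assumed rule, taken from the text of Section 2.3 rather than Table 1:
      - graduate if any considered outcome graduates;
      - drop only if every considered outcome is dropped;
      - otherwise continue (at an EA) or indeterminate (at the FA).
    `outcomes` lets an ISA restrict the rule, e.g. ("RB",) for an RB-targeted drug.
    """
    selected = [decisions[k] for k in outcomes]
    if not selected or any(d not in VALID for d in selected):
        raise ValueError("unknown or missing outcome decision")
    if "graduate" in selected:
        return "graduate"
    if all(d == "drop" for d in selected):
        return "drop"
    return "indeterminate" if final else "continue"


if __name__ == "__main__":
    print(combine_decisions({"SC": "graduate", "RB": "drop"}, final=True))   # graduate
    print(combine_decisions({"SC": "continue", "RB": "drop"}, final=False))  # continue
```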

3. Results

3.1. Single Intervention/ISA simulation study

To help understand the impact of the cutoffs on the operating characteristics, a simulation study was conducted for a platform trial with a single ISA consisting of placebo and experimental intervention arms, with an EA every four months. The simulation was then extended to three ISAs, each with placebo control and experimental intervention arms, to understand the potential gain from borrowing placebo control patients across ISAs and from changing the within-ISA randomization ratio to place more patients on the experimental arm in later ISAs.

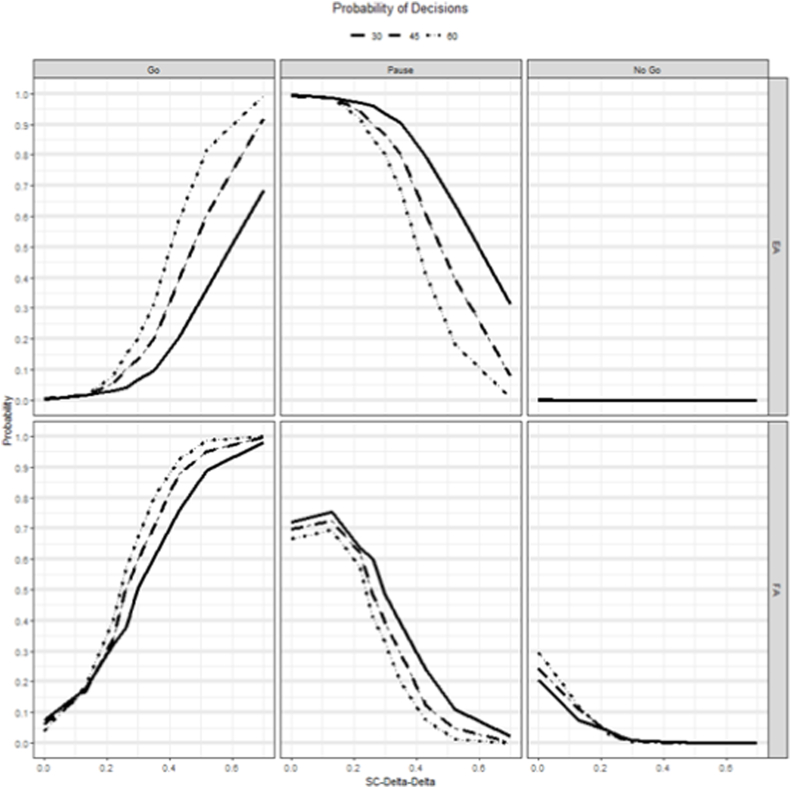

Fig. 2 shows the simulation results for sample sizes of 30, 45, and 60 per arm for an intervention that provides benefit on SC, and Supplementary Fig. 1 shows the corresponding results for an intervention that improves RB. The probability of a Go decision (P(Go)) at the FA followed typical power curves, with very low P(Go) for a null effect and increasing P(Go) for higher responses in the experimental arm. Under the same assumptions, P(Go) at the EA was much lower than at the FA because the FA uses lower cutoffs for decision making. For SC (Fig. 2), P(Go) at the FA was ≥80% for sample sizes of 30, 45, and 60 when the true delta was 0.52, 0.4, and 0.35, respectively. In contrast, P(Go) at the EA reached 80% only for a sample size of 60 with a true delta of 0.52. The probability of a No Go decision (P(No Go)) was almost zero at the EA, whereas at the FA it was between 20% and 30% under a null effect across sample sizes. For RB (Supplementary Fig. 1), P(Go) at the FA was >80% for a sample size of 60 patients per arm when the true delta was 0.47, compared with 42% at the EA under the same assumptions. The MAV for RB (0.14) was higher than that for SC (0.11), which explains the lower power for RB.

Fig. 2.

Simulation results showing the probability of making a Go, Pause or NoGo decision at the EA and FA for different sample size scenarios for an intervention that improves SC.

SC-Delta: the difference in effect size between treatment and control, using change from baseline values.

EA, evolution analysis; FA, final analysis; SC, Social Communication.

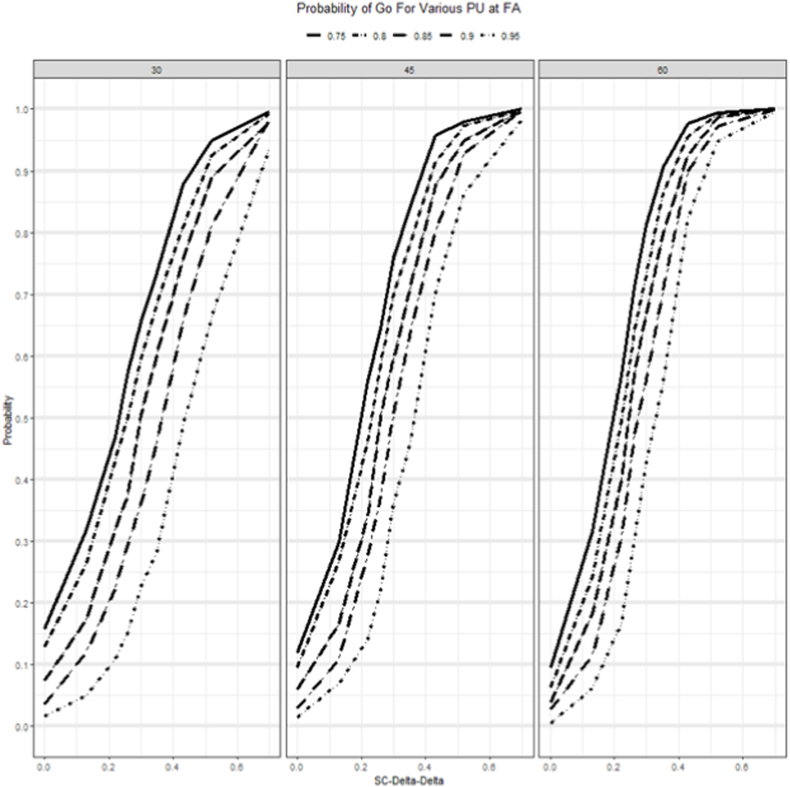

Because each ISA must specify its sample size and cutoff values, it is important to provide simulation results for a variety of cutoff values for PU and PL at the EA and FA to evaluate their operating characteristics (OCs). For PU at the FA ranging from 0.75 to 0.95, Fig. 3 displays P(Go) for an intervention that provides benefit on SC. For SC (Fig. 3), with a sample size of 30 and a true SC delta of 0.42, P(Go) at the FA was 88% for a PU of 0.75, compared with 76% for a PU of 0.85. As the assumed true delta for SC increases to values as high as 0.7, P(Go) converges to similar values for all thresholds. However, the high-delta assumptions served as sensitivity checks, and trade-offs had to be made on the decision criteria, including the posterior probability cutoffs PU used to declare success and PL used to declare futility. Supplementary Fig. 2 shows P(Go) for an intervention that improves RB, for different PU values and sample sizes under different assumptions of true delta.
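Under a simplified normal model (flat prior, known SD, equal arms), the FA rule has a closed form that shows why a lower PU raises P(Go) and why the curves for different PU values converge as the true delta grows. Pr(Δ > MAV | data) > PU holds exactly when the observed difference exceeds MAV + z_PU·SE, so P(Go) = Φ((δ - MAV)/SE - z_PU), where δ is the true delta. This is a sketch under those assumptions, not the paper's AR(1) model. The SD of 0.5 and the function name are hypothetical, so it will not reproduce the percentages in Fig. 3.

```python
from statistics import NormalDist


def approx_p_go(true_delta, mav, pu, n_per_arm, sd=0.5):
    """Normal-approximation P(Go) at the FA for one outcome.

    Assumes a flat prior, known sd, and equal arms, so Delta | data ~
    N(diff_hat, se^2) and diff_hat ~ N(true_delta, se^2). Then
    Pr(Delta > MAV | data) > PU  <=>  diff_hat > MAV + z_PU * se, giving
    P(Go) = Phi((true_delta - MAV) / se - z_PU).
    """
    std = NormalDist()
    se = sd * (2.0 / n_per_arm) ** 0.5
    z_pu = std.inv_cdf(pu)
    return std.cdf((true_delta - mav) / se - z_pu)


if __name__ == "__main__":
    for pu in (0.75, 0.85, 0.95):
        print(pu, round(approx_p_go(0.42, 0.11, pu, 30), 3))
```

As δ grows, the term (δ - MAV)/SE dominates z_PU, so P(Go) approaches 1 for every cutoff, which matches the convergence pattern described above.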

Fig. 3.

Probability of making a Go Decision at FA for various PU cutoff values across scenarios for an intervention that improves SC.

SC-Delta: the difference in effect size between treatment and control, using change from baseline values.

FA, final analysis; PU, posterior probability upper cutoffs for graduating an intervention; SC, Social Communication.

3.2. Three Interventions/ISA simulation study

One of the major benefits of a platform study is improved decision-making ability in ISAs added to the platform after the initial ISA. To quantify the benefits of sharing the placebo arm across several ISAs, a platform trial with three ISAs was simulated. We assumed that ISA 1 starts enrollment at the start of the platform, ISA 2 begins enrollment 12–13 months after the start of the platform, and ISA 3 begins enrollment 18–19 months after the start of the platform. Each ISA is allotted 90 participants. Three design approaches were considered: (1) all ISAs have 45 participants each on placebo and intervention, with no borrowing of placebo participants across ISAs; (2) all ISAs have 45 participants each on placebo and intervention, and placebo data are shared across ISAs; and (3) in ISA 1, 45 participants each receive placebo and intervention; in ISA 2, 30 participants receive placebo and 60 receive intervention; and in ISA 3, 15 participants receive placebo and 75 receive intervention, with all placebo data shared across ISAs.
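To give some intuition for why designs 2 and 3 help later ISAs, the Python sketch below encodes the three allocations. For each ISA it computes the factor sqrt(1/n_E + 1/n_P), which scales the standard error of Δ under a simple two-sample normal approximation. For illustration only, it assumes that the pooled placebo group for ISA i contains every placebo participant from ISAs 1 to i. This ignores enrollment timing, follow-up, any adjustment for non-concurrent controls, and the possibility that earlier ISAs also borrow later placebo data. `DESIGNS`, `SHARES_PLACEBO`, and `se_factor` are hypothetical names.

```python
import math

# Hypothetical encoding of the three designs: (placebo, experimental) per ISA.
DESIGNS = {
    1: [(45, 45), (45, 45), (45, 45)],
    2: [(45, 45), (45, 45), (45, 45)],
    3: [(45, 45), (30, 60), (15, 75)],
}
SHARES_PLACEBO = {1: False, 2: True, 3: True}


def se_factor(design, isa_index):
    """sqrt(1/n_E + 1/n_P) for an ISA (0-based index); multiply by the SD to get SE(Delta).

    When placebo is shared, n_P is assumed to pool all placebo participants
    from ISA 1 up to this ISA (an illustrative upper bound).
    """
    arms = DESIGNS[design]
    n_p, n_e = arms[isa_index]
    if SHARES_PLACEBO[design]:
        n_p = sum(p for p, _ in arms[: isa_index + 1])
    return math.sqrt(1.0 / n_e + 1.0 / n_p)


if __name__ == "__main__":
    print([round(se_factor(d, 2), 3) for d in (1, 2, 3)])  # ISA 3: [0.211, 0.172, 0.156]
```

Under these assumptions, the factor for ISA 3 is smallest in design 3. This is consistent in direction with the ordering of designs reported for ISA 3, although the simulated results depend on the full model and timing.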

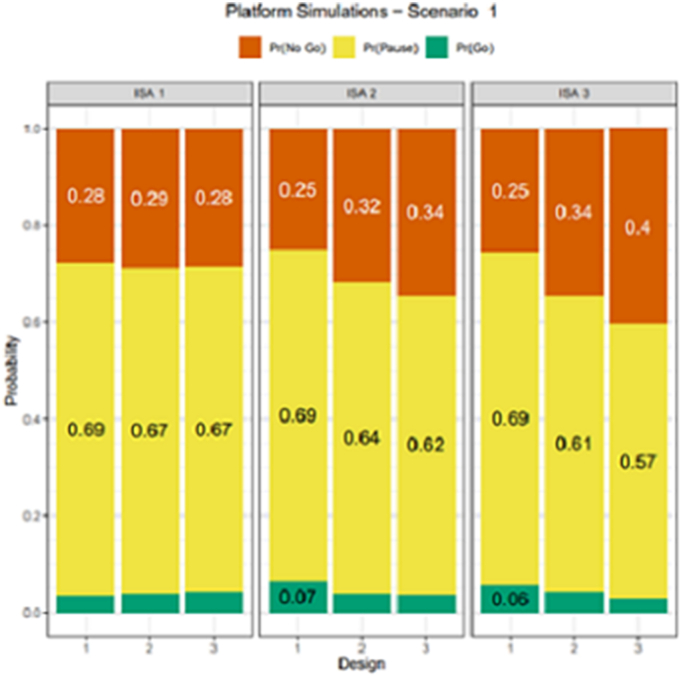

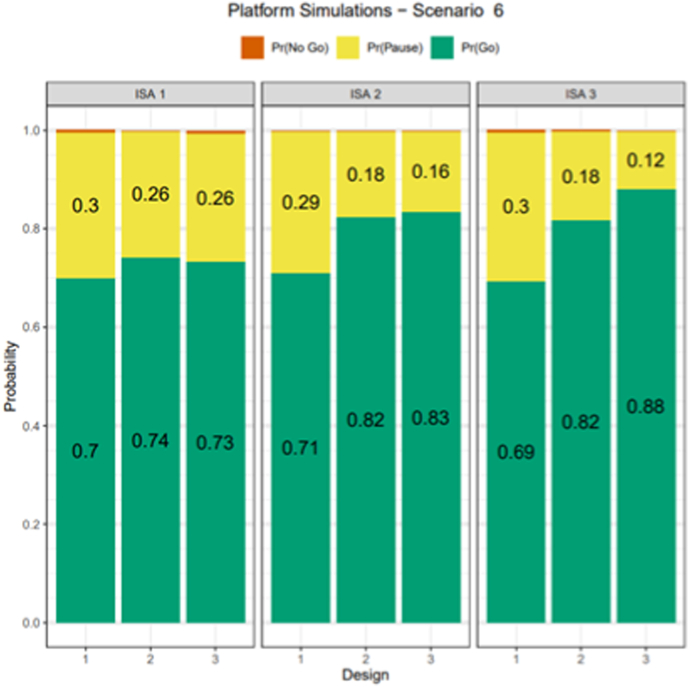

Design option 1 mimics what is typically done without a platform trial, option 2 illustrates the benefit of sharing placebo data, and option 3 both increases the number of participants receiving the intervention and borrows placebo data, making it a more efficient and flexible platform trial design. The remaining design parameters and scenarios were the same as in the single-ISA case, and for simplicity all ISAs had the same assumed true delta and used the same decision cutoffs. Under the null case (Fig. 4), all three designs had very similar Go probabilities; however, the No Go probability for ISA 3 increased from 25% with design 1 to 40% with design 3, indicating that design 3 improved the likelihood of making the correct decision. Fig. 5 displays P(Go) for all ISAs and designs for a true SC delta of 0.35 and shows that for ISA 3, relative to design 1, design 3 improved P(Go) by almost 20% and design 2 improved it by about 13%. While P(Go) under the null case (Fig. 4), i.e., the type I error, was similar across ISAs and designs, the gain in P(Go), i.e., power, in design 3 came from a lower P(Pause). This gain in power for design 3 decreased as the assumed true delta increased to a high value of 0.52 (Supplementary Fig. 3), largely because the correct decision is easy to make when the effect is large. These results suggest that borrowing control participants across ISAs can improve decision-making ability, and that additional gains are realized by allocating more participants to the experimental intervention arm in later ISAs while borrowing placebo control participants across ISAs. In design 3, ISA 3 benefits most from the platform because it borrows more placebo data and allocates more patients to the treatment arm, thus increasing the probability of a Go decision compared with ISA 1 or 2.

Fig. 4.

Simulation results of the probability of decision rules to graduate (P(Go)), drop (P(No Go)), and continue to next analyses (at EA)/Indeterminate (at FA) using both RB and SC for decision making in the null case

Note: Each ISA will utilize a numeric value of 0.01 for PL, 0.99 for PU at an EA and 0.25 for PL, 0.85 for PU at the FA.

EA, evolution analysis; FA, final analysis; ISA, intervention specific appendix; ISA 1 starts enrollment at the start of the platform; ISA 2 begins enrollment 12–13 months after the start of the platform; ISA 3 begins enrollment 18–19 months after the start of the platform; P(Go), probability of a go; P(No Go), probability of a no go; PL, posterior probability lower cutoffs at which an intervention will be dropped from the platform; PU, posterior probability upper cutoffs for graduating an intervention; RB, Restrictive Behaviors; SC, Social Communication.

Fig. 5.

Simulation results of the probability of decision rules to graduate (P(Go)), drop (P(No Go)), and continue to next analyses (at EA)/Indeterminate (at FA) using both Restrictive Behaviors and Social Communication for decision making, for the scenario where the true delta for SC and RB is assumed to be 0.35 and 0.0, respectively

EA, evolution analysis; FA, final analysis; ISA, intervention specific appendix; ISA 1 starts enrollment at the start of the platform; ISA 2 begins enrollment 12–13 months after the start of the platform; ISA 3 begins enrollment 18–19 months after the start of the platform; P(Go), probability of a go; P(No Go), probability of a no go; RB, Restrictive Behaviors; SC, Social Communication.

4. Discussion

Multiple clinical trials have tested potential interventions for the core symptoms of ASD; however, results have been variable and often not promising [21]. Autism research, shaped particularly by the heterogeneity of ASD, the wide variety of potential targets, and uncertainty regarding fruitful treatment targets, may benefit from the platform trial approach [21]. A key benefit of a platform trial is the sharing of placebo or control patients across different treatments; these placebo patients may be non-concurrent, depending on how much time lag between new therapies entering the platform is considered acceptable. This paper provides preliminary statistical details for designing a potential ASD platform trial using a Bayesian model. A master protocol was developed that defines the treatment difference (primary endpoint: change from baseline in ABI SC/RB domains) between control and investigational interventions within a common Bayesian model. Although adaptive designs have been developed in both frequentist and Bayesian contexts, a Bayesian design provides a natural way of incorporating prior information, at both the design and the analysis stage, about the effects of prognostic variables and biomarker-defined groups or subgroups, historical treatment or placebo effects, and potential treatment benefit [22,23]. Another feature of the Bayesian framework is that it allows inferences to be updated continuously as data accrue, enabling a more flexible design [22].

While platform trials are innovative and potentially improve on the standard paradigm, understanding how such a trial would function in practice and computing standard operating characteristics (OCs) is a non-trivial task, since appropriate software is not commercially available. To obtain the OCs for the ASD platform trial, a custom R package was developed for simulating the design. The package was generalized and released publicly as OCTOPUS (Optimize Clinical Trials On Platforms Using Simulation [24]). It provides a framework for platform trial simulation and design that users can readily extend to other trials.

Overall, the simulation results show potential gains in statistical properties, especially improved decision-making ability. Although the details are not reported here, simulations were also conducted to compare recruitment between a platform trial and standard consecutive proof-of-concept trials across a wide range of scenarios. These simulations showed that an ASD platform trial typically took longer to complete enrollment for the first ISA, potentially because of the initial complexities of planning and executing a platform trial. However, for the second and all subsequent ISAs, the platform outperformed consecutive studies. Careful planning before the first ISA can mitigate the risk of delaying it because of the complexities of a platform [25].

A platform trial increases the complexity of development, as researchers need to think about future interventions that would enter the study and how decisions could be made without knowing much about those interventions. Thus, researchers must plan adequately and perform a carefully designed simulation study to understand potential gains and possible setbacks. In autism research, as biomarkers and stratification processes are developed to address heterogeneity, strategies for identifying the populations in which to evaluate intervention effects will need to be decided. Although sharing control and active treatment patients across different interventions is an advantage, long intervals between different therapies entering the trial and differences between interventions and entry criteria can complicate the sharing of patients between arms. Early and thoughtful planning for ethical considerations, statistical modelling, and methodology to interpret findings can greatly facilitate the implementation of platform trials.

Several critical issues that need consideration when designing a platform trial include: 1) the number of interventions with real potential to become available for inclusion in the near future; 2) historical trial-to-trial variability in the placebo/standard-of-care response rate; 3) whether potential interventions provide similar benefit-risk profiles; 4) how participant recruitment can be increased to provide numbers sufficient for efficient evaluation of concurrent ISAs; and 5) the potential for patient population drift over the life of the platform trial. These issues are non-trivial, but many of them can be addressed, and their impact lowered, through careful planning, modeling, and simulation.

5. Conclusions

Autism research, shaped particularly by the heterogeneity of ASD, the wide variety of potential targets, and uncertainty regarding which targets are likely to be fruitful for treatment, may benefit from the platform trial approach for POC clinical studies [21]. As potential treatments for ASD emerge, deciding whether therapies should be evaluated in a particular subgroup or in the overall population will not be trivial. A platform trial strategy, while complex to initiate, could prove more time- and cost-efficient in the long term [21]. The design of this ASD platform trial allows for concurrent testing of multiple treatments, stratification by subgroups, efficacy evaluation based on ASD core symptoms (ABI SC/RB domains), and options for advancing and dropping interventions during the course of the trial. These features may help drive the ongoing development of biomarkers and treatments for ASD with more efficient use of resources. Despite its challenges, the proposed trial design is a useful addition to the inventory of platform trials and, with continued innovation, can help enable rapid selection of effective treatments for ASD. A synergistic collaboration between autistic people, their families, caregivers, and health care providers and trial sponsors, overseen by a non-profit or government entity, would help identify unmet patient needs and develop more sustainable models [21].

Funding

Funded by Janssen Research & Development, LLC, NJ, USA.

Declaration of competing interest

The authors declare the following financial interests/personal relationships which may be considered as potential competing interests: Drs. Jagannatha, Bangerter and Pandina are employees of Janssen Research & Development, LLC and hold company stock and/or stock options. Drs. Wathen and Ness were employees of Janssen Research & Development, LLC at the time of the study. Dr. Wathen is currently an employee of Cytel Inc.

Acknowledgements

Priya Ganpathy, MPharm CMPP (SIRO Clinpharm Pvt. Ltd., India) provided writing assistance and Ellen Baum, PhD (Janssen Global Services, LLC) provided additional editorial support.

Footnotes

Supplementary data to this article can be found online at https://doi.org/10.1016/j.conctc.2023.101061.


Data availability

No data was used for the research described in the article.

References

1. Adaptive Platform Trials Coalition. Adaptive platform trials: definition, design, conduct and reporting considerations. Nat. Rev. Drug Discov. 2019;18(10):797–807. doi: 10.1038/s41573-019-0034-3.

2. Bothwell L.E., et al. Assessing the gold standard--lessons from the history of RCTs. N. Engl. J. Med. 2016;374(22):2175–2181. doi: 10.1056/NEJMms1604593.

3. Berry S.M., Connor J.T., Lewis R.J. The platform trial: an efficient strategy for evaluating multiple treatments. JAMA. 2015;313(16):1619–1620. doi: 10.1001/jama.2015.2316.

4. Saville B.R., Berry S.M. Efficiencies of platform clinical trials: a vision of the future. Clin. Trials. 2016;13(3):358–366. doi: 10.1177/1740774515626362.

5. U.S. Food and Drug Administration. Master Protocols: Efficient Clinical Trial Design Strategies to Expedite Development of Oncology Drugs and Biologics. Guidance for Industry. 2018. Available from: https://www.fda.gov/media/120721/download

6. Pallmann P., et al. Adaptive designs in clinical trials: why use them, and how to run and report them. BMC Med. 2018;16(1):29. doi: 10.1186/s12916-018-1017-7.

7. Harrington D., Parmigiani G. I-SPY 2--A glimpse of the future of phase 2 drug development? N. Engl. J. Med. 2016;375(1):7–9. doi: 10.1056/NEJMp1602256.

8. Nanda R., et al. Effect of pembrolizumab plus neoadjuvant chemotherapy on pathologic complete response in women with early-stage breast cancer: an analysis of the ongoing phase 2 adaptively randomized I-SPY2 trial. JAMA Oncol. 2020;6(5):676–684. doi: 10.1001/jamaoncol.2019.6650.

9. Wang H., Yee D. I-SPY 2: a neoadjuvant adaptive clinical trial designed to improve outcomes in high-risk breast cancer. Curr. Breast Cancer Rep. 2019;11(4):303–310. doi: 10.1007/s12609-019-00334-2.

10. Alexander B.M., et al. Individualized screening trial of innovative glioblastoma therapy (INSIGhT): a Bayesian adaptive platform trial to develop precision medicines for patients with glioblastoma. JCO Precis. Oncol. 2019;3. doi: 10.1200/PO.18.00071.

11. Alexander B.M., et al. Adaptive global innovative learning environment for glioblastoma: GBM AGILE. Clin. Cancer Res. 2018;24(4):737–743. doi: 10.1158/1078-0432.CCR-17-0764.

12. Ritchie C.W., et al. The European Prevention of Alzheimer's Dementia (EPAD) longitudinal cohort study: baseline data release V500.0. J. Prev. Alzheimers Dis. 2020;7(1):8–13. doi: 10.14283/jpad.2019.46.

13. Loth E., Murphy D.G., Spooren W. Defining precision medicine approaches to autism spectrum disorders: concepts and challenges. Front. Psychiatr. 2016;7:188. doi: 10.3389/fpsyt.2016.00188.

14. Ghosh A., et al. Drug discovery for autism spectrum disorder: challenges and opportunities. Nat. Rev. Drug Discov. 2013;12(10):777–790. doi: 10.1038/nrd4102.

15. McConachie H., et al. Systematic review of tools to measure outcomes for young children with autism spectrum disorder. Health Technol. Assess. 2015;19(41):1–506. doi: 10.3310/hta19410.

16. Frye R.E., et al. Emerging biomarkers in autism spectrum disorder: a systematic review. Ann. Transl. Med. 2019;7(23):792. doi: 10.21037/atm.2019.11.53.

17. Ness S.L., et al. JAKE® multimodal data capture system: insights from an observational study of autism spectrum disorder. Front. Neurosci. 2017;11:517. doi: 10.3389/fnins.2017.00517.

18. Bangerter A., et al. Autism Behavior Inventory: a novel tool for assessing core and associated symptoms of autism spectrum disorder. J. Child Adolesc. Psychopharmacol. 2017;27(9):814–822. doi: 10.1089/cap.2017.0018.

19. Bangerter A., et al. Clinical validation of the Autism Behavior Inventory: caregiver-rated assessment of core and associated symptoms of autism spectrum disorder. J. Autism Dev. Disord. 2020;50(6):2090–2101. doi: 10.1007/s10803-019-03965-7.

20. Pandina G., et al. Qualitative evaluation of the Autism Behavior Inventory: use of cognitive interviewing to establish validity of a caregiver report scale for autism spectrum disorder. Health Qual. Life Outcomes. 2021;19:26. doi: 10.1186/s12955-020-01665-w.

21. Ness S., et al. ASPI: a public-private partnership to develop treatments for autism. Nat. Rev. Drug Discov. 2020;19(4):219–220. doi: 10.1038/d41573-020-00012-4.

22. Giovagnoli A. The Bayesian design of adaptive clinical trials. Int. J. Environ. Res. Publ. Health. 2021;18(2). doi: 10.3390/ijerph18020530.

23. Gupta S.K. Use of Bayesian statistics in drug development: advantages and challenges. Int. J. Appl. Basic Med. Res. 2012;2(1):3–6. doi: 10.4103/2229-516X.96789.

24. Wathen K.J. OCTOPUS - optimize clinical trials on platforms using simulation update. 2020. Available from: https://kwathen.github.io/OCTOPUS/

25. Meyer E.L., et al. Systematic review of available software for multi-arm multi-stage and platform clinical trial design. Trials. 2021;22(1):183. doi: 10.1186/s13063-021-05130-x.
