Summary

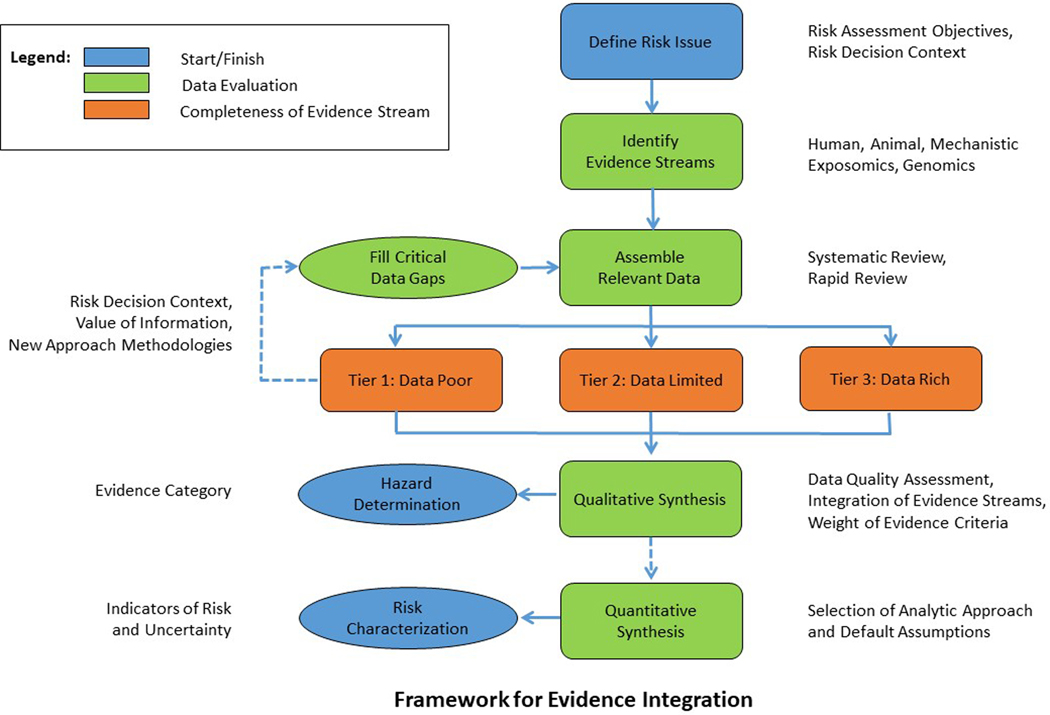

Assessment of potential human health risks associated with environmental and other agents requires careful evaluation of all available and relevant evidence, whether the agent of interest is data-rich or data-poor. With the advent of new approach methodologies in toxicological risk assessment, guidance on integrating evidence from multiple evidence streams is needed to ensure that all available data is given due consideration in both qualitative and quantitative risk assessment. The present report summarizes the discussions among academic, government, and private sector participants from North America and Europe in an international workshop convened to explore the development of an evidence-based risk assessment framework that takes all available evidence into account in an appropriate manner, in order to arrive at the best possible characterization of potential human health risks and associated uncertainty. Although consensus among workshop participants was not a specific goal, there was general agreement on the key considerations involved in incorporating 21st century science into evidence-based human health risk assessment. These considerations have been embodied in an overarching prototype framework for evidence integration that will be explored in more depth in a follow-up meeting.

Keywords: risk assessment, environmental agents, population health, new approach methodologies, evidence stream, evidence integration

1. Introduction and Background

Risk science has evolved into a well-established interdisciplinary practice incorporating diverse data and methods in order to characterize population health risks and inform decision-making. Risk science has benefitted from advances in biology and toxicology over the last decade, providing powerful new tools and technologies — including high-throughput in vitro screening and computational toxicology — that can be used to better assess risks to population health. Risk science has also benefitted from advances in molecular and genetic epidemiology which, combined with concomitant advances in exposure science, permit direct estimation of risk in human populations. These and other advances have been incorporated into a framework for the next generation of risk science proposed by Krewski et al. (2014), which was based on work completed under the US Environmental Protection Agency (EPA) NexGen program, with input from a large number of stakeholders from North America and Europe.

An important aspect of the evolution of risk science is the desire to ensure that risk decisions are based on the best available scientific evidence, with this evidence identified and evaluated in accordance with appropriate processes and criteria. This trend is consistent with the evolution of evidence-based medicine, which makes use of current best evidence in making clinical decisions about the care of individual patients (Masic, Miokovic, & Muhamedagic, 2008; Sackett, 1997). More recently, the concept of evidence-based toxicology has emerged under the leadership of investigators at the Johns Hopkins Bloomberg School of Public Health (Stephens et al., 2013). Like evidence-based medicine, evidence-based toxicology seeks to ensure that the best available data is used in toxicological risk assessment.

The present initiative seeks to build on the scientific advances covered above, and the trends towards evidence-based decision making in multiple disciplines, to derive a framework for evidence-based risk assessment that incorporates all relevant data needed to support risk decision-making in a transparent and objective manner. The specific objectives of this project are:

to develop a framework for evidence-based risk assessment describing how all relevant evidence relating to a specific risk decision should be assembled and evaluated;

to conduct case study prototypes to evaluate the utility of the framework and demonstrate its application in practice; and

to lay out a knowledge translation action plan to support the adoption and use of the framework for evidence-based risk assessment in decision making.

2. Evidence for Causation

Establishing causality requires a careful evaluation of the available evidence for and against a causal association between exposure and outcome. Evaluating evidence for causality can be a complex undertaking, particularly in the presence of diverse sources of information which may report inconsistent findings and which may be of unequal relevance or reliability. A systematic review can be used to summarize the available evidence in a comprehensive and reproducible manner (Wang, Gomes, Cashman, Little, & Krewski, 2014). Although not all systematic reviews are designed to evaluate causality, there has been a trend towards including causality evaluation as a component of systematic review in recent years. Historically, the Hill criteria (strength, consistency, specificity, temporality, biological gradient, plausibility, coherence, experiment, and analogy) have provided useful general guidance on weighing the evidence for causality (Lucas & McMichael, 2005), though they were originally designed with only observational (epidemiologic) data in mind, and do not address other aspects of causality determination, such as consideration of experimental data and integrating different sources of evidence. The Grading of Recommendations Assessment, Development and Evaluation (GRADE) approach incorporates aspects of the considerations for causality identified by Hill as well as other considerations, providing an approach to evaluate the certainty of the body of evidence across the following domains: risk of bias, inconsistency, indirectness, imprecision, publication bias, magnitude of effect, dose-response gradient, and opposing residual confounding (Schunemann et al., 2008). As a further example, the International Agency for Research on Cancer (IARC) has developed a detailed approach for identifying agents that can cause cancer in humans, based on a careful evaluation of the available human, animal and mechanistic data (IARC, 2019).
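To make the GRADE domains listed above concrete, the following minimal Python sketch treats a certainty rating as a starting level that is adjusted by the downgrading and upgrading domains. It assumes the commonly described convention that randomized evidence starts at "high" and observational evidence at "low". The class and function names are hypothetical, and the arithmetic is a simplification of what is in practice a structured expert judgment rather than a formula.

```python
from dataclasses import dataclass

# Certainty levels, ordered from lowest to highest.
LEVELS = ("very low", "low", "moderate", "high")


@dataclass
class GradeJudgement:
    """Hypothetical container for judgements on one body of evidence."""
    randomized: bool               # True if the evidence comes from randomized studies
    # Downgrading domains: 0 = no concern, 1 = serious, 2 = very serious
    risk_of_bias: int = 0
    inconsistency: int = 0
    indirectness: int = 0
    imprecision: int = 0
    publication_bias: int = 0
    # Upgrading domains
    magnitude_of_effect: int = 0   # 0, 1 (large) or 2 (very large)
    dose_response: int = 0         # 0 or 1
    opposing_confounding: int = 0  # 0 or 1


def grade_certainty(j: GradeJudgement) -> str:
    """Return the certainty label after applying downgrades and upgrades."""
    start = 3 if j.randomized else 1  # assumed starting points: high vs. low
    down = (j.risk_of_bias + j.inconsistency + j.indirectness
            + j.imprecision + j.publication_bias)
    up = j.magnitude_of_effect + j.dose_response + j.opposing_confounding
    # Clamp the result to the available levels.
    index = max(0, min(len(LEVELS) - 1, start - down + up))
    return LEVELS[index]


# Made-up example: observational evidence with a large effect and a
# dose-response gradient, but serious imprecision.
example = GradeJudgement(randomized=False, magnitude_of_effect=1,
                         dose_response=1, imprecision=1)
print(grade_certainty(example))
```

The clamping step reflects the fact that certainty cannot be rated above "high" or below "very low", however many domains apply.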

Rhomberg and colleagues (2013) recently reviewed 50 different frameworks that have been proposed in different contexts in the interests of developing a “transparent and defensible” methodology for evaluating the evidence for causation. This review identified four key phases for such assessments: (1) defining the causal question and developing criteria for study selection, (2) developing and applying criteria for review of individual studies, (3) evaluating and integrating evidence, and (4) drawing conclusions based on inferences. Although a specific framework that would be widely applicable in different contexts was not proposed, this work serves to define important attributes of what a broadly applicable framework might include. Five years later, another review of the body of knowledge presented a framework with a similar four-step approach: (1) plan and scope the weight of evidence (WoE) assessment, (2) establish lines of evidence, (3) integrate lines of evidence to assess WoE, and (4) summarize conclusions (Martin et al., 2018). While the specific principles, practices and approaches proposed by these two reviews may differ, together they offer a general approach to evaluating evidence for causation that can be refined and adapted as needed.

Several organizations have provided more detailed guidance for evaluating evidence of causation in various circumstances, depending in part on the nature of the available data (predominantly epidemiological or toxicological) and the risk decision context. Following a review of risk assessment approaches used by the US EPA’s Integrated Risk Information System (IRIS), the US National Research Council (2014a) identified systematic review and evidence integration as key components of a qualitative and quantitative risk assessment paradigm for environmental chemicals. More broadly, the National Toxicology Program Office of Health Assessment and Translation developed a Handbook for Conducting a Literature-Based Health Assessment Using OHAT Approach for Systematic Review and Evidence Integration. In the context of establishing dietary reference intakes (DRIs) taking into account chronic disease outcomes, a committee of the National Academies of Sciences, Engineering, and Medicine (NASEM, 2017) developed Guiding Principles for Developing Dietary Reference Intakes Based on Chronic Disease and adopted GRADE (Guyatt et al., 2008) as the preferred approach both for establishing evidence of causation and for intake-response assessment.

3. Sources of Evidence

In conducting evidence-based risk assessment, it is important that all available and relevant sources of information be considered. Risk assessment may be informed by toxicological, epidemiological, clinical, surveillance, mechanistic and other data, all of which need to be considered collectively in order to ensure that the evidence base assembled to potentially support the assessment conclusions is appropriately comprehensive.

An important aspect of evidence-based risk assessment is the amount of data that may or may not be available to support the assessment. Cote and colleagues (2016) note that data-rich and data-poor risk decisions necessarily require different approaches and offer advice on what might be done in a data-poor situation where a risk decision must be made without the luxury of filling key data gaps.

In elaborating the proposed framework for evidence-based risk assessment, the strengths and limitations of different sources of information will be identified, and their complementary role in informing the overall assessment outlined. The framework will address both data-rich and data-poor risk decision contexts and establish minimum data requirements to support evidence-based risk decision making.

3.1. Current Approaches to Evidence Integration

3.1.1. EFSA

With a mandate to provide scientific expertise related to food and feed products in the European Union, the European Food Safety Authority (EFSA) is among the global leaders in hazard identification and risk assessment. Established in 2002 with funding from the European Union, EFSA has developed a variety of frameworks and approaches to support transparency, rigor and quality in evidence-based risk assessment for products under their remit (EFSA, 2010, 2014, 2015). While additional information on some of these is provided in a separate publication in this issue (Aiassa et al. 2022), a brief summary of the 4-step framework for conducting a scientific risk assessment is included herein.

The 4-step process comprises the following “plan”, “do”, “verify” and “report” stages.

Plan: Formulate the key research question and (as relevant) associated sub-questions, outlining the methodology for answering the question(s) in a protocol developed a priori

Do: Execute the methodology outlined in the protocol to collect, analyze and leverage data to inform conclusions (specifics will depend on study design and type(s) of data being collected)

Verify: Compare the methodology taken with that outlined in the protocol, making note of any deviations from the original plan

Report: Promote transparency through the publication of relevant methodologies, assumptions, results and uncertainties

This approach has been piloted across EFSA with some success. For example, such an approach promotes the impartiality, rigour and overall scientific value of the assessment process, as well as of the resulting conclusions, by reducing the risk of bias from decisions made in light of the data collected (Munafo et al., 2017; Shamseer et al., 2015). Conversely, implementation of the proposed approach among new or novice users was found to be resource-, effort- and time-intensive, and EFSA continues to work towards building capacity and expertise to deliver scientific assessments that are both efficient and in line with current best practices in risk assessment. Nevertheless, EFSA’s prioritization of the principles of impartiality, methodological rigour, transparency and public engagement points to the value of promoting and integrating such a framework within everyday practice, recognizing that continued improvement should further advance EFSA’s ability to deliver high-quality scientific assessments of relevance to public health promotion across the European Union.

3.1.2. EPA IRIS

Created in 1985 and located within the EPA Center for Public Health and Environmental Assessment, the IRIS Program conducts chemical hazard assessments (EPA, 2018). These assessments examine the health consequences of lifetime exposure to environmental chemicals and are both a primary source of certain chemical toxicity information used in support of regulatory and non-regulatory decisions within EPA program offices and regions, and important sources of information for other state, federal and international organizations.

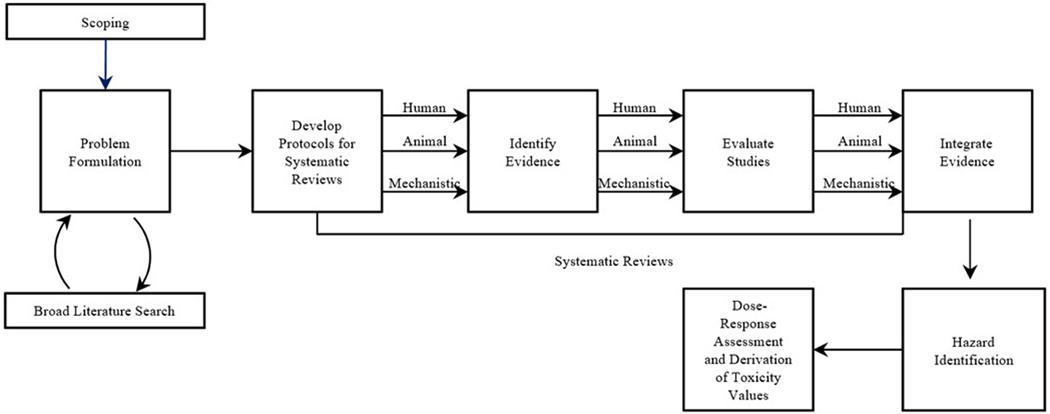

Figure 1. IRIS approach for assessment development, as interpreted by the NAS (NRC, 2014a).

The IRIS approach for assessment development (illustrated as interpreted by the NAS in Figure 1) has increasingly been framed through the lens of rigorous and transparent systematic review processes. Assessment development is part of a larger seven-step process for assessment review, which can be summarized as follows (NRC, 2011):

1. Complete draft IRIS assessment

2. Internal agency review

3. Science consultation on the draft assessment with other federal agencies and White House offices

4. Independent expert peer review, public review and comment, and public listening session

5. Revise assessment

6A. Internal agency review and EPA clearance of final assessment

6B. EPA-led interagency science discussion

7. Post final assessment on IRIS

Assessments are intended to inform decisions related to hazard identification and dose-response assessments, which can be integrated with exposure assessments by EPA programs and regional offices to characterize potential public health risks associated with exposure to an environmental chemical or group of chemicals.

IRIS supports the principle of transparency in their decision processes, with publicly available summaries and databases of chemical-specific evidence and assessment judgments provided since 1988 (EPA, 2018). Progress towards the application of best practices in systematic review and risk assessment has accelerated since 2011, when recognition of challenges in previous assessments motivated a commentary on the IRIS assessment development process by the NAS that was outside of the scope of the chemical-specific review (NRC, 2011). The resulting recommendations outlined a roadmap for a more systematic review process that triggered numerous planned enhancements to the assessment development process, including on-boarding of systematic review methodologies, adoption of the Health and Environmental Research Online (HERO) tool, and increased public engagement (EPA, 2018; NASEM, 2018).

In order to review changes and progress in the years following the 2011 NAS review, another committee was convened in 2014 (NRC, 2014a); systematic review and application of best practices in evidence integration were again identified as essential elements of environmental chemical human health assessment. Further improvements to the rigor of the IRIS process are being implemented, as reflected in a third assessment conducted in 2018 (NASEM, 2018), which concluded that the IRIS process — while still evolving to adapt to new scientific methodologies and data sources — had successfully undertaken reforms to improve the application and transparency of systematic review methodologies in chemical assessments. Moving forward, it was noted that new tools and approaches would be required to meet some of the outstanding recommendations from the 2014 assessment, “especially for incorporating mechanistic information and for integrating evidence across studies” (NASEM, 2018, p. 12).

3.1.3. Health Canada

The Canadian Environmental Protection Act (CEPA), 1999, serves as the main federal policy under which potentially hazardous environmental substances are assessed and regulated. Health Canada and Environment and Climate Change Canada work together to assess the potential for risk to the environment and the general Canadian population associated with these substances and, as necessary, develop policies and risk management measures for their control. Since its ratification twenty years ago, over 23,000 environmental substances have been registered on the Canadian Domestic Substances List (DSL) (Krewski et al., 2020). The Canadian Chemicals Management Plan (CMP), launched in 2006 based on results of Categorization of the DSL and New Substances Notifications, further sought to evaluate the risk associated with 4,300 prioritized chemicals prior to 2020. Our knowledge of chemicals and emerging technologies continues to evolve. Therefore, moving forward it is important to continue to screen, integrate and consider new information and the increasing complexities of chemicals that may have the potential to cause harm to the environment or human health. Under the CMP, the identification of risk assessment priorities (IRAP)1 approach is the ongoing prioritization activity for systematically collecting, consolidating and analyzing information for chemicals and polymers.

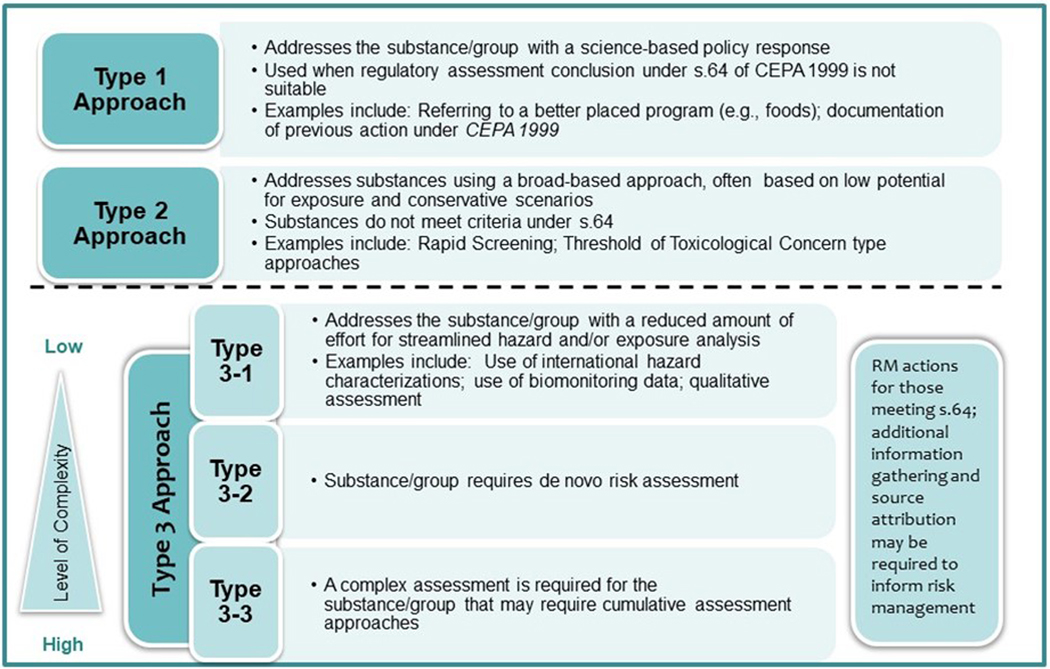

Figure 2. Chemicals Management Plan Risk Assessment Toolbox (Health Canada, 2016).

Given the ambitious timelines and number of chemicals to be assessed and addressed, an important element in Health Canada’s success to date has been the development and application of the CMP Risk Assessment Toolbox (Figure 2). This Toolbox was developed to delineate the various types of approaches used to address the remaining substances or groups of substances prioritized under the CMP. To make best use of available information, gain efficiencies and ensure the ability to focus on substances of highest concern, the Risk Assessment Toolbox outlines three types of approaches that can be selected as appropriate and used in a fit-for-purpose manner based on the complexity of the assessment required (Health Canada, 2016):

Type 1 Approaches use science-based policy responses such as referral of the assessment to a better-placed federal risk assessment program or documentation that the substance has already been addressed by an existing action or previous initiative under CEPA.

Type 2 Approaches address substances using broad-based quantitative or qualitative approaches and apply conservative (protective) assumptions. Formal CEPA conclusions may or may not be made under section 64.

Type 3 Approaches are applied for substances requiring a standard risk assessment approach including both hazard and exposure and may consider qualitative and quantitative lines of evidence in determining whether the substance or group of substances meets the criteria under section 64 of CEPA 1999. Further, the Toolbox proposes three approach subtypes spanning a continuum of complexity and methodology considerations in order to focus the risk assessment efforts.

Select examples of the types of approaches are noted in Figure 2 and the scientific details are described in the published CMP Science Approach Documents (SciADs). More information on the approaches, application and results, including the Threshold of Toxicological Concern, Ecological Risk Classification, and biomonitoring-based approaches can be found on the Chemical Substances webpage (Health Canada, 2020)2.

As the Government of Canada embarks on the next phase of its chemicals assessment and management program, new approach methods (NAMs) are being considered and developed for inclusion in the CMP Risk Assessment Toolbox, particularly for Type 2 and 3 approaches, to rapidly and effectively identify the potential for risk in support of the 21st century paradigm shift in risk science. To date, there has been a high degree of success in advancing the use of new technologies and analytical tools through several case studies that have illustrated the practical and positive impacts of integrating multiple lines of evidence, including emerging science. A solid foundation and proof of concept have been established for the application of several important NAMs and computational toxicology tools, including the example presented at the workshop on the use of Integrated Approaches for Testing and Assessment (IATA) for screening level risk assessment (Webster et al. 2019).

As Health Canada continues to advance chemicals assessment and management, NAMs will be considered in the evolving risk assessment toolbox and incorporated into decision-making through the application of robust methodologies that are context-specific and fit-for-purpose.

3.1.4. ANSES

Over the period 2015–2016, the French Agency for Food, Environmental and Occupational Health & Safety, ANSES (Agence nationale de sécurité sanitaire de l’alimentation, de l’environnement et du travail), convened an expert panel to provide a critical review and advice on best practices in evaluation of the weight of evidence (“le poids des preuves”) in the hazard identification step of the risk assessment process. ANSES is somewhat unique in this regard due to the breadth of its mandate. It sought to harmonize the application of weight-of-evidence concepts across multiple hazard domains, including public and occupational exposures to chemical hazards, radiation hazards, nutrients and microbial hazards, but all within the hazard identification stage. In this way, questions such as “Does exposure to this chemical cause cancer?” and “Are these particular prions transmissible to humans?” would be answered in a rigorous and harmonized manner. The result of the process was a report with several findings and recommendations (Makowski et al., 2016).

Following the literature review, the panel described a four-step process that is similar in general structure to other frameworks in the literature (planning, evaluation of lines of evidence, integration of lines of evidence and reporting on the overall weight-of-evidence). The panel’s recommendations to ANSES were described in line with this framework. Further work by ANSES has included, among other activities, consideration of the role of quantitative approaches to weight-of-evidence.

3.1.5. IARC

Several national and international health agencies have established programs with the aim of identifying agents and exposures that cause cancer in humans. The IARC Monographs on the Identification of Carcinogenic Hazards to Humans are published by the IARC, the cancer research arm of the World Health Organization (WHO). Each IARC Monograph represents the consensus of an international working group of expert scientists. The Monographs include a critical review of the pertinent peer-reviewed scientific literature as the basis for an evaluation of the weight of the evidence that an agent may be carcinogenic to humans. Published continuously since 1972, the IARC Monographs have expanded in scope beyond chemicals to include complex mixtures, occupational exposures, lifestyle factors, physical and biologic agents, and other potentially carcinogenic exposures. To date, 120 IARC Monograph Volumes are available on-line3 and four more are in preparation. More than 1000 agents, mixtures, and exposures have been evaluated. Among these, 120 have been characterized as carcinogenic to humans, 82 as probably carcinogenic to humans, and 311 as possibly carcinogenic to humans.

From the very beginning of the monographs program, there have been two criteria for consideration of an agent for evaluation: (a) there is evidence of human exposure and (b) there are published scientific data suggestive of carcinogenicity. For each agent considered, systematic reviews of the available scientific evidence on its carcinogenicity in humans and in experimental animals are conducted by an international working group of independent experts. Data on human exposure to the agent and toxicological data on pertinent mechanisms of carcinogenesis are also reviewed. An overall evaluation that integrates epidemiological and experimental cancer data as well as mechanistic evidence, most notably in exposed humans, is reached according to a structured process. Agents with ‘sufficient evidence of carcinogenicity’ in humans are assigned by default to the highest category, ‘carcinogenic to humans’ (IARC Group 1) whereas the categories of ‘probably’ (Group 2A) or ‘possibly’ (Group 2B) carcinogenic to humans, or ‘not classifiable as to its carcinogenicity to humans’ (Group 3) are assigned according to the combined strength of the human, animal and mechanistic evidence. Agents may be placed in a higher category when the evidence for a relevant mechanism of carcinogenesis is sufficiently strong. The IARC Monographs classifications refer to the strength of the evidence for a cancer hazard, rather than to the quantitative level of cancer risk. The IARC Monographs integrate the three streams of evidence on cancer in humans, cancer in experimental animals and mechanistic data into an overall evaluation of the strength of evidence in terms of a hazard identification on the carcinogenicity of an agent. Identification and critical appraisal of the published literature includes both exposure and (when possible) exposure-response characterization, supporting both hazard identification and characterization. 
In addition to identifying cancer hazards, the IARC Monographs may include quantitative statements about the level of cancer risk, as with Monograph Volume 114 on the consumption of red and processed meat and Monograph Volume 120 on benzene.

The Preamble to the IARC Monographs describes the objective and scope of the Programme, the scientific principles and procedures used in developing a Monograph, the types of evidence considered, and the scientific criteria that guide the evaluations. The IARC Monographs are prepared according to principles of scientific rigour, impartial evaluation, transparency, and consistency. The criteria defining those principles evolved during the early years of the Programme and were outlined in the first Preamble (IARC, 1978), which has been refined and updated a dozen times since. In the recently revised Preamble (IARC, 2019), mechanistic evidence has gained in prominence and in relevance to cancer hazard evaluation. One of the important changes in the new Preamble is the introduction of systematic review of mechanistic data, facilitated by its organization into Key Characteristics (Smith et al., 2016), which has been common practice since Monograph Volume 112. Further discussion of the evolution of the Preamble and other important changes incorporated into the most recent update is given by Samet and colleagues (2019). In their account of the history of the Preamble to the IARC Monographs, Baan & Straif (2022) note that new approach methodologies, discussed below, have the potential to reduce or avoid the use of experimental animals in future evaluations conducted by the IARC.

3.2. New Approach Methodologies

Following publication of the US National Research Council report, Toxicity Testing in the 21st Century: A Vision and A Strategy (NRC, 2007), there has been increasing emphasis on alternatives to animal testing in toxicological risk assessment (Bolt, 2019; Perkins et al., 2019a,b; Price et al., 2020). A mid-term update on progress made towards the realization of this vision over the original 20-year planning horizon has recently been prepared by Krewski and colleagues (2020). The broad suite of tools and strategies offering viable alternatives to animal testing is now referred to as new approach methodologies, or NAMs.

The US EPA (2018, p. 6) describes the term ‘NAM’ as a “descriptive reference to any technology, methodology, approach, or combination thereof that can be used to provide information on chemical hazard and risk assessment that avoids the use of intact animals”. US EPA (2019a) has developed a list of NAMs considered potentially relevant for evaluating chemical toxicity under the Toxic Substances Control Act. These new approaches can be broadly classified as including:

computational toxicology and bioinformatics;

high-throughput screening methods;

testing of categories of chemical substances;

tiered testing strategies;

in vitro studies;

systems biology; and

new or revised methods from validation bodies such as ICCVAM, ECVAM, NICEATM, and OECD4.

The US EPA National Center for Computational Toxicology (NCCT) has recently developed a roadmap outlining an approach for making greater use of NAMs (Thomas et al., 2019). Key elements of the EPA CompTox Blueprint include an emphasis on computational modeling and high-throughput approaches to supplement traditional approaches in chemical assessments for regulatory decision-making. On December 17, 2019, EPA hosted its First Annual Conference on the State of the Science on Development and Use of NAMs for Chemical Safety Testing (EPA, 2019b). The US National Toxicology Program has developed a listing of alternative test methods currently accepted by US regulatory agencies (US NTP, 2020).

In administering the European Union’s REACH (Registration, Evaluation, Authorization and Restriction of Chemicals) regulation (Armstrong et al., 2020), the European Chemicals Agency (ECHA, 2020) relies heavily on test guidelines that have been validated in accordance with guidance provided by the Organization for Economic Cooperation and Development5, including alternative test methods. The OECD Toolbox provides a rich suite of alternative computational tools for evaluating quantitative structure-activity relationships (OECD, 2020). Alternative test methods are an important component of REACH dossiers submitted by registrants: of 6,290 substances evaluated within REACH, some 89% were found to include at least one data endpoint based on alternative test methods (ECHA, 2017). Alternative test methods considered by US EPA include alternatives that have been validated by OECD, such as the Bacterial Reverse Mutation Test (OECD Test Guideline 471) and the Performance-Based Test Guideline for Human Recombinant Estrogen Receptor (hrER) in vitro Assays (OECD TG 493).

Andersen et al. (2019) have suggested a multi-level strategy for incorporating NAMs into toxicological risk assessment practice, seeking to deploy new methods in a context-specific manner. Level 1 in the proposed strategy focuses on computational screening, with quantitative structure-activity relationships (QSAR)/read across, cheminformatics, and threshold of toxicological concern approaches used to assess bioactivity and high-throughput exposure modeling approaches used to evaluate potential human exposure. Level 2 relies on high-throughput in vitro screening, using transcriptomics, high-content imaging and bioinformatics along with judiciously chosen test batteries, to evaluate bioactivity. Refined exposure models may be used, along with high-throughput in vitro to in vivo extrapolation (HT-IVIVE), to estimate human doses. Level 3 invokes fit-for-purpose assays for bioactivity, including lower throughput cell-based assays and consideration of metabolism. More specific exposure models are employed, along with quantitative in vitro to in vivo extrapolation (q-IVIVE). Level 4 employs more complex in vitro assays for bioactivity, advanced systems such as organ chips, and tailored in vivo studies to confirm in vitro results. Tailored exposure models may also be employed at this level, including physiologically based pharmacokinetic (PBPK) models for in vivo species extrapolation.
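The reverse-dosimetry idea that underlies HT-IVIVE can be sketched in a few lines of Python. The sketch assumes linear kinetics, so that steady-state plasma concentration scales proportionally with dose. The function name, parameter names and numerical values are hypothetical and are not taken from Andersen et al. (2019).

```python
def administered_equivalent_dose(ac50_um: float, css_um_per_unit_dose: float) -> float:
    """Estimate the dose (mg/kg/day) predicted to produce a steady-state plasma
    concentration equal to an in vitro bioactive concentration.

    ac50_um: in vitro concentration producing half-maximal activity (uM).
    css_um_per_unit_dose: modelled steady-state plasma concentration (uM)
        resulting from a constant dose of 1 mg/kg/day.
    Assumes linear kinetics, so concentration is proportional to dose.
    """
    if css_um_per_unit_dose <= 0:
        raise ValueError("steady-state concentration must be positive")
    return ac50_um / css_um_per_unit_dose


# Made-up inputs for illustration only.
aed = administered_equivalent_dose(ac50_um=3.0, css_um_per_unit_dose=1.5)
print(f"Administered equivalent dose: {aed:.2f} mg/kg/day")
```

In practice the steady-state concentration would itself come from a toxicokinetic model, and its uncertainty would carry through to the estimated dose.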

As envisaged by Andersen et al. (2019), level 1 approaches may be most useful in the context of priority setting, with level 2 approaches more suited to screening level assessments. Level 3 approaches afford greater insight into toxicity pathways and are consistent with the vision put forward by the US National Research Council (NRC, 2007) for toxicity testing in the 21st century (TT21C). When required, level 4 approaches may provide additional data using more integrated assays at the biological system level. At each level, margins of exposure (MOEs) based on a comparison of predicted human doses to doses at which bioactivity is expected provide valuable information in support of context specific risk decisions.
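As a rough illustration of the margin-of-exposure comparison described above, the following Python sketch divides a bioactivity-based dose by a predicted human exposure and flags substances whose margin falls below a cut-off. The substance names, doses and the cut-off of 100 are invented for illustration; an appropriate cut-off depends on the decision context.

```python
def margin_of_exposure(bioactive_dose: float, predicted_exposure: float) -> float:
    """Ratio of the dose at which bioactivity is expected to the predicted
    human dose. Both values must be in the same units (e.g. mg/kg/day)."""
    if predicted_exposure <= 0:
        raise ValueError("predicted exposure must be positive")
    return bioactive_dose / predicted_exposure


def flag_low_margins(substances: dict, cutoff: float) -> list:
    """Return (name, MOE) pairs with MOE below the cut-off, smallest first.

    substances maps a name to a (bioactive_dose, predicted_exposure) tuple.
    """
    margins = {name: margin_of_exposure(dose, exposure)
               for name, (dose, exposure) in substances.items()}
    flagged = [(name, moe) for name, moe in margins.items() if moe < cutoff]
    return sorted(flagged, key=lambda item: item[1])


# Hypothetical substances: (bioactive dose, predicted exposure) in mg/kg/day.
substances = {
    "substance A": (8.0, 0.25),
    "substance B": (50.0, 0.125),
    "substance C": (1.0, 0.5),
}
flagged = flag_low_margins(substances, cutoff=100.0)
for name, moe in flagged:
    print(f"{name}: MOE = {moe:g}")
```

Sorting by the smallest margin first mirrors the priority-setting use of level 1 and level 2 approaches, where substances with the narrowest margins would be examined first.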

The OECD IATA Case Studies Project includes a successful application of NAMs in risk assessment in which performance-based approaches were used to assess the reliability and accuracy of in vitro predictions (OECD 2019a,b). Specifically, estrogen receptor bioactivity models based on 18 HTS assays achieved a balanced accuracy of 86–95% across 43 reference chemicals; similar validation exercises for androgen receptor activity and anti-androgen activity also produced strong measures of validation performance. Encouraged by the success of these in vitro approaches, the OECD has published three guidance documents on incorporating NAMs into integrated assessment and testing approaches.

New approaches to risk decision making are increasingly being used by regulatory authorities worldwide, motivated in large part by the need for increased throughput in risk decision making. The US EPA used Attagene assays including multiple gene targets such as PPARα and NRF2 to rapidly evaluate the relative toxicity of eight dispersants in response to the Deepwater Horizon oil spill in the Gulf of Mexico in 2010; this represents an early practical application of NAMs in emergency risk decision-making by the US EPA (Anastas, Sonich-Mullin, & Fried, 2010). In February 2019, the Methodology Working Group of the Scientific Committee on Consumer Safety organized a workshop to discuss the use of NAMs to advance “Next Generation Risk Assessment” in the context of animal-free safety testing of cosmetic ingredients in Europe (Rogiers et al., 2020). With the diverse set of NAMs currently available, there are unprecedented opportunities to apply new high- and medium-throughput assays in support of human health risk assessment.

As an additional example, Gannon et al. (2019) conducted a tiered evaluation of hexabromocyclododecane (HBCD), a widely used flame retardant. This analysis incorporated ToxCast™ HTS data and in vitro-in vivo extrapolation, or IVIVE (Tier 1), rat liver transcriptomic data (Tier 2), and conventional rat bioassay data (Tier 3). Toxicity pathway perturbations were closely aligned between Tiers 1 and 2, and consistent with apical effects seen in Tier 3. Bioactivity-exposure ratios (BERs), calculated as the ratio of levels (expressed in human dose equivalents) at which biological activity was seen in these tiered assays to Canadian exposure levels, were smallest in Tier 1 and similar in Tiers 2 and 3.
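The arithmetic behind a bioactivity-exposure ratio can be sketched in a few lines of Python. The fragment below is only an illustration: the tier doses, the exposure estimate, and the function name `bioactivity_exposure_ratio` are hypothetical placeholders and are not taken from Gannon et al. (2019).

```python
# Hypothetical illustration of a bioactivity-exposure ratio (BER) by tier.
# None of the numbers below come from Gannon et al. (2019).

def bioactivity_exposure_ratio(bioactive_dose: float, exposure: float) -> float:
    """Ratio of a bioactive dose to an exposure estimate (same units, e.g. mg/kg bw/day)."""
    if bioactive_dose <= 0 or exposure <= 0:
        raise ValueError("doses and exposures must be positive")
    return bioactive_dose / exposure

# Assumed lowest bioactive dose per tier, expressed as human dose equivalents.
tier_doses = {
    "Tier 1 (HTS + IVIVE)": 0.5,
    "Tier 2 (transcriptomics)": 4.0,
    "Tier 3 (rat bioassay)": 5.0,
}
exposure_estimate = 1e-4  # assumed population exposure, same units as the doses

bers = {tier: bioactivity_exposure_ratio(dose, exposure_estimate)
        for tier, dose in tier_doses.items()}

# The tier with the smallest BER is the most conservative basis for a decision.
most_conservative_tier = min(bers, key=bers.get)

for tier, ber in bers.items():
    print(f"{tier}: BER = {ber:,.0f}")
print("Smallest BER:", most_conservative_tier)
```

A smaller BER means less separation between bioactive doses and exposure, so in this toy example the Tier 1 result would drive a screening-level decision.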

Other comparisons of indicators of toxicity used in regulatory risk assessment between alternative and traditional test methods include benchmark dose estimates reported by Thomas et al. (2012), who showed good agreement between transcriptomic- and bioassay-based BMDs in a pilot study of five chemicals. More recently, Paul Friedman et al. (2020) conducted a comparison of points of departure (PODs) for 448 chemicals based on quantitative high-throughput screening (qHTS) predictions of bioactivity, denoted PODNAM, with results from mammalian toxicity tests, denoted PODtraditional. The 95% lower confidence limit (LCL) on the PODNAM was less than the PODtraditional for the great majority of these chemicals, suggesting that qHTS-based exposure guidelines are likely to afford at least as much public health protection as exposure guidelines based on traditional animal tests.

The elaboration of strategies for deploying new approach methodologies in a systematic manner, such as that suggested by Andersen et al. (2019), will be of great value in choosing the most appropriate approaches to employ within specific risk decision contexts. While such efforts are both needed and welcome, the increased use of NAMs by regulatory authorities will also require new thinking on how to incorporate new types of data into weight of evidence evaluations.

3.3. Trade-offs between Cost, Timeliness and Uncertainty Reduction in Toxicity Testing

A fundamental tenet of the vision for the future of toxicity testing advanced by the NRC (2007) was the need for more rapid toxicity testing strategies in order to expand the coverage of the large number of chemicals for which toxicological data is lacking. This need was underscored by the NRC (2009), which recommended the adoption of formal value-of-information (VOI) methods to evaluate alternative testing strategies. Price et al. (2021) and Hagiwara et al. (2022) have recently developed informal and formal VOI methods to evaluate the trade-offs among cost, timeliness, and uncertainty reduction. These contributions suggest that while rapid alternative test methods may be subject to greater uncertainty than traditional more expensive animal tests of longer duration, having sufficient information to support risk decision making in a timely manner can result in a lower total social cost by avoiding public health impacts that can accrue when decisions are delayed pending the results of toxicity tests of longer duration. Although further VOI analyses are needed to reaffirm these findings under broader real-world toxicity testing scenarios, initial results appear to be supportive of alternative test methods that can provide cost-effective toxicity data in a timely manner.
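The trade-off described above can be illustrated with a toy expected-cost comparison. The Python sketch below is not the model of Price et al. (2021) or Hagiwara et al. (2022); it simply assumes that total social cost is the sum of the direct testing cost, a cost that accrues for each year a decision is delayed, and the expected cost of a wrong decision made under residual uncertainty. Every number, and the `TestingStrategy` class itself, is a hypothetical placeholder.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TestingStrategy:
    name: str
    test_cost: float         # direct cost of testing (assumed, in millions)
    years_to_result: float   # delay before a decision can be made
    p_wrong_decision: float  # assumed chance that residual uncertainty leads to a wrong decision

def expected_total_cost(strategy: TestingStrategy,
                        annual_cost_of_delay: float,
                        cost_of_wrong_decision: float) -> float:
    """Testing cost + cost of delay + expected cost of a wrong decision."""
    return (strategy.test_cost
            + strategy.years_to_result * annual_cost_of_delay
            + strategy.p_wrong_decision * cost_of_wrong_decision)

# Hypothetical strategies: a rapid, more uncertain test versus a slow, more certain one.
rapid = TestingStrategy("rapid in vitro battery", 0.1, 0.5, 0.20)
traditional = TestingStrategy("chronic animal bioassay", 2.0, 4.0, 0.05)

costs = {s.name: expected_total_cost(s, annual_cost_of_delay=1.0,
                                     cost_of_wrong_decision=10.0)
         for s in (rapid, traditional)}
preferred = min(costs, key=costs.get)

for name, cost in costs.items():
    print(f"{name}: expected total cost = {cost:.2f} million")
print("Lower expected cost:", preferred)
```

With these assumed inputs the rapid strategy has the lower expected cost despite its higher error probability, because the cost of delay dominates. Different inputs can reverse the ranking, which is why case-specific analysis is needed.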

4. Defining the Research Question

Formulating a clear and actionable research question creates structure in the approach to conducting systematic reviews and developing health guidance (Guyatt, Oxman, Kunz, Atkins, et al., 2011). Within the field of risk assessment, this question may be tailored for studies of exposure as a PECO question, which is used to outline the Population, Exposure, Comparator, and Outcomes (Morgan, Whaley, Thayer, & Schunemann, 2018). Guidance has been provided to help users operationalize the PECO question, as this informs many stages of the evidence review and quality assessment of the findings (Section 7). The population may be defined based upon particular characteristics relevant to the exposure or outcome of interest, including geographic, demographic, socioeconomic, or genetic and biological factors. Approaches for identifying the exposure and comparator are discussed by Morgan and colleagues (2018). Research question formulation may also benefit from consideration of the FINER criteria (feasible, interesting, novel, ethical and relevant) (Farrugia et al., 2010).

In designing a systematic review, it is important to avoid the risk of formulation bias. Researchers should clearly identify the objectives of the review, which in turn should clearly reflect the ultimate use of the review in risk decision-making (Farhat et al. 2022). Phrasing of the problem and definition of the search parameters and selection criteria a priori should help limit formulation bias. Many risk assessment frameworks (e.g., Krewski et al., 2014; NRC, 2009; Paoli et al., 2022) emphasize the importance of problem formulation, including consideration of value of information to ensure that information collected is informative with respect to the objective of the assessment.

5. Assembling the Evidence

Because of the diversity of evidence that could be considered in risk decision-making, it is essential to have structured approaches for identifying and summarizing all relevant information. Systematic review provides a powerful approach to meet this need. Guidelines for systematic review of clinical data have been established by the Cochrane Collaboration, including the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines (Moher, Liberati, Tetzlaff, & Altman, 2009). Although guidelines for best practices in systematic review were developed first for summarizing clinical evidence, similar guidelines are now being developed for other sources of evidence, including toxicology and epidemiology. An overview of best practices in systematic review is provided in a separate article in this issue (Farhat et al., 2022).

Within the field of environmental health in particular, there is a clear need for rigorous systematic review methodologies. Although outside the scope of the present report, less intensive expedited or rapid reviews (see Farhat et al., 2022) may be conducted when time and resources do not permit the completion of a comprehensive systematic review. Review of the scientific evidence plays a critical role in decision-making about exposures to environmental chemicals by local and federal government agencies. However, challenges exist where there are large data sets, variable study quality, conflicting evidence, or limited information, all of which impede evidence integration and the drawing of final conclusions. Approaches used to assemble and synthesize evidence have evolved over the last three decades, with expert judgement increasingly supported by guidance developed by national and international organizations. Improved methods of chemical assessment that better reflect scientific knowledge have been articulated by the National Research Council in several recent reports (NRC, 2008, 2009, 2014a, 2014b), in particular identifying systematic review as an approach that could substantially improve the processes used to inform policy- and decision-making regarding environmental chemicals.

Several systematic approaches have been evolving and undergoing applications to chemical assessment at the National Toxicology Program (Rooney, Boyles, Wolfe, Bucher, & Thayer, 2014) and U.S. EPA (NRC, 2014a, 2014b). One novel approach to systematic review in environmental health is the Navigation Guide, developed in 2009 through a collaboration between academic scientists and clinicians with the goal of expediting the development of evidence-based recommendations for preventing harmful environmental exposures (Woodruff & Sutton, 2011). The Navigation Guide was developed by drawing from the rigor of systematic review methods used in the clinical sciences with modifications allowing for the unique challenges faced with evidence streams specific to environmental health (i.e., animal toxicology and human epidemiology data).

The Navigation Guide has been applied in five published case studies as proof-of-concept (Johnson et al., 2016; Johnson et al., 2014; Koustas et al., 2014; Lam et al., 2014; Lam et al., 2017; Lam et al., 2016; Vesterinen et al., 2015) (Table 1). These case studies were some of the first to demonstrate that systematic and transparent review approaches in environmental health were not only achievable but also advantageous over existing methodologies such as narrative reviews.

Table 1. Five case studies of the Navigation Guide

| Citation | Case study | Evidence streams | Findings |
|---|---|---|---|
| Johnson et al. 2014; Koustas et al. 2014; Lam et al. 2014 | Developmental exposure to perfluorooctanoic acid (PFOA) and fetal growth outcomes | Human and animal | Rated 18 epidemiology studies and 21 animal toxicology studies. Both evidence streams were rated as “moderate” quality and “sufficient” strength, leading to a final conclusion that PFOA was “known to be toxic” to human reproduction and development. |
| Vesterinen et al. 2015 | Association between fetal growth and maternal glomerular filtration rates | Human and animal | Rated 31 human and non-human observational studies as “low” quality and two experimental non-human studies as “very low” quality. All three evidence streams were rated as “inadequate.” There was insufficient evidence to support the plausibility of a reverse causality hypothesis for associations between environmental exposures during pregnancy and fetal growth. |
| Johnson et al. 2016 | Exposure to triclosan and human development or reproduction | Human and animal | Rated three human studies and eight experimental animal studies in rats reporting hormone concentration outcomes (thyroxine levels). Human studies were rated as “moderate/low” and animal studies were rated as “moderate.” There was “sufficient” non-human evidence and “inadequate” human evidence, leading to the conclusion that triclosan was “possibly toxic” to reproductive and developmental health. |
| Lam et al. 2016 | Exposure to air pollution and Autism Spectrum Disorder (ASD) | Human only | Rated 23 epidemiology studies. Evidence was rated as “moderate” quality, leading to the conclusion that there was “limited evidence of toxicity” between exposure to air pollution and ASD diagnosis. |
| Lam et al. 2017 | Developmental exposure to polybrominated diphenyl ethers (PBDEs) and IQ/ADHD outcomes | Human only | Rated 10 epidemiology studies for intelligence outcomes and 9 studies for ADHD outcomes. Evidence was rated as “moderate” quality with “sufficient” evidence for IQ outcomes and as “moderate” quality with “limited” evidence for ADHD outcomes. |

The Navigation Guide systematic review methodology involves three main steps:

1) Specify the study question: Frame a specific research question relevant to decision-makers about whether human exposure to a chemical or other environmental exposure is a health risk.

2) Select the evidence: Conduct and transparently document a systematic search for published and unpublished evidence.

3) Rate the quality and strength of the evidence: Rate the potential risk of bias (i.e., internal validity) of individual studies and the quality/strength of the overall body of evidence based on prespecified and transparent criteria (typically outlined in a pre-published, publicly available protocol). The Navigation Guide methodology conducts this process separately by evidence stream (i.e., human and animal evidence). Ultimately, evidence is combined by integrating the quality ratings of each of these two evidence streams. The end result is one of five possible statements about the overall strength of the evidence: “known to be toxic,” “probably toxic,” “possibly toxic,” “not classifiable,” or “probably not toxic.”

To date, application of the Navigation Guide has been restricted to human and animal data (Woodruff & Sutton, 2014). Future iterations of the guide could benefit from extensions to include consideration of alternative test data derived from new approach methodologies.

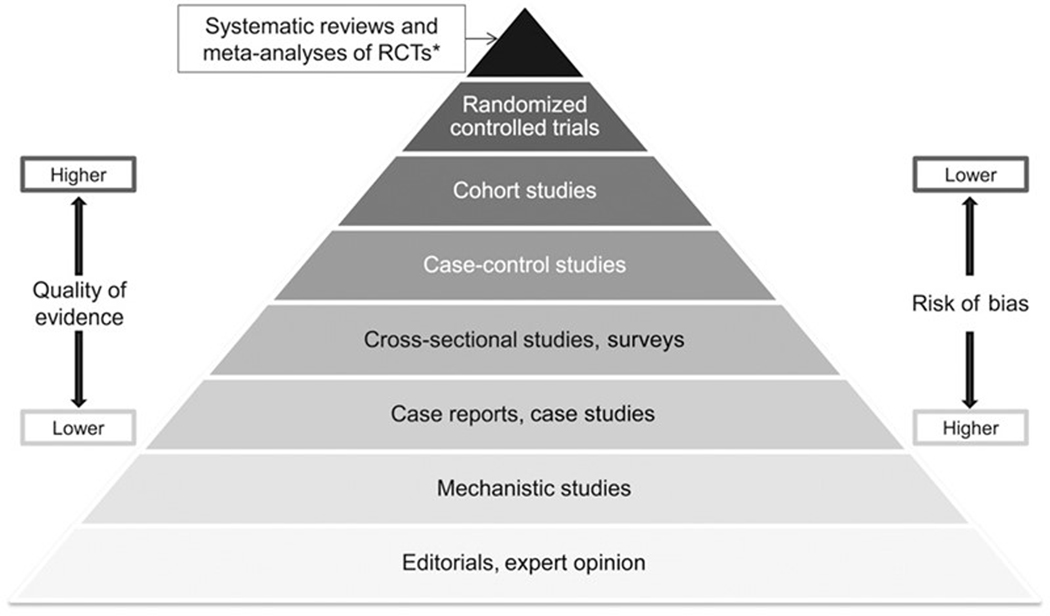

Although various authors have suggested different data hierarchies for use in evidence integration (Burns, Rohrich, Chung, 2012; Petrisor & Bhandari, 2007), consensus on a single hierarchy for application across diverse risk assessment contexts is lacking. As an example, Yetley et al. (2017) identified hierarchies of evidence considering sources of information to support the establishment of dietary reference intakes (DRIs) of nutrients present in the food supply. In this paradigm, well-conducted randomized clinical trials (RCTs) represent the ‘gold standard’ in terms of obtaining unbiased information directly in human populations. RCTs will not be available for most hazards of concern, though the hierarchy of evidence pyramid shown in Figure 3 also identifies other valuable sources of information that are frequently used in risk assessment applications. Other variations of this hierarchy have also been suggested, such as that discussed by Murad et al. (2016), which includes consideration of both study design and quality to allow for departures from a strict a priori hierarchy of evidence.

Figure 3. Hierarchy of evidence pyramid.

[adapted from Yetley et al. (2017)]

The emphasis on the application of systematic review to assemble the data needed to support evidence-based risk assessment is consistent with recommendations made by the US National Research Council (NRC, 2014a) as part of its review of the US EPA’s IRIS program. As indicated in Figure 1, adapted from the NRC review, systematic review represents a critical first step in assembling all human, animal, and mechanistic data relevant to the assessment of potential risks associated with environmental health hazards.

Additional guidance on the use of systematic review in risk assessment is available from numerous sources. Farhat and colleagues (2022) trace the evolution of incorporating systematic review into evidence-based risk assessment, present contemporary examples of the application of current methods in systematic review across different domains (including clinical, epidemiological, and toxicological applications), and provide a summary of available tools to support best practices in systematic review.

6. Synthesizing the Evidence

Once all relevant information has been assembled in a systematic review, this information needs to be synthesized, qualitatively and sometimes quantitatively. Qualitative synthesis involves a determination as to whether the exposure of interest constitutes a human health hazard. Within the context of evidence-based risk assessment, this is done using an appropriate evidence integration framework. Should a potential human health hazard be identified through the qualitative synthesis, the next step is to determine whether the available data are sufficient to support an evidence-based quantitative estimate of population health risk.

6.1. Qualitative Synthesis

A number of frameworks for specific types of risk have been developed by different authorities. IARC, for example, has elaborated and refined a well-known scheme for evaluating human carcinogenicity which, over the last 50 years, has led to the identification of 120 agents as known causes of human cancer. (To date, only one agent, caprolactam, of the more than 1,000 agents evaluated has been classified as being probably not carcinogenic to humans, demonstrating the well-known scientific challenges in establishing a negative outcome with high confidence.) It is important to recognize that the IARC framework for evaluating potential cancer risk to humans identifies cancer hazards, but generally does not result in a quantitative estimate of human cancer risk.

Schemes for evaluating non-cancer hazards have also been proposed by other authorities. Rhomberg and colleagues (2013) recently reviewed 50 frameworks in the literature. An update to this search from 2013 to 2018 is provided in Supplementary Material I; a number of these frameworks specifically address the incorporation of data derived from alternative test methods. Meanwhile, Martin and colleagues (2018) conducted a critical assessment of 24 approaches in an effort to develop a generalized approach: a major conclusion of this assessment was that incorporation of factors such as extent of prescription, relevance to the question of interest, and ease of implementation increases the transparency of the approach taken.

6.2. Quantitative Synthesis

In many cases, a quantitative estimate of risk will be needed to complete the risk assessment. In the past, quantitative estimates of risk have often been based on identifying a key study (or studies) that are amenable to fitting an appropriate dose-response or exposure-response model. [Although outside the scope of this report, sophisticated analytical techniques, such as Bayesian model averaging (Thomas et al., 2007), can be used to incorporate results from multiple exposure–response models that are compatible with the data.] These models can then be used to develop projections of potential population health risk and associated uncertainty under specified exposure scenarios. These models can also be used to identify a point of departure (PoD) on the dose-response curve that can be used to establish a reference value (RfV) or other toxicity benchmark to serve as a guideline for human exposure (EPA, 2012). The PoD can also be used to establish a margin of exposure (MoE) reflecting the ratio between the toxicity benchmark and estimated or predicted human exposure levels (Thomas et al., 2013).
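As a hedged illustration of how a fitted dose-response model leads to a PoD, a reference value, and a margin of exposure, the Python sketch below assumes a simple quantal-linear model in which extra risk is 1 − exp(−slope × dose). The slope, the benchmark response, the composite uncertainty factor, and the exposure level are all hypothetical. The benchmark dose itself is used as the PoD for simplicity, whereas regulatory practice commonly uses its lower confidence limit.

```python
from math import exp, log

def extra_risk(dose: float, slope: float) -> float:
    """Extra risk over background under an assumed quantal-linear model."""
    return 1.0 - exp(-slope * dose)

def benchmark_dose(bmr: float, slope: float) -> float:
    """Dose at which extra risk equals the benchmark response (BMR)."""
    if not 0.0 < bmr < 1.0 or slope <= 0.0:
        raise ValueError("bmr must lie in (0, 1) and slope must be positive")
    return -log(1.0 - bmr) / slope

def reference_value(pod: float, uncertainty_factor: float) -> float:
    """Reference value: PoD divided by a composite uncertainty factor."""
    return pod / uncertainty_factor

def margin_of_exposure(pod: float, exposure: float) -> float:
    """Margin of exposure: PoD divided by estimated human exposure."""
    return pod / exposure

# Hypothetical inputs (doses in mg/kg bw/day).
slope = 0.02        # assumed fitted slope parameter
bmr = 0.10          # 10% extra risk
pod = benchmark_dose(bmr, slope)
rfv = reference_value(pod, uncertainty_factor=100.0)
moe = margin_of_exposure(pod, exposure=0.01)

print(f"PoD (BMD at {bmr:.0%} extra risk): {pod:.3f}")
print(f"Reference value: {rfv:.5f}")
print(f"Margin of exposure: {moe:.1f}")
```

Other model forms would need a numerical root-finder to solve for the benchmark dose; the closed form here is specific to the assumed quantal-linear model.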

When multiple studies with quantitative information on dose-response are available, it may be possible to combine the results of these studies. As discussed below, combined analysis of the primary raw data may be possible when the study designs are compatible. When the primary raw data are not accessible for analysis, meta-analysis of summary risk estimates from the individual studies is often done. Another potentially useful approach to combining data involving different toxicological endpoints is categorical regression. Each of these approaches is described briefly below.

6.2.1. Combined Analysis

When access to the primary raw data from a series of related studies is available, a combined analysis of the raw data can be conducted. Having access to the raw data affords maximum flexibility in modelling exposure-response relationships across the studies being combined, as well as an opportunity to evaluate the effects of potential modifying factors included in the original studies. For example, Krewski and colleagues (2006) conducted a combined analysis of the primary raw data from a series of case–control studies on residential radon and lung cancer risk, demonstrating for the first time a strong association between radon and lung cancer in residential settings.

6.2.2. Pooling Epidemiological Data

There are various methods for pooling data from epidemiological studies, each with its own strengths and limitations. Combining primary data from individual studies to yield a large dataset (referred to as pooled analysis when used in epidemiological studies) has many advantages. In particular, the increase in sample size allows for more precise calculations of risk estimates (Tobias, Saez, & Kogevinas, 2004). The large sample size can also improve the statistical power to allow the assessment of risks in specific subgroups or restricted subsets of data that would not be possible in smaller data sets of individual studies. These strengths of pooling data make it appealing when investigating effects of rare exposures or risk factors of diseases that have long induction periods, such as cancer (Cardis et al., 2011; Fehringer et al., 2017; Felix et al., 2015; Gaudet et al., 2010; Kheifets et al., 2010; Peres et al., 2018; Wyss et al., 2013).

Although pooling primary sources of data can be expensive and time-consuming, and requires data-sharing agreements and the cooperation of investigators from multiple study centers, the approach avoids several limitations of meta-analyses based on published findings. Pooling primary data can allow investigators to draw a broader range of conclusions than meta-analyses that are based on published study findings (Checkoway, 1991). This approach also has other advantages: it allows unified inclusion criteria and variable definitions to be applied across the centers, and it allows the same statistical model to be used on all of the combined data. This is particularly important since individual studies commonly adjust for different confounders in their analysis (Friedenreich, 1993). Standardizing the methods used reduces potential sources of heterogeneity across the studies. Finally, the large sample size allows examination of rare exposures and performing subgroup analyses that would not be feasible in individual studies due to low statistical power.

This type of analysis can be done retrospectively or prospectively. Prospective planning has the added advantage that it allows co-investigators to plan ahead and ensure uniform methods are used for data collection and reporting (Blettner, Sauerbrei, Schlehofer, Scheuchenpflug, & Friedenreich, 1999). Nevertheless, many pooled analyses have been conducted retrospectively after individual studies had reported their findings.

For example, as mentioned above, Krewski and colleagues (2006) retrospectively combined primary data from seven case-control studies in North America. Their pooled findings, based on 4,081 cancer cases, indicate an association between residential radon and lung cancer, although findings from the individual case-control studies had provided inconsistent evidence on the risks of lung cancer. The pooling of data further allowed analysis on subsets of the data with more complete radon dosimetry in the most critical exposure time windows. The investigators also performed dose–response analyses based on the histological type of lung cancer (Field et al., 2006). These analyses had not been possible when the data from each study were analysed individually.

Similar radon risk analyses were conducted by Darby and colleagues (2005) from 13 European case–control studies on 7,148 lung cancer cases. The large sample size achieved by pooling provided sufficient statistical power to detect moderate risks that could not be detected in individual studies.

To perform a pooled analysis of primary data from multiple studies, Friedenreich (1993) suggests the need for a strict protocol. Further, the author details eight steps to follow for pooling data and analysing the combined dataset: 1) identify relevant studies; 2) select (sufficiently similar) studies from which to pool data; 3) combine the data after obtaining each study's data from the original investigators; 4) estimate study-specific effects using logistic regression; 5) examine the homogeneity of study-specific effects; 6) estimate pooled effects (if study-specific effects are homogeneous); 7) explain heterogeneity between studies (if study-specific effects are not homogeneous); and 8) perform sensitivity analyses to examine the robustness of the pooled effects.
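Steps 4 and 6 of this sequence can be sketched with a deliberately simplified Python example. It assumes a single binary exposure and no covariates, in which case the odds ratio from a logistic regression equals the cross-product ratio of the 2×2 table, and it pools across studies with the Mantel-Haenszel estimator stratified by study. The counts are hypothetical, and the use of Mantel-Haenszel pooling is an assumption made here for illustration rather than a method prescribed by Friedenreich (1993).

```python
# Each table: (exposed cases, unexposed cases, exposed controls, unexposed controls).
# All counts are hypothetical.
studies = {
    "Study A": (40, 60, 30, 70),
    "Study B": (25, 75, 20, 80),
    "Study C": (60, 140, 45, 155),
}

def odds_ratio(a: int, b: int, c: int, d: int) -> float:
    """Study-specific odds ratio; equals exp(beta) from a logistic regression
    with one binary exposure and no other covariates."""
    return (a * d) / (b * c)

def mantel_haenszel_or(tables) -> float:
    """Pooled odds ratio stratified by study (Mantel-Haenszel estimator)."""
    numerator = sum(a * d / (a + b + c + d) for a, b, c, d in tables)
    denominator = sum(b * c / (a + b + c + d) for a, b, c, d in tables)
    return numerator / denominator

# Step 4: study-specific effects.
study_ors = {name: odds_ratio(*table) for name, table in studies.items()}

# Step 6: pooled effect, keeping each study as its own stratum.
pooled_or = mantel_haenszel_or(studies.values())

for name, value in study_ors.items():
    print(f"{name}: OR = {value:.2f}")
print(f"Pooled (Mantel-Haenszel) OR = {pooled_or:.2f}")
```

Stratifying by study, rather than collapsing all records into one table, guards against confounding by study. A real pooled analysis would also adjust for harmonized covariates and examine homogeneity (step 5) before reporting a pooled effect.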

While pooling of data can serve to both increase precision and support subgroup analyses that would not otherwise be possible in individual studies, inferences regarding causality (see section 2) will typically still require establishment of a plausible biological hypothesis, with consideration of mechanistic data, including data derived using NAMs.

6.2.3. Meta-Analysis

Meta-analysis has become a popular and useful technique for developing a more pragmatic estimate of risk by quantitatively combining compatible study-specific risk estimates. Meta-analysis requires that the designs for the studies being combined are reasonably compatible, and that the study results do not demonstrate a high degree of heterogeneity. While meta-analysis has been applied in toxicological risk scenarios, it is predominantly applied in cases where human data is available.

When pooling data, primary data from individual studies are combined to provide a much larger dataset that is then analysed to obtain an overall effect estimate. This is the main difference compared to meta-analyses, where effect estimates reported from individual studies are combined into one overall effect estimate. Meta-analyses are very common in epidemiology, and have many advantages including low associated costs, time efficiency, and the ability to provide an overall quantitative assessment of risk and uncertainty (Friedenreich, 1993). However, limitations do arise in instances where significant heterogeneity between studies is present due to variations in study design, eligibility criteria, exposure and outcome ascertainment and statistical analyses (Blettner et al., 1999). Meta-analyses are also limited by the information provided in the publication and may preclude dose–response analysis and specific subgroup analyses (Friedenreich, 1993; Tobias et al., 2004).
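The combination of published effect estimates can be sketched as follows. This Python example applies standard inverse-variance weighting on the log scale, with Cochran's Q, the I² statistic, and the DerSimonian-Laird estimate of between-study variance for a random-effects summary. The four study estimates are hypothetical, and the function names are illustrative rather than drawn from any particular package.

```python
from math import exp, log, sqrt

Z_95 = 1.959964  # standard normal quantile for a two-sided 95% interval

def se_from_ci(lower: float, upper: float) -> float:
    """Standard error of a log ratio estimate, recovered from its 95% CI."""
    return (log(upper) - log(lower)) / (2 * Z_95)

def meta_analyse(estimates):
    """estimates: list of (ratio, ci_lower, ci_upper) from individual studies."""
    y = [log(ratio) for ratio, _, _ in estimates]
    v = [se_from_ci(lo, hi) ** 2 for _, lo, hi in estimates]
    w = [1 / vi for vi in v]

    # Fixed-effect (inverse-variance) estimate and Cochran's Q.
    fixed = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, y))
    df = len(y) - 1

    # DerSimonian-Laird between-study variance, truncated at zero.
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0

    # Random-effects estimate with a 95% confidence interval.
    w_re = [1 / (vi + tau2) for vi in v]
    pooled = sum(wi * yi for wi, yi in zip(w_re, y)) / sum(w_re)
    se = sqrt(1 / sum(w_re))
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0

    return {
        "pooled_ratio": exp(pooled),
        "ci_lower": exp(pooled - Z_95 * se),
        "ci_upper": exp(pooled + Z_95 * se),
        "Q": q,
        "I2": i2,
        "tau2": tau2,
    }

# Hypothetical study-specific odds ratios with 95% confidence intervals.
hypothetical_studies = [
    (1.10, 0.85, 1.42),
    (1.35, 1.05, 1.74),
    (1.25, 1.00, 1.56),
    (1.60, 1.10, 2.33),
]
result = meta_analyse(hypothetical_studies)
print({key: round(value, 3) for key, value in result.items()})
```

Because only published summaries enter the calculation, the sketch also shows the limitation noted above: dose-response and subgroup analyses are possible only to the extent that the original publications report them.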

Recent examples of informative meta-analyses of epidemiological data include analyses of the association between exposure to diesel exhaust and lung cancer (Vermeulen et al., 2014) and analyses of the association between talc and ovarian cancer (Taher et al., 2019). Vermeulen and colleagues (2014) conducted a meta-analysis of three epidemiological studies of the association between occupational exposure to diesel exhaust emissions in the mining and trucking industries and lung cancer, using elemental carbon as an indicator of exposure to diesel exhaust. This analysis provides a possible approach to characterizing the exposure-response relationship between diesel exhaust and lung cancer risk (HEI Diesel Epidemiology Panel, 2015). Taher et al. (2019) conducted a meta-analysis of 27 case-control and cohort studies, with limited evidence of study heterogeneity, to estimate the odds ratio for ever use of talc to be 1.28 (95% CI: 1.20–1.37). An important component of this work was the conduct of a series of subgroup analyses, focusing on the nature of talc use, tumor characteristics and the possible effect of menopausal state, hormone use and pelvic surgery.

6.2.4. Categorical Regression

Categorical regression can be used to combine data from diverse sources, including different (both toxicological and epidemiological) types of studies and studies focusing on diverse health endpoints. This is done by developing a severity scoring system to place different adverse health outcomes on a common severity scale, following which categorical regression modelling of the severity scores can be done. In analyses involving both animal and human data, adjustments for inter-species differences and sensitivity can be included in the model.
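The model form underlying this approach can be illustrated with a cumulative-logit sketch in Python, in which the probability of observing an effect of at least a given severity increases with log dose. The intercepts and slope below are hypothetical values chosen for illustration, not fitted estimates, and the code is not the CatReg implementation. A full analysis would estimate the parameters by maximum likelihood and could add covariates such as species or exposure duration.

```python
from math import exp, log, log10

def expit(x: float) -> float:
    return 1.0 / (1.0 + exp(-x))

def logit(p: float) -> float:
    return log(p / (1.0 - p))

# Hypothetical parameters: one intercept per severity threshold (must decrease
# with severity so cumulative probabilities stay ordered) and a common slope.
INTERCEPTS = {1: -1.0, 2: -2.5, 3: -4.0}
SLOPE = 2.0  # change in log-odds per tenfold increase in dose

def prob_at_least(severity: int, dose: float) -> float:
    """P(severity score >= severity | dose) under the cumulative-logit model."""
    return expit(INTERCEPTS[severity] + SLOPE * log10(dose))

def category_probabilities(dose: float) -> list:
    """Probability of each severity category 0..3 at the given dose."""
    cumulative = [1.0] + [prob_at_least(s, dose) for s in sorted(INTERCEPTS)] + [0.0]
    return [cumulative[i] - cumulative[i + 1] for i in range(len(cumulative) - 1)]

def dose_for_probability(severity: int, target: float) -> float:
    """Dose at which P(severity score >= severity) equals the target probability."""
    return 10 ** ((logit(target) - INTERCEPTS[severity]) / SLOPE)

probs_at_10 = category_probabilities(10.0)
dose_10pct_sev2 = dose_for_probability(2, 0.10)

print("Category probabilities at dose 10:", [round(p, 3) for p in probs_at_10])
print(f"Dose giving a 10% chance of severity >= 2: {dose_10pct_sev2:.2f}")
```

Because every endpoint is first translated to the common severity scale, observations from different study types contribute to the same likelihood, which is what allows heterogeneous data to be combined in one model.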

The US EPA has invested considerable effort in developing a software package called CatReg to perform categorical regression (EPA, 2017). More recently, Milton and colleagues (2017a; 2017b) have extended the US EPA CatReg approach to permit modelling of U-shaped dose-response curves for essential elements that demonstrate toxicity due to both excess and deficiency: this modelling approach facilitates minimization of the joint risks of excess and deficiency after incorporating all relevant dose-response data within a single categorical regression model. Yetley and colleagues (2017) have identified categorical regression as a potentially useful tool for combining data from multiple sources in establishing DRIs for nutrients. To illustrate the use of categorical regression in practice, Farrell and colleagues (2022) provide a description of the application of this technique to rich datasets on two essential elements — copper and manganese — that include extensive human and animal data. Farrell and colleagues indicate how animal and human evidence on diverse endpoints associated with excess and deficiency of essential elements could be supplemented with alternative test data on the severity of biological changes occurring as upstream effects in adverse outcome pathways.

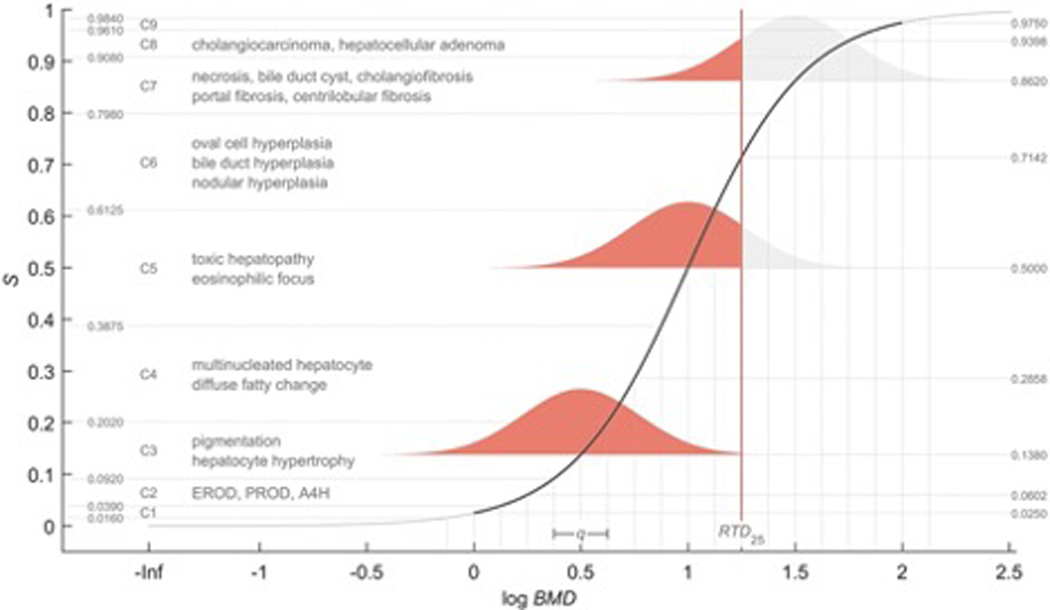

6.2.5. Combining Outcomes with Different Severities

Assessment of chemical hazards is based on specific critical health effect(s). As an extension, Sand and colleagues (2018) introduced a method for characterizing the dose-related sequence of the development of multiple (lower- to higher-order) toxicological health effects caused by a chemical. Although novel, the method proposed by Sand for combining data on different endpoints, like categorical regression, is not restricted to specific types of data, and can accommodate data from NAMs as well as traditional toxicological and epidemiological data. A “reference point profile” was defined as the relation between benchmark doses (BMDs) for selected health effects, and a standardized severity score determined for these effects (Figure 4). For a given dose of a chemical or mixture, the probability for exceeding the reference point profile can be assessed. Following severity weighting, an overall toxicological response (expressed in terms of the most severe outcomes) at the same dose can then be derived by integrating contributions across all health effects. Conversely, dose equivalents corresponding to specified levels of the new response metric can also be estimated. The reference point profile is a cross-section of the dose-severity-response volume, and in its generalized form the method accounts for all three dimensions (dose, severity, response). In this case, the new response metric becomes a proxy for the probability of response for the most severe health effects, rather than the probability for exceeding the BMD for such effects.

Figure 4.

Technical illustration of the reference point profile, which is a cross-section of the dose-severity-response volume. The solid s-shaped (Hill) curve describes the relation between the BMD for selected health effects, and the severity of toxicity (S) determined for these effects. The severity for individual health effects is first determined categorically according to a hierarchical classification scheme: the classification by Sand and colleagues (2018) of the health effects considered in the liver is illustrated. The nine-graded categorical scale, C1 - C9, is then mapped to a quantitative scale that ranges from S = 0 to S = 1. The default mapping distributes severity categories symmetrically across S (see Sand et al., 2018 for details). The variability is assumed to be normally distributed on the log-scale with constant variance. Red areas describe probabilities for exceeding the reference point profile at exposure level, E, corresponding to the vertical (red) line. Here, E corresponds to an integrated response of 0.5 (50%), and E intersects the solid curve at S ≈ 0.71. The midpoint of C6 thus represents the center in terms of the new response metric. This point of calibration is approximately independent of the model parameters (Sand et al., 2018), and C6 also is regarded as the breaking point between reversible and irreversible effects. Association of C6 to a 50% response is therefore considered as a plausible starting point for severity weighting. A non-linear severity-weight, w(S) ≠ S, will indirectly modify the default mapping. This allows the midpoint of the system, corresponding to a 50% response, to be lower (C1 - C9 skewed upward) or higher (C1 - C9 skewed downward) than the midpoint of C6, which would also increase or decrease the response associated with E, respectively.

Conceptually, there are similarities between this method and categorical regression (e.g., Hertzberg and Miller, 1985; Dourson et al., 1997; Milton et al., 2017b). Categorical regression has, for example, been used to calculate the probability of a given severity category. The method introduced by Sand and colleagues (2018) provides this type of output simultaneously across all categories. In addition, as indicated earlier, probabilities are integrated over the entire severity domain to produce an overall response expressed in terms of the most severe health effects. Integration across different severities requires weighting, and the system of nine severity categories (C1 to C9) is therefore mapped to a quantitative severity scale (S = 0 to S = 1) (see Figure 4).
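The exceedance probabilities described above can be illustrated with a minimal Python sketch. It is not the implementation of Sand and colleagues (2018): the evenly spaced category midpoints, the Hill parameters `K` and `N`, the log-scale standard deviation `SIGMA`, and all function names are assumptions chosen for illustration only. The sketch inverts a Hill curve to obtain the median BMD at a given severity and then applies the log-normal variability assumption stated in the caption of Figure 4.

```python
import math

def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def category_midpoints(n_categories=9):
    """Midpoints of C1..C9 on S in [0, 1].
    Assumption: categories are evenly spaced, which may differ from
    the default mapping used by Sand et al. (2018)."""
    return [(i + 0.5) / n_categories for i in range(n_categories)]

def profile_bmd(s, k, n):
    """Median BMD at severity s, obtained by inverting the Hill curve
    S = d**n / (k**n + d**n). Requires 0 < s < 1."""
    return k * (s / (1.0 - s)) ** (1.0 / n)

def exceedance_probability(exposure, s, k, n, sigma):
    """Probability that exposure exceeds the BMD at severity s, assuming
    log BMDs vary normally around the profile with constant sigma."""
    z = (math.log(exposure) - math.log(profile_bmd(s, k, n))) / sigma
    return normal_cdf(z)

# Hypothetical parameter values, for illustration only.
K, N, SIGMA = 10.0, 2.0, 0.8
EXPOSURE = 10.0

probs = [exceedance_probability(EXPOSURE, s, K, N, SIGMA)
         for s in category_midpoints()]
for label, p in zip(range(1, 10), probs):
    print(f"C{label}: P(exceed) = {p:.3f}")
```

Under these assumptions the exceedance probability falls as severity increases, since higher severity categories are associated with higher BMDs. The severity-weighted integration across S that yields the overall response is deliberately left out, because its exact form is given in Sand et al. (2018) rather than in this report.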

For BMD analysis, Sand and colleagues (2018) used data on dioxin-like chemicals from the U.S. National Toxicology Program long-term toxicology and carcinogenesis studies in rats, describing various health effects in the liver (Figure 4). They demonstrated that results derived by the method are largely insensitive to the choice of model used to describe the reference point profile. The proposed method also appears to be robust with respect to minor and moderate changes in the severity classification of BMDs. Further analyses indicate that the interpretation of effective doses or points of departure, based on individual health effects, may change when health effects are considered jointly along the lines proposed (Sand, 2022). This influences the consideration of equipotent doses for different chemicals, and the concept of acceptable response levels for individual effects. Preliminary results to date suggest that estimation of exposure guidelines, or similar, by the proposed method may be sufficiently accurate and precise even if data for the most severe health effects, associated with the highest severity categories, are omitted (Sand, 2022). The method may therefore enable derivation of a surrogate for the probability of severe health effects, and/or the probability of exceeding corresponding BMDs, even when only data on comparatively “mild” effects are used.

Sand (2022) further extends the proposed concept to incorporate genomic dose-response information. A “genomic reference point profile”, describing variability within and across affected gene-sets, is characterized by using an iterative method for combining all unique BMDs from a transcriptomic study. Similar to the implementation for traditional toxicity data, results suggest that joint consideration of different effects and associated BMDs differentiates the consequences of chemical exposure to a greater extent than using a specific/lowest BMD only. This can help to refine the establishment of points of departure for various levels of health concern. Analysis and comparison of apical and genomic reference point profiles, as well as consideration of functional relations between gene sets within such analyses, may aid in the transition towards a new approach method-based risk assessment paradigm.

6.2.6. Structured Expert Elicitation

Structured expert elicitation (SEE) is a well-established approach for gauging expert opinion, of particular value in contexts characterized by limited data, low risk and substantial uncertainty (Aspinall, 2008, 2010; Cooke, 2013, 2015). One challenge associated with expert elicitation is the risk or perception of overly subjective expert input. For example, Schünemann and colleagues (2019) point to the need to distinguish expert-elicited evidence from expert opinion, relying on evidence to inform decision-making. Concerns about expert bias are reduced through an anonymized elicitation procedure with a formal, transparent, and auditable processing of responses and a performance-based weighting scheme for pooling judgements. This encourages experts to be open-minded in responding with their estimates and uncertainties, based on their own personal knowledge, expertise, and experience.

There are nearly 100 specific SEE methodologies, which may concern a generic scenario or circumstances related to the assessment or management of a specific project scenario (Colson & Cooke, 2017; Cooke & Goossens, 2008). Generally speaking, these methods quantify subjective judgements by weighting expert responses in order to generate a collective view, represented as a median value and an accompanying uncertainty distribution. An expert panel of between four and twenty members is considered adequate to obtain meaningful results (Colson & Cooke, 2017).

The elicitation may be convened either in person or through video conferencing (thus also reducing the carbon footprint of the event), with experts being offered the opportunity to comment on the process, decline to answer specific questions or withdraw from the exercise entirely. Per Cooke’s Classical Model, a SEE should include the following steps (Cooke, 2013, 2015):

A draft version of the elicitation instrument is reviewed by independent experts (modifications as necessary).

The elicitation instrument is introduced to the expert participants, with a thorough review of the relevant terms and conditions. Questions are permitted throughout the process, such that the problem, context, definitions, and question content are understood. Refinements may be made, with the goal of ensuring the same understanding among experts.

Experts complete each question individually using pre-formatted response tables, which are submitted by the experts to the investigators upon completion. Experts first complete a series of “calibration” questions on technical issues for the topic of interest. This calibration exercise enables distinct performance weights to be given to individual experts based on their accuracy and ability to judge uncertainties.

Experts then respond to numerical uncertainty distribution “target” questions of the same format, providing a central value (the median, or 50th percentile, representing their best judgement) and a 90% credible range (a lower limit at the 5th percentile and an upper limit at the 95th percentile).

Following the analysis, another facilitated meeting or video conference is arranged, providing the expert panel with an opportunity to review preliminary findings. Another round of modification and elicitation may be conducted if necessary.
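The pooling of expert judgements described in the steps above can be sketched as a weighted linear opinion pool. The Python sketch below is a simplification made under stated assumptions, not Cooke's Classical Model itself: the performance weights are taken as given inputs rather than computed from calibration and information scores, each expert's distribution is approximated by a piecewise-linear CDF through the elicited 5th, 50th, and 95th percentiles, and the 10% `overshoot` used to bound the range, like all function names, is an illustrative choice.

```python
from bisect import bisect_right

PROBS = [0.0, 0.05, 0.50, 0.95, 1.0]

def expert_cdf(x, quantiles, lo, hi):
    """Piecewise-linear CDF through (lo, 0), the elicited 5th/50th/95th
    percentiles, and (hi, 1). Quantiles must be strictly increasing."""
    xs = [lo, *quantiles, hi]
    if x <= lo:
        return 0.0
    if x >= hi:
        return 1.0
    i = bisect_right(xs, x) - 1
    frac = (x - xs[i]) / (xs[i + 1] - xs[i])
    return PROBS[i] + frac * (PROBS[i + 1] - PROBS[i])

def pool_quantiles(experts, weights, probs=(0.05, 0.50, 0.95), overshoot=0.1):
    """Quantiles of the weighted linear pool of the experts' CDFs.
    experts: list of (q05, q50, q95); weights: non-negative numbers
    (hypothetical performance weights, supplied by the caller)."""
    for q in experts:
        if not q[0] < q[1] < q[2]:
            raise ValueError("quantiles must be strictly increasing")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    low = min(q[0] for q in experts)
    high = max(q[2] for q in experts)
    span = high - low
    lo, hi = low - overshoot * span, high + overshoot * span

    def pooled_cdf(x):
        return sum(w * expert_cdf(x, q, lo, hi)
                   for w, q in zip(weights, experts)) / total

    results = []
    for p in probs:
        a, b = lo, hi
        for _ in range(200):  # bisection on the monotone pooled CDF
            mid = 0.5 * (a + b)
            if pooled_cdf(mid) < p:
                a = mid
            else:
                b = mid
        results.append(b)
    return results

# Hypothetical panel of three experts and illustrative weights.
panel = [(1.0, 2.0, 4.0), (0.5, 3.0, 6.0), (2.0, 2.5, 5.0)]
pooled = pool_quantiles(panel, weights=[0.6, 0.3, 0.1])
print([round(v, 3) for v in pooled])
```

A linear pool of this kind preserves disagreement between experts as added spread in the pooled distribution, which is one reason it is commonly preferred over simply averaging the elicited quantiles.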

7. Evidence Assessment & Presentation of Findings

7.1. Determining certainty in the evidence based on the GRADE approach

The evidence identified by systematic reviews can be assessed collectively in a framework such as that described for GRADE (Guyatt, Oxman, Kunz, Atkins, et al., 2011). This extends the assessment beyond individual studies, so that end-users understand the strengths and limitations (i.e. certainty) across the body of the evidence. Informed by the Bradford Hill criteria and the iterative development of evidence-based medicine, the GRADE approach for evidence assessment evaluates the certainty in the evidence based on the following domains that may decrease one’s certainty in the body of evidence: risk of bias (i.e. study limitations), inconsistency (i.e. heterogeneity), indirectness, imprecision, and publication bias (Balshem et al., 2011; Guyatt et al., 2011). In addition, for nonrandomized studies, one’s certainty in the body of evidence may be increased by the following domains: magnitude of effect (e.g. large or very large effect size), dose-response gradient, or opposing residual confounding (an effect seen in the direction opposite to that expected from confounders).

These eight domains can help assessors understand the body of the evidence across outcomes as it relates to the research question of interest (i.e. our PECO question, section 3), even when the evidence comes from non-human studies. As mentioned previously, five domains relate to lowering one’s certainty in the body of evidence. Risk of bias is informed by the individual study assessments performed as part of the systematic review. While GRADE was originally developed in the context of randomized controlled trials, its application has expanded to include risk of bias related to randomized and nonrandomized intervention and exposure studies (Guyatt, Oxman, Kunz, Atkins, et al., 2011; Morgan et al., 2019; Schünemann et al., 2019). When considering inconsistency across the pooled evidence, a distinction is made between explained and unexplained inconsistency (Guyatt, Oxman, Kunz, Atkins, et al., 2011). Indirectness, based on how directly the identified evidence answers the research question, is a key element for evidence integration (Guyatt, Oxman, Kunz, Woodcock, et al., 2011). For example, when the population of interest is humans, indirectness concerns how directly evidence from animal experiments or other types of research (in vitro or in vivo) can be extrapolated to, or help inform, the association between an exposure and an outcome. Some research has explored how the domain of indirectness relates to evidence from pre-clinical studies (e.g. research from animals) (Hooijmans et al., 2018). Imprecision considers whether the overall estimate of effect is precise or may be attributable to random error. Lastly, publication bias summarizes whether or not all the studies that have been conducted were captured in the review.

When considering the three domains that allow for increased certainty across the body of evidence, the magnitude of effect captures the extent of the observed effect, dose-response considers the exposure-effect relationship, and opposing residual confounding captures whether or not the worst-case scenario still allows for drawing strong conclusions (Guyatt, Oxman, Sultan, et al., 2011).

Operationalizing these domains requires an understanding of the relationship between the research question and the evidence extrapolated to answer it. In most instances, this can be informed by exploring the various sources of indirectness. For example, one may be interested in humans as a population, such as humans exposed to a carcinogen, with the aim of exploring how this exposure relates to an adverse outcome of interest. Within GRADE, the best available evidence is understood to come from human studies; however, indirect evidence from other sources (animal, mechanistic) is also considered. The exposure or comparator could also introduce an element of indirectness. To apply this to a review, five paradigmatic scenarios exist (Morgan et al., 2018). In one particular scenario, little is known about the association between the exposure and outcome, and the assessment therefore seeks to define that relationship. In this situation, mechanistic data or modelling may be used to determine whether we can have some confidence in a statement about the relationship between the exposure and outcome. Indirectness may also be identified within the outcome, depending on whether or not our evidence is extrapolated from a surrogate.

Certainty across the body of evidence for an outcome typically starts at high for RCTs and at low for nonrandomized studies. However, with the development of risk of bias instruments for nonrandomized studies that use a standardized scale derived from RCTs, the level of certainty assigned to nonrandomized studies could be increased (Morgan et al., 2019; Schünemann et al., 2019).
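The rating logic outlined in this section can be sketched in a few lines of Python. This is a deliberately simplified illustration, not an official GRADE tool: in practice each domain rating is a structured judgement rather than a tally, and the function name, the domain keys, and the rule that ignores upgrades for randomized evidence are assumptions made for this sketch.

```python
LEVELS = ["very low", "low", "moderate", "high"]

DOWNGRADE_DOMAINS = {
    "risk_of_bias", "inconsistency", "indirectness",
    "imprecision", "publication_bias",
}
UPGRADE_DOMAINS = {
    "magnitude_of_effect", "dose_response", "opposing_residual_confounding",
}

def grade_certainty(design, downgrades=None, upgrades=None):
    """Return a certainty label for a body of evidence.

    design: "rct" (starts at high) or "nonrandomized" (starts at low).
    downgrades / upgrades: dicts mapping a domain name to the number of
    levels (0, 1 or 2) by which certainty is lowered or raised.
    """
    downgrades = downgrades or {}
    upgrades = upgrades or {}
    if design not in ("rct", "nonrandomized"):
        raise ValueError(f"unknown design: {design}")
    unknown = (set(downgrades) - DOWNGRADE_DOMAINS) | (set(upgrades) - UPGRADE_DOMAINS)
    if unknown:
        raise ValueError(f"unknown domains: {sorted(unknown)}")

    score = 3 if design == "rct" else 1
    score -= sum(downgrades.values())
    # The text describes upgrading for nonrandomized studies, so this
    # sketch ignores upgrades for other designs (an assumption).
    if design == "nonrandomized":
        score += sum(upgrades.values())
    return LEVELS[max(0, min(score, len(LEVELS) - 1))]

# Hypothetical example: nonrandomized evidence with a clear dose-response
# gradient but serious indirectness (e.g. animal data for a human question).
print(grade_certainty("nonrandomized",
                      downgrades={"indirectness": 1},
                      upgrades={"dose_response": 1}))
```

Keeping the per-domain ratings as explicit inputs mirrors the transparency that GRADE aims for: the reasons for each rating decision remain visible alongside the final certainty label.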

7.2. Relevance to Risk Assessment

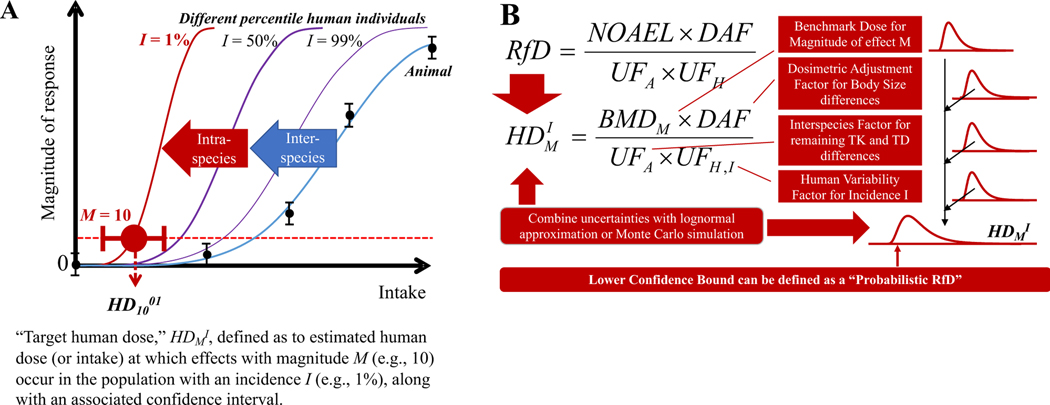

Characterizing the relationship between exposure levels and the health impacts they exert has been the focus of much research conducted by risk assessors, health practitioners, and regulatory experts in developing health protection programs to establish safe intake levels for humans (Krewski et al., 2010; Stern et al., 2007). Any substance, including but not limited to chemicals, nutrients, vitamins, or pharmaceuticals, has the potential to be harmful to humans if they are exposed to too much or too little. To establish a range of allowable intake for a substance that may be harmful to humans in excess or deficient amounts, it is necessary to strike a balance between the health impacts exerted by exposures across the excess-deficiency spectrum. The challenge of identifying an acceptable medium between excess and deficiency motivates the development of exposure–response models; they provide the foundation for identifying recommended levels of exposure to essential and nonessential substances (Krewski et al., 2010).