Abstract

Medical diagnostic methods that utilise modalities of patient symptoms such as speech are increasingly being used for initial diagnostic purposes and monitoring disease state progression. Speech disorders are particularly prevalent in neurological degenerative diseases such as Parkinson’s disease, the focus of the study undertaken in this work. We will demonstrate state-of-the-art statistical time-series methods that combine elements of statistical time series modelling and signal processing with modern machine learning methods based on Gaussian process models to develop methods to accurately detect a core symptom of speech disorder in individuals who have Parkinson’s disease. We will show that the proposed methods out-perform standard best practices of speech diagnostics in detecting ataxic speech disorders, and we will focus the study, particularly on a detailed analysis of a well regarded Parkinson’s data speech study publicly available making all our results reproducible. The methodology developed is based on a specialised technique not widely adopted in medical statistics that found great success in other domains such as signal processing, seismology, speech analysis and ecology. In this work, we will present this method from a statistical perspective and generalise it to a stochastic model, which will be used to design a test for speech disorders when applied to speech time series signals. As such, this work is making contributions both of a practical and statistical methodological nature.

1 Introduction

Numerous degenerative neurological diseases require continuous monitoring of the patient’s status to ensure treatment regimes are up to date. Furthermore, the same symptoms manifest in multiple of these conditions [1], demanding expensive equipment and advanced expertise for the correct diagnosis. As a solution, the developments of artificial intelligence in biotechnology have started to support these medical settings with automated computational tools that can increasingly identify disorders’ abnormalities in real-life-sensing environments [2–5]. The challenge in detecting symptoms of such nervous system disorders through a computerised practice is accomplished via several modalities (such as speech, handwriting, radiology, gait, etc.) which are employed to reveal indicators of discriminant symptoms associated with neurodegenerative disorders, see [3, 4]. The idea is to map different modality-derived features to the various symptoms and obtain discriminant information about the studied illness. In such a way, what is usually referred to as a “biomarker” could be defined.

This work focuses on Parkinson’s disease, the degenerative disorder of the central nervous system resulting from the death of dopamine-containing cells in the substantia nigra, a midbrain region [1]. It includes both motor and non-motor signs, worsening with disease progression [6, 7]. Medical treatments can alleviate the course of the disease, but no definite cure exists, and an early diagnosis and remote monitoring are critical for prolonging quality of life in those diagnosed, see [8, 9]. The modality in focus in this work is speech which sets our goal as characterising speech anomalies of such a disorder for implementing a pre-screening diagnostic tool and promoting remote telemedicine practices for understanding disease progression. Thus, our interest is restricted to voice symptoms that manifest from this neurodegenerative disorder, part of the speech-motor disease (SMD) class and markers of what is known as dysarthria.

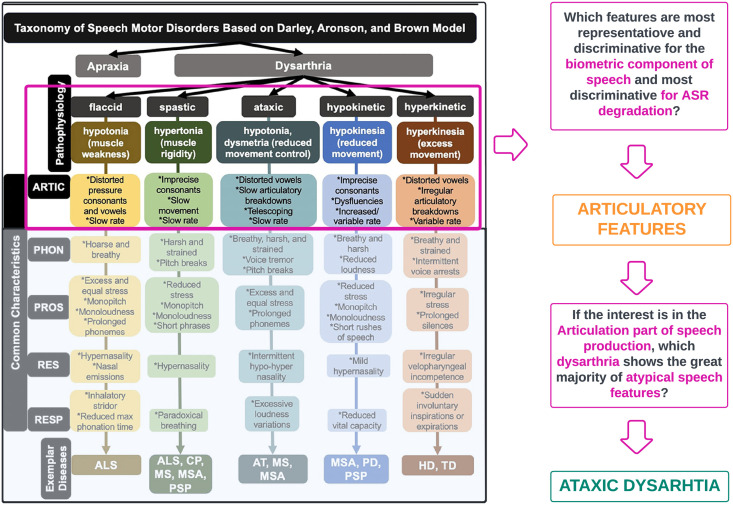

Dysarthria refers to a group of divergent SMDs often secondary to neurologic injury (but not limited to it) and exhibits highly variable speech patterns within and across individuals [10]. One of the most established clinical taxonomy for SMD corresponds to the Darley, Aronson, and Brown (DAB) model [11] that foresees 38 atypical speech features rated on a 7-point scale and groups dysarthria types based on speech feature profiles [10]. The DAB model split SMD into two classes, apraxia and dysarthria, and dysarthria into five clusters, flaccid, spastic, ataxic, hypokinetic, and hyperkinetic. Patients often show a combination of the five subtypes (i.e., mixed dysarthria) independently of the final diagnosis, and no speech feature (or a set) has yet to be found discriminative of the different types [1, 12–14]. Furthermore, this clinical system relies entirely on subjective auditory-perceptual observations requiring advanced expert clinical training [10, 13]. Automatic Speaker Recognition (ASR) represent the ideal tool for automatically detecting and monitoring the range of diversity in dysarthria symptoms.

Different types of ASR systems could be used [15, 16]. For example, there are ASR speaker-independent (SI) systems, trained on large multispeaker datasets, or ASR speaker-dependent (SD) systems, trained by an existing SI model to a target speaker or by a unique target speaker’s speech data [10, 17]. Commercially developed SI have low error rates for healthy speakers but appear to perform considerably worse with speech impairments tasks [10, 18]. Thus, extensive work has been conducted on SD systems for speech impairments showing stronger performances than SI [10, 19, 20]. The speech task used for the discrimination might vary and be dependent on the speech methodology or the final goal. These are repeating syllables, spontaneous dialogue, improvised description of a figure, etc. [2]. An ASR system can use several speech features descriptive of the different phases of speech production process, extensively reviewed by [2, 4, 21, 22]. Amongst many, acoustic or vocal tract features describing the articulatory phase are the ones that correlate the most with neurodegenerative disorders. Under the source-filter model [23], a speech signal results from the glottal airflow shaped by the vocal tract filter as it passes through it. Numerous studies in ASR prove that vocal folds features are not as discriminatory as vocal tract features [24]. In particular, representations containing information about the vocal tract’s resonance properties, also known as formants. An individual’s speech formant structures are analogous to that individual’s speech fingerprint, thereby characterising unique traits of the filter model specific to a human [17]. Following the introduced evidence, an ASR-SD system, relying on acoustic features and describing the speech formant structure, would represent a powerful solution for characterising different symptoms of dysarthria.

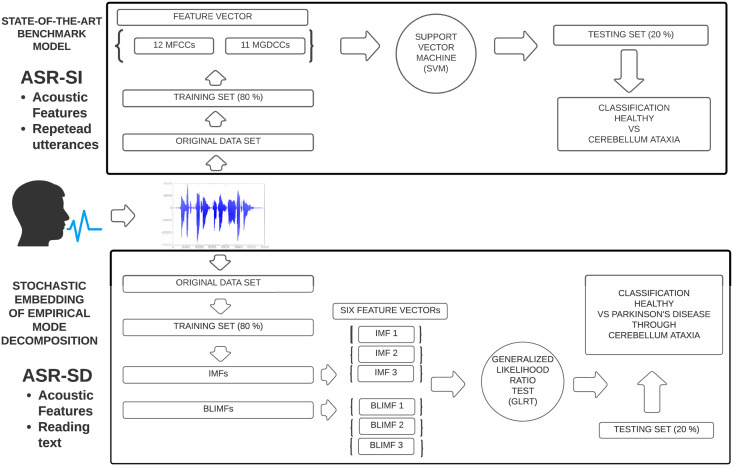

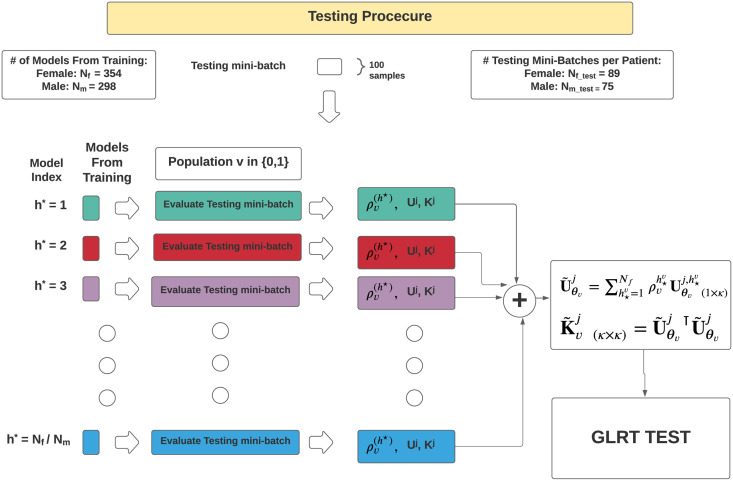

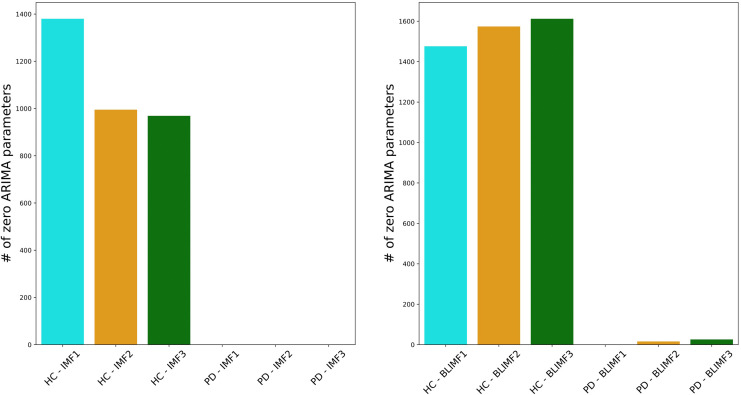

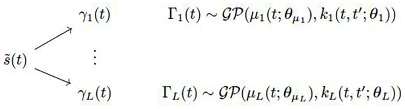

Our work is built upon the following considerations. Firstly, we consider the speech taxonomy provided by the DAB model shown in Fig 1 (produced by [10]). Secondly, we consider Parkinson’s disease and aim to discriminate the presence or absence of such disorder by quantifying ataxic dysarthria or ataxic speech. Fig 1 shows that articulatory speech abnormalities are prevalent in this kind of dysarthria and correspond to distorted vowels, slow articulatory breakdowns, telescoping, and slow rate [25–27]. Such abnormalities must be detected through time-varying features of formant structures. Thus, we will consider data for which the assigned speech task is “reading text” to observe the evolution of speech over time rather than using repeated syllables. Ataxic speech is chosen as the discriminant factor for Parkinson’s disease since, beyond being characterised by several abnormalities of the articulatory tract, whose features best capture biometric properties of a human voice, several studies reported a 70–90% of its prevalence once Parkinson’s appears [28]). Moreover, ataxic speech might be one of the earliest indicators of Parkinson’s [6]. Hence, we aim to construct a biomarker that efficiently detects formant structures of ataxic speech abnormalities based on acoustic features formulated through a sophisticated time-series signal processing technique. Fig 1 shows the steps of this procedure. This idea is based on the work proposed in [29], which sought to detect the presence of ataxic speech in participants with cerebellar ataxia using standard acoustic features. By presenting an ad hoc ASR-SD system substituting the one of [29] and efficiently targeting the formant structure of Parkinson’s subjects, we can characterise such a condition through ataxic speech anomalies. Our method is directly comparable to the one proposed by [29] and hence interpretable. Fig 2 shows the two ASR systems and their differences. The top diagram represents the ASR-SI system implemented by [29], while the bottom panel represents the one proposed in this work. Features and classification information will be provided in the text below since the methodologies must be introduced first. Note that, only the novel features are represented in the plot.

Fig 1. Figure describing the taxonomy of SMD according to the Darley, Aronson, and Brown model.

Note that the taxonomy panel was produced by [10] and modified in this paper. Acoustic features representing the vocal tract and capturing formant structure are amongst the most discriminant in ASR tasks. Our interest is to detect the presence or absence of Parkinson’s through such acoustic features. Hence, since one of the early symptoms of Parkinson’s is ataxic speech, which implies several speech abnormalities in the vocal tract, this will be the set of anomalies we aim to discriminate. Furthermore, based on [17], our goal is to construct an ASR-SD system able to deal with complex settings such as non-stationarity of the speech, small sample sizes, unbalanced data, and interpretation of the obtained results concerning gender voices, carrying different formant structure.

Fig 2. Figure showing the ASR systems detecting ataxic speech.

The top panel represents the ASR-SI system implemented by [29], which has been exploited to develop our technique. After having collected the speech data and split it into training and testing sets, the authors extracted (amongst others) Mel Frequency Cepstral Coefficients (MFCCs) and phase-based cepstral coefficients (MGDCCs) and combined them into a unique feature vector to then perform a classification task with a Support Vector Machine (SVM) for the diagnosis of cerebellar ataxia. The bottom panel of the plot shows the steps of our ASR system, which instead is SD and relies on read text as the speech task performed by the participants. The considered data set is given at [38], with people affected by Parkinson’s disease. We constructed the training and testing set and then extracted (amongst others) six different feature vectors, which we have been tested individually through a Generalized Likelihood Ratio Test (GLRT). The classification task targets the detection of ataxic speech with an equivalent statistical framework for diagnosing Parkinson’s disease. Note that an extension of the bottom panel including all the novel features will be presented in Fig 3.

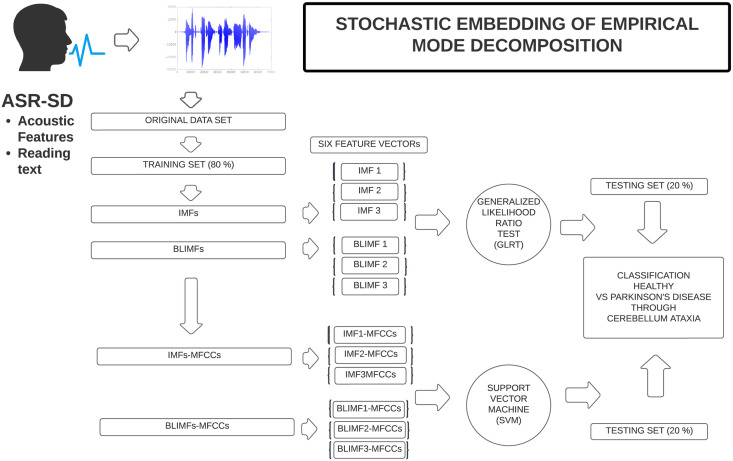

Fig 3. Figure showing the proposed ASR system detecting ataxic speech.

It corresponds to an extension of Fig 2 and presenting all the novel features used, hence, the IMFs and the BLIMFs (output of SM2 and SM3) and, further, MFCCs will be extracted on these and an SVM equivalent the one performed by [29] will be carried. Note that only the first 3 bases are retained. Reasons behind this choice will be later introduced.

The research question we want to address is whether it is possible to quantify ataxic speech, as done in [29], more robustly and if, by considering that there will be further statistical confounders, i.e. other types of dysarthria, such ataxic quantification will be discriminative for Parkinson’s disease. In doing so, the following components must be taken into account. Firstly, the developed method should be robust to small sample sizes, often affecting medical diagnostic studies. Secondly, if the data is unbalanced, the designed training and testing procedure combined with the classification method must handle such an issue to avoid introducing undesired bias. The standard practice followed by ASR methodologies is to refine standard glottal/voice features for the classification task or search for a more complex classifier based on deep learning techniques ([30–36]). The third point is that [17] the ASR speech method should account for gender since male and female voices enclose distinct resonant frequencies of the vocal cords and a joint classification would reduce accuracy of the classifier. Furthermore, the classifier must guarantee a physical interpretation of the obtained results, i.e. features better performing should reflect the discriminatory power carried by female or male voices. The other relevant aspect is that considering an ASR-SD is more powerful nowadays in medical settings since averaging results often employed in standard ASR or Speaker Verification tasks might still be too general for such medical biomarker discovery settings, given the lack of substantial reference data sets for specific diseases. Further, before moving to a generalisation protocol, experts providing the final diagnosis and treatments would need highly tested models already studied on several data.

Furthermore, since accuracy levels of at least 80% are required in health diagnostics, such challenges just discussed will require the development of tailored solutions involving sophisticated speech analysis methodologies that should be interpretable in order for them to be relevant for medical practitioners to interpret and trust. This paper aims to address these challenges by providing a novel method for a modelling methodology for ataxic speech symptom detection associated with Parkinson’s disease by addressing two core components of statistical speech analysis for medical diagnosis. The first involves detecting and quantifying ataxic speech anomalies in the case of Parkinson’s disease, with the case study considering speech recordings of patients at various stages of this disease. Secondly, it makes statistical contributions related to developing non-linear and non-stationary time-series methods based on Empirical Mode Decomposition (EMD) [37], where a novel stochastic model representation is established for the EMD which then allows a statistical treatment of EMD to be considered. This is important to undertake statistical analysis tasks such as estimation and inference and to accurately incorporate statistical uncertainty quantification in out-of-sample predictions and forecasts, distinct from model from naive extrapolation, often used in the absence of a stochastic model for EMD. We will show that the implemented methodology outperforms traditional speech methodologies with accuracy scores greater than 80% on the data set collected and provided by King’s College given at [38], available at https://zenodo.org/record/2867216#.ZAiHuRWZO3B.

1.1 Introduction to time series empirical mode decomposition

Speech data represents a complex data type that can be analysed through advanced time series decomposition methods since, if appropriately designed, the extracted bases reveal hidden insights into the data generating process, often not visible via the analysis of the original signal. We focus on the time-frequency method [39, 40] known as the EMD. Compared to traditional Fourier-like methods, the EMD is not prescriptive of the functional form of the basis used (as cosine for Fourier, for example) and only specifies the properties its basis functions must satisfy. Further, the EMD can relax requirements for statistical assumptions such as linearity or stationarity. Despite these critical practical features, there has been no statistical formalisation of a stochastic representation or embedding of the empirical algorithm that the EMD offers, and we address this challenge in this manuscript.

The EMD basis functions, known as Intrinsic Mode Functions (IMFs), carry the advantage of being monocomponent [41]. A monocomponent signal is described in the time-frequency (t,f)-domain by one single “ridge” corresponding to an elongated region of energy concentration. In addition, considering the crest of the ridge as a graph of Instantaneous Frequency (IF) vs time, one requires the IF of a monocomponent signal to be a scalar-valued function of time. In such a way, one is allowed to form the analytic extensions of each of the basis function IMFs via a well-defined Huang-Hilbert transform to characterise the collection of frequency representations obtained explicitly, i.e. the IFs of the signal, in our case the speech signals, see discussion in [39, 42–44]. The EMD method then utilises the fact that a multicomponent signal may be described as the sum of two or more monocomponent signals. A basis decomposition method utilising such characterising features can capture both time and frequency events in a localised fashion, which is extremely useful when there are non-stationarity effects present, as in speech.

Developing a stochastic representation or embedding along with a family of statistical model representations for the EMD method to complement its algorithmic formulation will be achieved by considering three methodological problem statements (PS1, PS2, PS3) addressed in this paper. The first problem is establishing a path-wise statistical model for the IMFs, satisfying the definitions provided in [37] that will also be consistent with the developed stochastic representation. The second problem statement considers the assumption that the EMD is algorithmically applied to the realisation of a time series signal sampled from an unknown stochastic process. Given the realised time series, the IMFs, per path, are then considered deterministic unknown functions that must be estimated from the samples. Therefore, in PS2, we seek to determine a stochastic version of the IMF decomposition compatible at a population process level with the pathwise representation of the deterministic decomposition being estimated under the solution to PS1.

Given that we will work with spline model representations as the solution to PS1, it becomes natural to consider whether Gaussian Processes (GP) [45] stochastic model embeddings will satisfy the solution to PS2 when stochastically embedding the IMFs. In this work’s context, a Gaussian process will be considered a continuous-time stochastic process for which all finite-dimensional distributions follow multivariate normal distributions. One may then interpret the GP as a random variable on L2([0, 1]) such that the individual sample paths mapping are considered random functions. In particular, there is a known connection between such functions when they are represented by splines, which under appropriate conditions are known to be suitable sample path realisations for GPs, see [46]. The challenge will be to ascertain whether this class of GP stochastic models will sufficiently satisfy the requirements imposed on the characteristic properties that such a representation should capture if it is to represent an EMD decomposition as a stochastic representation adequately.

Furthermore, GPs are a robust inference supervised machine learning technique used in many applications, given that they can be entirely specified by their mean and covariance, or kernel, functions. This will allow the definition of a stochastic representation with practical utility in performing tasks such as estimation, inference and forecasting. We will demonstrate that the GP stochastic representation we will develop for EMD basis functions IMFs when aggregated together to represent the original signal, can be considered as a special class of multi-kernel (MKL) GP (see review in [47]) stochastic model representation of the original time series signal. In practice, the EMD is then learning the multi-kernel spectral decomposition in terms of the number of kernel components to consider and their characteristic time-frequency structure for each kernel component. MKL representations can be achieved through multiple strategies developed in the literature ([48–51]).

The third problem addressed (PS3) pertains to the suitable selection of the covariance function used to capture the IMFs being stochastically modelled by GPs adequately. Since IMFs correspond to a collection of non-stationary basis functions, there is a requirement to properly design the family of kernel functions to accurately model the IMF spline representations estimated under the EMD basis extraction procedure, known as sifting. In this regard, in non-trivial applications such as speech analysis focused on in this manuscript, standard parametric kernels such as the Matern kernel and the RBF kernel (see [45]) will not suffice. Instead, we will develop two classes of solutions to this problem that generate two different families of stochastic model GP representations of EMD decompositions. The first is based on a family of data-adaptive kernels known as the Fisher kernel [52–55], which provides a generic mechanism incorporating generative probability models into the development of the covariance operator that will be data-adaptive and act as a flexible time series kernel. The second approach is based on a novel framework to learn optimal partitions of the time-frequency plane that utilises the IFs obtained from the EMD basis IMFs to partition the energy spectrum into localised regions that can then be modelled via localised GPs. One of the challenges with this second approach is how best to learn the time-frequency partition rule. This is solved via a novel application of Cross Entropy optimisation (CEM), which is a stochastic optimisation technique that Rubinstein first presented in 1999 (see [56, 57]). Once the optimal core bandwidths are computed, a new set of frequency band-limited bases we term “band-limited” IMFs (BLIMFs) will be derived. These new set of basis functions are obtained by aggregating the original IMFs sample points according to the location of their IFs within the regions of the computed optimal bandwidths partition. With such a partition model, we can characterise adaptive local bandwidths of the IMFs frequency domain with a kernel function in a GP setting.

1.2 Contributions, notation and structure

There are multiple contributions made by this work both in the direction of medical diagnosis for ataxic speech in Parkinson’s and for signal processing decomposition methods in speech analysis. These are given as follows.

A stochastic embedding model is developed for the EMD method that is consistent with the properties of the IMFs. The stochastic model for the IMFs is compatible with statistical representation comprised of B-spline and P-spline and proposes flexible statistical models that readily lend themselves to estimation, inference and statistical forecasting methods for EMD decompositions. Yet, this needed to be improved in the time-series signal processing literature, since traditionally the EMD method did not admit a probabilistic model representation, so we have developed one in this work.

-

The following notation will be used throughout: t0 < t1 < … < tN denotes signal observation times; the time series signal is denoted by and is observed at ; the continuous time spline reconstruction of the signal is denoted by ; the L IMF basis function from the EMD method are denoted by such that each satisfies ; L generically denoted the total number of IMFs extracted for a given signal; the analytic extension of the l-th IMF will be denoted by where denotes the Hilbert transform which produces the analytic signal ; will denoted the Fourier transform; when extracting IMF basis functions under the EMD method sifting algorithm, we will denote by the upper envelope used in sifting that is a spline interpolating the maximum of the current best estimate of the l-th IMF and analogously by the lower envelope of the l-th IMF interpolating the minimum of the current best estimate of the l-th IMF in the iterative IMF extraction algorithm known as sifting; finally, we will denote the collection of frequency band limited IMFs by the band-limited IMF construction based on M total specified bandwidths.;

The paper is organised as follows: firstly, a review of the EMD method is shown. We refer to [17] as main reference. Secondly, the EMD stochastic embedding set up is proposed with a set of objectives that must be satisfied. Afterwards, the stochastic embedding is formally developed, with the required notions presented to achieve it. Note that, three different system models will be formulated in this section: one for the stochastic embedding of the original signals and two which are the ones relating to the EMD and proposed in this manuscript. Section 5 presents how to develop a generative embedding kernel based on the Fisher kernel. Furthermore, the formulation of the cross-entropy problem with the derived solution used to formalise an optimal time-frequency partition for the second stochastic embedding is presented. Section 6 introduces the framework of speech based medical diagnostic with a subsection on motivation for Parkinson’s speech detection, a subsection standard benchmark model solving this task and the GLRT Test used to test the presence or absence of Parkinson’s disease developed in this paper. The last section shows the experiments results and discussion conducted on the speech data for Parkinson’s detection.

2 Statistical model framework for empirical mode decomposition

This section introduces a formalism required to understand the EMD method and builds upon the work presented in [17]. EMD basis characteristics of IMFs have been defined in [37] through a set of non-constructive properties only and are obtained via a procedure known as sifting, based on a recursive extraction of the signal energy associated with the intrinsic time scales of the original signal. They are therefore ordered according to their number of oscillations or convexity changes, and they furthermore satisfy the property that their sum reproduces the original realised signal path. Hence, the observed time series is reconstructed in principle exactly when the resulting IMFs are estimated or extracted numerically in a manner that perfectly satisfies the characterising properties of the EMD method.

Consider a continuous non-stationary speech signal s(t) observed as a sample recording at times 0 = t1 < … < tN = T. When applying the EMD basis decomposition framework, we first convert the partially observed discrete time signal s(t) into a continuous time analog signal, denote by . To achieve this we use a natural cubic polynomial spline. We will also express the EMD bases as natural cubic splines, derived from representation .

Definition 2.1. Given a set of l knots a = τ1 < τ2 < … < τl = b, a function is called a cubic polynomial spline if:

is a polynomial of degree 3 on each interval (τj, τj+1) (j = 1, …, l − 1)

is twice continuously differentiable

It is then a natural cubic spline when .

Hence, the speech signal representation is expressed in the class of truncated power basis, where the knot points are placed at the sampling times (τi = ti)

The coefficients are estimated by standard penalised least squares

with natural cubic spline constraints and where λ > 0 controls smoothness of the representation. In this case, the number of total convexity changes (oscillations) of the analog signal within the time domain [0, tN] is denoted by to . One may now define the EMD decomposition of a speech signal as follows.

Definition 2.2 (Empirical Mode Decomposition). The Empirical Mode Decomposition of signal is represented by the finite number of non-stationary basis functions known as Intrinsic Mode Functions (IMFs), denoted by {γl(t)}, such that

| (1) |

where r(t) represents the final residual (or final tendency) extracted, which has only a single convexity. In general the γl basis will have l-convexity changes throughout the domain (t1, tN) and each IMF satisfies:

- Oscillation The number of extrema and zero-crossing must either equal or differ at most by one;

(2) -

Local Symmetry The local mean value of the envelope defined by a spline through the local maxima denoted and the envelope defined by a spline through the local minima denoted by is equal to zero pointwise i.e.

(3) The minimum requirements of the upper and lower envelopes are:(4)

This definition provides characteristic properties that an IMF basis, γl(t), under the EMD method should satisfy. Evidently, it is not constructive, i.e. prescriptive of the functional form of the basis. Therefore, in this manuscript, we opt to utilise throughout the same flexible natural cubic spline representation as used to represent the speech signal interpolation also for the IMFs. Such a B-spline based representation for the realised deterministic basis decomposition that makes up the statistical model for the EMD pathwise representation will be essential to motivate the use of the Gaussian process stochastic model embedding for the stochastic process based representation we develop for the EMD method.

One can note that each IMF carries a unique number of convexity changes that can occur at any time spacings. Typically, the times of convexity change are irregularly spaced and reflect non-stationarity in a local bandwidth of the frequencies that characterize the signal at that time instant. As a result of this property, one can still order the basis IMF’s naturally according to the unique number of total convexity changes they produce in (t1, tN).

As outlined in [37], the construction of an IMF basis is directly linked to the concept of local symmetry required to handle non-stationary data. This notion is enclosed by the mean envelope that captures a local time scale, and the definition of a local averaging time scale is hence bypassed. Such a requirement is fundamental to avoid asymmetric waves affecting the concept of instantaneous frequency, formalised below.

2.1 Extraction of EMD basis functions Intrinsic Mode Functions (IMFs): The sifting procedure

We briefly outline the process applied to extract recursively the IMF basis representations, which is a procedure known as sifting, see [58]. To extract the l-th IMF The first step consists of computing extrema of the current signal representation after having removed the previously extracted IMFs by , which still admits a spline representation. Using the spline representation of one needs to find the roots of the first derivative to produce the sequence of time points for successive maxima and minima given by:

Without loss of generality, we assume the maxima occur at odd intervals, i.e. , and minima occur at even intervals, i.e. . The second step of sifting builds an upper () and lower () envelope of using two natural cubic splines through the sequence of maxima and the sequence of minima respectively:

such that ∀t with for all odd and strictly greater otherwise; and equivalently ∀t with for all even and strictly less than otherwise. One then utilises these envelopes to construct the mean signal denoted by ml(t) given in Eq (3), which will then be used to compensate the current representation of the speech signal by at each time point t ∈ [t1, tN]. This procedure is then repeated on the compensated signal, where again the current maxima and minima are obtained to produce envelopes which in turn produce a new estimate of the mean ml(t) which in turn is used in a defluctuation step to compensate the signal . This is repeated until the conditions specified in Definition 2.2 for the envelope and mean functions are satisfied, which when achieved produce the current deflucuated version of the signal as the l-th IMF γl(t). This procedure then repeats again for the l + 1-th IMF extraction working now on signal , and the entire sifting process terminates when the L + 1-st IMF is extracted and it corresponds to the IMF ‘tendency’ which only has one convexity change in [t1, tN] and is often denoted distinctly by r(t), see [17] for an algorithm and further details.

2.2 Obtaining Instantaneous Frequencys (IFs) from IMF basis functions

The EMD method extracts a set of basis functions (IMFs), each of which will admit a time-varying frequency structure that can be characterized by their corresponding instantaneous frequeny (IF) signal. The IF of a given IMF basis is extracted in the following stages.

First, one takes the Hilbert Transform of each IMF , in order to construct a set of analytic extensions via the Hilbert transform as follows:

which then produces the collection of analytic signals {zl(t)} with . We observe that when γl(t) is a proper IMF such that it respects the restrictions defined in (4), its Hilbert transform can be obtained in closed form. The complex analytical signal zl(t) can be then represented by the polar representation with time varying amplitude and time varying phase .

The instantaneous frequency ωl(t) for IMF γl(t) is then found from the time-varying phased of zl(t) as the rate of change given by:

As observed in [37] conditions (4) that characterize the IMF properties are specified to ensure that the instantaneous frequency remains positive and therefore admits a meaningful physical interpretation.

Since, we adopt a statistical model representation for the IMFs based on cubic splines one can utilise this representation of the l-th IMF to obtain the Hilbert transform of the sum of local cubic polynomial transforms, see for details [59]:

where △i = τi − τi−1 and is the Hilbert transform of the i-th polynomial:

Such a representation for the IMF γl(t) produces a smooth, differentiable, continuous function, it is approximated by the class of polynomial basis in the L2 space.

3 EMD stochastic embedding set-up

We have shown in Section 2 that working with cubic splines for the representation of the EMD method is advantageous from many perspectives. Firstly it is suitable to represent the interpolated signal from the observed time series in an optimal fashion based on minimising mean squared error. Secondly, it allows one to perform the sifting procedure readily when representing the envelope functions and results in a collection of IMF basis functions representations that are also cubic splines. Thirdly, the analytic extension via the Huang Hilbert transform, used to obtain the instantaneous frequency, admits closed form solutions for the representations of the IFs which is also characterised readily by cubic splines. Lastly, and most importantly, when considering moving from the path-wise EMD method basis extraction for one of the time series realised trajectories to a stochastic process embedding representation, the representation of IMFs via cubic splines allows one to utilise the established connection between Gaussian processes and B-splines to motivate working with Gaussian process stochastic embeddings.

3.1 EMD stochastic embedding objectives

In developing the stochastic embedding of the EMD, we will distinguish between the deterministic (realised) or empirical EMD decomposition for a given signal trajectory, satisfying at any time t ∈ [0, T] the property of EMD decomposition

for IMF γl(t) satisfying the mathematical characterisation given in Definition 2.2; and the stochastic process embedding of the EMD representation, denoted at any time t ∈ [0, T], by the random variables (upper case for random variables)

The challenge with developing a stochastic embedding for EMD method is that it will be required to satisfy a few core features:

Sample paths of the embedded EMD stochastic process should be able to be consistent with the basis functions for the IMFs obtained from the empirical sample based characteristics that represent the classical EMD method as set-up in Definition 2.2.;

-

Since the EMD method satisfies for each realised sample time-series trajectory that

then one would naturally require such a property to be inherited at the population stochastic process level such that:

where we have denoted the stochastic process for R(t) by ΓL(t) to reduce notational burden.Ideally the representations of processes and IMF stochastic processes would satisfy:

Stochastic processes used to model and IMF processes have known finite dimensional distributions and are from family of known stochastic process models which are easily parameterised and characterised. We will denote this family of models for distributions at time t

Stochastic processes used to model and IMF processes would also ideally be easily calibrated to realised EMD sample based decompositions via standard estimation methods like maximum likelihood estimation with closed form expressions for the likelihood of the model for the stochastic embedding.

- IMF stochastic processes are of the same family of stochastic process model as that which represents the signal stochastic process . In other words if, for each time t, one has that random variable is distributed by F in a family of distribution models where

with denoting the parameters of the model that indexes the family member from and furthermore, where is the joint distribution of the IMF random variables and tendency at time t, then it also holds that for each t ∈ [0, T] and l ∈ {1, …, L + 1} the distribution of the IMF random variables satisfies that it is also a member of this family of distribution models such that

indexed by parameter vectors Ψl. -

Another desirable property for the stochastic embedding representation of EMD would be to have the conditional distributions also members of the same family of distributions of , such that for each t ∈ [0, T] and any combination of J ≤ L + 1 indexes denoted by subset one has that the random variableNote: In the case one assumes an independence model approximation for the joint distribution of the IMF random variables and tendency at each time t ∈ [0, T] such thatThen the EMD method decomposition implies that the stochastic representation of the IMFs are closed under convolution. This means that at each time t the random variable for the signal S(t)∼F(s;ΨS) and the random variables for the IMFs satisfy that

such that

4 Developing a stochastic embedding of EMD

In this section we develop two approaches for the stochastic embedding of the EMD method which will be consistent with the EMD empirical decomposition whilst also concurrently satisfying the properties set out for such a stochastic representation of EMD given in Section 3.1. To achieve this we will develop two different system models each of which will be based on versions of multi-kernel Gaussian Processes models with specially selected kernel structures. The reference baseline or benchmark model we will compare to these two novel system models for EMD stochastic representation will be a Gaussian process fit directly to the original signal s(t).

Gaussian Processes (GPs) are a highly expressive family of stochastic models widely adopted in machine learning, see [45]. Formally, a Gaussian process is a collection of random variables, any finite number of which have a joint Gaussian distribution, which is entirely described by its mean and kernel covariance function as detailed in Definition 4.1. The positive definite covariance function often referred to as kernel determines the class of functions from which such processes sample paths take support.

Definition 4.1 (Gaussian Process (GP)). Denote by a stochastic process, parametrised with state-space , where . The random function f(x) is a Gaussian Process if all finite dimensional distributions are Gaussian, where for any , the random vector (f(x1), f(x2), …, f(xn)) is jointly normally distributed. We can therefore interpret a GP formally defined by the following class of random functions:

| (5) |

with , ,

| (6) |

The properties of the functions, i.e. smoothness, periodicity, etc., are determined by the sufficient statistic given by the covariance kernel function.

Before introducing these GP models, we will motivate theoretically why the class of GP models is suitable for a stochastic embedding that will be shown to be both meaningful for regularised spline representations of IMFs as well as suitable to satisfy the properties outlined for such a stochastic embedding of EMD discussed in Section 3.1.

4.1 Spline representations of an IMF and reproducing kernel hilbert spaces

In order to make explicit the connection between using spline models to represent the path-wise empirical EMD decomposition of and the stochastic embedding via a multi-kernel Gaussian process, we will recall briefly known connections between splines and Gaussian Processes (GPs). Splines may be viewed as limits of interpolations related to stationary Gaussian processes. Hence, we will explore further this connection as follows.

Consider seeking to recover the l-th unknown IMF function γl(t) for t ∈ [0, T] based on current sifting defluctuation step data at time points t1, …, tN denoted as observations here generically by . That is one has data and we seek the function representation for the l-th IMF that minimizes the objective given generically in Eq (7), for instance which may be the familiar penalised residual sum-of-squares,

| (7) |

where L is a loss function, λ ≥ 0 is regularisation strength and J is a functional imposing smoothness on the IMF representation γl. One can connect the regularised spline solution to GPs by considering Reproducing Kernel Hilbert Spaces (RKHS) to explore the unifying framework to motive the GP stochastic embedding model, see details in [60] and more recent works in [46, 61, 62].

A Hilbert space is an inner-product space which is complete in the metric induced by its norm. For every Hilbert space of functions on a set , one may define for each the evaluation functional f: t ↦ f(t). If every evaluation functional in the Hilbert space is bounded, then one obtains a Reproducing Kernel Hilbert Space (RKHS). Note L2 is not an RKHS since the Dirac-delta function is not in L2. In an RKHS the Riesz representation theorem states that one may find, for each t a representer such that

Then one can define a function known as the kernel by k(s, t) = ks(t). This function will be unique to a given RKHS and has the properties of symmetry, nonnegative definiteness and satisfies the reproducing property 〈k(⋅, s), k(⋅, t)〉 = k(s, t).

To understand why the RKHS space and reproducing kernel K are introduced, consider the space of all finite linear combinations of functions with the inner product given by 〈ks, kt〉 = k(s, t) along with linearity. It is then the case that k is a kernel for this space with the property, according to the Representer Theorem, that solutions to the regularised empirical risk given in Eq (7) take the form

for for all i ∈ {1, …, N}. The conditions under which such a representer theorem exists are studied in [63].

Given these results one may then link the estimation problem for representing each IMF to the case of polynomial smoothing splines, used to represent the IMF basis functions under the EMD method proposed. To see this consider, without loss of generality , penalty function which acts to penalise irregularity and induce smoothness in the spline representation of IMF basis. One can then construct an RKHS whose norm corresponds to this smoothing penalty J. Hence, the kernel needs to be made explicit.

Using Taylor’s theorem in one dimension with integral remainder term to express the IMF function γl, which is assumed to have at least m − 1 order absolutely continuous derivative in [0, 1] and , then

where (⋅)+ is the positive part only and zero otherwise. If functions with this series representation with the first m − 1 derivatives being 0 at t = 0 are denoted by , then for one has

where . Now observe that one can obtain an RKHS space from with the inner product

and kernel . Now if one defines the null space of the penalty function as with φi(t) = ti−1/(i − 1)!. Then the kernel for is . As shown in [60] the space of functions with m − 1 absolutely continuous derivatives and m derivatives can be written as a direct sum with kernel k = k1 + k0. Furthermore, J(γl) will be the square norm of the projection Pγl of γl onto so the PRSS estimation objective in Eq (7) with becomes

| (8) |

for . By Representer Theorem, the solution is the generalised form given by

is comprised of two parts: an unpenalized component of and a linear combination of the projections onto of the representers of evaluation at the N time points t1, …, tN. For the squared error loss L(yi, γl(ti)) = L(yi − γl(ti))2 the solution corresponds to the natural polynomial spline, see discussion in [64].

Hence, we have been able to motivate the spline representation of the IMF as the solution to a generalised estimation problem in an RKHS regularised function space. Now we will endeavour to connection this through the RKHS theory to the Gaussian process embedding.

4.2 Relating spline representations of an IMF and a gaussian processes stochastic embedding

Now we will treat Γl(t) as a random function modelled by a GP and we will illustrate the mathematical connection between the spline representation on the pathwise EMD method decomposition of an IMF and the stochastic embedding developed in this work via GP models.

For Gaussian process prediction with likelihoods that involve the observed values of the IMF γl at N training points, extracted by the EMD method sifting algorithm, the empirical loss L(yi, γl(ti)) can be expressed according to the negative log-likelihood. Then the analog of the representer theorem, as detailed in [65] is given as follows.

Since the predictive distribution of Γl(t*) at test point t* given observations y1, …, yN is given by

which in the GP case is expressed in terms of the GP covariance kernel k by

| (9) |

with where K is the N×N Kernel matrix (Gram matrix).

One then obtains the regularized solution to Eq (7) from a GP perspective by noting that for the specific choice of loss and penalty given by

where the loss function is set to the negative log-likelihood in which is the Gaussian noise model variance. The solution for the estimated IMF using this regularized estimation produces which if one substitutes and uses the fact of RKHS space can be re-expressed by an estimation objective explicitly in terms of the GP model as follows:

Rewriting the objective in this manner expresses it as a parameter optimization problem in terms of coefficient vector α, this is the advantage of knowing that a Representer Theorem can be applied. If one then minimizes Q w.r.t. vector of coefficients α one obtains

which gives the prediction at test point t*

which is exactly the predictive mean given in Eq (9).

Now to explicitly recover the solution to the smooth spline interpolation for the IMF representation obtained via solving Eq (8) using m = 2 and the regularised GP solution just presented we can use the result of [66] which shows that in this case if one considers a random function representation of the IMF given by

where and f(⋅) a GP with covariance given by

Then to complete the example of the regularizer in the cubic spline case, we must remove penalties on polynomial terms in the null space by making taking σβ → ∞. This produces the final predictive mean solution for the GP representation of the cubic spline characterisation of the IMF given by

with Kernel covariance matrix Ky corresponding to elements evaluated at all training points, H the matrix collecting the vector of polynomial basis terms (1, t) at training points and kernel least squares coefficient estimator given by

From this solution, one can see that the resulting solution for the predictive mean function for the GP representation of the IMF for γl will have a cubic polynomial form.

4.3 Gaussian processes based stochastic EMD embeddings

Having established how the GP representations is connected mathematically to the empirical path-wise cubic spline representation for an IMF in the EMD method, we now generalise the stochastic embedding from a single IMF to the entire collection of IMFs under two different system models proposed. Each of these will be designed to satisfy the properties proposed for the stochastic embedding objectives set out in Section 3.1.

To achieve the desired embedding, consider first the stochastic process associated with the observed sampled signal converted from samples {s(t1), …, s(tN)} to spline which when considered as the realisation of stochastic process will be denoted by S(t) and respectively. The reference model used for comparison to the stochastic EMD models will involve directly modelling the process without the EMD method signal decomposition information, via a GP model given in System Model 1 (SM1).

4.3.1 System Model 1 (SM1): Gaussian process for

For SM1 there is a choice to calibrate the GP model directly to observations of the process S(t) or to set up the model alternatively as follows, using the values of for estimation of the GP model. This second choice will often be both more aligned as a reference model to the EMD method stochastic embedding as well as more robust to noise due to the regularisation that can be adopted when obtaining . Therefore, under SM1 the GP model for signal S(t) is obtained via

where we treat as a GP

| (10) |

with and representing the mean and kernel functions respectively, and are the sets of hyperparameters of the mean and the kernel respectively. The additive error ϵ(t) corresponds to a regression error based on using the spline representation for the representation and potentially calibration of the SM1.

4.3.2 System Model 2 (SM2): Gaussian processses for IMFs

When the EMD is applied to signal and the set of basis functions are extracted, each IMF γl(t) will be considered as the realised path of the stochastic process denoted as Γl(t) and the one for the residual r(t) denoted as R(t). This will produce the following stochastic embedding of the EMD given:

|

with

where ϵ(t)∼N(0, σϵ) and Γl(t) represents the GP for IMF l and there are l = 1, …, L of them and R(t) represents the GP on the residual tendency component. This general structure will form the basic structure for the two stochastic embeddings proposed for the EMD method and we will refer to these two models as System Model 2 (SM2) and System Model 3 (SM3).

Therefore one can see that the resulting model is still a GP model but differs from the baseline benchmark model in Eq (10) as follows

| (11) |

It is apparent that the proposed GP model for the stochastic embedding of the EMD method differs from a direct GP model on the signal as detailed in reference model directly in how the sufficient statics are designed. The key point of the stochastic embedding of the EMD method GP framework is that the kernel of the GP is now comprised of a multi-kernel framework, where each kernel can be specifically calibrated to the extracted EMD’s basis functions. Furthermore, it is trivially to verify that this stochastic embedding of the EMD method satisfies the objectives set-out in Section 3.1.

4.4 Treatment of the residual tendency stochastic embedding

As detailed in Section 3 last component extracted by the EMD corresponds to the residual or tendency component r(t). By definition, this last component has only one convexity within the domain [0, T]. Therefore, it is possible, without loss of generality, to partition it in two subregions [0, s] and [s, T] in which monotonicity applies locally in each. Consequently one could then impose the following structure on the GP model for R(t) over each region that enforces a stochastic monotonicity as discussed in [67], producing an isotonic restriction on the Gaussian Process. This is achieved by imposing derivative constraints on the sufficient statistics. Effectively, this utilises the fact that a derivative of a Gaussian process is a Gaussian process ([65]) and therefore a convexity constraint will result in conditions on the mean as outlined below:

One can then consider to impose these conditions at all out-of-sample points R(t*) in such a manner that on average one preserves monotonicity. Given the conditional distribution for R(t*)|R(t1), …, R(tN) one imposes the following conditions on the predictive distribution:

where t = [t1, …, tN]T and

There exists a second option for the stochastic embedding of EMD to treat the tendency, which involves rewriting the model in a conditional form as follows:

Under this formulation, the monotonicity of the tendency is obtained using the EMD methods pathwise extracted tendency function r(t). This is equivalent to developing an empirical Bayes formulation of the stochastic EMD embedding, see discussion in [68].

4.5 Adaptive band-limited IMF partitions

Consider the extracted instantaneous frequencies (IFs) ω1(t), ω2(t), …, ωL(t) which were constructed from the IMFs γ1(t), …, γL(t) as described in Section 2.2. The EMD method extracts these functions in decreasing order according to the oscillation index of the IMFs, i.e. osc[ω1(t)] > osc[ω2(t)] > … > osc[ωL(t)], where osc[⋅] is an operator that counts the number of turning points ie. convexity changes of a signal. Notice, that in non-stationary settings, the number of oscillations will not correspond to particular stationarity in the frequency plane, and in fact the IMFs can have time-varying IFs that move around the frequency plane but remain ordered in general by their oscillation. Therefore, in order to use the EMD extracted IMFs for a stochastic embedding that is aligned with a traditional notion of bandwidth based analysis, we develop the concept of the Band Limited IMFs (BLIMFs). This allows for the development of a stochastic representation of an EMD signal decomposition that is guaranteed to be characteristic of a particular frequency band. This leads to the third system model (SM3) which is formulated based on the idea of aggregating the IMFs samples whose IFs lie within the same frequency band. Such newly formulated Quasi-IMFs are named band-limited IMFs and denoted as BLIMFs and are then modelled according to the same GP. To define the model, one needs first to introduce a partition rule which identifies different local frequency bandwidths.

In order to develop SM3 based on BILMFs we need to first present the formalism of what we refer to as an adaptive partition of the (time,frequency) plane based on the EMD extracted instantaneous frequencies (IFs) ω1(t), ω2(t), …, ωL(t). We will construct a partition based on the observed IF samples, denoted by where with time interval and frequency interval , where Π denotes the partition region. In developing the BLIMFs, a criteria and estimation objective will be established that will allow for the definition of an optimal partition, denoted by Π*, for the collection of empirical samples . To define Π* we will segregate Π into an M×D partition. The partition of M non-overlapping bandwidths, denoted , in the frequency domain satisfy

Within each bandwidth a time domain partition is sought, that can be unique to each bandwidth, corresponding to D total time partitions per bandwidth. This produces a set of time partitions for the m-th bandwidth given by

As noted, it is not necessary that for m ≠ m′ and m, m′ ∈ {1, …, M}. From this formulation of time partitioned bandwidths we can arrive at a partition of Π by defining MD rectangles, each denoted by for m = 1, …, M and d = 1, …, D which are non-overlapping and satisfy

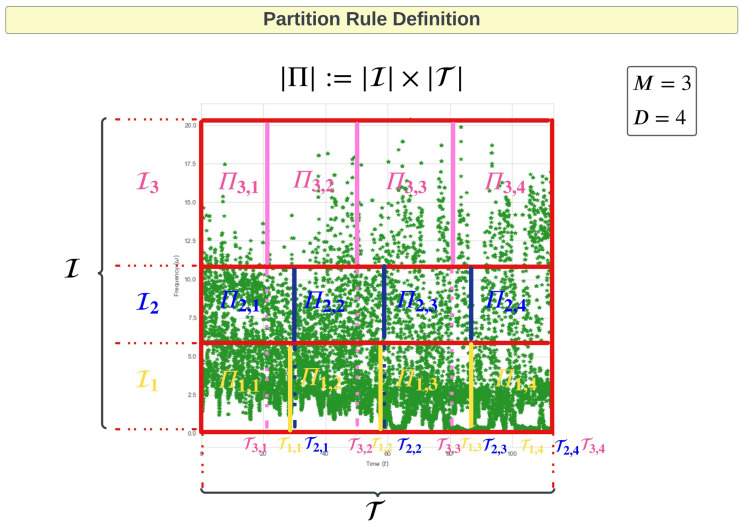

See a diagramatic example of such a partition in Fig 4. In this illustration the frequency domain is partitioned into three intervals and the time domain into four intervals.

Fig 4. Partition Rule Definition showing how the empirical IFs samples (colored in green) within region Π are partitioned into 12 time-frequency sub-regions that are defined by running the CEM method deriving Π*.

Note that, for this figure, we used only the first three IMFs, hence the first three IFs. This means that L = 3 in the Figure. The three IFs corresponds to the first three IFs of a speech segment used within the application of interest. Therefore, as it will be later in the paper highlighted, we consider speech segments with length N = 5000 samples.

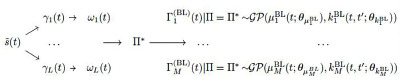

System Model 3 (SM3): Gaussian Processses for BLIMFs

Given a partition Π* with M bandwidth we can develop the BLIMFs as follows

| (12) |

these extracted BLIMFs in turn lead to the band-limited stochastic embedding of EMD method that we denoted as System Model 3 (SM3) given as follows

|

where denote the stochastic GP embedding of the l-th BLIMF. We note that since the BLIMF construction satisfies that

one can see that there will be no loss of information. However, the advantage will be in bandwidth selectivity as well as producing a frequency band-limited multi-kernel GP formulation where under SM3 one represents the stochastic process via multi-kernel representation given by

where and .

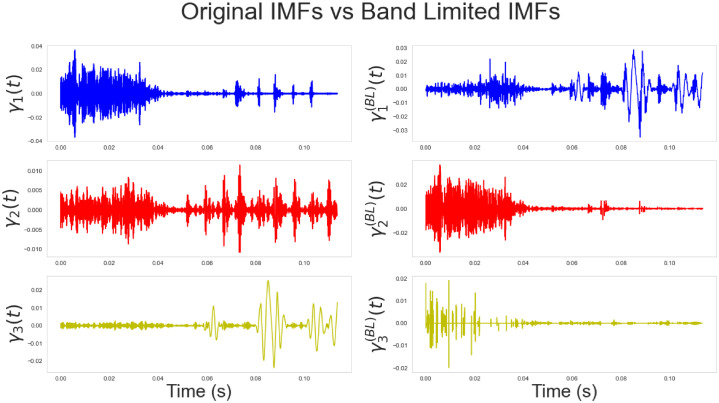

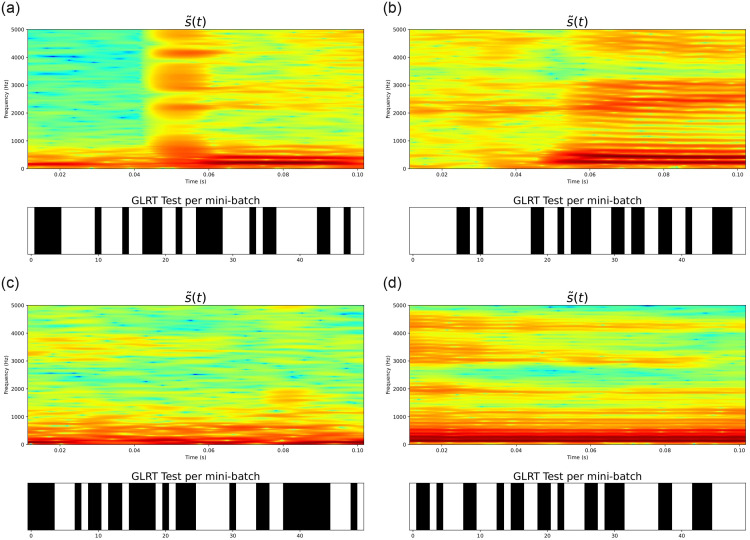

To demonstrate such a construction, consider the illustration in Fig 5. The left panels show the first three IMFs γ1(t), γ2(t), γ3(t) extracted on a given speech signal. The x-axis represents the time (in seconds). Only three IMFs have been considered in this example since, for speech analysis in general, the first 3 IMFs capture the majority of the frequency content (corresponding to formant frequencies, i.e. the frequencies at which the vocal folds vibrate) required to describe, capture or classify voices in general (see [17]). The right panels present the first three BLIMFs, which are obtained according to the model given in Eq (12). It is possible to observe how the time sample points have been reassigned within a new basis since its related frequency sample points fell into a different sub-region.

Fig 5. Comparison of the original extracted IMFs (left panels) and the obtained band-limited IMFs.

(right panels). The original signal is a segment of the speech signals considered in section 7. The x-axis represents time and is given in seconds. It corresponds to 0.13 seconds, or, 130 milliseconds approximately (given that the speech segments is 5000 samples recorded at 44.kHz). The y-axis shows the amplitudes of the IMFs (left panels) and the band-limited IMFs (right panels).

5 Time series covariance functions for multi-kernel GP stochastic EMD embeddings

In this section we discuss how to develop a generative embedding kernel based on the Fisher kernel first proposed in [52]. This kernel family has the advantage that it can be developed to produce a time series kernel for a GP that will adapt to the local structure of the observed process being modelled. It does this through a generative embedding mechanism that transfers the observed signal into a model space and then develops a subsequent sequence of feature vectors captured by the covariance operator that makes up the kernel. When the feature vectors represent summary statistics of a fitted model over the observed signal, such as the Fisher score, one produces the Fisher kernel embedding. We will use this Fisher kernel structure for SM1, SM2 (per IMF) and SM3 (per BLIMF). We begin this section by presenting the Fisher kernel basic details. We then subsequently discuss how we obtain the partition Π* for SM3 definition of the optimal BLIMFs.

5.1 Generative embedding kernel

The idea of a generative embedding kernel is to map the original time series data into a model derived sequence of feature vectors that form an embedded time series representations. Think of, for instance, a time series of summary statistics. When the summary statistics are based on a model representation, this is known as a generative embedding as the model generates the feature time series upon which the GP kernel is designed from the original input time series data. In [52] a generative embedding approach was developed where the kernel used was termed a Fisher kernel. It was given this name as the final stage of the generative embedding map was determined by the gradient of the log-likelihood of the parameters of an underlying generative model, which subsequently defined a new feature space called the Fisher score space. It describes how that parameter contributes to the process of generating a particular input data. The gradient maintains all the structural assumptions that the model encodes about the generation process.

The Fisher kernel has been successfully employed within speech verification and recognition tasks by [69] and [70]. Its role in this work consists of detecting voice disturbances in displacement, direction, and velocity to differentiate between healthy and ill subjects. The adopted generative models used to produce the Fisher score feature space were intentionally kept simple and utilised basic time series models to represent the generative model embedding selected to produce the speech signal IMF based feature vectors. The model for the generative embedding of the l-th IMF will be denoted by g(γl(t);θk) with model parameters θk. Such generative models are not designed to be perfect representations of the original time series but rather to capture summary features of the IMF over time that, in turn could produce an adaptive Fisher kernel structure that could adapt locally to a time varying frequency characteristics of each IMF.

One defines the Fisher score at time t, denoted by as follows:

where ∇θk denotes the gradient operator with respect to θk of the time t of the log-likelihood term lng(γl(t);θk). In so doing, one constructs an embedding into a generative model feature space which allows one to subsequently define the Fisher kernel via the inner product in this space:

where is the Fisher Information Matrix . Hence, the Fisher score is a feature mapping such that maps γl(t) into a feature vector that is a point in the gradient space of the manifold , see [52]. The gradient defines the direction δ which maximizes lng(γl(t);θk) while traversing the minimum distance in the manifold given by D(θk, θk + δ), where D(x, y) = ‖x − y‖. This latter gradient is usually known as natural gradient and is obtained from the ordinary gradient via . Hence, the mapping is called the natural mapping and the natural kernel associated to it corresponds to the inner product between these feature vectors relative to the local Riemannian metric. Note that the information matrix is asymptotically immaterial and so often one works with the simplified kernel given by setting .

5.2 Adaptive gaussian kernel design through optimal time-frequency EMD partitions

In SM3, where the BLIMFs are used to define the inputs to the GP models, one has a choice to either select the desirable time-frequency partitions Π* based on apriori information about the signal spectrum or frequency bands of interest over time. Alternatively, in many settings, such apriori beliefs about the partition may not be available and one instead seeks an optimal partition Π* according to a desirable data-driven criterion. This section develops a solution to the optimal data-driven partition rule for SM3.

Many possible objectives could be considered. The one considered in this work is to determine the optimal partition for a given number of bandwidths that achieves empirical coverage of the sample IFs per time-frequency slot with most uniform coverage over Π. Such a partition is based on a discretised representation of the time-frequency plane that uses the IFs samples so that these can be allocated to frequency bandwidths whose distribution is as close as possible to uniform such that each band selected will have equivalent total spectral energy contributions from each BLIMF. This problem corresponds to a combinatorial search which becomes highly computational when it comes to standard optimisation techniques like simulated annealing, tabu search, MCMC algorithms. In this section an effective solution is proposed using the cross-entropy method (CEM) of [71] which has been shown to be highly effective in solving hard COPs.

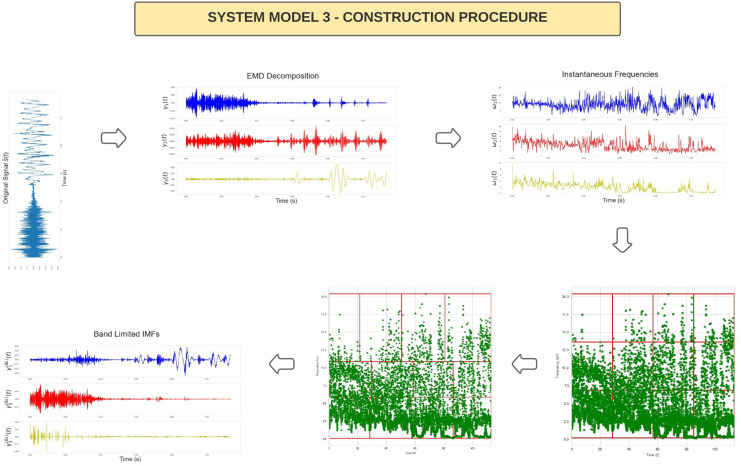

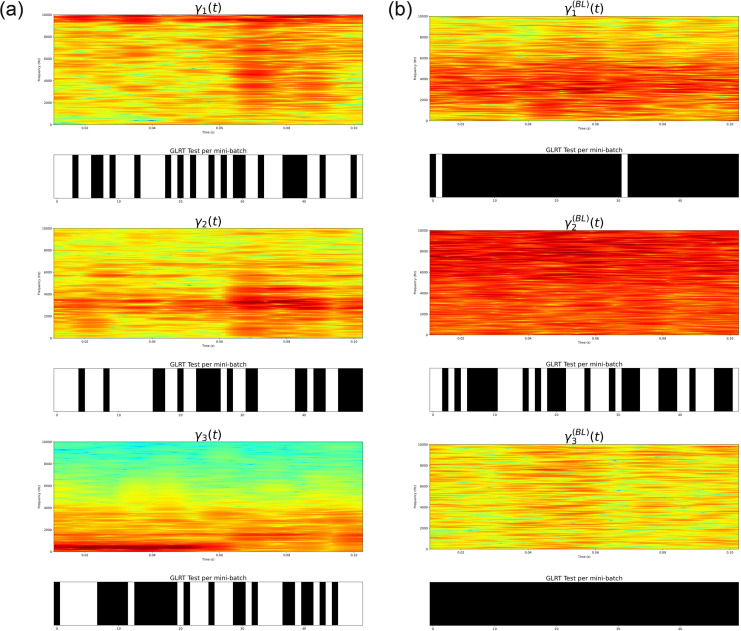

A core component of CEM is that it exploits an Importance Sampling (IS) framework to approximate the optimal solution. In the main literature of CEM minimising the Kullback–Leibler (KL) divergence, the distributions are commonly referred to as the target (true) distribution treated as an ideal model for the data (in this case, a uniform distribution) and an empirical distribution (an approximation of the true distribution), in this case, based on the empirical distribution of the sample IFs obtained from a given partition rule. An overview of the process of constructing IMFs followed by IFs then an optimal partition rule Π* via CEM followed by construction of the subsequent BLIMFs given the partition rule is provided in Fig 6.

Fig 6. Figure presenting the steps required for the implementation of System Model 3.

The first plot represents the original interpolated signal . This is a segment of speech signal used within the experiments section and corresponds to 0.13 seconds of speech. The x-axis corresponds to time (measures in seconds) and the y-axis to the amplitude. In the following plots, equivalent settings for the axes apply. Afterwards, the EMD is applied and the first three IMFs γ1(t), γ2(t), γ3(t) are plotted. The related IFs ω1(t), ω2(t), ω3(t) are extracted and plotted. After, the empirical sample points of the IFs are passed to the CEM method. The fourth step of this procedure is the initial partition Π0 used to initialise the cross-entropy algorithm, while the fifth step represents the CEM estimated optimal partition Π*. Lastly, the reconstructed BLIMFs are provided.

5.2.1 Formulation of the time-frequency partition optimisation problem

This subsection formalises the optimisation problem that estimates the optimal partition Π*. A given partition of Π according to M frequency bands is structured according to an increasing sequence of parameters ω1, …, ωM−1, defining frequency bandwidth subintervals of . In addition, for each bandwith there are D time partitions determined, for the m-th bandwidth, by an increasing sequences of parameters sm,1, …, sm,D−1, which defines the subintervals of . Hence, we denote the set of parameters to be estimated to determine the partition by vector:

| (13) |

We will next introduce the CEM importance sampling structure. Consider , the set of DM tuples and a random variable with a target uniform density π(x) given on support by:

such that the probability of drawing tuple (m, d) is proportional to the area of rectangle Πm,d versus Π. Given a current estimate of the partition Π* one can also construct the empirical distribution from N time samples of the L set of IFs denoted by such that

where . Therefore, the probability of drawing tuple (m, d) reflects the proportion of the number of points pl,n = (tn, ω(tn)) that lay within the rectangle to the overall sample size. Furthermore, the distribution is clearly then a function of the parameter vector Ψ, which has parameters that satisfy the conditions for each bandwith:

and characterise the partition Π*. From these definitions, it is clear that under these definitions one has that πm,d and are valid probabilities and satisfy

The optimization objective can then be formed under the CEM which in this problem formulation involves selecting the support of X in such a way that the Kullback-Leibler divergence,

measuring the similarity between the two proposed distributions target and empirical partitioned density, is minimised based on determining an optimal choice of the parameters that define the partition ψ⋆, given as follows:

| (14) |

Since this is a discrete problem, this objective can be simplified as follows:

| (15) |

The derivation is provided in SI, section 6 in S1 File.

5.2.2 Kernel density estimator smoothing of kullback-leibler divergence in optimal partitioning problem

For a given current estimate of the partition Π*, it can arise for a given emprical sample of the IFs that certain sub-rectangles might not contain any of the sample points pl,n = (tn, ωl(tn)) ∈ Π. As a result, the corresponding set will be empty, i.e. . Consequently, the probabilities equal zero and their logarithms used to calculate in Eq (15) tend to infinity. To avoid these numerical difficulties one can approximate by a kernel density estimator parametrised by kernel and bandwidth h > 0 such that

where is a kernel density estimator of points p = (t, ω(t)) ∈ Π specified on a sample set pn,l

By using the above, the objective function of the partitioning problem in (15) is reformulated to be the Kullback-Leibler divergence between π(x) and

| (16) |

given by

| (17) |

with C > 0 and set to a very small number, ie C = 10−100. The derivation of the above is provided in SI section 7.

5.3 Stochastic optimisation of optimal time-frequency partition via cross entropy

Given the formulated objective function for the partition problem defined in (14) one can now define the CEM approach to stochastic optimisation used to solve for the optimal partition given the IFs. Recall, such an objective utilises the KL(⋅) divergence as a similarity measure between two distributions, empirical and target. This must be optimised with respect to the vector of parameters ψ. The CEM process to undertake this stochastic optimisation is developed by considering the level sets of the objective function {ψ: KL(ψ)≥ζ} for , such that at the point that , we have {ψ: KL(ψ)≥ζ} = {ψ⋆}. We can formulate the importance sampling solution to achieving this outcome through a sequence of K intermediate solutions each based on a progressively less relaxed level set constraint i.e. ζ1 < ζ2 < ⋯ < ζK where and at each iteration one updates the importance distribution to increase the chance of sampling solutions that are feasible according to the current level set constraint. Next we define the IS formulation of the CEM stochastic optimisation solution. This will involve defining an IS sampling distribution for the parameters ψ as given in Eq (13) that make up the specification of the current estimate of the optimal partition Π*. In order to achieve this we consider a family of probability measure with support Ψ that admits a density {fφ: φ ∈ Φ} also parametrised by φ ∈ Φ. Let denote the expectation taken with respect to . Let us fix φ and ζ and define a rare event probability problem:

Instead of approximating this probability naively by sampling from fφ, the importance sampling method is used. Let gφ′ denote the importance sampler with φ′ ∈ Φ. Importance sampling approximates the rare event probability by

where vectors ψi for i = 1, …, S are iid samples generated from IS density gφ′(ψ). The optimal importance sampler densities (gφ′) parameters φ′ are then obtained progressively in the CEM iterations for a given level set ζ by:

| (18) |

where vectors ψi for i = 1, …, S are iid samples generated from fφ′(ψ). Notice that the last line of 18 corresponds to the maximum likelihood estimation (MLE) of φ′ when the samples are {ψi: KL(ψi)≥ζ}. The CEM starts from an initial sampling distribution and iteratively updates the threshold and the sampling distribution gφ′. For a detailed introduction to cross-entropy, the reader should refer to [57].

5.4 Design of the cross entropy importance sampling distribution

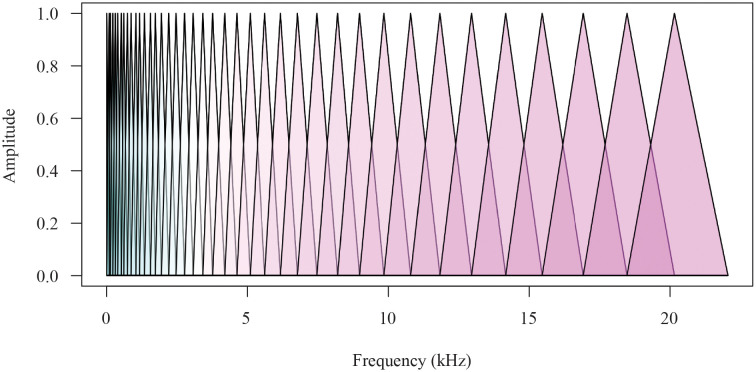

In this manuscript the optimisation problem is over a discrete support and so we have utilised a Multinomial distribution for the importance sampling distribution. In order to specify this distribution, consider a discretisation of the intervals and . The importance sampling distribution must reflect the distribution of discrete random variables that partition the rectangle Π. Consider regular dense grids of and constructed by:

Partition of into small Nω intervals of size , and we define for nω = 1, …, Nω, therefore ;

We partition into small Nτ intervals of size , and we define for nτ = 1, …, Nτ, therefore, .

Now define the probabilistic model to partition into M subintervals, for m = 1, …, M according to an (M)-dimensional multinomial random vector X with entries Xm on the support of {0, …, Nω} which indicate how many subsequent grids are connected to construct partitions and corresponding break points . Therefore, the multinomial random vector X models the number of grid points out of Nω that belong to each of M intervals with probabilities of being in an interval being 0 ≤ p1, …, pM ≤ 1 for . The distribution function of X is formulated as

for p = [p1, …, pM]. Recall that since X divides Nω points into M subsets. For instance, for realisations of X1, Â X2 such that x1 = 2 and x2 = 5, the partitions and are given by

This example gives an intuition for the general rule

and defines the approach to sample W1, …, WM−1 via change of variables such that for m = 1, …M − 1. The realisation of W1, …, WM−1, denoted by ω1, …, ωM−1, represent the break points defining partitions . Also, we recall that ω0 and WM = ωM are fixed.

We model M independent not identical partitions of the time-domain interval into D subintervals by following the same steps. We define M independent multinomial random variables that are D-dimensional, each, denoted by for m = 1, …, M, which entries on the support of {0, …, Nτ}, for d = 1, …, D, specify how many subsequent grids are connected to construct partitions of and determine break points . We denote their distributions by for such that . For every m = 1, …, M this construction satisfies and

where is a realisation of . Therefore, the random variables Sm,1, …, Sm,D−1 for m = 1, …, M are defined via change of variables such that for d = 1, …D − 1 with realisations sm,1, …, sm,D−1 representing the break points of the partitions . Again, we recall that t0 and Sm,D = tN are fixed for every m = 1, …, M.

We can now connect this formulation back to the IS framework in the previous section as follows. Given this model, the joint distribution of Ψ = [W1, …, WM−1, S1,1, …, SM,D−1] can we written as

Using this IS distribution we can now rewrite the IS parameter estimation rule under CEM framework, according to Eq (18) as follows, using

to obtain the estimation equation for the IS parameters with constraint imposed on under a Lagrangian constrained parameter estimation given as follows:

where P represents the IS distribution parameters to be estimated and vector are the Lagrangian multipliers. If one then seeks the First Order Conditions for this Lagrangian, one obtains the system of equations that admit a feasible solution as follows:

These solutions to the IS distribution parameter estimates can be further simplified by noting that since and one can obtain:

and finally

| (19) |

Following the same steps, we have that

| (20) |

Note that the support of the random variables introduced in this subsection includes zero, and this may lead to the situation that some partitions are of zero length. If that happens, the breakpoints ω1, …, ωM and s1,1, …, sM,D−1 are not admissible as they may not form increasing sequence. Consequently, they do not belong to the feasible set Ψ. To address this difficulty, we may consider two procedures

- sample directly from the conditional distribution

sampling from the Multinomial distribution and and force non zero realisation by removing any realisations that contain 0 entry to meet the conditions of the feasible set.

An algorithm for the CEM method based on this IS distribution construction is provided in the, section 8 in S1 File.

6 Application: Speech based medical diagnostics

In this section, we introduce how we will adopt the aforementioned Stochastic Embedding of the EMD method into a medical signal processing application based on the diagnostics of Parkinson’s Disease. The goal is to detect ataxic speech by constructing a probabilistic model for the speech signal whose tested properties will reveal the presence or absence of acoustic feature abnormalities consistent with ataxia. Before proceeding to the experiments and the obtained results, we first review speech medical diagnostic frameworks and benchmark models used for Parkinson’s disease.

6.1 Comparative benchmark models for Parkinson disease speech analysis

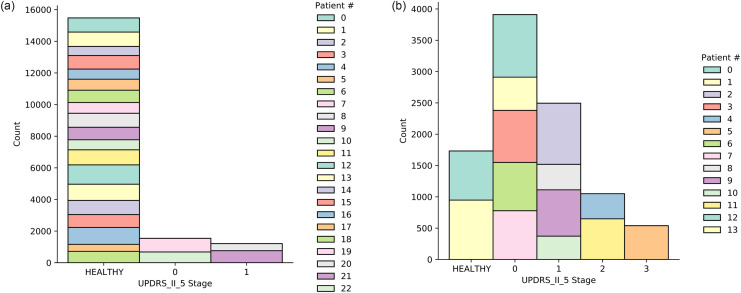

Among the various empirical tests considered for Parkinson’s disease dysfunctions evaluation, there are also speech and voice tests, based on auditory-perceptual subjective assessments of the patient’s ability to perform a range of tasks. The standard metric designed to follow Parkinson’s disease progression, introduced in 1987, is called the “Unified Parkinson’s Disease Rating Scale” (UPDRS) [72, 73]. A UPDRS assessment produces an integer number providing information about the stage of symptoms, where speech has two explicit labels, namely UPDRS II-5 and UPDRS III-18, ranging between 0–4. The label 0 represents the less severe stage, given as “Normal speech”, and 4 is the most severe stage, given as “Unintelligible most of the time”.

One challenge with such a survey-based diagnosis is that even for expert specialist doctors, it is difficult to find standardised reference baselines. This leads to a desire for a standardised objective based on formulation of a statistical model based solution that can be used for detecting the presence of the disease and surveilling its progression, see discussion in [74]. The biomarker used in this work corresponds to formant structure in speech, and the symptoms of interest are the ones affecting the vocal tract that result in ataxic speech in people with Parkinson’s disease. Hence, the objective is to identify acoustic disturbances in displacement, direction and rate (or velocity); see discussion in [29]. For further discussion on how to detect ataxic speech symptoms in Parkinson’s disease, the given speech tasks used or the employed acoustic features the reader might refer to [9, 74, 75] as references for further description of both tasks and features.