Abstract

Finding alignments between millions of reads and genome sequences is crucial in computational biology. Since the standard alignment algorithm has a large computational cost, heuristics have been developed to speed up this task. Though orders of magnitude faster, these methods lack theoretical guarantees and often have low sensitivity especially when reads have many insertions, deletions, and mismatches relative to the genome. Here we develop a theoretically principled and efficient algorithm that has high sensitivity across a wide range of insertion, deletion, and mutation rates. We frame sequence alignment as an inference problem in a probabilistic model. Given a reference database of reads and a query read, we find the match that maximizes a log-likelihood ratio of a reference read and query read being generated jointly from a probabilistic model versus independent models. The brute force solution to this problem computes joint and independent probabilities between each query and reference pair, and its complexity grows linearly with database size. We introduce a bucketing strategy where reads with higher log-likelihood ratio are mapped to the same bucket with high probability. Experimental results show that our method is more accurate than the state-of-the-art approaches in aligning long-reads from Pacific Bioscience sequencers to genome sequences.

Subject terms: DNA sequencing, Computational biology and bioinformatics

Introduction

Aligning millions of DNA sequences is significant for identifying functional and evolutionary relationships between organisms, assembling genomes, and analyzing single-nucleotide polymorphisms1,18. Many methods have been developed for solving the sequence alignment problem over the past decades3,4,7,9–12,15. Currently, the most popular alignment algorithms use the seed-chain alignment procedure. In this procedure a set of subsequences are extracted from the reference genome and indexed. Likewise a set of subsequences are extracted from a batch of query reads. Then each query read is only compared to sections of the reference genome that have subsequences in common. The assumption is that each read can only map to sections of the genome which share exact subsequences with the read. This assumption, however, is violated when error rate is high and thus the most popular alignment algorithms fail to identify a large portion of true alignments. One way to identify more true alignments in the high error rate regime is to relax the requirement that read and genome share exactly matching subsequences to read and genome share similar subsequences (not identical). However, methods for speeding up alignments of reads with high error rates using this relaxation are not available.

Sequence alignment has been studied from a statistical inference perspective8,9. It has been shown that optimal inference is equivalent to the dynamic programming solution to the sequence alignment problem. In order to overcome the low true positive rate of existing sequence alignment methods when the error rate is high, we first model sequence alignment as an inference problem in a latent variable generative model (Figs. 1 and 2) and then develop “asymetrical” hashing techniques for fast inference in this model. These asymetrical hashes can hash two reads to the same bucket or value whenever the two reads contain a pair of k-mers that are similar (but not necessarily identical). The set of non-matching pairs used for asymetrical hashing is optimally chosen with respect to the latent variable generative model.

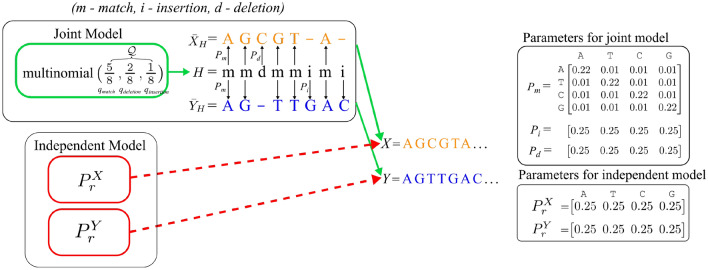

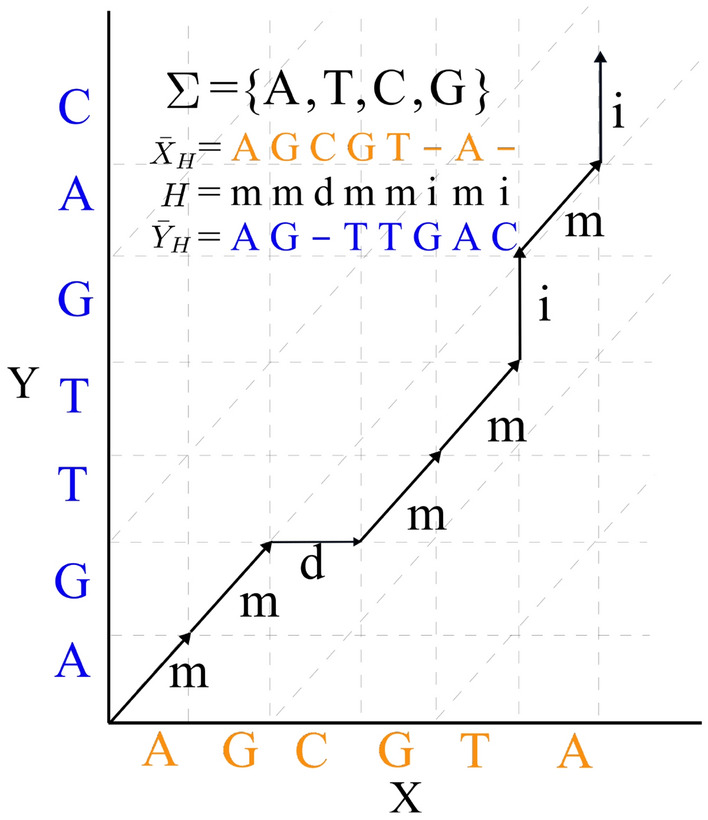

Figure 1.

We model sequence alignment as an inference problem in a latent variable model. We assume the true alignments are generated through a joint model, while random pairs are generated through independent models. In the joint model, a latent variable is first sampled from a multinomial distribution. Here, “m”, “i”, and “d” represent match/mismatch, insertion and deletion. Then sequences and are generated based on sequence H and probability matrices , , and . Whenever we have “m” at a position in H, the corresponding positions in and are sampled jointly from . Whenever we have “d” at a position in H, the corresponding position in is sampled from , while we have “−” for . Whenever we have “i” at a position in H, the corresponding position in is sampled from , while we have “−” for . Then sequences X and Y are formed by removing “−” from and . For the independent model, X and Y are independently sampled from and .

Figure 2.

In the joint model, a latent variable is first sampled, and then and are generated based on H. X and Y are generated by removing “−” from and . Here, “m”, “i” and “d” stand for match/mismatch, insertion and deletion.

In our model, we assume that pairs of sequences with high alignment scores are generated jointly from a generative model, and pairs of sequences with low alignment scores are generated independently of each other. Here we denote the probability that a pair of sequences X and Y are generated under the joint model as . The probability that X and Y are generated independently of each other is thus where denotes the marginal of along the x variable and denotes the marginal of along the y variable.

Given this model, a natural algorithm to find aligned sequences is the following: for each pair of sequences X and Y, compute . If is high, then X and Y are more likely to be generated from the joint model, rather than the independent model. Therefore, can be used as an alignment score between X and Y.

A naive implementation of this natural algorithm suffers from the fact that needs to be computed for each pair of sequences X and Y in the database. To overcome this challenge, we further propose a bucketing method inspired by locality sensitive hashing. In this strategy, we put each sequence X into several buckets (likewise for Y). For each X, we compute only for the sequences Y that are in the same buckets as X. This strategy significantly reduces the number of sequence pairs for which is computed, without missing true positive pairs.

In this paper, we introduce Distribution Sensitive Bucketing (DSB), a novel technique for efficient and accurate alignment of large read datasets against genomes. To do this, we first formulated the sequence alignment problem as an inference in two types of latent variable models, Hidden Markov Models (HMMs) and pair Hidden Markov Models (pair-HMMS). For both probabilistic models, we developed an efficient inference algorithm and derived its complexity. We further designed a family of asymmetric bucketing functions that minimize the complexity. Several alignment software packages have been developed using HMM like probabilistic models1–3. Algorithms based on HMM like probabilistic models are either slow or require heuristics1,3 with no theoretical bound on performance. The area of asymetric bucketing is relatively underexplored4. In order to detect homolog sequences, Mak et al.4 introduced a method that would put pairs of sequences in the same bucket if they shared the same subsequence plus or minus a few deletions and insertions. However, Mak et al. does not consider that computational complexity may increase due introducing this procedure and do not provide a methodology that adjusts the subsequence length and number of indels considered to various indel probabilities.

DSB is an algorithm that given indel probabilities will generate a bucketing data structure for sequence alignment that aims to keep sensitivity high while keeping computational complexity of sequence alignment low. Like Mak et al., DSB will compare reads that share similar subsequences, not just identical ones. Our results show that the DSB algorithm is at least as sensitive as competing methods in aligning reads at low error rates, and outperforms all methods when the error rates are high. When aligning reads to distant homologs, the DSB algorithm is 10 percent more sensitivity than the closest competitor.

For the sake of presentation clarity, we provide bodies of algorithms in Supplementary Materials and their summaries in the main text. Additional figures and tables are also provided in Supplementary Materials.

Results

We benchmarked DSB-SA against popular alignment methods in several scenarios. The scenarios include aligning simulated reads against a reference genome, aligning experimental reads against corresponding genomes, and aligning E. coli reads against reference genomes of distance homologs. In each scenario both Sensitivity and False Positives are reported. Sensitivity is measured as the proportion of aligned regions (read to genome) in the reference that are reported by the corresponding method. A sensitivity of 1 means that every region in the reference is recovered while 0 means no regions in the genome are recovered.

Brief overview of distribution sensitive bucketing

Distribution Sensitive Bucketing (DSB) consists of the following steps (see details in the Method Section). First, a directed graph is constructed, where each sink node represents a bucket. Then, starting from the source node, query and reference reads are mapped to one or several buckets through the decision graph (at each node in the graph, the decision graph might route a read to more than one direction). Finally, all the buckets are explored, and pairs of query and reference reads in each bucket are reported as matches. In the following, DSB-HMM refers to DSB algorithm in case of HMM model (Algorithms 1 and 2), while DSB-SA refers to DSB algorithm in case of string alignment model (Algorithms 3 and 5).

We benchmarked DSB-SA against MHAP5, Minimap26, DALIGNER7, BlasR8, MMSeqs29, GraphMap10, CD-HIT11, and Winnowmap12. MHAP is the state of the art algorithm for aligning reads with high insertion, deletion, and mismatch rates, that is based on compressing sequences to their representative fingerprints and detecting overlaps by estimating Jaccard’s similarity using min-wise hashing13. Minimap2 is a general-purpose alignment program for mapping DNA reads to reads/large reference databases, that is based on collecting and indexing minimizers in a hash table6. DALIGNER is based on an efficient and highly sensitive filter that predicts points between pairs of reads that are likely to have a significant local alignment passing through them7. BlasR first finds clusters of short exact matches between the read and the genome using a suffix array, and then perform a more detailed alignment of the regions where reads are matched8. MMSeqs2 is a sensitive and fast alignment program that utilizes k-mer matching and ungapped alignment to speed up mapping without losing sensitivity9. GraphMap is a fast and sensitive reads mapping program which is designed to analyze sequence data with high error such as nanopore sequencing data10. CD-HIT is a clustering algorithm that group reads together based on their pair-wise similarity11. Winnowmap is a direct descendent of Minimap2, and it uses weighted frequently occurring k-mers to reduce excessive false positives12.

Benchmarking DSB-HMM in matching simulated data

In this experiment, we compared the performances of DSB-HMM (Algorithms 1 and 2) against the brute force search (Algorithm 4). Data is simulated from a HMM with hidden state for and the following parameters:

| 1 |

| 2 |

where represents the transition matrix of the hidden states, i.e. and represents the emission probabilities, i.e. . Here, is the error rate, and is the probability of transition to an alternative state. In hidden state 0, the HMM mostly generates matching nucleotides whereas in hidden state 1 the HMM generates mostly mismatching nucleotides.

We first simulate the hidden states using the transition probabilities, and then simulate data using the emission probabilities for various values of and . We run for 1000, 2000, 5000, 10 000, 50 000, 100 000, and here . The brute force algorithm has a quadratic growth in complexity with respect to the number of sequences N, while our method has a sub-quadratic complexity (Fig. S1).

Benchmarking DSB-SA in aligning simulated reads against genomes

We benchmarked the different methods in mapping PacBio simulated reads against the E. coli reference genome. We used recommended settings for all methods. The reads are simulated using PBSIM14 and the E. coli reference genome, with a mean length of 700 bps. Figure 3 shows the sensitivity, the percentage of reads mapped correctly to the genome.

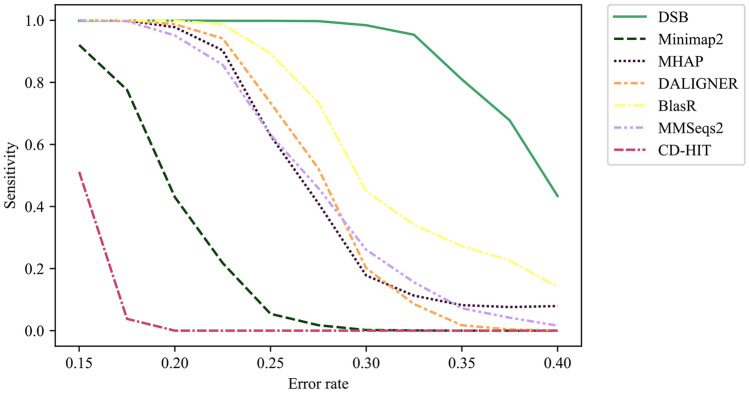

Figure 3.

Benchmarking different methods on mapping simulated reads against the genome. We use PBSIM to simulate PacBio reads from the E. coli reference genome. Indel and substitution errors are introduced, and the error rate on reads is the sum of these errors. In this experiment, we simulated the reads with an average length of 700 bps and average read errors from 0.25 to 0.45. Sensitivity versus error rate for DSB-SA, Minimap2, Winnowmap, DALIGNER, BlasR, MMSeqs2, and GraphMap are shown. Details of the PBSIM simulation are provided in the Supplementary Note 4.

We observe that DSB-SA maintains high sensitivity as the error rate increases. BlasR has a lower sensitivity compared to DSB-SA, and its performance decreases substantially for higher error rates. DALIGNER, Minimap2, Winnowmap and MMSeqs2 show similar low sensitivity across different error rates. The false positive rate for all methods is nearly zero (Fig. 4).

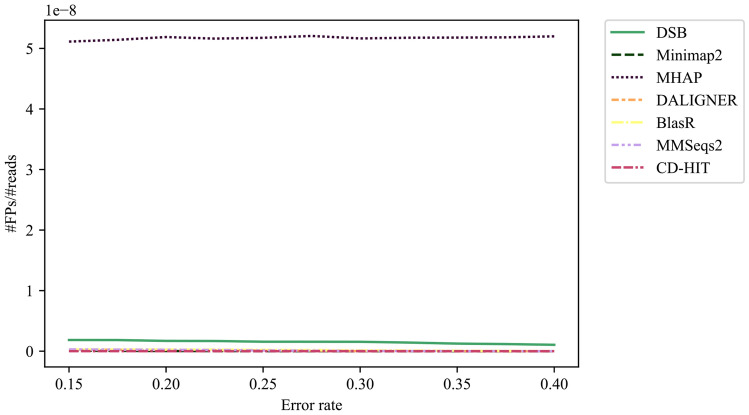

Figure 4.

Benchmarking the false positive rate of different methods on mapping simulated reads against the genome. We use PBSIM to simulate PacBio reads from the E. coli reference genome. Indel and substitution errors are introduced, and the error rate on reads is the sum of these errors. In this experiment, we simulated the reads with an average length of 700 bps and average read errors from 0.15 to 0.40. False positive rate of all methods is nearly zero.

Benchmarking DSB-SA in aligning pacbio reads to distant homologs

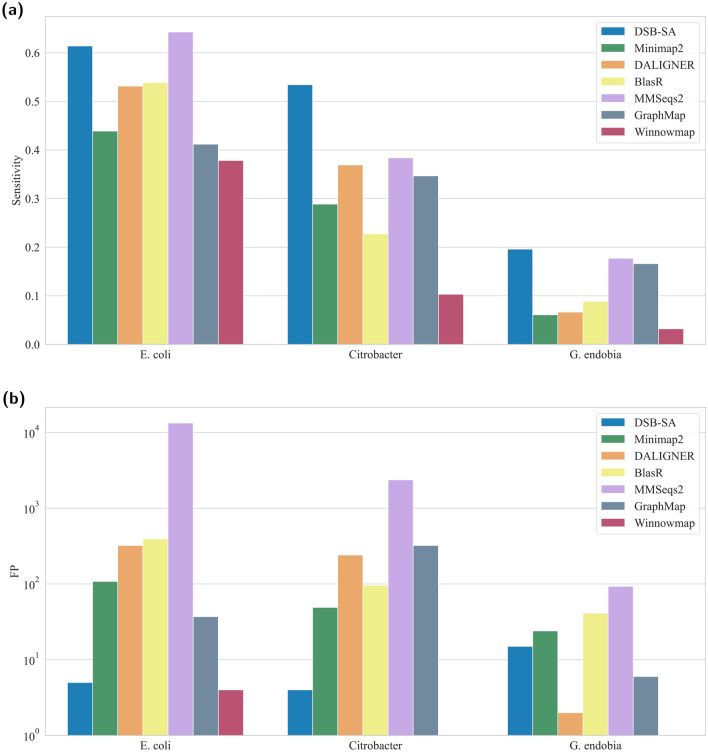

In this experiment, we compared the performances of DSB-SA with various methods on mapping PacBio reads of the E. coli genome to two genomes of related species. The ground truth is inferred by aligning the reads against the genomes with LALIGN15. LALIGN is a brute force method that finds all local alignments between given queries and targets. We use default settings for DALIGNER, BlasR, MMSeqs2, GraphMap and Winnowmap, and PacBio preset for Minimap2. Figure 5 illustrates the sensitivity of the examined methods.

Figure 5.

Comparison of sequence alignment methods in (a) sensitivity and (b) false positives. Here, Pacbio reads from E. coli are searched against the genome of Citrobacter and G. endobia to assess the power of various methods in identifying distant homologs.

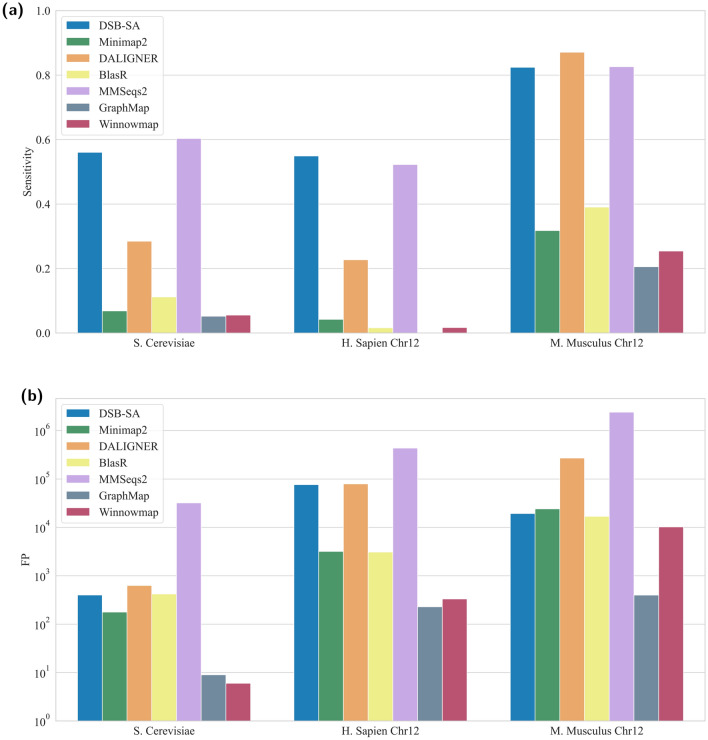

With the exception of MMSeq2, DSB-SA is significantly more sensitive than the other methods (Fig. 5a). While DSB-SA is nearly as sensitive as MMSeq2, it produced three orders of magnitude fewer false positives (Fig. 5b). Runtimes for all the methods are shown in Table 1. False negatives and true positive rates for all methods are shown in Supplementary Tables S1, S2, and S3.

Table 1.

Runtime/memory analysis of various methods for mapping PacBio long reads of E. coli to the E. coli genome.

| E. coli | ||

|---|---|---|

| runtime (s) | memory (MB) | |

| LALIGN | 1587806.20 | - |

| DSB-SA | 174.22 | 2022.54 |

| Minimap2 | 1.79 | 71.14 |

| DALIGNER | 11.92 | 761.04 |

| BlasR | 482.95 | 104.89 |

| MMSeqs2 | 64.80 | 8314.44 |

| GraphMap | 102.53 | 369.94 |

| Winnowmap | 1.41 | 60.05 |

The runtime is recorded in seconds (s), and the memory usage is recorded in megabytes (MB).

Benchmarking DSB-SA in aligning PacBio reads to corresponding genomes

We benchmarked DSB-SA against other methods in mapping PacBio reads of S. Cerevisiae, chromosome 12 of H. Sapiens, and chromosome 12 of M. Musculus to their respective genomes (Fig. 6). DSB-SA is significantly better than all the other methods with exception of MMSeq2 and DALIGNER. DSB-SA is comparable (or better) than MMSeq2 and DALIGNER, and produces significantly fewer false positives. False negatives and true positive rates for all methods are shown in Supplementary Tables S4, S5, and S6.

Figure 6.

Comparison of (a) sensitivity and (b) false positives of various sequence alignment methods. Here, Pacbio reads from S. Cerevisiae, H. Sapien, and M. Musculus are searched against their respective genomes.

Discussion

In recent years, various efficient methods have been introduced for aligning large number of reads against reads/genomes. However, majority of these techniques are limited to the cases where reads are mapped to close homologs (low mutations, insertions and deletions) and they do not generalize to alignment of reads to distant homologs. Furthermore, most of the methods that are used in practice use heuristics to speed up alignment.

Many approaches also use decision trees to solve the string alignment problem. The main contribution of the presented work is in modeling the string alignment problem as a statistical inference problem and applying a general hashing strategy for efficient inference. Since we use pair-HMMs to model the joint distribution of aligned reads, the final hidden state of a pair of aligned reads can be a deletion, insertion, or match/mismatch. Thus, there are three pairs of subsequences that are predecessors of a sequence pair (note that in traditional HMM, there is only a single predecessor). Thus the hashes for pair-HMMs are decision graphs (Fig. S4) rather than decision trees.

The sequence alignment problem has been modeled as a statistical inference problem, and dynamic programming solutions have been proposed to achieve optimal inference. Since these statistical models can be parameterized by different insertion, deletion, and mutation rates, the dynamic programming solutions are capable of discovering alignments between distant homologs. Still dynamic programming is very slow and runtime grows linearly with the size of reference. Inspired by locality sensitive hashing, in this paper we introduced the distribution sensitive bucketing paradigm for efficient subquadratic inference in latent variable models. In this paradigm, given a joint and an independent model for true and random pair of sequences, the sequences are mapped to buckets in a way that pairs of sequences from the joint model are more likely to fall into the same bucket than random ones. By focusing on pairs that fall into the same bucket, Distribution Sensitive Bucketing (DSB) can not only find read/genome pairs over a wide range of insertion, deletion, and mutation rates, but also avoids many unnecessary computations.

Brute force techniques (e.g. LALIGN) are incapable of handling large scale alignment tasks. On the other hand, faster methods (e.g. Minimap2 and DALIGNER) can not handle larger mismatch, insertion and deletion rates. DSB is capable of recovering a significant portion of alignments with a reasonable computation time. We do not advise using DSB in cases where error rates are small (e.g. Illumina reads). In these scenarios, our benchmark shows that Minimap2 outperforms other methods while maintaining high accuracy. In case of erroneous reads or distant homologs, DSB algorithm achieves high sensitivity, while being orders of magnitude faster than the brute force alternatives.

An alternative strategy to the MinHash approach used in MHAP is densified MinHash16. However, our results show that the main disadvantage of MinHash based methods is in their low sensitivity and high false positive rate. Switching to densified MinHash can make this problem more severe, since it has been reported that densified MinHash slightly improves the speed of MinHash while sacrificing sensitivity17.

Currently, DSB is based on a simplistic assumption that insertions and deletions occur independently. The current approach paves the path toward more sophisticated models, such as affine gap penalties and non-uniform distribution of nucleotides.

Methods

Inference problem for hidden Markov models

Consider an HMM with a finite alphabet of hidden states and an alphabet of observations where and are finite discrete alphabets. For any pair of sequences , where , let

| 3 |

| 4 |

| 5 |

where denotes hidden states, denotes the emission probabilities, denotes the transition probabilities, and denotes the initial probability of the hidden state . Here all multi-dimensional probabilities are shown with , while scalar probabilities are shown with P. In the context of sequence alignment, one can treat Y as the query sequence and X as a sequence in the database. The inference problem for latent variable models is defined as follows: Given a latent variable model , a set of sequences , and a query sequence , find satisifying:

| 6 |

where is the product of background probabilities of each base and insertions/deletions. In the case where is a HMM defined in (3)-(5), the naive method to solve this optimization problem requires computing for each using the classic forward algorithm18, and then finding that maximizes (see Supplementary Algorithm 4). However, since the complexity of the forward algorithm is , the complexity of this naive method is (computing P(Y|X) for all ). This solution thus cannot scale to cases where the number of sequences N is large. Also note that the forward algorithm is not applicable to the string alignment problem with insertions and deletions.

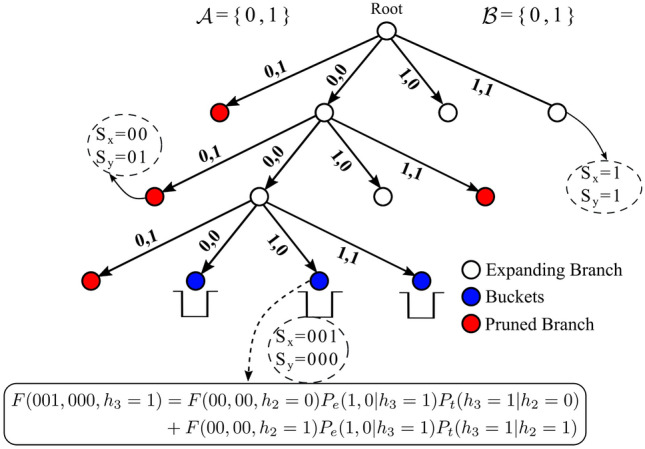

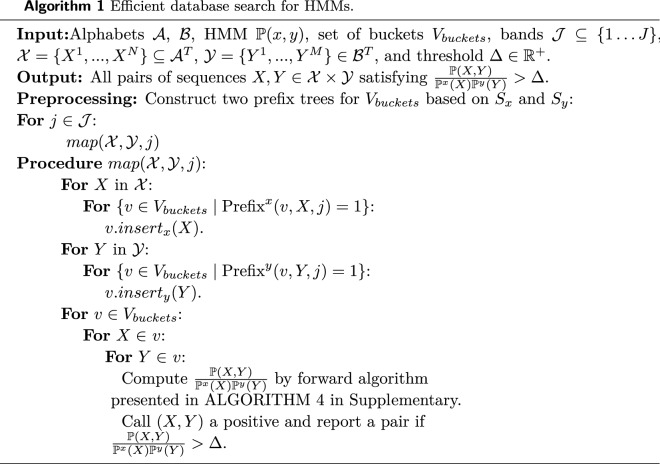

Database search by distribution sensitive bucketing for HMMs

Here we introduce decision trees with bucket data structures, and show how they can be used for mapping database sequences and query sequences to buckets in a way that for pairs (X, Y) with higher , the chance of mapping to the same bucket is higher. Intuitively, each node in the tree contains two subsequences and , and (resp. ) falls in a bucket node in the tree if (resp. ) is its prefix. We then use this data structure to design an algorithm that solves the inference problem in the case of HMMs.

The data structure consists of a decision tree G and a set of distinguished leaf nodes of G called “buckets”. Decision tree G is a directed tree with a set of nodes V. Each node is associated with two sequences and (called x-sequence and y-sequence of v). For each , and have the same size, and this size is equal to their depth in the tree. For the root node, , , where is the empty string. Each node is either a leaf node, or has exactly children. For each child w of v corresponding to , its x-sequence and y-sequence are defined as follows:

| 7 |

where plus sign stands for string concatenation. In other words, and are formed by attaching a and b to the end of and strings, respectively. The edge from v to w is indexed by (a, b). Furthermore the set of D buckets is a subset of the leaf nodes of the tree, where D is referred to as the number of buckets. Later we will discuss how to set D and in order to optimize accuracy and efficiency. Figure 7 shows the schematic of this data-structure for . Note that the proposed tree differs from suffix/prefix trees in that they are constructed from two distinct databases of strings, and each node in the tree corresponds to a pair of substrings.

Figure 7.

Construction of the HMM decision tree. Examples of accepted, pruned, and branched nodes are shown. Refer to Algorithm 3 for further details of the tree construction.

Given the decision tree G and a set of buckets , we define a natural bucketing strategy for database and query sequences in and . Let’s define:

| 8 |

| 9 |

A sequence maps to a bucket v in position if and only if is a subsequence of X starting at j, i.e. . A sequence Y is mapped similarly. For example if and , then while for any . We will refer to each position j, as a band. We further refer to the set of bands as (here ). Intuitively, only a small ratio of true positives fall into the same bucket in a single band, and therefore J bands are needed in order to guarantee a true positive rate nearly one.

Let’s define the bucketing function (resp. ) as the set all the buckets in the decision tree that a sequence belongs to from its j-th band. That is:

| 10 |

| 11 |

Given these buckets we can then solve the inference problem using Algorithm 1. In summary, Algorithm 1 first maps all sequences in and to appropriate buckets, which are obtained by growing and pruning the decision tree G (Fig. S3). Then, it examines all pairs in each bucket using forward algorithm to compute , and , and uses the likelihood ratio to determine if the pairs are likely to have an alignment. Since only pairs in each bucket are examined, the complexity is much lower than the brute force strategy from Algorithm 4.

In the special case where for every bucket v, , Algorithm 1 will be restricted to finding exact match substrings based on prefix trees. Enforcing exact match substrings could results in high false negative rates, as distance homolog sequences might not share any substring of certain length. However, by constructing optimal trees and buckets that tolerate errors ( not exactly equal to ), Algorithm 1 can achieve lower false negative rates than methods that enforce exact matches.

Another naive choice of the decision tree and buckets is a complete tree with all leaf nodes selected as buckets. In this case, every pair of sequences will share a bucket, and therefore the complexity of Algorithm 1 would be the same as the brute force algorithm. Now we provide a complexity and true positive rate analysis of Algorithm 1. We further present an algorithm to select a decision tree and a set of buckets, , that minimizes the complexity in Supplementary Sect. 9.6. This algorithm iteratively grows a tree and prunes nodes/buckets that do not have a sufficiently high probability of containing pairs of sequences generated under the joint distribution.

Complexity and true positive rate analysis

In the Supplementary Materials, we show proofs for the complexity and true positive rate under the HMM model. True positive rate (TPR) of Algorithm 1 is the fraction of (X, Y) pairs jointly generated under the HMMs that are captured in the same bucket. Using J bands we have

| 12 |

where is defined as the true positive rate in band j. In order to have a nearly one true positive rate, i.e. for a small , we select

| 13 |

With (13), the overall expected computational complexity of Algorithm 1 is

| 14 |

where M is the number of sequences in , N is the number of sequences in , is defined as the false positive rate in band j, and are defined as expected numbers of buckets that sequences X and Y in band j fall into, respectively.

Sequence alignment model

Algorithm 1 is limited to standard HMMs, and does not generalize to other latent variable models. Unfortunately, standard HMMs are not sufficient for modelling the important features of the sequence alignment problem, as they can not model insertions and deletions. Here, we define a natural probabilistic model (very similar to pair-HMMs) that we refer to as the sequence alignment model, that takes into account insertions, deletions, and matches/mismatches between pairs of sequences. Our probabilistic model first generates a latent variable H, e.g. . Here, “m”, “i”, and “d” represent match/mismatch, insertion, and deletion respectively. Given H, the generative model generates pre-sequences and , e.g. and . Note that whenever the entry of H is “i” (“d”), the corresponding entry (resp. ) is “−” . The model generates X and Y by removing all “−” from and (Fig. 2). In this example, we have:

| 15 |

| 16 |

with , and , and are positive, (Fig. 2). Here , and are the emission probabilities when the latent variable is match, deletion, and insertion respectively. , , are probabilities of the latent variables. Note that represents the probability for both match and mismatch.

For simplicity we have assumed that insertion, deletion, and match/mismatch events are happening independent of each other. In practice, these events are usually not independent, and a HMM is used for modeling intervals of insertions and deletions, that is equivalent to using an affine gap penalty. While in this paper we will focus on the independent insertion and deletion model, the algorithms presented can generalize to arbitrary priors.

So far, we have shown that the sequence alignment model can be stated as a special case of latent variable models. We will show how the algorithms we have developed for HMMs (without insertions/deletions) can be adapted to the case of sequence alignment models.

Efficient sequence alignment by sub-quadratic inference in sequence alignment model

Here we develop analogous methods to solve the inference problem in case of the sequence alignment model. We present a method to align sequences via the bucketing strategy (Algorithm 4), and we detail how to construct optimal buckets to minimize the runtime (Algorithm 5). Our model relies on pair-HMMs, which are different from standard HMMs in that they can also incorporate insertions and deletions. An efficient bucketing strategy is designed using a decision graph structure (Fig. S4). Decision graphs are iteratively grown and pruned, in order to optimize the theoretical complexity (Supplementary Sect. 9.6).

Supplementary Information

Acknowledgements

The work of M.M., C.S., and A.G.D. and H.M. was supported by a National Institute of Health Award DP2GM137413. G.M. was supported by US National Institutes of Health Award R01HG012470.

Author contributions

M.M., C.S. and A.G.D. designed the model. M.M. and C.S. implemented the model. M.M. and C. S. performed benchmarking experiments and analyzed the data. M.M. G.M and H.M. wrote the manuscript. H.M. supervised the project.

Data availability

All data generated or analysed during this study are included in this published article and its supplementary information files.

Code availability

The command-line version of DSB, available from https://github.com/mohimanilab/DistributionSensitiveBucketing, is capable of conducting searches in both all versus all mode (when searching many reads against each other) and all versus one mode (searching many reads against a single genome).

Competing interests

H.M. is a co-founder and has equity interest from Chemia Biosciences. The other authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

These authors contributed equally: Mihir Mongia and Chengze Shen.

Supplementary Information

The online version contains supplementary material available at 10.1038/s41598-023-34257-x.

References

- 1.Potter SC, et al. Hmmer web server: 2018 update. Nucleic Acids Res. 2018;46:W200–W204. doi: 10.1093/nar/gky448. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Zhan Q, et al. Probpfp: A multiple sequence alignment algorithm combining hidden Markov model optimized by particle swarm optimization with partition function. BMC Bioinform. 2019;20:1–10. doi: 10.1186/s12859-019-3132-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Slater GSC, Birney E. Automated generation of heuristics for biological sequence comparison. BMC Bioinform. 2005;6:1–11. doi: 10.1186/1471-2105-6-31. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Mak D, Gelfand Y, Benson G. Indel seeds for homology search. Bioinformatics. 2006;22:e341–e349. doi: 10.1093/bioinformatics/btl263. [DOI] [PubMed] [Google Scholar]

- 5.Berlin K, et al. Assembling large genomes with single-molecule sequencing and locality-sensitive hashing. Nat. Biotechnol. 2015;33:623–630. doi: 10.1038/nbt.3238. [DOI] [PubMed] [Google Scholar]

- 6.Li H. Minimap2: Pairwise alignment for nucleotide sequences. Bioinformatics. 2018;34:3094–3100. doi: 10.1093/bioinformatics/bty191. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Myers, G. Efficient local alignment discovery amongst noisy long reads. Workshop on Algorithms in Bioinformatics8701 (2014).

- 8.Chaisson M, Tesler G. Mapping single molecule sequencing reads using basic local alignment with successive refinement (blasr): Application and theory. BMC Bioinform. 2012;13:238. doi: 10.1186/1471-2105-13-238. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Steinegger M, Söding J. Mmseqs2 enables sensitive protein sequence searching for the analysis of massive data sets. Nat. Biotechnol. 2017;35:1026–1028. doi: 10.1038/nbt.3988. [DOI] [PubMed] [Google Scholar]

- 10.Sović I, et al. Fast and sensitive mapping of nanopore sequencing reads with graphmap. Nat. Commun. 2016;7:11307. doi: 10.1038/ncomms11307. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Li W, Godzik A. Cd-hit: A fast program for clustering and comparing large sets of protein or nucleotide sequences. Bioinformatics. 2006;22:1658–1659. doi: 10.1093/bioinformatics/btl158. [DOI] [PubMed] [Google Scholar]

- 12.Jain C, et al. Weighted minimizer sampling improves long read mapping. Bioinformatics. 2020;36:i111–i118. doi: 10.1093/bioinformatics/btaa435. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Shrivastava, A. & Li, P. In defense of minhash over simhash. In Artificial Intelligence and Statistics 886–894 (2014).

- 14.Ono Y, Asai K, Hamada M. Pbsim: Pacbio reads simulator-toward accurate genome assembly. Bioinformatics. 2013;29:119–121. doi: 10.1093/bioinformatics/bts649. [DOI] [PubMed] [Google Scholar]

- 15.Madeira F, et al. The embl-ebi search and sequence analysis tools apis in 2019. Nucleic Acids Res. 2019;47:636–641. doi: 10.1093/nar/gkz268. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Shrivastava A. Optimal densification for fast and accurate minwise hashing. Proc. Int. Conf. Mach. Learn. 2017;70:3154–3163. [Google Scholar]

- 17.Shrivastava, A. Optimal densification for fast and accurate minwise hashing. In International Conference on Machine Learning 3154–3163 (PMLR, 2017).

- 18.Rabiner LR, Juang B-H. An introduction to hidden Markov models. IEEE ASSP Mag. 1986;3:4–16. doi: 10.1109/MASSP.1986.1165342. [DOI] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Data Availability Statement

All data generated or analysed during this study are included in this published article and its supplementary information files.

The command-line version of DSB, available from https://github.com/mohimanilab/DistributionSensitiveBucketing, is capable of conducting searches in both all versus all mode (when searching many reads against each other) and all versus one mode (searching many reads against a single genome).