Abstract

Contemporary pose estimation methods enable precise measurements of behavior via supervised deep learning with hand-labeled video frames. Although effective in many cases, the supervised approach requires extensive labeling and often produces outputs that are unreliable for downstream analyses. Here, we introduce “Lightning Pose,” an efficient pose estimation package with three algorithmic contributions. First, in addition to training on a few labeled video frames, we use many unlabeled videos and penalize the network whenever its predictions violate motion continuity, multiple-view geometry, and posture plausibility (semi-supervised learning). Second, we introduce a network architecture that resolves occlusions by predicting pose on any given frame using surrounding unlabeled frames. Third, we refine the pose predictions post-hoc by combining ensembling and Kalman smoothing. Together, these components render pose trajectories more accurate and scientifically usable. We release a cloud application that allows users to label data, train networks, and predict new videos directly from the browser.

1. Introduction

Behavior is our window into the processes that underlie animal intelligence, ranging from early sensory processing to complex social interaction [1]. Methods for automatically quantifying behavior from video [2–4] have opened the door to high-throughput experiments that compare animal behavior across pharmacological [5] and disease [6] conditions. Moreover, when behavior is carefully monitored, motor signals are revealed in unexpected brain areas, even regions classically defined to be purely sensory [7, 8].

Pose estimation methods based on fully-supervised deep learning have emerged as a workhorse for behavioral quantification [9–13]. This technology reduces high-dimensional videos of behaving animals to low-dimensional time series of their poses, defined in terms of a small number of user-selected keypoints per video frame. Three steps are required to accomplish this feat. Users first create a training dataset by manually labeling poses on a subset of video frames; typically hundreds or thousands of frames are labeled to obtain reliable pose estimates. A neural network is then trained to predict poses that match user labels. Finally, the network is run on a new video to predict a pose for each frame separately. This process of labeling-training-prediction can be iterated until performance is satisfactory. The resulting pose estimates are used extensively in downstream analyses including quantifying predefined behavioral features (e.g., gait features such as stride length, or social features such as distance between subjects), estimation of neural encoding and decoding models, classification of behaviors into discrete “syllables,” and closed-loop experiments [14–19].

Although the supervised paradigm is effective in many cases, a number of critical roadblocks remain. To start, the labeling process can be laborious, especially when labeling complicated skeletons on multiple views. Even with large labeled datasets, trained networks are often unreliable: they output “glitchy” predictions that require further manipulation before downstream analyses [20, 21], and struggle to generalize to animals and sessions that were not represented in their labeled training set. Even well-trained networks that achieve low pixel error on a small number of labeled test frames can still produce error frames that hinder downstream scientific tasks. Manually identifying these error frames is like finding a needle in a haystack [22]: errors persist for a few frames at a time whereas behavioral videos can be hours long. Automatic approaches – currently limited to filtering low-confidence predictions and temporal discontinuities – can easily miss scientifically critical errors.

To improve the robustness and usability of animal pose estimation, we present Lightning Pose, a solution at three levels: modeling, software, and a cloud-based application.

First, we leverage semi-supervised learning, which involves training networks on both labeled frames and unlabeled videos, and is known to improve generalization and data-efficiency [23]. On unlabeled videos, the networks are trained to minimize a number of unsupervised losses that encode our prior beliefs about moving bodies: poses should evolve smoothly in time, be physically plausible, and be localized consistently when seen from multiple views. In addition, we leverage unlabeled frames in a Temporal Context Network architecture, which instead of taking in a single frame at a time, processes each frame with its neighboring (unlabeled) frames. Our resulting models outperform their purely supervised counterparts across a range of metrics and datasets, providing more reliable predictions for downstream analyses.

We further improve our networks’ predictions using a general Bayesian post-processing approach, which we term the Ensemble Kalman Smoother: we aggregate (“ensemble”) the predictions of multiple networks – which is known to improve their accuracy and robustness [24, 25] – and model those aggregated predictions with a spatially-constrained Kalman smoother that takes their collective uncertainty into account.

We implemented these tools in a deep learning software package that capitalizes on recent advances in the deep learning ecosystem. Open-source technologies allow us to outsource engineering-heavy tasks (such as GUI development, or training orchestration), which simplifies our package and allows users to focus on scientific modeling decisions. We name our package Lightning Pose, as it is based on the PyTorch Lightning deep learning library [26]. Unlike most existing packages, Lightning Pose is video-centric and built for manipulating large videos directly on the GPU, to support our semi-supervised training (and enable fast evaluation on new videos). Our modular design allows users to quickly prototype new training objectives and network architectures without affecting any aspects of training.

Finally, to make pose estimation tools accessible to the broader audience in life sciences, their adoption should not depend on programming skills or access to specialized hardware. Therefore, we developed a no-install cloud application that runs in the browser and allows users to perform the entire cycle of pose estimation: uploading raw videos to the cloud, annotating frames, training networks, and diagnosing the reliability of the results using our unsupervised loss terms.

2. Results

We first describe the dominant supervised approach to pose estimation and illustrate its drawbacks, especially when applied to new subjects and sessions. Next, we introduce our unsupervised losses and Temporal Context Network architecture. We illustrate that these unsupervised losses can be used to identify outlier predictions in unlabeled videos, and find that networks trained with these losses lead to more reliable tracking compared to purely supervised models. We then introduce the Ensemble Kalman Smoother post-processing approach and show that it further improves tracking performance. We proceed to apply our combined methods to the International Brain Lab datasets, and show that they improve pupil and paw tracking, thereby improving neural decoding. Finally, we showcase our software package and cloud-hosted application. Further details on our models, losses, and training protocol are provided in the Methods.

2.1. Supervised pose estimation and its limitations

The leading packages for animal pose estimation – DeepLabCut [9], SLEAP [10], DeepPoseKit [11], and others – differ in architectures and implementation but all perform supervised heatmap regression on a frame-by-frame basis (Fig. 1A). A standard model is composed of a “backbone” that extracts features for each frame (e.g., a ResNet-50 network) and a “head” that uses these features to predict body part location heatmaps. Networks are trained to match their outputs to manual labels.

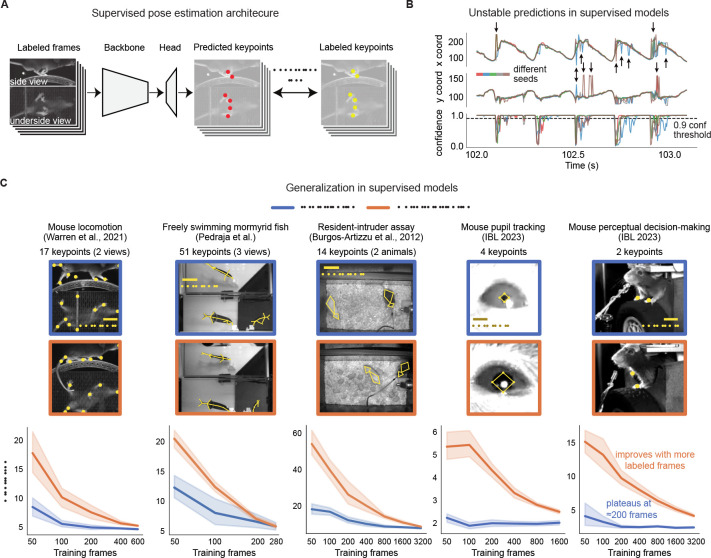

Figure 1: Fully-supervised pose estimation often outputs unstable predictions and requires many labels to generalize to new animals.

A. Diagram of a typical pose estimation model trained with supervised learning, illustrated using the mirror-mouse dataset [16]. A dataset is created by labeling keypoints on a subset of video frames. A convolutional neural network, consisting of a “backbone” and a prediction “head,” takes in a batch of frames as inputs, and predicts a set of keypoints for each frame. It is trained to minimize the distance from the labeled keypoints. B. Predictions from five supervised DeepLabCut networks (trained with 631 labeled frames on the mirror-mouse dataset), for the left front paw position (top view) during one second of running behavior. Top: x-coordinate; Middle: y-coordinate; Bottom: confidence, with a standard 0.9 threshold shown as a dashed line. The predictions demonstrate occasional discontinuities and disagreements across the five networks, only some of which are flagged by low confidence (Supplementary Video 1). C. To generalize robustly to unseen animals, many more labels are required. Top row shows five example datasets. Each blue image is an example taken from the in-distribution (InD) test set, which contains new images of animals that were seen in the training set. The orange images are test examples from unseen animals altogether, which we call the out-of-distribution (OOD) test set. Bottom row shows data-efficiency curves, measuring test-set pixel error as a function of the training set size. InD pixel error is in blue and OOD in orange. Line plots show the mean pixel error across all keypoints and frames ± standard error over n=10 random subsets of InD training data.

Training supervised networks from a random initialization requires a large number of labeled frames. Existing methods [9] circumvent this requirement by relying on transfer learning: pre-training a network on one task (e.g., image classification on ImageNet, with over one million labeled frames), and fine-tuning it on another task, in this case pose estimation, using far fewer labeled frames (~100s–1000s). Typically, the backbone is fine-tuned and the head is trained from a random initialization.

After training, a fixed model is evaluated on a new video by predicting pose on each frame separately. Each predicted keypoint on each frame is accompanied by an estimate of the network’s confidence for that prediction; often low-confidence estimates are dropped in a post-processing step to reduce tracking errors. Even when trained with many labeled frames, pose estimation network outputs may still be erroneous. We highlight this point using the “mirror-mouse” dataset, which features a head-fixed mouse running on a wheel and performing a sensory-guided locomotion task ([16]; see Methods). Using a camera and a bottom mirror, the mouse’s side and underside are observed simultaneously, recorded at 250 frames per second. 17 body parts are tracked, including all four paws in both views. We trained five DeepLabCut networks on 631 labeled frames (for each network, we used a different random seed to split the labeled frames into train and test sets).

Figure 1B shows the time series of the estimated left hind paw position during one second of a running behavior for each of the five networks (in colors). Each time series exhibits the expected periodic pattern (due to the running gait), but includes numerous “glitches,” some of which are undetected by the networks’ confidence. This collection of five networks – also known as a “deep ensemble” [24] – outputs highly variable predictions on many frames, especially in challenging moments of ambiguity or occlusion (Supplementary Video 1). We will later use this ensemble variance as a proxy for frame “difficulty.”
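As a minimal sketch of how ensemble variance could serve as a per-frame difficulty proxy, the snippet below computes the ensemble mean and the summed x/y variance across networks for a single keypoint. The function name `ensemble_stats`, the nested-list data layout, and the toy values are assumptions made for illustration; they are not taken from the paper or its software.

```python
from statistics import mean, pvariance


def ensemble_stats(preds):
    """Per-frame ensemble mean and variance for one keypoint.

    preds[m][t] is the (x, y) prediction of network m on frame t
    (a hypothetical layout chosen for this sketch).
    Returns (means, variances), where variances[t] = var_x + var_y.
    """
    n_frames = len(preds[0])
    means, variances = [], []
    for t in range(n_frames):
        xs = [net[t][0] for net in preds]
        ys = [net[t][1] for net in preds]
        means.append((mean(xs), mean(ys)))
        variances.append(pvariance(xs) + pvariance(ys))
    return means, variances


# Toy example: three networks, three frames; the third network "glitches" on frame 1.
preds = [
    [(10.0, 5.0), (12.0, 5.0), (14.0, 5.0)],
    [(10.0, 5.0), (12.0, 5.0), (14.0, 5.0)],
    [(10.0, 5.0), (42.0, 5.0), (14.0, 5.0)],
]
means, variances = ensemble_stats(preds)

# The frame on which the networks disagree most is treated as the "hardest".
hardest_frame = max(range(len(variances)), key=variances.__getitem__)
```

Frames on which the networks agree have zero variance, while a single disagreeing network is enough to make a frame stand out.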

2.2. Supervised networks need more labeled data to generalize

It is standard to train a pose estimator using a representative sample of subjects, evaluate performance on held-out examples from that sample (“In Distribution” test set, henceforth InD), and then deploy the network for incoming data. The incoming data may include new subjects, seen from slightly different angles and lighting conditions (“Out of Distribution” test set, henceforth OOD). Differences between the InD and OOD test sets are termed “OOD shifts”; building models that are robust to such shifts is a contemporary frontier in machine learning research [27, 28].

We analyze five datasets: the “mirror-mouse” dataset introduced above [16], a freely swimming Mormyrid fish imaged with a single camera and two mirrors (for three views total; “mirror-fish,” Supplementary Fig. 1), a resident-intruder assay (“CRIM13;” two camera views are available but we consider the top view only; [29]), paw tracking in a head-fixed mouse (“IBL-paw;” three camera views are available but we only use the two side cameras; [30]), and a crop of the pupil area in IBL-paw (“IBL-pupil;” we use just one camera view). We split each labeled dataset into two cohorts of subjects, InD and OOD (see dataset and split details in Methods and Supplementary Table 1).

We train supervised heatmap regression networks that use a pretrained ResNet-50 backbone, similar to DeepLabCut (see Methods for architectural details), on InD data with an increasing number of labeled frames. Ten networks are trained per condition, each on a different random subset of InD data. We evaluate the networks’ performance on held-out InD and OOD labeled examples.

In Fig. 1C, we first replicate the observation that InD test-set error (blue curve) plateaus starting from ~200 labeled frames [18]. From looking at this curve in isolation, it could be inferred that additional manual annotation is unnecessary. However, the OOD error curve (orange) is both overall higher, and keeps steeply declining as more labels are added. To obtain an OOD error comparable to InD, many more labels will be needed. This larger label requirement is consistent with recent work showing that ~50k labeled frames are needed to robustly track ape poses [31], and that mouse face tracking networks need to be explicitly fine-tuned on labeled OOD data to achieve good performance [32]. For scarce labels, we find the gap between InD and OOD errors to be so large for some datasets that it renders prediction on new animals unusable for many downstream analyses.

To address these limitations, we propose the Lightning Pose framework, comprising two components: semi-supervised learning and a Temporal Context Network architecture, which we describe next.

2.3. Semi-supervised learning via spatiotemporal constraints

Most animal pose estimation algorithms treat body parts as independent in time and space. Moreover, they do not utilize the vast amounts of available unlabeled videos for training the networks; instead, most video data are used just at prediction time. These two observations offer an opportunity for semi-supervised learning [23]. We thus train a network on both labeled frames (supervised) and large volumes of unlabeled videos (unsupervised). During training, the network is penalized whenever its pose predictions violate a set of spatiotemporal constraints on the unlabeled videos. We use “soft” constraints, i.e., the network is penalized only for severe constraint violations (with a controllable threshold parameter). The unsupervised losses are applied only during training and not during video prediction. As a result, after training, a semi-supervised model predicts a video as quickly as its fully-supervised counterpart.

Our semi-supervised pose estimation paradigm is depicted in Fig. 2A. The top row, shaded in gray, is simply the supervised pose estimation approach à la DeepLabCut. In each training iteration, the network additionally receives an unlabeled video clip (selected at random from a queue of videos), and outputs a time series of pose predictions – one pose vector for each frame (bottom row). Those predictions are subjected to our unsupervised losses. We describe these unsupervised losses next.

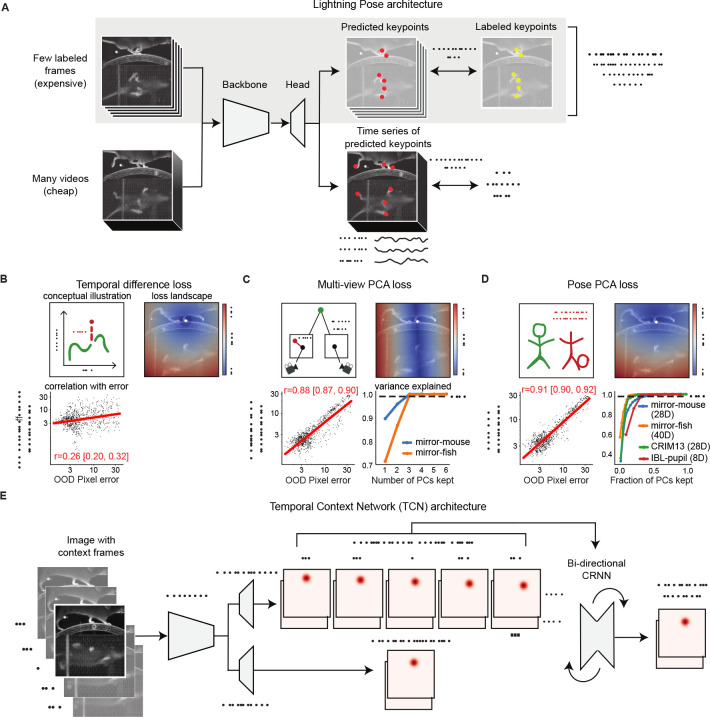

Figure 2: Lightning Pose exploits unlabeled data in pose estimation model training.

A. Diagram of our semi-supervised model that contains supervised (top row) and unsupervised (bottom row) components. B. Temporal difference loss penalizes jump discontinuities in predictions. Top left: illustration of a jump discontinuity. Top right: loss landscape for frame t given the prediction at t − 1 (white diamond), for the left front paw (top view). The loss increases further away from the previous prediction, and the dark blue circle corresponds to the maximum allowed jump, below which the loss is set to zero. Bottom left: correlation between temporal difference loss and pixel error on labeled test frames. C. Multi-view PCA loss constrains each multi-view prediction of the same body part to lie on a three-dimensional subspace found by Principal Component Analysis (PCA). Top left: illustration of a 3D keypoint detected on the imaging plane of two cameras. The left detection is inconsistent with the right. Top right: loss landscape for the left front paw (top view; white diamond) given its predicted location on the bottom view. The blue band of low loss values is an “epipolar line” on which the top-view paw could be located. Bottom left: multi-view PCA loss is strongly correlated with pixel error. Bottom right: three PCs explain >99% of label variance on multi-view datasets. D. Pose PCA loss constrains predictions to lie on a low-dimensional subspace of plausible poses, found by PCA. Top left: illustration of plausible and implausible poses. Top right: loss landscape for the left front paw (top view; white diamond) given all other keypoints, which is minimized around the paw’s actual position. Bottom left: Pose PCA loss is strongly correlated with pixel error. Bottom right: cumulative variance explained versus fraction of PCs kept. Across four datasets, >99% of the variance in the pose vectors can be explained with <50% of the PCs. E. The Temporal Context Network processes each labeled frame with its adjacent unlabeled frames, using a bi-directional convolutional recurrent neural network. It forms two sets of location heatmap predictions, one using single-frame information and another using temporal context.

2.4. Temporal difference loss

The first spatiotemporal constraint we introduce is also one held by 4-month-old infants: objects should move continuously [33] and not jump too far between video frames. We define the temporal difference loss for each body part as the Euclidean distance between consecutive predictions in pixels. Similar losses have been used by several practitioners to detect outlier predictions post-hoc [16, 32], whereas our goal here, following [34], is to incorporate these penalties directly into network training to achieve more accurate network output. Figure 2B illustrates this penalty: the cartoon in the left panel indicates a jump discontinuity we would like to penalize. In the right panel we plot the loss landscape, evaluating the loss for every pixel in the image. The paw’s previous predicted position is depicted as a white diamond. Observe a ball of zero-loss values centered at the diamond, whose radius (in pixels) is the maximum allowed jump we set for this dataset; this threshold can be set depending on the frame rate, frame size, the camera’s distance from the subject, and how quickly or jerkily the subject moves. Outside the ball, the loss increases as we move farther away from the previous prediction.
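The thresholded penalty described above can be sketched in a few lines. This is an illustrative version only: the hinge form `max(0, jump - max_jump)` is an assumption consistent with a zero-loss ball and a loss that grows outside it, and the names `temporal_difference_loss` and `max_jump` as well as the toy trajectory are hypothetical.

```python
import math


def temporal_difference_loss(track, max_jump):
    """Soft temporal penalty for one keypoint.

    track: list of (x, y) predictions in pixels, one per frame.
    max_jump: radius (pixels) of the zero-loss ball around the previous prediction.
    Returns one loss value per frame transition (len(track) - 1 values).
    """
    losses = []
    for (x0, y0), (x1, y1) in zip(track, track[1:]):
        jump = math.hypot(x1 - x0, y1 - y0)       # Euclidean distance in pixels
        losses.append(max(0.0, jump - max_jump))  # assumed hinge: zero inside the ball
    return losses


# Toy trajectory: a small step, a 100-pixel jump, then another small step.
track = [(100.0, 50.0), (103.0, 54.0), (163.0, 134.0), (165.0, 134.0)]
losses = temporal_difference_loss(track, max_jump=20.0)
```

Only the middle transition exceeds the allowed jump, so it is the only one that contributes to the loss.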

If our losses are indeed viable proxies for pose prediction errors, they should be correlated with pixel errors in test frames for which we have ground-truth annotations. To test this, we trained a supervised model with 75 labeled frames, and computed the temporal difference loss on fully-labeled OOD frames. We anticipate a mild correlation with pixel error: prediction errors may persist across multiple frames and exhibit low temporal difference loss; in periods of fast motion, temporal difference loss may be high, yet keypoints may remain easily discernible. Indeed, in the bottom left panel of Fig. 2B, we see that the temporal difference loss is mildly correlated with pixel error on these frames (log-linear regression: Pearson r = 0.26, 95% CI = [0.20, 0.32]; each point is the mean across all keypoints for a given frame). As a comparison, confidence is a more reliable predictor of pixel error (Pearson r = −0.54, 95% CI = [−0.59, −0.49]).

2.5. Multi-view PCA loss

Our cameras see three-dimensional bodies from a two-dimensional perspective. It is increasingly common to record behavior using multiple synchronized cameras, train a network to estimate pose independently in each 2D view, and then use standard techniques post-hoc to fuse those 2D pose predictions into a 3D pose [20, 35]. This approach has two limitations. First, to reconstruct 3D poses, one needs to calibrate each camera, that is, to precisely infer where it is in the 3D world and carefully model its intrinsic parameters such as focal length and distortion. This typically involves filming a calibration board from all cameras after any camera adjustment; this adds experimental complexity and may be challenging or unreliable in some geometrically constrained experimental setups built for small model organisms. Second, localizing a body part in one view will constrain its allowed location in all other views [36], and we want to exploit this structure during training to obtain a stronger network; the post-hoc 3D reconstruction does not take advantage of this important structure to improve network training.

We use a “multi-view PCA” loss that constrains the predictions for unlabeled videos to be consistent across views [37, 38], while bypassing the need for complicated camera calibration. Each multi-view prediction (containing width-height coordinates for a single body part seen from multiple views) is compressed to three dimensions via simple principal components analysis (PCA; see Methods), and then this three-dimensional representation is linearly projected back into the original pixel coordinates (henceforth, “PCA reconstruction”). If the predictions are consistent across views and nonlinear camera distortion is negligible, no information should be lost when linearly compressing to three dimensions. We define the multi-view PCA loss as the pixel error between the original versus the PCA-reconstructed prediction, averaged across keypoints and views.
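The reconstruction described above can be written compactly. The notation here is assumed for illustration rather than taken from the Methods: $y_k \in \mathbb{R}^{2V}$ stacks the predicted width-height coordinates of keypoint $k$ across $V$ views, $\mu$ is the mean of the labeled multi-view vectors, and the rows of $P \in \mathbb{R}^{3 \times 2V}$ are the top three orthonormal principal directions. Any soft threshold applied to the loss is omitted.

$$
\hat{y}_k = P^\top P\,(y_k - \mu) + \mu,
\qquad
\mathcal{L}_{\text{multi-view}} = \frac{1}{KV} \sum_{k=1}^{K} \sum_{v=1}^{V} \left\lVert y_{k,v} - \hat{y}_{k,v} \right\rVert_2 ,
$$

where $y_{k,v} \in \mathbb{R}^2$ is the part of $y_k$ belonging to view $v$. If the views are mutually consistent, $y_k - \mu$ already lies in the span of the rows of $P$, so $\hat{y}_k = y_k$ and the loss is zero.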

This simple linear approach will not be robust to substantial nonlinear distortions coming either from the lens or from a water medium. In both the mirror-mouse (two views) and mirror-fish (three views) datasets, distortions were minimized by placing the camera far from the subject (~ 1.1 and ~ 1.7 meters respectively). Indeed, in both cases, three PCA dimensions explain > 99.9% of the multi-view ground truth label variance (Fig. 2C, bottom right).

Figure 2C (top left) provides a cartoon illustration of the idea we have just described: an inconsistent detection by the left camera will result in high multi-view loss. In the top right panel we compute the loss landscape for the left front paw on the top view, given its position in the bottom view. According to principles of multiple-view geometry, a point identified in one camera constrains the corresponding point in a second camera to a specific line, known as the “epipolar line” [36]. Indeed, the loss landscape exhibits a line of low loss values (blue) that intersects with the paw’s true location. Finally, as we did for the temporal difference loss, we compute the correlation between the multi-view loss and objective prediction errors for a test-set of labeled OOD frames. The multi-view loss is strongly correlated with pixel error (Pearson r = 0.88, 95% CI = [0.87, 0.90]), much more so than the temporal difference loss or confidence, motivating its use both as a post-hoc quality metric and as a penalty during training.

2.6. Pose PCA loss

Not all body configurations are feasible, and of those that are feasible, many are unlikely. Even diligent yoga practitioners will find their head next to their foot only on rare occasions (Fig. 2D, top left). In other words, in many pose estimation problems there are fewer degrees of freedom than there are body parts. The Pose PCA loss constrains the full predicted pose (over all keypoints) to lie on a low-dimensional subspace of feasible and likely body configurations. It is defined as the pixel error between an original pose prediction and its reconstruction after low-dimensional compression (see Methods).

Our loss is inspired by the success of low-dimensional models in capturing biological movement [39], ranging from worm locomotion [40] to human hand grasping [41]. We similarly find that across four of our datasets, 99% of the pose variance can be explained with far fewer dimensions than the number of pose coordinates (Fig. 2D, bottom right) – mirror-mouse: 14/28 components; mirror-fish: 8/40; CRIM13: 8/28; IBL-pupil: 3/8 (IBL-paw only contains four dimensions). The effective pose dimensionality depends on the complexity of behavior, the keypoints selected for labeling, and the quality of the labeling. Sets of spatially-correlated keypoints will have a lower effective dimension (relative to the total number of keypoints); label errors tend to reduce these correlations and inflate the effective dimension.
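A rough NumPy sketch of this idea follows: fit PCA on labeled poses, keep enough components to explain 99% of the variance, and score a predicted pose by the pixel error between it and its low-dimensional reconstruction. The function names, the toy data (four keypoints that only translate together), and the omission of any soft threshold are assumptions made for illustration, not the package's implementation.

```python
import numpy as np


def fit_pose_pca(labeled_poses, var_explained=0.99):
    """labeled_poses: (n_frames, 2 * n_keypoints) array of labeled coordinates."""
    mean = labeled_poses.mean(axis=0)
    # Rows of vt are principal directions; s holds the singular values.
    _, s, vt = np.linalg.svd(labeled_poses - mean, full_matrices=False)
    ratio = np.cumsum(s**2) / np.sum(s**2)
    # Smallest number of components whose cumulative variance reaches the target.
    n_keep = min(int(np.searchsorted(ratio, var_explained)) + 1, len(s))
    return mean, vt[:n_keep]


def pose_pca_loss(pred_poses, mean, components):
    """Per-frame pixel error between each pose and its PCA reconstruction,
    averaged over keypoints."""
    centered = pred_poses - mean
    recon = centered @ components.T @ components + mean
    diff = (pred_poses - recon).reshape(len(pred_poses), -1, 2)
    return np.linalg.norm(diff, axis=2).mean(axis=1)


# Toy labels: four keypoints that only translate together in x and y,
# so the 8-dimensional pose has two degrees of freedom.
rng = np.random.default_rng(0)
basis = np.array([[1, 0, 1, 0, 1, 0, 1, 0],
                  [0, 1, 0, 1, 0, 1, 0, 1]], dtype=float)
offset = np.array([10, 10, 20, 10, 10, 20, 20, 20], dtype=float)
labels = rng.normal(size=(200, 2)) @ basis + offset

mean, components = fit_pose_pca(labels)

plausible = offset + 3.0 * basis[0] - 2.0 * basis[1]
implausible = plausible.copy()
implausible[0] += 30.0  # move one keypoint 30 pixels away from the rest
loss = pose_pca_loss(np.stack([plausible, implausible]), mean, components)
```

The plausible pose reconstructs almost exactly, whereas displacing a single keypoint breaks the learned correlations and produces a clearly nonzero loss.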

Fig. 2D (top right) shows the Pose PCA loss landscape for the left hind paw location in the mirror-mouse dataset (true location shown as a white diamond) given the location of all the other body parts. As desired, the Pose PCA loss is lower around the paw’s true location and accommodates plausible neighbouring locations. Here too, the Pose PCA loss closely tracks ground truth pixel error on labeled OOD frames (Fig. 2D, bottom left; Pearson r = 0.91, 95% CI = [0.90, 0.92]).

The Pose PCA loss might erroneously penalize valid postures that are not represented in the labeled dataset. To test the prevalence of this issue, we took DeepLabCut models trained with abundant labels and computed the Pose PCA loss on held-out videos. We collected 100 frames with the largest Pose PCA loss per dataset. Manual labeling revealed that 85/100 (mirror-mouse; Supplementary Video 2), 87/100 (mirror-fish; Supplementary Video 3), and 100/100 (CRIM13; Supplementary Video 4) of the frames include true errors, indicating that in most cases, large Pose PCA losses correspond to pose estimation errors, rather than unseen rare poses.

2.7. Temporal Context Network

Some frames are more challenging to label than others, due to occlusions or ambiguities between similar body parts. In many cases, additional temporal context can help resolve ambiguities: e.g., if a keypoint is occluded briefly we can scroll backwards and forwards in the video to help “fill in the gaps.” However, this useful temporal context is not provided to standard frame-by-frame pose estimation architectures, which instead must make guesses about such challenging keypoint locations given information from just one frame at a time.

Therefore, we propose a Temporal Context Network (TCN), illustrated in Fig. 2E, which uses a sequence of 2J + 1 frames to predict the location heatmaps for the middle (i.e., (J + 1)-th) frame. As in the standard architecture, the TCN starts by pushing each image through a neural network backbone that computes useful features from each frame. Then, instead of predicting the pose directly from each of these individual per-frame feature vectors, we combine this information across frames using a bi-directional convolutional recurrent neural network (CRNN), and then use the output of the CRNN to form predictions for the middle frame.

The CRNN is lightweight compared to the backbone, and we only apply the backbone once per frame; therefore the TCN runtime scales linearly with the number of total context frames. We have found that a context window of 5 frames (i.e., J = 2) provides an effective balance between speed and accuracy and have used this value throughout the paper. In practice, the output of the TCN and single-frame architectures usually match on visible keypoints; rather, the TCN helps with more rare occlusions and ambiguities, where predictions from a single-frame architecture might jump to a different region of the image.
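To make the context window concrete, the helper below lists the 2J + 1 frame indices that would accompany a given frame. It is a sketch only: the function name `context_window` is hypothetical, and clamping indices at the start and end of the video is an assumed edge-handling choice that the text does not specify.

```python
def context_window(t, n_frames, J=2):
    """Indices of the 2J + 1 frames centered on frame t.

    Indices that fall before the first or after the last frame are clamped
    to the nearest valid frame (an assumption made for this sketch).
    """
    return [min(max(i, 0), n_frames - 1) for i in range(t - J, t + J + 1)]


window = context_window(t=10, n_frames=100)  # frames 8 through 12
edge = context_window(t=0, n_frames=100)     # first frame repeated at the left edge
```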

2.8. Spatiotemporal losses enhance outlier detection

Before training networks with these spatiotemporal losses, we first assess whether violations of these losses correspond to meaningful errors in video predictions, going beyond correlations with pixel errors on relatively small labeled test sets (Fig. 2B,C,D). Practitioners often detect outliers using a combination of low confidence and large temporal difference loss [16, 20, 32, 42]. Here we show that the standard approach can be complemented by multi-view and Pose PCA, which capture additional unique outliers.

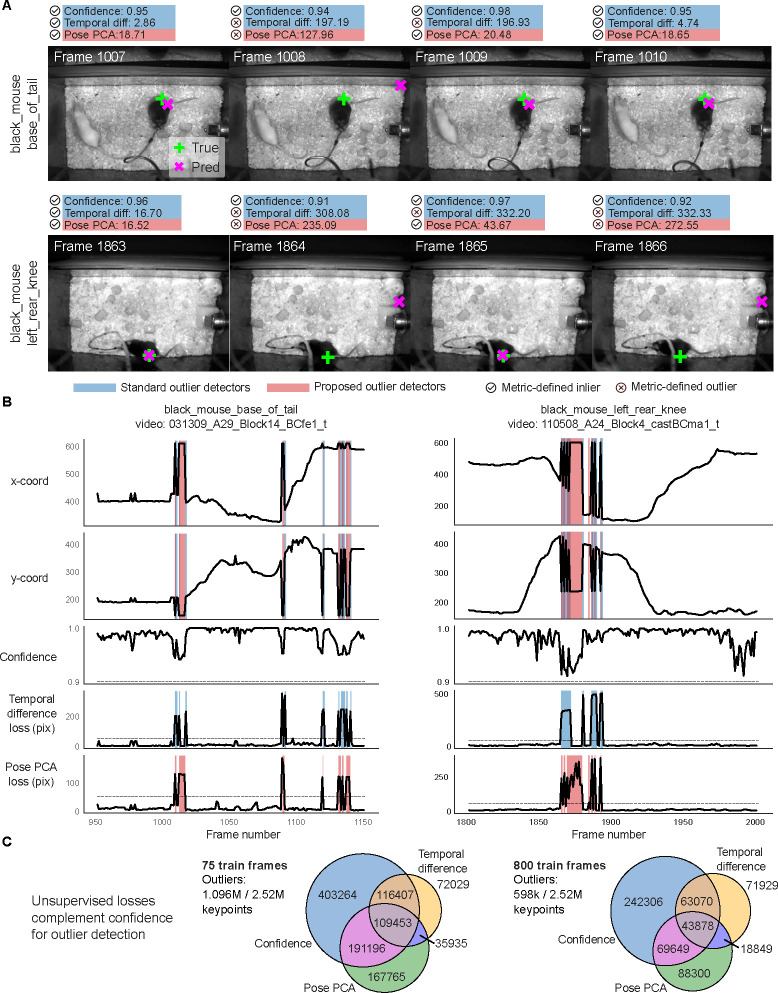

We start with an example video snippet from the mirror-mouse dataset, focusing on the left hind paw on the bottom view (Fig. 3A,B). We analyze the predictions from a DeepLabCut model (trained as in Fig. 1B). Fig. 3A shows that the x coordinate’s discontinuity in frames 290–294, for example, is a result of the network switching back and forth between the similar-looking front and hind paws. These common “paw-switching” errors are mostly missed by network confidence, which remains almost entirely above the 0.9 threshold. The temporal difference loss does not reliably detect these errors, for two main reasons: first, this loss spikes not only when the network jumps to a wrong location (frame 291), but also when it jumps back to the correct location (frame 292). Second, the temporal difference loss misses frames when the network lingers at the wrong location (frame 294). On the other hand, the multi-view PCA loss trace correctly utilizes the top-view prediction for this keypoint (white circle) to flag the error frames as inconsistent across views.

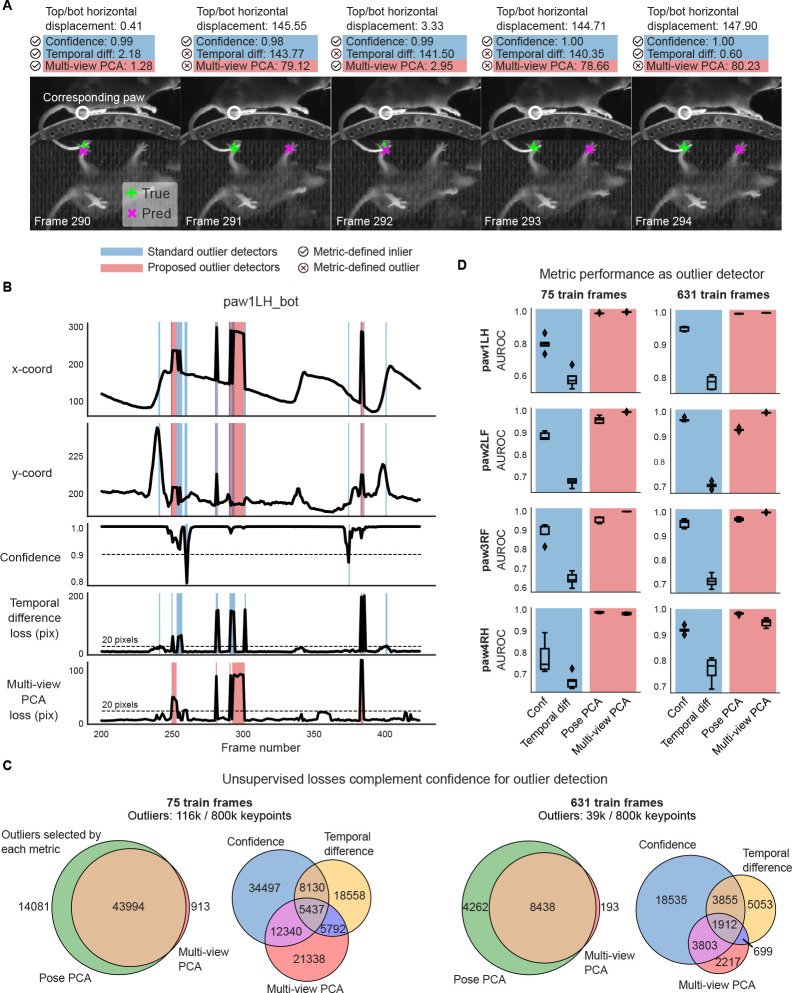

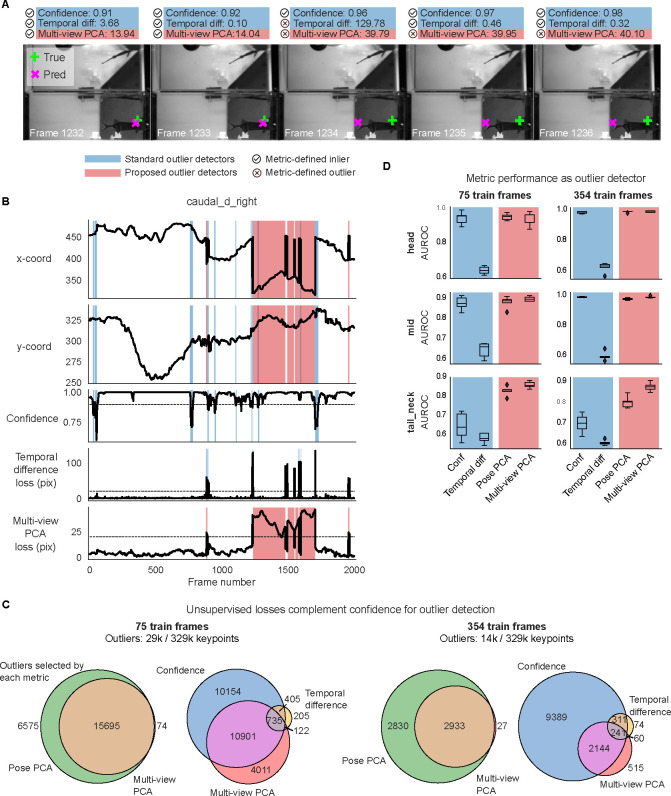

Figure 3: Unsupervised losses complement model confidence for outlier detection.

A. Example frame sequence from the mirror-mouse dataset. Predictions from a DeepLabCut model (trained on 631 frames) are overlaid (magenta ×), along with the ground truth (green +). Open white circles denote the location of the same body part (left hind paw) in the other (top) view; given the geometry of this setup, a large horizontal displacement between the top and bottom predictions indicates an error. Each frame is accompanied with “standard outlier detectors,” including confidence and temporal difference loss (shaded in blue), and “proposed outlier detectors,” including multi-view PCA loss (shaded in red; Pose PCA excluded for simplicity). Symbols indicate whether each frame is an inlier or an outlier as defined by each metric. Confidence is high for all frames shown, and the temporal difference loss misses error frame 294 which does not contain an immediate jump, and flags frame 292 which demonstrates a jump to the correct location. Multi-view PCA captures these correctly. B. Example traces from the same video. Blue background denotes times where standard outlier detection methods flag frames: confidence falls below a threshold (0.9) and/or the temporal difference loss exceeds a threshold (20 pixels). Red background indicates times where the multi-view PCA error exceeds a threshold (20 pixels). Purple background indicates both conditions are met. C. The total number of keypoints flagged as outliers by each metric, and their overlap. D. Area under the receiver operating characteristic curve (AUROC) for each paw, for DeepLabCut models trained with 75 and 631 labeled frames (left and right columns, respectively). AUROC=1 indicates the metric perfectly identifies all nominal outliers in the video data; 0.5 indicates random guessing. AUROC values are computed across all frames from 20 test videos; boxplot variability is over n=5 random subsets of training data. Boxes use 25th/50th/75th percentiles for min/center/max; whiskers extend to 1.5 * IQR (inter-quartile range).

We proceed to generalize the example and quantify the overlaps and unique contributions of the different outlier detection methods on 20 unlabeled videos. We investigate two data regimes: “scarce labels” (75), which mimics prototyping a new tracking pipeline, and “abundant labels” (631 for the mirror-mouse dataset), i.e., a “production” setting with a fully trained network.

First, as we move from the scarce to the abundant labels regime, we find a 66% reduction in the outlier rate – the union of keypoints flagged by confidence, temporal difference, and multi-view PCA losses – going from 116k/800k to 39k/800k keypoints. This indicates that the networks become better and more confident. The Venn diagrams in Fig. 3C show that multi-view PCA captures a meaningful number of unique outliers which are missed by confidence and the temporal difference loss. (The Pose PCA includes both views and thus is largely overlapping with multi-view PCA.)
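The outlier union and the reported reduction can be illustrated with a few lines of NumPy. This is a minimal sketch, not the package's implementation: the function names `flag_outliers` and `relative_reduction` are hypothetical, and we assume the thresholds quoted for Fig. 3B (confidence below 0.9; temporal difference or multi-view PCA error above 20 pixels).

```python
import numpy as np


def flag_outliers(confidence, temporal_diff, mv_pca_error,
                  conf_thresh=0.9, pixel_thresh=20.0):
    """Return one boolean mask per metric plus their union.

    All inputs are arrays of the same shape (one value per keypoint per frame).
    Thresholds are assumed to match those quoted for Fig. 3B.
    """
    low_conf = np.asarray(confidence) < conf_thresh
    big_jump = np.asarray(temporal_diff) > pixel_thresh
    pca_violation = np.asarray(mv_pca_error) > pixel_thresh
    union = low_conf | big_jump | pca_violation
    return low_conf, big_jump, pca_violation, union


def relative_reduction(before, after):
    """Fractional drop in a count, e.g. flagged keypoints."""
    return (before - after) / before


# 116k flagged keypoints (scarce labels) vs. 39k (abundant labels)
print(round(100 * relative_reduction(116_000, 39_000)))  # 66
```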

The overlap analysis above does not indicate which outliers are true versus false positives. To analyze this at a large scale, we restrict ourselves to a meaningful subset of the “true outliers” that can be detected automatically, namely predictions that are impossible given the mirrored geometry. We define this subset of outliers as frames for which the horizontal displacement between the top and bottom view predictions for a paw exceeds 20 pixels, similar to [16]; the networks output 72k/800k such errors with scarce labels, and 16k/800k with abundant labels. These spatial outliers should violate the PCA losses, but it is unknown whether they are associated with low confidence and large temporal differences. Instead of setting custom thresholds on our metrics as in Fig. 3B, we now estimate each metric’s sensitivity via a “Receiver Operating Characteristic” (ROC) curve, which plots the true positive rate against the false positive rate (both between 0 and 1), across all possible thresholds. The area under the ROC curve (AUROC) is a single measure summarizing the performance of each outlier detector: AUROC equals 1 for a perfect outlier detector, 0.5 for random guessing, and values below 0.5 indicate systematic errors. All metrics are above chance in detecting “true outliers” (Fig. 3D); for this class of spatial errors, the PCA losses are more sensitive outlier detectors than network confidence, and certainly more than the temporal difference loss (due to the pathologies described above).
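The AUROC computation can be sketched without choosing any threshold, using the rank-based (Mann-Whitney) formulation. The helper names below are hypothetical, and the 20-pixel displacement cutoff is the one stated above; this is an illustration under those assumptions rather than the analysis code used for Fig. 3D.

```python
import numpy as np


def average_ranks(values):
    """1-based ranks; tied values share the average of their ranks."""
    values = np.asarray(values, dtype=float)
    order = np.argsort(values, kind="mergesort")
    sorted_vals = values[order]
    ranks = np.empty(values.size)
    start = 0
    while start < values.size:
        stop = start
        while stop + 1 < values.size and sorted_vals[stop + 1] == sorted_vals[start]:
            stop += 1
        ranks[order[start:stop + 1]] = 0.5 * (start + stop) + 1.0
        start = stop + 1
    return ranks


def auroc(scores, is_outlier):
    """Probability that a random outlier scores higher than a random inlier."""
    labels = np.asarray(is_outlier, dtype=bool)
    n_pos = int(labels.sum())
    n_neg = labels.size - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one outlier and one inlier")
    ranks = average_ranks(scores)
    u_stat = ranks[labels].sum() - n_pos * (n_pos + 1) / 2.0
    return u_stat / (n_pos * n_neg)


def geometric_outliers(x_top, x_bottom, max_disp=20.0):
    """Nominal 'true outliers': horizontal disagreement between the two views."""
    return np.abs(np.asarray(x_top, float) - np.asarray(x_bottom, float)) > max_disp
```

Scores must be oriented so that larger values mean "more outlier-like": loss values can be passed directly, whereas confidence should be negated (e.g. `auroc(-confidence, labels)`).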

To summarize, the PCA losses identify additional outliers that would have been otherwise missed by standard confidence and temporal difference thresholding (see Extended Data Fig. 1 and Extended Data Fig. 2 for similar results on mirror-fish and CRIM13 datasets). It is therefore advantageous to include PCA losses in standard outlier detection pipelines.

2.9. Both unsupervised losses and TCN boost tracking performance

Above we established that spatiotemporal constraint violations help identify network prediction errors. Next we quantify whether networks trained to avoid these constraint violations achieve more accurate and reliable tracking performance. As a “baseline” model for comparison, we implemented a supervised heatmap regression network that is identical to our more sophisticated model variants, but without semi-supervised learning or the TCN architecture. The baseline model matches DeepLabCut in performance across all datasets, though it is not intended to exactly match it in implementation (see Methods). The baseline model is useful because it eliminates implementation-level artifacts from model comparison. We quantify the networks’ performance both on an out-of-distribution labeled test set and on many unlabeled video frames. In this section, we compare the networks’ raw predictions, without any post-processing, to isolate the effects of our architecture and unsupervised losses.

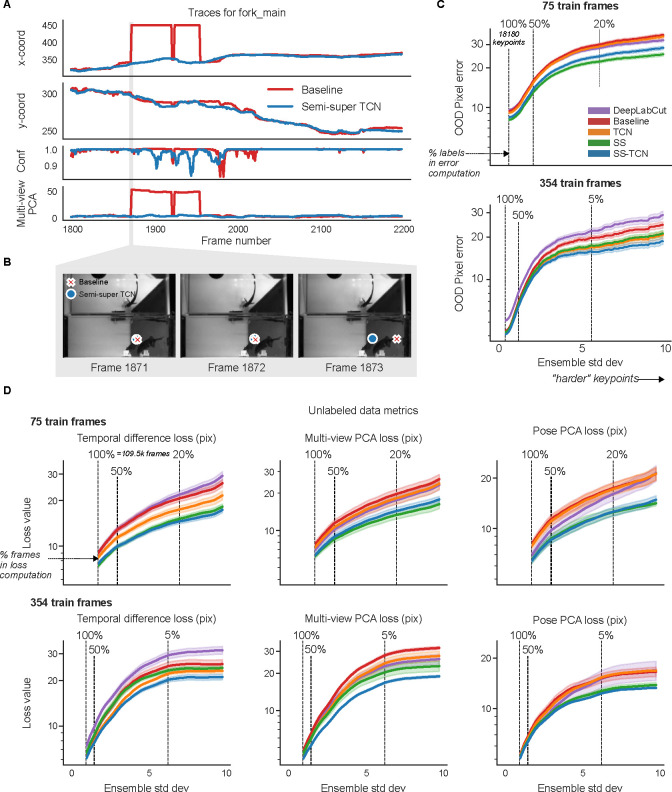

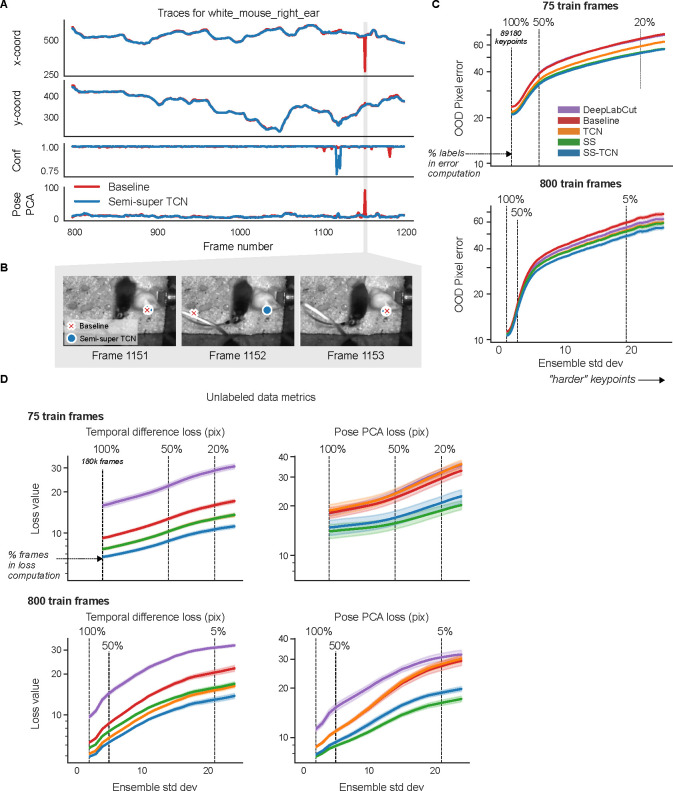

In Fig. 4A and Supplementary Video 5, we examine the mouse’s right hind paw position (top view) during two seconds of running. We compare the raw video predictions from our full semi-supervised model (in blue), including all supervised and unsupervised losses and a TCN architecture (henceforth, SS-TCN), to the predictions generated by our supervised baseline model, both trained on 75 labeled frames. The SS-TCN predictions are smoother (top two panels) and more confident (bottom panel), exhibiting a clearer periodic pattern expected for running on a stationary wheel. While some of the baseline model’s discontinuities are flagged by low confidence, some reflect a confident confusion between similar body parts, echoing Fig. 3A. One such confident confusion is highlighted in gray shading, and further scrutinized in Fig. 4B, showing that the baseline model (red) mistakenly switches to the left hind paw for two frames. The SS-TCN model avoids paw switching first because each frame is processed with its context frames, and second, because switching would have been heavily penalized by both the temporal difference loss and multi-view PCA loss.

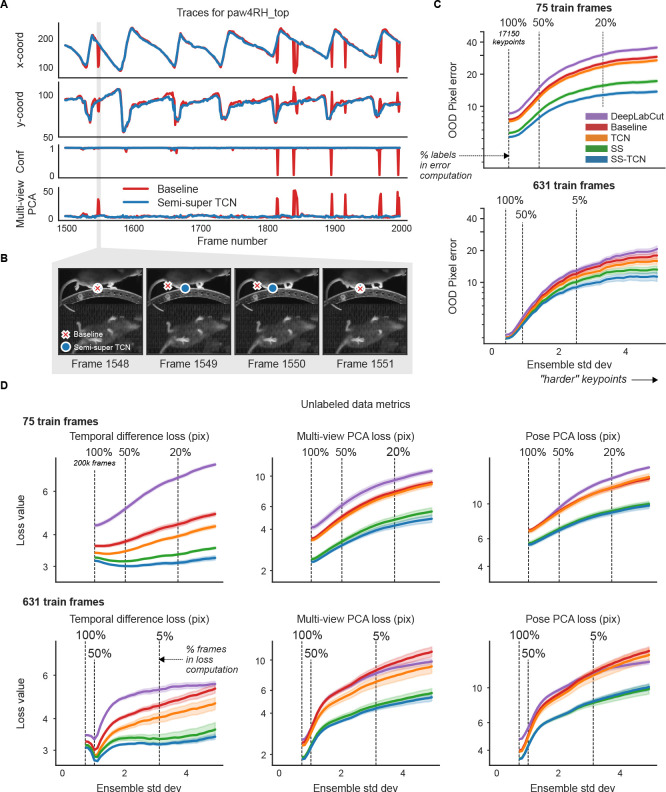

Figure 4: Unlabeled frames improve pose estimation (raw network predictions).

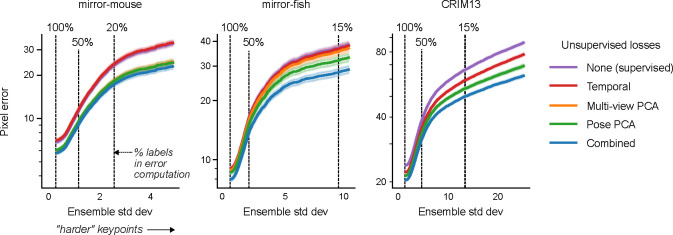

A. Example traces from the baseline model and the semi-supervised TCN model (trained with 75 labeled frames) for a single keypoint (right hind paw; top view) on a held-out video (Supplementary Video 5). The semi-supervised TCN model is able to resolve the visible glitches in the trace, only some of which are flagged by the baseline model’s low confidence. One erroneous paw switch missed by confidence – but captured by multi-view PCA loss – is shaded in gray. B. A sequence of frames (1548–1551) corresponding to the gray shaded region in panel A in which a paw switch occurs. The estimates from both models are initially correct, then at Frame 1549 the baseline model prediction jumps to the incorrect paw, and stays there until it jumps back at Frame 1551. C. We compute the standard deviation of each keypoint prediction in each frame in the OOD labeled data across all model types and seeds (five random shuffles of training data). We then take the mean pixel error over all keypoints with a standard deviation larger than a threshold value, for each model type. Smaller standard deviation thresholds include more of the data (n=17150 keypoints total, indicated by the “100%” vertical line; (253 frames) × (5 seeds) × (14 keypoints) - missing labels), while larger standard deviation thresholds highlight more “difficult” keypoints. Error bands represent standard error of the mean over all included keypoints and frames for a given standard deviation threshold. D. Individual unsupervised loss terms are plotted as a function of ensemble standard deviation for the scarce (top) and abundant (bottom) label regimes. Error bands as in panel C, except we first compute the average loss over all keypoints in the frame (200k frames total; (40k frames) × (5 seeds)).

We perform an ablation study to isolate the contributions of our semi-supervised losses, our TCN architecture, and their combination. For each model type, we trained five networks with different random subsets of InD data. As noted by [22, 43], simple pixel error is an incomplete summary of network performance, since error averages may be dominated by a majority of “easy” keypoints, obscuring differences that may only be visible on the minority of “difficult” keypoints. We found it informative to quantify the pixel error as a function of keypoint difficulty, where we operationally define “difficulty” as the variance in the predictions of a deep ensemble of (five) networks (averaged across all model types). When this variance is large, at least one network in the ensemble must be in error; indeed, qualitatively, Fig. 1 and Supplementary Video 1 show that ensemble variance tends to increase on occlusion frames.

As expected, for both scarce and abundant label regimes (Fig. 4C), OOD pixel error increases as a function of ensemble standard deviation. With scarce labels, models struggle to resolve even “easy” keypoints, and SS-TCN outperforms baseline and DeepLabCut models across all levels of difficulty. The TCN architecture alone only mildly contributes to the improvements compared to semi-supervised learning in this dataset. By training semi-supervised models with a single loss at a time, we identify that multi-view and Pose PCA losses underlie most improvements (Extended Data Fig. 3). With abundant labels, all models accurately resolve “easy” keypoints, and the trends observed in the scarce labels regime become pronounced only for more “difficult” keypoints.
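The error-versus-difficulty curves can be sketched as follows. This is a simplified illustration: the function names are hypothetical, and we assume the ensemble standard deviation of a keypoint is the square root of the summed x and y variances across models (the exact definition, and the averaging across model types, are described in the Methods).

```python
import numpy as np


def ensemble_std(preds):
    """Per-keypoint ensemble spread.

    preds: array of shape (n_models, n_frames, n_keypoints, 2).
    Returns an array of shape (n_frames, n_keypoints).
    Assumption: combine x and y by summing their variances across models.
    """
    preds = np.asarray(preds, dtype=float)
    return np.sqrt(preds.var(axis=0).sum(axis=-1))


def error_vs_difficulty(pixel_error, ens_std, thresholds):
    """Mean pixel error over keypoints whose ensemble std is at least each threshold.

    NaN pixel errors (missing labels) are ignored. A threshold of 0 keeps
    100% of the labeled keypoints; larger thresholds keep harder ones only.
    """
    pixel_error = np.asarray(pixel_error, dtype=float)
    ens_std = np.asarray(ens_std, dtype=float)
    means = []
    for thresh in thresholds:
        mask = (ens_std >= thresh) & ~np.isnan(pixel_error)
        means.append(pixel_error[mask].mean() if mask.any() else np.nan)
    return np.array(means)
```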

The above analysis was performed on a small set of 253 labeled OOD test frames. If we assess performance on a much larger unlabeled dataset of 20 OOD videos, and compute each of our losses for every predicted keypoint on every video frame, we observe similar trends (Fig. 4D): the SS-TCN model improves sample-efficiency with scarce labels, and reduces rare errors with abundant labels. (Recall that the semi-supervised models are explicitly trained to minimize these losses, and so these results on OOD data are consistent with expectations.)

We find similar patterns for the mirror-fish (Extended Data Fig. 4, Supplementary Video 6) and CRIM13 (Extended Data Fig. 5, Supplementary Video 7) datasets.

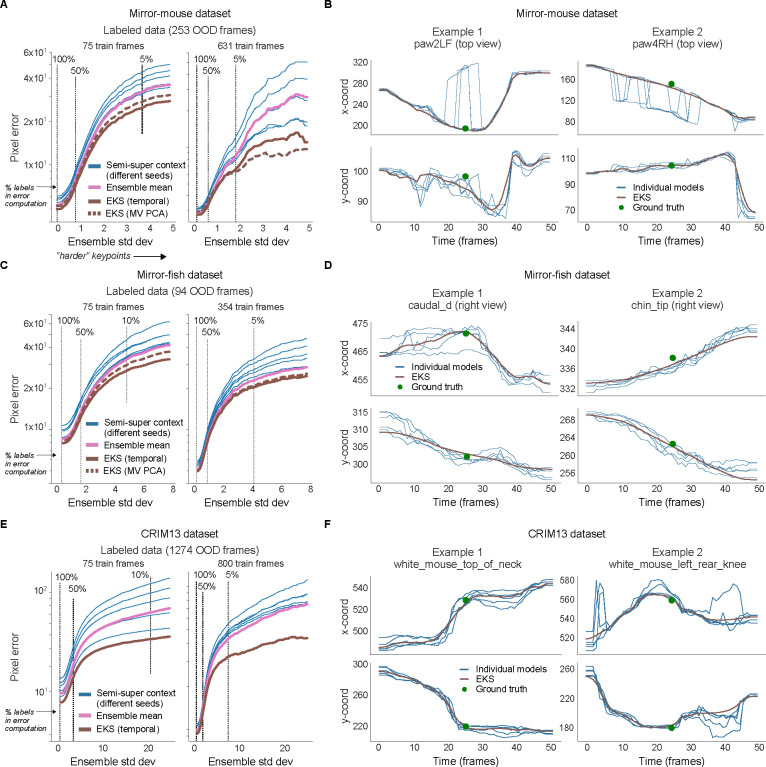

2.10. The Ensemble Kalman Smoother (EKS) enhances accuracy post-hoc

While our semi-supervised networks improve upon their fully-supervised counterparts, they still make mistakes. Recall that the spatiotemporal constraints are enforced during training but not at prediction time; therefore, we now present a post-processing algorithm which uses these constraints to refine the predictions. Successful post-processing requires identifying which predictions need fixing, that is, properly quantifying uncertainty for each keypoint on each frame. As emphasized above, low network confidence captures some, but not all, errors; conversely, constraint violations indicate the presence of additional errors within a set of keypoints but do not identify which specific keypoint within a given constraint violation is in fact an error.

Fig. 4C demonstrates that the ensemble variance – which varies for each keypoint on every frame – is an additional useful signal of model uncertainty [44, 45]. Hence, we developed a post-processing framework that integrates this ensemble variance uncertainty signal with our spatiotemporal constraints, via a probabilistic “state-space” model approach (Fig. 5A,B). Our model posits a latent “state” that evolves smoothly in time, and is projected onto the keypoint positions to enforce our spatial constraints. For example, we enforce multi-view constraints by projecting the three-dimensional true position of the body part (the “latent state”) through two-dimensional linear projections to obtain the keypoints in each camera view. This probabilistic state-space model corresponds to a Kalman filter-smoother model [46] and so we name the resulting post-processing approach the “Ensemble Kalman Smoother” (EKS) (see Methods).
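To make the idea concrete, the sketch below implements a deliberately simplified, one-dimensional version of the temporal-only case: a random-walk latent state, the ensemble mean as the observation, and the per-frame ensemble variance as the observation noise, followed by a standard Kalman filter and Rauch-Tung-Striebel smoother. The function name, the random-walk dynamics, and the `process_var`, `prior_var`, and `eps` parameters are assumptions made for illustration; the actual EKS model is specified in the Methods.

```python
import numpy as np


def eks_temporal_1d(ens_preds, process_var=1.0, prior_var=1e4, eps=1e-6):
    """Smooth one coordinate of one keypoint.

    ens_preds: array of shape (n_models, n_frames).
    Returns (smoothed_mean, smoothed_var), each of shape (n_frames,).
    """
    ens_preds = np.asarray(ens_preds, dtype=float)
    obs = ens_preds.mean(axis=0)             # ensemble mean per frame
    obs_var = ens_preds.var(axis=0) + eps    # time-varying observation noise
    n = obs.size
    m_pred, v_pred = np.empty(n), np.empty(n)
    m_filt, v_filt = np.empty(n), np.empty(n)

    # Forward pass (Kalman filter) with random-walk dynamics.
    for t in range(n):
        if t == 0:
            m_pred[t], v_pred[t] = obs[0], prior_var   # broad initial prior
        else:
            m_pred[t] = m_filt[t - 1]
            v_pred[t] = v_filt[t - 1] + process_var
        gain = v_pred[t] / (v_pred[t] + obs_var[t])    # small when ensemble disagrees
        m_filt[t] = m_pred[t] + gain * (obs[t] - m_pred[t])
        v_filt[t] = (1.0 - gain) * v_pred[t]

    # Backward pass (Rauch-Tung-Striebel smoother).
    m_smooth, v_smooth = m_filt.copy(), v_filt.copy()
    for t in range(n - 2, -1, -1):
        g = v_filt[t] / v_pred[t + 1]
        m_smooth[t] = m_filt[t] + g * (m_smooth[t + 1] - m_pred[t + 1])
        v_smooth[t] = v_filt[t] + g ** 2 * (v_smooth[t + 1] - v_pred[t + 1])
    return m_smooth, v_smooth
```

On frames where the ensemble members agree, the gain is close to one and the output follows the observations; on frames where they disagree, the output is pulled toward the neighboring frames.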

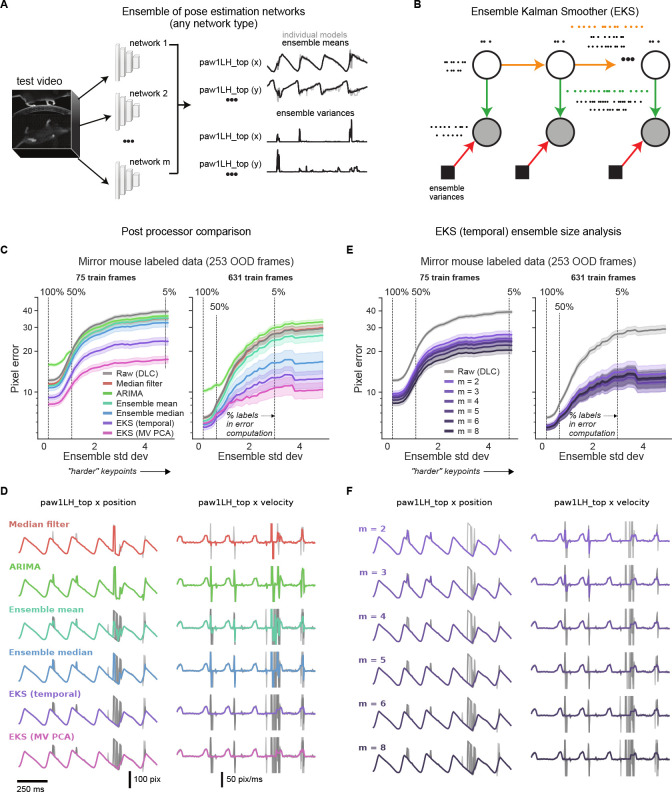

Figure 5: The Ensemble Kalman Smoother (EKS) post-processor.

Results are based on DeepLabCut models trained with different subsets of InD data and different random initializations of the head. A. Deep ensembling combines the predictions of multiple networks. The ensemble mean is potentially more accurate than single model predictions, and the ensemble variance can be a useful measure of uncertainty that is complementary to single model confidence values. B. EKS leverages the spatiotemporal constraints of the unsupervised losses as well as uncertainty measures from the ensemble variance in a probabilistic state-space model. Ensemble means of the keypoints are modeled with a latent linear dynamical system; temporal smoothness constraints are enforced through linear dynamics (yellow arrows) and spatial constraints (Pose or multi-view PCA) are enforced through a fixed observation model that maps the latent state to the observations (green arrows). Instead of learning the observation noise, we use the time-varying ensemble variance (red arrows). EKS uses a Bayesian approach to weight the relative contributions from the prior and observations. C. Post-processor comparison on OOD frames from the mirror-mouse dataset. We plot pixel error as a function of ensemble standard deviation (as in Fig. 4). The median filter and ARIMA models act on the outputs of single networks; the ensemble means, ensemble medians, and EKS variants act on an ensemble of five networks. EKS (temporal) only utilizes temporal smoothness, and is applied one keypoint at a time. EKS (MV PCA) utilizes multi-view information as well as temporal smoothness, and is applied one body part at a time (tracked by one keypoint in each of two views). Error bands as in Fig. 4 (n=17150 keypoints at 100% line). D. Trace comparisons for different methods (75 train frames). Gray lines show the raw traces used as input to the method, colored lines show the post-processed trace. E. Pixel error comparison for the EKS (temporal) post-processor as a function of ensemble members (m). Error bands as in panel C. F. Trace comparisons for varying numbers of ensemble members (75 train frames).

The EKS model output represents a Bayesian compromise between the spatiotemporal constraints (prior) and the information provided by the ensemble observations (likelihood). Concretely, if a keypoint’s uncertainty is low (i.e., all ensemble members agree) then this observation will be upweighted relative to the spatiotemporal prior and will only be lightly smoothed. Conversely, when a keypoint’s uncertainty is high, the spatiotemporal priors and other more-confident keypoints’ predictions will be used to interpolate over these uncertain observations. Unlike previous approaches [16, 20, 22, 32, 42], EKS requires no manual selection of confidence thresholds or (suboptimal) temporal linear interpolation separately for each dropped keypoint. Moreover, the EKS post-processing approach is agnostic to the type of networks used to generate the ensemble predictions.

We benchmark EKS on DeepLabCut models fit to the mirror-mouse dataset. EKS compares favorably to other standard post-processors, including median filters and ARIMA models (which are fit on the outputs of single networks), and the ensemble mean and median (computed using an ensemble of multiple networks; Fig. 5C,D). EKS provides substantial improvements in OOD pixel errors with as few as m = 2 networks; we find m = 5 networks is a reasonable choice given the computation-accuracy tradeoff (Fig. 5E,F), and use this ensemble size throughout.

When applied to Lightning Pose semi-supervised TCN models, EKS provides additional improvements across multiple datasets, particularly on “difficult” keypoints where the ensemble variance is higher (Extended Data Fig. 6). EKS achieves smooth and accurate tracking even when the models make errors due to occlusion and paw confusion (Extended Data Fig. 6; Supplementary Video 8–12; Supplementary Figs. 2–4).

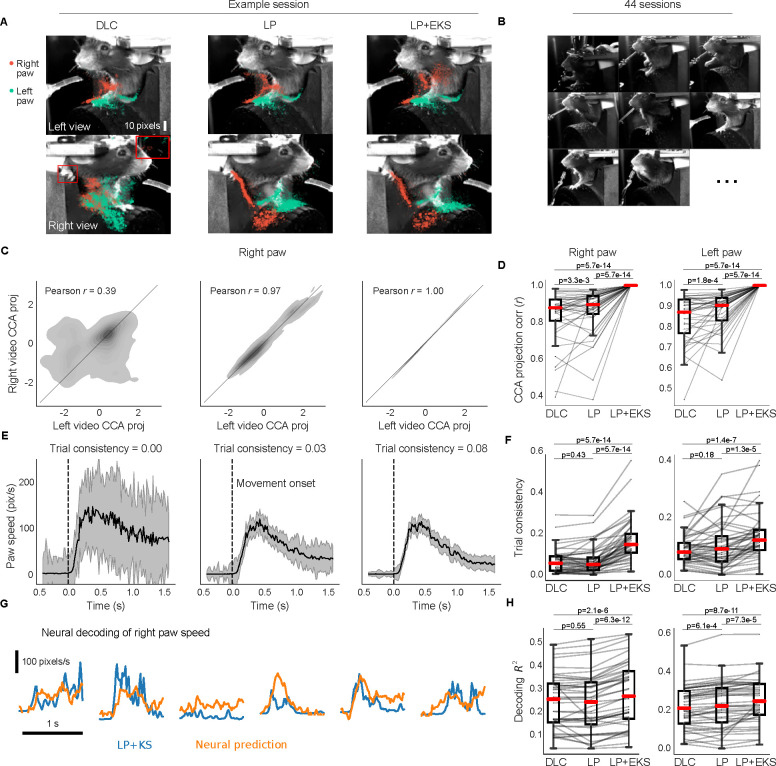

2.11. Improved tracking on International Brain Laboratory datasets

Next we turn to an analysis of large-scale public datasets from the International Brain Laboratory (IBL) [30]. In each experimental session, a mouse was observed by three cameras while performing a visually-guided decision-making task. The mouse signaled its decisions by manually moving a rotary wheel left or right. We analyze two of IBL’s video datasets. “IBL-pupil” contains zoomed-in videos of the pupil, where we track the top, bottom, left, and right edges of the pupil. In “IBL-paw” we track the left and right paws.

Despite efforts at standardization, the data exhibit considerable visual variability between sessions and labs, which presents serious challenges to existing pose estimation methods. Specifically, in IBL’s preliminary data release we used DeepLabCut, followed by custom post-processing. As detailed in [30], this approach fails in a majority of pupil recordings: the signal-to-noise ratio of the estimated pupil diameter is too low for reliable downstream use, largely due to occlusions caused by whisking and infrared light reflections. Paw tracking tends to be more accurate, but is contaminated by discontinuities, especially when a paw is retracted behind the torso. In this section, we report the results for IBL-pupil. The IBL-paw results appear in Extended Data Fig. 7 and the Supplementary Information.

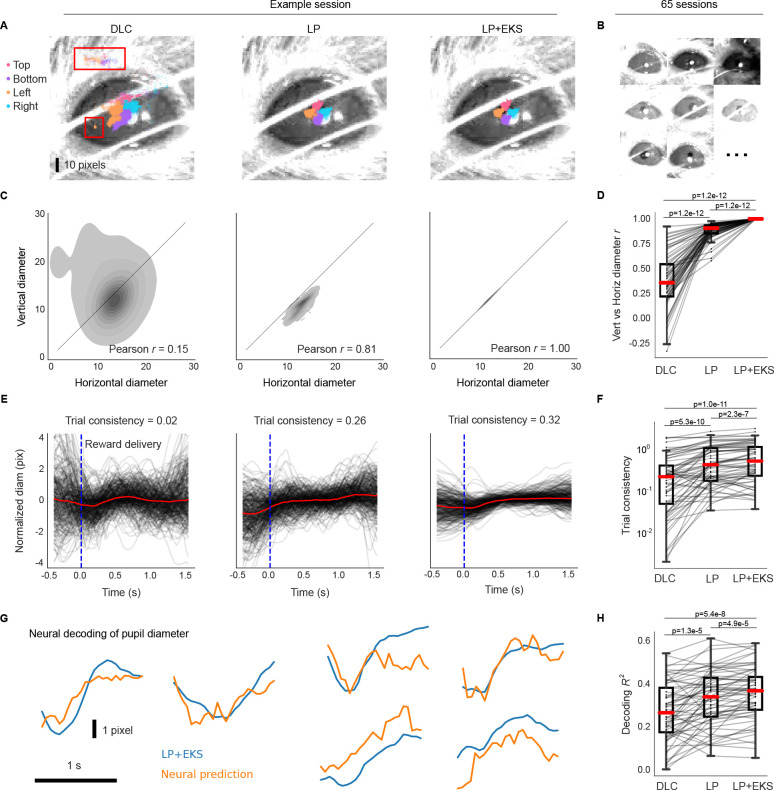

In Fig. 6, we evaluate three pose estimators: DeepLabCut with custom post-processing (DLC; left column of example session), Lightning Pose’s semi-supervised TCN model with the same post-processing (LP; middle column, using temporal difference and Pose PCA losses), and the pupil-specific EKS variant applied to an ensemble of m = 5 LP models (LP+EKS; right column). The pupil-specific EKS uses a three-dimensional latent state: pupil centroid (width and height coordinates) and a diameter. (It is straightforward to construct an EKS version with separate horizontal and vertical diameters, but we found this extension to be unnecessary for this dataset.) The latent state is then projected linearly onto the eight-dimensional tracked pixel coordinates (width and height for top, bottom, left, and right edges; see Methods).
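The linear projection from the three-dimensional latent state to the eight tracked coordinates can be written as a fixed 8×3 matrix. The sketch below is an illustration under assumptions: the row ordering, the names `PUPIL_OBS_MATRIX`, `project_latent`, and `recover_latent`, and the sign convention (chosen so that top(y) - bottom(y) and right(x) - left(x) both equal the diameter) are ours, and the static least-squares inversion stands in for the full Kalman smoother.

```python
import numpy as np

# Columns: centroid x, centroid y, diameter.
# Rows: top (x, y), bottom (x, y), left (x, y), right (x, y).
# Sign convention is an assumption: top(y) - bottom(y) = diameter.
PUPIL_OBS_MATRIX = np.array([
    [1.0, 0.0,  0.0],   # top x
    [0.0, 1.0,  0.5],   # top y
    [1.0, 0.0,  0.0],   # bottom x
    [0.0, 1.0, -0.5],   # bottom y
    [1.0, 0.0, -0.5],   # left x
    [0.0, 1.0,  0.0],   # left y
    [1.0, 0.0,  0.5],   # right x
    [0.0, 1.0,  0.0],   # right y
])


def project_latent(latent):
    """Map (centroid x, centroid y, diameter) to the 8 pixel coordinates."""
    return PUPIL_OBS_MATRIX @ np.asarray(latent, dtype=float)


def recover_latent(keypoints):
    """Least-squares estimate of the latent state from 8 pixel coordinates."""
    solution, *_ = np.linalg.lstsq(
        PUPIL_OBS_MATRIX, np.asarray(keypoints, dtype=float), rcond=None
    )
    return solution
```

Because a single diameter drives both the vertical and horizontal marker pairs, any estimate produced through this matrix has equal vertical and horizontal diameters.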

Figure 6: Lightning Pose models and EKS improve pose estimation on IBL pupil data.

A. Sample frame overlaid with a subset of pupil markers estimated from DeepLabCut (DLC; left), Lightning Pose using a semi-supervised TCN model (LP; center), and a 5-member ensemble using semi-supervised TCN models (LP+EKS; right). B. Example frames from a subset of 65 IBL sessions, illustrating the diversity of imaging conditions in the dataset. C. Empirical distribution of vertical diameter measured from top and bottom markers scattered against horizontal pupil diameter measured from left and right markers. These estimates should ideally be equal, i.e., the distribution should lie near the diagonal line. Column arrangement as in panel A. The LP+EKS estimate imposes a low-dimensional model that enforces perfectly correlated vertical and horizontal diameters by construction. D. Vertical vs horizontal diameter correlation is computed across n=65 sessions for each model. The LP+EKS model has a correlation of 1.0 by construction. E. Pupil diameter is plotted for correct trials aligned to feedback onset; each trial is mean-subtracted. DeepLabCut and LP diameters are smoothed using IBL default post-processing (Methods), compared to LP+EKS outputs. We compute a trial consistency metric (the variance explained by the mean over trials; see text) as indicated in the panel titles. See Supplementary Video 13. F. The trial consistency metric computed across n=65 sessions. G. Example traces of LP+EKS pupil diameters (blue) and predictions from neural activity (orange) for several trials using cross-validated, regularized linear regression (Methods). H. Neural decoding performance across n=65 sessions. Panels D, F, and H use a one-sided Wilcoxon signed-rank test; boxes use 25th/50th/75th percentiles for min/center/max, and whiskers extend to 1.5 * IQR. See Supplementary Table 2 and main text for further quantification of boxes.

To directly compare our methods to the publicly released IBL DeepLabCut traces, we train on all available data and evaluate on held-out unlabeled videos. We define several pupil-specific metrics to quantify the accuracy of the different models and their utility for downstream analyses.

The first metric compares the “vertical” and “horizontal” diameters, i.e., top(y) - bottom(y) and right(x) - left(x), respectively. The vertical and horizontal diameters should be equal (or at least highly correlated) and, therefore, low correlations between these two values signal poor tracking. We compute this correlation in an example session in Fig. 6C, and over 65 sessions in Fig. 6D. The LP model (Pearson’s r=0.88±0.01, mean±sem) improves over the DeepLabCut model (r=0.36±0.03). (Since the pupil-specific EKS uses a single value for both vertical and horizontal diameters, it enforces a correlation of 1.0 by construction.)
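A minimal sketch of this first metric, assuming per-frame arrays of the relevant marker coordinates (the function name `diameter_correlation` is hypothetical):

```python
import numpy as np


def diameter_correlation(top_y, bottom_y, left_x, right_x):
    """Pearson correlation between vertical and horizontal pupil diameters.

    Each argument is a 1D array with one value per video frame.
    """
    vertical = np.asarray(top_y, float) - np.asarray(bottom_y, float)
    horizontal = np.asarray(right_x, float) - np.asarray(left_x, float)
    return np.corrcoef(vertical, horizontal)[0, 1]
```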

Scientifically, we are interested in how behaviorally-relevant events (such as reward onset) impact pupil dynamics, as well as the correlation between pupil dynamics and neural activity. We expect that noise in our estimates of pupil diameter would reduce the apparent consistency of pupil dynamics across trials and also reduce any correlations between pupil diameter and neural activity; this is exactly what we observe (Fig. 6E–H). In Fig. 6E, we align diameter estimates across multiple trials to the time of reward delivered at the end of each successful trial. We define a second quality metric – trial consistency – by taking the variance of the mean pupil diameter trace and dividing by the variance of the mean-subtracted traces across all trials. This metric is zero if there are no reproducible dynamics across trials; it is infinity if the pupil dynamics are identical and non-constant across trials (constant outputs will result in an undefined metric since both numerator and denominator are zero). Although we expect some amount of real trial-to-trial variability in pupil dynamics, any noise introduced during pose estimation will decrease this metric. The LP and LP+EKS estimates show greater trial-to-trial consistency compared to the DeepLabCut estimates, both within a single session (Fig. 6E) and across multiple sessions (Fig. 6F; DeepLabCut 0.35±0.06; LP 0.62±0.07; LP+EKS 0.74±0.08). Supplementary Video 13 shows pupil diameter traces in multiple trials, and demonstrates that the increased trial-to-trial consistency does not compromise the model’s ability to track the pupil well within individual trials.
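The trial consistency metric can be sketched as below. We interpret "mean-subtracted traces" as each trial's trace minus the across-trial mean trace, which is the reading consistent with the limiting cases described above; this interpretation and the function name are assumptions.

```python
import numpy as np


def trial_consistency(traces):
    """Variance of the trial-averaged trace divided by the residual variance.

    traces: array of shape (n_trials, n_timepoints), aligned to the event.
    Returns 0 when the mean trace is flat, inf when all trials are identical
    and non-constant, and NaN when all trials are identical and constant.
    """
    traces = np.asarray(traces, dtype=float)
    mean_trace = traces.mean(axis=0)
    signal_var = mean_trace.var()
    residual_var = (traces - mean_trace).var()
    if residual_var == 0:
        return np.inf if signal_var > 0 else np.nan
    return signal_var / residual_var
```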

After establishing the improved reliability of the pupil diameter signal, we now examine whether it is more correlated with neural data. This analysis serves to verify that the LP+EKS approach is not merely suppressing pupil diameter fluctuations, but rather better capturing pupil signals that can be predicted from an independent measurement of neural activity. We compute the accuracy of a ridge regression model from neural data to pupil diameter (R2 on held-out trials; see Methods). Fig. 6H shows that across sessions, LP and LP+EKS enhance decoding accuracy compared to DeepLabCut (DLC R2=0.27±0.02; LP 0.33±0.02; LP+EKS 0.35±0.02).
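The decoding analysis can be illustrated with a closed-form ridge regression evaluated on held-out data. This is a simplified sketch: the function names, the single train/test split, and the fixed regularization strength are assumptions, whereas the cross-validation procedure actually used is described in the Methods.

```python
import numpy as np


def ridge_fit(features, target, alpha):
    """Closed-form ridge regression with an unpenalized intercept.

    features: (n_samples, n_features) neural activity; target: (n_samples,).
    Returns (weights, intercept).
    """
    features = np.asarray(features, dtype=float)
    target = np.asarray(target, dtype=float)
    feat_mean, target_mean = features.mean(axis=0), target.mean()
    centered = features - feat_mean
    gram = centered.T @ centered + alpha * np.eye(features.shape[1])
    weights = np.linalg.solve(gram, centered.T @ (target - target_mean))
    return weights, target_mean - feat_mean @ weights


def r2_score(y_true, y_pred):
    """Coefficient of determination; 1 is perfect, 0 matches predicting the mean."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = ((y_true - y_pred) ** 2).sum()
    ss_tot = ((y_true - y_true.mean()) ** 2).sum()
    return 1.0 - ss_res / ss_tot
```

Fitting on training trials and calling `r2_score` on held-out trials gives the quantity reported as R2.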

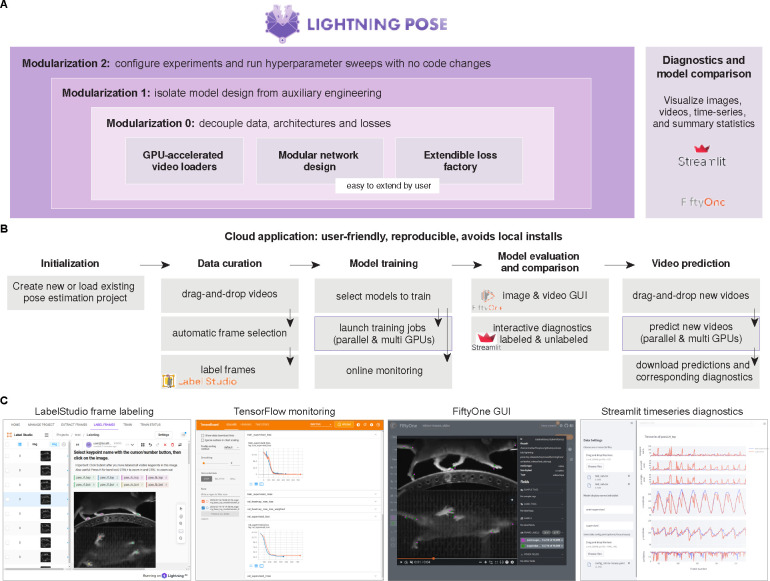

2.12. The Lightning Pose software package and a cloud application

We open-source a flexible software package and an easy-to-use cloud application. Both are presented in detail in the Methods, and we provide a brief overview here.

Extended Data Fig. 8A illustrates the design principles of the Lightning Pose package, which we built to be 1) simple to use and easy to maintain: we aim to minimize “boilerplate” code (such as basic GUI development or training loggers) by outsourcing to industry-grade packages; 2) video-centric: the networks operate on video clips, rather than on a single image at a time; 3) modular and extensible: our goal is to facilitate prototyping of new losses and models; 4) scalable: we support efficient semi-supervised training and evaluation; 5) interactive: we offer a variety of tracking performance metrics and visualizations during and after training, enabling easy model comparison and outlier detection.

The scientific adoption of deep learning packages like ours presents an infrastructure challenge. Labs need access to GPU-accelerated hardware with a set of pre-installed drivers and packages. Often, developers spend more time on hardware setup and installation than on configuring, training, and evaluating pose tracking models (see, e.g., [25] for further discussion). We therefore developed a cloud application that supports the full life cycle of animal pose estimation (Extended Data Fig. 8B), is suitable for users with minimal coding expertise, and requires only internet access.

3. Discussion

We presented Lightning Pose, a semi-supervised deep learning system for animal pose estimation. Lightning Pose uses a set of spatiotemporal constraints on postural dynamics to improve network reliability and efficiency. We further refine the pose estimates post-hoc, with the Ensemble Kalman Smoother (EKS) that uses reliable predictions and spatiotemporal constraints to interpolate over unreliable ones.

Our work builds on previous semi-supervised animal pose estimation algorithms that use spatiotemporal losses on unlabeled videos [34, 37]. Semi-supervised learning is not the only technique that enables improvements over standard supervised learning protocols. First, it has been suggested that supervised pose estimation networks can be improved by pretraining them on large labeled datasets for image classification [9] or pose estimation [47], to an extent that might eliminate dataset-specific training [48]. Other work avoids pretraining altogether by using lighter architectures [10]. These ideas are complementary to ours: any robust backbone obtained through these procedures could be easily integrated into Lightning Pose, and further refined via semi-supervised learning.

Human pose estimation, like animal pose estimation, is most commonly approached using supervised heatmap regression on a frame-by-frame basis [49]. Unlike the animal setting, human models are trained on much larger labeled datasets containing either annotated images [50] or 3D motion capture [51]. Moreover, human models track a standardized set of keypoints, and some operate on a standard skinned human body model [52]. In contrast, most animal pose estimation must contend with relatively scarce labels, lower quality videos, and bespoke sets of labels to track, varying by species and lab. Though human pose estimation models can impressively track crowds of moving humans, doing downstream science using the keypoints still presents several challenges [49] similar to those discussed in the Results. Lightning Pose can be applied to single-human pose estimation, by fine-tuning an off-the-shelf human pose estimation backbone to specific experimental setups (such as patients in a clinic), while enforcing our spatiotemporal constraints (or new ones). Future work could also apply EKS to the outputs of off-the-shelf human trackers.

Roughly speaking, two camps coexist in multi-view animal pose estimation [53]: those who use 3D information during training [12, 37, 54, 55] and those who train standard 2D networks and perform 3D reconstruction post-hoc [20, 56]. Either approach involves camera calibration, whose limitations we discussed above. Lightning Pose can be seen as an intermediate approach: we train with 3D constraints without an explicit camera calibration step (however, our current approach assumes the cameras have no distortion). At the same time, Lightning Pose does not provide an exact 3D reconstruction of the animal, but rather a scaled, rotated and shifted version thereof. Our improved predictions could be readily used as inputs to existing 3D reconstruction pipelines. Some authors have employed 3D convolutional networks that operate on 3D location voxels instead of 2D heatmaps [12]. These architectures have been recently trained in a semi-supervised fashion with temporal constraints [43] akin to [34] and the current work. We note that, although 3D voxels are more computationally expensive than 2D heatmaps, they could be incorporated as strong backbones for Lightning Pose.

A number of additional important directions remain for future work. One involves implementing richer models of moving bodies as losses. Multiple approaches have been recently proposed for modeling pose trajectories post-hoc. These include probabilistic body models [21, 57, 58], mechanical models [59], switching linear dynamical systems [19, 34, 60], and autoencoders [20]. These models could be made even more effective by being integrated into network training in a so-called end-to-end manner. Any model of pose dynamics, as long as it is differentiable, could be incorporated as an unsupervised loss.

Another important direction is to improve the efficiency of the EKS method. The advantages of ensembling come at a cost: we need to train, store, and run inference with multiple networks. (Post-processing the networks’ output with EKS is relatively computationally cheap.) One natural approach would be knowledge distillation [61]: train a single network to emulate the full EKS output.

Finally, while the methods proposed here can currently track multiple distinguishable animals (e.g., a black mouse and a white mouse), they do not apply directly to multi-animal tracking problems involving multiple similar animals [18, 62], since to compute our unsupervised losses we need to be able to know which keypoint belongs to which animal. Thus adapting our approaches to the general multi-animal setting remains an important open avenue for future work.

4. Methods

All datasets used for the experiment were collected in compliance with the relevant ethical regulations. See the following published papers for each dataset: mirror-mouse: [1], CRIM13: [2], and IBL datasets: [3]. All mirror-fish experiments adhere to the American Physiological Society’s Guiding Principles in the Care and Use of Animals and were approved by the Institutional Animal Care and Use Committee of Columbia University, protocol number AABN0557.

4.1. Datasets

We consider diverse datasets collected via different experimental paradigms for mice and fish. For each dataset, we collected a large number of videos including different animals and experimental sessions, and labeled a subset of frames from each video. We then split this data into two non-overlapping subsets (i.e., a given animal and/or session would appear only in one subset). The first subset is the “in-distribution” (InD) data that we use for model training. The second subset is the “out-of-distribution” (OOD) data that we use for model evaluation. This setup mimics the common scenario in which a network is thoroughly trained on one cohort of subjects, and is then used to predict new subjects. Supplementary Table 1 details the number of frames for each subset per dataset, as well as the number of unique animals and videos those frames came from.
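The key property of this split is that no animal or session contributes frames to both subsets. A minimal sketch, assuming each labeled frame carries a session (or animal) identifier and that the held-out identifiers are chosen in advance; the function name is hypothetical:

```python
import numpy as np


def split_by_session(session_ids, ood_sessions):
    """Return (ind_indices, ood_indices) with no session shared between them.

    session_ids: one identifier per labeled frame.
    ood_sessions: identifiers reserved for out-of-distribution evaluation.
    """
    session_ids = np.asarray(session_ids)
    is_ood = np.isin(session_ids, list(ood_sessions))
    return np.flatnonzero(~is_ood), np.flatnonzero(is_ood)
```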

Mirror-mouse.

Head-fixed mice ran on a circular treadmill while avoiding a moving obstacle [1]. The treadmill had a transparent floor and a mirror mounted inside at 45°, allowing a single camera to capture two roughly orthogonal views (side view and bottom view via the mirror) at 250 Hz. The camera was positioned at a large distance from the subject (~ 1.1 meters) to minimize perspective distortion. Frames are (406×396) and reshaped during training to (256×256). 17 keypoints were labeled across the two views including seven keypoints on the mouse’s body per view, plus three keypoints on the moving obstacle.

Mirror-fish.

19 wild-caught (age unknown) adult male and female Mormyrid fish (15–22 cm in length) of the species Gnathonemus petersii were used in the experiment. Fish were housed in 60-gallon tanks in groups of 5–20. Water conductivity was maintained between 60–100 microsiemens both in the fish’s home tanks and during experiments.

The fish swam freely in and out of an experimental tank, capturing worms from a well. The tank had a side mirror and a top mirror, both at 45°, providing three different views seen from a single camera at 300 Hz (Supplementary Fig. 1). Here too, the camera was placed ~ 1.7 meters away from the center of the fish tank to reduce distortions. Frames are (384 × 512) and reshaped during training to (256 × 384).

17 body parts were tracked across all three views for a total of 51 keypoints. We pre-processed the labeled dataset as follows. First, we identified labeling errors by flagging large values of the multi-view PCA loss. We then fixed the wrong labels manually. Next, in the InD data only, we used a probabilistic variant of multi-view PCA (PPCA) to infer keypoints that were occluded in one out of the three views, effectively similar to the triangulation-reprojection protocols used for multi-view tracking by [4, 5]. This resulted in a 30% increase in the number of keypoints usable for training, with more occluded keypoints included in the augmented label set.

CRIM13.

The Caltech Resident-Intruder Mouse dataset (CRIM13) [2] consists of two mice interacting in an enclosed arena, captured by top and side view cameras at 30 Hz. We only use the top view. Frames are (480 × 640) and reshaped during training to (256 × 256). Seven keypoints were labeled on each mouse for a total of 14 keypoints.

Unlike the other datasets, the InD/OOD splits do not contain completely non-overlapping sets of animals, as we used the train/test split provided in the dataset. The four resident mice are present in both InD and OOD splits; however, the intruder mouse is different for each session. Each keypoint in the CRIM13 dataset is labeled by five different annotators. To create the final set of labels for network training, we took the median across all labels for each keypoint.
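
The consensus step above can be sketched in a few lines. This is a minimal illustration, assuming the median is taken coordinate-wise and that a missing annotation is stored as NaN; the function name `consensus_labels` and the array layout are hypothetical and not taken from the dataset or the package.

```python
import numpy as np

def consensus_labels(annotations):
    """Coordinate-wise median over annotators.

    annotations: array of shape (n_annotators, n_keypoints, 2) holding
    (x, y) pixel coordinates for one frame; NaN marks a missing label.
    Returns an array of shape (n_keypoints, 2).
    """
    annotations = np.asarray(annotations, dtype=float)
    # nanmedian skips missing annotations (an assumption of this sketch).
    return np.nanmedian(annotations, axis=0)
```

The median is less sensitive than the mean to a single annotator who clicks far from the others.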

IBL-paw.

This dataset [3] comes from the International Brain Lab and consists of head-fixed mice performing a decision-making task [6, 7]. Two cameras – “left” (60 Hz) and “right” (150 Hz) – capture roughly orthogonal side views of the mouse’s face and upper trunk during each session. The original dataset does not contain synchronized labeled frames for both cameras, preventing the direct use of multi-view PCA losses during training. Instead, we treat the frames as coming from a single camera by flipping the right camera video. Frames were initially downsampled to (102 × 128) for labeling and video storage; frames were reshaped during training to (128×128). We tracked two keypoints per view, one for each paw. More information on the IBL video processing pipeline can be found in [8]. For the large scale analysis in Extended Data Fig. 7 we selected 44 additional test sessions that were not represented in the InD or OOD sessions listed in Supplementary Table 1; these could be considered additional OOD data.

IBL-pupil.

The pupil dataset also comes from the International Brain Lab. Frames from the right camera were spatially upsampled and flipped to match the left camera. Then, a 100 × 100 pixel ROI was cropped around the pupil. The frames were reshaped in training to (128 × 128). Four keypoints were tracked on the top, bottom, left and right edges of the pupil, forming a diamond shape. For the large scale analysis in Fig. 6 we selected left videos from 65 additional sessions that were not represented in the InD or OOD sessions listed in Supplementary Table 1.

4.2. Problem formulation

Let $K$ denote the number of keypoints to be tracked, and $N$ the number of labeled frames. After manual labeling, we are given a dataset:

$$\mathcal{D}_s = \{(\mathbf{x}^i, \mathbf{y}^i)\}_{i=1}^{N} \tag{1}$$

where $\mathbf{x}^i$ is the $i$-th image and $\mathbf{y}^i \in \mathbb{R}^{2K}$ its associated label vector, stacking the annotated width-height pixel coordinates for each of the $K$ tracked keypoints.

It is standard practice to represent each annotated keypoint $\mathbf{y}^i_k \in \mathbb{R}^2$ as a heatmap $\mathbf{h}^i_k$ with width $W_h$ and height $H_h$, thus converting $\mathbf{y}^i$ to a set of $K$ heatmaps $\{\mathbf{h}^i_k\}_{k=1}^{K}$. This is done by defining a bivariate Gaussian centered at each annotated keypoint with variance $\sigma^2$ (a controllable parameter), and evaluating it at the 2D grid points of the heatmap (for more details, see [9]). If $\mathbf{y}^i_k$ lacks an annotation (e.g., if it is occluded), we do not form a heatmap for it.

We normalize the heatmaps so that each sums to one, which allows us to both evenly scale the outputs during training and use losses that operate on heatmaps as valid probability mass functions. Then, the dataset for training supervised networks is just frames and heatmaps, $\{(\mathbf{x}^i, \{\mathbf{h}^i_k\}_{k=1}^{K})\}_{i=1}^{N}$. To accelerate training, the heatmaps are made 4 or 8 times smaller than the original frames.
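
As an illustration of the heatmap construction described above, the following is a minimal NumPy sketch. The function name `keypoint_to_heatmap`, the default standard deviation, and the convention that an unlabeled keypoint is passed as NaN are assumptions made for this example, not the package's actual interface.

```python
import numpy as np

def keypoint_to_heatmap(xy, width, height, sigma=1.25):
    """Build a normalized Gaussian heatmap for one keypoint.

    xy: (x, y) location in heatmap pixel coordinates, or NaNs if unlabeled.
    Returns a (height, width) array that sums to 1, or None if unlabeled.
    """
    xy = np.asarray(xy, dtype=float)
    if np.any(np.isnan(xy)):
        return None  # occluded or unlabeled keypoint: no heatmap is formed
    grid_x, grid_y = np.meshgrid(np.arange(width), np.arange(height))
    sq_dist = (grid_x - xy[0]) ** 2 + (grid_y - xy[1]) ** 2
    heatmap = np.exp(-sq_dist / (2.0 * sigma ** 2))
    return heatmap / heatmap.sum()  # valid probability mass function

# A keypoint at pixel (100, 60) in a 256 x 256 frame, with heatmaps made
# 4 times smaller, lands at (25, 15) on a 64 x 64 grid.
example = keypoint_to_heatmap((100 / 4, 60 / 4), width=64, height=64)
```

Normalizing after evaluating the Gaussian on the grid guarantees a unit sum even when the keypoint lies close to the image border and part of the Gaussian is cut off.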

4.3. Model architectures

4.3.1. Baseline

Our baseline model performs heatmap regression on a frame-by-frame basis, akin to DeepLabCut [10], SLEAP [9], DeepPoseKit [11], and others. It has roughly the same architecture: a “backbone” network that extracts a feature vector per frame, and a “head” that transforms these into $K$ predicted heatmaps. In the results reported here, we use a ResNet-50 backbone network pretrained on the AnimalPose10K dataset ([12]; 10,015 annotated frames from 54 different animal species). For the mirror-fish dataset, we rely on ImageNet pretraining (except for the sample efficiency experiments in Fig. 1). However, our package, like others, is largely agnostic to backbone choices. Let $B$ denote batch size, $C$ the RGB color channels, and $U$ an “upscaling factor” by which we increase the size of our representations. The head includes a fixed PixelShuffle(2) layer (i.e., $U = 2$) that reshapes the features tensor output by the backbone from $(B, C_{\text{in}}, H_{\text{in}}, W_{\text{in}})$ to $(B, C_{\text{in}}/U^2, U H_{\text{in}}, U W_{\text{in}})$, where $C_{\text{in}}$, $H_{\text{in}}$, and $W_{\text{in}}$ are the channels, height, and width of the backbone features, and a series of identical ConvTranspose2D layers that further double it in size (kernel size (3 × 3), stride (2 × 2), input padding (1 × 1), and output padding (1 × 1)) [13]. The number of ConvTranspose2D layers is determined by the desired shape of the output heatmaps, and most commonly two such layers are used. Each heatmap is normalized with a 2D spatial softmax with a temperature parameter $\tau$. The supervised loss is a divergence between predicted heatmaps and labeled heatmaps. Here, we use squared error for each batch element and keypoint, $\lVert \mathbf{h}^i_k - \hat{\mathbf{h}}^i_k \rVert_2^2$, where $\hat{\mathbf{h}}^i_k$ is the predicted heatmap for keypoint $k$ on frame $i$.
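
To make the shape bookkeeping of the head concrete, here is a small pure-Python sketch. It uses the standard transposed-convolution output-size formula and the fact that a ResNet-50 backbone reduces spatial size by 32 while producing 2048 feature channels. The helper names are hypothetical, and the assumption that the last layer emits one channel per keypoint is ours.

```python
def conv_transpose2d_out(size, kernel=3, stride=2, padding=1,
                         output_padding=1, dilation=1):
    """Output length of a transposed convolution along one spatial axis."""
    return ((size - 1) * stride - 2 * padding
            + dilation * (kernel - 1) + output_padding + 1)

def head_output_shape(feature_shape, n_keypoints, n_deconv=2, shuffle=2):
    """Trace tensor shapes through PixelShuffle and the upsampling layers."""
    batch, channels, height, width = feature_shape
    if channels % shuffle ** 2 != 0:
        raise ValueError("channels must be divisible by shuffle ** 2")
    # PixelShuffle trades channels for spatial resolution.
    channels //= shuffle ** 2
    height, width = height * shuffle, width * shuffle
    # Each transposed convolution with these settings doubles height and width.
    for _ in range(n_deconv):
        height = conv_transpose2d_out(height)
        width = conv_transpose2d_out(width)
    # Assumption: the final layer outputs one heatmap channel per keypoint.
    return (batch, n_keypoints, height, width)

# 256 x 256 frames -> ResNet-50 features of shape (B, 2048, 8, 8).
shape = head_output_shape((4, 2048, 8, 8), n_keypoints=17)
```

With two upsampling layers the 8 × 8 features become 64 × 64 heatmaps, 4 times smaller than the 256 × 256 input; a single layer would give 32 × 32 heatmaps, 8 times smaller.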

Once heatmaps have been predicted for each keypoint, we must transform these 2D arrays into estimates of the width-height coordinates in the original image space. We first upsample each heatmap using bicubic interpolation. We then compute a subpixel maximum akin to DeepPoseKit [11]. A 2D spatial softmax renormalizes the heatmap to sum to 1, and we apply a high temperature parameter to suppress non-global maxima. A 2D spatial expectation then produces a subpixel estimate of the location of the heatmap’s maximum value. These two operations – spatial softmax followed by spatial expectation – are together known as a soft argmax [14]. Importantly, this soft argmax operation is differentiable (unlike the location refinement strategy employed in [10]), and allows the estimated coordinates to be used in downstream losses. To compute the confidence value associated with the pixel coordinates, we sum the values of the normalized heatmap within a configurable radius of the soft argmax.
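
The soft argmax and confidence computations can be sketched as follows. This is a NumPy illustration for a single heatmap, not the package's implementation. It assumes the temperature multiplies the heatmap values before the softmax, so that larger values sharpen the distribution; the default temperature, the radius, and the function names are hypothetical.

```python
import numpy as np

def soft_argmax(heatmap, temperature=1000.0):
    """Spatial softmax followed by spatial expectation; returns (x, y)."""
    logits = temperature * np.asarray(heatmap, dtype=float)
    logits -= logits.max()  # subtract the max for numerical stability
    probs = np.exp(logits)
    probs /= probs.sum()
    n_rows, n_cols = probs.shape
    x = float(probs.sum(axis=0) @ np.arange(n_cols))  # expected column
    y = float(probs.sum(axis=1) @ np.arange(n_rows))  # expected row
    return x, y

def confidence(norm_heatmap, x, y, radius=2.0):
    """Sum the mass of a normalized heatmap within `radius` of (x, y)."""
    n_rows, n_cols = norm_heatmap.shape
    cols, rows = np.meshgrid(np.arange(n_cols), np.arange(n_rows))
    mask = (cols - x) ** 2 + (rows - y) ** 2 <= radius ** 2
    return float(norm_heatmap[mask].sum())
```

Because every step is a smooth function of the heatmap values, the same computation written in an automatic differentiation framework lets gradients flow from coordinate-based losses back to the network.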

4.3.2. Temporal Context Network

Many detection ambiguities and occlusions in a given frame can be resolved by considering some video frames before and after it. The Temporal Context Network (TCN) uses a sequence of $2J+1$ frames to predict the labeled heatmaps for the middle frame,

$$\{\mathbf{x}^{i-J}, \ldots, \mathbf{x}^{i}, \ldots, \mathbf{x}^{i+J}\} \mapsto \{\hat{\mathbf{h}}^i_k\}_{k=1}^{K} \tag{2}$$

where $\mathbf{x}^i$ is the labeled frame and, for example, $\mathbf{x}^{i-1}$ is the preceding (unlabeled) frame in the video.

During training, batches of frame sequences are passed through the backbone to obtain feature vectors. The TCN has two upsampling heads, one “static” and one “context-aware,” each identical to the baseline model’s head. The static head takes the features of only the central frame and predicts location heatmaps for that frame. The context-aware head generates predicted location heatmaps for each of the frames (note, these are the same shape as the location heatmaps, but we do not explicitly enforce them to match labeled heatmaps). Those heatmaps are passed as inputs to a bi-directional convolutional recurrent neural network whose output is the context-aware predicted heatmap for the middle frame. We then apply our supervised loss to both predicted heatmaps, forcing the network to learn the standard static mapping from an image to heatmaps, while independently learning to take advantage of temporal context when needed. (Recall Fig. 2E, which provides an overview of this architecture.)

The network described above outputs two predicted heatmaps per keypoint, one from each head, and applies the computations described above to obtain two sets of keypoint predictions with confidences. For each keypoint, the more confident prediction of the two is selected for downstream analysis.
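
The per-keypoint selection between the two heads can be sketched with NumPy as below. The array layout (frames × keypoints) and the function name `select_more_confident` are assumptions made for this illustration.

```python
import numpy as np

def select_more_confident(xy_static, conf_static, xy_context, conf_context):
    """Pick, per frame and keypoint, the prediction with higher confidence.

    xy_*:   arrays of shape (n_frames, n_keypoints, 2)
    conf_*: arrays of shape (n_frames, n_keypoints)
    Ties go to the static head (an arbitrary choice in this sketch).
    """
    xy_static = np.asarray(xy_static, dtype=float)
    xy_context = np.asarray(xy_context, dtype=float)
    conf_static = np.asarray(conf_static, dtype=float)
    conf_context = np.asarray(conf_context, dtype=float)
    use_context = conf_context > conf_static
    xy = np.where(use_context[..., None], xy_context, xy_static)
    conf = np.where(use_context, conf_context, conf_static)
    return xy, conf
```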

4.4. Semi-supervised learning

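We perform semi-supervised learning by jointly training on a labeled dataset $\mathcal{D}_s$ (constructed as described above) and an unlabeled dataset $\mathcal{D}_u$:

$$\mathcal{D} = \mathcal{D}_s \cup \mathcal{D}_u \tag{3}$$

where $\mathcal{D}_u$ is constructed as follows.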

Assume we have access to one or more unlabeled videos; we splice these into a set of $M$ disjoint $T$-frame clips (discarding the very last clip if it has fewer than $T$ frames),

$$\mathcal{D}_u = \{\mathbf{x}^{m}_{1:T}\}_{m=1}^{M} \tag{4}$$

where the clip length $T$ is typically set according to available memory, with smaller frame sizes freeing up memory for longer sequences.

Now, assume we selected a mechanism (baseline model or TCN) for predicting keypoint heatmaps for a given frame. At each training step, in addition to a batch of labeled frames drawn from $\mathcal{D}_s$, we present the network with a short unlabeled video clip randomly drawn from $\mathcal{D}_u$. The network outputs a time-series of keypoint predictions (one pose for each of the $T$ frames in the clip), which is then subjected to one or more of our unsupervised losses.
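
The clip construction can be illustrated with a short pure-Python sketch that returns frame index ranges rather than pixel data. The function name `split_into_clips` and the half-open index convention are choices made for this example.

```python
def split_into_clips(n_frames, clip_len):
    """Return (start, stop) frame indices of disjoint clips of equal length.

    A trailing remainder shorter than `clip_len` is discarded.
    """
    if clip_len <= 0:
        raise ValueError("clip_len must be positive")
    n_clips = n_frames // clip_len
    return [(m * clip_len, (m + 1) * clip_len) for m in range(n_clips)]

# A 10-frame video cut into 4-frame clips keeps frames 0-7 and drops 8-9.
clips = split_into_clips(n_frames=10, clip_len=4)
```

At each training step one of these index ranges would be sampled at random and the corresponding frames loaded as the unlabeled clip.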

All unsupervised losses are expressed as pixel distance between a keypoint prediction and the constraint. Since our constraints are merely useful approximate models of reality, we do not require the network to perfectly satisfy them. We are particularly interested in preventing, and having the network learn from, severe violations of these constraints. Therefore, we enforce our losses only when they exceed a tolerance threshold $\epsilon$, rendering them $\epsilon$-insensitive:

$$\mathcal{L}(\hat{\mathbf{y}}) = \max\big(0, \; \ell(\hat{\mathbf{y}}) - \epsilon\big) \tag{5}$$

where $\ell(\hat{\mathbf{y}})$ is the pixel distance between the prediction $\hat{\mathbf{y}}$ and the constraint. The threshold $\epsilon$ could be chosen using prior knowledge, or estimated empirically from the labeled data, as we will demonstrate below. $\mathcal{L}$ is computed separately for each keypoint on each frame, and averaged to obtain a scalar loss to be minimized. Multiple losses can be jointly minimized via a weighted sum, with weights determined by a parallel hyperparameter search, which is supported in Lightning Pose with no code changes.
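
The thresholding and the weighted sum can be sketched as follows. This is a NumPy illustration under stated assumptions: the dictionary keys, tolerance values, and weights are hypothetical, and the function names are not taken from the package.

```python
import numpy as np

def epsilon_insensitive(distances, epsilon):
    """Zero out pixel distances below the tolerance; shift the rest down."""
    return np.maximum(0.0, np.asarray(distances, dtype=float) - epsilon)

def total_unsupervised_loss(distances_by_loss, epsilons, weights):
    """Weighted sum of the averaged, thresholded losses.

    distances_by_loss: dict mapping a loss name to an array of per-keypoint,
    per-frame pixel distances between predictions and that constraint.
    """
    total = 0.0
    for name, distances in distances_by_loss.items():
        per_element = epsilon_insensitive(distances, epsilons[name])
        total += weights[name] * per_element.mean()
    return float(total)

# Hypothetical distances for two constraints on a tiny clip.
loss = total_unsupervised_loss(
    {"temporal": [1.0, 5.0, 12.0], "pca": [10.0, 2.0]},
    epsilons={"temporal": 5.0, "pca": 4.0},
    weights={"temporal": 2.0, "pca": 1.0},
)
```

Predictions that violate a constraint by less than its tolerance contribute nothing to the loss, and therefore nothing to the gradient, so the network is only corrected for severe violations.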

4.4.1. Temporal difference loss

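Keypoints should not jump too far between consecutive frames. We measure the jump in pixels and ignore jumps smaller than $\epsilon_{\text{temp}}$, the maximum jump allowed by the user,

$$\mathcal{L}_{\text{temp}}(\hat{\mathbf{y}}_{k,t}) = \max\big(0, \; \lVert \hat{\mathbf{y}}_{k,t} - \hat{\mathbf{y}}_{k,t-1} \rVert_2 - \epsilon_{\text{temp}}\big) \tag{6}$$

where $\hat{\mathbf{y}}_{k,t}$ is the predicted location of keypoint $k$ on frame $t$, and $\epsilon_{\text{temp}}$ could be determined based on image size, frame rate, and rough viewing distance from the subject. We compute this loss for a pair of successive predictions only when both have confidence greater than a configurable threshold (e.g., 0.9) to avoid artificially enforcing smoothness in stretches where the keypoint is unseen. We average the loss across the $K$ keypoints and the $T$ frames of the unlabeled clip:

$$\bar{\mathcal{L}}_{\text{temp}} = \frac{1}{K(T-1)} \sum_{k=1}^{K} \sum_{t=2}^{T} \mathcal{L}_{\text{temp}}(\hat{\mathbf{y}}_{k,t}) \tag{7}$$

and minimize $\bar{\mathcal{L}}_{\text{temp}}$ during training. Lightning Pose also offers the option to apply the temporal difference loss on predicted heatmaps instead of the keypoints. We have found both methods comparable and focus on the keypoint version for clarity.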
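
A minimal NumPy sketch of the keypoint version of this loss follows. The default tolerance, the choice to average only over frame pairs that pass the confidence gate, and the function name are assumptions of this illustration rather than the package's behavior.

```python
import numpy as np

def temporal_difference_loss(preds, confs, epsilon=20.0, conf_thresh=0.9):
    """Thresholded jump size between consecutive frames, averaged.

    preds: (n_frames, n_keypoints, 2) predicted pixel coordinates
    confs: (n_frames, n_keypoints) prediction confidences
    """
    preds = np.asarray(preds, dtype=float)
    confs = np.asarray(confs, dtype=float)
    # Euclidean jump of each keypoint between frame t-1 and frame t.
    jumps = np.linalg.norm(preds[1:] - preds[:-1], axis=-1)
    penalties = np.maximum(0.0, jumps - epsilon)
    # Keep a pair only if the keypoint is confidently seen on both frames.
    valid = (confs[1:] > conf_thresh) & (confs[:-1] > conf_thresh)
    if not valid.any():
        return 0.0
    return float(penalties[valid].mean())
```

For example, a keypoint that moves 5 pixels and then 50 pixels, with a tolerance of 20 pixels, is penalized only for the second jump, by 30 pixels.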

4.4.2. Multi-view PCA loss

Background.

Let $\mathbf{z} \in \mathbb{R}^3$ be an unknown 3D keypoint of interest. Assume that we have $V$ cameras and that each camera $v$ sees a single perspective projection of $\mathbf{z}$, denoted as $\mathbf{y}_v \in \mathbb{R}^2$, in pixel coordinates. (Note that it is standard to express $\mathbf{z}$ and $\mathbf{y}_v$ in “homogeneous coordinates”, i.e., appending another element to each vector, yet we omit this for simplicity and for a clearer connection with our PCA approach.) Thus, we have a $2V$-dimensional measurement $\mathbf{y} = [\mathbf{y}_1, \ldots, \mathbf{y}_V]$ of our 3D keypoint $\mathbf{z}$.

The multi-view geometry approach.

It is standard to model each view as a pinhole camera [15]: such a camera has intrinsic parameters (focal length and distortion) and extrinsic parameters (its 3D location and orientation, a.k.a. “camera pose”), that together specify where a 3D keypoint will land on its imaging plane, i.e., the transformation from $\mathbf{z}$ to $\mathbf{y}_v$. This transformation involves a linear projection (scaling, rotation, translation) followed by a nonlinear distortion. While one might know a camera’s focal length and distortion, in general, both the intrinsic and extrinsic parameters are not exactly known and have to be estimated. A standard way to estimate these involves “calibrating” the camera: filming objects with ground-truth 3D coordinates, and measuring their 2D pixel coordinates on the camera’s imaging plane. Physical checkerboards are typically used for this purpose. They have known patterns that can be presented to the camera and detected using traditional computer vision techniques. Now with a sufficient set of 3D inputs and 2D outputs, the intrinsic and extrinsic parameters can be estimated via (nonlinear) optimization.

Multi-view PCA on the labels (our approach).

We take a simpler approach which does not require camera calibration or, in the mirrored datasets considered in this paper, explicit information about the location of the mirrors. Our first insight is that the multi-view ($2V$-dimensional) labeled keypoints could be used as keypoint correspondences to learn the geometric relationship between the views. We approximate the pinhole camera as a linear projection (with zero distortion), and estimate the parameters of this linear projection by fitting PCA on the labels (details below), and keeping the first three PCs, since all we are measuring from our different cameras is a single 3D object. Fig. 2C (bottom right) confirms that our PCA model can explain > 99% of the variance with the first three PCs in several multi-view experimental setups, indicating that our linear approximation is suitable at least for the mirror-mouse and mirror-fish datasets, in which the camera is relatively far from the subject. We do anticipate cases where our linear approximation will not be sufficiently accurate (e.g., strongly distorted lenses, or highly zoomed in); the more general epipolar geometry approach of [16,17] could be applicable here. Note that our three-dimensional PCA coordinates do not exactly match the 3D width-height-depth physical coordinates of the keypoints in space; instead, these two sets of three-dimensional coordinates are related via an affine transformation.
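
The reasoning behind keeping three PCs can be checked on synthetic data. In the sketch below, random 3D points are projected through hypothetical affine cameras (a linear map plus an offset, with no distortion), and the stacked 2D coordinates are analyzed with PCA via the singular value decomposition. The camera parameters and the function name are invented for illustration and do not correspond to any dataset in this paper.

```python
import numpy as np

def top3_explained_variance(labels):
    """Fraction of variance captured by the first three principal components.

    labels: array of shape (n_samples, 2 * n_views), one row per 3D keypoint.
    """
    centered = labels - labels.mean(axis=0)
    singular_values = np.linalg.svd(centered, compute_uv=False)
    variances = singular_values ** 2
    return float(variances[:3].sum() / variances.sum())

rng = np.random.default_rng(0)
points_3d = rng.normal(size=(500, 3))  # synthetic 3D keypoints
views = []
for _ in range(3):  # three hypothetical affine cameras
    projection = rng.normal(size=(2, 3))
    offset = rng.normal(size=2)
    views.append(points_3d @ projection.T + offset)
labels = np.hstack(views)  # shape (500, 6): stacked multi-view coordinates
fraction = top3_explained_variance(labels)
```

Under an exactly affine camera model the stacked coordinates lie in a three-dimensional affine subspace, so the first three PCs capture essentially all of the variance; lens distortion or strong perspective effects would show up as variance left over in the remaining components.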

Before training: fitting multi-view Principal Component Analysis (PCA) on the labels.