Abstract

OBJECTIVES

The transition to competency-based medical education (CBME) has increased the volume of residents’ assessment data; however, the quality of the narrative feedback within these data has yet to be used to provide feedback-on-feedback to faculty. Our objectives were (1) to explore and compare the quality and content of narrative feedback provided to residents in medicine and surgery during ambulatory patient care and (2) to use the Deliberately Developmental Organization framework to identify strengths, weaknesses, and opportunities to improve the quality of feedback within CBME.

METHODS

We conducted a convergent mixed-methods study with residents from the Departments of Surgery (DoS; n = 7) and Medicine (DoM; n = 9) at Queen's University. We used thematic analysis and the Quality of Assessment for Learning (QuAL) tool to analyze the content and quality of narrative feedback documented in entrustable professional activity (EPA) assessments for ambulatory care. We also examined whether the basis of assessment and the time taken to provide feedback were associated with the quality of narrative feedback.

RESULTS

Forty-one EPA assessments were included in the analysis. Three major themes arose from thematic analysis: Communication, Diagnostics/Management, and Next Steps. The quality of the narrative feedback varied: 46% had sufficient evidence about residents’ performance, 39% provided a suggestion for improvement, and 11% provided a connection between the suggestion and the evidence. There were significant differences between DoM and DoS in quality of feedback scores for the evidence (2.1 [1.3] vs. 1.3 [1.1]; p < 0.01) and connection (0.4 [0.5] vs. 0.1 [0.3]; p = 0.04) domains of the QuAL tool. Feedback quality was not associated with the basis of assessment or the time taken to provide feedback.

CONCLUSION

The quality of the narrative feedback provided to residents during ambulatory patient care was variable, with the greatest gap in connecting suggestions for improvement to evidence about residents’ performance. Ongoing faculty development is needed to improve the quality of narrative feedback provided to residents.

Keywords: faculty development, resident education, feedback, surgery, medicine

Introduction

In recent years, residency education in Canada has transitioned from time-based to competency-based medical education (CBME), with a focus on entrustable professional activities (EPAs) as an assessment framework that translates the necessary competencies into clinical practice. 1 Within CBME, assessors are encouraged to directly observe residents’ performance on real-world tasks and to provide frequent and actionable feedback. 2 The transition to CBME increased the quantity of assessments and feedback; however, the quality of the feedback has not changed. 3,4 To reconcile this tension between assessment and feedback, the focus must therefore shift to giving meaningful, constructive, and specific feedback within the CBME framework. Residents and faculty need training in how to provide high-quality feedback to optimize residency education.

Resident and faculty growth within CBME can be explored using the conceptual framework of Deliberately Developmental Organizations (DDOs). The DDO framework was developed to help create institutions whose fundamental objective is developing people. It comprises three key features: The Edge, The Groove, and The Home. 5 The Edge is an aspirational orientation toward the growth of individuals within an organization, which includes identifying weaknesses and using them as opportunities for improvement. The Groove represents the active implementation of tools and practices that facilitate growth, and The Home refers to an overarching culture that embraces feedback and the improvement of individuals. To meet these expectations at an organizational level, the DDO framework relies on performance data to measure and inform the growth of individuals and of the organization. 5 Within CBME, faculty use EPAs to assess residents’ competency and to inform decisions about promotion between stages of training. However, residency programs often fail to use the wealth of EPA data to its full potential, for example by operationalizing it for faculty development. 6 The DDO framework can address this gap by using EPA assessment data to evaluate faculty performance and to give faculty feedback on it. Used in this way, EPA data may provide residency programs with the Edge to create novel tools for faculty development (the Groove) and to foster an environment that embraces high-quality feedback and encourages the professional development of residents and faculty (the Home), which in turn may improve resident performance and patient care. 6

In this study, we aimed to explore and compare the quality and content of narrative feedback provided to residents in the Departments of Medicine and Surgery during ambulatory clinical care at our academic institution. We used the DDO conceptual framework to identify strengths and weaknesses and to make recommendations for improving the quality of feedback within CBME in an ambulatory setting.

Methods

Study design

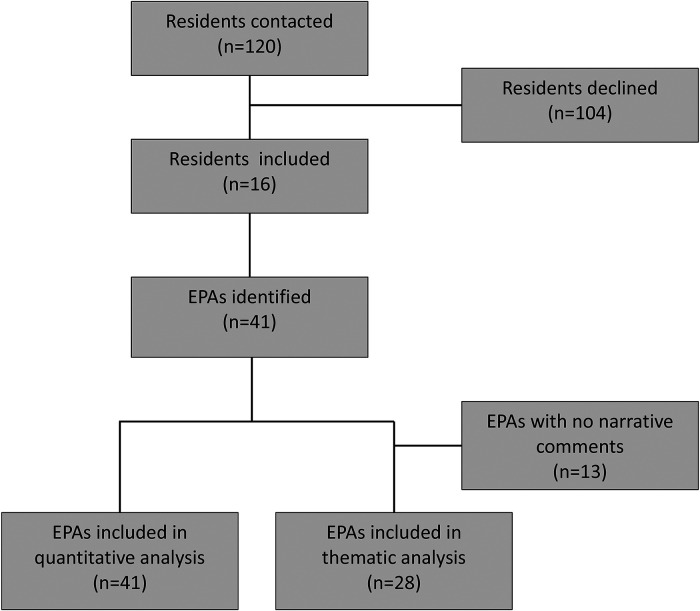

We used a convergent mixed-methods design and purposive sampling to recruit residents in the CBME stream from the Departments of Surgery (DoS) and Medicine (DoM) at Queen's University and its affiliated academic health sciences centre, Kingston Health Sciences Centre (Figure 1). 7 Kingston Health Sciences Centre serves a large rural catchment area of 20,000 km² and a population of approximately 500,000. The DoS and DoM offer residency programs in orthopedic and general surgery, internal medicine, and neurology, with approximately 120 residents across all years in these four programs. Study participants were contacted by email and provided written informed consent for us to access their EPA assessment data related to ambulatory patient care (including both in-person and virtual encounters) completed between July 1, 2020 and February 28, 2021, from Elentra™, an online academic portal. The content of each EPA assessment in each postgraduate specialty is determined by the Royal College of Physicians and Surgeons of Canada and includes a list of milestones, a global assessment of entrustment, and two written prompts (“next steps” and “global feedback”) that specifically ask the assessor for narrative comments (see Supplemental File A). 8 We collected the following EPA data variables: residency program, basis of assessment, date of clinical encounter, date of assessment completion, and narrative feedback. Basis of assessment included direct observation (faculty present in the room during the encounter), indirect observation (case reviews, presentations, discussions, and dictated letters), and other methods (a combination, or not specified).
Time taken to provide narrative feedback was captured by two variables: the time for residents to initiate the EPA (days elapsed from the encounter date to the date the EPA was initiated) and the time for faculty to complete the EPA assessment with narrative feedback (days elapsed from initiation to completion by faculty).
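The two time variables are simple date differences. The sketch below assumes each assessment record carries three calendar dates (encounter, initiation, completion); the function and argument names are hypothetical and do not reflect Elentra's actual export format.

```python
from datetime import date


def feedback_intervals(encounter: date, initiated: date, completed: date) -> tuple:
    """Return (days to initiate, days to complete) for one EPA assessment.

    Hypothetical helper:
      days to initiate = encounter date -> date the resident initiated the EPA
      days to complete = initiation date -> date the faculty member completed it
    """
    if not (encounter <= initiated <= completed):
        raise ValueError("expected encounter <= initiated <= completed")
    days_to_initiate = (initiated - encounter).days
    days_to_complete = (completed - initiated).days
    return days_to_initiate, days_to_complete


# Hypothetical record: encounter on Oct 1, EPA initiated Oct 3, completed Oct 10.
print(feedback_intervals(date(2020, 10, 1), date(2020, 10, 3), date(2020, 10, 10)))
# → (2, 7)
```

Rejecting out-of-order dates guards against data-entry errors that would otherwise produce negative intervals.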

Figure 1.

Study flow diagram.

This study was approved by the Health Sciences and Affiliated Teaching Hospitals Research Ethics Board on August 20, 2020 (HSREB; file #6030474). All methods were conducted in accordance with the relevant guidelines and regulations of the HSREB. All study participants provided written informed consent for us to access their EPA assessment data related to ambulatory patient care.

Data analysis

Quality of feedback analysis

We used the Quality of Assessment for Learning (QuAL) score to rate the overall quality of the feedback documented in the EPA assessments. 8 The QuAL score assesses the quality of narrative feedback in the CBME context and has documented reliability and validity evidence. 9 It rates narrative feedback in three domains: (1) Evidence, (2) Suggestion, and (3) Connection. Evidence judges whether the information provided about the resident's performance is sufficient (0 = no comment, 1 = no, but comment present, 2 = somewhat, 3 = yes/full description). Suggestion indicates whether the rater provided a suggestion for improvement (0 = no, 1 = yes). Connection indicates whether the rater's suggestion for improvement was linked to the behavior described in the evidence portion (0 = no, 1 = yes). The maximum score is 5; the minimum score is 1 if a narrative comment is present and 0 if no written feedback was provided (Supplemental File B).
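The scoring rules above can be expressed as a small data type. This is a minimal sketch, not the official QuAL instrument: the class name is hypothetical, and the two consistency checks (an absent comment cannot contain a suggestion, and a connection requires a suggestion) are our reading of the domain definitions rather than rules stated in the source.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QuALRating:
    """One rater's QuAL rating of a single narrative comment (hypothetical helper)."""

    evidence: int    # 0 = no comment, 1 = comment but insufficient, 2 = somewhat, 3 = full description
    suggestion: int  # 1 if the rater suggests an improvement, else 0
    connection: int  # 1 if the suggestion is linked to the behavior described, else 0

    def __post_init__(self) -> None:
        if self.evidence not in (0, 1, 2, 3):
            raise ValueError("evidence must be 0, 1, 2, or 3")
        if self.suggestion not in (0, 1) or self.connection not in (0, 1):
            raise ValueError("suggestion and connection must be 0 or 1")
        # Assumed consistency rules derived from the domain definitions.
        if self.evidence == 0 and self.suggestion == 1:
            raise ValueError("a missing comment cannot contain a suggestion")
        if self.connection == 1 and self.suggestion == 0:
            raise ValueError("a connection requires a suggestion")

    @property
    def total(self) -> int:
        """Total QuAL score: 0 when no comment is present, otherwise 1 to 5."""
        return self.evidence + self.suggestion + self.connection


print(QuALRating(evidence=3, suggestion=1, connection=1).total)  # → 5
print(QuALRating(evidence=1, suggestion=0, connection=0).total)  # → 1
```

Because any comment earns at least 1 point in the Evidence domain, the total cleanly separates "no feedback" (0) from "feedback present" (1 to 5).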

Three researchers (JSSH, RL, HB) rated the quality of the narrative feedback. They first rated 10% of the narrative feedback together, then rated a further 10% individually and compared their ratings, reaching 100% interrater agreement. Two researchers (JSSH, RL) then independently rated the remaining data, with an initial agreement of 93%; all disagreements were resolved by a third rater (HB). Examples of quality ratings are provided in Supplemental File C. For sub-group analyses of qualitative themes, basis of assessment, and time to narrative feedback, we stratified feedback quality into high (score of 4 or 5) and low (score of 1 or 2). QuAL scores and basis of assessment were compared between the DoM and DoS using a Chi-squared, Fisher's exact, or Fisher–Freeman–Halton exact test, as appropriate (SPSS Statistics). Time to initiate EPAs was compared between the high- and low-quality feedback groups using an independent samples t-test, and time to complete EPAs was compared using the Mann–Whitney U test.
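The stratification rule and a two-sided Fisher's exact test for a 2 × 2 comparison (for example, Medicine vs. Surgery on a yes/no QuAL domain) can be sketched with the standard library alone. This is an illustrative reimplementation under our own function names, not the SPSS procedure the study used; the p-value follows the common convention of summing the probabilities of all tables with the same margins that are no more likely than the observed table.

```python
from math import comb
from typing import Optional


def quality_stratum(total: int) -> Optional[str]:
    """Assign a total QuAL score (0-5) to the high/low strata used for sub-group analysis."""
    if total in (4, 5):
        return "high"
    if total in (1, 2):
        return "low"
    return None  # scores of 0 (no comment) and 3 belong to neither stratum


def fisher_exact_2x2(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact p-value for the 2x2 table [[a, b], [c, d]].

    With row and column totals fixed, the top-left cell follows a
    hypergeometric distribution; sum the probabilities of every table
    that is no more likely than the observed one.
    """
    row1 = a + b          # first row total (e.g., Medicine)
    col1 = a + c          # first column total (e.g., domain scored 1)
    n = a + b + c + d
    denom = comb(n, col1)

    def prob(x: int) -> float:
        # Probability that the top-left cell equals x under fixed margins.
        return comb(row1, x) * comb(n - row1, col1 - x) / denom

    observed = prob(a)
    lowest = max(0, col1 - (n - row1))
    highest = min(row1, col1)
    p_value = 0.0
    for x in range(lowest, highest + 1):
        p = prob(x)
        if p <= observed * (1 + 1e-7):  # tolerance so exact ties count
            p_value += p
    return min(1.0, p_value)


# Connection domain counts from Table 2: Medicine 8 yes / 10 no, Surgery 3 yes / 20 no.
print(round(fisher_exact_2x2(8, 10, 3, 20), 3))  # → 0.036
```

Applied to the Connection counts in Table 2, this sketch gives a value that agrees with the reported p = 0.036; larger r × c comparisons such as the four-level Evidence domain would need the Fisher–Freeman–Halton generalization instead.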

Thematic analysis

We used thematic analysis to analyze the narrative feedback documented in the EPA assessments. Three independent coders (JSSH, RL, HB) analyzed the narrative feedback inductively, using preliminary coding, line-by-line coding, and generation of themes. 10 During preliminary coding, 10% of the feedback was coded together to develop a preliminary codebook. A further 10% of the data was then coded independently and compared across coders to assess inter-coder reliability, yielding 96% agreement; the remaining 4% (the discrepant codes) was discussed until consensus was reached. Two researchers (JSSH, RL) used the resulting consensus-built codebook to code the remaining feedback. Lastly, all three researchers (JSSH, RL, HB) conducted axial coding and concept grouping to identify themes. Coding was performed in QSR International's NVivo software (Version 12). Throughout this process, we considered how personal biases may have influenced thematic interpretation, including personal experiences with providing and receiving narrative feedback, and the training and educational backgrounds of the researchers. 11 The researchers who conducted the analysis were medical students (JSSH, RL) trained by a health education researcher and consultant with the Office of Professional Development and Educational Scholarship (HB). The research team also included faculty from the Departments of Surgery (BZ, SM) and Medicine (SA), and two residents (EK, SB). Throughout the analysis, the entire research team met to discuss emerging codes, interpretations, and themes, drawing on the insights of both those handling the data and those with educational and clinical experience.
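The agreement figures reported for rating and coding are simple percent agreement. A minimal sketch, assuming each coder assigns one code per item (the function names and example codes are hypothetical):

```python
from typing import List, Sequence


def percent_agreement(coder_a: Sequence[str], coder_b: Sequence[str]) -> float:
    """Percentage of items to which two coders assigned the same code."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("need two non-empty code lists of equal length")
    matches = sum(1 for x, y in zip(coder_a, coder_b) if x == y)
    return 100.0 * matches / len(coder_a)


def disagreements(coder_a: Sequence[str], coder_b: Sequence[str]) -> List[int]:
    """Indices of items the coders disagree on, to be discussed until consensus."""
    return [i for i, (x, y) in enumerate(zip(coder_a, coder_b)) if x != y]


# Hypothetical codes assigned to four feedback segments.
a = ["Communication", "Next steps", "Patient approach", "Next steps"]
b = ["Communication", "Next steps", "Patient approach", "Developing a plan"]
print(percent_agreement(a, b))  # → 75.0
print(disagreements(a, b))      # → [3]
```

Percent agreement is easy to interpret but does not correct for agreement expected by chance; chance-corrected statistics such as Cohen's kappa do.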

Results

Demographics

Residents’ demographics are outlined in Table 1.

Table 1.

Demographics.

| Resident participants | n |
|---|---|
| Total number | 16 |
| Department | |
| Surgery | 7 |
| Medicine | 9 |
| Mean age, years (SD) | 29.1 (5.1) |
| Sex | |
| Male | 7 |
| Female | 9 |
| Postgraduate year (PGY), n (%) | |
| PGY-1 | 5 (35%) |
| PGY-2 | 3 (15%) |
| PGY-3 | 4 (30%) |
| PGY-4 | 1 (5%) |
| PGY-5 | 3 (15%) |

Quality of feedback

A total of 41 EPA assessments were analyzed. Thirteen out of 41 (32%) assessments did not have narrative feedback and received a QuAL score of 0. The overall quality of the narrative feedback varied, with a mean total QuAL score of 2.2 (1.9) out of 5. Forty-six percent of narrative feedback comments provided sufficient evidence about residents’ performance with a mean score of 1.57 (1.29) out of 3, 39% provided a suggestion for improvement with a mean score of 0.39 (0.49) out of 1, and 11% provided a connection between the suggestion and the evidence with a mean score of 0.28 (0.45) out of 1 (Table 2). Overall, assessors were significantly better at providing suggestions for improvement versus making connections between suggestions and described behaviors (0.39 [0.49] vs. 0.28 [0.45]; p < 0.001).

Table 2.

Feedback quality scores of surgery versus medicine.

| Domain | Value | Total (N = 41), n (%) | Medicine (N = 18), n (%) | Surgery (N = 23), n (%) | p-value (Medicine vs. Surgery) |
|---|---|---|---|---|---|
| Evidence: Does the rater provide sufficient evidence about resident performance? | 3 | 15 (37) | 11 (61) | 4 (17) | 0.008 |
| | 2 | 6 (14) | 2 (11) | 4 (17) | |
| | 1 | 7 (17) | 0 (0) | 7 (30) | |
| | 0 | 13 (32) | 5 (28) | 8 (35) | |
| | Mean score (SD) | 1.57 (1.29) | 2.10 (1.35) | 1.17 (1.11) | |
| Suggestion: Does the rater provide a suggestion for improvement? | 1 | 16 (39) | 8 (44) | 8 (35) | 0.529 |
| | 0 | 25 (61) | 10 (56) | 15 (65) | |
| | Mean score (SD) | 0.39 (0.49) | 0.44 (0.51) | 0.35 (0.49) | |
| Connection: Is the rater's suggestion linked to the behavior described? | 1 | 11 (27) | 8 (44) | 3 (13) | 0.036 |
| | 0 | 30 (73) | 10 (56) | 20 (87) | |
| | Mean score (SD) | 0.28 (0.45) | 0.45 (0.51) | 0.12 (0.34) | |
| Mean total QuAL score (SD) | | 2.2 (1.9) | 2.9 (2.1) | 1.6 (1.5) | 0.022 |

There were significant differences in the quality of narrative feedback from faculty members in the DoM and DoS (Table 2). Overall, faculty within the DoM provided significantly higher quality feedback (2.9 [2.1] vs. 1.6 [1.5]; p = 0.022), specifically in the domains of “evidence” and “connection” as compared to the faculty in the DoS. There was no significant difference within the domain of “suggestion” between faculty from either department (Table 2).

Quality of feedback and basis of assessment

The basis of assessment for residents in an ambulatory setting included direct observation, indirect observation, and others (Table 3). There were no significant differences in the basis of assessment used between the DoS and DoM (p = 0.552). There was no association between the basis of assessment and the quality of feedback, with assessors providing high- and low-quality feedback regardless of the basis of assessment used (Table 4).

Table 3.

Basis of assessments.

| Basis of assessment | Total (N = 41), n (%) | Medicine (N = 18), n (%) | Surgery (N = 23), n (%) | p-value |
|---|---|---|---|---|
| Direct observation | 19 (46) | 7 (39) | 12 (52) | 0.552 |
| Indirect observation | 14 (34) | 8 (44) | 6 (26) | |
| Case review (indirect) | 7 (17) | 3 (17) | 4 (17) | |
| Case presentation/discussion (indirect) | 5 (12) | 5 (28) | 0 (0) | |
| Discussion (indirect) | 1 (2) | 0 (0) | 1 (4) | |
| Dictated letters (indirect) | 1 (2) | 0 (0) | 1 (4) | |
| Other (both, not specified) | 8 (19) | 3 (16) | 5 (22) | |

Table 4.

Feedback and associated themes and basis of assessment stratified by quality.

| Quality | Avg quality rating (/5) | Basis: direct observation | Basis: indirect observation | Basis: both | Basis: not specified | Theme: communication | Theme: diagnostics and management | Theme: next steps | Example quotes |
|---|---|---|---|---|---|---|---|---|---|
| High (n = 11) | 4.7 | 3 | 6 | 0 | 2 | 8 | 10 | 11 | “Excellent comprehensive assessment and recognition of social determinants of health…One additional thing that came out was the cost of certain unnecessary medications. Always keep this in mind…. thoughtful recommendation that included contributors from hypovolemia and from SIADH….” (IM-EPA-15) |
| Low (n = 12) | 1.7 | 5 | 2 | 1 | 4 | 0 | 3 | 3 | “Performing at level expected.” (GS-EPA-35) |

Quality of feedback and time to complete the EPA assessment

Residents took an average of 3.6 (SD = 9.4) days to initiate an EPA assessment after a clinical encounter, and faculty took an average of 5.8 (SD = 15.6) days to complete the EPA assessment and to provide narrative feedback after the EPA was initiated by the resident. There was no difference in time taken by residents to initiate assessments between EPAs which had high- versus low-quality narrative feedback from faculty (3.18 [SD = 5.8] days vs. 6.25 [SD = 15.9] days; p = 0.59). Similarly, there was no difference in the time taken by faculty to complete assessments with high- versus low-quality narrative feedback (2.82 [SD = 4.8] days vs. 2.95 [SD = 4.3] days; p = 0.28).

Thematic analysis

A total of 41 individual EPA assessments were included in the analysis, representing 19 EPAs (Supplemental File D). Thirteen (32%) out of 41 assessments did not have narrative feedback, leaving a total of 28 assessments for thematic analysis. Three major themes of narrative feedback within ambulatory clinical settings emerged from the data: (1) Communication, (2) Diagnostics and Management, and (3) Next steps. Within each theme, several subthemes were identified (Table 5).

Table 5.

Themes and subthemes identified with quotes.

| Theme | Subtheme | Example quotations |
|---|---|---|
| Theme 1: Communication | With other healthcare providers | “[Resident] is bright and receptive to discussion and feedback. We had some excellent discussions on opioids, volatiles, epidural analgesia, etc.” (GS-EPA-44) |
| | With patients | “You were calm, informative, empathic, and patient….” (IM-EPA-19) |
| Theme 2: Diagnostics and Management | Patient approach | “Nice approach to a patient presenting with transient neurological symptoms, most in keeping with non-neurologic origin, but keeping in mind the need to rule out serious disease and refer on appropriately.” (IM-EPA-24) |
| | Clinical reasoning and evidence-based practice | “Good approach to osteoporosis and review of FRAX scores to determine moderate to high-risk patients who would benefit from pharmacotherapy.” (IM-EPA-18) |
| Theme 3: Next Steps | Patient interactions | “Consider using concrete examples instead of abstractions when explaining options around goals of care to patients/families. Most people find it easier to understand ‘…if you developed pneumonia, would you want that treated with IV antibiotics in the hospital in addition to symptom management to help you feel short of breath, or would you like to remain at home and just focus on feeling comfortable and less short of breath?’ This (using examples of illness rather than abstractions) has been a really helpful tool for me to communicate effectively and help patients make goal-concordant health care decisions.” (IM-EPA-14) |
| | Developing a plan | “Learning to be selective in tests that we order for patients with UGI symptoms. Not everyone will require EGD, 24-h pH, manometry. Always think why a test should be ordered. If you cannot explain the reason, do not order the test.” (GS-EPA-34) |
| | Improving process | “Try and use less redundant words in dictations eg, in 1 year's time should just be 1 year.” (GS-EPA-41) |

Theme 1: Communication

Communication was the main theme within the narrative feedback provided to residents, and two subthemes were identified: (1) communication with staff and other health care providers and (2) communication with patients. Residents received narrative feedback on their communication with faculty (presenting case summaries, case discussions, and documentation) and with patients (active listening, education, and building rapport).

Theme 2: Diagnostics and Management

The narrative feedback often highlighted residents’ skills in Diagnostics and Management. Two subthemes emerged: (1) Patient approach and (2) Clinical reasoning and evidence-based practice. Within the subtheme of “Patient approach,” faculty provided feedback on the efficiency and thoroughness of residents’ patient assessments and on the organization of their histories and clinical presentations. Within the subtheme of “Clinical reasoning and evidence-based practice,” faculty provided feedback on the appropriateness of management plans and on residents’ use of clinical support tools and clinical practice guidelines.

Theme 3: Next Steps

Faculty provided residents with narrative feedback on the next steps to improve their performance in the ambulatory clinics. Three subthemes were identified: (1) Patient interactions, (2) Developing a plan, and (3) Improving process.

The subtheme of “Patient interactions” included feedback regarding providing patient education and counseling, working on nonconfrontational approaches to antagonistic patients, avoiding the use of medical jargon in conversations with patients, and working on exploring goals of care and end-of-life discussions.

The subtheme of “Developing a plan” included feedback about considering a wider range of differentials and working toward a comprehensive differential diagnosis. Residents were encouraged to explore different management options for their patients, and to familiarize themselves with practice guidelines and legal processes such as medical assistance in dying. Residents were encouraged to apply their skills to more complex patient encounters, and to select appropriate investigations by providing a rationale for each investigation ordered.

The subtheme of “Improving process” included feedback on the ways residents could improve workflow in ambulatory clinics, such as dictations and charting. Faculty suggested ways to streamline the process of documentation to increase clinic efficiency.

Quality of feedback and major qualitative themes

Narrative feedback from faculty was stratified into high-quality (QuAL score 4–5; n = 11) and low-quality (QuAL score 1–2; n = 12). Low-quality feedback mentioned at most one qualitative theme (Table 4). Such feedback often consisted of general praise such as “well done” or “good” without specific details or suggestions for improvement. High-quality narrative feedback often mentioned two or three themes and included various subthemes (Table 4).

Discussion

In this study, we used the QuAL framework to assess the quality of narrative feedback, and thematic analysis to examine the content of feedback, provided to residents during ambulatory patient care in the Departments of Medicine and Surgery at our academic institution. We found that the overall quality of the narrative feedback varied, with 46% of feedback providing sufficient evidence about residents’ performance, 39% providing a suggestion for improvement, and 11% providing a connection between the suggestion and the evidence. We also identified three major themes within the content of narrative feedback: Communication, Diagnostics/Management, and Next steps. More qualitative themes were present in high-quality than in low-quality narrative feedback, which provides additional validity evidence for the QuAL tool. 10

The overall quality of the narrative feedback varied, with a mean (±SD) total QuAL score of 2.2 (±1.9) out of 5. This appears to be lower than the quality reported in a study of narrative feedback documented in EPA assessments for emergency medicine residents at the Ottawa Hospital (Ontario, Canada), whose authors reported a mean (±SD) total QuAL score of 3.78 (±0.95) for residents who were directly observed and 3.63 (±0.96) for residents who were indirectly observed (p = 0.17). 12 To date, no other studies have used the QuAL tool to evaluate the quality of narrative feedback outside of emergency medicine. Assessment burden on faculty may be contributing to the overall low quality of narrative feedback in our study. 13–15 With the transition to CBME, faculty are required to complete many more assessments, ideally with direct observation of the learners. A study from Western University (Ontario, Canada) examined surgery and anesthesia residents’ opinions on the burden of assessment within CBME and found that residents felt the need to request multiple assessments from faculty strained their relationships with faculty and added to the demands of their clinical responsibilities. 16

Moreover, faculty from the DoM provided significantly higher quality narrative feedback than faculty from the DoS. Challenges with feedback in surgery have been documented previously in a general surgery residency program, where 46% of residents reported receiving feedback less than 20% of the time in the past year. 17 Unfortunately, that study did not assess the quality of the feedback provided. One plausible explanation for the lower quality of feedback in the DoS in our study is that most surgery EPAs focus on procedures performed in the operating room (OR) and on inpatient care, with learning in the ambulatory setting given a lower priority. 18 It has also been suggested that surgery residents receive much of their feedback verbally in the intraoperative period; 17 however, there is little research on the quality and quantity of feedback in surgery outside of simulation-based interventions. In comparison, the quality and quantity of feedback provided within medicine subspecialties have been investigated in several studies. For example, a medical oncology program created a quality analysis tool to determine the quality of feedback provided within EPAs during the transition to CBME. 19 Within the DDO framework, the significant difference in feedback quality between faculty in the DoM and the DoS highlights an opportunity for growth within the surgery residency programs at our institution. Residency programs are driven by the goal of helping residents progress through their clinical training; however, they are also organizations that should support the development of their faculty, in keeping with the DDO mandate to support the growth of all individuals within the organization.

We did not identify any relationship between the quality of feedback and the basis of assessment. Similar results were reported by Landreville et al, who examined the effect of direct versus indirect observation on the quality of narrative feedback in emergency medicine. 12 Direct observation has been described as the gold standard basis of assessment within CBME; 20 however, it is reassuring that some participants in our study received high-quality feedback even without direct observation. Direct observation of residents’ performance may not always be feasible, 21 and our findings suggest that the basis of assessment does not influence the quality of the feedback that residents receive. Additionally, because the need for direct observations is partially responsible for the assessment burden on faculty within CBME, there may be an opportunity to reduce the required number of direct observations without compromising the quality of feedback provided to learners. 16

We also did not find any relationship between the quality of feedback and the time taken to initiate or complete the assessment. This was surprising, as feedback provided immediately after the clinical encounter would be expected to be of higher quality because it minimizes recall bias. However, literature examining this phenomenon is scarce, and further research is needed to confirm these findings.

Our findings support assessing the quality of narrative feedback in both surgical and medical residency programs. This information can then be used to provide feedback-on-feedback to faculty members as part of a continuing faculty development program; however, the utility of this approach should be investigated in future studies. Residency programs at other institutions can consider adopting our approach to assessing feedback quality when evaluating their programs. Continuing to operationalize EPA assessment data to assess faculty performance, and providing faculty development focused on the areas identified as needing improvement, would be a step toward residency programs that embrace the DDO framework and aim to support the development of their faculty as well as their residents. One framework that can be taught to faculty is the SBAI model (Situation–Behaviour–Action–Impact), which provides a systematic approach to giving feedback. 22 The SBAI model has previously been used in the clinical context to assess narrative feedback provided in EPAs within an emergency medicine program. 23 Although some studies have shown that residents and faculty often prefer verbal to written feedback, 19 written feedback is essential for tracking residents’ progress through CBME, and faculty should be skilled in providing high-quality narrative feedback. Faculty development should also focus on improving the “connections” between suggestions for improvement and evidence of residents’ performance, as we identified a significant difference between these two domains in our study.

The three major themes identified within the content of narrative feedback align with the CanMEDS competencies: Theme 1, Communication (Communicator and Collaborator); Theme 2, Diagnostics and Management (Health Advocate, Scholar, and Medical Expert); and Theme 3, Next Steps (Leader and Professional) (Supplemental File E). This alignment is not surprising, as CBME was developed to align with the CanMEDS competencies while reflecting the realities of clinical practice. 24 As such, CBME appears to operationalize the assessment of the CanMEDS competencies in the context of EPAs. The CanMEDS roles have been identified as building blocks for curriculum development; however, stakeholders have identified other key competencies, such as clinical expertise, reflective practice, collaboration, a holistic view, and involvement in practice management, as important in practice. 25 Therefore, while the CanMEDS competencies provide the basic building blocks for curriculum development, timely and high-quality feedback within CBME remains fundamental to the training of future physicians. The finding that high-quality feedback contained a greater number of themes suggests an avenue for faculty development: teaching faculty to provide examples of learners’ communication ability and diagnostic and management ability, together with next steps for improvement.

We used the DDO framework to interpret the quantity and quality of feedback provided to residents as a measure of faculty performance. Our analysis revealed that the quality of narrative feedback remains an area of weakness for some faculty members, which, through the lens of the DDO framework, can be viewed as an opportunity. We also demonstrated how EPA assessment data can be operationalized for use in faculty development, as suggested by Thoma et al and the DDO framework, thus providing The Edge so that residency programs can facilitate The Groove of creating tools to support faculty development. 6 In keeping with the features of DDOs, residency programs can foster environments that support the development of both residents and faculty within their organizations. The DDO framework parallels CBME in many ways, including the core belief that individuals can grow, the use of weaknesses as learning opportunities, an emphasis on developmental objectives, investment in tools and curriculum to facilitate growth, and communities that support individual development through small groups and close mentorship. 6 Beyond these parallels, a DDO framework can help CBME shift away from the completion-based mindset often seen in physician training and toward a mindset of ongoing growth for both learners and teachers. 6 Even faculty who have already completed traditional faculty development courses should be encouraged, and provided with resources, to continue growing as educators and to further develop essential skills such as providing high-quality feedback.

Limitations

First, the data for our study were collected from EPAs initiated by residents in ambulatory patient care during the COVID-19 pandemic. The pandemic affected residency education through limited patient volumes, virtual learning, and a shift to virtual ambulatory care. 18 The use of virtual ambulatory care increased dramatically in Ontario during the pandemic: the proportion of physicians who provided one or more virtual visits per year rose from 7.0% in the second quarter of 2019 to 85.9% in the second quarter of 2020, a more than twelvefold increase. 26 Our results should therefore not be generalized to pre-pandemic practice. Additionally, EPA forms did not record whether clinical encounters were in person or virtual; therefore, we could not determine what impact virtual ambulatory care had on the quality of narrative feedback. Second, we used assessment data, with consent, from participants who were recruited for a parallel qualitative study. 13 As such, we were not able to recruit additional participants for our study and did not perform a power calculation, making our results subject to type II error and selection bias. Apart from using the same cohort of resident participants, there was no overlap in the outcome measures or findings of the two studies. Although our study was limited by the number of EPA assessments available for analysis (n = 41), qualitative analysis revealed a richness within the content of the feedback. Third, the rapid transition to virtual care and other learning modalities during the COVID-19 pandemic may have altered the structures and frameworks established through CBME development at our institution, so our results should not be used to evaluate the effectiveness of CBME overall. Fourth, as our study was conducted at one academic institution with 16 residents and 41 assessments, our results may not be generalizable or transferable to other training programs or institutions.
Fifth, we did not have permission to collect demographic information on the faculty who completed the assessments. We also do not know how much exposure faculty from the DoM and DoS had to faculty development sessions on feedback. Future studies should examine how faculty members’ individual training and educational backgrounds affect the quality of the feedback they provide. Finally, EPA assessments alone do not allow us to determine the quality of the verbal feedback provided at the time of the clinical encounters.

Conclusions

Feedback and assessment are major tenets of CBME; however, the quality of narrative feedback in our study varied widely across EPAs for ambulatory patient care, suggesting an ongoing need for improvement that could be addressed through faculty development on the provision of high-quality narrative feedback within CBME. Feedback quality differed between departments, with feedback from the DoM scoring higher than feedback from the DoS in the evidence and connection domains; however, quality was not associated with the basis of assessment or the time taken to complete the EPA assessments. By operationalizing EPA data to examine faculty performance in providing feedback, we demonstrated how CBME can align with The Edge of the DDO framework and how residency programs can use assessment data to identify areas for improvement and further support their faculty's growth and development as educators.

Supplemental Material

Supplemental material, sj-docx-1-mde-10.1177_23821205231175734 for Exploring the Quality of Narrative Feedback Provided to Residents During Ambulatory Patient Care in Medicine and Surgery by Rebecca Leclair, Jessica S. S. Ho, Heather Braund, Ekaterina Kouzmina, Samantha Bruzzese, Sara Awad, Steve Mann and Boris Zevin in Journal of Medical Education and Curricular Development

Supplemental material, sj-pdf-2-mde-10.1177_23821205231175734 for Exploring the Quality of Narrative Feedback Provided to Residents During Ambulatory Patient Care in Medicine and Surgery by Rebecca Leclair, Jessica S. S. Ho, Heather Braund, Ekaterina Kouzmina, Samantha Bruzzese, Sara Awad, Steve Mann and Boris Zevin in Journal of Medical Education and Curricular Development

Supplemental material, sj-docx-3-mde-10.1177_23821205231175734 for Exploring the Quality of Narrative Feedback Provided to Residents During Ambulatory Patient Care in Medicine and Surgery by Rebecca Leclair, Jessica S. S. Ho, Heather Braund, Ekaterina Kouzmina, Samantha Bruzzese, Sara Awad, Steve Mann and Boris Zevin in Journal of Medical Education and Curricular Development

Acknowledgements

The authors thank the residents within the Departments of Surgery and Medicine at Queen's University for participating in this study.

Footnotes

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The authors received no financial support for the research, authorship, and/or publication of this article.

ORCID iD: Rebecca Leclair https://orcid.org/0000-0001-6302-7129

Supplemental material: Supplemental material for this article is available online.

References

1. Shorey S, Lau TC, Lau ST, Ang E. Entrustable professional activities in health care education: a scoping review. Med Educ. 2019;53(8):766–777. doi: 10.1111/MEDU.13879

2. Frank JR, Snell LS, Ten Cate O, et al. Competency-based medical education: theory to practice. Med Teach. 2010;32(8):638–645. doi: 10.3109/0142159X.2010.501190

3. Mann S, Hastings Truelove A, Beesley T, Howden S, Egan R. Resident perceptions of competency-based medical education. Can Med Educ J. 2020;11(5):31–43. doi: 10.36834/CMEJ.67958

4. Branfield Day L, Miles A, Ginsburg S, Melvin L. Resident perceptions of assessment and feedback in competency-based medical education: a focus group study of one internal medicine residency program. Acad Med. 2020;95(11):1712–1717. doi: 10.1097/ACM.0000000000003315

5. Kegan R, Lahey LL, Miller ML, Fleming A, Helsing D. An Everyone Culture: Becoming a Deliberately Developmental Organization. Boston, Massachusetts: Harvard Business Review Press; 2016.

6. Thoma B, Caretta-Weyer H, Schumacher DJ, et al. Becoming a deliberately developmental organization: using competency based assessment data for organizational development. Med Teach. 2021;43(7):801–809. doi: 10.1080/0142159X.2021.1925100

7. Creswell JW, Creswell JD. Chapter 9: designing research - qualitative methods. In: Research Design: Qualitative, Quantitative, and Mixed Methods Approaches. 5th ed. Thousand Oaks, California: SAGE Publications; 2018:179–211.

8. Entrustable Professional Activity Guides. https://www.royalcollege.ca/rcsite/documents/cbd/epa-observation-templates-e. Accessed March 13, 2023.

9. Chan TM, Sebok-Syer SS, Sampson C, Monteiro S. The quality of assessment of learning (Qual) score: validity evidence for a scoring system aimed at rating short, workplace-based comments on trainee performance. Teach Learn Med. 2020;32(3):319–329. doi: 10.1080/10401334.2019.1708365

10. Braun V, Clarke V. Using thematic analysis in psychology. Qual Res Psychol. 2006;3(2):77–101. doi: 10.1191/1478088706QP063OA

11. Molintas MP, Caricativo RD. Reflexivity in qualitative research: a journey of learning. Qual Rep. 2017;22(2):426–438. doi: 10.46743/2160-3715/2017.2552

12. Landreville JM, Wood TJ, Frank JR, Cheung WJ. Does direct observation influence the quality of workplace-based assessment documentation? AEM Educ Train. 2022;6(4):e10781. doi: 10.1002/aet2.10781

13. Carraccio C, Englander R, Van Melle E, et al. Advancing competency-based medical education. Acad Med. 2016;91(5):645–649. doi: 10.1097/acm.0000000000001048

14. Lockyer J, Carraccio C, Chan M-K, et al. Core principles of assessment in competency-based medical education. Med Teach. 2017;39(6):609–616. doi: 10.1080/0142159x.2017.1315082

15. Van Melle E, Frank JR, Holmboe ES, Dagnone D, Stockley D, Sherbino J. A core components framework for evaluating implementation of competency-based medical education programs. Acad Med. 2019;94(7):1002–1009. doi: 10.1097/acm.0000000000002743

16. Ott MC, Pack R, Cristancho S, Chin M, Van Koughnett JA, Ott M. “The most crushing thing”: understanding resident assessment burden in a competency-based curriculum. J Grad Med Educ. 2022;14(5):583–592. doi: 10.4300/jgme-d-22-00050.1

17. Gupta A, Villegas CV, Watkins AC, et al. General surgery residents’ perception of feedback: we can do better. J Surg Educ. 2020;77(3):527–533. doi: 10.1016/J.JSURG.2019.12.009

18. Ho JSS, Leclair R, Braund H, et al. Transitioning to virtual ambulatory care during the COVID-19 pandemic: a qualitative study of faculty and resident physician perspectives. Can Med Assoc Open Access J. 2022;10(3):E762–E771. doi: 10.9778/CMAJO.20210199

19. Tomiak A, Braund H, Egan R, et al. Exploring how the new entrustable professional activity assessment tools affect the quality of feedback given to medical oncology residents. J Cancer Educ. 2020;35(1):165–177. doi: 10.1007/S13187-018-1456-Z

20. Lockyer J, Carraccio C, Chan MK, et al. Core principles of assessment in competency-based medical education. Med Teach. 2017;39(6):609–616. doi: 10.1080/0142159X.2017.1315082

21. Young JQ, Sugarman R, Schwartz J, O’Sullivan PS. Overcoming the challenges of direct observation and feedback programs: a qualitative exploration of resident and faculty experiences. Teach Learn Med. 2020;32(5):541–551. doi: 10.1080/10401334.2020.1767107

22. Weitzel SR. Feedback That Works: How to Build and Deliver Your Message. Greensboro, North Carolina: John Wiley & Sons, Inc; 2007.

23. Lee C-W, Chen G-L, Yu M-J, Cheng P-L, Lee Y-K. A study to analyze narrative feedback record of an emergency department. J Acute Med. 2021;11(2):39. doi: 10.6705/J.JACME.202106_11(2).0001

24. Caccia N, Nakajima A, Kent N. Competency-based medical education: the wave of the future. J Obstet Gynaecol Can. 2015;37(4):349–353. doi: 10.1016/S1701-2163(15)30286-3

25. Van Der Lee N, Fokkema JPI, Westerman M, et al. The CanMEDS framework: relevant but not quite the whole story. Med Teach. 2013;35(11):949–955. doi: 10.3109/0142159X.2013.827329

26. Bhatia RS, Chu C, Pang A, Tadrous M, Stamenova V, Cram P. Virtual care use before and during the COVID-19 pandemic: a repeated cross-sectional study. Can Med Assoc Open Access J. 2021;9(1):E107–E114. doi: 10.9778/CMAJO.20200311
